Abstract

It has been known for over 70 years that there is an asymptotic transition of Charlier polynomials to Hermite polynomials. This transition, which is still presented in its classical form in modern reference works, is valid if and only if a certain parameter is integer. In this light, it is surprising that a much more powerful transition exists from Charlier polynomials to the Hermite function, valid for any real value of the parameter. This greatly strengthens the asymptotic connections between Charlier polynomials and special functions, with applications in queueing theory, where this transition is crucial for solving first-passage problems with moving boundaries. It is shown in this paper that the convergence is locally uniform, and a sharp rate bound is proved. In addition, it is shown that there is a transition of derivatives of Charlier polynomials to the derivative of the Hermite function, again with a sharp rate bound. Finally, it is proved that zeros of Charlier polynomials converge to zeros of the Hermite function.

1 Introduction

A unique feature of Charlier polynomials [1,2,3,4,5] is their affinity with the Poisson distribution. This has many important applications. Charlier polynomials concisely express the behavior of Erlang loss systems, a fundamental concept in queueing theory [6,7,8]. Here, Charlier polynomials (and the transition to the Hermite function) are instrumental when computing the probability of the first loss for a time-variable number of servers [9]. Another example is the generalization of stochastic integrals over Poisson distributions to multiple stochastic integrals, which can be effectively computed using Charlier polynomials [10, 11], whereas yet another is that of random matrices over Poisson distributions [12], which can be characterized by Charlier polynomial zeros.

High-dimensional or asymptotic problems typically engage Charlier polynomials of high degree and order (index). For instance, the asymptotic behavior in the number of servers of Erlang loss systems is described by Charlier polynomials whose degree and order tend to infinity simultaneously according to the Halfin–Whitt regime [13]. At first glance, the classical formula ([5, Eq. 9.14.12], [14, p. 532], [4, Eq. 18.21.9], [3, Eq. 2.82.7])

\[ \lim _{a\rightarrow \infty }{{\left( 2a \right) }^{n/2}}\,C_{n}\left( a+x\sqrt{2a},a \right) ={{(-1)}^{n}}H_{n}(x) \tag{1} \]

appears useful for reducing Charlier polynomials in this limit, but unfortunately, this formula holds only for non-negative integer n. Given how long this formula has stood, it is somewhat surprising that it can be shown that

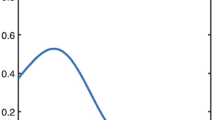

for any real x and \(\nu \), a much stronger statement (Fig. 1). Here, the ceiling function \(\lceil x \rceil \) denotes the smallest integer not smaller than x, and \(H_\nu (x)\) denotes the Hermite function [15, Ch. 10].

A proof of (1) has been given via Krawtchouk polynomials [3, pp. 36–37]. When \(\nu \) is non-negative real and \(a-x\sqrt{2a}\) is integer, pointwise convergence of (2) (without rate bound) follows implicitly from [16].

Fig. 1 Transition of Charlier polynomials \({{\left( 2a \right) }^{\nu /2}}C_{\lceil a+\sqrt{a}\rceil }(\nu ,a)\) (thin lines) to the Hermite function \(H_\nu (-1/\sqrt{2})\) (thick line). The different values of the parameter a are 100 (dotted line), 400 (dashed line), and 1600 (solid thin line). Figure adapted from Fig. 2 in [9] under Creative Commons license.

In Sect. 2, it is proved rigorously that convergence to the Hermite function holds for any real \(\nu \), and that convergence is uniform for \(\nu \) and x in any bounded interval, i.e., locally uniform. A sharp rate bound is established. The same technique is then employed in Sect. 3 to prove that there is a similar transition of the derivative with respect to \(\nu \), and a sharp rate bound is provided here, too. These results are used in Sect. 4 for proving that zeros of Charlier polynomials converge to zeros of the Hermite function.

To make the paper self-contained, some well-known definitions and recurrence relations from [2, 4, 5, 15] are first recalled below. This is followed by three sections, each proving an aspect of the transition of Charlier polynomials to Hermite functions.

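The notation “\(A\triangleq B\)” is used for “A is defined as B”, to make the introduction of new symbols more explicit. The expression “bounded \(\nu \le -3\)” is shorthand for “\(\nu \) in any bounded interval \([{{\nu }_{0}},-3]\).” Charlier polynomials \(C_n(x,a)\) will be abbreviated as \(c_{n}^{a}(x)\), \(c_{n}(x)\), \(c_{n}^{a}\), or even \(c_{n}\), unless there is a risk of misunderstanding. They can be defined for positive a and non-negative integer n by [2, Eq. 10.25.4], [4, Eq. 18.20.8],

\[ C_{n}(x,a)\triangleq \sum _{k=0}^{n}\left( {\begin{array}{c}n\\ k\end{array}}\right) \left( {\begin{array}{c}x\\ k\end{array}}\right) k!\,{{(-a)}^{-k}}, \tag{3} \]

where

\[ \left( {\begin{array}{c}x\\ k\end{array}}\right) \triangleq \frac{x(x-1)\cdots (x-k+1)}{k!}. \]

These polynomials obey the three-term recurrence relation [2, Eq. 10.25.8], [4, Eq. 18.22.2],

\[ a\,C_{n+1}(x,a)-(n+a-x)\,C_{n}(x,a)+n\,C_{n-1}(x,a)=0, \tag{4} \]

and the difference equation [2, Eq. 10.25.9], [4, Eq. 18.22.12],

\[ a\,C_{n}(x+1,a)-(x+a-n)\,C_{n}(x,a)+x\,C_{n}(x-1,a)=0, \tag{5} \]

as well as the backward recurrence relation [5, Eq. 9.14.8],

\[ C_{n+1}(x,a)=C_{n}(x,a)-\frac{x}{a}\,C_{n}(x-1,a). \tag{6} \]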

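The Hermite function \({{H}_{\nu }}(x)\) is a solution of the differential equation [15, Eq. 10.2.3]

\[ y''-2xy'+2\nu y=0 \tag{7} \]

and satisfies the three-term recurrence [15, Eq. 10.4.7]

\[ {{H}_{\nu +1}}(x)-2x{{H}_{\nu }}(x)+2\nu {{H}_{\nu -1}}(x)=0 \tag{8} \]

and the derivative rule [15, Eq. 10.4.4]

\[ {H'_{\nu }}(x)=2\nu {{H}_{\nu -1}}(x). \tag{9} \]

It can be defined by [15, Eq. 10.2.8]

\[ {{H}_{\nu }}(x)\triangleq {{2}^{\nu }}\sqrt{\pi }\left[ \frac{M\left( -\frac{\nu }{2},\frac{1}{2},{{x}^{2}} \right) }{\Gamma \left( \frac{1-\nu }{2} \right) }-\frac{2x\,M\left( \frac{1-\nu }{2},\frac{3}{2},{{x}^{2}} \right) }{\Gamma \left( -\frac{\nu }{2} \right) } \right] , \tag{10} \]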

where M is the confluent hypergeometric function of the first kind. When the expression involves a gamma function of a non-positive integer argument, the expression should be interpreted by its limiting value.
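As an illustration of the definition, the following minimal Python sketch evaluates the Hermite function for real \(\nu \). It assumes the representation \({{H}_{\nu }}(x)={{2}^{\nu }}\sqrt{\pi }\,[M(-\nu /2,1/2,{{x}^{2}})/\Gamma ((1-\nu )/2)-2x\,M((1-\nu )/2,3/2,{{x}^{2}})/\Gamma (-\nu /2)]\), sums the series for M directly, and takes \(1/\Gamma \) as zero at the poles, which is the limiting-value convention described above. The helper names `rgamma`, `kummer_m`, and `hermite_function` are hypothetical, and the plain power series is only suitable for moderate \(|x|\).

```python
import math

def rgamma(z):
    """Reciprocal gamma 1/Gamma(z); the limiting value 0 is used at z = 0, -1, -2, ..."""
    if z <= 0 and z == math.floor(z):
        return 0.0
    return 1.0 / math.gamma(z)

def kummer_m(a, b, z, max_terms=500):
    """Confluent hypergeometric function M(a, b, z), summed from its power series."""
    term, total = 1.0, 1.0
    for k in range(max_terms):
        term *= (a + k) / (b + k) * z / (k + 1)
        total += term
        if abs(term) <= 1e-17 * abs(total):
            break
    return total

def hermite_function(nu, x):
    """Hermite function H_nu(x) for real nu (assumed representation, see lead-in)."""
    z = x * x
    first = kummer_m(-nu / 2, 0.5, z) * rgamma((1 - nu) / 2)
    second = 2 * x * kummer_m((1 - nu) / 2, 1.5, z) * rgamma(-nu / 2)
    return 2.0 ** nu * math.sqrt(math.pi) * (first - second)

if __name__ == "__main__":
    # Residual of the three-term recurrence at a non-integer index
    nu, x = 0.3, 0.8
    residual = (hermite_function(nu + 1, x) - 2 * x * hermite_function(nu, x)
                + 2 * nu * hermite_function(nu - 1, x))
    print("recurrence residual:", residual)
```

For integer \(\nu \) the sketch reduces to the Hermite polynomials, which gives a quick sanity check; for non-integer \(\nu \) the residual of the three-term recurrence should be at rounding level.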

2 Transition of Charlier Polynomials

Theorem 1

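For real x, \(\nu \), and positive a,

\[ {{\left( 2a \right) }^{\nu /2}}\,c_{\lceil a-x\sqrt{2a}\rceil }^{a}(\nu )={{H}_{\nu }}(x)+O\left( {1}/{\sqrt{a}}\; \right) , \]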

where \(c_{n}^{a}(\nu )\) are Charlier polynomials and \({{H}_{\nu }}(x)\) is the Hermite function. The error bound \(O\left( {1}/{\sqrt{a}}\; \right) \) is locally uniform for \(\nu \) and x and is sharp in the sense that there are \(\nu \) and x such that the error is proportional to \(1/\sqrt{a}\) for arbitrarily large a.
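The statement can be explored numerically. The sketch below is a rough check rather than part of the proof: it assumes the sum representation \(C_n(x,a)=\sum _k \left( {\begin{array}{c}n\\ k\end{array}}\right) x(x-1)\cdots (x-k+1)(-a)^{-k}\) for Charlier polynomials and the confluent hypergeometric representation of \({{H}_{\nu }}\), and it picks integer n and a so that the ceiling is exact, with \(x=(a-n)/\sqrt{2a}\). All function names are hypothetical.

```python
import math

def rgamma(z):
    """1/Gamma(z), with the limiting value 0 at non-positive integers."""
    if z <= 0 and z == math.floor(z):
        return 0.0
    return 1.0 / math.gamma(z)

def kummer_m(a, b, z, max_terms=500):
    """Power series of the confluent hypergeometric function M(a, b, z)."""
    term, total = 1.0, 1.0
    for k in range(max_terms):
        term *= (a + k) / (b + k) * z / (k + 1)
        total += term
        if abs(term) <= 1e-17 * abs(total):
            break
    return total

def hermite_function(nu, x):
    """H_nu(x) for real nu via the M-representation (an assumption, see lead-in)."""
    z = x * x
    first = kummer_m(-nu / 2, 0.5, z) * rgamma((1 - nu) / 2)
    second = 2 * x * kummer_m((1 - nu) / 2, 1.5, z) * rgamma(-nu / 2)
    return 2.0 ** nu * math.sqrt(math.pi) * (first - second)

def charlier(n, x, a):
    """C_n(x, a) summed term by term; x may be any real number."""
    term, total = 1.0, 1.0
    for k in range(n):
        # ratio of consecutive terms: binom(n,k+1)/binom(n,k) * (x - k) / (-a)
        term *= (n - k) / (k + 1) * (x - k) / (-a)
        total += term
    return total

def scaled_charlier(nu, n, a):
    """(2a)^(nu/2) * C_n(nu, a), the left-hand side of the transition."""
    return (2 * a) ** (nu / 2) * charlier(n, nu, a)

def transition_error(nu, n, a):
    """|scaled Charlier - Hermite function| at x = (a - n) / sqrt(2a)."""
    x = (a - n) / math.sqrt(2 * a)
    return abs(scaled_charlier(nu, n, a) - hermite_function(nu, x))

if __name__ == "__main__":
    # n = a + sqrt(a) corresponds to x = -1/sqrt(2), the setting of Fig. 1
    for a in (100, 400, 1600):
        n = a + math.isqrt(a)
        print(a, transition_error(-0.5, n, a))
```

If the theorem holds as stated, the printed errors should shrink roughly in proportion to \(1/\sqrt{a}\) as a is quadrupled.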

Proving asymptotic properties of Charlier polynomials is difficult, since these do not satisfy a second-order linear ordinary differential equation with respect to the independent variable [17]. However, the three-term recurrence relation (4) is a discretization of such a differential equation (7). This can be used to prove the theorem in the following way: It is first proven for the special case \(x=0\) and \(\nu \le -4\) (Lemmas 1–5) and then generalized to arbitrary real \(\nu \) (Lemma 6). After that, the scaled polynomials are shown to approximate a Cauchy polygon converging to the \({{H}_{\nu }}\left( x \right) \) solution of the Hermite differential equation initial value problem (Lemma 7).

2.1 Convergence for \(x=0\) and \(\nu \le -4\)

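For notational convenience, define \(A\triangleq \lceil a \rceil \) and

\[ y_{\nu }^{a}(x)\triangleq {{\left( 2a \right) }^{\nu /2}}\,c_{\lceil a-x\sqrt{2a}\rceil }^{a}(\nu ). \tag{11} \]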

The superscript will be left out in \(y_{\nu }^{a}\) and \(c_{n}^{a}\) unless there is a risk for misunderstanding. Consider the case \(x=0\) and \(\nu \le -4\). By the definition of Charlier polynomials (3),

To prove that

using the definition of \({{H}_{\nu }}(x)\) in (10), \({{y}_{\nu }}(0)\) can be expressed as a sum

of terms

When \(\nu \) is negative, these are all positive. The series is difficult to sum due to multiple levels of numerical cancellation, but can be estimated by separating the factors. Another difficulty is the changing behavior of \(T_k\) with increasing a. This problem can be remedied by defining a border between “head” and “tail” sections that increases with a properly tuned power of a.

Lemma 1

The factor

for \(1\le k\le A\) satisfies

and for \(1\le k<A/2\),

Proof

Define the “nuisance factor” due to truncation by the ceiling function by

where \(0\le \theta <1\). For \(1\le k\le A\), taking the logarithm of \(\beta p(k)\) and Taylor expanding,

where \({{R}_{p}}\ge 0\). By re-exponentiation,

so

On the other hand, for \(1\le k\le A/2\), by comparison with a geometric series,

so by (14),

By (13)

\(\square \)

The following lemma is similar to Gautschi’s inequality [18], but whereas the latter inequality is restricted to \(-1\le \nu \le 0\), the lemma here needs to hold for arbitrary negative \(\nu \).

Lemma 2

The factor

for \(1\le k\le A\) and \(\nu \le 0\) satisfies

Proof

By Stirling’s approximation for \(k>0\) [19, §6.1.37-38], [4, Eq. 5.11.3]

and the relation

gives

so from the definition of q(k),

\(\square \)

The sum (12) can now be treated by splitting it into a head part and a tail part at index \(M\triangleq \lceil {{A}^{3/4}}\rceil \),

Define \(\Delta t\triangleq 1/\sqrt{A}\) and the function

Clearly, the functions \({{f}_{\nu }}\left( t \right) \) (Fig. 2) and

are continuous and bounded for bounded \(\nu \le -3\) and \(t\ge 0\).

Lemma 3

The following relations hold for \(\nu \le -3\):

and

Proof

According to the well-known trapezoidal rule, since \({{f}_{\nu }}\left( t \right) \) and \({f''_{\nu }}(t)\) are bounded for \(\nu \le -3\) and \(t\ge 0\), and for some \(\tau \in \left[ M\Delta t,A\Delta t \right] \),

so that

By substituting \({{t}^{2}}/2=u\) in the integral of \({{f}_{\nu }}\), the upper incomplete gamma function [19, §6.5.3], [4, Eq. 8.2.2] is obtained,

Asymptotically [19, §6.5.32], [4, Eq. 8.11.2-3],

implying that when z increases, \(\Gamma (s,z)\) approaches zero faster than any negative power of z, including \(1/\sqrt{a}\), i.e.,

This proves the first relation. For the second relation, by (18),

\(\square \)
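The role of the trapezoidal rule in this lemma can be illustrated numerically. The sketch below assumes \({{f}_{\nu }}(t)={{t}^{-\nu -1}}{{e}^{-{{t}^{2}}/2}}\), a form chosen because it is consistent with the identity \({{f}_{\nu }}(t){{t}^{n}}={{f}_{\nu -n}}(t)\) and with the substitution \({{t}^{2}}/2=u\), which gives \(\int _0^\infty {{f}_{\nu }}\,\mathrm{d}t={{2}^{-\nu /2-1}}\Gamma (-\nu /2)\). It compares the sum with step \(\Delta t=1/\sqrt{A}\) against that closed form. The function names are hypothetical.

```python
import math

def f(nu, t):
    """Assumed integrand f_nu(t) = t^(-nu-1) * exp(-t^2/2) for nu <= -3, t >= 0."""
    return t ** (-nu - 1) * math.exp(-t * t / 2)

def step_sum(nu, A):
    """Sum of f_nu(k*dt)*dt for k = 1..A with dt = 1/sqrt(A)."""
    dt = 1 / math.sqrt(A)
    return sum(f(nu, k * dt) for k in range(1, A + 1)) * dt

def full_integral(nu):
    """Integral of f_nu over [0, infinity), obtained with u = t^2/2."""
    return 2 ** (-nu / 2 - 1) * math.gamma(-nu / 2)

if __name__ == "__main__":
    for A in (100, 400, 1600):
        print(A, abs(step_sum(-3.5, A) - full_integral(-3.5)))
```

Because \({{f}_{\nu }}\) vanishes at \(t=0\) and is negligible at \(t=\sqrt{A}\), the plain sum coincides with the trapezoidal sum up to a tiny end term, so the discrepancy is well below the \(O(1/\sqrt{a})\) level needed in the lemma.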

Lemma 4

For bounded \(\nu \le -3\), \({{R}_\mathrm{tail}}=O\left( 1/\sqrt{a} \right) \).

Proof

Substituting \(k=k\Delta t \sqrt{A}\),

by Lemma 3. \(\square \)

The term \({{R}_\mathrm{head}}\) in (15) can be computed in a similar way.

Lemma 5

For bounded \(\nu \le -4\),

Proof

This time \(k<M\), and by Lemmas 1 and 2,

Using the identity \({{f}_{\nu }}\left( t \right) {{t}^{n}}={{f}_{\nu -n}}(t)\), the error term \(\Delta {}S\) is

Since

which by Lemma 3 is bounded for \(\nu \le -3\),

for \(\nu \le -4\). For the sum S in (20), again using Lemma 3,

\(\square \)

By (12), and combining Lemmas 5 and 4,

By the gamma function duplication rule [15, Eq. 1.2.3], [4, Eq. 5.5.5],

substituting \(z=-\nu /2\),

2.2 Convergence for \(x=0\) and Arbitrary \(\nu \)

Lemma 6

For \(\nu \) in any bounded interval,

and for \(\Delta x=1/\sqrt{2a}\),

Proof

Given that \({{y}_{\nu }}\left( 0 \right) ={{H}_{\nu }}(0)+O\left( 1/\sqrt{a} \right) \) for bounded \(\nu <{{\nu }_{0}}\), then for \({{\nu }_{0}}\le \nu <{{\nu }_{0}}+1\) the difference equation (5) can be rewritten into

so that for \(n=A=\lceil a \rceil \) and by (21),

By induction, \({{y}_{\nu }}\left( 0 \right) ={{H}_{\nu }}\left( 0 \right) +O\left( {1}/{\sqrt{a}} \right) \) locally for \(\nu \). Additionally, by the backward recurrence relation (6) and the derivative rule for the Hermite function (9),

\(\square \)

2.3 Convergence for Arbitrary x and Arbitrary \(\nu \)

To prove that \({{y}_{\nu }}\left( x \right) \) in (11) converges to the solution of the Hermite differential equation (7) having initial conditions \(y\left( 0 \right) ={{H}_{\nu }}(0)\) and \(y'(0)=H'_\nu (0)\), it can be rewritten in normal form as

where \({\varvec{y}}(x)\triangleq {{\left( y\left( x \right) ,~{y}'(x) \right) }^{T}}\) and

Let \(r\triangleq \sqrt{2a}\), \(\Delta x\triangleq 1/r\), and \({{x}_{k}}\triangleq k\Delta x\). Define a Cauchy polygon \({\varvec{u}}(x)\) for the differential equation (25) by linear interpolation between points \(\left( {{x}_{k}},{{{\varvec{u}}}_{k}} \right) \), where \({{{\varvec{u}}}_{0}}={\varvec{y}}(0)\) and

Lemma 7

For x and \(\nu \) in bounded intervals \([0,\xi ]\) and \([-\psi ,\psi ]\), respectively, the Cauchy polygon \({\varvec{u}}(x)\) converges uniformly to the Hermite function solution with an error bound

Proof

The Euclidean norm \(\left\Vert {\varvec{A}}(x)\right\Vert \) of \({\varvec{A}}\) in (25) equals the largest singular value of the matrix, so

Given arbitrary \(\xi ,\psi >0\) and \(L\triangleq ~\sqrt{1+4{{\psi }^{2}}+4{{\xi }^{2}}}\), for x in \(\left[ 0,\xi \right] \) and \(\nu \) in \([-\psi ,\psi ]\), by the definition of the Euclidean norm,

so L is additionally a Lipschitz constant for (25) when \(x\in \left[ 0,\xi \right] \). A definition and two theorems proved in [20, Sect. 7.3] are now handy:

Definition 1

A vector function \({\varvec{u}}(x)\) is an approximate solution with deviation at most \(\epsilon \) in the interval \(a \le x \le \xi +a\) of the vector differential equation

when \({\varvec{u}}(x)\) is continuous and satisfies the differential inequality

for all except a finite number of points x of the interval \(\left[ a, a+\xi \right] \).

Theorem 2

(Birkhoff and Rota, Th. 7.1) Let the continuously differentiable function \({\varvec{Y}}\) satisfy \(|{\varvec{Y}}|\le M\), \(|\partial {\varvec{Y}}/\partial x|\le C\), and L be a Lipschitz constant in the cylinder \(D: |{\varvec{y}}-{\varvec{c}}|\le K\), \(a \le x \le a + \xi \). Then, any Cauchy polygon in D with partition \(\pi \) is an approximate solution of \({\varvec{y}}'(x)={\varvec{Y}}({\varvec{y}},x)\) with deviation at most \((C + LM)|\pi |\).

Theorem 3

(Birkhoff and Rota, Th. 7.3) Let \({\varvec{y}}(x)\) be an exact solution and \({\varvec{u}}(x)\) be an approximate solution, with deviation \(\epsilon \), of the differential equation \({\varvec{y}}'(x)={\varvec{Y}}({\varvec{y}},x)\). Let \({\varvec{Y}}\) satisfy a Lipschitz condition with Lipschitz constant L. Then, for \(x \ge a\),

Bounds for \(|{\varvec{A}}\left( x \right) {\varvec{u}}(x) |\) and \(|\partial \left( {\varvec{A}}\left( x \right) {\varvec{u}}(x) \right) /\partial x |\) in \([0,\xi ]\) can be chosen

and

By Theorem 2, Theorem 3, and Lemma 6, for \(x\in [0,\xi ]\) and \(\nu \in [-\psi ,\psi ]\),

which is independent of x and \(\nu \), so the Cauchy polygon (26) converges uniformly to the Hermite function when \(a\rightarrow \infty \). \(\square \)
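A Cauchy polygon is the piecewise linear curve produced by Euler's method, so the convergence in Lemma 7 can be observed directly. The following sketch assumes the Hermite equation in the form \(y''-2xy'+2\nu y=0\), i.e., the first-order system \(y'=v\), \(v'=2xv-2\nu y\), with initial values \({{H}_{\nu }}(0)={{2}^{\nu }}\sqrt{\pi }/\Gamma ((1-\nu )/2)\) and \({H'_{\nu }}(0)=2\nu {{H}_{\nu -1}}(0)\), and step \(\Delta x=1/\sqrt{2a}\). The function names are hypothetical.

```python
import math

def rgamma(z):
    """1/Gamma(z), with the limiting value 0 at non-positive integers."""
    if z <= 0 and z == math.floor(z):
        return 0.0
    return 1.0 / math.gamma(z)

def hermite_at_zero(nu):
    """H_nu(0) = 2^nu * sqrt(pi) / Gamma((1 - nu)/2)."""
    return 2.0 ** nu * math.sqrt(math.pi) * rgamma((1 - nu) / 2)

def cauchy_polygon(nu, a, x_end):
    """Node value of the Euler polygon at x_end, using step 1/sqrt(2a)."""
    r = math.sqrt(2 * a)
    dx = 1 / r
    steps = int(round(x_end * r))
    y = hermite_at_zero(nu)
    v = 2 * nu * hermite_at_zero(nu - 1)   # derivative rule at x = 0
    for k in range(steps):
        x_k = k * dx
        # simultaneous Euler update of (y, y') for y'' = 2x y' - 2 nu y
        y, v = y + dx * v, v + dx * (2 * x_k * v - 2 * nu * y)
    return y

if __name__ == "__main__":
    # nu = 2: the exact solution is H_2(x) = 4x^2 - 2, so H_2(1) = 2
    for a in (5000, 20000, 80000):
        print(a, abs(cauchy_polygon(2.0, a, 1.0) - 2.0))
```

Quadrupling a halves the step, and the printed deviation from \({{H}_{2}}(1)=2\) should roughly halve as well, in line with a deviation bound proportional to \(\Delta x\).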

Define \({\varvec{z}}_0\triangleq {\varvec{u}}_0\) and

Let \(m\triangleq \lceil a-{{x}_{k}}r\rceil =a-{{x}_{k}}r+\left( \lceil a \rceil -a \right) =a-{{x}_{k}}r+\theta \), where \(0\le \theta <1\). For simplicity of notation, the argument of \({{c}_{m}}\) is dropped when it is \(\nu \). Consequently,

and

Multiplying the three-term recurrence relation (4) by two, and substituting \(x=\nu \) and \(m=n\) gives the identity

Rearranging, and using the facts that \(2a={{r}^{2}}\) and \(m=a-{{x}_{k}}r+\theta \),

by which

This is nearly the same expression as for the Cauchy polygon (26), with only the \(\theta \)-term differing. Understanding the product sign below to multiply matrices in the proper order, and \(\varvec{I}\) to denote the identity matrix,

Bounding the factor \(\left|\left|{\varvec{I} + \Delta x~{\varvec{A}}{\left( x_j \right) } }\right|\right|\le \exp (L\xi )\) in the same way as in (27),

demonstrates that \({\varvec{z}}\) converges uniformly to \({\varvec{u}}\) for \(x \in \left[ 0,\xi \right] \) and \(\nu \in [-\psi ,\psi ]\). The proof for the descending direction from \(x=0\) is omitted, since it is exactly analogous. By (28) and Lemma 7,

so for \(x_k \le x < x_{k+1}\),

where the right hand side is independent of x and \(\nu \) for these parameters in any bounded interval.

To demonstrate the sharpness of the bound, choose \(\nu =2\), any real x, and arbitrarily large a such that \(n=a-x\sqrt{2a}\) is integer. Since \(c_{2}^{a}\left( n \right) =1-\left( 1+2a \right) n/{{a}^{2}}+{{n}^{2}}/{{a}^{2}}\) and \({{H}_{2}}\left( x \right) =4{{x}^{2}}-2\),

\[ y_{2}^{a}(x)-{{H}_{2}}(x)=2a\,c_{2}^{a}(n)-\left( 4{{x}^{2}}-2 \right) =\frac{2\sqrt{2}\,x}{\sqrt{a}}. \tag{34} \]

This completes the proof of Theorem 1. \(\square \)
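The sharpness example is easy to verify by direct computation. Writing \(d=a-n=x\sqrt{2a}\), the quadratic above gives \(2a\,c_{2}^{a}(n)=2({{d}^{2}}-n)/a=4{{x}^{2}}-2+2\sqrt{2}\,x/\sqrt{a}\), so the gap to \({{H}_{2}}(x)\) is exactly \(2\sqrt{2}\,x/\sqrt{a}\). The short sketch below, with hypothetical function names, evaluates both sides for integer n and a.

```python
import math

def charlier_index_two(n, a):
    """c_2^a(n) = 1 - (1 + 2a) n / a^2 + n^2 / a^2, as given in the text."""
    return 1 - (1 + 2 * a) * n / a ** 2 + n ** 2 / a ** 2

def sharpness_gap(a, n):
    """Return x = (a - n)/sqrt(2a) and the gap 2a*c_2^a(n) - H_2(x)."""
    x = (a - n) / math.sqrt(2 * a)
    gap = 2 * a * charlier_index_two(n, a) - (4 * x * x - 2)
    return x, gap

if __name__ == "__main__":
    # a = 2*m^2 and n = a - 2*m give x = 1 for every m
    for m in (10, 100, 1000):
        a = 2 * m * m
        x, gap = sharpness_gap(a, a - 2 * m)
        print(a, gap, 2 * math.sqrt(2) * x / math.sqrt(a))
```

The two printed columns should agree up to rounding, and both decay like \(1/\sqrt{a}\), which is why the rate in Theorem 1 cannot be improved in general.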

3 Transition of the Derivative

Theorem 4

For real x, \(\nu \), and positive a,

where \(c_{n}^{a}(\nu )\) are Charlier polynomials and \({{H}_{\nu }}(x)\) is the Hermite function. The error bound \(O\left( {1}/{\sqrt{a}}\; \right) \) is sharp and locally uniform for \(\nu \) and x.

The proof of this theorem uses the same technique as the proof of Theorem 1, so the procedure is abbreviated. First, the theorem is proved for the special case \(x=0\) and \(\nu \le -5\), then generalized to arbitrary \(\nu \), and finally shown to converge to the solution of a differential equation uniquely solved by the derivative of the Hermite function.

3.1 Convergence for \(x=0\) and \(\nu \le -5\)

Differentiating (12) with respect to \(\nu \),

The first sum \(\sum T_k\) is given by Lemmas 4 and 5. Consider the second sum

Here,

and \(\psi (z)=\Gamma '(z)/\Gamma (z)\). By [19, §6.3.5 and §6.3.2], [4, Eqs. 5.4.12, 5.4.14, and 5.5.2],

Define

Since \(t \ln t \rightarrow 0\) when \(t \rightarrow 0^+\), taking zero as the value at \(t = 0\), the functions \({{g}_{\nu }}\left( t \right) \) and

are continuous and bounded for bounded \(\nu \le -4\) and \(t\ge 0\).

Lemma 8

For bounded \(\nu \le -4\), \({{R}_\mathrm{tail}}=O\left( 1/\sqrt{a} \right) \).

Proof

Substituting \(k=k\Delta t \sqrt{A}\),

Since \(\vert \ln t\vert \le 1/t\) for \(0 < t \le 1\) and \(\vert \ln t\vert \le t\) for \(t\ge 1\), \(\vert g_\nu (t)\vert \le f_{\nu +1}(t) + f_{\nu -1}(t)\) for \(t \ge 0\), and for \(\nu \le -4\),

by Lemma 3. \(\square \)

Lemma 9

For bounded \(\nu \le -4\),

Proof

This time \(k<M\), and by Lemmas 1 and 2, like (20),

Since \(\vert {{g}_{\nu }}\left( t \right) {{t}^{n}}\vert =\vert {{g}_{\nu -n}}(t)\vert \le f_{\nu - n +1}+f_{\nu -n-1}\), the error term \(\Delta {}S\) is

for \(\nu \le -4\) by Lemma 3.

For the sum S in (38), again using the trapezoidal rule, as in the proof of Lemma 3,

For \(\nu \le -4\),

The function \(f_\nu \) satisfies \(\int _0^\infty {f_{\nu } \mathrm{d}t} < \infty \) for \(\nu \le -1\), and for \(|h|\le 1\) and \(t\ge 1\),

The function \(f_{\nu -1}(t)\) is an integrable function dominating \(|{f_{\nu +h}(t)-f_\nu (t)}|/{h}\) for \(|h|\le 1\) and \(t\ge 1\), since

so by Lebesgue’s dominated convergence theorem, the integration and differentiation order can be switched in the integral

\(\square \)

Using Lemmas 9 and 8, Eq. (35) becomes

3.2 Convergence for \(x=0\) and Arbitrary \(\nu \)

Lemma 10

For \(\nu \) in any bounded interval,

and for \(\Delta x=1/\sqrt{2a}\),

Proof

Induction can be applied again, just as in the proof of Lemma 6. Given that \({\partial {y}_{\nu }(0)/\partial \nu }={\partial {H}_{\nu }}(0)/\partial \nu +O\left( 1/\sqrt{a} \right) \) for bounded \(\nu <{{\nu }_{0}}\) and \(n=A=\lceil a \rceil \), again using (22),

Applying the induction step,

This implies that \({\partial {y}_{\nu }(0)/\partial \nu }={\partial {H}_{\nu }}(0)/\partial \nu +O\left( {1}/{\sqrt{a}} \right) \) locally for \(\nu \). Using the backward recurrence relation (6), as in (24),

\(\square \)

3.3 Convergence for Arbitrary x and Arbitrary \(\nu \)

By differentiating Eq. (7) with respect to \(\nu \), and defining \(w \triangleq \partial y/\partial \nu \),

This equation has the particular solution \(w(x) = \partial H_\nu (x)/\partial \nu \). The homogeneous equation is again the Hermite equation, so the general solution of (39) is

For initial conditions \(w(0)=\partial H_\nu (0)/\partial \nu \) and \(w'(0) = \partial H'_\nu (0)/\partial \nu \), the unique solution of (39) is obviously \(w(x) = \partial H_\nu (x)/\partial \nu \).
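As a worked step, and assuming that Eq. (7) is the Hermite equation in the form \(y''-2xy'+2\nu y=0\), the inhomogeneous equation for \(w=\partial y/\partial \nu \) follows by differentiating each term with respect to \(\nu \). Only the last term depends on \(\nu \) explicitly, which produces the extra term \(2y\):

```latex
\frac{\partial}{\partial \nu}\left( y'' - 2x\,y' + 2\nu\,y \right)
  = w'' - 2x\,w' + 2\nu\,w + 2y = 0
\quad\Longrightarrow\quad
w'' - 2x\,w' + 2\nu\,w = -2y .
```

With \(y={{H}_{\nu }}\) on the right-hand side, \(w=\partial {{H}_{\nu }}/\partial \nu \) is a particular solution, and any other solution differs from it by a solution of the homogeneous Hermite equation.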

Let \({\varvec{y}}(x)\triangleq {{\left( y\left( x \right) ,~{y}'(x),~w(x),~w'(x) \right) }^{T}}\). Equation (39) can be rewritten in the normal form (25), where

This time, the Euclidean norm of \({\varvec{A}}\) satisfies \( \left\Vert {\varvec{A}}(x)\right\Vert \le \sqrt{6+8{{\nu }^{2}}+8{{x}^{2}}} \), giving the Lipschitz constant \(\sqrt{6+8{{\psi }^{2}}+8{{\xi }^{2}}}\). In analogy with (26), a Cauchy polygon \({\varvec{u}}(x)\) can be defined such that for x and \(\nu \) in bounded intervals \([0,\xi ]\) and \([-\psi ,\psi ]\), respectively,

As in the proof of Lemma 7, this means that for bounded x and \(\nu \), \({\varvec{u}}(x)\) converges uniformly to the solution \({\varvec{y}}\) with an error bound

Now, extending the definition of \({\varvec{z}}_k\) (29) to four components, \({\varvec{z}}_0\triangleq {\varvec{u}}_0\) and

and writing \(d_m \triangleq \partial c_m/\partial \nu \),

and

Differentiating (30) with respect to \(\nu \),

leads to

By a procedure similar to the application of Eqs. (31)–(33) in Sect. 2,

where the right hand side is independent of x and \(\nu \) for these parameters in any bounded interval.

The sharpness of the bound can be proved by contradiction: Suppose that \({\partial y^a_{\nu }(x)}/{\partial \nu } -\partial H_\nu (x)/{\partial \nu } = O\left( b(a)\right) \) where O(b(a)) is tighter than \(O\left( 1/\sqrt{a}\right) \). Integrating this difference,

Choosing \(\nu _1=0\) and \(\nu _2=2\), arbitrary x, arbitrarily large a such that \(a - x\sqrt{2a}\) is integer, and using (34),

which is a contradiction. This completes the proof of Theorem 4. \(\square \)

4 Convergence of Zeros

Theorem 5

For fixed real x and positive \(a\rightarrow \infty \), let \(n\triangleq \lceil a-x\sqrt{2a}\rceil \). For a convergent sequence of zeros \({{\nu }_{n}}\rightarrow \nu \) such that \(c_{n}^{a}\left( {{\nu }_{n}} \right) =0\), the limit \(\nu \) is a zero of the Hermite function, \({{H}_{\nu }}\left( x \right) =0\), satisfying \(\nu ={{\nu }_{n}}+O\left( {1}/{\sqrt{a}}\; \right) \). Conversely, for a positive real zero \(\nu \) of the Hermite function, there is a convergent sequence \({{\nu }_{n}}\rightarrow \nu \) of zeros of \(c_{n}^{a}\) satisfying \(\nu ={{\nu }_{n}}+O\left( {1}/{\sqrt{a}}\; \right) \).

Proof

Define \({{w}_{n}}\left( z \right) \triangleq {{\left( \sqrt{2a} \right) }^{n}}c_{n}^{a}(z)\) and note that \({{w}_{n}}\) has the same zeros in z as \(c_{n}^{a}\). The proof is based on the well-known fact that the zeros of a Charlier polynomial are real, simple, and positive [8]. Taylor-expanding \({{w}_{n}}\left( z \right) \) around one of its zeros \(z={{\nu }_{n}}\), writing \({w'_n}({{\nu }_{n}})\) for \(\partial {{w}_{n}}\left( z \right) /\partial z\) at \(z={{\nu }_{n}}\),

Since the zeros of a Charlier polynomial are simple, \({w'_{n}}({{\nu }_{n}})\ne 0\), so the expression \(W\left( {{\nu }_{n}},\varepsilon \right) \) must be nonzero for \(\varepsilon \) in some sufficiently small interval \(I=[-\delta ,\delta ]\), where \(0 < \delta \le {{\nu }_{n}}\). Assume that \({w'_{n}}({{\nu }_{n}})>0\). The case \({w'_{n}}({{\nu }_{n}})<0\) is treated in an analogous way. Let \(c\triangleq \inf _{\varepsilon \in I} \,W\left( {{\nu }_{n}},\varepsilon \right) \). Figure 3 illustrates \(\vert (z-\nu _n)c\vert \) as a lower bound for \(\vert w_n(z)\vert \). By Theorem 1, due to the uniform convergence, for \(z\in [{{\nu }_{n}}- \delta ,{{\nu }_{n}}+\delta ]\), there is a b, independent of n and z, such that

Choose \(\varepsilon \triangleq \left( 1+b \right) /\left( c\sqrt{a} \right) \), which satisfies \(\varepsilon <\delta \) for sufficiently large a. For \(z = {{\nu }_{n}}+\varepsilon \),

Similarly, \(z = {{\nu }_{n}}-\varepsilon \) implies that \({{H}_{z}}\left( x \right) <0\). Since \({{H}_{z}}(x)\) is an entire function and changes sign for z in \([{{\nu }_{n}}- \varepsilon ,{{\nu }_{n}}+\varepsilon ]\), it must have a zero there. By letting \(a\rightarrow \infty \), the theorem is proved in one direction. For the reverse direction, switch the roles of w and H. Assume that \({{H}_{\nu }}\left( x \right) =0\). Since \({{H}_{0}}(x)\equiv 1\), \(\nu \) cannot be zero. Expand \({{H}_{z}}(x)\) around \(z=\nu \), writing \({\partial {{H}_{\nu }}(x)}/{\partial \nu }\) for \(\partial {{H}_{z}}\left( x \right) /\partial z\) at \(z=\nu \),

Let \(x(\nu )\) be defined as the pth zero in x of \(H_\nu (x)=0\). It is known that \(x(\nu )\) is a strictly monotonic function of \(\nu \) for \(\nu \ge 0\), so \(\mathrm{d}x/\mathrm{d}\nu \ne 0\) [21]. Differentiating the equation by \(\nu \),

so obviously, \({\partial {{H}_{\nu }}(x)}/{\partial \nu } = 0\) if and only if \({\partial {{H}_{\nu }}(x)}/{\partial x} = 0\). But if the latter derivative is zero, then \({{H}_{\nu -1}}\left( x \right) =0\) by the derivative rule (9), and according to the three-term recurrence for Hermite functions (8), all derivatives of \({{H}_{z}}(x)\) would be zero at \(z=\nu \), entailing that H, being analytic, would be identically zero. In other words, all positive real zeros \(\nu \) of \(H_{\nu }(x)\) are simple.

Consequently, \({\partial {{H}_{\nu }}(x)}/{\partial \nu } \ne 0\), and similarly to the first half of the proof, \(Z\left( \nu ,\varepsilon \right) \) must be nonzero for \(\varepsilon \) in some sufficiently small interval. It follows that \({{w}_{n}}\left( z \right) \) must be zero for some \(z\in [\nu -\varepsilon ,\nu +\varepsilon ]\), where \(\varepsilon =O\left( 1/\sqrt{a} \right) \). \(\square \)
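The convergence of zeros can be observed in a simple case. For \(x=1/\sqrt{2}\), the Hermite function has the zero \(\nu =2\), since \({{H}_{2}}(x)=4{{x}^{2}}-2\). The sketch below assumes the sum representation of \(C_n(\nu ,a)\), takes \(n=a-\sqrt{a}\) with a a perfect square so that the ceiling is exact, and locates a zero in \(\nu \) by bisection on the bracket [1, 3], where the polynomial changes sign for the values of a used here. The function names are hypothetical.

```python
import math

def charlier(n, x, a):
    """C_n(x, a) summed term by term; x may be any real number."""
    term, total = 1.0, 1.0
    for k in range(n):
        term *= (n - k) / (k + 1) * (x - k) / (-a)
        total += term
    return total

def charlier_zero(a, n, lo, hi, iters=60):
    """Bisection for a zero in nu of C_n(nu, a) on a sign-changing bracket [lo, hi]."""
    f_lo = charlier(n, lo, a)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        f_mid = charlier(n, mid, a)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid   # zero lies in the upper half
        else:
            hi = mid                # zero lies in the lower half
    return 0.5 * (lo + hi)

if __name__ == "__main__":
    for a in (100, 400, 1600):
        n = a - math.isqrt(a)       # corresponds to x = 1/sqrt(2)
        nu_n = charlier_zero(a, n, 1.0, 3.0)
        print(a, nu_n, (nu_n - 2.0) * math.sqrt(a))
```

If the zeros converge at the rate stated in Theorem 5, the last printed column, the distance to \(\nu =2\) scaled by \(\sqrt{a}\), should stay bounded as a grows.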

5 Conclusions

It has been shown that the scaled Charlier polynomials, their scaled derivatives, and zeros converge to the Hermite function, the derivative of the Hermite function, and the zeros of the Hermite function, respectively. The convergence rates are inversely proportional to the square root of the order of the Charlier polynomial.

The proof technique used for showing the convergence of Charlier polynomials and their first derivatives is applicable to higher derivatives of the polynomials. There is a possibility that the Charlier polynomials may be extensible to an entire function. Such an extension could simplify the convergence proofs, but finding it appears nontrivial.

References

1. Charlier, C.V.L.: Über die Darstellung willkürlicher Funktionen. Ark. Mat. Astron. Fys. 2(20), 1–35 (1905)

2. Erdélyi, A., Magnus, W., Oberhettinger, F., Tricomi, F.G.: Higher Transcendental Functions, vol. II. McGraw-Hill, New York, Toronto, London (1953)

3. Szegő, G.: Orthogonal Polynomials, AMS Colloquium Publications, vol. XXIII, 4th edn. American Mathematical Society, Providence (1975)

4. Olver, F.W.J., Lozier, D.W., Boisvert, R.F., Clark, C.W. (eds.): NIST Handbook of Mathematical Functions. Cambridge University Press, New York (2010)

5. Koekoek, R., Lesky, P.A., Swarttouw, R.F.: Hypergeometric Orthogonal Polynomials and Their \(q\)-Analogues. Springer, Berlin (2010)

6. Jagerman, D.L.: Some properties of the Erlang loss function. Bell Syst. Tech. J. 53(3), 525–551 (1974)

7. Karlin, S., McGregor, J.: Many server queueing processes with Poisson input and exponential service times. Pac. J. Math. 8(1), 87–118 (1958)

8. Kijima, M.: On the largest negative eigenvalue of the infinitesimal generator associated with \({M}/{M}/n/n\) queues. Oper. Res. Lett. 9, 59–64 (1990)

9. Nilsson, M.N.P.: Hitting time in Erlang loss systems with moving boundaries. Queueing Syst. 78(3), 225–254 (2014). https://doi.org/10.1007/s11134-014-9399-5

10. Engel, D.D.: The Multiple Stochastic Integral, Memoirs of the American Mathematical Society, vol. 38, pp. 1–82. AMS, Providence (1982)

11. Xiu, D.: Numerical Methods for Stochastic Computations: A Spectral Method Approach. Princeton University Press, Princeton (2010)

12. König, W.: Orthogonal polynomial ensembles in probability theory. Probab. Surv. 2, 385–447 (2005). arXiv:math/0403090v3 [math.PR]

13. Halfin, S., Whitt, W.: Heavy-traffic limits for queues with many exponential servers. Oper. Res. 29(3), 567–588 (1981)

14. Meixner, J.: Erzeugende Funktionen der Charlierschen Polynome. Math. Z. 44(1), 531–535 (1939). https://doi.org/10.1007/BF01210670

15. Lebedev, N.N.: Special Functions and Their Applications. Dover Publications, New York (1972)

16. Dominici, D.: Asymptotic analysis of the Askey scheme I: from Krawchouk to Charlier. Cent. Eur. J. Math. 5(2), 280–304 (2007)

17. Dunster, T.M.: Uniform asymptotic expansions for Charlier polynomials. J. Approx. Theory 112, 93–133 (2001). https://doi.org/10.1006/jath.2001.3595

18. Laforgia, A.: Further inequalities for the gamma function. Math. Comput. 42(166), 597–600 (1984)

19. Abramowitz, M., Stegun, I.A.: Handbook of Mathematical Functions. Applied Mathematics Series 55. National Bureau of Standards, Washington, DC (1972)

20. Birkhoff, G., Rota, G.-C.: Ordinary Differential Equations, 2nd edn. Xerox College Publishing, Lexington (1969)

21. Elbert, A., Muldoon, M.E.: Inequalities and monotonicity properties for zeros of Hermite functions. Proc. R. Soc. Edinb. Sect. A 129, 57–75 (1999)

Acknowledgements

This research was funded by the European Union FP7 research project THE, “The Hand Embodied,” under Grant Agreement 248587. The author is grateful for support by Prof. Henrik Jörntell of Lund University, Department of Experimental Medical Science.

Funding

Open access funding provided by RISE Research Institutes of Sweden.

Additional information

Communicated by Edward B. Saff.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nilsson, M.N.P. On the Transition of Charlier Polynomials to the Hermite Function. Constr Approx 56, 479–504 (2022). https://doi.org/10.1007/s00365-021-09559-w

DOI: https://doi.org/10.1007/s00365-021-09559-w