Abstract

Nowadays, massive data are available in many different fields, and for several reasons it may be convenient to analyze just a subset of the data. The D-optimality criterion can help to select a subsample of observations optimally. However, it is well known that D-optimal support points lie on the boundary of the design space and, if they go hand in hand with extreme response values, they can have a severe influence on the estimated linear model (leverage points with high influence). To overcome this problem, we first propose a non-informative “exchange” procedure that selects a “nearly” D-optimal subset of observations without high leverage values. We then provide an informative version of this exchange procedure, which avoids not only high leverage points but also outliers in the responses (which are not necessarily associated with high leverage points). This is possible because, unlike other design situations, in subsampling from big datasets the response values may be available. Finally, both the non-informative and informative selection procedures are adapted to I-optimality, with the goal of getting accurate predictions.

1 Introduction

Recently, the theory of optimal design has been exploited to draw a subsample from huge datasets, containing the most information for the inferential goal; see Drovandi et al. (2017), Wang et al. (2019), Deldossi and Tommasi (2022) among others. Unfortunately, Big Data sets usually are the result of passive observations, so some high leverage values in the covariates and/or outliers in the response variable (denoted by Y) may be present. In this study, we assume that a small percentage of the data are outliers and the goal is to provide a precise estimate of the model parameters or an accurate prediction for the model that generates the majority of the data.

The most commonly applied criterion is D-optimality. It is well known that D-optimal designs tend to lie on the boundary of the design region; thus, in the presence of high leverage values, all of them would be selected. Since this circumstance could have a severe influence on the estimated model (leverage points with high influence), we propose an “exchange” procedure to select a “nearly” D-optimal subset which does not include high leverage values. Avoiding high leverage points, however, does not guard against all the outliers in Y. Therefore, we also modify the previous method to exploit the information about the responses and avoid the selection of abnormal Y-values. The first proposal is a non-informative procedure, as it is not based on the response observations, while the latter is an informative exchange method.

Finally, both these exchange algorithms are adapted to the I-criterion, which aims at providing accurate predictions in a set of covariate-values (called prediction set).

Notation and motivation of the work are introduced in Sect. 2. Section 3 describes the novel modified exchange algorithm to obtain both non-informative and informative D-optimal subsamples without outliers. In Sect. 4 we adapt our proposal to I-optimality, to select a subsample with the goal of obtaining accurate predictions. In Sect. 5 we present some simulations and a real data example, to assess the performance of the proposed subsampling methods. Finally, in the Appendix we suggest a procedure for the initialization of these algorithms.

2 Notation and motivation of the work

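Assume that N independent responses have been generated by a super-population model

$$\begin{aligned} Y_i = \varvec{x}_i^\top \varvec{\beta } + \varepsilon _i, \quad i=1,\ldots ,N, \end{aligned}$$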

where \(^\top \) denotes transposition, \(\varvec{\beta }=(\beta _0,\beta _1,\ldots ,\beta _k)^\top \) is a vector of unknown coefficients, \(\varvec{x}_i^\top =(1,\tilde{\varvec{x}}_i^\top )\) where \(\tilde{\varvec{x}}_i=({x}_{i1},\ldots ,x_{ik})^\top \), for \(i=1,\ldots , N\), are N iid repetitions of a k-variate explanatory variable, and \(\varepsilon _i\) are iid random errors with zero mean and equal variance \(\sigma ^2\).

\(\varvec{D}=\{(\tilde{\varvec{x}}_1^\top , Y_1),\ldots ,(\tilde{\varvec{x}}_N^\top , Y_N)\}\) indicates the available dataset, which is assumed to be a tall dataset, i.e. with \(k \ll N\).

The population under study is denoted by \(U=\{1,\ldots ,N\}\) and \(s_n \subseteq U\) denotes a sample without replications of size n from U (i.e. a collection of n different indices from U).

Herein, we describe a new sampling method from a given dataset \(\varvec{D}\), with the goal of selecting n observations (\(k< n \ll N\)) to produce an efficient parameter estimate or an accurate prediction for the model generating the whole dataset apart from a few outliers, i.e. a small number of points that take “abnormal” values with respect to the rest of the data and that possibly have been generated by a different model.

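Given a sample \(s_n=\{i_1,\ldots ,i_n \}\), let \(\varvec{X}\) be the \(n \times (k+1)\) matrix whose rows are \(\varvec{x}_i^\top \), for \(i \in s_n\), and let \(\varvec{Y} = (Y_{i_1}, \ldots , Y_{i_n})^\top \) be the \(n \times 1\) vector of the sampled responses. We consider the OLS estimator of the coefficients of the linear model based on the sample \(s_n\):

$$\begin{aligned} \hat{\varvec{\beta }} = (\varvec{X}^\top \varvec{X})^{-1} \varvec{X}^\top \varvec{Y} = \left( \sum _{i=1}^{N} \varvec{x}_i \varvec{x}_i^\top I_i \right) ^{-1} \sum _{i=1}^{N} \varvec{x}_i Y_i I_i, \end{aligned}$$

where

$$\begin{aligned} I_i = \begin{cases} 1 &amp; \text {if } i \in s_n \\ 0 &amp; \text {otherwise} \end{cases} \end{aligned}$$

denotes the sample inclusion indicator.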

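To improve the precision of \(\hat{\varvec{\beta }}\), we suggest selecting the sample \(s_n\) according to D-optimality. We denote the D-optimum sample as

$$\begin{aligned} s_n^* = \arg \max _{s_n} \left| \sum _{i=1}^{N} \varvec{x}_i \varvec{x}_i^\top I_i \right| . \end{aligned}$$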

When \(\varvec{D}\) contains outliers, \(s_n^*\) may include them, because D-optimal support points usually lie on the boundary of the experimental region. Example 1 illustrates this issue.

Example 1

An artificial dataset \(\varvec{D}\) with \(N=10000\) observations has been generated from a simple linear model,

in the following way:

for \(i = 1, \ldots , 9990\), \(\varvec{\beta } = (1.5, 2.7)^\top \), \(x_i \sim \mathcal {N}(3, 4)\), \(\varepsilon _i \sim \mathcal {N}(0, 9^2)\);

for \(i = 9991, \ldots ,10000\), \(\varvec{\beta } = (1.5, -2.7)^\top \), \(x_i \sim \mathcal {N}(3, 20)\), \(\varepsilon _i \sim \mathcal {N}(0, 20^2)\).

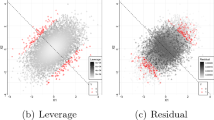

The left-hand side of Fig. 1 displays these last 10 observations which are isolated with respect to the majority of the data, generated from the first distribution. The right-hand side of Fig. 1 emphasises the D-optimal subsample of size \(n =100\), \(s_n^*\). As expected, all the abnormal values in X are included in \(s_n^*\) because they maximize the determinant of the information matrix [\(s_n^*\) has been obtained by applying the function od_KL of the R package OptimalDesign (Harman and Filová, 2019)].

The I-optimal subsample, which should be used to get accurate predictions (see Sect. 4), would display a similar behaviour. To avoid the inclusion of outliers when applying D- or I-optimal subsampling, we propose a modification of the well-known exchange algorithm.

Before describing our proposal, we recall that a tool to identify an outlier in the factor-space is the leverage score, \(h_{ii} =\varvec{x}^\top _i (\varvec{X}^\top \varvec{X})^{-1} \varvec{x}_i \). Points which are isolated in the factor-space (i.e., located far from the main body of points) can be thought of as outliers and are characterized by high leverage values [see Chatterjee and Hadi (1986)]. Specifically, an observation \(\varvec{x}_i\), with \(i=1, \ldots , n\), such that

$$\begin{aligned} h_{ii} > \nu _1 \, \frac{k+1}{n}, \end{aligned}$$

where \(\nu _1\) is a tuning parameter usually set equal to 2 [see for instance Hoaglin and Welsch (1978)], is called a high leverage point. In general, high leverage points make it possible to reduce the variance of the parameter estimates, and many leverage-based sampling procedures have been proposed in the literature [see, among others, Ma et al. (2015)]. However, if these high leverage points are associated with outlying response values, their inclusion in the sample may lead to misleading inferential results. For this reason, our aim is to avoid these points.
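As a small illustration of the leverage rule recalled above, the following Python sketch computes the leverage scores of a simple linear model and flags the points exceeding the cut-off \(\nu _1 (k+1)/n\). The data, the seed and the function name `leverage_scores` are illustrative assumptions, not part of the original study; the cut-off is the usual Hoaglin and Welsch rule with \(\nu _1=2\).

```python
import numpy as np

def leverage_scores(X):
    """Diagonal of the hat matrix X (X^T X)^{-1} X^T, computed via a thin QR."""
    Q, _ = np.linalg.qr(X)            # X = QR, hence the hat matrix equals Q Q^T
    return np.sum(Q**2, axis=1)

# Illustrative data (assumption): one covariate, one isolated point.
rng = np.random.default_rng(0)
n, k = 200, 1
x = rng.normal(3.0, 2.0, size=n)
x[-1] = 25.0                          # a point far from the main body of the data
X = np.column_stack([np.ones(n), x])  # rows are x_i^T = (1, x_i)

h = leverage_scores(X)
nu1 = 2.0                             # tuning parameter
high = h > nu1 * (k + 1) / n          # boolean flag of high leverage points
print("flagged indices:", np.flatnonzero(high))
```

The leverage scores always sum to \(k+1\), so the cut-off compares each score with \(\nu _1\) times their average.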

3 Modified exchange algorithms

The common structure of the t-th iteration of an exchange algorithm consists in adding a unit, chosen from a list of candidate points \(\mathcal{C}^{(t)}\), to the current sample \(s_n^{(t)}\), and then deleting an observation from it. The choice of the augmented and deleted points is based on the achievement of some optimality criterion. For instance, for D-optimality, Algorithm 1 describes the classical exchange procedure [see Chapter 12 in Atkinson et al. (2007)].
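Since the algorithm box is not reproduced in this text, the following Python sketch only illustrates the generic add-then-delete structure described above for D-optimality; it is an assumption-laden outline, not the authors' Algorithm 1. The function name `classical_exchange`, the stopping rule and the use of all non-sampled units as candidates are illustrative choices.

```python
import numpy as np

def classical_exchange(X_all, idx0, t_max=100):
    """Add-then-delete exchange for D-optimality (illustrative sketch).

    X_all : (N, k+1) array whose rows are x_i^T; idx0 : initial sample indices.
    """
    idx = list(idx0)
    for _ in range(t_max):
        M_inv = np.linalg.inv(X_all[idx].T @ X_all[idx])
        in_sample = np.zeros(len(X_all), dtype=bool)
        in_sample[idx] = True
        cand = np.flatnonzero(~in_sample)          # candidate list (assumption: all other units)
        # Augmentation: candidate with the largest variance function x^T M^{-1} x
        d_add = np.einsum("ij,jk,ik->i", X_all[cand], M_inv, X_all[cand])
        j_add = cand[np.argmax(d_add)]
        aug = idx + [j_add]
        M_inv_aug = np.linalg.inv(X_all[aug].T @ X_all[aug])
        # Deletion: unit with the smallest variance function in the augmented sample
        d_del = np.einsum("ij,jk,ik->i", X_all[aug], M_inv_aug, X_all[aug])
        pos = int(np.argmin(d_del))
        if aug[pos] == j_add:                      # added unit would be deleted: no gain
            break
        del aug[pos]
        idx = aug
    return np.array(idx)

# Illustrative usage on simulated data (assumption)
rng = np.random.default_rng(1)
X_all = np.column_stack([np.ones(300), rng.normal(size=300)])
idx0 = rng.choice(300, size=10, replace=False)
idx = classical_exchange(X_all, idx0)
```

Each completed exchange multiplies the determinant of the information matrix by a factor not smaller than one, so the sketch never worsens the initial sample; as noted in the text, it also tends to pick boundary points, including any high leverage ones.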

Our main idea is to modify Algorithm 1 by not proposing for the exchange the high leverage points, thus avoiding the inclusion in the sample of high leverage scores with abnormal responses, which could lead to wrong inferential conclusions. This goal is reached by:

(a) Switching the augmentation and deletion steps;

(b) Changing the set \(\mathcal{C}^{(t)}\) where the observation to be added is searched.

If the information about the responses is not exploited in step b (to identify \(\mathcal{C}^{(t)}\)), then the modified D-optimal sample is non-informative for the parameters of interest. The non-informative procedure is described in detail in Subsect. 3.1.

Avoiding high leverage points, however, does not guard against all the outliers in Y: there may exist points that are in the core of the data with respect to the features, while being abnormal with respect to the response variable. In Subsect. 3.2 we propose another version of the algorithm, where (in step b) we employ the responses to remove the outliers in Y. Note that the obtained optimal subsample becomes informative because of its dependence on the Y values.

3.1 Non-informative D-optimal samples without high leverage points

Let \(s_n^{(t)}\) be the current sample of size n and \(s_n^{(0)}\) an initial sample which does not include high leverage points (see Algorithm 5 in Appendix for a detailed procedure to get a convenient initial sample).

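To update \(s_n^{(t)}\), we first remove from it the unit \(i_{m}\) with the smallest leverage score,

$$\begin{aligned} i_m = \arg \min _{i \in s_n^{(t)}} \varvec{x}_i^\top ({\varvec{X}}_t^\top {\varvec{X}}_t)^{-1} \varvec{x}_i, \end{aligned}$$

thus obtaining a reduced sample of size \(n-1\), where \({\varvec{X}}_t\) denotes the design matrix associated with \(s_n^{(t)}\).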

Let \({\varvec{X}}_t^{\!-}\) be the design matrix attained by leaving out the row \(\varvec{x}_{i_m}\) from \(\varvec{X}_t\). Subsequently, we add the unit \(j_a \in \mathcal{C}^{(t)} \) with the largest leverage score \({\varvec{x}}_{j_a}^\top ({{\varvec{X}}_t^{\!-}}^\top {\varvec{X}}_t^{\!-})^{-1} \varvec{x}_{j_a}\), where the set of candidate points for the exchange at the current iteration is

[see Searle (1982) p. 153 to get (3)] and \(h_{i_m i_m}(\varvec{x}_j)\) is the leverage score obtained by exchanging \(\varvec{x}_{i_m}\) with \(\varvec{x}_j\) for \(j\in \{U-s_n^{(t)}\}\). The next theorem provides an analytical expression for \(h_{i_m i_m}(\varvec{x}_j)\), which reduces the computational burden of the algorithm.
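The inverse of the information matrix after deleting one row does not need to be recomputed from scratch. As an illustration, the sketch below uses the standard rank-one (Sherman-Morrison) downdate, which is the kind of matrix identity found in Searle (1982); the function name `downdate_inverse` is a hypothetical choice, and the formula is shown as a generic identity rather than as the exact form of the expression used in the paper.

```python
import numpy as np

def downdate_inverse(M_inv, x):
    """Return (X^T X - x x^T)^{-1} given M_inv = (X^T X)^{-1}.

    Sherman-Morrison: M^{-1} + M^{-1} x x^T M^{-1} / (1 - x^T M^{-1} x).
    Requires the leverage x^T M^{-1} x of the removed row to be below 1.
    """
    Mx = M_inv @ x
    h = x @ Mx                      # leverage score of the removed row
    return M_inv + np.outer(Mx, Mx) / (1.0 - h)

# Illustrative check against a direct inversion (simulated data, assumption)
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
M_inv = np.linalg.inv(X.T @ X)
fast = downdate_inverse(M_inv, X[0])
direct = np.linalg.inv(X[1:].T @ X[1:])
print(np.allclose(fast, direct))
```

The update costs a matrix-vector product and an outer product instead of a full inversion, which matters when it is repeated at every iteration of an exchange algorithm.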

Theorem 1

Let \(_j{\varvec{X}}_t\) be the design matrix obtained from \(\varvec{X}_t\) exchanging \(\varvec{x}_{i_m}\) with \(\varvec{x}_j\), then

where

with

Proof

Expression (5) can be obtained from Lemma 3.3.1 in Fedorov (1972) after some cumbersome algebra. \(\square \)

By virtue of the upper bound in (2), our proposal is to consider as candidates for the exchange only observations in \(\{U-s_n^{(t)}\}\) which are not high leverage points. In addition, to speed up the algorithm we reduce the number of exchanges by imposing the lower bound in (2). Without this lower bound, if \(h_{i_m i_m}(\varvec{x}_j) \le h_{i_{m} i_{m}}\), the new observation j could be removed at the subsequent iteration.

Algorithm 2 outlines the steps to select a D-optimal subsample without high leverage points.
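Because the algorithm box is not reproduced here, the following Python sketch is only one possible reading of the delete-then-add procedure described in this subsection, not the authors' Algorithm 2. Assumptions: the candidate set keeps the units \(j\) whose leverage after the exchange lies strictly between \(h_{i_m i_m}\) and \(\nu _1 (k+1)/n\); that leverage is computed as \(d_j/(1+d_j)\) with \(d_j=\varvec{x}_j^\top ({{\varvec{X}}_t^{\!-}}^\top {\varvec{X}}_t^{\!-})^{-1}\varvec{x}_j\), an algebraically equivalent shortcut; `n_tilde` is interpreted as the number of randomly drawn units examined per iteration; the function name and the stopping rule are hypothetical.

```python
import numpy as np

def modified_exchange_d(X_all, idx0, nu1=2.0, n_tilde=None, t_max=500, seed=0):
    """Delete-then-add exchange avoiding high leverage points (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    N, p = X_all.shape                       # p = k + 1
    idx = np.array(idx0)
    n = len(idx)
    bound = nu1 * p / n                      # high leverage cut-off
    for _ in range(t_max):
        Xt = X_all[idx]
        M_inv = np.linalg.inv(Xt.T @ Xt)
        h = np.einsum("ij,jk,ik->i", Xt, M_inv, Xt)
        m = int(np.argmin(h))                # deletion: smallest leverage score
        x_m, h_m = Xt[m], h[m]
        Mx = M_inv @ x_m
        M_inv_minus = M_inv + np.outer(Mx, Mx) / (1.0 - h_m)   # rank-one downdate
        pool = np.setdiff1d(np.arange(N), idx)
        if n_tilde is not None and n_tilde < len(pool):
            pool = rng.choice(pool, size=n_tilde, replace=False)
        d = np.einsum("ij,jk,ik->i", X_all[pool], M_inv_minus, X_all[pool])
        h_new = d / (1.0 + d)                # leverage of x_j after the exchange
        ok = (h_new > h_m) & (h_new < bound) # candidate set (assumed form)
        if not ok.any():
            break                            # no admissible exchange: stop
        idx[m] = pool[ok][np.argmax(d[ok])]  # augmentation: largest d_j among candidates
    return idx

# Illustrative usage on data resembling Example 1 (assumption)
rng = np.random.default_rng(2)
x = np.concatenate([rng.normal(3, 2, 1990), rng.normal(3, np.sqrt(20), 10)])
X_all = np.column_stack([np.ones(2000), x])
idx0 = rng.choice(1990, size=100, replace=False)
idx = modified_exchange_d(X_all, idx0, t_max=200)
```

Under these assumptions the lower bound guarantees that every exchange strictly increases the determinant, since the determinant is multiplied by \((1-h_{i_m i_m})(1+d_j)>1\), while the upper bound keeps out units that would enter the sample as high leverage points.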

3.2 Informative D-optimal sample without outliers

Whenever the response values are available, this information should be exploited by the exchange algorithm, obtaining an informative D-optimal subsample.

According to Chatterjee and Hadi (1986), an influential data point in Y is an observation that strongly influences the fitted values. To identify these influential values, we adopt Cook’s distance, but other measures can be similarly applied. Cook’s distance for the i-th observation, \(C_i\), quantifies how much all of the fitted values in the model change when the i-th data point is deleted:

$$\begin{aligned} C_i = \frac{(\hat{\varvec{Y}} - \hat{\varvec{Y}}_{(i)})^\top (\hat{\varvec{Y}} - \hat{\varvec{Y}}_{(i)})}{(k+1)\, \hat{\sigma }^2}, \end{aligned}$$

where \(\hat{\varvec{Y}}= \varvec{X} \hat{\varvec{\beta }}\), \(\hat{\sigma }^2\) is the residual mean square estimate of \(\sigma ^2\) and \(\hat{\varvec{Y}}_{(i)}= \varvec{X} \hat{\varvec{\beta }}_{(i)}\) is the vector of predicted values when the i-th unit is removed from the data set \(\varvec{D}\). According to a general practical rule, any observation with a Cook’s distance larger than 4/n may be considered an influential point.
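As an illustration of the influence measure described above, the sketch below computes Cook’s distance through the well-known closed form \(C_i = e_i^2 h_{ii} / \{(k+1)\hat{\sigma }^2 (1-h_{ii})^2\}\), which avoids refitting the model n times, and applies the 4/n rule. The function name `cooks_distance`, the simulated data and the shifted response are illustrative assumptions.

```python
import numpy as np

def cooks_distance(X, y):
    """Cook's distance for every row of an OLS fit, without leave-one-out refits."""
    n, p = X.shape                                  # p = k + 1
    M_inv = np.linalg.inv(X.T @ X)
    beta = M_inv @ X.T @ y                          # OLS estimate
    resid = y - X @ beta
    h = np.einsum("ij,jk,ik->i", X, M_inv, X)       # leverage scores
    s2 = resid @ resid / (n - p)                    # residual mean square
    return resid**2 * h / (p * s2 * (1.0 - h) ** 2)

# Illustrative data (assumption): one response shifted far from the regression line
rng = np.random.default_rng(3)
X = np.column_stack([np.ones(30), rng.normal(size=30)])
y = X @ np.array([1.5, 2.7]) + rng.normal(size=30)
y[0] += 30.0
C = cooks_distance(X, y)
influential = C > 4 / len(y)                        # practical 4/n rule
print("flagged indices:", np.flatnonzero(influential))
```

The closed form is equivalent to the definition based on \(\hat{\varvec{Y}} - \hat{\varvec{Y}}_{(i)}\), so it can be checked against explicit leave-one-out fits on small examples.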

To get an informative D-optimal sample, Algorithm 2 is modified by including the additional steps illustrated in Algorithm 3.

Example 2

Figure 2 illustrates the performance of the proposed algorithms in comparison with the Iboss subsampling method [proposed by Wang et al. (2019)] and the simple random sample, in the artificial dataset of Example 1.

Fig. 2 Subsamples (in green) of the artificial dataset of Example 1, obtained applying: Iboss, Simple Random Sampling, Non-informative D-optimal sampling, Informative D-optimal sampling. The black line is the true regression model, while the green line is the fitted model based on the subsample (of size \(n=100\)) selected according to the different procedures. (Color figure online)

As expected, the Iboss algorithm provides a subset similar to the D-optimal sample (cf. Fig. 1) since it selects the points on the boundary of the design space, thus including most of the outliers. As a consequence, the true model and the fitted model are quite distinct. The simple random sample does not produce a good fitted model either, as it includes an outlier. The non-informative selection procedure seems to improve the fit of the true model, even if the best performance is obtained using the informative selection approach, which does not include outliers.

Remark

Let us note that an increase of \(t_{max}\) and \(\tilde{N}\) would lead to an improvement of the D-optimal subsamples, because of a better chance of exchanging sample points. In particular, it is reasonable to consider \(\tilde{N}=N-n\) whenever N is not too large.

4 Optimal subsampling to get accurate predictions

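If we are interested in obtaining accurate predictions on a set of values \(\mathcal{X}_0=\{ \varvec{x}_{01},\ldots ,\varvec{x}_{0N_0}\}\) instead of a precise parameter estimation, then we should select the observations minimizing the overall prediction variance. Let \(\hat{Y}_{0i}= \hat{\varvec{\beta }}^\top \varvec{x}_{0i}\) be the prediction of \(\mu _{0i}=E(Y_{0i}|\varvec{x}_{0i})\) at \(\varvec{x}_{0i}\), \(i=1,\ldots ,N_0\). The prediction variance at \(\varvec{x}_{0i}\), also known as “mean squared prediction error”, is

$$\begin{aligned} \textrm{MSPE}(\hat{Y}_{0i}|\varvec{x}_{0i}) = E\big [(\hat{Y}_{0i}-\mu _{0i})^2 \,|\, \varvec{x}_{0i}\big ] = \sigma ^2\, \varvec{x}_{0i}^\top (\varvec{X}^\top \varvec{X})^{-1} \varvec{x}_{0i}. \end{aligned}$$

If \({\varvec{X}}_0\) is the \(N_0\times (k+1)\) matrix whose i-th row is \(\varvec{x}_{0i}^\top \), then a measure of the overall mean squared prediction error is the sum of the prediction variances in \(\mathcal{X}_0\):

$$\begin{aligned} \sum _{i=1}^{N_0} \textrm{MSPE}(\hat{Y}_{0i}|\varvec{x}_{0i}) = \sigma ^2\, {\text {trace}}\!\left[ (\varvec{X}^\top \varvec{X})^{-1} \varvec{X}_0^\top \varvec{X}_0 \right] . \qquad (7) \end{aligned}$$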

The following sample

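minimizes the overall prediction variance (7) and is called I-optimal. It is well known that to produce accurate predictions it would be advisable to avoid outliers. An I-optimal subsample without high leverage points can be obtained by modifying the deletion and augmentation steps of the exchange algorithm described in Sect. 3.1 according to the I-criterion. The current sample \(s_n^{(t)}\) should be updated by removing the unit \(i_{m}\) which minimises the increase in the overall mean squared prediction error. From the results given in Appendix A of Meyer and Nachtsheim (1995), the increment in the overall mean squared prediction error due to the omission of the unit i is given by

$$\begin{aligned} \sigma ^2\, \frac{\varvec{x}_i^\top ({\varvec{X}}_t^\top {\varvec{X}}_t)^{-1} \varvec{X}_0^\top \varvec{X}_0\, ({\varvec{X}}_t^\top {\varvec{X}}_t)^{-1} \varvec{x}_i}{1-\varvec{x}_i^\top ({\varvec{X}}_t^\top {\varvec{X}}_t)^{-1} \varvec{x}_i}, \end{aligned}$$

where \(\varvec{X}_t\) is the \(n \times (k+1)\) matrix whose rows are \(\varvec{x}_i^\top \) with \(i \in s_n^{(t)}\).

Subsequently, to obtain again a sample of size n, from a set \(\mathcal{C}^{(t)}\) of candidate points, we should add the unit \(j_a\) which maximizes the decrease in the overall mean squared prediction error:

$$\begin{aligned} j_a = \arg \max _{j \in \mathcal{C}^{(t)}} \frac{\varvec{x}_j^\top ({\varvec{X}}_t^{\!-\top } {\varvec{X}}_t^{\!-})^{-1} \varvec{X}_0^\top \varvec{X}_0\, ({\varvec{X}}_t^{\!-\top } {\varvec{X}}_t^{\!-})^{-1} \varvec{x}_j}{1+\varvec{x}_j^\top ({\varvec{X}}_t^{\!-\top } {\varvec{X}}_t^{\!-})^{-1} \varvec{x}_j}, \end{aligned}$$

where \({\varvec{X}}_t^{\!-}\) is the design matrix obtained by removing the row \(\varvec{x}_{i_m}\) from \(\varvec{X}_t\) and \(({\varvec{X}}_t^{\!-\top } {\varvec{X}}_t^{\!-})^{-1}\) can be computed from (3).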
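The deletion and augmentation steps for the I-criterion can be evaluated without refitting. The sketch below, offered as an illustration under the assumption that the changes are expressed through the standard Sherman-Morrison identity (up to the constant factor \(\sigma ^2\)), computes the increase of \({\text {trace}}[(\varvec{X}^\top \varvec{X})^{-1}\varvec{X}_0^\top \varvec{X}_0]\) when a row is removed and its decrease when a row is added. The function names and the simulated data are hypothetical.

```python
import numpy as np

def i_criterion(M_inv, X0):
    """trace[(X^T X)^{-1} X0^T X0]: overall prediction variance up to sigma^2."""
    return np.trace(M_inv @ X0.T @ X0)

def deletion_increase(M_inv, A, x):
    """Increase of the criterion when row x is removed; A = X0^T X0."""
    Mx = M_inv @ x
    return (Mx @ A @ Mx) / (1.0 - x @ Mx)

def addition_decrease(M_inv, A, x):
    """Decrease of the criterion when row x is added; A = X0^T X0."""
    Mx = M_inv @ x
    return (Mx @ A @ Mx) / (1.0 + x @ Mx)

# Illustrative numerical check (simulated data, assumption)
rng = np.random.default_rng(4)
X = rng.normal(size=(40, 3))
X0 = rng.normal(size=(15, 3))
A = X0.T @ X0
M_inv = np.linalg.inv(X.T @ X)
M_minus = np.linalg.inv(X[1:].T @ X[1:])            # sample without its first row
x_new = rng.normal(size=3)
X_plus = np.vstack([X[1:], x_new])                  # reduced sample plus a new row
inc = deletion_increase(M_inv, A, X[0])
dec = addition_decrease(M_minus, A, x_new)
print(np.isclose(i_criterion(M_minus, X0) - i_criterion(M_inv, X0), inc))
```

In an exchange step, the unit to delete would be the sample row with the smallest `deletion_increase`, and the unit to add the candidate with the largest `addition_decrease`.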

The set of candidate points should be composed of units that are not at risk of being deleted at the next iteration and are not high leverage points:

where \({h}_{i_m i_m}(\varvec{x}_j)\) is given in (4),

\(_j{\varvec{X}}_t\) is the matrix obtained from \(\varvec{X}_t\) by exchanging \(\varvec{x}_{i_m}\) with \(\varvec{x}_j\) and \((_j{\textbf{X}}_t^\top {_j{\textbf{X}}_t})^{-1}\) can be computed from Eq. (5).

Algorithm 4 summarizes the steps to select a non-informative I-optimal sample, while to obtain its informative version, it is enough to incorporate the additional steps of Algorithm 3.

5 Numerical studies

5.1 Simulation results

In this section, we evaluate the performance of our proposals through a simulation study. We generate \(H\times S\) random datasets of size \(N=10^6\), each one including \(N_{out}=500\) high leverage points/outliers (with \(H=30\) and \(S=50\)). The computation of some metrics will illustrate the validity of our procedures in selecting D- or I-optimal subsamples without outliers.

Precisely, for each \(h=1, \ldots , H\), N iid repetitions of a 10-variate explanatory variable \(_h\tilde{\varvec{x}}_i=({x}_{i1},\ldots ,x_{i10})^\top \) are generated as follows:

1. \(x_{i1}\), \(x_{i2}\) and \(x_{i3}\), for \(i=1, \ldots , N\), are independently distributed as U(0, 5);

2. \((x_{i4},x_{i5},x_{i6},x_{i7})^\top \) is distributed as a multivariate normal r.v. with zero mean and

2.a. For \( i=1, \ldots , (N-N_{out})\): covariance matrix \(\varvec{\Sigma }_1=\left[ a_{rs} \right] \), with \(a_{rr}=9\) and \(a_{rs}=-1\) (\(r\ne s\)), \(r,s=1,\ldots ,4\);

2.b. For \(i=(N-N_{out})+1, \ldots , N\): covariance matrix \(\varvec{\Sigma }_{1.out}=\left[ a_{rs} \right] \), with \(a_{rr}=25\) and \(a_{rs}=1\) (\(r\ne s\)), \(r,s=1,\ldots ,4\);

3. \((x_{i8},x_{i9})^\top \), for \(i=1, \ldots , N\), is distributed as a multivariate t-distribution with 3 degrees of freedom and scale matrix \( \varvec{\Sigma }_2=\left[ \begin{array}{cc} 1 &amp; 0.5 \\ 0.5 &amp; 1\\ \end{array} \right] \);

4. \(x_{i10}\), for \(i=1, \ldots , N\), is distributed as a Poisson distribution \(\mathcal{P}(5)\).

For each generated \(N\times (k+1)\) design matrix \(_h\varvec{X}\), whose i-th row is \(_h{\varvec{x}}^\top _i=(1, {_h{\tilde{\varvec{x}}}^\top _i} )\) (\(i=1,\ldots ,N\)), we have simulated \(S=50\) independent \(N\times 1\) response vectors \({_h{\!\varvec{Y}\!}_{s}}\) (with \(s=1,\ldots , S\)), whose i-th item is

with

(i) \(\varvec{\beta }=(1, 1, 1, 1, 2, 2, 2, 2, 1, 1, 1)\) and \(\sigma =3\) for \(i=1, \ldots , N-N_{out}\);

(ii) \(\varvec{\beta }=(1, 1, 1, 1, -2, -2, -2, -2, 1, -1, -1)\) and \(\sigma =20\) for \(i=(N-N_{out})+1, \ldots , N\).
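The data-generating scheme described above can be sketched in Python as follows. This is only an illustration under explicit assumptions: the errors are taken to be Gaussian (their distribution is not stated in the text above), the default sizes are much smaller than \(N=10^6\) and \(N_{out}=500\) to keep the example light, the multivariate t vector is obtained as a normal vector divided by the square root of a scaled chi-square variable, and the function name `simulate_dataset` is hypothetical.

```python
import numpy as np

def simulate_dataset(N=10_000, N_out=5, seed=0):
    """One simulated design matrix and response vector (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    n_in = N - N_out
    # 1. three independent U(0, 5) covariates
    x13 = rng.uniform(0.0, 5.0, size=(N, 3))
    # 2. four-variate normal, with a different covariance for the last N_out units
    S1 = np.full((4, 4), -1.0)
    np.fill_diagonal(S1, 9.0)
    S1_out = np.full((4, 4), 1.0)
    np.fill_diagonal(S1_out, 25.0)
    x47 = np.vstack([
        rng.multivariate_normal(np.zeros(4), S1, size=n_in),
        rng.multivariate_normal(np.zeros(4), S1_out, size=N_out),
    ])
    # 3. bivariate t with 3 degrees of freedom: normal / sqrt(chi2_3 / 3)
    S2 = np.array([[1.0, 0.5], [0.5, 1.0]])
    z = rng.multivariate_normal(np.zeros(2), S2, size=N)
    w = rng.chisquare(3, size=N) / 3.0
    x89 = z / np.sqrt(w)[:, None]
    # 4. Poisson(5) covariate
    x10 = rng.poisson(5.0, size=(N, 1))
    X = np.column_stack([np.ones(N), x13, x47, x89, x10])
    beta = np.array([1, 1, 1, 1, 2, 2, 2, 2, 1, 1, 1], dtype=float)
    beta_out = np.array([1, 1, 1, 1, -2, -2, -2, -2, 1, -1, -1], dtype=float)
    eps = rng.standard_normal(N)                 # assumption: Gaussian errors
    y = np.empty(N)
    y[:n_in] = X[:n_in] @ beta + 3.0 * eps[:n_in]
    y[n_in:] = X[n_in:] @ beta_out + 20.0 * eps[n_in:]
    return X, y

X, y = simulate_dataset()
print(X.shape, y.shape)
```

The last `N_out` rows play the role of the abnormal units: they combine inflated covariate variances with a different coefficient vector and a larger error standard deviation.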

At each simulation step (h, s), to draw subsamples from the simulated dataset:

we have applied the following algorithms (\(h=1,\ldots ,H\) and \(s=1,\ldots ,S\)):

1. Non-informative I (Algorithm 4)

2. Non-informative D (Algorithm 2)

3. Informative I (Algorithms 4 and 3)

4. Informative D (Algorithms 2 and 3)

5. Simple random sampling (SRS): passive learning selection

To assess these subsampling techniques, we have generated a test set of size \(N_T=500\), without high leverage points and outliers (i.e. with \(N_{out}=0\)):

Finally, to implement the I-optimality procedure, we have generated a prediction region \(\mathcal{X}_0\) without high leverage points. In addition, to compare the performance of the distinct subsamples in terms of prediction ability on \(\mathcal{X}_0\), we have generated also the corresponding responses (without outliers). Let

be the prediction set, where \(N_0=500\).

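A subsample selected from the dataset \(_h\varvec{D}_{s}\) (generated at the (h, s)-th simulation step) is denoted by \(s_{n}^{(h,s)}\), and

$$\begin{aligned} I_i^{(h,s)} = \begin{cases} 1 &amp; \text {if } i \in s_n^{(h,s)} \\ 0 &amp; \text {otherwise} \end{cases} \end{aligned}$$

is the corresponding sampling indicator variable, for \(h=1,\ldots ,H\) and \(s=1,\ldots ,S\).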

At each simulation step (h, s):

(a) To evaluate the performance of the subsampling techniques with respect to D- and I-optimality criteria, we have computed:

– The average mean squared prediction error in \(\mathcal{X}_0\) [from (7)]:

$$\begin{aligned} \textrm{MSPE}_{\mathcal{X}_0}^{(h,s)}= \sigma ^2 \dfrac{{\text {trace}}\!\left[ \!\left( \sum _{i = 1}^{N} {_h\varvec{x}}_i\, {_h\varvec{x}}_i^\top I_{i}^{(h,s)}\right) ^{\!-1} \! \varvec{X}_0^\top \varvec{X}_0 \right] }{N_0}; \end{aligned}$$

– The logarithm of the determinant of the information matrix:

$$\begin{aligned} \mathrm{Log(det)}^{(h,s)} = \log \left|\sum _{i = 1}^{N} {_h\varvec{x}}_{i}\, {_h\varvec{x}}_{i}^\top I_{i}^{(h,s)} \right|; \end{aligned}$$

(b) To assess the predictive ability of the selection algorithms, we have considered:

– The average squared prediction error in \(\mathcal{X}_0\) and in \(\mathcal{X}_{T}=\{\varvec{x}_{T1},\ldots ,\varvec{x}_{TN_{T}}\}\):

$$\begin{aligned} \textrm{SPE}_{\mathcal{X}_{0}}^{(h,s)}= \dfrac{\sum _{i=1}^{N_{0}} ( \hat{y}_{0i}^{(h,s)}- \mu _{0i})^2}{N_{0}} \;\; \textrm{and} \;\; \textrm{SPE}_{\mathcal{X}_{T}}^{(h,s)} = \dfrac{\sum _{i=1}^{N_{T}} ( \hat{y}_{Ti}^{(h,s)}- \mu _{Ti})^2}{N_{T}}, \end{aligned}$$

where \(\hat{y}_{0i}^{(h,s)}={_h\hat{\varvec{\beta }}_s}^\top \varvec{x}_{0i}\), \(\hat{y}_{Ti}^{(h,s)}={_h\hat{\varvec{\beta }}_s}^\top \varvec{x}_{Ti}\), \(\mu _{0i}=\varvec{\beta }^\top \varvec{x}_{0i}\), \(\mu _{Ti}=\varvec{\beta }^\top \varvec{x}_{Ti}\) and \(_h\hat{\varvec{\beta }}_s\) is the OLS estimate of \({\varvec{\beta }}\) based on the subsample \(s_{n}^{(h,s)}\);

– The standard error in the prediction set \({\varvec{D}_0}\) and in the test set \({\varvec{D}_T}\):

$$\begin{aligned} \textrm{SE}_{\varvec{D}_0}^{(h,s)} =\dfrac{\sum _{i=1}^{N_{0}} \big ( \hat{y}_{0i}^{(h,s)} - y_{0i}\big )^2}{N_{0}} \;\; \textrm{and} \;\; \textrm{SE}_{\varvec{D}_T}^{(h,s)}=\dfrac{\sum _{i=1}^{N_{T}} \big ( \hat{y}_{Ti}^{(h,s)} - y_{Ti}\big )^2}{N_{T}} . \end{aligned}$$
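The evaluation metrics listed above translate directly into a few lines of code. The following Python sketch is an illustration for a single subsample; the function names are hypothetical, `X_s` denotes the rows of the design matrix selected by the subsample, and `X0`, `y0` stand for either the prediction set or the test set.

```python
import numpy as np

def log_det_information(X_s):
    """Logarithm of the determinant of the information matrix X_s^T X_s."""
    sign, value = np.linalg.slogdet(X_s.T @ X_s)
    return value if sign > 0 else -np.inf

def mspe(X_s, X0, sigma2):
    """Average mean squared prediction error on the rows of X0."""
    M_inv = np.linalg.inv(X_s.T @ X_s)
    return sigma2 * np.trace(M_inv @ X0.T @ X0) / X0.shape[0]

def spe(beta_hat, beta, X0):
    """Average squared difference between predictions and true means."""
    return np.mean((X0 @ (beta_hat - beta)) ** 2)

def se(beta_hat, X0, y0):
    """Average squared difference between predictions and observed responses."""
    return np.mean((X0 @ beta_hat - y0) ** 2)

# Tiny worked example (assumption): X_s^T X_s = 2 I and X0 = I
X_s = np.sqrt(2.0) * np.eye(3)
X0 = np.eye(3)
print(mspe(X_s, X0, sigma2=1.0), log_det_information(X_s))
```

Averaging these quantities over the \(H \times S\) simulated datasets gives the Monte Carlo summaries reported for each sampling strategy.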

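Table 1 displays the following Monte Carlo averages,

\(\textrm{MSPE}_{\mathcal{X}_0}=\sum _{h=1}^H \sum _{s=1}^S \textrm{MSPE}_{\mathcal{X}_0}^{(h,s)}/HS\;\) and \(\;\mathrm{Log(det)}=\sum _{h=1}^H \sum _{s=1}^S \mathrm{Log(det)}^{(h,s)}/HS\),

for the different sampling strategies: non-inf. I, non-inf. D, inf. I, inf. D and SRS, respectively. The results have been obtained by setting \(n=500\), \(\tilde{N}=1000\), \(t_{max}=500\), \(\nu _1=2\) and \(\nu _2=3\).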

From Table 1, the non-informative procedures seem to provide subsamples “nearly” D- and I-optimal that do not include high leverage points (they would be exactly D- and I-optimal if they allowed for these abnormal values). This result is consistent with the definitions of I- and D-optimality.

Table 2 instead lists the following Monte Carlo averages:

\(\textrm{SPE}_{\mathcal{X}_{0}}=\sum _{h=1}^H \sum _{s=1}^S \textrm{SPE}_{\mathcal{X}_{0}}^{(h,s)}/HS\;\), \(\;\;\textrm{SPE}_{\mathcal{X}_{T}}=\sum _{h=1}^H \sum _{s=1}^S \textrm{SPE}_{\mathcal{X}_{T}}^{(h,s)}/HS\),

\(\textrm{SE}_{\varvec{D}_0}=\sum _{h=1}^H \sum _{s=1}^S \textrm{SE}_{\varvec{D}_0}^{(h,s)}/HS\;\) and \(\;\textrm{SE}_{\varvec{D}_T}= \sum _{h=1}^H \sum _{s=1}^S \textrm{SE}_{\varvec{D}_T}^{(h,s)}/HS\),

for the different subsamples. These quantities make it possible to assess the predictive ability of the subsampling techniques. From Table 2, we can appreciate the prominent role of the informative procedures. In fact, when the database includes outliers in Y which are not associated with high leverage points (as in this simulation study), only the informative procedures are able to exclude them, thus providing accurate predictions.

From the last row of Table 2, the SRS seems to behave quite well: it is fast, easy to implement and provides good predictions compared to the informative I-optimal subsampling. However, this good performance is due to the low percentage of outliers present in the artificial datasets. Figure 3 displays the superiority of the informative procedures with respect to the passive learning selection (SRS), as the percentage of outliers increases. Of course, we consider a short range for the percentage of outliers because outliers are (by definition) a few isolated data points.

Comparing the third and the fourth rows of Table 2, informative I-optimal subsamples seem to outperform the D-optimal ones only slightly, even though I-optimality should reflect the goal of getting accurate predictions. This happens because the prediction set \(\mathcal{X}_0\) has a shape similar to that of the dataset. When \(\mathcal{X}_0\) defines a specific subset of covariate-values, the superiority of I-optimality emerges. See for instance the values of \(\textrm{SPE}_{\mathcal{X}_{0}}\) and \(\textrm{SPE}_{\mathcal{X}_{T}}\) in Table 3, where \(\mathcal{X}_0\) and \(\mathcal{X}_T\) involve only positive values of the features.

Remark

Actually, to take into account the randomness of the SRS technique, we have drawn \(N_{SRS}=50\) different independent SRSs from each dataset \(_h\varvec{D}_{s}\), for \(h=1, \ldots ,H\) and \(s=1,\ldots ,S\); the Monte Carlo averages for SRS are based also on these additional observations.

5.2 Real data example

In this section we apply our proposal to the diamonds data set in the ggplot2 package. This dataset contains the prices and the specifications for more than 50000 diamonds. More specifically, 7 features are included:

- The carat \(x_1\), which is the weight of the diamond and ranges from 0.2 to 5.01;

- The quality of the diamond cut \(x_2\), which is coded by one if the quality is better than “Very Good” and zero otherwise;

- The level of diamond color \(x_3\), which is coded by one if the quality is better than “level F” and zero otherwise;

- A measurement of the diamond clarity \(x_4\), which takes value one if the quality is better than “SI1” and zero otherwise;

- The total depth percentage \(x_5\);

- The width at the widest point \(x_6\);

- The volume of the diamond \(x_7\).

To avoid a multicollinearity problem, \(x_1\) has not been considered in the analysis, because it is highly correlated with \(x_7\) (the volume). Furthermore, to obtain a better fit of the data, the quadratic effect of \(x_7\) has been included in the model, where the response variable Y is the logarithm of the price (\(\log _{10}\)).

The dataset contains some outliers, such as observation No. 24068, which corresponds to a diamond with an unusually large width that makes the price too high.

Let us assume that the goal is the prediction of the price of the diamonds with a volume larger than 200 mm\(^3\). Therefore, to apply the I-optimality strategy, we have randomly selected a prediction set \(\mathcal{X}_0\) from all the diamonds with \(x_7\) larger than 200 mm\(^3\). Then, the remaining dataset has been divided into four folds of the same size to compare the different subsampling techniques through a cross-validation approach. In rotation, one fold represents the test set, while the others form the training set, from which subsamples of size n are selected according to the different algorithms. In each test set only diamonds with volume larger than 200 mm\(^3\) are considered; in addition, the outliers (if present) are removed. In this example we have set \(n = 100\), \(\tilde{N} = 2000\), \(t_{max}=2000\).

The first two columns of Table 4 show that the minimum value of \(\textrm{MSPE}_{\mathcal{X}_0}\) is associated with the non-informative I-algorithm, while the maximum value of Log(Det) corresponds to the non-informative D-optimal subsample. This result is consistent with the definitions of I- and D-optimality and with the results of the simulation study in Sect. 5.1. With regard to the predictive ability of the subsampling techniques, we can observe that the informative I-procedure leads to the minimum values of the cross-validation averages \(\textrm{SE}_{\varvec{D}_0}\) and \(\textrm{SE}_{\varvec{D}_T}\) (last two columns of Table 4). Unlike in the simulation study, in this real data example the non-informative I-criterion also seems to perform properly. This is because in the diamonds dataset most of the outliers in Y are associated with high leverage points, and thus the non-informative procedure is also able to exclude them, providing accurate predictions.

6 Discussion

Recent advances in technology have brought the ability to collect, transfer and store large datasets. The availability of such a huge amount of data is a great challenge nowadays. However, very often Big Datasets contain noisy data because they are the result of passive observation and not of a well planned survey. Moreover, huge datasets may not be queried for free; typically, agencies that create and manage huge databases allow data to be downloaded at a price per MB. Furthermore, there are circumstances where the value of the response variable may be obtained only for a restricted number of units.

For these reasons, we suggest considering only a subsample of the dataset excluding abnormal values, with the idea that a subset of a few relevant data may be more “informative” than a huge quantity of raw, redundant, and noisy observations. The theory of optimal design is a guide to draw a subsample containing the most informative observations, but optimal subsamples frequently lie on the boundary of the factor-domain, including all the outliers. Two modifications of the well-known exchange algorithm are herein proposed to select “nearly” optimal subsamples without abnormal values:

- A non-informative procedure, which avoids the inclusion of high leverage points, can be applied whenever information about the responses is not available or is too expensive to obtain;

- An informative procedure, which excludes outliers in the response besides high leverage points, can be used whenever the responses are available.

A simulation study confirms that D-optimal subsampling should be applied if the inferential goal is precise estimation of the parameters, while the informative I-optimal algorithm should be applied to get accurate predictions on a specified prediction set.

A limitation of these methods is that they are model-based, while in real-life problems the model is unknown. This relevant issue will be handled in future research by adapting the algorithms to optimality criteria for model selection, possibly combined with D- and I-criteria.

Finally, another challenging future development could be the extension of the proposed algorithms to the generalised linear model, because the definition of outliers and high leverage points, in this context, is not straightforward.

References

Atkinson A, Donev A, Tobias R (2007) Optimum experimental designs, with SAS. Oxford University Press, Oxford

Chatterjee S, Hadi AS (1986) Influential observations, high leverage points, and outliers in linear regression. Stat Sci 1(3):379–416

Deldossi L, Tommasi C (2022) Optimal design subsampling from Big Datasets. J Qual Technol 54(1):93–101

Drovandi CC, Holmes CC, McGree JM, Mengersen K, Richardson S, Ryan EG (2017) Principles of experimental design for big data analysis. Stat Sci 32(3):385–404

Fedorov VV (1972) Theory of optimal experiments. Academic Press, New York

Harman R, Filová L (2019) OptimalDesign: a toolbox for computing efficient designs of experiments. R package version 1.0.1. https://CRAN.R-project.org/package=OptimalDesign

Hoaglin DC, Welsch RE (1978) The hat matrix in regression and ANOVA. Am Stat 32(1):17–22

Ma P, Mahoney MW, Yu B (2015) A statistical perspective on algorithmic leveraging. J Mach Learn Res 16(27):861–911

Meyer RK, Nachtsheim CJ (1995) The coordinate-exchange algorithm for constructing exact optimal experimental designs. Technometrics 37(1):60–69

Searle SR (1982) Matrix algebra useful for statistics. Wiley, New York

Wang H, Yang M, Stufken J (2019) Information-based optimal subdata selection for Big Data linear regression. J Am Stat Assoc 114(525):393–405

Acknowledgements

We are grateful to Prof. Claudio Agostinelli of the University of Trento for the useful discussions related to robust statistics, which have stimulated the development of this study.

Funding

Open access funding provided by Università Cattolica del Sacro Cuore within the CRUI-CARE Agreement.

Appendix

The following algorithm provides a "good" initial sample for Algorithms 2 and 4.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Deldossi, L., Pesce, E. & Tommasi, C. Accounting for outliers in optimal subsampling methods. Stat Papers 64, 1119–1135 (2023). https://doi.org/10.1007/s00362-023-01422-3

DOI: https://doi.org/10.1007/s00362-023-01422-3