Abstract

When self-reported data are used in statistical analysis to estimate the mean and variance, as well as regression parameters, the estimates tend, in many cases, to be biased. This is because interviewees tend to heap their answers at certain values. The aim of this paper is to examine the bias-inducing effect of the heaping error in self-reported data and to study the effect of the heaping error on the mean and variance of a distribution as well as on the regression parameters. A new method is introduced to correct the bias due to the heaping error using validation data. Using publicly available data and simulation studies, we show that the newly developed method is practical and can easily be applied to correct the bias in the estimated mean and variance, as well as in the estimated regression parameters computed from self-reported data. Hence, the method of correction presented in this paper allows researchers to draw accurate conclusions leading to the right decisions, e.g. regarding health care planning and delivery.

1 Introduction

In practice, when interviewees are questioned for data collection, they often do not report true values (Devaux and Sassi 2016). There may be many reasons for this: misunderstanding the question, estimating an answer, or feeling uncomfortable giving the most accurate answer (Rosenman et al. 2011). Instead, they may understate or exaggerate their responses towards certain target values, e.g. the nearest integer or specific numbers such as those ending in “zero” or “five” (Pardeshi 2010). Consequently, data collected by personal interview often show accumulations at specific values. These values are also called “target values” (Camarda et al. 2017). An example can be clearly seen in breastfeeding duration data, where 6, 12, 18 and 24 months are the most frequently reported breastfeeding times (Bracher and Santow 1982; Haaga 1988; Klerman 1993). Another example is data on length of unemployment in months, with 6, 12, 18 and 24 months being the most commonly reported lengths of unemployment (Neels 2000). In the modern statistical literature, these attractive values are called heaped points, and data that lie on these heaped points are called heaped data (Zinn and Würbach 2016). Furthermore, heaping error in a variable can bias the estimates of the mean and variance as well as regression parameters (Wang et al. 2012; Hanisch 2005).

The phenomenon of heaping has been intensively investigated in several studies. Zinn and Würbach (2016) describe a statistical approach for self-reported heaped income data in which the heaping mechanism and the true underlying model are modelled jointly using the zero-inflated log-normal distribution. Kraus and Steiner (1998) examined heaping in unemployment spells drawn from the GSOEP West and modelled unemployment duration data using a maximum likelihood framework based on external validation information; they show that parameter estimates in discrete-time proportional hazard models of unemployment duration are affected by the heaping mechanism. A Bayesian approach was implemented using the Gibbs sampler to reduce biased estimates due to heaping error in measurements from ultrasound images (Wright and Bray 2003).

Similarly, heaping is apparent in income data: Taiwanese firms have observed that their employees report their monthly earnings to the nearest 5 within the first two digits of the earnings figure (Lin et al. 2011). The possible effects of heaping on the parameter estimates of an exponential, a Weibull and a log-logistic model were examined by Monte Carlo simulations, which showed that heaping is common and may affect the relationship between the reported and the actual duration of unemployment (Torelli and Trivellato 1993).

In the same way, the bias for general cluster patterns was examined and recommendations for bias correction were obtained. Monte Carlo simulations show that the amount of heaping that characterizes the GSOEP West does not result in significantly biased parameter estimates of a Weibull model. However, it clearly leads to false seasonal effects (Wolff and Augustin 2003). Self-reported smoking data, specifically the number of cigarettes smoked daily, are also subject to a user-dependent heaping bias. Here, multiples of 5, 10 or 20 cigarettes smoked daily are reported. Furthermore, it has been shown that the heaping error in this type of data can bias the estimation of parameters of interest such as mean cigarette consumption (Wang and Heitjan 2008).

It has been suggested that heaping adds significantly to the distortion of self-reported number of cigarettes smoked daily (Wang et al. 2012). It has been shown that simple random heaping may lead to a biased estimate of mean daily cigarette consumption of up to 20% depending on both the true mean and the extent of heaping (Wang and Heitjan 2008; Wang et al. 2012). The same heaping errors are present in accurately measured datasets such as birthweight, when births are formally registered. It has been observed that rather than using the birthweight given to at least two decimal places, birthweights are rounded up to the closest integer at the time of birth registration (Barreca et al. 2011).

Multiple imputation has been suggested to replace heaped data. This statistical analysis may lead to more accurate estimates for heaping error model misspecification (Heitjan and Rubin 1990). Similarly, a Bayesian hierarchical model was used to control the heaping procedure in longitudinal self-reported counts of sex partners in a study of high-risk behavior in HIV-positive youth (Crawford et al. 2015). The performance of naive estimators that ignore the cumulative error was examined for special cases of the Weibull model, and a correction approach was introduced for cases where bias due to heaping errors is identified (Augustin and Wolff 2004). Asymmetric rounding, which is a special kind of heaping error in the variables, has also been investigated. It has been shown that both symmetric and asymmetric rounding errors in the variables have no effect on the expected value, but do affect the variance estimated from rounded data (Schneeweiss et al. 2010).

Section 2 discusses several datasets where both self-reported and measured values are available. Section 3 provides an overview of the self-reported data and the heaping error, which is the difference between the self-reported and measured data. In Sect. 4, we present a method of correction for the bias in the mean and the variance caused by the heaping error in self-reported data. Section 5 is a simulation study and Sect. 6 is the discussion.

2 Investigating dataset with self-reported and measured variables

In order to gain practical knowledge about the heaping error, we examine two datasets where both self-reported and measured values are available. The heaping error was calculated as the difference between self-reported and measured values.

2.1 Self-reported and measured sleep duration

Sleep data from 647 subjects (Lauderdale et al. 2008) were collected between 2003 and 2005 using self-reports and measured sleep duration. Objective sleep duration data (in hours) were measured with wrist actigraphy. The mean (standard deviation) of the objective sleep duration and the subjective sleep duration (self-reported) were 6.06 (1.16) and 6.83 (1.11), respectively. This difference of 0.77 between the two means is a simple estimate of bias in the mean. The result of this study shows that the estimated mean of self-reported sleep duration is greater than the estimated mean of measured sleep duration as the mean of the differences (heaping error) is not zero (i.e. bias in the mean).

2.2 Self-reported and measured height, weight and body-mass-index

We investigated a dataset published by Krul et al. (2010) to gain practical knowledge about the heaping error. The dataset includes both self-reported and measured height and weight from 1257 Dutch adults (males and females). The body-mass-index (BMI) was computed as the ratio of measured or reported weight (kg) to squared height (\(m^{2}\)). Figure 3 shows the distribution of the self-reported and measured height and weight. For the reported body height, the values 165, 170, 175, 180, 185, 190, and 195 were often given as target values, whereas for the reported body weight, the values 65, 70, 75, 80, 85 and 90 were often given as target values (Fig. 3). These target values (the most frequently given values) are seen as attractive heaping values. Furthermore, the heaping error was computed as the difference between the self-reported and measured data. The mean (95% confidence interval) of the heaping error of the height, weight and BMI was estimated as 1.041 (95% CI 0.920, 1.161), \(-\) 1.064 (95% CI \(-\) 1.243, \(-\) 0.886) and \(-\) 0.658 (95% CI \(-\) 0.724, \(-\) 0.592) respectively. The result of this study shows that the mean estimated from self-reported data is a biased estimate of the true mean (as compared to the mean estimated from measured data), as the mean of the differences (heaping error) is not zero. Figure 4 presents the density of the heaping error, which is the difference between the self-reported and measured weight (kg), for six target values (65, 70, 75, 80, 85 and 90).

Furthermore, the estimated variance of the self-reporting error is 4.7 for height and 10.4 for weight, whereas the estimated variance of the measured (self-reported) height and weight was 109.4 (112.9) and 276.9 (260.6) respectively. We can see from this dataset that the estimated variance of the height and weight from self-reported data is a biased estimate of the true variance. Moreover, the variance of the measured height is less than the variance of the self-reported height, whereas the variance of the measured weight is greater than the variance of the self-reported weight. Table 1 shows the estimated variance of the measured values, the self-reported values and the differences (self-reported minus measured) for height and weight, as well as the estimated covariance between measured values, self-reported values and the differences for height and weight respectively.

Data from 168 students were included in a study to validate on-line self-reported heights and weights against objective measurements (Nikolaou et al. 2017). The estimated mean (SD) self-reported and measured weight was 66.9 (17.7) kg and 67.5 (16.7) kg respectively. The estimated mean (SD) of the differences between self-reported and measured weight was \(-\) 0.6 (0.54) kg. Furthermore, after rounding to two decimal places, the estimated mean (SD) self-reported and measured heights had the same mean, at 1.71 (0.09) m and 1.71 (0.07) m, respectively. The BMI calculated from self-reported height and weight was significantly lower than the measured BMI, by 0.2 (0.2) kg/m\(^2\).

The accuracy of self-reported height and body mass measurements was compared with measured values within the US law enforcement population, together with the impact these estimations have on the accuracy of BMI classifications (Dawes et al. 2019). Data from 33 male law enforcement officers (age: \(40.48 \pm 6.66\) years) were included in the study. The estimated mean (standard deviation) of measured height and body mass was 180.42 (6.87) cm and 100.82 (19.86) kg respectively. The estimated mean (standard deviation) of reported height and body mass was 180.49 (6.62) cm and 100.59 (19.54) kg respectively. The self-report bias in the mean, i.e. the estimated mean (standard deviation) of the differences between self-reported and measured height and body mass, was 0.08 (0.25) cm and \(-\) 0.23 (0.32) kg respectively.

Flegal et al. (2019) compared national estimates of self-reported and measured height and weight among adults from three US surveys, including the National Health and Nutrition Examination Survey (NHANES), for the years 1999 to 2016. Table 2 shows mean differences between self-reported and measured height (cm) and weight (kg) by survey year for females and males (NHANES), 1999–2016. From Table 2 one can see that the mean of the self-reported data is not equal to the mean of the measured data, and the calculated mean differences represent the estimated bias in the mean.

3 Overview

Let X be a continuous random variable with density \(f(x)=f(x,\vartheta )\), where \(\vartheta \) is an unknown parameter with a parameter space \(\varOmega \). Moreover, let \(X^{*}\) be the self-reported variable for the unmeasured variable X. The self-reported variable \(X^{*}\) can be written as

\[X^{*} = X + \varDelta , \qquad (1)\]

where \(\varDelta \) is the measurement error, which is the difference between the self-reported variable and unmeasured variable (\(\varDelta = X^{*} - X\)). Equation (1) looks similar to the classical measurement error model. However, the measurement error \(\varDelta \) is not independent of X. Instead of that, \(\varDelta \) is a function of X as \(X^{*}\) is a function of X (Augustin and Wolff 2004; Schneeweiss et al. 2010).

Based on our practical knowledge and several simulations as well as the data we discuss in Sect. 2, we define a new probabilistic heaping model that shows how data can be self-reported in practice. Here, we assume that the unmeasured variable X in (1) is heaped with multiple target values or rounded according to

with

where \(j^{*}=\arg \displaystyle {\max _{j}}\,\, HP_{j}\), I is a predetermined intensity, round() is the simple rounding function and B is a uniformly distributed random variable on the interval [0; 1] (\(B\sim U[0;1]\)), with

Figure 1 shows heaping profiles with multiple target values, which is similar to Fig. 4 and Figure 1 in Torelli and Trivellato (1993). Note that \(X^{*}=X\) in Eq. (2), if \(B\ge \displaystyle {\max _{j}}\,\, HP_{j}\) and X is a discrete random variable. Equation (2) allows for overlapping, i.e. values can be heaped to different heaping points. For example, a weight value of 68.93, 69.12, 70.88, 72.36, 74.27, and 75.29 can be heaped to 70.
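The heaping idea can be illustrated with a short simulation. The sketch below does not reproduce Eq. (2); it uses a simplified, hypothetical rule in which a value lying within `width` of a target is reported as its nearest target with probability `heap_prob`, and every other value is rounded to the nearest integer. The function name `heap_and_round` and its parameters are assumptions made for illustration. Because this rule is symmetric around each target and never heaps to a non-nearest target, it does not capture the overlapping or asymmetric reporting discussed in the text.

```python
import numpy as np

def heap_and_round(x, targets, heap_prob, width, rng):
    """Hypothetical simplified heaping rule (not the paper's Eq. (2)).

    A value within `width` of a target is reported as its nearest target
    with probability `heap_prob`; all other values are rounded to the
    nearest integer.
    """
    x = np.asarray(x, dtype=float)
    targets = np.asarray(targets, dtype=float)
    # Nearest target value for every observation.
    nearest = targets[np.abs(x[:, None] - targets[None, :]).argmin(axis=1)]
    close = np.abs(x - nearest) <= width
    # b plays the role of the uniform variable B ~ U[0, 1].
    b = rng.uniform(0.0, 1.0, size=x.shape)
    heaped = close & (b < heap_prob)
    return np.where(heaped, nearest, np.round(x))

rng = np.random.default_rng(0)
x = rng.normal(77.8, 16.6, size=1200)            # "true" weights
x_star = heap_and_round(x, [65, 70, 75, 80, 85, 90],
                        heap_prob=0.5, width=2.5, rng=rng)
delta = x_star - x                                # heaping-and-rounding error

print("mean of X, X*, Delta:", x.mean(), x_star.mean(), delta.mean())
print("variance of X, X*:", x.var(ddof=1), x_star.var(ddof=1))
```

Replacing the symmetric rule by a direction-dependent one (for example, heaping weights downwards more often than upwards) would be one way to mimic the asymmetric reporting seen in Sect. 2.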

Through repeated simulations and by investigating several datasets (see Sect. 2), we have found that the estimated mean and variance of the self-reported variable \(X^{*}\) are, in many cases (see Note 1), biased estimators of the mean and variance of the X variable. Therefore, we have under Eq. (2)

(see Tables 2 and 4). Furthermore,

The variance of the self-reported data is not equal to the variance of the measured data; it can be greater or less than the variance of the measured data. This depends on the data under investigation and on how the data were self-reported. Since \(X^{*}=X+\varDelta \), we have \({\mathbb {V}}X^{*} = {\mathbb {V}}X + {\mathbb {V}}\varDelta + 2{\mathbb {C}}ov(X,\varDelta )\), so the variance of the self-reported data is greater or less than the variance of the measured data if \({\mathbb {V}}\varDelta + 2{\mathbb {C}}ov(X,\varDelta ) > 0\) or \({\mathbb {V}}\varDelta + 2{\mathbb {C}}ov(X,\varDelta ) < 0\), respectively (see Tables 1 and 4).
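As a worked illustration, the moment relations behind this discussion can be written out from \(X^{*}=X+\varDelta \) using only the standard rules for expectations and variances. This is a sketch and not a reproduction of the paper's numbered equations:

\[
\begin{aligned}
{\mathbb {E}}X^{*} &= {\mathbb {E}}X + {\mathbb {E}}\varDelta ,\\
{\mathbb {V}}X^{*} &= {\mathbb {V}}X + {\mathbb {V}}\varDelta + 2\,{\mathbb {C}}ov(X,\varDelta ).
\end{aligned}
\]

Plugging in the variances reported in Sect. 2.2 gives the covariance implied by this identity. For weight, \(260.6 = 276.9 + 10.4 + 2\,{\mathbb {C}}ov(X,\varDelta )\) implies \({\mathbb {C}}ov(X,\varDelta )\approx -13.4\); for height, \(112.9 = 109.4 + 4.7 + 2\,{\mathbb {C}}ov(X,\varDelta )\) implies \({\mathbb {C}}ov(X,\varDelta )\approx -0.6\). These implied values are simple arithmetic on the rounded figures quoted in the text and may differ slightly from the entries of Table 1.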

4 Method of correction

Assume that we interview N participants to collect a certain piece of information (for example their weight). The answers \(X^{*}\) of these N interviewees will not be accurate and will usually be heaped or rounded to an attractive number or to the nearest integer. Therefore, we randomly select a subsample of size n from these N participants and measure the required information exactly.

We assume an approximately linear relationship between these two variables according to the following

with

A linear regression assumes that the conditional expectation of the residual \(\epsilon \) given an exogenous observed variable equals zero. Even if this is not satisfied, the linear model in (5) is still a good approximation of the relationship between X and \(X^{*}\). In general a linear model assumes that

However, in the case of heaped data the true variable X and the heaping error \(\varDelta \) are assumed to be dependent. Thus, at first sight, one has to ask whether the assumption in (6) may break down. However, through repeated simulations we have found that \({\mathbb {E}}(X|X^{*})\approx X^{*}\beta \). Therefore

Hence, we estimate the unknown parameters \(\beta \) by the method of least squares, which consists of finding the values of

that minimize the sum of squares of the residuals

We estimate the unmeasured values of the X variable for the remaining \(N-n\) observations according to

where \({\widehat{W}}=X^{*}{\widehat{\beta }}\) and u is simulated normal distributed random variable with mean zero and variance \(\sigma _{u}^{2} = \sigma _{{\widehat{\epsilon }}}^{2}\).

Here we assume that \(\sigma _{u}^{2} = \sigma _{{\widehat{\epsilon }}}^{2}\) because the subsample was selected randomly; the estimated variance of the residuals \(\sigma _{{\widehat{\epsilon }}}^{2}\) is therefore representative of the variance of the residuals of the whole sample.
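The steps described so far can be sketched in code. The following is a minimal illustration, not the authors' implementation. It assumes that the linear model includes an intercept, that the residual variance is estimated with \(n-2\) degrees of freedom, and that validated participants keep their exact measurement in both corrected variables; the function name `correct_heaping` and all argument names are hypothetical.

```python
import numpy as np

def correct_heaping(x_star, x_measured, val_idx, rng):
    """Sketch of a validation-based correction (assumptions in the text).

    x_star     : self-reported values for all N participants
    x_measured : exact measurements for the n validated participants
    val_idx    : integer indices of those participants within x_star
    Returns (w_tilde, x_tilde).
    """
    x_star = np.asarray(x_star, dtype=float)
    x_measured = np.asarray(x_measured, dtype=float)
    n = len(val_idx)

    # Least-squares fit of X on (1, X*) in the validation subsample.
    design_val = np.column_stack([np.ones(n), x_star[val_idx]])
    beta_hat, *_ = np.linalg.lstsq(design_val, x_measured, rcond=None)
    resid = x_measured - design_val @ beta_hat
    sigma_eps = np.sqrt(resid @ resid / (n - 2))  # residual standard deviation

    # W-hat = X* beta-hat; X-hat = W-hat + u with u ~ N(0, sigma_eps^2).
    design_all = np.column_stack([np.ones(len(x_star)), x_star])
    w_hat = design_all @ beta_hat
    x_hat = w_hat + rng.normal(0.0, sigma_eps, size=len(x_star))

    # Assumption: validated participants keep their exact measurement.
    w_tilde, x_tilde = w_hat.copy(), x_hat.copy()
    w_tilde[val_idx] = x_measured
    x_tilde[val_idx] = x_measured
    return w_tilde, x_tilde

# Usage with simulated data; rounding to multiples of 5 is a crude
# stand-in for heaped self-reports.
rng = np.random.default_rng(1)
x = rng.normal(77.8, 16.6, size=1200)
x_star = np.round(x / 5) * 5
val_idx = rng.choice(len(x), size=120, replace=False)
w_tilde, x_tilde = correct_heaping(x_star, x[val_idx], val_idx, rng)
print(x.mean(), x_star.mean(), x_tilde.mean())
print(x.var(ddof=1), x_star.var(ddof=1), x_tilde.var(ddof=1))
```

The random term u is what restores the variance: the fitted values alone vary less than X, so adding noise with the residual variance brings the spread of the corrected variable back towards that of the measured variable.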

Then, we have the new variables \({\widetilde{X}}\) and \({\widetilde{W}}\) such that

and

4.1 Comparison of the mean and the variance

We use validation data to estimate \({\tilde{X}}\). We would like to prove that the newly estimated variable \({\tilde{X}}\) has the same mean and variance as the unmeasured variable X.

Proof

From (8) we have

with

\(\therefore \) \({\mathbb {E}}({\widetilde{X}})={\mathbb {E}}(X)\). \(\square \)

Proof

Furthermore

with

This holds because u is independent of \({\widehat{\beta }}\) and \(X^{*}\).

Let

\(\Longrightarrow \)

\(\hbox {tr}(M\varSigma _{{\widehat{\beta }}})\approx 0\), because \(\varSigma _{{\widehat{\beta }}}\longrightarrow 0\) for \(N\longrightarrow \infty \)

\(\Longrightarrow \)

\(\Longrightarrow \)

\(\therefore \) \({\mathbb {V}}({\tilde{X}})={\mathbb {V}}(X)\). \(\square \)

Note that the approximation sign (\(\approx \)) in (10) will be an exact result once the validation data sample size goes to infinity (\(n\rightarrow \infty \)).

From (9) and (10) we have proved that the mean and the variance of the newly estimated variable \({\tilde{X}}\) are equal to the mean and the variance of the unmeasured variable X.

4.2 The influence of heaping on regression estimates

Next, we would like to discuss the influence of self-reported data on the estimates of the regression coefficients. Let Y be a measured outcome (dependent variable), and \(X^{*}\) and X be the self-reported and measured explanatory variable respectively. We consider a simple linear regression model

where \(\alpha _{0}\) and \(\alpha _{1}\) are the unknown intercept and slope respectively. The corresponding regression for self-reported and corrected data is \(Y = \alpha _{0}^{*} + X^{*}\alpha _{1}^{*} + \epsilon ^{*}\) and \(Y = {\tilde{\alpha }}_{0} + {\widetilde{X}}{\tilde{\alpha }}_{1} + {\tilde{\epsilon }}\) respectively.

The true regression parameter \(\alpha _{1}\) is estimated by \({\mathbb {C}}ov(X,Y)/{\mathbb {V}}X\), whereas the regression parameter computed from the self-reported data, \(\alpha _{1}^{*}\), is estimated by \({\mathbb {C}}ov(X^{*},Y)/{\mathbb {V}}X^{*}\), which differs from \(\alpha _{1}\). Furthermore, using the corrected variable \({\widetilde{X}}\) to estimate the unknown parameter \(\alpha _{1}\) (\({\mathbb {C}}ov({\widetilde{X}},Y)/{\mathbb {V}}{\widetilde{X}}\)) will also lead to a biased estimate, even though \({\mathbb {E}}X={\mathbb {E}}{\widetilde{X}}\) and \({\mathbb {V}}X={\mathbb {V}}{\widetilde{X}}\) (when \(n\rightarrow \infty \)). This is because, under Eq. (2), \({\mathbb {E}}(XY)\ne {\mathbb {E}}({\widetilde{X}}Y)\). Nevertheless, we can obtain an approximately unbiased estimate of \(\alpha _{1}\) in Eq. (11) by fitting a linear regression model with \({\widetilde{W}}\) as a predictor of Y (see Note 2).
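A short derivation may help to see why the fitted variable behaves differently from the noise-augmented one. It is a sketch under assumptions that go beyond the text: \(\beta \) is treated as known (large n), \(W=X^{*}\beta \) satisfies \({\mathbb {E}}(X|X^{*})=W\), the error \(\epsilon \) of the outcome model is uncorrelated with \(X^{*}\), and only non-validated observations are considered, for which \({\widehat{X}}=W+u\) with u independent of \((X^{*},Y)\).

\[
\begin{aligned}
{\mathbb {C}}ov(W,X) &= {\mathbb {C}}ov\bigl (W,{\mathbb {E}}(X|X^{*})\bigr ) = {\mathbb {V}}W,\\
{\mathbb {C}}ov(W,Y) &= \alpha _{1}\,{\mathbb {C}}ov(W,X) + {\mathbb {C}}ov(W,\epsilon ) = \alpha _{1}\,{\mathbb {V}}W,\\
\frac{{\mathbb {C}}ov(W,Y)}{{\mathbb {V}}W} &= \alpha _{1},\\
\frac{{\mathbb {C}}ov({\widehat{X}},Y)}{{\mathbb {V}}{\widehat{X}}} &= \frac{\alpha _{1}\,{\mathbb {V}}W}{{\mathbb {V}}W+\sigma _{u}^{2}}.
\end{aligned}
\]

Under these assumptions the slope based on W recovers \(\alpha _{1}\), while adding the independent noise u inflates the denominator without changing the covariance with Y, which pulls the slope towards zero.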

5 Simulation study

5.1 Simulation study based on Krul et al. (2010) data

To show the accuracy of the method in Sect. 4, we performed a simulation study with 10,000 replications. First, we used the estimated mean (77.8) and standard deviation (16.6) of the measured weight from the Krul et al. data (Krul et al. 2010) to simulate a normally distributed variable X with mean 77.8 and standard deviation 16.6 (\(X\sim N(77.8, 16.6)\)). Then, we heaped-and-rounded the X variable into \(X^{*}\) as in Eq. (2). For each simulation, we varied the percentage of heaping (from 10% to 90%, in steps of 10%), and the rest of the sample was rounded classically to zero decimal places (see Eq. (2)). The heaping values (target values) were 65, 70, 75, 80, 85, and 90.

Next, we simulated a simple linear regression model with the normally distributed independent variable X. The regression parameters were \(\alpha _{0}=-2\) and \(\alpha _{1}=2\), and the error term was simulated as standard normal, with mean zero and variance one (\(\epsilon \sim N(0,1)\)). The dependent variable Y was simulated as \(Y=\alpha _{0}+\alpha _{1}X+\epsilon \).

Finally, we applied our method of correction in Sect. 4 to correct the mean and variance of the \(X^{*}\) variable by estimating the \({\widetilde{W}}\) and \({\widetilde{X}}\) variables as in Eqs. (8) and (7). Next we fitted three simple linear regression models with Y as the dependent variable and \(X^{*}\), \({\widetilde{W}}\) and \({\widetilde{X}}\) as the independent variable, respectively. The estimates of the regression parameters were computed from 10,000 replications; in each replicate the validation subsample had size \(n=120\) (10%) of the sample size \(N=1200\).
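A single replicate of such a simulation can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the heaping rule is a simplified, hypothetical stand-in for Eq. (2) (values within 2.5 of a target are heaped to that target with probability 0.5, the rest are rounded), the linear model for X given \(X^{*}\) includes an intercept, and validated participants keep their exact measurement. With this mild, symmetric rule the differences between the slopes may be small.

```python
import numpy as np

rng = np.random.default_rng(42)
N, n = 1200, 120
targets = np.array([65, 70, 75, 80, 85, 90], dtype=float)

# True covariate and outcome, Y = alpha0 + alpha1 * X + eps.
x = rng.normal(77.8, 16.6, size=N)
y = -2.0 + 2.0 * x + rng.normal(0.0, 1.0, size=N)

# Hypothetical heaping rule (not Eq. (2)): values within 2.5 of a target
# are heaped to it with probability 0.5; all others are rounded.
nearest = targets[np.abs(x[:, None] - targets).argmin(axis=1)]
close = np.abs(x - nearest) <= 2.5
heaped = close & (rng.uniform(size=N) < 0.5)
x_star = np.where(heaped, nearest, np.round(x))

# Validation subsample and least-squares fit of X on (1, X*).
val = rng.choice(N, size=n, replace=False)
design = np.column_stack([np.ones(n), x_star[val]])
beta, *_ = np.linalg.lstsq(design, x[val], rcond=None)
resid = x[val] - design @ beta
sigma = np.sqrt(resid @ resid / (n - 2))

# Corrected variables: fitted values, and fitted values plus noise.
w_tilde = beta[0] + beta[1] * x_star
x_tilde = w_tilde + rng.normal(0.0, sigma, size=N)
w_tilde[val] = x[val]   # assumption: keep exact values where available
x_tilde[val] = x[val]

def slope(pred, resp):
    """Simple-regression slope Cov(pred, resp) / Var(pred)."""
    c = np.cov(pred, resp)
    return c[0, 1] / c[0, 0]

slopes = {name: slope(v, y) for name, v in
          [("X", x), ("X*", x_star), ("W~", w_tilde), ("X~", x_tilde)]}
print(slopes)
```

Wrapping the body in a loop over replicates and over heaping probabilities, and averaging the resulting slopes, would give a small-scale analogue of the study described above.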

Figure 2 contains four graphs which summarize the results of this simulation study. The top-left graph shows the estimated mean of the measured X, the heaped-and-rounded \(X^{*}\) and the corrected variables (\({\widetilde{W}}\) and \({\widetilde{X}}\)), as well as the mean of the heaping-and-rounding error (\({\mathbb {E}}\varDelta \)), for different percentages of heaping. The estimated mean of \(X^{*}\) is a biased estimate of the mean of X, and the difference between \({\mathbb {E}}X^{*}\) and \({\mathbb {E}}X\), which is \({\mathbb {E}}\varDelta \), decreases exponentially as the percentage of heaping increases. However, the estimated mean of the corrected variables \({\widetilde{W}}\) and \({\widetilde{X}}\) is an unbiased estimate of the mean of the true variable X (\({\mathbb {E}}X={\mathbb {E}}{\widetilde{W}}={\mathbb {E}}{\widetilde{X}}\)).

Fig. 2 Results of the simulation study in Sect. 5.1

Furthermore, the top-right graph in Fig. 2 demonstrates that the estimated variance of the corrected variable \({\widetilde{X}}\) is an unbiased estimate of the variance of the true variable X for all simulated percentages of heaping (10% to 90%). The estimated variances of the heaped-and-rounded variable \(X^{*}\) and of the corrected variable \({\widetilde{W}}\) are biased estimates of the variance of the measured variable X (top-right graph in Fig. 2).

Moreover, the bottom-left graph indicates that the estimated covariances \({\mathbb {C}}ov(X^{*},Y)\), \({\mathbb {C}}ov({\widetilde{W}},Y)\) and \({\mathbb {C}}ov({\widetilde{X}},Y)\) are biased estimates of \({\mathbb {C}}ov(X,Y)\), and the degree of bias depends on the percentage of heaping. Therefore, the slopes estimated by \({\mathbb {C}}ov(Y,X^{*})/{\mathbb {V}}(X^{*})\) and \({\mathbb {C}}ov(Y,{\widetilde{X}})/{\mathbb {V}}({\widetilde{X}})\) are biased estimates of the true slope \({\mathbb {C}}ov(Y,X)/{\mathbb {V}}(X)\). However, an unbiased estimate of the true slope \(\alpha _{1}\) can be obtained from \({\mathbb {C}}ov(Y,{\widetilde{W}})/{\mathbb {V}}({\widetilde{W}})\) (Fig. 2, bottom-right graph).

From Fig. 2, we can see that heaping-and-rounding error leads to biased estimates of the mean and variance of the heaped-and-rounded variable \(X^{*}\). This bias increases as the heaping percentage increases. Nevertheless, our method of correction in Sect. 4 can be applied to reach unbiased estimates.

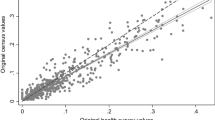

In addition, we simulated \(X\sim N(77.8, 16.6)\). Then, we heaped-and-rounded the X variable into \(X^{*}\) as in Eq. (2). For each simulation, we varied the percentage of heaping (from 5% to 95%, in steps of 10%), and the rest of the sample was rounded classically to zero decimal places (see Eq. (2)). The heaping values (target values) were 65, 70, 75, 80, 85, and 90. Then we applied our method of correction in Sect. 4 to correct the mean and variance of the \(X^{*}\) variable by estimating the \({\widetilde{W}}\) and \({\widetilde{X}}\) variables as in Eqs. (8) and (7). Next we fitted three univariate linear regression models with X as the dependent variable and \(X^{*}\), \({\widetilde{X}}\) and \({\widetilde{W}}\) as the independent variable, respectively. Table 3 shows that the estimated slope of X regressed on \({\widetilde{W}}\) is approximately equal to one even if 95% of the data were heaped to several heaping points (more than two values). However, the estimated slopes of X regressed on \(X^{*}\) and \({\widetilde{X}}\) are less than one when 20% of the data are heaped (Table 3).

Through different simulations, we have found that the linearity assumption between self-reported and measured data holds even if 100% of the data were heaped to several heaping points (target values). However, the linearity assumption between self-reported and measured data breaks down when 100% of the self-reported data are heaped to one or two target values. Furthermore, the corrected variable \({\widetilde{W}}\) has the highest correlation with the Y variable (between 0.99 and 0.4 for different heaping percentages) compared with the self-reported (\(X^{*}\)) and corrected \({\widetilde{X}}\) variables.

5.2 Simulation study using Krul et al. (2010) data

We carried out a simulation study with 10,000 replications to investigate the accuracy of the method in Sect. 4. We used the Krul et al. data (Krul et al. 2010) to compare the mean and the variance of the heaped and measured variables with the mean and the variance of the estimated variable as in (8). For each simulation (replicate), we randomly sampled (without replacement) a subsample of size n from the \(N=1257\) measured data (height, weight and BMI). Next we applied our method of correction in Sect. 4 to estimate the new variables \({\tilde{W}}\) and \({\tilde{X}}\) for the height, weight and BMI respectively. The mean and variance of the estimated height, weight and BMI were computed for each replicate (simulation), and the average over 10,000 simulations was computed for each variable. We varied the size of the randomly sampled subsample over 126 (10%), 251 (20%), 377 (30%), 503 (40%) and 628 (50%). In addition, four bivariate linear regression models were fitted with measured, self-reported, and corrected BMI as the outcome. The predictors were age and gender. We estimated the intercept and slopes of each model for each replicate. Similarly, the sample size (n) was varied over 126 (10%), 251 (20%), 377 (30%), 503 (40%), and 628 (50%).

Table 4 presents the average mean and standard deviation of the height, weight and BMI from 10,000 replications. From Table 4 we can see that the mean and standard deviation of the estimated variable \({\tilde{X}}\) are equal to the mean and standard deviation of the measured variable X for all validation sample sizes (n) used in this simulation study. Furthermore, Table 5 shows the average of 10,000 simulations for the subsample sizes 126 (10%), 251 (20%), 377 (30%), 503 (40%) and 628 (50%). A good correction of the estimated regression coefficients is obtained when our method of correction in Sect. 4 is used to estimate the effect of age and gender on BMI, as compared with the naive estimators, i.e. when self-reported BMI is used as the outcome variable (Table 5).

6 Discussion

In empirical research, variables such as weight (kg) or sleep duration (hours) can be measured directly for each individual (Narciso et al. 2019). However, self-reported measures can be valuable when actual measurements are unavailable or too time consuming or expensive to obtain (Short et al. 2009). Self-reported measures can also be very useful in situations where conducting measurements is not possible, such as during a pandemic (e.g. the COVID-19 pandemic). Instead, data can be collected via self-reporting questionnaires (Garcia and Gustavson 1997). Nevertheless, self-reported data are subject to error (Trabulsi and Schoeller 2001), and standard statistical approaches do not consider self-reporting errors in the data. This can, in many cases, lead to invalid inferences.

This paper investigates to what extent heaping-and-rounding error in self-reported data can affect the estimates of the mean and variance, as well as regression parameters, when self-reported data are used in statistical analysis. Exploring several datasets in Sect. 2, we show that using self-reported data can, in many situations, lead to biased estimates of the mean and variance, as well as regression parameters (Lauderdale et al. 2008; Krul et al. 2010; Dawes et al. 2019; Flegal et al. 2019). This is also because most people heap their responses in an asymmetrical manner. For instance, it is well known that many people tend to understate their real weight (Krul et al. 2010; Short et al. 2009) and income (Maynes 1968) and overstate their height (Krul et al. 2010; Short et al. 2009). As a consequence the mean and the variance of the differences between self-reported and measured data are not zero.

As a result, a new method of correction is introduced to correct the heaping error effects using validation data. Validation data are very valuable when self-reported data are collected (Ahmad 2007). In statistics, validation is performed to find out whether predicted values from a statistical model are likely to correctly predict responses on future observed values (Frank and Harrell 2015). In our case, the technical term “validation data” denotes an exact measurement of a certain subsample of the data collected at interview time. For example, assume that we interview N persons about a certain piece of information (for example their height or weight). Their answers are usually heaped; therefore we can randomly select n individuals from the study sample of size N and measure them exactly (without rounding or heaping error). An example of such data can be found in Krul et al. (2010).

Section 5 shows that heaping leads to bias in the mean and variance of a heaped variable, and that this bias increases with the degree of heaping. In empirical research, however, the heaping-and-rounding procedure and the heaping degree are unknown. Nevertheless, our method of correction in Sect. 4 can be applied to reach unbiased estimates even if the heaping-and-rounding procedure and the heaping degree are unknown, and validation data can be used to make inferences on the mean and variance as well as regression parameters. A randomly selected subsample with a size of 10% of the study sample size N is recommended in order to use our method of correction in Sect. 4. However, careful consideration is needed when drawing conclusions from the self-reported, validation, and estimated data in (8) and (7). We recommend a sensitivity analysis in which self-reported data of size N, validation data of size n, and estimated data of size N are analyzed.

Notes

This is because many people heap their responses in an asymmetrical manner, under-reporting their weight and over-reporting their height (Krul et al. 2010).

Through several simulations, we found that the variable \({\widetilde{W}}\) is almost perfectly calibrated (\(0.999<\) slope \(<1.004\)) with the measured variable X. Also, \({\widetilde{W}}\) has a higher correlation with Y than either \(X^{*}\) or \({\widetilde{X}}\).

References

Ahmad A (2007) Statistical analysis of heaping and rounding effects. Dr Hut Verlag. ISBN: 978-3-89963-508-9

Augustin T, Wolff J (2004) A bias analysis of Weibull models under heaped data. Stat Pap 45:211–229

Barreca AI, Guldi M, Lindo JM, Waddell GR (2011) Saving babies? Revisiting the effect of very low birth weight classification. Q J Econ 126(4):2117–2123

Bracher MD, Santow G (1982) Breastfeeding in Central Java. Popul Stud 36:413–430

Camarda CG, Eilers PHC, Gampe J (2017) Modelling trends in digit preference patterns. J R Stat Soc B 66(5):893

Flegal KM, Ogden CL, Fryar C, Afful J, Klein R, Huang DT (2019) Comparisons of self-reported and measured height and weight, BMI, and obesity prevalence from national surveys: 1999–2016. Obesity (Silver Spring) 27(10):1711–1719

Crawford FW, Weiss RE, Suchard MA (2015) Sex, lies and self-reported counts: Bayesian mixture models for heaping in longitudinal count data via birth-death processes. Ann Appl Stat 9(2):572–596

Dawes JJ, Lockie RG, Kukic F, Cvorovic A, Kornhauser Ch, Holmes R, Orr RM (2019) Accuracy of self-reported height, body mass and derived body mass index in a group of United States law enforcement officers. NBP. https://doi.org/10.5937/nabepo24-21191

Devaux M, Sassi F (2016) Social disparities in hazardous alcohol use: self-report bias may lead to incorrect estimates. Eur J Public Health 26(1):129–134

Frank E, Harrell Jr (2015) Regression modeling strategies with applications to linear models, logistic and ordinal regression, and survival analysis, Springer series in statistics, p 109. ISSN 0172-7397

Garcia J, Gustavson AR (1997) The science of self-report. APS Observer 10, 1

Haaga JG (1988) Reliability of retrospective survey data on infant feeding. Demography 25:307–314

Hanisch JU (2005) Rounded responses to income questions. Allg Stat Arch 89(1):39–48

Heitjan DF, Rubin DB (1990) Inference from coarse data via multiple imputation with application to age heaping. J Am Stat Assoc 85(410):304–314

Klerman JA (1993) Heaping in retrospective data: insights from Malaysian family life surveys’ breastfeeding data. The RAND Corporation

Kraus F, Steiner V (1998) Modelling heaping effects in unemployment duration models–with an application to retrospective event data in the German Socio-Economic Panel. Jahrbücher für Nationalökonomie und Statistik 217:550–573

Krul A, Daanen HAM, Choi H (2010) Self-reported and measured weight, height and body mass index (BMI) in Italy, The Netherlands and North America. Eur J Pub Health 21(4):414–419

Lauderdale DS, Knutson KL, Yan LL, Liu K, Rathouz PJ (2008) Self-reported and measured sleep duration: how similar are they? Epidemiology 19(6):838–845

Lin F, Guan L, Fang W (2011) Heaping in reported earnings: evidence from monthly financial reports of Taiwanese firms. Emerg Mark Finance Trade 47(2):62

Maynes ES (1968) Minimizing response errors in financial data: the possibilities. J Am Stat Assoc 63:214–227

Narciso J, Silva AJ, Rodrigues V, Monteiro MJ, Almeida A, Saavedra R, Cost AM (2019) Behavioral, contextual and biological factors associated with obesity during adolescence: a systematic review. PLoS ONE 14:e0214941

Neels K (2000) Education and the transition to employment: young Turkish and Moroccan adults in Belgium. Interface Demography. Vrije Universiteit Brussel, Brussels

Nikolaou CK, Hankey CR, Lean MEJ (2017) Accuracy of on-line self-reported weights and heights by young adults. Eur J Public Health 27(5):898–903

Pardeshi GS (2010) Age heaping and accuracy of age data collected during a community survey in the Yavatmal District, Maharashtra. Indian J Community Med 35(3):391–395

Rosenman R, Tennekoon V, Hill LG (2011) Measuring bias in self-reported data. Int J Behav Healthc Res 2(4):320–332

Schneeweiss H, Komlos J, Ahmad A (2010) Symmetric and asymmetric rounding: a review and some new results. AStA Adv Stat Anal 94:247–271

Short ME, Goetzel RZ, Pei X, Tabrizi MJ, Ozminkowski RJ, Gibson TB, DeJoy DM, Wilson MG (2009) How accurate are self-reports? An analysis of self-reported healthcare utilization and absence when compared to administrative data. J Occup Environ Med 51(7):786–796

Torelli N, Trivellato U (1993) Modelling inaccuracies in Job-Search duration data. J Econ 59:187–211

Trabulsi J, Schoeller D (2001) Evaluation of dietary assessment instruments against doubly labeled water, a biomarker of habitual energy intake. Am J Physiol Endocrinol Metab 281(5):E891–E899

Wang H, Heitjan DF (2008) Modeling heaping in self-reported cigarette counts. Stat Med 27(19):3789–3804

Wang H, Shiffman S, Griffith SD, Heitjan DF (2012) Truth and memory: linking instantaneous and retrospective self-reported cigarette consumption. Ann Appl Stat 6(4):1689–1706

Wolff J, Augustin T (2003) Heaping and its consequences for duration analysis: a simulation study. Allg Stat Arch 87:59–86

Wright DE, Bray IA (2003) Mixture model for rounded data. The Statistician 52(Part 1):3–13

Zinn S, Würbach A (2016) A statistical approach to address the problem of heaping in self-reported income data. J Appl Stat 43(4):682


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ahmad, A.S., Al-Hassan, M., Hussain, H.Y. et al. A method of correction for heaping error in the variables using validation data. Stat Papers 65, 687–704 (2024). https://doi.org/10.1007/s00362-023-01405-4
