Abstract

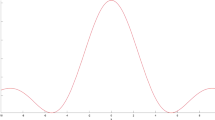

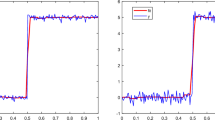

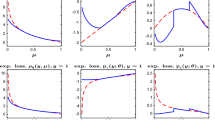

In this paper, we investigate the nonparametric regression model with repeated measurements and extended negatively dependent (END) errors. Using a Rosenthal-type inequality and a Marcinkiewicz–Zygmund-type strong law of large numbers, we establish the mean consistency, weak consistency, strong consistency, complete consistency and strong convergence rate of the wavelet estimator under mild conditions. These results generalize the corresponding ones for negatively associated errors. Numerical simulations are presented to illustrate the theoretical results.
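The estimator itself is defined in the body of the paper and is not reproduced in this section. As a rough illustration of the kind of simulation mentioned above, the Python sketch below assumes a linear wavelet estimator built from the Haar scaling function \(\varphi=\mathbf{1}_{[0,1)}\), equally spaced design points \(x_i=i/n\) with cells \(A_i=[(i-1)/n,i/n)\), and averaging of the \(m\) repeated measurements at each design point, i.e. \(\hat g(x)=\sum_{i=1}^{n}\bar Y(x_i)\int_{A_i}E_k(x,s)\,ds\) at resolution level \(k\). The errors are Gaussian MA(1) with nonpositive correlations; such a Gaussian vector is negatively associated and hence END, which makes it one convenient END example. All function names, the resolution level and the error model are assumptions for illustration, not the authors' simulation design.

```python
import math
import random


def haar_kernel_weight(x, a, b, level):
    """Integral of the Haar wavelet kernel E_k(x, s) over s in [a, b), k = level.

    With phi = 1_[0,1), E_k(x, s) = 2^k * 1{floor(2^k x) == floor(2^k s)},
    so the integral is 2^k times the length of [a, b) intersected with the
    dyadic cell [j/2^k, (j+1)/2^k) that contains x.
    """
    scale = 2 ** level
    j = math.floor(scale * x)
    lo, hi = j / scale, (j + 1) / scale
    overlap = max(0.0, min(b, hi) - max(a, lo))
    return scale * overlap


def negatively_correlated_errors(count, theta, rng):
    """Gaussian MA(1) errors e_t = (z_t - theta * z_{t-1}) / sqrt(1 + theta^2).

    For 0 < theta < 1 every pairwise correlation is <= 0, so the Gaussian
    vector is negatively associated and therefore END (dominating coefficient 1).
    """
    z = [rng.gauss(0.0, 1.0) for _ in range(count + 1)]
    norm = math.sqrt(1.0 + theta * theta)
    return [(z[t + 1] - theta * z[t]) / norm for t in range(count)]


def simulate(g, n, m, theta=0.5, seed=0):
    """Return ys[j][i] = g(x_i) + e^{(j)}(x_i) with x_i = (i + 1) / n, j = 0..m-1."""
    rng = random.Random(seed)
    errors = negatively_correlated_errors(n * m, theta, rng)
    xs = [(i + 1) / n for i in range(n)]
    return [[g(xs[i]) + errors[j * n + i] for i in range(n)] for j in range(m)]


def wavelet_estimate(x, ys, level):
    """Linear Haar wavelet estimate of g(x) from m repeated measurements.

    Uses the cells A_i = [i/n, (i+1)/n) and averages the m measurements
    taken at each design point before weighting.
    """
    m, n = len(ys), len(ys[0])
    total = 0.0
    for i in range(n):
        weight = haar_kernel_weight(x, i / n, (i + 1) / n, level)
        if weight > 0.0:
            total += weight * sum(ys[j][i] for j in range(m)) / m
    return total


if __name__ == "__main__":
    g = lambda t: math.sin(2 * math.pi * t)
    ys = simulate(g, n=1024, m=10)
    print("estimate:", wavelet_estimate(0.3, ys, level=5), "true:", g(0.3))
```

The Haar choice keeps the kernel integral in closed form; smoother scaling functions would require numerical evaluation of \(\int_{A_i}E_k(x,s)\,ds\).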

Additional information

Supported by the National Natural Science Foundation of China (11671012, 11871072, 11701004, 11701005), the Natural Science Foundation of Anhui Province (1808085QA03, 1908085QA01, 1908085QA07) and the Project on Reserve Candidates for Academic and Technical Leaders of Anhui Province (2017H123).

Appendix

Proof of Corollary 3.1

It is easily checked that

where the last inequality follows from Lemma 3.1 (ii).

Noting that

we have, by the \(C_r\)-inequality, that

By Lemma 3.3, \(\{V^{(1)}_+(x),V^{(2)}_+(x),\ldots ,V^{(m)}_+(x)\}\) are still zero-mean END random variables. Hence, it follows from Lemma 3.4 and (4.1) that

Similarly, we have

Therefore, the desired result (3.23) follows immediately from (4.2)–(4.4). This completes the proof of the corollary. \(\square \)
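Two moment tools recur in this proof and in the proof of Lemma 3.5 below: the \(C_r\)-inequality and Lemma 3.4. For reference, the \(C_r\)-inequality is stated first. The second display assumes that Lemma 3.4 is the Rosenthal-type inequality for zero-mean END random variables mentioned in the abstract, written in the form usually stated for END sequences, with \(C_\delta\) a positive constant not depending on \(n\); the exact statement of Lemma 3.4 is given in the body of the paper and may differ (for example, in the constant or in a maximal version).

```latex
% C_r-inequality: for r > 0 and random variables X, Y,
E|X+Y|^{r} \le c_{r}\left(E|X|^{r}+E|Y|^{r}\right),
\qquad
c_{r}=\begin{cases}1, & 0<r\le 1,\\ 2^{r-1}, & r>1.\end{cases}

% Rosenthal-type inequality (assumed form of Lemma 3.4): if X_1,\ldots,X_n are
% zero-mean END random variables with E|X_i|^{\delta}<\infty for some \delta\ge 2, then
E\Big|\sum_{i=1}^{n}X_{i}\Big|^{\delta}
\le C_{\delta}\left\{\sum_{i=1}^{n}E|X_{i}|^{\delta}
+\Big(\sum_{i=1}^{n}EX_{i}^{2}\Big)^{\delta/2}\right\}.
```

Combined with Markov's inequality, \(P(|S|>t)\le t^{-\delta}E|S|^{\delta}\), the second display turns tail probabilities of centered partial sums into moment sums, which is how Lemma 3.4 and Markov's inequality are paired in the arguments here.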

Proof of Lemma 3.5

Since \(\alpha p>1\), we can choose \(q\) such that \(\frac{1}{\alpha p}< q<1\). For fixed \(n\ge 1\) and \(1\le i\le n\), define

Noting that

for \(1\le j\le n\), we have that for all \(\varepsilon >0\),

Hence, in order to prove (3.2), it suffices to show that \(I_1<\infty \), \(I_2<\infty \) and \(I_3<\infty \).
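The display defining the truncated variables \(X_{ni}^{(1)}\), \(X_{ni}^{(2)}\), \(X_{ni}^{(3)}\) is not reproduced above. Proofs of this kind commonly split each \(X_i\) at a level such as \(n^{\alpha q}\) into a clipped part and two excess parts, each a nondecreasing function of \(X_i\); since the END property is preserved under nondecreasing transformations (presumably the content of Lemma 3.3 as used here), the centered pieces stay END. The sketch below assumes that common three-part scheme; the function names and the level \(n^{\alpha q}\) are hypothetical, and the paper's own definitions may differ.

```python
def truncate_three_way(x, level):
    """Split x into (x1, x2, x3) with x1 + x2 + x3 == x (hypothetical scheme).

    x1: x clipped to [-level, level]   (bounded part)
    x2: excess above +level            (>= 0)
    x3: excess below -level            (<= 0)
    Each component is a nondecreasing function of x.
    """
    x1 = max(-level, min(x, level))
    x2 = max(x - level, 0.0)
    x3 = min(x + level, 0.0)
    return x1, x2, x3


def truncation_level(n, alpha, q):
    """Hypothetical truncation level n^(alpha * q), with 1/(alpha p) < q < 1."""
    return n ** (alpha * q)


if __name__ == "__main__":
    lvl = truncation_level(100, alpha=1.0, q=0.75)
    for value in (-50.0, -3.5, 0.0, 2.0, 50.0):
        print(value, truncate_three_way(value, lvl))
```

Monotonicity in \(x\) is the property that matters for the dependence structure; boundedness of the clipped part is what makes high moments of \(X_{ni}^{(1)}\) finite for the Rosenthal-type step.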

For \(I_1\), we first show that

It follows from \(EX_{n} = 0\), Markov’s inequality and Property 1.1 that

which, together with \(E |X|^{p} <\infty \) and \(\frac{1}{ \alpha p }< q<1\), yields (4.6). Hence, by (4.6), we have

For fixed \(n\ge 1\), \(\{X_{ni} ^{(1)}-EX_{ni} ^{(1)}, 1\le i\le n\}\) are still END random variables by Lemma 3.3. It follows from (4.7), Markov’s inequality and Lemma 3.4 that, for any \(\delta \ge 2\),

Taking \(\delta >\max \left\{ \frac{\alpha p-1}{\alpha -1/2}, 2, p\right\} \), we have that

and

It follows from the \(C_r\)-inequality, Markov’s inequality and Property 1.1 that

and

Hence, \(I_1<\infty \) follows immediately from (4.8)–(4.10).
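The two explicit parameter choices in this proof, \(q\) with \(\frac{1}{\alpha p}<q<1\) and \(\delta>\max\left\{\frac{\alpha p-1}{\alpha-1/2},2,p\right\}\), can be checked mechanically. Assuming \(\alpha>1/2\) (needed for \(\alpha-1/2>0\)), the condition \(\delta>\frac{\alpha p-1}{\alpha-1/2}\) is equivalent to \(\alpha p-2-\delta(\alpha-1/2)<-1\), i.e. to convergence of \(\sum_{n}n^{\alpha p-2-\delta(\alpha-1/2)}\); a series of this form is a plausible reason for the threshold, although the displays (4.8)–(4.10) are not reproduced here. The helper names and the specific choices (midpoint for \(q\), maximum plus one for \(\delta\)) are illustrative assumptions.

```python
def admissible_q_delta(alpha, p):
    """Return (q, delta) with 1/(alpha p) < q < 1 and
    delta > max{(alpha p - 1)/(alpha - 1/2), 2, p}.

    Assumes alpha > 1/2 and alpha * p > 1, so that both ranges are
    nonempty and the denominator alpha - 1/2 is positive.
    """
    if alpha <= 0.5 or alpha * p <= 1:
        raise ValueError("need alpha > 1/2 and alpha * p > 1")
    q = 0.5 * (1.0 / (alpha * p) + 1.0)  # midpoint of (1/(alpha p), 1)
    delta = max((alpha * p - 1) / (alpha - 0.5), 2.0, p) + 1.0
    return q, delta


def series_exponent(alpha, p, delta):
    """Exponent of n in n^(alpha p - 2) * n^(-delta (alpha - 1/2))."""
    return alpha * p - 2 - delta * (alpha - 0.5)


if __name__ == "__main__":
    q, delta = admissible_q_delta(alpha=1.0, p=1.5)
    print(q, delta, series_exponent(1.0, 1.5, delta))
```

With the "maximum plus one" choice the exponent is at most \(-1-(\alpha-1/2)\), so the series converges for every admissible \((\alpha,p)\).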

Next, we show that \(I_{2}<\infty \). For fixed \(n\ge 1\) and \(1\le i\le n\), define

It is easily checked that

which implies that

It follows from \(E|X|^{p} < \infty \) that

Since \(\frac{1}{\alpha p}<q<1\), we have, by the definition of \(X_{ni} ^{(4)}\) and Property 1.1, that

Since \(X_{ni} ^{(4)}>0\), we have by (4.11)–(4.13) that

For fixed \( n\ge 1\), \(\{X_{ni} ^{(4)}-EX_{ni} ^{(4)}, 1\le i\le n\}\) are still END random variables by Lemma 3.3. It follows from Markov’s inequality, the \(C_r\)-inequality and Lemma 3.4 that

By the \(C_r\)-inequality and Property 1.1 again, we obtain

Arguing as in the proofs of (4.10) and (4.16), we obtain \(J_2<\infty \), which, together with (4.15) and (4.16), yields \(I_2<\infty \).

Arguing as in the proof of \(I_2<\infty \), one obtains \(I_3<\infty \). Hence, (3.2) follows immediately from (4.5) together with \(I_1<\infty \), \(I_2<\infty \) and \(I_3<\infty \). By a standard argument, (3.3) then follows from (3.2). This completes the proof of the lemma. \(\square \)

About this article

Cite this article

Wang, X., Wu, Y., Wang, R. et al. On consistency of wavelet estimator in nonparametric regression models. Stat Papers 62, 935–962 (2021). https://doi.org/10.1007/s00362-019-01117-8

DOI: https://doi.org/10.1007/s00362-019-01117-8

Keywords

- Nonparametric regression model

- END random variables

- Wavelet estimator

- Consistency

- Marcinkiewicz–Zygmund type strong law of large numbers