Abstract

The theoretical literature on public school choice proposes centralized mechanisms that assign children to schools on the basis of parents’ preferences and the priorities children have for different schools. The related experimental literature analyzes in detail how various mechanisms fare in terms of welfare and stability of the resulting matchings, yet often provides only aggregate statistics of the individual behavior that leads to these outcomes (i.e., the degree to which subjects tell the truth in the induced simultaneous move game). In this paper, we show that the quantal response equilibrium (QRE) adequately describes individual behavior and the resulting matching in three constrained problems for which the immediate acceptance mechanism and the student-optimal stable mechanism coincide. Specifically, the comparative statics of the logit-QRE with risk-neutral and expected-payoff-maximizing agents capture the directional changes of subject behavior and the prevalence of the different stable matchings when cardinal payoffs (i.e., relative preference intensities) are modified in the experiment.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 Motivation

In many public school choice programs, centralized mechanisms such as the student-optimal stable mechanism and the immediate acceptance mechanism are used to assign children to schools on the basis of parents’ preferences and the priorities of children for different schools (based on, e.g., walking distance, siblings, etc.).Footnote 1 In a constrained setting where parents can only rank a limited number of schools, which happens in many real-life applications,Footnote 2 there may be incentives to behave strategically. Specifically, a very common situation is that the parents prefer their child to go to school x instead of school y but the child has higher priority at school y than at school x. The parents then face an important decision. Will they report their preferences on the pair (x, y) truthfully or will they misrepresent their preferences by ranking school y above school x? Our paper contributes to the experimental literature that analyzes the structure of manipulations in school choice problems by studying how cardinal payoffs affect subject behavior.

For the constrained setting, laboratory experiments have shown that subjects may fail to coordinate on a Nash equilibrium.Footnote 3 In fact, to the best of our knowledge, there are no studies that give clear-cut descriptions of subjects’ behavior and resulting matchings. We consider three constrained problems for which the immediate acceptance mechanism and the student-optimal stable mechanism coincide and show that quantal response theory introduced by McKelvey and Palfrey (1995) is capable of describing the main behavioral patterns.Footnote 4 From a logit-QRE perspective, if the payoff of school x is much higher than the payoff of school y, there are incentives to play a strategy in which school x is ranked above school y. On the other hand, if the payoffs of the two schools are close to each other, strategies that rank school y above school x have the advantage that not much payoff is foregone and that the risk of getting an even worse outcome than school y diminishes. We next detail the experimental design and our main findings to clarify these points further.

1.2 Experiment and contribution

We consider a setting with three students and two schools (\(s_1\) and \(s_2\)) such that each student can only apply to one school. The preferences of the students and the priorities students have at the schools remain the same throughout the experiment. Two parameters vary in our study. First, the number of seats schools offer changes each six rounds of an experimental session. School \(s_1\) offers one and school \(s_2\) offers two seats in the first six rounds of a session (we refer to this situation as problem \({{\mathcal {P}}}_1\)), both schools offer one seat in the next six rounds (problem \({{\mathcal {P}}}_2\)), and in the final six rounds \(s_1\) offers two and \(s_2\) offers one seat (problem \({{\mathcal {P}}}_3\)). Our treatment variable is the material payoff subjects receive from their matches. A subject always receives 1 monetary unit if she is assigned to the school she likes most and nothing if she remains unmatched. In treatment L (low payoff), a subject receives 0.3 monetary units if she is matched to the school she likes least. This payoff equals 0.7 monetary units in treatment H (high payoff). In each of \({{\mathcal {P}}}_1\) and \({{\mathcal {P}}}_3\), there are two stable matchings: the student-optimal stable matching \(\mu ^I\) and the school-optimal stable matching \(\mu ^S\). All students weakly prefer \(\mu ^I\) to \(\mu ^S\). There is a unique stable matching in \({{\mathcal {P}}}_2\).Footnote 5

Our experimental predictions are based on the logit version of the quantal response equilibrium (logit-QRE) with risk-neutral and expected-payoff-maximizing agents, which is parametrized by a “rationality parameter” \(\lambda \ge 0\).Footnote 6 It is well-known that for large \(\lambda \), the logit-QRE tends towards a Nash equilibrium in mixed strategies. For both \({{\mathcal {P}}}_1\) and \({{\mathcal {P}}}_3\), the resulting matching under this limiting logit-QRE is \(\mu ^I\) in treatment L but \(\mu ^S\) in treatment H. The underlying intuition is that at the pure strategy Nash equilibrium that yields \(\mu ^I\) some students take the risk of being unmatched. This risk comes with the potential benefit of being matched to the most preferred school. For it being worthwhile to take this risk (instead of applying to the worst school, which ensures a seat, but comes with a low payoff), the payoff difference between the two schools must be sufficiently high, which is the case in treatment L but not in treatment H. A similar argument applies in the general (non-limiting) case as well. In fact, we establish that independently of the actual rationality parameters in treatments L and H, \(\mu ^I\) is more likely to be obtained than \(\mu ^S\) in treatment L but \(\mu ^S\) is more prevalent than \(\mu ^I\) in treatment H. Our data supports this prediction, which we consider to be our main finding. In \({{\mathcal {P}}}_1\) of treatment L, \(\mu ^I\) is obtained in 30.2% and \(\mu ^S\) in 27.2% of the cases (the frequency of an unstable matching is thus 42.6%). On the other hand, in \({{\mathcal {P}}}_1\) of treatment H, \(\mu ^I\) is obtained in 19.7% and \(\mu ^S\) in 45.1% of the cases. The frequencies for \({{\mathcal {P}}}_3\) are 43.2% for \(\mu ^I\) and 37.0% for \(\mu ^S\) in treatment L, but 24.1% for \(\mu ^I\) and 59.9% for \(\mu ^S\) in treatment H. In each of the two problems, the frequency difference between \(\mu ^I\) and \(\mu ^S\) is significant at the 5-percent level for treatment H but not for treatment L.

The logit-QRE also permits us to derive hypotheses regarding student behavior. Point predictions indicate for a given problem \({{\mathcal {P}}} \in \{{{\mathcal {P}}}_1,{{\mathcal {P}}}_2,{{\mathcal {P}}}_3\}\) and for a given treatment whether a student is more likely to apply to school \(s_1\) or to school \(s_2\). And treatment comparisons refer to how the probability with which a student applies to each of the two schools changes between the two treatments. The experimental data is in almost all instances consistent with these predictions. In particular, for \({{\mathcal {P}}}_1\) and \({{\mathcal {P}}}_3\), 4 out of a total of 5 statistical tests regarding treatment comparisons yield a one-sided p value below 0.05. With respect to the point predictions, there is more noise in the data because “only” 3 out of a total of 12 comparisons are significant at the 5-percent level.

We also estimate the logit-QRE for the pooled data at the problem level via maximum likelihood. The estimated \(\lambda \) is largest in \({{\mathcal {P}}}_2\), which is arguably the simplest setting because it has a unique Nash equilibrium outcome (supported by an infinite number of Nash equilibria in mixed strategies). In each of \({{\mathcal {P}}}_1\) and \({{\mathcal {P}}}_3\), the estimated \(\lambda \) is smaller in treatment L than in treatment H. Finally, we find that this discrepancy in the estimation of \(\lambda \) is reduced if agents are risk-averse but not if they have preferences for the student-optimal stable matching (the expected utility depends positively on the probability that this matching is reached) or the expected group payoff.

1.3 Related literature

There is a steadily increasing number of experimental studies that complement the theoretical literature on school choice (see Hakimov and Kübler 2020 for an excellent overview). It is by now well understood that some subjects fail to report their true preferences under the unconstrained student-optimal stable mechanism. Under the immediate acceptance mechanism, subject behavior is even less in line with the true preferences (see, e.g., Calsamiglia et al. 2010; Chen and Kesten 2019; Chen and Sönmez 2006; Featherstone and Niederle 2016; Pais and Pintér 2008). The kind of deviations subjects employ under the two mechanisms are very similar. In particular, the order of two schools at the top of their preference ranking might be switched if students have a higher priority in the less desired of the two schools.

The existing experiments on school choice mainly compare various mechanisms in terms of their outcome (stability and welfare of the resulting matching), but only offer a less detailed overview of the behavior that leads to these outcomes (i.e., often the focus is a comparison of truth-telling rates across mechanisms). It seems therefore necessary to develop alternative models that capture the observed behavioral patterns. The logit-QRE is a natural starting point because it takes relative preference intensities explicitly into account.Footnote 7 Apart from our study, only Echenique et al. (2016) and Dreyfuss et al. (2021) estimate a logit-QRE in a two-sided matching market. The main difference between our study and Echenique et al. (2016) is that we specifically design the experiment in such a way that the predictions of the logit-QRE depend on the treatment condition. Furthermore, Echenique et al. (2016) do not derive testable hypotheses from the logit-QRE.

Using the data of Li (2017) on the (strategy-proof) random serial dictatorship mechanism, Dreyfuss et al. (2021) suggest that expectation-based loss aversion can explain individuals’ deviations from truth-telling. When the underlying mechanism is strategy-proof, the logit-QRE with expected-payoff-maximizing agents converges towards truthful preference revelation, which implies that deviations of rational subjects are likely to be caused by non-standard preferences.

1.4 Remainder

We proceed as follows. In Sect. 2, we detail the experimental design and procedures and we derive predictions from the logit-QRE. In Sect. 3, we present all experimental results. Section 4 concludes. The appendix contains the experimental instructions, formal proofs, additional data analysis, and theoretical predictions for lists of length 2 (i.e., the unconstrained mechanisms).

2 Laboratory experiment

2.1 Design and procedures

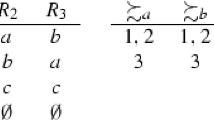

Our experiment is designed to analyze behavior in three school choice problems in which three students (labeled 1, 2, and 3) seek to obtain a seat at schools \(s_1\) and \(s_2\). The preferences of the students and the priorities of the schools are presented in Table 1 and are the same in all three problems. The number of seats schools offer, however, changes over the course of an experimental session and is given in Table 2.

During the experiment, subjects assume the role of students. Schools are not strategic players. The information in Table 1 is common knowledge. The number of seats schools offer is made public at the beginning of each round of the experiment. Given this information, the subjects’ task is to submit a single application to a school (not necessarily to the most preferred school) to be used by a central clearinghouse to assign students to schools. Specifically, we focus on the constrained immediate acceptance or, equivalently, the constrained deferred acceptance mechanism (the equivalence is due to the fact that lists are of length 1):

Constrained Immediate/Deferred Acceptance Mechanism

- Step 1. :

-

Each student sends an application to exactly one school.

- Step 2. :

-

Each school that has received at least one application accepts the application from the student with the highest priority (among all received applications). If a school with two seats has received at least two applications, then it also accepts the application from the student with the second highest priority (among all received applications). All other applications (if any) are rejected.

Each student is assigned to the school that she applied to provided that the school accepted her application. If the application of a student was rejected, then the student remains without a seat.

The experiment was programmed within the z-Tree toolbox provided by Fischbacher (2007) and carried out at Lineex (www.lineex.es) hosted at the University of Valencia. At the beginning of a session, subjects received written instructions that were read aloud by an instructor (see Appendix 1). Participants were informed in particular that the experiment would take a total of 18 rounds and that the number of seats schools offer would change each six rounds. In all sessions, \({{\mathcal {P}}}_1\) was always played first (rounds 1–6), \({{\mathcal {P}}}_2\) always played second (rounds 7–12), and \({{\mathcal {P}}}_3\) always played third (13–18).Footnote 8 Then, the computer software started. The program matched participants anonymously into groups of three. Within each group, one subject was assigned the role of student 1, another subject the role of student 2, and a third subject the role of student 3. Groups and roles did not change over the course of the experiment. Before round 1, subjects first went individually over an illustrative example (to get used to the mechanism) and then played a trial round that was not taken into account for payment (to become familiar with the computer software). At the beginning of each round, the computer screen presented the preferences of the three group members, the priorities of the two schools, and the number of seats at each school. Subjects took then their respective decisions. At the end of each round, each subject was informed of her resulting match.

Our treatment variable is the payoff subjects receive. In each round, a subject received 1 ECU if she ended up at her most preferred school and 0 ECU if her application was rejected (in which case she ended up unmatched). In treatment L (low), a subject received 0.3 ECU if she ended up at her second most preferred (or equivalently, her least preferred) school. In treatment H (high), this payoff was 0.7 ECU. Each ECU was worth 1€.

We ran two sessions (one with 42 and another one with 39 subjects) per treatment. In total, 162 undergraduates from various disciplines participated in the experiment. Each session lasted about 90 min. Apart from the payoff subjects accumulated during the session, they also received a show-up fee of 3€. Subjects earned on average 13.73€ in treatment L and 14.60€ in treatment H.

2.2 Predictions

In this subsection, we derive our experimental predictions. A (school choice) problem is a five-tuple \(\mathcal {P}=\langle I, S, q, P_I, P_S \rangle \) where

-

\(I=\{1,2,\ldots ,n\}\) is a finite set of students (individuals);

-

S is a finite set of schools;

-

\(q\equiv {(q_s)}_{s\in S}\) where for each \(s\in S\), \(q_s\) is the number of available seats at school s;

-

\(P_I \equiv {(P_i)}_{i \in I}\) is a profile of strict preference relations of the students, where for each \(i\in I\), \(P_i\) is a complete, irreflexive, and transitive binary relation over \(S \cup \{ i \}\); and

-

\(P_S \equiv {(P_s)}_{s \in S}\) is a profile of strict priority relations of the schools, where for each \(s\in S\), \(P_s\) is a complete, irreflexive, and transitive binary relation over I.

We assume that for each student i, each school is preferred to her outside option (being unmatched), which is denoted by i. A matching of students to schools is a function \(\mu : I \rightarrow S\) such that for each \(i \in I\) and for each \(s \in S\),

-

\(\mu (i) \in S \cup \{i \}\) and

-

\(|\mu ^{-1}(s)|\le q_s\).

We will often write a matching \(\mu \) as the vector \((\mu (1),\mu (2),\ldots ,\mu (n))\), where for each i, \(\mu (i)\) is called student i’s match. Whenever \(\mu (i)=i\), student i is unmatched, i.e., remains without seat. A pair \((i,s)\in I\times S\) blocks matching \(\mu \) if \(s \, P_i \, \mu (i)\) and

-

\(|\mu ^{-1}(s)|< q_s\) or

-

\(|\mu ^{-1}(s)|= q_s\) and there exists \(k \in \mu ^{-1}(s)\) such that \(i \, P_s \, k\).

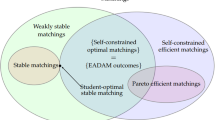

A matching is stable if no pair (i, s) blocks it. The set of stable matchings is non-empty (Gale and Shapley 1962). Moreover, there exists a student-optimal stable matching \(\mu ^I\) which is weakly preferred by all students to all other stable matchings. Similarly, there exists a school-optimal stable matching \(\mu ^S\) which is student-pessimal, i.e., all students weakly prefer any other stable matching to \(\mu ^S\). Table 3 shows all stable matchings in our three problems. In problems \({{\mathcal {P}}}_1\) and \({{\mathcal {P}}}_3\), there are two stable matchings (\(\mu ^I\) and \(\mu ^S\)). Since there is only one stable matching in problem \({{\mathcal {P}}}_2\), the side-optimal stable matchings \(\mu ^I\) and \(\mu ^S\) coincide in this problem.

We consider the strategic game induced by the school choice mechanism. Let \(I=\{1,2,3\}\) be the set of players. Each player \(i \in I\) has a set of pure strategies \(A_i = \{a_{i1},a_{i2}\}\), where \(a_{ij}\) is player i’s strategy of sending an application to school \(s_j\). Let \(A \equiv \times _{i \in I} A_i\) denote the set of strategy-profiles \(a = (a_1, a_2, a_3)\).

Let \(\mathcal {P} \in \{ \mathcal {P}_1, \mathcal {P}_2, \mathcal {P}_3 \}\). For each \(a \in A\) and each \(i\in I\), let \(\beta _i(a,\mathcal {P})\) denote the match of player i when players send applications a and schools have priorities and seat availability as given by \(\mathcal {P}\). Fix a problem \(\mathcal {P} \in \{ \mathcal {P}_1, \mathcal {P}_2, \mathcal {P}_3 \}\) and a treatment \(T \in \{L,H\}\). We assume that player i has a utility function \(u_i: A \rightarrow {\mathbb {R}}\) that reflects the possible per-round payoffs in the experiment, i.e.,

Here, good (bad) match refers to player i’s most (least) preferred school at \(\mathcal {P}\).

A strategy-profile \(\hat{a} \in A\) is a Nash equilibrium (in pure strategies) if for each \(i \in I\) and each \(a_i \in A_i\), \(u_i(\hat{a}) \ge u_i(a_i,({\hat{a}_{j})}_{j\ne i})\). For each problem, the set of Nash equilibrium outcomes of the simultaneous-move game induced by the constrained immediate acceptance mechanism coincides with the set of stable matchings (Haeringer and Klijn 2009). Hence, while there is a unique stable matching and therefore a unique Nash equilibrium outcome in problem \(\mathcal {P}_2\), there are two Nash equilibrium outcomes in each of the other two problems.

Let \(\Delta _i\) denote the set of mixed strategies for player i. More specifically, an element of \(\Delta _i\) is a probability distribution \(p_i: A_i \rightarrow {\mathbb {R}}\), i.e., \(p_i(a_{i1})+p_i(a_{i2})=1\) and for each \(a_{ij} \in A_i\), \(p_i(a_{ij}) \ge 0\). Let \(\Delta \equiv \times _{i \in I} \Delta _i\). The domain of the utility function u is extended from A to \(\Delta \) by defining for each \(p \in \Delta \),

Since \(p_i(a_{i1})+p_i(a_{i2})=1\), we can denote \(p_i(a_{i1})\) by \(p_i\) and \(p_i(a_{i2})\) by \(1-p_i\), and thus interchangeably refer to an element of \(\Delta _i\) by \((p_i,1-p_i)\) or simply by \(p_i\). For each \(p =(p_1,p_2,p_3) \in \Delta \) and each \(i\in I\), we let \(p_{-i}\equiv {(p_j)}_{j\ne i}\).

A strategy-profile \({\hat{p}} \in \Delta \) is a Nash equilibrium in mixed strategies if for each \(i \in I\) and each \(p_i \in \Delta _i\), \(u_i({\hat{p}}) \ge u_i(p_i,{\hat{p}}_{-i})\). The set of Nash equilibria in mixed strategies is computed and depicted for each problem in Fig. 4 of Appendix 2. The resulting probability distributions over matchings are presented in Table 4.

Table 4 highlights that there are multiple Nash equilibrium outcomes in problems \(\mathcal {P}_1\) and \(\mathcal {P}_3\). The notion of quantal response equilibrium for normal form games, introduced by McKelvey and Palfrey (1995), can be used as an equilibrium selection criterion. Quantal response equilibria in its logit form are defined by means of a non-negative parameter \(\lambda \) that is inversely related to the players’ error level. Given \(\lambda \ge 0\), a strategy-profile \(p^* \in \Delta \) is a logit quantal response equilibrium (logit-QRE) if for each \(i \in I\) and each \(a_{ij} \in A_i\),

If \(\lambda = 0\), then players choose uniformly at random. By Theorem 2 in McKelvey and Palfrey (1995), if \(\lambda \rightarrow \infty \), then \(p^*\) converges to a Nash equilibrium in mixed strategies. We refer to this Nash equilibrium as the limiting logit-QRE.

In each problem, since each of the three players has two pure strategies, the system of equations (1) reduces to three equations with three unknowns, i.e., \(p_1^*\), \(p_2^*\), and \(p_3^*\) (the probabilities with which the students apply to school \(s_1\)). Given \(\lambda \ge 0\), we indicate this logit-QRE probability for student i in problem \({{\mathcal {P}}} \in \{{{\mathcal {P}}}_1,{{\mathcal {P}}}_2,{{\mathcal {P}}}_3\}\) of treatment \(T \in \{L,H\}\) by \(p_i^*(\lambda \, | \, {{\mathcal {P}}}, T)\). Figure 1 depicts the logit-QRE probabilities for all instances (combinations of problems and treatments) of our experiment.

Most importantly, in each of the problems \(\mathcal {P}_1\) and \(\mathcal {P}_3\), the limiting logit-QRE depends on the treatment. In particular, panel 1 of Fig. 1 reveals that in \(\mathcal {P}_1\) (\(q_1=1\) and \(q_2=2\)) of treatment L, as \(\lambda \) grows large, the probability with which student 1 applies to school \(s_1\) and students 2 and 3 apply to school \(s_2\) approaches 1. So, all students are accepted with probability 1 for large \(\lambda \) and the resulting matching is \((s_1,s_2,s_2)\), which corresponds to the student-optimal stable matching of \(\mathcal {P}_1\). However, panel 2 shows that in \(\mathcal {P}_1\) of treatment H, the resulting matching under the limiting logit-QRE is the school-optimal stable matching of \(\mathcal {P}_1\), i.e., \((s_2,s_2,s_1)\). A similar observation can be made for \(\mathcal {P}_3\) (\(q_1=2\) and \(q_2=1\)): for large \(\lambda \), the resulting matching converges again to the student-optimal stable matching \((s_1,s_1,s_2)\) in treatment L, while the school-optimal stable matching \((s_1,s_2,s_1)\) is obtained in treatment H. Finally, while \((1,s_2,s_1)\) is the unique Nash equilibrium outcome in \(\mathcal {P}_2\) (\(q_1=q_2=1\)), Table 4 shows that it is sustained by an infinite number of Nash equilibria in mixed strategies. The probability distribution over matchings induced by the logit-QRE converges to the degenerate probability distribution where \((1,s_2,s_1)\) has probability 1. This is because in the limiting logit-QRE of both treatments, the probability with which student 1 applies to school \(s_1\) converges to 0.5, while the probability with which students 2 and 3 apply to schools \(s_2\) and \(s_1\), respectively, approaches 1. However, the trajectory of the logit-QRE clearly depends on the treatment.Footnote 9

Figure 1 permits us to make point predictions and derive treatment effects regarding student behavior. Point predictions indicate (ranges of) probabilities that are consistent with logit-QRE behavior and analyze in particular whether a student is more likely to apply to school \(s_1\) or to school \(s_2\). Based on Fig. 1 we see that for each \(\lambda >0\),

- \({{\mathcal {P}}}_1:\):

-

\(p_1^*(\lambda \, | \, {{\mathcal {P}}}_1, L)> \frac{1}{2} > p_1^*(\lambda \, | \, {{\mathcal {P}}}_1, H)\), \(p_2^*(\lambda \, | \, {{\mathcal {P}}}_1, L), p_2^*(\lambda \, | \, {{\mathcal {P}}}_1, H) < \frac{1}{2}\), and \(p_3^*(\lambda \, | \, {{\mathcal {P}}}_1, L)< \frac{1}{2} < p_3^*(\lambda \, | \, {{\mathcal {P}}}_1, H)\);

- \({{\mathcal {P}}}_2:\):

-

for each \(T \in \{L,H\}\), \(p_1^*(\lambda \, | \, {{\mathcal {P}}}_2, T) \ge \frac{1}{2}\), \(p_2^*(\lambda \, | \, {{\mathcal {P}}}_2, T)< \frac{1}{2}\), and \(p_3^*(\lambda \, | \, {{\mathcal {P}}}_2, T) > \frac{1}{2}\);

- \({{\mathcal {P}}}_3:\):

-

\(p_1^*(\lambda \, | \, {{\mathcal {P}}}_3, L), p_1^*(\lambda \, | \, {{\mathcal {P}}}_3, H) > \frac{1}{2}\), \(p_2^*(\lambda \, | \, {{\mathcal {P}}}_3, L)> \frac{1}{2} > p_2^*(\lambda \, | \, {{\mathcal {P}}}_3, H)\), and \(p_3^*(\lambda \, | \, {{\mathcal {P}}}_3, L)< \frac{1}{2} < p_3^*(\lambda \, | \, {{\mathcal {P}}}_3, H)\).

As a consequence we formulate the first part of our first̄ hypothesis as follows.

Hypothesis 1.a (point predictions)

- \({{\mathcal {P}}}_1:\) :

-

In treatment L, student 1 is (students \(\{2,3\}\) are) more (less) likely to apply to \(s_1\) than to \(s_2\). In treatment H, student 3 is (students \(\{1,2\}\) are) more (less) likely to apply to \(s_1\) than to \(s_2\).

- \({{\mathcal {P}}}_2:\) :

-

Student 1 is weakly more likely, student 2 is less likely, and student 3 is more likely to apply to \(s_1\) than to \(s_2\).

- \({{\mathcal {P}}}_3:\) :

-

In treatment L, students \(\{1,2\}\) are (student 3 is) more (less) likely to apply to \(s_1\) than to \(s_2\). In treatment H, students \(\{1,3\}\) are (student 2 is) more (less) likely to apply to \(s_1\) than to \(s_2\).

Treatment effects deal with comparative statics by studying how the probability with which a student applies to school \(s_1\) is affected by the payoff \(x \in \{0.3,0.7\}\) (i.e., the two treatments L and H) that the student receives from being matched to her least preferred school. We distinguish between ambiguous and unambiguous effects. Given a problem and a student, the treatment effect is unambiguous if the logit-QRE probability in one treatment is always greater than in the other treatment, i.e., independently of the rationality parameter \(\lambda \). Formally, given a problem \({{\mathcal {P}}}\) and a student i, the treatment effect is unambiguous if

We can observe from Fig. 1 that there are four unambiguous effects.Footnote 10 Specifically, in \({{\mathcal {P}}}_1\), the probability with which student 1 (student 3) applies to \(s_1\) in treatment L is always greater (smaller) than in treatment H. Similarly, in \({{\mathcal {P}}}_3\), the probability with which student 2 (student 3) applies to \(s_1\) in treatment L is greater (smaller) than in treatment H.

The treatment effect is ambiguous if the comparison depends on the actual values \(\lambda \) takes in the two treatments, i.e., if

Ambiguous treatment effects occur for student 2 in \({{\mathcal {P}}}_1\), all three students in \({{\mathcal {P}}}_2\), and student 1 in \({{\mathcal {P}}}_3\). We are able to make some further predictions in these cases if we assume that \(\lambda \) is treatment-independent. We first define

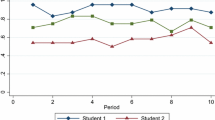

i.e., the logit-QRE probability difference for student i between treatment L and treatment H in problem \({{\mathcal {P}}}\), as a function of \(\lambda >0\) (in both treatments). Figure 2 graphically presents this function for all students in all problems.

It can be observed that only for student 1 in \({{\mathcal {P}}}_3\) there are values \(\lambda ,\lambda '>0\) such that \(\delta _1(\lambda \, | \, {{\mathcal {P}}}_3)>0\) and \(\delta _1(\lambda ' \, | \, {{\mathcal {P}}}_3)<0\). So, for student 1 in \({{\mathcal {P}}}_3\) there is no clear treatment effect even under the additional assumption that \(\lambda \) is the same in both treatments. For the other four ambiguous effects we have that for all \(\lambda >0\), \(\delta _2(\lambda \, | \, {{\mathcal {P}}}_1) > 0\), \(\delta _1(\lambda \, | \, {{\mathcal {P}}}_2) > 0\), \(\delta _2(\lambda \, | \, {{\mathcal {P}}}_2) > 0\), and \(\delta _3(\lambda \, | \, {{\mathcal {P}}}_2) < 0\).

Hypothesis 1.b (treatment effects).

-

Unambiguous effects. Student 1 in \({{\mathcal {P}}}_1\) and student 2 in \({{\mathcal {P}}}_3\) apply to \(s_1\) more often and student 3 in \(\{{{\mathcal {P}}}_1,{{\mathcal {P}}}_3\}\) applies to \(s_1\) less often in treatment L than in treatment H.

-

Ambiguous effects. Student 1 in \({{\mathcal {P}}}_2\) and student 2 in \(\{ {{\mathcal {P}}}_1,{{\mathcal {P}}}_2 \}\) apply to \(s_1\) more often and student 3 in \({{\mathcal {P}}}_2\) applies to \(s_1\) less often in treatment L than in treatment H.

Next, we derive predictions about the prevalence of the side-optimal stable matchings. Given \(\lambda \), let \(p^I(\lambda \, | \, {{\mathcal {P}}}, T)\) be the probability with which the student-optimal stable matching \(\mu ^I\) is obtained in problem \({{\mathcal {P}}}\) of treatment T. The probability \(p^S(\lambda \, | \, {{\mathcal {P}}}, T)\) for the school-optimal stable matching is similarly defined. The four panels in the left part of Fig. 3 depict for each problem \({{\mathcal {P}}} \in \{{{\mathcal {P}}}_1,{{\mathcal {P}}}_3\}\) and for each treatment \(T \in \{L,H\}\), the trajectories of \(p^I\) and \(p^S\) as a function of \(\lambda \). The two panels in the right part of Fig. 3 depict the probability difference

To the left: probabilities of the student-optimal stable matching \(p^I(\lambda \, | \, {{\mathcal {P}}}, T)\) (light-gray) and the school-optimal stable matching \(p^S(\lambda \, | \, {{\mathcal {P}}}, T)\) (black) under the logit-QRE in \({{\mathcal {P}}}_1\) and \({{\mathcal {P}}}_3\) for each of the two treatments. To the right: probability difference \(d(\lambda \, | \, {{\mathcal {P}}}, T)\) in \({{\mathcal {P}}}_1\) and \({{\mathcal {P}}}_3\) for treatment \(T=H\) (black) and treatment \(T=L\) (light-gray)

In all four panels in the left part of Fig. 3, if \(\lambda =0\), i.e, all three students apply to each of the two schools with probability \(\frac{1}{2}\), then both \(\mu ^I\) and \(\mu ^S\) occur with the same probability (namely, \(\frac{1}{8}\)). Also, for each of the problems \({{\mathcal {P}}}_1\) and \({{\mathcal {P}}}_3\), \(p^I\) is strictly increasing over the whole domain of \(\lambda \) in treatment L but mostly decreases in \(\lambda \) in treatment H. The opposite happens for \(p^S\): it mostly decreases in \(\lambda \) in treatment L but strictly increases over the whole domain of \(\lambda \) in treatment H. This is reflected in the right part of Fig. 3 where it can be observed that in each problem \({{\mathcal {P}}} \in \{{{\mathcal {P}}}_1,{{\mathcal {P}}}_3\}\) and for all \(\lambda >0\), \(d(\lambda \, | \, {{\mathcal {P}}}, L)> d(0 \, | \, {{\mathcal {P}}}, L) = 0 = d(0 \, | \, {{\mathcal {P}}}, H) > d(\lambda \, | \, {{\mathcal {P}}}, H)\). These inequalities allow us to formulate the following hypothesis.

Hypothesis 2 (side-optimal stable matchings).

In each \({{\mathcal {P}}} \in \{{{\mathcal {P}}}_1,{{\mathcal {P}}}_3\}\), it is more likely to obtain \(\mu ^I\) than \(\mu ^S\) in treatment L and it is less likely to obtain \(\mu ^I\) than \(\mu ^S\) in treatment H.

3 Results

We next present the results of our experiment. First, we statistically test our two hypotheses in order to assess whether the logit-QRE makes sound directional predictions in our setting. Afterwards, we estimate \(\lambda \).

The relevant data for the statistical analysis is displayed in Tables 5 and 6. Table 5 indicates the frequencies with which students apply to \(s_1\) and, thus, analyzes how subjects behave in the different student roles. The corresponding predictions are in Hypothesis 1. We employ Wilcoxon signed-rank tests for the within-treatments comparisons (point predictions) and Mann–Whitney U tests for the between-treatments comparisons. Table 6 shows the probability distribution over matchings that we obtained in the experiment. We focus in Hypothesis 2 on the probability \(p^I\) that the student-optimal stable matching and the probability \(p^S\) that the school-optimal stable matching is reached. For this reason the probabilities of all other matchings are added up under the column “unstable.”Footnote 11 For a visual overview of the per-round data and some additional statistical tests we refer to Appendix 3.

Evidence on Hypothesis 1:

We show that the experimental data in Table 5 considerably supports Hypothesis 1.

Consider first \({{\mathcal {P}}}_1\). It is expected that in treatment L, student 1 is more and students 2 and 3 are less likely to apply to \(s_1\) than to \(s_2\). Also, in treatment H, student 3 is expected to be more and students 1 are 2 are expected to be less likely to apply to \(s_1\) than to \(s_2\). Only the data for student 1 in treatment L is outright against this prediction: the experimental frequency with which the student applies to \(s_1\) is 49%, yet this frequency is expected to be greater than 50%. From a statistical point of view, only the comparison for student 2 in treatment H is significant at the 5% level (the one-sided p value is 0.039). With respect to the treatment effects, students 1 and 2 are expected to be more and student 3 is expected to be less likely to apply to \(s_1\) in treatment L than in treatment H. The experimental data goes in the correct direction. The one-sided p values of the corresponding Mann–Whitney U tests are 0.062 for student 1, 0.031 for student 2, and 0.024 for student 3.

Consider next \({{\mathcal {P}}}_2\). It is expected that in both treatments, student 3 is more and student 2 is less likely to apply to \(s_1\) than to \(s_2\). It is evident from Table 5 that the experimental data is completely in line with these predictions even though the comparison for student 3 in treatment L is only significant at a one-sided p value of 0.098. With respect to student 1, for whom the point prediction was expressed in a weak sense, the hypothesis that this student applies to school \(s_1\) with probability \(\frac{1}{2}\) cannot be rejected in either of the two treatments. The experimental data is also consistent with the ambiguous treatment effects in Hypothesis 1: Students 1 and 2 are more and student 3 is less likely to apply to \(s_1\) in treatment L than in treatment H. The one-sided p values of the corresponding Mann–Whitney U tests are 0.019 for student 1, 0.069 for student 2, and 0.067 for student 3.

Consider finally \({{\mathcal {P}}}_3\). It is expected that in treatment L, students 1 and 2 are more and student 3 is less likely to apply to \(s_1\) than to \(s_2\). Also, in treatment H, students 1 and 3 are expected to be more and student 2 is expected to be less likely to apply \(s_1\) than to \(s_2\). The experimental data is again consistent with these predictions. The comparisons for student 1 are in both treatments significant at the 1% level. Finally, student 2 (student 3) is supposed to apply to \(s_1\) more (less) often in treatment L than in treatment H. The data confirms these predictions: the one-sided p values of the Mann-Whitney U tests are 0.040 for student 2 and 0.025 for student 3. \(\square \)

A first observation from Table 6 is that even though our setting is highly stylized, it is not straightforward for subjects to coordinate in \(\{{{\mathcal {P}}}_1,{{\mathcal {P}}}_3\}\) on a Nash equilibrium in pure strategies. A Nash equilibrium in pure strategies leads to a stable matching, however an unstable matching is reached in \({{\mathcal {P}}}_1\) in more than 35% and in \({{\mathcal {P}}}_3\) in more than 16% of the cases. Moreover, the probability distribution over matchings in these two problems does not coincide with the one induced by the non-degenerate mixed strategy Nash equilibria in Table 4. To see this, we employ Wilcoxon signed-rank tests at the group level to analyze whether the empirical distribution over matchings is different from the one that arises from the non-degenerate Nash equilibria in mixed strategies. The (truly independent) observation for a group is the probability with which a matching (\(\mu ^I\), \(\mu ^S\), or unstable) is reached over the course of the six rounds in which the problem is played. We find that in treatment L of \({{\mathcal {P}}}_1\), \(p^S\) is significantly different from 0.49 (two-sided \(p=0.0052\)). Also, in treatment H of \({{\mathcal {P}}}_1\), \(p^S\) is significantly greater than 0.09 (one-sided \(p \le 0.0001\)). And the probability of an unstable matching is significantly different from 0.42 in treatment L (two-sided \(p<0.0001\)) and treatment H (two-sided \(p=0.0052\)) of \({{\mathcal {P}}}_3\). Finally, in \({{\mathcal {P}}}_2\) the unique stable outcome is “only” obtained in 78% of the cases in treatment L and in 90% of the cases in treatment H. This hints at misplays, yet the per-round data at the bottom of Fig. 5 in Appendix 3 provides evidence of learning and coordination effects because unstable matchings occur less often in later rounds of a problem.

Evidence on Hypothesis 2:

Hypothesis 2 states that for each problem \({{\mathcal {P}}} \in \{{{\mathcal {P}}}_1,{{\mathcal {P}}}_3\}\), it is more likely to obtain \(\mu ^I\) than \(\mu ^S\) in treatment L and it is less likely to obtain \(\mu ^I\) than \(\mu ^S\) in treatment H. We find for \({{\mathcal {P}}}_1\) that the probability difference between \(\mu ^I\) and \(\mu ^S\) is \(0.302-0.272=0.030\) in treatment L and \(0.197-0.451=-0.254\) in treatment H. The one-sided p values of the Wilcoxon signed-rank tests at the group level are 0.3528 in treatment L and 0.0078 in treatment H. Similar observations hold for problem \({{\mathcal {P}}}_3\): the probability difference between \(\mu ^I\) and \(\mu ^S\) is \(0.432-0.370=0.062\) in treatment L (one-sided p=0.3625) and \(0.241-0.599=-0.358\) in treatment H (one-sided p=0.0033). We conclude that the experimental data is supportive of Hypothesis 2. \(\square \)

In the final part of this section, we estimate the logit-QRE via maximum likelihood. Suppose that a total of K subjects participate in a given treatment \(T \in \{L,H\}\) in each student role i. Let \(x_{l,i}^t\) be a particular observation for subject l in role i of round \(t\in \{1,\ldots ,6\}\). We define \(x_{l,i}^t=1\) if student l in role i applies in round t to school \(s_1\). Otherwise, \(x_{l,i}^t=0\). Under the logit-QRE, \(p_i^*(\lambda )\) is the probability that \(x_{l,i}^t=1\) and \(1-p_i^*(\lambda )\) is the probability that \(x_{l,i}^t=0\). The joint likelihood of observing the data is then

The data can be pooled if it is assumed that subject behavior is time-independent, i.e., for all \(t,t' \in \{1,\ldots ,6\}\), \(x_{l,i}^t=x_{l,i}^{t'} \equiv x_{l,i}\).Footnote 12 Then,

Let \({\tilde{p}}_i = \sum _{l=1}^K x_{l,i}/K\) be the empirical probability from the experiment that subjects in student role i apply to school \(s_1\). We finally obtain that

In order to maximize this function, we calculate the equilibrium probabilities of the logit-QRE numerically on a fine grid—the unique model parameter \(\lambda \) is varied in steps of 0.01 between 0 and 10, which means that 1000 different values for \(\lambda \) are considered—and evaluate the objective function at these equilibrium values. For each of the 1000 estimations of \(\lambda \) we use a random sample that consists of 60% of the available data for each student.Footnote 13 This yields a distribution of estimates for \(\lambda \) and allows us to analyze treatment effects. We denote by \({\widehat{\lambda }}^T_j\) the mean of the estimated distribution for problem \({{\mathcal {P}}}_j\) of treatment T.

Table 7 presents the estimation results for three different models (utility functions). We first concentrate on the case when the subjects are risk-neutral expected-payoff maximizers (Model I) and the only parameter to be estimated is \(\lambda \). The intuition of the logit-QRE is that larger values of \(\lambda \) imply that choices are closer to Nash equilibrium behavior. In this sense, \(\lambda \) measures the rationality of the observed behavior. In our experiment, \({{\mathcal {P}}}_2\) is arguably the simplest of the three problems. In this problem, the continuum of mixed strategy Nash equilibria leads to the same (stable) matching. In the other two problems, there are two stable matchings and a coordination problem arises because different Nash equilibria induce different stable (or even unstable) matchings. Our estimation results in Table 7 support this interpretation since in Model I, \({\widehat{\lambda }}\) is largest in \({{\mathcal {P}}}_2\) (for both treatments).Footnote 14 Furthermore, it is worth noting that in each of the problems \({{\mathcal {P}}}_1\) and \({{\mathcal {P}}}_3\), \({\widehat{\lambda }}\) is larger in treatment H than in treatment L. For a possible explanation, recall that for large \(\lambda \), the logit-QRE converges to \(\mu ^S\) in treatment H and to \(\mu ^I\) in treatment L. At the pure strategy Nash equilibrium that induces \(\mu ^S\), students apply to their “safety schools,” i.e., they maximize the probability of being accepted. Yet, at the pure strategy Nash equilibrium that yields \(\mu ^I\) some students take the risk of remaining unmatched (if some of the other students deviates). This risk comes with the potential benefit of being matched to the good school. If the payoff of the (bad) safety school is close to the payoff of the good school, which is the case in treatment H, then a subject may prefer to send an application to her (bad) safety school, which would result in a relatively large \(\lambda \). On the other hand, if there is a big payoff difference between the good and the (bad) safety school, which is the case in treatment L, the logit-QRE demands to make the risky choice but some subjects might still apply to their (bad) safety school due to risk preferences (see, e.g., Klijn et al. 2013Footnote 15), which would result in a relatively small \(\lambda \). As a consequence, one could expect to obtain larger estimates of \(\lambda \) in treatment H than in treatment L for both \({{\mathcal {P}}}_1\) and \({{\mathcal {P}}}_3\).

We address in Model II the question whether subjects’ risk aversion indeed affects the estimation of \(\lambda \) by assuming a CARA utility function on expected payoffs.Footnote 16 Equation (1) then becomes

where r is the Arrow-Pratt measure of risk aversion. If \(r=0\), subjects are risk-neutral; and if \(r \rightarrow 1\), then \(u_i(a_{ij},p_{-i}^*)^r/(1-r)\) tends to \(\ln (u_i(a_{ij},p_{-i}^*))\).

Table 7 shows that \(\widehat{r}\) is in all problems consistent across treatments, yet there are important differences between problems. Risk aversion is maximal in \({{\mathcal {P}}}_1\) and \({{\mathcal {P}}}_2\), while \({\widehat{r}} \rightarrow 0\) in \({{\mathcal {P}}}_3\). It is worth noting that player 1 has the dominant strategy \(p_1=1\) in \({{\mathcal {P}}}_3\), which implies that the optimal behavior of this player is independent of her risk aversion. If one compares specifications under the premise that \(\lambda \) is exogenous and is “supposed to be” constant across treatments, Model II improves upon Model I. In Model I, the ratio \(|{\widehat{\lambda }}^H_j / {\widehat{\lambda }}^L_j|\) is 3.81 in \({{\mathcal {P}}}_1\), 0.53 in \({{\mathcal {P}}}_2\), and 1.43 in \({{\mathcal {P}}}_3\). The ratios for Model II are closer to 1 than the ratios of Model I: 3.24 in \({{\mathcal {P}}}_1\), 0.92 in \({{\mathcal {P}}}_2\), and 1.43 in \({{\mathcal {P}}}_3\).

Finally, we study in Model III whether the systematic differences of the between-treatment estimates of \(\lambda \) in Model I are explained by subjects trying to coordinate on the student-optimal stable matching. For that it is assumed that the utility function is a linear combination of the expected payoffs and the probability that the student-optimal stable matching is reached. In particular,

where \(c \in [0,1]\). We find that the estimation outcome of this model is worse than that of Model II. First, \({\widehat{c}}\) varies substantially between treatments and between problems (and not only between problems as in Model II). And second, the ratio \(|{\widehat{\lambda }}^H_j / {\widehat{\lambda }}^L_j|\) is 3.42 in \({{\mathcal {P}}}_1\), 1.90 in \({{\mathcal {P}}}_2\), and 6.32 in \({{\mathcal {P}}}_3\). For all problems, these ratios are further away from 1 than the ratios of Model II. One natural alternative to Model III is to assume that subjects have preferences for the expected group payoff instead of preferences for the student-optimal stable matching. We also estimate this alternative model and, perhaps surprisingly, no evidence of this type of preferences for efficiency is found. In all cases, \({\widehat{c}} = 1\).

One important conclusion that can be drawn from the estimation results in Table 7 is that including the risk parameter r reduces the ratio \(|{\widehat{\lambda }}^H_j / {\widehat{\lambda }}^L_j|\) in each of the three problems. We explore this point further by re-estimating Model I for various non-zero levels of r. In fact, one caveat of Model II is that \({\widehat{r}}\) is 1 or close to 1 in problems \({{\mathcal {P}}}_1\) and \({{\mathcal {P}}}_2\), yet \({\widehat{r}}\) is 0 in problem \({{\mathcal {P}}}_3\). This stands in contrast with the idea that a subject’s risk aversion is an external characteristic that is constant throughout the experiment.

Table 8 shows that \({\widehat{\lambda }}\) decreases as r increases. The last column of Table 8 calculates for each level of risk aversion r the variance of \({\widehat{\lambda }}\) over all 6 numbers in the same row. In this calculation, the \(\lambda \) estimates are normalized to the unit interval. The variance is lowest for \(r=0.6\), which means that intermediate values of r seem to be more appropriate if the degree of risk aversion is considered an external characteristic and one aims at minimizing the variance of \({\widehat{\lambda }}\).

4 Concluding remarks

An important part of the experimental literature on school choice focuses on comparing different centralized assignment mechanisms. The theoretical literature is sometimes able to provide very sharp predictions. For example, in an unconstrained setting in which subjects can rank all schools, both the student-optimal stable mechanism and the top trading cycles mechanism are strategy-proof, that is, in the induced simultaneous-move games subjects have an incentive to reveal their preferences truthfully. One can then use the truth-telling rates observed in a laboratory experiment to make inferences about the quality of the decisions. Since the immediate acceptance mechanism is manipulable, the comparison of subject behavior between the student-optimal stable mechanism and the immediate acceptance mechanism is not as straightforward, even in an unconstrained setting. Thus, comparing truth-telling rates between mechanisms is only useful in certain clearly defined instances. In general, there is a need to specify a behavioral model that permits the derivation of testable predictions and compare the quality of the decisions between treatments. The quantal response equilibrium is a natural tool in this respect.

With the help of a laboratory experiment we have analyzed the effects of changes in the cardinal payoffs (i.e., relative preference intensities) on subject behavior for three stylized school choice problems. Our main finding is that the logit-QRE correctly captures the qualitative changes of both subject behavior and the resulting matching. We note that the logit-QRE can also be used to derive predictions across mechanisms, which is a recurring topic in the experimental literature. Given the potential interest for future research, we briefly discuss an example. Consider the problem with three students (1, 2, and 3) and three schools (\(s_1\), \(s_2\), and \(s_3\)) in Table 9. Each school has 1 seat and gives a strictly positive payoff. Also assume that students submit lists of size 3.

There are three stable matchings. In the student-optimal stable matching, each student is assigned to her best match. In the “median” stable matching, each student is assigned to her second best match. And in the school-optimal stable matching, each student is assigned to her worst match. The unconstrained student-optimal stable mechanism is strategy-proof, which implies that agents report their preferences truthfully in the limiting logit-QRE. Since students cannot remain unmatched under the immediate acceptance mechanism, the worst school must be ranked last (not doing so is a weakly dominated strategy). Whether students rank their best school or their second best school first in the limiting logit-QRE under the immediate acceptance mechanism is a function of the payoff structure. If the payoff of the best and the second best school are “close enough” to each other, the limiting logit-QRE is equal to the median stable matching. And if there is a “suficient” payoff difference for these two schools, the student-optimal stable matching is obtained.

Finally, part of the recent experimental literature on school choice studies mechanisms that incorporate concerns for affirmative action (i.e., Klijn et al. 2016; Kawagoe et al. 2018), allow for information acquisition (Chen and He 2021), or employ a dynamic assignment procedure (i.e., Klijn et al. 2019; Bó and Hakimov 2020; Dur et al. 2021). Also, there are innovative experimental designs that analyze the roots of sub-optimal behavior in experimental matching markets (i.e., Guillen and Hakimov 2017; Dur et al. 2018; Ding and Schotter 2019; Guillen and Veszteg 2021). It seems worthwhile to more closely study the predictive power of the quantal response equilibrium in these settings.

Notes

The student-optimal mechanism is the mechanism based on the deferred acceptance algorithm (Gale and Shapley 1962). The immediate acceptance mechanism is also known as the “Boston mechanism.” For further details we refer to Abdulkadiroğlu and Sönmez (2003) who initiated the market design literature on school choice.

See the subsection “Related literature” at the end of the Introduction for references.

The quantal response equilibrium has been applied as an equilibrium notion in many experimental settings including all-pay auctions (Anderson et al. 1998), the traveler’s dilemma (Capra et al. 1999), jury decision rules (Guarnaschelli et al. 2000), alternating offer bargaining games (Goeree and Holt 2000), coordination games (Anderson et al. 2001), first price auctions (Goeree et al. 2002), generalized matching pennies games (Goeree et al. 2003), capacity allocation games (Chen et al. 2012), and two-sided matching (Echenique et al. 2016).

Problem \({{\mathcal {P}}}_2\) has been added to the design mainly for completeness reasons. We thus concentrate in the introduction on our two main problems \({{\mathcal {P}}}_1\) and \({{\mathcal {P}}}_3\). A complete set of predictions and the corresponding data analysis for problem \({{\mathcal {P}}}_2\) is presented alongside the other two problems in Sects. 2 and 3, respectively.

Abdulkadiroğlu et al. (2011) highlight in a restricted environment that the immediate acceptance mechanism allows parents to express their relative preference intensities.

There is no need to counterbalance the order in which problems are played because we do not compare outcomes between problems.

The structure of the limiting logit-QRE in Fig. 1 hinges on the fact that students submit a single application. We show in Appendix 4 that if students submit lists of length 2, then the limiting logit-QRE converges for all three problems of both treatments to the student-optimal stable matching. Thus, the robustness of our results cannot be analyzed with this alternative design.

For all \(\lambda ,\lambda '>0\), \(p_1^*(\lambda \, | \, {{\mathcal {P}}}_1, L) > p_1^*(\lambda ' \, | \, {{\mathcal {P}}}_1, H)\), \(p_3^*(\lambda \, | \, {{\mathcal {P}}}_1, L) < p_3^*(\lambda ' \, | \, {{\mathcal {P}}}_1, H)\), \(p_2^*(\lambda \, | \, {{\mathcal {P}}}_3, L) > p_2^*(\lambda ' \, | \, {{\mathcal {P}}}_3, H)\), and \(p_3^*(\lambda \, | \, {{\mathcal {P}}}_3, L) < p_3^*(\lambda ' \, | \, {{\mathcal {P}}}_3, H)\).

Truly independent observations for all statistical tests are obtained by aggregating data over all six consecutive rounds in which a particular problem is played. As an example, consider the hypothesis for treatment L that the resulting matching in \({{\mathcal {P}}}_1\) is more likely to be \(\mu ^I\) than \(\mu ^S\). For each of the six groups, we calculate the overall empirical probabilities \(p^I\) and \(p^S\) with which \(\mu ^I\) and \(\mu ^S\) are obtained for this \({{\mathcal {P}}}_1\). For each group, we then take the difference \(d=p^I-p^S\) between these empirical probabilities. Thus, we obtain for each group a sole number between \(-1\) and 1. The associated Wilcoxon signed-rank test then analyzes whether the mean of these six numbers is significantly greater than 0.

Time-independence is a restrictive condition, but we do not have sufficient data points to perform an estimation for each round.

The log-likelihood function is strictly concave and we find an interior solution on the considered grid. Hence, even though the logit-QRE is defined for all \(\lambda >0\), there is no need to widen the grid.

It might be surprising that \({\widehat{\lambda }}_2^L = 8.1266\) is substantially greater than \({\widehat{\lambda }}_2^H = 4.3436\) even though there are only minor treatment differences in Table 5. The logit-QRE trajectories in Fig. 1 provide an explanation for this. According to the empirical data, there is a high likelihood that student 3 applies to school \(s_1\). Yet, a high \(p_3^*\) requires a substantially greater \(\lambda \) in treatment L than in treatment H.

Klijn et al. (2013) show experimentally that the student-optimal stable mechanism is more robust to changes in the cardinal preference structure than the immediate acceptance mechanism and that subjects with a higher degree of risk aversion (measured through a Holt-Laury lottery task) are more likely to play a “protective strategy” under the student-optimal stable mechanism but not under the immediate acceptance mechanism.

We are very grateful to an anonymous referee for suggesting us to analyze the impact of risk preferences and preferences for the student-optimal stable matching on the estimates of \(\lambda \).

For instance, given strategies \((p_2,p_3)\) of students 2 and 3, the expected utility of student 1 from applying to \(s_1\) is \(1\times (1-p_3)\) because \(s_1\) has only one seat and only student 3 has higher priority for the school than student 1. Similarly, applying to \(s_2\) yields the expected utility \(x \times 1\), because \(s_2\) has two seats and student 1 has the second highest priority for the school (so, applying to the school guarantees entrance).

References

Abdulkadiroğlu A, Sönmez T (2003) School choice: a mechanism design approach. Am Econ Rev 93(3):729–747

Abdulkadiroğlu A, Che Y-K, Yasuda Y (2011) Resolving conflictive preferences in school choice: the Boston mechanism reconsidered. Am Econ Rev 101(1):399–410

Anderson SP, Goeree JK, Holt CA (1998) Minimum-effort coordination games: an analysis of all-pay auctions. J Political Econ 106(4):828–853

Anderson SP, Goeree JK, Holt CA (2001) Rent seeking with bounded rationality: stochastic potential and logit equilibrium. Games Econ Behav 34(2):177–199

Bó I, Hakimov R (2020) Iterative versus standard deferred acceptance: experimental evidence. Econ J 130(626):356–392

Bonkoungou S, Nesterov A (2021) Comparing school choice and college admissions mechanisms by their strategic accessibility. Theor Econ 16(3):881–909

Calsamiglia C, Haeringer G, Klijn F (2010) Constrained school choice: an experimental study. Am Econ Rev 100(4):1860–1874

Capra MC, Goeree JK, Gomez R, Holt CA (1999) Anomalous behavior in a traveler’s dilemma. Am Econ Rev 89(3):678–690

Chen Y, He Y (2021) Information acquisition and provision in school choice: an experimental study. J Econ Theory 197:105345

Chen Y, Kesten O (2019) Chinese college admissions and school choice reforms: an experimental study. Games Econ Behav 115(1):83–100

Chen Y, Sönmez T (2006) School choice: an experimental study. J Econ Theory 127(1):202–231

Chen Y, Su X, Zhao Z (2012) Modeling bounded rationality in capacity allocation games with the quantal response equilibrium. Manag Sci 58(10):1952–1962

Ding T, Schotter A (2019) Learning and mechanism design: an experimental test of school matching mechanisms with intergenerational advice. Econ J 129(623):2779–2804

Dreyfuss B, Heffetz O, Rabin M (2022) Expectations-based loss aversion may help explain seemingly dominated choices in strategy-proof mechanisms. Am Econ J Microecon 14(4):515–555

Dur U (2019) The modified Boston mechanism. Math Soc Sci 101:31–40

Dur U, Hammond RG, Kesten O (2021) Sequential school choice: theory and evidence from the field. J Econ Theory 198:105344

Dur U, Hammond RG, Morrill T (2018) Identifying the harm of manipulable school-choice mechanisms. Am Econ J Econ Policy 10(1):187–213

Dur U, Morrill T (2020) What you don’t know can help you in school assignment. Games Econ Behav 120:246–256

Echenique F, Wilson A, Yariv L (2016) Clearinghouse for two-sided matching: an experimental study. Quant Econ 7(2):449–482

Featherstone CR, Niederle M (2016) Boston versus deferred acceptance in an interim setting: an experimental investigation. Games Econ Behav 100(1):353–375

Fischbacher U (2007) Z-tree: Zurich toolbox for ready-made economic experiments. Exp Econ 10(2):171–178

Gale D, Shapley LS (1962) College admissions and the stability of marriage. Am Math Mon 69(1):9–15

Goeree JK, Holt CA (2000) Asymmetric inequality aversion and noisy behavior in alternating-offer bargaining games. Eur Econ Rev 44(4):1079–1089

Goeree JK, Holt CA, Palfrey TR (2002) Quantal response equilibrium and overbidding in private-value auctions. J Econ Theory 104(1):257–272

Goeree JK, Holt CA, Palfrey TR (2003) Risk averse behavior in generalized matching pennies games. Games Econ Behav 45(1):97–113

Goeree JK, Holt CA, Palfrey TR (2005) Regular quantal response equilibrium. Exp Econ 8:347–367

Guarnaschelli S, McKelvey RD, Palfrey TR (2000) An experimental study of jury decision rules. Am Political Sci Rev 94(2):407–423

Guillen P, Hakimov R (2017) Not quite the best response: truth-telling, strategy-proof matching, and the manipulation of others. Exp Econ 20(3):670–686

Guillen P, Veszteg R (2021) Strategy-proofness in experimental matching markets. Exp Econ 24(2):650–668

Haeringer G, Klijn F (2009) Constrained school choice. J Econ Theory 144(5):1921–1947

Haile PA, Hortaçsu A, Kosenok G (2008) On the empirical content of quantal response equilibrium. Am Econ Rev 98(1):180–200

Hakimov R, Kübler D (2020) Experiments on centralized school choice and college admissions: a survey. Exp Econ 24:434–488

Kawagoe T, Matsubae T, Takizawa H (2018) The skipping-down strategy and stability in school choice problems with affirmative action: theory and experiment. Games Econ Behav 109:212–239

Klijn F, Pais J, Vorsatz M (2013) Preference intensities and risk aversion in school choice: a laboratory experiment. Exp Econ 16:1–23

Klijn F, Pais J, Vorsatz M (2016) Affirmative action through minority reserves: an experimental study on school choice. Econ Lett 139:72–75

Klijn F, Pais J, Vorsatz M (2019) Static versus dynamic deferred acceptance in school choice: a laboratory experiment. Games Econ Behav 113:147–163

Kojima F, Ünver MU (2014) The Boston school-choice mechanism: an axiomatic approach. Econ Theory 55:515–544

Li S (2017) Obviously strategy-proof mechanisms. Am Econ Rev 107(11):3257–3287

McKelvey RD, Palfrey TR (1995) Quantal response equilibria for normal form games. Games Econ Behav 10(1):6–38

Pais J, Pintér Á (2008) School choice and information: an experimental study on matching mechanisms. Games Econ Behav 64(1):303–328

Pathak PA, Sönmez T (2013) School admissions reform in Chicago and England: comparing mechanisms by their vulnerability to manipulation. Am Econ Rev 103(1):80–106

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. The authors gratefully acknowledge financial support from Fundación Ramón Areces. J. Alcalde-Unzu gratefully acknowledges financial support from Ministerio de Ciencia, Innovación y Universidades (PGC2018-093542-B-I00 and PID2021-127119NB-I00). F. Klijn gratefully acknowledges financial support from AGAUR–Generalitat de Catalunya (2017-SGR-1359 and 2021-SGR-00416) and the Spanish Agencia Estatal de Investigación (AEI) through grants ECO2017-88130-P and PID2020-114251GB-I00 and the Severo Ochoa Programme for Centres of Excellence in R&D (Barcelona School of Economics CEX2019-000915-S). M. Vorsatz gratefully acknowledges financial support from Ministerio de Ciencia, Innovación y Universidades (PGC2018-096977-B-I00 and PID2021-122919NB-I00).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

We thank an associate editor and two anonymous reviewers for their valuable comments and suggestions which improved the paper. The authors gratefully acknowledge financial support from Fundación Ramón Areces. J. Alcalde-Unzu gratefully acknowledges financial support from Ministerio de Ciencia, Innovación y Universidades (PGC2018-093542-B-I00 and PID2021-127119NB-I00). F. Klijn gratefully acknowledges financial support from AGAUR–Generalitat de Catalunya (2017-SGR-1359 and 2021-SGR-00416) and the Spanish Agencia Estatal de Investigación (AEI) through grants ECO2017-88130-P and PID2020-114251GB-I00 and the Severo Ochoa Programme for Centres of Excellence in R&D (Barcelona School of Economics CEX2019-000915-S). M. Vorsatz gratefully acknowledges financial support from Ministerio de Ciencia, Innovación y Universidades (PGC2018-096977-B-I00 and PID2021-122919NB-I00).

Appendices

Appendix 1: Instructions (translated from Spanish)

1.1 General instructions

Dear participant, thank you for taking part in this experiment. The purpose of this session is to study how people make decisions. The session will last about 90 min. In addition to the 3 Euro show-up fee you can—depending on your decisions—earn some more money. In order to ensure that the experiment takes place in an optimal setting, we would like to ask you to abide to the following rules during the whole experiment:

-

Please, do not communicate with other participants!

-

Do not forget to switch off your mobile phone!

-

Read the instructions carefully. If something is unclear or if you have any question now or at any time during the experiment, please ask one of the experimenters. However, do not ask out loud, raise your hand instead. We will answer questions privately.

If you do not obey the rules, the data becomes useless for us and in this case we will have to exclude you from this experiment and you will not receive any monetary compensation. Payoffs during the experiment are expressed in ECU (experimental currency units). At the end of the session you will receive 1 Euro for each ECU obtained in the course of the experiment.

1.2 Description

The basic decision environment in the experiment is as follows. There are three students—let us call them \(E_1\), \(E_2\), and \(E_3\)—that can be assigned to a school. There are two schools—denoted \(C_1\) and \(C_2\)—and each school can have 1 or 2 available seats (we will specify this later on in these instructions).

Since the schools differ in their location and quality, students have different opinions regarding which school they would like to attend. The desirability of schools in terms of location and quality is expressed in a table such as Table 10. Note: this is an illustrative example and hence any table you will see later in the experiment might be different.

Each column gives the preferences of a particular student. Consider the column that is marked \(E_3\). This column gives the preferences of student \(E_3\) and tells us that he/she would most of all like to obtain a seat in school \(C_1\). Therefore, the least preferred school of student \(E_3\) is \(C_2\). Finally, not obtaining a seat at any of the schools is the worst possible outcome. The columns of \(E_1\) and \(E_2\) have similar interpretations.

Some students have already a brother or sister attending one of the schools. Also, the students differ in walking distance to the schools. The authorities use these and other factors to determine the schools’ priorities over the students. Each school has a priority ordering where all students are ranked. The priority orderings of the schools can be summarized in a table such as Table 11. Note: this is an illustrative example and hence any table you will see later in the experiment might be different.

Each column gives the priority ordering of a particular school. Consider the column that is marked \(C_2\). This column gives the priority ordering of school \(C_2\) and tells us that this school gives the highest priority to receiving student \(E_2\). If this is not possible, then school \(C_2\) gives priority to student \(E_3\) to be enrolled. The lowest priority student of school \(C_2\) is student \(E_1\). The column of \(C_1\) has similar interpretations.

1.3 The matching procedure

To decide if and how students are assigned to schools, the following procedure is followed. It consists of two phases.

Phase 1. Students are asked to simultaneously and independently send an application to one school. For instance, it can happen that the students apply to schools as described by Table 12. Here each column shows the application of a student.

Note: this is an illustrative example of three applications. Each student is free to apply to the school that he/she thinks is appropriate. The application does not necessarily have to coincide with the most preferred. In fact, in our example students \(E_1\) and \(E_3\) apply to their most preferred school, but this is not the case for student \(E_2\).

Phase 2. The students’ applications together with the schools’ priority orderings determine an assignment of students to schools in the following way.

-

Step 1: Each school that has received at least one application accepts the application from the student with the highest priority (among all received applications). If a school with two seats has received at least two applications, then it also accepts the application from the student with the second highest priority (among all received applications). All other applications (if any) are rejected.

-

Step 2: Each student is assigned to the school that she applied to provided that the school accepted her application. If the application of a student was rejected, then the student remains without a seat.

1.4 The experiment

In the beginning of the experiment, the computer randomly divides the participants into groups of 3. The assignment process is random and anonymous, so no participant will know who is in which group. Then, each participant in a group gets randomly assigned the role of a student in such a way that one group member will be in the role of student \(E_1\), another group member will be in the role of student \(E_2\), and a third member will be in the role of student \(E_3\).

You will play the basic decision situation explained above 18 times in total. The composition of the group and the roles of the participants within each group do not change over the course of the experiment (for example, if you are assigned the role of student \(E_2\), then this will be your role until the end of the experiment; also, you will always be playing with the same participant in role \(E_1\) and with the same participant in role \(E_3\)). Every 6 rounds the numbers of seats school offer change. The first table with student preferences and the second table with priority orderings of schools remain the same in all 18 rounds of the experiment.

In each of the 18 rounds, payoffs are such that you receive 1 ECU if you end up at the school you prefer most, \(\varvec{x}\) ECU if you are assigned to your second most preferred school, and 0 ECU if you end up unassigned. At the end of the experiment, we will sum up your payoffs over the 18 rounds. Your final payoff will be equivalent of the sum of the per-round ECUs and the 3 Euro show-up fee.

The first thing you will see when the computer program starts is an illustrative example. Then, there will be one trial round that does not count for your final payoff so that you can familiarize yourself with the computer program. Afterwards, the first of the 18 rounds that count for payment starts.

Note: in the experimental sessions of treatment H, the parameter x took the value 0.7; in the experimental sessions of treatment L, the parameter x took the value 0.3.

Appendix 2: Nash equilibria

Let x be the per-round payoff for obtaining a seat at the second most preferred school, i.e., \(x=0.3\) in treatment L and \(x=0.7\) in treatment H. Recall that a mixed strategy of player/student i is completely described by the probability \(p_i\in [0,1]\) with which the student applies to school \(s_1\) (so, \(1-p_i\) is the probability with which student i applies to school \(s_2\)).

1.1 The set of NE in mixed strategies of problem \({{\mathcal {P}}}_1\)

One can easily verify that the normal-form game is given by Tables 13 and 14.

Thus, the best response correspondences of the three students are as followsFootnote 17:

We compute the set of Nash equilibria by checking the three Cases I, II, and III below. Let \((p_1,p_2,p_3)\) be a Nash equilibrium.

-

I:

\(1-p_3 > x\). It follows from \(br_1\) that \(p_1=1\). Since \(p_1=1, (1-p_1)(1-p_3)=0 < x\). Therefore, \(p_2=0\) by \(br_2\). Hence, \(x< 1 = p_1+p_2-p_1p_2\). So, \(p_3=0\) by \(br_3\). Now one easily verifies that the strategy-profile \((p_1,p_2,p_3)=\varvec{(1,0,0)}\) is indeed a Nash equilibrium.

-

II:

\(1-p_3<x\). It follows from \(br_1\) that \(p_1=0\). Since \(p_1=0\), \((1-p_1)(1-p_3)=1-p_3 < x\). Therefore, \(p_2=0\) by \(br_2\). Hence, \(x> 0 = p_1+p_2-p_1p_2\). So, \(p_3=1\) by \(br_3\). Now one easily verifies that the strategy-profile \((p_1,p_2,p_3)=\varvec{(0,0,1)}\) is indeed a Nash equilibrium.

-

III:

\(1-p_3=x\). We distinguish between two subcases.

-

Subcase \(0< p_1 \le 1\). Then, since \(x> 0\), \((1-p_1)(1-p_3) < x\). Then, \(p_2=0\) by \(br_2\). Since \(p_3 = 1-x \in \{0.3, 0.7\}\) and \(p_3=br_3(p_1,p_2)\), we have \(x = p_1+p_2-p_1p_2 = p_1\). Now one easily verifies that the strategy-profile \((p_1,p_2,p_3)=\varvec{(x,0,1-x)}\) is indeed a Nash equilibrium.

-

Subcase \(p_1 = 0\). Then, \((1-p_1)(1-p_3) = x\). Since \(p_3 = 1-x \in \{0.3, 0.7\}\) and \(p_3=br_3(p_1,p_2)\), we have \(x = p_1+p_2-p_1p_2 = p_2\). One easily verifies that the strategy-profile \((p_1,p_2,p_3)=\varvec{(0,x,1-x)}\) is indeed a Nash equilibrium.

-

1.2 The set of NE in mixed strategies of problem \({{\mathcal {P}}}_2\)

One can easily verify that the normal-form game is given by Tables 15 and 16.

Thus, the best response correspondences are as follows:

We compute the set of Nash equilibria by checking the three cases I, II, and III below. Let \((p_1,p_2,p_3)\) be a Nash equilibrium.

-

I:

\(1-p_3 > p_2x\). It follows from \(br_1\) that \(p_1=1\). Since \(p_1=1\), \((1-p_1)(1-p_3)=0 < x\). Therefore, \(p_2=0\) by \(br_2\). Hence, \(x> 0 = p_1p_2\). So, \(p_3=1\) by \(br_3\). But then \(0=1-p_3 > p_2x =0\). Hence, there is no Nash equilibrium in this case.

-

II:

\(1-p_3 < p_2x\). It follows from \(br_1\) that \(p_1=0\). Since \(p_1=0\), \((1-p_1)(1-p_3)= 1-p_3 < p_2x\le x\). Therefore, \(p_2=0\) by \(br_2\). Hence, \(x> 0 = p_1p_2\). So, \(p_3=1\) by \(br_3\). But then \(0=1-p_3 < p_2x=0\). Hence, there is no Nash equilibrium in this case.

-

III:

\(1-p_3 = p_2x\). We distinguish among three subcases.

-

Subcase \((1-p_1)(1-p_3) > x\). Then, \(p_2=1\) by \(br_2\). Thus, \(1-p_3=x\). From \((1-p_1)(1-p_3) > x\) and \(1-p_3=x\) we obtain \((1-p_1) x > x\), which, by \(x>0\), is equivalent to \(p_1 < 0\). Hence, there is no Nash equilibrium in this subcase.

-

Subcase \((1-p_1)(1-p_3) = x\). Substituting \(1-p_3=p_2x\) yields \((1-p_1) p_2x = x\) or equivalently (because \(x\ne 0\)), \((1-p_1) p_2 = 1\). Then, since \(p_1,p_2\in [0,1]\), \(p_1=0\) and \(p_2=1\). Since \(1-p_3=p_2x\), \(p_3=1-x<1\). However, from \(x>0=p_1p_2\) and \(br_3\) it follows that \(p_3=1\). Hence, there is no Nash equilibrium in this subcase.

-

Subcase \((1-p_1)(1-p_3) < x\). Then, \(p_2=0\) by \(br_2\). From \(1-p_3 = p_2 x\), \(p_3=1\). Now one easily verifies that for each \(\varvec{p_1 \in [0,1]}\) the strategy-profile \((p_1,p_2,p_3)=\varvec{(p_1,0,1)}\) is indeed a Nash equilibrium.

-

1.3 The set of NE in mixed strategies of problem \({{\mathcal {P}}}_3\)

One can easily verify that the normal-form game is given by Tables 17 and 18.

In particular, player 1 has the dominant strategy \(p_1=1\). The best response correspondences of the other two players are then as follows:

We compute the set of Nash equilibria by checking the three cases I, II, and III below. Let \((p_1,p_2,p_3)\) be a Nash equilibrium.

-

I:

\(1-p_3 > x\). It follows from \(br_2\) that \(p_2=1\). Then, from \(br_3\) and \(x<1=p_2\), \(p_3=0\). One easily verifies that the strategy-profile \((p_1,p_2,p_3)=\varvec{(1,1,0)}\) is indeed a Nash equilibrium.

-

II:

\(1-p_3 < x\). It follows from \(br_2\) that \(p_2=0\). Then, from \(br_3\) and \(x>0=p_2\), \(p_3=1\). One easily verifies that the strategy-profile \((p_1,p_2,p_3)=\varvec{(1,0,1)}\) is indeed a Nash equilibrium.

-

III:

\(1-p_3=x\). Since \(p_3 =1-x \in \{0.3,0.7\}\) and \(p_3=br_3(1,p_2)\), \(x=p_1p_2=p_2\). One easily verifies that the strategy-profile \((p_1,p_2,p_3)=\varvec{(1,x,1-x)}\) is indeed a Nash equilibrium.

Appendix 3: Disaggregated data

Figure 5 indicates how subject behavior changes over rounds. One natural hypothesis regarding learning is that repetitions bring subject behavior closer to the limiting logit-QRE. To statistically analyze this hypothesis, we divide the six rounds in which a particular problem is played into two subsets of three rounds each, namely the first three rounds and the last three rounds. Table 19 shows that all differences that are significant at the 5% level (4 comparisons out of a total of 18) support this hypothesis.

Figure 6 shows the distributions over matchings per round.

Table 20 analyzes the hypothesis that repetitions help reaching a stable matching. We divide the six rounds in which a particular problem is played again into two subsets of three rounds each (the first three and the last three rounds, respectively). We observe that in all six instances in Table 20, the frequency of reaching an unstable matching is substantially lower in the last three than in the first three rounds. The effect is significant at the 5%-level in five out of the six instances.

Appendix 4: Lists of length 2

We analyze the robustness of the theoretical results for the immediate acceptance and the student-optimal stable mechanism, respectively, when students submit lists of length 2. Note that the two mechanisms do not coincide in general in this setting.Footnote 18 For each problem \(\mathcal {P} \in \{\mathcal {P}_1,\mathcal {P}_2,\mathcal {P}_3\}\), \(\mathcal {P}^{IA}\) and \(\mathcal {P}^{DA}\) refer to problem \(\mathcal {P}\) under the immediate acceptance and the student-optimal stable mechanism, respectively. A mixed strategy of player/student i is completely described by the probability \(p_i\in [0,1]\) with which the student uses strategy \(s_1,s_2\) (so, \(1-p_i\) is the probability with which student i uses strategy \(s_2,s_1\)). Again, x is the per-round payoff for obtaining a seat at the second most preferred school, i.e., \(x=0.3\) in treatment L and \(x=0.7\) in treatment H. Table 27 shows the probability distribution of matchings induced by the Nash equilibria. There are neither differences between treatments nor between mechanisms. Our most important findings are the logit-QRE predictions in Fig. 7. Together with Table 27 they establish that in both treatments, the student-optimal stable matching is obtained in the limiting logit-QRE. Therefore, we expect that a similar experiment applied to this alternative setting would yield results that are different from our study.

1.1 The set of NE in mixed strategies of problems \({{\mathcal {P}}}_1^{IA}\) and \({{\mathcal {P}}}_1^{DA}\)

One can easily verify that the normal-form game is given by Tables 21 and 22. Note that reporting truthfully is a weakly dominant strategy. The best response correspondences are as follows:

We compute the set of Nash equilibria by checking the two cases I and II below. Let \((p_1,p_2,p_3)\) be a Nash equilibrium.

-

I:

\(p_3\ne 1\). From \(br_1\), \(p_1=1\). Then, from \(br_2\) and \(br_3\), \(p_2\in [0,1]\) and \(p_3=0\), respectively. One easily verifies that for each \(\varvec{p_2\in [0,1]}\), the strategy-profile \((p_1,p_2,p_3)=\varvec{(1,p_2,0)}\) is indeed a Nash equilibrium.

-

II:

\(p_3= 1\). Then, from \(br_3\), \(p_1=p_2=0\). One easily verifies that the strategy-profile \((p_1,p_2,p_3)=\varvec{(0,0,1)}\) is indeed a Nash equilibrium.

1.2 The set of NE in mixed strategies of problems \({{\mathcal {P}}}_2^{IA}\)

One can easily verify that the normal-form game is given by Tables 23 and 24. Thus, the best response correspondences are as follows: