Abstract

We study a continuous one-dimensional spatial model of electoral competition with two office-motivated candidates differentiated by their “intensity valence”, the degree to which they will implement their announced policy. The model generates results that differ significantly from those obtained in models with additive valence. First, the low intensity valence candidate is supported by voters with ideal points on both extremes of the policy space. Second, there exist pure strategy Nash equilibria (PSNE) in which the high intensity valence candidate wins if the distribution of voters in the policy space is sufficiently homogeneous. If, instead, this distribution is sufficiently heterogeneous, there are PSNE in which the low intensity valence candidate wins. For moderate heterogeneity, only mixed strategy equilibria exist.

Notes

Note also that when candidates maximize vote share and the distribution of voters is public information, a pure strategy Nash equilibrium exists if the additive valence of one candidate is sufficiently higher than that of the other candidate. In such a case, the candidate with the highest additive valence obtains 100% of the votes (Dix and Santore 2002). When the difference between the additive valences of the two candidates is not large enough for a pure strategy Nash equilibrium to exist, there exists a mixed strategy equilibrium in which the candidate with the highest additive valence expects a higher percentage of votes. Moreover, a more homogeneous distribution of voters increases the expected percentage of votes that this additive-valence advantaged candidate can obtain. So, again, an increase in voter homogeneity favors the candidate with the highest additive valence, in the same way as voter homogeneity favors the candidate with the highest intensity valence in our model; but a higher additive valence is always an advantage, contrary to a higher intensity valence.

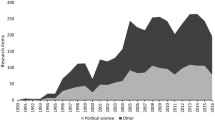

Several papers treat the valence as endogenous and/or private information. Various papers consider that campaign expenditures or costly effort on the part of a candidate may improve his valence, and thus his probability of winning (e.g., Ashworth and de Mesquita 2009; Carrillo and Castanheira 2008; Meirowitz 2008). In Herrera et al. (2008), there is an exogenous valence, uncertain ex-ante, which is revealed after the parties propose their policy platform and carry out their campaign spending. The campaign spending in their model has an impact on the mobilization of voters by bringing them to the booth. An increase in the ex-ante heterogeneity of the values that the valence can take implies that the results of the election are less certain, so it increases polarization; this, in turn, increases campaign spending (because it increases its marginal benefit). Bernhardt et al. (2011) is another model wherein the valence is uncertain ex-ante. They introduce an exogenous valence in a repeated election model à la Duggan (2000); the valence of a candidate is revealed to the electorate if he is elected. In all these models, the valence remains additive and increases the utility of all voters.

Note that K is not candidate-specific. This choice is motivated by Gouret et al. (2011), who show that the intensity valence utility function (i.e., with a parameter K which is not candidate-specific) explains their data as well as a utility function with candidate-specific \(K_j\). More precisely, the intensity valence utility function implies different testable cross-equation parameter restrictions on a system of unrestricted utility functions which includes candidate-specific-additive and candidate-specific-multiplicative parameters at the same time; the number of equations in the system is the number of candidates, given that each equation represents the utility of a voter i if a specific candidate j is elected. The null hypothesis that a system of intensity valence utility functions explains their data as well as a system of unrestricted utility functions is not rejected.

The main result of Krasa and Polborn (2012, p.255, Theorem 3) states that when candidates are uncertain about the distribution of voter preferences and all voters have UCR preferences, if a strict Nash equilibrium exists, then there is policy convergence. That all voters have UCR preferences is a sufficient but not necessary condition for convergence (if a Nash equilibrium exists). Indeed, convergence might occur with non-UCR preferences. That some voters have non-UCR preferences is necessary but not sufficient for policy divergence (Krasa and Polborn 2012, p.256).

Following Gans and Smart (1996), a utility function satisfies the single-crossing property if for two voters i and \(i'\) with ideal points \(a_i\) and \(a_{i'}\) and two policies x and \(x'\), the following statement is true: if \(x>x'\) and \(a_{i'}>a_i\), or if \(x<x'\) and \(a_{i'}<a_i\), then \(u(a_i,x)\ge u(a_i,x')\) implies that \(u(a_{i'},x)\ge u(a_{i'},x')\).

Note that this distributional assumption can be justified empirically. Using data from the 2012 American National Election Study, Hare et al. (2015, p.766) find that the distribution of voters on a liberal-conservative scale “follows a bell curve pattern with a marked peak in the middle”. In the 2007 French data used by Gouret et al. (2011), the distribution of voters on a left-right scale also follows a bell curve with a marked peak in the middle. Assuming a distribution for voter preferences is necessary to find pure (and mixed) strategy Nash equilibria; hence it is natural to choose a distribution which can be justified empirically. However, a natural question is whether the basic message of the model still holds under alternative distributional assumptions. By basic message we mean that a high intensity valence is not always an advantage for winning the election, because its effect depends on the heterogeneity of the distribution of voters in the policy space. We elaborate on this in Sect. 4.3 by making no distributional assumption on f and considering the minimal conditions to have pure strategy Nash equilibria.

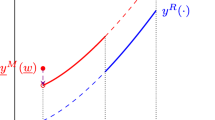

Remark: Panels (C) and (D) of Fig. 2 depict the two boundary situations \(x_2=x_1+\frac{(\lambda _1-1)K}{\lambda _1}\) and \(x_2=x_1-\frac{(\lambda _1-1)K}{\lambda _1}\).

If we had not normalized \(\lambda _2=1\), the results in Proposition 1 would have been the same substituting \(\lambda _1\) by \(\lambda _1/\lambda _2\). For instance, Case (A) would have given \(b=\frac{(\lambda _1/\lambda _2)x_1-x_2}{(\lambda _1/ \lambda _2)-1}-K=\frac{\lambda _1x_1-\lambda _2x_2}{\lambda _1-\lambda _2}-K\) and \(c=\frac{\lambda _1x_1-\lambda _2x_2}{\lambda _1-\lambda _2}+K\) if \(x_2\in \left[ x_1-\frac{(\lambda _1-\lambda _2)K}{\lambda _1},x_1 +\frac{(\lambda _1-\lambda _2)K}{\lambda _1}\right] \).

Note that Proposition 6 does not consider the limit case \(\sigma =\sigma ^{**}\). If \(\sigma =\sigma ^{**}\), Candidate 2 wins with probability 1 by playing a mixed strategy \(\delta _{2}^{*}\) such that \(\text{ supp }(\delta _{2}^{*})\) is a nonempty open interval symmetric with respect to 0 (not too large), and Candidate 2 plays uniformly in this interval.

The function \(\phi \) is defined by \(x_{2}=\phi (x_{1})\Leftrightarrow \pi _{1}(x_{1},x_{2})=\frac{1}{2}\) (as specified a few lines before the statement of Lemma 3 in “Appendix A.8”).

We would like to thank a referee for stressing this point.

By atomless, we mean, as is standard in measure theory, a measure which has no atoms. An atom is a measurable set which has positive measure and contains no subset of smaller positive measure. In particular, a mixed strategy which is a continuous distribution is atomless because the probability of every one-point set is zero.

Mattozzi and Merlo (2015) note that the term “aristocracy” comes from the Greek “aristokratía” meaning “the government of the best” while “mediocracy” is defined as the “rule by the mediocre”.

To be more precise about their paper, Mattozzi and Merlo (2015) compare majoritarian and proportional systems. The majoritarian system is more competitive because it is a winner-takes-all system while the proportional system implies that the probability that each candidate wins the elections is proportional to his effort. The winner-takes-all nature of the majoritarian system makes the electoral return to candidate’s ability higher; thus it is less likely to generate a mediocracy than the proportional system.

Parts (i), (iii), (iv) and (v) of Lemma 3 are also valid if \(\sigma >\sigma ^{**}\).

References

Ansolabehere S, Snyder J (2000) Valence politics and equilibrium in spatial election models. Public Choice 103:327–336

Aragones E, Palfrey TR (2002) Mixed equilibrium in a Downsian model with a favored candidate. J Econ Theory 103:131–161

Aragonès E, Xefteris D (2012) Candidate quality in a Downsian model with a continuous policy space. Games Econ Behav 75:464–480

Aragonès E, Xefteris D (2017) Imperfectly informed voters and strategic extremism. Int Econ Rev 58:439–471

Ashworth S, de Mesquita EB (2009) Elections with platform and valence competition. Games Econ Behav 67:191–216

Bernhardt D, Câmara O, Squintani F (2011) Competence and ideology. Rev Econ Stud 78:487–522

Carrillo JD, Castanheira M (2008) Information and strategic political polarization. Econ J 118:845–874

Dix M, Santore R (2002) Candidate ability and platform choice. Econ Lett 76:189–194

Downs A (1957) An economic theory of democracy. Harper and Row, New York

Duggan J (2000) Repeated elections with asymmetric information. Econ Politics 12:109–135

Evrenk H (2019) Valence politics, ch.13. In: Congleton RD, Grofman BN, Voigt S (eds) The Oxford handbook of public choice. Oxford University Press, Oxford, pp 266–291

Gans JS, Smart M (1996) Majority voting with single-crossing preferences. J Public Econ 59:219–237

Gerber ER, Lewis JB (2004) Beyond the median: Voter preferences, district heterogeneity, and political representation. J Political Econ 112:1364–1383

Gouret F, Hollard G, Rossignol S (2011) An empirical analysis of valence in electoral competition. Soc Choice Welf 37:309–340

Groseclose T (2001) A model of candidate location when one candidate has a valence advantage. Am J Political Sci 45:862–886

Hare C, Armstrong DA II, Baker R, Carroll R, Poole KT (2015) Using Bayesian Aldrich–McKelvey scaling to study citizens’ ideological preferences and perceptions. Am J Political Sci 59:759–774

Herrera H, Levine DK, Martinelli C (2008) Policy platforms, campaign spending and voter participation. J Public Econ 92:501–513

Hummel P (2010) On the nature of equilibria in a Downsian model with candidate valence. Games Econ Behav 70:425–445

Kartik N, McAfee RP (2007) Signaling character in electoral competition. Am Econ Rev 97:852–869

Krasa S, Polborn MK (2010) Competition between specialized candidates. Am Political Sci Rev 104:745–765

Krasa S, Polborn MK (2012) Political competition between differentiated candidates. Games Econ Behav 76:249–271

Mattozzi A, Merlo A (2015) Mediocracy. J Public Econ 130:32–44

Meirowitz A (2008) Electoral contests, incumbency advantages, and campaign finance. J Politics 70:680–699

Merrill III S, Grofman B (1999) A unified theory of voting: directional and proximity spatial models. Cambridge University Press, Cambridge

Miller MK (2011) Seizing the mantle of change: modeling candidate quality as effectiveness instead of valence. J Theor Politics 23:52–68

Soubeyran R (2009) Does a disadvantaged candidate choose an extremist position? Ann Econ Stat 93–94:328–348

Stokes DE (1963) Spatial models of party competition. Am Political Sci Rev 57:368–377

Xefteris D (2012) Mixed strategy equilibrium in a Downsian model with a favored candidate: a comment. J Econ Theory 147:393–396

We are grateful to the Editor Maggie Penn, an associate editor and two referees for valuable criticisms. We also thank Navin Kartik, Annick Laruelle, Martin Obradovits and Marcus Pivato for comments on previous versions, as well as Micael Castanheira, Frédéric Chantreuil, Sébastien Courtin, Eric Danan, Renaud Foucart, Abel François, Etienne Farvaque, Jérôme Héricourt, Antonin Macé, Mathieu Martin, Vincent Merlin, Magali Noël-Linnemer, Matías Núñez, Charlotte Simunek, Julien Vauday and participants at the 3rd Lille Workshop on Political Economy, the 2018 Meeting of the Society for Social Choice and Welfare, the 2017 European Meeting of the Econometric Society, the 2016 ASSET Meeting, a Seminar in Lille and a CREM Seminar in Caen. Fabian Gouret would like to acknowledge the financial support of a “Chaire d’Excellence CNRS”, Labex MME-DII and ANR Elitisme (ANR-14-CE22-0006).

A Proofs

A.1 Proof of Proposition 1

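We prove first that \(\Omega _1(x_1,x_2)=(b,c)\), i.e., the set of voters who strictly prefer Candidate 1, is a bounded open interval, and find the bounds b and c. Let \(u_{1}=u(a,x_{1},\lambda _{1},K)=\lambda _{1}(K-|x_{1}-a|)\) and \(u_{2}=u(a,x_{2},\lambda _{2},K)=K-|x_{2}-a|\). We thus have:

$$\begin{aligned} u_1>u_2\Leftrightarrow \lambda _1(K-|x_1-a|)>K-|x_2-a|\Leftrightarrow (\lambda _1-1)K+|x_2-a|>\lambda _1|x_1-a|. \end{aligned}$$ (A1)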

Note also the three preliminary results in (A2), (A3) and (A4):
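As a worked check, the two one-sided conditions invoked in the case analysis below can be recovered directly from the definitions of \(u_1\) and \(u_2\). This sketch assumes \(\lambda _1>1\) and \(K>0\), and is offered as a reading aid rather than as the authors' own statement of the preliminary results. Since \(u_1>u_2\Leftrightarrow \lambda _1(K-|x_1-a|)>K-|x_2-a|\), removing the absolute values for a voter located on one side of both platforms gives:

$$\begin{aligned} a\le \min \{x_1,x_2\}:\quad & (\lambda _1-1)K+(x_2-a)>\lambda _1(x_1-a)\Leftrightarrow a>\frac{\lambda _1x_1-x_2}{\lambda _1-1}-K,\\ a\ge \max \{x_1,x_2\}:\quad & (\lambda _1-1)K+(a-x_2)>\lambda _1(a-x_1)\Leftrightarrow a<\frac{\lambda _1x_1-x_2}{\lambda _1-1}+K. \end{aligned}$$

These are the forms in which Inequalities (A3) and (A4) are applied in the cases that follow.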

Consider the three following cases: Case (A) \(\left| x_{1}-x_{2}\right| \le \frac{(\lambda _1-1)K}{\lambda _1}\), Case (B) \(x_2>x_1+\frac{(\lambda _1-1)K}{\lambda _1}\), and Case (B’) \(x_2<x_1-\frac{(\lambda _1-1)K}{\lambda _1}\).

- Case (A):

– If \(\left| x_{1}-x_{2}\right| \le \frac{(\lambda _1-1)K}{\lambda _1}\) and \(a\in (\min \{x_1,x_2\},\max \{x_1,x_2\})\), then \(\lambda _1|x_{1}-a|< \lambda _1|x_{1}-x_{2}| \le (\lambda _1-1)K< (\lambda _1-1)K+|x_2-a|\). Inequality (A1) is thus satisfied, so \(u_1>u_2\).

– If \(\left| x_{1}-x_{2}\right| \le \frac{(\lambda _1-1)K}{\lambda _1}\) and \(a\le \min \{x_{1},x_{2}\}\), then \(u_1>u_2\Leftrightarrow a>\frac{\lambda _1x_1-x_2}{\lambda _1-1}-K\) according to Inequality (A3).

– If \(\left| x_{1}-x_{2}\right| \le \frac{(\lambda _1-1)K}{\lambda _1}\) and \(a\ge \max \{x_{1},x_{2}\}\), then \(u_1>u_2\Leftrightarrow a<\frac{\lambda _1x_1-x_2}{\lambda _1-1}+K\) according to Inequality (A4).

- Conclusion:

If \(\left| x_{1}-x_{2}\right| \le \frac{(\lambda _1-1)K}{\lambda _1}\), then \(\Omega _{1}(x_{1},x_{2})=\left( \frac{\lambda _1x_1-x_2}{\lambda _1-1} -K,\frac{\lambda _1x_1-x_2}{\lambda _1-1}+K\right) \).

- Case (B):

– If \(x_2>x_1+\frac{(\lambda _1-1)K}{\lambda _1}\) and \(x_1<a<x_2\), then, according to Inequality (A1), \(u_1>u_2\Leftrightarrow (\lambda _1-1)K+(x_2-a)>\lambda _1(a-x_1)\), i.e., \(u_1>u_2\Leftrightarrow a<\frac{(\lambda _1-1)K+\lambda _1x_1+x_2}{\lambda _1+1}\).

– If \(x_2>x_1+\frac{(\lambda _1-1)K}{\lambda _1}\) and \(a\le x_1\), then \(u_1>u_2\Leftrightarrow a>\frac{\lambda _1x_1-x_2}{\lambda _1-1}-K\) according to Inequality (A3).

– If \(x_2>x_1+\frac{(\lambda _1-1)K}{\lambda _1}\) and \(a\ge x_2\), then \(u_1>u_2\Leftrightarrow a<\frac{\lambda _1x_1-x_2}{\lambda _1-1}+K\) according to Inequality (A4). However, since \(x_2>x_1 +\frac{(\lambda _1-1)K}{\lambda _1}\) and \(a\ge x_2\), note that \(\frac{\lambda _1x_1-x_2}{\lambda _1-1}+K=\frac{\lambda _1 \left[ x_1+\frac{(\lambda _1-1)K}{\lambda _1}\right] -x_2}{\lambda _1-1} <\frac{(\lambda _1-1)x_2}{\lambda _1-1}=x_2\le a\). Hence, \(u_1\le u_2\) if \(a\ge x_2\) and \(x_2>x_1+\frac{(\lambda _1-1)K}{\lambda _1}\).

- Conclusion:

If \(x_2>x_1+\frac{(\lambda _1-1)K}{\lambda _1}\), then \(\Omega _{1}(x_{1},x_{2})=\left( \frac{\lambda _1x_1-x_2}{\lambda _1-1} -K,\frac{(\lambda _1-1)K+\lambda _1x_1+x_2}{\lambda _1+1}\right) \).

- Case (B’):

– If \(x_2<x_1-\frac{(\lambda _1-1)K}{\lambda _1}\) and \(x_2<a<x_1\), then, according to Inequality (A1), \(u_1>u_2\Leftrightarrow (\lambda _1-1)K+(a-x_2)>\lambda _1(x_1-a)\), i.e., \(u_1>u_2\Leftrightarrow a>\frac{(1-\lambda _1)K+\lambda _1x_1+x_2}{\lambda _1+1}\).

– If \(x_2<x_1-\frac{(\lambda _1-1)K}{\lambda _1}\) and \(a\le x_2\), then \(u_1>u_2\Leftrightarrow a>\frac{\lambda _1x_1-x_2}{\lambda _1-1}-K\) according to Inequality (A3). However, since \(x_2<x_1-\frac{(\lambda _1-1)K}{\lambda _1}\) and \(a\le x_2\), note that \(\frac{\lambda _1x_1-x_2}{\lambda _1-1}-K=\frac{\lambda _1 \left[ x_1-\frac{(\lambda _1-1)K}{\lambda _1}\right] -x_2}{\lambda _1-1} >\frac{(\lambda _1-1)x_2}{\lambda _1-1}=x_2\ge a\). Hence \(u_1\le u_2\) if \(a\le x_2\) and \(x_2<x_1-\frac{(\lambda _1-1)K}{\lambda _1}\).

– If \(x_2<x_1-\frac{(\lambda _1-1)K}{\lambda _1}\) and \(a\ge x_1\), then \(u_1>u_2\Leftrightarrow a<\frac{\lambda _1x_1-x_2}{\lambda _1-1} +K\) according to Inequality (A4).

- Conclusion:

If \(x_2<x_1 -\frac{(\lambda _1-1)K}{\lambda _1}\), then \(\Omega _{1}(x_{1},x_{2})=\left( \frac{(1-\lambda _1)K +\lambda _1x_1+x_2}{\lambda _1+1},\frac{\lambda _1x_1-x_2}{\lambda _1-1}+K\right) \).

Now we prove that \(\Omega _2(x_1,x_2)=(-\infty ,b)\cup (c,+\infty )\), i.e., the set of voters who strictly prefer Candidate 2, is a non-convex set. In the three cases (A), (B) and (B’), it is easily shown (replacing \(u_1>u_2\) by \(u_1=u_2\)) that \(I(x_1,x_2)=\{b,c\}\). Given that \(\Omega _2(x_1,x_2)=\mathbb {R}\backslash \left[ \Omega _1(x_1,x_2)\cup I(x_1,x_2)\right] \), we obtain that \(\Omega _2(x_1,x_2)=(-\infty ,b)\cup (c,+\infty )\). \(\square \)
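The three cases of this proof can be sketched numerically. The block below is a minimal illustration, assuming \(\lambda _2=1\), \(\lambda _1>1\) and \(K>0\); the function names `utility`, `omega1_bounds` and `prefers_candidate_1` are hypothetical and not taken from the paper. It returns the bounds b and c of \(\Omega _1(x_1,x_2)\) and allows them to be cross-checked against a direct comparison of \(u_1\) and \(u_2\).

```python
def utility(a, x, lam, K):
    """Intensity valence utility lam * (K - |x - a|) of a voter with ideal point a."""
    return lam * (K - abs(x - a))


def omega1_bounds(x1, x2, lam1, K):
    """Bounds (b, c) of Omega_1(x1, x2) in Cases (A), (B) and (B'), with lambda_2 = 1."""
    if lam1 <= 1 or K <= 0:
        # Assumption of this sketch, not a statement from the paper's text.
        raise ValueError("this sketch assumes lam1 > 1 and K > 0")
    pivot = (lam1 * x1 - x2) / (lam1 - 1)
    gap = (lam1 - 1) * K / lam1
    if abs(x1 - x2) <= gap:  # Case (A)
        return pivot - K, pivot + K
    if x2 > x1 + gap:  # Case (B)
        return pivot - K, ((lam1 - 1) * K + lam1 * x1 + x2) / (lam1 + 1)
    # Case (B')
    return ((1 - lam1) * K + lam1 * x1 + x2) / (lam1 + 1), pivot + K


def prefers_candidate_1(a, x1, x2, lam1, K):
    """Direct comparison u_1 > u_2, used to cross-check the closed-form bounds."""
    return utility(a, x1, lam1, K) > utility(a, x2, 1.0, K)


if __name__ == "__main__":
    # One platform pair per case, with lam1 = 2 and K = 1.
    for x2 in (0.0, 2.0, -2.0):
        print(x2, omega1_bounds(0.0, x2, 2.0, 1.0))
```

Voters outside \([b,c]\) on either side prefer Candidate 2, which is the non-convexity of \(\Omega _2\) established above.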

A.2 Proof of Lemma 1

We first show that (i) \(\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da=\int _{-\frac{(\lambda _1+1)K}{\lambda _1}}^{\frac{(\lambda _1-1)K}{\lambda _1}}f_\sigma (a)da\), and then that (ii) \(0<\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da<\int _{-K}^Kf_\sigma (a)da\), i.e., that \(\int _{-K}^{K}f_\sigma (a)da-\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da>0\).

(i) Making the substitution \(u=-a\), \(du=-da\), we get:

$$\begin{aligned} \int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da=-\int _{\frac{(\lambda _{1}-1)K}{\lambda _{1}}}^{\frac{ -(\lambda _{1}+1)K}{\lambda _{1}}}f_{\sigma }(-u)du= \int _{\frac{-(\lambda _{1}+1)K}{\lambda _{1}}}^{\frac{(\lambda _{1}-1)K}{\lambda _{1}}}f_{\sigma }(-u)du. \end{aligned}$$

Given that \(f_{\sigma }\) is an even function, we have \(\int _{\frac{-(\lambda _{1}+1)K}{\lambda _{1}}}^{\frac{(\lambda _{1}-1)K}{\lambda _{1}}}f_{\sigma }(-u)du=\int _{\frac{-(\lambda _{1}+1)K }{\lambda _{1}}}^{\frac{(\lambda _{1}-1)K}{\lambda _{1}}}f_{\sigma }(u)du\).

(ii) Remark that

$$\begin{aligned} \int _{-K}^{K}f_\sigma (a)da =\int _{-K}^{\frac{(1-\lambda _1)K}{\lambda _1}} f_\sigma (a)da+\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^Kf_\sigma (a)da, \end{aligned}$$ (A5)

and

$$\begin{aligned} \int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}} f_\sigma (a)da=\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{K}f_\sigma (a)da +\int _{K}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da. \end{aligned}$$ (A6)

Subtracting Eq. (A6) from Eq. (A5), we get

$$\begin{aligned} \int _{-K}^{K}f_\sigma (a)da-\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1) K}{\lambda _1}}f_\sigma (a)da=\int _{-K}^{\frac{(1-\lambda _1)K}{\lambda _1}}f_\sigma (a)da -\int _{K}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da. \end{aligned}$$ (A7)

Since \(f_\sigma \) is an even function, \(\int _{-K}^{\frac{(1-\lambda _1)K}{\lambda _1}} f_\sigma (a)da=\int _{\frac{(\lambda _1-1)K}{\lambda _1}}^{K}f_\sigma (a)da\). Eq. (A7) is thus equivalent to:

$$\begin{aligned} \int _{-K}^{K}f_\sigma (a)da-\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1) K}{\lambda _1}}f_\sigma (a)da= & {} \int _{\frac{(\lambda _1-1)K}{\lambda _1}}^{K}f_\sigma (a)da -\int _{K}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da\nonumber \\= & {} \int _{\frac{(\lambda _1-1)K}{\lambda _1}}^{K}\left[ f_\sigma (a)-f_\sigma \left( a+\frac{K}{\lambda _1}\right) \right] da. \end{aligned}$$ (A8)

The limits of integration in the right hand side of (A8) are positive, and \(f_\sigma (a)\) is strictly decreasing on \(\mathbb {R}_+\), so \(\left[ f_\sigma (a)-f_\sigma \left( a+\frac{K}{\lambda _1}\right) \right] >0\) for every a in the range of integration, and (A8) is strictly positive. The result in Lemma 1 follows. \(\square \)
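Both parts of Lemma 1 can be checked numerically for a specific density. The sketch below assumes, purely for illustration, that \(f_\sigma \) is the centred normal density with standard deviation \(\sigma \) (an even, bell-shaped density that is strictly decreasing on \(\mathbb {R}_+\)); the helper names `normal_cdf`, `mass` and `lemma1_quantities` are hypothetical.

```python
import math


def normal_cdf(x, sigma):
    """CDF of N(0, sigma^2); the normal shape is an assumption of this sketch."""
    return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2.0))))


def mass(lo, hi, sigma):
    """Integral of f_sigma over [lo, hi]."""
    return normal_cdf(hi, sigma) - normal_cdf(lo, sigma)


def lemma1_quantities(lam1, K, sigma):
    """Return the two shifted integrals of part (i) and the central integral of part (ii)."""
    shifted = mass((1 - lam1) * K / lam1, (lam1 + 1) * K / lam1, sigma)
    mirrored = mass(-(lam1 + 1) * K / lam1, (lam1 - 1) * K / lam1, sigma)
    central = mass(-K, K, sigma)
    return shifted, mirrored, central


if __name__ == "__main__":
    shifted, mirrored, central = lemma1_quantities(2.0, 1.0, 1.0)
    # Part (i): shifted equals mirrored; part (ii): 0 < shifted < central.
    print(shifted, mirrored, central)
```

For very small \(\sigma \) both integrals round to 1 in floating point, so the strict inequality of part (ii) is only visible numerically for moderate values of \(\sigma \).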

A.3 Proof of Lemma 2

Let \(g_1(\sigma )=\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da\) and \(g_2(\sigma )=\int _{-K}^{K}f_\sigma (a)da\). According to Lemma 1, we know that \(0<g_1(\sigma )<g_2(\sigma )\), \(\forall \sigma >0\).

Recall the definition of \(f_\sigma \) and note that \(g_1(\sigma )=\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}}\frac{1}{\sigma }f_1(\frac{a}{\sigma })da\). Let \(z=\frac{a}{\sigma }\). Then \(g_1(\sigma )=\int _{\frac{(1-\lambda _1)K}{\lambda _1\sigma }}^{\frac{(\lambda _1+1)K}{\lambda _1\sigma }}f_1(z)dz\): one can see that when \(\sigma \) increases, \(g_1(\sigma )\) is an integral of \(f_1\) on a smaller interval. Thus, \(\sigma \mapsto g_1(\sigma )\) is a continuous and strictly decreasing function on \(\mathbb {R}_+^*\), with \(\lim _{\sigma \rightarrow 0^+}g_1(\sigma )=1\) and \(\lim _{\sigma \rightarrow +\infty }g_1(\sigma )=0\).

Hence, there is a unique \(\sigma ^*\) such that \(g_1(\sigma ^*)=\frac{1}{2}\); moreover, \(g_1(\sigma )>\frac{1}{2}\Leftrightarrow \sigma <\sigma ^*\).

Now consider \(g_2(\sigma )=\int _{-K}^{K}\frac{1}{\sigma }f_1(\frac{a}{\sigma })da\). Let \(z=\frac{a}{\sigma }\). Then \(g_2(\sigma )=\int _{-\frac{K}{\sigma }}^{\frac{K}{\sigma }}f_1(z)dz\). When \(\sigma \) increases, \(g_2(\sigma )\) is an integral of \(f_1\) on a smaller interval. Thus, \(\sigma \mapsto g_2(\sigma )\) is a continuous and strictly decreasing function on \(\mathbb {R}_+^*\), with \(\lim _{\sigma \rightarrow 0^+}g_2(\sigma )=1\) and \(\lim _{\sigma \rightarrow +\infty }g_2(\sigma )=0\).

Hence, there is a unique \(\sigma ^{**}\) such that \(g_2(\sigma ^{**})=\frac{1}{2}\). Moreover, \(g_2(\sigma )>\frac{1}{2}\Leftrightarrow \sigma <\sigma ^{**}\).

According to Lemma 1, \(g_1(\sigma )<g_2(\sigma )\), \(\forall \sigma >0\), which implies \(g_1(\sigma ^*)=\frac{1}{2}<g_2(\sigma ^*)\). Given that \(g_2(\sigma ^{**})=\frac{1}{2}\), then \(g_2(\sigma ^{**})<g_2(\sigma ^*)\). Thus, \(\sigma ^{**}>\sigma ^{*}\) since \(g_2\) is a strictly decreasing function. Results (i), (ii) and (iii) in Lemma 2 follow. \(\square \)
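Because \(g_1\) and \(g_2\) are continuous and strictly decreasing, the two thresholds can be located by bisection. The following is a minimal sketch, again assuming a centred normal density for \(f_\sigma \) (an illustrative choice); `g1`, `g2`, `threshold` and `sigma_thresholds` are hypothetical names, and the search bracket \([10^{-6},10^{6}]\) is an arbitrary assumption.

```python
import math


def normal_cdf(x, sigma):
    """CDF of N(0, sigma^2); the normal shape is an assumption of this sketch."""
    return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2.0))))


def g1(sigma, lam1, K):
    """Mass of f_sigma on [(1 - lam1) K / lam1, (lam1 + 1) K / lam1]."""
    lo, hi = (1 - lam1) * K / lam1, (lam1 + 1) * K / lam1
    return normal_cdf(hi, sigma) - normal_cdf(lo, sigma)


def g2(sigma, K):
    """Mass of f_sigma on [-K, K]."""
    return normal_cdf(K, sigma) - normal_cdf(-K, sigma)


def threshold(g, lo=1e-6, hi=1e6, iterations=200):
    """Bisection for the sigma solving g(sigma) = 1/2, with g strictly decreasing."""
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if g(mid) > 0.5:
            lo = mid  # g is still above 1/2, so the root lies to the right
        else:
            hi = mid
    return 0.5 * (lo + hi)


def sigma_thresholds(lam1, K):
    """Return (sigma*, sigma**) for the assumed normal density."""
    sigma_star = threshold(lambda s: g1(s, lam1, K))
    sigma_2star = threshold(lambda s: g2(s, K))
    return sigma_star, sigma_2star


if __name__ == "__main__":
    print(sigma_thresholds(2.0, 1.0))
```

Under these assumptions the computed values satisfy \(\sigma ^{*}<\sigma ^{**}\), in line with the ordering derived in the proof.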

A.4 Proof of Proposition 2

We prove (i) first, i.e., if \(\sigma <\sigma ^{*}\), Candidate 1 wins with certainty if he chooses \(x_{1}^{*}=0\), \(\forall x_{2}\in \mathbb {R}\). We thus have to show that if \(\sigma <\sigma ^{*}\), then \(S_1(0,x_{2})=\int _b^cf_\sigma (a)da >\frac{1}{2}\), \(\forall x_2\in \mathbb {R}\). Since \(\sigma <\sigma ^{*}\), we know from Lemma 2 that \(\frac{1}{2}<\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da<\int _{-K}^Kf_\sigma (a)da\). Let’s consider the three possible cases: Case (A) \(x_2\in \left[ -\frac{(\lambda _1-1)K}{\lambda _1}, \frac{(\lambda _1-1)K}{\lambda _1}\right] \), Case (B) \(x_2>\frac{(\lambda _1-1)K}{\lambda _1}\), and Case (B’) \(x_2<-\frac{(\lambda _1-1)K}{\lambda _1}\) which correspond to Panels (A), (B) and (B’) in Fig. 2 when \(x_1=0\).

- Case (A):

If \(x_2\in \left[ -\frac{(\lambda _1-1)K}{\lambda _1},\frac{(\lambda _1-1)K}{\lambda _1}\right] \) and \(x_1=x_{1}^{*}=0\), then we know from Proposition 1 that \(b=\frac{-x_2}{\lambda _1-1}-K\) and \(c=\frac{-x_2}{\lambda _1-1} +K\). If so, \(S_{1}(0,x_{2})=\int _{\frac{-x_2}{\lambda _1-1} -K}^{\frac{-x_2}{\lambda _1-1}+K}f_{\sigma }(a)da\) and \(\frac{\partial S_1}{\partial x_2}(0,x_2)=\frac{-1}{\lambda _1 -1}f_\sigma \left( \frac{-x_2}{\lambda _1-1}+K\right) +\frac{1}{\lambda _1 -1}f_\sigma \left( \frac{-x_2}{\lambda _1-1}-K\right) \). Given that \(f_{\sigma }\) is an even function, \(f_\sigma \left( \frac{-x_2}{\lambda _1 -1}-K\right) =f_\sigma \left( \frac{x_2}{\lambda _1-1}+K\right) \), so \(\frac{\partial S_1}{\partial x_2}=\frac{-1}{\lambda _1-1} f_\sigma \left( \frac{-x_2}{\lambda _1-1}+K\right) +\frac{1}{\lambda _1 -1}f_\sigma \left( \frac{x_2}{\lambda _1-1}+K\right) \). Furthermore, given that this even function is strictly increasing on \(\mathbb {R}_{-}\), and strictly decreasing on \(\mathbb {R}_{+}\), then \(f_\sigma \left( \frac{x_2}{\lambda _1-1}+K\right) \ge f_\sigma \left( \frac{-x_2}{\lambda _1-1}+K\right) \) if \(x_2\le 0\), while \(f_\sigma \left( \frac{x_2}{\lambda _1-1}+K\right) \le f_\sigma \left( \frac{-x_2}{\lambda _1-1}+K\right) \) if \(x_2\ge 0\). That is \(\frac{\partial S_1}{\partial x_2}\ge 0\Leftrightarrow x_2\le 0\). Thus, \(x_{2}\mapsto S_{1}(0,x_{2})\) is increasing on \(\left[ -\frac{(\lambda _1-1)K}{\lambda _1},0\right] \), and decreasing on \(\left[ 0,\frac{(\lambda _1-1)K}{\lambda _1}\right] \), and it has a minimum at \(x_{2}=\pm \frac{(\lambda _1-1)K}{\lambda _1}\). 
Note that \(S_{1}\left( 0, \frac{(\lambda _1-1)K}{\lambda _1}\right) =\int _{-\frac{(\lambda _1 +1)K}{\lambda _1}}^{\frac{(\lambda _1-1)K}{\lambda _1}}f_\sigma (a)da\) and \(S_{1}\left( 0, -\frac{(\lambda _1-1)K}{\lambda _1}\right) =\int _{\frac{(1 -\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da\), and according to Lemma 1, we have \(S_{1}\left( 0, \frac{(\lambda _1-1)K}{\lambda _1}\right) =S_{1}\left( 0, -\frac{(\lambda _1-1)K}{\lambda _1}\right) \). Now since \(\sigma <\sigma ^*\) and given Lemma 2, we have \(S_{1}\left( 0, -\frac{(\lambda _1-1)K}{\lambda _1}\right) =\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1 +1)K}{\lambda _1}}f_\sigma (a)da>\frac{1}{2}\); we can thus conclude that \(S_{1}(0,x_{2})\ge S_{1}\left( 0,-\frac{(\lambda _1-1)K}{\lambda _1}\right) >\frac{1}{2}\), \(\forall x_{2}\in \left[ -\frac{(\lambda _1-1)K}{\lambda _1},\frac{(\lambda _1 -1)K}{\lambda _1}\right] \).

- Case (B):

If \(x_2>\frac{(\lambda _1-1)K}{\lambda _1}\) and \(x_1=x_{1}^{*}=0\), then we know from Proposition 1 that \(b=\frac{-x_2}{\lambda _1-1}-K\) and \(c=\frac{(\lambda _1-1)K+x_2}{\lambda _1+1}\). We thus have \(S_{1}(0,x_{2})=\int _{\frac{-x_2}{\lambda _1-1}-K}^{\frac{(\lambda _1 -1)K+x_2}{\lambda _1+1}}f_{\sigma }(a)da\) and \(\frac{\partial S_1}{\partial x_2}(0,x_2)=\frac{1}{\lambda _1+1}f_\sigma \left( \frac{(\lambda _1-1)K +x_2}{\lambda _1+1}\right) +\frac{1}{\lambda _1-1}f_\sigma \left( \frac{-x_2}{\lambda _1 -1}-K\right) >0\). Then, \(x_{2}\mapsto S_{1}(0,x_{2})\) is strictly increasing on \(\left[ \frac{(\lambda _1-1)K}{\lambda _1},+\infty \right) \), and has a minimum at \(x_{2}=\frac{(\lambda _1-1)K}{\lambda _1}\). Consequently, \(S_{1}(0,x_{2})> S_{1}\left( 0,\frac{(\lambda _1-1)K}{\lambda _1}\right) >\frac{1}{2}\), \(\forall x_{2}> \frac{(\lambda _1-1)K}{\lambda _1}\).

- Case (B’):

If \(x_{2}< -\frac{(\lambda _1-1)K}{\lambda _1}\) and \(x_1=x_{1}^{*}=0\), we know from Proposition 1 that \(b=\frac{(1-\lambda _1)K+x_2}{\lambda _1+1}\) and \(c=\frac{-x_2}{\lambda _1-1}+K\). We thus have \(S_{1}(0,x_{2})=\int _{\frac{(1-\lambda _1)K+x_2}{\lambda _1 +1}}^{\frac{-x_2}{\lambda _1-1}+K}f_{\sigma }(a)da\) and \(\frac{\partial S_1}{\partial x_2}(0,x_2) =\frac{-1}{\lambda _1-1}f_\sigma \left( \frac{-x_2}{\lambda _1-1}+K\right) -\frac{1}{\lambda _1+1}f_\sigma \left( \frac{(1-\lambda _1)K +x_2}{\lambda _1+1}\right) <0\). Then, \(x_{2}\mapsto S_{1}(0,x_{2})\) is strictly decreasing on \(\left( -\infty ,-\frac{(\lambda _1-1)K}{\lambda _1}\right] \) and has a minimum at \(x_{2}=-\frac{(\lambda _1-1)K}{\lambda _1}\). Consequently, \(S_{1}(0,x_{2})> S_{1}\left( 0,-\frac{(\lambda _1-1)K}{\lambda _1}\right) >\frac{1}{2}\), \(\forall x_{2}< -\frac{(\lambda _1-1)K}{\lambda _1}\).

- Conclusion: we have shown that if \(\sigma <\sigma ^{*}\), then \(S_{1}(0,x_{2})>\frac{1}{2}\), \(\forall x_{2}\in \mathbb {R}\).
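Result (i) can be illustrated on a grid of platforms for Candidate 2. The sketch below assumes a centred normal density for \(f_\sigma \), \(\lambda _2=1\), and the illustrative values \(\lambda _1=2\), \(K=1\), \(\sigma =1\) (which lies below \(\sigma ^{*}\) for this density); all function names are hypothetical. A finite grid is only a sanity check, not a substitute for the proof.

```python
import math


def normal_cdf(x, sigma):
    """CDF of N(0, sigma^2); the normal shape is an assumption of this sketch."""
    return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2.0))))


def omega1_bounds(x1, x2, lam1, K):
    """Bounds (b, c) of Omega_1 from Proposition 1, with lambda_2 = 1 and lam1 > 1."""
    pivot = (lam1 * x1 - x2) / (lam1 - 1)
    gap = (lam1 - 1) * K / lam1
    if abs(x1 - x2) <= gap:  # Case (A)
        return pivot - K, pivot + K
    if x2 > x1 + gap:  # Case (B)
        return pivot - K, ((lam1 - 1) * K + lam1 * x1 + x2) / (lam1 + 1)
    # Case (B')
    return ((1 - lam1) * K + lam1 * x1 + x2) / (lam1 + 1), pivot + K


def vote_share_1(x1, x2, lam1, K, sigma):
    """S_1(x1, x2): mass of f_sigma on the interval (b, c)."""
    b, c = omega1_bounds(x1, x2, lam1, K)
    return normal_cdf(c, sigma) - normal_cdf(b, sigma)


def min_share_at_centre(lam1, K, sigma, half_width=10.0, steps=2000):
    """Smallest S_1(0, x2) over an evenly spaced grid of x2 in [-half_width, half_width]."""
    grid = [-half_width + 2.0 * half_width * i / steps for i in range(steps + 1)]
    return min(vote_share_1(0.0, x2, lam1, K, sigma) for x2 in grid)


if __name__ == "__main__":
    # The minimum is attained at x2 = +/- (lam1 - 1) K / lam1, as in Case (A).
    print(min_share_at_centre(2.0, 1.0, 1.0))
```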

Now we prove (ii), i.e., if \(\sigma >\sigma ^{**}\), Candidate 2 wins with certainty if he chooses \(x_{2}^{*}=0\), \(\forall x_{1}\in \mathbb {R}\). We thus have to show that if \(\sigma >\sigma ^{**}\), then \(S_1(x_1,0)=\int _b^cf_\sigma (a)da<\frac{1}{2}\), \(\forall x_1\in \mathbb {R}\). Since \(\sigma >\sigma ^{**}\), we know from Lemma 2 that \(\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1 +1)K}{\lambda _1}}f_\sigma (a)da<\int _{-K}^Kf_\sigma (a)da<\frac{1}{2}\). Let’s consider the three possible cases: Case (A) \(x_1\in \left[ -\frac{(\lambda _1-1)K}{\lambda _1},\frac{(\lambda _1-1)K}{\lambda _1}\right] \), Case (B) \(x_1<-\frac{(\lambda _1-1)K}{\lambda _1}\), and Case (B’) \(x_1>\frac{(\lambda _1-1)K}{\lambda _1}\) which correspond to Panels (A), (B) and (B’) in Fig. 2 when \(x_2=0\).

- Case (A):

If \(x_1\in \left[ -\frac{(\lambda _1-1)K}{\lambda _1},\frac{(\lambda _1-1)K}{\lambda _1}\right] \) and \(x_2=x_{2}^{*}=0\), then we know from Proposition 1 that \(b=\frac{\lambda _1x_1}{\lambda _1-1}-K\) and \(c=\frac{\lambda _1x_1}{\lambda _1-1}+K\). If so, \(S_{1}(x_1,0)=\int _{\frac{\lambda _1x_1}{\lambda _1-1} -K}^{\frac{\lambda _1x_1}{\lambda _1-1}+K}f_{\sigma }(a)da\) and \(\frac{\partial S_1}{\partial x_1}(x_1,0)=\frac{\lambda _1}{\lambda _1-1}f_\sigma \left( \frac{\lambda _1x_1}{\lambda _1-1} +K\right) -\frac{\lambda _1}{\lambda _1-1}f_\sigma \left( \frac{\lambda _1x_1}{\lambda _1-1}-K\right) \). Given that \(f_{\sigma }\) is an even function, strictly increasing on \(\mathbb {R}_{-}\), and strictly decreasing on \(\mathbb {R}_{+}\), \(f_\sigma \left( \frac{\lambda _1x_1}{\lambda _1-1}+K\right) \ge f_\sigma \left( \frac{\lambda _1x_1}{\lambda _1-1}-K\right) \) if \(x_1\le 0\), while \(f_\sigma \left( \frac{\lambda _1x_1}{\lambda _1-1}+K\right) \le f_\sigma \left( \frac{\lambda _1x_1}{\lambda _1-1}-K\right) \) if \(x_1\ge 0\). That is \(\frac{\partial S_1}{\partial x_1}\ge 0\Leftrightarrow x_1\le 0\). Thus, \(x_{1}\mapsto S_{1}(x_1,0)\) is strictly increasing on \(\left[ -\frac{(\lambda _1-1)K}{\lambda _1},0\right] \), and strictly decreasing on \(\left[ 0,\frac{(\lambda _1-1)K}{\lambda _1}\right] \), and it has a maximum at \(x_{1}=0\). \(S_1(0,0)=\int _{-K}^Kf_\sigma (a)da\), and since \(\sigma >\sigma ^{**}\), we know from Lemma 2 that \(S_1(0,0)=\int _{-K}^Kf_\sigma (a)da<\frac{1}{2}\). We can thus conclude that \(S_{1}(x_{1},0)\le S_{1}(0,0)<\frac{1}{2}\), \(\forall x_{1}\in \left[ -\frac{(\lambda _1-1)K}{\lambda _1},\frac{(\lambda _1-1)K}{\lambda _1}\right] \).

- Case (B):

If \(x_1<-\frac{(\lambda _1-1)K}{\lambda _1}\) and \(x_2=x_{2}^{*}=0\), then we know from Proposition 1 that \(b=\frac{\lambda _1x_1}{\lambda _1-1}-K\) and \(c=\frac{(\lambda _1-1)K+\lambda _1x_1}{\lambda _1+1}\). If so, \(S_{1}(x_{1},0)=\int _{\frac{\lambda _1x_1}{\lambda _1-1}-K}^{\frac{(\lambda _1-1) K+\lambda _1x_1}{\lambda _1+1}}f_{\sigma }(a)da\). Now remark that if \(x_1<-\frac{(\lambda _1-1)K}{\lambda _1}\), then \(\frac{(\lambda _1-1)K+\lambda _1x_1}{\lambda _1+1}<0\), so \(S_{1}(x_{1},0)=\int _{\frac{\lambda _{1}x_{1}}{\lambda _{1}-1}-K}^{\frac{ (\lambda _{1}-1)K+\lambda _{1}x_{1}}{\lambda _{1}+1}}f_{\sigma }(a)da<\int _{ \frac{\lambda _{1}x_{1}}{\lambda _{1}-1}-K}^{0}f_{\sigma }(a)da<\int _{-\infty }^{0}f_{\sigma }(a)da=\frac{1}{2}\), i.e., \(S_{1}(x_{1},0)<\frac{1}{2}\), \(\forall x_{1}<-\frac{(\lambda _{1}-1)K}{ \lambda _{1}}\).

- Case (B’):

If \(x_1>\frac{(\lambda _1-1)K}{\lambda _1}\) and \(x_2=x_{2}^{*}=0\), then we know from Proposition 1 that \(b=\frac{(1-\lambda _1)K+\lambda _1x_1}{\lambda _1+1}\) and \(c=\frac{\lambda _1x_1}{\lambda _1-1}+K\). If so, \(S_{1}(x_{1},0)=\int _{\frac{(1-\lambda _1)K+\lambda _1x_1}{\lambda _1+1}}^{\frac{\lambda _1x_1}{\lambda _1-1}+K}f_{\sigma }(a)da\). Now remark that if \(x_1>\frac{(\lambda _1-1)K}{\lambda _1}\), then \(\frac{(1-\lambda _1)K+\lambda _1x_1}{\lambda _1+1}>0\), so \(S_{1}(x_{1},0)=\int _{\frac{(1-\lambda _1)K+\lambda _1x_1}{\lambda _1 +1}}^{\frac{\lambda _1x_1}{\lambda _1-1}+K}f_{\sigma }(a)da<\int _{0}^{\frac{\lambda _1x_1}{\lambda _1-1}+K}f_{\sigma }(a)da\le \int _{0}^{+\infty }f_\sigma (a)da=\frac{1}{2}\), i.e., \(S_{1}(x_{1},0)<\frac{1}{2}\), \(\forall x_1>\frac{(\lambda _1-1)K}{\lambda _1}\).

- Conclusion:

We have shown that if \(\sigma >\sigma ^{**}\), then \(S_{1}(x_1,0)<\frac{1}{2}\), \(\forall x_{1}\in \mathbb {R}\).
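As a numerical sanity check of part (ii), the following sketch evaluates \(S_1(x_1,0)\) on a grid, using the bounds \(b\) and \(c\) quoted above from Proposition 1. It is only an illustration under stated assumptions: \(f_\sigma\) is taken to be the normal density with mean 0 and standard deviation \(\sigma\) (the proof only requires an even, single-peaked scale family), the values \(K=1\), \(\lambda_1=2\), \(\sigma=2\) are arbitrary, and the function names are hypothetical.

```python
import math

def normal_cdf(z):
    """Standard normal CDF; assumed shape for f_1 (illustration only)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def share_1(x1, x2, lam, K, sigma):
    """S_1(x1, x2): mass of f_sigma on (b, c), with b and c as quoted from Proposition 1."""
    t = (lam - 1.0) * K / lam                  # half-width of the Case (A) region
    centre = (lam * x1 - x2) / (lam - 1.0)
    if abs(x1 - x2) <= t:                      # Case (A)
        b, c = centre - K, centre + K
    elif x2 > x1 + t:                          # Case (B)
        b = centre - K
        c = ((lam - 1.0) * K + lam * x1 + x2) / (lam + 1.0)
    else:                                      # Case (B')
        b = ((1.0 - lam) * K + lam * x1 + x2) / (lam + 1.0)
        c = centre + K
    return normal_cdf(c / sigma) - normal_cdf(b / sigma)

K, lam = 1.0, 2.0
# For the normal case, sigma** solves 2*Phi(K/sigma) - 1 = 1/2, i.e. K/sigma = Phi^{-1}(0.75).
sigma_2star = K / 0.6744897501960817
sigma = 2.0                                    # an arbitrary value above sigma**

grid = [i / 100.0 for i in range(-1000, 1001)]
worst = max(share_1(x1, 0.0, lam, K, sigma) for x1 in grid)
print("largest share of Candidate 1 against x2 = 0:", worst)
```

On this grid the largest share stays below one half, in line with Cases (A), (B) and (B’) above.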

Finally, we prove (iii), i.e., if \(\sigma ^{* }\le \sigma \le \sigma ^{**}\), there is no political equilibrium. Since \(\sigma ^{*}\le \sigma \le \sigma ^{**}\), we know from Lemma 2 that \(\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1 +1)K}{\lambda _1}}f_\sigma (a)da\le \frac{1}{2}\le \int _{-K}^Kf_\sigma (a)da\). Because of Lemma 1, one of these inequalities must be strict, so without loss of generality, we consider that \(\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}} f_\sigma (a)da<\frac{1}{2}\le \int _{-K}^Kf_\sigma (a)da\). Now let \((\overline{x}_{1},\overline{x}_{2})\in \mathbb {R}^{2}\). We want to show that \((\overline{x}_{1},\overline{x}_{2})\) is not a political equilibrium. It is sufficient to prove that there exist \(x_{1}\) and \(x_{2}\) such that \(S_{1}(x_{1},\overline{x}_{2})\ge \frac{1 }{2}> S_{1}(\overline{x}_{1},x_{2})\). We proceed in two steps.

First step: we first show that \(S_{1}(x_{1},\overline{x}_{2})\ge \frac{1}{2}\) if \(x_{1}=\frac{\overline{x}_{2}}{\lambda _1}\), \(\forall \overline{x}_{2}\).


If \(\overline{x}_{2}\in \left[ -K,K\right] \), then \(\left| \overline{x}_{2}-x_1\right| = \left| \frac{(\lambda _1-1)\overline{x}_{2}}{\lambda _1}\right| \le \frac{(\lambda _1-1)K}{\lambda _1}\). We are thus in Case (A), where \(b=\frac{\lambda _1x_1-\overline{x}_2}{\lambda _1-1}-K\) and \(c=\frac{\lambda _1x_1-\overline{x}_2}{\lambda _1-1}+K\) (see Proposition 1). Given that \(x_{1}=\frac{\overline{x}_{2}}{\lambda _1}\), then \(b=-K\) and \(c=K\), so \(S_{1}\left( \frac{\overline{x}_{2}}{\lambda _1},\overline{x}_{2}\right) =\int _{-K}^{+K}f_{\sigma }(a)da\ge \frac{1}{2}\).


If \(\overline{x}_{2}>K\), then \(\overline{x}_{2}-x_1=\frac{(\lambda _1-1)\overline{x}_{2}}{\lambda _1} >\frac{(\lambda _1-1)K}{\lambda _1}\). We are thus in Case (B), where \(b=\frac{\lambda _1x_1-\overline{x}_2}{\lambda _1-1}-K\) and \(c=\frac{(\lambda _1-1)K+\lambda _1x_1+\overline{x}_2}{\lambda _1+1}\) (see Proposition 1). Given that \(x_{1}=\frac{\overline{x}_{2}}{\lambda _1}\), then \(b=-K\) and \(c=\frac{(\lambda _1-1)K+2\overline{x}_2}{\lambda _1+1}>K\) (since \(\overline{x}_{2}>K\)). Hence, \(S_{1}\left( \frac{\overline{x}_{2}}{\lambda _1}, \overline{x}_{2}\right) =\int _{-K}^{\frac{(\lambda _1-1)K +2\overline{x}_2}{\lambda _1+1}}f_{\sigma }(a)da>\int _{-K}^{K}f_{\sigma }(a)da\ge \frac{1}{2}\).


If \(\overline{x}_{2}<-K\), then \(\overline{x}_2-x_1 =\frac{(\lambda _1-1)\overline{x}_{2}}{\lambda _1}<-\frac{(\lambda _1-1)K}{\lambda _1}\). We are thus in Case (B’), where \(b=\frac{(1-\lambda _1)K+\lambda _1x_1+\overline{x}_2}{\lambda _1+1}\) and \(c=\frac{\lambda _1x_1-\overline{x}_2}{\lambda _1-1}+K\) (see Proposition 1). Given that \(x_{1}=\frac{\overline{x}_{2}}{\lambda _1}\), then \(b=\frac{(1-\lambda _1)K+2\overline{x}_2}{\lambda _1+1}<-K\) (since \(\overline{x}_{2}<-K\)) and \(c=K\). Hence, \(S_{1}\left( \frac{\overline{x}_{2}}{\lambda _1}, \overline{x}_{2}\right) =\int _{\frac{(1-\lambda _1)K +2\overline{x}_2}{\lambda _1+1}}^{K}f_{\sigma } (a)da> \int _{-K}^{K}f_{\sigma }(a)da\ge \frac{1}{2}\).

Second step: we now show that \(S_{1}(\overline{x}_{1},x_{2})<\frac{1}{2}\) when \(x_{2}=\overline{x}_{1}-\frac{(\lambda _1-1)K}{\lambda _1}\) if \(\overline{x}_{1}\ge 0\), and \(x_{2}=\overline{x}_{1}+\frac{(\lambda _1-1)K}{\lambda _1}\) if \(\overline{x}_{1}<0\). In both cases \(\left| \overline{x}_{1}-x_{2}\right| =\frac{(\lambda _1-1)K}{\lambda _1}\), so we are in Case (A), where \(b=\frac{\lambda _1\overline{x}_1-x_2}{\lambda _1-1}-K\) and \(c=\frac{\lambda _1\overline{x}_1-x_2}{\lambda _1-1}+K\) (see Proposition 1).


If \(\overline{x}_{1}\ge 0\), and given that \(x_{2}=\overline{x}_{1}-\frac{(\lambda _1-1)K}{\lambda _1}\), then \(b=\overline{x}_1+\frac{(1-\lambda _1)K}{\lambda _1}\) and \(c=\overline{x}_{1}+\frac{(\lambda _1+1)K}{\lambda _1}\), so \(S_{1}\left( \overline{x}_{1},\overline{x}_{1} -\frac{(\lambda _1-1)K}{\lambda _1}\right) =\int _{\overline{x}_1 +\frac{(1-\lambda _1)K}{\lambda _1}}^{\overline{x}_{1} +\frac{(\lambda _1+1)K}{\lambda _1}}f_{\sigma }(a)da\le \int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_{\sigma }(a)da<\frac{1}{2}\).


If \(\overline{x}_{1}<0\), and given that \(x_{2}=\overline{x}_{1}+\frac{(\lambda _1-1)K}{\lambda _1}\), then \(b=\overline{x}_1-\frac{(\lambda _1+1)K}{\lambda _1}\) and \(c=\overline{x}_{1}+\frac{(\lambda _1-1)K}{\lambda _1}\), so \(S_{1}\left( \overline{x}_{1},\overline{x}_{1}+\frac{(\lambda _1 -1)K}{\lambda _1}\right) =\int _{\overline{x}_1-\frac{(\lambda _1 +1)K}{\lambda _1}}^{\overline{x}_{1}+\frac{(\lambda _1 -1)K}{\lambda _1}}f_{\sigma }(a)da< \int _{-\frac{(\lambda _1 +1)K}{\lambda _1}}^{\frac{(\lambda _1-1)K}{\lambda _1}}f_{\sigma }(a)da\). Because of Lemma 1, we know that \(\int _{-\frac{(\lambda _1+1)K}{\lambda _1}}^{\frac{(\lambda _1 -1)K}{\lambda _1}}f_{\sigma }(a)da=\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_{\sigma }(a)da\), so \(S_{1}\left( \overline{x}_{1},\overline{x}_{1}+\frac{(\lambda _1 -1)K}{\lambda _1}\right)<\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1 +1)K}{\lambda _1}}f_{\sigma }(a)da<\frac{1}{2}\).

Conclusion: we have shown that if \(\sigma ^{*}\le \sigma \le \sigma ^{**}\), then there is no political equilibrium. \(\square \)
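The two deviations used in this proof can be checked numerically. The sketch below is an illustration only: it assumes a normal \(f_\sigma\), picks \(K=1\), \(\lambda_1=2\) and \(\sigma=1.44\) (a value for which both Lemma 2 inequalities hold strictly under that assumption), and uses hypothetical function names. It evaluates Candidate 1's share after the deviation \(x_1=\overline{x}_2/\lambda_1\) of the first step and after Candidate 2's deviation of the second step.

```python
import math

def normal_cdf(z):
    """Standard normal CDF; assumed shape for f_1 (illustration only)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def share_1(x1, x2, lam, K, sigma):
    """S_1(x1, x2) with the bounds b, c of Cases (A), (B), (B') from Proposition 1."""
    t = (lam - 1.0) * K / lam
    centre = (lam * x1 - x2) / (lam - 1.0)
    if abs(x1 - x2) <= t:                      # Case (A)
        b, c = centre - K, centre + K
    elif x2 > x1 + t:                          # Case (B)
        b, c = centre - K, ((lam - 1.0) * K + lam * x1 + x2) / (lam + 1.0)
    else:                                      # Case (B')
        b, c = ((1.0 - lam) * K + lam * x1 + x2) / (lam + 1.0), centre + K
    return normal_cdf(c / sigma) - normal_cdf(b / sigma)

K, lam, sigma = 1.0, 2.0, 1.44                 # illustrative values (assumption)
t = (lam - 1.0) * K / lam

# The two integrals compared with 1/2 in Lemma 2.
centred = normal_cdf(K / sigma) - normal_cdf(-K / sigma)
shifted = (normal_cdf((lam + 1.0) * K / (lam * sigma))
           - normal_cdf((1.0 - lam) * K / (lam * sigma)))

def deviation_of_1(x2_bar):
    """First step: Candidate 1 answers x2_bar with x1 = x2_bar / lam."""
    return x2_bar / lam

def deviation_of_2(x1_bar):
    """Second step: Candidate 2 moves to the edge of Case (A), away from the origin's side."""
    return x1_bar - t if x1_bar >= 0 else x1_bar + t

points = [i / 10.0 for i in range(-50, 51)]
first_step = min(share_1(deviation_of_1(x2), x2, lam, K, sigma) for x2 in points)
second_step = max(share_1(x1, deviation_of_2(x1), lam, K, sigma) for x1 in points)
print(shifted, centred, first_step, second_step)
```

For every starting profile on the grid, Candidate 1 can reach at least one half and Candidate 2 can push Candidate 1 strictly below one half, so no profile survives both deviations.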

1.5 A.5 Proof of Proposition 3

We prove (i) first, i.e., if \(\sigma <\sigma ^{*}\), then \(X_{1}^{*}=(-\alpha ,\alpha )\) where \(\alpha \) is the unique positive real which satisfies \(\int _{\alpha +\frac{(1-\lambda _1)K}{\lambda _1}}^{\alpha +\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da=\frac{1}{2}\). Moreover, we prove that \(\alpha \in \left( 0,\frac{(\lambda _1-1)K}{\lambda _1}\right) \), \(\sigma \mapsto \alpha (\sigma )\) is a decreasing function on \((0,\sigma ^*)\), with \(\lim _{\sigma \rightarrow {\sigma ^*}^{-}} \alpha (\sigma )=0\) and \(\lim _{\sigma \rightarrow 0^+} \alpha (\sigma )=\frac{(\lambda _1-1)K}{\lambda _1}\). We proceed in three steps.

- First step:

First we show that there is a unique positive real \(\alpha \) satisfying \(\int _{\alpha +\frac{(1-\lambda _1)K}{\lambda _1}}^{\alpha +\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da=\frac{1}{2}\), and \(\alpha \in \left( 0,\frac{(\lambda _1-1)K}{\lambda _1}\right) \). Since \(\sigma <\sigma ^{*}\), we know from Lemma 2 that \(\frac{1}{2}<\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da<\int _{-K}^{K}f_\sigma (a)da\). Now let \(g(v)=\int _{v-K}^{v+K}f_{\sigma }(a)da\), for \(v\in \mathbb {R}\), we have \(g^{\prime }(v)=f_{\sigma }(v+K)-f_{\sigma }(v-K)\). Given that \(f_{\sigma }\) is an even function strictly increasing on \(\mathbb {R}_{-}\), and strictly decreasing on \(\mathbb {R}_{+}\), \(f_{\sigma }\left( v+K\right) < f_{\sigma }\left( v-K\right) \) if \(v> 0\), and \(f_{\sigma }\left( v+K\right) > f_{\sigma }\left( v-K\right) \) if \(v< 0\). It implies that g is an even continuous function, strictly increasing on \(\mathbb {R}_{-}\), and strictly decreasing on \(\mathbb {R}_{+}\). Since \(g\left( \frac{K}{\lambda _1}\right) = \int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da>\frac{1}{2}\) and \(g(K)=\int _{0}^{2K}f_{\sigma }(a)da<\int _{0}^{+\infty }f_{\sigma }(a)da=\frac{1}{2}\), there exists a unique \(v^{*}>0\) such that \(g(v^{*})=\frac{1}{2}\). And we must have \(\frac{K}{\lambda _1}<v^{*}<K\). Now let \(\alpha =v^{*}-\frac{K}{\lambda _1}\), then \(0<\alpha <\frac{(\lambda _1-1)K}{\lambda _1}\). Given that \(g(v^{*})=\int _{v^{*}-K}^{v^{*}+K}f_{\sigma }(a)da=\frac{1}{2}\),

$$\begin{aligned} g\left( \alpha +\frac{K}{\lambda _1}\right) =\int _{\alpha +\frac{(1-\lambda _1)K}{\lambda _1}}^{\alpha +\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da=\frac{1}{2}. \end{aligned}$$(A9)
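The construction of \(\alpha\) in this first step can be reproduced numerically by bisection on \(g(v)=\frac{1}{2}\) over \(\left( \frac{K}{\lambda_1},K\right)\). The sketch below is a minimal illustration assuming a normal \(f_\sigma\) and the arbitrary values \(K=1\), \(\lambda_1=2\), \(\sigma=1\) (which satisfy \(g(K/\lambda_1)>\frac{1}{2}\) under that assumption); the helper names are hypothetical.

```python
import math

def normal_cdf(z):
    """Standard normal CDF; assumed shape for f_1 (illustration only)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def g(v, K, sigma):
    """g(v): mass of f_sigma on [v - K, v + K]."""
    return normal_cdf((v + K) / sigma) - normal_cdf((v - K) / sigma)

def alpha(lam, K, sigma, iters=100):
    """Solve g(v*) = 1/2 on (K/lam, K) by bisection and return alpha = v* - K/lam."""
    lo, hi = K / lam, K
    if not g(lo, K, sigma) > 0.5:
        raise ValueError("g(K/lam) must exceed 1/2, i.e. sigma must be below sigma*")
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if g(mid, K, sigma) > 0.5:             # g is decreasing on R_+, so the root is to the right
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi) - K / lam

K, lam, sigma = 1.0, 2.0, 1.0                  # illustrative values (assumption)
a = alpha(lam, K, sigma)
print("alpha =", a, " g(alpha + K/lam) =", g(a + K / lam, K, sigma))
```

The returned value lies strictly between 0 and \(\frac{(\lambda_1-1)K}{\lambda_1}\) and satisfies Eq. (A9) up to numerical tolerance.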

- Second step:

Now we show that \(X_{1}^*=(-\alpha ,\alpha )\).


First, we show that if \(x_{1}\in (-\alpha ,\alpha )\), then \(x_{1}\in X_{1}^{*}\), i.e., \(S_{1}(x_{1},x_{2})>\frac{1}{2}\), \(\forall x_{2}\). Consider the three possible cases: Case (A) \(-\frac{(\lambda _1-1)K}{\lambda _1}\le x_{1}-x_{2}\le \frac{(\lambda _1-1)K}{\lambda _1}\), Case (B) \(x_{2}>x_{1}+\frac{(\lambda _1-1)K}{\lambda _1}\), and Case (B’) \(x_{2}<x_{1}-\frac{(\lambda _1-1)K}{\lambda _1}\).

- Case (A):

If \(-\frac{(\lambda _1-1)K}{\lambda _1}\le x_{1}-x_{2}\le \frac{(\lambda _1-1)K}{\lambda _1}\), then \(b=\frac{\lambda _1x_1-x_2}{\lambda _1-1}-K\) and \(c=\frac{\lambda _1x_1-x_2}{\lambda _1-1}+K\) (see Proposition 1). So \(S_{1}(x_{1},x_{2})= \int _{\frac{\lambda _1x_1-x_2}{\lambda _1-1}-K}^{\frac{\lambda _1x_1-x_2}{\lambda _1-1}+K}f_{\sigma }(a)da=g\left( \frac{\lambda _1x_1-x_2}{\lambda _1-1}\right) \).

If \(-\frac{(\lambda _1-1)K}{\lambda _1}\le x_{1}-x_{2}\le \frac{(\lambda _1-1)K}{\lambda _1}\) and \(-\alpha<x_{1}<\alpha \) (so \(-(\lambda _1-1)\alpha<(\lambda _1-1)x_{1}<(\lambda _1-1)\alpha \)), then adding these two inequalities we obtain \(-(\lambda _1-1)\left( \alpha +\frac{K}{\lambda _1}\right)<\lambda _1x_{1}-x_2<(\lambda _1-1)\left( \alpha +\frac{K}{\lambda _1}\right) \). Dividing by \((\lambda _1-1)\), then \(-\left( \alpha +\frac{K}{\lambda _1}\right)<\frac{\lambda _1x_1-x_2}{\lambda _1-1}<\alpha +\frac{K}{\lambda _1}\). Since g is an even function, strictly increasing on \(\mathbb {R}_{-}\), and strictly decreasing on \(\mathbb {R}_{+}\), then \(g\left( \frac{\lambda _1x_1-x_2}{\lambda _1-1}\right) >g\left( \alpha +\frac{K}{\lambda _1}\right) =\frac{1}{2}\) (see Eq. (A9)). Hence, if \(x_{1}\in (-\alpha ,\alpha )\), \(S_{1}(x_{1},x_{2})>\frac{1}{2}\), \(\forall x_{2}\in \left[ x_1-\frac{(\lambda _1-1)K}{\lambda _1},x_1 +\frac{(\lambda _1-1)K}{\lambda _1}\right] \).

- Case (B):

If \(x_2>x_1+\frac{(\lambda _1-1)K}{\lambda _1}\), then \(b=\frac{\lambda _1x_1-x_2}{\lambda _1-1}-K\) and \(c=\frac{(\lambda _1-1)K+\lambda _1x_1+x_2}{\lambda _1+1}\) (see Proposition 1), and \(S_1(x_1,x_2)=\int _{\frac{\lambda _1x_1 -x_2}{\lambda _1-1}-K}^{\frac{(\lambda _1-1)K+\lambda _1x_1+x_2}{\lambda _1+1}}f_{\sigma } (a)da\). Thus, it is easy to see that if \(x_2>x_1+\frac{(\lambda _1-1)K}{\lambda _1}\), then \(S_1(x_1,x_2)\) is an increasing function of \(x_2\), and \(S_{1}(x_{1},x_{2})>S_{1} \left( x_{1},x_1+\frac{(\lambda _1-1)K}{\lambda _1}\right) \), with \(S_{1}\left( x_{1},x_1+\frac{(\lambda _1-1)K}{\lambda _1}\right) >\frac{1}{2}\) according to Case (A). Hence, if \(x_{1}\in (-\alpha ,\alpha )\), \(S_{1}(x_{1},x_{2})>\frac{1}{2}\), \(\forall x_{2}>x_1+\frac{(\lambda _1-1)K}{\lambda _1}\).

- Case (B’):

If \(x_2<x_1-\frac{(\lambda _1-1)K}{\lambda _1}\), then \(b=\frac{(1-\lambda _1)K+\lambda _1x_1+x_2}{\lambda _1+1}\) and \(c=\frac{\lambda _1x_1-x_2}{\lambda _1-1}+K\) (see Proposition 1), and \(S_1(x_1,x_2)=\int _{\frac{(1-\lambda _1)K +\lambda _1x_1+x_2}{\lambda _1+1}}^{\frac{\lambda _1x_1-x_2}{\lambda _1-1}+K}f_{\sigma }(a)da\). Thus, it is easy to see that if \(x_2<x_1-\frac{(\lambda _1-1)K}{\lambda _1}\), \(S_1(x_1,x_2)\) is a decreasing function of \(x_2\), and \(S_{1}(x_{1},x_{2})>S_{1} \left( x_{1},x_1-\frac{(\lambda _1-1)K}{\lambda _1}\right) \), with \(S_{1}\left( x_{1},x_1-\frac{(\lambda _1-1)K}{\lambda _1}\right) >\frac{1}{2}\) according to Case (A). Hence, if \(x_{1}\in (-\alpha ,\alpha )\), \(S_{1}(x_{1},x_{2})>\frac{1}{2}\), \(\forall x_{2}<x_1-\frac{(\lambda _1-1)K}{\lambda _1}\).

- Conclusion:

We have shown that if \(x_{1}\in (-\alpha ,\alpha )\), then \(x_{1}\in X_{1}^{*}\).


Second, we show that if \(x_{1}\notin (-\alpha ,\alpha )\), then \(x_{1}\notin X_{1}^{*}\). Assume that \(x_{1}\ge \alpha \), and consider \(x_{2}=x_1-\frac{(\lambda _1-1)K}{\lambda _1}\). We are then in Case (A), and \(S_{1}(x_{1},x_{2})= \int _{\frac{\lambda _1x_1-x_2}{\lambda _1-1} -K}^{\frac{\lambda _1x_1-x_2}{\lambda _1-1}+K}f_{\sigma }(a)da=\int _{\frac{\lambda _1x_1-x_1+\frac{(\lambda _1-1) K}{\lambda _1}}{\lambda _1-1}-K}^{\frac{\lambda _1x_1-x_1 +\frac{(\lambda _1-1)K}{\lambda _1}}{\lambda _1-1}+K}f_{\sigma }(a)da=\int _{x_1+\frac{(1-\lambda _1)K}{\lambda _1}}^{x_1 +\frac{(\lambda _1+1)K}{\lambda _1}}f_{\sigma }(a)da=g\left( x_1+\frac{K}{\lambda _1}\right) \). Given that \(x_{1}\ge \alpha \), then \(x_1+\frac{K}{\lambda _1}\ge \alpha +\frac{K}{\lambda _1}\). Since g is strictly decreasing on \(\mathbb {R}_+\), then \(g\left( x_1+\frac{K}{\lambda _1}\right) \le g\left( \alpha +\frac{K}{\lambda _1}\right) =\frac{1}{2}\) (see Eq. (A9)). Thus, for each \(x_{1}\ge \alpha \), there exists \(x_{2}\) such that \(S_{1}(x_{1},x_{2})\le \frac{1}{2}\), so \(x_{1}\notin X_{1}^{*}\). Similarly, if \(x_{1}\le -\alpha \), consider \(x_{2}=x_1+\frac{(\lambda _1-1)K}{\lambda _1}\). We are again in Case (A), and \(S_{1}(x_{1},x_{2})= \int _{\frac{\lambda _1x_1-x_2}{\lambda _1-1}-K}^{\frac{\lambda _1x_1-x_2}{\lambda _1-1}+K}f_{\sigma }(a)da=\int _{\frac{\lambda _1x_1-x_1-\frac{(\lambda _1-1)K}{\lambda _1}}{\lambda _1-1}-K}^{\frac{\lambda _1x_1-x_1-\frac{(\lambda _1-1)K}{\lambda _1}}{\lambda _1-1}+K}f_{\sigma }(a)da=\int _{x_1-\frac{(\lambda _1+1)K}{\lambda _1}}^{x_1 +\frac{(\lambda _1-1)K}{\lambda _1}}f_{\sigma }(a)da=g\left( x_1-\frac{K}{\lambda _1} \right) \). We have \(x_{1}\le -\alpha \), so \(x_{1}-\frac{K}{\lambda _1}\le -\alpha -\frac{K}{\lambda _1} \). Now recall that g is an even function so \(g\left( -\alpha -\frac{K}{\lambda _1}\right) =g\left( \alpha +\frac{K}{\lambda _1}\right) =\frac{1}{2}\) (see Eq. (A9)). 
And since \(x_{1}-\frac{K}{\lambda _1}\le -\alpha -\frac{K}{\lambda _1}<0\) and g is strictly increasing on \(\mathbb {R}_-\), we have \(g\left( x_1-\frac{K}{\lambda _1}\right) \le g\left( -\alpha -\frac{K}{\lambda _1}\right) =\frac{1}{2}\). Thus, for each \(x_{1}\le -\alpha \), there exists \(x_{2}\) such that \(S_{1}(x_{1},x_{2})\le \frac{1}{2}\), so \(x_{1}\notin X_{1}^{*}\).

- Conclusion:

We have shown that if \(x_{1}\notin (-\alpha ,\alpha )\), then \(x_{1}\notin X_{1}^{*}\). Given that we have also shown that if \(x_{1}\in (-\alpha ,\alpha )\), then \(x_{1}\in X_{1}^{*}\), it follows that \(X_{1}^*=(-\alpha ,\alpha )\).
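The characterization \(X_1^*=(-\alpha,\alpha)\) can be illustrated numerically. In the sketch below, \(\alpha\) is recovered from the binding deviation \(x_2=x_1-\frac{(\lambda_1-1)K}{\lambda_1}\) used in the second part of this step, and shares are then evaluated on a grid of replies \(x_2\). This is a hedged illustration: the normal \(f_\sigma\), the values \(K=1\), \(\lambda_1=2\), \(\sigma=1\), the grid, and all names are assumptions.

```python
import math

def normal_cdf(z):
    """Standard normal CDF; assumed shape for f_1 (illustration only)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def share_1(x1, x2, lam, K, sigma):
    """S_1(x1, x2) with the bounds b, c of Cases (A), (B), (B') from Proposition 1."""
    t = (lam - 1.0) * K / lam
    centre = (lam * x1 - x2) / (lam - 1.0)
    if abs(x1 - x2) <= t:                      # Case (A)
        b, c = centre - K, centre + K
    elif x2 > x1 + t:                          # Case (B)
        b, c = centre - K, ((lam - 1.0) * K + lam * x1 + x2) / (lam + 1.0)
    else:                                      # Case (B')
        b, c = ((1.0 - lam) * K + lam * x1 + x2) / (lam + 1.0), centre + K
    return normal_cdf(c / sigma) - normal_cdf(b / sigma)

K, lam, sigma = 1.0, 2.0, 1.0                  # illustrative values (assumption)
t = (lam - 1.0) * K / lam

def binding_share(x1):
    """Share of Candidate 1 when Candidate 2 replies with x2 = x1 - t; equals g(x1 + K/lam)."""
    return share_1(x1, x1 - t, lam, K, sigma)

# alpha is the point of [0, t] where the binding share crosses 1/2 (it is decreasing there).
lo, hi = 0.0, t
for _ in range(100):
    mid = 0.5 * (lo + hi)
    if binding_share(mid) > 0.5:
        lo = mid
    else:
        hi = mid
alpha = 0.5 * (lo + hi)

x2_grid = [j / 20.0 for j in range(-200, 201)]
inside = [alpha * k / 10.0 for k in range(-9, 10)]           # platforms in (-alpha, alpha)
lowest_inside = min(share_1(x1, x2, lam, K, sigma) for x1 in inside for x2 in x2_grid)
just_outside = binding_share(alpha + 0.05)                   # a platform slightly above alpha
print(alpha, lowest_inside, just_outside)
```

Platforms inside \((-\alpha,\alpha)\) keep a share above one half against every reply on the grid, while a platform just outside can be beaten by the binding deviation.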

- Third step:

It remains to show that \(\sigma \mapsto \alpha (\sigma )\) is a decreasing function on \((0,\sigma ^*)\), with \(\lim _{\sigma \rightarrow {\sigma ^*}^{-}} \alpha (\sigma )=0\) and \(\lim _{\sigma \rightarrow 0^+} \alpha (\sigma ) =\frac{(\lambda _1-1)K}{\lambda _1}\).

– First, we show that \(\sigma \mapsto \alpha (\sigma )\) is a decreasing function on \((0,\sigma ^*)\). We know from Equation (A9) that \(G(\sigma ,\alpha )=g\left( \alpha +\frac{K}{\lambda _1} \right) -\frac{1}{2}= \int _{\alpha +\frac{(1-\lambda _1)K}{\lambda _1}}^{\alpha +\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da-\frac{1}{2}=0\). The implicit function theorem gives \(\frac{d\alpha }{d\sigma }=-\frac{\frac{\partial G}{\partial \sigma }}{\frac{\partial G}{\partial \alpha }}\). Let \(z=\frac{a}{\sigma }\), so \(G(\sigma ,\alpha )=\int _{\alpha +\frac{(1-\lambda _1)K}{\lambda _1}}^{\alpha +\frac{(\lambda _1 +1)K}{\lambda _1}}\frac{1}{\sigma }f_1(\frac{a}{\sigma })da-\frac{1}{2} =\int _{\frac{\alpha +\frac{(1-\lambda _1)K}{\lambda _1}}{\sigma }}^{\frac{\alpha +\frac{(\lambda _1+1)K}{\lambda _1}}{\sigma }}f_1(z)dz-\frac{1}{2}=0\) and \(\frac{\partial G}{\partial \sigma }=-\frac{\alpha +\frac{(\lambda _1 +1)K}{\lambda _1}}{\sigma ^2}f_1\left( \frac{\alpha +\frac{(\lambda _1+1)K}{\lambda _1}}{\sigma }\right) +\frac{\alpha -\frac{(\lambda _1-1)K}{\lambda _1}}{\sigma ^2}f_1\left( \frac{\alpha -\frac{(\lambda _1-1)K}{\lambda _1}}{\sigma }\right) \). Now recall that we have shown that \(\alpha <\frac{(\lambda _1-1)K}{\lambda _1}\) (first step of this proof), so \(\frac{\partial G}{\partial \sigma }<0\). Concerning \(\frac{\partial G}{\partial \alpha }=f_\sigma \left( \alpha +\frac{(\lambda _1 +1)K}{\lambda _1}\right) -f_\sigma \left( \alpha +\frac{(1-\lambda _1)K}{\lambda _1}\right) \), given that \(f_\sigma \) is an even function, strictly increasing on \(\mathbb {R}_-\) and strictly decreasing on \(\mathbb {R}_+\), \(f_\sigma \left( \alpha +\frac{(\lambda _1+1)K}{\lambda _1}\right) <f_\sigma \left( \alpha +\frac{(1-\lambda _1)K}{\lambda _1}\right) \) and \(\frac{\partial G}{\partial \alpha }<0\). 
Since \(\frac{\partial G}{\partial \sigma }<0\) and \(\frac{\partial G}{\partial \alpha }<0\), then \(\frac{d\alpha }{d\sigma }<0\) according to the implicit function theorem. Hence \(\sigma \mapsto \alpha (\sigma )\) is a decreasing function on \((0,\sigma ^*)\).

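– Second, we show that \(\lim _{\sigma \rightarrow {\sigma ^*}^{-}} \alpha (\sigma )=0\). According to Lemma 2, we know that \(\lim _{\sigma \rightarrow {\sigma ^*}^{-}} \int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}} f_{\sigma }(a)da=\frac{1}{2}\). We also know from Eq. (A9) that \(\int _{\alpha +\frac{(1-\lambda _1)K}{\lambda _1}}^{\alpha +\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da=\frac{1}{2}\). Since \(\sigma \mapsto \alpha (\sigma )\) is decreasing and positive, it has a limit \(\alpha _{*}\ge 0\) when \(\sigma \rightarrow {\sigma ^*}^{-}\). Passing to the limit in Eq. (A9), \(\int _{\alpha _{*}+\frac{(1-\lambda _1)K}{\lambda _1}}^{\alpha _{*}+\frac{(\lambda _1+1)K}{\lambda _1}}f_{\sigma ^{*}}(a)da=\frac{1}{2}=\int _{\frac{(1-\lambda _1)K}{\lambda _1}}^{\frac{(\lambda _1+1)K}{\lambda _1}}f_{\sigma ^{*}}(a)da\), and since g is strictly decreasing on \(\mathbb {R}_{+}\), we must have \(\alpha _{*}=0\).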

– Third, we show that \(\lim _{\sigma \rightarrow 0^{+}}\alpha (\sigma )=\frac{(\lambda _{1}-1)K}{\lambda _{1}}\). According to Eq. (A9), \(\alpha \) is defined by \(\int _{\alpha +\frac{(1-\lambda _1)K}{\lambda _1}}^{\alpha +\frac{(\lambda _1+1)K}{\lambda _1}}f_\sigma (a)da=\frac{1}{2}\). Let \(z=\frac{a}{\sigma }\). We then obtain that \(\alpha =\alpha (\sigma )\) is defined by \(\int _{\frac{1}{\sigma } \left[ \alpha +\frac{(1 -\lambda _{1})K}{\lambda _{1}}\right] }^{\frac{1}{\sigma }\left[ \alpha +\frac{(\lambda _{1}+1)K}{\lambda _{1}}\right] }f_{1}(z)dz=\frac{1}{2}\). \(\sigma \mapsto \alpha (\sigma )\) is a decreasing function on \(\sigma \in (0,\sigma ^*)\), with \(0<\alpha <\frac{ (\lambda _{1}-1)K}{\lambda _{1}}\), i.e., \(\sigma \mapsto \alpha (\sigma )\) is bounded. Thus there is a limit \(\alpha _{0}=\lim _{\sigma \rightarrow 0^{+}}\alpha (\sigma )\), with \(\alpha _{0}\in \left[ 0,\frac{(\lambda _{1}-1)K}{\lambda _{1}}\right] \).

We must have \(\lim _{\sigma \rightarrow 0^{+}}\int _{\frac{1}{ \sigma }\left[ \alpha +\frac{(1-\lambda _{1})K}{\lambda _{1}} \right] }^{\frac{1}{\sigma } \left[ \alpha +\frac{(\lambda _{1}+1)K}{\lambda _{1}}\right] }f_{1}(z)dz=\int _{\lim _{\sigma \rightarrow 0^{+}} \frac{1}{\sigma }\left[ \alpha +\frac{(1-\lambda _{1})K}{\lambda _{1}} \right] }^{\lim _{\sigma \rightarrow 0^{+}}\frac{1}{\sigma }\left[ \alpha +\frac{(\lambda _{1}+1)K}{\lambda _{1}}\right] }f_{1}(z)dz=\int _{\lim _{\sigma \rightarrow 0^{+}}\frac{1}{\sigma }\left[ \alpha +\frac{(1-\lambda _{1})K}{\lambda _{1}}\right] }^{+\infty }f_{1}(z)dz=\frac{ 1}{2}\). If \(\alpha _{0} < \frac{(\lambda _{1}-1)K}{\lambda _{1}}\), then \(\int _{\lim _{\sigma \rightarrow 0^{+}}\frac{1}{\sigma }\left[ \alpha +\frac{ (1-\lambda _{1})K}{\lambda _{1}}\right] }^{+\infty }f_{1}(z)dz=\int _{-\infty }^{+\infty }f_{1}(z)dz =1\ne \frac{1}{2}\). It is impossible, thus \(\alpha _{0}=\frac{(\lambda _{1}-1)K}{\lambda _{1}}\), and we have \(\int _{\lim _{\sigma \rightarrow 0^{+}}\frac{1}{\sigma }\left[ \alpha +\frac{(1-\lambda _{1})K}{\lambda _{1}}\right] }^{+\infty }f_{1}(z)dz=\int _{0}^{+\infty }f_{1}(z)dz=\frac{1}{2}\).

- Conclusion:

We have shown that \(\sigma \mapsto \alpha (\sigma )\) is a decreasing function on \((0,\sigma ^*)\), with \(\lim _{\sigma \rightarrow {\sigma ^*}^{-}} \alpha (\sigma )=0\) and \(\lim _{\sigma \rightarrow 0^{+}}\alpha (\sigma )=\frac{(\lambda _{1}-1)K}{\lambda _{1}}\).
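The comparative statics of \(\alpha(\sigma)\) established in this third step can be illustrated by solving Eq. (A9) for several values of \(\sigma\). The sketch below assumes a normal \(f_\sigma\), \(K=1\) and \(\lambda_1=2\); the helper names and the chosen grid of \(\sigma\) values are hypothetical.

```python
import math

def normal_cdf(z):
    """Standard normal CDF; assumed shape for f_1 (illustration only)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def g(v, K, sigma):
    """g(v): mass of f_sigma on [v - K, v + K]."""
    return normal_cdf((v + K) / sigma) - normal_cdf((v - K) / sigma)

def solve_half(fn, lo, hi, iters=200):
    """Bisection for fn(x) = 1/2 when fn is decreasing on [lo, hi]."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if fn(mid) > 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def alpha(lam, K, sigma):
    """alpha(sigma) = v* - K/lam, where g(v*) = 1/2 on (K/lam, K); see Eq. (A9)."""
    return solve_half(lambda v: g(v, K, sigma), K / lam, K) - K / lam

def sigma_star(lam, K):
    """sigma* solves g(K/lam) = 1/2; the left-hand side is decreasing in sigma."""
    return solve_half(lambda s: g(K / lam, K, s), 1e-3, 1e3)

K, lam = 1.0, 2.0                              # illustrative values (assumption)
t = (lam - 1.0) * K / lam
s_star = sigma_star(lam, K)
sigmas = [0.3, 0.5, 0.8, 1.0, 1.2, 1.35]       # all below sigma* in this example
alphas = [alpha(lam, K, s) for s in sigmas]
alpha_near_star = alpha(lam, K, s_star - 1e-6)
print(s_star, alphas, alpha_near_star)
```

In this example \(\alpha\) decreases along the grid, is numerically indistinguishable from \(\frac{(\lambda_1-1)K}{\lambda_1}\) for small \(\sigma\), and is close to 0 just below \(\sigma^*\).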

Now we prove (ii), i.e., if \(\sigma >\sigma ^{**}\), then \(X_{2}^{* }=(-\beta ,\beta )\) where \(\beta \) is the unique positive real which satisfies \(\sup _{x_{1}\le \beta -\frac{(\lambda _1-1)K}{\lambda _1}}S_{1}(x_{1},\beta )=\frac{1}{2}\). Then we will prove that \(\sigma \mapsto \beta (\sigma )\) is an increasing function on \((\sigma ^{**},+\infty )\).

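Since \(\sigma >\sigma ^{**}\), we know from Lemma 2 that \(\int _{-K}^{+K}f_{\sigma }(a)da<\frac{1}{2}\). For a given \(x_{2}\), let us set:

$$\begin{aligned} M(x_{2})&=\sup _{x_{1}\in \mathbb {R}}S_{1}(x_{1},x_{2}), \\ M_{A}(x_{2})&=\sup _{x_{1}; \text{ Case } \text{ A }}S_{1}(x_{1},x_{2}),\quad M_{B}(x_{2})=\sup _{x_{1}; \text{ Case } \text{ B }}S_{1}(x_{1},x_{2}),\quad M_{B^{\prime }}(x_{2})=\sup _{x_{1}; \text{ Case } \text{ B' }}S_{1}(x_{1},x_{2}), \end{aligned}$$

so that \(M(x_{2})=\max \left\{ M_{A}(x_{2}),M_{B}(x_{2}),M_{B^{\prime }}(x_{2})\right\} \), and \(x_{2}\in X_{2}^{*}\) if and only if \(M(x_{2})<\frac{1}{2}\).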

Remark that each \(\sup \) is in fact a \(\max \) since \(S_{1}\) is continuous and \(S_{1}(x_{1},x_{2})\rightarrow 0\) if \(x_{1}\rightarrow \pm \infty \). Now, consider the three cases, Case (A), Case (B), and Case (B’).

- Case (A):

If \(x_{1}\in \left[ x_{2}-\frac{(\lambda _{1}-1)K}{\lambda _{1}},x_{2}+\frac{(\lambda _{1}-1)K}{\lambda _{1}}\right] \), then \(S_{1}(x_{1},x_{2})=\int _{\frac{\lambda _{1}x_{1}-x_{2}}{\lambda _{1}-1}-K}^{ \frac{\lambda _{1}x_{1}-x_{2}}{\lambda _{1}-1}+K}f_{\sigma }(a)da\) (see Proposition 1). Remark that the length of the interval \(\Omega _1(x_1,x_2)\) is 2K for all \(x_{1}\in \left[ x_{2}-\frac{(\lambda _{1}-1)K}{\lambda _{1}},x_{2}+\frac{(\lambda _{1}-1)K}{\lambda _{1}}\right] \). We then have \(\int _{\frac{\lambda _{1}x_{1}-x_{2}}{\lambda _{1}-1}-K}^{\frac{\lambda _{1}x_{1}-x_{2}}{\lambda _{1}-1}+K}f_{\sigma }(a)da\le \int _{-K}^{+K}f_{\sigma }(a)da\) since \([-K,+K]\) is the interval of length 2K on which the integral of \(f_{\sigma }\) has the highest value. Thus \( S_{1}(x_{1},x_{2})\le \int _{-K}^{+K}f_{\sigma }(a)da<\frac{1}{2}\). It implies that \(M_{A}(x_{2})=\sup _{x_{1}; \text{ Case } \text{ A } }S_{1}(x_{1},x_{2})<\frac{1}{2}\).

- Case (B):

If \(x_{1}\le x_{2}-\frac{(\lambda _{1}-1)K}{\lambda _{1}}\), then \(S_{1}(x_{1},x_{2})=\int _{\frac{\lambda _{1}x_{1}-x_{2}}{\lambda _{1}-1}-K}^{\frac{(\lambda _{1}-1)K+\lambda _{1}x_{1}+x_{2}}{\lambda _{1}+1}}f_{\sigma }(a)da\) (see Proposition 1). Here \(\frac{\partial S_{1}}{\partial x_{2}}>0\), thus \(x_{2}\mapsto M_{B}(x_{2})\) is an increasing function. Moreover \(M(0)<\frac{1}{2}\) according to Proposition 2 (ii), thus \(M_{B}(0)\le M(0)<\frac{1}{2}\). Since \(\lim _{x_{2}\rightarrow +\infty }M_{B}(x_{2})=1\) and \(M_{B}\) is a continuous, strictly increasing function, there exists a unique \(\beta >0\) such that \(M_{B}(\beta )=\frac{1}{2}\).

– If \(x_{2}\ge \beta \), then \(M_{B}(x_{2})\ge \frac{1}{2}\), thus \(M(x_{2})\ge \frac{1 }{2}\), which implies that \(x_{2}\notin X_{2}^{*}\).

– If \(0\le x_{2}<\beta \), then \(M_{B}(x_{2})<\frac{1}{2}\).

- Case (B’):

If \(x_{1}\ge x_{2}+\frac{(\lambda _{1}-1)K}{\lambda _{1}}\), then \(S_{1}(x_{1},x_{2})=\int _{\frac{(1-\lambda _{1})K+\lambda _{1}x_{1}+x_{2}}{\lambda _{1}+1}}^{\frac{\lambda _{1}x_{1}-x_{2}}{\lambda _{1}-1}+K}f_{\sigma }(a)da\) (see Proposition 1). Here \(\frac{\partial S_{1}}{\partial x_{2}}<0\), thus \(x_{2}\mapsto M_{B^{\prime }}(x_{2})\) is a decreasing function. Moreover \(M(0)<\frac{1}{2}\) according to Proposition 2 (ii), thus \(M_{B^{\prime }}(0)\le M(0)<\frac{1}{2}\), and \(\lim _{x_{2}\rightarrow -\infty }M_{B^{\prime }}(x_{2})=1\). By symmetry (since \(f_{\sigma }\) is an even function), \(M_{B^{\prime }}(x_{2})=M_{B}(-x_{2})\), so for the same \(\beta >0\), we have \(M_{B^{\prime }}(-\beta )=\frac{1}{2}\).

– If \(x_{2}\le -\beta \), then \(M_{B^{\prime }}(x_{2})\ge \frac{1}{2}\), thus \(M(x_{2})\ge \frac{1}{2}\), which implies that \(x_{2}\notin X_{2}^{*}\).

– If \(-\beta <x_{2}\le 0\), then \(M_{B^{\prime }}(x_{2})<\frac{1}{2}\).

- Conclusion:

To sum up, we have:

– If \(x_{2}\ge \beta \) or \(x_{2}\le -\beta \), then \(x_{2}\notin X_{2}^{*}\).

– If \(0\le x_{2}<\beta \), then \(M_{B}(x_{2})<\frac{1}{2}\). Since \(M_{A}(x_{2})<\frac{1}{2}\) and \(M_{B^{\prime }}(x_{2})\le M_{B}(x_{2})\) for \(x_{2}\ge 0\), we can conclude that \(M(x_{2})<\frac{1}{2}\), i.e., \(x_{2}\in X_{2}^{*}\).

– If \(-\beta <x_{2}\le 0\), then \(M_{B^{\prime }}(x_{2})<\frac{1}{2}\). Since \(M_{A}(x_{2})<\frac{1}{2}\) and \(M_{B}(x_{2})\le M_{B^{\prime }}(x_{2})\) for \(x_{2}\le 0\), we can conclude that \(M(x_{2})<\frac{1}{2}\), i.e., \(x_{2}\in X_{2}^{*}\).

Now we prove that \(\sigma \mapsto \beta (\sigma )\) is an increasing function on \((\sigma ^{**},+\infty )\).

\(\beta \) is defined by \(M_{B}(\beta )=\max _{x_{1}\le \beta -\left( \frac{ \lambda _{1}-1}{\lambda _{1}}\right) K}S_{1}(x_{1},\beta )=\max _{x_{1}; \text{ Case } \text{ B }}S_{1}(x_{1},\beta )=\frac{1}{2}\). \((x_{1},x_{2})\) in Case (B) means that \(x_{2}\ge x_{1}+\left( \frac{\lambda _{1}-1}{\lambda _{1}}\right) K\). Now, note that:


\(\forall x_{1}\in \mathbb {R}\), if \(x_{2}=x_{1}+\left( \frac{\lambda _{1}-1}{ \lambda _{1}}\right) K\) (i.e., at the boundary between Case (A) and Case (B)), then \(S_{1}(x_{1},x_{2})<\frac{1}{2}\) according to Part (ii) of Lemma 2.


\(\forall x_{1}\in \mathbb {R}\), \(x_{2}\mapsto S_{1}(x_{1},x_{2})\) is an increasing continuous function on \(x_{2}>x_{1}+\left( \frac{\lambda _{1}-1}{ \lambda _{1}}\right) K\), with \(\lim _{x_{2}\rightarrow +\infty }S_{1}(x_{1},x_{2})=\lim _{x_{2}\rightarrow +\infty }\int _{\frac{\lambda _{1}x_{1}-x_{2}}{\lambda _{1}-1}-K}^{\frac{\lambda _{1}x_{1}+x_{2}+(\lambda _{1}-1)K}{\lambda _{1}+1}}f_{\sigma }(a)da=\int _{-\infty }^{+\infty } f_{\sigma }(a)da=1\).

Thus there exists a continuous function \(\phi \) such that in Case (B):

\(x_{2}>\phi (x_{1})\Leftrightarrow S_{1}(x_{1},x_{2})>\frac{1}{2}\) and \(x_{2}=\phi (x_{1})\Leftrightarrow S_{1}(x_{1},x_{2})=\frac{1}{2}\).

\(\phi \) is a convex function according to Part (i) of Lemma 3 (in “Appendix A.8”; see footnote 17).

According to Parts (iv) and (v) of Lemma 3, the function \(x_{1}\mapsto \phi (x_{1})\) is first decreasing, then increasing. Hence \(\beta \) satisfies \(\beta =\inf _{x_{1}\in \mathbb {R}}\phi (x_{1})=\min _{x_{1}\in \mathbb {R}}\phi (x_{1})\).

We now write \(\beta (\sigma )=\min _{x_{1}\in \mathbb {R}}\phi _{\sigma }(x_{1})\) and \(S_{1}^{\sigma }(x_{1},x_{2})\) to stress the impact of \(\sigma \). Hence, we have \(S_{1}^{\sigma }(x_{1},x_{2})=\int _{b(x_{1},x_{2})}^{c(x_{1},x_{2})}f_{\sigma }(a)da\) where \(b(x_{1},x_{2})=\frac{\lambda _{1}x_{1}-x_{2}}{\lambda _{1}-1}-K\le c(x_{1},x_{2})=\frac{\lambda _{1}x_{1}+x_{2}+(\lambda _{1}-1)K}{\lambda _{1}+1}\) and we are in Case (B).

\(b(x_{1},x_{2})\ge 0\) or \(c(x_{1},x_{2})\le 0\) would imply \(S_{1}(x_{1},x_{2})<\frac{1}{2}\), thus: \(S_{1}^{\sigma }(x_{1},x_{2})\ge \frac{1}{2}\Longrightarrow b(x_{1},x_{2})<0<c(x_{1},x_{2})\).

If \(x_{2}=\phi (x_{1})\), then \(S_{1}^{\sigma }(x_{1},x_{2})=\int _{b(x_{1},x_{2})}^{c(x_{1},x_{2})}f_{\sigma }(a)da=\int _{\frac{1}{\sigma }b(x_{1},x_{2})}^{\frac{1}{\sigma }c(x_{1},x_{2})}f_{1}(a)da=\frac{1}{2}\).

If \(\widetilde{\sigma }>\sigma \), then, because \(b(x_{1},x_{2})<0<c(x_{1},x_{2})\), the interval \(\left( \frac{1}{\widetilde{\sigma }}b(x_{1},x_{2}),\frac{1}{\widetilde{\sigma }}c(x_{1},x_{2})\right) \) is strictly contained in \(\left( \frac{1}{\sigma }b(x_{1},x_{2}),\frac{1}{\sigma }c(x_{1},x_{2})\right) \), so \(\int _{\frac{1}{\widetilde{\sigma }}b(x_{1},x_{2})}^{\frac{1}{\widetilde{\sigma }}c(x_{1},x_{2})}f_{1}(a)da<\int _{\frac{1}{\sigma }b(x_{1},x_{2})}^{\frac{1}{\sigma }c(x_{1},x_{2})}f_{1}(a)da=\frac{1}{2}\), i.e., \(S_{1}^{\widetilde{\sigma }}(x_{1},x_{2})<S_{1}^{\sigma }(x_{1},x_{2})=\frac{1}{2}\).

Since \(x_{2}\mapsto S_{1}^{\widetilde{\sigma }}(x_{1},x_{2})\) is increasing in Case (B), this implies that \(\phi _{\widetilde{\sigma }}(x_{1})>\phi _{\sigma }(x_{1})\), \(\forall x_{1}\in \mathbb {R}\), thus \(\beta (\widetilde{\sigma })>\beta (\sigma )\), \(\forall \widetilde{\sigma }>\sigma \).

Conclusion: \(\sigma \mapsto \beta (\sigma )\) is an increasing function on \((\sigma ^{**},+\infty )\). \(\square \)
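The monotonicity of \(\beta(\sigma)\) can be illustrated with a rough numerical approximation of \(M_B\). The sketch below is not the construction used in the proof: it replaces the maximum over \(x_1\) by a grid search on a heuristic window and then solves \(M_B(\beta)=\frac{1}{2}\) by bisection. The normal \(f_\sigma\), the values \(K=1\), \(\lambda_1=2\), \(\sigma\in\{2,3\}\) (both above \(\sigma^{**}\) under that assumption), the window width and all names are assumptions.

```python
import math

def normal_cdf(z):
    """Standard normal CDF; assumed shape for f_1 (illustration only)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def max_share_case_b(x2, lam, K, sigma, n=2000):
    """Grid approximation of M_B(x2) = max of S_1(x1, x2) over x1 <= x2 - (lam-1)K/lam."""
    top = x2 - (lam - 1.0) * K / lam
    width = 5.0 * (abs(x2) + K + sigma)        # heuristic search window (assumption)
    best = 0.0
    for i in range(n + 1):
        x1 = top - width * i / n
        b = (lam * x1 - x2) / (lam - 1.0) - K                  # Case (B) lower bound
        c = ((lam - 1.0) * K + lam * x1 + x2) / (lam + 1.0)    # Case (B) upper bound
        best = max(best, normal_cdf(c / sigma) - normal_cdf(b / sigma))
    return best

def beta(lam, K, sigma, iters=40):
    """Approximate the positive root of M_B(x2) = 1/2 (M_B is increasing in x2)."""
    lo, hi = 0.0, 1.0
    while max_share_case_b(hi, lam, K, sigma) < 0.5:           # bracket the root
        hi *= 2.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if max_share_case_b(mid, lam, K, sigma) < 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

K, lam = 1.0, 2.0                              # illustrative values (assumption)
beta_2 = beta(lam, K, 2.0)
beta_3 = beta(lam, K, 3.0)
print("beta(2) =", beta_2, " beta(3) =", beta_3)
```

In this example the approximate threshold is larger for the larger \(\sigma\), consistent with \(\sigma \mapsto \beta(\sigma)\) being increasing.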

1.6 A.6 Proof of Proposition 4

We proceed in three steps.

- First step:

We first show that \(\lambda _1\mapsto \sigma ^*(\lambda _1)\) is an increasing function on \((1,+\infty )\). Recall that according to the proof of Lemma 2 in “Appendix A.3”, \(\sigma ^*\) is unique and defined by:

$$\begin{aligned} g_1(\sigma ^*)= \int _{\frac{(1-\lambda _1)K}{\sigma ^*\lambda _1}} ^{\frac{(\lambda _1+1)K}{\sigma ^*\lambda _1}}f_1(z)dz=\frac{1}{2} \end{aligned}$$(A10)

Using the implicit function theorem, we know that \(\frac{d\sigma ^*}{d\lambda _1}=-\frac{\frac{\partial g_1}{\partial \lambda _1}}{\frac{\partial g_1}{\partial \sigma ^*}}\). We have \(\frac{\partial g_1}{\partial \sigma ^*}=-\frac{(\lambda _1+1)K}{\lambda _1(\sigma ^{*})^{2}}f_1\left( \frac{(\lambda _1+1)K}{\sigma ^{*}\lambda _1}\right) +\frac{(1-\lambda _1)K}{\lambda _1(\sigma ^{*})^{2}}f_1\left( \frac{(1-\lambda _1)K}{\sigma ^{*}\lambda _1}\right) <0\). Concerning \(\frac{\partial g_{1}}{\partial \lambda _{1}}=-\frac{K}{\sigma ^{*}\lambda _{1}^{2}}f_{1}\left( \frac{(\lambda _{1}+1)K}{\sigma ^{*}\lambda _{1}}\right) +\frac{K}{\sigma ^{*}\lambda _{1}^{2}}f_{1}\left( \frac{(1-\lambda _{1})K}{\sigma ^{*}\lambda _{1}}\right) \), given that \(f_{1}\) is an even function, strictly increasing on \(\mathbb {R}_{-}\) and strictly decreasing on \(\mathbb {R}_{+}\), \(f_{1}\left( \frac{(\lambda _{1}+1)K}{\sigma ^{*}\lambda _{1}}\right) <f_{1}\left( \frac{(1-\lambda _{1})K}{\sigma ^{*}\lambda _{1}}\right) \), thus \(\frac{\partial g_{1}}{\partial \lambda _{1}}>0\). Since \(\frac{\partial g_{1}}{\partial \sigma ^{*}}<0\) and \(\frac{\partial g_{1}}{\partial \lambda _{1}}>0\), then \(\frac{d\sigma ^{*}}{d\lambda _{1}}>0\) according to the implicit function theorem.

- Second step:

Now we show that \(\lim _{\lambda _1\rightarrow 1^+}\sigma ^*(\lambda _1)=0\). According to Eq. (A10), \(\sigma ^{*}=\sigma ^{*}(\lambda _{1})\) is defined by \(\int _{\frac{(1-\lambda _{1})K}{\lambda _{1}\sigma ^{* }}}^{\frac{(\lambda _{1}+1)K}{\lambda _{1}\sigma ^{* }} }f_{1}(z)dz=\frac{1}{2}\), for all \(\lambda _{1}>1\). \(\lambda _{1}\mapsto \sigma ^{*}(\lambda _{1})\) is an increasing function on \(\lambda _{1}>1\), with \(\sigma ^{*}(\lambda _{1})>0\), i.e., \(\lambda _{1}\mapsto \sigma ^{*}(\lambda _{1})\) has a lower bound on \(\lambda _{1}>1\). Thus there is a limit \(\sigma _{0}^{* }=\lim _{\lambda _{1}\rightarrow 1^{+}}\sigma ^{*}(\lambda _{1})\), with \(\sigma _{0}^{*}\ge 0\). We must have \(\lim _{\lambda _{1}\rightarrow 1^{+}}\int _{\frac{(1-\lambda _{1})K}{\lambda _{1}\sigma ^{* }}}^{\frac{(\lambda _{1}+1)K}{\lambda _{1}\sigma ^{* }}}f_{1}(z)dz=\int _{\lim _{\lambda _{1} \rightarrow 1^{+}}\frac{(1-\lambda _{1})K}{\lambda _{1} \sigma ^{* }}}^{\lim _{\lambda _{1}\rightarrow 1^{+}} \frac{(\lambda _{1}+1)K}{\lambda _{1}\sigma ^{* }}}f_{1}(z)dz=\frac{1}{2}\). If \(\sigma _{0}^{*}>0\), then \(\int _{\lim _{\lambda _{1}\rightarrow 1^{+}}\frac{(1-\lambda _{1})K}{\lambda _{1}\sigma ^{*}} }^{\lim _{\lambda _{1}\rightarrow 1^{+}}\frac{(\lambda _{1}+1)K}{\lambda _{1}\sigma ^{*}}}f_{1}(z)dz=\int _{0}^{\frac{2K}{\sigma _{0}^{*}}}f_{1}(z)dz<\frac{1}{2}\). It is impossible, thus \(\sigma _{0}^{*}=0\), and we find \(\int _{\lim _{\lambda _{1} \rightarrow 1^{+}}\frac{(1-\lambda _{1})K}{\lambda _{1}\sigma ^{*}}}^{\lim _{\lambda _{1}\rightarrow 1^{+}}\frac{(\lambda _{1}+1)K}{\lambda _{1}\sigma ^{*}}}f_{1}(z)dz =\int _{0}^{+\infty } f_{1}(z)dz=\frac{1}{2}\).

- Third step:

We finally show that \(\lim _{\lambda _1\rightarrow +\infty }\sigma ^*(\lambda _1)=\sigma ^{**}\), and that \(\sigma ^{**}\) does not depend on \(\lambda _{1}\). First, recall that according to the proof of Lemma 2 in “Appendix A.3”, \(\sigma ^{**}\) is defined by \(g_{2}(\sigma ^{**})=\int _{-K}^{K}\frac{1}{\sigma ^{**}}f_{1}\left( \frac{a}{\sigma ^{**}}\right) da=\frac{1}{2}\); thus \(\sigma ^{**}\) does not depend on \(\lambda _{1}\). Second, \(\lambda _{1}\mapsto \sigma ^{*}(\lambda _{1})\) is an increasing function on \(\lambda _{1}>1\), with \(0<\sigma ^{*}(\lambda _{1})\le \sigma ^{**}\), i.e., \(\lambda _{1}\mapsto \sigma ^{*}(\lambda _{1})\) has an upper bound on \(\lambda _{1}>1\). Thus there is a limit \(\sigma _{\infty }^{*}=\lim _{\lambda _{1}\rightarrow +\infty }\sigma ^{*}(\lambda _{1})\), with \(0<\sigma _{\infty }^{*}\le \sigma ^{**}\). We must have \(\lim _{\lambda _{1}\rightarrow +\infty }\int _{\frac{(1-\lambda _{1})K}{\lambda _{1}\sigma ^{*}}}^{\frac{(\lambda _{1}+1)K}{\lambda _{1}\sigma ^{*}}}f_{1}(z)dz=\int _{\lim _{\lambda _{1}\rightarrow +\infty }\frac{(1-\lambda _{1})K}{\lambda _{1}\sigma ^{*}}}^{\lim _{\lambda _{1}\rightarrow +\infty }\frac{(\lambda _{1}+1)K}{\lambda _{1}\sigma ^{*}}}f_{1}(z)dz=\int _{\frac{-K}{\sigma _{\infty }^{*}}}^{\frac{K}{\sigma _{\infty }^{*}}}f_{1}(z)dz=\frac{1}{2}\). Since \(\int _{\frac{-K}{\sigma ^{**}}}^{\frac{K}{\sigma ^{**}}}f_{1}(z)dz=\frac{1}{2}\) and \(\sigma \mapsto \int _{\frac{-K}{\sigma }}^{\frac{K}{\sigma }}f_{1}(z)dz\) is strictly decreasing, then \(\sigma _{\infty }^{*}=\sigma ^{**}\), i.e., \(\lim _{\lambda _{1}\rightarrow +\infty }\sigma ^{*}(\lambda _{1})=\sigma ^{**}\).

- Conclusion :

We have shown that \(\lambda _{1}\mapsto \sigma ^{* }(\lambda _{1})\) is an increasing function on \((1,+\infty )\), with \(\lim _{\lambda _{1}\rightarrow 1^{+}}\sigma ^{*}(\lambda _{1})=0\) and \(\lim _{\lambda _{1}\rightarrow +\infty }\sigma ^{*}(\lambda _{1})=\sigma ^{** }\), and that \(\sigma ^{**}\) does not depend on \(\lambda _{1}\). \(\square \)
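The three properties just established can be illustrated numerically. The sketch below is only an illustration under assumptions that are not in the text: \(f_{1}\) is taken to be the standard normal density, \(K=1\), and the names `norm_cdf`, `mass` and `sigma_star` are hypothetical helpers. It solves the defining equation of \(\sigma ^{*}(\lambda _{1})\) by bisection and compares the result with \(\sigma ^{**}\).

```python
import math
from statistics import NormalDist

K = 1.0  # illustrative value of K (assumption)


def norm_cdf(x):
    """Standard normal CDF, our assumed choice for the CDF of f_1."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def mass(sigma, lam, K=K):
    """Integral of f_1 between the two bounds in the definition of sigma*."""
    lo = (1.0 - lam) * K / (lam * sigma)
    hi = (lam + 1.0) * K / (lam * sigma)
    return norm_cdf(hi) - norm_cdf(lo)


def sigma_star(lam, K=K, iters=200):
    """Solve mass(sigma, lam) = 1/2 by bisection.

    For lam > 1 the mass falls from about 1 to about 0 as sigma grows,
    so the bracket [1e-9, 1e3] contains the root for the values used here.
    """
    a, b = 1e-9, 1e3
    for _ in range(iters):
        m = 0.5 * (a + b)
        if mass(m, lam, K) > 0.5:
            a = m
        else:
            b = m
    return 0.5 * (a + b)


# sigma** solves norm_cdf(K/s) - norm_cdf(-K/s) = 1/2, i.e. norm_cdf(K/s) = 3/4.
sigma_star_star = K / NormalDist().inv_cdf(0.75)

lams = [1.001, 1.1, 2.0, 5.0, 50.0, 5000.0]
values = [sigma_star(lam) for lam in lams]
for lam, s in zip(lams, values):
    print(f"lambda_1 = {lam:>8}: sigma* = {s:.6f}  (sigma** = {sigma_star_star:.6f})")
```

On this grid the computed values should increase with \(\lambda _{1}\) and stay below \(\sigma ^{**}\). Under the normal assumption the lower limit appears to be approached only slowly as \(\lambda _{1}\rightarrow 1^{+}\), so the grid mainly illustrates monotonicity and the upper limit.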

1.7 A.7 Proof of Proposition 5

We prove (i) first, i.e., if there exists an interval of length \(2\frac{(\lambda _1-1)K}{\lambda _1}\) wherein strictly more than 50 percent of the voters are located, Candidate 1 wins with certainty if he chooses the midpoint of this interval. Let z be the midpoint of such an interval \(\Delta =\left[ z-\frac{(\lambda _1 -1)K}{\lambda _1},z+\frac{(\lambda _1-1)K}{\lambda _1}\right] \) of length \(2\frac{(\lambda _1-1)K}{\lambda _1}\), so that \(\int _{\Delta }f(a)da=\int _{z-\frac{(\lambda _1-1)K}{\lambda _1}}^{z +\frac{(\lambda _1-1)K}{\lambda _1}}f(a)da>\frac{1}{2}\). We have to show that Candidate 1 wins with certainty if he chooses \(x_1^*=z\), \(\forall x_2\in \mathbb {R}\). Consider the three possible cases: Case (A) \(x_2\in \left[ z-\frac{(\lambda _1-1)K}{\lambda _1},z +\frac{(\lambda _1-1)K}{\lambda _1}\right] \), Case (B) \(x_2>z+\frac{(\lambda _1-1)K}{\lambda _1}\), and Case (B’) \(x_2<z-\frac{(\lambda _1-1)K}{\lambda _1}\), which correspond to Panels (A), (B) and (B’) in Fig. 2 when \(x_1=z\).

- Case (A) :

If \(x_2\in \left[ z-\frac{(\lambda _1-1)K}{\lambda _1},z +\frac{(\lambda _1-1)K}{\lambda _1}\right] \) and \(x_1=x_{1}^{*}=z\), then we know from Proposition 1 that \(b=\frac{\lambda _1z-x_2}{\lambda _1-1}-K\) and \(c=\frac{\lambda _1z-x_2}{\lambda _1-1}+K\). If so, \(\Omega _1(z,x_2) =\left( \frac{\lambda _1z-x_2}{\lambda _1-1}-K,\frac{\lambda _1z-x_2}{\lambda _1-1} +K\right) \). Note that \(\frac{\lambda _1z-x_2}{\lambda _1-1}-K\le z-\frac{(\lambda _1-1)K}{\lambda _1}\Leftrightarrow x_2\ge z-\frac{(\lambda _1-1)K}{\lambda _1}\) which is always true in Case (A), i.e., the lower bound of \(\Omega _1(z,x_2)\) is lower than the lower bound of \(\Delta \). Note also that \(\frac{\lambda _1z-x_2}{\lambda _1-1}+K\ge z+\frac{(\lambda _1-1)K}{\lambda _1}\Leftrightarrow x_2\le z+\frac{(\lambda _1-1)K}{\lambda _1}\) which is always true in Case (A), i.e., the upper bound of \(\Omega _1(z,x_2)\) is higher than the upper bound of \(\Delta \). If so, \(\Delta \subseteq \Omega _1(z,x_2)\); hence, \(S_1(z,x_2)>\frac{1}{2}\) if \(\int _{z-\frac{(\lambda _1-1)K}{\lambda _1}}^{z +\frac{(\lambda _1-1)K}{\lambda _1}}f(a)da>\frac{1}{2}\), \(\forall x_2\in \left[ z -\frac{(\lambda _1-1)K}{\lambda _1},z+\frac{(\lambda _1-1)K}{\lambda _1}\right] \).

- Case (B) :

If \(x_2>z+\frac{(\lambda _1-1)K}{\lambda _1}\) and \(x_1=x_{1}^{*}=z\), then \(b=\frac{\lambda _1z-x_2}{\lambda _1-1}-K\) and \(c=\frac{(\lambda _1-1)K+\lambda _1z+x_2}{\lambda _1+1}\) (see Proposition 1). If so, \(\Omega _1(z,x_2)=\left( \frac{\lambda _1z-x_2}{\lambda _1 -1}-K,\frac{(\lambda _1-1)K+\lambda _1z+x_2}{\lambda _1+1}\right) \). Note that \(\frac{\lambda _1z-x_2}{\lambda _1-1}-K\le z-\frac{(\lambda _1-1)K}{\lambda _1}\Leftrightarrow x_2\ge z-\frac{(\lambda _1-1)K}{\lambda _1}\) which is always true in Case (B), i.e., the lower bound of \(\Omega _1(z,x_2)\) is lower than the lower bound of \(\Delta \). Note also that \(\frac{(\lambda _1-1)K+\lambda _1z+x_2}{\lambda _1+1}> z+\frac{(\lambda _1-1)K}{\lambda _1}\Leftrightarrow x_2> z+\frac{(\lambda _1-1)K}{\lambda _1}\) which is always true in Case (B), i.e., the upper bound of \(\Omega _1(z,x_2)\) is higher than the upper bound of \(\Delta \). If so, \(\Delta \subseteq \Omega _1(z,x_2)\); hence, \(S_1(z,x_2)>\frac{1}{2}\) if \(\int _{z-\frac{(\lambda _1-1)K}{\lambda _1}}^{z+\frac{(\lambda _1-1)K}{\lambda _1}}f(a)da>\frac{1}{2}\), \(\forall x_2>z+\frac{(\lambda _1-1)K}{\lambda _1}\).

- Case (B’) :

If \(x_2<z-\frac{(\lambda _1-1)K}{\lambda _1}\) and \(x_1=x_{1}^{*}=z\), then \(b=\frac{(1-\lambda _1)K+\lambda _1z+x_2}{\lambda _1+1} \) and \(c=\frac{\lambda _1z-x_2}{\lambda _1-1}+K\) (see Proposition 1). If so, \(\Omega _1(z,x_2)=\left( \frac{(1-\lambda _1) K+\lambda _1z+x_2}{\lambda _1+1},\frac{\lambda _1z-x_2}{\lambda _1-1}+K\right) \). Note that \(\frac{(1-\lambda _1)K+\lambda _1z+x_2}{\lambda _1+1}<z -\frac{(\lambda _1-1)K}{\lambda _1}\Leftrightarrow x_2< z -\frac{(\lambda _1-1)K}{\lambda _1}\) which is always true in Case (B’), i.e., the lower bound of \(\Omega _1(z,x_2)\) is lower than the lower bound of \(\Delta \). Note also that \(\frac{\lambda _1z-x_2}{\lambda _1-1}+K> z+\frac{(\lambda _1-1)K}{\lambda _1}\Leftrightarrow x_2<z+\frac{(\lambda _1-1)K}{\lambda _1}\) which is always true in Case (B’) since \(x_2<z-\frac{(\lambda _1-1)K}{\lambda _1}\), i.e., the upper bound of \(\Omega _1(z,x_2)\) is higher than the upper bound of \(\Delta \). If so, \(\Delta \subseteq \Omega _1(z,x_2)\); hence, \(S_1(z,x_2)>\frac{1}{2}\) if \(\int _{z-\frac{(\lambda _1-1)K}{\lambda _1}}^{z+\frac{(\lambda _1 -1)K}{\lambda _1}}f(a)da>\frac{1}{2}\), \(\forall x_2<z-\frac{(\lambda _1-1)K}{\lambda _1}\).

- Conclusion :

We have shown that if there exists an interval of length \(2\frac{(\lambda _1-1)K}{\lambda _1}\) wherein strictly more than 50 percent of the voters are located, Candidate 1 wins with certainty if he chooses the midpoint of this interval.
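The inclusion \(\Delta \subseteq \Omega _1(z,x_2)\) used in Cases (A), (B) and (B’) can be spot-checked numerically. The sketch below is not part of the proof: it transcribes the bounds \(b\) and \(c\) quoted above from Proposition 1 into a hypothetical helper `omega1` and tests the inclusion on random draws of \(z\), \(x_2\), \(\lambda _1\) and \(K\) (the sampling ranges are arbitrary assumptions).

```python
import random


def omega1(z, x2, lam, K):
    """Bounds (b, c) of Omega_1(z, x2), transcribed from the three cases above."""
    d = (lam - 1.0) * K / lam
    if x2 > z + d:        # Case (B)
        b = (lam * z - x2) / (lam - 1.0) - K
        c = ((lam - 1.0) * K + lam * z + x2) / (lam + 1.0)
    elif x2 < z - d:      # Case (B')
        b = ((1.0 - lam) * K + lam * z + x2) / (lam + 1.0)
        c = (lam * z - x2) / (lam - 1.0) + K
    else:                 # Case (A)
        b = (lam * z - x2) / (lam - 1.0) - K
        c = (lam * z - x2) / (lam - 1.0) + K
    return b, c


def delta_inside_omega1(z, x2, lam, K, tol=1e-9):
    """True if Delta = [z - d, z + d] lies inside [b, c], up to rounding error."""
    d = (lam - 1.0) * K / lam
    b, c = omega1(z, x2, lam, K)
    return b <= z - d + tol and c >= z + d - tol


random.seed(0)
ok = all(
    delta_inside_omega1(
        z=random.uniform(-5, 5),
        x2=random.uniform(-50, 50),
        lam=random.uniform(1.05, 10),
        K=random.uniform(0.1, 5),
    )
    for _ in range(100_000)
)
print("Delta is contained in Omega_1 on all sampled draws:", ok)
```

A random check of this kind cannot replace the algebra, but it is a cheap way to catch a sign error in any of the six inequalities.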

Now we prove (ii), i.e., if all the possible intervals of length 2K have strictly less than 50% of the voters, Candidate 2 wins with certainty if he chooses the median location. Without loss of generality, we consider that the median location is 0, so we have to show that in such a case, Candidate 2 wins with certainty if he chooses \(x_2^*=0\), \(\forall x_1\in \mathbb {R}\). Consider the three possible cases: Case (A) \(x_1\in \left[ -\frac{(\lambda _1-1)K}{\lambda _1},\frac{(\lambda _1-1)K}{\lambda _1}\right] \), Case (B) \(x_1<-\frac{(\lambda _1-1)K}{\lambda _1}\), and Case (B’) \(x_1>\frac{(\lambda _1-1)K}{\lambda _1}\) which correspond to Panels (A), (B) and (B’) in Fig. 2 when \(x_2=0\).

- Case (A) :

If \(x_1\in \left[ -\frac{(\lambda _1-1)K}{\lambda _1},\frac{(\lambda _1-1)K}{\lambda _1}\right] \) and \(x_2=x_{2}^{*}=0\), then we know from Proposition 1 that \(b=\frac{\lambda _1x_1}{\lambda _1-1}-K\) and \(c=\frac{\lambda _1x_1}{\lambda _1-1}+K\). If so, \(S_{1}(x_1,0)=\int _{\frac{\lambda _1x_1}{\lambda _1 -1}-K}^{\frac{\lambda _1x_1}{\lambda _1-1}+K}f(a)da\). Note that the length of the interval \(\Omega _1(x_1,0)\) is 2K for all \(x_{1}\in \left[ -\frac{(\lambda _{1}-1)K}{\lambda _{1}},\frac{(\lambda _{1}-1)K}{\lambda _{1}}\right] \). Hence, given that all the intervals of length 2K have strictly less than 50 percent of the voters, we can conclude that \(S_{1}(x_{1},0)<\frac{1}{2}\), \(\forall x_{1}\in \left[ -\frac{(\lambda _1-1)K}{\lambda _1},\frac{(\lambda _1 -1)K}{\lambda _1}\right] \).

- Case (B) :

If \(x_1<-\frac{(\lambda _1-1)K}{\lambda _1}\) and \(x_2=x_{2}^{*}=0\), then \(S_{1}(x_{1},0)=\int _{\frac{\lambda _1x_1}{\lambda _1-1}-K}^{\frac{(\lambda _1-1)K+\lambda _1x_1}{\lambda _1+1}}f(a)da\) (see Proposition 1). If \(x_1<-\frac{(\lambda _1-1)K}{\lambda _1}\), then \(\frac{(\lambda _1-1)K+\lambda _1x_1}{\lambda _1+1}<0\), so \(S_{1}(x_{1},0)=\int _{\frac{\lambda _{1}x_{1}}{\lambda _{1}-1}-K}^{\frac{ (\lambda _{1}-1)K+\lambda _{1}x_{1}}{\lambda _{1}+1}}f(a)da<\int _{\frac{\lambda _{1} x_{1}}{\lambda _{1}-1}-K}^{0}f(a)da<\int _{-\infty }^{0}f(a)da=\frac{1}{2}\), i.e., \(S_{1}(x_{1},0)<\frac{1}{2}\), \(\forall x_{1} <-\frac{(\lambda _{1}-1)K}{\lambda _{1}}\).

- Case (B’) :

If \(x_1>\frac{(\lambda _1-1)K}{\lambda _1}\) and \(x_2=x_{2}^{*}=0\), then \(S_{1}(x_{1},0)=\int _{\frac{(1-\lambda _1)K +\lambda _1x_1}{\lambda _1+1}}^{\frac{\lambda _1x_1}{\lambda _1-1}+K}f(a)da\) (see Proposition 1). If \(x_1>\frac{(\lambda _1-1)K}{\lambda _1}\), then \(\frac{(1-\lambda _1)K+\lambda _1x_1}{\lambda _1+1}>0\), so \(S_{1}(x_{1},0) =\int _{\frac{(1-\lambda _1)K+\lambda _1x_1}{\lambda _1+1}}^{\frac{\lambda _1x_1}{\lambda _1-1}+K}f(a)da<\int _{0}^{\frac{\lambda _1x_1}{\lambda _1-1}+K}f(a)da \le \int _{0}^{+\infty }f(a)da=\frac{1}{2}\), i.e., \(S_{1}(x_{1},0)<\frac{1}{2}\), \(\forall x_1>\frac{(\lambda _1 -1)K}{\lambda _1}\).

- Conclusion :

We have shown that if all the possible intervals of length 2K have strictly less than 50 percent of the voters, then \(S_{1}(x_1,0)<\frac{1}{2}\), \(\forall x_{1}\in \mathbb {R}\), i.e., Candidate 2 wins with certainty if he chooses the median location. \(\square \)
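Part (ii) can also be illustrated on a concrete distribution. The sketch below assumes, purely for illustration, that voters are distributed \(N(0,\sigma ^{2})\) with \(K=1\) and \(\lambda _1=2\); the helper names are hypothetical. For a normal density the heaviest interval of length 2K is centred at the median, so the hypothesis of (ii) reduces to \(\sigma >K/\Phi ^{-1}(3/4)\), where \(\Phi \) denotes the standard normal CDF. The code then evaluates \(S_{1}(x_{1},0)\) on a grid of \(x_{1}\) using the bounds of Cases (A), (B) and (B’).

```python
import math
from statistics import NormalDist


def norm_cdf(x):
    """Standard normal CDF."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def s1_vs_median(x1, lam, K, sigma):
    """S_1(x1, 0) when voters are N(0, sigma^2) (an assumption of this sketch)."""
    d = (lam - 1.0) * K / lam
    if x1 < -d:      # Case (B)
        b = lam * x1 / (lam - 1.0) - K
        c = ((lam - 1.0) * K + lam * x1) / (lam + 1.0)
    elif x1 > d:     # Case (B')
        b = ((1.0 - lam) * K + lam * x1) / (lam + 1.0)
        c = lam * x1 / (lam - 1.0) + K
    else:            # Case (A)
        b = lam * x1 / (lam - 1.0) - K
        c = lam * x1 / (lam - 1.0) + K
    return norm_cdf(c / sigma) - norm_cdf(b / sigma)


K, lam = 1.0, 2.0
# Smallest sigma for which the interval [-K, K] holds exactly half the voters.
sigma_threshold = K / NormalDist().inv_cdf(0.75)
sigma = 1.25 * sigma_threshold  # heterogeneous enough for the hypothesis of (ii)

grid = [i / 100.0 for i in range(-2000, 2001)]
worst = max(s1_vs_median(x1, lam, K, sigma) for x1 in grid)
print(f"largest S1(x1, 0) on the grid: {worst:.4f}")
```

If the reasoning above is right, the largest value on the grid stays below one half, so Candidate 2 located at the median wins against every sampled \(x_{1}\).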

1.8 A.8 Proof of Proposition 6

We consider that \(\sigma \in [\sigma ^{* },\sigma ^{** })\). If so, we know from Proposition 2 that there is no political equilibrium, i.e., no pure strategy equilibrium, and we are looking for a set of mixed strategy equilibria. We will proceed in three steps.

- First step :

In the space \(\mathbb {R}^{2}\), we will study the shape of:

$$\begin{aligned} H_{1}= & {} \left\{ (x_{1},x_{2})\in \mathbb {R}^{2}; \pi _{1}(x_{1},x_{2})=1\right\} \\ H_{2}= & {} \left\{ (x_{1},x_{2})\in \mathbb {R}^{2}; \pi _{2}(x_{1},x_{2})=1\right\} \\ H_{0}= & {} \left\{ (x_{1},x_{2})\in \mathbb {R}^{2}; \pi _{1}(x_{1},x_{2})=\pi _{2}(x_{1},x_{2})=\frac{1}{2}\right\} \end{aligned}$$

- Second step :

We will eliminate weakly dominated strategies.

- Third step :

We will determine a set of mixed strategy equilibria.

- First step :

Clearly we have \(H_{1}\cup H_{2}\cup H_{0}=\mathbb {R}^{2}\). If Candidate 1 plays \(x_{1}\) and Candidate 2 plays \(x_{2}\), then: Candidate 1 wins if \((x_{1},x_{2})\in H_{1}\); Candidate 2 wins if \((x_{1},x_{2})\in H_{2}\); there is a tie if \((x_{1},x_{2})\in H_{0}\).

We want to draw the graph in \(\mathbb {R}^{2}\) delimiting \(H_{1}\), \(H_{2}\), \(H_{0}\). Consider Cases (A), (B) and (B’) of Proposition 1. Then, Case (A) corresponds to the domain of \(\mathbb {R}^{2}\) between lines \(D_{1}\) and \(D_{2}\), Case (B) to the domain above line \(D_{2}\), and Case (B’) to the domain below line \(D_{1}\), where:

– \(D_{1}\) is the line of Equation \(x_{1}-x_{2}=\left( \frac{\lambda _{1}-1}{ \lambda _{1}}\right) K\).

– \(D_{2}\) is the line of Equation \(x_{1}-x_{2}=-\left( \frac{\lambda _{1}-1}{ \lambda _{1}}\right) K\).

- Case (A) :

If \(\left| x_{1}-x_{2}\right| \le \left( \frac{\lambda _{1}-1}{\lambda _{1}}\right) K\), and recalling that according to the proof of Part (i) of Proposition 3 in “Appendix A.5” (first step, and Case (A) in the second step) \(S_{1}(x_{1},x_{2})=g\left( \frac{\lambda _1x_1-x_2}{\lambda _1-1}\right) \), then

$$\begin{aligned} (x_{1},x_{2})\in & {} H_{1}\Leftrightarrow S_{1}(x_{1},x_{2})>\frac{1}{2}\\ (x_{1},x_{2})\in & {} H_{1}\Leftrightarrow g\left( \frac{\lambda _{1}x_{1} -x_{2}}{\lambda _{1}-1}\right) >\frac{1}{2}\\ (x_{1},x_{2})\in & {} H_{1}\Leftrightarrow \left| \frac{\lambda _{1}x_{1}-x_{2}}{\lambda _{1}-1}\right|<v^{*}\\ (x_{1},x_{2})\in & {} H_{1}\Leftrightarrow -(\lambda _{1}-1)v^{*}<\lambda _{1}x_{1}-x_{2}<(\lambda _{1}-1)v^{*} \end{aligned}$$

Let \(\Delta _{1}\) be the line of Equation \(\lambda _{1}x_{1}-x_{2}=(\lambda _{1}-1)v^{*}\), and \(\Delta _{2}\) be the line of Equation \(\lambda _{1}x_{1}-x_{2}=-(\lambda _{1}-1)v^{*}\), then

\((x_{1},x_{2})\in H_{1}\Leftrightarrow (x_{1},x_{2})\) is between \(\Delta _{1}\) and \(\Delta _{2}\)

\((x_{1},x_{2})\in H_{0}\Leftrightarrow (x_{1},x_{2})\in \Delta _{1}\cup \Delta _{2}\)

Denote by E and F the points such that \(\{E\}=D_{2}\cap \Delta _{1}\) and \(\{F\}=D_{2}\cap \Delta _{2}\).

If \((x_{1},x_{2})\in D_{2}\) (i.e., just at the boundary between Case (A) and Case (B)), then:

$$\begin{aligned} \pi _{1}(x_{1},x_{2})= & {} 1 \text{ if } (x_{1},x_{2})\in (F,E) \nonumber \\ \pi _{2}(x_{1},x_{2})= & {} 1 \text{ if } (x_{1},x_{2})\notin [ F,E]\nonumber \\ \pi _{1}(x_{1},x_{2})= & {} \pi _{2}(x_{1},x_{2})=\frac{1}{2} \text{ if } (x_{1},x_{2})\in \{F,E\} \end{aligned}$$(A11)

- Case (B) :

If \(x_{2}\ge x_{1}+\left( \frac{\lambda _{1}-1}{\lambda _{1}}\right) K\), then \((x_{1},x_{2})\in H_{1}\Leftrightarrow S_{1}(x_{1},x_{2})>\frac{1}{2}\) where \(S_{1}(x_{1},x_{2}) =\int _{\frac{\lambda _{1}x_{1}-x_{2}}{\lambda _{1}-1}-K}^{\frac{\lambda _{1}x_{1}+x_{2} +(\lambda _{1}-1)K}{\lambda _{1}+1}}f_{\sigma }(a)da\). For given \(x_{1}\), \(x_{2}\mapsto S_{1}(x_{1},x_{2})\) is a continuous increasing function, with \(\lim _{x_{2}\rightarrow +\infty }S_{1}(x_{1},x_{2})=\int _{-\infty }^{+\infty }f_{\sigma }(a)da=1\). This means that for any given \(x_{1}\), we have \(S_{1}(x_{1},x_{2})>\frac{1}{2}\) for \(x_{2}\) high enough; hence, \(\pi _{1}(x_{1},x_{2})=1\). From this result and from Eq. (A11), there is a function \(\phi :\mathbb {R}\rightarrow \mathbb {R}\) such that (in Case (B)):

\(*\) If \(x_{1}\le x_{1}(F)\) or \(x_{1}\ge x_{1}(E)\), then

\(\pi _{1}(x_{1},x_{2})=1\Leftrightarrow x_{2}>\phi (x_{1})\)

\(\pi _{1}(x_{1},x_{2})=\frac{1}{2}\Leftrightarrow x_{2}=\phi (x_{1})\)

\(*\) If \(x_{1}\in (x_{1}(F),x_{1}(E))\), then \(\pi _{1}(x_{1},x_{2})=1\).

It means that \(H_{1}\) is the domain above the graph of \(\phi \) (for \( x_{1}\le x_{1}(F)\) or \(x_{1}\ge x_{1}(E)\) ) and above \(D_{2}\) (for \( x_{1}\in [ x_{1}(F),x_{1}(E)]\)).

Lemma 3

- (i) \(H_{1}\) is a convex set inside Case (B).

- (ii) \(\phi \) is a convex function on \(x_{1}\le x_{1}(F)\) and on \(x_{1}\ge x_{1}(E)\).

- (iii) The set \(H_{1}\) does not intersect the line \(x_{2}=0\) inside Case (B).

- (iv) The line of Equation \(\lambda _{1}x_{1}+x_{2}+(\lambda _{1}-1)K=0\) is an asymptote of \(\phi \) when \(x_{1}\rightarrow -\infty \) and \( x_{2}\rightarrow +\infty \).

- (v) The line of Equation \(\lambda _{1}x_{1}-x_{2}-(\lambda _{1}-1)K=0\) is an asymptote of \(\phi \) when \(x_{1}\rightarrow +\infty \) and \(x_{2}\rightarrow +\infty \).
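To get a feel for the function \(\phi \) and for the asymptotes stated in (iv) and (v), one can trace the lower boundary of \(H_{1}\) in Case (B) numerically. The sketch below is an illustration only: it assumes that \(f_{\sigma }\) is the \(N(0,\sigma ^{2})\) density, picks \(\lambda _{1}=2\), \(K=1\) and \(\sigma =1.43\) arbitrarily, and uses hypothetical helper names. Because \(x_{2}\mapsto S_{1}(x_{1},x_{2})\) is increasing in Case (B), the boundary can be located by bisection.

```python
import math


def norm_cdf(x):
    """Standard normal CDF."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def s1_case_b(x1, x2, lam, K, sigma):
    """S_1(x1, x2) in Case (B), with f_sigma assumed to be the N(0, sigma^2) density."""
    b = (lam * x1 - x2) / (lam - 1.0) - K
    c = (lam * x1 + x2 + (lam - 1.0) * K) / (lam + 1.0)
    return norm_cdf(c / sigma) - norm_cdf(b / sigma)


def h1_lower_boundary(x1, lam, K, sigma, iters=200):
    """Lower boundary of H_1 in Case (B) at a given x1.

    Returns the point of D_2 when S_1 >= 1/2 already holds there,
    and otherwise the value phi(x1) at which S_1 crosses 1/2.
    """
    lo = x1 + (lam - 1.0) * K / lam  # x2-coordinate of the point of D_2 above x1
    if s1_case_b(x1, lo, lam, K, sigma) >= 0.5:
        return lo
    step = 1.0
    while s1_case_b(x1, lo + step, lam, K, sigma) < 0.5:  # S_1 -> 1 as x2 -> +inf
        step *= 2.0
    hi = lo + step
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if s1_case_b(x1, mid, lam, K, sigma) < 0.5:
            lo = mid
        else:
            hi = mid
    return hi


lam, K, sigma = 2.0, 1.0, 1.43
for x1 in (-20.0, -5.0, 0.5, 5.0, 20.0):
    x2 = h1_lower_boundary(x1, lam, K, sigma)
    left = -lam * x1 - (lam - 1.0) * K   # asymptote in (iv), solved for x2
    right = lam * x1 - (lam - 1.0) * K   # asymptote in (v), solved for x2
    print(f"x1 = {x1:6.1f}: boundary x2 = {x2:9.5f}, (iv) gives {left:7.2f}, (v) gives {right:7.2f}")
```

Far to the left the computed boundary should approach the line in (iv), and far to the right the line in (v); near the middle the function simply returns the point of \(D_{2}\).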

Proof of Lemma 3

-

(i)