Abstract

Particle size measurement is crucial in various applications, be it sizing droplets in inkjet printing or respiratory events, tracking particulate ejection in hypersonic impacts or detecting floating target markers in free-surface flows. Such systems are characterised by extracting quantitative information like size, position, velocity and number density of the dispersed particles, which is typically non-trivial. The existing methods like phase Doppler or digital holography offer precise estimates at the expense of complicated systems, demanding significant expertise. We present a novel volumetric measurement approach for estimating the size and position of dispersed spherical particles that utilises a unique ‘Depth from Defocus’ (DFD) technique with a single camera. The calibration-free sizing enables in situ examination of hard to measure systems, including naturally occurring phenomena like pathogenic aerosols, pollen dispersion or raindrops. The efficacy of the technique is demonstrated for diverse sparse dispersions, including dots, glass beads and spray droplets. The simple optical configuration and semi-autonomous calibration procedure make the method readily deployable and accessible, with a scope of applicability across vast research horizons.


1 Introduction

Dispersions are heterogeneous mixtures of particles dispersed within a continuous phase, whereby the term ‘particle’ can refer to particles of any phase, e.g. drops/aerosols, bubbles or solid particles. These particulate systems are omnipresent, and they bear significance in numerous natural and practical applications. For instance, in industrial settings, the size, location and velocity of atomized fuel droplets are crucial for evaporation, rapid ignition and achieving higher efficiency of combustion-based engines. Parallel examples apply to the pharmaceutical, food, agriculture, energy and automobile industries. Understanding the transport mechanism of toxic dispersions, such as contagious aerosol droplets, dust or microplastics, is crucial for health care and environmental sciences since it is constrained by their size ranges. From a biological perspective, entities such as pollen, blood cells, vesicles or micro-organisms possess characteristics that are dependent on their size. The list is endless, but in summary, the need to characterise the size, position and velocity of dispersed particles in a mixture is ubiquitous. Knowing such information then also allows for concentration and flux to be measured.

Among the numerous alternatives to perform such measurements, optical methods are of particular interest, as they are non-intrusive. Optical techniques are usually characterised as being pointwise, planar or volumetric and are based on various principles, such as interferometry (e.g. phase Doppler, holography, laser diffraction, ILIDS/IPI, etc.), time shift or direct imaging (Tropea 2011). However, pointwise or planar methods are tedious to deploy when volumetric information is required for two reasons. For one, the measurement point or plane must be traversed throughout the flow field, necessitating tedious measurement repetition and demanding steady flow conditions during the entire measurement procedure. Furthermore, the measurement volume is seldom known exactly, making a quantitative computation of global volumetric distributions difficult. Holography offers a volumetric measurement, and furthermore, in-line holography is optically quite simple to realise. Nevertheless, holography does involve considerable computational effort, making the processing time longer.

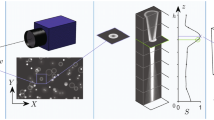

Direct imaging techniques provide a potential solution as they allow for high spatiotemporal resolution combined with simple experimental configurations. Shadow imaging is one such favourable configuration suitable to distinguish the particulate content from the continuous phase, and furthermore, it is easy to set up and adjust (Erinin et al. 2023). However, delineating the observation volume is difficult with such approaches. As the particle moves out of focus away from the object plane (Fig. 1a), projected geometric features become blurred and the apparent size seems to increase. Hence, most of the early implementations of direct imaging involved only the measurement of particles in focus and rejection of the blurred projections based on grey-level intensity (Fantini et al. 1990), gradient (Hay et al. 1998; Lecuona et al. 2000) or contrast-based criteria (Kim and Kim 1994). In many applications, near-focus instances occur less often, resulting in a small sample size and consequently increasing the statistical uncertainty of the measurement. Moreover, smaller particles tend to blur more rapidly with increasing distance from the object plane, reaching beyond the detection limit faster than the larger particles. This leads to an intrinsic bias in evaluating the size distribution using arithmetic averaging, by overweighting the occurrence of larger particles.

These drawbacks can be mitigated by considering volumetric methods where the blurring of the out-of-focus particles is utilised to determine not only size but also position through the degree of blurring. Such systems are known as the Depth from Defocus (DFD) approach, first introduced in the context of general imaging systems (Pentland 1987; Krotkov 1988). Several extensions were then proposed, which can be broadly classified into single or two image approaches. The single image approach is realised through special apertures (Willert and Gharib 1992; Levin et al. 2007; Cao and Zhai 2019), lenses (Cierpka et al. 2010) or active illumination (Ghita and Whelan 2001). Another approach is to employ image processing algorithms based on the concept of deconvolution (Ens and Lawrence 1993; Subbarao and Surya 1994), normalised contrast (Blaisot and Yon 2005; Fdida and Blaisot 2010), circle of confusion (Legrand et al. 2016) or machine learning (Saxena et al. 2008; Wang et al. 2022a, b). Some of these methods offer both size and depth estimation, albeit with an ambiguity in the depth direction, as blurring is symmetric across the object plane. Furthermore, these methods usually require a lengthy calibration procedure.

The two image DFD approach involves acquiring the images at different degrees of blur (out of focus). This can be realised from a single camera by capturing sequential images after changing the parameters of the optical system (Subbarao et al. 1995) or by using coloured illumination with suitable filters (Murata and Kawamura 1999). Alternatively, two cameras and a beam splitter can be deployed to obtain simultaneous images, each with a different degree of focus. Recent developments of the two-camera DFD (Zhou et al. 2020, 2021; Sharma et al. 2023) enable reliable measurement of size and depth using images from two cameras, whose object planes have a prescribed spacing. These images are processed using functions determined from the calibration procedure, requiring a series of target dot images of known size moved along the optical axis at known depths. Unlike other methods, this DFD approach enables the precise estimation of the measurement volume (or more precisely, the detection volume), which varies with particle size. The theoretical formulation of this two-camera DFD (Sharma et al. 2023) lays the foundation for the present newly proposed technique using only one camera for the measurement of spherical particles.

The underlying principle of the proposed technique is illustrated in Fig. 1b. When the dispersed particle of interest is located on the object plane of the lens, a focused image with distinct features is obtained, blurred only by the point spread function (diffraction limit). However, as the particle is displaced along the depth axis away from this plane, blurring occurs, resulting in smoother features and lower intensity gradients. A parameter of interest is the radius of the particle image at a constant grey-level threshold, which decreases as the depth increases. In the earlier DFD approaches, two experimental calibration functions were employed, utilising the radius information obtained from two cameras for analysis. However, since the actual size and position of a particle also influence the greyscale gradient of its image, this gradient is utilised in the single-camera approach proposed here. This approach aims to determine the size and depth of a particle using the thresholded radius and gradient magnitude extracted from a single image at a reference normalised greyscale intensity (chosen here as 0.5). This is achieved using analytical functions, which constitutes the core novelty of the technique.

Fig. 1 (a) Illustration of image projection using ray optics, where a particle located at a distance \(u_\textrm{o}\) from the lens in the object plane is in focus at a distance s in the image plane (IP). Objects in front of or behind the object plane by a distance \(|\Delta z|\) appear blurred on the image plane. (b) Graphical illustration of particle size estimation using a single-camera image by extracting two quantities, the radius (\(r_\textrm{t}\)) and the intensity gradient (\(\partial g_\textrm{t}/\partial r_\textrm{t}\)) at a reference intensity value (\(g_\textrm{t}=0.5\)), both of which decrease with increasing depth from the object plane \(|\Delta z|\)

2 Theoretical analysis

This novel implementation of a single image DFD relies on a theoretical description of the image blurring as a function of the position of the dispersed particle with respect to the object plane of the system. How this description is implemented into the analysis and into a practical measurement procedure is summarised graphically in Fig. 2. Note that the image processing is always performed on a normalised greyscale, where the intensity values are scaled between 0 and 1. This normalisation step and the image processing algorithm are described in more detail in Sect. 3.

2.1 Blurred image formation

The image projection onto a camera sensor can be described using simple ray optics, as illustrated in Fig. 1a. When a particle is on the object plane at a distance \(u_\textrm{o}\) from the lens, a focused image is formed at the imaging plane, located at a distance s from the lens. However, when the particle is displaced to a distance \(|\Delta z|\) from the object plane, the focused image shifts to a different plane, causing a blurred image projection on the sensor. This blurred image can be described by a convolution of the focused image (\(i_\textrm{f}\)) of a particle (size \(d_\textrm{o}\)) with a blurring kernel (h) (Blaisot and Yon 2005; Zhou et al. 2020). The intensity \(g_\textrm{t}\) at any location \(r_\textrm{t}\) is then evaluated as (Fig. 3a):

Fig. 3 (a) The blurred image is estimated by convolving the focused image of a particle of size \(d_\textrm{o}\) with a Gaussian blur kernel (shown as a shaded circle), which yields the intensity (\(g_\textrm{t}\)) at each location (\(r_\textrm{t}\)). (b) Theoretical variation of the dimensionless threshold radius \(\tilde{\rho }_\textrm{t} = r_\textrm{t}/d_\textrm{o}\) with \(\tilde{\sigma } = \sigma /d_\textrm{o}\) for different intensity threshold values (\(g_\textrm{t} =\) 0.1–0.9)

Here \(i_\textrm{f}(r)\) is a normalised intensity image of a particle of radius \(r_\textrm{o} = d_\textrm{o}/2\) on the image plane in polar coordinates

The particle dimension on the image plane \(d_\textrm{o}\) is related to the actual size \(d_\textrm{p}\) through \(d_\textrm{o} = d_\textrm{p} \times M\), where M is the magnification of the optical system. The blur kernel \(h(r_\textrm{h})\) can be represented using a Gaussian profile with \(\sigma\) as the standard deviation (Savakis and Trussell 1993; Liu et al. 2021; Dasgupta 2022):
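A normalised, rotationally symmetric Gaussian consistent with this description can be written as below. The unit-volume normalisation is an assumption made here so that blurring conserves the total intensity.

\[ h(r_\textrm{h}) = \frac{1}{2\pi \sigma ^2}\exp \left( -\frac{r_\textrm{h}^2}{2\sigma ^2}\right) \]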

where \(\vec {r_\textrm{h}} = \vec {r}-\vec {r_\textrm{t}}\). Therefore, the two-dimensional convolution Eq. (1) can be written as:

The standard deviation \(\sigma\) represents the degree of blur or size of the blur kernel, which can be expressed as (Zhou et al. 2020)

where A is an experimental constant for the imaging system, D is the aperture diameter, f is the focal length and \(\Delta z\) is the distance of the particle from the object plane (see Fig. 1a). As A, D, M and f are invariant for a given DFD measurement system, these terms are replaced with a single constant \(\beta\). Ultimately, the resolution of imaging systems is limited by diffraction, and the smallest possible point spread function (PSF) is associated with the formation of the Airy disk. This limits the contour sharpness when in focus, i.e. \(\sigma \ne 0\) at \(\Delta z = 0\). However, for the present system parameters, this diffraction limitation is negligible, and other factors are more prominent, as discussed in detail in Appendix A.

The solution for the convolution integral Eq. (4) is obtained by non-dimensionalisation of the variables with the particle image diameter as
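Consistent with the notation of Fig. 3b, this scaling amounts to the following, where \(\widetilde{\rho }\) is used here as an assumed name for the scaled integration radius:

\[ \widetilde{\rho } = \frac{r}{d_\textrm{o}}, \qquad \widetilde{\rho }_\textrm{t} = \frac{r_\textrm{t}}{d_\textrm{o}}, \qquad \widetilde{\sigma } = \frac{\sigma }{d_\textrm{o}} \]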

Here we use \(\widetilde{\sigma }\) as a parameter to represent the dimensionless depth from the object plane; see Eq. (5). Using appropriate substitutions in Eq. (4), the reduced dimensionless form is obtained as
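One form that follows from convolving a disc of unit dimensionless diameter with a Gaussian kernel is sketched below. It assumes the focused image is normalised to 1 inside the particle contour and 0 outside; for the opposite convention the right-hand side becomes one minus this expression, which leaves the \(g_\textrm{t}=0.5\) contour and the gradient magnitude unchanged.

\[ g_\textrm{t}\left( \widetilde{\rho }_\textrm{t}\right) = \frac{1}{\widetilde{\sigma }^2}\exp \left( -\frac{\widetilde{\rho }_\textrm{t}^2}{2\widetilde{\sigma }^2}\right) \int _0^{1/2} \widetilde{\rho }\,\exp \left( -\frac{\widetilde{\rho }^2}{2\widetilde{\sigma }^2}\right) I_\textrm{o}\left( \frac{\widetilde{\rho }\,\widetilde{\rho }_\textrm{t}}{\widetilde{\sigma }^2}\right) \textrm{d}\widetilde{\rho } \]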

where \(I_\textrm{o}\) is the zeroth order modified Bessel function of the first kind. The dimensionless equation, Eq. (7), is the foundation for the analytical functions, the solutions of which are determined numerically and are depicted in Figs. 3b and 4a. Note that when \(\widetilde{\sigma } \rightarrow 0\), the argument of the Bessel function, and hence the function itself, diverges in Eq. (7). To obtain a solution in this region, the asymptotic estimate of the function as \(({\widetilde{\rho }\widetilde{\rho _\textrm{t}}}/{{\widetilde{\sigma }}^2}) \rightarrow \infty\) is used (Bowman 1958). Figure 3b presents the variation of threshold radius with particle depth from the object plane for a specific threshold intensity. This solution also provides the foundation for the calibration curves used in the earlier two-camera DFD approach (Sharma et al. 2023). Figure 4a presents the intensity distribution of blurred images in the radial direction at a specific depth.
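The blurred radial profile can also be evaluated without Bessel functions, which sidesteps the divergence at small \(\widetilde{\sigma }\). The sketch below is not the authors' implementation: it assumes a Gaussian kernel, a disc of unit dimensionless diameter normalised to 1 inside and 0 outside, and integrates the Gaussian over the disc chord by chord. The function name `blurred_intensity` and the quadrature resolution are illustrative choices. Under these assumptions the on-axis value has the closed form \(1-\exp (-1/(8\widetilde{\sigma }^2))\), so the \(g_\textrm{t}=0.5\) contour shrinks to a point at \(\widetilde{\sigma } = 1/\sqrt{8\ln 2} \approx 0.425\).

```python
import math


def blurred_intensity(rho_t, sigma, n=400):
    """Normalised intensity at dimensionless radius rho_t for a disc of unit
    diameter blurred by a Gaussian of dimensionless standard deviation sigma.

    The 2D Gaussian centred at (rho_t, 0) is integrated over the disc: the
    integral along each vertical chord is an error function, and the remaining
    integral over x = a*sin(theta) is done with the midpoint rule.
    """
    a = 0.5  # dimensionless particle radius
    h = math.pi / n
    total = 0.0
    for k in range(n):
        theta = -0.5 * math.pi + (k + 0.5) * h
        x = a * math.sin(theta)
        half_chord = a * math.cos(theta)
        gauss = math.exp(-0.5 * ((x - rho_t) / sigma) ** 2) / (
            sigma * math.sqrt(2.0 * math.pi)
        )
        total += gauss * math.erf(half_chord / (sigma * math.sqrt(2.0))) * half_chord
    return total * h


# Radial profile at one blur level (compare the shape of the curves in Fig. 4a).
sigma = 0.2
for rho_t in (0.0, 0.25, 0.5, 0.75):
    print(f"rho_t = {rho_t:.2f}  g_t = {blurred_intensity(rho_t, sigma):.4f}")

# Closed-form on-axis value and the blur level at which the centre reaches 0.5.
centre_exact = 1.0 - math.exp(-1.0 / (8.0 * sigma**2))
sigma_vanish = 1.0 / math.sqrt(8.0 * math.log(2.0))
print(f"centre (closed form) = {centre_exact:.4f}, sigma_vanish = {sigma_vanish:.4f}")
```

The chord-wise form keeps every factor bounded, so no asymptotic expansion is needed near the object plane, at the cost of a finer quadrature grid when \(\widetilde{\sigma }\) is very small.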

Fig. 4 (a) Analytical variation of intensity \(g_\textrm{t}\) with dimensionless radius \(\tilde{\rho }_\textrm{t}\) for various dimensionless blurring standard deviations \(\tilde{\sigma }\), which is proportional to depth. (b) Theoretical intensity variation with modified dimensionless radius \(\tilde{R}_\textrm{t}\) for various dimensionless blurring standard deviations \(\tilde{\sigma }\)

Fig. 5 Analytical curves \(f_1\) and \(f_2\) for reference intensity \(g_\textrm{t}=0.5\). (a) Variation of dimensionless gradient \(\tilde{G}\) with depth, i.e. \(\tilde{G}\) = \(f_2(\tilde{\sigma })\). (b) Variation of dimensionless radius \(\tilde{\rho }_\textrm{t}\) with depth, i.e. \(\tilde{\rho }_\textrm{t}\) = \(f_1(\tilde{\sigma })\)

2.2 Analytical characteristic functions

From the single-camera image, two quantities can be extracted: the radius \(\left( r_\textrm{t}\right)\) and the intensity gradient \(\left( \partial g_\textrm{t}/\partial r_\textrm{t}\right)\) at a reference intensity value \(g_\textrm{t}=0.5\). These parameters decrease with increasing depth of the particle from the object plane \(\left| \Delta z\right|\) (Fig. 1b), indicating that a gradient-based function could be used to estimate the degree of blur and hence, indirectly, the depth. This is confirmed in Fig. 4a by observing the intensity profiles for blurred particles at different depths, which exhibit different gradients at a reference intensity. Using an experimental image, we can only evaluate the radial intensity profiles, i.e. the \(r_\textrm{t}-g_\textrm{t}\) variation rather than the dimensionless version shown in Fig. 4a, since \(d_\textrm{o}\) is unknown. Hence, we propose a novel measurable dimensionless radius:

where \(\left( r_\textrm{t}\right) _{g_\textrm{t}=0.5}\) is the radius at the reference intensity. The corresponding modified solution is depicted in Fig. 4b, and the proposed functional form of the characteristic function based on the modified gradient at reference intensity \(g_\textrm{t}=0.5\) is

From this measurable dimensionless version of intensity gradient \(|\partial g_\textrm{t}/\partial {\widetilde{R}}_\textrm{t}| = |r_\textrm{t} \partial g_\textrm{t}/\partial r_\textrm{t}|\) at the reference intensity (sub-script \(g_\textrm{t}=0.5\) omitted for brevity from now on), we can estimate the dimensionless quantity \(\widetilde{\sigma }\), which is proportional to depth. This curve is shown in Fig. 5a. From the solution depicted in Fig. 3b, another required function is directly obtained to estimate \({\widetilde{\rho }}_\textrm{t}\) from \(\widetilde{\sigma }\) at the reference intensity represented in the functional form as

This curve is illustrated in Fig. 5b. The input parameters for the analytical functions \(f_1\) and \(f_2\) are conveniently measurable from the image. These functions can be further combined in the form \({\widetilde{\rho }}_\textrm{t}=f_1(f_2^{-1}(\widetilde{G}))\), as depicted in Fig. 6a.
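Written out in the notation of this subsection, the three relations read as follows. The exact typeset form is inferred from the text and the caption of Fig. 5, so it should be read as an assumption.

\[ \widetilde{R}_\textrm{t} = \frac{r_\textrm{t}}{\left( r_\textrm{t}\right) _{g_\textrm{t}=0.5}}, \qquad \widetilde{G} = \left| \frac{\partial g_\textrm{t}}{\partial \widetilde{R}_\textrm{t}}\right| _{g_\textrm{t}=0.5} = f_2\left( \widetilde{\sigma }\right) , \qquad \left( \widetilde{\rho }_\textrm{t}\right) _{g_\textrm{t}=0.5} = f_1\left( \widetilde{\sigma }\right) \]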

Fig. 6 (a) Combination of analytical curves \({\tilde{\rho }}_\textrm{t}=f_1\left( f_2^{-1}\left( \tilde{G}\right) \right)\) for the reference intensity \(g_\textrm{t}=0.5\). A steep variation of \(\tilde{\rho }_\textrm{t}\) with the gradient is observed in the blue-shaded region where \(\tilde{\sigma }>0.35\). At the same time, there is a minimal variation in the grey-shaded region, i.e. \(\tilde{\rho }_\textrm{t} \approx 0.5\), where \(\tilde{\sigma }<0.05\). (b) Variation of the intensity value at the location of maximum gradient magnitude with dimensionless depth \(\tilde{\sigma }\). This corresponds to \(g_\textrm{t} \approx 0.5\) for most of the suitable working range (\(\tilde{\sigma } \leqslant 0.2\)) and therefore is chosen as reference. Beyond the working range, i.e. in the grey-shaded region, \(r_\textrm{t} \rightarrow 0\), as is evident from function \(f_1\)

Being dimensionless, these analytical functions are universal to optical systems that exhibit a Gaussian blur kernel, which makes this technique a powerful measurement tool. The measurement process based on these functions is explained in the next subsection.

On assessing the radial intensity profiles, i.e. \(\widetilde{\rho }_\textrm{t}-g_\textrm{t}\) curves with varying \(\widetilde{\sigma }\), the maximum slope values are found to occur at the intensity \(g_\textrm{t} \approx 0.5\) for most of the suitable working range \(\left( \widetilde{\sigma }\le 0.2\right)\) (see Fig. 6b). This intensity value at the location of maximum gradient magnitude, \(g_\textrm{t} = 0.5\), is therefore chosen as the reference intensity introduced earlier, making gradient estimation less susceptible to noise. The gradient \(\widetilde{G}\) is estimated by considering the average magnitude within a thin strip whose edges are defined by the intensities \(\left( g_\textrm{t}\pm \delta g_\textrm{t}\right)\) around the reference intensity (see Fig. 7a). This is necessary as the image is composed of pixels, and precise estimation at exactly the reference intensity is challenging. Moreover, noise manifests as pixel-level fluctuations, which produce sharp intensity variations and hence steep local gradient values. Because the underlying gradient is at its maximum in this region, these fluctuations have a smaller relative influence on the estimated average than they would elsewhere in the image.

The current analysis considers individual blurred particles, but in practical applications, particles often overlap when projected onto the image plane. This overlapping can result in a single indistinguishable, non-symmetric entity due to blurring. Appendix A includes a discussion on the particle concentration limit, which refers to the maximum degree to which closely packed particles can be distinguished. By solving the convolution equation specific to this case, it is deduced that the particles with a spacing between their centres greater than 1.4 times the diameter will be distinguishable at all depths for the segmentation threshold of 0.4.

To the best of the authors’ knowledge, this approach using both an intensity threshold and the grey-level gradient for contour and size measurement is novel and a patent for this analytic approach has been filed.

Fig. 7 (a) Gradient \(\tilde{G}\) estimation using the average magnitude in a thin strip (\(g_\textrm{t} \pm \delta g_\textrm{t}\)) at the reference intensity depicted by cyan in the figure. This is necessary because the image is composed of pixels, restricting the precise estimation of gradients at exactly the reference intensity. The strip width increases as \(\tilde{\sigma }\) increases, causing the average value to deviate from the anticipated exact value. (b) Error correction function \(\varepsilon\) (ratio of actual to estimated diameter) generated using synthetic images to consider the pixelation effect on size and gradient estimation at reference intensity location \(g_\textrm{t}=0.5\)

2.3 Measurement process

Size estimation: The size of the particles can be estimated based on the analytical functions \(f_1\) and \(f_2\). First, the threshold radius \(r_\textrm{t}\) and gradient magnitude \(\left| \frac{\partial g_\textrm{t}}{\partial r_\textrm{t}}\right|\) are evaluated at the reference intensity \(g_\textrm{t}=0.5\) from the particle image. The associated image processing is explained in Sect. 3, consisting of aspects like image normalisation, segmentation and sub-pixel interpolation. These parameters are used to calculate the dimensionless gradient \(\widetilde{G}=\left| \frac{\partial g_\textrm{t}}{\partial {\widetilde{R}}_\textrm{t}}\right| =\left| r_\textrm{t}\frac{\partial g_\textrm{t}}{\partial r_\textrm{t}}\right|\). From Eq. (9), the dimensionless depth \(\widetilde{\sigma }=f_2^{-1}(\widetilde{G})\) is obtained and substituted into Eq. (10) to evaluate the dimensionless radius \({\widetilde{\rho }}_\textrm{t}=f_1\left( \widetilde{\sigma }\right)\). The size of the particle in the image plane, \(d_\textrm{o}\), is then evaluated using the relation \(d_\textrm{o}\ ={r_\textrm{t}}/{{\widetilde{\rho }}_\textrm{t}}\).
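The size-estimation chain can be sketched end to end as follows. This is an illustrative reimplementation under stated assumptions, not the authors' code: the blur kernel is Gaussian, the particle image is a disc normalised to 1 inside and 0 outside, and `f1`, `f2`, `f2_inverse` and `estimate_size` are hypothetical helper names. The characteristic functions are evaluated numerically on demand rather than read from tabulated curves, and the inversion is restricted to an assumed working range \(0.05 \le \widetilde{\sigma } \le 0.35\).

```python
import math

A = 0.5  # dimensionless particle radius (image diameter scaled to 1)


def g(rho_t, sigma, n=200):
    """Blurred intensity of a unit-diameter disc at dimensionless radius rho_t."""
    h = math.pi / n
    total = 0.0
    for k in range(n):
        theta = -0.5 * math.pi + (k + 0.5) * h
        x, half_chord = A * math.sin(theta), A * math.cos(theta)
        gauss = math.exp(-0.5 * ((x - rho_t) / sigma) ** 2) / (
            sigma * math.sqrt(2.0 * math.pi)
        )
        total += gauss * math.erf(half_chord / (sigma * math.sqrt(2.0))) * half_chord
    return total * h


def f1(sigma):
    """Dimensionless threshold radius at g_t = 0.5 (0 once the contour vanishes)."""
    if g(0.0, sigma) <= 0.5:
        return 0.0
    lo, hi = 0.0, A  # g decreases with radius and is below 0.5 at rho_t = 0.5
    for _ in range(40):
        mid = 0.5 * (lo + hi)
        if g(mid, sigma) > 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def f2(sigma, eps=1e-4):
    """Dimensionless gradient G = |rho_t * dg/drho_t| on the g_t = 0.5 contour."""
    rho_t = f1(sigma)
    slope = (g(rho_t + eps, sigma) - g(rho_t - eps, sigma)) / (2.0 * eps)
    return abs(rho_t * slope)


def f2_inverse(G, lo=0.05, hi=0.35):
    """Bisection for sigma_tilde, assuming f2 decreases monotonically on [lo, hi]."""
    for _ in range(30):
        mid = 0.5 * (lo + hi)
        if f2(mid) > G:  # edge sharper than measured: more blur is needed
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def estimate_size(r_t, grad):
    """Return (d_o, sigma) in pixels from threshold radius and gradient at g_t = 0.5."""
    G = abs(r_t * grad)
    sigma_tilde = f2_inverse(G)
    d_o = r_t / f1(sigma_tilde)
    return d_o, sigma_tilde * d_o


# Round trip on noise-free, synthetic "measurements" of a 40-pixel particle image.
d_true, sigma_tilde_true = 40.0, 0.2
r_t = f1(sigma_tilde_true) * d_true   # threshold radius in pixels
grad = f2(sigma_tilde_true) / r_t     # |dg/dr| in 1/pixel
d_est, sigma_est = estimate_size(r_t, grad)
print(f"d_o = {d_est:.3f} px, sigma = {sigma_est:.3f} px")
```

In practice the two curves would be tabulated once and interpolated, since they do not depend on the optical system; the on-demand bisection is used here only to keep the sketch short.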

Depth estimation: The estimation of particle depth requires an experimental calibration function in addition to the analytical functions used above. This step is optional and is not required if emphasis is placed only on the particle size estimation. Experimental calibration is achieved following the size estimation procedure described earlier and is performed for target dots or reticles of known size moved along the optical axis at known depths. The blur kernel size \(\sigma\) is evaluated using the relation \(\sigma = \widetilde{\sigma }d_\textrm{o}\). Since the depths of these target dots are already known, the correlation between \(\sigma\) and \(\left| \Delta z\right|\) can be estimated through Eq. (5). The calculated linear fit \(\beta\) remains constant for the system and is applied to the \(\sigma\) values obtained from the sample particle measurements to estimate their corresponding depths. Due to the symmetric nature of the image blurring across the object plane, the depth location exhibits directional ambiguity, and only absolute values can be determined from the object plane.

Referring to Fig. 6a, we now examine the characteristics of the functions \(f_1\) and \(f_2\) and their implications for the measurement process. In the vicinity of the object plane or the near-focus depth field \(\widetilde{\sigma }<0.05\), the parameter \(\widetilde{\rho }_\textrm{t}\) is practically constant, as can be seen in the combined calibration curve. This makes the method robust under near-focus conditions for diameter estimation, even though the gradient estimation and thus, \(\widetilde{\sigma }\), is prone to error. This is due to the expected sharp gradients and the limitations imposed by image projection onto discrete pixels. Consequently, the depth estimates of particles near the object plane are unreliable. Furthermore, Fig. 6a reveals a steep variation of \({\widetilde{\rho }}_\textrm{t}\) with the gradient in the blue-shaded region corresponding to \(\widetilde{\sigma }>0.35\). This region represents larger depth locations, approaching the limit of the measurement system. The measurements in this region are unreliable for diameter estimation. Moreover, the overall intensity level is lower due to a higher degree of blur, rendering the image susceptible to noise. This limits the measurement depth to approximately \(\widetilde{\sigma }_\textrm{c}=0.35\), and the results beyond this are disregarded. Corresponding to this imposed limit, \(\widetilde{\rho _\textrm{t}}=0.3211\) and \(\widetilde{G}=0.2501\).

The discrete, pixelated nature of the two-dimensional intensity data poses a challenge in several ways. The errors associated with estimating gradients and threshold radius propagate through the aforementioned functions, leading to inaccuracies in the estimated size values. To quantify this error, synthetic images of dots with known sizes and degrees of blur were analysed. An error correction function \(\varepsilon\) is developed to compensate for the errors due to the pixelation effect, which is defined as the ratio:

where \(d_\textrm{0,est}\) is the diameter estimated using the proposed method and \(d_\textrm{0,act}\) is the actual diameter of the particle in the image. This function is illustrated in Fig. 7b and used to estimate the corrected diameter as \(d_\textrm{0,corr} = d_\textrm{0,est} \cdot \varepsilon (d_\textrm{0,est})\). On closer inspection, we find the error in diameter estimation to be \(\Delta d_{0} \approx 0.35\) pixel irrespective of the actual particle diameter for the proposed algorithm and parameters. Since the particle image is discrete, an inaccuracy of \(\Delta d_{0} \approx 1\) pixel is anticipated; however, we are able to achieve a lower value due to the sub-pixel interpolation procedure discussed in Sect. 3. The correction function depends on the sub-pixel grid size, and for Fig. 7b and the rest of the study, a \(5 \times 5\) sub-pixel grid with bilinear interpolation was employed.

2.4 Depth of detection

In the limit of detection, corresponding to the depth \(|\Delta z|=|\Delta z|_\textrm{c}\), the threshold radius \(r_\textrm{t}\) goes to zero (\(r_\textrm{t} \rightarrow 0\)). Solving the dimensionless equation, Eq. (7) developed earlier, this limit predicts a linear variation of depth of detection \(\delta\) (total depth considering both sides of the object plane) with particle diameter \(d_\textrm{p}\) (Sharma et al. 2023), which can be represented as

where \(\alpha\) is a constant and \(d_\textrm{p0}\) is an offset parameter to adjust the linear fit (usually \(d_\textrm{p} \gg d_\textrm{p0}\)). This offset parameter is an artefact of the pixelation associated with actual images and is discussed in detail in previous articles (Sharma et al. 2023).

Considering the limit set on the measurement up to \(\widetilde{\sigma }_\textrm{c}\), \(\alpha\) can be determined using Eqs. (5), (6) and (12)
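A short derivation under stated assumptions illustrates the dependence. It takes Eq. (5) in its reduced form \(\sigma = \beta |\Delta z|\) and Eq. (12) as \(\delta = \alpha \left( d_\textrm{p} - d_\textrm{p0}\right)\), both inferred from the surrounding text, and neglects the offset \(d_\textrm{p0}\). At the cut-off, \(\sigma _\textrm{c} = \widetilde{\sigma }_\textrm{c}\, d_\textrm{o} = \widetilde{\sigma }_\textrm{c}\, M d_\textrm{p}\), so that

\[ \delta = 2|\Delta z|_\textrm{c} = \frac{2\sigma _\textrm{c}}{\beta } = \frac{2\widetilde{\sigma }_\textrm{c} M}{\beta }\, d_\textrm{p} \quad \Rightarrow \quad \alpha = \frac{2\widetilde{\sigma }_\textrm{c} M}{\beta } \]

If \(\beta\) itself scales with M, the magnification cancels and \(\alpha\) depends only on A, D and f.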

The detection volume can then be determined as a function of particle size as

where \(H\times L\) are the dimensions of the region of interest. This precise determination of the measurement volume is a distinguishing feature of the DFD approach, and a detailed discussion can be found in previous works (Sharma et al. 2023). The smaller particles are measured over a smaller depth range, and the detection depth increases linearly as the size increases, leading to an overweighting of larger particles. Hence, the information on detection depth is used for volumetric bias correction of the size distributions as discussed in Sect. 3.
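The effect of a size-dependent detection volume on the statistics can be sketched as follows. The linear form \(\delta = \alpha (d_\textrm{p} - d_\textrm{p0})\), the volume \(V_\textrm{d} = H L \delta\) and the inverse-depth weighting are assumptions made for this illustration; the function names and all numerical values are hypothetical and are not taken from the experiments.

```python
def detection_depth(d_p, alpha, d_p0=0.0):
    """Total depth of detection, assuming delta = alpha * (d_p - d_p0)."""
    return alpha * (d_p - d_p0)


def detection_volume(d_p, alpha, height, length, d_p0=0.0):
    """Detection volume, assuming V_d = H * L * delta for the region of interest."""
    return height * length * detection_depth(d_p, alpha, d_p0)


def bias_weights(diameters, alpha, d_p0=0.0):
    """Per-particle weights proportional to 1 / delta, normalised to sum to 1.

    Larger particles are detected over a deeper range, so each detection is
    down-weighted in proportion to its detection depth.
    """
    raw = [1.0 / detection_depth(d, alpha, d_p0) for d in diameters]
    total = sum(raw)
    return [w / total for w in raw]


# Illustrative values only (SI units): alpha is dimensionless, sizes in metres.
alpha = 20.0
height, length = 2.0e-3, 3.0e-3
diameters = [10e-6, 20e-6, 40e-6]

for d, w in zip(diameters, bias_weights(diameters, alpha)):
    v = detection_volume(d, alpha, height, length)
    print(f"d_p = {d * 1e6:.0f} um  V_d = {v:.3e} m^3  weight = {w:.4f}")
```

With equal counts in each size class, the smallest particles receive the largest weight, which counteracts the overweighting of larger particles described above.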

The parameter \(\alpha\) plays a significant role in determining the detection volume (\(V_\textrm{d}\)), as indicated by Eq. (14). This system parameter is dependent on \(\beta\), implying that \(\alpha \propto f/AD\) according to Eqs. (5) and (13). Therefore, by choosing or adjusting these parameters, one can ensure a larger detection volume for a higher sampling rate. For instance, in designing the optical system for a particular application, a larger focal length (f) or a smaller aperture diameter (D) yields a larger detection volume. The latter, however, significantly affects the overall intensity profiles captured in the image and must be compensated by controlling the background illumination. Furthermore, the experimental factors affecting parameter A are not precisely known, but it is highly dependent on the type, collimation and chromaticity of the background illumination. As will be demonstrated later using target dot measurements, a diffused beam illumination leads to a lower value of \(\alpha\) and a smaller detection volume, but provides reliable measurement results. In contrast, a collimated beam illumination leads to a much higher \(\alpha\) value, but the results obtained are unreliable due to interference effects.

3 Materials and methods

3.1 Experimental set-up

3.1.1 Suitable set-up requirements

This measurement technique requires only the minimal equipment associated with basic backlight imaging: a camera and a diffused light source for background illumination, as shown in Fig. 8. For reliable measurements, the camera resolution and magnification should be carefully selected to ensure that the smallest particle of interest has a diameter of at least 3–5 pixels on the image sensor plane. To achieve suitable background illumination, a diffusor plate or an appropriate optical device should be used. It is crucial to avoid collimated beams, as they can lead to inaccurate results due to non-Gaussian blurring and interference effects, such as Fresnel diffraction (see Appendix A). Additionally, the light source should be aligned along the optical axis to ensure proper shadow formation, which means that the contours should remain circular when the particle is blurred. The background intensity should be adjusted to an intermediate value in the dynamic range of the sensor to avoid saturation associated with very high intensities and noise with low intensity levels. If the particle is not completely opaque, a bright central spot will appear inside the shadow, corresponding to first-order refracted light passing through the particle. However, this effect can be largely eliminated by moving the light source farther away from the object plane. In this manner, to an increasing degree, only paraxial rays will be seen and the intensity of the bright central spot decreases. The formation of this localised central bright spot does not impact the estimation of radius and gradient at the reference intensity (see Fig. 11).

The choice of lens is crucial and depends on the particle sizes being measured and the observation volume. A telecentric lens is preferred for accurate measurements, since it maintains a constant magnification, keeping the object size constant, independent of its position along the optical axis. Furthermore, a telecentric lens maintains symmetry of the blurred image for particles behind or in front of the object plane. However, standard optical arrangements can be used if the measurement volume is small in the depth direction, where the magnification variation is insignificant. It is important to note that the aperture size and focal length can affect the system parameter \(\beta\) (Eq. (5)) and consequently the measurement depth of the system (Eqs. (13) and (14)).

3.1.2 Set-up used in experiments

The basic configuration used in the present study consisted of a high-speed camera, zoom lens and light source, with other accessories such as a beam expander, diffusor plate and calibration target dot plate.

Target dot measurement: High-speed camera: Photron SA5; Lens: \(6.5\times\) Navitar zoom lens coupled with \(1.5\times\) lens attachment, and \(1\times\) and \(2\times\) objectives, where the latter was used for the higher magnification configuration; Light sources: Dolan Jenner Fiber-Lite Mi-150 LED light and Cavitar Cavilux smart UHS pulsed laser; Beam expander: Thorlabs GBE05-A; Magnification: \(\sim 6.8 \times\) and \(\sim 13.7 \times\); Resolution: 2.94 \(\upmu\)m/pixel and 1.46 \(\upmu\)m/pixel.

Glass beads on a slide: High-speed camera: Photron SA5; Lens: \(6.5\times\) Navitar zoom lens coupled with \(1.5\times\) lens attachment, and \(1\times\) objective; Light source: Dolan Jenner Fiber-Lite Mi-150 LED light; Magnification: \(\sim 6.7 \times\); Resolution: 2.97 \(\upmu\)m/pixel.

Dispersed glass beads and ethanol spray measurements: High-speed camera: Photron SA5; Lens: \(6.5\times\) Navitar zoom lens coupled with \(1.5\times\) lens attachment, and \(1\times\) objective; Light source: Cavitar Cavilux smart UHS pulsed laser with diffusor plate; Magnification: \(\sim 4 \times\); Resolution: 5 \(\upmu\)m/pixel.

Bubble rupture aerosol measurement and surface reconstruction: High-speed camera: Photron SA5; Lens: Tokina AT-X PRO M100 F2.8 D Macro lens; Light source: Dolan Jenner Fiber-Lite Mi-150 LED light with diffusor plate; Magnification: \(\sim 0.32 \times\); Resolution: 62.5 \(\upmu\)m/pixel.
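As a quick plausibility check, the guideline of at least 3–5 pixels per particle diameter can be combined with the resolutions listed above to estimate the smallest particle each configuration can reasonably size. The short Python sketch below is illustrative only; the function names are hypothetical and are not part of the original processing code. It also shows that magnification multiplied by resolution gives roughly the same value for every configuration, which is the implied pixel pitch of the sensor.

```python
def min_particle_diameter_um(resolution_um_per_px, min_pixels=3):
    """Smallest particle diameter (micrometres) that spans `min_pixels` pixels."""
    if resolution_um_per_px <= 0 or min_pixels <= 0:
        raise ValueError("resolution and pixel count must be positive")
    return resolution_um_per_px * min_pixels


def implied_pixel_pitch_um(magnification, resolution_um_per_px):
    """Sensor pixel pitch implied by a magnification and an object-space resolution."""
    return magnification * resolution_um_per_px


# (approximate magnification, resolution in micrometres per pixel) as quoted above
setups = [(13.7, 1.46), (6.8, 2.94), (6.7, 2.97), (4.0, 5.0), (0.32, 62.5)]

for mag, res in setups:
    smallest = min_particle_diameter_um(res, 3)
    safer = min_particle_diameter_um(res, 5)
    pitch = implied_pixel_pitch_um(mag, res)
    print(f"{mag:5.2f}x, {res:5.2f} um/px: particles of {smallest:.1f} to {safer:.1f} um "
          f"span 3 to 5 px (implied pixel pitch about {pitch:.1f} um)")
```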

3.2 Experimental calibration procedure

The calibration procedure for position involves capturing a sequence of images to obtain the correlation between depth \(|\Delta z|\) and blur kernel size \(\sigma\). To repeat, this step is optional and required only for depth estimation. We have confirmed a linear relationship between \(\sigma\) and \(|\Delta z|\) as depicted in the subsequent section in Fig. 10c. The calibration target dots of known size are moved along the optical axis at known depth positions from the object plane. For each of these particles, blur kernel size or \(\sigma\) can be estimated. Linear regression is performed on the scatter plot of \(\sigma\) and \(|\Delta z|\), as shown in Fig. 10c, to derive the inverse functional form \(|\Delta z| = m\sigma + c\). This functional form and associated parameters (m, c) remain constant for all measurements performed using the same optical system. Utilising the calculated \(\sigma\), along with the established functional form, we can estimate the depth of the particles under measurement. For improved accuracy, higher order polynomial fits can be considered.
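The regression step can be sketched in a few lines of Python. This is a minimal illustration using an ordinary least-squares fit; the calibration values below are synthetic numbers invented for the example, and the function names are hypothetical rather than taken from the authors' MATLAB implementation.

```python
def fit_line(x, y):
    """Ordinary least-squares fit y = m*x + c; returns (m, c)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired samples")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("all sigma values are identical")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    m = sxy / sxx
    c = mean_y - m * mean_x
    return m, c


def depth_from_sigma(sigma, m, c):
    """Absolute distance from the object plane, |dz| = m*sigma + c."""
    return m * sigma + c


# Synthetic calibration data (illustrative only): blur kernel size in pixels
# and the known absolute depth of the target dot in micrometres.
sigma_cal = [2.0, 4.0, 6.0, 8.0, 10.0]
abs_dz_cal = [105.0, 195.0, 310.0, 400.0, 490.0]

m, c = fit_line(sigma_cal, abs_dz_cal)
print(f"m = {m:.2f} um/px, c = {c:.2f} um")
print(f"|dz| for sigma = 5 px: {depth_from_sigma(5.0, m, c):.1f} um")
```

Once m and c have been determined for a given optical system, only `depth_from_sigma` is needed during measurements.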

If the object plane lies behind a glass window, then the calibration should ideally also be conducted with the glass window in place. The glass window will have the effect of shifting the absolute position measured by the system, but will not affect the relative positions between particles. If, however, the dispersed phase is in a continuous phase with a refractive index other than that of air, then the value of \(\beta\) will be affected. An example would be solid spheres in a liquid vessel, whereby the shadow imaging system is outside looking through the vessel. In this case, the calibration is best performed in situ, i.e. the calibration plate is traversed inside the vessel.

3.3 Image processing algorithm

The image processing routine consists of the following key steps: normalisation, particle identification, sub-pixel interpolation, size estimation and depth estimation. The size and depth estimation steps utilise the proposed algorithm, whereas the preceding steps are standard procedures in image processing. The algorithm, whose flow chart is depicted in Fig. 2, was implemented in MATLAB.

Normalisation: This process involves rescaling the intensity of the greyscale shadow image to a range of [0, 1]. The global maximum value associated with the unobstructed illuminated background is mapped to 0, while the global minimum corresponding to the completely obstructed background or shadow is mapped to 1. The reference value for the former is derived from background illumination images and the latter from black-shading images (images captured with the camera lid on). Mathematically, the normalised intensity \((I_n)\) is obtained (Zhou et al. 2020) as:

\[I_n = \frac{I_\textrm{bi} - I}{I_\textrm{bi} - I_\textrm{bs}}\]

where \(I, I_\textrm{bi}\) and \(I_\textrm{bs}\) are actual shadow image, background illumination and blackshade image intensities, respectively.
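A minimal Python sketch of this normalisation is given below. It assumes the form \(I_n = (I_\textrm{bi} - I)/(I_\textrm{bi} - I_\textrm{bs})\), which is consistent with the mapping described above (bright background to 0, full shadow to 1). Images are represented as nested lists for simplicity, and the clipping to [0, 1] and the handling of dead pixels are choices made for this example, not details taken from the original code.

```python
def normalise(image, background, black_shade, eps=1e-12):
    """Pixel-wise I_n = (I_bi - I) / (I_bi - I_bs), clipped to the range [0, 1]."""
    out = []
    for row_i, row_bi, row_bs in zip(image, background, black_shade):
        out_row = []
        for i, bi, bs in zip(row_i, row_bi, row_bs):
            span = bi - bs
            # A pixel with no usable dynamic range is treated as background.
            value = (bi - i) / span if span > eps else 0.0
            out_row.append(min(1.0, max(0.0, value)))
        out.append(out_row)
    return out


# Tiny example: background level 1000 counts, black-shading level 100 counts.
image = [[100.0, 1000.0], [550.0, 2000.0]]
background = [[1000.0, 1000.0], [1000.0, 1000.0]]
black_shade = [[100.0, 100.0], [100.0, 100.0]]

normalised = normalise(image, background, black_shade)
print(normalised)
```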

Particle identification: This step involves isolating and extracting individual particles from the normalised image for further analysis. In this study, a simple intensity-based method was adopted, in which regions with an intensity above a threshold value were identified as particles. This process is known as segmentation in image processing; the threshold was set at 0.4 for this study. The particles were isolated as separate images based on the bounding boxes enclosing the regions identified by segmentation (see Fig. 9). The bounding box refers to the smallest rectangular region that encloses the particle. The intensity threshold for particle detection should be lower than the reference intensity value of 0.5, at which the subsequent analysis for size and depth estimation is conducted. This ensures that the information used for estimation is extracted within the bounding box, sufficiently far from its edges. Depending on the system under study, more advanced algorithms can be employed for the segmentation or isolation process.
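The threshold-based segmentation can be illustrated with a simple connected-region search. The Python sketch below is one possible realisation, not the authors' implementation: it uses a flood fill with 8-connectivity (an assumption) on a normalised image stored as nested lists, and returns the bounding box of each region above the threshold.

```python
def find_particles(norm_img, threshold=0.4):
    """Return bounding boxes (row_min, col_min, row_max, col_max), inclusive,
    of 8-connected regions whose normalised intensity exceeds `threshold`."""
    rows = len(norm_img)
    cols = len(norm_img[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r0 in range(rows):
        for c0 in range(cols):
            if seen[r0][c0] or norm_img[r0][c0] <= threshold:
                continue
            # Flood fill from this seed pixel, tracking the extent of the region.
            stack = [(r0, c0)]
            seen[r0][c0] = True
            rmin = rmax = r0
            cmin = cmax = c0
            while stack:
                r, c = stack.pop()
                rmin, rmax = min(rmin, r), max(rmax, r)
                cmin, cmax = min(cmin, c), max(cmax, c)
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        rr, cc = r + dr, c + dc
                        if (0 <= rr < rows and 0 <= cc < cols
                                and not seen[rr][cc]
                                and norm_img[rr][cc] > threshold):
                            seen[rr][cc] = True
                            stack.append((rr, cc))
            boxes.append((rmin, cmin, rmax, cmax))
    return boxes


# Small synthetic normalised image containing two separate shadows.
demo = [
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.9, 0.8, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.7, 0.6, 0.0, 0.0, 0.5, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.9, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
]
boxes = find_particles(demo, threshold=0.4)
print(boxes)
```

In practice the bounding box would typically be padded by a few pixels so that the full blurred edge is retained for the gradient evaluation.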

Sub-pixel interpolation: This step involves interpolating the intensity data of the isolated particles onto a grid finer than the pixel resolution. This is necessary because an image provides only discrete information, which makes it difficult to extract information exactly at a prescribed reference intensity. In this study, a simple bilinear interpolation was performed, where each pixel was subdivided into a \(5\times 5\) grid (see Fig. 9). Prior to the interpolation process, a noise removal step is performed using a Wiener filter. Depending on the noise characteristics of the system, more advanced interpolation techniques can be applied on a suitable sub-grid.
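A bilinear refinement of this kind can be sketched as follows. The example treats pixel values as samples at pixel centres and places `factor` sub-steps between neighbouring centres, which is only one of several possible conventions for subdividing a pixel; the Wiener filtering step is omitted. The function name is hypothetical.

```python
def bilinear_upsample(img, factor=5):
    """Bilinear interpolation of a 2D image (nested lists) onto a grid with
    `factor` sub-steps between neighbouring pixel centres."""
    rows, cols = len(img), len(img[0])
    if rows < 2 or cols < 2:
        raise ValueError("image must be at least 2 x 2 pixels")
    out_rows = (rows - 1) * factor + 1
    out_cols = (cols - 1) * factor + 1
    out = []
    for i in range(out_rows):
        r0 = min(i // factor, rows - 2)   # upper neighbouring pixel row
        ty = i / factor - r0              # fractional distance below it
        out_row = []
        for j in range(out_cols):
            c0 = min(j // factor, cols - 2)
            tx = j / factor - c0
            top = img[r0][c0] * (1 - tx) + img[r0][c0 + 1] * tx
            bottom = img[r0 + 1][c0] * (1 - tx) + img[r0 + 1][c0 + 1] * tx
            out_row.append(top * (1 - ty) + bottom * ty)
        out.append(out_row)
    return out


coarse = [[0.0, 1.0], [1.0, 2.0]]
fine = bilinear_upsample(coarse, factor=5)
print(len(fine), len(fine[0]))
```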

Fig. 9 Image processing steps depicting segmentation of normalised image and extracting image of each particle enclosed in a bounding box, sub-pixel interpolation, thresholding to estimate radius \(r_\textrm{t}\) and average gradient \(\widetilde{G}\) within a thin strip defined by edges at (\(g_\textrm{t} \pm \delta g_\textrm{t}\))

Size estimation: To estimate the image size \(d_\textrm{o}\) of the isolated particle, radius and gradient magnitude information at a reference intensity of 0.5 is required. The radius \(r_\textrm{t}\) is determined by obtaining a region with an intensity above 0.5 and calculating the equivalent radius from its area \(A_\textrm{t}\) as \(r_\textrm{t} = \sqrt{A_\textrm{t} / \pi }\) (see Fig. 9). If glare points exist, they will appear as holes in this image region and can be easily removed by the ‘fill hole’ operation commonly available in image processing systems. This would be typical of transparent particles like glass beads (see Fig. 11). The region eccentricity provides an estimation of the actual particle shape and is used to segregate non-circular particles as discussed in the subsequent sections. To determine the gradient, the average magnitude in a thin strip (\(g_\textrm{t} \pm \delta g_\textrm{t}\)) centred at reference intensity is considered (Figs. 7a, 9). The gradient can be calculated using standard gradient functions available in image processing systems. For this study, the strip width is set by choosing \(\delta g_\textrm{t} = 0.005\).
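The two quantities extracted at the reference intensity can be illustrated with the following Python sketch. It computes the equivalent radius from the area above the reference level and averages a central-difference gradient magnitude over the pixels inside the strip. The synthetic test image, a disc with a linear radial intensity ramp, is an artificial stand-in chosen so that the expected radius and gradient are known; it is not a physically blurred particle image. Hole filling for glare points is not included.

```python
import math


def radius_and_gradient(img, g_ref=0.5, delta=0.005):
    """Return (r_t, mean gradient magnitude) in grid units for one particle image."""
    rows, cols = len(img), len(img[0])
    # Equivalent radius from the area of the region above the reference intensity.
    area = sum(1 for row in img for value in row if value > g_ref)
    r_t = math.sqrt(area / math.pi)
    # Average central-difference gradient magnitude inside the thin strip.
    grads = []
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            if abs(img[r][c] - g_ref) <= delta:
                gy = (img[r + 1][c] - img[r - 1][c]) / 2.0
                gx = (img[r][c + 1] - img[r][c - 1]) / 2.0
                grads.append(math.hypot(gx, gy))
    if not grads:
        raise ValueError("no samples inside the strip; use a finer sub-pixel grid")
    return r_t, sum(grads) / len(grads)


def synthetic_disc(size=81, radius=20.0, slope=0.05):
    """Artificial shadow: intensity 0.5 at `radius`, falling linearly with distance."""
    centre = size // 2
    return [[min(1.0, max(0.0, 0.5 - slope * (math.hypot(r - centre, c - centre) - radius)))
             for c in range(size)] for r in range(size)]


r_t, grad = radius_and_gradient(synthetic_disc())
g_tilde = r_t * grad  # dimensionless gradient
print(f"r_t = {r_t:.2f}, gradient = {grad:.4f}, G = {g_tilde:.3f}")
```

Because the strip is very thin, only a few samples fall inside it, which is one reason why the sub-pixel interpolation step precedes this evaluation.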

The threshold radius \(r_\textrm{t}\) and gradient magnitude \(\left| \frac{\partial g_\textrm{t}}{\partial r_\textrm{t}}\right|\) evaluated as above are then used to determine the dimensionless gradient \(\widetilde{G}=\left| r_\textrm{t}\frac{\partial g_\textrm{t}}{\partial r_\textrm{t}}\right|\). The analytical functions \(f_1\) and \(f_2\) are employed to determine \(\widetilde{\sigma }=f_2^{-1}(\widetilde{G})\) and subsequently, \({\widetilde{\rho }}_\textrm{t}=f_1\left( \widetilde{\sigma }\right)\). The size of the particle \(d_\textrm{o}\) is evaluated using the relation \(d_\textrm{o}\ ={r_\textrm{t}}/{{\widetilde{\rho }}_\textrm{t}}\). The blur kernel size \(\sigma\) is evaluated using the relation \(\sigma = \widetilde{\sigma }d_\textrm{o}\). Until this step, analytical functions are sufficient and experimental calibration is not required. Hence, size estimation can be performed independently in a calibration-free manner.
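The chain of evaluations above can be sketched in Python as shown below. The analytical functions \(f_1\) and \(f_2\) are passed in as arguments. The stand-ins used in the example are NOT the functions derived in this work: they correspond to the limiting case of a straight edge blurred by a Gaussian of standard deviation \(\sigma\), for which the 0.5 contour stays at the true edge (\({\widetilde{\rho }}_\textrm{t}=0.5\)) and the edge gradient is \(1/(\sigma \sqrt{2\pi })\). They serve only to make the example runnable. The inversion of \(f_2\) by bisection and the search bounds are likewise assumptions.

```python
import math


def invert_monotonic(f, target, lo, hi, tol=1e-10, max_iter=200):
    """Solve f(x) = target on [lo, hi] by bisection; f must be monotonic there."""
    f_lo = f(lo) - target
    f_hi = f(hi) - target
    if f_lo * f_hi > 0:
        raise ValueError("target is not bracketed on [lo, hi]")
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid) - target
        if f_lo * f_mid <= 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


def estimate_size(r_t, grad, f1, f2, sigma_bounds=(1e-3, 0.35)):
    """Return (d_o, sigma, sigma_tilde) from threshold radius and edge gradient."""
    g_tilde = abs(r_t * grad)                                 # dimensionless gradient
    sigma_tilde = invert_monotonic(f2, g_tilde, *sigma_bounds)
    rho_tilde = f1(sigma_tilde)                               # r_t / d_o
    d_o = r_t / rho_tilde
    sigma = sigma_tilde * d_o
    return d_o, sigma, sigma_tilde


# Illustrative stand-ins only (straight-edge limit), not the functions of this work.
def f1_stand_in(sigma_tilde):
    return 0.5


def f2_stand_in(sigma_tilde):
    return 0.5 / (sigma_tilde * math.sqrt(2.0 * math.pi))


d_o, sigma, sigma_tilde = estimate_size(20.0, 0.05, f1_stand_in, f2_stand_in)
print(f"d_o = {d_o:.2f} px, sigma = {sigma:.3f} px, sigma_tilde = {sigma_tilde:.4f}")
```

Passing the functions as arguments keeps the sketch independent of their exact analytical form, so the actual \(f_1\) and \(f_2\) can be substituted without changing the rest of the procedure.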

Depth estimation: To estimate depth, the inverse functional form \(|\Delta z| = m\sigma + c\) from the experimental calibration procedure is required. By substituting the determined value of \(\sigma\), the absolute depth from the object plane is evaluated. However, the proposed method does not provide directional information for the depth, i.e. whether the particle lies in front of or behind the object plane. Nevertheless, this does not prevent an accurate estimate of number and/or volume concentration from being made, since the detection volume is symmetric about the object plane.

3.4 Limiting parameters for reliable measurements

Particles located at the outer limits of the detection depth exhibit high levels of blurring, low intensities and significant alterations in gradients due to imaging system noise. Consequently, measurements in this region are highly unreliable, as even a small error in gradient estimation can result in a large diameter error. To address this, we introduce a critical measurement depth limit \(\widetilde{\sigma }_\textrm{c}=0.35\), beyond which results are not considered. By imposing a tighter depth of detection with a lower \(\widetilde{\sigma }_\textrm{c}\) value, more accurate overall results can be achieved. Furthermore, while the ideal eccentricity for spherical entities is zero, a practical limit can be set in the range of 0.3–0.5. Particles exceeding this limit can be rejected from the analysis.
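These two acceptance criteria amount to a simple filter on the detected particles, as the following Python sketch shows. The dictionary keys and the eccentricity limit of 0.4 (a value within the 0.3–0.5 range mentioned above) are choices made for this illustration.

```python
def is_reliable(particle, sigma_tilde_c=0.35, ecc_max=0.4):
    """Accept a particle only if it is within the critical depth limit and near-circular."""
    return (particle["sigma_tilde"] <= sigma_tilde_c
            and particle["eccentricity"] <= ecc_max)


# Made-up detections: diameter in micrometres, dimensionless blur, eccentricity.
detections = [
    {"d": 50.0, "sigma_tilde": 0.10, "eccentricity": 0.05},  # accepted
    {"d": 48.0, "sigma_tilde": 0.40, "eccentricity": 0.10},  # too blurred
    {"d": 60.0, "sigma_tilde": 0.20, "eccentricity": 0.70},  # not circular
]

accepted = [p for p in detections if is_reliable(p)]
print([p["d"] for p in accepted])
```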

3.5 Volumetric corrections in size distributions

The detection depth and volume are dependent on the size of the particle being measured. Detection depth varies linearly with particle size, and the detection volume can be determined as per Eq. (14). This leads to a volumetric measurement bias, because larger particles are measured (and counted) over a larger volume compared to the smaller particles. To address this bias, it is important to consider the number of dispersed particles per unit volume when determining the size distribution. This can be achieved by weighting the occurrence frequency in each histogram bin by the inverse of the corresponding measurement volume. Normalising this weighted frequency yields the required probability density function. From Eq. (14), it can be observed that \(d_\textrm{p0}\) is not significant, since \(d_\textrm{p} \gg d_\textrm{p0}\) and \(V_\textrm{d} \propto \alpha\), which implies that this \(\alpha\) will cancel out uniformly during the normalisation procedure. Hence, the volumetric bias correction of the PDFs can be easily achieved without any experimental calibration or knowledge of \(\alpha\). This estimation of the size probability density distribution implicitly assumes that the distribution is uniform along the optical axis. Nevertheless, since the position and size of all particles are known, one could retroactively examine sub-volumes and determine whether the assumption of uniformity was correct. However, the sub-volumes must lie within the detection bounds of all particles.
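The bias correction can be sketched in Python as follows. The example assumes that the detection volume is proportional to the particle diameter (linear detection depth, fixed field of view, \(d_\textrm{p0}\) neglected), so each bin count is weighted by the inverse of the bin-centre diameter; the proportionality constant cancels in the normalisation. The data are synthetic and the function name is hypothetical.

```python
def size_pdfs(diameters, bin_edges):
    """Return (uncorrected PDF, volume-bias-corrected PDF) over the given bins."""
    n_bins = len(bin_edges) - 1
    counts = [0] * n_bins
    for d in diameters:
        for k in range(n_bins):
            is_last = k == n_bins - 1
            if bin_edges[k] <= d < bin_edges[k + 1] or (is_last and d == bin_edges[-1]):
                counts[k] += 1
                break
    widths = [bin_edges[k + 1] - bin_edges[k] for k in range(n_bins)]
    centres = [0.5 * (bin_edges[k] + bin_edges[k + 1]) for k in range(n_bins)]
    # Detection volume assumed proportional to diameter: weight each bin by 1/d.
    weighted = [counts[k] / centres[k] for k in range(n_bins)]

    def to_pdf(values):
        total = sum(values)
        if total == 0:
            raise ValueError("no particles fall inside the bins")
        return [v / (total * w) for v, w in zip(values, widths)]

    return to_pdf(counts), to_pdf(weighted)


# Synthetic case: equal number density of 10 um and 20 um particles, but the
# 20 um particles are counted over twice the depth, hence twice as often.
diameters = [10.0] * 10 + [20.0] * 20
raw_pdf, corrected_pdf = size_pdfs(diameters, [5.0, 15.0, 25.0])
print(raw_pdf, corrected_pdf)
```

In this synthetic case the uncorrected distribution over-represents the larger particles, whereas the corrected distribution recovers the equal number densities.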

4 Results

4.1 Parametric analysis of measurement system

Calibration target dots (or reticles) of known size are moved along the optical axis to known depths and captured in different background illumination configurations (see Fig. 8). This allows the measurement technique to be validated by comparing the size estimated by the proposed technique (\(d_\textrm{p}\)) with the actual dot size (\(d_\textrm{p,a}\)) at various depth locations (\(|\Delta z|\)). A comprehensive discussion on various illumination configurations using diffused and collimated light can be found in Appendix A. Measurements for the case of diffused LED illumination are performed at a magnification of \(\sim 6.8{\times }\) at two background intensity levels, low (0.2) and high (0.65), and are depicted in Fig. 10. These levels are the rescaled average pixel value of the background image, where 0.2 means an intensity at \(20\%\) of the dynamic range of the image sensor and \(100\%\) represents complete saturation. The size is predicted accurately up to a 5–15% relative error in most parts of the measurement depth (Fig. 10b). One observes a higher relative error in measurements for collimated beam illumination due to the interference pattern caused by Fresnel diffraction (Hecht 2017). Hence the proposed analysis does not apply to such optical settings, due to the non-Gaussian blurring (Stokseth 1969; Lee 1990) of the dots. The dashed line in Fig. 10a represents the linear depth of detection, indicated by \(\widetilde{\sigma }_\textrm{c}=0.35\). Measurements beyond this limit, on the right side, are unreliable, as anticipated. The target dot measurements also validate the hypothesis of a linear relationship \(\sigma \propto |\Delta z|\), as depicted in Fig. 10c. Hence, the experimental calibration can be performed and \(\beta\) can be estimated through linear regression from these dot images. No considerable effect of the background illumination intensity is observed. Still, an intermediate background intensity is suggested, as a lower value is prone to noise, and a higher value might wash out the blurring information due to saturation at the sensor. For particles of the same physical size, a higher magnification makes more pixels available, from which more accurate information can be extracted. This enables a slightly better estimation of size. A discussion on measurements at higher magnification (\(\sim 13.7{\times }\)) is presented in Appendix A.

Fig. 10 Measurement results for calibration dots of known sizes and depths at a magnification \(\sim 6.8{\times }\) for diffused LED beam illumination depicting the variation of a measured diameter with depth, b the relative error in diameter measurement with dimensionless depth, c blur kernel size with depth. ‘Low’ and ‘High’ intensity background illumination measurements are overlaid on the same plot

4.2 Technique implementation for diverse applications

This section illustrates the application of the technique to a diverse range of problems. The details of the experimental set-up for each system are provided in Sect. 3.

4.2.1 Dispersed glass beads

Untinted spherical glass beads within a size range of 40–90 \(\upmu \text {m}\) are used for sample measurement. Such beads are common in the chemical sciences, particularly as calibration standards for a wide range of analytical techniques such as flow cytometry and spectroscopy. To validate the method, transparent glass beads were placed on a slide, which was traversed to prescribed depths. The blurred shadow images thus captured are analysed in the same fashion as the calibration target dots in the previous section. Measurements obtained using a diffused LED light source illumination were performed at a magnification of \(\sim 6.7{\times }\) as depicted in Fig. 11. The size of the particle when in focus is considered to be the true size (\(d_\textrm{p,a}\)) while determining the relative errors in Fig. 11b. Furthermore, as shown in Fig. 11d, the glare point caused by first-order refraction (Hulst and Wang 1991) appears as a bright spot in the centre, which is very small and only exists when the particle is near the object plane. This has a negligible influence on the gradient estimation, and therefore, the size is predicted accurately from the blurred particle images.

Fig. 11 Measurement results for spherical glass beads traversed to prescribed depths at a magnification \(\sim 6.7{\times }\) for diffused LED beam illumination depicting the variation of a measured diameter with depth, b the relative error in diameter measurement with dimensionless depth, c blur kernel size with depth. d A shadowgraph image of the beads in focus, exhibiting a glare point at the centre. The scale bar represents 60 \(\upmu\)m

Moreover, a reference size distribution is estimated using microscope images of glass beads on a slide (Fig. 12a). For measuring in a DFD system, the glass beads are uniformly dispersed in a DI water solution and stirred continuously to avoid settling. Shadow images of the dispersed solution are captured using a diffused LED and laser illumination (Fig. 12b). The predicted size distribution from the DFD measurement is compared with the microscope results and is in good agreement (see Fig. 12d). Error bars are added to represent one standard deviation realised over six runs. The volumetric measurement with a varying detection depth is evident from Fig. 12c.

Fig. 12 Global measurements for glass beads utilising a diffused background illumination. a Spherical glass beads under the microscope. b Glass beads dispersed in a solution, being continuously stirred. The detected beads are marked with red circles in the normalised shadow image with size \(d_\textrm{p}\) in \(\upmu\)m. c The estimated size of dispersed glass beads \(d_\textrm{p}\) and the corresponding blur kernel size \(\sigma\) depicting the linear relationship between the depth of detection and the diameter. d Comparison of the size distribution evaluated from the DFD technique with the microscope measurements as a reference. The uncorrected and detection volume bias-corrected estimates are depicted as probability density functions (PDFs)

4.2.2 Sprays

The measurement of a droplet size distribution in sprays holds significance in various natural and industrial systems. For instance, in fuel injection systems, the size of atomized droplets affects combustion efficiency through droplet lifetime and evaporation rate (Kumar et al. 2022). In high-speed gas flow-induced atomization, precise control of droplet dispersion size is important for monodisperse powder production for additive manufacturing and pharmaceutical applications (Sharma et al. 2021, 2022). The COVID-19 pandemic highlighted the role of microdroplets in disease transmission and the requirement to develop mitigation strategies (Fischer et al. 2020; Prather et al. 2020; Sharma et al. 2021). To illustrate the applicability of the DFD method, shadow imaging of an ethanol spray using monochromatic background illumination from a diffused laser beam is performed (Fig. 13a). The spray is generated using a laboratory grade positive displacement pump-type spray dispenser. Measurements are performed at a downstream sparse spray region to obtain the size distribution, as depicted in Fig. 13b. The error bars correspond to the standard deviation evaluated from six runs. The number distribution follows a familiar skewed distribution, commonly observed in dispersed spray systems.

Fig. 13 a Ethanol spray with monochromatic background illumination using a diffused laser beam. b Ethanol spray droplet size distribution measured using the DFD technique, represented as a PDF corrected for detection volume bias. c Droplets generated during the rupture of a surface bubble in DI water. d Droplet size distribution from bubble rupture measured using the DFD technique, represented as a corrected PDF

4.2.3 Aerosol generation from surface bubble rupture

Air bubbles formed at a liquid surface undergo film drainage, eventually leading to rupture and fragmentation into dispersed droplets (Fig. 13c). Depending on the surface tension, film thickness and the bubble lifetime, this can lead to the formation of droplets in the aerosolization range (Lhuissier and Villermaux 2012). This mode of mass transfer at bulk liquid interfaces is of interest in marine and environmental sciences. Furthermore, recent studies (Poulain and Bourouiba 2018) identified the effect of biological secretions on the size of fragmenting droplets, with many falling in sizes critical for aerosolization. Such transport of pathogen-loaded droplets into the ambient environment is relevant to disease transmission. Hence the proposed method can be deployed for such studies. To illustrate this, bubbles are generated below the surface of a sample liquid pool with a nozzle connected to an air supply from a pump. The continuous bubbles generated in the DI water sample coalesce to form a larger surface bubble of diameter \(\sim\)30 mm (spherical cap), which eventually ruptures. For measurement, shadow imaging is performed on the unobstructed dispersed droplets generated from the rupture of a bubble, and \(\sim\)50 such events were considered. The obtained size distribution is depicted in Fig. 13d.

5 Discussion

We introduce a new measurement technique to precisely characterise the size and position of both in-focus and out-of-focus spherical dispersions using minimal and accessible optical resources. The measurement principle is based on an analytical framework of image blurring, and the derived functions are universal, enabling particle sizing without calibration of the blur kernel. Particle position from the object plane is estimated based on its correlation with the degree of blurring, established using a simple calibration procedure. The method also yields the measurement volume and its dependence on the size of the dispersed particles. This is crucial to obtain bias-free size distribution and volume concentration estimates. The method requires simple shadow imaging with a diffused light source for background illumination and a camera, paired with a telecentric lens or equivalent arrangement. With a suitable spatiotemporal resolution, implementation is possible in various systems, including micron- to millimetre-sized particles, from stationary suspensions to droplets moving at supersonic speeds, limited only by imaging hardware capabilities.

To validate the method, opaque target dots of known size at known incremental depth locations across the object plane were considered. The implementation under various background illumination demonstrated its suitability in diffused beams, where the blurring is Gaussian. However, in cases with collimated beams, the presence of diffraction effects resulted in deviations due to the non-Gaussian nature of the point spread function (PSF), in particular for very small particles.

To illustrate the technique, sparse dispersions of spherical particles like glass beads and spray droplets were considered. In the case of dispersed glass beads, microscopy was used as a reference to validate the DFD measurements.

It should be noted that the measurement accuracy is limited by the precision of gradient evaluation from discrete pixel information, which is susceptible to noise. Moreover, although the absolute distance of the particle from the object plane is known, an ambiguity remains as to whether the particle is positioned in front of or behind the object plane. Thus, in practice the optical arrangement should be designed such that the region of interest lies entirely on one side of the object plane, to avoid ambiguous position measurements. Note that this ambiguity does not exist for the two-camera implementation of the DFD technique.

The question may be posed whether a position measurement of each particle is necessary, since this requires the extra calibration step. There are several reasons why this might be essential. For one, if particle tracking is to be realised, for instance with a high-speed camera, then the particle position must be known at each time step. The position would also be necessary if spatial inhomogeneities of size distribution are to be detected.

As an outlook, the approach using blur gradients together with a grey-level threshold offers possibilities in characterising overlapping projections in dense particle clusters and/or non-spherical/irregular particles. The first extension would greatly increase the tolerable volume concentration for applying this technique. The second extension would open up innumerable new application areas. Both of these extensions are currently being developed by the authors.

Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Blaisot JB, Yon J (2005) Droplet size and morphology characterization for dense sprays by image processing: application to the Diesel spray. Exp Fluids 39(6):977–994. https://doi.org/10.1007/s00348-005-0026-4

Bowman F (1958) Introduction to Bessel functions. Dover Publications, New York

Cao Z, Zhai C (2019) Defocus-based three-dimensional particle location with extended depth of field via color coding. Appl Opt 58(17):4734–4739. https://doi.org/10.1364/AO.58.004734

Cierpka C, Segura R, Hain R, Kähler CJ (2010) A simple single camera 3C3D velocity measurement technique without errors due to depth of correlation and spatial averaging for microfluidics. Meas Sci Technol 21(4):045401. https://doi.org/10.1088/0957-0233/21/4/045401

Damaschke N, Nobach H, Tropea C (2002) Optical limits of particle concentration for multi-dimensional particle sizing techniques in fluid mechanics. Exp Fluids 32(2):143–152. https://doi.org/10.1007/s00348-001-0371-x

Dasgupta PB (2022) Comparative analysis of non-blind deblurring methods for noisy blurred images. Int J Comput Trends Technol 70(3):1–8. https://doi.org/10.14445/22312803/IJCTT-V70I3P101

Ens J, Lawrence P (1993) An investigation of methods for determining depth from focus. IEEE Trans Pattern Anal Mach Intell 15(2):97–108. https://doi.org/10.1109/34.192482

Erinin MA, Néel B, Mazzatenta MT, Duncan JH, Deike L (2023) Comparison between shadow imaging and in-line holography for measuring droplet size distributions. Exp Fluids 64(5):96. https://doi.org/10.1007/s00348-023-03633-8

Fantini E, Tognotti L, Tonazzini A (1990) Drop size distribution in sprays by image processing. Comput Chem Eng 14(11):1201–1211. https://doi.org/10.1016/0098-1354(90)80002-S

Fdida N, Blaisot J-B (2010) Drop size distribution measured by imaging: determination of the measurement volume by the calibration of the point spread function. Meas Sci Technol 21(2):025501. https://doi.org/10.1088/0957-0233/21/2/025501

Fischer EP, Fischer MC, Grass D, Henrion I, Warren WS, Westman E (2020) Low-cost measurement of face mask efficacy for filtering expelled droplets during speech. Sci Adv 6(36):3083. https://doi.org/10.1126/sciadv.abd3083

Ghita O, Whelan PF (2001) A video-rate range sensor based on depth from defocus. Opt Laser Technol 33(3):167–176. https://doi.org/10.1016/S0030-3992(01)00010-X

Hay KJ, Liu Z-C, Hanratty TJ (1998) A backlighted imaging technique for particle size measurements in two-phase flows. Exp Fluids 25(3):226–232. https://doi.org/10.1007/s003480050225

Hecht E (2017) Optics, 5th global edn. Pearson, London

Hulst HCVD, Wang RT (1991) Glare points. Appl Opt 30(33):4755–4763. https://doi.org/10.1364/AO.30.004755

Kim KS, Kim S-S (1994) Drop sizing and depth-of-field correction in TV imaging. Atomization Sprays. https://doi.org/10.1615/AtomizSpr.v4.i1.30

Krotkov E (1988) Focusing. Int J Comput Vis 1(3):223–237. https://doi.org/10.1007/BF00127822

Kumar S, Rathod DD, Basu S (2022) Experimental investigation of performance of high-shear atomizer with discrete radial-jet fuel nozzle: mean and dynamic characteristics. Flow 2:31. https://doi.org/10.1017/flo.2022.25

Lecuona A, Sosa PA, Rodríguez PA, Zequeira RI (2000) Volumetric characterization of dispersed two-phase flows by digital image analysis. Meas Sci Technol 11(8):1152. https://doi.org/10.1088/0957-0233/11/8/309

Lee H-C (1990) Review of image-blur models in a photographic system using the principles of optics. Opt Eng 29(5):405. https://doi.org/10.1117/12.55609

Legrand M, Nogueira J, Lecuona A, Hernando A (2016) Single camera volumetric shadowgraphy system for simultaneous droplet sizing and depth location, including empirical determination of the effective optical aperture. Exp Therm Fluid Sci 76:135–145. https://doi.org/10.1016/j.expthermflusci.2016.03.018

Levin A, Fergus R, Durand F, Freeman WT (2007) Image and depth from a conventional camera with a coded aperture. ACM Trans Graph 26(3):70. https://doi.org/10.1145/1276377.1276464

Lhuissier H, Villermaux E (2012) Bursting bubble aerosols. J Fluid Mech 696:5–44. https://doi.org/10.1017/jfm.2011.418

Liu Y-Q, Du X, Shen H-L, Chen S-J (2021) Estimating generalized Gaussian blur kernels for out-of-focus image deblurring. IEEE Trans Circuits Syst Video Technol 31(3):829–843. https://doi.org/10.1109/TCSVT.2020.2990623

Murata S, Kawamura M (1999) Particle depth measurement based on depth-from-defocus. Opt Laser Technol 31(1):95–102. https://doi.org/10.1016/S0030-3992(99)00027-4

Pentland AP (1987) A new sense for depth of field. IEEE Trans Pattern Anal Mach Intell PAMI-9(4):523–531. https://doi.org/10.1109/TPAMI.1987.4767940

Poulain S, Bourouiba L (2018) Biosurfactants change the thinning of contaminated bubbles at bacteria-laden water interfaces. Phys Rev Lett 121(20):204502. https://doi.org/10.1103/PhysRevLett.121.204502

Prather KA, Marr LC, Schooley RT, McDiarmid MA, Wilson ME, Milton DK (2020) Airborne transmission of SARS-CoV-2. Science 370(6514):303–304. https://doi.org/10.1126/science.abf0521

Savakis AE, Trussell HJ (1993) On the accuracy of PSF representation in image restoration. IEEE Trans Image Process 2(2):252–259. https://doi.org/10.1109/83.217229

Saxena A, Chung SH, Ng AY (2008) 3-D depth reconstruction from a single still image. Int J Comput Vis 76(1):53–69. https://doi.org/10.1007/s11263-007-0071-y

Sharma S, Pratap Singh A, Srinivas Rao S, Kumar A, Basu S (2021) Shock induced aerobreakup of a droplet. J Fluid Mech 929:27. https://doi.org/10.1017/jfm.2021.860

Sharma S, Pinto R, Saha A, Chaudhuri S, Basu S (2021) On secondary atomization and blockage of surrogate cough droplets in single- and multilayer face masks. Sci Adv 7(10):0452. https://doi.org/10.1126/sciadv.abf0452

Sharma S, Chandra NK, Basu S, Kumar A (2022) Advances in droplet aerobreakup. Eur Phys J Spec Top. https://doi.org/10.1140/epjs/s11734-022-00653-z

Sharma S, Rao SJ, Chandra NK, Kumar A, Basu S, Tropea C (2023) Depth from defocus technique applied to unsteady shock-drop secondary atomization. Exp Fluids 64(4):65. https://doi.org/10.1007/s00348-023-03588-w

Stokseth PA (1969) Properties of a defocused optical system. J Opt Soc Am 59(10):1314. https://doi.org/10.1364/JOSA.59.001314

Subbarao M, Surya G (1994) Depth from defocus: a spatial domain approach. Int J Comput Vis 13(3):271–294. https://doi.org/10.1007/BF02028349

Subbarao M, Wei T-C, Surya G (1995) Focused image recovery from two defocused images recorded with different camera settings. IEEE Trans Image Process 4(12):1613–1628. https://doi.org/10.1109/TIP.1995.8875998

Tropea C (2011) Optical particle characterization in flows. Annu Rev Fluid Mech 43(1):399–426. https://doi.org/10.1146/annurev-fluid-122109-160721

Wang Z, He F, Zhang H, Hao P, Zhang X, Li X (2022) Characterization of the in-focus droplets in shadowgraphy systems via deep learning-based image processing method. Phys Fluids 34(11):113316. https://doi.org/10.1063/5.0121174

Wang Z, He F, Zhang H, Hao P, Zhang X, Li X (2022) Three-dimensional measurement of the droplets out of focus in shadowgraphy systems via deep learning-based image-processing method. Phys Fluids 34(7):073301. https://doi.org/10.1063/5.0097375

Willert CE, Gharib M (1992) Three-dimensional particle imaging with a single camera. Exp Fluids 12(6):353–358. https://doi.org/10.1007/BF00193880

Zhou W, Tropea C, Chen B, Zhang Y, Luo X, Cai X (2020) Spray drop measurements using depth from defocus. Meas Sci Technol 31(7):075901. https://doi.org/10.1088/1361-6501/ab79c6

Zhou W, Zhang Y, Chen B, Tropea C, Xu R, Cai X (2021) Sensitivity analysis and measurement uncertainties of a two-camera depth from defocus imaging system. Exp Fluids 62(11):224. https://doi.org/10.1007/s00348-021-03316-2

Funding

Open Access funding enabled and organized by Projekt DEAL. The financial support of the Science and Engineering Research Board of India is acknowledged in sponsoring author CT through the VAJRA Faculty scheme. SJR acknowledges the support from the Prime Minister’s Research Fellowship (PMRF). SB is thankful to SERB (Science and Engineering Research Board) - CRG: CRG/2020/000055 for financial support.

Author information

Contributions

SJR and CT conceptualised the methodology. SJR and SS performed the experiments and analysed the data. SJR developed the image processing code. SB and CT supervised the project. SJR and SS prepared the original draft, CT edited the draft and all authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors report no competing interests.

Ethical Approval

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Parametric analysis of measurement system

Detailed results and discussion are presented here on the effect of various system parameters on measurement accuracy. Calibration target dots of known size and depth location are imaged under different background illumination configurations, and the size estimated by the proposed technique is compared with the actual dot size at each depth location. Measurements performed at a magnification of \(\sim 6.8{\times }\) are depicted in Figs. 14, 15 and 16. Two background intensity levels, low (0.2) and high (0.65), were considered. These values are the average pixel value of the background image rescaled to 0–1; for a 16-bit image, the raw pixel value ranges from 0 to 65,535.
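The rescaling of the background level described above can be sketched in a few lines. This is a minimal illustration only: the function name `normalised_background_level` and the synthetic background frame are assumptions made for the example and are not part of the original processing code.

```python
import numpy as np

def normalised_background_level(image, bit_depth=16):
    """Mean pixel value of a background image, rescaled to the range 0-1."""
    full_scale = 2 ** bit_depth - 1          # 65,535 for a 16-bit image
    return float(np.mean(image, dtype=np.float64)) / full_scale

# Synthetic stand-in for a recorded background frame (not measured data).
background = np.full((64, 64), 13107, dtype=np.uint16)
level = normalised_background_level(background)
```

With this synthetic frame the rescaled level corresponds to the 'low' setting quoted above.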

Diffused background illumination: With the diffused light source, the size is predicted to within a relative error of 5–10% throughout the measurement depth (Fig. 15a, c). The measurements of target dots further validate the linear relationship between \(\sigma\) and \(|\Delta z|\), as shown in Fig. 16a, c. The intensity of the background illumination is found to have no significant effect on the results. The use of diffused white light yields better results due to its incoherent nature.
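A check of this kind can be sketched as follows: the relative error of each measured diameter is computed against the known dot size, and a straight line is fitted to \(\sigma\) versus \(|\Delta z|\) with the coefficient of determination as a measure of linearity. The function names and all numerical values below are hypothetical placeholders, not data from the present measurements.

```python
import numpy as np

def relative_error(d_measured, d_true):
    """Relative error of measured diameters with respect to the known dot size."""
    d_measured = np.asarray(d_measured, dtype=float)
    return np.abs(d_measured - d_true) / d_true

def linear_fit_r2(abs_dz, sigma):
    """Least-squares line sigma = slope * |dz| + intercept and its R^2."""
    abs_dz = np.asarray(abs_dz, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    slope, intercept = np.polyfit(abs_dz, sigma, 1)
    residuals = sigma - (slope * abs_dz + intercept)
    ss_res = np.sum(residuals ** 2)
    ss_tot = np.sum((sigma - sigma.mean()) ** 2)
    return slope, intercept, 1.0 - ss_res / ss_tot

# Hypothetical values for illustration only.
abs_dz = np.array([0.0, 100.0, 200.0, 300.0, 400.0])   # depth offset, micrometres
sigma = 0.02 * abs_dz + 1.5                            # blur kernel size, pixels
slope, intercept, r2 = linear_fit_r2(abs_dz, sigma)
errors = relative_error([48.0, 52.5, 50.0], 50.0)
```

An \(R^2\) close to one indicates that the linear model is adequate, whereas a visibly lower value would point to the kind of deviation reported for collimated illumination.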

Collimated beam illumination: Measurements with a collimated light source exhibit a higher relative error, as shown in Fig. 15b, d. This is due to the interference pattern caused by Fresnel diffraction and Poisson spot formation as depicted in Fig. 18. The presence of interference patterns causes significant deviations in the gradients, which do not align with the expected profiles based on Gaussian PSFs. This non-Gaussian blurring of the dots invalidates the proposed analysis. However, in the case of a collimated white light beam, this error is prominent only for the smaller dots (\(\le 30\,\upmu \text {m}\)), since the interference patterns due to different wavelengths average out at length scales associated with the larger dot sizes. The measurements also deviate from the hypothesised linear relationship between \(\sigma\) and \(|\Delta z|\), as illustrated in Fig. 16b, d. However, the depth of detection is substantially increased, as evident from the higher values of \(\alpha\). The background illumination intensity has minimal impact on the results.

The second set of measurements, shown in Fig. 17, is performed using diffused laser beam illumination at a higher magnification of approximately 13.7\({\times }\). The same low and high normalised background intensity levels as before are used for these measurements. For a particle of the same physical size, the higher magnification provides more pixels from which to extract accurate information. Hence, with roughly twice as many pixels available in this second set, a slightly better estimate of size is achieved than with the first set of measurements. The validity of the proposed technique is demonstrated for particle sizes as small as 7 \(\upmu\)m with a suitable resolution.

Fig. 14 Measurement results for calibration dots of known size and depth illustrating the variation of measured diameter with depth for different background illumination configurations. a LED white light diffused beam, b LED white light collimated beam, c Laser monochromatic light diffused beam, d Laser monochromatic light collimated beam. ‘Low’ and ‘High’ intensity illumination measurements are overlaid on the same plot for magnification \(\sim 6.8{\times }\)

Fig. 15 Measurement results for calibration dots of known size and depth illustrating the variation of relative error in measured diameter with dimensionless depth for different background illumination configurations. a LED white light diffused beam, b LED white light collimated beam, c Laser monochromatic light diffused beam, d Laser monochromatic light collimated beam. ‘Low’ and ‘High’ intensity illumination measurements are overlaid on the same plot for magnification \(\sim 6.8{\times }\)

Fig. 16 Measurement results for calibration dots of known size and depth illustrating the variation of blur kernel size with depth for different background illumination configurations. a LED white light diffused beam, b LED white light collimated beam, c Laser monochromatic light diffused beam, d Laser monochromatic light collimated beam. ‘Low’ and ‘High’ intensity illumination measurements are overlaid on the same plot for magnification \(\sim 6.8{\times }\)

Fig. 17 Measurement results for calibration dots of known size and depth at a higher magnification \(\sim 13.7{\times }\) for monochromatic diffused laser beam illumination illustrating the variation of a measured diameter with depth, b the relative error in measured diameter with dimensionless depth, c blur kernel size with depth. ‘Low’ and ‘High’ intensity illumination measurements are overlaid on the same plot

Fig. 18 Non-Gaussian blurring of a calibration target dot (diameter 20 \(\upmu\)m) in collimated background illumination. a LED white light collimated beam, b Laser monochromatic light collimated beam. Fresnel diffraction and the Poisson spot are observed due to interference of light waves, and are more evident for the monochromatic light

1.1 Limits on point spread function (PSF)

The presumed Gaussian PSF in optical systems is limited by the diffraction of light waves and the formation of the Airy disk. This limits the resolution of the system as well as the validity of the proposed DFD approach. Fig. 18 shows how interference patterns emerge owing to diffraction around the particle edges when collimated beams are used. In this section, we estimate the size of the smallest PSF, i.e. the Airy disk, to see whether it affects the measurement analysis.

For the given combination of lenses (Navitar \(1.5\times\) lens attachment + \(6.5\times\) Zoom lens + \(1.0\times\) or \(2.0\times\) adapter) being used for the parametric study using target dots, the Objective Numerical Aperture \({NA}_{obj}\) as provided by the manufacturer is

The corresponding F-number \((f/\#)\) is given as

Then, Airy disk diameter \(d_{Airy}\) in terms of \((f/\#)\) is given by (Stokseth 1969)

For the Cavilux light source, \(\lambda = 640\,\text {nm}\) (red). Substituting values in Eqs. A1 and A2, we get

Even in the case of a white light source, the components with a longer wavelength will form a larger Airy disk, as evident from Eq. A2, and hence we can use the red light wavelength as a test case to evaluate the limitations.
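The chain from numerical aperture to Airy disk diameter can be sketched as below. The sketch assumes the standard textbook relations \((f/\#) \approx 1/(2\,NA)\) and \(d_{Airy} = 2.44\,\lambda\,(f/\#)\), which may differ in detail from the forms used in Eqs. A1 and A2. The numerical aperture used here is an arbitrary placeholder and not the manufacturer's value, and no magnification factor is applied.

```python
def f_number_from_na(na_obj):
    """Paraxial approximation f/# ~ 1 / (2 NA) (assumed form of Eq. A1)."""
    return 1.0 / (2.0 * na_obj)

def airy_disk_diameter(wavelength, f_number):
    """Diameter of the first dark ring, 2.44 * lambda * f/# (assumed form of Eq. A2)."""
    return 2.44 * wavelength * f_number

# Placeholder numerical aperture for illustration; NOT the manufacturer's value.
NA_PLACEHOLDER = 0.1
wavelength_um = 0.640                      # red light, micrometres
d_airy_um = airy_disk_diameter(wavelength_um, f_number_from_na(NA_PLACEHOLDER))
```

Since the diameter scales linearly with wavelength in this relation, evaluating it at the red end of the spectrum gives the largest Airy disk among the visible components, which is the reasoning behind using red light as the test case.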

The least squared error fit of a Gaussian PSF to the Airy disk profile provides an equivalent Gaussian blurring standard deviation \(\sigma _\textrm{eq}\) with respect to the Airy disk diameter as

where the R-squared value of the fit is \(R^2=0.9981\). Substituting the values in Eq. A3, we get

This value is smaller than the pixel size (refer to Sect. 3 for details) and hence will not affect the results drastically. Furthermore, as a diffused light beam is suggested for the proposed technique, these diffraction effects will be significantly less pronounced.
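A least-squares fit of a Gaussian to the Airy profile can be sketched numerically as follows. Several choices here are assumptions made for the illustration: the fit uses a one-dimensional radial profile, a unit-amplitude Gaussian, a fit range from the centre to the first dark ring, and a simple grid search over \(\sigma _\textrm{eq}/d_{Airy}\). The resulting ratio and \(R^2\) therefore need not reproduce the values of Eq. A3 exactly.

```python
import numpy as np

def bessel_j1(x, n_theta=2001):
    """J1(x) from Bessel's integral (1/pi) * int_0^pi cos(t - x sin t) dt."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    theta = np.linspace(0.0, np.pi, n_theta)
    integrand = np.cos(theta[None, :] - x[:, None] * np.sin(theta)[None, :])
    d_theta = theta[1] - theta[0]
    # Trapezoidal rule written out explicitly.
    integral = d_theta * (integrand.sum(axis=1)
                          - 0.5 * (integrand[:, 0] + integrand[:, -1]))
    return integral / np.pi

def airy_profile(u):
    """Normalised Airy intensity as a function of u = r / d_Airy."""
    u = np.atleast_1d(np.asarray(u, dtype=float))
    x = 2.0 * 3.8317059702 * u        # first zero of J1 lies at r = d_Airy / 2
    profile = np.ones_like(x)
    nonzero = x > 0
    profile[nonzero] = (2.0 * bessel_j1(x[nonzero]) / x[nonzero]) ** 2
    return profile

def fit_gaussian_sigma(u, profile):
    """Grid-search least-squares fit of exp(-u^2 / (2 s^2)); returns (s, R^2)."""
    candidates = np.arange(0.05, 0.40, 0.0005)
    model = np.exp(-u[None, :] ** 2 / (2.0 * candidates[:, None] ** 2))
    sse = np.sum((model - profile[None, :]) ** 2, axis=1)
    best = int(np.argmin(sse))
    ss_tot = np.sum((profile - profile.mean()) ** 2)
    return candidates[best], 1.0 - sse[best] / ss_tot

u = np.linspace(0.0, 0.5, 201)        # centre to first dark ring (assumed range)
airy = airy_profile(u)
sigma_ratio, r_squared = fit_gaussian_sigma(u, airy)   # sigma_eq / d_Airy, R^2
```

Multiplying `sigma_ratio` by an Airy disk diameter gives an equivalent Gaussian standard deviation that can be compared with the pixel size.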

From Figs. 16 and 17c, however, one observes that the calculated blur kernel size approaches a finite nonzero value at focus \((|\Delta z|=0)\), instead of the sharp focused image expected for a Dirac function as PSF (i.e. \(\sigma =0\)). This is expected for the following reasons:

1. The pixel intensity value is the average manifestation of the light intensity falling over the sensor. The image of a focused particle (both actual and artificial) has some pixels with intermediate intensity values at the boundary due to the edge of the projected shadow lying in an intermediate position within the pixel/sensor. This gives a sense of blurring even for the focused image with \(\sigma \ne 0\).

2. In theory, we need gradients at the edge of the particle image to approach infinity when in focus, which practically never seems to happen, partially due to this discrete way of capturing information. The \(\sigma\) calculations are further affected due to errors associated with the estimation of steep gradients from the available discrete information in the image.
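The pixel-averaging effect described in the two points above can be illustrated with a synthetic example, in which a perfectly sharp disc is area-averaged onto a coarse pixel grid. The function name, grid size, oversampling factor and sub-pixel offset are arbitrary choices for this sketch and do not correspond to the actual sensor or processing code.

```python
import numpy as np

def pixelated_disc(diameter_px, n_pixels=32, oversample=20, centre_offset=(0.3, 0.1)):
    """Area-average a perfectly sharp disc (value 1 inside) onto sensor pixels."""
    n_fine = n_pixels * oversample
    # Sub-pixel sample coordinates, expressed in units of sensor pixels.
    coords = (np.arange(n_fine) + 0.5) / oversample
    xx, yy = np.meshgrid(coords, coords)
    cx = n_pixels / 2 + centre_offset[0]
    cy = n_pixels / 2 + centre_offset[1]
    sharp = ((xx - cx) ** 2 + (yy - cy) ** 2 <= (diameter_px / 2) ** 2).astype(float)
    # Each sensor pixel reports the mean of its oversample x oversample block.
    blocks = sharp.reshape(n_pixels, oversample, n_pixels, oversample)
    return blocks.mean(axis=(1, 3))

image = pixelated_disc(diameter_px=10.0)
# Pixels straddling the disc edge take intermediate values between 0 and 1.
edge_pixels = np.count_nonzero((image > 0.0) & (image < 1.0))
```

Even though no blur kernel has been applied, the ring of intermediate-valued edge pixels yields finite gradients, so any gradient-based estimate of \(\sigma\) returns a nonzero value for this in-focus image.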

Theoretical particle concentration limit

The proposed methodology is currently capable of analysing an isolated blurred particle. However, in sprays and other dispersed systems, particle images often overlap when projected along the optical axis onto the image plane. Blurring can cause particles to appear as a single indistinguishable non-symmetric entity, even if they do not overlap. The particle concentration limit is the extent to which the closely packed particles are distinguishable on the imaging plane based on a segmentation threshold value, which is chosen for the current study as \(g_\textrm{t,c}=0.4\). This limiting condition is illustrated theoretically for a simple case, where two particles of the same size \(d_\textrm{o}\) are considered at a specified centre-to-centre distance of \(2\Delta\), as illustrated in Fig. 19a. The intensity at point ‘O’ is evaluated for different degrees of blurring \(\widetilde{\sigma }\) and separation distance \(\Delta\). If this exceeds the detection threshold value \(g_\textrm{t,c}\), then the particles are indistinguishable. Convolution, as earlier (Eq. (1)), is applied using a Gaussian blur kernel h (Eq. (3)). In this case, the normalised image function \((i_\textrm{f})\) takes a value of one within shaded regions (1) and (2) in Fig. 19a, and zero otherwise. These shaded regions can be defined geometrically in polar coordinates, with point ‘O’ as the origin

where \(\phi\) is the angle subtended by the tangent to the particle contour at the origin, as depicted in Fig. 19a. Substituting this into Eq. (1) to evaluate \(g_\textrm{t,c}\) at ‘O’ where \(r_\textrm{t}=0\), while considering the additional non-dimensionalisation \(\widetilde{\Delta } = \Delta /d_\textrm{o}\), we obtain the following expression:

where \(\phi =\sin ^{-1}{\left( \frac{d_\textrm{o}}{2\Delta }\right) }=\sin ^{-1}{\left( \frac{1}{2\widetilde{\Delta }}\right) }\). The solutions are evaluated numerically, and the variation of the dimensionless parameter \(\widetilde{\sigma }\) with inter-particle half separation \(\widetilde{\Delta }\) for different intensity values \(\left( g_\textrm{t,c}\right)\) at the centre of the pair ‘O’ is depicted in Fig. 19b. Two solutions for \(\widetilde{\sigma }\), at near-focus and far-focus depths, exist for a prescribed \(\widetilde{\Delta }\) and \(g_\textrm{t,c}\). Also, there is a critical separation \({\widetilde{\Delta }}_\textrm{c}\) for a prescribed \(g_\textrm{t,c}\) beyond which, for any depth, the particle pair is distinguishable. For the chosen \(g_\textrm{t,c}=0.4\) corresponding to particle segmentation, this value is \({\widetilde{\Delta }}_\textrm{c} \approx 0.7\). This signifies the critical concentration limit, and particles with spacing such that \(\widetilde{\Delta } > {\widetilde{\Delta }}_\textrm{c}\) are distinguishable for all depths in the measurement. In simpler terms, particles with a spacing between their centres greater than 1.4 times the diameter will be distinguishable at all depths for the segmentation threshold of 0.4.
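The numerical evaluation can be sketched as follows. The sketch assumes a normalised isotropic Gaussian kernel \(h = \exp (-r^2/2\sigma ^2)/(2\pi \sigma ^2)\), which may differ in detail from Eq. (3), and describes each disc in polar coordinates about ‘O’ by \(r = \Delta \cos \theta \pm \sqrt{d_\textrm{o}^2/4 - \Delta ^2\sin ^2\theta }\) for \(|\theta | \le \phi\). The radial integral is then analytic and only the angular integral is computed numerically. All lengths are in units of \(d_\textrm{o}\), and the function names are hypothetical.

```python
import numpy as np

def centre_intensity(sigma_t, delta_t, n_theta=4001):
    """Blurred intensity at the midpoint 'O' between two equal discs."""
    radius = 0.5                                   # d_o / 2 in units of d_o
    if delta_t <= radius:
        raise ValueError("half separation must exceed the particle radius")
    phi = np.arcsin(radius / delta_t)              # tangent angle seen from 'O'
    theta = np.linspace(0.0, phi, n_theta)
    root = np.sqrt(np.maximum(radius ** 2 - (delta_t * np.sin(theta)) ** 2, 0.0))
    r_near = delta_t * np.cos(theta) - root
    r_far = delta_t * np.cos(theta) + root
    # Radial integral of r * exp(-r^2 / 2 sigma^2) / sigma^2 done analytically.
    radial = (np.exp(-r_near ** 2 / (2.0 * sigma_t ** 2))
              - np.exp(-r_far ** 2 / (2.0 * sigma_t ** 2)))
    d_theta = theta[1] - theta[0]
    integral = d_theta * (radial.sum() - 0.5 * (radial[0] + radial[-1]))
    # Two discs, each symmetric about theta = 0, divided by the 2*pi of the kernel.
    return 2.0 * integral / np.pi

def max_centre_intensity(delta_t, sigmas=np.linspace(0.02, 3.0, 600)):
    """Largest intensity at 'O' over all degrees of blur for a given separation."""
    return max(centre_intensity(s, delta_t) for s in sigmas)

def critical_separation(threshold=0.4, lo=0.51, hi=2.0, tol=1e-4):
    """Bisection for the half separation at which the peak equals the threshold."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if max_centre_intensity(mid) > threshold:
            lo = mid                               # still indistinguishable
        else:
            hi = mid
    return 0.5 * (lo + hi)

delta_c = critical_separation(0.4)
```

The bisection assumes that the peak intensity at ‘O’ decreases monotonically with separation. Under these assumptions, `delta_c` can be compared with the value \({\widetilde{\Delta }}_\textrm{c} \approx 0.7\) quoted above, and `centre_intensity` evaluated over a range of \(\widetilde{\sigma }\) shows the two-branch behaviour with a near-focus and a far-focus solution.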

This analysis is a simplified demonstration that such a limit exists and should be considered when choosing an image-based system for measurement. However, several further aspects must be considered when attempting to determine an absolute concentration limit for a given optical configuration. To start, most dispersed systems consist of multiple particles of different sizes, and the size distribution must be accounted for. Furthermore, there are two effects leading to overlap. Even if all particles were in the same plane perpendicular to the optical axis, the overlap would increase with the degree of out of focus, as treated above. This is very similar to the situation encountered in other out-of-focus approaches such as ILIDS/IPI, and concentration limits for such techniques have been derived previously by Damaschke et al. (2002). However, with the DFD technique, we also encounter varying degrees of out of focus because the detection volume is also larger in the z-direction. This is an added influence that was not treated in the earlier work of Damaschke et al. (2002). Finally, when attempting to determine a concentration limit theoretically, some assumption must be made regarding how uniform the concentration is throughout the detection volume, the simplest assumption being a uniform distribution.

Fig. 19 a The blurred image for a particle pair is estimated by convolving the focused image with a Gaussian blur kernel, shown as a shaded circle. Here, 2\(\Delta\) is the separation between the particles of the same size \(d_\textrm{o}\). Intensity at point ‘O’ is estimated for different degrees of blur \(\tilde{\sigma }\). \(\phi\) is the angle that the tangent from ‘O’ makes with the horizontal axis. b Theoretical variation of non-dimensional parameter \(\tilde{\sigma } = \sigma /d_\textrm{o}\) with inter-particle half separation \(\tilde{\Delta } = \Delta /d_\textrm{o}\) for different intensity values (\(g_\textrm{t,c}\)) at the centre of the pair ‘O’. Two \(\tilde{\sigma }\) solutions, at near-focus (B) and far-focus (C) depths, exist for a prescribed \(\tilde{\Delta }\) and \(g_\textrm{t,c}\). Also, there is a critical separation \(\tilde{\Delta }_\textrm{c}\) corresponding to (D) for a prescribed \(g_\textrm{t,c}\) beyond which, for any depth, the particle pair is distinguishable. c Illustration of the blurred image of a particle pair corresponding to points (A), (B) and (C) in (b). If \(g_\textrm{t,c}\) exceeds the detection intensity threshold (0.4 here), then the two particles cannot be directly distinguished, as shown. Here cyan represents \(g_\textrm{t}=0.4 \pm 0.05\)

Rights and permissions