Abstract

We rigorously derive linear elasticity as a low energy limit of pure traction nonlinear elasticity. Unlike previous results, we do not impose any restrictive assumptions on the forces, and obtain a full \(\Gamma \)-convergence result. The analysis relies on identifying the correct reference configuration to linearize about, and studying its relation to the rotations preferred by the forces (optimal rotations). The \(\Gamma \)-limit is the standard linear elasticity model, plus a term that penalizes fluctuations of the reference configurations from the optimal rotations. However, on minimizers this additional term is zero and the limit energy reduces to standard linear elasticity.

1 Introduction: How to Choose a Reference Configuration?

Derivation of Linear Elasticity from Finite Elasticity. In nonlinear (or finite) hyperelasticity, the elastic problem consists of minimizing an elastic energy over deformations \(y:\Omega \rightarrow {\mathbb {R}}^n\), where \(\Omega \subset {\mathbb {R}}^n\) is the elastic body. Linear elasticity is the linearization of this problem about a reference configuration: under the assumption that the displacement \(u(x) := y(x)-x\) is small, one obtains a quadratic energy-minimization problem for u. While this derivation of linear elasticity has long been textbook material, its first fully rigorous justification, via variational convergence, was obtained less than 20 years ago, in Dal Maso et al. (2002). There, the authors considered elastic energies of the type

$$\begin{aligned} {\bar{J}}_\varepsilon (y) = \int _\Omega {\mathcal {W}}(x,\nabla y)\,\mathrm{d}x - \varepsilon \int _\Omega g\cdot y\,\mathrm{d}x, \qquad y\in W^{1,2}_{\varepsilon v_0}(\Omega ;{\mathbb {R}}^n), \end{aligned}$$

where \({\mathcal {W}}(x,A)\) is the elastic energy density, \(g\in L^2(\Omega ;{\mathbb {R}}^n)\) is the body force, and \(W^{1,2}_{\varepsilon v_0}(\Omega ;{\mathbb {R}}^n)\) is the space of all maps \(y\in W^{1,2}(\Omega ;{\mathbb {R}}^n)\) such that \(y = x + \varepsilon v_0\) on \(\partial \Omega _D\), where \(v_0\) is a given vector field and \(\partial \Omega _D\) is a prescribed subset of \(\partial \Omega \). They showed that the functionals \(\frac{1}{\varepsilon ^2}({\bar{J}}_\varepsilon (y_\varepsilon ) - {\bar{J}}_\varepsilon ({\text {id}}))\), where \({\text {id}}:\Omega \rightarrow {\mathbb {R}}^n\) is the identity map, \(\Gamma \)-converge to a linear elastic functional

$$\begin{aligned} \int _\Omega {\mathcal {Q}}(x,e(u))\,\mathrm{d}x - \int _\Omega g\cdot u\,\mathrm{d}x, \end{aligned}\tag{1.1}$$

where u is the limit of the rescaled displacements \(u_\varepsilon = \frac{1}{\varepsilon }(y_\varepsilon (x)-x)\), e(u) is its symmetric gradient, and \({\mathcal {Q}}\) is the quadratic form obtained from linearizing \({\mathcal {W}}\) at the identity (see (2.1)). They also showed the associated compactness result; namely, if \({\bar{J}}_\varepsilon (y_\varepsilon ) - {\bar{J}}_\varepsilon ({\text {id}})\le C\varepsilon ^2\), then \(u_\varepsilon \) weakly converge to some u (modulo a subsequence).

Of course, the map \({\text {id}}:\Omega \rightarrow {\mathbb {R}}^n\) is not the only reference configuration of the elastic body \(\Omega \); any isometric embedding \(Rx + c\), where \(R\in \text {SO}(n)\) and \(c\in {\mathbb {R}}^n\), is. Nevertheless, the choice of \({\text {id}}\) as a reference configuration in Dal Maso et al. (2002) is a natural one, as they show that boundary conditions force \(y-{\text {id}}\) to be small in \(W^{1,2}\).

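A recent paper, Maddalena et al. (2019a), approached the analogous problem, but with Neumann boundary conditions instead of Dirichlet ones. That is, they considered the pure traction problem

$$\begin{aligned} {\bar{J}}_\varepsilon (y) = \int _\Omega {\mathcal {W}}(x,\nabla y)\,\mathrm{d}x - \varepsilon \int _{\partial \Omega } f\cdot y\,\mathrm{d}{\mathcal {H}}^{n-1} - \varepsilon \int _\Omega g\cdot y\,\mathrm{d}x, \qquad y\in W^{1,2}(\Omega ;{\mathbb {R}}^n), \end{aligned}\tag{1.2}$$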

where \(f\in L^2(\partial \Omega ;{\mathbb {R}}^n)\) and \(g\in L^2(\Omega ;{\mathbb {R}}^n)\) are the traction forces and body forces, respectively, which are equilibrated in the sense that the energy \({\bar{J}}_\varepsilon \) is invariant to translations. Furthermore, they assume a certain non-degeneracy condition (called compatibility there); as explained later on, it is equivalent to the assumption that among all rigid motions, \({\bar{J}}_\varepsilon \) is minimized at \({\text {id}}\), which is a unique minimizer (up to translations). The fact that \({\text {id}}\) is a minimizer among rigid motions can always be guaranteed by rotating the whole system; the fact that it is a unique minimizer, however, does limit the admissible forces.

Under these assumptions, as in the Dirichlet case, they analyze the energy \(J_\varepsilon (y):= {\bar{J}}_\varepsilon (y) - {\bar{J}}_\varepsilon ({\text {id}})\). The analysis in this case turns out to be trickier than in the Dirichlet case, with some surprising results:

1. A sequence of displacements \(u_\varepsilon = \frac{1}{\varepsilon }(y_\varepsilon (x)-x)\) associated with approximate minimizers \(y_\varepsilon \) of \(\frac{1}{\varepsilon ^2} {\bar{J}}_\varepsilon \) need not be bounded in \(W^{1,2}\); in fact, one can only obtain, after passing to a subsequence, that \(e(u_\varepsilon )\rightharpoonup e(u)\) and \(\sqrt{\varepsilon } \nabla u_\varepsilon \rightarrow W\) for some \(u\in W^{1,2}\) and \(W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\) (Maddalena et al. 2019a, Theorem 2.2).

2. The limiting u does not minimize the expected linear elastic functional (1.1), but rather the energy

$$\begin{aligned} {\tilde{I}}(u) = \min _{W\in {\mathbb {M}}^{n\times n}_{\text {skew}}} \int _\Omega {\mathcal {Q}}\left( x,e(u) - \frac{1}{2}W^2\right) \,\mathrm{d}x - \int _{\partial \Omega } f\cdot u\, \,\mathrm{d}{\mathcal {H}}^{n-1}- \int _\Omega g\cdot u\,\mathrm{d}x. \end{aligned}$$

This energy is further investigated in a sequel paper, Maddalena et al. (2019b).

3. Unlike Dal Maso et al. (2002), there is no full \(\Gamma \)-limit, but rather a statement about approximate minimizers.

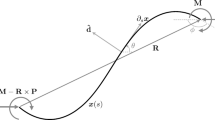

Reference Configurations and Optimal Rotations. The above-mentioned works defined the displacement with respect to a reference configuration that is dictated by the problem; that is, by the boundary conditions or the forces. In this work, we show that by choosing, for a given deformation, the rigid motion closest to it as its reference configuration, one can obtain stronger and more general results. More precisely, we define the reference configuration of a deformation \(y\in W^{1,2}(\Omega ;{\mathbb {R}}^n)\) as the map \(Rx+c\), \(R\in \text {SO}(n)\), \(c\in {\mathbb {R}}^n\) that minimizes the displacement, that is,

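In this case, one should distinguish between the reference configuration induced by a deformation y, and the preferred rotations of the forces, which we call optimal rotations. Formally, for the energy (1.2), we define the set of optimal rotations as

$$\begin{aligned} {\mathcal {R}} := \mathop {\text {argmax}}\limits _{R\in \text {SO}(n)} F(R), \end{aligned}$$

where \(F\in \left( {\mathbb {M}}^{n\times n}\right) ^*\) is the linear functional defined by the forces, that is,

$$\begin{aligned} F(A) := \int _{\partial \Omega } f\cdot Ax \,\mathrm{d}{\mathcal {H}}^{n-1} + \int _\Omega g\cdot Ax \,\mathrm{d}x. \end{aligned}\tag{1.4}$$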

In this setting, the correct normalization of the energy to consider is

that is, the deviation of \({{\bar{J}}}_\varepsilon \) from its value on optimal rotations. By rotating the system, we can always assume that \(I\in {\mathcal {R}}\) and thus, define \(J_\varepsilon (y) := {\bar{J}}_\varepsilon (y) - {\bar{J}}_\varepsilon ({\text {id}})\). As shown in Corollary 4.2, the compatibility assumption of Maddalena et al. (2019a) is equivalent to saying that \({\mathcal {R}}=\{I\}\).

Main Results. In this paper we address the pure traction elastic problem (1.2), using the definitions of reference configurations, optimal rotations, and normalized energy as discussed above. That is, for a given deformation \(y_\varepsilon \in W^{1,2}(\Omega ;{\mathbb {R}}^n)\), whose reference configuration according to (1.3) is \(R_\varepsilon x+ c_\varepsilon \), we define its rescaled displacement by

$$\begin{aligned} u_\varepsilon (x) := \frac{1}{\varepsilon } R_\varepsilon ^T\left( y_\varepsilon (x) - R_\varepsilon x - c_\varepsilon \right) . \end{aligned}$$

We obtain the following:

1. First, we prove that the set of optimal rotations \({\mathcal {R}}\) is a totally geodesic submanifold of \(\text {SO}(n)\) (Proposition 4.1). This geometric observation is important for the following analysis. We also give a complete classification of the possible optimal rotations in dimensions \(n=2,3\) (Sect. 6).

2. Compactness (Theorem 5.1): If \(\frac{1}{\varepsilon ^2}J_\varepsilon (y_\varepsilon )\) is bounded, then, modulo a subsequence, we have

   - \(u_\varepsilon \rightharpoonup u_0\) in \(W^{1,2}(\Omega ;{\mathbb {R}}^n)\),

   - \(R_\varepsilon \rightarrow R_0\) for some \(R_0\in {\mathcal {R}}\),

   - \(\frac{1}{\sqrt{\varepsilon }}(R_\varepsilon - {\mathcal {P}}(R_\varepsilon )) \rightarrow A_0\), where \({\mathcal {P}}(R_\varepsilon )\) is the projection of \(R_\varepsilon \) onto \({\mathcal {R}}\), and \(A_0\) is an element of the normal bundle at \(R_0\) of \({\mathcal {R}}\) in \(\text {SO}(n)\). We can write \(A_0 = R_0 W_0\) for some \(W_0 \in {\mathbb {M}}^{n\times n}_{\text {skew}}\).

3. \(\Gamma \)-convergence (Theorem 5.2): Under the above notion of convergence \(y_\varepsilon \rightarrow (u_0,R_0,W_0)\), the functionals \(\frac{1}{\varepsilon ^2}J_\varepsilon \) \(\Gamma \)-converge to

$$\begin{aligned} I(u_0,R_0,W_0)&:= \int _\Omega {\mathcal {Q}}(x,e(u_0(x)))\,\mathrm{d}x - \int _{\partial \Omega } f\cdot R_0 u_0 \, \,\mathrm{d}{\mathcal {H}}^{n-1} \\&\quad - \int _\Omega g\cdot R_0 u_0 \, \mathrm{d}x - \frac{1}{2}F(R_0W_0^2), \end{aligned}$$

where F is defined in (1.4).

It turns out that this viewpoint, compared to the one of Maddalena et al. (2019a), provides better compactness properties, a full \(\Gamma \)-convergence result, and it is valid for all equilibrated forces (in particular, the assumption \({\mathcal {R}}=\{I\}\) is not necessary for a rigorous validation of linear elasticity). On a more technical point, our proofs are simpler and work for any dimension n, whereas the proofs in Maddalena et al. (2019a) rely on the Rodrigues rotation formula (see (A.1)), which is only valid for \(n=2,3\).

Our approach also gives a geometric interpretation to the difference between the Dirichlet and Neumann derivations of linear elasticity: Whereas in the Dirichlet case, the rotational part \(R_\varepsilon \) of the reference configuration differs from the rotation prescribed by the boundary data by an order of \(\varepsilon \) (see Dal Maso et al. 2002, equation (3.14)), in the Neumann case the distance between \(R_\varepsilon \) and the optimal rotations prescribed by the forces is only of order \(\sqrt{\varepsilon }\). From a mechanical point of view, this means that a low energy pure traction elastic body can fluctuate more than a low energy elastic body that is clamped on part of its boundary.

Finally, we note that the term \(-\frac{1}{2}F(R_0W_0^2)\) that appears in the limiting energy, does not appear in the standard linear elastic energy, such as (1.1) (this can be viewed as a manifestation of the “gap”, as it is called in Maddalena et al. (2019a), between standard linear elasticity and its rigorous derivation from finite elasticity for pure traction problems). This term represents the elastic cost of fluctuations of the reference configurations from the optimal rotations; in the Dirichlet case, these fluctuations are smaller, and their elastic cost does not appear in this energy scaling. However, note that the term \(-\frac{1}{2}F(R_0W_0^2)\) is non-negative, since \(R_0\) is an optimal rotation (see (4.1) below); therefore, from a minimization point of view, we can always choose \(W_0=0\), thus eliminating it. More precisely, we show that minimizers of \(J_\varepsilon \) converge to minimizers of I of the form \((u_0,R_0,0)\), which reduces I to the standard linear elasticity energy (see Theorem 5.3), with the slight difference that formal derivations of linear elasticity typically focus on linearization about a fixed optimal rotation and thus do not consider \(R_0\) explicitly. In other words, the standard linear elasticity energy gives the correct asymptotic description of minimizers of finite elasticity for small forces not only in the Dirichlet case, but also for all pure traction problems.

After this work was essentially complete, we learned about the papers (Mainini and Percivale 2020; Jesenko and Schmidt 2020), where the authors study the derivation of pure traction linear elasticity from finite elasticity for incompressible materials. In Mainini and Percivale (2020), the external forces are assumed to satisfy the same compatibility condition as in Maddalena et al. (2019a), that is, in our language \({\mathcal {R}}=\{I\}\). In Jesenko and Schmidt (2020), the assumptions on the forces imply the other extreme, namely that \({\mathcal {R}}=\text {SO}(n)\). We believe that our approach, adapted to the incompressible case, should be able to unify these two results and extend them to all forces.

Structure of this Paper. In Sect. 2, we describe in more detail the elastic energy \(J_\varepsilon \) that we are considering, and define the set of optimal rotations \({\mathcal {R}}\) induced by it. In Sect. 3, we give some standard preliminary estimates, regarding (a) the distance between a deformation and its reference configuration (Lemma 3.1, in which the Friesecke–James–Müller rigidity theorem comes into play), and (b) the scaling of the infimum of the elastic energy \(J_\varepsilon \) (Proposition 3.2), which justifies the energy scaling considered. In Sect. 4, we treat the geometry of the set of optimal rotations \({\mathcal {R}}\), and show that it is a totally geodesic submanifold of \(\text {SO}(n)\) (Proposition 4.1). In Sect. 5, we state and prove our main results: compactness (Theorem 5.1), \(\Gamma \)-convergence (Theorem 5.2), and convergence of minimizers (Theorem 5.3). In Sect. 6, we give a full classification of the possible sets of optimal rotations that can arise in two and three dimensions, and provide examples for each.

2 The Model

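Let \(\Omega \subset {\mathbb {R}}^n\) be a Lipschitz domain, and consider the energy \({\bar{J}}_\varepsilon : W^{1,2}(\Omega ;{\mathbb {R}}^n) \rightarrow {\mathbb {R}}\cup \{+\infty \}\), defined by

$$\begin{aligned} {\bar{J}}_\varepsilon (y) := \int _\Omega {\mathcal {W}}(x,\nabla y)\,\mathrm{d}x - \varepsilon \int _{\partial \Omega } f\cdot y\,\mathrm{d}{\mathcal {H}}^{n-1} - \varepsilon \int _\Omega g\cdot y\,\mathrm{d}x, \end{aligned}$$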

where \({\mathcal {W}}: \Omega \times {\mathbb {M}}^{n\times n} \rightarrow [0,\infty ]\) is the elastic energy density, a Carathéodory function satisfying the following assumptions:

(a) Frame indifference: \({\mathcal {W}}(x,RA) = {\mathcal {W}}(x,A)\) for a.e. \(x\in \Omega \), all \(A\in {\mathbb {M}}^{n\times n}\) and \(R\in \text {SO}(n)\).

(b) \({\mathcal {W}}(x,A) = 0\) if and only if \(A\in \text {SO}(n)\).

(c) Coercivity: There exists \(c>0\) such that \({\mathcal {W}}(x,A) \ge c {\text {dist}}^2(A,\text {SO}(n))\) for all \(A\in {\mathbb {M}}^{n\times n}\) and a.e. \(x\in \Omega \).

(d) Regularity: There exists a neighborhood of \(\text {SO}(n)\) in which \({\mathcal {W}}(x,\cdot )\) is \(C^2\), uniformly in x:

$$\begin{aligned} \left| {\mathcal {W}}(x, I + B) - {\mathcal {Q}}(x,B)\right| \le \omega (|B|), \qquad {\mathcal {Q}}(x,B) := \frac{1}{2}D_A^2{\mathcal {W}}(x,I)(B,B), \end{aligned}\tag{2.1}$$

where \(\omega :[0,\infty )\rightarrow [0,\infty ]\) is a function satisfying \(\lim _{t\rightarrow 0} \omega (t)/t^2 = 0\). Moreover, \(D_A^2{\mathcal {W}}(\cdot ,I)\) is a bounded function in \(\Omega \).

We note that assumptions (b) and (c) imply that

for all \(B\in {\mathbb {M}}^{n\times n}\) and a.e. \(x\in \Omega \).
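As a concrete illustration (a standard model density, not one singled out in the text), the following sketch checks assumptions (a)–(d) for the squared distance to \(\text {SO}(n)\) and computes the associated quadratic form. The notation \(\text {sym}\,B = \frac{1}{2}(B+B^T)\) is introduced only for this example.

$$\begin{aligned} {\mathcal {W}}(x,A)&:= {\text {dist}}^2(A,\text {SO}(n)), \\ {\mathcal {W}}(x,RA)&= {\text {dist}}^2(A,R^T\text {SO}(n)) = {\text {dist}}^2(A,\text {SO}(n)) \quad \text {for } R\in \text {SO}(n), \\ {\text {dist}}^2(I+B,\text {SO}(n))&= |\text {sym}\,B|^2 + O(|B|^3) \quad \text {as } |B|\rightarrow 0, \\ {\mathcal {Q}}(x,B)&= |\text {sym}\,B|^2. \end{aligned}$$

Here (b) holds by definition, (c) holds with \(c=1\), and (d) holds with \(\omega (t)\) of order \(t^3\) near \(t=0\), since the squared distance to a compact smooth submanifold is smooth in a neighborhood of it. In this example \({\mathcal {Q}}\) depends only on the symmetric part of B and vanishes on skew-symmetric matrices.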

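We assume that the forces f and g are equilibrated, that is,

$$\begin{aligned} \int _{\partial \Omega } f \,\mathrm{d}{\mathcal {H}}^{n-1} + \int _\Omega g \,\mathrm{d}x = 0. \end{aligned}\tag{2.3}$$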

Without this assumption, by changing \(y\mapsto y+c\) we can make \({\bar{J}}_\varepsilon \) arbitrarily small, i.e., \(\inf {\bar{J}}_\varepsilon = -\infty \).
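A short computation behind this remark, assuming that the forces enter \({\bar{J}}_\varepsilon \) through the terms \(-\varepsilon \int _{\partial \Omega } f\cdot y\) and \(-\varepsilon \int _\Omega g\cdot y\) as in (1.2): since the elastic part depends on y only through \(\nabla y\), for every \(c\in {\mathbb {R}}^n\) we have

$$\begin{aligned} {\bar{J}}_\varepsilon (y+c) = {\bar{J}}_\varepsilon (y) - \varepsilon \, c\cdot \left( \int _{\partial \Omega } f \,\mathrm{d}{\mathcal {H}}^{n-1} + \int _\Omega g \,\mathrm{d}x\right) . \end{aligned}$$

If the vector in parentheses is nonzero, choosing c to be a large positive multiple of it (and, say, \(y={\text {id}}\)) sends the energy to \(-\infty \). If it vanishes, \({\bar{J}}_\varepsilon \) is invariant under translations.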

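Let

$$\begin{aligned} F(A) := \int _{\partial \Omega } f\cdot Ax \,\mathrm{d}{\mathcal {H}}^{n-1} + \int _\Omega g\cdot Ax \,\mathrm{d}x, \qquad A\in {\mathbb {M}}^{n\times n}, \end{aligned}$$

and define the set of optimal rotations \({\mathcal {R}}\) by

$$\begin{aligned} {\mathcal {R}} := \mathop {\text {argmax}}\limits _{R\in \text {SO}(n)} F(R). \end{aligned}$$

Fix \({\bar{R}}\in {\mathcal {R}}\). By changing \(f\mapsto {\bar{R}}^T f\), \(g\mapsto {\bar{R}}^T g\) and \(y\mapsto {\bar{R}}^T y\), we can assume without loss of generality that \({\bar{R}} = I\). In particular, we have

$$\begin{aligned} F(R) \le F(I) \quad \text {for every } R\in \text {SO}(n), \end{aligned}$$

with equality holding if and only if \(R\in {\mathcal {R}}\).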
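To make this normalization concrete, here is a minimal sketch in dimension \(n=2\). It assumes that F has the form \(F(A)=\int _{\partial \Omega } f\cdot Ax + \int _\Omega g\cdot Ax\), so that F is the Frobenius product with a fixed matrix M. The symbols M and \(R_\theta \) are notation introduced only for this illustration.

$$\begin{aligned} M&:= \int _{\partial \Omega } f\otimes x \,\mathrm{d}{\mathcal {H}}^{n-1} + \int _\Omega g\otimes x \,\mathrm{d}x, \qquad F(A) = \sum _{i,j} M_{ij}A_{ij}, \\ R_\theta&:= \begin{pmatrix} \cos \theta & -\sin \theta \\ \sin \theta & \cos \theta \end{pmatrix}, \qquad F(R_\theta ) = (M_{11}+M_{22})\cos \theta + (M_{21}-M_{12})\sin \theta . \end{aligned}$$

Hence \(\theta =0\) is a maximizer, that is \(I\in {\mathcal {R}}\), exactly when \(M_{12}=M_{21}\) and \(M_{11}+M_{22}\ge 0\). In that case \(F(R_\theta ) = (M_{11}+M_{22})\cos \theta \), so \({\mathcal {R}}=\{I\}\) if the trace of M is positive, and \({\mathcal {R}}=\text {SO}(2)\) if it is zero.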

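Let \(I_\varepsilon \) be the elastic part of \({\bar{J}}_\varepsilon \), i.e.,

$$\begin{aligned} I_\varepsilon (y) := \int _\Omega {\mathcal {W}}(x,\nabla y)\,\mathrm{d}x, \end{aligned}$$

and denote

$$\begin{aligned} J_\varepsilon (y) := {\bar{J}}_\varepsilon (y) - {\bar{J}}_\varepsilon ({\text {id}}) = I_\varepsilon (y) - \varepsilon \int _{\partial \Omega } f\cdot (y-x)\,\mathrm{d}{\mathcal {H}}^{n-1} - \varepsilon \int _\Omega g\cdot (y-x)\,\mathrm{d}x. \end{aligned}$$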

3 Preliminary Estimates

We begin with some preliminary calculations. In Lemma 3.1, we show that if \(J_\varepsilon (y_\varepsilon )\le C\varepsilon ^2\), then the \(W^{1,2}\)-distance between \(y_\varepsilon \) and its reference configuration is of order \(\varepsilon \). In Proposition 3.2 we show that

$$\begin{aligned} -C\varepsilon ^2 \le \inf _{y\in W^{1,2}(\Omega ;{\mathbb {R}}^n)} J_\varepsilon (y) \le 0 \end{aligned}$$

for some \(C>0\) depending on the forces f, g and the energy density \({\mathcal {W}}\). These estimates motivate the study of the \(\Gamma \)-limit of \(\frac{1}{\varepsilon ^2} J_\varepsilon \).

In this section, we use the notation \(A_\varepsilon \lesssim B_\varepsilon \) if \(A_\varepsilon \le C B_\varepsilon \) for some constant \(C>0\) that is independent of \(\varepsilon \), but can depend on \(\Omega \), the constant c in the coercivity assumption (c), and other fixed quantities.

Lemma 3.1

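If \(J_\varepsilon (y_\varepsilon ) \le C\varepsilon ^2\), then \(I_\varepsilon (y_\varepsilon ) = O(\varepsilon ^2)\) and there exist a sequence \(R_\varepsilon \in \text {SO}(n)\) and constants \(c_\varepsilon \in {\mathbb {R}}^n\) such that

$$\begin{aligned} \Vert y_\varepsilon - R_\varepsilon x - c_\varepsilon \Vert _{W^{1,2}(\Omega ;{\mathbb {R}}^n)} \lesssim \varepsilon . \end{aligned}$$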

If \(R_\varepsilon '\in \text {SO}(n)\) is another sequence with respect to which this holds, then \(|R_\varepsilon - R'_\varepsilon | \lesssim \varepsilon \).

Remark

As we will show later, the fact that \(|R_\varepsilon - R'_\varepsilon | \lesssim \varepsilon \) implies that we can regard any sequence \(R_\varepsilon x+c_\varepsilon \) for which this lemma holds as reference configurations of the sequence \(y_\varepsilon \), without changing the results of this paper.

Proof

By the Friesecke–James–Müller rigidity theorem (Friesecke et al. 2002, Theorem 3.1), the coercivity assumption (c) on \({\mathcal {W}}\) implies that there exist \(R_\varepsilon \in \text {SO}(n)\) such that

This also implies that, for an appropriate constant \(c_\varepsilon \),

where \(Y_\varepsilon := y_\varepsilon - R_\varepsilon x - c_\varepsilon \). From the trace theorem, a similar bound also holds for \(L^2\)-norm of the trace of \(Y_\varepsilon \). Therefore, we only need to prove that \(I_\varepsilon (y_\varepsilon ) = O(\varepsilon ^2)\). Using the inequalities above, (2.3) and (2.4), we have

which completes the proof by choosing \(\delta \) small enough.
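The absorption step can be sketched as follows. This is a reconstruction under stated assumptions rather than the exact chain of inequalities of the proof: it assumes \(J_\varepsilon (y) = I_\varepsilon (y) - \varepsilon \int _{\partial \Omega } f\cdot (y-x) - \varepsilon \int _\Omega g\cdot (y-x)\) and \(F(A)=\int _{\partial \Omega } f\cdot Ax + \int _\Omega g\cdot Ax\), and it uses that the forces are equilibrated, that \(F(R_\varepsilon )\le F(I)\), and that \(\Vert Y_\varepsilon \Vert _{W^{1,2}}^2 \le C I_\varepsilon (y_\varepsilon )\). The letter C denotes a generic constant and \(\delta >0\) is the free parameter in Young's inequality.

$$\begin{aligned} I_\varepsilon (y_\varepsilon )&= J_\varepsilon (y_\varepsilon ) + \varepsilon \left( F(R_\varepsilon ) - F(I)\right) + \varepsilon \left( \int _{\partial \Omega } f\cdot Y_\varepsilon \,\mathrm{d}{\mathcal {H}}^{n-1} + \int _\Omega g\cdot Y_\varepsilon \,\mathrm{d}x\right) \\&\le C\varepsilon ^2 + C\varepsilon \Vert Y_\varepsilon \Vert _{W^{1,2}} \\&\le C\varepsilon ^2 + \frac{C}{\delta }\varepsilon ^2 + \delta \Vert Y_\varepsilon \Vert _{W^{1,2}}^2 \\&\le \left( C + \frac{C}{\delta }\right) \varepsilon ^2 + C\delta \, I_\varepsilon (y_\varepsilon ). \end{aligned}$$

Choosing \(\delta \) so that \(C\delta \le \frac{1}{2}\) and moving the last term to the left-hand side gives \(I_\varepsilon (y_\varepsilon ) \lesssim \varepsilon ^2\).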

Finally, the last statement follows since

\(\square \)

Proposition 3.2

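There exists \(C>0\) such that

$$\begin{aligned} -C\varepsilon ^2 \le \inf _{y\in W^{1,2}(\Omega ;{\mathbb {R}}^n)} J_\varepsilon (y) \le 0. \end{aligned}$$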

Proof

The upper bound follows since \(J_\varepsilon ({\text {id}}) = 0\). For the lower bound, consider a sequence of approximate minimizers \(y_\varepsilon \), that is

for some \(C'>0\). In particular, \(J_\varepsilon (y_\varepsilon ) \le C'\varepsilon ^2\), hence the results of Lemma 3.1 hold. We therefore have

for some constant \(C>0\). \(\square \)

4 Geometry of the Set of Optimal Rotations \({\mathcal {R}}\)

We recall that the tangent space to \(\text {SO}(n)\) at the identity is the space of skew-symmetric matrices, and at \(R\in \text {SO}(n)\) it is \(\{RW ~:~ W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\}\). Moreover, for a fixed R, we have that \(\text {SO}(n) = \{Re^W ~:~ W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\}\), and for every \(R'\in \text {SO}(n)\), there exists \(W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\) such that \(R' = Re^W\) and the map \(t\in [0,1]\mapsto Re^{tW}\) is a minimizing geodesic in \(\text {SO}(n)\) connecting R and \(R'\).

Let now \(R\in {\mathcal {R}}\) and \(W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\). From the definition of \({\mathcal {R}}\), the function \(\phi (t):=F(Re^{tW})\) satisfies \(\phi '(0)=0\) and \(\phi ''(0)\le 0\). Thus, we deduce that

$$\begin{aligned} F(RW) = 0, \qquad F(RW^2) \le 0 \end{aligned}\tag{4.1}$$

for every \(W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\) and \(R\in {\mathcal {R}}\). We note that the first equation in (4.1) for \(R=I\), together with (2.3), provides the usual balance condition in linearized elasticity:

$$\begin{aligned} \int _{\partial \Omega } f\cdot (Wx+c) \,\mathrm{d}{\mathcal {H}}^{n-1} + \int _\Omega g\cdot (Wx+c) \,\mathrm{d}x = 0 \end{aligned}$$

for every \(W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\) and \(c\in {\mathbb {R}}^n\).
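The two conditions on \(\phi \) translate into conditions on F by differentiating the exponential. The following lines spell this out, using only the linearity of F and the fact that \(t=0\) maximizes \(\phi \) when \(R\in {\mathcal {R}}\).

$$\begin{aligned} \phi '(t)&= F(Re^{tW}W),&\phi ''(t)&= F(Re^{tW}W^2), \\ \phi '(0)&= F(RW) = 0,&\phi ''(0)&= F(RW^2) \le 0. \end{aligned}$$

For instance, in dimension \(n=2\) every skew-symmetric matrix is of the form \(W=\theta J\) with \(J^2=-I\), so \(F(RW^2) = -\theta ^2 F(R)\). The second-order condition then simply says that \(F(R)\ge 0\) at a maximizer, which is consistent with \(F(-R)=-F(R)\) and \(-R\in \text {SO}(2)\).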

Our main result of this section is the following characterization of the set of optimal rotations:

Proposition 4.1

\({\mathcal {R}}\) is a closed, connected, boundaryless, totally geodesic submanifold of \(\text {SO}(n)\), and the tangent space of \({\mathcal {R}}\) at \(R_0\) is

In particular, \(T{\mathcal {R}}_{R_0}\) is a linear space.

Recall that a totally geodesic submanifold \({\mathcal {M}}\) of a manifold \({\mathcal {N}}\) is a submanifold, such that a length-minimizing curve in \({\mathcal {M}}\) between any two elements in \({\mathcal {M}}\) is also a length-minimizing curve in \({\mathcal {N}}\) (e.g., a hyperplane in Euclidean space).

Corollary 4.2

An immediate corollary is that strict inequality in (4.1) is equivalent to saying that \({\mathcal {R}}\) is a singleton, i.e., \({\mathcal {R}}=\{I\}\). This strict inequality is the compatibility assumption on the forces in Maddalena et al. (2019a) (see (2.25) there).

Proposition 4.1 is what we need for the compactness and \(\Gamma \)-convergence results. Later on, in Sect. 6, we give more details on the structure of \({\mathcal {R}}\); in particular, we show that the second fundamental form of \(\text {SO}(n)\) in \({\mathbb {M}}^{n\times n}\) in the direction F is negative semi-definite, and that the number of its zero principal curvatures corresponds to the dimension of \({\mathcal {R}}\). This yields a complete classification of the possible optimal rotations in two and three dimensions.

We will prove Proposition 4.1 at the end of the section, after a few preliminaries. For later use, we denote

Note that \(R_0 N{\mathcal {R}}_{R_0}\) is the normal space of \(T{\mathcal {R}}_{R_0}\) in \(T_{R_0} \text {SO}(n)\). Also, we define the projection operator

Since \({\mathcal {R}}\) is a closed submanifold, \({\mathcal {P}}\) is well defined in a neighborhood of \({\mathcal {R}}\). Here, \({\text {dist}}_{\text {SO}(n)}\) is the intrinsic distance in the manifold \(\text {SO}(n)\); that is,

Note that this distance is equivalent to the regular (Frobenius) distance in \({\mathbb {M}}^{n\times n}\) (since \(\text {SO}(n)\) is a compact submanifold), and moreover,

Towards the proof of Proposition 4.1, we start by recalling a few linear algebra facts: any \(W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\) can be written as \(R^T\Sigma R\), where \(R\in \text {SO}(n)\) and

From this, we have the following:

Lemma 4.3

Given a rotation \(R\in \text {SO}(n)\), any rotation \(R'\in \text {SO}(n)\) can be written as \(R' = Re^W\), where \(W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\) and the values \(\lambda _1,\ldots ,\lambda _k\) in the representation (4.6) of W belong to the interval \((-\pi ,\pi ]\).

Proof

We prove the case \(R=I\); that is, we show that for each \(W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\) there exists \(W'\in {\mathbb {M}}^{n\times n}_{\text {skew}}\) such that \(e^W=e^{W'}\), and whose nonzero eigenvalues \(\{\pm \lambda _i i\}_{i=1}^k\) satisfy \(\lambda _i \in (-\pi ,\pi ]\). For a general R, the result follows by multiplying everything from the left by R. First, note that (4.6) implies that

where \(\lambda _i\) and \(R\in \text {SO}(n)\) are as in (4.6), and

We note that \(e^W = \cosh (W) + \sinh (W)\), and this is exactly the decomposition of \(e^W\) into a symmetric matrix (\(\cosh (W)\)) and a skew-symmetric matrix (\(\sinh (W)\)). Formulae (4.7) imply, in particular, that if

where \(\lambda _i' - \lambda _i \in 2\pi {\mathbb {Z}}\) for every i, then \(e^W = e^{W'}\). Thus, it is possible to choose the \(\lambda _i\)s in any interval of length \(2\pi \). This completes the proof. \(\square \)
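For orientation, the simplest instance of these formulas is a single \(2\times 2\) block. The sketch below assumes that the block is normalized as \(\lambda J\) with J as written (the sign convention in (4.6) may differ), and uses \(\cosh \) and \(\sinh \) for the even and odd parts of the exponential series.

$$\begin{aligned} J&:= \begin{pmatrix} 0 & -1 \\ 1 & 0 \end{pmatrix}, \qquad J^2 = -I, \qquad e^{\lambda J} = \sum _{k\ge 0} \frac{(\lambda J)^k}{k!} = \cos \lambda \, I + \sin \lambda \, J, \\ \cosh (\lambda J)&= \cos \lambda \, I, \qquad \sinh (\lambda J) = \sin \lambda \, J, \\ e^{(\lambda + 2\pi m)J}&= e^{\lambda J} \quad \text {for every } m\in {\mathbb {Z}}. \end{aligned}$$

In particular, \(\lambda \) can always be shifted into \((-\pi ,\pi ]\) without changing the rotation \(e^{\lambda J}\).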

Next, we note that for every \(R_0\in {\mathcal {R}}\), \(R\in \text {SO}(n)\) and i,

Assume otherwise; without loss of generality, assume that

Now, consider the matrix

We have

hence, for every \(t\in (0,2\pi )\), using that \(\sinh (tW)\in {\mathbb {M}}^{n\times n}_{\text {skew}}\) and thus, \(F(R_0\sinh (tW)) =0\) by (4.1),

which is a contradiction to \(R_0\in {\mathcal {R}}\).

Now we can easily prove the following two lemmas, which are the main building blocks towards Proposition 4.1. Lemma 4.4 states that for any \(W\in T{\mathcal {R}}_{R_0}\) (see (4.2)), the whole \(\text {SO}(n)\)-geodesic emanating from \(R_0\) in direction W belongs to \({\mathcal {R}}\); Lemma 4.5 states that for any two elements \(R_0,R_1\in {\mathcal {R}}\), there exists a geodesic between them that belongs to \({\mathcal {R}}\).

Lemma 4.4

If \(R_0\in {\mathcal {R}}\) and \(W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\) such that \(F(R_0W^2) = 0\), then \(R_0e^{tW} \in {\mathcal {R}}\) for any \(t\in {\mathbb {R}}\).

Proof

Let \(W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\) be such that \(F(R_0W^2) = 0\). Let us write W in its canonical form (4.6), with \(\lambda _i\ne 0\). Note that

By (4.8) it follows that \(F(R_0 R^T D_i R)=0\) for \(i=1,\ldots ,k\). We then have

hence \(R_0e^{tW}\in {\mathcal {R}}\) for every \(t\in {\mathbb {R}}\). \(\square \)

Lemma 4.5

If \(R_0,R_1\) are two distinct elements in \({\mathcal {R}}\), then \({\mathcal {R}}\) contains a geodesic of \(\text {SO}(n)\) that connects \(R_0\) and \(R_1\). More precisely, if \(R_1 = R_0 e^{W}\), where W is of the form of Lemma 4.3, then

Remark

In dimensions \(n=2,3\), we can actually obtain that any geodesic between \(R_0\) and \(R_1\) lies in \({\mathcal {R}}\); for \(n>3\), this is no longer the case due to conjugate points. See Appendix A for details.

Proof

Let \(R_0,R_1\in {\mathcal {R}}\), and pick \(W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\) such that \(R_1=R_0 e^{W}\), with W of the form of Lemma 4.3. We therefore have, for some \(R\in \text {SO}(n)\), that

where we used (4.8). Since \(\lambda _i \in (-\pi ,\pi ]{\setminus } \{0\}\), it follows that \(a_i=0\) for all i. But then, for every \(t\in {\mathbb {R}}\),

hence \(R_0e^{tW}\in {\mathcal {R}}\) for every \(t\in {\mathbb {R}}\). \(\square \)

Finally, we prove Proposition 4.1.

Proof of Proposition 4.1:

We first prove that the set

is a vector space. It is obvious that T is closed under scalar multiplication; the idea is to “zoom in” near the origin, where we can effectively treat the geodesics that connect two matrices in \(\text {SO}(n)\) as straight lines in the linear space of matrices: Assume that \(W_1,W_2\in T\); Lemma 4.4 implies that \(e^{taW_1},e^{tbW_2}\in {\mathcal {R}}\) for every \(a,b\in {\mathbb {R}}\) and \(t> 0\). We will show that for small t, the midpoint of the geodesic between \(e^{taW_1}\) and \(e^{tbW_2}\) belongs to \({\mathcal {R}}\), and that this midpoint is \(\exp \left( \frac{t}{2}(aW_1 + bW_2 + O(t))\right) \). The previous lemmata will then imply that \(\frac{t}{2}(aW_1 + bW_2 + O(t))\in T\); we will then “zoom out” and obtain that \(aW_1 + bW_2 \in T\). Indeed, consider, for small t, the geodesic between \(e^{taW_1}\) and \(e^{tbW_2}\). We can write it as

where \(e^Z = e^{-taW_1}e^{tbW_2}\), hence

Since \(|Z|=O(t)\), we obtain that for small enough t, all the eigenvalues of Z are close to zero; hence, Lemma 4.5 implies that this geodesic belongs to \({\mathcal {R}}\). In particular, we have that the midpoint of this geodesic, \(e^{taW_1} e^{Z/2}\), belongs to \({\mathcal {R}}\); we can write it as

Using Lemma 4.5 again, we have that \(e^{\tau Z'}\in {\mathcal {R}}\) for every \(\tau \), from which we obtain that \(Z'\in T\). Since T is closed under scalar multiplication, we have that \(2Z'/t\in T\); thus,

for every \(t>0\), and since T is a closed set, we have that \(aW_1 + bW_2 \in T\).
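The expansions used in this argument can be sketched via the Baker–Campbell–Hausdorff formula, \(\log (e^Xe^Y) = X + Y + O(|X||Y|)\) for small X and Y. This is an illustrative reconstruction in which the error terms are tracked only to the order needed, and \(Z'\) denotes the skew-symmetric matrix whose exponential is the midpoint of the geodesic.

$$\begin{aligned} Z&= \log \left( e^{-taW_1}e^{tbW_2}\right) = t(bW_2 - aW_1) + O(t^2), \\ e^{Z'}&= e^{taW_1}e^{Z/2} = \exp \left( taW_1 + \tfrac{1}{2}Z + O(t^2)\right) = \exp \left( \tfrac{t}{2}\left( aW_1 + bW_2 + O(t)\right) \right) , \\ \tfrac{2}{t}Z'&= aW_1 + bW_2 + O(t) \rightarrow aW_1 + bW_2 \quad \text {as } t\rightarrow 0. \end{aligned}$$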

We now claim that in the vicinity of I, \({\mathcal {R}}\) is the image of the exponential map restricted to T. Indeed, Lemma 4.4 implies that the image of the exponential map, restricted to T, is in \({\mathcal {R}}\). On the other hand, Lemma 4.5 implies that if \(R\in {\mathcal {R}}\), then \(R = e^{W}\) for some \(W\in T\). This tells us that in the vicinity of I, \({\mathcal {R}}\) is a manifold whose tangent space is T.

However, we can do this analysis around any \(R_0\in {\mathcal {R}}\), and thus, \({\mathcal {R}}\) is indeed a manifold whose tangent space is \(T{\mathcal {R}}_{R_0}\). Lemma 4.5 implies that it is connected. Since for each \(R_0\), \({\mathcal {R}}\) is locally homeomorphic to an open neighborhood of the zero element of the vector space \(T{\mathcal {R}}_{R_0}\), we have that \({\mathcal {R}}\) has no boundary; since, by definition, \({\mathcal {R}}\) is a set of maximizers of a continuous function, it is closed. We therefore deduce that \({\mathcal {R}}\) is a closed manifold.

Finally, Lemma 4.4 implies that for any \(W\in T_{R_0}{\mathcal {R}}\), the \(\text {SO}(n)\)-geodesic \(R_0e^{tW}\) stays on the submanifold \({\mathcal {R}}\); hence, \({\mathcal {R}}\) is totally geodesic. \(\square \)

5 Main Results

Theorem 5.1

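(Compactness) Let \(y_\varepsilon \in W^{1,2}(\Omega ;{\mathbb {R}}^n)\) be such that \(J_\varepsilon (y_\varepsilon ) \le C\varepsilon ^2\), and let \(R_\varepsilon x + c_\varepsilon \) be a reference configuration of \(y_\varepsilon \), satisfying the results of Lemma 3.1. Denote the rescaled displacement of \(y_\varepsilon \) by

$$\begin{aligned} u_\varepsilon (x) := \frac{1}{\varepsilon } R_\varepsilon ^T\left( y_\varepsilon (x) - R_\varepsilon x - c_\varepsilon \right) . \end{aligned}\tag{5.1}$$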

We then have the following, up to moving to a subsequence:

- \(u_\varepsilon \rightharpoonup u_0\) in \(W^{1,2}(\Omega ;{\mathbb {R}}^n)\),

- \(R_\varepsilon \rightarrow R_0\in {\mathcal {R}}\),

- \(\frac{1}{\sqrt{\varepsilon }}\left( R_\varepsilon - {\mathcal {P}}(R_\varepsilon )\right) \rightarrow R_0W_0\), for some \(W_0 \in N{\mathcal {R}}_{R_0}\),

where \(N{\mathcal {R}}_{R_0}\) and \({\mathcal {P}}\) were defined in (4.3) and (4.4). Moreover, we have that \(R_0\), \(W_0\) are independent of the choice of \(R_\varepsilon \), and \(u_0\) is independent up to a change by an infinitesimal isometry \(Ax + b\), where \(A\in {\mathbb {M}}^{n\times n}_{\text {skew}}\) and \(b\in {\mathbb {R}}^n\).

Theorem 5.2

(\(\Gamma \)-convergence) Under the convergence \(y_\varepsilon \rightarrow (u_0,R_0,W_0)\) as defined in Theorem 5.1, we have

where \({\mathcal {Q}}\) is defined in (2.1). In particular, this means

1. Lower bound: If \(y_\varepsilon \rightarrow (u_0,R_0,W_0)\), then

$$\begin{aligned} \liminf \frac{1}{\varepsilon ^2} J_\varepsilon (y_\varepsilon )\ge & {} \int _\Omega {\mathcal {Q}}(x,e(u_0(x)))\,\mathrm{d}x - \int _{\partial \Omega } f\cdot R_0 u_0 \, \,\mathrm{d}{\mathcal {H}}^{n-1} - \int _\Omega g\cdot R_0 u_0 \, \mathrm{d}x \\&- \frac{1}{2}F(R_0W_0^2). \end{aligned}$$

2. Upper bound: For every \(u_0\in W^{1,2}(\Omega ;{\mathbb {R}}^n)\), \(R_0\in {\mathcal {R}}\) and \(W_0\in N{\mathcal {R}}_{R_0}\), there exists \(y_\varepsilon \in W^{1,2}(\Omega ;{\mathbb {R}}^n)\) such that \(y_\varepsilon \rightarrow (u_0,R_0,W_0)\) and

$$\begin{aligned} \lim \frac{1}{\varepsilon ^2} J_\varepsilon (y_\varepsilon )= & {} \int _\Omega {\mathcal {Q}}(x,e(u_0(x)))\,\mathrm{d}x - \int _{\partial \Omega } f\cdot R_0 u_0 \, \,\mathrm{d}{\mathcal {H}}^{n-1} - \int _\Omega g\cdot R_0 u_0 \, \mathrm{d}x \\&- \frac{1}{2}F(R_0W_0^2). \end{aligned}$$

Theorem 5.3

(Convergence of minimizers) Let \(y_\varepsilon \in W^{1,2}(\Omega ;{\mathbb {R}}^n)\) be a sequence such that

Then, there exist a sequence \(R_\varepsilon \in \text {SO}(n)\) and constants \(c_\varepsilon \in {\mathbb {R}}^n\) such that, up to subsequences, the rescaled displacements

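converge to \(u_0\) strongly in \(W^{1,q}(\Omega ;{\mathbb {R}}^n)\) for every \(1\le q<2\), \(R_\varepsilon \) converge to \(R_0\in {\mathcal {R}}\), and \(\frac{1}{\sqrt{\varepsilon }}\left( R_\varepsilon - {\mathcal {P}}(R_\varepsilon )\right) \rightarrow 0\). Furthermore, \((u_0, R_0)\) is a minimizer of the functional

$$\begin{aligned} (u,R) \mapsto \int _\Omega {\mathcal {Q}}(x,e(u(x)))\,\mathrm{d}x - \int _{\partial \Omega } f\cdot R u \,\mathrm{d}{\mathcal {H}}^{n-1} - \int _\Omega g\cdot R u \,\mathrm{d}x \end{aligned}$$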

on \(W^{1,2}(\Omega ;{\mathbb {R}}^n)\times {\mathcal {R}}\).

Remark

The results of Maddalena et al. (2019a) are an immediate consequence of Theorem 5.1. Indeed, let \(y_\varepsilon \in W^{1,2}(\Omega ;{\mathbb {R}}^n)\) be such that \(J_\varepsilon (y_\varepsilon ) \le C\varepsilon ^2\) and let \(v_\varepsilon =\frac{1}{\varepsilon }(y_\varepsilon -{\text {id}})\) be the displacement as defined in Maddalena et al. (2019a). By (5.1) we have that

From this relation it is clear that in general one cannot expect \(v_\varepsilon \) to be bounded in \(W^{1,2}\), since the limit \(R_0\) of \(R_\varepsilon \) may be different from I and, even if \(R_0=I\), the distance of \(R_\varepsilon \) from \({\mathcal {R}}\) is only of order \(\sqrt{\varepsilon }\). Assume now that \({\mathcal {R}}=\{I\}\). By Theorem 5.1 and Eq. (5.3), we deduce that \(\sqrt{\varepsilon }\nabla v_\varepsilon \) converge, up to subsequences, to \(W_0\) strongly in \(L^2\). Moreover, writing \(R_\varepsilon =e^{\sqrt{\varepsilon }W_\varepsilon }\), with \(W_\varepsilon \) a bounded sequence (Theorem 5.1), we obtain

hence, \(e(v_\varepsilon )\) converges, up to subsequences, to \(e(u_0)\) weakly in \(L^2\), and \(e(v_0)=e(u_0)+\frac{1}{2} W_0^2\). Thus, we recover the result of Maddalena et al. (2019a).
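The correction \(\frac{1}{2}W_0^2\) in this remark comes from the second-order term of the matrix exponential: for a skew-symmetric W, the symmetric part of \(e^{\sqrt{\varepsilon }W}\) is \(I + \frac{\varepsilon }{2}W^2 + O(\varepsilon ^2)\). The following minimal Python sketch checks this expansion numerically. The helper names (`matmul`, `expm`, `sym_defect`) and the test matrix are illustrative choices and not part of the paper.

```python
import math

def matmul(A, B):
    """Product of two square matrices given as nested lists."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def expm(A, terms=30):
    """Matrix exponential via a truncated power series (adequate for small matrices)."""
    n = len(A)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        term = [[x / k for x in row] for row in matmul(term, A)]
        result = [[result[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return result

def sym_defect(W, eps):
    """Return (1/eps) * sym(exp(sqrt(eps) * W) - I)."""
    n = len(W)
    s = math.sqrt(eps)
    R = expm([[s * x for x in row] for row in W])
    return [[(0.5 * (R[i][j] + R[j][i]) - float(i == j)) / eps for j in range(n)]
            for i in range(n)]

# An arbitrary skew-symmetric matrix (illustrative data).
W = [[0.0, -1.0, 0.5], [1.0, 0.0, -2.0], [-0.5, 2.0, 0.0]]
half_W2 = [[0.5 * x for x in row] for row in matmul(W, W)]
for eps in (1e-1, 1e-2, 1e-3):
    D = sym_defect(W, eps)
    err = max(abs(D[i][j] - half_W2[i][j]) for i in range(3) for j in range(3))
    print(eps, err)  # the error shrinks proportionally to eps
```

The printed error decays linearly in \(\varepsilon \), consistent with the \(O(\varepsilon ^2)\) remainder in the expansion.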

Proof of Theorem 5.1:

Convergence of \(u_\varepsilon \) and \(R_\varepsilon \). By Lemma 3.1, we have that \(u_\varepsilon \) is bounded in \(W^{1,2}\), from which the first assertion follows. \(\text {SO}(n)\) is compact; hence, by moving to a subsequence, we have \(R_\varepsilon \rightarrow R_0\in \text {SO}(n)\). Note that the boundedness of \(u_\varepsilon \) implies that for some \(C>0\) we have

If \(R_0\notin {\mathcal {R}}\), then \({\text {dist}}(R_0,{\mathcal {R}})\ge c\) for some constant \(c>0\), and since, from the definition of \({\mathcal {R}}\),

we obtain from (5.4) that \(\varepsilon ^{-2} J_\varepsilon (y_\varepsilon )\rightarrow \infty \), which is a contradiction. This proves the second assertion.

Convergence of \(\varepsilon ^{-1/2}(R_\varepsilon -{\mathcal {P}}(R_\varepsilon ))\). First, note that \(R_\varepsilon \rightarrow R_0\in {\mathcal {R}}\) implies that \({\mathcal {P}}(R_\varepsilon )\) is well defined for small enough \(\varepsilon \). We first show that \({\text {dist}}_{\text {SO}(n)}(R_\varepsilon ,{\mathcal {R}}) = O(\sqrt{\varepsilon })\).

To simplify the notation, denote \(Q_\varepsilon = {\mathcal {P}}(R_\varepsilon )\) and \(d_\varepsilon = {\text {dist}}_{\text {SO}(n)}(R_\varepsilon ,{\mathcal {R}})\). We therefore have \(R_\varepsilon = Q_\varepsilon e^{d_\varepsilon W_\varepsilon }\) for some \(W_\varepsilon \in N{\mathcal {R}}_{Q_\varepsilon }\), with \(|W_\varepsilon | = 1\). Since \(R_\varepsilon \rightarrow R_0\), we also have \(Q_\varepsilon \rightarrow R_0\), and therefore, by moving to a subsequence, we have that \(W_\varepsilon \rightarrow W\), where \(|W|= 1\) and \(W\in N{\mathcal {R}}_{R_0}\). From (5.4) and (4.1), we have that for some constant \(C>0\),

we therefore obtain that if \(d_\varepsilon \gg \sqrt{\varepsilon }\), then \(F(R_0 W^2) = \lim F(Q_\varepsilon W_\varepsilon ^2) = 0\). But this is a contradiction since W is a non-zero element of \(N{\mathcal {R}}_{R_0}\). We therefore obtain that \(d_\varepsilon = O(\sqrt{\varepsilon })\) as needed. By moving to a subsequence, we have that \(d_\varepsilon /\sqrt{\varepsilon }\rightarrow \alpha \) for some \(\alpha \ge 0\).

Putting this all together we have

which completes the proof as \(W_0 = \alpha W \in N{\mathcal {R}}_{R_0}\).

Uniqueness of \(R_0\) and \(e(u_0)\). We now show that \(R_0\) is independent of the choice of \(R_\varepsilon \), and that \(u_0\) is also independent up to a change by a linear function \(Ax + b\), with \(A\in {\mathbb {M}}^{n\times n}_{\text {skew}}\).

Indeed, assume that \(R_\varepsilon '\) is an alternative choice of rotations, \(u_\varepsilon '\) are the associated displacements, and let \(u_0'\) be their limit. From Lemma 3.1, we know that \(|R_\varepsilon -R_\varepsilon '|<C\varepsilon \) for some \(C>0\); thus, \(\lim R_\varepsilon ' = \lim R_\varepsilon = R_0\).

Moreover, writing \(R_\varepsilon ' = R_\varepsilon e^{\varepsilon A_\varepsilon }\) for some uniformly bounded matrices \(A_\varepsilon \in {\mathbb {M}}^{n\times n}_{\text {skew}}\), we have

Here, \(O(\varepsilon )\) is with respect to the \(L^2\) norm. By passing to the limit, using the fact that \(A_\varepsilon \) is antisymmetric and \(R_\varepsilon ^T\nabla y_\varepsilon \rightarrow I\) strongly in \(L^2\) (Lemma 3.1), we obtain that \(u_0' = u_0 + Ax + b\), where \(A\in {\mathbb {M}}^{n\times n}_{\text {skew}}\).

Uniqueness of \(W_0\). It remains to show that \(W_0\) is independent of the choice of \(R_\varepsilon \). Assume we have an alternative choice of rotations \(R_\varepsilon '\). From Lemma 3.1, we have that \(|R_\varepsilon -R_\varepsilon '|=O(\varepsilon )\).

Denote \(Q_\varepsilon = {\mathcal {P}}(R_\varepsilon )\), \(Q_\varepsilon ' = {\mathcal {P}}(R_\varepsilon ')\) and define

We have already established the bounds

From the definition of \(Q_\varepsilon \) and \(Q_\varepsilon '\), it therefore follows that

Indeed, this follows from

and similarly when reversing the roles of \(R_\varepsilon \) and \(R_\varepsilon '\).

Our goal is to obtain \(|Q_\varepsilon - Q_\varepsilon '| \ll \sqrt{\varepsilon }\), which would imply the uniqueness of \(W_0\). If \(d_\varepsilon \ll \sqrt{\varepsilon }\), then we are done by (5.5), since the extrinsic and intrinsic distances on \(\text {SO}(n)\) are equivalent. We can therefore assume that \(d_\varepsilon \approx \sqrt{\varepsilon }\). Let us write

where \(W_\varepsilon , {\bar{W}}_\varepsilon \in {\mathbb {M}}^{n\times n}_{\text {skew}}\) are of norm 1, and \(t_\varepsilon = |Q_\varepsilon - Q_\varepsilon '| + O(\varepsilon )\) (see (4.5)). In particular, \(t_\varepsilon \rightarrow 0\).

Since both \(Q_\varepsilon ,Q_\varepsilon '\in {\mathcal {R}}\) are optimal rotations, we obtain from Lemma 4.5 that for \(\varepsilon \) small enough, \(Q_\varepsilon e^{t {\bar{W}}_\varepsilon }\in {\mathcal {R}}\) for any \(t\in {\mathbb {R}}\). We therefore have, for any \(t\in {\mathbb {R}}\),

Let us restrict ourselves to \(|t| \le c d_\varepsilon \) for some \(c>0\). Since \(d_\varepsilon \approx \sqrt{\varepsilon }\), we obtain that

Now, since \(|W_\varepsilon | = |{\bar{W}}_\varepsilon | = 1\), we have

from which we obtain that

On the other hand, we have

Therefore, using again the fact that \(d_\varepsilon \approx \sqrt{\varepsilon }\), we have

which implies that \(t_\varepsilon \ll d_\varepsilon = O(\sqrt{\varepsilon })\), hence \(|Q_\varepsilon - Q_\varepsilon '| \ll \sqrt{\varepsilon }\), which completes the uniqueness proof. \(\square \)

Proof of Theorem 5.2:

Lower Bound. First consider the elastic part \(\varepsilon ^{-2}I_\varepsilon (y_\varepsilon )\). We have, using frame indifference,

Taylor expanding \(W(I+A)\), we have from the regularity assumption (d) and (2.2) that

where \(\omega (t)\) is a non-negative function satisfying \(\lim _{t\rightarrow 0} \omega (t)/t^2 = 0\). We therefore have

where

Since \(u_\varepsilon {\mathop {\rightharpoonup }\limits ^{}} u_0\) in \(W^{1,2}\), we have that \(\chi _\varepsilon \rightarrow 1\) in \(L^2\) and therefore also \(\chi _\varepsilon ^{1/2} e(u_\varepsilon ) {\mathop {\rightharpoonup }\limits ^{}} e(u_0)\) in \(L^2\). Therefore, since \({\mathcal {Q}}(x,\cdot )\) is positive-semidefinite (and in particular, convex), we have that

From this, and the fact that \(\chi _\varepsilon \frac{\omega (\varepsilon |\nabla u_\varepsilon |)}{\varepsilon ^2 |\nabla u_\varepsilon |^2}\rightarrow 0\) uniformly, we obtain that

Next, since \(R_\varepsilon u_\varepsilon {\mathop {\rightharpoonup }\limits ^{}} R_0 u_0\) in \(W^{1,2}\), we have that

Finally, writing \(R_\varepsilon = {\mathcal {P}}(R_\varepsilon ) e^{\sqrt{\varepsilon }W_\varepsilon }\), we have that

Putting all these together, we have

which completes the proof of the lower bound.

Upper Bound. For \(u_0 \in W^{1,2}\), choose a sequence \(u_\varepsilon \in W^{1,\infty }\) such that \(u_\varepsilon \rightarrow u_0\) in \(W^{1,2}\) and \(\Vert \nabla u_\varepsilon \Vert _{\infty } < \varepsilon ^{-1/2}\). Define \(y_\varepsilon := R_0 e^{\sqrt{\varepsilon }W_0} (x + \varepsilon u_\varepsilon )\). In this case we have \(R_\varepsilon = R_0 e^{\sqrt{\varepsilon }W_0}\) and \(u_\varepsilon \) is indeed the displacement of \(y_\varepsilon \) as in (5.1). Note that since \(R_0\in {\mathcal {R}}\) and \(W_0 \in N{\mathcal {R}}_{R_0}\), we have that \(R_0 = {\mathcal {P}}(R_\varepsilon )\). It follows that \(y_\varepsilon \rightarrow (u_0,R_0,W_0)\) as needed. Now, similarly as in the lower bound, we have

since \(\varepsilon \Vert \nabla u_\varepsilon \Vert _{\infty } = O(\sqrt{\varepsilon })\). Now, since \(u_\varepsilon \rightarrow u_0\) strongly in \(W^{1,2}\) and \(D_A^2{\mathcal {W}}(\cdot ,I)\) is in \(L^\infty \), we have that \(\int _\Omega {\mathcal {Q}}(x, e(u_\varepsilon ))\,\mathrm{d}x \rightarrow \int _\Omega {\mathcal {Q}}(x, e(u_0))\,\mathrm{d}x\).

The forces part behaves exactly as in the lower bound, yielding

\(\square \)

Proof of Theorem 5.3:

By Proposition 3.2 we have that \(J_\varepsilon (y_\varepsilon ) < C\varepsilon ^2\); hence, by Theorem 5.1 there exist \(u_0\in W^{1,2}(\Omega ;{\mathbb {R}}^n)\), \(R_0\in {\mathcal {R}}\), and \(W_0 \in N{\mathcal {R}}_{R_0}\) such that \(u_\varepsilon {\mathop {\rightharpoonup }\limits ^{}} u_0\) in \(W^{1,2}\), \(R_\varepsilon \rightarrow R_0\), and

where we used the lower bound in Theorem 5.2.

Let now \(v\in W^{1,2}\) and \(R\in {\mathcal {R}}\). By the upper bound in Theorem 5.2 with \(W_0=0\), there exists a sequence \(v_\varepsilon \in W^{1,2}\) such that

Combining (5.2), (5.8), and (5.9), we deduce

Therefore, \((u_0, R_0)\) is a minimizer of the functional J on \(W^{1,2}\times {\mathcal {R}}\), and \(W_0 = 0\) (this follows from (4.1) and the definition of \(N{\mathcal {R}}_{R_0}\)).

It remains to show that \(u_\varepsilon \) converge to \(u_0\) strongly in \(W^{1,q}\) for every \(1\le q<2\). Choosing \(v=u_0\) and \(R=R_0\) in (5.10), we obtain

hence

Equation (5.7) and the fact that I is an optimal rotation imply that \(\frac{1}{\varepsilon } F(R_\varepsilon - I)\rightarrow 0\) and

Let now \(\chi _\varepsilon \) be defined as in (5.6). From the proof of the lower bound in Theorem 5.2, it follows that

Therefore, by (5.11) we obtain

By the coercivity of \({\mathcal {Q}}\), we have that

We now use the weak convergence of \(\chi _\varepsilon ^{1/2} e(u_\varepsilon )\) to \(e(u_0)\) in \(L^2\), the boundedness of \(D_A^2{\mathcal {W}}(x,I)\), and equation (5.12), to deduce that \(\chi _\varepsilon ^{1/2} e(u_\varepsilon )\rightarrow e(u_0)\) strongly in \(L^2\). Since \(\chi _\varepsilon \rightarrow 1\) in \(L^p\) for every \(1\le p<\infty \) and \(e(u_\varepsilon )\) is bounded in \(L^2\), we have that \((1-\chi _\varepsilon ^{1/2})e(u_\varepsilon )\rightarrow 0\) strongly in \(L^q\) for every \(1\le q<2\), hence \(e(u_\varepsilon )\rightarrow e(u_0)\) strongly in \(L^q\) for every \(1\le q<2\). By Korn’s inequality, there exists, for every \(q\in (1,2)\), a constant \(c_q\) such that

By the Rellich theorem, \(u_\varepsilon \rightarrow u_0\) strongly in \(L^q\); hence, we conclude that \(u_\varepsilon \rightarrow u_0\) strongly in \(W^{1,q}\) for every \(q\in (1,2)\). The convergence for \(q=1\) follows immediately since \(\Omega \) is a bounded domain. \(\square \)

6 Classification and Examples of Optimal Rotations

In this section, we classify the possible sets \({\mathcal {R}}\) of optimal rotations, in dimensions \(n=2,3\). The optimal rotations are derived from the functional \(F\in ({\mathbb {M}}^{n\times n})^*\). Endowing \({\mathbb {M}}^{n\times n}\) with the Frobenius inner-product, we can identify F with an \(n\times n\) matrix, which we will also denote by F; since \(F(W) = 0\) for any \(W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\), it follows that F is a symmetric matrix. Note that the assumption \(I\in {\mathcal {R}}\) gives further restrictions on F, as seen in (4.8); in particular, it cannot be an arbitrary symmetric matrix.

Proposition 6.1

(Classification of optimal rotations in 2D) When \(n=2\), the set of optimal rotations is either \({\mathcal {R}}=\{I\}\) or \({\mathcal {R}}= \text {SO}(2)\). The latter case happens if and only if \({\text {tr}}F = 0\).

Proof

Since \({\mathcal {R}}\) is a complete, connected, closed, boundaryless submanifold of \(\text {SO}(2)\), and \(\text {SO}(2)\) is one dimensional, \({\mathcal {R}}\) is either a singleton or the whole \(\text {SO}(2)\). Since \(R\in \text {SO}(2)\) implies that \(-R\in \text {SO}(2)\), the case \({\mathcal {R}} = \text {SO}(2)\) happens if and only if \(F(R)=0\) for every \(R\in \text {SO}(2)\). Since F is symmetric and \(R= \left( \begin{matrix} \cos \alpha &{} -\sin \alpha \\ \sin \alpha &{} \cos \alpha \end{matrix} \right) \) for some angle \(\alpha \), this holds if and only if F is traceless. \(\square \)
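The last step of the proof can be made explicit: identifying F with a symmetric \(2\times 2\) matrix through the Frobenius inner product, one finds \(F(R) = \cos \alpha \, {\text {tr}}F\) for the rotation R by angle \(\alpha \). The short Python sketch below checks this identity; the function names and the sample matrices are hypothetical and serve only as an illustration.

```python
import math

def frobenius(A, B):
    """Frobenius inner product of two square matrices."""
    n = len(A)
    return sum(A[i][j] * B[i][j] for i in range(n) for j in range(n))

def rotation(alpha):
    """Planar rotation by the angle alpha."""
    c, s = math.cos(alpha), math.sin(alpha)
    return [[c, -s], [s, c]]

def F_on_rotation(F, alpha):
    """Value of the linear functional <F, .> on the rotation by alpha."""
    return frobenius(F, rotation(alpha))

F_generic = [[2.0, 0.7], [0.7, -1.0]]     # symmetric, trace 1
F_traceless = [[1.0, 0.4], [0.4, -1.0]]   # symmetric, trace 0
for alpha in (0.0, 0.5, 1.3, math.pi):
    # First value equals cos(alpha) * tr F; second value vanishes for every alpha.
    print(alpha, F_on_rotation(F_generic, alpha), F_on_rotation(F_traceless, alpha))
```

When \({\text {tr}}F>0\) the value \(\cos \alpha \,{\text {tr}}F\) is maximized only at \(\alpha =0\), and when \({\text {tr}}F=0\) it vanishes on all of \(\text {SO}(2)\), in agreement with the dichotomy of Proposition 6.1.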

Proposition 6.2

(Classification of optimal rotations in 3D) When \(n=3\), the set of optimal rotations is either \({\mathcal {R}}=\{I\}\) or one of the following:

- \({\mathcal {R}}= \text {SO}(3)\), if and only if \(F\equiv 0\).

- \({\mathcal {R}}\) is isometric to the real projective plane \({\mathbb {P}}_2({\mathbb {R}}) \cong S^2\big /\sim \), where \(\sim \) is the identification of antipodal points and \(S^2\) is the round sphere. This happens if and only if the eigenvalues of F are \(a,a,-a\) for some \(a>0\).

- \({\mathcal {R}}\) is a single closed geodesic (that is, it is isometric to \(\text {SO}(2) \cong S^1\)); this happens if and only if the eigenvalues of F are \(b,a,-a\) for some \(b>a\ge 0\).

Proof

Classification of the Possible Isometry Classes of \({\mathcal {R}}\). Assume that \({\mathcal {R}}\ne \{I\}\), hence it is a closed, connected, boundaryless totally geodesic submanifold of \(\text {SO}(3)\). In particular, \({\mathcal {R}}\) is the image of the exponential map of \(\text {SO}(3)\), restricted to the subspace \(T{\mathcal {R}}_I\subset T\text {SO}(3)_I\). This is because every complete manifold is the image of its exponential map, and the exponential map of a totally geodesic submanifold is the exponential map of the ambient manifold restricted to the tangent plane of the submanifold. It follows that if \(\dim {\mathcal {R}}= 1\), then \({\mathcal {R}}\) consists of a single, closed geodesic. If \(\dim {\mathcal {R}}= 3 = \dim \text {SO}(3)\), then \(T{\mathcal {R}}_I = T\text {SO}(3)_I\), hence \({\mathcal {R}}= \text {SO}(3)\).

Note that \(\text {SO}(3)\), with the metric induced from \({\mathbb {M}}^{3\times 3}\), is isometric to \(S^3/{\sim }\), where \(S^3\) is the round 3-sphere, and \(\sim \) is the identification of antipodal points. This follows since in both cases the metric obtained is bi-invariant with respect to the group action, and such a metric is unique.Footnote 4 Denote by \(\pi : S^3 \rightarrow \text {SO}(3)\) the covering map. If \(\dim {\mathcal {R}}= 2\), then \(\pi ^{-1} {\mathcal {R}}\) is a connected, totally geodesic, complete two-dimensional submanifold of \(S^3\); hence, it is isometric to the round \(S^2\) (since the image of a two-dimensional subspace of \(T S^3_p\) under the exponential map of \(S^3\) is isometric to \(S^2\)). Thus, \({\mathcal {R}}\) is isometric to \({\mathbb {P}}_2({\mathbb {R}}) = S^2\big / \sim \). This completes the classification of the possible isometry classes of \({\mathcal {R}}\).

The Principal Curvatures of \(\text {SO}(n)\) in \({\mathbb {M}}^{n\times n}\). In order to relate the eigenvalues of F to the structure of \({\mathcal {R}}\), we first need to recall the second fundamental form of \(\text {SO}(n)\) in \({\mathbb {M}}^{n\times n}\).Footnote 5 Generally, the second fundamental form of a submanifold \({\mathcal {M}} \subset {\mathcal {N}}\) at \(p\in {\mathcal {M}}\) is the vector-valued quadratic form \(II _p: T{\mathcal {M}}_p \rightarrow N{\mathcal {M}}_p\) defined by \(II _p(X) := \nabla ^{{\mathcal {N}}}_X X - \nabla ^{{\mathcal {M}}}_X X\) (here \(N{\mathcal {M}}_p\) is the normal space of \({\mathcal {M}}\) at p, and \(\nabla ^{{\mathcal {M}}}\) and \(\nabla ^{{\mathcal {N}}}\) are the Levi–Civita covariant derivatives of \({\mathcal {M}}\) and \({\mathcal {N}}\), respectively). The second fundamental form of \({\mathcal {M}}\) in direction \(\eta \in N{\mathcal {M}}_p\) is the quadratic form \(X\mapsto \left\langle II _p(X), \eta \right\rangle \), and the principal curvatures of \({\mathcal {M}}\) in direction \(\eta \) are the eigenvalues of this form (with respect to an orthonormal basis of \(T{\mathcal {M}}_p\)). If \({\mathcal {M}}\) is totally geodesic in \({\mathcal {N}}\), then its second fundamental form vanishes identically.

Now let \({\mathcal {N}} = {\mathbb {R}}^D\). Since \(T{\mathcal {M}}_p \oplus N{\mathcal {M}}_p ={\mathbb {R}}^D\), we can write \({\mathcal {M}}\), at the vicinity of p, as a graph of a function \(f:T{\mathcal {M}}_p \rightarrow N{\mathcal {M}}_p\), whose differential at p vanishes. In this case we can identify the second fundamental form as the quadratic correction of f, that is, \(f(X) = f(0) + II (X) + O(|X|^3)\).

In our case, the tangent and normal spaces of \(\text {SO}(n)\) at I are \({\mathbb {M}}^{n\times n}_{\text {skew}}\) and \({\mathbb {M}}^{n\times n}_{\text {sym}}\), respectively. The map \(W\mapsto e^{W}\) maps \({\mathbb {M}}^{n\times n}_{\text {skew}}\) to \(\text {SO}(n)\); the decomposition of \(e^W\) into skew and symmetric parts is given by

$$\begin{aligned} e^W = \sinh W + \cosh W. \end{aligned}$$

Therefore, since \(W\mapsto \sinh W\) is a diffeomorphism of \({\mathbb {M}}^{n\times n}_{\text {skew}}\) at the vicinity of 0, we obtain that \(\text {SO}(n)\) is, near I, the graph of the function \(f: {\mathbb {M}}^{n\times n}_{\text {skew}}\rightarrow {\mathbb {M}}^{n\times n}_{\text {sym}}\), defined by

$$\begin{aligned} f(W) = \cosh \left( \sinh ^{-1} W\right) = I + \frac{1}{2}W^2 + O(|W|^4) \end{aligned}$$

for small enough W. Thus, the second form of \(\text {SO}(n)\) at the identity is \(II (W) = \frac{1}{2}W^2\). The second fundamental form in a direction \(S \in {\mathbb {M}}^{n\times n}_{\text {sym}}\) is then the map \(W\mapsto \left\langle \frac{1}{2}W^2 , S\right\rangle \). If \(s_1,\ldots , s_n\) are the eigenvalues of S, then a direct calculation shows that \(-\frac{1}{4}(s_i + s_j)\), \(i<j\) are the principal curvatures of \(\text {SO}(n)\) at I in direction S.Footnote 6
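The "direct calculation" for a diagonal direction S can be checked numerically. The sketch below, written in plain Python with hypothetical helper names, builds \(W=\sum _{i<j}\alpha _{ij}W_{ij}\) from the orthonormal basis \(W_{ij} = \frac{1}{\sqrt{2}}(e_{ij}-e_{ji})\) and compares \(\left\langle \frac{1}{2}W^2, S\right\rangle \) with \(-\frac{1}{4}\sum _{i<j}\alpha _{ij}^2(s_i+s_j)\). The coefficients and eigenvalues are arbitrary sample data.

```python
import math

def skew_from_coeffs(alpha, n):
    """Build W = sum_{i<j} alpha[(i, j)] * (e_ij - e_ji) / sqrt(2)."""
    W = [[0.0] * n for _ in range(n)]
    for (i, j), a in alpha.items():
        W[i][j] += a / math.sqrt(2)
        W[j][i] -= a / math.sqrt(2)
    return W

def second_form_in_direction(W, s):
    """<W^2 / 2, diag(s)> in the Frobenius inner product."""
    n = len(W)
    # Only the diagonal of W^2 pairs with a diagonal S: (W^2)_kk = sum_l W_kl * W_lk.
    return 0.5 * sum(s[k] * sum(W[k][l] * W[l][k] for l in range(n)) for k in range(n))

def curvature_formula(alpha, s):
    """-(1/4) * sum_{i<j} alpha_ij^2 * (s_i + s_j)."""
    return -0.25 * sum(a * a * (s[i] + s[j]) for (i, j), a in alpha.items())

alpha = {(0, 1): 0.3, (0, 2): -1.2, (1, 2): 0.8}   # sample coefficients
s = [2.0, -0.5, 1.0]                               # sample eigenvalues of S
W = skew_from_coeffs(alpha, 3)
print(second_form_in_direction(W, s), curvature_formula(alpha, s))  # the two values agree
```

Since the quadratic form is diagonal in the coefficients \(\alpha _{ij}\), its eigenvalues can be read off directly as \(-\frac{1}{4}(s_i+s_j)\).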

Back to our case, we show that the second form of \(\text {SO}(n)\) at the identity in the direction F is negative semi-definite. That is, if \(f_1,\ldots , f_n\) are the eigenvalues of F, then \(f_i+f_j \ge 0\) for all \(i\ne j\). Assume otherwise, and without loss of generality assume that \(f_1+f_2 <0\). This contradicts (4.8): Indeed, we can write \(F = R^T {\text {diag}}(f_1,\ldots , f_n) R\) for some \(R\in \text {SO}(n)\), and then, with the notation of (4.8), we obtain

which is a contradiction to (4.8).

The Relation Between Eigenvalues of F and \(\dim {\mathcal {R}}\). Denote by H the hyperplane \(H := F^{-1}\{F(I)\} \subset {\mathbb {M}}^{3\times 3}\). The normal to H is, by definition, the matrix F. We have the inclusions

In what follows, \(II ^{{\mathcal {R}}, H}\) denotes the second fundamental form of \({\mathcal {R}}\) in H at I, and similarly for the other inclusions; \(II ^{{\mathcal {R}}, H}_F\) denotes the second fundamental form in direction F at I, and so on. Since H is a hyperplane, it is totally geodesic in \({\mathbb {M}}^{3\times 3}\). It follows that \(II ^{{\mathcal {R}}, {\mathbb {M}}^{3\times 3}}_F\) vanishes:

where we used the fact that H is totally geodesic in \({\mathbb {M}}^{3\times 3}\) and thus, \(\nabla ^{{\mathbb {M}}^{3\times 3}}_{W} W = \nabla ^{H}_W W\). Now, since \({\mathcal {R}}\subset H\), \(\nabla ^{H}_W W - \nabla ^{\mathcal {R}}_W W\) is a tangent vector to H; on the other hand, F is perpendicular to H; hence, \(II ^{{\mathcal {R}}, {\mathbb {M}}^{3\times 3}}_F=0\). On the other hand, since \({\mathcal {R}}\subset \text {SO}(3)\) is totally geodesic, \(II ^{{\mathcal {R}}, \text {SO}(3)} = 0\). Thus, by a similar argument (with \(\text {SO}(3)\) instead of H and without taking the inner product with F), we obtain that \(II ^{{\mathcal {R}}, {\mathbb {M}}^{3\times 3}} = \left. II ^{\text {SO}(3), {\mathbb {M}}^{3\times 3}} \right| _{T{\mathcal {R}}_I}\). Thus, we obtain that

Recall that \(II ^{\text {SO}(3), {\mathbb {M}}^{3\times 3}}_F\) is a negative semi-definite quadratic form. Since it vanishes on a subspace of dimension \(\dim {\mathcal {R}}\), it follows that at least \(\dim {\mathcal {R}}\) of the principal curvatures of \(\text {SO}(n)\) in the direction F vanish. As shown above, the principal curvatures are \(-\frac{1}{4}(f_1+f_2)\), \(-\frac{1}{4}(f_2+f_3)\) and \(-\frac{1}{4}(f_1+f_3)\), where \(f_i\) are the eigenvalues of F.

- If \(\dim {\mathcal {R}}= 3\), it follows that \(f_1=f_2=f_3=0\), and thus \(F=0\). Obviously, if \(F=0\) then \({\mathcal {R}}= \text {SO}(3)\) and thus \(\dim {\mathcal {R}}= 3\).

- If \(\dim {\mathcal {R}}= 2\), we have that, without loss of generality, \(f_1=f_2 =-f_3\). Since \(II ^{\text {SO}(3), {\mathbb {M}}^{3\times 3}}_F\) is negative semi-definite, we have that \(f_1+f_2 \ge 0\); if equality holds, then \(F=0\) and \(\dim {\mathcal {R}}= 3\). We thus obtain that \(\dim {\mathcal {R}}= 2\) implies that the eigenvalues of F are \(a,a,-a\) for some \(a>0\).

- If \(\dim {\mathcal {R}}= 1\), we have that, without loss of generality, \(f_2 = -f_3\). Again, the negative semi-definiteness of \(II ^{\text {SO}(3), {\mathbb {M}}^{3\times 3}}_F\) implies that \(f_1 \ge |f_2| = |f_3|\); thus, \(\dim {\mathcal {R}}= 1\) implies that the eigenvalues of F are \(b,a,-a\) for some \(b>a\ge 0\).

In order to complete the proof, we need to show that if the eigenvalues of F are \(a,a,-a\) for some \(a>0\), then \(\dim {\mathcal {R}}= 2\), and if they are \(b,a,-a\) for \(b> a \ge 0\), then \(\dim {\mathcal {R}}= 1\). Assume that for some \(Q\in \text {SO}(3)\),

Thus, for a general matrix \(R\in \text {SO}(3)\), we have that

Writing R in a quaternion representation, that is \(R = p_1 + p_2\mathbf{i} +p_3 \mathbf{j} + p_4 \mathbf{k}\) for a unit vector \(p=(p_1, p_2, p_3, p_4)\), we obtain that

Thus, \({\mathcal {R}}\) is the two-dimensional submanifold \(Q\{p_4=0\}Q^T\).

Next, assume that for some \(Q\in \text {SO}(3)\) and \(b>a\ge 0\), we have

In this case \(F(Q^T R Q)\) is maximized for all rotations R around the x-axis. Thus, \(\dim {\mathcal {R}}\ge 1\), and since \(b>a\), we have that \(\dim {\mathcal {R}}= 1\). \(\square \)
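The two quaternion computations in the last part of the proof can be verified with a few lines of Python. The sketch assumes \(Q=I\), so that F is the diagonal matrix of its eigenvalues, and uses the standard diagonal entries of the rotation associated with a unit quaternion \(p=(p_1,p_2,p_3,p_4)\). The closed forms \(a(1-4p_4^2)\) and \(b-2(b-a)p_3^2-2(b+a)p_4^2\) are our own evaluation of the pairing, stated here as a check rather than as the displayed formulas of the paper; all function names are hypothetical.

```python
import math
import random

def rotation_diagonal(p):
    """Diagonal entries of the rotation represented by the unit quaternion p."""
    p1, p2, p3, p4 = p
    return (1 - 2 * (p3 * p3 + p4 * p4),
            1 - 2 * (p2 * p2 + p4 * p4),
            1 - 2 * (p2 * p2 + p3 * p3))

def pairing(d, p):
    """<diag(d), R> = sum_k d_k * R_kk for the rotation R represented by p."""
    return sum(dk * rk for dk, rk in zip(d, rotation_diagonal(p)))

def random_unit_quaternion(rng):
    """A unit vector in R^4 obtained by normalizing a Gaussian sample."""
    q = [rng.gauss(0.0, 1.0) for _ in range(4)]
    norm = math.sqrt(sum(x * x for x in q))
    return tuple(x / norm for x in q)

rng = random.Random(0)
a, b = 1.5, 2.0   # sample eigenvalues with b > a > 0
for _ in range(5):
    p = random_unit_quaternion(rng)
    # Eigenvalues a, a, -a: the value is a(1 - 4 p4^2), maximal exactly when p4 = 0.
    assert abs(pairing((a, a, -a), p) - a * (1 - 4 * p[3] ** 2)) < 1e-12
    # Eigenvalues b, a, -a: the value is b - 2(b - a) p3^2 - 2(b + a) p4^2,
    # maximal exactly when p3 = p4 = 0, i.e. for rotations around the x-axis.
    expected = b - 2 * (b - a) * p[2] ** 2 - 2 * (b + a) * p[3] ** 2
    assert abs(pairing((b, a, -a), p) - expected) < 1e-12
```

In the first case the maximizers form the two-dimensional set \(\{p_4=0\}\), and in the second case, since \(b>a\), only the one-parameter family \(p_3=p_4=0\) remains.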

Example 6.1

(Uniform tension) Let \(\Omega \subset {\mathbb {R}}^n\) be a Lipschitz domain, and denote by \(\nu \) the outer normal of \(\partial \Omega \). Let the traction force f be \(f=\nu \), and set the body force g to be zero. We then have, using the divergence theorem, that

It immediately follows that I is the unique maximizer of F on \(\text {SO}(n)\). That is, \({\mathcal {R}}= \{I\}\) in this case.Footnote 7
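As a sanity check of the divergence-theorem computation, the following Python sketch evaluates the boundary integral on the unit square. It assumes that the functional has the form \(F(A) = \int _{\partial \Omega } f\cdot Ax\,\mathrm{d}{\mathcal {H}}^{n-1} + \int _\Omega g\cdot Ax\,\mathrm{d}x\) (its definition is not restated in this section), and that \(\Omega =(0,1)^2\), which is an illustrative choice. Under these assumptions, \(f=\nu \) and \(g=0\) should give \(F(A) = |\Omega |\,{\text {tr}}A = {\text {tr}}A\).

```python
import math

def traction_functional_unit_square(A, samples=200):
    """Midpoint-rule value of the boundary integral of nu . (A x) over the unit square."""
    # Each edge is given as (outer normal, start point, end point); all edges have length 1.
    edges = [
        ((0.0, -1.0), (0.0, 0.0), (1.0, 0.0)),   # bottom
        ((1.0, 0.0), (1.0, 0.0), (1.0, 1.0)),    # right
        ((0.0, 1.0), (1.0, 1.0), (0.0, 1.0)),    # top
        ((-1.0, 0.0), (0.0, 1.0), (0.0, 0.0)),   # left
    ]
    h = 1.0 / samples
    total = 0.0
    for nu, start, end in edges:
        for k in range(samples):
            t = (k + 0.5) * h
            x = (start[0] + t * (end[0] - start[0]), start[1] + t * (end[1] - start[1]))
            Ax = (A[0][0] * x[0] + A[0][1] * x[1], A[1][0] * x[0] + A[1][1] * x[1])
            total += (nu[0] * Ax[0] + nu[1] * Ax[1]) * h
    return total

A = [[1.5, -0.3], [0.8, 2.0]]   # arbitrary sample matrix
print(traction_functional_unit_square(A), A[0][0] + A[1][1])   # both values agree

# On rotations the value is 2 cos(alpha), which is maximal only at alpha = 0.
for alpha in (0.0, 0.5, 1.0, math.pi):
    R = [[math.cos(alpha), -math.sin(alpha)], [math.sin(alpha), math.cos(alpha)]]
    print(alpha, traction_functional_unit_square(R))
```

The midpoint rule is exact here because the integrand is affine along each edge, so the agreement is up to rounding only.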

Example 6.2

(Uniform compression) Reversing the sign from the previous example, that is, taking \(f = -\nu \), we obtain

In this case I is a minimizer of F among rotations; hence, in order to use the formalism of this paper, we first need to rotate the system by a maximizer of F.Footnote 8

If \(n=2\) (or more generally, if n is even), then \(-I\) is a maximizer, and rotating by it reduces this example to the previous one, with a unique maximizer.

If \(n=3\), we recall that for \(R = p_1 + p_2\mathbf{i} +p_3 \mathbf{j} + p_4 \mathbf{k}\), \({\text {tr}}(R) = 3 - 4(p_2^2 + p_3^2 + p_4^2)\). Thus, a maximizer of F in \(\text {SO}(3)\) is any rotation with \(p_1=0\) (that is, a rotation by \(\pi \) around any axis). In particular, we obtain that \({\mathcal {R}}\) is two-dimensional in this case.
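The trace formula used in the case \(n=3\) can be cross-checked against the familiar identity \({\text {tr}}R = 1+2\cos \theta \) for a rotation by angle \(\theta \). The Python sketch below does so, using the standard axis-angle form of a unit quaternion; the function names are hypothetical.

```python
import math

def trace_from_quaternion(p):
    """Trace of the rotation represented by the unit quaternion p = (p1, p2, p3, p4)."""
    p1, p2, p3, p4 = p
    return 3 - 4 * (p2 * p2 + p3 * p3 + p4 * p4)

def axis_angle_quaternion(axis, theta):
    """Unit quaternion of the rotation by theta around the given (nonzero) axis."""
    norm = math.sqrt(sum(x * x for x in axis))
    s = math.sin(theta / 2) / norm
    return (math.cos(theta / 2), s * axis[0], s * axis[1], s * axis[2])

axis = (1.0, 2.0, 2.0)   # arbitrary sample axis
for theta in (0.0, 0.7, 2.0, math.pi):
    p = axis_angle_quaternion(axis, theta)
    # The two printed values agree: 3 - 4 sin^2(theta / 2) = 1 + 2 cos(theta).
    print(theta, trace_from_quaternion(p), 1 + 2 * math.cos(theta))
```

The trace attains its minimum \(-1\) exactly at \(\theta =\pi \), that is, when \(p_1=0\), whatever the axis; this is the two-parameter family of maximizers described in the example.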

Example 6.3

(Tangential forces) Consider now the two-dimensional case \(n=2\), and let the traction force be \(f=Z\tau \), where \(\tau \) is the unit tangent to \(\partial \Omega \), and Z is a reflection matrix, say, the reflection about the \(x_2\) axis. If there are no body forces, we have (by Green’s theorem),

In particular, \(F|_{\text {SO}(2)} = 0\), and thus \({\mathcal {R}}= \text {SO}(2)\). By considering a cylinder \(\Omega \times (0,1)\), this example can be lifted to three dimensions, thus obtaining a three-dimensional example in which \(\dim {\mathcal {R}}= 1\).

Example 6.4

(Full degeneracy) In dimensions \(n>2\), \({\mathcal {R}}= \text {SO}(n)\) implies that \(F\equiv 0\) (the previous example is a counterexample for this for \(n=2\)). However, as the following example shows, \(F \equiv 0\) does not imply that the forces themselves must be zero. Let \(\Omega \) be the unit ball, and consider zero traction forces \(f\equiv 0\) and a body force \(g(x) = \rho (|x|)e_1\) for some sufficiently nice function \(\rho :(0,1)\rightarrow {\mathbb {R}}\). In order for the forces to be equilibrated (2.3), we must have

where \(\omega _n\) is the measure of the unit ball. For example, if \(n=3\), we can take \(\rho (r) = 1 -\frac{4}{3}r\) or \(\rho (r) = \frac{1}{r^2} - \frac{2}{r}\). For any such force, we obtain that \(F\equiv 0\):

since the domain is a ball.
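For a radial density on the unit ball, the total force is \(\int _\Omega g\,\mathrm{d}x = e_1\, n\omega _n\int _0^1 \rho (r) r^{n-1}\,\mathrm{d}r\), so the two densities proposed for \(n=3\) can be tested by checking that \(\int _0^1 \rho (r) r^{2}\,\mathrm{d}r = 0\). A minimal Python sketch of this check follows; the function name `radial_moment` and the use of a midpoint rule are illustrative choices.

```python
def radial_moment(rho, n=3, samples=100000):
    """Midpoint-rule approximation of the integral of rho(r) * r**(n - 1) over (0, 1)."""
    h = 1.0 / samples
    total = 0.0
    for k in range(samples):
        r = (k + 0.5) * h   # midpoints avoid the singularity of 1/r^2 at r = 0
        total += rho(r) * r ** (n - 1)
    return total * h

def rho_linear(r):
    """First density suggested in the example: 1 - (4/3) r."""
    return 1.0 - 4.0 * r / 3.0

def rho_singular(r):
    """Second density suggested in the example: 1/r^2 - 2/r."""
    return 1.0 / (r * r) - 2.0 / r

# Both values are zero up to quadrature and rounding error.
print(radial_moment(rho_linear), radial_moment(rho_singular))
```

By contrast, the constant density \(\rho \equiv 1\) gives the moment \(1/3\), so it does not produce an equilibrated force.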

Example 6.5

(Gravity field) In dimension \(n=3\) let the traction force f be zero and let the body force g be given by the gravity field

where \({{\bar{g}}}\) is the gravitational constant and \(\rho \in L^2(\Omega )\) is the mass density. The normalization constant \(-\frac{1}{|\Omega |}\int _\Omega \rho (z)\,\mathrm{d}z\) is introduced to guarantee that the forces are equilibrated. By a direct computation, we have

Set \(b:= \int _\Omega {\bar{\rho }}(x) x\,\mathrm{d}x\). If \(b=0\), then \(F\equiv 0\) and \({\mathcal {R}}= \text {SO}(3)\). If \(b\ne 0\), then \({\mathcal {R}}\) is the set of all rotations having \(-b/|b|\) as third row. Note that this is a mechanically relevant example, which is covered by our analysis (after rotating the system, so that \(I\in {\mathcal {R}}\)), whereas the compatibility assumption of Maddalena et al. (2019a) is not satisfied.

Notes

The fact that the minimum here is comparable with the elastic energy of y is the content of the celebrated Friesecke–James–Müller rigidity theorem (Friesecke et al. 2002, Theorem 3.1), which is the key technical tool for rigorously establishing limiting theorems for low-energy elastic systems.

Under the assumption that \({\mathcal {R}}=\{I\}\), this functional coincides with the functional obtained in Maddalena et al. (2019a), under the change \(u_0(x) \mapsto u_0(x) - \frac{1}{2} W_0^2 x\) in the functional above.

We note that a related observation appears in Maddalena et al. (2019a, Remark 2.9).

In the case of \(S^3\), with its canonical embedding into \({\mathbb {R}}^4\), the group action is quaternion conjugation, where we identify \(p=(p_1,p_2,p_3,p_4)\in S^3\) with the quaternion \(p_1 + p_2\mathbf{i} +p_3 \mathbf{j} + p_4 \mathbf{k}\).

This is by no means a new result; here we follow the presentation as in Bryant (2018).

Indeed, consider, for \(1\le i<j\le n\), the orthonormal basis \(W_{ij} = \frac{1}{\sqrt{2}}(e_{ij} - e_{ji})\) of \({\mathbb {M}}^{n\times n}_{\text {skew}}\), where \(e_{ij}\) is the standard matrix basis. If S is diagonal with entries \(s_1,\ldots ,s_n\), then for \(W= \sum _{i<j} \alpha _{ij}W_{ij}\), we have that

$$\begin{aligned} \left\langle \frac{1}{2}W^2 , S\right\rangle = -\frac{1}{4}\sum _{i<j} \alpha _{ij}^2 (s_i+s_j), \end{aligned}$$

showing that the eigenvalues are \(-\frac{1}{4}(s_i + s_j)\). For a general S, we have that \(S = R^T D R\) for some rotation R and diagonal matrix D. The calculation is then similar, using the orthonormal basis \(R^T W_{ij} R\).

This example essentially appears in Maddalena et al. (2019a, Remark 2.8).

Compare with Maddalena et al. (2019a, Remark 2.7, Example 4.6).

References

Bryant, R.: Principal curvatures of \({\mathbb{R}}^{n^2}\)-embedded \(\text{SO}{(n)}\) (2018). https://mathoverflow.net/q/313403

Dal Maso, G., Negri, M., Percivale, D.: Linearized elasticity as \(\Gamma \)-limit of finite elasticity. Set Valued Anal. 10, 165–183 (2002)

Friesecke, G., James, R.D., Müller, S.: A theorem on geometric rigidity and the derivation of nonlinear plate theory from three dimensional elasticity. Commun. Pure Appl. Math. 55, 1461–1506 (2002)

Jesenko, M., Schmidt, B.: Geometric linearization of theories for incompressible elastic materials and applications (2020). arXiv:2004.11271

Mainini, E., Percivale, D.: Variational linearization of pure traction problems in incompressible elasticity. Z. Angew. Math. Phys. 71, 146 (2020)

Maddalena, F., Percivale, D., Tomarelli, F.: The gap in pure traction problems between linear elasticity and variational limit of finite elasticity. Arch. Ration. Mech. Anal. 234, 1091–1120 (2019)

Maddalena, F., Percivale, D., Tomarelli, F.: A new variational approach to linearization of traction problems in elasticity. J. Optim. Theory Appl. 182, 383–403 (2019)

Acknowledgements

CM was partially supported by ISF Grant 1269/19. MGM acknowledges support by the Università degli Studi di Pavia through the 2017 Blue Sky Research Project “Plasticity at different scales: micro to macro”, by MIUR–PRIN 2017, and by GNAMPA–INdAM.

Funding

Open access funding provided by Università degli Studi di Pavia within the CRUI-CARE Agreement.

Additional information

Communicated by Irene Fonseca.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

An Example for Lemma 4.5

Here we show that, for \(n>3\), Lemma 4.5 does not imply that if \(R_0\) and \(R_1\) are two distinct elements of \({\mathcal {R}}\), then any geodesic between \(R_0\) and \(R_1\) lies in \({\mathcal {R}}\). Let

Since all the entries of a rotation matrix are between \(-1\) and 1, it is obvious that \(R_0 :=I\in {\mathcal {R}}\). Choose \(\lambda \) and \(\mu \) such that \(\rho := \lambda /\mu \) is not an integer, and let

We then have

hence \(e^{tW_0}\in {\mathcal {R}}\) if and only if \(t\in \frac{2\pi }{\mu } {\mathbb {Z}}\), and since \(\lambda /\mu \) is not an integer, \(R_1 := e^{\frac{2\pi }{\mu } W_0}\ne I\). In other words, the geodesic \(e^{tW_0}\) between I and \(R_1\) does not belong to \({\mathcal {R}}\). The geodesic connecting I and \(R_1\) that does belong to \({\mathcal {R}}\) is \(e^{tW_1}\), where

In dimensions \(n=2,3\) this cannot happen. In these dimensions, we have the Rodrigues formula

whenever \(W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\), \(|W| =\sqrt{2}\).Footnote 9 Let \(R_0,R_1\in {\mathcal {R}}\). If \(R_1=R_0 e^{t_0W}\) for some \(t_0\ne 0\) and \(W\in {\mathbb {M}}^{n\times n}_{\text {skew}}\), \(|W| =\sqrt{2}\), then \(F(R_0)=F(R_1)\), together with (A.1) imply that \(F(R_0W^2) = 0\). Using (A.1) again (or Lemma 4.4), we have that \(R_0e^{tW}\in {\mathcal {R}}\) for every \(t\in {\mathbb {R}}\). In other words, the assumption in Lemma 4.5, that W needs to be of the form of Lemma 4.3, can be dropped in dimensions \(n=2,3\).
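The argument above relies on the Rodrigues formula. Assuming that (A.1) is its classical form \(e^{tW} = I + \sin t\, W + (1-\cos t)\, W^2\) for skew-symmetric W with \(|W|=\sqrt{2}\) (the display is not reproduced here), the following Python sketch compares it with a truncated power series of the exponential in dimension 3. All helper names are hypothetical.

```python
import math

def matmul(A, B):
    """Product of two square matrices given as nested lists."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def expm(A, terms=40):
    """Matrix exponential via a truncated power series (adequate for small matrices)."""
    n = len(A)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        term = [[x / k for x in row] for row in matmul(term, A)]
        result = [[result[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return result

def cross_matrix(axis):
    """Skew matrix of the normalized axis; its Frobenius norm equals sqrt(2)."""
    norm = math.sqrt(sum(x * x for x in axis))
    k1, k2, k3 = (x / norm for x in axis)
    return [[0.0, -k3, k2], [k3, 0.0, -k1], [-k2, k1, 0.0]]

def rodrigues(W, t):
    """I + sin(t) W + (1 - cos(t)) W^2."""
    n = len(W)
    W2 = matmul(W, W)
    return [[float(i == j) + math.sin(t) * W[i][j] + (1.0 - math.cos(t)) * W2[i][j]
             for j in range(n)] for i in range(n)]

def max_abs_diff(A, B):
    """Largest entrywise difference between two matrices."""
    return max(abs(a - b) for ra, rb in zip(A, B) for a, b in zip(ra, rb))

W = cross_matrix((1.0, 2.0, 2.0))   # arbitrary sample axis
for t in (0.3, 1.0, 2.0):
    tW = [[t * x for x in row] for row in W]
    print(t, max_abs_diff(expm(tW), rodrigues(W, t)))   # differences at rounding level
```

Applying the linear functional \(F(R_0\,\cdot )\) to both sides gives \(F(R_0e^{tW}) = F(R_0) + \sin t\, F(R_0W) + (1-\cos t)\, F(R_0W^2)\), which is the expression used in the argument above.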

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Maor, C., Mora, M.G. Reference Configurations Versus Optimal Rotations: A Derivation of Linear Elasticity from Finite Elasticity for all Traction Forces. J Nonlinear Sci 31, 62 (2021). https://doi.org/10.1007/s00332-021-09716-2

DOI: https://doi.org/10.1007/s00332-021-09716-2