Abstract

We study the integrability of an eight-parameter family of three-dimensional spherically confined steady Stokes flows introduced by Bajer and Moffatt. This volume-preserving flow was constructed to model the stretch–twist–fold mechanism of the fast dynamo magnetohydrodynamical model. In particular we obtain a complete classification of cases when the system admits an additional Darboux polynomial of degree one. All but one such case are integrable, and first integrals are presented in the paper. The case when the system admits an additional Darboux polynomial of degree one but is not evidently integrable is investigated by methods of differential Galois theory. It is proved that the four-parameter family contained in this case is not integrable in the Jacobi sense, i.e. it does not admit a meromorphic first integral. Moreover, we investigate the integrability of other four-parameter \({\textit{STF}}\) systems using the same methods. We distinguish all the cases when the system satisfies necessary conditions for integrability obtained from an analysis of the differential Galois group of variational equations.


1 Introduction

1.1 Origin of \({\textit{STF}}\) Systems

One of the important problems of geo- and astrophysics is an explanation of the origin of magnetic fields of stars and planets. The dynamo model provides a widely accepted explanation. Let us consider the liquid iron in the outer core of the Earth or an ionized gas in a star. An external magnetic field operates on the particles of electrically conducting liquid flowing with velocity \(\varvec{u}\) by the electromotive force \(\varvec{u}\times \varvec{B}\) which generates a current. But according to Ampère’s law, whenever a current flows, a magnetic field is generated. Conditions under which an induced magnetic field and an inducing field are the same are especially interesting, and are studied in the dynamo theory, where we say that a dynamo is self-excited and produces a magnetic field in a continuous way. To describe these complex phenomena, we use equations of magnetohydrodynamics, see e.g. Childress and Gilbert (1995) or Childress (1992). However, in this kinematic approach to the dynamo theory, we assume that a velocity field \(\varvec{u}\) is known. Its properties are crucial when one wants to explain how a flowing conductive liquid can generate the magnetic field, because the magnetic field is frozen into this fluid.

In the case of the so-called fast dynamo, a heuristic explanation of the mechanism was proposed by Vainshtein and Zeldovich (1972). The growth of the magnetic field is generated by an iterated sequence of three processes, i.e. stretch, twist and fold (\({\textit{STF}}\)), acting on the flux tube created by a small bundle of lines of the magnetic field. On the basis of this explanation, scientists started to construct dynamical systems called \({\textit{STF}}\) systems describing a steady-state velocity field \(\varvec{u}(\varvec{x})\) which mimics these three processes and is subject to certain constraints. We usually assume that \(\varvec{u}(\varvec{x})\) satisfies the incompressibility condition \(\nabla \cdot \varvec{u}=0\), and that the flow is confined to the unit ball bounded by the sphere \(\varvec{x}\cdot \varvec{x}=1\), on which the boundary condition \(\varvec{x}\cdot \varvec{u}=0\) holds. The streamlines in the first \({\textit{STF}}\) model proposed in Moffatt and Proctor (1985) were unbounded, which was an undesirable property of the system. The authors tried to correct this defect by multiplying the vector potential of the obtained velocity field \(\varvec{u}(\varvec{x})\) by the exponential term \(e^{-r/R}\) that forces the streamlines to return to the interior of the sphere with radius \(r=\sqrt{\varvec{x}\cdot \varvec{x}}=R\). Numerical simulations of the dynamics of this modified velocity field, in particular of its multi-fractal properties, were reported in Vainshtein et al. (1996b).

A more elegant remedy was proposed in Bajer (1989) and Bajer and Moffatt (1990). The authors extended the velocity field considered in Moffatt and Proctor (1985), adding to it the appropriate additional potential field such that the two required conditions were satisfied. As a result, they obtained the following differential system

The properties of the system (1.1) were investigated in many articles. The condition of incompressibility \(\nabla \cdot \varvec{u}=0\) means that the system preserves the volume in its phase space and is manifested by the absence of strange attractors. However, such systems can still exhibit a rich variety of structures with chaotic and regular orbits intricately interspersed among one another, see e.g. Chapter 7 in Lakshmanan and Rajasekar (2003). Bajer and Moffatt (1990) observed that for \(\alpha =\beta =0\), the system (1.1) is integrable with first integrals \(I_1= x_1 x_3^4\) and \(I_2=x_3^{-3}(x_1^2+x_2^2+x_3^2-1)\), and it is chaotic for small values of \(\alpha \). Lyapunov exponents and the power spectrum of (1.1) were analysed by Aqeel and Yue (2013). Additionally, Yue and Aqeel (2013) detected Smale’s horseshoe chaos using the Shil’nikov criterion for the existence of a heteroclinic trajectory. Vainshtein et al. (1996a) considered the system (1.1) with \(\beta =0\) and with small values of \(\alpha \) as a small perturbation of the integrable system corresponding to \(\alpha =0\).
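The two first integrals quoted for \(\alpha =\beta =0\) can be spot-checked by computing their Lie derivatives exactly at rational points. The sketch below rests on an assumption: it takes (1.1) to be the Bajer and Moffatt system in the form \(\dot{x}_1=\alpha x_3-8x_1x_2\), \(\dot{x}_2=11x_1^2+3x_2^2+x_3^2+\beta x_1x_3-3\), \(\dot{x}_3=-\,\alpha x_1+2x_2x_3-\beta x_1x_2\). This explicit form is not displayed in the present text, and the function names are illustrative only.

```python
from fractions import Fraction
import random

def stf_11(x, alpha=0, beta=0):
    """ASSUMED right-hand side of (1.1); this explicit form is not given in the text."""
    x1, x2, x3 = x
    return (alpha*x3 - 8*x1*x2,
            11*x1**2 + 3*x2**2 + x3**2 + beta*x1*x3 - 3,
            -alpha*x1 + 2*x2*x3 - beta*x1*x2)

def grad_I1(x):
    """Gradient of I_1 = x1 * x3^4."""
    x1, x2, x3 = x
    return (x3**4, 0, 4*x1*x3**3)

def grad_I2(x):
    """Gradient of I_2 = F0 / x3^3 with F0 = x1^2 + x2^2 + x3^2 - 1."""
    x1, x2, x3 = x
    F0 = x1**2 + x2**2 + x3**2 - 1
    return (2*x1/x3**3, 2*x2/x3**3, 2/x3**2 - 3*F0/x3**4)

def lie_derivative(grad, field, x):
    """Directional derivative of a function along the vector field at the point x."""
    return sum(g*u for g, u in zip(grad(x), field(x)))

def random_point(rng):
    # Strictly positive rational coordinates, so that x3 != 0.
    return tuple(Fraction(rng.randint(1, 9), rng.randint(1, 9)) for _ in range(3))

rng = random.Random(0)
points = [random_point(rng) for _ in range(20)]
residuals = [(lie_derivative(grad_I1, stf_11, p),
              lie_derivative(grad_I2, stf_11, p)) for p in points]
```

Exact rational arithmetic is used so that a vanishing residual is an identity at the sampled points rather than a floating-point coincidence. It is still only a check at finitely many points, not a proof.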

Let \(\varvec{u}_0(\varvec{x})\) denote the vector field given by the right-hand sides of (1.1). It has zero divergence and can be considered as a velocity field of an incompressible fluid. Moreover, the unit ball

is invariant with respect to its flow, and the vector field \(\varvec{u}_0(\varvec{x})\) is tangent to the boundary \(\partial B^3\), which is the unit sphere \({\mathbb {S}}^{2}\). In fact, the polynomial \(F_0=x_1^2+x_2^2+x_3^2-1 \) is a Darboux polynomial of \(\varvec{u}_0(\varvec{x})\), as it satisfies the equality

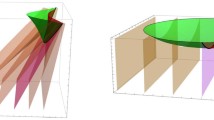

where \(L_{\varvec{v}}\) denotes the Lie derivative along vector field \(\varvec{v}\). Thus, sphere \({\mathbb {S}}^{2}\) which coincides with level set \(F_{0}(\varvec{x})=0\) is also invariant with respect to the flow generated by \(\varvec{u}_0(\varvec{x})\). Hence, considering \(\varvec{u}_0(\varvec{x})\) as the velocity of a fluid, the system (1.1) describes a steady flow inside a unit ball. As was pointed out by Bajer and Moffatt (1990), this is the first example of a steady Stokes flow in a bounded region exhibiting chaos. The fact that the system (1.1) is chaotic for generic values of parameters is clearly visible on the Poincaré cross-sections in Fig. 1 containing large chaotic regions.

Fig. 1 Example of Poincaré cross-sections for the system (1.1) with cross-plane \(y=0\)

The system (1.1) is contained in a wider multi-parameter family of three-dimensional quadratic systems satisfying incompressibility and boundedness conditions proposed in Bajer and Moffatt (1990), of the form

where \(\varvec{a}, \varvec{\omega }\in \mathbb {R}^3\) are constant vectors and \(\varvec{J}\) is a symmetric matrix. We also call it the \({\textit{STF}}\) system. The vector field \(\varvec{u}(\varvec{x})\) defined by the right-hand side of (1.3) satisfies

for arbitrary values of parameters \(\varvec{a}\), \(\varvec{\omega }\) and \(\varvec{J}\). Thus, it is a divergence-free vector field, and the unit ball \(B^3\) and the unit sphere \({\mathbb {S}}^2\) are invariant with respect to its flow. In fact, this is the most general polynomial vector field of degree two having these two properties.

Note that Eq. (1.1) is just a special case of the system (1.3) corresponding to parameters

It is also worth mentioning that some experimental realizations of the \({\textit{STF}}\) flows have been conducted, see e.g. Fountain et al. (1998, 2000).

1.2 The Canonical Form of \({\textit{STF}}\) System

In this subsection we rewrite the considered system (1.3) in an equivalent form that is useful for further analysis. Let \(\varvec{A}\in \mathrm {SO}(3,\mathbb {R})\) be a rotation matrix and let \(\varvec{x}\mapsto \varvec{y}=\varvec{A}\varvec{x}\) be the corresponding change of variables. Then the transformed vector field \({{\widetilde{\varvec{u}}}}(\varvec{y})= \varvec{A}\varvec{u}(\varvec{A}^{\mathrm{T}}\varvec{y})\) has the form

where

Using this invariance property of \(\varvec{u}(\varvec{x})\), we can assume that the matrix \(\varvec{J}\) is diagonal, \(\varvec{J}={\text {diag}}(J_1,J_2,J_3)\), and then

Hence, we introduce new parameters \(m_1=J_2-J_3\), \(m_2=J_3-J_1\) and \(m_3=J_1-J_2= -\,m_1-m_2\). Using these, we can write \(\varvec{J}\) in the form

Thus, system (1.3) can be written as

where \(\varvec{W}=(x_2 x_3, x_1 x_3, x_1 x_2)\) and \(\varvec{K}={\text {diag}}(m_1,m_2,m_3)\). In further analysis we will use this form of the \({\textit{STF}}\) flow which depends on eight parameters: components of vectors \(\varvec{a}\), \(\varvec{\omega }\), and \(m_1,m_2\).

Let us also note that this system is invariant with respect to simultaneous cyclic permutations of variables \((x_1,x_2, x_3)\mapsto \pi (\varvec{x})=(x_3, x_1,x_2)\) and parameters \(\varvec{a}\mapsto \pi (\varvec{a})\), \(\varvec{\omega }\mapsto \pi (\varvec{\omega })\), and \((m_1,m_2, m_3)\mapsto \pi (\varvec{m})=(m_3, m_1,m_2)\).
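The passage from \(\varvec{J}\) to \(\varvec{K}\varvec{W}\) can be illustrated with a small exact check. The sketch below assumes that the \(\varvec{J}\)-dependent term of (1.3) is \((\varvec{J}\varvec{x})\times \varvec{x}\), which is the orientation consistent with the definitions \(m_1=J_2-J_3\), \(m_2=J_3-J_1\), \(m_3=J_1-J_2\). The helper names are hypothetical.

```python
import random

def cross(u, v):
    """Cross product of two 3-vectors given as tuples."""
    return (u[1]*v[2] - u[2]*v[1],
            u[2]*v[0] - u[0]*v[2],
            u[0]*v[1] - u[1]*v[0])

def quadratic_term_J(J, x):
    """(J x) × x for a diagonal matrix J = diag(J1, J2, J3) (assumed orientation)."""
    Jx = tuple(Ji*xi for Ji, xi in zip(J, x))
    return cross(Jx, x)

def quadratic_term_K(J, x):
    """K W with K = diag(m1, m2, m3) and W = (x2 x3, x1 x3, x1 x2)."""
    J1, J2, J3 = J
    m1, m2, m3 = J2 - J3, J3 - J1, J1 - J2
    x1, x2, x3 = x
    W = (x2*x3, x1*x3, x1*x2)
    return (m1*W[0], m2*W[1], m3*W[2])

rng = random.Random(1)
samples = [(tuple(rng.randint(-9, 9) for _ in range(3)),
            tuple(rng.randint(-9, 9) for _ in range(3))) for _ in range(50)]
all_match = all(quadratic_term_J(J, x) == quadratic_term_K(J, x) for J, x in samples)
```

Since \(m_1+m_2+m_3=0\) by construction, only two of the three \(m_i\) are independent. Together with the components of \(\varvec{a}\) and \(\varvec{\omega }\), this accounts for the eight parameters.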

Remark 1.1

Let

where

Then one can check that the change \(\varvec{x}\mapsto \varvec{A}\varvec{x}\) transforms the system (1.1) to the form of (1.3) with \(\varvec{a}=(0,0,-\,3)\), \( \varvec{\omega }=(0,0,\alpha )\) and

This gives

which satisfy the relation

1.3 Main Problem

Let us note that investigations of the system (1.1), which is a two-parameter subfamily of the general \({\textit{STF}}\) system (1.3), were performed in two directions. Apart from the results showing that for generic values of parameters \((\alpha ,\beta )\in \mathbb {R}^2\) the system (1.1) is chaotic, investigations of integrability have been carried out. Bao and Yang (2014) proved that if \(\alpha \ne 0\), then the system (1.1) does not admit a Darboux first integral. Nishiyama (2014a) proved that if \(\alpha \in \mathbb {R}^{+}{\setminus } \varLambda \), where \(\varLambda =\big \{\tfrac{24}{\sqrt{65}},4\sqrt{\tfrac{6}{5}}, 4\sqrt{\tfrac{21}{17}}\big \},\) and \(\beta = 1\), or \(\alpha = 1\), \(\beta \in \mathbb {R}^{+}{\setminus }\{2\sqrt{23}, 8\sqrt{5}, 16\sqrt{2}\}\), the system has no real meromorphic first integral. Later, Nishiyama (2014b) showed that the system does not admit a meromorphic first integral for an arbitrary \(\alpha >0\) and \(\beta =1\). These non-integrability results were obtained by means of the Ziglin theory combined with differential Galois theory. Yagasaki and Yamanaka (2017) formulated necessary conditions for the integrability of systems with orbits which are homo- or heteroclinic to unstable equilibria. Using these, they proved that if the system (1.1) admits a real meromorphic first integral, then \(\sqrt{25-\alpha (\alpha \pm \beta )}\in \mathbb {Q}\).

On the other hand, the strongest results describing chaotic behaviour of the system (1.1) were obtained by Neishtadt et al. (1999, 2003) by considering small perturbations of the integrable case \(\alpha =0\). The mechanism of destruction of an adiabatic invariant caused by the separatrix crossings, scatterings and captures by resonances results in mixing and transport in large parts of the phase space. Although the dynamics of the system are close to hyperbolic, the system is not ergodic, as one can find stable periodic orbits surrounded by stability islands.

In order to perform investigations of the general \({\textit{STF}}\) flow (1.3) using methods similar to those of Neishtadt et al. (1999, 2003), we must identify the parameter values for which the system is integrable.

For example, we found that if \(\varvec{a}=0\), then the system (1.3) simplifies to

and it is integrable with two quadratic first integrals

Let us note that system (1.14) coincides with the Zhukovski–Volterra gyrostat (Basak 2009). Thus, for small values of \(\varvec{a}\), one can consider the \({\textit{STF}}\) system as a perturbation of an integrable Zhukovski–Volterra gyrostat. This makes it possible to investigate chaotic behaviour for small values of \(\Vert \varvec{a} \Vert \), and it seems that this fact has not been explored until now.

The aim of this paper is to study the integrability of the general \({\textit{STF}}\) flow (1.3). More precisely, our goal is to distinguish the parameter values for which the dynamics is regular and the considered system is integrable. However, all known methods for studying integrability give only the necessary conditions for integrability. Thus, it is better to say that our main goal is to distinguish parameter values for which the system is not integrable. Nevertheless, considering a specific family of \({\textit{STF}}\) systems, we found the necessary and sufficient conditions for its integrability.

To the best of our knowledge, apart from a preliminary analysis in Bajer and Moffatt (1990), the integrability of (1.3) has not yet been investigated. Thus, our main goal is to initiate such an investigation. Here we underline again that the formulated problem is very hard because the system depends on many parameters. Our attempt is to distinguish as many cases as possible for which investigation of the integrability can be performed effectively.

For analysis of the integrability, we use the differential Galois framework. Here, two facts are important. In the context of this paper, integrability means integrability in the Jacobi sense. Thus, we do not use the criteria for integrability of non-Hamiltonian systems developed by Ayoul and Zung (2010). In fact, we know only one article in which differential Galois methods were specifically used to investigate integrability in the Jacobi sense (Przybylska 2008). Moreover, in this paper we propose to combine differential Galois tools with the Darboux method for studying integrability. This idea is general in the sense that it can be applied to studying the integrability of an arbitrary polynomial system. That is, to apply the differential Galois methods, we need a particular solution of the considered system. To find it we perform a direct search for Darboux polynomials. Then we restrict the search for a particular solution to the common zero level of Darboux polynomials. In the case of the \({\textit{STF}}\) system, we already have one Darboux polynomial \(F_0=x_1^2+x_2^2+x_3^2-1\). Hence, if \(F_1\) is an additional Darboux polynomial, then their common level

if non-empty, is a union of phase curves of the system. An analysis of variational equations along selected phase curves gives obstructions for the integrability. These are expressed in terms of properties of the differential Galois group of the variational equations.

In the general case, when the system admits several Darboux polynomials, we can try to find a first integral using the Darboux method.

When we simply try to carry out the procedure described above for the \({\textit{STF}}\) flow, we quickly face serious difficulties. Again, because of the large number of parameters, the direct search for Darboux polynomials, even with the help of computer algebra systems, must be restricted to polynomials of low degree. Moreover, it appeared that the existence of just one additional Darboux polynomial almost always gives rise to a first integral of the system. This is why we restricted our search to Darboux polynomials of first degree, and we found all the cases when the \({\textit{STF}}\) system admits such a polynomial. The proof of this fact is purely analytic.

Thanks to the above result, we have found cases dependent on six parameters for which the differential Galois methods can be used, i.e. we know a particular solution of the system. However, an investigation of this case with all admissible parameters leads to intractable complexities. This is why we restrict our study to some cases with certain restrictions on parameters.

2 Results

In this section we collect the main results of our paper. They are naturally divided into two parts.

The first involves the determination of cases when the general \({\textit{STF}}\) system (1.9) admits a linear Darboux polynomial. Although this was a preliminary step in our investigations, it unexpectedly gave, among other things, quite a large list of integrable cases.

The second part of our results contains theorems which give necessary or necessary and sufficient conditions for the integrability of distinguished families of the \({\textit{STF}}\) system obtained by an application of the differential Galois methods.

2.1 \({\textit{STF}}\) System with Linear Darboux Polynomials

Finding all Darboux polynomials of a given system is difficult because we do not know the upper bound for the degree of this polynomial. Moreover, even if we fix the degree of the Darboux polynomial we search for, the problem is difficult because it reduces to a system of non-linear polynomial equations. The difficulty grows significantly when the systems considered depend on parameters.

As we mentioned above, the general \({\textit{STF}}\) system (1.9) has Darboux polynomial \(F_{0}(\varvec{x})\), and the problem is to find all values of parameters for which other Darboux polynomials exist. Even if we limit ourselves to linear Darboux polynomials, finding all of them for a multi-parameter \({\textit{STF}}\) system is not trivial. In fact, we have to find all solutions of a system of 10 quadratic polynomial equations dependent on 16 variables.

The results of the search for an additional linear Darboux polynomial in variables can be summarized in the following theorem.

Theorem 2.1

The \({\textit{STF}}\) system (1.9) has a Darboux polynomial of degree one only in the cases listed below and in conjugated cases obtained by a cyclic permutation of the parameters and variables.

- Case Ia:

If \(m_1=m_2=0\) and \(\varvec{a}\cdot \varvec{\omega }=0\), then there are three Darboux polynomials

$$\begin{aligned} \begin{aligned} F_1&= a_3x_2-a_2x_3-\omega _1, \quad F_2 = a_1x_3-a_3x_1-\omega _2,\quad F_3 =a_2x_1-a_1x_2-\omega _3,\\ P_1&=P_2=P_3=a_1x_1+a_2x_2+a_3x_3. \end{aligned} \end{aligned}$$(2.1)

- Case Ib:

If \(m_1=m_2=0\) and \(\varvec{\omega }=\lambda \varvec{a}\), then there are two Darboux polynomials

$$\begin{aligned} \begin{aligned} F_1^{\varepsilon }&=-\left( a_2\Vert \varvec{a} \Vert +\mathrm {i}\varepsilon a_1a_3\right) x_1+\left( a_1\Vert \varvec{a} \Vert -\mathrm {i}\varepsilon a_2a_3\right) x_2 +\mathrm {i}\varepsilon (a_1^2+a_2^2)x_3,\\ P_1^{\varepsilon }&=a_1x_1+a_2x_2+a_3x_3+\mathrm {i}\varepsilon \lambda \Vert \varvec{a} \Vert , \end{aligned} \end{aligned}$$(2.2)

where \(\varepsilon ^2=1\).

- Case IIa:

If

$$\begin{aligned} m_1a_2^2 =m_2a_1^2, \qquad \varvec{a}\cdot \varvec{\omega }= \omega _3 m_1\frac{a_2}{a_1}, \quad a_1\ne 0, \end{aligned}$$

and \(m_1^2 +m_2^2\ne 0\) and \(a_1^2+a_2^2\ne 0\), then there is one Darboux polynomial

$$\begin{aligned} F_1 = -\,\omega _3+a_2x_1 -a_1 x_2, \quad P= \varvec{a}\cdot \varvec{x}- m_1\frac{a_2}{a_1}x_3. \end{aligned}$$(2.3)

- Case IIb:

If \(a_1=a_2=0\), \(\omega _3=0\) and \(m_1^2+m_2^2\ne 0\), then

$$\begin{aligned} F_1^{\varepsilon } = \varepsilon \sqrt{m_1 m_2} x_1 + m_1 x_2+\frac{-m_1\omega _1 + \varepsilon \sqrt{m_{1}m_2}\omega _2 }{a_3 + \varepsilon \sqrt{m_1m_2}}, \quad P_1^{\varepsilon }= \left( a_3 +\varepsilon \sqrt{m_1m_2}\right) x_3. \end{aligned}$$(2.4)

In this case, if additionally \(a_3 +\varepsilon \sqrt{m_1m_2}=0\), then it must be \( -m_1\omega _1 + \varepsilon \sqrt{m_{1}m_2}\omega _2=0\) and

$$\begin{aligned} F_1^{\varepsilon } = \varepsilon \sqrt{m_1 m_2} x_1 + m_1 x_2 \end{aligned}$$(2.5)

is a first integral of the system.

- Case IIc:

If \(a_1=a_2=0\), \(\omega _1=\omega _2=0\) and \(m_2=-\,m_1\) and \(\omega _3\ne 0\), then the two polynomials

$$\begin{aligned} F_1^{\varepsilon } = -\,\mathrm {i}\varepsilon x_1 + x_2,\quad P_1^{\varepsilon }= -\,\mathrm {i}\varepsilon \omega _3 + \left( a_3 +\mathrm {i}\varepsilon m_1\right) x_3 \end{aligned}$$

are Darboux polynomials.

- Case III:

If \(a_1=m_1=0\) and \(\varvec{\omega }=(\omega _1, -\alpha a_3, \alpha a_2)\), then there is one Darboux polynomial

$$\begin{aligned} F_1= -\,\alpha + x_1,\quad P_1 = a_2x_2 +a_3x_3. \end{aligned}$$(2.6)

All of the above cases except Case IIa are integrable. Since the \({\textit{STF}}\) flow preserves volume in the phase space, just one first integral is needed for integrability; see the explanations about integrability in the Jacobi sense at the beginning of Sect. 3. Knowing Darboux polynomials, one can effectively construct first integrals using properties of Darboux polynomials recapitulated in Proposition 3.1. In particular, when cofactors are linearly dependent over \(\mathbb {Z}\), a rational first integral can be built. The \({\textit{STF}}\) system reduced to a fixed level of a first integral has an integrating factor (3.3) that enables us to find the second first integral using formula (3.4). This procedure is called the last Jacobi multiplier method and is briefly described in Sect. 3. Finding an explicit form of this first integral, however, can be difficult.
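The mechanism behind these constructions is a one-line computation, sketched here for orientation. It assumes only the defining relation \(L_{\varvec{u}}F_i=P_iF_i\) of a Darboux polynomial \(F_i\) with cofactor \(P_i\). The integer exponents \(n_i\) are notation introduced for this sketch. By the Leibniz rule,

$$\begin{aligned} L_{\varvec{u}}\Big (\prod _i F_i^{n_i}\Big ) =\Big (\sum _i n_iP_i\Big )\prod _i F_i^{n_i}, \end{aligned}$$

so the product is a first integral whenever \(\sum _i n_iP_i=0\). For instance, in Case III the cofactor of \(F_1\) is \(P_1=a_2x_2+a_3x_3\). If the cofactor of \(F_0\) is \(P_0=-\,2\,\varvec{a}\cdot \varvec{x}\) (our reading, consistent with the value \(P_0=-\,2a_3x_3\) quoted for \(a_1=a_2=0\)), then \(a_1=0\) gives \(2P_1+P_0=0\), and \(F_1^2F_0\) is a polynomial first integral.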

In Case Ia, polynomials of degree four \(F_0 F_i^2\), or rational functions \(F_i/F_j\), where \(F_i\) are given in (2.1), are first integrals, and one can choose two that are functionally independent e.g.

In Case Ib, since the Darboux polynomial \(F_1^{+}F_1^{-}\) has the cofactor \(2\varvec{a}\cdot \varvec{x}\) (see Eq. (2.2)), \(I_1=F_1^{+}F_1^{-}F_0\) is a first integral that after division by constant \(a_1^2+a_2^2\) takes the final form

In Case IIa, as well as in the conjugated cases obtained by a cyclic permutation of the parameters, the integrability of the system is an open question.

In Cases IIb and IIc, it holds that \(a_1=a_2=0\), and the Darboux polynomial \(F_0\) has the cofactor \(P_0=-\,2 a_3 x_3\). Thus, the Darboux polynomial \(F_1=F_1^+F_1^-\) has the cofactor \(P_1=2 a_3 x_3\), and the product \(I_1=F_0F_1\) is a polynomial first integral of the system. In Case IIb, first integral \(I_1=F_0F_1\) takes the form

Moreover, one can construct the second first integral \(I_2\) which is functionally independent of \(I_1\) and is of the Darboux type

where \(F_1^{\pm }\) are given in (2.4).

In the special subcase of Case IIb, the second first integral built by means of the last Jacobi multiplier and functionally independent of (2.5) is

In Case IIc, the explicit form of the first integral \(I_1=F_0F_1\) is

The second first integral can be constructed by means of the last Jacobi multiplier as

where \(I_1\) is given in (2.11). The integral in the last term defines an elliptic integral, see Section 230 in Byrd and Friedman (1971).

In Case III, the system is integrable with the polynomial first integral \(I_1=F_1^2F_0=(x_1-\alpha )^2(x_1^2+x_2^2+x_3^2-1)\).

We can find the second first integral just by applying the Jacobi last multiplier method. However, it is instructive to note that in the considered case, the system admits an exponential factor H of the form

This satisfies the equation \(L_{\varvec{u}} H= -\,(a_2 x_2 +a_3x_3)H\), so

is a first integral of the system.

2.2 Integrability of Distinguished Families of the \({\textit{STF}}\) System

In Case IIa given in the previous section, the \({\textit{STF}}\) system depends on six parameters. The intersection of sphere \(F_0(\varvec{x})=0\) with plane \(F_1(\varvec{x})=0\), where \(F_1(\varvec{x})\) is given by (2.3), is in \(\mathbb {R}^3\), if not empty, a small circle on the sphere. This is precisely the kind of phase curve we look for in order to apply the differential Galois methods to study integrability. In general, the sphere \(F_0(\varvec{x})=0\) and plane \(F_1(\varvec{x})=0\) have a non-empty intersection in \(\mathbb {C}^3\) which gives us a phase curve of the complexified \({\textit{STF}}\) system. Hence, our idea concerning finding a particular solution of the system was successfully applied. In fact, it gave us more than we expected. When trying to apply the differential Galois techniques for the Case IIa family, we encountered serious problems. When working with the full six-parameter family, we did not find a good way to cope with the complexity of calculation. Moreover, the difficulties were of a fundamental nature. In the best case, using the differential Galois method, we can obtain necessary conditions for integrability that depend on five or four parameters. In fact, these conditions are not usable. This is why we decided to consider a family of the \({\textit{STF}}\) system in Case IIa with the additional assumption \(\varvec{\omega }=\varvec{0}\). We obtained the necessary and sufficient integrability conditions formulated in the following theorem.

Theorem 2.2

Assume that \(\varvec{\omega }=\varvec{0}\) and \(a_1^2m_2 =a_2^2m_1\). Then the \({\textit{STF}}\) system is integrable if and only if either \(a_1=a_2=0\), or \(m_1=m_2=0\), or \(a_1=m_1=0\), or \(a_2=m_2=0\).

The first integrals in the four cases mentioned in the above theorem are constructed using Darboux polynomials and using the last Jacobi multiplier method.

If \(a_1=a_2=0\), then we are in Case IIb. Formulae for the two additional Darboux polynomials (2.4) simplify to

$$\begin{aligned} F_1^{\varepsilon }=\varepsilon \sqrt{m_1m_2}\,x_1+m_1x_2,\quad P_1^{\varepsilon }=(a_3 +\varepsilon \sqrt{m_1m_2})x_3, \end{aligned}$$

and for the first integral (2.8) to

$$\begin{aligned} I_1=F_1^{+1}F_1^{-1}F_0=\left( m_1 x_2^2-m_2 x_1^2\right) \left( x_1^2 + x_2^2 + x_3^2-1\right) . \end{aligned}$$

The second first integral built by means of the last Jacobi multiplier and after taking the exponent becomes

$$\begin{aligned} I_2=\frac{1}{m_2x_1^2-m_1x_2^2}\exp \left\{ \frac{2a_3}{\sqrt{m_1}\sqrt{m_2}}{\text {arctanh}}\left( \frac{\sqrt{m_2}\,x_1}{\sqrt{m_1}\,x_2}\right) \right\} . \end{aligned}$$

One can simplify it using the formula \({\text {arctanh}}x=\tfrac{1}{2}\ln \tfrac{x+1}{1-x}\) to the form

$$\begin{aligned} I_2=\frac{\left( 1+\frac{2\sqrt{m_2}\, x_1}{\sqrt{m_1}\, x_2-\sqrt{m_2}\, x_1}\right) ^{\frac{a_3}{\sqrt{m_1}\sqrt{m_2}}}}{m_2x_1^2-m_1x_2^2}. \end{aligned}$$

If \(m_1=m_2=0\), then we are in Case Ia with additional Darboux polynomials (2.1) and two functionally independent first integrals (2.7).
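For the case \(a_1=a_2=0\), the agreement between the \({\text {arctanh}}\) form and the power form of \(I_2\) can be spot-checked numerically. This is a minimal sketch restricted to real sample values with \(m_1,m_2>0\) and \(|\sqrt{m_2}\,x_1|<|\sqrt{m_1}\,x_2|\), so that both expressions are real. The function names and the sample point are illustrative assumptions.

```python
import math

def I2_arctanh(x1, x2, a3, m1, m2):
    """I_2 written with arctanh, as obtained from the last Jacobi multiplier."""
    root = math.sqrt(m1)*math.sqrt(m2)
    z = math.sqrt(m2)*x1 / (math.sqrt(m1)*x2)
    return math.exp(2*a3/root*math.atanh(z)) / (m2*x1**2 - m1*x2**2)

def I2_power(x1, x2, a3, m1, m2):
    """I_2 after applying arctanh(z) = (1/2) ln((1 + z)/(1 - z))."""
    root = math.sqrt(m1)*math.sqrt(m2)
    base = 1 + 2*math.sqrt(m2)*x1 / (math.sqrt(m1)*x2 - math.sqrt(m2)*x1)
    return base**(a3/root) / (m2*x1**2 - m1*x2**2)

# Hypothetical sample point and parameters satisfying the restrictions above.
sample = dict(x1=0.3, x2=0.8, a3=1.5, m1=2.0, m2=1.0)
values_agree = math.isclose(I2_arctanh(**sample), I2_power(**sample), rel_tol=1e-12)
```

The base of the power equals \((1+z)/(1-z)\) with \(z=\sqrt{m_2}\,x_1/(\sqrt{m_1}\,x_2)\), which is why the exponent is halved relative to the prefactor of \({\text {arctanh}}\).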

If \(a_1=m_1=0\), we are in Case III, where an additional Darboux polynomial given in (2.6) simplifies to \(F_1=x_1\) with the cofactor \(P_1=a_2x_2+a_3x_3\). The corresponding first integral is

$$\begin{aligned} I_1=F_1^2F_0=x_1^2\left( x_1^2 + x_2^2 + x_3^2-1\right) . \end{aligned}$$

The second first integral built by means of the last Jacobi multiplier is

$$\begin{aligned} I_2=\frac{a_2x_3-a_3x_2}{x_1}+m_2\ln |x_1|, \end{aligned}$$(2.14)

where \(|\cdot |\) denotes the absolute value.

If \(a_2=m_2=0\), an additional Darboux polynomial is \(F_1=x_2\) with the cofactor \(P_1=a_1x_1+a_3x_3\). The corresponding first integral is

$$\begin{aligned} I_1=F_1^2F_0=x_2^2\left( x_1^2 + x_2^2 + x_3^2-1\right) , \end{aligned}$$

and the second first integral built by means of the last Jacobi multiplier takes the form

$$\begin{aligned} I_2=\frac{a_1x_3-a_3x_1}{x_2}+m_1\ln |x_2|. \end{aligned}$$

This case can be obtained from the previous one by the change of variables \(x_1\leftrightarrow x_2.\)
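The two first integrals of the case \(a_1=m_1=0\), \(\varvec{\omega }=\varvec{0}\) can be checked exactly at rational points, because the gradients of both \(I_1\) and \(I_2\) in (2.14) are rational functions. The sketch assumes that the \({\textit{STF}}\) field has the form \(\varvec{K}\varvec{W}+\varvec{\omega }\times \varvec{x}+\varvec{a}(1-2\,\varvec{x}\cdot \varvec{x})+(\varvec{a}\cdot \varvec{x})\varvec{x}\). This is our reading of (1.9), which is not displayed in the present text. The parameter values and function names are hypothetical.

```python
from fractions import Fraction
import random

def stf_field(x, a, omega, m1, m2):
    """ASSUMED STF field: K W + omega × x + a (1 - 2 x·x) + (a·x) x."""
    x1, x2, x3 = x
    m = (m1, m2, -(m1 + m2))
    W = (x2*x3, x1*x3, x1*x2)
    r2 = x1*x1 + x2*x2 + x3*x3
    ax = a[0]*x1 + a[1]*x2 + a[2]*x3
    wx = (omega[1]*x3 - omega[2]*x2,
          omega[2]*x1 - omega[0]*x3,
          omega[0]*x2 - omega[1]*x1)
    return tuple(m[i]*W[i] + wx[i] + a[i]*(1 - 2*r2) + ax*x[i] for i in range(3))

def grad_I1(x):
    """Gradient of I_1 = x1^2 (x1^2 + x2^2 + x3^2 - 1)."""
    x1, x2, x3 = x
    F0 = x1*x1 + x2*x2 + x3*x3 - 1
    return (2*x1*F0 + 2*x1**3, 2*x1**2*x2, 2*x1**2*x3)

def grad_I2(x, a2, a3, m2):
    """Gradient of I_2 = (a2 x3 - a3 x2)/x1 + m2 ln|x1|, valid for x1 != 0."""
    x1, x2, x3 = x
    return (-(a2*x3 - a3*x2)/x1**2 + m2/x1, -a3/x1, a2/x1)

# Hypothetical parameter values with a1 = m1 = 0 and omega = 0.
a2, a3, m2 = Fraction(2), Fraction(-3), Fraction(5, 2)
a, omega = (Fraction(0), a2, a3), (0, 0, 0)

rng = random.Random(2)
residuals = []
for _ in range(20):
    x = tuple(Fraction(rng.randint(1, 9), rng.randint(1, 9)) for _ in range(3))
    u = stf_field(x, a, omega, Fraction(0), m2)
    r1 = sum(g*ui for g, ui in zip(grad_I1(x), u))
    r2 = sum(g*ui for g, ui in zip(grad_I2(x, a2, a3, m2), u))
    residuals.append((r1, r2))
```

Under the same assumption, swapping the roles of the first and second coordinates and parameters gives the analogous check for the case \(a_2=m_2=0\).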

Let us note that in all integrable cases, the first integral \(I_1\) is polynomial and global, but the second first integral obtained from the last Jacobi multiplier method is not meromorphic. The dynamics of divergence-free three-dimensional systems with one global first integral is described in Section 3 of Lerman and Yakovlev (2019). The phase space of such a system is foliated by the levels of its global first integral. In the case when a global first integral has only a finite number of critical levels, its non-critical levels are always 2-tori, but the linearization of the flow on these tori is not always possible.

The second analysed family of \({\textit{STF}}\) systems can be considered as a direct generalization of the system (1.1). That is, we consider the system (1.9) with the following parameters

We denote the corresponding vector field by \(\varvec{u}_{\mathrm {g}}(\varvec{x})\). According to Remark 1.1, for the system (1.1) we have \(m_1m_2=25\), \(\omega _3=\alpha \) and \(a_3=-\,3\). Thus, \(\varvec{u}_{\mathrm {g}}(\varvec{x})\) is a two-parameter generalization of the system (1.1).

To describe the obtained results, we introduce the following parameters

With these parameters, and after rescaling of time \(t\mapsto a_3t\), the explicit form of the system corresponding to \(\varvec{u}_{\mathrm {g}}(\varvec{x})\) reads

where we defined \(\mu _3=-\,(\mu _1+\mu _2) \).

Fig. 3 Regions \({\mathscr {C}}\), \({\mathscr {D}}\), \({\mathscr {D}}'\) and \({\mathscr {E}}\) on the plane of parameters \((\mu _1,\mu _2)\). The dotted lines in region \({\mathscr {D}}\) denote hyperbolas \({\mathscr {H}}_{3,1},{\mathscr {H}}_{5,3}, {\mathscr {H}}_{1,1}\), and the lines in region \({\mathscr {D}}'\) denote hyperbolas \({\mathscr {H}}_{1,1}^{\prime },{\mathscr {H}}_{5,3}^{\prime }, {\mathscr {H}}_{3,1}^{\prime }\), respectively, counting from the top

We divide the whole range of parameters \((\mu _1, \mu _2)\) into disjoint sets as shown in Figs. 2 and 3. Our investigation of the integrability of the system (2.17) is performed separately in each of these regions. Let us first note that in the case \(\mu _3=0\), i.e. where \(\mu _2=-\,\mu _1\), the system (2.17) is integrable with the first integral

Thus, in our further analysis, we exclude cases where \(\mu _2=-\,\mu _1\).

The results of our analysis are split into five theorems. To formulate the first of these, we define the hyperbolas

and

Theorem 2.3

Assume that \(\mu _1\mu _2\le 0\) i.e. \((\mu _1, \mu _2)\in {\mathscr {A}}\cup {\mathscr {B}}\cup {\mathscr {B}}'\) in Fig. 2. If the system (2.17) is integrable, then either

- 1.

\(\mu _1+\mu _2=0\), or

- 2.

\((\mu _1,\mu _2)\in {\mathscr {H}}\), \(\mu _2 <0\), and

$$\begin{aligned} \omega ^2 = \frac{4m^2}{ (\mu _1-1)(\mu _2+1)} \end{aligned}$$(2.21)for an integer m, or

- 3.

\((\mu _1,\mu _2)\in {\mathscr {H}}'\), \(\mu _2 >0\), and

$$\begin{aligned} \omega ^2 = \frac{4m^2}{ (\mu _1+1)(\mu _2-1)} \end{aligned}$$(2.22)for an integer m.

The cases specified in points 2 and 3 of Theorem 2.3 with parameters \((\mu _1,\mu _2)\in {\mathscr {H}}_{-}\) or \((\mu _1,\mu _2)\in {\mathscr {H}}'_{-}\) and satisfying (2.21) or (2.22), respectively, seem to be non-integrable. In Fig. 4a we present a Poincaré cross-section for the system (2.17) with parameters \((\mu _1,\mu _2)=\left( 3,-\tfrac{1}{2}\right) \in {\mathscr {H}}_{-}\subset {\mathscr {B}}\) and \(\omega =4\), satisfying (2.21) for \(m=2\). Similarly, Fig. 4b shows a Poincaré cross-section corresponding to the parameters \((\mu _1,\mu _2)=\left( -\,11,\tfrac{3}{8}\right) \in {\mathscr {H}}'_{-}\subset {\mathscr {B}}'\) and \(\omega =4\), satisfying (2.22) for \(m=5\). Both Poincaré cross-sections are mostly filled with scattered points obtained from intersections of a few chaotic orbits with the cross-plane \(y=0\). Also visible are two regions filled with closed quasi-periodic orbits around central points corresponding to stable periodic solutions.
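The parameter values quoted for Fig. 4 can be checked against conditions (2.21) and (2.22) with exact rational arithmetic. The sketch below only evaluates the right-hand sides of these two conditions. It does not test membership in the hyperbolas \({\mathscr {H}}\) and \({\mathscr {H}}'\), and the function names are illustrative.

```python
from fractions import Fraction

def omega_squared_221(mu1, mu2, m):
    """Right-hand side of (2.21): 4 m^2 / ((mu1 - 1)(mu2 + 1))."""
    return Fraction(4*m*m) / ((mu1 - 1)*(mu2 + 1))

def omega_squared_222(mu1, mu2, m):
    """Right-hand side of (2.22): 4 m^2 / ((mu1 + 1)(mu2 - 1))."""
    return Fraction(4*m*m) / ((mu1 + 1)*(mu2 - 1))

# Parameters quoted for Fig. 4a and Fig. 4b; both should reproduce omega^2 = 4^2.
fig_4a = omega_squared_221(Fraction(3), Fraction(-1, 2), 2)
fig_4b = omega_squared_222(Fraction(-11), Fraction(3, 8), 5)
```

Both values equal \(16=\omega ^2\), so the quoted parameter sets satisfy the necessary conditions of Theorem 2.3 even though the corresponding cross-sections look chaotic.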

Fig. 4 Example of Poincaré cross-sections for the system (2.17) with parameters \((\mu _1,\mu _2)\) lying on hyperbolas \({\mathscr {H}}_{-}\subset {\mathscr {B}}\) or \({\mathscr {H}}'_{-}\subset {\mathscr {B}}'\), cross-plane \(y=0\)

The analysis of cases with \(\mu _1\mu _2>0\) is split into four cases. Region \({\mathscr {C}}\) on the \((\mu _1,\mu _2) \) plane is defined by

see Fig. 3.

Theorem 2.4

Assume that \((\mu _1,\mu _2)\in {\mathscr {C}}\). If the system (2.17) is integrable, then \(\mu _1=\mu _2=\mu \) and \(\omega \mu \in \mathbb {Z}\).

In the cases specified in Theorem 2.4, Poincaré cross-sections do not give a clear suggestion concerning the integrability, see Fig. 5a, and its magnification around an unstable periodic solution in Fig. 5b. Calculations were carried out for the system (2.17) with parameters \(\mu _1=\mu _2=\mu =\tfrac{1}{2}\) and \(\omega =2\) satisfying \(\mu \omega =1\in \mathbb {Z}\). Actually, when we compare these Poincaré cross-sections with Fig. 6a and with its magnification in Fig. 6b obtained for the system (2.17) with parameters \(\mu _1=\mu _2=\mu =\sqrt{\tfrac{2}{3}}\) and \(\omega =\tfrac{3}{2}\sqrt{\tfrac{3}{2}}\), we do not see a large difference in the regularity of the trajectories, although in this case \(\mu \omega =\tfrac{3}{2}\not \in \mathbb {Z}\). The global Poincaré cross-sections shown in Figs. 5a and 6a have very regular structures built by means of quasi-periodic orbits. But the fact that we do not see chaos on the global scale does not mean that the system is regular. We can expect that chaotic zones exist in the neighbourhood of an unstable periodic orbit, but the magnifications in Figs. 5b and 6b do not show them.

Example of a Poincaré cross-section for the system (2.17) with \((\mu _1,\mu _2)=\left( \tfrac{1}{2},\tfrac{1}{2}\right) \in {\mathscr {C}}\) and \(\omega =2\) satisfying \(\mu \omega =1\); cross-plane \(y=0\)

Example of a Poincaré cross-section for the system (2.17) with parameters \((\mu _1,\mu _2)=\left( \sqrt{\tfrac{2}{3}},\sqrt{\tfrac{2}{3}}\right) \in {\mathscr {C}}\) and \(\omega =\tfrac{3}{2}\sqrt{\tfrac{3}{2}}\) for which \(\mu \omega =\tfrac{3}{2}\not \in \mathbb {Z}\), cross-plane \(y=0\)

Region \({\mathscr {D}}\) in Fig. 3 is defined by the following inequalities

We also define a family of hyperbolas

parameterized by two odd integers \(k,l\in \mathbb {Z}\).

Theorem 2.5

Assume that \((\mu _1,\mu _2)\in {\mathscr {D}}\). If the system (2.17) is integrable, then \((\mu _1,\mu _2)\in {\mathscr {H}}_{k,l}\) and

for certain odd integers \(k,l\in \mathbb {Z}\).

In region \({\mathscr {D}}'\) determined by inequalities

we define a family of hyperbolas

parameterized by two odd integers \(k,l\in \mathbb {Z}\), see Fig. 3.

Examples of Poincaré cross-sections for the system (2.17) with parameters lying on hyperbolas \({\mathscr {H}}_{k,l}\subset {\mathscr {D}}\) and \({\mathscr {H}}'_{k,l}\subset {\mathscr {D}}'\), cross-plane \(y=0\)

Theorem 2.6

Assume that \((\mu _1,\mu _2)\in {\mathscr {D}}'\). If the system (2.17) is integrable, then \((\mu _1,\mu _2)\in {\mathscr {H}}_{k,l}'\) and

for certain odd integers \(k,l\in \mathbb {Z}.\)

The cases specified in Theorems 2.5 and 2.6 seem to be non-integrable. Figure 7a presents a Poincaré cross-section for the system (2.17) with parameters \((\mu _1,\mu _2)=\left( \tfrac{9}{8},\tfrac{1}{3}\right) \in {\mathscr {H}}_{3,1}\subset {\mathscr {D}}\) and \(\omega =\sqrt{\tfrac{3}{2}}\), satisfying (2.26). Similarly, Fig. 7b shows a Poincaré cross-section for the system (2.17) with parameters \((\mu _1,\mu _2)=\left( \tfrac{1}{3},\tfrac{9}{8}\right) \in {\mathscr {H}}'_{3,1}\subset {\mathscr {D}}'\) and \(\omega =\sqrt{\tfrac{3}{2}}\), satisfying the condition (2.29). Both Poincaré cross-sections consist mainly of scattered points due to chaotic trajectories, with two regions filled with closed quasi-periodic orbits surrounding certain periodic orbits.

The most difficult case for the analysis is that of \((\mu _1,\mu _2)\in {\mathscr {E}}\) in Fig. 3. This is why the necessary conditions given in Theorem 2.7 are not optimal. Here, region \({\mathscr {E}}\) is defined by the following inequalities

Theorem 2.7

Assume that \((\mu _1,\mu _2)\in {\mathscr {E}}\). If \(\omega \sqrt{\mu _1\mu _2} \in \mathbb {C}{\setminus } \tfrac{1}{2}\mathbb {Z}\) and

then the system (2.17) is not integrable.

Example of a Poincaré cross-section for the system (4.52) with \((\mu _1,\mu _2)=\left( \tfrac{3}{2},\tfrac{3}{2}\right) \in {\mathscr {E}}\) and \(\omega =\tfrac{2}{3}\), cross-plane \(y=0\)

Let us check what happens when we consider the system (2.17) satisfying conditions mentioned in Theorem 2.4, i.e. \(\mu _1=\mu _2=\mu \) and \(\mu \omega \in \mathbb {Z}\), but when \((\mu _1,\mu _2)\in {\mathscr {E}}\). Then the Poincaré cross-section given in Fig. 8 for the values of parameters \((\mu _1,\mu _2)=\left( \tfrac{3}{2},\tfrac{3}{2}\right) \in {\mathscr {E}}\) and \(\omega =\tfrac{2}{3}\), satisfying \(\mu \omega =1\), shows an evident macroscopic chaotic region.

3 Tools and Methods

Let us make the notion of integrability precise in the context of this paper. Since the system is divergence-free, it is natural to use integrability in the Jacobi sense.

Definition 3.1

An n-dimensional system \({{\dot{\varvec{x}}}}=\varvec{v}(\varvec{x})\) is integrable in the Jacobi sense if and only if it admits \((n-2)\) functionally independent first integrals \(f_1(\varvec{x}), \ldots , f_{(n-2)}(\varvec{x})\), and an invariant n-form \(\omega =\rho (\varvec{x}) \mathrm {d}x_1 \wedge \cdots \wedge \mathrm {d}x_n\).

The invariance of \(\omega \) in the above definition means that

As the \({\textit{STF}}\) system is divergence-free, i.e. \(\rho =1\), its integrability in the Jacobi sense means that it possesses a first integral.

A system integrable in the Jacobi sense is integrable by quadratures. In fact, taking the first integrals \(f_1(\varvec{x}), \ldots , f_{(n-2)}(\varvec{x})\) as new variables, we can assume that the transformation

is invertible, at least locally. In new variables, the system reduces to two equations

with right-hand sides dependent on \((n-2)\) parameters. System (3.2) has an integrating factor

so

is the remaining first integral, which allows us to determine phase curves of the system, and with one more quadrature allows us to determine the time evolution along them.

Let us recall basic definitions and facts concerning Darboux polynomials. We denote by \(\mathbb {C}[\varvec{x}]=\mathbb {C}[x_1, \ldots , x_n]\) the ring of complex polynomials in n variables \(\varvec{x}\), and by \(\mathbb {C}(\varvec{x})\) the field of rational functions. Let \(\varvec{v}(\varvec{x})=(v_1(\varvec{x}), \ldots , v_n(\varvec{x}))\in \mathbb {C}[\varvec{x}]^n \) be a polynomial vector field and let \(L_{\varvec{v}}\) be the corresponding Lie derivative.

Polynomial \(F\in \mathbb {C}[\varvec{x}]\) is called a Darboux polynomial of \(\varvec{v}(\varvec{x})\) if \(L_{\varvec{v}}F=PF\) for a certain polynomial \(P\in \mathbb {C}[\varvec{x}]\), which is called the cofactor of F. We collect basic properties of Darboux polynomials in the following proposition.

Proposition 3.1

1. If \(F_i\) are Darboux polynomials, \(L_{\varvec{v}}F_i=P_iF_{i}\), for \(i=1,\ldots , k\), then their product \(F= F_1\cdots F_k \) is a Darboux polynomial with a cofactor \(P=P_1+\cdots +P_k\), i.e. \(L_{\varvec{v}}F=PF\).

2. If F is a Darboux polynomial, then its irreducible factors are also Darboux polynomials.

3. If \(F_1, \ldots , F_k\) are Darboux polynomials and their cofactors satisfy

$$\begin{aligned} \sum _{i=1}^k \alpha _i P_i(\varvec{x})=0 \end{aligned}\tag{3.5}$$

for certain numbers \(\alpha _1, \ldots , \alpha _k\in \mathbb {C}\), then \(F=F_1^{\alpha _1} \cdots F_k^{\alpha _k}\) is a first integral of \(\varvec{v}(\varvec{x})\).

4. If \(F_1, \ldots , F_k\) are Darboux polynomials with the same cofactor P, then an arbitrary linear combination

$$\begin{aligned} F=\sum _{i=1}^k \alpha _i F_i(\varvec{x}), \qquad \alpha _i\in \mathbb {C}, \quad i=1, \ldots , k \end{aligned}\tag{3.6}$$

is a Darboux polynomial with the cofactor P.
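The properties listed in Proposition 3.1 are easy to test on a toy example. The sketch below stores polynomials with integer coefficients as dictionaries mapping exponent tuples to coefficients, computes the Lie derivative exactly, and checks the defining relation \(L_{\varvec{v}}F=PF\). The vector field \(\varvec{v}=(x_1, 2x_2, -3x_3)\) is an assumption chosen for illustration (it is divergence-free, but it is not the \({\textit{STF}}\) system), and all function names are hypothetical.

```python
from collections import defaultdict


def p_add(p, q):
    """Sum of two polynomials stored as {exponent tuple: coefficient}."""
    out = defaultdict(int)
    for poly in (p, q):
        for mono, c in poly.items():
            out[mono] += c
    return {m: c for m, c in out.items() if c != 0}


def p_mul(p, q):
    """Product of two polynomials."""
    out = defaultdict(int)
    for m1, c1 in p.items():
        for m2, c2 in q.items():
            out[tuple(e1 + e2 for e1, e2 in zip(m1, m2))] += c1 * c2
    return {m: c for m, c in out.items() if c != 0}


def p_diff(p, i):
    """Partial derivative with respect to the i-th variable."""
    out = {}
    for mono, c in p.items():
        if mono[i] > 0:
            out[mono[:i] + (mono[i] - 1,) + mono[i + 1:]] = c * mono[i]
    return out


def lie_derivative(v, F):
    """L_v F = sum_i v_i * dF/dx_i."""
    total = {}
    for i, vi in enumerate(v):
        total = p_add(total, p_mul(vi, p_diff(F, i)))
    return total


def is_darboux(v, F, P):
    """True if L_v F = P F holds identically."""
    return lie_derivative(v, F) == p_mul(P, F)


def const(c):
    return {(0, 0, 0): c} if c != 0 else {}


X1, X2, X3 = {(1, 0, 0): 1}, {(0, 1, 0): 1}, {(0, 0, 1): 1}

# Toy field v = (x1, 2*x2, -3*x3); x_i are Darboux with cofactors 1, 2, -3.
v = (X1, p_mul(const(2), X2), p_mul(const(-3), X3))

# Points 1 and 3: the cofactors sum to zero, so x1*x2*x3 is a first integral.
product = p_mul(p_mul(X1, X2), X3)
first_integral = is_darboux(v, product, const(0))

# Point 4: x1^2 and x2 share the cofactor 2, and so does x1^2 + 5*x2.
combination = p_add(p_mul(X1, X1), p_mul(const(5), X2))
same_cofactor = is_darboux(v, combination, const(2))
```

Exact integer arithmetic is used so that the identity \(L_{\varvec{v}}F=PF\) is checked coefficient by coefficient rather than at sample points.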

A very nice and concise exposition of this subject can be found in Nowicki (1994).

To prove non-integrability of the \({\textit{STF}}\) system, we need strong necessary integrability conditions that can be effectively applied. We use obstructions formulated by means of the properties of the differential Galois group of variational equations obtained from the linearization of the \({\textit{STF}}\) system along certain known particular solutions. For a detailed exposition of the differential Galois theory, see e.g. Kaplansky (1976) and Morales Ruiz (1999). To find a necessary particular solution, an additional Darboux polynomial or a manifold invariant with respect to the \({\textit{STF}}\) flow can be useful. We will apply the following theorem, which follows from Corollary 3.7 in Casale (2009).

Theorem 3.1

Assume that a complex meromorphic system \({{\dot{\varvec{x}}}} =\varvec{v}(\varvec{x})\), \(\varvec{x}\in \mathbb {C}^n\) is integrable in the Jacobi sense with meromorphic first integrals and with a meromorphic invariant n-form. Then the identity component of the differential Galois group of variational equations along a particular phase curve is solvable. Moreover, the identity component of the differential Galois group of the normal variational equations is Abelian.

Our paper is the first application of this general criterion for the integrability in the Jacobi sense. The applicability of this theorem is dependent on the knowledge of a particular solution and the possibility of determining the differential Galois group of variational equations along this particular solution.

For the investigated system, we found particular solutions, so the problem is to determine the differential Galois group of variational equations. Here we underline that for a parameterized system, this problem is very hard and, in fact, is unsolvable, see Theorem 1 in Boucher (2000).

The crucial step in our investigation is the proper reduction of the variational equations to a second-order equation with rational coefficients. There is a canonical recipe for how to perform this. The fact that we succeeded in reducing the variational equations to the Riemann P equation gave us the possibility of proving our main theorem. It was equally important to find necessary and sufficient conditions for which the differential Galois group of the Riemann P equation has an Abelian identity component, as the well-known Kimura theorem only gives the necessary and sufficient conditions for solvability of this group.

4 Proofs

4.1 Proof of Theorem 2.1

Preliminary analysis Let F be a Darboux polynomial linear in variables and let P be its cofactor. We can write them in the form

and we can assume that \(\varvec{f}\ne {\mathbf {0}}\). The polynomial \(R=L_{\varvec{u}}F - PF\) has degree two. Its homogeneous terms \(R_i\) of degree i are as follows

As, by assumption, \(R(\varvec{x})\) vanishes identically, all its coefficients vanish so that we obtain the following system of polynomial equations:

where

and \( [\cdot ,\cdot ]\) denotes the commutator of matrices. Hence, we have a system of 10 polynomial equations for 15 variables. Taking into account two independent rescalings, we can reduce the number of variables to 13.

Equation (4.3c) can be rewritten in the form

where (i, j, k) is a cyclic permutation of (1, 2, 3).

The starting point of our analysis is two equations \(R_1(\varvec{f})=0\) and \(R_2(\varvec{f})=0\). Their explicit forms are

Note also that from (4.5) we get

Proposition 4.1

Let \(F(\varvec{x})\) and \(P(\varvec{x})\) of the form (4.1) be a Darboux polynomial and the respective cofactor of (1.3). Then \( \varvec{f}\cdot \varvec{a}=0\), and \( \varvec{f}\cdot \varvec{p}=0\). Moreover,

1. if \(\varvec{f}\cdot \varvec{f}\ne 0\), then \(p_0=0\) and \(\varvec{\omega }\cdot \varvec{p}=0\),

2. if \(p_0\ne 0\), then \(f_0=0\), \(\varvec{f}\cdot \varvec{f}=0\) and \(\varvec{f}\cdot \varvec{\omega }=0\).

Proof

There are two cases.

If \(\varvec{f}\cdot \varvec{f}\ne 0\), then directly from Eqs. (4.8) and (4.9) we obtain that \(\varvec{f}\cdot \varvec{a}=0\), and \( \varvec{f}\cdot \varvec{p}=0\).

If \(\varvec{f}\cdot \varvec{f}= 0\), then from (4.7) it follows that either \(f_0=0\), or \(\varvec{f}\cdot \varvec{p}=0\). But, if \(f_0=0\), then by (4.3a) \(\varvec{f}\cdot \varvec{a}=0\), and then by (4.9), \(\varvec{f}\cdot \varvec{p}=0\). If \(\varvec{f}\cdot \varvec{p}=0\), then (4.9) implies that \(\varvec{f}\cdot \varvec{a}=0\).

In this way we have proved that \( \varvec{f}\cdot \varvec{a}=0\), and \( \varvec{f}\cdot \varvec{p}=0\).

To prove point 1 of the Proposition, we note that if \(\varvec{f}\cdot \varvec{f}\ne 0\), then from (4.7) we get \(p_0=0\), because \( \varvec{f}\cdot \varvec{p}=0\). Next, from Eq. (4.3b) we obtain

so, taking the scalar product of both sides with \(\varvec{p}\) we get \((\varvec{p}\cdot \varvec{\omega }) (\varvec{f}\cdot \varvec{f})=0 \). As \(\varvec{f}\cdot \varvec{f}\ne 0\) by assumption, we obtain \(\varvec{p}\cdot \varvec{\omega }=0\).

Now we prove point 2. As \(\varvec{f}\cdot \varvec{a}=0\), Eq. (4.3a) implies that if \(p_0\ne 0\), then \(f_0=0\). Similarly, because \(\varvec{f}\cdot \varvec{p}=0\), from Eq. (4.7) it follows that \(p_0\ne 0\) implies \(\varvec{f}\cdot \varvec{f}= 0\).

From Eq. (4.3b), with \(f_0=0\), we have

so \((\varvec{f}\cdot \varvec{\omega })=0\) because \(\varvec{f}\ne \varvec{0}\). \(\square \)

We recapitulate the above considerations in the following.

Corollary 4.1

System (1.3) with \(\varvec{a}\ne \varvec{0}\) has a Darboux polynomial \(F=f_0 +\varvec{f}\cdot \varvec{x}\) with cofactor \(P=p_0+\varvec{p}\cdot \varvec{x}\) if

and

and

where (i, j, k) is a cyclic permutation of (1, 2, 3).

We split our further analysis into three disjoint cases corresponding to the number of non-vanishing components of vector \(\varvec{f}=(f_1,f_2,f_3)\). We assume that \(\varvec{a}\ne \varvec{0}\), \(\varvec{a},\varvec{\omega }\in \mathbb {R}^3\), and \(m_1,m_2\in \mathbb {R}\). Under these assumptions, our analysis is complete.

Case I: Let us assume that \(f_i\ne 0\) for \(i=1,2,3\). Then, Eqs. (4.12) imply that \(\varvec{p}=\varvec{a}\), and in turn, from Eq. (4.15) we obtain \(m_1=m_2=m_3=0\).

If \(\varvec{f}\cdot \varvec{f}\ne 0\), then by Proposition 4.1 \(\varvec{p}\cdot \varvec{\omega }=\varvec{a}\cdot \varvec{\omega }=0\) and \(p_0=0\). Therefore, in this case we take an arbitrary \(\varvec{f}\ne 0\) such that \(\varvec{f}\cdot \varvec{a}=0\), and then Eqs. (4.15), (4.12) and (4.13) are fulfilled. It remains to solve Eq. (4.14) for \(f_0\). By taking the scalar product of both sides of (4.14) with \(\varvec{a}\), we obtain

To summarize, if \(\varvec{f}\cdot \varvec{f}\ne 0\) and \(\varvec{f}\cdot \varvec{a}=0\), then \(F=f_0 +\varvec{f}\cdot \varvec{x}\) with \(f_0\) given above is a Darboux polynomial of (1.3), and \(P=\varvec{a}\cdot \varvec{x}\) is its cofactor. Note that the cofactor does not depend on a choice of \(\varvec{f}\). Thus, we have a family of Darboux polynomials parameterized by a complex vector \(\varvec{f}\) which is orthogonal to vector \(\varvec{a}\). As all these Darboux polynomials have the same cofactor, they form a two-dimensional complex linear space, see point 4 in Proposition 3.1. Each element of this vector space can be written as a linear combination of the following three polynomials

Compare this with formula (2.1).

Assume now that \(p_0\ne 0\); then by Proposition 4.1 we have \(f_0=0\) and \(\varvec{f}\cdot \varvec{f}=0\). It is easy to show that \(\varvec{f}\cdot \varvec{f}=0\) if and only if \(\varvec{f}=\varvec{b}+\mathrm {i}\varvec{c}\), with \(\varvec{b},\varvec{c}\in \mathbb {R}^3\) such that \(\varvec{b}\cdot \varvec{b}=\varvec{c}\cdot \varvec{c}\) and \(\varvec{b}\cdot \varvec{c}=0\). As \(\varvec{f}\cdot \varvec{a}=0\), we have \(\varvec{a}\cdot \varvec{b}=0\) and \(\varvec{a}\cdot \varvec{c}=0\). Thus, a real vector perpendicular to \(\varvec{b}\) and \(\varvec{c}\) is parallel to \(\varvec{a}\). Hence, because \(\varvec{f}\cdot \varvec{\omega }=0\), we have \(\varvec{\omega }=\lambda \varvec{a}\) for a certain \(\lambda \in \mathbb {R}\). It remains to determine \(p_0\). When multiplying Eq. (4.3b) by \(\varvec{b}\), we obtain

To summarize, if \(\varvec{f}\cdot \varvec{f}= 0\) and \(\varvec{f}\cdot \varvec{a}=0\), then \(F=\varvec{f}\cdot \varvec{x}\) is a Darboux polynomial of (1.3), and \(P=p_0+\varvec{a}\cdot \varvec{x}\) with \(p_0\) defined above is its cofactor. In fact, the above formulae define a family of Darboux polynomials for the given parameters of the system. To show this we assume that for a given \(\varvec{a}\) and \(\varvec{\omega }=\lambda \varvec{a}\), \(\varvec{f}_0=\varvec{b}_0 +\mathrm {i}\varvec{c}_0\) satisfies \(\varvec{f}_0\cdot \varvec{f}_0=0\) and \(\varvec{a}\cdot \varvec{f}_0=0\). Then \(\varvec{f}(s)=\varvec{b}(s) +\mathrm {i}\varvec{c}(s)\) where

satisfies \(\varvec{f}(s)\cdot \varvec{f}(s)=0\) and \(\varvec{a}\cdot \varvec{f}(s)=0\) for all \(s\in \mathbb {R}\). Moreover

Hence, for arbitrary \(s\in \mathbb {R}\), \(F(s)=\varvec{f}(s)\cdot \varvec{x}\) is a Darboux polynomial of the system and \(P=p_0 +\varvec{a}\cdot \varvec{x}\) is its cofactor.

To give explicit forms of vectors \(\varvec{b}\) and \(\varvec{c}\), we assume that \(a_1\ne 0\). Then, we can set

and \(p_0=\mathrm {i}\lambda \Vert \varvec{a} \Vert \). Substituting these formulas into (4.19) gives \(F(s)=\varvec{f}(s)\cdot \varvec{x}=\tfrac{e^{-\mathrm {i}s}}{\Vert \varvec{a} \Vert }F_1^{+}\), where \(F_1^{+}\) is given in (2.2). Since \(F_1^{+}\) is a complex Darboux polynomial, its complex conjugation is also a Darboux polynomial \(F_1^{-}={\overline{F}}_1^{+}\) with the cofactor \(P_1^{-}={\overline{P}}_1^{+}\).
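The isotropy and orthogonality conditions for this one-parameter family can be verified numerically. The sketch below assumes that the family is \(\varvec{f}(s)=e^{-\mathrm {i}s}\varvec{f}_0\), which is consistent with the relation \(F(s)=\tfrac{e^{-\mathrm {i}s}}{\Vert \varvec{a} \Vert }F_1^{+}\) stated above. The particular choice \(\varvec{b}_0=(-a_2,a_1,0)\), \(\varvec{c}_0=\varvec{a}\times \varvec{b}_0/\Vert \varvec{a}\Vert \) is a hypothetical one made for illustration and need not coincide with the explicit vectors used in the text.

```python
import cmath
import math


def dot(u, v):
    # Bilinear product WITHOUT complex conjugation, as in f . f = 0.
    return sum(ui * vi for ui, vi in zip(u, v))


def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def isotropic_family(a, s):
    """Return f(s) = exp(-i s) (b0 + i c0) for a real vector a with a[0] != 0."""
    norm_a = math.sqrt(dot(a, a))
    b0 = (-a[1], a[0], 0.0)                          # orthogonal to a
    c0 = tuple(ci / norm_a for ci in cross(a, b0))   # orthogonal to a and b0, |c0| = |b0|
    phase = cmath.exp(-1j * s)
    return tuple(phase * (bi + 1j * ci) for bi, ci in zip(b0, c0))


a = (1.0, 2.0, -0.5)
residuals = []
for s in (0.0, 0.7, 2.5):
    f = isotropic_family(a, s)
    residuals.append((abs(dot(f, f)), abs(dot(a, f))))
```

Both residuals vanish up to rounding for every \(s\), because multiplying \(\varvec{f}_0\) by a phase multiplies \(\varvec{f}_0\cdot \varvec{f}_0\) by \(e^{-2\mathrm {i}s}\) and \(\varvec{a}\cdot \varvec{f}_0\) by \(e^{-\mathrm {i}s}\).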

Case II: Here we assume that two components of \(\varvec{f}\) are different from zero. Let \(f_1 f_2\ne 0\) and let \(f_3 = 0\). Then, by (4.12) we get \(p_1=a_1\) and \(p_2=a_2\). Equations (4.15) reduce to the following system

As a homogeneous system for \((f_1,f_2)\) it has a non-zero solution if

We can assume that \(m_1m_2 \ne 0\). In fact, if \(m_1=0\), then \(p_3=a_3\) and \(m_2=0\), so this is the case considered in the previous subsection.

We split our further analysis into two parts with results collected in two lemmas.

Lemma 4.1

Assume that the \({\textit{STF}}\) system has a Darboux polynomial \(F=f_0 +f_1x_1+f_2x_2\) with \(f_1f_2\ne 0\) and \(m_1^2 +m_2^2\ne 0\). If \(a_1^2+a_2^2\ne 0\), then

and the parameters of the system satisfy the conditions

The cofactor of F is

Proof

As \(\varvec{f}\cdot \varvec{a}= a_1f_1+a_2f_2=0\) and \(f_1f_2\ne 0\), we have \(a_1a_2\ne 0\) and we can assume that \(\varvec{f}=(a_2, -a_1,0)\). Next, from (4.22) we obtain

and

As \(\varvec{f}\cdot \varvec{f}=a_1^2+a_2^2\ne 0\), from Proposition 4.1 we obtain that \(p_0=0\), and then condition \(\varvec{p}\cdot \varvec{\omega }=0\) reads

Finally, Eqs. (4.14) simplify to

Hence, \(f_0=-\,\omega _3\), and then the last equation in (4.30) coincides with (4.29). \(\square \)

Lemma 4.2

Assume that \(a_1=a_2=0\) and \(m_1^2 +m_2^2\ne 0\). If an \({\textit{STF}}\) system has a Darboux polynomial \(F=f_0 +f_1x_1+f_2x_2\) with \(f_1f_2\ne 0\), then either

1. \(\omega _3=0\) and

$$\begin{aligned} F_1^{\varepsilon } = f_0^{\varepsilon } +\varepsilon \sqrt{m_1 m_2} x_1 + m_1 x_2, \quad \varepsilon ^2=1, \end{aligned}\tag{4.31}$$

where

$$\begin{aligned} f_0^{\varepsilon }=\frac{-m_1\omega _1 + \varepsilon \sqrt{m_{1}m_2}\omega _2 }{a_3 + \varepsilon \sqrt{m_1m_2}}, \end{aligned}\tag{4.32}$$

are Darboux polynomials with cofactors

$$\begin{aligned} P_1^{\varepsilon }= (a_3 +\varepsilon \sqrt{m_1m_2})x_3. \end{aligned}\tag{4.33}$$

In this case, if \(a_3 +\varepsilon \sqrt{m_1m_2}=0\), then \(m_1\omega _1 - \varepsilon \sqrt{m_{1}m_2}\omega _2=0\) and \(F_1^{\varepsilon } = \varepsilon \sqrt{m_1 m_2} x_1 + m_1 x_2\) are first integrals of the system;

2. or \(\omega _3\ne 0\), and then \(\omega _1=\omega _2=0\) and \(m_2=-\,m_1\). In this case there are two Darboux polynomials

$$\begin{aligned} F_1^{\varepsilon } = -\,\mathrm {i}\varepsilon x_1 + x_2, \end{aligned}\tag{4.34}$$

with the corresponding cofactors

$$\begin{aligned} P_1^{\varepsilon }= -\,\mathrm {i}\varepsilon \omega _3 + (a_3 +\mathrm {i}\varepsilon m_1)x_3. \end{aligned}\tag{4.35}$$

Proof

Since, by assumption, \(a_1=a_2=0\), we have also \(p_1=p_2=0\). Next, from Eq. (4.22) we determine two values for \(p_{3}\)

From the same equation we conclude that up to a multiplicative constant, \(f_1 = \varepsilon \sqrt{m_1 m_2}\) and \(f_2=m_1\). Vector \(\varvec{f}\) is isotropic if \(f_1^2 +f_2^2=m_1(m_1+m_2)=-\,m_1m_3=0\). Let us first assume that \(m_3\ne 0\). Then, \(\varvec{f}\cdot \varvec{f}\ne 0\) and, by Proposition 4.1, \(p_0=0\), but we still have to solve Eqs. (4.14), which now reduce to

which give \(\omega _3=0\) and

If \(a_3 + \varepsilon \sqrt{m_1 m_2}=0\), then the third equation in (4.37) gives \(-m_1\omega _1 + \varepsilon \sqrt{m_1 m_2}\omega _2=0\) and the second statement in point 1 of the lemma follows.

If \(m_3=0\), i.e. \(m_2=-\,m_1\), we cannot claim that \(p_0=0\). But in this case we can set \(\varepsilon \sqrt{m_1 m_2}=\mathrm {i}\varepsilon m_1\). Now, Eqs. (4.13) and (4.14) reduce to the following system:

Hence, if \(p_0=0\), then \(\omega _3=0\) and \(F_1^{\varepsilon }\) and \(P_1^{\varepsilon }\) are given by formulae (4.31) and (4.33) with \(m_2=-\,m_1\).

On the other hand, if \(p_0\ne 0\), i.e. \(\omega _3\ne 0\), then necessarily \(f_0=0 \), and the third equation in (4.39) implies that \(\omega _1=\omega _2=0\). As \(f_0=0\), we rescale \(F_1^{\varepsilon }\) dividing it by \(m_1\) in order to obtain (4.34). \(\square \)

Case III: Here we assume that \(f_1\ne 0\) and \(f_2=f_3=0\). Thus, from equation \(\varvec{f}\cdot \varvec{a}=0\) we get \(a_1=0\), and similarly, \(p_1=0\). Then, from Eq. (4.6) we obtain immediately \(m_1=0\), \(p_2=a_2\) and \(p_3=a_3\). Now Eqs. (4.14) read

Thus, \(p_0=0\), and from the last two equations we deduce that \(\omega _2a_2 +\omega _3a_3=0\). Because, by assumption, \(\varvec{a}\ne 0\), we can set \((\omega _2,\omega _3) = \alpha (-\,a_3, a_2)\), and taking \(f_1=1\), we obtain \(f_0=-\,\alpha \).

To conclude, if \(a_1=m_1=0\) and \(\varvec{\omega }=(\omega _1, -\alpha a_3, \alpha a_2)\), then the system has the Darboux polynomial \(F_1= x_1-\alpha \) with the cofactor \(P_1 = a_2x_2 +a_3x_3\).

Collecting the results obtained for all the above cases gives the statement of Theorem 2.1.

4.2 Proof of Theorem 2.2

Proof

In the previous section we showed that if the \({\textit{STF}}\) system possesses a linear Darboux polynomial, then it is integrable, with the exception of the families distinguished in Lemma 4.1. These families depend generically on six real parameters, and there is no reasonable way to effectively and completely investigate their integrability. This is why we decided to investigate certain subfamilies which depend on a smaller number of parameters. Thus, in Theorem 2.2, we consider the \({\textit{STF}}\) system satisfying the two conditions \(\varvec{\omega }=\varvec{0}\) and \(a_1^2m_2=a_2^2m_1\).

Taking into account the statement of Theorem 2.2, we can assume that \(a_1a_2\ne 0\) and \(m_1m_2\ne 0\).

With the specified restrictions on parameters, the \({\textit{STF}}\) system possesses one additional Darboux polynomial \(F_1\) with the corresponding cofactor \(P_1\) given by

We first set \(a_1=a\sin \alpha \) and \(a_2=a\cos \alpha \), \(a=\sqrt{a_1^2+a_2^2}\). Next, we rotate coordinates \(\varvec{x}=A\varvec{y}\) in such a way that the Darboux polynomial \(F_1\) becomes a new coordinate

The transformed system reads

where parameters c and s are defined by \(a_3=a c\), \(a_3 + m_1 \cot \alpha =a s \), and

A particular solution is given by the intersection of the sphere \(F_0=y_1^2+y_2^2+y_3^2 -1=0\) with the plane \(F_{1}=y_1=0\), so it is the great circle \(y_2^2+y_3^2=1\). We parameterize it in the following way

where function x(t) satisfies the differential equation

The variational equations for this particular solution have the form

where

The explicit form of entries \(b_{i1}\) is irrelevant for our further considerations. The equation for \(Y_1\) separates from the other equations. Thus, we can assume that \(Y_1=0\), and then equations for \(Y_2\) and \(Y_3\) form a closed system called a normal variational system. If we choose

as a dependent variable, and

as an independent variable, then we obtain the second-order differential equation

with rational coefficients

The reduced form of this equation

is obtained by means of the transformation

The coefficient r(z) in (4.48) has the form

where

Equation (4.48) has three regular singular points at \(z=0\), \(z=1\) and \(z=\infty \), so it is a Riemann P equation. To prove non-integrability of the \({\textit{STF}}\) system by Theorem 3.1, we must show that the identity component of the differential Galois group of Eq. (4.48) is not Abelian. The facts concerning the differential Galois group of a general Riemann P equation are collected in “Appendix B” section.

In the above notation, \(\rho \), \(\sigma \) and \(\tau \) are the differences of exponents at singular points. From (4.51) we have \(\rho -\sigma +\tau =1\), so by Lemma B.1, the equation and its differential Galois group are reducible. Next, by Lemma B.4, if the identity component of the differential Galois group is Abelian, then either all the exponents are rational or the difference of the exponents at one point is an integer, and this singularity is not logarithmic.

Let us check the first possibility. Conditions \(\rho , \sigma \in \mathbb {Q}\) imply that \(c=s\). Recall that \(c=\tfrac{a_3}{a}\), and \(s=\tfrac{b}{a}=\tfrac{a_3}{a}+\tfrac{a_2m_1}{a_1a}\). Thus, we obtain condition \(a_2m_1=0\). However, the assumptions of the theorem exclude this case.

As \(\rho \) and \(\tau \) are not real numbers, for the second possibility we have only one choice, namely, that the difference of exponents \(\sigma \) at \(z=1\) is an integer.

In order to apply Lemma B.5, we must calculate all the exponents at all singularities. If we assume that \(\sigma =n\in \mathbb {N}\), then \(c=\tfrac{n-3+(n-1)s^2}{2s},\) and the exponents are

We calculate all the sums mentioned in Lemma B.5

Note that none of these sums belongs to the set \(\langle n\rangle \) defined as

This means that the singularity \(z=1\) is logarithmic.

To summarize, the identity component of the differential Galois group of the variational equation is solvable, but not Abelian. Hence, the system is not integrable; this finishes our proof. \(\square \)

4.3 Proof of Theorems 2.3–2.7

In this section we prove theorems concerning the non-integrability of the systems

where we defined \(\mu _3=-\,(\mu _1+\mu _2) \).

The system (4.52) has a particular phase curve defined by

The variational equations for this curve have the form

Only the subsystem for the first two variables is relevant for further consideration.

This is a normal variational system. It can be rewritten as a second-order equation, although this procedure is not unique. To find an optimal reduction, we can derive the second-order differential equation for variable \(Z = c_1X_1 +c_2X_2\) with arbitrary constant coefficients \(c_1\) and \(c_2\). We achieve this by the elimination of \(X_{1}\) and \(X_2\) from the equations

The obtained equation

has complicated coefficients. For further analysis, it is crucial to choose coefficients \(\varvec{c}\) in such a way that the obtained equation has the simplest form. For the problem considered here, a generic choice of coefficients \(\varvec{c}=[c_1,c_2]^{\mathrm{T}}\) leads to an equation with four regular singular points. However, we note that for all the values of the problem parameters except the case \(\mu _2=-\,\mu _1\), we can reduce the system to an equation with three regular singular points, i.e. to the Riemann P equation. To achieve this, we choose the independent variable

and set

as a dependent variable, where

Here, we assume that \(\omega \ne 0\). Then, w(z) satisfies the equation

where

This is the Riemann P equation; see “Appendix B” section. The differential Galois group of this equation is denoted by \({\mathscr {G}}\), and its identity component by \({\mathscr {G}}^{\circ }\). The differences of exponents \(\rho \) and \(\sigma \) are real or imaginary, depending on the values taken by \(\mu _1\) and \(\mu _2\), and the analysis of \({\mathscr {G}}^{\circ }\) splits into parts related to particular regions of \((\mu _1,\mu _2)\)-plane. We first consider the case when parameters belong to the region \({\mathscr {A}}\) defined by the following three inequalities

see Fig. 2.

Lemma 4.3

If \((\mu _1,\mu _2)\in {\mathscr {A}}\), then the identity component of the differential Galois group of Eq. (4.60) is not Abelian, except the case \(\mu _1=\mu _2=0\).

Proof

If \((\mu _1,\mu _2)\in {\mathscr {A}}\), then \(\rho , \sigma \in \mathrm {i}\mathbb {R}\), and \(\tau = 1 - 2\mathrm {i}\omega \sqrt{-\mu _1\mu _2}\) with \({\text {Im}}\tau = -\, 2\omega \sqrt{-\mu _1\mu _2}\), see formulae (4.61).

Assume that \((\mu _1,\mu _2)\in {\mathscr {A}}{\setminus }\{(0,0)\}\), and that the group \({\mathscr {G}}^{\circ }\) is Abelian. Then, by the Kimura Theorem B.1, either this group is reducible (case A of this theorem), or the differences of exponents \((\rho ,\sigma ,\tau )\) belong to an item of the table given for case B.

We show that case B does not occur for the considered domain of the parameters. It is impossible that \(\rho ,\sigma ,\tau \in \mathbb {R}\). In fact, if \(\rho ,\sigma \in \mathbb {R}\), then \( \rho =\sigma =0\), and this is possible only for \((\mu _1,\mu _2)=(1,-\,1)\) or \((\mu _1,\mu _2)=(-\,1,1)\), but for these two values \(\tau =1 -2\mathrm {i}\omega \not \in \mathbb {R}\). In this way we have excluded items 2 to 15 in the table of case B. If \((\rho ,\sigma ,\tau )\) belongs to the first item in this table, then two of these numbers belong to \(\tfrac{1}{2}+\mathbb {Z}\). Thus, either \(\rho \in \tfrac{1}{2}+\mathbb {Z}\) or \(\sigma \in \tfrac{1}{2}+\mathbb {Z}\). However, this is impossible.

Thus, if \({\mathscr {G}}^{\circ }\) is Abelian, then it is reducible (case A of the Kimura theorem). By Lemma B.4, it is possible in only two cases. Either \(\rho \), \(\tau \) and \(\sigma \) are rational, but we have already shown that this is impossible, or one of these numbers is an integer and the corresponding singularity is not logarithmic. The case \(\rho \in \mathbb {Z}\) implies that \(\rho =0\) and the singularity \(z=0\) is logarithmic. Similarly, if \(\sigma \in \mathbb {Z}\), then \(\sigma =0\) and the singularity \(z=1\) is logarithmic.

Thus, the only possibility is that \(\tau \in \mathbb {Z}\), but this immediately implies that \(\tau =1\) and \(\mu _1\mu _2=0\).

Assume that \(\mu _2=0\) and \(\mu _1\ne 0\). Then, \(\tau =1\) and \(\rho , \sigma \in \mathrm {i}\mathbb {R}\). Moreover, either \(\rho \ne 0\), or \(\sigma \ne 0\). Now, the necessary and sufficient condition for case A of the Kimura theorem implies that either \(\rho +\sigma =0\), or \(\rho -\sigma =0\). This implies that \(\rho ^2 =\sigma ^2\), but this is possible only if \(\mu _1=0\). However, we have excluded the case with \((\mu _1,\mu _2)=(0,0)\).

In a similar way we show that if \(\mu _1=0\) and \(\mu _2\ne 0\), then \({\mathscr {G}}^{\circ }\) is not Abelian.

To summarize, we have shown that if \((\mu _1,\mu _2)\in {\mathscr {A}}{\setminus }\{(0,0)\}\), then \({\mathscr {G}}^{\circ }\) is not Abelian.

It remains to be shown that if \(\mu _1=\mu _2=0\), then \({\mathscr {G}}^{\circ }\) is Abelian. In this case \(\rho =\sigma =\mathrm {i}\omega \) and \(\tau =1\), and the equation is reducible because \(\rho -\sigma +\tau =1\). We show that singularity \(z=\infty \) is not logarithmic. In fact, we have \(\tau _1=0\), \(\tau _2=-\,1\) and \(\rho _1=\sigma _1 = \tfrac{1}{2}(1+\mathrm {i}\omega )\), \(\rho _2=\sigma _2 = \tfrac{1}{2}(1-\mathrm {i}\omega )\). Thus, among the numbers

only \(s_{12}=s_{21}=1\) are integers. By Lemma B.5, this implies that the singularity is not logarithmic, and thus the group \({\mathscr {G}}^{\circ }\) is Abelian. \(\square \)
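The reducibility test that appears repeatedly in these proofs can be expressed as a small numerical check. The sketch below assumes the commonly quoted form of case A of the Kimura theorem: the Riemann P equation is reducible when at least one of \(\rho +\sigma +\tau \), \(-\rho +\sigma +\tau \), \(\rho -\sigma +\tau \), \(\rho +\sigma -\tau \) is an odd integer. Appendix B is not reproduced in this section, so the agreement of this criterion with Lemma B.1 and condition (B.7) is an assumption, as are the function names and the tolerance. The check says nothing about logarithmic singularities, so it does not by itself decide whether \({\mathscr {G}}^{\circ }\) is Abelian.

```python
import math


def is_odd_integer(z, tol=1e-9):
    """True if the complex number z is, within tol, a real odd integer."""
    z = complex(z)
    nearest = round(z.real)
    return (abs(z.imag) < tol
            and abs(z.real - nearest) < tol
            and nearest % 2 == 1)


def is_reducible(rho, sigma, tau, tol=1e-9):
    """Assumed case A criterion: one of the four signed sums is an odd integer."""
    candidates = (rho + sigma + tau, -rho + sigma + tau,
                  rho - sigma + tau, rho + sigma - tau)
    return any(is_odd_integer(c, tol) for c in candidates)


# Case mu1 = mu2 = 0 of Lemma 4.3: rho = sigma = i*omega and tau = 1,
# so rho - sigma + tau = 1 and the equation is reducible.
omega = 4.0
case_origin = is_reducible(1j * omega, 1j * omega, 1.0)

# Purely imaginary rho with rho + tau = 1 and integer sigma = n:
# rho + sigma + tau = n + 1 is odd only for even n.
rho = 2j * omega * math.sqrt(1.5)
tau = 1.0 - rho
even_sigma = is_reducible(rho, 2.0, tau)
odd_sigma = is_reducible(rho, 3.0, tau)
```

The last two calls illustrate why an even value of \(\sigma \) is singled out when \(\rho +\tau =1\): for odd \(\sigma \) none of the four sums is an odd integer.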

Now let us consider region \({\mathscr {B}}\) on the \((\mu _1,\mu _2)\) plane. It is defined by

see Fig. 2. We also define hyperbola \({\mathscr {H}}\) given by

and denote by \({\mathscr {H}}_{-}={\mathscr {H}}\cap {\mathscr {B}}\) its component contained in \({\mathscr {B}}\).

Lemma 4.4

For \((\mu _1,\mu _2)\in {\mathscr {B}}\), the identity component of the differential Galois group of Eq. (4.60) is Abelian if and only if \((\mu _1,\mu _2)\in {\mathscr {H}}_{-} \) and

where m is a non-zero integer.

Proof

If \((\mu _1,\mu _2)\in {\mathscr {B}}\), then \(\tau =1-2\mathrm {i}\omega \sqrt{-\mu _1\mu _2}\), \(\rho \in \mathrm {i}\mathbb {R}\), \(\rho \ne 0\) and \(\sigma \in \mathbb {R}\). Moreover, if \(\mu _1\mu _2\ne 0\), then \(\tau \not \in \mathbb {R}\).

Let us assume that the group \({\mathscr {G}}^{\circ }\) is Abelian. Then, we proceed as in the previous lemma. We first show that case B of the Kimura theorem is impossible. In fact, because \(\rho \not \in \mathbb {R}\), only the first item in the table of case B is possible. Therefore, \(\sigma \in \tfrac{1}{2}+\mathbb {Z}\), \(\tau \in \tfrac{1}{2}+\mathbb {Z}\), but the last condition is impossible.

Thus, if \({\mathscr {G}}^{\circ }\) is Abelian, then Eq. (4.60) is reducible. From the condition (B.7) for this case we deduce that \(\rho =\pm {\text {im}}\tau \). By squaring this equality, we obtain

This is exactly the hyperbola \({\mathscr {H}}\) defined by (4.65) and one of its components denoted by \({\mathscr {H}}_-\) lies in region \({\mathscr {B}}\).

Since \(\rho \) is not rational, either \(\sigma \in \mathbb {Z}\) or \(\tau \in \mathbb {Z}\). If \(\tau \in \mathbb {Z}\), then \(\mu _1\mu _2=0\), and from (4.67) we obtain a contradiction. Thus, \(\sigma \in \mathbb {Z}\). We can assume that \(\sigma =n>0\), so \(\sigma _1=\tfrac{1}{2}(1+n)> \sigma _2=\tfrac{1}{2}(1-n)\). We also denote the remaining exponents as \(\rho _1=\tfrac{1}{2}(1+\rho )\), \(\rho _2=\tfrac{1}{2} (1-\rho )\) and \(\tau _1=\tfrac{1}{2}(-\,1+\tau )\), \(\tau _2=\tfrac{1}{2}(-\,1-\tau )\). If \((\mu _1, \mu _2)\in {\mathscr {H}}_-\), then \(\rho =2\mathrm {i}\omega \sqrt{-\mu _1\mu _2}\). Hence, \(\rho +\tau =1\) for \((\mu _1, \mu _2)\in {\mathscr {H}}_-\). In order to apply Lemma B.5, we must calculate the four numbers

By this lemma, the singularity \(z=1\) is not logarithmic if and only if one of these numbers is an element of \(\{1,2, \ldots , n\}\). But

and \(s_{12}, s_{21}\not \in \mathbb {R}\). This proves our claim.

To finish the proof of the lemma, it is enough to rewrite the equality \(n^2=4m^2=\sigma ^2\) in the form (4.66). \(\square \)
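The parity of \(n\) behind the equality \(n^2=4m^2\) can be checked as follows. This is a sketch under the assumption that condition (B.7), which is not reproduced in this section, requires at least one of \(\rho +\sigma +\tau \), \(-\rho +\sigma +\tau \), \(\rho -\sigma +\tau \), \(\rho +\sigma -\tau \) to be an odd integer. On \({\mathscr {H}}_-\) we have \(\rho +\tau =1\), \(\rho \in \mathrm {i}\mathbb {R}{\setminus }\{0\}\) and \(\sigma =n\), so

\[
\rho +\sigma +\tau = 1+n, \qquad \rho -\sigma +\tau = 1-n, \qquad -\rho +\sigma +\tau = 1+n-2\rho , \qquad \rho +\sigma -\tau = n-1+2\rho .
\]

The last two numbers are not real, and each of the first two is an odd integer exactly when \(n\) is even. Hence \(n=2m\) with a non-zero integer \(m\), which is consistent with \(n^2=4m^2=\sigma ^2\).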

Let us consider region \({\mathscr {B}}'\) on the \((\mu _1,\mu _2) \) plane defined by

see Fig. 2. We also define curve \({\mathscr {H}}'\) given by the equation

and we denote its component contained in \({\mathscr {B}}'\) as \({\mathscr {H}}_{-}'={\mathscr {H}}'\cap {\mathscr {B}}'\).

Lemma 4.5

For \((\mu _1,\mu _2)\in {\mathscr {B}}'\), the identity component of the differential Galois group of Eq. (4.61) is Abelian if and only if \((\mu _1,\mu _2)\in {\mathscr {H}}'_{-} \) and

where m is a non-zero integer.

The proof of this lemma is similar to the previous one, so we leave it to the reader.

Now, the proof of Theorem 2.3 follows directly from Lemmas 4.3–4.5.

For the system (4.52) with parameters \((\mu _1,\mu _2)\in {\mathscr {C}}\), see Eq. (2.23) and Fig. 3, we can prove the following.

Lemma 4.6

For \((\mu _1,\mu _2)\in {\mathscr {C}}\), the identity component of the differential Galois group of Eq. (B.4) is Abelian if and only if \(\mu _1=\mu _2=\mu \) and \(\omega \mu \in \mathbb {Z}\).

Proof

If \((\mu _1,\mu _2)\in {\mathscr {C}}\), then \(\rho ,\sigma \in \mathrm {i}\mathbb {R}\) and \(\tau \in \mathbb {R}\). Assume that the group \({\mathscr {G}}^{\circ }\) is Abelian. Then, the Kimura Theorem B.1 gives two possibilities. As in the previous proofs, we first exclude case B of this theorem. If \(\rho \in \mathbb {R}\), then \(\rho =0\), and, similarly, if \(\sigma \in \mathbb {R}\), then \(\sigma =0\), and this eliminates all the items in the table for case B. In fact, for case B at least two of the numbers \(\rho \), \(\sigma \) and \(\tau \) are non-zero real numbers.

Thus, if \({\mathscr {G}}^{\circ }\) is Abelian, then Eq. (4.60) is reducible. The necessary conditions (B.7) for this case imply that \(\rho ^2=\sigma ^2\), so \(\mu _1=\mu _2=\mu \). Moreover, the same conditions require that \(\tau =1-2\omega \mu =m= 2n+1\) for a certain \(n\in \mathbb {Z}\). Thus, \(\omega \mu \in \mathbb {Z}\). To prove that \({\mathscr {G}}^{\circ }\) is Abelian in this case, it is enough to show that infinity is not a logarithmic singularity. We apply Lemma B.5. The exponents at infinity are \(\tau _1=n\) and \(\tau _2= -\,(1+n)\). We can assume that \(n>0\). Now, among the numbers

we have \(s_{12}=s_{21}=1+n\). Hence, by Lemma B.5 the singularity is not logarithmic and the group \({\mathscr {G}}^{\circ }\) is Abelian. \(\square \)
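The values of the exponents at infinity used in this proof can be verified directly, assuming the same convention \(\tau _{1,2}=\tfrac{1}{2}(-\,1\pm \tau )\) as in the proof of Lemma 4.4. With \(\tau =1-2\omega \mu =2n+1\) we get

\[
\omega \mu =\tfrac{1}{2}(1-\tau )=-\,n\in \mathbb {Z}, \qquad \tau _1=\tfrac{1}{2}(-\,1+\tau )=n, \qquad \tau _2=\tfrac{1}{2}(-\,1-\tau )=-\,(1+n),
\]

so that \(\tau _1-\tau _2=2n+1=\tau \), as expected for the difference of exponents.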

Now, we consider region \({\mathscr {D}}\) in Fig. 3 defined by the following inequalities

We also define a family of hyperbolas

parametrized by two odd integers \(k,l\in \mathbb {Z}\).

Lemma 4.7

Assume that \((\mu _1,\mu _2)\in {\mathscr {D}}\). Then, the group \({\mathscr {G}}^{\circ }\) is Abelian if and only if \((\mu _1,\mu _2)\in {\mathscr {H}}_{k,l}\) and

for certain odd integers \(k,l\in \mathbb {Z}\).

Proof

For \((\mu _1,\mu _2)\in {\mathscr {D}}\), \(\rho \in \mathrm {i}\mathbb {R}\), \(\rho \ne 0\), and \(\sigma ,\tau \in \mathbb {R}\).

Assume that the group \({\mathscr {G}}^{\circ }\) is Abelian. Then, by the Kimura Theorem B.1, we have two possibilities. However, case A of this theorem is impossible. In case B we have only one possibility, which is the first item in the table for this case:

for certain integers s and t. Let \(l=2s+1\) and let \(k=2t-1\). Then, eliminating \(\omega \) from the equations

we obtain an equation defining \({\mathscr {H}}_{k,l}\). Moreover, from the second of the above equations we obtain (4.76). This ends our proof. \(\square \)

Theorem 2.5 follows directly from the above lemma.

A similar result holds for region \({\mathscr {D}}'\) defined in Eq. (2.27) and drawn in Fig. 3.

Lemma 4.8

Assume that \((\mu _1,\mu _2)\in {\mathscr {D}}'\). Then, the identity component \({\mathscr {G}}^{\circ }\) of the differential Galois group of Eq. (B.4) is Abelian if and only if \((\mu _1,\mu _2)\in {\mathscr {H}}_{k,l}'\) defined in Eq. (2.28), and

for certain odd integers \(k,l\in \mathbb {Z}\).

The proof of this lemma is similar to the proof of the previous one, and so we omit it. This lemma proves Theorem 2.6.

We prove Theorem 2.7 by contradiction. Let us assume that the system is integrable. Then the group \({\mathscr {G}}^{\circ }\) is Abelian. Again, we invoke the Kimura theorem. Case A of this theorem cannot occur: because \(\rho \) and \(\sigma \) are not rational, by Lemma B.4 the difference of exponents \(\tau \) must be an integer, but this is excluded by assumption. Case B of the Kimura theorem is also impossible, because \(\rho \) and \(\sigma \) are not rational. Hence, \({\mathscr {G}}^{\circ }\) is not Abelian. This contradiction proves the theorem.

5 Final Remarks

When we started our analysis of the Bajer–Moffatt system (1.3), we did not expect to find many integrable cases. Thus, the fact that almost all the cases with a degree one Darboux polynomial are integrable was a surprise. This is why we distinguished and classified all the cases where the Bajer–Moffatt system (1.3) has a Darboux polynomial of degree one in variables.

In Lemma 4.1 we distinguish three families that admit a linear Darboux polynomial F of the form (4.24). A common level \(F(\varvec{x})=F_0(\varvec{x})=0\) gives a particular phase curve, so we could potentially apply differential Galois methods to study the integrability of these cases. However, there are two difficulties that block this idea. First of all, necessary integrability conditions distinguish algebraic sets of codimension one in the space of parameters. When the number of parameters is large, it is practically impossible to distinguish all of them. Moreover, the variational equations for this case do not reduce to the Riemann P equation, and this fact makes the problem even more difficult.

The key point in the proofs of Theorems 2.3–2.7 is the reduction of the variational equations to the Riemann P equation. Thanks to the Kimura theorem, we know all the cases where the identity component of the differential Galois group is solvable. Moreover, we supplement this analysis with a criterion, see Lemma B.4, which distinguishes cases where this group is Abelian. The system depends on three parameters; however, only two of them, \(\mu _1\) and \(\mu _2\), play a crucial role. This is why we divided the \((\mu _1, \mu _2) \) plane into non-overlapping regions, and we performed our analyses in each of these regions separately.

Most interesting are the cases where the system satisfies the necessary conditions for integrability. In the parameter space \((\mu _1, \mu _2,\omega ) \), they form surfaces. If a system is integrable, then the parameters necessarily belong to one of these surfaces. However, numerical tests show that generically the system is not integrable in these cases. Moreover, although we performed additional searches using the direct method, we did not find integrable cases.

The most peculiar case corresponds to the two-dimensional plane \(\mu _1=\mu _2\) in \((\mu _1, \mu _2,\omega ) \) space. We performed intensive numerical tests looking for signs of non-integrability, but without success. The Poincaré cross-sections are presented in Figs. 5 and 6. They show the behaviour of trajectories when the necessary condition for integrability \(\mu \omega \in \mathbb {Z}\) is fulfilled (see Fig. 5), as well as when it is not satisfied (see Fig. 6). Magnifications of regions shown in these figures are small, but in both cases we have searched for chaos in neighbourhoods of unstable periodic solutions of size \(10^{-5}\) to \(10^{-6}\), and we did not find any. However, chaos appears immediately when we leave region \({\mathscr {C}}\), i.e. when we take \(\mu _1=\mu _2>1\), see Fig. 8. This strange behaviour requires separate investigation.

References

Aqeel, M., Yue, B.Z.: Nonlinear analysis of stretch–twist–fold (STF) flow. Nonlinear Dyn. 72(3), 581–590 (2013)

Ayoul, M., Zung, N.T.: Galoisian obstructions to non-Hamiltonian integrability. C. R. Math. Acad. Sci. Paris 348(23–24), 1323–1326 (2010)

Bajer, K.: Flow kinematics and magnetic equilibria. Ph.D. Thesis, Cambridge University (1989)

Bajer, K., Moffatt, H.K.: On a class of steady confined Stokes flows with chaotic streamlines. J. Fluid Mech. 212, 337–363 (1990)

Bao, J., Yang, Q.: Darboux integrability of the stretch–twist–fold flow. Nonlinear Dyn. 76(1), 797–807 (2014)

Basak, I.: Explicit solution of the Zhukovski–Volterra gyrostat. Regul. Chaot. Dyn. 14(2), 223–236 (2009)

Boucher, D.: Sur les équations différentielles linéaires paramétrées, une application aux systèmes hamiltoniens. Ph.D. Thesis, Université de Limoges, France (2000)

Byrd, P.F., Friedman, M.D.: Handbook of elliptic integrals for engineers and scientists. Die Grundlehren der mathematischen Wissenschaften, Band 67, 2nd edition, revised. Springer, New York (1971)

Casale, G.: Morales–Ramis theorems via Malgrange pseudogroup. Ann. Inst. Fourier (Grenoble) 59(7), 2593–2610 (2009)

Childress, S.: Fast dynamo theory. In: Moffatt, H.K., Zaslavsky, G.M., Comte, P., Tabor, M. (eds.) Topological Aspects of the Dynamics of Fluids and Plasmas, pp. 111–147. Springer, Dordrecht (1992)

Childress, S., Gilbert, A.D.: Stretch, Twist, Fold: The Fast Dynamo. Springer, Berlin (1995)

Churchill, R.C.: Two generator subgroups of \(\mathrm {SL}(2,\mathbb {C})\) and the hypergeometric, Riemann, and Lamé equations. J. Symb. Comput. 28(4–5), 521–545 (1999)

Fountain, G.O., Khakhar, D.V., Ottino, J.M.: Visualization of three-dimensional chaos. Science 281(5377), 683–686 (1998)

Fountain, G.O., Khakhar, D.V., Mezić, I., Ottino, J.M.: Chaotic mixing in a bounded three-dimensional flow. J. Fluid Mech. 417, 265–301 (2000)

Iwasaki, K., Kimura, H., Shimomura, S., Yoshida, M.: From Gauss to Painlevé, A modern theory of special functions. Aspects of Mathematics, E16. Friedr. Vieweg & Sohn, Braunschweig (1991)

Kaplansky, I.: An Introduction to Differential Algebra, 2nd edn. Hermann, Paris (1976)

Kimura, T.: On Riemann’s equations which are solvable by quadratures. Funkcial. Ekvac. 12, 269–281 (1969/1970)

Kovacic, J.J.: An algorithm for solving second order linear homogeneous differential equations. J. Symb. Comput. 2(1), 3–43 (1986)

Lakshmanan, M., Rajasekar, S.: Nonlinear Dynamics. Advanced Texts in Physics. Springer, Berlin (2003)

Lerman, L., Yakovlev, E.: On interrelations between divergence-free and Hamiltonian dynamics. J. Geom. Phys. 135, 70–79 (2019)

Moffatt, H.K., Proctor, M.R.E.: Topological constraints associated with fast dynamo action. J. Fluid Mech. 154, 493–507 (1985)

Morales Ruiz, J.J.: Differential Galois Theory and Non-integrability of Hamiltonian Systems, Volume 179 of Progress in Mathematics. Birkhäuser, Basel (1999)

Neishtadt, A.I., Vainshtein, D.L., Vasiliev, A.A.: Adiabatic Chaos of Streamlines in a Family of 3D Confined Stokes Flows, pp. 618–624. Springer, Dordrecht (1999)

Neishtadt, A.I., Simó, C., Vasiliev, A.: Geometric and statistical properties induced by separatrix crossings in volume-preserving systems. Nonlinearity 16(2), 521–557 (2003)

Nishiyama, T.: Meromorphic non-integrability of a steady Stokes flow inside a sphere. Ergod. Theory Dyn. Syst. 34(2), 616–627 (2014a)

Nishiyama, T.: Algebraic approach to non-integrability of Bajer–Moffatt’s steady Stokes flow. Fluid Dyn. Res. 46(6), 061426 (2014b)

Nowicki, A.: Polynomial Derivations and Their Rings of Constants. N. Copernicus University Press, Toruń (1994). http://www.mat.uni.torun.pl/~anow/polder.html