Abstract

We present an existence and stability theory for gravity–capillary solitary waves on the top surface of and interface between two perfect fluids of different densities, the lower one being of infinite depth. Exploiting a classical variational principle, we prove the existence of a minimiser of the wave energy \({\mathcal {E}}\) subject to the constraint \({\mathcal {I}}=2\mu \), where \({\mathcal {I}}\) is the wave momentum and \(0< \mu <\mu _0\), with \(\mu _0\) chosen small enough for the validity of our calculations. Since \({\mathcal {E}}\) and \({\mathcal {I}}\) are both conserved quantities, a standard argument asserts the stability of the set \(D_\mu \) of minimisers: solutions starting near \(D_\mu \) remain close to \(D_\mu \) in a suitably defined energy space over their interval of existence. The solitary waves which we construct are of small amplitude and are to leading order described by the cubic nonlinear Schrödinger equation. They exist in a parameter region in which the ‘slow’ branch of the dispersion relation has a strict non-degenerate global minimum and the corresponding nonlinear Schrödinger equation is of focussing type. The waves detected by our variational method converge (after an appropriate rescaling) to solutions of the model equation as \(\mu \downarrow 0\).



1 Introduction

1.1 The Model

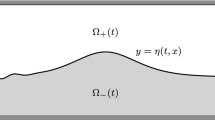

We consider a two-layer perfect fluid with irrotational flow subject to the forces of gravity, surface tension and interfacial tension. The lower layer is assumed to be of infinite depth, while the upper layer has finite asymptotic depth \({\overline{h}}\). We assume that the density \({\underline{\rho }}\) of the lower fluid is strictly greater than the density \({\overline{\rho }}\) of the upper fluid. The layers are separated by a free interface \(\{y={\underline{\eta }}(x,t)\}\), and the upper one is bounded from above by a free surface \(\{y={\overline{h}}+{\overline{\eta }}(x,t)\}\). The fluid motion in each layer is described by the incompressible Euler equations. The fluid occupies the domain \({\underline{\Sigma }}({\underline{\eta }}) \cup {\overline{\Sigma }}(\varvec{\eta })\), where

$$\begin{aligned} {\underline{\Sigma }}({\underline{\eta }})=\{(x,y)\in {\mathbb {R}}^2: y<{\underline{\eta }}(x,t)\}, \qquad {\overline{\Sigma }}(\varvec{\eta })=\{(x,y)\in {\mathbb {R}}^2: {\underline{\eta }}(x,t)<y<{\overline{h}}+{\overline{\eta }}(x,t)\}, \end{aligned}$$

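and \(\varvec{\eta }=({\underline{\eta }}, {\overline{\eta }})\). Since the flow is assumed to be irrotational in each layer, there exist velocity potentials \({\underline{\phi }}\) and \({\overline{\phi }}\) satisfying

$$\begin{aligned} \Delta {\underline{\phi }}=0 \quad \text {in } {\underline{\Sigma }}({\underline{\eta }}), \qquad \Delta {\overline{\phi }}=0 \quad \text {in } {\overline{\Sigma }}(\varvec{\eta }). \end{aligned}$$
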
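On the interface \(\{y={\underline{\eta }}\}\) we have the kinematic boundary conditions

$$\begin{aligned} {\underline{\eta }}_t=\sqrt{1+{\underline{\eta }}_x^2}\,\nabla {\underline{\phi }}\cdot {\underline{n}}=\sqrt{1+{\underline{\eta }}_x^2}\,\nabla {\overline{\phi }}\cdot {\underline{n}}, \end{aligned}$$
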
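where

$$\begin{aligned} {\underline{n}}=\frac{1}{\sqrt{1+{\underline{\eta }}_x^2}}\begin{pmatrix} -{\underline{\eta }}_x\\ 1 \end{pmatrix} \end{aligned}$$
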
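is the upward unit normal vector to the interface. In particular, this implies that the normal component of the velocity is continuous across the interface. At the free surface \(\{y={\overline{h}}+{\overline{\eta }}\}\), the kinematic boundary condition reads

$$\begin{aligned} {\overline{\eta }}_t=\sqrt{1+{\overline{\eta }}_x^2}\,\nabla {\overline{\phi }}\cdot {\overline{n}}, \end{aligned}$$
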
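where

$$\begin{aligned} {\overline{n}}=\frac{1}{\sqrt{1+{\overline{\eta }}_x^2}}\begin{pmatrix} -{\overline{\eta }}_x\\ 1 \end{pmatrix} \end{aligned}$$
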
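is the outer unit normal vector at the surface. In addition, we have the Bernoulli conditions

$$\begin{aligned} {\underline{\rho }}\Big ({\underline{\phi }}_t+\frac{1}{2}|\nabla {\underline{\phi }}|^2+g{\underline{\eta }}\Big )-{\overline{\rho }}\Big ({\overline{\phi }}_t+\frac{1}{2}|\nabla {\overline{\phi }}|^2+g{\underline{\eta }}\Big )-{\underline{\sigma }}\left( \frac{{\underline{\eta }}_x}{\sqrt{1+{\underline{\eta }}_x^2}}\right) _x=0 \end{aligned}$$
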
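and

$$\begin{aligned} {\overline{\rho }}\Big ({\overline{\phi }}_t+\frac{1}{2}|\nabla {\overline{\phi }}|^2+g{\overline{\eta }}\Big )-{\overline{\sigma }}\left( \frac{{\overline{\eta }}_x}{\sqrt{1+{\overline{\eta }}_x^2}}\right) _x=0 \end{aligned}$$
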
at the interface and surface, respectively, where \(g>0\) is the acceleration due to gravity, \({\overline{\sigma }}>0\) the coefficient of surface tension, and \({\underline{\sigma }}>0\) the coefficient of interfacial tension.

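In order to obtain dimensionless variables we define

$$\begin{aligned} x'=\frac{x}{{\overline{h}}},\quad y'=\frac{y}{{\overline{h}}},\quad t'=\sqrt{\frac{g}{{\overline{h}}}}\,t,\quad {\underline{\eta }}'=\frac{{\underline{\eta }}}{{\overline{h}}},\quad {\overline{\eta }}'=\frac{{\overline{\eta }}}{{\overline{h}}},\quad {\underline{\phi }}'=\frac{{\underline{\phi }}}{{\overline{h}}\sqrt{g{\overline{h}}}},\quad {\overline{\phi }}'=\frac{{\overline{\phi }}}{{\overline{h}}\sqrt{g{\overline{h}}}} \end{aligned}$$
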
and obtain the equations (dropping the primes for notational simplicity)

with boundary conditions

and

in which \(\rho :={\overline{\rho }}/{\underline{\rho }}\in (0,1)\), \({\underline{\beta }}:={\underline{\sigma }}/(g {\overline{h}}^2 {\underline{\rho }})>0\) and \({\overline{\beta }}:={\overline{\sigma }}/(g {\overline{h}}^2 {\overline{\rho }})>0\). The total energy

and the total horizontal momentum

are conserved quantities.

Our interest lies in solitary-wave solutions of (1.1)–(1.8), that is, localised waves of permanent form which propagate in the negative x-direction with constant (dimensionless) speed \(\nu >0\), so that \({\underline{\eta }}(x,t)={\underline{\eta }}(x+\nu t)\), \({\overline{\eta }}(x,t)={\overline{\eta }}(x+\nu t)\), \({\underline{\phi }}(x,y,t)={\underline{\phi }}(x+\nu t,y)\) and \({\overline{\phi }}(x,y,t)={\overline{\phi }}(x+\nu t,y)\), and \({\underline{\eta }}(x+\nu t), {\overline{\eta }}(x+\nu t) \rightarrow 0\) as \(|x+\nu t|\rightarrow \infty \). Figure 1 contains a sketch of the physical setting.

1.2 Heuristics

The existence of small-amplitude solitary waves can be predicted by studying the dispersion relation of the linearised version of (1.1)–(1.8). Instead of linearising (1.1)–(1.8) directly, we may derive the dispersion relation by using the fact that these waves are minimisers of an energy functional \({\mathcal {J}}_\mu (\varvec{\eta })={\mathcal {K}}(\varvec{\eta })+\mu ^2/{\mathcal {L}}(\varvec{\eta })\), where \(\mu \) is the momentum (see Sect. 1.3 below). Writing the corresponding Euler–Lagrange equation in the form \({\mathcal {K}}'(\varvec{\eta })-\nu ^2 {\mathcal {L}}'(\varvec{\eta })=0\), where \(\nu =\mu /{\mathcal {L}}(\varvec{\eta })\) is the wave speed, linearising and substituting the ansatz \(\varvec{\eta }(x)=\cos (kx) \varvec{v}\), with \(\varvec{v}\) a constant vector, leads to the equation

where

(The formula for \({\mathcal {J}}_\mu '(\varvec{\eta })\) and its linearisation can be obtained from Lemmas A.27 and A.28.) Equivalently, \(\nu ^2\) is an eigenvalue and \(\varvec{v}\) an eigenvector of the matrix

(assuming that \(k\ne 0\) so that F(k) is invertible). The eigenvalues are given by

with

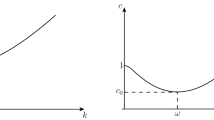

It follows that \(\lambda _-(k)<\lambda _+(k)\) for all \(k\ne 0\), meaning that for each wavenumber \(k\ne 0\) there is an associated ‘slow’ speed \(\sqrt{\lambda _-(k)}\) and a ‘fast’ speed \(\sqrt{\lambda _+(k)}\) (see Fig. 2). Moreover,

as \(k\rightarrow 0\). As \(|k|\rightarrow \infty \) we have that

Since

we have that \(\lambda _\pm (k) \rightarrow \infty \) as \(|k|\rightarrow \infty \). In view of the behaviour at 0, we conclude that \(\lambda _-(k)\) is minimised at some \(k=k_0>0\).
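The eigenvalue problem above can be explored numerically. Since the explicit matrices are not reproduced in this copy, the sketch below assumes (our reconstruction from the quadratic parts of \({\mathcal {K}}\) and \({\mathcal {L}}\), not a formula quoted from the paper) that \(P(k)=\mathrm{diag}(1-\rho +{\underline{\beta }}k^2,\ \rho (1+{\overline{\beta }}k^2))\) and \(F(k)=|k|\begin{pmatrix} 1+\rho \coth |k| & -\rho \,\mathrm{csch}|k|\\ -\rho \,\mathrm{csch}|k| & \rho \coth |k|\end{pmatrix}\). With these choices \(\lambda _\pm (k)\) are the roots of \(\det (P(k)-\lambda F(k))=0\), and the limiting minimisers quoted in Examples 1.5 and 1.6 are reproduced. The function names are hypothetical, and \(k_0\) is located by a plain grid search rather than by any method from the paper.

```python
import numpy as np

def dispersion_branches(k, rho, beta_lo, beta_up):
    """Return (lambda_minus, lambda_plus) for wavenumbers k != 0.

    ASSUMPTION: reconstructed symbols
      P(k) = diag(1 - rho + beta_lo k^2, rho (1 + beta_up k^2)),
      F(k) = |k| [[1 + rho coth|k|, -rho csch|k|], [-rho csch|k|, rho coth|k|]],
    with beta_lo / beta_up the interfacial / surface tension parameters.
    """
    k = np.abs(np.asarray(k, dtype=float))
    coth, csch = 1.0 / np.tanh(k), 1.0 / np.sinh(k)
    P11 = 1.0 - rho + beta_lo * k**2
    P22 = rho * (1.0 + beta_up * k**2)
    F11 = k * (1.0 + rho * coth)
    F12 = -rho * k * csch
    F22 = rho * k * coth
    # det(P - lam F) = a lam^2 - b lam + c, using that P is diagonal
    a = F11 * F22 - F12**2
    b = P11 * F22 + P22 * F11
    c = P11 * P22
    # b^2 - 4ac written as a sum of squares, so the square root is always real
    disc = np.sqrt((P11 * F22 - P22 * F11) ** 2 + 4.0 * F12**2 * P11 * P22)
    lam_minus = 2.0 * c / (b + disc)   # smaller root, free of cancellation
    lam_plus = (b + disc) / (2.0 * a)
    return lam_minus, lam_plus

def slow_branch_minimum(rho, beta_lo, beta_up, kmin=1e-3, kmax=20.0, n=200_001):
    """Grid search for the minimiser k0 > 0 of lambda_minus on [kmin, kmax]."""
    k = np.linspace(kmin, kmax, n)
    lam_minus, _ = dispersion_branches(k, rho, beta_lo, beta_up)
    i = int(np.argmin(lam_minus))
    return k[i], lam_minus[i]

# Parameters in the regime of Example 1.5 (small rho, beta_lo < 1/4, beta_up >= 1/3)
k0, nu0_sq = slow_branch_minimum(rho=1e-4, beta_lo=0.1, beta_up=0.5)
print(k0, nu0_sq)   # should be close to 1/sqrt(0.1) and 2*sqrt(0.1)
```

Computing the smaller root as \(2c/(b+\sqrt{b^2-4ac})\) avoids subtracting nearly equal numbers when the two branches are far apart.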

In order to find solitary waves we will assume the following non-degeneracy conditions.

Assumption 1.1

and

The first part of the assumption is introduced in order to avoid resonances. The second part is introduced in order to obtain inequality (1.14) below. This in turn dictates the choice of model equation (the cubic nonlinear Schrödinger equation). We note that these conditions are satisfied for generic parameter values, but that there are exceptions; see Figs. 3 and 4.

Set \(\nu _0=\sqrt{\lambda _-(k_0)}\) and note that \(\varvec{v}_0=(1,-a)\) is an eigenvector to the eigenvalue \(\nu _0^2\) of the matrix \(F(k_0)^{-1}P(k_0)\), in which

For future use we also introduce the matrix-valued function

which satisfies \(g(k_0)\varvec{v}_0=0\) and (due to the second part of Assumption 1.1 and evenness)

for \(||k|-k_0|\ll 1\), where c is a positive constant.

Fig. 4: Numerical computations indicate that \(\lambda _-(k)\) has a degenerate minimum at \(k=1\) (\(\lambda _-'(1)=\lambda _-''(1)=\lambda _-'''(1)=0\), \(\lambda _-^{(iv)}(1)>0\)) for \(\rho \approx 0.063\), \({\underline{\beta }}\approx 0.939\), \({\overline{\beta }}\approx 0.232\), in violation of condition (1.11).

Bifurcations of nonlinear solitary waves are expected whenever the linear group and phase speeds are equal, so that \(\nu ^\prime (k)=0\) [see Dias and Kharif (1999, Sect. 3)]. We therefore expect the existence of small-amplitude solitary waves with speed near \(\nu _0\), bifurcating from a linear periodic wave train with frequency \(k_0 \nu _0\). Making the ansatz

where ‘\(\mathrm {c.c.}\)’ denotes the complex conjugate of the preceding quantity, and expanding in powers of \(\mu \) one obtains the cubic nonlinear Schrödinger equation

for the complex amplitude A, in which

and \(A_3\) and \(A_4\) are functions of \(\rho \), \({\underline{\beta }}\) and \({\overline{\beta }}\) which are given in Proposition 2.27 and Corollary 2.24. At this level of approximation a standing wave solution to Eq. (1.15) of the form \(A(X,T)=\mathrm {e}^{i\nu _\mathrm {NLS}\,T}\phi (X)\) with \(\phi (X) \rightarrow 0\) as \(X \rightarrow \pm \infty \) corresponds to a solitary water wave with speed

Lemma 1.2

\(A_2>0\) under Assumption 1.1.

Proof

Let \(\varvec{v}(k)\), defined for \(k\) near \(k_0\), be a smooth curve of eigenvectors of \(F(k)^{-1}P(k)\) corresponding to the eigenvalue \(\lambda _-(k)\) with \(\varvec{v}(k_0)=\varvec{v}_0\). Then

and

Evaluating the first equation at \(k=k_0\) and using that \(\lambda _-'(k_0)=0\), we find that

Taking the scalar product of the second equation with \(\varvec{v}(k)\), evaluating at \(k=k_0\) and using the previous equality, we therefore find that

where we have also used that \(g(k_0)\varvec{v}_0=0\). This concludes the proof since \(\lambda _-''(k_0)>0\), \(F(k_0)\) is positive definite and \(g(k_0)\) is positive semi-definite. \(\square \)
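The identity behind this argument can be written out as follows. The definition of \(g\) is not reproduced in this copy, so we assume \(g(k)=P(k)-\nu _0^2F(k)\) with \(P\) and \(F\) real symmetric, which is consistent with \(g(k_0)\varvec{v}_0=0\). Then \(g(k)\varvec{v}(k)=(\lambda _-(k)-\nu _0^2)F(k)\varvec{v}(k)\), and differentiating twice at \(k_0\) (where \(\lambda _-(k_0)=\nu _0^2\) and \(\lambda _-'(k_0)=0\)) gives, as a sketch:

```latex
\begin{aligned}
g'(k_0)\boldsymbol v_0+g(k_0)\boldsymbol v'(k_0) &= 0,\\
g''(k_0)\boldsymbol v_0+2g'(k_0)\boldsymbol v'(k_0)+g(k_0)\boldsymbol v''(k_0) &= \lambda_-''(k_0)\,F(k_0)\boldsymbol v_0,\\
g''(k_0)\boldsymbol v_0\cdot\boldsymbol v_0 &= \lambda_-''(k_0)\,F(k_0)\boldsymbol v_0\cdot\boldsymbol v_0
  + 2\,g(k_0)\boldsymbol v'(k_0)\cdot\boldsymbol v'(k_0) \;>\; 0 .
\end{aligned}
```

The last line follows by taking the scalar product with \(\varvec{v}_0\) and using symmetry: \(g(k_0)\varvec{v}''\cdot \varvec{v}_0=\varvec{v}''\cdot g(k_0)\varvec{v}_0=0\) and \(g'(k_0)\varvec{v}'\cdot \varvec{v}_0=\varvec{v}'\cdot g'(k_0)\varvec{v}_0=-g(k_0)\varvec{v}'\cdot \varvec{v}'\). Under the stated assumption, \(g(k_0)\) is positive semi-definite because \(\nu _0^2\) is the smaller eigenvalue of \(F(k_0)^{-1}P(k_0)\). How \(A_2\) is normalised relative to \(g''(k_0)\varvec{v}_0\cdot \varvec{v}_0\) is not shown here.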

It follows that a necessary and sufficient condition for Eq. (1.15) to possess solitary standing waves is that the coefficient in front of the cubic term is negative.
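For a concrete picture of such standing waves, recall the explicit bright soliton of a focusing cubic ODE. The ODE of Lemma 1.7 and the formula for \(\phi _\mathrm {NLS}\) are not reproduced in this copy, so the sketch uses a hypothetical normalisation \(a\phi ''-\nu \phi -b|\phi |^2\phi =0\) with \(a>0\), \(b<0\) and \(\nu >0\) (the parameters \(a\), \(b\), \(\nu \) are placeholders, not the paper's coefficients). It checks the real sech profile by finite differences and shows that prescribing \(\Vert \phi \Vert _0^2\) selects \(\nu \), which is the mechanism behind the Lagrange multiplier in Lemma 1.7. Multiplying by \(\mathrm {e}^{i\omega }\) or translating gives the rest of the family.

```python
import numpy as np

def sech_soliton(X, a, b, nu):
    """Real solution of a*phi'' - nu*phi - b*phi**3 = 0 decaying at +-infinity.

    HYPOTHETICAL normalisation: a > 0 (dispersion), b < 0 (focusing), nu > 0.
    The paper's own ODE and coefficients are not reproduced here.
    """
    amplitude = np.sqrt(2.0 * nu / abs(b))
    inverse_width = np.sqrt(nu / a)
    return amplitude / np.cosh(inverse_width * X)

def nu_for_mass(mass, a, b):
    """Invert ||phi||_0^2 = 4*sqrt(a*nu)/|b| to get nu from the squared L^2 norm."""
    return (mass * abs(b) / 4.0) ** 2 / a

a, b, nu = 1.3, -0.7, 0.9
h = 1e-2
X = np.arange(-40.0, 40.0 + h, h)
phi = sech_soliton(X, a, b, nu)

# ODE residual on interior grid points, using a centred second difference
phi_xx = (phi[2:] - 2.0 * phi[1:-1] + phi[:-2]) / h**2
residual = a * phi_xx - nu * phi[1:-1] - b * phi[1:-1] ** 3

mass = np.sum(phi**2) * h     # Riemann sum for ||phi||_0^2 (tails are negligible)
print(np.max(np.abs(residual)), mass, nu_for_mass(mass, a, b))
```

The residual is of the size of the finite-difference error, and recovering \(\nu \) from the mass illustrates that the constraint \(\Vert \phi \Vert _0^2=\text {const}\) fixes a unique member of the one-parameter family.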

Assumption 1.3

It seems difficult to give a general criterion for when this assumption is satisfied. In specific cases it can be verified numerically.

Example 1.4

Consider the two choices of parameter values in Fig. 3a, c, that is, \(\rho =0.5\), \({\underline{\beta }}=1\) and (a) \({\overline{\beta }}=0.04\) or (c) \({\overline{\beta }}=0.07\). Numerical computations reveal that \(k_0\approx 4.99\) and \(\frac{1}{2} A_3 +A_4\approx -2.11 \times 10^{13}\) in case (a), while \(k_0 \approx 0.245\) and \(\frac{1}{2} A_3 +A_4\approx -50.7\) in case (c). Thus, Assumption 1.3 is satisfied in both cases.

Furthermore, in some cases it is possible to verify both Assumptions 1.1 and 1.3 using asymptotic analysis.

Example 1.5

Assume that \({\underline{\beta }}<1/4\) and \({\overline{\beta }}\ge 1/3\), and consider the limit \(\rho \rightarrow 0\). Straightforward computations show that

locally uniformly. Moreover, all derivatives also converge locally uniformly away from points where the two functions in the bracket are equal (that is, where \(D^\star (k):=((1+{\underline{\beta }}|k|^2)-(1+{\overline{\beta }}|k|^2)\tanh |k|)^2=0\)). Since \(\lambda _-^\star (k)\) has a unique strict and non-degenerate positive global minimiser \(k_0^\star =1/\sqrt{{\underline{\beta }}}\) with

(note that \((1+{\overline{\beta }}|k|^2) \tanh |k|/|k| \ge 1\) under the assumption \({\overline{\beta }}\ge 1/3\)) and \(\lim _{|k|\rightarrow \infty } \lambda _-(k)=\infty \) uniformly in \(\rho \), we find that \(\lambda _-(k)\) has a unique strict and non-degenerate positive global minimiser \(k_0\) for sufficiently small \(\rho \), and that \(k_0\rightarrow 1/\sqrt{{\underline{\beta }}}\) and \(\lambda _-(k_0)\rightarrow 2\sqrt{{\underline{\beta }}}\) as \(\rho \rightarrow 0\). Using the formulas in Propositions 2.23, 2.27 and Corollary 2.24 one now verifies that

as \(\rho \rightarrow 0\). Thus, under the conditions \({\underline{\beta }}<1/4\) and \({\overline{\beta }}\ge 1/3\), Assumptions 1.1 and 1.3 are both satisfied if \(\rho \) is sufficiently small.

Example 1.6

Consider next the limit \({\overline{h}} \rightarrow \infty \). Note that \({\overline{\beta }}, {\underline{\beta }}\rightarrow 0\) as \({\overline{h}} \rightarrow \infty \). Moreover, we expect \(k_0\) to diverge. Therefore, it is convenient to introduce the new length scale \(h:=\sqrt{{\underline{\sigma }}/(g{\underline{\rho }})}\), the non-dimensional length parameter \(H:={\overline{h}}/h\), the non-dimensional surface tension parameter \(B:={\overline{\beta }} H^2={\overline{\beta }}/{\underline{\beta }}={\overline{\sigma }}/(\rho {\underline{\sigma }})\) (note that \({\underline{\beta }}=1/H^2\)) and the new non-dimensional wavenumber \(K:=k/H\). We then find that

with

It follows that

uniformly for \(|K|\ge \delta \), where \(\delta >0\) is arbitrary, and that all derivatives converge uniformly on the same set away from points where the functions within the brackets coincide. On the other hand, \(H\lambda _-(k)\) can be made arbitrarily large for \(|K|\le \delta \) by first choosing \(\delta \) sufficiently small and then H sufficiently large depending on \(\delta \). Choosing \(B>(1-\rho )/(1+\rho )^2\), we find that the function \(\lambda _-^\star (K)\) has the unique strict and non-degenerate positive global minimiser \(K_0^\star =\sqrt{1-\rho }\) with

Therefore, \(\lambda _-(k)\) has a unique strict and non-degenerate positive global minimiser \(k_0\) for large H with \(k_0/H\rightarrow K_0^\star \) as \(H\rightarrow \infty \). Straightforward computations now yield

as \(H\rightarrow \infty \). The right-hand side is negative for \(\rho <\rho ^\star =(21-8\sqrt{5})/11\approx 0.28\) and positive for \(\rho >\rho ^\star \). Thus, if \(B>(1-\rho )/(1+\rho )^2\) and \(\rho <\rho ^\star \) both Assumptions 1.1 and 1.3 are satisfied if \({\overline{h}}\) is sufficiently large, while if \(\rho >\rho ^\star \) then Assumption 1.1 is satisfied but not Assumption 1.3.
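The threshold on \(B\) can be traced as follows. The formula for \(\lambda _-^\star \) is not reproduced in this copy; assuming that in the limit the layers decouple, so that \(\lambda _-^\star \) is the smaller of a deep-water interfacial branch and a surface branch, a short calculation gives:

```latex
\begin{aligned}
\lambda_-^\star(K) &= \min\big\{ f_{\mathrm{int}}(K),\, f_{\mathrm{surf}}(K) \big\},
\qquad f_{\mathrm{int}}(K)=\frac{1-\rho+K^2}{(1+\rho)|K|},\qquad f_{\mathrm{surf}}(K)=\frac{1+BK^2}{|K|},\\
f_{\mathrm{int}}'(K) &= \frac{1}{1+\rho}\Big(1-\frac{1-\rho}{K^2}\Big)=0
\;\Longleftrightarrow\; K=K_0^\star=\sqrt{1-\rho},\qquad
f_{\mathrm{int}}(K_0^\star)=\frac{2\sqrt{1-\rho}}{1+\rho},\qquad
f_{\mathrm{int}}''(K_0^\star)=\frac{2}{(1+\rho)\sqrt{1-\rho}}>0,\\
\min_{K>0} f_{\mathrm{surf}} &= f_{\mathrm{surf}}\big(1/\sqrt{B}\big)=2\sqrt{B},
\qquad
\frac{2\sqrt{1-\rho}}{1+\rho}<2\sqrt{B}\;\Longleftrightarrow\; B>\frac{1-\rho}{(1+\rho)^2}.
\end{aligned}
```

Under this assumption, the interfacial branch carries the strict, non-degenerate global minimum at \(K_0^\star =\sqrt{1-\rho }\) exactly when \(B>(1-\rho )/(1+\rho )^2\), in agreement with the statement above.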

The following lemma gives a variational description of the set of solitary waves of the nonlinear Schrödinger equation (1.15) [see Cazenave (2003, Sect. 8)].

Lemma 1.7

Assume that \(A_2>0\) and \(\frac{1}{2} A_3+A_4<0\). The set of complex-valued solutions to the ordinary differential equation

satisfying \(\phi \not \equiv 0\) and \(\phi (X) \rightarrow 0\) as \(X \rightarrow \pm \infty \) is \(D_\mathrm {NLS} = \{\mathrm {e}^{{i}\omega }\phi _\mathrm {NLS}(\cdot + y):\omega \in [0,2\pi ), y \in {\mathbb {R}}\},\) where

These functions are precisely the minimisers of the functional \({\mathcal {E}}_\mathrm {NLS}:H^1({\mathbb {R}}) \rightarrow {\mathbb {R}}\) given by

over the set \(N_\mathrm {NLS} = \{\phi \in H^1({\mathbb {R}}): \Vert \phi \Vert _0^2=2\alpha _\mathrm {NLS}\}\), where \(\alpha _\mathrm {NLS}=2(\nu _0 k_0+\nu _0\rho {\overline{F}}(k_0)\varvec{v}_0\cdot \varvec{v}_0)^{-1}\); the constant \(2\nu _\mathrm {NLS}\) is the Lagrange multiplier in this constrained variational principle and

1.3 Main Results

The main result of this paper is an existence theory for small-amplitude solitary-wave solutions to Eqs. (1.1)–(1.8) under Assumptions 1.1 and 1.3. The waves are constructed by minimising the energy functional \({\mathcal {E}}\) subject to the constraint of fixed horizontal momentum \({\mathcal {I}}\); see Theorem 2.4 for a precise statement. As a consequence of the existence result we also obtain a stability result for the set of minimisers; see Theorem 2.5.

Before describing our approach in further detail, we note that the above formulation of the hydrodynamic problem has the disadvantage of being posed in a priori unknown domains. It is therefore convenient to reformulate the problem in terms of the traces of the velocity potentials on the free surface and interface. We denote the boundary values of the velocity potentials by \({\underline{\Phi }}(x):={\underline{\phi }}(x,{\underline{\eta }}(x))\) and \(\overline{\varvec{\Phi }}(x)=({\overline{\Phi }}_i(x),{\overline{\Phi }}_s(x))\) where \({\overline{\Phi }}_i(x):={\overline{\phi }}(x,{\underline{\eta }}(x))\) and \({\overline{\Phi }}_s(x):={\overline{\phi }}(x,1+{\overline{\eta }}(x))\). Following Kuznetsov and Lushnikov (1995) and Benjamin and Bridges (1997) (see also Craig and Groves 2000; Craig et al. 2005) we set

the natural choice of canonical variables is \((\varvec{\eta },\varvec{\xi })\), where \(\varvec{\eta }=({\underline{\eta }},{\overline{\eta }})\), \(\varvec{\xi }=({\underline{\xi }},{\overline{\xi }})\). We formally define Dirichlet–Neumann operators \({\underline{G}}({\underline{\eta }})\) and \({\overline{G}}(\varvec{\eta })\) which map (for a given \(\varvec{\eta }\)) Dirichlet boundary data of solutions of the Laplace equation to the Neumann boundary data, i.e.

see Appendix A for the rigorous definition. Note that \({\underline{G}}\) only depends on \({\underline{\eta }}\), whereas \({\overline{G}}\) depends on \({\underline{\eta }}\) and \({\overline{\eta }}\). The boundary conditions (1.3)–(1.4) imply that

If we define

we can recover \({\underline{\Phi }}\) and \({\overline{\Phi }}\) from \(\varvec{\xi }\) using the formulas

under assumption (1.18). Moreover, the total energy and horizontal momentum can be re-expressed as

and

respectively, where we have abbreviated

Note that

We now give a brief outline of the variational existence method. We tackle the problem of finding minimisers of \({\mathcal {E}}(\varvec{\eta }, \varvec{\xi })\) under the constraint \({\mathcal {I}}(\varvec{\eta },\varvec{\xi })=2\mu \) in two steps.

- 1.

Fix \(\varvec{\eta }\ne 0\) and minimise \({\mathcal {E}}(\varvec{\eta },\cdot )\) over \(T_\mu :=\left\{ \varvec{\xi }\in {{\tilde{X}}} :{\mathcal {I}}(\varvec{\eta },\varvec{\xi })=2\mu \right\} \), where the space \({{\tilde{X}}}\) is defined in Definition A.16. This problem (of minimising a quadratic functional over a linear manifold) admits a unique global minimiser \(\varvec{\xi }_{\varvec{\eta }}\).

- 2.

Minimise \({\mathcal {J}}_\mu (\varvec{\eta }):={\mathcal {E}}(\varvec{\eta },\varvec{\xi }_{\varvec{\eta }})\) over \(\varvec{\eta }\in U{\setminus }\{0\}\) with \(U:=B_M(0)\subset (H^2({\mathbb {R}}))^2\). Because \(\varvec{\xi }_{\varvec{\eta }}\) minimises \({\mathcal {E}}(\varvec{\eta },\cdot )\) over \(T_\mu \) there exists a Lagrange multiplier \(\gamma _{\varvec{\eta }}\) such that

$$\begin{aligned} G(\varvec{\eta })\varvec{\xi }_{\varvec{\eta }}=\gamma _{\varvec{\eta }} \varvec{\eta }_x. \end{aligned}$$
Hence,

$$\begin{aligned} \varvec{\xi }_{\varvec{\eta }}&=\gamma _{\varvec{\eta }}G(\varvec{\eta })^{-1}\varvec{\eta }_x. \end{aligned}$$
Furthermore, we get

$$\begin{aligned} \gamma _{\varvec{\eta }}=\frac{\mu }{{\mathcal {L}}(\varvec{\eta })}, \qquad {\mathcal {L}}(\varvec{\eta })=\frac{1}{2}\int _{{{\mathbb {R}}}}\varvec{\eta }K(\varvec{\eta })\varvec{\eta }\,\mathrm{d}x, \end{aligned}\tag{1.24}$$
where

$$\begin{aligned} K(\varvec{\eta })=-\partial _x G(\varvec{\eta })^{-1}\partial _x = -\partial _x \begin{pmatrix} \rho {\overline{N}}_{11}(\varvec{\eta }) +{\underline{N}}({\underline{\eta }}) &{}\quad -\rho {\overline{N}}_{12}(\varvec{\eta }) \\ -\rho {\overline{N}}_{21}(\varvec{\eta }) &{}\quad \rho {\overline{N}}_{22}(\varvec{\eta }) \end{pmatrix}\partial _x, \end{aligned}\tag{1.25}$$
with \({\underline{N}}({\underline{\eta }}):={\underline{G}}({\underline{\eta }})^{-1}\) and

$$\begin{aligned} {\overline{N}}(\varvec{\eta })= \begin{pmatrix} {\overline{N}}_{11}(\varvec{\eta })&{}\quad {\overline{N}}_{12}(\varvec{\eta })\\ {\overline{N}}_{21}(\varvec{\eta })&{}\quad {\overline{N}}_{22}(\varvec{\eta }) \end{pmatrix} :={\overline{G}}(\varvec{\eta })^{-1}; \end{aligned}$$
see Proposition A.19. For \({\mathcal {J}}_\mu (\varvec{\eta })\) we obtain the representation

$$\begin{aligned} {\mathcal {J}}_\mu (\varvec{\eta })={\mathcal {K}}(\varvec{\eta })+\frac{\mu ^2}{{\mathcal {L}}(\varvec{\eta })}, \end{aligned}\tag{1.26}$$
where

$$\begin{aligned} \begin{aligned} {\mathcal {K}}(\varvec{\eta })&=\underline{{\mathcal {K}}}({\underline{\eta }})+\overline{{\mathcal {K}}}({\overline{\eta }}),\\ \underline{{\mathcal {K}}}({\underline{\eta }})&= \int _{{\mathbb {R}}} \left\{ \frac{(1-\rho )}{2}{\underline{\eta }}^2 + {\underline{\beta }}\sqrt{1+{\underline{\eta }}_x^2}-{\underline{\beta }} \right\} \, \mathrm{d}x, \\ \overline{{\mathcal {K}}}({\overline{\eta }})&= \rho \int _{{\mathbb {R}}} \left\{ \frac{1}{2}{\overline{\eta }}^2 + {\overline{\beta }}\sqrt{1+{\overline{\eta }}_x^2}-{\overline{\beta }} \right\} \, \mathrm{d}x. \end{aligned} \end{aligned}$$

We address the problem of minimising \({\mathcal {J}}_\mu \) using the concentration-compactness method. The main difficulties are that the functional is quasilinear, non-local and non-convex. These difficulties are partly solved by minimising over a bounded set in the function space, but we then have to prevent minimising sequences from converging to the boundary of this set. This is achieved by constructing a suitable test function and a special minimising sequence with good properties using the intuition from the nonlinear Schrödinger equation above.
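Step 1 can be illustrated by a finite-dimensional analogue. We assume, consistently with the formulas for \(\varvec{\xi }_{\varvec{\eta }}\), \(\gamma _{\varvec{\eta }}\) and \({\mathcal {J}}_\mu \) above but without quoting (1.21)–(1.22), that the \(\varvec{\xi }\)-dependent part of \({\mathcal {E}}\) is \(\frac{1}{2}\langle \varvec{\xi },G(\varvec{\eta })\varvec{\xi }\rangle \) and that \({\mathcal {I}}(\varvec{\eta },\varvec{\xi })=\langle \varvec{\eta }_x,\varvec{\xi }\rangle \). Replacing \(G(\varvec{\eta })\) by a random symmetric positive definite matrix and \(\varvec{\eta }_x\) by a random vector \(b\), the minimiser is \(\gamma G^{-1}b\) with \(\gamma =\mu /L\), \(L=\frac{1}{2}b\cdot G^{-1}b\), and the minimum value is \(\mu ^2/L\), mirroring the term \(\mu ^2/{\mathcal {L}}(\varvec{\eta })\) in (1.26). All names are illustrative.

```python
import numpy as np

def constrained_quadratic_min(G, b, mu):
    """Minimise 0.5 * xi @ G @ xi subject to b @ xi = 2 * mu (G symmetric positive definite).

    Finite-dimensional stand-in for step 1: G plays the role of G(eta), b that of eta_x.
    """
    G_inv_b = np.linalg.solve(G, b)
    L = 0.5 * b @ G_inv_b          # analogue of L(eta)
    gamma = mu / L                 # Lagrange multiplier, cf. (1.24)
    xi = gamma * G_inv_b           # analogue of xi_eta = gamma * G(eta)^{-1} eta_x
    return xi, gamma, L

rng = np.random.default_rng(seed=1)
n = 8
A = rng.standard_normal((n, n))
G = A @ A.T + n * np.eye(n)        # random symmetric positive definite matrix
b = rng.standard_normal(n)
mu = 0.25

xi, gamma, L = constrained_quadratic_min(G, b, mu)
energy = 0.5 * xi @ G @ xi
print(b @ xi, energy, mu**2 / L)   # constraint value 2*mu; energy equals mu^2 / L

# Any admissible perturbation d (with b @ d = 0) can only increase the quadratic energy
d = rng.standard_normal(n)
d -= (b @ d) / (b @ b) * b
assert 0.5 * (xi + d) @ G @ (xi + d) > energy
```

The perturbation check works because the cross term \(d\cdot G\xi =\gamma \,d\cdot b\) vanishes on the constraint set, leaving \(\frac{1}{2}d\cdot Gd>0\).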

Our approach is similar to that originally used by Buffoni (2004) to study solitary waves with strong surface tension on a single layer of fluid of finite depth, and later extended to deal with weak surface tension (Buffoni 2005, 2009; Groves and Wahlén 2010), infinite depth (Buffoni 2004; Groves and Wahlén 2011), fully localised three-dimensional waves (Buffoni et al. 2013) and constant vorticity (Groves and Wahlén 2015). Our main interest is in investigating the non-trivial modifications needed to deal with multi-layer flows. We give detailed explanations when needed and refer to the above papers for the details of the proofs when possible. In particular, a new challenge is that we need to consider vector-valued Dirichlet–Neumann operators. This is discussed in detail in Appendix A. Another novelty is related to the special minimising sequence mentioned above. Since \(\varvec{\eta }\) is vector-valued it is not sufficient to prove that the spectrum of the special minimising sequence concentrates around the wavenumbers \(\pm k_0\). In addition, we need to identify a leading term related to the zero eigenvector \(\varvec{v}_0\) of the matrix \(g(k_0)\) and estimate the minimising sequence in a more refined way. Finally, as already discussed in Sect. 1.2, the multi-layer case allows for a much richer variety of scenarios in the weakly nonlinear regime. In particular, this means that we have to make some assumptions in order for the approach to work. We have, however, made these as weak as possible, and the examples in Sect. 1.2 show that they are satisfied in important special cases.

Note that we could also have considered a bottom layer with finite depth. This introduces an additional dimensionless parameter in the problem (the ratio between the depths of the two layers), which allows for other phenomena. (For example, the slow speed can have a minimum at the origin.) We refer to Woolfenden and Părău (2011) for a discussion of the dispersion relation and numerical computations of solitary waves in the finite depth case. One of the reasons why we chose to look at the infinite depth problem is that it entails some technical challenges which invalidate the use of certain methods that are widely used to find solitary waves in hydrodynamics. In particular, the idea originally due to Kirchgässner (1982) of formulating the steady water-wave problem as an ill-posed evolution equation and applying a centre-manifold reduction cannot be used. The variational method that we use is less sensitive to these issues. Note, however, that Kirchgässner’s method has been extended to deal with the issues due to infinite depth by several authors (see Barrandon and Iooss 2005 and references therein) and this could have been used in order to construct solitary waves also in our setting. These methods give no information about stability, however.

As far as we are aware, there are no previous existence results for solitary waves in our setting. However, Iooss (1999) constructed small-amplitude periodic travelling-wave solutions of problem (1.1)–(1.8) in two situations. The first situation is when the parameters are chosen so that \(\nu ^2=\lambda _+(k)\) or \(\nu ^2=\lambda _-(k)\) for some wavenumber \(k\ne 0\) which is not in resonance with any other wavenumber (i.e. \(\lambda _{\pm }(nk) \ne \nu ^2\) for all \(n\in {\mathbb {Z}}{\setminus }\{-1,1\}\)) and \(\lambda _\pm '(k) \ne 0\) (where the sign is chosen such that \(\lambda _{\pm }(k)=\nu ^2\)). The second situation is the 1 : 1 resonance, that is when k is a non-degenerate critical point of \(\lambda _{\pm }\). In both situations he proved the existence of small-amplitude waves with period close to \(2\pi /k\) using dynamical systems techniques. The second situation includes our setting, but is somewhat more general. (The critical point is, for example, not assumed to be a minimum.) There are also a number of papers dealing with solitary or generalised solitary waves (asymptotic to periodic solutions at spatial infinity) in the related settings where either one or both of the surface and interfacial tension vanish (see Barrandon 2006; Barrandon and Iooss 2005; Dias and Iooss 2003; Iooss et al. 2002; Lombardi and Iooss 2003; Sun and Shen 1993 and references therein). The variational method presented in this paper does not work in those settings since it requires both surface tension and interfacial tension. Finally, we mention that our assumptions exclude two possibilities which could be interesting for further study (by variational or other methods), namely when \(\lambda _-\) has a degenerate global minimum at \(k_0\) (see Fig. 4) or when the minimum value is attained at two distinct positive wavenumbers (see Fig. 3). Moreover, when Assumption 1.1 is satisfied but the corresponding nonlinear Schrödinger equation is of defocussing type (so that Assumption 1.3 is violated), one would expect the existence of dark solitary waves.

2 Existence and Stability

This section contains the main results of the paper. We begin by proving that the functional \({\mathcal {J}}_\mu \) has a minimiser in \(U\!\setminus \!\{0\}\). This is done by using concentration-compactness and penalisation methods as in Buffoni (2004, 2005, 2009), Buffoni et al. (2013), Groves and Wahlén (2011, 2015), and we refer to those papers for the details of some of the proofs. The outcome is the following result.

Theorem 2.1

Suppose that Assumptions 1.1 and 1.3 hold.

- (i)

The set \(C_\mu \) of minimisers of \({\mathcal {J}}_\mu \) over \(U \!\setminus \!\{0\}\) is non-empty.

- (ii)

Suppose that \(\{\varvec{\eta }_n\}\) is a minimising sequence for \({\mathcal {J}}_\mu \) on \(U\!\setminus \!\{0\}\) which satisfies

$$\begin{aligned} \sup _{n\in {{\mathbb {N}}}} \Vert \varvec{\eta }_n\Vert _2 < M. \end{aligned}\tag{2.1}$$
There exists a sequence \(\{x_n\} \subset {{\mathbb {R}}}\) with the property that a subsequence of \(\{\varvec{\eta }_n(x_n+\cdot )\}\) converges in \((H^r({\mathbb {R}}))^2\), \(0 \le r < 2\), to a function \(\varvec{\eta }\in C_\mu \).

The first statement of the theorem is a consequence of the second statement, once the existence of a minimising sequence satisfying (2.1) has been established. The existence of such a sequence can be proved using a penalisation method, cf. Buffoni (2004), Buffoni et al. (2013) and Groves and Wahlén (2011, 2015). A key part of the proof of Theorem 2.1 is the existence of a suitable ‘test function’ \(\varvec{\eta }_\star \) which satisfies the inequality

This implies in particular that any minimising sequence \(\{\varvec{\eta }_n\}\) satisfies this property for n sufficiently large. We construct such a test function in Appendix B. Once the existence of the test function has been proved, the remaining steps in the construction of the special minimising sequence satisfying (2.1) are similar to Buffoni (2004), Buffoni et al. (2013) and Groves and Wahlén (2011, 2015), to which we refer for further details. In fact, this special minimising sequence satisfies further properties which will be used below. (Note that a general minimising sequence satisfies the weaker estimate \(\Vert \varvec{\eta }_n\Vert _1^2 \le c\mu \) by Proposition A.29.)

Theorem 2.2

Suppose that Assumptions 1.1 and 1.3 hold. There exists a minimising sequence \(\{\tilde{\varvec{\eta }}_n\}\) for \({\mathcal {J}}_\mu \) over \(U\!\setminus \!\{0\}\) with the properties that \(\Vert \tilde{\varvec{\eta }}_n\Vert _2^2 \le c \mu \) and \({\mathcal {J}}_\mu (\tilde{\varvec{\eta }}_n) < 2\nu _0\mu -c\mu ^3\) for each \(n \in {{\mathbb {N}}}\), and \(\lim _{n \rightarrow \infty }\Vert {\mathcal {J}}_\mu ^\prime (\tilde{\varvec{\eta }}_n)\Vert _0=0\).
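The threshold \(2\nu _0\mu \) can be motivated at the quadratic level. Assume, as in the heuristics of Sect. 1.2, that the quadratic parts \({\mathcal {K}}_2\) and \({\mathcal {L}}_2\) of \({\mathcal {K}}\) and \({\mathcal {L}}\) have Fourier symbols \(P(k)\) and \(F(k)\) (the normalisation of the Fourier transform \(\hat{\varvec{\eta }}\) is immaterial here). Since \(\lambda _-(k)\) is the smaller eigenvalue of \(F(k)^{-1}P(k)\) and \(\lambda _-(k)\ge \nu _0^2\), a Rayleigh-quotient bound followed by the inequality \(\nu _0^2 x+\mu ^2/x\ge 2\nu _0\mu \) for \(x>0\) gives the following sketch:

```latex
\begin{aligned}
{\mathcal K}_2(\boldsymbol\eta)
 &= \tfrac12\int_{\mathbb R} P(k)\hat{\boldsymbol\eta}(k)\cdot\overline{\hat{\boldsymbol\eta}(k)}\,\mathrm dk
 \;\ge\; \tfrac12\int_{\mathbb R} \lambda_-(k)\,F(k)\hat{\boldsymbol\eta}(k)\cdot\overline{\hat{\boldsymbol\eta}(k)}\,\mathrm dk
 \;\ge\; \nu_0^2\,{\mathcal L}_2(\boldsymbol\eta),\\
{\mathcal K}_2(\boldsymbol\eta)+\frac{\mu^2}{{\mathcal L}_2(\boldsymbol\eta)}
 &\ge \nu_0^2\,{\mathcal L}_2(\boldsymbol\eta)+\frac{\mu^2}{{\mathcal L}_2(\boldsymbol\eta)}
 \;\ge\; 2\nu_0\mu,
 \qquad\text{with equality in the last step iff } {\mathcal L}_2(\boldsymbol\eta)=\mu/\nu_0 .
\end{aligned}
```

At this level of approximation \(2\nu _0\mu \) is therefore the natural lower bound; the strict gain \(-c\mu ^3\) in Theorem 2.2 must come from the higher-order (focussing) terms, which this sketch does not capture.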

The second statement of Theorem 2.1 is proved by applying the concentration-compactness principle (Lions 1984a, b) [a form suitable for the present situation can be found in Groves and Wahlén (2015, Theorem 3.7)] to the sequence \(\{u_n\}\) defined by

where \(\{\varvec{\eta }_n\}\) is a minimising sequence satisfying (2.1). Taking a subsequence if necessary, we may suppose that the limit \(\ell :=\lim _{n \rightarrow \infty } \int _{-\infty }^\infty u_n(x) \, \mathrm{d}x> 0\) exists (\(\ell =0\) would imply that \(\lim _{n\rightarrow \infty } {\mathcal {J}}_\mu (\varvec{\eta }_n) =\infty \)). As in Buffoni et al. (2013, Lemma 3.7), one readily shows that the vanishing property

leads to a contradiction with the estimate \(\Vert \varvec{\eta }_n\Vert _{1,\infty }\ge c\mu ^3\), which any minimising sequence has to satisfy because of the estimate \({\mathcal {J}}_\mu (\varvec{\eta }_n)<2\nu _0 \mu -c\mu ^3\) [see Lemma 2.29 and Buffoni et al. (2013, Lemma 3.4)]. We now comment on the more involved case of ‘dichotomy’. Let us assume that there are sequences \(\{x_n\} \subset {\mathbb {R}}\), \(\{M_n^{(1)}\}, \{M_n^{(2)}\} \subset {\mathbb {R}}\) and a real number \(\kappa \in (0,\ell )\) with the properties that \(M_n^{(1)}\), \(M_n^{(2)} \rightarrow \infty \), \(M_n^{(1)}/M_n^{(2)} \rightarrow 0\),

as \(n \rightarrow \infty \). Furthermore,

for each \(r>0\), and for each \(\varepsilon >0\) there is a positive real number R such that

for each \(n \in {{\mathbb {N}}}\). We abbreviate the sequence \(\{\varvec{\eta }_n(\cdot +x_n)\}\) to \(\{\varvec{\eta }_n\}\) and define sequences \(\{\varvec{\eta }_n^{(1)}\}\), \(\{\varvec{\eta }_n^{(2)}\}\) by the formulas

where \(\chi \in C^\infty _c(-2,2)\) with \(\chi =1\) in \([-1,1]\) and \(0\le \chi \le 1\). The ‘splitting properties’

and hence

are straightforward consequences of these definitions [see Buffoni et al. (2013, Lemma 3.10 and Appendix C)]. The corresponding splitting property

for the non-local functional \({\mathcal {L}}\) is not as direct, but nevertheless follows using its ‘pseudo-local’ properties [see Appendix D, in particular Theorem D.6, in Buffoni et al. (2013) and Sect. 2.2.2, in particular Theorem 2.36, in Groves and Wahlén (2015)]. Taking subsequences, we can assume that all of the sequences \(\{{\mathcal {K}}(\varvec{\eta }_n)\}\), \(\{{\mathcal {K}}(\varvec{\eta }_n^{(1)})\}\), \(\{{\mathcal {K}}(\varvec{\eta }_n^{(2)})\}\), \(\{{\mathcal {L}}(\varvec{\eta }_n)\}\), \(\{{\mathcal {L}}(\varvec{\eta }_n^{(1)})\}\) and \(\{{\mathcal {L}}(\varvec{\eta }_n^{(2)})\}\) converge and that the limits are positive [see Buffoni et al. (2013, Lemma 3.10 and Appendix C)]. Setting

we obtain that \(\mu =\mu _1+\mu _2\), \(\mu _1, \mu _2>0\) and

The next key step in the analysis of dichotomy is to show that the function

is strictly sub-additive.

Theorem 2.3

Suppose that Assumptions 1.1 and 1.3 hold. The number \(I_\mu \) has the strict sub-additivity property

Theorem 2.3 is obtained using a careful analysis of the special minimising sequence from Theorem 2.2, which is postponed to the end of this section. With this at hand, the dichotomy assumptions lead to the contradiction

It follows that the sequence \(\{u_n\}\) concentrates, that is, there is a sequence \(\{x_n\} \subset {\mathbb {R}}\) with the property that for each \(\varepsilon >0\) there exists a positive real number R with

for each \(n \in {{\mathbb {N}}}\). Arguing as in the proof of Lemma 3.9 of Buffoni et al. (2013), one finds that the sequence \(\{\varvec{\eta }_n(\cdot + x_n)\}\) admits a subsequence which converges in \((H^r({\mathbb {R}}))^2\), \(0 \le r < 2\), to a minimiser of \({\mathcal {J}}_\mu \) over \(U{\setminus } \{0\}\). This concludes the proof of Theorem 2.1.
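The dichotomy argument only needs some cut-off \(\chi \in C^\infty _c(-2,2)\) with \(\chi =1\) on \([-1,1]\) and \(0\le \chi \le 1\); the pieces \(\varvec{\eta }_n^{(1)}\), \(\varvec{\eta }_n^{(2)}\) are built from rescaled copies of \(\chi \) (the precise formulas are not reproduced in this copy). One standard concrete choice, given here as an illustration and not necessarily the function used in the cited papers, glues the smooth function \(t\mapsto \mathrm {e}^{-1/t}\) into a smooth step; its support is \([-1.5,1.5]\subset (-2,2)\), and \(\chi (\cdot /M)\) equals 1 on \([-M,M]\).

```python
import numpy as np

def _flat(t):
    """exp(-1/t) for t > 0 and 0 otherwise; smooth, with all derivatives vanishing at t = 0."""
    t = np.asarray(t, dtype=float)
    safe_t = np.where(t > 0, t, 1.0)          # avoid dividing by zero for t <= 0
    return np.where(t > 0, np.exp(-1.0 / safe_t), 0.0)

def smooth_step(t):
    """Smooth map equal to 0 for t <= 0 and 1 for t >= 1 (the denominator never vanishes)."""
    return _flat(t) / (_flat(t) + _flat(1.0 - t))

def chi(x):
    """Cut-off: 1 on [-1, 1], 0 for |x| >= 1.5, values in [0, 1]; support inside (-2, 2)."""
    return smooth_step((1.5 - np.abs(x)) / 0.5)

print(chi(np.array([0.0, 1.0, 1.25, 1.5, 2.0])))   # values 1, 1, 0.5, 0, 0
```

Composing with \(|x|\) does not spoil smoothness here, because \(\chi \) is identically 1 near the origin.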

The next step is to relate the above result to our original problem of finding minimisers of \({\mathcal {E}}(\varvec{\eta },\varvec{\xi })\) subject to the constraint \({\mathcal {I}}(\varvec{\eta },\varvec{\xi }) = 2\mu \), where \({\mathcal {E}}\) and \({\mathcal {I}}\) are defined in Eqs. (1.21) and (1.22). The following result is obtained using the argument explained in Sect. 5.1 of Groves and Wahlén (2015): we first minimise \({\mathcal {E}}(\varvec{\eta },\cdot )\) over \(T_\mu \) and then minimise \({\mathcal {J}}_\mu (\varvec{\eta })={\mathcal {E}}(\varvec{\eta },\varvec{\xi }_{\varvec{\eta }})\) over \(U{\setminus }\{0\}\), where \(U=B_M(0)\subset (H^2({\mathbb {R}}))^2\) (cf. Theorem 2.1), as indicated in Sect. 1.3.

Theorem 2.4

Suppose that Assumptions 1.1 and 1.3 hold.

- (i)

The set \(D_\mu \) of minimisers of \({\mathcal {E}}\) over the set

$$\begin{aligned} S_\mu =\{(\varvec{\eta },\varvec{\xi }) \in U \times {{\tilde{X}}}:{\mathcal {I}}(\varvec{\eta },\varvec{\xi })=2\mu \} \end{aligned}$$ is non-empty.

- (ii)

Suppose that \(\{(\varvec{\eta }_n,\varvec{\xi }_n)\} \subset S_\mu \) is a minimising sequence for \({\mathcal {E}}\) with the property that

$$\begin{aligned} \sup _{n\in {{\mathbb {N}}}} \Vert \varvec{\eta }_n\Vert _2 < M. \end{aligned}$$ There exists a sequence \(\{x_n\} \subset {\mathbb {R}}\) with the property that a subsequence of \(\{\varvec{\eta }_n(x_n+\cdot ),\varvec{\xi }_n(x_n+\cdot )\}\) converges in \((H^r({\mathbb {R}}))^2 \times {{\tilde{X}}}\), \(0 \le r < 2\), to a function in \(D_\mu \).

We obtain a stability result as a corollary of Theorem 2.4 using a contradiction argument as in Buffoni (2004), Theorem 19. Recall that the usual informal interpretation of the statement that a set V of solutions to an initial value problem is ‘stable’ is that a solution which begins close to V remains close to V at all subsequent times. The precise meaning of a solution in the theorem below is irrelevant, as long as it conserves the functionals \({\mathcal {E}}\) and \({\mathcal {I}}\) over some time interval [0, T] with \(T>0\).

Theorem 2.5

Suppose that Assumptions 1.1 and 1.3 hold and that \((\varvec{\eta },\varvec{\xi }):[0,T] \rightarrow U \times {{\tilde{X}}}\) has the properties that

and

Choose \(r \in [0,2)\), and let ‘\({{\,\mathrm{dist}\,}}\)’ denote the distance in \((H^r({\mathbb {R}}))^2 \times {{\tilde{X}}}\). For each \(\varepsilon >0\) there exists \(\delta >0\) such that

for \(t\in [0,T]\).

This result is a statement of the conditional, energetic stability of the set \(D_\mu \). Here ‘energetic’ refers to the fact that the distance in the statement of stability is measured in the ‘energy space’ \((H^r({\mathbb {R}}))^2 \times {{\tilde{X}}}\), while ‘conditional’ alludes to the well-posedness issue. At present there is no global well-posedness theory for interfacial water waves (although there is a large and growing body of literature concerning well-posedness issues for water-wave problems in general). The solution \(t \mapsto (\varvec{\eta }(t),\varvec{\xi }(t))\) may exist in a smaller space over the interval [0, T], at each instant of which it remains close (in energy space) to a solution in \(D_\mu \). Furthermore, Theorem 2.5 is a statement of the stability of the set \(D_\mu \) of constrained minimisers; establishing that the constrained minimiser is unique up to translations would imply that \(D_\mu \) consists of translations of a single solution, so that the stability of \(D_\mu \) is equivalent to classical orbital stability of this solution.

Let us finally discuss the relation to nonlinear Schrödinger waves and confirm the heuristic argument given in Sect. 1.2. Due to the relation

for the special test function \(\varvec{\eta }_\star \) obtained in Lemma B.1 (constructed via the function \(\phi _{\mathrm {NLS}}\) from Lemma 1.7), and the variational characterisation of \(D_\mathrm{NLS}\) from Lemma 1.7, one can prove the following result by contradiction as in Groves and Wahlén (2011, Sect. 5; 2015, Sect. 5.2.2). Since the proof is similar, we omit the details.

Theorem 2.6

Under Assumptions 1.1 and 1.3, the set \(D_\mu \) of minimisers of \({{\mathcal {E}}}\) over \(S_\mu \) satisfies

as \(\mu \downarrow 0\), where \(\varvec{\eta }_1^+={{\mathcal {F}}}^{-1}[\chi _{[k_0-\delta _0,k_0+\delta _0]}\hat{\varvec{\eta }}]\) with \(\delta _0 \in (0,\tfrac{1}{3}k_0)\) and the rescaled profile \({\varvec{\phi }}_{\varvec{\eta }}\) is defined by writing \({\varvec{\eta }}_1^+(x) = \tfrac{1}{2}\mu {\varvec{\phi }}_{\varvec{\eta }}(\mu x)e^{i k_0 x}\). Furthermore,

and the speed \(\nu _\mu \) of the corresponding solitary wave satisfies

uniformly over \((\varvec{\eta },\varvec{\xi }) \in D_\mu \).
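The cut-off and rescaling in Theorem 2.6 can be illustrated in a scalar setting. The Python sketch below assumes a hypothetical wave packet \(\eta (x)=\mu \phi (\mu x)\cos (k_0x)\) with a Gaussian envelope \(\phi \) (not the profile \(\phi _\mathrm{NLS}\) of Lemma 1.7), applies the Fourier cut-off onto \([k_0-\delta _0,k_0+\delta _0]\) with the FFT, and inverts \(\eta _1^+(x)=\tfrac{1}{2}\mu \phi _\eta (\mu x)e^{ik_0x}\). Since \(\cos (k_0x)=\tfrac{1}{2}(e^{ik_0x}+e^{-ik_0x})\), the recovered profile should coincide with \(\phi \) up to rounding errors; the cut-off itself does not depend on how the discrete Fourier transform is normalised.

```python
import numpy as np

def positive_band(eta, dx, k0, delta0):
    """Return F^{-1}[chi_[k0 - delta0, k0 + delta0] eta_hat] on a periodic grid."""
    k = 2 * np.pi * np.fft.fftfreq(eta.size, d=dx)  # angular wavenumbers
    keep = np.abs(k - k0) <= delta0
    return np.fft.ifft(np.where(keep, np.fft.fft(eta), 0.0))

def rescaled_profile(eta, x, mu, k0, delta0):
    """Invert eta_1^+(x) = (1/2) mu phi(mu x) exp(i k0 x).

    Returns the slow variable X = mu x and phi(X) = 2 mu^{-1} eta_1^+(x) exp(-i k0 x).
    """
    dx = x[1] - x[0]
    eta1_plus = positive_band(eta, dx, k0, delta0)
    return mu * x, (2.0 / mu) * eta1_plus * np.exp(-1j * k0 * x)

# hypothetical scalar wave packet with a Gaussian envelope
mu, k0, delta0 = 0.02, 1.0, 0.3          # delta0 lies in (0, k0/3)
x = np.linspace(-500.0, 500.0, 2**15, endpoint=False)

def envelope(X):
    return np.exp(-X ** 2)

eta = mu * envelope(mu * x) * np.cos(k0 * x)
X, phi = rescaled_profile(eta, x, mu, k0, delta0)
max_error = np.max(np.abs(phi - envelope(X)))
```

Here the envelope's spectrum has width of order \(\mu \), far inside the band of half-width \(\delta _0\), so the cut-off removes only the component near \(-k_0\).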

Note in particular that, since \(\varvec{v}_0=(1,-a)\) with \(a>0\) (cf. Eq. (1.12)), the surface profile \({\overline{\eta }}\) is to leading order a scaled and inverted copy of the interface profile \({\underline{\eta }}\) (cf. Fig. 1). The fact that we do not know whether the minimiser is unique up to translations is reflected in the lack of control over \(\omega \); for the model equation, the minimiser is in fact not unique up to translations (see Lemma 1.7). Using dynamical-systems methods (see, for example, Barrandon and Iooss 2005), we expect that one can prove the existence of two solutions corresponding to \(\omega =0\) and \(\omega =\pi \) above, but without any information about their stability.

The goal of the rest of this section is to prove Theorem 2.3, which follows directly from the strict sub-homogeneity of \(I_\mu \) (see Corollary 2.32). We will work under Assumptions 1.1 and 1.3 throughout the rest of the section, without explicitly mentioning when they are used. We begin by giving an outline of the proof. The heuristic argument in Sect. 1.2 (verified a posteriori in Theorem 2.6) suggests that the spectrum of minimisers should concentrate at wavenumbers \(\pm k_0\) and that they should resemble the test function \(\varvec{\eta }_\star \) identified in Lemma B.1 for small \(\mu \). Consequently, \(I_\mu \) should be well approximated by the upper bound \(2\nu _0 \mu + I_\mathrm{NLS}\mu ^3 +o(\mu ^3)\), the first two terms of which define a strictly sub-homogeneous function. The strict sub-homogeneity property is rigorously established by proving results in this direction for a ‘near minimiser’ of \({\mathcal {J}}_\mu \) over \(U\!\setminus \!\{0\}\), that is, a function in \(U \!\setminus \!\{0\}\) with

for some \(N\ge 3\). The existence of near minimisers is a consequence of Theorem 2.2. One of the main tools that we will use is the weighted norm

and a splitting of \(\tilde{\varvec{\eta }}\) in view of the expected wavenumber distribution. One difference from previous works is that \(\tilde{\varvec{\eta }}\) is vector-valued, so that we have to identify a leading term related to the zero eigenvector \(\varvec{v}_0\) of the matrix \(g(k_0)\) (see Sect. 1.2). We establish weighted and non-weighted estimates for the different components of \(\tilde{\varvec{\eta }}\) in Lemma 2.19. These estimates allow us to identify the dominant term in the ‘nonlinear part’

of \({\mathcal {J}}_\mu (\tilde{\varvec{\eta }})\) for near minimisers \(\tilde{\varvec{\eta }}\), the key ingredients being a Modica–Mortola-type argument in the proof of Lemma 2.29 and the effect of the concentration of the Fourier modes (cf. Lemma 2.20). Finally, in Proposition 2.31 we show that the function \(s\mapsto s^{-q}{\mathcal {M}}_{s^2\mu }(s\tilde{\varvec{\eta }})\) is decreasing for a certain \(q>2\). The strict sub-homogeneity follows easily from this (see Corollary 2.32).
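The claim that the leading terms \(2\nu _0\mu +I_\mathrm{NLS}\mu ^3\) define a strictly sub-homogeneous function can be checked directly: for \(s>1\) one has \(2\nu _0 s\mu +I_\mathrm{NLS}s^3\mu ^3-s(2\nu _0\mu +I_\mathrm{NLS}\mu ^3)=I_\mathrm{NLS}\mu ^3(s^3-s)\), which is negative precisely when \(I_\mathrm{NLS}<0\). The Python sketch below evaluates this model on a grid, using the hypothetical values \(\nu _0=1\), \(I_\mathrm{NLS}=-0.5\) (not taken from the paper), and also checks strict sub-additivity, for which the corresponding difference is \(3I_\mathrm{NLS}\mu _1\mu _2(\mu _1+\mu _2)\).

```python
import numpy as np

NU0, I_NLS = 1.0, -0.5  # hypothetical sample values; only I_NLS < 0 matters

def model_I(mu):
    """Leading-order model 2*nu0*mu + I_NLS*mu**3 of I_mu."""
    return 2 * NU0 * mu + I_NLS * mu**3

mus = np.linspace(0.01, 0.5, 50)
ss = np.linspace(1.01, 3.0, 50)
# strict sub-homogeneity: I(s mu) < s I(mu) whenever s > 1
sub_hom = all(model_I(s * m) < s * model_I(m) for m in mus for s in ss)
# strict sub-additivity: I(m1 + m2) < I(m1) + I(m2)
sub_add = all(model_I(a + b) < model_I(a) + model_I(b) for a in mus for b in mus)
print(sub_hom, sub_add)  # True True
```

The linear term \(2\nu _0\mu \) is exactly homogeneous, so both strict inequalities come entirely from the cubic term.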

Turning now to the details of the proof, it follows from Appendix A.3 that the functionals \({\mathcal {K}}\) and \({\mathcal {L}}\) are analytic on U with convergent power series expansions

Moreover, the gradients \({\mathcal {K}}'(\varvec{\eta })\) and \({\mathcal {L}}'(\varvec{\eta })\) exist in \((L^2({\mathbb {R}}))^2\) for each \(\varvec{\eta }\in U\) and define analytic operators \(U \rightarrow (L^2({\mathbb {R}}))^2\). Formulas for some of the terms in the power series and their gradients can be found in Appendix A.3. In particular, the quadratic part \({\mathcal {L}}_2(\varvec{\eta })\) can be expressed as

using the Fourier multiplier operators

with

We will also use the notation \({\mathcal {K}}_\mathrm {nl}(\varvec{\eta }):={\mathcal {K}}(\varvec{\eta })-{\mathcal {K}}_2(\varvec{\eta })\), \({\mathcal {L}}_\mathrm {nl}(\varvec{\eta }):={\mathcal {L}}(\varvec{\eta })-{\mathcal {L}}_2(\varvec{\eta })\) for the superquadratic parts of the functionals. (The corresponding gradients are the nonlinear parts of \({\mathcal {K}}'\) and \({\mathcal {L}}'\), respectively.)

We next seek to split each \(\varvec{\eta }\in U\) into the sum of a function \(\varvec{\eta }_1\) with spectrum near \(k=\pm k_0\) and a function \(\varvec{\eta }_2\) whose spectrum is bounded away from these points. To this end we write the identity

in the form

where g(k) is given by (1.13). We decompose it into two coupled equations by defining \(\varvec{\eta }_2 \in (H^2({\mathbb {R}}))^2\) by the formula

and \(\varvec{\eta }_1 \in (H^2({\mathbb {R}}))^2\) by \(\varvec{\eta }_1=\varvec{\eta }-\varvec{\eta }_2\), so that \(\hat{\varvec{\eta }}_1\) has support in \(S:=[-k_0-\delta _0,-k_0+\delta _0] \cup [k_0-\delta _0,k_0+\delta _0]\), where \(\delta _0\in (0,k_0/3)\). Here we have used the fact that

is a bounded linear operator \((L^2({\mathbb {R}}))^2 \rightarrow (H^2({\mathbb {R}}))^2\).

It will also be useful to express vectors \(\varvec{w}=({\underline{w}}, {{\overline{w}}})\) in the basis \(\{\varvec{v}_0, \varvec{v}_0^\sharp \}\), where \(\varvec{v}_0\) is the zero eigenvector of the matrix \(g(k_0)\) (see Sect. 1.2) and \(\varvec{v}_0^\sharp \not \parallel \varvec{v}_0\). The exact choice of the complementary vector \(\varvec{v}_0^\sharp \) is unimportant, but in order to simplify the notation later on we choose \(\varvec{v}_0^\sharp =(0,1)\). This implies that

where \(c_1={\underline{w}}\) and \(c_2={{\overline{w}}}+a{\underline{w}}\).
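A minimal Python sketch of this change of basis, in which the value of a is a hypothetical sample: the coordinates are read off as \(c_1={\underline{w}}\), \(c_2={{\overline{w}}}+a{\underline{w}}\), and the vector is reassembled as \(c_1\varvec{v}_0+c_2\varvec{v}_0^\sharp \) with \(\varvec{v}_0=(1,-a)\) to confirm the formula. The same function applies componentwise if \({\underline{w}}\) and \({{\overline{w}}}\) are arrays of function values.

```python
import numpy as np

def coordinates(w, a):
    """Coordinates (c1, c2) of w = (w_lower, w_upper) in the basis
    v0 = (1, -a), v0_sharp = (0, 1)."""
    w_lower, w_upper = w
    return w_lower, w_upper + a * w_lower

a = 0.7                          # hypothetical value of a > 0
v0 = np.array([1.0, -a])
v0_sharp = np.array([0.0, 1.0])
w = np.array([2.0, -0.3])
c1, c2 = coordinates(w, a)
print(np.allclose(c1 * v0 + c2 * v0_sharp, w))  # True
```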

The following propositions are used to estimate the special minimising sequence. The proofs follow Groves and Wahlén (2015, Sect. 4.1) and are omitted.

Proposition 2.7

- (i)

The estimates \(\Vert \varvec{\eta }\Vert _{1,\infty } \le c \mu ^\frac{\alpha }{2}|{}|{}|\varvec{\eta }|{}|{}|_\alpha \), \(\Vert {\underline{K}}^0{{\underline{\eta }}}\Vert _\infty \le c \mu ^\frac{\alpha }{2}|{}|{}|\varvec{\eta }|{}|{}|_\alpha \), \(\Vert {\overline{K}}^0_{ij}\varvec{\eta }\Vert _\infty \le c \mu ^\frac{\alpha }{2}|{}|{}|\varvec{\eta }|{}|{}|_\alpha \) hold for each \(\varvec{\eta }\in (H^2({\mathbb {R}}))^2\).

- (ii)

The estimates

$$\begin{aligned} \Vert \varvec{\eta }^{\prime \prime }+k_0^2\varvec{\eta }\Vert _0 \le c \mu ^{\alpha }|{}|{}|\varvec{\eta }|{}|{}|_\alpha \end{aligned}$$

and

$$\begin{aligned} \Vert ({\underline{K}}^0{\underline{\eta }})^{(n)}\Vert _\infty , \Vert ({\overline{K}}^0\varvec{\eta })^{(n)}\Vert _\infty \le c\mu ^\frac{\alpha }{2}|{}|{}|\varvec{\eta }|{}|{}|_\alpha , \quad n=0,1,2,\ldots , \end{aligned}$$

hold for each \(\varvec{\eta }\in (H^2({\mathbb {R}}))^2\) with \(\mathrm {supp}\,\hat{\varvec{\eta }} \subseteq S\).

Proposition 2.8

Any near minimiser \(\tilde{\varvec{\eta }}\) satisfies the inequalities

and

where

and

Proposition 2.9

The estimates

hold for each \(\varvec{\eta }\in U\).

Proposition 2.10

The estimates

hold for each \(\varvec{\eta }\in U\).

It is also helpful to write

where \({\underline{m}}\in {{\mathcal {L}}}_\mathrm {s}^2(H^2({\mathbb {R}}), L^2({\mathbb {R}}))\) and \({\overline{m}}\in {{\mathcal {L}}}_\mathrm {s}^2((H^2({\mathbb {R}}))^2, (L^2({\mathbb {R}}))^2)\) are defined by

and similarly

where \(n_j \in {{\mathcal {L}}}_\mathrm {s}^3(H^2({\mathbb {R}}), {\mathbb {R}})\), \(j=1,2,3\), are defined by

The symbol \({\mathcal {P}}[\cdot ]\) denotes the sum of all distinct expressions resulting from permutations of the variables appearing in its argument.
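To make the convention for \({\mathcal {P}}[\cdot ]\) concrete, consider a hypothetical trilinear expression \(u_1u_2\,L(u_3)\), with L a linear operator and pointwise products commuting. The six permutations of \((u_1,u_2,u_3)\) produce only three distinct expressions, since interchanging the two multiplied factors changes nothing. The sketch below identifies expressions through a canonical key and sums them; the operator L and the functions are illustrative choices, not those appearing in the definitions above.

```python
from itertools import permutations
import numpy as np

def P_terms(names):
    """Distinct expressions a*b*L(c) obtained by permuting the variables.

    Pointwise multiplication commutes, so a*b*L(c) is identified with
    b*a*L(c): the canonical key is (sorted pair of factors, operand of L).
    """
    keys = {(tuple(sorted((a, b))), c) for a, b, c in permutations(names)}
    return sorted(keys)

def P_eval(funcs, L):
    """Evaluate P[u1 u2 L(u3)] for sampled functions stored in a dict."""
    total = 0.0
    for (a, b), c in P_terms(list(funcs)):
        total = total + funcs[a] * funcs[b] * L(funcs[c])
    return total

terms = P_terms(["u1", "u2", "u3"])
print(terms)
# [(('u1', 'u2'), 'u3'), (('u1', 'u3'), 'u2'), (('u2', 'u3'), 'u1')]

x = np.linspace(0.0, 1.0, 5)
funcs = {"u1": x, "u2": x ** 2, "u3": 1.0 + x}
value = P_eval(funcs, lambda f: 2.0 * f)  # hypothetical L: multiplication by 2
```

With L a multiple of the identity all three terms coincide, so here the sum equals \(6x^3(1+x)\).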

Arguing as in Groves and Wahlén (2015, Proposition 4.6), we obtain the following estimates by direct calculation.

Proposition 2.11

The estimates

hold for each \(\varvec{\eta }\in U\) and \(\varvec{u}_2\), \(\varvec{u}_3 \in (H^2({\mathbb {R}}))^2\).

Using Proposition 2.9 and arguing as in Groves and Wahlén (2015, Proposition 4.6 and Lemma 4.7) we obtain the following estimates.

Lemma 2.12

The estimates

and

hold for each \(\varvec{\eta }\in U\) with \(\Vert \varvec{\eta }\Vert _2 \le c\mu ^\frac{1}{2}\) and \({\mathcal {L}}_2(\varvec{\eta })>c\mu \).

The following proposition is an immediate consequence of the definition of \(\varvec{\eta }_1\).

Proposition 2.13

The identity

holds for each \(\varvec{\eta }\in U\).

As a consequence, \(\varvec{\eta }_1\) satisfies the equation

where

In keeping with Eq. (2.2) we write the equation for \(\varvec{\eta }_2\) in the form

where

the decomposition \(\varvec{\eta }=\varvec{\eta }_1-H(\varvec{\eta })+\varvec{\eta }_3\) forms the basis of the calculations presented below. An estimate on the size of \(H(\varvec{\eta })\) is obtained from Eq. (2.4) and Proposition 2.11.

Proposition 2.14

The estimate

holds for each \(\varvec{\eta }\in U\).

The above results may be used to derive estimates for the gradients of the cubic parts of the functionals which are used in the analysis below.

Proposition 2.15

Any near minimiser \(\tilde{\varvec{\eta }}\) satisfies the estimates

Proof

Observe that

and estimate the right-hand side of this equation using Propositions 2.11 and 2.14. \(\square \)

An estimate for \({\mathcal {L}}_3(\tilde{\varvec{\eta }})\) is obtained in a similar fashion using Propositions 2.11, 2.13, and 2.14.

Proposition 2.16

Any near minimiser \(\tilde{\varvec{\eta }}\) satisfies the estimates

Estimating the right-hand sides of the inequalities

(together with the corresponding inequalities for \({\mathcal {K}}\) and \({\mathcal {L}}\)) using Propositions 2.9 and 2.10, the calculation

and Propositions 2.15 and 2.16 yields the following estimates for the ‘nonlinear’ parts of the functionals.

Lemma 2.17

Any near minimiser \(\tilde{\varvec{\eta }}\) satisfies the estimates

We now have all the ingredients necessary to estimate the wave speed and the quantity \(|{}|{}|\tilde{\varvec{\eta }}_1 |{}|{}|_\alpha \).

Proposition 2.18

Any near minimiser \(\tilde{\varvec{\eta }}\) satisfies the estimates

Proof

Combining Lemma 2.12, inequality (2.5) and Lemma 2.17, one finds that

from which the given estimates follow by Proposition 2.8. \(\square \)

Lemma 2.19

Any near minimiser \(\tilde{\varvec{\eta }}\) satisfies \(|{}|{}|\tilde{\varvec{\eta }}_{1} |{}|{}|_\alpha ^2 \le c\mu \), \(\Vert \tilde{\varvec{\eta }}_{1}^{\varvec{v}_0^\sharp } \Vert _0^2\le c\mu ^{3+2\alpha }\), \(\Vert \tilde{\varvec{\eta }}_3\Vert _2^2 \le c\mu ^{3+2\alpha }\) and \(\Vert H(\tilde{\varvec{\eta }})\Vert _2^2 \le c\mu ^{2+\alpha }\) for \(\alpha <1\).

Proof

Lemma 2.17 and Proposition 2.18 assert that

which shows that

and therefore

and

Multiplying the above inequality by \(\mu ^{-4\alpha }\) and adding \(\Vert \tilde{\varvec{\eta }}_1\Vert _0^2 \le \Vert \tilde{\varvec{\eta }}\Vert _0^2 \le c \mu \), one finds that

where Proposition 2.7 and the inequality

for \(k \in S\) have also been used. The latter follows from (1.14) and the fact that \(g(k) \varvec{v}_0^\sharp \ne 0\) for \(k\in S\).

The estimate for \(\tilde{\varvec{\eta }}_1\) follows from the previous inequality using the argument given by Groves and Wahlén (2010, Theorem 2.5), while those for \(\tilde{\varvec{\eta }}_3\) and \(H(\tilde{\varvec{\eta }})\) are derived by inserting the estimate \(|{}|{}|\tilde{\varvec{\eta }}_1 |{}|{}|_\alpha ^2 \le c\mu \) into Eq. (2.6) and Proposition 2.14. Finally, as a consequence of (2.7)–(2.9), we obtain the inequality

using that \(|{}|{}|\tilde{\varvec{\eta }}_1|{}|{}|^2_\alpha \le c\,\mu \). \(\square \)

The next step is to identify the dominant terms in the formulas for \({\mathcal {M}}_\mu (\tilde{\varvec{\eta }})\) and \(\langle {\mathcal {M}}_\mu ^\prime (\tilde{\varvec{\eta }}), \tilde{\varvec{\eta }} \rangle + 4\mu {\tilde{{\mathcal {M}}}}_\mu (\tilde{\varvec{\eta }})\) given in Lemma 2.12. We begin by examining the quantities \({\mathcal {K}}_4(\tilde{\varvec{\eta }})\) and \({\mathcal {L}}_4(\tilde{\varvec{\eta }})\) using a lemma which allows us to replace Fourier multiplier operators acting on functions with spectrum localised around certain wavenumbers by multiplication by constants. The result is a straightforward modification of Groves and Wahlén (2011, Proposition 4.13; 2015, Lemma 4.23), and the proof is therefore omitted.

Lemma 2.20

Assume that \(u, v \in H^2({\mathbb {R}})\) with \(\mathrm {supp}\,{\hat{u}}, \mathrm {supp}\,{\hat{v}}\subseteq S\) and \(|{}|{}|u|{}|{}|_\alpha , |{}|{}|v|{}|{}|_\alpha \le c\mu ^\frac{1}{2}\) for some \(\alpha <1\) and let \(u^+:={\mathcal {F}}^{-1}[\chi _{[0,\infty )}{\hat{u}}]\), \(v^+:={\mathcal {F}}^{-1}[\chi _{[0,\infty )}{\hat{v}}]\) and \(u^-:={\mathcal {F}}^{-1}[\chi _{(-\infty ,0]}{\hat{u}}]\), \(v^-:={\mathcal {F}}^{-1}[\chi _{(-\infty ,0]}{\hat{v}}]\) (so that \(u^-=\overline{u^+}\) and \(v^-=\overline{v^+}\)). Then u and v satisfy the estimates

- (i)

\(L(u^{\pm }) = m(\pm k_0)u^\pm + {\underline{O}}(\mu ^{\frac{1}{2}+\alpha })\),

- (ii)

\(L(u^+ v^+)= m(2k_0) u^+ v^+ + {\underline{O}}(\mu ^{1+\frac{3\alpha }{2}})\),

- (iii)

\(L(u^- v^-)= m(-2k_0) u^- v^- + {\underline{O}}(\mu ^{1+\frac{3\alpha }{2}})\),

- (iv)

\(L(u^+ v^-) =m(0)u^+v^-+ {\underline{O}}(\mu ^{1+\frac{3\alpha }{2}})\),

where \(L={\mathcal {F}}^{-1}[m(k) \cdot ]\) is a Fourier multiplier operator whose symbol m is locally Lipschitz continuous, and \({\underline{O}}(\mu ^p)\) denotes a quantity whose Fourier transform has compact support and whose \(L^2({\mathbb {R}})\)-norm (and hence \(H^s({\mathbb {R}})\)-norm for \(s \ge 0\)) is \(O(\mu ^p)\).
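Lemma 2.20(i) can be illustrated numerically. The Python sketch below uses the hypothetical symbol \(m(k)=|k|\) (which is locally Lipschitz) and the narrow-band test function \(u(x)=e^{-\mu ^2x^2}\cos (k_0x)\) with \(k_0=1\), \(\delta _0=0.3\), first cut off to S; here \(\mu \) simply controls the spectral width and the normalisation \(|{}|{}|u|{}|{}|_\alpha \le c\mu ^{1/2}\) is not reproduced. Since \(|{\hat{u}}^+|^2\) is then approximately a Gaussian centred at \(k_0\) with standard deviation \(\mu \), the relative defect \(\Vert L(u^+)-m(k_0)u^+\Vert _0/\Vert u^+\Vert _0\) should be close to \(\mu \): replacing L by multiplication with \(m(k_0)\) costs an error proportional to the spectral width.

```python
import numpy as np

def multiplier_defect(mu, m=np.abs, k0=1.0, delta0=0.3, length=1000.0, n=2**15):
    """Relative L^2 size of L(u^+) - m(k0) u^+ for a narrow-band test function.

    u(x) = exp(-(mu x)^2) cos(k0 x) is first cut off to
    S = [-k0 - delta0, -k0 + delta0] U [k0 - delta0, k0 + delta0];
    u^+ keeps the part of the spectrum with k >= 0.
    """
    x = np.linspace(-length / 2, length / 2, n, endpoint=False)
    k = 2 * np.pi * np.fft.fftfreq(n, d=length / n)
    u_hat = np.fft.fft(np.exp(-(mu * x) ** 2) * np.cos(k0 * x))
    in_S = np.abs(np.abs(k) - k0) <= delta0
    u_plus_hat = np.where(in_S & (k >= 0), u_hat, 0.0)
    defect_hat = (m(k) - m(k0)) * u_plus_hat  # symbol of L - m(k0)
    # by Parseval, ratios of L^2 norms can be computed on the Fourier side
    return np.linalg.norm(defect_hat) / np.linalg.norm(u_plus_hat)

for mu in (0.04, 0.02):
    print(mu, multiplier_defect(mu))  # the relative defect is close to mu
```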

Remark 2.21

Note in particular that we can take \(L\in \{\partial _x, {\underline{K}}^0, {\overline{K}}^0_{ij}\}\) in estimates (i)–(iv) in Lemma 2.20 and that we can take \(m(k)=(g(k)^{-1})_{ij}\) in (ii)–(iv) since \((g(k)^{-1})_{ij}\) is locally Lipschitz on \({\mathbb {R}}{\setminus } S\).

Using formulas (A.16), (A.20), (A.21), Lemmas 2.19 and 2.20 (with \(\alpha \) sufficiently close to 1), and the identity \(\tilde{\varvec{\eta }}_1^{\varvec{v}_0}= \tilde{{\underline{\eta }}}_1 (1,-a)\) we now obtain the following estimates.

Proposition 2.22

Any near minimiser \(\tilde{\varvec{\eta }}\) satisfies the estimates

Proposition 2.23

Any near minimiser \(\tilde{\varvec{\eta }}\) satisfies the estimates

Corollary 2.24

Any near minimiser \(\tilde{\varvec{\eta }}\) satisfies the estimate

where

We now turn to the corresponding result for \({\mathcal {L}}_3(\tilde{\varvec{\eta }})\). The following result is obtained by writing

expanding the right-hand side and estimating the terms using Propositions 2.7 and 2.11, Lemma 2.19 and the identity \(n(\tilde{\varvec{\eta }}_1, \tilde{\varvec{\eta }}_1, \tilde{\varvec{\eta }}_1)=0\).

Proposition 2.25

Any near minimiser \(\tilde{\varvec{\eta }}\) satisfies the estimate

Proposition 2.26

Any near minimiser \(\tilde{\varvec{\eta }}\) satisfies the estimate

Proof

Noting that

(see Propositions 2.7, 2.10 and 2.18, and Lemma 2.19) one finds that

recalling the definition of H in (2.4). The proof is concluded by estimating

(cf. Propositions 2.7 and 2.11, and Lemma 2.19). \(\square \)

Combining Propositions 2.25 and 2.26, one finds that

Expanding the right-hand side using Lemma 2.20 we then obtain the following result.

Proposition 2.27

Any near minimiser \(\tilde{\varvec{\eta }}\) satisfies

where

The following estimates for \({\mathcal {M}}_\mu (\tilde{\varvec{\eta }})\) and \(\langle {\mathcal {M}}_\mu ^\prime (\tilde{\varvec{\eta }}), \tilde{\varvec{\eta }} \rangle + 4\mu {\tilde{{\mathcal {M}}}}_\mu (\tilde{\varvec{\eta }})\) may now be derived from Corollary 2.24 and Proposition 2.27.

Lemma 2.28

The estimates

hold uniformly over \(s \in [1,2]\).

Proof

Lemma 2.12 asserts that

uniformly over \(s \in [1,2]\). The first result follows by estimating

(see Eq. (2.5)),

(by Propositions 2.22, 2.23 and 2.27) and

The second result is derived in a similar fashion. \(\square \)

Lemma 2.29

Any near minimiser satisfies the inequality

Proof

Note first that an arbitrary function \(\varvec{\eta }\in U{\setminus }\{0\}\) satisfies the inequality

where we have used that

(cf. (1.14)). The result now follows from the calculation

\(\square \)

Corollary 2.30

The estimates

hold uniformly over \(s \in [1,2]\) and

Proof

The estimates follow by combining Corollary 2.24, Proposition 2.27 and Lemma 2.28, while the inequality for \(\underline{{\tilde{\eta }}}_1\) is a consequence of the first estimate (with \(s=1\)) and Lemma 2.29. \(\square \)

Proposition 2.31

There exist \(s_0\in (1,2]\) and \(q>2\) with the property that the function

is decreasing and strictly negative.

Proof

This result follows from the calculation

for \(s \in (1,s_0)\) and \(q \in (2,q_0)\), where we have used Corollary 2.30 and chosen \(s_0>1\) and \(q_0>2\) so that \((3-q)A_3+s(4-q)A_4\), which is negative for \(s=1\) and \(q=2\) (by Assumption 1.3), is also negative for \(s \in (1,s_0]\) and \(q \in (2,q_0]\). \(\square \)
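The choice of \(s_0\) and \(q_0\) is a continuity argument, which can be made explicit: \(f(s,q)=(3-q)A_3+s(4-q)A_4\) is affine in s for fixed q and affine in q for fixed s, so its maximum over a rectangle \([1,s_0]\times [2,q_0]\) is attained at a corner. The Python sketch below halves a trial size until all four corners give negative values; the numbers \(A_3=-1\), \(A_4=0.4\) are hypothetical and merely satisfy \(A_3+2A_4<0\), the sign condition at \(s=1\), \(q=2\) quoted in the proof.

```python
def f(s, q, A3, A4):
    """The coefficient (3 - q) A3 + s (4 - q) A4 from the proof."""
    return (3 - q) * A3 + s * (4 - q) * A4

def choose_s0_q0(A3, A4, h=1.0, max_halvings=60):
    """Return (s0, q0) with s0 in (1, 2] and f < 0 on [1, s0] x [2, q0].

    Requires f(1, 2) < 0. Because f is affine in each variable separately,
    checking the four corners of the rectangle suffices.
    """
    if f(1.0, 2.0, A3, A4) >= 0:
        raise ValueError("need A3 + 2*A4 < 0")
    for _ in range(max_halvings):
        s0, q0 = 1.0 + h, 2.0 + h
        corners = [(s, q) for s in (1.0, s0) for q in (2.0, q0)]
        if all(f(s, q, A3, A4) < 0 for s, q in corners):
            return s0, q0
        h /= 2
    raise RuntimeError("no admissible rectangle found")

s0, q0 = choose_s0_q0(A3=-1.0, A4=0.4)
print(s0, q0)  # 1.125 2.125
```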

The sub-homogeneity of \(I_\mu \) now follows using a simplified form of the argument in the proof of Groves and Wahlén (2015, Corollary 4.32), which is repeated here for the reader’s convenience.

Corollary 2.32

There exists \(\mu _0>0\) such that the map \((0, \mu _0)\ni \mu \mapsto I_\mu \) is strictly sub-homogeneous, that is

$$\begin{aligned} I_{s\mu }<sI_\mu \end{aligned}$$

whenever \(0<\mu<s\mu <\mu _0\).

Proof

It follows from Proposition 2.31 that there exists \(q>2\) such that

Combining this with Lemma 2.29 and letting \(\{\tilde{\varvec{\eta }}_n\}\) be the special minimising sequence in Theorem 2.2, we find that

As \(n\rightarrow \infty \) this inequality yields

For \(s>s_0^2\) choose \(p\ge 2\) such that \(s\in (1,s_0^{2p}]\) and note that

\(\square \)
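A sketch of the standard iteration behind the last step, assuming that \(I_{t\nu }<tI_\nu \) has already been established for all \(t\in (1,s_0^2]\) and all \(\nu \) with \(0<\nu<t\nu <\mu _0\): put \(t:=s^{1/p}\), so that \(t\in (1,s_0^2]\) by the choice of p. Since \(t^j\mu \le s\mu <\mu _0\) for \(j=0,\ldots ,p\), the inequality may be applied with \(\nu =t^{j-1}\mu \) for \(j=p,p-1,\ldots ,1\), and multiplying by the positive factors \(t,t^2,\ldots \) gives

$$\begin{aligned} I_{s\mu }=I_{t^p\mu }<t\,I_{t^{p-1}\mu }<t^2I_{t^{p-2}\mu }<\cdots <t^pI_\mu =sI_\mu . \end{aligned}$$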

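Theorem 2.3 follows from Corollary 2.32 using a classical argument. Indeed, if \(0<\mu _2 \le \mu _1\) with \(\mu _1+\mu _2 <\mu _0\), then

$$\begin{aligned} I_{\mu _1+\mu _2}<\Bigl (1+\frac{\mu _2}{\mu _1}\Bigr )I_{\mu _1}=I_{\mu _1}+\frac{\mu _2}{\mu _1}I_{\mu _1}\le I_{\mu _1}+I_{\mu _2}, \end{aligned}$$

where the last step uses \(I_{\mu _1}\le \frac{\mu _1}{\mu _2}I_{\mu _2}\) (with equality if \(\mu _1=\mu _2\)).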

References

Barrandon, M.: Reversible bifurcation of homoclinic solutions in presence of an essential spectrum. J. Math. Fluid Mech. 8, 267–310 (2006)

Barrandon, M., Iooss, G.: Water waves as a spatial dynamical system; infinite depth case. Chaos 15, 037112 (2005)

Benjamin, T.B., Bridges, T.J.: Reappraisal of the Kelvin–Helmholtz problem. I. Hamiltonian structure. J. Fluid Mech. 333, 301–325 (1997)

Buffoni, B.: Existence and conditional energetic stability of capillary–gravity solitary water waves by minimisation. Arch. Ration. Mech. Anal. 173, 25–68 (2004)

Buffoni, B.: Conditional energetic stability of gravity solitary waves in the presence of weak surface tension. Topol. Methods Nonlinear Anal. 25, 41–68 (2005)

Buffoni, B.: Gravity solitary waves by minimization: an uncountable family. Topol. Methods Nonlinear Anal. 34, 339–352 (2009)

Buffoni, B., Groves, M.D., Sun, S.M., Wahlén, E.: Existence and conditional energetic stability of three-dimensional fully localised solitary gravity–capillary water waves. J. Differ. Equ. 254, 1006–1096 (2013)

Cazenave, T.: Semilinear Schrödinger Equations. Courant Lecture Notes in Mathematics, vol. 10. New York University, Courant Institute of Mathematical Sciences, New York (2003)

Craig, W., Groves, M.D.: Normal forms for wave motion in fluid interfaces. Wave Motion 31, 21–41 (2000)

Craig, W., Guyenne, P., Kalisch, H.: Hamiltonian long-wave expansions for free surfaces and interfaces. Commun. Pure Appl. Math. 58, 1587–1641 (2005)

Dias, F., Iooss, G.: Water-Waves as a Spatial Dynamical System. Handbook of Mathematical Fluid Dynamics, vol. II, pp. 443–499. North-Holland, Amsterdam (2003)

Dias, F., Kharif, C.: Nonlinear gravity and capillary–gravity waves. Annu. Rev. Fluid Mech. 31, 301–346 (1999)

Groves, M.D., Wahlén, E.: On the existence and conditional energetic stability of solitary water waves with weak surface tension. C. R. Math. Acad. Sci. Paris 348, 397–402 (2010)

Groves, M.D., Wahlén, E.: On the existence and conditional energetic stability of solitary gravity–capillary surface waves on deep water. J. Math. Fluid Mech. 13, 593–627 (2011)

Groves, M.D., Wahlén, E.: Existence and conditional energetic stability of solitary gravity–capillary water waves with constant vorticity. Proc. R. Soc. Edinb. Sect. A 145, 791–883 (2015)

Iooss, G.: Gravity and capillary–gravity periodic travelling waves for two superposed fluid layers, one being of infinite depth. J. Math. Fluid Mech. 1, 24–61 (1999)

Iooss, G., Lombardi, E., Sun, S.M.: Gravity travelling waves for two superposed fluid layers, one being of infinite depth: a new type of bifurcation. R. Soc. Lond. Philos. Trans. Ser. A Math. Phys. Eng. Sci. 360, 2245–2336 (2002). Recent developments in the mathematical theory of water waves (Oberwolfach, 2001)

Kirchgässner, K.: Wave-solutions of reversible systems and applications. In: Dynamical Systems, II (Gainesville, FL, 1981), pp. 181–200. Academic Press, New York (1982)

Kuznetsov, E.A., Lushnikov, P.M.: Nonlinear theory of the excitation of waves by a wind due to the Kelvin–Helmholtz instability. J. Exp. Theor. Phys. 81, 332–340 (1995)

Lannes, D.: The Water Waves Problem. Mathematical Analysis and Asymptotics. Mathematical Surveys and Monographs, vol. 188. American Mathematical Society, Providence, RI (2013)

Lions, P.-L.: The concentration-compactness principle in the calculus of variations. The locally compact case. I. Ann. Inst. H. Poincaré Anal. Non Linéaire 1, 109–145 (1984a)

Lions, P.-L.: The concentration-compactness principle in the calculus of variations. The locally compact case. II. Ann. Inst. H. Poincaré Anal. Non Linéaire 1, 223–283 (1984b)

Lombardi, E., Iooss, G.: Gravity solitary waves with polynomial decay to exponentially small ripples at infinity. Ann. Inst. H. Poincaré Anal. Non Linéaire 20, 669–704 (2003)

Sun, S.M., Shen, M.C.: Exact theory of generalized solitary waves in a two-layer liquid in the absence of surface tension. J. Math. Anal. Appl. 180, 245–274 (1993)

Woolfenden, H.C., Părău, E.I.: Numerical computation of solitary waves in a two-layer fluid. J. Fluid Mech. 688, 528–550 (2011)

Acknowledgements

We are grateful to the referees for valuable suggestions. E. Wahlén was supported by the Swedish Research Council (Grant Nos. 621-2012-3753 and 2016-04999).


Additional information

Communicated by Dr. Paul Newton.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

The Functional-Analytic Setting

The goal of this section is to introduce rigorous definitions of the Dirichlet–Neumann operators \({\underline{G}}({{\underline{\eta }}})\) and \({\overline{G}}(\varvec{\eta })\) and their inverses \({\underline{N}}({{\underline{\eta }}})\) and \({\overline{N}}(\varvec{\eta })\), as well as the operators \(G(\varvec{\eta })\) and \(K(\varvec{\eta })\).

A.1 Definition of Operators

A.1.1 Lower Fluid

In order to define \({\underline{G}}({{\underline{\eta }}})\) and \({\underline{N}}({{\underline{\eta }}})\), we first introduce suitable function spaces on which these operators are well defined. We begin by recalling the definition of the Schwartz class \({\mathcal {S}}({\overline{\Omega }})\) for an open set \(\Omega \subset {\mathbb {R}}^n\):

Definition A.1

- (i)

Let \(\dot{H}^{\frac{1}{2}}({\mathbb {R}})\) be the completion of \({\mathcal {S}}({\mathbb {R}})\) with respect to the norm

$$\begin{aligned} \Vert u\Vert _{\dot{H}^{\frac{1}{2}}({\mathbb {R}})}:=\left( \int _{\mathbb {R}}|k| |{\hat{u}}(k)|^2 \, \mathrm{d}k\right) ^{\frac{1}{2}}. \end{aligned}$$

- (ii)

Let \({\dot{H}}^{-\frac{1}{2}}({\mathbb {R}})\) be the completion of \({\overline{{\mathcal {S}}}}({\mathbb {R}})=\{u\in {\mathcal {S}}({\mathbb {R}}):{\hat{u}}(0)=0\}\) with respect to the norm

$$\begin{aligned} \Vert u\Vert _{\dot{H}^{-\frac{1}{2}}({\mathbb {R}})}:=\left( \int _{\mathbb {R}}|k|^{-1} |{\hat{u}}(k)|^2\, \mathrm{d}k\right) ^{\frac{1}{2}}. \end{aligned}$$

- (iii)

Let \(\dot{H}^1(\Omega )\) be the completion of \({\mathcal {S}}({\overline{\Omega }})\) with respect to the norm

$$\begin{aligned} \Vert u\Vert _{\dot{H}^1(\Omega )}:=\left( \int _{\Omega }|\nabla u|^2\, \mathrm{d}x\, \mathrm{d}y\right) ^{\frac{1}{2}}. \end{aligned}$$
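The homogeneous norms in (i) and (ii) can be approximated on a periodic grid with the FFT. The Python sketch below assumes the unitary convention \({\hat{u}}(k)=(2\pi )^{-1/2}\int u(x)e^{-ikx}\,\mathrm{d}x\) (the normalisation used in the paper may differ by a constant factor) and drops the \(k=0\) mode. For \(u(x)=e^{-x^2/2}\) one has \(|{\hat{u}}(k)|^2=e^{-k^2}\), and for \(u(x)=xe^{-x^2/2}\), which satisfies \({\hat{u}}(0)=0\), one has \(|{\hat{u}}(k)|^2=k^2e^{-k^2}\); in both cases the relevant squared norm equals \(\int _{\mathbb {R}}|k|e^{-k^2}\,\mathrm{d}k=1\), which the numerical values should reproduce.

```python
import numpy as np

def homogeneous_norm(u, dx, power):
    """Approximate (int |k|^power |u_hat(k)|^2 dk)^(1/2) on a periodic grid.

    power = 1 gives the homogeneous H^{1/2} norm, power = -1 the homogeneous
    H^{-1/2} norm. Unitary convention u_hat(k) = (2 pi)^{-1/2} int u e^{-ikx} dx;
    the k = 0 mode is dropped.
    """
    n = u.size
    k = 2 * np.pi * np.fft.fftfreq(n, d=dx)  # angular wavenumbers
    dk = 2 * np.pi / (n * dx)
    # |u_hat(k_j)|^2 from the DFT; the phase from the grid offset cancels
    u_hat_abs2 = (dx / np.sqrt(2 * np.pi)) ** 2 * np.abs(np.fft.fft(u)) ** 2
    nz = k != 0
    return np.sqrt(np.sum(np.abs(k[nz]) ** power * u_hat_abs2[nz]) * dk)

x = np.linspace(-40.0, 40.0, 2**12, endpoint=False)
dx = x[1] - x[0]
norm_plus = homogeneous_norm(np.exp(-x**2 / 2), dx, 1)
norm_minus = homogeneous_norm(x * np.exp(-x**2 / 2), dx, -1)
print(norm_plus, norm_minus)  # both close to 1
```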

The following result is classical, and the proof is therefore omitted. (We do, however, present a proof of a similar result for the upper domain later; see Proposition A.7.)

Proposition A.2

- (i)

The trace map \(u \mapsto u|_{y={\underline{\eta }}}\) defines a continuous map \(\dot{H}^1({\underline{\Sigma }}({\underline{\eta }})) \rightarrow \dot{H}^{\frac{1}{2}}({\mathbb {R}})\) and has a continuous right inverse \(\dot{H}^{\frac{1}{2}}({\mathbb {R}})\rightarrow \dot{H}^1({\underline{\Sigma }}({\underline{\eta }}))\).

- (ii)

The space \({\dot{H}}^{-\frac{1}{2}}({\mathbb {R}})\) can be identified with \(({\dot{H}}^{\frac{1}{2}}({\mathbb {R}}))^\prime \).

Definition A.3

For \({\underline{\eta }}\in W^{1,\infty }({\mathbb {R}})\), the bounded linear operator \({\underline{G}}({\underline{\eta }}):\dot{H}^{\frac{1}{2}}({\mathbb {R}})\rightarrow \dot{H}^{-\frac{1}{2}}({\mathbb {R}})\) is defined by

where \(\langle \cdot \,, \cdot \rangle \) denotes the \(\dot{H}^{-\frac{1}{2}}({\mathbb {R}}) \times \dot{H}^{\frac{1}{2}}({\mathbb {R}})\) pairing and \({\underline{\phi }}_j\), \(j=1,2\), is the unique function in \(\dot{H}^1({\underline{\Sigma }}({\underline{\eta }}))\) such that \({\underline{\phi }}_j|_{y={\underline{\eta }}}={\underline{\Phi }}_j\) and

for all \({\underline{\psi }} \in \dot{H}^1({\underline{\Sigma }}({\underline{\eta }}))\) with \({\underline{\psi }}|_{y={\underline{\eta }}}=0\).

Using Proposition A.2 and the definition of \({\underline{G}}({\underline{\eta }})\), we find that

for some constant \(c>0\) which depends on \(\Vert {\underline{\eta }}\Vert _{W^{1, \infty }({\mathbb {R}})}\). From this we immediately obtain the following result.

Lemma A.4

The Dirichlet–Neumann operator \({\underline{G}}({\underline{\eta }}):\dot{H}^{\frac{1}{2}}({\mathbb {R}}) \rightarrow \dot{H}^{-\frac{1}{2}}({\mathbb {R}})\) is an isomorphism for each \({\underline{\eta }}\in W^{1,\infty }({\mathbb {R}})\).

Definition A.5

For \({\underline{\eta }}\in W^{1,\infty }({\mathbb {R}})\), the Neumann–Dirichlet operator \({\underline{N}}({\underline{\eta }}) :\dot{H}^{-\frac{1}{2}}({\mathbb {R}})\rightarrow \dot{H}^{\frac{1}{2}}({\mathbb {R}})\) is defined as the inverse of \({\underline{G}}({\underline{\eta }})\).

A.1.2 Upper Fluid

We next discuss the same questions for the upper fluid. Here we have the additional difficulty that both boundaries are free. Choose \(h_0 \in (0,1)\). In order to prevent the boundaries from intersecting, we consider the class

of surface and interface profiles.

Definition A.6

-

(i)

Let \(H_\star ^{\frac{1}{2}}({\mathbb {R}})\) be the completion of \({\mathcal {S}}({\mathbb {R}})\) with respect to the norm

$$\begin{aligned} \Vert u\Vert _{H_\star ^{\frac{1}{2}}({\mathbb {R}})}:=\left( \int _{{\mathbb {R}}} (1+k^2)^{-\frac{1}{2}}k^2|\hat{u}|^2\, \mathrm{d}k\right) ^{\frac{1}{2}}, \end{aligned}$$ -

(ii)

Let \(H_\star ^{-\frac{1}{2}}({\mathbb {R}})\) be the completion of \({\overline{{\mathcal {S}}}}({\mathbb {R}})\) with respect to the norm

$$\begin{aligned} \Vert u\Vert _{H_\star ^{-\frac{1}{2}}({\mathbb {R}})}:=\left( \int _{{\mathbb {R}}} (1+k^2)^{\frac{1}{2}}k^{-2}|\hat{u}|^2\, \mathrm{d}k\right) ^{\frac{1}{2}}. \end{aligned}$$ -

(iii)

Let X be the Hilbert space

$$\begin{aligned} \{ \overline{\varvec{\Phi }}=({\overline{\Phi }}_i, {\overline{\Phi }}_s)\in (H_\star ^{\frac{1}{2}}({\mathbb {R}}))^2 :{\overline{\Phi }}_s-{\overline{\Phi }}_i \in H^{\frac{1}{2}}({\mathbb {R}})\} \end{aligned}$$equipped with the inner product

$$\begin{aligned} \langle \overline{\varvec{\Phi }}_1,\overline{\varvec{\Phi }}_2\rangle _X= \langle \overline{\varvec{\Phi }}_1,\overline{\varvec{\Phi }}_2\rangle _{(H_\star ^{\frac{1}{2}}({\mathbb {R}}))^2}+\langle {\overline{\Phi }}_{1,s}-{\overline{\Phi }}_{1,i},{\overline{\Phi }}_{2,s}-{\overline{\Phi }}_{2,i}\rangle _{H^{\frac{1}{2}}({\mathbb {R}})}. \end{aligned}$$ -

(iv)

Let Y be the Hilbert space

$$\begin{aligned} \{\overline{\varvec{\Psi }}=({\overline{\Psi }}_i, {\overline{\Psi }}_s) \in (H^{-\frac{1}{2}}({{\mathbb {R}}}))^2:{\overline{\Psi }}_s+ {\overline{\Psi }}_i \in H_\star ^{-\frac{1}{2}}({\mathbb {R}})\} \end{aligned}$$equipped with the inner product

$$\begin{aligned} \langle \overline{\varvec{\Psi }}_1,\overline{\varvec{\Psi }}_2\rangle _Y=\langle \overline{\varvec{\Psi }}_1,\overline{\varvec{\Psi }}_2\rangle _{(H^{-\frac{1}{2}}({\mathbb {R}}))^2}+\langle {\overline{\Psi }}_{1,s}+{\overline{\Psi }}_{1,i},{\overline{\Psi }}_{2,s}+{\overline{\Psi }}_{2,i}\rangle _{H_\star ^{-\frac{1}{2}}({\mathbb {R}})}. \end{aligned}$$
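The norms in Definition A.6 are weighted \(L^2\) norms of \(\hat{u}\), so they are easy to approximate numerically. The following Python sketch is an illustration only: it assumes the unitary convention \(\hat{u}(k)=(2\pi )^{-1/2}\int u(x)e^{-ikx}\,\mathrm{d}x\) (the convention is not fixed in this appendix), replaces \({\mathbb {R}}\) by a long periodic interval, and compares an FFT approximation of \(\Vert u\Vert _{H_\star ^{\frac{1}{2}}({\mathbb {R}})}\) for the Gaussian \(u(x)=e^{-x^2/2}\) (whose transform is \(e^{-k^2/2}\) in this convention) with a fine Riemann sum over that transform. It also checks the elementary weight inequalities \((1+k^2)^{-\frac{1}{2}}k^2\le (1+k^2)^{\frac{1}{2}}\) and \((1+k^2)^{\frac{1}{2}}k^{-2}\ge (1+k^2)^{-\frac{1}{2}}\), which give the norm bounds behind \(H^{\frac{1}{2}}({\mathbb {R}})\hookrightarrow H_\star ^{\frac{1}{2}}({\mathbb {R}})\) and \(H_\star ^{-\frac{1}{2}}({\mathbb {R}})\hookrightarrow H^{-\frac{1}{2}}({\mathbb {R}})\). All function names are hypothetical.

```python
import numpy as np

# Fourier weights from Definition A.6 (hypothetical helper names).
def w_star_plus(k):
    """Weight (1+k^2)^(-1/2) k^2 of the H_star^{1/2} norm."""
    return k**2 / np.sqrt(1.0 + k**2)

def w_star_minus(k):
    """Weight (1+k^2)^(1/2) k^(-2) of the H_star^{-1/2} norm (k != 0)."""
    return np.sqrt(1.0 + k**2) / k**2

def hstar_half_norm_fft(u, dx):
    """Approximate ||u||_{H_star^{1/2}} from samples u on a uniform periodic grid.

    Assumes the unitary transform u_hat(k) = (2 pi)^(-1/2) int u(x) exp(-ikx) dx,
    approximated by dx * FFT / sqrt(2 pi) up to a phase factor removed by abs()."""
    n = u.size
    k = 2.0 * np.pi * np.fft.fftfreq(n, d=dx)      # angular wavenumbers
    u_hat_abs = dx * np.abs(np.fft.fft(u)) / np.sqrt(2.0 * np.pi)
    dk = 2.0 * np.pi / (n * dx)                     # spacing of the wavenumber grid
    return np.sqrt(np.sum(w_star_plus(k) * u_hat_abs**2) * dk)

def hstar_half_norm_gaussian_reference():
    """Same norm for u(x) = exp(-x^2/2), using its transform exp(-k^2/2)."""
    k = np.linspace(-30.0, 30.0, 600001)
    dk = k[1] - k[0]
    return np.sqrt(np.sum(w_star_plus(k) * np.exp(-k**2)) * dk)

# A long periodic box stands in for the real line.
x = np.linspace(-40.0, 40.0, 4096, endpoint=False)
dx = x[1] - x[0]
u = np.exp(-x**2 / 2.0)

approx = hstar_half_norm_fft(u, dx)
reference = hstar_half_norm_gaussian_reference()
print(f"FFT: {approx:.10f}  reference: {reference:.10f}")

# Weight comparisons on a sample of nonzero wavenumbers.
ks = np.logspace(-4, 4, 1001)
assert np.all(w_star_plus(ks) <= np.sqrt(1.0 + ks**2))          # H^{1/2} weight dominates
assert np.all(w_star_minus(ks) >= 1.0 / np.sqrt(1.0 + ks**2))   # H_star^{-1/2} weight dominates
```

The weight \((1+k^2)^{-\frac{1}{2}}k^2\) behaves like \(k^2\) at low frequencies and like \(|k|\) at high frequencies, so \(H_\star ^{\frac{1}{2}}({\mathbb {R}})\) controls only the gradient for long waves and a half derivative for short waves.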

Note that we have the inclusions

The reason for introducing the space X is that it is the natural trace space associated with \(\dot{H}^1({\overline{\Sigma }}(\varvec{\eta }))\). Since this is not completely standard, we include a proof.

Proposition A.7

Fix \(\varvec{\eta }\in W\). The trace map \(u \mapsto (u|_{y={\underline{\eta }}}, u|_{y=1+{\overline{\eta }}})\) defines a continuous map \(\dot{H}^1({\overline{\Sigma }}(\varvec{\eta }))\rightarrow X\) with a continuous right inverse \(X\rightarrow \dot{H}^1({\overline{\Sigma }}(\varvec{\eta }))\).

Proof

We flatten the domain using the transformation \((x,y)\mapsto (x, y^\prime (x,y))\), where

This maps the domain \({\overline{\Sigma }}(\varvec{\eta })\) onto the strip \({{\overline{\Sigma }}}_0=\{(x,y)\in {\mathbb {R}}^2 :0<y<1\}\) and \(\dot{H}^1({\overline{\Sigma }}(\varvec{\eta }))\) isomorphically onto \(\dot{H}^1({\overline{\Sigma }}_0)\). Letting \(\chi \in C_0^\infty ({\mathbb {R}})\) be a cut-off function with \(\chi (0)=1\) and support in \([-1/2,1/2]\), we find that

and hence

Moreover,

It follows that \(\Vert \phi (\cdot , 1)-\phi (\cdot , 0)\Vert _{H^{\frac{1}{2}}({\mathbb {R}})}\le c\Vert \phi \Vert _{\dot{H}^1({\overline{\Sigma }}_0)}\), and hence that \(\Vert (\phi |_{y=0}, \phi |_{y=1})\Vert _X\le c\Vert \phi \Vert _{\dot{H}^1({\overline{\Sigma }}_0)}\). The continuity of the trace map now follows by a density argument.

Conversely, given \(({\overline{\Phi }}_i,{\overline{\Phi }}_s)\in X\), we formally define \(u\in \dot{H}^1({\overline{\Sigma }}_0)\) by

This means that u is the element of \(\dot{H}^1({\overline{\Sigma }}_0)\) whose partial derivatives have Fourier transforms

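These formulas show that the map \(X\ni ({\overline{\Phi }}_i, {\overline{\Phi }}_s) \mapsto u\in \dot{H}^1({\overline{\Sigma }}_0)\) is continuous; composing it with the inverse of the flattening transformation yields the required right inverse \(X\rightarrow \dot{H}^1({\overline{\Sigma }}(\varvec{\eta }))\). \(\square \)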
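For the flat configuration \(\varvec{\eta }=0\), Proposition A.7 can be checked frequency by frequency. The Python sketch below is an illustration under that assumption only (it says nothing about general \(\varvec{\eta }\in W\)): for a fixed wavenumber \(k\), the harmonic function in the strip with traces \((a,b)=(\hat{{\overline{\Phi }}}_i,\hat{{\overline{\Phi }}}_s)\) is \(\hat{u}(k,y)=(a\sinh (|k|(1-y))+b\sinh (|k|y))/\sinh |k|\), and integrating \(k^2|\hat{u}|^2+|\partial _y\hat{u}|^2\) over \(0<y<1\) gives the Hermitian form \(|k|\,(\coth |k|\,(|a|^2+|b|^2)-2\,\mathrm{csch}|k|\,\mathrm{Re}(a\bar{b}))\). The code compares this with the density of \(\Vert \cdot \Vert _X^2\) by computing generalised eigenvalues of the two \(2\times 2\) matrices; uniform upper and lower bounds mean that the Dirichlet energy of the extension and the squared \(X\)-norm of its traces are equivalent. Function names are hypothetical.

```python
import numpy as np

# Flat case only (assumption): eta = 0, so the upper fluid occupies the strip 0 < y < 1.
# For one wavenumber k > 0 and traces (a, b), the Dirichlet energy density of the
# harmonic extension is  k [coth(k) (|a|^2 + |b|^2) - 2 csch(k) Re(a conj b)].

def energy_matrix(k):
    """2x2 matrix of the Dirichlet energy density in the variables (a, b)."""
    c, s = 1.0 / np.tanh(k), 1.0 / np.sinh(k)
    return k * np.array([[c, -s], [-s, c]])

def x_norm_matrix(k):
    """2x2 matrix of the squared X-norm density (Definition A.6 (iii))."""
    w_star = k**2 / np.sqrt(1.0 + k**2)   # H_star^{1/2} weight on each component
    w_half = np.sqrt(1.0 + k**2)          # H^{1/2} weight on the difference b - a
    return w_star * np.eye(2) + w_half * np.array([[1.0, -1.0], [-1.0, 1.0]])

def equivalence_constants(ks):
    """Smallest and largest generalised eigenvalue of (energy, X-norm) over ks > 0."""
    eigs = []
    for k in ks:
        ev = np.linalg.eigvals(np.linalg.solve(x_norm_matrix(k), energy_matrix(k)))
        eigs.extend(ev.real)
    return min(eigs), max(eigs)

ks = np.logspace(-3, 2.4, 400)   # stop well before sinh(k) overflows
lo, hi = equivalence_constants(ks)
print(f"{lo:.4f} * X-norm^2 <= Dirichlet energy <= {hi:.4f} * X-norm^2")

# Both matrices are diagonal in the basis (1, 1), (1, -1), giving the closed forms
w_star = ks**2 / np.sqrt(1.0 + ks**2)
w_half = np.sqrt(1.0 + ks**2)
r_sum = ks * np.tanh(ks / 2.0) / w_star                 # direction a = b
r_diff = (ks / np.tanh(ks / 2.0)) / (w_star + 2.0 * w_half)   # direction a = -b
```

The closed forms use \(\coth x-\mathrm{csch}\,x=\tanh (x/2)\) and \(\coth x+\mathrm{csch}\,x=\coth (x/2)\); the ratios tend to \(1/2\) and \(1\) as \(k\rightarrow 0\) and to \(1\) and \(1/3\) as \(k\rightarrow \infty \).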

Note that \((H_\star ^{\frac{1}{2}}({\mathbb {R}}))'\) can be identified with \(H_\star ^{-\frac{1}{2}}({\mathbb {R}})\). A straightforward argument shows that the dual space of X is Y.

Proposition A.8

The space Y can be identified with the dual of X using the duality pairing

Definition A.9

For \(\varvec{\eta }\in W\), the bounded linear operator \({\overline{G}}(\varvec{\eta }):X\rightarrow Y\) is defined by

where \(\langle \cdot , \cdot \rangle \) denotes the \(Y\times X\) pairing and \({\overline{\phi }}_j\), \(j=1,2\), is the unique function in \(\dot{H}^1({\overline{\Sigma }}(\varvec{\eta }))\) such that \({\overline{\phi }}_j|_{y=1+{\overline{\eta }}}={\overline{\Phi }}_{j,s}\), \({\overline{\phi }}_j|_{y={\underline{\eta }}}={\overline{\Phi }}_{j,i}\) and

for all \({\overline{\psi }} \in \dot{H}^1({\overline{\Sigma }}(\varvec{\eta }))\) with \({\overline{\psi }}|_{y={\underline{\eta }}}=0\) and \({\overline{\psi }}|_{y=1+{\overline{\eta }}}=0\).

As in the case of the lower fluid, we obtain that

for some constant \(c>0\) which depends on \(h_0\) and \(\Vert \varvec{\eta }\Vert _{W^{1,\infty }({\mathbb {R}})}\), and hence the following result.

Lemma A.10

The operator \({\overline{G}}(\varvec{\eta }):X \rightarrow Y\) is an isomorphism for each \(\varvec{\eta }\in W\).

Definition A.11

For \(\varvec{\eta }\in W\), the Neumann–Dirichlet operator \({\overline{N}}(\varvec{\eta }) :Y \rightarrow X\) is defined as the inverse of \({\overline{G}}(\varvec{\eta })\).

1.1.3 Further Operators

We now proceed with the rigorous definition of the operators \(G(\varvec{\eta })\), \(N(\varvec{\eta })\) and \(K(\varvec{\eta })\). Recall that the definition of \(G(\varvec{\eta })\) involves various combinations of the components of \({\overline{G}}(\varvec{\eta })\) (cf. (1.23)). We can formally write

but since the definition of the function space X involves the condition \({\overline{\Phi }}_s-{\overline{\Phi }}_i \in H^{\frac{1}{2}}({\mathbb {R}})\) which couples the components \({\overline{\Phi }}_s\) and \({\overline{\Phi }}_i\), the definition of the components \({\overline{G}}_{ij}\) requires some care. Note, however, that \((H^{\frac{1}{2}}({\mathbb {R}}))^2 \subset X\), so that the components \({\overline{G}}_{ij}(\varvec{\eta })\) define bounded operators \(H^{\frac{1}{2}}({\mathbb {R}})\rightarrow H^{-\frac{1}{2}}({\mathbb {R}})\). The components \({\overline{N}}_{ij}(\varvec{\eta })\) can similarly be defined by considering the subspace \((H_\star ^{-\frac{1}{2}}({\mathbb {R}}))^2\subset Y\).
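To illustrate the components in the simplest configuration (a sketch under assumptions, since the defining displays are not reproduced here): suppose \(\varvec{\eta }=0\), so that \({\overline{\Sigma }}(0)\) is the strip \(\{0<y<1\}\), and suppose that the pairing in Definition A.9 is the Dirichlet integral \(\int _{{\overline{\Sigma }}(0)}\nabla {\overline{\phi }}_1\cdot \nabla {\overline{\phi }}_2\,\mathrm{d}x\,\mathrm{d}y\). The harmonic function with traces \(({\overline{\Phi }}_i,{\overline{\Phi }}_s)\) has Fourier transform \(\hat{{\overline{\phi }}}(k,y)=(\hat{{\overline{\Phi }}}_i\sinh (|k|(1-y))+\hat{{\overline{\Phi }}}_s\sinh (|k|y))/\sinh |k|\), and an integration by parts in \(y\) gives the Fourier multipliers

$$\begin{aligned} \widehat{{\overline{G}}_{11}(0)u}=\widehat{{\overline{G}}_{22}(0)u}=|k|\coth |k|\,\hat{u},\qquad \widehat{{\overline{G}}_{12}(0)u}=\widehat{{\overline{G}}_{21}(0)u}=-\frac{|k|}{\sinh |k|}\,\hat{u}. \end{aligned}$$

Each symbol is bounded by a multiple of \((1+k^2)^{\frac{1}{2}}\), in line with the boundedness \(H^{\frac{1}{2}}({\mathbb {R}})\rightarrow H^{-\frac{1}{2}}({\mathbb {R}})\), while the sum \({\overline{G}}_{11}(0)+{\overline{G}}_{21}(0)\) has symbol \(|k|\tanh (|k|/2)=O(k^2)\) as \(k\rightarrow 0\); this low-frequency cancellation is reflected in the condition \({\overline{\Psi }}_s+{\overline{\Psi }}_i\in H_\star ^{-\frac{1}{2}}({\mathbb {R}})\) in the definition of Y. Under the same assumptions, the lower fluid of infinite depth gives \(\widehat{{\underline{G}}(0)u}=|k|\hat{u}\).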

Proposition A.12

The operators \({\overline{G}}_{ij}(\varvec{\eta }):H^{\frac{1}{2}}({\mathbb {R}})\rightarrow H^{-\frac{1}{2}}({\mathbb {R}})\) and \({\overline{N}}_{ij}(\varvec{\eta }):H_\star ^{-\frac{1}{2}}({\mathbb {R}})\rightarrow H_\star ^{\frac{1}{2}}({\mathbb {R}})\) are continuous.

Lemma A.13

For each \(\varvec{\eta }\in W\), the operator \(B(\varvec{\eta }):={\overline{G}}_{11}(\varvec{\eta })+ \rho {\underline{G}}({\underline{\eta }})\) is an isomorphism \(H^{\frac{1}{2}}({\mathbb {R}})\rightarrow H^{-\frac{1}{2}}({\mathbb {R}})\).

Proof

Recall that \({\underline{G}}({\underline{\eta }}):\dot{H}^{\frac{1}{2}}({\mathbb {R}})\rightarrow \dot{H}^{-\frac{1}{2}}({\mathbb {R}})\) is an isomorphism, with

(cf. (A.1) and Lemma A.4) for some \(c>0\). On the other hand,

by Definition A.6 and (A.2) with \({\overline{\Phi }}_s=0\). It follows that

and hence \(B(\varvec{\eta }) :H^{\frac{1}{2}}({\mathbb {R}})\rightarrow H^{-\frac{1}{2}}({\mathbb {R}})\) is an isomorphism. \(\square \)
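A Fourier multiplier with positive symbol \(b(k)\) maps \(H^{\frac{1}{2}}({\mathbb {R}})\) isomorphically onto \(H^{-\frac{1}{2}}({\mathbb {R}})\) precisely when \(c\le b(k)/(1+k^2)^{\frac{1}{2}}\le C\) for constants \(0<c\le C\). The Python sketch below checks this for the flat configuration \(\varvec{\eta }=0\) only. It assumes the multipliers \(|k|\coth |k|\) for \({\overline{G}}_{11}(0)\) and \(|k|\) for \({\underline{G}}(0)\) (these depend on sign conventions that are not reproduced in this appendix) and treats \(\rho >0\) as a free parameter. Since \(\max (1,|k|)\le |k|\coth |k|\le 1+|k|\), the ratio lies between \(2^{-\frac{1}{2}}\) and \(2^{\frac{1}{2}}+\rho \); the code confirms this on a grid. It is an illustration, not a proof of Lemma A.13 for general \(\varvec{\eta }\), and the function names are hypothetical.

```python
import numpy as np

# Flat case only (assumptions): eta = 0, upper layer 0 < y < 1, lower layer of infinite
# depth, with symbols  G11_bar(0) = |k| coth|k|  and  G_under(0) = |k|.
# The positive constant rho in B is treated as a free parameter.

def b_symbol(k, rho):
    """Symbol |k| coth|k| + rho |k| of B(0); at k = 0 use the limit value 1."""
    k = np.abs(k)
    safe_k = np.where(k > 0, k, 1.0)                       # avoid 0/0 at k = 0
    k_coth_k = np.where(k > 0, safe_k / np.tanh(safe_k), 1.0)
    return k_coth_k + rho * k

def equivalence_constants(rho, ks):
    """Min and max of b(k) / (1 + k^2)^(1/2) over the sample wavenumbers ks."""
    ratio = b_symbol(ks, rho) / np.sqrt(1.0 + ks**2)
    return ratio.min(), ratio.max()

ks = np.concatenate(([0.0], np.logspace(-4, 4, 2001)))
results = {rho: equivalence_constants(rho, ks) for rho in (0.1, 1.0, 10.0)}
for rho, (lo, hi) in results.items():
    print(f"rho = {rho}: {lo:.4f} <= b(k) / (1+k^2)^(1/2) <= {hi:.4f}")
```

The ratio equals \(1\) at \(k=0\) and tends to \(1+\rho \) as \(|k|\rightarrow \infty \), so both constants stay away from \(0\) and \(\infty \) for every fixed \(\rho >0\).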

Recall that we formally defined the operator \(G(\varvec{\eta })\) by

It is not difficult to see that \(G(\varvec{\eta })\) is bounded \((H^{\frac{1}{2}}({\mathbb {R}}))^2 \rightarrow (H^{-\frac{1}{2}}({\mathbb {R}}))^2\). However, we need to extend it to a larger space in order to define \(K(\varvec{\eta })\). We record some lemmas which enable us to do this.

Lemma A.14

The operators \({\overline{G}}_{11}(\varvec{\eta }) B^{-1}(\varvec{\eta })\) and \({\overline{G}}_{21}(\varvec{\eta }) B^{-1}(\varvec{\eta })\) are bounded on \(\dot{H}^{-\frac{1}{2}}({\mathbb {R}})\).

Proof

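The first part follows from the identity \({\overline{G}}_{11}(\varvec{\eta })B^{-1}(\varvec{\eta })=I-\rho {\underline{G}}({\underline{\eta }})B^{-1}(\varvec{\eta })\), the fact that \({\underline{G}}({\underline{\eta }})B^{-1}(\varvec{\eta }) \in {\mathcal {L}}(H^{-\frac{1}{2}}({\mathbb {R}}), \dot{H}^{-\frac{1}{2}}({\mathbb {R}}))\) and the continuous embedding \(\dot{H}^{-\frac{1}{2}}({\mathbb {R}})\hookrightarrow H^{-\frac{1}{2}}({\mathbb {R}})\). The second part then follows by writing \({\overline{G}}_{21}(\varvec{\eta })B^{-1}(\varvec{\eta })=({\overline{G}}_{11}(\varvec{\eta })+{\overline{G}}_{21}(\varvec{\eta }))B^{-1}(\varvec{\eta })-{\overline{G}}_{11}(\varvec{\eta })B^{-1}(\varvec{\eta })\) and using that \({\overline{G}}_{11}(\varvec{\eta })+{\overline{G}}_{21}(\varvec{\eta })\in {\mathcal {L}}(H^{\frac{1}{2}}({\mathbb {R}}), H_\star ^{-\frac{1}{2}}({\mathbb {R}}))\) together with the continuous embedding \(H_\star ^{-\frac{1}{2}}({\mathbb {R}})\hookrightarrow \dot{H}^{-\frac{1}{2}}({\mathbb {R}})\). \(\square \)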
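In the flat configuration every operator in Lemma A.14 is a Fourier multiplier, and a multiplier with bounded symbol is bounded on \(\dot{H}^{-\frac{1}{2}}({\mathbb {R}})\). The Python sketch below works under the flat-case assumptions stated in its comments (multipliers \(|k|\coth |k|\), \(-|k|/\sinh |k|\) and \(|k|\) for \({\overline{G}}_{11}(0)\), \({\overline{G}}_{21}(0)\) and \({\underline{G}}(0)\), and the sample value \(\rho =1\), chosen arbitrarily). It computes the symbols of \({\overline{G}}_{11}B^{-1}\), \({\overline{G}}_{21}B^{-1}\) and \({\underline{G}}B^{-1}\), checks that the first two are bounded by \(1\), verifies the identity used in the proof, and checks that the symbol of \({\overline{G}}_{11}+{\overline{G}}_{21}\) divided by \(|k|\) equals \(\tanh (|k|/2)\le 1\), which is what makes this sum map \(H^{\frac{1}{2}}({\mathbb {R}})\) into \(H_\star ^{-\frac{1}{2}}({\mathbb {R}})\). The function name is hypothetical.

```python
import numpy as np

# Flat case only (assumptions): eta = 0 with symbols
#   G11_bar = |k| coth|k|,  G21_bar = -|k| / sinh|k|,  G_under = |k|,
# and B = G11_bar + rho * G_under.  For k != 0 the common factor |k| cancels.

def lemma_a14_symbols(k, rho):
    k = np.abs(k)
    coth, csch = 1.0 / np.tanh(k), 1.0 / np.sinh(k)
    m11 = coth / (coth + rho)        # symbol of G11_bar B^{-1}
    m21 = -csch / (coth + rho)       # symbol of G21_bar B^{-1}
    m_under = 1.0 / (coth + rho)     # symbol of G_under B^{-1}
    m_sum_over_k = coth - csch       # symbol of (G11_bar + G21_bar) divided by |k|
    return m11, m21, m_under, m_sum_over_k

ks = np.logspace(-4, 2.5, 1000)      # k > 0, below the range where sinh overflows
rho = 1.0
m11, m21, m_under, m_sum_over_k = lemma_a14_symbols(ks, rho)
print("sup |m11| =", np.abs(m11).max(), " sup |m21| =", np.abs(m21).max())
```

Since \(\rho >0\), one has \(0<\coth |k|/(\coth |k|+\rho )<1\) and \(\mathrm{csch}|k|/(\coth |k|+\rho )<\mathrm{sech}|k|\le 1\), so the bounds seen numerically hold for all \(k\ne 0\).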

Corollary A.15

By duality, the maps \(B^{-1}(\varvec{\eta }) {\overline{G}}_{11}(\varvec{\eta })\) and \(B^{-1}(\varvec{\eta }) {\overline{G}}_{12}(\varvec{\eta })\), which are the adjoints of the operators in Lemma A.14, extend to bounded mappings on \(\dot{H}^{\frac{1}{2}}({\mathbb {R}})\).

Recall that \(\varvec{\xi }\) is defined in terms of \({\underline{\Phi }}\) and \(\overline{\varvec{\Phi }}\) through (1.17). Conversely, we can formally recover \({\underline{\Phi }}\) and \(\overline{\varvec{\Phi }}\) from \(\varvec{\xi }\) through (1.20) under assumption (1.18). We now investigate these relations in more detail, beginning by defining appropriate function spaces for \(\varvec{\xi }\) and \(G(\varvec{\eta }) \varvec{\xi }\).

Definition A.16


(i)

Let \({{\tilde{X}}}\) be the Hilbert space

$$\begin{aligned} \{\varvec{\xi }=({\underline{\xi }}, {\overline{\xi }})\in (H_\star ^{\frac{1}{2}}({\mathbb {R}}))^2 :{\underline{\xi }}+{\overline{\xi }} \in \dot{H}^{\frac{1}{2}}({\mathbb {R}})\} \end{aligned}$$equipped with the inner product

$$\begin{aligned} \langle \varvec{\xi }_1,\varvec{\xi }_2\rangle _{{{\tilde{X}}}}=\langle \varvec{\xi }_1, \varvec{\xi }_2\rangle _{(H_\star ^{\frac{1}{2}}({\mathbb {R}}))^2}+\langle {\overline{\xi }}_{1}+{\underline{\xi }}_{1},{\overline{\xi }}_{2}+{\underline{\xi }}_{2}\rangle _{\dot{H}^{\frac{1}{2}}({\mathbb {R}})}. \end{aligned}$$

(ii)

Let \({{\tilde{Y}}}\) be the Hilbert space

$$\begin{aligned} \{\varvec{\zeta }=({\underline{\zeta }}, {\overline{\zeta }}) \in (\dot{H}^{-\frac{1}{2}}({\mathbb {R}}))^2 :{\overline{\zeta }}-{\underline{\zeta }}\in H_\star ^{-\frac{1}{2}}({\mathbb {R}})\} \end{aligned}$$equipped with the inner product

$$\begin{aligned} \langle \varvec{\zeta }_1, \varvec{\zeta }_2\rangle _{{{\tilde{Y}}}}=\langle \varvec{\zeta }_1,\varvec{\zeta }_2 \rangle _{(\dot{H}^{-\frac{1}{2}}({\mathbb {R}}))^2}+\langle {\overline{\zeta }}_{1}-{\underline{\zeta }}_{1},{\overline{\zeta }}_{2}-{\underline{\zeta }}_{2}\rangle _{H_\star ^{-\frac{1}{2}}({\mathbb {R}})}. \end{aligned}$$

An argument similar to the proof of Proposition A.8 shows that \({{\tilde{Y}}}\) can be identified with the dual of \({{\tilde{X}}}\).

Lemma A.17

Equation (1.20) defines bounded linear operators

and

with

Proof

By definition, we have that

This defines an element of \(\dot{H}^{\frac{1}{2}}({\mathbb {R}})\) by Corollary A.15 and the continuity of

Similarly,

It is obvious that \({\overline{\Phi }}_s=\frac{1}{\rho } {\overline{\xi }} \in H_\star ^{\frac{1}{2}}({\mathbb {R}})\). To see that \(\overline{\varvec{\Phi }}\in X\), we note that

All of the operators involved are easily seen to be bounded, and the final formula follows by straightforward algebraic manipulations. \(\square \)

Proposition A.18

The operator \(G(\varvec{\eta })\) is bounded \({{\tilde{X}}} \rightarrow {{\tilde{Y}}}\).

Proof

Assume that \(\varvec{\xi }\in {{\tilde{X}}}\). A direct computation then shows that

where we have used Lemma A.17. Similarly,

We have to show that the last expression is actually an element of \(\dot{H}^{-\frac{1}{2}}({\mathbb {R}})\). To see this, we note that

by the definition of Y and Definition A.9. On the other hand,

This shows that \({\overline{G}}_{21}(\varvec{\eta }){\overline{\Phi }}_i+{\overline{G}}_{22}(\varvec{\eta }){\overline{\Phi }}_s\in \dot{H}^{-\frac{1}{2}}({\mathbb {R}})\). The fact that \((G(\varvec{\eta })\varvec{\xi })_2-(G(\varvec{\eta })\varvec{\xi })_1\in H_\star ^{-\frac{1}{2}}({\mathbb {R}})\) follows from (A.3). The boundedness of \(G(\varvec{\eta })\) follows from the above formulas and Lemma A.17. \(\square \)

Define

Proposition A.19

\(G(\varvec{\eta }):{{\tilde{X}}} \rightarrow {{\tilde{Y}}}\) is invertible with

Proof

We begin by showing that \(N(\varvec{\eta })\) defines an operator \({{\tilde{Y}}}\rightarrow {{\tilde{X}}}\). Indeed, if \(\varvec{\zeta }=({\underline{\zeta }}, {\overline{\zeta }})\in {{\tilde{Y}}}\), then \(({\underline{\zeta }}, -{\overline{\zeta }})\in Y\), whence

and

Finally,

which implies that

The equation \(G(\varvec{\eta }) \varvec{\xi }=\varvec{\zeta }\in {{\tilde{Y}}}\) can equivalently be written

with the unique solution

On the other hand, we also have \({\underline{G}}({\underline{\eta }}){\underline{\Phi }} ={\underline{\zeta }}\), so that \({\underline{\Phi }}={\underline{N}}({\underline{\eta }}) {\underline{\zeta }}\). It follows that \(G(\varvec{\eta }) \varvec{\xi }=\varvec{\zeta }\) if and only if

and

Hence, \(N(\varvec{\eta })\) is the inverse of \(G(\varvec{\eta })\). \(\square \)

We are now finally ready to discuss the operator \(K(\varvec{\eta })\).

Definition A.20


(i)

Let \(\breve{X}\) be the Hilbert space

$$\begin{aligned} \{\varvec{\xi }=({\underline{\xi }}, {\overline{\xi }})\in (\dot{H}^{\frac{1}{2}}({\mathbb {R}}))^2 :{\underline{\xi }}-{\overline{\xi }} \in H^{\frac{1}{2}}({\mathbb {R}})\} \end{aligned}$$equipped with the inner product

$$\begin{aligned} \langle \varvec{\xi }_1,\varvec{\xi }_2\rangle _{\breve{X}}=\langle \varvec{\xi }_1, \varvec{\xi }_2\rangle _{(\dot{H}^{\frac{1}{2}}({\mathbb {R}}))^2}+\langle {\overline{\xi }}_{1}-{\underline{\xi }}_{1},{\overline{\xi }}_{2}-{\underline{\xi }}_{2}\rangle _{H^{\frac{1}{2}}({\mathbb {R}})}. \end{aligned}$$

(ii)

Let \(\breve{Y}\) be the Hilbert space

$$\begin{aligned} \{\varvec{\zeta }=({\underline{\zeta }}, {\overline{\zeta }}) \in (H^{-\frac{1}{2}}({\mathbb {R}}))^2 :{\overline{\zeta }}+{\underline{\zeta }}\in \dot{H}^{-\frac{1}{2}}({\mathbb {R}})\} \end{aligned}$$equipped with the inner product

$$\begin{aligned} \langle \varvec{\zeta }_1,\varvec{\zeta }_2\rangle _{\breve{Y}}=\langle \varvec{\zeta }_1, \varvec{\zeta }_2\rangle _{(H^{-\frac{1}{2}}({\mathbb {R}}))^2}+\langle {\overline{\zeta }}_{1}+{\underline{\zeta }}_{1},{\overline{\zeta }}_{2}+{\underline{\zeta }}_{2}\rangle _{\dot{H}^{-\frac{1}{2}}({\mathbb {R}})}. \end{aligned}$$

Note that \(\breve{X}\) and \(\breve{Y}\) are dual to each other and that \((H^{\frac{1}{2}}({\mathbb {R}}))^2 \hookrightarrow \breve{X}\), \(\breve{Y}\hookrightarrow (H^{-\frac{1}{2}}({\mathbb {R}}))^2\).
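Proposition A.21 below defines \(K(\varvec{\eta })\) through the composition \(-\partial _x N(\varvec{\eta }) \partial _x\). The Python sketch that follows only illustrates how such a composition acts in a scalar analogue chosen as an assumption, not the two-layer operator itself: a single fluid of infinite depth below a flat interface, for which \({\underline{G}}(0)\) has symbol \(|k|\) and hence \({\underline{N}}(0)\) has symbol \(|k|^{-1}\). The real line is replaced by a long periodic interval and each factor is applied as an FFT multiplier. Because \(\partial _x\) removes the zero Fourier mode, the singularity of \(|k|^{-1}\) at \(k=0\) is never seen; the composition reduces to the multiplier \(k^2/|k|=|k|\) and annihilates constants, which is consistent with working on spaces built from \(\dot{H}^{\frac{1}{2}}({\mathbb {R}})\). Function names are hypothetical.

```python
import numpy as np

# Scalar flat analogue (assumption): one fluid of infinite depth, flat interface,
# N(0) with symbol 1/|k|.  K = -d/dx N d/dx is applied as three FFT multipliers.

def apply_K_flat(u, dx):
    k = 2.0 * np.pi * np.fft.fftfreq(u.size, d=dx)
    du_hat = 1j * k * np.fft.fft(u)              # d/dx
    inv_abs_k = np.zeros_like(k)
    nonzero = k != 0
    inv_abs_k[nonzero] = 1.0 / np.abs(k[nonzero])  # N(0); the k = 0 mode of du/dx is zero anyway
    ku_hat = -(1j * k) * (inv_abs_k * du_hat)    # -d/dx
    return np.fft.ifft(ku_hat).real

def apply_abs_D(u, dx):
    """Direct application of the multiplier |k| for comparison."""
    k = 2.0 * np.pi * np.fft.fftfreq(u.size, d=dx)
    return np.fft.ifft(np.abs(k) * np.fft.fft(u)).real

x = np.linspace(-50.0, 50.0, 2048, endpoint=False)
dx = x[1] - x[0]
u = np.exp(-x**2)

print("max |K u - |D| u| =", np.abs(apply_K_flat(u, dx) - apply_abs_D(u, dx)).max())
print("max |K 1| =", np.abs(apply_K_flat(np.ones_like(u), dx)).max())
```

Setting the \(k=0\) entry of \(|k|^{-1}\) to zero is a harmless convention here, since it multiplies the vanishing zero mode of \(\partial _x u\).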

Proposition A.21

The formula \(K(\varvec{\eta })=-\partial _x N(\varvec{\eta }) \partial _x\) defines an isomorphism \(\breve{X}\rightarrow \breve{Y}\) with

Proof