Abstract

We present a data-driven framework for extracting complex spatiotemporal patterns generated by ergodic dynamical systems. Our approach, called vector-valued spectral analysis (VSA), is based on an eigendecomposition of a kernel integral operator acting on a Hilbert space of vector-valued observables of the system, taking values in a space of functions (scalar fields) on a spatial domain. This operator is constructed by combining aspects of the theory of operator-valued kernels for multitask machine learning with delay-coordinate maps of dynamical systems. In contrast to conventional eigendecomposition techniques, which decompose the input data into pairs of temporal and spatial modes with a separable, tensor product structure, the patterns recovered by VSA can be manifestly non-separable, requiring only a modest number of modes to represent signals with intermittency in both space and time. Moreover, the kernel construction naturally quotients out dynamical symmetries in the data and exhibits an asymptotic commutativity property with the Koopman evolution operator of the system, enabling decomposition of multiscale signals into dynamically intrinsic patterns. Application of VSA to the Kuramoto–Sivashinsky model demonstrates significant performance gains in efficient and meaningful decomposition over eigendecomposition techniques utilizing scalar-valued kernels.

1 Introduction

Spatiotemporal pattern formation is ubiquitous in physical, biological, and engineered systems, ranging from molecular-scale reaction-diffusion systems, to engineering- and geophysical-scale convective flows, and astrophysical flows, among many examples (Cross and Hohenberg 1993; Ahlers et al. 2009; Fung et al. 2016). The mathematical models for such systems are generally formulated by means of partial differential equations (PDEs), or coupled ordinary differential equations, with dissipation playing an important role in the development of low-dimensional effective dynamics on attracting subsets of the state space (Constantin et al. 1989). In light of this property, many pattern-forming systems are amenable to analysis by empirical, data-driven techniques, complementing the scientific understanding gained from first-principles approaches.

Historically, many of the classical proper orthogonal decomposition (POD) and principal component analysis (PCA) techniques for spatiotemporal pattern extraction have been based on the spectral properties of temporal and spatial covariance operators estimated from snapshot data (Aubry et al. 1991; Holmes et al. 1996). In singular spectrum analysis (SSA) and related algorithms (Broomhead and King 1986; Vautard and Ghil 1989; Ghil et al. 2002), combining this approach with delay-coordinate maps of dynamical systems (Packard et al. 1980; Takens 1981; Sauer et al. 1991; Robinson 2005; Deyle and Sugihara 2011) generally improves the representation of the information content of the data in terms of a few meaningful modes. More recently, advances in machine learning and harmonic analysis (Schölkopf et al. 1998; Belkin and Niyogi 2003; Coifman et al. 2005; Coifman and Lafon 2006; Singer 2006; von Luxburg et al. 2008; Berry and Harlim 2016; Berry and Sauer 2016) have led to techniques for recovering temporal and spatial patterns through the eigenfunctions of kernel integral operators (e.g., heat operators) defined intrinsically in terms of a Riemannian geometric structure of the data. In particular, in a family of techniques called nonlinear Laplacian spectral analysis (NLSA) (Giannakis and Majda 2012), and independently in Berry et al. (2013), the diffusion maps algorithm (Coifman and Lafon 2006) was combined with delay-coordinate maps to extract spatiotemporal patterns through the eigenfunctions of a kernel integral operator adept at capturing distinct and physically meaningful timescales in individual eigenmodes from multiscale high-dimensional signals.

At the same time, spatial and temporal patterns have been extracted from eigenfunctions of Koopman (Mezić and Banaszuk 2004; Mezić 2005; Rowley et al. 2009; Giannakis et al. 2015; Williams et al. 2015; Brunton et al. 2017; Das and Giannakis 2019; Giannakis 2017) and Perron–Frobenius (Dellnitz and Junge 1999; Froyland and Dellnitz 2000) operators governing the evolution of observables and probability measures, respectively, in dynamical systems (Budisić et al. 2012; Eisner et al. 2015). Koopman eigenfunction analysis is also related to the dynamic mode decomposition (DMD) algorithm (Schmid 2010) and linear inverse model techniques (Penland 1989). An advantage of these approaches is that they target operators defined intrinsically for the dynamical system generating the data, and thus able, in principle, to recover temporal and spatial patterns of higher physical interpretability and utility in predictive modeling than kernel-based approaches. In practice, however, the Koopman and Perron–Frobenius operators tend to have significantly more complicated spectral properties (e.g., non-isolated eigenvalues and/or continuous spectra) than kernel integral operators, hindering the stability and convergence of data-driven approximation techniques. These issues were recently addressed through an approximation scheme for the generator of the Koopman group with rigorous convergence guarantees (Giannakis 2017; Das and Giannakis 2019), utilizing a data-driven orthonormal basis of the \(L^2\) space associated with the invariant measure, acquired through diffusion maps. There, it was also shown that the eigenfunctions of kernel integral operators defined on delay-coordinate mapped data (e.g., the covariance and heat operators in SSA and NLSA, respectively) in fact converge to Koopman eigenfunctions in the limit of infinitely many delays, indicating a deep connection between these two branches of data analysis algorithms.

All of the techniques described above recover from the data a set of temporal patterns and a corresponding set of spatial patterns, sometimes referred to as “chronos” and “topos” modes, respectively (Aubry et al. 1991). In particular, for a dynamical system with a state space X developing patterns in a physical domain Y, each chronos mode, \( \varphi _j \), corresponds to a scalar- (real- or complex-) valued function on X, and the corresponding topos mode, \( \psi _j \), corresponds to a scalar-valued function on Y. Spatiotemporal reconstructions of the data with these approaches thus correspond to linear combinations of tensor product patterns of the form \( \varphi _j \otimes \psi _j \), mapping pairs of points (x, y) in the product space \( \Omega = X \times Y \) to the number \( \varphi _j( x ) \psi _j( y ) \). For a dynamical system possessing a compact invariant set \( A \subseteq X \) (e.g., an attractor) supporting an ergodic invariant measure, the chronos modes effectively become scalar-valued functions on A, which may be of significantly smaller dimension than X, increasing the robustness of approximation of these modes from finite datasets.

Evidently, for spatiotemporal signals F(x, y) of high complexity, tensor product patterns, with separable dependence on x and y, can be highly inefficient in capturing the properties of the input signal. That is, the number l of such patterns needed to recover F at high accuracy via a linear superposition

$$\begin{aligned} F \approx F_l = \sum _{j=0}^{l-1} \varphi _j \otimes \psi _j \end{aligned}$$(1)

is generally large, with none of the individual patterns \( \varphi _j \otimes \psi _j \) being representative of F. In essence, the problem is similar to that of approximating a non-separable space-time signal in a tensor product basis of temporal and spatial basis functions. Another issue with tensor product decompositions based on scalar-valued eigenfunctions is that in the presence of nontrivial symmetries, the recovered patterns are oftentimes pure symmetry modes (e.g., Fourier modes in a periodic domain with translation invariance), with minimal dynamical significance and physical interpretability (Aubry et al. 1993; Holmes et al. 1996).
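The cost of separability can be illustrated with a small numerical sketch. It is not taken from the original analysis: the two synthetic signals, the grid sizes, and the 99% energy threshold are hypothetical choices, and the singular value decomposition is used here simply as the optimal separable (tensor product) approximation in the least-squares sense.

```python
import numpy as np

def modes_needed(F, energy=0.99):
    """Smallest l such that a rank-l separable approximation of F[t, y]
    captures the fraction `energy` of the squared Frobenius norm."""
    s = np.linalg.svd(F, compute_uv=False)
    cumulative = np.cumsum(s**2) / np.sum(s**2)
    return int(np.searchsorted(cumulative, energy) + 1)

# Hypothetical sampling: 200 time samples, 128 points on a periodic domain [0, 1).
t = np.linspace(0.0, 1.0, 200, endpoint=False)
y = np.linspace(0.0, 1.0, 128, endpoint=False)
T, Y = np.meshgrid(t, y, indexing="ij")

# Separable signal: a fixed spatial bump modulated in time.
standing = np.cos(2 * np.pi * T) * np.exp(-((Y - 0.5) / 0.05) ** 2)

# Non-separable signal: the same bump travelling around the periodic domain.
dist = (Y - T + 0.5) % 1.0 - 0.5          # signed periodic distance to the bump centre
travelling = np.exp(-(dist / 0.05) ** 2)

l_standing = modes_needed(standing)
l_travelling = modes_needed(travelling)
print(l_standing, l_travelling)
```

In this toy setting the standing pattern is a single tensor product, whereas the travelling bump, although equally simple to describe, requires many separable modes.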

Here, we present a framework for spatiotemporal pattern extraction, called vector-valued spectral analysis (VSA), designed to alleviate the shortcomings mentioned above. The fundamental underpinning of VSA is that time-evolving spatial patterns have a natural structure as vector-valued observables on the system’s state space, and thus data analytical techniques operating on such spaces are likely to offer maximal descriptive efficiency and physical insight. We show that eigenfunctions of kernel integral operators on vector-valued observables, constructed by combining aspects of the theory of operator-valued kernels (Micchelli and Pontil 2005; Caponnetto et al. 2008; Carmeli et al. 2010) with delay-coordinate maps of dynamical systems (Packard et al. 1980; Takens 1981; Sauer et al. 1991; Robinson 2005; Deyle and Sugihara 2011): (a) are superior to conventional algorithms in capturing signals with intermittency in both space and time; (b) naturally incorporate any underlying dynamical symmetries, eliminating redundant modes and thus improving physical interpretability of the results; (c) have a correspondence with Koopman operators, allowing detection of intrinsic dynamical timescales; and (d) can be stably approximated via data-driven techniques that provably converge in the asymptotic limit of large data.

The plan of this paper is as follows. Section 2 introduces the class of dynamical systems under study and provides an overview of data analysis techniques based on scalar kernels. In Sect. 3, we present the VSA framework for spatiotemporal pattern extraction using operator-valued kernels, and in Sect. 4 discuss the behavior of the method in the presence of dynamical symmetries, as well as its correspondence with Koopman operators. Section 5 describes the data-driven implementation of VSA. In Sect. 6, we present applications to the Kuramoto–Sivashinsky (KS) PDE model (Kuramoto and Tsuzuki 1976; Sivashinsky 1977) in periodic and chaotic regimes. Our primary conclusions are described in Sect. 7. Technical results, descriptions of basic properties of kernels and Koopman operators, pseudocode, and an overview of NLSA are collected in six appendices.

2 Background

2.1 Dynamical System and Spaces of Observables

We begin by introducing the dynamical system and the spaces of observables under study. The dynamics evolves by a \(C^1 \) flow map \(\Phi ^t : X \rightarrow X\), \(t \in {\mathbb {R}}\), on a manifold X, possessing an ergodic, invariant, Borel probability measure \(\mu \) with compact support \(A \subseteq X\). The system develops patterns on a spatial domain Y, which has the structure of a compact metric space, supporting a finite Borel measure (volume) \( \nu \). As a natural space of vector-valued observables, we consider the Hilbert space \(H = L^2(X,\mu ; H_Y)\) of square-integrable functions with respect to the invariant measure \( \mu \), taking values in \(H_Y = L^2(Y,\nu )\). That is, modulo sets of \(\mu \)-measure 0, the elements of H are functions \( \vec {f} : X \rightarrow H_Y \), such that for any dynamical state \(x\in X\), \(\vec {f}(x) \) is a scalar (complex-valued) field on Y, square-integrable with respect to \(\nu \). For every such observable \( \vec {f} \), the map \(t \mapsto \vec {f}(\Phi ^t(x)) \) describes a spatiotemporal pattern generated by the dynamics. Given \(\vec {f}, \vec {f}' \in H \) and \( g, g' \in H_Y \), the corresponding inner products on H and \(H_Y\) are given by \(\langle \vec {f}, \vec {f}' \rangle _{H} = \int _X \langle \vec {f}( x ), \vec {f}'( x ) \rangle _{H_Y} \, \mathrm{d}\mu (x)\) and \( \langle g, g' \rangle _{H_Y} = \int _Y g^*(y) g'(y) \, \mathrm{d}\nu (y)\), respectively.

An important property of H is that it exhibits the isomorphisms

$$\begin{aligned} H \simeq H_X \otimes H_Y \simeq H_\Omega , \end{aligned}$$

where \(H_X = L^2(X,\mu )\) and \(H_\Omega = L^2( \Omega , \rho ) \) are Hilbert spaces of scalar-valued functions on X and the product space \(\Omega = X \times Y\), square-integrable with respect to the invariant measure \( \mu \) and the product measure \( \rho = \mu \times \nu \), respectively (the inner products of \(H_X\) and \(H_\Omega \) have analogous definitions to the inner product of \(H_Y\)). That is, every \(\vec {f}\in H\) can be equivalently viewed as an element of the tensor product space \(H_X\otimes H_Y\), meaning that it can be decomposed as \(\vec {f} = \sum _{j=0}^\infty \varphi _j \otimes \psi _j\) for some \(\varphi _j \in H_X\) and \(\psi _j \in H_Y\), or it can be represented by a scalar-valued function \(f \in H_\Omega \) such that \(\vec {f}(x)(y) = f(x,y)\). Of course, not every observable \( \vec {f} \in H \) is of pure tensor product form, \( \vec {f} = \varphi \otimes \psi \), for some \( \varphi \in H_X \) and \( \psi \in H_Y \).

We consider that measurements \( \vec {F}(x_n)\) of the system are taken along a dynamical trajectory \( x_n = \Phi ^{n\tau }(x_0) \), \( n \in {\mathbb {N}}\), starting from a point \(x_0 \in X\) at a fixed sampling interval \( \tau > 0 \) through a continuous vector-valued observation map \( \vec {F} \in H\). We also assume that \( \tau \) is such that \( \mu \) is an ergodic invariant probability measure of the discrete-time map \( \Phi ^\tau \).

2.2 Separable Data Decompositions via Scalar Kernel Eigenfunctions

Before describing the operator-valued kernel formalism at the core of VSA, we outline the standard approach to separable decompositions of spatiotemporal data as in (1) via eigenfunctions of kernel integral operators associated with scalar-valued kernels. In this context, a kernel is a continuous bivariate function \( k : X \times X \rightarrow {\mathbb {R}} \), which assigns a measure of correlation or similarity to pairs of dynamical states in X. Sometimes, but not always, we will require that k be symmetric, i.e., \( k( x, x' ) = k( x', x ) \) for all \( x, x' \in X \). Two examples of popular kernels used in applications (both symmetric) are the covariance kernels employed in POD,

$$\begin{aligned} k( x, x' ) = \langle \vec {F}( x ), \vec {F}( x' ) \rangle _{H_Y}, \end{aligned}$$(2)

and radial Gaussian kernels,

$$\begin{aligned} k( x, x' ) = \exp \left( - \frac{ \Vert \vec {F}( x ) - \vec {F}( x' ) \Vert ^2_{H_Y} }{ \epsilon } \right) , \quad \epsilon > 0, \end{aligned}$$(3)

which are frequently used in manifold learning applications. Note that in both of the above examples the dependence of \( k( x, x' ) \) on x and \( x' \) is through the values of \( \vec {F} \) at these points alone; this allows \( k(x,x') \) to be computable from observed data, without explicit knowledge of the underlying dynamical states x and \(x'\). Hereafter, we will always work with such “data-driven” kernels.

Associated with every scalar-valued kernel is an integral operator \( K : H_X \rightarrow H_X \), acting on \( f \in H_X \) according to the formula

$$\begin{aligned} K f( x ) = \int _X k( x, x' ) f( x' ) \, \mathrm{d}\mu (x'). \end{aligned}$$(4)

If k is symmetric, then by compactness of A and continuity of k, K is a compact, self-adjoint operator with an associated orthonormal basis \( \{ \varphi _0, \varphi _1, \ldots \} \) of \( H_X \) consisting of its eigenfunctions. Moreover, the eigenfunctions \( \varphi _j \) corresponding to nonzero eigenvalues are continuous. These eigenfunctions are employed as the chronos modes in (1), each inducing a continuous temporal pattern, \( t \mapsto \varphi _j( \Phi ^t( x ) ) \), for every state \( x \in X \). The spatial pattern \( \psi _j \in H_Y \) corresponding to \( \varphi _j \) is obtained by pointwise projection of the observation map onto \( \varphi _j \), namely

$$\begin{aligned} \psi _j( y ) = \langle \varphi _j, F_y \rangle _{H_X}, \end{aligned}$$(5)

where \( F_y \in H_X \) is the continuous scalar-valued function on X satisfying \( F_y( x ) = \vec {F}( x )( y ) \) for all \( x \in X \).
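The procedure of this subsection can be sketched on sampled data as follows. This is a minimal illustration under stated assumptions rather than the implementation used in the paper: the function name `scalar_kernel_modes`, the synthetic field, and the bandwidth value are hypothetical; the invariant measure \( \mu \) is replaced by the empirical measure on N samples, and \( \nu \) by the uniform probability measure on M grid points.

```python
import numpy as np

def scalar_kernel_modes(F, eps):
    """Chronos modes phi_j (columns of phi) and topos modes psi_j (rows of psi)
    from snapshots F[n, m] = F(x_n)(y_m), using a radial Gaussian kernel."""
    N, M = F.shape
    # Squared H_Y distances between snapshots (nu = uniform probability on the grid).
    sq = np.sum(F**2, axis=1) / M
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * (F @ F.T) / M, 0.0)
    # Kernel matrix; the factor 1/N discretizes the integral against mu.
    K = np.exp(-d2 / eps) / N
    lam, V = np.linalg.eigh(K)
    order = np.argsort(lam)[::-1]            # decreasing eigenvalues
    lam, V = lam[order], V[:, order]
    phi = np.sqrt(N) * V                     # orthonormal in the empirical L^2(mu)
    psi = phi.T @ F / N                      # psi_j(y_m) = <phi_j, F_{y_m}>
    return lam, phi, psi

# Hypothetical test signal: two travelling waves on a periodic domain.
N, M = 60, 32
t = 0.1 * np.arange(N)
y = np.linspace(0.0, 2.0 * np.pi, M, endpoint=False)
F = np.cos(y[None, :] - t[:, None]) + 0.5 * np.cos(2.0 * y[None, :] + 3.0 * t[:, None])

lam, phi, psi = scalar_kernel_modes(F, eps=1.0)
F_full = phi @ psi               # superposition of all N tensor product patterns
F_trunc = phi[:, :5] @ psi[:5]   # truncation to the leading 5 patterns
```

With all N modes retained the superposition reproduces the samples exactly, since the discrete eigenvectors form a complete basis; the quality of a truncation depends on the signal and on the kernel.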

2.3 Delay-Coordinate Maps and Koopman Operators

A potential shortcoming of spatiotemporal pattern extraction via the kernels in (2) and (3) is that the corresponding integral operators depend on the dynamics only indirectly, e.g., through the geometrical structure of the set \(\vec {F}( A ) \subset H_Y \) on which the data is concentrated. Indeed, a well-known deficiency of POD, particularly in systems with symmetries, is failure to identify low-variance, yet dynamically important patterns (Aubry et al. 1993). As a way of addressing this issue, it has been found effective (Broomhead and King 1986; Vautard and Ghil 1989; Ghil et al. 2002; Giannakis and Majda 2012; Berry et al. 2013) to first embed the observed data in a higher-dimensional data space through the use of delay-coordinate maps, and then extract spatial and temporal patterns through a kernel operating in delay-coordinate space. For instance, analogs of the covariance and Gaussian kernels in (2) and (3) in delay-coordinate space are given by

$$\begin{aligned} k_Q( x, x' ) = \frac{1}{Q} \sum _{q=0}^{Q-1} \langle \vec {F}( \Phi ^{-q\tau }(x) ), \vec {F}( \Phi ^{-q\tau }(x') ) \rangle _{H_Y} \end{aligned}$$(6)

and

$$\begin{aligned} k_Q( x, x' ) = \exp \left( - \frac{1}{\epsilon Q} \sum _{q=0}^{Q-1} \Vert \vec {F}( \Phi ^{-q\tau }(x) ) - \vec {F}( \Phi ^{-q\tau }(x') ) \Vert ^2_{H_Y} \right) , \quad \epsilon > 0, \end{aligned}$$(7)

respectively, where \( Q \in {\mathbb {N}} \) is the number of delays. The covariance kernel in (6) is essentially equivalent to the kernel employed in multi-channel SSA (Ghil et al. 2002) in an infinite-channel limit, and the Gaussian kernel in (7) is closely related to the kernel utilized in NLSA (though the NLSA kernel employs a state-dependent distance scaling akin to (19) ahead, as well as Markov normalization, and these features lead to certain technical advantages compared to unnormalized radial Gaussian kernels). See Appendix F for a description of NLSA.
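As a rough sketch of how a delay-coordinate Gaussian kernel can be evaluated from a time-ordered array of snapshots, the code below averages snapshot distances along lagged index pairs. The function names, the averaging over Q lags, the bandwidth `eps`, and the random test data are assumptions made for illustration; the state-dependent scaling and Markov normalization used in NLSA are not included.

```python
import numpy as np

def delay_sq_distances(F, Q):
    """Averaged squared distances in delay-coordinate space,
    (1/Q) * sum_q ||F(x_{n-q}) - F(x_{m-q})||^2, for all n, m >= Q - 1.
    F[n, m] holds the snapshot at time index n on M grid points."""
    N, M = F.shape
    sq = np.sum(F**2, axis=1) / M
    d2 = sq[:, None] + sq[None, :] - 2.0 * (F @ F.T) / M   # snapshot distances
    n_out = N - Q + 1
    out = np.zeros((n_out, n_out))
    for q in range(Q):
        start = Q - 1 - q                  # row/column offset of lag q
        out += d2[start:start + n_out, start:start + n_out]
    return np.maximum(out / Q, 0.0)        # clip tiny negative round-off

def delay_gaussian_kernel(F, Q, eps):
    """Radial Gaussian kernel evaluated in delay-coordinate space."""
    return np.exp(-delay_sq_distances(F, Q) / eps)

# Hypothetical data: 12 snapshots on 5 grid points, 3 delays.
rng = np.random.default_rng(1)
F = rng.standard_normal((12, 5))
K3 = delay_gaussian_kernel(F, Q=3, eps=2.0)
```

Summing shifted blocks of the snapshot distance matrix avoids forming the delay-embedded vectors explicitly, which matters when the snapshots are high-dimensional.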

As is well known (Packard et al. 1980; Takens 1981; Sauer et al. 1991; Robinson 2005; Deyle and Sugihara 2011), delay-coordinate maps can help recover the topological structure of state space from partial measurements of the system (i.e., non-injective observation maps), but in the context of kernel algorithms they also endow the kernels, and thus the corresponding eigenfunctions, with an explicit dependence on the dynamics. In Giannakis (2017) and Das and Giannakis (2019), it was established that as the number of delays Q grows, the integral operators \(K_Q\) associated with a family of scalar kernels \(k_Q\) operating in delay-coordinate space converge in operator norm, and thus in spectrum, to a compact kernel integral operator \(K_\infty \) on \(H_X\) commuting with the Koopman evolution operators (Budisić et al. 2012; Eisner et al. 2015) of the dynamical system. The latter are the unitary operators \(U^t : H_X \rightarrow H_X\), \( t \in {\mathbb {R}} \), acting by composition with the flow map,

$$\begin{aligned} U^t f = f \circ \Phi ^t, \end{aligned}$$

thus governing the evolution of observables in \(H_X\) under the dynamics.

In the setting of measure-preserving ergodic systems, associated with \(U^t\) is a distinguished orthonormal set \(\{ z_j \}\) of observables \(z_j \in H_X\) consisting of Koopman eigenfunctions (see Appendix A). These observables have the special property of exhibiting time-periodic evolution under the dynamics at a single frequency \( \alpha _j \in {\mathbb {R}}\) intrinsic to the dynamical system,

$$\begin{aligned} U^t z_j = e^{i \alpha _j t} z_j, \end{aligned}$$

even if the underlying dynamical flow \( \Phi ^t \) is aperiodic. Moreover, every Koopman eigenspace is one dimensional by ergodicity. Because commuting operators have common eigenspaces, and the eigenspaces of compact operators corresponding to nonzero eigenvalues are finite-dimensional, it follows that as Q increases, the eigenfunctions of \(K_Q \) at nonzero eigenvalues acquire increasingly coherent (periodic or quasiperiodic) time evolution associated with a finite number of Koopman eigenfrequencies \( \alpha _j\). This property significantly enhances the physical interpretability and predictability of these patterns, providing justification for the skill of methods such as SSA and NLSA in extracting dynamically significant patterns from complex systems. Conversely, because kernel integral operators are generally more amenable to approximation from data than Koopman operators (which can have a highly complex spectral behavior), the operators \(K_Q\) provide an effective route for identifying finite-dimensional approximation spaces to stably and efficiently solve the Koopman eigenvalue problem.
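The eigenfunction property can be checked by hand on a textbook example that is not discussed in the paper: a linear flow on the 2-torus with rationally independent frequencies, whose orbits never close. The frequencies, the wavenumbers, and the helper names below are hypothetical choices for this illustration only.

```python
import numpy as np

# Quasiperiodic flow on the 2-torus: Phi^t(theta) = theta + omega * t (mod 2 pi).
omega = np.array([1.0, np.sqrt(2.0)])    # rationally independent frequencies

def flow(theta, t):
    return (theta + omega * t) % (2.0 * np.pi)

def z(wavenumber, theta):
    """Fourier function exp(i <wavenumber, theta>), a Koopman eigenfunction of this flow."""
    return np.exp(1j * (theta @ wavenumber))

rng = np.random.default_rng(0)
theta = rng.uniform(0.0, 2.0 * np.pi, size=(100, 2))   # arbitrary states on the torus
wavenumber = np.array([2, -1])
alpha = float(wavenumber @ omega)        # eigenfrequency associated with this wavenumber
t = 0.37

lhs = z(wavenumber, flow(theta, t))                   # (U^t z)(theta) = z(Phi^t(theta))
rhs = np.exp(1j * alpha * t) * z(wavenumber, theta)   # e^{i alpha t} z(theta)
```

Each such observable oscillates at the single frequency `alpha`, although no trajectory of the flow is itself periodic.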

2.4 Differences Between Covariance and Gaussian Kernels

Before closing this section, it is worthwhile pointing out two differences between covariance and Gaussian kernels, indicating that the latter may be preferable to the former in applications.

First, Gaussian kernels are strictly positive and bounded below on compact sets. That is, for every compact set \(S \subseteq X\) (including \(S=A\)), there exists a constant \(c_S > 0 \) such that \( k( x, x' ) \ge c_S \) for all \( x,x'\in S\). This property allows Gaussian kernels to be normalizable to ergodic Markov diffusion kernels (Coifman and Lafon 2006; Berry and Sauer 2016). In a dynamical systems context, an important property of such kernels is that the corresponding integral operators always have an eigenspace at eigenvalue 1 containing constant functions, which turns out to be useful in establishing well-posedness of Galerkin approximation techniques for Koopman eigenfunctions (Das and Giannakis 2019). Markov diffusion operators are also useful for constructing spaces of observables of higher regularity than \(L^2\), such as Sobolev spaces.

Second, if there exists a finite-dimensional linear subspace of \(H_Y\) containing the image of A under \( \vec {F} \), then the integral operator K associated with the covariance kernel has necessarily finite rank (bounded above by the dimension of that subspace), even if \( \vec {F} \) is an injective map on A. This effectively limits the richness of observables that can be stably extracted from data-driven approximations of covariance eigenfunctions. In fact, it is a well-known property of covariance kernels that every eigenfunction \( \varphi _j\) at nonzero corresponding eigenvalue depends linearly on the observation map; specifically, up to proportionality constants, \( \varphi _j( x ) = \langle \psi _j, \vec {F}( x ) \rangle _{H_Y} \) with \(\psi _j\) given by (5), and the number of such patterns is clearly finite if \( \vec {F}(x ) \) spans a finite-dimensional linear space as x is varied. On the other hand, apart from trivial cases, the kernel integral operators associated with Gaussian kernels have infinite rank (even if \( \vec {F} \) is non-injective), and if \( \vec {F} \) is injective they have no zero eigenvalues. In the latter case, data-driven approximations to the eigenfunctions of K provide an orthonormal basis for the full \(H_X \) space. Similar arguments also motivate the use of Gaussian kernels over polynomial kernels. In effect, by invoking the Taylor series expansion of the exponential function, a Gaussian kernel can be thought of as an “infinite-order” polynomial kernel.
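The rank argument can be illustrated numerically. In the sketch below, which uses hypothetical sizes and a hypothetical bandwidth, the observation map is injective on a circle of states but takes values in a two-dimensional subspace of \(H_Y\), so the covariance kernel matrix has rank two while the Gaussian kernel matrix built from the same data has a much larger numerical rank.

```python
import numpy as np

N, M = 200, 50
theta = 2.0 * np.pi * np.arange(N) / N            # states on a circle
y = np.linspace(0.0, 1.0, M, endpoint=False)      # spatial grid
e1, e2 = np.cos(2.0 * np.pi * y), np.sin(2.0 * np.pi * y)

# Observation map with values in span{e1, e2}; injective in theta.
F = np.cos(theta)[:, None] * e1 + np.sin(theta)[:, None] * e2

# Covariance kernel <F(x), F(x')> with nu = uniform probability on the grid.
K_cov = F @ F.T / M

# Radial Gaussian kernel from the same data (bandwidth 0.5 is a hypothetical choice).
sq = np.diag(K_cov)
d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * K_cov, 0.0)
K_gauss = np.exp(-d2 / 0.5)

rank_cov = np.linalg.matrix_rank(K_cov)
rank_gauss = np.linalg.matrix_rank(K_gauss)
print(rank_cov, rank_gauss)
```

The finite numerical rank of the Gaussian matrix reflects floating-point precision and the rapid decay of its eigenvalues, not a finite rank of the underlying integral operator.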

3 Vector-Valued Spectral Analysis (VSA) Formalism

The main goal of VSA is to construct a decomposition of the observation map \( \vec {F}\) via an expansion of the form

$$\begin{aligned} \vec {F} \approx \vec {F}_l = \sum _{j=0}^{l-1} c_j \vec {\phi }_j, \end{aligned}$$(8)

where the \( c_j \) and \( \vec {\phi }_j \) are real-valued coefficients and vector-valued observables in H, respectively. Along a dynamical trajectory starting at \(x\in X\), every such \(\vec {\phi }_j\) gives rise to a spatiotemporal pattern \(t \mapsto \vec {\phi }_j(\Phi ^t(x))\), generalizing the time series \( t \mapsto \varphi _j(\Phi ^t(x)) \) from Sect. 2.2. A key consideration in the VSA construction is that the recovered patterns should not necessarily be of the form \( \vec {\phi }_j = \varphi _j \otimes \psi _j \) for some \( \varphi _j \in H_X \) and \( \psi _j \in H_Y \), as would be the case in the conventional decomposition in (1). To that end, we will determine the \( \vec {\phi }_j\) through the vector-valued eigenfunctions of an integral operator acting on H directly, as opposed to first identifying scalar-valued eigenfunctions in \(H_X\), and then forming tensor products with the corresponding projection-based spatial patterns, as in Sect. 2.2. As will be described in detail below, the integral operator nominally employed by VSA is constructed using the theory of operator-valued kernels (Micchelli and Pontil 2005; Caponnetto et al. 2008; Carmeli et al. 2010) for multitask machine learning, combined with delay-coordinate maps and Markov normalization as in NLSA.

3.1 Operator-Valued Kernel and Vector-Valued Eigenfunctions

Let \(B(H_Y)\) be the Banach space of bounded linear maps on \(H_Y\), equipped with the operator norm. For our purposes, an operator-valued kernel is a continuous map \( l : X \times X \rightarrow B(H_Y) \), mapping pairs of dynamical states in X to a bounded operator on \(H_Y\). Every such kernel has an associated integral operator \( L : H \rightarrow H \), acting on vector-valued observables according to the formula [cf. (4)]

$$\begin{aligned} L \vec {f}( x ) = \int _X l( x, x' ) \vec {f}( x' ) \, \mathrm{d}\mu (x'), \end{aligned}$$

where the integral above is a Bochner integral (a vector-valued generalization of the Lebesgue integral). Note that operator-valued kernels and their corresponding integral operators can be viewed as generalizations of their scalar-valued counterparts from Sect. 2.2, in the sense that if Y only contains a single point, then \(H_Y\) is isomorphic to the vector space of complex numbers (equipped with the standard operations of addition and scalar multiplication and the inner product \( \langle w, z \rangle _{{\mathbb {C}}} = w^* z\)), and \(B(H_Y)\) is isomorphic to the space of multiplication operators on \({\mathbb {C}}\) by complex numbers. In that case, the action \(l(x,x') \vec {f}(x')\) of the linear map \( l(x,x') \in B(H_Y) \) on the function \( \vec {f}(x') \in H_Y\) becomes equivalent to multiplication of the complex number \(f(x')\), where f is a complex-valued observable in \(H_X\), by the value \(k(x,x') \in {\mathbb {C}} \) of a scalar-valued kernel k on X.

Consider now an operator-valued kernel \( l : X \times X \rightarrow B(H_Y)\), such that for every pair \( ( x, x' ) \) of states in X, \( l( x, x' ) =L_{xx'} \) is a kernel integral operator on \(H_Y \) associated with a continuous kernel \( l_{xx'} : Y \times Y \rightarrow {\mathbb {R}} \) with the symmetry property

$$\begin{aligned} l_{xx'}( y, y' ) = l_{x'x}( y', y ), \quad \forall x, x' \in X, \quad \forall y, y' \in Y. \end{aligned}$$(9)

This operator acts on a scalar-valued function \( g \in H_Y \) on the spatial domain via an integral formula analogous to (4), viz.

$$\begin{aligned} L_{xx'} g( y ) = \int _Y l_{xx'}( y, y' ) g( y' ) \, \mathrm{d}\nu (y'). \end{aligned}$$

Moreover, it follows from (9) that the corresponding operator L on vector-valued observables is self-adjoint and compact, and thus there exists an orthonormal basis \( \{ \vec {\phi }_j\} \) of H consisting of its eigenfunctions,

$$\begin{aligned} L \vec {\phi }_j = \lambda _j \vec {\phi }_j, \quad \lambda _j \in {\mathbb {R}}. \end{aligned}$$

Hereafter, we will always order the eigenvalues \( \lambda _j\) of integral operators in decreasing order starting at \( j = 0\). By continuity of l and \( l_{xx'} \), and compactness of A and Y, every eigenfunction \( \vec {\phi }_j \) at nonzero corresponding eigenvalue is a continuous function on X, taking values in the space of continuous functions on Y. Such eigenfunctions can be employed in the VSA decomposition in (8) with the expansion coefficients

$$\begin{aligned} c_j = \langle \vec {\phi }_j, \vec {F} \rangle _H. \end{aligned}$$(10)

Note that, as with scalar kernel techniques, the decomposition in (8) does not include eigenfunctions at zero corresponding eigenvalue, for, to our knowledge, no data-driven approximation schemes are available for such eigenfunctions. See Sect. 5 and Appendix D for further details.

Because H is isomorphic as Hilbert space to the space \(H_\Omega \) of scalar-valued observables on the product space \( \Omega = X \times Y \) (see Sect. 2.1), every operator-valued kernel satisfying (9) can be constructed from a symmetric scalar kernel \( k : \Omega \times \Omega \rightarrow {\mathbb {R}}\) by defining \( l( x, x' ) = L_{xx'} \) as the integral operator associated with the kernel

$$\begin{aligned} l_{xx'}( y, y' ) = k( ( x, y ), ( x', y' ) ). \end{aligned}$$(11)

In particular, the vector-valued eigenfunctions of L are in one-to-one correspondence with the scalar-valued eigenfunctions of the integral operator \( K : H_\Omega \rightarrow H_\Omega \) associated with k, where

$$\begin{aligned} K f( \omega ) = \int _\Omega k( \omega , \omega ' ) f( \omega ' ) \, \mathrm{d}\rho (\omega '). \end{aligned}$$(12)

That is, the eigenvalues and eigenvectors of K satisfy the equation \( K \phi _j =\lambda _j \phi _j \) for the same eigenvalues as those of L, and we also have

$$\begin{aligned} \vec {\phi }_j( x )( y ) = \phi _j( x, y ). \end{aligned}$$(13)

It is important to note that unless k is separable as a product of kernels on X and Y, i.e., \( k( ( x, y ), ( x', y' ) ) = k^{(X)}(x,x') k^{(Y)}(y,y') \) for some \(k^{(X)} : X \times X \rightarrow {\mathbb {R}} \) and \( k^{(Y)} : Y \times Y \rightarrow {\mathbb {R}} \), the \( \vec {\phi }_j \) will not be of pure tensor product form, \( \vec {\phi }_j = \varphi _j \otimes \psi _j \) with \( \varphi _j \in H_X \) and \( \psi _j \in H_Y \). Thus, passing to an operator-valued kernel formalism allows one to perform decompositions of significantly higher generality than the conventional approach in (1).
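The role of kernel separability can be checked on a small discretized example. The sketch below is an illustration under assumptions, not the paper's numerical scheme: \( \Omega \) is replaced by an N by M grid of (state, location) pairs, eigenvectors of kernel matrices on that grid are reshaped into N by M arrays playing the role of vector-valued eigenfunctions, and the ratio of the two largest singular values of such an array is used as a hypothetical measure of departure from pure tensor product form. The travelling pulse, kernel bandwidths, and function names are likewise hypothetical.

```python
import numpy as np

def eigvec(K, index):
    """Eigenvector of the symmetric matrix K for its (index+1)-th largest eigenvalue."""
    lam, V = np.linalg.eigh(K)
    return V[:, np.argsort(lam)[::-1][index]]

def separability_ratio(v, N, M):
    """s_2 / s_1 of v reshaped to (N, M); zero iff v is a pure tensor product."""
    s = np.linalg.svd(v.reshape(N, M), compute_uv=False)
    return s[1] / s[0]

N, M = 12, 16
n, m = np.arange(N), np.arange(M)
dist = (m[None, :] - n[:, None] + M // 2) % M - M // 2   # periodic distance to pulse centre
f = np.exp(-(dist / 2.0) ** 2)                           # travelling pulse f[n, m]

# Non-separable kernel on Omega: depends on (x, y) only through the local value f(x, y).
vals = f.reshape(-1)                                     # omega = (n, m) -> index n * M + m
K_omega = np.exp(-(vals[:, None] - vals[None, :]) ** 2 / 0.1)

# Separable kernel k^(X)(x, x') k^(Y)(y, y') on the same grid.
KX = np.exp(-((f[:, None, :] - f[None, :, :]) ** 2).mean(axis=-1) / 0.1)
dm = np.abs(m[:, None] - m[None, :])
KY = np.exp(-np.minimum(dm, M - dm) ** 2 / 4.0)
K_sep = np.kron(KX, KY)                                  # same index convention n * M + m

ratio_nonsep = separability_ratio(eigvec(K_omega, 1), N, M)
ratio_sep = separability_ratio(eigvec(K_sep, 0), N, M)
```

In this toy setting the leading eigenvector of the product kernel reshapes to a rank-one array up to round-off, whereas the eigenvector of the non-separable kernel does not.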

3.2 Operator-Valued Kernels with Delay-Coordinate Maps

While the framework described in Sect. 3.1 can be implemented with a broad range of kernels, VSA employs kernels leveraging the insights gained from SSA, NLSA, and related techniques on the use of kernels operating in delay-coordinate space. That is, analogously to the kernels employed by these methods that depend on the values \( \vec {F}( x ),\vec {F}( \Phi ^{- \tau }(x) ), \ldots , \vec {F}( \Phi ^{-(Q-1) \tau }(x)) \) of the observation map on dynamical trajectories, VSA is based on kernels on the product space \( \Omega \) that also depend on data observed on dynamical trajectories, but with the key difference that this dependence is through the local values \( F_y( x ), F_y( \Phi ^{- \tau }(x) ), \ldots , F_y( \Phi ^{-(Q-1) \tau }(x)) \) of the observation map at each point y in the spatial domain Y. Specifically, defining the family of pointwise delay-embedding maps \( {\tilde{F}}_Q : \Omega \rightarrow {\mathbb {R}}^Q \) with \( Q \in {\mathbb {N}} \) and

$$\begin{aligned} {\tilde{F}}_Q( ( x, y ) ) = \left( F_y( x ), F_y( \Phi ^{-\tau }(x) ), \ldots , F_y( \Phi ^{-(Q-1)\tau }(x) ) \right) , \end{aligned}$$(14)

we require that the kernels \( k_Q : \Omega \times \Omega \rightarrow {\mathbb {R}} \) utilized in VSA have the following properties:

1. For every \(Q \in {\mathbb {N}} \), \( k_Q \) is the pullback under \( {\tilde{F}}_Q \) of a continuous kernel \( {\tilde{k}}_Q : {\mathbb {R}}^Q \times {\mathbb {R}}^Q \rightarrow {\mathbb {R}} \), i.e.,

$$\begin{aligned} k_Q( \omega , \omega ' ) = {\tilde{k}}_Q( {\tilde{F}}_Q( \omega ), {\tilde{F}}_Q( \omega ' ) ), \quad \forall \omega ,\omega '\in \Omega . \end{aligned}$$(15)

2. The sequence of kernels \( k_1, k_2, \ldots \) converges in \( H_\Omega \otimes H_\Omega \) norm to a kernel \( k_\infty \in H_\Omega \otimes H_\Omega \).

3. The limit kernel \(k_\infty \) is invariant under the dynamics, in the sense that for all \( t\in {\mathbb {R}}\) and \( ( \rho \times \rho ) \)-a.e. \( ( \omega , \omega ' ) \in \Omega \times \Omega \), where \( \omega = ( x, y ) \) and \( \omega ' = ( x', y' ) \),

$$\begin{aligned} k_\infty ( ( \Phi ^t(x), y ), ( \Phi ^t(x'), y' ) ) = k_\infty ( \omega , \omega ' ). \end{aligned}$$(16)

We denote the integral operator on vector-valued observables in H associated with \(k_Q\) through (11) by \( L_Q\). As we will see below, operators of this class can be highly advantageous for the analysis of signals with an intermittent spatiotemporal character, as well as signals generated in the presence of dynamical symmetries. In addition, as in the case of SSA and NLSA, the family \(L_Q\) commutes with the Koopman operators in the infinite-delay limit.

Let \( \omega = ( x, y ) \) and \( \omega ' = (x',y') \) with \(x,x' \in X \) and \( y, y' \in Y\) be arbitrary points in \( \Omega \). As concrete examples of kernels satisfying the conditions listed above,

$$\begin{aligned} k_Q( \omega , \omega ' ) = \frac{1}{Q} \sum _{q=0}^{Q-1} F_y( \Phi ^{-q\tau }(x) ) F_{y'}( \Phi ^{-q\tau }(x') ) \end{aligned}$$(17)

and

$$\begin{aligned} k_Q( \omega , \omega ' ) = \exp \left( - \frac{1}{\epsilon Q} \sum _{q=0}^{Q-1} \left| F_y( \Phi ^{-q\tau }(x) ) - F_{y'}( \Phi ^{-q\tau }(x') ) \right| ^2 \right) , \quad \epsilon > 0, \end{aligned}$$(18)

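are analogs of the covariance and Gaussian kernels in (2) and (3), respectively, defined on \( \Omega \). For the reasons stated in Sect. 2.4, in practice we generally prefer Gaussian kernels over covariance kernels. Moreover, following the approach employed in NLSA and in Berry and Harlim (2016) and Giannakis (2017), we consider a more general class of Gaussian kernels than (18), namely

$$\begin{aligned} k_Q( \omega , \omega ' ) = \exp \left( - \frac{ a_Q( \omega ) a_Q( \omega ' ) }{ \epsilon Q } \sum _{q=0}^{Q-1} \left| F_y( \Phi ^{-q\tau }(x) ) - F_{y'}( \Phi ^{-q\tau }(x') ) \right| ^2 \right) , \quad \epsilon > 0, \end{aligned}$$(19)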

where \( a_Q : \Omega \rightarrow {\mathbb {R}}\) is a continuous nonnegative scaling function. Intuitively, the role of \(a_Q \) is to adjust the bandwidth (variance) of the Gaussian kernel in order to account for variations in the sampling density and time tendency of the data. The explicit construction of this function is described in Appendix C.1. For the purposes of the present discussion, it suffices to note that \( a_Q( \omega ) \) can be evaluated given the values of \(F_y \) on the lagged trajectory \( \Phi ^{-q\tau }(x) \), so that, as with the covariance and radial Gaussian kernels, the class of kernels in (19) also satisfy (15). The existence of the limit \( k_\infty \) for this family of kernels, as well as the covariance kernels in (17), satisfying the conditions listed above is established in Appendix C.3.
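A minimal sketch of the pointwise delay embedding and of an unscaled Gaussian kernel on \( \Omega \) is given below. The function names, the averaging over Q lags, the bandwidth, and the travelling-pulse data are assumptions for illustration; the scaling function \( a_Q \) of Appendix C.1 is omitted (equivalently, set to one).

```python
import numpy as np

def pointwise_delay_embedding(F, Q):
    """E[i, m, q] = F[i + Q - 1 - q, m]: the Q most recent values of the signal
    at grid point y_m, for the state with time index n = i + Q - 1."""
    N, M = F.shape
    n_out = N - Q + 1
    lags = [F[Q - 1 - q : Q - 1 - q + n_out] for q in range(Q)]
    return np.stack(lags, axis=-1)

def vsa_gaussian_kernel(F, Q, eps):
    """Gaussian kernel matrix on Omega; points omega = (x_n, y_m) are ordered as i * M + m."""
    Z = pointwise_delay_embedding(F, Q).reshape(-1, Q)
    sq = np.sum(Z**2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * (Z @ Z.T), 0.0) / Q
    return np.exp(-d2 / eps)

# Hypothetical data: a pulse advancing one grid point per time step on a periodic domain.
N, M, Q = 14, 16, 3
n, m = np.arange(N), np.arange(M)
dist = (m[None, :] - n[:, None] + M // 2) % M - M // 2
F = np.exp(-(dist / 2.0) ** 2)

E = pointwise_delay_embedding(F, Q)
K = vsa_gaussian_kernel(F, Q, eps=0.1)

# Two points of Omega related by the translation symmetry of the data.
i0, m0 = 4, 7
idx_a = i0 * M + m0
idx_b = (i0 + 1) * M + (m0 + 1) % M
```

Because the kernel sees \( (x, y) \) only through the local history of the signal, the two symmetry-related points above have identical delay vectors and kernel value one, so an eigenfunction at nonzero eigenvalue cannot distinguish them.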

3.3 Markov Normalization

As a final kernel construction step, when working with a strictly positive, symmetric kernel \( k_Q\), such as (18) and (19), we normalize it to a continuous Markov kernel \(p_Q : \Omega \times \Omega \rightarrow {\mathbb {R}}\), satisfying \( \int _\Omega p_Q( \omega , \cdot ) \, \mathrm{d}\rho = 1\) for all \(\omega \in \Omega \), using the normalization procedure introduced in the diffusion maps algorithm (Coifman and Lafon 2006) and in Berry and Sauer (2016); see Appendix C.2 for a description. Due to this normalization, the corresponding integral operator \( P_Q : H_\Omega \rightarrow H_\Omega \) is an ergodic Markov operator having a simple eigenvalue \( \lambda _0 = 1 \) and a corresponding constant eigenfunction \( \phi _0\). Moreover, the range of \(P_Q\) is included in the space of continuous functions on \( \Omega \). While this operator is not necessarily self-adjoint (since the kernel \(p_Q\) resulting from diffusion maps normalization is generally non-symmetric), it can be shown that it is related to a self-adjoint, compact operator by a similarity transformation. As a result, all eigenvalues of \(P_Q \) are real and admit the ordering \( 1 = \lambda _0 > \lambda _1 \ge \lambda _2 \ge \cdots \). Moreover, there exists a (non-orthogonal) basis \( \{ \phi _0, \phi _1, \ldots \} \) of \(H_\Omega \) consisting of eigenfunctions corresponding to these eigenvalues, as well as a dual basis \( \{ \phi '_0, \phi '_1, \ldots \} \) consisting of eigenfunctions of \( P^*_Q \) satisfying \( \langle \phi '_i, \phi _j \rangle _{H_\Omega } = \delta _{ij}\). As with their unnormalized counterparts \(k_Q\), the sequence of Markov kernels \( p_Q \) has a well-defined, shift-invariant limit \(p_\infty \in H_\Omega \otimes H_\Omega \) as \( Q \rightarrow \infty \); see Appendix C.2 for further details.

The eigenfunctions \( \phi _j \) induce vector-valued observables \( \vec {\phi }_j \in H\) through (13), which are in turn eigenfunctions of an integral operator \( {\mathcal {P}}_Q : H \rightarrow H\) associated with the operator-valued kernel determined via (11), applied to the Markov kernel \(p_Q\). Similarly, the dual eigenfunctions \( \phi '_j\) induce vector-valued observables \( \vec {\phi }'_j \in H \), which are eigenfunctions of \({\mathcal {P}}^*_Q\) satisfying \( \langle \vec {\phi }_i', \vec {\phi }_j \rangle _{H} = \delta _{ij} \). Equipped with these observables, we perform the VSA decomposition in (8) with the expansion coefficients \(c_j = \langle \vec {\phi }_j', \vec {F} \rangle _H\). The latter expression can be viewed as a generalization of (10), applicable to non-orthonormal eigenbases.
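The normalization and the biorthogonal expansion described above can be illustrated numerically. The following is a minimal sketch rather than the procedure of Appendix C.2: it assumes one common variant of the diffusion maps normalization (a right normalization by a kernel density estimate followed by a left normalization), a toy Gaussian kernel on random points standing in for \(k_Q\), and uniform weights standing in for \( \rho \). The names `markov_normalize`, `phi`, and `phi_dual` are illustrative only.

```python
import numpy as np

def markov_normalize(K, w):
    """Normalize a symmetric, strictly positive kernel matrix K (M x M)
    with respect to positive weights w (M,), assumed to sum to 1.

    Returns the matrix A of the Markov operator, a symmetric matrix Sym
    similar to A, and the diagonal t of the similarity transformation.
    """
    d = K @ w                                  # d_i = sum_j k_ij w_j
    q = (K / d[None, :]) @ w                   # q_i = sum_j k_ij w_j / d_j
    A = K * w[None, :] / np.outer(q, d)        # A_ij = p_ij w_j, rows sum to 1
    t = np.sqrt(w * q / d)                     # T = diag(t), T A T^{-1} = Sym
    Sym = (t / q)[:, None] * K * (w / (t * d))[None, :]
    return A, 0.5 * (Sym + Sym.T), t           # symmetrize against roundoff

# Toy data (assumption): random points and a Gaussian kernel.
rng = np.random.default_rng(0)
M = 40
pts = rng.normal(size=(M, 2))
w = np.full(M, 1.0 / M)
sq = ((pts[:, None, :] - pts[None, :, :]) ** 2).sum(axis=-1)
K = np.exp(-sq / 2.0)

A, Sym, t = markov_normalize(K, w)
lam, V = np.linalg.eigh(Sym)
lam, V = lam[::-1], V[:, ::-1]                 # descending eigenvalues
phi = V / t[:, None]                           # eigenvectors of A
phi_dual = V * (t / w)[:, None]                # dual basis in the w-weighted inner product

# Expansion coefficients c_j = <phi'_j, F> and reconstruction of a test signal.
F = np.sin(pts[:, 0]) + pts[:, 1] ** 2
c = phi_dual.T @ (w * F)
F_rec = phi @ c
```

Working with the symmetric matrix `Sym` keeps the eigenvalues real by construction; the eigenvectors of the non-symmetric Markov matrix and their duals are then recovered by undoing the diagonal similarity transformation.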

4 Properties of the VSA Decomposition

In this section, we study the properties of the operators \(K_Q\) employed in VSA and their eigenfunctions in two relevant scenarios in spatiotemporal data analysis, namely data generated by systems with (i) dynamical symmetries, and (ii) nontrivial Koopman eigenfunctions. These topics will be discussed in Sects. 4.2 and 4.3, respectively. We begin in Sect. 4.1 with some general observations on the topological structure of spatiotemporal data in delay-coordinate space, and the properties this structure imparts on the recovered eigenfunctions.

4.1 Bundle Structure of Spatiotemporal Data

In order to gain insight on the behavior of VSA, it is useful to consider the triplet \((\Omega ,B_Q,\pi _Q)\), where \(B_Q = {\tilde{F}}_Q( \Omega ) \) is the image of the product space \( \Omega \) under the delay-coordinate observation map, and \( \pi _Q : \Omega \rightarrow B_Q \) is the continuous surjective map defined as \(\pi _Q( \omega ) = {\tilde{F}}_Q( \omega )\) for any \( \omega \in \Omega \). Such a triplet forms a topological bundle with \( \Omega \), \( B_Q \), and \( \pi _Q \) playing the role of the total space, base space, and projection map, respectively. In particular, \(\pi _Q\) partitions \(\Omega \) into equivalence classes

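$$\begin{aligned} [ \omega ]_Q = \{ {{\tilde{\omega }}} \in \Omega : \pi _Q( {{\tilde{\omega }}} ) = \pi _Q( \omega ) \}, \end{aligned}$$(20)

called fibers, on which \(\pi _Q \) attains a fixed value (i.e., \( {{\tilde{\omega }}} \) lies in \( [ \omega ]_Q \) if \( \pi _Q( {{\tilde{\omega }}} ) = \pi _Q( \omega ) \)).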

By virtue of (15), the kernel \( k_Q \) is a continuous function, constant on the \([\cdot ]_Q\) equivalence classes, i.e., for all \( \omega ,\omega ' \in \Omega \), \( {{\tilde{\omega }}} \in [\omega ]_Q\), and \( {{\tilde{\omega }}}' \in [\omega ']_Q\),

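$$\begin{aligned} k_Q( {{\tilde{\omega }}}, {{\tilde{\omega }}}' ) = k_Q( \omega , \omega ' ). \end{aligned}$$(21)

Therefore, since for any \( f \in H_\Omega \) and \( {{\tilde{\omega }}} \in [\omega ]_Q\),

$$\begin{aligned} K_Q f( {{\tilde{\omega }}} ) = \int _\Omega k_Q( {{\tilde{\omega }}}, \omega ' ) f( \omega ' ) \, \mathrm{d}\rho ( \omega ' ) = \int _\Omega k_Q( \omega , \omega ' ) f( \omega ' ) \, \mathrm{d}\rho ( \omega ' ) = K_Q f( \omega ), \end{aligned}$$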

the range of the integral operator \( K_Q \) is a subspace of the continuous functions on \( \Omega \), containing functions that are constant on the \( [ \cdot ]_Q \) equivalence classes. Correspondingly, the eigenfunctions \( \phi _j \) corresponding to nonzero eigenvalues (which lie in \( {{\,\mathrm{ran}\,}}K_Q\)) have the form \( \phi _j = \eta _j \circ \pi _Q \), where \( \eta _j \) are continuous functions in the Hilbert space \( L^2( B_Q, \pi _{Q*} \rho ) \) of scalar-valued functions on \(B_Q\), square-integrable with respect to the pushforward of the measure \( \rho \) under \( \pi _Q\). We can thus conclude that, if all eigenvalues \( \lambda _j \) with \( j \le l-1 \) are nonzero, the VSA-reconstructed signal from (8) (viewed as a scalar-valued function on \(\Omega \)) lies in the closed subspace \( \overline{ {{\,\mathrm{ran}\,}}K_Q } = \overline{ {{\,\mathrm{span}\,}}\{ \phi _j : \lambda _j > 0 \} } \) of \( H_\Omega \) spanned by functions that are constant on the \( [ \cdot ]_Q \) equivalence classes. Note that \( \overline{ {{\,\mathrm{ran}\,}}K_Q } \) is not necessarily decomposable as a tensor product of \(H_X\) and \(H_Y\) subspaces.
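The statement that eigenfunctions at nonzero eigenvalues are constant on fibers can be checked on a small discrete example. The sketch below is a toy construction under stated assumptions: 40 sample points are assigned to 5 hypothetical fibers, each fiber shares one delay-coordinate vector (the rows of `base`, chosen arbitrarily), the kernel is a plain Gaussian of the squared distance between delay-coordinate vectors, and the sampling weights are uniform.

```python
import numpy as np

# Five distinct delay-coordinate vectors (assumed values), one per fiber.
base = 2.0 * np.array([[0, 0, 0],
                       [1, 0, 0],
                       [0, 1, 0],
                       [0, 0, 1],
                       [1, 1, 1]], dtype=float)
labels = np.arange(40) % 5           # fiber index of each sample point
feats = base[labels]                 # pi_Q(omega_i), shared within a fiber
M = feats.shape[0]

# Kernel depends on omega only through pi_Q(omega), as in (15).
sq = ((feats[:, None, :] - feats[None, :, :]) ** 2).sum(axis=-1)
K = np.exp(-sq) / M                  # operator matrix for uniform weights 1/M

lam, V = np.linalg.eigh(K)
keep = np.abs(lam) > 1e-10           # eigenvalues that are numerically nonzero
phi = V[:, keep]

# Largest variation of any retained eigenvector within any fiber.
spread = max(np.ptp(phi[labels == b], axis=0).max() for b in range(5))
```

In this example the number of nonzero eigenvalues equals the number of fibers, and `spread` vanishes to roundoff, so each retained eigenvector is a function on the base space pulled back to the sample points.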

Observe now that with the definition of the kernel in (19), the \([\cdot ]_Q\) equivalence classes consist of pairs of dynamical states \(x \in X \) and spatial points \(y \in Y\) for which the evolution of the observable \( F_y\) is identical over Q delays. While one can certainly envision scenarios where these equivalence classes each contain only one point, in a number of cases of interest, including the presence of dynamical symmetries examined below, the \([\cdot ]_Q\) equivalence classes will be nontrivial, and as a result \(\overline{{{\,\mathrm{ran}\,}}K_Q}\) will be a strict subspace of \(H_\Omega \). In such cases, the patterns recovered by VSA naturally factor out data redundancies, which generally enhances both robustness and physical interpretability of the results. Besides spatiotemporal data, the bundle construction described above may be useful in other scenarios, e.g., analysis of data generated by dynamical systems with varying parameters (Yair et al. 2017).

4.2 Dynamical Symmetries

An important class of spatiotemporal systems exhibiting nontrivial \([\cdot ]_Q\) equivalence classes is PDE models with equivariant dynamics under the action of symmetry groups on the spatial domain (Holmes et al. 1996). As a concrete example, we consider a PDE for a scalar field in \(H_Y\), possessing a \(C^1\) inertial manifold, i.e., a finite-dimensional, forward-invariant submanifold of \(H_Y\) containing the attractor of the system, onto which every trajectory is exponentially attracted (Constantin et al. 1989). In this setting, the inertial manifold plays the role of the state space manifold X. Moreover, we assume that the full system state is observed, so that the observation map \( \vec {F} \) reduces to the inclusion \( X \hookrightarrow H_Y \).

Consider now a topological group G (the symmetry group) with a continuous left action \(\Gamma _Y^g: Y \rightarrow Y \), \( g \in G \), on the spatial domain, preserving null sets with respect to \(\nu \). Suppose also that the dynamics is equivariant under the corresponding induced action \(\Gamma _X^g : X \rightarrow X \), \( \Gamma _X^g( x ) = x \circ \Gamma _Y^{g^{-1}} \), on the state space manifold. This means that the dynamical flow map and the symmetry group action commute,

$$\begin{aligned} \Phi ^t \circ \Gamma ^g_X = \Gamma ^g_X \circ \Phi ^t, \quad \forall t \in {\mathbb {R}}, \quad \forall g \in G, \end{aligned}$$(22)

or, in other words, if \( t \mapsto \Phi ^t( x ) \) is a solution starting at \( x \in X \), then \( t \mapsto \Phi ^t( \Gamma ^g_X( x ) ) \) is a solution starting at \( \Gamma ^g_X( x ) \). Additional aspects of symmetry group actions and equivariance are outlined in Appendix B. Our goal for this section is to examine the implications of (22) for the properties of the operators \(K_Q\) employed in VSA and their eigenfunctions.

4.2.1 Dynamical Symmetries and VSA Eigenfunctions

We begin by considering the induced action \( \Gamma _\Omega ^g : \Omega \rightarrow \Omega \) of G on the product space \( \Omega \), defined as

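$$\begin{aligned} \Gamma ^g_\Omega ( ( x, y ) ) = ( \Gamma ^g_X( x ), \Gamma ^g_Y( y ) ). \end{aligned}$$

This group action partitions \( \Omega \) into orbits, defined for every \( \omega \in \Omega \) as the subsets \( \Gamma _\Omega ( \omega ) \subseteq \Omega \) with

$$\begin{aligned} \Gamma _\Omega ( \omega ) = \{ \Gamma ^g_\Omega ( \omega ) : g \in G \}. \end{aligned}$$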

As with the subsets \( [ \omega ]_Q \subseteq \Omega \) from (20) associated with delay-coordinate maps, the G-orbits on \( \Omega \) form equivalence classes, consisting of points connected by symmetry group actions (as opposed to having common values under delay-coordinate maps). In general, these two sets of equivalence classes are unrelated, but in the presence of dynamical symmetries, they are, in fact, compatible, as follows:

Proposition 1

If the equivariance property in (22) holds, then for every \( \omega \in \Omega \), the G-orbit \( \Gamma _\Omega ( \omega ) \) is a subset of the \( [ \omega ]_Q \) equivalence class. As a result, the following diagram commutes:

Proof

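Let x(y) denote the value of the dynamical state \( x \in X \subset H_Y \) at \( y \in Y \). It follows from (22) that for every \( t \in {\mathbb {R}}\), \( g \in G\), \(x \in X\), and \( y \in Y \),

$$\begin{aligned} \Phi ^t( \Gamma ^g_X( x ) )( \Gamma ^g_Y( y ) ) = \Gamma ^g_X( \Phi ^t( x ) )( \Gamma ^g_Y( y ) ) = \Phi ^t( x )( \Gamma ^{g^{-1}}_Y( \Gamma ^g_Y( y ) ) ) = \Phi ^t( x )( y ). \end{aligned}$$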

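Therefore, since \( \vec {F} \) is an inclusion (i.e., \(F_y(x) = x(y)\)), we have \( F_{\Gamma ^g_Y(y)}( \Phi ^t( \Gamma ^g_X( x ) ) ) = F_y( \Phi ^t( x ) ) \) for every \( t \in {\mathbb {R}}\). Evaluating this identity at the lag times entering the delay-coordinate map and setting \( \omega = (x, y ) \in \Omega \), we obtain

$$\begin{aligned} \pi _Q( \Gamma ^g_\Omega ( \omega ) ) = {\tilde{F}}_Q( \Gamma ^g_\Omega ( \omega ) ) = {\tilde{F}}_Q( \omega ) = \pi _Q( \omega ). \end{aligned}$$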

\(\square \)

We thus conclude from Proposition 1 and (21) that the kernel \(k_Q \) is constant on G-orbits,

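$$\begin{aligned} k_Q( \Gamma ^g_\Omega ( \omega ), \Gamma ^{g'}_\Omega ( \omega ' ) ) = k_Q( \omega , \omega ' ), \quad \forall \omega , \omega ' \in \Omega , \quad \forall g, g' \in G, \end{aligned}$$(23)

and therefore the eigenfunctions \( \phi _j\) corresponding to nonzero eigenvalues of \(K_Q\) are continuous functions with the invariance property

$$\begin{aligned} \phi _j \circ \Gamma ^g_\Omega = \phi _j, \quad \forall g \in G. \end{aligned}$$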

This is one of the key properties of VSA, which we interpret as factoring the symmetry group from the recovered spatiotemporal patterns.
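The invariance of delay-coordinate data and of the kernel under independent group elements can be verified on a toy system. The sketch below makes several assumptions that are not part of the text: the spatial domain is a periodic grid with the cyclic translation group acting by `np.roll`, the dynamics is a hypothetical shift-equivariant map `step` iterated forward in time (so that the state of interest is the last state of each short orbit), and the kernel is a Gaussian of the delay-averaged squared difference.

```python
import numpy as np

def step(x):
    """One time step of a toy shift-equivariant map on a periodic grid."""
    lap = np.roll(x, 1) - 2.0 * x + np.roll(x, -1)
    return x + 0.1 * lap + 0.05 * x * (1.0 - x**2)

def delay_vectors(x0, Q):
    """Row s holds (x_{Q-1}[s], ..., x_0[s]) for the orbit x_{q+1} = step(x_q)."""
    traj = [x0]
    for _ in range(Q - 1):
        traj.append(step(traj[-1]))
    return np.stack(traj[::-1], axis=1)        # shape (S, Q)

def kernel(a, b, eps=1.0):
    """Gaussian of the delay-averaged squared difference (assumed form)."""
    return np.exp(-np.mean((a - b) ** 2) / eps)

rng = np.random.default_rng(1)
S, Q, g, gp = 32, 6, 5, 11                     # grid size, delays, two group elements
x0, xp0 = rng.normal(size=S), rng.normal(size=S)

D, Dp = delay_vectors(x0, Q), delay_vectors(xp0, Q)
Dg = delay_vectors(np.roll(x0, g), Q)          # orbit of the state acted on by g
Dpg = delay_vectors(np.roll(xp0, gp), Q)       # orbit of the state acted on by g'

# Compare the kernel at (omega, omega') with its value after moving omega by g
# and omega' by g', where each group element shifts both the state and the grid point.
s, sp = 7, 20
k_orig = kernel(D[s], Dp[sp])
k_moved = kernel(Dg[(s + g) % S], Dpg[(sp + gp) % S])
```

Here `np.roll(x, g)` plays the role of \( \Gamma ^g_X \) and the index shift \( s \mapsto s + g \) that of \( \Gamma ^g_Y \); the delay-coordinate vectors of the shifted state are the shifted delay-coordinate vectors of the original state, which is the discrete counterpart of Proposition 1.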

4.2.2 Spectral Characterization

In order to be able to say more about the implication of the results in Sect. 4.2.1 at the level of operators, we now assume that the group action \( \Gamma ^g_\Omega \) preserves the measure \( \rho \). Then, there exists a unitary representation of G on \( H_\Omega \), whose representatives are unitary operators \( R^g_\Omega : H_\Omega \rightarrow H_\Omega \) acting on functions \( f \in H_\Omega \) by composition with \( \Gamma ^g_\Omega \), i.e., \( R^g_\Omega f = f \circ \Gamma _\Omega ^g \). Another group of unitary operators acting on \( H_\Omega \) consists of the Koopman operators, \( {\tilde{U}}^t : H_\Omega \rightarrow H_\Omega \), which we define here via a trivial lift of the Koopman operators \(U^t \) on \(H_X\), namely \({\tilde{U}}^t = U^t \otimes I_{H_Y}\), where \(I_{H_Y} \) is the identity operator on \(H_Y\); see Appendix A for further details. In fact, the map \( t \mapsto {\tilde{U}}^t \) constitutes a unitary representation of the Abelian group of real numbers (playing the role of time), equipped with addition as the group operation, much like \( g \mapsto R^g_\Omega \) is a unitary representation of the symmetry group G. The following theorem summarizes the relationship between the symmetry group representatives and the Koopman and kernel integral operators on \(H_\Omega \).

Theorem 2

For every \( g \in G\) and \(t \in {\mathbb {R}}\), the operator \( R^g_\Omega \) commutes with \( K_Q\) and \( {\tilde{U}}^t\). Moreover, every function in the range of \(K_Q\) is invariant under \(R^g_\Omega \), i.e., \( R^g_\Omega K_Q = K_Q\).

Proof

The commutativity between \(R^g_\Omega \) and \( {\tilde{U}}^t\) is a direct consequence of (22). To verify the claims involving \( K_Q\), we use (23) and the fact that \( \Gamma ^g_\Omega \) preserves \( \rho \) to compute

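$$\begin{aligned} R^{g'}_\Omega K_Q R^g_\Omega f( \omega )&= \int _\Omega k_Q( \Gamma ^{g'}_\Omega ( \omega ), \omega ' ) f( \Gamma ^g_\Omega ( \omega ' ) ) \, \mathrm{d}\rho ( \omega ' ) = \int _\Omega k_Q( \omega , \Gamma ^g_\Omega ( \omega ' ) ) f( \Gamma ^g_\Omega ( \omega ' ) ) \, \mathrm{d}\rho ( \omega ' ) \\&= \int _\Omega k_Q( \omega , \omega ' ) f( \omega ' ) \, \mathrm{d}\rho ( \omega ' ) = K_Q f( \omega ), \end{aligned}$$

where g and \( g' \) are arbitrary, and the equalities hold for \( \rho \)-a.e. \( \omega \in \Omega \). Setting \( g' = g^{-1} \) in the above, and acting on both sides by \( R^g_\Omega \), leads to \( R^g_\Omega K_Q = K_Q R^g_\Omega \), i.e., \( [ R^g_\Omega , K_Q ] = 0 \), as claimed. On the other hand, setting g to the identity element of G leads to \( K_Q = R^{g'}_\Omega K_Q \), completing the proof of the theorem.\(\square \)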

Because commuting operators have common eigenspaces, Theorem 2 establishes the existence of two sets of common eigenspaces associated with the symmetry group, namely common eigenspaces between \( R^g_\Omega \) and \( K_Q\) and those between \( R^g_\Omega \) and \({\tilde{U}}^t\). In general, these two families of eigenspaces are not compatible since \( {\tilde{U}}^t \) and \( K_Q \) may not commute, so for now we will focus on the common eigenspaces between \( R^g_\Omega \) and \( K_Q \), which are accessible via VSA with finitely many delays. In particular, because \( R^g_\Omega K_Q = K_Q \), and every eigenspace \( W_l \) of \( K_Q \) at nonzero corresponding eigenvalue \( \lambda _l \) is finite-dimensional (by compactness of that operator), we can conclude that the \( W_l\) are finite-dimensional subspaces onto which the action of \(R^g_\Omega \) reduces to the identity. In other words, the eigenspaces of \( K_Q\) at nonzero corresponding eigenvalues are finite-dimensional trivial representation spaces of G, and every VSA eigenfunction \( \phi _j \) at nonzero eigenvalue is also an eigenfunction of \(R^g_\Omega \) at eigenvalue 1.

At this point, one might naturally ask to what extent these properties are shared between VSA and conventional eigendecomposition techniques based on scalar kernels on X. In particular, in the measure-preserving setting for the product measure \( \rho = \mu \times \nu \) examined above, it must necessarily be the case that the group actions \( \Gamma ^g_X\) and \( \Gamma ^g_Y\) separately preserve \( \mu \) and \( \nu \), respectively, thus inducing unitary operators \( R^g_X : H_X \rightarrow H_X \) and \( R^g_Y : H_Y \rightarrow H_Y\), defined analogously to \(R^g_\Omega \). For a variety of kernels \( k^{(X)}_Q : X \times X \rightarrow {\mathbb {R}}\) that only depend on observed data through inner products and norms on \(H_Y\) (e.g., the covariance and Gaussian kernels in Sect. 2.2), the unitarity of \( R^g_X\) and \(R^g_Y\) implies that the invariance property

$$\begin{aligned} k^{(X)}_Q( \Gamma ^g_X( x ), \Gamma ^g_X( x' ) ) = k^{(X)}_Q( x, x' ) \end{aligned}$$(24)

holds for all \( g \in G\) and \(x,x' \in X\). Moreover, proceeding analogously to the proof of Theorem 2, one can show that \( R^g_X \) and the integral operator \( K^{(X)}_Q : H_X \rightarrow H_X\) associated with \(k_Q^{(X)}\) commute, and thus have common eigenspaces \(W^{(X)}_l\), \( \lambda _l^{(X)} \ne 0 \), which are finite-dimensional invariant subspaces under \( R^g_X\). Projecting the observation map \( \vec {F} \) onto \( W^{(X)}_l\) as in (5), then yields a finite-dimensional subspace \(W^{(Y)}_l \subset H_Y\), which is invariant under \(R^g_Y \), and thus \( W^{(X)}_l \otimes W^{(Y)}_l \subset H_\Omega \) is invariant under \( R^g_\Omega \). The fundamental difference between the representation of G on \( W^{(X)}_l \otimes W^{(Y)}_l \) and that on the \(W_l\) subspaces recovered by VSA, is that the former is generally not trivial, i.e., in general, \( R^g_\Omega \) does not reduce to the identity map on \( W^{(X)}_l \otimes W^{(Y)}_l\). A well-known consequence of this is that the corresponding spatiotemporal patterns \( \varphi _j \otimes \psi _j\) from (1) become pure symmetry modes (e.g., Fourier modes in dynamical systems with translation invariance), hampering their physical interpretability.

This difference between VSA and conventional eigendecomposition techniques can be traced back to the fact that on X there is no analog of Proposition 1, relating equivalence classes of points with respect to delay-coordinate maps and group orbits on that space. Indeed, Proposition 1 plays an essential role in establishing the kernel invariance property in (23), which is stronger than (24) as it allows action by two independent group elements. Equation (23) is in turn necessary to determine that \(K_Q R^g_\Omega = K_Q \) in Theorem 2. In summary, these considerations highlight the importance of taking into account the bundle structure of spatiotemporal data when dealing with systems with dynamical symmetries.

4.3 Connection with Koopman Operators

4.3.1 Behavior of Kernel Integral Operators in the Infinite-Delay Limit

As discussed in Sect. 4.2, in general, the kernel integral operators \( K_Q \) do not commute with the Koopman operators \( {\tilde{U}}^t\), and thus these families of operators do not share common eigenspaces. Nevertheless, as we establish in this section, under the conditions on kernels stated in Sect. 3.2, the sequence of operators \( K_Q \) has an asymptotic commutativity property with \( {\tilde{U}}^t \) as \(Q \rightarrow \infty \), allowing the kernel integral operators from VSA to approximate eigenspaces of Koopman operators.

In order to place our results in context, we begin by noting that an immediate consequence of the bundle construction described in Sect. 4.1 is that if the support of the measure \( \rho \), denoted \( M \subseteq \Omega \), is connected as a topological space, then in the limit of no delays, \(Q=1\), the image of M under the delay-coordinate map \( {\tilde{F}}_1 \) is a closed interval in \( {\mathbb {R}}\), and correspondingly the eigenfunctions \( \phi _j \) are pullbacks of orthogonal functions on that interval under \( {\tilde{F}}_1\). In particular, because \( {\tilde{F}}_1 \) is equivalent to the vector-valued observation map, in the sense that \( \vec {F}( x )( y ) = {\tilde{F}}_1( (x, y ) ) \), the eigenfunctions \( \phi _j\) of the \(Q=1\) operator corresponding to nonzero eigenvalues are continuous functions, constant on the level sets of the input signal. Therefore, in this limit, the recovered eigenfunctions will generally have comparable complexity to the input data, and thus be of limited utility for the purpose of decomposing complex signals into simpler patterns. Nevertheless, besides the strict \(Q = 1\) limit, the \( \phi _j \) should remain approximately constant on the level sets of the input signal for moderately small values \( Q > 1\), and this property should be useful in a number of applications, such as signal denoising and level set estimation [note that data-driven approximations to \( \phi _j \) become increasingly robust to noise with increasing Q (Giannakis 2017)]. Mathematically, in this small-Q regime VSA has some common aspects with nonlocal averaging techniques in image processing (Buades et al. 2005).

We now focus on the behavior of VSA in the infinite-delay limit, where the following is found to hold.

Theorem 3

Under the conditions on the kernels \( k_Q\) stated in Sect. 3.2, the associated integral operators \(K_Q \) converge as \(Q \rightarrow \infty \) in operator norm, and thus in spectrum, to the integral operator \( K_\infty \) associated with the kernel \( k_\infty \). Moreover, \( K_\infty \) commutes with the Koopman operator \( {\tilde{U}}^t\) for all \( t \in {\mathbb {R}}\).

Proof

Since \( k_Q \) and \(k_\infty \) all lie in \(H_\Omega \otimes H_\Omega \), \( K_Q \) and \(K_\infty \) are Hilbert–Schmidt integral operators. As a result, the operator norm \( ||K_Q - K_\infty ||\) is bounded above by \( ||k_Q - k_\infty ||_{H_\Omega \otimes H_\Omega }\), and the convergence of \( ||K_Q - K_\infty ||\) to zero follows from the fact that \( \lim _{Q\rightarrow \infty } ||k_Q - k_\infty ||_{H_\Omega \otimes H_\Omega } = 0 \), as stated in the conditions in Sect. 3.2. To verify that \(K_\infty \) and \( {\tilde{U}}^t\) commute, we proceed analogously to the proof of Theorem 2, using the shift invariance of \( k_\infty \) in (16) and the fact that \( {{\tilde{\Phi }}}^t = \Phi ^t \otimes I_Y \) preserves the measure \( \rho \) to compute

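$$\begin{aligned} {\tilde{U}}^{-t} K_\infty {\tilde{U}}^t f( \omega )&= \int _\Omega k_\infty ( {{\tilde{\Phi }}}^{-t}( \omega ), \omega ' ) f( {{\tilde{\Phi }}}^t( \omega ' ) ) \, \mathrm{d}\rho ( \omega ' ) = \int _\Omega k_\infty ( \omega , {{\tilde{\Phi }}}^t( \omega ' ) ) f( {{\tilde{\Phi }}}^t( \omega ' ) ) \, \mathrm{d}\rho ( \omega ' ) \\&= \int _\Omega k_\infty ( \omega , \omega ' ) f( \omega ' ) \, \mathrm{d}\rho ( \omega ' ) = K_\infty f( \omega ), \end{aligned}$$

where the equalities hold for \( \rho \)-a.e. \(\omega \in \Omega \). Pre-multiplying these expressions by \({\tilde{U}}^t \) leads to

$$\begin{aligned} K_\infty {\tilde{U}}^t = {\tilde{U}}^t K_\infty , \end{aligned}$$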

as claimed. \(\square \)
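The two ingredients of this proof, the Hilbert–Schmidt bound on the operator norm and the commutation of the limit operator with the lifted Koopman operator, can be illustrated on a periodic toy signal. The sketch below rests on assumptions not taken from the text: the orbit is exactly periodic with N samples, so that N delays already yield a shift-invariant kernel that stands in for \( k_\infty \); the kernel is a Gaussian of the delay-averaged squared difference; the sampling weights are uniform; and the flattened index of the pair \( (x_n, y_s) \) is `n * S + s`.

```python
import numpy as np

def delay_kernel_matrix(u, Q, eps=1.0):
    """Operator matrix of an assumed Gaussian delay kernel on the N*S sample
    pairs of a periodic signal u (N x S), with uniform weights 1/(N*S)."""
    N, S = u.shape
    lags = (np.arange(N)[:, None] - np.arange(Q)[None, :]) % N   # (N, Q)
    D = u[lags, :].transpose(0, 2, 1).reshape(N * S, Q)          # row n*S + s
    sq = ((D[:, None, :] - D[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-sq / (eps * Q)) / (N * S)

N, S = 16, 4
theta = 2.0 * np.pi * np.arange(N) / N
y = 2.0 * np.pi * np.arange(S) / S
u = np.cos(theta[:, None] + y[None, :]) + 0.5 * np.cos(2.0 * theta[:, None])

# Lifted Koopman operator: shift in time, identity in space.
U_time = np.roll(np.eye(N), 1, axis=1)        # (U f)[n] = f[n + 1 mod N]
U = np.kron(U_time, np.eye(S))

K_lim = delay_kernel_matrix(u, N)             # stands in for K_infinity here
comm, hs_gap = {}, {}
for Q in (1, 4, N):
    K_Q = delay_kernel_matrix(u, Q)
    comm[Q] = np.linalg.norm(U @ K_Q - K_Q @ U, 2)
    hs_gap[Q] = (np.linalg.norm(K_Q - K_lim, 2),       # operator norm
                 np.linalg.norm(K_Q - K_lim, "fro"))   # Hilbert-Schmidt norm
```

With uniform weights, the operator norm on the discrete \(L^2\) space coincides with the matrix 2-norm and the Hilbert–Schmidt norm of the kernel with the Frobenius norm of the operator matrix, so `hs_gap` compares the two sides of the bound used in the proof, while `comm` records the norm of the commutator at each number of delays.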

Theorem 3 generalizes the results in Giannakis (2017) and Das and Giannakis (2019), where analogous commutativity properties between Koopman and kernel integral operators were established for scalar-valued observables in \(H_X\). By virtue of the commutativity between \( K_\infty \) and \( {\tilde{U}}^t \), at large numbers of delays Q, VSA decomposes the signal into patterns with a coherent temporal evolution associated with intrinsic frequencies of the dynamical system. In particular, being a compact operator, \( K_\infty \) has finite-dimensional eigenspaces, \( W_l\), corresponding to nonzero eigenvalues, whereas the eigenspaces of \(\tilde{U}^t \) are infinite-dimensional, yet are spanned by eigenfunctions with a highly coherent (periodic) time evolution at the corresponding eigenfrequencies \( \alpha _j \in {\mathbb {R}}\),

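$$\begin{aligned} {\tilde{U}}^t {\tilde{z}}_j = e^{i \alpha _j t} {\tilde{z}}_j; \end{aligned}$$

see Appendix A.1 for further details. The commutativity between \(K_\infty \) and \(\tilde{U}^t\) allows us to identify finite-dimensional subspaces \(W_l\) of \(H_\Omega \) containing distinguished observables which are simultaneous eigenfunctions of \(K_\infty \) and \( {\tilde{U}}^t \). As shown in Appendix A.2, these eigenfunctions have the form

$$\begin{aligned} {\tilde{z}}_{jl} = z_{jl} \otimes \psi _{jl}, \end{aligned}$$(25)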

where \(z_{jl} \) is an eigenfunction of the Koopman operator \(U^t\) on \( H_X \) at eigenfrequency \( \alpha _{jl}\), and \( \psi _{jl}\) a spatial pattern in \(H_Y\). Note that here we use a two-index notation, \( z_{jl} \) and \( \alpha _{jl} \), for Koopman eigenfunctions and eigenfrequencies, respectively, to indicate the fact that they are associated with the \(W_l\) eigenspace of \(K_\infty \). We therefore deduce from (25) that in the infinite-delay limit, the spatiotemporal patterns recovered by VSA can be factored into a separable, tensor product form similar to the conventional decomposition in (1) based on scalar kernel algorithms. It is important to note, however, that unlike (1), the spatial patterns \( \psi _{jl} \) in (25) are not necessarily given by linear projections of the observation map onto the corresponding scalar Koopman eigenfunctions \( z_{jl} \in H_X\) [called Koopman modes in the Koopman operator literature (Mezić 2005)]. In effect, taking into account the intrinsic structure of spatiotemporal data as vector-valued observables allows VSA to recover more general spatial patterns than those associated with linear projections of observed data.

Another consideration to keep in mind (which applies for many techniques utilizing delay-coordinate maps besides VSA) is that \(K_\infty \) can only recover patterns in a subspace \( {\mathcal {D}}_\Omega \) of \(H_\Omega \) associated with the point spectrum of the dynamical system generating the data (i.e., the Koopman eigenfrequencies; see Appendix A.1). Dynamical systems of sufficient complexity will exhibit a nontrivial subspace \( {\mathcal {D}}_\Omega ^\perp \) associated with the continuous spectrum, which does not admit a basis associated with Koopman eigenfunctions. One can show via analogous arguments to Das and Giannakis (2019) that \( {\mathcal {D}}_\Omega ^\perp \) is, in fact, contained in the nullspace of \(K_\infty \), which is a potentially infinite-dimensional space not accessible from data. Of course, in practice, one always works with finitely many delays Q, which in principle allows recovery of patterns in \( {\mathcal {D}}_\Omega ^\perp \) through eigenfunctions of \(K_Q\), and these patterns will not have an asymptotically separable behavior as \(Q \rightarrow \infty \) analogous to (25).

In light of the above, we can conclude that increasing Q from small values will impart changes to the topology of the base space \(B_Q\), and in particular the image of the support M of \( \rho \) under \(\pi _Q\), but also the spectral properties of the operators \(K_Q\). On the basis of classical delay-embedding theorems (Sauer et al. 1991), one would expect the topology of \(\pi _Q(M)\) to eventually stabilize, in the sense that for every spatial point \(y \in Y\) the set \(A_y = A \times \{ y \} \subseteq M \) will map homeomorphically under \( \pi _Q \) for Q greater than a finite number (that is, topologically, \(\pi _Q(A_y)\) will be a “copy” of A). However, apart from special cases, \( K_Q \) will continue changing all the way to the asymptotic limit \(Q \rightarrow \infty \) where Theorem 3 holds.

Before closing this section, we also note that while VSA does not directly provide estimates of Koopman eigenfrequencies, such estimates could be computed through Galerkin approximation techniques utilizing the eigenspaces of \( K_Q\) at large Q as trial and test spaces, as done elsewhere (Giannakis et al. 2015; Giannakis 2017; Das and Giannakis 2019) for scalar-valued Koopman eigenfunctions. A study of such techniques in the context of vector-valued Koopman eigenfunctions (equivalently, eigenfunctions in \(H_\Omega \)) is beyond the scope of this work, though it is expected that their well-posedness and convergence properties should follow from fairly straightforward modification of the approach in the references cited above.

4.3.2 Infinitely Many Delays with Dynamical Symmetries

As a final asymptotic limit of interest, we consider the limit \( Q \rightarrow \infty \) under the assumption that a symmetry group G acts on \( H_\Omega \) via unitary operators \(R^g_\Omega \), as described in Sect. 4.2. In that case, the commutation relations

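$$\begin{aligned} [ R^g_\Omega , {\tilde{U}}^t ] = 0, \quad [ R^g_\Omega , K_\infty ] = 0, \quad [ {\tilde{U}}^t, K_\infty ] = 0 \end{aligned}$$

imply that there exist finite-dimensional subspaces of \( H_\Omega \) spanned by simultaneous eigenfunctions of \( R^g_\Omega \), \( {\tilde{U}}^t \), and \( K_\infty \). We know from (25) that these eigenfunctions, \( {\tilde{z}}_{jl}\), are given by a tensor product between a Koopman eigenfunction \( z_{jl} \in H_X\) and a spatial pattern \(\psi _{jl} \in H_Y\). It can further be shown (see Appendix B.2) that \( z_{jl} \) and \( \psi _{jl}\) are eigenfunctions of the unitary operators \(R^g_X \) and \( R^g_Y \), i.e.,

$$\begin{aligned} R^g_X z_{jl} = \gamma ^g_{X,jl} z_{jl}, \quad R^g_Y \psi _{jl} = \gamma ^g_{Y,jl} \psi _{jl}, \end{aligned}$$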

and moreover the eigenvalues \( \gamma ^g_{X,jl}\) and \( \gamma ^g_{Y,jl}\) satisfy \( \gamma ^g_{X,jl} \gamma ^g_{Y,jl} = 1\). In particular, we have \( R^g_\Omega = R^g_X \otimes R^g_Y\), and the quantity \( \gamma ^g_{\Omega ,jl} = \gamma ^g_{X,jl} \gamma ^g_{Y,jl}\) is equal to the eigenvalue of \( R^g_\Omega \) corresponding to \( {\tilde{z}}_{jl}\), which is equal to 1 by Theorem 2.

In summary, every simultaneous eigenfunction \( {\tilde{z}}_{jl}\) of \(K_\infty \), \({\tilde{U}}^t \), and \( R^g_{\Omega }\) is characterized by three eigenvalues, namely (i) a kernel eigenvalue \( \lambda _l \) associated with \( K_\infty \); (ii) a Koopman eigenfrequency \( \alpha _{jl} \) associated with \( {\tilde{U}}^t \); and (iii) a spatial symmetry eigenvalue \( \gamma ^g_{Y,jl} \) (which can be thought of as a “wavenumber” on Y).

5 Data-Driven Approximation

In this section, we consider the problem of approximating the eigenvalues and eigenfunctions of the kernel integral operators employed in VSA from a finite dataset consisting of time-ordered measurements of the vector-valued observable \( \vec {F}\). Specifically, we assume that available to us are measurements \(\vec {F}(x_0), \vec {F}(x_1), \ldots ,\vec {F}( x_{N-1} ) \) taken along an (unknown) orbit \(x_n = \Phi ^{n\tau }(x_0)\) of the dynamics at the sampling interval \(\tau \), starting from an initial state \(x_0 \in X\). We also consider that each scalar field \(\vec {F}(x_n) \in H_Y \) is sampled at a finite collection of distinct points \( y_0, y_1, \ldots , y_{S-1} \) in Y. We will exclude the trivial case that the support A of the invariant measure \(\mu \) is a fixed point by assumption. Given such data, and without assuming knowledge of the underlying dynamical flow and/or state space geometry, our goal is to construct a family of operators, whose eigenvalues and eigenfunctions converge, in a suitable sense, to those of \(K_Q\), in an asymptotic limit of large data, \(N, S \rightarrow \infty \). In essence, we seek to address a problem on spectral approximation of kernel integral operators from an unstructured grid of points \(( x_n, y_s)\) in \( \Omega \).

5.1 Data-Driven Hilbert Spaces and Kernel Integral Operators

An immediate consequence of the fact that the dynamics is unknown is that the invariant measure \(\mu \) defining the Hilbert space \(H_X = L^2(X,\mu ) \) is also unknown (arguably, apart from special cases, \(\mu \) would be difficult to explicitly determine even if \(\Phi ^t\) were known). This means that instead of \(H_X\) we only have access to a finite-dimensional Hilbert space \(H_{X,N} = L^2(X,\mu _N) \) associated with the sampling measure \( \mu _N = \sum _{n=0}^{N-1} \delta _{x_n}/N\) on the trajectory \(X_N = \{ x_0, \ldots ,x_{N-1} \}\), where \( \delta _{x_n} \) is the Dirac probability measure supported at \( x_n \in X \). Since \(\mu \) is not supported at a fixed point, it follows by ergodicity of \(\Phi ^\tau \) and continuity of \( t \mapsto \Phi ^t \) that all points in \(X_N\) are distinct for \(\mu \)-a.e. starting state \(x_0\). The analysis that follows will thus only treat the case of distinct sampled states \(x_n\). In that case, \(H_{X,N}\) consists of equivalence classes of functions on X having common values on the finite set \(X_N \subset X\), and is equipped with the inner product \( \langle f, g \rangle _{H_{X,N}} = \sum _{n=0}^{N-1} f^*(x_n) g(x_n)/ N\). Because every such equivalence class f is uniquely characterized by N complex numbers, \( f(x_0), \ldots , f(x_{N-1})\), corresponding to the values of one of its representatives on \(X_N \), \(H_{X,N}\) is isomorphic to \( {\mathbb {C}}^N\), equipped with a normalized Euclidean inner product. Thus, we can represent every \( f \in H_{X,N}\) by an N-dimensional column vector \( {\underline{f}} = ( f(x_0), \ldots , f(x_{N-1} ) )^\top \in {\mathbb {C}}^N\), and every linear operator \( T : H_{X,N} \rightarrow H_{X,N} \) by an \(N\times N \) matrix \( {\varvec{T}} \) such that \( {\varvec{T}} {\underline{f}} \) is equal to the column-vector representation of Tf. 
In particular, associated with every scalar kernel \( k : X \times X \rightarrow {\mathbb {R}} \) is a kernel integral operator \( K_N : H_{X,N} \rightarrow H_{X,N} \), acting on \(f \in H_{X,N}\) according to the formula (cf. (4))

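$$\begin{aligned} K_N f = \int _X k( \cdot , x ) f( x ) \, \mathrm{d}\mu _N( x ) = \frac{1}{N} \sum _{n=0}^{N-1} k( \cdot , x_n ) f( x_n ). \end{aligned}$$(26)

This operator is represented by an \(N\times N\) kernel matrix \( {\varvec{K}} = [ k(x_m,x_n ) / N ] \).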

In the setting of spatiotemporal data analysis, one has to also take into account the finite sampling of the spatial domain, replacing \(H_Y =L^2(Y,\nu )\) by the S-dimensional Hilbert space \(H_{Y,S} = L^2(Y,\nu _S)\) associated with a discrete measure \( \nu _S = \sum _{s=0}^{S-1} \beta _{s,S} \delta _{y_s} \). Here, the \(\beta _{s,S} \) are positive quadrature weights such that given any continuous function \(f : Y \rightarrow {\mathbb {C}}\), the quantity \( \sum _{s=0}^{S-1} \beta _{s,S} f(y_s) \) approximates \( \int _Y f \, \mathrm{d}\nu \). For instance, if \( \nu \) is a probability measure, and the sampling points \( y_s \) are equidistributed with respect to \( \nu \), a natural choice is uniform weights, \( \beta _{s,S} = 1/ S \). The space \(H_{Y,S}\) is constructed analogously to \(H_{X,N}\), and similarly we replace \(H_\Omega = L^2(\Omega ,\rho )\) by the NS-dimensional Hilbert space \(H_{\Omega ,NS} = L^2 (\Omega , \rho _{NS}) \), where \( \rho _{NS} = \mu _N \times \nu _S = \sum _{n=0}^{N-1} \sum _{s=0}^{S-1} \beta _{s,S} \delta _{\omega _{ns}} /N \) and \( \omega _{ns} = ( x_n, y_s)\). As a Hilbert space, \(H_{\Omega ,NS} \) is isomorphic to the space \(H_{NS} = L^2( X, \mu _N; H_{Y,S} )\) of vector-valued observables, which is the data-driven analog of H, as well as the tensor product space \(H_{X,N} \otimes H_{Y,S}\) (cf. Sect. 2.1). Given a kernel \( k_Q : \Omega \times \Omega \rightarrow {\mathbb {R}} \) satisfying the conditions in Sect. 3.2, there is an associated integral operator \(K_{Q,NS} : H_{\Omega ,NS} \rightarrow H_{\Omega ,NS} \), defined analogously to (26) by

$$\begin{aligned} K_{Q,NS} f = \int _\Omega k_Q( \cdot , \omega ) f( \omega ) \, \mathrm{d}\rho _{NS}( \omega ) = \frac{1}{N} \sum _{n=0}^{N-1} \sum _{s=0}^{S-1} \beta _{s,S} \, k_Q( \cdot , \omega _{ns} ) f( \omega _{ns} ), \end{aligned}$$

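and represented by the \((NS)\times (NS) \) matrix \( {\varvec{K}} \) with elements

$$ K_{ij} = \frac{\beta _{s_j,S}}{N} \, k_Q( \omega _{n_i s_i}, \omega _{n_j s_j} ), $$

where \( i \mapsto (n_i, s_i) \) is any fixed enumeration of the index pairs \( (n,s) \) by \( i \in \{ 0, \ldots , NS-1 \} \).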

Solving the eigenvalue problem for \(K_{Q,NS} \) (which is equivalent to the matrix eigenvalue problem for \( {\varvec{K}}\)) leads to eigenvalues \( \lambda _{NS,j} \in {\mathbb {R}} \) and eigenfunctions \( \phi _{NS,j} \in H_{\Omega ,NS} \), the latter represented by column vectors \( {\underline{\phi }}_{j} \in {\mathbb {R}}^{NS} \) with elements equal to \( \phi _{NS,j}( \omega _{ns} )\). We consider \( \lambda _{NS,j} \) and \( \phi _{NS,j} \) as data-driven approximations to the eigenvalues and eigenfunctions \( \lambda _j \) and \(\phi _j\), respectively, of the integral operator in (12) associated with the kernel \(k_Q\). The convergence properties of this approximation will be made precise in Sect. 5.2.
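As an illustration of the matrix eigenvalue problem just described, the sketch below assembles a weighted \((NS)\times (NS)\) kernel matrix and computes its spectrum. It is only a sketch under stated assumptions: the Gaussian kernel on \( \Omega \) is a generic stand-in and not the VSA kernel \(k_Q\) of Sect. 3.2, the states are random placeholders, and the flattening \( i = nS + s \) is one possible index convention.

```python
import numpy as np

rng = np.random.default_rng(1)
N, S = 20, 8
x = rng.standard_normal((N, 2))                # stand-in states x_n
y = np.linspace(0.0, 1.0, S, endpoint=False)   # spatial sampling points y_s
beta = np.full(S, 1.0 / S)                     # uniform quadrature weights

# Flatten omega_{ns} = (x_n, y_s) with index i = n * S + s.
omega = np.column_stack([np.repeat(x, S, axis=0), np.tile(y, N)])
weights = np.tile(beta, N) / N                 # rho_NS mass of each omega_{ns}

sq = np.sum((omega[:, None, :] - omega[None, :, :]) ** 2, axis=-1)
k_vals = np.exp(-sq)                           # hypothetical symmetric kernel

K = k_vals * weights[None, :]                  # K[i, j] = k(omega_i, omega_j) * w_j

# K is similar to the symmetric matrix W^{1/2} k W^{1/2}, so its spectrum is real.
sqrt_w = np.sqrt(weights)
sym = sqrt_w[:, None] * k_vals * sqrt_w[None, :]
eigvals, vecs = np.linalg.eigh(sym)
order = np.argsort(eigvals)[::-1]
eigvals = eigvals[order]
phi = vecs[:, order] / sqrt_w[:, None]         # columns are eigenvectors of K
```

Solving the symmetrized problem and rescaling by \( W^{-1/2} \) yields eigenvectors of \( {\varvec{K}} \) that are orthonormal with respect to the \(H_{\Omega ,NS}\) inner product, even when the quadrature weights are non-uniform.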

A similar data-driven approximation can be performed for operators based on the Markov kernels \( p_Q \) from Sect. 3.3, which is our preferred class of kernels for VSA. However, in this case the kernels \( p_{Q,NS} : \Omega \times \Omega \rightarrow {\mathbb {R}}\) associated with the approximating operators \(P_{Q,NS} \) on \(H_{\Omega ,NS}\) are Markov-normalized with respect to the measure \( \rho _{NS}\), i.e., \( \int _\Omega p_{Q,NS}(\omega , \cdot ) \, \mathrm{d}\rho _{NS} = 1\), so they acquire a dependence on N and S. As with the eigenvalues of \(P_Q\), the eigenvalues \( \lambda _{NS,j}\) of \(P_{Q,NS}\) are real, and admit the ordering \(1=\lambda _{NS,0} > \lambda _{NS,1} \ge \lambda _{NS,2} \ge \cdots \ge \lambda _{NS,NS-1}\). Moreover, there exists a basis of \(H_{\Omega ,NS}\) consisting of corresponding eigenvectors, \( \phi _{NS,j} \), as well as a dual basis with elements \( \phi '_{NS,j} \) such that \(\langle \phi '_{NS,i}, \phi _{NS,j} \rangle _{H_{\Omega ,NS}}= \delta _{ij}\). Further details on this construction and its convergence properties can be found in Appendix D.
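The Markov property with respect to \( \rho _{NS} \) can be illustrated with a small numerical sketch. The example assumes the simplest possible (left) normalization of a hypothetical Gaussian kernel; the normalization actually used for \(p_Q\) in Sect. 3.3 and Appendix D may differ, so this should be read as an illustration of the property, not of the paper's construction.

```python
import numpy as np

rng = np.random.default_rng(2)
M = 60                                     # stand-in for the N*S sampled points
omega = rng.standard_normal((M, 3))
weights = rng.uniform(0.5, 1.5, size=M)
weights /= weights.sum()                   # masses of rho_NS, summing to 1

sq = np.sum((omega[:, None, :] - omega[None, :, :]) ** 2, axis=-1)
k_vals = np.exp(-sq)                       # hypothetical symmetric, positive kernel

# Left normalization: p(w, w') = k(w, w') / (integral of k(w, .) d rho_NS).
degree = k_vals @ weights
p_vals = k_vals / degree[:, None]

P = p_vals * weights[None, :]              # matrix representing the Markov operator
row_sums = P.sum(axis=1)                   # integral of p(w, .) d rho_NS at each sample

# P is conjugate to a symmetric matrix, so its eigenvalues are real.
scale = np.sqrt(weights / degree)
sym = scale[:, None] * k_vals * scale[None, :]
eigvals = np.sort(np.linalg.eigvalsh(sym))[::-1]
```

In this sketch every entry of `row_sums` equals 1 up to rounding, the leading eigenvalue is 1, and the remaining eigenvalues are strictly smaller, mirroring the ordering \(1=\lambda _{NS,0} > \lambda _{NS,1} \ge \cdots \) stated above. Because the normalization depends on `weights` and on the sampled points, the normalized kernel changes with N and S.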

The \( \phi _{NS,j} \) and \( \phi '_{NS,j} \) have associated vector-valued functions \( \vec {\phi }_{NS,j} \) and \( \vec {\phi }'_{NS,j}\), respectively, in \( H_{NS} \), which we employ to perform a decomposition of the observation map \( \vec {F}\) analogous to (8), viz.

Here, the reconstructed signal \(\sum _{j=0}^{l-1} {\vec {F}}_{NS,j}\) converges to \( \vec {F} \) in the limit of \( l = NS -1 \) in \( H_{NS} \) norm; this is equivalent to pointwise convergence on the sampled dynamical states \(x_n \in X\) and spatial points \(y_s \in Y\). Moreover, as we will see in Sect. 5.2, if all eigenvalues \(\lambda _{NS,j}\) with \( j \le l -1\) are nonzero, \( \vec {F}_{NS,l} \) has a continuous representative, which can be evaluated at arbitrary \(x \in X \) and \( y \in Y \). A pseudocode implementation of the full VSA pipeline for the class of Markov kernels \(p_{Q,NS}\) is included in Appendix E.

5.2 Spectral Convergence

For a spectrally consistent data-driven approximation scheme, we would like to be able to establish that, as N and S increase, the sequence of eigenvalues \( \lambda _{NS,j}\) of \(K_{NS}\) converges to the eigenvalue \( \lambda _j \) of K, and that for every eigenfunction \( \phi _j\) of K corresponding to \(\lambda _j \) there exists a sequence of eigenfunctions \( \phi _{NS,j} \) of \( K_{NS}\) converging to it. While convergence of eigenvalues can be unambiguously understood in terms of convergence of real numbers, in the setting of interest here a suitable notion of convergence of eigenfunctions (or, more generally, eigenspaces) is not obvious, since \( \phi _{NS,j}\) and \( \phi _j \) lie in fundamentally different spaces. That is, there is no natural way of mapping equivalence classes of functions with respect to \( \rho _{NS}\) (i.e., elements of \(H_{\Omega ,NS}\)) to equivalence classes of functions with respect to \( \rho \) (i.e., elements of \(H_{\Omega }\)), allowing one, e.g., to establish convergence of eigenfunctions in \(H_\Omega \) norm. This issue is further complicated by the fact that, in many cases of interest, the support A of the invariant measure \(\mu \) is a non-smooth subset of X of zero Lebesgue measure (e.g., a fractal attractor), and the sampled states \(x_n \) do not lie exactly on A (as that would require starting states \(x_0 \) drawn from a measure zero subset of X, which is not feasible experimentally). In fact, the issues outlined above are common to many other data-driven techniques for analysis of dynamical systems besides VSA (e.g., POD and DMD), yet are oftentimes not explicitly addressed in the literature.

Here, following Das and Giannakis (2019), we take advantage of the fact that, by the assumed continuity of VSA kernels, every kernel integral operator \(K_Q : H_{\Omega } \rightarrow H_{\Omega } \) from Sect. 3.2 can also be viewed as an integral operator on the space \(C({\mathcal {V}})\) of continuous functions on any compact subset \( {\mathcal {V}} \subset \Omega \) containing the support of \( \rho \). This integral operator, denoted by \( {\tilde{K}}_Q : C({\mathcal {V}}) \rightarrow C({\mathcal {V}})\), acts on continuous functions through the same integral formula as (12), although the domains and codomains of \(K_Q \) and \( {\tilde{K}}_Q\) are different. It is straightforward to verify that every eigenfunction \( \phi _j \in H_\Omega \) of \(K_Q\) at nonzero eigenvalue \( \lambda _j \) has a unique continuous representative \( {{\tilde{\phi }}}_j \in C({\mathcal {V}}) \), given by

$$ {{\tilde{\phi }}}_j = \frac{1}{\lambda _j} \int _\Omega k_Q(\cdot , \omega ) \phi _j(\omega ) \, \mathrm{d}\rho (\omega ), \tag{29} $$

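and \( {{\tilde{\phi }}}_j \) is an eigenfunction of \( {\tilde{K}}_Q \) at the same eigenvalue \( \lambda _j \). Assuming further that \( {\mathcal {V}}\) also contains the supports of the measures \( \rho _{NS}\) for all \(N,S \ge 1 \), we can define \( {\tilde{K}}_{Q,NS} : C({\mathcal {V}}) \rightarrow C({\mathcal {V}})\) analogously to (27). Then, every eigenfunction \( \phi _{NS,j} \in H_{\Omega ,NS} \) of \( K_{Q,NS}\) at nonzero corresponding eigenvalue \( \lambda _{NS,j}\) has a continuous representative \( {{\tilde{\phi }}}_{NS,j}\), with

$$ {{\tilde{\phi }}}_{NS,j} = \frac{1}{\lambda _{NS,j}} \int _\Omega k_Q(\cdot , \omega ) \phi _{NS,j}(\omega ) \, \mathrm{d}\rho _{NS}(\omega ) = \frac{1}{\lambda _{NS,j} N} \sum _{n=0}^{N-1} \sum _{s=0}^{S-1} \beta _{s,S} \, k_Q(\cdot , \omega _{ns}) \phi _{NS,j}(\omega _{ns}), \tag{30} $$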

which is an eigenfunction of \( {\tilde{K}}_{Q,NS}\) at the same eigenvalue \( \lambda _{NS,j}\).
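The continuous representative of a data-driven eigenfunction amounts to a Nyström-type extension: the eigenvector is pushed through the kernel and divided by its eigenvalue. A minimal sketch follows, assuming a hypothetical Gaussian kernel, uniform weights, and random sample points; `kernel` and `continuous_representative` are illustrative names.

```python
import numpy as np

def kernel(a, b):
    """Hypothetical continuous symmetric kernel on points stored as rows."""
    sq = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq)

rng = np.random.default_rng(3)
M = 80
omega = rng.uniform(-1.0, 1.0, size=(M, 2))   # sampled points (support of the discrete measure)
weights = np.full(M, 1.0 / M)                 # uniform masses, so K below is symmetric

K = kernel(omega, omega) * weights[None, :]
eigvals, vecs = np.linalg.eigh(K)
lam, phi = eigvals[-1], vecs[:, -1]           # leading (nonzero) eigenpair

def continuous_representative(points):
    """Evaluate (1 / lam) * sum_i w_i k(point, omega_i) phi(omega_i) at arbitrary points."""
    return (kernel(points, omega) * weights[None, :]) @ phi / lam

on_samples = continuous_representative(omega)
off_sample = continuous_representative(np.array([[0.1, -0.2]]))
```

On the sampled points the extension reproduces the eigenvector values, while `off_sample` shows that the same formula can be evaluated at a point that was never sampled. The division by `lam` is why the construction is restricted to nonzero eigenvalues.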

As is well known, the space \(C({\mathcal {V}})\) equipped with the uniform norm \( ||f ||_{C({\mathcal {V}})} = \max _{\omega \in {\mathcal {V}}} |f(\omega ) |\) becomes a Banach space, and it can further be shown that \({\tilde{K}}_{Q,NS}\) and \( {\tilde{K}}_Q\) are compact operators on this space. In other words, \(C({\mathcal {V}})\) can be used as a universal space to establish spectral convergence of \( {\tilde{K}}_{Q,NS}\) to \( {\tilde{K}}_Q\), using approximation techniques for compact operators on Banach spaces (Chatelin 2011). Von Luxburg et al. (2008) use this approximation framework to establish convergence results for spectral clustering techniques, and their approach can naturally be adapted to show that, under natural assumptions, \( {\tilde{K}}_{Q,NS}\) indeed converges in spectrum to \( {\tilde{K}}_Q\). In Appendix D, we prove the following result:

Theorem 4

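Suppose that \({\mathcal {V}} \subseteq \Omega \) is a compact set containing the supports of \( \rho \) and the family of measures \( \rho _{NS} \), and assume that \( \rho _{NS}\) converges weakly to \( \rho \), in the sense that

$$ \lim _{N,S \rightarrow \infty } \int _{{\mathcal {V}}} f \, \mathrm{d}\rho _{NS} = \int _{{\mathcal {V}}} f \, \mathrm{d}\rho , \quad \forall f \in C({\mathcal {V}}). \tag{31} $$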

Then, for every nonzero eigenvalue \( \lambda _j \) of \( K_Q \), including multiplicities, there exist positive integers \( N_0, S_0 \) such that the eigenvalues \( \lambda _{NS,j} \) of \( K_{Q,NS} \) with \( N \ge N_0 \) and \( S \ge S_0\) converge, as \( N,S \rightarrow \infty \), to \( \lambda _j \). Moreover, for every eigenfunction \( \phi _j \in H_\Omega \) of \( K_Q \) corresponding to \(\lambda _j\), there exist eigenfunctions \( \phi _{NS,j} \) of \( K_{Q,NS} \) corresponding to \( \lambda _{NS,j} \), whose continuous representatives \( {{\tilde{\phi }}}_{NS,j} \) from (30) converge uniformly on \( {\mathcal {V}} \) to \( {{\tilde{\phi }}}_j \) from (29). Finally, analogous results hold for the eigenvalues and eigenfunctions of the Markov operators \(P_{Q,NS} \) and \(P_Q\).

A natural setting in which the conditions stated in Theorem 4 are satisfied is that of dynamical systems with compact absorbing sets and associated physical measures. Specifically, for such systems we shall assume that there exists a Lebesgue measurable subset \({\mathcal {U}}\) of the state space manifold X, such that (i) \({\mathcal {U}}\) is forward-invariant, i.e., \( \Phi ^t({\mathcal {U}}) \subseteq {\mathcal {U}}\) for all \(t\ge 0\); (ii) the topological closure \( \overline{{\mathcal {U}}}\) is a compact set containing the support of \(\mu \); (iii) \({\mathcal {U}}\) has positive Lebesgue measure in X; and (iv) for any starting state \(x_0 \in {\mathcal {U}}\), the corresponding sampling measures \(\mu _N\) converge weakly to \(\mu \), i.e., \( \lim _{N\rightarrow \infty } \int _X f \, \mathrm{d}\mu _N = \int _X f \, \mathrm{d}\mu \) for all \(f \in C(X)\). Invariant measures exhibiting Properties (iii) and (iv) are known as physical measures (Young 2002); in such cases, the set \({\mathcal {U}}\) is called a basin of \(\mu \). Clearly, Properties (i)–(iv) are satisfied if \( \Phi ^t : X \rightarrow X \) is a flow on a compact manifold with an ergodic invariant measure supported on the whole of X, but are also satisfied in more general settings, such as certain dissipative flows on noncompact manifolds [e.g., the Lorenz 63 system on \(X={\mathbb {R}}^3\) (Lorenz 1963)]. Assuming further that the measures \( \nu _S\) associated with the sampling points \(y_0,\ldots , y_{S-1} \) and the corresponding quadrature weights \( \beta _{0,S}, \ldots , \beta _{S-1,S} \) on the spatial domain Y converge weakly to \(\nu \), i.e., \( \lim _{S\rightarrow \infty } \int _Y g \, \mathrm{d}\nu _S = \int _Y g \, \mathrm{d}\nu \) for every \( g \in C(Y)\), the conditions in Theorem 4 are met with \( {\mathcal {V}} = \overline{{\mathcal {U}}} \times Y \), and the measures \( \rho _{NS}\) constructed as described in Sect. 5.1 for any starting state \(x_0 \in {\mathcal {U}}\).
Under these conditions, the data-driven spatiotemporal patterns \( \phi _{NS,j}\) recovered by VSA converge for an experimentally accessible set of initial states in X.

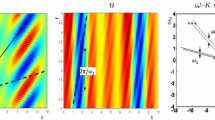

6 Application to the Kuramoto–Sivashinsky Model

6.1 Overview of the Kuramoto–Sivashinsky Model

The KS model, originally introduced as a model for wave propagation in a dissipative medium (Kuramoto and Tsuzuki 1976), or laminar flame propagation (Sivashinsky 1977), is one of the most widely studied dissipative PDE models displaying spatiotemporal chaos. On a one-dimensional spatial domain \( Y = [ 0, L ], L \ge 0 \), the governing evolution equation for the real-valued scalar field \( u( t, \cdot ) : Y \rightarrow {\mathbb {R}} \), \( t \ge 0 \) is given by

where \( \nabla \) and \( \Delta = - \nabla ^2 \) are the derivative and (positive definite) Laplace operators on Y, respectively. In what follows, we always work with periodic boundary conditions, \( u( t, 0 ) = u(t, L) \), \( \nabla u(t, 0 ) = \nabla u( t, L ) \), ..., for all \(t \ge 0 \).

The domain size parameter L controls the dynamical complexity of the system. At small values of this parameter, the trivial solution \(u=0\) is globally asymptotically stable, but as L increases, the system undergoes a sequence of bifurcations, marked by the appearance of steady spatially periodic modes (fixed points), then traveling waves (periodic orbits), and progressively more complicated solutions leading to chaotic behavior for \( L \gtrsim 4 \times 2 \pi \) (Greene and Kim 1988; Armbruster et al. 1989; Kevrekidis et al. 1990; Cvitanović et al. 2009; Takeuchi et al. 2011).
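The role of L can be made concrete with a linear stability count. The sketch below assumes the commonly used form of the KS equation written with ordinary derivatives, \( u_t = -u u_x - u_{xx} - u_{xxxx} \), on a periodic domain of length L; under that assumption, linearizing about \( u = 0 \) gives the growth rate \( q^2 - q^4 \) for the Fourier mode with wavenumber \( q = 2 \pi m / L \). The function names are hypothetical, and the count only describes local (linear) stability of the trivial solution, not the global behavior or the later bifurcations.

```python
import numpy as np

def linear_growth_rates(L, n_modes=64):
    """Growth rates q^2 - q^4 of Fourier modes m = 1..n_modes about u = 0 (assumed KS form)."""
    m = np.arange(1, n_modes + 1)
    q = 2.0 * np.pi * m / L
    return q**2 - q**4

def count_unstable_modes(L, n_modes=64):
    """Number of nonconstant Fourier modes that grow in the linearized dynamics."""
    return int(np.sum(linear_growth_rates(L, n_modes) > 0.0))

small_domain = count_unstable_modes(0.9 * 2.0 * np.pi)   # all wavenumbers exceed 1
larger_domain = count_unstable_modes(3.5 * 2.0 * np.pi)  # modes m = 1, 2, 3 have q < 1
```

With these assumptions, no mode is linearly unstable for \( L < 2\pi \), and the number of unstable modes grows roughly like \( L / (2\pi ) \), consistent with the increase in dynamical complexity with domain size described above.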

A fundamental property of the KS system is that it possesses a global compact attractor, embedded within a finite-dimensional inertial manifold of class \( C^r \), \( r \ge 1 \) (Foias et al. 1986, 1988; Constantin et al. 1989; Jolly et al. 1990; Chow et al. 1992; Robinson 1994). That is, there exists a \(C^r\) submanifold \( {\mathcal {X}} \) of the Hilbert space \( H_Y = L^2( Y, \nu ) \) with \( \nu \) set to the Lebesgue measure, which is invariant under the dynamics, and to which the solutions \( u( t, \cdot ) \) are exponentially attracted. This means that after the decay of initial transients, the number of effective degrees of freedom of the KS system, bounded above by the dimension of \( {\mathcal {X}} \), is finite. Dimension estimates of inertial manifolds (Robinson 1994; Jolly et al. 2000) and attractors (Tajima and Greenside 2002) of the KS system as a function of L indicate that the system exhibits extensive chaos, i.e., unbounded growth of the attractor dimension with L. As is well known, analogous results to those outlined above are not available for many other important models of complex spatiotemporal dynamics such as the Navier–Stokes equations.

For our purposes, the availability of strong theoretical results and rich spatiotemporal dynamics makes the KS model particularly well-suited to test the VSA framework. In our notation, an inertial manifold \( {\mathcal {X}} \) of the KS system will act as the state space manifold X, which is embedded in this case in \( H_Y \). Moreover, the compact invariant set A will be a subset of the global attractor supporting an ergodic probability measure, \( \mu \). On X, the dynamics is described by a \( C^r \) flow map \( \Phi ^t : X \rightarrow X \), \( t \in {\mathbb {R}} \), as in Sect. 2.1. In particular, for every initial condition \( x_0 \in X \), the orbit \( t \mapsto x( t ) = \Phi ^t( x_0 ) \) with \( t \ge 0 \) is the unique solution \(u(t,\cdot )=x(t)\) to (32) with initial condition \( x_0 \). While in practice the initial data will likely not lie on X, the exponential tracking property of the dynamics ensures that for any admissible initial condition \( u \in H_Y \) there exists a trajectory x(t) on X to which the evolution starting from u converges exponentially fast.

As stated in Sect. 5.2, for data-driven approximation purposes, we will formally assume that the measure \( \mu \) is physical. While, to our knowledge, there are no results in the literature addressing the existence of physical measures (with appropriate modifications to account for the infinite state space dimension) specifically for the KS system, recent results (Lu et al. 2013; Lian et al. 2016) on infinite-dimensional dynamical systems that include the class of dissipative systems to which the KS system belongs indicate that analogs of the assumptions made in Sect. 5.2 should hold.