Abstract

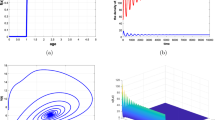

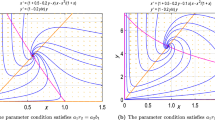

We study a class of Lotka–Volterra stochastic differential equations with continuous and pure-jump noise components, and derive conditions that guarantee the strong stochastic persistence (SSP) of the populations engaged in the ecological dynamics. More specifically, we prove that, under certain technical assumptions on the jump sizes and rates, there is convergence of the laws of the stochastic process to a unique stationary distribution supported far away from extinction. We show how the techniques and conditions used in proving SSP for general Kolmogorov systems driven solely by Brownian motion must be adapted and tailored in order to account for the jumps of the driving noise. We provide examples of applications to the case where the underlying food-web is: (a) a 1-predator, 2-prey food-web, and (b) a multi-layer food-chain.

Acknowledgements

I would like to thank my Ph.D. adviser Dr. Rolando Rebolledo for his supportive and attentive guidance during the preparation of the first draft of this article.

Funding

The author has been supported by ANID, ex-CONICYT, through Beca de Doctorado Nacional, 21170406, convocatoria 2017. This research was partially funded by project ANID-FONDECYT 1200925. The author declares that he has no financial or personal relationship with other people or organizations that could inappropriately influence or bias the content of this paper.

Ethics declarations

Conflicts of interest

The author declares that he has no conflicts of interest.

Appendix: Deferred proofs

Proof of Lemma 1

Since \((\mathbf{A}, \mathbf{B})\) is feasible, consider the vector \(\mathbf{c}\) given by Definition 1. We will prove that there exist positive constants \(K_1\), \(K_2\) such that:

for every \(\mathbf{x}\in {\mathbb {R}}^n_{+}\). Indeed:

where we have used Jensen’s inequality to obtain the last line. Thus:

Of course, there exists \(R> 0\) such that \(\Vert \mathbf{x}\Vert > R\) implies \(\dfrac{1}{\sum _{i=1}^{n}x_i} < 1\). Define \(\tilde{K}_1= \dfrac{ \max _{i=1, \ldots , n} \left\{ c_i b_i \right\} }{\min _{i=1, \ldots , n} c_i}\) and \(K_2= \dfrac{\min _{i=1, \ldots , n} \left\{ c_i a_{ii} \right\} }{n (1+\max _{i=1,\ldots , n} c_i)} \). Thus, for \(\Vert \mathbf{x}\Vert > R\), we have:

Observe that the right hand side is a continuous function of \(\mathbf{x}\). Let:

Then, (48) holds with \(K_1:=\max \{\tilde{K}_1, r_1+r_2\}\). \(\square \)
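As a numerical illustration, the following minimal sketch evaluates the constants \(\tilde{K}_1\) and \(K_2\) defined in the proof above. The function name `lemma1_constants` and the sample values are hypothetical, and the sketch assumes that \(c_i\), \(b_i\) and \(a_{ii}\) are supplied as positive numbers, as the definitions suggest.

```python
def lemma1_constants(c, b, a_diag):
    """Evaluate K~_1 and K_2 as defined in the proof of Lemma 1.

    c      : positive weights c_i (the vector from Definition 1)
    b      : coefficients b_i
    a_diag : coefficients a_ii (assumed positive in this sketch)
    """
    n = len(c)
    if not (len(b) == n and len(a_diag) == n):
        raise ValueError("c, b and a_diag must have the same length")
    # K~_1 = max_i {c_i b_i} / min_i c_i
    k1_tilde = max(ci * bi for ci, bi in zip(c, b)) / min(c)
    # K_2 = min_i {c_i a_ii} / (n (1 + max_i c_i))
    k2 = min(ci * ai for ci, ai in zip(c, a_diag)) / (n * (1 + max(c)))
    return k1_tilde, k2


# Hypothetical two-species example.
k1_tilde, k2 = lemma1_constants([1.0, 2.0], [3.0, 0.5], [1.0, 1.0])
print(k1_tilde, k2)
```

The final constant \(K_1\) in the lemma additionally depends on \(r_1\) and \(r_2\), which this sketch does not compute.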

Proof of Lemma 2

Let \(V: {\mathbb {R}}^n_{++} \mapsto {\mathbb {R}}_{+}\) be the function defined by:

where the \(c_i\) are the constants guaranteed by Assumption 1. Then V is log-Lyapunov and for \(\mathbf{x}\in {\mathbb {R}}^n_{++}\):

By Assumption 2, Taylor’s theorem, and Assumption 3, the last term is bounded above. Moreover, since the intraspecies interactions are negative, the above expression is not greater than:

for some positive constants \(K_1, K_2, K_3\). Now, the last sum is non-negative, by definition of feasibility. Altogether, there exist positive constants \(K_1\), \(K_2\), \(K_3\) such that:

We conclude that there exists a constant \(M> 0\) such that on \({\mathbb {R}}^n_{++}\):

If we set:

then

Since V is continuous, \(V_k\) is open, and thus

is an \({\mathcal {F}}_t\)-stopping time. Dynkin’s formula applied to V gives:

Fix \(N \in {\mathbb {N}}\), and consider the set:

Define:

Observe that for every \(k \ge 1\):

where the second-to-last line follows from the fact that \(\mathbf{X}\) has a.s. right-continuous paths and from relation (51). This last inequality and (52) give:

and thus necessarily \(\epsilon _N={\mathbb {P}}_{\mathbf{X}_0}(\varOmega _N)=0\) for every \(N \in {\mathbb {N}}\). Consequently,

and this concludes the proof. \(\square \)

Recall that \((P_t: t\ge 0)\) is the semigroup associated to the process \(\mathbf{X}\), i.e.,

for bounded measurable real functions f.
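For intuition, \(P_t f(\mathbf{x})\) can be approximated by Monte Carlo simulation. The sketch below is illustrative only and is not the construction used in the paper. It assumes a special case of the model: drift \(x_i (B_i+\sum _j A_{ij}x_j)\), diffusion \(x_i \sigma _i\), and a jump part given by a single compensated Poisson process of finite rate `lam` with constant relative jump sizes `gamma[i] > -1`. It applies an Euler step to \(\log X_i\) so that simulated paths stay positive. All names (`simulate_endpoint`, `semigroup_mc`, `lam`, `gamma`) are hypothetical.

```python
import math
import random


def simulate_endpoint(x0, B, A, sigma, lam, gamma, t, steps, rng):
    """One approximate sample of X_t started at x0 (Euler steps on log X).

    Assumed dynamics (illustrative special case, not the general model):
      dX_i = X_i (B_i + sum_j A_ij X_j) dt + X_i sigma_i dW_i
             + X_i gamma_i (dN - lam dt),
    with N a Poisson process of rate lam and gamma_i > -1.
    """
    n = len(x0)
    dt = t / steps
    log_x = [math.log(v) for v in x0]
    for _ in range(steps):
        x = [math.exp(v) for v in log_x]
        # At most one jump per step: a first-order approximation in dt.
        jump = rng.random() < lam * dt
        for i in range(n):
            growth = B[i] + sum(A[i][j] * x[j] for j in range(n))
            # Ito correction and jump compensator enter the drift of log X_i.
            drift = growth - 0.5 * sigma[i] ** 2 - lam * gamma[i]
            noise = sigma[i] * math.sqrt(dt) * rng.gauss(0.0, 1.0)
            log_x[i] += drift * dt + noise
            if jump:
                log_x[i] += math.log1p(gamma[i])
    return [math.exp(v) for v in log_x]


def semigroup_mc(f, x0, B, A, sigma, lam, gamma, t,
                 steps=200, samples=500, seed=0):
    """Monte Carlo estimate of P_t f(x0) = E_{x0}[f(X_t)]."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        total += f(simulate_endpoint(x0, B, A, sigma, lam, gamma, t, steps, rng))
    return total / samples


# Hypothetical prey-predator parameters; f is the total population size.
B = [1.0, -0.5]
A = [[-1.0, -0.5], [0.5, -0.2]]
est = semigroup_mc(lambda x: x[0] + x[1], [0.5, 0.5], B, A,
                   [0.1, 0.1], 1.0, [-0.2, -0.1], t=1.0)
print(est)
```

Working in logarithmic coordinates is a design choice of this sketch: a plain Euler step on \(X_i\) can leave the positive orthant, whereas the exponential of a real number cannot.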

Proof of Lemma 3

Let \(F_{i}(\mathbf{x})=x_{i} (B_i+\sum _{j=1}^n A_{ij}x_j ) \), \(G_{i}(\mathbf{x})= x_{i}\sigma _{i}\), and \(H_i(\mathbf{x}, \mathbf{z})= x_i L_{i}(\mathbf{x}, \mathbf{z})\). For two initial conditions \(\mathbf{x}, \mathbf{y}\), let \(\ {^\mathbf{x}}{\tilde{\mathbf{X}}}{}\) and \(\ {^\mathbf{y}}{\tilde{\mathbf{X}}}{}\) be the solutions of (2) in the natural coupling, i.e., driven by the same Lévy noise \((\mathbf{W}, \tilde{N})\). Let \(D_{k}:= \{\mathbf{x}\in {\mathbb {R}}^n_+: \Vert \mathbf{x}\Vert \le k\}\), and set \(\eta _k:=\inf \{ t\ge 0: \ {^\mathbf{x}}{\tilde{\mathbf{X}}}{_t} \in D^C_k \text { or } \ {^\mathbf{y}}{\tilde{\mathbf{X}}}{_t} \in D^C_k \}\). For \(\mathbf{u}=\mathbf{x}\text { or } \mathbf{y}\) define:

Observe that \(\mathbf{F}=(F_{1}, F_2, \ldots , F_n) \) is locally Lipschitz continuous, and thus for every \(k\in {\mathbb {N}}\) there exists a constant \(M_k\) such that for \(\mathbf{x}, \mathbf{y}\in D_k\):

Fix \(T\ge 0\). For any time \(0 \le t \le T\) define \(t_{k}:= t \wedge \eta _k\). For \(t \le T_k\):

The Cauchy–Schwarz inequality yields:

On \(s \le T_k\), by (53) we have that:

and trivially, for \(s \le T_k\):

Let \(\tilde{M}_1\) be a Lipschitz constant for G. By the Burkholder–Davis–Gundy inequality, the Lipschitz continuity of G, and Fubini’s theorem:

Analogously, for the jump component, we have:

where the constant \(\tilde{M}_2\) is guaranteed by Assumption 3(c). Thus, if we take the supremum over \(0 \le t \le T_{k}\) and then take expectations on both sides of inequality (54), we obtain:

where C is a universal constant and \((C_k: k\ge 0)\) is an increasing positive sequence. We conclude by Grönwall’s lemma that:

Now, let \(f: {\mathbb {R}}^n_{+} \mapsto {\mathbb {R}}\) be a bounded continuous function, and fix \(\mathbf{x}\in {\mathbb {R}}^n_{+}\), \(t \ge 0\), \(\varepsilon > 0\). Let \(r > 0\) be such that \( \bar{B} ( \mathbf{x}, r ) \subset {\mathbb {R}}^n_{++}\). Plainly, for \(\mathbf{y}\in \bar{B} ( \mathbf{x}, r)\):

On the other hand, observe that from inequality (52), we deduce that for \(\mathbf{y}\in \bar{B}(\mathbf{x}, r)\):

and thus, we can choose a \(k_0\) such that uniformly on \(\mathbf{y}\in \bar{B} (\mathbf{x}, r)\), we have:

Since f is continuous, it is uniformly continuous on the compact set \(D_{k_0}\). Let \(\delta : {\mathbb {R}}_{+} \mapsto {\mathbb {R}}_{+}\) be a modulus of continuity of f on \(D_{k_0}\), i.e. a function such that for every \(\mathbf{y}_0 \in D_{k_0}\), \(f(B(\mathbf{y}_0, \delta (\varepsilon )) \cap D_{k_0}) \subseteq B (f(\mathbf{y}_0), \varepsilon )\). Let \(\varDelta = \delta (\varepsilon /3)\). Then, again for \(\mathbf{y}\in \bar{B} (\mathbf{x}, r)\):

Using (56) and Markov’s Inequality, the last term is not greater than \( \Vert \mathbf{x}-\mathbf{y}\Vert ^2 \dfrac{2 C \Vert f \Vert e ^{C_k t}}{\varDelta ^2} \) and this is smaller than \(\varepsilon /3\) for every \(\mathbf{y}\) in the open ball \(B \left( \mathbf{x}, r \wedge \dfrac{\sqrt{\varepsilon }\varDelta }{\sqrt{6 C \Vert f \Vert e^{C_{k}t}}} \right) \). The result follows. \(\square \)
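The radius of the ball in the last step of the proof of Lemma 3 is obtained by solving the bound for \(\Vert \mathbf{x}-\mathbf{y}\Vert \). Written out with only the quantities already introduced, and assuming \(\Vert f \Vert > 0\) (the case \(f \equiv 0\) being trivial):

```latex
\[
\Vert \mathbf{x}-\mathbf{y}\Vert ^2 \,
\dfrac{2 C \Vert f \Vert e^{C_k t}}{\varDelta ^2} < \dfrac{\varepsilon }{3}
\iff
\Vert \mathbf{x}-\mathbf{y}\Vert ^2 <
\dfrac{\varepsilon \varDelta ^2}{6 C \Vert f \Vert e^{C_k t}}
\iff
\Vert \mathbf{x}-\mathbf{y}\Vert <
\dfrac{\sqrt{\varepsilon }\, \varDelta }{\sqrt{6 C \Vert f \Vert e^{C_k t}}} .
\]
```

Taking the minimum with \(r\) keeps \(\mathbf{y}\) inside \(\bar{B}(\mathbf{x}, r)\), where the earlier estimates hold.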

Proof of Lemma 9

For fixed \(\alpha > 0\) set \(\varrho (\alpha ; \mathbf{x})= \exp \{\alpha (1+\mathbf{c}^T \mathbf{x}) \}\). An easy computation shows that:

Fix \(\alpha _1> 0\) such that for \(i=1, \ldots , n\):

and observe that for every \(0 \le \alpha \le \alpha _1\), our choice of the \(c_i\), Assumptions 3 and 5 imply that for every \(\delta > 0\) there exists \(M> 0\) such that:

whenever \(\alpha \) is small enough. Just as in the proof of Lemma 5, Dynkin’s formula yields:

for \(0 \le \alpha \le \alpha _1\). Consider the random variables \(\ {^\mathbf{x}}{U}{_t} = \varrho (\alpha _1, \ {^\mathbf{x}}{\mathbf{X}}{_t})\). Since \(\varrho (\alpha ; \cdot )\) is continuous, De la Vallée Poussin’s Lemma implies that for every compact set \(K \subset {\mathbb {R}}^n_{+}\) and \(T > 0\) the family:

is a uniformly integrable (UI) family of random variables, written:

Now, by our assumptions on \(\mathbf{L}\):

for some positive constant C. Consequently, there exists \(\alpha _0\) such that for \(\alpha < \alpha _0\):

Consequently, there exists \(R_1\) such that whenever \(\varrho (\alpha _1, \mathbf{x})> R_1\) and \(\alpha < \alpha _0\):

Next, consider a sequence \((\mathbf{x}_{k})\) converging to \(\mathbf{x}\in {\mathbb {R}}^n_{+}\), and set \(\varepsilon > 0\). Fix \(T > 0\). Since \(\mathbf{x}_{k}\) is convergent, there exists a compact set K containing \((\mathbf{x}_k)\) and \(\mathbf{x}\). By the UI property (60), there exists \(R_2 >0\) such that for every \(\mathbf{y}\in K\) and \(0 \le t \le T\):

Fix \(R_3 = \max \{R_1, R_2\}\), and let \(\eta : {\mathbb {R}}^n_{+}\mapsto [0,1]\) be a smooth function such that \(\eta (\mathbf{x})=1\) for \(\varrho (\alpha _1; \mathbf{x})< R_3\) and \(\eta (\mathbf{x})=0\) for \(\varrho (\alpha _1; \mathbf{x}) > 2 R_3\). Now, for \(\alpha < \alpha _0\), \(0\le t \le T\) and \(k \in {\mathbb {N}}\):

The \({\mathcal {C}}_b\)-Feller property (Lemma 3) applies to the first term on the right, and thus there exists a \(k_0 > 0\) such that for every \(k \ge k_0\) it is smaller than \(\varepsilon /2\). As for the second term, observe that:

Of course, an analogous inequality holds for the terms \({\mathbb {E}}_{\mathbf{x}_k}(\cdot )\). This concludes the proof. \(\square \)
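A cutoff \(\eta \) with the properties used in the proof of Lemma 9 can be built explicitly. The sketch below uses the standard smooth-step construction based on \(s \mapsto e^{-1/s}\). It is one possible choice, not necessarily the one the author has in mind, and the names `rho`, `smooth_step` and `make_cutoff` are hypothetical.

```python
import math


def rho(alpha, c, x):
    """rho(alpha; x) = exp(alpha * (1 + c^T x))."""
    return math.exp(alpha * (1.0 + sum(ci * xi for ci, xi in zip(c, x))))


def _g(s):
    # Smooth on the real line: zero for s <= 0, positive for s > 0.
    return math.exp(-1.0 / s) if s > 0.0 else 0.0


def smooth_step(s):
    """Smooth step: 0 for s <= 0, 1 for s >= 1, strictly between otherwise."""
    # The denominator is never zero because s > 0 or 1 - s > 0 always holds.
    return _g(s) / (_g(s) + _g(1.0 - s))


def make_cutoff(alpha1, c, r3):
    """Return eta with eta = 1 where rho < r3 and eta = 0 where rho > 2 * r3."""
    def eta(x):
        return smooth_step((2.0 * r3 - rho(alpha1, c, x)) / r3)
    return eta


# Hypothetical values: alpha_1 = 0.1, c = (1, 1), R_3 = 5.
eta = make_cutoff(0.1, [1.0, 1.0], 5.0)
print(eta([0.0, 0.0]), eta([18.5, 0.0]), eta([30.0, 0.0]))
```

Since \(\varrho (\alpha _1; \cdot )\) is smooth and the step function is smooth, the composition is smooth, takes values in \([0,1]\), and interpolates between the two regions.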

Cite this article

Videla, L. Strong stochastic persistence of some Lévy-driven Lotka–Volterra systems. J. Math. Biol. 84, 11 (2022). https://doi.org/10.1007/s00285-022-01714-6

DOI: https://doi.org/10.1007/s00285-022-01714-6

Keywords

- Ecological models

- Stochastic Lotka–Volterra systems

- Lévy-driven SDE

- Strong stochastic persistence

- Food-chains