Abstract

In a physiologically structured population model (PSPM) individuals are characterised by continuous variables, like age and size, collectively called their i-state. The world in which these individuals live is characterised by another set of variables, collectively called the environmental condition. The model consists of submodels for (i) the dynamics of the i-state, e.g. growth and maturation, (ii) survival, (iii) reproduction, with the relevant rates described as a function of (i-state, environmental condition), (iv) functions of (i-state, environmental condition), like biomass or feeding rate, that integrated over the i-state distribution together produce the output of the population model. When the environmental condition is treated as a given function of time (input), the population model becomes linear in the state. Density dependence and interaction with other populations are captured by feedback via a shared environment, i.e., by letting the environmental condition be influenced by the populations’ outputs. This yields a systematic methodology for formulating community models by coupling nonlinear input–output relations defined by state-linear population models. For some combinations of submodels an (infinite dimensional) PSPM can without loss of relevant information be replaced by a finite dimensional ODE. We then call the model ODE-reducible. The present paper provides (a) a test for checking whether a PSPM is ODE-reducible, and (b) a catalogue of all possible ODE-reducible models given certain restrictions, to wit: (i) the i-state dynamics is deterministic, (ii) the i-state space is one-dimensional, (iii) the birth rate can be written as a finite sum of environment-dependent distributions over the birth states weighted by environment-independent ‘population outputs’. So under these restrictions our conditions for ODE-reducibility are not only sufficient but in fact necessary.
Restriction (iii) has the desirable effect that it guarantees that the population trajectories are after a while fully determined by the solution of the ODE so that the latter gives a complete picture of the dynamics of the population and not just of its outputs.


1 Introduction

From the very beginning of community modelling, ordinary differential equations (ODEs) have been its main tool. This is so notwithstanding the fact that, much earlier, Euler (1760) and other mathematicians working on population dynamics had already considered age-structured models; see Bacaër (2008, 2011) and Gyllenberg (2007) for more information on the history of population dynamics. There were probably two causes: the architects of the initial flurry in the nineteen-twenties and thirties (cf. Scudo and Ziegler 1998) and their successors like MacArthur and May (cf. Kingsland 1995) got their inspiration from the successes of physics, which is dominated by differential equations, and ODEs are rather easier to write down and analyse than, e.g., integral equations. However, the assumptions needed to arrive at ODEs generally match biological reality less closely, and give these models more of a toy character: good for getting new ideas, but difficult to match in any detail to concrete ecological systems. That age may well matter in such systems is something mathematicians also know from their immediate experience: few women give birth before the age of ten, and while most humans nowadays reach their seventieth birthday, still few live beyond a century. For this reason, many mathematical modellers turned to age as a structuring variable, even in the non-linear realm. However, for ectotherms, that is, all organisms other than mammals and birds, size usually matters far more than age (cf. de Roos and Persson 2013). We have spent considerable effort in the past to develop tools for studying general physiologically structured models, in the hope of gradually overcoming the surviving endotherm bias of the modelling community. Yet ODE models remain paramount as didactical tools and for the initial exploration of so far unexplored mechanisms, notwithstanding the disadvantage that in these models individual-level mechanisms generally can only be fudged instead of faithfully represented.
Given this situation, it becomes important to explore how ODE models fit among the physiologically structured ones. Of course, there is the boringly simple embedding of the unrealistic case where individuals indeed have only a single, or at most a few, possible states.

Example 1.1

Consider a size-structured population with individual size (biomass) denoted as x, starting from a birth size \(x_b\), individual growth rate g(x, E), E a resource density, per capita birth rate \(\beta (x,E)\), and per capita death rate \(\mu (x,E)\). For such populations, if

the population biomass B per unit of spatial area or volume (below to be abbreviated as just volume) satisfies

To see this, observe that the left hand side of (1.1) corresponds to the contribution to the change in population biomass by an individual of size x expressed as fraction of its own biomass. So if we integrate this term over the biomass density over size (and volume), say xn(t, x), n the numeric (per unit of volume) size density, we get the total change in biomass (per unit of volume), (cf. de Roos et al. 2013).
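The verbal argument above can be written out as a short calculation. What follows is a sketch under assumptions that the text suggests but does not spell out here: the density n obeys the standard transport equation \(\partial n/\partial t+\partial (gn)/\partial x=-\mu n\) with renewal condition \(g(x_b,E)n(t,x_b)=\int _{x_b}^\infty \beta (x,E)n(t,x)dx\), the product xgn vanishes at the upper end of the size range, and the size-independent rate is given the hypothetical name r(E). The condition on the ingredients then reads

$$\begin{aligned} \frac{g(x,E)}{x}+\frac{x_b\,\beta (x,E)}{x}-\mu (x,E)=r(E)\quad \text {for all } x, \end{aligned}$$

and, with \(B(t)=\int _{x_b}^\infty x\,n(t,x)dx\), integration by parts gives

$$\begin{aligned} \frac{dB}{dt}&=x_b\,g(x_b,E)\,n(t,x_b)+\int _{x_b}^\infty \bigl (g(x,E)-x\mu (x,E)\bigr )n(t,x)dx\\ &=\int _{x_b}^\infty \left( \frac{g(x,E)}{x}+\frac{x_b\,\beta (x,E)}{x}-\mu (x,E)\right) x\,n(t,x)dx=r(E)B. \end{aligned}$$

The first term in the first line is the biomass entering through births, which the renewal condition turns into \(x_b\int \beta n\,dx\).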

If moreover

(i) the per capita contribution to the “consumptive pressure on a resource unit” is a product of an individual’s size and a size-independent functional response based component, say, f(E) / E,

(ii) all other populations in the community are similarly affected only by our focal population’s biomass, and

(iii) we ourselves are also only interested in this quantity,

then we can for all practical purposes represent our focal population by no more than its biomass.

We call (1.2) an ODE-reduction of the size-structured population model.

The question then naturally arises whether this example of ODE-reducibility is essentially the only one, that is, the only one up to coordinate choices (for instance, in the case of isomorphs, using not biomass but its scaled cube root, length). The following example shows this not to be the case.

Example 1.2

Daphnia models. Now let in the wake of (Kooijman and Metz 1984) and (de Roos et al. 1990) size be represented by length, starting from a size \(x_b\) at birth, the growth rate be given by \(g(x,E)=\delta f(E) -\varepsilon x\), the per capita birth rate by \(\beta (x,E)=\alpha f(E)x^2\), the per capita death rate by \(\mu \), and the per capita resource consumption by \(f(E)x^2\). (This means that individual biomass, w, scaled to be equal to \(x^3\), grows as \(3\delta f(E)w^{2/3}-3\varepsilon w\), that is, mass intake is taken to be proportional to surface area and metabolism to biomass.) Let n(t, x) again denote the numeric size-density. Now define

that is, \(N_0\) is the total population size, \(N_1\) the total population length, \(N_2\) the total population surface area, \(N_3\) the total population biomass, all per unit of volume. Then

etc. If the only other component of the community is an unstructured resource, and we need no further output from the population than its total biomass per unit of volume, we can combine (1.4) with

into a sufficient representation of our community model.

The differential equation for \(N_0\) is obvious, and so is the first term in the differential equation for \(N_1\). To understand the second term observe that g consists of two terms, the first of which is size independent. This term makes all individuals of the population increase their length at a rate \(\delta f(E)\). We get the corresponding increase in the total population length by multiplying this \(\delta f(E)\) with the total population density. The \(\varepsilon \) in the last term also derives from the length growth except that the corresponding term in g contains a factor x. When we account for this x when calculating the integral over n we get \(N_1\). The differential equations for the other \(N_i\) follow in a similar manner.
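The bookkeeping in the preceding paragraph can be condensed into one formula. This is a sketch, assuming that the moments are defined as \(N_i(t)=\int _{x_b}^\infty x^i n(t,x)dx\) (in line with the verbal description of \(N_0\) to \(N_3\)), that n obeys the usual transport equation with all newborns entering at \(x_b\), and that boundary terms at large x vanish. Multiplying the transport equation by \(x^i\) and integrating by parts then gives

$$\begin{aligned} \frac{dN_i}{dt}=x_b^{\,i}\,\alpha f(E)N_2+i\,\delta f(E)N_{i-1}-(\mu +i\varepsilon )N_i,\qquad i=0,1,2,3, \end{aligned}$$

where the term with \(N_{i-1}\) is absent for \(i=0\). For \(i=1\) the three terms are, in order, the length added by newborns, the size-independent part of growth acting on all \(N_0\) individuals, and the combined loss through death and the size-proportional part of g, as described above. Since only \(N_0,\dots ,N_3\) and \(N_2\) occur on the right hand side, the four equations form a closed system once E is given.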

ODE-reducibility of age-structured models and, slightly more generally, of distributed delay systems, has been investigated since the mid 1960s (Vogel 1965; Fargue 1973, 1974; Gurtin and MacCamy 1974, 1979a, b; McDonald 1978, 1989).

It has already been known for a long while that there also exist more realistic cases, where for instance a size-structured model allows a faithful representation in ODE terms (Murphy 1983; Cushing 1989; Metz and Diekmann 1991).

The next question is then whether we can characterise the set of all possible cases. For the practically important subset of cases where the population birth rate figures on the list of population outputs and with a single state variable on the level of the individuals, the last author solved this problem on a heuristic level already in 1989 during a holiday week in summer spent at the Department of Applied Physics of the University of Strathclyde. An allusion to this was given in a “note added in print” to the paper (Metz and Diekmann 1991). However, it took till now before we together had plugged all the minor holes in the proof. Below you find the result.

2 Preview of Sects. 3 to 6

In this section we give a preview of the main content of the paper, first for theoretical biologists and probabilists and then for all kinds of mathematicians. The much shorter paper (Diekmann et al. 2019) provides additional examples and may serve as a more friendly user guide to ODE-reducibility of structured population models.

2.1 Mainly for theoretical biologists and probabilists: the context of discovery

2.1.1 Biological context

The term “physiologically structured population models” (PSPM) refers to large system size limits of individual-based models where (i) individuals are differentiated by physiological states, e.g. \(x = (\mathrm {size, age})\), referred to as i(ndividual)-states, (ii) the world in which these individuals live is characterized by a set of variables collectively called environmental condition, to be denoted as E. [Hard proofs for the limit conjectures are still lacking except for age-based models (cf. Tran 2008, and the references therein), and more recently also for a class of simple (size,age)-based ones (Metz and Tran 2013).] Sections 2.2 and 3 go into how these deterministic models can be specified by means of equations.

The i-level model ingredients are a set of feasible i-states, \(\varOmega \subset \mathbb {R}^m\), \(m \in {\mathbb {N}}\), and, most commonly,

(i) a rate of i-state change taken to be deterministic, g(x, E),

(ii) a death rate, \(\mu (x,E)\), and

(iii) a birth rate, \(\beta (x,E)\).

In the general case \(\beta (x,E)\) has as value a distribution over \(\varOmega \). However, from a mathematical perspective it is preferable to use instead of “distribution” the term “measure” as this is more encompassing, and the birth rate does not total to one and may consist of a mixture of discrete and continuous distributions. (Actually, we should even be a bit more general and talk about a signed measure as a cell that divides generates a measure over the states where the daughters may land plus a compensating negative mass, equal to minus the division rate, at the state of the mother.)

Notational convention. The value of \(\beta (x,E)\) for the measurable set \(\omega \subset \varOmega \) is denoted by \(\beta (x,E,\omega )\). A similar convention applies to other measure valued functions.

The p(opulation)-state then is a measure m on \(\varOmega \). However, on many occasions it suffices to think in terms of just densities n on \(\varOmega \), or \(n\in L^1(\varOmega )\) in the mathematicians’ jargon. As a consequence of how the rates are specified, when E is given as a function of time (below to be looked at as input) the individuals are independent (except for a possible dependence of their birth state on the state of their parents), and hence the dynamics of the p-state is linear.

The more interesting case is when E is determined by the surrounding community. Community models are sets of population models coupled through a common E. This leads to c(ommunity)-state spaces that are products of the state spaces of the comprised species, times the state spaces of any non-living resources. The mass action principle tells that generally E can be calculated by applying a linear map to the c-state, like when a predation pressure equals the sum of the predation pressures exerted by all individuals in the community. This leads us to the final set of ingredients of a population model:

(iv) functions of (x, E), like biomass or per capita feeding rate, that when cumulated over all individuals produce components of the population output.

Side remarks on terminology: In our context, each output component is thus obtained by taking the integral of the p-state over \(\varOmega \) after multiplying it with a, possibly E-dependent, function of the i-state variables. This function specifies the relationship between the i-state and the property that we want to measure, e.g., biomass as a function of length. In mathematical jargon we say that the output components are obtained by applying a linear functional, i.e., a linear map from the p-state space to the real numbers. The corresponding function will be referred to as weight function, and for a p-state m and weight function \(\psi \), the corresponding map will be written as \(\left\langle {m,\psi } \right\rangle \).

The population dynamical behaviour of individuals can almost never be captured in terms of a finite number of i-states. Yet, ecological discourse is dominated by ODE models. This leads to the philosophical question of which of these models can be justified from the more realistic physiologically structured populations perspective. On the more pragmatic side there is moreover the problem that in community biology PSPMs usually become too difficult to handle for more than two or three species. This leads to the complementary question whether there are relevant choices of model ingredients for which a PSPM can be represented by a low dimensional system of ODEs. To answer these questions we looked at populations as state-linear input–output relations, with E as input, and as output a population’s contribution to E as well as anything that ‘a client’ may want to keep track of. The key question addressed in this paper is thus: under what conditions on the model ingredients is it possible to obtain the same input–output relation when the PSPM is replaced by a finite dimensional ODE? (This representation may have an interpretation in its own right, but this is not required.) If such a representation is possible, we say that the population model is reduced to the ODE or that the input–output relation is realised by it.

2.1.2 The mathematical question

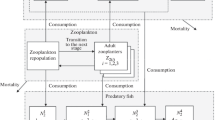

Our starting point thus are models that can be represented as in the following diagram.

In Fig. 1Y is the p-state space and \({\mathbb {R}}^r\) the output space. E is the time course of the environment and \(U_E^c (t,s)\) the (positive) linear state transition map with \(s,\; t\) the initial and final time. (The upper index c here refers to the mathematical construction of the p-state, explained in Sect. 3, through the cumulation of subsequent generations.) Finally O(E(t)) is the linear output map. The mathematical question then is under which conditions on the model ingredients it is possible to extend the diagram in Fig. 1 (for all \(E,\; t,\; s\)) to the following diagram.

Here P is a linear map, \(\varPhi _E (t,s)\) a linear state transition map (which should be differentiable with respect to t) and Q(E(t)) a linear output map. The dynamics of the output cannot be generated by an ODE when the space spanned by the output vectors at a given time is not finite dimensional. Hence ODE reducibility implies that there exists an r such that the outputs at a given time can be represented by \({\mathbb {R}}^r\). (Below we drop the time arguments to diminish clutter, except in statements that make sense only for each value of the argument separately, or when we need to refer to those arguments.) Moreover, the biological interpretation dictates that

where m is the p-state and the components of the vector \(\varGamma (E)\) are functions \(\gamma _i(E): \varOmega \rightarrow \mathbb {R}\).

Thanks to the linearity of \(U^c_E (t,s)\) and O(E(t)) we can without loss of generality assume P, \(\varPhi _E (t,s)\) and Q(E(t)) to be linear. Moreover, ODE reducibility requires that P can be written as \(Pm = \left\langle {m,\varPsi } \right\rangle \) with \(\varPsi =(\psi _1,\dots ,\psi _k)^\mathrm {T}\), \(\psi _i:\varOmega \rightarrow \mathbb {R}\), where the \(\psi _i\) should be sufficiently smooth to allow

(The last expression comes from combining \({{d\varPhi _E (t,s)} \big /{dt}} = K(E(t))\varPhi _E (t,s)\) and \(\varPhi _E (t,t)=I\).) Finally, we should have \(O(E)=Q(E)P\), and therefore \(\varGamma (E)=Q(E)\varPsi \), implying that the output weight functions should be similarly smooth. (The precise degree of smoothness needed depends on the other model ingredients in a manner that is revealed by the TEST described in Sect. 2.1.4.)

2.1.3 A tool

The main tool in the following considerations is the so-called backward operator, to be called \(\bar{A}(E)\), as encountered in the backward equation of Markov process theory and thereby in population genetics, used there to extract various kinds of information from the process without solving for its transition probabilities. What matters here is that the backward operator summarises the behaviour of the “clan averages” \(\bar{\psi } (t,s)\) of weight functions \(\psi \) (such as occur in \(Pm = \left\langle {m,\varPsi } \right\rangle \)), defined by

where \({m(t,s;\delta _{x } )}\) is the p-state resulting at t from a p-state corresponding to a unit mass \(\delta _x\) at x at time s (hence the term clan average), in the sense that

For further use we moreover note that

independent of s, which on differentiating for s gives

(When E does not depend on s, (2.5) also holds good for \(t\ne s\), leading to the perhaps more familiar form of the backward equation: \(d\bar{\psi } /dt = \bar{A}\bar{\psi }\).)

One reason to start from (2.2) is that it leads to simple interpretation-based heuristics for calculating backward operators, which we shall discuss a little later on. (More abstract and rigorous functional analytical counterparts to the intuitive interpretation-based line of argument developed here can be found in Sects. 2.2 and 4.) The reason for bringing in the backward operator is that it provides the counterpart in the space of weight functions \(\psi \) of the time differentiation in (2.1). To see that, the perspective sketched so far still needs a slight extension. A look at (2.2) shows that we can also interpret the \({\bar{\psi }}(t,s)\) as weight functions in their own right that can be paired with \(\delta _x\) as \(\left\langle {\delta _x,\bar{\psi } (t,s) } \right\rangle \,(\,=\bar{\psi } (t,s)(x )\,)\). Moreover, thanks to the linearity of (2.2), (2.3) and the p-state transition maps \(U_E^c (t,s)\) and the consequent linearity of \(m(t,s;\cdot )\), we can extend the action of these candidate functionals to more general measures \(m_s\) at time s, and in this way calculate \(\int _\varOmega {\psi (\xi )m(t,s;m_s,d\xi )}\) as \(\left\langle {m_s,\bar{\psi } (t,s) } \right\rangle \). This sleight of hand transforms the question about the change of Pm over time to a question about the dynamics of the \(\bar{\psi }(t,s)\), for which we can find the time derivative by applying \(\bar{A}(E)\) to \(\bar{\psi }\). The final step then is to make the connection with (2.1) by setting \(s=t\) and using (2.4), to arrive at

To apply these ideas we need to find expressions for the backward operators. To this end we use that we assumed E to be given, making individuals independent. Then on the level of population averages it makes no difference whether we start with a scaled large number of individuals all with i-state x or with a single individual in that state. Since it is easier to think in terms of individuals, we shall do the latter. We can then look at the effect on the clan averages of the founder individual engaging in each of the component processes (i) to (iii) over a short time interval from s to \(s+h\). For such short intervals the effects of the interaction of the components in determining their combined effect on the clan average is only second order in h and can be neglected together with the other higher order terms. Hence the backward operator can be written as a sum of operators corresponding to the model ingredients as introduced at the beginning of Sect. 2.1.1. As this is useful for the remainder of the paper we here combine ingredients (i) and (ii):

The expressions in (2.7) are found as follows:

(i) If we neglect births and deaths, for small h an individual situated at x at time s produces an individual situated at \(x+g(x, E(s))h\) at time \(s+h\). Therefore \({\bar{\psi }}(t,s+h)(x+g(x, E(s))h)={\bar{\psi }}(t,s)(x)\), which, after subtracting \(\bar{\psi }(t,s+h)(x)\) from both sides, dividing by h, and letting h tend to zero, gives

$$\begin{aligned} \left. {-\frac{{\partial \bar{\psi }}(t,s)(x )}{{\partial s}}} \right| _{{\text {movement}}} = \frac{{\partial \bar{\psi } }(t,s)(x )}{{\partial x }}g(x,E(s)). \end{aligned}$$(2.8)

(ii) Next we account for the possibility that individuals die on the way, which happens with probability \(\mu (x,E(s))h\), so that, if we neglect movement and births, \({\bar{\psi }}(t,s)(x)=(1-\mu (x,E(s))h){\bar{\psi }}(t,s+h)(x)\) (over a longer time survival is less), giving

$$\begin{aligned} \left. {-\frac{{\partial \bar{\psi } }(t,s)(x )}{{\partial s}}} \right| _{{\text {death}}} = -\mu (x,E(s)){\bar{\psi }}(t,s)(x ). \end{aligned}$$(2.9)

(iii) Finally, a parent at x through its kids at \(\xi \) will have added a contribution \(h\beta (x,E(s),d\xi ){\bar{\psi }}(t,s)(\xi )\) to \({\bar{\psi }}(t,s)(x)\), which is missing in \(\bar{\psi }(t,s+h)(x)\). Summing over all these contributions gives

$$\begin{aligned} \left. {-\frac{{\partial \bar{\psi } }(t,s)(x )}{{\partial s}}} \right| _{{\text {births}}}=\int _\varOmega {\bar{\psi }}(t,s)(\xi )\beta (x,E(s),d\xi ). \end{aligned}$$(2.10)
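Adding the three contributions (2.8) to (2.10) gives the backward operator as a sum of a movement-plus-death part and a birth part. The following display is a sketch of that sum; the labels \(A_0\) and B for the two parts are chosen here for illustration and need not coincide with the notation used in (2.7).

$$\begin{aligned} -\frac{\partial \bar{\psi }(t,s)}{\partial s}&=\bar{A}(E(s))\bar{\psi }(t,s),\qquad \bar{A}(E)=A_0(E)+B(E),\\ (A_0(E)\psi )(x)&=\frac{\partial \psi (x)}{\partial x}g(x,E)-\mu (x,E)\psi (x),\\ (B(E)\psi )(x)&=\int _\varOmega \psi (\xi )\beta (x,E,d\xi ). \end{aligned}$$

When all newborns have the same state \(x_b\) and \(\beta (x,E)\) denotes the scalar per capita birth rate, the birth part reduces to \(\beta (x,E)\psi (x_b)\).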

2.1.4 Testing combinations of model ingredients for ODE reducibility

The classical search heuristic for finding a state space representation for a given set of dynamical variables in continuous time is to see whether their derivatives can be expressed in terms of the variables themselves, and, if not, to join the derivatives for which this is not the case as additional prospective state variables to the original set, whereupon the procedure is repeated till one succeeds or runs out of patience (cf. Diekmann et al. 2018, p. 1443; Fargue 1973).

If one is after a representation as a state linear system with a linear output map, “can be expressed as” translates to “is linearly dependent on”, a property that can be checked algorithmically, so that with infinite patience we end up with a firm conclusion. In our case the only difference is that we choose not to look at the output variables themselves, but at the weight functions by which they are produced from the population state, and therefore replace the time derivative of the output variables by the backward operator applied to these functions.

Notation. For the remainder of this subsection we shall again use E to denote the environmental conditions, as opposed to the course of the environment as we did in the previous two subsections.

TEST

- Choose a basis \(V_0=\{\psi _1,\dots ,\psi _{k_0}\}\) for the \(\gamma _i\) making up the population output map.

- Provided that the expression \(\bar{A}(E)V_i\) makes sense, extend \(V_{i}\) to a basis \(V_{i+1}\) for \(V_i \cup \bigcup _{\mathrm{{all \; possible \; }}E} {\bar{A}(E)V_{i} }\).

- The population model is ODE reducible if and only if the expressions \(\bar{A}(E)V_i\) keep making sense, and the \(V_i\) stop increasing after a certain \(i=h\).

For the \(\bar{A}(E)\) from (2.7), \(\bar{A}(E)V_i\) makes sense iff all elements of \(V_i\) are differentiable. (For a mathematically more precise rendering see Sect. 4.)

If the biological ingredients of a model satisfy the above test, it is possible to go directly from the ingredients to the ODE.

Example 2.1

Daphnia models, continued. In Example 1.2 the output weight functions were \(x^3\) and \(x^2\). Applying the backward operator

to these functions gives

This introduces x as an additional weight function, linearly independent of \(x^3\) and \(x^2\). Applying the backward operator to x gives

introducing the constant 1 as further weight function. Applying \(\bar{A}(E)\) to 1 gives

which introduces no further linearly independent weight functions, and thus ends the process. Hence, a P can be built from the weight functions 1, x, \(x^2\) and \(x^3\) leading to the four dimensional ODE representation already derived in Example 1.2.
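The successive steps of this example are instances of a single computation. As a sketch, assuming that all newborns have size \(x_b\), so that the birth term of the backward operator reduces to \(\beta (x,E)\psi (x_b)\), applying the operator to a monomial gives

$$\begin{aligned} \bar{A}(E)x^i&=(\delta f(E)-\varepsilon x)\,i\,x^{i-1}-\mu x^i+\alpha f(E)x^2\,x_b^{\,i}\\ &=i\,\delta f(E)\,x^{i-1}-(\mu +i\varepsilon )\,x^i+\alpha f(E)\,x_b^{\,i}\,x^2. \end{aligned}$$

For \(i=3\) only \(x^2\) and \(x^3\) appear on the right hand side, for \(i=2\) the monomial x is added, for \(i=1\) the constant 1 is added, and for \(i=0\) nothing new appears, so the span of \(1, x, x^2, x^3\) is mapped into itself for every E.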

Integrating (2.11) to (2.13) left and right over the p-state gives (1.4). This is why we could reorder (2.11) to (2.13) to look like (1.4), with the \(N_i\) replaced by \(x^i\). Rewritten in matrix form this then gives the K(E) from (2.1). Note though that in the process we have made some particular choices in order to get the precise form (1.4). In general K(E) is only unique up to a similarity transform, corresponding to alternative choices of a basis for the weight functions \(\psi _1,\dots ,\psi _k\), with a corresponding change of Q(E).
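The iteration of the TEST for the Daphnia ingredients can also be carried out mechanically. The following is a minimal sketch, not a general implementation: it assumes polynomial weight functions stored as coefficient lists, uses illustrative parameter values that are not taken from the text, and assumes that f(E) takes at least two distinct values, so that the span over all possible E of the images under \(\bar{A}(E)\) equals the span of the images under the E-independent part and under the coefficient of f(E). All function names are hypothetical.

```python
from fractions import Fraction

# Polynomials are coefficient lists: p[i] multiplies x**i.
def add(p, q):
    n = max(len(p), len(q))
    p = list(p) + [Fraction(0)] * (n - len(p))
    q = list(q) + [Fraction(0)] * (n - len(q))
    return [a + b for a, b in zip(p, q)]

def scale(c, p):
    return [c * a for a in p]

def derivative(p):
    return [i * c for i, c in enumerate(p)][1:] or [Fraction(0)]

def shift(p, k):
    """Multiply the polynomial p by x**k."""
    return [Fraction(0)] * k + list(p)

def evaluate(p, x):
    return sum(c * x**i for i, c in enumerate(p))

# Illustrative parameter values (assumptions, not taken from the text).
DELTA, EPS, MU = Fraction(1), Fraction(1, 2), Fraction(1, 10)
ALPHA, X_B = Fraction(2), Fraction(1, 4)

def a_const(psi):
    """E-independent part of the backward operator: -eps*x*psi' - mu*psi."""
    return add(scale(-EPS, shift(derivative(psi), 1)), scale(-MU, psi))

def a_food(psi):
    """Coefficient of f(E): delta*psi' + alpha*psi(x_b)*x**2."""
    birth = scale(ALPHA * evaluate(psi, X_B), [0, 0, 1])
    return add(scale(DELTA, derivative(psi)), birth)

def leading_index(p):
    for i in range(len(p) - 1, -1, -1):
        if p[i] != 0:
            return i
    return None

def insert(basis, v):
    """Reduce v against the echelon basis {leading index: vector};
    store the remainder and return True if v was linearly independent."""
    v = [Fraction(c) for c in v]
    for pivot in sorted(basis, reverse=True):
        if pivot < len(v) and v[pivot] != 0:
            v = add(v, scale(-v[pivot] / basis[pivot][pivot], basis[pivot]))
    lead = leading_index(v)
    if lead is None:
        return False
    basis[lead] = v
    return True

def run_test(outputs, operators, max_rounds=20):
    """Return a basis of the closed span, or None if no closure was
    found within max_rounds (which leaves the question undecided)."""
    basis = {}
    frontier = [psi for psi in outputs if insert(basis, psi)]
    for _ in range(max_rounds):
        new = []
        for psi in frontier:
            for op in operators:
                image = op(psi)
                if insert(basis, image):
                    new.append(image)
        if not new:
            return basis
        frontier = new
    return None

x2 = [Fraction(0), Fraction(0), Fraction(1)]
x3 = [Fraction(0), Fraction(0), Fraction(0), Fraction(1)]
closed = run_test([x2, x3], [a_const, a_food])
print(sorted(closed))  # leading degrees of the basis elements found
```

Starting from the output weight functions \(x^2\) and \(x^3\), the sketch stops with a basis of four polynomials whose leading degrees are 0, 1, 2 and 3, matching the four dimensional representation of the example. A return value of `None` only means that the search was cut off; it does not prove that the model fails the TEST.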

Remark

At first sight the TEST may not seem very practical as deciding that a certain set of model ingredients is not ODE reducible may require infinitely many operations. However, if the model ingredients come in the form of explicit expressions it can often be inferred from these whether the combination of ingredients will or will not pass the test. And even when those expressions at first sight are less than transparent, not all is lost, as in practice people are generally not so much interested in whether a certain model is ODE reducible as such, but in whether there exists a representation with a relatively low dimensional state space, which after specification of the maximum allowed dimension leads to a task executable by e.g. Maple™ or Mathematica®.

2.1.5 A catalogue of ODE reducible models

Modellers often initially still have quite some freedom in their choice of model ingredients. Hence it is useful to construct catalogues of classes of ODE reducible models, to help them make their choices with an eye on future model tractability (for a good example, see (Cushing 1989)). For this purpose observe that the TEST is no more than an attempt to construct a vector \(\varPsi \) of weight functions such that for all environmental conditions E (i) \(\varGamma (E)=Q(E)\varPsi \) and (ii)

suggesting the following strategy: start from some specific promising model class, and within this class try to solve (2.14) for \(\varPsi \) and K. In Sects. 5 and 6 we concentrate on one particular class of models that is both hallowed by tradition, and has the good property of allowing the p-state trajectory to be reconstructed by relatively easy means:

with the \(\alpha _i\) treated as output weight functions on a par with the other \(\gamma _i\). (For the numerics it helps when the \(\beta _i(E)\) consist of just a few point masses at positions that depend smoothly on E.) For the remainder of this subsection we assume that (2.15) holds good.

Remark

In earlier papers like (Metz and Diekmann 1991) we referred to ODE reducible models satisfying (2.15) as ordinarily ODE reducible or ODE reducible sensu stricto, as these models then were the only ones figuring in discussions of ODE reducibility (or linear chain trickability as it then was called).

The assumption that the \(\alpha _i\) are additional output weight functions makes that we can absorb the birth term of the backward operator into the right hand side of (2.14) giving

All our general results so far pertain to the case where \(\mathrm {dim}(\varOmega )=1\). In Sect. 8 we show how the results for this case can be combined to make ODE reducible models with \(\mathrm {dim}(\varOmega )>1\), but a full catalogue for general \(\varOmega \) is still lacking. So for the remainder of this subsection we assume that \(\mathrm {dim}(\varOmega )=1\).

Below we give a rough sketch of the reasoning. As first step we choose a fixed constant \(E=E_\mathrm {r}\), write \(v_\mathrm {r}(x)=g(x,E_\mathrm {r}),\, \mu _\mathrm {r}(x)=\mu (x,E_\mathrm {r}),\, H_\mathrm {r}=H(E_\mathrm {r})\), where the label \(\mathrm {r}\) in the subscripts stands for “reference”, and solve the corresponding version of (2.16),

for \(\varPsi \). The result is that \(\varPsi (x)\) is the product of

(note that \({\mathscr {G}}_\mathrm {r}(x)\) can be interpreted as the inverse of a survival probability up to size x) and a matrix exponential in the transformed i-state variable

This tells that the weight functions \(\psi \) should be linear combinations of polynomials times exponentials in \(\zeta _\mathrm {r}\), all multiplied with the same \({\mathscr {G}}_\mathrm {r}\). The fact that these weight functions should not depend on E then gives a set of conditions that should be satisfied by the \(\varPsi \), g and \(\mu \) together. There are three possibilities to satisfy these conditions:

(i) and (ii) Without any restrictions on \(H_\mathrm {r}\), the death rate \(\mu \) should be decomposable as \(g(x,E)\gamma _0(x)+\mu _0(E)\), that is, a death rate component that at each value of x depends on how fast an individual grows through this value plus a death rate component that does not depend on x, and

(i) the representation should be one-dimensional, in which case \(\varPsi (x) = d{\mathscr {G}}(x)\) with \({\mathscr {G}}(x):=\exp \left( \int ^x \gamma _0(\xi )d\xi \right) \), d a scalar of choice, and \(H(E)=-\mu _0(E)\),

or, in the higher dimensional case,

(ii) g should be decomposable as \(g(x,E) = v_0(x)v_1(E)\), so that we may interpret \(\zeta (x):=\int ^x(v_0(\xi ))^{-1}d\xi \) as physiological age. H in this case can be written as \(H(E) = -\mu _0(E)I + v_1(E) L\), with L an arbitrary matrix with all eigenvalues having geometric multiplicity one. \(\varPsi \) can then be written as

$$\begin{aligned} \varPsi (x)={\mathscr {G}}(x)D\left( e^{\lambda _1 \zeta },\dots ,\zeta ^{k_1-1} e^{\lambda _1 \zeta },\dots , e^{\lambda _r \zeta },\dots ,\zeta ^{k_r-1} e^{\lambda _r \zeta } \right) ^\mathrm {T}, \end{aligned}$$

with \(\zeta =\zeta (x)\) and D a nonsingular matrix, with a corresponding representation of L as

$$\begin{aligned}L= D\left( {\begin{array}{*{20}c} {\left( {\begin{array}{*{20}c} {\lambda _1 } &{}\quad {} &{}\quad {} &{}\quad 0 \\ 1 &{}\quad \ddots &{}\quad {} &{}\quad {} \\ {} &{}\quad \ddots &{}\quad \ddots &{}\quad {} \\ 0 &{}\quad {} &{}\quad {k_1 - 1} &{}\quad {\lambda _1 } \\ \end{array} } \right) } &{}\quad {} &{}\quad 0 \\ {} &{}\quad \ddots &{}\quad {} \\ 0 &{}\quad {} &{}\quad {\left( {\begin{array}{*{20}c} {\lambda _r } &{}\quad {} &{}\quad {} &{}\quad 0 \\ 1 &{}\quad \ddots &{}\quad {} &{}\quad {} \\ {} &{}\quad \ddots &{}\quad \ddots &{}\quad {} \\ 0 &{}\quad {} &{}\quad {k_r - 1} &{}\quad {\lambda _r } \\ \end{array} } \right) } \\ \end{array} } \right) D^{ - 1}. \end{aligned}$$

(iii) For higher dimensional representations also slightly less restricted growth laws are possible, but at the cost of a severe restriction on the eigenvalues of \(H_\mathrm {r}\) which should lie in special regular configurations in the complex plane. A lot of hard work is then required to render the corresponding class of representations into a biologically interpretable form. The end result becomes a slight extension of the Daphnia model of Examples 1.2 and 2.1 with \(\mu (x,E) = g(x,E)\gamma _0(x) + \mu _0(E)\), \(g(x,E) = v_0(x)(v_1(E)+v_2(E)\zeta )\) (note that if we transform from x to \(\zeta \) the growth law stays the same but for the disappearance of the factor \(v_0(x)\)). In this case \(\varPsi (x) = {\mathscr {G}}(x)D\varXi (x)\) with \(\varXi (x) = (1,\ldots , \zeta ^{k-1})^\mathrm {T}\). (The growth laws of this model family, in addition to von Bertalanffy growth, also encompass two other main growth laws from the literature: logistic, \(a(E)x + b(E)x^2\), and Gompertz, \(a(E)x -b(E)x \ln (x)\). Just appropriately transform the x-axis.) A further extension comes from a mathematical quirk for which we failed to find a biological interpretation: it is possible to add a quadratic term in \(\zeta \) to the growth law, \(g(x,E) = v_0(x)(v_1(E)+v_2(E)\zeta +v_3(E) \zeta ^2)\), which then should be exactly compensated by a similar additional term in the death rate, \(\mu (x,E) = g(x,E)\gamma _0(x) + \mu _0(E)+(k-1)v_3(E)\zeta \). Not only are these terms uninterpretable, the required simultaneous fine tuning of the model components makes them irrelevant in any modelling context coming to mind.

For this model family \(H(E) = -\mu _1 (E)I + DL(E)D^{-1}\) with

$$\begin{aligned} L(E)=\left( \begin{array}{ccccc} 0 &{}\quad -(k-1)v_3(E) &{}\quad 0 &{}\quad \cdots &{}\quad 0\\ v_1(E) &{}\quad v_2(E) &{}\quad -(k-2)v_3(E) &{}\quad &{}\quad 0 \\ 0 &{}\quad 2v_1(E) &{}2v_2(E) &{}\quad &{}\quad 0\\ \vdots &{}\quad 0 &{}\quad 3v_1(E) &{}\quad &{}\quad 0\\ &{}\quad &{}\quad &{}\quad \ddots &{}\quad \\ 0&{}\quad \cdots &{}\quad \cdots &{}\quad (k-1)v_1(E) &{}\quad (k-1)v_2(E)\\ \end{array}\right) \end{aligned}$$with \(v_3=0\) in the Daphnia style models.
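The structure of \(L(E)\) can be checked mechanically. The Python sketch below is an illustration under simplifying assumptions of our own: D is the identity, \(v_0 \equiv 1\) and \(\gamma _0 \equiv 0\) (so that \(\zeta = x\) and \({\mathscr {G}} \equiv 1\)), the environment-only part of the death rate is a number called `mu0`, and the values of \(v_1, v_2, v_3\) are arbitrary rationals. Assuming the backward relation \(g\,w' - \mu \,w = H\,w\), the hypothetical helper `residual` compares \(g\,\varXi ' - \mu \,\varXi \) with \((-\mu _0 I + L)\varXi \) for \(\varXi (\zeta ) = (1,\ldots ,\zeta ^{k-1})^\mathrm {T}\).

```python
from fractions import Fraction

def build_L(k, v1, v2, v3):
    """Return the k x k matrix L(E) for fixed values of v_1(E), v_2(E), v_3(E)."""
    L = [[Fraction(0)] * k for _ in range(k)]
    for i in range(k):                        # row i belongs to the monomial zeta**i
        L[i][i] = i * v2                      # diagonal entry i * v_2
        if i >= 1:
            L[i][i - 1] = i * v1              # subdiagonal entry i * v_1
        if i + 1 < k:
            L[i][i + 1] = -(k - 1 - i) * v3   # superdiagonal entry -(k-1-i) * v_3
    return L

def residual(k, v1, v2, v3, mu0, zeta):
    """Largest entry of |g*Xi' - mu*Xi - (-mu0*I + L)*Xi| at the point zeta."""
    g = v1 + v2 * zeta + v3 * zeta**2         # growth rate in the zeta coordinate
    mu = mu0 + (k - 1) * v3 * zeta            # death rate with the compensating term
    xi = [zeta**i for i in range(k)]
    dxi = [Fraction(0)] + [i * zeta**(i - 1) for i in range(1, k)]
    L = build_L(k, v1, v2, v3)
    worst = Fraction(0)
    for i in range(k):
        lhs = g * dxi[i] - mu * xi[i]
        rhs = -mu0 * xi[i] + sum(L[i][j] * xi[j] for j in range(k))
        worst = max(worst, abs(lhs - rhs))
    return worst

# Arbitrary sample values (assumptions, not taken from the text).
worst = max(
    residual(4, Fraction(1, 2), Fraction(-1, 3), Fraction(1, 5), Fraction(1, 10), z)
    for z in (Fraction(0), Fraction(3, 7), Fraction(2))
)
```

In exact rational arithmetic the residual vanishes. Without the term \((k-1)v_3(E)\zeta \) in the death rate, the last component would contain a multiple of \(\zeta ^k\), which lies outside the span of the components of \(\varXi \); this is the compensation referred to above.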

The matrix K occurring in the ODE realising the population input–output relation can be recovered by removing the effect of subtracting the birth operator on both sides of (2.14) to get (2.15): \(K(E)=H(E)+ \int _\varOmega \psi _i (\xi )\beta _j(E,d\xi ) C\), with C defined by the requirement that \(\alpha _j=\sum _h c_{jh}\psi _h\), where the \(\alpha _j\) are the weight functions telling how the birth rates depend on the i-states (see Formula (2.15)).

The remainder of the paper is geared to an audience of analysts, and accordingly stresses proofs, instead of interpretation-based heuristics.

2.2 For mathematicians: the context of justification

We shall look at a community as a set of coupled populations. The coupling is mediated by the environment, denoted by E. On the one hand individuals react to the environment, on the other hand they influence their environment. We concentrate on a single population and pretend that E is a given function of time taking values in a set \({\mathscr {E}}\). So E can be regarded as an input. The single population model can serve as a building block for the community model once we have also specified a population level output by additively combining the impact that individuals have.

In order to account for the population dynamical behaviour of individuals (giving birth, dying, impinging on the environment), we first introduce the concept of individual state (i-state). Given the course of the environment, the i-states of the individuals independently move through the i-state space and their current position is all that matters at any particular time. The use of the word state entails the Markov property; admittedly idealisation is involved and finding the i-state space as well as specifying the relevant environmental variables is often a process of trial and error. The art of modelling comprises deliberate simplification in order to gain significant insights.

We denote the i-state space by \(\varOmega \) and assume that it is a subset of \({\mathbb {R}}^n\). In general the population state (p-state) is a measure m on \(\varOmega \) with the interpretation that \(m(\omega )\) is the number (per unit of volume or area) of individuals with i-state in the measurable subset \(\omega \) of \(\varOmega \).

A word of warning. We usually denote a Borel subset of \(\varOmega \) by \(\omega \). We are aware of a different notational convention in probability theory where \(\omega \) denotes a point in \(\varOmega \). We hope this will not lead to confusion.

In many models the p-state can adequately be represented by a density \(n \in L^1(\varOmega )\). The abstract ODE

$$\begin{aligned} \frac{dn}{dt} = A_0(E)n + B(E)n \end{aligned}$$(2.18)

captures that the density n(t) changes in time due to

- (i) transport through \(\varOmega \) due to i-state development such as growth of individuals, and degradation due to death of individuals,

- (ii) reproduction.

The effects of (i) are incorporated in the action of \(A_0(E)\) and the effects of (ii) in the action of B(E). Since the i-states of offspring are, as a rule, quite different from the i-states of the parent, the operator B(E) is usually non-local. When \(x \in \varOmega \) specifies the size of an individual, growth is deterministic and giving birth does not affect the parent’s size, the abstract ODE (2.18) corresponds to the PDE

$$\begin{aligned} \frac{\partial }{\partial t}n(t,x) = -\frac{\partial }{\partial x}\bigl (g(x,E(t))\,n(t,x)\bigr ) - \mu (x,E(t))\,n(t,x) + \int _\varOmega \widetilde{\beta }(\xi ,E(t),x)\,n(t,\xi )\,d\xi \end{aligned}$$(2.19)

with \(g,\, \mu \) and \(\int _\varOmega \widetilde{\beta }(\cdot ,\cdot ,x)dx\) denoting, respectively, the growth, death, and reproduction rate of an individual with the specified i-state under the specified environmental condition.

The mathematical justification of (2.18) or (2.19) is cumbersome. In particular, it is difficult to give a precise characterisation of the domains of the various unbounded operators. Note that we are not in the setting of (generators of) semigroups of linear operators for which some results can be found in the paper by Atay and Roncoroni (2017). Indeed, we are dealing with evolutionary systems and for this non-autonomous analogue of semigroups a one-to-one correspondence between an evolutionary system and a generating family of differential operators is not part of a well-established theory (but see our earlier work (Clément et al. 1988; Diekmann et al. 1995) for some results in that direction). As shown by Diekmann et al. (1998, 2001), one can actually avoid the differential operators. In the next section we present this approach in the setting where, because of assumptions concerning reproduction, we can work with densities rather than measures. In the present section we simply ignore the mathematical difficulties and proceed formally.

If the test described in Sects. 4.1 and 2.1.4 yields a positive result in the end, it provides

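- an integer k,

- a bounded linear map \(P:L^1(\varOmega ) \rightarrow {\mathbb {R}}^k\),

- a family K(E) of \(k \times k\) matrices,

such that

$$\begin{aligned} P\bigl (A_0(E) + B(E)\bigr ) = K(E)P \end{aligned}$$(2.20)

and accordingly

$$\begin{aligned} N(t) := Pn(t), \end{aligned}$$(2.21)

where n(t) is a solution of (2.18), satisfies the ODE

$$\begin{aligned} \frac{dN}{dt} = K(E)N. \end{aligned}$$(2.22)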

The p-output that is needed in the community model, which is our ultimate interest, as well as any other p-output that we are interested in, was the starting point for the test and thus is incorporated in N, so we can focus our attention on the finite dimensional ODE (2.22) and forget about the infinite dimensional version (2.18) from which it was derived.

A special case occurs when there exist

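- a family H(E) of \(k \times k\) matrices,

- a family Q(E) of bounded linear maps from \({\mathbb {R}}^k\) to \(L^1(\varOmega )\)

such that

$$\begin{aligned} PA_0(E) = H(E)P, \end{aligned}$$(2.23)

$$\begin{aligned} B(E) = Q(E)P. \end{aligned}$$(2.24)

(Incidentally, we here took \(L^1(\varOmega )\) as the range space for Q(E), but actually we shall allow Q(E) to take on values in a linear subspace of the vector space of all Borel measures on \(\varOmega \), see Sect. 4.) This case amounts to taking

$$\begin{aligned} K(E) = H(E) + M(E) \end{aligned}$$(2.25)

with

$$\begin{aligned} M(E) = PQ(E). \end{aligned}$$(2.26)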

The key nice features of this special case are:

- (i) \(A_0(E)\) is a strictly local operator and this allows us to make an in-depth study of the characterisation of those \(A_0(E)\) for which P and H(E) exist.

- (ii) \(B(E)n =Q(E)N\) and hence we can rewrite (2.18) in the form

$$\begin{aligned} \frac{dn}{dt} = A_0(E)n +Q(E)N. \end{aligned}$$(2.27)

The same local character of \(A_0(E)\) now allows us to solve (2.27) explicitly, thus expressing the part of the population that is born after the time at which we put an initial condition explicitly in terms of N(t). See Proposition 7.2 for a concrete example.

If we ignore birth, that is, set \(B(E)=0\) (\(\widetilde{\beta }(\cdot ,E,\cdot ) =0\)), then the abstract ODE (2.18) and its PDE counterpart (2.19) become transport-degradation equations and we only have to consider condition (2.23). In this paper we give necessary and sufficient conditions in terms of g and \(\mu \) for the existence of \(k,\,P\) and H(E) such that (2.23) holds and hence N(t) satisfies (2.22) (with \(K(E)=H(E)\), or, equivalently \(M(E)=0\)). Subsequently we view condition (2.24) as a restriction on the submodel for reproduction. If \(\widetilde{\beta }\) is such that the restriction (2.24) holds, then the full infinite-dimensional system (2.18) is reducible to the finite dimensional ODE (2.22). In this manner we obtain a catalogue of models that are ODE-reducible and within a restricted class of models with one-dimensional i-state space the catalogue is even complete.

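Let \(w_i,\, i=1,2,\ldots ,k,\) be bounded measurable functions defined on \(\varOmega \) such that

$$\begin{aligned} (Pn)_i = \int _\varOmega w_i(x)n(x)\,dx,\quad i=1,2,\ldots ,k. \end{aligned}$$(2.28)

Then condition (2.23) amounts to

$$\begin{aligned} A_0^*(E)w_i = \sum _{j=1}^k H_{ij}(E)w_j,\quad i=1,2,\ldots ,k. \end{aligned}$$(2.29)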

We might call \(A_0^*(E)\) the Kolmogorov backward operator although strictly speaking that operator acts on the continuous functions on \(\varOmega \) and is the pre-adjoint of the forward operator acting on the measures on \(\varOmega \). Since the elements \(w_i\) that figure in our catalogue are continuous functions, the distinction between \(A_0^*(E)\) and the Kolmogorov backward operator is inessential.

In words (2.29) states that the E-independent subspace spanned by \(\{w_1,w_2,\ldots , w_k\}\) is in the domain of \(A_0^*(E)\) and invariant under \(A_0^*(E)\) for all relevant E. To avoid redundancy one should choose k as small as possible and we therefore require that the functions \(w_i:\varOmega \rightarrow {\mathbb {R}},\, i=1,2,\ldots ,k,\) are linearly independent.

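When (2.18) represents (2.19), condition (2.23) with P given by (2.28), amounts to

$$\begin{aligned} g(x,E)w'(x) - \mu (x,E)w(x) = H(E)w(x). \end{aligned}$$(2.30)

It is easy to find a solution: if

$$\begin{aligned} \mu (x,E) = \gamma _0(x)g(x,E) + \mu _0(E) \end{aligned}$$(2.31)

for some functions \(\gamma _0:\varOmega \rightarrow {\mathbb {R}}\) and \(\mu _0:{\mathscr {E}}\rightarrow {\mathbb {R}}\), then the choice

$$\begin{aligned} k=1,\quad w(x) = \exp \left( \int _{x_b}^x \gamma _0(y)\,dy\right) ,\quad H(E) = -\mu _0(E) \end{aligned}$$(2.32)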

makes (2.30) a valid equality. If we restrict to \(k=1\), then this is in fact the only possibility.
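The case \(k=1\) is easy to check numerically. The sketch below assumes that (2.30) is the backward relation \(g\,w' - \mu \,w = H\,w\) and that the solution in question is \(w(x) = \exp (\int _{x_b}^x \gamma _0(y)dy)\) with \(H(E) = -\mu _0(E)\). The particular functions `gamma0`, `mu0` and `g`, as well as the reference size `X_B`, are hypothetical choices made only for this illustration.

```python
X_B = 1.0  # assumed reference size x_b

def gamma0(x):
    """Hypothetical size-dependent factor gamma_0(x)."""
    return 1.0 / (1.0 + x)

def mu0(E):
    """Hypothetical environment-dependent mortality mu_0(E)."""
    return 0.2 + 0.1 * E

def g(x, E):
    """Hypothetical growth rate; any g works for the k = 1 solution."""
    return E * (3.0 - x)

def mu(x, E):
    """Death rate of the required form gamma_0(x) g(x,E) + mu_0(E)."""
    return gamma0(x) * g(x, E) + mu0(E)

def w(x):
    """exp of the integral of gamma_0 from X_B to x, in closed form for this gamma_0."""
    return (1.0 + x) / (1.0 + X_B)

def backward_residual(x, E, h=1e-5):
    """g*w' - mu*w - H*w with H = -mu_0(E); w' is a central difference."""
    dw = (w(x + h) - w(x - h)) / (2.0 * h)
    return g(x, E) * dw - mu(x, E) * w(x) + mu0(E) * w(x)

worst = max(abs(backward_residual(x, E))
            for x in (1.0, 1.7, 2.5) for E in (0.5, 1.0, 2.0))
```

The residual is zero up to rounding for every growth rate g, because the term \(\gamma _0 g\) in \(\mu \) cancels \(g\,w'/w\) exactly; only the environment-dependent part \(\mu _0(E)\) survives in H(E).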

As mentioned above, condition (2.24) is a restriction on reproduction. The smaller the value of k, the more severe is the restriction. We therefore want to make k as large as possible while retaining the linear independence of \(\{w_1,w_2,\ldots , w_k\}\). So the question arises whether it is possible to pinpoint more restrictive conditions on g and \(\mu \) that allow for arbitrarily large values of k.

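If the growth rate of an individual does not depend on its i-state but only on the environmental condition, that is, if

$$\begin{aligned} g(x,E) = v(E) \end{aligned}$$(2.33)

for some function \(v:{\mathscr {E}}\rightarrow {\mathbb {R}}\), we call the i-state physiological age and often talk about maturation rather than growth. If on top of (2.33) we assume (2.31) and replace the unknown w by \(\widetilde{w}\) via the transformation

$$\begin{aligned} w(x) = \exp \left( \int _{x_b}^x \gamma _0(y)\,dy\right) \widetilde{w}(x), \end{aligned}$$(2.34)

then (2.30) is equivalent to

$$\begin{aligned} v(E)\widetilde{w}'(x) = \bigl (H(E) + \mu _0(E)I\bigr )\widetilde{w}(x). \end{aligned}$$(2.35)

If we choose

$$\begin{aligned} H(E) = -\mu _0(E)I + v(E)\varLambda \end{aligned}$$(2.36)

with \(\varLambda \) an arbitrary \(k \times k\) matrix for arbitrary k, then the E-dependence vanishes from Eq. (2.35), which becomes an autonomous linear ODE with solution

$$\begin{aligned} \widetilde{w}(x) = e^{(x-x_b)\varLambda }\widetilde{w}(x_b). \end{aligned}$$(2.37)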

To avoid redundancy, we have to make sure that \(\varLambda \) and \(\widetilde{w}(x_b)\) are such that the components of \(\widetilde{w}\) are linearly independent as scalar functions of the variable \(x\in \varOmega \), see conditions (H1) to (H3) of Sect. 5 as well as Corollary 6.3.
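The role of the independence requirement can be illustrated numerically. The sketch below assumes that the solution has the form \(\widetilde{w}(x) = e^{(x-x_b)\varLambda }\widetilde{w}(x_b)\); the two matrices and initial vectors are hypothetical examples, and the helper names are ours. It evaluates \(\widetilde{w}\) at k sample points and computes the determinant of the resulting \(k\times k\) matrix. A nonzero determinant proves that the components are linearly independent; a vanishing one is only a warning sign, which in the second example reflects genuine dependence.

```python
def mat_vec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def expm_vec(Lam, a, v, terms=60):
    """exp(a*Lam) v via the Taylor series sum_n (a*Lam)^n v / n!."""
    result = list(v)
    term = list(v)
    for n in range(1, terms):
        term = [a * x / n for x in mat_vec(Lam, term)]
        result = [r + t for r, t in zip(result, term)]
    return result

def det(M):
    """Determinant by Gaussian elimination with partial pivoting."""
    M = [row[:] for row in M]
    n = len(M)
    d = 1.0
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        if abs(M[p][c]) < 1e-14:
            return 0.0
        if p != c:
            M[c], M[p] = M[p], M[c]
            d = -d
        d *= M[c][c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n):
                M[r][j] -= f * M[c][j]
    return d

def sample_matrix(Lam, w_at_birth, offsets):
    """Row i holds component i of w-tilde at the given values of x - x_b."""
    cols = [expm_vec(Lam, a, w_at_birth) for a in offsets]
    return [[col[i] for col in cols] for i in range(len(w_at_birth))]

lam = -0.5
jordan = [[lam, 0.0], [1.0, lam]]      # one Jordan block: components exp and x*exp
diagonal = [[lam, 0.0], [0.0, lam]]    # repeated eigenvalue without a Jordan chain

det_jordan = det(sample_matrix(jordan, [1.0, 0.0], [0.0, 1.0]))
det_diagonal = det(sample_matrix(diagonal, [1.0, 1.0], [0.0, 1.0]))
```

For the Jordan block the two components are \(e^{\lambda (x-x_b)}\) and \((x-x_b)e^{\lambda (x-x_b)}\), which are independent. For the diagonal matrix both components are multiples of \(e^{\lambda (x-x_b)}\), so one of them is redundant and k should be reduced.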

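If instead of (2.33) we assume

$$\begin{aligned} g(x,E) = v_0(x)v_1(E) \end{aligned}$$(2.38)

for some functions \(v_0:\varOmega \rightarrow {\mathbb {R}}\) and \(v_1:{\mathscr {E}}\rightarrow {\mathbb {R}}\), we can introduce \(\zeta \) defined in terms of x by

$$\begin{aligned} \zeta (x) = \int _{x_b}^x \frac{dy}{v_0(y)} \end{aligned}$$(2.39)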

as a new i-state variable and thus reduce the situation to (2.33).
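A small numerical experiment makes the reduction concrete. The sketch assumes a growth rate of product form \(g(x,E) = v_0(x)v_1(E)\) and the transformation \(\zeta (x) = \int _{x_b}^x dy/v_0(y)\). The choices \(v_0(x) = x\), \(v_1(E) = E\), the input \(E(t) = 1 + \tfrac{1}{2}\sin t\) and the value of `X_B` are hypothetical. With these choices \(\zeta = \ln (x/x_b)\), and \(\zeta \) should advance at the rate \(v_1(E(t))\) irrespective of the current size.

```python
import math

X_B = 0.5  # assumed reference size x_b

def v0(x):
    return x

def v1(E):
    return E

def E_of_t(t):
    """Hypothetical time-dependent input."""
    return 1.0 + 0.5 * math.sin(t)

def g(x, E):
    return v0(x) * v1(E)

def zeta(x):
    """Integral of dy / v0(y) from X_B to x, in closed form for v0(y) = y."""
    return math.log(x / X_B)

def grow(x, t0, t1, steps=2000):
    """Classical Runge-Kutta integration of dx/dt = g(x, E(t))."""
    h = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        k1 = g(x, E_of_t(t))
        k2 = g(x + 0.5 * h * k1, E_of_t(t + 0.5 * h))
        k3 = g(x + 0.5 * h * k2, E_of_t(t + 0.5 * h))
        k4 = g(x + h * k3, E_of_t(t + h))
        x += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
        t += h
    return x

T = 2.0
zeta_end = zeta(grow(X_B, 0.0, T))
# Integral of v1(E(t)) over [0, T], computed by hand for this input.
expected = T + 0.5 * (1.0 - math.cos(T))
```

The two numbers agree up to the integration error, which shows that in the \(\zeta \) coordinate the individual ages at an environment-dependent speed only.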

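In Example 1.2 we have

$$\begin{aligned} g(x,E) = v_1(E) + v_2(E)x. \end{aligned}$$(2.40)

In combination with (2.31) this leads to

$$\begin{aligned} \bigl (v_1(E) + v_2(E)x\bigr )\widetilde{w}'(x) = H_0(E)\widetilde{w}(x), \end{aligned}$$(2.41)

where \(\widetilde{w}\) is once more defined by (2.34) and where we have put

$$\begin{aligned} H_0(E) = H(E) + \mu _0(E)I. \end{aligned}$$(2.42)

For arbitrary k we can make (2.41) into an identity by choosing for \(i=1,2,\ldots ,k\)

$$\begin{aligned} \widetilde{w}_i(x) = x^{i-1} \end{aligned}$$(2.43)

and the entries of the matrix \(H_0(E)\)

$$\begin{aligned} H_0(E)_{i,i-1} = (i-1)v_1(E),\quad H_0(E)_{i,i} = (i-1)v_2(E),\quad H_0(E)_{i,j} = 0 \text{ otherwise}. \end{aligned}$$(2.44)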

Again we can allow g to have an extra factor \(v_0(x)\) since we can remove it by the transformation from x to \(\zeta \) defined by (2.39).

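If instead of (2.40) we assume that

$$\begin{aligned} g(x,E) = v_1(E) + v_2(E)x + v_3(E)x^2, \end{aligned}$$(2.45)

we can, as somewhat more complicated computations show, keep the \(\widetilde{w}\) specified in (2.43), but adapt \(H_0(E)\) slightly as follows:

$$\begin{aligned} H_0(E)_{i,i+1} = -(k-i)v_3(E),\quad i=1,2,\ldots ,k-1, \end{aligned}$$(2.46)

while keeping the entries for all other combinations of i and j as in (2.44). But in addition we need to replace (2.31) by

$$\begin{aligned} \mu (x,E) = \gamma _0(x)g(x,E) + \mu _0(E) + (k-1)v_3(E)x, \end{aligned}$$(2.47)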

which, nota bene, involves k. So if we consider g and \(\mu \) as given, there can be at most one k for which this works. Again we can allow a factor \(v_0(x)\) in g and work with \(\zeta \) defined by (2.39).

The main result of the present paper is that problem (2.30), with \(\{w_1,w_2,\ldots , w_k\}\) linearly independent, admits no other solution than the ones described above.

3 Physiologically structured population models

The formulation of a population model starts at the individual level with the specification of the individual states (i-states for short) representing physiological conditions that distinguish individuals from one another. The set of all admissible i-states is denoted by \(\varOmega \). In the present paper we restrict ourselves to the case of a finite dimensional \(\varOmega \subset {\mathbb {R}}^n\) which we make a measurable space by equipping it with the \(\sigma \)-algebra \(\varSigma \) of all Borel sets. This measurable space is called the individual state space (i-state space). Our main results concern \(n=1\) with \(\varOmega \) an interval, possibly of infinite length. In that case one may think of the i-state as, for example, the size of an individual and we shall indeed often refer to the i-state as size.

The world in which individuals lead their lives has an impact on their development and behaviour. We capture the relevant aspects of the external world in a variable called the environmental condition denoted by E and taken from a set denoted by \({\mathscr {E}}\). One may think of E as a specification of food concentration, predation pressure and, possibly, other quantities like temperature or humidity.

Dependence among individuals arises from a feedback loop: the individuals themselves exert an influence on the environmental condition, for instance, by consuming food or serving as food for predators. As a rule, this feedback loop involves several species. We refer to the paper by Diekmann et al. (2010) for a concrete example. Note, however, that the example of cannibalism shows that this rule is not universal.

We consider the environmental condition as input and investigate how the input leads to output that comprises the contribution to the (change of) the environmental condition of the species itself, or other species, or any other quantity that we happen to be interested in. By taking population outputs as inputs for other populations or inanimate resources, we can build a dynamical model of a community. The ultimate model incorporates dependence among individuals and leads to nonlinear equations. But each building block considers the environmental condition as a given input and computes population output by summing the contributions by individuals. The present paper focusses on the population state (p-state for short) linear (but otherwise nonlinear) input–output map generated by a single building block.

The processes that have to be modelled are:

- i-state development (called growth for short),

- survival,

- reproduction (how much offspring and with what i-state at birth).

We assume that, given the environmental condition, growth is deterministic. We further assume that reproduction can be described by a per capita rate. We thus exclude, for instance, cell fission occurring exactly when the mother cell reaches a threshold size (see Example 8.5). Accordingly, and in line with Metz and Diekmann (1986) and de Roos et al. (2013), we introduce the three key model ingredients:

- the growth rate g(x, E),

- the death rate \(\mu (x,E)\),

- the reproduction rate \(\beta (x, E, \omega ),\, \omega \in \varSigma \).

Again we warn the readers that our use of the symbol \(\omega \) for a measurable subset of \(\varOmega \) differs from the notational convention in probability theory.

The reproduction rate \(\beta \) should be interpreted as follows: the rate at which an individual of size x gives birth under environmental condition E is \(\beta (x, E, \varOmega )\) and the state-at-birth of the offspring is distributed according to the Borel probability measure \(\beta (x, E, \cdot )/\beta (x, E, \varOmega )\).

Once we have a model at the i-level, it is a matter of bookkeeping to lift it to the p-level (Metz and Diekmann 1986; Diekmann and Metz 2010): Equating a p-level fraction to an i-level probability one obtains the deterministic (that is, the large population limit) link between the two levels. Still there are choices to be made for the formalism to employ: it could be partial differential equations (Metz and Diekmann 1986; Perthame 2007; Gwiazda and Marciniak-Czochra 2010) or renewal equations (RE) (Diekmann et al. 1998, 2001). Here we choose RE, albeit not in the most general form, since reproduction is described by a per capita rate.

As a first step we build composite ingredients from the basic ingredients \(g, \mu \), and \(\beta \). We shall do so without specifying the nature of the environmental condition E, in particular, without specifying the space \({\mathscr {E}}\) to which E belongs. Often we conceive of the environmental condition as a given function of time. When E occurs as a subscript to a function with argument (t, s) this entails that \(E(\tau )\) is given for \(\tau \in [s,t]\).

We shall provide a constructive definition of the following quantities:

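We assume that g and \(\tau \mapsto E(\tau )\) are such that the initial value problem

$$\begin{aligned} \frac{d\xi }{d\tau }(\tau ) = g(\xi (\tau ),E(\tau )),\quad \xi (s) = x \end{aligned}$$(3.1)

has a unique solution \(\xi (\tau )\) on [s, t] and define

$$\begin{aligned} X_E(t,s,x) := \xi (t). \end{aligned}$$(3.2)

We further assume that \(\mu ,\, g\), and \(\tau \mapsto E(\tau )\) are such that also the initial value problem

$$\begin{aligned} \frac{df}{d\tau }(\tau ) = -\mu (\xi (\tau ),E(\tau ))f(\tau ),\quad f(s) = 1 \end{aligned}$$(3.3)

has a unique solution \(f(\tau )\) on [s, t] and define

$$\begin{aligned} {\mathscr {F}}_E(t,s,x) := f(t). \end{aligned}$$(3.4)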

Let

Then, since the growth of an individual is deterministic, we have

that is, the (unless survival is guaranteed) defective probability distribution \(u^0_E(t,s,x,\cdot )\) is a point measure concentrated at position \(X_E(t,s,x) \in \varOmega \) of mass \({\mathscr {F}}_E(t,s,x)\). Let

Then

Lemma 3.1

Given the input \(\tau \mapsto E(\tau )\) defined on [s, t], the relations

hold for all \(\tau \in (s,t)\) and all \(\omega \in \varSigma \).

Relation (3.7) is the Chapman–Kolmogorov equation and (3.8) is a similar consistency relation relating growth, survival and reproduction.

We omit the straightforward proof of Lemma 3.1, but note that, essentially, the consistency relations reflect the uniqueness of solutions to Eqs. (3.1) and (3.3).
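For the point measure \(u^0_E\) the consistency relation (3.7) boils down to two identities for the position \(X_E\) and the survival probability \({\mathscr {F}}_E\): composing the flow over \([s,\tau ]\) and \([\tau ,t]\) gives the flow over \([s,t]\), and survival probabilities multiply. The sketch below checks this numerically, assuming that (3.1) reads \(d\xi /d\tau = g(\xi ,E)\), \(\xi (s)=x\), and that (3.3) reads \(df/d\tau = -\mu (\xi ,E)f\), \(f(s)=1\). The rates `g` and `mu` and the input `E_of_t` are hypothetical choices.

```python
import math

def E_of_t(t):
    """Hypothetical input, for instance a fluctuating food level."""
    return 2.0 + math.sin(t)

def g(x, E):
    """Hypothetical growth rate of von Bertalanffy type."""
    return E - x

def mu(x, E):
    """Hypothetical size-dependent death rate."""
    return 0.1 + 0.05 * x

def flow(t, s, x, h=1e-3):
    """Return (X_E(t,s,x), F_E(t,s,x)) by Runge-Kutta integration from s to t."""
    steps = max(1, round((t - s) / h))
    dt = (t - s) / steps
    f = 1.0
    tau = s

    def rhs(tau, x, f):
        E = E_of_t(tau)
        return g(x, E), -mu(x, E) * f

    for _ in range(steps):
        k1x, k1f = rhs(tau, x, f)
        k2x, k2f = rhs(tau + dt / 2, x + dt / 2 * k1x, f + dt / 2 * k1f)
        k3x, k3f = rhs(tau + dt / 2, x + dt / 2 * k2x, f + dt / 2 * k2f)
        k4x, k4f = rhs(tau + dt, x + dt * k3x, f + dt * k3f)
        x += dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
        f += dt * (k1f + 2 * k2f + 2 * k3f + k4f) / 6
        tau += dt
    return x, f

s, tau_mid, t, x0 = 0.0, 1.3, 3.0, 0.5
X_direct, F_direct = flow(t, s, x0)
X_mid, F_mid = flow(tau_mid, s, x0)
X_composed, F_rest = flow(t, tau_mid, X_mid)
F_composed = F_rest * F_mid
```

Both `X_composed` and `F_composed` agree with the direct computation up to the integration error, in line with the remark that the consistency relations reflect uniqueness of solutions.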

The composite ingredients \(u^0_E\) and \(\beta ^1_E\) satisfying (3.7) and (3.8) are the starting point for a next round of constructive definitions. They are examples of kernels parametrised by the input (cf. Diekmann et al. 2001). Such a kernel \(\phi _E\) assigns to each input \(\tau \mapsto E(\tau )\) defined on [s, t] a function \(\phi _E(t,s,\cdot ,\cdot ): \varOmega \times \varSigma \rightarrow {\mathbb {R}}\) which is bounded and measurable with respect to the first variable and countably additive with respect to the second variable. This is to say that for fixed \(\omega \in \varSigma \) the function \(x \mapsto \phi _E(t,s,x,\omega )\) is bounded and measurable while for fixed \(x \in \varOmega \) the map \(\omega \mapsto \phi _E(t,s,x,\omega )\) is a finite signed measure.

The product \((\phi \star \psi )_E\) of two kernels \(\phi _E\) and \(\psi _E\) parametrised by the input is defined by

The \(\star \)-product is associative.

For \(k \ge 2\) we define recursively

The interpretation of \(\beta _E^k\) is as follows: Given the input \(\tau \mapsto E(\tau )\) defined on [s, t], the quantity \(\beta _E^2(t,s,x,\omega )\) is the rate at which grandchildren of an individual that had i-state x at time s are born at time t with i-state-at-birth in the set \(\omega \in \varSigma \). The quantity \(\beta _E^3(t,s,x,\omega )\) has the same interpretation but for great-grandchildren and \(\beta _E^k(t,s,x,\omega )\) for kth generation offspring. To get the combined birth rate of all descendants of such an individual we sum up over all generations and define

where the superscript c stands for clan.

Because every member of the clan is either a child of the ancestor or a child of a member of the clan, or, alternatively, either a child of the ancestor or a member of the clan of a child of the ancestor, we obtain a consistency relation in terms of the following RE:

Mathematically, (3.12) means that \(\beta _E^c\) is the resolvent kernel of the kernel \(\beta _E^1\) (cf. Gripenberg et al. 1990).
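The equivalence between the generation expansion and the renewal equation can be seen in a scalar caricature. The sketch below is a simplified analogue, not the general construction: it assumes a single state-at-birth and a constant environment, so that \(\beta _E^1(t,s)\) depends only on the age \(a = t-s\) and the \(\star \)-product becomes an ordinary convolution. The kernel `b`, the grid and the rectangle rule used in `conv` are hypothetical choices.

```python
import math

def conv(f, g, h):
    """Discrete convolution (f * g)[n] = h * sum_{j=0..n} f[j] g[n-j]."""
    return [h * sum(f[j] * g[n - j] for j in range(n + 1)) for n in range(len(f))]

def clan_by_generations(b, h):
    """Sum the birth rates of children, grandchildren, ... on the grid."""
    total = list(b)
    gen = list(b)
    for _ in range(len(b)):
        gen = conv(b, gen, h)             # next generation
        if max(abs(v) for v in gen) == 0.0:
            break                          # no further generation fits on the grid
        total = [t + v for t, v in zip(total, gen)]
    return total

def clan_by_renewal(b, h):
    """Solve R = b + b * R by forward substitution (requires h*b[0] != 1)."""
    R = []
    for n in range(len(b)):
        s = b[n] + h * sum(b[j] * R[n - j] for j in range(1, n + 1))
        R.append(s / (1.0 - h * b[0]))
    return R

h = 0.1
ages = [n * h for n in range(60)]
b = [1.5 * a * math.exp(-a) for a in ages]   # newborns do not reproduce at age 0

by_generations = clan_by_generations(b, h)
by_renewal = clan_by_renewal(b, h)
max_diff = max(abs(u - v) for u, v in zip(by_generations, by_renewal))
```

Because `b[0]` vanishes, the k-th generation contributes nothing on the first k grid points, so the generation sum is finite on the grid and coincides with the solution of the discrete renewal equation up to rounding.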

In order to incorporate both the founding ancestor and the development of the descendants after birth, we finally define

For later use we note that

and

The clan-kernels \(u_E^c\) and \(\beta _E^c\) satisfy the same consistency relations as \(u_E^0\) and \(\beta _E^1\) of Lemma 3.1.

Theorem 3.2

Given the input \(\tau \mapsto E(\tau )\) defined on [s, t], the relations

hold for all \(\tau \in (s,t)\) and all \(\omega \in \varSigma \).

The proof of Theorem 3.2 proceeds by proving (3.17) first and then using this identity to verify (3.16). The papers (Diekmann et al. 1998, 2001, 2003) contain a much more detailed exposition of this constructive approach, including proofs of (3.16) and (3.17) in a generalised form with the instantaneous rate \(\beta _E^1\) replaced by cumulative offspring production \(\varLambda \), necessitating the use of the Stieltjes integral, which can be avoided here since we assume that offspring are produced at a per capita rate, so with some probability per unit of time. In our paper (Diekmann et al. 1998) we considered general linear time dependent problems (the time dependence corresponding to fixing an input). In the paper (Diekmann et al. 2001) we explicitly considered input, but focussed on the feedback loop that captures dependence. As the notation of (Diekmann et al. 2001) is not ideal for investigating the problem introduced in the next section, we have adopted in the present exposition a different, more suitable notation, viz. the use of the subscript E. In the paper (Diekmann et al. 2003) the main objective was to characterise the steady states.

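The central idea of the modelling and analysis methodology of physiologically structured populations is that we view the population state as a measure m on \(\varOmega \). We use the kernel \(u_E^c\) to define operators \(U_E^c(t,s)\) that map the p-state at time s to the p-state at time t as follows. Assuming that all individuals experience the same environmental condition E, we can associate to each measure m a new measure

$$\begin{aligned} \bigl (U_E^c(t,s)m\bigr )(\omega ) = \int _\varOmega u_E^c(t,s,x,\omega )\,m(dx),\quad \omega \in \varSigma , \end{aligned}$$(3.18)

and note that the Chapman–Kolmogorov relation (3.16) translates into the semigroup property

$$\begin{aligned} U_E^c(t,\tau )U_E^c(\tau ,s) = U_E^c(t,s),\quad s \le \tau \le t, \end{aligned}$$(3.19)

while (3.15) yields

$$\begin{aligned} U_E^c(s,s) = I. \end{aligned}$$(3.20)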

Note that we may replace the superscript c in (3.18) by 0 and use (3.7) to deduce the semigroup property for \(U_E^0\). That \(U_E^0(s,s)=I\) follows again from (3.15). Families of linear maps that satisfy the conditions (3.18) and (3.20) are called state-linear dynamical systems with input.

In a previous paper (Diekmann et al. 2018) we have already considered what amounts to population level conditions for ODE-reducibility; in this paper we concentrate on finding i-level ones.

4 Finite dimensional state representation

4.1 General considerations

Let Y be a vector space. We do not yet fix a topology for Y, but note that if \(Y'\) is a separating vector space of linear functionals on Y, then the weakest topology on Y for which all \(y' \in Y'\) are continuous (the so-called \(w(Y,Y')\)-topology) makes Y into a locally convex space whose dual space is \(Y'\) (Rudin 1973; Theorem 3.10, p.62).

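Let \(U_E\) be a state-linear dynamical system with input \(\tau \mapsto E(\tau )\), which at this point has no connection yet with either \(U^0_E\) or \(U^c_E\). This means that each \(U_E(t,s)\) is a linear operator on Y and

$$\begin{aligned} U_E(t,\tau )U_E(\tau ,s) = U_E(t,s),\quad s \le \tau \le t, \end{aligned}$$(4.1)

$$\begin{aligned} U_E(s,s) = I. \end{aligned}$$(4.2)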

If a vector topology has been chosen for Y we also require the operators \(U_E(t,s)\) to be continuous with respect to this topology.

We are interested in finding a finite dimensional exact reduction (or, lumping) of \(U_E(t,s)\). More precisely, we want, if possible, to choose a separating vector space \(Y'\) of linear functionals on Y and construct a \(w(Y,Y')\)-continuous linear map \(P: Y \rightarrow {\mathbb {R}}^k\) and a \(k \times k\) matrix K(E) such that

$$\begin{aligned} PU_E(t,s) = \varPhi _E(t,s)P, \end{aligned}$$(4.3)

where \(\varPhi _E(t,s)\) is the fundamental matrix solution of the k-dimensional ODE-system

$$\begin{aligned} \frac{dN}{dt}(t) = K(E(t))N(t). \end{aligned}$$(4.4)

The \(w(Y,Y')\)-continuous linear map P can be represented by elements \(W_i \in Y',\, i=1,2,\ldots ,k\) as

To avoid useless variables, P should be surjective or, equivalently, the functionals \(W_1, W_2,\ldots , W_k\) should be linearly independent.

The adjoint of a forward evolutionary system characterised by (4.1)–(4.2) is a backward evolutionary system (see Clément et al. 1988; Diekmann et al. 1995). For these it is more natural to think of s as the dynamic variable with respect to which we differentiate. Since the restriction of an evolutionary system to the diagonal in the (t, s)-plane is the identity operator, the derivative with respect to t is simply minus the derivative with respect to s at the diagonal. This observation suffices for our purpose and we therefore do not elaborate the forward-backward duality here.

Let W denote the k-vector with components \(W_i\). We may then rewrite (4.3) in the form

as a shorthand for

Since the right hand side of (4.7) is differentiable, the same must be true for the left hand side. By differentiation we obtain the following task:

Here the subscript L refers to lumpability and the task is to find k elements \(W_i \in Y^*\) such that

- the derivatives exist,

- the outcome is a linear combination, with input dependent coefficients, of the elements \(W_i\).

Assuming that the derivative exists, we may write

and in Sects. 2.1 and 8 we employ the notation of the right hand side.

In this paper we accomplish \(\hbox {TASK}_{\mathrm{L}}\) for the state-linear dynamical system \(U_E^0\) with input introduced in Sect. 3. In fact, we characterise the growth rates g and the death rates \(\mu \) for which exact reduction is possible and we compute the corresponding W and K(E). In order to explain the relevance of these results for the dynamical system \(U_E^c\) we now widen the perspective by introducing output.

We return to (4.1) and (4.2) and complement them by a \(w(Y,Y')\)-continuous linear map

We call

the output at time t, given the state y at time s and the input \(\tau \mapsto E(\tau )\) defined on [s, t]. The idea is that the state itself is not observable, only the output can be measured. We now ask the following question: can, in fact, the relation between the pair (initial state, input) on the one hand and output on the other, alternatively and equivalently be described in terms of a finite dimensional dynamical system? That is, when is the diagram in Fig. 2 commutative for all inputs \(\tau \mapsto E(\tau )\) defined on [s, t]?

More precisely, we again want to find an integer k and continuous linear maps P and K(E), but in addition to (4.3) we now require

where the \(r \times k\) matrix Q(E) is also to be determined. In other words, we require that, given the state y at time s and the input \(\tau \mapsto E(\tau )\) defined on [s, t], the output at time t is obtained by applying Q(E(t)) to the solution at time t of (4.4) with initial condition

$$\begin{aligned} N(s) = Py. \end{aligned}$$(4.12)

We stress that, as far as the output is concerned, the reduction does not involve loss of information. At the black box level we cannot distinguish between the true system \(U_E\) and its finite dimensional counterpart \(\varPhi _E\).
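The black box statement can be illustrated with a finite dimensional stand-in for \(U_E\). The model below is hypothetical and serves only as an analogue: \(Y = {\mathbb {R}}^4\) holds two juvenile types and two adult types, juveniles switch type at rates 3 and 5, die at rate 1 and mature at rate E, adults die at rate 2, and the output is the total number of adults. The maps `P`, `K`, `Q` and `O` are our choices for this example; they satisfy \(PA(E) = K(E)P\) and \(O = QP\).

```python
import math

def E_of_t(t):
    """Hypothetical time-dependent input."""
    return 1.0 + 0.5 * math.sin(t)

def A(E):
    """Generator of the full system dn/dt = A(E) n on R^4."""
    return [
        [-(4.0 + E), 5.0, 0.0, 0.0],
        [3.0, -(6.0 + E), 0.0, 0.0],
        [E, 0.0, -2.0, 0.0],
        [0.0, E, 0.0, -2.0],
    ]

def K(E):
    """Generator of the reduced system dN/dt = K(E) N on R^2."""
    return [[-(1.0 + E), 0.0], [E, -2.0]]

P = [[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]   # (all juveniles, all adults)
O = [0.0, 0.0, 1.0, 1.0]                            # output: total adults
Q = [0.0, 1.0]                                      # same output read from N

def mat_vec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def integrate(matrix_of_E, y, t0, t1, steps=4000):
    """Runge-Kutta integration of dy/dt = matrix_of_E(E(t)) y."""
    h = (t1 - t0) / steps
    t = t0
    y = list(y)
    for _ in range(steps):
        k1 = mat_vec(matrix_of_E(E_of_t(t)), y)
        k2 = mat_vec(matrix_of_E(E_of_t(t + h / 2)), [a + h / 2 * b for a, b in zip(y, k1)])
        k3 = mat_vec(matrix_of_E(E_of_t(t + h / 2)), [a + h / 2 * b for a, b in zip(y, k2)])
        k4 = mat_vec(matrix_of_E(E_of_t(t + h)), [a + h * b for a, b in zip(y, k3)])
        y = [a + h * (b + 2 * c + 2 * d + e) / 6 for a, b, c, d, e in zip(y, k1, k2, k3, k4)]
        t += h
    return y

y0 = [1.0, 2.0, 0.5, 0.0]
output_full = dot(O, integrate(A, y0, 0.0, 5.0))
output_reduced = dot(Q, integrate(K, mat_vec(P, y0), 0.0, 5.0))
```

The two outputs coincide up to rounding, although the reduced system has forgotten how the juveniles and the adults are divided over the two types.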

Remark 4.1

In principle we could allow P to depend on E and write the initial condition (4.12) as

But if we can choose E(s) without affecting \(U_E(t,s)\), then, since also \(\varPhi _E(t,s)\) is insensitive to the precise value of E in s, it follows that after all, P cannot depend on E. A similar observation was made for a related problem in Remark 7.3 of (Diekmann et al. 2018).

Taking \(t=s\) in (4.11) we find that necessarily

This allows us to rewrite (4.11) as

The aim is now to derive necessary and sufficient conditions on O(E) and \(U_E\) for the existence of \(P,\, Q(E),\, K(E)\) that make (4.15) a valid identity for a minimal value of k. Note that for \(k>r\), Eq. (4.15) allows for possibly redundant information in (4.4). Indeed, adding components to N that are unobservable, in the sense that they do not contribute directly or indirectly to future output, does no harm; by requiring k to be minimal we avoid such redundancy. To derive the conditions, we follow an iterative procedure that is well-known in systems theory.

Our starting point is the output map O(E). For (4.14) to be possible at all, the range of \(O(E)^*: {\mathbb {R}}^r \rightarrow Y'\) should be contained in a finite dimensional subspace which is independent of E. Without loss of generality we can assume that this subspace has dimension r. Indeed, if the dimension is less than r there is dependence among the output components and by choosing suitable coordinates we can reduce r without losing any information.

Let \(\left\{ W_1^0, W_2^0,\ldots , W_r^0\right\} \subset Y'\) be a basis for the subspace of \(Y'\) that contains the range of \(O(E)^*\). Recall the representation (4.5) of P in terms of the elements \(W_i \in Y',\, i=1,2,\ldots ,k\). From (4.14) we conclude that for all \(i=1,2,\ldots ,r\), the element \(W_i^0\) belongs to the subspace spanned by \(\left\{ W_1, W_2,\ldots , W_k\right\} \). In particular, \(k \ge r\). By a suitable choice of basis for \({\mathbb {R}}^k\) we can arrange things so that

Define \(P_0:Y \rightarrow {\mathbb {R}}^r\) by

Then

with \(Q_0(E): {\mathbb {R}}^r \rightarrow {\mathbb {R}}^r\) such that for all \(v \in {\mathbb {R}}^r\)

because we have chosen r in the optimal way and \(\left\{ W_1^0, W_2^0,\ldots , W_r^0\right\} \) is a basis for the range of \(O(E)^*\). In the first step in the iterative procedure we try to find \(K_0(E):{\mathbb {R}}^r \rightarrow {\mathbb {R}}^r\) such that (4.15) in the guise of

holds, where \(\varPhi _E(t,s)\) is now the fundamental matrix solution of the r-dimensional system

On account of (4.19) we may reduce (4.20) to

if, as we assume, we can manipulate E(t) without changing \(P_0U_E(t,s)\).

One should compare (4.22) with (4.3), but there is an important difference: determining the map P or, equivalently, the elements \(W_i, \, i= 1,2,\ldots ,k\) of \(Y'\) is part of the task whereas the elements \(W_i^0, \, i= 1,2,\ldots , r\) are known because O(E) is given. So rather than a task, we now have the following test:

In more detail the test consists in answering the following questions:

- Does \(\frac{d}{dt}U_E^*(t,s)W_i^0{}_{\big | t=s}\) exist for \(i=1,2,\ldots ,r\)?

- Is the outcome a linear combination, with input dependent coefficients, of the elements \(W_1^0,W_2^0,\ldots , W_r^0\)?

If, for some index i, the derivative does not exist, finite dimensional state representation is not possible. In contrast, there is still hope that a finite dimensional state representation might be possible if a derivative is not in the span of \(\left\{ W_1^0, W_2^0,\ldots , W_r^0\right\} \). If that is the case, we add the derivative to the basis and thus enlarge the subspace. Varying both the index i and the value of E(s), we obtain a new subspace of \(Y'\) that may, or may not, be finite dimensional. If it is finite dimensional we perform \(\hbox {TEST}_1\) which is the analogue of \(\hbox {TEST}_0\), but with \(W^0\) extended to \(W^1\), a vector the components of which span the new subspace. If necessary this procedure can be repeated. If the process leads after a finite number of steps to a finite dimensional subspace, we are in business. If the process does not terminate, finite dimensional state representation is not possible.
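In a finite dimensional analogue the iterative procedure is a few lines of linear algebra. The sketch below assumes \(Y = {\mathbb {R}}^4\) and a hypothetical compartment model \(dn/dt = A(E)n\), so that the derivative of \(U_E^*(t,s)W\) at \(t=s\) is the row vector \(WA(E(s))\) and existence of the derivative is not an issue. Since E enters the model affinely, two sample values of E suffice to generate all derivatives. The function names and the model are ours.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a list of row vectors, by exact Gaussian elimination."""
    M = [[Fraction(v) for v in r] for r in rows]
    rk = 0
    ncols = len(M[0]) if M else 0
    for c in range(ncols):
        piv = next((r for r in range(rk, len(M)) if M[r][c] != 0), None)
        if piv is None:
            continue
        M[rk], M[piv] = M[piv], M[rk]
        for r in range(len(M)):
            if r != rk and M[r][c] != 0:
                f = M[r][c] / M[rk][c]
                M[r] = [a - f * b for a, b in zip(M[r], M[rk])]
        rk += 1
    return rk

def apply_adjoint(w, A):
    """The functional w composed with A, that is, the row vector w A."""
    n = len(w)
    return [sum(w[i] * A[i][j] for i in range(n)) for j in range(n)]

def close_subspace(w0_list, generators):
    """Enlarge span(w0_list) until it is invariant under all generators."""
    basis = []
    for w in w0_list:
        if rank(basis + [w]) > len(basis):
            basis.append(list(w))
    changed = True
    while changed:
        changed = False
        for w in list(basis):
            for A in generators:
                d = apply_adjoint(w, A)
                if rank(basis + [d]) > len(basis):   # derivative outside the span
                    basis.append(d)
                    changed = True
    return basis

def A(E, extra_death_type2=0):
    """Two juvenile types (switching at rates 3 and 5), two adult types."""
    return [
        [-(4 + E), 5, 0, 0],
        [3, -(6 + E + extra_death_type2), 0, 0],
        [E, 0, -2, 0],
        [0, E, 0, -2],
    ]

total_adults = [0, 0, 1, 1]
k_equal = len(close_subspace([total_adults], [A(0), A(1)]))
k_unequal = len(close_subspace([total_adults], [A(0, 1), A(1, 1)]))
```

When both juvenile types have the same death and maturation rates, the procedure stops at dimension 2 (total juveniles and total adults). Giving one juvenile type an extra death rate forces the two juvenile types to be tracked separately, and the procedure stops at dimension 3. In an infinite dimensional setting the same loop may fail to terminate, which is the case in which no finite dimensional state representation exists.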

In general, finding P and K(E) such that (4.3) holds, or, in other words, performing \(\hbox {TASK}_{\mathrm{L}}\), is hard since, literally, one does not know how to start. In contrast, for a given output O(E), the tests \(\hbox {TEST}_0\), \(\hbox {TEST}_1, \ldots \) yield a constructive procedure.

If one does manage to characterise P and K(E) such that (4.3) holds, one can give the output problem a twist: (4.11) holds for all outputs of the form (4.14). Below we shall follow this road while considering reproduction as part of the output. In this manner we can focus on (4.3) for \(U_E^0\) and in the end still obtain results for \(U_E^c\) as described in the next subsection.

4.2 Physiologically structured population models

As explained in Sect. 3, it is natural to consider the p-state of a physiologically structured population as a measure and therefore the p-state space should be a linear subspace Y of the vector space \(M(\varOmega )\) of all Borel measures on \(\varOmega \). One reason for not choosing the whole \(M(\varOmega )\) as p-state space is that we may want to keep biologically relevant quantities such as the total biomass finite. If in the one-dimensional i-state space case x denotes the size of an individual, then \(\int _\varOmega x m(dx)\) represents the total biomass and it is finite only for measures m in a proper subspace of \(M(\varOmega )\) if \(\varOmega \) is an infinite interval. Another reason is that when we check whether the infinite dimensional model is ODE-reducible we construct a \(w(Y,Y')\)-continuous linear map \(P:Y \rightarrow {\mathbb {R}}^k\) or, equivalently, linear functionals \(W_i \in Y'\), now representable by locally bounded measurable functions \(w_i\) on \(\varOmega \) via the pairing

and we may end up with functions \(w_i\) for which the integral in (4.23) is not finite for every \(m \in M(\varOmega )\). So we want these functions \(w_i\) to represent elements in \(Y'\) and consequently have to restrict Y to a suitably chosen subspace of \(M(\varOmega )\). The freedom we have in choosing Y and \(Y'\) therefore comes in very handy.

We denote the \({\mathbb {R}}^k\)-valued function with components \(w_i\) by w. Later we shall show that when a finite dimensional state representation exists for the physiologically structured population model, the function w is actually continuous, but not necessarily bounded, on \(\varOmega \).

Consider the dynamical systems \(U_E^0\) and \(U_E^c\) with input of Sect. 3. The system \(U_E^0\) represents a transport-degradation process (without reproduction) while \(U_E^c\) represents a physiologically structured population model with reproduction.

Using (3.18), in its superscript 0 version, (3.13) and (3.5) we find that for the transport-degradation case, (4.3) amounts to

while for complete physiologically structured population models, it amounts to

Using in addition (3.14) and (3.5) we can elaborate \(\hbox {TASK}_{\mathrm{L}}\) by taking the derivative with respect to t of both sides of (4.25) and then putting \(t=s\). Since \(t \mapsto X_E(t,s,x)\) is differentiable, the differentiability of the left hand side of (4.25) implies that the function \(x \mapsto w(x)\) is differentiable, at least in certain directions. More precisely, for \(x\in \varOmega \) the linear approximation of \(w(x+h)-w(x)\) exists as a map acting on the space of vectors h spanned by \(g(x,\cdot )\). This map we call (Dw)(x). We therefore find that necessarily

for the transport-degradation model, and

for the population model.

If the transport-degradation model is ODE-reducible, that is, if we can find a positive integer k, linearly independent measurable functions \(\{w_1,w_2,\ldots ,w_k\}\) and a \(k \times k\) matrix K(E) so that (4.26) holds, the physiologically structured population model is also ODE-reducible if we impose the following restriction on the reproduction process: There exists a \(k \times k\) matrix M(E) such that

The reason is simply that when this is the case, we can write the Eqs. (4.26) and (4.27) in a unified way as

where \(H(E)=K(E)\) for the transport-degradation model and \(H(E)=K(E)-M(E)\) for the population model.

Note that the restriction (4.28) is satisfied if reproduction is (part of the) output in the following sense:

because then we can take M(E) to be the \(k \times k\) matrix with entries

But there are other situations in which (4.28) is satisfied. The simplest case is when \(M(E)=0\). Note that this does not imply the absence of reproduction, merely that \(\beta \) is annihilated by all \(w_i\) or, in other words, that the functions \(w_i\) measure properties of individuals that are preserved under reproduction (as a concrete example, think of mass in cell-fission models).

Yet another case, viz. the one in which \(\beta \) has the form

will be briefly discussed in Sect. 8.

When \(n=1\), that is, when the i-state space is one-dimensional, (4.29) reduces to the following equation:

$$\begin{aligned} g(x,E)\,w'(x) - \mu (x,E)\,w(x) = H(E)\,w(x). \end{aligned}\tag{4.33}$$

In Sect. 5 we address the following problem: list necessary and sufficient conditions on g and \(\mu \) for the existence of a measurable function \(w: \varOmega \rightarrow {\mathbb {R}}^k\) with a priori unknown k such that there exists a \(k \times k\) matrix H(E) for which (4.33) holds.

To solve the problem, we rely heavily on the fact that (4.33) is a local equation in the x-variable. For a fixed value \(E_0\) the solution w of (4.33) is, essentially, given by a matrix exponential. Once w has been determined, we can view (4.33) as a constraint on the ways in which \(g,\, \mu \) and H can depend on E.
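To make the matrix exponential explicit, here is a short derivation under two assumptions: (4.33) is read as \(g(x,E)w'(x)-\mu (x,E)w(x)=H(E)w(x)\), the form consistent with the catalogue entries of Sect. 5, and \(g(x,E_0)\ne 0\) on \(\varOmega \). Fixing \(E=E_0\) and dividing by g gives a linear ODE in x whose coefficient matrices commute for different values of x, so that, with \(x_b \in \varOmega \) an arbitrary reference point,

$$\begin{aligned} w'(x)&= \frac{1}{g(x,E_0)}\bigl (H(E_0)+\mu (x,E_0)I\bigr )w(x),\\ w(x)&= \exp \left( \int _{x_b}^x \frac{\mu (y,E_0)}{g(y,E_0)}dy\right) \exp \left( \int _{x_b}^x \frac{dy}{g(y,E_0)}\,H(E_0)\right) w(x_b). \end{aligned}$$

The scalar factor collects the effect of mortality relative to growth, while the matrix exponential carries the coupling between the components of w.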

5 A catalogue of models that admit a finite dimensional state representation

In this section we present an explicit catalogue of all possible combinations of model ingredients that allow a finite dimensional state representation for transport-degradation models with \(\varOmega \subset {\mathbb {R}}^1\) an interval that may have infinite length. The catalogue extends naturally to all physiologically structured population models in which the submodel for reproduction is restricted by (4.28).

One should choose the individual state space \(\varOmega \) such that every point in it is reachable. The following assumption guarantees this. We shall also make use of it in the proofs of our results.

Assumption 5.1

There exists a constant environmental condition \(E_0\) such that

The catalogue consists of three families \(F_i,\,\,i=1,2,3,\) of functions g and \(\mu \) for which we specify w and H(E) such that (4.33) holds. These families involve infinite dimensional parameters in the form of functions \(\gamma _0:\varOmega \rightarrow {\mathbb {R}}, \,\, \mu _0:{\mathscr {E}}\rightarrow {\mathbb {R}},\,\, v_0:\varOmega \rightarrow {\mathbb {R}},\,\, v_i:{\mathscr {E}}\rightarrow {\mathbb {R}}, \,\, i=1,2,3\). We do not claim that \(g, \,\, \mu \) and w are biologically meaningful for all choices of these parameters (in fact they are not). The catalogue simply provides a precise description of the constraints on g and \(\mu \) that enable an equivalent, as far as the output map defined by w is concerned, finite dimensional representation of the corresponding transport-degradation model.

A transformation of the i-state variable affects the death rate \(\mu \) and the output function w in the usual manner. But since the growth rate needs to keep its interpretation, we have to incorporate a transformation-specific factor. If

and

then

and accordingly

is the growth rate of the transformed i-state variable y.

Transformation of the i-state variable induces a transformation of the parameters in our families \(F_i\). As we shall indicate below, the multiplicative factor highlighted by (5.4) allows us to transform the i-state variable in such a way that the formula for the growth rate becomes relatively simple.
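As a sketch of how such a transformation can simplify the growth rate, assume that \(y=\phi (x)\) with \(\phi \) differentiable and strictly increasing, so that by the chain rule the growth rate of y is \(\phi '(x)g(x,E)\). Assume further that g has the product form \(g(x,E)=v_0(x)v_1(E)\) with \(v_0>0\) (the notation anticipates the family \(F_2\)) and take \(\phi (x)=\int _{x_b}^x dy/v_0(y)\) for some reference point \(x_b \in \varOmega \). Then

$$\begin{aligned} \frac{dy}{dt} = \phi '(x)\,g(x,E) = \frac{1}{v_0(x)}\,v_0(x)\,v_1(E) = v_1(E), \end{aligned}$$

so in the new variable all individuals grow at the same, purely environment-dependent, rate.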

Let \(L:{\mathbb {R}}^k \rightarrow {\mathbb {R}}^k\) be linear and invertible. If (4.33) holds and we define

then, by applying L to the identity (4.33), we obtain

So, if g and \(\mu \) are given and (w, H) is a solution to (4.33), then \((\widetilde{w},\widetilde{H})\) defined by (5.5) is also a solution. As (5.5) defines an equivalence relation we see that solutions (w, H) to (4.33) occur in equivalence classes. In our catalogue we choose w such that H has a relatively simple form, but one should keep in mind that this choice yields a representative of an equivalence class.
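The computation behind this claim takes one line, assuming that (5.5) is the pair \(\widetilde{w}(x)=Lw(x)\), \(\widetilde{H}(E)=LH(E)L^{-1}\) and that (4.33) reads \(g(x,E)w'(x)-\mu (x,E)w(x)=H(E)w(x)\). Because L is constant and g and \(\mu \) are scalar,

$$\begin{aligned} g\,\widetilde{w}' - \mu \,\widetilde{w} = L\bigl (g\,w' - \mu \,w\bigr ) = L\,H(E)\,w = L\,H(E)\,L^{-1}\,\widetilde{w} = \widetilde{H}(E)\,\widetilde{w}. \end{aligned}$$

In particular, choosing L to bring a constant matrix into Jordan normal form does not change the class of models described.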

As a reference for integration we choose a reference point \(x_b \in \varOmega \). If all individuals are born with the same i-state at birth, we choose this i-state as \(x_b\).

We are now ready to present our first family in the catalogue.

| \(F_1\): scalar representations | |
|---|---|
| k | \(k=1\) |
| Parameters | \(\gamma _0:\varOmega \rightarrow {\mathbb {R}},\,\, \mu _0:{\mathscr {E}}\rightarrow {\mathbb {R}}\) |
| g | No restriction |
| \(\mu \) | \(\mu (x,E) = \gamma _0(x)g(x,E) + \mu _0(E)\) |
| w | \(w(x) = \exp \left( \int _{x_b}^x \gamma _0(y)dy\right) \) |
| H | \(H(E)=-\mu _0(E)\) |

The proof that (4.33) is an identity if \(F_1\) applies is straightforward and omitted.
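For orientation, the computation can be sketched as follows, assuming that (4.33) reads \(g(x,E)w'(x)-\mu (x,E)w(x)=H(E)w(x)\). Since \(w'(x)=\gamma _0(x)w(x)\) for the function w of \(F_1\),

$$\begin{aligned} g\,w' - \mu \,w = \gamma _0\,g\,w - \bigl (\gamma _0\,g + \mu _0(E)\bigr )w = -\mu _0(E)\,w = H(E)\,w. \end{aligned}$$

The x-dependent part of the death rate is thus exactly compensated by the growth of w along the i-state trajectory.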

In the rest of this section we assume that \(k \ge 2\) and we supplement (4.33) with the non-degeneracy condition

- (H1) \(w_1,w_2,\ldots ,w_k\) are linearly independent as scalar functions of \(x \in \varOmega \).

Among the parameters of the second family are a constant (that is, independent of both x and E) \(k \times k\) matrix \(\varLambda \) and a constant vector \(w(x_b)\in {\mathbb {R}}^k\) specifying the value of w in \(x_b\). The identity (4.33) holds for all choices of \(\varLambda \) and \(w(x_b)\). But (H1) has also to be satisfied. Therefore we have to impose the condition

- (H2) the eigenvalues of \(\varLambda \) have geometric multiplicity one

on the matrix \(\varLambda \) and the condition

- (H3) if \(\{\theta _1,\theta _2,\ldots ,\theta _k\}\) is a basis for \({\mathbb {R}}^k\) consisting of eigenvectors and generalised eigenvectors of \(\varLambda \) and

  $$\begin{aligned} w(x_b) = \sum _{j=1}^k d_j\theta _j, \end{aligned}$$

  then \(d_j \ne 0\) whenever \(\theta _j\) is a generalised eigenvector of highest rank

on the combination of \(\varLambda \) and \(w(x_b)\).

We are now ready for the second family in our catalogue.

| \(F_2\): physiological age | |
|---|---|
| k | \(k \ge 2\) |
| Parameters | \(\gamma _0:\varOmega \rightarrow {\mathbb {R}},\,\, \mu _0:{\mathscr {E}}\rightarrow {\mathbb {R}},\,\,v_0:\varOmega \rightarrow {\mathbb {R}},\,\,v_1:{\mathscr {E}}\rightarrow {\mathbb {R}}\) |
| | \(\varLambda \in {\mathbb {R}}^{k\times k}\) and \(w(x_b) \in {\mathbb {R}}^k\) such that (H2) and (H3) hold |
| | \(\zeta (x):= \int _{x_b}^x \frac{dy}{v_0(y)}\) |
| g | \(g(x,E) = v_0(x)v_1(E)\) |
| \(\mu \) | \(\mu (x,E) = \gamma _0(x)g(x,E) + \mu _0(E)\) |
| w | \(w(x) = \exp \left( \int _{x_b}^x \gamma _0(y)dy\right) \exp \left( \zeta (x)\varLambda \right) w(x_b)\) |
| H | \(H(E)=v_1(E)\varLambda -\mu _0(E)I\) |

Note that we do not lose any generality by assuming that \(\varLambda \) is in Jordan normal form. Apart from the common factor \(\exp \left( \int _{x_b}^x \gamma _0(y)dy\right) \) the components of w are therefore linear combinations of k building blocks of the form

where \(j=1,2,\ldots , r,\,\, m=0,1,\ldots ,k_j-1,\,\, \sum _{j=1}^r k_j = k\). The condition (H3) guarantees that each and every building block (5.7) contributes to at least one component of w. By choosing \(w(x_b)\) such that \(d_j=1\) whenever \(\theta _j\) is a generalised eigenvector of highest rank and zero otherwise, the components are (because \(\varLambda \) is assumed to be in Jordan normal form) precisely these building blocks.
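A numerical sanity check of the family \(F_2\) may help to see the building blocks at work. The sketch below assumes that (4.33) reads \(g(x,E)w'(x)-\mu (x,E)w(x)=H(E)w(x)\). All concrete choices are hypothetical and serve only as an illustration: \(k=2\), \(\varLambda \) a single Jordan block with eigenvalue 0.7, \(w(x_b)=(0,1)^T\), \(x_b=0\), \(v_0(x)=1+x\), \(\gamma _0(x)=-0.2x\), and the functions `v1` and `mu0`. The derivative of w is approximated by a central difference.

```python
import math

LAM = 0.7  # eigenvalue of the 2x2 Jordan block [[LAM, 1], [0, LAM]] (hypothetical)

def v0(x): return 1.0 + x
def zeta(x): return math.log(1.0 + x)      # integral of 1/v0 from x_b = 0 to x
def gamma0(x): return -0.2 * x
def int_gamma0(x): return -0.1 * x * x     # integral of gamma0 from x_b = 0 to x
def v1(E): return E / (1.0 + E)
def mu0(E): return 0.1 + 0.05 * E

def g(x, E): return v0(x) * v1(E)
def mu(x, E): return gamma0(x) * g(x, E) + mu0(E)

def w(x):
    # exp(zeta * Lambda) applied to w(x_b) = (0, 1) equals exp(LAM * zeta) * (zeta, 1)
    common = math.exp(int_gamma0(x) + LAM * zeta(x))
    return [common * zeta(x), common]

def H(E):
    # H(E) = v1(E) * Lambda - mu0(E) * I
    return [[v1(E) * LAM - mu0(E), v1(E)],
            [0.0, v1(E) * LAM - mu0(E)]]

def residual(x, E, h=1e-5):
    """Largest component of |g w' - mu w - H w| with w' from a central difference."""
    wp = [(a - b) / (2 * h) for a, b in zip(w(x + h), w(x - h))]
    wx, HE = w(x), H(E)
    lhs = [g(x, E) * d - mu(x, E) * c for d, c in zip(wp, wx)]
    rhs = [sum(HE[i][j] * wx[j] for j in range(2)) for i in range(2)]
    return max(abs(l - r) for l, r in zip(lhs, rhs))

worst_f2 = max(residual(x, E) for x in (0.5, 1.0, 3.0) for E in (0.2, 1.0, 5.0))
```

Up to the common factor, the two components of `w` are \(\zeta e^{\lambda \zeta }\) and \(e^{\lambda \zeta }\); the residual `worst_f2` should vanish up to discretisation error.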

We now present the third and last family of the catalogue.

| \(F_3\): generalised von Bertalanffy growth | |
|---|---|
| k | \(k \ge 2\) |
| Parameters | \(\gamma _0:\varOmega \rightarrow {\mathbb {R}},\,\, \mu _0:{\mathscr {E}}\rightarrow {\mathbb {R}},\,\,v_0:\varOmega \rightarrow {\mathbb {R}},\,\,v_j:{\mathscr {E}}\rightarrow {\mathbb {R}},\,\, j=1,2,3\) |
| | \(\zeta (x):= \int _{x_b}^x \frac{dy}{v_0(y)}\) |
| g | \(g(x,E) = v_0(x)\left( v_1(E)+v_2(E)\zeta (x)+v_3(E)\zeta (x)^2\right) \) |
| \(\mu \) | \(\mu (x,E) = \gamma _0(x)g(x,E) + \mu _0(E) +(k-1)v_3(E)\zeta (x)\) |
| w | \(w_j(x) = \exp \left( \int _{x_b}^x \gamma _0(y)dy\right) \zeta (x)^{j-1},\,\, j=1,2,\ldots ,k\) |
| H | \(H(E)=H_0(E)-\mu _0(E)I\) |
| | \(H_0=\left( \begin{array}{ccccc} 0 & -(k-1)v_3 & 0 & \cdots & 0\\ v_1 & v_2 & -(k-2)v_3 & & \vdots \\ 0 & 2v_1 & 2v_2 & \ddots & 0\\ \vdots & & \ddots & \ddots & -v_3\\ 0 & \cdots & 0 & (k-1)v_1 & (k-1)v_2 \end{array} \right) \) |
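The tridiagonal structure of \(H_0\) can be checked numerically. The sketch below assumes that (4.33) reads \(g(x,E)w'(x)-\mu (x,E)w(x)=H(E)w(x)\) and that row j of \(H_0\) has the entries \((j-1)v_1\), \((j-1)v_2\) and \(-(k-j)v_3\) on the sub-diagonal, diagonal and super-diagonal, which is what inserting \(w_j\) into that equation yields. The helper `build_H0` and all concrete functions (\(k=4\), \(x_b=0\), \(v_0(x)=1+x\), \(\gamma _0(x)=-0.2x\), `mu0`, `v`) are hypothetical illustration choices; \(w'\) is approximated by a central difference.

```python
import math

K = 4  # dimension of the representation (hypothetical choice)

def v0(x): return 1.0 + x
def zeta(x): return math.log(1.0 + x)      # integral of 1/v0 from x_b = 0 to x
def gamma0(x): return -0.2 * x
def int_gamma0(x): return -0.1 * x * x     # integral of gamma0 from x_b = 0 to x
def mu0(E): return 0.1 + 0.05 * E
def v(E): return (E / (1.0 + E), -0.1 * E, 0.05 * E)   # (v1, v2, v3)

def g(x, E):
    v1, v2, v3 = v(E)
    z = zeta(x)
    return v0(x) * (v1 + v2 * z + v3 * z * z)

def mu(x, E):
    return gamma0(x) * g(x, E) + mu0(E) + (K - 1) * v(E)[2] * zeta(x)

def w(x):
    common = math.exp(int_gamma0(x))
    return [common * zeta(x) ** j for j in range(K)]   # w_{j+1} = common * zeta^j

def build_H0(k, v1, v2, v3):
    """Tridiagonal matrix H_0; index j is zero-based, i.e. row j+1 of the table."""
    H0 = [[0.0] * k for _ in range(k)]
    for j in range(k):
        if j > 0:
            H0[j][j - 1] = j * v1
        H0[j][j] = j * v2
        if j < k - 1:
            H0[j][j + 1] = -(k - 1 - j) * v3
    return H0

def residual(x, E, h=1e-5):
    """Largest component of |g w' - mu w - (H_0 - mu0 I) w|."""
    wp = [(a - b) / (2 * h) for a, b in zip(w(x + h), w(x - h))]
    wx, H0 = w(x), build_H0(K, *v(E))
    lhs = [g(x, E) * d - mu(x, E) * c for d, c in zip(wp, wx)]
    rhs = [sum(H0[i][j] * wx[j] for j in range(K)) - mu0(E) * wx[i] for i in range(K)]
    return max(abs(l - r) for l, r in zip(lhs, rhs))

worst_f3 = max(residual(x, E) for x in (0.5, 1.0, 3.0) for E in (0.2, 1.0, 5.0))
```

The extra term \((k-1)v_3(E)\zeta (x)\) in \(\mu \) is what removes the power \(\zeta ^k\) from the last row, so that the span of \(w_1,\ldots ,w_k\) is closed.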

It is important to realise that the parametrisation is far from unique. For instance, if we write

it is not difficult to check that the linear fractional transformation

with \(ad-bc \ne 0\) yields the alternative form

with

With a little bit more effort one can check that

becomes

with

Note that

and that, accordingly,

So apart from the factor \(\exp \left( \int _{x_b}^x \gamma _0(y)dy\right) \) the components \(\widetilde{w}_j(x)\) are linear combinations of the powers \(\zeta ^\ell ,\,\, \ell =0,1,\ldots ,k-1\). We conclude that the requirement that \(\{w_1,w_2,\ldots ,w_k\}\) and \(\{\widetilde{w}_1,\widetilde{w}_2,\ldots ,\widetilde{w}_k\}\) are equivalent systems of output functionals is indeed satisfied.

A transformation \(y=\phi (x)\) leaves the form of g invariant. Because of the extra factor (recall (5.4)) we have to adapt \(\gamma _0\) by a factor, too. The net effect is that the integral of \(\gamma _0\), and hence w, transforms in the standard manner.