Abstract

Response inhibition is essential for terminating inappropriate actions and, in some cases, may be required selectively. Selective stopping can be investigated with multicomponent anticipatory or stop-signal response inhibition paradigms. Here we provide a freely available open-source Selective Stopping Toolbox (SeleST) to investigate selective stopping using either anticipatory or stop-signal task variants. This study aimed to evaluate selective stopping between the anticipatory and stop-signal variants using SeleST and provide guidance to researchers for future use. Forty healthy human participants performed bimanual anticipatory response inhibition and stop-signal tasks in SeleST. Responses were more variable and slowed to a greater extent during the stop-signal than in the anticipatory paradigm. However, the stop-signal paradigm better conformed to the assumption of the independent race model of response inhibition. The expected response delay during selective stop trials was present in both variants. These findings indicate that selective stopping can successfully be investigated with either anticipatory or stop-signal paradigms in SeleST. We propose that the anticipatory paradigm should be used when strict control of response times is desired, while the stop-signal paradigm should be used when it is desired to estimate stop-signal reaction time with the independent race model. Importantly, the dual functionality of SeleST allows researchers flexibility in paradigm selection when investigating selective stopping.

Introduction

Humans rely on response inhibition to stop inappropriate pre-planned or ongoing actions. Response inhibition can be required in nonselective or selective stopping contexts. Nonselective stopping refers to scenarios where all components of a response must be terminated, for example, actions that require coordination of effectors with a common goal. Selective stopping represents complex scenarios where inhibitory control is required in response to only certain stimuli (stimulus-selective; Bissett and Logan 2014) or only part of a multicomponent action (response-selective; Coxon et al. 2007). Selective stopping may better capture the complexity of human actions, which often require the coordination of multiple effectors guided by various environmental stimuli (for a detailed review of selective stopping, see Wadsley et al. 2022b). To date, investigations of selective stopping have provided new insights into healthy ageing (Albert et al. 2022; Coxon et al. 2016; Hsieh and Lin 2017) and may lead to a better understanding of clinical conditions associated with poor impulse control (MacDonald et al. 2016; Rincon-Perez et al. 2020).

Multicomponent stop-signal task (SST; Lappin and Eriksen 1966; Verbruggen et al. 2019) and anticipatory response inhibition (ARI; He et al. 2021; Slater-Hammel 1960) paradigms can be used to investigate nonselective and response-selective stopping (hereafter referred to as selective stopping for simplicity). Both paradigms require a default multi-effector action (e.g., press two buttons) in response to a go-signal which, on a subset of trials, must be terminated on the presentation of a stop-signal. Responses in the SST are equivalent to a reaction time task where an imperative go-signal cues a speeded action. In contrast, responses in the ARI are cued by a predictable indicator reaching a stationary target. Both paradigms can assess nonselective stopping by presenting a stop-signal to all subcomponents of the cued response (stop-all trials), or they can assess selective stopping by presenting a stop-signal to only one subcomponent while the other continues as initially cued by the go-signal (partial-stop trials). Importantly, both a go- and a stop-signal are presented during stop trials, which captures response inhibition as a form of top-down cognitive control better than Go/No-Go paradigms (Verbruggen and Logan 2008).

Selective stopping can be supported by selective or nonselective response inhibition. During partial-stop trials, a substantial response delay is observed in the subcomponent cued to go when the subcomponent cued to stop is successfully withheld (Coxon et al. 2007). This stopping-interference effect has been observed across various within-limb and between-limb effector pairings across SST and ARI paradigms (Aron and Verbruggen 2008; MacDonald et al. 2012; Xu et al. 2015). Stopping-interference likely arises due to a restart process during partial-stop trials (De Jong et al. 1995), whereby a response delay reflects nonselective response inhibition followed by selective re-initiation of the effector cued to respond (MacDonald et al. 2017). Importantly, the stopping-interference effect is not hard-wired and can be modulated by factors that result in nonselective response inhibition (e.g., functional coupling; Wadsley et al. 2022a), or vice-versa, factors that allow for selective response inhibition (e.g., proactive cueing; Majid et al. 2012). Therefore, the stopping-interference effect provides a means to capture the selectivity, or lack thereof, of response inhibition.

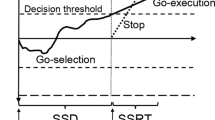

An independent race model can describe behaviour in response inhibition paradigms (Logan and Cowan 1984). The independent race model proposes a theoretical race between independent go and stop processes triggered by their respective signals. The outcome on a given stop trial is governed by which of the two processes ‘wins’ the race. The covert latency of response inhibition, termed stop-signal reaction time (SSRT), can be estimated since both a go- and a stop-signal are presented during a stop trial (Logan et al. 1984). Estimates of SSRT provide an objective measure of stopping latency that can be compared across experimental conditions and populations (e.g., Aron et al. 2003). Importantly, behavioural data need to meet the independence assumption of the race model (i.e., response times during failed stop trials should be faster than those during go trials) for SSRT estimates to be reliable (Verbruggen et al. 2019).
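The logic of the race can be illustrated with a short simulation. The following is a minimal sketch, not part of SeleST: the go-time distribution, the fixed SSRT, and the stop-signal delay are all illustrative assumptions, and `simulate_race` is a hypothetical helper name. It shows why, under independence, responses that escape inhibition are drawn from the fast end of the go distribution.

```python
import random
import statistics


def simulate_race(n_trials=10000, ssd=0.250, ssrt=0.220, seed=1):
    """Simulate stop trials under an independent race (times in seconds).

    All parameter values are illustrative assumptions, not estimates
    from the study. SSRT is held constant for simplicity.
    """
    rng = random.Random(seed)
    go_rts, failed_stop_rts = [], []
    stop_finish = ssd + ssrt                    # stop process finishing time
    for _ in range(n_trials):
        go_finish = rng.gauss(0.500, 0.080)     # go process finishing time
        go_rts.append(go_finish)
        if go_finish < stop_finish:             # go wins: the response escapes
            failed_stop_rts.append(go_finish)
    return go_rts, failed_stop_rts


go_rts, failed = simulate_race()
p_respond = len(failed) / len(go_rts)
print(f"P(respond | stop-signal) = {p_respond:.2f}")
print(f"mean go RT          = {statistics.mean(go_rts) * 1000:.0f} ms")
print(f"mean failed-stop RT = {statistics.mean(failed) * 1000:.0f} ms")
```

Because only go processes that finish before the stop process produce a response, the simulated failed-stop response times are necessarily faster on average than the full go distribution, which is the pattern the independence check looks for in real data.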

The aim of the current study is to improve the ability to conduct research on selective stopping. At present, selective stopping research has been limited by software availability. Open-source versions of the ARI (He et al. 2021) and SST (Verbruggen et al. 2008) paradigms are freely available; however, they do not provide the functionality to assess selective stopping. Current open-source paradigms also only support using either the SST or ARI paradigm, but not both. We believe that the best practice for selective stopping research is to have the option to choose either paradigm and to have maximum flexibility with task design. Therefore, to achieve our aim we have developed a freely available, open-source toolbox to design selective stopping tasks with in-built optionality for either the SST or ARI paradigm. The toolbox was then used to conduct an experiment to provide key comparisons of behavioural data acquired using the SST and ARI paradigms. In doing so, the experiment reveals important information about each paradigm’s strengths and limitations, guiding recommendations for future use and interpretation of findings. Three hypotheses were tested: (1) Response slowing and variation would be greater during the SST than ARI paradigm. (2) The stopping-interference effect would be larger during the SST than ARI paradigm. (3) The assumption of the independent race model would be met more frequently with the SST than the ARI paradigm.

Methods

Participants

Participants were eligible for the experiment if they had no history of neurological illness and were aged between 18 and 60 years. Forty neurologically healthy adults volunteered to participate and met the inclusion criteria (23 female and 17 male; mean age 29.4 yrs., range 18 to 56 yrs.; 36 right-handed and 4 left-handed). The study was approved by the University of Auckland Human Participants Ethics Committee (Ref. UAHPEC22709).

The selective stopping toolbox

The Selective Stopping Toolbox (SeleST) allows response inhibition to be assessed in nonselective and selective stopping contexts via the SST or ARI paradigm. SeleST was developed using freely available Python-based PsychoPy software that provides high-quality stimulus and response timing (Bridges et al. 2020; Peirce et al. 2019). SeleST is open-source and made freely available using a dedicated GitHub repository at: https://github.com/coreywadsley/SeleST. Detailed and up-to-date instructions for installation and operation are included in the GitHub repository. An overview of key features and design choices is provided below.

Application

SeleST makes use of the object-oriented basis of Python. Classes and functions are contained in two primary components: SeleST_initialize and SeleST_run. Most parameters can be modified using a graphical user interface (GUI) presented whenever SeleST is run (Fig. 1A). The default menu presents users with dialogue boxes where general information (e.g., participant demographics) and options (e.g., paradigm) can be set. Additional options (e.g., trial numbers, response keys, stimuli colours) can be set as desired through advanced settings GUIs. The design of SeleST provides maximum flexibility without disrupting data collection for experimenters who are inexperienced with coding. Advanced users can also make use of the design by inserting modified versions of the various functions that handle specific aspects of the task (e.g., stop-signal presentation, feedback).

Fig. 1 The selective stopping toolbox (SeleST). (A) A graphical user interface (GUI) is presented whenever SeleST is run. Participant demographics and basic design choices, such as paradigm type, can be set from the initial GUI. Advanced settings can be set through subsequent GUIs by selecting the ‘Change general settings?’ option. (B) Default implementations of simple (top) and choice (bottom) variants of the anticipatory response inhibition (ARI) and stop-signal task (SST) in SeleST

Paradigms

SeleST contains simple and choice variants of multicomponent SST and ARI paradigms (Fig. 1B). A go-signal is presented for only two visual indicators during the simple variants, whereas a go-signal is presented for two of four possible visual indicators during the choice variants. An element of choice is typically included in the SST to slow go-signal RTs and provide sufficient time for a stop-signal to be processed before the go response is enacted. Choice variants may also be beneficial to avoid the automaticity of responding that can occur in simple contexts (Verbruggen and Logan 2008). Simple variants may be more suitable for populations where response times are slowed (e.g., Parkinson’s disease) or for the ARI paradigm since it is robust to response slowing (Leunissen et al. 2017). Notably, the data output structure is consistent across all paradigm types, allowing flexible comparisons and design choices based on the population of interest.

Default task structure

The default version of SeleST implements a choice variant of the SST or ARI paradigm. Participants first complete a practice routine with in-built task instructions. During practice, a participant is first introduced to, and required to complete, a block of only go trials. Stop trials are then introduced, and participants must complete a mixed block of go and stop trials. Introducing go trials before stop trials works to prioritise “going” as the default mode of responding and ensures a measure of go performance that is not biased by the implicit expectation of stopping in mixed blocks (Verbruggen and Logan 2009b). A nonbiased measure of go response time is also important when determining if deficits in response inhibition are beyond simple differences in processing speed (e.g., Verhaeghen 2011). After practice, the default trial arrangement includes 288 go trials and 144 stop trials (equally distributed for stop-all, stop-left, and stop-right trials) across 12 blocks. Each block takes approximately 2 min to complete. Thus, a behavioural assessment of selective stopping can be completed within 30 min.

Custom task routines can be implemented easily within SeleST. For example, trial and block numbers can be modified using the GUIs to set a 25% stop-signal probability or to include stop-all trials only. More advanced customisation is made available through the option to import a custom trial list. A custom trial list can be created with the SeleST_trialArrayCreator script in which various parameters (e.g., cue and stop-signal colour) can be specified across a series of custom trial types. Custom trial lists are helpful for advanced implementations of SeleST, for example, to assess proactive selective stopping (e.g., Cirillo et al. 2018) or create a stimulus-selective stopping task (e.g., Sanchez-Carmona et al. 2021). Together, the above options provide a variety of methods for experimenters to modify the task structure to suit their demands.

Feedback

Trial-by-trial feedback is available for all paradigms in SeleST. Feedback is provided by points on a sliding scale, with more points earned by making more accurate responses. During the ARI paradigm, accuracy is determined by stop success or the closeness of the go-signal RT to the target time. During the SST, accuracy is determined by stop success or the speed of the go-signal RT. Points are awarded independently for the left and right responses to provide separate feedback for the respond-hand and stop-hand during partial-stop trials. The number of points awarded is signalled by changing the colour of the target line or stimulus outline for the ARI and SST paradigm, respectively. Using symbolic rather than text cues helps avoid potential language-related confounds. The current block and total score are updated and displayed to the participant at the end of each task block. An option to modify or disable trial-by-trial feedback is also included in SeleST.

Trial-by-trial feedback is beneficial to task performance. Strategic slowing (i.e., delaying responses to improve the chance of success in a stop trial) is a prevalent confound in response inhibition paradigms (Verbruggen et al. 2013; Verbruggen and Logan 2009b). The tendency to slow responses is less during the ARI than the SST since go-trial success depends on a temporally precise response (Leunissen et al. 2017). We have applied this concept to the SST in SeleST by making feedback during a go-trial dependent on the speed of the response, as opposed to the binary presence of a response used in typical implementations. We also use points for feedback rather than information on RTs or stop success. Points are a way to gamify a task which, in turn, can enhance task engagement and be more intuitive than information on response times for most populations (Lumsden et al. 2016).

Experiment protocol

Choice variants of the ARI and SST implementations of SeleST were used to assess response-selective stopping. Participants were seated comfortably in front of an LG-24GL600F-B monitor (144 Hz refresh rate, ~ 60 cm viewing distance) controlled by an RTX A4000 Laptop GPU. The operating system was set to optimise timing as per the recommendations of Bridges et al. (2020). Responses were made using the middle and index fingers of both hands on a DELL KB216 keyboard. A keyboard was used for accessibility, but a specialised response box (e.g., Li et al. 2010) is recommended for accurate timing during advanced implementations of SeleST. Each trial consisted of a fixation (0.75–1.25 s), response (1.25 s), and feedback (1 s) period.

Trial onset occurred when the go-signal was presented on two of four possible indicators. The go-signal was always presented for either the two inner or two outer indicators; thus, the choice component required responding with either the index (inner) or middle (outer) fingers. Inner and outer trials were randomised and distributed equally throughout the task. The objective during most trials was to respond with both hands (go-left go-right: GG). A subset of stop trials was included to assess response inhibition (Fig. 2). A stop-signal required the corresponding response to be withheld and was presented for both indicators during nonselective stop-all trials (stop-left stop-right: SS). During selective partial-stop trials, a stop-signal was presented for either the left (stop-left go-right: SG) or right (go-left stop-right: GS) indicator, requiring the response to be withheld in the stop-hand but not in the respond-hand. The stop-signal delay (SSD) was initially set to occur at 250 ms for SS, SG, and GS trials. The SSD was then adjusted in steps of 35 ms (~ 5 frames) across each stop trial type independently. The SSD was increased after successful stopping and decreased after unsuccessful stopping to obtain an average stopping success of ~ 50%.
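The stop-signal delay tracking described above can be sketched as a simple one-up/one-down staircase. This is a minimal illustration under stated assumptions rather than the SeleST implementation: the class name `SSDStaircase` and the floor and ceiling values are hypothetical, while the 250 ms start, the 35 ms step, and the independent tracking of SS, SG, and GS trials come from the text.

```python
from dataclasses import dataclass


@dataclass
class SSDStaircase:
    """One-up/one-down tracker for a single stop-trial type (times in ms)."""
    ssd_ms: float = 250.0     # starting delay reported in the text
    step_ms: float = 35.0     # step size reported in the text
    min_ms: float = 0.0       # assumed floor (not stated in the source)
    max_ms: float = 1250.0    # assumed ceiling: length of the response period

    def update(self, stop_success: bool) -> float:
        """Lengthen the delay after a successful stop, shorten it after a failure."""
        delta = self.step_ms if stop_success else -self.step_ms
        self.ssd_ms = min(self.max_ms, max(self.min_ms, self.ssd_ms + delta))
        return self.ssd_ms


# One independent staircase per stop-trial type.
staircases = {trial_type: SSDStaircase() for trial_type in ("SS", "SG", "GS")}
staircases["SS"].update(stop_success=True)    # stopping gets harder next time
staircases["SG"].update(stop_success=False)   # stopping gets easier next time

# At 144 Hz a 35 ms step corresponds to roughly 5 screen refreshes.
frames_per_step = round(35 / 1000 * 144)
print({k: s.ssd_ms for k, s in staircases.items()}, frames_per_step)
```

Tracking each stop-trial type separately lets stop-all and partial-stop trials each converge on roughly 50% stopping success even if they differ in difficulty.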

Fig. 2 Schematic of primary trial types during choice variants of the anticipatory response inhibition (ARI) and stop-signal task (SST) in SeleST. Participants are presented with four empty indicators during fixation. A multicomponent ‘go’ response is cued for either the inner (L1 & R1, depicted) or outer (L2 & R2) indicators by presenting the go-signal (ARI: filling bars reaching target; SST: indicator turning black). Nonselective stopping is assessed by presenting a stop-signal (indicator turning cyan) on both the selected indicators. Selective stopping is assessed by presenting a stop-signal on either the left or the right indicator while the other continues as initially cued by the go-signal

Participants completed the SST and ARI (counterbalanced order) in a single experiment which lasted approximately one hour. Task instructions were built into SeleST and given prior to the start of each task. Participants completed a certain-go practice block (36 GG trials) and then a maybe-stop practice block (24 GG, 4 SS, 4 SG, 4 GS trials) for familiarisation. The task consisted of 12 maybe-stop blocks after practice, resulting in a total of 288 GG, 48 SS, 48 SG, and 48 GS trials for each paradigm. The trial order was randomised with the exception that each block started with at least one GG trial. Participants were informed that their primary goal was to earn as many points as possible and to avoid slowing their responses to prepare for a stop trial. The block and total score were updated and displayed at the end of each block. Paradigm-specific information is presented below.
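The block structure described above can be reproduced with a few lines of Python. The sketch below is illustrative only and is not taken from SeleST: `build_block` is a hypothetical helper, and the even inner/outer split within each trial type is an assumption (the text states only that inner and outer trials were distributed equally throughout the task). The per-block counts, the constraint that each block starts with a GG trial, and the trial timings come from the text.

```python
import random


def build_block(rng, n_gg=24, n_ss=4, n_sg=4, n_gs=4):
    """Return one shuffled block of (trial_type, finger_pair) tuples."""
    trials = []
    for trial_type, n in (("GG", n_gg), ("SS", n_ss), ("SG", n_sg), ("GS", n_gs)):
        half = n // 2
        # Assumed: each trial type is split evenly between finger pairs.
        trials += [(trial_type, "inner")] * half
        trials += [(trial_type, "outer")] * (n - half)
    rng.shuffle(trials)
    # Enforce the stated constraint that a block starts with a GG trial.
    first_gg = next(i for i, t in enumerate(trials) if t[0] == "GG")
    trials[0], trials[first_gg] = trials[first_gg], trials[0]
    return trials


rng = random.Random(0)
task = [build_block(rng) for _ in range(12)]

counts = {}
for block in task:
    for trial_type, _ in block:
        counts[trial_type] = counts.get(trial_type, 0) + 1

# Expected block duration from the trial timings: mean fixation of 1 s,
# a 1.25 s response period, and 1 s of feedback.
mean_trial_s = (0.75 + 1.25) / 2 + 1.25 + 1.0
block_s = len(task[0]) * mean_trial_s
print(counts, f"{block_s:.0f} s per block")
```

With 36 trials per block and a mean trial length of 3.25 s, the estimated block duration is 117 s, consistent with the approximately 2 min per block stated for the default task structure.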

ARI

The display for the ARI paradigm consisted of four white bars (15 cm high, 1.5 cm wide) on a grey background. A black horizontal target line was positioned behind each bar at 80% of its total height. Trial onset occurred when the two innermost or outermost bars appeared to “fill” (i.e., gradually turn black from bottom to top). The objective during GG trials was to stop the bars from filling as close as possible to the target lines. Each bar took 1 s to fill completely; thus, a target RT of 800 ms was cued during GG trials. Response keys were pressed with either the index fingers (inner bars) or middle fingers (outer bars) to cease filling. On stop trials, either both bars (SS), or only the left (SG) or right bar (GS), changed colour (cyan). Participants were instructed to let the corresponding bars fill completely when a stop-signal was presented. Points were awarded based on the closeness of the RT to the target for each response (green [100 points]: < 25 ms or successful stop; yellow [50 points]: 26–50 ms; orange [25 points]: 51–75 ms; red [0 points]: > 75 ms or failed stop).

SST

The display for the SST paradigm consisted of four empty white bars with black outlines (5 cm high, 1.5 cm wide). Trial onset occurred when the inner or outer bars turned completely black. The objective during GG trials was to respond with the correct response keys as fast as possible. Either both, the left, or the right bar turned to the stop colour after the go-signal during SS, SG, and GS stop trials, respectively. Participants were instructed to cancel the corresponding go response when a stop-signal was presented. Points were awarded based on the speed of RT for each response (green [100 points]: < 400 ms or successful stop; yellow [50 points]: 401–500 ms; orange [25 points]: 501–600 ms; red [0 points]: > 600 ms or failed stop).
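The two points schemes share the same banded structure and can be sketched with one small scoring function. This is a hedged illustration rather than the SeleST feedback code: the function names are hypothetical, the treatment of values that fall exactly on a band edge (e.g., 25 ms or 400 ms) is an assumption because the text leaves those values unassigned, and awarding 0 points for an omitted go response is also assumed.

```python
ARI_BANDS = [(25, 100), (50, 50), (75, 25)]      # |RT - target| in ms -> points
SST_BANDS = [(400, 100), (500, 50), (600, 25)]   # RT in ms -> points


def points_from_bands(value_ms, bands):
    """Return the points of the first band whose upper edge is >= value_ms."""
    for upper_ms, points in bands:
        if value_ms <= upper_ms:     # assumed: band edges are inclusive
            return points
    return 0                         # beyond the last band (red)


def score_response(paradigm, stop_cued, rt_ms, target_ms=800):
    """Score one hand on one trial; rt_ms is None when no key was pressed."""
    if stop_cued:
        return 100 if rt_ms is None else 0       # successful vs failed stop
    if rt_ms is None:
        return 0                                 # omitted go response (assumed)
    if paradigm == "ARI":
        return points_from_bands(abs(rt_ms - target_ms), ARI_BANDS)
    return points_from_bands(rt_ms, SST_BANDS)


# Partial-stop (SG) trial in the ARI: left hand withheld, right hand 60 ms late.
left = score_response("ARI", stop_cued=True, rt_ms=None)
right = score_response("ARI", stop_cued=False, rt_ms=860)
print(left, right)
```

Scoring each hand separately mirrors the independent left and right feedback described for partial-stop trials, where the stop-hand and respond-hand can earn different numbers of points.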

Dependent measures

Data were processed using custom Python analysis scripts available in the SeleST GitHub repository. Responses during GG trials were coded as errors if the incorrect response keys were chosen or if part of the cued response was omitted. Mean GG response time was calculated across both hands during successful trials. Response slowing was calculated by subtracting mean GG response time during the certain-go block from that during the mixed go and stop task blocks to quantify strategic slowing. Response variation was calculated as the median absolute deviation of GG response times. Stop-trial data were taken from stop-all trials or averaged across GS and SG partial-stop trials for statistical analyses on nonselective and selective stopping, respectively. Mean stop success was calculated as the percentage of trials where the response was correctly withheld in the cued indicators. Mean SSD was calculated and made relative to the target for the ARI paradigm; thus, smaller and larger SSDs indicate less time available for stopping in the ARI and SST, respectively. Stopping-interference was calculated on a trial-by-trial basis by subtracting the mean GG response time during the task blocks from the response time of the respond-hand during partial-stop trials. Stopping-interference was used to determine stopping selectivity, where greater values indicate more interference (less selective stopping). The assumption of the independent race model (fail-stop RT < go RT) was tested for each paradigm across nonselective stop-all and selective partial-stop trials, respectively. Stop-signal reaction time (SSRT) was estimated with the integration method (Logan et al. 1984). Response times from GG trials with errors were included, and omissions were replaced with the maximum RT value (1.25 s) before averaging across both hands (Verbruggen et al. 2019).
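The dependent measures above can be expressed compactly in code. The following is a minimal sketch and not the analysis script from the SeleST repository: all function names are hypothetical, the inputs are assumed to be response times in ms already averaged across hands, and the example data are invented for illustration. The ARI-specific re-referencing of SSD to the target is not handled here.

```python
import math
import statistics


def response_slowing(go_only_rts, mixed_rts):
    """Mean GG RT in mixed blocks minus mean GG RT in the certain-go block."""
    return statistics.mean(mixed_rts) - statistics.mean(go_only_rts)


def median_abs_deviation(rts):
    """Median of absolute deviations from the median RT."""
    med = statistics.median(rts)
    return statistics.median([abs(rt - med) for rt in rts])


def stopping_interference(respond_hand_rts, mixed_gg_rts):
    """Trial-wise respond-hand RT minus the mean GG RT of the task blocks."""
    gg_mean = statistics.mean(mixed_gg_rts)
    return [rt - gg_mean for rt in respond_hand_rts]


def independence_met(failed_stop_rts, go_rts):
    """Race-model check: failed-stop RTs should be faster than go RTs."""
    return statistics.mean(failed_stop_rts) < statistics.mean(go_rts)


def ssrt_integration(go_rts, n_omissions, p_respond, mean_ssd, max_rt=1250.0):
    """Integration method with go omissions replaced by the maximum RT.

    go_rts includes RTs from go trials with choice errors; p_respond is the
    proportion of stop trials on which a response was made.
    """
    rts = sorted(list(go_rts) + [max_rt] * n_omissions)
    # The nth RT is the go RT at the p_respond quantile of the distribution.
    n = max(1, math.ceil(p_respond * len(rts)))
    return rts[n - 1] - mean_ssd


# Invented example data (ms).
gg = [400, 420, 440, 460, 480, 500, 520, 540, 560]
mad = median_abs_deviation(gg)
slowing = response_slowing([480, 500, 520], [500, 520, 540])
interference = stopping_interference([600, 650], gg)
ssrt = ssrt_integration(gg, n_omissions=1, p_respond=0.5, mean_ssd=250)
print(mad, slowing, interference, ssrt)
```

In the example, one omission is appended at 1250 ms to give ten go RTs; with a 50% probability of responding on stop trials, the fifth-fastest RT (480 ms) minus the mean SSD (250 ms) gives an SSRT estimate of 230 ms.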

Statistical analyses

Data were analysed using JASP software (Version 0.16.3; JASP Team 2020). Evidence was determined using Bayes factor in favour of the alternative hypothesis (BF10), where values greater than 1 indicate support for the alternative hypothesis and values less than 1 support the null hypothesis. The strength of evidence was determined using a standard BF10 classification table (BF10 < 0.3: moderate evidence for null hypothesis; 0.3 ≤ BF10 ≤ 3: inconclusive evidence; BF10 > 3: moderate evidence for alternative hypothesis; van Doorn et al. 2021). Comparisons of GG trial (success, response slowing, response variation) and stop trial (success, stopping-interference) performance between the ARI and SST paradigm were made using Bayesian paired Wilcoxon signed-rank tests with default priors (van Doorn et al. 2020). The association between stop-signal delay and stopping-interference was determined using Bayesian Kendall’s tau correlations with pooled data from successful partial-stop trials across all participants. A frequentist linear mixed model with a fixed effect of Outcome (successful, failed) and random effect of participant was used to determine whether log-transformed stopping-interference was specific to successful partial-stop trials. In this case, stopping-interference data were also pooled from all participants. SSRT data were compared between nonselective stop-all and selective partial-stop trials with Wilcoxon signed-rank tests to determine how the speed of stopping is impacted by the required selectivity for each paradigm separately. Data are presented as median ± interquartile range unless otherwise specified.
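The evidence categories used throughout the Results can be written as a small lookup. This sketch simply restates the thresholds given above; `classify_bf10` is a hypothetical helper and is not part of JASP or SeleST.

```python
def classify_bf10(bf10):
    """Map a Bayes factor (BF10) onto the three categories used in the text."""
    if bf10 < 0.3:
        return "moderate evidence for the null hypothesis"
    if bf10 <= 3:
        return "inconclusive evidence"
    return "moderate evidence for the alternative hypothesis"


for value in (0.245, 0.75, 48.95):
    print(value, "->", classify_bf10(value))
```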

Results

Trial success and response times

Trial success and response time results are shown in Table 1. There was no difference in GG success between paradigms for either the go-only (BF10 = 0.245) or mixed go and stop trial blocks (BF10 = 0.300). The amount of response slowing from the go-only to mixed task blocks was greater in the SST (73.6 ± 54.0 ms) compared to ARI (7.3 ± 11.6 ms; BF10 = 1.16 × 10^5; Fig. 3A). The median absolute deviation during GG trials was greater in the SST (37.8 ± 20.1 ms) compared to ARI (18.4 ± 7.2 ms; BF10 = 1.94 × 10^5; Fig. 3B). As expected, stop success was close to 50% for both paradigms, but overall was greater with ARI than SST for both stop-all (BF10 = 356.05) and partial-stop trials (BF10 = 5.38). GG trial success was similar, but responses were slower and more variable during the SST compared to ARI.

Fig. 3 Go-left go-right (GG) trial behavioural comparisons between anticipatory response inhibition (ARI) and stop-signal task (SST) paradigms. (A) Response slowing measured as the difference in GG response times from the go-only to mixed task blocks, where values greater than 0 indicate more slowing. (B) Response variation measured as the median absolute deviation of GG response times during the mixed blocks. Grey lines connect paired participant averages

Stopping-interference effect

The stopping-interference effect was examined from successful partial-stop trials. The stopping-interference effect was larger in the SST (146.5 ± 31.0 ms) compared to ARI (65.0 ± 146.5 ms; BF10 = 1.56 × 10^6) and is shown in Fig. 4A. The magnitude of stopping-interference on a given partial-stop trial was positively associated with the stop-signal delay for both the ARI (tau-b = 0.18, BF10 = 7.47 × 10^27) and SST (tau-b = 0.25, BF10 = 1.20 × 10^55) paradigm (Fig. 4B). Stopping-interference was larger in the SST compared to the ARI paradigm and was larger when there was less time available for stopping.

Fig. 4 Comparisons between ARI and SST paradigms. (A) The stopping-interference effect calculated as the difference between mean GG response time and the response time of the respond-hand during partial-stop trials, where greater values indicate more interference. Grey lines connect paired participant averages. (B) Correlation between stopping-interference and stop-signal delay on a trial-by-trial basis. Stop-signal delay values closer to or further from 0 indicate less time for stopping in the ARI and SST paradigms, respectively

Trial-wise analyses of stopping-interference (Fig. 5) indicate that response delays were greater during successful partial-stop (M = 62.3 ms, SD = 53.39 ms) compared to failed partial-stop trials in the ARI paradigm (M = 9.4 ms, SD = 48.8 ms; F(1, 38.9) = 165.1, P < 0.001). The same relationship of greater stopping-interference in successful partial-stop (M = 153.7 ms, SD = 82.7 ms) compared to failed partial-stop trials was also evident for the SST paradigm (M = -27.7 ms, SD = 107.9 ms; F(1, 38.5) = 210.8, P < 0.001). The stopping-interference effect was specific to successful partial-stop trials.

Fig. 5 Frequency histograms (n bins = 25) of trial-wise stopping-interference from failed and successful partial-stop trials. Stopping-interference is calculated as the difference between mean go-trial response time and the response time of the respond-hand during partial-stop trials, where greater values indicate more interference. Solid vertical lines indicate condition means. ***P < 0.001 for fixed effect of Outcome (failed, successful)

Stop-signal reaction time

As expected, the independence assumption (fail-stop RT < go RT) was met for 100% of participants during nonselective stop-all trials with SST. Conversely, the assumption was met for only 20% of the same participants with ARI. For selective partial-stop trials, the independence assumption was met in 95% and 25% of participants in the SST and ARI, respectively. After excluding participants who violated the independence assumption, estimates of SSRT were larger in stop-all (243.8 ± 36.7 ms) compared to partial-stop trials for the SST (231.6 ± 30.6 ms; BF10 = 48.95). The ARI paradigm showed inconclusive evidence of SSRT differences between stop-all (254.1 ± 63.2 ms) and partial-stop trials (208.2 ± 22.7 ms; BF10 = 0.75), likely due to the limited number of available participants. Assumptions of the independent race model were met more frequently for the SST than for the ARI paradigm for both nonselective and selective stop trials (Fig. 6).

Fig. 6 Stop-signal reaction time (SSRT) estimates during nonselective and selective stop trials for the anticipatory response inhibition (ARI) and stop-signal task (SST) separately. SSRT was only estimated for participants that met the independence assumption and was calculated using the integration method with inclusion and replacement of go trial errors and omissions, respectively. Grey lines connect paired participant averages. ***Posterior odds > 100

Discussion

The present study compared response-selective stopping between multicomponent SST and ARI paradigms, using a novel open-source Selective Stopping Toolbox (SeleST). In support of the first hypothesis, go responses were more variable and slowed to a greater extent in the SST than ARI. In support of the second hypothesis, the stopping-interference effect was larger during successful partial-stop trials in the SST than ARI. The third hypothesis was also supported since the assumption of the independent race model was met more frequently in the SST than ARI. The findings indicate that nonselective and selective stopping can be assessed with SeleST using either the SST or ARI paradigm. Below we discuss the main findings and provide recommendations for future use.

Responses are slower and more variable during the SST

Responses were more variable and slowed to a greater extent in the SST than in the ARI paradigm. While GG trial success was equivalent between both paradigms, response slowing from a go-only to a mixed go and stop trial context was ~ 66 ms larger in the SST. Strategic slowing in the SST has been attributed to the lack of a temporal constraint for GG trial success (Verbruggen and Logan 2009b). However, slowing still occurred in the current implementation of the SST, where points were awarded trial-by-trial based on response speed. Response slowing was small in the ARI paradigm, presumably since the go response was cued by a predictable indicator reaching a stationary target (MacDonald et al. 2017). This temporal constraint also likely contributed to response times being half as variable in the ARI as in the SST paradigm. Response slowing and variability are likely driven by the competing tendencies of going and stopping (Verbruggen and Logan 2009a). Strategic slowing does not invalidate the SST and instead likely reflects how individuals slow responses in contexts where stopping may be required (e.g., Hannah and Aron 2021). However, slowing does produce right-skewed response time distributions, which can bias SSRT estimates (Leunissen et al. 2017; Verbruggen et al. 2013). Isolating reactive and proactive response inhibition processes is also problematic with response slowing since successful stopping may simply reflect an omission of the go process rather than overt response inhibition. Therefore, the ARI paradigm provides more stable GG trial performance.

Stopping-interference is greater in the SST and specific to successful selective stopping

The stopping-interference effect was ~ 80 ms longer in the SST than in the ARI paradigm. Greater stopping-interference in the SST may be related to response slowing. Xu et al. (2015) identified that part of the measured response delay during partial-stop trials results from a sampling bias. According to the independent race model, successful partial-stop trials, from which stopping-interference is sampled, are likely to reflect the slowest components of the response time distributions (Logan et al. 1984). Thus, a portion of the measured response delay likely reflects a natural discrepancy between GG trial and successful partial-stop trial response times. While it is possible that a sampling bias also impacts stopping-interference estimates in the ARI paradigm, it is likely to be smaller since slowing is less than in the SST, and the assumptions of the independent race model are often violated (Leunissen et al. 2017). Therefore, the stopping-interference effect during partial-stop trials is present in both paradigms but may be inflated by a sampling bias in the SST.

The stopping-interference effect was specific to successful partial-stop trials for both the SST and ARI paradigms. A pause-then-cancel model of action stopping posits that a nonselective pause process is initiated during the presentation of infrequent stimuli, such as a stop-signal (Diesburg and Wessel 2021). Thus, a response delay would be expected to occur during all partial-stop trials, whether partial stopping was successful or not. However, the trial-by-trial analyses indicated that stopping-interference was specific to or at least amplified by successfully stopping part of a response. Greater stopping-interference during successful partial-stop trials is likely the consequence of a nonselective cancel process in addition to the indiscriminate pause process (e.g., Wadsley et al. 2022b). Stopping-interference was also positively associated with stop-signal delay on a trial-by-trial basis, whereby response delays were greater as there was less time available for stopping in both paradigms. Likely, excitatory processes in the respond-hand took longer to reach threshold the later into the planned response the stop process was triggered. Therefore, the stopping-interference effect is specific to successful selective stopping and temporally associated with stop-signal delay.
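
As a minimal sketch of how stopping-interference can be separated by stopping outcome, the respond-hand response time on partial-stop trials can be compared against the mean GG response time, split by whether the stop-hand was successfully withheld. The data layout (tuples of response time and success flag), the function name, and the values are assumptions made for illustration and do not reflect the SeleST output format.

```python
from statistics import mean


def stopping_interference(gg_rts, partial_trials):
    """Mean respond-hand delay on partial-stop trials relative to GG trials.

    gg_rts: response times (ms) from go trials.
    partial_trials: iterable of (respond_hand_rt_ms, stop_success) tuples.
    Returns a dict with the delay for successful and failed partial-stop
    trials; a value is None when no trials of that type are available.
    """
    baseline = mean(gg_rts)
    delays = {}
    for label, outcome in (("successful", True), ("failed", False)):
        rts = [rt for rt, success in partial_trials if success == outcome]
        delays[label] = mean(rts) - baseline if rts else None
    return delays


# Hypothetical values (ms), not data from the study.
gg = [500, 510, 490, 500]
partial = [(620, True), (640, True), (510, False), (530, False)]
interference = stopping_interference(gg, partial)
print(interference)
```

A larger delay for successful than for failed partial-stop trials in such a summary would correspond to the pattern described above, where interference is specific to, or amplified by, successful selective stopping.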

SSRT was faster during selective than nonselective trials

In examining the reliability of SSRT estimates, the SST better conformed to the assumptions of the independent race model. The independence assumption predicts that response times during failed stop trials should be faster than those during go trials. This independence assumption was valid for all but two participants in the SST but only valid for ~25% of participants in the ARI paradigm. Violations of the independence assumption are problematic since they can produce unreliable estimates of SSRT (Verbruggen et al. 2019). The proportion of violations in the current ARI paradigm may have been elevated by adding a choice component, as previous studies have reported SSRT in many participants using a simple ARI paradigm (Leunissen et al. 2017; Zandbelt et al. 2013). It is important to note, however, that the presence of a stopping-interference effect may itself violate the independence assumption since the presentation of a stop-signal should not influence the go process. Indeed, the independent race model is often violated in selective stopping contexts (Bissett and Logan 2014).
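
The independence check described above can be sketched as a simple per-participant comparison of means. The function names, the dictionary layout, and the response times below are hypothetical and used only for illustration; the exact criterion applied in the SeleST analysis code may differ.

```python
from statistics import mean


def independence_holds(go_rts, failed_stop_rts):
    """True when mean failed-stop RT is faster than mean go RT (times in ms)."""
    return mean(failed_stop_rts) < mean(go_rts)


def proportion_valid(participants):
    """Proportion of participants meeting the independence check.

    participants: dict mapping an ID to (go_rts, failed_stop_rts).
    """
    checks = [independence_holds(go, failed) for go, failed in participants.values()]
    return sum(checks) / len(checks)


# Hypothetical participants (ms), not data from the study.
sample = {
    "p01": ([500, 520, 540], [470, 480, 490]),  # failed stops faster: valid
    "p02": ([500, 520, 540], [530, 540, 550]),  # failed stops slower: violation
}
valid_share = proportion_valid(sample)
print(valid_share)
```

Participants who fail this check would typically be excluded before SSRT is estimated, since the estimate is only interpretable when the race model assumptions hold.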

More sophisticated race models may need to be developed to better account for the relationship between going and stopping in a selective context (Bissett et al. 2021). Matzke et al. (2013a, b) have developed a Bayesian parametric model for SSRT estimation that can account for failures to trigger the stop process (Matzke et al. 2017) as well as context independence violations in a unimanual ARI paradigm (Matzke et al. 2021). An assessment of the efficacy of the above models during selective stopping is beyond the scope of the current study. However, SeleST provides a toolbox that facilitates such assessments across various contexts, which could be explored in future studies. An alternative approach to SSRT estimation is to use electrophysiological measures to capture stopping latency, for example, more direct measures from electromyography that indicate the time taken to cancel a response (Jana et al. 2020; Raud et al. 2022). In summary, the SST can be used more reliably than the ARI to describe behaviour in the context of the independent race model, but both paradigms may require alternative methods for selective stopping.

Analyses of SSRT estimates indicated that stopping was faster during partial-stop compared to stop-all trials in the SST paradigm. It is reasonable to expect SSRT to be longer during selective than nonselective stopping since it represents a more complex form of response inhibition that may occur through a slower but more selective mechanism (Aron 2011). Some studies have indicated longer selective than nonselective SSRTs (Cirillo et al. 2018; MacDonald et al. 2021). Other studies have found no difference, suggesting that a global response inhibition mechanism may be recruited regardless of stopping selectivity (Majid et al. 2012; Raud et al. 2020). In the current study, a trend for faster selective stopping was also observed for the ARI paradigm; however, too few participants remained after exclusions. Faster SSRTs for selective stopping may result from participants attending more to partial-stop trials since they represent a greater percentage of trials (22%) than stop-all trials (11%). A key distinction of partial-stop trials in SeleST is that feedback is provided for both the respond-hand and stop-hand, which encourages accurate selective stopping. Alternatively, the independent race model may not accurately reflect the relationship between going and stopping in a selective context, which requires more than global response inhibition (Bissett et al. 2021; Hall et al. 2022). In summary, the speed of selective versus nonselective stopping may depend on context. Future studies may wish to address this matter by exploring alternative models of response inhibition (e.g., Matzke et al. 2013a, b).
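
For readers unfamiliar with nonparametric SSRT estimation, the following is a minimal sketch of the integration method described in the consensus guide (Verbruggen et al. 2019): the go response time at the percentile matching the probability of responding on stop trials, minus the mean stop-signal delay. It is offered as an illustration under simplifying assumptions (no go omissions or premature responses) and is not claimed to be the routine used in the SeleST analysis code. The function name and values are hypothetical.

```python
import math


def ssrt_integration(go_rts, p_respond_given_stop, mean_ssd):
    """Estimate SSRT (ms) with the integration method.

    go_rts: go-trial response times (ms).
    p_respond_given_stop: proportion of stop trials with a (failed-stop) response.
    mean_ssd: mean stop-signal delay (ms) on the same time base as go_rts.
    """
    if not 0 < p_respond_given_stop < 1:
        raise ValueError("p_respond_given_stop must lie strictly between 0 and 1")
    ordered = sorted(go_rts)
    # Rank of the go RT that marks the finishing time of the stop process.
    n = math.ceil(p_respond_given_stop * len(ordered))
    nth_rt = ordered[n - 1]
    return nth_rt - mean_ssd


# Hypothetical values (ms), not data from the study.
go = [420, 380, 460, 400, 440, 500, 480, 360, 520, 540]
ssrt = ssrt_integration(go, p_respond_given_stop=0.5, mean_ssd=220)
print(ssrt)
```

Applying the same function separately to partial-stop and stop-all trials, each with its own response probability and mean stop-signal delay, would give the selective and nonselective estimates compared above.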

Recommendations for future use

The SST and ARI paradigms offer unique insights into selective stopping but may be suited to different experimental demands. Response slowing and variability were lower in the ARI than in the SST, which corroborates previous comparisons made in a unimanual task context (Leunissen et al. 2017). Less response slowing is important when the stopping-interference effect is a key measure of interest since slowing can lead to estimates of stopping-interference inflated by a sampling bias (Xu et al. 2015). Tight control of response times in the ARI paradigm is also an important feature for studies investigating neural processes of response inhibition, for example, using transcranial magnetic stimulation to probe the primary motor cortex at specific time points during response preparation and inhibition (e.g., MacDonald et al. 2014). Indeed, stimulus timing can be set a priori relative to the target response time in the ARI paradigm, whereas it may need to be set post hoc based on observed response times in the SST. Furthermore, acquiring a stable baseline can be more challenging when response times are variable based on pre-trial expectations. Thus, the ARI paradigm favours selective stopping experiments where strict control of response times is required.

The independence assumption of the independent race model was met in most participants for the SST but not the ARI paradigm. It is sensible to expect the SST to better conform to the independent race model since it was formulated from behavioural observations in a unimanual SST (Logan et al. 1984). Many race model variants have since been developed for reaction time paradigms that provide detailed descriptions of behaviour (Heathcote and Matzke 2022). For example, a parametric race model can be used to estimate SSRT as well as the percentage of stop trials where an inhibitory process was not triggered (Skippen et al. 2019). The applicability of the SST to race models is important for studies determining where deficits in inhibitory control may arise beyond simple behavioural measures (e.g., Choo et al. 2022). Overall, race models seem less applicable to selective stopping in ARI (Matzke et al. 2021). In summary, the assumptions of an independent race model are favoured by the SST when examining selective stopping.

Conclusion

Selective stopping is a complex form of action stopping that can provide unique insights into response inhibition. Here we provide a freely available open-source selective stopping toolbox, SeleST, which can be used to assess response inhibition with either an SST or ARI paradigm. By directly comparing performance between SST and ARI paradigms, the current study showed that each paradigm may be best suited to different experimental demands. The SST is better suited when there is a desire to estimate SSRT in the context of the independent race model, whereas the ARI paradigm is better suited when strict control of response times is required. Importantly, we believe the SST and ARI paradigms can be viewed as complementary methods to assess selective stopping. The dual functionality of SeleST allows the decision of which paradigm to use to be guided by the research question. The research community interested in response inhibition can also drive advances with SeleST since it is freely available and open-source. SeleST can help bridge the gap between researchers who favour one paradigm over the other and may therefore increase the generalisability of selective stopping research.

Data availability

The datasets generated during the current study are not publicly available due to restricted ethical approval but are available on reasonable request. Analysis code is freely available along with an example dataset within the SeleST GitHub repository. The experiment was not preregistered.

References

Albert J, Rincon-Perez I, Sanchez-Carmona AJ, Arroyo-Lozano S, Olmos R, Hinojosa JA, Fernandez-Jaen A, Lopez-Martin S (2022) The development of selective stopping: qualitative and quantitative changes from childhood to early adulthood. Dev Sci 25(5):e13210. https://doi.org/10.1111/desc.13210

Aron AR (2011) From reactive to proactive and selective control: developing a richer model for stopping inappropriate responses. Biol Psychiatry 69(12):e55-68. https://doi.org/10.1016/j.biopsych.2010.07.024

Aron AR, Verbruggen F (2008) Stop the presses: dissociating a selective from a global mechanism for stopping. Psychol Sci 19(11):1146–1153. https://doi.org/10.1111/j.1467-9280.2008.02216.x

Aron AR, Fletcher PC, Bullmore ET, Sahakian BJ, Robbins TW (2003) Stop-signal inhibition disrupted by damage to right inferior frontal gyrus in humans. Nat Neurosci 6(2):115–116. https://doi.org/10.1038/nn1003

Bissett PG, Jones HM, Poldrack RA, Logan GD (2021) Severe violations of independence in response inhibition tasks. Sci Adv. https://doi.org/10.1126/sciadv.abf4355

Bissett PG, Logan GD (2014) Selective stopping? Maybe not. J Exp Psychol Gen 143(1):455–472. https://doi.org/10.1037/a0032122

Bridges D, Pitiot A, MacAskill MR, Peirce JW (2020) The timing mega-study: comparing a range of experiment generators, both lab-based and online. PeerJ 8:e9414. https://doi.org/10.7717/peerj.9414

Choo Y, Matzke D, Bowren MD, Tranel D, Wessel JR (2022) Right inferior frontal cortex damage impairs the initiation of inhibitory control, but not its implementation. bioRxiv. https://doi.org/10.1101/2022.05.03.490498

Cirillo J, Cowie MJ, MacDonald HJ, Byblow WD (2018) Response inhibition activates distinct motor cortical inhibitory processes. J Neurophysiol 119(3):877–886. https://doi.org/10.1152/jn.00784.2017

Coxon JP, Stinear CM, Byblow WD (2007) Selective inhibition of movement. J Neurophysiol 97(3):2480–2489. https://doi.org/10.1152/jn.01284.2006

Coxon JP, Goble DJ, Leunissen I, Van Impe A, Wenderoth N, Swinnen SP (2016) Functional brain activation associated with inhibitory control deficits in older adults. Cereb Cortex 26(1):12–22. https://doi.org/10.1093/cercor/bhu165

De Jong R, Coles MG, Logan GD (1995) Strategies and mechanisms in nonselective and selective inhibitory motor control. J Exp Psychol Hum Percept Perform 21(3):498–511. https://doi.org/10.1037//0096-1523.21.3.498

Diesburg DA, Wessel JR (2021) The Pause-then-cancel model of human action-stopping: theoretical considerations and empirical evidence. Neurosci Biobehav Rev 129:17–34. https://doi.org/10.1016/j.neubiorev.2021.07.019

Hall A, Jenkinson N, MacDonald HJ (2022) Exploring stop signal reaction time over two sessions of the anticipatory response inhibition task. Exp Brain Res 240(11):3061–3072. https://doi.org/10.1007/s00221-022-06480-x

Hannah R, Aron AR (2021) Towards real-world generalizability of a circuit for action-stopping. Nat Rev Neurosci 22(9):538–552. https://doi.org/10.1038/s41583-021-00485-1

He JL, Hirst RJ, Puri R, Coxon J, Byblow W, Hinder M, Skippen P, Matzke D, Heathcote A, Wadsley CG, Silk T, Hyde C, Parmar D, Pedapati E, Gilbert DL, Huddleston DA, Mostofsky S, Leunissen I, MacDonald HJ, Chowdhury NS, Gretton M, Nikitenko T, Zandbelt B, Strickland L, Puts NAJ (2021) OSARI, an open-source anticipated response inhibition task. Behav Res Methods. https://doi.org/10.3758/s13428-021-01680-9

Heathcote A, Matzke D (2022) Winner takes all! what are race models, and why and how should psychologists use them? Curr Dir Psychol Sci. https://doi.org/10.1177/09637214221095852

Hsieh S, Lin YC (2017) Strategies for stimulus selective stopping in the elderly. Acta Psychol (amst) 173:122–131. https://doi.org/10.1016/j.actpsy.2016.12.011

Jana S, Hannah R, Muralidharan V, Aron AR (2020) Temporal cascade of frontal, motor and muscle processes underlying human action-stopping. Elife. https://doi.org/10.7554/eLife.50371

JASP Team (2020) JASP. https://jasp-stats.org/. Accessed 12 Oct 2022

Lappin JS, Eriksen CW (1966) Use of a delayed signal to stop a visual reaction-time response. J Exp Psychol 72(6):805–811. https://doi.org/10.1037/h0021266

Leunissen I, Zandbelt BB, Potocanac Z, Swinnen SP, Coxon JP (2017) Reliable estimation of inhibitory efficiency: to anticipate, choose or simply react? Eur J Neurosci 45(12):1512–1523. https://doi.org/10.1111/ejn.13590

Li X, Liang Z, Kleiner M, Lu ZL (2010) RTbox: a device for highly accurate response time measurements. Behav Res Methods 42(1):212–225. https://doi.org/10.3758/BRM.42.1.212

Logan GD, Cowan WB (1984) On the ability to inhibit thought and action: a theory of an act of control. Psychol Rev 91(3):295–327. https://doi.org/10.1037/0033-295x.91.3.295

Logan GD, Cowan WB, Davis KA (1984) On the ability to inhibit simple and choice reaction time responses: a model and a method. J Exp Psychol Hum Percept Perform 10(2):276–291. https://doi.org/10.1037//0096-1523.10.2.276

Lumsden J, Edwards EA, Lawrence NS, Coyle D, Munafo MR (2016) Gamification of cognitive assessment and cognitive training: a systematic review of applications and efficacy. JMIR Serious Games 4(2):e11. https://doi.org/10.2196/games.5888

MacDonald HJ, Stinear CM, Byblow WD (2012) Uncoupling response inhibition. J Neurophysiol 108(5):1492–1500. https://doi.org/10.1152/jn.01184.2011

MacDonald HJ, Coxon JP, Stinear CM, Byblow WD (2014) The fall and rise of corticomotor excitability with cancellation and reinitiation of prepared action. J Neurophysiol 112(11):2707–2717. https://doi.org/10.1152/jn.00366.2014

MacDonald HJ, Stinear CM, Ren A, Coxon JP, Kao J, Macdonald L, Snow B, Cramer SC, Byblow WD (2016) Dopamine gene profiling to predict impulse control and effects of dopamine agonist ropinirole. J Cogn Neurosci 28(7):909–919. https://doi.org/10.1162/jocn_a_00946

MacDonald HJ, Laksanaphuk C, Day A, Byblow WD, Jenkinson N (2021) The role of interhemispheric communication during complete and partial cancellation of bimanual responses. J Neurophysiol 125(3):875–886. https://doi.org/10.1152/jn.00688.2020

MacDonald HJ, McMorland AJ, Stinear CM, Coxon JP, Byblow WD (2017) An activation threshold model for response inhibition. PLoS ONE 12(1):e0169320. https://doi.org/10.1371/journal.pone.0169320

Majid DS, Cai W, George JS, Verbruggen F, Aron AR (2012) Transcranial magnetic stimulation reveals dissociable mechanisms for global versus selective corticomotor suppression underlying the stopping of action. Cereb Cortex 22(2):363–371. https://doi.org/10.1093/cercor/bhr112

Matzke D, Dolan CV, Logan GD, Brown SD, Wagenmakers EJ (2013a) Bayesian parametric estimation of stop-signal reaction time distributions. J Exp Psychol Gen 142(4):1047–1073. https://doi.org/10.1037/a0030543

Matzke D, Love J, Wiecki TV, Brown SD, Logan GD, Wagenmakers EJ (2013b) Release the BEESTS: Bayesian estimation of ex-Gaussian stop-signal reaction time distributions. Front Psychol 4:918. https://doi.org/10.3389/fpsyg.2013.00918

Matzke D, Love J, Heathcote A (2017) A Bayesian approach for estimating the probability of trigger failures in the stop-signal paradigm. Behav Res Methods 49(1):267–281. https://doi.org/10.3758/s13428-015-0695-8

Matzke D, Strickland LJG, Sripada C, Weigard AS, Puri R, He J, Hirst R, Heathcote A (2021) Stopping timed actions. PsyArXiv. https://doi.org/10.31234/osf.io/9h3v7

Peirce J, Gray JR, Simpson S, MacAskill M, Hochenberger R, Sogo H, Kastman E, Lindelov JK (2019) PsychoPy2: Experiments in behavior made easy. Behav Res Methods 51(1):195–203. https://doi.org/10.3758/s13428-018-01193-y

Raud L, Huster RJ, Ivry RB, Labruna L, Messel MS, Greenhouse I (2020) A Single mechanism for global and selective response inhibition under the influence of motor preparation. J Neurosci 40(41):7921–7935. https://doi.org/10.1523/JNEUROSCI.0607-20.2020

Raud L, Thunberg C, Huster RJ (2022) Partial response electromyography as a marker of action stopping. Elife. https://doi.org/10.7554/eLife.70332

Rincon-Perez I, Echeverry-Alzate V, Sanchez-Carmona AJ, Buhler KM, Hinojosa JA, Lopez-Moreno JA, Albert J (2020) The influence of dopaminergic polymorphisms on selective stopping. Behav Brain Res 381:112441. https://doi.org/10.1016/j.bbr.2019.112441

Sanchez-Carmona AJ, Rincon-Perez I, Lopez-Martin S, Albert J, Hinojosa JA (2021) The effects of discrimination on the adoption of different strategies in selective stopping. Psychon Bull Rev 28(1):209–218. https://doi.org/10.3758/s13423-020-01797-6

Skippen P, Matzke D, Heathcote A, Fulham WR, Michie P, Karayanidis F (2019) Reliability of triggering inhibitory process is a better predictor of impulsivity than SSRT. Acta Psychol (amst) 192:104–117. https://doi.org/10.1016/j.actpsy.2018.10.016

Slater-Hammel AT (1960) Reliability, accuracy, and refractoriness of a transit reaction. Research Quarterly. Am Asso Health, Phy Edu Recreat 31(2):217–228. https://doi.org/10.1080/10671188.1960.10613098

van Doorn J, Ly A, Marsman M, Wagenmakers EJ (2020) Bayesian rank-based hypothesis testing for the rank sum test, the signed rank test, and Spearman’s rho. J Appl Stat 47(16):2984–3006. https://doi.org/10.1080/02664763.2019.1709053

van Doorn J, van den Bergh D, Bohm U, Dablander F, Derks K, Draws T, Etz A, Evans NJ, Gronau QF, Haaf JM, Hinne M, Kucharsky S, Ly A, Marsman M, Matzke D, Gupta A, Sarafoglou A, Stefan A, Voelkel JG, Wagenmakers EJ (2021) The JASP guidelines for conducting and reporting a Bayesian analysis. Psychon Bull Rev 28(3):813–826. https://doi.org/10.3758/s13423-020-01798-5

Verbruggen F, Logan GD (2008) Automatic and controlled response inhibition: associative learning in the go/no-go and stop-signal paradigms. J Exp Psychol Gen 137(4):649–672. https://doi.org/10.1037/a0013170

Verbruggen F, Logan GD (2009a) Models of response inhibition in the stop-signal and stop-change paradigms. Neurosci Biobehav Rev 33(5):647–661. https://doi.org/10.1016/j.neubiorev.2008.08.014

Verbruggen F, Logan GD (2009b) Proactive adjustments of response strategies in the stop-signal paradigm. J Exp Psychol Hum Percept Perform 35(3):835–854. https://doi.org/10.1037/a0012726

Verbruggen F, Logan GD, Stevens MA (2008) STOP-IT: Windows executable software for the stop-signal paradigm. Behav Res Methods 40(2):479–483. https://doi.org/10.3758/brm.40.2.479

Verbruggen F, Chambers CD, Logan GD (2013) Fictitious inhibitory differences: how skewness and slowing distort the estimation of stopping latencies. Psychol Sci 24(3):352–362. https://doi.org/10.1177/0956797612457390

Verbruggen F, Aron AR, Band GP, Beste C, Bissett PG, Brockett AT, Brown JW, Chamberlain SR, Chambers CD, Colonius H, Colzato LS, Corneil BD, Coxon JP, Dupuis A, Eagle DM, Garavan H, Greenhouse I, Heathcote A, Huster RJ, Jahfari S, Kenemans JL, Leunissen I, Li CR, Logan GD, Matzke D, Morein-Zamir S, Murthy A, Pare M, Poldrack RA, Ridderinkhof KR, Robbins TW, Roesch M, Rubia K, Schachar RJ, Schall JD, Stock AK, Swann NC, Thakkar KN, van der Molen MW, Vermeylen L, Vink M, Wessel JR, Whelan R, Zandbelt BB, Boehler CN (2019) A consensus guide to capturing the ability to inhibit actions and impulsive behaviors in the stop-signal task. Elife. https://doi.org/10.7554/eLife.46323

Verhaeghen P (2011) Aging and executive control: reports of a demise greatly exaggerated. Curr Dir Psychol Sci 20(3):174–180. https://doi.org/10.1177/0963721411408772

Wadsley CG, Cirillo J, Nieuwenhuys A, Byblow WD (2022a) Decoupling countermands nonselective response inhibition during selective stopping. J Neurophysiol 127(1):188–203. https://doi.org/10.1152/jn.00495.2021

Wadsley CG, Cirillo J, Nieuwenhuys A, Byblow WD (2022b) Stopping interference in response inhibition: behavioral and neural signatures of selective stopping. J Neurosci 42(2):156–165. https://doi.org/10.1523/JNEUROSCI.0668-21.2021

Xu J, Westrick Z, Ivry RB (2015) Selective inhibition of a multicomponent response can be achieved without cost. J Neurophysiol 113(2):455–465. https://doi.org/10.1152/jn.00101.2014

Zandbelt BB, Bloemendaal M, Neggers SF, Kahn RS, Vink M (2013) Expectations and violations: delineating the neural network of proactive inhibitory control. Hum Brain Mapp 34(9):2015–2024. https://doi.org/10.1002/hbm.22047

Acknowledgements

The authors thank Fenwick Nolan for their assistance developing SeleST.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Communicated by Bill J Yates.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wadsley, C.G., Cirillo, J., Nieuwenhuys, A. et al. Comparing anticipatory and stop-signal response inhibition with a novel, open-source selective stopping toolbox. Exp Brain Res 241, 601–613 (2023). https://doi.org/10.1007/s00221-022-06539-9

DOI: https://doi.org/10.1007/s00221-022-06539-9