Abstract

The least squares linear filter, also called the Wiener filter, is a popular tool to predict the next element(s) of time series by linear combination of time-delayed observations. We consider observation sequences of deterministic dynamics, and ask: Which pairs of observation function and dynamics are predictable? If one allows for nonlinear mappings of time-delayed observations, then Takens’ well-known theorem implies that a set of pairs, large in a specific topological sense, exists for which an exact prediction is possible. We show that a similar statement applies for the linear least squares filter in the infinite-delay limit, by considering the forecast problem for invertible measure-preserving maps and the Koopman operator on square-integrable functions.

1 Introduction

The prediction (or forecast or extrapolation) of time series is important in diverse applications [1,2,3,4,5] and attracts lively research activity [6,7,8,9,10,11,12,13,14,15]. It can be framed as follows. Given a discrete-time dynamics \(T:\mathbb {X} \rightarrow \mathbb {X}\) on state space \(\mathbb {X}\) and observation function \(f:\mathbb {X}\rightarrow \mathbb {C}\), are we able to assess from the time series \(f(x), f(Tx), \ldots , f(T^n x)\) its next element \(f(T^{n+1}x)\)?

For this problem we consider the linear least (mean) squares filter [16, section 9.7]. Going back to Wiener [17] and Kolmogorov [18, 19], it is also called the Wiener filter. It computes linear combination coefficients for d consecutive observations \(f(T^ix), \ldots , f(T^{i+d-1}x)\) such that the squared error between the linear combination and the next observation in the sequence, \(f(T^{i+d}x)\), averaged over the sequence, is minimal. The positive integer d is called the delay depth.
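
As a minimal sketch of this fitting step (not the authors' implementation), the coefficients can be computed with an ordinary least squares solve over all delay windows of a series. The helper names `fit_linear_filter` and `predict_next`, the rotation angle `alpha` and the cosine observable are illustrative assumptions.

```python
import numpy as np

def fit_linear_filter(series, d):
    """Least squares coefficients predicting series[i + d] from series[i : i + d]."""
    series = np.asarray(series)
    n_windows = len(series) - d
    # Row i holds the d consecutive observations series[i], ..., series[i + d - 1].
    windows = np.stack([series[i:i + d] for i in range(n_windows)])
    targets = series[d:]
    coeffs, *_ = np.linalg.lstsq(windows, targets, rcond=None)
    return coeffs

def predict_next(series, coeffs):
    """Apply the fitted filter to the last d observations."""
    d = len(coeffs)
    return np.asarray(series)[-d:] @ coeffs

# Hypothetical data: f(x) = cos(2*pi*x) observed along the rotation x -> x + alpha (mod 1).
alpha = (np.sqrt(5) - 1) / 2
n = np.arange(200)
series = np.cos(2 * np.pi * alpha * n)

coeffs = fit_linear_filter(series, d=2)
forecast = predict_next(series, coeffs)
truth = np.cos(2 * np.pi * alpha * 200)
```

For this particular observable a delay depth of two already suffices, because the cosine satisfies a two-term linear recurrence; in general the forecast is only approximate for finite d.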

If we were to allow nonlinear transformations to map the time-delayed observations, Takens’ celebrated theorem [13] implies that an exact prediction is possible for a “large” set of pairs of map T and observable f:

Takens’ theorem

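Let \(\mathbb {M}\) be a compact manifold of dimension m. The set of pairs of mappings and observables \((T,f)\in \textrm{Diff}^2(\mathbb {M}) \times C^2(\mathbb {M},\mathbb {R})\), for which the delay-coordinate map

\[ \Phi _{T,f}: \mathbb {M} \rightarrow \mathbb {R}^{2m+1}, \qquad \Phi _{T,f}(x) = \left( f(x), f(Tx), \ldots , f(T^{2m}x) \right) \]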

is an embedding, is open and dense with respect to the \(C^1\) topology.

To be more precise, exact predictability is implied by the consequence of Takens’ theorem that the mapping \(\left( f(T^ix), \ldots , f(T^{i+d-1}x) \right) \mapsto \left( f(T^{i+1}x), \ldots , f(T^{i+d}x) \right) \) with \(d=2\,m+1\) is one-to-one as the composition \(\Phi _{T,f}(x) \mapsto x \mapsto Tx \mapsto \Phi _{T,f}(Tx)\) of (smooth) one-to-one mappings. Note that a part of this pipeline is the reconstruction step \(\Phi _{T,f}(x) \mapsto x\), possible by the injectivity of the map \(\Phi _{T,f}\). Closer to our way of looking at the problem, [8] considers the (less restrictive) problem of predicting from d consecutive terms of the time series its future values. Notice that such prediction takes place not in the original phase space \(\mathbb {M}\), but in \(\Phi _{T,f}(\mathbb {M})\subset \mathbb {R}^d\), considered as a model space for the system. See also [20].

The descriptor “large” from above refers to the adjectives ‘open and dense’ from Takens’ theorem—often called genericity in this context. It is customary to call a property generic if it holds for all points of a set that is large in a topological sense. This largeness, however, has no unique description throughout the literature. Indeed, in most cases the word “generic” refers to a property that holds for a set of points containing a dense G\(_{\delta }\) set. Here, a set is called G\(_{\delta }\) if it is a countable intersection of open sets, cf. Definition 15 below. Note that this is slightly weaker than the genericity used in Takens’ theorem itself.

Takens’ theorem has been extended over the years in different directions, such as less smoothness of the setting [6, 7, 11, 21, 22] (see also [8, 23] for a comprehensive overview) or stronger concepts for the largeness of the set for which it holds. One such concept is prevalence [11], which is a generalization of “Lebesgue-almost every” for infinite-dimensional normed spaces; cf. Definition 16.

Takens-type theorems serve as a justification of the validity of time-delay based approaches used in applications, and have been the basis of further methodological developments, see e.g. [2,3,4,5, 9, 10, 24,25,26,27,28].

At this point the prediction problem seems to reduce to learning the scalar function \(\left( f(x), \ldots , f(T^{d-1}x) \right) \mapsto f(T^dx)\) for a sufficiently large delay depth. However, this function is nonlinear and the curse of dimensionality becomes a burden, as no additional exploitable structural property of it is known. Although, for instance, deep-learning based approaches have been applied to this learning problem with success [15, 29,30,31], training such artificial neural networks remains a nontrivial optimization problem and performance guarantees are not available. Similar difficulties arise for “composite” methods, where for a fixed set of nonlinear ansatz functions a linear combination optimizing the forecast error is sought [28, 32, 33], and for the related family of regression methods based on the Mori–Zwanzig formalism [34, 35], utilizing a projection-based quantification of how the unobserved past propagates to the present and future [36,37,38,39]. Due to its simplicity and (also theoretical) accessibility, the linear filter remains a viable tool for prediction. In addition, as we will see below, the forecast problem is all about approximating a linear operator.

Indeed, we interpret the prediction problem by the linear least squares filter in the setting of ergodic theory, with an essentially invertible measure-preserving transformation \(T: (\mathbb {X},\mathfrak {B}, \mu ) \rightarrow (\mathbb {X},\mathfrak {B}, \mu )\) and its associated Koopman operator \(\mathcal {U}:L^2_\mu \rightarrow L^2_\mu \) with \(\mathcal {U}f = f\circ T\) [40, 41], often also called composition operator. We will see in Proposition 3 below that the least squares filter in the limit of infinite data corresponds to an orthogonal projection of \(\mathcal {U}\) to the Krylov subspace \(\mathcal {K}_d = \textrm{span}\{ f\circ T^{-d+1}, \ldots , f\circ T^{-1}, f\} \subset L^2_{\mu }\) spanned by time-delayed observables.

Without mentioning it explicitly, a first connection between the linear least squares filter and the Koopman operator in this projective sense seems to have appeared in [42]. Therein, the focus was on the connection to a more recent linear method, Dynamic Mode Decomposition (DMD), which arose in the context of fluid dynamics [43]. The relationship of DMD and the Koopman operator was observed in [44], after which generalizations such as Extended DMD [45] appeared. This led to the reintroduction of the Wiener filter under the name “Hankel DMD” in [42].

The marriage of Koopman operator and delay embedding has since then witnessed lively activity. Intermittency in chaotic systems was investigated by the “Hankel alternative view of Koopman analysis” [26, 27], spectral approximation results of Hankel DMD have been derived in [46], while others have also sought spectral approximation beyond the linear filter scenario [46,47,48,49,50,51].

Note that prediction requires the inference of \(\mathcal {U}f(x)\) from past observations \(\ldots , f\circ T^{-2}(x), f\circ T^{-1}(x), f(x)\). The usual trade-off in Koopman-operator based methods is that the original nonlinear but (potentially) finite-dimensional system is considered through a linear, but infinite-dimensional operator. It is thus not surprising that in general perfect prediction by linear manipulations of time-delayed observations cannot be achieved, merely in the limit of infinite delay depth, i.e. “asymptotically”. We will consider two notions of asymptotic linear predictiveness. The first is (strong) predictiveness, requiring that the closure \(\overline{\bigcup _{d\in \mathbb {N}}\mathcal {K}_d} = L^2_{\mu }\); i.e., that the Krylov spaces “fill” \(L^2_{\mu }\), cf. Definition 7. The second, weak predictiveness, we define by \({ \mathcal {U}f \in \overline{\bigcup _{d\in \mathbb {N}}\mathcal {K}_d} }\), cf. Definition 10. With regard to the above discussion after Takens’ theorem, we note that the stronger version of predictiveness here is closer to the notion of reconstruction (of the Koopman operator), while the weaker one is in line with what one considered above as prediction in model space.

In the following we consider the Wiener filter and our results will mainly concern (strong) linear asymptotic predictiveness. We provide some Takens-type predictiveness theorems where the prediction map is linear. Using the notions of standard probability space without atoms (that is, a measure space isomorphic to [0, 1] with Lebesgue measure) and weak topology on measure-preserving essential bijections from Sect. 3.1.2, our main results are summarized in a simplified fashion as follows:

Koopman–Takens theorem

Let \((\mathbb {X},\mathcal {B},\mu )\) be a standard probability space without atoms and let \(\text {MPT}\) be the space of \(\mu \)-preserving essential bijections on \(\mathbb {X}\) with the weak topology.

(a) Theorem 23: For a dense set of transformations \(T \in \text {MPT}\), the collection of asymptotically linearly predictive observables f is prevalent and generic in \(L^2_{\mu }\).

(b) Proposition 26: For a generic set of transformations \(T \in \text {MPT}\), the collection of asymptotically linearly predictive observables f is generic in \(L^2_{\mu }\).

(c) Proposition 14: For a dense set of transformations \(T \in \text {MPT}\), every \(L^2_\mu \)-observable f is weakly linearly asymptotically predictive. Specifically, the conclusion holds for all transformations T with discrete spectrum.

Practically, this means that for a large set of systems almost any measurement procedure (i.e., observable) results in a predictive scenario. However, it also means that for finite delay depths the Wiener filters of predictive and of unpredictive systems can be arbitrarily close (Proposition 38). A result of our analysis is also that there are mixing systems that are predictive (Sect. 3.5). Since mixing systems “lose their memory” over time, it was not clear a priori whether observations from arbitrarily far in the past can contribute enough for this to be the case.

We can compare the Takens and Koopman–Takens theorems along different lines:

(i) While for Takens’ theorem the (often unknown) dimension of the manifold \(\mathbb {M}\) provides a bound on the minimal delay depth for predictability to hold, in the Koopman–Takens setting statements hold in the asymptotic limit of infinite delay depth. In fact, in this latter ergodic setting the structure of a manifold is not needed; we will only require Lebesgue–Rokhlin spaces below (Sect. 3.1.2). However, in Sect. 3.6 we can extend our results to the setting of measure-preserving homeomorphisms on compact connected manifolds.

(ii) Takens-type results require the mapping and the observable to be at least (sometimes Lipschitz) continuous, while our framework works with measurable maps and observables. It should also be observed that the prediction problem can be considered for both invertible and non-invertible transformations T, while the possibility of dynamical reconstruction (embedding) by delay-coordinate maps is generally restricted to invertible systems in the Takens framework. It is important to note that from a theoretical point of view, reconstruction implies prediction but not vice versa. In the Koopman–Takens framework we only consider essentially invertible maps.

(iii) For Takens’ theorem, one can identify a class of \(T\in \textrm{Diff}^2(\mathbb {M})\) such that the conclusion of the theorem holds: If T has finitely many periodic points of period at most 2m and for any periodic point x of period \(p\le 2m\) the eigenvalues of \(\textrm{D}T^p(x)\) are all distinct, then for generic \(f \in C^2(\mathbb {M},\mathbb {R})\) the map \(\Phi _{T,f}\) is an embedding [52, Theorem 2]. In our setting, one can also give a class of systems for which weak predictiveness holds: Mappings with discrete spectrum are weakly predictive for every \(L^2_\mu \)-observable (see Proposition 14).

The paper is structured as follows. Section 2 sets up the prediction problem in an ergodic-theoretic context and shows that an important role is played by so-called cyclic vectors of the Koopman operator. The size of the set of predictive pairs of dynamics and observables is considered in Sect. 3, where we give different genericity results for predictiveness—both of constructive and nonconstructive nature. Further practical properties of the least squares linear filter for time series analysis are collected in Sect. 4. We illustrate our findings on four numerical examples in Sect. 5, before we conclude with Sect. 6.

2 The Least-Squares Filter

2.1 Preliminaries on operator-based considerations in ergodic theory

The measure-theoretic consideration of dynamical systems starts with a measure space \((\mathbb {X},\mathfrak {B}, \mu )\) and a measure-preserving transformation (mpt) \(T:\mathbb {X}\rightarrow \mathbb {X}\), i.e., \(\mu = \mu \circ T^{-1}\). If the \(\sigma \)-algebra \(\mathfrak {B}\) is clear from the context, we suppress it in the notation, and write \((\mathbb {X},\mu )\). We will work with probability spaces, thus \(\mu (\mathbb {X})=1\).

The system (or, if no ambiguity arises, the dynamics or the measure) is called ergodic if there are only trivial invariant sets, that is, for every set \(E\in \mathfrak {B}\) with \(\mu (T^{-1}(E) \triangle E)=0\) we have \(\mu (E)=0\) or \(\mu (E)=1\). Every system can be restricted to subsets \(\mathbb {X}' \subset \mathbb {X}\) such that \(T\big \vert _{\mathbb {X}'}\) is ergodic, the so-called ergodic components. For ergodic systems, the Birkhoff Ergodic Theorem states that for \(f\in L^p_\mu := L^p_\mu (\mathbb {X})\), \(1\le p < \infty \), the orbital averages converge, more precisely

\[ \lim _{n\rightarrow \infty } \frac{1}{n} \sum _{k=0}^{n-1} f(T^k x) = \int _{\mathbb {X}} f \, d\mu \]

for \(\mu \)-a.e. \(x\in \mathbb {X}\) and also as a function in \(L^p_\mu \). Note that this is a functional version of the indecomposability statement defining ergodicity: The orbital averages of any observable converge to a constant. Again, if T is not ergodic, this statement holds restricted to its ergodic components.

This functional view on ergodic theory (also) motivates considering observables and their evolution under the dynamics, giving rise to the Koopman operator \(\mathcal {U}: L^2_\mu \rightarrow L^2_\mu \), \(\mathcal {U}f = f\circ T\). The analogously defined operator could be considered on \(L^p_\mu \), \(1\le p\le \infty \), as well, but the additional structure of a Hilbert space and the possibility to consider autocorrelations lead to our choice of \(L^2_\mu \). Sometimes, to avoid ambiguity, we write \(\mathcal {U}_T\) to denote the Koopman operator associated to T. Since T preserves the measure, we have that \({\Vert \mathcal {U}f\Vert _{L^2_\mu } = \Vert f\Vert _{L^2_\mu } }\), and if T is (essentially) invertible, then \(\mathcal {U}\) is unitary. Then, in particular, \(\mathcal {U}_{T^{-1}} = \mathcal {U}_T^{-1}\) and the spectrum of \(\mathcal {U}\) is contained in \(\{z\in \mathbb {C}\mid |z|=1\}\), since both \(\mathcal {U}\) and \(\mathcal {U}^{-1}\) are non-expansive. This spectrum can be further classified as follows. The mpt T (or the operator \(\mathcal {U}\)) is said to have (purely) discrete spectrum, if \(\mathcal {U}\) has a countable set of eigenvalues with associated eigenfunctions yielding an orthonormal basis \(\phi _i\), \(i\in \mathbb {N}\), of \(L^2_\mu \). Note that \(\lambda _1=1\) is always an eigenvalue of \(\mathcal {U}\) with eigenfunction \(\phi _1 = \mathbb {1}\) (the constant function with value one). If 1 is the only eigenvalue of \(\mathcal {U}\), then T (and \(\mathcal {U}\)) is said to have (purely) continuous spectrum. This is for instance the case for mixing systems, while rotations of abelian groups have purely discrete spectrum [53, §3.3]. One speaks of T having mixed spectrum if neither of the above pure cases holds. We note that the terminology is consistent with the functional-analytic partitioning of spectra into the point, continuous, and residual parts. Indeed, unitary operators have no residual spectrum: one way to see this known fact is to combine [54, Theorem 9.2-4] and [54, section 10.6].
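
To make the discrete-spectrum case concrete, the following sketch checks numerically that Fourier modes are eigenfunctions of the Koopman operator of a circle rotation, with eigenvalues on the unit circle. The rotation angle `alpha`, the mode index `k` and the helper `koopman` are illustrative choices, not notation from the text.

```python
import numpy as np

alpha = np.sqrt(2) - 1                 # hypothetical irrational rotation angle

def T(x):
    """Rotation x -> x + alpha (mod 1), which preserves Lebesgue measure on [0, 1)."""
    return (x + alpha) % 1.0

def koopman(f):
    """Return the observable U f = f o T."""
    return lambda x: f(T(x))

k = 3
phi = lambda x: np.exp(2j * np.pi * k * x)       # Fourier mode
eigenvalue = np.exp(2j * np.pi * k * alpha)      # candidate eigenvalue, |eigenvalue| = 1

x = np.random.default_rng(0).random(1000)        # sample points in [0, 1)
lhs = koopman(phi)(x)                            # (U phi)(x)
rhs = eigenvalue * phi(x)                        # eigenvalue * phi(x)
```

Since the Fourier modes form an orthonormal basis of \(L^2\) on the circle, this is an instance of purely discrete spectrum.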

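If \(f,g \in L^2_\mu \), then \(f\, \mathcal {U}^k g \in L^1_\mu \) for \(k\ge 0\), and the Birkhoff Ergodic Theorem yields

\[ \lim _{n\rightarrow \infty } \frac{1}{n} \sum _{i=0}^{n-1} f(T^i x)\, g(T^{i+k} x) = \int _{\mathbb {X}} f \, \mathcal {U}^k g \, d\mu \tag{1} \]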

for \(\mu \)-a.e. \(x\in \mathbb {X}\).

Finally, the form of the observation sequence suggests considering sequences of the form \((f,\mathcal {U}f, \mathcal {U}^2 f,\ldots )\). If the so-called cyclic subspace of f, defined by

\[ \overline{\textrm{span}\{ f, \mathcal {U}f, \mathcal {U}^2 f, \ldots \}} \subset L^2_\mu , \]

is equal to \(L^2_\mu \), then we call f a cyclic vector of \(\mathcal {U}\). As we will see, such cyclic observables play an important role for the prediction problem.

2.2 The filter as \(L^2\)-orthogonal projection

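In all that follows, we assume T to be a \(\mu \)-ergodic essentially invertible mpt on \(\mathbb {X}\). Let a trajectory

\[ f(x), f(Tx), \ldots , f(T^m x) \]

of length \((m+1)\) be given. For a given delay depth \(d\in \mathbb {N}\), let us consider the linear least squares filtering problem for forecasting the next observation from the previous d ones:

\[ \tilde{J}_m(\tilde{c}) := \frac{1}{m-d+1} \sum _{i=0}^{m-d} \Big| f(T^{i+d}x) - \sum _{j=0}^{d-1} \tilde{c}_j\, f(T^{i+j}x) \Big| ^2 \rightarrow \min _{\tilde{c}\in \mathbb {C}^{d}}! \tag{2} \]

where \(\rightarrow \min _{\tilde{c}\in \mathbb {C}^{d}}!\) expresses the goal of minimizing \(\tilde{J}_m(\tilde{c})\) over \(\tilde{c}=(\tilde{c}_0,\dots ,\tilde{c}_{d-1})\in \mathbb {C}^{d}\). For \(\mu \)-a.e. \(x\in \mathbb {X}\) the value function converges as \(m\rightarrow \infty \) by (1) and we obtain the limiting filtering problem

\[ \tilde{J}(\tilde{c}) := \int _{\mathbb {X}} \Big| f(T^{d}x) - \sum _{j=0}^{d-1} \tilde{c}_j\, f(T^{j}x) \Big| ^2 d\mu (x) \rightarrow \min _{\tilde{c}\in \mathbb {C}^{d}}! \tag{3} \]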

Replacing \(\tilde{J}_m\) by \(\tilde{J}\) is reasonable in the following sense.

Lemma 1

Let \(d\in \mathbb {N}\) be fixed and let \(\{f, f\circ T,\ldots ,f\circ T^{d-1}\}\) be a linearly independent set in \(L^2_\mu \). Then the minimizer of \(\tilde{J}_m\) converges for almost every \(x\in \mathbb {X}\) to the unique minimizer of \({\tilde{J}}\) for \(m\rightarrow \infty \).

Proof

The linear independence assumption guarantees that \(\tilde{J}:\mathbb {C}^d \rightarrow \mathbb {R}\) is a quadratic loss function with symmetric positive definite \(d\times d\) Hessian matrix H. Thus, \(\tilde{J}\) has a unique minimizer, which can be characterized as the unique solution of a linear system \(H \tilde{c} = b\) with a suitable right-hand side b. Also, \(\tilde{J}_m:\mathbb {C}^d\rightarrow \mathbb {R}\) is a quadratic loss function, whose finitely many parameters converge by the Birkhoff Ergodic Theorem for a.e. \(x\in \mathbb {X}\) to those of \(\tilde{J}\). Thus, \(\tilde{J}_m\) has almost surely a unique minimizer for sufficiently large m, which converges essentially because \((H,b) \mapsto H^{-1}b\) is continuous for invertible matrices H. \(\square \)

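From now on, we will thus consider the infinite-data case. More precisely, we will work with the following equivalent formulation of problem (3). Note that by invariance of \(\mu \) with respect to T and hence with respect to \(T^{d-1}\) as well, we have with \(c_i:= \tilde{c}_{d-1-i}\) that

\[ \tilde{J}(\tilde{c}) = \int _{\mathbb {X}} \Big| f(Tx) - \sum _{i=0}^{d-1} c_i\, f(T^{-i}x) \Big| ^2 d\mu (x) = \Big\Vert \mathcal {U}f - \sum _{i=0}^{d-1} c_i\, \mathcal {U}^{-i} f \Big\Vert _{L^2_\mu }^2 =: J(c). \tag{4} \]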

We note that at this point it is convenient to write the (finite) trajectory, by redefining its starting point, as

whenever we interpret the ergodic limit in the finite-data case.

To interpret the minimizer of J in (4), we define the Krylov “basis” \(\mathcal {B}_d:= (f, f\circ T^{-1}, \ldots , f\circ T^{-d+1})\) and the associated Krylov space \(\mathcal {K}_d:= \textrm{span}\, \mathcal {B}_d\). The Krylov basis is a basis of \(\mathcal {K}_d\) if and only if it forms a linearly independent set.

Remark 2

Note that if f is a cyclic vector of \(\mathcal {U}^{-1}\), then \(\mathcal {B}_d\) is a basis for any \(d\ge 1\), assuming that \(L^2_\mu \) is infinite-dimensional.

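Since \(J(c) \rightarrow \min !\) is the best approximation problem in \(L^2_\mu \), the minimizer \(c = (c_0,\ldots ,c_{d-1})\) of J induces a function

\[ \mathcal {U}_d f := \sum _{i=0}^{d-1} c_i \, f\circ T^{-i} \in \mathcal {K}_d \]

that is the unique \(L^2_{\mu }\)-orthogonal projection of \(\mathcal {U}f\) onto \(\mathcal {K}_d\). There will be no confusion of notation between \(\mathcal {U}_T\) and \(\mathcal {U}_d\), as T will always be a map and d an integer. If \(\mathcal {B}_d\) is a basis, the associated coefficient vector c is unique. The orthogonal projection property is equivalent to the variational equation (by setting the gradient of J to zero)

\[ \int _{\mathbb {X}} \Big( f\circ T - \sum _{j=0}^{d-1} c_j \, f\circ T^{-j} \Big) \, \overline{f\circ T^{-i}} \, d\mu = 0, \qquad i = 0,\ldots ,d-1. \tag{5} \]

We can extend the operator \(\mathcal {U}_d\) to \(\mathcal {K}_d\) by defining it to have the matrix representation

\[ U_d := \begin{pmatrix} c_0 & 1 & 0 & \cdots & 0 \\ c_1 & 0 & 1 & \ddots & \vdots \\ \vdots & \vdots & \ddots & \ddots & 0 \\ c_{d-2} & 0 & \cdots & 0 & 1 \\ c_{d-1} & 0 & \cdots & \cdots & 0 \end{pmatrix} \in \mathbb {C}^{d\times d} \]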

with respect to \(\mathcal {B}_d\), where the first column is the vector c, the first upper diagonal contains ones, and all other entries are zero. By setting up the variational equation for the \(L^2_\mu \)-orthogonal projection of the Koopman operator \(\mathcal {U}\) on the space \(\mathcal {K}_d\), it is immediate to see the following

Proposition 3

The operator \(\mathcal {U}_d: \mathcal {K}_d \rightarrow \mathcal {K}_d\) with matrix representation \(U_d\) with respect to \(\mathcal {B}_d\) is the \(L^2_\mu \)-orthogonal projection of \(\mathcal {U}\) onto \(\mathcal {K}_d\).
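
The matrix structure described above (first column c, ones on the first upper diagonal) can be sketched as follows. The function name `filter_matrix` and the numerical values of `c` are hypothetical; the coordinate vectors refer to the ordered family \((f, f\circ T^{-1}, f\circ T^{-2})\).

```python
import numpy as np

def filter_matrix(c):
    """Matrix with first column c, ones on the first upper diagonal, zeros elsewhere."""
    d = len(c)
    U = np.zeros((d, d), dtype=complex)
    U[:, 0] = c
    U[np.arange(d - 1), np.arange(1, d)] = 1.0
    return U

c = np.array([0.5, -0.25, 0.1])      # hypothetical filter coefficients for d = 3
U_d = filter_matrix(c)

e0 = np.eye(3)[:, 0]                 # coordinates of f
e1 = np.eye(3)[:, 1]                 # coordinates of f o T^{-1}

image_of_f = U_d @ e0                # coordinates of U_d f, i.e. the filter vector c
image_of_shifted_f = U_d @ e1        # U_d maps f o T^{-1} back to f
```

The first column encodes the one-step forecast of f, while the remaining columns act as an exact shift, since applying the Koopman operator to \(f\circ T^{-j}\) gives \(f\circ T^{-j+1}\), which already lies in the span for \(j\ge 1\).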

Remark 4

(Hankel DMD) A statement similar to Proposition 3 has been made in [42, section 3] and was used there in the case \(\mathcal {K}_{d+1} = \mathcal {K}_d\) for some \(d\in \mathbb {N}\). Therein, the filter was coined Hankel Dynamic Mode Decomposition (Hankel DMD), because it arises as the least squares solution of the (usually overdetermined) linear system

involving a Hankel-type matrix (more precisely, a rectangular Toeplitz matrix) in the construction of (Extended) Dynamic Mode Decomposition [43, 45].

Remark 5

(Computational aspects) There are several issues that need to be taken into account when computing the filter numerically from finite data.

The problem of obtaining the filter vector c via (4) inherits the numerical condition of the Krylov basis \(\mathcal {B}_d\). If the basis elements are highly non-orthogonal, the computation of c is ill-conditioned. This need not affect the performance of the filter, though, because the filter’s performance is measured by the objective function J in \(L^2_\mu \) and not in the space of coefficients c.

A more severe source of error is the finite-sample approximation (2) of J. From an ergodic-theoretic perspective, the convergence in Lemma 1 as \(m\rightarrow \infty \) can be arbitrarily slow. From the perspective of quadrature, integrals of the form (5) involve functions \(f\circ T^{-d+1}\), which might become increasingly irregular as d grows, thus slowing down convergence. Such integrals enter implicitly while computing inner products in the solution of the least squares problem (7). A remedy (simple, but without performance guarantee) is to compute the filter for larger sample sizes m (or to start with a reduced sample set, if obtaining new samples is not an option) and thus to check whether convergence can be safely assumed.
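
The first point can be illustrated with a small experiment, offered as a sketch under illustrative assumptions (the rotation angle `alpha`, the smooth observable and the depths 2, 4, 8 are not taken from the text): the condition number of the delay matrix grows with d, while the fitted mean squared residual does not deteriorate.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

alpha = (np.sqrt(5) - 1) / 2
x = (alpha * np.arange(2000)) % 1.0
series = 1.0 / (1.5 - np.cos(2 * np.pi * x))        # hypothetical smooth observable

d_max = 8
windows = sliding_window_view(series[:-1], d_max)   # row i: series[i : i + d_max]
targets = series[d_max:]                            # target for row i: series[i + d_max]

conds, residuals = [], []
for d in (2, 4, 8):
    A = windows[:, d_max - d:]                      # the d most recent observations
    c, *_ = np.linalg.lstsq(A, targets, rcond=None)
    conds.append(np.linalg.cond(A))                 # conditioning of the delay columns
    residuals.append(np.sqrt(np.mean(np.abs(A @ c - targets) ** 2)))
```

All depths use the same rows and targets, so the column spaces are nested and the residuals can be compared directly across d.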

We now return to our original question, whether the filter is able to continue an observation sequence \(\ldots ,f(T^{-1}x),f(x)\). Hence, we would like to infer \(\mathcal {U}f(x)\). While there are also other mathematical frameworks for quantifying predictability and information content in ensemble predictions (see [55,56,57] and references therein), we focus on the mean squared forecast error and can give the following result.

Proposition 6

Let f be a cyclic vector for \(\mathcal {U}^{-1}\). Then, as \(d\rightarrow \infty \), we have

\[ \Vert \mathcal {U}_d f - \mathcal {U}f \Vert _{L^2_\mu } \rightarrow 0. \]

Proof

Since f is a cyclic vector, the space \(\bigcup _{d\ge 0} \mathcal {K}_d\) is dense in \(L^2_\mu \). Since \(\mathcal {U}_d\) is the orthogonal projection of \(\mathcal {U}\) to \(\mathcal {K}_d\), and the spaces \(\mathcal {K}_d\) are nested, i.e., \(\mathcal {K}_{d_1} \subset \mathcal {K}_{d_2}\) for \(d_1\le d_2\), this proves that \(\mathcal {U}_d f\) converges to \(\mathcal {U}f\). \(\square \)
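
The steps of this argument can be spelled out as follows; this is a sketch of the standard best-approximation reasoning, where \(\delta _d\) is an auxiliary symbol introduced here for the forecast error at delay depth d.

\[
\begin{aligned}
\delta _d := \Vert \mathcal {U}f - \mathcal {U}_d f \Vert _{L^2_\mu } &= \min _{g\in \mathcal {K}_d} \Vert \mathcal {U}f - g\Vert _{L^2_\mu }, \\
\mathcal {K}_d \subset \mathcal {K}_{d+1} &\;\Longrightarrow \; \delta _{d+1} \le \delta _d, \\
\mathcal {U}f \in \overline{\textstyle \bigcup _{d}\mathcal {K}_d} &\;\Longrightarrow \; \inf _{d} \delta _d = 0 .
\end{aligned}
\]

Hence \(\delta _d\) is non-increasing with infimum zero, so \(\delta _d \rightarrow 0\) as \(d\rightarrow \infty \).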

Proposition 6 motivates the following notion of predictiveness.

Definition 7

(Predictiveness). We call the pair (T, f) of an mpt and an \(L^2_{\mu }\)-observable asymptotically linearly predictive in the \(L^2\)-sense, if f is a cyclic vector of \(\mathcal {U}^{-1} = \mathcal {U}_{T^{-1}}\). If T and \(\mu \) are clear from the context, we simply say that f is predictive.

Remark 8

The term “predictive” is motivated by the observation that if \(f \in L^2_\mu \) is a cyclic vector of \(\mathcal {U}^{-1}\), then for any \(g \in L^2_{\mu }\) we can approximate \(\mathcal {U}g\) by a linear combination of \(\mathcal {U}^{-j}f\), \(j\in \mathbb {N}\), to any desired accuracy in \(L^2_{\mu }\) norm by minimizing a modified version of \(\tilde{J}(\tilde{c})\) in (3).

An interesting question is, whether \(L^2_\mu \) convergence in Proposition 6 can be replaced by almost sure pointwise convergence.

Remark 9

(Almost sure asymptotic predictiveness) Convergence of a sequence in \(L^2_\mu \) implies convergence almost everywhere for a subsequence. Thus, if f is a cyclic vector of \(\mathcal {U}^{-1}\), there is a sequence \((d_k)_{k\in \mathbb {N}}\) of delay depths for which “almost sure asymptotic linear predictiveness” holds. However, we do not know in general which subsequence this is. In practice, an a posteriori test for convergence of a sequence \(\big (\mathcal {U}_{d_k}f(x) \big )_{k\in \mathbb {N}}\) could be our only option.

We also note that almost-everywhere pointwise convergence of orthogonal projections on \(L^2\) spaces is considered in [58, Theorems 2 and 3], but it seems unclear whether the conditions therein (e.g., positivity) on the projection can be met in our situation (i.e., \(L^2_\mu \)-orthogonal projection on \(\mathcal {K}_d\)). It seems rather unlikely for an arbitrary observation function f.

Proposition 6 shows that cyclic vectors of \(\mathcal {U}\) are of particular interest and importance to the forecast properties of the filter. In Sect. 3 we shall discuss the size of the set of cyclic vectors and the size of the set of transformations that have “many” of them. Before doing so, we consider a weaker but “sufficient” notion of predictiveness.

2.3 On weak predictiveness

Observe that in order to approximate the next element of the time series by a linear filter, it is only required that \(\mathcal {U}f\) can be arbitrarily well approximated in \(L^2_\mu \) by polynomials in \(\mathcal {U}^{-1}\) applied to f.

Definition 10

(Weak predictiveness). We call the pair (T, f), or simply the observable f, weakly predictive if \(f \circ T \in \overline{\bigcup _{d\in \mathbb {N}} \mathcal {K}_d}\). Equivalently, weak predictiveness means \(\lim _{d\rightarrow \infty } d_{L^2_\mu }(\mathcal {U}f, \mathcal {K}_d) = 0\), where \(d_{L^2_\mu }\) denotes the distance in \(L^2_\mu \) of a function to a subspace.

In the following, we are almost exclusively going to consider the stronger notion of predictiveness implied by the existence of cyclic vectors, as in Definition 7 and Proposition 6. It is nevertheless worthwhile discussing the weaker notion presented here, since observables that are eigenfunctions of \(\mathcal {U}\) (such as constant functions) will always be weakly but never strongly predictive (except for pathological cases where \(L^2_\mu \) is one-dimensional). In particular, we give a characterization of weak predictiveness in Theorem 12 and show in Proposition 14 that if the system has discrete spectrum, then every observable f is weakly predictive.
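
The remark on eigenfunctions can be checked on a toy case. In this sketch the rotation angle `alpha`, the starting point x = 0 and the eigenfunction \(f(x) = e^{2\pi i x}\) are illustrative assumptions; a filter of delay depth one then already reproduces the next observation exactly.

```python
import numpy as np

alpha = np.sqrt(2) - 1                         # hypothetical rotation angle
n = np.arange(500)
series = np.exp(2j * np.pi * alpha * n)        # f(T^n x) for f(x) = exp(2*pi*i*x), x = 0

past, future = series[:-1], series[1:]
# Least squares solution of future ~ c * past for delay depth d = 1.
c = np.vdot(past, future) / np.vdot(past, past)
residual = np.linalg.norm(future - c * past)
```

The fitted coefficient coincides with the eigenvalue \(e^{2\pi i \alpha }\), while the span of the delayed observables stays one-dimensional, so f is weakly predictive without being cyclic.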

To analyze weak predictiveness of a system, we will use the Spectral Theorem for unitary operators. Let \(\mathbb {T}\subset \mathbb {C}\) denote the unit circle. In a simplified form (namely by restricting the operator in question to a cyclic subspace), the Spectral Theorem of unitary operators states that \(\mathcal {U}\) is isomorphic to the multiplication operator on \(L^2_\nu (\mathbb {T})\) for a suitable measure \(\nu \). The general form shows isomorphy to a sum of such spaces. See, for instance, [59, Chapter 8] or [60, Chapter 18] for the general statement and also for the form discussed next in Sect. 2.3.1.

2.3.1 Spectral Theorem for unitary operators

As a warm-up, consider a unitary (with respect to the Euclidean inner product \(\langle \cdot ,\cdot \rangle \)) matrix \(A \in \mathbb {C}^{k\times k}\) with eigenvalues \(\lambda _i \in \mathbb {T}\), \(i=1,\ldots ,k\), with an orthonormal set of eigenvectors \(v_i\). We can write the image of a vector \(v = \sum _i c_i v_i\) under p(A), where p is a polynomial, as \(p(A)v = \sum _i p(\lambda _i) c_i v_i\). Then we have that

\[ \langle p(A)v, v\rangle = \sum _{i=1}^k p(\lambda _i)\, |c_i|^2 = \int _{\mathbb {T}} p \; d\Big( \sum _{i=1}^k |c_i|^2 \delta _{\lambda _i} \Big) , \]

where \(\delta _x\) denotes the Dirac measure centered at x. The measure \(\nu = \sum _i |c_i|^2\delta _{\lambda _i}\) thus encodes a weighted version of the spectrum of A associated with the vector v.
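
A numerical check of this finite-dimensional picture, as a sketch: the random unitary matrix, the vector `v` and the test polynomial \(p(z) = 1 + 2z + 3z^2\) are arbitrary choices, and the inner product is assumed to be linear in its first argument.

```python
import numpy as np

rng = np.random.default_rng(1)
k = 4
# Orthonormal eigenvectors (columns of Q) and eigenvalues on the unit circle.
Q, _ = np.linalg.qr(rng.normal(size=(k, k)) + 1j * rng.normal(size=(k, k)))
lam = np.exp(2j * np.pi * rng.random(k))
A = Q @ np.diag(lam) @ Q.conj().T            # unitary matrix with spectrum lam

v = rng.normal(size=k) + 1j * rng.normal(size=k)
c = Q.conj().T @ v                           # coefficients of v in the eigenbasis
weights = np.abs(c) ** 2                     # masses of nu at the eigenvalues

pA = np.eye(k) + 2 * A + 3 * A @ A           # p(A) for p(z) = 1 + 2 z + 3 z^2
p_lam = 1 + 2 * lam + 3 * lam ** 2           # p evaluated on the spectrum

lhs = np.vdot(v, pA @ v)                     # <p(A) v, v>
rhs = np.sum(p_lam * weights)                # integral of p with respect to nu
```

The total mass of \(\nu \) equals \(\Vert v\Vert ^2\), so \(\nu \) is a probability measure exactly when v is normalized.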

The above finite-dimensional construction can be generalized to unitary operators on Hilbert spaces. We will not consider the most general case; it will be sufficient to restrict a unitary operator \(\mathcal {A}:H \rightarrow H\) on the Hilbert space H to the cyclic subspace \(C_{v,\mathcal {A}}\) of \(\mathcal {A}\) and \(v\in H\):

\[ C_{v,\mathcal {A}} := \overline{\textrm{span}\{ v, \mathcal {A}v, \mathcal {A}^2 v, \ldots \}}. \]

Note that \(C_{v,\mathcal {A}}\) is invariant under \(\mathcal {A}\). By Riesz duality, one finds a unique (v-dependent) measure \(\nu \) (which we will call the “trace of the spectral measure for v”, or simply “trace measure”) with

In fact, this equality can be extended to hold for every \(h\in L^2_{\nu }(\mathbb {T})\), and one can define a unitary transformation

Note that if \(\mathbb {1}\) denotes the constant function with value 1 on \(\mathbb {T}\), then \(W\mathbb {1} = v\). Ultimately, by applying W to the function \(\zeta \mapsto \zeta h(\zeta )\), one has that

Thus, on its spectrum, \(\mathcal {A}\) can be characterized by the multiplication operator M, with \((Mh)(\zeta ):= \zeta h(\zeta )\), just as for matrices. The Spectral Theorem comprises the statements that W is a unitary transformation and that (8) holds.

2.3.2 Application of the Spectral Theorem

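For weak predictiveness, we would need \(\mathcal {U}^{-1}f \in C_{f,\, \mathcal {U}}\) (for simplicity of notation, we swapped the roles of T and \(T^{-1}\)). In other words, for any \(\varepsilon >0\) we would like to have a polynomial \(p_{\varepsilon }\) such that

\[ \Vert \mathcal {U}^{-1}f - p_{\varepsilon }(\mathcal {U})f \Vert _{L^2_\mu } < \varepsilon . \]

Note that the Spectral Theorem can be used in its above simplified form on the invariant cyclic subspace \(C_{f,\,\mathcal {U}}\) for \(v = f\) and \(\mathcal {A} = \mathcal {U}\). With this and \(W\mathbb {1} = f\), one has that

\[ \Vert \mathcal {U}^{-1}f - p_{\varepsilon }(\mathcal {U})f \Vert _{L^2_\mu } = \Vert M^{-1}\mathbb {1} - p_{\varepsilon }(M)\mathbb {1} \Vert _{L^2_\nu }. \]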

Spelling out the last expression, we would like to approximate the function \(\zeta \mapsto \zeta ^{-1} \) arbitrarily well by polynomials in the \(L^2_\nu \) norm. Note that if \(\nu = \textrm{Leb}\), then this is impossible, because on the complex circle \(\mathbb {T}\) the monomials \(\zeta \mapsto \zeta ^k\), \(k\in \mathbb {Z}\), are orthogonal trigonometric polynomials. If, however, \(\nu \) is a finite sum of weighted Dirac measures (for finitely many distinct eigenvalues \(\lambda _i\)), then there is a polynomial p with \(p(\lambda _i) = \frac{1}{\lambda _i}\) for all i. This follows from the invertibility of a Vandermonde matrix. A much broader answer, containing these previous two examples, can be given by invoking an implication of Szegő’s theorem:

Theorem 11

(Kolmogorov’s Density Theorem [61, Theorem 2.11.5]). Let \(\nu \) be a probability measure on the complex circle \(\mathbb {T}\) of the form \(d\nu (\theta ) = w(\theta )\,d\theta + d\nu _s(\theta )\), where \(\nu _s\) is singular and \(d\theta \) measures arc length. Then the polynomials are dense in \(L^2_\nu (\mathbb {T})\) if and only if

\[ \int \log (w(\theta ))\,d\theta = -\infty . \]

Theorem 12

Let \(d\nu (\theta ) = w(\theta )\,d\theta + d\nu _s(\theta )\) denote the decomposition of the trace measure \(\nu \) associated with T and f as in Theorem 11. Then f is weakly predictive if and only if the Szegő condition \(\int \log (w(\theta ))\,d\theta = -\infty \) holds.

Proof

We recall that weak predictiveness is equivalent to \(\zeta ^{-1}\) being approximable arbitrarily well in \(L^2_\nu \) by linear combinations of the monomials \(\mathbb {1},\zeta ,\zeta ^2,\ldots \) (here, as an abbreviation, \(\zeta ^n\) denotes the rational function \(\zeta \mapsto \zeta ^n\) for \(n\in \mathbb {Z}\)). Equivalently, we write \(\zeta ^{-1} \in \overline{\textrm{span}\{\mathbb {1},\zeta ,\zeta ^2,\ldots \}}\).

“\(\Leftarrow \)”. Assume that the Szegő condition holds. Then, by Theorem 11 we have that \(L^2_\nu = \overline{\textrm{span}\{\mathbb {1},\zeta ,\zeta ^2,\ldots \}}\), and in particular \(\zeta ^{-1} \in \overline{\textrm{span}\{\mathbb {1},\zeta ,\zeta ^2,\ldots \}}\).

“\(\Rightarrow \)”. The proof of this direction is due to Friedrich Philipp and is inspired by [62, Lemma 7.4]. We include it with his permission.

Assume weak predictiveness, i.e., \(\zeta ^{-1} \in \overline{\textrm{span}\{\mathbb {1},\zeta ,\zeta ^2,\ldots \}}\). By [61, Lemma 2.11.3] we have that \(\zeta ^{-n} \in \overline{\textrm{span}\{\mathbb {1},\zeta ,\zeta ^2,\ldots \}}\) for every \(n\in \mathbb {N}\). In particular, for any two polynomials p, q we have that \(p(\zeta ) + q(\bar{\zeta }) \in \overline{\textrm{span}\{\mathbb {1},\zeta ,\zeta ^2,\ldots \}}\), showing that \(\overline{\textrm{span}\{\mathbb {1},\zeta ,\zeta ^2,\ldots \}}\) is closed under complex conjugation (here, \(\bar{\zeta }\) stands for the complex conjugate of \(\zeta \)). The Stone–Weierstraß theorem now implies that the functions of the form \(p(\zeta ) + q(\bar{\zeta })\), all of which lie in \(\overline{\textrm{span}\{\mathbb {1},\zeta ,\zeta ^2,\ldots \}}\), are dense in \(C(\mathbb {T})\), the usual space of continuous functions on the unit circle. Since \(\nu \) is a Borel probability measure on \(\mathbb {T}\), it is regular, and [63, Theorem 3.14] shows that \(C(\mathbb {T})\) is dense in \(L^2_\nu \). Hence \(\overline{\textrm{span}\{\mathbb {1},\zeta ,\zeta ^2,\ldots \}} = L^2_\nu \), and Theorem 11 now implies that the Szegő condition holds. \(\square \)

The above proof also shows that weak predictiveness implies that \(\mathcal {U}^n f\) can be approximated from past observations for every \(n\in \mathbb {N}\), not merely for \(n=1\).
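The two examples preceding Theorem 11, together with a density that vanishes on an arc, can be checked numerically. The following Python sketch is illustrative only: it replaces \(\nu \) by a discrete measure on finitely many nodes of \(\mathbb {T}\) and computes the weighted least squares distance from \(\zeta ^{-1}\) to the polynomials of a given degree. The function name `residual`, the node counts, the weights, and the degrees are assumptions made for this illustration and are not taken from the paper.

```python
import numpy as np

def residual(nodes, weights, degree):
    """Distance in L^2_nu from zeta^{-1} to the polynomials of the given degree,
    for the discrete measure nu = sum_i weights[i] * delta_{nodes[i]}."""
    sqrt_w = np.sqrt(weights)
    # Columns of A are the monomials 1, zeta, ..., zeta^degree on the nodes.
    A = nodes[:, None] ** np.arange(degree + 1)
    b = 1.0 / nodes
    # Weighted least squares: minimize sum_i weights[i] * |p(nodes[i]) - 1/nodes[i]|^2.
    coef, *_ = np.linalg.lstsq(sqrt_w[:, None] * A, sqrt_w * b, rcond=None)
    return np.sqrt(np.sum(weights * np.abs(A @ coef - b) ** 2))

# (a) Finite atomic measure (hypothetical eigenvalues): four distinct nodes,
#     so a polynomial of degree 3 interpolates 1/lambda_i (Vandermonde argument).
lam = np.exp(1j * np.array([0.3, 1.1, 2.0, 4.5]))
res_atomic = residual(lam, np.array([0.1, 0.2, 0.3, 0.4]), degree=3)

# (b) Discretized Lebesgue measure: N equispaced nodes with equal weights.
#     The comparison is only meaningful for degree < N - 1.
N = 512
circle = np.exp(2j * np.pi * np.arange(N) / N)
res_lebesgue = residual(circle, np.full(N, 1.0 / N), degree=20)

# (c) Density supported on the upper half circle, i.e. w = 0 on an arc,
#     so that the Szego condition of Theorem 12 holds.
arc = np.exp(1j * np.pi * (np.arange(N) + 0.5) / N)
res_arc = residual(arc, np.full(N, 1.0 / N), degree=20)

print(res_atomic, res_lebesgue, res_arc)
```

Under these assumptions the residual vanishes up to rounding for the atomic measure, stays at 1 for the discretized Lebesgue measure (orthogonality of the monomials), and is small for the half-circle density. Since every discretized measure is itself atomic, the degrees must stay well below the number of nodes for cases (b) and (c) to reflect the continuous setting.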

Remark 13

(Time flip)

Note that swapping the roles of T and \(T^{-1}\) merely reflects the trace measure \(\nu \) about the real axis. Thus, all statements on weak predictiveness that we consider here are valid both for T and \(T^{-1}\).

Furthermore, the proof of Theorem 12 shows that weak predictiveness is equivalent to the cyclic subspace of f under \(\mathcal {U}\) being equal to its cyclic subspace under \(\mathcal {U}^{-1}\), i.e., \(C_{f,\mathcal {U}} = C_{f,\mathcal {U}^{-1}}\). To see this, first note that the proof shows the equivalence of weak predictiveness and \(\overline{\textrm{span}\{\ldots , \zeta ^{-2},\zeta ^{-1},\mathbb {1},\zeta ,\zeta ^2,\ldots \}} = \overline{\textrm{span}\{\mathbb {1},\zeta ,\zeta ^2,\ldots \}} = L^2_\nu \). By complex conjugation one obtains that also \(\overline{\textrm{span}\{\mathbb {1},\zeta ^{-1},\zeta ^{-2},\ldots \}} = L^2_\nu \), which implies our claim.

If \(\mathcal {U}\) has discrete spectrum, then for any observable f the decomposition of the associated trace measure \(\nu \) in Theorem 11 has no absolutely continuous part, i.e., \(w\equiv 0\). Theorem 12 thus implies:

Proposition 14

If the invertible mpt T has discrete spectrum, then every observable \(f\in L^2_\mu \) is weakly predictive.
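Proposition 14 can be illustrated with a finite-delay least squares filter applied to an ergodic circle rotation, which has discrete spectrum. The sketch below is a minimal illustration under stated assumptions: the rotation number `alpha`, the observable `f` (a real trigonometric polynomial with four Fourier modes), the initial point, and the train/test split are all hypothetical choices. For such an observable the time series satisfies a linear recurrence of order four, so delay depth d = 4 already suffices; for a general observable one would only expect the error to decrease as d grows.

```python
import numpy as np

alpha = (np.sqrt(5) - 1) / 2          # assumed irrational rotation number

def f(x):
    # Assumed observable with Fourier modes k = -2, -1, 1, 2 (1-periodic).
    return np.cos(2 * np.pi * x) + 0.5 * np.sin(4 * np.pi * x)

n = np.arange(200)
series = f(0.1 + n * alpha)           # f(x), f(Tx), f(T^2 x), ... with x = 0.1

d = 4                                 # delay depth
# Row i holds d consecutive observations; the target is the next observation.
X = np.stack([series[i:i + d] for i in range(len(series) - d)])
y = series[d:]

train = 100
# Least squares filter coefficients, fitted on the first part of the series.
coef, *_ = np.linalg.lstsq(X[:train], y[:train], rcond=None)

# Maximal one-step prediction error on the remaining, unseen part.
err = np.max(np.abs(X[train:] @ coef - y[train:]))
print(err)
```

The prediction error on the held-out part is at the level of rounding errors, in line with the observable being predictable from its own past.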

We note that Theorem 12 allows for mixing systems (that have purely continuous spectrum) also to be predictive; for instance by having a subinterval of \(\mathbb {T}\) (i.e., an arc) of positive (arc-length) measure on which \(w=0\). Note that this is compatible with our observation in Sect. 3.5 below, that there are mixing systems which even possess cyclic vectors.

3 Genericity of Asymptotically Linearly Predictive Pairs (T, f)

Predictiveness in Proposition 6 requires cyclic vectors of \(\mathcal {U}^{-1}\). For simplicity of notation, in the remainder of this section we are going to speak about cyclic vectors of \(\mathcal {U}\). We ask the reader to keep in mind that the statements hold true for \(\mathcal {U}^{-1}\) just as well, because the properties discussed herein are shared by T and \(T^{-1}\).

In Sect. 3.1.2 below we define \(\text {MPT}\) as the set of all invertible mpts and endow it with the weak topology. We are going to investigate the size of the set of pairs (T, f) such that f is a cyclic vector of \(\mathcal {U}= \mathcal {U}_T\). For instance, we show in Sect. 3.3 that a dense set of measure-preserving transformations admits a prevalent set of cyclic vectors. The collection of mpts with a dense G\(_{\delta }\) set of cyclic vectors is even a dense G\(_{\delta }\) set in \(\text {MPT}\) (see Sect. 3.4). This result is extended to the setting of measure-preserving homeomorphisms in Sect. 3.6.

In Sect. 3.5 we discuss so-called rank one systems. We will see that the rank one property is generic in \(\text {MPT}\) and guarantees existence of cyclic vectors.

3.1 Preliminaries

3.1.1 Some terminology from topology

Definition 15

(G\(_{\delta }\) and generic). In a topological space a G\(_{\delta }\) set is a countable intersection of open sets. We call a property generic if the set with this property contains a dense G\(_{\delta }\) set.

Generic properties represent sets which are large in a topological sense, and in particular, nonempty. Since a unitary transformation is an isometric bijection, it maps G\(_{\delta }\) sets to G\(_{\delta }\) sets. It is also easy to see that a unitary transformation maps dense sets to dense ones; we will spell this out in Lemma 21 for a specific setting.

For comparison, we also mention another related concept of genericity: Some authors define a generic property as one that holds on a residual set (that is, a countable intersection of dense open sets), with the dual concept being a meagre set (i.e., a countable union of nowhere dense closed sets). We observe as an important practical aspect for applications that, if a property holds on a residual set, it may not hold for every point, but perturbing the point slightly will generally land one inside the residual set (by nowhere density of the components of the meagre set). Thus, generic sets constitute the most important case to address in theorems and algorithms.

In this paper we will actually study generic sets in completely metrizable topological spaces. In those spaces a residual set is dense by the Baire category theorem and, hence, the two concepts of genericity coincide.

The next “largeness” concept, prevalence, arose from the desire to generalize the notion “Lebesgue-almost surely” to infinite-dimensional spaces.

Definition 16

(Prevalence [11]). Let \(\mathbb {V}\) be a vector space. A set \(\mathbb {S} \subset \mathbb {V}\) is called prevalent if there is a finite-dimensional probe space \(\mathbb {E} \subset \mathbb {V}\) such that for all \(v\in \mathbb {V}\) (Lebesgue-)almost every point in \(v + \mathbb {E}\) belongs to \(\mathbb {S}\).

We also note that unitary transformations map prevalent sets to prevalent sets. To see this, we pick an orthonormal basis \(\{e_1,\dots , e_{\dim \mathbb {E}}\}\) of the finite-dimensional probe space \(\mathbb {E}\) and define the Lebesgue measure \(\mu _{v+\mathbb {E}}\) on \(v+\mathbb {E}\) via the coefficients with respect to this basis, that is, for any subset A of \(v+\mathbb {E}\) we define \(\mu _{v+\mathbb {E}}(A)=\lambda (\widetilde{A})\), where \({\widetilde{A}=\left\{ c\in \mathbb {C}^{\dim \mathbb {E}}\mathrel {}|\mathrel {}v+\sum ^{\dim \mathbb {E}}_{i=1}c_ie_i \in A\right\} }\) and \(\lambda \) is the Lebesgue measure on \(\mathbb {C}^{\dim \mathbb {E}}\). Since a unitary transformation U is isometric, it is measure-preserving as a map from \((v+\mathbb {E},\mu _{v+\mathbb {E}})\) to \((Uv+U\mathbb {E},\mu _{Uv+U\mathbb {E}})\). Consequently, if \(\mathbb {S}\) is prevalent with probe space \(\mathbb {E}\), then \(U\mathbb {S}\) is prevalent with probe space \(U\mathbb {E}\).

3.1.2 Some terminology from measure theory

Two measure spaces \((\mathbb {X},\mathcal {B},\mu )\) and \((\mathbb {X}',\mathcal {B}',\mu ')\) are said to be isomorphic if there are null sets \(N \subset \mathbb {X}\), \(N' \subset \mathbb {X}'\) and an invertible map \(\phi :\mathbb {X}{\setminus } N \rightarrow \mathbb {X}'{\setminus } N'\) such that \(\phi \) and \(\phi ^{-1}\) both are measurable and measure-preserving maps. Such a map \(\phi \) is called a measure-theoretic isomorphism. In the case when \((\mathbb {X},\mathcal {B},\mu )=(\mathbb {X}',\mathcal {B}',\mu ')\) one sometimes also calls such an isomorphism \(\phi \) an automorphism. We denote the collection of automorphisms of a given measure space by \(\text {MPT}\). As usual, two measure-preserving transformations are identified if they differ on a set of measure zero only.

If \(\mu (\mathbb {X})=1\), then the measure space \((\mathbb {X},\mathcal {B},\mu )\) is a probability space. An important class of probability spaces are the Lebesgue–Rokhlin spaces, that is, complete and separable probability spaces. We refer to [64, section 9.4] for details. Every Lebesgue–Rokhlin space is isomorphic to a disjoint union of the unit interval with Lebesgue measure and at most countably many atoms [64, Theorem 9.4.7].

In the following, we restrict ourselves to Lebesgue–Rokhlin spaces without any atoms and call them standard measure spaces. Since every standard measure space is isomorphic to the unit interval with Lebesgue measure on the Borel sets, every invertible mpt of a standard measure space is isomorphic (Footnote 2) to an invertible Lebesgue-measure-preserving transformation on [0, 1]. Hence, it often suffices to prove statements for mpts on [0, 1].

We endow \(\text {MPT}\) with the weak topology.

Definition 17

(Weak topology on \(\text {MPT}\) [65, p. 62]). A subbasis of the weak topology is given by all sets of the form

where E is measurable and \(\varepsilon >0\). Convergence in the weak topology of the sequence of mpts \((T_n)_n\) to the mpt T, denoted by \(T_n {\mathop {\rightarrow }\limits ^{w}} T\), thus means that \(\mu (T_n E \, \triangle \, T E) \rightarrow 0\) as \(n\rightarrow \infty \) for every \(E\in \mathcal {B}\).

This weak topology is even completely metrizable, see [65, p. 64]. To quote Halmos: “Since, however, this rather artificial construction [of the metric] does not seem to throw any light on the structure of \(\text {MPT}\), I see no point in studying it further.” Weak convergence of mpts can be characterized as follows. The proof can be found in “Appendix A”.

Lemma 18

For \(T,T_n \in \text {MPT}\), \(n\in \mathbb {N}\), the following are equivalent:

1. \(T_n {\mathop {\rightarrow }\limits ^{w}} T\) as \(n\rightarrow \infty \).

2. \(T_n^{-1} {\mathop {\rightarrow }\limits ^{w}} T^{-1}\) as \(n\rightarrow \infty \).

3. \(\mathcal {U}_{T_n} \rightarrow \mathcal {U}_T\) as \(n\rightarrow \infty \) in the strong operator topology, i.e., \(\Vert \mathcal {U}_{T_n} g - \mathcal {U}_T g\Vert _{L^2_\mu } \rightarrow 0\) for all \(g\in L^2_\mu \).

4. \(\mathcal {U}_{T_n} \rightarrow \mathcal {U}_T\) as \(n\rightarrow \infty \) in the weak operator topology, i.e., \(\langle \mathcal {U}_{T_n} g - \mathcal {U}_T g, h \rangle _{L^2_\mu } \rightarrow 0\) for all \(g,h\in L^2_\mu \).

5. For every \(g\in L^2_\mu \), one has that \(\Vert g\circ T_n^i - g\circ T^i\Vert _{L^2_\mu } \rightarrow 0\) as \(n\rightarrow \infty \) for every \(i\in \mathbb {Z}\).
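As a numerical illustration of the first and third characterization in Lemma 18, the following sketch considers circle rotations \(T_n x = x + \alpha _n \mod 1\) with \(\alpha _n \rightarrow \alpha \). Everything specific here is an assumption made for illustration: the rotation numbers, the set \(E=[0,0.3)\), the observable `g`, and the midpoint grid that approximates Lebesgue integrals.

```python
import numpy as np

N = 100_000
x = (np.arange(N) + 0.5) / N          # midpoint grid on [0, 1)
alpha = (np.sqrt(5) - 1) / 2          # assumed limit rotation number

def indicator_E(y):
    # Indicator function of the assumed test set E = [0, 0.3) on the circle.
    return ((y % 1.0) < 0.3).astype(float)

def g(y):
    # Assumed L^2 observable; it has a jump at the point 0 of the circle.
    return (y % 1.0) ** 2

def sym_diff_measure(a, b):
    # mu(T_a E triangle T_b E), using 1_{T_a E}(y) = 1_E(y - a).
    return np.mean(np.abs(indicator_E(x - a) - indicator_E(x - b)))

def koopman_distance(a, b):
    # || g o T_a - g o T_b || in L^2, approximated on the grid.
    return np.sqrt(np.mean((g(x + a) - g(x + b)) ** 2))

ns = [10, 100, 1000]
sym = [sym_diff_measure(alpha + 1.0 / n, alpha) for n in ns]
koop = [koopman_distance(alpha + 1.0 / n, alpha) for n in ns]
print(sym, koop)
```

Both quantities decrease as n grows; for this choice of E the measure of the symmetric difference is approximately 2/n.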

3.2 Ergodic circle rotations have a prevalent set of cyclic vectors

Recall that predictiveness requires cyclic vectors of \(\mathcal {U}^{-1}\), while, for simplicity of notation, we consider cyclic vectors of \(\mathcal {U}\) in the entire Sect. 3. Note that the statement of the next result holds just as well for \(T^{-1}\) as for T. Let \(S^1=\mathbb {R}/\mathbb {Z}\) denote the unit circle (the one-dimensional unit torus), regarded as an additive group.

Proposition 19

Let \(T:S^1\rightarrow S^1\), \(Tx = x + \alpha \mod 1\), be an ergodic circle rotation and \(\mathcal {U}:L^2(S^1)\rightarrow L^2(S^1)\) the associated Koopman operator, where the measure underlying \(L^2(S^1)\) is the Lebesgue measure. Then \(\mathcal {U}\) has a prevalent set of cyclic vectors. Furthermore, the set of cyclic vectors contains a dense G\(_{\delta }\) set.

Proof

1. Prevalence. We recall that the translation semigroup \(\mathcal {T}^t f = f(\cdot - t)\), \(t\in \mathbb {R}\), is strongly continuous on \(L^2(S^1)\), which means that \(\lim _{t\rightarrow s} \mathcal {T}^t f = \mathcal {T}^s f\) for \(f\in L^2(S^1)\), cf. [66, I.4.18]. This implies, since the sequence \((n\alpha )_n\) is dense in \(S^1\) by ergodicity of T, that

Assume that \(f\in L^2(S^1)\) is not cyclic. Then there is a \(0 \ne g\in L^2(S^1)\) such that

which is by (9) equivalent to

where \(\mathcal {R}f\), defined by \((\mathcal {R}f)(x):= f(-x)\) for \(x\in S^1\), is the reflection of f and \(g*h\) denotes the convolution of g with h. Denoting by \(\mathcal {F}: L^2(S^1) \rightarrow \ell ^2(\mathbb {Z})\) the Fourier transform, we have that (10) is equivalent to

Consider the probe vector \(p \in L^2(S^1)\) such that the Fourier transform of its reflection is given by

Alternatively, one could consider the real-valued probe vector p by replacing \(\frac{1}{k^2}\) by \(\frac{1}{k^2}(1 + i\, \textrm{sign}(k))\), where i denotes the imaginary unit. Let \(\lambda \in \mathbb {C}\). By (11), the requirement for \(f+\lambda p\) not to be cyclic is that

for some (possibly \(\lambda \)-dependent) \(g\ne 0\). However, \(g\ne 0\) implies that \(\mathcal {F}g(k) \ne 0\) for at least one \(k\in \mathbb {Z}\). Thus, there is a \(k\in \mathbb {Z}\) with \(\mathcal {F}\mathcal {R}f(k) + \lambda \mathcal {F}\mathcal {R}p(k) = 0\), implying \(\lambda = -k^2 \mathcal {F}\mathcal {R}f(k)\). Hence, cyclicity of \(f+\lambda p\) can fail at most at countably many \(\lambda \). In other words, \(f+\lambda p\) is cyclic for Lebesgue-almost every \(\lambda \), rendering the set of cyclic vectors of \(\mathcal {U}\) prevalent.

2. Dense G\(_{\delta }\). By the above we have the implication

For \(k\in \mathbb {Z}\) let us define

where we denote by \(s_k\) the k-th element of a sequence s. With (12) we have that \(\bigcap _{k\in \mathbb {Z}} A_k\) is contained in the set of cyclic vectors. Note that both \(\mathcal {F}\) and \(\mathcal {R}\) are unitary transformations, hence \((\mathcal {F}\mathcal {R})^{-1}\) is a bounded operator preserving openness and denseness with respect to the norm topologies. As the sets \(\left\{ s\in \ell ^2(\mathbb {Z}) \mid s_k \ne 0\right\} \) are open and dense for every \(k\in \mathbb {Z}\), the set \(\bigcap _{k\in \mathbb {Z}} A_k\) is G\(_{\delta }\). Since the set \(\left\{ s\in \ell ^2(\mathbb {Z}) \mid s_k \ne 0\ \forall k \in \mathbb {Z} \right\} \) is also dense, so is its preimage \(\bigcap _{k\in \mathbb {Z}} A_k\) under \(\mathcal {F}\mathcal {R}\). \(\square \)

Remark 20

Note that the set \(\left\{ s\in \ell ^2(\mathbb {Z}) \mid s_k \ne 0\ \forall k \in \mathbb {Z} \right\} \) is not open in \(\ell ^2(\mathbb {Z})\). Thus, “dense G\(_{\delta }\)” cannot be replaced in the statement of Proposition 19 by “open and dense”.
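The role of nonvanishing Fourier coefficients in Proposition 19 can be made concrete in a finite-dimensional truncation. The sketch below is a simplified analogue and not the construction of the proof: it restricts to the Fourier modes \(|k|\le K\), on which the Koopman operator of the rotation acts diagonally with eigenvalues \(e^{2\pi i k\alpha }\), and it works with the Fourier coefficients of f directly rather than with those of its reflection. The values of `alpha` and `K`, the coefficient vectors, and the helper `krylov_rank` are assumptions for illustration.

```python
import numpy as np

alpha = (np.sqrt(5) - 1) / 2              # assumed irrational rotation number
K = 3                                     # truncation: modes k = -K, ..., K
k = np.arange(-K, K + 1)
eig = np.exp(2j * np.pi * k * alpha)      # Koopman eigenvalue of the mode e^{2 pi i k x}

def krylov_rank(coeffs):
    """Rank of the matrix whose n-th column holds the Fourier coefficients
    of U^n f, n = 0, ..., 2K, for f with Fourier coefficients `coeffs`."""
    n = np.arange(len(coeffs))
    krylov = coeffs[:, None] * eig[:, None] ** n[None, :]
    return np.linalg.matrix_rank(krylov)

# All Fourier coefficients nonzero: f is cyclic in the truncated space.
c_full = 1.0 / (1.0 + k ** 2)
# Two coefficients set to zero (modes k = -3 and k = 1): f is not cyclic.
c_gap = c_full.copy()
c_gap[[0, 4]] = 0.0

rank_full = krylov_rank(c_full)
rank_gap = krylov_rank(c_gap)
print(rank_full, rank_gap)
```

In this truncated setting the dimension of the cyclic subspace equals the number of nonzero Fourier coefficients, so a single vanishing coefficient already prevents cyclicity.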

3.3 One map with a prevalent set of cyclic vectors implies densely many

We just showed that ergodic circle rotations possess a prevalent set of cyclic vectors. Through the isomorphy of the nonatomic and separable probability space \((\mathbb {X},\mu )\) and \(([0,1], \textrm{Leb})\) (see Sect. 3.1.2 and also [65, p. 61]) we can build an ergodic “rotation” on \((\mathbb {X},\mu )\), hence a transformation with a prevalent set of cyclic vectors. First, one transfers a cyclic vector via isomorphy:

Lemma 21

Let \(T:\mathbb {X}\rightarrow \mathbb {X}\) be a \(\mu \)-preserving transformation with a cyclic vector c. For every \(\mu \)-preserving essential bijection \(S:\mathbb {X}\rightarrow \mathbb {X}\), the mpt \(\widetilde{T} = S^{-1}TS\) has the cyclic vector \(c\circ S\).

Proof

Note that \(\mathcal {U}_{\widetilde{T}}^n = \mathcal {U}_S \circ \mathcal {U}_T^n \circ \mathcal {U}_{S}^{-1}\) for \(n\in \mathbb {N}\). In particular,

Since \(\textrm{span}\{\mathcal {U}_T^n c\mid n\in \mathbb {N}\}\) is dense, it is sufficient to show that for any dense subspace \({ \mathbb {U} \subset L^2_\mu }\) also the space \({ \mathcal {U}_{S}\mathbb {U} }\) is dense. For any fixed \(\varepsilon >0\) and \({ f\in L^2_\mu }\) let \(u\in \mathbb {U}\) be such that \({ \Vert u- f\circ S^{-1} \Vert _{L^2_\mu } < \varepsilon }\). Since S is measure-preserving and an essential bijection, we have that \({ \Vert u\circ S - f \Vert _{L^2_\mu } < \varepsilon }\). Thus, \(\mathcal {U}_{S}\mathbb {U}\) is dense and the claim follows. \(\square \)
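A finite analogue of Lemma 21 can be checked directly. In the following sketch, the state space is a set of n points with uniform measure, T is a cyclic shift, and S is a random permutation; these choices, the random seed, and the helper `koopman_krylov_rank` are assumptions made purely for illustration. The Koopman operator acts by \((\mathcal {U}_T g)(x) = g(Tx)\), and a vector is cyclic if its iterates span the whole space.

```python
import numpy as np

def koopman_krylov_rank(T, c):
    """Rank of the span of c, U_T c, ..., U_T^{n-1} c, where T is a
    permutation given as an index array and (U_T g)(x) = g(T x)."""
    rows, g = [], c.copy()
    for _ in range(len(T)):
        rows.append(g)
        g = g[T]                       # apply the Koopman operator once
    return np.linalg.matrix_rank(np.array(rows))

n = 8
T = (np.arange(n) + 1) % n             # cyclic shift x -> x + 1 mod n
rng = np.random.default_rng(0)
S = rng.permutation(n)                 # measure-preserving bijection
S_inv = np.argsort(S)
T_tilde = S_inv[T[S]]                  # conjugated map x -> S^{-1}(T(S(x)))

c = rng.standard_normal(n)             # a generic vector, cyclic for the shift

rank_c = koopman_krylov_rank(T, c)
rank_conjugated = koopman_krylov_rank(T_tilde, c[S])      # vector c o S
rank_constant = koopman_krylov_rank(T, np.ones(n))        # not cyclic
print(rank_c, rank_conjugated, rank_constant)
```

The Krylov matrix of \(c\circ S\) under the conjugated map is a column permutation of the one of c under T, which is the finite-dimensional counterpart of the argument in the proof.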

To show denseness of transformations with specific ergodic properties, the following tool is extremely useful.

Lemma 22

(Conjugacy Lemma, [65, p. 77]). Let \(T_0\) be an aperiodic \(\mu \)-preserving transformation (i.e., the set of periodic points has zero measure). In the weak topology the set of all transformations of the form \(S^{-1}T_0S\), where S is a \(\mu \)-preserving essential bijection, is dense in \(\text {MPT}\).

It follows that the set of transformations that have prevalently many cyclic vectors is dense in \(\text {MPT}\), because we can match every cyclic vector c of T with a cyclic vector \(c\circ S\) of \(S^{-1}TS\). The mapping \(c \mapsto c\circ S\) is a unitary transformation, since S is a measure-preserving essential bijection, and thus it preserves the properties discussed in Sect. 3.1.1.

Altogether we conclude the following denseness result.

Theorem 23

The collection of \(\mu \)-preserving transformations with a prevalent and dense G\(_{\delta }\) set of cyclic vectors in \(L^2_{\mu }\) lies dense in \(\text {MPT}\) with respect to the weak topology.

Proof

We take any fixed irrational rotation \(R_{\alpha }\) on \(S^1\) (that has a dense G\(_{\delta }\) and prevalent set of cyclic vectors by Proposition 19) and conjugate it with an isomorphy \(\phi \) from \(\mathbb {X}\) to \(S^1\) to get an aperiodic mpt \(T_0=\phi ^{-1} \circ R_{\alpha } \circ \phi \) on \(\mathbb {X}\). By Lemma 21, \(T_0\) has a dense G\(_{\delta }\) and prevalent set of cyclic vectors. Then we apply the Conjugacy Lemma (Lemma 22) to get a dense set of mpts, each of which has a dense G\(_{\delta }\) and prevalent set of cyclic vectors, again by Lemma 21. \(\square \)

Remark 24

(Weakly predictive mpts are dense) In Theorem 23 we used an ergodic circle rotation to generate densely many ergodic transformations on an arbitrary Lebesgue–Rokhlin space \((\mathbb {X},\mathcal {B},\mu )\) without atoms, such that all these transformations yield a prevalent set of cyclic vectors. Note that for such spaces \(L^2_\mu (\mathbb {X})\) is separable. From [53, §3.3] we know that ergodic group rotations have discrete spectrum. Thus, the transformations in the dense set constructed in Theorem 23 also have discrete spectrum, and by Proposition 14 all observables for these transformations are weakly predictive.

It is natural to ask whether for an ergodic transformation the possession of discrete spectrum already implies that the set of cyclic vectors is prevalent. The Representation Theorem [53, Theorem 3.6] states that an ergodic measure-preserving transformation with discrete spectrum on a probability space is isomorphic to an ergodic rotation on a compact abelian group. The strong analogy between (ergodic) circle rotations and group rotations, together with the prevalence statement of Proposition 19 could lead one to formulate the following conjecture:

Conjecture 25

Let the ergodic \(T \in \text {MPT}\) possess discrete spectrum. Then \(\mathcal {U}= \mathcal {U}_T\) has a prevalent set of cyclic vectors in \(L^2_\mu \).

In Sect. 3.5 we will discuss another type of system that possesses cyclic vectors.

3.4 A generic MPT has a dense G\(_{\delta }\) set of cyclic vectors

By Theorem 23 the collection of measure-preserving transformations with a dense G\(_{\delta }\) set of cyclic vectors lies dense in \(\text {MPT}\) with respect to the weak topology. In fact, we can even prove that it is a dense G\(_{\delta }\) set in \(\text {MPT}\). We use similar methods as in [67, Theorem 8.26], where it is shown that the collection of measure-preserving transformations admitting a cyclic vector is a dense G\(_{\delta }\) set in \(\text {MPT}\) with respect to the weak topology.

Proposition 26

The collection of \(\mu \)-preserving transformations with a dense G\(_{\delta }\) set of cyclic vectors in \(L^2_\mu \) is a dense G\(_{\delta }\) set in \(\text {MPT}\) with respect to the weak topology.

Proof

By Theorem 23 we already know that the collection of measure-preserving transformations with a dense G\(_{\delta }\) set of cyclic vectors is dense in \(\text {MPT}\).

To show that it is a G\(_{\delta }\) set, we let \(\{f_j\}_{j\in \mathbb {N}}\) be a dense set in \(L^2_{\mu }\). For \(g\in L^2_{\mu }\), \(m,N\in \mathbb {N}\) we introduce the set

where \(P_N\) denotes the set of polynomials of degree not larger than N. Such a set \(\tilde{V}_i(m,N,g)\) is open in the space \(\mathcal {B}(L^2_\mu )\) of bounded linear operators with respect to the strong operator topology because \(\mathcal {U}_n \rightarrow \mathcal {U}\) implies \(p(\mathcal {U}_n) \rightarrow p(\mathcal {U})\) for every polynomial p by Lemma 18(5). Since the weak topology on \(\text {MPT}\) coincides with the topology induced from the strong operator topology on Koopman operators (cf. Lemma 18), we see that the sets

are open with respect to the weak topology. Thus, the finite intersections

are also open. With this terminology, the collection of mpts with a dense G\(_{\delta }\) set of cyclic vectors is given by the G\(_{\delta }\) set

where it remains to show that \(T\in \mathcal {T}\) has a dense G\(_{\delta }\) set of cyclic vectors in \(L^2_\mu \). Heuristically, this dense G\(_{\delta }\) set of cyclic vectors is obtained by the intersections over t and s which guarantee for any function \(f_s\) from our dense family and any \(t\in \mathbb {N}\) the existence of a cyclic vector that is \(\frac{1}{t}\)-close to \(f_s\). To show this in detail, let \(B_r(f) \subset L^2_\mu \) denote the open ball with radius \(r>0\) and center \(f\in L^2_\mu \) and consider

The functions \(g \in L^2_\mu \) that appear in the characterization of \(\mathcal {T}(m,n)\) are candidates for cyclic vectors upon intersection over all \(m,n\in \mathbb {N}\). We denote this set by \(C(T,m,n) \subset L^2_\mu \), more precisely, for a fixed \(T\in \mathcal {T}(m,n)\) let

For \(T \in \mathcal {T} = \bigcap _{m,n \in \mathbb {N}} \mathcal {T}(m,n)\), the set of cyclic vectors is given by \(\bigcap _{m,n\in \mathbb {N}} C(T,m,n)\). To see that this is a G\(_{\delta }\) set, it is sufficient to show that the C(T, m, n) are themselves G\(_{\delta }\) for every \(m,n\in \mathbb {N}\). This is straightforward by noting that \(p(\mathcal {U}_T)\) is a continuous mapping on \(L^2_\mu \) for every \(p\in P_N\) and that

Arbitrary unions and finite intersections of open sets are open, hence C(T, m, n) and with it the set of cyclic vectors for \(T \in \mathcal {T}\) is G\(_{\delta }\), concluding the proof. \(\square \)

While this result gives us the genericity of transformations with cyclic vectors, it does not provide us with a criterion to check if a given transformation has cyclic vectors. In the following section we meet such a sufficient condition to guarantee the existence of cyclic vectors.

3.5 Rank one systems

In this section we discuss rank one systems which constitute a generic class of measure-preserving transformations possessing cyclic vectors. We start with the admittedly technical definition of rank one systems (Footnote 3).

Definition 27

(Rank one system). A system \(T:(\mathbb {X},\mu ) \rightarrow (\mathbb {X},\mu )\) is of rank one if for any measurable set \(A\subset \mathbb {X}\) and any \(\varepsilon >0\) there exist \(F\subset \mathbb {X}\), \(h\in \mathbb {Z}^+\) and a measurable subset \(A^{\prime }\subset \mathbb {X}\) such that

- the sets \(T^kF\), \(k=0,1,\ldots ,h-1\), are disjoint;

- \(\mu (A\triangle A^{\prime })<\varepsilon \);

- \(\mu (\bigcup ^{h-1}_{k=0}T^kF)>1-\varepsilon \);

- \(A^{\prime }\) is measurable with respect to the partition \(\xi (\mathcal {T})\) formed by the sets \(F,TF,\ldots , T^{h-1}F\) and \(\mathbb {X} {\setminus } \bigcup ^{h-1}_{k=0}T^kF\).

One calls \(\mathcal {T}=\{F,TF,\ldots , T^{h-1}F\}\) a Rokhlin tower (or column) of height h and with base F.

Thus, a system is rank one if, for any given set and tolerance, one can choose a base set whose disjoint iterates both fill the space and approximate the given set within that tolerance. Furthermore, one says that a sequence of towers \(\mathcal {T}_n\) is exhaustive if \(\xi (\mathcal {T}_n)\) converges to the decomposition into points as \(n\rightarrow \infty \), that is, for every measurable set \(A\subseteq \mathbb {X}\) and for every \(n \in \mathbb {N}\) there exists a set \(A_n\), which is a union of elements of \(\xi (\mathcal {T}_n)\), such that \(\lim _{n\rightarrow \infty }\mu (A \triangle A_n)=0\). By definition, rank one systems have exhaustive sequences of towers.

If \(T\in \text {MPT}\) is rank one, so is \(T^{-1}\). To see this, note that for every \(\varepsilon >0\) and associated tower \(\mathcal {T}\) with base F for T we get the same tower with base \(T^{h-1}F\), now for \(T^{-1}\), satisfying the requirements of Definition 27.

Examples of rank one systems include rotations and odometer transformations; see our example in Sect. 5.4. In general, it is true that discrete spectrum together with ergodicity implies rank one [69]. Rank one systems were introduced and actively studied in the framework of so-called cutting-and-stacking constructions in ergodic theory (see e.g. [70, section 5.2]). Here, one can view a rank one system as a transformation obtained from a cutting-and-stacking construction with a single tower, although explicit examples are scarce.
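To make the notion of a Rokhlin tower tangible, the following sketch uses the dyadic odometer on [0, 1) in its interval-exchange form (the von Neumann–Kakutani adding machine). This concrete formula, the function names, and the chosen rank k are assumptions for illustration and need not coincide with the example of Sect. 5.4. The base \(F=[0,2^{-k})\) generates a tower of height \(2^k\) whose levels are exactly the dyadic intervals of rank k; the code tracks one point of F to list the order in which the levels are visited.

```python
def odometer(x):
    """Dyadic odometer on [0, 1): on [1 - 2^-n, 1 - 2^-(n+1)) it acts as the
    translation x -> x - 1 + 2^-n + 2^-(n+1)."""
    n = 0
    while x >= 1.0 - 2.0 ** -(n + 1):
        n += 1
    return x - 1.0 + 2.0 ** -n + 2.0 ** -(n + 1)

def tower_levels(k, x0):
    """Indices i of the rank-k dyadic intervals [i 2^-k, (i+1) 2^-k) visited
    by x0, T x0, ..., T^(2^k - 1) x0."""
    levels, x = [], x0
    for _ in range(2 ** k):
        levels.append(int(x * 2 ** k))
        x = odometer(x)
    return levels

k = 3
# The starting point 2^-(k+2) lies in the base F = [0, 2^-k); dyadic
# rationals keep the floating-point arithmetic exact.
levels = tower_levels(k, x0=2.0 ** -(k + 2))
print(levels)
```

Each dyadic interval of rank k is visited exactly once, so the levels are disjoint and fill the whole space. Since unions of dyadic intervals approximate any measurable set as k grows, this is consistent with the odometer being of rank one.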

Our interest in the rank one property is based on the fact that it is a sufficient condition to guarantee the existence of cyclic vectors (Footnote 4).

Proposition 28

A rank one system has cyclic vectors.

Proof

We combine arguments from [67, chapter 4] and [70, Proposition 5.8].

Let T be a rank one transformation. Recall that \({ C_g = \overline{\textrm{span} \{ g,\mathcal {U}_T g, \mathcal {U}^2_T g,\ldots \}} }\) denotes the cyclic subspace of \(g\in L^2_{\mu }\). Furthermore, \(d_{L^2_\mu }(f,C_g)\) denotes the \(L^2_{\mu }\) distance of \(f\in L^2_{\mu }\) from \(C_g\).

Suppose that T does not admit cyclic vectors. Then there exist orthogonal unit vectors \(f_1,f_2 \in L^2_{\mu }\) such that

for every \(g\in L^2_{\mu }\) (see [71, Lemma 3.1] or [67, Theorem 4.3] for a proof of a more general result). As in the definition of the rank one property, we consider an exhaustive sequence of towers \(\mathcal {T}_n\) with base \(F_n\) and height \(h_n\) approximating T. Then for each n the images of the characteristic function \(\chi _{F_n}\) under \(\mathcal {U}^i_T\), \(i=0,1,\ldots ,h_n-1\), are characteristic functions of the disjoint levels of the tower \(\mathcal {T}_n\). Then for each n there is a cyclic subspace \(C_{\chi _{F_n}}\) which contains all characteristic functions of the levels of the tower and their linear combinations. Since our sequence of towers is exhaustive, we can choose n sufficiently large such that \(d_{L^2_\mu }(f_1,C_{\chi _{F_n}})<\frac{1}{2}\) and \(d_{L^2_\mu }(f_2,C_{\chi _{F_n}})<\frac{1}{2}\). We obtained a contradiction to (13). Hence, T has cyclic vectors. \(\square \)

In the converse direction to Footnote 4, the simplicity of the spectrum does not imply the rank one property; see [68, section 2.4.2] for various types of counterexamples. Interestingly, there are also mixing systems of rank one; see e.g. [68, section 1.4.4] for a celebrated construction by Ornstein. We would like to emphasize the following:

Remark 29

(Mixing can be predictive) On the one hand, measure-theoretic (strong) mixing implies asymptotic independence from the past. Intuitively, this could lead to the impossibility of asymptotic predictiveness, since the observation \(f\circ T^{-k}\) gives “increasingly less information” about \(f\circ T\) as k grows. On the other hand, the existence of mixing systems that are rank one, and thus possess cyclic vectors, tells us that this intuition is not necessarily correct (recall also the discussion after Proposition 14).

Given the significance of cyclic vectors for predictiveness, it is an interesting question if a rank one system has a prevalent set of cyclic vectors. We do not provide an answer to this question, but note that if it were positive, then also Conjecture 25 would be true, as ergodic systems with discrete spectrum are rank one.

We provide a proof of the following folklore result by adapting proofs of similar results in [71, Theorem 1.1] and [72, Theorem 3.1].

Proposition 30

The collection of rank one systems is a dense G\(_{\delta }\) set in \(\text {MPT}\) with respect to the weak topology.

Proof

Let \(\{E_j\}_{j\in \mathbb {N}}\) be a dense collection of measurable subsets in [0, 1]. We define \(\mathcal {A}_n\) as the set of transformations \(T\in \text {MPT}\) that admit a tower approximating \(E_1,\ldots , E_n\) with accuracy 1/n. We claim that \(\mathcal {R}:=\bigcap _{n\in \mathbb {N}}\mathcal {A}_n\) is a dense G\(_{\delta }\) set and coincides with the collection of rank one systems.

To see that every \(T\in \mathcal {R}\) has rank one, we let A be a measurable set and \(\varepsilon >0\). Since \(\{E_j\}_{j\in \mathbb {N}}\) is dense, there is \(E_k\) with \(\mu (A \triangle E_k)< \varepsilon /2\). Let \(n>\max (k,2/\varepsilon )\). Since \(T \in \mathcal {A}_n\), there is a tower approximating \(E_k\) with accuracy \(1/n<\varepsilon /2\). Hence, the tower approximates A with accuracy \(\varepsilon \).

In the next step we show that each \(\mathcal {A}_n\) is dense. For this purpose, we choose \(K\in \mathbb {N}\) sufficiently large such that for all \(k\ge K\) each \(E_1,\ldots , E_n\) can be approximated by unions of dyadic intervals

with accuracy 1/n. On the partition \(\left\{ I_{k,i}\mathrel {}|\mathrel {}i=0,1,\ldots , 2^k-1\right\} \) we consider cyclic permutations of order k, that is, maps cyclically permuting the dyadic intervals of rank k by translations. We denote the collection of cyclic permutations of order k by \(\Pi _k\). In particular, each such permutation admits a tower of exactly the dyadic intervals of rank k. Hence, \(\Pi _k \subset \mathcal {A}_n\). By the so-called Weak Approximation Theorem from [65, p. 65] the collection \(\bigcup _k \Pi _k\) of cyclic permutations is dense in \(\text {MPT}\) with respect to the weak topology. Since all but finitely many of the cyclic permutations are in \(\mathcal {A}_n\), \(\mathcal {A}_n\) still contains a dense set.

One can also verify that each \(\mathcal {A}_n\) is open. Then the Baire category theorem yields that \(\mathcal {R}\) is a dense G\(_{\delta }\) set. \(\square \)

3.6 A generic measure-preserving homeomorphism has a dense G\(_{\delta }\) set of cyclic vectors

In this section we want to extend our results to the setting of measure-preserving homeomorphisms. Let \(\mathbb {M}\) be a compact connected manifold and \(\mu \) be a so-called OU measure (Footnote 5), that is, \(\mu \) is nonatomic, locally positive (i.e., it is positive on every nonempty open set), and zero on the manifold boundary. We consider the collection \(\textrm{Homeo}(\mathbb {M},\mu )\) of \(\mu \)-preserving homeomorphisms of \(\mathbb {M}\) with the uniform topology defined by the complete metric

where \(\textrm{d}\) is a given metric on \(\mathbb {M}\) compatible with its topology (Footnote 6).

In this setting, any measure-theoretic property which is generic for abstract measure-preserving transformations is also generic for measure-preserving homeomorphisms of compact manifolds. This can be stated as follows.

Lemma 31

([73, Corollary 10.4]). Let \(\mu \) be an OU measure on a compact connected manifold \(\mathbb {M}\). Let \(\mathcal {V}\) be a conjugate invariant dense G\(_{\delta }\) subset of \(\text {MPT}\) with the weak topology. Then \(\mathcal {V}\cap \textrm{Homeo}(\mathbb {M},\mu )\) is a dense G\(_{\delta }\) subset of \(\textrm{Homeo}(\mathbb {M},\mu )\) with the uniform topology.

Since the collection of mpts with a dense G\(_{\delta }\) set of cyclic vectors is conjugate invariant by Lemma 21, we can apply Lemma 31 on Proposition 26. This gives the following genericity result for homeomorphisms with cyclic vectors.

Proposition 32

Let \(\mathbb {M}\) be a compact connected manifold and \(\mu \) be an OU measure. The collection of \(\mu \)-preserving homeomorphisms on \(\mathbb {M}\) with a dense G\(_{\delta }\) set of cyclic vectors in \(L^2_\mu \) is a dense G\(_{\delta }\) set in \(\textrm{Homeo}(\mathbb {M},\mu )\) with the uniform topology.

Thus, a generic homeomorphism admits a generic set of predictive \(L^2\) observables. Note that Takens-type theorems require observables to be continuous.

4 Properties of the Least Squares Filter

4.1 Forecast properties

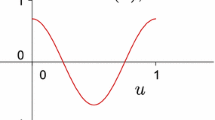

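Beyond the prediction error considered in Proposition 6, a further dynamic object of interest is the autocorrelation function; that is

$$\begin{aligned} A(n) := \langle \mathcal {U}^n f, f \rangle _{L^2_\mu }, \quad n\in \mathbb {N}. \end{aligned}$$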

It turns out that autocorrelations for \(n\le d\) are always reproduced correctly, irrespective of whether the observation function is a cyclic vector of \(\mathcal {U}\) or not.

To show this, let us define the shorthand notations \(\Pi _{\mathcal {V}}\) (or \(\Pi _h\)) for the orthogonal projection in \(L^2_{\mu }\) onto a subspace \(\mathcal {V} \subset L^2_{\mu }\) (or onto the one-dimensional subspace spanned by \(h\in L^2_\mu \)) and \(\mathcal {K}_d^- := \textrm{span}\{ f\circ T^{-d+1},\ldots , f\circ T^{-1}\}\). Note, in particular, that \(\mathcal {K}_d^- = \mathcal {U}^{-1} \mathcal {K}_{d-1}\) and \(\mathcal {K}_d = \mathcal {K}_d^- + \textrm{span}\{f\}\). Let \(\Pi _{\mathcal {V}}^{\perp }:= \textrm{id} - \Pi _{\mathcal {V}}\) denote the orthogonal projection onto the orthogonal complement of \(\mathcal {V}\).

Proposition 33

Let \(A_d(n) := \langle \mathcal {U}_d^n f, f \rangle _{L^2_\mu }\) denote the filter’s autocorrelation. We have for every map T and observable f that

Proof

We can express the statement of Proposition 3 as

For \(n=1\), the claim \(A(n) = A_d(n)\) translates to

Note that (14) implies this with \(g=f\) and \(i=0\). By expressing the difference \(\mathcal {U}_d^n - \mathcal {U}^n\) as a telescopic sum, we can extend this result to higher powers n:

where we used \({ \langle \mathcal {U}^i h, f\rangle _{L^2_\mu } = \langle h, f\circ T^{-i} \rangle _{L^2_\mu } }\) for any \(h \in L^2_\mu \), implied by the invariance of \(\mu \). Since \(i\le n-1\), note that \(g:= \mathcal {U}_d^{n-i-1}f \in \mathcal {K}_d\) and (14) implies that for \(i=0,\ldots ,d-1\),

The first term on the right-hand side of (15) is hence zero. Thus, \(A_d(n) = A(n)\) for \(n\le d\), as the second sum on the right-hand side of (15) is empty.

For \(n=d+1\) the second sum on the right-hand side of (15) reduces to

The inequality for \(n=d+1\) thus follows with \(\Vert \mathcal {U}\Vert =1\). Note that since \(\mathcal {U}\) is unitary and \(\mathcal {U}_d\) is the orthogonal projection of \(\mathcal {U}\), by the non-expansiveness of orthogonal projections we have that \(\Vert \mathcal {U}_d\Vert \le 1\).

For \(n\ge d+2\) we can estimate the terms in the second sum on the right-hand side of (15) by

It remains to bound \(\left\| (\mathcal {U}_d - \mathcal {U})\vert _{\mathcal {K}_d} \right\| \). We note that for every \(f^- \in \mathcal {K}_d^-\) we have that \(\mathcal {U}_d f^- = \mathcal {U}f^-\) because \(\mathcal {U}f^- \in \mathcal {K}_d\). Thus, we can write

where the second equation holds because for any g without a contribution from f (i.e., \(g\in \mathcal {K}_d^-\)) the fraction on the right is zero, clearly dominated by the supremum as written. We obtain

The claim follows by applying the bound (16) to every term in the sum. \(\square \)
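The exact reproduction of autocorrelations up to lag d can be checked numerically. The following is a minimal sketch under assumptions that are not part of the text above: we take the circle rotation \(Tx = x + \alpha \pmod 1\) with Lebesgue measure and a real cosine-series observable, for which \(A(n)\) is known in closed form. The function names and the parameter choices (golden-mean angle, geometric weights, \(d=6\)) are purely illustrative. The filter is obtained from the normal equations, and \(A_d(n)\) is computed by applying \(\mathcal {U}_d\) repeatedly to the coefficient vector of f in the basis \(f, f\circ T^{-1},\ldots , f\circ T^{-d+1}\).

```python
import numpy as np

# Assumed test case: rotation T x = x + alpha (mod 1) with Lebesgue measure and
# f(x) = sum_k sqrt(2 w_k) cos(2 pi k x), so that A(n) = sum_k w_k cos(2 pi k alpha n).
alpha = (np.sqrt(5) - 1) / 2
k = np.arange(1, 31)
w = 0.5 ** k


def autocorrelation(n):
    """Exact A(n) = <U^n f, f> for the assumed rotation and observable."""
    return float(np.sum(w * np.cos(2 * np.pi * k * alpha * n)))


def filter_autocorrelation(A, d, n_max):
    """Return the filter c and A_d(0), ..., A_d(n_max), using only A(0), ..., A(d)."""
    idx = np.arange(d)
    # Gram matrix of f, f∘T^{-1}, ..., f∘T^{-(d-1)}; Toeplitz in A because f is real.
    G = A[np.abs(idx[:, None] - idx[None, :])]
    r = A[1:d + 1]                      # <U f, f∘T^{-j}> = A(j+1)
    c = np.linalg.solve(G, r)           # normal equations of the least squares problem
    a = np.zeros(d)
    a[0] = 1.0                          # coefficients of U_d^0 f = f
    values = []
    for _ in range(n_max + 1):
        values.append(a @ A[:d])        # <sum_j a_j f∘T^{-j}, f> = sum_j a_j A(j)
        # Apply U_d: f is sent to sum_j c_j f∘T^{-j}, and f∘T^{-j} to f∘T^{-j+1}.
        a = a[0] * c + np.append(a[1:], 0.0)
    return c, np.array(values)


d, n_max = 6, 12
A = np.array([autocorrelation(n) for n in range(n_max + 1)])
c, A_d = filter_autocorrelation(A, d, n_max)
for n in range(n_max + 1):
    print(f"n={n:2d}  A(n)={A[n]: .6f}  A_d(n)={A_d[n]: .6f}")
```

In this sketch the two columns should agree up to round-off for \(n\le d\), while for \(n>d\) they are free to differ.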

Thus, if f is predictive (in particular, if it is a cyclic vector of \(\mathcal {U}\)), then even

By the Wiener–Khinchin theorem, autocorrelation is related to the notion of power spectrum through Fourier transformation [74]. Since Proposition 33 does not indicate anything about autocorrelation beyond a finite horizon, statements about the reconstruction of the power spectrum seem to be elusive. Methods more powerful than the least squares filter can approximate the power spectrum, e.g. [75, §4.5]. In the next section we consider spectral approximation of \(\mathcal {U}\). We note that the rightmost term in (17) will reappear in the pseudospectral bound given there.

4.2 Some spectral properties

We start with the following trivial observation:

Proposition 34

The spectrum of \(\mathcal {U}_d\) is contained in the closed complex unit disk.

Proof

As in the proof of Proposition 33: Since \(\mathcal {U}\) is unitary and \(\mathcal {U}_d\) is the orthogonal projection of \(\mathcal {U}\), by the non-expansiveness of orthogonal projections we have that \(\Vert \mathcal {U}_d\Vert \le 1\), implying the claim. \(\square \)

Under additional assumptions, stronger statements about the position and the distribution of the spectrum of \(\mathcal {U}_d\) can be found in [46, Corollaries 2 and 3].

How close is the spectrum of \(\mathcal {U}_d\) to that of \(\mathcal {U}\)? The theory of pseudospectra [76] for normal operators offers a way to approach this question. For simplicity of notation, we use \(\Vert \cdot \Vert = \Vert \cdot \Vert _{L^2_\mu }\) for the remainder of this section. A scalar \(\lambda \in \mathbb {C}\) is said to be in the \(\varepsilon \)-pseudospectrum of \(\mathcal {U}\) if there is an \(h\in L^2_{\mu }\) with \(\Vert h\Vert =1\) such that \(\Vert \mathcal {U}h- \lambda h\Vert < \varepsilon \). Since \(\mathcal {U}\) is unitary, hence normal, every value in the \(\varepsilon \)-pseudospectrum is at most distance \(\varepsilon \) away from a true spectral value [76].

One can now take an eigenpair \((\lambda _d,\phi _d)\) of \(\mathcal {U}_d\) with \(\Vert \phi _d\Vert =1\) and estimate \(\Vert \mathcal {U}\phi _d - \lambda _d \phi _d\Vert \) to assess how far \(\lambda _d\) is from the spectrum of \(\mathcal {U}\). Recall the notation introduced prior to Proposition 33. With it, we have:

Proposition 35

Let \(\lambda _d\) be an eigenvalue of \(\mathcal {U}_d\). Then \(\lambda _d\) is contained in the \(\varepsilon \)-pseudospectrum of \(\mathcal {U}\), where

Proof

Let \((\lambda _d,\phi _d)\) be an eigenpair of \(\mathcal {U}_d\) with \(\Vert \phi _d\Vert =1\). Note that we seek \(\varepsilon = \Vert \mathcal {U}\phi _d - \lambda _d \phi _d\Vert \). Let us write \(\phi _d = a f^- + b f\) with \(f^- \in \mathcal {K}_d^-\) and \(a,b \in \mathbb {C}\). Then, since \(\lambda _d\phi _d = \mathcal {U}_d \phi _d\), we have that

because \(\Pi _{\mathcal {K}_d}^{\perp } \mathcal {U}f^- = 0\). We thus need to bound |b| from above, given \(\Vert a f^- + b f\Vert =1\). This can be done by basic trigonometry. Let us consider the triangle with corners \(0,a f^-\), and \(\phi _d\), which then have the opposite “sides” of lengths \(\Vert bf\Vert \), \(\Vert \phi _d\Vert =1\), and \(\Vert a f^-\Vert \), respectively. Denote the angle at the corner 0 by \(\beta \) and the one at \(af^-\) by \(\theta \). It is then immediate that

where we used \(\textrm{span}\{f^-\} \subset \mathcal {K}_d^-\) for the inequality and \(\Pi _{\mathcal {K}_d}^{\perp } f^- = 0\) for the equality. The sine law for this triangle yields

giving \(|b| = \frac{\sin \beta }{\Vert f\Vert \sin \theta }\). Since \(\sin \beta \le 1\), combining this with the above proves the claim.

\(\square \)
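Propositions 34 and 35 can be illustrated on a small example. The sketch below rests on assumptions of our own choosing: the same circle rotation and cosine-series observable as a test case, and the matrix of \(\mathcal {U}_d\vert _{\mathcal {K}_d}\) written in the (generally non-orthogonal) basis \(f, f\circ T^{-1},\ldots , f\circ T^{-d+1}\). For each eigenpair it evaluates the residual via the identity \(\Vert \mathcal {U}\phi _d - \lambda _d\phi _d\Vert = |b|\, \Vert \Pi _{\mathcal {K}_d}^{\perp }\mathcal {U}f\Vert \), which is our reading of the first step of the proof, with b the coefficient of f in the normalized eigenvector. For an irrational rotation the spectrum of \(\mathcal {U}\) is the whole unit circle, so the distance of \(\lambda _d\) to it is \(1-|\lambda _d|\).

```python
import numpy as np

# Assumed test case: rotation by alpha with f(x) = sum_k sqrt(2 w_k) cos(2 pi k x),
# for which A(n) = <U^n f, f> = sum_k w_k cos(2 pi k alpha n).
alpha = (np.sqrt(5) - 1) / 2
k = np.arange(1, 31)
w = 0.5 ** k
d = 6
A = np.array([np.sum(w * np.cos(2 * np.pi * k * alpha * n)) for n in range(d + 1)])

idx = np.arange(d)
G = A[np.abs(idx[:, None] - idx[None, :])]   # Gram matrix of the basis of K_d
r = A[1:d + 1]
c = np.linalg.solve(G, r)                    # least squares filter

# Matrix of U_d on coefficient vectors: f -> sum_j c_j f∘T^{-j}, f∘T^{-j} -> f∘T^{-j+1}.
M = np.zeros((d, d))
M[:, 0] = c
M[idx[:-1], idx[1:]] = 1.0

lam, V = np.linalg.eig(M)
# L^2 norms of the eigenfunctions: ||phi||^2 = v^H G v.
norms = np.sqrt(np.real(np.einsum("ij,ik,kj->j", V.conj(), G, V)))
b = V[0, :] / norms                          # coefficient of f in the unit eigenfunction
forecast_error = np.sqrt(max(A[0] - c @ r, 0.0))   # ||Pi^perp U f||
residual = np.abs(b) * forecast_error        # ||U phi_d - lambda_d phi_d||

for l, res in zip(lam, residual):
    print(f"|lambda_d| = {abs(l):.6f}   1 - |lambda_d| = {1 - abs(l):.3e}   residual = {res:.3e}")
```

Each eigenvalue should lie in the closed unit disk, and its distance to the unit circle should not exceed the printed residual.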

Remark 36

(a) Note that in the expression (18) for \(\varepsilon \), the denominator represents the error to approximate f by the \((d-1)\) observables \(f\circ T^{1-d},\ldots ,f \circ T^{-1}\) preceding it, and the numerator represents the error to approximate \(\mathcal {U}f\) by the d observables \(f\circ T^{1-d},\ldots , f\) preceding it.

(b) In [77, Lemma 2], there was an attempt to make a similar pseudospectral statement. The result, however, is questionable, since its proof seems to be incorrect: Their pseudo-eigenfunction \(\tilde{ \textbf{e} } \cdot \tilde{ \textbf{f} }\) does not have \(L^2_{\mu }\)-norm equal to 1, in general. This also impairs [77, Theorem 5].

Despite the appealing form of (18), we do not seem to be able to draw practical conclusions from it for \(d\rightarrow \infty \). By passing to a subsequence, however, we can make connections to the point spectrum. The following is a consequence of [78, Theorem 4], by noting that \(\mathcal {U}_d\) is the \(L^2_{\mu }\)-orthogonal projection of \(\mathcal {U}\) onto \(\mathcal {K}_d\).

Proposition 37

Let \((\lambda _d,\phi _d)\) be eigenpairs of \(\mathcal {U}_d\) for \(d\in \mathbb {N}\) with \(\Vert \phi _d\Vert =1\). If f is a cyclic vector of \(\mathcal {U}\), then there is a subsequence \((d_k)_k \subset \mathbb {N}\) such that \(\phi _{d_k} {\mathop {\rightarrow }\limits ^{w}} \phi \) and \(\lambda _{d_k} \rightarrow \lambda \), and \(\mathcal {U}\phi = \lambda \phi \). In particular, if \(\phi \ne 0\), then \((\lambda ,\phi )\) is an eigenpair of \(\mathcal {U}\).

At this point it is important to note that methods other than the least squares filter allow stronger claims about the faithful approximation of (parts of) the spectrum of \(\mathcal {U}\), see [46,47,48,49,50,51].

4.3 Approximate filter

Both Theorem 23 and Proposition 26 imply that the set of systems that are predictive for a large set of observables is weakly dense in \(\text {MPT}\). As the weak topology is metrizable [65, p. 64], there is some predictive map “arbitrarily close” to our original system T. Recall, however, that a practical interpretation of this metric seems hardly possible according to Halmos. To remedy this fact somewhat, we will consider this closeness statement on the level of filters. In particular, we show in Proposition 38 below that there is a predictive system that produces a filter arbitrarily close to that produced by T.

Note that, by ergodicity of T, for almost every \(x\in \mathbb {X}\) we obtain the same filter c (cf. Lemma 1). A quick inspection of the structure of the objective function J in (4) reveals that the actual observation sequence is of little relevance for the filter c; what matters are the correlation integrals \(\int (f\circ T^{-i})\, (f\circ T^{-j})\,d\mu \), \(i,j=-1,0,\ldots ,d-1\). Note that Lemma 18(5) gives for a sequence \((T_n)_n \subset \text {MPT}\) with \({ T_n{\mathop {\rightarrow }\limits ^{w}} T }\) that

$$\begin{aligned} \int (f\circ T_n^{-i})\, (f\circ T_n^{-j})\,d\mu \rightarrow \int (f\circ T^{-i})\, (f\circ T^{-j})\,d\mu \quad \text {as } n\rightarrow \infty \end{aligned}$$

for all \(i,j = -1,0,\ldots ,d-1\). Since for a fixed \(d\in \mathbb {N}\) there are finitely many pairs (i, j) to consider, this convergence is uniform across these pairs. Thus, Theorem 23, Equation (19), together with Lemma 1 gives

Proposition 38

Let \(T\in \text {MPT}\) and let \(d\in \mathbb {N}\) be a fixed delay depth. Let \(f\in L^2_\mu \) be an observable such that \(\mathcal {B}_d\) is a basis. Then there are predictive \(S\in \text {MPT}\) that produce filters arbitrarily close to that of T.

Remark 39

In particular, Proposition 38 implies that based on the filter alone, which is computed from (finite or infinite) observation data, one is not able to infer whether the system at hand is predictive. This also implies that the spectrum of \(\mathcal {U}_d\) (which is for a fixed d a continuous function of the filter vector c) is not able to distinguish whether the observed time series comes from a predictive system.

We stress that Proposition 38 is a statement for a fixed delay depth, and in particular does not imply anything about the forecast error \(\Vert \mathcal {U}_d f - \mathcal {U}f\Vert _{L^2_\mu }\) in the limit \(d\rightarrow \infty \).
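The closeness of filters at a fixed delay depth can be made tangible with a toy computation. The sketch below is an illustration under assumptions of our own and does not use the maps constructed in the proofs: T is the rotation by the golden-mean angle \(\alpha \), and the approximating maps S are rotations by Fibonacci ratios \(p/q\rightarrow \alpha \). These rational rotations are periodic, so that \(f\circ S^{q} = f\) and an exact linear forecast exists for \(d\ge q\); we use them as simple stand-ins for predictive systems. The observable and the helper name `filter_from_rotation` are hypothetical choices, and the filter is computed directly from the exact correlation integrals.

```python
import numpy as np

# Assumed observable: f(x) = sum_k sqrt(2 w_k) cos(2 pi k x), for which the correlation
# integrals of the rotation by angle a are A(n) = sum_k w_k cos(2 pi k a n).
k = np.arange(1, 31)
w = 0.5 ** k


def filter_from_rotation(angle, d):
    """Least squares filter of depth d from the exact correlation integrals."""
    A = np.array([np.sum(w * np.cos(2 * np.pi * k * angle * n)) for n in range(d + 1)])
    idx = np.arange(d)
    G = A[np.abs(idx[:, None] - idx[None, :])]
    return np.linalg.solve(G, A[1:d + 1])


d = 3
alpha = (np.sqrt(5) - 1) / 2
c_T = filter_from_rotation(alpha, d)          # filter of the original system T

p, q = 8, 13                                  # consecutive Fibonacci numbers
errors = []
for _ in range(10):
    c_S = filter_from_rotation(p / q, d)      # filter of the periodic rotation by p/q
    errors.append(float(np.linalg.norm(c_S - c_T)))
    print(f"p/q = {p}/{q}   |c_S - c_T| = {errors[-1]:.3e}")
    p, q = q, p + q
```

The printed differences should shrink as \(p/q\) approaches \(\alpha \), although the delay depth at which each periodic system becomes exactly predictable grows with q.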

5 Numerical Examples

We illustrate our results and properties of the linear least squares filter with a variety of examples. In particular, we will consider the prediction error and the autocorrelations.

5.1 Numerical setup

The filter is learned on (observations of) a long “training” trajectory of length \((m+1)\), cf. (2). The mean squared forecast error is computed on a different “testing” trajectory, of the same length \((m+1)\), by sliding the filter along the trajectory and comparing the prediction with the next (the true) element on the trajectory. By ergodicity, the mean square error thus approximates \({ \Vert \mathcal {U}_d f - \mathcal {U}f \Vert _{L^2_\mu }^2 }\) in the limit of \(m\rightarrow \infty \).

The autocorrelations are compared as follows. We generate a large sample of \(\mu \)-distributed initial points \(x^{(i)}_1\), \(i=1,\ldots ,N\). This is done either by random uniform sampling, as in most cases the uniform distribution is the unique ergodic distribution, or, in the case of the one time-continuous system, by a long trajectory whose step size is rationally independent of the one used for learning and testing. From each of these initial conditions we generate trajectory “snippets” of length d, denoted by \(x^{(i)}_1, x^{(i)}_2 = T x^{(i)}_1, \ldots , x^{(i)}_d = T^{d-1} x^{(i)}_1\), and continue each snippet in two ways:

(i) By the true map, applying the observation map to the resulting points afterwards, giving

$$\begin{aligned} f \big (x^{(i)}_1\big ), f \big ( x^{(i)}_2\big ), \ldots , f \big ( x^{(i)}_d\big ), f \big ( x^{(i)}_{d+1} = T x^{(i)}_d\big ), f \big ( x^{(i)}_{d+2} = T^2 x^{(i)}_d\big ), \ldots \end{aligned}$$

(ii) By applying the filter iteratively to the observed trajectory “snippet” to generate an observation sequence of the same length as in (i), giving

$$\begin{aligned} z^{(i)}_1 = f \big (x^{(i)}_1\big ), z^{(i)}_2 = f \big ( x^{(i)}_2\big ), \ldots , z^{(i)}_d = f \big ( x^{(i)}_d\big ), z^{(i)}_{d+1}, z^{(i)}_{d+2}, \ldots , \end{aligned}$$

where \(z^{(i)}_n\), \(n>d\), is obtained by the filter applied to \(z^{(i)}_{n-d},\ldots , z^{(i)}_{n-1}\).
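The procedure described in this subsection can be sketched in a few lines. The code below is a hedged illustration, not the implementation behind the experiments: the system (a circle rotation), the observable (two cosine harmonics), the trajectory length, and all function names are assumptions chosen so that the outcome is easy to verify. Since this observable spans a four-dimensional \(\mathcal {U}\)-invariant subspace, the filter with \(d=4\) should forecast exactly, whereas \(d=2\) should leave a visible error.

```python
import numpy as np

alpha = (np.sqrt(5) - 1) / 2          # assumed rotation angle


def f(x):
    """Assumed observable with two harmonics."""
    return np.cos(2 * np.pi * x) + 0.5 * np.cos(4 * np.pi * x)


def observe(x0, length):
    """Observations f(x0), f(T x0), ... for T x = x + alpha (mod 1)."""
    return f((x0 + alpha * np.arange(length)) % 1.0)


def delay_data(z, d):
    """Rows (z_n, ..., z_{n+d-1}) and the corresponding targets z_{n+d}."""
    X = np.column_stack([z[j:len(z) - d + j] for j in range(d)])
    return X, z[d:]


def learn_filter(z, d):
    """Least squares filter of depth d, ordered from oldest to newest observation."""
    X, y = delay_data(z, d)
    c, *_ = np.linalg.lstsq(X, y, rcond=None)
    return c


def forecast_mse(z, c):
    """Mean squared one-step forecast error when sliding the filter along z."""
    X, y = delay_data(z, len(c))
    return float(np.mean((X @ c - y) ** 2))


m = 5000
train, test = observe(0.1, m + 1), observe(0.37, m + 1)
filters = {d: learn_filter(train, d) for d in (2, 4)}
mse = {d: forecast_mse(test, c) for d, c in filters.items()}
print("test MSE by delay depth:", mse)

# Snippets: continue by the true map (i) and by iterating the filter (ii).
rng = np.random.default_rng(0)
d, steps, N = 4, 5, 1000
x1 = rng.random(N)                                         # uniform initial points
true_obs = np.stack([observe(x, d + steps) for x in x1])   # continuation (i)
Z = true_obs[:, :d].copy()
for _ in range(steps):                                     # continuation (ii)
    Z = np.column_stack([Z, Z[:, -d:] @ filters[d]])
max_gap = float(np.max(np.abs(Z - true_obs)))
print("largest gap between (i) and (ii):", max_gap)
```

Autocorrelation estimates for both continuations could then be formed by averaging products of entries of `true_obs` and of `Z` over the N snippets.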