Abstract

We consider a discrete-time TASEP, where each particle jumps according to Bernoulli random variables with particle-dependent and time-inhomogeneous parameters. We use the combinatorics of the Robinson–Schensted–Knuth correspondence and certain intertwining relations to express the transition kernel of this interacting particle system in terms of ensembles of weighted, non-intersecting lattice paths and, consequently, as a marginal of a determinantal point process. We next express the joint distribution of the particle positions as a Fredholm determinant, whose correlation kernel is given in terms of a boundary-value problem for a discrete heat equation. The solution to such a problem finally leads us to a representation of the correlation kernel in terms of random walk hitting probabilities, generalizing the formulation of Matetski et al. (Acta Math. 227(1):115–203, 2021) to the case of both particle- and time-inhomogeneous rates. The solution to the boundary value problem in the fully inhomogeneous case appears with a finer structure than in the homogeneous case.

1 Introduction

1.1 Background and literature

The Kardar–Parisi–Zhang (KPZ) universality class is a large class of stochastic systems with highly correlated components that exhibit a similar statistical asymptotic behavior under space-time rescaling. They include \((1+1)\)-dimensional random growth models, interacting particle systems, eigenvalues of random matrices, and stochastic partial differential equations. These models can be characterized by means of a space-time ‘height function’, which typically features random non-Gaussian fluctuations depending on the initial height profile. For certain specific initial configurations (e.g. ‘step’ and ‘flat’), the one-point distributions are given by the Tracy–Widom laws (first introduced in the random matrix literature [TW94, TW96]) and the multi-point distributions are given by Airy processes. Such precise asymptotics have been obtained so far only for a few integrable models, whose rich algebraic structure leads to exact formulas that are suitable for asymptotic analysis; see e.g. [BG16, Zyg22]. Among these integrable models, the most accessible ones can be described in terms of determinantal measures: popular examples are the corner growth model, the polynuclear growth model, last passage percolation, and various types of exclusion processes.

It is conjectured that all KPZ models, under the so-called 1:2:3-scaling, converge to a universal, scale-invariant Markov process, known as the KPZ fixed point. Such a limiting process was constructed in [MQR21] for the continuous-time Totally Asymmetric Simple Exclusion Process (\(\textsf{TASEP}\)), a prototypical interacting particle system on the integer line. The transition probabilities of \(\textsf{TASEP}\) were first shown to admit determinantal formulas in [Sch97], using the coordinate Bethe ansatz. Based on these formulas, [Sas05, BFPS07] showed that the \(\textsf{TASEP}\) evolution is encoded by a determinantal point process; consequently, for arbitrary initial configurations, the multi-point distribution of \(\textsf{TASEP}\) particles was given as a Fredholm determinant, whose kernel is implicitly characterized by a biorthogonalization problem. This problem was solved in [BFPS07] in the case of flat (2-periodic) initial configuration for the particles. The solution to the problem of biorthogonalization for general initial configuration was given in [MQR21], where the kernel was expressed in terms of a functional of a random walk and its hitting times to a curve encoding the (arbitrary) initial configuration. The KPZ fixed point was then constructed by taking a Donsker type scaling limit, under which random walk and associated hitting times turn into Brownian motion and corresponding hitting times.

Since the seminal contribution of [MQR21], numerous works have considered other, not only determinantal, KPZ models with general initial configurations, obtaining similar Fredholm determinant formulas and, in certain cases, also convergence to the KPZ fixed point. Here is a non-exhaustive list of such results. A system of one-sided reflected Brownian motions was studied in [NQR20]. Two variations of discrete-time \(\textsf{TASEP}\) with geometric and Bernoulli jumps were considered in [Ara20]. Convergence to the KPZ fixed point was proved in [QS23] in the case of finite range asymmetric exclusion processes and the KPZ equation, for certain classes of initial conditions. In [MR22] it was shown that the method of [MQR21] can cover a general class of models whose multipoint distributions possess a Schütz type determinantal formula.

In the present work, we consider a discrete-time \(\textsf{TASEP}\) with inhomogeneous jump probabilities. We provide an explicit, step-by-step route from the very definition of the model to a Fredholm determinant representation of the joint distribution of the particle positions in terms of random walk hitting times. This is, to the best of our knowledge, the first such formulation for an interacting particle system with both particle- and time-inhomogeneous rates. As discussed above, a result of this type, for the continuous-time and homogeneous-rate \(\textsf{TASEP}\), was first obtained in [MQR21]. However, our approach differs from that of [MQR21]: Firstly, our starting point is not a Schütz type formula, but rather the combinatorial structure of the integrable model. Moreover, instead of solving the biorthogonalization problem, we map to ensembles of non-intersecting paths. We work directly with the corresponding determinantal processes, exploiting some special features that emerge through mapping to path ensembles and from the expression of the path weights via local operators. We hope this perspective can shed additional light on the structure of the KPZ fixed point formulas and may also be useful in different settings, in particular for other particle systems that can be characterized by intertwining relations. In the next subsection we present our result and discuss our approach in detail.

1.2 Our result and approach

In all variations of \(\textsf{TASEP}\), particles occupy sites of \(\mathbb {Z}\) and, according to a stochastic mechanism, perform jumps in the same direction (to the right, by convention). The interaction between particles consists in the exclusion rule: no two particles may occupy the same position at any given time. Therefore, if a particle attempts to jump to an occupied site, the jump is suppressed. There are several possible stochastic mechanisms inducing the dynamics. In this article, as a working example, we consider a version of \(\textsf{TASEP}\) where:

(i) there is a finite number \(N\ge 2\) of particles;
(ii) the dynamics evolves in discrete time;
(iii) jump sizes are given by independent Bernoulli random variables;
(iv) the expected jump size may depend both on the particle (particle-inhomogeneous rates) and on time (time-inhomogeneous rates);
(v) particle positions are updated sequentially from right to left.

Let us define the version of \(\textsf{TASEP}\) we are concerned with. For \(k=1,\ldots ,N\), denote by \(Y_k(t) \in \mathbb {Z}\) the position of the k-th particle from the right at time \(t \in \mathbb {Z}_{\ge 0}\). Therefore, the configuration encoding the positions of the particles, at time t, will be

\[ Y(t) = (Y_1(t)> Y_2(t)> \cdots > Y_N(t)), \]

with arbitrary initial configuration

\[ Y(0) = {\varvec{y}} = (y_1> y_2> \cdots > y_N). \]

Let \(p_t\), \(t\ge 1\), and \(q_k\), \(1\le k\le N\), be positive parameters. The random dynamics is then given by sequential updates from right to left, i.e. from the particle labeled 1 to the particle labeled N, as follows: Assume we have already updated the position of the \((k-1)\)-th particle at time t (with \(k\ge 2\)). Then, the k-th particle updates its position at time t by jumping one step to the right with probability \(p_tq_k/(1+p_tq_k)\), assuming that the neighboring site on its right is not occupied by the \((k-1)\)-th particle; otherwise, the particle remains in its current position. We call this model discrete-time (Bernoulli) \(\textsf{TASEP}\) and abbreviate it as \(\textsf{dTASEP}\).
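The sequential update just described can be illustrated with a short simulation. The following is only a sketch under stated assumptions, not part of the analysis: the function names `dtasep_step` and `simulate` are hypothetical, positions are stored in a list whose index 0 is the rightmost particle (so index `k` corresponds to particle \(k+1\)), and the time-dependent parameters \(p_t\) are passed as a finite list.

```python
import random


def dtasep_step(positions, p_t, q, rng):
    """One time step: particles are updated from right (index 0) to left."""
    new_positions = []
    for k, y in enumerate(positions):
        # Jump probability p_t q_k / (1 + p_t q_k) for this particle and time.
        jump_prob = p_t * q[k] / (1.0 + p_t * q[k])
        wants_jump = rng.random() < jump_prob
        # The target site y + 1 is blocked if the already updated
        # right neighbour occupies it (the rightmost particle is never blocked).
        blocked = k > 0 and new_positions[k - 1] == y + 1
        new_positions.append(y + 1 if (wants_jump and not blocked) else y)
    return new_positions


def simulate(initial, p, q, seed=0):
    """Return the list of configurations Y(0), Y(1), ..., Y(len(p))."""
    rng = random.Random(seed)
    trajectory = [list(initial)]
    for p_t in p:
        trajectory.append(dtasep_step(trajectory[-1], p_t, q, rng))
    return trajectory


if __name__ == "__main__":
    # Illustrative parameters (hypothetical values with q_k > 1 and q_k p_t < 1).
    for config in simulate([0, -1, -2], [0.3, 0.2, 0.35, 0.1], [1.5, 2.0, 2.5]):
        print(config)
```

Because each particle looks at the updated position of its right neighbour, a particle may jump into a site that the neighbour vacated during the same time step; this is what distinguishes the sequential update from a parallel one.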

To state our main result, we first define the following kernels of operators from \(\mathbb {Z}\) to \(\mathbb {Z}\). For \(1\le j\le k\) and \(0\le r\le t\), let

where \(\Gamma _0\) and \(\Gamma _{{\varvec{q}}}\) are simple closed contours with counterclockwise orientation, enclosing 0 and \(\{q_i\}_{i=1}^N\) as the only poles, respectively. The kernels \(\mathcal {S}_{[j,k],(r,t]}\) are compositions of some random walk transition kernels and \(\bar{\mathcal {S}}_{[j,k],(r,t]}\) are dual versions of \(\mathcal {S}_{[j,k],(r,t]}\) with contribution coming from the poles \(\{q_i\}_{i=1}^N\) instead of 0; see Proposition 4.1 for the precise statement. The random walk/path interpretations of the kernels will be explained in Sects. 2–4.

Let S be a geometric random walk (as defined in (4.24)). Let

be the (discrete) strict epigraph of the (discrete) curve \((i,y_{i+1})_{0\le i\le N-1}\). For \(n\le N\), let \(\tau \) be the first time \(\le n\) at which the random walk S hits \(\textrm{epi}(y)\):

The kernel encoding the initial configuration \({\varvec{y}}=(y_1>\cdots >y_N)\) is then expressed in terms of \(\bar{\mathcal {S}}\) and \(\tau \) as the expectation

Let also \(Q_i(x,y):= q_i^{y-x}\mathbbm {1}_{\{y< x\}}\) for \(1\le i\le N\) and \(x,y\in \mathbb {Z}\) and \(Q_{(m,n]}:=Q_{m+1}\circ \cdots \circ Q_n\) for \(n>m\).

Finally, for two operators A and B with kernels A(x, y) and B(x, y), \(x,y\in \mathbb {Z}\), we define the composition operator \(A\circ B\) through the kernel \((A\circ B) (x,z):=\sum _{y\in \mathbb {Z}} A(x,y) B(y,z)\). With these notations, our main result is the following.

Theorem 1.1

(Multipoint distributions of \(\textsf{dTASEP}\) with particle- and time-inhomogeneous rates). Let \((Y(t))_{t\ge 0}=(Y_1(t)>\cdots >Y_N(t))_{t\ge 0}\) be the locations of N particles evolving according to the \(\textsf{dTASEP}\) dynamics with parameters \(\{p_t\}_{t\ge 1}\) and \(\{q_k\}_{k=1}^N\) and initial configuration \(Y(0)={\varvec{y}}\). Assume that \(q_kp_t<1\) for all k, t and \(q_k>1\) for all k. Then, the joint distribution of particle locations at time t is given by the Fredholm determinant

for any \(m\in \mathbb {N}\), \(1\le k_1<k_2<\cdots <k_m\le N\) and \((s_1,\ldots ,s_m)\in {\mathbb {R}^m}\), where \(\chi _s(k_i,x):= \mathbbm {1}_{x<s_i}\) and K is the kernel

We remark that the condition \(q_k p_t<1\) for all k, t is equivalent to assuming that, for all k, t, the k-th particle attempts its t-th jump with probability \((q_k p_t)/(1+q_k p_t)<\frac{1}{2}\). The case when all the jump probabilities are greater than 1/2 can be analyzed using particle-hole duality (see for example [Fer04]). Our theorem does not cover the case where some of the jump rates are \(>\frac{1}{2}\) and others are \(<\frac{1}{2}\). This seems to be a common restriction, appearing for example also in [MR22, Assumption 1.1].

The assumption \(q_k>1\) is innocuous, as we will explain in Remark 4.8.

The original work [MQR21] dealt with homogeneous rates, while [Ara20] considered time-inhomogeneous rates only. In their recent work [MR22], Matetski and Remenik expressed interest in the case of particle-inhomogeneous rates, considering it “meaningful from a physical point of view”. However, they only considered this general case in the preliminary part of their analysis, and did not solve the corresponding biorthogonalization problem.

Let us mention that shape functions and hydrodynamic limits of inhomogeneous \(\textsf{TASEP}\) and corner growth model have been studied since the 1990s; see [SK99, Emr16, EJS21]. Furthermore, multipoint formulas for \(\textsf{TASEP}\) with particle- and time-inhomogeneous rates have been obtained e.g. in [BP08, KPS19, JR22, IMS22]. However, Theorem 1.1 expresses, for the first time in the case of a particle- and time-inhomogeneous \(\textsf{TASEP}\), the joint law of particle locations in terms of random walk hitting times. As we explain later in the introduction, we obtain these formulas in a quite different way from [MQR21, MR22], overcoming the technical difficulties that such a generalization presents.

The route we follow to prove Theorem 1.1 is also new, in various aspects, compared to the methods used so far, as we now discuss. The remarkable idea of expressing the multipoint law in terms of a random walk hitting problem is due to [MQR21]. Before that, the standard representation was in terms of a Fredholm determinant involving two families of biorthogonal functions \(\{\Psi _k^n(\cdot ), \Phi _k^n(\cdot ) \}_{1\le k \le n }\), where \(\{\Psi _k^n(\cdot )\}\) are typically given explicitly, but \(\{\Phi _k^n(\cdot )\}\) are to be determined by solving the biorthogonalisation problem with respect to \(\{\Psi _k^n(\cdot )\}\). This formulation was first established in [Sas05, BFPS07], where the case of flat (2-periodic) initial configuration for continuous-time \(\textsf{TASEP}\) (which corresponds to a biorthogonalisation problem for shifted Charlier polynomials) was solved.

Starting from the determinantal formula of [BFPS07] for \(\textsf{TASEP}\) with general initial configuration, [MQR21] were able to solve the biorthogonalisation problem in terms of a hitting time expectation of the form (1.4). At the core of this lay an expression of the family \(\{\Phi _k^n(\cdot )\}\) in terms of a terminal-boundary value problem for a discrete heat equation. The fact that the family \(\{\Phi _k^n(\cdot )\}\) obtained by this method solves the biorthogonal problem with respect to \(\{\Psi _k^n(\cdot )\}\) was achieved via a direct check. As explained in [MQR21] (see also [Rem22]), the intuition that led to such a guess was based on two points. Firstly, thanks to the “skew time reversibility” of \(\textsf{TASEP}\), the one-point distribution of \(\textsf{TASEP}\) with homogeneous parameters and a general initial profile is equal to the multi-point distribution of \(\textsf{TASEP}\) starting from the so-called step initial configuration (which represented the first solvable example of a particle system [Joh00]). Secondly, the multi-point distribution of \(\textsf{TASEP}\) with the step initial condition has been known since [PS02] to possess the Fredholm determinant expression

where \(Q^n\) is the n-step transition kernel of a homogeneous, geometric random walk, \(K_t^{(n)}\) is the kernel of the one-point distribution of the model and \(\chi '_{s}(k_j,x):=\mathbbm {1}_{x>s_j}\). The expression \(Q^{k_1-k_m}\chi '_{s_1} Q^{k_2-k_1}\chi '_{s_2}\cdots Q^{k_m-k_{m-1}}\chi '_{s_m}\) can then be interpreted as the probability that a homogeneous, geometric random walk with transition kernel Q lies above \(s_1,\ldots ,s_m\) at times \(k_1,\ldots ,k_m\). We remark that, due to the aforementioned skew time reversibility of \(\textsf{TASEP}\), the levels \(s_1,\ldots ,s_m\) are related to the initial (rather than final) positions of the particles.

Our approach to Theorem 1.1 differs from the above, even though our guiding principle has been the already mentioned terminal-boundary value problem and a desire to better understand its foundations. We do not start from the determinantal formulas; instead, we work with the combinatorial foundations of discrete \(\textsf{TASEP}\) and its links, via intertwinings and Markov functions, to determinantal point processes. We first compute the transition kernel of \(\textsf{dTASEP}\) by using the column insertion, dual version of the Robinson–Schensted–Knuth (\(\textsf{RSK}\)) correspondence, which we abbreviate as \(\textsf{dRSK}\). As it turns out, this combinatorial algorithm, viewed from a dynamical standpoint, encodes the \(\textsf{dTASEP}\) dynamics as a projection. Furthermore, the transition kernel of \(\textsf{dTASEP}\) intertwines with the transition kernel of the evolution of the shape of the tableaux generated by the \(\textsf{dRSK}\) dynamics and, thus, can be written as the latter kernel conjugated by an ‘intertwining’ kernel; see (2.25). The general link between \(\textsf{TASEP}\) dynamics and \(\textsf{RSK}\) correspondences is of course well known; see for example [DW08] and references therein. In particular, our approach can be regarded as a time-inhomogeneous generalization of [DW08] (see Case B: ‘Bernoulli jumps with blocking’).

Next, we interpret all the kernels appearing in our representation of the \(\textsf{dTASEP}\) transition kernel in terms of weights of ensembles of non-intersecting lattice paths; see (3.29). For our later goals, it is important to remark that these weights can be expressed in terms of one-step (local) transition operators.

The Lindström–Gessel–Viennot theorem leads, then, to a determinantal formulation for all these kernels, thus allowing us to view the transition distribution of \(\textsf{dTASEP}\) as a marginal of a determinantal point process; see (3.35). Using standard methods in the theory of determinantal point processes (as in [BFPS07, Joh03]), we express the fixed-time joint distribution of \(\textsf{dTASEP}\) particles as a Fredholm determinant; see Proposition 3.10. The correlation kernel of the Fredholm determinant involves the local operators encoding the transition weights of the path ensemble as well as the inverse of a matrix M; see (3.53)–(3.54). The geometric picture that we obtain through the non-intersecting path ensembles leads us to conclude that M is upper triangular and, therefore, explicitly invertible. This crucial aspect leads to the boundary-terminal value problem (Proposition 4.2), which we next solve to arrive at the random walk hitting formula (1.4). This task turns out to be more challenging than in [MQR21], since, in the case of particle-inhomogeneous rates, the solution is not spanned by polynomials; see Remark 4.4. In particular, we develop a very careful double induction argument (see the proof of Proposition 4.6) that involves some subtle cancellations of inclusion–exclusion type that take place in the formulas.

As outlined in Sect. 1.1, the KPZ fixed point was constructed in [MQR21] as the 1:2:3 scaling limit, at large time and length scales, of the homogeneous continuous-time \(\textsf{TASEP}\). The present work paves the way to construct analogous processes from particle systems with variable, fast/slow, rates. We leave this task for future work.

1.3 Outline of the article

In Sect. 2 we start by presenting some combinatorial objects and, in particular, the dual, column \(\textsf{RSK}\) algorithm (\(\textsf{dRSK}\)) and its link with discrete-time \(\textsf{TASEP}\) (\(\textsf{dTASEP}\)); we also obtain an expression for the transition probability kernel of \(\textsf{dTASEP}\) via an intertwining relation. In Sect. 3 we re-express the transition kernel of \(\textsf{dTASEP}\) in terms of weights of ensembles of non-intersecting paths and determinantal point processes, thus arriving at an initial Fredholm determinant formula. In Sect. 4 we prove our main result, Theorem 1.1, first formulating a terminal-boundary value problem and then solving it, to arrive at the hitting time representation for the correlation kernel of \(\textsf{dTASEP}\) with inhomogeneous rates. To solve the terminal-boundary value problem, we first use path representations for certain subsets of \(\mathbb {Z}\), and then extend the solution to the whole space via a subtle double induction argument (see Proposition 4.6).

2 \(\textsf{TASEP}\) Dynamics and Combinatorics

In this section we present the main combinatorial tools that we need for the analysis of the \(\textsf{dTASEP}\) dynamics. In Sect. 2.1, we introduce a few standard algebraic combinatorial objects. In Sect. 2.2, we describe the dual, column \(\textsf{RSK}\) (\(\textsf{dRSK}\)) correspondence and its main properties. In Sect. 2.3, we discuss the link between \(\textsf{dTASEP}\) and \(\textsf{dRSK}\). Finally, in Sect. 2.4 we establish certain intertwining relations and deduce a preliminary expression for the \(\textsf{dTASEP}\) transition kernel.

2.1 Partitions, tableaux, and Schur polynomials

A partition \(\lambda \) of \(n\ge 0\) is a sequence \(\lambda = (\lambda _1\ge \lambda _2 \ge \cdots )\) of weakly decreasing non-negative integers, called parts of \(\lambda \), such that \(|\lambda |:=\sum _{i\ge 1} \lambda _i =n\). If \(\lambda \) is a partition of n, we write \(\lambda \vdash n\) and refer to n as the size of \(\lambda \). We will also say that \(\lambda \) is a partition without referring to its size. We will denote by \(\emptyset \) the only partition of 0. Any partition of n can be graphically represented as a Young diagram of size n, i.e. a collection of n cells arranged in left-justified rows, with \(\lambda _i\) cells in the i-th row. Every such cell can be identified with a pair \((i,j) \in \mathbb {Z}_{\ge 1}^2\) with row index i and column index j; thus, we may alternatively write \(\lambda \) as the set of such pairs:

\[ \lambda = \{(i,j)\in \mathbb {Z}_{\ge 1}^2 :1\le j\le \lambda _i \}. \tag{2.1} \]

The conjugate partition of \(\lambda \), which we denote by \(\lambda ^{\top }\), is defined by setting \(\lambda _i^{\top }\) to be the number of \(k\ge 1\) such that \(\lambda _k\ge i\); conjugating a partition corresponds to transposing the associated Young diagram. The length of \(\lambda \) is the number of its non-zero parts; since it clearly coincides with the first part of the conjugate partition \(\lambda ^{\top }\), we denote it by \(\lambda ^{\top }_1\).
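As a small illustration of the definition of \(\lambda ^{\top }\), the following sketch computes the conjugate of a partition stored as a list of parts. The function name `conjugate` and the list representation are assumptions made for this example only.

```python
def conjugate(partition):
    """Conjugate partition: the i-th part counts the parts of `partition` that are >= i."""
    parts = [p for p in partition if p > 0]  # ignore trailing zeros
    if not parts:
        return []
    # parts[0] is the largest part, hence the number of columns of the diagram.
    return [sum(1 for p in parts if p >= i) for i in range(1, parts[0] + 1)]


if __name__ == "__main__":
    lam = [4, 2, 1]
    lam_t = conjugate(lam)
    print(lam_t)                  # conjugate partition
    print(lam_t[0] == len(lam))   # first part of the conjugate equals the length
```

Applying `conjugate` twice returns the original partition, which reflects the fact that conjugation is the transposition of the Young diagram.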

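We define the (discrete) Weyl chamber as

\[ {\textsf{W}}_n := \{ \lambda = (\lambda _1,\ldots ,\lambda _n) \in \mathbb {Z}^n :\lambda _1 \ge \lambda _2 \ge \cdots \ge \lambda _n \}. \]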

Throughout this section, elements of \({\textsf{W}}_n\) will be implicitly taken to have non-negative components and, thus, to be integer partitions of length \(\le n\). In later sections, we will drop this assumption and consider elements of \({\textsf{W}}_n\) with possibly negative components.

For any two partitions \(\lambda \) and \(\mu \), we write \(\mu \subseteq \lambda \) if \(\mu _i\le \lambda _i\) for all \(i\ge 1\), or equivalently if \(\mu \) is a subset of \(\lambda \), viewing the partitions as sets as in (2.1). A skew Young diagram \(\lambda /\mu \) is the set difference between two partitions \(\lambda \) and \(\mu \) such that \(\mu \subseteq \lambda \). The size of \(\lambda /\mu \), denoted by \(|\lambda /\mu |\), is the number of its cells, which equals \(|\lambda | - |\mu |\). If \(\lambda /\mu \) has at most one cell per column, we call it a horizontal strip; if \(\lambda /\mu \) has at most one cell per row, we call it a vertical strip. We say that two partitions \(\mu \) and \(\lambda \) interlace, and write \(\mu \prec \lambda \), if \(\lambda /\mu \) is a horizontal strip, or equivalently if \(\lambda _i \ge \mu _i \ge \lambda _{i+1}\) for all \(i\ge 1\).
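The containment, strip, and interlacing conditions above are simple inequalities on the parts, which the following sketch checks directly. The helper names (`part`, `contains`, `is_horizontal_strip`, `is_vertical_strip`) are hypothetical, and partitions are assumed to be stored as lists of parts padded implicitly with zeros.

```python
def part(partition, i):
    """i-th part (1-indexed), with the convention that missing parts are zero."""
    return partition[i - 1] if i <= len(partition) else 0


def contains(lam, mu):
    """mu is contained in lam: mu_i <= lam_i for all i."""
    n = max(len(lam), len(mu))
    return all(part(mu, i) <= part(lam, i) for i in range(1, n + 1))


def is_horizontal_strip(lam, mu):
    """lam/mu has at most one cell per column: lam_i >= mu_i >= lam_{i+1} for all i."""
    n = max(len(lam), len(mu))
    return all(part(lam, i) >= part(mu, i) >= part(lam, i + 1) for i in range(1, n + 1))


def is_vertical_strip(lam, mu):
    """lam/mu has at most one cell per row: 0 <= lam_i - mu_i <= 1 for all i."""
    n = max(len(lam), len(mu))
    return all(0 <= part(lam, i) - part(mu, i) <= 1 for i in range(1, n + 1))


if __name__ == "__main__":
    print(is_horizontal_strip([3, 1], [2, 1]))  # mu = (2,1) interlaces with lam = (3,1)
    print(is_horizontal_strip([2, 2], [1, 1]))  # two cells in the same column
    print(is_vertical_strip([2, 2], [1, 1]))
```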

A Young tableau \({\textsf{T}}=\{{\textsf{T}}_{i,j} :(i,j)\in \lambda \}\) of shape \(\lambda \) is a filling of a Young diagram \(\lambda \) with elements of an alphabet \(A\subseteq \mathbb {Z}_{\ge 1}\). We write \({{\,\mathrm{\textrm{sh}}\,}}({\textsf{T}})\) for the shape of \({\textsf{T}}\). The transpose of \({\textsf{T}}\), denoted by \({\textsf{T}}^{\top }\), is the tableau of shape \(\lambda ^{\top }\) that is obtained from \({\textsf{T}}\) by exchanging its rows with its columns. A Young tableau \({\textsf{T}}\) is called column-strict if its entries weakly increase along rows and strictly increase down columns. Every column-strict Young tableau \({\textsf{T}}\) of shape \(\lambda \) can be alternatively represented as a sequence of interlacing partitions:

\[ \emptyset = \lambda ^{(0)} \prec \lambda ^{(1)} \prec \lambda ^{(2)} \prec \cdots , \]

where each \(\lambda ^{(k)}\) is the shape of the Young tableau obtained from \({\textsf{T}}\) by removing all the cells containing numbers \(>k\). By the column-strict property of \({\textsf{T}}\), we have \(\lambda ^{(k)} \in {\textsf{W}}_k\) for all k, and the partitions interlace. Clearly, \(\lambda ^{(k)}\) coincides with \(\lambda \) for k large enough; therefore, one can think of the sequence as finite, by stopping it at any \(\lambda ^{(k)}\) such that \(\lambda ^{(k)}=\lambda \). See Fig. 1 for an example of a column-strict Young tableau. Similarly, a Young tableau \({\textsf{T}}\) is called row-strict if its rows are strictly increasing and its columns are weakly increasing, or equivalently if \({\textsf{T}}^{\top }\) is column-strict.

We define the left edge of a column-strict tableau \({\textsf{T}}= \big (\lambda ^{(0)} \prec \lambda ^{(1)} \prec \lambda ^{(2)} \prec \cdots \big )\) to be the partition

\[ \big (\lambda ^{(1)}_1 \ge \lambda ^{(2)}_2 \ge \lambda ^{(3)}_3 \ge \cdots \big ). \]

Notice that, as all entries of the k-th row of \({\textsf{T}}\) are \(\ge k\) by the column-strict property, \(\lambda ^{(k)}_k\) is simply the number of k’s in the k-th row of \({\textsf{T}}\). See again Fig. 1 for an example.
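The passage from a column-strict tableau to its sequence of interlacing partitions, and the reading of the left edge, can be made concrete as follows. This is an illustrative sketch: the tableau is assumed to be stored as a list of rows, and the names `trim`, `interlacing_shapes`, and `left_edge` are hypothetical.

```python
def trim(partition):
    """Drop trailing zero parts."""
    parts = list(partition)
    while parts and parts[-1] == 0:
        parts.pop()
    return parts


def interlacing_shapes(rows, n):
    """Shapes lam^(0), ..., lam^(n): lam^(k) counts, row by row, the entries <= k."""
    return [trim(sum(1 for e in row if e <= k) for row in rows) for k in range(n + 1)]


def left_edge(rows):
    """Left edge: the k-th part is the number of k's in the k-th row."""
    return trim(sum(1 for e in row if e == k) for k, row in enumerate(rows, start=1))


if __name__ == "__main__":
    # A column-strict tableau of shape (4, 2, 1) in the alphabet {1, 2, 3}.
    tableau = [[1, 1, 2, 3], [2, 3], [3]]
    for k, shape in enumerate(interlacing_shapes(tableau, 3)):
        print(k, shape)
    print(left_edge(tableau))
```

For the tableau in the usage example, the k-th part of the left edge coincides with the k-th part of \(\lambda ^{(k)}\), in agreement with the remark above.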

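Finally, we give two equivalent, combinatorial definitions of Schur polynomials. Let \(n\ge 1\) and \(\lambda \in {\textsf{W}}_n\). The Schur polynomial in n variables of shape \(\lambda \) is given by

\[ \textrm{s}_{\lambda }(x_1,\ldots ,x_n) := \sum _{\lambda ^{(0)} \prec \cdots \prec \lambda ^{(n)}} \prod _{k=1}^n x_k^{|\lambda ^{(k)}/\lambda ^{(k-1)}|} = \sum _{{\textsf{T}}} \prod _{k=1}^n x_k^{\#\{(i,j)\in \lambda :{\textsf{T}}_{i,j}=k\}}. \]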

The first sum is taken over any sequence \(\big (\lambda ^{(0)} \prec \cdots \prec \lambda ^{(n)}\big )\) of interlacing partitions such that \(\lambda ^{(k)} \in {\textsf{W}}_k\) for all k and \(\lambda ^{(n)}=\lambda \). The second sum is taken over any column-strict Young tableau \({\textsf{T}}\) of shape \(\lambda \) in the alphabet \(\{1,\ldots ,n\}\). It is also convenient to define \(\textrm{s}_{\lambda }(x_1,\ldots ,x_n):=0\) whenever the length of \(\lambda \) exceeds n.
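The sum over interlacing sequences can be evaluated recursively by peeling off the last variable: one sums over all \(\mu \prec \lambda \) of length at most \(n-1\), with weight \(x_n^{|\lambda |-|\mu |}\) (the standard weight of a sequence being \(\prod _k x_k^{|\lambda ^{(k)}|-|\lambda ^{(k-1)}|}\)). The sketch below does this by brute force; the function name `schur` is hypothetical, and the approach is meant only for small examples, not as an efficient algorithm.

```python
from itertools import product


def schur(lam, xs):
    """Evaluate the Schur polynomial of shape `lam` at the numbers `xs`."""
    lam = [p for p in lam if p > 0]
    n = len(xs)
    if len(lam) > n:
        return 0  # convention: zero when the length of lam exceeds n
    if n == 0:
        return 1  # empty shape, no variables
    padded = lam + [0] * (n - len(lam))
    # Interlacing mu with lam: lam_i >= mu_i >= lam_{i+1} for i = 1, ..., n-1.
    ranges = [range(padded[i + 1], padded[i] + 1) for i in range(n - 1)]
    total = 0
    for mu in product(*ranges):
        total += schur(list(mu), xs[:-1]) * xs[-1] ** (sum(lam) - sum(mu))
    return total


if __name__ == "__main__":
    print(schur([1], [2, 3]))         # x1 + x2 at (2, 3)
    print(schur([2, 1], [1, 1, 1]))   # number of column-strict tableaux of shape (2,1), entries <= 3
```

Evaluating at \(x_1=\cdots =x_n=1\) counts the column-strict tableaux of shape \(\lambda \) in the alphabet \(\{1,\ldots ,n\}\), which gives a quick sanity check of the second sum.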

2.2 Dual column \(\textsf{RSK}\)

As we will see, the \(\textsf{TASEP}\) dynamics we are concerned with are encoded by a certain variation of the Robinson–Schensted–Knuth correspondence (\(\textsf{RSK}\)), a celebrated combinatorial algorithm [Knu70, Ful97, Sta99].

All the \(\textsf{RSK}\) variations map a matrix (input) to a pair of Young tableaux (output). They can be differentiated based on two key factors:

-

The input may be a non-negative integer matrix or a \(\{0,1\}\)-matrix (in the latter case, one usually talks about dual \(\textsf{RSK}\));

-

The algorithm may be based on the so-called row insertion or column insertion.

According to these factors, one obtains four variations of \(\textsf{RSK}\): row \(\textsf{RSK}\), column \(\textsf{RSK}\), dual row \(\textsf{RSK}\), and dual column \(\textsf{RSK}\). For our purposes we need the latter variation, dual column \(\textsf{RSK}\), which we abbreviate as \(\textsf{dRSK}\). We introduce it here and refer to [Ful97, A.4.3] for further details.

It is convenient to first define, for \(j\ge 1\), a mapping

\[ \mathcal {I}_j :({\textsf{T}},x) \mapsto ({\textsf{T}}',y), \tag{2.6} \]

which should be interpreted as the insertion of a number x into the j-th column of a tableau \({\textsf{T}}\). Here:

(i) \({\textsf{T}}\) is an input column-strict Young tableau of shape \(\lambda \), with \(\lambda _1 \ge j-1\);
(ii) x is an input positive integer such that, if \(j>1\) and \(\lambda ^{\top }_j = \lambda ^{\top }_{j-1}\), then \(x\le \max _{i} {\textsf{T}}_{i,j}\);
(iii) \({\textsf{T}}'\) is an output column-strict Young tableau;
(iv) y is either an output positive integer or a ‘stop symbol’.

The mapping works as follows. For fixed \(j\ge 1\), if all entries \({\textsf{T}}_{i,j}\), \(1\le i\le \lambda _j^{\top }\), of the j-th column of \({\textsf{T}}\) are \(<x\) (so that, by (ii), we have \(\lambda ^{\top }_j < \lambda ^{\top }_{j-1}\)), then a new cell \((\lambda ^{\top }_j+1,j)\) containing x is added to the column, thus yielding a new column-strict tableau \({\textsf{T}}'\); the outputs are then \({\textsf{T}}'\) and the stop symbol. Otherwise, let i be the smallest integer such that \(x \le {\textsf{T}}_{i,j} =: y\); define \({\textsf{T}}'\) to be the same tableau as \({\textsf{T}}\) except for the (i, j)-entry \({\textsf{T}}'_{i,j}:=x\); the outputs are then \(({\textsf{T}}',y)\).

We now define the column insertion algorithm as a composition of several mappings of the form (2.6). Consider the sequence

\[ ({\textsf{T}},x) =: ({\textsf{T}}^{(0)},y^{(0)}) \overset{\mathcal {I}_1}{\longmapsto } ({\textsf{T}}^{(1)},y^{(1)}) \overset{\mathcal {I}_2}{\longmapsto } \cdots \overset{\mathcal {I}_k}{\longmapsto } ({\textsf{T}}^{(k)},y^{(k)}), \]

where k is the smallest integer such that \(y^{(k)}\) is the stop symbol. Notice that, by construction, every \(y^{(j-1)}\), \(1\le j\le k\), can be inserted into the j-th column of \({\textsf{T}}^{(j-1)}\), in the sense that hypothesis (ii) above is satisfied. We then set \({\textsf{T}}' := {\textsf{T}}^{(k)}\) to be the outcome of the column insertion of x into the tableau \({\textsf{T}}\). Clearly, if \({\textsf{T}}\) is of size n, then \({\textsf{T}}'\) will be of size \(n+1\). See Fig. 2 for a graphical representation of the column insertion algorithm.
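The single-column mapping and the resulting column insertion can be sketched in code as follows. This is a minimal illustration under stated assumptions: a tableau is stored as a list of rows, the stop symbol is represented by `None` (named `STOP` here), and the function names `insert_into_column` and `column_insert` are hypothetical.

```python
STOP = None  # stand-in for the 'stop symbol'


def insert_into_column(rows, x, j):
    """Insert x into the j-th column (1-indexed); return (new tableau, bumped entry or STOP)."""
    new_rows = [list(r) for r in rows]
    height = sum(1 for r in new_rows if len(r) >= j)  # number of cells in column j
    for i in range(height):
        if x <= new_rows[i][j - 1]:
            # Smallest row index whose entry is >= x: replace it and bump it out.
            y = new_rows[i][j - 1]
            new_rows[i][j - 1] = x
            return new_rows, y
    # All entries of column j are < x: add a new cell at the bottom of the column.
    if height == len(new_rows):
        assert j == 1, "hypothesis (ii) is violated"
        new_rows.append([x])
    else:
        assert len(new_rows[height]) == j - 1, "hypothesis (ii) is violated"
        new_rows[height].append(x)
    return new_rows, STOP


def column_insert(rows, x):
    """Column insertion of x: apply the column mappings for j = 1, 2, ... until STOP."""
    j, y = 1, x
    while y is not STOP:
        rows, y = insert_into_column(rows, y, j)
        j += 1
    return rows


if __name__ == "__main__":
    print(column_insert([[1, 2, 3], [2, 3], [4]], 2))
```

In the usage example the inserted number bumps an entry in each of the first three columns, and the last bumped entry is placed in a new cell at the end of the first row, so the size grows by exactly one.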

Fig. 2 Example of column insertion of an integer x into a tableau \({\textsf{T}}\). We start with the pair \(({\textsf{T}},x)\), on the left-hand side, and apply the mappings \(\mathcal {I}_1, \mathcal {I}_2, \ldots \) until we get a pair whose second component is the stop symbol. The tableau \({\textsf{T}}'\) is then the outcome of the column insertion. At the j-th step, the red number is to be inserted into the j-th column: either it replaces the blue number (first two steps) or it is inserted in a new cell at the end of the column (third step). In the former case, the blue number becomes the red one at the next step; in the latter case, a ‘stop symbol’ is returned and the procedure stops.

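We now construct the \(\textsf{dRSK}\) algorithm. Given an input matrix \(w=\{w_{i,j} :1\le i\le n, \, 1\le j\le N \}\) with entries in \(\{0,1\}\), we define a sequence

\[ (\emptyset ,\emptyset ) = ({\textsf{P}}(0),{\textsf{Q}}(0)), \ ({\textsf{P}}(1),{\textsf{Q}}(1)), \ \ldots , \ ({\textsf{P}}(n),{\textsf{Q}}(n)) = ({\textsf{P}},{\textsf{Q}}) \tag{2.7} \]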

of Young tableaux pairs starting from the pair of empty tableaux and ending at the \(\textsf{dRSK}\) output pair \(({\textsf{P}},{\textsf{Q}})\) (for an example, see Fig. 3). Essentially, each \({\textsf{P}}(i)\) is constructed by column inserting into \({\textsf{P}}(i-1)\) the column indices j that correspond to ones in the i-th row of w, whereas each \({\textsf{Q}}(i)\) records the cells that are added in the construction of \({\textsf{P}}(i)\). More precisely, for all \(i=1,\ldots ,n\), given \(({\textsf{P}}(i-1),{\textsf{Q}}(i-1))\), the next pair \(({\textsf{P}}(i),{\textsf{Q}}(i))\) is obtained as the last element of the sequence

\[ ({\textsf{P}}(i-1),{\textsf{Q}}(i-1)) =: ({\textsf{P}}(i,0),{\textsf{Q}}(i,0)), \ ({\textsf{P}}(i,1),{\textsf{Q}}(i,1)), \ \ldots , \ ({\textsf{P}}(i,N),{\textsf{Q}}(i,N)) =: ({\textsf{P}}(i),{\textsf{Q}}(i)), \]

where, for \(j=1,\ldots ,N\):

- if \(w_{i,j}=0\), then \({\textsf{P}}(i,j)={\textsf{P}}(i,j-1)\) and \({\textsf{Q}}(i,j)={\textsf{Q}}(i,j-1)\);
- if \(w_{i,j}=1\), then
  - \({\textsf{P}}(i,j)\) is the tableau obtained by column inserting j into \({\textsf{P}}(i,j-1)\), and
  - \({\textsf{Q}}(i,j)\) is obtained from \({\textsf{Q}}(i,j-1)\) by adding a cell, filled with i, at the same location where a cell was added in the column insertion of j into \({\textsf{P}}(i,j-1)\).

By construction, for all i, j, \({\textsf{P}}(i,j)\) and \({\textsf{Q}}(i,j)\) are Young tableaux of the same shape. Each \({\textsf{P}}(i,j)\) is column-strict. Moreover, it is not difficult to see that each \({\textsf{Q}}(i,j)\) is row-strict.
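The construction above translates almost line by line into code. The following is a minimal sketch under stated assumptions, not an optimized implementation: tableaux are stored as lists of rows, the input matrix as a list of 0/1 rows, and the names `column_insert` and `drsk` are hypothetical. The helper returns the location of the new cell so that the recording tableau can be updated.

```python
def column_insert(rows, x):
    """Column-insert x; return the new tableau and the (row, column) of the added cell (0-indexed)."""
    rows = [list(r) for r in rows]
    j = 0
    while True:
        height = sum(1 for r in rows if len(r) > j)  # cells in column j
        bump_row = next((i for i in range(height) if x <= rows[i][j]), None)
        if bump_row is None:
            # Every entry of column j is < x: add a new cell at its bottom.
            if height == len(rows):
                rows.append([])
            rows[height].append(x)
            return rows, (height, j)
        # Replace the smallest entry >= x and carry it to the next column.
        rows[bump_row][j], x = x, rows[bump_row][j]
        j += 1


def drsk(w):
    """Dual column RSK sketch: map a 0/1 matrix to a pair (P, Q) of tableaux of the same shape."""
    P, Q = [], []
    for i, row in enumerate(w, start=1):
        for j, entry in enumerate(row, start=1):
            if entry == 1:
                P, (r, _) = column_insert(P, j)
                # Record the new cell in Q, filled with the row index i.
                if r == len(Q):
                    Q.append([])
                Q[r].append(i)
    return P, Q


if __name__ == "__main__":
    P, Q = drsk([[1, 0, 1], [0, 1, 1]])
    print(P)
    print(Q)
```

In the usage example, both output tableaux have four cells, one for each entry equal to 1 in the input matrix; the first is column-strict and the second is row-strict, as stated above.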

Fig. 3 An example of the \(\textsf{dRSK}\) correspondence, constructed as in (2.7). An input \(\{0,1\}\)-matrix w yields a sequence of tableaux pairs, which terminates at the \(\textsf{dRSK}\) output pair \(({\textsf{P}},{\textsf{Q}})=({\textsf{P}}(3),{\textsf{Q}}(3))\).

In the next theorem we summarize the properties of this mapping that are useful for our purposes. They are all either immediate from the construction or easy to prove, and can be visualized in the example of Fig. 3. We refer e.g. to [Ful97, A.4.3] for a proof.

Theorem 2.1

The dual column Robinson–Schensted–Knuth correspondence \(\textsf{dRSK}:w \mapsto ({\textsf{P}},{\textsf{Q}})\) is a bijection between matrices with entries in \(\{0,1\}\) and pairs \(({\textsf{P}},{\textsf{Q}})\) of Young tableaux of the same shape such that \({\textsf{P}}\) is column-strict and \({\textsf{Q}}\) is row-strict. If the input matrix is \(n\times N\), then \({\textsf{P}}\) has entries in the alphabet \(\{1,\ldots ,N\}\) and \({\textsf{Q}}\) has entries in the alphabet \(\{1,\ldots ,n\}\), so one can identify

where \(\lambda ^{(k)}, \mu ^{(k),\top } \in {\textsf{W}}_k\) for all k. Referring to the sequence of pairs (2.7) that defines \(\textsf{dRSK}\), we then have

Moreover, we have

2.3 \(\textsf{dTASEP}\) dynamics and \(\textsf{dRSK}\)

Let us now elaborate on the definition of \(\textsf{dTASEP}\) given in the introduction and describe its relation to the dynamics of \(\textsf{dRSK}\). Recall that the N-particle \(\textsf{dTASEP}\) is encoded by the discrete-time Markov chain \((Y(t))_{t\ge 0}\) of particle configurations \(Y(t) = (Y_1(t)> Y_2(t)> \cdots > Y_N(t))\), where \(Y_k(t)\) is the location of the k-th particle from the right. We consider an arbitrary initial configuration \(Y(0) = {\varvec{y}} = (y_1> y_2> \cdots > y_N)\). Let \({\varvec{p}}=(p_t)_{t\ge 1}\) and \({\varvec{q}}=(q_1,\ldots ,q_N)\) be positive parameters and let \(W = \{ W_{t,k} :t \ge 1, \, 1\le k\le N\}\) be a collection of independent Bernoulli random variables with

The random dynamics is then given by sequential updates from right to left, i.e. from the particle labeled 1 to the particle labeled N, driven by these random variables as follows:

with the convention that \(Y_0(t) = \infty \) for all \(t \ge 0\). These dynamics clearly preserve the ordering of the particles (exclusion rule). We will abbreviate the N-particle \(\textsf{dTASEP}\) with parameters \({\varvec{p}}\) and \({\varvec{q}}\) as \(\textsf{dTASEP}(N;{\varvec{p}},{\varvec{q}})\).

The construction of \(\textsf{dRSK}\) given in Sect. 2.2 (see in particular (2.7) and Fig. 3) is ‘dynamic’: at the t-th step, the tableau pair \(({\textsf{P}}(t-1),{\textsf{Q}}(t-1))\) and the t-th row of the input matrix w are used to generate a new tableau pair \(({\textsf{P}}(t),{\textsf{Q}}(t))\) through the column insertion algorithm. We will now see how this dynamic procedure encodes the evolution of the \(\textsf{dTASEP}\), if one interprets the input matrix entries as the Bernoulli random variables governing the particle jumps.

Notice that the collection \(W = \{ W_{t,k} :t \ge 1, \, 1\le k\le N\}\) of Bernoulli random variables defined in (2.11) can be seen as a (random) matrix with infinitely many rows. For all \(t\ge 0\), let \(({\textsf{P}}(t),{\textsf{Q}}(t))\) be the tableau pair obtained by applying \(\textsf{dRSK}\) to the (random) matrix \(\{ W_{i,j} :1\le i\le t, \, 1\le j\le N\}\) consisting of the first t rows of W. As each \({\textsf{P}}(t)\) is a column-strict tableau, we may write \({\textsf{P}}(t) = \big (\lambda ^{(0)}(t) \prec \lambda ^{(1)}(t) \prec \cdots \prec \lambda ^{(N)}(t)\big )\) as a sequence of interlacing partitions; see (2.3).

Recall from (2.4) that the left edge of \({\textsf{P}}(t)\) is the partition \({{\,\mathrm{\mathrm {l-edge}}\,}}({\textsf{P}}(t)) = (\lambda ^{(1)}_1(t) \ge \cdots \ge \lambda ^{(N)}_N(t))\), where each \(\lambda ^{(k)}_k(t)\) is the number of k’s in the k-th row of \({\textsf{P}}(t)\). It is a consequence of the column insertion algorithm that the time evolution of \({{\,\mathrm{\mathrm {l-edge}}\,}}({\textsf{P}}(t))\) is autonomous, i.e. it does not depend on any additional information carried by \({\textsf{P}}(t)\). To see this, suppose that the first \(t-1\) rows of W have been inserted, yielding a \({\textsf{P}}\)-tableau \({\textsf{P}}(t-1)\). If \(W_{t,1}=1\), then a 1 is column inserted into \({\textsf{P}}(t-1)\), thus yielding a new tableau \({\textsf{P}}(t,1)\) (according to the notation of Sect. 2.2) that contains one more 1 in the first row. As the subsequent insertion of any \(k>1\) does not affect the cells containing 1’s, we have \(\lambda ^{(1)}_1(t) = \lambda ^{(1)}_1(t-1)+W_{t,1}\). Suppose now that, at time t, for some \(k\ge 2\), the numbers \(<k\) have been sequentially inserted, thus yielding a tableau \({\textsf{P}}(t,k-1)\). Now, if \(W_{t,k}=1\), then \({\textsf{P}}(t,k)\) is generated by column inserting a k into \({\textsf{P}}(t,k-1)\): if there are more \((k-1)\)’s in the \((k-1)\)-th row than k’s in the k-th row, then the ‘new’ k will end up in the k-th row; however, if there are as many \((k-1)\)’s in the \((k-1)\)-th row as there are k’s in the k-th row, then the ‘new’ k will end up in the j-th row, for some \(j<k\). Again, since the cells containing k’s are not affected by subsequent insertions of larger numbers, we conclude that

The latter formula is also valid for \(k=1\), if we adopt the convention \(\lambda ^{(0)}_0(t)=\infty \) for all \(t\ge 0\). Notice that the update rules (2.13) must be applied sequentially, from \(k=1\) to \(k=N\). It is then straightforward to check that the N-tuple

satisfies the same recursion (2.12) as the \(\textsf{dTASEP}\).
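The autonomy of the left edge can be checked numerically. The following sketch rests on our reading of the argument above: a new k lands in the k-th row exactly when the \((k-1)\)-th row holds strictly more \((k-1)\)’s than the k-th row holds k’s, which we encode as \(\lambda ^{(k)}_k(t)=\min \{\lambda ^{(k)}_k(t-1)+W_{t,k},\, \lambda ^{(k-1)}_{k-1}(t)\}\), with \(\lambda ^{(0)}_0(t)=\infty \). The column bumping rule (replace the smallest entry that is greater than or equal to the inserted number) is the standard one and is assumed here; the function names, the fair-coin input and the sizes `N`, `T` are illustrative choices.

```python
import random


def column_insert(columns, x):
    """Column-insert x into a tableau stored as a list of strictly increasing columns."""
    for column in columns:
        pos = next((r for r, v in enumerate(column) if v >= x), None)
        if pos is None:                  # x exceeds every entry: append and stop
            column.append(x)
            return
        column[pos], x = x, column[pos]  # bump the old entry to the next column
    columns.append([x])                  # every column bumped: open a new one


def left_edge(columns, N):
    """Number of k's in the k-th row of the tableau, for k = 1, ..., N."""
    return [sum(1 for col in columns if len(col) >= k and col[k - 1] == k)
            for k in range(1, N + 1)]


def edge_step(edge, w_row):
    """One sequential update (k = 1, ..., N) of the left edge, driven by a 0/1 row."""
    new, prev = [], float("inf")         # convention: lambda^(0)_0 = infinity
    for lam, w in zip(edge, w_row):
        prev = min(lam + w, prev)        # blocked by the updated (k-1)-th entry
        new.append(prev)
    return new


random.seed(0)
N, T = 4, 30
P, edge = [], [0] * N
for t in range(T):
    w_row = [random.randint(0, 1) for _ in range(N)]
    for j, entry in enumerate(w_row, start=1):
        if entry:
            column_insert(P, j)
    edge = edge_step(edge, w_row)
    assert edge == left_edge(P, N)       # the recursion tracks the tableau
```

The recursion in `edge_step` uses only the previous left edge and the current row of weights, which is the content of the autonomy statement.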

Remark 2.2

Integer partitions coming from Young tableaux have of course nonnegative parts. As a result, the transition kernels arising in the \(\textsf{dRSK}\) dynamics that will be computed in the next subsection will be acting, in principle, on elements of \({\textsf{W}}_N\) with nonnegative components. On the other hand, \(\textsf{dTASEP}\) particles may occupy any site of \(\mathbb {Z}\). However, this is not an issue: the kernels coming from \(\textsf{dRSK}\) can be extended to elements of \({\textsf{W}}_N\) with components of any sign, just by shifting all the parts by the same (integer) amount.

2.4 Transition probabilities for \(\textsf{dRSK}\) and \(\textsf{dTASEP}\)

We now study the evolution of the \({\textsf{P}}\)- and \({\textsf{Q}}\)-tableaux under the \(\textsf{dRSK}\) dynamics considered in Sect. 2.3. This will yield useful formulas for the transition probabilities of \(\textsf{dTASEP}(N;{\varvec{p}},{\varvec{q}})\), as defined in (2.11)–(2.12).

By (2.11), the joint probability distribution of the Bernoulli weights up to time t is

where \(\{w_{i,j}:1\le i\le t, 1\le j\le N\}\) is any \(\{0,1\}\)-matrix and, for \(0\le r< t\),

Then, by Theorem 2.1, the pushforward law of the tableaux under the \(\textsf{dRSK}\) bijection at time t is given by

where \(\big (\lambda ^{(0)}\prec \cdots \prec \lambda ^{(N)}\big )\) and \(\big (\mu ^{(0),\top }\prec \cdots \prec \mu ^{(t),\top }\big )\) are any sequences of interlacing partitions such that \(\lambda ^{(k)}, \mu ^{(k),\top } \in {\textsf{W}}_k\) for all k.

It follows from (2.15) and from the definition (2.5) of Schur polynomials that the marginal law of the common shape of \({\textsf{P}}(t)\) and \({\textsf{Q}}(t)\) is given by a Schur measure:

where \({\varvec{p}}_{[1,t]}:= (p_1,\ldots ,p_t)\). By summing the above probabilities over all partitions \(\lambda \), one obtains the so-called dual Cauchy identity (see e.g. [Sta99, §7.14]):

Recalling (2.8) and taking a marginal of (2.15), we see that the joint distribution of the shapes of the \({\textsf{P}}\)-tableaux up to time t is given by

For \(0\le r<t\), define now the kernels \(\hat{{{\mathcal {R}}} }_{(r,t]}\) and \({{\mathcal {R}}} _{(r,t]}\) by setting

for any \(\mu ,\lambda \in {\textsf{W}}_N\), where \(\nu ^{(k)} \in {\textsf{W}}_k\) for all k. From the first equality, we see that \(\hat{{{\mathcal {R}}} }_{(r,t]}\) can be interpreted as a Doob h-transform of \({{\mathcal {R}}} _{(r,t]}\), with Schur polynomials as h-functions (for a precise account of Doob’s h-transforms, see e.g. [RW00, Doo01]). It follows immediately from (2.17) that \(\hat{{{\mathcal {R}}} }_{(r,t]}\) is the transition kernel of the shape of the \({\textsf{P}}\)-tableau from time r to time t:

Next, define the kernels \(\hat{K}\) and K by setting

for any \(\lambda , {\varvec{y}} \in {\textsf{W}}_N\), where, as usual, \(\lambda ^{(k)} \in {\textsf{W}}_k\) for all k. Notice that K is an unnormalised version of \(\hat{K}\), which is a probability kernel. It follows from (2.15) and (2.17) that, for all \(t\ge 0\),

Finally, recall from Sect. 2.3 that the left edge of \({\textsf{P}}\) evolves as a Markov chain in its own filtration (i.e., autonomously from the rest of \({\textsf{P}}\)). Thus, we may write its transition kernel from time r to time t, for \(0\le r<t\), as

for \({\varvec{y}}, {\varvec{y}}' \in {\textsf{W}}_N\). We will soon derive explicit expressions for the kernel \({{\mathcal {Q}}} _{(r,t]}\) defined above.

The \({{\mathcal {Q}}} \)- and \(\hat{{{\mathcal {R}}} }\)-kernels are transition kernels of the left edge and of the shape of the \({\textsf{P}}\)-tableau, respectively; on the other hand, \(\hat{K}\) encodes the conditional law of the left edge of \({\textsf{P}}\) given its shape at any given time. Therefore, from the theory of Markov functions (see e.g. [RP81]), we expect these kernels to satisfy intertwining relations, and this is indeed the case. We state the result in the next proposition and, for completeness, we also provide a proof, following [DW08].

Proposition 2.3

For \(0\le r<t\), the following intertwining relations between operators from \({\textsf{W}}_N\) to \({\textsf{W}}_N\) hold:

Proof

Let \({\varvec{y}}\in {\textsf{W}}_N\). By (2.21) and (2.19), we have

We point out that, by the definition of \(\hat{{{\mathcal {R}}} }_{(r,t]}\) in (2.18), \(\hat{{{\mathcal {R}}} }_{(r,t]}( {{\,\mathrm{\textrm{sh}}\,}}({\textsf{P}}(r)), \lambda )\) is non-zero only for a finite number of partitions \(\lambda \).

On the other hand, by (2.22) and (2.21), we have

Comparing the two expressions above leads to the first intertwining relation in (2.23). The second one follows from the first, by using (2.18) and (2.20) and noting that the Schur polynomials cancel out. \(\square \)

Remark 2.4

Notice that, by construction, \({{\mathcal {Q}}} _{(r,t]}({\varvec{y}}, {\varvec{y}}')\) equals zero unless \(y_j\le y_j'\) for all \(1\le j\le N\).

Define now the modified kernels

It is immediate to see that the second intertwining relation in (2.23) still holds when replacing K with \(\Lambda \) and \({{\mathcal {Q}}} _{(r,t]}\) with \(\hat{{{\mathcal {Q}}} }_{(r,t]}\). Moreover, it was proven in [DW08, Prop. 3] that \(\Lambda \) is invertible (with an explicit inverse). This provides an explicit expression for the kernel \(\hat{{{\mathcal {Q}}} }_{(r,t]}\). We summarize these facts in the next proposition.

Proposition 2.5

The intertwining relation \({{\mathcal {R}}} _{(r,t]} \Lambda = \Lambda \, \hat{{{\mathcal {Q}}} }_{(r,t]}\) holds. Moreover, the operator \(\Lambda \) is invertible, so that

In the next section we will interpret (2.25) in terms of weights of non-intersecting paths.

3 Path Ensembles and Determinantal Point Processes

This section concerns the non-intersecting path constructions that lie at the core of our approach. In Sect. 3.1, we introduce certain ‘local’ Toeplitz operators that we will use throughout this work. In Sect. 3.2, we provide a non-intersecting path interpretation of the \(\textsf{dTASEP}\) transition kernel in terms of these local operators. In Sect. 3.3, we deduce an expression for the law of \(\textsf{dTASEP}\) in terms of a determinantal point process, which we then study in Sect. 3.4, obtaining an initial expression for its correlation kernel in terms of biorthogonal functions and local operators.

3.1 Local operators

We will express the weights of the path ensembles in terms of convolutions of local operators, which we now introduce. Let us first define the conventions for the operator formalism. For an operator A defined through a kernel \((A(x,y) :x\in X, y\in Y)\) on suitable spaces X, Y and a function (or vector) \(f=(f(y) :y\in Y)\), we define the function \((A\circ f)(x):=\sum _{y\in Y} A(x,y) f(y)\), whenever the sum is absolutely convergent. Similarly, for a function \(g=(g(x) :x\in X)\), we define the function \((g\circ A)(y):=\sum _{x \in X} g(x) A(x,y)\). For two operators A and B with kernels \((A(x,y) :x\in X, y\in Y)\) and \((B(y,z) :y\in Y, z\in Z)\), respectively, we define the operator \(A\circ B\) through the kernel \((A\circ B) (x,z):=\sum _{y\in Y} A(x,y) B(y,z)\). Finally, we define the adjoint of A as the operator \(A^*\) with kernel \(A^*(x,y):= A(y,x)\).

Let us now introduce some specific local operators we are concerned with. Recall from Sect. 2.3 that we fixed positive parameters \({\varvec{p}}=(p_i)_{i\ge 1}\) and \({\varvec{q}}=(q_1,\ldots ,q_N)\).

The first family of operators encodes geometric jumps weakly to the right: for \(i=1,\ldots ,N\), let

We also define a family of operators encoding geometric jumps strictly to the left:

We note that, under the hypothesis \(q_i>1\) (which we will always assume, without explicitly mentioning, from now on), the kernel \(Q_i(x,y)\) defines a bounded operator on \(\ell ^1(\mathbb {Z})\) with a well-defined inverse:

Finally, for \(i\ge 1\), we define the operators

For \(1\le m\le n\le N\), we will use the compact notations

We will abuse the notation slightly by defining

which makes sense even for \(m>n\), in which case \(Q_{[m,n]}=Q_{m-1}^{-1} \circ \cdots \circ Q_{n+1}^{-1}\). In particular, we have \(Q_{(n,n]}=Q_{[n+1,n]}:=I\) for \(1\le n\le N\). We will use similar conventions for the \(Q^\dagger \)- and R-operators.

Certain convolutions of the operators defined above may be expressed in terms of symmetric functions. Given indeterminates \(x_1,\ldots ,x_N\), let

be the complete symmetric polynomial of degree n and the elementary symmetric polynomial of degree n, respectively. By convention, we set \(h_0=e_0:=1\) and \(h_n=e_n:=0\) for all \(n<0\). Then, it is not difficult to check that

and

for \(x,y\in \mathbb {Z}\), where the first i indeterminates of the symmetric polynomials are set to be 0. These identities follow from the definitions of the symmetric functions \(h_n\) and \(e_n\), the form of the operators \(Q^\dagger \) and \(Q^{-1}\) in (3.1) and (3.3) and the definition of the operation \(\circ \).
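As a sanity check of the first identity, one can compose the operators on a finite window. The sketch below assumes that \(Q^\dagger _i(x,y)=q_i^{\,y-x}\) for \(y\ge x\) and 0 otherwise, which is our reading of ‘geometric jumps weakly to the right’, and verifies that the composition of \(Q^\dagger _1,Q^\dagger _2,Q^\dagger _3\) has entries \(h_{y-x}(q_1,q_2,q_3)\). The parameter values, the window size and the helper names are illustrative.

```python
from itertools import combinations_with_replacement
from math import prod


def h(n, xs):
    """Complete symmetric polynomial h_n(xs), with h_0 = 1 and h_n = 0 for n < 0."""
    if n < 0:
        return 0
    return sum(prod(c) for c in combinations_with_replacement(xs, n))


def q_dagger(q, size):
    """Window {0, ..., size-1}^2 of the assumed kernel q^(y-x) * 1{y >= x}."""
    return [[q ** (y - x) if y >= x else 0 for y in range(size)]
            for x in range(size)]


def compose(A, B):
    """(A o B)(x, z) = sum_y A(x, y) B(y, z), restricted to the window."""
    n = len(A)
    return [[sum(A[x][y] * B[y][z] for y in range(n)) for z in range(n)]
            for x in range(n)]


qs = [2, 3, 5]
size = 6
kernel = q_dagger(qs[0], size)
for q in qs[1:]:
    kernel = compose(kernel, q_dagger(q, size))

# Upper-triangular kernels compose exactly on a finite window, because the
# sum over y only involves x <= y <= z.
assert all(kernel[x][y] == h(y - x, qs)
           for x in range(size) for y in range(size))
```

Integer parameters are used so that the comparison is exact; the hypothesis \(q_i>1\) plays no role in this finite computation.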

Observe that the values of \(Q^\dagger _i(x,y)\), \(Q_i(x,y)\), \(Q_i^{-1}(x,y)\) and \(R_i(x,y)\) only depend on \(y-x\). The operators with such a property are known as (bi-infinite) Toeplitz operators. To each Toeplitz operator T with kernel T(x, y) on \(\mathbb {Z}\times \mathbb {Z}\), we associate a formal Laurent series \(\varphi _{T}(z)\), known as the symbol of T, defined by

Inside its domain of convergence, which is a (possibly empty) annulus \(\{r<|z|<R\}\), the function \(\varphi _T(z)\) is analytic in z. We summarize some standard properties of Toeplitz operators that will be used later; the proofs are elementary, so we omit them. From now on, all contours will be implicitly taken to have a counterclockwise orientation.

Proposition 3.1

- (i) Let T be a (bi-infinite) Toeplitz operator whose symbol \(\varphi _T(z)\) is analytic in a non-empty annulus \(\{r<|z|<R\}\). Then, the entries T(x, y) can be computed through the contour integral

  $$\begin{aligned} T(x,y)=\oint _{|z|=r_1}\frac{\textrm{d}z}{2\pi \textrm{i} z}z^{y-x}\cdot \varphi _T(z), \end{aligned} \tag{3.10}$$

  for any \(r<r_1<R\).

- (ii) Let T and S be two (bi-infinite) Toeplitz operators whose symbols \(\varphi _T(z)\) and \(\varphi _S(z)\) are both analytic inside a common non-empty annulus \(\{r<|z|<R\}\). Then, the convolutions \(T\circ S\) and \(S\circ T\) both converge, with

  $$\begin{aligned} \varphi _{T\circ S}(z) = \varphi _{T}(z)\varphi _{S}(z) = \varphi _{S\circ T}(z) \end{aligned} \tag{3.11}$$

  for all z on the annulus. In particular, T and S commute. Assuming that T is invertible with \(T^{-1}=S\), we have

  $$\begin{aligned} 1=\varphi _{\textrm{id}}(z) =\varphi _T(z)\varphi _{T^{-1}}(z), \end{aligned}$$

  or equivalently

  $$\begin{aligned} \varphi _{T^{-1}}(z)=\varphi _{T}(z)^{-1}. \end{aligned}$$
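Both parts of Proposition 3.1 can be illustrated numerically. In the sketch below we take the convention implied by (3.10), namely that T(x, y) is the coefficient of \(z^{x-y}\) in \(\varphi _T(z)\); under this convention, the kernel \(a^{y-x}\) for \(y\ge x\) (and 0 otherwise) has symbol \(1/(1-a/z)\) on \(|z|>|a|\). The values of a and b, the radius, the number of quadrature nodes and the function names are assumptions made for illustration only.

```python
import cmath


def toeplitz_entry(symbol, d, radius, nodes=256):
    """Trapezoid-rule approximation of (3.10) for d = y - x on |z| = radius."""
    total = 0j
    for m in range(nodes):
        z = radius * cmath.exp(2j * cmath.pi * m / nodes)
        total += z ** d * symbol(z)   # dz / (2 pi i z) becomes d(theta) / (2 pi)
    return total / nodes


a, b = 0.5, 0.25
phi_T = lambda z: 1 / (1 - a / z)     # symbol of T(x, y) = a^(y-x) 1{y >= x}
phi_S = lambda z: 1 / (1 - b / z)     # symbol of S(x, y) = b^(y-x) 1{y >= x}
phi_TS = lambda z: phi_T(z) * phi_S(z)


def convolution_entry(d):
    """(T o S)(x, x + d), computed directly from the two kernels."""
    return sum(a ** k * b ** (d - k) for k in range(d + 1))


for d in range(-2, 5):
    expected_T = a ** d if d >= 0 else 0.0
    # part (i): the contour integral recovers the kernel
    assert abs(toeplitz_entry(phi_T, d, radius=1.0) - expected_T) < 1e-12
    # part (ii): the product of symbols is the symbol of the convolution
    assert abs(toeplitz_entry(phi_TS, d, radius=1.0) - convolution_entry(d)) < 1e-12
```

The contour \(|z|=1\) lies inside the common annulus of analyticity \(\{|z|>1/2\}\), and the trapezoid rule converges geometrically for such integrands, which is why a moderate number of nodes suffices.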

For example, the symbols of the \(Q^\dagger \)- and Q-operators defined above are given by

As a consequence of Proposition 3.1 and the fact that the series are absolutely convergent in the domains considered below, we then obtain the contour integral representations

3.2 Non-intersecting path ensembles

We now define two ensembles of paths, which we call h-paths \({\textsf{h}}\Pi \) (related to the complete symmetric polynomials h) and e-paths \({\textsf{e}}\Pi \) (related to the elementary symmetric polynomials e).

Let \((y_1,i_1),\ldots ,(y_n,i_n),(x_1,j_1),\ldots ,(x_n,j_n) \in \mathbb {Z}^2\). We denote by \({{\textsf{h}}\Pi }_{\{(y_1,i_1),\ldots ,(y_n,i_n)\}}^{\{(x_1,j_1),\ldots ,(x_n,j_n)\}}\) the (possibly empty) ensemble of all n-tuples \((\pi _1,\ldots ,\pi _n)\) of non-intersecting paths in \(\mathbb {Z}^2\), such that each path \(\pi _k\) starts from \((y_k,i_k)\), ends at \((x_k,j_k)\), and moves either straight up or straight to the right at each step; namely, from a point (x, j) the path moves either to \((x,j+1)\) or to \((x+1,j)\). We also denote by \({\textsf{h}}\Pi _{\{(y_1,i_1),\ldots ,(y_n,i_n)\}, \uparrow }^{\{(x_1,j_1),\ldots ,(x_n,j_n)\}}\) the subset of \({\textsf{h}}\Pi _{\{(y_1,i_1),\ldots ,(y_n,i_n)\}}^{\{(x_1,j_1),\ldots ,(x_n,j_n)\}}\) of all \((\pi _1,\ldots ,\pi _n)\) such that the first step of each path \(\pi _k\) is vertical, upwards.

We also denote by \({{\textsf{e}}\Pi }_{\{(y_1,i_1),\ldots ,(y_n,i_n)\}}^{\{(x_1,j_1),\ldots ,(x_n,j_n)\}}\) the ensemble of all n-tuples \((\pi _1,\ldots ,\pi _n)\) of non-intersecting paths in \(\mathbb {Z}^2\), such that each path \(\pi _k\) starts from \((y_k,i_k)\), ends at \((x_k,j_k)\), and moves either straight up or diagonally up-right at each step; namely, from a point (x, i) the path moves either to \((x,i+1)\) or to \((x+1,i+1)\). Finally, we denote by \(({{\textsf{e}}\Pi }^*)_{\{(y_1,i_1),\ldots ,(y_n,i_n)\}}^{\{(x_1,j_1),\ldots ,(x_n,j_n)\}}\) a similar e-path ensemble, where the allowed diagonal steps are up-left, instead of up-right.

In the following, both h- and e-paths will be assigned weights, based on suitable weights \({\textsf{w}}{\textsf{t}}({\textsf{e}})\) assigned to each edge \({\textsf{e}}\). The rules are as follows. The weight of a path \(\pi \) with edges \({\textsf{e}}_1,{\textsf{e}}_{2},\ldots \) is defined as \({\textsf{w}}{\textsf{t}}(\pi )= {\textsf{w}}{\textsf{t}}({\textsf{e}}_1) {\textsf{w}}{\textsf{t}}({\textsf{e}}_2)\cdots \). The total weight of an n-tuple \((\pi _1,\ldots ,\pi _n)\) of paths is defined as \({\textsf{w}}{\textsf{t}}(\pi _1,\ldots ,\pi _n):=\prod _{i=1}^n{\textsf{w}}{\textsf{t}}(\pi _i)\). Finally, the weight of an ensemble \(\Pi \) of n-tuples of paths is defined as \({\textsf{w}}{\textsf{t}}(\Pi ):= \sum _{(\pi _1,\ldots ,\pi _n)\in \Pi } {\textsf{w}}{\textsf{t}}(\pi _1,\ldots ,\pi _n)\).

We are now ready to provide the path and local operator representations of the kernels appearing in (2.25).

Proposition 3.2

(Path and local operator representation of \(\Lambda \)). Let a vertical edge connecting (x, i) to \((x,i+1)\) be assigned weight 1 and a horizontal edge connecting (x, i) to \((x+1,i)\) be assigned weight \(q_i\), for \(x\in \mathbb {Z}\) and \(1\le i\le N\). For \(\lambda =(\lambda _1\ge \cdots \ge \lambda _N)\) and \({\varvec{y}}'=(y_1'\ge \cdots \ge y_N')\) in \({\textsf{W}}_N\), the kernel \( \Lambda (\lambda ,{\varvec{y}}')\) defined in (2.24) can be written as

Proof

The first equality is a rewriting of the definition of \(\Lambda \) (see (2.24) and (2.20)) in terms of weights of non-intersecting path ensembles; see e.g. [FK97, Section 4] for a description of the connection between tableaux and non-intersecting lattice paths. The second equality is an application of the Lindström–Gessel–Viennot theorem. Note now that, by the definition of path weights and by the form of the complete symmetric polynomials, we have

Combining this with (3.8), we arrive at the third equality. Notice that this proposition can be also seen as a reformulation of [DW08, Prop. 2]. \(\square \)
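The Lindström–Gessel–Viennot step can be illustrated on a toy instance. The sketch below enumerates by brute force all pairs of vertex-disjoint up/right paths between two sources and two sinks, with weight \(q_i\) on horizontal edges at level i and weight 1 on vertical edges, and compares the total weight with the \(2\times 2\) determinant of single-path weights. The endpoints and the values \(q_1=2\), \(q_2=3\) are arbitrary choices, not the specific ensemble of the proposition.

```python
def paths(start, end, q):
    """Yield (vertices, weight) for each up/right lattice path from start to end.

    A horizontal edge at level i carries weight q[i]; vertical edges carry weight 1.
    """
    (x, i), (xe, je) = start, end
    if x > xe or i > je:
        return
    if start == end:
        yield frozenset([start]), 1
        return
    for nxt, w in (((x + 1, i), q[i]), ((x, i + 1), 1)):
        for verts, wt in paths(nxt, end, q):
            yield verts | {start}, w * wt


def total_weight(start, end, q):
    """Sum of the weights of all single paths from start to end."""
    return sum(wt for _, wt in paths(start, end, q))


q = {1: 2, 2: 3}
starts = [(1, 1), (0, 1)]
ends = [(3, 2), (1, 2)]

# Brute force: sum of weights over pairs of vertex-disjoint paths.
brute = sum(w1 * w2
            for v1, w1 in paths(starts[0], ends[0], q)
            for v2, w2 in paths(starts[1], ends[1], q)
            if v1.isdisjoint(v2))

# Determinant of the matrix of single-path weights.
M = [[total_weight(s, e, q) for e in ends] for s in starts]
lgv = M[0][0] * M[1][1] - M[0][1] * M[1][0]
assert brute == lgv == 30
```

Here the matrix entries are \(h_2(2,3)=19\), \(h_0=1\), \(h_3(2,3)=65\) and \(h_1(2,3)=5\), so the single-path weights are complete symmetric polynomials in the horizontal weights, as used in the proof.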

As stated in Proposition 2.5, the operator \(\Lambda \) is invertible. We now provide a determinantal and path representation of its inverse.

Proposition 3.3

(Path and local operator representation of \(\Lambda ^{-1}\)). Let a vertical edge connecting \((x,i-1)\) to (x, i) be assigned weight 1 and a diagonal up-right edge connecting \((x,i-1)\) to \((x+1,i)\) be assigned weight \(-q_{N-i+1}\), for \(x\in \mathbb {Z}\) and \(1\le i\le N-1\). For \({\varvec{y}}=(y_1\ge \cdots \ge y_N)\) and \(\mu =(\mu _1\ge \cdots \ge \mu _N)\) in \({\textsf{W}}_N\), the kernel \(\Lambda ^{-1}({\varvec{y}},\mu )\) can be written as

Proof

It was proved in [DW08, Prop. 3] that \(\Lambda \) is invertible, with an inverse given by

This, together with (3.9), yields the first equality. On the other hand, from the form of the elementary symmetric functions, it is easy to see that

The latter, together with (3.24) and (3.9), yields the second equality. Finally, the third equality follows from the Lindström–Gessel–Viennot theorem. \(\square \)

The proof of the following proposition follows the same lines as the proofs of Propositions 3.2 and 3.3, so we will be brief.

Proposition 3.4

(Path and local operator representation of \({{\mathcal {R}}} _{(r,t]}\)). Let \(0\le r< t\). Let a vertical edge connecting (x, i) to \((x,i+1)\) be assigned weight 1 and a diagonal up-right edge connecting (x, i) to \((x+1,i+1)\) be assigned weight \(p_{i+1}\), for \(x\in \mathbb {Z}\) and \(r\le i\le t-1\). Then, for \(\lambda =(\lambda _1\ge \cdots \ge \lambda _N)\) and \(\mu =(\mu _1\ge \cdots \ge \mu _N)\) in \({\textsf{W}}_N\) with \(\mu \subseteq \lambda \), the kernel \({{\mathcal {R}}} _{(r,t]}(\mu ,\lambda )\) defined in (2.18) can be written as

where the R-operators are defined in (3.4).

Proof

The first equality follows from the definition of \({{\mathcal {R}}} _{(r,t]}(\mu ,\lambda )\) in (2.18) and its representation in terms of weights of non-intersecting lattice paths. The second equality is then a consequence of the Lindström–Gessel–Viennot theorem, while the last equality is a direct consequence of the representation of the weight of a single e-path ensemble in terms of the R-operator. \(\square \)

The following proposition provides a path representation of the transition kernel of \(\textsf{dTASEP}(N;{\varvec{p}},{\varvec{q}})\), obtained by path concatenation. The graphical depiction of this result is shown in Fig. 4. Let us first explain what we mean by path concatenation, again referring to Fig. 4 for an illustration. Let \(\Sigma ^{(1)}\) and \(\Sigma ^{(2)}\) be two lattice path ensembles, each consisting of N-tuples of paths. Suppose that, for all \((\pi ^{(1)}_1,\ldots ,\pi ^{(1)}_N)\in \Sigma ^{(1)}\) and \((\pi ^{(2)}_1,\ldots ,\pi ^{(2)}_N)\in \Sigma ^{(2)}\) and for all j, the endpoint of \(\pi ^{(1)}_j\) equals the starting point of \(\pi ^{(2)}_j\). Then, we define the path concatenation \(\Sigma ^{(1)}\sqcup \Sigma ^{(2)}\) to be the ensemble consisting of all N-tuples \((\pi ^{(1)}_1 \cup \pi ^{(2)}_1,\ldots ,\pi ^{(1)}_N \cup \pi ^{(2)}_N)\) with \((\pi ^{(1)}_1,\ldots ,\pi ^{(1)}_N)\in \Sigma ^{(1)}\) and \((\pi ^{(2)}_1,\ldots ,\pi ^{(2)}_N)\in \Sigma ^{(2)}\) (here, union of paths is understood in terms of both edges and vertices).

Proposition 3.5

(Path representation of \(\textsf{dTASEP}\) transition kernel). Let \({\varvec{y}}, {\varvec{y}}'\in {\textsf{W}}_N\) with \({\varvec{y}} \subseteq {\varvec{y}}'\). The transition kernel of \(\textsf{dTASEP}(N;{\varvec{p}},{\varvec{q}})\), encoding the probability that particles starting from locations \((y_1-1>y_2-2>\cdots >y_N-N)\) at time r end up at locations \((y_1'-1>y_2'-2>\cdots >y_N'-N)\) at time t, admits the following weighted path representation:

The weights assigned to the edges are as follows:

- All vertical edges are assigned weight 1.

- Horizontal edges between (x, i) and \((x+1,i)\) for \(x\in \mathbb {Z}\) and \(1\le i\le N\) are assigned weight \(q_i\).

- Diagonal, up-left edges between (x, i) and \((x-1,i+1)\), for \(x\in \mathbb {Z}\) and \(N\le i\le N+t-r-1\), are assigned weight \(p_{N+t-i}\).

- Finally, diagonal, up-right edges between (x, i) and \((x+1,i+1)\) for \(x\in \mathbb {Z}\) and \(N+t-r\le i\le 2N+t-r-2\), are assigned weight \({-q_{2N+t-r-i}}\).

Choose now \(x_0\) so that \(x_0-1< y_N-N\) and consider an auxiliary vector \({\varvec{x}}^{(0)}=(x^{(0)}_1,\ldots ,x^{(0)}_{N})\), with \(x^{(0)}_i=x_0-i\) for \(1\le i\le N\). Then, with the same assignment of weights and setting \(\widehat{Z}^{{\varvec{p}},{\varvec{q}}}_{(r,t]}:=Z^{{\varvec{p}},{\varvec{q}}}_{(r,t]} \prod _{i=1}^N q_i^{y_i-x_0}\), we also have

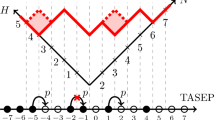

Non-intersecting path representation of the transition kernel of \(\textsf{dTASEP}\) particles, as in (3.29). The figure refers to the transition probability of five \(\textsf{dTASEP}\) particles, starting from locations \((y_1-1>y_2-2>\cdots >y_5-5)\) at time r and ending at locations \((y_1'-1>y_2'-2>\cdots >y_5'-5)\) at time t. Note that the paths do not depict the actual trajectories of the particles. The weights assigned to all vertical steps are equal to 1. In the bottom part of the figure, paths move either vertically up or horizontally to the right, with horizontal weights \(q_1,\ldots ,q_5\), as shown on the left-hand side of the figure. In the middle part, paths move vertically up or diagonally up-left, with diagonal weights \(p_t, p_{t-1}, \ldots , p_{r+2},p_{r+1}\), as shown. In the top part, paths move either vertically up or diagonally up-right, with diagonal weights \(q_5,\ldots ,q_2\), as shown. The solid paths can be extended in such a way that they all start at level zero and include the dashed colored lines in the bottom-left part of the picture; due to the non-intersecting property and the fact that vertical weights are assigned weight 1, such an extension does not change the weight of the ensemble (provided that the horizontal edges on the bottom level are assigned weight 0). The bullets in the bottom part of the figure refer to the point processes \({\textsf{X}}_N\) and \(\overline{{\textsf{X}}}_N\) from Sect. 3.3

Proof

By Proposition 2.5, we have

where the summation is over all partitions \(\lambda ,\mu \in {\textsf{W}}_N\) such that \(\mu \subseteq \lambda \), \(\mu \subseteq {\varvec{y}}\) and \({\varvec{y}}' \subseteq \lambda \). We now concatenate the non-intersecting paths corresponding to the operators \(\Lambda ^{-1}\), \({{\mathcal {R}}} _{(r,t]}\) and \(\Lambda \), as given in Propositions 3.3, 3.4 and 3.2, respectively, and as shown in Fig. 4. The only point to notice is that, compared to Proposition 3.4, the paths corresponding to the operator \({{\mathcal {R}}} _{(r,t]}\) are flipped upside down, resulting in the ‘reverse’ ensemble \(({{\textsf{e}}\Pi }^*_r)_{\{(\lambda _i-i,N) :1\le i\le N\}}^{\{(\mu _i-i,N+t-r) :1\le i\le N\}}\), in which paths move either upwards or in the up-left direction at each step, starting from the points \(\lambda _i-i\), \(1\le i\le N\), at level N and ending at the points \(\mu _i-i\), \(1\le i\le N\), at level \(N+t-r\). Thus, using Eqs. (3.18), (3.23) and (3.25) and the weights defined in the proposition, we obtain

which is the same as (3.28).

We now rewrite (3.28), by multiplying and dividing by \(\prod _{i=1}^N q_i^{-x_0}\), as

The denominator equals \({\widehat{Z}}^{{\varvec{p}},{\varvec{q}}}_{(r,t]}\). Moreover, the product \(\prod _{i=1}^N q_i^{y'_i-x_0}=\prod _{i=1}^N q_i^{(y'_i-i)-x^{(0)}_i}\) equals the total weight of the path ensemble \({{\textsf{h}}\Pi }^{\{(y'_i-i,i) :1\le i\le N\}}_{\{(x_{i}^{(0)},i) :1\le i\le N \}}\), since the h-weight of a single path from \((x_i^{(0)},i)\) to \((y_i'-i,i)\) (see bottom-left end of the path depictions in Fig. 4) is \(q_i^{(y'_i-i)-x^{(0)}_i}\). Notice that such a path ensemble is nonempty, due to the hypothesis \(x_0-1< y_N-N\), which implies

for \(1\le i\le N\). This readily yields (3.29). \(\square \)

3.3 Determinantal point processes

We now describe the law of \(\textsf{dTASEP}(N;{\varvec{p}},{\varvec{q}})\) as a marginal of a determinantal point process, which we now build out of the above path construction. The configuration space will be integer arrays

Such a point process naturally arises from the non-intersecting path construction given in Proposition 3.5 (see in particular (3.29)) and illustrated in the bottom part of Fig. 4. For \(1\le j\le i\le N\), the point \(x_j^{(i)}\) of \({\textsf{X}}_N\) is identified with the rightmost point on the horizontal line \(\{(x,i):x\in \mathbb {Z}\}\) of the j-th path (enumerating the paths from right to left). We will consider (3.31) as a point process on \(\mathbb {Z}\times \{1,\ldots ,N\}\), such that the line \(\mathbb {Z}\times \{i\}\) has exactly i points \(x^{(i)}_1,\ldots ,x^{(i)}_i\), for \(1\le i\le N\). However, for brevity and when there is no ambiguity, we will usually write \(x^{(i)}_j\) instead of \((x^{(i)}_j,i)\). We note that the non-intersecting property of the paths enforces the inequalities in (3.31). It will be useful to extend the above triangular array to a square array with additional frozen points on every line:

The auxiliary points \(x^{(i)}_j\) with \( 1\le i<j\le N\) are illustrated as the ‘frozen’ bullets in the bottom-left part of Fig. 4. As in Proposition 3.5, the point \(x_0\) is chosen arbitrarily but such that \(x_0 -1< y_N-N\). We will also use the notation \({\varvec{x}}^{(0)}:=(x^{(0)}_1,x^{(0)}_2,\ldots ,x^{(0)}_N)\), with \(x^{(0)}_i:=x_0-i\), for \(i=1,\ldots ,N\). The freezing is, again, due to the non-intersecting nature of the extended paths (i.e., the paths that start from \(\{(x^{(0)}_i,0):1\le i\le N\}\) and include the dashed lines) in Fig. 4.

For \(0\le r<t\), \(1\le k\le N\) and \(x\in \mathbb {Z}\), we now define the family of functions

where, as usual, \(R^{*}_{(r,t]}\) denotes the adjoint of \(R_{(r,t]}\), i.e. \(R^{*}_{(r,t]}(x,y):= R_{(r,t]}(y,x)\). Notice that the function \(\Psi ^{(N)}_{k} (x)\) depends implicitly on \({\varvec{y}}=(y_1\ge \cdots \ge y_N)\). With reference to Fig. 4, \((-1)^{N-k}\Psi ^{(N)}_{k} (x)\) captures the weight of a path starting at location (x, N) on the lower solid black line and ending at \((y_k-k, 2N+t-r-k)\) at the top of the figure (passing through any point z on the upper solid black line). Note also that, even though the sums in the first two lines of (3.33) are over \(\mathbb {Z}\), these sums actually have only a finite number of non-zero terms, since the values of x and \(y_k-k\) are fixed.

Observe that the left edge of the triangular array \({\textsf{X}}_N\), i.e. \({{\,\mathrm{\mathrm {l-edge}}\,}}( {\textsf{X}}_N ):=(x^{(i)}_i:1\le i\le N)\), coincides with the terminal positions \((y_1'-1>y_2'-2>\cdots >y_N'-N)\) of the \(\textsf{dTASEP}(N;{\varvec{p}},{\varvec{q}})\) particles, by Proposition 3.5. Thanks to this, we are able to obtain an initial representation of the transition kernel of \(\textsf{dTASEP}(N;{\varvec{p}},{\varvec{q}})\) as a marginal of the determinantal point process \({\textsf{X}}_N\).

Proposition 3.6

Let \({\varvec{y}}\in {\textsf{W}}_N\). Let \(x_0\in \mathbb {Z}\) with \(x_0 -1< y_N-N\) and write \({\varvec{x}}^{(0)}:=(x^{(0)}_1,x^{(0)}_2,\ldots ,x^{(0)}_N)\), where \(x^{(0)}_i:=x_0-i\). Define the (signed) determinantal measure

on configurations \({\textsf{X}}_N= \{x^{(i)}_j\}_{ 1 \le j\le i \le N} \) with \(x^{(i+1)}_{j+1} < x^{(i)}_j\le x^{(i+1)}_j\), where we have set \(x^{(k-1)}_k=x^{(0)}_k\) for \(1\le k\le N\), \(\widehat{Z}^{{\varvec{p}},{\varvec{q}}}_{(r,t]}:=Z^{{\varvec{p}},{\varvec{q}}}_{(r,t]} \prod _{i=1}^N q_i^{y_i-x_0}\) and \(Z^{{\varvec{p}},{\varvec{q}}}_{(r,t]} \) as in (2.14) (note that the right-hand side of (3.34) depends on \({\varvec{y}}\) through the \(\Psi \)-functions). The determinantal measure does not depend on the auxiliary point \(x_0\), as long as the condition \(x_0-1<y_N-N\) holds. Then, the transition kernel of \(\textsf{dTASEP}(N;{\varvec{p}},{\varvec{q}})\), encoding the probability that particles starting from locations \((y_1-1>y_2-2>\cdots >y_N-N)\) at time r end up at locations \((y_1'-1>y_2'-2>\cdots >y_N'-N)\) at time t, is given by

Proof

In a nutshell, this result is a consequence of the path representation of \({{\mathcal {Q}}} _{(r,t]}({\varvec{y}},{\varvec{y}}')\), as described in Proposition 3.5 (see in particular (3.29)), as well as the identification of the point process \({\textsf{X}}_N\) as the ‘trace’ of that path ensemble on \(\{(x,i):x\in \mathbb {Z}, \, 1\le i\le N \}\). Starting from (3.30) and using (3.21), (3.27) and (3.20), we obtain

where the summations are over all \(\lambda ,\mu \in {\textsf{W}}_N\) such that \(\mu \subseteq \lambda \), \(\mu \subseteq {\varvec{y}}\) and \({\varvec{y}}' \subseteq \lambda \). Using the Cauchy–Binet identity to compute the sum over \(\mu \), we have

where the latter equality follows from definition (3.33). We next multiply and divide by \(\prod _{i=1}^N q_i^{-x_0}\), recall that \(\widehat{Z}^{{\varvec{p}},{\varvec{q}}}_{(r,t]}=\big (\prod _{i=1}^N q_i^{y_i-x_0} \big )Z^{{\varvec{p}},{\varvec{q}}}_{(r,t]}\) and absorb the factor \(\prod _{i=1}^N q_i^{y_i'-x_0}\) into the second determinant, thus obtaining

Using the facts that \(Q^\dagger _{(0,i-1]}(x^{(0)}_i,x^{(0)}_i )=1\) and \(q_i^{y_i'-x_0}=Q^\dagger _i(x^{(0)}_i,y_i'-i)\), we can rewrite

Using several times the Cauchy–Binet identity, we obtain

Notice that the constraints \(x^{(i-1)}_i=\cdots =x^{(1)}_{i}=x^{(0)}_i\), \(1\le i\le N\), correspond to ‘freezing’ the points \(x^{(i)}_j\), \(1\le i<j\le N\), as in (3.32). Due to the inequalities (3.32) that \(\overline{{\textsf{X}}}_N\) satisfies, we have \(x^{(k)}_{j}<x^{(k-1)}_i\) for \(i<j\), hence \(Q^\dagger _{k}(x^{(k-1)}_i,x^{(k)}_j)=0\) for \(i<j\). Furthermore, due to the constraints \(x^{(i-1)}_i=\cdots =x^{(1)}_{i}=x^{(0)}_i\), we have \(Q^\dagger _{k}(x^{(k-1)}_i,x^{(k)}_i )=1\) for \(i>k\). Therefore, the matrix \(\big (Q^\dagger _{k}(x^{(k-1)}_i,x^{(k)}_j ) \big )_{i,j=1}^N\) is lower triangular with diagonal elements \(Q^\dagger _{k}(x^{(k-1)}_i,x^{(k)}_i )=1\) for \(i>k\). This implies

which leads to

with the convention that \(x^{(k-1)}_k=x^{(0)}_k\) for \(1\le k\le N\). This completes the proof of (3.35).

To show that the determinantal measure does not in fact depend on \(x_0\), notice first that the k-th row of the matrix \(Q^\dagger _{k}(x^{(k-1)}_i,x^{(k)}_j )\) has a common factor \(q^{-x_0}\), for all \(1\le k\le N\). When factoring these terms out of the determinants, we see a cancellation with the corresponding terms in the normalizing constant \(\widehat{Z}^{{\varvec{p}},{\varvec{q}}}_{(r,t]}\). The resulting expression has no further dependence on \(x_0\). \(\square \)
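The proof above invokes the Cauchy–Binet identity several times to turn a sum over point configurations of a product of two determinants into a single determinant. The following is a minimal sketch of that identity on a finite set of points, not the paper's actual computation: the functions `det` and `cauchy_binet_sides` and the sample values of `f` and `g` are hypothetical and chosen only for illustration.

```python
from itertools import combinations, permutations


def det(m):
    """Determinant by the Leibniz formula (adequate for tiny matrices)."""
    n = len(m)
    total = 0
    for perm in permutations(range(n)):
        # Sign of the permutation, read off from its number of inversions.
        inversions = sum(
            1 for a in range(n) for b in range(a + 1, n) if perm[a] > perm[b]
        )
        term = -1 if inversions % 2 else 1
        for row, col in enumerate(perm):
            term *= m[row][col]
        total += term
    return total


def cauchy_binet_sides(f, g):
    """Return both sides of the Cauchy-Binet identity.

    f[i][x] and g[j][x] are n functions evaluated on m points (n <= m).
    """
    n, m = len(f), len(f[0])
    # Left side: sum over ordered configurations x_1 < ... < x_n of
    # det[f_i(x_a)] * det[g_j(x_a)].
    lhs = sum(
        det([[f[i][x] for x in xs] for i in range(n)])
        * det([[g[j][x] for x in xs] for j in range(n)])
        for xs in combinations(range(m), n)
    )
    # Right side: one determinant of the pairwise sums over all points.
    rhs = det(
        [[sum(f[i][x] * g[j][x] for x in range(m)) for j in range(n)]
         for i in range(n)]
    )
    return lhs, rhs


# Two functions on four points (illustrative integer values).
f = [[1, 2, 0, 1], [0, 1, 3, 1]]
g = [[2, 0, 1, 1], [1, 1, 0, 3]]
lhs, rhs = cauchy_binet_sides(f, g)
print(lhs, rhs)
```

In the proof, the role of the finite point set is played by the integer positions of the intermediate configuration, and the sums are finite because the kernels involved vanish outside a bounded range.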

For the analysis that will follow in the next sections, it will be convenient to re-express the determinantal measure (3.34) in terms of Q-operators, which represent weights of paths moving strictly to the left, rather than \(Q^\dagger \)-operators, which represent weights of paths moving weakly to the right. Towards this, the main observation is the following equality of determinants, which will be a consequence of certain path constructions.

Proposition 3.7

Let \(\{x_j^{(i)}\}_{1\le j\le i\le N}\) be a triangular array of integers and let \(\{x_j^{(j-1)}\}_{j=1}^N\) and \(\{x_0^{(j-1)}\}_{j=1}^N\) be auxiliary integer variables. Assume that \(\{x_j^{(i)}\}_{1\le j\le i\le N}\) satisfies

Then we have

and both sides are nonzero.

Proof

By the Lindström–Gessel–Viennot theorem, we may view \(\det \big (Q^\dagger _k(x_{i}^{(k-1)},x_j^{(k)})\big )_{i,j=1}^{k}\) as the total weight of k non-intersecting paths starting from \((x_k^{(k-1)},k-1),\ldots ,(x_{1}^{(k-1)},k-1)\) and ending at \((x_k^{(k)},k),\ldots ,(x_{1}^{(k)},k)\), with the first step upwards (with weight 1) and subsequent steps in the horizontal right direction (with weight \(q_k\) at each step). Note that such a non-intersecting path ensemble exists if and only if

In such a case, the weight of the ensemble equals \(\prod _{j=1}^{k}Q^\dagger _k(x^{(k-1)}_j,x^{(k)}_j)\). Taking the product over \(k=1,\ldots ,N\), we obtain the total weight of the non-intersecting paths illustrated in red in Fig. 5:

We now consider similar, ‘dual’ paths, illustrated in blue in Fig. 5. Recalling from (3.2) that the Q-operators encode geometric jumps strictly to the left, by the Lindström–Gessel–Viennot theorem we view \(\det \big ( Q_k(x_{i-1}^{(k-1)},x_j^{(k)})\big )_{i,j=1}^{k}\) as the total weight of k non-intersecting paths starting from \((x_{k-1}^{(k-1)},k-1),\ldots ,(x_{0}^{(k-1)},k-1)\) and ending at \((x_k^{(k)},k),\ldots ,(x_{1}^{(k)},k)\), with the first step diagonally up-left and subsequent steps in the horizontal left direction, with all steps having weight \(q_k\). Reasoning as before, we obtain

Thus, since by hypothesis the interlacing conditions (3.36) are satisfied, both sides of (3.37) are nonzero. Moreover, we have

Furthermore, up to inverting the weights of the blue paths, the total weight of red and blue paths simply equals the weight of all the horizontal paths from \((x_{k}^{(k-1)}, k)\) to \((x_0^{(k-1)},k)\), \(k=1,\ldots ,N\); in other words, we have

Combining (3.39) with (3.38), we readily arrive at (3.37). \(\square \)

Fig. 5 Ensemble of non-intersecting red and blue paths used in the proof of Proposition 3.7
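The first step of the proof of Proposition 3.7 can be illustrated numerically on one level of the array. The sketch below assumes that \(Q^\dagger _k(x,y)=q_k^{y-x}\) for \(y\ge x\) and 0 otherwise, a form inferred from the identities \(Q^\dagger (x,x)=1\) and \(q_i^{y_i'-x_0}=Q^\dagger _i(x^{(0)}_i,y_i'-i)\) used earlier. The helper names and the sample positions are hypothetical. Under interlacing of start and end points the determinant reduces to the product of the diagonal entries, whereas it vanishes when interlacing fails.

```python
from fractions import Fraction
from itertools import permutations


def q_dagger(q, x, y):
    """Assumed kernel: weight q^(y-x) of a weakly rightward path, 0 if y < x."""
    return q ** (y - x) if y >= x else Fraction(0)


def det(m):
    """Determinant by the Leibniz formula (adequate for tiny matrices)."""
    n = len(m)
    total = Fraction(0)
    for perm in permutations(range(n)):
        inversions = sum(
            1 for a in range(n) for b in range(a + 1, n) if perm[a] > perm[b]
        )
        term = Fraction(-1 if inversions % 2 else 1)
        for row, col in enumerate(perm):
            term *= m[row][col]
        total += term
    return total


def path_determinant(q, starts, ends):
    """det[Q^dagger(starts_i, ends_j)], the LGV weight of the path ensemble."""
    return det([[q_dagger(q, s, e) for e in ends] for s in starts])


def diagonal_product(q, starts, ends):
    """Product of the weights of the paths joining starts_j to ends_j."""
    prod = Fraction(1)
    for s, e in zip(starts, ends):
        prod *= q_dagger(q, s, e)
    return prod


q = Fraction(1, 2)
starts = [5, 2, 0]        # positions on level k-1, listed in decreasing order
interlaced = [7, 3, 1]    # 0 <= 1 < 2 <= 3 < 5 <= 7: interlacing holds
crossing = [7, 6, 1]      # 6 >= 5 violates the strict inequality

print(path_determinant(q, starts, interlaced), diagonal_product(q, starts, interlaced))
print(path_determinant(q, starts, crossing))
```

With interlaced endpoints the matrix is lower triangular, which is the same mechanism as in the proof of Proposition 3.6; without interlacing no non-intersecting ensemble exists and the determinant is zero.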

The following corollary re-expresses the determinantal measure (3.34) in a form that will be more suitable to our purposes.

Corollary 3.8

The determinantal measure (3.34) is equal to

with

The determinantal measure (3.40) is actually independent of the auxiliary variables \(\{x_0^{(j-1)}\}_{j=1}^N\). Reasoning as in the proof of Proposition 3.6, the first row of the matrix \( \big ( Q_{k}(x^{(k-1)}_{i-1},x^{(k)}_j) \big )_{i,j=1}^{k}\) has a common factor \(q_k^{-x_0^{(k-1)}}\), for all \(1\le k\le N\). These terms, when factored out of the determinants, cancel the corresponding terms in the normalizing constant \(\tilde{Z}^{p,q}_{(r,t]}\), making the resulting expression independent of \(\{x_0^{(j-1)}\}_{j=1}^N\). Thus, we may set these auxiliary variables to be \(\infty \) and simply define

Using these conventions and defining

the determinantal measure (3.40) may be written in the form

3.4 Correlation kernel and biorthogonal functions

From now on, throughout this subsection and most of Sect. 4, we make the additional assumption that \(q_1<q_2<\cdots \); we will remove it in the proof of Theorem 1.1 (see Sect. 4). This assumption allows for a bona fide composition of the local operators and makes all infinite sums in this subsection and in the next section well defined; it also justifies swapping sums and exchanging sums with contour integrals. To better understand why it is needed, recall that in (3.42) we defined \(Q_k(x_0^{(k-1)},y):=q_k^y\), for the virtual variable \(x_0^{(k-1)}\) regarded as \(\infty \). This convention might seemingly lead to issues when defining

for \(k>j\): if, for example, \(k=j+1\), the sum above equals \(\sum _{y>x}q_j^{y}\cdot q_{j+1}^{x-y}\), which diverges for \(q_{j+1}\le q_j\). However, for \(q_1<q_2<\cdots \), (3.45) is well defined. To see this, recall from (3.16) that

where \(0<r<\min \{q_\ell \}_{\ell =j+1}^k\). To get a well-defined expression for \(Q_{[j,k]}(x_0^{(j-1)},y)\), note that, since \(q_j<q_k\) for all \(k>j\), we can write

where \(r,r'\) are chosen such that \(0<r<q_j<r'<\min \{q_\ell \}_{\ell =j+1}^k\). Both geometric series converge and computing them yields

where \(\gamma _{q_j}\) is any simple closed contour enclosing \(q_j\) as the only pole for the integrand. This computation motivates the definition

where \(\gamma _{q_j}\) is the contour defined above. We also set

We now summarize some basic properties of \(Q_{[j,k]}(x_0^{(j-1)},x)\), which we will use frequently:

Proposition 3.9

Assume that \(q_1<q_2<\cdots \) and take \(Q_{[j,k]}(x_0^{(j-1)},x)\) to be defined as in (3.47)–(3.48), where \(x_0^{(j-1)}\) are virtual variables regarded as \(\infty \).

(i) For \(k\ge 1\) and \(y\in \mathbb {Z}\), we have

$$\begin{aligned} Q_{[k,k]}(x_0^{(k-1)},y) = q_k^{y}=Q_k(x_0^{(k-1)},y), \end{aligned}\tag{3.49}$$

which is consistent with (3.42).

(ii) Given \(j,k,n\ge 1\) and \(y\in \mathbb {Z}\) with \(j<k\) and \(j<n\), we have

$$\begin{aligned} Q_{[j,k]}\circ Q_{(k,n]}(x_0^{(j-1)},y):= \sum _{x'\in \mathbb {Z}}Q_{[j,k]}(x_0^{(j-1)},x')\,Q_{(k,n]}(x',y) =Q_{[j,n]}(x_0^{(j-1)},y), \end{aligned}\tag{3.50}$$

where we allow the slight abuse of notation (3.7) for \(k\ge n\).

(iii) For any \(j,k\ge 1\), we have

$$\begin{aligned} Q_{[j,k]}(x_0^{(j-1)},y) = \lim _{x\rightarrow \infty } q_j^{x}\cdot Q_{[j,k]}(x,y). \end{aligned}\tag{3.51}$$

Proof

(i) This follows immediately from (3.47).

(ii) To prove this, one can mimic (3.46), using the integral representation (3.16) and splitting the sum into two geometric series with contours deformed in such a way that both series converge simultaneously.

(iii) We first prove (3.51) for \(1\le j\le k\), using again the integral representation (3.16). Since \(q_j<q_{j+1}<\cdots \), if we deform the contour \(|z|=r\) to be a slightly larger circle \(|z|=r'\) with \(0<r<q_j<r'<q_{j+1}\), the only extra contribution to the integral comes from the residue at \(z=q_j\). Hence, we have

$$\begin{aligned} Q_{[j,k]}(x,y)&= \oint _{|z|=r} \frac{\textrm{d} z}{2\pi \textrm{i}z} \cdot \frac{z^{y-x+k-j+1}}{\prod _{\ell =j}^{k}(q_\ell -z)}\\&= \oint _{|z|=r'} \frac{\textrm{d} z}{2\pi \textrm{i}z} \cdot \frac{z^{y-x+k-j+1}}{\prod _{\ell =j}^{k}(q_\ell -z)}+\frac{q_j^{y-x+k-j}}{\prod _{\ell =j+1}^{k}(q_\ell -q_j)}. \end{aligned}$$

Note that

$$\begin{aligned} \left| q_j^x\cdot \oint _{|z|=r'}\frac{\textrm{d} z}{2\pi \textrm{i}z} \cdot \frac{z^{y-x+k-j+1}}{\prod _{\ell =j}^{k}(q_\ell -z)}\right| \le C\cdot \left( \frac{q_j}{r'}\right) ^{x}\rightarrow 0,\qquad \text {as }x\rightarrow \infty , \end{aligned}$$

for some constant \(C>0\). Thus, by (3.47), we have

$$\begin{aligned} \lim _{x\rightarrow \infty } q_j^{x}\cdot Q_{[j,k]}(x,y)= \frac{q^{y+k-j}_j}{\prod _{\ell =j+1}^{k}(q_\ell -q_j)}=Q_{[j,k]}(x_0^{(j-1)},y). \end{aligned}$$

To prove (3.51) for \(j>k\), notice that in this case, using our convention (3.7), we have

$$\begin{aligned} Q_{[j,k]}(x,y)= Q_{j-1}^{-1}\circ \cdots \circ Q^{-1}_{k+1}(x,y)=0, \end{aligned}$$

whenever \(x>y\). By definition (3.48), it then follows that

$$\begin{aligned} \lim _{x\rightarrow \infty } q_j^{x}\cdot Q_{[j,k]}(x,y)= 0 =Q_{[j,k]}(x_0^{(j-1)},y), \end{aligned}$$

as desired.

\(\square \)
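The limit in part (iii) can be checked numerically for \(1\le j\le k\). The sketch below is a sanity check under stated assumptions, not part of the proof: it reads the contour integral in the proof as a coefficient extraction, so that \(Q_{[j,k]}(x,y)\) is the coefficient of \(z^{x-y-(k-j+1)}\) in the power series of \(\prod _{\ell =j}^{k}(q_\ell -z)^{-1}\) around \(z=0\). The function names and the sample rates are hypothetical.

```python
from fractions import Fraction


def composite_q(qs, x, y):
    """Q_{[j,k]}(x, y) for rates qs = [q_j, ..., q_k], by coefficient extraction.

    Assumes the contour formula of the proof: the result is the coefficient
    of z^(x - y - len(qs)) in prod_l 1/(q_l - z), expanded around z = 0.
    """
    n = x - y - len(qs)
    if n < 0:
        return Fraction(0)
    series = [Fraction(1)] + [Fraction(0)] * n  # power series of the constant 1
    for q in qs:
        # Divide the series by (q - z): g_d = (f_d + g_{d-1}) / q.
        prev = Fraction(0)
        for d in range(n + 1):
            prev = (series[d] + prev) / q
            series[d] = prev
    return series[n]


def boundary_value(qs, y):
    """Right-hand side of the limit: q_j^(y+k-j) / prod_{l>j} (q_l - q_j)."""
    qj = qs[0]
    value = qj ** (y + len(qs) - 1)
    for ql in qs[1:]:
        value /= ql - qj
    return value


# Strictly increasing rates, as required by the standing assumption.
qs = [Fraction(3, 10), Fraction(1, 2), Fraction(4, 5)]
y = 0
target = boundary_value(qs, y)
for x in (10, 30, 60):
    approx = qs[0] ** x * composite_q(qs, x, y)
    print(x, float(approx), float(abs(approx - target)))
```

The error decays geometrically in x, at rate \(q_j/q_{j+1}\) in this example, in line with the bound \(C\,(q_j/r')^{x}\) from the proof. For a single rate, the same routine returns \(q^{y-x}\) for \(y<x\), which is the one-step kernel assumed here.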

The following proposition is rather standard in the theory of determinantal point processes. However, here we unveil an additional important structure, i.e. the triangularity of the correlation matrix \(\mathsf M\), which can be seen from our non-intersecting paths formulation.

Define first, for \(n< N\), the following generalization of the functions \(\Psi _k^{(N)}\) from (3.33):

We remark that the summation over z is actually within the finite range \(y_k-N\le z < x\); see Fig. 4 and recall the definition of the operator Q.

Proposition 3.10

(Correlation kernel and Fredholm determinant). Let the functions \(\Psi ^{(n)}_k\) be defined by (3.33) and (3.52). Let \( Q_{[j,n]}(x_0^{(j-1)},y)\) be defined by (3.47)–(3.48). Under the assumption that \(q_1<q_2<\cdots \), the determinantal point process (3.40) admits the Fredholm determinant representation

for any bounded test function \(g:\{1,\ldots ,N\}\times \mathbb {Z}\rightarrow \mathbb {R}\). The correlation kernel K is given by

where the matrix \({\textsf{M}}\) is defined by

Furthermore, \({\textsf{M}}\) is upper-triangular and invertible.

Proof

Except for the stated properties of \({\textsf{M}}\), Proposition 3.10 follows from [BFPS07, Lemma 3.4] and the accompanying remark; see also [Joh03, Proposition 2.1]. We now check that, under our assumption that \(q_1<q_2<\cdots \), the matrix \(\mathsf M\) is indeed upper-triangular with nonzero diagonal entries, and therefore invertible. By (3.54) and (3.33), we have

for \(1\le i,j\le N\). Recalling formula (3.47) for \(Q_{[i,N]}(x_0^{(i-1)},z)\) and expressing the Toeplitz operator \(R^*_{(r,t]}\circ Q_{(j,N]}^{-1}\) as a contour integral in the usual way (see (4.6) in the next section for details), we see that

where \(r>\max \{|\xi |:\xi \in \gamma _{q_i}\}\), so that the sum over z converges. Note that in the second equality we can restrict the sum to \(z\ge {y_j-N}\), since the contour integral with respect to w vanishes for \(z<{y_j-N}\) due to analyticity. The only pole inside the w-contour is at \(w=\xi \), and calculating its residue yields

Note now that, for \(i>j\), the integrand is analytic at \(q_i\), hence the integral vanishes and \({\textsf{M}}_{i,j}=0\). On the other hand, when \(i=j\), by our assumptions on the parameters we have

which is nonzero. We conclude that the matrix \({\textsf{M}}\) is upper-triangular and invertible. \(\square \)

Remark 3.11

Here we provide a more intuitive, pathwise explanation of the fact that the matrix \({\textsf{M}}\) is upper-triangular. By (3.51), for \(i>j\) we have

where in the last equality we used the fact that all the operators commute. Viewing the operators as associated to paths, \(R^*\)-operators take at most one step to the left at a time, whereas \(Q^{-1}\)-operators take at most one step to the right at a time. Thus, if \(i>j\), for any x sufficiently large, the point \({y_j-j}\) cannot be reached from x by applying the operator \(R_{(r,t]}^*\circ Q^{-1}_{[j+1,i-1]}\), hence \(R_{(r,t]}^*\circ Q^{-1}_{[j+1,i-1]}(x,{y_j-j})=0\). This shows that \({\textsf{M}}_{i,j}=0\) for \(i>j\).

We now derive a simplified expression for the correlation kernel in terms of biorthogonal functions. Define

where the latter equality is due to the fact that \({\textsf{M}}\) (and hence \({\textsf{M}}^{-1}\)) is upper-triangular.

Proposition 3.12

The kernel K in (3.53) can be written as

Moreover, for \(1\le i,j\le n\) and \(n=1,\ldots ,N\), the following biorthogonality relation holds: