Abstract

This paper is motivated by computing correlations for domino tilings of the Aztec diamond. It is inspired by two of the three distinct methods that have recently been used in the simplest case of a doubly periodic weighting, that is, the two-periodic Aztec diamond. One of the methods, powered by the domino shuffle, involves inverting the Kasteleyn matrix giving correlations through the local statistics formula. Another of the methods, driven by a Wiener–Hopf factorization for two-by-two matrix-valued functions, involves the Eynard–Mehta Theorem. For arbitrary weights, the Wiener–Hopf factorization can be replaced by an LU- and UL-decomposition, based on a matrix refactorization, for the product of the transition matrices. This paper shows that, for arbitrary weightings of the Aztec diamond, the evolution of the face weights under the domino shuffle and the matrix refactorization is the same. In particular, these dynamics can be used to find the inverse of the LGV matrix in the Eynard–Mehta Theorem.

1 Introduction

1.1 Domino tilings of the Aztec diamond

Random tiling models of bounded regions have been studied heavily in the past few decades; see [Gor20] and references therein. One of the central examples of this area is domino tilings of the Aztec diamond, where an Aztec diamond of size n is all the squares of the square grid whose centers satisfy the condition that \(|x|+|y| \le n\) and a domino tiling is a non-overlapping covering by two by one rectangles [EKLP92]. To obtain a random domino tiling of the Aztec diamond, one assigns weights to particular dominoes, which can be dependent on their location, picking each domino tiling with probability proportional to the product of the domino weights in that domino tiling. These models are often studied on the dual graph with a tile becoming a dimer, and the resulting random tiling probability measure is known as the dimer model.

These random tilings contain many fascinating asymptotic behaviors that should be apparent in other statistical mechanical models. Indeed, for large random tilings limit shape curves emerge splitting the domain into different macroscopic regions, of which there are three types: frozen, where the configurations are deterministic; rough, where the correlations between tiles decay polynomially; smooth, where the correlations between tiles decay exponentially. These phases were characterized for dimer models on bipartite graphs in [KOS06].

To study these interesting asymptotic behaviors, one of the main approaches in recent years has been to find a non-intersecting path picture for the tiling. Using a combination of the Lindström–Gessel–Viennot theorem and the Eynard–Mehta Theorem (e.g., see [BR06]), the correlation kernel of the underlying determinantal point process of the particle system defined through the paths can be written in terms of the inverse of a particular principal submatrix of a product of transition matrices. Finding an explicit expression for that inverse and thus the correlation kernel, one that is amenable to asymptotic analysis, poses a serious challenge that has only been carried out in special situations. For instance, it has been worked out for models that are Schur processes, such as uniformly random domino tilings of the Aztec diamond [Joh05]. For Schur processes, the transition matrices are doubly infinite Toeplitz matrices and (in an appropriate limit) the inverse can be computed using a Wiener–Hopf factorization of the product of the symbols. As the symbols are scalar-valued, finding a Wiener–Hopf factorization is a mere reordering of the symbols in the product (see for example [Joh18]). In [BD19], the authors introduced a natural generalization of Schur processes, one that includes doubly periodically weighted domino tilings of the Aztec diamond, by taking block Toeplitz matrices as transition matrices. In this case, the symbols are matrix valued and this complicates a Wiener–Hopf factorization. Still, it is possible to define a refactorization procedure that provides such a Wiener–Hopf factorization, and in special situations this Wiener–Hopf factorization is even explicit. Once formulas for the correlation kernel of the determinantal point process have been found in a suitable form, fine asymptotic analysis unlocks the full asymptotic picture, which is often unavailable in more complicated models.

An alternative approach for random tiling models has been through the Kasteleyn matrix K and its inverse. The (Percus)-Kasteleyn matrix is a type of signed adjacency matrix whose rows and columns are indexed by the black and white vertices of the graph, respectively. The appeal of the inverse Kasteleyn matrix is that it is the correlation kernel of the determinantal point process on the edges of the graph [Ken97]. Computations of this inverse are only known for periodic graphs and in certain special cases such as the Aztec diamond. For instance, a procedure for computing the inverse Kasteleyn matrix for the Aztec diamond was given in [CY14] in a certain setting, which showed that the entries could be computed using recurrence relations from an entry-wise expansion of the matrix equations \(K.K^{-1}=K^{-1}.K=\mathbb {I}\) and a boundary recurrence relation. This boundary recurrence relation involved transformations of the entries of the inverse Kasteleyn matrix under the domino shuffle (see Footnote 1), which is a particular graphical move special to 4-valent faces of the graph.

The main purpose of this paper is to show that both formulations for computing correlations for arbitrarily weighted domino tilings of the Aztec diamond are equivalent and have the same computational complexity. Along the way, we show that commuting transition matrices arising from the non-intersecting path picture for arbitrary weights is equivalent to the domino shuffle, thus providing an analog to the role of Yang–Baxter for the six-vertex model [Bax89]; see Corollary 5.5. This had previously been noted only for Schur processes [BCC17]. We next give an overview of the main results along with an outline of the paper.

1.2 Outline of the main results

Since our results hold for arbitrary weights and minimal assumptions, we start our discussion of the Aztec diamond from first principles. We therefore recall the basics of the Kasteleyn approach in Sect. 2 and the Eynard–Mehta Theorem for non-intersecting paths in Sect. 3.

Our first main result is a general expression in Theorem 4.1 for the inverse Kasteleyn matrix that involves the inverse of a matrix that counts the DR-paths on the Aztec diamond, very similar to the Eynard–Mehta Theorem for the non-intersecting paths process. In fact, when setting this up in the slightly larger domain, cf. Theorem 4.3, it is exactly the same matrix from the Eynard–Mehta Theorem that needs to be inverted. This shows that the two approaches ultimately boil down to the same question.

In Sect. 5, we then discuss and compare two fundamental discrete dynamical systems on the infinite underlying weighted graphs for the dimer model and the non-intersecting path process. For the dimer model, we apply the well-known square move on all even faces, as one does in the domino shuffle. For the non-intersecting path processes, we use a matrix refactorization by swapping all even transition matrices with their consecutive odd neighbor. We show that these two systems are equivalent in the sense that each iteration changes the face weights of the underlying graphs identically. For special choices of doubly periodic weights (as we briefly discuss below), both systems have been used in the literature to compute the correlation functions, and we will show that this can be done for arbitrary weights.

The dynamics (and its reverse) provides us, up to a trivial shift, with an LU- and UL-decomposition of the product of the transition matrices. For the Eynard–Mehta Theorem, we need to invert a particular submatrix of the product of transition matrices, and the LU- and UL-decompositions do not immediately provide an inverse of this matrix. Inspired by a similar analysis for the special case of block Toeplitz matrices [Wid74], we show in Sect. 6 that it is possible to provide an explicit expression for an approximate inverse, with only very minor assumptions on the weights (in particular, no periodicity is required). The approximation converges to the inverse when the size of the submatrix tends to infinity. Our analysis culminates in an expression of the correlation kernel that only involves the LU-decomposition and the transition matrices, cf. Theorem 6.9. Although the expression we obtain is not yet in a form from which one can start an asymptotic study, it is valid under fairly weak conditions on the parameters, and we find it remarkable that it can be carried out in this generality. Moreover, it covers the result of [BD19] as a special case, including doubly periodic weights, and even provides an alternative, more direct proof, which we include in Appendix B.

The results of Sect. 7 are discussed in the next subsection. Finally, in Sect. 8, we show how to use the domino shuffle to compute the boundary recurrence relations, a method used in [CY14], and outline the steps needed to compute the inverse of the Kasteleyn matrix of the Aztec diamond.

1.3 Doubly periodic weightings

In Sect. 7, we discuss how our general procedure specializes to doubly periodic domino tilings of the Aztec diamond, for which there has been significant progress in recent years. The attraction of these models is that they are currently the only statistical mechanical models with all three types of macroscopic regions present that also have explicit formulas for their correlations. Indeed, the original motivation for studying the two-periodic Aztec diamond was to study the probabilistic behavior at the rough–smooth boundary, which is a transition between polynomially and exponentially decaying regions. This type of interface is believed to appear in other statistical mechanical models such as the six-vertex model with domain wall boundary conditions (with the associated parameter \(\Delta <-1\)) and low temperature 3D Ising models with certain boundary conditions.

The starting point was [CY14], where a formula for the inverse Kasteleyn matrix was derived for the two-periodic Aztec diamond. This formula was later simplified in [CJ16] to a form suitable for asymptotic analysis, leading to asymptotic results for the two-periodic Aztec diamond, including the behavior at the rough–smooth boundary [BCJ18, BCJ22, JM21, Bai22], which is much more nuanced than the behavior at the frozen–rough boundary. An alternative approach is to find the correlation kernel of the determinantal point process for the particle system associated with the non-intersecting path picture, for which two different methods are available. One of these methods uses matrix orthogonal polynomials combined with Riemann–Hilbert techniques [DK21], while the other method, introduced in [BD19], is an important inspiration for the general refactorization of the present paper.

For doubly periodic weights, the transition matrices are doubly infinite block Toeplitz. The refactorization procedure in this case is equivalent to a Wiener–Hopf factorization of the symbol corresponding to the product of the transition matrices. For various models, such as the two-periodic Aztec diamond and even a class of weightings with higher periodicity [Ber21], the dynamical system determined by the refactorization procedure is periodic and this allows for a very explicit double integral formulation for the correlation kernel that can be analyzed asymptotically. In general, tracing this dynamical system is not an easy task. In a recent work [BD22], the authors showed that for the biased two-periodic Aztec diamond the dynamical system from the refactorization is equivalent to a linear flow on an elliptic curve, and it is reasonable to expect that the general case can be linearized on the Jacobian of the spectral curve.

It is important to note that the (matrix)-orthogonal method of [DK21] for doubly periodically weighted tilings is essentially based on an LU-decomposition of the submatrix of the product of transition matrices, whereas the Wiener–Hopf factorization in [BD19] is an LU- and UL-decomposition for the entire matrix. The LU-decomposition is hiding in the orthogonality condition, but it was an important fact in [DK21]. The benefit of the approach of [DK21] is that it also holds in more general situations. Moreover, one can use tools from complex analysis, such as the Riemann-Hilbert problem, to study these polynomials. This has been carried out for several interesting tiling models [DK21, Cha21, CDKL20, GK21]. For the Aztec diamond, the polynomials simplify significantly, and therefore, one can circumvent this heavy machinery. It is also important to observe that the orthogonal polynomials only occur for weightings that have at least one direction in which they are periodic.

Restricting our results to doubly periodic weights means that Wiener–Hopf factorization is equivalent to the domino shuffle. The dynamical system from the domino shuffle, known as the dimer cluster integrable system introduced in [GK13], has been studied extensively in various contexts [GSTV16, KLRR18, AGR21, Izo21], for example under the guise of the octahedron recurrence [Spe07, DFSG14, DF14]. These dynamics also have a probabilistic interpretation [CT19, CT21] and, when applied to the Aztec diamond, give a powerful method for perfect simulation of domino tilings of the Aztec diamond with arbitrary weights [Pro03]. Finally, we mention that the connection between the dimer cluster integrable system and matrix refactorization is currently being investigated [BGR22].

2 Preliminaries on the Aztec Diamond and the Kasteleyn Approach

In this section, we give the general setup, definitions of the Aztec diamond and tower Aztec diamonds graphs, and the Kasteleyn matrices associated to these graphs. We specify the importance of the inverse (of the) Kasteleyn matrix for computing correlations. Finally, we introduce the DR-path picture for domino tilings of the Aztec diamond and tower Aztec diamond graphs.

We note that throughout the paper, for a matrix \(M=(m_{i,j})_{1 \le i,j \le n}\), we write either \(M(i,j)\) or \(m_{i,j}\) for its entries, whichever is most convenient. We will also use the notation \(\mathbb {I}_B\) to denote the indicator of a set B.

2.1 General setup

We consider the dimer model on (finite) planar bipartite graphs \(G=(V,E)\). A dimer configuration is a collection of edges such that each vertex is incident to exactly one edge of the collection. To each edge of the graph, assign a positive number, that is, \(w:E \rightarrow \mathbb {R}\) with \(w(e)>0\) for all \(e \in E\). The weight of a dimer configuration, M, is equal to the product of the edge weights in that dimer configuration, that is \(\prod _{e \in M} w(e)\). The dimer model is the probability measure where each dimer configuration is picked with probability proportional to the product of the edge weights. In other words, the probability of a dimer configuration M is equal to

\[\mathbb {P}(M)=\frac{\prod _{e \in M} w(e)}{\sum _{M' \in \mathcal {M}} \prod _{e \in M'} w(e)},\]

where the sum is over all possible dimer configurations, \(\mathcal {M}\), of the graph G.
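The definition of the dimer measure can be illustrated by brute force on a very small graph. The following is a minimal sketch, not taken from the paper: the \(2\times 3\) grid graph, its weights (1 on vertical edges, 2 on horizontal edges), and the helper names `perfect_matchings` and `dimer_measure` are all illustrative assumptions.

```python
from fractions import Fraction
from math import prod

def perfect_matchings(vertices, edges):
    """Yield every perfect matching (dimer configuration) as a frozenset of edges."""
    if not vertices:
        yield frozenset()
        return
    v = min(vertices)                      # always match the smallest free vertex
    for e in edges:
        if v in e:
            (u,) = e - {v}                 # the other endpoint of the edge
            if u in vertices:
                for m in perfect_matchings(vertices - {v, u}, edges):
                    yield m | {e}

def dimer_measure(vertices, weights):
    """Return ({configuration: probability}, partition function Z)."""
    wt = {m: prod(weights[e] for e in m)
          for m in perfect_matchings(vertices, weights)}
    Z = sum(wt.values())
    return {m: x / Z for m, x in wt.items()}, Z

# Hypothetical example: 2 x 3 grid, vertical edges weight 1, horizontal edges weight 2.
verts = frozenset((r, c) for r in range(2) for c in range(3))
weights = {}
for c in range(3):
    weights[frozenset({(0, c), (1, c)})] = Fraction(1)
for r in range(2):
    for c in range(2):
        weights[frozenset({(r, c), (r, c + 1)})] = Fraction(2)

probs, Z = dimer_measure(verts, weights)
print(Z, sorted(probs.values()))
```

This graph has three dimer configurations, with weights 1, 4 and 4, so the configurations containing horizontal dimers are each four times as likely as the all-vertical one.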

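For this paper, we work only on the square grid and particular subgraphs of it. Introduce the vertex sets

\[\texttt{W}=\{(i,j) \in \mathbb {Z}^2: i \bmod 2=1,\ j \bmod 2=0\}\]

and

\[\texttt{B}=\{(i,j) \in \mathbb {Z}^2: i \bmod 2=0,\ j \bmod 2=1\},\]

which denote the white and black vertices. The centers of the faces of the infinite graph are given by \((i,j) \in (2\mathbb {Z})^2\) or \((i,j) \in (2\mathbb {Z}+1)^2\).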

Although we introduced the edge weights above, as mentioned in [GK13], it is in fact the face weights which parameterize the dimer model (see Footnote 2). The face weights are defined as the alternating product of the edge weights around each face viewed from a clockwise orientation, such that the weights of the edges of the black to white vertices are in the numerator and the weights of the edges of the white to black vertices are in the denominator of this alternating product. For \(i,j \in \mathbb {Z}\), let the face weight of the face whose center is given by \((2i+1,2j+1)\) be equal to \(F_{2i,j}\) and let the face weight of the face whose center is given by \((2i+2,2j+2)\) be equal to \(F_{2i+1,j}\).

Without loss of generality, we fix a convention for the edge-weights used throughout this paper. For \(\texttt{b}=(2i,2j+1)\) with \(i,j \in \mathbb {Z}\), we assert that the edge weights of the edges \((\texttt{w},\texttt{b})\) are given by

- 1 if \(\texttt{w}=(2i-1,2j+2)\) or \(\texttt{w}=(2i-1,2j)\),

- \(a_{i,j}\) if \(\texttt{w}=(2i+1,2j+2)\),

- \(b_{i,j}\) if \(\texttt{w}=(2i+1,2j)\),

for \(a_{i,j},b_{i,j}>0\) for all \(i,j \in \mathbb {Z}\). With our conventions, each face \((2i+1,2j+1)\) has face weight \(F_{2i,j}=\frac{a_{i,j}}{b_{i,j}}\) while each face \((2i+2,2j+2)\) has face weight \(F_{2i+1,j}=\frac{b_{i+1,j+1}}{a_{i+1,j}}\). We will use this weighting throughout the paper.

Remark 2.1

Note that setting the weight of the edges \(((2i,2j+1),(2i-1,2j))\) equal to 1 can be done without loss of generality. Indeed, in a general edge weighting one can always change all edge weights so that the edges \(((2i,2j+1),(2i-1,2j))\) have weights 1 without changing the face weights. This can be achieved by a so-called successive application of gauge transformations, in which one multiplies each edge weight around a given vertex by a common factor. We note, however, that this can have an effect on the structure of the edge weights (see for instance Remark 7.1).
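The invariance of face weights under gauge transformations can be checked directly on a single face. The sketch below is illustrative only: it uses the face centered at \((1,1)\) with the edge-weight convention of Sect. 2.1, the numerical values \(a_{0,0}=3\), \(b_{0,0}=5\) are arbitrary, and the helper names `face_weight` and `gauge` are hypothetical.

```python
from fractions import Fraction

def face_weight(cycle, w):
    """Alternating product around a face.

    `cycle` lists the vertices of the face in clockwise order, starting at a
    black vertex, so black-to-white edges go to the numerator and
    white-to-black edges to the denominator.
    """
    num, den = Fraction(1), Fraction(1)
    for t in range(len(cycle)):
        e = frozenset((cycle[t], cycle[(t + 1) % len(cycle)]))
        if t % 2 == 0:
            num *= w[e]
        else:
            den *= w[e]
    return num / den

def gauge(w, vertex, lam):
    """Multiply every edge weight incident to `vertex` by the common factor lam."""
    return {e: (x * lam if vertex in e else x) for e, x in w.items()}

# Face centered at (1, 1): black (0,1), (2,1); white (1,2), (1,0).
a, b = Fraction(3), Fraction(5)            # arbitrary values for a_{0,0}, b_{0,0}
w = {
    frozenset({(0, 1), (1, 2)}): a,
    frozenset({(0, 1), (1, 0)}): b,
    frozenset({(2, 1), (1, 2)}): Fraction(1),
    frozenset({(2, 1), (1, 0)}): Fraction(1),
}
cycle = [(0, 1), (1, 2), (2, 1), (1, 0)]   # clockwise, starting at a black vertex

F_before = face_weight(cycle, w)
w2 = gauge(gauge(w, (0, 1), Fraction(7)), (1, 2), Fraction(1, 2))
F_after = face_weight(cycle, w2)
print(F_before, F_after)
```

Both values equal \(a_{0,0}/b_{0,0}\), in agreement with the formula for \(F_{2i,j}\) above, because each gauge factor enters the numerator and the denominator exactly once.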

2.2 The Aztec diamond

We introduce the Aztec diamond graph of size n denoted by \(G_n^{\textrm{Az}}\). Let

and

The edges are given by

Let \([n]=\{1,\dots ,n\}\). We assign a specific ordering to the white and black vertices, which are given by the functions \(w_n^{\textrm{Az}}:[n(n+1)] \rightarrow \texttt{W}_n^{\textrm{Az}}\) and \(b_n^{\textrm{Az}}:[n(n+1)] \rightarrow \texttt{B}_n^{\textrm{Az}}\) where

and

for \(1 \le i \le n(n+1)\), where \([i]_n=i\mod n\). See Fig. 1 for an example of these labels.

Fig. 1 An Aztec diamond of size 3 with the Cartesian coordinates given on the left and the vertex labels on the right, including the edge weights with our conventions given in Sect. 2.1. The unmarked edges have weight 1

The Kasteleyn(-Percus) matrix on \(G_n^{\textrm{Az}}\), \(K_n^{\textrm{Az}}:\texttt{B}_n^{\textrm{Az}} \times \texttt{W}_n^{\textrm{Az}} \rightarrow \mathbb {C}\) is given by

where \(x=(x_1,x_2)\), \(r=x_1/2\), and \(s=(x_2-1)/2\). The significance of the Kasteleyn matrix is explained below.

2.3 Tower Aztec diamond

We next introduce the tower Aztec diamond of size n and corridor of size p. Informally speaking, this is two Aztec diamonds of size n and \(n-1\) stitched together by a strip of the square grid of size p; see Fig. 2. This model was introduced in [BD19]; however, it was not assigned a name. It takes little effort to see that it is not possible to have a dimer configuration of the tower Aztec diamond in which there is an edge with one vertex in the strip and the other in one of the Aztec diamonds. In fact, each dimer configuration consists of three independent dimer configurations: one for each of the two Aztec diamonds and a trivial configuration for the strip. The benefit of using the tower Aztec diamond is that it allows us to use infinite matrices in our analysis, after letting p tend to infinity; see Sect. 6. Let

and

The edges here are given by

Let \(G_{n,p}^{\textrm{Tow}}=(\texttt{W}_{n,p}^{\textrm{Tow}}\cup \texttt{B}_{n,p}^{\textrm{Tow}},\texttt{E}_{n,p}^{\textrm{Tow}})\) denote the tower Aztec diamond of size n with corridor of size p. We assign a specific ordering to the white and black vertices, which are given by the functions \(w_n^{\textrm{Tow}}:[n(2n+p)] \rightarrow \texttt{W}_{n,p}^{\textrm{Tow}}\) and \(b_n^{\textrm{Tow}}:[n(2n+p)] \rightarrow \texttt{B}_{n,p}^{\textrm{Tow}}\) where

and

where we recall that \([i]_n=i\mod n\). See Fig. 2 for an example of these labels.

The Kasteleyn matrix on \(G_{n,p}^{\textrm{Tow}}\), defined by \(K_{n,p}^{\textrm{Tow}}:\texttt{B}_{n,p}^{\textrm{Tow}} \times \texttt{W}_{n,p}^{\textrm{Tow}} \rightarrow \mathbb {C}\), is given by

where \(x=(x_1,x_2)\), \(r=x_1/2\), and \(s=(x_2-1)/2\).

2.4 The Kasteleyn method

Kasteleyn’s theorem [Kas61, Kas63, TF61] gives that \(|\det K_n^{\textrm{Az}} |\) equals the weighted number of dimer coverings on \(G_n^{\textrm{Az}}\) while \(|\det K_{n,p}^{\textrm{Tow}} |\) equals the weighted number of dimer coverings on \(G_{n,p}^{\textrm{Tow}}\). We have chosen the sign conventions in (2.6) and (2.12) so that the sign of \(\det K_n^{\textrm{Az}}\) equals \((-1)^{\lfloor (n+1)/2 \rfloor }\), whereas the sign of \(\det K_{n,p}^{\textrm{Tow}}\) equals

Both of these follow after a computation which we omit in this paper.
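Kasteleyn's theorem can be checked numerically on small Aztec diamonds. The sketch below makes two assumptions that are not taken from the text: the graph is built from the black vertices \((2i,2j+1)\) and white vertices \((2i+1,2j)\) lying in \([0,2n]^2\), and the signs use a standard convention (a factor \(\textrm{i}\) on edges in the directions \(\pm (1,-1)\)) that may differ from the one in (2.6). Only the absolute value of the determinant is compared, so the sign statement above is not tested.

```python
def aztec_vertices(n):
    """Black and white vertices of the (assumed) Aztec diamond graph of size n."""
    blacks = [(x, y) for x in range(0, 2 * n + 1, 2) for y in range(1, 2 * n, 2)]
    whites = [(x, y) for x in range(1, 2 * n, 2) for y in range(0, 2 * n + 1, 2)]
    return blacks, whites

def edge_weight(b, w, a, bb):
    """Edge weights of Sect. 2.1 for the black vertex b = (2i, 2j+1)."""
    i, j = b[0] // 2, (b[1] - 1) // 2
    if w[0] < b[0]:
        return 1.0                          # edges to the left have weight 1
    return a(i, j) if w[1] > b[1] else bb(i, j)

def partition_function(n, a, bb):
    """Weighted number of dimer coverings by exhaustive search."""
    blacks, whites = aztec_vertices(n)
    white_set = set(whites)
    def rec(k, used):
        if k == len(blacks):
            return 1.0
        x, y = blacks[k]
        total = 0.0
        for dx in (1, -1):
            for dy in (1, -1):
                w = (x + dx, y + dy)
                if w in white_set and w not in used:
                    total += edge_weight(blacks[k], w, a, bb) * rec(k + 1, used | {w})
        return total
    return rec(0, frozenset())

def kasteleyn(n, a, bb):
    """Kasteleyn matrix with the assumed sign convention (not the paper's (2.6))."""
    blacks, whites = aztec_vertices(n)
    K = []
    for b in blacks:
        row = []
        for w in whites:
            dx, dy = w[0] - b[0], w[1] - b[1]
            if abs(dx) == 1 and abs(dy) == 1:
                phase = 1 if dx == dy else 1j
                row.append(phase * edge_weight(b, w, a, bb))
            else:
                row.append(0)
        K.append(row)
    return K

def det(m):
    """Determinant by Gaussian elimination with partial pivoting."""
    m = [row[:] for row in m]
    d = 1
    for c in range(len(m)):
        p = max(range(c, len(m)), key=lambda r: abs(m[r][c]))
        if abs(m[p][c]) < 1e-12:
            return 0
        if p != c:
            m[c], m[p] = m[p], m[c]
            d = -d
        d *= m[c][c]
        for r in range(c + 1, len(m)):
            f = m[r][c] / m[c][c]
            m[r] = [x - f * y for x, y in zip(m[r], m[c])]
    return d

one = lambda i, j: 1.0
for n in (1, 2, 3):
    print(n, partition_function(n, one, one), abs(det(kasteleyn(n, one, one))))
```

With all weights equal to 1, both columns give \(2^{n(n+1)/2}\), the number of domino tilings of the Aztec diamond [EKLP92].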

In what follows, it is useful to define \(K_n=K_n^{\textrm{Az}}(w_n^{\textrm{Az}}(i),b_n^{\textrm{Az}}(j))_{1\le i,j \le n(n+1)}\), which is the Kasteleyn matrix for the Aztec diamond using the specific ordering of the white and black vertices, and \(K_{n,p}=K_{n,p}^{\textrm{Tow}}(w_{n,p}^{\textrm{Tow}}(i),b_{n,p}^{\textrm{Tow}}(j))_{1\le i,j \le n(2n+p)}\), which is the Kasteleyn matrix for the tower Aztec diamond. This gives a more compact notation for our Kasteleyn matrices, and the subscript p helps distinguish between the two.

The inverse of the Kasteleyn matrix can be used to compute statistics. We only state the result for the Aztec diamond graph; the formulation is analogous for the tower Aztec diamond as well as other graphs. Suppose that \(E=\{e_i\}_{i=1}^m\) with \(e_i=(b_i,w_i)\) is a collection of distinct edges with \(b_i\) and \(w_i\) denoting black and white vertices.

Theorem 2.2

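([Ken97, Joh18]). The dimers form a determinantal point process on the edges of the Aztec diamond graph with correlation kernel given by \(L(e_i,e_j)=K_n(b_i,w_i) K_n^{-1}(w_j,b_i)\), that is,

\[\mathbb {P}[e_1,\dots ,e_m \text { are covered by dimers}]=\det \big (L(e_i,e_j)\big )_{1 \le i,j \le m}.\]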
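The local statistics formula can be verified by hand on the Aztec diamond of size 1, which has two dimer coverings with weights \(a_{0,0}\) and \(b_{0,0}\). The sketch below is a worked example under assumptions: the values \(a_{0,0}=3\), \(b_{0,0}=5\) are arbitrary, and the Kasteleyn signs use a standard convention (a factor \(\textrm{i}\) on edges in the directions \(\pm (1,-1)\)) that may differ from (2.6).

```python
a, b = 3.0, 5.0                            # arbitrary values for a_{0,0}, b_{0,0}

# Rows: black vertices (0,1), (2,1). Columns: white vertices (1,0), (1,2).
K = [[1j * b, a],
     [1.0, 1j]]

d = K[0][0] * K[1][1] - K[0][1] * K[1][0]
# Inverse of a 2 x 2 matrix; rows are white vertices, columns are black vertices.
Kinv = [[K[1][1] / d, -K[0][1] / d],
        [-K[1][0] / d, K[0][0] / d]]

def L(e, f):
    """Kernel L(e, f) = K(b_e, w_e) * Kinv(w_f, b_e) for edges e = (b_e, w_e)."""
    (be, we), (bf, wf) = e, f
    return K[be][we] * Kinv[wf][be]

e1 = (0, 1)                                # edge (0,1)-(1,2), weight a
e2 = (1, 0)                                # edge (2,1)-(1,0), weight 1
e3 = (0, 0)                                # edge (0,1)-(1,0), weight b

p1 = L(e1, e1)                             # one-edge probabilities
p3 = L(e3, e3)
p12 = L(e1, e1) * L(e2, e2) - L(e1, e2) * L(e2, e1)   # 2 x 2 determinant
print(abs(d), p1, p3, p12)
```

The edges `e1` and `e2` always occur together, so the two-edge determinant `p12` reproduces the one-edge probability \(a_{0,0}/(a_{0,0}+b_{0,0})\), while `p3` equals \(b_{0,0}/(a_{0,0}+b_{0,0})\).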

2.5 DR paths for the Aztec diamond

Associated with each dimer covering of the Aztec diamond of size n, there are DR-lattice paths [Joh05]. The vertex set for the DR-lattice paths is given by

and the edge set

Then, we write \(G_n^{\textrm{Az},\textrm{DR}}=( \texttt{V}_n^{\textrm{Az},\textrm{DR}}, \texttt{E}_n^{\textrm{Az},\textrm{DR}})\) and label this graph the DR graph for the Aztec diamond. Figure 3 shows an example of the DR graph for the Aztec diamond. The lattice paths start at the vertices \(\{(0,2k-1), 0 \le k \le n\}\) and end at \(\{(2j,-1), 0 \le j \le n\}\). We use the convention that we drop the path from \((0,-1)\) to \((0,-1)\) as this path is trivial. The paths are non-intersecting meaning that they cannot share a vertex.

The correspondence between dimers on the Aztec diamond graph and the DR lattice paths is given as follows:

- if a dimer covers the edge \(((2i,2j+1),(2i+1,2j))\in \texttt{E}_n^{\textrm{Az}}\) with \((2i,2j+1) \in \texttt{B}_n^{\textrm{Az}}\), then there is an edge \(((2i,2j+1),(2i+2,2j-1))\) in \(\texttt{E}_n^{\textrm{Az},\textrm{DR}}\);

- if a dimer covers the edge \(((2i,2j+1),(2i+1,2j+2))\in \texttt{E}_n^{\textrm{Az}}\) with \((2i,2j+1) \in \texttt{B}_n^{\textrm{Az}}\), then there is an edge \(((2i,2j+1),(2i+2,2j+1))\) in \(\texttt{E}_n^{\textrm{Az},\textrm{DR}}\);

- if a dimer covers the edge \(((2i,2j+1),(2i-1,2j))\in \texttt{E}_n^{\textrm{Az}}\) with \((2i,2j+1) \in \texttt{B}_n^{\textrm{Az}}\), then there is an edge \(((2i,2j+1),(2i,2j-1))\) in \(\texttt{E}_n^{\textrm{Az},\textrm{DR}}\).

The edge weights of the dimers transfer directly to the edges associated to the lattice paths. Recall that the edge weight of \(((2i,2j+1),(2i-1,2j+2))\in \texttt{E}_n^{\textrm{Az}}\) for \((2i,2j+1) \in \texttt{B}_n^{\textrm{Az}}\) is equal to 1, so these dimers, which have no associated path edge, do not contribute to the weight. We conclude that weighted dimer coverings are in one-to-one correspondence with weighted lattice path configurations.
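The three rules of the correspondence can be written as a short function. The sketch below is illustrative: the representation of a dimer covering as a dictionary from black to white vertices and the helper names `dimers_to_dr_edges` and `follow` are assumptions, and the example uses the two coverings of the Aztec diamond of size 1.

```python
def dimers_to_dr_edges(matching):
    """Map a dimer covering to its DR edges.

    `matching` is a dict sending each black vertex (2i, 2j+1) to the white
    vertex it is matched with.
    """
    edges = []
    for (x, y), (u, v) in matching.items():
        if (u, v) == (x + 1, y - 1):       # dimer to (2i+1, 2j): diagonal step
            edges.append(((x, y), (x + 2, y - 2)))
        elif (u, v) == (x + 1, y + 1):     # dimer to (2i+1, 2j+2): horizontal step
            edges.append(((x, y), (x + 2, y)))
        elif (u, v) == (x - 1, y - 1):     # dimer to (2i-1, 2j): downward step
            edges.append(((x, y), (x, y - 2)))
        # a dimer to (2i-1, 2j+2) has weight 1 and produces no path edge
    return sorted(edges)

def follow(start, edges):
    """Trace the lattice path that starts at `start`."""
    step = dict(edges)
    path = [start]
    while path[-1] in step:
        path.append(step[path[-1]])
    return path

# The two dimer coverings of the Aztec diamond of size 1.
cover_a = {(0, 1): (1, 2), (2, 1): (1, 0)}
cover_b = {(0, 1): (1, 0), (2, 1): (1, 2)}

path_a = follow((0, 1), dimers_to_dr_edges(cover_a))
path_b = follow((0, 1), dimers_to_dr_edges(cover_b))
print(path_a, path_b)
```

In both cases the single path runs from the start point \((0,1)\) to the end point \((2,-1)\), once via a horizontal step followed by a downward step and once via a diagonal step.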

2.6 Tower Aztec diamond DR paths

As with the Aztec diamond case, we can also associate DR-lattice paths to the tower Aztec diamond. The vertex set for the DR-lattice paths for the tower Aztec diamond of size n and corridor of size p is given by

and the edge set

Let \(G_{n,p}^{\textrm{Tow},\textrm{DR}}=(V_{n,p}^{\textrm{Tow},\textrm{DR}},E_{n,p}^{\textrm{Tow},\textrm{DR}})\) and call this graph the DR graph for the tower Aztec diamond. The lattice paths on this DR graph start at the vertices \(\{(0,2k-1), -p+1 \le k \le n\}\) and end at \(\{(2n,-1-2j), 0 \le j \le n+p-1\}\). The same correspondence between paths and dimers for the Aztec diamond holds for the tower Aztec diamond; see Fig. 2.

3 Preliminaries on Non-intersecting Paths

We will now recall the model of non-intersecting paths that is equivalent to the dimer model for the Aztec diamond.

We start with a directed graph \(\mathcal G=(\mathtt V,\mathtt E)\), with the vertex set \(\mathtt V=\mathbb Z^2\) and (directed) edges

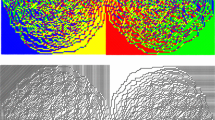

See also Fig. 6. This graph can be thought of as gluing two types of strips in an alternating fashion. The two types of strips are found in Fig. 5. Both strips consist of two columns of vertices. One strip has horizontal and diagonal edges all directed from left to the right. The other strip does not have the diagonal edges but instead has edges pointing down between consecutive vertices in the right column. We then cover \(\mathbb Z ^2\) by putting copies of the left strip in Fig. 5 such that the horizontal coordinates of the vertices in the left column of the strip are even and putting copies of the other strip such that the horizontal coordinates of the left column of vertices are odd.

Fig. 5 Two strips that are the building blocks for the graph \(\mathcal G\). Each strip has infinite length and consists of two vertical columns of vertices. The left strip has horizontal and diagonal edges directed from left to right. The right strip has downward edges between consecutive vertices in the right column instead of diagonal edges

Next we introduce a function \(w:\mathtt E \rightarrow [0,\infty )\) that puts weights on all edges as follows

for \(i,j \in \mathbb Z\). So only the edges \(((2i,j),(2i+1,j))\) and \(((2i,j),(2i+1,j-1))\) may have weights different from 1.

Based on the edge weights, we also assign weights to the faces of the graph. Let \(F_{2i,j}\) be the trapezoidal face defined by the vertices (2i, j), \((2i+2,j)\), \((2i+2,j-1)\) and \((2i+1,j-1)\). Similarly, let \(F_{2i+1,j}\) be the triangular face defined by the vertices \((2i+2,j)\), \((2i+2,j+1)\) and \((2i+3,j)\). We then define the weights of the faces by

and

In Sect. 5, we will use the infinite graph \(\mathcal G\), but for the connection with the Aztec diamond we will only need the subgraph that is obtained by gluing strips of the type in Fig. 5 on \(\{0,\ldots ,2n\} \times \mathbb Z\) in the same way as before, starting with a copy of the type on the left of Fig. 5.

The correspondence between dimers on the tower Aztec diamond graph and the non-intersecting paths on \(\mathcal G\) is obtained by inserting trivial horizontal parts after every step in the DR-paths of Sect. 2.6 (albeit trivial, these parts help when applying the LGV-Theorem below, see also [Joh05]). More precisely, for a given dimer configuration, construct a collection of paths in the following way:

- if there is a dimer covering the edge \(((2i,2j+1),(2i+1,2j))\in \texttt{E}_n^{\textrm{Tow,Az}}\) with \((2i,2j+1) \in \texttt{B}_n^{\textrm{Tow,Az}}\), then select the edges \(((2i,j),(2i+1,j-1)), ((2i+1,j-1),(2i+2,j-1))\) in \(\texttt{E}\);

- if there is a dimer covering the edge \(((2i,2j+1),(2i+1,2j+2))\in \texttt{E}_n^{\textrm{Tow,Az}}\) with \((2i,2j+1) \in \texttt{B}_n^{\textrm{Tow,Az}}\), then select the edges \(((2i,j),(2i+1,j)),((2i+1,j),(2i+2,j))\) in \(\texttt{E}\);

- if there is a dimer covering the edge \(((2i,2j+1),(2i-1,2j))\in \texttt{E}_n^{\textrm{Tow,Az}}\) with \((2i,2j+1) \in \texttt{B}_n^{\textrm{Az}}\), then select the edge \(((2i,j),(2i,j-1))\) in \(\texttt{E}\).

The selected edges in E form non-intersecting paths \((\pi _1,\ldots ,\pi _{n+p})\) in \(\mathcal G\) such that

1. for each j, the path \(\pi _j\) starts in \((0,n-j)\) and ends in \((2n,-j)\),

2. the paths are non-intersecting, i.e., \(\pi _j \cap \pi _k = \varnothing \) for \(j \ne k\).

See Fig. 7 for an example.

Let us denote the set of all such collections of non-intersecting paths by \(\Pi _{ni}\). Then, we can define a probability measure on \( \Pi _{ni}\) by setting the probability of a given collection \((\pi _1,\ldots ,\pi _{n+p})\) to be proportional to

\[\prod _{j=1}^{n+p} \prod _{e \in \pi _j} w(e),\]

where w is the weight function on the edges.

For each \(i \in \mathbb Z\), we define a \(\mathbb Z\times \mathbb Z\) matrix \(M_i(j,k)\) and refer to these as transition matrices. For even indices, the matrices \(M_i\) are only nonzero on the diagonal and the subdiagonal right below the main diagonal and have the values

For odd indices the matrices \(M_i\) are lower triangular, with values

Note that the transition matrices \(M_{2i}\) and \(M_{2i+1}\) correspond to the left and right columns, respectively, in Fig. 5.
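The structure of the transition matrices can be explored on a finite window of heights. The sketch below rests on assumptions, since the entries are only described in words here: \(M_{2i}(j,j)=a_{i,j}\), \(M_{2i}(j,j-1)=b_{i,j}\) and \(M_{2i+1}(j,k)=1\) for \(k \le j\), so that \(M_i(j,k)\) is the total weight of the path pieces from \((i,j)\) to \((i+1,k)\). Because all steps are weakly downward, restricting to a window that contains the start and end heights does not change the relevant entries of the product. The function names are hypothetical.

```python
from fractions import Fraction

def m_even(i, lo, hi, a, b):
    """Window [lo, hi] of the assumed even transition matrix M_{2i}."""
    size = hi - lo + 1
    M = [[Fraction(0)] * size for _ in range(size)]
    for j in range(lo, hi + 1):
        M[j - lo][j - lo] = Fraction(a(i, j))            # horizontal edge j -> j
        if j > lo:
            M[j - lo][j - 1 - lo] = Fraction(b(i, j))    # diagonal edge j -> j-1
    return M

def m_odd(lo, hi):
    """Window of the assumed odd transition matrix: weight 1 for every k <= j."""
    size = hi - lo + 1
    return [[Fraction(1 if k <= j else 0) for k in range(size)] for j in range(size)]

def matmul(A, B):
    return [[sum(A[r][m] * B[m][c] for m in range(len(B))) for c in range(len(B[0]))]
            for r in range(len(A))]

def lgv_matrix(n, p, a, b):
    """Entries (M_0 ... M_{2n-1})(n - j, -k) for 1 <= j, k <= n + p."""
    lo, hi = -(n + p), n - 1
    size = hi - lo + 1
    P = [[Fraction(int(r == c)) for c in range(size)] for r in range(size)]
    for i in range(n):
        P = matmul(matmul(P, m_even(i, lo, hi, a, b)), m_odd(lo, hi))
    return [[P[(n - j) - lo][(-k) - lo] for k in range(1, n + p + 1)]
            for j in range(1, n + p + 1)]

def det(m):
    """Exact determinant by Gaussian elimination over the rationals."""
    m = [row[:] for row in m]
    d = Fraction(1)
    for c in range(len(m)):
        p = next((r for r in range(c, len(m)) if m[r][c] != 0), None)
        if p is None:
            return Fraction(0)
        if p != c:
            m[c], m[p] = m[p], m[c]
            d = -d
        d *= m[c][c]
        for r in range(c + 1, len(m)):
            f = m[r][c] / m[c][c]
            m[r] = [x - f * y for x, y in zip(m[r], m[c])]
    return d

one = lambda i, j: 1
print(lgv_matrix(2, 0, one, one), det(lgv_matrix(2, 0, one, one)))
print(det(lgv_matrix(2, 1, one, one)))
```

For unit weights and \(n=2\) the determinant is 16 for both \(p=0\) and \(p=1\). This is consistent with the tower Aztec diamond splitting into Aztec diamonds of sizes 2 and 1, which have 8 and 2 domino tilings, respectively.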

The random configuration of paths induces a natural point process \(\{(i,x_j^i)\}_{i=0,j=1}^{2n,n+p}\) where \((i,x_j^i)\) is the lowest vertex in \(\pi _j\) at the vertical section with horizontal coordinate i. By a celebrated theorem of Lindström–Gessel–Viennot [GV85, Lin73], which we will henceforth simply refer to as the LGV Theorem (for completeness, we include this theorem in Appendix A), the point process has a probability distribution proportional to a product of determinants

The Eynard–Mehta Theorem [EM98] then tells us that the process is a determinantal point process:

Theorem 3.1

[EM98]. Let W be the matrix

and set

Then, for any \((m_\ell ,x_\ell )\) for \(\ell =1, \ldots ,M\) we have

The power of this result is that studying the asymptotic behavior of the point process, as \(n \rightarrow \infty \), boils down to studying the limiting behavior of the kernel K. Obviously, a major obstacle remains: the expression for the kernel (3.7) involves the inverse of the matrix W in (3.6), which is growing in size. In the literature, one typically restricts to special situations, in which more workable expressions for the inverse can be found. In Sect. 6, we will show how, with only very minor restrictions on the weights, the inverse can be computed using a dynamical system defined by refactorizing the matrices \(M_i\), giving an LU- and UL-decomposition of the doubly infinite matrix \(M_0 \cdots M_{2n-1}\) (corrected by a shift matrix). Since we are after the inverse of a submatrix, these decompositions do not immediately provide the inverse of W. Here the auxiliary variable p comes to the rescue: from these LU- and UL-decompositions one can construct an approximate inverse for p large enough, and, by taking the limit \(p\rightarrow \infty \), this can be used to find an expression for K.

But before we explain this inverse, we first return to the approach with the inverse Kasteleyn matrix and show that there is an analogue of Theorem 3.1 for the inverse Kasteleyn matrix that involves the inverse of the same matrix W in (3.6) (up to a trivial sign change in the entries).

4 Relation Between the Kasteleyn Approach and the LGV Theorem

In this section, we show a relation between the Kasteleyn matrix and the DR-lattice paths via the LGV Theorem. This also gives a relation for computing the inverse of the Kasteleyn matrix using the inverse of the (complete) LGV matrix. We prove our result for the Aztec diamond and the tower Aztec diamond graph. This result holds more generally, but we specialize to the cases of interest in this paper.

4.1 The relation for the Aztec diamond graph

Let \(W_n^{\textrm{Az}}=(w_{ij})_{1 \le i,j \le n}\) with \(w_{ij}\) equal to the weighted number of paths from \((0,2i-1)\) to \((2j,-1)\) for \(1 \le i,j \le n\) on \(G_n^{\textrm{Az},\textrm{DR}}\). The LGV Theorem, Theorem A.1, asserts that the weighted number of non-intersecting lattice paths on \(G_n^{\textrm{Az},\textrm{DR}}\) whose start points are given by \(\{(0,2k-1), 0 \le k \le n\}\) and whose end points are given by \(\{(2j,-1), 0 \le j \le n\}\) (where, as in Sect. 2.5, the trivial path from \((0,-1)\) to \((0,-1)\) is dropped) is equal to \(\det W_n^{\textrm{Az}}\). Due to the one-to-one correspondence with dimer coverings, \(\det W_n^{\textrm{Az}}\) is also equal to the weighted number of dimer coverings on \(G_n^{\textrm{Az}}\).
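The equality between \(\det W_n^{\textrm{Az}}\) and the weighted number of dimer coverings can be tested exactly for small n. The sketch below assumes a concrete form of the DR graph inferred from the correspondence in Sect. 2.5: from a vertex \((2i,2j+1)\) with \(j \ge 0\) there is a horizontal step of weight \(a_{i,j}\) and a diagonal step of weight \(b_{i,j}\) when \(i<n\), and a downward step of weight 1 when \(i>0\). The dimer side uses black vertices \((2i,2j+1)\) and white vertices \((2i+1,2j)\) in \([0,2n]^2\). The weights `wa`, `wb` and all function names are hypothetical.

```python
from fractions import Fraction
from functools import lru_cache

def lgv_matrix(n, a, b):
    """w_ij = weighted number of DR paths from (0, 2i-1) to (2j, -1)."""
    @lru_cache(maxsize=None)
    def paths(x, y, tx):
        if y == -1:                        # bottom row: the path must have ended here
            return Fraction(1) if x == tx else Fraction(0)
        i, j = x // 2, (y - 1) // 2
        total = Fraction(0)
        if x < 2 * n:
            total += a(i, j) * paths(x + 2, y, tx)        # horizontal step
            total += b(i, j) * paths(x + 2, y - 2, tx)    # diagonal step
        if x > 0:
            total += paths(x, y - 2, tx)                  # downward step, weight 1
        return total
    return [[paths(0, 2 * i - 1, 2 * j) for j in range(1, n + 1)]
            for i in range(1, n + 1)]

def partition_function(n, a, b):
    """Weighted number of dimer coverings of the Aztec diamond by exhaustive search."""
    blacks = [(x, y) for x in range(0, 2 * n + 1, 2) for y in range(1, 2 * n, 2)]
    whites = {(x, y) for x in range(1, 2 * n, 2) for y in range(0, 2 * n + 1, 2)}
    def weight(x, y, dx, dy):
        if dx == -1:
            return Fraction(1)
        i, j = x // 2, (y - 1) // 2
        return Fraction(a(i, j)) if dy == 1 else Fraction(b(i, j))
    def rec(k, used):
        if k == len(blacks):
            return Fraction(1)
        x, y = blacks[k]
        total = Fraction(0)
        for dx in (1, -1):
            for dy in (1, -1):
                w = (x + dx, y + dy)
                if w in whites and w not in used:
                    total += weight(x, y, dx, dy) * rec(k + 1, used | {w})
        return total
    return rec(0, frozenset())

def det(m):
    """Exact determinant by Gaussian elimination over the rationals."""
    m = [row[:] for row in m]
    d = Fraction(1)
    for c in range(len(m)):
        p = next((r for r in range(c, len(m)) if m[r][c] != 0), None)
        if p is None:
            return Fraction(0)
        if p != c:
            m[c], m[p] = m[p], m[c]
            d = -d
        d *= m[c][c]
        for r in range(c + 1, len(m)):
            f = m[r][c] / m[c][c]
            m[r] = [x - f * y for x, y in zip(m[r], m[c])]
    return d

one = lambda i, j: Fraction(1)
wa = lambda i, j: Fraction(1 + i + 2 * j)          # hypothetical weights a_{i,j}
wb = lambda i, j: Fraction(2 + 3 * i + j, 3)       # hypothetical weights b_{i,j}
print(lgv_matrix(2, one, one), det(lgv_matrix(2, one, one)))
print(det(lgv_matrix(2, wa, wb)) == partition_function(2, wa, wb))
```

For unit weights and \(n=2\) the path-counting matrix has rows \((2,2)\) and \((2,6)\), with determinant 8, the number of domino tilings of the Aztec diamond of size 2.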

For a matrix M, let M[i; j, k; l] denote the submatrix of M restricted to rows i through j and columns k through l.

Theorem 4.1

Let \(A_n=K_n[1;n,1;n]\), \(B_n=K_n[1;n,n+1;n(n+1)]\), \(C_n=K_n[n+1;n(n+1),1;n]\) and \(D_n=K_n[n+1;n(n+1),n+1;n(n+1)]\). For \(1 \le i,j \le n\), let

and \(\tilde{W}_n^{\textrm{Az}}=(\tilde{w}_{ij})_{1 \le i,j \le n}\). Then, we have that \(w_{ij}=| \tilde{w}_{ij}|\) for \(1 \le i,j \le n\) and \(\det W_n^{\textrm{Az}}=|\det \tilde{W}_n^{\textrm{Az}}|\). We also have that

Remark 4.2

1. A similar assertion for the first statement has been made for the square-grid on a cylinder; see [AGR21, Section 4].

2. The signs for the entries in \((\tilde{W}_n^{\textrm{Az}})^{-1}\) can be computed explicitly. In fact, by [CY14, Lemma 3.6], we have that \(\tilde{w}_{i,j}=\textrm{i}^{i+j-1}w_{i,j}\) for \(1 \le i,j\le n\). We omit this computation.

3. Here, \(D_n\) is a triangular matrix while \(B_n\) and \(C_n\) are very sparse matrices.

Proof

We first show that \(|\det D_n|=1\). Notice that \(D_n\) is the Kasteleyn matrix of the graph obtained from \(G_n^{\textrm{Az}}\) by removing the vertices \(\{(2k-1,0):1\le k \le n \} \subset \texttt{W}_n^{\textrm{Az}}\) and \(\{(0,2k-1):1\le k \le n \} \subset \texttt{B}_n^{\textrm{Az}}\) and their incident edges, that is, by removing the bottom row and leftmost column of vertices together with their incident edges. This graph is tileable, and so \(\det D_n\) is non-zero. Moreover, it is easy to see that there is in fact exactly one dimer configuration on this graph; see Fig. 8 for an example. This configuration consists precisely of all dimers of the form \(((i,j),(i-1,j+1))\in \texttt{E}_n^{\textrm{Az}}\) for \((i,j) \in \texttt{B}_n^{\textrm{Az}}\backslash \{(0,2k+1):0\le k \le n-1 \}\), which all have weight 1.

Kasteleyn’s theorem, the formula for determinants of 2 by 2 block matrices, and the evaluation of \(\det D_n\) above give

Observe that \((A_n)_{i,j}=\mathbb {I}[i=j=1] b_{0,0}\). Next, we expand out \(B_n D_n^{-1} C_n\). We have that

where the penultimate line follows from only considering the nonzero entries of \(B_n\) and \(C_n\) and the last line is just a rearrangement of the sum.

The entries of \(D_n^{-1}\) in the last line of the above formula are those on the boundary of the graph induced from the Kasteleyn matrix \(D_n\). Since these vertices are on the boundary, they represent, up to sign, the ratio between the number of weighted dimer coverings on the graph induced by \(D_n\) with these vertices removed and the number of weighted dimer coverings on the graph induced by \(D_n\). We next show that \(\textrm{sgn}( D_n^{-1})_{n^2+1-\varepsilon _1n, n^2-n+\varepsilon _2}=\textrm{i}^{\varepsilon _1+\varepsilon _2+1}\). To see this, observe that \(\textrm{sgn}(\det D_n)=\textrm{i}^{n^2}\), since there are \(n^2\) edges each having weight \(\textrm{i}\) and the only configuration corresponds to the identity permutation in the expansion of the determinant. It follows that \(\textrm{sgn}( D_n^{-1})_{n^2+1-n, n^2-n+1}=\textrm{i}^{n^2-1}/\textrm{i}^{n^2}=\textrm{i}^{3}\) since the numerator corresponds to the sign of the relevant entry of the adjugate matrix. We can then sequentially increase \(\varepsilon _1\), which removes a factor of \(\textrm{i}\) from the product of entries of the Kasteleyn matrix and flips a sign in the relevant entry of the adjugate matrix; the argument for \(\varepsilon _2\) is similar.

We have that for \(1 \le i,j \le n\),

is equal to, up to sign, the weighted number of dimer coverings on \(G_n^{\textrm{Az}}\backslash (\{(0,2k-1):k\not = i \} \cap \{(2k-1,0):k\not = j \})\). This indeed follows because the prefactors and postfactors coming from the entries of \(B_n\) and \(C_n\), respectively, are in fact edge weights due to their specific entries, and the signs from each of the terms combine in such a way that the expansion of each term has the same sign.

Next notice that the graph \(G_n^{\textrm{Az}}\backslash (\{(0,2k-1):k\not = i \} \cap \{(2k-1,0):k\not = j \})\) induces a single DR lattice path from \((0,2i-1)\) to \((2j,-1)\) on \(G_n^{\textrm{Az},\textrm{DR}}\) for \(1 \le i,j\le n\); see Fig. 9 for an example. Therefore, the above expression, up to sign, is also equal to the weighted number of DR lattice paths from \((0,2i-1)\) to \((2j,-1)\) for \(1 \le i,j\le n\).

The final assertion is a consequence of the first assertion in the statement of the theorem and the Schur complement formula. \(\square \)
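The two block-matrix facts used in this proof, the determinant formula for 2 by 2 block matrices and the Schur complement formula for the inverse, can be checked numerically. The following Python sketch uses a random complex matrix as a hypothetical stand-in for \(K_n\) (it is not an actual Kasteleyn matrix), and the block sizes are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m = 3, 5  # sizes of the A-block and the D-block (illustrative assumption)

# Random complex stand-in for a block matrix K = [[A, B], [C, D]].
K = rng.normal(size=(n + m, n + m)) + 1j * rng.normal(size=(n + m, n + m))
A, B = K[:n, :n], K[:n, n:]
C, D = K[n:, :n], K[n:, n:]

# Schur complement of the block D.
schur = A - B @ np.linalg.inv(D) @ C

# det K = det D * det(A - B D^{-1} C)
det_ok = np.isclose(np.linalg.det(K), np.linalg.det(D) * np.linalg.det(schur))

# The top-left n x n block of K^{-1} is the inverse of the Schur complement.
inv_ok = np.allclose(np.linalg.inv(K)[:n, :n], np.linalg.inv(schur))

print(det_ok, inv_ok)
```

In the setting of the theorem, \(|\det D_n|=1\), so the first identity reduces the determinant of the Kasteleyn matrix, up to sign, to the determinant of an \(n \times n\) matrix.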

4.2 The relation for the tower Aztec diamond

We next give an analogous theorem to Theorem 4.1 for the tower Aztec diamond of size n with corridor p.

Theorem 4.3

Let \(B_{n,p}=K_{n,p}[1;n+p,n+p+1;n(2n+p)]\), \(C_{n,p}=K_{n,p}[n+p+1;n(2n+p),1;n+p]\) and \(D_{n,p}=K_{n,p}[n+p+1;n(2n+p),n+p+1;n(2n+p)]\). For \(1 \le i,j \le n+p\), let

and \(\tilde{W}_{n,p}^{\textrm{Tow}}=(\tilde{w}_{ij})_{i,j=1}^{n+p}\). Then, up to sign, \(\tilde{W}_{n,p}^{\textrm{Tow}}\) is equal to the LGV matrix for the tower Aztec diamond of size n with corridor p and

We also have that

Remark 4.4

1. The signs for the entries in \((\tilde{W}_{n,p}^{\textrm{Tow}})^{-1}\) can be computed explicitly. Following the computation given in [CY14, Lemma 3.6], we have that \(\textrm{sgn}\tilde{w}_{ij}=\textrm{i}^{i+3j+1}(-1)^n\) for \(1 \le i,j\le n+p\). We omit this computation.

2. The matrix \(D_{n,p}\) is triangular, so its inverse can easily be computed, while \(B_{n,p}\) and \(C_{n,p}\) are very sparse matrices. Thus, the complicated step in finding a formula for the asymptotic inverse of the Kasteleyn matrix as p tends to infinity is to find the asymptotics of \((\tilde{W}_{n,p}^{\textrm{Tow}})^{-1}\) as p tends to infinity. We will discuss this in Sect. 6. In certain special cases, such as doubly periodic weights, we expect that our results yield double contour integral formulas for the asymptotic inverse of the Kasteleyn matrix, but we will not work this out here.

The two left figures show the graph induced by \(D_{3,4}\) and the only possible dimer covering. The two right figures show a dimer covering on \(G_{3,4}^{\textrm{Tow}}\backslash (\{(0,2n+1-2k):k\not = 2 \} \cap \{(2n-1,2-2k):k\not = 5 \})\) and the corresponding path from (0, 3) to \((6,-7)\) on \(G_{3,4}^{\textrm{Tow},\textrm{LGV}}\)

Proof

The proof proceeds similarly to the proof of Theorem 4.1.

First observe that \(D_{n,p}\) is the Kasteleyn matrix of the graph obtained from \(G_{n,p}^{\textrm{Tow}}\) by removing the vertices \(\{(2k,2n-1):-p-n+1\le k \le 0 \} \subset \texttt{W}_{n,p}^{\textrm{Tow}}\) and \(\{(0,2k+1):-p\le k \le n-1 \} \subset \texttt{B}_{n,p}^{\textrm{Tow}}\) and their incident edges. This graph is tileable and has exactly one dimer configuration with all edges in the configuration having weight 1, and so \(| \det D_{n,p}|=1\); see Fig. 10 for an example. From the formula for determinants of 2-by-2 block matrices and the fact that \(D_{n,p}\) is invertible, we have

since \(K_{n,p}[1;n+p,1;n+p]\) has all entries equal to 0. This gives (4.5).

The entries of \(D_{n,p}^{-1}\) in the last line of the above formula are those on the boundary of the graph induced from the Kasteleyn matrix \(D_{n,p}\). Since these vertices are on the boundary, they represent, up to sign, the ratio between the number of weighted dimer coverings on the graph induced by \(D_{n,p}\) with these vertices removed and the number of weighted dimer coverings on the graph induced by \(D_{n,p}\). Similar to the proof of Theorem 4.1, we can compute explicitly the sign of the boundary entries of \(D_{n,p}^{-1}\); we omit this computation.

We have that for \(1 \le i,j \le n+p\),

where we have simplified by only recording the non-zero entries of \(B_{n,p}\) and \(D_{n,p}\). This is equal, up to sign, to the number of weighted dimer coverings on \(G_{n,p}^{\textrm{Tow}}\backslash (\{(0,2n+1-2k):k\not = i \} \cap \{(2n-1,2-2k):k\not = j \})\). This indeed follows because the prefactors and postfactors coming from the entries of \(B_{n,p}\) and \(C_{n,p}\), respectively, are in fact edge weights due to their specific entries, and the signs from each of the terms combine in such a way that the expansion of each term has the same sign.

Next notice that the graph \(G_{n,p}^{\textrm{Tow}}\backslash (\{(0,2n+1-2k):k\not = i \} \cap \{(2n-1,2-2k):k\not = j \})\) induces a single DR lattice path from \((0,2n-1-2i)\) to \((2n-1,1-2j)\) for \(1 \le i,j\le n+p\) on \(G_{n,p}^{\textrm{Tow},\textrm{LGV}}\); see Fig. 10 for an example. Therefore, the above expression, up to sign, is also equal to the weighted number of DR lattice paths from \((0,2n-1-2i)\) to \((2n-1,1-2j)\).

The final statement of the theorem follows directly from the Schur complement formula.

5 Equivalence of the Domino Shuffle and Matrix Refactorizations

5.1 Domino shuffle for face weights

Introduce the vertex sets

and

We initially set \(\texttt{W}=\texttt{V}_1\) and \(\texttt{B}=\texttt{V}_2\), that is, the white and black vertices.

Square move and its effect on the edge weights. The left figure shows a square with edge weights a, b, c and d while the right figure shows an application of the square move to that single face. Here, we have \(A=c/\Delta \), \(B=d/\Delta \), \(C=a/\Delta \), and \(D=b/\Delta \) where \(\Delta =(ac+bd)\)

We need the following two graph transformations.

1. (Square Move) Suppose the edge weights around a square with vertices \((0,1),(1,0),(0,-1)\), and \((-1,0)\) are given by a, b, c, and d where the labeling is done clockwise around the face starting with the NE edge. We can replace the square by a smaller square with edge weights A, B, C, and D (with the same labeling convention) and add an edge, with edge-weight equal to 1, between each vertex of the smaller square and its original vertex. Then, set \(A=c/\Delta \), \(B=d/\Delta \), \(C=a/\Delta \), and \(D=b/\Delta \) where \(\Delta =(ac+bd)\). This transformation is called the square move; see Fig. 11.

2. (Edge contraction) For any two-valent vertex in the graph with incident edges having weight 1, contract the two incident edges. This is called edge contraction.

When we apply the above two moves to all the even (or odd) faces, we recover \(\mathbb {Z}^2\) but with different face weights and the black and white vertices interchanged; that is, after applying these two moves, we have that \(\texttt{W}=\texttt{V}_2\) and \(\texttt{B}=\texttt{V}_1\). To counter this interchanging of vertex colors, we translate the square grid by \((-1,1)\), that is, the face \((2i+1,2j+1)\) becomes the face \((2i,2j+2)\). We call the application of the two moves above together with the shift the domino shuffle. To simplify conventions, we call the faces \((2i+1,2j+1)\) even faces. Due to the shift, we only need to consider the domino shuffle applied to even faces; it is not hard to see that applying the square move twice to the same face gives the original graph and its original face weights.
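The claim that applying the square move twice returns the original weights can be checked directly from the formulas \(A=c/\Delta \), \(B=d/\Delta \), \(C=a/\Delta \), \(D=b/\Delta \). The following Python sketch does this with exact rational arithmetic; the function name and the sample weights are illustrative assumptions.

```python
from fractions import Fraction

def square_move(a, b, c, d):
    """Edge weights (A, B, C, D) of the small square after the square move.

    The labels a, b, c, d go clockwise around the face starting with the
    NE edge, and Delta = a*c + b*d.
    """
    delta = a * c + b * d
    return c / delta, d / delta, a / delta, b / delta

# Hypothetical sample edge weights (assumption, for illustration only).
w = tuple(Fraction(x) for x in (2, 3, 5, 7))

once = square_move(*w)       # weights after one square move
twice = square_move(*once)   # weights after applying the move again

# The new Delta is the reciprocal of the old one, so the second move
# undoes the first.
delta_before = w[0] * w[2] + w[1] * w[3]
delta_after = once[0] * once[2] + once[1] * once[3]

print(once, twice, delta_before * delta_after)
```

Since \(AC+BD=(ac+bd)/\Delta ^2=1/\Delta \), the second application divides by \(1/\Delta \) and restores a, b, c, and d exactly.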

An example of the graph transformation after applying the square move on all even faces of an Aztec diamond of size 4. The left figure shows where the square move is applied. The figure on the right shows the actual graph. Applying edge contraction to the two-valent vertices and removing pendant edges gives an Aztec diamond of size 3; see Sect. 8 for an explanation of pendant edges

Square move and its effect on the face weights. The left figure shows the original even face with center \((2i+1,2j+1)\), whose face weight is given by \(F_{2i,j}\), and the neighboring face weights given by \( (F_{2i+1,j}, F_{2i+1,j-1}, F_{2i-1,j-1}, F_{2i-1,j})\) for the faces with centers \((2i+2,2j+2), (2i+2,2j), (2i,2j),\) and \((2i,2j+2)\), respectively

The next two propositions describe the transformation of the face weights after the square move is applied to a single even face and after the domino shuffle is performed on all even faces. The first is well known, see, e.g. [GK13], but we include it in our presentation to keep the paper self-contained; see Fig. 13.

Proposition 5.1

Consider the even face \((2i+1,2j+1)\) whose face weight is given by \(F_{2i,j}\) and whose neighboring faces have face weights

$$\begin{aligned} (F_{2i+1,j}, F_{2i+1,j-1}, F_{2i-1,j-1}, F_{2i-1,j}) \end{aligned}$$

for the faces with centers \((2i+2,2j+2), (2i+2,2j), (2i,2j),\) and \((2i,2j+2)\), respectively. Applying the square move to the face \((2i+1,2j+1)\) changes the face weight at \((2i+1,2j+1)\) to \(1/F_{2i,j}\) and the face weights of the neighboring faces to

for the faces whose original centers are given by \((2i+2,2j+2), (2i+2,2j), (2i,2j),\) and \((2i,2j+2)\), respectively.

Proof

Let the edge weights around the face whose center is \((2i+1,2j+1)\) be given by a, b, c, and d where the labeling is done clockwise around the face starting with the NE edge. By definition of the face weights and the fact that \((2i+1,2j+1)\) is even, we have

After applying the square move to \((2i+1,2j+1)\), the small square has the opposite parity to the original square and so the new face at \((2i+1,2j+1)\) is given by

where \(\Delta =ac+bd\). We now need to compute the face weights of the faces neighboring the face whose center is at \((2i+1,2j+1)\). The face whose center is at \((2i+2,2j+2)\) now has weight

To see this equation, the first term on the left side is the original face weight, the second term removes the contribution of a from \(F_{2i+1,j}\), while the third term is the weight of the new edge, which is oriented from white to black around the face (clockwise). The computation for the new face weight at the face (2i, 2j), which has original face weight \(F_{2i-1,j-1}\), is similar. The face whose center is at \((2i+2,2j)\) now has weight

To see this equation, the first term on the left side is the original face weight, the second term is removing the contribution from b from \(F_{2i+1,j-1}\) while the third term is the weight of the new edge, which is oriented from black to white around the face (clockwise). The computation for the new face weight at the face \((2i,2j+2)\) which has original face weight \(F_{2i-1,j}\) is similar.

We next consider the effect of applying the shuffle to all even faces.

Proposition 5.2

Let the face weights of the even faces whose centers are given by \((2i+1,2j+1)\) be equal to \(F_{2i,j} > 0\) for all \((i,j) \in \mathbb {Z}^2\) and the face weights of the odd faces whose centers are given by \((2i+2,2j+2)\) be equal to \(F_{2i+1,j} > 0\) for all \((i,j) \in \mathbb {Z}^2\). After applying the domino shuffle to all the even faces of the graph,

1. the face weight at \((2i+2,2j+2)\) is given by \(1/F_{2i+2,j}\),

2. the face weight at \((2i+1,2j+1)\) is given by

$$\begin{aligned} F_{2i+1,j-1} \frac{1+F_{2i+2,j}}{1+1/F_{2i+2,j-1}}\frac{1+F_{2i,j-1}}{1+1/F_{2i,j}} \end{aligned}$$

for all \(i,j \in \mathbb {Z}\).

Proof

The proposition follows by applying Proposition 5.1 to all even faces and noting the shift by \((-1,1)\) in our conventions of the domino shuffle.
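The update rule of Proposition 5.2 is easy to iterate on a computer. The following Python sketch applies one domino shuffle to face weights stored on a finite array with periodic boundary conditions; the periodicity, the array layout (`F_even[i][j]` for \(F_{2i,j}\) and `F_odd[i][j]` for \(F_{2i+1,j}\)), and the function name are assumptions made only for this illustration.

```python
from fractions import Fraction

def shuffle_face_weights(F_even, F_odd):
    """One domino shuffle on periodic face weights (Proposition 5.2).

    F_even[i][j] stores F_{2i,j} and F_odd[i][j] stores F_{2i+1,j};
    indices are taken modulo the array sizes (an assumption for this sketch).
    """
    I, J = len(F_even), len(F_even[0])
    E = lambda i, j: F_even[i % I][j % J]
    O = lambda i, j: F_odd[i % I][j % J]

    # Item 1: the new weight at the odd face (2i+2, 2j+2) is 1 / F_{2i+2,j}.
    new_odd = [[1 / E(i + 1, j) for j in range(J)] for i in range(I)]

    # Item 2: the new weight at the even face (2i+1, 2j+1).
    new_even = [[O(i, j - 1)
                 * (1 + E(i + 1, j)) / (1 + 1 / E(i + 1, j - 1))
                 * (1 + E(i, j - 1)) / (1 + 1 / E(i, j))
                 for j in range(J)] for i in range(I)]
    return new_even, new_odd

# Example: constant even weight x = 2 and constant odd weight y = 3.
x, y = Fraction(2), Fraction(3)
F_even = [[x] * 2 for _ in range(2)]
F_odd = [[y] * 2 for _ in range(2)]
new_even, new_odd = shuffle_face_weights(F_even, F_odd)
print(new_even[0][0], new_odd[0][0])
```

For constant weights x on even faces and y on odd faces, the formulas reduce to \(y x^2\) on even faces and \(1/x\) on odd faces, which gives a quick check of the indexing.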

5.2 Dynamics on the transition matrices

Now let \(\mathcal {G}\) be the weighted directed graph from Sect. 3, i.e., the underlying graph for the non-intersecting path model (3.3). We recall that the weights are determined by the transition matrices (3.4) and (3.5). We will now define a dynamics on the set of transition matrices that is equivalent to the domino shuffle for the corresponding dimer model. The dynamics will be based on a commutation relation between transition matrices that we will discuss first.

It will be convenient to use the notations

and

Note that this series converges entrywise, but not in matrix norm. For two sequences \(\mathtt a=(\mathtt a_j)_{j\in \mathbb Z}, \mathtt b =(\mathtt b_j)_{j\in \mathbb Z}\) of non-negative real numbers, we define

where \(D(\mathtt a)\) is the diagonal matrix \((D(\mathtt a))_{jj}= a_j\).

Then, we can rewrite (3.4) as

with \(\mathtt a_i=(a_{i,j})_{j \in \mathbb Z},\) and (3.5) as

The following lemma is the main ingredient for the dynamics.

Lemma 5.3

Let \(\sigma (\mathtt b)=(b_{i-1})_{i \in \mathbb Z}\). Then,

Proof

First we write

and then

which can then be turned into

Then, using

we find

and we have proved the statement. \(\square \)

Given transition matrices \(M_i\) on the graph \(\mathcal G\), it follows from the above lemma that

where \(\tilde{M}_{2i+1}=M_{2i+1}=\Psi \), \(X_i=D(\mathtt a_i +\mathtt b_i)\), and \(\tilde{M}_{2i}= \Phi (\mathtt a_i,\sigma (\mathtt b_i))\). We define a new weighting \(\{\hat{M}_i\}_{i \in \mathbb Z}\) on the graph \(\mathcal G\) such that the transition matrices are the following:

and \(\hat{M}_{2i+1}=\Psi \). Then, (5.7) can be written as

The parameters \((\hat{\mathtt a}_i)_{i \in \mathbb Z}\) and \((\hat{\mathtt b}_i)_{i \in \mathbb Z}\) for \(\hat{M}_{2i}\) can be obtained from the parameters \((\mathtt a_i)_{i \in \mathbb Z}\) and \((\mathtt b_i)_{i \in \mathbb Z}\) for \(M_{2i}\), using the maps

and

The map

defines a discrete dynamical system that we will be interested in. In the following theorem, we show how the face weights change under this map.

Theorem 5.4

Under the map \(\{M_i\}_{i\in \mathbb {Z}}\mapsto \{\hat{M}_i\}_{i\in \mathbb {Z}}\), the face weights change as:

Proof of Theorem 5.4

Substituting (5.9) and (5.10) into the face weights (3.1) gives

This can be written as

By putting a factor \(\frac{ b_{i+1,j}}{ a_{i+1,j-1}}\) on front, we get

This proves the statement for the even face weights.

For the odd face weights, we substitute (5.9) and (5.10) into (3.2) giving

and this finishes the proof.\(\square \)

We have the following corollary.

Corollary 5.5

The evolution of the face weights under domino shuffle and the map \(\{M_i\}_{i\in \mathbb {Z}}\mapsto \{\hat{M}_i\}_{i\in \mathbb {Z}}\) are the same.

Proof

This is immediate from comparing the evolution of the face weights in Proposition 5.2 and Theorem 5.4. \(\square \)

It is also possible to define a reverse flow, which we will discuss now.

Given transition matrices \(M_i\) on the graph \(\mathcal G\), it follows from the above lemma that

where  , \(Y_i=D(\mathtt a_i+\sigma ^{-1}(\mathtt b_i))\), and  . We can now define a new weighting \(\{\check{M}_{i}\}_i\) by setting

and \(\check{M}_{2i+1}=\Psi \). With this definition, we can rewrite (5.7) as

Now the parameters \((\check{\mathtt a}_i)_{ i\in \mathbb Z }\) and \((\check{\mathtt b}_i)_{ i\in \mathbb Z }\) for \(\check{M}_i\) can be obtained from the parameters \((\mathtt a_i)_{ i\in \mathbb Z }\) and \((\mathtt b_i)_{ i\in \mathbb Z }\) as follows:

and

The following theorem explains how the face weights change under the reverse dynamics \(\{M_i\}_{i \in \mathbb Z}\mapsto \{\check{M}_i\}_{i \in \mathbb Z}\).

Theorem 5.6

Under the map \(\{M_i\}_{i \in \mathbb Z}\mapsto \{\check{M}_i\}_{i \in \mathbb Z}\), the face weights change as:

Proof

We start with \(\check{F}_{2i,j}\). By (3.1) and using (5.12) and (5.13), we find

and we arrive at the statement for \(\check{F}_{2i,j}\). Then, by (3.2) and using (5.12) and (5.13), we find

This can be rewritten using (3.2) to

Then, we can put a factor \(F_{2i,j+1}=\frac{ a_{i,j+1}}{ b_{i,j+1}}\) in front and obtain the statement for \(\check{F}_{2i+1,j}\). \(\square \)

6 Inverse from Matrix Refactorization

In this section, we return to the Eynard–Mehta Theorem 3.1 for the non-intersecting paths, and we show how to compute \(W^{-1}\) from the matrix refactorization (5.8) and its reverse (5.11). We recall (3.6)

where the doubly infinite matrix \((V_{i,j})_{i,j=-\infty }^\infty \) is defined by

Now W is a submatrix of V, but it is not a principal submatrix. It will be convenient to write

where \(G=V S^{-n}\) and S is the shift matrix (5.1).

Although we will focus on the non-intersecting paths, the analysis in this section can also be applied to find the inverse of the Kasteleyn matrix through Theorem 4.3. Indeed, we have that \(W_{r,s}=(-1)^n {\mathrm i}^{r+3s+1}\tilde{W}_{n,p}^{\textrm{Tow}}(r,s)\) for \(1 \le r,s\le n+p\) where \(\tilde{W}_{n,p}^{\textrm{Tow}}\) is defined in Theorem 4.3; see also Remark 4.4 for a statement on the exact sign.

The heart of the matter is that the map (5.8) and its reverse (5.11) can be iteratively used to find an LU-decomposition and a UL-decomposition of the matrix G. From these decompositions, it will be easy to find the inverse of G. However, we are after the inverse of a particular submatrix of G of size \((n+p) \times (n+p)\). We will show how an approximate inverse for this submatrix can be computed using the LU- and UL-decomposition for the doubly infinite matrix G. This construction is inspired by the formula for the approximate inverse of submatrices of block Toeplitz matrices, introduced by Widom [Wid74]. With the approximate inverse at hand, it will be easy to take the limit \(p \rightarrow \infty \) and give a general expression for the correlation function K of (3.7).

Before we come to the arguments, we stress that the relevance of the final result is the following: if one is able to track and comprehend the flows defined by iterating the maps (5.9)–(5.10) and (5.12)–(5.13) (for instance, by finding closed expressions), then the final result of the procedure in this section will give an explicit expression for the correlation kernel. Understanding these flows is not a trivial matter, and one typically has to resort to weightings with special structures, for instance, uniform weights or doubly periodic weights, which we discuss briefly in Sect. 7.

6.1 Inverse from LU- and UL-decomposition

We start with some basic facts on LU-decompositions.

Let \((G_{i,j})_{i,j=-\infty }^{\infty }\) be an infinite matrix that has an LU-decomposition and a UL-decomposition. That is, we assume that, for \(j=1,2\), there exist lower triangular matrices \(L^{(j)}\) and upper triangular matrices \(U^{(j)}\) such that

Suppose now that \(L^{(j)}\) and \(U^{(j)}\) are invertible and denote the inverses, for \(j=1,2\), by the lower triangular matrices \(\Lambda ^{(j)}\) and upper triangular matrices \(\Upsilon ^{(j)}\). Then,

We decompose all matrices in nine blocks:

where \(A_{22}\) is a finite \((n+p)\times (n+p)\) matrix such that \((A_{22})_{i,j}=A_{i,j}\) for \(i,j=-p,\ldots ,n-1\). Note that this also fixes the dimensions of the other blocks. In particular, \(A_{11}\) and \(A_{33}\) are square infinite matrices.

We also need the diagonal matrix \(P_2\) defined by

where \(I_2\) is the identity matrix of size \(n+p\), placed at the rows and columns with indices between \(-p\) and \(n-1\). We let I denote the doubly infinite identity matrix. We will need the following formula

The following result is a key step in inverting the matrix W.

Lemma 6.1

We have

Proof

From \(G^{-1}=\Lambda ^{(2)} \Upsilon ^{(2)}=\Upsilon ^{(1)}\Lambda ^{(1)}\) and the fact that \(\Lambda ^{(j)}\) and \(\Upsilon ^{(j)}\) are lower and upper triangular, respectively, we find

and

Now

where we inserted (6.5) and (6.6) in the last step. Note also that

Combining (6.7) and (6.8) gives

Now using that \(\Lambda ^{(2)}\) is the inverse of \(L^{(2)}\), we can write using (6.3)

and thus, using the fact that \(U^{(2)}\) is upper triangular

Similarly,

and thus,

We obtain the statement after inserting (6.10) and (6.11) into (6.9).

The intuition behind (6.4) is the following: in special cases, the matrices that we are interested in are diagonally dominant and the values on the k-th subdiagonals above and below the main diagonal decrease rapidly with k. This means that the entries of the matrices \(\Upsilon ^{(1)}_{13}\) and \(\Lambda ^{(2)}_{31}\) are small. This can be used to show that the right-hand side of (6.4) equals \(I_2\) plus a small correction. Thus,

is an approximate inverse to \(G_{22}\).
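To illustrate the mechanism numerically, the following Python sketch replaces the doubly infinite matrix G by a finite, diagonally dominant tridiagonal matrix and computes an LU- and a UL-decomposition without pivoting. It then tests the candidate approximate inverse \(\Upsilon ^{(1)}_{22}\Lambda ^{(1)}_{22}+\Lambda ^{(2)}_{22}\Upsilon ^{(2)}_{22}-(G^{-1})_{22}\), written in the spirit of Widom's formula; this specific form, the finite stand-in for G, and all helper names are assumptions of the sketch and are not taken from the displayed formulas of this section. Using only block triangularity, one can check that \(G_{22}\) times this candidate equals \(I_2+L^{(1)}_{21}U^{(1)}_{11}\Upsilon ^{(1)}_{13}\Lambda ^{(1)}_{32}+U^{(2)}_{23}L^{(2)}_{33}\Lambda ^{(2)}_{31}\Upsilon ^{(2)}_{12}\), and the code verifies this identity and the decay of the correction as the window grows.

```python
import numpy as np

def lu_nopivot(G):
    """Doolittle factorization G = L U without pivoting (L unit lower)."""
    n = G.shape[0]
    L, U = np.eye(n), np.array(G, dtype=float)
    for k in range(n - 1):
        L[k + 1:, k] = U[k + 1:, k] / U[k, k]
        U[k + 1:, :] -= np.outer(L[k + 1:, k], U[k, :])
    return L, np.triu(U)

def ul_nopivot(G):
    """Factorization G = U L, obtained by reversing the index order."""
    Lr, Ur = lu_nopivot(G[::-1, ::-1])
    return Lr[::-1, ::-1], Ur[::-1, ::-1]  # (upper factor, lower factor)

def window_residual(G, lo, hi):
    """Return (G22 @ candidate - I, predicted correction) for rows lo..hi-1."""
    L1, U1 = lu_nopivot(G)            # G = L1 U1
    U2, L2 = ul_nopivot(G)            # G = U2 L2
    Lam1, Ups1 = np.linalg.inv(L1), np.linalg.inv(U1)
    Lam2, Ups2 = np.linalg.inv(L2), np.linalg.inv(U2)
    Ginv = np.linalg.inv(G)
    b1, b2, b3 = slice(0, lo), slice(lo, hi), slice(hi, G.shape[0])
    candidate = (Ups1[b2, b2] @ Lam1[b2, b2]
                 + Lam2[b2, b2] @ Ups2[b2, b2] - Ginv[b2, b2])
    residual = G[b2, b2] @ candidate - np.eye(hi - lo)
    predicted = (L1[b2, b1] @ U1[b1, b1] @ Ups1[b1, b3] @ Lam1[b3, b2]
                 + U2[b2, b3] @ L2[b3, b3] @ Lam2[b3, b1] @ Ups2[b1, b2])
    return residual, predicted

# Finite diagonally dominant tridiagonal stand-in for G (assumption).
rng = np.random.default_rng(1)
N = 40
G = (np.diag(2.5 + rng.random(N))
     + np.diag(0.5 + 0.5 * rng.random(N - 1), 1)
     + np.diag(0.5 + 0.5 * rng.random(N - 1), -1))

res_small, pred_small = window_residual(G, 18, 22)   # window of size 4
res_large, pred_large = window_residual(G, 12, 28)   # window of size 16
print(np.linalg.norm(res_small), np.linalg.norm(res_large))
```

The correction terms only involve the far off-diagonal blocks \(\Upsilon ^{(1)}_{13}\) and \(\Lambda ^{(2)}_{31}\), which is why the residual shrinks quickly once the window is large compared with the decay length of the inverse factors.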

Note that

but also

In the upper left corner of (6.12), the matrix \(\Upsilon ^{(1)}_{23} \Lambda ^{(1)}_{32}\) is small, and thus, by (6.12) and (6.13) we find that

Similarly, in the lower right corner we find

We formalize this discussion in the following proposition.

Proposition 6.2

Assume that there exists an \(R>0\) and \(0< \rho <1\) such that, for \(i,j \in \mathbb Z\),

Then,

as \(p\rightarrow \infty \). The error term is with respect to the standard matrix norm.

Moreover, for \(i,j=1,\ldots ,n+p\),

and

as \(p\rightarrow \infty \).

Proof

By expanding the product

and using the bounds (6.15) and (6.17), we find, for \(-p \le r,s \le n-1\),

for some constant C independent of r and s. By further using the standard inequality \(\Vert A\Vert \le \sum _{i,j}|A_{i,j}|\), we thus find

By a similar argument, we find that (we can choose the constant \(C>0\) large enough such that also)

Then, (6.21) and (6.22) prove (6.18). It also shows that \(G_{22}\) is invertible for p sufficiently large and

as \(p \rightarrow \infty \).

Finally, (6.19) and (6.20) follow by applying (6.17) to (6.13) and (6.14), respectively, and inserting the result in (6.12).

In the special case that G is a block Toeplitz matrix, this result was already proved by Widom in [Wid74]. We will also come back to this in Appendix B.

6.2 LU-decomposition

We now show how we can obtain an LU-decomposition for the doubly infinite matrix \(VS^{-n}\) with V as in (6.1). The idea is to use the commutation relation (5.8) and shift all \(M_{2i}\) to the right and all \(M_{2i+1}\) to the left.

Repeatedly applying (5.8) gives

Care should be taken here since \(X_n^{-1}\) requires the parameters of \(M_{2n}\) and this matrix is not necessarily defined. Instead, we will work with the assumption that \(X_{n}=I\), giving

Since \(X_0 \Psi \) and \(\hat{M}_{2n-2}\) are at the desired locations already, we drop these factors and continue with

and refactorize \(\hat{M}_{2j} \Psi \) using the same principles. We iterate this procedure n times in total, so that all factors are in the desired place.

This iteration is described by the following algorithm:

For \(j=0\), we set

and then, for \(j=1,\ldots , n\),

where, for \(i=0,\ldots ,n-j-1\), we define \(\hat{\mathtt a}^{(j-1)}_i\) and \(\hat{\mathtt b}^{(j-1)}_i\) as in (5.9) and (5.10), respectively, and, for \(i=n-j\) we set, for \(k \in \mathbb Z\),

and

The difference in the last step is explained by the fact that (5.9) and (5.10) are defined for infinite products, while we are working with a finite product, and thus, care should be taken for the last matrix in the product.

We then set

Note also that from (5.8) we have

where

The conclusion of this procedure is that

After multiplying by \(S^{-n}\) from the right, this gives an LU-decomposition for G.

Lemma 6.3

Let

and

Then \(L^{(1)}\) is lower triangular, \(U^{(1)}\) is upper triangular, and \(G=VS^{-n}=L^{(1)}U^{(1)}\).

Proof

We have already seen that \(VS^{-n}=L^{(1)}U^{(1)}\). It remains to prove the triangular structure of \(L^{(1)}\) and \(U^{(1)}\). From the definition (5.2), it is clear that \(\Psi \) is lower triangular. Combining this with the fact that each \(X_0^{(j)}\) is diagonal, it follows that \(L^{(1)}\) is lower triangular. To see that \(U^{(1)}\) is upper triangular, we write

Each \(S^{i} M_{2i}^{(n-i)} S^{-i-1}\), for \(i=0,\ldots ,n-1\), is upper triangular, and thus, the product \(U^{(1)}\) is upper triangular. \(\square \)

6.3 UL-decomposition

To obtain a UL-decomposition, we use the reverse dynamics but with all the \(M_{2i}\) to the left and all \(M_{2i+1}\) to the right. Since the outermost factors are already in the desired place, we drop them in the first step and start with

Repeatedly applying (5.11) gives

In this factorization, we have the freedom to choose \(Y_0\) as we wish (as long as it is consistent for the definition of \(\check{M}_2\)), and we choose it to be \(Y_0=I\) so that it can be removed from the product. Now we drop the first and last factor and obtain

and refactorize \(\Psi \check{M}_{2j} \) using the same principles. We then iterate this procedure \(n-1\) times in total, until all factors are in the desired place.

The result can be presented as follows.

We start by defining

and then set, for \(j=1,\ldots ,n-1\),

where, for \(i=j+1,\ldots ,n-1\), we define \(\check{\mathtt a}_i^{[j-1]}\) and \(\check{ \mathtt b}_i^{[j-1]}\) as in (5.12) and (5.13), respectively, and, for \(i=j\), we set

and

We then set

for \(j=1,\ldots , n-1\) and \(i=j,\ldots , n-1\).

It is important to observe that from (5.11) we find that

where

for \(j=1,\ldots , n-1\) and \(i=j,\ldots , n-1\).

The conclusion of this procedure is that

The following lemma is the analogue of Lemma 6.3 for the reverse dynamics.

Lemma 6.4

Let

and

Then \(L^{(2)}\) is lower triangular, \(U^{(2)}\) is upper triangular, and \(G=VS^{-n}=U^{(2)}L^{(2)}.\)

Proof

As the proof is similar to the proof of Lemma 6.3, it will be omitted.

6.4 The correlation kernel

Now that we have both an LU- and UL-decomposition:

with \(L^{(1)}\) and \(U^{(1)}\) as in (6.25) and (6.26), respectively, and \(L^{(2)}\) and \(U^{(2)}\) as in (6.27) and (6.28), respectively, we can try the ideas of Sect. 6.1 to compute the inverse of the matrix W in (3.7). To this end, we define

and decompose all matrices into blocks as in (6.2).

Note that the inverses \(\Lambda ^{(1,2)}\) of \(L^{(1,2)}\) are rather easy to compute, as they are products that alternate between diagonal matrices (with trivial inverses) and the matrix \(\Psi \), which has inverse

Observe also that this means that \(\Lambda ^{(1,2)}\) are banded matrices where the width of the band depends on n, but not on p.

The inverses \(\Upsilon ^{(1,2)}\) of \(U^{(1,2)}\) are also easy to compute, but now \(\Upsilon ^{(1,2)}\) are not banded.

In order to take the limit \(p \rightarrow \infty \), we also need \(\Lambda ^{(1,2)}\) and \(\Upsilon ^{(1,2)}\) to satisfy (6.17). This requires a condition on the parameters \(a_{i,j}\) and \(b_{i,j}\).

Assumption 6.5

We assume that there exists \(R>0\) and \(0< \rho <1\) such that, for \(1\le i \le n\) and \(k\in \mathbb N\),

Furthermore, we assume that there exists \(0<\delta _1< \delta _2\) such that, for \(j \in \mathbb Z\) and \(i=1,\ldots ,n\),

Lemma 6.6

Under Assumption 6.5, we have that for each \(i=1,\ldots , n\) the inverse of \(D(\mathtt a_i)+D(\mathtt b_i)S\) satisfies (6.17).

Proof

By using the notation \((\mathtt a_i/ \mathtt b_i)=(a_{i,j}/b_{i,j})_{j \in \mathbb Z}\) and using the rule \(S^{-1}D(\mathtt a)=D(\sigma ^{-1} \mathtt a) S^{-1}\), we can write

By (6.30), we see that the values of the diagonal matrix \(D(\mathtt b_i)^{-1}\) are bounded from above and below. By (6.29), we have that the values of the diagonal matrix \(D\left( \prod _{\ell =0}^{k-1} \sigma ^{-\ell }(\mathtt a_i/ \mathtt b_i)\right) \) are bounded by \(R \rho ^k\). Combining these facts gives that \( \left( D(\mathtt a_i)S^{-1} +D(\mathtt b_i)\right) ^{-1}\) satisfies (6.17).
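The mechanism of this proof can be seen on a finite truncation. The following Python sketch assumes that \(S^{-1}\) acts as the shift onto the first superdiagonal (which is consistent with the rule \(S^{-1}D(\mathtt a)=D(\sigma ^{-1} \mathtt a) S^{-1}\)), so that the truncation of \(D(\mathtt a)S^{-1}+D(\mathtt b)\) is upper bidiagonal, and that (6.17) is an off-diagonal decay bound of the form \(|A_{i,j}|\le R \rho ^{|i-j|}\). Both are assumptions of the sketch, as are the sample parameters and the helper name. The inverse is built from the (finite) Neumann series and its entries are compared with the geometric bound.

```python
import numpy as np

def bidiagonal_inverse(a, b):
    """Inverse of the upper bidiagonal matrix diag(b) + superdiag(a[:-1]).

    Entry (i, i+k) equals (-1)^k * prod_{l<k} (a[i+l] / b[i+l]) / b[i+k],
    which is the Neumann series of (I + D(a/b) N)^{-1} D(b)^{-1}.
    """
    n = len(b)
    c = a / b
    inv = np.zeros((n, n))
    for i in range(n):
        prod = 1.0
        for k in range(n - i):
            inv[i, i + k] = (-1) ** k * prod / b[i + k]
            prod *= c[i + k]
    return inv

# Hypothetical parameters: delta1 <= b_j <= delta2 and a_j / b_j <= rho < 1.
rng = np.random.default_rng(2)
n, rho, delta1, delta2 = 12, 0.5, 1.0, 2.0
b = rng.uniform(delta1, delta2, size=n)
a = b * rng.uniform(0.1, rho, size=n)

M = np.diag(b) + np.diag(a[:-1], 1)   # truncated D(b) + D(a) S^{-1}
M_inv = bidiagonal_inverse(a, b)

# Geometric bound |M_inv[i, j]| <= rho^{|i-j|} / delta1 (here with R = 1).
idx = np.arange(n)
bound = rho ** np.abs(idx[None, :] - idx[:, None]) / delta1
print(np.allclose(M_inv @ M, np.eye(n)), bool(np.all(np.abs(M_inv) <= bound)))
```

The lower bound on \(\mathtt b\) controls the factor \(D(\mathtt b)^{-1}\), while the bound on the products of the ratios \(\mathtt a/\mathtt b\) produces the factor \(\rho ^k\) on the k-th superdiagonal.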

Lemma 6.7

Assume that \(( \mathtt a_i, \mathtt b_i)_{i=1,\ldots ,n-1}\) satisfies Assumption 6.5. Then, the parameters \((\hat{\mathtt a}_i,\hat{\mathtt b} _i)_{i=1,\ldots ,n-1}\) also satisfy assumptions (6.29) and (6.30), possibly with different values of \(R, \delta _1\) and \(\delta _2\), but with the same \(\rho \). Consequently, each inverse of \(D(\hat{\mathtt a}_i)+D(\hat{\mathtt b}_i)S\), for \(i=1,\ldots , n-1\), also satisfies (6.17).

Proof

It is clear from (6.30) that also

for some \(0<\delta _1'<\delta _2\). Then,

By (6.29) and (6.30), we thus find

and thus, \((\hat{\mathtt a}_i, \hat{\mathtt b}_i)_{i=0}^{n-1} \) also satisfy both (6.29) and (6.30), which by the above means that \( \left( D(\hat{\mathtt a}_i)S^{-1} +D(\hat{\mathtt b}_i)\right) ^{-1}\) satisfies (6.17).

Lemma 6.8

Under assumptions (6.29) and (6.30), it holds that both \(\Lambda ^{(1,2)}\) and \(\Upsilon ^{(1,2)}\) satisfy (6.17).

Proof

Let us start by mentioning that if A and B are matrices for which there exist \(R>0\) and \(0< \rho <1\) such that, for all i, j, we have

then also

for some \(\tilde{R}\).

As in the proof of Lemma 6.3, we write \(U^{(1)}\) as

By the principle in (6.32), it is sufficient to show that the inverse of each \(S^{i} M_{2i}^{(n-m)}S^{-i-1}\) satisfies (6.17). Conjugation by \(S^{\pm 1}\) only moves the values on the diagonal up or down by 1, and thus, it is sufficient to show that the inverse of

satisfies (6.17). But since \(({\mathtt a}^{(n-i)}_i,{\mathtt b}^{(n-i)}_i)\) are obtained by iterating the maps (5.9) and (5.10), this fact follows from Lemma 6.7 and we have thus proved the statement for \(\Upsilon ^{(1)}\).

The claim for \(\Upsilon ^{(2)}\) follows similarly.

The claim for \(\Lambda ^{(1,2)}\) is easier. Indeed, both matrices are banded with a bandwidth independent of p, and it is not hard to verify that under assumption (6.30) there is a uniform bound for all the entries. \(\square \)

Theorem 6.9

With L and U as in (6.25) and (6.26), respectively, set \(\Upsilon =U^{-1}\) and \(\Lambda =L^{-1}\). Then, for \(x_1,x_2\ge -1\), we have

Proof

The starting point is the expression for K in (3.7). Then, we note that

Now note that there exists a constant \(C>0\) such that

for all \(r,s\ge 1\), and thus, by (6.18),

as \(p \rightarrow \infty \). We then use (6.14) to write

as \(p \rightarrow \infty \). It remains to show that the term \(\Lambda _{21}^{(2)} \Upsilon _{12}^{(2)}\) can be ignored. To this end, note that

Since, by assumption, \(x_2\ge -1\), we can restrict the sum over r to \(r=1,\ldots ,n+1\). Observe that this range is independent of p. Using (6.17), we then find that, for some constant C independent of p,

for \(s=1,\ldots , n+p\) and \(r=1,\ldots , n\). Together with (6.34), this implies that

as \(p \rightarrow \infty \).

The last step is to take the limit \(p \rightarrow \infty \). First observe that the series converges because of (6.17) and (6.34). Then,

for \(r,s=1,2, \ldots \). Inserting this back into (6.37) and taking the limit \(p \rightarrow \infty \) gives

Since \(\Upsilon ^{(1)}_{n-s,n-\ell }=0\) for \(s \le \ell \) and \(\ell \ge 1\), we can let the sum over s range from \(-\infty \) to \(\infty \). Similarly, we can let the sum over r range from \(-\infty \) to \(\infty \), since \(r\ge 1\) and \(\Lambda ^{(1)}_{n-\ell ,n-r}=0\) for \(r<\ell \). By doing so, and writing

we prove (6.33).

7 Periodic Weights

7.1 Preliminaries on block Toeplitz matrices

Let A(z) be a \(p\times p\) matrix-valued function whose entries are rational functions in z. Then, the doubly infinite block Toeplitz matrix M(A(z)) is defined as the doubly infinite matrix

for \(j,k \in \mathbb Z\). Typically, one assumes that A(z) has no poles on the unit circle. In our situation, we will consider matrices with poles on the unit circle, and we integrate over a circle centered at the origin with radius \(1+\varepsilon \), where \(\varepsilon \) is sufficiently small so that all the poles of A(z) and \((A(z))^{-1}\) that are outside the unit circle also lie outside the circle of radius \(1+\varepsilon \).

The values on the diagonals in the upper triangular part decay exponentially with the distance to the main diagonal. The values on the diagonals in the lower triangular part decay exponentially with the distance to the main diagonal if there are no poles on the unit circle, and remain bounded if there is a pole on the unit circle. Indeed, by deforming contours it is straightforward to check that

for \(k-j \rightarrow \infty \), and

for \(j-k \rightarrow \infty \), where \(r_*>1\) is the smallest modulus among the poles of A outside the unit circle and \(r_{**} \le 1\) is the largest modulus among the poles of A inside or on the unit circle.

Doubly infinite block Toeplitz matrices have the convenient property that

for any two \(p\times p\) matrix-valued functions \(A_{1,2}(z)\) with rational entries.
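The multiplicative property can be checked numerically on finite sections. The following is a minimal sketch under simplifying assumptions that are not taken from the text: the symbols are matrix Laurent polynomials rather than general rational functions, and block \((j,k)\) of the matrix is taken to be the coefficient of \(z^{k-j}\) (the opposite index convention would work equally well). The helper names `block_toeplitz`, `multiply_symbols` and `interior_mismatch` are hypothetical. Truncation spoils the identity only near the boundary, so the comparison is restricted to interior block rows.

```python
import numpy as np

def block_toeplitz(coeffs, n_blocks):
    """Finite section of M(A): block (j, k) is the coefficient of z^(k-j).

    `coeffs` maps an integer power m to the p x p coefficient of z^m.
    The index convention (k - j rather than j - k) is an assumption.
    """
    p = next(iter(coeffs.values())).shape[0]
    T = np.zeros((n_blocks * p, n_blocks * p))
    for j in range(n_blocks):
        for k in range(n_blocks):
            if (k - j) in coeffs:
                T[j * p:(j + 1) * p, k * p:(k + 1) * p] = coeffs[k - j]
    return T

def multiply_symbols(a, b):
    """Coefficients of the product symbol A(z) B(z), in this order."""
    out = {}
    for m, am in a.items():
        for n, bn in b.items():
            out[m + n] = out.get(m + n, 0) + am @ bn
    return out

def interior_mismatch(a, b, n_blocks=12):
    """Largest entrywise gap between M(A) M(B) and M(AB) away from the boundary."""
    p = next(iter(a.values())).shape[0]
    w = max(abs(m) for m in list(a) + list(b))  # band half-width in blocks
    lhs = block_toeplitz(a, n_blocks) @ block_toeplitz(b, n_blocks)
    rhs = block_toeplitz(multiply_symbols(a, b), n_blocks)
    rows = slice(w * p, (n_blocks - w) * p)  # block rows unaffected by truncation
    return float(np.max(np.abs(lhs[rows] - rhs[rows])))

# Two random 2 x 2 Laurent-polynomial symbols (illustrative data only).
rng = np.random.default_rng(0)
a_sym = {m: rng.normal(size=(2, 2)) for m in (-1, 0, 1)}
b_sym = {m: rng.normal(size=(2, 2)) for m in (0, 1, 2)}
print(interior_mismatch(a_sym, b_sym))  # of the order of rounding errors
```

Note that the order of the factors is preserved, which matters for \(p>1\) since the coefficient matrices need not commute.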

In the upcoming discussion, we will need block LU- and UL-decompositions of doubly infinite Toeplitz matrices.

Note that if A(z) has no poles inside or on the unit circle, then M(A(z)) is an upper triangular block matrix, and if A(z) has no poles outside the unit circle, then M(A(z)) is a lower triangular block matrix. This, together with (7.3), means that finding a block UL-decomposition for a matrix M(A(z)) amounts to finding a factorization

of the matrix-valued symbol A(z) such that \(A_+(z)\) has no poles inside or on the unit circle and \(A_-(z)\) has no poles outside the unit circle. Similarly, a block LU-decomposition for a matrix M(A(z)) amounts to finding a factorization

of the matrix-valued symbol A(z) such that \(\tilde{A}_+(z)\) has no poles inside or on the unit circle and \(\tilde{A}_-(z)\) has no poles outside the unit circle. Note that in the scalar case \(p=1\), we can take \(\tilde{A}_\pm =A_\pm \).

In a block LU- or UL-decomposition, we will also want \(L^{-1}\) and \(U^{-1}\) to be lower and upper block triangular matrices, respectively. To this end, we also need that \(A_+^{-1}\) and \(\tilde{A}_+^{-1}\) have no poles inside or on the unit circle, and that \(A_-^{-1}\) and \(\tilde{A}_-^{-1}\) have no poles outside the unit circle. By Cramer’s rule, this is equivalent to requiring that \(\det A_+(z)\) has no zero inside or on the unit circle and \(\det A_-(z)\) has no zero outside the unit circle (and likewise for \(\tilde{A}_\pm \)).

Concluding, finding a block LU- and UL-decomposition of a doubly infinite block Toeplitz matrix with symbol A(z) such that the lower and upper triangular matrices remain lower and upper triangular after taking inverses is equivalent to finding factorizations

where \(A_+^{\pm 1}\) and \(\tilde{A}_+^{\pm 1}\) have no poles inside or on the unit circle and \(A_-^{\pm 1}\) and \(\tilde{A}_-^{\pm 1}\) have no poles outside the unit circle. Such factorizations are called Wiener–Hopf factorizations and have been studied extensively in the literature.
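As a simple illustration of these conditions, consider the scalar case \(p=1\) with a symbol chosen purely for the example; the parameters a and b below are hypothetical and do not refer to the weights of the model.

```latex
% Scalar (p = 1) illustration, assuming 0 < |a| < 1 and 0 < |b| < 1.
A(z) = (1 + a z)\left(1 + \frac{b}{z}\right), \qquad
A_+(z) = \tilde{A}_+(z) = 1 + a z, \qquad
A_-(z) = \tilde{A}_-(z) = 1 + \frac{b}{z}.
% A_+ is a polynomial whose only zero z = -1/a lies outside the unit circle,
% so A_+ and 1/A_+ have no poles inside or on the unit circle.
% A_- has its only pole at z = 0 and its only zero at z = -b, both inside the unit circle,
% so A_- and 1/A_- = z/(z + b) have no poles outside the unit circle.
```

Since scalar factors commute, the same pair serves for both orderings of the factorization. For \(p>1\) the two orderings generally require different factors.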

7.2 Vertically periodic transition matrices

In this section, we assume that there exists a p such that, for \(i=0,\ldots ,n-1\) and \(j \in \mathbb Z\),

In other words, the parameters are p-periodic in the vertical direction. Before we continue, we mention that Assumption 6.5 takes a simpler form. Indeed, (6.30) just means that all parameters are positive, and (6.29) is equivalent to requiring

We will see shortly why this assumption is relevant.

With p-periodic parameters (7.4), the transition matrices \(M_{i}\) become block Toeplitz matrices. Indeed, for each \(i\in \mathbb Z\), we have \(M_i=M(A_i(z))\) with

for the symbols with even index, and

for the symbols with odd index.

It is convenient to use the notation

and observe that the shift matrix S is the block Toeplitz matrix with symbol s(z).

The matrix \(G=V S^{-n}\) is then the block Toeplitz matrix \(M(\eta (z))\) with symbol

which, using the fact that \(s(z)\psi (z) s(z)^{-1}= \psi (z)\), we can rewrite as

Note that the factor \(\psi (z)\) and its inverse are analytic outside the unit circle, and \(\psi (z)\) has a pole on the unit circle. Thus, in a block LU-decomposition, we would like to have the factors \(M(\psi )\) as part of L. Now also observe that each factor

is analytic inside the unit circle, and its determinant is linear:

The assumption (7.5) shows that its zero is outside the unit circle, and thus, each factor \(s(z)^i \phi (z;\mathtt a_{i}, \mathtt b_{i})s(z)^{-i-1}\) and its inverse are analytic inside and on the unit circle. This is the importance of (7.5).
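The role of the determinant can be illustrated on a toy symbol. The sketch below does not reproduce the symbol \(\phi \) of the text. It assumes instead the symbol \(D(\mathtt a)+D(\mathtt b)s(z)\) of the matrix \(D(\mathtt a)+D(\mathtt b)S\), with \(s(z)\) taken to be the \(p\times p\) matrix with ones on the superdiagonal and z in the bottom-left corner. Both this form of \(s(z)\) and the function names are assumptions made for the example. For this toy symbol, the determinant is affine in z and the modulus of its zero equals the ratio of the products of the two parameter vectors, so the position of the zero relative to the unit circle is decided by a single inequality between these products.

```python
import numpy as np

def shift_symbol(p, z):
    """Assumed symbol s(z) of the shift S in p x p blocks:
    ones on the superdiagonal and z in the bottom-left corner."""
    s = np.zeros((p, p), dtype=complex)
    for r in range(p - 1):
        s[r, r + 1] = 1.0
    s[p - 1, 0] = z
    return s

def toy_symbol(z, a, b):
    """Hypothetical symbol D(a) + D(b) s(z) of the matrix D(a) + D(b) S."""
    return np.diag(a).astype(complex) + np.diag(b) @ shift_symbol(len(a), z)

def det_at(z, a, b):
    """Determinant of the toy symbol at the point z."""
    return np.linalg.det(toy_symbol(z, a, b))

def det_zero(a, b):
    """Zero of z -> det(D(a) + D(b) s(z)), using that this map is affine in z."""
    d0 = det_at(0.0, a, b)
    d1 = det_at(1.0, a, b)
    return -d0 / (d1 - d0)

# Illustrative 3-periodic parameters (not taken from the text).
a = [1.0, 2.0, 0.5]
b = [3.0, 1.0, 2.0]
print(abs(det_zero(a, b)), np.prod(a) / np.prod(b))
```

The two printed numbers agree up to rounding, reflecting that the determinant of the toy symbol is \(\prod _j a_j \pm z \prod _j b_j\).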

We see that for a block LU-factorization we would like to reorganize the factors in \(\eta (z)\) such that the factors \(s(z)^i \phi (z;\mathtt a_{i}, \mathtt b_{i})s(z)^{-i-1}\) are on the right, and the factors \(\psi (z)\) are all on the left. If \(p=1\), all terms commute and this is trivial. If \(p>1\), this is far from trivial, and this is where the refactorization procedure of Sect. 6.2 comes into play. Note that in this procedure we update the parameters using the maps (5.9) and (5.10), and it is crucial that these maps preserve the condition (7.5). That this is indeed the case follows from the fact that \(\det \phi (z;\mathtt a_i, \mathtt b_{i})=c\det \phi (z;\hat{\mathtt a}_i, \hat{\mathtt b}_i)\) for some constant c, and thus the location of its zero is preserved. This also directly proves Lemma 6.7 in the periodic setting.

7.3 Doubly periodic weights

Let us now assume in addition that the weights are also periodic in the horizontal direction, and let q be the smallest integer such that

and hence \(M_{q+i}=M(A_{q+i})=M(A_i)=M_i\). For simplicity, we will assume that the total number of transition matrices is qn (instead of n). Then, the product of all transition matrices has the symbol

The idea that was introduced in [BD19], and used in [Ber21] and [BD22], is to first find a Wiener–Hopf factorization \(B(z)=B_+(z)B_-(z)\) (and similarly \(B(z)=\tilde{B}_-(z) \tilde{B}_+(z)\)) and then continue with the symbol

Now set \(\hat{B}(z)=B_-(z)B_+(z)\) and try to find a Wiener–Hopf factorization \(\hat{B}(z)=\hat{B}_+(z) \hat{B}_-(z)\), and so forth until there are no factors left. This gives a discrete dynamical system on the space of symbols, by computing first a factorization and then swapping the order of that factorization. In special situations, the dynamics is periodic and this helps in finding a Wiener–Hopf factorization in compact and explicit form, which greatly helps in the asymptotic study. Generally, however, it will not be periodic. Recently, it was shown for the biased two-periodic Aztec diamond [BD22] that the dynamical system can be linearized by passing to the Jacobian of the spectral curve of B(z) (which is an invariant for the flow). In a work in progress, this is worked out in a more general situation [BB22].
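The invariance of the spectral curve under one step of the dynamics can be seen directly from the swap. The short derivation below assumes that the spectral curve is the zero set of \(\det (B(z)-wI)\) in the variables \((z,w)\); the name w for the spectral variable is introduced here for illustration only.

```latex
% One step of the dynamics: factor, then swap the two factors.
B(z) = B_+(z) B_-(z), \qquad
\hat{B}(z) = B_-(z) B_+(z) = B_+(z)^{-1} B(z) B_+(z).
% Conjugation leaves the characteristic polynomial in w unchanged:
\det\bigl(\hat{B}(z) - w I\bigr)
  = \det\bigl(B_+(z)^{-1} \bigl(B(z) - w I\bigr) B_+(z)\bigr)
  = \det\bigl(B(z) - w I\bigr).
```

The identity holds for every z at which \(B_+(z)\) is invertible, and hence as an identity of rational functions in z.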

Remark 7.1

We emphasize that the restriction that all transition matrices with odd index are given by (7.6) means that we do not cover all doubly periodic models. Although it is always possible to change the edge weights such that the face weights do not change and such that the transition matrices with odd index are the doubly infinite Toeplitz matrix with symbol (7.6), it is not necessarily true that the corresponding transition matrices at even steps are doubly infinite Toeplitz matrices. In other words, the gauge transformations needed to turn the weights of the desired edges to 1 do not necessarily preserve double periodicity. See also Remark 2.1.

8 The Inverse \((W_n^{\textrm{Az}})^{-1}\)

In this section, we give a recurrence for \((W_n^{\textrm{Az}})^{-1}\) using the domino shuffle. This is a generalization of the computation which originally appeared in [CY14].

8.1 Recurrence for entries of \((W_n^{\textrm{Az}})^{-1}\)

In order to give the recurrence for \((W_n^{\textrm{Az}})^{-1}\), we first need to consider the partition function of the square move and introduce an additional graphical transformation.

For a finite graph, let \(Z_{\textrm{Old}}\) denote the partition function before applying the square move to a single face with edge weights a, b, c, and d, and let \(Z_{\textrm{New}}\) denote the partition function after applying the square move. Then, it is easy to see that \(Z_{\textrm{Old}}=\Delta Z_{\textrm{New}}\).
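This relation can be verified by brute force on a small graph. The sketch below assumes the standard urban-renewal form of the square move, which may be phrased differently from the definition used earlier in the paper: the face with cyclically ordered edge weights a, b, c, d is replaced by a smaller square attached to the original vertices by edges of weight 1, each new edge carries the weight of the opposite old edge divided by \(\Delta =ac+bd\). The graph (two adjacent faces), the vertex labels and the numerical weights are all hypothetical.

```python
def dimer_partition_function(edges):
    """Sum over perfect matchings of the product of edge weights.

    `edges` maps frozenset({u, v}) -> weight. Brute force, tiny graphs only.
    """
    vertices = sorted({v for e in edges for v in e})

    def rec(unmatched):
        if not unmatched:
            return 1.0
        u = unmatched[0]  # u must be matched to one of the remaining vertices
        total = 0.0
        for v in unmatched[1:]:
            w = edges.get(frozenset((u, v)))
            if w is not None:
                rest = [x for x in unmatched[1:] if x != v]
                total += w * rec(rest)
        return total

    return rec(vertices)

def make_graph(triples):
    """Build the edge dictionary from (u, v, weight) triples."""
    return {frozenset((u, v)): w for u, v, w in triples}

# Illustrative weights: a, b, c, d around the face v0-v1-v4-v3; e, f, g elsewhere.
a, b, c, d, e, f, g = 2.0, 3.0, 5.0, 7.0, 11.0, 13.0, 17.0
delta = a * c + b * d
outer = [("v1", "v2", e), ("v2", "v5", f), ("v4", "v5", g)]

old_graph = make_graph(
    [("v0", "v1", a), ("v1", "v4", b), ("v4", "v3", c), ("v3", "v0", d)] + outer
)
new_graph = make_graph(
    [
        # weight-1 legs joining the old vertices to the new inner square
        ("v0", "u0", 1.0), ("v1", "u1", 1.0), ("v4", "u4", 1.0), ("v3", "u3", 1.0),
        # inner square: weight of the opposite old edge divided by delta
        ("u0", "u1", c / delta), ("u1", "u4", d / delta),
        ("u4", "u3", a / delta), ("u3", "u0", b / delta),
    ]
    + outer
)

z_old = dimer_partition_function(old_graph)
z_new = dimer_partition_function(new_graph)
print(z_old, delta * z_new)
```

In this example the old graph has three perfect matchings with total weight \(acf+bdf+deg\), and the new graph has two with total weight \(f+deg/\Delta \), so the two printed values coincide.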

The final graphical transformation that we need is the removal of pendant edges: if a vertex is incident to exactly one edge and that edge has weight 1, then the edge, together with its two endpoints and all other edges incident to them, can be removed from the graph, since the vertex must be covered by a dimer on that edge. This transformation does not alter the partition function.

For notational simplicity in stating the result and its proof, for each even face with center \((2i+1,2j+1)\) of the Aztec diamond graph of size n, let \(r_1(i,j), r_2(i,j), r_3(i,j)\), and \(r_4(i,j)\) denote the edge weights, where the labeling proceeds clockwise starting with the north-east edge. From our choice of edge weights, we initially have \(r_1(i,j)=1\), \(r_2(i,j)=1\), \(r_3(i,j)=b_{i,j}\) and \(r_4(i,j)=a_{i,j}\), but these will change when the square move is applied. We denote by \(F^{(n)}_{2i,j}\) the face weight of the Aztec diamond of size n at face \((2i+1,2j+1)\) and by \(F^{(n)}_{2i+1,j}\) the face weight at face \((2i+2,2j+2)\), where the superscript marks the size of the Aztec diamond. This is the same convention as in Sect. 5.1, and we write \(F^{(n)}\) for the collection of face weights of an Aztec diamond of size n. We remind the reader that the face weight at the face whose center is given by \((2i+1,2j+1)\) equals

for \(0\le i,j \le n-1\) while the face whose center is given by \((2i+2,2j+2)\) equals

for \(0\le i,j \le n-2\).

To preempt the use of the domino shuffle, we write \((W_n^{\textrm{Az}})^{-1} (i,j,F^{(n)})\) instead of \((W_n^{\textrm{Az}})^{-1} (i,j)\) for \(1 \le i,j \le n\) and \((K_n^{\textrm{Az}})^{-1}((x,y),F^{(n)})\) instead of \((K_n^{\textrm{Az}})^{-1}(x,y)\) for \(x \in \texttt{W}_n^{\textrm{Az}}\) and \(y \in \texttt{B}_n^{\textrm{Az}}\). Here, the third argument in each case marks the face weights as these will change under successive iterations of the domino shuffle.

For the purposes of the proof of the result below, set, for \(1\le i,j\le n\), \(\Delta (i,j)=r_1(i,j)r_3(i,j)+r_2(i,j)r_4(i,j)\), \(Z_n(F^{(n)})\) to be the partition function of the Aztec diamond and