Abstract

We show that typical cocycles (in the sense of Bonatti and Viana) over irreducible subshifts of finite type obey several limit laws with respect to the unique equilibrium states for Hölder potentials. These include the central limit theorem and the large deviation principle. We also establish the analytic dependence of the top Lyapunov exponent on the underlying equilibrium state. The transfer operator and its spectral properties play key roles in establishing these limit laws.

References

Avila, A., Viana, M.: Simplicity of Lyapunov spectra: proof of the Zorich–Kontsevich conjecture. Acta Mathematica 198(1), 1–56 (2007)

Baladi, V.: Positive Transfer Operators and Decay of Correlations. Advanced Series in Nonlinear Dynamics, vol. 16. World Scientific Publishing Co., Inc., River Edge (2000)

Backes, L., Brown, A., Butler, C.: Continuity of Lyapunov exponents for cocycles with invariant holonomies. J. Mod. Dyn. 12(1), 223–260 (2018)

Bonatti, C., Gómez-Mont, X., Viana, M.: Généricité d’exposants de Lyapunov non-nuls pour des produits déterministes de matrices. Annales de l’IHP Analyse non linéaire 20, 579–624 (2003)

Bougerol, P., Lacroix, J.: Products of Random Matrices with Applications to Schrödinger Operators, Progress in Probability and Statistics, vol. 8. Birkhäuser Boston Inc, Boston (1985)

Bocker-Neto, C., Viana, M.: Continuity of Lyapunov exponents for random two-dimensional matrices. Ergod. Theory Dyn. Syst. 37(5), 1413–1442 (2017)

Bougerol, P.: Théorèmes limite pour les systèmes linéaires à coefficients markoviens. Probab. Theory Relat. Fields 78(2), 193–221 (1988)

Bowen, R.: Equilibrium States and the Ergodic Theory of Anosov Diffeomorphisms, Lecture Notes in Mathematics, vol. 470. Springer-Verlag (1975)

Benoist, Y., Quint, J.-F.: Random Walks on Reductive Groups, pp. 153–167. Springer (2016)

Bonatti, C., Viana, M.: Lyapunov exponents with multiplicity 1 for deterministic products of matrices. Ergod. Theory Dyn. Syst. 24(5), 1295–1330 (2004)

Bochi, J., Viana, M.: The Lyapunov exponents of generic volume-preserving and symplectic maps. Ann. Math. 161, 1423–1485 (2005)

Conway, J.B.: A Course in Functional Analysis, Graduate Texts in Mathematics, vol. 96, 2nd edn. Springer-Verlag, New York (1990)

Duarte, P., Klein, S.: Lyapunov exponents of linear cocycles, continuity via large deviations. Atl. Stud. Dyn. Syst. 3 (2016)

Duarte, P., Klein, S., Poletti, M.: Hölder continuity of the Lyapunov exponents of linear cocycles over hyperbolic maps. arXiv preprint arXiv:2110.10265 (2021)

Dunford, N., Schwartz, J.T.: Linear Operators, Part I. Wiley Classics Library, Wiley, New York (1988)

Furstenberg, H., Kesten, H.: Products of random matrices. Ann. Math. Stat. 31, 457–469 (1960)

Furstenberg, H., Kifer, Y.: Random matrix products and measures on projective spaces. Israel J. Math. 46(1–2), 12–32 (1983)

Furstenberg, H.: Noncommuting random products. Trans. Am. Math. Soc. 108(3), 377–428 (1963)

Guivarc’h, Y., Le Page, É.: Simplicité de spectres de Lyapounov et propriété d’isolation spectrale pour une famille d’opérateurs de transfert sur l’espace projectif, Random Walks and Geometry, pp. 181–259. Walter de Gruyter, Berlin (2004)

Gouëzel, S., Stoyanov, L.: Quantitative Pesin theory for Anosov diffeomorphisms and flows. Ergod. Theory Dyn. Syst. 39(1), 159–200 (2019)

Guivarc’h, Y.: Spectral gap properties and limit theorems for some random walks and dynamical systems, hyperbolic dynamics, fluctuations and large deviations. Proc. Sympos. Pure Math. 89, 279–310 (2015)

Hennion, H.: Sur un théorème spectral et son application aux noyaux lipchitziens. Proc. Am. Math. Soc. 118(2), 627–634 (1993)

Horn, R.A., Johnson, C.R.: Matrix Analysis, 2nd edn. Cambridge University Press, Cambridge (2013)

Kato, T.: Perturbation Theory for Linear Operators, Classics in Mathematics. Springer-Verlag, Berlin (1995)

Kingman, J.F.C.: Subadditive ergodic theory. Ann. Probab. 1, 883–909 (1973)

Kloeckner, B.R.: Effective perturbation theory for simple isolated eigenvalues of linear operators. J. Oper. Theory 81(1), 175–194 (2019)

Kalinin, B., Sadovskaya, V.: Cocycles with one exponent over partially hyperbolic systems. Geometriae Dedicata 167(1), 167–188 (2013)

Leplaideur, R.: Local product structure for equilibrium states. Trans. Am. Math. Soc. 352(4), 1889–1912 (2000)

Le Page, É.: Théorèmes limites pour les produits de matrices aléatoires, Probability measures on groups (Oberwolfach, 1981) Lecture Notes in Mathematics, vol. 928, pp. 258–303. Springer, Berlin-New York (1982)

Le Page, É.: Régularité du plus grand exposant caractéristique des produits de matrices aléatoires indépendantes et applications. Annales de l’IHP Probabilités et statistiques 25, 109–142 (1989)

Naud, F.: Birkhoff cones, symbolic dynamics and spectrum of transfer operators. Discrete Contin. Dyn. Syst. 11(2–3), 581–598 (2004)

Oseledets, V.I.: A multiplicative ergodic theorem. Characteristic Ljapunov exponents of dynamical systems. Trudy Moskovskogo Matematicheskogo Obshchestva 19, 179–210 (1968)

Park, K.: Quasi-multiplicativity of typical cocycles. Commun. Math. Phys. 376(3), 1957–2004 (2020)

Peres, Y.: Domains of analytic continuation for the top Lyapunov exponent. Ann. Inst. H. Poincaré Probab. Stat. 28(1), 131–148 (1992)

Piraino, M.: The weak Bernoulli property for matrix Gibbs states. Ergod. Theory Dyn. Syst. 40, 1–20 (2018)

Parry, W., Pollicott, M.: Zeta functions and the periodic orbit structure of hyperbolic dynamics. Astérisque 187–188, 1–268 (1990)

Ratner, M.: The central limit theorem for geodesic flows on n-dimensional manifolds of negative curvature. Israel J. Math. 16(2), 181–197 (1973)

Sasser, D.W.: Quasi-positive operators. Pac. J. Math. 14, 1029–1037 (1964)

Sert, C.: Large deviation principle for random matrix products. Ann. Probab. 47(3), 1335–1377 (2019)

Viana, M., Yang, J.: Continuity of Lyapunov exponents in the \(C^{0}\) topology. Israel J. Math. 229(1), 461–485 (2019)

Young, L.-S.: Large deviations in dynamical systems. Trans. Am. Math. Soc. 318(2), 525–543 (1990)

Acknowledgements

The authors would like to thank Amie Wilkinson, Aaron Brown, Jairo Bochi, and Silvius Klein for helpful discussions and comments. The authors would also like to thank the anonymous referees for their comments, which helped improve the paper.

Additional information

Communicated by C. Liverani.

M.P. was supported in part by the National Science Foundation Grant “RTG: Analysis on manifolds” at Northwestern University.

Appendix

1.1 The Ruelle–Perron–Frobenius theorem for semi-positive operators

Here we present the abstract Ruelle–Perron–Frobenius theorem that we make use of in this paper. We begin by recalling some definitions and propositions; for more background and proofs of these facts, we refer the reader to [DS88].

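Let X be a complex Banach space and \({\mathcal {L}}(X)\) the space of bounded linear operators on X. If \(L \in {\mathcal {L}}(X)\), we define

$$\begin{aligned} {{\,\mathrm{res}\,}}(L)=\left\{ \lambda \in {\mathbb {C}}: \lambda - L \text { is bijective with bounded inverse} \right\} \end{aligned}$$

and

$$\begin{aligned} {{\,\mathrm{spec}\,}}(L)={\mathbb {C}}{\setminus }{{\,\mathrm{res}\,}}(L). \end{aligned}$$

We write \(\rho (L)=\sup \left\{ \left| \lambda \right| : \lambda \in {{\,\mathrm{spec}\,}}(L) \right\} \) for the spectral radius of L.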

Theorem 9

The function \(\lambda \mapsto (\lambda - L)^{-1}\) is analytic on \({{\,\mathrm{res}\,}}(L)\).

For \(\lambda \in {{\,\mathrm{res}\,}}(L)\), we define

$$\begin{aligned} R(\lambda )=(\lambda - L)^{-1}. \end{aligned}$$

Definition 5

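The geometric multiplicity of an eigenvalue \(\lambda \) is

$$\begin{aligned} \dim \ker (\lambda - L), \end{aligned}$$

and the algebraic multiplicity is

$$\begin{aligned} \dim \bigcup _{n \ge 1}\ker (\lambda - L)^{n}. \end{aligned}$$

The order of \(\lambda \), provided it exists, is

$$\begin{aligned} \min \left\{ n \ge 1: \ker (\lambda - L)^{n}=\ker (\lambda - L)^{n+1} \right\} . \end{aligned}$$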

Proposition 12

If \(\lambda _{0}\) is an isolated point of \({{\,\mathrm{spec}\,}}(L)\) and a pole of \(R(\lambda )\), then \(\lambda _{0}\) is an eigenvalue for L. The order of \(\lambda _{0}\) as an eigenvalue is the order of \(\lambda _{0}\) as a pole of \(R(\lambda )\).

The main ideas behind the results of this subsection are essentially contained in Sasser [Sas64]. However, the results of [Sas64] do not apply directly in our setting, so we adapt those ideas to prove a Ruelle–Perron–Frobenius theorem which applies in our case.

Definition 6

Let X be a real topological vector space. A set \({\mathcal {C}}\subseteq X\) is called a cone if

1. \({\mathcal {C}}\) is convex, and

2. if \(x \in {\mathcal {C}}\), then \(\lambda x \in {\mathcal {C}}\) for all \(\lambda \ge 0\).

A cone is called proper if \(-{\mathcal {C}}\cap {\mathcal {C}}= \left\{ 0 \right\} \). A cone is called closed if it is a closed set in the topology of X.

Throughout this section we will assume that X is a real Banach space ordered by a closed proper cone \({\mathcal {C}}\).

Definition 7

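A set \({\mathcal {S}}\subseteq X^{*}\) is called sufficient for \({\mathcal {C}}\) if

$$\begin{aligned} {\mathcal {C}}=\left\{ x \in X: \left\langle x,s \right\rangle \ge 0 \text { for all }s \in {\mathcal {S}} \right\} . \end{aligned}$$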

Definition 8

Let \(L:X \rightarrow X\) be linear and bounded, \({\mathcal {C}}\subseteq X\) be a closed proper cone with non-empty interior, and \({\mathcal {S}}\) be a sufficient set for \({\mathcal {C}}\). We say that L is semi-positive with respect to \({\mathcal {C}}\) if

1. \(L{\mathcal {C}}\subseteq {\mathcal {C}}\).

2. For all \(x \in {\mathcal {C}}\) one of the following is true:

   (a) There exists N(x) such that \(L^{n}x \in {{\,\mathrm{int}\,}}({\mathcal {C}})\) for all \(n \ge N(x)\).

   (b) For any \(s\in {\mathcal {S}}\), the sequence \(\left\{ \left\langle L^{n}x,s \right\rangle \right\} _{n=1}^{\infty }\) converges to 0.

The main goal of this subsection is to prove the following theorem.

Theorem 10

Let X be a real Banach space ordered by a proper closed cone \({\mathcal {C}}\) with non-empty interior, and let \(L:X \rightarrow X\) be a quasi-compact bounded linear operator which is semi-positive with respect to \({\mathcal {C}}\). Then

1. There exist \(u \in {{\,\mathrm{int}\,}}({\mathcal {C}})\) and \(u^{*}\in {\mathcal {C}}^{*}\) such that \(Lu=\rho (L)u\), \(L^{*}u^{*}=\rho (L)u^{*}\), and \(\left\langle u,u^{*} \right\rangle =1\).

2. If \(\lambda \in {{\,\mathrm{spec}\,}}(L)\) with \(\left| \lambda \right| =\rho (L)\), then \(\lambda =\rho (L)\).

3. L can be written as

   $$\begin{aligned} L=\rho (L)(P+S) \end{aligned}$$

   where \(Px = \left\langle x,u^{*} \right\rangle u\), S is a bounded linear operator with \(\rho (S)<1\) and \(PS=SP=0\).

4. There exist constants \(C>0\) and \(0<\gamma < 1\) such that for any \(x \in X\)

   $$\begin{aligned} \left\| \rho (L)^{-n}L^{n}x - \left\langle x,u^{*} \right\rangle u \right\| \le C\left\| x \right\| \gamma ^{n} \end{aligned}$$

   for all \(n \ge 0\).
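To make parts (1) and (4) of Theorem 10 concrete, the following minimal sketch checks them on a hypothetical finite-dimensional example that is not taken from the paper: the positive matrix \(L\) with rows (2, 1) and (1, 2) acting on \({\mathbb {R}}^{2}\), ordered by the non-negative quadrant. For this assumed example \(\rho (L)=3\), \(u=(1,1)\) and \(u^{*}=(1/2,1/2)\); the helper names `mat_vec`, `pairing` and `error` are ad hoc.

```python
from fractions import Fraction

# Hypothetical example (not from the paper): a positive 2x2 matrix on R^2,
# with the cone C taken to be the non-negative quadrant.
L = [[Fraction(2), Fraction(1)],
     [Fraction(1), Fraction(2)]]
rho = Fraction(3)                          # spectral radius of L
u = [Fraction(1), Fraction(1)]             # eigenvector in int(C): L u = rho u
u_star = [Fraction(1, 2), Fraction(1, 2)]  # dual eigenvector with <u, u*> = 1


def mat_vec(M, v):
    """Apply the matrix M to the vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in M]


def pairing(x, x_star):
    """Evaluate the functional x_star on the vector x."""
    return sum(a * b for a, b in zip(x, x_star))


def error(x, n):
    """Max-norm of rho^{-n} L^n x - <x, u*> u."""
    y = list(x)
    for _ in range(n):
        y = [c / rho for c in mat_vec(L, y)]
    c = pairing(x, u_star)
    return max(abs(yi - c * ui) for yi, ui in zip(y, u))


x = [Fraction(1), Fraction(0)]
errors = [error(x, n) for n in range(6)]
for n, e in enumerate(errors):
    print(n, e)
```

In this example the error in the maximum norm is exactly \(\tfrac{1}{2}\cdot 3^{-n}\), so the constants \(C=1/2\) and \(\gamma =1/3\) work for this particular x.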

Lemma 10

Suppose that \({\mathcal {C}}\) is a closed proper cone and \({\mathcal {S}}\) is a sufficient set for \({\mathcal {C}}\). If \(\left\langle x,s \right\rangle =0\) for all \(s \in {\mathcal {S}}\), then \(x=0\).

Proof

Notice that if \(\left\langle x,s \right\rangle =0\) for all s, then \(x \in {\mathcal {C}}\). On the other hand \(\left\langle -x,s \right\rangle =0\) for all \(s \in {\mathcal {S}}\), so \(-x \in {\mathcal {C}}\). As \({\mathcal {C}}\) is proper this implies that \(x=0\). \(\quad \square \)

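One may in general be concerned that a cone may not have a sufficient set. Note that every cone has a natural candidate for a sufficient set. Define

$$\begin{aligned} {\mathcal {C}}^{*}=\left\{ x^{*}\in X^{*}: \left\langle x,x^{*} \right\rangle \ge 0 \text { for all }x \in {\mathcal {C}} \right\} . \end{aligned}$$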

Lemma 11

Suppose that \({\mathcal {C}}\) is a closed proper cone with non-empty interior. Then

1. \(x \in {\mathcal {C}}\) if and only if \(\left\langle x,x^{*} \right\rangle \ge 0\) for all \(x^{*}\in {\mathcal {C}}^{*}\); that is, \({\mathcal {C}}^{*}\) is a sufficient set for \({\mathcal {C}}\).

2. We have

   $$\begin{aligned} {{\,\mathrm{int}\,}}({\mathcal {C}})=\left\{ x \in X: \left\langle x,x^{*} \right\rangle >0 \text { for all }x^{*}\in {\mathcal {C}}^{*}{\setminus }\left\{ 0 \right\} \right\} . \end{aligned}$$

Proof

See [Nau04, Proposition 4.11] for (1).

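For (2), we show that each set is contained in the other. First, suppose that \(x \in {{\,\mathrm{int}\,}}({\mathcal {C}})\). Take \(\delta >0\) such that \(B(x,\delta )\subseteq {\mathcal {C}}\). If \(\left\| y \right\| =\delta /2\), then

$$\begin{aligned} \left\| (x\pm y)-x \right\| =\delta /2<\delta , \end{aligned}$$

and this implies that \(x-y,x+y \in {\mathcal {C}}\). Thus for any \(x^{*} \in {\mathcal {C}}^{*}\),

$$\begin{aligned} \left\langle x-y,x^{*} \right\rangle \ge 0 \quad \text {and}\quad \left\langle x+y,x^{*} \right\rangle \ge 0, \end{aligned}$$

which implies

$$\begin{aligned} \left| \left\langle y,x^{*} \right\rangle \right| \le \left\langle x,x^{*} \right\rangle . \end{aligned}$$

Then we have

$$\begin{aligned} \left\| x^{*} \right\| =\frac{2}{\delta }\sup _{\left\| y \right\| =\delta /2}\left| \left\langle y,x^{*} \right\rangle \right| \le \frac{2}{\delta }\left\langle x,x^{*} \right\rangle . \end{aligned}\tag{34}$$

In particular if \(x^*\ne 0\), then \(\left\langle x,x^{*} \right\rangle >0\).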

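For the other inclusion, suppose that

$$\begin{aligned} \left\langle x,x^{*} \right\rangle >0 \text { for all }x^{*}\in {\mathcal {C}}^{*}{\setminus }\left\{ 0 \right\} . \end{aligned}$$

Let \(u \in {{\,\mathrm{int}\,}}({\mathcal {C}})\) and take \(\delta >0\) such that \(B(u , \delta )\subseteq {{\,\mathrm{int}\,}}({\mathcal {C}})\). Setting

$$\begin{aligned} S=\left\{ x^{*}\in {\mathcal {C}}^{*}: \left\langle u,x^{*} \right\rangle =1 \right\} , \end{aligned}$$

notice that S is closed in the weak*-topology and by (34) we have that \(S \subseteq \overline{B(0, 2/ \delta )}^{\left\| \cdot \right\| }\). Thus S is weak*-compact by Banach–Alaoglu, and we can take \(\eta >0\) such that \(\left\langle x,x^{*} \right\rangle >\eta \) for all \(x^{*}\in S\). Then for any \(y\in X\) with \(\left\| x-y \right\| <\eta \delta /4\) and any \(x^{*}\in S\), we have

$$\begin{aligned} \left\langle y,x^{*} \right\rangle =\left\langle x,x^{*} \right\rangle -\left\langle x-y,x^{*} \right\rangle >\eta -\left\| x-y \right\| \left\| x^{*} \right\| \ge \eta -\frac{\eta \delta }{4}\cdot \frac{2}{\delta }=\frac{\eta }{2}. \end{aligned}$$

Hence \(\left\langle y,x^{*} \right\rangle >0\). Every non-zero element of \({\mathcal {C}}^{*}\) is a positive multiple of an element of S, because \(\left\langle u,x^{*} \right\rangle >0\) for all non-zero \(x^{*}\in {\mathcal {C}}^{*}\) by the first inclusion. As, by part (1), \({\mathcal {C}}\) is given by

$$\begin{aligned} {\mathcal {C}}=\left\{ y \in X: \left\langle y,x^{*} \right\rangle \ge 0 \text { for all }x^{*}\in {\mathcal {C}}^{*} \right\} , \end{aligned}$$

we have that \(y \in {\mathcal {C}}\). This shows that \(x \in {{\,\mathrm{int}\,}}({\mathcal {C}})\). \(\quad \square \)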

Denote by \(\widetilde{X}\) the complexification of X. That is,

$$\begin{aligned} \widetilde{X}=\left\{ x+iy: x,y \in X \right\} . \end{aligned}$$

With addition and scalar multiplication defined in the natural way, this becomes a complex vector space. The function

defines a norm on \(\widetilde{X}\) making it into a Banach space.

Any bounded linear operator \(T:X \rightarrow X\) extends to a bounded linear operator \(\widetilde{T}:\widetilde{X}\rightarrow \widetilde{X}\) and \(\left\| T \right\| _{X}=\left\| \widetilde{T} \right\| _{\widetilde{X}}\). For a bounded linear operator T, we define \({{\,\mathrm{spec}\,}}(T):={{\,\mathrm{spec}\,}}(\widetilde{T})\). Finally the dual of the complexification of X is isomorphic to the complexification of \(X^{*}\) and elements \(x^{*}\in X^{*}\) act on \(\widetilde{X}\) in the natural way by \(\left\langle x+iy,x^{*} \right\rangle :=\left\langle x,x^{*} \right\rangle +i\left\langle y,x^{*} \right\rangle \).

Let \(\widetilde{L}:\widetilde{X} \rightarrow \widetilde{X}\) be the extension of L to \(\widetilde{X}\). As L is quasi-compact, any \(\lambda _{0}\in {{\,\mathrm{spec}\,}}(L):={{\,\mathrm{spec}\,}}(\widetilde{L})\) with \(\left| \lambda _{0} \right| =1\) is an isolated point of the spectrum and a pole of the resolvent. Thus \(R(z)=(zI-\widetilde{L})^{-1}\) has an expansion

which is valid for \(0<\left| z-\lambda _{0} \right| <\delta \) for any \(\delta >0\) with \(B(\lambda _{0},\delta )\cap {{\,\mathrm{spec}\,}}(L)=\left\{ \lambda _{0} \right\} \). Note that \(\displaystyle \sum _{k=0}^{\infty }(z-\lambda _{0})^{k}A_{k}\) converges in the operator norm topology, n is the order of \(\lambda _{0}\) (by Proposition 12, n is the smallest integer such that \(\ker (\lambda _{0}I-\widetilde{L})^{n}=\ker (\lambda _{0}I-\widetilde{L})^{n+1}\)), and \(P_{\lambda _{0}}\) is the spectral projection onto the subspace \(\ker (\lambda _{0}I-\widetilde{L})^{n}\).

The next two results, due to Sasser [Sas64], describe properties of quasi-compact operators preserving a closed cone.

Proposition 13

Suppose that \(L:X\rightarrow X\) is quasi-compact, \({\mathcal {C}}\) is a proper closed cone, \(L{\mathcal {C}}\subseteq {\mathcal {C}}\) and \(\rho (L)=1\). Then \(1 \in {{\,\mathrm{spec}\,}}(L)\). Moreover if \(\lambda _{0}\in {{\,\mathrm{spec}\,}}(L)\) and \(\left| \lambda _{0} \right| =1\), then the order of \(\lambda _{0}\) is at most the order of 1.

Proof

This is [Sas64, Theorem 2]. \(\quad \square \)

Lemma 12

Suppose that \(L:X\rightarrow X\) is quasi-compact, \({\mathcal {C}}\) is a proper closed cone, \(L{\mathcal {C}}\subseteq {\mathcal {C}}\), and \(\rho (L)=1\). Then there exist \(u \in {\mathcal {C}}\) (\(u \ne 0\)) and \(u^{*}\in {\mathcal {C}}^{*}\) (\(u^{*}\ne 0\)) such that \(Lu=u\) and \(L^{*}u^{*}=u^{*}\).

Proof

This is [Sas64, Theorem 3]. \(\quad \square \)

Lemma 13

If \(z \in {{\,\mathrm{int}\,}}({\mathcal {C}})\) and \(x \in X\) with \(x \ne 0\), then there exists \(t \in {\mathbb {R}}{\setminus }\left\{ 0 \right\} \) such that \(z+tx \in \partial {\mathcal {C}}\).

Proof

By replacing x with \(-x\) if necessary, we may assume that \(x \notin {\mathcal {C}}\). Set

$$\begin{aligned} t_{0}=\sup \left\{ t \ge 0: z+tx \in {\mathcal {C}} \right\} \end{aligned}$$

and notice that as \(z \in {{\,\mathrm{int}\,}}({\mathcal {C}})\) we have that \(t_{0}>0\). As \(x \notin {\mathcal {C}}\), we may choose \(x^{*} \in {\mathcal {C}}^{*}\) such that \(\left\langle x,x^{*} \right\rangle <0\) by Lemma 11 (1). Notice that if \(t>-\left\langle z,x^{*} \right\rangle /\left\langle x,x^{*} \right\rangle \), then \(z+tx \notin {\mathcal {C}}\). Thus \(t_{0}<\infty \). As \({\mathcal {C}}\) is closed we have that \(z+t_{0}x \in {\mathcal {C}}\), but it is clear that \(z+t_{0}x\notin {{\,\mathrm{int}\,}}({\mathcal {C}})\). Hence, \(z+t_{0}x \in \partial {\mathcal {C}}\). \(\quad \square \)

The following proposition shows that with the added assumption of semi-positivity with respect to a proper closed cone, extra properties (such as some of the listed properties in Theorem 10) of the operator can be established.

Proposition 14

Suppose that \(L:X\rightarrow X\) is quasi-compact, \({\mathcal {C}}\) is a proper closed cone, L is semi-positive with respect to \({\mathcal {C}}\), and \(\rho (L)=1\). Then the following are true:

1. If \(w \in {\mathcal {C}}{\setminus }\{0\}\) with \(Lw=w\), then \(w \in {{\,\mathrm{int}\,}}({\mathcal {C}})\).

2. The order of the eigenvalue 1 is one.

3. \(\widetilde{L}\) can be written as

   $$\begin{aligned} \widetilde{L}=\sum _{j=1}^{m}\lambda _{j}P_{j}+S \end{aligned}$$

   where \(\left\{ \lambda _{j} \right\} \) is the collection of eigenvalues of L with modulus 1, \(P_{j}^{2}=P_{j}\), \(P_{j}S=SP_{j}=0\), \(P_{i}P_{j}=0\) for \(i\ne j\), and \(\rho (S)<1\).

4. The dimension of the eigenspace corresponding to 1 is one.

Proof

We can modify the proof of [Sas64, Theorem 4] to fit our assumptions.

1. Since \(L^{n}w=w\ne 0\) for all n, Lemma 10 gives some \(s\in {\mathcal {S}}\) with \(\left\langle w,s \right\rangle \ne 0\), so \(\left\{ \left\langle L^{n}w,s \right\rangle \right\} _{n=1}^{\infty }\) does not converge to 0. So by the assumption that L is semi-positive, it must be that \(L^{n}w\in {{\,\mathrm{int}\,}}({\mathcal {C}})\) for some n, but of course \(L^{n}w=w\). Hence, \(w \in {{\,\mathrm{int}\,}}({\mathcal {C}})\).

2. Let n be the order of 1 and set

   $$\begin{aligned} \varGamma = (I-\widetilde{L})^{n-1}P_{1}. \end{aligned}$$

   From the Laurent expansion of R(z) about 1 we can see that

   $$\begin{aligned} \varGamma&= \lim _{z \rightarrow 1}(z-1)^{n}R(z) \\&= \lim _{z \rightarrow 1^{+}}(z-1)^{n}R(z)\\&= \lim _{z \rightarrow 1^{+}}(z-1)^{n}\sum _{k=0}^{\infty }z^{-k-1}\widetilde{L}^{k}. \end{aligned}$$

   From this it follows that \(\varGamma X \subseteq X\) and \(\varGamma {\mathcal {C}}\subseteq {\mathcal {C}}\). Moreover

   $$\begin{aligned} (I-\widetilde{L})\varGamma = (I-\widetilde{L})^{n}P_{1}=0. \end{aligned}$$

   This combined with the previous equation implies that \(\varGamma \widetilde{L}=\widetilde{L}\varGamma = \varGamma \).

   Take \(x\in {\mathcal {C}}\) such that \(\varGamma x \ne 0\). Notice that \(L\varGamma x = \varGamma x\) and \(\varGamma x \in {\mathcal {C}}\), and thus \(\varGamma x \in {{\,\mathrm{int}\,}}({\mathcal {C}})\) by part (1). With \(u^* \in {\mathcal {C}}^*\) from Lemma 12, we have

   $$\begin{aligned} 0<\left\langle \varGamma x, u^{*} \right\rangle&=\lim _{z\rightarrow 1^{+}}(z-1)^{n}\sum _{k=0}^{\infty }z^{-k-1}\left\langle \widetilde{L}^{k}x,u^{*} \right\rangle \\&=\left\langle x,u^{*} \right\rangle \lim _{z\rightarrow 1^{+}}(z-1)^{n}\sum _{k=0}^{\infty }z^{-k-1}\\&=\left\langle x,u^{*} \right\rangle \lim _{z\rightarrow 1^{+}}(z-1)^{n}\frac{z^{-1}}{1-z^{-1}}\\&=\left\langle x,u^{*} \right\rangle \lim _{z\rightarrow 1^{+}}(z-1)^{n-1}. \end{aligned}$$

   Therefore \(n =1\).

3. We enumerate the points in \({{\,\mathrm{spec}\,}}(L)\) with modulus 1 as \(\left\{ \lambda _{i} \right\} _{i=1}^{m}\) where \(\lambda _{1}=1\). Letting \(P_{i}=P_{\lambda _{i}}\) be the spectral projection onto \(\ker (\lambda _{i}I-\widetilde{L})\), notice from Proposition 13 and part (2) that the order of \(\lambda _{i}\) is one. Setting \({\mathcal {P}}=\sum _{i}P_{i}\) and writing

   $$\begin{aligned} \widetilde{L}= \sum _{i}\widetilde{L}P_{i} + \widetilde{L}(I-{\mathcal {P}}), \end{aligned}$$

   it is easily seen that \(\widetilde{L}P_{i} = \lambda _{i}P_{i}\).

   We then take \(S = \widetilde{L}(I-{\mathcal {P}})\). As \(I-{\mathcal {P}}\) is the spectral projection onto \({{\,\mathrm{spec}\,}}(L){\setminus }\left\{ \lambda _{i} \right\} _{i=1}^{m}\) we have that \({{\,\mathrm{spec}\,}}(\widetilde{L}|_{{{\,\mathrm{Ran}\,}}(I-{\mathcal {P}})})={{\,\mathrm{spec}\,}}(L){\setminus } \left\{ \lambda _{i} \right\} _{i=1}^{m}\). In particular, \(\rho (S)<1\).

4. Let \(u\in {\mathcal {C}}\) be as in Lemma 12, so that \(u \in {{\,\mathrm{int}\,}}({\mathcal {C}})\) by part (1). Supposing that \(Lx=x\) for some non-zero \(x\in X\), we take t as in Lemma 13 such that \(u+tx \in \partial {\mathcal {C}}\). Since \(L(u+tx)=u+tx\) and a non-zero fixed point in \({\mathcal {C}}\) lies in \({{\,\mathrm{int}\,}}({\mathcal {C}})\) by part (1), it follows that \(u+tx=0\); that is, x is a scalar multiple of u.

   Now if \(x+iy \in \widetilde{X}\) is such that \(\widetilde{L}(x+iy)=x+iy\), then \(Lx=x\) and \(Ly=y\). Hence, \(x+iy\) is a scalar multiple of u, as required. \(\quad \square \)

Lemma 14

Suppose that \(L:X\rightarrow X\) is quasi-compact, \({\mathcal {C}}\) is a proper closed cone, L is semi-positive with respect to \({\mathcal {C}}\), and \(\rho (L)=1\). Then for any \(z \in {{\,\mathrm{int}\,}}({\mathcal {C}})\) we have that \(\overline{\left\{ L^{n}z \right\} }\subseteq {{\,\mathrm{int}\,}}({\mathcal {C}})\).

Proof

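Let \(u\in {\mathcal {C}}\) be as in Lemma 12. Notice that for any \(x^{*}\in {\mathcal {C}}^{*}\), we have that

$$\begin{aligned} \left\langle u,(L^{*})^{n}x^{*} \right\rangle =\left\langle L^{n}u,x^{*} \right\rangle =\left\langle u,x^{*} \right\rangle \quad \text {for all }n \ge 0. \end{aligned}$$

Suppose \(z \in {{\,\mathrm{int}\,}}({\mathcal {C}})\) and take \(\delta >0\) such that \(B(z,\delta )\subseteq {\mathcal {C}}\). Since \(L{\mathcal {C}}\subseteq {\mathcal {C}}\), we have \((L^{*})^{n}x^{*}\in {\mathcal {C}}^{*}\). Then

$$\begin{aligned} \left\langle L^{n}z,x^{*} \right\rangle =\left\langle z,(L^{*})^{n}x^{*} \right\rangle \ge \frac{\delta }{2}\left\| (L^{*})^{n}x^{*} \right\| \ge \frac{\delta }{2\left\| u \right\| }\left\langle u,(L^{*})^{n}x^{*} \right\rangle =\frac{\delta }{2\left\| u \right\| }\left\langle u,x^{*} \right\rangle , \end{aligned}$$

where the first inequality is due to (34). As \(u \in {{\,\mathrm{int}\,}}({\mathcal {C}})\) by Proposition 14 (1), the right-hand side is positive whenever \(x^{*}\ne 0\). In particular, if \(y \in \overline{\left\{ L^{n}z \right\} }\), then \(\left\langle y,x^{*} \right\rangle >0\) for all non-zero \(x^{*}\in {\mathcal {C}}^{*}\). Thus by Lemma 11, \(y \in {{\,\mathrm{int}\,}}({\mathcal {C}})\). \(\quad \square \)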

Proposition 15

Suppose that \(L:X\rightarrow X\) is quasi-compact, \({\mathcal {C}}\) is a proper closed cone, L is semi-positive with respect to \({\mathcal {C}}\), and \(\rho (L)=1\). Then 1 is the only element of \({{\,\mathrm{spec}\,}}(L)\) of modulus 1.

Proof

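Let u be as in Lemma 12. Suppose that \(e^{i\theta }\) belongs to \({{\,\mathrm{spec}\,}}(L)\) and let \(x+iy \ne 0\) be its corresponding eigenvector; that is, \(\widetilde{L}(x+iy)=e^{i\theta }(x+iy)\). Take a sequence \(n_{k}\rightarrow \infty \) such that \(e^{in_{k}\theta }\xrightarrow {k \rightarrow \infty }1\), then

$$\begin{aligned} \widetilde{L}^{n_{k}}(x+iy)=e^{in_{k}\theta }(x+iy)\xrightarrow {k \rightarrow \infty }x+iy. \end{aligned}$$

Thus

$$\begin{aligned} L^{n_{k}}x\rightarrow x \quad \text {and}\quad L^{n_{k}}y \rightarrow y. \end{aligned}$$

If \(x \ne 0\), then by Lemma 13 we can take \(t\ne 0\) such that \(u+tx \in \partial {\mathcal {C}}\). Notice that

$$\begin{aligned} L^{n_{k}}(u+tx)=u+tL^{n_{k}}x\xrightarrow {k \rightarrow \infty }u+tx. \end{aligned}$$

By the assumption that L is semi-positive with respect to \({\mathcal {C}}\), either \(u+tx=0\) or there exists N such that \(L^{n}(u+tx)\in {{\,\mathrm{int}\,}}({\mathcal {C}})\) for all \(n\ge N\).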

It turns out that we must have \(u+tx=0\). This is because if \(u+tx \ne 0\), then we would have \(u+tx \in \overline{\left\{ L^{n}(L^{N}(u+tx)) \right\} _{n=1}^{\infty }} \subseteq {{\,\mathrm{int}\,}}({\mathcal {C}})\) by Lemma 14. Then \(u+tx \in \partial {\mathcal {C}}\), and at the same time, \(u+tx \in {{\,\mathrm{int}\,}}({\mathcal {C}})\), which is absurd.

The same argument shows that if \(y \ne 0\), then y is a scalar multiple of u. Therefore, \(x+iy\) is a scalar multiple of u and \(e^{i \theta }=1\). \(\quad \square \)

Proof of Theorem 10

1. This follows by applying Lemma 12 as well as Proposition 14 (1) and (2) to \(\rho (L)^{-1}L\).

2. This follows by an application of Proposition 15 to \(\rho (L)^{-1}L\).

3. This follows from Proposition 14 (3) and (4) and part (2) of this theorem.

4. Notice that by part (3) we have that \(\rho (L)^{-n}L^{n}= P + S^{n}\). Thus

   $$\begin{aligned} \left\| \rho (L)^{-n}L^{n}x - \left\langle x,u^{*} \right\rangle u \right\| = \left\| Px+S^{n}x - \left\langle x,u^{*} \right\rangle u \right\| =\left\| S^{n}x \right\| . \end{aligned}$$

   Take \(\rho (S)<\gamma < 1\), then

   $$\begin{aligned} \left\| S^{n}x \right\| \le \frac{\left\| S^{n} \right\| }{\gamma ^{n}}\left\| x \right\| \gamma ^{n} . \end{aligned}$$

   As \(\left\| S^{n} \right\| \) has an exponential growth rate of \(\log \rho (S)\) by Gelfand’s formula, we have that \(\displaystyle \frac{\left\| S^{n} \right\| }{\gamma ^{n}}\) tends to 0, and in particular, is bounded by some constant \(C>0\). \(\quad \square \)
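The role of the constant C in the last step can be seen in a small numerical sketch. The matrix below is a hypothetical non-normal example, not taken from the paper, with \(\rho (S)=1/2\) but \(\left\| S \right\| >1\); the choice \(\gamma =3/4\) and the helper names `mat_mul` and `op_norm` are likewise assumptions made for illustration. The ratios \(\left\| S^{n} \right\| /\gamma ^{n}\) first grow and then decay, so a constant larger than 1 is needed.

```python
from fractions import Fraction

# Hypothetical non-normal example: rho(S) = 1/2 but the operator norm exceeds 1.
S = [[Fraction(1, 2), Fraction(10)],
     [Fraction(0), Fraction(1, 2)]]
gamma = Fraction(3, 4)  # any gamma with rho(S) < gamma < 1 would do


def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def op_norm(A):
    """Operator norm induced by the max-norm on vectors (max absolute row sum)."""
    return max(sum(abs(a) for a in row) for row in A)


ratios = []
power = [[Fraction(1), Fraction(0)], [Fraction(0), Fraction(1)]]  # S^0
for n in range(40):
    ratios.append(op_norm(power) / gamma ** n)  # ||S^n|| / gamma^n
    power = mat_mul(power, S)

C = max(ratios)  # a valid constant over the computed range
print(C, float(ratios[-1]))
```

Here \(\left\| S^{n} \right\| =2^{-n}(1+20n)\), so the ratios equal \((1+20n)(2/3)^{n}\); they peak at \(n=2\) and then tend to 0, in line with Gelfand's formula.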

1.2 Direct sums of Banach spaces and spectral properties of non-negative matrices

Proposition 16

1. Let \(\left\{ X_{i} \right\} _{i=1}^{n}\) be a finite collection of Banach spaces. Define the vector space

   $$\begin{aligned} \bigoplus _{i=1}^{n}X_{i}=\left\{ (x_{1}, x_{2}, \ldots , x_{n}) :x_{i}\in X_{i} \right\} \end{aligned}$$

   with addition and scalar multiplication being coordinate-wise. Then the function

   $$\begin{aligned} \left\| (x_{i})_{i=1}^{n} \right\| = \max _{i}\left\| x_{i} \right\| _{X_{i}} \end{aligned}$$

   defines a norm on \(\bigoplus _{i=1}^{n}X_{i}\) which makes it a Banach space.

2. Suppose that \(\left\{ X_{i} \right\} _{i=1}^{n}\) is a collection of Banach spaces and that \(\left\{ A_{ij} \right\} _{i=1,j=1}^{n,n}\) is a collection of bounded linear operators \(A_{ij}:X_{j}\rightarrow X_{i}\). The function

   $$\begin{aligned} {\mathcal {A}}(x_{i})_{i=1}^{n}:= \left( \sum _{j=1}^{n}A_{ij}x_{j}\right) _{i=1}^{n} \end{aligned}$$

   defines a bounded linear operator \({\mathcal {A}}:\bigoplus _{i=1}^{n}X_{i}\rightarrow \bigoplus _{i=1}^{n}X_{i}\). We will denote this operator by \([A_{ij}]\).

3. Suppose that \({\mathcal {A}}: \bigoplus _{i}X_{i} \rightarrow \bigoplus _{i}X_{i}\) is a bounded linear operator. Then there exists a collection of bounded linear operators \(A_{ij}:X_{j}\rightarrow X_{i}\) such that \({\mathcal {A}}= [A_{ij}]\).

4. Let \({\mathcal {A}}= [A_{ij}]\) and \({\mathcal {B}}=[B_{ij}]\), then \({\mathcal {A}}{\mathcal {B}}= [\sum _{k}A_{ik}B_{kj}]\).

Proof

1. This can be found in [Con90, Chapter 3, Proposition 4.4].

2. That the function \({\mathcal {A}}\) is linear can be verified by computation. To see that \({\mathcal {A}}\) is bounded, we note that

   $$\begin{aligned} \left\| \sum _{j=1}^{n}A_{ij}x_{j} \right\| _{X_{i}}\le \sum _{j=1}^{n}\left\| A_{ij} \right\| _{{{\,\mathrm{op}\,}}}\left\| x_{j} \right\| _{X_{j}}\le \left\| (x_{i}) \right\| \sum _{j=1}^{n}\left\| A_{ij} \right\| _{{{\,\mathrm{op}\,}}}. \end{aligned}$$

   Therefore \(\left\| {\mathcal {A}} \right\| _{{{\,\mathrm{op}\,}}}\le \max \limits _{i}\displaystyle \sum _{j=1}^{n}\left\| A_{ij} \right\| _{{{\,\mathrm{op}\,}}}\).

3. For each \(1\le i\le n\), define \(P_{i}:\bigoplus _{k}X_{k} \rightarrow X_{i}\) by

   $$\begin{aligned} P_{i}(x_{1}, x_{2}, \ldots , x_{n})=x_{i} \end{aligned}$$

   and \(\overline{P}_{i}:X_{i} \rightarrow \bigoplus _{k}X_{k}\) by

   $$\begin{aligned} \overline{P}_{i}x = (0,\ldots , 0,\underset{i\text {th place}}{x},0,\ldots ,0). \end{aligned}$$

   Setting \(A_{ij}=P_{i}{\mathcal {A}}\overline{P}_{j}\), it can be verified that \({\mathcal {A}}=[A_{ij}]\).

4. Notice that

   $$\begin{aligned} {\mathcal {A}}{\mathcal {B}}(x_{i})_{i=1}^{n}&= {\mathcal {A}}\left( \sum _{j=1}^{n}B_{ij}x_{j}\right) _{i=1}^{n}\\&=\left( \sum _{k=1}^{n}A_{ik}\sum _{j=1}^{n}B_{kj}x_{j}\right) _{i=1}^{n}\\&=\left( \sum _{j=1}^{n}\sum _{k=1}^{n}A_{ik}B_{kj}x_{j}\right) _{i=1}^{n}. \end{aligned}$$

   Therefore \({\mathcal {A}}{\mathcal {B}}= [\sum _{k}A_{ik}B_{kj}]\). \(\quad \square \)

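When working with an operator \({\mathcal {A}}\) acting on a direct sum, we will sometimes write

$$\begin{aligned} {\mathcal {A}}= \begin{bmatrix} A_{11} & \cdots & A_{1n} \\ \vdots & \ddots & \vdots \\ A_{n1} & \cdots & A_{nn} \end{bmatrix} \end{aligned}$$
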
to mean that \({\mathcal {A}}=[A_{ij}]\). By Proposition 16, the operation of multiplication of operators is compatible with the typical formulas for matrix multiplication, justifying such notation.

Lemma 15

Let \(\left\{ X_{i} \right\} _{i=1}^{n}\) be a collection of Banach spaces and \({\mathcal {A}}:\bigoplus _{i}X_{i}\rightarrow \bigoplus _{i}X_{i}\) a bounded linear operator. Suppose that \({\mathcal {A}}=[A_{ij}]\) is block upper triangular, that is, \(A_{ij}=0\) whenever \(i>j\). Then

1. \({\mathcal {A}}\) is invertible if and only if \(A_{ii}\) is invertible for all \(1 \le i \le n\).

2. We have

   $$\begin{aligned} {{\,\mathrm{spec}\,}}({\mathcal {A}}) = \bigcup _{i=1}^{n}{{\,\mathrm{spec}\,}}(A_{ii}). \end{aligned}$$

Proof

1. \((\Rightarrow )\) This direction is clear; use the invertibility of \({\mathcal {A}}\) and apply it to vectors of the form \((0,0, \ldots , x_{i}, \ldots , 0)\) to define the inverse for \(A_{ii}\).

   \((\Leftarrow )\) The proof is by induction on n (the number of Banach spaces). It is clear that the result holds for \(n=1\). Supposing that the result holds for \(n-1\), we can think of \(\bigoplus _{i=1}^{n}X_{i}\) as \(\left( \bigoplus _{i=1}^{n-1}X_{i}\right) \oplus X_{n}\) and write \({\mathcal {A}}\) as a matrix with respect to this direct sum

   $$\begin{aligned} {\mathcal {A}}= \begin{bmatrix} \widetilde{A}_{11}&{} \widetilde{A}_{12} \\ 0 &{} A_{nn} \end{bmatrix} . \end{aligned}$$

   By assumption \(A_{nn}\) is invertible, and by the induction hypothesis \(\widetilde{A}_{11}\) is invertible. Now one can check using Proposition 16 (4) that

   $$\begin{aligned} \begin{bmatrix} \widetilde{A}^{-1}_{11}&{}-\widetilde{A}_{11}^{-1}\widetilde{A}_{12}A_{nn}^{-1} \\ 0 &{} A_{nn}^{-1} \end{bmatrix} \end{aligned}$$

   defines an inverse for \({\mathcal {A}}\).

2. This follows from part (1) applied to \(\lambda I-{\mathcal {A}}\), which is again block upper triangular with diagonal blocks \(\lambda I-A_{ii}\). \(\quad \square \)
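The block inverse formula used in the induction step can be checked on a small finite-dimensional instance. The sketch below uses hypothetical \(2\times 2\) rational blocks chosen here for illustration (they are not from the paper), and ad hoc helpers `mat_mul`, `inv2`, `neg` and `assemble`; it verifies that the candidate matrix is a two-sided inverse.

```python
from fractions import Fraction as F


def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def inv2(M):
    """Inverse of an invertible 2x2 matrix via the adjugate formula."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def neg(M):
    """Entrywise negation."""
    return [[-x for x in row] for row in M]


def assemble(B11, B12, B21, B22):
    """Build the full matrix from its four blocks."""
    top = [r1 + r2 for r1, r2 in zip(B11, B12)]
    bottom = [r1 + r2 for r1, r2 in zip(B21, B22)]
    return top + bottom


# Hypothetical blocks with invertible diagonal blocks A11 and A22.
A11 = [[F(1), F(2)], [F(3), F(5)]]
A12 = [[F(1), F(0)], [F(2), F(1)]]
A22 = [[F(2), F(1)], [F(1), F(1)]]
ZERO = [[F(0), F(0)], [F(0), F(0)]]

A = assemble(A11, A12, ZERO, A22)
A11_inv, A22_inv = inv2(A11), inv2(A22)
off_diagonal = neg(mat_mul(mat_mul(A11_inv, A12), A22_inv))
candidate = assemble(A11_inv, off_diagonal, ZERO, A22_inv)

identity = [[F(int(i == j)) for j in range(4)] for i in range(4)]
left = mat_mul(candidate, A)
right = mat_mul(A, candidate)
print(left == identity and right == identity)
```

Exact rational arithmetic is used so that the comparison with the identity matrix involves no rounding.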

Theorem 11

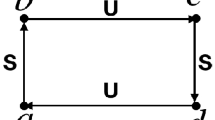

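(Perron–Frobenius). Suppose that A is an irreducible non-negative matrix. There exist a number \(h\ge 1\), called the period of A, and a permutation matrix P such that \(PAP^{*}\) can be written as a block matrix of the following form

$$\begin{aligned} PAP^{*}= \begin{bmatrix} 0 & A_{12} & 0 & \cdots & 0 \\ 0 & 0 & A_{23} & \cdots & 0 \\ \vdots & \vdots & & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & A_{h-1,h} \\ A_{h1} & 0 & 0 & \cdots & 0 \end{bmatrix} \end{aligned}$$

where the diagonal blocks are square. Moreover,

$$\begin{aligned} (PAP^{*})^{h}= \begin{bmatrix} B_{1} & & \\ & \ddots & \\ & & B_{h} \end{bmatrix} \end{aligned}$$

and each diagonal block \(B_{i}\) is primitive.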

Proof

For the proof we refer the reader to [HJ13, Section 8.4] and the references therein. \(\quad \square \)
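For an irreducible non-negative matrix, the period h in Theorem 11 coincides with the greatest common divisor of the lengths of the cycles in the directed graph of the matrix. The following sketch computes it with a standard breadth-first-search method; it is an illustration added here rather than a procedure from the paper, the function name `period` is hypothetical, and the input is assumed to be irreducible.

```python
from collections import deque
from math import gcd


def period(A):
    """Period of an irreducible non-negative square matrix A.

    Runs a breadth-first search from index 0 on the graph with an edge
    i -> j whenever A[i][j] > 0, and returns the gcd of
    level[i] + 1 - level[j] over all edges. Irreducibility of A is assumed
    and not checked.
    """
    n = len(A)
    level = [None] * n
    level[0] = 0
    queue = deque([0])
    g = 0
    while queue:
        i = queue.popleft()
        for j in range(n):
            if A[i][j] > 0:
                if level[j] is None:
                    level[j] = level[i] + 1  # tree edge, contributes 0
                    queue.append(j)
                else:
                    g = gcd(g, abs(level[i] + 1 - level[j]))
    return g


cyclic = [[0, 1, 0],
          [0, 0, 1],
          [1, 0, 0]]      # a single 3-cycle
swap = [[0, 1],
        [1, 0]]           # a single 2-cycle
primitive = [[1, 1],
             [1, 0]]      # has a loop, hence aperiodic

print(period(cyclic), period(swap), period(primitive))
```

A matrix with period 1 is primitive, which matches the case \(h=1\) of the theorem.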

Proposition 17

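Suppose that

$$\begin{aligned} {\mathcal {A}}= \begin{bmatrix} 0 & A_{12} & 0 & \cdots & 0 \\ 0 & 0 & A_{23} & \cdots & 0 \\ \vdots & \vdots & & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & A_{h-1,h} \\ A_{h1} & 0 & 0 & \cdots & 0 \end{bmatrix} . \end{aligned}$$

That is \({\mathcal {A}}=[A_{ij}]\) where \(A_{ij}=0\) unless \(j\equiv i+1 \mod h\). Then for each \(0\le k\le h-1\), there exists an isometric isomorphism \({\mathcal {D}}:\bigoplus _{i=1}^{h}X_{i}\rightarrow \bigoplus _{i=1}^{h}X_{i}\) such that \({\mathcal {D}}^{-1}{\mathcal {A}}{\mathcal {D}}= e^{\frac{2k \pi i}{h}}{\mathcal {A}}\).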

Proof

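Define

$$\begin{aligned} {\mathcal {D}}(x_{j})_{j=1}^{h}=\left( e^{\frac{2k \pi i j}{h}}x_{j}\right) _{j=1}^{h}. \end{aligned}$$

Then

$$\begin{aligned} {\mathcal {D}}^{-1}{\mathcal {A}}{\mathcal {D}}(x_{j})_{j=1}^{h}=\left( \sum _{j=1}^{h}e^{\frac{2k \pi i (j-i)}{h}}A_{ij}x_{j}\right) _{i=1}^{h}, \end{aligned}$$

which is equal to \(e^{\frac{2k \pi i}{h}}{\mathcal {A}}(x_{j})_{j=1}^{h}\), since \(A_{ij}=0\) unless \(j\equiv i+1 \mod h\) and \(e^{\frac{2k \pi i (1-h)}{h}}=e^{\frac{2k \pi i}{h}}\). \(\quad \square \)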
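The conjugation identity of Proposition 17 can be checked numerically in the simplest case where every \(X_{i}\) is one-dimensional, so that \({\mathcal {A}}\) is an \(h\times h\) cyclic matrix. The sketch below assumes one natural choice of \({\mathcal {D}}\), a diagonal matrix whose entries are successive powers of \(\omega =e^{2k\pi i/h}\); the matrix entries and the function name `conjugation_defect` are hypothetical.

```python
import cmath

# Hypothetical cyclic matrix with scalar blocks: A[i][j] = 0 unless j = i + 1 mod h.
A = [[0, 2, 0],
     [0, 0, 5],
     [7, 0, 0]]
h = len(A)


def conjugation_defect(A, k):
    """Largest entry of |D^{-1} A D - omega A| for D = diag(omega^0, ..., omega^(h-1))."""
    h = len(A)
    omega = cmath.exp(2j * cmath.pi * k / h)
    d = [omega ** j for j in range(h)]  # unimodular diagonal entries of D
    conj = [[A[i][j] * d[j] / d[i] for j in range(h)] for i in range(h)]
    return max(abs(conj[i][j] - omega * A[i][j])
               for i in range(h) for j in range(h))


defects = [conjugation_defect(A, k) for k in range(h)]
print(defects)
```

Because the diagonal entries of \({\mathcal {D}}\) have modulus 1, this \({\mathcal {D}}\) is an isometry for the maximum norm, and the defects vanish up to floating-point rounding for every k.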

Cite this article

Park, K., Piraino, M. Transfer Operators and Limit Laws for Typical Cocycles. Commun. Math. Phys. 389, 1475–1523 (2022). https://doi.org/10.1007/s00220-021-04300-x
