Abstract

We consider a sharp interface formulation for the multi-phase Mullins–Sekerka flow. The flow is characterized by a network of curves evolving such that the total surface energy of the curves is reduced, while the areas of the enclosed phases are conserved. Making use of a variational formulation, we introduce a fully discrete finite element method. Our discretization features a parametric approximation of the moving interfaces that is independent of the discretization used for the equations in the bulk. The scheme can be shown to be unconditionally stable and to satisfy an exact volume conservation property. Moreover, an inherent tangential velocity for the vertices on the discrete curves leads to asymptotically equidistributed vertices, meaning no remeshing is necessary in practice. Several numerical examples, including a convergence experiment for the three-phase Mullins–Sekerka flow, demonstrate the capabilities of the introduced method.



1 Introduction

In this paper, we consider the problem of networks of curves moving under the multi-phase Mullins–Sekerka flow, see, e.g., [14]. These networks feature triple junctions, at which certain balance laws need to hold. The network depicted in Fig. 1 consists of three time-dependent curves \(\Gamma ^{}_{i}(t)\), \(i=1,2,3\), that meet at two triple junction points \(\mathcal {T}_1(t)\) and \(\mathcal {T}_2(t)\). We assume that the curve network \(\Gamma ^{}_{}(t):= \cup _{i=1}^3\Gamma ^{}_{i}(t)\) lies in a domain \(\Omega \subset \mathbb {R}^2\) for all times \(t \in [0, T ]\) and partitions \(\Omega \) into the three subdomains \(\Omega _j(t)\), \(j=1,2,3\), which correspond to the different phases of the multi-component system. The evolution of the interfaces \(\Gamma ^{}_{1}(t),\Gamma ^{}_{2}(t),\) and \(\Gamma ^{}_{3}(t)\) is driven by diffusion. As in [14], given a time \(T > 0\) and the hyperplane \(T\Sigma := \{{\varvec{u}}\in \mathbb {R}^3\mid \sum _{j=1}^3u_j = 0\}\), we introduce a vector of chemical potentials \({\varvec{w}}^{}_{}:(0,T]\times \Omega \rightarrow T\Sigma \) which fulfills the quasi-static diffusion equation for \(j=1,2,3\) and \(t\in (0,T]\),

together with

where \(\vec {\nu }_\Omega \) denotes the outer unit normal vector to \(\partial \Omega \).

To close the system, we need boundary conditions on \(\Gamma ^{}_{}(t)\) and on \(\mathcal {T}_1(t)\) and \(\mathcal {T}_2(t)\). These boundary conditions are given by a Stefan-type kinetic condition and the Gibbs–Thomson law on the moving interfaces, and Young’s law at the triple junctions, see [14]. The kinetic condition reads

where \({\varvec{\chi }}= (\chi _1,\chi _2,\chi _3)^T\) denotes the vector of characteristic functions \(\chi _j = \mathrm {\mathcal {X}}_{\Omega _j(t)}\) of \(\Omega _j(t)\), \(\vec {\nu }\) is the unit normal vector on \(\Gamma ^{}_{}(t)\), and V is the velocity of \(\Gamma (t)\) in the direction of \(\vec {\nu }\). We write \(\vec \nu = \sum _{i=1}^3 \vec \nu _i\mathrm {\mathcal {X}}_{\Gamma _i(t)}\) and use this convention for quantities defined on \(\Gamma (t)\) throughout the paper. The orientation of the three normal vectors is shown in Fig. 1. In addition, [q] denotes the jump of q across \(\Gamma (t)\) in the direction of \(\vec {\nu }\), defined by \([q](\vec x) := \lim _{\varepsilon \searrow 0}\{q(\vec x + \varepsilon \vec {\nu }) - q(\vec x - \varepsilon \vec {\nu })\}\). Furthermore, the Gibbs–Thomson equations can be written as

where \(\varkappa \) denotes the curvature of \(\Gamma (t)\) (well-defined on the interiors of \(\Gamma _i(t)\)), and \(\sigma = \sum _{i=1}^3 \sigma _i \mathrm {\mathcal {X}}_{\Gamma _i(t)}\) is a surface tension coefficient on \(\Gamma (t)\). Our sign convention is such that unit circles have curvature \(\varkappa =-1\), which differs from the one used in [14]. Finally, denoting by \(\vec {\mu }_{i}\) the outer unit co-normal to \(\Gamma ^{}_{i}(t)\), we require Young’s law, which is the following force balance condition at the triple junctions:

For this condition to be satisfiable, we require \(\sigma _1 \le \sigma _2 + \sigma _3\), \(\sigma _2 \le \sigma _1 + \sigma _3\) and \(\sigma _3 \le \sigma _1 + \sigma _2\). It can be shown that solutions to (1.1) reduce the weighted length \(\sum _{i=1}^3 \sigma _i |\Gamma _i(t)|\) of the curve network, while conserving the areas of the subdomains \(\Omega _1(t)\) and \(\Omega _2(t)\) (and hence trivially also of \(\Omega _3(t)\)); see Sect. 2 for the precise details. Our aim in this paper is to introduce a numerical method that preserves these two properties on the discrete level.
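To see why these triangle inequalities are needed, assume that Young’s law takes the standard form \(\sigma _1\vec \mu _1 + \sigma _2\vec \mu _2 + \sigma _3\vec \mu _3 = \vec 0\). Then the vectors \(\sigma _i\vec \mu _i\) close up into a triangle with side lengths \(\sigma _i\), and taking squared norms in \(\sigma _i\vec \mu _i = -(\sigma _j\vec \mu _j + \sigma _k\vec \mu _k)\) gives \(\sigma _i^2 = \sigma _j^2 + \sigma _k^2 + 2\sigma _j\sigma _k\cos \theta _{jk}\), which fixes the angle \(\theta _{jk}\) between \(\vec \mu _j\) and \(\vec \mu _k\). A small Python sketch (the helper name is hypothetical) that computes these angles and rejects infeasible tensions:

```python
import math


def junction_angles(sigma):
    """Angles in degrees between the co-normals at a triple junction.

    Assumes Young's law in the form sigma_1*mu_1 + sigma_2*mu_2 + sigma_3*mu_3 = 0
    with unit co-normals mu_i. Returns a dict mapping the pair (j, k) to the
    angle between mu_j and mu_k.
    """
    s1, s2, s3 = sigma
    # The vectors sigma_i*mu_i must close up into a triangle with side lengths sigma_i.
    if not (s1 <= s2 + s3 and s2 <= s1 + s3 and s3 <= s1 + s2):
        raise ValueError("Young's law cannot be satisfied for these sigma")
    angles = {}
    for i, j, k in [(0, 1, 2), (1, 0, 2), (2, 0, 1)]:
        # Squared norms of sigma_i*mu_i = -(sigma_j*mu_j + sigma_k*mu_k) give
        # sigma_i^2 = sigma_j^2 + sigma_k^2 + 2*sigma_j*sigma_k*cos(angle(mu_j, mu_k)).
        c = (sigma[i] ** 2 - sigma[j] ** 2 - sigma[k] ** 2) / (2 * sigma[j] * sigma[k])
        c = max(-1.0, min(1.0, c))  # guard against round-off
        angles[(j + 1, k + 1)] = math.degrees(math.acos(c))
    return angles
```

For equal surface tensions every pair of co-normals meets at 120°, the familiar symmetric triple junction; for \(\sigma = (3,4,5)\) the three angles are different but still add up to 360°.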

Prescribing an initial condition \(\Gamma ^{}_{}(0) = \Gamma ^{0}_{}\) for the interface, we altogether obtain the following system:

As written, system (1.2) describes the setup of Fig. 1, i.e. a network of three curves meeting at two triple junctions and partitioning \(\Omega \) into three phases. We will later generalize this to an arbitrary network of curves. The simplest case is a single closed curve that partitions the domain into two phases, which yields the classical two-phase Mullins–Sekerka problem, see [18] and the references given below. Indeed, let \(({\varvec{w}},\{\Gamma ^{}_{}(t)\}_{0\le t\le T})\), with \({\varvec{w}} = (w_1,w_2)^T\), be a solution to the corresponding problem (1.2) with \(\sigma =1\), and let \(\vec {\nu }^{}_{}\) point into \(\Omega _2(t)\), the interior domain of \(\Gamma ^{}_{}(t)\). Then \([{\varvec{\chi }}] = {(-1,1)}^T\) on \(\Gamma ^{}_{}(t)\), and \(w = {w_2 - w_1}\) solves the system

The multi-phase Mullins–Sekerka problem (1.2) arises naturally as the sharp interface limit of a nondegenerate multi-component Cahn–Hilliard equation. Let \(\Sigma :=\{{\varvec{u}}\in \mathbb {R}^3\mid \sum _{j=1}^3u_j = 1\}\) and let \(T\widetilde{\Sigma }\) be the family of all functions \({\varvec{w}}^{}_{}:\Omega \rightarrow \mathbb {R}^3\) such that \({\text {Im}}{({\varvec{w}}^{}_{})}\subset T\Sigma \). Let \(\psi :\mathbb {R}^3\rightarrow \mathbb {R}\) be a potential whose restriction to \(\Sigma \) has exactly three distinct and strict global minima, say \({\varvec{p}}_i\in \Sigma \), \(i=1,2,3\), with \(\psi ({\varvec{p}}_1) = \psi ({\varvec{p}}_2) = \psi ({\varvec{p}}_3)\). Let \(F:\mathbb {R}^3\rightarrow \mathbb {R}^3\) be the projection of \(\nabla {\psi }\) onto \(T\widetilde{\Sigma }\). According to [14, Section 2], the system (1.2) is obtained in the limit \(\varepsilon \rightarrow 0\) of a chemical system of three species governed by the vector-valued Cahn–Hilliard equation, which reads:

where \({\varvec{u}}_0:\Omega \rightarrow \Sigma \) denotes the initial distribution of each component, and \({\varvec{u}}:\Omega \times [0,T]\rightarrow \Sigma \) and \({\varvec{w}}^{}_{}:\Omega \times (0,T]\rightarrow T\Sigma \) denote the concentration and the chemical potential of each component over time, respectively. In [14], a distributional solution concept for (1.2) was proposed, and the existence of such solutions was established via an implicit time discretization, under the assumption that no interfacial energy is lost in the limit of the time discretization (see [14, Definition 4.1, Theorem 5.8]). See [28] for related work that treats the case without triple junctions and with a driving force.

Compared to the multi-phase Mullins–Sekerka problem, the binary, i.e. two-phase, case is well studied. Regarding classical solutions, Chen et al. [18] proved the local-in-time existence of a classical solution to the Mullins–Sekerka problem in two space dimensions, whereas Escher and Simonett [22] obtained a similar result in arbitrary dimensions. Regarding weak solutions, Luckhaus and Sturzenhecker [33] established the existence of weak solutions to (1.2) in a distributional sense. There, the weak solution was obtained as the limit of a sequence of time discrete approximate solutions under the assumption of no mass loss. This implicit time discretization is the basis of the approach in [14]. Later, Röger [40] used geometric measure theory to remove the technical no-mass-loss assumption in the case of Dirichlet–Neumann boundary conditions. Recently, works treating the boundary contact case have begun to appear. Garcke and Rauchecker [27] performed a stability analysis in a curved domain in \(\mathbb {R}^2\) via a linearization approach. Hensel and Stinson [29] proposed a varifold solution concept for (1.2), starting from the energy dissipation property. For the gradient flow structure of the Mullins–Sekerka flow, see, e.g., [42, Section 3.2].

The numerical scheme that we propose in this paper is based on the BGN method, a parametric finite element method that allows the variational treatment of triple junctions and was first introduced by Barrett, Garcke, and Nürnberg in [6, 7]. We also refer to the review article [9] for more details on the BGN method, including in the context of the standard Mullins–Sekerka problem (1.3). Alternative front-tracking methods for geometric flows of curve networks have been considered in, e.g., [15, 35, 38, 39, 43].

Let us briefly review the numerical methods available in the literature for the Mullins–Sekerka problem and for its diffuse interface model, the multi-component Cahn–Hilliard equation (1.4). To the best of our knowledge, there are presently no sharp interface methods for the numerical approximation of the multi-phase Mullins–Sekerka problem. For boundary integral methods for the two-phase case, we refer the reader to [10, 11, 16, 34, 44]. A level set formulation of the moving boundaries combined with a finite difference method was proposed in [17]. For an implementation of the method of fundamental solutions for the Mullins–Sekerka problem in 2D, see [23]. For parametric finite element methods in general dimensions, see [8, 37]. The numerical analysis of the scalar Cahn–Hilliard equation is dealt with in [3, 4, 13, 21]. Feng and Prohl [25] proposed a mixed fully discrete finite element method for the Cahn–Hilliard equation and provided error estimates between the solution of the Mullins–Sekerka problem and the approximate solution of the Cahn–Hilliard equation computed by their scheme. These error bounds yielded a convergence result in [26]. Aside from sharp interface models, the multi-component Cahn–Hilliard equation has been solved numerically in several works, see [5, 12, 24, 31, 36]. The multi-component Cahn–Hilliard equation on surfaces has recently been considered in [32].

This paper is organized as follows. In the first part, we focus on the three-phase case outlined in the introduction. In Sect. 2, we show a curve-shortening property and an area-preserving property of strong solutions to the system. In Sect. 3, we introduce a weak formulation of the system, which in Sect. 4 is then approximated with the help of an unfitted parametric finite element method. The resulting scheme is linear and can be shown to be unconditionally stable. Section 5 is devoted to solution methods for the linear systems that arise at each time level. In Sect. 6, we adapt our approximation to obtain an exact area-preservation property on the fully discrete level, leading to a nonlinear scheme. Section 7 is devoted to generalizing the problem formulation and our numerical approximation to the general multi-phase case. Finally, in Sect. 8 we present several numerical results, including convergence experiments for a constructed solution in the three-phase case.

2 Mathematical properties

In this section, we shall present two important properties of strong solutions to (1.2).

Proposition 2.1

(Curve shortening property of strong solutions) Assume that \(({\varvec{w}},\{\Gamma ^{}_{}(t)\}_{0\le t\le T})\) is a solution to (1.2). Then it holds that

where we have defined the weighted length \(|\Gamma (t)|_\sigma = \sum _{i=1}^3\sigma _i|\Gamma ^{}_{i}(t)| = \sum _{i=1}^3 \sigma _i \int _{\Gamma ^{}_{i}(t)} 1 \,\textrm{d}\mathcal {H}^{1}\). Here \(\,\textrm{d}\mathcal {L}^{2}\) and \(\,\textrm{d}\mathcal {H}^{1}\) refer to integration with respect to the Lebesgue measure and the one-dimensional Hausdorff measure in \({\mathbb R}^2\), respectively.

Proof

Since the curvature is the first variation of the curves’ length in the normal direction, we deduce, on recalling (1.1d) and (1.1c), that

Now for each \(1 \le j \le 3\) it follows from integration by parts, (1.1a) and (1.1b) that

Summing (2.2) for \(j=1,2,3\) and combining with (2.1) yields the desired result. \(\square \)
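A minimal Python sketch of the two geometric quantities behind Proposition 2.1, for polygonal curves. `weighted_length` is the discrete counterpart of \(|\Gamma |_\sigma \). `discrete_curvature_vector` follows the remark in the proof that curvature is the first variation of length: for a closed polygon with vertices \(\vec q_j\) and unit edge tangents \(\vec t_j = (\vec q_j - \vec q_{j-1})/|\vec q_j - \vec q_{j-1}|\), the gradient of the length with respect to \(\vec q_j\) is \(\vec t_j - \vec t_{j+1}\), and dividing its negative by the lumped vertex mass \(m_j = \frac{1}{2}(|\vec q_j - \vec q_{j-1}| + |\vec q_{j+1} - \vec q_j|)\) approximates \(\varkappa \vec \nu \). Both names are hypothetical, and this is an illustration, not part of the finite element scheme of Sect. 4.

```python
import numpy as np


def curve_length(q):
    """Length of the polygonal curve with vertices q (shape (n, 2))."""
    return float(np.sum(np.linalg.norm(np.diff(q, axis=0), axis=1)))


def weighted_length(curves, sigma):
    """Discrete analogue of |Gamma|_sigma = sum_i sigma_i |Gamma_i|."""
    return sum(s * curve_length(np.asarray(c, dtype=float)) for s, c in zip(sigma, curves))


def discrete_curvature_vector(q):
    """Approximate kappa*nu at the vertices of a closed polygon q (shape (n, 2)).

    The closing edge q[-1] -> q[0] is implied. Uses (kappa nu)_j = -(dL/dq_j) / m_j
    with dL/dq_j = t_j - t_{j+1} and lumped mass m_j = (|e_j| + |e_{j+1}|) / 2.
    """
    q = np.asarray(q, dtype=float)
    e = q - np.roll(q, 1, axis=0)            # e[j] = q_j - q_{j-1}: edge ending at q_j
    lengths = np.linalg.norm(e, axis=1)
    t = e / lengths[:, None]                 # unit tangent of the edge ending at q_j
    t_next = np.roll(t, -1, axis=0)          # unit tangent of the edge starting at q_j
    mass = 0.5 * (lengths + np.roll(lengths, -1))
    return (t_next - t) / mass[:, None]
```

For a regular polygon inscribed in the unit circle and traversed anticlockwise, the computed vector equals \(-\vec q_j\) at every vertex. With the outward normal this corresponds to \(\varkappa = -1\), in line with the sign convention of the introduction, assuming \(\varkappa \vec \nu \) is the second arclength derivative of the position, as in the identity \(\sigma \Delta _s\vec {{\text {Id}}} = \varkappa _\sigma \vec {\nu }\) used in Sect. 3.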

Proposition 2.2

(Area preserving property of strong solutions) Let \(\Omega _1(t),\Omega _2(t),\) and \(\Omega _3(t)\) be the domains bounded by \(\Gamma ^{}_{2}(t)\cup \Gamma ^{}_{3}(t)\), \(\Gamma ^{}_{1}(t)\cup \Gamma ^{}_{3}(t)\), and \(\Gamma ^{}_{1}(t)\cup \Gamma ^{}_{2}(t)\cup \partial \Omega \), respectively, see Fig. 1. Then for any solution to (1.2), it holds that

Proof

We first deduce from the motion law (1.1c) that

Here, to derive the last equality, we have used that \(\nabla w_1\) does not jump across \(\Gamma ^{}_{1}(t)\). Meanwhile, integration by parts together with (1.1a) and (1.1b) shows

Combining (2.3) and (2.4) gives the assertion for \(j=1\). The cases \(j=2,3\) can be shown in the same manner. \(\square \)

3 Weak formulation

Let us derive a weak formulation for (1.2). In the sequel, we often abbreviate \(\Omega _j(t)\) and \(\Gamma ^{}_{i}(t)\) as \(\Omega _j\) and \(\Gamma ^{}_{i}\), for simplicity. Let \(1\le j\le 3\). Testing the first equation of (1.2) with \(\varphi \in H^1(\Omega )\), we deduce similarly to (2.4) that

Then, we obtain from the second equality of (2.3) that

Hence, \({\varvec{w}} \in T\widetilde{\Sigma }\cap [H^1(\Omega )]^3\) is such that

where

The Gibbs–Thomson relation (1.1d) is encoded as follows:

Finally, we give a weak formulation of the weighted curvature vector \(\varkappa _\sigma := \sigma \varkappa \), which means that \(\varkappa _\sigma = \sigma _i\varkappa _i\) on \(\Gamma ^{}_{i}(t)\) for \(i=1,2,3\). Let \(\vec {{\text {Id}}}\) denote the identity map in \(\mathbb {R}^2\). Then, it holds that \(\sigma \Delta _s\vec {{\text {Id}}} = \varkappa _\sigma \vec {\nu }\) on \(\Gamma \), see [20]. Take a test function \(\vec {\eta }\in H^1(\Gamma ;\mathbb {R}^2)\) with \(\vec {\eta }\!\mid _{\Gamma ^{}_{1}} = \vec {\eta }\!\mid _{\Gamma ^{}_{2}} = \vec {\eta }\!\mid _{\Gamma ^{}_{3}}\) on \(\partial \Gamma ^{}_{1}\cap \partial \Gamma ^{}_{2}\cap \partial \Gamma ^{}_{3}\). Then applying integration by parts gives

Here, we have used Young’s law (1.1e) to get the last equality. For later use, we define inner products on \(\Gamma ^{}_{}\) and \(\Omega \) as follows:

Let us summarize the weak formulation of the system (1.2) as follows, where we recall (3.3).

[Motion law] For all \({\varvec{\varphi }}\in T\widetilde{\Sigma }\cap [H^1(\Omega )]^3\),

[Gibbs–Thomson law] For all \(\xi \in L^2(\Gamma ^{}_{})\),

[Curvature vector] For all \(\vec {\eta }\in H^1(\Gamma ^{}_{};\mathbb {R}^2)\) with \(\vec {\eta }_1 = \vec {\eta }_2 = \vec {\eta }_3\) on \(\partial \Gamma ^{}_{1}\cap \partial \Gamma ^{}_{2}\cap \partial \Gamma ^{}_{3}\),

4 Finite element approximation

To approximate the weak solution \(({\varvec{w}},V,\varkappa _\sigma )\) of (1.2), we use ideas from [6, (2.30a,b)] and [37, Section 3]. Let the time interval [0, T] be split into M sub-intervals \([t_{m}, t_{m+1}]\), \(m = 0,\cdots ,M-1\), of lengths \(\tau _m := t_{m+1} - t_m\). Then, given a triplet of polygonal curves \(\Gamma ^{0}_{} = (\Gamma ^{0}_{1},\Gamma ^{0}_{2},\Gamma ^{0}_{3})\), our aim is to find time discrete triplets \(\Gamma ^{1}_{},\cdots ,\Gamma ^{M}_{}\) governed by discrete analogues of (3.1), (3.4) and (3.5). For each \(m\ge 0\) and \(1\le i\le 3\), \(\Gamma ^{m}_{i} = \vec {X}^{m}_{i}(I)\) is parameterized by \(I = [0,1]\ni \rho \mapsto \vec {X}^{m}_{i}(\rho )\in \mathbb {R}^2\), and I is split into sub-intervals as \(I = \cup _{j=1}^{N_i}[q_{i,j - 1}, q_{i,j}]\), where \(N_i\in \mathbb {N}_{\ge 2}\). Then, we note that

Set \(\vec {q}^{m}_{i,j}:= \vec {X}^{m}_{i}(q_{i,j})\). Let \(\mathfrak {T}^m\) be a triangulation of \(\overline{\Omega }\), and let \({S}^m\) and \({\varvec{S}}^m\) be the associated scalar- and vector-valued finite element spaces, defined by

Let \({V^h_{i}}\) be the set of all continuous functions on I which are affine on each sub-interval \([q_{i,j-1},q_{i,j}]\), and let \(\pi ^{{h}}_i:C({I})\rightarrow {V^h_{i}}\) be the associated standard interpolation operators for \(1\le i\le 3\). Similarly, \({\underline{V}^h_{i}}\) denotes the set of all vector-valued functions whose components belong to \({V^h_{i}}\). Let \(\{{\Phi _{{i},{j}}}\}_{j=0}^{N_i}\) be the standard basis of \({V^h_{i}}\) for \(1\le i\le 3\), namely \({\Phi _{{i},{j}}}{(q_{i,k})} = {\delta _{jk}}\). We set \(V(\Gamma ^{m}_{}):= \bigotimes _{i=1}^3{V^h_{i}}\) and

Let \(e^{m}_{i,j}\) denote the edge \([\vec {q}^{m}_{i,j-1},\vec {q}^{m}_{i,j}]:= \{(1-s)\vec {q}^{m}_{i,j-1} + s\vec {q}^{m}_{i,j}\mid 0\le s\le 1\}\). We define the normal vector to each edge of \(\Gamma ^{m}_{i}\) by

where \(\left( {\begin{array}{c}a\\ b\end{array}}\right) ^\perp = \left( {\begin{array}{c}-b\\ a\end{array}}\right) \) denotes the anti-clockwise rotation of a vector \((a,b)^T\) through \(\frac{\pi }{2}\), and \(|e^{m}_{i,j}|\) is the length of the edge \(e^{m}_{i,j}\). Let \(\vec {\nu }^{m}_{i}\) be the normal vector field on \(\Gamma ^{m}_{i}\) which is equal to \(\vec {\nu }^{m}_{i,j-\frac{1}{2}}\) on each edge \(e^{m}_{i,j}\ (1\le j\le N_i)\).

For two piecewise continuous functions on I, which may jump across the points \({q_{i,j}}\ (1\le j\le N_i)\), we define the mass lumped inner product

where \(u({q^{-}_{i,j}}):= \lim _{{[q_{i,j-1},q_{i,j}]}\ni y\rightarrow {q_{i,j}}}u(y)\) and \(u({q^{+}_{i,j}}):= \lim _{{[q_{i,j},q_{i,j+1}]}\ni y\rightarrow {q_{i,j}}}u(y)\) for each \(1\le i\le 3\). We extend these definitions to vector- and tensor-valued functions. Moreover, we define

where here and throughout, the notation \(\cdot ^{(h)}\) means an expression with or without the superscript h. The vertex normals \(\vec {\omega }^{m}_{i}\in {\underline{V}^h_{i}}\) on \(\Gamma ^{m}_{i}\) are defined through the lumped \(L^2\) projection

see [9, Definition 51].
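For a single open polygonal curve, the edge normals and the vertex normals of the lumped \(L^2\) projection can be sketched in a few lines of Python. The sketch assumes the sign convention \(\vec \nu _{j-\frac{1}{2}} = -(\vec q_j - \vec q_{j-1})^\perp /|\vec q_j - \vec q_{j-1}|\) (the opposite sign would only flip all normals) and uses the fact that, with mass lumping and piecewise constant \(\vec \nu \), the vertex normal at \(\vec q_j\) is the length-weighted average of the normals of the one or two adjacent edges. Function names are hypothetical.

```python
import numpy as np


def perp(v):
    """Anti-clockwise rotation through pi/2: (a, b) -> (-b, a), row by row."""
    return np.stack([-v[..., 1], v[..., 0]], axis=-1)


def edge_normals(q):
    """Unit edge normals and edge lengths of an open polygon q of shape (N+1, 2).

    Assumed convention: nu_{j-1/2} = -(q_j - q_{j-1})^perp / |q_j - q_{j-1}|.
    """
    e = np.diff(q, axis=0)
    lengths = np.linalg.norm(e, axis=1)
    return -perp(e) / lengths[:, None], lengths


def vertex_normals(q):
    """Vertex normals omega_j from the mass-lumped L2 projection of the edge normals.

    Each omega_j is the length-weighted average of the normals of the adjacent
    edges, so it is generally not of unit length.
    """
    q = np.asarray(q, dtype=float)
    nu, lengths = edge_normals(q)
    weighted = nu * lengths[:, None]         # |e| * nu on every edge
    num = np.zeros_like(q)
    den = np.zeros(len(q))
    num[:-1] += weighted                     # edge k contributes to vertex k ...
    num[1:] += weighted                      # ... and to vertex k + 1
    den[:-1] += lengths
    den[1:] += lengths
    return num / den[:, None]
```

At a right-angled corner with two unit edges, for example, the vertex normal is the average of the two edge normals and has length \(1/\sqrt{2}\).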

We make an assumption on the discrete vertex normals \(\vec {\omega }^{m}_{}\), following [6, Assumption \(\mathcal {A}\)] and [9, Assumption 108], which will guarantee well-posedness of the system of linear equations:

Assumption 1

Assume that \({\text {span}}{\{\vec {\omega }^{m}_{i,j}\}_{1\le j\le N_i-1}} \not = \vec {\{0\}}\) for \(1 \le i \le 3\) and

Here, for \(\vec {\xi }\in {\underline{V}^h_{i}}\) and \(\varphi \in S^m\), we use the slight abuses of notation \(\left<\vec \xi ,\varphi \right>^{}_{\Gamma ^{m}_{i}} = \int _{\Gamma ^{m}_{i}} \vec \xi \varphi \,\textrm{d}\mathcal {H}^{1}\) and \(\left<\vec \xi ,\varphi \right>^{h}_{\Gamma ^{m}_{i}} = \int _{\Gamma ^{m}_{i}} \pi ^h[\vec \xi \varphi ] \,\textrm{d}\mathcal {H}^{1}\).

We remark that the first condition essentially means that each of the three curves has at least one nonzero inner vertex normal, which can only be violated in very pathological cases. The proof of Theorem 4.1 shows that it is actually sufficient to require this for just two of the three curves, but for simplicity we state the stronger assumption. The second condition in Assumption 1, on the other hand, is a very mild constraint on the interaction between bulk and interface meshes. In fact, it can only be violated if all the vectors in the set are parallel, which happens, for example, if the three curves are straight lines that lie on top of each other.
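Assuming, as in [6, Assumption \(\mathcal {A}\)], that the second condition requires a finite set of vectors in \(\mathbb {R}^2\) to span \(\mathbb {R}^2\), its failure is easy to detect numerically: plane vectors span \(\mathbb {R}^2\) exactly when they are not all parallel, i.e. when the matrix with these vectors as rows has rank 2. A hypothetical helper in Python:

```python
import numpy as np


def spans_plane(vectors, tol=1e-12):
    """Return True if the given 2D vectors span R^2, i.e. are not all parallel."""
    v = np.asarray(vectors, dtype=float).reshape(-1, 2)
    return np.linalg.matrix_rank(v, tol=tol) == 2
```

Vectors that all point along one direction, as for three straight lines lying on top of each other, give rank 1 and are flagged as a violation.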

Given \((\vec {X}^{m}_{},\kappa ^m_\sigma )\in \underline{V}(\Gamma ^{m}_{})\times V(\Gamma ^{m}_{})\), find \(({{\varvec{W}}^{m+1}_{}}, \vec {X}^{m+1}_{}, \kappa ^{m+1}_\sigma )\in {\varvec{S}}^m_\Sigma \times \underline{V}(\Gamma ^{m}_{})\times V(\Gamma ^{m}_{})\) such that the following conditions hold:

[Motion law] For all \({\varvec{\varphi }}\in {\varvec{S}}^m_\Sigma \),

[Gibbs–Thomson law] For all \(\xi \in V(\Gamma ^{m}_{})\),

[Curvature vector] For all \(\vec \eta \in \underline{V}(\Gamma ^{m}_{})\),

We stress that (4.2) encodes two different schemes: one that uses mass lumping in the two bulk-surface terms in (4.2a) and (4.2b), and one that uses exact integration in both. The interpolation operator \(\pi ^h_i\) in (4.2a) is superfluous in the former case, but necessary for the stability proof of the latter. Writing (4.2) as above allows for a compact presentation. Observe that the scheme with mass lumping is far easier to implement, since bulk finite element functions then only need to be evaluated at the vertices of the curve network. We refer to [8] for more details.
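In the mass-lumped variant, each bulk-surface term only needs the bulk function at the curve vertices. Assuming piecewise linear bulk elements on \(\mathfrak {T}^m\), such a value is obtained by locating a triangle that contains the vertex and combining its nodal values with barycentric weights. A brute-force Python sketch with hypothetical names:

```python
import numpy as np


def barycentric(p, tri):
    """Barycentric coordinates of the point p with respect to a triangle (3x2 array)."""
    a, b, c = tri
    T = np.column_stack([b - a, c - a])      # affine map of the reference triangle
    lam = np.linalg.solve(T, p - a)
    return np.array([1.0 - lam.sum(), lam[0], lam[1]])


def eval_p1_at_points(points, nodes, triangles, values, tol=1e-12):
    """Evaluate a continuous piecewise linear bulk function at the given points.

    nodes: (K, 2) mesh vertices, triangles: (T, 3) vertex indices,
    values: (K,) nodal values. The triangle search is brute force, which is
    fine for a sketch but far too slow for realistic meshes.
    """
    out = np.empty(len(points))
    for n, p in enumerate(np.asarray(points, dtype=float)):
        for tri in triangles:
            lam = barycentric(p, nodes[tri])
            if np.all(lam >= -tol):          # p lies in the closed triangle
                out[n] = lam @ values[tri]
                break
        else:
            raise ValueError(f"point {p} lies outside the mesh")
    return out
```

Since affine functions are reproduced exactly, nodal values of \(2x + 3y + 1\) on the unit square split into two triangles give exactly \(3.0\) at \((0.25, 0.5)\). In practice, a spatial search structure would replace the double loop.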

Theorem 4.1

(Existence and uniqueness) Let Assumption 1 hold and let \(m\ge 0\). Then there exists a unique solution \(({{\varvec{W}}^{m+1}_{}}, \vec {X}^{m+1}_{}, \kappa ^{m+1}_\sigma )\in {\varvec{S}}^m_\Sigma \times \underline{V}(\Gamma ^{m}_{})\times V(\Gamma ^{m}_{})\) to (4.2).

Proof

Since (4.2a), (4.2b) and (4.2c) form a linear system with the same number of unknowns and equations, existence follows from uniqueness. To show the latter, it suffices to prove that the homogeneous system has only the zero solution. Hence let \(({{\varvec{W}}^{}_{}}, \vec {X}^{}_{}, \kappa _\sigma )\in {\varvec{S}}^m_\Sigma \times \underline{V}(\Gamma ^{m}_{})\times V(\Gamma ^{m}_{})\) be such that

Choosing \({\varvec{\varphi }}= {{\varvec{W}}^{}_{}}\) in (4.3a), \(\xi = \pi ^h[\vec {X}^{}_{}\cdot \vec {\omega }^{m}_{}]\) in (4.3b) and \(\vec {\eta } = \vec {X}^{}_{} \in \underline{V}(\Gamma ^{m}_{})\) in (4.3c) gives

Thus, we see that \({W^{}_{i}} = C_i\in {\mathbb R}\) and \(\vec {X}^{}_{i} = \vec {X}^{c}_{}\in {\mathbb R}^2\), for \(1\le i\le 3\), are constant functions, with \(\sum _{i=1}^3 C_i=0\). We deduce from (4.3a) that

and so the second condition in Assumption 1 yields that \(\vec {X}^{}_{} = \vec {X}^{c}_{} = \vec {0}\). Moreover, it follows from (4.3b) that \(\kappa _{\sigma ,1} = C_3 - C_2, \kappa _{\sigma ,2} = C_1 - C_3\), and \(\kappa _{\sigma ,3} = C_2 - C_1\) are constant as well. We now choose as a test function in (4.3c) the function \(\vec \eta \in \underline{V}(\Gamma ^{m}_{})\) with \(\vec \eta _i =\kappa _{{\sigma ,i}} \vec z^m_i\), where \(\vec z^m_i = \sum _{j=1}^{N_i-1} \vec \omega ^m_{i,j} \Phi _{i,j}\) for \(i=1,2,3\); since each \(\vec z^m_i\) vanishes at the endpoints of \(\Gamma ^{m}_{i}\), this \(\vec \eta \) indeed lies in \(\underline{V}(\Gamma ^{m}_{})\). Hence, we obtain

so that the first condition in Assumption 1 implies that \(\kappa _{\sigma ,i} = 0\) for \(i=1,2,3\), i.e. \(\kappa _\sigma = 0\). Hence \(C_1=C_2=C_3\), and since \(\sum _{i=1}^3 C_i=0\), they must all be zero, so that \({\varvec{W}} = \textbf{0}\) also follows. We have thus shown the existence of a unique solution \(({{\varvec{W}}^{m+1}_{}}, \vec {X}^{m+1}_{}, \kappa ^{m+1}_\sigma )\in {\varvec{S}}^m_\Sigma \times \underline{V}(\Gamma ^{m}_{})\times V(\Gamma ^{m}_{})\) to (4.2). \(\square \)

Before proving the stability of our scheme, we recall the following lemma from [9, Lemma 57] without proof.

Lemma 4.2

Let \(\Gamma ^{h}_{}\) be a polygonal curve in \(\mathbb {R}^2\). Then, for any \(\vec {X}^{}_{}\in \underline{V}(\Gamma ^{h}_{})\), it holds that

where \(|\Gamma ^{h}_{}|\) and \(|\vec {X}^{}_{}(\Gamma ^{h}_{})|\) are the lengths of \(\Gamma ^{h}_{}\) and \(\vec {X}^{}_{}(\Gamma ^{h}_{})\), respectively.
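Assuming Lemma 4.2 takes its usual form \(\left<\nabla _s \vec X, \nabla _s(\vec X - \vec {{\text {Id}}})\right>_{\Gamma ^h} \ge |\vec X(\Gamma ^h)| - |\Gamma ^h|\), it reduces to an inequality on each edge. If an edge of \(\Gamma ^h\) has edge vector \(\vec b\) and its image under \(\vec X\) has edge vector \(\vec a\), the edge contributes \((|\vec a|^2 - \vec a\cdot \vec b)/|\vec b|\) to the left-hand side, and \((|\vec a|^2 - \vec a\cdot \vec b)/|\vec b| \ge |\vec a|(|\vec a| - |\vec b|)/|\vec b| = (|\vec a| - |\vec b|) + (|\vec a| - |\vec b|)^2/|\vec b| \ge |\vec a| - |\vec b|\). The following Python sketch (hypothetical function name) evaluates both sides, so the inequality can be checked on random polygons:

```python
import numpy as np


def lemma_sides(q_old, q_new):
    """Both sides of <grad_s X, grad_s (X - Id)> >= |X(Gamma)| - |Gamma| for a polygon.

    q_old: vertices of Gamma^h; q_new: their images X(q_j). On an edge with old
    edge vector b and new edge vector a the integrand is constant, and the edge
    contributes (|a|^2 - a.b) / |b| to the left-hand side.
    """
    b = np.diff(np.asarray(q_old, dtype=float), axis=0)
    a = np.diff(np.asarray(q_new, dtype=float), axis=0)
    len_b = np.linalg.norm(b, axis=1)
    len_a = np.linalg.norm(a, axis=1)
    lhs = np.sum((len_a**2 - np.einsum("ij,ij->i", a, b)) / len_b)
    rhs = np.sum(len_a) - np.sum(len_b)
    return lhs, rhs
```

Equality holds for \(\vec X = \vec {{\text {Id}}}\), where both sides vanish; this edge-wise bound is what turns the curvature equation into a length decrease in the stability proof.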

Theorem 4.3

(Unconditional stability) Let \(m\ge 0\) and let \(({{\varvec{W}}^{m+1}_{}},\vec {X}^{m+1}_{},\kappa ^{m+1}_\sigma )\in {\varvec{S}}^m_\Sigma \times \underline{V}(\Gamma ^{m}_{})\times V(\Gamma ^{m}_{})\) be a solution to (4.2). Then the following estimate is satisfied:

where we recall that \(|\Gamma ^{m}_{}|_\sigma = \sum _{i=1}^3\sigma _i|\Gamma ^{m}_{i}|\).

Proof

We choose \({\varvec{\varphi }}= {{\varvec{W}}^{m+1}_{}}\) in (4.2a) and \(\xi = \pi ^h\left[ \frac{\vec {X}^{m+1}_{}-\vec {X}^{m}_{}}{\tau _m}\cdot \vec {\omega }^{m}_{}\right] \) in (4.2b) to obtain

Choosing \(\vec {\eta } = \vec {X}^{m+1}_{} - \vec {X}^{m}_{}\in \underline{V}(\Gamma ^{m}_{})\) in (4.2c) gives

We compute from (4.6) and (4.7), on recalling Lemma 4.2, that

This proves the desired result. \(\square \)

Remark 4.4

Similar stability results for the discretization of other gradient flows of curve networks can be found in [6, 7]. For the two-phase Mullins–Sekerka problem, related stability results were derived in [8, 37].

5 Solution of the linear system

In this section, we discuss solution methods for the systems of linear equations arising from (4.2) at each time level. To this end, we make use of ideas from [6, 8]. The crucial idea is to avoid working directly with the trial and test spaces \(\underline{V}(\Gamma ^{m}_{})\) and \({\varvec{S}}^m_\Sigma \), and instead to employ a technique similar to a standard treatment of periodic boundary conditions for ODEs and PDEs. In particular, following [6, (2.44)], we introduce the orthogonal projections \(\mathcal {P}:[V(\Gamma ^{m}_{})]^2\rightarrow \underline{V}(\Gamma ^{m}_{})\) and \(\mathcal {Q}:{\varvec{S}}^m\rightarrow {\varvec{S}}^m_\Sigma \). On letting \(\textbf{1}= (1,1,1)^T\), it is easy to see that for \({\varvec{W}} \in {\varvec{S}}^m\) it holds that

point-wise in \(\overline{\Omega }\).
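The projection \(\mathcal {Q}\) acts node by node. Since \(T\Sigma \) is the orthogonal complement of \(\textbf{1}\) in \(\mathbb {R}^3\), the natural formula, assumed here, is \(\mathcal {Q}{\varvec{W}} = {\varvec{W}} - \frac{1}{3}({\varvec{W}}\cdot \textbf{1})\textbf{1}\), i.e. the mean of the three components is subtracted at every node. A minimal Python sketch on nodal values (the function name is hypothetical):

```python
import numpy as np


def project_Q(W):
    """Apply W -> W - (1/3)(W . 1) 1 at every bulk node.

    W has shape (K, 3): row k holds the three components at node k.
    The result satisfies W_1 + W_2 + W_3 = 0 at every node.
    """
    W = np.asarray(W, dtype=float)
    return W - W.mean(axis=1, keepdims=True)
```

Applying it twice changes nothing, and it is symmetric with respect to the Euclidean inner product on the coefficient vectors, as expected of an orthogonal projection.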

Now, given \(\vec {X}^{m}_{} \in \underline{V}(\Gamma ^{m}_{})\), let \(({{\varvec{W}}^{m+1}_{}},\kappa ^{m+1}_\sigma ,\vec {X}^{m}_{}+\delta \vec {X}^{m+1}_{})\in {\varvec{S}}^m_\Sigma \times V(\Gamma ^{m}_{})\times \underline{V}(\Gamma ^{m}_{})\) be the unique solution to (4.2) whose existence has been proven in Theorem 4.1. Let \(N:= \sum _{i=1}^3(N_i+1)\) be the total number of vertices of the three curves, counted curve by curve, and let \(K^m_{\Omega }\) be the number of vertices of the mesh \(\mathfrak {T}^m\) in \(\overline{\Omega }\). From now on, as no confusion can arise, we identify \(({{\varvec{W}}^{m+1}_{}},\kappa ^{m+1}_\sigma ,\delta \vec {X}^{m+1}_{})\) with their vectors of coefficients with respect to the bases \(\{\Psi ^{m}_{i}\}_{1\le i\le K^m_{\Omega }}\) and \(\{\{{\Phi _{{i},{j}}}\}_{0\le j\le N_i}\}_{i=1}^3\) of the unconstrained spaces \({\varvec{S}}^m\) and \(V(\Gamma ^{m}_{})\). In addition, we let  be the Euclidean space equivalent of \(\mathcal {P}\), and similarly let \(Q: (\mathbb {R}^3)^{K^m_{\Omega }}\rightarrow \mathbb {W}:= \{(v_1,v_2,v_3)^T\in (\mathbb {R}^3)^{K^m_{\Omega }}\!\!\! \ \mid \ \!\!\! v_1+v_2+v_3 = 0\in \mathbb {R}^{K^m_{\Omega }}\}\) be the Euclidean space equivalent of \(\mathcal {Q}\).

Then the solution to (4.2) can be written as  for any solution of the linear system

where \(A_\Omega \in \mathbb {R}^{3K^m_{\Omega }\times 3K^m_{\Omega }},\vec {N}_{\Omega ,\Gamma }\in (\mathbb {R}^2)^{N\times 3K^m_{\Omega }},B_{\Omega ,\Gamma }\in \mathbb {R}^{N\times 3K^m_{\Omega }},C_\Gamma \in \mathbb {R}^{N\times N},\vec {D}_\Gamma \in (\mathbb {R}^2)^{N\times N}\) and  are defined by

with

for each \(1\le c\le 3\). The advantage of the system (5.1) over a naive implementation of (4.2) is that complications due to nonstandard finite element spaces are completely avoided. A disadvantage is, however, that the system (5.1) is highly singular, in that due to the presence of the projections the dimension of its kernel is larger than the dimension of the scalar bulk finite element space \(S^m\). This makes it difficult to solve (5.1) in practice. A more practical formulation can be obtained by eliminating one of the components of \({{\varvec{W}}^{m+1}_{}}\) completely. In particular, on recalling that \({{\varvec{W}}^{m+1}_{}} \cdot \textbf{1}= 0\), we can reduce the unknown variables \({{\varvec{W}}^{m+1}_{}}\in (\mathbb {R}^3)^{K^m_{\Omega }}\) to \((W^{m+1}_1,W^{m+1}_2)\in (\mathbb {R}^2)^{K^m_{\Omega }}\) by introducing the linear map \(\widehat{Q}:(\mathbb {R}^2)^{K^m_{\Omega }}\rightarrow \mathbb {W}\subset (\mathbb {R}^3)^{K^m_{\Omega }}\) defined by

where \(I_M\) denotes the identity matrix of size M for \(M\in \mathbb {N}\).
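One natural choice for \(\widehat{Q}\), assumed here, is the block matrix with block rows \((I_K, 0)\), \((0, I_K)\) and \((-I_K, -I_K)\), where \(K = K^m_{\Omega }\), which maps \((W_1, W_2)\) to \((W_1, W_2, -W_1-W_2)\). A dense Python sketch (hypothetical function name), with coefficients ordered component by component:

```python
import numpy as np


def build_Q_hat(K):
    """Dense (3K x 2K) matrix mapping (W1, W2) to (W1, W2, -W1 - W2).

    The input stacks two length-K coefficient vectors, the output stacks three.
    """
    I = np.eye(K)
    Z = np.zeros((K, K))
    return np.block([[I, Z], [Z, I], [-I, -I]])
```

The matrix has full column rank, so the reduction removes the redundant third component without introducing a new kernel. For realistic meshes the same block structure would be assembled as a sparse matrix, for example with `scipy.sparse.bmat`.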

Then the solution to (4.2) can be written as  for any solution of the reduced linear system

where

In contrast to (5.1), the kernel of (5.3) is small. In fact, it has dimension 8, due to the fact that  has a kernel of dimension 8. Hence, iterative solution methods, combined with good preconditioners, work very well to solve (5.3) in practice. For our numerical results in Sect. 8, below, we employ a GMRes iterative solver with least squares solution of the block matrix in (5.3) without  as preconditioners.

6 Obtaining a fully discrete area conservation property

Although the linear scheme (4.2) introduced in Sect. 4 is unconditionally stable (recall Theorem 4.3), in general the areas occupied by the discrete approximations of the three phases will not be conserved. In this section, we show how to modify the scheme (4.2) so that it satisfies both structure-defining properties from Sect. 2. To this end, we follow the discussion in [37, Section 3] to obtain an exact area preservation property on the fully discrete level. We remark that our approach hinges on ideas first presented for area-conserving geometric flows of closed curves in [2, 30]. See also [1, Section 3.2] for related work in the context of the surface diffusion flow for curve networks with triple junctions.

Let us define families of polygonal curves \(\{\Gamma ^{h}_{i}(t)\}_{t\ge 0}\), \(i=1,2,3\), parameterized by time. In particular, for each \(0\le m\le M-1\), \(t\in [t_{m},t_{m+1}]\) and \(1\le i\le 3\), we define the polygonal curve \(\Gamma ^{h}_{i}(t)\) by

More precisely, the vertices of \(\Gamma ^{h}_{i}(t)\) are defined as follows:

while we write each edge of \(\Gamma ^{h}_{i}(t)\) as \(e^{h}_{i,j}(t):= [\vec {q}^{h}_{i,j-1}(t),\vec {q}^{h}_{i,j}(t)]\) for \(1\le i\le 3\) and \(1\le j\le N_i\).

Lemma 6.1

For each \(m\ge 0\) and \((i,j,k)\in \Lambda \), it holds that

where

Proof

The desired result for \(i=1,2\) is shown in [1, Lemma 3.1], and the result for \(i=3\) follows analogously on noting that \(\partial \Omega \) is fixed. \(\square \)
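The mechanism behind Lemma 6.1 can be illustrated on a single closed polygon. If every vertex moves linearly in time from \(\vec q^m_j\) to \(\vec q^{m+1}_j\), the enclosed signed area (positive for anticlockwise orientation) changes by exactly \(-\sum _j \det (\bar{e}_j, \bar{\delta }_j)\), where \(\bar{e}_j\) is the average of the old and new edge vectors \(\vec q_j - \vec q_{j-1}\) and \(\bar{\delta }_j\) is the average displacement of the two endpoints of that edge. This follows by integrating the rate of change of the area over the time step, which only involves the time-averaged edge vectors, and it is the kind of identity that makes a normal built from both \(\Gamma ^m\) and \(\Gamma ^{m+1}\), such as \(\vec \nu ^{m+\frac{1}{2}}\), area-preserving. The Python sketch below (hypothetical names, a single closed curve instead of the three-phase network) checks the identity against the shoelace formula:

```python
import numpy as np


def signed_area(q):
    """Shoelace formula; positive for an anticlockwise closed polygon q of shape (n, 2)."""
    x, y = q[:, 0], q[:, 1]
    return 0.5 * np.sum(x * np.roll(y, -1) - np.roll(x, -1) * y)


def area_change(q_old, q_new):
    """Area change for vertices moving linearly in time from q_old to q_new.

    Returns -sum_j det(ebar_j, dbar_j) with ebar_j = (e_j^old + e_j^new)/2,
    e_j = q_j - q_{j-1} (cyclic), and dbar_j = (delta_{j-1} + delta_j)/2.
    """
    def edges(q):
        return q - np.roll(q, 1, axis=0)     # e_j = q_j - q_{j-1}
    ebar = 0.5 * (edges(q_old) + edges(q_new))
    delta = q_new - q_old
    dbar = 0.5 * (delta + np.roll(delta, 1, axis=0))  # (delta_{j-1} + delta_j)/2
    det = ebar[:, 0] * dbar[:, 1] - ebar[:, 1] * dbar[:, 0]
    return -np.sum(det)
```

The identity is exact for arbitrary displacements; doubling the unit square, for instance, gives an area change of exactly 3.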

Now the weighted vertex normal vector \(\vec {\omega }^{m+\frac{1}{2}}_{i}\in {\underline{V}^h_{i}}\), for \(i=1,2,3\), associated with \(\vec {\nu }^{m+\frac{1}{2}}_{i}\) is defined through the following formula:

Consequently, we obtain a nonlinear system with the aid of \(\vec {\omega }^{m+\frac{1}{2}}_{i}\): Given \((\vec {X}^{m}_{},\kappa ^m_\sigma )\in \underline{V}(\Gamma ^{m}_{})\times V(\Gamma ^{m}_{})\), find \(({{\varvec{W}}^{m+1}_{}}, \vec {X}^{m+1}_{}, \kappa ^{m+1}_\sigma )\in {\varvec{S}}^m_\Sigma \times \underline{V}(\Gamma ^{m}_{})\times V(\Gamma ^{m}_{})\) such that

[Motion law] For all \({\varvec{\varphi }}\in {\varvec{S}}^m_\Sigma \),

[Gibbs–Thomson law] For all \(\xi \in V(\Gamma ^{m}_{})\),

[Curvature vector] For all \(\vec {\eta }\in \underline{V}(\Gamma ^{m}_{})\),

We can now prove that, on the discrete level, the area of each domain enclosed by the polygonal curves is preserved.

Theorem 6.2

(Area preserving property for the discrete scheme) Let \(m\ge 0\) and let \(({{\varvec{W}}^{m+1}_{}},\vec {X}^{m+1}_{},\kappa ^{m+1}_\sigma )\in {\varvec{S}}^m_\Sigma \times \underline{V}(\Gamma ^{m}_{})\times V(\Gamma ^{m}_{})\) be a solution of (6.3). Then, for each \(1\le j\le 3\) it holds that

Proof

We will argue similarly to the proof of [37, Theorem 3.3]. Choosing \({\varvec{\varphi }}= (-\frac{2}{3},\frac{1}{3},\frac{1}{3})^T\in {\varvec{S}}^m_\Sigma \) in (6.3a), we obtain from (6.2) and Lemma 6.1 that

This yields the desired result for \(j=1\). The other cases can be treated analogously. \(\square \)

Theorem 6.3

(Stability for the area-conserving scheme) Let \(m\ge 0\) and let \(({{\varvec{W}}^{m+1}_{}},\vec {X}^{m+1}_{},\kappa ^{m+1}_\sigma )\in {{\varvec{S}}^m_\Sigma }\times \underline{V}(\Gamma ^{m}_{})\times V(\Gamma ^{m}_{})\) be a solution of (6.3). Then, it holds that

Proof

The proof is analogous to the proof of Theorem 4.3 once we replace \(\vec {\omega }^{m}_{}\) by \(\vec {\omega }^{m+\frac{1}{2}}_{}\). \(\square \)

Remark 6.4

Since \(\vec {\omega }^{m+\frac{1}{2}}_{i}\) depends on \(\vec {X}^{m+1}_{i}\), the scheme (6.3) is no longer linear. In practice, the nonlinear systems of equations arising at each time level of (6.3) can be solved with a simple lagged iteration, as mentioned in [37, Section 3]. In particular, given \(\Gamma ^m\), let \(\Gamma ^{m+1,0} = \Gamma ^m\). Then, for \(\ell \ge 0\) and until convergence, define \(\vec \omega ^{m+\frac{1}{2},\ell }\) through (6.2) and (6.1), but with \(\Gamma ^{m+1}\) replaced by \(\Gamma ^{m+1,\ell }\), and find \(({{\varvec{W}}^{m+1,\ell +1}_{}}, \vec {X}^{m+1,\ell +1}_{}, \kappa ^{m+1,\ell +1}_\sigma )\in {\varvec{S}}^m_\Sigma \times \underline{V}(\Gamma ^{m}_{})\times V(\Gamma ^{m}_{})\) such that

for all \({\varvec{\varphi }}\in {\varvec{S}}^m_\Sigma \), \(\xi \in V(\Gamma ^{m}_{})\), \(\vec {\eta }\in \underline{V}(\Gamma ^{m}_{})\).
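The lagged iteration of Remark 6.4 freezes the nonlinear coefficient at the previous iterate, solves the resulting linear system, and repeats until the iterates stop changing. The Python sketch below shows this pattern on a hypothetical toy problem \(A(x)\,x = b\) whose matrix depends weakly on the solution; in the actual scheme the frozen quantity is \(\vec \omega ^{m+\frac{1}{2},\ell }\). The helper names, the toy matrix, the tolerance and the zero starting guess (playing the role of \(\Gamma ^{m+1,0} = \Gamma ^m\)) are all assumptions for illustration.

```python
import numpy as np


def lagged_iteration(assemble, b, x0, tol=1e-12, max_iter=100):
    """Solve the nonlinear system A(x) x = b by a lagged (Picard) iteration.

    At step ell the matrix is frozen at the previous iterate, A(x^ell), and the
    linear system A(x^ell) x^{ell+1} = b is solved. Returns (x, iterations).
    """
    x = np.asarray(x0, dtype=float)
    for ell in range(1, max_iter + 1):
        x_new = np.linalg.solve(assemble(x), b)  # linear solve with lagged matrix
        if np.linalg.norm(x_new - x) <= tol * max(1.0, np.linalg.norm(x_new)):
            return x_new, ell  # iterates have stopped changing
        x = x_new
    raise RuntimeError("lagged iteration did not converge")


def toy_matrix(x):
    """Hypothetical solution-dependent matrix: a fixed tridiagonal part plus a small
    diagonal term depending on x (standing in for the dependence of omega on X^{m+1})."""
    n = x.size
    A = 4.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
    return A + 0.1 * np.diag(x**2 / (1.0 + x**2))


b = np.array([1.0, 2.0, 3.0, 2.0, 1.0])
x, iterations = lagged_iteration(toy_matrix, b, x0=np.zeros_like(b))
print(iterations, np.linalg.norm(toy_matrix(x) @ x - b))
```

Because the matrix depends only weakly on the solution here, the fixed-point map is a strong contraction and a handful of iterations suffice; no such guarantee is claimed for the actual scheme.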

7 Generalization to multi-component systems

To simplify the presentation, the previous sections concentrated on the three-phase situation depicted in Fig. 1. However, our finite element approximations generalize readily to the general multi-phase case. We present the details in this section, closely following the description of general curve networks in [1, Section 2].

7.1 Problem setting

For later use, we let \(\mathbb {N}_{\le {M}}:= \{1,\ldots ,M\}\) for each \(M\in \mathbb {N}\). We first introduce some counters that will be used frequently. Given a curve network \(\Gamma ^{}_{}(t)\), \(I_C\ge 1\) denotes the number of curves contained in \(\Gamma ^{}_{}(t)\). Thus, we have \(\Gamma ^{}_{}(t) = \cup _{i=1}^{I_C}\Gamma ^{}_{i}(t)\), where each \(\Gamma ^{}_{i}(t)\) is either open or closed. Let \(I_P\ge 2\) be the number of phases, i.e. the (not necessarily connected) subdomains of \(\Omega \) that are separated by \(\Gamma ^{}_{}(t)\). This means that \(\Omega \backslash \Gamma ^{}_{}(t) = \bigcup _{j=1}^{I_P}\Omega _{j}(t)\). Finally, each endpoint of an open curve in \(\Gamma ^{}_{}(t)\) is part of a triple junction. We denote the number of triple junctions by \(I_T\ge 0\).

Assumption 2

(Triple junctions) Every curve \(\Gamma ^{}_{i}(t)\), \(i\in \mathbb {N}_{\le {I_C}}\), does not self-intersect and may intersect other curves only at its boundary \(\partial \Gamma ^{}_{i}(t)\). If \(\partial \Gamma ^{}_{i}(t) = \emptyset \), then \(\Gamma ^{}_{i}(t)\) is called a closed curve, otherwise an open curve. For each triple junction \(\mathcal {T}_{k}(t)\), \(k\in \mathbb {N}_{\le {I_T}}\), there exists a unique tuple \((c^{k}_{1}, c^{k}_{2}, c^{k}_{3})\) with \(1\le c^{k}_{1}<c^{k}_{2}<c^{k}_{3}\le I_C\) such that \(\mathcal {T}_{k}(t)=\partial \Gamma ^{}_{c^{k}_{1}}(t)\cap \partial \Gamma ^{}_{c^{k}_{2}}(t)\cap \partial \Gamma ^{}_{c^{k}_{3}}(t)\). Moreover, \(\cup _{i=1}^{I_C} \partial \Gamma ^{}_{i}(t)\subset \cup _{k=1}^{I_T} \mathcal {T}_{k}(t)\).

Assumption 3

(Phase separation) The curve network \(\Gamma ^{}_{}(t)\) is equipped with a matrix \(\mathcal {O}\in \{-1,0,1\}^{I_P \times I_C}\) that encodes the orientations of the phase boundaries. In particular, each row contains nonzero entries only for the curves that make up the boundary of the corresponding phase, where the sign indicates whether the normal of the curve points out of the phase (\(+1\)) or into it (\(-1\)). That is, for \(p\in \mathbb {N}_{\le {I_P}}\) and \(i\in \mathbb {N}_{\le {I_C}}\) we have

For every \(i\in \mathbb {N}_{\le {I_C}}\), there exists a unique pair \((p_1,p_2)\) with \(p_1,p_2\in \mathbb {N}_{\le {I_P}}\) such that \(\mathcal {O}_{p_1,i} = -\mathcal {O}_{p_2,i} = 1\). In this situation, we say that \(\Omega _{p_1}(t)\) is a neighbour of \(\Omega _{p_2}(t)\).

Clearly, given the matrix \(\mathcal {O}\), the boundary of \(\Omega _p(t)\) can be characterized by

where we have assumed that \(\Omega _{I_P}(t)\) is the only phase in contact with the external boundary.

Remark 7.1

(Examples) We note that for the three-phase problem shown in Fig. 1, we have \(I_C = 3\), \(I_P = 3\), \(I_T = 2\), \((c^{1}_{1},c^{1}_{2},c^{1}_{3}) = (c^{2}_{1},c^{2}_{2},c^{2}_{3}) = (1,2,3)\) and \(\mathcal {O}= \begin{pmatrix}0 &{}-1&{}1 \\ 1 &{} 0 &{}-1 \\ -1 &{}1&{}0 \end{pmatrix}\). Examples of more complicated networks, and their description in our general framework, can be found in Sect. 8.2.
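The conditions of Assumptions 2 and 3 can be checked mechanically for a given network description. The following Python sketch, whose helper names are hypothetical, verifies that every column of an orientation matrix \(\mathcal {O}\) contains exactly one entry \(+1\) and one entry \(-1\), returns the pair \((p_1,p_2)\) of neighbouring phases for each curve, and checks that each triple junction lists three strictly increasing curve indices. It uses the data of Remark 7.1, with 1-based indices to match the text.

```python
def neighbour_pairs(O):
    """Return [(p1, p2), ...], one pair per curve, with O[p1][i] = 1 and O[p2][i] = -1.

    Raises ValueError if a column violates Assumption 3. Indices are 1-based.
    """
    n_phases, n_curves = len(O), len(O[0])
    pairs = []
    for i in range(n_curves):
        column = [O[p][i] for p in range(n_phases)]
        plus = [p + 1 for p, v in enumerate(column) if v == 1]
        minus = [p + 1 for p, v in enumerate(column) if v == -1]
        if len(plus) != 1 or len(minus) != 1 or any(v not in (-1, 0, 1) for v in column):
            raise ValueError(f"column {i + 1} violates Assumption 3: {column}")
        pairs.append((plus[0], minus[0]))
    return pairs


def check_junctions(junctions, n_curves):
    """Each triple junction must list curve indices 1 <= c1 < c2 < c3 <= I_C."""
    return all(1 <= c1 < c2 < c3 <= n_curves for (c1, c2, c3) in junctions)


# Three-phase network of Fig. 1 (Remark 7.1): I_C = 3, I_P = 3, I_T = 2.
O_fig1 = [[0, -1, 1],
          [1, 0, -1],
          [-1, 1, 0]]
print(neighbour_pairs(O_fig1))                              # [(2, 3), (3, 1), (1, 2)]
print(check_junctions([(1, 2, 3), (1, 2, 3)], n_curves=3))  # True
```

The same check applies unchanged to the larger orientation matrices used in Sect. 8.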

With these preparations, we can generalize the system (1.2) to the multi-phase case. Where no confusion can arise, we use the same notation as before, e.g., \(T\Sigma = \{{\varvec{u}} \in \mathbb {R}^{I_P}\mid \ \sum _{j=1}^{I_P}u_j = 0\}\). Given an initial curve network \(\Gamma ^{0}_{}\), our aim is to find \({\varvec{w}}^{}_{}:\Omega \rightarrow T\Sigma \) and the evolution of a curve network \(\{\Gamma ^{}_{}(t)\}_{t\ge 0}\) which satisfy

where \({\varvec{\chi }}\) denotes the vector of the characteristic functions \(\chi _j = \mathrm {\mathcal {X}}_{\Omega _j(t)}\), \(j=1,\ldots ,I_P\), as before.

Remark 7.2

The system (7.1) does not depend on the choice of the normals \(\vec {\nu }^{}_{i}\), for \(i\in \mathbb {N}_{\le {I_C}}\). Indeed, if we take \(-\vec {\nu }^{}_{i}\) as the unit normal vector to \(\Gamma ^{}_{i}(t)\), then the sign of \(\varkappa _i\) is reversed. At the same time, the sign of the jump \(\left[ {{\varvec{\chi }}}\right] \) is reversed as well, so that the second condition of (7.1) does not change. Similarly, the sign of the normal velocity \(V_i\) in the third condition is reversed, which balances the sign change of \(\vec {\nu }^{}_{i}\) on the left-hand side.

7.2 Weak formulation

Similarly to Sect. 3, it is possible to derive a weak formulation of the multi-phase problem (7.1). On noting that the jump of the j-th characteristic function \(\chi _j\) across the curve \(\Gamma ^{}_{i}\) is \(-\mathcal {O}_{ji}\), we can compute from the third condition in (7.1) that

Hence, overall we obtain the following weak formulation:

[Motion law] For all \({{{\varvec{\varphi }}}\in T\widetilde{\Sigma }\cap [H^1(\Omega )]^{I_P}}\),

[Gibbs–Thomson law] For all \(\xi \in L^2(\Gamma ^{}_{})\),

[Curvature vector] For all \(\vec {\eta }\in H^1(\Gamma ^{}_{};\mathbb {R}^2)\) such that \(\vec {\eta }\!\mid _{\Gamma ^{}_{c^{k}_{1}}} = \vec {\eta }\!\mid _{\Gamma ^{}_{c^{k}_{2}}} = \vec {\eta }\!\mid _{\Gamma ^{}_{c^{k}_{3}}}\) on \(\mathcal {T}_{k}\) for all \(k\in \mathbb {N}_{\le {I_T}}\),

7.3 Finite element approximations

We now generalize our finite element approximation (4.2) to the multi-phase case. The necessary discrete function spaces are the obvious generalizations, for example

where \(\rho ^{k}_{i} \in \{0,1\}\) encodes at which of its two endpoints the discrete curve \(\Gamma ^m_{c^{k}_{i}}\) meets the k-th triple junction.

Given \(\vec {X}^{m}_{}\in \underline{V}(\Gamma ^{m}_{})\), we find \(({{\varvec{W}}^{m+1}_{}}, \vec {X}^{m+1}_{}, \kappa ^{m+1}_\sigma )\in {{\varvec{S}}^m_\Sigma }\times \underline{V}(\Gamma ^{m}_{})\times V(\Gamma ^{m}_{})\) such that the following conditions hold:

[Motion law] For all \({{\varvec{\varphi }}}\in {{\varvec{S}}^m_\Sigma }\),

[Gibbs–Thomson law] For all \(\xi \in {V(\Gamma ^{m}_{})}\),

[Curvature vector] For all \(\vec {\eta }\in \underline{V}(\Gamma ^{m}_{})\),

Remark 7.3

(Linear system) The linear system of equations arising at each time level of (7.3) is given by the obvious generalization of (5.1), where the block matrix entries of (5.1) are now defined by \(A_\Omega = {\text {diag}}{(A)_{j=1,\ldots ,I_P}}\), \(C_\Gamma = {\text {diag}}{(C_i)_{i=1,\ldots ,I_C}}\), \(\vec {N}_{\Omega ,\Gamma } = (\mathcal {O}_{ji}\vec {N}_i)_{i=1,\ldots ,I_C,j=1,\ldots ,I_P}\), \(B_{\Omega ,\Gamma } = (\mathcal {O}_{ji}B_i)_{i=1,\ldots ,I_C,j=1,\ldots ,I_P}\), \(\vec {D}_\Gamma = {\text {diag}}{(\vec {D}_i)_{i=1,\ldots ,I_C}}\) and  . Once again the generalized system (5.1) can be reduced by eliminating the final component \(W^{m+1}_{I_P}\) from \({{\varvec{W}}^{m+1}_{}}\). We obtain the same block structure as in (5.3), with the new entries now given by \({\widehat{A}}_\Omega = {\text {diag}}{(A)_{j=1,\ldots ,I_P-1}}\), \(\widehat{B}_{\Omega ,\Gamma } = ((\mathcal {O}_{I_P,i}-\mathcal {O}_{ji})B_i)_{i=1,\ldots ,I_C,j=1,\ldots ,I_P-1}\) and \(\widehat{N}_{\Omega ,\Gamma } = (\mathcal {O}_{ji}\vec {N}_i)_{i=1,\ldots ,I_C,j=1,\ldots ,I_P-1}\).


Finally, on using the techniques from Sect. 6, we can adapt the approximation (7.3) to obtain a structure preserving scheme that is unconditionally stable and that conserves the areas of the enclosed phases exactly. Given \((\vec {X}^{m}_{},\kappa ^m_\sigma )\in \underline{V}(\Gamma ^{m}_{})\times V(\Gamma ^{m}_{})\), find \(({{\varvec{W}}^{m+1}_{}}, \vec {X}^{m+1}_{}, \kappa ^{m+1}_\sigma )\in {\varvec{S}}^m_\Sigma \times \underline{V}(\Gamma ^{m}_{})\times V(\Gamma ^{m}_{})\) such that

[Motion law] For all \({\varvec{\varphi }}\in {\varvec{S}}^m_\Sigma \),

[Gibbs–Thomson law] For all \(\xi \in V(\Gamma ^{m}_{})\),

[Curvature vector] For all \(\vec {\eta }\in \underline{V}(\Gamma ^{m}_{})\),

We conclude this section by stating theoretical results for the generalized schemes. Their proofs are straightforward adaptations of the proofs of Theorems 4.1, 4.3 and 6.2.

Assumption 4

Assume that \({\text {span}}{\{\vec {\omega }^{m}_{i,j}\}_{1\le j\le N_i-1}} \not = \{\vec {0}\}\) for \(1 \le i \le I_C\) and

Theorem 7.4

Suppose that Assumption 4 holds, and that \(m\ge 0\). Then, there exists a unique solution \(({{\varvec{W}}^{m+1}_{}},\vec {X}^{m+1}_{},\kappa ^{m+1}_\sigma )\in {\varvec{S}}^m_\Sigma \times \underline{V}(\Gamma ^m)\times V(\Gamma ^m)\) to (7.3). Moreover, any solution to (7.3) or (7.4) satisfies the stability bound

Finally, a solution to (7.4) satisfies

8 Numerical results

We implemented the fully discrete finite element approximations (7.3) and (7.4) within the finite element toolbox ALBERTA, see [41]. The arising linear systems of the form (5.3) are solved with a GMRes iterative solver, using as a preconditioner a least squares solution of the block matrix in (5.3) without the projection matrices. For the computation of the least squares solution we employ the sparse factorization package SPQR, see [19].
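The solver strategy described above, GMRES preconditioned by a least squares solve with a related block matrix, can be sketched with SciPy. This is not the ALBERTA/SPQR implementation used for the computations: a dense `numpy.linalg.lstsq` call stands in for the sparse QR factorization of SPQR, and the matrices `B` (playing the role of the block matrix without the projection matrices) and `A` are hypothetical toy data.

```python
import numpy as np
from scipy.sparse.linalg import LinearOperator, gmres


def least_squares_preconditioner(B):
    """Return a LinearOperator applying r -> argmin_z ||B z - r||_2.

    Dense numpy.linalg.lstsq stands in for a sparse QR solver such as SPQR.
    """
    n = B.shape[0]

    def apply(r):
        z, *_ = np.linalg.lstsq(B, r, rcond=None)
        return z

    return LinearOperator((n, n), matvec=apply, dtype=float)


# Hypothetical toy data: B plays the role of the block matrix without the
# projection matrices; A adds a small low-rank correction to it.
rng = np.random.default_rng(0)
n = 60
B = 4.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
u, v = rng.standard_normal(n), rng.standard_normal(n)
A = B + 0.05 * np.outer(u, v) / n
b = rng.standard_normal(n)

x, info = gmres(A, b, M=least_squares_preconditioner(B))  # info == 0 signals convergence
print(info, np.linalg.norm(A @ x - b) / np.linalg.norm(b))
```

Since the preconditioned operator is a low-rank perturbation of the identity in this toy case, GMRES needs only a few iterations; the behaviour for the actual block systems may differ.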


For the triangulation \(\mathfrak {T}^m\) of the bulk domain \(\Omega \), on which the bulk finite element space \(S^m\) is defined, we use an adaptive mesh with fine elements close to the interface \(\Gamma ^m\) and coarser elements away from it. The precise strategy is described in [8, Section 5.1]; for a domain \(\Omega =(-H,H)^d\) and two integer parameters \(N_c < N_f\), it results in elements with maximal diameter approximately equal to \(h_f = \frac{2H}{N_f}\) close to \(\Gamma ^m\) and approximately equal to \(h_c = \frac{2H}{N_c}\) far away from it. For all our computations we use \(H=4\). An example of such an adaptive mesh is shown in Fig. 3.

We stress that, due to the unfitted nature of our finite element approximations, special quadrature rules are needed to assemble terms that involve both bulk and surface finite element functions. For all the computations presented in this section, we use true integration for these terms; see [8, 37] for details on the practical implementation. Throughout this section we use (almost) uniform time steps, in that \(\tau _m=\tau \) for \(m=0,\ldots , M-2\) and \(\tau _{M-1} = T - t_{M-1} \le \tau \). Unless otherwise stated, we set \(\sigma =1\).
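The (almost) uniform time stepping can be generated as follows. This Python sketch assumes only the rule stated above, \(\tau _m=\tau \) for \(m=0,\ldots ,M-2\) and a shorter final step \(\tau _{M-1} = T - t_{M-1}\le \tau \); the function name and the small round-off guard are our own choices.

```python
import math


def almost_uniform_steps(T, tau):
    """Time steps tau_m = tau for m = 0, ..., M-2 and a final step
    tau_{M-1} = T - t_{M-1} <= tau, so that the steps sum to T."""
    # number of steps M: smallest integer with M * tau >= T, with a small
    # guard so that round-off in T / tau does not add a spurious extra step
    M = max(1, math.ceil(T / tau - 1e-12))
    steps = [tau] * (M - 1)
    steps.append(T - (M - 1) * tau)
    return steps


steps = almost_uniform_steps(T=0.5, tau=0.064)
print(len(steps), steps[-1])  # 8 steps, the last one of length about 0.052
```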

8.1 Convergence experiment

To validate our proposed schemes, we use the following exact solution for a network of three concentric circles. Let \(0<R_1(t)<R_2(t)<R_3(t)\) and \(\Gamma ^{}_{i}(t):= \partial B(0,R_i(t))\) for each \(i\in \mathbb {N}_{\le {3}}\). Suppose that \(\vec {\nu }^{}_{1}\), \(-\vec {\nu }^{}_{2}\) and \(\vec {\nu }^{}_{3}\) point towards the origin, and let \(\Omega _1(t) = B(0,R_1(t)) \cup (\Omega {\setminus } \overline{B(0,R_3(t))})\), \(\Omega _j(t) = B(0,R_j(t)) {\setminus } \overline{B(0,R_{j-1}(t))}\), \(j=2,3\). Hence, with the notation from Sect. 7.1 we have \(I_C = 3\), \(I_P = 3\), \(I_T = 0\) and \(\mathcal {O}= \begin{pmatrix}-1 &{}0&{}1 \\ 1 &{} 1 &{} 0 \\ 0&{} -1 &{}-1 \end{pmatrix}\). See Fig. 2 for the setting.

We shall prove in Appendix A that \(({\varvec{w}},\{\Gamma ^{}_{}(t)\}_{0\le t\le T})\) is a solution to (7.1) with \(\sigma =1\) if the three radii satisfy the differential algebraic equations:

where \(F(u) := (\frac{1}{\sqrt{u^2 - A_2}} + \frac{1}{u} + \frac{1}{\sqrt{u^2 + A_3}})/({u\log {\frac{u^2 + A_3}{u^2 - A_2}}})\) for \(u\in (\sqrt{A_2},\infty )\), \(A_2 := R_2(0)^2 - R_1(0)^2\), and \(A_3 := R_3(0)^2 - R_2(0)^2\), and if the three chemical potentials are given by

where \(\alpha (t):= \frac{\frac{1}{R_1{(t)}} + \frac{1}{R_2{(t)}} + \frac{1}{R_3{(t)}}}{2\log {\frac{R_3{(t)}}{R_1{(t)}}}}\).
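The identity \(F(R_2(t)) = \alpha (t)/R_2(t)\) used in Appendix A can be checked numerically once \(R_1\) and \(R_3\) are expressed through \(R_2\). The Python sketch below assumes that the algebraic part of (8.1a) encodes the conservation of the two annulus areas, i.e. \(R_1^2 = R_2^2 - A_2\) and \(R_3^2 = R_2^2 + A_3\), which is consistent with the definitions of \(A_2\) and \(A_3\); it does not reproduce the ODE for \(R_2\) or the root-finding step.

```python
import math


def radii_from_R2(R2, A2, A3):
    """Inner and outer radii from the middle one, assuming conserved annulus
    areas: R1^2 = R2^2 - A2 and R3^2 = R2^2 + A3."""
    return math.sqrt(R2**2 - A2), math.sqrt(R2**2 + A3)


def F(u, A2, A3):
    """F(u) as defined after (8.1a), for u > sqrt(A2)."""
    num = 1.0 / math.sqrt(u**2 - A2) + 1.0 / u + 1.0 / math.sqrt(u**2 + A3)
    return num / (u * math.log((u**2 + A3) / (u**2 - A2)))


def alpha(R1, R2, R3):
    """alpha(t) as defined in (8.1), written in terms of the three radii."""
    return (1.0 / R1 + 1.0 / R2 + 1.0 / R3) / (2.0 * math.log(R3 / R1))


R1_0, R2_0, R3_0 = 2.0, 2.5, 3.0
A2, A3 = R2_0**2 - R1_0**2, R3_0**2 - R2_0**2  # 2.25 and 2.75
for R2 in (2.5, 2.2):
    R1, R3 = radii_from_R2(R2, A2, A3)
    print(R1, R3, F(R2, A2, A3), alpha(R1, R2, R3) / R2)
```

The last two columns coincide up to round-off, since \(\log \frac{R_2^2+A_3}{R_2^2-A_2} = 2\log \frac{R_3}{R_1}\) under the stated assumption.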

In order to accurately compute the radius \(R_2(t)\), rather than numerically solving the ODE in (8.1a), we employ a root finding algorithm for the equation

following ideas similar to those in [8, 37].

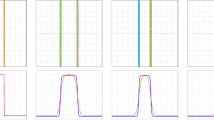

For the initial radii \(R_1(0) = 2\), \(R_2(0) = 2.5\), \(R_3(0) = 3\) and the time interval [0, T] with \(T=\frac{1}{2}\), so that \(R_1(T) \approx 1.60\), \(R_2(T) \approx 2.20\) and \(R_3(T) \approx 2.75\), we perform a convergence experiment for the exact solution (8.1). To this end, for \(i=0,\ldots ,4\), we set \(N_f = \frac{1}{2} K = 2^{7+i}\), \(N_c = 4^{i}\) and \(\tau = 4^{3-i}\times 10^{-3}\). In Tables 1 and 2 we display the errors

and

where \(I^m: C^0(\overline{\Omega }) \rightarrow S^m\) denotes the standard interpolation operator, for the schemes (7.3) and (7.4), respectively. We also let \(K_\Omega ^m\) denote the number of degrees of freedom of \(S^m\), and define \(h^m_\Gamma = \max _{i=1,\ldots ,3} \max _{j = 1,\ldots ,N_i} \sigma ^m_{i,j}\), as well as \(v_\Delta ^M = \max _{j=1,\ldots ,I_P} |\,|\Omega ^M_j| - |\Omega ^0_j| \,|\). As expected, we observe exact volume preservation for the scheme (7.4) in Table 2, up to solver tolerance, while the relative volume loss in Table 1 decreases as \(\tau \) becomes smaller. Surprisingly, the two error quantities \(\Vert \Gamma ^h - \Gamma \Vert _{L^\infty }\) and \(\Vert {\varvec{W}}^h - {\varvec{w}}\Vert _{L^\infty }\) are generally smaller in Table 1 than in Table 2, although the difference decreases as the discretization parameters become smaller.
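The discretization parameters of this experiment, and two of the reported quantities, can be tabulated with a few lines of Python. In this sketch the helper names are hypothetical, \(H=4\) is taken from the description of the adaptive mesh, \(h_\Gamma \) is read as the largest edge length of the discrete curves (an assumption about the meaning of \(\sigma ^m_{i,j}\)), and the test curves are regular polygons rather than computed solutions.

```python
import math


def refinement_schedule(levels=5, H=4.0):
    """Parameters of the convergence experiment for i = 0, ..., levels - 1."""
    rows = []
    for i in range(levels):
        N_f = 2 ** (7 + i)  # N_f = K / 2
        N_c = 4 ** i
        rows.append({
            "i": i, "N_f": N_f, "K": 2 * N_f, "N_c": N_c,
            "tau": 4.0 ** (3 - i) * 1e-3,
            "h_f": 2 * H / N_f,  # element size near the interface
            "h_c": 2 * H / N_c,  # element size far from the interface
        })
    return rows


def h_gamma(closed_curves):
    """Largest edge length over all closed polygonal curves."""
    return max(math.dist(c[j], c[(j + 1) % len(c)])
               for c in closed_curves for j in range(len(c)))


def v_delta(areas_initial, areas_final):
    """max_j | |Omega_j^M| - |Omega_j^0| | for lists of phase areas."""
    return max(abs(a1 - a0) for a0, a1 in zip(areas_initial, areas_final))


def regular_polygon(R, N):
    """N vertices on the circle of radius R, counterclockwise."""
    return [(R * math.cos(2 * math.pi * j / N), R * math.sin(2 * math.pi * j / N))
            for j in range(N)]


for row in refinement_schedule():
    print(row)
circles = [regular_polygon(R, 64) for R in (2.0, 2.5, 3.0)]
print(h_gamma(circles))  # equals 6 * sin(pi / 64): the outer circle has the longest edges
```

Along this sequence each refinement halves \(h_f\) and divides \(\tau \) by four, so \(\tau \) stays proportional to \(h_f^2\).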

For all subsequent numerical simulations, we employ the fully structure-preserving scheme (7.4).

8.2 Evolutions with equal surface energies

8.2.1 3 Phases

In the next set of experiments, we investigate the evolution of a standard double bubble and a disk, where one phase consists of the left bubble and another phase consists of the right bubble together with the disk. With the notation from Sect. 7.1 we have \(I_C = 4\), \(I_P = 3\), \(I_T = 2\), \((c^{1}_{1},c^{1}_{2},c^{1}_{3}) = (c^{2}_{1},c^{2}_{2},c^{2}_{3}) = (1,2,3)\) and \(\mathcal {O}= \begin{pmatrix}0 &{}-1&{}1&{}0 \\ 1 &{} 0 &{}-1&{}-1 \\ -1 &{}1&{}0&{}1 \end{pmatrix}\).

The two bubbles of the double bubble each enclose an area of about 3.139, while the disk has an initial radius of \(\frac{5}{8}\), so that it initially encloses an area of \(\frac{25\pi }{64} \approx 1.227\). During the evolution, the disk vanishes and the right bubble grows correspondingly; see Fig. 3. We note that our theoretical framework does not allow for changes of topology, such as the vanishing of curves. Hence, in our computations we perform heuristic surgeries whenever a curve becomes too short. A closed curve is then simply discarded. A short curve that is part of a network is removed as well; this leaves two triple junctions at which only two curves meet, and at each of them the two remaining curves are glued together so that the simulation can continue.

Repeating the simulation with a larger initial disk gives the results in Fig. 4. Here the radius is \(\frac{5}{4}\), so that the enclosed area is \(\frac{25\pi }{16} \approx 4.909\). Now the disk grows at the expense of the right bubble, so that eventually two separate phases remain.

With the next simulation we demonstrate that, in the given setup, the initial sizes alone do not determine which of the two components of phase 1 survives. In particular, we let the initial disk have area 3.300, so that it is larger than the other component of the same phase, namely the right bubble of the double bubble. Nevertheless, since the boundary of the bubble is overall cheaper than the boundary of the disk, the latter shrinks to extinction; see Fig. 5.
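The disk areas quoted in these experiments follow from \(|B_r| = \pi r^2\). The short Python check below reproduces them and, conversely, computes the radius of a disk with the area 3.300 used for Fig. 5; the dictionary labels are only for readability.

```python
import math

disk_radii = {"Fig. 3": 5 / 8, "Fig. 4": 5 / 4}
for label, r in disk_radii.items():
    print(label, r, math.pi * r ** 2)  # 25*pi/64 ~ 1.227 and 25*pi/16 ~ 4.909

# radius of a disk with the area 3.300 used for Fig. 5
r_fig5 = math.sqrt(3.300 / math.pi)
print(r_fig5)  # about 1.025
```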

For the next experiment, we start from a nonstandard triple bubble in which the rightmost bubble has area 1.5, while the other two bubbles have unit area. The two outer bubbles are assigned to the same phase. In particular, with the notation from Sect. 7.1 we have \(I_C = 6\), \(I_P = 3\), \(I_T = 4\), \((c^{1}_{1},c^{1}_{2},c^{1}_{3}) = (1,2,5)\), \((c^{2}_{1},c^{2}_{2},c^{2}_{3}) = (1,3,5)\), \((c^{3}_{1},c^{3}_{2},c^{3}_{3}) = (2,4,6)\), \((c^{4}_{1},c^{4}_{2},c^{4}_{3}) = (3,4,6)\) and

We observe that during the evolution the larger bubble on the right grows at the expense of the left bubble, until the latter vanishes completely. The remaining interfaces then evolve towards a standard double bubble with enclosed areas 1 and 2.5; see Fig. 6.

In the final numerical simulation for the setting with 3 phases, we consider the evolution of two double bubbles. With the notation from Sect. 7.1 we have \(I_C = 6\), \(I_P = 3\), \(I_T = 4\), \((c^{1}_{1},c^{1}_{2},c^{1}_{3}) = (c^{2}_{1},c^{2}_{2},c^{2}_{3}) = (1,2,3)\), \((c^{3}_{1},c^{3}_{2},c^{3}_{3}) = (c^{4}_{1},c^{4}_{2},c^{4}_{3}) = (4,5,6)\) and

The first double bubble encloses areas 3.14 and 6.48, while the second double bubble encloses two areas of 3.64 each. In each case, the left bubble is assigned to phase 1, while the right bubble is assigned to phase 2. In this way, the lower double bubble holds the larger portion of phase 1, while the upper double bubble holds the larger portion of phase 2. Consequently, each double bubble evolves to a single disk that contains just one phase. See Fig. 7.

8.2.2 4 Phases

In the next set of experiments, we consider a nonstandard triple bubble, one of whose bubbles forms a single phase together with a separate disk. In particular, with the notation from Sect. 7.1 we have \(I_C = 7\), \(I_P = 4\), \(I_T = 4\), \((c^{1}_{1},c^{1}_{2},c^{1}_{3}) = (1,2,5)\), \((c^{2}_{1},c^{2}_{2},c^{2}_{3}) = (1,3,5)\), \((c^{3}_{1},c^{3}_{2},c^{3}_{3}) = (2,4,6)\), \((c^{4}_{1},c^{4}_{2},c^{4}_{3}) = (3,4,6)\) and

The three bubbles of the triple bubble each enclose unit area, while the disk has an initial radius of \(\frac{1}{2}\), so that it initially encloses an area of \(\frac{\pi }{4} \approx 0.785\). During the evolution, the disk vanishes and the right bubble grows correspondingly; see Fig. 8.

Repeating the experiment with initial data where the disk is a unit disk leads to the evolution in Fig. 9, where the disk now expands and survives, at the expense of the right bubble in the triple bubble.

8.3 Evolutions with different surface energies

8.3.1 3 Phases

As an example of unequal surface energy densities for the various curves, we repeat the simulation in Fig. 3, but now weight curves 1 and 3 of the double bubble with \(\sigma _1 = \sigma _3 = 2\), while keeping the other two densities at unity. In contrast to Fig. 3, it is now energetically favourable to enlarge the single bubble, while shrinking the bubble that is surrounded by the more expensive interfaces. See Fig. 10 for the observed evolution.
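The comparison above rests on the weighted interface energy \(\sum _i \sigma _i|\Gamma _i|\), i.e. the total surface energy that the flow reduces. As a minimal sketch with hypothetical helper names and toy geometry (not the configuration of Fig. 10), the weighted energy of a polygonal network can be evaluated as follows; doubling \(\sigma _1\) and \(\sigma _3\) raises only the cost of the first and third curves.

```python
import math


def curve_length(vertices, closed):
    """Length of a polyline; closed curves include the edge back to the first vertex."""
    n = len(vertices)
    n_edges = n if closed else n - 1
    return sum(math.dist(vertices[j], vertices[(j + 1) % n]) for j in range(n_edges))


def interface_energy(curves, sigmas):
    """Weighted total length sum_i sigma_i |Gamma_i| of a polygonal network."""
    return sum(s * curve_length(v, closed) for (v, closed), s in zip(curves, sigmas))


# Toy network (hypothetical): three unit segments meeting at the origin,
# plus a separate closed unit square.
c = math.sqrt(3) / 2
curves = [
    ([(0.0, 0.0), (1.0, 0.0)], False),
    ([(0.0, 0.0), (-0.5, c)], False),
    ([(0.0, 0.0), (-0.5, -c)], False),
    ([(2.0, 0.0), (3.0, 0.0), (3.0, 1.0), (2.0, 1.0)], True),
]
print(interface_energy(curves, [1, 1, 1, 1]))  # 7 up to round-off
print(interface_energy(curves, [2, 1, 2, 1]))  # 9 up to round-off
```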

8.3.2 4 Phases

Similarly, if we make the interface of the single circular bubble in the initial data of Fig. 9 more expensive, it no longer grows but shrinks to a point. Setting the weight of this curve to \(\sigma _7 = 2\) leads to the evolution seen in Fig. 11.

References

Bao, W., Garcke, H., Nürnberg, R., Zhao, Q.: A structure-preserving finite element approximation of surface diffusion for curve networks and surface clusters. Numer. Methods Partial Differ. Equ. 39, 759–794 (2023)

Bao, W., Zhao, Q.: A structure-preserving parametric finite element method for surface diffusion. SIAM J. Numer. Anal. 59, 2775–2799 (2021)

Barrett, J.W., Blowey, J.F.: An error bound for the finite element approximation of the Cahn-Hilliard equation with logarithmic free energy. Numer. Math. 72, 1–20 (1995)

Barrett, J.W., Blowey, J.F., Garcke, H.: Finite element approximation of the Cahn-Hilliard equation with degenerate mobility. SIAM J. Numer. Anal. 37, 286–318 (1999)

Barrett, J.W., Blowey, J.F., Garcke, H.: On fully practical finite element approximations of degenerate Cahn-Hilliard systems. M2AN Math. Model. Numer. Anal. 35, 713–748 (2001)

Barrett, J.W., Garcke, H., Nürnberg, R.: On the variational approximation of combined second and fourth order geometric evolution equations. SIAM J. Sci. Comput. 29, 1006–1041 (2007)

Barrett, J.W., Garcke, H., Nürnberg, R.: A parametric finite element method for fourth order geometric evolution equations. J. Comput. Phys. 222, 441–462 (2007)

Barrett, J.W., Garcke, H., Nürnberg, R.: On stable parametric finite element methods for the Stefan problem and the Mullins-Sekerka problem with applications to dendritic growth. J. Comput. Phys. 229, 6270–6299 (2010)

Barrett, J.W., Garcke, H., Nürnberg, R.: Parametric finite element approximations of curvature-driven interface evolutions. Handb. Numer. Anal. 21, 275–423 (2020)

Bates, P.W., Brown, S.: A numerical scheme for the Mullins–Sekerka evolution in three space dimensions. In: Differential Equations and Computational Simulations. Chengdu, 1999. World Scientific Publishing, River Edge, pp. 12–26 (2000)

Bates, P.W., Chen, X., Deng, X.: A numerical scheme for the two phase Mullins-Sekerka problem. Electron. J. Differ. Equ. 1995, 1–27 (1995)

Blowey, J.F., Copetti, M.I.M., Elliott, C.M.: Numerical analysis of a model for phase separation of a multi-component alloy. IMA J. Numer. Anal. 16, 111–139 (1996)

Blowey, J.F., Elliott, C.M.: The Cahn–Hilliard gradient theory for phase separation with nonsmooth free energy. II. Numerical analysis. Eur. J. Appl. Math. 3, 147–179 (1992)

Bronsard, L., Garcke, H., Stoth, B.: A multi-phase Mullins-Sekerka system: matched asymptotic expansions and an implicit time discretisation for the geometric evolution problem. Proc. R. Soc. Edinb. Sect. A 128, 481–506 (1998)

Bronsard, L., Wetton, B.T.R.: A numerical method for tracking curve networks moving with curvature motion. J. Comput. Phys. 120, 66–87 (1995)

Chen, C., Kublik, C., Tsai, R.: An implicit boundary integral method for interfaces evolving by Mullins–Sekerka dynamics. In: Mathematics for Nonlinear Phenomena—Analysis and Computation. Springer Proceedings in Mathematics and Statistics, vol. 215. Springer, Cham, pp. 1–21 (2017)

Chen, S., Merriman, B., Osher, S., Smereka, P.: A simple level set method for solving Stefan problems. J. Comput. Phys. 135, 8–29 (1997)

Chen, X., Hong, J., Yi, F.: Existence, uniqueness, and regularity of classical solutions of the Mullins-Sekerka problem. Commun. Partial Differ. Equ. 21, 1705–1727 (1996)

Davis, T.A.: Algorithm 915, SuiteSparseQR: multifrontal multithreaded rank-revealing sparse QR factorization. ACM Trans. Math. Softw. 38, 1–22 (2011)

Dziuk, G.: Finite elements for the Beltrami operator on arbitrary surfaces. In: Hildebrandt, S., Leis, R. (eds.) Partial Differential Equations and Calculus of Variations. Lecture Notes in Mathematics, vol. 1357, pp. 142–155. Springer, Berlin (1988)

Elliott, C.M., French, D.A.: Numerical studies of the Cahn-Hilliard equation for phase separation. IMA J. Appl. Math. 38, 97–128 (1987)

Escher, J., Simonett, G.: Classical solutions for Hele-Shaw models with surface tension. Adv. Differ. Equ. 2, 619–642 (1997)

Eto, T.: A rapid numerical method for the Mullins-Sekerka flow with application to contact angle problems. J. Sci. Comput. 98, 1573–7691 (2024)

Eyre, D.J.: Systems of Cahn-Hilliard equations. SIAM J. Appl. Math. 53, 1686–1712 (1993)

Feng, X., Prohl, A.: Error analysis of a mixed finite element method for the Cahn-Hilliard equation. Numer. Math. 99, 47–84 (2004)

Feng, X., Prohl, A.: Numerical analysis of the Cahn-Hilliard equation and approximation of the Hele-Shaw problem. Interfaces Free Bound. 7, 1–28 (2005)

Garcke, H., Rauchecker, M.: Stability analysis for stationary solutions of the Mullins-Sekerka flow with boundary contact. Math. Nachr. 295, 683–705 (2022)

Garcke, H., Sturzenhecker, T.: The degenerate multi-phase Stefan problem with Gibbs-Thomson law. Adv. Math. Sci. Appl. 8, 929–941 (1998)

Hensel, S., Stinson, K.: Weak solutions of Mullins–Sekerka flow as a Hilbert space gradient flow. Arch. Rational Mech. Anal. 248, 8 (2024)

Jiang, W., Li, B.: A perimeter-decreasing and area-conserving algorithm for surface diffusion flow of curves. J. Comput. Phys. 443, 110531 (2021)

Li, Y., Choi, J., Kim, J.: Multi-component Cahn-Hilliard system with different boundary conditions in complex domains. J. Comput. Phys. 323, 1–16 (2016)

Li, Y., Liu, R., Xia, Q., He, C., Li, Z.: First- and second-order unconditionally stable direct discretization methods for multi-component Cahn-Hilliard system on surfaces. J. Comput. Appl. Math. 401, 113778 (2022)

Luckhaus, S., Sturzenhecker, T.: Implicit time discretization for the mean curvature flow equation. Calc. Var. Partial Differ. Equ. 3, 253–271 (1995)

Mayer, U.F.: A numerical scheme for moving boundary problems that are gradient flows for the area functional. Eur. J. Appl. Math. 11, 61–80 (2000)

Neubauer, R.: Ein Finiteelementeansatz für Krümmungsfluß von unter Tripelpunktbedingungen verbundenen Kurven, Master’s thesis, University Bonn, Bonn (2002)

Nürnberg, R.: Numerical simulations of immiscible fluid clusters. Appl. Numer. Math. 59, 1612–1628 (2009)

Nürnberg, R.: A structure preserving front tracking finite element method for the Mullins-Sekerka problem. J. Numer. Math. 31, 137–155 (2023)

Pan, Z., Wetton, B.: A numerical method for coupled surface and grain boundary motion. Eur. J. Appl. Math. 19, 311–327 (2008)

Pozzi, P., Stinner, B.: On motion by curvature of a network with a triple junction. SMAI J. Comput. Math. 7, 27–55 (2021)

Röger, M.: Existence of weak solutions for the Mullins-Sekerka flow. SIAM J. Math. Anal. 37, 291–301 (2005)

Schmidt, A., Siebert, K.G.: Design of Adaptive Finite Element Software: The Finite Element Toolbox ALBERTA. Lecture Notes in Computational Science and Engineering, vol. 42. Springer, Berlin (2005)

Serfaty, S.: Gamma-convergence of gradient flows on Hilbert and metric spaces and applications. Discrete Contin. Dyn. Syst. 31, 1427–1451 (2011)

Thaddey, B.: Numerik für die Evolution von Kurven mit Tripelpunkt, Master’s thesis, University Freiburg, Freiburg (1999)

Zhu, J., Chen, X., Hou, T.Y.: An efficient boundary integral method for the Mullins-Sekerka problem. J. Comput. Phys. 127, 246–267 (1996)

Acknowledgements

Part of this study was conducted during the first author's research stay in Regensburg, Germany. He is grateful to the administration of the University of Regensburg for the invitation and for the financial support by the DFG Research Training Group 2339 IntComSin (Project-ID 32182185). He also thanks all members of Research Training Group 2339 for their kindness and hospitality.

Funding

Open Access funding enabled and organized by Projekt DEAL.


Appendix A: An exact solution for the three-phase Mullins–Sekerka flow


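In this appendix, we prove that (8.1) is a solution to (7.1) with \(\sigma =1\). First, since \(R_1(t)^2 = R_2(t)^2 - A_2\) and \(R_3(t)^2 = R_2(t)^2 + A_3\) by the algebraic equations in (8.1a), we have \(\log \frac{R_2(t)^2 + A_3}{R_2(t)^2 - A_2} = 2\log \frac{R_3(t)}{R_1(t)}\), so that the definitions of F and \(\alpha \) in (8.1) directly yield \(F(R_2(t)) = \alpha (t) / R_2(t)\). Consequently, (8.1a) immediately implies that the normal velocity V of \(\Gamma ^{}_{}(t)\) satisfies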

The first and fourth equations in (7.1) hold trivially since \({\varvec{w}}\) as defined in (8.1b) is constant in the two connected components of \(\Omega _1(t)\), and harmonic in \(\Omega _2(t)\) and \(\Omega _3(t)\). Let us confirm the Gibbs–Thomson law. We see from (8.1b) that \(w_1 - w_2 = 1/R_1(t)\) on \(\Gamma ^{}_{1}(t)\), \(w_3 - w_2 = -1/R_2(t)\) on \(\Gamma ^{}_{2}(t)\) and \(w_3 - w_1 = 1/R_3(t)\) on \(\Gamma ^{}_{3}(t)\). Thus, \({\varvec{w}}\) satisfies the second condition in (7.1). We now turn to the motion law. A direct calculation shows

Hence, the third condition of (7.1) holds by (A.1) and (A.2). Therefore, \(({\varvec{w}},\{\Gamma ^{}_{}(t)\}_{0\le t\le T})\) with \({\varvec{w}} = (w_1,w_2,w_3)^T\) given by (8.1) is an exact solution of (7.1) with \(\sigma =1\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Eto, T., Garcke, H. & Nürnberg, R. A structure-preserving finite element method for the multi-phase Mullins–Sekerka problem with triple junctions. Numer. Math. 156, 1479–1509 (2024). https://doi.org/10.1007/s00211-024-01414-x


DOI: https://doi.org/10.1007/s00211-024-01414-x