Abstract

An explicit and computable error estimator for the \(hp\) version of the virtual element method (VEM), together with lower and upper bounds with respect to the exact energy error, is presented. This error estimator is employed to drive, following the approach of Melenk and Wohlmuth (Adv Comput Math 15(1–4):311–331, 2001), \(hp\) adaptive mesh refinements on very general polygonal meshes. In addition, a novel VEM \(hp\) Clément quasi-interpolant, instrumental for the a posteriori error analysis, is introduced. The performance of the adaptive method is validated by a number of numerical experiments.

1 Introduction

Among the novel technologies developed in the last two decades, polygonal methods [8, 14, 30, 32, 37, 43, 48, 50] have received increasing attention, thanks to the advantages that they offer compared to methods based on standard simplicial/quadrilateral meshes. One of the appealing features of polygonal methods is that they dovetail particularly well with adaptive mesh refinements, since polygonal meshes do not require any post-processing within the adaptive procedure.

In the mare magnum of the various theoretical and practical applications of mesh adaptivity, a particular place is occupied by the \(hp\) a posteriori analysis of Galerkin methods, and in particular of the finite element method (FEM) [3, 25, 28, 33, 36, 42]. Here, one combines mesh refinements with an increase of the dimension of the local approximation spaces.

This results in particularly competitive methods that are able to capture and resolve efficiently the singular behaviour of solutions to partial differential equations (PDEs), but it comes at the price of a more involved framework. For instance, one has to decide whether to refine in \(h\) (mesh refinement) or in \(p\) (increasing the dimension of the local spaces), has to construct an error estimator with bounds that are explicit in terms of the distribution of local polynomial degrees, and has to construct spaces possibly tailored for high polynomial order (such that, e.g., the condition number of the stiffness matrix is sufficiently robust in terms of \(p\)).

Amidst the polygonal methods, we pinpoint the virtual element method (in short VEM, introduced in [8, 10]), which has established itself as one of the most successful technologies in this context and has attracted a growing community (a very limited list of papers being [4, 6, 16, 18, 21, 22, 24, 35, 45, 47, 52, 53]). Differently from other polygonal approaches, the VEM is built without an explicit knowledge of the functions in the approximation spaces. The construction of the method is based on two main ingredients, which are computable only via the degrees of freedom with which the spaces are endowed: projections onto polynomial spaces and particular bilinear forms stabilizing the method. Virtual elements are particularly suitable for the \(hp\) framework not only due to the mesh refinement flexibility, but also due to the fact that variable (from element to element) polynomial degrees can be included very naturally in the formulation, without resorting to “ad hoc” modifications as in finite elements.

A posteriori error analysis for the VEM was investigated in [17, 20, 26, 27, 46], but \(hp\) adaptivity has never been targeted before and is the topic of the present work. This paper belongs to a series of articles covering different aspects of the \(p\) and \(hp\) versions of VEM, namely a priori error analysis on quasi-uniform meshes [11], approximation of corner singularities [12], multigrid algorithms [5], ill-conditioning in two [39] and three dimensional problems [31], Trefftz and non-conforming approaches [29, 40], and theoretical and numerical analysis of the stabilization typical of VEM [38].

In the present work, we introduce a VEM computable error estimator for a two dimensional model problem and we prove lower and upper bounds with respect to the energy error that are explicit both in the mesh size and in the distribution of local degrees of accuracy (which are the VEM counterpart of polynomial degrees for FEM). Additionally, we recall from [38, 42] some \(hp\) inverse inequalities on triangles and polygons. Besides, we introduce and prove error estimates of a novel \(hp\) Clément type quasi-interpolant, which is constructed starting from the quasi-interpolant of [41] on subtriangulations of the underlying polygonal meshes. Such estimates are an interesting result on their own, independently of their application in the a posteriori error analysis.

We also present several numerical experiments; more precisely, after verifying the lower and upper bounds of the error estimator, we recall from [42] an \(hp\) refinement strategy and we apply it to the proposed adaptive scheme. This refinement strategy heuristically indicates on which marked elements the solution is expected to be “smooth” and on which it is expected to be “singular”; in the former case, \(p\) refinement is performed (since \(p\) VEM converges exponentially when approximating analytic solutions, see [11]), in the latter, \(h\) refinement is performed (geometric refinements towards the singularities also give exponential convergence in terms of the number of degrees of freedom, see [12]).

Possible further developments of this work include extension to more complex problems and to the three dimensional case. Moreover, one could also take into account in the adaptive strategy an \(hp\) derefinement process including the agglomeration of elements.

The outline of the paper is the following. We begin in Sect. 2 by presenting the model problem and the \(hp\) version of the virtual element method and next, in Sect. 3, we introduce a set of technical tools needed for the a posteriori error analysis; in particular, we discuss approximation by functions in the virtual element space, \(hp\) polynomial inverse estimates and extension operators from an edge into the interior of a triangle. Subsequently, in Sect. 4 we deal with the a posteriori error analysis: we introduce an error estimator and we prove the “reliability” and the “efficiency” of such an estimator. Finally, a number of numerical experiments, including a comparison with the pure \(h\) refinement strategy, are presented in Sect. 5.

Notation. In the remainder of the paper, we will employ the following notation. Given D a measurable open set in \({\mathbb {R}}^2\), we denote by \(L^2(D)\) and \(H^s(D)\), \(s \in {\mathbb {N}}\), the standard Lebesgue and Sobolev spaces endowed with the standard inner products and seminorms:

The Sobolev norms are denoted by:

Sobolev spaces with fractional order are defined via interpolation theory [51].

It is worth highlighting with a separate notation the \(H^1\) inner product over the domain D:

$$\begin{aligned} a^D(u,v) := \int _D \nabla u\cdot \nabla v. \end{aligned}$$

We refer to [1] for the definition of spaces, inner products and (semi)norms.

Given \(\ell \in {\mathbb {N}}\), we set \({\mathbb {P}}_\ell (D)\) to be the space of polynomials of degree at most \(\ell \) over D. Finally, we write \(a \lesssim b\) and \(a \approx b\) in lieu of: there exist positive constants \(c_1\), \(c_2\) and \(c_3\) independent of the polynomial degree and of the mesh-size such that \(a \le c_1 b\) and \(c_2 a \le b \le c_3 a\), respectively.

2 Model problem and the \(hp\) version of the virtual element method

In this section, we briefly review the model problem and the \(hp\) version of the virtual element method.

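Given \(\varOmega \subset {\mathbb {R}}^2\) a polygonal domain and \(f\in L^2(\varOmega )\), we consider the Poisson problem with homogeneous Dirichlet boundary conditions

$$\begin{aligned} -\varDelta u= f\quad \text {in } \varOmega ,\qquad u= 0\quad \text {on } \partial \varOmega , \end{aligned}$$(1)

and its weak formulation

$$\begin{aligned} \text {find } u\in V\text { such that}\quad a(u, v) = (f, v)_{0,\varOmega }\quad \forall v\in V, \end{aligned}$$(2)

where we have set

$$\begin{aligned} V:= H^1_0(\varOmega ),\qquad a(u, v) := \int _{\varOmega } \nabla u\cdot \nabla v. \end{aligned}$$(3)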

Next, we introduce a virtual element method based on polygonal meshes and with nonuniform local degree of accuracy for the approximation of problem (2). We note that virtual element methods with nonuniform degree of accuracy were first introduced in [12].

Given \(\{{\mathcal {T}}_n\}_{n\in {\mathbb {N}}}\) a sequence of decompositions of \(\varOmega \) into polygons with straight edges, we associate to each \({\mathcal {T}}_n\), \(n \in {\mathbb {N}}\), its set of vertices \({\mathcal {V}} _n\) and boundary vertices \({\mathcal {V}}_n^b\), its set of edges \({\mathcal {E}}_n\) and boundary edges \({\mathcal {E}}_n^b\). To each \(K\) in \({\mathcal {T}}_n\), we associate its diameter \(h_K\), its barycenter \({\mathbf {x}} _K\), its set of vertices \({\mathcal {V}}^K\) and its set of edges \({\mathcal {E}}^K\). To each edge \(s\in {\mathcal {E}}_n^b\), we associate once and for all a unit normal \({\mathbf {n}}_{\mathbf s} = {\mathbf {n}}\).

We require that \({\mathcal {T}}_n\) is a conforming polygonal decomposition of \(\varOmega \) for every \(n \in {\mathbb {N}}\), i.e. every internal edge belongs to the intersection of the boundaries of two polygons.

Besides, we require the following two geometric assumptions on the sequence \({\mathcal {T}}_n\).

- (D1): Every \(K\in {\mathcal {T}}_n\) is star-shaped with respect to a ball (see [23]) with radius greater than or equal to \(\gamma h_K\), where \(\gamma \) is a universal positive constant.

- (D2): Given \(K\in {\mathcal {T}}_n\), each of its edges \(s\in {\mathcal {E}}^K\) has length greater than or equal to \({\widetilde{\gamma }} h_K\), where \({\widetilde{\gamma }}\) is a universal positive constant.

A consequence of assumptions (D1) and (D2) which will be extensively used in the following is highlighted in Remark 1.

Remark 1

Owing to assumptions (D1) and (D2), the following fact holds true. The subtriangulation \(\widetilde{{\mathcal {T}}}_n= \widetilde{{\mathcal {T}}}_n(K)\) of \(K\), obtained by joining the vertices of \(K\) to the center of the ball with respect to which \(K\) is star-shaped, is made of triangles that are star-shaped with respect to balls with radius greater than or equal to \({\overline{\gamma }} h_K\), where \({\overline{\gamma }}\) is a universal positive constant depending only on \(\gamma \) and \({\widetilde{\gamma }}\). For a proof of this fact, see [38, Chapter 2]. In the forthcoming analysis, we will often make use of standard functional inequalities (Poincaré, trace, ...). If not explicitly mentioned, the constants in such inequalities, and therefore also in all the results we will present, depend solely on the shape regularity of \({\mathcal {T}}_n\) and \(\widetilde{{\mathcal {T}}}_n\).
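The subtriangulation described in Remark 1 can be illustrated with a minimal sketch. The function names `polygon_area` and `fan_subtriangulation` are hypothetical, the polygon is assumed to be given by its vertices in counterclockwise order, and the center is assumed to be a point with respect to which the polygon is star-shaped; the sketch only builds the triangles and does not verify (D1) or (D2).

```python
from typing import List, Tuple

Point = Tuple[float, float]
Triangle = Tuple[Point, Point, Point]


def polygon_area(vertices: List[Point]) -> float:
    """Signed area by the shoelace formula (positive for counterclockwise order)."""
    area = 0.0
    n = len(vertices)
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]
        area += x0 * y1 - x1 * y0
    return 0.5 * area


def fan_subtriangulation(vertices: List[Point], center: Point) -> List[Triangle]:
    """Join each pair of consecutive vertices of the polygon to the given center.

    Assumption: the polygon is star-shaped with respect to `center`,
    so that the resulting triangles do not overlap.
    """
    n = len(vertices)
    return [(center, vertices[i], vertices[(i + 1) % n]) for i in range(n)]


# Illustrative data: the unit square, split from its midpoint.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
triangles = fan_subtriangulation(square, (0.5, 0.5))
triangle_areas = [polygon_area(list(t)) for t in triangles]
```

A quick sanity check is that all triangle areas are positive and add up to the area of the polygon, which holds precisely because the center sees every edge from the inside.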

Next, we associate to each element \(K\in {\mathcal {T}}_n\) a local degree of accuracy \(p_K\). To each boundary edge \(s\in {\mathcal {E}}^b_n\), we associate \(p_s\) equal to \(p_{{\widetilde{K}}}\), where \({\widetilde{K}}\) is the unique polygon having \(s\) as an edge. On the other hand, to each internal edge \(s\in {\mathcal {E}}_n \setminus {\mathcal {E}}_n^b\) we associate \(p_s\) equal to \(\max (p_{K_1}, p_{K_2})\), where \(s= \overline{K_1} \cap \overline{K_2}\).
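The rule for the edge degrees can be sketched in a few lines. The data layout (elements stored as lists of vertex indices, a hypothetical function `edge_degrees`) is an assumption made for illustration only; the rule itself is the one stated above: a boundary edge inherits the degree of its unique polygon, an internal edge takes the maximum over the two polygons sharing it.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, List


def edge_degrees(
    elements: Dict[str, List[int]], p: Dict[str, int]
) -> Dict[FrozenSet[int], int]:
    """Assign p_s to every edge of a conforming polygonal mesh.

    elements: element name -> vertex indices listed along the boundary.
    p:        element name -> local degree of accuracy p_K.
    """
    owners = defaultdict(list)
    for name, verts in elements.items():
        n = len(verts)
        for i in range(n):
            edge = frozenset((verts[i], verts[(i + 1) % n]))
            owners[edge].append(name)
    # One owner: boundary edge, p_s = p_K. Two owners: internal edge, p_s = max.
    return {edge: max(p[k] for k in ks) for edge, ks in owners.items()}


# Illustrative mesh: two quadrilaterals sharing the edge {1, 4}.
elements = {"K1": [0, 1, 4, 3], "K2": [1, 2, 5, 4]}
p = {"K1": 2, "K2": 4}
p_edge = edge_degrees(elements, p)
```

In this example the shared edge receives degree 4, while the boundary edges of `K1` keep degree 2.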

Given now \(\widetilde{{\mathcal {T}}}_n\) the regular subtriangulation of \(\varOmega \) obtained by gluing the local triangulations introduced in Remark 1, we let \(\widetilde{{\mathcal {V}}}_n\) and \(\widetilde{{\mathcal {E}}}_n\) be the sets of vertices and edges of \(\widetilde{{\mathcal {T}}}_n\), respectively. To each \(T\in \widetilde{{\mathcal {T}}}_n\) with \(T\subset K\), we associate \(p_T\) equal to \(p_K\). We denote by \(\mathbf p\) the vector of degrees of accuracy associated with polygonal mesh \({\mathcal {T}}_n\), whereas we denote by \(\widetilde{\mathbf p}\) the vector of degrees associated with triangular subdecomposition \(\widetilde{{\mathcal {T}}}_n\). To each edge \({\widetilde{s}}\) of \(T\) in the boundary of \(K\) we associate \(p_{{\widetilde{s}}}\) equal to \(p_s\), where \(s= {\overline{T}} \cap {\overline{K}} = {\widetilde{s}}\). To the other edges \({\widetilde{s}}\) of \(T\), we simply associate \(p_{{\widetilde{s}}}\) equal to \(p_K\). We also associate with each vertex \({\mathbf {V}}\in \widetilde{{\mathcal {V}}}_n\)

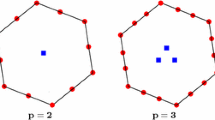

See Fig. 1 for a graphical idea regarding the distribution of the degrees over \({\mathcal {T}}_n\), \(\widetilde{{\mathcal {T}}}_n\), \({\mathcal {E}}_n\), and \(\widetilde{{\mathcal {E}}}_n\).

Fig. 1 Local degrees of accuracy distribution. Left: distribution over \({\mathcal {T}}_n\), the polygonal decomposition, and \({\mathcal {V}}_n\), the set of vertices of \({\mathcal {T}}_n\). Center-left: distribution over \({\mathcal {E}}_n\), the set of edges of \({\mathcal {T}}_n\). Center-right: distribution over \(\widetilde{{\mathcal {T}}}_n\), the subtriangulation of \({\mathcal {T}}_n\), and \(\widetilde{{\mathcal {V}}}_n\), the set of vertices of \(\widetilde{{\mathcal {T}}}_n\). Right: distribution over \(\widetilde{{\mathcal {E}}}_n\), the set of edges of \(\widetilde{{\mathcal {T}}}_n\)

We also require the following assumption on the local degree of accuracy distribution associated with \({\mathcal {T}}_n\):

- (P1): for every \(K\) and \(K'\) in \({\mathcal {T}}_n\) with \({\overline{K}} \cap \overline{K'}\ne \emptyset \), there exists a positive universal constant c such that

$$\begin{aligned} \vert p_K- p_{K'} \vert \le c. \end{aligned}$$(4)

As a consequence of the assumption (P1) and the above construction, it also holds that \(p_s\approx p_K\) whenever \(s\) is an edge of \(K\).
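For the reader's convenience, this equivalence can be checked in one line. The sketch assumes \(p_K\ge 1\) for every element (an assumption that is not stated explicitly at this point) and uses the constant c from (4). For an internal edge \(s\) shared by \(K\) and \(K'\),

$$\begin{aligned} p_K\le p_s= \max (p_K, p_{K'}) \le p_K+ c \le (1+c)\, p_K, \end{aligned}$$

whereas \(p_s= p_K\) on boundary edges by construction.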

We now introduce the virtual element method associated with the Poisson problem (2). In particular, following [8], we introduce a finite dimensional subspace \(V_n\) of \(V\), a discrete bilinear form \(a_n: V_n\times V_n\rightarrow {\mathbb {R}}\) and a discrete right-hand side \(f_n\), such that the method

$$\begin{aligned} \text {find } u_n\in V_n\text { such that}\quad a_n(u_n, v_n) = \langle f_n, v_n\rangle _n\quad \forall v_n\in V_n \end{aligned}$$(5)

is well-posed and its output \(u_n\) approximates the solution \(u\) of (2).

In Sect. 2.1, we describe the virtual element space \(V_n\). Next, in Sect. 2.2, we present a computable choice for the discrete bilinear form \(a_n\). Finally, in Sect. 2.3, we discuss the construction of the discrete right-hand side \(\langle f_n, \cdot \rangle _n\).

2.1 The virtual element space

The present section is devoted to introducing the virtual element space \(V_n\).

We begin by defining the local virtual element spaces. Given \(K\in {\mathcal {T}}_n\), we set first

The local virtual element space over polygon \(K\) reads

Let us consider the following set of linear functionals defined on \(V_n(K)\): given \(v_n\in V_n(K)\),

- The point-values of \(v_n\) at the vertices of \(K\);

- For every edge \(s\in {\mathcal {E}}^K\), the point-values at the \(p_s-1\) internal Gauß-Lobatto nodes on \(s\);

- The internal moments

$$\begin{aligned} \frac{1}{\vert K\vert } \int _Km_{\varvec{\alpha }} v_n\quad \forall \varvec{\alpha }\in {\mathbb {N}}^2,\quad \vert \varvec{\alpha }\vert := \alpha _1+\alpha _2 \le p_K-2, \end{aligned}$$(7)

where \(\{m_{\varvec{\alpha }},\, \varvec{\alpha }\in {\mathbb {N}}^2,\, \vert \varvec{\alpha }\vert \le p_K-2\}\) is any basis of \({\mathbb {P}}_{p_K-2}(K)\), provided that \(\Vert m_{\varvec{\alpha }} \Vert _{\infty ,K}\approx 1\) and that it is invariant with respect to homothetic transformations.

These linear functionals are a set of unisolvent degrees of freedom (dofs), see [8].
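The three families of degrees of freedom listed above can be made concrete with a small sketch. It counts the local dofs and computes the internal Gauß-Lobatto nodes on the reference edge \([-1,1]\), which are the zeros of the derivative of the Legendre polynomial of degree \(p_s\). The function names are hypothetical, and the Newton iteration started from Chebyshev-Lobatto points is a choice made for this illustration, not a procedure taken from the paper.

```python
import math
from typing import List, Tuple


def legendre_and_derivative(n: int, x: float) -> Tuple[float, float]:
    """Return (P_n(x), P_n'(x)) for |x| < 1 via the three-term recurrence."""
    if n == 0:
        return 1.0, 0.0
    p_prev, p_curr = 1.0, x
    for k in range(1, n):
        p_prev, p_curr = p_curr, ((2 * k + 1) * x * p_curr - k * p_prev) / (k + 1)
    dp = n * (x * p_curr - p_prev) / (x * x - 1.0)
    return p_curr, dp


def internal_gauss_lobatto_nodes(p: int) -> List[float]:
    """The p - 1 internal Gauss-Lobatto nodes on [-1, 1], i.e. the zeros of P_p'."""
    nodes = []
    for k in range(1, p):
        x = -math.cos(math.pi * k / p)  # Chebyshev-Lobatto initial guess
        for _ in range(50):
            P, dP = legendre_and_derivative(p, x)
            # Legendre ODE: (1 - x^2) P'' = 2 x P' - p (p + 1) P
            d2P = (2.0 * x * dP - p * (p + 1) * P) / (1.0 - x * x)
            step = dP / d2P
            x -= step
            if abs(step) < 1e-15:
                break
        nodes.append(x)
    return nodes


def local_dof_count(edge_degrees: List[int], p_K: int) -> int:
    """Vertex values + internal edge nodes + internal moments up to degree p_K - 2."""
    n_vertices = len(edge_degrees)  # a polygon has as many vertices as edges
    n_edge = sum(p_s - 1 for p_s in edge_degrees)
    n_internal = (p_K - 1) * p_K // 2  # dimension of P_{p_K - 2} in 2D
    return n_vertices + n_edge + n_internal


nodes_p3 = internal_gauss_lobatto_nodes(3)
dofs_square_p3 = local_dof_count([3, 3, 3, 3], 3)
```

For a quadrilateral with uniform degree 3, the count is 4 vertex values, 8 edge values and 3 internal moments.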

Remark 2

The choice of the polynomial basis dual to the internal moments plays a fundamental role in the possible ill-conditioning of the high-order virtual element method. We refer to [31, 39] for deeper insight into this topic and to Sect. 5 for an explicit choice of such a polynomial basis.

Although the space \(V_n(K)\) is not defined explicitly inside the element \(K\), by means of the set of degrees of freedom above, it is possible to compute a couple of local projectors, see [10]. The first operator is the \(L^2\) projector \(\varPi ^{0,K}_{p_K-2}: V_n(K)\rightarrow {\mathbb {P}}_{p_K- 2}(K)\) defined as

The second one is an \(H^1\) projector \(\varPi ^{\nabla ,K}_{p_K}: V_n(K)\rightarrow {\mathbb {P}}_{p_K} (K)\) defined as

When no confusion occurs we will write \(\varPi ^0_{p_K-2}\) and \(\varPi ^{\nabla }_{p_K}\) in lieu of \(\varPi ^{0,K}_{p_K-2}\) and \(\varPi ^{\nabla ,K}_{p_K}\).

The global virtual element space is obtained as in FEM by a standard conforming coupling of the local sets of degrees of freedom and by enforcing homogeneous boundary conditions on \(\partial \varOmega \):

We also introduce the set of global degrees of freedom and the global canonical basis

where \(\delta _{i,j}\) is the Kronecker delta.

Remark 3

We point out that if we aim to approximate the Poisson problem (1) with a non-homogeneous Dirichlet boundary condition \(g\in H^{\frac{1}{2}}(\partial \varOmega )\), then the global space (10) is modified into

where \(g_{GL|_s}\) denotes the Gauß-Lobatto interpolant of \(g\) over the boundary edge \(s\). In fact, Gauß-Lobatto interpolation on the boundary guarantees optimal approximation properties for the \(p\) and \(hp\) versions of VEM, see [29]. However, in order to avoid cumbersome technicalities in the following, we assume henceforth to deal with homogeneous Dirichlet boundary conditions.

2.2 The discrete bilinear form

Here, we introduce the discrete bilinear form \(a_n\) associated with method (5). To this purpose, we follow the guidelines of [8, 11]. Given \(a_n^K: V_n(K)\times V_n(K)\rightarrow {\mathbb {R}}\) local bilinear forms, the (global) discrete bilinear form is a sum of such local contributions:

where the local discrete bilinear forms \(a_n^K\) are assumed to satisfy

- (A1): polynomial consistency: for every \(K\in {\mathcal {T}}_n\),

$$\begin{aligned} a_n^K(q, v_n) = a^K(q, v_n) \quad \forall q\in {\mathbb {P}}_{p_K} (K),\; \forall v_n\in V_n(K); \end{aligned}$$

- (A2): local stability: for every \(K\in {\mathcal {T}}_n\), there exist two positive constants \(\alpha _*(p_K) \le \alpha ^*(p_K)\) independent of \(h_K\) but possibly depending on the local degree of accuracy \(p_K\), such that

$$\begin{aligned} \alpha _*(p_K) \vert v_n\vert ^2_{1,K} \le a_n^K(v_n, v_n) \le \alpha ^*(p_K) \vert v_n\vert ^2 _{1,K} \quad \forall v_n\in V_n(K). \end{aligned}$$(12)

The assumption (A1) implies that the discrete bilinear form is locally exact for piecewise polynomial functions having a proper degree. On the other hand, the assumption (A2) guarantees the coercivity and the continuity of the global discrete bilinear form and hence the well-posedness of method (5).
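The step from (A2) to well-posedness can be sketched as follows, using only that \(a_n\) is the sum of the local forms \(a_n^K\). Summing (12) over all \(K\in {\mathcal {T}}_n\) gives, for every \(v_n\in V_n\),

$$\begin{aligned} \min _{K\in {\mathcal {T}}_n} \alpha _*(p_K)\, \vert v_n\vert ^2_{1,\varOmega } \le a_n(v_n, v_n) \le \max _{K\in {\mathcal {T}}_n} \alpha ^*(p_K)\, \vert v_n\vert ^2_{1,\varOmega }. \end{aligned}$$

The lower bound is coercivity, since \(\vert \cdot \vert _{1,\varOmega }\) is a norm on \(V_n\subset H^1_0(\varOmega )\) by the Poincaré inequality. If, in addition, each \(a_n^K\) is symmetric (an assumption made here for the purpose of this sketch), the Cauchy-Schwarz inequality for symmetric positive semidefinite forms turns the upper bound into continuity,

$$\begin{aligned} a_n(u_n, v_n) \le a_n(u_n, u_n)^{\frac{1}{2}}\, a_n(v_n, v_n)^{\frac{1}{2}} \le \max _{K\in {\mathcal {T}}_n} \alpha ^*(p_K)\, \vert u_n\vert _{1,\varOmega }\, \vert v_n\vert _{1,\varOmega }, \end{aligned}$$

and the Lax-Milgram lemma applies.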

A number of computable discrete bilinear forms can be found in the literature. We refer to [12] for what concerns the first “\(p\)-explicit” stabilization available in the VEM literature and to [38, Chapter 6] and to [15] for a survey of the topic.

Following [8], the local bilinear forms \(a_n^K\) can be built with the aid of an auxiliary local stabilizing bilinear form \(S^K: \ker (\varPi ^{\nabla }_{p_K}) \times \ker (\varPi ^{\nabla }_{p_K}) \rightarrow {\mathbb {R}}\). For every \(K\in {\mathcal {T}}_n\), the bilinear form \(S^K\) must satisfy

where \(c_*(p_K)\) and \(c^*(p_K)\) are two positive constants independent of \(h_K\) but possibly depending on the local degree of accuracy \(p_K\). The local discrete bilinear form

where the energy projector \(\varPi ^{\nabla }_{p_K}\) is defined in (9), satisfies assumptions (A1) and (A2) with \(\alpha _*(p_K) = \min (1, c_*(p_K))\) and \(\alpha ^*(p_K) = \max (1, c^*(p_K))\), see [8].
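The values of \(\alpha _*(p_K)\) and \(\alpha ^*(p_K)\) stated above can be recovered by a short computation. The sketch below assumes that the stability requirement on \(S^K\) and the local discrete bilinear form take the standard form of [8]; this is an assumption based on that reference, not a quotation of the displayed formulas:

$$\begin{aligned} c_*(p_K) \vert v_n\vert ^2_{1,K} \le S^K(v_n, v_n) \le c^*(p_K) \vert v_n\vert ^2_{1,K}\quad \forall v_n\in \ker (\varPi ^{\nabla }_{p_K}), \end{aligned}$$

$$\begin{aligned} a_n^K(u_n, v_n) = a^K(\varPi ^{\nabla }_{p_K}u_n, \varPi ^{\nabla }_{p_K}v_n) + S^K\big ((I-\varPi ^{\nabla }_{p_K})u_n, (I-\varPi ^{\nabla }_{p_K})v_n\big ). \end{aligned}$$

Under this assumption, and since \(\varPi ^{\nabla }_{p_K}\) is orthogonal with respect to \(a^K(\cdot , \cdot )\), one has \(\vert v_n\vert ^2_{1,K} = \vert \varPi ^{\nabla }_{p_K}v_n\vert ^2_{1,K} + \vert (I-\varPi ^{\nabla }_{p_K})v_n\vert ^2_{1,K}\), whence

$$\begin{aligned} a_n^K(v_n, v_n) \ge \vert \varPi ^{\nabla }_{p_K}v_n\vert ^2_{1,K} + c_*(p_K) \vert (I-\varPi ^{\nabla }_{p_K})v_n\vert ^2_{1,K} \ge \min (1, c_*(p_K))\, \vert v_n\vert ^2_{1,K}. \end{aligned}$$

The upper bound with \(\max (1, c^*(p_K))\) follows in the same way.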

An explicit choice of the stabilization, and consequently also of the discrete bilinear form, will be discussed in Sect. 5.

2.3 The discrete right-hand side

Here, we present the discrete right-hand side of method (5). In particular, we split the global discrete right-hand side \(\langle f_n, \cdot \rangle _n\) as a sum of local contributions:

where the local discrete duality pairings are defined as

For a deeper analysis concerning the discrete right-hand side, we refer to [2, 9, 11].

3 Technical tools

In this section, we introduce and discuss some technical tools that we will employ in the a posteriori error analysis. In Sect. 3.1, we prove local \(L^2\) and \(H^1\) \(hp\) approximation properties in terms of functions in the virtual element space. In Sect. 3.2, we recall a couple of \(hp\) polynomial inverse estimates, whereas, in Sect. 3.3, we recall from [42] the existence of a lifting operator of a polynomial from any edge of a triangle into its interior with good stability properties.

Henceforth, we adopt the following notation concerning spaces of continuous piecewise polynomials over triangular meshes. Given \(\widetilde{{\mathcal {T}}}_n\) a triangular mesh, we write

where the choice of the polynomial degrees \({\widetilde{p}}_s\) on the edges is picked as in Sect. 2, see Fig. 1.

3.1 Local \(hp\) approximation estimates

In this section, we discuss approximation properties of functions in the local virtual element spaces \(V_n(K)\) defined in (6). In particular, we prove local approximation estimates in the \(L^2\) and \(H^1\) (semi)norms and in the \(L^2\) norm on the boundary. Previous results on \(hp\)-VEM approximation can be found in [11, 12].

We first need to recall a technical tool from [41, 42]. Given \(\widetilde{{\mathcal {T}}}_n\) the (regular) triangular subdecomposition of \(\varOmega \), defined locally by the subtriangulations of the polygons of \({\mathcal {T}}_n\) introduced in Remark 1, and given a vertex \({\mathbf {V}}\in \widetilde{{\mathcal {V}}}_n\) of subtriangulation \(\widetilde{{\mathcal {T}}}_n\), we set the triangular patch around vertex \({\mathbf {V}}\) as

Given D either a polygon of \({\mathcal {T}}_n\) or a triangle of \(\widetilde{{\mathcal {T}}}_n\), we define the triangular patch around D as

Besides, given an edge \(s\in {\mathcal {E}}_n\), we also define the triangular patch around \(s\) as

In Figs. 2, 3, 4 and 5 we provide graphical examples of patches around a vertex, a polygon, a triangle and an edge.

Fig. 2 Left: a polygonal mesh \({\mathcal {T}}_n\) and its subtriangulation \(\widetilde{{\mathcal {T}}}_n\), in a red circle a vertex \({\mathbf {V}}\). Right: in light blue, \(\omega _{{\mathbf {V}}}\), the triangular patch around vertex \({\mathbf {V}}\), defined in (15) (color figure online)

Fig. 3 Left: a polygonal mesh \({\mathcal {T}}_n\), in light red a polygon \(K\). Center: the regular subtriangulation \(\widetilde{{\mathcal {T}}}_n\). Right: in light blue, \(\omega _{K}\), the triangular patch around \(K\), defined in (16) (color figure online)

Fig. 4 Left: a polygonal mesh \({\mathcal {T}}_n\). Center: the regular subtriangulation \(\widetilde{{\mathcal {T}}}_n\), in light red a triangle \(T\). Right: in light blue, \(\omega _{T}\), the triangular patch around \(T\), defined in (16) (color figure online)

Fig. 5 Left: a polygonal mesh \({\mathcal {T}}_n\), in red an edge \(s\). Center: the regular subtriangulation \(\widetilde{{\mathcal {T}}}_n\). Right: in light blue, \(\omega _{s}\), the triangular patch around edge \(s\), defined in (17) (color figure online)

Using assumptions (D1)–(D2), it can be proven that \(\text {diam}(\omega _D) \approx \text {diam}(D)\), D being either a polygon or a triangle and \(\omega _D\) being the associated triangular patch.
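The patches can be illustrated with a minimal sketch. It assumes the usual convention that the patch around a vertex collects all triangles of the subtriangulation touching that vertex, and that the patch around a polygon, a triangle or an edge is the union of the vertex patches of its vertices; this convention, the index-based data layout and the function names are assumptions made for illustration, since the precise definitions are those of (15), (16) and (17).

```python
from typing import Iterable, List, Set, Tuple

Triangle = Tuple[int, int, int]  # a triangle stored as three vertex indices


def vertex_patch(triangles: List[Triangle], v: int) -> Set[int]:
    """Indices of all triangles having v as a vertex."""
    return {i for i, t in enumerate(triangles) if v in t}


def patch_around(triangles: List[Triangle], vertices_of_D: Iterable[int]) -> Set[int]:
    """Union of the vertex patches over the vertices of D (polygon, triangle or edge)."""
    patch: Set[int] = set()
    for v in vertices_of_D:
        patch |= vertex_patch(triangles, v)
    return patch


# Illustrative data: a square with corners 0..3, split from its center (vertex 4).
triangles = [(4, 0, 1), (4, 1, 2), (4, 2, 3), (4, 3, 0)]
omega_center = vertex_patch(triangles, 4)
omega_corner = vertex_patch(triangles, 0)
omega_edge = patch_around(triangles, (0, 1))
```

Storing a patch as a set of triangle indices keeps the union operation trivial and makes it easy to loop over the triangles of a patch when local quantities are accumulated.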

We now recall the following polynomial Clément-type approximation result over triangles.

Lemma 1

Let the assumptions (D1)–(D2) be valid. Then, given \(u\in H^1_0(\varOmega )\), there exists \(u_M\in S^{{\mathbf {p}}, 0} (\varOmega , \widetilde{{\mathcal {T}}}_n) \cap H^1_0(\varOmega )\), see (14), such that, for each triangle \(T\in \widetilde{{\mathcal {T}}}_n\) and for each edge \(s\) in \(\widetilde{{\mathcal {E}}}_n\) (i.e. in the skeleton of \(\widetilde{{\mathcal {T}}}_n\)), the following estimates hold true:

where \(c_M\) is a positive constant independent of \(u\), \(h_T\) and \(p_T\) but depending on the shape-regularity of \({\mathcal {T}}_n\) and hence of \(\widetilde{{\mathcal {T}}}_n\). Above, the symbols \(\omega _{T}\) and \(\omega _{s}\) represent the triangular patch around triangle \(T\) and the triangular patch around edge \(s\) defined in (16) and (17), respectively.

Proof

The proof, based on the partition of unity method [7], can be found in [41, Theorem 3.3]. \(\square \)

Lemma 1 is a key ingredient for the following result which asserts the existence of an \(hp\) VEM Clément quasi-interpolant.

Proposition 1

Let the assumptions (D1)–(D2) be valid. Given \(u\) the solution to (2), a convex polygon \(K\in {\mathcal {T}}_n\) and \(s\) any of its edges, there exists a function \(u_I\in V_n\), such that its restriction to \(K\) satisfies the following estimates:

where c is a positive constant independent of \(u\), \(h_K\) and \(p_K\) but depending on the shape-regularity of \({\mathcal {T}}_n\) and hence of \(\widetilde{{\mathcal {T}}}_n\), and where \(\omega _{K}\) and \(\omega _{s}\) follow the notation introduced in (16) and (17).

Proof

Given \(K\in {\mathcal {T}}_n\) convex, we define \(u_I\in V_n(K)\) as the solution to the following Poisson problem with nonhomogeneous Dirichlet boundary conditions

where \(u_M\) is the quasi-interpolant from [41] introduced in Lemma 1.

We observe that the construction of the quasi-interpolant \(u_M\) is based on the idea of subtriangulation of a polygon, which in turn can be traced back to the interpolation theory of generalized barycentric coordinates on polygons. In practice, we fix \(u_I\) on the boundary to be the polynomial quasi-interpolant guaranteeing \(hp\) approximation properties on triangles and we fix the internal moments of \(u_I\) by demanding

Roughly speaking, this last condition enforces the (distributional) identity \(\varDelta u_I= \varDelta u_M\) in an approximated sense.

The estimate (19b) is trivially guaranteed by (18b).

Next, we investigate the bound on the \(H^1\) seminorm in (19a). Given any bubble function w in \(V_n(K)\cap H^1_0(K)\), we observe that, owing to (20),

Therefore, since any function \({\widetilde{w}}\) in the virtual element space with \({\widetilde{w}}_{|\partial K} = u_{M|\partial K} = u_{I|\partial K}\) can be written as \(u_I+w\), one easily gets

As a consequence,

where we choose \({\widetilde{u}} _I\in V_n(K)\) satisfying the following local problem:

where \(u_\pi \) is equal to \(\varPi ^{\nabla }_{p_K}u\), see (9).

The Dirichlet principle, see e.g. [34], yields

whence, applying (18a) and [11, Lemma 4.2] to the first and second terms on the right-hand side of (24), respectively,

We underline that the constant c depends only on the shape-regularity of \({\mathcal {T}}_n\) and hence of \(\widetilde{{\mathcal {T}}}_n\).

Finally, we investigate the bound on the \(L^2\) norm in (19a). To this purpose, we employ the hypothesis that \(K\) is convex and we consider the auxiliary problem

The following standard a priori bound on the solution to the auxiliary problem (25) for convex \(K\) is valid:

Owing to the fact that \((u_M-u_I)_{|\partial K} = 0\) and to the orthogonality of \(u_M- u_I\) with respect to virtual bubble functions (21), we have

where \(\psi _{II}\) is a function in \(V_n(K)\cap H^1_0(K)\) providing optimal \(hp\) approximation estimates of \(\psi \), see e.g. [11, Lemma 4.3],

where c is a positive constant depending only on the shape-regularity of \(K\).

We deduce, plugging (28) into (27),

where c is the same constant as in (28).

Recalling (26), we obtain

The assertion follows from a triangle inequality and the bound on the \(H^1\) seminorm in (19a). \(\square \)

Remark 4

If the element \(K\) is nonconvex, then the internal \(L^2\) bound of Proposition 1 is modified to

for all \(\varepsilon > 0 \) arbitrarily small, where \(\alpha _K\) denotes the largest interior angle of \(K\).

3.2 Inverse estimates on triangles and polygons

In this section, we recall two \(hp\) polynomial inverse inequalities that will be instrumental in the forthcoming analysis.

We begin with the following 1D result.

Lemma 2

Given \({\widehat{I}} = [-1,1]\), given \(\psi \) the quadratic bubble function over \({\widehat{I}}\) and given \(0\le \alpha _1 \le \alpha _2\), there exists a positive constant such that, for all \(q\in {\mathbb {P}}_p({\widehat{I}})\), \(p\in {\mathbb {N}}\),

Proof

See [19, Lemma 4]. \(\square \)

Given \(K\in {\mathcal {T}}_n\), we define a piecewise bubble function \(\psi _{K}\) over \(\widetilde{{\mathcal {T}}}_n(K)\) as follows:

where we recall that \(\widetilde{{\mathcal {T}}}_n(K)\) is the subtriangulation of \(K\) introduced in Remark 1.

The following \(hp\) polynomial inverse inequality over a polygon holds true.

Lemma 3

Given \(K\) a polygon in \({\mathcal {T}}_n\), let \(\psi _K\) be the piecewise bubble function associated with the subtriangulation \(\widetilde{{\mathcal {T}}}_n(K)\) of \(K\) defined in (29). For all \(-1 < \alpha \le \beta \), there exists a positive constant c depending only on \(\alpha \), \(\beta \), and the shape-regularity of the subtriangulation \(\widetilde{{\mathcal {T}}}_n(K)\), such that, for all \(q\in {\mathbb {P}}_{p_K}(K)\),

Proof

See [38, Theorem B.3.2]. \(\square \)

3.3 A lifting operator

In this section, we recall the existence of a lifting operator from an edge of a triangle to its interior.

Lemma 4

Given \(T\in \widetilde{{\mathcal {T}}}_n\) a triangle and \(s\) any of its edges and given \(1/2 < \alpha \le 2\), let \(\psi _{s}\) be the quadratic bubble function associated with edge \(s\). Then, for every \(q\in {\mathbb {P}}_{p_{s}}(s)\) and for every \(\varepsilon >0\), there exists a lifting \(E(q) \in H^1(T)\), such that

where \(c_\alpha \) is a positive constant depending only on \(\alpha \) and on the shape of \(T\).

Proof

The proof follows from [42, Lemma 2.6] and a scaling argument. \(\square \)

Note that, since \(E(q)\) vanishes on \(\partial T\setminus s\), then it can be extended to 0 on the remaining part of the polygon \(K\) containing \(T\) as part of its subtriangulation whenever \(s\) lies on the boundary of \(K\). As a consequence, (32) and (33) can be “generalized” in a straightforward way, employing on the left-hand side (semi)norms on the complete polygon in lieu of their counterparts on triangle \(T\).

4 A posteriori error analysis

In this section, we build an error estimator and we prove that it can be upper and lower bounded by the \(H^1\) error of the method, with constants explicit in terms of the discretization parameters.

The remainder of the section is structured as follows. In Sect. 4.1, we write the residual equation, whereas, in Sect. 4.2, we construct an error estimator and we show that the energy error can be bounded in terms of such an estimator with an explicit dependence on the discretization parameters. Next, in Sect. 4.3, we show that the local estimator can be bounded by the \(H^1\) seminorm of the quasi-local error plus a couple of terms involving the oscillation of the right-hand side of the problem (1) and the stabilization of the method. Finally, in Sect. 4.4, we summarize the foregoing results.

Henceforth, we assume for the sake of clarity that all the polygons in \({\mathcal {T}}_n\) are convex. The nonconvex case is discussed in Remark 5. Furthermore, we also assume in the forthcoming analysis that \(p_K\ge 2\) for all \(K\in {\mathcal {T}}_n\); this allows us to avoid a cumbersome notation when treating the discrete right-hand side, cf. Sect. 2.3. Thanks to this assumption, we will write \(\langle f_n, v_n\rangle _n\) as \((f_n, v_n)_{0,\varOmega }\), where \(f_{n|_{K}} = \varPi ^0_{p_K-2}f\) for all \(K\in {\mathcal {T}}_n\).

Finally, we set the jump across an edge \(s\in {\mathcal {E}}_n\) as

where \(v^+\) and \(v^-\) are the restrictions (assumed sufficiently regular) of \(v\) over \(\partial K^+\) and \(\partial K^-\), respectively, and where \(s\subseteq \partial K^+ \cap \partial K^-\). Note that we are assuming that the unit normal \({\mathbf {n}}\) is pointing outside \(K^+\) and inside \(K^-\).

4.1 The residual equation

We begin by introducing the residual equation. Let \(e := u- u_n\) be the difference between the solution to the continuous (2) and discrete (5) problems, respectively. One has, for any \(v\in H^1_0(\varOmega )\) and \(\chi _n\in V_n\),

We rewrite the last term on the right-hand side of (35) by observing that, for all \(w\in H^1_0(\varOmega )\), the following holds true:

where we recall that the jump \(\llbracket \cdot \rrbracket _s\) is defined in (34) and where we recall that we are assuming that the unit normal \({\mathbf {n}}\) of each edge is fixed once and for all. Note that \({\mathcal {E}}_n^I\) represents the set of edges of \({\mathcal {E}}_n\) not lying on \(\partial \varOmega \).

We highlight the presence of a polynomial projector in (36); without such a projector we would not be able to compute exactly the jump across the edges of normal derivatives of functions in the virtual element space, which we anticipate will be part of the error residual of the method.

Plugging (36) in (35) with \(w= v- \chi _n\), we deduce

whence

The first two and the last term on the right-hand side of (37) are analogous to the terms appearing in the FEM counterpart of the residual equation, see [42, Lemma 3.1]. The only difference here is that we consider the \(H^1\) projection of the discrete solution. Note that in the FEM framework such a projection applied to the solution coincides with the solution itself (since the solution is a piecewise polynomial).

The remaining terms (virtual element consistency terms) on the right-hand side of (37) are instead typical of the virtual element setting and they take into account the fact that the discrete bilinear form is only an approximation to the exact one.

4.2 Error estimator and upper bounds

In this section, we introduce a computable error estimator and we prove an upper bound on the energy error in terms of such an estimator.

To this purpose, we use the residual equation (37) with \(v=e:= u-u_n\) and \(\chi _n= e_I= (u- u_n)_I\), where we recall that the local \(hp\) approximation properties of \(e_I\) are described in Proposition 1. We obtain

We estimate the five local terms separately. We start with the term I. Applying Proposition 1, which is valid since we are assuming \(K\) convex, we get

where we recall that the hidden constant depends solely on the shape-regularity of \({\mathcal {T}}_n\) and hence of \(\widetilde{{\mathcal {T}}}_n\), see Remark 1. In the following, when no confusion occurs, we will not highlight such dependence.

Secondly, we investigate II, the term involving the oscillation of the right-hand side. Owing to \(L^2\) orthogonality and \(hp\) approximation properties of such projector, see e.g. [11, Lemma 4.2], we write

where we recall that the \(L^2\) projector \(\varPi ^0_{p_K-2}\) is defined in (8).

Next, we deal with V, the projected jump edge residual term: for all \(s\in {\mathcal {E}}_n^I\),

where in the last but one inequality we employed (19b).

The first virtual element consistency term IV can be bounded instead using (19a) (in the last but one inequality) and the stability property (12) (in the last inequality):

We emphasize that the stabilization term is made to appear because we want the error residual to consist of computable quantities only.

Finally, we study III, the second virtual element consistency term:

whence, recalling Proposition 1 and the stability property (12),

In order to simplify the notation, we write henceforth

Collecting the estimates on the five terms on the right-hand side of (38), we get, after some trivial algebra,

whence

where we have set the local error estimators as

For ease of notation, we define next \(\eta _{p_K}\), which takes into account both the bulk and the edge error estimators \(\eta _K\) and \(\eta _s\), \(s\in {\mathcal {E}}^K\), defined in (41), as

We highlight two facts. The first one is that, apart from the term involving the stabilization, the upper bound is analogous to the one of \(hp\) FEM, see [42, Lemma 3.1]. The second observation is that for nonconvex \(K\), we would have an additional factor \(p_K^{2\frac{\pi }{\alpha _K} - \varepsilon }\) for all \(\varepsilon >0\) arbitrarily small in front of \(\eta _K^2\) in (40), where \(\alpha _K\) denotes the largest interior angle of \(K\), see Remark 4.

4.3 Lower bound

In this section, we bound the local error estimator \(\eta _{p_K}\) introduced in (42) with the quasi-local \(H^1\) seminorm of the error of the method plus a term related to the oscillation of the right-hand side and a term related to the stabilization of the method. After recalling a technical tool in Sect. 4.3.1, we prove in Sects. 4.3.2 and 4.3.3 the bounds on the internal and edge residuals, respectively, in terms of the local energy errors.

4.3.1 An inverse inequality

Here, we recall an \(hp\) polynomial inverse inequality, which will be used in Sect. 4.3.2.

Lemma 5

Given a polygon \(K\in {\mathcal {T}}_n\), we denote by \(\psi _K\) the piecewise bubble function associated with \(K\) as in (29). Then, for all \(q\in {\mathbb {P}}_{p_K}(K)\) and \(1/2 < \alpha \le 2\), the following holds true:

where the hidden constant depends on the shape-regularity of \({\mathcal {T}}_n\) and hence of \(\widetilde{{\mathcal {T}}}_n\).

Proof

For a complete proof, see [38, Lemma B.3.3], which is the “polygonal” counterpart of [42, Lemma 3.4]. \(\square \)

4.3.2 Bounding the internal residual

In this section, we bound the local internal residual \(R_K\) appearing on the right-hand side of (42). To this purpose, we note that plugging \(\chi _n= 0\) in (37), we have

Let us focus our attention on a single element \(K\). Given \(\psi _K\) the piecewise bubble function associated with element \(K\) defined as in (29), we extend it by 0 outside \(K\). We then choose \(v= \psi _K^{\alpha } R_K\) in (44), with \(1/2 < \alpha \le 2\), obtaining

From (45), we get

As a consequence, applying the stability bounds (12), recalling that \(R_K\in {\mathbb {P}}_{p_K}(K)\) and applying the \(hp\) polynomial inverse estimate on polygons (43), one has, for all \(1/2 < \alpha \le 2\),

or, equivalently,

We are now ready to prove the bound on the internal residual. Recalling that \(1/2< \alpha \le 2\), the \(hp\) polynomial inverse estimate on polygons (30) implies

Hence,

We pick \(\alpha = 1/2 + \varepsilon \), with \(\varepsilon >0\) arbitrarily small, and get

4.3.3 Bounding the edge residual

Next, we bound the edge residual \(R_s\) appearing on the right-hand side of (42). We henceforth consider a function \({\overline{R_s}}\) given by \(E(R_s)\), where the lifting operator E is defined in Lemma 4. We recall that the restriction of \({\overline{R_s}}\) to \(s\) is equal to \(\psi _s^\alpha \, R_s\), where \(\psi _s\) is the quadratic edge bubble function on \(s\) and \(1/2 < \alpha \le 2\).

In the following, we assume that \({\overline{R_s}}\) is extended by 0 outside \({\overline{T}}_1 \cup {\overline{T}}_2\), see (17). Let us denote by \(K_1\) and \(K_2\) the two polygons containing the triangles \(T_1\) and \(T_2\).

We substitute \(v= {\overline{R_s}}\) and \(\chi _n=0\) in (37), obtaining for all \(s\in {\mathcal {E}}_n^I\)

We observe that the following bound of the third term on the right-hand side of (47) holds true:

We deduce from (47) that

and, combining this with (48), we arrive at

Recalling that \(p_s\approx p_{K_i}\) for all edges \(s\subset \partial K_i\), \(i=1,2\), see (4), and applying Lemma 4 with \(1/2 < \alpha \le 2\) on \(\vert {\overline{R_s}}\vert _{1,K_i}\) and \(\Vert {\overline{R_s}}\Vert _{0,K_i}\), we obtain, for every \(\varepsilon >0\),

Therefore, we can write

Hence, squaring both sides, one deduces

Using that \(h_s\le h_{K_i}\) for \(i=1,2\), also recalling (4) and (46), we get

whence, using again (4),

Selecting \(\varepsilon = p_s^{-2}\) and using once more (4), one obtains

So far, we have assumed that \(1/2 < \alpha \le 2\). In order to get the desired bound on the edge residual, i.e. the one with \(\alpha =0\), we apply Lemma 2 with \(\alpha _1=0\) and \(\alpha _2 = 1/2+\varepsilon \), getting

Finally, note that for any value of \(p_{K_i}\in {\mathbb {N}}\) it holds that \(p_{K_i}^{\frac{2}{p_{K_i}^2}} \le 3\) and thus such term can be discarded.
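The boundedness of \(p^{2/p^2}\) over the positive integers can be checked numerically. The snippet below is only an illustrative sketch (the helper name `pollution_term` is ours, not part of the method); it evaluates the term for a range of degrees and locates its maximum.

```python
import math


def pollution_term(p: int) -> float:
    """Return p**(2 / p**2) for a positive integer degree p."""
    return p ** (2.0 / p**2)


# Evaluate the term for degrees 1, ..., 200 and locate the largest value.
values = {p: pollution_term(p) for p in range(1, 201)}
p_max = max(values, key=values.get)
largest = values[p_max]
```

Over this range the largest value is attained at \(p=2\) and equals \(\sqrt{2}\), so the constant 3 is a comfortable bound.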

4.4 Conclusions

We collect here the lower and upper bounds discussed in the foregoing Sects. 4.2 and 4.3. Note that such bounds are explicit in \(h\) and \(p\).

Theorem 1

Assume that the assumptions (D1)–(D2)–(P1) hold true. Let \(u\) and \(u_n\) be the solutions to (2) and (5), respectively, and let \(e= u- u_n\). For all \(K\in {\mathcal {T}}_n\), let \(\eta _{p_K}\) be the error residual defined in (42) and let \(\rho _{p_K}\) and \(\zeta _{p_K}\) be defined in (41). Then, assuming that all the polygons \(K\in {\mathcal {T}}_n\) are convex, the following global upper bound holds true:

Further, for every \(K\in {\mathcal {T}}_n\) and for all \(\varepsilon > 0\), the following local lower bounds hold true:

where \({\widetilde{\omega }}_K= \cup \{K' \in {\mathcal {T}}_n\mid \partial K' \cap \partial K\ne \emptyset \}\). The hidden constants in (49) and (50) depend solely on \(\varepsilon \) and on the shape-regularity of \({\mathcal {T}}_n\) and hence of \(\widetilde{{\mathcal {T}}}_n\), see Remark 1.
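In an implementation, the patch \({\widetilde{\omega }}_K\) can be assembled from the element-to-vertex connectivity. The following is a minimal sketch under the assumption that hanging nodes are stored as vertices of every element whose boundary contains them, so that two element boundaries intersect exactly when the elements share a vertex index; the function name `vertex_patches` and the data layout are hypothetical.

```python
from typing import Dict, List, Set


def vertex_patches(elements: List[List[int]]) -> Dict[int, Set[int]]:
    """Map each element index K to the indices of all elements sharing
    at least one vertex with K (K itself included)."""
    # Invert the connectivity: vertex index -> elements containing it.
    vertex_to_elements: Dict[int, Set[int]] = {}
    for k, vertices in enumerate(elements):
        for v in vertices:
            vertex_to_elements.setdefault(v, set()).add(k)

    # The patch of K is the union of the element sets of its vertices.
    patches: Dict[int, Set[int]] = {}
    for k, vertices in enumerate(elements):
        patch: Set[int] = set()
        for v in vertices:
            patch |= vertex_to_elements[v]
        patches[k] = patch
    return patches


# A strip of three quadrilaterals with vertices numbered row by row.
strip = [[0, 1, 5, 4], [1, 2, 6, 5], [2, 3, 7, 6]]
strip_patches = vertex_patches(strip)
```

For the strip above, the patch of the middle element contains all three elements, whereas the patches of the two outer elements contain two elements each.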

In light of Theorem 1, the global (computable) error estimator that we propose is

Note that in the bounds (49) and (50) there appear additional multiplicative terms depending on \(\max (\alpha _*^{-1}(p_K), \alpha ^*(p_K))\). On the other hand, such terms were numerically shown in [12] to have a very mild dependence in terms of \(p_K\). We refer to Remark 8 for additional comments regarding the effects of the “stability” terms \(\alpha _*^{-1}(p_K)\) and \(\alpha ^*(p_K)\).

We also underline that on the right-hand side of (50), in addition to the energy error, two other terms appear. The term \(\rho _{p_{K}}\) is related to the oscillation of \(f\), the datum of the problem (1), and is typical also in the finite element framework. On the other hand, the term \(\zeta _{p_{K}}\) deals with the nonexactness of the discrete bilinear form and is standard in a posteriori error analysis of VEM, see [17, 26].

Remark 5

In the presence of nonconvex polygons, the bound (49) needs to be modified; in particular, a suboptimal factor \(p^{2\frac{\pi }{\alpha _K} - \varepsilon }\), for all \(\varepsilon >0\) arbitrarily small, appears in front of \(\eta _{p_K}^2\), \(\alpha _K\) being the largest interior angle of \(K\). Moreover, if the assumption (D2) does not hold true, the estimates get more involved, as one should take care of the different scaling in terms of the size of elements and edges and of the effects of small edges on the stabilization.

5 Numerical results

In this section, we present a set of numerical experiments investigating the performance of the error estimator introduced in (51). More precisely, we validate in Sect. 5.1 the estimates presented in Theorem 1, whereas, in Sect. 5.2, we recall from [42] an \(hp\) refinement algorithm which permits us to apply the adaptive \(hp\) virtual element method on a number of test cases.

Before presenting the results, we have to settle some features of the method, which so far were kept at a very general level. First of all, we underline that in the virtual element setting, it is not possible to compute explicitly the exact \(H^1\) error (since functions in virtual element spaces are not known in closed form) and therefore we compute instead the following quantity, which is a classical choice in the VEM literature:

where \((\varPi ^{\nabla }u_n)_{|{K}} = \varPi ^{\nabla }_{p_K}(u_n)_{|_{K}}\) for all \(K\in {\mathcal {T}}_n\), see (9). By a triangle inequality and the continuity of the \(\varPi ^\nabla \) operator, it can be easily checked that the quantity above differs from \(\vert u - u_n\vert _{1,\varOmega }\) by a piecewise polynomial approximation term (and thus exhibits the same behaviour in essentially all cases of interest).

Another important aspect of the method is the choice of the stabilization in (13). We adopt here the so-called “D-recipe”, which was first introduced in [13] and whose performance was investigated in depth in [31, 39]. If we denote by \(\mathbf {S^K}\) the matrix representing the stabilization \(S^K\) on element \(K\) with respect to the canonical basis (11), then we set

where \(\delta _{i,j}\) denotes the Kronecker delta. Among the possible stabilizations available in the virtual element literature, the one defined in (53) is one of the most appealing in terms of robustness of the method, both when considering high degrees of accuracy and in the presence of badly-shaped elements.
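As a minimal sketch of how such a diagonal stabilization may be assembled, we assume here the form of the D-recipe commonly reported in the VEM literature, namely that the \(i\)-th diagonal entry is the maximum between 1 and the \(i\)-th diagonal entry of the consistency matrix with entries \(a^K(\varPi ^{\nabla }\varphi _i, \varPi ^{\nabla }\varphi _j)\). The function name `d_recipe` and the plain nested-list storage are illustrative choices only.

```python
from typing import List

Matrix = List[List[float]]


def d_recipe(consistency: Matrix) -> Matrix:
    """Build a diagonal stabilization matrix from the consistency matrix.

    Assumed form: S[i][i] = max(1, consistency[i][i]); all off-diagonal
    entries are zero.
    """
    n = len(consistency)
    return [
        [max(1.0, consistency[i][i]) if i == j else 0.0 for j in range(n)]
        for i in range(n)
    ]


# Small made-up consistency matrix, used only to exercise the function.
example_consistency = [[4.0, 1.0], [1.0, 0.25]]
example_stabilization = d_recipe(example_consistency)
```

In the example, the first diagonal entry is inherited from the consistency matrix, whereas the second one is lifted to 1, which prevents the stabilization from degenerating on basis functions with a small projected energy.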

Finally, we address the issue of picking a “clever” polynomial basis dual to the internal moments (7). In particular, following again [31, 39], we employ a basis \(\{m_{\varvec{\alpha }}\}_{\vert \varvec{\alpha }\vert =0}^{p-2}\) which is \(L^2(K)\) orthonormal for all \(K\in {\mathcal {T}}_n\). Such a basis can be built, for instance, by orthonormalizing a basis of scaled and shifted monomials. Picking an orthonormal basis dual to internal moments is a choice particularly suited for the \(p\) version of the method since it allows one to effectively damp the condition number of the stiffness matrix for high values of the polynomial degree.
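The orthonormalization step can be illustrated on a single element. The sketch below makes several simplifying assumptions that are not part of the method: the element is the unit square (so that moments of scaled and shifted monomials are available in closed form), the basis is orthonormalized by a modified Gram-Schmidt process in the \(L^2(K)\) inner product, and all function names are hypothetical. On a general polygon, the closed-form moments would be replaced by a quadrature rule.

```python
import math
from typing import List, Tuple

H = math.sqrt(2.0)  # diameter of the unit square
C = 0.5 / H         # half side length after scaling by the diameter


def monomial_exponents(degree: int) -> List[Tuple[int, int]]:
    """Exponents (a, b) with a + b <= degree, ordered by total degree."""
    return [(a, d - a) for d in range(degree + 1) for a in range(d, -1, -1)]


def moment(k: int) -> float:
    """Integral over [0, 1] of ((x - 0.5) / H)**k with respect to x."""
    return 0.0 if k % 2 else 2.0 * H * C ** (k + 1) / (k + 1)


def mass_matrix(exps: List[Tuple[int, int]]) -> List[List[float]]:
    """L2 mass matrix of the scaled, shifted monomials on the unit square."""
    return [[moment(a1 + a2) * moment(b1 + b2) for (a2, b2) in exps]
            for (a1, b1) in exps]


def orthonormalize(mass: List[List[float]]) -> List[List[float]]:
    """Modified Gram-Schmidt in the inner product induced by `mass`.

    Row i of the result holds the monomial coefficients of the i-th
    orthonormal polynomial.
    """
    n = len(mass)

    def inner(u: List[float], v: List[float]) -> float:
        return sum(u[i] * mass[i][j] * v[j]
                   for i in range(n) for j in range(n))

    basis: List[List[float]] = []
    for i in range(n):
        q = [1.0 if j == i else 0.0 for j in range(n)]
        for b in basis:
            coeff = inner(q, b)
            q = [qj - coeff * bj for qj, bj in zip(q, b)]
        norm = math.sqrt(inner(q, q))
        basis.append([qj / norm for qj in q])
    return basis


exps = monomial_exponents(2)   # internal moments for degree p = 4
M = mass_matrix(exps)
Q = orthonormalize(M)
```

The rows of `Q` give the change of basis from monomials to orthonormal polynomials; for high degrees, the monomial mass matrix becomes ill-conditioned and a more stable orthogonalization may be preferable.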

In the numerical experiments, both the error estimator and the computable error (52) are normalized by \(\vert u \vert _{1,\varOmega }\).

5.1 Performances in terms of \(p\) of the error estimator

In this section, we investigate the performance of the error estimator for uniform \(p\) refinements; that is, we fix a coarse mesh and we achieve convergence by raising the polynomial degree in all mesh elements (note that the performance of \(h\) refinements was the topic of [26, Sect. 6.1] and is therefore not explored here). To this end, we consider three test cases with known exact solution

As usual, the loading term and the (Dirichlet) boundary conditions are set in accordance with the exact solution. Function \(u_1\) is analytic, function \(u_2\) belongs to \(H^{3-\varepsilon }(\varOmega _1)\) for all \(\varepsilon >0\) and is the “natural” singular solution to an elliptic problem over square \(\varOmega _1\), function \(u_3\) belongs to \(H^{\frac{5}{3}-\varepsilon }(\varOmega _2)\) for all \(\varepsilon >0\) and is the “natural” singular solution to an elliptic problem over the L-shaped domain \(\varOmega _2\).
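The two regularity exponents quoted above are consistent with the standard rule for corner singularities of the Laplace operator with Dirichlet data: at a corner with interior angle \(\omega \), the leading singular function belongs to \(H^{1+\pi /\omega -\varepsilon }\) for all \(\varepsilon >0\). The following sketch, in which the helper name is ours and the rule itself is the only assumption, evaluates this exponent exactly for the corner of a square and for the re-entrant corner of an L-shaped domain.

```python
from fractions import Fraction


def corner_regularity(angle_over_pi: Fraction) -> Fraction:
    """Return s = 1 + pi / omega, where omega = angle_over_pi * pi.

    The leading corner singular function is then in H^(s - eps)
    for every eps > 0.
    """
    return 1 + 1 / angle_over_pi


square_exponent = corner_regularity(Fraction(1, 2))    # omega = pi / 2
l_shape_exponent = corner_regularity(Fraction(3, 2))   # omega = 3 pi / 2
```

The computed exponents are 3 and 5/3, respectively, matching the regularity stated for \(u_2\) and \(u_3\).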

We test the performances of the error indicator employing two types of mesh, namely a Cartesian and a Voronoi mesh. In Fig. 6, we depict the two meshes (on domain \(\varOmega _1\)), their counterparts in the L-shaped domain \(\varOmega _2\) being analogous.

In Figs. 7 and 8, we plot (for different values of the degree p) the computable error (52) versus the computed error estimator (51) for the three exact solutions in (54) on the two meshes of Fig. 6.

We observe that, when approximating analytic solutions, the error estimator and the computed error behave practically in the same way. For singular functions, the behaviour is instead slightly different and in particular the error estimator is slightly smaller than the computed error. This is due to the use of inverse estimates producing extra factors of \(p\) and to the pollution effect of the stabilization, see (49)–(50); however, if the solution is analytic, the pollution factors due to the inverse estimates and the stabilization are negligible, since all the local error estimators, as well as the exact error, decrease exponentially in terms of \(p\), see [11].

5.2 The \(hp\) adaptive refinement strategy

In this section, we present an \(hp\) refinement strategy, based on the standard procedure

Before discussing the \(hp\) refinement strategy, we describe how to perform \(h\) refinements. The standard strategy that one can follow consists in subdividing a polygon \(K\) with \(N^K\) “edges” into a number of “quadrilaterals” smaller than or equal to \(N^K\) obtained by connecting the barycenter of \(K\) to the midpoints of its “edges”. Note that by “edge” we mean a straight line on the boundary of a polygonal element, possibly consisting of more edges belonging to the polygonal decomposition; in particular, an edge could contain hanging nodes. Besides, by “quadrilateral”, we mean polygons with four “edges”, that is, such “quadrilateral” could have more than four edges, but precisely four “edges”. See Fig. 9.

We observe that this procedure may generate very small edges, thus contradicting the assumption (D2). Nevertheless, we will not experience in the forthcoming numerical experiments any loss in accuracy due to the presence of small edges, which in any case appear rarely.

Fig. 9 Standard \(h\) refinement strategy. One connects the barycenter of the element with the midpoints of every “edge”. As an exception, whenever two or more edges belong to the same straight line (“edge”), one connects the barycenter with the midpoint of such straight line. The red dots denote the vertices; the original polygon is denoted by solid lines; the new “quadrilaterals” are highlighted with dashed lines

We stress that this procedure can be performed only on elements containing their barycenter (e.g. convex elements), but, importantly, starting from a convex element this construction generates only convex elements. Besides, the hanging nodes arising from this strategy fit naturally in the polygonal framework of VEM. Note that, when dealing with Cartesian meshes, the refinement strategy of Fig. 9 reduces to standard square refinement.
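The barycenter-midpoint subdivision can be sketched as follows. This is a simplified illustration under stated assumptions: the polygon is convex, its vertices are listed counter-clockwise, and no two consecutive edges are collinear, so that every “edge” is a single edge and hanging nodes need no special treatment. The function names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_area(poly: List[Point]) -> float:
    """Signed area via the shoelace formula (positive if counter-clockwise)."""
    n = len(poly)
    return 0.5 * sum(poly[i][0] * poly[(i + 1) % n][1]
                     - poly[(i + 1) % n][0] * poly[i][1] for i in range(n))


def barycenter(poly: List[Point]) -> Point:
    """Area barycenter of a simple polygon."""
    n = len(poly)
    cx = cy = 0.0
    for i in range(n):
        (x0, y0), (x1, y1) = poly[i], poly[(i + 1) % n]
        cross = x0 * y1 - x1 * y0
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    area = polygon_area(poly)
    return (cx / (6.0 * area), cy / (6.0 * area))


def refine(poly: List[Point]) -> List[List[Point]]:
    """Split a convex polygon into one quadrilateral per vertex."""
    n = len(poly)
    center = barycenter(poly)
    # mids[i] is the midpoint of the edge from vertex i to vertex i + 1.
    mids = [((poly[i][0] + poly[(i + 1) % n][0]) / 2.0,
             (poly[i][1] + poly[(i + 1) % n][1]) / 2.0) for i in range(n)]
    # Child i: previous midpoint, vertex i, next midpoint, barycenter.
    return [[mids[i - 1], poly[i], mids[i], center] for i in range(n)]


unit_square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
children = refine(unit_square)
```

On the unit square, the four children are the four congruent subsquares, in agreement with the observation that the strategy reduces to standard square refinement on Cartesian meshes.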

We now briefly review the \(hp\) refinement strategy of [42, Sect. 4.2], here adapted to the present method. The basic idea behind this approach is that, on the elements on which the exact solution to the continuous problem is “regular”, one performs \(p\) refinement (since the \(p\) version of the method leads to exponential convergence of the error in terms of \(p\) when approximating analytic solutions, see [11]), whereas, on the elements where the solution has a “singular” behaviour, one performs \(h\) refinement (since geometric mesh refinements lead to exponential convergence of the error in terms of the number of degrees of freedom when approximating singular functions, cf. [12]).

The \(hp\) refinement strategy reads as follows. At the n-th level of the algorithm, one marks the elements on which the error estimator is sufficiently large. To this end, one marks all the elements such that \(\eta ^2_{comp,K,n} \ge \sigma {\overline{\eta }}_a^2\) for some \(\sigma \in (0,1)\), where

where \(\eta ^2_{comp,K,n}\) and \(\eta _{comp,n}\) are computed as in (51) for all \(K\in {\mathcal {T}}_n\) and for all \(n \in {\mathbb {N}}\).
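The marking step can be sketched in a few lines. We assume here, in the spirit of [42], that \({\overline{\eta }}_a^2\) denotes the arithmetic mean of the squared local estimators over the elements of the current mesh; this interpretation, as well as the function name `mark_elements`, is an assumption made for the purpose of illustration.

```python
from typing import List


def mark_elements(eta_sq: List[float], sigma: float) -> List[int]:
    """Return the indices K with eta_sq[K] >= sigma * mean(eta_sq)."""
    if not 0.0 < sigma < 1.0:
        raise ValueError("sigma must lie in (0, 1)")
    mean_sq = sum(eta_sq) / len(eta_sq)
    return [k for k, value in enumerate(eta_sq) if value >= sigma * mean_sq]


# Made-up squared local estimators on a mesh with four elements.
local_eta_sq = [1.0, 0.1, 4.0, 0.5]
marked = mark_elements(local_eta_sq, sigma=0.75)
```

With these made-up values, the mean is 1.4 and the threshold is 1.05, so that only the third element is marked.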

Let us now be given, at the n-th adaptive refinement step, a “prediction-estimator” \(\eta _{\text {pred},K,n}\) on each element \(K\) of the mesh \({\mathcal {T}}_n\). Such estimator is instrumental in order to heuristically decide whether (elementwise) the exact solution is regular or not.

If one performs an \(h\) refinement on element \(K\), then one expects, under the hypothesis of analyticity of \(u\), that the error reduces by a factor decreasing exponentially in terms of \(p\), see [11, 12]. Therefore, denoting by \(N^K\) the number of child elements \(K_i\) of \(K\), one sets the prediction-estimator \(\eta _{\text {pred},K_i,n}\) on \(K_i\) to:

for some \(\gamma _h\) fixed positive parameter. Note that the term 0.5 in the above equation stems from the fact that we are roughly dividing the element diameter \(h_K\) by two when performing the procedure in Fig. 9.

Instead, if one performs on element \(K\) a \(p\) refinement, one expects, under the hypothesis of analyticity of \(u\), a reduction of the error by a fixed factor \(\gamma _p\). Thus, one sets the prediction-estimator \(\eta _{\text {pred},p_K+1}\) on \(K\) to:

for some \(0< \gamma _p< 1\) fixed parameter.

On the elements that are not marked for refinement, one updates in a trivial fashion as

for some \(\gamma _n\) fixed positive parameter.

So far, we have constructed, at the n-th level of the \(hp\) refinement process, a set of prediction-estimators for the \((n+1)\)-th level; as already underlined, such prediction-estimators have the purpose of “guessing” elementwise the behaviour of the exact solution.

At this point, on all marked elements, one checks whether

If this is the case, the actual error estimator is “larger” than the predicted one, which was computed under the assumption that the solution is analytic; this means that the solution is not sufficiently “regular” on the element and therefore an \(h\) refinement has to be performed. Otherwise, a \(p\) refinement is performed.

Since the prediction-estimators are not available in the first refinement step (there is no previous level in the adaptive construction of the spaces), the \(hp\) refinement boils down to a simple \(h\) refinement. To this purpose, in the first iteration of the adaptive algorithm, it suffices to set \(\eta ^2_{pred,K,0} = \frac{\eta ^2_{comp,K,0}}{2}\) on all elements \(K\).

In Algorithm 1, we present the \(hp\) refinement process discussed so far.
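The decision logic described so far can be sketched as follows. This is a minimal illustration and not a transcription of Algorithm 1: the function names are hypothetical, and the precise form of the prediction updates (in particular the factor \(\gamma _h\, 0.5^{2p_K}/N^K\) for \(h\) refinement) is an assumption modelled on the strategy of [42]. The parameters \(\gamma _h\), \(\gamma _p\), and \(\gamma _n\) are left as inputs.

```python
from typing import List


def choose_refinement(eta_sq: float, pred_sq: float) -> str:
    """Return 'h' if the computed estimator is at least as large as the
    predicted one (solution deemed singular), and 'p' otherwise."""
    return "h" if eta_sq >= pred_sq else "p"


def predict_after_h(eta_sq: float, p: int, n_children: int,
                    gamma_h: float) -> List[float]:
    """Assumed prediction on each child after an h refinement:
    gamma_h * 0.5**(2 p) * eta_sq / n_children."""
    value = gamma_h * 0.5 ** (2 * p) * eta_sq / n_children
    return [value] * n_children


def predict_after_p(eta_sq: float, gamma_p: float) -> float:
    """Assumed prediction after a p refinement: reduction by gamma_p."""
    return gamma_p * eta_sq


def predict_unmarked(pred_sq: float, gamma_n: float) -> float:
    """Assumed update on elements that are not marked for refinement."""
    return gamma_n * pred_sq


# First adaptive step: the prediction is half of the computed estimator,
# so every marked element is refined in h.
first_step = choose_refinement(eta_sq=1.0, pred_sq=0.5)
```

Two consistency checks follow directly from the description in the text: with the initialization \(\eta ^2_{pred,K,0} = \eta ^2_{comp,K,0}/2\) the first step is always an \(h\) refinement, and setting all prediction-estimators to zero turns the scheme into a pure \(h\) adaptive strategy.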

Remark 6

It may occur within the adaptive algorithm that the assumptions (D2), guaranteeing the presence of edges with size comparable to that of the element to which they belong, and (P1), guaranteeing comparable degrees of accuracy on neighbouring elements, are not valid. However, the forthcoming numerical experiments indicate that the method is robust in this respect.

Remark 7

The approach of Algorithm 1 is not the only one available in the framework of \(hp\) Galerkin methods. We refer to [44] for an overview of different \(hp\) refinement approaches.

In our experiments, we set the parameters in Algorithm 1, following [42], to

where we recall that \(N^K\) is the number of elements into which polygon \(K\) is split in case of \(h\) refinement.

Moreover, we test the method on the test case with known solution \(u_3\), see (54), and

where we recall that \(\varOmega _1\) is the square domain introduced in (54). We underline that the solution \(u_4\) is analytic but has steep derivatives around (0.5, 0.5).

Remark 8

Algorithm 1 is based on \(h\) refinements where the solution is assumed to have a “singular” behaviour, whereas it is based on \(p\) refinements where the solution is assumed to be “smooth”. Therefore, the pollution factor \(\max (\alpha _*^{-1}(p), \alpha ^*(p))\) appearing in the estimates of Theorem 1, behaves in the following heuristic fashion. It does not blow up when doing \(h\) refinements since it is independent of \(h\). Instead, when doing \(p\) refinements, it multiplies the factor \(\zeta _{p_K}\) defined in (41) which, since we are assuming that the solution is analytic in case of \(p\) refinements, is converging exponentially, in terms of \(p\). Thus, the pollution factor, which typically grows algebraically in terms of \(p\), see [5, Lemma 2.5] and [12, Theorem 2], is not in principle spoiling the behaviour of the adaptive scheme. This observation is confirmed by the numerical experiments below.

In Figs. 10 and 11, we show the \(hp\) mesh refinement after 3 and 10 steps of Algorithm 1 applied to the two test cases with known solution \(u_3\) and \(u_4\), starting from a coarse Cartesian mesh. Furthermore, we depict in Figs. 12 and 13 the same set of experiments starting from a coarse Voronoi mesh. In both cases, the \(h\) refinements are performed as in Fig. 9.

From Figs. 10 and 12, it is possible to appreciate the fact that Algorithm 1 is actually capturing the singular behaviour of the solution \(u_3\). In particular, the mesh is geometrically refined towards such singularity. Note that, taking into account that the degrees of accuracy increase where the solution is smooth, it may seem that \(p\) refinements are also performed towards the re-entrant corner. This is not what actually happens, since many geometric refinements are performed and a high degree of accuracy is picked only in the outer layers.

Regarding Figs. 11 and 13, we can observe that the algorithm indeed detects that the target function is regular and therefore mainly applies \(p\) refinements; such refinements are slightly more concentrated in the area with higher derivatives. In order to have a more quantitative evaluation on the algorithm performance, we plot in Fig. 14 the error (52) and the error estimator (51) in terms of the square root of the total number of degrees of freedom, for the case with exact solution \(u_4\). We can appreciate the exponential convergence of the method, which resembles that of the \(p\) version of VEM when approximating analytic solutions [11].

Finally, we concentrate on the singular solution case \(u_3\). We aim to investigate whether Algorithm 1 leads to a decay of the error which is exponential in terms of the cubic root of the overall degrees of freedom also in this more complex situation. This is indeed what one expects from the a priori error analysis of \(hp\) FEM [49] and of \(hp\) VEM [12], employing graded meshes towards the singularity and increasing elementwise the degrees of accuracy. In Fig. 15, we plot the computable error (52) and the error estimator (51). We observe that, after some adaptive refinement steps, the decay gets the desired exponential slope.
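One way to quantify such a decay is to fit the model \(\text {error} \approx C \exp (-b\, N^{1/3})\), where \(N\) denotes the number of degrees of freedom, by linear least squares on the logarithm of the error. The sketch below is illustrative only: the function name is hypothetical and it is exercised on synthetic data generated from the model itself, not on results of the experiments. The `root` argument allows one to switch to the square root of \(N\), which is the abscissa used for the analytic test case.

```python
import math
from typing import List, Tuple


def fit_exponential_rate(dofs: List[int], errors: List[float],
                         root: int = 3) -> Tuple[float, float]:
    """Least-squares fit of log(error) = log(C) - b * N**(1/root).

    Returns the pair (C, b).
    """
    x = [n ** (1.0 / root) for n in dofs]
    y = [math.log(e) for e in errors]
    m = len(x)
    x_mean = sum(x) / m
    y_mean = sum(y) / m
    slope = (sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
             / sum((xi - x_mean) ** 2 for xi in x))
    intercept = y_mean - slope * x_mean
    return math.exp(intercept), -slope


# Synthetic data following the model exactly, with C = 2 and b = 0.7.
synthetic_dofs = [8, 27, 64, 125, 216]
synthetic_errors = [2.0 * math.exp(-0.7 * n ** (1.0 / 3.0))
                    for n in synthetic_dofs]
C_fit, b_fit = fit_exponential_rate(synthetic_dofs, synthetic_errors)
```

On real data, only the last few adaptive steps should be used for the fit, since the exponential regime is typically reached after a preasymptotic phase.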

As a final experiment, we compare in Fig. 16 the performances of the \(hp\) adaptive refinement strategy of Algorithm 1, with a pure \(h\) adaptive refinement strategy, which is equivalent to the scheme presented in Algorithm 1 setting \(\eta ^2_{pred,K, n} = 0\) for all \(K\in {\mathcal {T}}_n\) and for all \(n\in {\mathbb {N}}\).

Fig. 16 Comparison between the \(hp\) and \(h\) adaptive refinement in Algorithm 1. Exact solution: \(u_3\) introduced in (54) on Cartesian (left) and Voronoi (right) meshes. On the \(x\)-axis, we depict the cubic root of the number of degrees of freedom

From Fig. 16, it is evident that the \(hp\) adaptive strategy outperforms the \(h\) refinement strategy, since, on the portions of the domain where the solution is sufficiently smooth, the \(p\) version is able to reach with very few degrees of freedom the same accuracy as an \(h\) version with many more degrees of freedom. It is however worth underlining that this comes at the price of having, for high \(p\), a more densely populated stiffness matrix.

References

Adams, R.A., Fournier, J.J.F.: Sobolev Spaces, vol. 140. Academic Press, New York (2003)

Ahmad, B., Alsaedi, A., Brezzi, F., Marini, L.D., Russo, A.: Equivalent projectors for virtual element methods. Comput. Math. Appl. 66(3), 376–391 (2013)

Ainsworth, M., Oden, J.T.: A procedure for a posteriori error estimation for \(hp\) finite element methods. Comput. Methods Appl. Mech. Eng. 101(1–3), 73–96 (1992)

Antonietti, P.F., Manzini, G., Verani, M.: The fully nonconforming virtual element method for biharmonic problems. Math. Models Methods Appl. Sci. 28(02), 387–407 (2018)

Antonietti, P.F., Mascotto, L., Verani, M.: A multigrid algorithm for the \(p\)-version of the virtual element method. ESAIM Math. Model. Numer. Anal. 52(1), 337–364 (2018)

Ayuso, B., Lipnikov, K., Manzini, G.: The nonconforming virtual element method. ESAIM Math. Model. Numer. Anal. 50(3), 879–904 (2016)

Babuška, I., Melenk, J.M.: The partition of unity finite element method: basic theory and applications. Comput. Methods Appl. Mech. Eng. 139(1–4), 289–314 (1996)

Beirão da Veiga, L., Brezzi, F., Cangiani, A., Manzini, G., Marini, L.D., Russo, A.: Basic principles of virtual element methods. Math. Models Methods Appl. Sci. 23(01), 199–214 (2013)

Beirão da Veiga, L., Brezzi, F., Marini, L.D.: Virtual elements for linear elasticity problems. SIAM J. Numer. Anal. 51, 794–812 (2013)

Beirão da Veiga, L., Brezzi, F., Marini, L.D., Russo, A.: The hitchhiker’s guide to the virtual element method. Math. Models Methods Appl. Sci. 24(8), 1541–1573 (2014)

Beirão da Veiga, L., Chernov, A., Mascotto, L., Russo, A.: Basic principles of \(hp\) virtual elements on quasiuniform meshes. Math. Models Methods Appl. Sci. 26(8), 1567–1598 (2016)

Beirão da Veiga, L., Chernov, A., Mascotto, L., Russo, A.: Exponential convergence of the \(hp\) virtual element method with corner singularity. Numer. Math. 138, 581–613 (2018)

Beirão da Veiga, L., Dassi, F., Russo, A.: High-order virtual element method on polyhedral meshes. Comput. Math. Appl. 74, 1110–1122 (2017)

Beirão da Veiga, L., Lipnikov, K., Manzini, G.: The Mimetic Finite Difference Method for elliptic problems, vol. 11. Springer, Berlin (2014)

Beirão da Veiga, L., Lovadina, C., Russo, A.: Stability analysis for the virtual element method. Math. Models Methods Appl. Sci. 27(13), 2557–2594 (2017)

Beirão da Veiga, L., Lovadina, C., Vacca, G.: Divergence free virtual elements for the Stokes problem on polygonal meshes. ESAIM Math. Model. Numer. Anal. 51(2), 509–535 (2017)

Beirão da Veiga, L., Manzini, G.: Residual a posteriori error estimation for the virtual element method for elliptic problems. ESAIM Math. Model. Numer. Anal. 49(2), 577–599 (2015)

Benedetto, M.F., Berrone, S., Borio, A., Pieraccini, S., Scialò, S.: A hybrid mortar virtual element method for discrete fracture network simulations. J. Comput. Phys. 306, 148–166 (2016)

Bernardi, C., Fiétier, N., Owens, R.G.: An error indicator for mortar element solutions to the Stokes problem. IMA J. Numer. Anal. 21(4), 857–886 (2001)

Berrone, S., Borio, A.: A residual a posteriori error estimate for the virtual element method. Math. Models Methods Appl. Sci. 27(08), 1423–1458 (2017)

Bertoluzza, S., Pennacchio, M., Prada, D.: BDDC and FETI-DP for the virtual element method. Calcolo 54(4), 1565–1593 (2017)

Brenner, S.C., Guan, Q., Sung, L.-Y.: Some estimates for virtual element methods. Comput. Methods Appl. Math. 17(4), 553–574 (2017)

Brenner, S.C., Scott, L.R.: The Mathematical Theory of Finite Element Methods. Texts in Applied Mathematics, vol. 15, Third edn. Springer, New York (2008)

Cáceres, E., Gatica, G.N.: A mixed virtual element method for the pseudostress-velocity formulation of the Stokes problem. IMA J. Numer. Anal. 37(1), 296–331 (2017)

Cangiani, A., Georgoulis, E.H., Giani, S., Metcalfe, S.: \(hp\)-adaptive discontinuous Galerkin methods for non-stationary convection-diffusion problems. https://doi.org/10.1016/j.camwa.2019.04.002 (2019)

Cangiani, A., Georgoulis, E.H., Pryer, T., Sutton, O.J.: A posteriori error estimates for the virtual element method. Numer. Math. 137, 857–893 (2017)

Cangiani, A., Munar, M.: A posteriori error estimates for mixed virtual element methods (2019). arXiv:1904.10054

Canuto, C., Nochetto, R.H., Stevenson, R., Verani, M.: Convergence and optimality of \(hp\)-AFEM. Numer. Math. 135(4), 1073–1119 (2017)

Chernov, A., Mascotto, L.: The harmonic virtual element method: stabilization and exponential convergence for the Laplace problem on polygonal domains. IMA J. Numer. Anal. (2018). https://doi.org/10.1093/imanum/dry038

Cockburn, B., Gopalakrishnan, J., Lazarov, R.: Unified hybridization of discontinuous Galerkin, mixed, and continuous Galerkin methods for second order elliptic problems. SIAM J. Numer. Anal. 47(2), 1319–1365 (2009)

Dassi, F., Mascotto, L.: Exploring high-order three dimensional virtual elements: bases and stabilizations. Comput. Math. Appl. 75(9), 3379–3401 (2018)

Di Pietro, D.A., Ern, A.: Hybrid high-order methods for variable-diffusion problems on general meshes. C. R. Math. Acad. Sci. Paris 353(1), 31–34 (2015)

Dolejsi, V., Ern, A., Vohralík, M.: \(hp\)-adaptation driven by polynomial-degree-robust a posteriori error estimates for elliptic problems. SIAM J. Sci. Comput. 38(5), A3220–A3246 (2016)

Evans, L.C.: Partial Differential Equations. American Mathematical Society, Providence (2010)

Gain, A.L., Talischi, C., Paulino, G.H.: On the virtual element method for three-dimensional elasticity problems on arbitrary polyhedral meshes. Comput. Methods Appl. Mech. Eng. 282, 132–160 (2014)

Houston, P., Süli, E.: A note on the design of \(hp\)-adaptive finite element methods for elliptic partial differential equations. Comput. Methods Appl. Mech. Eng. 194(2–5), 229–243 (2005)

Lipnikov, K., Manzini, G., Shashkov, M.: Mimetic finite difference method. J. Comput. Phys. 257, 1163–1227 (2014)

Mascotto, L.: The \(hp\) Version of the Virtual Element Method. Ph.D. thesis (2018)

Mascotto, L.: Ill-conditioning in the virtual element method: stabilizations and bases. Numer. Methods Partial Differ. Equ. 34(4), 1258–1281 (2018)

Mascotto, L., Perugia, I., Pichler, A.: Non-conforming harmonic virtual element method: \(h\)- and \(p\)-versions. J. Sci. Comput. 77(3), 1874–1908 (2018)

Melenk, J.M.: \(hp\)-interpolation of non-smooth functions. SIAM J. Numer. Anal. 43, 127–155 (2005)

Melenk, J.M., Wohlmuth, B.I.: On residual-based a posteriori error estimation in \(hp\)-FEM. Adv. Comput. Math. 15(1–4), 311–331 (2001)

Menezes, I.F.M., Paulino, G.H., Pereira, A., Talischi, C.: Polygonal finite elements for topology optimization: a unifying paradigm. Int. J. Numer. Methods Eng. 82(6), 671–698 (2010)

Mitchell, W.F., McClain, M.A.: A survey of \(hp\)-adaptive strategies for elliptic partial differential equations. Recent Advances in Computational and Applied Mathematics, pp. 227–258. Springer, Berlin (2011)

Mora, D., Rivera, G., Rodríguez, R.: A virtual element method for the Steklov eigenvalue problem. Math. Models Methods Appl. Sci. 25(08), 1421–1445 (2015)

Mora, D., Rivera, G., Rodríguez, R.: A posteriori error estimates for a virtual element method for the Steklov eigenvalue problem. Comput. Math. Appl. 74, 2172–2190 (2017)

Perugia, I., Pietra, P., Russo, A.: A plane wave virtual element method for the Helmholtz problem. ESAIM Math. Model. Numer. Anal. 50(3), 783–808 (2016)

Rjasanow, S., Weißer, S.: Higher order BEM-based FEM on polygonal meshes. SIAM J. Numer. Anal. 50(5), 2357–2378 (2012)

Schwab, C.: \(p\)- and \(hp\)-Finite Element Methods: Theory and Applications in Solid and Fluid Mechanics. Clarendon Press, Oxford (1998)

Sukumar, N., Tabarraei, A.: Conforming polygonal finite elements. Int. J. Numer. Methods Eng. 61, 2045–2066 (2004)

Triebel, H.: Interpolation Theory, Function Spaces, Differential Operators. North-Holland, Amsterdam (1978)

Vacca, G.: An \({H}^1\)-conforming virtual element for Darcy and Brinkman equations. Math. Models Methods Appl. Sci. 28(01), 159–194 (2018)

Wriggers, P., Rust, W.T., Reddy, B.D.: A virtual element method for contact. Comput. Mech. 58(6), 1039–1050 (2016)

Acknowledgements

Open access funding provided by University of Vienna. L. B. d. V. was partially supported by the European Research Council through the H2020 Consolidator Grant (Grant no. 681162) CAVE, Challenges and Advancements in Virtual Elements. This support is gratefully acknowledged. G. M. was supported by the Laboratory Directed Research and Development Program (LDRD), U.S. Department of Energy Office of Science, Office of Fusion Energy Sciences, and the DOE Office of Science Advanced Scientific Computing Research (ASCR) Program in Applied Mathematics Research, under the auspices of the National Nuclear Security Administration of the U.S. Department of Energy by Los Alamos National Laboratory, operated by Los Alamos National Security LLC under contract DE-AC52-06NA25396. L. M. was funded by the Austrian Science Fund (FWF) through the project F 65.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Beirão da Veiga, L., Manzini, G. & Mascotto, L. A posteriori error estimation and adaptivity in hp virtual elements. Numer. Math. 143, 139–175 (2019). https://doi.org/10.1007/s00211-019-01054-6

DOI: https://doi.org/10.1007/s00211-019-01054-6