Abstract

We consider a system of charged particles moving on the real line driven by electrostatic interactions. Since we consider charges of both signs, collisions might occur in finite time. Upon collision, some of the colliding particles are effectively removed from the system (annihilation). The two applications we have in mind are vortices and dislocations in metals. In this paper we achieve two goals. First, we develop a rigorous solution concept for the interacting particle system with annihilation. The main innovation here is to provide a careful management of the annihilation of groups of more than two particles, and we show that the definition is consistent by proving existence, uniqueness, and continuous dependence on initial data. The proof relies on a detailed analysis of ODE trajectories close to collision, and a reparametrization of vectors in terms of the moments of their elements. Second, we pass to the many-particle limit (discrete-to-continuum), and recover the expected limiting equation for the particle density. Due to the singular interactions and the annihilation rule, standard proof techniques of discrete-to-continuum limits do not apply. In particular, the framework of measures seems unfit. Instead, we use the one-dimensional feature that both the particle system and the limiting PDE can be characterized in terms of Hamilton–Jacobi equations. While our proof follows a standard limit procedure for such equations, the novelty with respect to existing results lies in allowing for stronger singularities in the particle system by exploiting the freedom of choice in the definition of viscosity solutions.


1 Introduction

Our starting point is the interacting particle system formally given by

where \(n \ge 2\) is the number of particles, \({\mathbf {x}}:= (x_1, \ldots , x_n)\) are the particle positions in \({\mathbb {R}}\), and \({\mathbf {b}}:= (b_1, \ldots , b_n)\) are the charges of the particles, which are initially set as \(+1\) or \(-1\). One can think of this particle system as charged particles in a viscous fluid. Indeed, the right-hand side of the ODE shows that the interaction forces are nonlocal and singular. Moreover, equal-sign charges repel and opposite-sign charges attract each other.

Due to these attractive forces, particles of opposite charge may collide in finite time. Since the right-hand side of the ODE does not vanish prior to the collision (in fact, it blows up), the ODE is ill-defined at the collision time, and a modeling choice has to be made on how to continue it. Here we make the choice that opposite signs annihilate each other: when two particles of opposite charge collide, they are removed from the system, and the remaining particles continue to evolve by (\(P_n\)). Technically we encode this annihilation by setting the charges \(b_i\) of the colliding particles to 0. This is equivalent to removing them from the system because the ODE assigns zero velocity to particles with zero charge and, conversely, particles with zero charge do not exert a force on any other particle. In analogy with electric charges we call particles with zero charge neutral, and particles with nonzero charge charged.

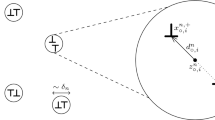

A rigorous description of (\(P_n\)) is given in Definition 2.1, and Fig. 1 illustrates an example set of trajectories. To give some insight into the properties of (\(P_n\)), we list here several observations:

- Gradient flow. In between collisions, the ODE is the gradient flow of the energy

  $$\begin{aligned} E({\mathbf {x}}; {\mathbf {b}}) = \frac{1}{2n^2} \sum _{i=1}^n \sum _{ \begin{array}{c} j = 1 \\ j \ne i \end{array} }^n b_i b_j V (x_i - x_j), \qquad V(x) := \log \frac{1}{|x|}, \end{aligned} \tag{1}$$

  where V is the particle interaction potential.

- Particle exchangeability. (\(P_n\)) is invariant under relabeling the indices of particles with the same charge.

- Multiple-particle collisions. The most likely collision is that between two charged particles of opposite charge. Yet, there are possible situations in which three or more charged particles collide; see Fig. 1.

- Translation invariance. If \(({\mathbf {x}}, {\mathbf {b}})\) is a solution to \((P_n)\), then \(({\mathbf {x}}+ a {\varvec{1}}, {\mathbf {b}})\) also is a solution, for any \(a \in {\mathbb {R}}\) (here \({\varvec{1}}= (1,\ldots ,1)\)).

- Scale invariance. If \(t\mapsto ({\mathbf {x}}(t), {\mathbf {b}}(t))\) is a solution to \((P_n)\), then \(t\mapsto (\alpha {\mathbf {x}}(\alpha ^{-2} t), {\mathbf {b}}(\alpha ^{-2} t))\) also is a solution for any \(\alpha > 0\).

- Conserved quantities. The first moment \(M_1 ({\mathbf {x}}) := \sum _{i=1}^n x_i\) and the net charge \(\sum _{i=1}^n b_i\) are conserved under (\(P_n\)).
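Several of these observations can be checked numerically away from collisions. The sketch below is illustrative only: since the displayed form of (\(P_n\)) is not reproduced above, it assumes the right-hand side \(\frac{1}{n} \sum _{j \ne i} b_i b_j / (x_i - x_j)\), which is \(-n\) times the gradient of the energy (1). The function names `velocity` and `energy` are hypothetical.

```python
import numpy as np

def velocity(x, b):
    """Assumed right-hand side of (P_n): (1/n) * sum_{j != i} b_i b_j / (x_i - x_j).

    Requires pairwise distinct positions (no collision has happened yet).
    """
    n = len(x)
    diff = x[:, None] - x[None, :]      # diff[i, j] = x_i - x_j
    np.fill_diagonal(diff, 1.0)         # placeholder, avoids 0/0 on the diagonal
    w = np.outer(b, b) / diff
    np.fill_diagonal(w, 0.0)            # drop the excluded terms j == i
    return w.sum(axis=1) / n

def energy(x, b):
    """Energy (1) with V(x) = log(1/|x|)."""
    n = len(x)
    dist = np.abs(x[:, None] - x[None, :])
    np.fill_diagonal(dist, 1.0)         # log(1) = 0, so the diagonal contributes nothing
    return -0.5 / n**2 * np.sum(np.outer(b, b) * np.log(dist))

if __name__ == "__main__":
    x = np.array([-1.0, -0.2, 0.5, 1.3])
    b = np.array([1.0, -1.0, 1.0, 1.0])
    v = velocity(x, b)
    print("sum of velocities (first moment rate):", v.sum())
    print("translation invariant:", np.allclose(velocity(x + 3.7, b), v))
    print("scaling x -> 2x halves the velocity:", np.allclose(velocity(2 * x, b), v / 2))
```

The antisymmetry of \(1/(x_i - x_j)\) is what makes the velocities sum to zero, and the scaling of the velocity by \(1/\alpha \) is the pointwise counterpart of the parabolic rescaling \(t \mapsto \alpha ^{-2} t\) in the scale invariance property.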

The main aim of this paper is to establish the ‘continuum limit’ \(n\rightarrow \infty \). This is a classical question in the study of interacting particle systems, with a long and distinguished history (see below). What makes the results of this paper special is the combination of opposite signs (and the ensuing annihilation) with singular interaction potentials.

Along the way we also develop a theory for the annihilating finite-n particle system (\(P_n\)). For instance, it turns out that formulating the right solution concept for (\(P_n\)) is subtle; with the choices made in Definition 2.1 we are able to prove the existence, uniqueness, stability, and various other properties of its solutions.

1.1 Motivation

Our main motivation for studying the limit \(n \rightarrow \infty \) of (\(P_n\)) comes from a key problem in understanding plastic deformation of metals. Plastic deformation of metals is the macroscopic behavior of many dislocations interacting on microscopic time and length scales. Dislocations are curve-like defects in the atomic lattice of the metal. We refer to [27, 29] for textbook descriptions of dislocations and their relation to plasticity. Because of the immense complexity of the dynamics of groups of dislocations, it is a long-standing problem to derive plastic deformation as the micro-to-macro limit of interacting dislocations.

To address this problem, several simplifying assumptions are common in the literature:

1. The assumption that the dislocation lines are straight and parallel, such that their position can be identified by points in a 2D cross section [1, 5, 11, 19, 20, 21, 24, 30, 36, 39].

2. The further assumption that these dislocation points move along the same line [17, 23, 25, 28, 49]. This assumption is inspired by the fact that for many types of dislocations the movement is confined to a slip plane.

3. The assumption that the velocity of dislocations is proportional to the stress acting on them. Most of the literature on micro-to-macro limits operates under this assumption. A few exceptions are [2, 32, 39].

Under these assumptions, the resulting dynamics of dislocations are given by (\(P_n\)). The charges \(b_i\) correspond to the orientation of the dislocations (given by their Burgers vector). Dislocations with opposite Burgers vector are such that when they collide, their lattice defects cancel out, and a locally perfect lattice emerges after a collision. This motivates the annihilation rule that we adopt here.

Including annihilation in dynamic dislocation models in one form or another is not new. For instance, the discrete-to-continuum limits of Alicandro, De Luca, Garroni, and Ponsiglione [1, 2] allow opposite-sign defects to cancel each other, in a regime corresponding to O(1) net dislocations. Forcadel, Imbert, and Monneau [18] and Van Meurs and Morandotti [50] both include annihilation for regularized dislocation interactions. Another example is [37, 38, 42, 43], where the dislocation positions are described by a phase-field model (the Peierls–Nabarro model) which by construction includes annihilation, and can be considered a form of regularization.

The work of this paper is different in a number of ways. First, we insist on taking the \(n\rightarrow \infty \) limit for point particles with unregularized, singular interactions. Second, the finite-n system has an explicit annihilation rule, which is a consequence of the point nature of the particles and the singularity of the ODE, as we described above.

Our second motivation for studying annihilation is the dynamics of vortices. In [47] and [45] it is shown that the two-dimensional dissipative Ginzburg–Landau equation converges, as the related phase-field parameter tends to zero, to an evolution of point vortices. Besides the complication of two spatial dimensions, vortices may have an integer-valued degree (charge), which further complicates the annihilation rule. In fact, a self-contained equation for the vortex dynamics including an annihilation rule has not yet been constructed. With the results of this paper we hope to help in clarifying vortex dynamics.

On the other end of the spectrum, a model for the many-vortex limit appears in superconductivity where it is called the Chapman–Rubinstein–Schatzman–E model. In [4] a gradient flow structure of this model is formulated, but the mathematical description is not completely satisfactory due to an implicit term regarding the vortex annihilation.

As of yet, there appears to be no rigorous result on the many-vortex limit passage for multi-sign vortices. In this paper we aim to contribute by considering a simpler particle system.

Our third motivation for studying the limit \(n \rightarrow \infty \) of (\(P_n\)) is to contribute to the general understanding of many-particle limits for interacting particle systems with multiple species; see, e.g., [13,14,15]. The particle systems considered in these papers are similar to (\(P_n\)), but have bounded interaction forces depending on the particle types \(b_i\) and \(b_j\), for which no particular collision rule needs to be specified. Still, in [13], the interaction force is discontinuous at zero, which results in nontrivial particle collisions that require special attention. A central difference with the current paper is that particles that collide are not removed; instead, particles are conserved after collision. In the subsequent dynamics they remain completely ‘joined’ to each other, and this joined couple effectively decouples the interaction between other particles to the left and to the right. Since in [13] the number of particles is conserved and the interaction forces remain bounded, different techniques such as Wasserstein gradient flows apply, which makes the analysis significantly different from that in this paper.

1.2 Well-Posedness of the ODE

Our first main result is the well-posedness of (\(P_n\)). While the classical ODE theory gives existence and uniqueness of solutions up to the first collision time \(\tau \), it fails to say what happens at \(\tau \). Since the interaction force is singular, it is not obvious why the limit of \(x_i(t)\) as \(t \nearrow \tau \) exists. And, if it exists, the possibility of multiple particle collisions makes it unclear whether the right-hand side of the ODE is defined after the annihilation rule has been applied. Besides the classical ODE theory, the gradient flow structure is of limited use too, because the energy diverges to \(-\infty \) prior to collision.

Our strategy to overcome these two difficulties at a collision event is to consider the relevant quantities of the ODE. First, for existence of the limits \(x_i(t)\) as \(t \nearrow \tau \), we use the property that the moments

do not blow up as \(t \nearrow \tau \). Indeed, differentiating the moments along solutions yields that the singularity in the right-hand side of the ODE cancels out. This turns out to be sufficient to deduce that the limit of \(x_i(t)\) as \(t \nearrow \tau \) exists.

Secondly, we show that at any collision event, the total net charge of the colliding particles has to be either \(-1\), 0, or \(+1\). Hence, after a collision, at most one of the colliding particles remains charged, so the right-hand side of the ODE (\(P_n\)) is again meaningful and the ODE can be restarted.
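The cancellation of the singularity in the moments can be illustrated for the second moment \(\sum _i x_i^2\). The sketch below again assumes that the right-hand side of (\(P_n\)) is \(\frac{1}{n} \sum _{j \ne i} b_i b_j / (x_i - x_j)\), and the normalization of the moments in (2) may differ from the plain sum used here. Under that assumption, symmetrizing in i and j gives \(\frac{\mathrm{d}}{\mathrm{d}t} \sum _i x_i^2 = \frac{1}{n} \sum _{i \ne j} b_i b_j\), which does not depend on the positions at all. The function names are hypothetical.

```python
import numpy as np

def velocity(x, b):
    """Assumed right-hand side of (P_n): (1/n) * sum_{j != i} b_i b_j / (x_i - x_j)."""
    n = len(x)
    diff = x[:, None] - x[None, :]
    np.fill_diagonal(diff, 1.0)          # placeholder, avoids 0/0 on the diagonal
    w = np.outer(b, b) / diff
    np.fill_diagonal(w, 0.0)             # drop the excluded terms j == i
    return w.sum(axis=1) / n

def second_moment_rate(x, b):
    """Time derivative of sum_i x_i^2, computed directly as 2 * sum_i x_i * v_i."""
    return 2.0 * np.dot(x, velocity(x, b))

def second_moment_rate_closed_form(b):
    """(1/n) * sum_{i != j} b_i b_j = ((sum_i b_i)^2 - sum_i b_i^2) / n."""
    n = len(b)
    return (b.sum() ** 2 - (b ** 2).sum()) / n

if __name__ == "__main__":
    b = np.array([1.0, 1.0, -1.0, 1.0])
    for gap in (1e-1, 1e-3, 1e-6):       # particles 2 and 3 approach each other
        x = np.array([-1.0, 0.0, gap, 2.0])
        print(gap, np.abs(velocity(x, b)).max(), second_moment_rate(x, b))
    print("closed form:", second_moment_rate_closed_form(b))
```

As the gap between the two opposite-sign particles shrinks, the velocities blow up while the rate of change of the second moment stays fixed, which is the mechanism behind the existence of the limits of \(x_i(t)\) as \(t \nearrow \tau \).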

These proof elements lead to our first main result.

Theorem A

(See Theorem 2.4.) The system of equations (\(P_n\)) with rigorous meaning given by Definition 2.1 is well posed, i.e. solutions exist, are unique, and depend continuously on the initial data. In addition they are Lipschitz continuous in time with respect to a special metric \(d_{\mathbf {M}}\) based on the moments \(M_k\) in (2).

1.3 The Many-Particle Limit \(n \rightarrow \infty \)

Our second main result, Theorem 4.1, is the passage of (\(P_n\)) to the limit \(n\rightarrow \infty \), and the characterization of the limit equation. The usual approach in the particle systems literature (see, e.g., [16, 24, 46, 49]) is to describe the particle positions by an empirical measure. For the two-species particle system (\(P_n\)) a natural choice is

Then, one derives from the particle system that \(\kappa _n\) satisfies a weak form of a PDE, which for (\(P_n\)) turns out to be

where \(V'(x) = - \frac{1}{x}\) as in (1) and \(*\) denotes the convolution over space. Finally, the task is to pass to \(n \rightarrow \infty \) in this weak form in the framework of measures. The expected limiting equation is

where positive values of the particle density \(\kappa \) correspond to positively charged particles, and negative values represent the density of the negatively charged ones. In our setting, it is difficult to make this limit passage rigorous due to the combination of signed charges, singular interactions, and the annihilation rule.

In this paper we follow a different approach. To date, the only rigorous interpretation of (5) is developed in [33] and [6]. It is given in terms of a Hamilton–Jacobi equation. Before laying out the details, let us formally derive this Hamilton–Jacobi equation. The idea is to integrate (5) in space. Assuming that \(\kappa \) is regular enough, we define the cumulative distribution function

Since \(\partial _x u = \kappa \), the derivative of u describes the density of particles; in particular, the sign of \(\partial _x u(x)\) determines the charge of the particles around x. With this definition of u, integrating (5) in space yields

The interaction term \(\partial _x (V' * u)\) has several alternative representations given by

where \(-{\mathcal {I}}\) is a Lévy operator of order 1, \((-\Delta )^{1/2}\) is the half-Laplacian, \({\mathcal {F}}\) is the Fourier transform, \({\mathcal {H}}\) is the Hilbert transform, and \(\text {pv}\int \) is the principal-value integral.

Using the short notation \({\mathcal {I}}[u]\) above we write the Hamilton–Jacobi equation (7) as

This is a Hamilton–Jacobi equation with a Hamiltonian \({\mathcal {I}}[u]\) which is non-standard for two reasons. First, it is a nonlocal function of u; similar nonlocal Hamilton–Jacobi equations are regularly used to describe curvature-driven flows such as mean-curvature flow (see e.g. [12]). Second, the singular kernel in \({\mathcal {I}}\) does not permit evaluation of \({\mathcal {I}}\) at every bounded, uniformly continuous function, and thus a proper extension has to be constructed. We postpone the related rigorous definition of viscosity solutions to Section 3.

Coming back to the question of passing to the limit \(n \rightarrow \infty \) in (\(P_n\)), it is then natural to seek a Hamilton–Jacobi formulation for it such that the limit passage can be carried out in a Hamilton–Jacobi framework. In [18] the authors develop such a formulation for a version of (\(P_n\)) with regularized interactions. They successfully pass to the limit \(n \rightarrow \infty \), but the link between the Hamilton–Jacobi equation and (\(P_n\)) (with singular interactions) is only established in the simple case where all particles have the same charge. The difficulty in the signed-charge case stems from the singularity of the interactions and the previously missing definition and well-posedness of (\(P_n\)). With Theorem A in hand, we overcome this difficulty, and treat the case of singular interactions and annihilation.

To construct a Hamilton–Jacobi formulation for (\(P_n\)), we build on the ideas in [18]. Analogously to (6), we set

as the level set function, where H is the Heaviside function with \(H(0) = 1\). We illustrate \(u_n\) in Fig. 2. The function \(u_n\) is piecewise constant, with jumps at the particle positions \(x_i\), where the direction of the jump (upward or downward) determines the charge \(b_i\). The precise value of \(u_n\) at the jump points turns out to be important; we will often consider the upper and lower semi-continuous envelope of \(u_n\).

Fig. 2 Possible graphs of the level set functions \(u_n\) and v for the particle trajectories in Fig. 1 sliced at three time points: slightly before \(\tau _1\), at \(\tau _1\), and slightly after \(\tau _1\). The change of sign of v at the intersections with the level sets at \({\mathbb {Z}}/n\) determines the charge of the particles. If there is no change in sign, no particle is associated to it (illustrated by the white circle in the second graph).
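The piecewise-constant function \(u_n\) described above can be sketched in a few lines. The defining formula (10) is not reproduced here, so the code assumes \(u_n(y) = \frac{1}{n} \sum _{i=1}^n b_i H(y - x_i)\) with \(H(0) = 1\), which matches the description of jumps of size \(1/n\) at the particle positions, upward for positive and downward for negative charges. The function name `level_set_step` is hypothetical.

```python
import numpy as np

def level_set_step(y, x, b):
    """Assumed form of u_n: (1/n) * sum_i b_i * H(y - x_i), with H(0) = 1.

    y may be a scalar or an array of evaluation points; neutral particles
    (b_i = 0) do not contribute.
    """
    y = np.atleast_1d(np.asarray(y, dtype=float))
    x = np.asarray(x, dtype=float)
    b = np.asarray(b, dtype=float)
    heaviside = (y[:, None] >= x[None, :]).astype(float)   # >= encodes H(0) = 1
    return heaviside @ b / len(x)

if __name__ == "__main__":
    x = [0.0, 1.0, 2.0]
    b = [1, 1, -1]
    for y in (-1.0, 0.0, 0.5, 1.0, 2.0, 3.0):
        print(y, level_set_step(y, x, b)[0])
```

With \(H(0) = 1\) the function is right-continuous, so at an upward jump it takes the upper value and at a downward jump the lower value. This is why the value of \(u_n\) at the jump points matters and why the semi-continuous envelopes are used.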

However, to derive formally the Hamilton–Jacobi equation, it is more instructive to replace \(u_n\) by a regular enough level set function v. Figure 2 illustrates a possible choice of v. Given v, the particle positions \(x_i\) can be recovered from the level sets of v at \(\frac{1}{n} {\mathbb {Z}}\), i.e. by solving for y in \(v(y) \in \frac{1}{n} {\mathbb {Z}}\). The charge \(b_i\) is then given by

which is the analogue of the continuum Hamilton–Jacobi equation in which \(\partial _x u\) determines the charge of the particle density. Given \(u_n\), a sufficient condition for a related continuous function v is

Because of the jumps in \(u_n\) and the continuity of v, this implies that

where \(u_n^*\) is the upper semi-continuous envelope of \(u_n\). By (12) we can recover \(u_n\) from v by

where \(\lfloor \cdot \rfloor \) denotes the floor function, that is, the largest integer less than or equal to the argument.

To derive formally the Hamilton–Jacobi equation from the solution \(({\mathbf {x}}, {\mathbf {b}})\) to (\(P_n\)), suppose that there exists a regular level set function \(v : [0,\infty ) \times {\mathbb {R}}\rightarrow {\mathbb {R}}\) such that \(v(t, \cdot )\) satisfies (12) at each \(t \ge 0\). Then, by (13), \(v(t, x_i(t))\) is constant in t for each i. Hence, \(0 = \frac{\mathrm{d}}{\mathrm{d}t} v(t, x_i(t))\), which we rewrite as

To rewrite the force in terms of v, we use for \(x_i > x_j\) that

to obtain (see (74) for details)

where in the second equality we have used (14) with

Inserting this in (15) and recalling (11), we obtain

This is the formal shape for the Hamilton–Jacobi equation which describes solutions to (\(P_n\)).

We take a moment to discuss several features of (18). First, the expression for \({\mathcal {I}}_n\) resembles the last expression of \({\mathcal {I}}\) in (8). The only difference is the appearance of \(E_{1/n}\), which is a staircase approximation of the identity (see Fig. 3). The offset \(\frac{1}{2n}\) is carefully chosen to cancel out the singularity of the kernel \(1/z^2\) at 0; it originates in the subtraction of \(\frac{1}{2}\) in the integrand in (16). The role of \(E_{1/n}\) is to project the information of v down to the behavior of the level sets of v corresponding to the discrete levels \(v(x) = k/n\) for different values of \(k\in {\mathbb {Z}}\); this corresponds to the discrete nature of (\(P_n\)). Because of this nonlocal feature of \({\mathcal {I}}_n\) which even includes interactions between different level sets, (18) is a non-standard Hamilton–Jacobi equation. To further illustrate its behavior, we compute in Example 3.1 an explicit, continuous solution v to (18), and show how each choice of \(a \in [0,1)\) for the level sets at \(\frac{1}{n} ({\mathbb {Z}}+ a)\) corresponds to a different solution to (\(P_n\)).

Secondly, the formulation of (\(P_n\)) in terms of the Hamilton–Jacobi equation (18) has several mathematical advantages:

1. No annihilation rule needs to be specified. For example, a two-particle annihilation happens when a local maximum of v crosses a level set downwards, or when a local minimum crosses it upwards (see Fig. 2).

2. The function v need not develop singularities around annihilation points (see again Fig. 2).

Note that the Hamilton–Jacobi formulation exposes a monotonicity property of the trajectories of (\(P_n\)): for \(a_1 \ne a_2\), the level sets of v at \(\frac{1}{n} ({\mathbb {Z}}+ a_1)\) do not cross with any of the level sets of v at \(\frac{1}{n} ({\mathbb {Z}}+ a_2)\). This property follows after establishing a comparison principle. This monotonicity of trajectories is difficult to obtain from either (\(P_n\)) or its measure formulation (4), and is not used in the part of the literature on many-particle limits which relies on a measure-theoretic framework.

Thirdly, even for smooth functions \(\phi \) the operator \({\mathcal {I}}_n\) is not defined at local maxima or local minima of \(\phi \). However, at these points, \(\partial _x \phi \) vanishes, and thus it may be possible to extend the right-hand side in (18) at these points. We show that such an extension is possible when developing a notion of viscosity solutions to (18). In this definition, we build on ideas from [33] for the limiting Hamilton–Jacobi equation (9). It is here that we depart from the framework in [18]; in [18] this issue was solved by regularizing the singular kernel \(1/z^2\) around 0, which implies modifying the equation (\(P_n\)), while we insist on studying the original equation.

Fourthly, our Definition 3.4 of viscosity solutions of (18) does not only allow for solutions that are continuous, but also for discontinuous solutions; we show in Lemma 4.2 and Proposition 4.5 that the step function \(u_n(t,x)\) as constructed from the solution to (\(P_n\)) indeed is the unique viscosity solution to (18) with initial datum \(u_n(0,x)\). Because of the discontinuity, usual proofs of uniqueness do not apply (see e.g. [6, Th. 2.4]); Proposition 4.5 uses the special structure of (\(P_n\)) to deal with this discontinuity. This shows that each solution of (\(P_n\)) generates a corresponding unique solution of the Hamilton–Jacobi equation (18). This is interesting, because (\(P_n\)) has a clear physical interpretation with possible extension to higher dimensions, whereas (18) has an advantageous mathematical structure.

We now return to the aim of this paper to pass to the limit \(n \rightarrow \infty \). For this limit passage we follow the usual approach in viscosity theory; this is our second main result.

Theorem B

(See Theorem 4.1.) Assume that the initial data satisfy \(u_n^\circ \rightarrow u^\circ \) uniformly, and that \(u^\circ \) is bounded and uniformly continuous. Then \(u_n \rightarrow u\) locally uniformly in time-space as \(n \rightarrow \infty \), the function u is continuous, and u satisfies the limiting Hamilton–Jacobi equation given formally by (9).

Turning to the limiting Hamilton–Jacobi equation (9), we construct a different—but equivalent—notion of viscosity solutions than that in [6, 33]. The reason for this is that Theorem B is easier to prove if the notions of viscosity solutions of (9) and (18) are similar. More precisely, in contrast to the viscosity-solution approach in [33], we restrict the class of test functions at \(\partial _x u = 0\) to functions of the specific form \(cx^4 + g(t)\). This idea of reducing the class of test functions is inspired by the approach of Ishii and Souganidis [34] and [40]; see also [12] and the discussion of \(\mathcal F\)-solutions in [22]. This choice interacts well with the observation that annihilating particles meet with quadratic rate (see Theorem 2.4(iv–v) and Fig. 1). Even with this restricted class of test functions, the standard comparison principle holds (Theorem 3.6 and Theorem 3.11) and yields uniqueness even for discontinuous initial data (Proposition 4.5).

1.4 Measure-Theoretic Version of Theorem B

As we mentioned above, a common approach in many-particle limits is to consider the evolution equation (4) for the empirical measure \(\kappa _n\), and pass to the limit in a weak formulation of that equation. The typical type of convergence that one obtains in this way is narrow convergence at each time t, i.e.

For comparison with this body of literature we now restate Theorem B in terms of \(\kappa _n\) and \(\kappa \), using measure terminology.

Theorem B requires the initial datum \(u_n^\circ \) to converge uniformly to a continuous limit \(u^\circ \). If \(\kappa _n^\circ \) were non-negative, then the pair \((\kappa _n^\circ ,u_n^\circ )\) could be interpreted as a probability distribution and its cumulative distribution function; in this case \(\kappa _n^\circ \) converges narrowly if and only if \(u_n^\circ \) converges at every x at which the limit \(u^\circ \) is continuous. When the limit \(u^\circ \) is continuous, as is the case here, the convergence strengthens to uniform convergence.

However, the measures \(\kappa _n\) have both signs, and then (locally) uniform convergence to a continuous limit is significantly stronger than narrow convergence alone (a counterexample is \(\kappa _n = \delta _{1/n} - \delta _0\); see Section 8.1 in [7] for a discussion). This explains the appearance of the third condition on \(\kappa _n\) in the following lemma:

Lemma C

(See Lemma 5.1.) Let \(u_n^\circ (x)=\kappa _n^\circ ((-\infty ,x])\) as in (10), and assume that the sequence \(\kappa _n^\circ \) is bounded in total variation and tight. Then the following are equivalent:

1. \(u_n^ \circ \) converges uniformly to \(u^\circ \), and \(u^\circ \) is continuous;

2. \(u_n^ \circ \) converges locally uniformly to \(u^\circ \), and \(u^\circ \) is continuous;

3. (a) \(\kappa _n^\circ \) converges narrowly to \(\kappa ^\circ \),

   (b) \(\kappa ^\circ \) has no atoms, and

   (c) there exist a sequence \(s_n\xrightarrow {n\rightarrow \infty }0\) and a modulus of continuity \(\omega \) such that

   $$\begin{aligned} \text {for all }-\infty< x\le y< \infty , \qquad |\kappa ^\circ _n((x,y])|\le s_n + \omega (|x-y|). \end{aligned} \tag{19}$$

The limits \(u^\circ \) and \(\kappa ^\circ \) are connected by \(u^\circ (x) = \kappa ^\circ ((-\infty ,x])\).
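The counterexample \(\kappa _n = \delta _{1/n} - \delta _0\) mentioned before Lemma C can be made concrete with a small sketch (the function names `cdf` and `pairing` are hypothetical). Tested against a bounded continuous function, \(\kappa _n\) tends to zero, yet its cumulative distribution function equals \(-1\) on \([0, 1/n)\), so it does not converge uniformly to the zero function. The same dip shows that condition (19) fails: \(|\kappa _n((x,y])| = 1\) for some pairs with \(|x - y|\) arbitrarily small.

```python
import math

def cdf(n, x):
    """u_n(x) = kappa_n((-inf, x]) for kappa_n = delta_{1/n} - delta_0."""
    return (1.0 if x >= 1.0 / n else 0.0) - (1.0 if x >= 0.0 else 0.0)

def pairing(n, phi):
    """Integral of a test function phi against kappa_n."""
    return phi(1.0 / n) - phi(0.0)

if __name__ == "__main__":
    for n in (10, 100, 1000):
        dip = cdf(n, 0.5 / n)             # evaluation point inside [0, 1/n)
        weak = pairing(n, math.atan)      # atan is bounded and continuous
        print(n, dip, weak)
```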

Corollary D

(See Corollaries 5.5 and 5.6.) Assume that \(\kappa _n^\circ \) converges to \(\kappa ^\circ \) in the sense of Lemma C. Then for any sequence \(t_n\rightarrow t\) in [0, T], \(\kappa _n(t_n)\) converges to \(\kappa (t)\) in the sense of Lemma C. The sequence \((s_n)_n\) and the modulus of continuity \(\omega \) can be chosen to be independent of the sequence \(t_n\rightarrow t\).

In addition the map \(t\mapsto \kappa (t)\) is narrowly continuous.

The connection between Theorem B and Corollary D is discussed in Section 5.

1.5 Discussion

To summarize the above, our two main results are the combination of Definition 2.1 and Theorem 2.4 on well-posedness of the particle systems described by (\(P_n\)), and Theorem 4.1 on the convergence of (\(P_n\)) as \(n \rightarrow \infty \) with (5) as the PDE for the signed limiting particle distribution. We conclude by discussing several features of these results.

Properties of the limiting equation. In [6] regularity of viscosity solutions to (9) is proven. In particular, if \(u^\circ \in {\text {Lip}}({\mathbb {R}})\), then \(\Vert u(t, \cdot ) \Vert _\infty \) and \(\Vert \partial _x u(t, \cdot ) \Vert _\infty \) are non-increasing in time. Hence, if the signed particle distribution \(\kappa ^\circ \) is an absolutely continuous measure with bounded density, then \(\kappa (t, \cdot )\) is also absolutely continuous with density in \(L^\infty ({\mathbb {R}})\) for each \(t \ge 0\).

Hölder-continuous trajectories. The properties of the solution \(({\mathbf {x}}, {\mathbf {b}})\) to (\(P_n\)) listed in Theorem 2.4 suggest that \(t \mapsto {\mathbf {x}}(t)\) is in \(C^{1/2}([0,T])\). Since our proof methods do not rule out oscillatory behavior of the trajectories prior to annihilation, we were only able to prove that \(t \mapsto {\mathbf {x}}(t)\) is continuous, and \(C^\infty \) away from collision times.

Other interaction potentials. In (\(P_n\)) we chose to take as the particle interaction potential the function \(V(x) = -\log |x|\). This choice is most relevant to the applications mentioned in Section 1.1. In the literature on particle systems other potentials such as \(V(x) = |x|^{-s}\) with \(0< s < 1\) or smooth perturbations thereof also appear [16, 23, 26, 41]. Due to lack of clear applications for annihilation and to avoid clutter, we have not investigated whether our method works for such potentials.

Two dimensions. A future goal is to define and prove well-posedness of (\(P_n\)) in two dimensions, and then also to pass to the limit \(n \rightarrow \infty \). Our current proof methods strongly rely on the ordering of the particles. Yet, already in 1D our proof method for the well-posedness of (\(P_n\)) contains unconventional ideas, which may inspire a new approach to treat the higher dimensional case.

Organization of the paper. In Section 2 we state and prove our first main result on the well-posedness of (\(P_n\)). In Section 3 we give a precise meaning to the Hamilton–Jacobi equations (18) and (9), prove that they satisfy a comparison principle, and establish the convergence of (18) to (9). In Section 4 we apply this convergence to pass to \(n \rightarrow \infty \) in (\(P_n\)). In Section 5 we reformulate the convergence result in a measure-theoretic framework.

2 Well-Posedness and Properties of \((P_n)\)

To give a rigorous meaning to the particle system in (\(P_n\)) with \(n \ge 2\), we start with defining a state space \({\mathcal {Z}}_n\) for the pair \(({\mathbf {x}}, {\mathbf {b}})\). It will be convenient to have a unique description of a set of particles by numbering them from left to right; in the case of charged particles the dynamics preserves such numbering, since same-sign neighbors repel each other and opposite-sign neighbors are removed upon collision. Neutral particles, however, have no reason to preserve the left-to-right numbering of the charged particles, since neutral and charged particles do not interact.

These observations lead to a definition of the state space \({\mathcal {Z}}_n\) that imposes ordering for charged particles only:

Since we are interested in the charged particles, we call two particles \(j < i\) neighbors if they are charged and any particle in between them is neutral, i.e.

(As an alternative to \({\mathcal {Z}}_n\), one could remove the neutral particles from the state, and consider the union of sets of varying dimension

as the state space. This state space can naturally be embedded into \({\mathcal {Z}}_n\) by relabeling the particle indices. We prefer to keep the number of particles the same, and ‘remove’ particles by setting their charge to zero.)

The solution concept to (\(P_n\)) is as follows:

Definition 2.1

(Solution to \((P_n)\)) Let \(n \ge 2\), \({\mathbf {b}}^\circ \in \{-1,0,1\}^n\) and \(({\mathbf {x}}^\circ , {\mathbf {b}}^\circ ) \in {\mathcal {Z}}_n\). A map \(({\mathbf {x}}, {\mathbf {b}}) : [0,T] \rightarrow {\mathcal {Z}}_n\) is a solution of \((P_n)\) if there exists a finite subset \(S \subset (0,T]\) such that

-

(i)

(Regularity) For each \(i\in \{1,n\}\), \(x_i \in C([0,T]) \cap C^1([0,T] \setminus S)\), and \(b_1, \ldots , b_n : [0,T] \rightarrow \{-1, 0, 1\}\) are right-continuous;

-

(ii)

(Initial condition) \(({\mathbf {x}}(0), {\mathbf {b}}(0)) = ({\mathbf {x}}^\circ , {\mathbf {b}}^\circ )\);

-

(iii)

(Annihilation rule) Each \(b_i\) jumps at most once. If \(b_i\) jumps at \(t \in [0,T]\), then \(t \in S\), \(|b_i(t-)| = 1\) and \(b_i(t) = 0\). Moreover, for all \((\tau , y) \in S \times {\mathbb {R}}\),

$$\begin{aligned} \sum _{i: x_i(\tau ) = y} \llbracket b_i \rrbracket (\tau ) = 0, \end{aligned} \tag{20}$$

where the bracket \(\llbracket f \rrbracket (t)\) is the difference between the right and left limits of f at t;

-

(iv)

(ODE of \({\mathbf {x}}\)) On \((0,T) \setminus S\), \({\mathbf {x}}\) satisfies the ODE in (\(P_n\)).

Definition 2.1 calls for some terminology. For a function f of one variable, we set \(f(t-) := \lim _{s \nearrow t} f(s)\) as the left limit. We call a point \((\tau , y) \in S \times {\mathbb {R}}\) an annihilation point if the sum in (20) contains at least one non-zero summand. We call the time \(\tau \) of an annihilation point a collision time. The set of all collision times \(\{ \tau _1, \ldots , \tau _K \}\) is finite, where \(K \le n^+ \wedge n^-\) and \(n^\pm \) are the numbers of positively/negatively charged particles at time 0. From Theorem 2.4 it turns out that the minimal choice for S is \(\{ \tau _1, \ldots , \tau _K \}\).

In Definition 2.1, annihilation is encoded by the combination of the annihilation rule in (iii) and the requirement that \(({\mathbf {x}}(t), {\mathbf {b}}(t)) \in {\mathcal {Z}}_n\). Indeed, Definition 2.1(iii) limits the choice of jump points for \(b_i\), while the separation of particles implied by \(({\mathbf {x}}(t), {\mathbf {b}}(t)) \in {\mathcal {Z}}_n\) requires particles to annihilate upon collision.

Remark 2.2

As we shall see in Theorem 2.4 below, solutions according to Definition 2.1 are unique, but only up to relabeling. One can recognize the possibility of relabeling as follows: if three particles (say numbered \(i=1,2,3\), with charges \(+,-,+\)) collide at some point \((\tau ,y)\), then according to (20) one positive particle should continue, while the two other particles should become neutral. Therefore either \(i=1\) could remain positive, with \(i=2,3\) becoming neutral, or \(i=3\) could remain positive with \(i=1,2\) becoming neutral. Both lead to the same evolution of points and their charges, and therefore the same physical interpretation, but the numbers attached to the points are different.
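The relabeling freedom described in Remark 2.2 can be made concrete with a small enumeration. The following is a minimal sketch, not the construction used in the proofs: the helper `admissible_outcomes` is hypothetical, and it assumes that membership in \({\mathcal {Z}}_n\) allows at most one charged particle at a given position after the collision. It lists every way of neutralizing particles in one colliding group such that the net charge is conserved, as required by (20).

```python
from itertools import product


def admissible_outcomes(charges):
    """Enumerate post-collision charge vectors for one colliding group.

    `charges` holds the charges just before the collision. A particle may
    only keep its charge or become neutral (Definition 2.1(iii)).
    """
    net = sum(charges)
    outcomes = set()
    for keep in product((0, 1), repeat=len(charges)):
        after = tuple(b * k for b, k in zip(charges, keep))
        conserved = sum(after) == net                    # rule (20)
        separated = sum(abs(b) for b in after) <= 1      # assumed reading of Z_n
        if conserved and separated:
            outcomes.add(after)
    return sorted(outcomes)


if __name__ == "__main__":
    # Three particles with charges +, -, + colliding at one point.
    print(admissible_outcomes((1, -1, 1)))
```

For the group \(+,-,+\) the enumeration returns exactly the two labelings named in the remark: either the first or the third particle stays positive.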

To state the main result of this section, Theorem 2.4, on the well-posedness of (\(P_n\)) and properties of the solutions, we introduce several objects. First, given \(({\mathbf {x}}, {\mathbf {b}}) \in {\mathcal {Z}}_n\), we set \(d^+\) as the smallest distance between any two neighboring particles with positive charge. Analogously, we define \(d^-\) for the negatively charged particles. More precisely, we set \(m := \sum _{i=1}^n |b_i|\) as the number of charged particles, and take a permutation \(\sigma \in S_n\) such that \((x_{\sigma (1)}, \dots , x_{\sigma (m)})\) is the ordered list of all charged particles. Then,

Secondly, we recall from the introduction the (scaled and signed) moments of \({\mathbf {x}}\) given by

Then, using the map

we define the moment-distance

Lemma 2.3

The distance \(d_{\mathbf {M}}\) is a metric on \({\mathbb {R}}^n / S_n\) and \(d_{\mathbf {M}}\)-bounded sets are relatively compact. Moreover, if \({\mathbf {x}}_m \rightarrow {\mathbf {x}}\) in \(({\mathbb {R}}^n/S_n, d_{\mathbf {M}})\) then \({\mathbf {x}}_m \rightarrow {\mathbf {x}}\) on \({\mathbb {R}}^n /S_n\) with the Euclidean norm.

Proof

Positivity and the triangle inequality are immediate. The fact that \(d_{\mathbf {M}}({\mathbf {x}}, {\mathbf {y}}) = 0\) implies \( {\mathbf {x}}= {\mathbf {y}}\) follows from Newton’s identities. To see this, we write

using the symmetric polynomials

By Newton’s identities, the symmetric polynomials satisfy

In particular, \(d_{\mathbf {M}}({\mathbf {x}}, {\mathbf {y}}) = 0\) implies \({\mathbf {M}}({\mathbf {x}}) = {\mathbf {M}}({\mathbf {y}})\) and hence \(\prod _{i=1}^n (z - x_i) = \prod _{i=1}^n (z - y_i)\) for all \(z\in {\mathbb {R}}\). Therefore \({\mathbf {x}}= {\mathbf {y}}\) up to a reordering of the indices, i.e. \({\mathbf {x}}={\mathbf {y}}\) as elements of \({\mathbb {R}}^n/S_n\). It follows that \(d_{\mathbf {M}}\) is a metric on \({\mathbb {R}}^n / S_n\).

If \(d_{\mathbf {M}}({\mathbf {x}}_m, {\mathbf {x}}) \rightarrow 0\) then by Newton’s identities above we deduce that \(e_k({\mathbf {x}}_m) \rightarrow e_k({\mathbf {x}})\) for all \(1 \le k \le n\) and hence the polynomials \(\prod _{i=1}^n(z - {\mathbf {x}}_{m,i})\) converge locally uniformly to \(\prod _{i=1}^n(z - {\mathbf {x}}_i)\). We deduce that \({\mathbf {x}}_m \rightarrow {\mathbf {x}}\) in the Euclidean norm as elements of \({\mathbb {R}}^n/S_n\).

Finally, take a sequence \({\mathbf {x}}_m\) bounded in \(d_{\mathbf {M}}\), that is, for some \(R >0\) we have \(d_{\mathbf {M}}({\mathbf {x}}_m, 0) < R\) for all \(m \ge 1\). In particular, \(\Vert {\mathbf {x}}_m \Vert _2^2 = 2 M_2({\mathbf {x}}_m) < 2R\), and therefore \({\mathbf {x}}_m\) converges along a subsequence in \({\mathbb {R}}^n\) to some \({\mathbf {x}}\) in the Euclidean norm. Then, along the same subsequence, \(M_k({\mathbf {x}}_m) \rightarrow M_k({\mathbf {x}})\) for all \(k \ge 1\), and thus \(d_{\mathbf {M}}({\mathbf {x}}_m, {\mathbf {x}}) \rightarrow 0\). \(\square \)
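The role of Newton's identities in the proof can be illustrated numerically. The sketch below is an illustration under simplifying assumptions: it uses the plain power sums \(p_k = \sum _i x_i^k\) rather than the scaled and signed moments \(M_k\) of the paper, and the function names are hypothetical. It recovers the elementary symmetric polynomials \(e_k\) from the power sums via \(k e_k = \sum _{i=1}^k (-1)^{i-1} e_{k-i} p_i\), and then recovers the points as the roots of \(\prod _i (z - x_i)\).

```python
import numpy as np


def power_sums(x):
    """Return [p_1, ..., p_n] with p_k = sum_i x_i**k for n = len(x)."""
    n = len(x)
    return [float(sum(xi ** k for xi in x)) for k in range(1, n + 1)]


def elementary_from_power_sums(p):
    """Newton's identities: k * e_k = sum_{i=1}^k (-1)^(i-1) e_{k-i} p_i."""
    e = [1.0]  # e_0 = 1
    for k in range(1, len(p) + 1):
        s = sum((-1) ** (i - 1) * e[k - i] * p[i - 1] for i in range(1, k + 1))
        e.append(s / k)
    return e


def points_from_power_sums(p):
    """Recover the unordered points as roots of prod_i (z - x_i)."""
    e = elementary_from_power_sums(p)
    # prod_i (z - x_i) = sum_k (-1)^k e_k z^(n-k), highest degree first.
    coeffs = [(-1) ** k * e[k] for k in range(len(e))]
    return np.sort(np.roots(coeffs).real)


if __name__ == "__main__":
    x = [2.0, -1.0, 0.5]
    print(points_from_power_sums(power_sums(x)))  # sorted copy of x, up to rounding
```

The output is only determined up to ordering, which matches the statement that \(d_{\mathbf {M}}\) is a metric on the quotient \({\mathbb {R}}^n / S_n\) rather than on \({\mathbb {R}}^n\). Root finding is ill-conditioned near repeated roots, so the sketch is meant for well-separated points only.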

Theorem 2.4

(Properties of \((P_n)\)) Let \(n \ge 2\), \(T > 0\) and \(({\mathbf {x}}^\circ , {\mathbf {b}}^\circ ) \in {\mathcal {Z}}_n\). Then, \((P_n)\) has a solution \(({\mathbf {x}}, {\mathbf {b}})\) with initial datum \(({\mathbf {x}}^\circ , {\mathbf {b}}^\circ )\), according to Definition 2.1, that is unique modulo relabeling (see Remark 2.2). Moreover, setting S as the minimal set from Definition 2.1 for the solution \(({\mathbf {x}}, {\mathbf {b}})\), the following properties hold:

-

(i)

(\(d_{\mathbf {M}}\)-Lipschitz regularity). There exists a constant \(C_n > 0\) depending only on n and \({\mathbf {M}}({\mathbf {x}}^\circ )\) such that

$$\begin{aligned} d_{\mathbf {M}}({\mathbf {x}}(t), {\mathbf {x}}(s)) \le C_n|t-s| \qquad \text {for all } 0 \le s < t \le T; \end{aligned}$$

-

(ii)

(Lower bound on minimal distance between neighbors of equal sign).

$$\begin{aligned} d^\pm (t) \ge \sqrt{ \tfrac{8}{n^2 - 1} t + d^\pm (0)^2} \qquad \text {for all } t \in [0,T]; \end{aligned}$$

-

(iii)

(Lower bound on distance between any two neighbors). Let \(i < j\) be neighboring particles at time \(t_0 \ge 0\). Then

$$\begin{aligned} x_j(t) - x_i(t) \ge \sqrt{c_0^2 - 8 \tfrac{\log n + 1}{n} (t - t_0)}, \qquad c_0 := \min (d^+, d^-, x_j - x_i) (t_0) \end{aligned} \tag{22}$$

for all \(t \ge t_0\) for which the square root exists;

-

(iv)

(Upper bound at collision). For any \(\tau \in S\) and any i, there exists a \(C \ge 0\) such that

$$\begin{aligned} |x_i(t) - x_i(\tau )| \le C \sqrt{\tau - t} \quad \text {for all } t \in [0, \tau ]; \end{aligned}$$

-

(v)

(Lower bound at collision). For each annihilation point \((\tau , y)\), there exists a \(c > 0\) and indices i, j such that \(x_i(\tau ) = x_j(\tau ) = y\), \(b_i(\tau -) \ne 0\), \(b_j(\tau -) \ne 0\) and

$$\begin{aligned} x_i(t) - y&> c \sqrt{\tau - t} \\ x_j(t) - y&< -c \sqrt{\tau - t} \end{aligned}$$

for all \(t < \tau \) large enough;

-

(vi)

(Stability with respect to \({\mathbf {x}}^\circ \)). Let \(({\mathbf {x}}_m^\circ , {\mathbf {b}}^\circ ) \in {\mathcal {Z}}_n\) be such that \({\mathbf {x}}_m^\circ \rightarrow {\mathbf {x}}^\circ \) as \(m \rightarrow \infty \). Let \(({\mathbf {x}}_m, {\mathbf {b}}_m)\) be the solution of \((P_n)\) with initial data \(({\mathbf {x}}_m^\circ , {\mathbf {b}}^\circ )\). Then, \({\mathbf {x}}_m \rightarrow {\mathbf {x}}\) in \(C([0,T]; ({\mathbb {R}}^n / S_n, d_{\mathbf {M}}))\) and \({\mathbf {b}}_m \rightarrow {\mathbf {b}}\) locally uniformly on \([0, T] \setminus S\) as \(m \rightarrow \infty \).

Note that in Property (ii), equality is attained when n is odd, \(b_i^\circ = 1\) and \(x_i^\circ = i\) for all i. Moreover, as a direct consequence of Theorem 2.4(ii) we have the following result.

Corollary 2.5

(Multiple-particle collisions) Let \((\tau , y) \in S \times {\mathbb {R}}\) be an annihilation point, and let I be the corresponding indices:

Then prior to annihilation, the particles with index in I have charges of alternating sign. In particular,

Proof of Theorem 2.4

Uniqueness. Let \(({\mathbf {x}}, {\mathbf {b}})\) and \(({\hat{{\mathbf {x}}}}, {\hat{{\mathbf {b}}}})\) be two solutions with minimal sets of annihilation times \(S = \{\tau _1, \ldots , \tau _K\}\) and \({\hat{S}} = \{{\hat{\tau }}_1, \ldots , \hat{\tau }_{{\hat{K}}} \}\) respectively. By standard ODE theory and the minimality of S and \({\hat{S}}\), we obtain \({\hat{\tau }}_1 = \tau _1\) and \({\hat{{\mathbf {x}}}} |_{[0, \tau _1)} = {\mathbf {x}}|_{[0, \tau _1)}\). By continuity, \({\hat{{\mathbf {x}}}}(\tau _1) = {\mathbf {x}}(\tau _1)\). Hence, any annihilation point \((\tau _1, y)\) of \({\mathbf {x}}\) is also an annihilation point of \({\hat{{\mathbf {x}}}}\). Let \((\tau _1, y)\) be such an annihilation point, and let I be the related index set of the colliding particles (see (23)). Definition 2.1(iii) implies that

In addition, since \(({\mathbf {x}}, {\mathbf {b}}), \, ({\hat{{\mathbf {x}}}}, {\hat{{\mathbf {b}}}}) \in {\mathcal {Z}}_n\),

Hence, \(\{ {\hat{b}}_i(\tau ) \}_{i \in I}\) equals \(\{ b_i(\tau ) \}_{i \in I}\) up to a possible relabeling. Thus, the ODEs for \({\mathbf {x}}\) and \({\hat{{\mathbf {x}}}}\) are restarted with the same right-hand side. Iterating the argument above over all annihilation times in S, we obtain \({\hat{S}} = S\) and \(({\hat{{\mathbf {x}}}}, {\hat{{\mathbf {b}}}}) = ({\mathbf {x}}, {\mathbf {b}})\), modulo relabeling of the particles.

Existence and Properties (i),(ii). Standard ODE theory provides the existence of \({\mathbf {x}}\) up to the first time \(\tau _1\) at which either \({\mathbf {x}}(\tau _1-)\) does not exist, or \(({\mathbf {x}}(\tau _1-), {\mathbf {b}}^\circ ) \notin {\mathcal {Z}}_n\). It is sufficient to show that \({\mathbf {x}}(\tau _1-)\) exists, and that under Definition 2.1(iii) \({\mathbf {b}}(\tau _1)\) can be chosen such that \(({\mathbf {x}}, {\mathbf {b}})(\tau _1) \in {\mathcal {Z}}_n\). Indeed, if these two conditions are met, then \(({\mathbf {x}}, {\mathbf {b}})(\tau _1)\) is an admissible initial condition for \((P_n)\), and further annihilation times \(\tau _k\) are found and treated by induction.

To prove these two conditions, we set \(\tau := \tau _1\), and note that it is sufficient to prove Properties (i) and (ii) both with T replaced by \(\tau \). Indeed, Property (i) implies that \({\mathbf {x}}(\tau _1-) \in {\mathbb {R}}^n\) exists. Then, Property (ii) implies Corollary 2.5, which gives enough information to construct \({\mathbf {b}}(\tau )\) such that \(({\mathbf {x}}, {\mathbf {b}})(\tau _1) \in {\mathcal {Z}}_n\).

Property (i) with T replaced by \(\tau \). For any integer \(k \ge 0\) we compute

on \((0, \tau )\). For \(k = 0\), the right-hand side vanishes, and thus \(M_1({\mathbf {x}}(t)) = M_1({\mathbf {x}}^\circ )\) is constant in t. For \(k = 1\), we observe that

which is constant and bounded from above by \((n-1)/2\). Hence,

For \(k \ge 2\),

To bound the right-hand side, note that for even \(\ell \),

and for odd \(\ell \)

Hence, \(| \frac{\mathrm{d}}{\mathrm{d}t} M_{k+1} ({\mathbf {x}}) |\) is bounded on \((0,\tau )\) in terms of \(f_k(M_1 ({\mathbf {x}}), \ldots , M_k ({\mathbf {x}}) )\) for some \(f_k \in C({\mathbb {R}}^k)\). The result follows from induction over k by integrating \(\frac{\mathrm{d}}{\mathrm{d}t} M_{k+1} ({\mathbf {x}})\) from 0 to \(\tau \).

Property (ii) with T replaced by \(\tau := \tau _1\). We prove Property (ii) for \(d^+\); the proof for \(d^-\) is analogous. For convenience, we assume that there are no neutral particles. Since \({\mathbf {x}}\) is a solution to \((P_n)\), we obtain from the definition of \(d^+\) in (21) that \(d^+\) as a function on \([0, \tau )\) is positive, locally Lipschitz continuous and hence differentiable almost everywhere.

Let \(t \in (0, \tau )\) be a point of differentiability of \(d^+\), and let \(x_i(t)\) and \(x_{i+1}(t)\) be particles for which the minimum in (21) is attained. Then, \((x_{i+1} - x_i)(t) = d^+(t)\), and at time t,

Next we bound the sum in (27) from below. For convenience, we focus on the part corresponding to \(j > i+1\). The idea is to remove certain positive terms from the summation such that the remaining indices in the sum correspond to negative contributions of positively charged particles which are all separated by a distance no smaller than \(d^+\).

We remove indices (particles) in two consecutive steps. In the first step, we apply the following rule for all \(j = i+2, \ldots , n-1\). If \(b_j = -1\) and \(b_{j+1} = 1\), then we remove both j and \(j+1\) from the summation. Note that the joint contribution of j and \(j+1\) to the sum is \(-b_j \gamma _j - b_{j+1} \gamma _{j+1} = \gamma _j - \gamma _{j+1}\), which is positive since \(\gamma _k\) is decreasing in k. The second step is simply to remove all remaining negatively charged particles \(x_j\). Since \(\gamma _k > 0\), each such particle provides a positive contribution \(\gamma _j > 0\) to the summation.
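The two-step removal rule just described can be checked by brute force on small charge configurations. The sketch below is an illustration only: it uses 0-based indices, assumes that there are no neutral particles (as in the proof), and the function name `surviving_indices` is hypothetical. It applies both steps to the indices to the right of a neighboring positive pair and returns the indices that stay in the summation.

```python
from itertools import product


def surviving_indices(b, i):
    """Apply the two-step removal rule to the indices j > i + 1.

    `b` is a list of charges in {-1, +1}, ordered from left to right, and
    `i` is a 0-based index with b[i] == b[i + 1] == 1.
    """
    n = len(b)
    removed = set()
    # Step 1: remove every adjacent pair (j, j + 1) with charges (-1, +1).
    for j in range(i + 2, n - 1):
        if b[j] == -1 and b[j + 1] == 1:
            removed.update((j, j + 1))
    # Step 2: remove all remaining negatively charged particles.
    for j in range(i + 2, n):
        if b[j] == -1:
            removed.add(j)
    return [j for j in range(i + 2, n) if j not in removed]


if __name__ == "__main__":
    # Charges + + - + + - - + : only index 4 survives to the right of the pair (0, 1).
    print(surviving_indices([1, 1, -1, 1, 1, -1, -1, 1], 0))
```

Every surviving index has a positive charge and a positively charged left neighbor in the original labeling, which is the property the lower bound relies on.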

After applying this rule for removing indices from the summation (but keeping the original labeling of the particles), all remaining indices j satisfy \(b_j = b_{j-1} = 1\), and thus all the corresponding particles are separated by a distance no smaller than \(d^+\). This yields the following lower bound (assuming n is even for convenience):

Inserting this lower bound in (27), we obtain

When n is odd, a similar computation yields

and thus

Since (29) holds for any \(n \ge 2\) and at any point of differentiability t, and since \(d^+\) is locally Lipschitz, we obtain by integrating from 0 to any \(t \in (0,\tau )\) that

This proves Property (ii) for \(t < \tau = \tau _1\).

A similar argument establishes Property (ii) for \(t\in [\tau _1,\tau _2)\), provided we show that \(d^+(\tau _1) \ge d^+(\tau _1-)\), which we do now. Let the indices \(i < k\) be such that \(x_{k}(\tau _1) - x_i(\tau _1)\) is a minimizer for the set in (21) at \(\tau _1\), i.e.,

-

\(d^+(\tau _1) = x_k(\tau _1)-x_i(\tau _1)\);

-

\(b_k(\tau _1) = b_i(\tau _1) = 1\);

-

\(x_i(\tau _1)\) and \(x_k(\tau _1)\) are neighbors.

Since \(x_i(\tau _1)\) and \(x_k(\tau _1)\) are neighbors, \(\sum _{j=i+1}^{k-1} b_j(\tau _1) = 0\), and (20) implies that \(\sum _{j=i+1}^{k-1} b_j(\tau _1-) = 0\). Therefore \(\sum _{j=i}^{k} b_j(\tau _1-) = 2\), and since all \(b_j(\tau _1-)\) in this sum are either \(+1\) or \(-1\), there exists \(j \in \{i, \ldots , k-1\}\) such that \(b_j(\tau _1-) = b_{j+1}(\tau _1-) = 1\). Hence,

This completes both the proof for the existence of the solution \(({\mathbf {x}}, {\mathbf {b}})\) to \((P_n)\) up to time T, and the proof of Properties (i),(ii) up to time T.
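The combinatorial step used in the argument for \(d^+(\tau _1) \ge d^+(\tau _1-)\) states that a finite sequence of charges in \(\{-1, +1\}\) whose sum equals 2 must contain two adjacent entries equal to \(+1\). The reason is that a sequence without adjacent positive entries has at most one more positive than negative entry, so its sum is at most 1. As a sanity check, the following sketch verifies the claim exhaustively for short sequences; the function names are hypothetical.

```python
from itertools import product


def has_adjacent_plus_pair(b):
    """True if some consecutive entries of b are both +1."""
    return any(b[j] == 1 and b[j + 1] == 1 for j in range(len(b) - 1))


def claim_holds(max_len):
    """Check all +-1 sequences of length 2..max_len whose sum equals 2."""
    for length in range(2, max_len + 1):
        for b in product((-1, 1), repeat=length):
            if sum(b) == 2 and not has_adjacent_plus_pair(b):
                return False
    return True


if __name__ == "__main__":
    print(claim_holds(12))
```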

Property (iii). For convenience, we assume that at \(t_0\) all particles are charged (this implies \(j = i+1\)) and that \(b_i(t_0) = 1\). Setting \(d := x_{i+1} - x_i\), we write

To estimate the right-hand side from above, we use the technique in the proof of Property (ii) to remove from the first sum a certain number of particles such that all remaining particles have positive charge and are separated by a distance \(d^+\). Using the same technique also for the second sum, we obtain

where \(d^= := \min (d^+, d^-)\). Since this upper bound is positive, it includes the scenario in which \(x_i\) annihilates with \(x_{i-1}\). A symmetric argument yields

Therefore,

As \(d^=\) is nondecreasing due to Property (ii), by comparison with \(\frac{\mathrm{d} e}{\mathrm{d}t} = -4/e\) with initial datum \(e(t_0) = \min (d^=(t_0), d(t_0))\), we deduce (22).

Property (iv). Note that it is sufficient to prove Property (iv) only for all t from the last annihilation time \(\tau _0\) prior to \(\tau \) (we set \(\tau _0 = 0\) if \(\tau \) is the first annihilation time) up to \(\tau \). Indeed, we can otherwise iterate backwards in time over the finitely many annihilation times, and use the continuity of \(x_i\) and the bound in Property (iv) at each annihilation time to capture the resulting curve in a new parabola.

Next, we prove Property (iv) for all \(t \in (\tau _0, \tau )\) and any i. We note that on this interval, \({\mathbf {b}}\) is constant, and \({\mathbf {x}}\) satisfies the ODE in (\(P_n\)). If \(b_i = 0\), then Property (iv) is satisfied with \(C = 0\). If \(b_i \ne 0\) and \(x_i\) does not collide at \(t = \tau \), then the right-hand side in (\(P_n\)) is bounded at \(\tau \). By the continuity of \({\mathbf {x}}\), it is also bounded in a neighborhood around \(\tau \). Hence, \(x_i\) is Lipschitz continuous in this neighborhood, which is sufficient to construct a C for which Property (iv) is satisfied.

The delicate case is when \(x_i\) collides with other particles at \(\tau \). To avoid relabeling, we assume that there are no neutral particles up to time \(\tau \). Let I as in (23) be the index set of all particles that collide with \(x_i\) at \(\tau \), including i itself. We use the translation invariance to assume that \(x_i(\tau ) = 0\). We can split the right-hand side of the ODE in (\(P_n\)) as

where

By the definition of I, the continuity of \({\mathbf {x}}\) and \(({\mathbf {x}}, {\mathbf {b}}) \in {\mathcal {Z}}_n\), we have

Using c, we obtain the bound

which is independent of t.

Next we inspect the second moment of the colliding particles, which we define by

By definition \(M(\tau ) = 0\). A computation similar to (24) and (25) yields

where \(B \in {\mathbb {R}}\) is a constant and \(R = R(t)\) is a remainder term. A similar computation as in (25) shows that

To bound R(t), we use that \(M(t) \rightarrow 0\) as \(t \nearrow \tau \) to get

Therefore for all \(t < \tau \) sufficiently large we have

From this and \(M(\tau ) = 0\) we deduce that

which completes the proof of Property (iv).

Property (v). We translate coordinates such that \(y=0\), and consider the computation and notation in the proof of Property (iv) for the colliding particles. In addition, we may assume that \(\tau \) is the first collision time. Set \(k := \min I\) and \(\ell := \max I\); we will construct \(c' > 0\) such that for all \(t < \tau \) large enough

From (31) we have for \(t < \tau \) large enough

We conclude that there exists \(c > 0\) such that for all \(t < \tau \) large enough

To show that both cases have to hold, we inspect the first moment \(m(t) := \sum _{ i \in I } x_i(t)\). Similar to (24) we compute

where \(F_i \in C([0, \tau ])\). Hence, \(m \in C^1([0,\tau ])\). Since \(m(\tau ) = 0\), there exists \(C > 0\) such that

Next we show that each of the two inequalities in (32) implies the other. Suppose that \(x_\ell (t) \ge c \sqrt{ \tau -t }\) holds for some \(t < \tau \) large enough (to be specified later). Then,

Rearranging terms and changing constants,

Hence, for t large enough, the upper bound on \(x_k\) in (32) holds. Similarly, it follows that the upper bound on \(x_k\) implies the lower bound on \(x_\ell \).

Property (vi). Using Property (i) and Lemma 2.3, Ascoli–Arzelà gives a subsequence m (not relabeled) and an \({\tilde{{\mathbf {x}}}} : [0,T] \rightarrow {\mathbb {R}}^n\) for which \({\mathbf {x}}_{m} \rightarrow {\tilde{{\mathbf {x}}}}\) in \(C([0,T]; ({\mathbb {R}}^n / S_n, d_{\mathbf {M}}))\) as \(m \rightarrow \infty \), and in particular \({\mathbf {x}}_m(t) \rightarrow {\tilde{{\mathbf {x}}}} (t)\) pointwise in the Euclidean norm as elements of \({\mathbb {R}}^n/S_n\). By uniqueness of solutions to (\(P_n\)), it is then sufficient to show that \(({\tilde{{\mathbf {x}}}}, {\mathbf {b}})\) is a solution to (\(P_n\)).

We start by proving that \(({\tilde{{\mathbf {x}}}}, {\mathbf {b}})\) is a solution to (\(P_n\)) up to the first collision time \(\tau \) of the limit \({\tilde{{\mathbf {x}}}}\). Let \(\delta \in (0, \tau )\). Passing to the limit \(m \rightarrow \infty \) in the weak version of the ODE (testing against \(\varphi \in C^1([0,\tau - \delta ])\)), we obtain that \({\tilde{{\mathbf {x}}}}\) satisfies the weak version of the ODE on \([0,\tau - \delta ]\). Since \(\delta \) is arbitrary, \(({\tilde{{\mathbf {x}}}}, {\mathbf {b}})\) satisfies the ODE on \((0, \tau )\). Moreover, by the continuity of \({\tilde{{\mathbf {x}}}}\),

Next we claim that for all \(\delta \) small enough there exists \(m_0 > 0\) such that for all \(m \ge m_0\)

From this claim, the argument above applies again to pass to the limit \(m \rightarrow \infty \) in the weak form of the ODE on any compact subinterval of \((\tau , \tau _2)\), where \(\tau _2\) is the second collision time of \({\mathbf {x}}\). This yields that \(({\tilde{{\mathbf {x}}}}, {\mathbf {b}})\) satisfies the ODE on \((\tau , \tau _2)\), and by the continuity of \({\tilde{{\mathbf {x}}}}\) we get

Property (vi) follows by iterating over the annihilation times of \({\mathbf {x}}\).

It is left to prove the claim (34). The idea of the argument is to localize around any annihilation point at \(\tau \). With this aim, we fix any \(i \in \{1,\ldots ,n\}\), and take I as the index set of particles \({\tilde{x}}_j\) which collide with \(\tilde{x}_i\) at \(\tau \), including i itself. We allow for \(I = \{i\}\), in which case \({\tilde{x}}_i\) does not collide with any other particle at \(\tau \). From (33) we infer that \((\tilde{\mathbf {x}}(\tau ), {\mathbf {b}}(\tau )) \in {\mathcal {Z}}_n\), and thus any two particles \(\tilde{x}_j\) and \({\tilde{x}}_k\) with \(j \in I\) and \(k \notin I\) at time \(\tau \) are separated by a distance of at least

Then, since \({\tilde{{\mathbf {x}}}}\) is continuous, a similar separation distance remains in effect over the time interval \([\tau - \delta , \tau + \delta ]\) for all \(\delta \) small enough, i.e.,

We illustrate the geometric interpretation of \(\rho \) and \(\delta \) in Fig. 4. For later use, we will take \(\delta \) small enough so that

Next we construct \(m_0\). First, a separation condition similar to (35) remains in effect for the particles \({\mathbf {x}}_m\) when m is large enough. Indeed, by the pointwise convergence of \({\mathbf {x}}_m\) to \({\tilde{{\mathbf {x}}}}\) in \({\mathbb {R}}^n / S_n\) as \(m \rightarrow \infty \), it follows from (35) by the triangle inequality that for all \(t \in [\tau - \delta , \tau + \delta ]\) there exists \(m_0 > 0\) such that for all \(m \ge m_0\)

Then, by Property (iii), this separation condition is uniform in time, i.e., there exists \(m_0 > 0\) such that for all \(m \ge m_0\)

Second, by (33) and the fact that the trajectories of \({\mathbf {x}}\) over \([0, \tau - \delta ]\) do not intersect, it follows again from the pointwise convergence of \({\mathbf {x}}_m\) to \(\tilde{\mathbf {x}}\) and Property (iii) that for m large enough no particles \(x_{m,j}\) with \(j \in I\) collide before time \(\tau - \delta \), i.e., by taking \(m_0\) larger if necessary,

for all \(j \in I\) and all \(m \ge m_0\). Third, for later use, we take \(m_0\) larger if necessary to ensure that for all \(m \ge m_0\)

Take \(m \ge m_0\) arbitrary. To prove (34) it is enough to show that at most one particle \(x_{m,j}\) with \(j \in I\) is charged at time \(\tau + \delta \), i.e.,

Indeed, from Corollary 2.5 it follows that also at most one particle \(x_j\) with \(j \in I\) is charged at time \(\tau + \delta \). Then, by the conservation of charge at collisions (see Definition 2.1(iii)) and (38) we obtain

and the claim in (34) follows.

Fig. 4. Sketch of the geometric interpretation of \(\rho \) and \(\delta \) for the localization of the trajectories of \(x_i\) in Fig. 1, zoomed in around \(\tau \).

To prove (40), we set

as the index set of charged particles \(x_{m,j}\) at time t with \(j \in I\), we define

as the maximal distance between any two particles with indices in \(I_m(t)\), and we prove that

so that in particular \(D_m(\tau + \delta ) = 0\), which implies (40).

By (39), the claim (41) holds at \(t = \tau \). To prove (41) beyond \(\tau \), we may assume that \(|I_m (\tau )| \ge 2\). For convenience, we relabel the particles so that \(I_m (\tau ) = \{k, k+1, \ldots , \ell \}\). We treat the case where \(b_{m,k}(\tau ) = 1\); the other case can be treated analogously. Let \(S_m\) be the set of annihilation times of \(\{ x_{m,j} : j \in I_m (\tau ) \}\), and set \(\tau _m = \min S_m\). It is sufficient to show that

Since (42a) is obvious, we focus on proving (42b). We first consider the case where |I| is even. In this case, \(|I_m (\tau )|\) is also even, and we find on \((\tau , \tau _m)\) that

where \(F_{m,k}\) is as in (30). We bound \(|F_{m,k}|\) as in the proof of Property (iv). Since any particle \(x_{m,j}(t)\) with index \(j < k\) or \(j > \ell \) satisfies either \(b_{m,j}(t) = 0\) or \(j \notin I\), we obtain from (37) that

For the first term on the right-hand side of (43), we infer from Corollary 2.5 that \(b_{m,j}(\tau ) = (-1)^{j-k}\) for all \(j = k, \ldots , \ell \). Hence, on \((\tau , \tau _m)\),

where in the last inequality we have used the ordering of the particles \(\{ x_{m,j} : j \in I_m (\tau ) \}\). Collecting these findings in (43), we obtain

Similarly, one can derive that \(\mathrm {d}x_{m, \ell }/\mathrm {d}t\le 3/\rho - 1/(n D_m)\) on \((\tau , \tau _m)\). Hence, \(\mathrm {d}D_m/\mathrm {d}t\le 6/\rho - 2/(n D_m)\), which by (36) and \(D_m(\tau ) < \delta \) implies (42b) on \((\tau , \tau _m)\). In fact, the estimates above show that outside of \(S_m\), \(\mathrm {d}D_m/\mathrm {d}t \le -1\) as long as \(|I_m (t)| \ge 2\), i.e., \(D_m(t) > 0\). This completes the proof of (42) for when |I| is even.

If |I| is odd, a similar argument applies. The only difference is that in (44) we need to be more precise in the estimates:

This concludes the proof of (41). Finally, the proof of (34) follows by repeating the construction of \(\delta \) small enough and \(m_0\) for each \(i \in \{1,\ldots ,n\}\), and then taking the minimum and maximum value, respectively, over i. \(\square \)

3 The Hamilton–Jacobi Equations

In this section we introduce the notion of viscosity solutions for the Hamilton–Jacobi equation (18) describing the particle system, as well as the limit equation (9).

Let us expand on the brief introduction of viscosity solutions at the end of Section 1.3. Viscosity solutions are the natural generalized notion of solutions for this type of nonlocal Hamilton–Jacobi equations due to their comparison principle structure. The classical theory for local Hamilton–Jacobi equations goes back to the work of Crandall and Lions [10]; see [9, 22] for the standard treatment of the theory and references. The general idea of viscosity solutions is to use the comparison principle as the defining property. We first identify a sufficiently large class of functions, called test functions, for which the property of locally being (strict) subsolutions or supersolutions has a classical meaning. We then require that a candidate viscosity solution satisfies a comparison principle with all such classical strict subsolutions and supersolutions. For the standard first-order and second-order partial differential equations one can choose smooth functions or even second order polynomials as the class of test functions; there is no unique choice. For more singular equations, it might be necessary to restrict the class of the test functions to be able to give the operator a classical meaning, but the choice of test functions may be subtle; choosing too few could make the comparison principle fail, whereas choosing too many could make the proof of existence or stability of solutions more challenging. This idea of restricting the class of test functions depending on the operator first appeared in [34] in the context of level set equations and in [40] for more general singular equations.

3.1 Notation

Throughout this section, we set \(Q := (0,\infty )\times {\mathbb {R}}\) and \(Q_T := (0,T) \times {\mathbb {R}}\). For any \(\varepsilon > 0\), the staircase function \(E_\varepsilon \) was already defined in (17). For a function \(f : {\mathbb {R}}^d \rightarrow {\mathbb {R}}\), we set \(f_*, f^*\) to be its lower/upper semi-continuous envelope over all its variables. For example,

Finally, BUC is the space of bounded uniformly continuous functions, \(C_b\) is the space of bounded continuous functions and \(C_b^2\) is the space of bounded twice continuously differentiable functions: \(C_b = C \cap L^\infty \) and \(C_b^2 = C^2 \cap L^\infty \).

3.2 Hamilton–Jacobi Equation at \(\varepsilon >0\)

With \(\varepsilon := \frac{1}{n}\) we rewrite the Hamilton–Jacobi equation in (18) as

with initial condition

where the nonlocal operator \({\mathcal {M}}_\varepsilon \) is formally defined as

In this section we switch the parameters n and \(\varepsilon \). Given any \(\varepsilon > 0\), the level sets of \(u^\circ \) at \(\varepsilon {\mathbb {Z}}\) determine \(({\mathbf {x}}^\circ , {\mathbf {b}}^\circ )\), which in particular prescribes the initial number \(n_\varepsilon \) of charged particles. Then, by the same formal arguments as in the introduction, it readily follows that the related ODE is

Before constructing a rigorous definition of (HJ\(_\varepsilon \)), we provide an explicit example of the expected solution to (HJ\(_\varepsilon \)) for simple choices of the initial condition \(u^\circ \).

Example 3.1

For any \(\varepsilon > 0\) and smooth even initial data \(u^\circ \) which is strictly decreasing in \([0, \infty )\) and satisfies \(\sup u^\circ - \inf u^\circ \le \varepsilon \), one can check that the function \(u(t,x) = u^\circ (\sqrt{x^2 + \varepsilon t})\) satisfies (HJ\(_\varepsilon \)) at all points (t, x) where \(\partial _x u(t,x) \ne 0\), i.e. at points \(x \ne 0\). As a concrete example, consider \(u^\circ (x) := \varepsilon /(x^2 + 1)\) that yields the solution \(u(t,x) = \varepsilon /(x^2 + \varepsilon t + 1)\). After giving a rigorous definition to (HJ\(_\varepsilon \)), it turns out to be the unique viscosity solution with the initial data \(u^\circ \). While it is not difficult to verify this, we do not provide the details, and refer instead to the proof of Lemma 4.2 for a possible procedure.

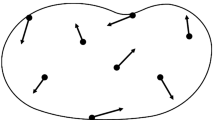

Instead, we focus on how the formula for u(t, x) can be deduced from the particle system (\(P_n\)) with time-rescaling factor \(\varepsilon n\) by using the method of characteristics. The initial data \(u^\circ \) describes a continuum of two-particle systems parametrized by their initial position \(0< a < \infty \): a particle of charge \(+1\) located at \(x_1^{a\circ } = -a\) and a particle of charge \(-1\) located at \(x_2^{a\circ } = a\). The unique solution of the rescaled (\(P_n\)) up to the annihilation time \(\tau _1 = a^2/\varepsilon \) is \(x_1^a(t) = -\sqrt{a^2 - \varepsilon t}\), with \(x_2^a(t) = -x_1^a(t)\). Note that the parameter a generates a foliation by trajectories of the half-plane \(\{(t, x) : t > 0\}\); see Fig. 5. As u is constant along the trajectories of the particles, \(u(t, x_i^a(t)) = u^\circ (x_i^{a\circ })\), where \(a = a(t,x) = \sqrt{x^2 + \varepsilon t}\) is the unique parameter so that the point (t, x) lies on either of the trajectories \(x_1^a\) or \(x_2^a\).
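The characteristic picture can be checked numerically for the concrete initial datum \(u^\circ (x) = \varepsilon /(x^2 + 1)\) of Example 3.1. The sketch below only uses the formulas stated in the example; the function names and the sampling of times are illustrative choices. It evaluates \(u(t,x) = u^\circ (\sqrt{x^2 + \varepsilon t})\) along the trajectory \(x_1^a(t) = -\sqrt{a^2 - \varepsilon t}\) and measures how far the values drift from \(u^\circ (-a)\).

```python
import math


def u0(x, eps):
    """Initial datum u0(x) = eps / (x^2 + 1) from Example 3.1."""
    return eps / (x * x + 1.0)


def u(t, x, eps):
    """Candidate solution u(t, x) = u0(sqrt(x^2 + eps * t))."""
    return u0(math.sqrt(x * x + eps * t), eps)


def trajectory(a, t, eps):
    """Left particle x_1^a(t) = -sqrt(a^2 - eps * t), for 0 <= t <= a^2 / eps."""
    # max(..., 0.0) guards against rounding at the annihilation time.
    return -math.sqrt(max(a * a - eps * t, 0.0))


def max_drift_along_trajectory(a, eps, steps=50):
    """Largest deviation of u along the trajectory from its initial value."""
    tau = a * a / eps  # annihilation time
    ref = u0(-a, eps)
    times = [tau * k / steps for k in range(steps + 1)]
    return max(abs(u(t, trajectory(a, t, eps), eps) - ref) for t in times)


if __name__ == "__main__":
    print(max_drift_along_trajectory(a=1.5, eps=0.1))  # zero up to rounding
```

The same trajectory also illustrates the square-root behavior in Theorem 2.4(iv) and (v): since \(a^2 = \varepsilon \tau _1\), one has \(|x_1^a(t)| = \sqrt{\varepsilon } \sqrt{\tau _1 - t}\).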

We continue with constructing a rigorous definition for (HJ\(_\varepsilon \)). The difference with the setting in [18] is that we consider the kernel \(z^{-2}\) in the definition of \({\mathcal {M}}_\varepsilon \), whereas [18] considers an integrable one. As a result, the integral in the definition of \({\mathcal {M}}_\varepsilon [w] (x)\) does not converge as a Lebesgue integral if \(\partial _x w (x) = 0\) due to the singularity of \(z^{-2}\) at 0.

We construct a proper replacement for \({\mathcal {M}}_\varepsilon [u] |u_x|\) in two steps. In the first step, we follow the idea in the works of Sayah [44], Imbert, Monneau and Rouy [33] and Jakobsen and Karlsen [35] to replace u in the integral of \({\mathcal {M}}_\varepsilon [u]\) by a test function \(\phi \) on the range \(-\rho< z < \rho \), where \(\rho > 0\) is a (small) parameter. The test function \(\phi \) will later be taken as the same test functions used in the definition of viscosity solutions. It will also turn out that the notion of viscosity solutions which follows is independent of the choice of \(\rho \).

Definition 3.2

(Hamiltonians at \(\varepsilon >0\)) Fix \(\rho >0\), \(u \in L^\infty ({\mathbb {R}}\times [0,\infty ))\), \(t > 0\), \(x\in {\mathbb {R}}\) and \(\phi \in C^2_b(B_\delta (t) \times B_\rho (x))\) for some \(\delta > 0\). If \(\phi _x(t,x) \ne 0\), we define

where

The Hamiltonians \(\overline{H}_{\rho ,\varepsilon }\) and \({\underline{H}}_{\rho ,\varepsilon }\) will replace the right-hand side of (HJ\(_\varepsilon \)) in the definition of the viscosity subsolution and supersolution. Since the Hamiltonians are discontinuous, we need to choose the one with the correct semi-continuity to be able to pass to various limits.

The next lemma shows that the expressions \(\overline{H}_{\rho ,\varepsilon }\) and \({\underline{H}}_{\rho ,\varepsilon }\) above are well-defined.

Lemma 3.3

Let \(\varepsilon \), \(\rho \), u, t, x and \(\phi \) be as in Definition 3.2. Then, dropping the dependence on t,

(i) \(\exists \, \rho _0 > 0 \, \forall \, {\tilde{\rho }} \in (0, \rho _0] : \displaystyle \mathrm {pv}\int _{B_{{\tilde{\rho }}}} E_\varepsilon ^*\big [ \phi (x+z)-\phi (x)\big ] \frac{\mathrm{d}z}{z^2} = 0\);

(ii) \(\displaystyle \bigg | \int _{B_\rho ^c} E_\varepsilon ^*\big [ u(x+z)-u(x)\big ] \frac{\mathrm{d}z}{z^2} \bigg | \le \frac{ 4 \Vert u\Vert _\infty + \varepsilon }{\rho }\).

The above two properties also hold when \(E_\varepsilon ^*\) is replaced with \(E_{\varepsilon ,*}\).

Proof

Let \(\varepsilon \), \(\rho \), u, t, x, \(\phi \) be given. Property (ii) follows by simply using \(| E_\varepsilon ^*[\alpha ] | \le |\alpha | + \varepsilon /2\). For Property (i), we assume for convenience that \(\phi '(x) > 0\). Since \(\phi \in C^1({\mathbb {R}})\), there exists \(\rho _0 > 0\) such that

Hence,

Property (i) follows.

The proof for \(E_{\varepsilon ,*}\) is analogous. \(\square \)
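For concreteness, the estimate behind Property (ii) in the proof above can be written out as follows. This is a sketch that uses only the bound \(| E_\varepsilon ^*[\alpha ] | \le |\alpha | + \varepsilon /2\) quoted in the proof, the elementary bound \(|u(x+z) - u(x)| \le 2 \Vert u\Vert _\infty \), and the integral of \(z^{-2}\) over \(B_\rho ^c\):

$$\begin{aligned} \bigg | \int _{B_\rho ^c} E_\varepsilon ^*\big [ u(x+z)-u(x)\big ] \frac{\mathrm{d}z}{z^2} \bigg | \le \Big ( 2 \Vert u\Vert _\infty + \frac{\varepsilon }{2} \Big ) \int _{B_\rho ^c} \frac{\mathrm{d}z}{z^2} = \Big ( 2 \Vert u\Vert _\infty + \frac{\varepsilon }{2} \Big ) \frac{2}{\rho } = \frac{ 4 \Vert u\Vert _\infty + \varepsilon }{\rho }. \end{aligned}$$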

Even after replacing \({\mathcal {M}}_\varepsilon [u] |u_x|\) by either \(\overline{H}_{\rho ,\varepsilon } [\phi ,u]\) or \({\underline{H}}_{\rho ,\varepsilon } [\phi ,u]\) for a regular test function \(\phi \), we still require \(\phi _x(t,x) \ne 0\) in Definition 3.2 for the Hamiltonians. This is the key difference with [33], where the corresponding integral is defined for any smooth \(\phi \). Here, the requirement \(\phi _x(t,x) \ne 0\) is more than a technical issue; at annihilation points of the particle system we necessarily have \(\partial _x v = 0\), and thus we require at least some test functions with \(\phi _x(t,x) = 0\). This issue is avoided in Slepčev [48] and Forcadel, Imbert and Monneau [18] by replacing the singular kernel \(1/z^2\) in the operator \({\mathcal {M}}_\varepsilon \) in (18) by a smooth one. In that case, the parameter \(\rho \) need not be introduced. However, in our case, regularizing the kernel breaks the connection with the particle system (\(P_n\)); we therefore take a different approach.

This brings us to step 2 of the construction of a rigorous definition of (HJ\(_\varepsilon \)). In this step we reduce the class of all regular enough test functions. This idea is briefly addressed in the introduction and at the start of Section 3. We recall that we may remove as many test functions as necessary as long as we can still prove a comparison principle. While Definition 3.2 suggests removing those with \(\phi _x(\overline{t}, \overline{x}) = 0\) at the test point \((\overline{t}, \overline{x})\), the discussion in the previous paragraph demonstrates that this would remove too many test functions. Hence, we need to appropriately extend the Hamiltonian \(\overline{H}_{\rho ,\varepsilon }\) (and \({\underline{H}}_{\rho ,\varepsilon }\)) at least for some functions \(\phi \) with \(\partial _x \phi (\overline{t}, \overline{x}) = 0\). Since \(|\partial _x \phi (\overline{t}, \overline{x})| = 0\), a natural extension is to define \(\overline{H}_{\rho ,\varepsilon } [\phi , u] (\overline{t}, \overline{x}) = 0\). Since pairs of annihilating particles move along parabolas (see Theorem 2.4(iv), (v)), there are smooth functions \(\phi \) for which \(\partial _t \phi (\overline{t}, \overline{x})\) can have either sign (see Example 3.1), which does not fit (HJ\(_\varepsilon \)) with \(\overline{H}_{\rho ,\varepsilon } [\phi , u] (\overline{t}, \overline{x}) = 0\). To remove such functions from the class of test functions, we require \(\partial _{xx} \phi (\overline{t}, \overline{x}) = 0\). Our proof of the comparison principle allows us to go even further; we restrict the subclass of regular test functions with \(\phi _x(\overline{t}, \overline{x}) = 0\) to functions of fourth-order growth with the specific form \(c (x - \overline{x})^4 + g(t)\). This restriction is helpful later on (see Lemma 4.2) in the proof of Theorem B where we show that the solution of the system of ODEs given by (\(P_n\)) translates to a solution of (HJ\(_\varepsilon \)).
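To make the last restriction concrete, here is a direct computation for a test function of the specific form \(\phi (t,x) = c (x - \overline{x})^4 + g(t)\), assuming for illustration that g is differentiable:

$$\begin{aligned} \phi _x(t,x) = 4 c (x - \overline{x})^3, \qquad \phi _{xx}(t,x) = 12 c (x - \overline{x})^2, \qquad \phi _t(t,x) = g'(t). \end{aligned}$$

In particular, \(\phi _x(\overline{t}, \overline{x}) = \phi _{xx}(\overline{t}, \overline{x}) = 0\), so such functions satisfy both requirements above, and at the test point the only remaining information is the sign of \(g'(\overline{t})\).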

Definition 3.4

(\(\rho \)-sub- and \(\rho \)-supersolutions for \(\varepsilon >0\)) Let \(\rho , \varepsilon > 0\).

- Let \(u:Q \rightarrow {\mathbb {R}}\) be upper semi-continuous and bounded. The function u is a \(\rho \)-subsolution of (HJ\(_\varepsilon \)) in Q if the following holds: whenever \(\phi \in C^2(Q)\) is such that \(u-\phi \) has a global maximum at \((\overline{t}, \overline{x})\), we have

$$\begin{aligned} \phi _t(\overline{t}, \overline{x}) \le {\left\{ \begin{array}{ll} \overline{H}_{\rho ,\varepsilon }[\phi ,u](\overline{t}, \overline{x})&{} \text { if }\phi _x(\overline{t}, \overline{x}) \ne 0,\\ 0 &{} \text { if }\phi ( t, x) \text { is of the form } c |x - \overline{x}|^4 + g(t),\\ +\infty &{} \text {otherwise}. \end{array}\right. } \end{aligned}$$

- Let \(v:Q \rightarrow {\mathbb {R}}\) be lower semi-continuous and bounded. The function v is a \(\rho \)-supersolution of (HJ\(_\varepsilon \)) in Q if the following holds: whenever \(\psi \in C^2(Q)\) is such that \(v-\psi \) has a global minimum at \((\overline{t}, \overline{x})\), we have

$$\begin{aligned} \psi _t(\overline{t}, \overline{x}) \ge {\left\{ \begin{array}{ll} {\underline{H}}_{\rho , \varepsilon }[\psi ,v](\overline{t}, \overline{x})&{} \text { if }\psi _x(\overline{t}, \overline{x}) \ne 0,\\ 0 &{} \text { if }\psi ( t, x) \text { is of the form } c |x - \overline{x}|^4 + g(t),\\ -\infty &{} \text {otherwise}. \end{array}\right. } \end{aligned}$$

A function \(u:Q \rightarrow {\mathbb {R}}\) is a \(\rho \)-solution of (HJ\(_\varepsilon \)) in Q if \(u^*\) is a \(\rho \)-subsolution and \(u_*\) is a \(\rho \)-supersolution.

We remark that, as usual, we extend subsolutions u and supersolutions v to \(t=0\) by

In addition, without loss of generality, we may assume in Definition 3.4 that the maximum of \(u-\phi \) (and the minimum of \(v-\psi \)) is strict, that \((u - \phi )(\overline{t}, \overline{x}) = 0\) and that \(\lim _{|t| + |x| \rightarrow \infty } (u - \phi )(t,x) = -\infty \). Indeed, if the maximum at \((\overline{t}, \overline{x})\) is not strict, then we can approximate \(\phi \) by

as \(\delta \searrow 0\). Indeed, the maximum of \(u-\phi _\delta \) is strict, and by Lemma 3.8(i) the right-hand side in Definition 3.9 converges as \(\delta \searrow 0\) to that of \(\phi \).

In the following lemma we show that Definition 3.4 does not depend on \(\rho \). Therefore we can simply talk about subsolutions, supersolutions and viscosity solutions of (HJ\(_\varepsilon \)).

Lemma 3.5

(Independence of \(\rho \)) If u is a \(\rho \)-sub- or \(\rho \)-supersolution of (HJ\(_\varepsilon \)) for some \(\rho > 0\), then it is a \({\tilde{\rho }}\)-sub- or \({\tilde{\rho }}\)-supersolution of (HJ\(_\varepsilon \)), respectively, for any \({\tilde{\rho }} > 0\).

Proof

This is a modification of the proof of [44, Prop. II.1]. We prove the lemma for subsolutions; the proof for supersolutions is analogous. To prove that u is a \({\tilde{\rho }}\)-subsolution of (HJ\(_\varepsilon \)), let \({\tilde{\phi }}\) be any corresponding test function such that \(u - {\tilde{\phi }}\) has a global maximum at (t, x). We assume for convenience that \(u( t, x) = {\tilde{\phi }} ( t, x)\). If \({\tilde{\phi }}_x(t,x) = 0\), then u satisfies the condition for being a \({\tilde{\rho }}\)-subsolution of (HJ\(_\varepsilon \)) for any \({\tilde{\rho }} > 0\). Hence, in the remainder we may assume that \({\tilde{\phi }}_x(t,x) \ne 0\).

We start with the case \({\tilde{\rho }} > \rho \). Since u is a \(\rho \)-subsolution, we have

Since \({\tilde{\phi }}(t, x+z) - {\tilde{\phi }}(t, x) \ge u(t, x+z)-u(t, x)\) for all \(z \in {\mathbb {R}}\) and since \(E_\varepsilon ^*\) is non-decreasing, we obtain from the definition of \(\overline{H}_{\rho ,\varepsilon }\) that \(\overline{H}_{\rho ,\varepsilon }[{\tilde{\phi }}, u]( t, x) \le \overline{H}_{\tilde{\rho },\varepsilon }[{\tilde{\phi }}, u]( t, x)\). This shows that u is a \(\tilde{\rho }\)-subsolution.

It is left to treat the case \({\tilde{\rho }} < \rho \). Let \((\phi ^k) \subset C^2(Q)\) be a sequence of test functions satisfying

such that \(\phi ^k \searrow u\) pointwise on the interior of \(B_{{\tilde{\rho }}} (t,x)^c\) as \(k \rightarrow \infty \). Since u is a \(\rho \)-subsolution and \(u - \phi ^k\) has a global maximum at (t, x), we have

Next we prepare for passing to the limit \(k \rightarrow \infty \) in the right-hand side. To avoid clutter, we remove the time variable. By construction of \(\phi ^k\),

for a.e. \(z \in {\mathbb {R}}\). Then, since \(E_\varepsilon ^*\) is non-decreasing and upper semi-continuous,

for a.e. \(z \in {\mathbb {R}}\). Hence, from the definition of \(\overline{H}_{\rho ,\varepsilon }\) and Fatou’s lemma, we obtain that

This shows that u is a \({\tilde{\rho }}\)-subsolution. \(\square \)

Theorem 3.6

(Comparison principle for (HJ\(_\varepsilon \))) Let u be a subsolution and v be a supersolution of (HJ\(_\varepsilon \)). Assume that for each \(T>0\),

Then \(u(0,\cdot ) \le v(0,\cdot )\) on \({\mathbb {R}}\) implies \(u\le v\) on Q.

Proof

Suppose that the inequality \(u\le v\) does not hold on Q; then there exists \(T>0\) such that \(\theta := \sup _{Q_T} (u-v) >0\). For \(\eta >0\) and \(\gamma >0\) define the function

For sufficiently small \(\eta \) it follows that \(\sup _{Q_T\times Q_T} \Phi \ge \theta /2\), independently of \(\gamma \). By (47) and the semi-continuity of u and v, the supremum is achieved at some point \((\overline{t}, \overline{x}, \overline{s}, \overline{y})\in \overline{Q_T}\times \overline{Q_T}\). By the divergence of \(\Phi \) as \(s\nearrow T\) or \(t\nearrow T\) we have \(\overline{t}, \overline{s}< T\).

We now show by the usual arguments that for sufficiently small \(\gamma >0\) we have \(\overline{t}, \overline{s}>0\). Assume, to force a contradiction, that there exists a sequence \(\gamma _n\searrow 0\) such that for all n the corresponding maxima \((\overline{t}_n, \overline{x}_n, \overline{s}_n, \overline{y}_n)\) satisfy \(\overline{t}_n=0\). As \(u - v\) is bounded, by the structure of \(\Phi \) we have \(\overline{s}_n\rightarrow 0\) and \(\overline{x}_n-\overline{y}_n\rightarrow 0\), and by (47) we can assume by taking a subsequence if necessary that there exists \(\overline{x}\in {\mathbb {R}}\) such that \(\overline{x}_n,\overline{y}_n\rightarrow \overline{x}\) as \(n\rightarrow \infty \). We then estimate, using the semi-continuity of u and v,

which is a contradiction. Therefore we can fix \(\gamma \) and assume that \(\overline{t}, \overline{s}>0\).

We therefore have \((\overline{t}, \overline{x}, \overline{s}, \overline{y})\in Q_T\times Q_T\). Next we will obtain the contradiction to \(\theta >0\) by constructing test functions \(\phi \) and \(\psi \) for the subsolution u and the supersolution v, respectively, for which either u or v does not satisfy Definition 3.4. With this aim, we set

Since \(u-\phi = \Phi (\cdot , \cdot , \overline{s}, \overline{y}) + C\) has a global maximum at \((\overline{t},\overline{x})\) and \(v-\psi = -\Phi (\overline{t}, \overline{x}, \cdot , \cdot ) + C\) has a global minimum at \((\overline{s},\overline{y})\), \(\phi \) and \(\psi \) are admissible test functions (see Footnote 1). We compute

Next we separate two cases. If \(\overline{x} = \overline{y}\), then \(\phi (t,x) = \frac{|x - \overline{x}|^4}{4\gamma } + g(t)\) and \( \psi (s, y) = -\frac{|y - \overline{y}|^4}{4\gamma } + h(s)\), and Definition 3.4 yields

This contradicts (49).

In the second case, \(\overline{x} \not = \overline{y}\), we note that \(\phi _x(\overline{t}, \overline{x}) = \psi _y(\overline{s}, \overline{y}) = \frac{1}{\gamma } (\overline{x} - \overline{y})^3 \ne 0\). We claim that

Then, as \(\phi _x(\overline{t}, \overline{x}) = \psi _y(\overline{s}, \overline{y})\),

and this expression equals zero for \(\rho \) small enough by Lemma 3.3(i). Together with (49), this contradicts Definition 3.4.

It is left to prove the claim (50). Assume for convenience that \(\overline{x} -\overline{y} >0\). For any \(\delta >0\) and \(z\in {\mathbb {R}}\), we have

We rewrite this inequality to find

Since the final term is strictly negative if \(|z|< (\overline{x} - \overline{y})/\delta \), we have

We now split the left-hand side of (50) into two parts:

Assuming that \(\delta \) is small enough such that \(\rho < (\overline{x} - \overline{y})/\delta \), we further split the first integral \(T_1\) into near-field and far-field parts:

The inequality (51) implies that \(T_{1,\mathrm {near}}\le 0\). For the integral \(T_{1,\mathrm {far}}\) we use Lemma 3.3(ii) to estimate

which converges to zero as \(\delta \rightarrow 0\).

Finally, to estimate the term \(T_2\) we set