Abstract

Minkowski space is shown to be globally stable as a solution to the massive Einstein–Vlasov system. The proof is based on a harmonic gauge in which the equations reduce to a system of quasilinear wave equations for the metric, satisfying the weak null condition, coupled to a transport equation for the Vlasov particle distribution function. Central to the proof is a collection of vector fields used to control the particle distribution function, a function of both spacetime and momentum variables. The vector fields are derived using a general procedure, are adapted to the geometry of the solution and reduce to the generators of the symmetries of Minkowski space when restricted to acting on spacetime functions. Moreover, when specialising to the case of vacuum, the proof provides a simplification of previous stability works.

1 Introduction

1.1 The Einstein–Vlasov System

The Einstein–Vlasov system provides a statistical description of a collection of collisionless particles, interacting via gravity as described by Einstein’s general theory of relativity. A fundamental problem is to understand the long time dynamics of solutions of this system. The problem is a great challenge even in the absence of particles, and global works on the vacuum Einstein equations all either involve simplifying symmetry assumptions or solutions arising from small data. In the asymptotically flat setting, small data solutions of the vacuum Einstein equations were first shown to exist globally and disperse to Minkowski space in the monumental work of Christodoulou–Klainerman [14]. An alternative proof of the stability of Minkowski space was later given by Lindblad–Rodnianski [37] in which a global harmonic coordinate system was constructed, described below. For the Einstein–Vlasov system, the global properties of small data solutions were first understood when the initial data were assumed to be spherically symmetric, an assumption under which the equations simplify dramatically, by Rein–Rendall [41] in the massive case, when all particles are assumed to have mass 1, and by Dafermos [15] in the massless case, when all particles are assumed to have mass 0. See also work of Andréasson–Kunze–Rein [4] for a global existence result in spherical symmetry for a class of large “outgoing” data. Without the simplifying assumption of spherical symmetry, small data solutions of the massless Einstein–Vlasov system were later understood by Taylor [46].

In this work the problem of the stability of Minkowski space for the Einstein–Vlasov system, without any symmetry assumptions, is addressed in the case that all particles have mass 1 (the argument can easily be adapted to the case that all particles have any fixed mass \(m>0\)). The system takes the form

where the unknown is a \(3+1\) dimensional manifold \(\mathcal {M}\) with Lorentzian metric g, together with a particle distribution function \(f: \mathcal {P}\rightarrow [0,\infty )\), where the mass shell \(\mathcal {P}\) is defined by

Here Ric(g) and R(g) denote the Ricci and scalar curvature of g respectively. A coordinate system (t, x) for \(\mathcal {M}\), with t a time function (that is, the one form dt is timelike with respect to the metric g), defines a coordinate system \((t,x,p^0,p)\) for the tangent bundle \(T\mathcal {M}\) of \(\mathcal {M}\) conjugate to (t, x), where \((t,x^i,p^0,p^i)\) denotes the point

The mass shell relation in the definition of \(\mathcal {P}\),

should be viewed as defining \(p^0\) as a function of \((t,x^1,x^2,x^3,p^1,p^2,p^3)\). Here Greek indices run over 0, 1, 2, 3, lower case Latin indices run over 1, 2, 3, and often the notation \(t=x^0\) is used. The vector \(\mathbf {X}\) is the generator of the geodesic flow of \(\mathcal {M}\) which, with respect to the coordinate system (t, x, p) for \(\mathcal {P}\), takes the form

The volume form \((\sqrt{\vert \det g \vert }/{p^0})\,\mathrm{d}p^1 \mathrm{d}p^2 \mathrm{d}p^3\) in (1.2) is the induced volume form of the spacelike hypersurface \(\mathcal {P}_{(t,x)} \!\subset \! T_{(t,x)}\mathcal {M}\) when the tangent space \(T_{(t,x)} \mathcal {M}\) is endowed with the metric \(g_{\mu \nu }(t,x)\,\mathrm{d}p^{\mu } \mathrm{d}p^{\nu }\) induced by g on \(\mathcal {M}\).
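
As a consistency check, the objects above can be written out in the flat case \(g = m = \text {diag}\,(-1,1,1,1)\). The display below is a sketch which assumes that the mass shell relation is normalised as \(g_{\mu \nu } p^{\mu } p^{\nu } = -1\) with \(p^0>0\); this normalisation is an assumption here, since the defining displays are not reproduced in this section.

```latex
% Flat case g = m = diag(-1,1,1,1).
% Assumption: the mass shell relation reads g_{\mu\nu} p^\mu p^\nu = -1, p^0 > 0.
\[
  -(p^0)^2 + (p^1)^2 + (p^2)^2 + (p^3)^2 = -1
  \quad\Longrightarrow\quad
  p^0 = \sqrt{1 + \vert p \vert^2}.
\]
% All Christoffel symbols vanish, so the geodesic flow is free streaming,
% and the volume form on each fibre reduces to dp / p^0.
\[
  \mathbf{X} = p^0\, \partial_t + p^i\, \partial_{x^i},
  \qquad
  \frac{\sqrt{\vert \det m \vert}}{p^0}\, \mathrm{d}p^1 \mathrm{d}p^2 \mathrm{d}p^3
  = \frac{\mathrm{d}p^1 \mathrm{d}p^2 \mathrm{d}p^3}{\sqrt{1 + \vert p \vert^2}}.
\]
```

The measure \(\mathrm{d}p^1 \mathrm{d}p^2 \mathrm{d}p^3 / p^0\) is the Lorentz invariant measure on the unit hyperboloid, which is the flat counterpart of the induced volume form described above.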

1.2 The Global Existence Theorem

1.2.1 The Initial Value Problem and the Global Existence Theorem

In the Cauchy problem for the system (1.1)–(1.3) one prescribes an initial data set, which consists of a Riemannian 3 manifold \((\Sigma , \overline{g})\) together with a symmetric (0, 2) tensor k on \(\Sigma \), and an initial particle distribution \(f_0\), satisfying the constraint equations

for \(j=1,2,3\). Here \(\overline{\mathrm {div}}\), \(\overline{\text {tr}}\), \(\overline{R}\) denote the divergence, trace and scalar curvature of \(\overline{g}\) respectively, and \(T_{00}, T_{0j}\) denote (what will become) the 00 and 0j components of the energy momentum tensor. The topology of \(\Sigma \) will here always be assumed to be that of \(\mathbb {R}^3\). A theorem of Choquet-Bruhat [10], based on previous work by Choquet-Bruhat [9] and Choquet-Bruhat–Geroch [12] on the vacuum Einstein equations, (see also the recent textbook of Ringström [42]) guarantees that, for any initial data set as above, there exists a globally hyperbolic solution \((\mathcal {M},g,f)\) of the system (1.1)–(1.3) which attains the initial data, in the sense that there exists an imbedding \(\iota : \Sigma \rightarrow \mathcal {M}\) under the pullback of which the induced first and second fundamental form of g are \(\overline{g}\) and k respectively, and the restriction of f to the mass shell over \(\iota (\Sigma )\) is given by \(f_0\). As in [37], the proof is based on the harmonic gauge (or wave gauge), that is a system of coordinates \(x^{\mu }\) satisfying

where \(\square _g\) denotes the geometric wave operator of the metric g.
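
For orientation, the coordinate expression of the geometric wave operator makes the gauge condition explicit. The computation below is standard; identifying its last line with the wave coordinate condition used later in the paper is an assumption about normalisation, since that display is not reproduced in this section.

```latex
% Coordinate expression of the geometric wave operator.
\[
  \square_g \phi
  = \frac{1}{\sqrt{\vert \det g \vert}}\,
    \partial_\mu \big( g^{\mu\nu} \sqrt{\vert \det g \vert}\, \partial_\nu \phi \big)
  = g^{\alpha\beta} \partial_\alpha \partial_\beta \phi
    - g^{\alpha\beta}\, \Gamma^{\lambda}_{\alpha\beta}\, \partial_\lambda \phi .
\]
% Apply to the coordinate function \phi = x^\lambda, for which
% \partial_\nu x^\lambda = \delta_\nu^\lambda and second derivatives vanish.
\[
  \square_g x^\lambda = 0
  \quad\Longleftrightarrow\quad
  \partial_\mu \big( g^{\mu\lambda} \sqrt{\vert \det g \vert} \big) = 0
  \quad\Longleftrightarrow\quad
  g^{\alpha\beta}\, \Gamma^{\lambda}_{\alpha\beta} = 0 .
\]
```

In such coordinates the wave operator acting on functions reduces to \(g^{\alpha \beta }\partial _{\alpha }\partial _{\beta }\), which is why the Einstein equations become a system of quasilinear wave equations for the metric components.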

The initial data set

where e denotes the Euclidean metric on \(\mathbb {R}^3\), satisfies the constraint equations and gives rise to Minkowski space, the trivial solution of the system (1.1)–(1.3). The main result of this work concerns solutions arising from initial data sufficiently close to the trivial initial data set (1.7). The initial data will be assumed to be asymptotically flat in the sense that \(\Sigma \) is diffeomorphic to \(\mathbb {R}^3\) and there exists a global coordinate chart \((x^1,x^2,x^3)\) of \(\Sigma \) and \(M \ge 0\) and \(0<\gamma <1\) such that

For such \(\overline{g}\), k, write

and \(\chi \) is a smooth cut off function such that \(0 \le \chi \le 1\), \(\chi (s) = 1\) for \(s \ge {3}/{4}\) and \(\chi (s) = 0\) for \(s \le {1}/{2}\).
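
One explicit choice of such a cut off function, given only as an illustration (the argument does not depend on the particular \(\chi \)), is the following standard construction, with \(\psi \) an auxiliary function introduced here.

```latex
% \psi is smooth on the real line, vanishes for u <= 0, and is positive for u > 0.
\[
  \psi(u) =
  \begin{cases}
    e^{-1/u}, & u > 0, \\
    0, & u \le 0,
  \end{cases}
  \qquad
  \chi(s) = \frac{\psi\big( s - \tfrac12 \big)}
                 {\psi\big( s - \tfrac12 \big) + \psi\big( \tfrac34 - s \big)} .
\]
% The denominator never vanishes, since s > 1/2 or s < 3/4 for every s.
% For s >= 3/4 the second term vanishes, so \chi = 1;
% for s <= 1/2 the numerator vanishes, so \chi = 0.
```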

For given \(\gamma >0\) and such an initial data set, define, for any \(N\ge 0\), the initial energy

where \(\nabla = (\partial _{x^1},\partial _{x^2}, \partial _{x^3})\) denotes the coordinate gradient, and the initial norms for \(f_0\):

By the Sobolev inequality there exists a constant C such that \(\mathbb {D}_{N-4} \le C \mathcal {V}_N\) for any \(N \ge 0\).

The main result of this work is the following:

Theorem 1.1

Let \((\Sigma , \overline{g}, k,f_0)\) be an initial data set for the Einstein–Vlasov system (1.1)–(1.3), asymptotically flat in the above sense, such that \(f_0\) is compactly supported. For any \(0<\gamma <1\) and \(N \ge 11\), there exists \(\varepsilon _0>0\) (depending on the size of \(\mathrm {supp}(f_0)\)) such that, for all \(\varepsilon \le \varepsilon _0\) and all initial data satisfying

there exists a unique future geodesically complete solution of (1.1)–(1.3), attaining the given data, together with a global system of harmonic coordinates \((t,x^1,x^2,x^3)\), relative to which the solution asymptotically decays to Minkowski space.

The precise sense in which the spacetimes of Theorem 1.1 asymptotically decay to Minkowski space is captured in the estimates (1.15), (1.16), (6.5), (6.6), (6.8), (6.9), (6.10) and (6.11) below.

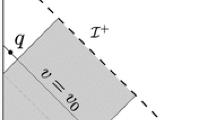

The analogue of Theorem 1.1 for the massless Einstein–Vlasov system (which is the system (1.1)–(1.3) but with the mass shell relation (1.4) replaced with the relation \(g_{\mu \nu } p^{\mu } p^{\nu } = 0\), so that all particles travel through spacetime along null geodesics, as opposed to unit timelike geodesics in the massive case considered here) was established by Taylor [46]. It should be noted that the difficulties encountered in [46] are very different to the difficulties encountered in the present work. The work [46] involves a double null gauge (in contrast to the harmonic gauge employed here) and, as is shown as part of the bootstrap argument in the proof, f, being initially supported in a spatially compact set, is supported only in the wave zone \(\vert x \vert \sim t\) in a region of finite retarded length. Due to the slow decay of the metric in the wave zone, the work [46] relies crucially on exploiting a null structure present in the Vlasov equation. In contrast, the main difficulties in the present work arise in the interior region \(\vert x \vert < t\). The “Minkowski vector fields” (see Section 1.5.1 below), which one would use to control the decoupled Vlasov equation on a fixed Minkowski background, are insufficient by themselves for the proof of Theorem 1.1 and have to be further adapted to the geometry of the spacetimes considered. See Section 1.5 below for a further discussion of the vector fields used in the proof.

The assumption in Theorem 1.1 that \(f_0\) is compactly supported is made for simplicity and, we believe, our method can be extended to relax this assumption. The assumption implies that the solution f is supported, at late times, away from the wave zone \(\vert x \vert \sim t\). The issue of the slow decay of the metric g in the wave zone is therefore not relevant in the proof of Theorem 1.1 when controlling the solution of the Vlasov equation f, and its derivatives. Were \(f_0\) not compactly supported, this slow decay of g would be relevant and it would be necessary to exploit a form of null structure present in the Vlasov equation, as in the massless case discussed above, together with the weak null structure of Einstein’s equations in wave coordinates.

A similar stability result to Theorem 1.1 was shown independently by Fajman–Joudioux–Smulevici [19]. We remark on some of the differences between the two proofs in Section 1.5 after presenting our argument.

There have been a number of related stability works on the Einstein–Vlasov system. In addition to those discussed earlier, there has been related work by Ringström [42] on the Einstein–Vlasov system in the presence of a positive cosmological constant, where the analogue of the Minkowski solution is the de Sitter spacetime. See also [5]. A stability result for a class of cosmological spacetimes was shown in \(2+1\) dimensions with vanishing cosmological constant by Fajman [16], and later extended to \(3+1\) dimensions by Andersson–Fajman [2]. See also the recent work of Moschidis [39] on the instability of the anti-de Sitter spacetime for a related spherically symmetric model in the presence of a negative cosmological constant. A much more comprehensive overview of work on the Einstein–Vlasov system can be found in the review paper of Andréasson [3].

There has also been work on the problem of the stability of Minkowski space for the Einstein equations coupled to various other matter models [7, 20, 22, 28,29,30, 43, 48].

Small data solutions of the Vlasov–Poisson system, the non-relativistic analogue of the Einstein–Vlasov system, were studied in [6, 23], and those of the Vlasov–Maxwell system in [21]. We note in particular works of Smulevici [44] and Fajman–Joudioux–Smulevici [18] (following [17]) on the asymptotic properties of small data solutions of the Vlasov–Poisson and the related Vlasov–Nordström systems respectively, where issues related to those described in Section 1.5.2 arise and are resolved using an alternative approach. Moreover, there are global existence results for general data for Vlasov–Poisson [38, 40] and Vlasov–Nordström [8].

1.2.2 Small Data Global Existence for the Reduced Einstein–Vlasov System

Following [37], the proof of Theorem 1.1 is based on a harmonic gauge (1.6), relative to which the Einstein equations (1.1) take the form of a system of quasilinear wave equations

where \(\widehat{T}_{\mu \nu } := T_{\mu \nu } - \frac{1}{2}g_{\mu \nu }{\text {tr}}_g T\) and \(F_{\mu \nu }(u)(v,v)\) depends quadratically on v. The system (1.2), (1.3), (1.11) is known as the reduced Einstein–Vlasov system. The condition that the coordinates \(x^{\mu }\) satisfy the harmonic gauge condition (1.6) is equivalent to the metric in the coordinates \(x^{\mu }\) satisfying the wave coordinate condition

Let \(m = \text {diag}\,(-1,1,1,1)\) denote the Minkowski metric in Cartesian coordinates and, for a solution g of the reduced Einstein equations (1.11), write

where \(\chi (s)=1\), when \(s>3/4\) and \(\chi (s)=0\), when \(s<1/2\). For a given \(0< \gamma < 1\), \(0<\mu < 1-\gamma \), we define the energy at time t as

Here I denotes a multi index and \(Z^I\) denotes a combination of |I| of the vector fields

for \(i,j=1,2,3\) and \(\alpha =0,1,2,3\). Let \(\vert \cdot \vert \) denote the norm, \( \vert x \vert = \big ( (x^1)^2 + (x^2)^2 + (x^3)^2 \big )^{{1}\!/{2}}\), and \( \vert h(t,x) \vert = \sum _{\alpha ,\beta =0}^3 \vert h_{\alpha \beta }(t,x)\vert \), \(\left| \Gamma (t,x) \right| = \sum _{\alpha ,\beta ,\gamma =0}^3 \big \vert \Gamma ^{\alpha }_{\beta \gamma } (t,x) \big \vert \), and similarly \(\Vert h(t,\cdot ) \Vert _{L^2} = \sum _{\alpha ,\beta =0}^3 \Vert h_{\alpha \beta }(t,\cdot )\Vert _{L^2}\), etc. The notation \(A \lesssim B\) will be used if there exists a universal constant C such that \(A \le C B\).
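
The list of vector fields Z is not reproduced in this section. The index ranges above are consistent with the standard generators of the symmetries of Minkowski space together with the scaling vector field, which are recorded below as an assumption, along with their commutators with the Minkowski wave operator \(\square \).

```latex
% Assumed list: translations, rotations, boosts and scaling (sum over repeated i).
\[
  \partial_\alpha, \qquad
  \Omega_{ij} = x^i \partial_{x^j} - x^j \partial_{x^i}, \qquad
  B_i = x^i \partial_t + t\, \partial_{x^i}, \qquad
  S = t\, \partial_t + x^i \partial_{x^i} .
\]
% Commutators with the Minkowski wave operator.
\[
  \square = -\partial_t^2 + \sum_{i=1}^{3} \partial_{x^i}^2,
  \qquad
  [\square, \partial_\alpha] = [\square, \Omega_{ij}] = [\square, B_i] = 0,
  \qquad
  [\square, S] = 2\, \square .
\]
```

The components of each of these vector fields are affine functions of (t, x), so that all second coordinate derivatives of the components vanish.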

Theorem 1.1 follows as a corollary of the following theorem:

Theorem 1.2

For any \(0<\gamma <1\), \(K,K'>0\) and \(N\ge 11\), there exists \(\varepsilon _0>0\) such that, for any data \((g_{\mu \nu }, \partial _t g_{\mu \nu },f) \vert _{t=0}\) for the reduced Einstein–Vlasov system (1.2), (1.3), (1.11) which satisfy the smallness condition

for any \(\varepsilon \le \varepsilon _0\) and the wave coordinate condition (1.12), and such that for

there exists a global solution attaining the data such that

for all \(t\ge 0\), along with the decay estimates

and the estimates (6.5), (6.6), (6.8), (6.9), (6.10) and (6.11) stated in Section 6.

Note that the wave coordinate condition (1.12), if satisfied initially, is propagated in time by the reduced Einstein–Vlasov system (1.2), (1.3), (1.11) (this fact is standard; see, for example, Section 4 of [36], which requires only minor modifications for the presence of matter).

Given an initial data set \((\Sigma ,\overline{g},k,f_0)\) as in Theorem 1.1, define initial data for the reduced equations

and

One can show that, with this choice, \( \left( E_N(0) \right) ^{{1}/{2}} \lesssim \mathcal {E}_N, \) where \(\mathcal {E}_N\) given by (1.9) is the norm of geometric data, and moreover \((g_{\mu \nu }, \partial _t g_{\mu \nu }) \vert _{t=0}\) satisfy the wave coordinate condition (1.12), see, for example, [36, 37].

It is therefore clear that Theorem 1.1 follows from Theorem 1.2 (the future causal geodesic completeness can be shown as in [36]) and so the goal of the paper is to establish the proof of Theorem 1.2.

1.3 Estimates for the Vlasov Matter

In what follows it is convenient, instead of parameterising the mass shell \(\mathcal {P}\) by (t, x, p), to instead parameterise it by \((t,x,{\,\widehat{\!p\!}\,\,})\), where

for \(i=1,2,3\). Note that, by the mass shell relation (1.4), in Minkowski space \((p^0)^2 = 1 + (p^1)^2 + (p^2)^2 + (p^3)^2\) and, under a mild smallness condition on \(g - m\), \(\vert {\,\widehat{\!p\!}\,\,}\vert < 1\). Abusing notation slightly, we will write \(f(t,x,{\,\widehat{\!p\!}\,\,})\) for the solution of the Vlasov equation (1.3).
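
In the flat case the bound \(\vert \widehat{p} \vert < 1\) can be checked directly. The sketch below assumes the normalisation \(\widehat{p}^{\,i} = p^i/p^0\), which is the natural reading of the definition above but is an assumption, since the defining display is not reproduced in this section.

```latex
% Flat case, with (p^0)^2 = 1 + |p|^2.
% Assumption: \widehat{p}^i = p^i / p^0.
\[
  \vert \widehat{p} \vert^2
  = \frac{\vert p \vert^2}{(p^0)^2}
  = \frac{\vert p \vert^2}{1 + \vert p \vert^2}
  = 1 - \frac{1}{(p^0)^2} < 1,
\]
% The change of variables is inverted by
\[
  p^0 = \frac{1}{\sqrt{1 - \vert \widehat{p} \vert^2}},
  \qquad
  p^i = \frac{\widehat{p}^{\,i}}{\sqrt{1 - \vert \widehat{p} \vert^2}} .
\]
```

Under this reading \(\widehat{p}\) is the coordinate velocity of the particle, and \(\vert \widehat{p} \vert < 1\) expresses that massive particles travel slower than the speed of light.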

Let \(\{ \Sigma _t \}\) denote the level hypersurfaces of the time coordinate t, and let \(X(s,t,x,{\,\widehat{\!p\!}\,\,})^i\), \({\,\widehat{\!P\!}\,\,}(s,t,x,{\,\widehat{\!p\!}\,\,})^i\) denote solutions of the geodesic equations (see Section 2)

normalised so that \((s,X(s,t,x,{\,\widehat{\!p\!}\,\,})) \in \Sigma _s\), with

Here

where \(\Gamma ^{\alpha }_{\beta \gamma }\) are the Christoffel symbols of the metric g with respect to a given coordinate chart \((t,x^1,x^2,x^3)\). Define \(X(s,t,x,{\,\widehat{\!p\!}\,\,})^0 = s\) and \({\,\widehat{\!P\!}\,\,}(s,t,x,{\,\widehat{\!p\!}\,\,})^0 = 1\). The notation X(s), \({\,\widehat{\!P\!}\,\,}(s)\) will sometimes be used for \(X(s,t,x,{\,\widehat{\!p\!}\,\,})\), \({\,\widehat{\!P\!}\,\,}(s,t,x,{\,\widehat{\!p\!}\,\,})\) when it is clear from the context which point \((t,x,{\,\widehat{\!p\!}\,\,})\) is meant, and the notation \({\,\widehat{\!X\!}\,\,}(s)=(s,X(s))\) will sometimes be used.

It follows that the Vlasov equation (1.3) can be rewritten as

for all s. The notation (y, q) will be used to denote points in the mass shell over the initial hypersurface, \(\mathcal {P}\vert _{t=0}\). In Theorem 1.2 it is assumed that \(f_0\) has compact support; \(|y|\le K\) and \(|q|\le K'\) for \((y,q) \in \mathrm {supp}(f_0)\), for some constants K, \(K'\). Under relatively mild smallness assumptions on \(h=g-m\), see Proposition 2.1, it follows that there exists \(c<1\), depending only on \(K'\), such that solutions of the Vlasov equation satisfy

The main new difficulties in the proof of Theorem 1.2, arising from the coupling to the Vlasov equation, are resolved in the following theorem, which is appealed to in the proof of Theorem 1.2:

Theorem 1.3

For a given \(t\ge 0\) and \(N\ge 1\), suppose that g is a Lorentzian metric such that the Christoffel symbols of g with respect to a global coordinate system \((t,x^1,x^2,x^3)\), for some \({1}/{2}<a<1\), satisfy

for all \(t'\!\in [0,t]\). Then there exists \(\varepsilon _0>0\) such that, for \(\varepsilon <\varepsilon _0\) and any solution f of the Vlasov equation (1.3) satisfying

and metric satisfying (1.20) and \(\vert g -m \vert \le \varepsilon \), the components of the energy momentum tensor \(T^{\mu \nu } (t,x)\) satisfy

for \(\vert I \vert \le N-1\), where \(k=|I|\) and \(k^\prime =\left\lfloor {k }/{2} \right\rfloor +1\), and

for \(\vert I \vert \le N\). Here the constants \(D_k\) depend only on \(C_N'\), K, \(K'\) and c, and \(\varepsilon _0\) depends only on c.

In the proof of Theorem 1.2, the \(L^2\) estimates of Theorem 1.3 are used to prove energy estimates for the metric g; see the discussion in Section 1.4.2. The \(L^1\) estimates of Theorem 1.3 are used to recover the pointwise decay (1.20) for the lower order derivatives of the Christoffel symbols \(\Gamma \); see the discussion in Section 1.4.7 below.

Remark 1.4

The proof of Theorem 1.3 still applies when \(a \ge 1\), though the theorem is only used in the proof of Theorem 1.2 for some fixed \(\frac{1}{2}< a< 1\). The case of \(a = 1\) is omitted in order to avoid logarithmic factors. The proof of an appropriate result when \(a > 1\) is much simpler, although, when Theorem 1.3 is used in the proof of Theorem 1.2, one could not hope for the assumptions (1.20) to hold with \(a > 1\), see Section 1.5.2.

Remark 1.5

In Section 5 a better \(L^2\) estimate in terms of t behaviour, compared with the \(L^2\) estimate of Theorem 1.3, is shown to hold for \(Z^I T^{\mu \nu }\), which involves one extra derivative of \(\Gamma \); see Proposition 5.8. It is important however to use the \(L^2\) estimate which does not lose a derivative in the proof of Theorem 1.2.

1.4 Overview of the Global Existence Theorem for the Reduced Einstein Equations

It should be noted from the outset that the reduced Einstein–Vlasov system (1.2), (1.3), (1.11) is a system of quasilinear wave equations coupled to a transport equation. It is well known that the general quasilinear wave equation does not necessarily admit global solutions for all small data [24, 25]. The null condition, an algebraic condition on the nonlinearity of such equations, was introduced by Klainerman [26], and used independently by Klainerman [27] and Christodoulou [13], as a sufficient condition that small data solutions exist globally in time and are asymptotically free. However, as was noticed by Lindblad [32, 33] and Alinhac [1], there are quasilinear equations that do not satisfy the null condition but still admit global solutions for all sufficiently small data. In fact, the classical null condition fails to be satisfied by the vacuum Einstein equations in the harmonic gauge ((1.11) with \(T\equiv 0\)), though it was noticed by Lindblad–Rodnianski [35] that they satisfy a weak null condition, which they used to prove a small data global existence theorem [36, 37].

The proof of Theorem 1.2 follows the strategy adopted in [37]. The new difficulties, of course, arise from the coupling to the Vlasov equation. A fundamental feature of the problem arises from the fact that, whilst the slowest decay of solutions to wave equations occurs in the wave zone, where \(t \sim r\), the slowest decay of solutions of the massive Vlasov equation occurs in the interior region \(t>r\). The most direct way to exploit this fact is to impose that \(f_0\) has compact support, in which case, as will be shown in Proposition 2.1, the support condition (1.19) holds and f actually vanishes in the wave zone at late times.
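
The mechanism is already visible for free streaming on Minkowski space. As a sketch, suppose that velocities are measured by \(\widehat{p} = p/p^0\) and that \(\vert y \vert \le K\) and \(\vert \widehat{p} \vert \le c < 1\) on the support of the initial datum; this normalisation is an assumption made for illustration, and the precise statement for the coupled system is Proposition 2.1.

```latex
% Free streaming: \partial_t f + \widehat{p}^i \partial_{x^i} f = 0,
% so characteristics are straight lines with constant velocity \widehat{p}.
\[
  f(t, x, \widehat{p}) = f_0\big( x - t\, \widehat{p},\, \widehat{p} \big) .
\]
% If |y| <= K and |\widehat{p}| <= c < 1 on supp(f_0), then on supp(f):
\[
  \vert x \vert
  \le \vert x - t\, \widehat{p} \vert + t\, \vert \widehat{p} \vert
  \le K + c\, t,
  \qquad\text{so}\qquad
  t - \vert x \vert \ge (1 - c)\, t - K .
\]
```

For \(t > K/(1-c)\) the support therefore lies strictly inside the light cone \(\vert x \vert = t\), at a distance from it which grows linearly in t.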

Since they have been described at length elsewhere, the difficulties associated to the failure of (1.11) to satisfy the classical null condition are only briefly discussed here. Suffice it to say that there is a rich structure in the equations (1.11) which is exploited heavily (see the further discussion in the introductions to [36, 37]). The main new features of this work are contained in the proof of Theorem 1.3. Indeed, for a given inhomogeneous term \(\widehat{T}\) in (1.11) which satisfies the support conditions and estimates of Theorem 1.3, the small data global existence theorem of [37] mostly goes through unchanged. An outline is given below, including a discussion of some observations which lead to simplifications compared with the proof in [37] (most notably the fact that the structure of the equations is better preserved under commutation with modified Lie derivatives, described in Section 1.4.4 below, but also the use of a simpler \(L^{\infty }\)–\(L^{\infty }\) estimate, described in Section 1.4.6).

The proof of Theorem 1.2 is based on a continuity argument. One assumes that the bounds

hold for all \(t\in [0,T_*]\) for some time \(T_*>0\) and some fixed constants \(C_N\) and \(\delta \), and the main objective is to use the Einstein equations to prove that the bounds (1.21) in fact hold with better constants, provided the initial data are sufficiently small.

1.4.1 The Contribution of the Mass

The first step in the proof of Theorem 1.2 is to identify the contribution of the mass M. Recall the decomposition of the metric (1.13) and note that the energy \(E_N\) is defined in terms of \(h^1\). Had the energy been defined with \(h=g-m\) in place of \(h^1\), it would not be finite unless \(M=0\), in which case it follows from the Positive Mass Theorem [45, 47] that the constraint equations imply that the solution is trivial. The contribution of the mass is therefore identified explicitly using the decomposition (1.13), and the reduced Einstein equations are recast as a system of equations for \(h^1\):

The term \(\widetilde{\square }_g \, h^0_{\mu \nu }\) is treated as an error term. Note that \(h^0\) is defined so that \(\square \, h^0_{\mu \nu }\), which is a good approximation to \(\widetilde{\square }_g \, h^0_{\mu \nu }\), is supported away from the wave zone \(t \sim r\) and so only contributes in the interior region, where \(h^1\) will be shown to have fast decay.
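
To see why \(\square \, h^0_{\mu \nu }\) is supported away from the wave zone, suppose, as an assumption on the form of the decomposition (1.13), which is not reproduced in this section, that \(h^0_{\mu \nu } = \chi \big (r/(1+t)\big )\, \frac{M}{r}\, \delta _{\mu \nu }\) with \(\chi \) the cut off function introduced earlier.

```latex
% M/r is static and harmonic away from the origin.
\[
  \square\, \frac{M}{r}
  = \Big( -\partial_t^2 + \partial_r^2 + \frac{2}{r}\, \partial_r \Big) \frac{M}{r}
  = M \Big( \frac{2}{r^3} - \frac{2}{r^3} \Big) = 0
  \qquad \text{for } r > 0 .
\]
% Where \chi \equiv 1 one has h^0 = (M/r)\delta, and where \chi \equiv 0 one has h^0 = 0.
\[
  \square\, h^0_{\mu\nu} = 0
  \ \text{ for } \frac{r}{1+t} > \frac34 \ \text{ and for } \frac{r}{1+t} < \frac12,
  \qquad\text{so}\qquad
  \mathrm{supp}\, \square\, h^0_{\mu\nu}
  \subset \Big\{ \tfrac12 \le \frac{r}{1+t} \le \tfrac34 \Big\} .
\]
```

Under this assumption the error term is nonzero only where at least one derivative falls on the cut off, which is a region strictly inside the light cone.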

1.4.2 Energy Inequality with Weights

An important ingredient in the procedure to recover the assumption on the energy (1.21) is the energy inequality with weights,

which holds, for any suitably regular function \(\phi \), under mild assumptions on the metric g (see Lemma 6.29), where the weight w is as in (1.14) and \(\overline{\partial }_{\mu } = \partial _{\mu } - \frac{1}{2}L_{\mu }(\partial _r - \partial _t)\), with \(L = \partial _t + \partial _r\), denotes the derivatives tangential to the outgoing Minkowski light cones. The inequality will be applied to the system (1.22) after commuting with vector fields.

It is in the energy inequality (1.23) that the \(L^2\) estimates of Theorem 1.3 are used. The proof of Theorem 1.2, using Theorem 1.3, is given in detail in Section 7, but we briefly illustrate the use of the \(L^2\) estimates of Theorem 1.3 here. Setting \(Q_N(t) = \sup _{0\le s \le t} \sum _{\vert I \vert \le N} \Vert w^{\frac{1}{2}} \partial Z^I h^1 (s,\cdot ) \Vert _{L^2}\), it follows from Theorem 1.3 that

(see Section 7 for details of how estimates for \({\,\widehat{\!T\!}\,\,}^{\mu \nu }\) follow from estimates for \(T^{\mu \nu }\)). By the reduced Einstein equations (1.22) and the energy inequality (1.23),

The first four terms on the right hand side arise already in [37] and so, combining estimates which will be shown for these terms in Section 6 (see also the discussion below) with (1.24), the bound (1.25) implies that

and so the Grönwall inequality yields

In the proof of Theorem 1.2, such a bound for \(Q_N\) will lead to a recovery of the assumption (1.21) on \(E_N\) with better constants provided \(C_N\) is chosen to be sufficiently large and \(\varepsilon \) is sufficiently small.
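
The Grönwall step has the following schematic shape. The displayed inequalities are not reproduced in this section, so the inequality below, with hypothetical constants A and C, only illustrates the mechanism by which a borderline integrable coefficient produces slow polynomial growth.

```latex
% Hypothetical constants A, C > 0; Q is nonnegative and continuous.
\[
  Q(t) \le A + C \varepsilon \int_0^t \frac{Q(s)}{1+s}\, \mathrm{d}s
  \quad\Longrightarrow\quad
  Q(t) \le A \exp\Big( C \varepsilon \int_0^t \frac{\mathrm{d}s}{1+s} \Big)
  = A\, (1+t)^{C \varepsilon} .
\]
```

The growth rate \(C\varepsilon \) can be made smaller than any fixed positive exponent by taking \(\varepsilon \) small, which is the sense in which smallness of the data allows a slowly growing energy bound to be recovered.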

1.4.3 The Structure of the Nonlinear Terms

As discussed above, whether a given quasilinear wave equation admits global solutions for small data or not depends on the structure of the nonlinear terms (moreover, the main analysis of the nonlinear terms is relevant in the wave zone, where \({{\,\widehat{\!T\!}\,\,}}^{\mu \nu }\) vanishes at late times and so plays no role in this discussion). A closer inspection of the nonlinearity in (1.22) reveals (see [11, 37]) that

where \(Q_{\mu \nu }(\partial h,\partial h)\) is a linear combination of null forms (satisfying \(\vert Q_{\mu \nu }(\partial h,\partial h) \vert \lesssim \vert \overline{\partial } h \vert \vert \partial h \vert \)), \(\vert G_{\mu \nu }(h)(\partial h,\partial h) \vert \lesssim \vert h \vert \vert \partial h \vert ^2\) denote cubic terms and

Clearly the failure of the semilinear terms of the system (1.22) to satisfy the classical null condition arises in the \(P(\partial _{\mu } h, \partial _{\nu } h)\) terms. In [35] it was observed that the semilinear terms of (1.22), after being decomposed with respect to a null frame \(\mathcal {N} = \{ \underline{L}, L, S_1, S_2 \}\), where

possess a weak null structure. It is well known that, for solutions of wave equations, derivatives tangential to the outgoing light cones \(\overline{\partial }\in \mathcal {T}=\{L,S_1,S_2\}\) decay faster and so, neglecting such \(\overline{\partial } h\) derivatives of h,

and, neglecting cubic terms and quadratic terms involving at least one tangential derivative,

For vectors U, V, define \((\widetilde{\square }_g h)_{UV} = U^{\mu } V^{\nu } \widetilde{\square }_g h_{\mu \nu }\). With respect to the null frame (1.28), the Einstein equations (1.22) become

since \(T^\mu L_\mu =0\) for \(T\in \mathcal {T}\). Decomposing with respect to the null frame (1.28), the term \(P(\partial _q h,\partial _q h)\) is equal to \(P_{\mathcal {N}}(\partial _{q} h, \partial _{q} h)\), where

(see [36]). Except for the \(\partial _q h_{LL} \partial _q h_{\underline{L} \underline{L}}\) term, \(P_{\!\mathcal {N}}( \partial _{q} h, \partial _{q} h)\) only involves the non \(\underline{L} \underline{L}\) components of h. The wave coordinate condition (1.12) with respect to the null frame becomes

neglecting tangential derivatives and quadratic terms, see [36]. In particular, the \(\partial _q h_{LL} \partial _q h_{\underline{L} \underline{L}}\) term in \(P_{\!\mathcal {N}}( \partial _{q} h, \partial _{q} h)\) can be neglected. The asymptotic identity (1.33) moreover implies (see equation (6.47)) that the leading order behaviour of \(P_{\!\mathcal {N}}(\partial _{q} h, \partial _{q} h)\) is contained in \(P_{\!\mathcal {S}}(\partial _q h,\partial _q h)\), where

A decoupling therefore occurs in the semilinear terms of (1.22), modulo terms which are cubic or involve at least one “good” \(\overline{\partial }\) derivative, and the right hand side of the second identity in (1.31) only depends on components we have better control on by the first identity in (1.31).
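
The null form estimate used above can be illustrated on the simplest example, \(Q_0(\partial \phi ,\partial \psi ) = m^{\alpha \beta }\partial _{\alpha }\phi \, \partial _{\beta }\psi \), for scalar functions \(\phi , \psi \). The sketch assumes the null frame is normalised by \(L = \partial _t + \partial _r\), \(\underline{L} = \partial _t - \partial _r\), with \(S_1, S_2\) orthonormal and tangent to the spheres of constant t and r; the display defining the frame is not reproduced in this section.

```latex
% Inner products of the frame with respect to m = diag(-1,1,1,1).
\[
  m(L, \underline{L}) = -2, \qquad
  m(L, L) = m(\underline{L}, \underline{L}) = 0, \qquad
  m(S_A, S_B) = \delta_{AB}, \qquad
  m(L, S_A) = m(\underline{L}, S_A) = 0 .
\]
% Inverse Minkowski metric expressed in the frame.
\[
  m^{\alpha\beta}
  = -\tfrac12 \big( L^\alpha \underline{L}^\beta + \underline{L}^\alpha L^\beta \big)
  + \sum_{A=1}^{2} S_A^\alpha S_A^\beta ,
\]
% Hence the basic null form contains no \underline{L}\phi\, \underline{L}\psi term.
\[
  m^{\alpha\beta}\, \partial_\alpha \phi\, \partial_\beta \psi
  = -\tfrac12 \big( L\phi\, \underline{L}\psi + \underline{L}\phi\, L\psi \big)
  + \sum_{A=1}^{2} S_A \phi\, S_A \psi .
\]
```

Every term on the right contains at least one derivative tangential to the outgoing light cones, which gives \(\vert Q_0(\partial \phi ,\partial \psi ) \vert \lesssim \vert \overline{\partial } \phi \vert \vert \partial \psi \vert + \vert \partial \phi \vert \vert \overline{\partial } \psi \vert \). By contrast, a product such as \(\partial _t \phi \, \partial _t \psi \) contains the term \(\frac{1}{4}\underline{L}\phi \, \underline{L}\psi \), involving no tangential derivative.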

A further failure of (1.22) to satisfy the classical null condition arises in the quasilinear terms. Expressing the inverse of the metric \(g_{\mu \nu }\) as

the reduced wave operator takes the form

which differs from the Minkowski wave operator \(\square \) only by the term \(H^{\underline{L} \underline{L}} \partial _q^2\), plus terms which involve at least one tangential \(\overline{\partial }\) derivative. This main quasilinear term is controlled by first rewriting the wave coordinate condition (1.12) as

where \(\vert W^{\nu }(h,\partial h) \vert \lesssim \vert h \vert \vert \partial h \vert \) is quadratic, and using the formula

for any \(F^{\mu \nu }\), to rewrite \(\partial _\mu \widehat{H}^{\mu \nu }\) in terms of the null frame. Here \(\partial _s=(\partial _r+\partial _t)/2\). This gives

that is \(\partial _q H^{\underline{L}\underline{L}}\) is equal to quadratic terms plus terms involving only tangential \(\overline{\partial }\) derivatives. Integrating \(\partial _q H^{\underline{L}\underline{L}}\) from initial data \(\{t=0\}\) then gives that \(H^{\underline{L} \underline{L}}\) is approximately equal to the main contribution of its corresponding initial value, 2M / r.

1.4.4 Commutation

In order to apply the energy inequality (1.23) to improve the higher order energy bounds (1.21), it is necessary to commute the system (1.22) with the vector fields Z. Instead of commuting with the vector fields Z directly as in [37], notice that, for any function \(\phi \),

where the fact that \(\partial _{\mu } \partial _{\nu } Z^{\lambda } = 0\) for each Z and \(\mu , \nu , \lambda = 0,1,2,3\) has been used. Here \(\mathcal {L}_Z\) denotes the Lie derivative along the vector field Z (see Section 6.3 for a coordinate definition). The procedure of commuting the system (1.22) therefore becomes computationally much simpler if it is instead commuted with the Lie derivatives along the vector fields, \(\mathcal {L}_Z\). In fact, the procedure simplifies further by commuting with a modified Lie derivative \(\widehat{\mathcal {L}}\), defined in the (t, x) coordinates by the formula

The modified Lie derivative has the property that \(\widehat{\mathcal {L}}_Z m = 0\) for each of the vector fields Z and moreover a computation shows that, in the case that \(\phi _{\mu \nu }\) is a (0, 2) tensor, the commutation property

holds for each of the vector fields Z.
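
The property \(\widehat{\mathcal {L}}_Z m = 0\) can be verified by hand. The sketch below assumes that Z ranges over the translations, rotations, boosts and the scaling \(S = x^{\alpha }\partial _{\alpha }\), and that on (0, 2) tensors the modification is the multiple of \(\partial _{\gamma } Z^{\gamma }\) shown; both are assumptions, since the defining display is not reproduced in this section.

```latex
% Lie derivative of the Minkowski metric; m_{\mu\nu} is constant, Z_\nu = m_{\nu\lambda} Z^\lambda.
\[
  (\mathcal{L}_Z m)_{\mu\nu}
  = Z^\lambda \partial_\lambda m_{\mu\nu}
  + \partial_\mu Z^\lambda\, m_{\lambda\nu}
  + \partial_\nu Z^\lambda\, m_{\mu\lambda}
  = \partial_\mu Z_\nu + \partial_\nu Z_\mu .
\]
% Translations, rotations and boosts are Killing; the scaling is conformal Killing.
\[
  \mathcal{L}_Z m = 0 \ \text{ and } \ \partial_\gamma Z^\gamma = 0
  \ \text{ for translations, rotations and boosts},
  \qquad
  \mathcal{L}_S m = 2\, m, \quad \partial_\gamma S^\gamma = 4 .
\]
% Assumed form of the modification on (0,2) tensors.
\[
  \widehat{\mathcal{L}}_Z \phi_{\mu\nu}
  := \mathcal{L}_Z \phi_{\mu\nu} - \tfrac12\, (\partial_\gamma Z^\gamma)\, \phi_{\mu\nu}
  \quad\Longrightarrow\quad
  \widehat{\mathcal{L}}_Z m = 0 \ \text{ for every such } Z .
\]
```

Since \(\partial _{\gamma } Z^{\gamma }\) is constant for each of these vector fields, the modification differs from the Lie derivative only by a constant multiple of the tensor itself.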

The commutation error in (1.36) can be controlled by \((\widehat{\mathcal {L}}_Z H^{\underline{L}\underline{L}}) \partial ^2 \phi _{\mu \nu }\), plus terms which involve at least one tangential derivative of \(\phi _{\mu \nu }\).

The Lie derivative along any of the vector fields Z commutes with partial derivatives \(\partial \) (see Proposition 6.24). This fact leads to the commutation formula

involving the modified Lie derivative. The term \(\widehat{\mathcal {L}}_Z H^{\underline{L}\underline{L}}\) is then controlled easily by using the formula (1.35) and repeating the argument, described in Section 1.4.3, used to control \(H^{\underline{L}\underline{L}}\) itself.

When applying the commutation formula (1.36) to the reduced Einstein equations (1.22), it in particular becomes necessary to estimate the Lie derivative of the nonlinear terms, \(\mathcal {L}_Z^I \left( F_{\mu \nu }(h)(\partial h, \partial h) \right) \). Recall the nonlinear terms take the form (1.26). The modified Lie derivative also simplifies the process of understanding derivatives of the nonlinear terms due to the following product rule. Let \(h_{\alpha \beta }\) and \(k_{\alpha \beta }\) be (0, 2) tensors and let \(S_{\mu \nu }(\partial h,\partial k)\) be a (0, 2) tensor which is a quadratic form in the (0, 3) tensors \(\partial h\) and \(\partial k\) with two contractions with the Minkowski metric (in particular \(P(\partial _\mu h,\partial _\nu k)\) or \(Q_{\mu \nu }(\partial h,\partial k)\)). Then

and so the desirable structure of the nonlinear terms \(P(\partial _\mu h,\partial _\nu h)\) and \(Q_{\mu \nu }(\partial h,\partial k)\) described in Section 1.4.3 is preserved after applying Lie derivatives.

The Lie derivatives of the energy momentum tensor are controlled by Theorem 1.3 since, for any function \(\phi \), the quantities \(\sum _{\vert I \vert \le N} \vert Z^I \phi \vert \) and \(\sum _{\vert I \vert \le N} \vert \widehat{{\mathcal {L}}}_Z^I \phi \vert \) are comparable.

1.4.5 The Klainerman–Sobolev Inequality with Weights

In order to control the derivatives of the nonlinear terms and the error terms arising from commuting the system (1.22), described in Section 1.4.4, when using the energy inequality (1.23), pointwise estimates for lower order derivatives of the solution are first shown to hold. The Klainerman–Sobolev Inequality can be used to derive non-sharp bounds for \(\vert \partial Z^I h^1 \vert \) for \(\vert I \vert \le N-3\) (see equation (6.10)) directly from the bound on the energy (1.21). These pointwise bounds can be integrated from \(\{t=0\}\) to also give pointwise bounds for \(\vert Z^I h^1\vert \) for \(\vert I \vert \le N-3\) which, using the fact that \(\vert \overline{\partial } \phi \vert \le \frac{C}{1+t+r} \sum _{\vert I \vert = 1} \vert Z^I \phi \vert \) for any function \(\phi \), lead to strong pointwise estimates for all components of \(\overline{\partial } Z^I h^1\) for \(\vert I \vert \le N-4\). See Section 6.1 for more details.

Since, without restricting to tangential derivatives, it is only true that \(\vert \partial \phi \vert \le \frac{C}{1+\vert t-r\vert } \sum _{\vert I \vert = 1} \vert Z^I \phi \vert \) for any function \(\phi \), the Klainerman–Sobolev Inequality does not directly lead to good pointwise estimates for all derivatives of \(h^1\). Some further improvement is necessary to control the terms in the energy estimate and recover the inequality (1.21).
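The origin of the two different weights can be seen from elementary identities. The following is a sketch, assuming the conventions \(S = t\partial _t + x^i \partial _i\), \(B_i = x^i \partial _t + t \partial _i\), \(\Omega _{ij} = x^i \partial _j - x^j \partial _i\), together with \(r = \vert x \vert \), \(\omega ^i = x^i/r\) and \(\partial _r = \omega ^i \partial _i\) (these conventions are not restated in this section):

\[
S + \omega ^i B_i = (t+r)(\partial _t + \partial _r), \qquad S - \omega ^i B_i = (t-r)(\partial _t - \partial _r),
\]

\[
\omega ^j \Omega _{ji} + B_i - \omega ^i \omega ^j B_j = (t+r)\big ( \partial _i - \omega ^i \partial _r \big ).
\]

Since \(\vert \omega \vert = 1\), the tangential derivatives \(\partial _t + \partial _r\) and \(\partial _i - \omega ^i \partial _r\) gain a factor \((t+r)^{-1}\) relative to \(\sum _{\vert I \vert = 1} \vert Z^I \phi \vert \), whereas the transverse derivative \(\partial _t - \partial _r\) only gains a factor \(\vert t-r\vert ^{-1}\).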

1.4.6 \(L^{\infty }\)–\(L^{\infty }\) Estimate for the Wave Equation

The pointwise decay obtained for the transverse derivative of certain components of \(h^1\) “for free” from the wave coordinate condition is not sufficient in the wave zone \(t\sim r\) to close the energy estimate. The decay in this region is further improved by an \(L^{\infty }\)–\(L^{\infty }\) estimate, obtained by integrating the equations along the outgoing characteristics of the wave equation. In fact, instead of using the estimate for the full wave operator \(\widetilde{\square }_g\), as in [37], it suffices to use the estimate for the operator \(\square _0 = \big ( m^{\alpha \beta } - \frac{M}{r} \chi \big ( \frac{r}{1+t} \big ) \delta ^{\alpha \beta } \big ) \partial _{\alpha } \partial _{\beta }\). Moreover, using the pointwise decay obtained from the Klainerman–Sobolev inequality and the wave coordinate condition, it can be seen that, for the purposes of this estimate, the essential contribution of the failure of (1.11) to satisfy the classical null condition is present in the \(P_{\!\mathcal {S}}(\partial _q h,\partial _q h)\) terms, defined by (1.34). See Proposition 6.20 for a precise statement of this, and Lemma 6.21 for a proof of the \(L^{\infty }\)–\(L^{\infty }\) inequality. The fact that f is supported away from the wave zone can be shown using only the decay obtained from the Klainerman–Sobolev Inequality, and so the \(\widehat{T}\) term in (1.22) plays no role in Lemma 6.21. The pointwise decay of higher order Lie derivatives of \(h^1\) is similarly improved in Section 6.4.

1.4.7 The Hörmander \(L^1\)–\(L^{\infty }\) Inequality

Whilst the pointwise decay for lower order derivatives of h described above is sufficient to recover the assumptions (1.21) in the vacuum (when \(T^{\mu \nu } \equiv 0\)), the interior decay is not sufficient to satisfy the assumptions of Theorem 1.3. In Proposition 6.11 the Hörmander \(L^1\)–\(L^{\infty }\) inequality, Lemma 6.7, is used, together with the assumptions (1.21) on the \(L^1\) norms of \(Z^I T^{\mu \nu }(t,\cdot )\) and on the energy \(E_N(t)\) of \(h^1\), in order to improve the interior decay of \(h^1\) and lower order derivatives. This improved decay ensures that the assumptions of Theorem 1.3 are satisfied and hence the theorem can be appealed to in order to recover the assumptions (1.21) on the \(L^1\) norms of \(Z^I T^{\mu \nu }\), and to control the \(L^2\) norms of \(Z^I T^{\mu \nu }\) arising when the energy inequality (1.23) is used to improve the assumptions (1.21) on the energy \(E_N\). See Section 7 for further details on the completion of the proof of Theorem 1.2.

1.5 Vector Fields for the Vlasov Equation

The remaining difficulty in the proof of Theorem 1.2 is in establishing the \(L^1\) and \(L^2\) estimates of the vector fields applied to components of the energy momentum tensor, \(Z^I T^{\mu \nu }(t,x)\), of Theorem 1.3. For simplicity, we outline here how bounds are obtained for \(Z \rho (t,x)\), for \(Z = \Omega _{ij}, B_i, S\), where \(\rho (t,x)\) is the momentum average of f, defined by

The bounds for \(Z \rho (t,x)\) will follow from bounds of the form

for a suitable collection of vector fields \(\overline{Z}\), acting on functions of \((t,x,{\,\widehat{\!p\!}\,\,})\), which reduce to the \(Z = \Omega _{ij}, B_i, S\) vector fields when acting on spacetime functions, that is functions of (t, x) only.

Throughout this section, and in Sections 4 and 5, it is convenient, instead of considering initial data to be given at \(t=0\), to consider initial data for the Vlasov equation to be given at \(t=t_0\) for some \(t_0\ge 1\) (Footnote 4). It follows from the form of the Vlasov equation (1.18) that

(where \(X(t_0,t,x,{\,\widehat{\!p\!}\,\,}), {\,\widehat{\!P\!}\,\,}(t_0,t,x,{\,\widehat{\!p\!}\,\,})\) are abbreviated to \(X(t_0), {\,\widehat{\!P\!}\,\,}(t_0)\) respectively) for any vector \(\overline{Z}\). Since derivatives of \(f|_{t=t_0}\) are explicitly determined by initial data and behave like f, an estimate for \(\vert \overline{Z}f(t,x,{\,\widehat{\!p\!}\,\,}) \vert \) will follow from appropriate estimates for \(\vert \overline{Z}\left( X(t_0,t,x,{\,\widehat{\!p\!}\,\,})^i \right) \vert \) and \(\vert \overline{Z}\big ( {\,\widehat{\!P\!}\,\,}(t_0,t,x,{\,\widehat{\!p\!}\,\,})^i \big ) \vert \).

1.5.1 General Procedure and Vector Fields in Minkowski Space

A natural way to extend a given vector field Z on \(\mathcal {M}\) to a vector field on \(\mathcal {P}\), which by construction will have the property that \(\overline{Z}(X(t_0)^i)\) satisfy good bounds, is as follows. For a given vector field Z on \(\mathcal {M}\), let \(\Phi ^Z_{\lambda } : \mathcal {M} \rightarrow \mathcal {M}\) denote the associated one parameter family of diffeomorphisms, so that

Under a mild assumption on g, for fixed \(\tau \) any point \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathcal {P}\) with \(t >\tau \) can be uniquely described by a pair of points \(\{ (t,x), (\tau ,y) \}\) in \(\mathcal {M}\), where

is the point where the geodesic emanating from (t, x) with velocity \({\,\widehat{\!p\!}\,\,}\) intersects the hypersurface \(\Sigma _{\tau }\) (recall that \(\{ \Sigma _t \}\) denotes the level hypersurfaces of the function t), that is \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathcal {P}\) can be parameterised by \(\{ (t,x), (\tau ,y) \}\) to get (Footnote 5)

The subscript X is used in \({\,\widehat{\!p\!}\,\,}_X(t,x,\tau ,y)\) to emphasise that \({\,\widehat{\!p\!}\,\,}\) is parametrised by y using the geodesics X (in contrast to suitable approximations to the geodesics, as will be considered later). Now the action of \(\Phi ^Z_{\lambda }\) on (t, x) and \((\tau ,y)\) induces an action on \(\mathcal {P}\) at time t, given by

For fixed \(t_0\) we define the vector field \(\overline{Z}\) by

A computation shows that

which results in a good bound for \(\vert \overline{Z}(X(t_0)^i) \vert \). In particular, if \(X^i(t_0,t,x,{\,\widehat{\!p\!}\,\,})\) and \({\,\widehat{\!P\!}\,\,}^i(t_0,t,x,{\,\widehat{\!p\!}\,\,})\) are bounded in the support of \(f(t_0,X(t_0), {\,\widehat{\!P\!}\,\,}(t_0))\), equation (1.40) guarantees that \(\vert \overline{Z}(X(t_0)^i) \vert \) is bounded by a constant.

To see that (1.40) indeed holds, first note that the left hand side is the derivative of \( X\big (t_0,\overline{\Phi }^{Z,X}_{\lambda ,t_0}(t,x,{\,\widehat{\!p\!}\,\,})\big )^i\) with respect to \(\lambda \) at \(\lambda =0\). Also the first term on the right hand side is the derivative of \(\Phi ^Z_{\lambda }(t_0,y)^i\) at \(\lambda =0\). The equality (1.40) follows from taking the derivative with respect to \(\lambda \), and setting \(\lambda =0\), of both sides of the identity

In Minkowski space, y has the explicit form \(y=x-(t-\tau ){\,\widehat{\!p\!}\,\,}\) and, when Z is chosen to be \(\Omega _{ij}, B_i, S\), a straightforward computation, see Section 3, shows that the resulting vectors \(\overline{Z}^M\!\) take the form,

Let \(\widehat{q}_X(t,x,t_0,y)=\widehat{P}(t_0,t,x,p)\) be the initial momentum of the geodesic going through \((t,x,\widehat{p})\). By differentiating the equality

with respect to \(\lambda \), one can similarly obtain an estimate for \(\overline{Z}({\,\widehat{\!P\!}\,\,}^i(t_0))\). In the simple case of Minkowski space, \(\widehat{q}_X\) has the explicit form

and so

since \({\mathrm{d}{\,\widehat{\!P\!}\,\,}}\!/{ \mathrm{d}s} = 0\) in Minkowski space, which leads to a good bound for \(\vert \overline{Z}({\,\widehat{\!P\!}\,\,}^i(t_0)) \vert \) and, together with (1.40), results in bounds of the form (1.37) for solutions of the Vlasov equation on Minkowski space. Such bounds lead to bounds on \(Z \rho (t,x)\), since

The rotation vector fields \(\overline{\Omega }^M_{ij}\) and a form of the scaling vector field \(\overline{S}^M\) were used in [46] for small data solutions of the massless Einstein–Vlasov system (note though that the above procedure of using Z to define \(\overline{Z}\) breaks down when the mass shell \(\mathcal {P}\) becomes the set of null vectors, as is the case for the massless Einstein–Vlasov system) (Footnote 6). The vector fields \(\overline{\Omega }^M_{ij}\), \(\overline{B}^M_i\), \(\overline{S}^M\) were also used in the work [17] on the Vlasov–Nordström system, where the authors notice that the rotations \(\overline{\Omega }^M_{ij}\) and the boosts \(\overline{B}^M_i\) are the complete lifts of the spacetime rotations and boosts, and hence generate symmetries of the tangent bundle.
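The lifting procedure of this section can be illustrated numerically in Minkowski space. The sketch below is a hedged illustration rather than the construction used in the proofs: it assumes that geodesics are the straight lines \(y = x-(t-\tau ){\,\widehat{\!p\!}\,\,}\), that the induced action on \({\,\widehat{\!p\!}\,\,}\) is the velocity of the straight line through the two image points \(\Phi ^Z_{\lambda }(t,x)\) and \(\Phi ^Z_{\lambda }(t_0,y)\), and the conventions \(\Omega _{12} = x^1 \partial _2 - x^2 \partial _1\), \(B_1 = x^1 \partial _t + t \partial _1\), \(S = t \partial _t + x^k \partial _k\). All function names are hypothetical. The \({\,\widehat{\!p\!}\,\,}\) components of the lifted vector field are approximated by a central difference in \(\lambda \).

```python
import math

def p_hat(t, x, tau, y):
    # Velocity of the straight line joining (tau, y) to (t, x).
    return [(xi - yi) / (t - tau) for xi, yi in zip(x, y)]

def y_of(t, x, p, tau):
    # Point where the line through (t, x) with velocity p meets {time = tau}.
    return [xi - (t - tau) * pi for xi, pi in zip(x, p)]

def flow_scaling(lam, t, x):
    # Flow of S = t d_t + x^k d_k.
    e = math.exp(lam)
    return e * t, [e * xi for xi in x]

def flow_rotation12(lam, t, x):
    # Flow of Omega_12 = x^1 d_2 - x^2 d_1 (list index 0 is x^1).
    c, s = math.cos(lam), math.sin(lam)
    return t, [c * x[0] - s * x[1], s * x[0] + c * x[1], x[2]]

def flow_boost1(lam, t, x):
    # Flow of B_1 = x^1 d_t + t d_1.
    ch, sh = math.cosh(lam), math.sinh(lam)
    return ch * t + sh * x[0], [ch * x[0] + sh * t, x[1], x[2]]

def lifted_p(flow, lam, t, x, p, t0):
    # Move both endpoints (t, x) and (t0, y) by the flow, then read off
    # the velocity of the straight line through the two image points.
    y = y_of(t, x, p, t0)
    t1, x1 = flow(lam, t, x)
    tau1, y1 = flow(lam, t0, y)
    return p_hat(t1, x1, tau1, y1)

def p_component(flow, t, x, p, t0, h=1e-6):
    # Central difference in lam at lam = 0: the p-hat part of the lift.
    plus = lifted_p(flow, h, t, x, p, t0)
    minus = lifted_p(flow, -h, t, x, p, t0)
    return [(a - b) / (2 * h) for a, b in zip(plus, minus)]
```

Under these assumptions the scaling has no \({\,\widehat{\!p\!}\,\,}\) component, the rotation rotates \({\,\widehat{\!p\!}\,\,}\) in the same way as x, and the boost \(B_1\) contributes \((\delta _1^{\,j} - {\,\widehat{\!p\!}\,\,}^1 {\,\widehat{\!p\!}\,\,}^j) \partial _{{\,\widehat{\!p\!}\,\,}^j}\), which may be compared with the expressions for \(\overline{Z}^M\) given in the text.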

1.5.2 Vector Fields Used in the Proof of Theorem 1.3

In the proof of Theorem 1.2, in order to obtain good estimates for \(Z^I T^{\mu \nu }(t,x)\), it is necessary to obtain bounds of the form (1.37), now for solutions of the Vlasov equation on the spacetimes being constructed. The sharp interior decay rate of the Christoffel symbols in the spacetimes which are constructed, as we plan to show in forthcoming work, is

where \(0<c<1\) and \(K \ge 0\) (Footnote 7). On a spacetime whose Christoffel symbols decay as such, it can be shown that the Minkowski vector fields \(\overline{Z}^M\) of the previous section only satisfy

for solutions f of the Vlasov equation. This logarithmic loss compounds at higher orders, and so the Minkowski vector fields cannot be used to recover the sharp bounds (1.42) in the context of Theorem 1.2.

The proof of Theorem 1.2 is therefore based on a different collection of vector fields, \(\overline{Z}\), which are adapted to the geometry of the background spacetime and again reduce to \(Z = \Omega _{ij}, B_i, S\) when acting on spacetime functions, and satisfy a good bound of the form (1.37) when applied to solutions f of the Vlasov equation. The vector fields can be derived using the procedure described in Section 1.5.1, which in fact did not rely on the background spacetime being Minkowski space. Instead of the expression (1.39), the components \(y^i\) are defined using approximations \(X_2(s,t,x,{\,\widehat{\!p\!}\,\,})\) to the true geodesics \(X(s,t,x,{\,\widehat{\!p\!}\,\,})\) of the spacetimes to be constructed (Footnote 8). One could also use the geodesics themselves; however, doing so involves estimating the components \(T^{\mu \nu }\) at the top order in a slightly different way to how they are estimated at lower orders. We choose to use the approximations to the geodesics so that \(T^{\mu \nu }\) can be estimated in the same way at all orders. A derivation of the vector fields obtained using this procedure is given in Section 3, but here the failure of the Minkowski vector fields \(\overline{Z}^M\) is identified explicitly and it is shown how they can be appropriately corrected. The two procedures agree up to lower order terms.

Instead of the sharp interior bounds (1.42), the proof of Theorem 1.3 requires only the weaker bounds

for \(\vert I \vert \le \left\lfloor N/2 \right\rfloor + 2\), where \(\frac{1}{2}< a < 1\). Consider first the rotation vector fields, and recall the expression (1.38). The rotation vector fields \(\overline{\Omega }_{ij}\) are defined using approximations to the geodesics. The geodesics take the form

Using the fact that

where each of the first approximations arises by replacing \({\,\widehat{\!P\!}\,\,}(s,t,x,{\,\widehat{\!p\!}\,\,})^k\) and \(X(s,t,x,{\,\widehat{\!p\!}\,\,})^k\) by their respective values in Minkowski space, and each of the second arises from the fact that \(\frac{x^i}{t} \sim {\,\widehat{\!p\!}\,\,}^i\), which holds asymptotically along each given geodesic (see Proposition 2.2 below), the approximations to the geodesics are defined as

for \(t_0 \le s \le t\). It will be shown in Section 2 that \(X_2(s,t,x,{\,\widehat{\!p\!}\,\,})^k\) are good approximations to the geodesics \(X(s,t,x,{\,\widehat{\!p\!}\,\,})^k\) in the sense that

for all \(t_0\le s \le t\) and \(k=1,2,3\). The idea is now to construct vector fields so that the vector fields applied to \(X_2\) are bounded. Then one can show that (1.45) is true with \(X_2 - X\) replaced by \(\overline{Z}(X_2-X)\). See Section 4 for more details. The approximations \(X_2\) have the desirable property, which will be exploited below, that

vanishes (and in particular does not involve derivatives of \(\Gamma \)).

Applying the Minkowski rotation vector fields to the approximations \(X_2\) gives

In the final equality, for \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathrm {supp}(f)\), the first two terms are bounded (as can be seen from (1.45) and the fact that \(f\vert _{t=t_0}\) has compact support). However on a spacetime only satisfying the bounds (1.43), the terms on the last line in general grow in t. The vector fields \(\overline{\Omega }_{ij}\) are defined so that these terms are removed:

where if the functions \(\mathring{\Omega }_{ij}^l\) are defined as

it follows from (1.46) and (1.47) that

and so

for \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathrm {supp}(f)\) and \(k=1,2,3\). It can similarly be shown (see Section 4.2) that

which, by (1.38), leads to a good bound for \(\overline{\Omega }_{ij} f(t,x,{\,\widehat{\!p\!}\,\,})\).

We remark that the identity (1.48) can be expressed as

which is exactly (1.40) when \(Z = \Omega _{ij}\) and X is replaced by \(X_2\). The rotation vector fields \(\overline{\Omega }_{ij}\) therefore arise by following the procedure in Section 1.5.1 with X replaced by \(X_2\); see Section 3.

Since the functions \(\mathring{\Omega }_{ij}^l\) do not depend on \({\,\widehat{\!p\!}\,\,}\),

and so the good bounds for \(\overline{\Omega }_{ij} f(t,x,{\,\widehat{\!p\!}\,\,})\) lead to good bounds for \(\Omega _{ij} \rho (t,x)\).

Had the true geodesics been used to define the vector fields \(\overline{\Omega }_{ij}\), the functions \(\mathring{\Omega }_{ij}^l\) would have involved a term of the form \(\int _{t_0}^t ({s' - t_0})({t-t_0})^{-1} \overline{\Omega }_{ij}^M \big ( {\,\widehat{\!\Gamma \!}\,\,}^k\big ( s',X(s'),{\,\widehat{\!P\!}\,\,}(s') \big ) \big )\, \mathrm{d}s'\) and so \(\partial _{{\,\widehat{\!p\!}\,\,}^k} \mathring{\Omega }_{ij}^l\) would involve second order derivatives of \(\Gamma \). The average \(\int \mathring{\Omega }_{ij}^l \partial _{{\,\widehat{\!p\!}\,\,}^l} f (t,x,{\,\widehat{\!p\!}\,\,}) \,\mathrm{d} {\,\widehat{\!p\!}\,\,}\) has better decay than the term \(\mathring{\Omega }_{ij}^l \partial _{{\,\widehat{\!p\!}\,\,}^l} f (t,x,{\,\widehat{\!p\!}\,\,})\) does pointwise and so, to exploit this fact when obtaining a bound for \(\Omega _{ij} \rho (t,x)\), it is necessary to integrate this term by parts: \(\int \mathring{\Omega }_{ij}^l \partial _{{\,\widehat{\!p\!}\,\,}^l} f (t,x,{\,\widehat{\!p\!}\,\,})\, \mathrm{d} {\,\widehat{\!p\!}\,\,}= - \int \big ( \partial _{{\,\widehat{\!p\!}\,\,}^l} \mathring{\Omega }_{ij}^l \big ) f (t,x,{\,\widehat{\!p\!}\,\,}) \,\mathrm{d} {\,\widehat{\!p\!}\,\,}\). If the true geodesics are used to define \(\overline{\Omega }_{ij}\) then, in the setting of Theorem 1.2, the fact that \(\partial _{{\,\widehat{\!p\!}\,\,}^k} \mathring{\Omega }_{ij}^l\) involves second order derivatives of \(\Gamma \) would mean that this integration by parts cannot be used when estimating derivatives of \(T^{\mu \nu }\) at the top order, and so \(T^{\mu \nu }\) would be estimated in a slightly different way at the top order.

The approximations to the geodesics \(X_2(s,t,x,{\,\widehat{\!p\!}\,\,})\) are used in a similar manner in Section 4.1 to define vector fields \(\overline{B}_i\) and \(\overline{S}\) (Footnote 9).

In Hwang–Rendall–Velázquez [23] derivatives of the average \(\int f(t,x,p)\, \mathrm{d}p\) for solutions of the Vlasov–Poisson system are controlled and \(L^{\infty }\) analogues of the estimates of Theorem 1.3 are obtained. The approach is different to that taken in the present work, though the estimates in [23] are obtained by similarly first controlling derivatives of the analogues of the maps X, P. The re-parameterisation (t, x, y) of \(\mathcal {P}\) is also used, though whilst here it is only formally used to motivate the definition of the \(\overline{Z}\) vector fields, in [23] \(\partial _x\) derivatives, in the (t, x, y) coordinate system, of the analogue of the maps X, P are controlled.

It is also interesting to compare the present work with the independent work of Fajman–Joudioux–Smulevici [19]. The proof in [19] is also based on the vector field method (following [29, 37] in the vacuum) and, accordingly, the new elements of the proof also involve controlling vector fields applied to the components of the energy momentum tensor \(T^{\mu \nu }\). The issue of the failure of the Minkowski vector fields applied to f to be bounded arises. A key step in [19] is therefore also to introduce a new collection of vector fields further adapted to the geometry of the spacetimes under consideration. The vector fields introduced are different to those introduced here. The construction in [19] proceeds roughly by, in the (t, x, p) coordinate system for \(\mathcal {P}\), considering the vectors \(\overline{Z}^M + C_Z(t,x,p)^{\mu } \partial _{x^{\mu }}\), where the coefficients \(C_Z(t,x,p)^{\mu }\) are defined, by solving an inhomogeneous transport equation, so that \(\overline{Z}^M+ C_Z(t,x,p)^{\mu } \partial _{x^{\mu }}\) has good commutation properties with \(\mathbf {X}\). The solutions of the Vlasov equation in [19] are controlled by commuting the Vlasov equation (1.3) with these vector fields, in contrast to the present work where solutions of the Vlasov equation are controlled by expressing them in terms of geodesics (1.18) and commuting the geodesic equations (1.17) with vector fields.

1.6 Outline of the Paper

In Section 2 the system (1.17) is used to prove the property (1.19) regarding the support of the matter, and the maps (1.44) are shown to be good approximations to the geodesics. In Section 3, which is not required for the proof of Theorem 1.2 or Theorem 1.3, the discussion of the vector fields in Section 1.5 is expanded on and a derivation of the vector fields used in the proof of Theorem 1.3 is given. In Section 4 combinations of the vector fields applied to the geodesics are estimated. In Section 5 the estimates of Section 4 are used to obtain estimates for spacetime vector fields applied to the components of the energy momentum tensor and hence prove Theorem 1.3. In Section 6 the solution of the reduced Einstein equations is estimated in terms of the components of the energy momentum tensor. The results of the previous sections are combined in Section 7 to give the proof of Theorem 1.2.

2 The Support of the Matter and Approximations to the Geodesics

In this section the Vlasov equation on a fixed spacetime is considered. It is shown that under some assumptions on the metric the solution is supported, for large times, away from the wave zone \(x \sim t\) provided \(f_0\) is compactly supported. Curves \(X_2\), which approximate the timelike geodesics X and are later used to define the \(\overline{Z}\) vector fields, are introduced.

Note that the characteristics of the operator \(\frac{1}{p^0} \mathbf {X}\), where \(\mathbf {X}\) is given by (1.5), solve

and so

Hence

where \(X^0=s\), \({\,\widehat{\!P\!}\,\,}^0=1\) and

Let functions \(\Lambda ^{\alpha \beta , \mu }_{\gamma }\) be defined so that

that is \(\Lambda ^{\alpha \beta , 0}_{\gamma } ({\,\widehat{\!p\!}\,\,})=0\), \(\Lambda ^{\alpha \beta , i}_{0} ({\,\widehat{\!p\!}\,\,})={\,\widehat{\!p\!}\,\,}^i{\,\widehat{\!p\!}\,\,}^{\alpha }{\,\widehat{\!p\!}\,\,}^\beta \) and \(\Lambda ^{\alpha \beta , i}_{j} ({\,\widehat{\!p\!}\,\,})=-\delta _{\!j}^{\,\,i}{\,\widehat{\!p\!}\,\,}^{\alpha }{\,\widehat{\!p\!}\,\,}^\beta \), for \(i=1,2,3\).
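The stated components of \(\Lambda \) can be recovered from the geodesic equations. The following is a sketch under two assumptions: that the geodesics satisfy \(\mathrm{d}x^{\mu }/\mathrm{d}\tau = p^{\mu }\) and \(\mathrm{d}p^{\mu }/\mathrm{d}\tau = -\Gamma ^{\mu }_{\alpha \beta } p^{\alpha } p^{\beta }\) in an affine parameter \(\tau \), and that \({\,\widehat{\!p\!}\,\,}^{\mu } = p^{\mu }/p^0\) with \(s = x^0\), so that \(\mathrm{d}s/\mathrm{d}\tau = p^0\). Then

\[
\frac{\mathrm{d} {\,\widehat{\!p\!}\,\,}^i}{\mathrm{d}s} = \frac{1}{p^0} \frac{\mathrm{d}}{\mathrm{d}\tau } \Big ( \frac{p^i}{p^0} \Big ) = \frac{1}{(p^0)^2} \frac{\mathrm{d} p^i}{\mathrm{d}\tau } - \frac{p^i}{(p^0)^3} \frac{\mathrm{d} p^0}{\mathrm{d}\tau } = {\,\widehat{\!p\!}\,\,}^i {\,\widehat{\!p\!}\,\,}^{\alpha } {\,\widehat{\!p\!}\,\,}^{\beta } \Gamma ^0_{\alpha \beta } - {\,\widehat{\!p\!}\,\,}^{\alpha } {\,\widehat{\!p\!}\,\,}^{\beta } \Gamma ^i_{\alpha \beta } ,
\]

which is the contraction \(\Lambda ^{\alpha \beta , i}_{0} \Gamma ^0_{\alpha \beta } + \Lambda ^{\alpha \beta , i}_{j} \Gamma ^j_{\alpha \beta }\) with the components listed above, while the vanishing of \(\Lambda ^{\alpha \beta , 0}_{\gamma }\) reflects \({\,\widehat{\!P\!}\,\,}^0=1\).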

2.1 Properties of the Support of the Matter

The results of this section will rely on the Christoffel symbols satisfying the assumptions

for various small \(N^{\prime \prime }\). Then if (2.7) below holds in \(\mathrm {supp}(f)\), the assumption (2.5) implies that

in \(\mathrm {supp}(f)\). The notation (y, q) will be used for points in the mass shell over the initial hypersurface \(\{t=0\}\), so \(y \in \Sigma _{0}\), \(q \in \mathcal {P}_{(0,y)}\).

The next result (Proposition 2.1) guarantees that

for \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathrm {supp}(f)\), for some constants K and c.

Proposition 2.1

Suppose that \(|y|\le K\) and \(|q|\le K^\prime \), for some \(K,K'\ge 1\), and that for some fixed \(a,\delta >0\)

where \(c'={1}/({16(1+8{K^{\prime }}^2)})\) and \(c^{\prime \prime }=\min {\big (2^{-\delta }{c'}^{\delta }K^{a}a,(1+2K/c')^{-\delta }\delta \big )}/({8(1+4{K^{\prime }})})\). Then with \(c=1-c'\) we have

Proof

Let \(s_1\) be the largest number such that \(| P(s,0,y,q)|\le 2K'\) for \(0\le s\le s_1\). We will show that then it follows that \(| P(s,0,y,q)|< K'+1\), for \(0\le s\le s_1\), contradicting the maximality of \(s_1\). Let \(p=P(s,0,y,q)\). Since \(g_{\alpha \beta }p^\alpha p^\beta =-1\) it follows that \(\big | 1+|p_{\,}|^2-|p^0|^2\big |\le |g-m|(|p^0|^2+|p_{\,}|^2)\). Hence \((1-c')|p^0|^2\le 1+(1+c')|p_{\,}|^2\) and

which proves the second and hence third part of (2.9), assuming the weaker bound of the first. It follows that

so \(s-|X| \ge (1-c)s-K\) along a characteristic X(s). Therefore \(|\Gamma \big (s,X(s)\big )|\le 2^{a+\delta }c^{\prime \prime }{c'}^{-a-\delta } s^{-1-a}\), when \(s\ge 2K/c'\), and \(|\Gamma |\le c^{\prime \prime }(1+s)^{-1+\delta }\), when \(s\le 2K/c'\) along a characteristic. By (2.1) we have

where

by assumption. Hence, using the weak inductive assumption,

It follows that \(|P(s,0,y,q)|\le K'+1/4\) in the support of f, and (2.9) follows.

\(\square \)
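The mass shell step in the above proof can be spelled out as follows. This is a sketch, assuming that \(m = \mathrm{diag}(-1,1,1,1)\), that \(\vert g - m \vert \le c'\) along the characteristic, and that the norm is such that \(\vert (g-m)_{\alpha \beta } p^{\alpha } p^{\beta } \vert \le \vert g-m \vert (\vert p^0 \vert ^2 + \vert p \vert ^2)\). Writing \(g = m + (g-m)\) in \(g_{\alpha \beta } p^{\alpha } p^{\beta } = -1\) gives

\[
1 + \vert p \vert ^2 - \vert p^0 \vert ^2 = -(g-m)_{\alpha \beta } p^{\alpha } p^{\beta } , \qquad \text{so} \qquad \big \vert 1 + \vert p \vert ^2 - \vert p^0 \vert ^2 \big \vert \le c' \big ( \vert p^0 \vert ^2 + \vert p \vert ^2 \big ),
\]

and rearranging the two resulting one-sided inequalities yields

\[
(1-c') \vert p^0 \vert ^2 \le 1 + (1+c') \vert p \vert ^2 , \qquad (1+c') \vert p^0 \vert ^2 \ge 1 + (1-c') \vert p \vert ^2 .
\]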

Proposition 2.2

If \(\vert \Gamma (t,x) \vert \le c^{\prime \prime \prime }(1+t)^{-1-a}\) for \(\vert x \vert \le ct +K\) then, for \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathrm {supp}(f)\) with \(t\ge 1\),

Proof

Note that any \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathrm {supp}(f)\) can be written as \((t,x,{\,\widehat{\!p\!}\,\,}) = (t,X(t,0,y,q),{\,\widehat{\!P\!}\,\,}(t,0,y,q))\) for some \((y,q) \in \mathrm {supp}(f_0)\). For (y, q) such that \((0,y,q) \in \mathrm {supp}(f)\),

for all \(s\ge 0\), using the bound (2.8). The proof follows by integrating forwards from \(s=0\) and using the fact that \(\vert y \vert + \vert q \vert \le C\),

and dividing by s. \(\quad \square \)

2.2 Translated Time Coordinate

Proposition 2.1 implies that \(\vert x \vert \le c t +K\) for \(t\ge 0\) in \(\mathrm {supp}(T^{\mu \nu })\) or, equivalently, \(\vert x \vert \le c \tilde{t}\) for \(\tilde{t} \ge t_0\), where \(t_0 := {K}/{c}\), and \(\tilde{t} = t_0 + t\). It is convenient to use this translated time coordinate \(\tilde{t}\) in what follows. In particular, the vector fields of Section 4 will be defined using this variable. The main advantage is that the spacetime vector fields \(\tilde{Z} = \tilde{\Omega }_{ij}, \tilde{B}_i, \tilde{S}\) defined by

satisfy, for any multi index I,

for some smooth functions \(\Lambda _{IJ}\). Estimates for \(\partial ^I \tilde{Z}^J T^{\mu \nu }\) will then follow directly from estimates for \(\tilde{Z}^I T^{\mu \nu }\), which are less cumbersome to obtain; see Section 5.4. For simplicity the \(\tilde{}\) will always be omitted, and statements will simply be made for \(t\ge t_0\).
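The equivalence of the two descriptions of the support used at the start of this section is a one line computation, using only the definitions \(t_0 = K/c\) and \(\tilde{t} = t_0 + t\):

\[
\vert x \vert \le ct + K = c \Big ( t + \frac{K}{c} \Big ) = c (t + t_0) = c \tilde{t} , \qquad t \ge 0 \iff \tilde{t} \ge t_0 .
\]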

2.3 Approximations to Geodesics

Define, for \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathrm {supp}(f)\) and \(t_0 \le s \le t\),

and set

for \(i=1,2,3\). Note that

for \(i=1,2,3\). The geodesic equations (2.2) can be used to derive the following equations for \(\overline{X}\) and \(\overline{P}\):

It follows from the next proposition that the curves \(s\mapsto X_2(s,t,x,{\,\widehat{\!p\!}\,\,})\) are good approximations to the geodesics \(s \mapsto X(s,t,x,{\,\widehat{\!p\!}\,\,})\). Recall, from Section 1.2.2, the notation \(\lesssim \).

Proposition 2.3

Suppose \(\vert \Gamma (t,x) \vert + t \vert \partial \Gamma (t,x) \vert \lesssim \varepsilon t^{-1-a}\) in \(\mathrm {supp}(f)\). Given \(t\ge t_0\) such that \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathrm {supp}(f)\),

for all \(t_0 \le s \le t\) and \(i=1,2,3\).

Proof

First note that equation (2.2) and the bounds assumed on \(\Gamma \) imply

for \(t_0\le s \le t\). Note also that

where \(y=X(0,t,x,{\,\widehat{\!p\!}\,\,})\), \(q = {\,\widehat{\!P\!}\,\,}(0,t,x,{\,\widehat{\!p\!}\,\,})\). Proposition 2.2, together with the integration of (2.17) from s to t and (2.11), then gives

for \(t_0 \le s \le t\) and \(i=1,2,3\). Hence

Moreover,

for \(t_0 \le s \le t\), and so

Integrating equation (2.15) backwards from \(s=t\), and using the fact that \(\overline{P}(t,t,x,{\,\widehat{\!p\!}\,\,})^i = 0\), gives

and integrating the equation (2.14) backwards from \(s=t\) and using \(\overline{X}(t,t,x,{\,\widehat{\!p\!}\,\,})^i = 0\) gives

since \(a> \frac{1}{2}\). \(\quad \square \)

Corollary 2.4

Suppose \(t\ge t_0\) is such that \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathrm {supp}(f)\) and \(\vert \Gamma (t,x) \vert + t \vert \partial \Gamma (t,x) \vert \lesssim \varepsilon t^{-1-a}\). Then

for \(t_0 \le s \le t\) and \(i=1,2,3\).

Proof

The proof is an immediate consequence of the first bound of (2.7), and Proposition 2.3. \(\quad \square \)

3 The Vector Fields

The general procedure for using approximate geodesics to lift a vector field Z on \(\mathcal {M}\) to a vector field \(\overline{Z}\) on \(\mathcal {P}\), outlined in Section 1.5 for geodesics, is described in more detail in Section 3.1. Here a formula for the action of the lifted vector fields on initial conditions for the approximate geodesics is derived. The essential property of the lifted vector fields is that they are bounded when applied to these initial conditions, which one can deduce from this formula. It is however computationally more convenient to define the vector fields from their action on initial conditions for the approximate geodesics, which we do in Section 3.2. Section 3.2 is independent of Section 3.1, but we include Section 3.1 for the purpose of conceptual justification of the vector fields and the formula mentioned above.

3.1 Geometric Construction of Lifted Vector Fields Using Approximate Geodesics

3.1.1 Parametrization of Momentum Space with Physical Initial and Final Coordinates for the Approximate Geodesics

Given first approximations to the geodesics \(X_1(s,t,x,{\,\widehat{\!p\!}\,\,})\) and \({\,\widehat{\!P\!}\,\,}_{\!\!1}(s,t,x,{\,\widehat{\!p\!}\,\,})\) we define the second approximations \(X_2(s,t,x,{\,\widehat{\!p\!}\,\,})\) and \({\,\widehat{\!P\!}\,\,}_{\!\!2}(s,t,x,{\,\widehat{\!p\!}\,\,})\) to the geodesics through \((t,x,{\,\widehat{\!p\!}\,\,})\), to be the solutions of the system

Under some mild assumptions on the metric g (Footnote 10), for fixed \(\tau \) any point \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathcal {P}\) with \(t >\tau \) can be described uniquely by the pair of points \(\{ (t,x), (\tau ,y) \}\) in \(\mathcal {M}\), where

is the point where the approximate geodesic \(X_2\) emanating from (t, x) with velocity \({\,\widehat{\!p\!}\,\,}\) intersects the hypersurface \(\Sigma _{\tau }\), that is \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathcal {P}\) can be parameterised by \(\{ (t,x), (\tau ,y) \}\),

where the subscript \(X_2\) is used in \({\,\widehat{\!p\!}\,\,}_{X_2}(t,x,\tau ,y)\) to emphasise that \({\,\widehat{\!p\!}\,\,}\) is now parametrised by y using the approximations \(X_2\) to the geodesics. Integrating (3.1) backwards from t to \(t_0\) gives that \({\,\widehat{\!p\!}\,\,}={\,\widehat{\!p\!}\,\,}_{X_2}(t,x,t_0,y)\) is implicitly given by

where

Here we used Taylor’s formula with integral remainder \(f(t_0)=f(t)+f^\prime (t)(t_0-t)+\int _t^{t_0}(t_0-s)f^{\prime \prime }(s)\, \mathrm{d}s\). The first approximate geodesics of the previous section are independent of \({\,\widehat{\!p\!}\,\,}\), so in that case \(\Theta \) is independent of \({\,\widehat{\!p\!}\,\,}\) and the above relation in fact gives \({\,\widehat{\!p\!}\,\,}_{X_2}\) explicitly.
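The use of Taylor's formula can be checked numerically. The sketch below is illustrative only: it treats a single scalar component, and the forcing term `G` is a hypothetical stand-in for the right hand side of the equation for \({\,\widehat{\!P\!}\,\,}_{\!\!2}\), whose sign convention is not assumed here. For a curve with \(X''(s) = G(s)\), \(X(t) = x\) and \(X'(t) = p\), Taylor's formula gives \(X(t_0) = x - (t-t_0)p + \int _{t_0}^t (s-t_0) G(s)\, \mathrm{d}s\), which can be solved explicitly for p whenever G does not depend on p.

```python
import math

def simpson(g, a, b, n=2000):
    # Composite Simpson rule with n (even) subintervals; if a > b the
    # step is negative and the signed integral from a to b is returned.
    h = (b - a) / n
    total = g(a) + g(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(a + k * h)
    return total * h / 3

def taylor_rhs(f, df, d2f, t, t0):
    # f(t) + f'(t)(t0 - t) + int_t^{t0} (t0 - s) f''(s) ds
    return f(t) + df(t) * (t0 - t) + simpson(lambda s: (t0 - s) * d2f(s), t, t0)

def initial_position(x, p, t, t0, G):
    # X(t0) for the curve with X'' = G, X(t) = x, X'(t) = p.
    return x - (t - t0) * p + simpson(lambda s: (s - t0) * G(s), t0, t)

def final_velocity(x, y, t, t0, G):
    # Inverts initial_position for p when G is independent of p.
    return (x - y + simpson(lambda s: (s - t0) * G(s), t0, t)) / (t - t0)
```

For instance, with the hypothetical choice \(G(s) = s^{-2}\), integrating twice by hand gives \(X(t_0) = x - (t-t_0)p + \log (t/t_0) + t_0/t - 1\), which `initial_position` reproduces up to quadrature error.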

3.1.2 Lifting of Physical Vector Fields to Vector Fields on Momentum Space Adapted to the Approximate Geodesics

For a given vector field Z on \(\mathcal {M}\), let \(\Phi ^Z_{\lambda } : \mathcal {M} \rightarrow \mathcal {M}\) denote the associated one parameter family of diffeomorphisms, so \(\Phi ^Z_{0}=Id\) and so that

Now the action of \(\Phi ^Z_{\lambda }\) on (t, x) and \((\tau ,y)\) in \(\mathcal {M}\) induces an action on \((t,x,{\,\widehat{\!p\!}\,\,})\) in \(\mathcal {P}\), given by

For fixed \(t_0\) we define the vector field \(\overline{Z}\) by

We have

where

3.1.3 The Lifted Vector Fields Applied to the Initial Conditions of the Approximate Geodesics Parameterized by the Final Momentum Space

A computation shows that

which as desired is bounded independently of \(t>t_0+1\). In fact, if \(y=X_2^i(t_0,t,x,{\,\widehat{\!p\!}\,\,})\) and \(q={\,\widehat{\!P\!}\,\,}_2^i(t_0,t,x,{\,\widehat{\!p\!}\,\,})\) are bounded in the support of \(f(t_0,y,q)\), equation (3.7) guarantees that \(\vert \overline{Z}(X_2(t_0,t,x,{\,\widehat{\!p\!}\,\,})^i) \vert \) is bounded.

To see that (3.7) indeed holds, first note that the left hand side is the derivative of \( X_2\big (t_0,\overline{\Phi }^{Z,X_2}_{\lambda ,t_0}(t,x,{\,\widehat{\!p\!}\,\,})\big )^i\) with respect to \(\lambda \) at \(\lambda =0\). Also the first term on the right hand side is the derivative of \(\Phi ^Z_{\lambda }(t_0,y)^i\) at \(\lambda =0\). The equality (3.7) follows from taking the derivative with respect to \(\lambda \), at \(\lambda =0\), of both sides of the identity

The identity (3.8) simply expresses in two different ways the application of the transformation \(\Phi _\lambda \) to the initial point \((t_0,y)\) of the path. On the left hand side the transformation is applied to the approximate geodesic, which in turn leads to a transformation of its initial point.

3.2 Computation of the Lifted Vector Fields from Their Action on Initial Conditions for the Approximate Geodesics

In this section we will compute the vector fields from how they act on the initial conditions. We will use a slight modification of the formula, derived in the previous section, for how they act on the initial conditions, and will hence obtain a slight modification of the vector fields of the previous section. They are computationally slightly simpler to use than the vector fields of the previous section, though they are mildly singular for t close to \(t_0\). It is therefore assumed throughout this section that \(t\ge t_0+1\), and the vector fields will only be used in the proof of Theorem 1.3 under this assumption.

The vector fields can be derived by imposing that they have the form

where \(\widetilde{Z}^i\) are to be determined, and insisting that the relation

holds, instead of the relation (3.7) which involves \({\,\widehat{\!P\!}\,\,}_{\!\!2}(t_0,t,x,{\,\widehat{\!p\!}\,\,})^i\) instead of \({\,\widehat{\!p\!}\,\,}^i\) and is satisfied by the vector fields of the previous section. Equation (3.10) is in particular true for the Minkowski vector fields \(\overline{Z}^M\).

We now make the further assumption that the first approximate geodesics \(X_1\) and \({\,\widehat{\!P\!}\,\,}_{\!\!1}\) in (3.1) are independent of \({\,\widehat{\!p\!}\,\,}\), in which case, by (3.3), the second approximations to the geodesics are given by

with \(\Theta \) given by (3.4) now independent of \({\,\widehat{\!p\!}\,\,}\). Applying the expression (3.9) to (3.11) gives

and so \(\widetilde{Z}^i\) is indeed determined if \(\overline{Z}\big (X_2(t_0,t,x,{\,\widehat{\!p\!}\,\,})^i\big )\) is prescribed for each i. Substituting the definition (3.10) into (3.12) and solving for \(\widetilde{Z}^i\) we obtain

The coefficients of the vector fields we are considering are linear functions

where \(x^0=t\). Hence, by (3.11),

where \({\,\widehat{\!p\!}\,\,}^0=1\) and we defined \(\Theta ^0=0\). Hence

Substituting \(X_1=sx/t\) and \({\,\widehat{\!P\!}\,\,}_{\! 1}=x/t\) into (3.4) we have

Lemma 3.1

If

then

where, with \((Z\Lambda )(x/t)=Z \big (\Lambda (x/t)\big )\),

Proof

Changing variables gives

Hence

Integrating by parts we have

and combining the above identities gives the lemma. \(\quad \square \)

Remark 3.2

We note that \(\Theta _Z\) is of exactly the same form as \(\Theta \) and hence can be estimated in the same way. Moreover, a vector field \(Z'\) applied to \(\Theta _Z\) will produce a \((\Theta _X)_{Z^\prime }\) of the same form.

One can further obtain bounds for \(\overline{Z}\) applied to the initial conditions for \({\,\widehat{\!P\!}\,\,}\) as follows: we have

so

which again is bounded, provided the components of Z grow at most like t.
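For orientation, the spacetime parts of the Minkowski generators can be written out in components. The display below is a sketch under the standard sign conventions for rotations, boosts and scaling (an assumption made for illustration; the conventions fixed earlier in the paper take precedence), with \(x^0=t\) and \(Z = Z^0\partial _t + Z^k\partial _{x^k}\):

```latex
% Components Z^0, Z^k of the standard Minkowski generators (assumed conventions).
\begin{align*}
\Omega_{ij} &= x^i\partial_{x^j} - x^j\partial_{x^i}: & Z^0 &= 0,   & Z^k &= x^i\delta_j^k - x^j\delta_i^k,\\
B_i         &= x^i\partial_t + t\,\partial_{x^i}:     & Z^0 &= x^i, & Z^k &= t\,\delta_i^k,\\
S           &= t\,\partial_t + x^k\partial_{x^k}:     & Z^0 &= t,   & Z^k &= x^k.
\end{align*}
```

Each component is linear in \((t,x)\) and, in the region \(\vert x \vert \le ct\) with \(0<c<1\), is bounded in absolute value by \(t\), which is consistent with the growth condition just stated.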

3.2.1 The Rotation Vector Fields

Since the rotations satisfy \(Z^0=0\) it easily follows from the above lemma that

where \(\Theta \) is given by (3.17) and

3.2.2 The Scaling Vector Field

By the above lemma we have

3.2.3 The Boost Vector Fields

By the above lemma we have

where

4 Vector Fields Applied to Geodesics

Recall from Section 2 the definitions (2.13) of \(X_2(s,t,x,{\,\widehat{\!p\!}\,\,})^i\) and \({\,\widehat{\!P\!}\,\,}_{\!\!2}(s,t,x,{\,\widehat{\!p\!}\,\,})^i\), and

In this section estimates for combinations of appropriate vector fields, \(\overline{Z}^I\) (see Sections 3 and 4.1 below), applied to the geodesics \(X(t_0,t,x,{\,\widehat{\!p\!}\,\,})\) and \({\,\widehat{\!P\!}\,\,}(t_0,t,x,{\,\widehat{\!p\!}\,\,})\) of a fixed spacetime satisfying the pointwise bounds

where \(\frac{1}{2}< a < 1\), are obtained. The main results are Proposition 4.24 and Corollary 4.27, which will be used in Section 5 to estimate combinations of the spacetime vector fields, \(Z^I\), applied to the components of the energy momentum tensor, \(T^{\mu \nu }(t,x)\).

Proposition 4.24 and Corollary 4.27 are obtained by applying \(\overline{Z}^I\) to the system (2.14)–(2.15) (see Propositions 4.23 and 4.26) to first estimate \(\overline{Z}^I(\overline{X}(t_0)^k)\) and \(\overline{Z}^I(\overline{P}(t_0)^k)\). Proposition 4.24 and Corollary 4.27 then follow from good estimates for \(\overline{Z}^I(X_2(t_0)^k)\) and \(\overline{Z}^I({\,\widehat{\!P\!}\,\,}_2(t_0)^k)\). The vector fields \(\overline{Z}\) are defined so that such good estimates indeed hold (see Proposition 4.1 for the case \(\vert I \vert = 1\), and Proposition 4.9 for general I).

In Section 4.1 the vector fields are recalled from Section 3. In Section 4.2 the basic idea of the remainder of the section is illustrated by considering only one rotation vector field \(\overline{\Omega }_{ij}\) applied to the geodesics. In Section 4.3 schematic expressions for \(\overline{Z}^I(X_2(t_0)^k)\), along with \(\overline{Z}^I\) applied to \(\frac{x^i}{t} - {\,\widehat{\!p\!}\,\,}^i\) and \({\,\widehat{\!p\!}\,\,}^i\) (estimates of which are also used in the proof of Propositions 4.23 and 4.26) are obtained. In Section 4.4 these schematic expressions are used to obtain estimates for \(\overline{Z}^I(X_2(t_0)^k)\), and for \(\overline{Z}^I\) applied to various other quantities. Since the commuted system (2.14)–(2.15) is integrated backwards from \(s=t\), it is necessary to first estimate the “final conditions”, \(\overline{Z}^I(\overline{X}(s,t,x,{\,\widehat{\!p\!}\,\,})^k)\vert _{s=t}\) and \(\overline{Z}^I(\overline{P}(s,t,x,{\,\widehat{\!p\!}\,\,})^k)\vert _{s=t}\). Such estimates are obtained in Section 4.7 (see Propositions 4.21 and 4.22) using the results of Sections 4.5 and 4.6. In Section 4.8 lower order derivatives of \(\overline{X}(s)^k\) and \(\overline{P}(s)^k\) are estimated (Proposition 4.23) and in Section 4.9 higher order derivatives are estimated (Proposition 4.26).

In Sections 4.1–4.9 it will be assumed that \(t\ge t_0+1\). Section 4.10 is concerned with the case \(t_0 \le t \le t_0 + 1\). It will be assumed throughout this section that \(\vert x \vert \le c t\), where \(0<c<1\) (recall the discussion in Section 2.2).

4.1 Vector Fields

The proof of the main result uses the following collection of vector fields, introduced in Section 3, which are schematically denoted \(\overline{Z}\)

for \(i,j=1,2,3\), \(i<j\), and reduce to the standard rotations, boosts and scaling vector field of Minkowski space when acting on spacetime functions. Recall the notation, for \(i=1,2,3\),

and

Recall that the vector fields \(\overline{\Omega }_{ij}, \overline{B}_i, \overline{S}\) take the following form: first,

where

for \(1 \le i < j \le 3\). Second,

where

for \(i=1,2,3\). Finally,

where

By the bound (2.16), the maps \(X_2(s,t,x,{\,\widehat{\!p\!}\,\,})^i\) are good approximations to the true geodesics, \(X(s,t,x,{\,\widehat{\!p\!}\,\,})^i\). Recall that the vector fields \(\overline{\Omega }_{ij}\), \(\overline{B}_i\), \(\overline{S}\) are defined so that, when applied to \(X_2(s,t,x,{\,\widehat{\!p\!}\,\,})^i\), the following hold at \(s=t_0\):

Proposition 4.1

For \((t,x,p) \in \mathrm {supp}(f)\), the vector fields \(\overline{\Omega }_{ij}\), \(\overline{B}_i\), \(\overline{S}\) defined above satisfy

for \(i,j,k = 1,2,3\).

Proof

The proof is a straightforward computation. See also Section 3.2, where the above form of the vector fields \(\overline{\Omega }_{ij}\), \(\overline{B}_i\), \(\overline{S}\) is derived by insisting that the result of this proposition is true. (Note that (4.7)–(4.9) are nothing other than equation (3.10) specialised to the case \(\overline{Z} = \overline{\Omega }_{ij}, \overline{B}_i, \overline{S}\) respectively. See also equation (1.40) and the general discussion in Section 1.5.) \(\quad \square \)

Remark 4.2

Recall the Minkowski vector fields \(\overline{\Omega }_{ij}^M\), \(\overline{B}_{i}^M\), \(\overline{S}^M\) from Section 1.5.1. Since, in Minkowski space, the maps \(X(s,t,x,{\,\widehat{\!p\!}\,\,})^i\) are simply given by

for \(i=1,2,3\), it is easy to see that Proposition 4.1 in fact holds in Minkowski space with \(t_0\) replaced by any s:
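The claim can be checked by hand. The sketch below assumes that the Minkowski geodesics are the straight lines \(X(s,t,x,{\,\widehat{\!p\!}\,\,})^k = x^k - (t-s){\,\widehat{\!p\!}\,\,}^k\) and that the lifted Minkowski vector fields take the standard form shown in the first display. Both are assumptions made for illustration and should be compared with Section 1.5.1.

```latex
% Assumed forms of the lifted Minkowski vector fields (illustrative; compare with Section 1.5.1).
\begin{align*}
\overline{\Omega}^M_{ij} &= x^i\partial_{x^j} - x^j\partial_{x^i}
  + \widehat{p}^i\partial_{\widehat{p}^j} - \widehat{p}^j\partial_{\widehat{p}^i},\\
\overline{B}^M_i &= x^i\partial_t + t\,\partial_{x^i}
  + \big(\delta_i^j - \widehat{p}^i\widehat{p}^j\big)\partial_{\widehat{p}^j},\\
\overline{S}^M &= t\,\partial_t + x^k\partial_{x^k}.
\end{align*}
% Applied to X(s)^k = x^k - (t-s)\widehat{p}^k, with s held fixed:
\begin{align*}
\overline{\Omega}^M_{ij}\big(X(s)^k\big) &= X(s)^i\delta_j^k - X(s)^j\delta_i^k,\\
\overline{B}^M_i\big(X(s)^k\big) &= -x^i\widehat{p}^k + t\,\delta_i^k
  - (t-s)\big(\delta_i^k - \widehat{p}^i\widehat{p}^k\big)
  = s\,\delta_i^k - X(s)^i\,\widehat{p}^k,\\
\overline{S}^M\big(X(s)^k\big) &= -t\,\widehat{p}^k + x^k = X(s)^k - s\,\widehat{p}^k.
\end{align*}
```

In each case the result has the form \(Z^k(s,X(s)) - Z^0(s,X(s)){\,\widehat{\!p\!}\,\,}^k\), where \(Z^0, Z^k\) are the components of the corresponding spacetime vector field, and the computation is valid for every \(s\), not only \(s=t_0\).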

4.2 Estimates for One Rotation Vector Field Applied to the Geodesics

Recall that the main goal of Section 4 is to control combinations of vector fields applied to the components of \(X(t_0,t,x,{\,\widehat{\!p\!}\,\,})\) and \({\,\widehat{\!P\!}\,\,}(t_0,t,x,{\,\widehat{\!p\!}\,\,})\), that is, to prove Proposition 4.24 and Corollary 4.27. Proposition 4.24 and Corollary 4.27 require introducing schematic notation and first obtaining preliminary results (see Sections 4.3–4.7). The main idea, however, is straightforward and can already be understood. In order to illustrate the idea, in this section it is shown how to estimate one rotation vector field applied to the components of \(X(t_0,t,x,{\,\widehat{\!p\!}\,\,})\) and \({\,\widehat{\!P\!}\,\,}(t_0,t,x,{\,\widehat{\!p\!}\,\,})\). This section is included for the purpose of exposition and the results are not directly used in the proof of Proposition 4.24 and Corollary 4.27 or elsewhere.

By Proposition 4.1, in order to control \(\overline{\Omega }_{ij}\) applied to the components of \(X(t_0,t,x,{\,\widehat{\!p\!}\,\,})\) and \({\,\widehat{\!P\!}\,\,}(t_0,t,x,{\,\widehat{\!p\!}\,\,})\) it suffices to control \(\overline{\Omega }_{ij}\) applied to the components of \(\overline{X}(t_0,t,x,{\,\widehat{\!p\!}\,\,})\) and \(\overline{P}(t_0,t,x,{\,\widehat{\!p\!}\,\,})\) or, more generally, to show the following:

Proposition 4.3

Suppose \(t\ge t_0 + 1\), \(\vert x \vert \le c t\), \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathrm {supp}(f)\) and the bounds (4.1) hold with \(N = 0\). Then, for \(i,j,k=1,2,3\), \(i\ne j\),

for all \(t_0\le s \le t\).

The proof of Proposition 4.3 relies on two facts. The first is the fact that, for \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathrm {supp}(f)\),

which follows from the fact that \(\overline{X}(t,t,x,{\,\widehat{\!p\!}\,\,}) = \overline{P}(t,t,x,{\,\widehat{\!p\!}\,\,}) = 0\), and that \(\overline{\Omega }_{ij}\) does not involve \(\partial _t\) derivatives. The analogue of (4.10) is not true, due to the presence of the \(\partial _t\) derivatives, when \(\overline{\Omega }_{ij}\) is replaced by \(\overline{B}_{i}\) or \(\overline{S}\). These “final conditions” for \(\overline{B}_{i}\) and \(\overline{S}\), and higher order combinations of all of the \(\overline{Z}\) vector fields, applied to \(\overline{X}\) and \(\overline{P}\) are estimated in Section 4.7.
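The role of the \(\partial _t\) derivative can be made explicit with the chain rule. The following is a sketch, writing \(F(s,t,x,{\,\widehat{\!p\!}\,\,})\) for any component of \(\overline{X}\) or \(\overline{P}\) (the symbol \(F\) is introduced here only for illustration) and assuming the identity \(F(t,t,x,{\,\widehat{\!p\!}\,\,})=0\) holds on an open set of \((t,x,{\,\widehat{\!p\!}\,\,})\):

```latex
% F vanishes on the diagonal s = t, so derivatives tangent to the diagonal vanish there.
\begin{align*}
F(t,t,x,\widehat{p}) = 0
\quad &\Longrightarrow \quad
(\partial_{x^i}F)\big\vert_{s=t} = (\partial_{\widehat{p}^i}F)\big\vert_{s=t} = 0,\\
0 = \frac{d}{dt}\Big[F(t,t,x,\widehat{p})\Big]
  = (\partial_s F)\big\vert_{s=t} + (\partial_t F)\big\vert_{s=t}
\quad &\Longrightarrow \quad
(\partial_t F)\big\vert_{s=t} = -(\partial_s F)\big\vert_{s=t}.
\end{align*}
```

A vector field built only from \(\partial _{x^i}\) and \(\partial _{{\,\widehat{\!p\!}\,\,}^i}\) derivatives therefore annihilates \(F\) at \(s=t\), whereas a \(\partial _t\) component contributes \(-(\partial _s F)\vert _{s=t}\), which need not vanish.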

The second fact required for the proof of Proposition 4.3 is the following estimate for \(\overline{\Omega }_{ij}\) applied to the right hand side of equation (2.15).

Proposition 4.4

Suppose \(t\ge t_0 + 1\), \(\vert x \vert \le c t\), \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathrm {supp}(f)\) and the bounds (4.1) hold with \(N = 0\). Then

for all \(t_0 \le s \le t\).

Proof

Recall that

Write

and note that

The bound for the first term follows from writing \(X(s)^l - s \frac{x^l}{t} = \overline{X}(s)^l + X_2(s)^l - s \frac{x^l}{t}\) and, using the definition (2.13) for \(X_2\) and the definition of \(\mathring{\Omega }^l_{ij}\) (see (4.3)),

Using the assumptions (4.1) on \(\Gamma \), the fact that

for \((t,x,{\,\widehat{\!p\!}\,\,}) \in \mathrm {supp}(f)\) (see Proposition 2.2), and the fact that \(\vert \mathring{\Omega }^l_{ij}(t,x) \vert \le Ct^{-a}\), it follows that

For the second term, note that

and, as above,

Hence,

Similarly, using the fact that

it follows that

and similarly,

Hence,

In a similar manner it follows that

from which (4.11) then follows. \(\quad \square \)

A higher order analogue of Proposition 4.4, which includes also the boosts and scaling and is used in the proof of Proposition 4.24 and Corollary 4.27, is obtained in Section 4.5.

Proof of Proposition 4.3

The proof proceeds by applying \(\overline{\Omega }_{ij}\) to the system (2.14)–(2.15). Applying \(\overline{\Omega }_{ij}\) to the equation (2.15) for \(\overline{P}\) and integrating backwards from \(s=t\), using (4.10), Proposition 4.4 and the fact that

and, summing over \(k=1,2,3\), the Grönwall inequality (see Lemma 4.25) gives

Inserting this bound into the equation (2.14) for \(\overline{X}\), after applying \(\overline{\Omega }_{ij}\), integrating backwards from \(s=t\) and using (4.10) gives

since \(2a>1\). For any function \(\lambda (\tilde{s})\),

where \(\chi _A\) denotes the indicator function of the set A, and so

and another application of the Grönwall inequality gives

The proof follows after inserting this bound back into (4.12). \(\quad \square \)
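Two standard tools enter the argument above, and it may help to record them in a form adapted to integration backwards from \(s=t\). The statements below are a sketch of the usual versions (not necessarily the exact displays used in the proof or in Lemma 4.25); the symbols \(u\), \(A\) and \(\beta \) are introduced here for illustration, and \(\lambda \) is assumed integrable.

```latex
% Exchange of the order of integration, for t_0 \le s \le t:
\begin{align*}
\int_s^t \int_{s'}^t \lambda(\tilde{s})\, d\tilde{s}\, ds'
= \int_s^t \int_s^t \chi_{\{s' \le \tilde{s}\}}\, \lambda(\tilde{s})\, ds'\, d\tilde{s}
= \int_s^t (\tilde{s} - s)\, \lambda(\tilde{s})\, d\tilde{s}.
\end{align*}
% Backward Gr\"onwall inequality: u, \beta \ge 0 continuous, A \ge 0 constant.
\begin{align*}
u(s) \le A + \int_s^t \beta(s')\, u(s')\, ds' \quad \text{for all } t_0 \le s \le t
\qquad \Longrightarrow \qquad
u(s) \le A \exp\Big( \int_s^t \beta(s')\, ds' \Big).
\end{align*}
```

The first identity converts a double time integral into a single integral with the weight \(\tilde{s}-s\). The second shows that \(u\) stays bounded by a multiple of \(A\) whenever \(\int _{t_0}^t \beta (s')\, ds'\) is bounded uniformly in \(t\).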

4.3 Repeated Vector Fields Applied to the Initial Conditions for Approximations to Geodesics

Recall the discussion at the beginning of Section 4. In order to motivate the results of this section and the next section note that, after applying \(\overline{Z}^I\) to the equation (2.15), the term

(amongst others) appears. Consider the first of these summands. Rewriting

the first term \(\overline{Z}^I(\overline{X}^k)\) is controlled, in the proofs of Propositions 4.23 and 4.26, by the Grönwall inequality. In Section 4.4, the term \(\overline{Z}^I\left( X^k_2(s) - \frac{sx^k}{t} \right) \) is controlled by first rewriting

The second term is computed schematically in Proposition 4.5 below, and is controlled in Section 4.4. For the first term, the fact that

is used, along with the schematic expressions of Propositions 4.6 and 4.7. The expression

similarly appears after applying \(\overline{Z}^I\) to equation (2.15), where

and is therefore also estimated in Section 4.4.

The following generalises Proposition 4.1 to higher orders. Recall it is assumed that \(t\ge t_0+1\). Section 4.10 is concerned with the case \(t_0 \le t \le t_0 + 1\).

Proposition 4.5

For any multi index I, there exist smooth functions \(\Lambda ^i_{I}\), \(\Lambda ^i_{I,j}\) such that

for \(i=1,2,3\), where \(X_2(t_0)^i = X_2(t_0,t,x,p)^i\) and \(\tilde{\Lambda }_{I,j,i_1,\ldots ,i_k}^{i,J_1,\ldots ,J_k}\) and \(\tilde{\Lambda }_{I,i_1,\ldots ,i_k}^{i,J_1,\ldots ,J_k}\) satisfy