Abstract

We reinvestigate data from the voting experiment of Forsythe et al. (Soc Choice Welf 10:223–247, 1993). In every one of 24 rounds, 28 players were randomly (re)allocated into two groups of 14 to play a voting stage game with or without a preceding opinion poll phase. We find that the null hypothesis that play in every round is given by a particular evolutionarily stable attainable equilibrium of the 14-player stage game cannot be rejected if we account for risk aversion (or a heightened concern for coordination), calibrated in another treatment.


1 Introduction

The purpose of this paper is to demonstrate, by means of a case study, how careful theory can be helpful in analyzing the data of laboratory experiments of (strategic) human interaction. For this case study, we chose an experiment performed by Forsythe et al. (1993). The authors’ aim was to perform an exploratory analysis of how opinion polls impact voting behavior in an election and to experimentally assess Duverger’s “law,” see Duverger (1954), that in any majority-rule election only two parties receive votes. They did not perform a full theoretical analysis of the game that the subjects played in the experiment. This experiment is close to perfect for our undertaking for the following reasons. First, it has a very clean and elegant design, which we explain in detail in Sect. 2. Second, it is a somewhat complicated game with a stage game that involves 14 players of three different types. Thus, the game has a large set of possible strategy profiles. This means that the game could possibly have (and indeed does have) a large number of Nash equilibria. Yet, the game has certain symmetries that pose subtle, but important restrictions on the set of feasible strategy profiles and thus on the set of feasible (or attainable) Nash equilibria in the sense of Alos-Ferrer and Kuzmics (2013).Footnote 1 Third, the game is played recurrently. This means players are repeatedly randomly chosen and matched up from a bigger pool of players to engage in this 14-player stage game. 
This implies that if a Nash equilibrium establishes itself over time, or if subjects play the same equilibrium throughout, this equilibrium should be evolutionarily stable in the sense of Maynard Smith and Price (1973) and Maynard Smith (1982).Footnote 2 Fourth, in one of the two treatments, the game has two stages, and even though the second stage game by itself has many equilibria, with symmetries posing subtle restrictions, this game can be said to have an “obvious” way to play, or focal point, in the spirit of Schelling (1960) and formally as in Alos-Ferrer and Kuzmics (2013). Nevertheless, while all these properties make the game somewhat hard for the analyst to analyze, the game, it seems to us, is not that difficult to (learn to) play for the actual subjects.

The laboratory experiment of Forsythe et al. (1993) has two treatments, a simpler one [denoted CPSS in Forsythe et al. (1993)], in which subjects only play a voting game, and a more complex one [denoted CPSSP in Forsythe et al. (1993)], in which subjects first play an opinion poll game with publicly observed outcome before they play a voting game. What we then find in our theory-driven analysis of these two treatments is this. We can calibrate the subjects’ payoff function with a parameter of risk aversion in such a way that the unique evolutionarily stable attainable equilibrium of the simpler game provides a perfect fit to the observed frequency of play. Furthermore, and this is the main result, taking this so-calibrated risk aversion parameter as given, the null hypothesis of play being given by an evolutionarily stable attainable equilibrium with focal voting stage behavior in the more complex game cannot be rejected at the 5% level of significance.Footnote 3

We believe the main contribution of this paper to be the illustration, by means of an interesting case study, of how careful theory can help with the understanding and analysis of experiments in which subjects play somewhat complicated games. The paper, arguably, also makes a contribution to the political science content of Forsythe et al. (1993) by completing the theory, which in fact provides the same insights as the experiment (by virtue of providing almost exactly correct predictions for the experiment).

1.1 Additional motivation and related literature

Why would we possibly expect equilibrium behavior in the experiments of Forsythe et al. (1993)? Bernheim (1984) and Pearce (1984) have shown that the rationality of players, in fact even common knowledge of rationality, is not sufficient for Nash equilibrium play, except in very special cases. One sufficient condition for equilibrium play, provided by Aumann and Brandenburger (1995), is that players have “mutual knowledge of the payoff functions and of rationality, and common knowledge of the conjectures,” where conjectures are meant to be “conjectures, on the part of other players, as to what a player will do.”Footnote 4 The question then is this: Under what conditions do players obtain common knowledge of conjectures and would we expect this in our case? One idea is that the game is simple enough for players to identify a focal point, see Schelling (1960), i.e., an “obvious” way to play the game. If it is both obvious and then also played, a necessary condition for such a focal point would be that it is a Nash equilibrium. Another idea is that this common knowledge of conjectures derives from the presence of a book that is commonly known to have been read, understood, and that its recommendations are followed by all players. Such a book could be, among others, a religious text, a book of etiquette, or a comprehensive attempt at such a book such as Harsanyi and Selten (1988).Footnote 5 A necessary condition for such a book to fulfill this role would be for it to describe Nash equilibrium behavior, and a unique one at that. In a similar spirit, it is feasible that a meta-norm, using the language of Alos-Ferrer and Kuzmics (2013)—meaning a norm not specific to the particular game that is played (and not necessarily in a book), exists that is commonly used as a guiding principle to identify a focal point, especially when multiple equilibria exist. 
This could, for instance, be “play a Pareto-optimal (or risk-dominant) Nash equilibrium if one exists” but would have to be much more refined and complex if it were to be successful in all games. To understand this, consider, for instance, a simple coordination game with two options A and B, where coordination on A gives both players a payoff of 1, coordination on B both players a payoff of 2, and mis-coordination both a payoff of zero. Despite the multiplicity of equilibria in this game, subjects presumably have no problem coordinating on the “focal” payoff- and risk-dominant action B.Footnote 6 Interestingly, Gunnthorsdottir et al. (2010a, b), who study somewhat more complicated games than this simple two-player, two-action game, also find that subjects are able to identify and play the unique Pareto-dominant (not risk-dominant) Nash equilibrium in their games.Footnote 7 Finally, one can derive conditions for Nash equilibrium play if the game is played recurrently, i.e., played often with always changing other players.Footnote 8 This is the subject of evolutionary game theory. For textbook treatments, see, e.g., Weibull (1995), Hofbauer and Sigmund (1998), and Sandholm (2010).Footnote 9 Its origins go back to at least Brown (1951) and Robinson (1951), and the idea is already present in the “mass action” interpretation of equilibrium in Nash (1950, page 21). One of the key findings of this literature, sometimes referred to as the “folk theorem” of evolutionary game theory, see, e.g., Nachbar (1990), is that if a “reasonable” evolutionary process converges to a point, then this point must be a Nash equilibrium. As our game of interest here is played recurrently, albeit only 24 times, concerns of evolutionary stability of play strike us as potentially relevant for our analysis. Whether 24 “repetitions” are sufficient for this is an empirical question which we address in this paper. 
Moreover, if a “reasonable” evolutionary process converges to a point, then this point should be evolutionarily stable in that it should be robust to the introduction of a small likelihood of arbitrary behavior. This idea has been formalized in the concept of an evolutionarily stable strategy (ESS) by Maynard Smith and Price (1973) and Maynard Smith (1982). There are many connections between convergence points of dynamic processes such as the replicator dynamic of Taylor and Jonker (1978) and ESS. See, e.g., the textbooks of Weibull (1995) and Sandholm (2010) for these. One of the interesting conclusions of this literature is that (certain) mixed equilibria in coordination games (or generally in many games with multiple equilibria) are not evolutionarily stable. This is despite the fact that these equilibria typically constitute a singleton strategically stable set in the sense of Kohlberg and Mertens (1986), and are therefore typically trembling-hand perfect (Selten 1975) and proper (Myerson 1978).Footnote 10 Evolutionarily stable strategies agree with strategic stability and other refinements in that they cannot be weakly dominated.Footnote 11

As the theoretical sufficient conditions for Nash equilibrium are never perfectly satisfied in practice, ultimately the question is an empirical one. There is evidence that playing a game only once is not typically sufficient to guarantee equilibrium play. For instance, Wright and Leyton-Brown (2017), performing a meta-analysis of many games, show that Nash equilibrium is overall not a good prediction in games played only once.Footnote 12 There is evidence that playing a game sufficiently often (recurrently with different other players) does lead to equilibrium play. For instance, Van Huyck et al. (1990) show that subjects often fail to play any Nash equilibrium in one-shot coordination games, while Cooper et al. (1990) find evidence of Nash equilibrium play in recurrent coordination games. Similarly, O’Neill (1987) finds evidence against laboratory subjects playing minmax (i.e., Nash equilibrium) strategies in zero-sum games, while Walker and Wooders (2001) find mixed evidence that professional tennis players use minmax strategies in their service game, Hsu et al. (2007) find evidence that professional tennis players use minmax strategies, and Palacios-Huerta (2003) finds strong evidence that professional soccer players (and goalkeepers) use minmax strategies when taking (or defending) penalty kicks. Professionals have played these games often, while laboratory subjects have not (or not often enough). Binmore et al. (2001) find that after, and only after, sufficient practice with the game do laboratory subjects play minmax strategies in a set of zero-sum games.Footnote 13 Also, in public good provision games, initial play is never an equilibrium (under material self-interested preferences), but eventual play after repetitions often is. See, e.g., Andreoni (1988) and the control group in Fehr and Gächter (2000). See also Duffy and Hopkins (2005) for learning in market entry games.

There is also a more specific literature that aims to test evolutionary game theory in the laboratory and in the field. One could argue that Cooper et al. (1990) is an early example of that. This literature is surveyed in Friedman and Sinervo (2016). For instance, Oprea et al. (2011) study the evolution in single-population and two-population hawk–dove games and find support for evolutionarily stable play (which is very different in the two different cases). Our paper does in fact have similarities to a two-player hawk–dove game. Recently, people have been interested in testing evolutionary game theory in those cases where it predicts cycles instead of convergence to equilibrium. See, for instance, Sinervo and Lively (1996) who find that certain types of lizards display cyclical behavior, and Hoffman et al. (2015), Cason et al. (2010, 2013) for experiments with human subjects and some evidence of cycling.

We are not aware of too many papers on the issue of whether only certain strategically or evolutionarily stable equilibria are played in laboratory experiments.Footnote 14 Laboratory experiments suffer a bit from the problem of the experimenter’s lack of complete control over the subjects’ utility or payoffs. See, e.g., Weibull (2004) for a discussion of this issue. For instance, observed play in ultimatum games, perhaps started by Güth et al. (1982) and recently surveyed in Cooper and Kagel (2016), could be evidence against subgame perfection, but could also be evidence against self-centered preferences. Indeed, the literature mostly interpreted this as evidence of other-regarding preferences.Footnote 15

There is a sizeable literature on laboratory experiments in political economy. The results in this literature, on the whole, mostly support the theoretical equilibrium predictions of various models on voter turnout, the Condorcet jury theorem, and the swing voter’s curse. See, e.g., Guarnaschelli et al. (2000), Aragones and Palfrey (2004), Levine and Palfrey (2007), and Bhattacharya et al. (2014). Palfrey (2009) provides a survey of this literature and finds that “[a]ll the experiments find significant evidence of strategic voting and, with a few exceptions, find support for the equilibrium predictions of the theories.”Footnote 16 What we add to this literature is that we provide an(other) instance of laboratory play that is extremely close to equilibrium theory for a rather complicated game with multiple equilibria. We show that despite these complications and strategic uncertainty, the subjects are able to coordinate their play very effectively on one of the evolutionarily stable attainable equilibria of this game.

We also point out the role risk aversion (or some other reasonable and slight transformation of the utility function) could play in explaining laboratory experiments more closely.Footnote 17 Risk aversion has been used to explain behavior in experiments in a variety of studies. For instance, Goeree and Holt (2004), Goeree et al. (2003), and Fudenberg and Liang (2018) find that risk aversion improves the explanatory power of equilibrium behavior in matrix games, while Cox and Oaxaca (1996), Chen and Plott (1998), Goeree et al. (2002), and Campo et al. (2011) find that risk aversion is helpful in explaining behavior in auctions. Deck et al. (2008) and Friedman et al. (2014) have shown, however, that estimated risk-aversion parameters are often individual and even situation specific.Footnote 18 In fact, we favor another interpretation of the necessary concavification of the utility of money to explain the data here close to perfectly as stemming from the subjects’ heightened concern for coordination beyond pure monetary incentives.

2 The experimental setup

In this section, we describe the experimental setup employed by Forsythe et al. (1993). There are two treatments. In treatment 1, the stage game is a voting game with three types of players. In treatment 2, the stage game, also with three types of players, has two phases: players, before playing the voting game, participate in an opinion poll. There is one session per treatment, and there are 28 subjects per session. These are randomly re-arranged into two equal-sized groups in each of the 24 rounds, and randomly re-assigned to a new type in each round.

The voting game is the same in all treatments, and its payoffs are given in Table 1. Four players are randomly assigned the type A, four the type B, and six the type C.Footnote 19 Each player can choose to vote for one of the three candidates, also labeled A, B, and C, or can abstain. The table states the monetary payoffs (in US$) to each type depending on which candidate wins the election. Voters of type A favor candidate A, voters of type B favor candidate B, and voters of type C favor candidate C. Voter types are, thus, named in terms of their favorite candidate.

Voters are asked to cast their vote for one of the three candidates or to abstain. If there is a tie between two or three of the most-voted candidates, the elected candidate is chosen uniformly randomly.

For treatment 2, players are first asked to state one of the three candidates (or to abstain) in an opinion poll (without payoffs), the outcome of which is then publicly announced before players are asked to play the voting game as described above. All this was carefully explained to the subjects. Subjects were, however, not told that the game would end after exactly 24 rounds.Footnote 20

There are two correct ways of seeing this experiment. One way is to see each of the two treatments in Forsythe et al. (1993) as a finitely repeated 28-player game without discounting and with a particular highly imperfect monitoring structure. Subjects, after each round, only learn what the frequency of play is within their group. They do not learn what happens in the parallel group. They also can never associate action choices with the identity of the other players. This finitely repeated game has a huge number of subgame perfect equilibria. We shall not attempt to identify all equilibria of this huge game. This is also not necessary, as we in fact show that subjects of this game play a very particular subgame perfect equilibrium of this game. They play an evolutionarily stable attainable equilibrium of the 14-player stage game at each of the 24 rounds.

The other way to see this experiment is the one that the authors seem to have intended: as a 14-player stage game recurrently played with always changing other players. Forsythe et al. (1993) have been very careful in their design so that this is a valid interpretation of the experiment. In fact, we find that subjects play independently of the past. To make it clear that this is the case and that the second view is appropriate, we analyze the data both at the aggregate level and at the individual level. Under the assumption that play is stationary, the aggregate analysis is more powerful. However, we perform a very detailed round-by-round analysis in “Appendix C” that demonstrates that the stationarity assumption is justified. One cannot reject the null of subjects playing the same strategy profile across all 24 rounds. Finally, we also split the data into early rounds (1 to 12) and later rounds (13 to 24). The analysis of these two data halves is presented in “Appendix C” as well. The main finding there is that in the game without polls there is no evidence of a change in behavior over rounds and in the game with polls there is some evidence of a change in behavior which could be interpreted as learning leading closer to (evolutionarily stable attainable) Nash equilibrium play (without risk aversion) of the game.

3 The voting game without opinion polls

In this section, we study the voting game without polls [treatment CPSS in Forsythe et al. (1993)].

3.1 The game

This is a game in which each player of each of the three types of players has four pure strategies: vote for A, vote for B, vote for C, and abstain from voting. There are four players of the A type, four of the B type, and six of the C type. The winning candidate is determined by simple (relative) majority. If there is a candidate with more votes than any other candidate, this candidate is elected with payoff consequences to the various voter types as given in Table 1.Footnote 21 If there are two or three candidates with the highest number of votes, then one of them is drawn uniformly randomly as the winner with payoff consequences again as given in Table 1.
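The election rule just described can be sketched in a few lines. This is a minimal illustration only; the function name `winner_distribution` and the representation of a vote tally as a dictionary are our own assumptions and are not taken from Forsythe et al. (1993).

```python
from fractions import Fraction

def winner_distribution(votes):
    """Probability that each candidate is elected under relative majority.

    `votes` maps candidate labels to vote counts (abstentions are not counted).
    Ties among the most-voted candidates are broken uniformly at random.
    """
    top = max(votes.values())
    leaders = [c for c, v in votes.items() if v == top]
    share = Fraction(1, len(leaders))
    return {c: (share if c in leaders else Fraction(0)) for c in votes}

# A and B types split evenly while C types vote C: C wins outright.
print(winner_distribution({"A": 4, "B": 4, "C": 6}))
# Two-way tie between A and C: each wins with probability 1/2.
print(winner_distribution({"A": 6, "B": 2, "C": 6}))
```

Expected payoffs for a voter type then follow by weighting the entries of Table 1 with these probabilities.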

3.2 Attainable equilibria

We shall here identify and compute all attainable equilibria of this 14-player voting game that are not weakly dominated.Footnote 22 Note that for C types voting for C is a weakly dominant strategy, while for A and B types voting for C and abstaining are both weakly dominated. Thus, we need to concern ourselves only with the choice of A and B types between voting for A and voting for B.

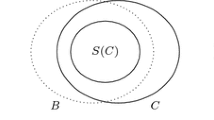

The game (without considering framing effects induced by ballot position—more about this further below) has inherent symmetries as identified already in Andonie and Kuzmics (2012). Before we identify attainable strategies for the game at hand, we here briefly explain how symmetries are identified mathematically and why symmetries restrict the set of feasible strategy profiles. We begin with the latter. The best simple example, that is in fact close to the game at hand, is (a version of) the two-player hawk–dove game. Suppose that there are two players who both can choose one of the two pure strategies, H or L. If both play H or both play L, they both get nothing. If one plays H and the other L, the player who chose H gets a high payoff and the player who chose L gets a low but also positive payoff. Suppose that this game is played recurrently by always independently randomly chosen and anonymously matched subjects from a large pool. It is then impossible, for instance, that every round of this game results in the asymmetric outcome of one player choosing H and the other L. Why? A necessary condition to achieve this asymmetric outcome would be that half of the pool of players choose H and the other half L. Note also that subjects cannot condition their behavior on the other player’s type as the interaction is completely anonymous. But then there is only a 50% chance that a pair of one H and one L player is matched.Footnote 23 Now suppose that an analyst arbitrarily always calls one of the two players player A and the other player B and arbitrarily labels the strategies H and L as A and B for player A and as B and A (i.e., the other way round) for player B. If this is only known to the analyst, then of course nothing changes for the subjects and they still find it impossible to play an asymmetric strategy profile. What changes if the subjects also see this labeling of the analyst? 
It is then feasible, but not clear whether it is very plausible, that the subjects now condition their behavior on the commonly known strategy names. For instance, they could use the fact that A comes before B in the alphabet to play the asymmetric equilibrium of everyone choosing A (that is, A players play their favorite strategy, and B players their second favorite strategy). In the present context, the ballot position could have this effect. Forsythe et al. (1993) purposely always put candidate A first on the ballot. It is now feasible and perhaps plausible that all players understand this and condition their choice on the ballot position. This would allow all A and B players to vote for A, identified as the first on the ballot. In the end, it is an empirical question whether players have sufficient faith in most of them “seeing” that they could do that in order for them to solve the coordination problem this way. In our empirical analysis, and also already in that of Forsythe et al. (1993), it emerges clearly that this is not (or at least hardly) the case.

Mathematically, attainable strategies are defined, see, e.g., Alos-Ferrer and Kuzmics (2013) building on prior work of Von Neumann and Morgenstern (1953), Nash (1951), Harsanyi and Selten (1988), Crawford and Haller (1990), and others, as strategies that are unaffected by a relabeling of symmetric strategies and symmetric players. Symmetric strategies and players have to be (in general, and this is also the case here) identified simultaneously. Loosely speaking, two players are symmetric to each other if the payoff function of one player is the same as the payoff function of another player after some relabeling of symmetric strategies and symmetric players. For a precise definition, see Alos-Ferrer and Kuzmics (2013). In the voting game treatment of Forsythe et al. (1993), we get the following restrictions for the various players. Every A-type player must use the same (mixed) strategy, every B-type player must use the same (mixed) strategy, and every C type must use the same (mixed) strategy. Let \(x_A(A)\) denote the probability that an A-type player attaches to pure strategy A. Then, as we assume that A-type players do not use strategy C, we must have that \(x_A(B)=1-x_A(A)\), the probability that an A-type player attaches to pure strategy B. A final and perhaps most important restriction of attainability is that \(x_A(A)=x_B(B)\) (and, of course also, \(x_A(B)=x_B(A)\)), where \(x_B(B)\) is the probability that a B type attaches to pure strategy B. See Andonie and Kuzmics (2012) for details. Thus, if we restrict attention to undominated attainable strategy profiles, all we need to determine is \(x=x_A(A)\).

Proposition 3.1

The voting game has exactly three undominated attainable equilibria. In all of these, C types play C, and A and B types put zero probability on C and abstaining. The three undominated equilibria are then characterized by the probability an A type attaches to A (same as a B type attaches to B) which in the three equilibria is given by \(x=0\), \(x=1\), and \(x=0.6615\), respectively.Footnote 24

Proof

We have already argued that C types must play C in an undominated equilibrium and that A and B types will avoid playing C and abstaining. Now consider the supposed equilibrium given by \(x=0\). This implies that all A types play B and all B types play A, which leads to C winning the election by two votes. This implies that no individual can change this outcome by unilaterally deviating from the stated strategy profile. The same argument applies for \(x=1\). So, these are both equilibria.

In order to identify an equilibrium \(x \in (0,1)\), we need to appeal to the indifference principle (i.e., voting for A and B has to be equally good for all A and B types of voters).

Consider w.l.o.g. an A-type voter. Let Y(x) denote the random variable that, given probability \(x \in (0,1)\), equals the number of A votes among all seven other A and B types of voters. Note that for the considered A-type voter, the choice between voting for A and voting for B changes the election result if and only if \(Y(x) \in \{1,2,5,6\}\). For instance, if \(Y(x)=1\), an A-vote by the considered A-type player will result in a tie between candidates B and C, while a B-vote would result in a win for B.
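The set of pivotal events can be checked by brute-force enumeration. The sketch below assumes, as in the text, that all six C types vote for C and that the seven other A and B types vote only for A or B; the helper names are ours.

```python
def winners(a, b, c=6):
    """Set of candidates with the highest vote count (ties are broken at random later)."""
    counts = {"A": a, "B": b, "C": c}
    top = max(counts.values())
    return frozenset(k for k, v in counts.items() if v == top)

def pivotal_values(n_others=7):
    """Values k of Y(x) at which the considered voter's choice between A and B matters."""
    pivotal = []
    for k in range(n_others + 1):
        after_a = winners(k + 1, n_others - k)      # she votes for A
        after_b = winners(k, n_others - k + 1)      # she votes for B
        if after_a != after_b:
            pivotal.append(k)
    return pivotal

print(pivotal_values())  # prints [1, 2, 5, 6]
```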

Let \(u_A(A,x)\) denote the expected payoff to the considered A-type voter if she votes for A given all others use mixing probability x, and let, similarly, \(u_A(B,x)\) denote her expected payoff in this case if she votes for B. The equilibrium condition is then given by \(u_A(A,x)=u_A(B,x)\). This equation is given in “Appendix B.” It is a polynomial in x of degree 7, and the equilibria are given by its zeros. Dividing this polynomial by \(x(1-x)\), given that \(x=0\) and \(x=1\) are zeros of this polynomial, leaves a polynomial in x of degree 5. We then apply Newton’s method to find all further zeros of this polynomial and obtain only one at \(x=0.6615\). \(\square \)
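The interior equilibrium can be reproduced numerically with a short sketch. Two things here are assumptions on our part: the payoffs of an A type are read from Table 1 as 1.2 if A wins, 0.9 if B wins, and 0.2 if C wins, and plain bisection on the payoff difference is used in place of Newton's method on the degree-5 polynomial. The function names and the bracket \([0.5, 0.9]\) are illustrative.

```python
from math import comb

# Assumed reading of Table 1 for an A-type voter (US$): A wins 1.2, B wins 0.9, C wins 0.2.
U_A, U_B, U_C = 1.2, 0.9, 0.2

def binom_pmf(n, p):
    return [comb(n, k) * p**k * (1 - p)**(n - k) for k in range(n + 1)]

def dist_Y(x):
    """Distribution of Y(x): A votes among the 3 other A types (prob. x each)
    and the 4 B types (prob. 1 - x each)."""
    pa, pb = binom_pmf(3, x), binom_pmf(4, 1 - x)
    dist = [0.0] * 8
    for i, p_i in enumerate(pa):
        for j, p_j in enumerate(pb):
            dist[i + j] += p_i * p_j
    return dist

def payoff_gap(x):
    """u_A(A, x) - u_A(B, x); only the pivotal events Y in {1, 2, 5, 6} contribute."""
    d = dist_Y(x)
    tie_bc, tie_ac = (U_B + U_C) / 2, (U_A + U_C) / 2
    return (d[1] * (tie_bc - U_B) + d[2] * (U_C - tie_bc)
            + d[5] * (tie_ac - U_C) + d[6] * (U_A - tie_ac))

def interior_equilibrium(lo=0.5, hi=0.9, tol=1e-10):
    """Bisection on the payoff gap; the bracket [lo, hi] is chosen by inspection."""
    assert payoff_gap(lo) > 0 > payoff_gap(hi)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if payoff_gap(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

print(round(interior_equilibrium(), 4))
```

Under these assumptions the root agrees with the value \(x=0.6615\) stated in Proposition 3.1 up to rounding.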

Note that among all (three) attainable equilibria, in all of which C types vote for C, A and B types clearly as a group and individually prefer the mixed equilibrium with \(x=0.6615\), in which they have at least a positive probability of winning the election.Footnote 25

Note that the voting game has other, non-attainable, equilibria. For instance, it is an equilibrium that all A and B types vote for A, or all A and B types vote for B.

3.3 Evolutionary stability of attainable equilibria

In this section, we study the evolutionary stability properties of the three attainable equilibria. We shall adapt the notion of an evolutionarily stable strategy (ESS) in the sense of Maynard Smith and Price (1973) and Maynard Smith (1982) [see Weibull (1995, Definition 2.1) for a textbook treatment] and Palm (1984) (who extended this to general symmetric multi-player games) to the context of attainable strategy profiles. Consider an attainable strategy (profile), characterized by \(x \in [0,1]\), the probability that an A type attaches to playing A (and equivalently that a B type attaches to B). Let \(y \in [0,1]\) denote a mutant strategy that enters with probability \(\epsilon >0\) close to zero.Footnote 26 Let \(w_{\epsilon }=(1-\epsilon )x + \epsilon y\) denote the post-entry mix of strategies. Let generally \(u_A(x,z)\) denote the payoff to an A type playing strategy \(x \in [0,1]\) when all (seven) other A- and B-type players (recall that C types just play C regardless) play strategy \(z \in [0,1]\). Then, x is an ESS if for all \(y \in [0,1]\) with \(y \ne x\) we have that \(u_A(x,w_{\epsilon }) > u_A(y,w_{\epsilon })\) for all sufficiently small \(\epsilon >0\).

Proposition 3.2

The only ESS among the three attainable equilibria of the voting game is \(x=0.6615\).

Proof

The attainability restriction that x is the probability of an A type playing A and at the same time also the probability of a B type playing B could also be handled by relabeling all B types’ strategies from A to B and vice versa, with the appropriate transformation in terms of utilities. Then, the attainable strategy x is simply a symmetric strategy of the relabeled game, which is now an eight-player symmetric game. In Lemma A.1 in the “Appendix,” we provide a simple equivalent condition for a (mixed) strategy \(x \in [0,1]\) to be an ESS for such games, where every player has the same two pure strategies. It implies that in the present context, attainable strategy (profile) x is an ESS if and only if, for all \(y < x\) close to x, A is a best reply to y for an A type and, for all \(y>x\) close to x, B is a best reply to y for an A type.

In order to check the evolutionary stability of the three attainable equilibria, we therefore numerically compute the payoff of an A type for strategies A and B as a function of x, the probability with which all other A types play A and all B types play B. This is given in Fig. 1.

Note that when y exceeds \(x=0.6615\), strategy B is best for an A type (and analogously strategy A best for a B type). On the other hand, when y is below \(x=0.6615\) strategy A is best for an A type (and analogously strategy B best for a B type). Thus, by Lemma A.1, the only evolutionarily stable strategy is the mixed equilibrium.Footnote 27\(\square \)
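The best-reply pattern used in this proof can be checked at a few sample points. The sketch below assumes the same reading of Table 1 for an A type as before (1.2 if A wins, 0.9 if B wins, 0.2 if C wins); the function names and the sample values of y are ours.

```python
from math import comb

def payoff_gap(y, u_a=1.2, u_b=0.9, u_c=0.2):
    """u_A(A, y) - u_A(B, y) for an A type when the other A and B types use y.
    The payoff values are an assumed reading of Table 1."""
    pmf = lambda n, p: [comb(n, k) * p**k * (1 - p)**(n - k) for k in range(n + 1)]
    d = [0.0] * 8
    for i, p_i in enumerate(pmf(3, y)):           # other A types vote A with prob. y
        for j, p_j in enumerate(pmf(4, 1 - y)):   # B types vote A with prob. 1 - y
            d[i + j] += p_i * p_j
    lose = (u_b - u_c) / 2   # loss from voting A rather than B when Y is 1 or 2
    gain = (u_a - u_c) / 2   # gain from voting A rather than B when Y is 5 or 6
    return gain * (d[5] + d[6]) - lose * (d[1] + d[2])

def best_reply(y):
    return "A" if payoff_gap(y) > 0 else "B"

for y in (0.01, 0.60, 0.70, 0.99):
    print(y, best_reply(y))
```

At these sample points, A is the best reply for y just above 0 and B is the best reply for y just below 1, so neither \(x=0\) nor \(x=1\) satisfies the condition of Lemma A.1, while the best reply switches from A to B between 0.60 and 0.70, where the mixed equilibrium lies.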

3.4 Empirical results and tests

The actual voting behavior in the 48 rounds of the stage game without opinion polls [treatment CPSS in Forsythe et al. (1993)] is given in Table 2.

Note that roughly 2–3% of subjects choose a weakly dominated strategy in each group of voter types. If we only look at those subjects that do not choose weakly dominated strategies, we get the empirical fractions for voter types A and B given in Table 3.

From the empirical results given in Tables 2 and 3, we can immediately reject the null hypotheses that A and B types play one of the asymmetric pure equilibria, or one of the symmetric pure equilibria. This is even true if we allow for a small enough fraction of “noise” players who just randomize in some arbitrary way. We now turn to testing attainability.

Test 3.1

The null hypothesis of attainability, i.e., \(x_A(A)=x_B(B)\), using a \(\chi ^2\) test of independence, cannot be rejected at the 5% level of significance. It produces a p value of 0.8396 (\(\chi ^2=0.8411\)).

Note that in order to perform a \(\chi ^2\) test of independence, we need to first relabel the strategies to take account of the symmetry restrictions. The result is given in Table 4. We can then perform the test and obtain a p value of 0.8396.
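As a plausibility check, the reported p value can be recomputed from the reported test statistic. The sketch assumes 3 degrees of freedom, which is what a table of two voter types by four (relabeled) strategies would give; this is our assumption about the shape of Table 4. The closed form used for the upper tail holds for 3 degrees of freedom only.

```python
from math import erfc, exp, pi, sqrt

def chi2_sf_3df(stat):
    """Upper-tail probability of a chi-square variable with 3 degrees of freedom
    (closed form valid for 3 d.f. only)."""
    return erfc(sqrt(stat / 2)) + sqrt(2 * stat / pi) * exp(-stat / 2)

p_value = chi2_sf_3df(0.8411)   # reported statistic from Test 3.1
print(round(p_value, 4))
```

Under this assumption, the statistic \(\chi ^2=0.8411\) and the p value of 0.8396 are consistent with each other.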

Test 3.1 is, thus, also a test of the null of the absence of a ballot position effect. As it cannot be rejected, there is no evidence, in the voting game, that players condition their strategy on the ballot position of the candidates. This is in agreement with the finding of Forsythe et al. (1993).

We can now test the null that A and B types (those that do not play dominated strategies) on average play the evolutionarily stable strategy \(x=0.6615\). While the empirical frequency of 0.5672 (average across the two types) is not very far from the hypothesized \(x=0.6615\), the null of \(x=0.6615\) can nevertheless be rejected at the 5% level of significance.

Test 3.2

The null hypothesis of ESS equilibrium play, i.e., \(x_A(A)=x_B(B)=x=0.6615\), using an exact binomial test must be rejected at the 5% level of significance. It produces a p value of 0.0002.

In this test, we are using the pooled data for both A and B types. The individual analysis performed in “Appendices C.1 and C.2” justifies looking at aggregate data and confirms this finding.
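A minimal sketch of such an exact binomial test is given below. It uses the pooled counts mentioned in Sect. 3.4 (211 "successes" among 372 "trials") and the null value \(x=0.6615\). The two-sided convention used here (summing the probabilities of all outcomes that are no more likely than the observed one) is an assumption, as the text does not say which convention was used; the function names are illustrative.

```python
import math

def binom_pmf(k: int, n: int, p: float) -> float:
    """Binomial probability mass, computed in logs for numerical stability."""
    log_pmf = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
               + k * math.log(p) + (n - k) * math.log(1.0 - p))
    return math.exp(log_pmf)

def exact_binom_two_sided(k: int, n: int, p: float) -> float:
    """Two-sided exact p value: total mass of outcomes no more likely than k."""
    threshold = binom_pmf(k, n, p) * (1.0 + 1e-7)  # small slack for float ties
    total = sum(pm for pm in (binom_pmf(i, n, p) for i in range(n + 1))
                if pm <= threshold)
    return min(1.0, total)

successes, trials, x_ess = 211, 372, 0.6615
p_value = exact_binom_two_sided(successes, trials, x_ess)
print(f"empirical frequency: {successes / trials:.4f}")
print(f"exact two-sided p value: {p_value:.5f}")
```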

Even though the null hypothesis of the evolutionarily stable equilibrium probability \(x=0.6615\) is rejected, this theory is nevertheless not so very far from “explaining” the data. In order to see this, we identify all possible \(x \in [0,1]\) that would “explain” the data better. That is, we identify those values of \(x \in [0,1]\) that produce a higher likelihood of the data than the equilibrium value \(x=0.6615\). One way to attempt this would be to compute the binomial likelihood of 211 “successes” (i.e., an A type choosing A, a B type choosing B) among 372 “trials.” This is computationally infeasible. Instead we perform a bootstrap, where we simulate the 48 rounds of the stage game 1000 times and compute the likelihood in each case and compute the average. We find that the set of theories “better than” the evolutionarily stable equilibrium theory is approximately the interval (0.4851, 0.6615) for x, which represents approximately 16.66% of the zero-one interval.

3.5 Risk-averse players

So far in this section, we considered that all players have affine preferences over money. In particular, for A and B types we postulated payoffs of \(u(m)=m\), where \(m \in \{1.2,0.9,0.2\}\) is one of the three possible distinct payoffs these types can receive (see Table 1). One might call such players risk-neutral.

One could, however, in principle envision that players do not have such affine preferences over money. We could, for example, fix that \(u(1.2)=1.2\) and \(u(0.2)=0.2\) but choose u(0.9) somewhere between 0.2 and 1.2. A person with \(u(0.9)>0.9\) could then be said to be risk-averse and one with \(u(0.9)<0.9\) would be risk-loving.Footnote 28 In the present context, one could also imagine another reason why a person might have a \(u(0.9)>0.9\). Such a person, in place of an A or B type, might consider the experiment mostly a challenge to achieve coordination for A or B, and not actually care too much about the money involved.

Proposition 3.3

The voting game with preferences for A and B types of \(u(0.2)=0.2\) and \(u(1.2)=1.2\) and \(u(0.9)=1.0722\) has a unique undominated attainable ESS with a probability of A types playing A (and B types playing B) of \(x=0.5672\).Footnote 29

The proof of this proposition is omitted. It follows the same steps as the proofs of Propositions 3.1 and 3.2.

Proposition 3.3, thus, states that the voting game can be calibrated so as to generate a unique undominated attainable ESS that perfectly matches the observed average frequency \(x=0.5672\) of A types playing A and B types playing B. The individual analysis performed in “Appendices C.1 and C.2” confirms the finding that the null hypothesis of stationary play of A and B types mixing with the round-independent probability of \(x=0.5672\) across all 24 rounds cannot be rejected.
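To give a feeling for the size of the calibrated value \(u(0.9)=1.0722\), it can be translated into a coefficient of constant absolute risk aversion (CARA), as done in the notes. The sketch below assumes a CARA utility that is rescaled affinely so that \(u(0.2)=0.2\) and \(u(1.2)=1.2\); this normalization and the function names are assumptions made for illustration only.

```python
import math

def cara_utility(m: float, a: float) -> float:
    """CARA utility rescaled so that u(0.2) = 0.2 and u(1.2) = 1.2 (assumed)."""
    lo, hi = 0.2, 1.2
    # expm1 keeps precision when a is close to zero
    return lo + (hi - lo) * math.expm1(-a * (m - lo)) / math.expm1(-a * (hi - lo))

def solve_cara(target: float, m: float = 0.9) -> float:
    """Bisection for the coefficient a with cara_utility(m, a) == target."""
    low, high = 1e-9, 50.0  # u(m) is increasing in a on this bracket
    for _ in range(200):
        mid = (low + high) / 2.0
        if cara_utility(m, mid) < target:
            low = mid
        else:
            high = mid
    return (low + high) / 2.0

a_hat = solve_cara(1.0722)
print(f"CARA coefficient matching u(0.9) = 1.0722: {a_hat:.3f}")
```

Under this normalization, the resulting coefficient should lie close to the value of 2.014 reported in the notes.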

4 Opinion polls before voting

In this section, we study the opinion poll game with the understanding that, after the opinion poll results are publicly announced, the voting game is played [treatment CPSSP in Forsythe et al. (1993)].

4.1 The game

This game is, thus, a two-stage game with 14 players, 4 A and 4 B types and 6 C types. There are many possible strategies in this two-stage game. Every single player has to choose one of the four actions (vote for A, vote for B, vote for C, or abstain) in the first, the opinion poll, stage. We shall refer to these, in what follows, as “straw” votes whenever the context may be insufficient to distinguish them from the actual votes in the second stage. Then, for the second stage, the players, after observing the outcome of opinion polls, choose to vote for A, B, or C, or to abstain. Payoffs depend only on which candidate ultimately wins the election and are given as in Table 1.

4.2 Attainable equilibria

Given that the voting game by itself has multiple equilibria, the two-stage game with an opinion poll stage before the actual voting stage has many subgame perfect equilibria. We here follow the argument in Andonie and Kuzmics (2012) to restrict attention to a small subset of attainable equilibria. This restriction is also justified empirically, as we shall see below.

The equilibria identified in Andonie and Kuzmics (2012) are such that the outcome in the opinion poll to a large extent already determines the ultimate election winner: Whichever of candidates A and B receives more straw votes in the opinion poll wins the election. This is achieved by A and B types of subjects coordinating their second-stage voting choices on the publicly observed opinion poll winner (between candidates A and B). This behavior is feasible under attainability. Attainability imposes, however, a stronger restriction on second-stage voting behavior in the case when there is a tie between the numbers of straw votes cast for candidates A and B in the opinion poll. Then only attainable equilibria of the voting game, as identified in Sect. 3.1, can be played. In this case, as we have seen in Sect. 3.2, the most likely candidate to win the election is candidate C. Thus, in these equilibria, the most important aim for A and B types is to cast straw votes in the opinion poll in such a way as to avoid a tie between A and B, ideally with their preferred candidate “winning” the opinion poll. The point of this paper is to demonstrate that the subjects’ behavior in the experiments of Forsythe et al. (1993) is very close to such an equilibrium in all stages of this game.

That the poll winner between candidates A and B determines the outcome of the election is an assumption that is somewhat justified by the experiment [treatment CPSSP in Forsythe et al. (1993)] as Table 5 illustrates.Footnote 30

When there is a tie between the numbers of straw votes cast for candidates A and B in the opinion poll, only attainable equilibria of the voting game, as identified in Sect. 3.1, can be played. There are, however, three such equilibria as we saw in Sect. 3.2. Only one of these is evolutionarily stable in the voting game (see previous section). We assume that this is the voting behavior that the players foresee for the second stage when they participate in the opinion poll in the first stage. In the experiment, such ties occur 11 out of 48 times. Play of A and B types in these cases is summarized in Table 6.

This means that the combined empirical proportion of A and B types who vote for their most preferred candidate (after a tie in the poll) is 0.7045, for their second most preferred is 0.2160, for C 0.0682, and for abstain 0.0114. This is close to but somewhat statistically different from the supposed equilibrium proportions of (0.6615, 0.3385, 0, 0) without risk aversion and (0.5672, 0.4328, 0, 0) with risk aversion. Nevertheless, we shall assume that players ex-ante expect the evolutionarily stable strategy play.Footnote 31

We shall term the behavior described in the previous paragraphs the focal voting behavior. Having solved (or assumed behavior for) the second-stage voting game for every subgame, we can write the first-stage opinion poll game in reduced form. Note that, under the given assumptions about subgame behavior, choosing to abstain in the opinion poll is equivalent to casting a straw vote for C for all types of players. Thus, each player of each type has three distinct pure strategies: Cast a straw vote for A, B, or C. The ultimate payoffs depend only on whether A or B has more straw votes in the poll or whether there is a tie between the two in the poll. These payoffs are summarized in Table 7.

We can, then, finally turn to the equilibrium analysis of the behavior in the opinion poll. Note that, unlike in the voting game, no player of any type has weakly dominated strategies. Consider, for instance, a C type and other player behavior in the poll such that without her straw vote A will beat B in the poll by one straw vote. Then this C type’s best strategy is to cast a straw vote for B to create a tie between A and B and, thus, make a win for C in the election much more likely. Also for an A or a B type, there are strategy profiles of the other players that make casting a straw vote for C a uniquely best strategy.

An attainable strategy profile, again following Andonie and Kuzmics (2012), in this polling game, must be such that all A types use the same (mixed) strategy, all B types the same (mixed) strategy, and all C types the same (mixed) strategy. Additional restrictions induced by the symmetries of the game are as follows. Let \(x_i(j)\) denote the probability a player of type i attaches to stating a preference for candidate j, for any \(i,j \in \{A,B,C\}\). Then, we must have that \(x_A(A)=x_B(B)\) and \(x_A(C)=x_B(C)\) (implying \(x_A(B)=x_B(A)\)) and \(x_C(A)=x_C(B)\). Thus, ultimately, there are three unknowns. These are w.l.o.g. \(x_A(A)\), \(x_A(B)\), and \(x_C(C)\).
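The symmetry restrictions above can be made concrete with a small helper that expands the three free probabilities into a full attainable profile. This is only an illustrative sketch; the function name `attainable_profile` and the data layout are assumptions, and the numbers plugged in are those of equilibrium \(x^*\) in Proposition 4.1.

```python
from typing import Dict, Tuple

# Probabilities of casting a straw vote for candidates (A, B, C)
Mixed = Tuple[float, float, float]

def attainable_profile(xA_A: float, xA_B: float, xC_C: float) -> Dict[str, Mixed]:
    """Expand the three unknowns into mixed strategies for all three types."""
    xA_C = 1.0 - xA_A - xA_B      # remaining probability for A types
    xC_A = (1.0 - xC_C) / 2.0     # C types treat A and B symmetrically
    return {
        "A": (xA_A, xA_B, xA_C),
        "B": (xA_B, xA_A, xA_C),  # mirror image: x_B(B) = x_A(A), x_B(A) = x_A(B)
        "C": (xC_A, xC_A, xC_C),
    }

profile = attainable_profile(0.7955, 0.0723, 1.0)
for voter_type, strategy in profile.items():
    print(voter_type, tuple(round(p, 4) for p in strategy))
```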

Proposition 4.1

There are exactly three attainable equilibria of the opinion poll game with focal voting behavior, and these are given by

| Equilibrium | \(x_A\) | \(x_B\) | \(x_C\) |
|---|---|---|---|
| \(x^{*}\) | (0.7955, 0.0723, 0.1322) | (0.0723, 0.7955, 0.1322) | (0, 0, 1) |
| \(x^{**}\) | (0.8872, 0, 0.1128) | (0, 0.8872, 0.1128) | (0.5, 0.5, 0) |
| \(x^{***}\) | (1, 0, 0) | (0, 1, 0) | (0.3965, 0.3965, 0.207) |

Proof

We need to find all attainable equilibria of the polling game given the assumed focal second-stage voting behavior. In principle, such equilibria may be in completely or only partially mixed or even pure strategies. We, thus, have to consider all possible support pairs. To give one example, there could be an equilibrium in which A types mix between A and C only (and, by attainability B types mix between B and C), while C types only play C. One would then get one equation from the indifference of A types between A and C and two inequalities as A types must then find playing B worse than (or equal to) playing A and C types must find playing A (and, thus, also B) worse than or equal to playing C.

Altogether, there are three possible equilibrium supports to be considered for C types (play the “pure” strategy given by playing A and B with probability 1 / 2 each, play pure strategy C, and mix between both). There are seven possible equilibrium supports for A types (B types then follow from attainability). These are pure strategies A, B, and C, mixing between two pure strategies AB, AC, and BC, and mixing between all three pure strategies ABC. Thus, there are 21 cases to consider.
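The case count can be reproduced by enumerating the support pairs directly. The following sketch is purely illustrative; the labels used for the supports of C types are our own shorthand.

```python
from itertools import combinations, product

pure_strategies = ("A", "B", "C")

# All non-empty subsets of {A, B, C}: the candidate supports for A types
a_supports = [s for size in (1, 2, 3) for s in combinations(pure_strategies, size)]

# Attainability forces C types to weight A and B equally, leaving three supports
c_supports = ["A and B with probability 1/2 each", "C only", "mix of both"]

cases = list(product(a_supports, c_supports))
print(len(a_supports), len(c_supports), len(cases))  # 7 3 21
```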

For each case, we write down the (polynomial of degree up to 7) equalities and inequalities induced by the case and use the Newton method to find all solutions. The program is available as part of the supplementary material. This procedure provides us with the stated three attainable equilibria. \(\square \)

Expected payoffs to all types in these three attainable equilibria are given in Table 8.

This reduced polling game also has non-attainable equilibria. For instance, all A and B types playing A and all C types playing C is an equilibrium, in which eventually A is elected. In this case, no player can change the outcome by unilaterally deviating to some other strategy. Even six of the A- and B-type players playing A, the other two playing B or C, and all C types playing C is an equilibrium, in which again no player can change the outcome (of A winning the election) by unilaterally deviating. There are many more non-attainable equilibria.

4.3 Evolutionary stability of attainable equilibria

In this section, we study the evolutionary stability properties of the three attainable equilibria.

Proposition 4.2

Of the three attainable equilibria of the opinion poll game with focal second-stage voting behavior, exactly two, \(x^*\) and \(x^{**}\), are an ESS.

Fig. 2 Payoff difference between playing A or B (solid line) or C (dashed line) for a C type as a function of \(\alpha \), where \((\alpha ,\alpha ,1-2\alpha )\) is the assumed mixed strategy of other C types, assuming all A and B types play according to their prescribed mixed strategy in equilibrium \(x^{***}\)

Proof

Let us first consider equilibrium \(x^{***}\). We shall look at the population of C types, which in this equilibrium mix between pure strategies A, B, and C with probabilities (0.3965, 0.3965, 0.207). Attainability restricts C types to attach the same probability to pure strategies A and B. Thus, attainable strategies for C types can be identified with one number \(\alpha \in [0,1/2]\), the probability they attach to pure strategy A (and, thus, also to pure strategy B). They then must attach probability \(1-2\alpha \) to pure strategy C. Fixing play of A and B types with their equilibrium play in \(x^{***}\), the C types are playing a six-player symmetric game with two strategies. We can, thus, appeal to Lemma A.1, given in “Appendix,” to check whether or not \(x^{***}\) is an ESS.

Figure 2 depicts the payoff to a C type, for playing A (same as B) and C, as a function of \(\alpha \), assuming A-type and B-type players use their prescribed mixed strategy in equilibrium \(x^{***}\). This figure, appealing to Lemma A.1, thus shows that the strategy profile \(x^{***}\) is not evolutionarily stable. This is so because strategy A is the unique best reply to strategies \(\alpha \) above the equilibrium value 0.3965 and is strictly worse than strategy C for values \(\alpha \) below 0.3965.

We now turn to equilibrium \(x^{**}\). Figure 3 depicts the payoff to a C type, for playing A (same as B) and C, as a function of \(\alpha \), assuming A-type and B-type players use their prescribed mixed strategy in equilibrium \(x^{**}\). Note that, if, as prescribed in the equilibrium, all other C types play \(\alpha =1/2\), then strategy C is strictly worse than strategies A and B. Thus, \(x^{**}\) is an ESS from the point of view of C types. We then need to turn to the A and B types in this equilibrium. Figure 4 shows the best response regions for an A type as a function of the mixed strategy assumed by all other A types (and all B types playing accordingly), assuming C types play according to equilibrium \(x^{**}\). One can see from Fig. 4, and appealing to Lemma A.1, that no mutant that places probability zero on strategy B can enter the equilibrium \(x^{**}\). This is so because the unique best response to a strategy that puts more probability on A than \(x^{**}\) does, while B receives zero probability, is to play C, while the unique best response to a strategy that puts more probability on C than \(x^{**}\) does, while B receives zero probability, is to play A. To see that no other mutant can enter \(x^{**}\) either, consider Fig. 5, which shows that the payoff from playing the A types’ part of \(x^{**}\) against all other A types playing y in the simplex (and all B types their corresponding mixed strategy), while C types play as prescribed in \(x^{**}\), is always positive unless \(y=x^{**}\).

Finally, we explore the evolutionary stability properties of equilibrium \(x^*\). This equilibrium is also an ESS. This can be seen partially from the best response regions given in Figs. 6 and 7. Figure 7, in conjunction with Lemma A.1, shows that playing C for C types is evolutionarily stable as C is the unique best reply to \(\alpha =0\) (i.e., to all other C types playing C). In Fig. 6, we can only partially see that \(x^*\) is an ESS. For instance, it is clear that a C mutant, who upon entering would lead us to region 1 of the picture, would lead to C being the worst strategy. From Fig. 6, the effect of mutants entering is, however, not clear for every possible mutant.Footnote 32 To fully see that \(x^*\) is an ESS, we appeal to Lemma A.2 and Fig. 8, which shows the payoff difference \(u_A(x^*,y^{n-1})-u_A(y,y^{n-1})\), which is positive everywhere and equal to zero only for \(y=x^*\). \(\square \)

Fig. 3 Payoff difference between playing A or B (solid line) or C (dashed line) for a C type as a function of \(\alpha \), where \((\alpha ,\alpha ,1-2\alpha )\) is the assumed mixed strategy of other C types, assuming all A and B types play according to their prescribed mixed strategy in equilibrium \(x^{**}\)

Fig. 4 The simplex of mixed strategies for players of type A and the best response regions for an A-type player if all other A types (and symmetrically B types) use a mixed strategy in this simplex, while all C types use their prescribed strategy in equilibrium \(x^{**}\). The solid line through the simplex is the indifference line between strategies A and C. The dashed line is the indifference line between strategies B and C. There is no point in the simplex at which an A type is indifferent between A and B

Fig. 6 The simplex of mixed strategies for players of type A and the best response regions for an A-type player if all other A types (and symmetrically B types) use a mixed strategy in this simplex, while all C types use their prescribed strategy in equilibrium \(x^{*}\). The three lines in this picture are the indifference lines between each pair of pure strategies. The line emerging from the A corner is the indifference line between strategies B and C. The one emerging from the B corner is the indifference line between A and C. The remaining line is the indifference line between A and B. Suppose we label these six regions clockwise starting with 1 at the top (around the C corner), then in region 1 the A type has preferences \(A \succ B \succ C\), in region 2 \(A \succ C \succ B\), in region 3 \(C \succ A \succ B\), in region 4 \(C \succ B \succ A\), in region 5 \(B \succ C \succ A\), and in region 6 \(B \succ A \succ C\)

4.4 Empirical results and tests

The actual behavior in the opinion poll in the 48 rounds of the stage game with opinion polls [treatment CPSSP in Forsythe et al. (1993)] is given in Table 9.

We first test the null of attainability, that is, we test independence in the relabeled Table 10.

Test 4.1

The null hypothesis of attainability, for A and B types, that \(x_A(A)=x_B(B)\) and \(x_A(C)=x_B(C)\), cannot be rejected at the 5% level of significance. The \(\chi ^2\) test of independence leads to a p value of 0.1182 (\(\chi ^2=5.8677\)).

A few notes are in order here. The null of attainability is also the null of the absence of a ballot position effect. We, thus, cannot reject this null. Thus, at the 5% level of significance, there is no evidence that players condition their strategy on the ballot position. Forsythe et al. (1993) find some evidence of a ballot position effect. This evidence can be reproduced here if we restrict attention to straw votes cast for A and B only, ignoring those cast for C and abstaining. If we do this test, we obtain a p value of 0.048 (\(\chi ^2=3.91\)), which would lead to rejecting the null at the 5% level of significance. This test, however, ignores the randomness in the total number of A and B votes (assuming this total to be exogenously given by the experimenter, which is not the case).Footnote 33
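The restricted test can be checked in the same spirit. The sketch below assumes that the test restricted to straw votes for A and B is a \(\chi ^2\) test with one degree of freedom (a two-by-two table), which is our reading of the reported numbers rather than something stated explicitly; the function name is illustrative.

```python
import math

def chi2_sf_df1(stat: float) -> float:
    """Upper-tail probability of a chi-square variable with 1 degree of freedom.

    For df = 1 the statistic is a squared standard normal, so the tail is
    erfc(sqrt(s/2)).
    """
    return math.erfc(math.sqrt(stat / 2.0))

# Statistic reported for the test restricted to A and B straw votes
p_restricted = chi2_sf_df1(3.91)
print(f"restricted test p value: {p_restricted:.3f}")

# The statistic only just exceeds the usual 5% critical value of about 3.841
print(f"tail probability at 3.841: {chi2_sf_df1(3.841):.3f}")
```

The comparison with the critical value illustrates how narrowly the restricted test crosses the 5% threshold.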

It is clear that subjects are not playing anywhere close to ESS \(x^{**}\). While play is very close to ESS \(x^*\), the null of \(x^*\) must nevertheless be rejected at the 5% level of significance.

Test 4.2

The null hypothesis of attainable ESS equilibrium play, i.e., \(x^*\) as identified in Proposition 4.2, using a \(\chi ^2\) goodness of fit test must be rejected at the 5% level of significance. The empirical frequency of play averaged for A and B types is given by (0.7005,0.1432,0.1563), and the p value is essentially zero (\(\chi ^2=32.7551\)).
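The statistic in Test 4.2 can be approximately reconstructed from the numbers given in the text. In the sketch below, the counts are an assumption: they are obtained by multiplying the reported empirical frequencies by \(n=384\) (48 rounds with 8 A and B types each) and rounding to integers. The p value uses the fact that, with two degrees of freedom, the upper tail of the \(\chi ^2\) distribution is \(e^{-s/2}\).

```python
import math

n = 384
# Assumed counts: reported frequencies (0.7005, 0.1432, 0.1563) times 384, rounded
observed = [269, 55, 60]
# A types' part of equilibrium x* (own candidate, other candidate, C)
x_star = [0.7955, 0.0723, 0.1322]

expected = [n * p for p in x_star]
chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Exact upper tail for 2 degrees of freedom (three categories minus one)
p_value = math.exp(-chi2 / 2.0)

print(f"chi-square statistic: {chi2:.4f}")
print(f"p value: {p_value:.2e}")
```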

The individual analysis performed in “Appendices C.3 and C.4” confirms this finding.

Aggregating across A and B types, the data are “explained better” than with the \(x^*\) equilibrium by all \(x \in \Delta (\{A,B,C\})\) indicated in Fig. 9. Here, we use the simulated likelihood approach introduced in Sect. 3.4. The area of the gray “spot” is about 1.37% of the area of the simplex.

4.5 Risk-averse players

We now return to the possibility of risk-averse players or players who for some other reason value the money amount of 0.9 relatively higher than under the assumption of an affine preference in money. In fact, we shall here consider the case where \(u(1.2)=1.2\), \(u(0.2)=0.2\), and \(u(0.9)=1.0722\), the value that perfectly calibrates or “explains” the outcome of the voting game alone (see Sect. 3.5).

Proposition 4.3

There are two evolutionarily stable attainable equilibria in the opinion poll under focal voting behavior, when A and B types have utility \(u(0.2)=0.2\) and \(u(1.2)=1.2\) and \(u(0.9)=1.0722\). One is given by \(\tilde{\tilde{x}}\) such that \(\tilde{\tilde{x}}_A=(0.8272,0,0.1728)\), \(\tilde{\tilde{x}}_B=(0,0.8272,0.1728)\), and \(\tilde{\tilde{x}}_C=(0.5,0.5,0)\), the other by \(\tilde{x}\) such that \(\tilde{x}_A= (0.6857,0.1552,0.1591)\), \(\tilde{x}_B=(0.1552,0.6857,0.1591)\), and \(\tilde{x}_C=(0,0,1)\). There is a third equilibrium given by (0.9825,0.0175,0), (0.0175,0.9825,0), and (0.3943,0.3943,0.2114). It is not an ESS.

The proof is omitted. It follows the steps of the proofs for Propositions 4.1 and 4.2.

Again, equilibrium \(\tilde{\tilde{x}}\) is not at all consistent with the data. Equilibrium \(\tilde{x}\) now is.

Test 4.3

The null hypothesis of evolutionarily stable attainable equilibrium \(\tilde{x}\) of the polling game, when A and B types have utility \(u(0.2)=0.2\) and \(u(1.2)=1.2\) and \(u(0.9)=1.0722\), cannot be rejected at the 5% level of significance. The \(\chi ^2\) goodness of fit test produces a p value of 0.7799 (\(\chi ^2=0.4972\)).

The null also cannot be rejected if we do this test individually for A and B types. The p values are 0.09889 (\(\chi ^2=4.6276\)) for A types and 0.4897 (\(\chi ^2=1.4277\)) for B types. The individual analysis performed in “Appendices C.3 and C.4” also confirms this finding.

Test 4.3 is very powerful.Footnote 34 The power function, for this test, is the function f from the set of all distributions x over \(\{A,B,C\}\) (for A types and appropriately relabeled for B types) to the interval [0, 1] with f(x) the probability of rejecting the null hypothesis at the 5% level of significance given that a sample of \(n=384\) data points is drawn given the alternative hypothesis x is true.
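The power function can be approximated by simulation. The following is a minimal Monte Carlo sketch, not the procedure used for the figure: it treats the 384 observations as independent draws, uses the asymptotic 5% critical value of the \(\chi ^2\) distribution with two degrees of freedom, and the number of repetitions, the seed, and all function names are arbitrary illustrative choices.

```python
import math
import random

N = 384                                  # sample size used in Test 4.3
X_TILDE = (0.6857, 0.1552, 0.1591)       # null hypothesis (A types' part)
CRITICAL = -2.0 * math.log(0.05)         # 5% critical value, chi-square with 2 df

def chi2_stat(counts, null, n):
    """Pearson goodness-of-fit statistic of counts against the null."""
    return sum((c - n * p) ** 2 / (n * p) for c, p in zip(counts, null))

def estimated_power(alternative, reps=1000, seed=0):
    """Share of simulated samples from `alternative` that reject the null."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(reps):
        draws = rng.choices(range(3), weights=alternative, k=N)
        counts = [draws.count(j) for j in range(3)]
        if chi2_stat(counts, X_TILDE, N) > CRITICAL:
            rejections += 1
    return rejections / reps

size = estimated_power(X_TILDE)                          # should be near 0.05
power_at_x_star = estimated_power((0.7955, 0.0723, 0.1322))
print(f"rejection rate under the null: {size:.3f}")
print(f"estimated power against x*:    {power_at_x_star:.3f}")
```

Evaluating `estimated_power` on a grid of alternatives in the simplex would give a rough picture of the level sets of f.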

Figure 10 provides two insights. For the first insight, the simplex represents the set of all possible data vectors for A and B types (with coordinates denoting the proportion of individuals choosing their favorite, second favorite, and least favorite, respectively, in the opinion poll). The black dot then represents the null hypothesis \(\tilde{x}\), and the gray area is the set of possible data vectors for which the null would not be rejected. For the second insight, the simplex represents the set of all possible alternative hypotheses x, distributions over \(\{A,B,C\}\) (for A types and appropriately relabeled for B types). The iso-quants of the power function f(x) are indicated by the dashed “ellipses.”

Fig. 10 Acceptance region (gray) and iso-quants (dashed lines) of the power function f for Test 4.3

5 Conclusion

What have we learnt from this paper? As the experiment was originally performed for a different purpose, we first had to carefully identify what equilibrium theory would predict in this game. It became clear that one had to take seriously certain symmetry restrictions, termed attainability by Crawford and Haller (1990), even though the game was presented to subjects in a way that also allowed play that violates these symmetry restrictions. As the game was played recurrently in the laboratory, the appropriate equilibrium theory is that of evolutionary stability. We, thus, need to check the evolutionary stability properties of all equilibria we found.

In one treatment, the two-stage game with an opinion poll followed by a voting stage, we needed to identify the focal (perhaps simplest reasonable and empirically founded) attainable subgame continuation play in the voting games for all possible different outcomes of the opinion poll.

We then tested whether subjects played an evolutionarily stable attainable equilibrium under the assumption that players are risk-neutral. While this theory must be rejected at the 5% level of significance, it is nevertheless quite close to “explaining” the data.

We then used one treatment, the voting game alone, to calibrate the risk aversion of the players to give a perfect fit to the data for this treatment. We then tested whether subjects’ play can be rationalized by an evolutionarily stable attainable equilibrium in the polling game. This hypothesis cannot be rejected at the 5% level of significance despite the fact that we have close to 400 data points.

All in all, evolutionarily stable attainable equilibrium is not too far off “explaining” the play in this particular laboratory experiment. It might be interesting to perform a similar case study for experimental work following up on Forsythe et al. (1993), in which symmetries play a role, including Forsythe et al. (1996), Rietz et al. (1998), and Bassi (2015).

Notes

This is building on work on symmetries in games by Von Neumann and Morgenstern (1953), Nash (1951), Harsanyi and Selten (1988), Crawford and Haller (1990), Peleg et al. (1999). The term “attainable” was introduced by Crawford and Haller (1990). See, e.g., Crawford and Haller (1990), Bhaskar (2000) (with Kuzmics and Rogers 2012), Kuzmics et al. (2014), Hefti (2017) and Plan (2017) for properties of attainable equilibria in games with (partial) symmetries.

In fact, the concept of an evolutionarily stable strategy (ESS) of Maynard Smith and Price (1973) and Maynard Smith (1982) applies directly only to two-player symmetric games. As the game here is a 14-player game, we need to utilize an appropriate extension of ESS to games with symmetries with more than two players by Palm (1984) and Broom et al. (1997).

“Appendix C” shows that there is some evidence of subjects gradually learning to actually play the evolutionarily stable attainable equilibrium without risk aversion in the game with opinion polls. There is no evidence for such a change in behavior in the game without polls.

Both quotes are from the abstract of Aumann and Brandenburger (1995).

In mechanism design, see, e.g., Börgers (2015) for a textbook treatment, a situation where one “designer” designs a game and provides a little book of rules of the game, this designer can also add what she expects everyone to play in this game so that she can try to induce the “common knowledge of conjectures” this way.

Unlike us, Gunnthorsdottir et al. (2010a) do not need to appeal to refinements as we do here, as the games they study only have two (kinds of) pure strategy Nash equilibria. We have chosen the experiments of Forsythe et al. (1993) here because the game played there must be one of the very few that are studied in the laboratory that are possibly simple for subjects but complicated for analysts; in that, almost the whole weight of the detailed developed theory of play is required for its analysis.

If the other players are not changing, then the game is really one big repeated game in which players have very different incentives than in the one-stage game. See, e.g., Mailath and Samuelson (2006) for a textbook treatment.

There is also a large related literature on learning in games. See, e.g., Roth and Erev (1995) for the seminal contribution of reinforcement learning in games and Camerer and Ho (1999) for a very flexible learning model. As subjects seem to play equilibrium from essentially the first round in the experiments we study here, we do not pursue these learning models further in this paper.

This literature on equilibrium “refinements” can be said to ask the following question. Suppose that for some reason some Nash equilibrium play has established itself. What properties would this equilibrium have to have so that “highly rational” individuals really want to play according to this equilibrium? See, e.g., Ritzberger and Weibull (1995), Swinkels (1993), Balkenborg et al. (2013) for connections between evolutionary and strategic stability. Typically, (smallest) evolutionarily stable sets of strategy profiles, while they can include non-Nash behavior, also include strategically stable sets. Yet, not every strategically stable set is necessarily included in a (smallest) evolutionarily stable set.

See also Fudenberg and Liang (2018) on this point.

There are games for which even very long periods of learning are insufficient to provide minmax play. We know from Zermelo (1913) that optimal (minmax) play in chess would result in every game of chess ending the same way. Yet even professional chess players’ games can end with any one of the three possible outcomes. Clearly, even these professionals do not play Nash equilibrium.

There is a sizeable empirical literature on whether one would expect Pareto-dominant or risk-dominant equilibria in coordination games. See, e.g., Van Huyck et al. (1990) as a starting point. There are also evolutionary game theory results about the ultra-long-run behavior in coordination games starting with Young (1993) and Kandori et al. (1993). But this is an issue of selection, not refinement, as all relevant equilibria among which selection may happen are singleton strategically stable sets.

See also Palfrey (forthcoming) for a more comprehensive and more recent survey.

Palfrey (forthcoming) argues that for cases when Nash equilibrium does not perfectly explain the data, quantal response equilibria of McKelvey and Palfrey (1995, 1998) often provide a much better fit. Given that Nash equilibrium theory suffices in the present case and that it is a special case of quantal response equilibrium, we have not attempted to consider quantal response equilibria further than that.

We are grateful to an anonymous referee for pointing this out.

As there are a finite and fixed number of each type, the game here differs somewhat from the voting games studied in, e.g., Palfrey (1989), Myerson and Weber (1993), Fey (1997), and Andonie and Kuzmics (2012), where the focus is on large elections and where every player is randomly allocated a type without keeping the fraction of players of any one type constant.

We are grateful to an anonymous referee for pointing this out.

We here assume that all players have affine preferences for money. This is challenged in Sect. 3.5.

A note on all our Propositions in this paper. Their proofs are done partly analytically and partly numerically as we need to solve polynomial equations of degree of up to seven. We employ the multi-dimensional Newton–Raphson method in order to do so. The MATLAB program with which we do this is provided as supplementary material.

Indeed of the 48 times that the stage game without polls is played in the experiment, three are won by candidate A and three are won by candidate B. See Sect. 3.4.

Throughout the paper, we shall only consider mutants separately in each population. That is, we do not consider a joint mutation in the population of A and B types and in the population of C types. Of course, any strategy profile that we find to be not evolutionarily stable is also not evolutionarily stable when we allow for more kinds of mutations. Strategy profiles that we find to be evolutionarily stable (within each population) may not necessarily be evolutionarily stable if we allow joint mutations. We shall, however, not pursue this point.

This is very similar to the two-player hawk–dove game. Indeed, the voting game is in some sense an eight-player (the C types just choose C and can be disregarded) hawk–dove game. The only difference here is that the payoff to an A type for playing A is not a linear function in x (because it is not a two-player game) and is not strictly decreasing.

There is plenty of experimental evidence that people are risk-averse even over small gambles (see, e.g., Holt and Laury 2002; Barberis et al. 2006; Harrison and Rutström 2008). This is normatively not very appealing, as such people would have to be implausibly risk-averse over larger gambles (see, e.g., Rabin 2000).

The value \(u(0.9)=1.0722\) is the unique value that generates the equilibrium probability of A types playing A (and B types playing B) of \(x=0.5672\). Utility values higher than 1.0722 lead to lower x, while utility values lower than 1.0722 lead to higher x. The value of \(u(0.9)=1.0722\) corresponds to a level of risk aversion in a CARA utility of 2.014. This is higher than typically reported levels of risk aversion from experimental data, see, e.g., Goeree et al. (2003). Note, however, that risk aversion is just one interpretation one could give the value of \(u(0.9)=1.0722\).
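
The mapping between a middle-payoff utility and a CARA coefficient can be sketched as follows. This is an illustration under assumptions not stated in this note: the monetary payoffs are taken to be 0.20, 0.90, and 1.20, and utility is normalised so that \(u(0.2)=0.2\) and \(u(1.2)=1.2\). The function names are hypothetical. Under these assumptions, a simple bisection returns a coefficient close to the reported 2.014 for \(u(0.9)=1.0722\).

```python
import math

# Assumed payoffs (low, middle, high) and normalisation u(LOW)=LOW, u(HIGH)=HIGH.
LOW, MID, HIGH = 0.2, 0.9, 1.2


def cara_mid_utility(a: float) -> float:
    """Normalised CARA utility of the middle payoff for risk aversion a > 0."""
    # expm1(-z) = exp(-z) - 1; the ratio equals (1 - e^{-a(MID-LOW)}) / (1 - e^{-a(HIGH-LOW)}).
    share = math.expm1(-a * (MID - LOW)) / math.expm1(-a * (HIGH - LOW))
    return LOW + (HIGH - LOW) * share


def calibrate_cara(target: float, lo: float = 1e-6, hi: float = 50.0) -> float:
    """Bisect for the coefficient a such that cara_mid_utility(a) equals target."""
    if not (cara_mid_utility(lo) < target < cara_mid_utility(hi)):
        raise ValueError("target lies outside the range CARA utility can generate")
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        # cara_mid_utility is increasing in a, so move the bracket accordingly.
        if cara_mid_utility(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


a_hat = calibrate_cara(1.0722)
```

The same call with a different target, for example `calibrate_cara(1.0444)`, gives the coefficient implied by any other middle-payoff utility strictly between the risk-neutral value 0.9 and the upper bound 1.2.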

One should also note that what matters for play in the first stage, the opinion poll, is what players expect the continuation play to be in all possible subgames.

The behavior of A and B types after an A–B tie in the poll is, thus, statistically different from the behavior of A and B types in the treatment without polls. This difference is somewhat difficult to explain. It is as if the heightened concern for coordination of A and B types after a tie in the poll changes somewhat from their original preference.

Theoretically, one could well expect that subjects who play this game often eventually learn to condition their strategy on the ballot position. This would then allow the A and B types to coordinate in more cases, if not in all. While there is some suggestion that this is going on here (see also Forsythe et al. (1993) for a discussion), there is no statistically significant evidence of this. See the analysis of the data of the individual rounds in “Appendix C.”

We thank Daniel Houser for suggesting that we perform a proper power analysis.

The two functions, \(u_{A}(A,x)\) and \(u_{A}(B,x)\), are depicted in Fig. 1.

We can also compute the value for the “middle” payoff that calibrates the data in early and later rounds perfectly. This value is \(u(0.9)=1.0444\) for the early rounds and \(u(0.9)=1.0992\) for the later rounds. Given the chi-squared test above, this difference is not statistically significant.

References

Alos-Ferrer, C., Kuzmics, C.: Hidden symmetries and focal points. J. Econ. Theory 148, 226–258 (2013)

Andonie, C., Kuzmics, C.: Pre-election polls as strategic coordination devices. J. Econ. Behav. Organ. 84, 681–700 (2012)

Andreoni, J.: Why free ride?: Strategies and learning in public goods experiments. J. Public Econ. 37(3), 291–304 (1988)

Andreoni, J., Blanchard, E.: Testing subgame perfection apart from fairness in ultimatum games. Exp. Econ. 9(4), 307–321 (2006)

Aragones, E., Palfrey, T.R.: The effect of candidate quality on electoral equilibrium: an experimental study. Am. Polit. Sci. Rev. 98(1), 77–90 (2004)

Aumann, R., Brandenburger, A.: Epistemic conditions for Nash equilibrium. Econometrica 63, 1161–1180 (1995)

Balkenborg, D., Hofbauer, J., Kuzmics, C.: Refined best reply correspondence and dynamics. Theor. Econ. 8(1), 165–192 (2013)

Barberis, N., Huang, M., Thaler, R.H.: Individual preferences, monetary gambles, and stock market participation: a case for narrow framing. Am. Econ. Rev. 96(4), 1069–1090 (2006)

Bassi, A.: Voting systems and strategic manipulation: an experimental study. J. Theor. Polit. 27(1), 58–85 (2015)

Bernergård, A., Mohlin, E.: Evolutionary Selection against Iteratively Weakly Dominated Strategies. Department of Economics, Lund University Working Papers (2017)

Bernheim, B.D.: Rationalizable strategic behavior. Econometrica 52, 1007–1029 (1984)

Bhaskar, V.: Egalitarianism and efficiency in repeated symmetric games. Games Econ. Behav. 32(2), 247–262 (2000)

Bhattacharya, S., Duffy, J., Kim, S.-T.: Compulsory versus voluntary voting: an experimental study. Games Econ. Behav. 84, 111–131 (2014)

Binmore, K., Swierzbinski, J., Proulx, C.: Does minimax work? An experimental study. Econ. J. 111(473), 445–464 (2001)

Börgers, T.: An Introduction to the Theory of Mechanism Design. Oxford University Press, Oxford (2015)

Broom, M., Cannings, C., Vickers, G.T.: Multi-player matrix games. Bull. Math. Biol. 59(5), 931–952 (1997)

Brown, G.W.: Iterative solutions of games by fictitious play. In: Koopmans, T.C. (ed.) Activity Analysis of Production and Allocation, pp. 374–376. Wiley, New York (1951)

Camerer, C., Ho, T.H.: Experience-weighted attraction learning in normal form games. Econometrica 67(4), 827–874 (1999)

Campo, S., Guerre, E., Perrigne, I., Vuong, Q.: Semiparametric estimation of first-price auctions with risk-averse bidders. Rev. Econ. Stud. 78(1), 112–147 (2011)

Cason, T.N., Friedman, D., Hopkins, E.: Testing the TASP: an experimental investigation of learning in games with unstable equilibria. J. Econ. Theory 145(6), 2309–2331 (2010)

Cason, T.N., Friedman, D., Hopkins, E.: Cycles and instability in a rock-paper-scissors population game: a continuous time experiment. Rev. Econ. Stud. 81(1), 112–136 (2013)

Chen, K.-Y., Plott, C.R.: Nonlinear behavior in sealed bid first price auctions. Games Econ. Behav. 25(1), 34–78 (1998)

Cooper, D.J., Kagel, J.H.: Other-regarding preferences. In: Kagel, J.H., Roth, A.E. (eds.) The Handbook of Experimental Economics, p. 217. Princeton University Press, Princeton (2016)

Cooper, R.W., DeJong, D.V., Forsythe, R., Ross, T.W.: Selection criteria in coordination games: some experimental results. Am. Econ. Rev. 80, 218–233 (1990)

Cox, J.C., Oaxaca, R.L.: Is bidding behavior consistent with bidding theory for private value auctions? Res. Exp. Econ. 6, 131–148 (1996)

Crawford, V., Haller, H.: Learning how to cooperate: optimal play in repeated coordination games. Econometrica 58, 571–596 (1990)

Deck, C., Lee, J., Reyes, J., Rosen, C.: Measuring risk attitudes controlling for personality traits. SSRN working paper (2008)

Duffy, J., Hopkins, E.: Learning, information, and sorting in market entry games: theory and evidence. Games Econ. Behav. 51(1), 31–62 (2005)

Duverger, M.: Political Parties: Their Organization and Activity in the Modern State. Wiley, New York (1954)

Farrell, J.: Cheap talk, coordination and entry. Rand J. Econ. 18, 34–39 (1987)

Fehr, E., Gächter, S.: Cooperation and punishment in public goods experiments. Am. Econ. Rev. 90, 980–994 (2000)

Fey, M.: Stability and coordination in Duverger’s law: a formal model of pre-election polls and strategic voting. Am. Polit. Sci. Rev. 91, 135–147 (1997)

Forsythe, R., Myerson, R., Rietz, T.A., Weber, R.J.: An experiment on coordination in multicandidate elections: the importance of polls and election histories. Soc. Choice Welf. 10, 223–247 (1993)

Forsythe, R., Rietz, T., Myerson, R., Weber, R.: An experimental study of voting rules and polls in three-candidate elections. Int. J. Game Theory 25(3), 355–383 (1996)

Friedman, D., Isaac, R.M., James, D., Sunder, S.: Risky Curves: On the Empirical Failure of Expected Utility. Routledge, Abingdon (2014)

Friedman, D., Sinervo, B.: Evolutionary Games in Natural, Social, and Virtual Worlds. Oxford University Press, Oxford (2016)

Fudenberg, D., Liang, A.: Predicting and Understanding Initial Play. Unpublished manuscript MIT and University of Pennsylvania (2018)

Gale, J., Binmore, K.G., Samuelson, L.: Learning to be imperfect: the ultimatum game. Games Econ. Behav. 8(1), 56–90 (1995)

Gilboa, I., Matsui, A.: Social stability and equilibrium. Econometrica 59, 859–867 (1991)

Goeree, J.K., Holt, C.A.: A model of noisy introspection. Games Econ. Behav. 46(2), 365–382 (2004)

Goeree, J.K., Holt, C.A., Palfrey, T.R.: Quantal response equilibrium and overbidding in private-value auctions. J. Econ. Theory 104(1), 247–272 (2002)

Goeree, J.K., Palfrey, T.R., Holt, C.A.: Risk averse behavior in generalized matching pennies games. Games Econ. Behav. 45(1), 97–113 (2003)

Guarnaschelli, S., McKelvey, R.D., Palfrey, T.R.: An experimental study of jury decision rules. Am. Polit. Sci. Rev. 94(2), 407–423 (2000)

Gunnthorsdottir, A., Vragov, R., Seifert, S., McCabe, K.: Near-efficient equilibria in contribution-based competitive grouping. J. Public Econ. 94(11–12), 987–994 (2010a)

Gunnthorsdottir, A., Vragov, R., Shen, J.: Tacit coordination in contribution-based grouping with two endowment levels. In: Isaac, R.M., Norton, D. (eds.) Charity with Choice, Research in Experimental Economics, vol. 13, pp. 13–75. Emerald Group Publishing Limited, Bingley (2010b)

Güth, W., Schmittberger, R., Schwarze, B.: An experimental analysis of ultimatum bargaining. J. Econ. Behav. Organ. 3(4), 367–388 (1982)

Harrison, G.W., Rutström, E.E.: Risk aversion in the laboratory. In: Cox, J., Harrison, G.W. (eds.) Research in Experimental Economics, vol. 12, pp. 41–196. Emerald Group Publishing Limited, Bingley (2008)

Harsanyi, J., Selten, R.: General Theory of Equilibrium Selection in Games. MIT Press, Cambridge, MA (1988)

Harsanyi, J.C.: Games with randomly disturbed payoffs: a new rationale for mixed-strategy equilibrium points. Int. J. Game Theory 2(1), 1–23 (1973)

Hart, S.: Evolutionary dynamics and backward induction. Games Econ. Behav. 41, 227–264 (2002)

Hedges, L., Olkin, I.: Statistical Methods for Meta-Analysis. Academic Press, Cambridge (1985)

Hefti, A.: Equilibria in symmetric games: theory and applications. Theor. Econ. 12(3), 979–1002 (2017)

Hofbauer, J.: Stability for the best response dynamics. Unpublished manuscript (1995)

Hofbauer, J., Sigmund, K.: Evolutionary Games and Population Dynamics. Cambridge University Press, Cambridge (1998)

Hoffman, M., Suetens, S., Gneezy, U., Nowak, M.A.: An experimental investigation of evolutionary dynamics in the Rock-Paper-Scissors game. Sci. Rep. 5, 8817 (2015)

Holt, C.A., Laury, S.K.: Risk aversion and incentive effects. Am. Econ. Rev. 92(5), 1644–1655 (2002)

Hsu, S.-H., Huang, C.-Y., Tang, C.-T.: Minimax play at Wimbledon: comment. Am. Econ. Rev. 97, 517–523 (2007)

Kandori, M., Mailath, G., Rob, R.: Learning, mutation, and long-run equilibria in games. Econometrica 61, 29–56 (1993)

Kohlberg, E., Mertens, J.-F.: On the strategic stability of equilibria. Econometrica 54, 1003–1037 (1986)

Kuzmics, C.: Stochastic evolutionary stability in extensive form games of perfect information. Games Econ. Behav. 83(2), 321–336 (2004)

Kuzmics, C.: On the elimination of dominated strategies in stochastic models of evolution with large populations. Games Econ. Behav. 72(2), 452–466 (2011)

Kuzmics, C., Palfrey, T., Rogers, B.W.: Symmetric play in repeated allocation games. J. Econ. Theory 154, 25–67 (2014)

Kuzmics, C., Rogers, B.: A comment on “Egalitarianism and efficiency in repeated symmetric games” by V. Bhaskar [Games Econ. Behav. 32: 247–262, 2000]. Games Econ. Behav. 74(1), 240–242 (2012)

Kuzmics, C., Rogers, B.W.: An incomplete information justification of symmetric equilibrium in symmetric games. SSRN working paper (2010)

Laraki, R., Mertikopoulos, P.: Higher order game dynamics. J. Econ. Theory 148(6), 2666–2695 (2013)

Levine, D.K., Palfrey, T.R.: The paradox of voter participation? A laboratory study. Am. Polit. Sci. Rev. 101(1), 143–158 (2007)

Mailath, G.J., Samuelson, L.: Repeated Games and Reputations. Oxford University Press, Oxford (2006)

Matsui, A.: Best response dynamics and socially stable strategies. J. Econ. Theory 57, 343–362 (1992)

Maynard Smith, J.: Evolution and the Theory of Games. Cambridge University Press, Cambridge (1982)

Maynard Smith, J., Price, G.R.: The logic of animal conflict. Nature 246, 15–18 (1973)

McKelvey, R.D., Palfrey, T.R.: Quantal response equilibria for normal form games. Games Econ. Behav. 10(1), 6–38 (1995)

McKelvey, R.D., Palfrey, T.R.: Quantal response equilibria for extensive form games. Exp. Econ. 1(1), 9–41 (1998)

Mehta, J., Starmer, C., Sugden, R.: Focal points in pure coordination games: an experimental investigation. Theor. Decis. 36, 163–185 (1994a)

Mehta, J., Starmer, C., Sugden, R.: The nature of salience: an experimental investigation of pure coordination games. Am. Econ. Rev. 84, 658–673 (1994b)

Myerson, R.B.: Refinements of the Nash equilibrium concept. Int. J. Game Theory 7, 73–80 (1978)

Myerson, R.B., Weber, R.J.: A theory of voting equilibria. Am. Polit. Sci. Rev. 87, 102–114 (1993)

Nachbar, J.: Evolutionary selection dynamics in games: convergence and limit properties. Int. J. Game Theory 19, 59–89 (1990)

Nash, J.: Non-cooperative games. Ph.D. dissertation, Princeton University (1950)

Nash, J.: Non-cooperative games. Ann. Math. 52, 286–295 (1951)

Nöldeke, G., Samuelson, L.: An evolutionary analysis of backward and forward induction. Games Econ. Behav. 5, 425–454 (1993)

O’Neill, B.: Nonmetric test of the minimax theory of two-person zerosum games. Proc. Natl. Acad. Sci. 84(7), 2106–2109 (1987)

Oprea, R., Henwood, K., Friedman, D.: Separating the Hawks from the Doves: evidence from continuous time laboratory games. J. Econ. Theory 146(6), 2206–2225 (2011)

Palacios-Huerta, I.: Professionals play minimax. Rev. Econ. Stud. 70(2), 395–415 (2003)

Palfrey, T.R.: A mathematical proof of Duverger’s law. In: Ordeshook, P.C. (ed.) Models of Strategic Choice in Politics, pp. 69–92. University of Michigan Press, Ann Arbor (1989)

Palfrey, T.R.: Laboratory experiments in political economy. Annu. Rev. Polit. Sci. 12, 379–388 (2009)

Palfrey, T.R.: Experiments in political economy. In: Kagel, J.H., Roth, A.E. (eds.) Handbook of Experimental Economics, vol. 2, pp. 347–434. Princeton University Press, Princeton (2016)

Palm, G.: Evolutionary stable strategies and game dynamics for n-person games. J. Math. Biol. 19(3), 329–334 (1984)

Pearce, D.G.: Rationalizable strategic behavior and the problem of perfection. Econometrica 52, 1029–1051 (1984)

Peleg, B., Rosenmüller, J., Sudhölter, P.: The canonical extensive form of a game form: symmetries. In: Current Trends in Economics, pp. 367–387. Springer, Berlin (1999)

Plan, A.: Symmetric N-Player Games. Mimeo, University of Arizona, Tucson (2017)

Rabin, M.: Risk aversion and expected-utility theory: a calibration theorem. Econometrica 68(5), 1281–1292 (2000)

Rietz, T., Myerson, R., Weber, R.: Campaign finance levels as coordinating signals in three-way, experimental elections. Econ. Polit. 10(3), 185–218 (1998)