Abstract

Using an agent-based model, this paper revisits the merits, for a central bank, of announcing its inflation target. The model preserves the main transmission channels of monetary policy used in dynamic stochastic general equilibrium models, namely the consumption and the expectation channels, while allowing for agents’ heterogeneity in both expectations and behavior. We find that, in a rather stable environment such as the Great Moderation period, announcing the target allows for the emergence of a loop between credibility and success: if the target is credible, inflation expectations remain anchored at the target, which helps stabilize inflation and, in turn, reinforces the central bank’s credibility. We then tune the degree of heterogeneity in agents’ behavior and the individual learning process to introduce inflationary pressures, accompanied or not by uncertainty affecting the real transmission channel of monetary policy. Although learning and heterogeneity would a priori suggest that transparency is beneficial, we show that this virtuous circle is not robust, as transparency may expose the central bank to a risk of credibility loss. In this case, we discuss the potential benefits of partial announcements.

1 Introduction

Over the past three decades, inflation targeting (IT hereafter) has been adopted by an increasing number of countries. Under an IT regime, the central bank (CB hereafter) puts a strong emphasis on communication, especially by announcing the inflation target to the public. Following this trend, an important strand of the academic literature has investigated the macroeconomic benefits that may be expected from adopting IT.Footnote 1 Most of the related studies point to the impact on inflation expectations as the key stabilization mechanism under this regime.

Two properties of an explicit inflation target have been emphasized (see, notably, Demertzis and Viegi 2008, 2009). First, an explicit, numerical target is a good candidate as a focal point for coordinating potentially heterogeneous inflation expectations. Second, the announced target becomes a natural reference point for assessing the inflation performance of the monetary authorities and, hence, for judging the credibility of the announcement itself. As a consequence, this credibility is a key factor in determining whether the target can become an effective anchoring device for inflation expectations.Footnote 2 In a dynamic perspective, Demertzis and Viegi (2009) show that the anchoring properties of IT arise through the emergence of a self-reinforcing credibility-success loop: the more credible the monetary authorities, the more likely inflation expectations are to be anchored on the target, and the more likely inflation is to be stabilized around the target, which consolidates the initially favorable credibility assessment, and so on.

The current paper revisits the stabilization properties of the inflation target in an economic setting characterized by a collection of fully heterogeneous agents who behave, interact and learn under bounded rationality. In that regard, we depart from the aforementioned literature, which has addressed the role of the target in a context where heterogeneity essentially pertains to the formation of inflation expectations, while treating agents as homogeneous and fully rational players in the coordination game. In this paper, agents may differ not only in the formation of their inflation expectations but also in other dimensions of their economic behavior. This heterogeneity is, in turn, likely to complicate the coordination process between agents in a bounded rationality context.

In such an environment, the question naturally arises of whether the anchoring properties of the inflation target can be used as an efficient stabilization tool by the monetary authorities. The answer depends on the interplay between, on the one hand, the dynamics of inflation expectations, which may be influenced by the public announcement of the target, and, on the other hand, the learning dynamics that drive the coordination between agents. In particular, controlling the inflation rate is unlikely to be a simple matter, as interactions between agents and learning shape the transmission mechanisms of monetary policy in the economy. As a consequence, announcing the target can pose a more pronounced credibility challenge for the policymaker than in representations of IT within representative-agent settings.

Given the features of the economy on which we want to focus, an agent-based model (ABM) is a well-suited framework. This framework acknowledges the heterogeneity between agents and the modalities of their learning behavior at the individual level, without being constrained by the assumptions of intertemporal optimization and the aggregation requirements of representative agents.Footnote 3 The price to pay for that flexibility is twofold: the model has no tractable analytical representation, so it must be assessed through numerical simulations of the emerging dynamics, and its outcomes depend on the range of parameters, which must therefore be chosen with caution.

In this paper, we elaborate on the ABM developed in Salle et al. (2013). This ABM is deliberately constructed so as to retain the basic structure of standard macroeconomic models dealing with monetary policy (such as the NK model). Specifically, it encompasses the consumption and the expectation channels of the transmission of monetary policy to inflation. We extend this ABM by explicitly accounting for an inflation expectations formation process that acknowledges the twofold status of the inflation target under IT, acting both as a focal point and as a reference point for credibility. On those aspects, we adapt the approach developed by Demertzis and Viegi (2009) to the case of agents’ heterogeneity and bounded rationality. We examine under which conditions a credibility-success loop may emerge under IT and make this regime an advantageous choice for the monetary authorities in the economic environment we consider.

Our main results can be summarized as follows. The announcement of an inflation target may allow for the emergence of a credibility-success loop if a relatively wide radius of tolerance is coupled with that target. However, this loop arises only in stable macroeconomic environments. In volatile macroeconomic environments, especially those characterized by significant variability in the expectation channel of monetary policy, tying one’s hands by announcing an inflation target turns out to be problematic. Macroeconomic volatility impairs the ability of the CB to deliver on its official inflation commitment, and a credibility problem emerges. As a result, a reverse credibility loop can set in. In that case, the economy may benefit from partial announcements of the inflation target, which provide a clear signal to anchor inflation expectations for the part of the public reached by the announcement, while allowing for a less tightly defined objective for the remaining part. Overall, our results suggest that fully revealing the inflation target can deteriorate macroeconomic performance, even in a setting with core features (i.e. learning and heterogeneity) that would a priori lead one to think favorably about CB transparency.

The remainder of the paper is organized as follows. Section 2 presents the ABM. Section 3 explains the simulation protocol and gives insights into the main mechanisms at work in the model. Section 4 discusses the results and Section 5 concludes.

2 The model

2.1 General features

This model elaborates on the macroeconomic ABM first introduced in Salle et al. (2013). This ABM shares several general features of the baseline NK framework (see Woodford 2003, Chap. 4). Labor is the only input, used to produce a perishable good, and the goods market operates under imperfect competition. Price and wage adjustments are characterized by nominal rigidities. Inflation is driven by both aggregate demand and inflation expectations, in line with the NK Phillips curve. The two usual transmission channels of monetary policy follow: the consumption and the expectation channels. The CB uses a Taylor rule to set the interest rate.

The economy is populated by n households, indexed by i ∈ [1,n], a single firm summarizing the supply side, and a CB. The sequence of events is as follows. First, the labor market allocates households’ labor supplies to the firm. The quantity of hired labor determines the unemployment rate, the firm’s goods supply, its labor costs and the corresponding price, as well as households’ labor income. Second, households choose their consumption and savings/debt strategy. In a third step, the goods market determines the allocation of the goods supply to each household. This allocation dictates the firm’s profit and each household’s utility. Fourth, agents update their individual behavior and inflation expectations. Finally, the CB sets the nominal interest rate for the next period, and the story starts all over again.

2.2 Households

Households supply labor and consume according to two simple rules of thumb. To implement those two rules, they need to forecast inflation. Moreover, they adapt those rules according to a social learning process, and update their inflation forecasts on the basis of the realization of inflation and the CB’s announcements.

2.2.1 Individual behavior

Labor supply

In each period, each household is endowed with an inelastic labor supply normalized to one, i.e. \(h^{s}_{i,t}= 1\), ∀t,i.Footnote 4 This normalization allows us to define unemployment explicitly in the model, and can be interpreted as a full-time occupation.Footnote 5 Labor supply behavior is formulated in terms of a reservation wage. The heuristic rule that we choose introduces a direct transmission channel from inflation expectations to the growth rate of nominal wages, and hence to price inflation, which yields an expectation channel of monetary policy in the model. In every period t, each household i sets its reservation wage following the first rule of thumb:

Heuristic (1) indicates that households raise their reservation wage \(w_{i,t}\) only if their inflation expectation \(\pi ^{e}_{i,t + 1}\) is positive (and, consequently, \(\mathbb {1}_{\pi ^{e}_{i,t+1}>0}= 1\)). A wage indexation process then prevails, according to which households raise their wage by \(\gamma ^{w}_{i,t}\, \pi ^{e}_{i,t + 1}\). Otherwise, they keep it unchanged (\(\mathbb {1}_{\pi ^{e}_{i,t+1}>0}= 0\)). Wages are increasing with expected inflation, while being subject to downward nominal wage stickiness (\(\gamma ^{w}_{i,t}>0\)).Footnote 6 In this set-up, coefficients \({\gamma ^{w}_{i}}\) stand for the strength of the wage-price inflation spiral. Coefficient \({\gamma ^{w}_{i}}\) is the first strategy variable of households. Any household faces a trade-off when choosing its strategy \({\gamma ^{w}_{i}}\): setting a high \({\gamma ^{w}_{i}}\), i.e. a high reservation wage, would induce higher wage payments, and a higher consumption level if the household is employed. However, at the same time, the probability of becoming unemployed rises, as the firm hires the least demanding households first (see Section 2.5). Furthermore, the higher inflation is, the higher the cost of under-indexation in terms of purchasing power, and the higher the incentive of the household to set an indexation coefficient at least equal to unity. This relationship between expected inflation and indexation coefficients is investigated in detail in numerical simulations in Section 3.
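The first rule of thumb can be sketched as a one-line update. Eq. 1 itself is not reproduced in this text, so the multiplicative form \(w_{i,t} = w_{i,t-1}(1 + \gamma^{w}_{i,t}\pi^{e}_{i,t+1})\) when \(\pi^{e}_{i,t+1} > 0\) is an assumption built from the verbal description above; the function name `update_reservation_wage` is hypothetical.

```python
def update_reservation_wage(w_prev: float, gamma_w: float, pi_e: float) -> float:
    """First rule of thumb (assumed multiplicative form of Eq. 1).

    The reservation wage is indexed on expected inflation, with indexation
    coefficient gamma_w, only when expected inflation is positive; otherwise it
    is left unchanged, which produces downward nominal wage stickiness.
    """
    if pi_e > 0.0:
        return w_prev * (1.0 + gamma_w * pi_e)
    return w_prev


# Full indexation (gamma_w = 1) with 2% expected inflation raises the wage by 2%.
print(update_reservation_wage(1.0, gamma_w=1.0, pi_e=0.02))
# Expected deflation leaves the reservation wage unchanged.
print(update_reservation_wage(1.0, gamma_w=1.0, pi_e=-0.01))
```

With \(\gamma^{w}_{i,t} < 1\), the reservation wage grows more slowly than expected prices, which is the purchasing-power cost of under-indexation discussed above.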

Consumption

In each period, each household receives a nominal income given by:

where \(h_{i,t} \leq h^{s}_{i,t}\) is the actual labor supplied by household i after the matching process in the labor market, \(w_{i,t}h_{i,t}\) is then the corresponding labor income, \(\Pi_{t-1}/n\) the share of the last period’s total nominal profits evenly distributed among households, and \(i_{t-1}\) the nominal riskless interest rate set by the CB. \(b_{i,t-1}\) stands for the nominal holdings (positive in case of savings and negative in case of debt), and is simply given by the difference between current income and actual consumption expenditures after the matching in the goods market.

In line with the consumption behavior described by the Euler equation in NK models, we assume that households desire to smooth their consumption path. Accordingly, each household computes a proxy of its permanent income, as defined by Friedman (1957, Chap. III), as a moving average of its past incomes:

where ρ ∈ [0,1[ is a memory parameter common to all households. Each household then intends to consume a share \(d_{i,t} > 0\) of its permanent income. Formally, the demand for goods of each household i in period t is expressed as:Footnote 7

Households adjust their consumption rate, \(d_{i,t}\), according to the second rule of thumb. We specify a counterpart of the standard Euler condition in which the real interest rate dictates consumption and savings decisions:Footnote 8

The adjustments of \(d_{i,t}\) depend on the gap between the current real interest rate expected by household i, i.e. \(i_{t} - \pi ^{e}_{i, t + 1}\), and the natural (real) rate \({r^{n}_{t}}\) (assumed to be zero in numerical simulations). Accordingly, monetary policy influences aggregate demand through the nominal interest rate and, for a given level of inflation expectations, through the real interest rate. The coefficient \(\gamma ^{d}_{i,t} \in \mathbb {R} \) is the households’ second strategy. As soon as \(\gamma ^{d}_{i,t} > 0\), consumption decreases when the real interest rate rises, and we obtain the standard consumption channel of monetary policy through the substitution effect. Otherwise (i.e., when \( \gamma ^{d}_{i,t} < 0\)), the income effect dominates and the consumption channel is reversed. Both effects have been emphasized as plausible in the empirical literature (see Oeffner 2008, p. 83, for a review).
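The income and consumption rules of this subsection can be sketched together. Eqs. 2 to 5 are not reproduced in this text, so every functional form below is an assumption built from the verbal description: income as labor income plus an equal profit share plus holdings returned with interest, permanent income as an exponential moving average with memory ρ, real demand as \(d_{i,t}\) times permanent income divided by the price, and an additive update of \(d_{i,t}\) in the expected real-rate gap, clipped to hypothetical bounds `d_min` and `d_max` (standing in for the consumption bounds mentioned in Section 3.1). All function names are hypothetical.

```python
def nominal_income(w, h, profits_prev, n, i_prev, b_prev):
    """Assumed Eq. 2: labor income + equal profit share + holdings with interest.

    b_prev > 0 denotes savings, b_prev < 0 denotes debt.
    """
    return w * h + profits_prev / n + (1.0 + i_prev) * b_prev


def permanent_income(yp_prev, y, rho):
    """Assumed Eq. 3: exponential moving average with memory rho in [0, 1)."""
    return rho * yp_prev + (1.0 - rho) * y


def goods_demand(d, yp, price):
    """Assumed Eq. 4: real demand = share d of permanent income / price."""
    return d * yp / price


def update_consumption_rate(d_prev, gamma_d, i_t, pi_e, r_n=0.0, *, d_min, d_max):
    """Assumed Eq. 5: additive adjustment in the expected real-rate gap.

    gamma_d > 0: a higher real rate lowers d (substitution effect);
    gamma_d < 0: a higher real rate raises d (income effect dominates).
    """
    gap = (i_t - pi_e) - r_n
    return min(max(d_prev - gamma_d * gap, d_min), d_max)


y = nominal_income(w=1.0, h=1.0, profits_prev=50.0, n=500, i_prev=0.01, b_prev=0.0)
yp = permanent_income(yp_prev=1.0, y=y, rho=0.9)
d = update_consumption_rate(0.9, gamma_d=2.0, i_t=0.03, pi_e=0.01, d_min=0.5, d_max=1.5)
print(y, yp, d, goods_demand(d, yp, price=1.0))
```

Flipping the sign of `gamma_d` in the last call raises the consumption rate instead of lowering it, which is the reversed consumption channel described above.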

2.2.2 Adaptation through social learning

Following the assumption of perpetual learning, the two strategies \(\gamma ^{w}_{i,t}\) and \(\gamma ^{d}_{i,t}\) are updated at the end of each period through a simple form of a genetic algorithm involving two learning operators: a social learning mechanism (imitation) and random experiments in the strategy space.Footnote 9

Imitation is based on households’ performance, measured by smoothed utility:

where \(u(c_{i,t}) \equiv \ln(c_{i,t})\) in the numerical simulations and ρ is the same memory parameter as in Eq. 3. The use of a smoothed measure reflects a concern for the persistence of performance. In each period t, with a probability \(P_{imit}\), a household i imitates the strategies \((\gamma ^{w}_{j,t}, \gamma ^{d}_{j,t})\) of another household j≠i. The household to be imitated is chosen with a roulette-wheel selection process. Formally, the probability that household j is imitated is proportional to its relative utility in the households’ population:

where the exponential function is set to cope with negative utility values. Consequently, better strategies in terms of utility are favored by the selection process, and they tend to replace less performing ones among the population of households.
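The imitation step can be sketched as follows, assuming that Eq. 6 is the exponential moving average \(U_{i,t} = \rho U_{i,t-1} + (1-\rho)\,u(c_{i,t})\) and that Eq. 7 assigns each \(j \neq i\) the probability \(e^{U_{j,t}} / \sum_{l \neq i} e^{U_{l,t}}\). Both forms, and the exclusion of household i from the denominator, are assumptions; the function names are hypothetical. Subtracting the largest utility before exponentiating leaves the probabilities unchanged and only avoids overflow.

```python
import math
import random


def smoothed_utility(U_prev, c, rho):
    """Assumed Eq. 6: exponential moving average of u(c) = ln(c)."""
    return rho * U_prev + (1.0 - rho) * math.log(c)


def imitation_probabilities(U, i):
    """Probability that each j != i is imitated by household i, proportional
    to exp(U_j); household i itself gets probability zero."""
    m = max(U[j] for j in range(len(U)) if j != i)  # shift for numerical stability
    weights = [0.0 if j == i else math.exp(U[j] - m) for j in range(len(U))]
    total = sum(weights)
    return [w / total for w in weights]


def pick_model(U, i, rng):
    """Roulette-wheel draw of the household whose strategies i will copy."""
    probs = imitation_probabilities(U, i)
    return rng.choices(range(len(U)), weights=probs, k=1)[0]


U = [0.0, math.log(2.0), math.log(3.0)]
print(imitation_probabilities(U, i=0))   # roughly [0.0, 0.4, 0.6]
print(pick_model(U, i=0, rng=random.Random(3)))
```

Negative smoothed utilities (consumption below one) are handled naturally, since the exponential keeps every weight positive.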

With a probability \(P_{mut}\), a household can also perform a random experiment, in order to potentially discover better strategies than those already present among the households’ population. In this case, it draws a new \(\gamma ^{w}_{i,t + 1}\) coefficient from a normal distribution with mean equal to the average of the coefficients \(\gamma ^{w}_{i,t} \) across all households, and a given standard deviation σw: \(\mathcal {N} \left (\frac {{\sum }_{l = 1}^{n} \gamma ^{w}_{l,t}}{n}, \sigma _{w} \right )\). We truncate the draw at zero, as negative indexation coefficients are not relevant. The new strategy \(\gamma ^{d}_{i,t + 1}\) is also drawn from a normal distribution, with a given standard deviation σd: \(\mathcal {N} \left (\frac {{\sum }_{l = 1}^{n} \gamma ^{d}_{l,t}}{n}, \sigma _{d} \right ) \), but this draw does allow for negative coefficients, as both substitution and income effects are plausible (see Eq. 5).

With a probability \(1 - P_{imit} - P_{mut}\), the household keeps its strategies \((\gamma ^{w}_{i,t}, \gamma ^{d}_{i,t})\) unchanged for the next period t + 1.
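Putting the three outcomes together, one household’s end-of-period learning step might look like the sketch below. The index `j` of the household to imitate is passed in (it would come from the roulette-wheel selection). Truncating the draw at zero is read here as resampling until the draw is non-negative; clipping negative draws to zero would be another reading. All names are hypothetical.

```python
import random
import statistics


def truncated_normal_nonneg(mean, sd, rng):
    """Draw from N(mean, sd) restricted to [0, +inf) by rejection sampling."""
    while True:
        x = rng.gauss(mean, sd)
        if x >= 0.0:
            return x


def learning_step(gw, gd, i, j, p_imit, p_mut, sigma_w, sigma_d, rng):
    """Return household i's strategies (gamma_w, gamma_d) for period t + 1.

    gw, gd: current strategies of all households; j: index of the household
    picked for imitation (j != i).
    """
    draw = rng.random()
    if draw < p_imit:
        # Imitation: copy both strategies of household j.
        return gw[j], gd[j]
    if draw < p_imit + p_mut:
        # Random experiment around the population means.
        new_gw = truncated_normal_nonneg(statistics.fmean(gw), sigma_w, rng)
        new_gd = rng.gauss(statistics.fmean(gd), sigma_d)  # may be negative
        return new_gw, new_gd
    # Otherwise keep current strategies (probability 1 - p_imit - p_mut).
    return gw[i], gd[i]


rng = random.Random(7)
gw = [0.5, 1.0, 1.5]
gd = [0.2, -0.1, 0.4]
print(learning_step(gw, gd, i=0, j=2, p_imit=0.1, p_mut=0.05,
                    sigma_w=0.1, sigma_d=0.1, rng=rng))
```

Because the mutation mean for \(\gamma^{w}\) is an average of non-negative coefficients, at least half of the normal mass lies above zero, so the rejection loop terminates quickly.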

Parameters σd and σw can be interpreted in terms of shocks: they control the endogenous variability in the model, which arises from the heterogeneity in the individual behavior and its evolution through the learning process.

High values of σd are associated with a high level of uncertainty about the way monetary policy is transmitted to aggregate demand (see Eq. 5). That situation is akin to model uncertainty in the related literature on monetary policy under uncertainty, and we refer to it as such in the paper.Footnote 10 Variability induced by σw directly translates into variability in the inflation process through the wage-indexation scheme, and has effects on inflation dynamics similar to those of cost-push shocks (see Eq. 1). Values of σw higher than unity generate second-round effects that fuel a wage-price inflation spiral, and may give rise to a stabilization trade-off between the level of inflation and the level of output.

From the preceding, it should be clear that households’ inflation expectations play a central role in the economic dynamics in our model: i) they determine the ex ante real interest rate, through which the CB affects aggregate demand, and ii) they feed the inflation dynamics, and can endogenously drive the inflation process. For these reasons, it becomes important that the CB acts as a manager of expectations (Woodford 2003).

2.2.3 Inflation expectations and CB’s announcements

We assume an inflation expectation formation mechanism that jointly integrates credibility and coordination issues, as in Demertzis and Viegi (2009).Footnote 11 We distinguish between two regimes: IT, in which the CB announces to all households the inflation target πT and the radius of tolerance ±ζ around it, and non-IT, in which neither of these parameters is announced. Unlike Demertzis and Viegi (2009), our ABM explicitly models heterogeneous expectations, and the question of coordination naturally arises.

Under IT,

agents assess the credibility they attribute to the CB by evaluating its past performance in terms of inflation over a finite number of past periods, denoted by window. In each period in which past inflation has been contained within the announced range [πT − ζ,πT + ζ], they consider the CB as successful. If the CB has been successful in x periods over the last window periods, they compute their perceived credibility, denoted by \(P_{target}\), as \( P_{target}=\frac {x}{window} \in [0, 1]\). Each household then determines its inflation expectation as follows:

where \(\xi_i\) is an i.i.d. noise with mean zero and variance σξ. The first case in Eq. 8 is in line with the definition of credibility given in Faust and Svensson (2001), as the gap between the inflation target and the average inflation expectations. Our expectations scheme allows for the emergence of a credibility-success loop: the more successful the CB, the higher \(P_{target}\), the closer inflation expectations are to the target, and the more likely inflation is to be contained in the announced range. The reverse is true in the case of a credibility loss. Moreover, the radius of tolerance around the target, ζ, plays an ambivalent role: the wider the radius (i.e. the higher ζ), the more likely past inflation rates are to fall within the range, and the higher, ceteris paribus, \(P_{target}\). However, the wider the range, the less clear the focal point, and the more heterogeneous agents’ expectations. Section 3.2 below illustrates this mechanism in the numerical simulations.

The second case of Eq. 8 corresponds to naive (noisy) expectations,Footnote 12 which capture well the unanchoring of inflation expectations when credibility is weak.
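The credibility computation and the two cases of the expectation rule can be sketched as follows. The computation of \(P_{target} = x/window\) follows the text directly. Eq. 8 itself is not reproduced here, so the expectation function is a hypothetical form: with probability \(P_{target}\) the household is anchored and draws its expectation uniformly inside the announced band (one way of making a wider ζ produce more heterogeneous expectations), and otherwise it uses last-period inflation plus Gaussian noise with variance σξ. Function names are hypothetical.

```python
import math
import random


def perceived_credibility(past_inflation, pi_target, zeta, window):
    """P_target = x / window, where x counts the last `window` periods in which
    inflation stayed inside [pi_target - zeta, pi_target + zeta]."""
    if len(past_inflation) < window:
        raise ValueError("need at least `window` past inflation rates")
    recent = past_inflation[-window:]
    x = sum(1 for pi in recent if pi_target - zeta <= pi <= pi_target + zeta)
    return x / window


def it_expectation(p_target, pi_target, zeta, pi_last, var_xi, rng):
    """Hypothetical form of Eq. 8 for one household under IT."""
    if rng.random() < p_target:
        # Anchored case (assumed): a draw inside the announced band, so a
        # wider zeta yields more heterogeneous expectations.
        return rng.uniform(pi_target - zeta, pi_target + zeta)
    # Naive case: last observed inflation plus N(0, var_xi) noise.
    return pi_last + rng.gauss(0.0, math.sqrt(var_xi))


history = [0.010, 0.025, 0.050, 0.020, 0.028]
p = perceived_credibility(history, pi_target=0.02, zeta=0.01, window=4)
print(p)  # 0.75: three of the last four periods fall inside [0.01, 0.03]
rng = random.Random(0)
expectations = [it_expectation(p, 0.02, 0.01, history[-1], 1e-6, rng) for _ in range(500)]
```

In this sketch, a run of periods outside the band lowers `p`, which raises the share of naive forecasts tied to realized inflation: the reverse credibility loop described above.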

Under non-IT,

we assume that households use the average inflation rate over the last window periods as a reference point to evaluate inflation performance, instead of the target, which they do not know.Footnote 13 This is the only difference between the two regimes. Each household then determines its inflation expectation as follows:

where \(\tilde {\pi }_{t}\) is the average inflation over the last window periods. Admittedly, the radius of tolerance ζ under non-IT is not a choice of the CB, and has a slightly different interpretation than under IT: it is the tolerance that households use to determine whether inflation has been far from or close to its past average value.

The choice of the inflation expectations formation process under non-IT is motivated by several reasons. First, it allows for a credibility-success loop as under IT, although it does not provide an anchoring device around the target as such (on that point, we follow an extension of their model suggested by Demertzis and Viegi 2009, p. 31). Second, Eq. 9 reflects the lack of anchor in the absence of an explicit inflation target. In our model, if the CB meets its target, the average past inflation remains close to the target, and non-IT resembles IT. However, a series of failures in keeping inflation close to the target pulls average inflation away from the implicit inflation target, and contributes to further deviations of inflation expectations from that target. It should be further noted, as illustrated in Section 3.2, that this design of non-IT results by construction in the same amount of heterogeneity in expectations under IT and non-IT (for given values of \(P_{target}\) and ζ), which allows for a fair comparison of their relative performance. Third, as stressed in an experimental study by Roos and Schmidt (2012), past trends of macroeconomic variables are a key determinant of forecasts when laypeople, such as households, are concerned. Finally, the specification we use reflects the Keynesian notion of “market sentiment”, which has been modelled in the context of monetary policy by Canzian (2009) and De Grauwe (2011).
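The non-IT reference point can be sketched in the same way: the only change is that the success band is centred on \(\tilde{\pi}_t\), the average inflation over the last window periods, rather than on the unannounced target. Using the same window both to compute \(\tilde{\pi}_t\) and to count successes is an assumption, and the function name is hypothetical.

```python
def non_it_credibility(past_inflation, zeta, window):
    """Under non-IT, success is judged against average past inflation
    pi_tilde over the last `window` periods, not against the target."""
    if len(past_inflation) < window:
        raise ValueError("need at least `window` past inflation rates")
    recent = past_inflation[-window:]
    pi_tilde = sum(recent) / window
    x = sum(1 for pi in recent if abs(pi - pi_tilde) <= zeta)
    return pi_tilde, x / window


pi_tilde, p = non_it_credibility([0.02, 0.02, 0.02, 0.06], zeta=0.015, window=4)
print(round(pi_tilde, 4), p)  # 0.03 0.75
```

The example shows the drift mechanism discussed above: a single high-inflation period pulls the reference point \(\tilde{\pi}_t\) from 0.02 to 0.03, away from any implicit 2% target.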

From the preceding, it follows that, in our set-up, the benefit from announcing the target mainly arises from the potential anchoring effect on households’ inflation expectations. Other economic effects of transparency have been considered in the literature, but we do not take them into consideration in this paper. For example, the role of policy objective announcements as an implicit commitment device has been stressed in models where the CB has an incentive to create inflation surprises (see, for example, Walsh 1995), which is not the case in the framework we consider. Furthermore, in our model, households do not rely on interest rate changes to forecast inflation in the absence of an explicit inflation target, so that we cannot address the so-called opacity bias (see Walsh 2010). Finally, in our model, coordination is not attractive as such, because the utility function depends only on consumption; however, households’ expectations indirectly influence other households’ consumption.Footnote 14 Households therefore have a collective interest in coordinating their inflation expectations. Coordination could also be assessed with respect to the performance of agents’ learning. As strategies \(\gamma ^{w}_{i,t}\) and \(\gamma ^{d}_{i,t}\) are directly related to individual inflation expectations, one could expect the social learning mechanism to yield better performance when it takes place in an environment where agents hold comparable beliefs about the future. Coordination could thus favor learning.

We now turn to the description of the rest of the model.

2.3 The firm

2.3.1 Production and price setting behavior

When the labor demand of the firm (see Section 2.3.2) meets the labor supply of households in the labor market (see Section 2.5), the rationing mechanism determines the actual quantity of labor (Ht) that the firm hires, and the corresponding wage bill. The firm uses that quantity to produce goods through a standard production function (see, for example, Gali 2008):

where α ∈ [0,1[ captures decreasing returns and \(A_t\) is the technology factor.Footnote 15 The only production costs of the firm result from the wage bill:

and we can compute the nominal aggregate wage level as a weighted average of individual wages, i.e. \(W_{t} \equiv \frac {\Psi ({Y_{t}^{s}})}{H_{t}} \).

The firm sets its price \(P_t\) as a mark-up μ on the marginal cost, and the resulting price is given by:

where we have used the property of the production function that the marginal cost equals the average cost divided by (1 − α).Footnote 16 The price is an increasing function of production \(Y^{s}_{t}\) as soon as 0 < α < 1.
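The supply side can be checked numerically. The sketch below assumes the standard form \(Y_t = A_t H_t^{1-\alpha}\) (the production function itself is not reproduced in this text), computes the wage bill as the sum of individual wage payments, and sets the price as a mark-up over marginal cost, with marginal cost equal to average cost divided by \(1-\alpha\). With \(A_t = 1\), the implied real wage \(W_t/P_t\) coincides with the expression \(\frac{(1-\alpha)}{(1+\mu)} H_t^{-\alpha}\) given in Section 2.5.1. Parameter values are illustrative only and function names are hypothetical.

```python
def output(H, A, alpha):
    """Assumed production function Y = A * H ** (1 - alpha), alpha in [0, 1)."""
    return A * H ** (1.0 - alpha)


def wage_bill(wages, hours):
    """Psi: sum of individual wage payments w_i * h_i over hired households."""
    return sum(w * h for w, h in zip(wages, hours))


def price(psi, Y_s, alpha, mu):
    """Mark-up mu over marginal cost, with MC = (psi / Y_s) / (1 - alpha)."""
    return (1.0 + mu) * psi / ((1.0 - alpha) * Y_s)


wages, hours = [1.0, 1.2], [1.0, 0.5]    # illustrative values
alpha, mu, A = 0.25, 0.1, 1.0
H = sum(hours)
Y_s = output(H, A, alpha)
psi = wage_bill(wages, hours)
P = price(psi, Y_s, alpha, mu)
W = psi / H                              # aggregate wage level W_t
print(W / P, (1.0 - alpha) / (1.0 + mu) * H ** (-alpha))  # equal up to rounding
```

The identity holds because \(W_t/P_t = (1-\alpha)Y_t/((1+\mu)H_t)\), and substituting the production function gives the Section 2.5.1 expression.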

The rationing mechanism in the goods market (see Section 2.5) determines the quantity that the firm actually sells to households (Yt), which gives its corresponding profit:

2.3.2 Adaptation of the goods supply

The firm behaves in an adaptive way, and updates, in each period, its labor demand strategy \({H^{d}_{t}}\).Footnote 17 As there is a single firm, it cannot benefit from social learning and can only learn through an individual learning process. We consider a simple adaptive mechanism, much in the spirit of gradient learning (see, for example, Leijonhufvud 2006, p. 1631-32 or Delli Gatti et al. 2005). We assume that the firm takes its unsold quantities, if any, as a proxy of the demand it faces, and specify the rule:Footnote 18

where 𝜖 > 0 is a parameter that denotes a (small) adjustment rate. The next period’s labor demand is slightly increased compared to the current quantity of labor hired \(H_t\) if all goods have been sold, or slightly decreased otherwise. As unsold quantities are a loss for the firm’s profit, and profit is increasing in sold quantities, the rule (14) ensures that the adjustment mechanism, while simple, always works in the direction of a profit increase.
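Rule (14) can be sketched as below. The multiplicative form \(H^{d}_{t+1} = H_t(1 \pm \epsilon)\) is an assumption consistent with the description of a slight increase or decrease; the function name is hypothetical.

```python
def update_labor_demand(H_t, unsold, eps):
    """Rule (14), assumed multiplicative form: expand labor demand by eps if
    all goods were sold, contract it by eps if some output went unsold."""
    if unsold <= 0.0:
        return H_t * (1.0 + eps)
    return H_t * (1.0 - eps)


print(update_labor_demand(400.0, unsold=0.0, eps=0.01))   # about 404
print(update_labor_demand(400.0, unsold=12.5, eps=0.01))  # about 396
```

Because the firm only observes whether output was fully sold, the rule uses the sign of unsold output rather than its size.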

2.4 Monetary authority

The CB reacts to both inflation and the level of activity, and sets the nominal interest rate \(i_t\) according to a non-linear Taylor (1993) instrument rule.Footnote 19

where πT stands for the inflation target, u∗ for the natural rate of unemployment, and ϕπ > 0 and ϕu > 0 are the reaction coefficients to the inflation and unemployment rates. The rule incorporates the unemployment rate because we are able to derive it explicitly from the model (see also Orphanides and Williams 2007). We assume u∗ = 0, so that the CB targets full employment.
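A hypothetical sketch of the policy rule. The rule itself is not reproduced in this text, so two points are assumptions: the linear part responds to the inflation gap with ϕπ and to the unemployment gap with ϕu around an intercept \(r^{n} + \pi^{T}\), and the non-linearity is taken to be a zero lower bound on \(i_t\). The function name is hypothetical.

```python
def taylor_rate(pi_t, u_t, pi_target, phi_pi, phi_u, r_n=0.0, u_star=0.0):
    """Hypothetical non-linear Taylor rule.

    Linear in the inflation gap and the unemployment gap, with the
    non-linearity assumed to come from a zero lower bound on i_t.
    """
    i_linear = r_n + pi_target + phi_pi * (pi_t - pi_target) - phi_u * (u_t - u_star)
    return max(i_linear, 0.0)


print(taylor_rate(0.02, 0.00, pi_target=0.02, phi_pi=1.5, phi_u=0.5))  # 0.02
print(taylor_rate(0.00, 0.20, pi_target=0.02, phi_pi=1.5, phi_u=0.5))  # 0.0
```

With u∗ = 0 as in the text, any positive unemployment lowers the policy rate, and a sufficiently deep slump pushes the rule against the floor.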

2.5 Aggregation and dynamics

Markets do not necessarily clear because price and wage strategies are not set a priori so as to make agents’ strategies mutually consistent. Markets instead confront aggregate supply and aggregate demand according to rationing mechanisms.

2.5.1 Labor market

Aggregate demand for labor is the firm’s strategy \({H^{d}_{t}}\), while aggregate supply is given by:

The two are matched according to a process that is designed to be consistent with the firm aiming at minimizing its production costs: the firm hires households in increasing order of reservation wage.Footnote 20 The aggregate hired labor is then set as:

The corresponding unemployment rate is computed as \(u_{t}=\frac {n - H_{t}}{n}\). The real wage rate is given by \(\omega \equiv \frac {W_{t}}{P_{t}} = \frac {(1-\alpha )}{(1 + \mu )} H_{t}^{-\alpha }\), which is decreasing in \(H_t\).
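A minimal sketch of the labor-market matching, assuming that each household supplies one unit of labor (so aggregate supply is n), that hired labor is \(\min(H^{d}_t, H^{s}_t)\) (the matching equation is not reproduced in this text), and that the marginal household may be hired part-time so that \(h_{i,t} \leq h^{s}_{i,t}\). The function name is hypothetical.

```python
def match_labor(reservation_wages, H_d):
    """Hire households in increasing order of reservation wage.

    Each household supplies one unit of labor, so aggregate supply is n.
    Returns individual hours h_i, aggregate hired labor H and unemployment u.
    """
    n = len(reservation_wages)
    to_hire = min(H_d, float(n))          # H_t = min(H^d_t, H^s_t) (assumed)
    hours = [0.0] * n
    for k in sorted(range(n), key=lambda idx: reservation_wages[idx]):
        if to_hire <= 0.0:
            break
        hours[k] = min(1.0, to_hire)      # the marginal household may work part-time
        to_hire -= hours[k]
    H = sum(hours)
    return hours, H, (n - H) / n


hours, H, u = match_labor([1.3, 1.0, 1.1, 1.2], H_d=2.5)
print(hours, H, u)  # [0.0, 1.0, 1.0, 0.5] 2.5 0.375
```

The household with the highest reservation wage (1.3) stays unemployed, which is the trade-off households face when choosing a high \(\gamma^{w}_{i}\).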

2.5.2 Goods market

Aggregate goods supply \({Y^{s}_{t}}\) is given by the production function (10) and the aggregate goods demand is given by the sum of individual ones (see Eq. 4). The two are confronted according to an efficient rationing mechanism: households are ranked by decreasing goods demand, so that the firm first faces the highest demand. This mechanism is the counterpart of the standard assumption of households maximizing the utility derived from their consumption. If a household is rationed, it buys bonds b with its remaining cash. Inflation πt is computed as \( \pi _{t} = \frac {P_{t} - P_{t-1}}{P_{t-1}} \).
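The efficient rationing rule can be sketched as follows: demands are served from the largest down until supply runs out, and whatever remains is the firm’s unsold output. Rationed households’ leftover cash going into bonds is not modelled in this sketch, and the function names are hypothetical.

```python
def ration_goods(demands, Y_s):
    """Efficient rationing: serve households by decreasing goods demand.

    Returns the quantity sold to each household and the firm's unsold output.
    """
    sold = [0.0] * len(demands)
    remaining = Y_s
    for k in sorted(range(len(demands)), key=lambda idx: demands[idx], reverse=True):
        if remaining <= 0.0:
            break
        sold[k] = min(demands[k], remaining)
        remaining -= sold[k]
    return sold, remaining


def inflation(P_t, P_prev):
    """pi_t = (P_t - P_{t-1}) / P_{t-1}."""
    return (P_t - P_prev) / P_prev


sold, unsold = ration_goods([0.5, 1.25, 0.75], Y_s=1.5)
print(sold, unsold)  # [0.0, 1.25, 0.25] 0.0
```

The unsold quantity returned here is the signal the firm uses in rule (14) to adjust its labor demand.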

2.5.3 Inflation dynamics

We show in Salle et al. (2013) that the Phillips curve in our ABM can be made explicit. First, notice that, through Eq. 1, we have:

and, by using the expression of the price (12) and the aggregate labor costs (11), and noticing that \({\Delta } H\equiv {\sum }_{i = 1}^{n}{\Delta } h_{i}\), we obtain:

where \(\pi ^{e}_{t + 1}\) refers to the average inflation expectation among households, and \({\Delta } h_{i,t} \propto {\Delta } {Y_{t}^{S}}\). In our ABM, the Phillips curve (19) does incorporate nominal rigidities, allowing for real effects of monetary policy in the short run.

Figure 1 summarizes the model’s dynamics. We now describe the simulation protocol.

3 Model simulations and emerging dynamics

The ABM outcomes are analyzed through a large number of computer simulations that are implemented over different sets of parameter values. In this section, we first describe the method we have adopted to determine these parameter values. We then carry out a first assessment of the mechanisms at play in the ABM on the basis of the emerging dynamics and salient features that arise from the computer simulations. We finally perform an empirical validation exercise, and show to what extent the ABM is able to account for the stylized facts that are key to the issues covered in this paper.

3.1 Parameter setting and simulation protocol

The structural parameters that underlie the microfoundations of the economy have been set according to standard values in the NK literature (see, notably, Woodford 2003). As for the consumption bounds (\(\underline {d}\) and \(\bar {d}\)), the adjustment rate of the firm 𝜖, the number of households n and the length of the simulations T, we set their values by relying on the results of intensive sensitivity analyses performed on the model, which allow for a first screening of the parameter space.Footnote 21 This screening has been performed following the validation procedure proposed by Klügl (2008), which consists of successive sub-sampling of parameter values and systematic analyses of the plausibility of emergent dynamics vis-à-vis the specific research question at hand.Footnote 22 This procedure results in a so-called minimal model, i.e., a model that incorporates the minimum set of assumptions and parameters needed to design consistent mechanisms for a specific research question. In particular, we checked whether the choice of specific parameter values (or ranges of values) significantly affected the dynamics generated at the micro or macro level, and whether the simulation of the model led to degenerate patterns that reflect an inconsistent behavior of the agents or of the economy as a whole.Footnote 23 During this step, we specifically checked that i) the size of the macroeconomic variables is plausible, ii) aggregate welfare increases and stabilizes, indicating that learning is efficient, and iii) explosive dynamics of real variables are ruled out. Accordingly, we use n = 500 households and T = 800 periods. We further set \(\sigma _{\xi }\equiv \frac {\sigma _{w}}{40}\), meaning that the variance of the noise ξ is tied to σw, the proxy for supply shocks (where 40 is a scaling parameter).
This is a rather intuitive modelling device: the more unstable the economy (i.e., the larger the shocks affecting inflation), the further from the objective the inflation rate is likely to be and the more difficult it is to stabilize inflation expectations. This assumption is also made for the sake of parsimony in the parameter set. Importantly, this feature is identical under IT and non-IT, so that the noise in inflation expectations does not differ exogenously between the two regimes.

Following that stage, we are left with the determination of the values of eight parameters, namely window, \(P_{mut}\), \(P_{imit}\), σd, σw, ϕπ, ϕu and ζ. This is no coincidence, as these parameters are expected to be key to the interplay between the learning environment, the inflation expectations dynamics and the monetary policy strategy upon which we want to focus. We use a design of experiment (DoE)Footnote 24 to cover the space of those remaining parameters and set their values accordingly. Large sampling methods such as Monte Carlo simulations are indeed computationally costly when there are numerous parameters with large experimental domains, which is a priori our case. DoE allows us to minimize the sample size under a representativeness constraint. We use the design proposed by Cioppa (2002) and provided by Sanchez (2005), which efficiently combines space-filling properties and the non-correlation criterion between parameter configurations, avoiding multicollinearity issues in the analysis of the results. The design is reported in Table 5 in Appendix A. Each given set of parameter values (i.e., each configuration or experiment) is simulated 30 times, in order to account for the non-deterministic nature of the model. The simulation setting is kept the same for IT and non-IT, in order to provide a relevant comparison of the outcomes and dynamics of the model over those two regimes.

As for the outcomes, we summarize the CB’s performance with a standard loss function (see, for example, Svensson 1999):

where the inflation rate πt and the unemployment rate ut are measured for each run as averages over the whole simulation, after a 100-period burn-in phase.
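The exact specification of the loss is the one given in Eq. 20. The Python sketch below only assumes a common quadratic form (squared inflation gap plus a weighted squared unemployment gap, averaged over periods); the weight, the unemployment benchmark `u_star` and the toy series are hypothetical. What it is meant to show is the bookkeeping described above: with T = 800, the first 100 periods are discarded before averaging.

```python
def average_loss(inflation, unemployment, pi_target, u_star,
                 weight=1.0, burn_in=100):
    """Quadratic loss averaged over the post-burn-in periods of one run.
    The quadratic form, `u_star` and `weight` are illustrative assumptions."""
    if len(inflation) != len(unemployment):
        raise ValueError("series must have the same length")
    kept = list(zip(inflation[burn_in:], unemployment[burn_in:]))
    if not kept:
        raise ValueError("simulation shorter than the burn-in phase")
    total = sum((pi - pi_target) ** 2 + weight * (u - u_star) ** 2
                for pi, u in kept)
    return total / len(kept)


# Toy run: turbulent first 100 periods, then inflation 1 point above a
# hypothetical 2% target and unemployment on its benchmark.
T, burn_in = 800, 100
inflation = [0.5] * burn_in + [0.03] * (T - burn_in)
unemployment = [0.9] * burn_in + [0.05] * (T - burn_in)
loss = average_loss(inflation, unemployment, pi_target=0.02, u_star=0.05)
```

Here the burn-in removes the initial transient entirely, so the loss reflects only the squared one-point inflation gap (about 1e-4).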

3.2 Emerging dynamics and salient features

We first assess the model outcomes on the basis of a regression tree (see Fig. 2) that reports the main determinants of the values of the loss function over the whole set of simulations implemented under IT and non-IT.

The results indicate that the distinction between IT and non-IT matters for the stabilization outcomes obtained by the monetary authorities. However, the stabilizing role of IT also appears to be affected by other parameters, namely the two monetary policy coefficients ϕπ and ϕu, the bandwidth of the range around the target ζ, and the features of the learning dynamics via the magnitude of the learning shocks (especially σw).Footnote 25 In Section 4, we therefore focus specifically on those parameters (fixing the other ones) and examine closely their interplay with the features of IT and non-IT.

More precisely, the regression tree (Fig. 2) indicates that an IT regime coupled with a relatively large radius (higher than 0.75%) yields the lowest expected average loss overall (0.085), while an IT regime coupled with a narrow range (no more than 0.75%) yields the highest loss (0.509) in a strongly volatile inflationary environment (i.e. with σw higher than 0.32). These two results suggest that the magnitude of the radius strongly influences the stabilization performance of an IT regime, which, however, also depends on the volatility arising from the learning environment.
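Thresholds such as ζ > 0.75% or σw > 0.32 come from the way a regression tree is grown: at each node, the algorithm searches over parameters and cut-points for the split that most reduces the within-node sum of squared errors of the outcome. The Python sketch below performs that search for a single split on a single parameter, in the spirit of CART; the radius values and losses are made up for illustration and are not the paper's simulation results.

```python
def _sse(values):
    """Sum of squared deviations from the node mean."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)


def best_split(x, y):
    """Exhaustive search for the threshold on one factor that minimizes the
    total within-node sum of squared errors (one CART-style split)."""
    pairs = sorted(zip(x, y))
    best_sse, best_threshold = float("inf"), None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # tied x values cannot be separated by a threshold
        left = [v for _, v in pairs[:i]]
        right = [v for _, v in pairs[i:]]
        sse = _sse(left) + _sse(right)
        if sse < best_sse:
            # place the cut halfway between the two neighbouring x values
            best_sse = sse
            best_threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
    return best_threshold, best_sse


zeta = [0.25, 0.5, 0.75, 1.0, 1.25, 1.5]       # radius, in % (hypothetical)
losses = [0.50, 0.45, 0.52, 0.09, 0.08, 0.10]  # average losses (hypothetical)
threshold, sse = best_split(zeta, losses)      # cut between 0.75 and 1.0
```

A full tree repeats this search over all parameters at every node and stops according to a complexity or minimum-size criterion; the predicted loss in each leaf is the mean outcome of the runs that fall into it.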

To further assess the role of the target range, Fig. 3 plots the distributions of various variables of interest for every value of ζ retained in the DoE, for both the IT and non-IT cases. As Fig. 3 clearly shows, the role played by the radius under IT hinges on a trade-off between a coordination motive and a credibility motive. Under IT, the choice of the radius involves a compromise between providing a clear signal that coordinates heterogeneous individual expectations (with a narrow range) on the one hand, and setting a less tightly defined objective that is easier to meet and could thus enhance credibility (with a wide range) on the other hand. Which side of this compromise dominates the stabilization performance under IT also depends on the volatility of micro behavior created by the learning environment. This point is investigated in more detail in Section 4. Looking at the inflation gap as a function of ζ, we see that this trade-off stands out less clearly under a non-IT regime.

Fig. 3 The role of the radius around the inflation target under IT and non-IT. The boxplots depict the distribution of the average inflation expectations among households (mean(πe), left panel), their variance (var(πe), middle panel) and the credibility measure probtarget (right panel) for the different values of the range ζ considered, in the IT regime (top panel) and in the non-IT regime (bottom panel), over all simulations (33 × 30 = 990 runs per regime). Each data point is measured every 50 periods, i.e. t = 100, 150, ..., 750, 800.

One of the main objectives of this section is to identify the main mechanisms that underlie the impact of IT on the economy for different parameter configurations. We establish two main results.

First, the stabilization benefits associated with IT come along with the emergence of a credibility-success loop. Figure 4 illustrates this mechanism in Experiment 13. This experiment has been chosen because it corresponds to a configuration where IT outperforms non-IT, as depicted in Fig. 2 (i.e. window > 5 and ζ > 0.75%). Under IT, we observe that inflation expectations are very well anchored to the target, which helps the monetary authorities keep the inflation rate within the range. As a consequence, credibility can be maintained at a high level, which feeds back positively into the formation of expectations. Given the structure of the ABM, what matters is that the anchoring dynamics of expectations show a stabilizing interplay with the learning process of the agents at the micro level. Higher values of the substitution coefficients governing the consumption channel of monetary policy (γd) emerge over time under IT (compared to non-IT). This clearly favors the transmission of monetary policy through the real interest rate, and hence the stabilization of inflation, as the coefficients \(\gamma ^{d}_{i,t}\) directly measure the reactivity of individual consumption decisions to the level of the real interest rate (see Eq. 5). In turn, this configuration supports the anchoring of inflation expectations to the target. As for the indexation coefficients \(\left (\gamma ^{w}_{i,t}\right )\), they stabilize at a level below one, which rules out emergent self-reinforcing price-wage inflation spirals. Therefore, the expectation channel reinforces the stabilizing effects of the anchoring of inflation expectations on the inflation process itself.

Fig. 4 Dynamics in selected experiments from the DoE given in Table 5. From top left to bottom right: evolution of the average inflation expectations, the realized inflation, the credibility measure Ptarget, households’ average strategies γd and γw, and the output gap (measured as the gap to the full-employment output level). Each variable is averaged over the 30 replications of each experiment.

Our second result shows that IT does not necessarily allow a credibility-success loop to emerge, so that a non-IT regime can outperform an IT regime. Figure 4 illustrates this problem using Experiment 3. This experiment is characterized by strong volatility of the learning process regarding the wage indexation coefficients (σw > 0.32), which directly impinges on the inflation process (and can thus be assimilated to a cost-push shock). Moreover, the radius around the target is small (ζ < 0.75%). According to the regression tree in Fig. 2, a non-IT regime outperforms an IT regime under that configuration.

As Fig. 4 clearly shows, inflation lies well above the target under both regimes at the beginning of the simulations. The substitution coefficients are more volatile under IT than under non-IT; they are, moreover, lower, and can even be negative. In that case, the income effect dominates the impact of interest rate changes on consumption, which means that the usual consumption channel of monetary policy breaks down. In such a context, and even more so if the radius of tolerance around the target is narrow, the CB quickly loses its credibility and inflation expectations become unanchored. This unanchoring in turn amplifies the volatility of the learning behavior, which feeds back negatively onto the stabilization of the inflation process and prevents the CB from benefiting from the credibility/success loop. This explains why, under IT, the CB fails to bring inflation back within the targeted range.

By contrast, under the non-IT regime, the monetary authorities manage over time to bring inflation expectations within the range, and the loss values are limited.

The outperformance of non-IT can be explained as follows: as the reference point of households’ expectations under non-IT is the average of past inflation, it works as a moving anchor which, in Experiment 3, decreases along the disinflationary path implemented by the CB. This allows inflation expectations to be channelled, despite a narrow range of tolerance ζ. By contrast, under IT, once credibility is lost, inflation expectations are driven only by naive expectations that extrapolate the path of inflation along the disinflation. At the micro level, the variability of agents’ behavior appears much lower under non-IT than under IT, although the shocks σd and σw are the same. This means that the learning process stabilizes the emerging substitution coefficients \(\gamma ^{d}_{i,t}\) at higher levels, with much less volatility, under non-IT than under IT, which makes the consumption channel more powerful. Indexation coefficients are also markedly less heterogeneous under non-IT and stabilize below unity, which contributes to stabilizing inflation close to the target.
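The moving-anchor argument can be illustrated with a minimal Python sketch, assuming that the non-IT reference point is the plain average of the last `window` observed inflation rates. The exact expectation-formation rule is the one described in Section 2.2.3; the disinflation path, the target and the window length below are hypothetical.

```python
def moving_anchor(inflation_history, window):
    """Average of the last `window` observed inflation rates (non-IT anchor)."""
    recent = inflation_history[-window:]
    return sum(recent) / len(recent)


# Hypothetical disinflation path (in %), not taken from the simulations.
path = [6.0, 5.5, 5.0, 4.5, 4.0, 3.5, 3.0, 2.5, 2.0]
pi_target = 2.0
window = 3

# Anchor available at each date once `window` observations exist.
anchors = [moving_anchor(path[: t + 1], window)
           for t in range(window - 1, len(path))]
# The anchor falls from 5.5 to 2.5 and its gap to the target shrinks, whereas
# a fixed announced target stays at 2.0 throughout, far from early inflation.
gaps_to_target = [a - pi_target for a in anchors]
```

The point of the sketch is that a backward-looking anchor tracks the disinflation, so expectations built on it are never far from realized inflation, whereas a fixed target that is missed for many periods can undermine credibility.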

As a final insight drawn from this overview exercise, we focus on the consumption and expectation channels as they emerge in the ABM from the micro behavior of households. The top panel of Fig. 5 shows the relationship between the average indexation coefficient γw among households and the gap between the average expected inflation rate and the target, under IT (left panel) and non-IT (right panel). The general pattern is very similar under the two regimes. It shows a structural change when indexation coefficients rise above unity: below unity, inflation expectations are stabilized around the target (the gaps are scattered around zero), while above unity an increasing relationship emerges between expected inflation and the indexation coefficients. This reflects potentially explosive wage-price inflation spirals. This feature emerges from the learning process: the higher the expected inflation rate, the more costly it is, in terms of purchasing power, for households not to index their reservation wage on expected inflation. This in turn amplifies the rise in inflation, and inflation expectations are driven away from the target. Under IT, expectations seem to remain anchored more often than under non-IT even when indexation coefficients rise beyond unity (though not to too high a level). However, this difference concerns only a few observations.
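Why unity acts as a threshold can be seen in a deliberately crude toy recursion, which is an assumption for illustration and not the ABM's wage-setting or inflation equations: suppose that, with naive expectations and full pass-through of wages to prices, inflation (in deviation from a zero steady state) inherits γw times its previous value. Deviations then die out when γw < 1 and compound when γw > 1.

```python
def indexation_path(gamma_w, initial_deviation, periods):
    """Toy recursion: next-period inflation deviation = gamma_w * current one.
    Hypothetical reduced form, not the model's Eq. 19."""
    path = [initial_deviation]
    for _ in range(periods):
        path.append(gamma_w * path[-1])
    return path


below = indexation_path(0.8, 1.0, 20)  # indexation below unity: converges
above = indexation_path(1.2, 1.0, 20)  # indexation above unity: spiral
```

After 20 periods the first path has shrunk to about 0.012 (0.8 to the power 20) while the second has grown to about 38 (1.2 to the power 20), which is the qualitative contrast visible on both sides of unity in the top panel of Fig. 5.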

Fig. 5 Micro-founded expectations and consumption channels in the ABM. Top panel: average γw among households against the average expected inflation gap (mean(πe) − πT) in the IT regime (left) and in the non-IT regime (right). Bottom panel: average consumption rate d among households against the expected real interest rate in the IT regime (left) and in the non-IT regime (right). Each variable is measured every 50 periods, i.e. t = 100, 150, ..., 750, 800, in each run of the DoE given by Table 5.

The bottom panel of Fig. 5 reports the average consumption rate di,t among households as a function of the expected real interest rate. Under IT, it is clear that negative real interest rates lead to consumption rates higher than unity (meaning that households are debtors), while positive interest rates drive the consumption rate towards the lower bound \(\underline {d}\), as households take advantage of the higher return on savings to save more and reduce their current consumption. This translates into positive coefficients \(\gamma ^{d}_{i,t}\) (see Eq. 5) and indicates that the consumption channel of monetary policy is operational. Under non-IT, we observe a similar, if less clear-cut, pattern: more observations than under IT indicate that households have a lower-than-unity (resp. higher-than-unity) consumption rate even though real interest rates are expected to be negative (resp. positive). Again referring to the consumption rule (5), this translates into more negative \(\gamma ^{d}_{i,t}\) coefficients under non-IT than under IT, meaning that the consumption channel is occasionally less effective under non-IT than under IT. However, it should be noted that Fig. 5 pools all observations of the DoE in Table 5, embedding a variety of situations, as illustrated by Experiments 3 and 13 in Fig. 4.

In conclusion, the overview of the model’s performance indicates that announcing the inflation target is not an unconditionally powerful tool for stabilizing the economy. Its effectiveness depends on the volatility stemming from the learning environment, the radius of tolerance around the target and the monetary policy rule. We examine in detail how these elements interact in Section 4.

3.3 Empirical validation of the model

We now define a baseline scenario in which we fix the values of the parameters for which the regression tree (see Fig. 2) does not report any significant influence on monetary policy performance. The parameter values are given in Table 1. We use standard values in the learning literature for the probabilities of imitation and mutation (see e.g. Lux and Schornstein 2005) and for monetary policy (see e.g. Taylor 1993).

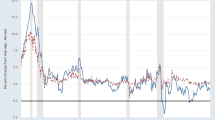

In line with recent developments in ABM,Footnote 26 we compare the baseline scenario with selected related empirical regularities. As the paper focuses on the interplay between inflation expectations, the CB’s credibility and macroeconomic stabilization, we concentrate on the following empirical features to assess the predictive properties of the ABM. Figure 6 displays the joint evolution of inflation and inflation expectations, and the statistical properties of the inflation distribution, in New Zealand between 1988 and 2012 and in the UK between 1997 and 2013. These countries were chosen because they were pioneers in inflation targeting (implemented in 1989 in New Zealand and in 1992 in the UKFootnote 27), and they have conducted surveys of inflation expectations since then (we use the J6 survey of inflation expectations available on the RBNZ website and the GfK NOP Inflation Attitudes survey available on the Bank of England’s website).

Three stylized facts are particularly relevant for our purpose.Footnote 28 First, inflation and inflation expectations are strongly and positively correlated, suggesting the predominance of the expectation channel (see also Woodford 2003). Second, inflation follows a non-normal, fat-tailed distribution: it displays excess kurtosis, indicating that values far from the mean are more frequent than under a normal distribution, and it is right-skewed, meaning that inflation rates are more often far above than far below the average (see also De Grauwe 2012 for a comparable analysis). Third, we compute an index of inflation target credibility for these two countries, in line with Faust and Svensson’s (2001) definition of credibility as negatively related to the distance between agents’ inflation expectations and the CB’s announced target.Footnote 29 In the ABM, credibility is modelled as the fraction of agents who believe that inflation will be contained in the range, and is not directly comparable to the values of the de Mendonça (2007) index. Nevertheless, both measures have the same interpretation: credibility varies between 0 (no credibility) and 1 (full credibility), and they are sufficient to highlight the third empirical regularity of interest: credibility and inflation performance appear highly correlated, with lower credibility associated with a higher inflation gap.
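For readers unfamiliar with distance-based credibility indices, the following Python sketch computes a symmetric index in the spirit of de Mendonça (2007): it equals 1 when expectations coincide with the target, decreases linearly with their distance to it, and is 0 at or beyond a tolerance bound. The exact specification used for the empirical index is the one referred to in Footnote 29; the symmetric treatment of deviations and the tolerance value are assumptions made here for illustration.

```python
def credibility_index(expected_inflation, target, tolerance):
    """Symmetric distance-based credibility index in [0, 1]:
    1 on the target, linearly decreasing with |expectation - target|,
    0 at or beyond `tolerance` (e.g. the half-width of an official range)."""
    if tolerance <= 0:
        raise ValueError("tolerance must be positive")
    gap = abs(expected_inflation - target)
    if gap >= tolerance:
        return 0.0
    return 1.0 - gap / tolerance


# Hypothetical 2% target with a 1-point tolerance:
on_target = credibility_index(2.0, 2.0, 1.0)   # full credibility
halfway = credibility_index(2.5, 2.0, 1.0)     # half-way to the bound
outside = credibility_index(3.5, 2.0, 1.0)     # no credibility
```

Unlike the ABM measure, which counts agents, this index maps an aggregate expectation to a score, which is why the two are comparable in interpretation but not in levels.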

We then run 100 replications of the baseline scenario, whose calibration is given in Table 1. Results are reported in Table 2. In line with the previous empirical findings, our model reproduces the significant correlation between expectations and inflation, as well as the non-normal distribution of inflation, with both excess kurtosis and right-skewness. Importantly, the model provides a comprehensive explanation of these findings, all the more so as it also accounts for the strong negative correlation between inflation target credibility and the inflation gap.
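The statistics behind these comparisons can be computed on a simulated or observed series with a few lines of code. The Python sketch below uses plain (biased) moment estimators without small-sample corrections or significance tests, which is an assumption on our part; a normal distribution has a skewness of 0 and an excess kurtosis of 0, so positive values signal right-skewness and fat tails respectively. The inflation sample is hypothetical.

```python
import math


def mean(xs):
    return sum(xs) / len(xs)


def correlation(x, y):
    """Pearson correlation, e.g. between inflation and expected inflation."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def skewness(xs):
    """Moment estimator of skewness; > 0 means a longer right tail."""
    m = mean(xs)
    m2 = mean([(v - m) ** 2 for v in xs])
    m3 = mean([(v - m) ** 3 for v in xs])
    return m3 / m2 ** 1.5


def excess_kurtosis(xs):
    """Kurtosis minus 3 (about 0 for a normal distribution); > 0 = fat tails."""
    m = mean(xs)
    m2 = mean([(v - m) ** 2 for v in xs])
    m4 = mean([(v - m) ** 4 for v in xs])
    return m4 / m2 ** 2 - 3.0


# Hypothetical inflation sample (in %) with one large upward episode.
sample = [1.8, 2.0, 2.1, 1.9, 2.0, 2.2, 1.9, 2.0, 5.0]
```

A single large upward episode in an otherwise tight sample is enough to produce both positive skewness and positive excess kurtosis, which is the shape of the distributions summarized in Table 2.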

Finally, it should be noted that non-normality is an emergent property of the model rather than an assumption. In our model, volatility results from the learning shocks, which are drawn from normal distributions, but the non-linear and decentralized nature of the model generates non-linear aggregate dynamics in response to these normal disturbances.

We conclude that our ABM is able to account for the stylized facts that are central to our research question.

4 Optimal monetary policy under IT

Section 3 shows that the merits of IT are contingent upon the level of volatility conveyed by agents’ learning behavior. We now focus on this issue and distinguish various environments in terms of macroeconomic volatility, so as to analyse optimal monetary policy in each configuration. We characterize these environments using ranges of values for the parameters σd and σw. We first define a stable environment by setting σd = σw = 0.05. We then consider two levels of model uncertainty, a moderate one (σd = 0.25) and a high one (σd = 0.4), as well as two levels of inflationary shocks, a moderate one (σw = 0.25) and a strong one (σw = 0.4).Footnote 30 Unless otherwise stated, the other parameters are kept at their baseline values, reported in Table 1. We measure the CB’s performance with the loss function given in Eq. 20. The methodology we use to map the loss function values to the monetary policy parameters in our non-linear ABM is detailed in Appendix B and is based on Roustant et al. (2010) and Salle and Yıldızoğlu (2014).

4.1 Transparency, variability and optimal monetary policy rule

We first characterize, under both IT and non-IT, the best monetary policy strategies (coefficients ϕπ and ϕu) in the stable scenario and in the alternative shock configurations. In each configuration, we consider two radius levels, corresponding to a price stability objective defined either as a single point (ζ = 0.1%) or as a range (ζ = 1%). Results are reported in Table 3, which shows the optimal coefficients \(\phi ^{*}_{\pi }\) and \(\phi _{u}^{*}\) as well as the corresponding minimum estimated loss L∗.

Table 6 in Appendix A gives the design of experiments of the ϕπ and ϕu values that we have used to estimate and validate the kriging metamodels that underlie our quantitative analysis, and Table 8 in Appendix B reports the details of the estimation.
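The logic of a kriging metamodel is to fit a cheap interpolating surface through the losses observed at the design points and then to search that surface, rather than the costly ABM, for the loss-minimizing coefficients. The paper follows Roustant et al. (2010) (the DiceKriging approach), with trend and covariance parameters estimated as described in Appendix B. The pure-Python sketch below is a simplified stand-in: simple kriging around the sample mean with a Gaussian covariance, a fixed (not estimated) length scale, a tiny nugget for numerical stability, and a hypothetical toy loss surface.

```python
import math


def gaussian_kernel(a, b, length_scale):
    """Gaussian covariance between two (phi_pi, phi_u) points."""
    d2 = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-d2 / (2.0 * length_scale ** 2))


def solve(matrix, rhs):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (a[r][n] - sum(a[r][c] * w[c] for c in range(r + 1, n))) / a[r][r]
    return w


def fit_kriging(points, losses, length_scale=1.0, nugget=1e-10):
    """Simple-kriging predictor with the sample mean as a constant trend."""
    mu = sum(losses) / len(losses)
    k = [[gaussian_kernel(p, q, length_scale) + (nugget if i == j else 0.0)
          for j, q in enumerate(points)] for i, p in enumerate(points)]
    weights = solve(k, [y - mu for y in losses])

    def predict(x):
        return mu + sum(w * gaussian_kernel(x, p, length_scale)
                        for w, p in zip(weights, points))
    return predict


def toy_loss(phi_pi, phi_u):
    # Hypothetical loss surface standing in for averaged ABM runs.
    return 0.01 + 0.002 * (phi_pi - 3.0) ** 2 + 0.003 * (phi_u - 1.0) ** 2


design = [(a, b) for a in (0.0, 2.0, 4.0) for b in (0.0, 1.0, 2.0)]
observed = [toy_loss(a, b) for a, b in design]
metamodel = fit_kriging(design, observed, length_scale=2.0)

# Grid search on the cheap metamodel instead of re-running the model.
grid = [(0.25 * i, 0.25 * j) for i in range(17) for j in range(9)]
best_point = min(grid, key=metamodel)
best_loss = metamodel(best_point)
```

Because the predictor interpolates the observed losses (up to the nugget), its quality between design points depends on the covariance parameters, which is why these are estimated and the metamodel validated in practice rather than fixed as here.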

In the stable environment, an IT regime with a relatively broad range (ζ = 1%) outperforms a non-IT strategy. In the absence of adverse shocks, the CB can benefit from the credibility/success loop and stabilize inflation expectations and inflation. Nevertheless, with a very tight objective (ζ = 0.1%), credibility and success cannot be ensured: the IT regime loses its attractiveness and its performance is in the same range as under a non-IT regime. The benefits that can be reaped from announcing a vague objective have been emphasized notably by Stein (1989) and Garfinkel and Oh (1995), but in a theoretical framework that incorporates time-inconsistency issues. In such a setting, the CB can create surprise inflation by announcing a wide range, which allows it to depart from the target without losing its credibility. In our model, the CB has no incentive to create inflation surprises, but the learning environment may cause inflation and unemployment to deviate from their targets and thereby put the CB’s credibility at risk.

We interpret the values of the optimal rule coefficients in terms of a trade-off between the two objectives of the CB. As long as the target is credible, expectations are anchored, and movements of inflation reflect changes in production (cf. the second term of Eq. 19). The two objectives of the CB then move in the same direction, and reacting to the deviation of one from its target simultaneously moves the other towards its target. There is thus no trade-off between the two objectives, and the optimal monetary policy prescribes a one-sided solution (with either \(\phi _{\pi }^{*}\) or \(\phi _{u}^{*}\) equal to zero). As the expectation channel plays a strongly dominant role in our ABM, it strengthens the role of the inflation target, when credible, as an anchoring device under IT; the target then acts as a second monetary policy instrument that stabilizes inflation (see Svensson 2010 for a similar interpretation). The monetary policy reaction to inflation can then become redundant, which may explain why the optimal reaction to inflation is zero in some cases under IT with a wide range.Footnote 31

By contrast, if expectations become unanchored, they drive inflation away from the target (cf. the first component of Eq. 19), and movements in inflation no longer reflect changes in production. A trade-off then arises between stabilizing inflation and the level of activity, and the optimal monetary policy is likely to be a two-sided solution, according to which the CB has to react to both objectives.

Introducing higher variability in the consumption channel (i.e. increasing σd) deteriorates the performance of the CB across all regimes. For instance, under IT with a wide range, the CB’s loss is more than four times higher when σd = 0.25 (\(\mathcal {L}^{*}= 0.008\)) than when σd = 0.05, and almost eight times higher when σd = 0.4 (\(\mathcal {L}^{*}= 0.0123\)). Following our interpretation of the optimal coefficients in terms of a trade-off, model uncertainty does not create a trade-off between the two objectives of the CB under IT, as the CB optimally adopts a one-sided reaction. With a widely defined objective (ζ = 1%), the credibility-success loop stabilizes inflation expectations better than with a narrow objective (ζ = 0.1%), and the CB should react only to deviations of unemployment.

Overall, under a moderate or high degree of uncertainty concerning the real transmission channel of monetary policy, an IT strategy slightly outperforms a non-IT strategy, but only if it is implemented with a wide range (ζ = 1%). With a tight objective (i.e. ζ = 0.1%), loss values are roughly the same as under non-IT.

Under inflationary shock dominance (i.e. moderate or high σw), macroeconomic outcomes deteriorate strongly. As explained in Section 2.2.3 (see also Eq. 19), this kind of volatility directly generates variability in the inflation process. The CB is therefore highly likely to miss its inflation objective and lose its credibility. Consequently, expectations become unanchored. Inflation is then mostly driven by naive expectations and is no longer in line with the aggregate demand stance. This creates a trade-off between the CB’s objectives, which translates into optimal monetary policy reactions to both inflation and the level of activity (see also Alichi et al. 2009 for a similar analysis in the presence of cost-push shocks). As soon as inflationary shocks are strong enough (i.e. for σw = 0.4), optimal monetary policy implies a strong reaction to both inflation and unemployment. In this case, a non-IT strategy clearly outperforms an IT regime. The worst performances are obtained under IT with a tight objective: the loop between credibility and success is strongly impaired, and loss values are much lower if the CB does not announce its target.

If model uncertainty and inflationary shocks coexist, performance again deteriorates compared to the cases with only one type of shock (i.e. either σw > 0.05 or σd > 0.05), and non-IT outperforms IT, especially when IT comes with a tight objective. The trade-off between the two objectives seems to be mitigated under non-IT as long as both shocks are moderate (i.e. when σw = σd = 0.25, the optimal reaction under non-IT is one-sided).

Finally, in all the cases we consider, the optimal monetary policy rule is an aggressive one. This result is in line with previous findings on optimal monetary policy under uncertainty (see Schmidt-Hebbel and Walsh 2009 for a review). Our environment is characterized by model uncertainty, i.e. uncertainty concerning the parameters that describe the transmission mechanisms of monetary policy. This is a case of multiplicative uncertainty, as shocks on agents’ behavior translate into inflation and economic activity in a non-linear way.Footnote 32 There is no consensus answer to the question of optimal monetary policy in such a context. The conservatism principle first established by Brainard (1967) prescribes a moderate rule. However, when shocks and parameters are correlated, as is the case in our model, the Brainard principle does not hold. Other contributions call for an aggressive rule under other cases of “Brainardian” uncertainty, for example when the CB cannot accurately estimate how inflation responds to inflation expectations (see Söderström 2002). Moreover, when radical uncertainty surrounds the economic model and is tackled with the tools of robust control theory, optimal monetary policy rules are hawkish (Giannoni 2007), especially when the CB cannot identify which parameters are uncertain (Tetlow and von zur Muehlen 2001). We also conclude in favor of aggressive rules under model uncertainty, which, in our case, primarily stems from learning.
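Why correlation between shocks and parameters can overturn Brainard’s conservatism is clearest in the textbook one-period problem, a stylized illustration rather than a representation of the ABM. The policy instrument i affects the target variable y through an uncertain multiplier k + ε, the shock u may be correlated with ε, and the CB minimizes the expected squared deviation from y*:

```latex
% Stylized one-period Brainard (1967) problem (not the ABM)
y = (k + \varepsilon)\, i + u, \qquad
\mathbb{E}[\varepsilon] = 0,\ \operatorname{Var}(\varepsilon) = \sigma_\varepsilon^2,\
\mathbb{E}[u] = \bar u,\ \operatorname{Var}(u) = \sigma_u^2,\
\operatorname{Cov}(\varepsilon, u) = \sigma_{\varepsilon u}

\mathbb{E}\big[(y - y^\ast)^2\big]
  = (k\, i + \bar u - y^\ast)^2 + \sigma_\varepsilon^2\, i^2
    + 2\,\sigma_{\varepsilon u}\, i + \sigma_u^2

\Longrightarrow\quad
i^\ast = \frac{k\,(y^\ast - \bar u) - \sigma_{\varepsilon u}}{k^2 + \sigma_\varepsilon^2}
```

With uncorrelated shocks (σεu = 0), i* equals k²/(k² + σε²) times the certainty-equivalent response (y* − ū)/k, so uncertainty attenuates the reaction. With correlated shocks, the extra term −σεu/(k² + σε²) can push i* above the certainty-equivalent response (for k > 0 and y* > ū, this happens whenever σεu < −σε²(y* − ū)/k), so attenuation is no longer guaranteed.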

4.2 Can partial announcements overperform pure IT or non-IT regimes?

Some contributions to the debate on the optimal degree of transparency have analysed partial announcements (see, among others, Cornand and Heinemann 2008 and Walsh 2009). In these works, only a fraction P ∈ [0,1] of agents is assumed to receive the CB’s signal, i.e. the so-called “degree of publicity” P can be lower than one. According to Cornand and Heinemann (2008), this is the case when the CB chooses to provide news only in certain communities, or in a language understood only by some. Furthermore, public announcements are generally released through the media, but each agent follows a given medium only with some probability, so that a CB can choose the degree of publicity by selecting the appropriate media for publication. Agents may also have a limited ability to process information, or may face costs to acquire it, so that a release is not necessarily incorporated immediately into all agents’ decisions. Partial dissemination of precise public information may be an optimal communication strategy, as it combines the positive effects of valuable information for the agents who receive it with a confinement of the threat of overreaction by limiting the number of receivers (Cornand and Heinemann 2008). Walsh (2007b) also shows that the optimal degree of economic transparency depends on the existence of cost-push or demand shocks.

In the same vein as these authors, we introduce partial dissemination of the CB’s announcement by defining the degree of publicity of the inflation target as the share of agents (P ∈ [0,1]) who know the target and use it to forecast inflation (see the mechanism described in Section 2.2.3). Conversely, a share 1 − P of agents form inflation expectations in the same way as under non-IT. Following Demertzis and Viegi (2009), we also include different values of the radius ζ around the target in our experiments. In order to keep the optimization problem two-dimensional, given the number of points of the DoE (see Table 7 in Appendix A), we fix the monetary policy coefficients at standard values (i.e. ϕπ = 1.5 and ϕu = 0.5) and derive the optimal announcement strategy (P,ζ) in the scenarios previously considered. Results are reported in Table 4, and details of the estimations in Table 9 in Appendix B.
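The population split implied by a degree of publicity P can be sketched in Python as follows. The sketch assumes that exactly round(P × n) randomly chosen households are informed and, as a strong simplification of the credibility-based mechanism of Section 2.2.3, that informed agents simply expect the announced target while the others use a single non-IT reference point. The helper names, the target and the anchor values are hypothetical; n = 500 and P = 0.36 are the values discussed in the text.

```python
import random


def assign_publicity(n_agents, publicity, seed=0):
    """Return one flag per agent: True if the agent knows the target.
    Exactly round(publicity * n_agents) agents, chosen at random, are informed."""
    if not 0.0 <= publicity <= 1.0:
        raise ValueError("publicity must lie in [0, 1]")
    n_informed = round(publicity * n_agents)
    rng = random.Random(seed)
    informed_ids = set(rng.sample(range(n_agents), n_informed))
    return [i in informed_ids for i in range(n_agents)]


def mean_expectation(informed, target, non_it_anchor):
    """Average expectation when informed agents (simplification) expect the
    announced target and the others use a non-IT reference point."""
    values = [target if flag else non_it_anchor for flag in informed]
    return sum(values) / len(values)


# Hypothetical 2% target and 3% non-IT anchor.
informed = assign_publicity(500, 0.36)
average = mean_expectation(informed, target=2.0, non_it_anchor=3.0)
```

With 180 of the 500 households informed, the average expectation lies between the target and the non-IT anchor (2.64 here): partial publicity buys partial anchoring, which is the trade-off the optimal (P, ζ) pairs in Table 4 arbitrate.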

In the stable scenario (i.e. {σw,σd} = {0.05,0.05}), a low publicity of the target (P = 0.36), coupled with a medium range (0.7%), is optimal. However, the minimum loss obtained (roughly 0.005) is about the same as under a pure IT regime (i.e. when P = 1, ζ = 1% and {ϕπ,ϕu} = {4,0}, see Table 3). We thus confirm the result of Demertzis and Viegi (2009): publicizing the target is superfluous in a weakly volatile environment. This result is in line with what was observed during the Great Moderation period, when developed countries, whether under IT or non-IT, experienced low macroeconomic variability (Geraats 2009). The performance of IT in these countries thus appears, at most, “non-negative” (Walsh 2009).

When shocks are introduced (by increasing σd, σw, or both), the expected loss increases and is minimized with partial announcements (i.e. P < 1).Footnote 33 The stronger the shocks (whether σd or σw), the lower the optimal degree of publicity. Intuitively, a limited dissemination of the target balances the risk of losing credibility in the face of inflation variability against the gain from coordinating inflation expectations on the targeted level.

The stronger the inflationary shocks (i.e. the higher σw), the wider the optimal range ζ to be communicated around the target, but the optimal range remains below the one obtained under the stable scenario (0.0067, i.e. about 0.7%). Conversely, the higher the model uncertainty (i.e. the higher σd), the narrower the optimal range. Yet these optimal ranges remain wide (typically above 0.5%), and wider than those obtained under the stable scenario and under strong inflationary shocks.

Note that this result contradicts Walsh (2007a), who establishes that complete transparency is optimal in the face of demand shocks. These demand shocks are comparable to the disturbances associated with a high level of σd in our model: in both frameworks, they correspond to the CB’s control error on demand through the nominal interest rate. However, the two models work differently. In Walsh’s set-up, transparency about the target allows firms to infer the kind of shock (demand or supply) that the CB is expecting, whereas under opacity firms set their forecasts using the CB’s instrument only, and the so-called opacity bias arises. As firms only adjust their prices in reaction to supply shocks, a demand shock contaminates inflation if firms misinterpret the change in the interest rate in reaction to the demand shock as a change in response to a supply shock. In our model, the gain (or loss) from being transparent comes from the gain of being credible (or the cost of having lost credibility). Credibility, in turn, anchors heterogeneous private inflation expectations and reduces macroeconomic volatility through more favorable micro behavior (i.e. lower-than-unity indexation coefficients \(\gamma ^{w}_{i,t}\) and high positive substitution coefficients \(\gamma ^{d}_{i,t}\)). In our set-up, partial dissemination of the target thus limits the risk of the CB losing its credibility, while maintaining a partial anchorage in case of success. In that respect, the optimal range around the target is relatively wide, close to 1%. This result indicates that insurance against a credibility loss is the primary concern of a CB facing high model uncertainty.

By contrast, the need to provide a clear focal point to coordinate expectations (through an explicit inflation target associated with a moderate radius) is of critical importance when volatility comes mainly from the inflation process per se (see also Libich 2011 for a similar argument in a context of wage inflation). Accordingly, the loss-minimizing range is narrower under σw-led than under σd-led volatility.

5 Conclusion

This paper revisits the virtues of transparency in an inflation targeting regime using an agent-based model. By transparency, we mean the announcement of the numerical value of the inflation target together with a range around it. The agent-based perspective provides a comprehensive way of modelling heterogeneity and bounded rationality in a collection of interacting agents, while preserving the main monetary policy mechanisms underlying the dynamics of the baseline NK model. In particular, our ABM incorporates the consumption (real interest rate) channel and the expectation channel of monetary policy.

In our setting, the benefit from announcing the target arises from the emergence of a virtuous circle, through a loop between credibility and success. Inflation expectations may then remain anchored at the CB’s inflation target, and inflation may be stabilized around the target. The trade-off that the CB faces between the inflation objective and the level of activity may thus be loosened. Our results confirm that this mechanism prevails in a rather stable environment, such as the so-called Great Moderation period. However, this virtuous circle is not robust to the introduction of strong inflationary pressures, whether or not they are coupled with uncertainty affecting the real transmission channel of monetary policy. This is because inflationary shocks feed back into the inflation dynamics and may produce a self-defeating mechanism.

In this case, partial dissemination of the target may limit the risk of the CB losing its credibility, while maintaining partial anchoring in case of success. We find that providing a clear signal to anchor the inflation expectations of one part of the public, while allowing a less tightly defined objective for the remaining part, achieves an optimal management of expectations when inflation and inflation expectations are highly volatile. In the face of model uncertainty, insurance against a loss of credibility, through the announcement of a wide range, appears to be of primary importance.

Overall, our results suggest that a fully transparent inflation targeting regime lacks robustness in volatile economic environments.

Notes

The literature on these intersecting issues is vast. For a recent survey on IT, see Svensson (2010) and the references therein; another useful reference is Walsh (2009). On the impact of CB transparency and communication, see, among others, Geraats (2002, 2009) and Woodford (2005). Empirical evidence on the effects of IT on expectations has been provided by Johnson (2002, 2003).

Empirical evidence supports the view that the credibility of the CB, inherited from past inflation performances, acts as a primary determinant of inflation expectations (Blinder et al. 2008).

Lower-case symbols stand for individual variables and upper-case symbols for aggregate ones. The superscripts s and d denote supply and demand variables, respectively.

In DSGE models, transversality conditions are imposed to avoid explosive dynamics in the bond accumulation process. Such restrictions cannot be set in our model, in which we have to impose period-by-period constraints instead. We therefore impose an upper limit \(\bar {d} > 1\) on the consumption adjustment rate d, in order to rule out excessive debt and household default, and a lower bound \(\underline {d} > 0\) to ensure minimal subsistence consumption in each period. This way, consumption cannot be driven to zero.
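
The period-by-period bounds can be illustrated as a simple clamp on the adjustment rate. The following is a minimal sketch, not the authors' code: it assumes, hypothetically, that household consumption is updated multiplicatively as c_t = d_t · c_{t-1}, and the helper names and bound values (d_min = 0.2, d_max = 2, the edges of the parameter ranges retained in the robustness checks) are illustrative.

```python
def clamp_adjustment_rate(d: float, d_min: float = 0.2, d_max: float = 2.0) -> float:
    """Restrict the consumption adjustment rate d to [d_min, d_max].

    Hypothetical helper: d_min > 0 guarantees subsistence consumption,
    d_max > 1 caps the per-period rise in consumption (and hence debt).
    """
    if not (0.0 < d_min < d_max and d_max > 1.0):
        raise ValueError("bounds must satisfy 0 < d_min < d_max and d_max > 1")
    return min(max(d, d_min), d_max)


def update_consumption(c_prev: float, d: float,
                       d_min: float = 0.2, d_max: float = 2.0) -> float:
    """Assumed multiplicative rule c_t = d_t * c_{t-1}, with d_t bounded."""
    return clamp_adjustment_rate(d, d_min, d_max) * c_prev


# A household that "wants" to stop consuming three periods in a row
# still keeps a positive, geometrically shrinking consumption level.
c = 1.0
for desired_d in (0.0, 0.0, 0.0):
    c = update_consumption(c, desired_d)
print(c)
```

Because the lower bound is strictly positive, repeated cuts only shrink consumption geometrically and never drive it to zero, which is the property the constraint is meant to guarantee.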

We depart from the behavioral rules introduced in the literature on learning about consumption (see the seminal contribution of Allen and Carroll 2001). This is because this literature seeks to explain how households may learn to smooth their consumption path over time assuming a constant nominal interest rate and a zero-inflation world, while we aim here at specifying the consumption channel of monetary policy through changes in the real interest rate.

On the credibility issue, our expectation model also shares features with Bomfim and Rudebusch (2000), Alichi et al. (2009) and Libich (2011). See also Arifovic et al. (2010) for a private inflation expectation formation process that is partially based on adaptive learning and takes into account the central bank's announcement of an inflation target.

See De Grauwe (2011) for a comparable mechanism.

We could have considered a noisy target, but our focus is on the credibility of the announcement and on how the CB can use it to manage expectations; we therefore leave aside issues of clarity, which are addressed in Salle et al. (2013).

For instance, if most agents anticipate a rise in inflation, actual inflation will rise next period through the expectation channel. Agents who did not expect that rise may lose purchasing power, both through a misassessment of the real rate of return of their savings and an under-indexation of their reservation wage.

We assume a deterministic natural production level and set \(A_t = 1,\ \forall t\) (the long-run value of technology assumed by Woodford 2003, p. 225).

The mark-up is usually computed over average cost rather than marginal cost, but we use the latter here in order to keep the analogy with the elasticity rule of the standard NK model and, more generally, comparability with the DSGE literature (see also Rotemberg and Woodford 1999).
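
To make the marginal-cost mark-up concrete, the sketch below assumes a linear technology y = A·h with labor as the only input (consistent with the note on Eq. 10, but not a reproduction of it), so that marginal cost is w/A, and an NK-style mark-up factor θ/(θ − 1) built from a hypothetical demand elasticity θ. With \(A_t = 1\), the price is simply the marked-up wage.

```python
def marginal_cost(wage: float, productivity: float = 1.0) -> float:
    """Marginal cost under an assumed linear technology y = A * h: w / A."""
    return wage / productivity


def markup_price(wage: float, elasticity: float, productivity: float = 1.0) -> float:
    """Price = theta / (theta - 1) * marginal cost (NK-style elasticity rule).

    `elasticity` (theta) is a hypothetical demand elasticity; it must exceed 1
    for the mark-up factor to be positive and finite.
    """
    if elasticity <= 1.0:
        raise ValueError("elasticity must exceed 1")
    return elasticity / (elasticity - 1.0) * marginal_cost(wage, productivity)


# With A_t = 1 and theta = 6, the mark-up factor is 6 / 5 = 1.2.
print(markup_price(wage=1.0, elasticity=6.0))
```

Pricing over marginal rather than average cost is what keeps the rule comparable with the optimal flexible-price condition of the standard NK model.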

If we overlook potential rationing, choosing labor demand or goods supply is equivalent from the firm's point of view, as labor is the only input (see Eq. 10). Through the mark-up price setting (Eq. 12), adjusting prices is also equivalent to adjusting quantities, so that the firm actually has a single decision variable, expressed here in terms of labor demand.

We consider the non-linear form of the rule rather than the log-linearized version, given the non-linear dynamics of our framework; see Ashraf and Howitt (2012) for a comparable specification.

Households are then either fully employed, i.e. \(h_{i,t} = 1\), or fully unemployed, i.e. \(h_{i,t} = 0\), except for the last one hired, who may be only partially employed, i.e. \(0 < h_{i,t} < 1\).
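
The all-or-nothing employment pattern described in this note can be sketched as a hiring queue: households are hired one after another until labor demand is exhausted. This minimal sketch assumes that each household supplies at most one unit of labor and that the hiring order is given (how the model orders households is not described here); `allocate_hours` is a hypothetical name.

```python
from typing import List


def allocate_hours(labor_demand: float, n_households: int) -> List[float]:
    """Return h_i for households listed in (assumed) hiring order.

    The first floor(demand) households get h = 1, the next one gets the
    fractional remainder (0 < h < 1) if any, and all others get h = 0.
    """
    if labor_demand < 0:
        raise ValueError("labor demand must be non-negative")
    hours = []
    remaining = min(labor_demand, float(n_households))  # cannot hire beyond the labor force
    for _ in range(n_households):
        h = min(1.0, remaining)
        hours.append(h)
        remaining -= h
    return hours


print(allocate_hours(2.5, 5))  # [1.0, 1.0, 0.5, 0.0, 0.0]
```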

Results are not displayed here, but the whole validation procedure is detailed in the PhD thesis of the first author; see Salle (2012).

For this reason, we rule out parameter values such that \(\epsilon > 0.05\), \(\bar {d}>2\) and \(\underline {d}< 0.2\). Other values of these parameters have been found to have little, if any, influence on the aggregate emergent dynamics.

See, for example, Goupy and Creighton (2007) for an introduction. The method is widely used in computer simulations in fields such as industry, chemistry, computer science and biology.
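
A design of experiments selects a small set of parameter configurations that covers the parameter space evenly. The reference list cites Cioppa (2002) on nearly orthogonal, space-filling designs; the sketch below is only a plain random Latin hypercube, which is a simpler technique and not Cioppa's construction. The parameter names and bounds in the usage lines are hypothetical.

```python
import random
from typing import Dict, List, Tuple


def latin_hypercube(bounds: Dict[str, Tuple[float, float]], n: int,
                    seed: int = 0) -> List[Dict[str, float]]:
    """Draw n configurations from a random Latin hypercube.

    Each parameter's interval is cut into n equal strata, one point is drawn
    uniformly inside every stratum, and the strata are shuffled independently
    per parameter, so each stratum of each parameter is used exactly once.
    """
    rng = random.Random(seed)
    columns = {}
    for name, (low, high) in bounds.items():
        width = (high - low) / n
        points = [low + (k + rng.random()) * width for k in range(n)]
        rng.shuffle(points)
        columns[name] = points
    return [{name: columns[name][i] for name in bounds} for i in range(n)]


# Hypothetical example: 17 configurations of two policy-rule coefficients.
design = latin_hypercube({"phi_pi": (1.0, 3.0), "phi_u": (0.0, 1.0)}, n=17)
print(design[0])
```

Compared with a full factorial grid, the number of runs grows linearly with n rather than exponentially with the number of parameters, which is why such designs suit costly stochastic simulations.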

A special case arises when the number of past observations used to forecast inflation (the window) does not exceed five periods (the lower bound of the interval we have retained). This occurs in three of the thirty-three configurations. In that case, the expected loss is high, probably because the expectation formation process is then very reactive to changes in the inflation process.
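
Why a short window makes forecasts very reactive can be seen with a plain moving average of the last w observations. This is a hypothetical stand-in: the paper's expectation formation rule is specified elsewhere and may differ (for instance, by fitting a trend over the window). Only the role of the window length is illustrated.

```python
from typing import Sequence


def window_forecast(history: Sequence[float], window: int) -> float:
    """Hypothetical forecast: mean of the last `window` inflation observations."""
    if window < 1 or len(history) < window:
        raise ValueError("need at least `window` past observations")
    recent = history[-window:]
    return sum(recent) / window


# Inflation jumps from 2% to 4% for the last three periods.
history = [2.0] * 20 + [4.0] * 3
short_forecast = window_forecast(history, 5)   # (2*2 + 3*4) / 5
long_forecast = window_forecast(history, 20)   # (17*2 + 3*4) / 20
print(short_forecast, long_forecast)
```

The 5-period window moves most of the way toward the new level after only three observations, whereas the 20-period window barely reacts.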

An inflation target was first announced in the UK in October 1992, but operational responsibility for monetary policy, which implies greater independence and credibility, was only given to the Bank of England in May 1997.

We have also checked that inflation time series in our model display significant autocorrelation. This is to be expected, as the micro behavioral rules in the ABM prescribe adjustments to past behavior, which by construction introduces inertia into the model dynamics. These additional results are available upon request.
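
A standard statistic for this kind of check is the sample autocorrelation at lag k. The sketch below is a generic stdlib implementation (the note does not say which statistic or test was used), and the AR(1) series in the usage lines is synthetic, generated only to illustrate inertia.

```python
import random
from typing import Sequence


def autocorrelation(x: Sequence[float], lag: int = 1) -> float:
    """Sample autocorrelation: sum (x_t - m)(x_{t-lag} - m) / sum (x_t - m)^2."""
    n = len(x)
    if not 0 < lag < n:
        raise ValueError("lag must be between 1 and len(x) - 1")
    m = sum(x) / n
    denom = sum((v - m) ** 2 for v in x)
    num = sum((x[t] - m) * (x[t - lag] - m) for t in range(lag, n))
    return num / denom


# Synthetic inertial series: pi_t = 0.8 * pi_{t-1} + noise.
rng = random.Random(1)
series = [0.0]
for _ in range(2000):
    series.append(0.8 * series[-1] + rng.gauss(0.0, 1.0))
print(round(autocorrelation(series, 1), 2))  # close to 0.8
```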

More precisely, we use the credibility index of de Mendonca (2007), which accounts for the tolerance range around the target:

We apply the same index to both countries to make the comparison easier, although the UK does not announce a range around its target. However, an implicit range of ±1% may prevail, as the Governor is held to account through an open letter to the Chancellor if inflation deviates from the target by more than 1%.
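
The formula of the index does not appear in this version of the text. For reference, the sketch below implements the piecewise-linear form usually associated with de Mendonca (2007), under the assumption that this is the specification used here: the index equals 1 when expected inflation lies inside the tolerance band, falls linearly to 0 at 0% below the band and at 20% above it, and is 0 outside that interval. The 2% target and ±1% band in the usage lines are illustrative values only.

```python
def credibility_index(expected_inflation: float, target: float, tolerance: float,
                      upper_limit: float = 20.0) -> float:
    """Piecewise-linear credibility index in the spirit of de Mendonca (2007).

    Inputs are in percent. Assumed form: 1 inside [target - tolerance,
    target + tolerance]; linear decline to 0 at 0% below the band and at
    `upper_limit` (20%) above it; 0 at or beyond those limits.
    """
    low, high = target - tolerance, target + tolerance
    if not 0.0 < low <= high < upper_limit:
        raise ValueError("need 0 < target - tolerance <= target + tolerance < upper_limit")
    if low <= expected_inflation <= high:
        return 1.0
    if high < expected_inflation < upper_limit:
        return 1.0 - (expected_inflation - high) / (upper_limit - high)
    if 0.0 < expected_inflation < low:
        return 1.0 - (expected_inflation - low) / (-low)
    return 0.0


# Illustrative 2% target with an implicit +/-1% band.
for expected in (2.5, 5.0, 0.5, 22.0):
    print(expected, round(credibility_index(expected, target=2.0, tolerance=1.0), 3))
```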

These values lie within the range analysed in Section 3 and, as the numerical simulation results below show, these σd and σw values are high enough to imply significant deviations from the stable case σd = σw = 0.05 in terms of loss function values.

It should be noted that, unlike in RE models, the dynamics arising from the ABM are not subject to determinacy issues, as ABMs simulate trajectories that are multiple by nature. Moreover, the assumptions underlying our ABM rule out sunspot equilibria, as inflation expectations only depend on realized past inflation. Consequently, our results should not be compared directly with those stressing the necessity of complying with the Taylor principle in the related literature on NK models (see, e.g. Bullard and Mitra 2002).

In the case of multiplicative uncertainty, shocks affect the parameters of the model, so that the noise propagates multiplicatively with changes in the variables concerned. By contrast, in the additive case, shocks enter the model as a term added to the model equations.
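
The distinction can be seen in a one-equation toy model, in the spirit of Brainard (1967) but not taken from the paper: y = b·x + ε (additive) versus y = (b + η)·x (multiplicative). The coefficient b, the noise scale and the function name are illustrative assumptions. With additive noise the variance of y does not depend on the policy variable x; with multiplicative noise it grows with x².

```python
import random
import statistics


def simulate(x: float, b: float = 0.5, sigma: float = 0.1, n: int = 20000,
             multiplicative: bool = False, seed: int = 0) -> float:
    """Return the sample variance of y in a toy model for a given setting of x."""
    rng = random.Random(seed)
    ys = []
    for _ in range(n):
        shock = rng.gauss(0.0, sigma)
        if multiplicative:
            y = (b + shock) * x      # noise hits the coefficient, so it scales with x
        else:
            y = b * x + shock        # noise is simply added to the equation
        ys.append(y)
    return statistics.variance(ys)


for x in (1.0, 4.0):
    print(x, round(simulate(x), 4), round(simulate(x, multiplicative=True), 4))
```

Quadrupling x leaves the additive variance unchanged but multiplies the multiplicative one by 16, which is why parameter uncertainty argues for more cautious use of the instrument.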

It should be noted that the loss values are higher under partial announcements than under the pure IT or non-IT regimes in Table 3. However, in the present exercise, the monetary policy coefficients are fixed, whereas they constitute a degree of freedom of monetary policy in Table 3. As a result, we should be cautious when directly comparing the loss values in Tables 3 and 4 and concluding that a pure non-IT regime outperforms any form of partial announcement.

We have n = 17 observation points of \(\mathcal {L}\) over D (see the DoE in Table 6, Appendix A). As the model is stochastic, we repeat each simulation 30 times. The kriging estimation is then performed on the \(\mathcal {L}\) values averaged over the 30 repetitions (van Beers and Kleijnen 2004), which yields n = 17 observations of \(\mathcal {L}\) over D.

More complex forms would involve more parameters to be estimated, besides \({\sigma ^{2}_{L}}\), \(\theta _{\phi _{\pi }}\) and \(\theta _{\phi _{u}}\).
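
These two notes suggest a Gaussian correlation function with one scale parameter per policy coefficient (\(\theta _{\phi _{\pi }}\), \(\theta _{\phi _{u}}\)) plus a process variance \({\sigma ^{2}_{L}}\). The sketch below illustrates the two steps under that assumption: averaging 30 replications at each design point, then computing the ordinary kriging predictor at a new point. It is not the authors' code. The θ values are fixed by hand rather than estimated by maximum likelihood, the design and the toy loss function are invented, and \({\sigma ^{2}_{L}}\) is omitted because it only scales the prediction variance, not the point prediction.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]


def gauss_corr(a: Sequence[float], b: Sequence[float], theta: Sequence[float]) -> float:
    """Gaussian correlation exp(-sum_k theta_k * (a_k - b_k)^2)."""
    return math.exp(-sum(t * (u - v) ** 2 for t, u, v in zip(theta, a, b)))


def solve(A: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve A z = rhs by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z


def ordinary_kriging(X: List[Point], y: List[float],
                     theta: Sequence[float]) -> Callable[[Point], float]:
    """Ordinary kriging predictor x0 -> mu + r0' R^{-1} (y - mu 1)."""
    n = len(X)
    R = [[gauss_corr(X[i], X[j], theta) for j in range(n)] for i in range(n)]
    mu = sum(solve(R, list(y))) / sum(solve(R, [1.0] * n))  # GLS estimate of the constant mean
    w = solve(R, [yi - mu for yi in y])

    def predict(x0: Point) -> float:
        r0 = [gauss_corr(x0, xi, theta) for xi in X]
        return mu + sum(ri * wi for ri, wi in zip(r0, w))

    return predict


# Hypothetical design: a 4 x 4 grid plus the centre, i.e. 17 points in a
# scaled (phi_pi, phi_u) square.
grid = [i / 3 for i in range(4)]
X = [(a, b) for a in grid for b in grid] + [(0.5, 0.5)]
rng = random.Random(42)


def toy_loss(p: Point) -> float:
    """Invented stand-in for the loss of one stochastic model run."""
    return (p[0] - 0.6) ** 2 + 0.5 * (p[1] - 0.3) ** 2 + rng.gauss(0.0, 0.05)


# Step 1: average 30 replications at each design point.
y_bar = [sum(toy_loss(p) for _ in range(30)) / 30 for p in X]
# Step 2: fit the kriging predictor with hand-picked scale parameters.
predict = ordinary_kriging(X, y_bar, theta=(5.0, 5.0))
print(round(predict((0.6, 0.3)), 3))
```

Without a nugget term, the predictor interpolates the averaged values exactly at the design points. Averaging the replications first is what makes this noise-free interpolation reasonable.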

References

Alichi A, Chen H, Clinton K, Freedman C, Johnson M, Kamenik O, Kisinbay T, Laxton D (2009) Inflation targeting under imperfect policy credibility. IMF Working Paper 09/94, International Monetary Fund

Allen T W, Carroll C (2001) Individual learning about consumption. Macroecon Dyn 5:255–271

Arifovic J (2000) Evolutionary algorithms in macroeconomic models. Macroecon Dyn 4(3):373–414

Arifovic J, Dawid H, Deissenberg C, Kostyshyna O (2010) Learning benevolent leadership in a heterogenous agents economy. J Econ Dyn Control 34:1768–1790

Ashraf Q, Howitt P (2012) How inflation affects macroeconomic performance: an agent-based computational investigation. NBER Working Paper 18225, National Bureau of Economic Research

Assenza T, Delli Gatti D, Grazzini J (2015) Emergent dynamics of a macroeconomic agent based model with capital and credit. J Econ Dyn Control 50(C):5–28

Blinder A S, Ehrmann M, Fratzscher M, De Haan J, Jansen D-J (2008) Central bank communication and monetary policy: a survey of theory and evidence. J Econ Lit 46(4):910–945

Bomfim A N, Rudebusch G D (2000) Opportunistic and deliberate disinflation under imperfect credibility. J Money Credit Bank 32(4):707–721

Brainard W (1967) Uncertainty and the effectiveness of policy. Amer Econ Rev Papers Proc 57:411–425

Bullard J, Mitra K (2002) Learning about monetary policy rules. J Monet Econ 49(6):1105–1129

Canzian J (2009) Three essays in agent-based macroeconomics. Doctoral Thesis, University of Trento CIFREM

Cioppa T (2002) Efficient nearly orthogonal and space-filling experimental designs for high-dimensional complex models. Doctoral dissertation in operations research, Naval Postgraduate School

Cornand C, Heinemann F (2008) Optimal degree of public information dissemination. Econ J 118(528):718–742

De Grauwe P (2011) Animal spirits and monetary policy. Econ Theory 47:423–457

De Grauwe P (2012) Lectures on behavioral macroeconomics. Princeton University Press

de Mendonca H (2007) Towards credibility from inflation targeting: the Brazilian experience. Appl Econ 39:2599–2615

Delli Gatti D, Gaffeo E, Gallegati M, Palestrini A (2005) The apprentice wizard: monetary policy, complexity and learning. New Math Natural Comput 1(1):109–128

Demertzis M, Viegi N (2008) Inflation targets as focal points. Int J Central Bank 4(1):55–87

Demertzis M, Viegi N (2009) Inflation targeting: a framework for communication. B E J Macroecon 9(1):44

Dosi G, Fagiolo G, Roventini A (2010) Schumpeter meeting Keynes: a policy-friendly model of endogenous growth and business cycles. J Econ Dyn Control 34(9):1748–1767

Faust J, Svensson L (2001) Transparency and credibility: monetary policy with unobservable goals. Int Econ Rev 42(2):369–397

Friedman M (1957) A theory of the consumption function. Princeton University Press

Gali J (2008) Monetary policy, inflation, and the business cycle: an introduction to the new Keynesian framework. Princeton University Press

Garfinkel M, Oh S (1995) When and how much to talk: credibility and flexibility in monetary policy with private information. J Monet Econ 35(2):341–357

Geraats P (2002) Central bank transparency. Econ J 112(483):532–565

Geraats P (2009) Trends in monetary policy transparency. Int Financ 12(2):235–268

Giannoni M (2007) Robust optimal monetary policy in a forward-looking model with parameter and shock uncertainty. J Appl Econom 22(1):179–213

Goupy J, Creighton L (2007) Introduction to design of experiments with JMP examples, 3rd edn. SAS Institute Inc., Cary, NC, USA

Holland J, Goldberg D, Booker L (1989) Classifier systems and genetic algorithms. Artif Intell 40:235–289

Klügl F (2008) A validation methodology for agent-based simulations. In: Wainwright R, Haddad H (eds) Proceedings of the 2008 ACM symposium on applied computing (SAC), Fortaleza

Leijonhufvud A (2006) Agent-based macro. In: Tesfatsion L, Judd K (eds) Handbook of computational economics, vol 2, chap 36. North-Holland, pp 1625–1646

Lengnick M (2013) Agent-based macroeconomics: a baseline model. J Econ Behav Org 86(C):102–120

Libich J (2011) Inflation nutters? Modelling the flexibility of inflation targeting. B E J Macroecon 11(1):1–17