Abstract

The progressive Type-II hybrid censoring scheme introduced by Kundu and Joarder (Comput Stat Data Anal 50:2509–2528, 2006) has received some attention in the last few years. One major drawback of this scheme is that very few failures, or even none at all, may be observed by the end of the experiment. To overcome this problem, Cho et al. (Stat Methodol 23:18–34, 2015) introduced generalized progressive hybrid censoring, which guarantees a prespecified number of failures. In this paper we analyze generalized progressive hybrid censored data in the presence of competing risks. For brevity we consider only two competing causes of failure, and we assume that the lifetimes under the competing causes follow one-parameter exponential distributions with different scale parameters. We obtain the maximum likelihood estimators of the unknown parameters and provide their exact distributions, from which exact confidence intervals can be obtained. Asymptotic and bootstrap confidence intervals are also provided for comparison. We further consider Bayesian analysis of the unknown parameters under a very flexible beta–gamma prior, and provide the Bayes estimates and the associated credible intervals. We present extensive simulation results to assess the effectiveness of the proposed methods, and finally analyze one real data set for illustration.

References

Balakrishnan N, Cramer E (2014) The art of progressive censoring. Birkhäuser, New York

Balakrishnan N, Childs A, Chandrasekar B (2002) An efficient computational method for moments of order statistics under progressive censoring. Stat Probab Lett 60:359–365

Balakrishnan N, Xie Q, Kundu D (2009) Exact inference for a simple step stress model from the exponential distribution under time constraint. Ann Inst Stat Math 61:251–274

Balakrishnan N, Kundu D (2013) Hybrid censoring: models, inferential results and applications. Comput Stat Data Anal 57:166–209 (with discussion)

Balakrishnan N, Cramer E, Iliopoulos G (2014) On the method of pivoting the CDF for exact confidence intervals with illustration for exponential mean under life-test with time constraint. Stat Probab Lett 89:124–130

Bhattacharya S, Pradhan B, Kundu D (2014) Analysis of hybrid censored competing risks data. Statistics 48(5):1138–1154

Chan P, Ng H, Su F (2015) Exact likelihood inference for the two-parameter exponential distribution under Type-II progressively hybrid censoring. Metrika 78:747–770

Chen SM, Bhattacharyya GK (1987) Exact confidence bounds for an exponential parameter under hybrid censoring. Commun Stat Theory Methods 16:2429–2442

Childs A, Chandrasekar B, Balakrishnan N, Kundu D (2003) Exact likelihood inference based on Type-I and Type-II hybrid censored samples from the exponential distribution. Ann Inst Stat Math 55:319–330

Cho Y, Sun H, Lee K (2015) Exact likelihood inference for an exponential parameter under generalized progressive hybrid censoring scheme. Stat Methodol 23:18–34

Cohen AC (1963) Progressively censored samples in life testing. Technometrics 5:327–329

Cox DR (1959) The analysis of exponentially distributed lifetimes with two types of failure. J R Stat Soc Ser B 21:411–421

Cramer E, Balakrishnan N (2013) On some exact distributional results based on Type-I progressively hybrid censored data from exponential distribution. Stat Methodol 10:128–150

Crowder M (2001) Classical competing risks. Chapman & Hall/CRC, London

Epstein B (1954) Truncated life tests in the exponential case. Ann Math Stat 25:555–564

Gorny J, Cramer E (2016) Exact likelihood inference for exponential distribution under generalized progressive hybrid censoring schemes. Stat Methodol 29:70–94

Hemmati F, Khorram E (2013) Statistical analysis of log-normal distribution under type-II progressive hybrid censoring schemes. Commun Stat Simul Comput 42:52–75

Hoel DG (1972) A representation of mortality data by competing risks. Biometrics 28:475–488

Kalbfleisch JD, Prentice RL (1980) The statistical analysis of failure time data. Wiley, New York

Kundu D, Basu S (2000) Analysis of incomplete data in presence of competing risks. J Stat Plan Inference 87:221–239

Kundu D, Joarder A (2006) Analysis of Type-II progressively hybrid censored data. Comput Stat Data Anal 50:2509–2528

Kundu D, Gupta RD (2007) Analysis of hybrid life-tests in presence of competing risks. Metrika 65(2):159–170

Lawless JF (1982) Statistical models and methods for lifetime data. Wiley, New York

Pena EA, Gupta AK (1990) Bayes estimation for the Marshall-Olkin exponential distribution. J R Stat Soc Ser B 52:379–389

Prentice RL, Kalbfleisch JD, Peterson AV Jr, Flournoy N, Farewell VT, Breslow NE (1978) The analysis of failure times in the presence of competing risks. Biometrics 34:541–554

Acknowledgements

The authors would like to thank the referees for their constructive suggestions which have helped us to improve the manuscript significantly.

Appendix: the proof of the main theorem

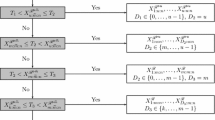

First we derive the distribution function of \(\widehat{\theta }_1\), which is given below.

where,

Now, to compute the terms on the right-hand side of (7), we need the following lemmas.
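For orientation, a decomposition of this kind would typically follow from the law of total probability over the three ways the experiment can terminate. The display below is a sketch reconstructed from the conditioning events used in Lemmas 1–3, not a verbatim copy of (7). The shorthand events \(A_j\), \(B\) and \(C\) are introduced here for illustration only, and conditioning on \(D_1>0\) is an assumption, motivated by the fact that the lemmas take \(i\ge 1\).

```latex
% Shorthand (illustrative, not the paper's notation):
%   A_j = \{J = j\},  j = k, \ldots, m-1      (termination at T)
%   B   = \{T < Z_{k:m:n} < Z_{m:m:n}\}       (termination at Z_{k:m:n})
%   C   = \{Z_{k:m:n} < Z_{m:m:n} < T\}       (termination at Z_{m:m:n})
\begin{align*}
P(\widehat{\theta}_1 \le x \mid D_1 > 0)
 &= \sum_{j=k}^{m-1}\sum_{i=1}^{j}
    P(\widehat{\theta}_1 \le x \mid A_j, D_1 = i)\,
    P(A_j, D_1 = i \mid D_1 > 0) \\
 &\quad + \sum_{i=1}^{k}
    P(\widehat{\theta}_1 \le x \mid B, D_1 = i)\,
    P(B, D_1 = i \mid D_1 > 0) \\
 &\quad + \sum_{i=1}^{m}
    P(\widehat{\theta}_1 \le x \mid C, D_1 = i)\,
    P(C, D_1 = i \mid D_1 > 0).
\end{align*}
```

Each conditional distribution on the right corresponds to one of the three conditioning events treated in Lemmas 1, 2 and 3, respectively.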

Lemma 1

The joint distribution of \(Z_{1:m:n},\ldots , Z_{J:m:n}\) given \(J=j, D_1=i\) for \(i=1,\ldots ,j\) and \(j=k,\ldots , m-1\) at \(z_1,\ldots , z_j\), is given by,

Proof of Lemma 1

For \(j=k,k+1,\ldots ,m-1\) and \(i=1,2,\ldots ,j\), consider the left side of (8),

Note that the event \(\{z_1<Z_{1:m:n}<z_1+dz_1,\ldots ,z_j<Z_{j:m:n}<z_j+dz_j,J=j, D_1=i\}\) for \(i=1,\ldots ,j; j=k,k+1,\ldots ,m-1\) corresponds to observing the failure times of j units up to the time point T, of which i units have failed due to Cause-1. The probability of this event is the likelihood contribution of the data when \(T^*=T\). Thus (9) becomes,

\(\square \)

Lemma 2

The joint distribution of \(Z_{1:m:n},\ldots , Z_{k:m:n}\) given \(T<Z_{k:m:n}<Z_{m:m:n}, D_1=i\) for \(i=1,\ldots ,k\) at \(z_1,\ldots , z_k\), is given by

Proof of Lemma 2

For \(i=1,2,\ldots ,k\), consider the left side of (10),

Note that the event \(\{z_1<Z_{1:m:n}<z_1+dz_1,\ldots ,z_k<Z_{k:m:n}<z_k+dz_k,T<Z_{k:m:n}, D_1=i\}\) for \(i=1,\ldots ,k\) corresponds to observing the failure times of k units up to the experiment termination point \(Z_{k:m:n}\), of which i units have failed due to Cause-1. The probability of this event is the likelihood contribution of the data when \(T^*=Z_{k:m:n}\). Thus (11) becomes,

\(\square \)

Lemma 3

The joint distribution of \(Z_{1:m:n},\ldots , Z_{m:m:n}\) given \(Z_{k:m:n}<Z_{m:m:n}<T, D_1=i\) for \(i=1,\ldots ,m\) at \(z_1,\ldots , z_m\), is given by,

Proof of Lemma 3

For \(i=1,2,\ldots ,m\), consider the left side of (12),

Note that the event \(\{z_1<Z_{1:m:n}<z_1+dz_1,\ldots ,z_m<Z_{m:m:n}<z_m+dz_m,Z_{k:m:n}<Z_{m:m:n}<T, D_1=i\}\) for \(i=1,\ldots ,m\) corresponds to observing the failure times of m units up to the experiment termination point \(Z_{m:m:n}\), of which i units have failed due to Cause-1. The probability of this event is the likelihood contribution of the data when \(T^*=Z_{m:m:n}\). Thus (13) becomes,

\(\square \)
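The three lemmas correspond to the three ways the experiment can terminate: at \(T\), at \(Z_{k:m:n}\), or at \(Z_{m:m:n}\). The following Python sketch illustrates this classification on simulated data. It is not taken from the paper: the function names, the removal scheme `R`, the mean parametrization of the exponential lifetimes, and the estimator form (total time on test divided by the number of failures from each cause, the usual form for exponential competing risks) are assumptions made for illustration.

```python
import random


def progressive_sample(n, R, theta1, theta2, rng):
    """Simulate a full progressive Type-II sample of m = len(R) failures.

    Assumes n >= m + sum(R[:-1]) and exponential latent lifetimes with
    means theta1 and theta2 (assumed parametrization).
    """
    units = []
    for _ in range(n):
        x1 = rng.expovariate(1.0 / theta1)  # latent failure time, Cause-1
        x2 = rng.expovariate(1.0 / theta2)  # latent failure time, Cause-2
        units.append((min(x1, x2), 1 if x1 < x2 else 2))
    z, causes = [], []
    for r in R:
        units.sort()
        t, c = units.pop(0)      # next observed failure and its cause
        z.append(t)
        causes.append(c)
        rng.shuffle(units)       # withdraw r surviving units at random
        del units[:r]
    return z, causes


def censor(z, causes, R, n, k, T):
    """Apply the stopping rule T* = max(Z_k, min(Z_m, T)).

    Returns (stop, j, d1, w): where the test stopped, the number of observed
    failures, the number due to Cause-1, and the total time on test.
    """
    m = len(R)
    if T < z[k - 1]:             # fewer than k failures by T: run on to Z_k
        stop, j, t_star, steps = "Z_k", k, z[k - 1], k - 1
    elif z[m - 1] <= T:          # all m failures occur before T: stop at Z_m
        stop, j, t_star, steps = "Z_m", m, z[m - 1], m - 1
    else:                        # between k and m-1 failures by T: stop at T
        j = sum(1 for t in z if t <= T)
        stop, t_star, steps = "T", T, j
    obs = z[:j]
    withdrawn = sum(R[i] * obs[i] for i in range(steps))
    remaining = n - j - sum(R[:steps])   # units still on test at T*
    w = sum(obs) + withdrawn + remaining * t_star
    d1 = causes[:j].count(1)
    return stop, j, d1, w


def mle(w, d1, j):
    """Assumed MLE form: W / D_1 and W / D_2; None when a count is zero."""
    d2 = j - d1
    return (w / d1 if d1 > 0 else None, w / d2 if d2 > 0 else None)
```

For example, with failure times `[1, 2, 3, 4, 5]`, `R = [1, 1, 1, 1, 1]`, `n = 10` and `k = 3`, choosing `T = 2.5`, `4.5` or `6` makes the experiment stop at \(Z_{k:m:n}\), at \(T\), and at \(Z_{m:m:n}\), respectively.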

Theorem 3

The conditional moment generating function of \(\widehat{\theta }_1\) given \(J=j, D_1=i\) for \(i=1,\ldots , j\) and \(j=k,\ldots , m-1\) is given by

Proof

The above equality follows using Lemma 1,

The last equality follows using Lemma 1 of Balakrishnan et al. (2002).\(\square \)

Corollary 1

The conditional distribution of \(\widehat{\theta }_1\) given \(J=j, D_1=i\) for \(i=1,\ldots ,j\) and \(j=k,\ldots ,m-1\) is given by,

Theorem 4

The conditional moment generating function of \(\widehat{\theta }_1\) given \(T<Z_{k:m:n}<Z_{m:m:n}, D_1=i\) for \(i=1,\ldots , k\) is given by,

Proof

The above equality follows using Lemma 2,

The last equality follows using Lemma 1 of Balakrishnan et al. (2002).\(\square \)

Corollary 2

The conditional distribution of \(\widehat{\theta }_1\) given \(T<Z_{k:m:n}<Z_{m:m:n}, D_1=i\) for \(i=1,\ldots , k\) is given by,

Theorem 5

The conditional moment generating function of \(\widehat{\theta }_1\) given \(Z_{k:m:n}<Z_{m:m:n}<T, D_1=i\) for \(i=1,\ldots ,m\) is given by,

Proof

The above equality follows using Lemma 3,

The last equality follows using Lemma 1 of Balakrishnan et al. (2002).\(\square \)

Corollary 3

The conditional distribution of \(\widehat{\theta }_1\) given \(Z_{k:m:n}<Z_{m:m:n}<T, D_1=i\) for \(i=1,\ldots , m\) is given by,

Proof of Theorem 1

Combining Corollaries 1–3, we get the first part of Theorem 1. \(\square \)

Derivation of \(P(D_1=0)\).

We find each of the above probabilities separately.
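As a hedged sketch of how these pieces combine: for independent exponential lifetimes, the cause of each failure is independent of the failure times, so the probability that all observed failures are due to Cause-2 depends only on the number of failures observed. The display below assumes that \(\theta _1\) and \(\theta _2\) denote the means of the two exponential distributions (the parametrization is not visible in this excerpt), and the symbol \(p\) is introduced here for illustration.

```latex
% p = probability that a single failure is due to Cause-2,
% assuming \theta_1, \theta_2 are exponential means.
\begin{align*}
p &= \frac{\theta_1}{\theta_1 + \theta_2}, \\
P(D_1 = 0)
 &= \sum_{j=k}^{m-1} p^{\,j}\, P(J = j)
  + p^{\,k}\, P(T < Z_{k:m:n})
  + p^{\,m}\, P(Z_{m:m:n} < T).
\end{align*}
```

The three terms correspond to termination at \(T\) with \(j\) failures, at \(Z_{k:m:n}\) with \(k\) failures, and at \(Z_{m:m:n}\) with \(m\) failures.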

Cite this article

Koley, A., Kundu, D. On generalized progressive hybrid censoring in presence of competing risks. Metrika 80, 401–426 (2017). https://doi.org/10.1007/s00184-017-0611-6

DOI: https://doi.org/10.1007/s00184-017-0611-6

Keywords

- Competing risk

- Generalized progressive hybrid censoring

- Beta–gamma distribution

- Maximum likelihood estimator

- Bootstrap confidence interval

- Bayes credible interval