Abstract

Two informed and interested parties (senders) repeatedly send messages to an uninformed party (public). Senders face a trade-off between propagating their favoured opinions, possibly by lying, and maintaining a large audience (or market share), as the state is occasionally revealed and lies cause audiences to switch to the competitor. We fully characterize a focal Markov perfect equilibrium of this game and discuss the impact of exogenous parameters on the truthfulness of equilibrium reporting. In particular, we find that senders’ lying propensities are strategic complements, so that increasing one sender’s bias decreases both senders’ truthfulness. We also analyse the role of polarization across senders.



1 Introduction

Interaction between informed parties (governments, firms, media outlets, etc.) and an uninformed public (citizens, customers, readers, etc.) typically repeats over time. As time passes, facts may become available that allow past claims to be verified. Inaccurate claims, though perhaps advantageous in the short run, hurt an informed party’s ability to wield influence in the future by damaging its credibility, audience size, etc. In spite of that, one often observes (diametrically) different accounts of the same underlying facts. For example, the following two quotes, taken from articles published in November 2018 by the New York Times and Fox Business respectively, offer very different evaluations of the state of the U.S. economy in the preceding months.

“Emerging signs of weakness in major economic sectors, including auto manufacturing, agriculture and home building, are prompting some forecasters to warn that one of the longest periods of economic growth in American history may be approaching the end of its run.”Footnote 1

“U.S. employers added a better-than-expected 250,000 jobs in October, soaring past expectations for an increase of 190,000 jobs (...). The unemployment rate remained at 3.7 percent, the lowest rate in nearly 50 years, while the labor force participation rate increased to 62.9 percent from 62.7 percent during the month.”Footnote 2

One potential driver of such divergence in the selection and interpretation of facts is that informed parties have an ideological agenda and wish to influence public opinion accordingly. In this paper, we investigate the competition between two biased senders for an audience, whose members switch their preferred source of information in proportion to the discrepancy between sources’ past messages and the revealed state.

We propose a simple infinite-horizon model that captures key features of the problem. Two biased and strategic senders face the public in every period. In each period, the state \(\omega _{t}\) is drawn anew from a fixed continuous distribution. Only the senders observe the state, and they do so at the beginning of the period. The public observes it at the end of the period with some probability. Each sender k has a fixed favoured state \(\omega ^{k}\), which may or may not be commonly known. After observing the state, each sender sends a period-t message \(m_{t}^{k}\). At the beginning of each period t, sender k holds a share \(x_{t}^{k}\) of the market (audience). This share is the fraction of the public that listens to him (i.e., trusts him, is loyal to him). Senders’ shares sum to one in every period, i.e., each member of the public is loyal to exactly one of the two senders.

Sender k’s period-t message affects his payoff through two channels: it influences the beliefs of his current audience, and it affects the size of his audience tomorrow. Formally, message \(m_{t}^{k}\) yields a period-t payoff to sender k that depends on three things: his audience share \(x_{t}^{k}\), the distance between \(m_{t}^{k}\) and his ideal state \(\omega ^{k}\), and his ideological commitment \(\lambda ^{k}\). His period-t payoff decreases in the weighted distance \(\lambda ^{k}(m_{t}^{k}-\omega ^{k})^{2}\) and increases in \(x_{t}^{k}\). In other words, sender k prefers sending messages that are close to his ideal state and are received by a large audience. The simplest interpretation is that k’s audience naively takes k’s message at face value. The smaller the distance between \(m_{t}^{k}\) and \(\omega ^{k}\), the closer his audience’s belief is to his ideal belief.

The message \(m_{t}^{k}\) chosen by k at t also affects k’s expected audience tomorrow. At the end of t, the current state \(\omega _{t}\) becomes observable with some probability \(\gamma\) and, in this case, the public compares senders’ messages to the revealed truth. Then, at \(t+1\), each sender loses to his opponent a fraction of his audience that is increasing in the (square of the) size of his lie \((m_{t}^{k}-\omega _{t})^{2}\). In particular, if senders’ initial market shares are identical and both reports diverge equally from the truth, market shares stay put. One can think of electoral campaigns, where false claims by two opposing parties (candidates) cancel out. If instead only k lied, then l acquires a share from k while k acquires no share from l.
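The switching mechanism can be illustrated with a minimal Python sketch. It assumes the quadratic updating rule formalized as (2) in Section 3. The function name `update_share` and all numeric values are illustrative choices, not part of the model.

```python
def update_share(x_k, m_k, m_l, omega, gamma):
    """Next-period audience share of sender k under the quadratic switching rule.

    x_k   -- current share of sender k (the rival holds 1 - x_k)
    m_k   -- message of sender k; m_l -- message of the rival
    omega -- realized state, revealed with probability gamma
    """
    outflow = x_k * gamma * (m_k - omega) ** 2        # k's listeners who leave
    inflow = (1 - x_k) * gamma * (m_l - omega) ** 2   # rival's listeners who join k
    return x_k - outflow + inflow


omega, gamma = 0.5, 0.5

# Equal shares and equally large lies in opposite directions: shares stay put.
both_lie = update_share(0.5, 0.75, 0.25, omega, gamma)
# Only k lies: k loses audience to l and gains nothing in return.
only_k_lies = update_share(0.5, 0.75, 0.5, omega, gamma)

print(both_lie)     # 0.5
print(only_k_lies)  # 0.484375
```

The values are powers of two, so the cancellation in the first case holds exactly in floating point.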

Summarizing, senders’ incentives exhibit two key features. First, a sender faces a trade-off between advantageously moving the beliefs of his audience towards his ideal state by biasing his report, and ensuring a large audience tomorrow by reporting truthfully. Second, a sender benefits from his competitor’s lies as this yields an expected inflow of audience.

Senders simultaneously choose their messages to maximize the expected discounted sum of their future payoffs. Each message \(m_{t}^{k}\) of sender k can depend on all information available up to the start of date t (including the realized state \(\omega _{t}\) and k’s audience \(x_{t}^{k}\)). The natural strategies to examine in this environment are Markov strategies, in which the period-t message can depend only on the current state and the current audience share. We construct a simple Markov perfect equilibrium (MPE) in which sender k’s message at each date t is a convex combination of the current state and his favoured state, \(m_{t}^{k}=a^{k}\omega _{t}+(1-a^{k})\omega ^{k}\). The truthfulness of k in any period is thus quantified by the constant \(a^{k}\), which depends neither on the state nor on k’s audience. In the extreme case \(a^{k}=1\), sender k always reports truthfully, whereas if \(a^{k}=0\), he ignores the realized state and simply reports his ideal point. Moreover, we show that this strategy profile is the unique MPE if senders’ strategy sets are restricted to Markov strategies that condition the period-t message on the current state only.
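A small Python sketch of this linear messaging rule follows. The realized and favoured states are hypothetical values, and `message` is an illustrative name. The sketch also shows that the distortion \(m_{t}^{k}-\omega _{t}=(1-a^{k})(\omega ^{k}-\omega _{t})\) shrinks as \(a^{k}\) grows.

```python
def message(a, omega, omega_fav):
    """Report a*omega + (1 - a)*omega_fav, where a in [0, 1] measures truthfulness."""
    if not 0.0 <= a <= 1.0:
        raise ValueError("truthfulness weight a must lie in [0, 1]")
    return a * omega + (1.0 - a) * omega_fav


omega, omega_fav = 0.75, 0.25        # hypothetical realized and favoured states
for a in (0.0, 0.5, 1.0):
    m = message(a, omega, omega_fav)
    print(f"a={a}: message={m}, distortion={m - omega}")
# a = 0 reports the favoured state; a = 1 reports the realized state.
```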

Our second set of findings concerns the comparative statics of \(a^{k}\) with respect to the key parameters. For example, we find that the common discount factor \(\delta\) and the verification probability \(\gamma\) influence the reporting strategies asymmetrically, through a function that captures the cost of lying. We also consider the effect of the senders’ ideal states and of their ideological commitments to these states. We refer to \((\omega ^{k}-E[\omega ])^{2}\) as the ex-ante bias of k.

First, assume identical ex-ante biases (resp. ideological commitments). Then k is more truthful than l, i.e., \(a^{k}>a^{l}\), if and only if k is less ideologically committed (resp. less ex-ante biased) than l. Second, unilaterally increasing the ex-ante bias or the ideological commitment of one sender renders both strictly less truthful. Hence, \(a^{k}\) and \(a^{l}\) are both decreasing in the ex-ante bias and in the ideological commitment of either sender. To see the indirect impact on sender k of more lying by l, note that l’s lying reduces the expected cost of lying for k (in terms of lost audience). This incentivizes k to lie more as well, which in turn has a positive feedback effect on l’s incentive to lie.

Interestingly, we also find that the degree of polarization of sender preferences is not a relevant quantity in determining the truthfulness of equilibrium communication. Polarization is captured by the distance \(\left| \omega ^{k}-\omega ^{l}\right|\) separating the senders’ ideal states. Rather, the key quantity is simply the sum of ex-ante biases. Finally, we show that higher uncertainty reduces the accuracy of communication: \(a^{k}\) and \(a^{l}\) both decrease in \(Var(\omega )\).

2 Related Literature

Our paper relates to the literature on strategic information transmission with multiple experts. A central question is how the diversity of biases among informed parties affects the informativeness of communication. In the cheap talk model of Krishna and Morgan (2001b), two senders have different biases and at least one is not extremely biased. Consulting both senders is then beneficial and may induce full revelation. In Battaglini (2002), the receiver faces multiple perfectly informed experts and the state is multidimensional. Full revelation is generically possible, even when the conflict of interest is arbitrarily large. In Battaglini (2004), senders hold noisy signals, and consulting multiple senders induces less precise communication by each sender. In Austen-Smith (1993), the receiver faces two imperfectly informed experts and the state and signal spaces are binary. Under some conditions, full revelation is possible with a single expert but not when two experts are consulted simultaneously. On the other hand, there is little difference between similar and opposing biases. Krishna and Morgan (2001a), reexamining Gilligan and Krehbiel (1989), find that full revelation can be achieved when fully informed experts have heterogeneous preferences. If instead experts have identical preferences, only imperfect revelation obtains.Footnote 3

McGee and Yang (2013) study a setup where a decision maker’s optimal decision is a (multiplicative) function of the uncorrelated types of two privately informed senders. In Li et al. (2016), a principal has to choose between two potential projects. Information about returns is held separately by two experts, each biased towards his own project. In both of these papers, senders’ levels of informativeness are strategic complements, as in our analysis. The underlying mechanism is that when the other sender communicates very informatively, large deviations from the truth become more costly.

Alonso et al. (2008) consider information transmission in a multi-division organization. Each division’s profits depend on how well its decision matches its privately known local conditions and on how well it matches the other division’s decision. One possible decision protocol is centralization, whereby division managers report to central headquarters, which decides for both divisions. They find that a stronger desire to coordinate decisions worsens headquarters’ ability to retrieve information from divisions.Footnote 4

Our modelling of the cost of lying (through the loss of audience tomorrow) relates to Kartik (2009). He proposes applying a cost function to a simple measure of lying, based on the distance between the sender’s true type and the exogenous (literal) meaning of the message sent. He finds that communication is noisy regardless of the level of lying costs, though higher lying costs intuitively induce more informativeness. Our paper also relates to the literature on communication between strategic senders and boundedly rational receivers (e.g., Ottaviani and Squintani 2006, or Kartik et al. 2007). Ottaviani and Squintani (2006) consider fully rational and boundedly rational receivers who are present simultaneously. They show that the amount of information revealed to fully rational receivers increases in the fraction of naive receivers.

Moreover, our paper connects to the literature on trust and reputation under repeated interaction. This literature divides into two main strands: Bayesian reputation building, and repeated games with punishment (see, e.g., Cabral 2005). An important instance of the first strand is Board and Meyer-ter-Vehn (2013). They examine a firm that can invest in product quality and whose profits from sales are determined by its reputation for quality. In their specification, learning is perfect Poisson with either perfect good news or perfect bad news. They find that equilibrium then takes a work-shirk form: the firm works when its reputation lies below some cutoff and shirks when it lies above it. A set of papers has examined reputation building specifically in a repeated cheap talk context (see Sobel 1985; Benabou and Laroque 1992; Morris 2001; Ely and Välimäki 2003). Here, the key is that an expert who wants to repeatedly influence the decisions of an uninformed party has to signal over time that he is a good expert. This may in turn bias the expert’s communication.

The literature on repeated games and punishment offers a different perspective on the foundations of trust. The general insight is that if players care enough about future payoffs, the prospect of future punishment makes credible certain strategies that are not credible in a one-shot setting. A set of papers focuses specifically on repeated communication, either as cheap talk or as disclosure of verifiable messages. Renault et al. (2013) study repeated cheap talk in which the underlying state follows a Markov process and the receiver never observes the utility obtained from the actions chosen. The sender is disciplined by the fact that, in the long run, the profile of reports must be compatible with the stationary distribution of the process. If the receiver detects a clear deviation from this distribution, punishment takes the form of reversion forever to the babbling equilibrium (see also Escobar and Toikka (2013) for a related model). In the same vein, Margaria and Smolin (2018) study repeated interaction with multiple senders who have state-independent payoffs, with the state evolving according to a Markov chain. They prove equivalents of the Folk Theorem for the case where senders are sufficiently patient. In Golosov et al. (2014), communication and decision making are repeated over time but the state is fixed throughout. Their main finding is that full revelation of the state can be achieved in finite time. In their equilibrium construction, future information revelation is conditioned on the receiver’s past responses to information. In particular, the receiver is punished if he has in the past responded to information in a hostile way.Footnote 5

We proceed as follows. Section 3 introduces the model. Section 4 presents the equilibrium analysis. Section 5 concludes. All proofs are relegated to the Appendix.

3 Two-sender game

Two biased and strategic senders 1 and 2 face the public in every period \(t=0,1,\ldots\), the horizon being infinite. Each sender k has an initial audience \(x_{0}^{k}\in (0,1)\), where \(x_{0}^{1}+x_{0}^{2}=1\). During each period, the following events take place consecutively:

1. The state of the world \(\omega _{t}\in [0,1]\) is drawn from a distribution with a continuous probability density function \(\phi (\omega )\) and full support on the unit interval. The state is i.i.d. across periods.

2. Each sender \(k\in \{1,2\}\) learns \(\omega _{t}\) and sends a message \(m_{t}^{k}\in [0,1]\) to his audience \(x_{t}^{k}\in (0,1)\). The message \(m_{t}^{k}\) can depend on all available past and current information (including \(\omega _{t}\) and \(x_{t}^{k}\)). Given the message \(m_{t}^{k}\), sender k receives the per-period utility

$$\begin{aligned} u^{k}(x_{t}^{k},m_{t}^{k})=x_{t}^{k}-x_{t}^{k}\cdot \lambda ^{k}\cdot (m_{t}^{k}-\omega ^{k})^{2}, \end{aligned}\tag{1}$$

where \(\omega ^{k}\in [0,1]\) is k’s favoured state and \(\lambda ^{k}\in [0,1]\) is k’s ideological commitment to \(\omega ^{k}\). Receivers may or may not observe \(\omega ^{k}\) and \(\lambda ^{k}\). The audience shares add up to one in each period, \(x_{t}^{1}+x_{t}^{2}=1\).

3. Given the state \(\omega _{t}\) and the messages \(m_{t}^{k}\) and \(m_{t}^{l}\), \(k\ne l\), sender k’s audience share is updated according to the function

$$\begin{aligned} x_{t+1}^{k}=x_{t}^{k}-x_{t}^{k}\cdot \gamma (m_{t}^{k}-\omega _{t})^{2}+(1-x_{t}^{k})\gamma (m_{t}^{l}-\omega _{t})^{2}. \end{aligned}\tag{2}$$

Hence, \(x_{t+1}^{k}\) is the net balance of the outflows from and inflows to the share \(x_{t}^{k}\) at the end of period t. The parameter \(\gamma \in (0,1)\) can be interpreted as the probability that the period-t state is revealed ex post, allowing the audience to assess the truthfulness of reports. This interpretation reflects the level of transparency, i.e., how observable the state of the world is to the public. It also highlights possible frictions in information acquisition (e.g., due to censorship, state media dominance, language barriers, etc.). Alternatively, \(\gamma\) captures the audience’s inertia in changing its information source.

The updating rule (2) implies two flows for sender k. He loses to the competitor l a fraction of his audience that is proportional to the squared distance between his message and the actual state of the world. At the same time, he gains a fraction of the competitor’s audience that is proportional to the squared distance between the competitor’s message and the actual state of the world. We show in Lemma 2 that, under this rule, \(x_{t}^{k}\in (0,1)\) and \(x_{t}^{1}+x_{t}^{2}=1\) hold for all \(t\ge 1\) and \(k\in \{1,2\}\) whenever \(x_{0}^{k}\in (0,1)\) and \(x_{0}^{1}+x_{0}^{2}=1\).
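The bounds in Lemma 2 can be checked numerically with a minimal Python sketch. It feeds rule (2) with arbitrary messages and tracks both shares. Here states and messages are drawn uniformly at random, an assumption made only for this check. The bounds follow directly from (2). All squared distances lie in [0,1] and \(\gamma <1\), so the next share is at least \(x_{t}^{k}(1-\gamma )>0\) and at most \(x_{t}^{k}+(1-x_{t}^{k})\gamma <1\).

```python
import random


def update_shares(x1, m1, m2, omega, gamma):
    """Apply rule (2) to both senders; returns (x1_next, x2_next)."""
    x2 = 1.0 - x1
    lie1 = gamma * (m1 - omega) ** 2     # fraction of sender 1's audience that leaves
    lie2 = gamma * (m2 - omega) ** 2     # fraction of sender 2's audience that leaves
    return x1 - x1 * lie1 + x2 * lie2, x2 - x2 * lie2 + x1 * lie1


def simulate(x1, gamma, periods, seed=0):
    """Share path when states and messages are arbitrary points of [0, 1]."""
    rng = random.Random(seed)
    path = [(x1, 1.0 - x1)]
    for _ in range(periods):
        omega, m1, m2 = rng.random(), rng.random(), rng.random()
        x1, x2 = update_shares(x1, m1, m2, omega, gamma)
        path.append((x1, x2))
    return path


path = simulate(x1=0.3, gamma=0.9, periods=1000)
print(min(p[0] for p in path), max(p[0] for p in path))
print(max(abs(a + b - 1.0) for a, b in path))   # rounding error only
```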

A simple interpretation of the above setup is that the public is boundedly rational. It follows simple rules of thumb, both in updating its beliefs in response to messages and in weighting news sources over time. Each receiver (of measure zero) in the audience of sender k takes the period-t message of sender k at face value, attaching probability one to state \(m_{t}^{k}\). Suppose the state is revealed and the receiver realizes that he was lied to, and thus tricked into wrong beliefs. He then becomes likely to switch to the other sender, the more so the larger the lie. One can interpret this switch as motivated by a desire to punish the liar, or by a naive expectation that the other sender will be more truthful.

In what follows, we focus on equilibria that feature simple Markov strategies. A Markov strategy for sender k requires that the sender use the same messaging rule \(m_{t}^{k}\) in every period. It also requires that this rule condition only on the current state variables of the stochastic game, namely \(\omega _{t}\) and \(x_{t}^{k}\). However, the senders’ strategy spaces themselves are unrestricted (conditional on the available information), except that messages must vary continuously with the state. The latter requirement ensures that agents’ expected utilities are well defined.

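Given a profile of communication strategies, agent k’s expected utility at period t from the infinite stream of per-period payoffs is given by

$$\begin{aligned} E\left[ \sum _{s=t}^{\infty }\delta ^{s-t}u^{k}(x_{s}^{k},m_{s}^{k})\right] , \end{aligned}\tag{3}$$

where \(\delta \in [0,1)\) is the senders’ common discount factor.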
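Under some assumptions, (3) can be evaluated numerically, as in the Python sketch below. The sketch assumes a uniform state density and linear messaging rules \(m=a\omega +(1-a)\omega ^{k}\) with hypothetical weights `a_k` and `a_l` that stay fixed over time. It also truncates the infinite sum at a finite horizon. The computation relies on two facts. First, per-period utility (1) is linear in the audience share, and the share is independent of the current state. The expected period payoff is therefore the expected share times one minus \(\lambda ^{k}\) times the expected squared distance to \(\omega ^{k}\). Second, (2) is linear in the share, so expected shares follow the same recursion with squared lies replaced by their expectations.

```python
def expectation(f, n=10_000):
    """Midpoint-rule approximation of E[f(omega)] for omega ~ Uniform[0, 1]."""
    h = 1.0 / n
    return sum(f((i + 0.5) * h) for i in range(n)) * h


def discounted_utility(x0, a_k, a_l, w_k, w_l, lam_k, delta, gamma, horizon=2_000):
    """Truncated expected discounted utility of sender k from period 0 on,
    when each sender j always reports a_j * omega + (1 - a_j) * w_j."""
    def msg_k(w):
        return a_k * w + (1 - a_k) * w_k

    def msg_l(w):
        return a_l * w + (1 - a_l) * w_l

    loss_k = expectation(lambda w: (msg_k(w) - w_k) ** 2)       # E[(m^k - w^k)^2]
    out_k = gamma * expectation(lambda w: (msg_k(w) - w) ** 2)   # expected outflow rate of k
    out_l = gamma * expectation(lambda w: (msg_l(w) - w) ** 2)   # expected outflow rate of l

    total, x = 0.0, x0                       # x is the expected share of sender k
    for t in range(horizon):
        total += delta ** t * x * (1 - lam_k * loss_k)   # expected period-t utility
        x = x - x * out_k + (1 - x) * out_l               # expected version of rule (2)
    return total


params = dict(x0=0.4, w_k=0.5, w_l=0.25, lam_k=0.5, delta=0.9, gamma=0.5)
print(discounted_utility(a_k=1.0, a_l=1.0, **params))
print(discounted_utility(a_k=1.0, a_l=0.5, **params))   # rival lies: k's value rises
```

The second call reflects the observation in the Introduction that a sender benefits from his competitor’s lies.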

Our solution concept is MPE, which is a subgame perfect equilibrium of the game in which each sender k uses a Markov messaging strategy. All of the above aspects are common knowledge among senders.

4 Main results

This section introduces our main findings and accompanying discussions. Given Markov strategies, agent k’s expected utility (3) takes the recursive form (note that we omit time indices in what follows),

where we substituted (1) for the current-period utility \(u^{k}(\cdot )\) and used (2) to compute the next-period audience.

As mentioned in the Introduction, sender k’s current payoff decreases in the distance between the message \(m^{k}\) and his favoured state \(\omega ^{k}\). His future audience decreases in the discrepancy between the message \(m^{k}\) and the realized state \(\omega\), due to the outflow of k’s audience. Therefore, a crucial role in our analysis will be played by the expected rate of utility loss,

$$\begin{aligned} l^{k}(m^{k})=E\left[ (m^{k}(\omega )-\omega ^{k})^{2}\right] =\int _{0}^{1}(m^{k}(\omega )-\omega ^{k})^{2}\phi (\omega )\,d\omega , \end{aligned}$$

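and the expected rate of audience outflow,

$$\begin{aligned} o^{k}(m^{k})=\gamma E\left[ (m^{k}(\omega )-\omega )^{2}\right] =\gamma \int _{0}^{1}(m^{k}(\omega )-\omega )^{2}\phi (\omega )\,d\omega . \end{aligned}$$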

When endowed with the audience \(x^{k}\) at a particular date t, sender k expects the period-t payoff \(x^{k}(1-\lambda ^{k}l^{k}(m^{k}))\) and the audience outflow \(x^{k}o^{k}(m^{k})\) at the end of period t. Note that neither expression depends on the messaging rule of the opponent. In equilibrium, both expressions depend on the expected squared deviation of the current state from the preferred state,

$$\begin{aligned} \theta (\omega ^{k})=E\left[ (\omega -\omega ^{k})^{2}\right] , \end{aligned}$$

which decomposes into two parts. The first is the squared difference between the preferred and the expected state (the ex-ante bias \(\left( \omega ^{k}-E[\omega ]\right) ^{2}\)). The second is the variance \(Var(\omega )\) of the state distribution. That is, \(\theta (\omega ^{k})=\left( \omega ^{k}-E[\omega ]\right) ^{2}+Var(\omega )\). Clearly, \(\theta (\omega ^{k})\) increases when the ex-ante bias and/or the uncertainty (variance) increases.
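The decomposition can be checked with a short Python sketch. As in Figure 1, the sketch assumes that the state is Uniform[0,1], so that \(E[\omega ]=1/2\) and \(Var(\omega )=1/12\). The function `theta` below is a numerical stand-in computed by the midpoint rule, not a closed form from the paper.

```python
def theta(w_fav, n=100_000):
    """Midpoint-rule value of E[(omega - w_fav)^2] for omega ~ Uniform[0, 1]."""
    h = 1.0 / n
    return sum(((i + 0.5) * h - w_fav) ** 2 for i in range(n)) * h


MEAN, VAR = 0.5, 1.0 / 12.0      # first two moments of Uniform[0, 1]

for w in (0.0, 0.25, 0.5, 1.0):
    bias = (w - MEAN) ** 2        # ex-ante bias of a sender with favoured state w
    print(f"w={w}: theta={theta(w):.8f}, bias + variance={bias + VAR:.8f}")
```

Even an ex-ante unbiased sender (\(w=1/2\)) has \(\theta =Var(\omega )>0\).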

Although each sender is allowed to condition on his audience, the current state and past play, there exists an MPE with strikingly simple strategies: a sender’s period-t message is a convex combination of the realized period-t state and his favoured state. The weight placed on the realized state (i.e., the sender’s truthfulness) in this equilibrium is a constant that depends only on the parameters of the game. This constant is computed by simultaneously minimizing the expected rate of utility loss and the expected rate of audience outflow under the equilibrium strategy. Equilibrium messages therefore vary continuously with the state.

Proposition 1

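Assuming \(0<\delta \gamma <1\), there is an MPE in which player \(k\in \{1,2\}\) uses the message function

$$\begin{aligned} {\widetilde{m}}^{k}(\omega )={\widetilde{a}}^{k}\omega +(1-{\widetilde{a}}^{k})\omega ^{k}, \end{aligned}$$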

where \(({\widetilde{a}}^{k},{\widetilde{a}}^{l})\in [0,1]^{2}\) is the unique maximizer of,

We argue that this equilibrium is focal due to its simplicity but also because it is unique if the strategy spaces of senders are restricted to Markov strategies that condition on the current state only. This is shown in our next result.

Proposition 2

The MPE in Proposition 1 is unique if the strategy space of sender \(k\in \{1,2\}\) is restricted to Markov strategies \(m^{k}:[0,1]\rightarrow [0,1]\) that map the realized state \(\omega\) into a message \(m^{k}(\omega )\).

For the message function \({\widetilde{m}}^{k}\), the expected rate of utility loss and the expected rate of audience outflow simplify to

$$\begin{aligned} l^{k}({\widetilde{m}}^{k})=({\widetilde{a}}^{k})^{2}\theta (\omega ^{k})\quad \text {and}\quad o^{k}({\widetilde{m}}^{k})=\gamma (1-{\widetilde{a}}^{k})^{2}\theta (\omega ^{k}), \end{aligned}$$

respectively. In the simple equilibrium that we focus on, players choose the vector \(({\widetilde{a}}^{k},{\widetilde{a}}^{l})\) that maximizes \(P(a^{k},a^{l})\). This implies that player k minimizes both the utility loss \(l^{k}({\widetilde{m}}^{k})\) in the numerator and the audience outflow \(o^{k}({\widetilde{m}}^{k})\) in the denominator of (9).
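Both simplifications follow from two identities: \({\widetilde{m}}^{k}(\omega )-\omega ^{k}={\widetilde{a}}^{k}(\omega -\omega ^{k})\) and \({\widetilde{m}}^{k}(\omega )-\omega =(1-{\widetilde{a}}^{k})(\omega ^{k}-\omega )\). They therefore hold for any state density. The Python sketch below checks them numerically. It treats the expected outflow rate as \(\gamma\) times the expected squared lie. It uses the density \(\phi (\omega )=6\omega (1-\omega )\) purely as a hypothetical example, and the parameter values are also illustrative.

```python
def expect(f, density, n=20_000):
    """Midpoint-rule approximation of the integral of f * density over [0, 1]."""
    h = 1.0 / n
    return sum(f((i + 0.5) * h) * density((i + 0.5) * h) for i in range(n)) * h


def phi(w):
    """Hypothetical example density on [0, 1] (Beta(2, 2): mean 1/2, variance 1/20)."""
    return 6.0 * w * (1.0 - w)


a, w_k, gamma = 0.6, 0.2, 0.3        # illustrative truthfulness, favoured state, gamma


def m(w):
    """Linear message with weight a on the realized state."""
    return a * w + (1 - a) * w_k


loss = expect(lambda w: (m(w) - w_k) ** 2, phi)             # l^k for the linear rule
outflow = gamma * expect(lambda w: (m(w) - w) ** 2, phi)    # o^k for the linear rule
th = expect(lambda w: (w - w_k) ** 2, phi)                  # theta(w_k)

print(loss, a ** 2 * th)
print(outflow, gamma * (1 - a) ** 2 * th)
```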

In this equilibrium, k’s choice boils down to picking \({\widetilde{a}}^{k}\), which we will henceforth refer to as k’s (messaging) strategy. As \({\widetilde{a}}^{k}\) is the weight of the realized state in k’s messages, it measures the truthfulness of reporting. In particular, \({\widetilde{a}}^{k}=0\) corresponds to extremely biased and \({\widetilde{a}}^{k}=1\) to perfectly truthful messages. Note that sender k reports truthfully whenever \(\omega =\omega ^{k}\).

We now comment on the fact that a player’s audience share does not affect his messaging incentives in the \(({\widetilde{m}}^{k},{\widetilde{m}}^{l})\) equilibrium. This property underpins the fact that the equilibrium is subgame perfect. The intuition follows from the one-shot deviation principle. In the proof of Proposition 1 (see equation (25) in the Appendix), we show the following. After any history that ends with the audience \(x^{k}\), sender k chooses a message m such that

$$\begin{aligned} \frac{du^{k}(x^{k},m)}{dm}+\delta \frac{d{\widetilde{v}}^{k}(z^{k})}{dz^{k}}\frac{dz^{k}}{dm}=0, \end{aligned}\tag{10}$$

where the current period utility \(u^{k}(x^{k},m)\) is defined in (1), the next period audience \(z^{k}\) is computed from \(x^{k}\) by the updating rule (2) and \({\widetilde{v}}^{k}(z^{k})\) is k’s value function at the beginning of next period when senders play \(({\widetilde{m}}^{k},{\widetilde{m}} ^{l})\) from then on. Note that \(\frac{d{\widetilde{v}}^{k}(z^{k})}{dz^{k}}\) does not depend on \(x^{k}\), as can be seen from considering the closed form expressions (24) and (15) in the Appendix. Instead, \(\frac{du^{k}(x^{k},m)}{dm}\) and \(\frac{dz^{k}}{dm}\) are linear functions of \(x^{k}\). It follows that \(x^{k}\) cancels out of the above equality (10), which means that \(x^{k}\) does not affect sender k’s one-shot deviation incentives.
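The cancellation can be seen in a minimal Python sketch of the one-shot deviation problem. For illustration only, the sketch assumes that the continuation value has a constant positive slope c in next period's audience. This slope is a hypothetical stand-in for \(d{\widetilde{v}}^{k}/dz^{k}\) and is called `slope` in the code. The sketch maximizes \(u^{k}(x^{k},m)+\delta c\,z^{k}(m)\) by grid search for several audience levels. Under this assumption the first-order condition gives \(m^{*}=(\lambda ^{k}\omega ^{k}+\delta \gamma c\,\omega )/(\lambda ^{k}+\delta \gamma c)\). This expression does not involve \(x^{k}\) and is itself a convex combination of the favoured and realized states.

```python
def best_message(x, omega, w_k, m_l, lam, delta, gamma, slope, grid=20_001):
    """Grid search over m in [0, 1] for the max of u^k(x, m) + delta * slope * z(m).

    `slope` is a hypothetical constant marginal continuation value of audience.
    """
    best_m, best_val = 0.0, float("-inf")
    for i in range(grid):
        m = i / (grid - 1)
        u = x - x * lam * (m - w_k) ** 2                       # current utility, as in (1)
        z = (x - x * gamma * (m - omega) ** 2
             + (1 - x) * gamma * (m_l - omega) ** 2)           # next audience, as in (2)
        val = u + delta * slope * z
        if val > best_val:
            best_m, best_val = m, val
    return best_m


params = dict(omega=0.8, w_k=0.2, m_l=0.5, lam=0.5, delta=0.9, gamma=0.5, slope=2.0)
k = params["delta"] * params["gamma"] * params["slope"]
closed_form = (params["lam"] * params["w_k"] + k * params["omega"]) / (params["lam"] + k)

for x in (0.1, 0.5, 0.9):
    print(x, best_message(x, **params), closed_form)   # same maximizer for every x
```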

4.1 Comparative statics

In the proof of Proposition 1, we show that the maximizer \(({\widetilde{a}}^{k},{\widetilde{a}}^{l})\) of the function \(P(\cdot )\) in (9) satisfies the equation,

This equation implicitly defines a strictly increasing continuous function \({\widetilde{a}}^{k}=\eta ({\widetilde{a}}^{l})\) such that \(\eta (0)=0\) and \(\eta (1)=1\). An increase in \({\widetilde{a}}^{l}\) is then only compatible with MPE when \({\widetilde{a}}^{k}\) increases as well. In other words, \({\widetilde{a}}^{k}\) and \({\widetilde{a}}^{l}\) are strategic complements. The idea is that more lying by l effectively reduces the cost of lying for k, which consists of the resulting future loss of audience. When k lies in period t, k mechanically loses a fraction of his audience to l in period \(t+1\). However, when l lies in period \(t+1\), k acquires a fraction of l’s period-\((t+1)\) audience at \(t+2\). This effectively recovers some of the audience k lost in period \(t+1\). Thus, the more l lies, the smaller the net future loss of audience associated with a fixed lie of k, and the higher k’s incentive to lie at t. In particular, false claims by competing parties partly cancel out, in proportion to the parties’ respective audience shares and (squared) deviations from the truth.
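The mechanism can be traced in a minimal Python sketch that applies rule (2) twice. For illustration, it assumes that shares start equal, that k lies only in period t and that l lies only in period \(t+1\). The squared lies and \(\gamma\) are hypothetical values.

```python
def next_share(x_k, sq_lie_k, sq_lie_l, gamma):
    """Rule (2) with squared lies sq_lie_j = (m^j - omega)^2 as inputs."""
    return x_k - x_k * gamma * sq_lie_k + (1 - x_k) * gamma * sq_lie_l


GAMMA, X_T = 0.5, 0.5
SQ_LIE_K = 0.25               # k's fixed lie in period t; l is truthful in period t


def net_loss_of_k(sq_lie_l):
    """k's audience lost between t and t+2 when l lies (only) in period t+1."""
    x_next = next_share(X_T, SQ_LIE_K, 0.0, GAMMA)        # k lies at t
    x_after = next_share(x_next, 0.0, sq_lie_l, GAMMA)    # l lies at t+1
    return X_T - x_after


for sq_lie_l in (0.0, 0.1, 0.25):
    print(sq_lie_l, net_loss_of_k(sq_lie_l))
# The net cost of k's lie falls as l lies more (it even turns negative here).
```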

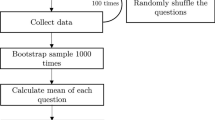

The shape of \(\eta ({\widetilde{a}}^{l})\) depends on the ex-ante biases \(\left( \omega ^{k}-E[\omega ]\right) ^{2}\), the ideological commitments and the variance of the state distribution, but not on \(\delta\) or \(\gamma\). To find the maximizer of \(P(\cdot )\) on \(\eta (\cdot )\) for particular values \(0<\delta ,\gamma <1\), we derive in the proof of Lemma 3 the formula for the best response function \(\beta ^{k}(a^{l})\) of player k to his opponent's strategy \(a^{l}\). We show that \(\beta ^{k}(\cdot ,\pi )\) intersects \(\eta (\cdot )\) only once and that their intersection uniquely maximizes \(P(\cdot )\). It turns out that \(\beta ^{k}(a^{l})\) depends on \(\delta\) and \(\gamma\) only via the “cost of lying” parameter \(\pi =\delta \gamma /(1-\delta )\). As illustrated in Figure 1, the best response shifts upwards when this parameter increases, i.e., when lying becomes more costly. Since \(\eta (\cdot )\) is increasing, \({\widetilde{a}}^{k}\) and \({\widetilde{a}}^{l}\) therefore move upwards along \(\eta (\cdot )\) when the cost of lying \(\pi\) increases, i.e., reports become more truthful.

Figure 1. Functions \(\eta (\cdot )\) (continuous line) and \(\beta ^{k}(\cdot )\) for \(\pi =3\) (dashed line) and for \(\pi =1\) (dotted line) when \(\omega ^{k}=\lambda ^{k}=1/2\), \(\omega ^{l}=\lambda ^{l}=1/4\), and \(\phi\) is the Uniform[0,1] PDF with \(E[\omega ]=1/2\) and \(Var(\omega )=1/12\)

Although the maximizer of (9) has no simple explicit form, we are able to perform the comparative statics analysis summarized in our next result.

Proposition 3

The equilibrium message function \(m^{k}(\omega )={\widetilde{a}}^{k}\cdot \omega +(1-{\widetilde{a}}^{k})\omega ^{k}\) of sender \(k\in \{1,2\}\) satisfies the following:

1. Extreme cases: \({\widetilde{a}}^{k}=0\) iff \(\gamma \delta =0\), and \({\widetilde{a}}^{k}\rightarrow 1\) iff \(\delta \rightarrow 1\) or \(\lambda ^{k}\rightarrow 0\), for \(k\in \{1,2\}\).

2. (A)symmetric messaging strategies:

$$\begin{aligned} {\widetilde{a}}^{k}>(=){\widetilde{a}}^{l}\Leftrightarrow \theta (\omega ^{k})\lambda ^{k}<(=)\theta (\omega ^{l})\lambda ^{l}. \end{aligned}$$

3. For \(0<\delta \gamma <1\), the discount factor \(\delta\) and the verification probability \(\gamma\) influence \({\widetilde{a}}^{k}\) only via the “cost of lying” parameter \(\pi =\frac{\delta \gamma }{1-\delta }\). Specifically,

$$\begin{aligned} \frac{d{\widetilde{a}}^{k}}{d\pi }>0,\quad k\in \{1,2\},\quad \pi \in [0,\infty ). \end{aligned}$$

4. Each of the following reduces truth telling by both senders: an increase in the ex-ante bias \(\left( \omega ^{l}-E[\omega ]\right) ^{2}\) or the ideological commitment \(\lambda ^{l}\) of sender \(l\in \{1,2\}\), or an increase in the variance of the state distribution \(Var\left( \omega \right) =E[\omega ^{2}]-E[\omega ]^{2}\):

$$\begin{aligned} \frac{d{\widetilde{a}}^{k}}{d\left( \omega ^{l}-E[\omega ]\right) ^{2}}<0,\text { }\frac{d{\widetilde{a}}^{k}}{d\lambda ^{l}}<0,\text { }\frac{d{\widetilde{a}}^{k} }{dVar\left( \omega \right) }<0,\quad \forall k,l\in \{1,2\}. \end{aligned}$$

Item (1) covers extreme cases that result in either fully revealing or fully uninformative messages. In particular, when \(\delta =0\), both players always report their preferred state, independently of \(\gamma\). At the other extreme, as \(\delta \rightarrow 1\), both players’ reports become truthful for any value \(\gamma >0\). Hence, for extreme values of \(\delta\), the level of transparency (verification probability) \(\gamma\) is irrelevant. A captive audience with scarce access to direct information on the state of the world (low \(\gamma\)) will still enjoy (nearly) truthful reporting when senders assign high weights to future profits. Conversely, a lie-sensitive audience that very likely observes the state of the world (high \(\gamma\)) will face very biased reports when senders are extremely myopic. Moreover, \({\widetilde{a}}^{k}\) also converges to 1 when \(\lambda ^{k}\rightarrow 0\). In other words, truthful reporting is guaranteed only by an ideologically uncommitted (but possibly biased) sender or by a sufficiently patient one.

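Item (2) specifies the condition for (a)symmetric reporting strategies. This condition reveals an interesting relationship between biases and ideological commitments. In particular, both senders use symmetric messaging functions, i.e., \({\widetilde{a}}^{1}={\widetilde{a}}^{2}\), when

$$\begin{aligned} \left[ \left( \omega ^{1}-E[\omega ]\right) ^{2}+Var(\omega )\right] \lambda ^{1}=\left[ \left( \omega ^{2}-E[\omega ]\right) ^{2}+Var(\omega )\right] \lambda ^{2}. \end{aligned}\tag{12}$$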

When (12) holds, the discount factor and the verification probability influence only the level of truth-telling by both senders but cannot break the symmetry of their reports.
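As an illustration of Item (2), the Python sketch below compares \(\theta (\omega ^{k})\lambda ^{k}\) with \(\theta (\omega ^{l})\lambda ^{l}\) in exact rational arithmetic, using the parameter values of Figure 1. It assumes the uniform state distribution used there, so that \(\theta (w)=(w-1/2)^{2}+1/12\). The sketch only applies the stated criterion; it does not compute the equilibrium weights themselves. The function names are illustrative.

```python
from fractions import Fraction as F


def theta_uniform(w):
    """E[(omega - w)^2] for omega ~ Uniform[0, 1]: (w - 1/2)^2 + 1/12."""
    return (w - F(1, 2)) ** 2 + F(1, 12)


def compare_truthfulness(w_k, lam_k, w_l, lam_l):
    """'>' if a~k > a~l, '=' if equal, '<' otherwise, by Item (2) of Proposition 3."""
    s_k = theta_uniform(w_k) * lam_k
    s_l = theta_uniform(w_l) * lam_l
    if s_k < s_l:
        return ">"
    return "=" if s_k == s_l else "<"


# Figure 1: w_k = lam_k = 1/2 and w_l = lam_l = 1/4.
print(theta_uniform(F(1, 2)) * F(1, 2), theta_uniform(F(1, 4)) * F(1, 4))  # 1/24 7/192
print(compare_truthfulness(F(1, 2), F(1, 2), F(1, 4), F(1, 4)))          # <
# Lowering k's commitment to 7/16 makes (12) hold, so reports are symmetric.
print(compare_truthfulness(F(1, 2), F(7, 16), F(1, 4), F(1, 4)))         # =
```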

Item (3) reveals, similarly to Item (1), an asymmetric impact of the discount factor \(\delta\) and the verification probability \(\gamma\) on equilibrium strategies. For example, the “cost of lying” parameter \(\pi =\frac{\delta \gamma }{1-\delta }\) cannot exceed \(\frac{\delta }{1-\delta }\) for any value of \(\gamma\). By contrast, for any \(\gamma >0\), this cost has no upper bound and goes to infinity as \(\delta \rightarrow 1\). Hence, for any given \(\gamma >0\) and any interior point \(({\widetilde{a}}^{1},{\widetilde{a}}^{2})\) on the \(\eta (\cdot )\) curve, there exists a \(\delta <1\) such that \(({\widetilde{a}}^{1},{\widetilde{a}}^{2})\) is an MPE. This point, however, will not be an equilibrium for any value of \(\gamma\) when \(\delta\) is sufficiently low. Generally, an increase in the discount factor \(\delta\) and/or in the verification probability \(\gamma\) leads to less bias in reporting, i.e., to an increase in both \({\widetilde{a}}^{k}\) and \({\widetilde{a}}^{l}\).
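The bound and its disappearance in the limit can be reproduced with a few lines of Python. The helper `delta_for` is an illustrative addition, not part of the paper. It inverts \(\pi =\delta \gamma /(1-\delta )\) to \(\delta =\pi /(\pi +\gamma )\), which makes the existence claim concrete: once \(\gamma >0\) is fixed, any target cost of lying is reached with some \(\delta <1\).

```python
def cost_of_lying(delta, gamma):
    """pi = delta * gamma / (1 - delta)."""
    if not (0.0 <= delta < 1.0 and 0.0 < gamma < 1.0):
        raise ValueError("need 0 <= delta < 1 and 0 < gamma < 1")
    return delta * gamma / (1.0 - delta)


def delta_for(pi, gamma):
    """Discount factor delta < 1 that yields cost of lying pi for a given gamma."""
    return pi / (pi + gamma)


delta = 0.5
bound = delta / (1 - delta)
print([cost_of_lying(delta, g) for g in (0.1, 0.5, 0.99)], "bound:", bound)
print([cost_of_lying(d, 0.1) for d in (0.9, 0.99, 0.999)])   # grows without bound
print(delta_for(5.0, 0.1))                                  # delta < 1 giving pi = 5
```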

Item (4) states that both reports become less truthful when one of the following increases:

- sender l’s ex-ante bias \(\left( \omega ^{l}-E[\omega ]\right) ^{2}\);
- sender l’s ideological commitment \(\lambda ^{l}\);
- the uncertainty of the state distribution, as measured by \(Var(\omega )\).

Therefore, sender l lies more (\({\widetilde{a}}^{l}\) decreases) when his ex-ante bias and/or his ideological commitment increase but also when there is more uncertainty about the state. As \({\widetilde{a}}^{l}\) and \({\widetilde{a}} ^{k}\) are strategic complements, this also induces more lying by k. Recall that the key intuition is that l’s lying reduces the expected cost of lying for k in terms of lost audience.

Note that senders distort their messages even if they are both ex-ante unbiased, i.e., even if \(\omega ^{1}=\omega ^{2}=E[\omega ]\). Clearly, ex-ante unbiased players have an ex-post incentive to lie which increases in the divergence between the realized state \(\omega\) and \(E[\omega ]\). For ex-ante unbiased players, the expected ex-post incentive to lie thus increases in \(Var(\omega )\). An intuition for the role of the key statistics and parameters can be gained by considering the expected period-t payoff (1) to player k, who holds an exogenous market share of \(x^{k}\). Under the equilibrium messaging rule \({\widetilde{a}}^{k}\omega +(1-{\widetilde{a}}^{k})\omega ^{k}\), this payoff simplifies to

Note that the derivative of the above w.r.t. \({\widetilde{a}}^{k}\) is negative and decreasing in the exogenous quantities \(\lambda ^{k}\), \(\left( \omega ^{k}-E[\omega ]\right) ^{2}\) and \(Var(\omega )\). Consequently, sender k will decrease \({\widetilde{a}}^{k}\) (i.e., distort his messages more) to offset an increase in one of these three exogenous quantities.Footnote 6
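The last two quantities enter the Appendix jointly through \(\theta (\omega ^{k})=\int (\omega -\omega ^{k})^{2}d\phi (\omega )\), which, by the usual bias–variance decomposition, equals \((\omega ^{k}-E[\omega ])^{2}+Var(\omega )\). Under a messaging rule \(a\omega +(1-a)\omega ^{k}\), the expected squared distance of the message from k’s ideal point is \(a^{2}\theta (\omega ^{k})\) and from the state is \((1-a)^{2}\theta (\omega ^{k})\). The Python sketch below checks these identities numerically; the uniform state distribution (so \(E[\omega ]=1/2\), \(Var(\omega )=1/12\)) and the values of \(\omega ^{k}\) and a are illustrative assumptions, not taken from the paper.

```python
import math

N = 20_000  # midpoint-rule cells on [0, 1]


def expect(f) -> float:
    """Approximate E[f(omega)] for omega ~ Uniform[0, 1] with the midpoint rule."""
    h = 1.0 / N
    return h * math.fsum(f((i + 0.5) * h) for i in range(N))


def theta(w_k: float) -> float:
    """E[(omega - w_k)^2]: expected squared distance of the state from k's ideal point."""
    return expect(lambda w: (w - w_k) ** 2)


def message(w: float, a: float, w_k: float) -> float:
    """Messaging rule a*omega + (1 - a)*omega^k."""
    return a * w + (1 - a) * w_k


mean = expect(lambda w: w)
var = expect(lambda w: (w - mean) ** 2)

w_k, a = 0.2, 0.7  # hypothetical ideal point and weight on the truth
dist_to_ideal = expect(lambda w: (message(w, a, w_k) - w_k) ** 2)
dist_to_state = expect(lambda w: (message(w, a, w_k) - w) ** 2)

print(theta(w_k), (w_k - mean) ** 2 + var)       # bias^2 + variance decomposition
print(dist_to_ideal, a ** 2 * theta(w_k))        # message vs ideal point
print(dist_to_state, (1 - a) ** 2 * theta(w_k))  # message vs state
```

The two distance identities are exact algebra (\(m-\omega ^{k}=a(\omega -\omega ^{k})\) and \(m-\omega =(1-a)(\omega ^{k}-\omega )\)), so they hold for any state distribution, not only the uniform one used here.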

We conclude with some observations on the role of polarization in this model. It is often argued that polarization is a serious threat to modern societies. In the context of this work, we can analyse its impact on reporting policies. Polarization in our framework can be expressed as the distance between individual preferred states, \(|\omega ^{k}-\omega ^{l}|\); recall that \((\omega ^{k}-E[\omega ])^{2}\) is the ex-ante bias of k. In order to disentangle the roles of polarization and ex-ante bias in our model, we consider two cases:

1. There is a “left” and a “right” sender, \(\omega ^{k}\le E[\omega ]\le \omega ^{l}\).
2. There are two “left” senders, \(\omega ^{k}\le \omega ^{l}\le E[\omega ]\) (the case of two “right” senders, \(\omega ^{k}\ge \omega ^{l}\ge E[\omega ]\), is analogous).

In the first case, rising polarization is equivalent to an increase in the ex-ante bias of at least one sender. By Proposition 3, this will lead to a larger bias in the reports of both senders. Hence, in this case, increasing polarization and ex-ante bias go hand in hand and have a negative effect on the quality of messages.

The analysis of the second situation is more subtle and requires distinguishing between two subcases. Depending on the subcase considered, increasing polarization is compatible with less or more ex-ante bias, which leads, respectively, to a smaller or a larger distortion in both senders’ reports. The first subcase is when \(\omega ^{l}\) moves closer to \(E[\omega ]\), thereby at the same time increasing polarization and making sender l less ex-ante biased. The second subcase is when \(\omega ^{k}\) moves closer to 0, thereby at the same time increasing polarization and making sender k more ex-ante biased. In either subcase, Item (4) of Proposition 3 makes it clear that ex-ante biases, and not polarization per se, influence the truthfulness of senders.
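The two subcases can be made concrete with a few numbers. The sketch below uses hypothetical values \(E[\omega ]=0.5\), \(\omega ^{k}=0.1\), \(\omega ^{l}=0.3\) and shows that the same rise in polarization (from 0.2 to 0.3) can come with a lower ex-ante bias for l (subcase 1) or a higher one for k (subcase 2); by Item (4) of Proposition 3, it is the direction of the change in biases that governs truthfulness.

```python
def polarization(w_k: float, w_l: float) -> float:
    """Distance between the two senders' preferred states, |w_k - w_l|."""
    return abs(w_k - w_l)


def ex_ante_bias(w: float, mean: float) -> float:
    """Ex-ante bias (w - E[omega])^2 of a sender with preferred state w."""
    return (w - mean) ** 2


MEAN = 0.5         # hypothetical E[omega]
base = (0.1, 0.3)  # two "left" senders: w_k <= w_l <= E[omega]
subcase1 = (0.1, 0.4)  # w_l moves toward E[omega]
subcase2 = (0.0, 0.3)  # w_k moves toward 0

for label, (w_k, w_l) in [("base", base), ("subcase 1", subcase1), ("subcase 2", subcase2)]:
    print(f"{label}: polarization={polarization(w_k, w_l):.2f}, "
          f"bias_k={ex_ante_bias(w_k, MEAN):.2f}, bias_l={ex_ante_bias(w_l, MEAN):.2f}")
```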

As an illustration, assume \(E[\omega ]=1/2\), \(\lambda ^{k}=\lambda ^{l}\) and consider the following two scenarios, where different levels of polarization lead to the same equilibrium outcome:

(a) \(\omega ^{k}=\omega ^{l}=c\) (polarization \(|\omega ^{k}-\omega ^{l}|=0\)),

(b) \(\omega ^{k}=1-\omega ^{l}=c<1/2\) (polarization \(|\omega ^{k}-\omega ^{l}|=1-2c\)).

In both scenarios, the equality in Proposition 3, Item (2), holds as,

It follows that both scenarios yield the same solution \(({\widetilde{a}} ^{1},{\widetilde{a}}^{2})\), which is furthermore symmetric, i.e. such that \({\widetilde{a}}^{1}={\widetilde{a}}^{2}\).
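With \(E[\omega ]=1/2\), the ex-ante biases in the two scenarios can be computed directly:

$$\begin{aligned} \text{(a)}:&\quad (\omega ^{k}-\tfrac{1}{2})^{2}=(\omega ^{l}-\tfrac{1}{2})^{2}=(c-\tfrac{1}{2})^{2},\\ \text{(b)}:&\quad (\omega ^{k}-\tfrac{1}{2})^{2}=(c-\tfrac{1}{2})^{2},\quad (\omega ^{l}-\tfrac{1}{2})^{2}=(\tfrac{1}{2}-c)^{2}=(c-\tfrac{1}{2})^{2}. \end{aligned}$$

The bias profile, and hence \(\theta (\omega ^{k})=(\omega ^{k}-E[\omega ])^{2}+Var(\omega )\) for each sender, is therefore identical across the two scenarios, and \(\lambda ^{k}=\lambda ^{l}\) by assumption; only polarization differs (0 versus \(1-2c\)).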

5 Conclusion

Two ideologically motivated informed parties repeatedly face an uninformed audience. Senders simultaneously aim at influencing their audiences’ views and at maximizing the size of their audiences. As the truth (the state) is occasionally revealed and lies cause audiences to switch to the competitor, senders face a trade-off between propagating their favoured views, possibly by lying, and maintaining a high audience. We fully characterize a focal MPE of this game, in which players condition their messages only on the realised state (and not on their current audience share). We study how key exogenous parameters, namely the discount factor, the verification probability, and the senders’ ideological commitments and ex-ante biases, affect equilibrium strategies. One of our key findings is that increasing the bias and/or ideological commitment of one player lowers the truthfulness of both senders.

An interesting direction worth exploring would be to assume Bayesian updating by audiences in every period (as opposed to the face-value interpretation assumed here), while maintaining our modelling of audience flows as a function of the distance between messages and the state.

Notes

The NYT report continues: “The economy has been a picture of health, expanding at a 3.5 percent annual pace during the third quarter and driving the unemployment rate to 3.7 percent, the lowest level in almost half a century. But General Motors’ plan to cut 14,000 jobs and shutter five factories reinforces other recent indications that the better part of the expansion is now in the rearview mirror.” Appelbaum, B., New York Times, November 28, 2018.

The FB report continues: “Average hourly earnings meanwhile rose by five cents to $27.30, or 3.1 percent year-over-year, the highest it’s been since the Great Recession. ‘We saw better-than-expected payroll gains at 250,000 jobs, surging wages and increased labor force participation,’ Mark Hamrick, a senior economic analyst for Bankrate.com said. ‘That’s a kind of an economic trinity that we haven’t often seen during this economic expansion.’” Henney, M., Fox Business, November 2, 2018.

Another strand of the static multi-expert literature examines games of disclosure (persuasion) with multiple senders; it is less closely related to our analysis. Milgrom and Roberts (1986) show that competition among interested parties with opposing biases might be helpful when the decision maker is uninformed or strategically unsophisticated (see also Shin (1994), Bhattacharya and Mukherjee (2013), Bhattacharya et al. (2018)).

\({\widetilde{a}}^{k}\) is positive in equilibrium because sender k, in maximising his expected stream of payoffs as given by the full expression (4), takes into account the negative effect of decreasing \({\widetilde{a}}^{k}\) on his expected market share.

We used Mathematica 11.3 commands FullSimplify, Resolve, Exists and FindInstance to obtain the derivatives and their signs.

References

Alonso R, Dessein W, Matouschek N (2008) When does coordination require centralization? Am Econ Rev 98(1):145–179

Ambrus A, Lu SE (2014) Almost fully revealing cheap talk with imperfectly informed senders. Games Econ Behav 88:174–189

Aumann RJ, Hart S (2003) Long cheap talk. Econometrica 71(6):1619–1660

Austen-Smith D (1993) Interested experts and policy advice: multiple referrals under open rule. Games Econ Behav 5(1):3–43

Battaglini M (2002) Multiple referrals and multidimensional cheap talk. Econometrica 70(4):1379–1401

Battaglini M (2004) Policy advice with imperfectly informed experts. Adv Theor Econ 4(1)

Benabou R, Laroque G (1992) Using privileged information to manipulate markets: insiders, gurus, and credibility. Q J Econ 107(3):921–958

Bhattacharya S, Mukherjee A (2013) Strategic information revelation when experts compete to influence. RAND J Econ 44(3):522–544

Bhattacharya S, Goltsman M, Mukherjee A (2018) On the optimality of diverse expert panels in persuasion games. Games Econ Behav 107:345–363

Board S, Meyer-ter-Vehn M (2013) Reputation for quality. Econometrica 81(6):2381–2462

Cabral LM (2005) The economics of trust and reputation: a primer. New York University and CEPR

Ely JC, Välimäki J (2003) Bad reputation. Q J Econ 118(3):785–814

Forges F, Koessler F (2008) Long persuasion games. J Econ Theory 143(1):1–35

Gilligan TW, Krehbiel K (1989) Asymmetric information and legislative rules with a heterogeneous committee. Am J Polit Sci pp 459–490

Golosov M, Skreta V, Tsyvinski A, Wilson A (2014) Dynamic strategic information transmission. J Econ Theory 151:304–341

Kartik N, Ottaviani M, Squintani F (2007) Credulity, lies, and costly talk. J Econ Theory 134(1):93–116

Kartik N (2009) Strategic communication with lying costs. Rev Econ Stud 76(4):1359–1395

Krishna V, Morgan J (2001) Asymmetric information and legislative rules: Some amendments. Am Polit Sci Rev 95(2):435–452

Krishna V, Morgan J (2001) A model of expertise. Q J Econ 116(2):747–775

Krishna V, Morgan J (2004) The art of conversation: eliciting information from experts through multi-stage communication. J Econ Theory 117(2):147–179

Li Z, Rantakari H, Yang H (2016) Competitive cheap talk. Games Econ Behav 96:65–89

Margaria C, Smolin A (2018) Dynamic communication with biased senders. Games Econ Behav 110:330–339

McGee A, Yang H (2013) Cheap talk with two senders and complementary information. Games Econ Behav 79:181–191

Milgrom P, Roberts J (1986) Relying on the information of interested parties. RAND J Econ pp 18–32

Morris S (2001) Political correctness. J Polit Econ 109(2):231–265

Ottaviani M, Sørensen P (2001) Information aggregation in debate: who should speak first? J Public Econ 81(3):393–421

Ottaviani M, Squintani F (2006) Naive audience and communication bias. Int J Game Theory 35(1):129–150

Renault J, Solan E, Vieille N (2013) Dynamic sender-receiver games. J Econ Theory 148(2):502–534

Shin HS (1994) The burden of proof in a game of persuasion. J Econ Theory 64(1):253–264

Sobel J (1985) A theory of credibility. Rev Econ Stud 52(4):557–573


Appendix


Unless stated otherwise, all results are shown for the parameter values in the open unit interval:

Lemma 1

For any \(\omega ^{k}\in [0,1]\) and continuous PDF \(\phi (\omega )\) with full support on the unit interval,

Proof: The first inequality is clear. For the second, we note that given the mean \(e=E[\omega ]\), the variance \(Var(\omega )\) is maximized under a distribution that assigns probability e to 1 and \(1-e\) to 0. On the other hand, \(\left( \omega ^{k}-E[\omega ]\right) ^{2}\) attains the highest value for \(\omega ^{k}=0\) (\(\omega ^{k}=1\)) when \(E[\omega ]=e\ge 1/2\) (\(E[\omega ]=e\le 1/2\)). In the former case, we have

where the r.h.s. attains the global maximum of one for \(e=1\). For any continuous PDF \(\phi (\omega )\), the maximum of \(\left( \omega ^{k} -E[\omega ]\right) ^{2}+Var(\omega )\) must thus be less than one. A similar argument applies to the case \(\omega ^{k}=1\) (\(e\le 1/2\)).
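The two bounds used in this proof can be made explicit. Since \(\omega ^{2}\le \omega\) on [0, 1], with equality only at 0 and 1, the variance bound holds for any distribution with mean \(e=E[\omega ]\), and in the case \(\omega ^{k}=0\) (\(e\ge 1/2\)) it gives

$$\begin{aligned} Var(\omega )&=E[\omega ^{2}]-e^{2}\le e-e^{2}=e(1-e),\\ \left( 0-e\right) ^{2}+Var(\omega )&\le e^{2}+e(1-e)=e\le 1. \end{aligned}$$

Under a continuous PDF the event \(\omega \in \{0,1\}\) has probability zero, so the first inequality is strict, which yields \(\left( \omega ^{k}-E[\omega ]\right) ^{2}+Var(\omega )<1\) in this case.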

Lemma 2

Under the law of motion (2), it holds for \(t\ge 1\) and \(k\in \{1,2\}\) that \(x_{t}^{k}\in (0,1)\) and \(x_{t}^{1}+x_{t}^{2}=1\) when \(x_{0}^{k}\in (0,1)\) and \(x_{0}^{1}+x_{0}^{2}=1\).

Proof: The law of motion (2) can be written as,

As \(\gamma \in (0,1)\) and \(m_{t}^{k}\), \(m_{t}^{l}\) and \(\omega _{t}\) all lie in the unit interval, it follows that the maximum (minimum) of the r.h.s. of (13) is less than 1 (greater than 0) when \(x_{t}^{k}\in (0,1)\) and, hence, \(x_{t+1}^{k}\) lies in the open interval (0, 1). On the other hand, substituting from (13) and rearranging terms, one obtains,

which implies \(x_{t+1}^{1}+x_{t+1}^{2}=x_{t}^{1}+x_{t}^{2}\) when \(x_{t} ^{1}+x_{t}^{2}=1\).

Lemma 3

The function \(P:R^{2}\rightarrow R\) (that is identical to the function in (9)),

where \(\mu ^{k}:R^{2}\rightarrow R\) is given by,

for \(k,l\in \{1,2\},\) \(l\ne k\) and \(\theta (\cdot )\) defined in (7) is uniquely maximized at some \(({\widetilde{a}}^{k},{\widetilde{a}}^{l})\in [0,1]^{2}\) that satisfies

Proof: We note that a finite maximum of \(P(\cdot )\) exists as \(P(a^{k},a^{l})<1/(1-\delta )\). Moreover, any maximizer must be a point in the unit square because, for any \(a^{l}\), \(P(0,a^{l})>P(a^{k},a^{l})\) when \(a^{k}<0\) and \(P(1,a^{l})>P(a^{k},a^{l})\) when \(a^{k}>1\) (similar inequalities hold for \(a^{l}\)). As \(P(\cdot )\) is differentiable, a maximizer \(({\widetilde{a}}^{k},{\widetilde{a}}^{l})\in [0,1]^{2}\) must satisfy the f.o.c. \(d\ln P(\cdot )/da^{k}=0\) and \(d\ln P(\cdot )/da^{l}=0\), which combined imply,

The last expression defines implicitly a continuous function \({\widetilde{a}} ^{k}=\eta ({\widetilde{a}}^{l})\) with the unit interval as its domain and range. Note that \(\eta (\cdot )\) does not depend on \(\delta\) and \(\gamma\). Implicit differentiation of (17) yields an explicit formula for \(\eta ^{\prime }(\cdot )\) with \(\eta ^{\prime }({\widetilde{a}}^{l})>0\). As \(\eta ({\widetilde{a}} ^{l})\) increases in \({\widetilde{a}}^{l}\), the equality (17) implies \(\eta (0)=0\) and \(\eta (1)=1\).

Moreover, for any maximizer \(({\widetilde{a}}^{k},{\widetilde{a}}^{l})\) of \(P(\cdot )\), it must hold that \({\widetilde{a}}^{k}\) maximizes \(\mu ^{k}(a^{k},{\widetilde{a}}^{l})\) w.r.t. \(a^{k}\). As in the case of \(P(\cdot )\), a finite maximum of \(\mu ^{k}(a^{k},{\widetilde{a}}^{l})\) exists and it is attained for some \({\widetilde{a}}^{k}\in [0,1]\). The f.o.c. \(d\mu ^{k}(\cdot )/da^{k}=0\), which then holds, defines the function,

Any maximizer \(({\widetilde{a}}^{k},{\widetilde{a}}^{l})\), where \({\widetilde{a}}^{k}=\beta ^{k}({\widetilde{a}}^{l},\cdot )\), is then an intersection of \(\beta ^{k}(\cdot )\) and \(\eta (\cdot )\) as illustrated in Figure 1. Given that \(P(\cdot )\) attains a maximum for any \(\pi >0\), \(\beta ^{k}(\cdot ,\pi )\) must intersect \(\eta (\cdot )\), which does not depend on \(\pi\), at least once. We can then define the function \(({\widetilde{a}}^{k}(\pi ),{\widetilde{a}}^{l}(\pi )):[0,\infty )\rightarrow [0,1]^{2}\) that maps \(\pi\) into the "highest" intersection of \(\beta ^{k}(\cdot ,\pi )\) with \(\eta (\cdot )\). By directly calculating,

we find that \(({\widetilde{a}}^{k}(\pi ),{\widetilde{a}}^{l}(\pi ))\) strictly increases in \(\pi\), \(\lim _{\pi \rightarrow 0}({\widetilde{a}}^{k}(\pi ),{\widetilde{a}}^{l}(\pi ))=(0,0)\) (note that the limit of \(\beta ^{k}(a^{l},\pi )\) as \(\pi \rightarrow 0\) is 0 for any \(a^{l}\)) and \(\lim _{\pi \rightarrow \infty }({\widetilde{a}}^{k}(\pi ),{\widetilde{a}}^{l}(\pi ))=(1,1)\). As \(({\widetilde{a}}^{k}(\pi ),{\widetilde{a}}^{l}(\pi ))\) inherits continuity in \(\pi\) from \(\beta ^{k}(\cdot ,\pi )\), it follows that any interior point on \(\eta (\cdot )\) corresponds to an intersection \(({\widetilde{a}}^{k}(\pi ),{\widetilde{a}}^{l}(\pi ))\) with \(\beta ^{k}(\cdot ,\pi )\) for some \(\pi >0\).

Now, we show that \(\beta ^{k}(\cdot ,\pi )\) intersects \(\eta (\cdot )\) only once for any \(\pi \in (0,\infty )\), i.e., that the maximizer of \(P(\cdot )\) is unique. First, we note that \(P({\widetilde{a}}^{k}(\pi ),{\widetilde{a}}^{l}(\pi ))\) strictly increases in \(\pi\). To see this, notice that \(P(a^{k},a^{l};\pi )\) increases in \(\pi\) for fixed \(a^{k},a^{l}\). Then, for any \(\pi ^{\prime }>\pi\),

where the first inequality follows because \(({\widetilde{a}}^{k}(\pi ^{\prime }),{\widetilde{a}}^{l}(\pi ^{\prime }))\) maximize \(P(a^{k},a^{l};\pi ^{\prime } )\). When \({\widetilde{a}}^{k}(\pi )\), \({\widetilde{a}}^{l}(\pi )\) and \(P({\widetilde{a}}^{k}(\pi ),{\widetilde{a}}^{l}(\pi ))\) all increase in \(\pi\), \(\beta ^{k}(\cdot ,\pi )\) cannot intersect \(\eta (\cdot )\) more than once. Otherwise, there exists \(\pi ^{\prime }\ne \pi\) such that \(({\widetilde{a}} ^{k}(\pi ),{\widetilde{a}}^{l}(\pi ))\) and \(({\widetilde{a}}^{k}(\pi ^{\prime }),{\widetilde{a}}^{l}(\pi ^{\prime }))\) are different intersection points with \(P({\widetilde{a}}^{k}(\pi ),{\widetilde{a}}^{l}(\pi ))\ne P({\widetilde{a}}^{k} (\pi ^{\prime }),{\widetilde{a}}^{l}(\pi ^{\prime }))\). This contradicts the fact that \(({\widetilde{a}}^{k}(\pi ),{\widetilde{a}}^{l}(\pi ))\) and \(({\widetilde{a}} ^{k}(\pi ^{\prime }),{\widetilde{a}}^{l}(\pi ^{\prime }))\) both maximize \(P(\cdot )\).
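For concreteness, the chain of inequalities used in this step reads, for any \(\pi ^{\prime }>\pi\), as follows; the second inequality is strict provided \(P(a^{k},a^{l};\pi )\) increases strictly in \(\pi\) for fixed \(a^{k},a^{l}\), as the conclusion of the step requires:

$$\begin{aligned} P({\widetilde{a}}^{k}(\pi ^{\prime }),{\widetilde{a}}^{l}(\pi ^{\prime });\pi ^{\prime })\ge P({\widetilde{a}}^{k}(\pi ),{\widetilde{a}}^{l}(\pi );\pi ^{\prime })>P({\widetilde{a}}^{k}(\pi ),{\widetilde{a}}^{l}(\pi );\pi ). \end{aligned}$$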

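In order to show (16), we note that \(\mu ^{k}(a^{k},a^{l})\) is a quotient of two functions of \(a^{k}\). As the derivative of a quotient \(f/g\) (with \(g\ne 0\)) vanishes exactly when \(f^{\prime }g=fg^{\prime }\), i.e., when \(f/g=f^{\prime }/g^{\prime }\) provided \(g^{\prime }\ne 0\), the f.o.c. holds when \(\mu ^{k}(a^{k},a^{l})\) is equal to the quotient of their derivatives w.r.t. \(a^{k}\),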

Proof of Proposition 1: When senders use the Markov strategies,

then we show in Proposition 2 that sender k’s expected value function takes the form,

where \(\mu ^{k}(a^{k},a^{l})\) is defined in (15). We note that in order to maximize \(v^{k}(x^{k})\), sender k need only maximize \(\mu ^{k}(a^{k},a^{l})\) w.r.t. \(a^{k}\). In Lemma 3, we showed that there is a unique maximizer \(({\widetilde{a}}^{k},{\widetilde{a}}^{l})\in [0,1]^{2}\) of the function (9) such that \({\widetilde{a}}^{k}\) maximizes \(\mu ^{k}(a^{k},{\widetilde{a}}^{l})\) w.r.t. \(a^{k}\) and \({\widetilde{a}}^{l}\) maximizes \(\mu ^{l}(a^{l},{\widetilde{a}}^{k})\) w.r.t. \(a^{l}\). Hence, \(({\widetilde{a}}^{k},{\widetilde{a}}^{l})\) defines an MPE when each sender k is restricted to a strategy of the form (20). Moreover, in Lemma 3 we also prove that,

We show now that sending the message

is an MPE strategy when each player \(k\in \{1,2\}\) chooses for each period t a messaging function \(m_{t}^{k}\) that can condition on all information available to k up to the start of date t (including \(\omega _{t}\) and \(x_{t}^{k}\)).

As an MPE is, by definition, subgame perfect, we can apply the one-shot deviation principle for infinite-horizon games with discounting. According to this principle, a strategy profile is a subgame perfect equilibrium if and only if no player has a profitable one-shot deviation in any subgame. For any history that ends with k’s audience \(x^{k}\) and state \(\omega\), we denote by \({\widetilde{v}}^{k}(x^{k})\) his expected payoff (4) when both players play the strategy profile \(({\widetilde{m}}^{k},{\widetilde{m}}^{l})\), and by \(v_{m}^{k}(x^{k})\) his expected payoff when he deviates from \(({\widetilde{m}}^{k},{\widetilde{m}}^{l})\) by sending the message m once and follows the strategy \({\widetilde{m}}^{k}\) thereafter. Then,

where the second equality follows after substitution from (1) and (21) and \(z^{k}\) results from the updating rule (2),

As \(v_{m}^{k}(x^{k})\) is strictly concave in m (\(d^{2}v_{m}^{k}(x^{k})/dm^{2}<0\)), sender k’s optimal (unrestricted) message m uniquely maximizes \(v_{m}^{k}(x^{k})\) and is given by the f.o.c.,

We note that the optimal message does not depend on the share \(x^{k}\), which cancels out in the above f.o.c. Comparison with (22) and (23) confirms that \({\widetilde{m}}^{k}={\widetilde{a}}^{k}\omega +(1-{\widetilde{a}}^{k})\omega ^{k}\) defines an MPE strategy for each player \(k\in \{1,2\}\) in the game with unrestricted strategy spaces. \(\square\)

Proof of Proposition 2: Given an infinite path \((\omega _{0},\omega _{1},...)\) of realized states, the payoff at date 0 to k under stationary message functions \((m^{k},m^{l})\) is computed by Eqs. (3) and (1),

where each \(x_{s}^{k}\), \(s>0\), is a linear function of \(x_{0}^{k}=x^{k}\) and of previous states and messages of both players by the (repeatedly applied) updating rule (2). As k’s expected payoff is, essentially, an average over paths \((\omega _{0},\omega _{1},...)\), it follows from (26) that \(v^{k}(x^{k})=v_{0}+v_{1}\cdot x^{k}\) for some expressions \(v_{0}\) and \(v_{1}\) that do not depend on \(x^{k}\) (because senders’ messages do not depend on \(x^{k}\)). We substitute this form on both sides of (4) and equate the coefficients \(v_{0}\) and \(v_{1}\). This leads to,

where

and \(o^{k}(m^{k})\) and \(l^{k}(m^{k})\) are defined in (6) and (5), respectively. In order to maximize \(v^{k}(\cdot )\) w.r.t. \(m^{k}\), sender k need only maximize \(\mu ^{k}(m^{k},m^{l})\).

By inspection of (28), we observe that the equilibrium message \(m^{k}\) must be a convex combination of the realized state and k’s ideal point, i.e., \(m^{k}(\omega )=a^{k}(\omega )\omega +(1-a^{k}(\omega ))\omega ^{k}\) for some \(a^{k}(\omega )\in [0,1]\). Otherwise, sender k could simultaneously decrease \(l^{k}(m^{k})\) and \(o^{k}(m^{k})\), thereby increasing \(\mu ^{k}(\cdot )\). For example, if \(m^{k}<\omega \le \omega ^{k}\) (\(\omega \le \omega ^{k}<m^{k}\)), this is achieved by marginally increasing (decreasing) the message \(m^{k}(\omega )\).

We show now that for any message function \(m^{k}\) of the form,

where the continuous function \(a^{k}(\omega )\) has the unit interval as its domain and range, there is a message \({\overline{m}}^{k}(\omega )=a^{k}\omega +(1-a^{k})\omega ^{k}\) such that

unless \(a^{k}(\omega )=a^{k}\).

We compute the constant \(a^{k}\) from the condition,

In light of the fact that

lies between 0 and \(\theta (\omega ^{k})= {\textstyle \int \nolimits _{0}^{1}} (\omega -\omega ^{k})^{2}d\phi (\omega )\), it holds that \(a^{k}\in [0,1]\). In order to show that \(o^{k}(m^{k})>o^{k}({\overline{m}}^{k})\) unless \(a^{k} (\omega )=a^{k}\), we first evaluate the definition (6) for \(m^{k}\) and \({\overline{m}}^{k}\),

After substituting for \(l^{k}(m^{k})\) from (31), we calculate the difference,

The sign of the last difference is the same as the sign of

where we substituted for \(a^{k}\) from (31) and from (32) and defined the PDF,

By Jensen’s inequality, the last difference in (34) is positive unless \(a^{k}(\omega )\) is constant with probability one (in which case this difference is zero). A continuous function \(a^{k}(\omega )\) is constant with probability one (under the continuous PDF \(\chi (\omega )\)) whenever it attains the same value for all \(\omega \in [0,1]\). Hence, for \({\overline{m}}^{k}\) (\(a^{k}\)) defined in (31), \(o^{k}(m^{k})>o^{k}({\overline{m}}^{k})\) unless \(a^{k}(\omega )=a^{k}\). We showed in Proposition 1 that \(a^{k}={\widetilde{a}}^{k}\) in equilibrium. \(\square\)

Proof of Proposition 3:

1. When \(\gamma \delta =0\), it is clear that \({\widetilde{a}}^{k}=0\) maximizes k’s objective function \(\mu ^{k}(a^{k},a^{l})\) defined in (15). For \(\delta \rightarrow 1\) (i.e., \(\pi =\frac{\delta \gamma }{1-\delta } \rightarrow \infty\)), we show in the proof of Lemma 3 that \(\lim _{\pi \rightarrow \infty }({\widetilde{a}}^{k}(\pi ),{\widetilde{a}}^{l}(\pi ))=(1,1)\). For \(\lambda ^{k}=0\), it is clear that \({\widetilde{a}}^{k}=1\) maximizes k’s objective function \(\mu ^{k}(a^{k},a^{l})\) defined in (15). In order to show that \({\widetilde{a}}^{k}=0\Rightarrow \gamma \delta =0\) and \({\widetilde{a}}^{k}=1\Rightarrow \lambda ^{k}=0\) or \(\delta \rightarrow 1\), we calculate the derivatives of the function \(P(\cdot )\) in (9),

$$\begin{aligned} \frac{dP(a^{k},a^{l})}{da^{k}}|_{a^{k}=0}=P_{0}\Delta >0,\quad \frac{dP(a^{k},a^{l})}{da^{k}}|_{a^{k}=1}=-P_{1}\Delta <0, \end{aligned}\tag{35}$$

where \(P_{0}\) and \(P_{1}\) are strictly positive expressions when \(0<\gamma ,\delta ,\lambda ^{k}<1\) and \(\Delta =1-\lambda ^{l}(a^{l})^{2}\theta (\omega ^{l})\). The signs of the derivatives follow because \(\lambda ^{l},a^{l}\in [0,1]\) and \(\theta (\omega ^{l})<1\) by Lemma 1, so that \(\Delta >0\). Hence, \({\widetilde{a}}^{k}\) can attain an extreme value only if one of the parameters does, i.e., only if \(\gamma \delta =0\), \(\lambda ^{k}=0\) or \(\delta \rightarrow 1\).

2. This part follows directly from the equality (17) derived in the proof of Lemma 3.

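3. It is clear from the definition of the function \(P(a^{1},a^{2})\) in (9) that its unique maximizer \(({\widetilde{a}}^{k},{\widetilde{a}}^{l})\) depends on \(0<\delta ,\gamma <1\) only via \(\pi =\frac{\delta \gamma }{1-\delta }\). In the proof of Lemma 3, we show that \(({\widetilde{a}}^{k}(\pi ),{\widetilde{a}}^{l}(\pi ))\) strictly increases in \(\pi\). Since \(\pi\) is strictly increasing in both \(\delta\) and \(\gamma\) on this domain, both \({\widetilde{a}}^{k}\) and \({\widetilde{a}}^{l}\) strictly increase in \(\delta\) and in \(\gamma\).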

4. The essence of the proof is in the evaluation of the relevant derivatives. Algebraic manipulationsFootnote 7 show that,

$$\begin{aligned} \frac{d\beta ^{k}(\cdot )}{d\theta (\omega ^{l})}<0,\quad \frac{d\beta ^{k}(\cdot )}{d\theta (\omega ^{k})}<0,\quad \frac{d\beta ^{k}(\cdot )}{d\lambda ^{k}}<0,\quad \frac{d\beta ^{k}(\cdot )}{d\lambda ^{l}}=0,\quad k\in \{1,2\}, \end{aligned}$$

for all values of the parameters \(\gamma\), \(\delta\), \(\lambda ^{1}\), \(\lambda ^{2}\), \(\omega ^{1}\) and \(\omega ^{2}\). As the adjustment (if any) of the messaging strategies of both senders goes in the same direction in response to a change in the bias or in the ideological commitment of one of them and, moreover, \(d\beta ^{k}({\widetilde{a}}^{l})/d{\widetilde{a}}^{l}>0\), the conclusions follow.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Polanski, A., Le Quement, M. The battle of opinion: dynamic information revelation by ideological senders. Int J Game Theory 52, 463–483 (2023). https://doi.org/10.1007/s00182-022-00826-z


DOI: https://doi.org/10.1007/s00182-022-00826-z