Abstract

When two agents with private information use a mechanism to determine an outcome, what happens if they are free to revise their messages and cannot commit to a mechanism? We study this problem by allowing agents to hold on to a proposed outcome in one mechanism while they play another mechanism and learn new information. A decision rule is posterior renegotiation-proof if it is posterior implementable and robust to a posterior proposal of any posterior implementable decision rule. We identify conditions under which such decision rules exist. We also show how the inability to commit to the mechanism constrains equilibrium: a posterior renegotiation-proof decision rule must be implemented with at most five messages for two agents.

Notes

See Poitevin (2000) for a discussion of the Revelation Principle in this context.

For example, this makes the first mechanism robust against the following possibility: agents play a second mechanism, and then replay the first mechanism.

Throughout the paper, we use \(\varvec{1}\{X\}\) to denote an indicator function that returns one if and only if the statement X is true.

More precisely, they disagree in the area strictly between the two curves, both are indifferent at the intersections of the two curves, only one agent is indifferent at points on either curve excluding the intersections, and both agree in the remaining area.

This may occur when an increase in \(\theta _{1}\) results in a discontinuous shift in the conditional density \(f_{1}\left( \cdot |\theta _{1}\right) \). This can lead to the possibility that \(V_{1}\left( \theta _{1};\left[ x_{2},y_{2}\right] \right) <0\) \(\forall \theta _{1}\in \left[ \underline{\theta }_{1},\theta ^{*}\right] \) and \(V_{1}\left( \theta _{1};\left[ x_{2},y_{2}\right] \right) >0\) \(\forall \theta _{1}\in \left( \theta ^{*},\overline{\theta }_{1}\right] \). I thank an anonymous referee for this point.

Their main result is stated as a necessary condition for posterior implementable rules. However, on page 88, Green and Laffont (1987) apply Brouwer’s fixed-point theorem using the functions \(h_{1}\) and \(h_{2}\) defined here. It appears that they assumed the continuity of these functions in some part of their analysis.

The formal definition of the Bayes–Nash equilibrium was presented in Green and Laffont (1987) and the earlier version of this paper. Since it does not add much to our analysis, we omit it here.

The assumption \(\Theta _{1}=\Theta _{2}=\left[ 0,1\right] \) can be relaxed at the cost of additional notation.

With \(\theta _{1}=y\), the equation becomes \(\varepsilon y-\left\{ \varepsilon a\left( 1-\frac{y}{2}\right) -\left( 1-\varepsilon \right) y\right\} -\left( 1-\varepsilon \right) a\left( 1-\frac{2y}{3}\right) =0\).
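Because the equation in this note is linear in \(y\), it can be solved in closed form. The following worked step uses only the symbols \(a\), \(\varepsilon \), and \(y\) that appear in the equation, and it assumes that the denominator is nonzero (which holds, for instance, whenever \(a\ge 0\) and \(\varepsilon \in \left[ 0,1\right] \)). Collecting the terms in \(y\) and the constant terms gives

\[
y\left[ \varepsilon +\left( 1-\varepsilon \right) +\frac{\varepsilon a}{2}+\frac{2\left( 1-\varepsilon \right) a}{3}\right] -a\left[ \varepsilon +\left( 1-\varepsilon \right) \right] =0
\quad \Longrightarrow \quad
y=\frac{a}{1+\frac{\left( 4-\varepsilon \right) a}{6}}=\frac{6a}{6+\left( 4-\varepsilon \right) a}.
\]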

This is because \(h_{1}\left( 0,1\right) \) defines \(\theta ^{d}\) and \( h_{1}\left( 1,1\right) =\left\{ \theta _{1}\in \Theta _{1}|v_{1}\left( \theta _{1},1\right) =0\right\} =0\).

In general, \(\left( \theta _{1}^{H},\theta _{2}^{H}\right) \) is characterized by two equations \(\theta _{1}^{H}=h_{1}\left( \theta _{2}^{H},1\right) \) and \(\theta _{2}^{H}=h_{2}\left( \theta _{1}^{H},1\right) \). In this symmetric example, \(h_{1}\left( x_{2},1\right) \) and \(h_{2}\left( x_{1},1\right) \) are identical and we can find \(\theta _{1}^{H}=\theta _{2}^{H}=\theta ^{H}\) by solving the single equation \( x=h_{1}\left( x,1\right) \).
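The single-equation characterization \(x=h_{1}\left( x,1\right) \) can be illustrated numerically. The sketch below is only an illustration under stated assumptions: the solver name `solve_symmetric_threshold` and the function `toy_h1` are hypothetical, and `toy_h1` is a linear stand-in chosen to satisfy the endpoint properties \(h_{1}\left( 0,1\right) =\theta ^{d}\) and \(h_{1}\left( 1,1\right) =0\), not the function \(h_{1}\) of the example. It also assumes that \(h_{1}\left( x,1\right) -x\) is continuous and changes sign on \(\left[ 0,1\right] \), so that bisection applies.

```python
from typing import Callable


def solve_symmetric_threshold(
    h1: Callable[[float, float], float],
    lo: float = 0.0,
    hi: float = 1.0,
    tol: float = 1e-10,
) -> float:
    """Find x in [lo, hi] with x = h1(x, 1) by bisection on g(x) = h1(x, 1) - x.

    Assumes g is continuous and has opposite signs at lo and hi.
    """
    def g(x: float) -> float:
        return h1(x, 1.0) - x

    g_lo, g_hi = g(lo), g(hi)
    if g_lo == 0.0:
        return lo
    if g_hi == 0.0:
        return hi
    if g_lo * g_hi > 0.0:
        raise ValueError("g(x) = h1(x, 1) - x does not change sign on [lo, hi]")

    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        g_mid = g(mid)
        if g_mid == 0.0:
            return mid
        if g_lo * g_mid < 0.0:
            hi = mid                # root lies in [lo, mid]
        else:
            lo, g_lo = mid, g_mid   # root lies in [mid, hi]
    return 0.5 * (lo + hi)


THETA_D = 0.5  # hypothetical value of theta^d, for illustration only


def toy_h1(x2: float, y2: float) -> float:
    """Hypothetical stand-in for h_1(x_2, 1); NOT the paper's h_1.

    Linear in x2 with toy_h1(0, 1) = THETA_D and toy_h1(1, 1) = 0; y2 is ignored.
    """
    return THETA_D * (1.0 - x2)


theta_H = solve_symmetric_threshold(toy_h1)
print(f"theta^H for the toy h1: {theta_H:.6f}")
```

In the asymmetric case the two equations \(\theta _{1}^{H}=h_{1}\left( \theta _{2}^{H},1\right) \) and \(\theta _{2}^{H}=h_{2}\left( \theta _{1}^{H},1\right) \) would have to be solved jointly; the one-dimensional bisection above relies on the symmetry noted in the text.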

Recall that \(\theta ^{d}=h_{1}\left( 0,1\right) \) is the indifferent type who believes that the other agent’s type is in the set \(\left[ 0,1\right] \). For a given type, changing this belief to \(\left[ 0,\theta ^{H}\right] \) decreases the expected payoff. Hence, the indifferent type associated with \(\left[ 0,\theta ^{H}\right] \) must be larger than \(\theta ^{d}\), i.e., \(h_{1}\left( 0,\theta ^{H}\right) >h_{1}\left( 0,1\right) \). Similarly, note that \(a=h_{1}\left( 0,0\right) \) is the indifferent type who believes that the other agent’s type is 0. Changing this belief to \(\left[ 0,\theta ^{H}\right] \) increases the expected payoff. Hence, the indifferent type associated with \(\left[ 0,\theta ^{H}\right] \) must be smaller than \(a\), i.e., \(h_{1}\left( 0,\theta ^{H}\right) <h_{1}\left( 0,0\right) \).

Because agent 1 with type \(\theta ^{d}\left( a,\varepsilon \right) \) is indifferent, he can randomly choose either message.

In this example, it can also be shown that the case (2-H) applies in the posterior type set \(\left[ \theta ^{H},1\right] \times \left[ 0,\theta ^{H} \right] \) after observing \(m=\left( H,L\right) \), and also in the posterior type set \(\left[ 0,\theta ^{H}\right] \times \left[ \theta ^{H},1\right] \) after observing \(m=\left( L,H\right) \).

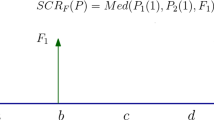

See the green double-dashed line. \(h_{2}\left( x_{1},\theta _{1}^{L}\left( a\right) \right) \) connects two points \(\left( 0,\theta _{2}^{L}\left( a\right) \right) \) and \(\left( y^{*},0\right) \), where \(y^{*}\) solves \(h_{2}\left( y^{*},\theta _{1}^{L}\left( a\right) \right) =0\). Because both \(\theta _{2}^{L}\) and \(y^{*}\) decrease, \(h_{2}\left( x_{1},\theta _{1}^{L}\left( a\right) \right) \) moves down.

On \(\xi \), \(\phi \) can take many values because indifferent agents may randomize. In our model, the set of indifferent types has measure zero, so we can ignore the impact of their particular randomization choice on expected payoffs and hence on the equilibrium strategies of other types.

For example, if \(\Theta _{1}\in \widehat{M}_{1}\), “my type is in \(\Theta _{1}\)” is truthful although it reveals no information.

A constant mechanism is the only case in which, if a direct mechanism \( M_{1}=\Theta _{1}\) is used instead of \(M_{1}=\{\Theta _{1}\}\), every agent is indifferent among all messages after messages are made public. Hence, there is a posterior equilibrium in pure strategies in which types are perfectly revealed. However, once this happens, any outcome based on \(\theta \) can be proposed (recall that there is no commitment). Anticipating this, a constant mechanism with full information revelation augmented with any subsequent proposal must have an ex post equilibrium. To focus on posterior implementation, we ignore this perfect information revelation in a constant mechanism.

Agent 1 can choose an outcome \(\phi ^{-}\) by choosing \(\widehat{\Theta } _{1,1}\) and \(\phi ^{+}\) by choosing \(\widehat{\Theta }_{1,2}\).

All \((K+1,K)\)-rules share the property that agent 1 can choose an outcome independent of agent 2’s message.

For a low type (2, 2)-rule, agent 1 (2) can implement \(\phi ^{+}\) by choosing \(\widehat{\Theta }_{1,2}\) (\(\widehat{\Theta }_{2,2}\)) regardless of the other agent’s message. To implement \(\phi ^{-}\), a coordinated choice of messages (\(\widehat{\Theta }_{1,1},\widehat{\Theta }_{2,1}\)) is required.

All (K, K)-rules share the property that both agents can choose the same outcome independent of the other agent’s message, while they need to coordinate their messages for the other outcome.

With the possible indifference for the lowest (highest) type observing the lowest (highest) type.

This is similar to strong renegotiation-proofness in Maskin and Tirole (1992).

(iii) and (v) have symmetric counterparts for (1, 2)- and (2, 3)-rules, and (iv) has a counterpart for the high type, but they are omitted.

See Green and Laffont (1987) page 84 for a discussion of an accumulation point.

In fact, it is always possible to make both agents better off when they reveal that they are middle types. See the discussion at the end of Example 1.

In (ii)(a) any \(\phi ^{0}\in \left[ 0,1\right] \) is possible, in (iii)(a) any \(\phi ^{-}<1\) is possible, and in (iii)(b) any \(\phi ^{+}>0\) is possible.

In (iii)(b), the same explanation applies to posterior disagreement in the posterior type set \(\left[ \theta _{1}^{d},\overline{\theta }_{1} \right] \times \Theta _{2}\). In (ii)(a), there is interim disagreement and the same explanation applies in the type set \(\Theta \).

First, no posterior implementable (1, 2)-rule exists above \(\theta _{2,1}\). Also, the figure shows that \(h_{1}\left( \theta _{2,1},y_{2}\right) =\underline{ \theta }_{1}\) \(\forall y_{2}\in \left[ \theta _{2,1},\overline{\theta }_{2} \right] \). This implies that no (2, 1)- or (2, 2)-rule is posterior implementable in A.

For example, consider a modified version of Example 1 where \(c_{1}=c_{2}=c\) is sufficiently small. The only posterior implementable rule that is not constant is a low type \(\left( 2,2\right) \)-rule, but it is not PRP. In sum, if (i) agents are symmetric, (ii) no \(\left( 2,1\right) \)-rule is posterior implementable, and (iii) the unique posterior implementable \(\left( 2,2\right) \)-rule has \(\theta _{1,1}=\theta _{2,1}\), then no PRP rule exists.

Watson (1999) is a related work in a dynamic environment.

References

Cramton PC, Palfrey TR (1995) Ratifiable mechanisms: learning from disagreement. Games Econ Behav 10:255–283

Forges F (1994) Posterior efficiency. Games Econ Behav 6:238–261

Green JR, Laffont JJ (1987) Posterior implementability in a two-person decision problem. Econometrica 55(1):69–94

Holmstrom B, Myerson RB (1983) Efficient and durable decision rules with incomplete information. Econometrica 51(6):1799–1819

Jehiel P, Meyer-ter-Vehn M, Moldovanu B, Zame W (2007) Posterior implementation vs ex-post implementation. Econ Lett 97:70–73

Lopomo G (2000) Optimality and robustness of the English auction. Games Econ Behav 36:219–240

Maskin E, Tirole J (1992) The principal–agent relationship with an informed principal, II: common values. Econometrica 60(1):1–42

Poitevin M (2000) Can the theory of incentives explain decentralization? Can J Econ 33(4):878–906

Vartiainen H (2013) Auction design without commitment. J Eur Econ Assoc 11(2):316–342

Watson J (1999) Starting small and renegotiation. J Econ Theory 85:52–90

Additional information

I would like to thank Georgy Artemov, Chris Edmond, seminar participants at the 2013 North American Summer Meeting of the Econometric Society and SAET 2014 (Tokyo), and anonymous referees and the associate editor for helpful comments on earlier drafts. Support from the Faculty of Business and Economics at the University of Melbourne is gratefully acknowledged.

Cite this article

Kawakami, K. Posterior renegotiation-proofness in a two-person decision problem. Int J Game Theory 45, 893–931 (2016). https://doi.org/10.1007/s00182-015-0491-9

DOI: https://doi.org/10.1007/s00182-015-0491-9

Keywords

- Information aggregation

- Limited commitment

- Posterior efficiency

- Posterior implementation

- Renegotiation-proofness