Abstract

In semiparametric regression, traditional methods such as mixed generalized additive models (GAM), computed via the Laplace approximation or via variational approximation with penalized marginal likelihood estimation, may not achieve sparsity and unbiasedness simultaneously, and may sometimes suffer from convergence problems. To address these issues, we propose an estimator for semiparametric generalized additive models based on the marginal likelihood. Our approach yields sparse estimates and allows for statistical inference. To estimate and select variables, we use the smoothly clipped absolute deviation (SCAD) penalty within the framework of variational approximation. We also propose efficient iterative algorithms to compute the estimates. Simulation results support the theoretical properties, and we demonstrate that our method is more effective than the original variational approximation framework and many other penalized methods under certain conditions. Moreover, applications to real data further demonstrate the superior performance of the proposed method.

References

Antoniadis A, Fan J (2001) Regularization of wavelet approximations. J Am Stat Assoc 96(455):939–967

Breslow NE, Clayton DG (1993) Approximate inference in generalized linear mixed models. J Am Stat Assoc 88(421):9–25

Brezger A, Lang S (2006) Generalized structured additive regression based on Bayesian P-splines. Comput Stat Data Anal 50(4):967–991

Chartrand R (2007) Exact reconstruction of sparse signals via nonconvex minimization. IEEE Signal Process Lett 14(10):707–710

Corbeil RR, Searle SR (1976) Restricted maximum likelihood (REML) estimation of variance components in the mixed model. Technometrics 18(1):31–38

Eilers PHC, Marx BD (1996) Flexible smoothing with B-splines and penalties. Stat Sci 11(2):89–121

Fan J, Li R (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J Am Stat Assoc 96(456):1348–1360

Fan J, Peng H (2004) Nonconcave penalized likelihood with a diverging number of parameters. Ann Stat 32(3):928–961

Fan J, Xue L, Zou H (2014) Strong oracle optimality of folded concave penalized estimation. Ann Stat 42(3):819

Friedman JH, Stuetzle W (1981) Projection pursuit regression. J Am Stat Assoc 76(376):817–823

Friedman J, Hastie T, Tibshirani R (2010) Regularization paths for generalized linear models via coordinate descent. J Stat Softw 33(1):1

Hastie T, Tibshirani R (1987) Generalized additive models: some applications. J Am Stat Assoc 82(398):371–386

Hui FK, You C, Shang HL, Müller S (2019) Semiparametric regression using variational approximations. J Am Stat Assoc

Kauermann G, Krivobokova T, Fahrmeir L (2009) Some asymptotic results on generalized penalized spline smoothing. J R Stat Soc Ser B 71(2):487–503

Loh P-L, Wainwright MJ (2015) Regularized M-estimators with nonconvexity: statistical and algorithmic theory for local optima. J Mach Learn Res 16(1):559–616

Luts J, Wand MP (2015) Variational inference for count response semiparametric regression. Bayesian Anal 10(4):991–1023

Luts J, Broderick T, Wand MP (2014) Real-time semiparametric regression. J Comput Graph Stat 23(3):589–615

Lv Y-W, Yang G-H (2022) An adaptive cubature Kalman filter for nonlinear systems against randomly occurring injection attacks. Appl Math Comput 418:126834

McElreath R (2018) Statistical rethinking: a Bayesian course with examples in R and Stan. Chapman and Hall/CRC

Nelder JA, Wedderburn RW (1972) Generalized linear models. J R Stat Soc Ser A 135(3):370–384

Shang HL, Hui FK (2019) Package ‘vagam’

Wang Z, Liu H, Zhang T (2014) Optimal computational and statistical rates of convergence for sparse nonconvex learning problems. Ann Stat 42(6):2164

Wood S (2018) Mixed GAM computation vehicle with automatic smoothness estimation. R package version 1.8-12

Wood SN (2006) Generalized additive models: an introduction with R. Chapman and Hall/CRC

Wood S, Scheipl F (2017) gamm4: Generalized additive mixed models using ‘mgcv’ and ‘lme4’. R package version 0.2-5

Xu Z, Chang X, Xu F, Zhang H (2012) \(L_{1/2}\) regularization: a thresholding representation theory and a fast solver. IEEE Trans Neural Netw Learn Syst 23(7):1013–1027

Zhang C-H (2010) Nearly unbiased variable selection under minimax concave penalty. Ann Stat 38(2):894–942

Zhou B, Gao J, Tran M-N, Gerlach R (2021) Manifold optimization-assisted Gaussian variational approximation. J Comput Graph Stat 30(4):946–957

Zou H, Li R (2008) One-step sparse estimates in nonconcave penalized likelihood models. Ann Stat 36(4):1509

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 12371281); the Emerging Interdisciplinary Project, Program for Innovation Research, and the Disciplinary Funds of Central University of Finance and Economics.

Appendix

Proof of Theorem 1

Set \(\delta =d^{1/2}n^{-1/2}\), where \(d = o(n^{1/4})\). The goal is to prove \(\Vert {\hat{\theta }}-\theta ^0\Vert _2=O_p(\delta )\). Let \(\theta = \theta ^0+\delta u\); then it is equivalent to prove that, for any \(\varepsilon >0\), there exists a large constant C such that
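The display that completes this sentence is not reproduced here. Assuming the argument follows the standard approach of Fan and Li (2001), it is an inequality of the following form. This is a sketch under that assumption, with the supremum taken over the sphere \(\Vert u\Vert _2=C\), and not necessarily the authors' exact statement:

\[
P\left\{ \sup _{\Vert u\Vert _2=C} l_{\lambda }\left( \theta ^0+\delta u,\text {vech}(A)\right) < l_{\lambda }\left( \theta ^0,\text {vech}(A)\right) \right\} \ge 1-\varepsilon ,
\]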

which implies that, with probability at least \(1-\varepsilon \), \(l_{\lambda }\left( \theta ,\text {vech}(A)\right) \) has a local maximizer in the ball \(\{\theta ^0+\delta u: \Vert u\Vert _2 \le C\} \). According to Lemma 1, when \({\hat{\theta }}\) lies in this ball, the covariance \({\hat{A}}\) estimated by the variational approximation (VA) satisfies \({\hat{A}} = O_p(1/n)\). For any \(\theta \) in this ball, by Taylor expansion, we have

We already have \(T_1=C\cdot O_p(d)\), \(T_2\le -K^2\tau _{\min }d\), and \(T_3=o_p(d)\). In addition, by the inequality \(p_\lambda (|x|)-p_\lambda (|y|)\le \lambda |x-y|\), together with the conditions \(\lambda _0=o(1)\) and \(\lambda _0=O\left( d^{1/2}n^{-1/2}\right) \), we have

By Condition C6, there exists a constant \(C_3\) such that \(\big | \sqrt{\beta _l^{\mathrm {\scriptscriptstyle T} }H_l\beta _l}+\sqrt{\beta _{0\,l}^{\mathrm {\scriptscriptstyle T} }H_l\beta _{0\,l}} \big |>C_3\), and hence

In summary, \(T_1=C \cdot O_p(d)\), \(T_2\le -K^2\tau _{\min }d\), \(T_3=o_p(d)\), and \(T_\lambda =O_p(d)\). If we choose a sufficiently large constant K, \(T_2\) will dominate \(T_1\), \(T_3\) and \(T_\lambda \). We thus conclude that \(l_{\lambda }\left( \theta ,\text {vech}(A)\right) \) has a local maximizer \({\hat{\theta }}\) in the ball \(\{\theta ^0+\delta u: \Vert u\Vert _2 \le C \} \). Hence \(\Vert {\hat{\theta }}-\theta ^0\Vert _2=O_p(\delta )=O_p(d^{1/2}n^{-1/2})\), completing the proof. \(\square \)
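The inequality \(p_\lambda (|x|)-p_\lambda (|y|)\le \lambda |x-y|\) used in this proof holds for the SCAD penalty because its derivative never exceeds \(\lambda \). The following is a minimal numerical sketch of that property, assuming the standard SCAD form of Fan and Li (2001) with their suggested default \(a=3.7\). The function names `scad_penalty` and `max_lipschitz_gap` are hypothetical and are not taken from the paper or from any package.

```python
from itertools import product


def scad_penalty(t, lam, a=3.7):
    """SCAD penalty p_lambda(|t|) of Fan and Li (2001); requires a > 2."""
    t = abs(t)
    if t <= lam:                      # linear (lasso-like) region
        return lam * t
    if t <= a * lam:                  # quadratic transition region
        return (2 * a * lam * t - t * t - lam * lam) / (2 * (a - 1))
    return lam * lam * (a + 1) / 2    # constant region: no further shrinkage


def max_lipschitz_gap(lam, a=3.7, grid_size=201):
    """Largest value of p(|x|) - p(|y|) - lam * |x - y| over a symmetric grid."""
    upper = 2 * a * lam               # grid covers all three regions
    grid = [-upper + 2 * upper * i / (grid_size - 1) for i in range(grid_size)]
    return max(
        scad_penalty(x, lam, a) - scad_penalty(y, lam, a) - lam * abs(x - y)
        for x, y in product(grid, grid)
    )


if __name__ == "__main__":
    # A non-positive gap (up to rounding) is consistent with the inequality.
    print(max_lipschitz_gap(lam=0.5))
```

A grid check of this kind only illustrates the inequality; it is not a proof. The analytic argument is that the penalty is continuous and piecewise differentiable with derivative bounded by \(\lambda \).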

Proof of Theorem 2

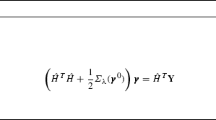

By Theorem 1, \({\hat{\theta }}\) is a consistent estimator of \(\theta \), so there exists a local maximizer \({\hat{\theta }}\) of \({l_{\lambda }\left( \theta ,\text {vech}(A)\right) }\) with convergence rate \(d^{1/2}n^{-1/2}\). That is

Since \({\hat{\theta }}\) converges to \(\theta \) in probability, a Taylor expansion gives the following. For \(j=1,\dots ,p\), the penalized and unpenalized score functions coincide, \(\partial l_{\lambda }\left( \theta ,\text {vech}(A)\right) /\partial \theta _{j}=\partial l\left( \theta ,\text {vech}(A)\right) /\partial \theta _{j}\), because the penalty term does not involve the parameter component \(\psi =(\alpha ,\phi )\). For \(j=p+1,\dots ,q\), the following holds

Therefore, the linear part \(\alpha \) of the solution to \(\partial l\left( \theta ,\text {vech}(A)\right) /\partial \theta _{j}=0\) is one and the same as that of the solution to \(\partial l_{\lambda }\left( \theta ,\text {vech}(A)\right) /\partial \theta _{j}=0\), so we only need to prove that the linear part of the VA estimator is asymptotically normal. According to Hui et al. (2019), the VA estimators for normal, Poisson, and Bernoulli responses satisfy:

and the last term on the right-hand side is asymptotically negligible. We also have \(\sum _{i=1}^{n} L_{i}=n^{-1 / 2} G \mathcal {I}^{-1}\left( \theta ^{0}\right) \nabla _{\theta } \ell \left( \theta ^{0}\right) \). Thus, we conclude \(E(L_i)=0\) and \({\text {Var}}\left( L_{i}\right) =n^{-1} G \mathcal {I}^{-1}\left( \theta ^{0}\right) G^\mathrm {\scriptscriptstyle T} \). By the multivariate Lindeberg–Feller central limit theorem, we have \(n^{-1 / 2} G \mathcal {I}^{-1}\left( \theta ^{0}\right) \nabla _{\theta } \ell \left( \theta ^{0}\right) {\mathop {\longrightarrow }\limits ^{d}}N(0,G \mathcal {I}^{-1} ( \theta ^0)G^\mathrm {\scriptscriptstyle T} )\), completing the proof. \(\square \)
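The limiting covariance in the last step can be checked directly from the stated variance of each \(L_i\). Assuming the \(L_i\) are independent across observations (an assumption made here for illustration, in line with the usual setting of independent responses), the variances add:

\[
\text {Var}\left( \sum _{i=1}^{n} L_{i}\right) =\sum _{i=1}^{n} \text {Var}\left( L_{i}\right) =n\cdot n^{-1}\, G \mathcal {I}^{-1}\left( \theta ^{0}\right) G^\mathrm {\scriptscriptstyle T} =G \mathcal {I}^{-1}\left( \theta ^{0}\right) G^\mathrm {\scriptscriptstyle T} ,
\]

which matches the covariance of the limiting normal distribution.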

About this article

Cite this article

Yang, F., Yang, Y. A sparse estimate based on variational approximations for semiparametric generalized additive models. Comput Stat (2024). https://doi.org/10.1007/s00180-024-01485-2

DOI: https://doi.org/10.1007/s00180-024-01485-2