Abstract

In this paper, we present a novel family of multivariate mixed Poisson-Generalized Inverse Gaussian INAR(1), MMPGIG-INAR(1), regression models for modelling time series of overdispersed count response variables in a versatile manner. The statistical properties associated with the proposed family of models are discussed and we derive the joint distribution of innovations across all the sequences. Finally, for illustrative purposes different members of the MMPGIG-INAR(1) class are fitted to Local Government Property Insurance Fund data from the state of Wisconsin via maximum likelihood estimation.


1 Introduction

In recent years, there has been growing interest in modelling integer-valued time series of univariate and multivariate count data in a plethora of scientific fields, such as sociology, econometrics, manufacturing, engineering, agriculture, biology, biometrics, genetics, medicine, sports, marketing and insurance. Regarding the univariate case, Al-Osh and Alzaid (1987) and McKenzie (1985) were the first to consider an INAR(1) model based on the so-called binomial thinning operator. Subsequently, many articles extended this setup by applying different thinning operators or by varying the distribution of the innovations. For more details, the interested reader can refer to Weiß (2018), Davis et al. (2016), Scotto et al. (2015) and Weiß (2008), among many others. The INAR(1) model with Poisson marginal distribution (Poisson INAR(1)) has been the most popular choice, because the simplicity of its log-likelihood function makes parameter estimation via maximum likelihood (ML) straightforward. Freeland and McCabe (2004) extended this model by allowing for regression specifications on the mean of the Poisson innovations as well as on the parameter of the binomial thinning operator. On the other hand, the literature on the multivariate case is less developed. Latour (1997) introduced a multivariate GINAR(p) model with a generalized thinning operator. Karlis and Pedeli (2013) and Pedeli and Karlis (2011, 2013a, b) focused on the diagonal case, in which the thinning operators do not introduce cross correlation among the different counts; in this case, the dependence structure is introduced by the innovations. Additionally, Ristić et al. (2012), Popović (2016), Popović et al. (2016) and Nastić et al. (2016) constructed multivariate INAR distributions with cross correlations among counts and random coefficients thinning. 
Finally, Karlis and Pedeli (2013) extended the setup of the previous articles by allowing for negative cross correlation via a copula-based approach for modelling the innovations.

In this paper, we extend the model proposed by Pedeli and Karlis (2011) by introducing the multivariate mixed Poisson-Generalized Inverse Gaussian INAR(1), MMPGIG-INAR(1), regression model for multivariate count time series data. The MMPGIG-INAR(1) is a general three-parameter family of INAR(1) models driven by mixed Poisson regression innovations, where the mixing densities are chosen from the Generalized Inverse Gaussian class of distributions. Thus, the proposed modelling framework can provide the appropriate level of flexibility for modelling positive correlations of different magnitudes among time series of different types of overdispersed count response variables. In particular, depending on the values taken by the shape parameter, the MMPGIG-INAR(1) family includes many members, such as the mixed Poisson-Inverse Gaussian (PIG), as special cases, and several others as limiting cases, such as the Negative Binomial (or Poisson-Gamma), the Poisson-Inverse Gamma (PIGA), the Poisson-Inverse Exponential, the Poisson-Inverse Chi Squared and the Poisson-Scaled Inverse Chi Squared distributions. Therefore, it can accommodate different levels of overdispersion depending on the chosen parametric form of the mixing density. Furthermore, the MMPGIG-INAR(1) family of models is constructed by assuming that the probability mass function (pmf) of the MMPGIG innovations is parameterized in terms of the mean parameter, which results in a more orthogonal parameterization that facilitates maximum likelihood (ML) estimation when regression specifications are allowed for the mean parameters of the MMPGIG-INAR(1) regression model. For expository purposes, we derive the joint probability mass functions and the derivatives of several special cases of the MMPGIG-INAR(1) family which are used as innovations. These models are fitted to time series of claim count data from the Local Government Property Insurance Fund (LGPIF) of the state of Wisconsin. 
At this point it is worth noting that modelling the correlation between different types of claims from the same and/or different types of coverage is very important from a practical business standpoint. Many articles have been devoted to this topic, see for example, Bermúdez and Karlis (2011), Bermúdez and Karlis (2012), Shi and Valdez (2014a, b), Abdallah et al. (2016), Bermúdez and Karlis (2017), Pechon et al. (2018), Pechon et al. (2019), Bolancé and Vernic (2019), Denuit et al. (2019), Fung et al. (2019), Bolancé et al. (2020), Pechon et al. (2021), Jeong and Dey (2021), Gómez-Déniz and Calderín-Ojeda (2021), Tzougas and di Cerchiara (2021a, b). However, with the exception of very few articles, such as Bermúdez et al. (2018) and Bermúdez and Karlis (2021), the construction of bivariate INAR(1) models that can capture both the serial correlation between the observations of the same policyholder over time and the correlation between different claim types remains largely uncharted territory. This is an additional contribution of this study.

The rest of the paper proceeds as follows. Section 2 presents the derivation of the MMPGIG-INAR(1) model. Statistical properties of the MMPGIG innovations are discussed in Sect. 3. In Sect. 4, we present a description of the alternative special cases of the MMPGIG-INAR(1) family. Section 5 discusses the parameter estimation for these models based on the maximum likelihood method and integer-valued prediction. Section 6 contains our empirical analysis for the LGPIF data set. Finally, concluding remarks are given in Sect. 7.

2 Generalized setting

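Let \(\mathbf {X}\) and \(\mathbf {R}\) be non-negative integer-valued random vectors in \({\mathbb {R}}^m\), and let \(\mathbf {P}\) be a diagonal matrix in \({\mathbb {R}}^{m \times m}\) with diagonal elements \(p_i \in (0,1)\). The multivariate Poisson-Generalized Inverse Gaussian INAR(1) is defined as

$$\mathbf {X}_t = \mathbf {P} \circ \mathbf {X}_{t-1} + \mathbf {R}_t, \quad \text {i.e.} \quad X_{i,t} = p_i \circ X_{i,t-1} + R_{i,t}, \quad i = 1,\ldots ,m,$$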

where the thinning operator \(\circ\) is the widely used binomial thinning operator, such that \(p_i \circ X_{i,t} = \sum _{k=1}^{X_{i,t}} U_k\), where the \(U_k\) are independent and identically distributed Bernoulli random variables with success probability \(p_i\), i.e. \({\mathcal {P}}(U_k = 1) = p_i\). Hence \(p_i \circ X_{i,t}\) is binomially distributed with size \(X_{i,t}\) and success probability \(p_i\), and its probability mass function \(f_{p_i}(x, X_{i,t})\) is

$$f_{p_i}(x, X_{i,t}) = \binom{X_{i,t}}{x} p_i^{x} (1-p_i)^{X_{i,t}-x}, \quad x = 0,1,\ldots ,X_{i,t}.$$

Note that, given \(X_{i,t}\) and \(X_{j,t}\) with \(i \ne j\), \(p_i \circ X_{i,t}\) and \(p_j \circ X_{j,t}\) are independent of each other. To accommodate the heteroscedasticity arising from the data, \(\{R_{i,t}\}_ {i=1,\ldots ,m}\) are mixed Poisson random variables \(Po(\theta _t \lambda _{i,t})\) with random effect \(\theta _t\). The rate \(\lambda _{i,t}\) depends on the observed covariate vector \(z_{i,t} \in {\mathbb {R}}^{a_i \times 1 }\), for some positive integer \(a_i\), through the log link function \(\log (\lambda _{i,t}) = z_{i,t}^{T} \beta _i\), where \(\beta _i \in {\mathbb {R}}^{a_i \times 1 }\). Furthermore, \(\{R_{i,t}\}_ {i=1,\ldots ,m}\) share the same random effect \(\theta _t\) with mixing distribution \(G(\theta )\), which means that the dependence structure among the \(X_{i,t}\) is controlled by the choice of mixing distribution and the values of its parameters. The joint distribution of \(\mathbf {R}_t\) is

$${\mathcal {P}}(\mathbf {R}_t = \mathbf {k}) = {\mathbb {E}}\left[ \prod _{i=1}^m \frac{e^{-\theta _t \lambda _{i,t}} (\theta _t \lambda _{i,t})^{k_i}}{k_i!}\right] = \int _0^{\infty } \prod _{i=1}^m \frac{e^{-\theta \lambda _{i,t}} (\theta \lambda _{i,t})^{k_i}}{k_i!} \, dG(\theta ), \quad \mathbf {k} = (k_1,\ldots ,k_m)^T.$$
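The data-generating mechanism can be made concrete with a short simulation. The following Python sketch assumes NumPy and, purely for illustration, draws the shared random effect \(\theta _t\) from the unit-mean Gamma\((\phi , \phi )\) density (one of the limiting cases treated in Sect. 4); the helper name and all parameter values are hypothetical.

```python
import numpy as np


def simulate_mixed_poisson_inar1(p, lam, phi, x0, rng):
    """Simulate X_t = P o X_{t-1} + R_t for t = 1..T (hypothetical helper).

    p   : length-m thinning probabilities (diagonal of P)
    lam : (T, m) array of Poisson rates lambda_{i,t}
    phi : shape and rate of the unit-mean Gamma(phi, phi) random effect (illustrative choice)
    x0  : length-m starting counts X_0
    """
    p = np.asarray(p, dtype=float)
    lam = np.asarray(lam, dtype=float)
    x = np.asarray(x0, dtype=np.int64)
    path = np.empty(lam.shape, dtype=np.int64)
    for t in range(lam.shape[0]):
        survivors = rng.binomial(x, p)                 # binomial thinning p_i o X_{i,t-1}
        theta = rng.gamma(shape=phi, scale=1.0 / phi)  # shared random effect, E[theta_t] = 1
        innovations = rng.poisson(theta * lam[t])      # R_{i,t} ~ Po(theta_t * lambda_{i,t})
        x = survivors + innovations
        path[t] = x
    return path


rng = np.random.default_rng(123)
lam = np.tile([1.0, 2.0], (20_000, 1))
path = simulate_mixed_poisson_inar1([0.3, 0.5], lam, 2.0, [0, 0], rng)
# With constant rates the stationary means are lambda_i / (1 - p_i), i.e. about 1.43 and 4.
print(path[1000:].mean(axis=0))
```

Because the same \(\theta _t\) multiplies every rate, the simulated components are positively correlated, which is the source of cross dependence when \(\mathbf {P}\) is diagonal.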

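We let \(\theta _t\) be a continuous random variable from the Generalized Inverse Gaussian distribution with density function \(g(\theta )\)

$$g(\theta ) = \frac{(\psi /\chi )^{\nu /2}}{2K_{\nu }(\sqrt{\psi \chi })} \, \theta ^{\nu -1} \exp \left\{ -\frac{1}{2}\left( \psi \theta + \frac{\chi }{\theta }\right) \right\} , \quad \theta > 0,$$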

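where \(-\infty < \nu < \infty , \psi>0, \chi >0\) and \(K_{\nu }(\omega )\) is the modified Bessel function of the third kind of order \(\nu\) and argument \(\omega\), such that

$$K_{\nu }(\omega ) = \frac{1}{2} \int _0^{\infty } x^{\nu -1} \exp \left\{ -\frac{\omega }{2}\left( x + \frac{1}{x}\right) \right\} dx.$$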

The Generalized Inverse Gaussian distribution is a widely used family; for example, it includes the Inverse Gaussian as a special case and the Gamma and Inverse Gamma as limiting cases. To avoid identification problems for the mixed Poisson regression random vector \(\mathbf {R}_t\), the mean of \(\theta _t\) is restricted to one, i.e. \({\mathbb {E}}[\theta _t] = 1\), and the parameters \(\nu , \psi , \chi\) are either fixed or functions of another parameter \(\phi\). Under these two constraints, only one parameter is free to vary; for example, for the Inverse Gaussian distribution, \(\nu = -\frac{1}{2}\) and \(\psi = \chi = \phi\). The joint distribution of \(\mathbf {R}_t\) then becomes an MPGIG distribution

$$f_{\phi }(\mathbf {k},t) = \left( \prod _{i=1}^m \frac{\lambda _{i,t}^{k_i}}{k_i!}\right) \left( \frac{\psi }{\chi }\right) ^{\nu /2} \left( \frac{\chi }{\varDelta }\right) ^{(\sum _{i=1}^m k_i + \nu )/2} \frac{K_{\sum _{i=1}^m k_i + \nu }\left( \sqrt{\chi \varDelta }\right) }{K_{\nu }\left( \sqrt{\psi \chi }\right) },$$

where \(\varDelta = \psi + 2 \sum _{i=1}^m \lambda _{i,t}\). In Sect. 4, we discuss the distribution function \(f_{\phi }(\mathbf {k},t)\) in detail for some special cases. Finally, it should be noted that several articles discuss multivariate versions of the MPGIG distribution and/or of the MPIG distribution, which is the special case \(\nu =-0.5\); see, for instance, Barndorff-Nielsen et al. (1992), Ghitany et al. (2012), Amalia et al. (2017), Mardalena et al. (2020), Tzougas and di Cerchiara (2021b) and Mardalena et al. (2021). However, this is the first time that the MMPGIG-INAR(1) family of INAR(1) models driven by mixed Poisson regression innovations is considered for modelling time series of count response variables.
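The MPGIG pmf (the Poisson product integrated against the GIG density, which yields the ratio \(K_{\sum _i k_i + \nu }(\sqrt{\chi \varDelta })/K_{\nu }(\sqrt{\psi \chi })\) times elementary factors) can be checked numerically. The sketch below uses only the Python standard library: as a stand-in for a library Bessel routine, it evaluates \(K_{\nu }(x) = \int _0^{\infty } e^{-x\cosh u} \cosh (\nu u)\, du\) with a trapezoidal rule, which is adequate for moderate orders only. It then confirms that, in the Inverse Gaussian case \(\nu = -\frac{1}{2}\), \(\psi = \chi = \phi\), the bivariate probabilities sum to one. Function names and parameter values are illustrative.

```python
import math


def bessel_k(nu, x, n=600):
    """Modified Bessel function K_nu(x), x > 0, from K_nu(x) = int_0^inf exp(-x cosh u) cosh(nu u) du.

    Trapezoidal rule on [0, upper]; adequate for moderate orders, not a library-grade routine.
    """
    a = abs(nu)                                   # K_nu = K_{-nu}
    upper = math.asinh(max(a, 1.0) / x) + 10.0    # the integrand is negligible beyond this point
    step = upper / n
    total = 0.0
    for j in range(n + 1):
        u = j * step
        decay = x * math.cosh(u)
        # cosh(nu u) exp(-x cosh u), with the exponents combined to delay overflow
        val = 0.5 * (math.exp(a * u - decay) + math.exp(-a * u - decay))
        total += val if 0 < j < n else 0.5 * val  # trapezoidal end-point weights
    return total * step


def mpgig_pmf(k, lam, nu, psi, chi):
    """Joint pmf f(k, t) of the MPGIG innovation vector for fixed rates lam = (lambda_1t, ..., lambda_mt)."""
    s_k = sum(k)
    delta = psi + 2.0 * sum(lam)
    order = s_k + nu
    log_f = sum(ki * math.log(li) - math.lgamma(ki + 1) for ki, li in zip(k, lam))
    log_f += 0.5 * nu * math.log(psi / chi) + 0.5 * order * math.log(chi / delta)
    log_f += math.log(bessel_k(order, math.sqrt(chi * delta)))
    log_f -= math.log(bessel_k(nu, math.sqrt(psi * chi)))
    return math.exp(log_f)


# Inverse Gaussian mixing: nu = -1/2 and psi = chi = phi (unit-mean constraint).
phi, lam = 1.0, (0.5, 0.8)
total = sum(mpgig_pmf((k1, k2), lam, -0.5, phi, phi) for k1 in range(30) for k2 in range(30))
print(total)  # close to 1 (the truncated tail is negligible for these rates)
```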

3 Properties of innovations \(\mathbf {R}_t\)

Proposition 3.1

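(The moments of \(\mathbf {R}_t\)) The mean and variance of \(R_{i,t}\) and the covariance between \(R_{i,t}\) and \(R_{j,t}\), \(i\ne j\), are given by

$${\mathbb {E}}[R_{i,t}] = \lambda _{i,t}, \quad Var(R_{i,t}) = \lambda _{i,t} + \lambda _{i,t}^2 \sigma _{\theta }^2, \quad Cov(R_{i,t}, R_{j,t}) = \lambda _{i,t}\lambda _{j,t} \sigma _{\theta }^2,$$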

where \(\sigma _{\theta }^2\) is the variance for the random effect \(\theta _t\) and \(i,j = 1,\ldots ,m\).
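A quick Monte Carlo check of Proposition 3.1 is sketched below. It assumes NumPy and, for illustration only, a Gamma\((\phi , \phi )\) random effect, so that \({\mathbb {E}}[\theta _t] = 1\) and \(\sigma _{\theta }^2 = 1/\phi\); the helper name and parameter values are hypothetical.

```python
import numpy as np


def innovation_moments(lam, sigma2):
    """Mean vector and covariance matrix of R_t implied by Proposition 3.1."""
    lam = np.asarray(lam, dtype=float)
    cov = sigma2 * np.outer(lam, lam)       # lambda_i lambda_j sigma_theta^2 for all (i, j)
    cov[np.diag_indices_from(cov)] += lam   # the diagonal adds the Poisson part lambda_i
    return lam.copy(), cov


rng = np.random.default_rng(2024)
phi, lam = 2.0, np.array([1.5, 3.0])
theta = rng.gamma(shape=phi, scale=1.0 / phi, size=400_000)  # E[theta] = 1, Var(theta) = 1/phi
r = rng.poisson(theta[:, None] * lam)                        # the same theta for both components
mean, cov = innovation_moments(lam, 1.0 / phi)
print(r.mean(axis=0), mean)
print(np.cov(r, rowvar=False), cov)
```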

Proposition 3.2

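(Marginal property) The joint distribution function \(f_{\phi }(\mathbf {k},t)\) is closed under marginalization, i.e. the marginal distribution of \(R_{i,t}\) is given by \(f_{\phi }(k_i,t)\) such that

$$f_{\phi }(k_i,t) = \sum _{k_j \ge 0, \, j \ne i} f_{\phi }(\mathbf {k},t) = {\mathbb {E}}\left[ \frac{e^{-\theta _t \lambda _{i,t}} (\theta _t \lambda _{i,t})^{k_i}}{k_i!}\right] ,$$

which is the distribution of a univariate mixed Poisson regression random variable. More generally, the result holds for any \(m'\)-variate marginal with \(m' < m\), which is an \(m'\)-variate mixed Poisson regression random vector.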

Proof

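We show the result for the univariate case; the \(m'\)-variate case follows in the same way, summing over \(m - m'\) components instead of \(m-1\). Interchanging summation and expectation (all terms are non-negative),

$$\sum _{k_j \ge 0, \, j \ne i} f_{\phi }(\mathbf {k},t) = {\mathbb {E}}\left[ \frac{e^{-\theta _t \lambda _{i,t}} (\theta _t \lambda _{i,t})^{k_i}}{k_i!} \prod _{j \ne i} \sum _{k_j=0}^{\infty } \frac{e^{-\theta _t \lambda _{j,t}} (\theta _t \lambda _{j,t})^{k_j}}{k_j!}\right] = {\mathbb {E}}\left[ \frac{e^{-\theta _t \lambda _{i,t}} (\theta _t \lambda _{i,t})^{k_i}}{k_i!}\right] = f_{\phi }(k_i,t),$$

since each inner Poisson sum equals one.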

\(\square\)
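Proposition 3.2 can be illustrated numerically. The sketch below assumes the Gamma mixing case of Sect. 4.1, whose closed form is the multivariate negative binomial pmf, and checks that summing the bivariate pmf over \(k_2\) reproduces the univariate pmf of \(R_{1,t}\). Function names and parameter values are illustrative.

```python
import math


def mnb_pmf(k, lam, phi):
    """f_phi(k, t) under Gamma(phi, phi) mixing (multivariate negative binomial)."""
    s_k, s_lam = sum(k), sum(lam)
    log_f = sum(ki * math.log(li) - math.lgamma(ki + 1) for ki, li in zip(k, lam))
    log_f += math.lgamma(s_k + phi) - math.lgamma(phi)
    log_f += phi * math.log(phi) - (s_k + phi) * math.log(phi + s_lam)
    return math.exp(log_f)


def marginal_by_summation(k1, lam, phi, kmax=200):
    """Sum the bivariate pmf over k2 = 0..kmax (the truncated tail is negligible here)."""
    return sum(mnb_pmf((k1, k2), lam, phi) for k2 in range(kmax + 1))


lam, phi = (0.7, 1.2), 1.5
for k1 in range(4):
    print(k1, marginal_by_summation(k1, lam, phi), mnb_pmf((k1,), (lam[0],), phi))
```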

The marginalization property enables, for example, insurers to easily price policyholders who engage in some but not all lines of business. The last property concerns the identifiability of \(\mathbf {R}_t\), which ensures the uniqueness of the model.

Proposition 3.3

(Identifiability of the joint distribution of \(\mathbf {R}_t\)) Assume that the covariate space \(\mathbf {z}_t = (z_{1,t}, \ldots , z_{m,t} )\) is of full rank. Denote the parameter sets \(\varTheta _R = \{ \beta _i, \phi | i = 1,\ldots , m\}\) and \(\tilde{\varTheta }_R = \{ \tilde{\beta _i}, \tilde{\phi } | i = 1,\ldots , m\}\). The joint distribution \(f_{\phi }(\mathbf {k},t)\) is identifiable, in the sense that

$$f_{\varTheta _R}(\mathbf {k},t) = f_{\tilde{\varTheta }_R}(\mathbf {k},t) \quad \text {for all } \mathbf {k} \in {\mathbb {N}}_0^m$$

if and only if \(\varTheta _R = \tilde{\varTheta }_R\).

Proof

With the assumption that the covariate \(\mathbf {z}\) is of full rank and that the log link function \(\log (\lambda _{i,t}) = z_{i,t}^T \beta _i\) is monotonic, the identification problem for the mixed Poisson regression random variable \(\mathbf {R}_t\) reduces to identification for a mixed Poisson random variable (without regression), which means that the parameter set can be re-parametrized as \(\varTheta ^{*}_R = \{ \lambda _{i,t}, \phi | i = 1,\ldots , m\}\) and \(\tilde{\varTheta }^*_R = \{\tilde{\lambda }_{i,t}, \tilde{\phi } | i = 1,\ldots , m\}\).

Then the 'if' statement is obvious, since the same set of parameters necessarily leads to the same joint distribution function. For the 'only if' statement, if the two distribution functions coincide, then all their moments (means, variances, covariances) must agree. From the moment properties above, matching \({\mathbb {E}}[R_{i,t}]\) gives \(\lambda _{i,t} = \tilde{\lambda }_{i,t}\). Likewise, given that the first moments match, only \(\phi = \tilde{\phi }\) leads to the same \(Var(R_{i,t})\). Matching these moments already yields \(\varTheta ^*_R = \tilde{\varTheta }^*_R\), and the covariances \(Cov(R_{i,t},R_{j,t})\) then coincide as well. \(\square\)

4 Model specification

The distributional properties of \(\mathbf {X}_t\), in particular the correlation structure and 'tailedness' of the distribution, are mainly determined by the innovation \(\mathbf {R}_t\), more specifically by the mixing density \(g(\theta )\). Moreover, explicit forms of the derivatives of \(f_{\phi }(\mathbf {k},t)\) can significantly accelerate the computation when performing estimation. Hence, the distribution function \(f_{\phi }(\mathbf {k},t)\) and its derivatives are derived for two limiting cases (Gamma, Inverse Gamma) and some other special cases (GIG with unit mean and different values of \(\nu\)). Throughout this section, we define \(S^{\lambda }_t = \sum _{i=1}^m \lambda _{i,t}\) and \(S^{k} = \sum _{i=1}^m k_i\).

4.1 Mixing by Gamma distribution

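If \(\mathbf {R}_t\) is univariate, the resulting distribution is known as the negative binomial distribution, and this result can easily be extended to the multivariate case, which is called the multivariate negative binomial distribution (see e.g. Marshall and Olkin 1990; Boucher et al. 2008; Cheon et al. 2009). The gamma density is obtained by letting \(\nu =\phi , \psi = 2\phi\) and \(\chi = 0\) in the Generalized Inverse Gaussian density of Sect. 2. The resulting mixing density has the following form:

$$g(\theta ) = \frac{\phi ^{\phi }}{\Gamma (\phi )} \theta ^{\phi -1} e^{-\phi \theta }, \quad \theta > 0,$$

with unit mean and variance \(\frac{1}{\phi }\). Then the expectation (2.3) can be evaluated explicitly:

$$f_{\phi }(\mathbf {k},t) = \left( \prod _{i=1}^m \frac{\lambda _{i,t}^{k_i}}{k_i!}\right) \frac{\Gamma (S^k + \phi )}{\Gamma (\phi )} \frac{\phi ^{\phi }}{(\phi + S^{\lambda }_t)^{S^k + \phi }}.$$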

Proposition 4.1

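The derivatives of the distribution function \(f_{\phi } (\mathbf {k},t)\) with respect to \(\varTheta _R = \{\phi , \beta _i \ | \ i =1,\ldots , m \}\) when \(\theta _t \sim\) Gamma\((\phi , \phi )\) are given by

$$\frac{\partial f_{\phi }(\mathbf {k},t)}{\partial \beta _i} = f_{\phi }(\mathbf {k},t) \left( k_i - \frac{(S^k + \phi ) \lambda _{i,t}}{\phi + S^{\lambda }_t}\right) z_{i,t},$$

$$\frac{\partial f_{\phi }(\mathbf {k},t)}{\partial \phi } = f_{\phi }(\mathbf {k},t) \left( \sum _{n=1}^{S^k} \frac{1}{n+\phi -1} + \log \phi + 1 - \log (\phi + S^{\lambda }_t) - \frac{S^k + \phi }{\phi + S^{\lambda }_t}\right) ,$$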

where the sum \(\sum _{n=1}^{S^k } \frac{1}{n+\phi -1 } = 0\) when \(S^{k} = 0\).

Proof

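The derivatives \(\frac{\partial f_{\phi } (\mathbf {k},t) }{\partial \beta _i}\) are straightforward to obtain, whereas \(\frac{\partial f_{\phi }(\mathbf {k},t) }{\partial \phi }\) involves the gamma function. The derivative of the gamma function can be derived by utilizing Weierstrass's alternative definition

$$\Gamma (z) = \frac{e^{-\gamma z}}{z} \prod _{n=1}^{\infty } \left( 1 + \frac{z}{n}\right) ^{-1} e^{z/n},$$

which is valid for all complex numbers z except the non-positive integers, where \(\gamma\) is the Euler–Mascheroni constant. Differentiating the log transform \(\log \Gamma (z+1)\) then leads to the series expansion of the digamma function

$$\frac{d}{dz} \log \Gamma (z+1) = -\gamma + \sum _{n=1}^{\infty } \left( \frac{1}{n} - \frac{1}{n+z}\right) ,$$

from which, by telescoping, \(\frac{d}{d\phi } \log \frac{\Gamma (S^k+\phi )}{\Gamma (\phi )} = \sum _{n=1}^{S^k} \frac{1}{n+\phi -1}\). The derivative \(\frac{\partial f_{\phi }(\mathbf {k},t) }{\partial \phi }\) can then be derived step by step. First, we simplify the expression of \(f_{\phi }(\mathbf {k},t)\) by taking logarithms and using \(\Gamma (S^k+\phi )/\Gamma (\phi ) = \prod _{n=1}^{S^k} (n+\phi -1)\):

$$\log f_{\phi }(\mathbf {k},t) = \sum _{i=1}^m \left( k_i \log \lambda _{i,t} - \log k_i!\right) + \sum _{n=1}^{S^k} \log (n + \phi - 1) + \phi \log \phi - (S^k + \phi ) \log (\phi + S^{\lambda }_t).$$

The derivative is then

$$\frac{\partial \log f_{\phi }(\mathbf {k},t)}{\partial \phi } = \sum _{n=1}^{S^k} \frac{1}{n+\phi -1} + \log \phi + 1 - \log (\phi + S^{\lambda }_t) - \frac{S^k + \phi }{\phi + S^{\lambda }_t},$$

and \(\frac{\partial f_{\phi }(\mathbf {k},t)}{\partial \phi } = f_{\phi }(\mathbf {k},t) \frac{\partial \log f_{\phi }(\mathbf {k},t)}{\partial \phi }\).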

\(\square\)
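Analytic derivatives such as those in Proposition 4.1 are worth checking against central finite differences before they are handed to an optimizer. The following standard-library Python sketch does this for \(\log f_{\phi }(\mathbf {k},t)\) under Gamma mixing, for both \(\phi\) and the regression coefficients; the covariate values, coefficients and helper names are hypothetical.

```python
import math


def log_f_gamma(k, lam, phi):
    """log f_phi(k, t) under Gamma(phi, phi) mixing."""
    s_k, s_lam = sum(k), sum(lam)
    return (sum(ki * math.log(li) - math.lgamma(ki + 1) for ki, li in zip(k, lam))
            + math.lgamma(s_k + phi) - math.lgamma(phi)
            + phi * math.log(phi) - (s_k + phi) * math.log(phi + s_lam))


def dlog_f_dphi(k, lam, phi):
    """Analytic d log f / d phi; the digamma difference is the finite sum over n (0 if S^k = 0)."""
    s_k, s_lam = sum(k), sum(lam)
    digamma_diff = sum(1.0 / (n + phi - 1.0) for n in range(1, s_k + 1))
    return digamma_diff + math.log(phi) + 1.0 - math.log(phi + s_lam) - (s_k + phi) / (phi + s_lam)


def dlog_f_dbeta(k, lam, phi, z_i, i):
    """Analytic d log f / d beta_i when log(lambda_i) = z_i^T beta_i (one entry per covariate)."""
    s_k, s_lam = sum(k), sum(lam)
    scale = k[i] - (s_k + phi) * lam[i] / (phi + s_lam)
    return [scale * z for z in z_i]


def rates(z, beta):
    """lambda_i = exp(z_i^T beta_i) for each component i."""
    return tuple(math.exp(sum(a * b for a, b in zip(zi, bi))) for zi, bi in zip(z, beta))


z = [[1.0, 0.5], [1.0, -0.3]]      # hypothetical covariates z_{1,t}, z_{2,t}
beta = [[-0.2, 0.4], [0.1, 0.6]]   # hypothetical coefficients beta_1, beta_2
k, phi, h = (2, 3), 1.3, 1e-5
lam = rates(z, beta)

fd_phi = (log_f_gamma(k, lam, phi + h) - log_f_gamma(k, lam, phi - h)) / (2 * h)
print(fd_phi, dlog_f_dphi(k, lam, phi))
```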

4.2 Mixing by Inverse Gamma

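The Inverse Gamma distribution, which is another limiting case of the Generalized Inverse Gaussian distribution, is discussed in Sect. 9.3 of Johnson et al. (1995). An Inverse Gamma random variable has a relatively heavy right tail and a low probability of taking values close to 0. In this case, the density function \(g(\theta )\) is obtained by letting \(\psi = 0, \chi = 2\phi\) and \(\nu = -\phi -1\), such that

$$g(\theta ) = \frac{\phi ^{\phi +1}}{\Gamma (\phi +1)} \theta ^{-\phi -2} e^{-\phi /\theta }, \quad \theta > 0,$$

with mean 1 and variance \(\frac{1}{\phi - 1}\) for \(\phi > 1\). It is also called the reciprocal gamma distribution, since \(\theta = 1/x\) where \(x \sim\) Gamma\((\phi + 1, \phi )\). The distribution function \(f_{\phi } (\mathbf {k},t)\) becomes

$$f_{\phi }(\mathbf {k},t) = \left( \prod _{i=1}^m \frac{\lambda _{i,t}^{k_i}}{k_i!}\right) \frac{2 \phi ^{\phi +1}}{\Gamma (\phi +1)} \left( \frac{\phi }{S^{\lambda }_t}\right) ^{\nu /2} K_{\nu }\left( \omega \right) ,$$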

where \(\nu = S^k - \phi - 1\) and \(\omega = 2 \sqrt{\phi S^{\lambda }_t}\). The derivatives of \(f_{\phi } (\mathbf {k},t)\) with respect to the parameter set \(\varTheta _R = \{\phi , \beta _i \ | \ i =1,\ldots , m \}\) are given by

In this case, numerical differentiation is applied to calculate \(\frac{\partial \log K_{\nu } \left( \omega \right) }{\partial \phi }\) since the parameter \(\phi\) appears both in the order \(\nu\) and argument \(\omega\) of the modified Bessel function \(K_{\nu }(\omega )\).
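A sketch of the Inverse Gamma case in standard-library Python follows. It again assumes a trapezoidal evaluation of \(K_{\nu }(x) = \int _0^{\infty } e^{-x\cosh u}\cosh (\nu u)\,du\) as a stand-in for a library Bessel routine, evaluates the pmf on the log scale, and approximates \(\partial \log K_{\nu }(\omega )/\partial \phi\) by a central difference in which both \(\nu = S^k - \phi - 1\) and \(\omega = 2\sqrt{\phi S^{\lambda }_t}\) move with \(\phi\). Names, step sizes and parameter values are illustrative.

```python
import math


def bessel_k(nu, x, n=600):
    """K_nu(x), x > 0, via int_0^inf exp(-x cosh u) cosh(nu u) du (trapezoidal rule, moderate orders)."""
    a = abs(nu)
    upper = math.asinh(max(a, 1.0) / x) + 10.0
    step = upper / n
    total = 0.0
    for j in range(n + 1):
        u = j * step
        decay = x * math.cosh(u)
        val = 0.5 * (math.exp(a * u - decay) + math.exp(-a * u - decay))
        total += val if 0 < j < n else 0.5 * val
    return total * step


def log_pmf_inv_gamma(k, lam, phi):
    """log f_phi(k, t) under Inverse Gamma mixing (psi = 0, chi = 2 phi, nu = -phi - 1)."""
    s_k, s_lam = sum(k), sum(lam)
    nu = s_k - phi - 1.0
    omega = 2.0 * math.sqrt(phi * s_lam)
    return (sum(ki * math.log(li) - math.lgamma(ki + 1) for ki, li in zip(k, lam))
            + math.log(2.0) + (phi + 1.0) * math.log(phi) - math.lgamma(phi + 1.0)
            + 0.5 * nu * math.log(phi / s_lam) + math.log(bessel_k(nu, omega)))


def dlogK_dphi(s_k, s_lam, phi, step=1e-5):
    """Central difference of log K_nu(omega) in phi, with nu and omega both depending on phi."""
    def log_k(ph):
        return math.log(bessel_k(s_k - ph - 1.0, 2.0 * math.sqrt(ph * s_lam)))
    return (log_k(phi + step) - log_k(phi - step)) / (2.0 * step)


phi, lam = 3.0, (0.6,)
total = sum(math.exp(log_pmf_inv_gamma((k,), lam, phi)) for k in range(81))
print(total)                      # close to 1 (polynomial tail, truncated at k = 80)
print(dlogK_dphi(2, 0.6, phi))
```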

4.3 Mixing by Generalized Inverse Gaussian

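Likewise, if \(\mathbf {R}_t\) is univariate, the distribution of \({\mathbf {R}}_t\) is known as the Poisson-Generalized Inverse Gaussian distribution. To comply with the constraints imposed in Sect. 2, the mixing density function has the following form

$$g(\theta ) = \frac{c^{\nu }}{2K_{\nu }(\phi )} \theta ^{\nu -1} \exp \left\{ -\frac{\phi }{2}\left( c\theta + \frac{1}{c\theta }\right) \right\} , \quad \theta > 0,$$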

with unit mean and variance \(var(\theta _t) = \frac{1}{c^2} + \frac{2 (\nu +1)}{c\phi } - 1\), where \(c = \frac{K_{\nu + 1}(\phi )}{K_{\nu }(\phi )}\), \(\phi >0\) and \(\nu \in {\mathbb {R}}\). Then the distribution function \(f_{\phi } (\mathbf {k},t)\) becomes

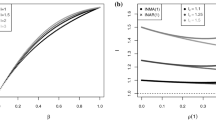

where \(a = \phi c + 2 S^{\lambda }_t\), \(b = \frac{\phi }{ c}\) and \(p = S^k+ \nu\). Furthermore, we keep \(\nu\) constant and fixed in order to avoid potential identification problems during estimation. In general, however, the derivative with respect to \(\phi\) is difficult to obtain, since the constant c involves the Bessel function. On the other hand, it is worth noting that \(var(\theta _t)\) is roughly unbounded when \(\nu \in [-2,0]\) and that the skewness and kurtosis decrease as \(\nu\) increases, which can easily be verified numerically. Hence, we discuss the cases \(\nu = -\frac{1}{2}, -\frac{3}{2}, -\frac{3}{4}\), two of which have 'explicit' distributions in the sense that the constant c can be evaluated in closed form.
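The unit-mean constant \(c = K_{\nu +1}(\phi )/K_{\nu }(\phi )\) and the variance \(\frac{1}{c^2} + \frac{2(\nu +1)}{c\phi } - 1\) can be evaluated directly. This standard-library sketch assumes the same trapezoidal Bessel helper in place of a library routine and checks the closed forms used in Sects. 4.3.1 and 4.3.2: \(c = 1\) for \(\nu = -\frac{1}{2}\), \(c = \phi /(1+\phi )\) for \(\nu = -\frac{3}{2}\), and \(var(\theta _t) = 1/\phi\) in both cases. The value of \(\phi\) is illustrative.

```python
import math


def bessel_k(nu, x, n=600):
    """K_nu(x), x > 0, via int_0^inf exp(-x cosh u) cosh(nu u) du (trapezoidal rule, moderate orders)."""
    a = abs(nu)
    upper = math.asinh(max(a, 1.0) / x) + 10.0
    step = upper / n
    total = 0.0
    for j in range(n + 1):
        u = j * step
        decay = x * math.cosh(u)
        val = 0.5 * (math.exp(a * u - decay) + math.exp(-a * u - decay))
        total += val if 0 < j < n else 0.5 * val
    return total * step


def gig_unit_mean_constants(nu, phi):
    """Return (c, var(theta_t)) for the unit-mean GIG mixing density with fixed nu."""
    c = bessel_k(nu + 1.0, phi) / bessel_k(nu, phi)
    var = 1.0 / c**2 + 2.0 * (nu + 1.0) / (c * phi) - 1.0
    return c, var


phi = 0.7
for nu in (-0.5, -1.5, -0.75):
    print(nu, gig_unit_mean_constants(nu, phi))
```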

4.3.1 Generalized Inverse Gaussian with \(\nu = -\frac{1}{2}\)

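In this case, the resulting distribution, known as the Poisson-Inverse Gaussian distribution, has been investigated by many authors (see, e.g., Sichel 1974, 1982; Atkinson and Yeh 1982; Stein and Juritz 1988, among others). When \(\nu = -\frac{1}{2}\), \(c = 1\) and, using \(K_{-1/2}(\phi ) = \sqrt{\pi /(2\phi )}\, e^{-\phi }\), the distribution function becomes

$$f_{\phi }(\mathbf {k},t) = \left( \prod _{i=1}^m \frac{\lambda _{i,t}^{k_i}}{k_i!}\right) \sqrt{\frac{2\phi }{\pi }} \, e^{\phi } \left( \frac{\phi }{\phi + 2S^{\lambda }_t}\right) ^{p/2} K_{p}\left( \sqrt{\phi (\phi + 2S^{\lambda }_t)}\right) .$$

For convenience, we reparametrize the above density by squaring the parameter \(\phi\), such that

$$f_{\phi }(\mathbf {k},t) = \left( \prod _{i=1}^m \frac{\lambda _{i,t}^{k_i}}{k_i!}\right) \sqrt{\frac{2}{\pi }} \, \phi \, e^{\phi ^2} \left( \frac{\phi }{\varDelta }\right) ^{p} K_{p}\left( \phi \varDelta \right) ,$$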

where \(p = S^k -\frac{1}{2}\) and \(\varDelta = \sqrt{\phi ^2 + 2S^{\lambda }_t}\). The derivatives of \(f_{\phi } (\mathbf {k},t)\) with respect to the different parameters can be derived by making use of the derivative of \(K_{\nu }(\omega )\) with respect to its argument,

$$\frac{\partial K_{\nu }(\omega )}{\partial \omega } = -K_{\nu -1}(\omega ) - \frac{\nu }{\omega } K_{\nu }(\omega ),$$

then it leads to the following derivatives

where \(\mathbf {1}_i = (0,\ldots ,0,1,0,\ldots , 0)^T \in {\mathbb {R}}^{m \times 1}\) is the vector whose i-th element is one and all other elements are zero.
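The recurrence \(\partial K_{\nu }(\omega )/\partial \omega = -K_{\nu -1}(\omega ) - \frac{\nu }{\omega }K_{\nu }(\omega )\) is what allows these derivatives to be written without numerical differentiation. The sketch below checks it against the closed form \(K_{1/2}(\omega ) = \sqrt{\pi /(2\omega )}\, e^{-\omega }\) and against a central difference at an order of the form \(p = S^k - \frac{1}{2}\); the trapezoidal Bessel helper is again an assumed stand-in for a library function, and the values are illustrative.

```python
import math


def bessel_k(nu, x, n=600):
    """K_nu(x), x > 0, via int_0^inf exp(-x cosh u) cosh(nu u) du (trapezoidal rule, moderate orders)."""
    a = abs(nu)
    upper = math.asinh(max(a, 1.0) / x) + 10.0
    step = upper / n
    total = 0.0
    for j in range(n + 1):
        u = j * step
        decay = x * math.cosh(u)
        val = 0.5 * (math.exp(a * u - decay) + math.exp(-a * u - decay))
        total += val if 0 < j < n else 0.5 * val
    return total * step


def dbessel_k_dx(nu, x):
    """dK_nu(x)/dx via the recurrence K'_nu(x) = -K_{nu-1}(x) - (nu / x) K_nu(x)."""
    return -bessel_k(nu - 1.0, x) - nu / x * bessel_k(nu, x)


x, step = 1.3, 1e-5
# Closed form: d/dx [sqrt(pi / (2x)) exp(-x)] = -K_{1/2}(x) (1 + 1 / (2x))
exact_half = -math.sqrt(math.pi / (2.0 * x)) * math.exp(-x) * (1.0 + 1.0 / (2.0 * x))
print(dbessel_k_dx(0.5, x), exact_half)
p = 2.5                                  # e.g. p = S^k - 1/2 with S^k = 3
fd = (bessel_k(p, x + step) - bessel_k(p, x - step)) / (2.0 * step)
print(dbessel_k_dx(p, x), fd)
```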

4.3.2 Generalized Inverse Gaussian with \(\nu = -\frac{3}{2}\)

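In this case, the constant \(c = \frac{\phi }{1+\phi }\) and the variance \(var(\theta _t) = \frac{1}{\phi }\), which is exactly the same as the variance in the Inverse Gaussian case, but the random effect \(\theta _t\) will in general have larger skewness and kurtosis. The resulting distribution function is

$$f_{\phi }(\mathbf {k},t) = \left( \prod _{i=1}^m \frac{\lambda _{i,t}^{k_i}}{k_i!}\right) \sqrt{\frac{2(1+\phi )}{\pi }} \, e^{\phi } \left( \frac{1+\phi }{\omega }\right) ^{p} K_{p}(\omega ),$$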

where \(p = S^k - \frac{3}{2}\) and \(\omega = \sqrt{\phi ^2 + 2(\phi +1)S^{\lambda }_t}\). The derivatives with respect to the different parameters can be derived similarly to those of the Inverse Gaussian case

The remaining case, \(\nu = -\frac{3}{4}\), cannot be simplified, since \(c = \frac{K_{1/4}(\phi )}{K_{3/4}(\phi )}\) cannot be written in terms of elementary functions. Hence numerical differentiation has to be applied when evaluating \(\frac{\partial f_{\phi } (\mathbf {k},t) }{\partial \phi }\) and \(\frac{\partial f_{\phi } (\mathbf {k},t) }{\partial \beta _i}\). Finally, Table 1 summarises the parametrization of all mixing densities and Table 2 shows the moment formulas for each mixing density.

Although the variance formulas differ slightly because of the different parametrizations, the densities can easily be reparameterized and compared with each other. It turns out that the Inverse Gamma has the largest skewness and kurtosis while the Gamma density has the smallest, which means that the 'tailedness' of the densities increases in a 'top-down' order according to the table. Hence, one can choose different densities to accommodate the different tail structures encountered in real data.
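To make the tail ordering concrete, one can compare the three mixing densities whose skewness has a simple closed form (Gamma, Inverse Gaussian and Inverse Gamma) after reparameterizing each to unit mean and a common variance \(v\). Matching by variance is our assumption for illustration; the skewness expressions are the standard ones for these distributions.

```python
import math


def skewness_at_common_variance(v):
    """Skewness of unit-mean Gamma, Inverse Gaussian and Inverse Gamma mixing densities with variance v."""
    gamma_skew = 2.0 * math.sqrt(v)                 # Gamma(phi, phi) with phi = 1 / v
    inv_gauss_skew = 3.0 * math.sqrt(v)             # Inverse Gaussian, mean 1, psi = chi = 1 / v
    shape = 2.0 + 1.0 / v                           # Inverse Gamma: shape phi + 1 with phi = 1 + 1 / v
    inv_gamma_skew = 4.0 * math.sqrt(shape - 2.0) / (shape - 3.0)  # finite only when v < 1
    return gamma_skew, inv_gauss_skew, inv_gamma_skew


print(skewness_at_common_variance(0.5))  # approximately (1.414, 2.121, 5.657)
```

For any variance below one the ordering Gamma < Inverse Gaussian < Inverse Gamma holds, consistent with the ranking stated above.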

5 Model fitting and prediction

5.1 Maximum likelihood estimation for the MMPGIG-INAR(1) model

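In this section, we derive the log likelihood function and score functions of the MMPGIG-INAR(1) model defined above for the general case. Let the whole parameter set be \(\varTheta = \{ p_i, \beta _i, \phi | i = 1,\ldots , m\}\). Since \(\{\mathbf {X}_t\}\) is a discrete Markov chain, the likelihood is the product of its conditional probability functions, \(\prod _{t} {\mathcal {P}}_{\varTheta }(\mathbf {X}_t | \mathbf {X}_{t-1})\), so that the log likelihood function is \(\ell (\varTheta ) = \sum _{t} \log {\mathcal {P}}_{\varTheta }(\mathbf {X}_t | \mathbf {X}_{t-1})\). Each conditional probability is the convolution of m+1 distribution functions (the m binomial thinnings and the joint innovation distribution):

$${\mathcal {P}}_{\varTheta }(\mathbf {X}_t | \mathbf {X}_{t-1}) = \sum _{\omega _1=0}^{\min (X_{1,t}, X_{1,t-1})} \cdots \sum _{\omega _m=0}^{\min (X_{m,t}, X_{m,t-1})} \prod _{j=1}^m f_{p_j}(\omega _j, X_{j,t-1}) \, {\mathbb {E}}\left[ \prod _{i=1}^m \frac{e^{-\theta _t \lambda _{i,t}} (\theta _t \lambda _{i,t})^{X_{i,t} - \omega _i}}{(X_{i,t} - \omega _i)!}\right] ,$$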

where the expectation is taken with respect to the random variable \(\theta _t\). The following proposition gives \(\ell (\varTheta )\) and its score functions.
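For \(m = 2\), the convolution can be coded directly. The standard-library sketch below assumes Gamma mixing, so that the expectation has the closed form of Sect. 4.1 (any other \(f_{\phi }(\mathbf {k},t)\) could be substituted), and then accumulates the conditional log likelihood of one bivariate series. All names and numbers are illustrative.

```python
import math


def log_f_gamma(k, lam, phi):
    """log f_phi(k, t) under Gamma(phi, phi) mixing."""
    s_k, s_lam = sum(k), sum(lam)
    return (sum(ki * math.log(li) - math.lgamma(ki + 1) for ki, li in zip(k, lam))
            + math.lgamma(s_k + phi) - math.lgamma(phi)
            + phi * math.log(phi) - (s_k + phi) * math.log(phi + s_lam))


def binom_pmf(x, n, p):
    """f_p(x, n): probability that binomial thinning keeps x of n counts."""
    return math.comb(n, x) * p**x * (1.0 - p) ** (n - x)


def transition_prob(x, x_prev, p, lam, phi):
    """P(X_t = x | X_{t-1} = x_prev) for m = 2: sum over the thinned parts w1, w2."""
    total = 0.0
    for w1 in range(min(x[0], x_prev[0]) + 1):
        for w2 in range(min(x[1], x_prev[1]) + 1):
            thinning = binom_pmf(w1, x_prev[0], p[0]) * binom_pmf(w2, x_prev[1], p[1])
            innovation = math.exp(log_f_gamma((x[0] - w1, x[1] - w2), lam, phi))
            total += thinning * innovation
    return total


def log_likelihood(series, p, lam_seq, phi):
    """Conditional log likelihood sum_{t >= 2} log P(X_t | X_{t-1}) for one bivariate series."""
    return sum(math.log(transition_prob(series[t], series[t - 1], p, lam_seq[t], phi))
               for t in range(1, len(series)))


p, lam, phi = (0.4, 0.6), (0.5, 0.9), 1.5
series = [(0, 1), (1, 1), (2, 0), (1, 2)]
print(log_likelihood(series, p, [lam] * len(series), phi))
```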

Proposition 5.1

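Suppose there is a multivariate random sequence \((\mathbf {X}_1, \mathbf {X}_2, \dots , \mathbf {X}_n)\) generated from the MMPGIG-INAR(1) model. The log likelihood function \(\ell (\varTheta )\) and the score functions are given by

$$\ell (\varTheta ) = \sum _{t=2}^{n} \log {\mathcal {P}}_{\varTheta }(\mathbf {X}_t | \mathbf {X}_{t-1}), \qquad \frac{\partial \ell (\varTheta )}{\partial \vartheta } = \sum _{t=2}^{n} \frac{1}{{\mathcal {P}}_{\varTheta }(\mathbf {X}_t | \mathbf {X}_{t-1})} \frac{\partial {\mathcal {P}}_{\varTheta }(\mathbf {X}_t | \mathbf {X}_{t-1})}{\partial \vartheta }, \quad \vartheta \in \varTheta .$$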

The derivatives inside the sum are given by

where the derivative \(\frac{\partial f_{p_j}(\omega ,X_{j,t-1}) }{\partial p_j }\) has the same form for all \(j = 1,...,m\).

The derivatives \(\frac{\partial f_{\phi }(\mathbf {k},t) }{\partial \vartheta _1}\) have already been discussed in Sect. 4 for the different cases. Hence, the maximum likelihood estimators can be obtained through numerical algorithms, for example Newton-Raphson or quasi-Newton methods. However, optimization becomes computationally intensive as m increases. One can address this issue by adopting the composite likelihood method introduced in Pedeli and Karlis (2013a), where the high-dimensional likelihood function is reduced to a sum of bivariate cases.

5.2 Integer-valued prediction

Based on the estimates obtained by maximum likelihood and the random sequence \((\mathbf {X}_{1}, \dots , \mathbf {X}_n)\), the h-steps ahead distribution of \(\mathbf {X}_{n + h}\) conditional on \(\mathbf {X}_{n}\) is given by

where \(\hat{\mathbf {P}}\) is obtained from the above estimation procedure. In classical time series models, one would minimise MSE\((h) = {\mathbb {E}}[(\hat{\mathbf {X}}_{n+h} - \mathbf {X}_{n+h})^2 \vert \mathbf {X}_n]\) to obtain the optimal predictor \(\hat{\mathbf {X}}_{n + h} = {\mathbb {E}}[\mathbf {X}_{n+h} \vert \mathbf {X}_n]\). However, this would inevitably produce real values for \(\hat{\mathbf {X}}_{n+h}\), which is not coherent with the integer-valued nature of the MMPGIG-INAR(1) model. To solve this, one can instead use the median \(\tilde{\mathbf {X}}_{n+h}\) of \(\mathbf {X}_{n+h}\), i.e. the 50% quantile, as the prediction value, as also discussed by Pavlopoulos and Karlis (2008) and Homburg et al. (2019). In the univariate case, the median is obtained by minimising the mean absolute error MAE\((h) = {\mathbb {E}}[|\tilde{X}_{n+h} - X_{n+h}| \vert X_n]\). This idea extends to the multivariate case, where the median \(\tilde{\mathbf {X}}_{n+h}\) is the so-called geometric median, calculated by minimising the expected Euclidean distance

In practice, the expectation can be evaluated numerically by simulating random samples of \(\mathbf {X}_{n+h}\).
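A simulation-based sketch of the geometric-median predictor, assuming NumPy, is given below. It draws \(\mathbf {X}_{n+h}\) by iterating the thinning-plus-innovation recursion from \(\mathbf {X}_n\) (with Gamma mixing, constant rates and hypothetical parameter values as illustrative choices) and then searches integer points for the minimiser of the average Euclidean distance to the draws. Restricting the search to integer points inside the bounding box of the draws is our assumption, motivated by the integer-valued prediction discussed above; clipping any point to that box cannot increase its distance to any draw, so the restriction to the box loses nothing.

```python
import numpy as np


def integer_geometric_median(samples):
    """Integer point minimising the mean Euclidean distance to bivariate draws."""
    samples = np.asarray(samples, dtype=float)
    lo = samples.min(axis=0).astype(int)
    hi = samples.max(axis=0).astype(int)
    best, best_cost = None, np.inf
    for a in range(lo[0], hi[0] + 1):
        for b in range(lo[1], hi[1] + 1):
            cost = np.linalg.norm(samples - np.array([a, b]), axis=1).mean()
            if cost < best_cost:
                best, best_cost = (a, b), cost
    return best


def simulate_ahead(x_n, p, lam, phi, h, n_draws, rng):
    """Draws of X_{n+h} given X_n, iterating X = P o X + R with a shared Gamma random effect."""
    draws = np.empty((n_draws, len(x_n)), dtype=np.int64)
    for s in range(n_draws):
        x = np.asarray(x_n, dtype=np.int64)
        for _ in range(h):
            theta = rng.gamma(shape=phi, scale=1.0 / phi)
            x = rng.binomial(x, p) + rng.poisson(theta * np.asarray(lam))
        draws[s] = x
    return draws


rng = np.random.default_rng(7)
draws = simulate_ahead([1, 0], [0.4, 0.3], [0.2, 0.1], 1.0, 1, 5000, rng)
print(integer_geometric_median(draws))
```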

6 Empirical analysis

The data used in this section come from the Local Government Property Insurance Fund (LGPIF) of the state of Wisconsin. This fund provides property insurance to different types of government units, including villages, cities, counties, towns and schools. The LGPIF contains three major groups of property insurance coverage, namely building and contents (BC), inland marine (IM) and motor vehicles (PN, PO, CN, CO). For exploratory purposes, we focus on jointly modelling the claim frequency of IM, denoted as \(X_{1,t}\), and comprehensive new vehicles collision (CN), denoted as \(X_{2,t}\). The insurance data cover the period 2006–2011, with 1234 policyholder records in total. Only \(n_1 = 1048\) of them have complete data over the period 2006–2010, and these are used as the training data set. The last year, 2011, with \(n_2 =1025\) of these 1048 policyholders, is used as the test data set. Denote the IM and CN claim frequencies of a particular policyholder as \(X^{(j)}_{1,t}\) and \(X^{(j)}_{2,t}\), respectively, where j is the identifier of each policyholder. Then \(X_{i,t} = \sum _{j=1}^{n_1} X_{i,t}^{(j)}\) with \(i = 1,2\), where t takes the values 1 to 5, corresponding to the years 2006 to 2010.

In what follows, basic statistical analysis is shown in Table 3 and Figs. 1 and 2. The proportion of zeros for the two types of claims is higher than 90% during the period 2006–2010. Also, both types of claims exhibit overdispersion, since their variances exceed their means during this period. Furthermore, the overdispersion of \(X_{2,t}\) is even stronger than that of \(X_{1,t}\), which indicates the need to employ an overdispersed distribution for these data. Additionally, the correlation tests for \(X_{1,t}\) and \(X_{2,t}\) show a positive correlation between the two claim types. At this point it is worth noting that modelling positively correlated claims has been explored by many articles; see, for example, Bermúdez and Karlis (2011), Bermúdez and Karlis (2012), Shi and Valdez (2014a, b), Abdallah et al. (2016), Bermúdez and Karlis (2017), Bermúdez et al. (2018), Pechon et al. (2018), Pechon et al. (2019), Bolancé and Vernic (2019), Denuit et al. (2019), Fung et al. (2019), Bolancé et al. (2020), Pechon et al. (2021), Jeong and Dey (2021), Gómez-Déniz and Calderín-Ojeda (2021), Tzougas and di Cerchiara (2021a, b) and Bermúdez and Karlis (2021). Finally, the proportion of zeros and the kurtosis show that the marginal distributions of \(X_{1,t}, X_{2,t}\) are positively skewed and exhibit a fat-tailed structure, which indicates the appropriateness of adopting a positively skewed and fat-tailed distribution (GIG distribution).

The description and some summary statistics for all the explanatory variables (covariates \(z_{1,t}, z_{2,t}\)) relevant to \(X_{1,t}, X_{2,t}\) are shown in Table 4. Variables 1–5, including 'TypeVillage', are categorical variables indicating the entity type of a policyholder. Because variables 6 and 9 have a strongly heavy-tailed structure that can drastically distort the model fitting, they are transformed by means of the 'rank' function in R and then standardized, which mitigates the effect of outliers. Variables 6–8 are relevant to IM claims \(X_{1,t}\), while variables 9 and 10 provide information for CN claims \(X_{2,t}\). The covariate \(z_{1,t}\) includes variables 1–8, and \(z_{2,t}\) contains variables 1–5 and variables 9 and 10. These covariates form the regression part for \(\lambda _{i,t}\) described in Sect. 2, which may help explain part of the heterogeneity between \(X_{1,t}\) and \(X_{2,t}\).
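The rank-then-standardize transformation can be sketched in a few lines of standard-library Python. We assume R's default tie handling in rank() (tied values receive their average rank) and standardization by the sample mean and sample standard deviation, as R's scale() does; the exact calls used for the analysis are not stated, so these defaults are assumptions.

```python
import statistics


def rank_standardize(values):
    """Average ranks (ties share their mean 1-based rank), then z-scores with the sample SD."""
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    ranks = [0.0] * n
    start = 0
    while start < n:
        end = start
        while end + 1 < n and values[order[end + 1]] == values[order[start]]:
            end += 1                               # extend the block of tied values
        avg_rank = (start + end) / 2.0 + 1.0       # mean of 1-based ranks start+1..end+1
        for idx in order[start:end + 1]:
            ranks[idx] = avg_rank
        start = end + 1
    mean, sd = statistics.mean(ranks), statistics.stdev(ranks)
    return [(r - mean) / sd for r in ranks]


# The extreme value 1e6 ends up only one rank above the next value.
print(rank_standardize([10.0, 1_000_000.0, 50.0, 50.0]))
```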

The MMPGIG-INAR(1) model with \(m = 2\) is applied to model the joint behaviour of \(X_{1,t}^{(j)}, X^{(j)}_{2,t}\) across all the policyholders. Note that when the Gamma mixing density is used in the MMPGIG-INAR(1), the resulting model is the "BINAR(1) process with BVNB innovations" of Pedeli and Karlis (2011), which we use as a benchmark for the other choices of mixing density. The log likelihood function then simply becomes

$$\ell (\varTheta ) = \sum _{j=1}^{n_1} \ell _j(\varTheta ),$$

where \(\ell _j(\varTheta )\) is the log likelihood function for policyholder j. Note that all policyholders share the same parameters \(p_i, \beta _i\) for claim type \(X_{i,.}\), and \(\phi\) is common to both claim types. In addition, it is necessary to show the appropriateness of introducing correlation and a time-series component (binomial thinning) in the MMPGIG-INAR(1). Therefore, we also fit the following models to the data.

1. The joint distribution of \(X^{(j)}_{1,t}\) and \(X^{(j)}_{2,t}\) is assumed to be a bivariate mixed Poisson distribution (BMP) with probability mass function \(f_{\phi }(\mathbf {k},t)\), as discussed in Sect. 4.

2. The joint distribution of \(X^{(j)}_{1,t}\) and \(X^{(j)}_{2,t}\) is characterized by two independent INAR(1) models (TINAR)

$$\begin{aligned} X^{(j)}_{1,t}&= p_1 \circ X^{(j)}_{1,t-1} + R_{1,t} \\ X^{(j)}_{2,t}&= p_2 \circ X^{(j)}_{2,t-1} + R_{2,t}, \end{aligned}$$

where \(R_{i,t} \sim Pois(\lambda _{i,t} \theta _{i,t}), i=1,2\), and the random effects \(\theta _{1,t}\) and \(\theta _{2,t}\) are independent of each other.

Similarly, the likelihood functions for these models have the same form as Eq. (6.1), but with a different joint distribution \(\Pr ( X^{(j)}_{1,t+1}, X^{(j)}_{2,t+1} | X^{(j)}_{1,t}, X^{(j)}_{2,t})\). For comparison purposes, we fit the bivariate mixed Poisson regression model to the training data starting from 2007, because the BMP model does not use lagged responses; in this way, all models are fitted to the same response observations.

All the estimations are implemented in R using the 'optim' function with method 'BFGS' (a quasi-Newton method). The gradient functions with respect to all the parameters are derived in Sects. 4 and 5, and they can be supplied as the gradient argument of 'optim', which significantly decreases the computational time compared with the numerical gradient used in the default setting.

Model fitting results are shown in Tables 5 and 6. All the results show a great improvement from adopting a time series model compared with the BMP results in Table 6. Focusing on the BINAR results in Table 6, except for the case where the mixing density is GIG with \(\nu = -\frac{3}{2}\), there is a significant improvement from introducing a fat-tailed distribution as the mixing density in \(\mathbf {R}_t\) compared with the Gamma case. On the other hand, the improvement from the optimal TINAR to the optimal BINAR (cells in bold face) is obvious, as indicated by the lower AIC and BIC of BINAR with GIG \(\nu = -\frac{3}{4}\) compared with TINAR with GIG \(\nu = -\frac{3}{4}\) and Inverse Gaussian. This implies that there is significant correlation between the two claim sequences. Maximum likelihood estimates for three cases are given in Table 7, together with their standard errors, which are estimated by inverting the numerical Hessian matrix. From Table 7 we see that the estimates of \(p_i, \beta _i\) are very close to each other, while the estimated \(\phi\) differs significantly among the three mixing densities, which is expected because \(\phi\) influences the tail and correlation structure of the bivariate sequence \(X_{1,t}, X_{2,t}\). Furthermore, the explanatory variables have similar effects (positive and/or negative), which are almost identical for both response variables, in all three models. Finally, the variables that are statistically significant at the 5% level for \(X_{1,t}\) are TypeCounty, TypeMisc, TypeVillage and NoClaimCreditIM, and those that are statistically significant at the 5% level for \(X_{2,t}\) are TypeCity, TypeCounty, TypeVillage, CoverageIM and CoverageCN.
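For reference, the information criteria used in this comparison follow the usual definitions, \(\mathrm {AIC} = -2\ell (\hat{\varTheta }) + 2d\) and \(\mathrm {BIC} = -2\ell (\hat{\varTheta }) + d \log N\), where d is the number of estimated parameters. Which sample size N enters the BIC for this panel of policyholders is not stated in the text, so it is left as an input in this minimal Python sketch; the numbers in the usage line are made up.

```python
import math


def information_criteria(loglik, n_params, n_obs):
    """Return (AIC, BIC) for a maximised log likelihood with n_params free parameters."""
    aic = -2.0 * loglik + 2.0 * n_params
    bic = -2.0 * loglik + n_params * math.log(n_obs)
    return aic, bic


# Hypothetical values, not taken from Tables 5 or 6.
print(information_criteria(loglik=-100.0, n_params=5, n_obs=100))
```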

Fig. 2 presents the predictions for both types of claims at \(t = 2011\) for the \(n_2 = 1025\) policyholders, based on the geometric median in Eq. (5.5). The predicted number of policyholders who make no claims appears reasonably good, while the predictions for \(X_{1,t}\) are generally underestimated in the tail and those for \(X_{2,t}\) are overestimated in the tail. On the other hand, Table 8 shows the prediction sum of squared errors (PSSE) and the frequencies of some basic combinations of observations, namely (0, 0), (1, 0), (0, 1), (1, 1), for the best fitted models within three classes: bivariate mixed Poisson regression, two independent INAR(1) and bivariate INAR(1). It is again clear that the introduction of the autoregressive part makes sense, as it greatly reduces the prediction error. Although the best TINAR model has the closest frequency of (0, 0), the best BINAR model has the lowest overall prediction error.
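The two summaries in Table 8 can be computed from predicted and observed count pairs as sketched below. We assume that PSSE is the sum, over policyholders and both claim types, of squared differences between predicted and observed counts, and that the combination frequencies count policyholders with each (IM, CN) pair; both definitions and the toy data are assumptions for illustration.

```python
from collections import Counter


def psse(predicted, observed):
    """Sum of squared prediction errors over all policyholders and both claim types."""
    return sum((a - b) ** 2
               for pred_row, obs_row in zip(predicted, observed)
               for a, b in zip(pred_row, obs_row))


def combination_frequencies(rows, combos=((0, 0), (1, 0), (0, 1), (1, 1))):
    """Number of policyholders whose (X_1, X_2) pair equals each listed combination."""
    counts = Counter(tuple(r) for r in rows)
    return {c: counts.get(c, 0) for c in combos}


predicted = [(0, 0), (0, 0), (1, 0), (0, 1)]   # toy geometric-median predictions
observed = [(0, 0), (2, 0), (1, 0), (1, 1)]    # toy observed claim counts
print(psse(predicted, observed))               # 5
print(combination_frequencies(observed))
```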

7 Concluding remarks

In this paper we proposed the MMPGIG-INAR(1) regression model for modelling multiple time series of different types of count response variables. The proposed model extends the BINAR(1) regression model introduced by Pedeli and Karlis (2011) and can accommodate positive correlation and multivariate overdispersion in a flexible manner. In particular, the Generalized Inverse Gaussian class includes many distributions as special and limiting cases, such as the Gamma and Inverse Gaussian densities considered in the application, all of which can be used as mixing densities for the innovations \(\mathbf {R}_t\). Thus, the proposed modelling framework can capture the stylized characteristics of a variety of complex data sets. Furthermore, due to the simple form of its density function, statistical inference for the MMPGIG-INAR(1) model is straightforward via the ML method, whereas other models proposed in the literature, such as copula-based models, may suffer from numerical instability during ML estimation. For demonstration purposes, different members of the proposed family of models were fitted to LGPIF data from the state of Wisconsin. Finally, a possible line of further research is to also allow for cross correlation, meaning that the off-diagonal elements of \(\mathbf {P}\) can take positive values.
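To make the special-case structure of the GIG class concrete, the sketch below evaluates a GIG density in the common \((\lambda, \chi, \psi)\) parameterization. This parameterization is an assumption and may differ from the paper's \((\nu, \phi)\) form; we assume only that \(\lambda\) plays the role of the index \(\nu\). The sketch checks numerically that \(\lambda = -\frac{1}{2}\) recovers the Inverse Gaussian density and that \(\chi \to 0\) with \(\lambda > 0\) approaches a Gamma density. To avoid dependencies, \(K_\lambda\) is computed from its integral representation with the trapezoidal rule, and all function names are hypothetical.

```python
import math


def bessel_k(nu, z, n=2000):
    """Modified Bessel function of the second kind K_nu(z), z > 0, from
    K_nu(z) = int_0^inf exp(-z cosh t) cosh(nu t) dt via the trapezoidal rule."""
    t_max = math.acosh(1.0 + 60.0 / z)  # exp(-z cosh t) <= exp(-60) beyond t_max
    h = t_max / n

    def g(t):
        return math.exp(-z * math.cosh(t)) * math.cosh(nu * t)

    return h * (0.5 * g(0.0) + sum(g(i * h) for i in range(1, n)) + 0.5 * g(t_max))


def gig_pdf(x, lam, chi, psi):
    """GIG(lam, chi, psi) density, proportional to x^(lam-1) exp(-(chi/x + psi*x)/2)."""
    omega = math.sqrt(chi * psi)
    norm = (psi / chi) ** (lam / 2) / (2.0 * bessel_k(lam, omega))
    return norm * x ** (lam - 1) * math.exp(-0.5 * (chi / x + psi * x))


def inverse_gaussian_pdf(x, mu, shape):
    return math.sqrt(shape / (2 * math.pi * x ** 3)) * math.exp(
        -shape * (x - mu) ** 2 / (2 * mu ** 2 * x))


def gamma_pdf(x, shape, rate):
    return rate ** shape * x ** (shape - 1) * math.exp(-rate * x) / math.gamma(shape)


# Special case: lam = -1/2, chi = shape, psi = shape / mu^2 gives IG(mu, shape).
for x in (0.3, 1.0, 2.5):
    print(gig_pdf(x, -0.5, 2.0, 2.0), inverse_gaussian_pdf(x, 1.0, 2.0))

# Limiting case: chi -> 0 with lam > 0 gives Gamma(shape=lam, rate=psi/2).
print(gig_pdf(1.0, 2.0, 1e-8, 2.0), gamma_pdf(1.0, 2.0, 1.0))
```

The trapezoidal rule is a deliberate choice here: the integrand is smooth, even in \(t\) and decays super-exponentially, so the rule converges very quickly without a special-function library.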

References

Abdallah A, Boucher J-P, Cossette H (2016) Sarmanov family of multivariate distributions for bivariate dynamic claim counts model. Insurance Math Econ 68:120–133

Al-Osh M, Alzaid AA (1987) First-order integer-valued autoregressive (INAR(1)) process. J Time Ser Anal 8(3):261–275

Amalia J, Purhadi, Otok BW (2017) Parameter estimation and statistical test of geographically weighted bivariate Poisson Inverse Gaussian regression models. In: AIP Conference Proceedings, vol 1905. AIP Publishing LLC, p 050005

Atkinson A, Yeh L (1982) Inference for Sichel’s compound Poisson distribution. J Am Stat Assoc 77(377):153–158

Barndorff-Nielsen O, Blaesild P, Seshadri V (1992) Multivariate distributions with generalized inverse Gaussian marginals, and associated Poisson mixtures. Can J Stat 109–120

Bermúdez L, Karlis D (2011) Bayesian multivariate Poisson models for insurance ratemaking. Insurance Math Econ 48(2):226–236

Bermúdez L, Karlis D (2012) A finite mixture of bivariate Poisson regression models with an application to insurance ratemaking. Comput Stat Data Anal 56(12):3988–3999

Bermúdez L, Karlis D (2017) A posteriori ratemaking using bivariate Poisson models. Scand Actuar J 2017(2):148–158

Bermúdez L, Karlis D (2021) Multivariate INAR(1) regression models based on the Sarmanov distribution. Mathematics 9(5):505

Bermúdez L, Guillén M, Karlis D (2018) Allowing for time and cross dependence assumptions between claim counts in ratemaking models. Insurance Math Econ 83:161–169

Bolancé C, Vernic R (2019) Multivariate count data generalized linear models: three approaches based on the Sarmanov distribution. Insurance Math Econ 85:89–103

Bolancé C, Guillen M, Pitarque A (2020) A Sarmanov distribution with beta marginals: an application to motor insurance pricing. Mathematics 8(11):2020

Boucher J-P, Denuit M, Guillen M (2008) Models of insurance claim counts with time dependence based on generalization of Poisson and negative binomial distributions. Variance 2(1):135–162

Cheon S, Song SH, Jung BC (2009) Tests for independence in a bivariate negative binomial model. J Korean Stat Soc 38(2):185–190

Davis RA, Holan SH, Lund R, Ravishanker N (2016) Handbook of discrete-valued time series. CRC Press, Boca Raton

Denuit M, Guillen M, Trufin J et al (2019) Multivariate credibility modelling for usage-based motor insurance pricing with behavioural data. Ann Actuar Sci 13(2):378–399

Freeland RK, McCabe BP (2004) Analysis of low count time series data by Poisson autoregression. J Time Ser Anal 25(5):701–722

Fung TC, Badescu AL, Lin XS (2019) A class of mixture of experts models for general insurance: application to correlated claim frequencies. ASTIN Bull 49(3):647–688

Ghitany M, Karlis D, Al-Mutairi D, Al-Awadhi F (2012) An EM algorithm for multivariate mixed Poisson regression models and its application. Appl Math Sci 6(137):6843–6856

Gómez-Déniz E, Calderín-Ojeda E (2021) A priori ratemaking selection using multivariate regression models allowing different coverages in auto insurance. Risks 9(7):137

Homburg A, Weiß CH, Alwan LC, Frahm G, Göb R (2019) Evaluating approximate point forecasting of count processes. Econometrics 7(3):30

Jeong H, Dey DK (2021) Multi-peril frequency credibility premium via shared random effects. Available at SSRN 3825435

Johnson NL, Kotz S, Balakrishnan N (1995) Continuous univariate distributions, vol 1. Wiley, New York

Karlis D, Pedeli X (2013) Flexible bivariate INAR(1) processes using copulas. Commun Stat-Theory Methods 42(4):723–740

Latour A (1997) The multivariate GINAR(p) process. Adv Appl Probab 29(1):228–248

Mardalena S, Purhadi P, Purnomo JDT, Prastyo DD (2020) Parameter estimation and hypothesis testing of multivariate Poisson Inverse Gaussian regression. Symmetry 12(10):1738

Mardalena S, Purnomo J, Prastyo D et al (2021) Bivariate Poisson inverse Gaussian regression model with exposure variable: infant and maternal death case study. In: Journal of Physics: Conference Series, vol 1752. IOP Publishing, p 012016

Marshall AW, Olkin I (1990) Multivariate distributions generated from mixtures of convolution and product families. Lecture Notes-Monograph Series, pp 371–393

McKenzie E (1985) Some simple models for discrete variate time series. J Am Water Resourc Assoc 21(4):645–650

Nastić AS, Ristić MM, Popović PM (2016) Estimation in a bivariate integer-valued autoregressive process. Commun Stat-Theory Methods 45(19):5660–5678

Pavlopoulos H, Karlis D (2008) INAR(1) modeling of overdispersed count series with an environmental application. Environmetrics 19(4):369–393

Pechon F, Trufin J, Denuit M et al (2018) Multivariate modelling of household claim frequencies in motor third-party liability insurance. ASTIN Bull 48(3):969–993

Pechon F, Denuit M, Trufin J (2019) Multivariate modelling of multiple guarantees in motor insurance of a household. Eur Actuar J 9(2):575–602

Pechon F, Denuit M, Trufin J (2021) Home and motor insurance joined at a household level using multivariate credibility. Ann Actuar Sci 15(1):82–114

Pedeli X, Karlis D (2011) A bivariate INAR(1) process with application. Stat Model 11(4):325–349

Pedeli X, Karlis D (2013a) On composite likelihood estimation of a multivariate INAR(1) model. J Time Ser Anal 34(2):206–220

Pedeli X, Karlis D (2013b) On estimation of the bivariate Poisson INAR process. Commun Stat-Simul Comput 42(3):514–533

Popović PM (2016) A bivariate INAR(1) model with different thinning parameters. Stat Pap 57(2):517–538

Popović PM, Ristić MM, Nastić AS (2016) A geometric bivariate time series with different marginal parameters. Stat Pap 57(3):731–753

Ristić MM, Nastić AS, Jayakumar K, Bakouch HS (2012) A bivariate INAR(1) time series model with geometric marginals. Appl Math Lett 25(3):481–485

Scotto MG, Weiß CH, Gouveia S (2015) Thinning-based models in the analysis of integer-valued time series: a review. Stat Model 15(6):590–618

Shi P, Valdez EA (2014a) Longitudinal modeling of insurance claim counts using jitters. Scand Actuar J 2014(2):159–179

Shi P, Valdez EA (2014b) Multivariate negative binomial models for insurance claim counts. Insurance Math Econ 55:18–29

Sichel H (1974) On a distribution representing sentence-length in written prose. J R Stat Soc Ser A 137(1):25–34

Sichel H (1982) Asymptotic efficiencies of three methods of estimation for the inverse Gaussian-Poisson distribution. Biometrika 69(2):467–472

Stein GZ, Juritz JM (1988) Linear models with an Inverse Gaussian Poisson error distribution. Commun Stat-Theory Methods 17(2):557–571

Tzougas G, di Cerchiara AP (2021a) The multivariate mixed negative binomial regression model with an application to insurance a posteriori ratemaking. Insurance Math Econ 101:602–625

Tzougas G, di Cerchiara AP (2021b) Bivariate mixed Poisson regression models with varying dispersion. N Am Actuar J 1–31

Weiß CH (2008) Thinning operations for modeling time series of counts—a survey. Adv Stat Anal 92(3):319–341

Weiß CH (2018) An introduction to discrete-valued time series. Wiley, New York

Acknowledgements

The authors would like to thank the anonymous referees for their very helpful comments and suggestions which have significantly improved this article.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, Z., Dassios, A. & Tzougas, G. Multivariate mixed Poisson Generalized Inverse Gaussian INAR(1) regression. Comput Stat 38, 955–977 (2023). https://doi.org/10.1007/s00180-022-01253-0

DOI: https://doi.org/10.1007/s00180-022-01253-0