Abstract

Additive manufacturing has been presented as a novel and competitive method to achieve unprecedented part shapes and material complexities. Though this holds true in niche markets, the economic viability of additive manufacturing for large-scale industrial production is still in question. Companies often struggle to justify their investment in additive manufacturing due to challenges in the integration of such technologies into mainstream production. First, most additive technologies exhibit a relatively low production rate when compared with traditional production processes. Second, there is a lack of robust design for additive manufacturing methods and tools that enable the leveraging of the attendant unique capabilities, including the ability to form organic part geometries and automated part consolidations. Third, there is a dearth of systematic part screening methods to evaluate manufacturability in additive manufacturing. To tackle the challenge of manufacturability evaluation, the present work proposes a novel approach derived from latent semantic analysis and dimensional analysis to evaluate parts and their production for a variety of selected metrics. The selected metrics serve as descriptors of design features and manufacturing functions, which are developed using functional modeling and dimensional analysis theory. Singular-value decomposition and Euclidean distance measurement techniques are used to determine the relative manufacturability for a set of parts for a specified manufacturing process technology. The utility of the method is demonstrated for laser powder bed fusion technology. While demonstrated for additive manufacturing here, the developed approach can be expanded for any given set of manufacturing processes. Expansion of this systemic manufacturability analysis method can support part design decision-making, process selection, and design and manufacturing optimization.

1 Introduction

Additive manufacturing (AM) is presented in literature as a strong competitor of traditional manufacturing methods [1]. The growth in popularity of AM is related to the advantages it provides in terms of material and design freedom to produce unprecedented shapes with high geometric complexity. However, successful adoption and integration of AM into production environments has been challenging for businesses. Often, the full potential of AM technologies is not leveraged, and direct application of AM for products with existing traditional manufacturing solutions is not economically viable [2]. Thus, industries must focus on designing and developing products that are suited for AM rather than using the technology as a direct replacement of traditional manufacturing processes [3].

A decision support system (DSS) can aid in finding potential candidate products for AM through systematic evaluation of alternatives. However, the priorities of most DSS solutions revolve around productivity and economics and seldom integrate design for manufacturing and assembly assessments. Recently, research effort has been directed towards building integrated DSS solutions capable of evaluating the technical and economic aspects of production [3, 4]. Nevertheless, such solutions are still in their infancy and require a significant amount of human interaction. In addition, the design freedom in AM can be leveraged by redesign for consolidation of parts into sub-assemblies; evaluating the relative performance of such part consolidations is challenging, however, due to a lack of metrics and systematic evaluation techniques. Pradel et al. [2] developed a design framework for mapping design and manufacturing knowledge in the context of design for additive manufacturing (DFAM). The study reviewed the current state of the art in DFAM and highlighted several limitations and future directions for research in the field. An important takeaway from the study was the need for easy-to-use and reliable tools that help the user identify when AM can function as a competitive alternative to traditional manufacturing. The authors emphasize that such tools must provide a comprehensive survey of available AM technologies and allow for rapid identification of potential processes based on evaluation of a product or part over a wide range of design, production, and economic metrics. Thus, in this research, a novel evaluation approach derived from singular value decomposition (SVD) and its application in the latent semantic analysis (LSA) method is developed to evaluate parts and their production against a variety of selected metrics. The developed approach serves two distinct objectives.
First, the approach supports fast clustering of parts from a database for specific manufacturing processes, which enables evaluation of their manufacturability based on a set of design and manufacturing metrics. Metric values for part design alternatives and an “ideal” part are computed and compared using a mapping approach based on Euclidean distances between the evaluated parts. Second, the potential for consolidation of parts into sub-assemblies is evaluated using the additive property of part features and metrics provided by the SVD approach. The developed methodology aims to provide users with an evaluation approach that is automatic and allows for rapid identification of potential processes for different designs. However, the current approach does not provide any design guidelines for AM; rather, it aims to provide designers with a generalized framework for developing dimensionless metrics that are scale independent and invariant, together with a mathematical mechanism for performing fast manufacturability evaluations.

The remainder of the manuscript is organized into five sections. Section 2 discusses relevant background information. Section 3 presents the methodology as follows. First, metrics are formalized using a combination of taxonomies organized around functions, organs, and variables, and the approach to build metrics as a combination of variables is selected. Second, the use of SVD is presented for part design and manufacturing evaluation. Sections 4 and 5 then demonstrate the usability of the developed approach using an AM case study. Finally, the findings of the presented work and potential for future developments are discussed in Section 6.

2 Background

DSS solutions for complex decision-making and problem-solving became widespread in the 1970s with the growth of computer technology. Over the past several decades, the utility of DSS has improved, and, with manufacturing moving towards more automated processes, solutions have been developed leading to intelligent decision support systems (IDSS) and cyber-physical production systems (CPPS). DSS can serve as passive, active, or cooperative systems depending upon their functionality. The available literature categorizes DSS into five types, namely, model-driven, data-driven, knowledge-driven, document-driven, and communication-driven systems [5]. In this current research, a combination of model-driven, data-driven, and knowledge-driven approaches is used to evaluate part manufacturability.

A model-driven DSS is typically not data intensive; rather, it uses analytical models, simulation tools, and optimization methods to generate multiple experiments depicting the effects of alternative decisions. Monte Carlo simulation, discrete event simulation, probabilistic forecasting, agent-based and multi-agent simulation, system dynamics, and visual simulation are some of the common simulation methods used in model-driven DSS [6,7,8]. A data-driven DSS, on the other hand, utilizes structured data, such as internal and external company data, time-series data, and real-time data, often processed with techniques such as machine learning using neural networks [9]. Business intelligence systems and online analytical processing (OLAP) are examples of data-driven DSS that enable better decision support by formulating decisions through triggering, manipulating, and/or analyzing data. However, accurate and structured data are a key requirement in developing data-driven solutions, and, thus, efficient data processing could enable fast and accurate decision-making [10, 11]. Knowledge-based DSS methods of today (e.g., fuzzy logic, Bayesian networks, and genetic algorithms) have evolved from their predecessors, known as rule-based expert systems. Such rule-based expert systems use heuristics to solve problems with the help of human expert knowledge stored in databases. In the age of big data, the challenges pertaining to the properties of data (i.e., volume, variety, velocity, veracity, validity, and value) need to be addressed to improve the process of decision-making [12,13,14,15].

Decision support for manufacturing process selection has been of key interest for the AM research community. Such systems require evaluation of design and manufacturing capability to choose the right process for a specific design or vice versa. Selection criteria for design elements are often objective and can be expressed in mathematical form to be either maximized or minimized. However, tradeoffs involving conflicting selection criteria may introduce a certain level of subjectivity which, when combined with the existing complexity of the evaluated problem, makes the selection process difficult. In addition, material selection, process selection, and shape selection for AM should not be considered independent, mutually exclusive entities; rather, they must be addressed with a focus on overlaps and interrelationships [2, 16]. Wang et al. [17] developed a DSS for AM process selection using a hybrid multi-criteria decision-making method that combines the analytic hierarchy process (AHP) with a technique for order preference by similarity to an ideal solution. Their method was used to explore and refine the solution space and to rank suitable alternatives. Technical and economic aspects of the processes are taken into account; however, the method requires development of probabilistic models for representing each process and its capabilities for initial sampling based on customer preferences obtained through the AHP. Such an approach implies the need for large amounts of training data relating to each technical or economic aspect of interest. Modeling each process with less data is challenging, especially in AM where parameters often reflect a non-linear relationship, requiring more intensive training to track them [18].

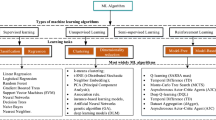

For humans, learning new concepts and skills is usually fast and efficient with only a few training examples. For example, people who know how to use a lathe are likely to quickly learn how to use a milling machine with little or no demonstration. In contrast, training a machine learning (ML) model for a similar situation would require a large number of training samples and is not likely to become an expert system capable of independent operation. Further, the training performed for the lathe will not be transferrable to the milling machine. The lack of interoperability is a fundamental problem of current ML approaches limiting their application to large-scale real problems [19]. In order to effectively use ML models in a particular design or manufacturing domain, it is important to evaluate the capability of current approaches based on their ability to quickly learn new concepts and skills with few training samples. Approaches that will enable the generalization of ML models for related application domains need to be developed.

Automated approaches are becoming increasingly popular for modeling AM processes, but the objectives for the use of ML methods are often unclear. The fundamental question remains: What is the real added value of the application of ML in AM process modeling? When comparing ML models with analytical modeling strategies, one fundamental difference is the ability of ML models to improve continuously and automatically over time. In this respect, other models are rigid and not updated dynamically during use. The second fundamental element to consider with modeling is the complexity of the modeling problem, which is strongly correlated with the required size of the datasets needed to generate the models. Based on these two fundamental aspects of ML, a primary goal of the research herein is to provide an approach capable of integrating new knowledge over time to modify predictions or update clustering when new data is included or when data is removed. Thus, the proposed approach can be coined as a type of machine learning method. As a second key goal, the ML approach should be capable of providing adequate evaluations using very small datasets.

To achieve these two goals, three fundamental approaches from meta-learning, a branch of ML dealing with improving learning and learning processes, are adopted. Meta-learning proposes metric-based, model-based, and optimization-based approaches to improve training of ML models. First, a metric-based approach is established here using dimensional analysis theory (DAT) and the Vaschy-Buckingham theorem. The approach has been applied to manufacturing in general and is applicable to other engineering domains at large. Second, model-based and optimization-based approaches are then combined using SVD to perform part evaluation. This paper presents only one limited aspect of the combination between model-based and optimization-based approaches; the combination will be studied further in future papers.

3 Methodology

As introduced above, the methodology followed in this research develops an ML approach for evaluating parts for manufacturability and presents some fundamental aspects of the approach suitable for part consolidation. The step-by-step implementation of the methodology is shown in Fig. 1. The methodology first uses the dimensional analysis conceptual modeling (DACM) framework to describe the function and define the organs and variables associated with the function. A group of dimensionless metrics are then formulated and computed for the part to be evaluated. Next, SVD and Euclidean distance measurement techniques are used to cluster part designs and compute distances between the evaluated parts and “ideal” parts for different manufacturing process technologies.

Steps I–V in Fig. 1 follow the hypothesis that functions can be efficiently described using a group of dimensionless variables and, subsequently, describe subsystems and systems. Such dimensionless variables can serve as metrics for assessing design and manufacturing information. The methodology starts by describing a set of functions representing the different activities of a system (step I). A function can be described using an action verb. In a graphical context, a function can be represented as a box with inputs and outputs; this is the traditional Pahl and Beitz model [18].

Figure 2 shows a modified generic model of a function and the associated variables. A generic model of a function was initially proposed in bond graph theory [20, 21] but later modified in the dimensional analysis conceptual modeling (DACM) framework by Coatanéa et al. [22]. In Fig. 2, a function is represented using three categories of variables, namely, power variables, state variables, and connecting variables. The power variables represent effort (in the form of force, pressure, temperature, and chemical potential) and flow (in the form of velocity, volumetric flow, volumetric velocity, and entropy flow), while state variables represent displacement and momentum. Generic categories of variables for different energy domains are shown in Table 1. The generic classification model was expanded by Coatanéa et al. [22] by introducing a supplementary category of variables called connecting variables, for describing the connections that exist between power variables (i.e., effort and flow) and state variables (i.e., displacement and momentum).

Connecting variables can describe the geometry of a part as well as material-related properties.

Functions can be classified using the terms employed by the DACM framework. The equivalence of functional terms used in DACM to the notation of other functional modeling approaches is shown in Fig. 3. After classification, the functional terms are associated with organs, such as in bond graph theory, to represent the function as an input-output relationship of generic variables [23]. Organs represent an intermediate layer of detail between the abstract concept of a function and a physical component or subsystem required to implement a function. The mapping between functions and organs is shown in Table 2.

Following the description of functions, dimensionless variables can be computed to define metrics. Metrics are measures used to track or assess the status of a system or activity. In this research, metrics are used to assess the performance of the different functions of the system. To illustrate how metrics can be used to assess the nature of surfaces, an example case for machining a cube is considered. Machining requires an operator to locate and to clamp a workpiece. We can assume that the functions (locating and clamping) are more easily achieved if the shape of the blank workpiece is known. Hence, the fulfillment of these functions can be analyzed by defining a metric representing the shape of the workpiece. We can conceptualize the shape of the workpiece with the help of a dimensionless Pi (π) number.

Assuming the face of the workpiece to be located and clamped is circular with a diameter D, the Pi number could be calculated using either Eq. 1 (surface area) or Eq. 2 (circumference).

Rearranging Eqs. 1 and 2, we obtain:

We see that both equations provide the same value for π (3.1416). This specific value of Pi is associated with the shape of the geometric form considered, in this case a circle.
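The displayed forms of Eqs. 1–4 are not reproduced in this text. Assuming that S denotes the area of the circular face and C its circumference (symbols chosen here for illustration), the standard relations and their rearrangements would read:

$$
S = \frac{\pi D^{2}}{4} \quad (1), \qquad C = \pi D \quad (2)
$$

$$
\pi = \frac{4S}{D^{2}} \quad (3), \qquad \pi = \frac{C}{D} \quad (4)
$$

Both ratios in Eqs. 3 and 4 are dimensionless (m²/m² and m/m, respectively), which is why the same value results for any diameter.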

The concept of reordering the elements in the equations to find the value of Pi can be performed using the Vaschy-Buckingham theorem in DAT [28]. The theorem allows the creation of a set of dimensionless numbers, where, if a physical phenomenon is represented such that q1, a dependent variable, is influenced by a set of independent variables, then

where qi are the n physical variables, which are expressed in terms of h independent physical quantities. Equation 5 can be rewritten as (Eq. 6):

where πi are dimensionless parameters constructed from qi such that j = (n – h) dimensionless equations (Pi numbers herein) exist of the form (Eq. 7):

where the exponents are rational numbers. To calculate the values of the dimensionless Pi numbers, one variable (qi) is selected as the performance variable (i.e., dependent variable) whose exponent is 1, while the other variables are classified as repeating variables.
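Because the displays for Eqs. 5–7 are missing from this text, the following is a sketch of the textbook statement of the theorem that matches the surrounding description. The function names f and g and the exponent symbols a_{i,j} are placeholders assumed here, not notation confirmed by the source.

$$
q_1 = f(q_2, q_3, \ldots, q_n) \tag{5}
$$

$$
\pi_1 = g(\pi_2, \pi_3, \ldots, \pi_{n-h}) \tag{6}
$$

$$
\pi_i = q_1^{a_{i,1}}\, q_2^{a_{i,2}} \cdots q_n^{a_{i,n}}, \qquad i = 1, \ldots, n-h \tag{7}
$$

In each Pi number, the exponents are chosen so that all base dimensions cancel, with the exponent of the selected performance variable fixed at 1.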

Similarly, consider the case in which the face of the workpiece to be located and clamped is rectangular and is described by three variables: length, l, and width, w, both measured in meters (m), and surface area, S, measured in square meters (m²). If S is selected as the performance variable, the Pi number can be calculated as shown in Eq. 8:

In this case, π = 1 is a constant value classifying the shape as being rectangular. It is important to note that the value of π is independent of the scale and dimensions of the rectangular shape, since S is dependent on l and w.
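The display for Eq. 8 is not reproduced here. With S as the performance variable (exponent 1) and l and w as repeating variables, dimensional homogeneity leaves only one possible form, sketched below under that assumption:

$$
\pi = S \cdot l^{-1} \cdot w^{-1} = \frac{S}{l\,w} \tag{8}
$$

As a quick check of scale invariance, a rectangle with l = 2 m, w = 3 m, and S = 6 m² gives π = 6/(2·3) = 1. Scaling every length by a factor of 10 gives l = 20 m, w = 30 m, and S = 600 m², and again π = 600/(20·30) = 1.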

Thus, using DAT and the Vaschy-Buckingham theorem, a generic method can be followed to combine parameters and form metrics measuring certain aspects of a designed part. Pi numbers have a broad usage in engineering; in this research, we demonstrate that they can be used as descriptors of functions. This hypothesis is supported in literature [29], where it is shown that combination of parameters supports the creation of parsimonious metrics. Such parsimonious metrics have proven to be efficient in assessing model performance.

Hence, the hypothesis in this research is similar, where the parsimonious principle applies to the description of functions. Revisiting the example of machining the cube, the goal is to evaluate the “locating” and “clamping” functions for a part in a material removal process. The evaluation of the functions requires considering the efficiency and ease with which both functions are performed. Thus, using the metric values, we can evaluate how “distant” a specific part is from an ideal part—a part for which the two functions (locating and clamping) are most optimally performed (e.g., when the shape is circular or prismatic and locating and clamping can be performed using a three- or six-jaw chuck or a vise). In this research, ideal parts are used to represent different manufacturing process technologies following the TRIZ concept of ideality [27]. In addition, metrics can be computed based on other functional requirements, such as shape complexity, macro- and micro-level precision, volume of material removed or added, and material complexity.

Through the previous examples, a generic method grounded in functional modeling and DAT can be conceptualized for the development of metrics. Though dimensional analysis has been widely used in engineering, the current application of this mathematical approach establishes a mechanism to select the appropriate combination of variables for an engineering application using the DACM framework. The lack of such mechanism to select the right performance and repeating variables for the study in traditional DAT implies that the domain knowledge associated with the study is absent. The DACM framework applied in this research bridges this gap by providing a functional basis through which performance and repeating variables can be assigned. Thus, the Pi numbers computed using this approach do not serve solely as combinations of variables to ensure dimensional homogeneity but also as metrics which describe specific functions associated with a technical system or part. The presented approach establishes a formal association between Pi numbers, a type of structural description metric, and functional modeling using the principle of composability (Fig. 4). In practice, a function is implemented using components. The components are modeled using variables and equations. Pi numbers are a generic form of power laws present ubiquitously in nature and used in order to model a function via key performances (i.e., key performance indicators), repeating variables (i.e., independent variables), and the equations combining them. Pi numbers are scale, metric, aggregation, and composition invariant. They form an ideal tool to represent manufacturing metrics when parts with different scales, metrics, and compositions need to be compared.

Metrics have long been used as a means of evaluating functions. Nevertheless, the use of dimensionless numbers as performance indicators is a generalization enabling the creation of generic metrics that can apply to a broader spectrum of functional use cases. The concept for the use of metrics to represent functions is next explained using an additive manufacturing example.

In laser or electron beam powder bed technologies, the build volume rate V (mm³/s) is a key performance indicator for assessing building time and, consequently, production rate and cost [30]. V is dependent on the layer thickness DS (μm), the scan speed Vscan (mm/s), and the scan line distance between parallel laser tracks Δys (μm). Other important variables affecting the overall product cost are height of the part H (mm), part orientation, part surface area SP (mm²), and total printing time tB (s). A printing process is equivalent to the superfunction/organ D in Fig. 4, wherein multiple low-level functions are integrated to form a high-level function (to print). The level of granularity of function descriptions focuses on the key outputs of the manufacturing machine sub-functions, namely, to create a layer of powder and to move the laser or electron beam with respect to the powder layer. Parameters related to the different functions as well as those that specify part properties and part position on the printing table are considered. Figure 5 shows the high-level functional model for the superfunction “to print” for powder bed printing technology. Variables are represented as their generic categories following Table 1.

The two performance variables (i.e., variables used to assess the performance of the printing process) here are V, the expected build volume rate, and tB, the total printing time. The expected build volume rate accounts for the specific performance of the laser or electron beam when taking into account laser beam diameter, line spacing between laser scans, and the height of the powder layer. The total printing time evaluates the total duration of the printing process, which is dependent on the part geometry. The dependent variables (performance variables) and the independent variables can be represented in the form of vectors. Table 3 presents the decomposition for the first performance variable V. Using the formula [C] = [A]⁻¹ · [B], the exponents ([C]) of the influencing variables in matrix A can be computed to ensure dimensional homogeneity. The base dimensions of the variables in this case are represented as the length of the part layers in each ordinal direction (Lx and Lz) and time (T). Here, time (T) is one of the seven base dimensions of the international system of units. In addition, matrix A is square owing to an appropriate decomposition of the base dimensions. A square (and non-singular) matrix A is needed to compute the matrix inverse, [A]⁻¹.
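The exponent computation [C] = [A]⁻¹ · [B] can be sketched in a few lines of Python. Table 3 is not reproduced in this text, so the dimensional assignments below (layer thickness along Lz, scan speed and scan line distance in the Lx plane) are illustrative assumptions, and the function name `pi_build_rate` is hypothetical.

```python
import numpy as np

# Rows: assumed base dimensions (Lx, Lz, T); columns: repeating variables.
# These assignments are illustrative assumptions, not values taken from Table 3.
#              DS   Vscan  dys
A = np.array([[0.0,  1.0, 1.0],   # Lx: in-plane length
              [1.0,  0.0, 0.0],   # Lz: build-direction length
              [0.0, -1.0, 0.0]])  # T: time

# Performance variable V (build volume rate): Lx^2 * Lz * T^-1
B = np.array([2.0, 1.0, -1.0])

# Exponents of the repeating variables that reproduce the dimensions of V.
# np.linalg.solve(A, B) gives the same result as inv(A) @ B without forming the inverse.
C = np.linalg.solve(A, B)


def pi_build_rate(V, DS, Vscan, dys):
    """Dimensionless build-rate metric; all inputs must use consistent units."""
    return V / (DS ** C[0] * Vscan ** C[1] * dys ** C[2])
```

Under these assumptions every exponent equals 1, so the metric reduces to V / (DS · Vscan · Δys). Because the source lists DS and Δys in μm and Vscan in mm/s, the inputs would need to be converted to a common length unit before the ratio is evaluated.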

Matrix A can be represented as a square matrix by modifying the number of base dimensions by either adding, suppressing, or combining variables in columns. Szirtes [31] presents heuristic rules to follow when performing such transformations. Using the proposed approach, Pi numbers are developed for the two performance variables, V and tB, selected above. Thus, the performance variables are represented in the form of dimensionless variables as presented in Eqs. 9 and 10:

Following the development of dimensionless variables as metrics describing functions, steps VI–IX in Fig. 1 are used to define the mathematical mechanisms to support SVD for part evaluation. The different part alternatives and metrics for their characterization are represented in the form of matrix X (Table 4). In addition to the evaluated part alternatives, an ideal part for each manufacturing process technology is also considered in matrix X. The ideal part is one in which the different functions of the manufacturing system are most optimally performed. This implies that the ideal part will have the ideal (maximum, minimum, or limit) value for all metrics assessed.

Matrix X is decomposed using SVD to enable the computation of distances between the evaluated part alternatives and the ideal part for each potential manufacturing process technology. SVD allows the creation of a model of parts and processes, presented in the form of a succession of vectors composed of metrics [32]. SVD is a decomposition technique related to principal component analysis (PCA) and used for classification and ranking of tasks. Applications of SVD have been presented in literature, for example, in aeronautics and in metamodeling [29, 33]. However, prior to this research, the use of the approach as a systematic part screening method has not been reported. In this research, SVD is used for part clustering, which enables the calculation of distances between the evaluated part alternatives and the ideal part for each manufacturing process technology. Matrix X can be decomposed to the form (Eqs. 11 and 12):

In Eq. 12, a compact SVD approach is followed, in which [X] is an (m × n) matrix, [U] is an (m × r) matrix, [W] is a square diagonal matrix of size (r × r), where r represents the rank of [X] with r ≤ min {m, n}, and [V] is an (n × r) matrix. A compact SVD approach implies that no null columns exist in the matrix [W]. The approach is chosen to ensure that the decomposition results in a square diagonal matrix to allow for straightforward inverse matrix operations.
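The displays for Eqs. 11 and 12 are not available in this text. Based on the matrix sizes stated above, the compact decomposition presumably takes the standard form below; the subscripts showing the matrix dimensions are added here for readability.

$$
[X]_{m \times n} = [U]_{m \times r}\,[W]_{r \times r}\,[V]^{T}_{r \times n}
$$

The inner dimensions agree (r with r), so the product is well defined and returns an (m × n) matrix.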

The matrix [W] resulting from the decomposition is arranged in a way that its diagonal elements (i.e., the singular values) are ranked in descending order. This implies that the influence of the [U] columns is also in descending order. The initial [X] can be reconstructed with reasonable accuracy by retaining only the leading columns of [U]. An interesting property of [U] is that the sum of the variance of each column is one. Thus, all columns have the same deviation. Considering only the singular values in [W], let us assume that the number of selected singular values, k, is two. Then, [W] can be represented as Eq. 13:
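Since the display for Eq. 13 is missing, the truncated matrix for k = 2 is sketched here in its standard form. The symbols w₁ and w₂ for the two largest singular values are assumed notation.

$$
[W_k] = \begin{bmatrix} w_1 & 0 \\ 0 & w_2 \end{bmatrix}, \qquad w_1 \ge w_2 > 0
$$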

The parts are represented by the terms in the row vectors of the matrix product [Uk] · [Wk]. Similarly, the metrics are represented by the column vectors of the matrix product [Wk] · [Vk]ᵀ. The matrix product [Uk] · [Wk] creates vectors of dimension k, where the rows represent the parts (and respective manufacturing process technologies) considered in the study. Thus, the SVD approach develops a reduced model linking parts, metrics, and manufacturing processes. The required level of accuracy can be selected by choosing the number of singular values retained in the reduced model. In this research, the variation in the accuracy of models depending on the number of singular values selected is investigated as part of the case study. The use of SVD and of the decomposed products [Uk] · [Wk] and [Wk] · [Vk]ᵀ provides several advantages. First, it enables computation of distances using the Euclidean distance measurement approach and thus allows screening and ranking of parts in terms of their manufacturability for each manufacturing process technology evaluated. Ideal parts can be added for consideration of different manufacturing process technologies by computing the limits of each metric. The ideal limit of each metric computed would imply the best performance in a specific function. Subsequent ranking would then allow evaluation of the suitability of multiple manufacturing methods for a given part design or set of design alternatives. Further, SVD enables reconstruction of the design space, which allows for development of aggregated metrics and parts. These advantages are further explored in the case study for an additive manufacturing process technology below.
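The reduced model described above can be illustrated with a minimal NumPy sketch. The matrix values are hypothetical normalized metrics invented for illustration (they are not data from Table 4), and the function name `reduced_model` is an assumption.

```python
import numpy as np


def reduced_model(X, k):
    """Return part coordinates, metric coordinates, and the rank-k reconstruction of X."""
    # full_matrices=False gives the economy-size SVD; singular values are sorted descending.
    U, w, Vt = np.linalg.svd(X, full_matrices=False)
    Uk, Wk, Vkt = U[:, :k], np.diag(w[:k]), Vt[:k, :]
    part_coords = Uk @ Wk      # one k-dimensional row per part
    metric_coords = Wk @ Vkt   # one k-dimensional column per metric
    X_k = Uk @ Wk @ Vkt        # rank-k approximation of X
    return part_coords, metric_coords, X_k


# Hypothetical metric matrix: rows = parts (last row = "ideal" part), columns = metrics.
X = np.array([[0.20, 0.90, 0.40],
              [0.70, 0.30, 0.80],
              [0.50, 0.60, 0.50],
              [1.00, 1.00, 1.00]])

for k in (1, 2, 3):
    _, _, X_k = reduced_model(X, k)
    rel_err = np.linalg.norm(X - X_k) / np.linalg.norm(X)
    print(f"k={k}: relative reconstruction error = {rel_err:.3f}")
```

The printed error shrinks as more singular values are retained and vanishes (up to rounding) once k reaches the rank of X, which is the accuracy trade-off the text refers to.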

In order to rank the different parts and evaluate their suitability for certain manufacturing processes, the distance between the ideal part for the given manufacturing process technology and the evaluated parts from a database needs to be computed. Several methods are presented in literature to perform such computation. The two most frequently used approaches, the cosine similarity approach and the Euclidean distance computation approach, are investigated in this research. Cosine similarity is a measure of similarity between two non-zero vectors of an inner product space based on the cosine of the angle between them. Cosine similarity is usually used in positive design space, wherein the outcome is bounded in [0, 1]. It is assumed that the set of metrics developed is also bounded in [0, 1]. Thus, the input data is normalized in order to obtain values in the range of zero (0) to one (1) for computing cosine similarity. For two vectors A and B, the cosine similarity measure can be computed as (Eq. 14):
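The display for Eq. 14 is not reproduced in this text; the standard definition of cosine similarity for two vectors A and B with components A_j and B_j is:

$$
\cos\theta = \frac{A \cdot B}{\lVert A \rVert\,\lVert B \rVert}
= \frac{\sum_{j} A_j B_j}{\sqrt{\sum_{j} A_j^{2}}\;\sqrt{\sum_{j} B_j^{2}}} \tag{14}
$$

When all components are non-negative, as with metrics normalized to [0, 1], the numerator cannot be negative, so the result lies in [0, 1].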

Cosine similarity is often used as a metric for measuring distance when the magnitude of the vectors does not matter, e.g., in semantic analysis. However, if the magnitude of the vectors is important, then a second approach can be followed by computing a Euclidean distance. The Euclidean distance between an ideal reference part (r), which represents a part containing the ideal features (also implies optimal metric values) for a specified manufacturing process technology, powder bed fusion technology in this case, and a real part to be evaluated (i), can be computed as (Eq. 15):

where x, y, z, and f represent the vectors of the product [Uk].[Wk] when assessing part distances (Fig. 7) and the vectors of the product [Wk].[Vk]T when assessing metrics (Fig. 8). In this research, the magnitude of the distance between the points is of importance. Since the reference technologies play the role of ideal targets, distances must be computed between these ideal parts (technologies) and the evaluated alternative parts. The Euclidean distance method is therefore chosen to compute distances and rank parts for a specific manufacturing process technology. Nevertheless, the cosine similarity measure is also used for comparison in the case study. Distance computation and ranking facilitate screening for part manufacturability for a specific technology by comparing metric performance with ideal values.
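The difference between the two measures can be seen in a small sketch. The part names and coordinates below are hypothetical values in a two-dimensional reduced space, chosen only to show how the rankings can disagree; they are not taken from the case study.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length coordinate tuples."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical coordinates of an ideal part and three evaluated parts.
ideal = (1.0, 0.5)
parts = {"part_1": (0.9, 0.4), "part_2": (2.0, 1.0), "part_3": (-0.5, 0.8)}

# Smaller distance means closer to the ideal; larger cosine means more similar.
by_distance = sorted(parts, key=lambda name: euclidean(parts[name], ideal))
by_cosine = sorted(parts, key=lambda name: cosine_similarity(parts[name], ideal), reverse=True)
print(by_distance)
print(by_cosine)
```

Here `part_2` points in exactly the same direction as the ideal part, so it ranks first by cosine similarity, yet it lies farther away than `part_1` and ranks second by Euclidean distance. This is the magnitude effect that motivates the choice of the Euclidean distance for ranking.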

An important aspect of the current methodology is the development of dimensionless metrics (Pi numbers), which are scale, metric, and composability invariant by virtue of the Pi laws (i.e., power laws with dimensional homogeneity). The developed metrics enable the computation of Euclidean distances in which each metric is weighted equally. Without this invariance, the computed Euclidean distance would not give equal importance to each metric but would instead favor those with high variance when measured in the principal component space [33]. The use of the Euclidean distance to perform ranking would therefore not be possible without the invariant nature of the developed metrics. Alternatively, the Mahalanobis distance could be used for comparing metrics. However, the Mahalanobis distance requires the computation of the variance-covariance matrix of the dataset, which is difficult when the data are multicollinear and/or when the number of variables exceeds the number of objects in the dataset. A feature reduction would then need to be carried out to accurately calculate the Mahalanobis distance [33]. The current approach provides an advantage over the Mahalanobis distance in that the developed Pi numbers provide a method to reduce the dimension and complexity of a problem by aggregating metrics.
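The difficulty with the variance-covariance matrix can be checked numerically. The sketch below uses random placeholder data with hypothetical sizes (more metrics than parts) and shows that the resulting covariance matrix is rank deficient, so it cannot be inverted as the Mahalanobis distance requires.

```python
import numpy as np

# Hypothetical sizes: 9 objects (parts) described by 12 variables (metrics).
n_parts, n_metrics = 9, 12
rng = np.random.default_rng(1)
X = rng.normal(size=(n_parts, n_metrics))

# Variance-covariance matrix of the metrics (columns are the variables).
cov = np.cov(X, rowvar=False)
rank = np.linalg.matrix_rank(cov)

# The rank cannot exceed n_parts - 1, so the 12 x 12 matrix is singular.
print(cov.shape, rank)
```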

4 Additive manufacturing case study

The utility of the method developed above for systematic manufacturability evaluation using dimensionless metrics and SVD is demonstrated for an additive manufacturing case study using the laser powder bed fusion (LPBF) process. LPBF is treated as the only manufacturing method in this proof-of-concept exemplar (no competing manufacturing method is considered here). Nevertheless, through future extension, the approach will enable several manufacturing processes to be integrated simultaneously. The capability of the approach to aid manufacturing process selection will be investigated in future research.

In this work, twelve metrics are computed, as presented in Table 5, following the design rules proposed by Zimmer and Adam [34]. The last column of Table 5 is used to compute the position of the ideal part used as the reference for a given manufacturing method. Eight parts exhibiting a range of complexities (e.g., size and the number and type of features) were selected to be assessed for the LPBF process (Fig. 6). Specific features of Bracket A and Connector D have been isolated in this case study to simplify the evaluation of certain metrics and to demonstrate the capability of the proposed method to aggregate metrics, elementary features, and/or parts through vector representation. Specifically, for Bracket A, the big hole and the small holes have been evaluated separately. Similarly, for Connector D, the helix and holes were evaluated separately. For both parts, the choice to isolate specific features was drawn from design rules for AM [35]. The parts and metrics are combined to form matrix X (Table 6).

Matrix X is decomposed using SVD code written in MATLAB. Before the decomposition, matrix X is normalized to the range [−1, 1] with a maximum standard deviation of 1.
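The exact normalization procedure is not detailed in the text. One plausible reading, shown here purely as an assumption, is a column-wise min-max scaling of each metric to [−1, 1]. Values confined to that interval cannot have a standard deviation greater than 1, which is consistent with the stated bound. The function name and the sample values are hypothetical.

```python
import numpy as np

def scale_to_unit_range(X):
    """Column-wise min-max scaling to [-1, 1]; constant columns are mapped to 0."""
    X = np.asarray(X, dtype=float)
    lo, hi = X.min(axis=0), X.max(axis=0)
    varying = hi > lo
    span = np.where(varying, hi - lo, 1.0)  # avoid division by zero for constant columns
    return np.where(varying, 2.0 * (X - lo) / span - 1.0, 0.0)

# Hypothetical raw metric values: 3 parts (rows) by 3 metrics (columns).
raw = np.array([[1.0, 10.0, 5.0],
                [2.0, 30.0, 5.0],
                [4.0, 20.0, 5.0]])
scaled = scale_to_unit_range(raw)
print(scaled)
```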

The normalized input matrix [X] is shown in Table 7.

The resulting decomposed matrices [U], [W], and [V]T are presented in Eqs. 16, 17, and 18, respectively.

Several important observations can be made. First, in matrix [W] (Eq. 17), the singular values are arranged along the diagonal in descending order. As a result, the initial matrix [X] can be approximately reconstructed by considering only the rows and columns in bold from matrices [U], [W], and [V]T in Eqs. 16, 17, and 18, respectively. This means that a complete dataset can be reconstructed from a reduced form of the product of the matrices [U], [W], and [V]T. For the bolded products in Eqs. 16, 17, and 18, the average summed error generated by this reconstruction of [X] is 6.66% for the columns (metrics) and 0.60% for the rows (evaluated parts). When four singular values from matrix [W] are retained, the average summed error drops to 2.66% for the columns and 0.16% for the rows. This important property, explained in Section 3, is used in this paper to represent the position of the parts and metrics in a reduced model. For the demonstration case herein, two singular values were retained to obtain a reduced model.
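The trade-off between the number of retained singular values and the reconstruction accuracy can be sketched as follows. The error measure used here, the relative Frobenius norm of the residual, is an assumption chosen for illustration; the definition of the average summed error reported above is not given in the text and may differ. The data are random placeholders.

```python
import numpy as np

def reconstruction_errors(X):
    """Relative Frobenius error of the rank-k reconstruction of X for k = 1..rank."""
    U, w, Vt = np.linalg.svd(X, full_matrices=False)
    total = np.linalg.norm(X)
    errors = []
    for k in range(1, len(w) + 1):
        Xk = (U[:, :k] * w[:k]) @ Vt[:k, :]  # [Uk].[Wk].[Vk]T
        errors.append(np.linalg.norm(X - Xk) / total)
    return np.array(errors), w

# Hypothetical 9 x 12 input matrix.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(9, 12))
errors, w = reconstruction_errors(X)
for k, err in enumerate(errors, start=1):
    print(k, round(float(err), 4))
```

The error never increases as k grows and vanishes when all singular values are kept, so k acts as a single tuning parameter for model accuracy.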

The decomposed matrices in Eqs. 16, 17, and 18 are processed to form two sub-products between the three matrices ([U], [W], and [V]T). The product matrices, [U].[W] and [W].[V]T, are shown in Table 8 and Table 9, respectively. Those two products are fundamental for representing the parts and metrics in a graphical space.

In Tables 8 and 9, the shaded rows and columns represent the vectors of the two retained singular values. In Table 8, each shaded row represents the specific vector of each single part considered in the model. In Table 9, each shaded column represents the specific vector of each metric. The parts and metrics evaluated can also be presented as a 2D graph (Fig. 7) representing the two main axes of the reduced model. In Fig. 7, the ideal part for LPBF serves as a reference for guiding the evaluation of part manufacturability/printability using the technology. From Fig. 7, it can be seen that Connector D with four (4) holes only is closest to the ideal part and, hence, would be the easiest to manufacture using LPBF when compared with the other evaluated parts. In addition, consolidation of parts to form sub-assemblies is investigated by combining two vectors (Connector B + Connector D). Here, the consolidated sub-assembly is evaluated by combining the metric values of the individual components through simple vector addition. Additionally, consolidation of metrics can also be performed in a similar fashion.
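Consolidation by vector addition relies on the linearity of the projection into the reduced space. In the sketch below, the data are random placeholders, the row indices standing in for two components are arbitrary, and the helper `project` is a hypothetical name. How the study handles normalization of the combined metric values is not specified and is not modeled here.

```python
import numpy as np

# Hypothetical 6 x 12 matrix of normalized metric values (rows: parts).
rng = np.random.default_rng(2)
X = rng.uniform(-1.0, 1.0, size=(6, 12))
U, w, Vt = np.linalg.svd(X, full_matrices=False)
k = 2
Vk = Vt[:k, :].T  # 12 x k basis of the reduced space

def project(row):
    """Coordinates of a metric vector in the k-dimensional part space."""
    return np.asarray(row, dtype=float) @ Vk

# Rows 1 and 3 stand in for two components to be consolidated.
part_b, part_d = X[1], X[3]
assembly = project(part_b + part_d)
print(assembly, project(part_b) + project(part_d))
```

Projecting the existing rows of X reproduces the rows of [Uk].[Wk], and the coordinates of the combined vector equal the sum of the coordinates of its components, so a consolidated sub-assembly can be placed on the same plot as the individual parts.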

Next, the Euclidean distances between the ideal part and the assessed parts are computed, and the parts are ranked on their manufacturability (Fig. 8). It is important to note that the ranking is dependent on the number of singular values considered, as shown in Fig. 8. The results of the case study are discussed further in Section 5.

5 Results and discussion

The methodology proposed in this article was demonstrated for ranking a set of parts on their manufacturability using laser powder bed fusion (LPBF) technology. The ranking was carried out for individual parts and for a consolidated sub-assembly of two components. The computed rankings of the initial parts and of the aggregated features and parts were validated, respectively, graphically (Fig. 7) and using the Euclidean distance for two singular values. The blue-, green-, and orange-shaded ranking scales in Fig. 8 show the ranking when the features of Bracket A (big hole and small holes) and Connector D (4 holes and helix) are evaluated individually. The ranking is updated when the two features of Bracket A and of Connector D are combined. In addition, a consolidated part (Connector B + Connector D) represented as a sub-assembly is added to the evaluation. The updated ranking with feature aggregation and part consolidation is shown in the bottom, gray-shaded ranking scale in Fig. 8.

The Euclidean distance-based approach provided a ranking consistent with the visual evaluation presented in Fig. 7, where Connector D appears closest to the ideal part for LPBF. Cosine similarity-based ranking was also evaluated and presented for comparison. The ranking was updated by considering the combined/aggregated features, which demonstrates how vector representation of the features enables the user both to isolate different features in a part and to compose different features of a part as needed. The combination of features also implies that individual parts can be combined into sub-assemblies. For example, the sub-assembly (Connector B + Connector D) used in this case study followed the same principle and illustrates the potential to consolidate different parts to improve the manufacturability for a specific manufacturing process technology. It can be seen that Bracket A (big hole) had an initial ranking (green-shaded scale) of seventh and Bracket A (small hole) was ranked sixth (Fig. 8). By virtue of the proposed approach, it was possible to evaluate the effect of combining the two features (Bracket A) and to compute the resulting ranking of the aggregated features (see Figs. 7 and 8).

As noted above, an important factor in the manufacturability ranking is the number of singular values selected. The global ranking changed for Connectors A, B, C, and D when three singular values were chosen instead of two (Fig. 8). Thus, any model reduction efforts must consider two factors: the average error generated by the reconstruction of a reduced model and the variation in the ranking based on the number of singular values considered.
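The sensitivity of the ranking to the number of retained singular values can be explored with a short sketch. The data are random placeholders, row 0 is arbitrarily treated as the ideal part, and the function name `rank_parts` is hypothetical.

```python
import numpy as np

def rank_parts(X, ideal_row, k):
    """Indices of the non-ideal rows of X, ordered by Euclidean distance
    to the ideal row in the k-dimensional reduced space."""
    U, w, _ = np.linalg.svd(X, full_matrices=False)
    coords = U[:, :k] * w[:k]  # rows of [Uk].[Wk]
    dist = np.linalg.norm(coords - coords[ideal_row], axis=1)
    return [int(i) for i in np.argsort(dist) if i != ideal_row]

# Hypothetical 9 x 12 matrix; row 0 plays the role of the ideal part.
rng = np.random.default_rng(3)
X = rng.uniform(-1.0, 1.0, size=(9, 12))
ranking_k2 = rank_parts(X, ideal_row=0, k=2)
ranking_k3 = rank_parts(X, ideal_row=0, k=3)
ranking_full = rank_parts(X, ideal_row=0, k=9)
print(ranking_k2)
print(ranking_k3)
print(ranking_full)
```

When all singular values are retained, the distances in the reduced space equal the distances between the original rows, so `ranking_full` serves as a baseline against which the rankings for smaller k can be compared.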

Another interesting observation is that some metrics appear in clusters when represented in a 2D graph (Fig. 9). For example, Metric 1 (individual part production rate), Metric 2 (batch production rate), and Metric 4 (the need for internal support structures) form a cluster. It is interesting to note that Metrics 1 and 2 relate to production time, whereas Metric 4 was not initially intended to. In addition, Metric 6 (minimum feasible vertical hole size), Metric 7 (difficulty of depowdering), and Metric 10 (internal lattice structures) each indicate part complexity and form a cluster. Metrics 11 and 12, design rules relating to ease of clamping and presence of overhanging features, respectively, appear to be somewhat correlated.

No specific clustering method has been used here, but methods such as k-means could be employed in future studies to further examine clustering and the drivers of correlation, which can better inform designers and process planners. Metrics clustered together imply the concept of similarity. Hence, metrics initially developed to characterize one or more specific features can assist in evaluating other features. For example, Metric 4, initially designed to assess internal support structures that would require additional post-processing, is closely related to Metrics 1 and 2, which are used to evaluate the production rate. Metric 4 combines part and machine properties, while Metric 1 is composed specifically of machine properties. Metric 2 includes the projected surface of parts and combines part and machine properties. The part- and machine-related properties considered in the development of the metrics affect the closeness of different metrics despite their differences in objectives. Nevertheless, the 2D visualizations in Figs. 7 and 9 represent the design space that enables the combination of metrics and parts to explore and exploit the design space. Using Fig. 9, it can be envisioned that new metrics can be automatically generated to evaluate parts in the design and manufacturing space. This approach to automated metric generation opens opportunities for future research, including the potential creation of new machine learning (ML) approaches for product design and production planning.
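As an illustration of how k-means could be applied to metric coordinates in the reduced space, a minimal implementation of Lloyd's algorithm is sketched below. No clustering was performed in the study; the point coordinates, the number of clusters, and the function name `kmeans` are hypothetical.

```python
import numpy as np

def kmeans(points, n_clusters, n_iter=50, seed=0):
    """Plain Lloyd's algorithm: returns (labels, centers) for 2D or higher points."""
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    # Start from n_clusters distinct data points chosen at random.
    centers = points[rng.choice(len(points), size=n_clusters, replace=False)]
    for _ in range(n_iter):
        # Assign every point to its nearest center.
        d = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = d.argmin(axis=1)
        # Move each center to the mean of its points (keep it if the cluster is empty).
        new_centers = np.array([
            points[labels == c].mean(axis=0) if np.any(labels == c) else centers[c]
            for c in range(n_clusters)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

# Hypothetical 2D metric coordinates forming two visually separate groups.
metric_points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
                 (5.0, 5.0), (5.1, 5.0), (5.0, 5.1)]
labels, centers = kmeans(metric_points, n_clusters=2)
print(labels)
```

In practice the number of clusters would need to be chosen, for example by inspecting the 2D plot, and the result may depend on the random initialization for less clearly separated data.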

6 Conclusions

This research presents a novel and systematic framework based on functional modeling and dimensional analysis theory for the development of parsimonious metrics as descriptors of functions. In addition, the developed metrics are utilized in a mathematical mechanism for evaluating part manufacturability using singular value decomposition. The metrics, developed as part of this study based on key performance indicators and independent variables, allow for evaluation of design features, production types, and product cost. The developed metrics are scale and composition invariant by virtue of the power law form (i.e., Pi number), which enables the computation of the Euclidean distance to facilitate the ranking of various part designs based on their ease of manufacturability for different production technologies and part dimensions. The proposed approach addresses existing limitations of DAT with the help of the DACM framework to promote a systematic approach for the selection of performance and repeating variables during the development of dimensionless metrics. The combination of functional modeling, DAT, and SVD for the purpose of part manufacturability evaluation has not been considered in the existing literature. The methodology reported herein provides a novel approach to automate product design evaluations and provide feedback to designers during process selection. Readers should keep in mind that automation of the evaluation process is fundamental in this work and is a key novelty compared with the manual (heuristic) guidelines proposed in DfAM methods.

The proposed method evaluates part designs for their “fit” for a variety of potential manufacturing process technologies through the evaluation of their manufacturability with respect to a reference (ideal) part for each process type. The ideal part is developed based on a set of metrics that define the unique attributes of the process. The method developed here and demonstrated for additive manufacturing has the potential to be extended to also consider consolidation of features and parts via the basic mechanisms presented for metric aggregation, i.e., SVD decomposition and the representation of features via vectors of metrics. Part aggregation also requires consideration of multiple other aspects, such as sub-assembly structure, mechanical interface, and the feasibility of assembling the parts in an aggregated sub-assembly. These considerations are not presented herein and form the basis for future work to extend the application of the basic principles and computational approach presented in this article. The principles presented are highly flexible and facilitate feature/part composability and metric ranking. These aspects of the approach have been demonstrated here, together with a concrete implementation of the concept of ideality from TRIZ, which was used in this research for representing the fit of a part for a given manufacturing process technology.

The developed method enables isolated evaluation of individual features of a part but also allows for combining features or parts for aggregate evaluation of complex parts and sub-assemblies. Thus, the method promotes design for manufacturing and assembly through its ability to separate or combine features and parts and to evaluate both individual and aggregated features/parts. In addition, the graphical representation of the design space can enable design space exploration to find new combinations of parts and/or new metrics to aid design and manufacturing analysis. An element not studied in this work is the use of the approach as a prediction tool, wherein SVD allows for the computation of metric values for new parts when information about certain metrics is already known. This last element will drive future research efforts for assisting engineers by providing them with emergent design and process knowledge during product design and process planning, for example, through the use of ML techniques.
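One possible way such a prediction could work, offered only as a sketch since this use is not studied in the work, is to fit the reduced-space coordinates of a new part to its known metric values by least squares and then read the unknown metrics off the reduced model. The function name `predict_missing`, the synthetic rank-2 data, and the choice of known metrics are all assumptions.

```python
import numpy as np

def predict_missing(X, new_row, known, k):
    """Estimate the unknown entries of new_row from its known entries,
    using the rank-k row space of X (rows: parts, columns: metrics)."""
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    Vk = Vt[:k, :].T  # n_metrics x k basis
    known = np.asarray(known, dtype=bool)
    new_row = np.asarray(new_row, dtype=float)
    # Least-squares fit of the k coordinates to the known metric values only.
    coords, *_ = np.linalg.lstsq(Vk[known], new_row[known], rcond=None)
    estimate = Vk @ coords
    return np.where(known, new_row, estimate)

# Synthetic example: a database whose rows lie exactly in a 2-dimensional subspace.
rng = np.random.default_rng(4)
A, B = rng.normal(size=(6, 2)), rng.normal(size=(2, 8))
X = A @ B
true_row = np.array([0.5, -1.5]) @ B  # a new part in the same subspace
known = np.array([True, True, True, True, False, False, True, True])
observed = np.where(known, true_row, np.nan)  # metrics 5 and 6 are unknown
completed = predict_missing(X, observed, known, k=2)
print(completed[~known], true_row[~known])
```

The recovery is exact here only because the synthetic data are exactly of rank 2. With real metric data the estimate would be approximate, and its quality would depend on the number of singular values retained and on which metrics are known.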

References

1. Bandyopadhyay A, Bose S (2019) Additive manufacturing. CRC press publisher, Boca Raton, pp 389

2. Pradel P, Zhu Z, Bibb R, Moultrie J (2018) A framework for mapping design for additive manufacturing knowledge for industrial and product design. J Eng Des 29(6):291–326

3. François M, Segonds F, Rivette M, Turpault S, Peyre P (2019) Design for additive manufacturing (DfAM) methodologies: a proposal to foster the design of microwave waveguide components. Virtual Phys Prototyp 14(2):175–187

4. Yang S, Page T, Zhang Y, Zhao YF (2020) Towards an automated decision support system for the identification of additive manufacturing part candidates. J Intell Manuf. https://doi.org/10.1007/s10845-020-01545-6

5. Felsberger A, Oberegger B, Reiner G (2016) A review of decision support systems for manufacturing systems presented at the SAMI@ iKNOW.

6. Li C, Ren J, Wang H (2016) A system dynamics simulation model of chemical supply chain transportation risk management systems. Comput Chem Eng 89:71–83

7. Hilletofth P, Hilmola O-P, Wang Y, Rouzafzoon J, Helo P (2016) Developing service supply chains by using agent based simulation. Industrial Management & Data Systems

8. Zhang F, Johnson D, Johnson M, Watkins D, Froese R, Wang J (2016) Decision support system integrating GIS with simulation and optimisation for a biofuel supply chain. Renew Energy 85:740–748

9. Power DJ (2001) Supporting decision-makers: an expanded framework. Proceedings of informing science and IT education. pp 1901–1915

10. Chaudhuri S, Dayal U, Narasayya V (2011) An overview of business intelligence technology. Commun ACM 54(8):88–98

11. Pillai DD (1990) Developing a decision support system for optimizing automated wafer fabrication. IEEE Trans Components Hybrids Manuf Technol 13(1):94–102

12. Berman JJ (2013) Principles of big data: preparing, sharing, and analyzing complex information. Morgan Kaufmann Publishers, Burlington

13. Kaisler S, Armour F, Espinosa JA, Money W (2013) Big data: issues and challenges moving forward, presented at the 2013 46th Hawaii International Conference on System Sciences, pp 995–1004

14. Mayer-Schönberger V, Cukier K (2013) Big data: a revolution that will transform how we live, work, and think. Houghton Mifflin Harcourt Publisher, New York

15. Zhang L (2014) A framework to model big data driven complex cyber physical control systems, presented at the 2014 20th International Conference on Automation and Computing. pp 283–288

16. Lovatt AM, Shercliff HR (1998) Manufacturing process selection in engineering design. Part 1: the role of process selection. Mater Des 19(5–6):205–215. https://doi.org/10.1016/S0261-3069(98)00038-7

17. Wang Y, Zhong RY, Xu X (2018) A decision support system for additive manufacturing process selection using a hybrid multiple criteria decision-making method. Rapid Prototyp J 58:145–157. https://doi.org/10.1016/j.rcim.2019.03.003

18. Majeed A, Lv J, Peng T (2019) A framework for big data driven process analysis and optimization for additive manufacturing. Rapid Prototyp J 25(2):308–321. https://doi.org/10.1108/RPJ-04-2017-0075

19. Nilsson J (2019) System of systems interoperability machine learning model

20. Borutzky W (2011) Bond graph modelling of engineering systems: Theory, Applications and Software Support, Edition 1, Springer Publisher

21. Shim T (2002) Introduction to physical system modelling using bond graphs

22. Coatanéa E (2005) Conceptual modelling of life cycle design: a modelling and evaluation method based on analogies and dimensionless numbers. Helsinki University of Technology

23. Andreasen MM, Howard TJ, Bruun HPL (2014) Domain theory, its models and concepts. In: Chakrabarti A, Blessing LTM (eds) An anthology of theories and models of design. Springer London, London, pp 173–195

24. Pahl G, Beitz W, Feldhusen J, Grote K-H (2007) Engineering Design: A Systematic Approach, Springer Publisher, New York

25. Hundal M, Byrne J (1990) Computer-aided generation of function block diagrams in a methodical design procedure, Proc of Design Theory and Methodology-DTM. vol. 91, pp 251–257

26. Stone RB, Wood KL (2000) Development of a functional basis for design. J Mech Des 122(4):359–370. https://doi.org/10.1115/1.1289637

27. Altshuller G (2002) 40 principles: TRIZ keys to innovation, vol 1. Technical Innovation Center Publisher, Hagerstown

28. Bridgman P (1922) Dimensional analysis. Philos Mag 2(12):1263–1266

29. Li X, Sudarsanam N, Frey DD (2006) Regularities in data from factorial experiments. Complexity 11(5):32–45. https://doi.org/10.1002/cplx.20123

30. Kretzschmar N, Ituarte IF, Partanen J (2018) A decision support system for the validation of metal powder bed-based additive manufacturing applications. Int J Adv Manuf Technol 96(9–12):3679–3690

31. Szirtes T (2007) Applied dimensional analysis and modeling. Second Edition, Butterworth-Heinemann Publishers, Oxford

32. Mandel J (1982) Use of the singular value decomposition in regression analysis. Am Stat 36(1):15–24

33. Krus P (2016) Models based on singular value decomposition for aircraft design, presented at the FT2016-Aerospace Technology Congress

34. Zimmer D, Adam G (2011) Direct manufacturing design rules, Innovative Developments in Virtual and Physical Prototyping. pp 545–551.

35. Bäßler R (2018) Additive manufacturing of metals–from fundamental technology to rocket nozzles, medical implants, and custom jewelry (book review)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Coatanéa, E., Nagarajan, H.P.N., Panicker, S. et al. Systematic manufacturability evaluation using dimensionless metrics and singular value decomposition: a case study for additive manufacturing. Int J Adv Manuf Technol 115, 715–731 (2021). https://doi.org/10.1007/s00170-020-06158-0

DOI: https://doi.org/10.1007/s00170-020-06158-0