Abstract

The use of technologies in personnel selection has come under increased scrutiny in recent years, revealing their potential to amplify existing inequalities in recruitment processes. To date, however, there has been a lack of comprehensive assessments of respective discriminatory potentials and no legal or practical standards have been explicitly established for fairness auditing. The current proposal of the Artificial Intelligence Act classifies numerous applications in personnel selection and recruitment as high-risk technologies, and while it requires quality standards to protect the fundamental rights of those involved, particularly during development, it does not provide concrete guidance on how to ensure this, especially once the technologies are commercially available. We argue that comprehensive and reliable auditing of personnel selection technologies must be contextual, that is, embedded in existing processes and based on real data, as well as participative, involving various stakeholders beyond technology vendors and customers, such as advocacy organizations and researchers. We propose an architectural draft that employs a data trustee to provide independent, fiduciary management of personal and corporate data to audit the fairness of technologies used in personnel selection. Drawing on a case study conducted with two state-owned companies in Berlin, Germany, we discuss challenges and approaches related to suitable fairness metrics, operationalization of vague concepts such as migration* and applicable legal foundations that can be utilized to overcome the fairness-privacy-dilemma arising from uncertainties associated with current laws. We highlight issues that require further interdisciplinary research to enable a prototypical implementation of the auditing concept in the mid-term.


1 Introduction

With the digitization of the world of work, data-driven technologies are increasingly being introduced to manage and optimize selection and hiring processes within companies (Hu 2019; Sánchez-Monedero et al. 2020; Tallgauer et al. 2020). Notably, predictive technologies are being widely adopted across various industries (Crawford et al. 2019), typically to automate subtasks such as sourcing, screening, interviewing, or selection (Ajunwa 2020; Rieke and Bogen 2018). However, these technologies can produce biased decision patterns due to manifold reasons, such as stereotypes implicitly or explicitly encoded in training data (Orwat 2019), the selection of features, target variables, or error function(s) (Dobbe et al. 2018), a lack of data points about certain groups of people, or the operationalization and measurement of phenomena that are not directly observable, such as recidivism risk (Jacobs and Wallach 2021). Thus, the use of these solutions in recruitment risks reproducing or even reinforcing existing inequalities, as individual studies have already demonstrated in specific systems (including Allhutter et al. 2020; Angwin et al. 2020; Dastin 2018; Zeide 2023).

Although research on discrimination potentials in personnel selection technologies and beyond has proliferated in recent years, producing various sociological, ethical, legal, and mathematical-technical approaches (Haeri and Zweig 2020; Krafft and Zweig 2018; Waltl and Becker 2022), comprehensive analyses of such potentials, particularly outside the U.S. context, are lacking (Sánchez-Monedero et al. 2020). Furthermore, no auditing standards have yet been established either explicitly in law or in practice (Crawford et al. 2019; Digital Regulation Cooperation Forum—DRCF 2022). At the same time, EU General Data Protection Regulation (GDPR) requires that when users of such technologies process personal data, they must minimize any discrimination risks that arise for applicants, by designing the technical and organizational procedures accordingly (Data Protection by Design, Art. 25 GDPR). The Artificial Intelligence Act proposal (AIA) of 21 April 2021 classifies AI systems “intended to be used for recruitment or selection of natural persons, notably for advertising vacancies, screening or filtering applications and evaluating candidates in the course of interviews or tests” as high-risk AI systems under Article 6(2). The providers of such technologies must ensure control of discrimination risks in the development phase and monitor them after deployment on the market (Chapters 2 and 3 as well as Art. 61 AIA proposal). However, concrete instructions on how to implement these mechanisms are largely lacking, particularly with regard to monitoring products in use.

We argue that comprehensive and reliable auditing of personnel selection technologies already introduced to the market must be contextualized, meaning conducted in their real deployment environment, embedded in existing personnel processes and based on real data. Furthermore, independent third parties such as advocacy organizations and research institutions need to be given participatory access to address the multiple relevant perspectives on the issue, as well as the need for research in this field. Our proposal in this paper thus represents an important concretization of the legal requirements of both the GDPR and the AI Act proposal, and can be used for possible legislative adjustments or fine-tuning.

The fundamental basis for contextualized and participatory auditing, however, necessitates access to personal data that is subject to stringent legal protection—particularly in the European Union, where the GDPR and the General Equal Treatment Act (German abbreviation: AGG) are in force. The legal uncertainties associated with these laws have been a major impediment even for organizations that have a commitment to equality and diversity, leading them to refrain from systematically collecting sensitive information such as gender, origin or age so far (Makkonen 2007). The resulting fairness-privacy dilemma has only recently been brought into the discourse on technology evaluation (Benjamins 2019). Despite legal regulations to the contrary, customers of respective software are often unaware of their need to audit for potential discrimination, viewing it as a sole responsibility of the developing company. Nonetheless, socio-political and legal discourse is increasingly engaging with the topic (Ajunwa 2019; Orwat 2019). A participatory and contextualized auditing of critical technologies requires the resolution of this dilemma to make technologies verifiable and thus minimize discrimination risks as far as possible while protecting the privacy of individuals seeking employment.

This paper proposes to leverage a data intermediary that provides independent, fiduciary management of personal and corporate data to audit the fairness of personnel selection technologies. Our idea resulted from a cooperation with two state-owned companies to examine their technology-based selection processes with regard to fairness in terms of gender, age and migration background. We begin by discussing some fundamental dimensions that affect all audits of technologies for personnel selection—the measurement of fairness, selection and retrieval of fairness-relevant data, with a specific focus on migration-related information, the legal framework governing data collection and processing, and possible ways to organize audits. We then use the case study to exemplify how these theoretical concepts can translate to a real-life audit and to highlight the challenges encountered. Building upon prior research on technology auditing, we argue that auditing in the human resources field must go far beyond examining the technology’s code and its underlying ‘training data’. We thus elaborate the groundwork for a conceptual framework for technology auditing in the area of personnel selection and discuss some open questions that require further interdisciplinary research to facilitate a prototypical implementation of the auditing concept in the near future.

2 Operationalization of fairness

Auditing technology for personnel selection encompasses several critical aspects that warrant careful consideration. One of utmost importance is the determination of fairness measurement, which raises fundamental questions about the applicable concepts and requisite data collection. Measuring fairness in technology audits for personnel selection typically necessitates the availability of individual-level data. Consequently, the inquiry into how to measure fairness becomes intrinsically entwined with the question of what fairness-related information should be gathered and how to collect these. Moreover, the legal framework governing data collection and processing emerges as a pertinent concern. Additionally, organizational considerations surface, encompassing the structure of the audit itself, including the responsible auditors and the types of information to be utilized throughout the process. We delve into these aspects in greater detail, discussing possible approaches and highlighting the need for further research.

2.1 Fairness metrics

The concept of fairness has become a ubiquitous theme in numerous debates, particularly with regard to ethical AI and ML system development (Smith 2020). However, the meaning of the term varies depending on the context or field of study (Mulligan et al. 2019): In legal contexts, fair practice often involves protecting individuals and groups from discrimination or securing equal participation and influence, while social science perspectives typically focus on social relationships, power dynamics, or institutions. In the context of recruitment, how fairness is interpreted depends on the situation: for example, on whether predictions made by a technology lead to discrimination, so that legal relevance comes to the fore, or whether an organization is seeking to promote diversity through changes in its selection process.

In the realm of machine learning technology and algorithm evaluations, many competing (and mutually exclusive) definitions of (un)fairness have been developed that are reminiscent of those developed in the 1960s and 1970s for testing university admission or personnel selection processes (Hutchinson and Mitchell 2019; Verma and Rubin 2018). The research has so far developed more than twenty different concepts that vary in many aspects. From the perspective of personnel selection, it is especially useful to distinguish the measures in terms of whether they focus on groups or individuals, whether they involve reference groups, and whether they assume the existence of a ‘ground truth’, i.e., an assumed outcome. Many of the existing definitions are not suitable for the HR context. For example, it is difficult to envision recruitment scenarios in which a ground truth would exist. Amazon’s suggestion system (Dastin 2018) is an example of how data from past hires may not be suitable for this purpose. As noted in Hauer et al. (2021), the framing of fairness seems mainly driven by the notion of equality as it demands that “people of all different kinds are either treated equally or distributed equally in a decision output set or some mix of both”.

Group-based metrics, which do not rely on comparisons with a definitive ‘ground truth’, employ sociodemographic characteristics or skill information to form groups. These metrics then compare group-based outcomes, such as the percentage of individuals invited for an interview, across these groups. Variability arises from different group formation methods and the thresholds chosen to determine whether group outcomes meet the criteria for fairness. Different definitions of fairness may be applied, such as the absence of statistically significant differences in proportions among groups or adherence to specific thresholds (e.g., the 4/5 rule in U.S. legislation). Notably, research primarily distinguishes between two types of group-oriented fairness measures that do not rely on the concept of a ground truth: demographic parity and conditional demographic parity. The former aims to preserve the demographic proportions (to a predefined level) of different population groups in the later stages of the application process, while the latter considers the proportions among those applicants who meet certain criteria, such as very good high school grades. Demographic parity is thus particularly appropriate from the perspective of a company that seeks equal distribution with respect to a particular characteristic such as gender (or multiple characteristics) when filling positions (Hauer et al. 2021). Unlike conditional parity, relevant qualifications are not taken into account, although it should also be borne in mind that their consideration masks existing structural inequalities. In contrast to group-based measures, individual-based fairness measures revolve around the principle that individuals with comparable qualifications should achieve similar outcomes in the selection process, irrespective of their membership in protected socio-demographic groups. 
Ideally, these approaches enable us to provide explanations for why certain individuals did not progress to a particular stage in the selection funnel, or what factors would have been necessary for them to advance further. By doing so, individual-based fairness measures can help to shed light on the specific mechanisms through which unfairness manifests in a given context (Hauer et al. 2021; Kusner et al. 2017).
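The two group-based measures described above can be illustrated with a minimal sketch. It assumes a hypothetical list of applicant records with a group label, a qualification flag, and an interview outcome; the field names, the toy data, and the use of a min/max rate ratio as the comparison are illustrative assumptions, not part of the case study.

```python
from collections import defaultdict

def selection_rates(applicants, group_key, outcome_key, condition=None):
    """Share of applicants with a positive outcome, per group.

    `condition` is an optional predicate. When given, only applicants who
    satisfy it are counted, which yields conditional demographic parity.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for a in applicants:
        if condition is not None and not condition(a):
            continue
        totals[a[group_key]] += 1
        if a[outcome_key]:
            selected[a[group_key]] += 1
    return {g: selected[g] / totals[g] for g in totals}

def parity_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical toy data, not taken from the case study.
applicants = [
    {"group": "a", "good_grades": True,  "invited": True},
    {"group": "a", "good_grades": True,  "invited": True},
    {"group": "a", "good_grades": False, "invited": True},
    {"group": "a", "good_grades": False, "invited": False},
    {"group": "b", "good_grades": True,  "invited": True},
    {"group": "b", "good_grades": True,  "invited": False},
    {"group": "b", "good_grades": False, "invited": False},
    {"group": "b", "good_grades": False, "invited": False},
]

# Demographic parity: compare invitation rates across all applicants.
dp = selection_rates(applicants, "group", "invited")
# Conditional demographic parity: compare only applicants with good grades.
cdp = selection_rates(applicants, "group", "invited",
                      condition=lambda a: a["good_grades"])

print("demographic parity rates:", dp, "ratio:", parity_ratio(dp))
print("conditional parity rates:", cdp, "ratio:", parity_ratio(cdp))
# Illustrative check against a 4/5 threshold on the rate ratio.
print("passes 4/5 threshold:", parity_ratio(dp) >= 0.8)
```

Which threshold or comparison is appropriate remains a normative and legal choice; the ratio above is only one possible way of turning group rates into a single number.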

Statistical evidence of discrimination is growing in importance in legal practice, particularly to demonstrate indirect discrimination. This can be especially beneficial in the deployment of complex technologies, when the potentially discriminatory logic cannot be revealed by examining the code or documentation. Technology deployment thus leads to a shift in focus from the process itself to its outcome, and thus to statistical analyses (Hauer et al. 2021). Current legal research has identified group-based fairness metrics such as (conditional) demographic parity as particularly relevant for proving indirect discrimination (Hauer et al. 2021; Wachter et al. 2020). However, many details remain unclear from a legal perspective. For instance, the disparate impact on groups is required to be sufficiently substantial, without any clarification of what the relevant reference groups are, what ‘sufficiently significant’ means, or how it can be measured (Sacksofsky 2010). Moreover, the practical application of metrics within an HR process can carry some caveats, as exemplified by conditional demographic parity. In particular, employers often fail to clearly articulate the measurable criteria necessary to fill a job post and to distinguish them from those that are desirable but not essential. This is especially true for apprenticeships, for which there are few concrete and measurable criteria due to the applicants’ lack of work experience, and extra criteria may get added in the course of the selection process.
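One common statistical way to ask whether a difference in group proportions is larger than chance would suggest is a two-proportion z-test. The following sketch is an illustration only: the choice of test, the function name, and any significance level are assumptions, and none of them is prescribed by the legal sources discussed here.

```python
import math

def two_proportion_z_test(selected_a, total_a, selected_b, total_b):
    """Two-sided z-test for the difference between two selection rates.

    Returns the z statistic and the two-sided p-value under the null
    hypothesis that both groups share the same underlying selection rate.
    """
    p_a = selected_a / total_a
    p_b = selected_b / total_b
    pooled = (selected_a + selected_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        # All or none selected in both groups: no observable difference.
        return 0.0, 1.0
    z = (p_a - p_b) / se
    # Two-sided p-value of a standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical counts: 60 of 100 invited in one group, 40 of 100 in the other.
z, p = two_proportion_z_test(60, 100, 40, 100)
print(f"z = {z:.3f}, p = {p:.4f}")
```

A small p-value indicates only that the observed gap is unlikely under equal rates; whether the gap is legally ‘substantial’ is a separate question that such a test cannot settle. For small samples, an exact test would be a more cautious choice than this normal approximation.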

2.2 Empirical survey of fairness-relevant data through the lens of migration*

While the European anti-discrimination framework primarily emphasizes the individual dimension of discrimination (Center for Intersectional Justice 2019), it should be noted that the assignment of social categories has a considerable impact on interpersonal and intergroup perceptions, which shape structural orders and hierarchies of society. Thus, categorizations must be contextualized within social structures, cultural norms, and different power interests (Beigang et al. 2017; Rommelspacher 1997, 164). Individuals categorize not only themselves but also others along certain characteristics and associated attributions (Baer 2008, 443-445), which can lead to disadvantages and discrimination risks in social (power) relations (Rottleuthner and Mahlmann 2011). Discrimination is based on social cognition and categorization processes (Aronson et al. 2008; Fiske and Taylor 1984; Gilovich et al. 2010) that divide people into ‘we’ and ‘other’ groups (Footnote 1) according to characteristics such as gender, social origin or self-identified or externally assigned ethnicity-related attributes (see Othering in Said (1978) for further information). Thus, discrimination processes can be accompanied by external (individual and institutional) attribution mechanisms, even if the individuals in question do not identify with the group or are not aware of the attribution. As a result, individuals can be associated with groups that they do not identify with, without being aware of the marking process itself.

Thus, the crucial question in social science theory and in practice is to what extent diversity-related information can be adequately operationalized without legitimizing reification processes. In line with an intersectional approach, the question arises as to which methodological procedures (quantitative/qualitative) are suitable, how many and which categories can be included in the analysis, and how these can be collected (Center for Intersectional Justice 2019; Knapp 2012; Winker and Degele 2009). While categories such as gender are still declared to be empirically easy to grasp (Wolf and Hoffmeyer-Zlotnik 2003), the empirical mapping of categories such as race or class is accompanied by difficulties of comparability, since the way people categorize themselves or others is highly context-specific. With regard to ethnicity, for instance, a person may experience discrimination on the basis of an attributed migration background (Footnote 2), even if that person is, e.g., ‘German’ or self-identifies as ‘German’. Thus, within the German setting, there is a growing debate regarding the adequacy of the migration background category as a factor in discrimination, particularly since this concept is not unidimensional but determined by other aspects. These include (1) whether and how a person identifies with an ethnicity, (2) how they are perceived by others (the ‘attributed migration background’), and (3) how they think they are perceived by others based on, for example, their physical appearance and manner of interaction (Center for Intersectional Justice 2019). Consequently, surveying ethnicity is highly complex due to the outlined multidimensionality, not least because an intersectional perspective is involved: other group affiliations such as gender, sexual orientation, age, disability, and socio-economic class, among others, determine everyday discrimination risks and have different effects, for example in the form of multiple victimization.
The academic discourse attempts to assess the feasibility of utilizing migration background as a category in empirical studies, considering the heterogeneous nature of the respective group, which includes individuals with (recent) migration experience, those with German education, and those born and raised in Germany, regardless of their citizenship status. These individuals may be exposed to similar or different discrimination risks (Supik 2014); an approach for accurate and discrimination-sensitive collection of ethnicity data that adequately includes experience of discrimination therefore needs to be developed.

2.3 Legal ways for collecting sensitive personal data

Despite the increasing importance of statistical fairness evaluations in the legal realm, the required data basis is only rarely available, largely due to fears of violating data protection regulations. In Germany (and the EU), many companies are hesitant to systematically collect sensitive information, such as gender, origin or age. The lack of respective information (Footnote 3) can protect against biases and discrimination on the part of decision-makers; however, it also impedes the urgently needed auditing, which is hardly feasible without capturing the corresponding characteristics. This raises the question of how to legally resolve this dilemma.

2.3.1 Prohibition of processing specific personal data categories

Article 9 of the GDPR sets the clearest legal bar for data protection, prohibiting the processing of personal data which reveals, for example, the ‘racial and ethnic origin of a natural person’ (Art. 9(1)). However, the law also provides for exceptions. One such exception exists when applicants consent to the processing of their data (Art. 9(2) lit. a), though this approach often only enables the collection of partial data, thus posing a significant challenge to subsequent analyses (this aspect is addressed in more detail in ‘Consent and partial availability’). An alternative exception could be seen in the fact that the employer or the applicant concerned can exercise the rights accruing to them under employment law and fulfill their obligations in this regard (Art. 9(2) lit. b).

2.3.2 Legally uncertain exception for the implementation of the AGG

In Germany, the General Equal Treatment Act (AGG) implements several EU directives to prevent or eliminate discrimination based on ‘race or ethnic origin, gender, (...) disability, age or sexual orientation’ (§1) in various social contexts. In the working environment, this means that employees (including applicants) may not be discriminated against on any of these grounds, even if the employer only presumes the existence of one of the stated reasons for discrimination (§7). The AGG therefore does not require that discriminated applicants actually have the characteristic attributed to them. The AGG distinguishes between direct and indirect discrimination. Direct discrimination occurs when a person is treated less favorably than another in a comparable situation due to one of the aforementioned reasons (§3(1) sentence 1), while indirect discrimination describes situations in which apparently neutral rules, criteria or procedures put an individual at a particular disadvantage compared to other individuals due to one of the specified grounds, unless the rules, criteria or procedures in question are objectively justified by a legitimate aim and the means are appropriate and necessary to achieve that aim (§3(2)). The law does not state what constitutes ‘less favorable treatment’, thus it is up to the interpretation of the legal provisions to decide whether demographic parity or conditional demographic parity or another procedure should be used as proof (Hauer et al. 2021).

The employer’s obligation to take necessary measures, including preventive ones, to protect against discrimination (§12(1)) can certainly be considered as an exception for the processing of personal data such as ethnicity data (Art. 9(2) lit. b GDPR). However, for this exception to be applicable, the AGG must provide appropriate safeguards for the data subject’s fundamental rights and interests (Art. 9(2) lit. b a.E.). Since the AGG was introduced before the GDPR and does not explicitly provide for such data protection safeguards, the application of the AGG as an exception in the sense of Art. 9(2) lit. b GDPR brings about considerable legal uncertainties in practice.

2.3.3 Exception for scientific research and statistical purposes

An exception for scientific research and statistical purposes may be considered (as far as this is provided for by the law of a Member State (Art. 9(2) lit. j GDPR)), as long as data are processed for the purpose of research or statistical detection of unequal treatment in job application processes. In fact, the German Federal Data Protection Act (BDSG) permits the processing of special categories of personal data, such as ethnicity data, if needed for research or statistical purposes, and the interests of the responsible party in the processing significantly outweigh the interests of the data subject in excluding the processing (§28(1) sentence 1 BDSG). Since ensuring a fair recruitment process is ultimately in the interest of all applicants, this consideration is usually positive. Nonetheless, the party processing the data—whether the employer or third parties involved—must implement a number of specific data protection measures, such as anonymization or at least pseudonymization of the data or encryption (§28(3) and §22(2) BDSG).
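As an illustration of the pseudonymization measure mentioned above, the following sketch replaces applicant identifiers with keyed hashes using Python's standard `hmac` and `hashlib` modules. The function name, the identifier format, and the key handling are assumptions made for this example; whether such a scheme satisfies the requirements of the BDSG in a given setting is a legal question that the sketch does not answer.

```python
import hashlib
import hmac
import secrets

def pseudonymize(applicant_id: str, secret_key: bytes) -> str:
    """Map an identifier to a stable pseudonym using HMAC-SHA256.

    The same identifier and key always yield the same pseudonym, so records
    can still be linked across selection stages. Without the key, the
    pseudonym cannot be recomputed from a guessed identifier.
    """
    digest = hmac.new(secret_key, applicant_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()

# Assumption: the key is generated and held only by the party that is
# entitled to re-identify applicants, and is never shared with analysts.
key = secrets.token_bytes(32)

print(pseudonymize("applicant-0001", key))
```

A keyed hash is used rather than a plain hash because identifiers with little variety (such as sequential application numbers) could otherwise be recovered by simply hashing all candidates.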

Finally, the BDSG refers to the implementation of technical-organizational measures according to Art. 25(1) GDPR (§22(2) No. 1 BDSG), to effectively protect the fundamental rights of applicants to privacy, freedom, and equality (Footnote 4). Where the effective protection of these fundamental rights requires it, the responsible party should consider providing audit procedures to detect and control potential risks of discrimination. This is especially true if such a procedure is scientifically proven to be the most effective means on the market and thus considered state of the art. However, such a specific obligation derives only implicitly from the law and also depends on market dynamics and the evolving state of the art (Von Grafenstein 2022).

The explanations above provide an insight into the legal ambiguities linked to these regulations. Coupled with the threat of severe fines (Art. 83(5) GDPR; Footnote 5), these ambiguities have had the practical effect that data required to detect potentially unequal treatment are not systematically collected, even though doing so would be legally permissible. It is thus not surprising that most developers and customers of HR technology are currently not (thoroughly) checking whether the application of the tools they develop or buy leads to discriminatory decisions (Michot et al. 2022).

2.4 Organization of auditing

Fairness evaluations can involve multiple parties, raising questions about the responsible entities conducting audits and the types of information utilized, as well as their relationship to legal conditions and resultant effects.

Internal or first-party audits are conducted by the developing company using their own resources, usually before the technology is released (pre-deployment audit). Manufacturers can adhere to existing recommendations for developing and auditing fair technology before it is put on the market (cf., e.g., Raji et al. 2020; Sloane et al. 2022). However, producers are not equipped to objectively, independently, and reliably assess their own ideas and implementations (Raji et al. 2020). Moreover, the respective companies, with their considerable resources and expertise, are the ones who decide whether to make the outcomes of the audit public. As a consequence, auditing of proprietary technologies remains ‘behind closed doors’, thus failing to address the societal need to intervene in questionable developments (Krafft and Zweig 2018; Michot et al. 2022).

In recent years, second-party audits, also referred to as cooperative audits, have been introduced in the recruitment field (O’Neil Risk Consulting and Algorithmic Auditing (ORCAA) 2020; Wilson et al. 2021). The results of such audits, carried out by external auditors commissioned by the company, are made available to the public and thus contribute to (at least limited) increases in transparency (Engler 2021a). Nevertheless, the auditors are not legally protected nor are they audited; companies are not obligated to act upon the audit’s findings. Moreover, such audits are sometimes utilized for promotional purposes, with companies engaging in ‘ethics washing’ to broadly vouch for the lack of bias (Digital Regulation Cooperation Forum—DRCF 2022; Engler 2021b).

However, companies are typically not inclined to be audited (Raji et al. 2021). A third-party or external audit refers to an evaluation without the consent of the developing company, sometimes even without their knowledge. The auditors are usually (financially) independent third parties (researchers, journalists, civil society, law firms, etc.) with the aim of identifying and quantifying the differences between the system’s expected and actual performance. One of the few published examples is the audit of the Austrian Labor Market Service’s Labor Market Opportunities Assistance System (AMAS) (Allhutter et al. 2020). External auditors often face opposition from technology manufacturers. Given the high and justified societal and legal demand for comprehensive and reliable evaluation of high-risk technologies by independent third parties, the legal and organizational complexity of carrying out such audits in practice, with its high costs and risks for the auditors, is unacceptable.

Audit approaches can further be distinguished in terms of the level of access to the respective technologies, the extent of which affects the depth of the analyses (Koshiyama et al. 2021): While internal auditing usually grants full access to algorithms, documentation, and information about internal company processes and strategies, access in cooperative auditing is contingent on the particular agreement. Within external audits, in contrast, the auditors do not have access to code and internal company documentation and must instead work with indirect observations of the system. This can be done qualitatively, for instance using available information and documentation to infer the key logic of the technology (Allhutter et al. 2020), or quantitatively, by applying the technology to generated or collected datasets and then assessing the outcomes (e.g., Mihaljević et al. 2022). Yet even the quantitative approach requires access to the technology, for example via an API, which in turn depends on the respective terms of use and the willingness of the technology providers (Sandvig et al. 2014).

3 Case study: fairness analysis of trainee selection

As part of a research project, we collaborated with two state-owned companies based in Berlin, Germany, to examine the use of technologies for the selection of trainees with regard to potentials and obstacles for equality. In this respect, the selection of trainees poses a special challenge: the typically young applicants possess minimal to no work experience, and thus can mainly present school grades as an indicator of capability, which increases the risk of potentially unfair decisions (Footnote 6). The partnering companies were primarily interested in monitoring fairness within the organization as well as establishing reflective practices on future utilization of technology. From our research perspective, the relevant question was how such analyses could best be operationalized (Footnote 7) in practice on a ‘micro-level’, i.e., in cooperation with an individual company. As such, each cooperation can be considered a second-party audit of a technology-supported selection process within the respective company.

3.1 Selection process of practice partners

The two companies under investigation have many similarities, making them ideal for comparison: both are state-owned entities in the same city and industry and of comparable size, and both are subject to the same legal provisions while striving to promote equal opportunities and increase diversity in their workforce. At the same time, their selection processes differ at various points, making them interesting from an analytical point of view: company B evaluates most applicants using psychometric tests, while company A prioritizes a comprehensive pre-selection based on application material. In both cases, however, only a small group is invited to an assessment center. Both companies manage the majority of the selection subprocesses with a (different) applicant management system that provides standardized application forms. Table 1 provides an overview of the most important information requested.

3.2 Statistical fairness analyses

We opted to concentrate on demographic parity and conditional demographic parity as the approaches for measuring fairness in our study. The rationale behind this selection is twofold. Firstly, conducting individual-level analyses would have necessitated complete data, particularly concerning all qualifications of applicants, which, unfortunately, was unavailable. Attempting to manually complete the missing data would have been unfeasible. Secondly, given the absence of a definitive ground truth, demographic parity and conditional demographic parity emerged as the most practical options for assessing fairness at a group level. The respective computations required access to (1) job requirements such as high school grades or language skills, (2) diversity characteristics of applicants, and (3) funnel stages of the selection process reached by each applicant.
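How the three inputs listed above combine can be sketched as follows: for each group, the share of applicants reaching each funnel stage is computed, optionally restricted to applicants who meet a job requirement. The stage names, field names, and toy records are hypothetical and do not reproduce the data or the exact computations of the case study.

```python
# Hypothetical funnel stages in the order in which they are reached.
STAGES = ["applied", "pre_selected", "assessment_center", "offer"]

def reach_rates(records, group_key="group", stage_key="stage_reached", meets=None):
    """Per group, the share of applicants who reached at least each stage.

    `meets` is an optional predicate on a record (for example, a grade
    requirement). When given, only matching applicants are counted, which
    corresponds to a conditional comparison.
    """
    rank = {stage: i for i, stage in enumerate(STAGES)}
    counts = {}
    for r in records:
        if meets is not None and not meets(r):
            continue
        per_stage = counts.setdefault(r[group_key], [0] * len(STAGES))
        # An applicant who reached stage i has also passed all earlier stages.
        for i in range(rank[r[stage_key]] + 1):
            per_stage[i] += 1
    # per_stage[0] is the group size, since every applicant has applied.
    return {
        g: {stage: c[i] / c[0] for i, stage in enumerate(STAGES)}
        for g, c in counts.items()
    }

# Hypothetical toy records, not taken from the case study.
records = [
    {"group": "x", "grade_ok": True,  "stage_reached": "offer"},
    {"group": "x", "grade_ok": True,  "stage_reached": "pre_selected"},
    {"group": "x", "grade_ok": False, "stage_reached": "applied"},
    {"group": "x", "grade_ok": False, "stage_reached": "applied"},
    {"group": "y", "grade_ok": True,  "stage_reached": "assessment_center"},
    {"group": "y", "grade_ok": True,  "stage_reached": "applied"},
    {"group": "y", "grade_ok": False, "stage_reached": "applied"},
    {"group": "y", "grade_ok": False, "stage_reached": "applied"},
]

overall = reach_rates(records)
conditional = reach_rates(records, meets=lambda r: r["grade_ok"])
for group in sorted(overall):
    print(group, overall[group], conditional[group])
```

Comparing the resulting rates stage by stage can indicate where in the funnel group differences emerge, which a single end-of-process comparison would not show.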

Based on discussions with the practice partners and the data available from the application forms, we focused on gender, migration background and age. While gender [Footnote 8] and age are asked directly through the application form (see Table 1), no data pertaining to migration experience, migration background, or any other form of ethnicity perception was collected from either the applicants or the recruiters. As a result, we opted to determine the ‘attributed’ migration background for each applicant. This approach allows us to investigate how decision-makers’ perceptions of an applicant’s migration background may have influenced their outcomes.

Given the lack of state-of-the-art algorithms for determining the most probable ascribed migration background from information such as names or foreign language skills, it was not possible to provide the company with suitable software, which would have adhered to the privacy-friendly premise of ‘code to the data’. Instead, we had to develop our own heuristic from the applicants’ data. Since personal names are already strongly associated with ethnicity [Footnote 9] and are included in each job application, we decided, also for data minimization reasons, to rely mainly on those. However, full names are personally identifiable, as some combinations of first and last names might be so rare in Berlin that a search, for example on social media, could lead to the identification of some individuals. Therefore, the following data anonymization procedure was implemented:

1. The company provided a data set of first names that they had augmented with a randomly selected set of names. We then semi-automatically assigned each name to one of six categories and returned the respective assignment to the company.

2. The company’s recruiters coded applicants’ surnames as either ‘German-sounding’ or ‘non-German-sounding’.

3. The company replaced names by categories for first and last names and provided the recoded data for analyses.
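The three steps above can be sketched as follows. This is an illustrative outline under stated assumptions, not the procedure as implemented by the company: the function names, the record fields, the category label `category_1`, and the fictional names are all hypothetical, and the surname label assigned here is an arbitrary placeholder.

```python
def names_for_categorization(real_first_names, decoy_first_names):
    """Step 1 (company side): mix real first names with decoys and
    de-duplicate, so the recipient cannot tell which names belong to applicants."""
    return sorted(set(real_first_names) | set(decoy_first_names))


def recode(record, first_name_categories, surname_codes):
    """Steps 2-3 (company side): drop both names and keep only their categories."""
    recoded = {k: v for k, v in record.items() if k not in ("first_name", "surname")}
    recoded["first_name_category"] = first_name_categories[record["first_name"]]
    recoded["surname_category"] = surname_codes[record["surname"]]
    return recoded


# Fictional example values
name_list = names_for_categorization(["Kim", "Alex"], ["Sam", "Alex"])

record = {"applicant_id": "A1", "first_name": "Alex", "surname": "Example", "grade": 2.0}
first_name_categories = {"Alex": "category_1"}   # assignment returned by the researchers
surname_codes = {"Example": "German-sounding"}   # placeholder for the recruiters' coding
recoded = recode(record, first_name_categories, surname_codes)
print(name_list, recoded)
```

Only the output of `recode` would leave the company in this sketch; the mapping from names to categories stays with the party that holds the original records.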

3.3 Technical-organizational measures for GDPR compliance

Company A deemed the research project to be compliant with the GDPR as long as only anonymized data was passed on to the research team. In this case, obtaining the consent of the applicants was not considered necessary, as the anonymization and forwarding of the anonymized data was carried out by the company and could be based on Art. 6(1) lit. f GDPR (legitimate interests). Consequently, the data provided must not contain any information that would enable the identification of an applicant.

Moreover, the original purpose of the data collection was deemed compatible with the new purpose of conducting the experimental audit due to their close connection, as the experimental research project was intended to reveal new insights into the application process that could ultimately help to optimize and improve it. At the same time, no sensitive information according to Art. 9 or 10 GDPR was involved. In addition, the personal reference of the data was largely minimized before further use, as described in Sect. 3.2 (‘Statistical fairness analyses’) with regard to the attributed migration background, so that no conclusions can be drawn about the individual persons concerned. This effectively protected the affected applicants, ensuring the absence of unacceptable risks on their side. Finally, the GDPR provides simplifications for data processing for the purposes of research and scientific work, e.g., Art. 89 GDPR, recitals 157 and 159. Following the recommendation of the GDPR, the privacy statement was amended to inform about the new purpose and its implementation.

In contrast to company A, the data protection representative of company B concluded that, according to the AGG and GDPR, it was not permissible to provide us with various attributes, including the applicants’ first names. As this information was indispensable for predicting the ascribed migration background, no further work was done with company B’s data. The divergent interpretation of legal principles in nearly identical corporate environments highlights the significant legal uncertainty in the interaction between privacy protection and assessment of discrimination potential.

4 Towards a concept for contextualized, participatory technology audit

The presented case study exemplifies the practical implementation of a second-party audit, showcasing how the theoretical dimensions of selecting fairness measures, surveying data, addressing legal considerations, and determining organizational structure can be effectively managed. Through this illustration, we also highlight some persisting challenges and unresolved questions in this field. However, it is important to note that the outcome of such an analysis, at best, involves the identification of statistically discernible disparities within a technology-driven personnel selection process of a single company. In the remainder of this paper, we delve into the potential realization of audits on a ‘macro-level’ and emphasize the benefits that companies utilizing such software would derive from analyzing the application of these technologies within their ‘micro-level’ processes. We begin our elaboration by considering the implications of the AI Act for AI technologies in personnel selection.

4.1 Auditing implications by the AI Act

According to Annex III No. 4 (a) of the AI Act proposal, AI systems intended for recruitment or selection of natural persons, in particular for advertising vacancies, screening or filtering applications and evaluating applicants in interviews or tests, are considered high-risk systems. The proposal sets out several rules for providers and users of high-risk AI systems to protect the fundamental rights (including equality rights) of data subjects, such as job applicants or employees. Among other things, AI system providers must take the following measures: the establishment of a risk management system (Art. 9); functioning data governance (addressing training, validation, and testing datasets, Art. 10); transparency and provision of information to users of AI systems (Art. 13); and human oversight during use (Art. 14).

The draft regulation explicitly allows for processing of special categories of personal data for the purpose of creating a properly functioning data governance, to the extent that it is absolutely necessary for the observation, detection and correction of biases in the development of the high-risk AI system. As with Art. 89 GDPR, AI system providers must take reasonable precautions to ensure the protection of fundamental rights such as data protection and the privacy of data subjects, including technical limitations on further use and ‘state-of-the-art security and privacy measures such as pseudonymization or encryption’ (Art. 10(5)). The AI Act thus clarifies—in contrast to the GDPR—that the processing of special categories of personal data to avoid discrimination risks is a lawful activity.

In addition to the general requirements for high-risk AI systems, the providers of such systems must comply with a whole range of auditing obligations (Art. 16 ff.): They are required, for example, to comply with certain registration obligations; to ensure that the system is subject to the relevant conformity assessment procedure before being placed on the market or put into use; to inform the national competent authorities of the member states where they have deployed or put into service the system and, where applicable, the notified body, of the non-compliance and of corrective actions already taken; to affix the CE marking on their high-risk AI systems to indicate legal compliance; to demonstrate, at the request of a national competent authority, that the high-risk AI system complies with the aforementioned requirements.

Finally, it is important for the context at hand that the AI Act primarily focuses on the time of development of the AI system. Providers must align protective measures not only with the system itself, but also with the purpose of the system (Art. 8 and 10). Accordingly, the risk management system contained therein is understood to be a continuous iterative process throughout the life cycle of an AI system, requiring regular systematic updates (Art. 9(1)). Thus, providers must monitor the high-risk AI systems they deploy (Art. 61), and take necessary corrective measures if the system does not meet the aforementioned requirements (Art. 16). There are also further obligations for users of the systems to report to the provider any newly discovered risks arising specifically from their use (Art. 29). This basically corresponds to an auditing approach that looks not only at the creation of the AI system, but also at the context of its use. However, since the primary regulatory addressee is the provider of the technology and not the user, this approach has certain weaknesses in practice. Moreover, it is questionable to what extent the users of the technologies, typically HR departments within companies, will be able and willing to notice the ‘bugs’ of the technologies and report them accordingly (Guijarro Santos 2023).

4.2 Beyond the AI Act: need for contextualized participatory auditing

The AI Act is intended to introduce quality requirements for the development and use of AI systems, particularly those considered high-risk. Though the specifics of these requirements are yet to be determined, it is likely that some major errors can be avoided during the design and development of software. However, the effectiveness of the measures will only become clear once they have been specified and implemented. In any case, compliance with almost any quality requirements during the development phase will not guarantee the system’s risk-free use. The ongoing incidents of discrimination through technology use across different products and industries confirm this appraisal. Even if developing companies are only interested in software that is ‘fair’ and non-exclusionary, their options are limited per se, for instance by the lack of perspectives of marginalized populations within the workforce or the simple fact that some exclusionary effects themselves lack a solid conceptual basis, as explained in the discussion of the migration background survey (see, e.g., the section ‘Empirical survey of fairness-relevant data through the lens of migration*’). It is therefore in the interest of society as a whole to enable external auditing after market launch which incorporates the interests of the developing and using companies, despite the extra effort involved.

Documentation, code, and technical testing can provide important insights into how a technology functions and can help to identify existing problems. However, they are not sufficient as a basis for uncovering potential discriminatory effects of a technology [Footnote 10] (Brevini and Pasquale 2020; Raghavan et al. 2020). Algorithms can be difficult to comprehend, even for experts, especially when it comes to complex methods such as deep-learning-based models. The growing use of large language models in NLP applications, which are becoming increasingly relevant for HR, illustrates the problem, as the so-called stereotypical bias (unsurprisingly) shows a positive correlation with the performance of corresponding models (Nadeem et al. 2021). This mode of evaluation in particular excludes non-computer scientists, hindering inter- and transdisciplinary collaboration.

Furthermore, discriminatory effects of technologies cannot be fully understood at the algorithmic level alone, as they may arise in the context of a particular dataset or application (Sandvig et al. 2014). Evaluations on benchmark datasets, for example, no matter who provides them, cannot capture all relevant impacts resulting from the usage of the respective products, as they cannot shed light on process- and organization-related aspects (Green and Chen 2021; Raji et al. 2020) as well as the comprehensive social processes (Engler 2021a; Kim 2017). In fact, even a third-party audited technology can be used within an organization in such a way that substantial equal opportunities are reduced and discrimination is promoted. For example, a recommender for writing inclusive job postings can be used in a way that minimizes the number of people from marginalized groups who feel targeted. Ultimately, personnel selection represents the culmination of a series of smaller, sequential decisions that are increasingly supported by algorithms, but final hiring decisions still involve human judgment. This highlights the need to examine how appropriate technologies operate at each step of the selection process to understand and reduce bias (Bogen 2019).

Empirical evaluations based on real data in real socio-technical contexts offer the possibility of comprehensive auditing where the technologies have an impact. Such data are generated by the technology manufacturers’ customers as part of their recruitment processes. To access the right data sources, technology vendors and customers as well as individuals applying for a job need to collaborate.

For such contextualized and participatory auditing, again the GDPR can be applied. From a legal perspective, the material scope of application of the AI Act is largely congruent with that of the GDPR. On the one hand, this is due to the fact that the current draft of the AI Act even counts statistical approaches, search and optimization methods as AI systems (see Annex II to Art. 3(1) AIA). Thus, a very large number of technologies that typically process personal data fall within the scope of the AI Act proposal. On the other hand, the regulation lists AI systems as high-risk primarily in those areas of usage in which the systems are typically operated with personal data (see Annex III). Despite the extensive overlap in the material scopes of application, the two laws are complementary in two key points: Since the AI Act primarily addresses the developers of AI systems, who have not been covered by the GDPR so far, it initially introduces producer liability that has long been called for in the context of the GDPR. At the same time, the GDPR does not focus on the time of development of the technology, as the AI Act proposal does, but on the time of its deployment (Art. 25(1) GDPR). The two laws are therefore complementary, not only in terms of the personal scope of application, but also in terms of the time of the audit.

The Data Protection by Design approach (Art. 25(1) GDPR) requires that the processors of the personal data, i.e., the users of the AI system, manage the technical and organizational procedures in such a way that the risks to the equality rights of the applicants are effectively controlled.

4.3 Utilization of data trusts

The collection and disclosure of personal data such as age, gender or migration background of job applicants is subject to a great deal of legal uncertainty on the side of HR departments which conduct technology-supported selection processes. In addition, applicants need to have a high level of trust in the technical and organizational measures for collecting, storing, processing, analyzing and archiving their data. However, neither potential employers nor technology manufacturers are suitable for this role. This problem, also seen in other areas like medical (Blankertz and Specht 2021) or mobility data (Wagner et al. 2021), has in recent years led to the discussion and, in some cases, the legal establishment of various concepts aimed at creating or ensuring the necessary legal certainty and trust in the use of sensitive data.

One of these concepts is the idea of a data trust—a legal structure that enables independent, fiduciary management of data (Open Data Institute 2018). A data trust thereby manages “data or rights relating to these data for [...] data provider and makes them available to third parties [...] for their purposes or also for purposes of the general public” (Funke 2020). The fiduciary duty implies a very high degree of impartiality, prudence, transparency and undivided loyalty (Open Data Institute 2020). Such a data trust or data intermediary model ensures that, while the data needed for fairness-related analyses is collected, it does not end up in the hands of potential employers. The data intermediary, through its central role or function in serving multiple employers as well as professional networks, can also ensure a higher number of cases needed for statistical analyses. Due to this scaling and trust-building function, data intermediaries have also gained a lot of interest from legislators and public administrators in recent years. This is demonstrated not least by the recommendation of the German Data Ethics Commission and the Competition Law 4.0 Commission (Federal Ministry for Economic Affairs and Energy (BMWi), Competition Law Commission, 2019) to investigate the feasibility of establishing them (Algorithm Watch 2020).

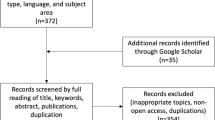

For the evaluation of technologies in the field of personnel selection, we propose the use of such a data trust, which stores applicants’ data relevant for fairness analyses, such as gender, age or migration background. Figure 1 displays a possible architecture draft, showing flows of data between application platforms, selection processes, the data trust and the auditing parties. Accordingly, HR personnel should obtain access to job-relevant non-personal data such as grades or work experience, while sensitive data of job applicants flows solely into the data trust. Moreover, to ensure a high degree of interoperability, applicants’ data should be collected in a way that is as standardized and inclusive as possible. The development and integration of gateways to job platforms such as LinkedIn, through which numerous employers now facilitate applications, could increase the standardization of the data collected. Furthermore, the desired analyses require the linking of an applicant’s personal data with recruitment progress indicators via an applicant ID (in Fig. 1 denoted by A-ID), as well as information regarding the software (module) applied in the respective recruitment step. The two latter data sources should be provided by the companies and organizations involved, which thus also act as data suppliers in the trust. Together, these data sources form the minimum data basis for fairness-related analyses. Since a technology would typically be used by multiple HR departments, additional technology identifiers, denoted in Fig. 1 as \(T_k\)-ID, are required to cross-connect the analyses across different job application processes.
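The linkage described above can be illustrated with a small sketch. It is not a specification of the proposed architecture: the data layout, the function name `pass_rates_by_technology`, and all identifiers and values are assumptions made for illustration. Sensitive attributes are keyed by A-ID and held only by the trust, while companies supply progress records that carry the A-ID, the recruitment step, the technology identifier, and the outcome.

```python
from collections import defaultdict

# Held only by the data trust: A-ID -> sensitive attributes (invented values)
sensitive = {
    "A1": {"gender": "f"},
    "A2": {"gender": "m"},
    "A3": {"gender": "f"},
    "A4": {"gender": "m"},
}

# Supplied by companies: (A-ID, recruitment step, T_k-ID, passed)
progress = [
    ("A1", "screening", "T1", True),   # company X
    ("A2", "screening", "T1", True),   # company X
    ("A3", "screening", "T1", False),  # company Y, same technology
    ("A4", "screening", "T1", True),   # company Y, same technology
    ("A5", "screening", "T1", True),   # no data shared with the trust
]


def pass_rates_by_technology(sensitive, progress, attribute):
    """Join progress records to sensitive attributes via A-ID and
    aggregate pass rates per (technology, group) across companies."""
    counts = defaultdict(lambda: [0, 0])  # (T-ID, group) -> [passed, total]
    for a_id, _step, t_id, passed in progress:
        attrs = sensitive.get(a_id)
        if attrs is None:
            continue  # applicant is unknown to the trust and cannot be linked
        key = (t_id, attrs[attribute])
        counts[key][0] += int(passed)
        counts[key][1] += 1
    return {key: p / n for key, (p, n) in counts.items()}


rates = pass_rates_by_technology(sensitive, progress, "gender")
print(rates)
```

Because the technology identifier is part of every progress record, results for the same product can be pooled across several companies, which is the scaling effect the data trust is meant to provide. Records without a matching A-ID are skipped here, which already hints at the partial-availability problem discussed in Sect. 4.5.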

Various questions arise when endeavoring to implement such a form of data governance. In addition to the previously discussed topics of measuring fairness and surveying migration experience, these encompass many other aspects such as concerns related to security, privacy, and the actual values that should underpin this form of governance (Zygmuntowski et al. 2021). In the subsequent sections, we delve into some of these aspects, exploring potential approaches and their implications, drawing upon existing research findings, and presenting ideas for future research and development.

4.4 Legal certainty and trust for the stakeholders

The concrete design options for implementing data trusts or data intermediaries remain largely unspecified, with few real-world examples to date (e.g., Creative Diversity Network 2022 in the field of media monitoring). The focus is therefore on the existing legal framework and the one under discussion.

The EU Data Governance Act (DGA), which is still in the draft stage, devotes an entire chapter to the legal conditions that must be satisfied by data intermediary services. Particularly pertinent to the proposed concept is Art. 11 No. 1 of the DGA, which stipulates that data intermediaries may not process data for their own purposes, but only for the purposes of the data donors and users (e.g., applicants, employers, and technology developing companies). Insofar as personal data is processed, which would be the case in the context of recruitment, this corresponds to the role of commissioned processors as defined by the GDPR (Art. 4 No. 8 and Art. 28).

In addition, there are requirements in both the GDPR and the AIA proposal that refer specifically to the proof of compliance with the legal requirements through auditing procedures. These fulfill the important function of increasing legal certainty from the perspective of the parties involved. The procedures are designed very differently and have not yet been aligned with each other. For instance, the GDPR provides voluntary certification procedures for controllers and processors (Art. 42 f.). These are the entities that set the specific purpose of the data processing (Art. 4 No. 7)—for example, the HR departments in companies—and process the data for these purposes (Art. 4 No. 8)—for example, a data trust. Completing a certification procedure can be used to demonstrate adherence to the GDPR regulations, providing an incentive for organizations to pursue certification (Art. 24(3), Art. 25(3), Art. 32(4) and Art. 83(2) lit. j). Since these auditing procedures start with the processing of personal data, they thus take the use context of AI technologies more directly into account than the auditing procedures for the AI systems themselves.

According to the AIA, providers of high-risk AI systems must ensure that their system is subject to the relevant conformity assessment procedure before it is placed on the market or put into operation (Art. 16). Thus, unlike in the GDPR, the conformity assessment programs of the AIA are not voluntary, but mandatory. On the other hand, the required conformity assessment procedures are a combination of partly internal and partly external validation. The AIA considers internal validation by the technology provider itself to be sufficient (Art. 43(1) sentence 1) if the technology complies with harmonized norms published in the Official Journal of the EU (Art. 40) or with common specifications issued by the EU Commission. The purely internal validation is also justified by the fact that, in addition to this ex-ante validation, ex-post monitoring measures are also provided for, both by the provider and by the state authorities (see Memorandum). Only if such norms or specifications are not available, or if the technology does not comply with them, does the provider have to submit its technology to external validation by an independent conformity assessment body (Art. 43(1) sentence 2). The proof of compliance provided by the respective procedure is thus accompanied by varying degrees of credibility.

In principle, certification procedures within the scope of the GDPR can build on the auditing procedures of the AIA. At present, however, the interplay between the various regulations has hardly been discussed. It is foreseeable that there will be a considerable need for synchronization here to ensure the effectiveness of the auditing procedures in the light of their legislative objective (Von Grafenstein 2022).

4.5 Consent and partial availability

Comprehensive information on all candidates regarding the relevant aspects would enable statistically reliable analyses, as in our case study, where we had a census for selected variables. However, if consent is chosen as the legal basis for the data trust, it can be assumed that only a (small) proportion of job applicants will share their personal data, resulting in what is known as participation bias. The decision to (not) share data is not random; therefore, the individuals whose data are ultimately available cannot be assumed to constitute a representative sample (in any sense) of the entire applicant population. Rather, willingness to share data is related to the purposes of sharing, socio-demographic characteristics of data donors, and the type of information shared (Cloos and Mohr 2022; Lin and Wang 2020; Sanderson et al. 2017). Numerous research works address the issue of information sharing, particularly on social media platforms. However, the context outlined here, namely auditing technologies and processes with the aim of making visible and reducing potential discrimination and disadvantage, has not yet been the subject of corresponding analyses. Thus, the specific reasons for (not) sharing data are not known, nor is an estimate of which socio-demographic groups would be particularly underrepresented.
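A small worked example may help to show why non-random consent matters for group-level rates. The numbers below are invented and do not come from the case study; the function `observed_rate` and its parameters are hypothetical. The sketch assumes that, within one group, selected and rejected applicants share their data with different probabilities.

```python
def observed_rate(selected_total, rejected_total, p_share_selected, p_share_rejected):
    """Expected selection rate among applicants who consented to share data."""
    shared_selected = selected_total * p_share_selected
    shared_rejected = rejected_total * p_share_rejected
    return shared_selected / (shared_selected + shared_rejected)


# Invented group: 100 applicants, 25 of them selected
true_rate = 25 / 100

# Rejected applicants share data far less often than selected ones
biased = observed_rate(25, 75, p_share_selected=0.8, p_share_rejected=0.2)

# Sharing is independent of the outcome
unbiased = observed_rate(25, 75, p_share_selected=0.5, p_share_rejected=0.5)

print(true_rate, round(biased, 3), unbiased)
```

Under the first assumption the observed rate is 20/35, more than twice the true rate of 0.25, whereas outcome-independent sharing recovers the true rate. If such sharing patterns differ between groups, a parity gap computed on consenting applicants alone can be arbitrarily far from the gap in the full applicant population.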

The issue of addressing participation bias in downstream tasks, including group-based fairness analyses, remains an ongoing challenge without a definitive state-of-the-art solution. Consequently, a key question arises regarding the statistical insights that can be gleaned from such data and the appropriate types of statistical analyses to apply. Recent developments in bias correction, such as the idea of acquiring differentially private information from non-participants regarding the demographic groups they identify with (Chen et al. 2023), might offer a promising avenue for mitigating and rectifying biases in participant data.

4.6 Specification and determination of fairness indicators

As explained in Sect. 2.1, various fairness metrics on the level of groups and individuals are in principle suitable for the field of recruitment. In reality, only a few of them will be suitable for a particular use case (e.g., evaluation of video-based screening software) and the particular perspective of the auditors (e.g., social impact on people with migration experience). It also remains to be conclusively clarified which information, if any, can be used as ground truth in the context of personnel selection. Therefore, in the context of a data trust, a selection or adaptation of indicators must be made depending on the technology used and the goals associated with its utilization or analysis. This requires a comprehensive knowledge of the possibilities and limitations of the respective metrics among all parties involved in an evaluation. For instance, when the number of applicants for a set of positions that were evaluated using a certain technology is high, as in our case study with hundreds of individuals handing in their applications for a trainee position, intersectional considerations are possible to a certain extent, at least in the case of (conditional) demographic parity. When specifying appropriate metrics, the inclusion of advocacy organizations for relevant groups of people is particularly important, as it allows up-to-date information on facets of real labor market disadvantage to be taken into account. Currently, the scientific discourse appears to lack sufficient incorporation of the perspectives of the affected population, making the involvement of advocacy organizations all the more vital. Still, in this context, it should be considered that the focus on bias against ‘protected classes’ ignores the more complex ‘subpopulations’ that are (implicitly) created by algorithms (Zeide 2023, 423f).

The legal interpretation, especially with regard to aspects such as the choice of benchmark groups or the definition of threshold values (see Sect. 2.1), is the subject of current discourse and must therefore also be continuously integrated into the further development of the concept. From a statistical point of view, it is in any case more favorable to be able to carry out analyses based on larger and more widely dispersed data sets—which is difficult to achieve when evaluating data from a single client company and therefore argues in favor of establishing a data trust with a high level of participation. Since hiring processes involve numerous decisions, the question of (un)fairness has to be raised at every stage and decision step in the process (Rieke and Bogen 2018; Schumann et al. 2020), which is reflected in our framework through continuous communication of progress indicators and respective technologies.
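Raising the fairness question at every stage can be sketched as a stage-wise pass rate per group: among the applicants of a group who reached a stage, the share who moved on to the next one. The stage names, the field `last_stage`, and the records below are assumptions for illustration and do not reflect the partner companies' actual funnels.

```python
from collections import defaultdict

# Hypothetical funnel; an applicant's last_stage is an index into this list
STAGES = ["application", "test", "assessment_center", "offer"]


def stage_pass_rates(applicants, group_key):
    """For each stage except the last, the share of each group that advanced."""
    rates = {}
    for i in range(len(STAGES) - 1):
        reached, passed = defaultdict(int), defaultdict(int)
        for a in applicants:
            if a["last_stage"] >= i:
                reached[a[group_key]] += 1
                if a["last_stage"] > i:
                    passed[a[group_key]] += 1
        rates[STAGES[i]] = {g: passed[g] / reached[g] for g in reached}
    return rates


# Invented records: group and index of the last stage reached
applicants = (
    [{"group": "a", "last_stage": s} for s in (3, 2, 1, 0)]
    + [{"group": "b", "last_stage": s} for s in (3, 0, 0, 0)]
)

funnel = stage_pass_rates(applicants, "group")
for stage, by_group in funnel.items():
    print(stage, by_group)
```

In this toy funnel the end-to-end outcome is identical for both groups (one offer each out of four applicants), yet the first stage passes 75% of group a and only 25% of group b. A disparity of this kind would remain hidden if only the final hiring decision were analyzed.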

4.7 Obtaining diversity-related information—self-identification as an alternative

With the presented case study, we applied an experimental approach to capture the ascribed migration background (see Sinanoglu 2022). In Sect. 2.2, we highlighted that the way people categorize themselves or others is highly context-specific. Statistically, an individual’s experiences of discrimination can be invisible, for example on account of their nationality; however, in daily life they can be exposed to discrimination, e.g., when looking for housing or applying for a job, without this being statistically counted as discrimination (Supik 2014). One idea to give more visibility to these invisible experiences is voluntary self-disclosure about (ascribed) group memberships (Supik 2014). DeZIM’s National Discrimination and Racism Monitor offers a notable approach, where both self-attribution and other-attribution are taken into account. The relationship between self-identification and subjectively perceived other-attribution highlights the complexity of racialization processes, as people may assign themselves to one racialized group, but identify with another one. At the same time, people can be labeled as racialized without identifying with any of these groups (see Module II in Sinanoglu 2022, 8).

The German Federal Anti-Discrimination Agency recommends collecting migration-related information based on self-identification (Beigang et al. 2017). Yet the category ‘migration background’ poses a particular challenge, as it is often not congruent with phenotypic characteristics (such as dark hair or skin color). Furthermore, ethnic discrimination is based on attributions by others, so that in addition to the self-description, the perceived categorization by others must also be recorded to make discrimination processes visible.

In this context, Germany has so far lacked an established categorization system for the self-assessment of one’s own ethnicity (Beigang et al. 2017) [Footnote 11]. Overall, it remains to be noted that there is a lack of instruments and continuous monitoring for the sensitive collection of discrimination-relevant categories (Beigang et al. 2017).

Accordingly, future research is urged to work on the challenges of the statistical compilation of diversity-related information and to advance innovative (longitudinal) analyses. The focus should be on the questions of which personal characteristics should be collected and how, and whether the answers might depend on factors such as industry, job category, or type of technology used.

5 Discussion

The increasing prevalence of data-driven technologies in HR processes raises important questions about the need for auditing and monitoring. The current proposal of the Artificial Intelligence Act (EU AI Act) classifies numerous AI systems in the area of personnel selection and recruitment as so-called high-risk technologies and formulates quality requirements for their development and post-deployment monitoring to ensure the protection of the fundamental rights of the individuals concerned. However, the focus lies primarily on the process of developing the technologies; how exactly monitoring should take place during the utilization of the technologies is not specified further. In view of the well-known automation bias, which is particularly pronounced in the case of technologies that appear to be intelligent, it is legitimate to ask to what extent the users of such tools would recognize outputs that lead to discrimination and also report them to the manufacturers. Thus, the current draft of the EU AI Act considers the regulation of AI largely detached from existing social power relations (Guijarro Santos 2023), in which corresponding technologies are used.

We have argued that existing technologies for personnel selection and recruitment on the market, especially those with a high risk of automation bias, should be evaluated both in context and with the participation of various stakeholders. To ensure comprehensive testing of these technologies, real data must be used in the real contexts in which the technologies are used. The auditing process should not only involve providers, users and, if necessary, certification authorities; access for researchers and advocacy organizations in particular must be granted to avoid a simplistic reduction to isolated metrics, as we have shown in our explorations of fairness metrics. This is especially necessary to verify and further explore the operationalization of difficult-to-measure concepts and constructs in practice, as we have shown in the example of migration*; or to be able to consider the perspectives of affected people who are increasingly confronted with a combination of algorithm-based decisions in their job search without being able to recognize them.

Such an approach requires collaboration among various stakeholders, especially for data and information sharing, resulting in considerable technical-organizational effort compared to post-deployment monitoring performed by developers (and/or users) alone. Nevertheless, as we have argued, a data trust as a trust-based architecture for evaluation offers numerous advantages for both technology providers and users, in particular the access to a wide range of real data, resulting in more robust results and the ability to make any necessary adjustments at an earlier stage. This is a particular advantage for small and medium-sized enterprises and providers with no large customers, as they are likely to have greater difficulties in ensuring adequate data quality and quantity for evaluations.

Further questions arise with regard to the legal basis of data trusts. The legal regulations for the use of data intermediaries are still in their infancy and require further elaboration. However, the legal basis chosen has implications, as we have shown with the example of partial availability based on consent.

Research is also needed concerning the identification of suitable intermediaries and financing models as well as the specification of technical and organizational measures such as the choice of platform technology or protocols for data security and privacy to ensure a high level of protection for sensitive data. Moreover, it is necessary to determine which stakeholders should have access to which data elements, which analyses should be possible for the technology providers, which for the users of the respective HR products, and which for external third parties such as NGOs and researchers. Research should also address questions about which external third parties can audit and whether all parties with access to audit should go through a certification process so that their analyses can be considered trustworthy. Evaluation of technologies based on data sets of different customers will raise reservations among companies, both on the manufacturer and user side, because of the possible disclosure of important business information. These must not only be addressed through detailed security, privacy and access concepts, but also by creating additional incentives. One such incentive would be the development of legislative requirements that necessitate contextualized participatory auditing along the lines we have developed. Alternatively, incentives such as certificates would be one way to translate the concept into practice (see, e.g., Ajunwa 2019). However, caution against ‘ethics-washing’ is needed. Fairness audits should not be linked to false expectations, as they cannot guarantee fairness, but primarily ‘expose blind spots’ (Raji et al. 2020).

Furthermore, there is the question of how to handle identified discrimination: which actors should be informed, and how is it ensured that the technology developers have adapted their systems? A new audit should definitely take place after the systems have been changed. As a good practice, initial positive results could be published and serve as an exemplary template. In the past, disclosure of bias in technologies not only led to a necessary thematization and scandalization of discrimination in sociopolitical discourse, but also to rapid improvement of tools (Raji and Buolamwini 2019).

Despite the relevance of participatory, contextualized evaluations, it is important to note that data are the underlying foundation for financial, political and social interventions. Having more comprehensive data is certainly not a panacea against discrimination, but primarily an improved diagnostic tool compared to current possibilities. Furthermore, it should not be overlooked that auditing processes entail emotional involvement (Keyes and Austin 2022). People in advocacy organizations, researchers, as well as others involved in the evaluation may be simultaneously affected by discrimination (which could be caused or exacerbated by the technologies under study); they are both subject and object (Pearce 2020). Auditing processes are ‘messy’ on many levels (Keyes and Austin 2022)—thus, the practical exploration of auditing is still at its very beginning.

Data availability

The research outcome described in this paper does not primarily rely on any specific data. Therefore, no data are associated with the findings and conclusions presented herein.

Notes

Detailed discussions on the critical appraisal of the (empirical) interpretation of the term ‘migration background’ can be found in El-Mafaalani (2017), among others.

This does not mean that HR decision-makers do not have access to sociodemographic information, since respective details can typically be found in CVs or inferred from proxies such as place of birth or name parts.

E.g., the EU fundamental rights to non-discrimination under Article 21, to cultural, religious and linguistic diversity under Article 22, to equality between women and men, respect for the rights of the child and the elderly under Articles 23 and 24, and the integration of persons with disabilities under Article 25.

Up to 4% of annual global sales or €20 million, whichever is greater.

Grades and (school) qualifications alone do not explain the poorer chances of young people with a migration background to find a trainee position, and the effect of grades differs for subgroups depending on gender, nationality and religion (cf. El-Mafaalani 2017).

By this we mean making a complex social process measurable with one or a few metrics, which is always accompanied by a reduction in complexity.

Gender is indirectly queried via the question about the desired salutation, whereby company A also offers ‘diverse’ as a selection option, which, however, was not selected by any of the applicants.

The discourse surrounding the ‘New Year’s Eve riots’ in Berlin highlighted a heightened association of first names with ethnicity, as exemplified by the question that the CDU parliamentary group in the Berlin House of Representatives put to the Internal Affairs Committee, ‘What are the first names of the crime suspects having German citizenship?’, which implied that they were not ‘real Germans’. https://www.berliner-zeitung.de/news/silvester-randale-cdu-berlin-fragt-nach-vornamen-deutscher-tatverdaechtiger-li.304116

Algorithms rarely use data containing explicitly protected information about individuals, simply because of data protection regulations. However, protected attributes can be learned from other variables with which they statistically correlate.

Using ethnicity as an example, DeZIM and collaborators highlight the following for developing a category system: (I) possibility of ethnic self-identification(s) of respondents (open-ended statements); (II) possibility to state multiple ethnic—national—cultural—ideological—religious—X—group identifications, hybrid, bi-/tri-/X*Y-/trans/intersectional identities; and (III) self-determined specification of discrimination experience modalities (additive, subtractive, interactive positive, interactive negative, multiplicative, accumulative), discrimination experience dimensionalities (individual \(\leftrightarrow\) group/experiential \(\leftrightarrow\) observed), and discrimination structuralities (institutional/structural/historical) (Center for Intersectional Justice 2019, 29)

References

Ajunwa I (2019) An auditing imperative for automated hiring. Harv J Law Technol. https://doi.org/10.2139/ssrn.3437631

Ajunwa I (2020) The Paradox of Automation as Anti-Bias Intervention. Cardozo Law Rev. https://doi.org/10.2139/ssrn.2746078

Algorithm Watch (2020) Data Trusts. Sovereignty in handling your personal data. https://algorithmwatch.org/en/data-trusts/. Accessed on 15 Feb 2023

Allhutter D, Cech F, Fischer F et al (2020) Algorithmic Profiling of Job Seekers in Austria: How Austerity Politics Are Made Effective. Front Big Data 3:5. https://doi.org/10.3389/fdata.2020.00005

Angwin J, Scheiber N, Tobin A (2020) Dozens of Companies Are Using Facebook to Exclude Older Workers From Job Ads. https://www.propublica.org/article/facebook-ads-age-discrimination-targeting. Accessed on 15 Feb 2023

Aronson E, Wilson TD, Akert RM, et al (2008) Sozialpsychologie, 6th edn. Pearson Studium

Baer S (2008) Ungleichheit der Gleichheiten? Zur Hierarchisierung von Diskriminierungsverboten. In: Klein E, Menke C (eds) Universalität - Schutzmechanismen - Diskriminierungsverbote. Berliner Wissenschafts-Verlag, Berlin, pp 421–450

Beigang S, Fetz K, Kalkum D, et al (2017) Diskriminierungserfahrungen in Deutschland. Ergebnisse einer Repräsentativ- und einer Betroffenenbefragung. Tech. rep., Nomos, Baden-Baden, https://www.antidiskriminierungsstelle.de/SharedDocs/downloads/DE/publikationen/Expertisen/expertise_diskriminierungserfahrungen_in_deutschland.pdf. Accessed on 15 Feb 2023

Benjamins R (2019) Is your AI system discriminating without knowing it?: The paradox between fairness and privacy. https://business.blogthinkbig.com/is-your-ai-system-discriminating-without-knowing-it-the-paradox-between-fairness-and-privacy/. Accessed on 15 Feb 2023

Blankertz A, Specht L (2021) What regulation for data trusts should look like. Tech. rep., Stiftung Neue Verantwortung

Bogen M (2019) All the Ways Hiring Algorithms Can Introduce Bias. Harvard Business Review, https://hbr.org/2019/05/all-the-ways-hiring-algorithms-can-introduce-bias. Accessed on 15 Feb 2023

Brevini B, Pasquale F (2020) Revisiting the Black Box Society by rethinking the political economy of big data. Big Data Soc. https://doi.org/10.1177/2053951720935146

Center for Intersectional Justice (2019) Intersektionalität in Deutschland. Chancen, Lücken und Herausforderungen. Tech. rep., Berlin, https://www.dezim-institut.de/fileadmin/PDF-Download/CIJ_Broschuere_190917_web.pdf. Accessed on 15 Feb 2023

Chen L, Hartmann V, West R (2023) DiPPS: Differentially Private Propensity Scores for Bias Correction. In: Lin YR, Cha M, Quercia D (eds) Proceedings of the Seventeenth International AAAI Conference on Web and Social Media, vol 17. Association for the Advancement of Artificial Intelligence (AAAI)

Cloos J, Mohr S (2022) Acceptance of data sharing in smartphone apps from key industries of the digital transformation: a representative population survey for Germany. Technol Forecast Soc Change. https://doi.org/10.1016/j.techfore.2021.121459

Crawford K, Dobbe R, Dryer T, et al (2019) AI Now 2019 Report. Tech. rep., AI Now Institute, New York, https://ainowinstitute.org/AI_Now_2019_Report.pdf. Accessed on 15 Feb 2023

Creative Diversity Network (2022) Diamond—Creative Diversity Network. https://creativediversitynetwork.com/diamond/

Dastin J (2018) Amazon Scraps Secret AI Recruiting Tool that Showed Bias against Women. In: Martin K (ed) Ethics of Data and Analytics. Auerbach Publications, pp 269–299, https://doi.org/10.1201/9781003278290-44

Digital Regulation Cooperation Forum-DRCF (2022) Auditing algorithms: the existing landscape, role of regulators and future outlook. Findings from the DRCF Algorithmic Processing workstream-Spring 2022 https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/1071554/DRCF_Algorithmic_audit.pdf. Accessed on 15 Feb 2023

Dobbe R, Dean S, Gilbert T, et al (2018) A broader view on bias in automated decision-making: Reflecting on epistemology and dynamics. ArXiv abs/1807.00553

El-Mafaalani A (2017) Diskriminierung von Menschen mit Migrationshintergrund. In: Scherr A, El-Mafaalani A, Yüksel G (eds) Handbuch Diskriminierung. Springer Reference Sozialwissenschaften, Springer Fachmedien, Wiesbaden, pp 465–478, https://doi.org/10.1007/978-3-658-10976-9_26

Engler A (2021a) Auditing employment algorithms for discrimination. Brookings, https://www.brookings.edu/research/auditing-employment-algorithms-for-discrimination/. Accessed on 15 Feb 2023

Engler A (2021b) Independent auditors are struggling to hold AI companies accountable. Fast Company, https://www.fastcompany.com/90597594/ai-algorithm-auditing-hirevue. Accessed on 15 Feb 2023

Fiske ST, Taylor SE (1984) Social Cognition. Topics in social psychology, Addison-Wesley Pub. Co, Reading, Mass

Funke M (2020) Die Vereinbarkeit von Data Trusts mit der DSGVO. Tech. rep., Algorithm Watch, Berlin, https://algorithmwatch.org/de/wp-content/uploads/2020/11/Die-Vereinbarkeit-von-Data-Trusts-mit-der-DSGVO-Michael-Funke-AlgorithmWatch-2020-1.pdf. Accessed on 15 Feb 2023

Gilovich T, Keltner D, Nisbett RE (2010) Social Psychology, 2nd edn. Norton, New York, NY

Green B, Chen Y (2021) Algorithmic Risk Assessments Can Alter Human Decision-Making Processes in High-Stakes Government Contexts. Proc ACM Hum-Comput Interact 5:1–33. https://doi.org/10.1145/3479562

Guijarro Santos V (2023) Nicht besser als nichts. Ein Kommentar zum KI-Verordnungsentwurf der EU Kommission und des Rats der EU. Zeitschrift für Digitalisierung und Recht 1

Haeri MA, Zweig KA (2020) The Crucial Role of Sensitive Attributes in Fair Classification. In: 2020 IEEE Symposium Series on Computational Intelligence (SSCI). IEEE, Canberra, ACT, Australia, pp 2993–3002, https://doi.org/10.1109/SSCI47803.2020.9308585

Hauer MP, Kevekordes J, Haeri MA (2021) Legal perspective on possible fairness measures—a legal discussion using the example of hiring decisions. Comput Law Secur Rev. https://doi.org/10.1016/j.clsr.2021.105583

Hu J (2019) Report: 99% of Fortune 500 companies use Applicant Tracking Systems. https://www.jobscan.co/blog/99-percent-fortune-500-ats/. Accessed on 15 Feb 2023

Hutchinson B, Mitchell M (2019) 50 Years of Test (Un)fairness: Lessons for Machine Learning. In: Proceedings of the Conference on Fairness, Accountability, and Transparency - FAT* ’19. ACM Press, Atlanta, GA, USA, pp 49–58, https://doi.org/10.1145/3287560.3287600