Abstract

The celebrated Fiat–Shamir transformation turns any public-coin interactive proof into a non-interactive one, which inherits the main security properties (in the random oracle model) of the interactive version. While originally considered in the context of 3-move public-coin interactive proofs, i.e., so-called \(\varSigma \)-protocols, it is now applied to multi-round protocols as well. Unfortunately, the security loss for a \((2\mu + 1)\)-move protocol is, in general, approximately \(Q^\mu \), where Q is the number of oracle queries performed by the attacker. In general, this is the best one can hope for, as it is easy to see that this loss applies to the \(\mu \)-fold sequential repetition of \(\varSigma \)-protocols, but it raises the question whether certain (natural) classes of interactive proofs feature a milder security loss. In this work, we give positive and negative results on this question. On the positive side, we show that for \((k_1, \ldots , k_\mu )\)-special-sound protocols (which cover a broad class of use cases), the knowledge error degrades linearly in Q, instead of \(Q^\mu \). On the negative side, we show that for t-fold parallel repetitions of typical \((k_1, \ldots , k_\mu )\)-special-sound protocols with \(t \ge \mu \) (and assuming for simplicity that t and Q are integer multiples of \(\mu \)), there is an attack that results in a security loss of approximately \(\frac{1}{2} Q^\mu /\mu ^{\mu +t}\).

1 Introduction

1.1 Background and State of the Art

The celebrated and broadly used Fiat–Shamir transformation turns any public-coin interactive proof into a non-interactive proof, which inherits the main security properties (in the random oracle model) of the interactive version. The rough idea is to replace the random challenges, which are provided by the verifier in the interactive version, by the hash of the current message (concatenated with the messages from previous rounds). By a small adjustment, where also the to-be-signed message is included in the hashes, the transformation turns any public-coin interactive proof into a signature scheme. Indeed, the latter is a commonly used design principle for constructing very efficient signature schemes.
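To make the hashing step concrete, the following is a minimal Python sketch of how a challenge could be derived from the transcript so far. It is an illustration only: SHA-256 stands in for the random oracle, and the function name `fs_challenge`, the length-prefixed encoding, and the reduction modulo the challenge-set size are assumptions of this sketch rather than part of the transformation as analyzed in this work.

```python
import hashlib

def fs_challenge(statement: bytes, prover_messages: list[bytes],
                 challenge_set_size: int) -> int:
    """Derive a challenge in {1, ..., challenge_set_size} from the transcript.

    SHA-256 is only a stand-in for the random oracle (assumption of this sketch).
    """
    h = hashlib.sha256()
    for part in [statement, *prover_messages]:
        # Length-prefix every part so that the concatenation is unambiguous.
        h.update(len(part).to_bytes(8, "big"))
        h.update(part)
    # Reducing modulo the set size is slightly biased unless the size divides
    # 2**256; this is acceptable for an illustration only.
    return int.from_bytes(h.digest(), "big") % challenge_set_size + 1

# Two-round example: the second challenge depends on both prover messages.
x = b"statement"
c1 = fs_challenge(x, [b"a1"], 2**16)
c2 = fs_challenge(x, [b"a1", b"a2"], 2**16)
```

For the signature variant mentioned above, the to-be-signed message would simply be hashed along with the statement.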

While originally considered in the context of 3-move public-coin interactive proofs, i.e., so-called \(\varSigma \)-protocols, the Fiat–Shamir transformation also applies to multi-round protocols. However, a major drawback in the case of multi-round protocols is that, in general, the security loss obtained by applying the Fiat–Shamir transformation grows exponentially with the number of rounds. Concretely, for any \((2\mu +1)\)-move interactive proof \(\varPi \) (where we may assume that the prover speaks first and last, so that the number of communication moves is indeed odd) that admits a cheating probability of at most \(\epsilon \), captured by the knowledge or soundness error, the Fiat–Shamir-transformed protocol \(\textsf{FS}[\varPi ]\) admits a cheating probability of (approximately) at most \(Q^\mu \cdot \epsilon \), where Q denotes the number of random-oracle queries admitted to the dishonest prover. A tight reduction is due to [12] with a security loss \(\binom{Q}{\mu } \approx \frac{Q^\mu }{\mu ^\mu }\), where the approximation holds whenever \(\mu \) is much smaller than Q, which is the typical case. More concretely, [12] introduces the notions of state-restoration soundness (SRS) and state-restoration knowledge (SRK), and it shows that any (knowledge) sound protocol \(\varPi \) satisfies these notions with the claimed security loss.Footnote 1 The security of \(\textsf{FS}[\varPi ]\) (with the same loss) then follows from the fact that these soundness notions imply the security of the Fiat–Shamir transformation.

Furthermore, there are (contrived) examples of multi-round protocols \(\varPi \) for which this \(Q^\mu \) security loss is almost tight. For instance, the \(\mu \)-fold sequential repetition \(\varPi \) of a special-sound \(\varSigma \)-protocol with challenge space \({\mathcal {C}}\) is \(\epsilon \)-sound with \(\epsilon = \frac{1}{|{{\mathcal {C}}}|^\mu }\), while it is easy to see that, by attacking the sequential repetitions round by round, investing \(Q/\mu \) queries per round to try to find a “good” challenge, and assuming \(|{{\mathcal {C}}}|\) to be much larger than Q, its Fiat–Shamir transformation \(\textsf{FS}[\varPi ]\) can be broken with probability approximately \(\big (\frac{Q}{\mu }\frac{1}{|{{\mathcal {C}}}|}\big )^\mu = \frac{Q^\mu }{\mu ^\mu }\cdot \epsilon \).Footnote 2
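The arithmetic of this round-by-round attack can be checked with a small Python sketch using exact fractions. It assumes, for illustration only, that in each round exactly one challenge lets the cheating prover continue and that every random-oracle query yields an independent uniform challenge. The function names and the toy parameters are hypothetical.

```python
from fractions import Fraction

def interactive_soundness_error(num_challenges: int, mu: int) -> Fraction:
    # epsilon = 1 / |C|^mu for the mu-fold sequential repetition.
    return Fraction(1, num_challenges) ** mu

def attack_success(Q: int, mu: int, num_challenges: int) -> Fraction:
    # Spend Q/mu queries per round; a round is won if at least one query
    # returns the single "good" challenge (modelling assumption).
    tries_per_round = Q // mu
    miss_all = (1 - Fraction(1, num_challenges)) ** tries_per_round
    return (1 - miss_all) ** mu

def approx_attack_success(Q: int, mu: int, num_challenges: int) -> Fraction:
    # The approximation (Q / (mu * |C|))^mu, valid when |C| is much larger than Q.
    return Fraction(Q, mu * num_challenges) ** mu

Q, mu, C = 4, 2, 10                        # toy parameters
eps = interactive_soundness_error(C, mu)   # 1/100
exact = attack_success(Q, mu, C)           # 361/10000
approx = approx_attack_success(Q, mu, C)   # 1/25
loss = exact / eps                         # 361/100, close to (Q/mu)^mu = 4
```

Exact fractions are only practical for toy sizes; for realistic parameters one would evaluate the approximation instead.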

For \(\mu \) beyond 1 or 2, let alone for non-constant \(\mu \) (e.g., IOP-based protocols [7, 11, 12] and also Bulletproofs-like protocols [9, 10]), this is a very unfortunate situation when it comes to choosing concrete security parameters. If one wants to rely on the proven security reduction, one needs to choose a large security parameter for \(\varPi \), in order to compensate for the order \(Q^\mu \) security loss, affecting its efficiency; alternatively, one has to give up on proven security and simply assume that the security loss is much milder than what the general bound suggests. Often, the security loss is simply ignored.

This situation gives rise to the following question: Do there exist natural classes of multi-round public-coin interactive proofs for which the security loss is more benign than what the general reduction suggests? Ideally, the general \(Q^\mu \) loss appears for contrived examples only.

So far, the only positive results, which yield a security loss linear in Q, were obtained in the context of straight-line/online extractors that do not require rewinding. These extractors either rely on the algebraic group model (AGM) [27], or are restricted to protocols using hash-based commitment schemes in the random oracle model [12]. To analyze the properties of straight-line extractors, new auxiliary soundness notions were introduced: round-by-round (RBR) soundness [19] and RBR knowledge [20]. However, it is unclear if and how these notions can be used in scenarios where straight-line extraction does not apply.

In this work, we address the above question (in the plain random-oracle model, and without restricting to schemes that involve hash-based commitments), and give both positive and negative answers, as explained in more detail below.

1.2 Our Results

1.2.1 Positive Result

We show that the Fiat–Shamir transformation of any \((k_1,\dots ,k_{\mu })\)-special-sound interactive proof has a security loss of at most \(Q+1\). More concretely, we consider the knowledge error \(\kappa \) as the figure of merit, i.e., informally, the maximal probability of the verifier accepting the proof when the prover does not have a witness for the claimed statement, and we prove the following result, also formalized in the theorem below. For any \((k_1,\dots ,k_{\mu })\)-special-sound \((2\mu +1)\)-move interactive proof \(\varPi \) with knowledge error \(\kappa \) (which is a known function of \((k_1,\dots ,k_{\mu })\)), the Fiat–Shamir transformed protocol \(\textsf{FS}[\varPi ]\) has a knowledge error at most \((Q+1)\cdot \kappa \). This result is directly applicable to a long list of recent zero-knowledge proof systems, e.g., [1, 3, 9, 10, 13, 14, 25, 32, 38]. While all these works consider the Fiat–Shamir transformation of special-sound protocols, most of them ignore the associated security loss.

Main Theorem

(Theorem 2) Let \(\varPi \) be a \((k_1,\dots ,k_\mu )\)-out-of-\((N_1,\dots ,N_\mu )\) special-sound interactive proof with knowledge error \(\kappa \). Then, the Fiat–Shamir transformation \(\textsf{FS}[\varPi ]\) of \(\varPi \) is knowledge sound with knowledge error

\[(Q+1) \cdot \kappa ,\]

where Q is the number of random-oracle queries admitted to the dishonest prover.

Since in the Fiat–Shamir transformation of any \((2\mu +1)\)-move protocol \(\varPi \), a dishonest prover can simulate any attack against \(\varPi \) and can try \(Q/\mu \) times when allowed to do Q queries in total, our new upper bound \((Q+1) \cdot \kappa \) is close to the trivial lower bound \(1 - \left( 1- \kappa \right) ^{Q/\mu } \approx Q \kappa /\mu \). Another, less explicit, security measure in the context of knowledge soundness is the runtime of the knowledge extractor. Our bound on the knowledge error holds by means of a knowledge extractor that makes an expected number of \(K + Q\cdot (K-1)\) queries, where \(K = k_1 \cdots k_{\mu }\). This is a natural bound: K is the number of necessary distinct “good” transcripts (which form a certain tree-like structure). The loss of \(Q\cdot (K-1)\) captures the fact that a prover may finish different proofs, depending on the random oracle answers, and only one out of Q proofs may be useful for extraction, as explained below.
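As a numerical illustration of how close these quantities are, the following Python sketch evaluates the upper bound \((Q+1)\cdot \kappa \), the trivial lower bound \(1-(1-\kappa )^{Q/\mu }\), and the expected query count \(K + Q\cdot (K-1)\) of the extractor. The function names and the toy parameters are assumptions made for this example only.

```python
from fractions import Fraction
from math import prod

def fs_knowledge_error_bound(Q: int, kappa: Fraction) -> Fraction:
    # Upper bound (Q + 1) * kappa on the knowledge error of FS[Pi].
    return (Q + 1) * kappa

def trivial_attack_success(Q: int, mu: int, kappa: Fraction) -> Fraction:
    # Repeat an attack against Pi Q/mu times: 1 - (1 - kappa)^(Q/mu).
    return 1 - (1 - kappa) ** (Q // mu)

def expected_extractor_queries(Q: int, ks: tuple[int, ...]) -> int:
    # K + Q * (K - 1), with K = k_1 * ... * k_mu.
    K = prod(ks)
    return K + Q * (K - 1)

kappa = Fraction(1, 100)   # toy knowledge error of the interactive proof
Q, mu = 9, 3               # toy query budget and number of challenges
upper = fs_knowledge_error_bound(Q, kappa)         # 1/10
lower = trivial_attack_success(Q, mu, kappa)       # 29701/1000000
queries = expected_extractor_queries(Q, (2, 2, 2))  # 8 + 9 * 7 = 71
```

In this toy instance the lower bound is close to \(Q\kappa /\mu = 3/100\), and the two bounds differ by roughly a factor of \(\mu \).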

Our result on the knowledge soundness of \(\textsf{FS}[\varPi ]\) for special-sound protocols \(\varPi \) immediately carries over to ordinary soundness of \(\textsf{FS}[\varPi ]\), with the same security loss \(Q+1\). However, proving knowledge soundness is more intricate; showing a linear-in-Q loss for ordinary soundness can be obtained via simpler arguments (e.g., there is no need to argue efficiency of the extractor).

The construction of our knowledge extractor is motivated by the extractor from [2] in the interactive case, but the analysis here in the context of a non-interactive proof is much more involved. We analyze the extractor in an inductive manner and capture the induction step (and the base case) by means of an abstract experiment. The crucial idea for the analysis (and extractor) is how to deal with accepting transcripts which are not useful.

To see the core problem, consider a \(\varSigma \)-protocol, i.e., a 3-move k-special-sound interactive proof, and a semi-honest prover that knows a witness and behaves as follows. It prepares, independently, Q first messages \(a^1, \ldots , a^Q\) and asks for all hashes \(c^i = \textsf{RO}(a^i)\), and then decides “randomly” (e.g., using a hash over all random oracle answers) which thread to complete, i.e., for which \(i^*\) to compute the response z and then output the valid proof \((a^{i^*},z)\). When the extractor then reprograms the random oracle at the point \(a^{i^*}\) to try to obtain another valid response but now for a different challenge, this affects \(i^*\), and most likely the prover will then use a different thread \(j^*\) and output the proof \((a^{j^*},z')\) with \(a^{j^*} \ne a^{i^*}\). More precisely, \(\Pr (j^* = i^*)= 1/Q\). Hence, an overhead of Q appears in the runtime.
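The effect can be reproduced with a small Monte Carlo experiment. The Python sketch below models the semi-honest prover described above: it picks its thread by hashing all Q random-oracle answers, and the extractor then resamples the answer at the chosen thread. The selection rule via SHA-256, the 32-bit challenges, and all names are assumptions of this sketch.

```python
import hashlib
import random

def chosen_thread(challenges: list[int]) -> int:
    # The prover decides "randomly" which thread to complete, here by
    # hashing all random-oracle answers (assumption of this sketch).
    data = b"".join(c.to_bytes(8, "big") for c in challenges)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % len(challenges)

def estimate_same_thread(Q: int, trials: int, seed: int = 0) -> float:
    """Estimate Pr(j* = i*) after reprogramming the oracle at thread i*."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        challenges = [rng.getrandbits(32) for _ in range(Q)]
        i_star = chosen_thread(challenges)
        # The extractor reprograms the oracle answer for the chosen thread.
        challenges[i_star] = rng.getrandbits(32)
        j_star = chosen_thread(challenges)
        hits += (j_star == i_star)
    return hits / trials

# With Q = 8 threads the estimate should be close to 1/8.
estimate = estimate_same_thread(Q=8, trials=20000)
```

The experiment only covers this particular prover; the difficulty addressed in the analysis is that an arbitrary prover need not behave this uniformly.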

In case of an arbitrary dishonest prover with an unknown strategy for computing the \(a^i\)’s above, and with an arbitrary (unknown) success probability \(\epsilon \), the intuition remains: after reprogramming, we still expect \({\Pr (j^* = i^*) \ge 1/Q}\) and thus a linear-in-Q overhead in the runtime of the extractor. However, providing a rigorous proof is complicated by the fact that the event \(j^* = i^*\) is not necessarily independent of the prover producing a valid proof (again) after the reprogramming. Furthermore, conditioned on the prover having been successful in the first run and conditioned on the corresponding \(i^*\), the success probability of the prover after the reprogramming may be skewed, i.e., may not be \(\epsilon \) anymore. As a warm-up for our general multi-round result, we first give a rigorous analysis of the above case of a \(\varSigma \)-protocol. For that purpose, we introduce an abstract sampling game that mimics the behavior of the extractor in finding two valid proofs with \(j^* = i^*\), and we bound the success probability and the “cost” (i.e., the number of samples needed) of the game, which directly translate to the success probability and the runtime of the extractor.

Perhaps surprisingly, when moving to multi-round protocols, dealing with the knowledge error is relatively simple by recursively composing the extractor for the \(\varSigma \)-protocol. However, controlling the runtime is intricate. If the extractor is recursively composed, i.e., it makes calls to a sub-extractor to obtain a sub-tree, then a naive construction and analysis gives a blow-up of \(Q^\mu \) in the runtime. Intuitively, this is because only a 1/Q fraction of the sub-extractor runs produce useful sub-trees, i.e., sub-trees which extend the current \(a^{i^*}\). The other trees belong to some \(a^{j^*}\) with \(j^* \ne i^*\) and are thus useless. This overhead of Q then accumulates per round (i.e., per sub-extractor).

The crucial observation that we exploit in order to overcome the above issue is that the very first (accepting) transcript sampled by a sub-extractor already determines whether a sub-tree will be (potentially) useful, or not. Thus, if this very first transcript already shows that the sub-tree will not be useful, there is no need to run the full-fledged sub-tree extractor, saving precious time.

To illustrate this further, we again consider the simple case of a dishonest prover that succeeds with certainty. Then, after the first run of the sub-extractor to produce the first sub-tree (which requires expected time linear in Q) and having reprogrammed the random oracle with the goal to find another sub-tree that extends the current \(a^{i^*}\), it is cheaper to first do a single run of the prover to learn \(j^*\) and only run the full-fledged sub-extractor if \(j^* = i^*\), and otherwise reprogram and retry. With this strategy, we expect Q tries, followed by the run of the sub-extractor, to find a second fitting sub-tree. Altogether, this amounts to linear-in-Q runs of the prover, compared to the \(Q^2\) runs of the naive approach.
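The cost comparison can be written down as a simplified model. The Python sketch below assumes a prover that always succeeds, a match \(j^* = i^*\) that occurs independently with probability 1/Q per try (so Q tries are expected), and a sub-extractor that costs `sub_cost` prover runs. These modelling assumptions and the function names are illustrative only.

```python
def naive_expected_cost(Q: int, sub_cost: int) -> int:
    # Naive approach: every try runs the full sub-extractor, and Q tries
    # are expected until the resulting sub-tree extends the current thread.
    return Q * sub_cost

def check_first_expected_cost(Q: int, sub_cost: int) -> int:
    # Check-first approach: every try costs a single prover run; the full
    # sub-extractor is run only once, after a try with j* = i*.
    return Q * 1 + sub_cost

Q = 100
sub_cost = Q  # the sub-extractor itself takes time linear in Q
naive = naive_expected_cost(Q, sub_cost)         # 10000, i.e., Q^2
smart = check_first_expected_cost(Q, sub_cost)   # 200, i.e., linear in Q
```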

Again, what complicates the rigorous analysis is that the prover may succeed with bounded probability \(\epsilon \) only, and the event \(j^* = i^*\) may depend on the prover/sub-extractor being successful (again) after the reprogramming. Furthermore, as an additional complication, conditioned on the sub-extractor having been successful in the first run and conditioned on the corresponding \(i^*\), both the success probability of the prover and the runtime of the sub-extractor after the reprogramming may be skewed now. Again, we deal with this by considering an abstract sampling game that mimics the behavior of the extractor, but where the cost function is now more fine-grained in order to distinguish between a single run of the prover and a run of the sub-extractor. Because of this more fine-grained way of defining the “cost”, the analysis of the game also becomes substantially more intricate.

1.2.2 Negative Result

We also show that the general exponential security loss of the Fiat–Shamir transformation, when applied to a multi-round protocol, is not an artefact of contrived examples, but there exist natural protocols that indeed have such an exponential loss. For instance, our negative result applies to the lattice-based protocols in [2, 17]. Concretely, we show that the t-fold parallel repetition \(\varPi ^t\) of a typical \((k_1,\dots ,k_{\mu })\)-special-sound \((2\mu +1)\)-move interactive proof \(\varPi \) features this behavior when \(t \ge \mu \). For simplicity, let us assume that t and Q are multiples of \(\mu \). Then, in more detail, we show that for any typical \((k_1,\dots ,k_{\mu })\)-special-sound protocol \(\varPi \) there exists a poly-time Q-query prover \(\mathcal {P}^*\) against \(\textsf{FS}[\varPi ^t]\) that succeeds in making the verifier accept with probability \(\approx \frac{1}{2} Q^\mu \kappa ^t/\mu ^{\mu +t}\) for any statement x, where \(\kappa \) is the knowledge error (as well as the soundness error) of \(\varPi \). Thus, with the claimed probability, \(\mathcal {P}^*\) succeeds in making the verifier accept for statements x that are not in the language and/or for which \(\mathcal {P}^*\) does not know a witness. Given that \(\kappa ^t\) is the soundness error of \(\varPi ^t\) (i.e., the soundness error of \(\varPi ^t\) as an interactive proof), this shows that the soundness error of \(\varPi ^t\) grows proportionally with \(Q^\mu \) when applying the Fiat–Shamir transformation. Recent work on the knowledge error of the parallel repetition of special-sound multi-round interactive proofs [5] shows that \(\kappa ^t\) is also the knowledge error of \(\varPi ^t\), and so the above shows that the same exponential loss holds in the knowledge error of the Fiat–Shamir transformation of a parallel repetition.
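To get a feeling for the size of this loss, the following Python sketch evaluates the approximate attack probability \(\frac{1}{2} Q^\mu \kappa ^t/\mu ^{\mu +t}\) on a logarithmic scale. The function name and the parameters are hypothetical, and the formula is only the approximation stated above; it is meaningful only when \(t \ge \mu \) and the result stays well below 1.

```python
import math

def log2_attack_success(log2_Q: float, mu: int, t: int, log2_kappa: float) -> float:
    # log2 of (1/2) * Q^mu * kappa^t / mu^(mu + t).
    return -1 + mu * log2_Q + t * log2_kappa - (mu + t) * math.log2(mu)

# Toy parameters: Q = 2^40 queries, mu = 2 challenges, t = 2 repetitions,
# and kappa = 2^-64 for a single instance.
log2_interactive = 2 * -64                       # kappa^t = 2^-128
log2_fs = log2_attack_success(40, 2, 2, -64)     # -53.0
bits_lost = log2_fs - log2_interactive           # 75.0
```

In this example the interactive soundness error of \(2^{-128}\) degrades to roughly \(2^{-53}\) after the Fiat–Shamir transformation.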

1.3 Related Work

1.3.1 Independent Concurrent Work

In independent and to a large extent concurrent work,Footnote 3 Wikström [37] achieves a similar positive result on the Fiat–Shamir transformation, using a different approach and different techniques: [37] reduces non-interactive extraction to a form of interactive extraction and then applies a generalized version of [36], while our construction adapts the interactive extractor from [2] and offers a direct analysis. One small difference in the results, which is mainly of theoretical interest, is that our result holds and is meaningful for any \(Q < |\mathcal {C}|\), whereas [37] requires the challenge set \(\mathcal {C}\) to be large.

1.3.2 The Forking Lemma

The security of the Fiat–Shamir transformation of k-special-sound 3-move protocols is widely used in the construction of signature schemes. There, unforgeability is typically proven via a forking lemma [18, 33], which extracts, with probability roughly \(\epsilon ^k/Q\), a witness from a signature-forging adversary with success probability \(\epsilon \), where Q is the number of queries to the random oracle. The loss \(\epsilon ^k\) is due to strict polynomial time extraction (and can be decreased, but in general not down to \(\epsilon \)). Such a k-th power loss in the success probability for a constant k is fine in certain settings, e.g., for proving the security of signature schemes; however, it is not acceptable for proofs of knowledge (which, on the other hand, consider expected polynomial time extraction [16]).

A previous version of [26] generalizes the original forking lemma [18, 33] to accommodate Fiat–Shamir transformations of a larger class of (multi-round) interactive proofs. However, their forking lemma only targets a subclass of the \((k_1,\dots ,k_\mu )\)-special-sound interactive proofs considered in this work. Moreover, in terms of (expected) runtime and success probability, our techniques significantly outperform their generalized forking lemma. For this reason, the latest version of [26] is based on our extraction techniques instead.

A forking lemma for interactive multi-round proofs was presented in [10], and its analysis was improved in a line of follow-up works [8, 23, 28, 30, 36]. This forking lemma shows that multi-round special-sound interactive proofs satisfy a notion of knowledge soundness called witness extended emulation. Eventually, it was shown that \((k_1,\dots ,k_{\mu })\)-special-soundness tightly implies knowledge soundness [2].

The aforementioned techniques for interactive proofs are not directly applicable to the Fiat–Shamir mode. First, incorporating the query complexity Q of a dishonest prover \(\mathcal {P}^*\) attacking the non-interactive Fiat–Shamir transformation complicates the analysis. Second, a naive adaptation of the forking lemmas for interactive proofs gives a blow-up of \(Q^\mu \) in the runtime.

1.4 Structure of the Paper

Section 2 recalls essential preliminaries. In Sect. 3, the abstract sampling game is defined and analyzed. It is used in Sect. 4 to handle the Fiat–Shamir transformation of \(\varSigma \)-protocols. Building on the intuition, Sect. 5 introduces the refined game, and Sect. 6 uses it to handle multi-round protocols. Lastly, our negative result on parallel repetitions is presented in Sect. 7.

2 Preliminaries

2.1 Interactive Proofs

Let \(R \subseteq \{0,1\}^* \times \{0,1\}^*\) be a binary relation. Following standard conventions, we call \((x; w) \in R\) a statement-witness pair, that is, x is the statement and w is a witness for x. The set of valid witnesses for a statement x is denoted by R(x), i.e., \(R(x) = \{ w: (x;w)\in R\}\). A statement that admits a witness is said to be a true or valid statement; the set of true statements is denoted by \(L_R\), i.e., \(L_R = \{ x: \exists \, w \text { s.t. } (x;w)\in R\}\). The relation R is an NP relation if the validity of a witness w can be verified in time polynomial in the size |x| of the statement x. From now on, we assume all relations to be NP relations.

In an interactive proof for a relation R, a prover \(\mathcal {P}\) aims to convince a verifier \(\mathcal {V}\) that a statement x admits a witness, or even that the prover knows a witness \(w \in R(x)\).

Definition 1

(Interactive Proof) An interactive proof \(\varPi = (\mathcal {P}, \mathcal {V})\) for relation R is an interactive protocol between two probabilistic machines, a prover \(\mathcal {P}\) and a polynomial time verifier \(\mathcal {V}\). Both \(\mathcal {P}\) and \(\mathcal {V}\) take as public input a statement x, and additionally, \(\mathcal {P}\) takes as private input a witness \(w \in R(x)\). The verifier \(\mathcal {V}\) either accepts or rejects and its output is denoted as \((\mathcal {P}(w),\mathcal {V})(x)\). Accordingly, we say the corresponding transcript (i.e., the set of all messages exchanged in the protocol execution) is accepting or rejecting.

Let us introduce some conventions and additional properties for interactive proof systems. We assume that the prover \(\mathcal {P}\) sends the first and the last message in any interactive proof \(\varPi = (\mathcal {P},\mathcal {V})\). Hence, the number of communication moves \(2\mu +1\) is always odd. We also say \(\varPi \) is a \((2\mu +1)\)-move protocol. We will refer to multi-round protocols as a way of emphasizing that we are not restricting to 3-move protocols.

Informally, an interactive proof \(\varPi = (\mathcal {P}, \mathcal {V})\) is complete if for any statement-witness pair \((x;w) \in R\) the honest execution results in the verifier accepting with high probability. It is sound if the verifier rejects false statements, i.e., \(x \notin L_R\), with high probability. We neither require nor formally define completeness or soundness, as our main focus is knowledge soundness. Intuitively, a protocol is knowledge sound if any (potentially malicious) prover \(\mathcal {P}^*\) which convinces the verifier must “know” a witness w such that \((x; w) \in R\). Informally, this means that any prover \(\mathcal {P}^*\) with \(\Pr ( (\mathcal {P}^*,\mathcal {V})(x)=\textsf {accept})\) large enough is able to efficiently compute a witness \(w \in R(x)\).

Definition 2

(Knowledge Soundness) An interactive proof \((\mathcal {P},\mathcal {V})\) for relation R is knowledge sound with knowledge error \(\kappa :\mathbb {N}\rightarrow [0,1]\) if there exists a positive polynomial q and an algorithm \(\mathcal {E}\), called a knowledge extractor, with the following properties. Given input x and black-box oracle access to a (potentially dishonest) prover \(\mathcal {P}^*\), the extractor \(\mathcal {E}\) runs in an expected number of steps that is polynomial in |x| (counting queries to \(\mathcal {P}^*\) as a single step) and outputs a witness \(w \in R(x)\) with probability

\[\Pr \bigl ( (x;w) \in R : w \leftarrow \mathcal {E}^{\mathcal {P}^*}(x) \bigr ) \ge \frac{\epsilon (\mathcal {P}^*,x) - \kappa (|x|)}{q(|x|)},\]

where \(\epsilon (\mathcal {P}^*,x):= \Pr ( (\mathcal {P}^*,\mathcal {V})(x) = \textsf {accept})\).

Remark 1

From the linearity of the expectation, it follows easily that it is sufficient to consider deterministic provers \(\mathcal {P}^*\) in Definition 2.

An important class of protocols has particularly simple verifiers: effectively stateless verifiers which send uniformly random challenges to the prover, and run an efficient verification function on the final transcript.

Definition 3

(Public-Coin) An interactive proof \(\varPi = (\mathcal {P},\mathcal {V})\) is public-coin if all of \(\mathcal {V}\)’s random choices are made public. The message \(c_i \leftarrow \mathcal {C}_i\) of \(\mathcal {V}\) in the 2i-th move is called the i-th challenge, and \(\mathcal {C}_i\) is the challenge set. We assume every challenge set to be enumerated, i.e., encoded as \(\{1, \dots , |\mathcal {C}_i|\}\).

2.2 Special-Sound Multi-Round Protocols

The class of interactive proofs we are interested in are those where knowledge soundness follows from another property, namely special-soundness. Special-soundness is often simpler to verify, and many protocols satisfy this notion. Note that we require special-sound protocols to be public-coin.

Definition 4

(k-out-of-N Special-Soundness) Let \(k,N \in \mathbb {N}\). A 3-move public-coin interactive proof \(\varPi = (\mathcal {P},\mathcal {V})\) for relation R, with challenge set of cardinality \(N\ge k\), is k-out-of-N special-sound if there exists a polynomial time algorithm that, on input a statement x and k accepting transcripts \((a,c_1,z_1), \dots , (a,c_k,z_k)\) with common first message a and pairwise distinct challenges \(c_1,\dots ,c_k\), outputs a witness \(w \in R(x)\). We also say \(\varPi \) is k-special-sound and, if \(k=2\), it is simply said to be special-sound.
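As an illustration of Definition 4 for \(k=2\), the following Python sketch extracts a witness from two accepting transcripts of Schnorr's protocol for knowledge of a discrete logarithm. Schnorr's protocol and the toy group parameters are not taken from this work; they are assumptions chosen only to have a concrete special-sound \(\varSigma \)-protocol at hand, and the parameters are far too small to be secure.

```python
# Toy parameters (assumption): G generates a subgroup of prime order 11 mod 23.
P, ORDER, G = 23, 11, 2

def verify(h: int, a: int, c: int, z: int) -> bool:
    # Schnorr verification: G^z == a * h^c (mod P).
    return pow(G, z, P) == (a * pow(h, c, P)) % P

def extract(t1: tuple[int, int, int], t2: tuple[int, int, int]) -> int:
    """Compute the witness from two accepting transcripts (a, c, z) that
    share the first message a and have distinct challenges."""
    (a1, c1, z1), (a2, c2, z2) = t1, t2
    if a1 != a2 or (c1 - c2) % ORDER == 0:
        raise ValueError("need a common first message and distinct challenges")
    # z1 - z2 = (c1 - c2) * w  (mod ORDER), so divide by c1 - c2.
    return ((z1 - z2) * pow((c1 - c2) % ORDER, -1, ORDER)) % ORDER

# Honest transcripts for witness w = 7, randomness r = 3, challenges 2 and 5.
w, r = 7, 3
h, a = pow(G, w, P), pow(G, r, P)
t1 = (a, 2, (r + 2 * w) % ORDER)
t2 = (a, 5, (r + 5 * w) % ORDER)
recovered = extract(t1, t2)
```

The modular inverse via `pow(..., -1, ORDER)` requires Python 3.8 or later.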

We refer to a 3-move public-coin interactive proof as a \(\varSigma \)-protocol. Note that often a \(\varSigma \)-protocol is required to be (perfectly) complete, special-sound and special honest-verifier zero-knowledge (SHVZK) by definition. We do not require a \(\varSigma \)-protocol to have these additional properties.

Definition 5

(\(\varSigma \)-Protocol) A \(\varSigma \)-protocol is a 3-move public-coin interactive proof.

In order to generalize k-special-soundness to multi-round protocols, we introduce the notion of a tree of transcripts. We follow the definition of [2].

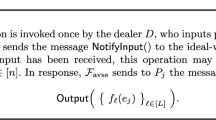

Definition 6

(Tree of Transcripts) Let \(k_1,\dots ,k_{\mu } \in \mathbb {N}\). A \((k_1, \dots , k_{\mu })\)-tree of transcripts for a \((2\mu +1)\)-move public-coin interactive proof \(\varPi = (\mathcal {P},\mathcal {V})\) is a set of \(K = \prod _{i=1}^{\mu } k_i\) transcripts arranged in the following tree structure. The nodes in this tree correspond to the prover’s messages and the edges to the verifier’s challenges. Every node at depth i has precisely \(k_i\) children corresponding to \(k_i\) pairwise distinct challenges. Every transcript corresponds to exactly one path from the root node to a leaf node. See Fig. 1 for a graphical illustration. We refer to the corresponding tree of challenges as a \((k_1, \dots , k_{\mu })\)-tree of challenges.

We will also write \(\textbf{k}= (k_1,\dots ,k_{\mu }) \in \mathbb {N}^{\mu }\) and refer to a \(\textbf{k}\)-tree of transcripts or a \(\textbf{k}\)-tree of challenges.

Fig. 1 \((k_1,\dots ,k_\mu )\)-tree of transcripts of a \((2\mu +1)\)-move interactive proof [2]
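The tree structure of Definition 6 can be checked mechanically. The Python sketch below tests whether a set of challenge tuples \((c_1,\dots ,c_\mu )\), one per transcript, forms a \((k_1,\dots ,k_\mu )\)-tree of challenges. It is a simplified illustration with a hypothetical function name: it inspects the challenges only and does not check that the prover's messages agree on shared prefixes, as a full tree of transcripts requires.

```python
from math import prod

def is_tree_of_challenges(paths, ks) -> bool:
    """Return True if the challenge tuples in `paths` form a ks-tree."""
    paths = {tuple(p) for p in paths}
    if any(len(p) != len(ks) for p in paths):
        return False

    def check(suffixes, depth):
        if depth == len(ks):
            return True  # reached a leaf
        # Group the remaining paths by their next challenge.
        children = {}
        for s in suffixes:
            children.setdefault(s[0], set()).add(s[1:])
        # A node at this level needs exactly ks[depth] distinct challenges,
        # and each child must again be the root of a valid sub-tree.
        return (len(children) == ks[depth]
                and all(check(sub, depth + 1) for sub in children.values()))

    return check(paths, 0)

ks = (2, 2)
good = {(1, 1), (1, 2), (3, 5), (3, 7)}   # K = 2 * 2 = 4 transcripts
bad = {(1, 1), (1, 2), (3, 5)}            # the node below challenge 3 has one child
```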

Definition 7

(\((k_1,\dots ,k_{\mu })\)-out-of-\((N_1,\dots ,N_{\mu })\) Special-Soundness) Let \(k_1,\dots ,k_{\mu },N_1,\dots ,N_{\mu } \in \mathbb {N}\). A \((2\mu +1)\)-move public-coin interactive proof \(\varPi = (\mathcal {P},\mathcal {V})\) for relation R, where \(\mathcal {V}\) samples the i-th challenge from a set of cardinality \(N_i \ge k_i\) for \(1 \le i \le \mu \), is \((k_1,\dots ,k_{\mu })\)-out-of-\((N_1,\dots ,N_{\mu })\) special-sound if there exists a polynomial time algorithm that, on input a statement x and a \((k_1,\dots ,k_{\mu })\)-tree of accepting transcripts outputs a witness \(w \in R(x)\). We also say \(\varPi \) is \((k_1,\dots ,k_{\mu })\)-special-sound.

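It is well known that, for 3-move protocols, k-special-soundness implies knowledge soundness, but only recently was it shown that more generally, for public-coin \((2\mu +1)\)-move protocols, \((k_1,\dots ,k_{\mu })\)-out-of-\((N_1,\dots ,N_{\mu })\) special-soundness tightly implies knowledge soundness [2], with knowledge error

\[{\text {Er}}(k_1,\dots ,k_{\mu };N_1,\dots ,N_{\mu }) = 1 - \prod _{i=1}^{\mu } \frac{N_i-k_i+1}{N_i} = 1 - \prod _{i=1}^{\mu } \Bigl (1-\frac{k_i-1}{N_i}\Bigr ),\]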

which matches the probability that at least one of the random challenges \(c_i\) hits a certain set \(\Gamma _i\) of size \(k_i-1\). Since typical protocols admit a trivial attack that succeeds if at least one of the random challenges \(c_i\) hits a certain set \(\Gamma _i\) of size \(k_i-1\) (we capture this by the special-unsoundness property in Sect. 7), the soundness/knowledge error \({\text {Er}}\) is tight for general special-sound protocols.

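Note that \({\text {Er}}(k;N)=(k-1)/N\) and, for all \(1 \le m \le \mu \),

\[{\text {Er}}(k_m,\dots ,k_{\mu };N_m,\dots ,N_{\mu }) = 1 - \frac{N_m-k_m+1}{N_m} \Bigl (1 - {\text {Er}}(k_{m+1},\dots ,k_{\mu };N_{m+1},\dots ,N_{\mu })\Bigr ),\]

where we define \({\text {Er}}(\emptyset ;\emptyset )=0\), so that the case \(m=\mu \) recovers \({\text {Er}}(k_\mu ;N_\mu )=(k_\mu -1)/N_\mu \). If \(N_1=\dots =N_\mu =N\), i.e., if the verifier samples all \(\mu \) challenges from a set of size N, we simply write \({\text {Er}}(k_1,\dots ,k_{\mu };N)\), or \({\text {Er}}(\textbf{k};N)\) for \(\textbf{k}= (k_1,\dots ,k_{\mu })\).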
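The interpretation of \({\text {Er}}\) as a hitting probability can be verified by brute force for small parameters. The Python sketch below assumes the closed form \(1-\prod _i (1-(k_i-1)/N_i)\) and compares it with an exhaustive count over all challenge tuples, taking \(\Gamma _i = \{1,\dots ,k_i-1\}\) as an arbitrary choice of the sets; the function names are hypothetical.

```python
from fractions import Fraction
from itertools import product

def er_closed_form(ks, Ns) -> Fraction:
    # 1 - prod_i (N_i - k_i + 1) / N_i
    miss_all = Fraction(1)
    for k, N in zip(ks, Ns):
        miss_all *= Fraction(N - k + 1, N)
    return 1 - miss_all

def er_by_enumeration(ks, Ns) -> Fraction:
    # Count the challenge tuples in which at least one c_i lies in
    # Gamma_i = {1, ..., k_i - 1} (assumed choice of the sets).
    total = hits = 0
    for cs in product(*(range(1, N + 1) for N in Ns)):
        total += 1
        hits += any(c <= k - 1 for c, k in zip(cs, ks))
    return Fraction(hits, total)

single = er_closed_form((2,), (10,))             # (k - 1) / N = 1/10
two_round = er_closed_form((2, 3), (10, 10))     # 1 - (9/10)(8/10) = 7/25
counted = er_by_enumeration((2, 3), (10, 10))    # 28 of 100 tuples = 7/25
```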

2.3 Non-Interactive Random Oracle Proofs (NIROP)

In practice, interactive proofs are typically not used directly. Instead, they are transformed into non-interactive proofs in the random oracle model (ROM). We define non-interactive random oracle proofs (NIROP) as in [12]. Their definition is a straightforward adaptation of (non-)interactive proof systems to the ROM. The same holds for their properties. Every algorithm is augmented by access to a random oracle.

In the random oracle model, algorithms have black-box access to an oracle \(\textsf{RO}:\{0,1\}^*\rightarrow {\mathcal {Y}}\), called the random oracle, which is instantiated by a uniformly random function with domain \(\{0,1\}^*\) and codomain \({\mathcal {Y}}\). For convenience, we let the codomain \({\mathcal {Y}}\) be an arbitrary finite set, while typically \({\mathcal {Y}} = \{0,1\}^{\eta }\) for some \(\eta \in \mathbb {N}\) related to the security parameter. Equivalently, \(\textsf{RO}\) is instantiated by lazy sampling, i.e., for every bitstring \(x \in \{0,1\}^*\), \(\textsf{RO}(x)\) is chosen uniformly at random in \({\mathcal {Y}}\) (and then fixed). To avoid technical difficulties, we limit the domain from \(\{0,1\}^*\) to \(\{0,1\}^{\le u}\), the finite set of all bitstrings of length at most u, for a sufficiently large \(u \in \mathbb {N}\). An algorithm \(\mathcal {A}^{\textsf{RO}}\) that is given black-box access to a random oracle is called a random-oracle algorithm. We call \(\mathcal {A}\) a Q-query random-oracle algorithm, if it makes at most Q queries to \(\textsf{RO}\) (independent of \(\textsf{RO}\)).
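Lazy sampling is easy to express in code. The following Python sketch keeps a table of answers that is filled on demand, counts queries, and additionally offers a `program` method of the kind a knowledge extractor may use when it implements the oracle for a prover. The class and method names are assumptions of this sketch, the codomain is encoded as \(\{0,\dots ,|{\mathcal {Y}}|-1\}\), and Python's `random` module is used only for illustration and is not cryptographically secure.

```python
import random

class LazyRandomOracle:
    """Random oracle with codomain {0, ..., codomain_size - 1}, sampled lazily."""

    def __init__(self, codomain_size: int, seed=None):
        self.codomain_size = codomain_size
        self.num_queries = 0
        self._table = {}                  # answers fixed so far
        self._rng = random.Random(seed)   # illustration only, not secure

    def query(self, x: bytes) -> int:
        self.num_queries += 1
        if x not in self._table:
            # First query on x: sample the answer uniformly and fix it.
            self._table[x] = self._rng.randrange(self.codomain_size)
        return self._table[x]

    def program(self, x: bytes, y: int) -> None:
        # Overwrite (or pre-set) the answer at x, e.g., before a rewinding.
        self._table[x] = y

ro = LazyRandomOracle(codomain_size=2**8, seed=1)
first = ro.query(b"a")
second = ro.query(b"a")       # same answer as before
ro.program(b"a", 5)
third = ro.query(b"a")        # now the programmed value
```

A Q-query algorithm in the sense above is one for which `num_queries` never exceeds Q, whatever answers the oracle returns.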

A natural extension of the random oracle model is when \(\mathcal {A}\) is given access to multiple independent random oracles \(\textsf{RO}_1,\ldots ,\textsf{RO}_\mu \), possibly with different codomains.Footnote 4 The definitions below apply to this extension in the obvious way.

Definition 8

(Non-Interactive Random Oracle Proof (NIROP)) A non-interactive random oracle proof for relation \(R\) is a pair \((\mathcal {P}, \mathcal {V})\) of (probabilistic) random-oracle algorithms, a prover \(\mathcal {P}\) and a polynomial-time verifier \(\mathcal {V}\), such that: Given \((x; w) \in R\) and access to a random oracle \(\textsf{RO}\), the prover \(\mathcal {P}^{\textsf{RO}}(x; w)\) outputs a proof \(\pi \). Given \(x\in \{0,1\}^*\), a purported proof \(\pi \), and access to a random oracle \(\textsf{RO}\), the verifier \(\mathcal {V}^{\textsf{RO}}(x, \pi )\) outputs 0 to reject or 1 to accept the proof.

As in the interactive setting, a NIROP is complete if honestly generated proofs for \((x;w) \in R\) are accepted by \(\mathcal {V}\) with high probability. It is sound if it is infeasible to produce an accepting proof for a false statement. In the non-interactive setting, the soundness error, i.e., the success probability of a cheating prover, necessarily depends on the number of queries it is allowed to make to the random oracle. The same holds true for knowledge soundness of NIROPs.

Definition 9

(Knowledge Soundness for NIROPs) A non-interactive random oracle proof \((\mathcal {P}, \mathcal {V})\) for relation \(R\) is knowledge sound with knowledge error \(\kappa :\mathbb {N}\times \mathbb {N}\rightarrow [0,1]\) if there exists a positive polynomial q and an algorithm \(\mathcal {E}\), called a knowledge extractor, with the following properties: The extractor, given input x and oracle access to any (potentially dishonest) Q-query random oracle prover \(\mathcal {P}^*\), runs in an expected number of steps that is polynomial in |x| and Q and outputs a witness \(w \in R(x)\) with probability

\[\Pr \bigl ( (x;w) \in R : w \leftarrow \mathcal {E}^{\mathcal {P}^*}(x) \bigr ) \ge \frac{\epsilon (\mathcal {P}^*,x) - \kappa (|x|,Q)}{q(|x|)}\]

for all \(x\in \{0,1\}^*\) where \(\epsilon (\mathcal {P}^*,x) = \Pr \bigl ( \mathcal {V}^{\textsf{RO}} (x, \mathcal {P}^{*,\textsf{RO}} ) =1 \bigr )\). Here, \(\mathcal {E}\) implements \(\textsf{RO}\) for \(\mathcal {P}^*\), in particular, \(\mathcal {E}\) can arbitrarily program \(\textsf{RO}\). Moreover, the randomness is over the randomness of \(\mathcal {E}\), \(\mathcal {V}\), \(\mathcal {P}^*\) and \(\textsf{RO}\).

Remark 2

As for the knowledge soundness of interactive proofs (see Remark 1), it is sufficient to consider deterministic provers \(\mathcal {P}^*\) in Definition 9. Consequently, we will assume all dishonest provers \(\mathcal {P}^*\) to be deterministic in order to simplify our analysis. Black-box access to \(\mathcal {P}^*\) then simply means black-box access to the next-message function of \(\mathcal {P}^*\). This in particular means that \(\mathcal {E}\) can “rewind” \(\mathcal {P}^*\) to any state. We stress though that \(\mathcal {E}\) cannot depend on (or “know”) certain properties of \(\mathcal {P}^*\), such as Q or the success probability \(\epsilon (\mathcal {P}^*,x)\).

Remark 3

The knowledge soundness definition for non-interactive random oracle proofs does not impose any (computational) restrictions on the prover \(\mathcal {P}^*\) attacking the proof, i.e., the knowledge extractor may also be given oracle access to an unbounded prover \(\mathcal {P}^*\). Therefore, it makes sense to refer to a NIROP admitting such a knowledge extractor as a proof of knowledge, rather than an argument of knowledge. However, at the same time, both the extractor’s runtime and the knowledge error are allowed to depend on the query complexity Q. In fact, the knowledge error \(\kappa (|x|,Q)\) typically converges to 1 as \({Q \rightarrow \infty }\). Therefore, in practice, one must bound the query complexity Q of a dishonest prover to derive nontrivial knowledge soundness properties. For this reason, a NIROP is sometimes also referred to as a non-interactive random oracle argument, even if \(\mathcal {P}^*\) is allowed to be inefficient.

2.4 (Non)-Interactive Arguments

Interactive and non-interactive proofs for which (knowledge) soundness only holds with respect to computationally bounded provers \(\mathcal {P}^*\) attacking the protocol are referred to as arguments (of knowledge). The computational soundness analysis of (non)-interactive arguments can be significantly more complicated than the unconditional soundness analysis of proofs. For instance, t-fold parallel repetition is relatively easily seen to reduce the soundness error of an interactive proof from \(\sigma \) down to \(\sigma ^t\), while the same result does not hold for arguments [15].

However, when considering knowledge soundness, arguments for a relation R can typically be cast as proofs, with unconditional knowledge soundness, but now for a slightly different relation; namely for the relation \(R'\) such that \((x;w) \in R'\) if and only if \((x;w) \in R\) or w is the solution to some computational problem (depending on the (non)-interactive argument, e.g., two different openings of a commitment, or a collision in a hash function). In particular, computationally special-sound protocols (with respect to relation R) are typically unconditionally special-sound with respect to \(R'\). Our results (and prior works) show that the unconditional special-soundness for \(R'\) implies unconditional knowledge soundness for \(R'\) (i.e., the extractor outputs a witness for the original relation R or it solves a computational problem), and thus computational knowledge soundness with respect to the original relation R. For this reason, our focus lies on the analysis of proofs, with the understanding that our results also apply to arguments.

Remark 4

The reason why the above does not work when considering ordinary soundness is that every statement x admits a witness with respect to relation \(R'\); a solution to the computational problem is a witness for any x. Hence, since there do not exist statements outside the language \(L_{R'}=\{0,1\}^*\), any (non)-interactive proof is sound with respect to relation \(R'\). In other words, the above reduction, in which an argument for relation R is cast as a proof for relation \(R'\), is only useful when considering knowledge soundness instead of ordinary soundness.

Example 1

(Bulletproofs) A typical computational problem, arising in the knowledge soundness analysis of (non)-interactive arguments, is breaking the binding property of some underlying commitment scheme. More precisely, in many (non)-interactive arguments the knowledge extractor either extracts a witness or it finds two distinct openings to the same commitment. For instance, Bulletproofs [9, 10] use the Pedersen vector commitment scheme, which is computationally binding assuming the hardness of finding non-trivial discrete logarithm relations. Hence, Bulletproofs are arguments of knowledge with respect to some relation R, but proofs of knowledge with respect to \(R'\), where a witness \(w \in R'(x)\) is either a witness \(w \in R(x)\) or a non-trivial discrete logarithm relation. This observation shows that our techniques also apply to Bulletproof-like protocols.
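
To make the relation \(R'\) concrete, the following minimal Python sketch uses a scalar Pedersen commitment in a toy group. The parameters `p`, `q`, `g`, `s` and the helper names are assumptions chosen for illustration; Bulletproofs use the vector variant over a cryptographically large group. The sketch shows how two distinct openings of one commitment yield a discrete logarithm, i.e., the alternative kind of witness admitted by \(R'\).

```python
# Toy parameters (illustrative assumptions, far too small for real use):
# g = 2 generates the subgroup of prime order q = 11 in Z_23^*.
p, q, g = 23, 11, 2
s = 7                      # discrete log of h; unknown to an honest committer
h = pow(g, s, p)


def commit(m: int, r: int) -> int:
    """Scalar Pedersen commitment g^m * h^r mod p."""
    return (pow(g, m, p) * pow(h, r, p)) % p


def dlog_from_openings(m1: int, r1: int, m2: int, r2: int) -> int:
    """Given two distinct openings of one commitment, return log_g(h).

    g^m1 h^r1 = g^m2 h^r2 implies m1 + s*r1 = m2 + s*r2 (mod q),
    hence s = (m1 - m2) / (r2 - r1) mod q.
    """
    assert commit(m1, r1) == commit(m2, r2) and (m1, r1) != (m2, r2)
    return ((m1 - m2) * pow(r2 - r1, -1, q)) % q


# A binding break, constructed here with knowledge of s only to obtain test data.
m1, r1 = 3, 5
r2 = 9
m2 = (m1 + s * (r1 - r2)) % q
recovered = dlog_from_openings(m1, r1, m2, r2)
```

In this toy setting, `recovered` is the discrete logarithm of `h`, which plays the role of the solution to the computational problem in the definition of \(R'\).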

2.5 Adaptive Security

Thus far, knowledge soundness has been defined with respect to static or non-adaptive provers \(\mathcal {P}^*\) attacking the considered (non-)interactive proof for a fixed statement x. However, in many practical scenarios the dishonest provers are free to choose the statement x adaptively. Hence, in these cases static security is not sufficient. For interactive proofs, it is well-known that static knowledge soundness implies adaptive knowledge soundness. However, this does not carry over to non-interactive proofs. For instance, it is easy to see that the static Fiat–Shamir transformation (see Definition 11) is in general not adaptively sound.

For this reason, let us formalize adaptive knowledge soundness for non-interactive random oracle proofs. An adaptive prover \(\mathcal {P}^{\textsf {a}}\) attacking the considered NIROP is given oracle access to a random oracle \(\textsf{RO}\) and outputs a statement x of fixed length \(|x|=n\) together with a proof \(\pi \). As in the static definition, adaptive knowledge soundness requires the existence of a knowledge extractor. However, formalizing the requirements of this extractor introduces some subtle issues. Namely, because \(\mathcal {P}^{\textsf {a}}\) chooses the statement x adaptively, it is not immediately clear for which statement the extractor should extract a witness. For instance, granting the extractor the same freedom of adaptively choosing the statement x, for which it needs to extract a witness w, renders knowledge extraction trivial; the extractor could simply output an arbitrary statement-witness pair (x; w). For this reason, we require the extractor to output statement-witness pairs (x; w) corresponding to the valid pairs \((x,\pi )\) output by the adaptive prover \(\mathcal {P}^{\textsf {a}}\). To formalize these requirements, we also write \((x,\pi ,v)\), with \(v \in \{0,1\}\) indicating whether \(\pi \) is a valid proof for statement x. Given this notation, the extractor should output a triple \((x,\pi ,v)\) with the same distribution as the triples \((x,\pi ,v)\) produced by \(\mathcal {P}^{\textsf {a}}\); furthermore, if \(\pi \) is a valid proof for statement x, i.e., \(v=1\), then the extractor should additionally aim to output a witness \(w \in R(x)\). As before, the success probability of the extractor is allowed to depend on the success probability of \(\mathcal {P}^{\textsf {a}}\). 
Finally, to ensure that the knowledge extractor can be used in compositional settings, where the NIROP is deployed as a component of a larger protocol, the prover \(\mathcal {P}^{\textsf {a}}\) is also allowed to additionally output arbitrary auxiliary information \(\textsf {aux}\in \{0,1\}^*\) and the extractor is then required to simulate the tuple \((x,\pi ,\textsf {aux},v)\), rather than the triple \((x,\pi ,v)\). The following definition formalizes adaptive knowledge soundness along these lines. For alternative definitions see, e.g., [22, 34].

Definition 10

(Adaptive Knowledge Soundness—NIROP) A non-interactive random oracle proof \((\mathcal {P}, \mathcal {V})\) for relation \(R\) is adaptively knowledge sound with knowledge error \(\kappa :\mathbb {N}\times \mathbb {N}\rightarrow [0,1]\) if there exists a positive polynomial q and an algorithm \(\mathcal {E}\), called a knowledge extractor, with the following properties: The extractor, given input \(n \in \mathbb {N}\) and oracle access to any adaptive Q-query random oracle prover \(\mathcal {P}^{\textsf {a}}\) that outputs statements x with \(|x|= n\), runs in an expected number of steps that is polynomial in n and Q and outputs a tuple \((x,\pi ,\textsf {aux},v;w)\) such that \(\{ (x,\pi ,\textsf {aux},v): (x,\pi ,\textsf {aux}) \leftarrow \mathcal {P}^{\textsf {a},\textsf{RO}} \wedge v \leftarrow \mathcal {V}^{\textsf{RO}}(x,\pi )\}\) and \(\{ (x,\pi ,\textsf {aux},v): (x,\pi ,\textsf {aux},v;w) \leftarrow \mathcal {E}^{\mathcal {P}^{\textsf {a}}}(n)\}\) are identically distributed and

\[ \Pr \bigl ( v = 1 \wedge w \in R(x) : (x,\pi ,\textsf {aux},v;w) \leftarrow \mathcal {E}^{\mathcal {P}^{\textsf {a}}}(n) \bigr ) \ge \frac{\epsilon (\mathcal {P}^{\textsf {a}}) - \kappa (n,Q)}{q(n)}, \]

where \(\epsilon (\mathcal {P}^{\textsf {a}}) = \Pr \bigl ( \mathcal {V}^{\textsf{RO}} (x, \pi ) = 1: (x, \pi ) \leftarrow \mathcal {P}^{\textsf {a},\textsf{RO}} \bigr )\). Here, \(\mathcal {E}\) implements \(\textsf{RO}\) for \(\mathcal {P}^{\textsf {a}}\), in particular, \(\mathcal {E}\) can arbitrarily program \(\textsf{RO}\). Moreover, the randomness is over the randomness of \(\mathcal {E}\), \(\mathcal {V}\), \(\mathcal {P}^{\textsf {a}}\) and \(\textsf{RO}\).

Remark 5

We note that, while the tuple \((x,\pi ,\textsf {aux},v)\) is required to have the same distribution for \(\mathcal {P}^{\textsf {a}}\) and \(\mathcal {E}(n)\), by default the respective executions of \(\mathcal {P}^{\textsf {a}}\) and \(\mathcal {E}(n)\) give rise to two different probability spaces. Looking ahead though, we remark that the extractor that we eventually construct first does an honest run of \(\mathcal {P}^{\textsf {a}}\) by faithfully simulating the answers to \(\mathcal {P}^{\textsf {a}}\)’s random oracle queries (this produces the tuple \((x,\pi ,\textsf {aux},v)\) that \(\mathcal {E}(n)\) eventually outputs and which so trivially has the right distribution), and then, if \(\pi \) is a valid proof, \(\mathcal {E}(n)\) starts rewinding \(\mathcal {P}^{\textsf {a}}\) and reprogramming the random oracle to try to find enough valid proofs to compute a witness. Thus, in this sense, we can then say that \(\mathcal {E}(n)\) aims to find a witness \(w \in R(x)\) for the statement x output by \(\mathcal {P}^{\textsf {a}}\).

2.6 Fiat–Shamir Transformations

The Fiat–Shamir transformation [24] turns a public-coin interactive proof into a non-interactive random oracle proof (NIROP). The general idea is to compute the i-th challenge \(c_i\) as a hash of the i-th prover message \(a_i\) and (some part of) the previous communication transcript. For a \(\varSigma \)-protocol, the challenge c is computed as \(c = H(a)\) or as \(c = H(x,a)\), where the former is sufficient for static security, where the statement x is given as input to the dishonest prover, and the latter is necessary for adaptive security, where the dishonest prover can choose the statement x for which it wants to forge a proof.

For multi-round public-coin interactive proofs, there is some degree of freedom in the computation of the i-th challenge. For concreteness and simplicity, we consider a particular version where all previous prover messages are hashed along with the current message. Our techniques also apply to some other variants of the Fiat–Shamir transformation (see below), but one has to be careful, e.g., hashing only the current message is known to be not sufficient for multi-round protocols. As for \(\varSigma \)-protocols, we consider a static and an adaptive variant of this version of the Fiat–Shamir transformation. In contrast to the static variant, the adaptive Fiat–Shamir transformation includes the statement x in all hash function evaluations. If it is not made explicit which variant is used, the considered result holds for both variants.

Let \(\varPi = (\mathcal {P},\mathcal {V})\) be a \((2\mu +1)\)-move public-coin interactive proof, where the challenge from the i-th round is sampled from set \(\mathcal {C}_i\). For simplicity, we consider \(\mu \) random oracles \(\textsf{RO}_i :\{0,1\}^{\le u} \rightarrow \mathcal {C}_i\) that map into the respective challenge spaces.

Definition 11

(Fiat–Shamir Transformation) The static Fiat–Shamir transformation \(\textsf{FS}[\varPi ] = (\mathcal {P}_{\textsf{fs}}, \mathcal {V}_{\textsf{fs}})\) is the NIROP where \(\mathcal {P}_{\textsf{fs}}^{\textsf{RO}_1,\ldots ,\textsf{RO}_\mu }(x;w)\) runs \(\mathcal {P}(x;w)\) but instead of asking the verifier for the challenge \(c_i\) on message \(a_i\), the challenges are computed as

\[ c_i = \textsf{RO}_i(a_1,\ldots ,a_{i-1},a_i); \tag{3} \]

the output is then the proof \(\pi = (a_1, \ldots , a_{\mu +1})\). On input a statement x and a proof \(\pi = (a_1, \ldots , a_{\mu +1})\), \(\mathcal {V}_{\textsf{fs}}^{\textsf{RO}_1,\ldots ,\textsf{RO}_\mu }(x,\pi )\) accepts if, for \(c_i\) as above, \(\mathcal {V}\) accepts the transcript \((a_1, c_1, \ldots , a_\mu , c_\mu , a_{\mu +1})\) on input x.

If the challenges are computed as

\[ c_i = \textsf{RO}_i(x,a_1,\ldots ,a_{i-1},a_i), \tag{4} \]

the resulting NIROP is referred to as the adaptive Fiat–Shamir transformation.

By means of reducing the security of other variants of the Fiat–Shamir transformation to Definition 11, appropriately adjusted versions of our results also apply to other variants of doing the “chaining” (Eqs. 3 and 4) in the Fiat–Shamir transformation, for instance when \(c_i\) is computed as \(c_i = \textsf{RO}_i(i, c_{i-1}, a_{i})\) or \(c_i = \textsf{RO}_i(x,i, c_{i-1}, a_{i})\), where \(c_0\) is the empty string.
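
The challenge computation of the static and adaptive variants can be sketched in Python as follows. This is only an illustration under stated assumptions: each random oracle \(\textsf{RO}_i\) is modelled by SHA-256 with the round index as a domain separator, messages are byte strings, and the names `fs_challenges` and `_encode` are hypothetical.

```python
import hashlib


def _encode(parts):
    """Length-prefix every part so that distinct tuples never collide."""
    return b"".join(len(part).to_bytes(8, "big") + part for part in parts)


def fs_challenges(messages, challenge_sizes, statement=None):
    """Derive c_1, ..., c_mu from the prover messages a_1, ..., a_mu.

    statement=None  -> static variant:   c_i = RO_i(a_1, ..., a_i)
    statement=bytes -> adaptive variant: c_i = RO_i(x, a_1, ..., a_i)
    RO_i is modelled by SHA-256 with the round index i as a domain separator;
    this stand-in is an assumption of the sketch, not part of the definition.
    """
    challenges = []
    for i, size in enumerate(challenge_sizes, start=1):
        parts = [i.to_bytes(4, "big")]
        if statement is not None:
            parts.append(statement)
        parts.extend(messages[:i])          # all prover messages up to round i
        digest = hashlib.sha256(_encode(parts)).digest()
        # Reduction modulo `size` is slightly biased unless size divides 2^256.
        challenges.append(int.from_bytes(digest, "big") % size)
    return challenges
```

Because the i-th challenge hashes only \(a_1,\ldots ,a_i\), changing a later message leaves earlier challenges unchanged, whereas passing a statement changes every challenge.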

2.7 Negative Hypergeometric Distribution

An important tool in our analysis is the negative hypergeometric distribution. Consider a bucket containing \(\ell \) green balls and \(N-\ell \) red balls, i.e., a total of N balls. In the negative hypergeometric experiment, balls are drawn uniformly at random from this bucket, without replacement, until k green balls have been found or until the bucket is empty. The number of red balls X drawn in this experiment is said to have a negative hypergeometric distribution with parameters \(N,\ell ,k\), which is denoted by \(X \sim \textsf{NHG}(N,\ell ,k)\).

Lemma 1

(Negative Hypergeometric Distribution) Let \(N,\ell ,k \in \mathbb {N}\) with \(\ell ,k \le N\), and let \(X \sim \textsf{NHG}(N,\ell ,k)\). Then \(\mathop {\mathrm {\mathbb {E}}}\limits [X] \le k \frac{N-\ell }{\ell +1}\).

Proof

If \(\ell <k\), it clearly holds that \(\Pr (X=N-\ell )=1\). Hence, in this case, \(\mathop {\mathrm {\mathbb {E}}}\limits [X] = N-\ell \le k \frac{N-\ell }{\ell +1}\), which proves the claim.

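So, let us now consider the case \(\ell \ge k\). Then, for all \(0 \le x \le N-\ell \),

\[ \Pr (X=x) = \frac{\binom{x+k-1}{k-1} \binom{N-x-k}{\ell -k}}{\binom{N}{\ell }}. \]

Hence, using \(x \binom{x+k-1}{k-1} = k \binom{x+k-1}{k}\),

\[ \mathop {\mathrm {\mathbb {E}}}\limits [X] = \sum _{x=0}^{N-\ell } x \Pr (X=x) = k \sum _{x=1}^{N-\ell } \frac{\binom{x+k-1}{k} \binom{N-x-k}{\ell -k}}{\binom{N}{\ell }} = k \frac{N-\ell }{\ell +1} \sum _{x=0}^{N-\ell -1} \Pr (Y=x) = k \frac{N-\ell }{\ell +1}, \]

where \(Y \sim \textsf{NHG}(N,\ell +1,k+1)\). This completes the proof of the lemma. \(\square \)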

Remark 6

Typically, negative hypergeometric experiments are restricted to the non-trivial case \(\ell \ge k\). For reasons to become clear later, we also allow parameter choices with \(\ell <k\) resulting in a trivial negative hypergeometric experiment in which all balls are always drawn.
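
As a sanity check of Lemma 1, including the trivial case \(\ell < k\), the expectation can be computed exactly for small parameters by enumerating all draw orders. The following Python sketch does this; the function names are assumptions made for illustration.

```python
from fractions import Fraction
from itertools import combinations
from math import comb


def expected_red_draws(N: int, l: int, k: int) -> Fraction:
    """Exact E[X] for X ~ NHG(N, l, k) with k >= 1, by enumeration.

    A draw order is determined by the set of positions holding the l green
    balls; all comb(N, l) position sets are equally likely.
    """
    total = 0
    for green_positions in combinations(range(N), l):
        if l < k:
            # Trivial case: the bucket is emptied, so all red balls are drawn.
            total += N - l
        else:
            # 0-based position of the k-th green ball; everything drawn
            # before it, apart from k - 1 green balls, is red.
            stop = green_positions[k - 1]
            total += stop - (k - 1)
    return Fraction(total, comb(N, l))


def lemma1_bound(N: int, l: int, k: int) -> Fraction:
    """Right-hand side k * (N - l) / (l + 1) of Lemma 1."""
    return Fraction(k * (N - l), l + 1)
```

For example, `expected_red_draws(2, 1, 1)` and `lemma1_bound(2, 1, 1)` both evaluate to 1/2.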

Remark 7

The above has a straightforward generalization to buckets with balls of more than 2 colors: say \(\ell \) green balls and \(m_i\) balls of color i for \(1\le i \le M\). The experiment proceeds as before, i.e., drawing until either k green balls have been found or the bucket is empty. Let \(X_i\) be the number of balls of color i that are drawn in this experiment. Then \(X_i \sim \textsf{NHG}(\ell +m_i,\ell ,k)\) for all i. To see this, simply run the generalized negative hypergeometric experiment without counting the balls that are neither green nor of color i.

3 An Abstract Sampling Game

Towards the goal of constructing and analyzing a knowledge extractor for the Fiat–Shamir transformation \(\textsf{FS}[\varPi ]\) of special-sound interactive proofs \(\varPi \), we define and analyze an abstract sampling game. Given access to a deterministic Q-query prover \(\mathcal {P}^*\), attacking the non-interactive random oracle proof \(\textsf{FS}[\varPi ]\), our extractor will essentially play this abstract game in the case \(\varPi \) is a \(\varSigma \)-protocol, and it will play this game recursively in the general case of a multi-round protocol. The abstraction allows us to focus on the crucial properties of the extraction algorithm, without unnecessarily complicating the notation.

The game considers an arbitrary but fixed U-dimensional array M, where, for all \(1 \le j_1,\dots ,j_{U} \le N\), the entry \(M(j_1,\dots ,j_{U})=(v,i)\) contains a bit \(v \in \{0,1\}\) and an index \(i \in \{1,\dots ,U\}\). Think of the bit v as indicating whether the entry is “good” or “bad”, and of the index i as pointing to one of the U dimensions. The goal will be to find k “good” entries with the same index i, and with all of them lying in the 1-dimensional array \(M(j_1,\dots ,j_{i-1}, \,\cdot \,,j_{i+1},\dots ,j_{U})\) for some \(1 \le j_1,\dots ,j_{i-1},j_{i+1},\dots ,j_{U} \le N\).

Looking ahead, considering the case of a \(\varSigma \)-protocol first, this game captures the task of our extractor to find k proofs that are valid and feature the same first message but have different hash values assigned to the first message. Thus, in our application, the sequence \(j_1,\dots ,j_{U}\) specifies the function table of the random oracle

\[ \textsf{RO} :\{1,\dots ,U\} \rightarrow \{1,\dots ,N\}, \quad i \mapsto j_i, \]

while the entry \(M(j_1,\dots ,j_{U})=(v,i)\) captures the relevant properties of the proof produced by the considered prover when interacting with that particular specification of the random oracle. Concretely, the bit v indicates whether the proof is valid, and the index i is the first message a of the proof. Replacing \(j_i\) by \(j'_i\) then means to reprogram the random oracle at the point \(i = a\). Note that after the reprogramming, we want to obtain another valid proof with the same first message, i.e., with the same index i (but now a different challenge, due to the reprogramming).

The game is formally defined in Fig. 2, and its core properties are summarized in Lemma 2. Looking ahead, we note that for efficiency reasons, the extractor will naturally not sample the entire sequence \(j_1,\dots ,j_{U}\) (i.e., function table), but will sample its components on the fly using lazy sampling.
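
Since Fig. 2 is not reproduced here, the following Python sketch records one reading of the game that is consistent with the description above and with its use in the proof of Lemma 2: sample a first entry uniformly at random, stop if it is “bad”, and otherwise resample only coordinate i without replacement until k “good” entries with index i have been found or the line is exhausted. The function name and the representation of M as a callable are assumptions.

```python
import random


def play_game(M, U, N, k, rng):
    """One run of the sampling game; returns (X, Lambda).

    M maps a tuple (j_1, ..., j_U) with entries in 1..N to a pair (v, i).
    X is the number of sampled entries (1, I), Lambda the number of entries
    sampled in total.
    """
    j = [rng.randint(1, N) for _ in range(U)]   # first entry, sampled uniformly
    v, idx = M(tuple(j))
    if v == 0:
        return 0, 1                             # bad first entry: stop at once
    good, sampled = 1, 1
    # Remaining values for coordinate idx, in random order (no replacement).
    others = [c for c in range(1, N + 1) if c != j[idx - 1]]
    rng.shuffle(others)
    for c in others:
        if good == k:
            break
        j_prime = j.copy()
        j_prime[idx - 1] = c                    # resample only coordinate idx
        sampled += 1
        if M(tuple(j_prime)) == (1, idx):
            good += 1
    return good, sampled
```

A run is successful when the first return value equals k; the second return value corresponds to the cost measure \(\Lambda \) of Lemma 2.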

It will be useful to define, for all \(1 \le i \le U\), the function

\[ a_i :\{1,\dots ,N\}^{U} \rightarrow \mathbb {N}_{\ge 0}, \quad (j_1,\dots ,j_U) \mapsto \bigl | \{ j : M(j_1,\dots ,j_{i-1},j,j_{i+1},\dots ,j_U) = (1,i) \} \bigr |. \tag{5} \]

The value \(a_i(j_1,\dots ,j_U)\) counts the number of entries that are “good” and have index i in the 1-dimensional array \(M(j_1,\dots ,j_{i-1}, \,\cdot ,j_{i+1},\dots ,j_{U})\). Note that \(a_i\) does not depend on the i-th entry of the input vector \((j_1,\dots ,j_U)\), and so, by a slight abuse of notation, we sometimes also write \(a_i(j_1,\dots ,j_{i-1}, j_{i+1},\dots ,j_{U})\).

Lemma 2

(Abstract Sampling Game) Consider the game in Fig. 2. Let \(J= (J_1,\dots ,J_{U})\) be uniformly distributed in \(\{1,\dots ,N\}^{U}\), indicating the first entry sampled, and let \((V,I)=M(J_1,\dots ,J_{U})\). Further, for all \(1\le i \le U\), let \(A_i = a_i(J)\). Moreover, let X be the number of entries of the form (1, i) with \(i=I\) sampled (including the first one), and let \(\Lambda \) be the total number of entries sampled in this game.

Then

\[ \mathop {\mathrm {\mathbb {E}}}\limits [\Lambda ] \le 1 + (k-1) P \quad \text {and} \quad \Pr (X=k) \ge \frac{N}{N-k+1} \Bigl ( \Pr (V=1) - P \cdot \frac{k-1}{N} \Bigr ), \]

where \(P = \sum _{i=1}^{U} \Pr (A_i > 0)\).

Remark 8

Note the abstractly defined parameter P. In our application, where the index i of \((v,i) = M(j_1,\dots ,j_{U})\) is determined by the output of a prover making no more than Q queries to the random oracle with function table \(j_1,\dots ,j_{U}\), the parameter P will be bounded by \(Q+1\). We show this formally (yet again somewhat abstractly) in Lemma 3. Intuitively, the reason is that the events \(A_i > 0\) are disjoint for all but Q indices i (those that the considered prover does not query), and so their probabilities add up to at most 1.

Indeed, the output of a prover \(\mathcal {P}^*\) attacking the protocol, while given oracle access to a random oracle with function table \(j_1,\dots ,j_U\), that does not query index i is oblivious to the value of \(j_i\). In particular, in this case, there exists a fixed \(i'\) such that \(M(j_1',\dots ,j_U') \in \{(0,i'), (1,i')\}\) for all \(j_1',\dots ,j_U'\) with \(j_\ell = j_\ell '\) for all indices \(\ell \) queried by \(\mathcal {P}^*\). Hence, if \(\mathcal {P}^*\) does not query i then \(a_i(j_1,\dots ,j_U)>0\) implies that \(i = i'\). From this it follows that \(P = \sum _{i=1}^{U} \Pr (A_i > 0) \le Q+1\). For a formal argument see the proof of Lemma 3.

Proof

(of Lemma 2) Expected Number of Samples. Let us first derive an upper bound on the expected value of \(\Lambda \). To this end, let \(X'\) denote the number of sampled entries of the form (1, i) with \(i = I\), but, in contrast to X, without counting the first one. Similarly, let \(Y'\) denote the number of sampled entries of the form (v, i) with \(v=0\) or \(i\ne I\), again without counting the first one. Then \(\Lambda = 1 + X' + Y'\) and

\[ \Pr (X'=0 \mid V=0) = \Pr (Y'=0 \mid V=0) = 1. \]

Hence, \(\mathop {\mathrm {\mathbb {E}}}\limits [X' \mid V=0 ] = \mathop {\mathrm {\mathbb {E}}}\limits [Y' \mid V=0 ] = 0\).

Let us now consider the expected value \(\mathop {\mathrm {\mathbb {E}}}\limits [Y' \mid V=1 ]\). To this end, we observe that, conditioned on the event \(V=1 \wedge I=i \wedge A_i=a\) with \(a>0\), \(Y'\) follows a negative hypergeometric distribution with parameters \(N-1\), \(a-1\) and \(k-1\). Hence, by Lemma 1,

\[ \mathop {\mathrm {\mathbb {E}}}\limits [Y' \mid V=1 \wedge I=i \wedge A_i=a ] \le (k-1) \frac{N-a}{a}, \]

and thus, using that \(\Pr (X' \le k-1 \mid V=1) =1\),

\[ \mathop {\mathrm {\mathbb {E}}}\limits [X'+Y' \mid V=1 \wedge I=i \wedge A_i=a ] \le (k-1) + (k-1) \frac{N-a}{a} = (k-1) \frac{N}{a}. \]

On the other hand

\[ \Pr (V=1 \wedge I=i \mid A_i=a) = \frac{a}{N} \]

and thus

\[ \Pr (V=1 \wedge I=i \wedge A_i=a) = \Pr (A_i=a) \frac{a}{N}. \tag{6} \]

Therefore, and since \(\Pr ( V=1 \wedge I=i \wedge A_i=0) =0\),

\[ \Pr (V=1) \cdot \mathop {\mathrm {\mathbb {E}}}\limits [X'+Y' \mid V=1] = \sum _{i=1}^{U} \sum _{a=1}^{N} \Pr (V=1 \wedge I=i \wedge A_i=a) \cdot \mathop {\mathrm {\mathbb {E}}}\limits [X'+Y' \mid V=1 \wedge I=i \wedge A_i=a] \le \sum _{i=1}^{U} \sum _{a=1}^{N} \Pr (A_i=a) (k-1) = (k-1) P, \]

where \(P = \sum _{i=1}^{U} \Pr (A_i > 0)\). Hence,

\[ \mathop {\mathrm {\mathbb {E}}}\limits [\Lambda ] = 1 + \Pr (V=0) \cdot \mathop {\mathrm {\mathbb {E}}}\limits [X'+Y' \mid V=0] + \Pr (V=1) \cdot \mathop {\mathrm {\mathbb {E}}}\limits [X'+Y' \mid V=1] \le 1 + (k-1) P, \]

which proves the claimed upper bound on \(\mathop {\mathrm {\mathbb {E}}}\limits [\Lambda ]\).

Success Probability. Let us now find a lower bound for the “success probability” \(\Pr (X=k)\) of this game. Using (6) again, we can write

\[ \Pr (X=k) = \sum _{i=1}^{U} \Pr (V=1 \wedge I=i \wedge A_i \ge k) = \sum _{i=1}^{U} \sum _{a=k}^{N} \Pr (V=1 \wedge I=i \wedge A_i=a) = \sum _{i=1}^{U} \sum _{a=k}^{N} \Pr (A_i=a) \frac{a}{N}. \]

Now, using \(a \le N\), note that

\[ \frac{a}{N} = 1 - \frac{N-a}{N} \ge 1 - \frac{N-a}{N-k+1} = \frac{a-k+1}{N-k+1} = \frac{N}{N-k+1} \Bigl ( \frac{a}{N} - \frac{k-1}{N} \Bigr ). \]

Therefore, combining the two, and using that the summand becomes negative for \(a < k\) to argue the second inequality, and using (6) once more, we obtain

\[ \begin{aligned} \Pr (X=k) &\ge \frac{N}{N-k+1} \sum _{i=1}^{U} \sum _{a=k}^{N} \Pr (A_i=a) \Bigl ( \frac{a}{N} - \frac{k-1}{N} \Bigr ) \\ &\ge \frac{N}{N-k+1} \sum _{i=1}^{U} \sum _{a=1}^{N} \Pr (A_i=a) \Bigl ( \frac{a}{N} - \frac{k-1}{N} \Bigr ) \\ &= \frac{N}{N-k+1} \sum _{i=1}^{U} \sum _{a=1}^{N} \Bigl ( \Pr (V=1 \wedge I=i \wedge A_i=a) - \Pr (A_i=a) \frac{k-1}{N} \Bigr ) \\ &= \frac{N}{N-k+1} \Bigl ( \Pr (V=1) - \frac{k-1}{N} \sum _{i=1}^{U} \Pr (A_i>0) \Bigr ) \\ &= \frac{N}{N-k+1} \Bigl ( \Pr (V=1) - P \cdot \frac{k-1}{N} \Bigr ), \end{aligned} \]

where, as before, we have used that \(\Pr (V=1 \wedge I=i \wedge A_i =0)=0\) for all \(1\le i \le U\) to conclude the second equality, and finally that \(P = \sum _{i=1}^U\Pr ( A_i>0) \). This completes the proof of the lemma. \(\square \)
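
For small parameters, both bounds of Lemma 2 can be checked exactly by enumerating the array. The Python sketch below does so for an arbitrary array M given as a callable; the function name is an assumption, and the computation of the expected number of samples uses the fact that the bound of Lemma 1 holds with equality in the non-trivial case \(\ell \ge k\).

```python
from fractions import Fraction
from itertools import product


def lemma2_quantities(M, U, N, k):
    """Return exact (E[Lambda], Pr(X = k), Pr(V = 1), P) for the array M."""
    cells = list(product(range(1, N + 1), repeat=U))

    def a(i, j):
        # a_i(j): number of entries (1, i) on the line through j along dimension i.
        return sum(M(j[:i - 1] + (c,) + j[i:]) == (1, i) for c in range(1, N + 1))

    weight = Fraction(1, len(cells))            # Pr(J = j)
    exp_samples = pr_success = pr_valid = P = Fraction(0)
    for j in cells:
        P += weight * sum(a(i, j) > 0 for i in range(1, U + 1))
        v, idx = M(j)
        if v == 0:
            exp_samples += weight               # only the first entry is sampled
            continue
        pr_valid += weight
        a_idx = a(idx, j)
        if a_idx >= k:
            pr_success += weight
            # First entry, k - 1 further good entries, and on average
            # (k - 1)(N - a)/a other entries: 1 + (k - 1) N / a in total.
            exp_samples += weight * (1 + Fraction((k - 1) * N, a_idx))
        else:
            exp_samples += weight * N           # the whole line is exhausted
    return exp_samples, pr_success, pr_valid, P
```

Comparing the first return value with \(1 + (k-1)P\) and the second with \(\frac{N}{N-k+1}(\Pr (V=1) - P (k-1)/N)\) reproduces the two inequalities of the lemma for the chosen array.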

Our knowledge extractor will instantiate the abstract sampling game via a deterministic Q-query prover \(\mathcal {P}^*\) attacking the Fiat–Shamir transformation \(\textsf{FS}[\varPi ]\). The index i of \((v,i) = M(j_1,\dots ,j_{U})\) is then determined by the output of \(\mathcal {P}^*\), with the random oracle being given by the function table \(j_1,\dots ,j_{U}\). Since the index i is thus determined by Q queries to the random oracle, the following shows that the parameter P will in this case be bounded by \(Q+1\).

Lemma 3

Consider the game in Fig. 2. Let v and \(\textsf{idx}\) be functions such that

\[ M(j) = \bigl ( v(j), \textsf{idx}(j) \bigr ) \]

for all \(j \in \{1,\dots ,N\}^{U}\). Furthermore, let \(J= (J_1,\dots ,J_{U})\) be uniformly distributed in \(\{1,\dots ,N\}^{U}\), and set \(A_i = a_i(J)\) for all \(1\le i \le U\). Let us additionally assume that for all \(j \in \{1,\dots ,N\}^{U}\) there exists a subset \(S(j) \subseteq \{1,\dots ,U\}\) of cardinality at most Q such that

\[ \textsf{idx}(j) = \textsf{idx}(j') \]

for all \(j'\) with \(j_\ell '=j_\ell \) for all \(\ell \in S(j)\). Then

\[ P = \sum _{i=1}^{U} \Pr (A_i > 0) \le Q+1. \]

Proof

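By basic probability theory, it follows that (see Footnote 5)

\[ P = \sum _{i=1}^{U} \Pr (A_i>0) = \sum _{j} \Pr (J=j) \sum _{i=1}^{U} \Pr (A_i>0 \mid J=j) = \sum _{j} \Pr (J=j) \Bigl ( \sum _{i \in S(j)} \Pr (A_i>0 \mid J=j) + \sum _{i \notin S(j)} \Pr (A_i>0 \mid J=j) \Bigr ), \]

where the outer sums range over all \(j \in \{1,\dots ,N\}^U\). Since \(|S(j) |\le Q\) for all j, it follows that

\[ \sum _{i \in S(j)} \Pr (A_i>0 \mid J=j) \le Q. \]

Now note that, by definition of the sets S(j), for all \(j \in \{1,\dots ,N\}^U\), \(i \notin S(j)\) and \(j^*\in \{1,\dots ,N\}\), it holds that

\[ \textsf{idx}(j_1,\dots ,j_{i-1},j^*,j_{i+1},\dots ,j_U) = \textsf{idx}(j_1,\dots ,j_U), \]

with \(\textsf{idx}(j)\) the index component of \(M(j)\). Therefore, for all \(i \notin S(j)\) with \(i \ne \textsf{idx}(j)\),

\[ \Pr (A_i>0 \mid J=j) = 0. \]

Hence,

\[ \sum _{i \notin S(j)} \Pr (A_i>0 \mid J=j) \le 1. \]

Altogether, it follows that

\[ P \le \sum _{j} \Pr (J=j) (Q+1) = Q+1, \]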

which completes the proof. \(\square \)
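
The bound \(P \le Q+1\) can also be observed on a small example. In the Python sketch below, the index is produced by a hypothetical deterministic toy prover that reads at most Q entries of the function table, so the assumption of Lemma 3 holds with S(j) the set of positions read; P is then computed exactly by enumeration. All names and the choice of the validity bit v are assumptions of the sketch.

```python
from fractions import Fraction
from itertools import product


def toy_index(table, Q):
    """Index output by a toy deterministic prover making Q adaptive queries."""
    U, pos = len(table), 0
    for _ in range(Q):
        pos = (pos + table[pos]) % U    # the next query point depends on the answer
    return pos + 1                      # 1-based; the final position is not queried


def compute_P(U, N, Q):
    """Exact P = sum_i Pr(A_i > 0) for the array induced by toy_index."""
    def M(j):
        idx = toy_index(j, Q)
        v = 1 if j[idx - 1] % 2 == 0 else 0   # validity depends on RO(idx)
        return v, idx

    def a(i, j):
        return sum(M(j[:i - 1] + (c,) + j[i:]) == (1, i) for c in range(1, N + 1))

    cells = list(product(range(1, N + 1), repeat=U))
    hits = sum(a(i, j) > 0 for j in cells for i in range(1, U + 1))
    return Fraction(hits, len(cells))
```

For instance, with \(Q = 0\) the toy prover always outputs index 1, and `compute_P(4, 3, 0)` evaluates to 1.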

4 Fiat–Shamir Transformation of \(\varSigma \)-Protocols

Let us first consider the Fiat–Shamir transformation of a k-special-sound \(\varSigma \)-protocol \(\varPi \), i.e., a 3-move interactive proof, with challenge set \(\mathcal {C}\); subsequently, in Sect. 6, we move to general multi-round interactive proofs.

Let \(\mathcal {P}^*\) be a deterministic dishonest Q-query random-oracle prover, attacking the Fiat–Shamir transformation \(\textsf{FS}[\varPi ]\) of \(\varPi \) on input x. Given a statement x as input, after making Q queries to the random oracle \(\textsf{RO}:\{0,1\}^{\le u} \rightarrow \mathcal {C}\), \(\mathcal {P}^*\) outputs a proof \(\pi = (a,z)\). For reasons to become clear later, we re-format (and partly rename) the output and consider \( I:= a\) and \(\pi \) as \(\mathcal {P}^*\)’s output. We refer to the output I as the index. Furthermore, we extend \(\mathcal {P}^*\) to an algorithm \(\mathcal {A}\) that additionally checks the correctness of the proof \(\pi \). Formally, \(\mathcal {A}\) runs \(\mathcal {P}^*\) to obtain I and \(\pi \), queries \(\textsf{RO}\) to obtain \(c:= \textsf{RO}(I)\), and then outputs

\[ I, \quad y := (a,c,z), \quad \text {and} \quad v := V(y), \]

where \(V(y)=1\) if y is an accepting transcript for the interactive proof \(\varPi \) on input x and \(V(y)=0\) otherwise. Hence, \(\mathcal {A}\) is a random-oracle algorithm making at most \(Q+1\) queries; indeed, it relays the oracle queries done by \(\mathcal {P}^*\) and makes the one needed to do the verification. We may write \(\mathcal {A}^{\textsf{RO}}\) to make the dependency of \(\mathcal {A}\)’s output on the choice of the random oracle \(\textsf{RO}\) explicit. \(\mathcal {A}\) has a naturally defined success probability

\[ \epsilon (\mathcal {A}) := \Pr \bigl ( v = 1 : (I,y,v) \leftarrow \mathcal {A}^{\textsf{RO}} \bigr ), \]

where \(\textsf{RO}:\{0,1\}^{\le u} \rightarrow \mathcal {C}\) is chosen uniformly at random. The probability \(\epsilon (\mathcal {A})\) equals the success probability \(\epsilon (\mathcal {P}^*,x)\) of the random-oracle prover \(\mathcal {P}^*\) on input x.

Our goal is now to construct an extraction algorithm that, when given black-box access to \(\mathcal {A}\), aims to output k accepting transcripts \(y_1,\dots ,y_k\) with common first message a and distinct challenges. By the k-special-soundness property of \(\varPi \), a witness for statement x can be computed efficiently from these transcripts.

The extractor \(\mathcal {E}\) is defined in Fig. 3. We note that, after a successful first run of \(\mathcal {A}\), having produced a first accepting transcript (a, c, z), we rerun \(\mathcal {A}\) from the very beginning and answer all oracle queries consistently, except the query to a, i.e., we only reprogram the oracle at the point \(I = a\). Note that since \(\mathcal {P}^*\) and thus \(\mathcal {A}\) is deterministic, and we only reprogram the oracle at the point \(I = a\), in each iteration of the repeat loop \(\mathcal {A}\) is guaranteed to make the query to I again (see Footnote 6).

A crucial observation is the following. Within a run of \(\mathcal {E}\), all the queries that are made by the different invocations of \(\mathcal {A}\) are answered consistently using lazy sampling, except for the queries to the index I, where different responses \(c,c',\ldots \) are given. This is indistinguishable from having them answered by a full-fledged random oracle, i.e., by means of a pre-chosen function \(\textsf{RO}:\{0,1\}^{\le u} \rightarrow \mathcal {C}\), but then replacing the output \(\textsf{RO}(I)\) at I by fresh challenges \(c'\) for the runs of \(\mathcal {A}\) in the repeat loop. By enumerating the elements in the domain and codomain of \(\textsf{RO}\), it is easily seen that the extractor is actually running the abstract game from Fig. 2. Thus, bounds on the success probability and the expected runtime (in terms of queries to \(\mathcal {A}\)) follow from Lemmas 2 and 3. Altogether, we obtain the following result.
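
The rewinding strategy with lazy sampling can be illustrated on Schnorr's protocol, a 2-special-sound \(\varSigma \)-protocol. The Python sketch below is not the extractor of Fig. 3 itself but a simplified stand-in under stated assumptions: a toy group, a toy prover that knows the witness and always succeeds, and hypothetical names `run_A`, `extract` and `witness_from`.

```python
import random

p, q, g = 23, 11, 2          # toy Schnorr group (illustrative assumption)
w = 4                        # witness known to the toy prover
h = pow(g, w, p)             # statement: h = g^w


def prover(ro):
    """Deterministic toy prover; `ro` answers random-oracle queries."""
    r = 6                                    # fixed randomness => deterministic
    a = pow(g, r, p)
    c = ro(a)
    return a, (r + c * w) % q                # proof pi = (a, z)


def run_A(table, rng, override=None):
    """Run the prover and verify its proof; oracle answers are sampled lazily.

    `table` is shared by all runs, so queries are answered consistently;
    `override = (point, value)` reprograms the oracle at a single point.
    """
    def ro(point):
        if override is not None and point == override[0]:
            return override[1]
        if point not in table:
            table[point] = rng.randrange(q)  # lazy sampling of RO(point)
        return table[point]
    a, z = prover(ro)
    c = ro(a)
    valid = pow(g, z, p) == (a * pow(h, c, p)) % p
    return a, (a, c, z), valid


def extract(k, rng):
    """Collect k accepting transcripts with common first message, or None."""
    table = {}
    a, y, valid = run_A(table, rng)
    if not valid:
        return None
    transcripts = [y]
    unused = [c for c in range(q) if c != y[1]]
    rng.shuffle(unused)                      # fresh challenges, no replacement
    for c in unused:
        if len(transcripts) == k:
            break
        a2, y2, valid2 = run_A(table, rng, override=(a, c))
        if valid2 and a2 == a:
            transcripts.append(y2)
    return transcripts if len(transcripts) == k else None


def witness_from(t1, t2):
    """2-special-soundness of Schnorr: w = (z1 - z2) / (c1 - c2) mod q."""
    (_, c1, z1), (_, c2, z2) = t1, t2
    return ((z1 - z2) * pow(c1 - c2, -1, q)) % q
```

Calling `extract(2, random.Random(1))` returns two accepting transcripts with the same first message and distinct challenges, from which `witness_from` recovers `w`.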

Lemma 4

(Extractor) The extractor \(\mathcal {E}\) of Fig. 3 makes an expected number of at most \( k + Q \cdot (k-1) \) queries to \(\mathcal {A}\) and succeeds in outputting k transcripts \(y_1,\dots ,y_k\) with common first message a and distinct challenges with probability at least

\[ \frac{\epsilon (\mathcal {A}) - (Q+1) \cdot (k-1)/N}{1 - (k-1)/N}, \quad \text {where } N = |\mathcal {C}|. \]

Proof

By enumerating all the elements in the domain and codomain of the random oracle \(\textsf{RO}\), we may assume that \(\textsf{RO}:\{1,\dots ,U\} \rightarrow \{1,\dots ,N\}\), and thus \(\textsf{RO}\) can be represented by the function table \((j_1,\dots ,j_U) \in \{1,\ldots ,N\}^U\) for which \(\textsf{RO}(i) = j_i\). Further, since \(\mathcal {P}^*\) is deterministic, the outputs I, y and v of the algorithm \(\mathcal {A}\) can be viewed as functions taking as input the function table \((j_1,\dots ,j_U) \in \{1,\dots ,N\}^U\) of \(\textsf{RO}\), and so we can consider the array \(M(j_1,\dots ,j_U)=\bigl (v(j_1,\dots ,j_U),I(j_1,\dots ,j_U)\bigr )\).

Then, a run of the extractor perfectly matches up with the abstract sampling game of Fig. 2 instantiated with array M. The only difference is that, in this sampling game, we consider full-fledged random oracles encoded by vectors \((j_1,\dots ,j_U) \in \{1,\dots ,N\}^U\), while the actual extractor implements these random oracles by lazy sampling. Thus, we can apply Lemma 2 to obtain bounds on the success probability and the expected runtime. However, in order to control the parameter P, which occurs in the bound of Lemma 2, we make the following observation, so that we can apply Lemma 3 to bound \(P \le Q+1\).

For every \((j_1,\dots ,j_U)\), let \(S(j_1,\dots ,j_U) \subseteq \{1, \dots , U\}\) be the set of points that \(\mathcal {P}^*\) queries to the random oracle when \((j_1,\dots ,j_U)\) corresponds to the entire function table of the random oracle. Then, \(\mathcal {P}^*\) will produce the same output when the random oracle is reprogrammed at an index \(i \notin S(j_1,\dots ,j_U)\). In particular, \( I(j_1,\dots ,j_{i-1},j,j_{i+1},\dots ,j_U)=I(j_1,\dots ,j_{i-1},j',j_{i+1},\dots ,j_U) \) for all \(j,j'\) and for all \(i \notin S(j_1,\dots ,j_U)\). Furthermore, \(|S(j_1,\dots ,j_U)| \le Q\). Hence, the conditions of Lemma 3 are satisfied and \(P \le Q+1\). The bounds on the success probability and the expected runtime now follow, completing the proof. \(\square \)

The existence of the above extractor, combined with the k-special-soundness property, implies the following theorem. One subtle issue is that the sampling without replacement needs to be done efficiently, i.e., in expected polynomial time; this is not completely trivial, because in the worst case the extractor has to try all (possibly exponentially many) challenges. We discuss in Appendix A how this can be done.

Theorem 1

(Fiat–Shamir Transformation of a \(\varSigma \)-Protocol) The Fiat–Shamir transformation \(\textsf{FS}[\varPi ]\) of a k-out-of-N special-sound \(\varSigma \)-protocol \(\varPi \) is knowledge sound with knowledge error

\[ \kappa _{\textsf{fs}}(Q) = (Q+1) \cdot \kappa , \]

where \(\kappa := {\text {Er}}(k;N) = (k-1)/N\) is the knowledge error of the (interactive) \(\varSigma \)-protocol \(\varPi \).
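
To get a feeling for the linear loss in Q, the following Python sketch evaluates the knowledge error \((Q+1) \cdot (k-1)/N\) for hypothetical parameters (a 2-special-sound protocol with 256-bit challenges and a prover limited to \(2^{64}\) queries). The function names are assumptions, and capping the value at 1 is a choice of the sketch, made because a knowledge error takes values in [0, 1].

```python
from fractions import Fraction
from math import log2


def sigma_knowledge_error(k: int, N: int) -> Fraction:
    """Er(k; N) = (k - 1) / N for a k-out-of-N special-sound Sigma-protocol."""
    return Fraction(k - 1, N)


def fs_knowledge_error(Q: int, k: int, N: int) -> Fraction:
    """(Q + 1) * Er(k; N), capped at 1 (the cap is an assumption of the sketch)."""
    return min(Fraction(1), (Q + 1) * sigma_knowledge_error(k, N))


# Hypothetical parameters, chosen only for illustration.
err = fs_knowledge_error(Q=2**64, k=2, N=2**256)
bits = -log2(err)   # about 192: the 2^64 queries cost roughly 64 of the 256 bits
```

The cap only becomes active once \(Q+1 \ge N/(k-1)\), in line with the observation in Remark 3 that the knowledge error typically approaches 1 as Q grows.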

5 Refined Analysis of the Abstract Sampling Game

Before we prove knowledge soundness of the Fiat–Shamir transformation of multi-round interactive protocols, we reconsider the abstract game of Sect. 3 and consider a refined analysis of the cost of playing the game. The multi-round knowledge extractor will essentially play a recursive composition of this game; however, the analysis of Sect. 3 is insufficient for our purposes (resulting in a super-polynomial bound on the runtime of the knowledge extractor). Fortunately, it turns out that a refinement allows us to prove the required (polynomial) upper bound.

In Sect. 3, the considered cost measure is the number of entries visited during the game. For \(\varSigma \)-protocols, every entry corresponds to a single invocation of the dishonest prover \(\mathcal {P}^*\). For multi-round protocols, every entry will correspond to a single invocation of a sub-tree extractor. The key observation is that some invocations of the sub-tree extractor are expensive while others are cheap. For this reason, we introduce a cost function \(\Gamma \) and a constant cost \(\gamma \) to our abstract game, allowing us to differentiate between these two cases. \(\Gamma \) and \(\gamma \) assign a cost to every entry of the array M; \(\Gamma \) corresponds to the cost of an expensive invocation of the sub-tree extractor and \(\gamma \) corresponds to the cost of a cheap invocation. While this refinement presents a natural generalization of the abstract game of Sect. 3, its analysis becomes significantly more involved.

The following lemma provides an upper bound for the total cost of playing the abstract game in terms of these two cost functions.

Lemma 5

(Abstract Sampling Game, Weighted Version) Consider again the game of Fig. 2, as well as a cost function \(\Gamma :\{1,\dots ,N\}^{U} \rightarrow \mathbb {R}_{\ge 0}\) and a constant cost \(\gamma \in \mathbb {R}_{\ge 0}\).

Let \(J= (J_1,\dots ,J_{U})\) be uniformly distributed in \(\{1,\dots ,N\}^{U}\), indicating the first entry sampled, and let \((V,I)=M(J_1,\dots ,J_{U})\). Further, for all \(1\le i \le U\), let \(A_i = a_i(J)\), where the function \(a_i\) is as defined in Eq. 5.

We define the cost of sampling an entry \(M(j_1,\dots ,j_{U})=(v,i)\) with index \(i=I\) to be \(\Gamma (j_1,\dots ,j_{U})\) and the cost of sampling an entry \(M(j_1,\dots ,j_{U})=(v,i)\) with index \(i\ne I\) to be \(\gamma \). Let \(\Delta \) be the total cost of playing this game. Then,

\[ \mathbb {E}[\Delta ] \le k \cdot \mathbb {E}[\Gamma (J)] + (k-1) \cdot T \cdot \gamma , \]

where \(T = \sum _{i=1}^{U} \Pr (I\ne i \wedge A_i > 0) \le P\).

Remark 9

Note that the parameter T in the statement here differs slightly from its counterpart \(P = \sum _{i} \Pr (A_i > 0) \) in Lemma 2. Recall the informal discussion of P in the context of our application (Remark 8), where the array M is instantiated via a Q-query prover \(\mathcal {P}^*\) attacking the Fiat–Shamir transformation of an interactive proof. We immediately see that now the defining events \(I\ne i \wedge A_i > 0\) are empty for all \(U-Q\) indices that the prover does not query, giving the bound \(T \le Q\) here, compared to the bound \(P \le Q+1\) on P. The formal (and more abstract) statement and its proof are given in Lemma 6.

Proof

Let us split up \(\Delta \) into the cost measures \(\Delta _1\), \(\Delta _2\) and \(\Delta _3\), defined as follows. \(\Delta _1\) denotes the total costs of the elements \(M(j_1,\dots ,j_{U})=(1,i)\) with \(i=I\) sampled in the game, i.e., the elements with bit \(v=1\) and index \(i=I\); correspondingly, X denotes the number of entries of the form (1, i) with \(i = I\) sampled (including the first one if \(V=1\)). Second, \(\Delta _2\) denotes the total costs of the elements \(M(j_1,\dots ,j_{U})=(0,i)\) with \(i=I\) sampled, i.e., the elements with bit \(v=0\) and index \(i=I\); correspondingly, Y denotes the number of entries of the form (0, i) with \(i=I\) sampled (including the first one if \(V=0\)). Finally, \(\Delta _3\) denotes the total costs of the elements \(M(j_1,\dots ,j_{U})=(v,i)\) with \(i \ne I\) sampled; correspondingly, Z denotes the number of entries of this form sampled.

Clearly \(\Delta = \Delta _1 + \Delta _2 + \Delta _3\). Moreover, since the cost \(\gamma \) is constant, it follows that \(\mathop {\mathrm {\mathbb {E}}}\limits [\Delta _3] = \gamma \cdot \mathop {\mathrm {\mathbb {E}}}\limits [Z]\). In a similar manner, we now aim to relate \(\mathop {\mathrm {\mathbb {E}}}\limits [\Delta _1]\) and \(\mathop {\mathrm {\mathbb {E}}}\limits [\Delta _2]\) to \(\mathop {\mathrm {\mathbb {E}}}\limits [X]\) and \(\mathop {\mathrm {\mathbb {E}}}\limits [Y]\), respectively. However, since the cost function \(\Gamma :\{1,\dots ,N\}^U \rightarrow \mathbb {R}_{\ge 0}\) is not necessarily constant, this is more involved.

For \(1 \le i \le U\), let us write \(J_i^* = ( J_1,\dots , J_{i-1}, J_{i+1}, \dots , J_{U})\), which is uniformly random with support \(\{1,\dots ,N\}^{U-1}\). Moreover, for all \(1 \le i \le U\) and \(j^* = (j^*_1,\dots ,j^*_{i-1},j^*_{i+1}, \ldots ,j^*_{U}) \in \{1,\dots ,N\}^{{U}-1}\), let \(\Lambda (i,j^*)\) denote the event

\[ \Lambda (i,j^*) := \bigl [\, I = i \wedge J_i^* = j^* \,\bigr ]. \]

We note that conditioned on the event \(\Lambda (i,j^*)\), all samples are picked from the subarray \(M(j^*_1,\dots ,j^*_{i-1}, \,\cdot \,,j^*_{i+1}, \cdots ,j^*_{U})\); the first one uniformly at random subject to the index I being i, and the remaining ones (if \(V=1\)) uniformly at random (without replacement).

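We first analyze and bound \(\mathop {\mathrm {\mathbb {E}}}\limits [ \Delta _1 \mid \Lambda (i,j^*) ]\). We observe that, for all i and \(j^*\) with \(\Pr \bigl (\Lambda (i,j^*)\bigr )>0\),

\[ \mathbb {E}[\Delta _1 \mid \Lambda (i,j^*)] = \sum _{\ell =0}^{N} \Pr \bigl (X=\ell \mid \Lambda (i,j^*)\bigr ) \cdot \mathbb {E}[\Delta _1 \mid \Lambda (i,j^*) \wedge X=\ell ]. \]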

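Since, conditioned on \(\Lambda (i,j^*) \wedge X=\ell \) for \(\ell \in \{0,\ldots ,N\}\), any size-\(\ell \) subset of elements with \(v=1\) and index i is equally likely to be sampled, it follows that

\[ \mathbb {E}[\Delta _1 \mid \Lambda (i,j^*) \wedge X=\ell ] = \ell \cdot \mathbb {E}[\Gamma (J) \mid V=1 \wedge \Lambda (i,j^*)]. \]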

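Hence,

\[ \mathbb {E}[\Delta _1 \mid \Lambda (i,j^*)] = \mathbb {E}[X \mid \Lambda (i,j^*)] \cdot \mathbb {E}[\Gamma (J) \mid V=1 \wedge \Lambda (i,j^*)]. \]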

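Similarly,

\[ \mathbb {E}[\Delta _2 \mid \Lambda (i,j^*)] = \mathbb {E}[Y \mid \Lambda (i,j^*)] \cdot \mathbb {E}[\Gamma (J) \mid V=0 \wedge \Lambda (i,j^*)]. \]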

Next, we bound the expected values of X and Y conditioned on \(\Lambda (i,j^*)\). The analysis is a more fine-grained version of the proof of Lemma 2. Bounding \(\mathop {\mathrm {\mathbb {E}}}\limits [X \mid \Lambda (i,j^*)]\) is quite easy: since \(V=0\) implies \(X=0\) and \(V=1\) implies \(X\le k\), it immediately follows that

\[ \mathbb {E}[X \mid \Lambda (i,j^*)] \le k \cdot \Pr \bigl (V=1 \mid \Lambda (i,j^*)\bigr ). \]

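Hence,

\[ \mathbb {E}[\Delta _1 \mid \Lambda (i,j^*)] \le k \cdot \Pr \bigl (V=1 \mid \Lambda (i,j^*)\bigr ) \cdot \mathbb {E}[\Gamma (J) \mid V=1 \wedge \Lambda (i,j^*)]. \tag{7} \]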

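Suitably bounding the expectation \(\mathop {\mathrm {\mathbb {E}}}\limits [Y\mid \Lambda (i,j^*) ]\), and thus \(\mathop {\mathrm {\mathbb {E}}}\limits [ \Delta _2 \mid \Lambda (i,j^*) ]\), is more involved. For that purpose, we introduce the following parameters. For the considered fixed choice of the index \(1 \le i \le U\) and of \(j^*= (j^*_1,\dots ,j^*_{i-1},j^*_{i+1}, \cdots ,j^*_{U})\), we let (see Footnote 7)

\[ a := a_i(j^*) = \bigl |\{ j : (v_j,i_j) = (1,i) \}\bigr | \quad \text {and} \quad b := b_i(j^*) = \bigl |\{ j : (v_j,i_j) = (0,i) \}\bigr |, \]

where \((v_j,i_j) := M(j^*_1,\dots ,j^*_{i-1},j,j^*_{i+1},\dots ,j^*_{U})\) for all \(j \in \{1,\dots ,N\}\).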

Let us first note that

\[ \Pr \bigl (V=1 \mid \Lambda (i,j^*)\bigr ) = \frac{a}{a+b} \]

for all i and \(j^*\) with \(\Pr \bigl (\Lambda (i,j^*)\bigr )>0\). Therefore, if we condition on the event \(V=1 \wedge \Lambda (i,j^*)\) we implicitly assume that i and \(j^*\) are so that a is positive. Now, towards bounding \(\mathop {\mathrm {\mathbb {E}}}\limits [Y\mid \Lambda (i,j^*) ]\), we observe that conditioned on the event \(V=1 \wedge \Lambda (i,j^*)\), the random variable Y follows a negative hypergeometric distribution with parameters \(a+b-1\), \(a-1\) and \(k-1\) (see also Remark 7). Hence, by Lemma 1,

\[ \mathbb {E}[Y \mid V=1 \wedge \Lambda (i,j^*)] \le (k-1) \cdot \frac{b}{a}, \]

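and thus

\[ \mathbb {E}[Y \mid \Lambda (i,j^*)] \le \frac{b}{a+b} + \frac{a}{a+b} \cdot (k-1) \cdot \frac{b}{a} = k \cdot \frac{b}{a+b} = k \cdot \Pr \bigl (V=0 \mid \Lambda (i,j^*)\bigr ), \]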

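where we use that \(\mathop {\mathrm {\mathbb {E}}}\limits [Y \mid V=0 \wedge \Lambda (i,j^*)] =1\). Hence,

\[ \mathbb {E}[\Delta _2 \mid \Lambda (i,j^*)] \le k \cdot \Pr \bigl (V=0 \mid \Lambda (i,j^*)\bigr ) \cdot \mathbb {E}[\Gamma (J) \mid V=0 \wedge \Lambda (i,j^*)], \]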

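and thus, combined with Eq. 7,

\[ \mathbb {E}[\Delta _1 + \Delta _2 \mid \Lambda (i,j^*)] \le k \cdot \mathbb {E}[\Gamma (J) \mid \Lambda (i,j^*)]. \]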

Since this inequality holds for all i and \(j^*\) with \(\Pr \bigl (\Lambda (i,j^*)\bigr )>0\), it follows that

\[ \mathbb {E}[\Delta _1 + \Delta _2] \le k \cdot \mathbb {E}[\Gamma (J)]. \]

What remains is to show that \(\mathop {\mathrm {\mathbb {E}}}\limits [Z] \le (k-1) T\), and thus \(\mathop {\mathrm {\mathbb {E}}}\limits [\Delta _3] = \gamma \mathop {\mathrm {\mathbb {E}}}\limits [Z] \le (k-1) T \gamma \). The slightly weaker bound \(\mathop {\mathrm {\mathbb {E}}}\limits [Z] \le (k-1) P\) follows immediately from observing that \(Z \le Y'\) for \(Y'\) as in the proof of Lemma 2 (the number of entries counted by Z is a subset of those counted by \(Y'\)), and using that \(\mathop {\mathrm {\mathbb {E}}}\limits [Y'] \le \mathop {\mathrm {\mathbb {E}}}\limits [X'+Y'] \le (k-1) P\) as derived in the proof of Lemma 2. In order to get the slightly better bound in terms of T, we bound \(\mathop {\mathrm {\mathbb {E}}}\limits [Z]\) from scratch below. We use a similar approach as above for bounding the expectation of Y. Thus, we consider a fixed choice of i and \(j^*\) and set \(a:=a_i(j^*)\) and \(b:=b_i(j^*)\). Then, conditioned on \(V=1 \wedge \Lambda (i,j^*)\), also Z follows a negative hypergeometric distribution, but now with parameters \(N-b-1\), \(a-1\) and \(k-1\). Therefore, for all i and \(j^*\) with \(\Pr \bigl (V=1 \wedge \Lambda (i,j^*)\bigr )>0\),

\[ \mathbb {E}[Z \mid V=1 \wedge \Lambda (i,j^*)] \le (k-1) \cdot \frac{N-a-b}{a}. \]

Using that \(\mathop {\mathrm {\mathbb {E}}}\limits [ Z \mid V=0 \wedge \Lambda (i,j^*) ] = 0\), but also recalling that \(\Pr \bigl (V=1\mid \Lambda (i,j^*) \bigr ) = a/(a+b)\) and exploiting \(\Pr (I=i \mid J_i^*=j^*) = (a+b)/N\), it follows that

\[ \Pr \bigl (\Lambda (i,j^*)\bigr ) \cdot \mathbb {E}[Z \mid \Lambda (i,j^*)] = \Pr (J_i^*=j^*) \cdot \frac{a+b}{N} \cdot \frac{a}{a+b} \cdot \mathbb {E}[Z \mid V=1 \wedge \Lambda (i,j^*)] \le (k-1) \cdot \Pr (J_i^*=j^*) \cdot \frac{N-a-b}{N} = (k-1) \cdot \Pr (I \ne i \wedge J_i^*=j^*). \]

We recall that the above holds for all i and \(j^*\) for which \(a = a_i(j^*) > 0\), so that \(\Pr ( V=1 \wedge \Lambda (i,j^*) ) > 0\). For i and \(j^*\) with \(a = a_i(j^*) = 0\), it holds that \(\Lambda (i,j^*)\) implies \(V=0\), and thus \(\mathop {\mathrm {\mathbb {E}}}\limits [ Z \mid \Lambda (i,j^*) ] = 0\). Therefore

\[ \mathbb {E}[Z] = \sum _{i=1}^{U} \sum _{j^*} \Pr \bigl (\Lambda (i,j^*)\bigr ) \cdot \mathbb {E}[Z \mid \Lambda (i,j^*)] \le (k-1) \sum _{i=1}^{U} \sum _{j^* : a_i(j^*)>0} \Pr (I \ne i \wedge J_i^*=j^*) = (k-1) \sum _{i=1}^{U} \Pr (I \ne i \wedge A_i>0) = (k-1) \cdot T. \]

Hence \(\mathop {\mathrm {\mathbb {E}}}\limits [ \Delta _3 ] \le (k-1) \cdot T \cdot \gamma \), as intended, and altogether it follows that

\[ \mathbb {E}[\Delta ] = \mathbb {E}[\Delta _1 + \Delta _2] + \mathbb {E}[\Delta _3] \le k \cdot \mathbb {E}[\Gamma (J)] + (k-1) \cdot T \cdot \gamma , \]

which completes the proof of the lemma. \(\square \)
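The two negative hypergeometric steps in the proof above can be checked numerically on small parameters. The following sketch computes, with exact rational arithmetic, the expected number of failures drawn when sampling without replacement until a target number of successes is reached or the population is exhausted. It then compares the result with the bounds \((k-1)\cdot b/a\) (for Y) and \((k-1)\cdot (N-a-b)/a\) (for Z) that one obtains from the stated parameters. The function names and the parameter ranges are illustrative assumptions, and the check is a sanity test on small cases, not a substitute for Lemma 1.

```python
from fractions import Fraction
from functools import lru_cache


@lru_cache(maxsize=None)
def expected_failures(successes: int, failures: int, target: int) -> Fraction:
    """Exact expected number of failures drawn when sampling without replacement
    until `target` successes were drawn or the population is exhausted."""
    if target == 0 or successes + failures == 0:
        return Fraction(0)
    total = successes + failures
    expectation = Fraction(0)
    if successes > 0:
        # Next draw is a success: one fewer success is still needed.
        expectation += Fraction(successes, total) * expected_failures(
            successes - 1, failures, target - 1
        )
    if failures > 0:
        # Next draw is a failure: count it and continue.
        expectation += Fraction(failures, total) * (
            1 + expected_failures(successes, failures - 1, target)
        )
    return expectation


def check_bounds(n_max: int = 7, k_max: int = 5) -> bool:
    """Check E[Y | V=1] <= (k-1)b/a and E[Z | V=1] <= (k-1)(N-a-b)/a on small cases."""
    for n in range(1, n_max + 1):
        for a in range(1, n + 1):
            for b in range(0, n - a + 1):
                for k in range(1, k_max + 1):
                    y = expected_failures(a - 1, b, k - 1)
                    z = expected_failures(a - 1, n - a - b, k - 1)
                    if y > Fraction((k - 1) * b, a):
                        return False
                    if z > Fraction((k - 1) * (n - a - b), a):
                        return False
    return True


if __name__ == "__main__":
    print(check_bounds())
```

Here `successes` plays the role of \(a-1\) (the remaining entries with \(v=1\) and index i), `target` the role of \(k-1\), and `failures` is b for Y and \(N-a-b\) for Z.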

Lemma 6

Consider the game in Fig. 2. Let v and I be functions such that \(M(j) = (v(j),I(j))\) for all \(j \in \{1,\dots ,N\}^{U}\). Furthermore, let \(J= (J_1,\dots ,J_{U})\) be uniformly distributed in \(\{1,\dots ,N\}^{U}\) and set \(A_i = a_i(J)\) for all \(1\le i \le U\) as in Eq. 5. Let us additionally assume that for all \(j \in \{1,\dots ,N\}^{U}\) there exists a subset \(S(j) \subseteq \{1,\dots ,U\}\) of cardinality at most Q such that \(I(j) = I(j')\) for all \(j,j'\) with \(j_\ell =j_\ell '\) for all \(\ell \in S(j)\). Then

\[ T = \sum _{i=1}^{U} \Pr (I\ne i \wedge A_i > 0) \le Q. \]

Proof

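The proof is analogous to the proof of Lemma 3. By basic probability theory, it follows that

\[ T = \sum _{i=1}^{U} \Pr \bigl (I\ne i \wedge A_i> 0 \wedge i \in S(J)\bigr ) + \sum _{i=1}^{U} \Pr \bigl (I\ne i \wedge A_i> 0 \wedge i \notin S(J)\bigr ) \le Q + \sum _{i=1}^{U} \Pr \bigl (I\ne i \wedge A_i > 0 \wedge i \notin S(J)\bigr ), \]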

where the inequality follows from the fact that \(|S(j) |\le Q\) for all j.

Now note that, by definition of the sets S(j), for all \(j \in \{1,\dots ,N\}^U\), \(i \notin S(j)\) and \(j'\in \{1,\dots ,N\}\), it holds that

\[ I(j_1,\dots ,j_{i-1},j',j_{i+1},\dots ,j_U) = I(j_1,\dots ,j_U). \]

Therefore, for all \(j \in \{1,\dots ,N\}^U\) and all \(i \notin S(j)\) with \(I(j) \ne i\),

\[ a_i(j) = 0. \]

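Hence,

\[ \Pr \bigl (I\ne i \wedge A_i > 0 \wedge i \notin S(J)\bigr ) = 0 \quad \text {for all } 1 \le i \le U. \]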

Altogether, it follows that

\[ T = \sum _{i=1}^{U} \Pr (I\ne i \wedge A_i > 0) \le Q, \]

which completes the proof. \(\square \)

6 Fiat–Shamir Transformation of Multi-Round Protocols

Let us now move to multi-round interactive proofs. More precisely, we consider the Fiat–Shamir transformation \(\textsf{FS}[\varPi ]\) of a \(\textbf{k}\)-special-sound \((2\mu +1)\)-move interactive proof \(\varPi \), with \(\textbf{k}=(k_1,\dots ,k_{\mu })\). While the multi-round extractor has a natural recursive construction, it requires a more fine-grained analysis to show that it indeed implies knowledge soundness.

To avoid a cumbersome notation, in Sect. 6.1 we first handle \((2\mu +1)\)-move interactive proofs in which the verifier samples all \(\mu \) challenges uniformly at random from the same set \(\mathcal {C}\). Subsequently, in Sect. 6.2, we argue that our techniques have a straightforward generalization to interactive proofs where the verifier samples its challenges from different challenge sets. In Sect. 6.3, we also show that our results extend to adaptive security in a straightforward way.

6.1 Multi-Round Protocols with a Single Challenge Set

Consider a deterministic dishonest Q-query random-oracle prover \(\mathcal {P}^*\), attacking the Fiat–Shamir transformation \(\textsf{FS}[\varPi ]\) of a \(\textbf{k}\)-special-sound interactive proof \(\varPi \) on input x. We assume all challenges to be elements in the same set \(\mathcal {C}\). After making at most Q queries to the random oracle, \(\mathcal {P}^*\) outputs a proof \(\pi = (a_1,\dots ,a_{\mu +1})\). We re-format the output and consider

\[ I_1 = a_1, \quad I_2 = (a_1,a_2), \quad \ldots , \quad I_\mu = (a_1,\dots ,a_\mu ) \quad \text {and} \quad \pi \]

as \(\mathcal {P}^*\)’s output. Sometimes it will be convenient to also consider \(I_{\mu +1}:= (a_1,\ldots ,a_{\mu +1})\). Furthermore, we extend \(\mathcal {P}^*\) to a random-oracle algorithm \(\mathcal {A}\) that additionally checks the correctness of the proof \(\pi \). Formally, relaying all the random oracle queries that \(\mathcal {P}^*\) is making, \(\mathcal {A}\) runs \(\mathcal {P}^*\) to obtain \(\textbf{I}=(I_1,\dots ,I_\mu )\) and \(\pi \), additionally queries the random oracle to obtain \(c_1:= \textsf{RO}(I_1), \dots , c_\mu := \textsf{RO}(I_\mu ) \), and then outputs

\[ \textbf{I}, \quad y := (a_1,c_1,\dots ,a_\mu ,c_\mu ,a_{\mu +1}) \quad \text {and} \quad v := V(x,y), \]

where \(V(x,y)=1\) if y is an accepting transcript for the interactive proof \(\varPi \) on input x and \(V(x,y)=0\) otherwise. Hence, \(\mathcal {A}\) makes at most \(Q+\mu \) queries (the queries done by \(\mathcal {P}^*\), and the queries to \(I_1,\ldots ,I_\mu \)). Moreover, \(\mathcal {A}\) has a naturally defined success probability

\[ \epsilon (\mathcal {A}) := \Pr \bigl (v = 1 : (\textbf{I},y,v) \leftarrow \mathcal {A}^{\textsf{RO}}\bigr ), \]

where \(\textsf{RO}:\{0,1\}^{\le u} \rightarrow \mathcal {C}\) is distributed uniformly. As before, \(\epsilon (\mathcal {A}) = \epsilon (\mathcal {P}^*,x)\).
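The wrapper \(\mathcal {A}\) around \(\mathcal {P}^*\) described above can be sketched as follows. This is a toy model under stated assumptions: the class `LazyRandomOracle`, the functions `extended_prover`, `toy_prover` and `toy_verify`, and the encoding of each \(I_i\) as a Python tuple \((a_1,\dots ,a_i)\) are all hypothetical choices made for illustration, and the toy verifier merely checks that the prover used the oracle answers consistently.

```python
import random
from typing import Callable, Hashable, List, Sequence, Tuple


class LazyRandomOracle:
    """Random oracle into a finite challenge set, sampled lazily; counts queries."""

    def __init__(self, challenge_set: Sequence[int], seed: int = 0) -> None:
        self._challenges = list(challenge_set)
        self._table = {}
        self._rng = random.Random(seed)
        self.num_queries = 0

    def query(self, point: Hashable) -> int:
        self.num_queries += 1
        if point not in self._table:
            self._table[point] = self._rng.choice(self._challenges)
        return self._table[point]


def extended_prover(prover: Callable, verify: Callable, x, oracle: LazyRandomOracle):
    """Run the prover, recompute c_i = RO(I_i), and output (I, y, v)."""
    messages = list(prover(x, oracle.query))  # pi = (a_1, ..., a_{mu+1})
    mu = len(messages) - 1
    indices = [tuple(messages[:i]) for i in range(1, mu + 1)]  # I_i = (a_1, ..., a_i)
    challenges = [oracle.query(index) for index in indices]  # mu extra queries
    transcript: List = []
    for a, c in zip(messages, challenges):  # zip stops after mu pairs
        transcript.extend([a, c])
    transcript.append(messages[-1])
    y: Tuple = tuple(transcript)
    return indices, y, verify(x, y)


def toy_prover(x, ro):
    """Hypothetical honest-looking prover for mu = 2 making Q = 2 queries."""
    a1 = "a1"
    c1 = ro((a1,))
    a2 = f"a2|{c1}"
    c2 = ro((a1, a2))
    return [a1, a2, f"a3|{c2}"]


def toy_verify(x, y) -> int:
    """Hypothetical verifier: accepts iff each message encodes the previous challenge."""
    a1, c1, a2, c2, a3 = y
    return int(a2 == f"a2|{c1}" and a3 == f"a3|{c2}")


if __name__ == "__main__":
    oracle = LazyRandomOracle(range(8), seed=1)
    indices, y, v = extended_prover(toy_prover, toy_verify, "x", oracle)
    print(v, oracle.num_queries)
```

In this toy run the oracle receives \(Q+\mu = 4\) queries in total, two from the prover and two from the wrapper, which matches the query count stated above.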

Our goal is now to construct an extraction algorithm that, when given black-box access to \(\mathcal {A}\), and thus to \(\mathcal {P}^*\), aims to output a \(\textbf{k}\)-tree of accepting transcripts (Definition 6). By the \(\textbf{k}\)-special-soundness property of \(\varPi \), a witness for statement x can then be computed efficiently from these transcripts.

To this end, we recursively introduce a sequence of “sub-extractors” \(\mathcal {E}_1,\ldots ,\mathcal {E}_\mu \), where \(\mathcal {E}_m\) aims to find a \((1,\dots ,1,k_m,\dots ,k_\mu )\)-tree of accepting transcripts. The main idea behind this recursion is that such a \((1,\dots ,1,k_m,\dots ,k_\mu )\)-tree of accepting transcripts is the composition of \(k_m\) appropriate \((1,\dots ,1,k_{m+1},\dots ,k_\mu )\)-trees.
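The recursive composition just described can be made concrete by counting transcripts. The sketch below assumes the usual reading of a \((k_1,\dots ,k_\mu )\)-tree, in which every node at depth \(i-1\) has \(k_i\) children and each leaf is one transcript. The function name `num_transcripts` is hypothetical.

```python
from typing import Sequence


def num_transcripts(k: Sequence[int], m: int = 1) -> int:
    """Number of transcripts (leaves) in a (1, ..., 1, k_m, ..., k_mu)-tree.

    Mirrors the recursion: such a tree is composed of k_m trees of type
    (1, ..., 1, k_{m+1}, ..., k_mu); for m = mu + 1 it is a single transcript.
    """
    mu = len(k)
    if m > mu:
        return 1  # a (1, ..., 1)-tree is a single accepting transcript
    return k[m - 1] * num_transcripts(k, m + 1)


if __name__ == "__main__":
    k = (2, 3, 4)
    for m in range(1, len(k) + 2):
        print(m, num_transcripts(k, m))
```

For \(\textbf{k}=(2,3,4)\), the sub-extractors \(\mathcal {E}_3,\mathcal {E}_2,\mathcal {E}_1\) thus aim for trees with 4, 12 and 24 transcripts, respectively.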