Abstract

Purpose

To develop a set of actionable quality indicators for critical care suitable for use in low- or middle-income countries (LMICs).

Methods

A list of 84 candidate indicators, compiled from a previous literature review and stakeholder recommendations, was categorised into three domains (foundation, process, and quality impact). An expert panel (EP) representing stakeholders from critical care and allied specialties in multiple low-, middle-, and high-income countries was convened. In rounds one and two of the Delphi exercise, the EP appraised each indicator for validity and feasibility on a Likert scale (1–5); in round three, sensitivity to change and reliability were also appraised. Potential barriers and facilitators to implementation of the quality indicators were also reported in this round. Median score and interquartile range (IQR) were used to determine consensus; indicators with consensus disagreement (median < 4, IQR ≤ 1) were removed, and indicators with consensus agreement (median ≥ 4, IQR ≤ 1) or no consensus were retained. In round four, indicators were prioritised based on their ability to impact cost of care to the provider and recipient, staff well-being, patient safety, and patient-centred outcomes.

Results

Seventy-one experts from 30 countries (n = 45, 63%, representing critical care) selected 57 indicators to assess quality of care in intensive care units (ICUs) in LMICs after round three: 16 foundation, 27 process, and 14 quality impact indicators. Round four resulted in 14 prioritised indicators. Fifty-seven respondents reported barriers and facilitators; electronic registry-embedded data collection was the biggest perceived facilitator to implementation (n = 54/57, 95%). Concerns over the burden of data collection (n = 53/57, 93%) and variations in definitions (n = 45/57, 79%) were perceived as the greatest barriers to implementation.

Conclusion

This consensus exercise provides a common set of indicators to support benchmarking and quality improvement programs for critical care populations in LMICs.

Whilst the need for ongoing investment in health service infrastructure is recognised, stakeholders seeking to improve the quality of critical care in LMICs are increasingly focused on improving processes of care, notably reducing avoidable harms (specifically healthcare-associated infection and antimicrobial resistance), and on patient-centred outcomes. Continuous surveillance using electronic records and registries was perceived as essential infrastructure to facilitate implementation.

Introduction

Intensive care units (ICUs) provide essential and lifesaving interventions to critically ill patients [1]. Delivery of critical care is resource intensive and complex, and places a considerable burden on patients, families, and those responsible for providing healthcare services [2]. Whilst increased access to critical care has contributed to a reduction in global mortality from disease and injury, the quality of critical care remains variable internationally. Poor-quality care is associated with longer hospital stays, excess morbidity, and avoidable healthcare-associated costs [3].

In high-income countries (HICs) and in those where ICU facilities are widely established, there has been an increasing focus on promoting safe and effective delivery of care, monitored through a series of quality metrics (indicators) measuring the availability of structures and the quality of processes and outcomes of care [4,5,6,7]. These indicators are derived almost exclusively from the values, practices, and organisational structures seen in a relatively narrow ICU construct synonymous with HIC settings. Consequently, few of them have been evaluated for feasibility, stakeholder relevance, or ability to drive actionable improvement in LMIC settings [6, 8, 9].

Efforts to develop globally applicable indicators suitable for LMICs have focused on health system measures, such as those used to benchmark national services and drive Universal Health Coverage [3]. Such metrics often struggle to reflect the priorities of patients and frontline healthcare providers and have had limited success in translating into actionable measures, resulting in under-adoption and underutilisation [6, 8, 10,11,12,13]. To address this gap in stakeholder-selected indicators that reflect both the priorities and practices of critical care services in LMICs, we undertook a RAND Delphi study [14] to develop a set of actionable quality indicators for use in ICUs in LMIC settings. Furthermore, we sought to identify barriers and facilitators to implementation of the selected indicators as perceived by the clinical teams delivering frontline care.

Methods

Study design

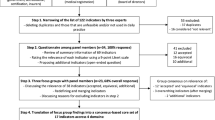

This Delphi study is reported in accordance with the Conducting and REporting DElphi Studies (CREDES) guidelines [15]. An advisory committee (AC) was convened to provide oversight, and an Expert Panel (EP), identified by the AC, was responsible for selecting indicators by voting in the Delphi, highlighting problematic indicator definitions, and identifying potential barriers and facilitators to implementation of the indicators. In addition, a subset of the AC and EP formed a Definitions Working Group (DWG), which was responsible for appraising voters' feedback on the indicator definitions and for adapting and refining those definitions after each round of voting.

The Delphi was conducted electronically using the online survey tool SurveyMonkey (SurveyMonkey Inc, San Mateo, California, USA; www.surveymonkey.com). Before the Delphi was conducted, it was piloted for readability, interpretability, and user experience by a physician, a nurse, and a non-clinician researcher, none of whom were part of the EP. All group discussions were conducted online using a video conferencing platform [16].

Study setting

The study was conducted as part of the work of the Collaboration for Research, Implementation and Training in Critical Care in Asia-Africa (CRIT Care Asia-Africa, CCAA). Established in 2019, this community of practice supports a network of nationally led ICU registries in nine countries in Asia and eight countries in Africa, representing 260+ acute and critical care departments. A detailed description of the project has been published [17]. Stakeholders representing acute and critical care services from all CCAA collaborating countries were invited to participate. In addition to members of the CCAA, researchers with extensive expertise in ICU registries, quality indicators, and/or healthcare evaluation were also invited from other parts of the world, including the Americas, Europe, and Oceania.

Study participants

Forty-seven individuals were invited to form the EP, representing clinicians primarily involved in ICU care (physicians, nurses, and allied health professionals), physicians from other specialties related to critical care, researchers, and patients who had survived ICU care or patient representatives. These EP members were identified as active members of the existing CCAA registry and wider research network. For the third round of voting, EP members were asked to invite 1–3 additional stakeholders from their respective national networks in CCAA participating countries, including representatives from healthcare with experience similar to that of the EP (Electronic supplementary material 1).

Data collection and analysis

Candidate indicator list identification

A candidate list of indicators was compiled by the AC from three sources: those identified through a published scoping review [18], those already used in national registries collaborating with CCAA [17], and those identified through a stakeholder prioritisation exercise at a national CCAA meeting. Additionally, participants were able to add indicators in the first round of voting. Indicators were categorised using the three domains of the Lancet Global Health High-Quality Health Systems framework: foundation, care process, or quality impact [11]. Each indicator was described, defined (based on existing published definitions), and graded for evidence before being presented to the EP for voting [19]. Where indicators had multiple published definitions, participants were asked to prioritise both the indicator and its most appropriate definition.

Scoring of indicators

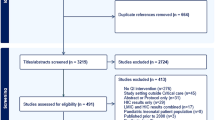

The Delphi had four rounds (Fig. 1). In rounds one and two, respondents appraised each indicator on two criteria: validity and feasibility. Consensus on validity and feasibility was considered a prerequisite for assessing sensitivity to change and reliability. In round three, candidate indicators were assessed for validity, feasibility, sensitivity to change, and reliability. In this round, voters were also asked to report perceived barriers and facilitators to implementation, which were then categorised according to the five domains of the Consolidated Framework for Implementation Research (CFIR): intervention characteristics, outer setting, inner setting, individual characteristics, and processes. CFIR was chosen for its systematic and replicable identification of constructs that may promote or inhibit implementation of interventions in clinical practice [20]. Finally, in round four, the indicators were prioritised according to their ability to influence five priority impacts: cost of care to the recipient, cost of care to the provider, staff wellbeing, patient safety, and patient-centred outcomes [21]. The EP rated each indicator as low, medium, or high impact on all five criteria.

An indicator was entered into subsequent rounds of voting if it achieved a median score of ≥ 4 on a 5-point Likert scale (strongly disagree = 1 to strongly agree = 5) and if there was consensus across the voting members (“consensus to retain”). Consensus was defined as an interquartile range (IQR, quartile 3 minus quartile 1) of < 1 for validity and feasibility, and an IQR of ≤ 2 for reliability and sensitivity to change. The criteria for reliability and sensitivity to change are less stringent because these characteristics may be interpreted differently across health systems, whereas feasibility and validity should be uniform. Indicators with consensus and a median of < 4 were removed from further rounds (“consensus to remove”). Indicators without consensus were put forward to the next round of voting regardless of their median score (“no consensus to retain or remove”). At the end of each voting round, the EP convened to discuss the results, with a view to moving towards consensus in subsequent rounds. Discussions focussed on indicators where consensus was absent, definitions varied, and/or voting patterns diverged [19, 22]. To inform these discussions, voting patterns were described according to the geographical distribution of respondents, using the United Nations division of the world's geographical regions: Asia, Africa, Americas, Europe, and Oceania [23]. The consensus process (rounds one to three) was intended to produce a list of stakeholder-selected indicators which, having already achieved agreement across the four criteria, could then be prioritised in the final round of voting (round four). In case consensus was not achieved, the protocol included an alternative strategy, similar to previous Delphi studies [10, 24]: following round three, indicators that received a Likert score of > 3 from more than 50% of respondents were retained for round four.
In addition, participants were provided with a free-text box after every section of the survey (rounds one and three), where they were asked to comment on any concerns with the indicator definitions. Definitions contested in this way were reviewed for accuracy and for the feasibility of collecting data, and, where necessary, alternatives were proposed by the DWG.
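The retain/remove rules above can be expressed as a short function. This is an illustrative sketch only: it assumes the thresholds as worded in this section (IQR < 1 for validity and feasibility, IQR ≤ 2 for reliability and sensitivity to change), the function and criterion names are hypothetical, and quartiles are computed with linear interpolation (Python's `statistics.quantiles` with `method="inclusive"`), which is an assumption because the quartile method used in the study is not reported. Because the text does not state how results on the separate criteria were combined for one indicator, the sketch classifies one criterion at a time.

```python
from statistics import median, quantiles

# Consensus thresholds as worded in the Methods: a strict IQR < 1 for validity and
# feasibility, and a more lenient IQR <= 2 for reliability and sensitivity to change.
STRICT_CRITERIA = {"validity", "feasibility"}
LENIENT_CRITERIA = {"reliability", "sensitivity_to_change"}


def iqr(scores):
    """Q3 minus Q1 using linear interpolation (assumed quartile method)."""
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    return q3 - q1


def has_consensus(scores, criterion):
    """Apply the criterion-specific IQR threshold."""
    spread = iqr(scores)
    if criterion in STRICT_CRITERIA:
        return spread < 1
    if criterion in LENIENT_CRITERIA:
        return spread <= 2
    raise ValueError(f"unknown criterion: {criterion}")


def classify(scores, criterion):
    """Classify one indicator on one criterion from its 1-5 Likert ratings."""
    if not has_consensus(scores, criterion):
        return "no consensus"  # carried forward to the next round
    return "retain" if median(scores) >= 4 else "remove"


ratings = [4, 4, 5, 5, 4, 5, 4, 4]  # hypothetical ratings from eight voters
print(classify(ratings, "validity"))     # no consensus (IQR = 1, not < 1)
print(classify(ratings, "reliability"))  # retain (IQR = 1 <= 2, median = 4)
```

The same hypothetical ratings reach consensus for reliability but not for validity, which illustrates why the stricter threshold matters. Other quartile conventions can give a different IQR for the same ratings, so the classification of borderline indicators depends on that choice.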

Results

Study participation

The study was conducted between March 2021 and October 2021 (Fig. 2). Of the 47 EP members invited, 43 agreed to participate. For round three, an additional 70 stakeholders were invited from the EP's respective networks, and 31 responded. A total of 71 clinicians, researchers, and healthcare professionals representing 30 countries participated (Electronic supplementary material 2). Response rates were 50% or greater for each round. Fifty-one participants (72%) completed all four rounds. The numbers, demographics, and professional characteristics of participants in each round are described in Table 1. The specialties of critical care, surgery, internal medicine, and public health were represented in each of the four rounds (Table 1). Patients and patient representatives invited to the EP declined, citing lack of experience of critical care internationally and/or unfamiliarity with quality-of-care metrics (Table 2).

Indicator selection

Eighty-four candidate indicators constituted the initial list put forward to round one. Sixty-seven indicators had one definition, whereas 17 indicators had multiple published definitions (Electronic supplementary material 3). Following three rounds of voting, 70 indicators and 70 definitions were retained (20 because of an absence of consensus) (Fig. 2). Given the lack of consensus, we proceeded with our a priori plan to include in round four those indicators receiving a Likert score of > 3 from more than 50% of respondents. This removed a further 21 indicators, leaving 49 indicators considered valid, feasible, sensitive to change, and reliable. Variation in voting patterns across geographic regions is described in Electronic supplementary material 6. This variation revealed inequalities in access to laboratory and point-of-care diagnostics and to equipment, which hindered the feasibility and reliability of metrics reliant on this information for measurement. On appraisal of the remaining indicators, it was noted that no patient-reported outcomes had been retained, owing to low scores for feasibility of data collection. EP discussion and review of voting patterns revealed that these indicators scored highly for validity, and the EP therefore concluded that they should be considered for prioritisation. The EP requested that the eight quality impact indicators measuring patient-centred and medium-term outcomes be included in round four. Prioritisation of the final 57 indicators (from round three: 16 foundation, 27 care process, and 14 quality impact) using the five priority-setting categories resulted in 14 prioritised indicators (4 foundation, 6 process, and 4 quality impact) (Electronic supplementary material 4).
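The fallback rule applied after round three, and the indicator counts it produced, can be checked with a few lines of code. This is a sketch under stated assumptions: `passes_fallback` is a hypothetical name, it reads "a Likert score of > 3" as a rating of 4 or 5, it requires strictly more than half of respondents, and it takes one criterion's ratings at a time because the text does not say how criteria were combined. The counts are those reported in this section.

```python
def passes_fallback(scores):
    """True if strictly more than 50% of respondents rated the indicator above 3."""
    if not scores:
        raise ValueError("at least one rating is required")
    favourable = sum(1 for s in scores if s > 3)  # ratings of 4 or 5
    return favourable / len(scores) > 0.5


# Indicator counts reported in the Results section.
retained_after_round_three = 70
removed_by_fallback = 21
added_at_ep_request = 8  # patient-centred and medium-term quality impact indicators
final_by_domain = {"foundation": 16, "care process": 27, "quality impact": 14}

passing_fallback = retained_after_round_three - removed_by_fallback  # 49
entering_round_four = passing_fallback + added_at_ep_request  # 57
assert entering_round_four == sum(final_by_domain.values())

print(passes_fallback([4, 4, 3, 5]))  # True: 3 of 4 respondents (75%) rated > 3
print(passes_fallback([4, 3, 3, 5]))  # False: exactly 50% is not "more than 50%"
```

Because the rule uses a strict inequality, an indicator rated 4 or 5 by exactly half of respondents would not be carried forward.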

Barriers and facilitators

Eight discrete barriers and seven facilitators to implementation were identified and categorised into four of the CFIR domains: intervention characteristics, inner setting, outer setting, and processes (Fig. 3). The most frequently perceived facilitator to implementation was electronic data collection through existing clinical quality registries to routinise and standardise data collection (n = 54/57, 95%). The burden of data collection (n = 53/57, 93%) and an existing lack of procedural uniformity (n = 36/57, 63%) were identified as barriers to implementation. Co-implementation of indicators as part of a cycle of audit and improvement, alongside data on case mix and outcomes, was also described as an important facilitator to both implementation and impact (n = 41/57, 72%), as was the opportunity to participate in quality improvement programmes (n = 25/57, 44%). The absence of standardised definitions and of associated expertise in interpreting measurements (n = 45/57, 79%) was a further barrier to implementation and reinforced the findings of the DWG's narrative analysis of the definitions described above (Electronic supplementary material 5).
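The percentages reported for barriers and facilitators are respondent counts divided by the 57 respondents who answered this part of the survey. A minimal sketch that recomputes them, assuming rounding to the nearest whole percent; the dictionary labels are shortened paraphrases of the text above.

```python
# Respondent counts (out of 57) and the percentages stated in the text.
RESPONDENTS = 57
reported = {
    "registry-embedded electronic data collection (facilitator)": (54, 95),
    "burden of data collection (barrier)": (53, 93),
    "absence of standardised definitions (barrier)": (45, 79),
    "co-implementation with audit and case-mix data (facilitator)": (41, 72),
    "lack of procedural uniformity (barrier)": (36, 63),
    "quality improvement programmes (facilitator)": (25, 44),
}


def percent(count, total=RESPONDENTS):
    """Whole-number percentage; Python's round() rounds exact halves to even."""
    return round(100 * count / total)


for label, (count, stated) in reported.items():
    assert percent(count) == stated, label  # recomputed value matches the text
    print(f"{label}: {count}/{RESPONDENTS} = {percent(count)}%")
```

The same helper reproduces the completion rate in the participation results: `percent(51, 71)` gives 72.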

Discussion

Our multinational four-round Delphi study resulted in the selection of 57 indicators for use in critical care settings in LMICs, 14 of which were prioritised. In addition, individual ICU networks will be able to choose which indicators to implement, and we anticipate that their selection will reflect context-specific factors and priorities for improvement in the different settings. These indicators will be evaluated for feasibility of implementation and for their ability to reliably measure associated outcomes. This study brings new perspectives to the ongoing discussion [11, 18, 25] regarding indicator selection for benchmarking ICUs internationally, and the potential implications that the use of some indicators in pay-for-performance schemes may have for efforts to improve quality of care. It adds to the existing international literature by providing much-needed representation from ICU services and critical care populations in low- and middle-income health systems that have previously been under-represented.

Disparities in access to basic resources for delivery of safe critical care were evident and were reflected in the voting. Comparatively low ratios of trained healthcare providers to patients, and inequalities in access to basic resources essential for ICU care (monitoring, infusion pumps, and medications, including oxygen, as described elsewhere in the literature), led respondents in our study to include measures of their availability as foundation indicators [2, 26, 27]. Healthcare-associated infections, pressure-related injury, and thrombosis were considered important, alongside the universal public health primacy of multidrug-resistant infection. The absence of laboratory services, and concerns over the reliability of sampling techniques and diagnostic stewardship, weighed on respondents when appraising indicators for feasibility and reliability. The ongoing need to improve the quality of microbiology and diagnostic services, particularly in Africa, is well described and, despite significant investment, remains a barrier to both quality improvement efforts and clinical trials [28, 29]. Whilst investment in laboratory and diagnostic services (including microbiology and haematology) has increased opportunities for training, disparities in infrastructure remain and are likely to hamper efforts to improve antimicrobial stewardship and infection control within critical care [28,29,30,31,32]. In addition, the absence of peer agreement on indicator definitions further hampered voters' ability to achieve consensus during selection, and raised concerns that continual refinement of definitions undermines indicator reliability, prevents accurate benchmarking, and opens the door to “gaming” of indicators, particularly in healthcare systems where indicators are linked to pay-for-performance schemes, which are common in South and Southeast Asia and emerging in some African countries [33,34,35,36].

A high proportion of the indicators selected in our study were process indicators (27/57, 47.4%), compared with 22.2% of those selected by the 2012 European Society of Intensive Care Medicine (ESICM) task force [7]. This shift may reflect growing awareness of the potential impact that omissions in daily care processes have on excess morbidity and mortality [31]. It may also reflect the impact on patient outcomes, and on efforts to improve care delivery, of staffing, training and, until recently, the relative absence of infrastructure for reliable, replicable, data-driven service evaluation. Outcome measures (quality impacts) were similar to those selected by previous consensus studies from Europe and the UK [1, 7], with the addition of cost to both patient and provider, which is congruent with the need for out-of-pocket payments for care in many LMIC settings [11]. Indicators implemented in the network will be evaluated for feasibility of collection and validated for their association with outcomes. As is common practice with national ICU registries internationally, population outcomes will be adjusted for case mix and risk using already validated and internationally comparable prognostic models (APACHE IV, SAPS III, and E-TropICS) [37, 38]. The impact of organisational factors, including team structure, resource availability, and culture of quality improvement, is also being explored using mixed methods. Definitions used to determine adverse events (for example, incidence of healthcare-associated infection) have been chosen from published literature and will be assessed for feasibility and reliability in the different ICU populations.
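Risk adjustment with a prognostic model is commonly summarised as a standardised mortality ratio (SMR): observed deaths divided by the deaths expected from each patient's model-predicted probability of death. The sketch below is a generic illustration of that calculation, not the network's analysis plan; it assumes the predicted probabilities have already been produced by a calibrated model such as APACHE IV, and the function name and example values are hypothetical.

```python
def standardised_mortality_ratio(died, predicted_risk):
    """Observed deaths divided by expected deaths (sum of predicted probabilities).

    died: one boolean per patient (True = died in hospital).
    predicted_risk: one model-predicted probability of death per patient.
    """
    if len(died) != len(predicted_risk):
        raise ValueError("need one predicted risk per patient")
    if any(not 0.0 <= p <= 1.0 for p in predicted_risk):
        raise ValueError("predicted risks must be probabilities in [0, 1]")
    observed = sum(died)  # True counts as 1
    expected = sum(predicted_risk)
    if expected == 0:
        raise ValueError("expected deaths must be greater than zero")
    return observed / expected


# Hypothetical four-patient example: 2 observed deaths vs 1.5 expected.
smr = standardised_mortality_ratio([True, False, False, True], [0.5, 0.25, 0.25, 0.5])
print(round(smr, 2))  # 1.33
```

An SMR above 1 means more deaths were observed than the model predicted for that case mix; the comparison is only as good as the model's calibration in the population being assessed.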

The use of registry-based data collection, the co-design of implementation processes, and the use of feedback tools were identified as important strategies to overcome known barriers to the feasibility and reliability of data collection [22,23,24,25]. Current measures of medium- to long-term functional outcomes, whilst perceived as a priority, were considered to reflect poorly some social and lifestyle constructs of communities in Africa and Asia. For example, items such as driving or playing sport did not reflect respondents' experience of patient or family priorities, particularly for older people or the poorer economic quintiles of the population. Alternatives, such as being able to carry and care for younger family members or to work on the farm, were proposed. Greater investment is still needed in research exploring patient and public priorities for recovery and quality of life after critical illness.

Representatives from all settings, including the most fragile health systems, recognised that the focus of critical care is expanding from episodic care towards longer-term, holistic provision of care, which requires a greater understanding of the impact of critical care on patients, their families, and wider population health well beyond the hospital walls. The absence of integrated health and social care systems and the lack of interoperability in healthcare data were seen as significant barriers to measuring the impact of existing ICU services and to informing investment in services as they rapidly expand in LMICs. Even if solutions such as telephone follow-up or cohort follow-up of specific critical care populations could facilitate collection of data for the quality indicators, concerns remained as to whether health ministries and healthcare policy-making agencies have sufficient expertise to interpret the information.

The perceived importance of these measures for evaluating quality of care, and of establishing the feasibility and acceptability to communities of capturing outcomes after ICU, is reflected in the EP's request to include such indicators in the prioritisation exercise (round four), despite these indicators failing to meet the consensus threshold for inclusion. Whilst overriding the Delphi process is a potential weakness of the study, the AC's decision to include these indicators for prioritisation was intended to strengthen the study's aim of identifying stakeholder-prioritised indicators. A unique opportunity offered by the network's community of practice is the uplift in infrastructure and methods needed to overcome potential barriers (such as the feasibility of follow-up) and to undertake parallel patient and public involvement and engagement (PPIE) research exploring the acceptability of follow-up services in Asia and Africa, where the network is active. Of the 57 indicators selected after round three, 14 were prioritised.

Limitations

The stakeholder representation in this Delphi was dominated by experts working in Asia and Africa, where the CCAA collaborating registries are operational, although ICU experts from South America with expertise in and experience of using clinical registries were also represented. Not all countries in Asia and Africa were included, and those that were have access to an online ICU registry and are part of a research collaboration; their representatives are therefore more likely to be engaged in, and aware of the value of, quality improvement and research as part of high-quality health systems. With increased focus on quality improvement across LMICs and investment in electronic systems such as DHIS2 [39], our findings may be applicable to LMICs beyond Asia and Africa.

Despite invitations to all sectors of clinical healthcare teams, nursing and allied health professional representation remained limited, which may have contributed to the gender imbalance observed in this Delphi: most respondents were male. The absence of patient representation, despite invitations to patients and patient advocacy experts, is a limitation of this study. Patient representatives who were approached declined to participate, citing concerns regarding their understanding of quality in healthcare and, in the case of advocacy experts, of critical care in the LMIC context. The pandemic brought into focus the lack of understanding of critical care among the general public and indeed other non-acute sectors of healthcare. Public engagement and education regarding the role critical care services may play in improving population health are needed. However, representation from stakeholders in Asia and Africa, who are often under-represented in similar research, was proportionally higher in this study than reported in other published studies [10, 21].

Conclusion

This Delphi study resulted in the selection of 57 indicators (16 foundation, 27 process, and 14 quality impact) suitable for use in LMICs. Whilst the need for ongoing investment in health service infrastructure was still recognised, stakeholders' voting patterns demonstrated increasing awareness and prioritisation of the need to measure care processes within the ICU, and of the potential of such measurement to improve care standards and reduce avoidable harm. Despite the challenges of maintaining the validity and reliability of existing ICU outcome indicators when applied to more diverse critical care populations and organisational structures, the Delphi process underscored the importance of these indicators. Context-specific definitions that address known barriers to the feasibility of data collection and to missing information were identified. This indicator set may become a tool to support benchmarking and quality improvement programs for ICUs beyond those represented here.

References

Huijben JA, Wiegers EJA et al (2019) Development of a quality indicator set to measure and improve quality of ICU care for patients with traumatic brain injury. Crit Care 23:95. https://doi.org/10.1186/s13054-019-2377-x

Murthy S, Wunsch H (2012) Clinical review: International comparisons in critical care—lessons learned. Crit Care 16:218. https://doi.org/10.1186/cc1114

Kruk ME, Gage AD, Joseph NT et al (2018) Mortality due to low-quality health systems in the universal health coverage era: a systematic analysis of amenable deaths in 137 countries. Lancet 392:2203–2212. https://doi.org/10.1016/S0140-6736(18)31668-4

Kallen MC, Roos-Blom M-J, Dongelmans DA et al (2018) Development of actionable quality indicators and an action implementation toolbox for appropriate antibiotic use at intensive care units: a modified-RAND Delphi study. PLoS ONE 13:e0207991. https://doi.org/10.1371/journal.pone.0207991

Viergever RF, Olifson S, Ghaffar A, Terry RF (2010) A checklist for health research priority setting: nine common themes of good practice. Health Res Policy Sys 8:1–9. https://doi.org/10.1186/1478-4505-8-36

Anema HA, Kievit J, Fischer C et al (2013) Influences of hospital information systems, indicator data collection and computation on reported Dutch hospital performance indicator scores. BMC Health Serv Res 13:212. https://doi.org/10.1186/1472-6963-13-212

Rhodes A, Moreno RP, Azoulay E et al (2012) Prospectively defined indicators to improve the safety and quality of care for critically ill patients: a report from the Task Force on Safety and Quality of the European Society of Intensive Care Medicine (ESICM). Intensive Care Med 38:598–605. https://doi.org/10.1007/s00134-011-2462-3

Fischer C, Lingsma HF, Anema HA et al (2016) Testing the construct validity of hospital care quality indicators: a case study on hip replacement. BMC Health Serv Res 16:551. https://doi.org/10.1186/s12913-016-1778-7

Hanefeld J, Powell-Jackson T, Balabanova D (2017) Understanding and measuring quality of care: dealing with complexity. Bull World Health Organ 95:368–374. https://doi.org/10.2471/BLT.16.179309

Odland ML, Nepogodiev D, Morton D et al (2021) Identifying a basket of surgical procedures to standardize global surgical metrics: an international Delphi study. Ann Surg 274:1107–1114. https://doi.org/10.1097/SLA.0000000000004611

Kruk ME, Gage AD, Arsenault C et al (2018) High-quality health systems in the sustainable development goals era: time for a revolution. Lancet Glob Health 6:e1196–e1252. https://doi.org/10.1016/S2214-109X(18)30386-3

Howell SJ, Pandit JJ, Rowbotham DJ, Research Council of the National Institute of Academic Anaesthesia (NIAA) (2012) National Institute of Academic Anaesthesia research priority setting exercise. Br J Anaesth 108:42–52. https://doi.org/10.1093/bja/aer418

Kok M, De Souza DK (2010) Young voices demand health research goals. Lancet 375:1416–1417. https://doi.org/10.1016/S0140-6736(10)60584-3

Dewar JA, Friel JA (2001) Delphi method. In: Gass SI, Harris CM (eds) Encyclopedia of operations research and management science. Springer US, New York, NY, pp 208–209

Jünger S, Payne SA, Brine J et al (2017) Guidance on conducting and reporting Delphi studies (CREDES) in palliative care: recommendations based on a methodological systematic review. Palliat Med 31:684–706. https://doi.org/10.1177/0269216317690685

Zoom Video Communications Inc (2021)

CRIT CARE ASIA, Beane A, Dondorp AM, Taqi A et al (2020) Establishing a critical care network in Asia to improve care for critically ill patients in low- and middle-income countries. Crit Care 24:608. https://doi.org/10.1186/s13054-020-03321-7

Jawad I, Rashan S, Sigera C et al (2021) A scoping review of registry captured indicators for evaluating quality of critical care in ICU. J Intensive Care 9:1–12. https://doi.org/10.1186/s40560-021-00556-6

Burns PB, Rohrich RJ, Chung KC (2011) The levels of evidence and their role in evidence-based medicine. Plast Reconstr Surg 128:305–310. https://doi.org/10.1097/PRS.0b013e318219c171

Constructs—the consolidated framework for implementation research. https://cfirguide.org/constructs/. Accessed 10 Nov 2021

World Health Organization (2007) Everybody’s business—strengthening health systems to improve health outcomes: WHO’s framework for action. World Health Organization, Geneva

Flottorp SA, Oxman AD, Krause J et al (2013) A checklist for identifying determinants of practice: a systematic review and synthesis of frameworks and taxonomies of factors that prevent or enable improvements in healthcare professional practice. Implement Sci 8:35. https://doi.org/10.1186/1748-5908-8-35

United Nations Statistics Division. Standard country or area codes for statistical use (M49). https://unstats.un.org/unsd/methodology/m49/. Accessed 25 Oct 2021

Whitaker J, Nepogodiev D, Leather A, Davies J (2020) Assessing barriers to quality trauma care in low and middle-income countries: a Delphi study. Injury 51:278–285. https://doi.org/10.1016/j.injury.2019.12.035

Beane A, Salluh JIF, Haniffa R (2021) What Intensive care registries can teach us about outcomes. Curr Opin Crit Care 27:537–543. https://doi.org/10.1097/MCC.0000000000000865

Vukoja M, Riviello E, Gavrilovic S et al (2014) A survey on critical care resources and practices in low- and middle-income countries. Glob Heart 9(3):337–42.e425. https://doi.org/10.1016/j.gheart.2014.08.002

Manafò E, Petermann L, Vandall-Walker V, Mason-Lai P (2018) Patient and public engagement in priority setting: a systematic rapid review of the literature. PLoS ONE 13(3):e0193579

Petti CA, Polage CR, Quinn TC et al (2006) Laboratory medicine in Africa: a barrier to effective health care. Clin Infect Dis 42:377–382. https://doi.org/10.1086/499363

Alemnji GA, Zeh C, Yao K, Fonjungo PN (2014) Strengthening national health laboratories in sub-Saharan Africa: a decade of remarkable progress. Trop Med Int Health 19:450–458. https://doi.org/10.1111/tmi.12269

Asmelash D, Worede A, Teshome M (2020) Extra-analytical clinical laboratory errors in Africa: a systematic review and meta-analysis. EJIFCC 31:208–224

Weiss CH, Moazed F, McEvoy CA et al (2011) Prompting physicians to address a daily checklist and process of care and clinical outcomes. Am J Respir Crit Care Med 184:680–686. https://doi.org/10.1164/rccm.201101-0037OC

Yadav H, Shah D, Sayed S et al (2021) Availability of essential diagnostics in ten low-income and middle-income countries: results from national health facility surveys. Lancet Glob Health 9:e1553–e1560. https://doi.org/10.1016/S2214-109X(21)00442-3

Alonge O, Lin S, Igusa T, Peters DH (2017) Improving health systems performance in low- and middle-income countries: a system dynamics model of the pay-for-performance initiative in Afghanistan. Health Policy Plan 32:1417–1426. https://doi.org/10.1093/heapol/czx122

Chalkley M, Mirelman AJ, Siciliani L, Suhrcke M (2018) Paying for performance for health care in low- and middle-income countries: an economic perspective. In: Global health economics. World Scientific, pp 157–190

Ider B-E, Adams J, Morton A et al (2011) Gaming in infection control: a qualitative study exploring the perceptions and experiences of health professionals in Mongolia. Am J Infect Control 39:587–594. https://doi.org/10.1016/j.ajic.2010.10.033

Al-Tawfiq JA, Tambyah PA (2014) Healthcare associated infections (HAI) perspectives. J Infect Public Health 7:339–344. https://doi.org/10.1016/j.jiph.2014.04.003

Vijayaraghavan BKT, Priyadarshini D, Rashan A et al (2020) Validation of a simplified risk prediction model using a cloud based critical care registry in a lower-middle income country. PLoS ONE 15:e0244989. https://doi.org/10.1371/journal.pone.0244989

Aminiahidashti H, Bozorgi F, Montazer SH et al (2017) Comparison of APACHE II and SAPS II scoring systems in prediction of critically ill patients’ outcome. Emergency (Tehran) 5:e4

Dehnavieh R, Haghdoost A, Khosravi A et al (2019) The District Health Information System (DHIS2): a literature review and meta-synthesis of its strengths and operational challenges based on the experiences of 11 countries. HIM J 48:62–75. https://doi.org/10.1177/1833358318777713

Honda CKY, Freitas FGR, Stanich P et al (2013) Nurse to bed ratio and nutrition support in critically ill patients. Am J Crit Care 22:e71–e78. https://doi.org/10.4037/ajcc2013610

Morita K, Matsui H, Yamana H et al (2017) Association between advanced practice nursing and 30-day mortality in mechanically ventilated critically ill patients: a retrospective cohort study. J Crit Care 41:209–215. https://doi.org/10.1016/j.jcrc.2017.05.025

Brown SES, Ratcliffe SJ, Halpern SD (2015) Assessing the utility of ICU readmissions as a quality metric: an analysis of changes mediated by residency work-hour reforms. Chest 147:626–636. https://doi.org/10.1378/chest.14-1060

Hill AD, Fowler RA, Burns KEA et al (2017) Long-term outcomes and health care utilization after prolonged mechanical ventilation. Ann Am Thorac Soc 14:355–362. https://doi.org/10.1513/AnnalsATS.201610-792OC

Centers for Disease Control and Prevention (2019) NHSN patient safety component manual 2019

Zilberberg MD, Shorr AF (2010) Ventilator-associated pneumonia: the clinical pulmonary infection score as a surrogate for diagnostics and outcome. Clin Infect Dis 51(Suppl 1):S131–S135. https://doi.org/10.1086/653062

Brown SES, Ratcliffe SJ, Halpern SD (2014) An empirical comparison of key statistical attributes among potential ICU quality indicators. Crit Care Med 42:1821–1831. https://doi.org/10.1097/CCM.0000000000000334

Gastmeier P, Sohr D, Geffers C et al (2005) Mortality risk factors with nosocomial Staphylococcus aureus infections in intensive care units: results from the German nosocomial infection surveillance system (KISS). Infection 33:50–55. https://doi.org/10.1007/s15010-005-3186-5

Zimmerman JE, Kramer AA, McNair DS et al (2006) Intensive care unit length of stay: benchmarking based on acute physiology and chronic health evaluation (APACHE) IV. Crit Care Med 34:2517–2529. https://doi.org/10.1097/01.CCM.0000240233.01711.D9

Moore L, Stelfox HT, Turgeon AF et al (2014) Hospital length of stay after admission for traumatic injury in Canada: a multicenter cohort study. Ann Surg 260:179–187. https://doi.org/10.1097/SLA.0000000000000624

Reiter A, Mauritz W, Jordan B et al (2004) Improving risk adjustment in critically ill trauma patients: the TRISS-SAPS score. J Trauma Acute Care Surg 57:375–380. https://doi.org/10.1097/01.TA.0000104016.78539.94

Acknowledgements

The authors are thankful for the key role played by patients, families and health care teams in critical care.

Writing group: Vrindha Pari (Chennai Critical Care Consultants, Pvt Ltd, Chennai, India), Eva Fleur Sluijs (Institute for Global Health & Development, VU University Amsterdam), Maria del Pilar Arias López (Argentine Society of Intensive Care (SATI); SATI-Q Program), David Alexander Thomson (University of Cape Town, Groote Schuur Hospital, Cape Town, South Africa), Swagata Tripathy (All India Institute of Medical Sciences, Bhubaneswar, India), Sutharshan Vengadasalam (Department of General Surgery, Teaching Hospital, Jaffna, Sri Lanka), Bharath Kumar Tirupakuzhi Vijayaraghavan (Department of Critical Care Medicine, Apollo Hospitals, Chennai, India), Luigi Pisani (Mahidol-Oxford Tropical Medicine Research Unit, Bangkok, Thailand; Doctors with Africa CUAMM, Padova, Italy), Nicolette de Keizer (Department of Medical Informatics, Amsterdam Public Health Institute, Amsterdam UMC, Amsterdam, The Netherlands), Neill K. J. Adhikari (Department of Critical Care Medicine, Sunnybrook Health Sciences Centre and Interdepartmental Division of Critical Care Medicine, University of Toronto, Toronto, Canada), David Pilcher (Department of Intensive Care, Alfred Health, Melbourne, VIC 3004, Australia; The Australian and New Zealand Intensive Care Society (ANZICS) Centre for Outcome and Resources Evaluation, Camberwell, Melbourne, VIC 3124, Australia), Rebecca Inglis (University of Oxford, Lao Oxford Mahosot Wellcome Trust Research Unit (LOMWRU), Vientiane, Lao PDR), Fred Bulamba (Department of Anesthesia and Critical Care, Faculty of Health Sciences, Busitema University, Mbale, Uganda), Arjen M Dondorp (Mahidol-Oxford Tropical Medicine Research Unit, Bangkok, Thailand; Nuffield Department of Clinical Medicine, University of Oxford, Oxford, UK), Rohit Aravindakshan Kooloth (Apollo Specialities, Chennai, India), Jason Phua (Fast and Chronic Programmes, Alexandra Hospital, National University Health System, Singapore; Division of Respiratory and Critical Care Medicine, National University Hospital, National University Health System, Singapore), Cornelius Sendagire (Makerere University College of Health Science, Department of Anaesthesia & Critical Care, 7072 Kampala, Uganda), Wangari Waweru-Siika (Department of Anaesthesia, Aga Khan University, Nairobi), Mohd Zulfakar Mazlan (Department of Anaesthesiology and Intensive Care, School of Medical Sciences, Universiti Sains Malaysia, 16150 Kubang Kerian, Kelantan, Malaysia), Rashan Haniffa (Centre for Inflammation Research, University of Edinburgh, UK; Mahidol-Oxford Tropical Medicine Research Unit, Bangkok, Thailand; Nat-Intensive Care Surveillance-MORU, Colombo, Sri Lanka), Jorge IF Salluh (D'Or Institute for Research and Education, Rio de Janeiro, Brazil; Programa de pós-graduação em clínica médica, Universidade Federal do Rio de Janeiro), Justine Davies (Institute of Applied Health Research, University of Birmingham, UK; Centre for Global Surgery, Department of Global Health, Stellenbosch University, Cape Town, South Africa; Medical Research Council/Wits University Rural Public Health and Health Transitions Research Unit, Faculty of Health Sciences, School of Public Health, University of the Witwatersrand, Johannesburg, South Africa), Abi Beane (Centre for Inflammation Research, University of Edinburgh, UK; Mahidol-Oxford Tropical Medicine Research Unit, Bangkok, Thailand). Justine Davies and Abigail Beane are joint last authors.

Collaborators and members of the CCAA: Teddy Thaddeus Abonyo (Department of Anaesthesia, Aga Khan University, Nairobi), Najwan Abu Al-Saad (Department of Critical Care, University College London Hospitals NHS Foundation Trust), Diptesh Aryal (Mahidol-Oxford Tropical Medicine Research Unit, Bangkok, Thailand; Nepal Intensive Care Research Foundation, Kathmandu, Nepal), Tim Baker (Muhimbili University of Health & Allied Sciences, Tanzania), Fitsum Kifle Belachew (University of Cape Town, Groote Schuur Hospital, Cape Town, South Africa; Debre Berhan University Asrat Woldyes Health Sciences Campus, Debre Berhan, Ethiopia), Bruce M Biccard (University of Cape Town, Groote Schuur Hospital, Cape Town, South Africa), Joseph Bonney (Komfo Anokye Teaching Hospital, Emergency Medicine Directorate, P.O. Box 1934, Kumasi, Ghana; Global Health and Infectious Diseases Research Group, Kumasi Center for Collaborative Research in Tropical Medicine), Gaston Burghi (Centro de Tratamiento Intensivo del Hospital de Clínicas, UDELAR, Montevideo, Uruguay), Dave A Dongelmans (Department of Intensive Care Medicine, Amsterdam University Medical Centers, Amsterdam, Netherlands), N. P. Dullewe (Post Basic College of Nursing, Colombo, Sri Lanka), Mohammad Abul Faiz (Dev Care Foundation, Dhaka, Bangladesh), Mg Ariel Fernandez (Argentine Society of Intensive Care (SATI); SATI-Q Program), Moses Siaw-Frimpong (Komfo Anokye Teaching Hospital, Directorate of Anaesthesia and Intensive Care, P.O. Box 1934, Kumasi, Ghana), Antonio Gallesio (Argentine Society of Intensive Care (SATI); SATI-Q Program), Maryam Shamal Ghalib (Wazir Akbar Khan Hospital, General Surgery Department, Kabul, Afghanistan), Madiha Hashmi (Southeast Asian Research in Critical Care and Health, Ziauddin Hospital, Karachi, Sindh, Pakistan), Raphael Kazidule Kayambankadzanja (College of Medicine, Private Bag 360, Blantyre, Malawi), Arthur Kwizera (Makerere University College of Health Science, Department of Anaesthesia & Critical Care, 7072 Kampala, Uganda), Subekshya Luitel (Department of Critical Care, University College London Hospitals NHS Foundation Trust), Ramani Moonesinghe (University College London (UCL); Consultant in anaesthesia and critical care, UCL Hospitals, London), Mohd Basri Mat Nor (Department of Anaesthesiology and Intensive Care, School of Medicine, International Islamic University Malaysia), Hem Raj Paneru (Department of Critical Care Medicine, Tribhuvan University Teaching Hospital, Kathmandu, Nepal), Dilanthi Priyadarshani (Nat-Intensive Care Surveillance-MORU, Colombo, Sri Lanka), Mohiuddin Shaikh (Southeast Asian Research in Critical Care and Health, Ziauddin Hospital, Karachi, Sindh, Pakistan), Nattachai Srisawat (Chulalongkorn University, Bangkok, Thailand), W. M. Ashan Wijekoon (Faculty of Medicine, University of Colombo, Sri Lanka), Lam Minh Yen (University of Oxford, Lao Oxford Mahosot Wellcome Trust Research Unit (LOMWRU), Vientiane, Lao PDR).

Funding

This research was conducted on behalf of the community of practice Collaboration for Research, Implementation and Training in Critical Care, Asia-Africa (CCAA), a Wellcome-UKRI/MRC-funded research network. Wellcome grant number: 215522/Z/19/Z; UKRI/MRC grant number: MR/V030884/1. This research was funded in whole or in part by the Wellcome Trust grant (215522/Z/19/Z). For the purpose of Open Access the author has applied a CC BY public copyright licence to any Author Accepted Manuscript arising from this submission.

Author information

Contributions

VP, AB and JD had full access to all of the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis. Concept and design: VP, EFS, RH, JD, JIFS, AB. Methodology: VP, EFS, RH, JD, JIFS, AB. Acquisition, analysis, or interpretation of data: all members. Drafting of the manuscript: VP, JD, JIFS, AB. Critical revision of the manuscript for important intellectual content: TTA, NKJA, NAS, DA, AB, GB, BB, FB, JD, AMD, DAD, MAF, AG, RH, RI, NDK, RAK, AK, MDPAL, MZM, VP, JP, DP, LP, JIFS, CS, MSF, EFS, DAT, ST, SV, BKTV, WWS. Statistical analysis: AB, JD, RH, DP, EFS, VP. Supervision and joint last authors: JD, AB. Obtained funding: AB, RH, AMD. Collaborators and members of the CCAA participated in the Delphi exercise.

Ethics declarations

Conflicts of interest

VP’s work and PhD are funded by CCAA-Wellcome. AB, RH and AD are partly funded by Wellcome. All other authors and collaborators have no competing interests.

Consent to participate and consent to publish

All authors and collaborators consented to participate in and publish this study.

Ethics approval and consent

This study was approved by the Oxford Tropical Research Ethics Committee (OxTREC) on 19 February 2021 (reference number 507-21).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Members of the CCAA are listed in the Acknowledgements section.

Supplementary Information

ESM 1

Online resource 1: Study participant information. Supplementary file1 (DOCX 19 KB)

ESM 2

Online resource 2: Delphi members country representation. Supplementary file2 (DOCX 646 KB)

ESM 3

Online resource 3: Indicator selection rounds 1 to 3. Supplementary file3 (DOCX 269 KB)

ESM 4

Online resource 4: Final selected indicators. Supplementary file4 (DOCX 25 KB)

ESM 5

Online resource 5: Indicator definitions - DWG revisions. Supplementary file5 (DOCX 28 KB)

ESM 6

Online resource 6: Regional voting patterns. Supplementary file6 (DOCX 43 KB)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

Pari, V., Collaboration for Research, Implementation and Training in Critical Care, Asia-Africa ‘CCAA’. Development of a quality indicator set to measure and improve quality of ICU care in low- and middle-income countries. Intensive Care Med 48, 1551–1562 (2022). https://doi.org/10.1007/s00134-022-06818-7

DOI: https://doi.org/10.1007/s00134-022-06818-7