Abstract

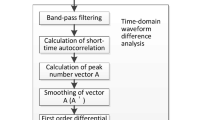

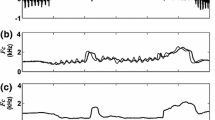

Cleft lip and palate (CLP) is a congenital disability of the craniofacial region that affects the speech production system. This work focuses on modifying misarticulated unvoiced stop consonants in CLP speech. Three types of misarticulation are studied: glottal, palatal, and velar stop substitutions. Stops are misarticulated because velopharyngeal dysfunction and oro-nasal fistula prevent adequate buildup of intra-oral pressure. Misarticulated stops degrade speech intelligibility and quality, which in turn hinders the use of speech-based applications. The misarticulated stops are analyzed and modified using speech data collected from 60 Kannada-speaking children (normal and CLP). An event-based modification approach is used to correct the misarticulated stops. First, the burst onset and vowel onset events are detected automatically. Then, the region from the vowel onset to the end of the first 20 ms of the vowel is extracted. The region from the burst onset point to the end of the first 20 ms of the vowel is defined as the region for modification and is transformed using nonnegative matrix factorization (NMF). Objective and subjective evaluations show that the proposed event-based transformation improves on entire-word modification, in which the signal is processed without knowledge of the burst and vowel onset events. The event-based transformed stops are perceptually close in quality to normal stops. The improved accuracy of the modified stops suggests that speech distortion is minimized.
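The two steps named in the abstract, locating the modification region and factorizing a nonnegative spectrogram, can be illustrated with a minimal sketch. It is not the authors' implementation. The function names `modification_region` and `nmf`, the 16 kHz sample rate in the usage note, and the choice of the standard multiplicative-update rule with a Euclidean cost are all assumptions made for illustration. The sketch shows only a plain factorization; the mapping from misarticulated to normal stops used in the paper is not reproduced here.

```python
import numpy as np


def modification_region(burst_onset_s, vowel_onset_s, sample_rate, vowel_span_s=0.020):
    """Return (start, end) sample indices of the region to modify.

    The region runs from the burst onset to 20 ms after the vowel onset.
    Onset times are assumed to be given in seconds by an external detector.
    """
    if vowel_onset_s < burst_onset_s:
        raise ValueError("vowel onset must not precede burst onset")
    start = int(round(burst_onset_s * sample_rate))
    end = int(round((vowel_onset_s + vowel_span_s) * sample_rate))
    return start, end


def nmf(V, rank, n_iter=200, eps=1e-9, seed=0):
    """Approximate a nonnegative matrix V (F x T) as W (F x rank) @ H (rank x T).

    Uses multiplicative updates for the Euclidean cost (an assumed choice).
    """
    rng = np.random.default_rng(seed)
    n_freq, n_frames = V.shape
    W = rng.random((n_freq, rank)) + eps   # hypothetical spectral basis
    H = rng.random((rank, n_frames)) + eps  # hypothetical activations
    for _ in range(n_iter):
        # Each update multiplies by a nonnegative ratio, so W and H stay nonnegative.
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H
```

As a usage example, with a burst onset at 0.100 s, a vowel onset at 0.130 s, and an assumed 16 kHz sample rate, `modification_region(0.100, 0.130, 16000)` selects the samples from the burst onset up to 0.150 s. A magnitude spectrogram of that segment could then be passed to `nmf` as `V`.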

Data availability statement

The data that support the findings of this study are available from the All India Institute of Speech and Hearing (AIISH), Mysore, India. However, restrictions apply to the availability of these data, and so they are not publicly available.

Acknowledgements

The authors would like to thank Prof. M. Pushpavathi and Prof. Ajish K. Abraham of the All India Institute of Speech and Hearing (AIISH), Mysore, India, for their help and support during data collection and perceptual evaluation. The authors also thank the research scholars of the signal processing laboratory for their participation in the subjective test.

Cite this article

Sudro, P.N., Vikram, C.M. & Prasanna, S.R.M. Event-Based Transformation of Misarticulated Stops in Cleft Lip and Palate Speech. Circuits Syst Signal Process 40, 4064–4088 (2021). https://doi.org/10.1007/s00034-021-01663-3

DOI: https://doi.org/10.1007/s00034-021-01663-3