Abstract

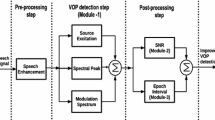

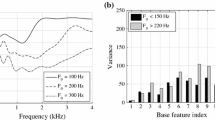

Efforts to improve automatic speech recognition (ASR) performance have focused on three major areas: enhanced acoustic modeling, new acoustic features, and language modeling. A less frequently considered aspect is the effect of incorrect transcriptions. The objective of this paper is to address this issue by correcting transcriptions during training. The phonetic transcriptions distributed with a corpus are often hand-labeled and are therefore prone to human error, owing to the short duration of phonemes. Alternatively, the phonetic sequence can be generated by forced alignment, selecting for each word the lexicon pronunciation that best matches the acoustic sequence. In either case, the resulting pronunciation may not match the actual acoustic sequence. This paper attempts to increase the likelihood of the transcript with respect to the acoustic features by systematically removing unarticulated vowels from the transcription. To identify vowels that are present in the transcript but missing from the utterance, a group delay (GD)-based boundary detection technique is employed. GD processing acts as a signal-processing-based vowel detector and is independent of the transcription. Viterbi forced alignment (VFA) is also used to obtain acoustic syllable boundaries from the phonetic transcription. Deviations between the syllable boundaries obtained from GD and from VFA are further confirmed by a silence–vowel–consonant classifier. The corrected transcription thus generated increases the log likelihood of the transcript with respect to the acoustic features, leading to a relative improvement of 2.8% in phone error rate on the TIMIT corpus.
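
The abstract does not spell out how GD and VFA boundaries are compared. A minimal sketch, assuming both boundary sets are available as times in seconds and that a VFA boundary counts as a deviation when no GD boundary lies within a tolerance `tol` (50 ms here, an arbitrary choice), could flag candidates as follows. The function name and tolerance are hypothetical, and the silence–vowel–consonant confirmation step is not modeled.

```python
import bisect
from typing import List, Sequence


def unmatched_boundaries(vfa: Sequence[float], gd: Sequence[float],
                         tol: float = 0.05) -> List[float]:
    """Return VFA syllable boundaries (seconds) with no GD boundary within +/- tol.

    In this sketch, such boundaries mark syllables that forced alignment placed
    from the transcript but that GD processing did not detect acoustically,
    i.e. candidate deleted vowels. The tolerance is an illustrative assumption.
    A real system would still confirm each candidate (the paper uses a
    silence-vowel-consonant classifier, which is not modeled here).
    """
    gd_sorted = sorted(gd)
    flagged = []
    for b in vfa:
        # Only the GD boundaries immediately before and after b can be nearest.
        i = bisect.bisect_left(gd_sorted, b)
        nearest = gd_sorted[max(0, i - 1):i + 1]
        if not any(abs(b - g) <= tol for g in nearest):
            flagged.append(b)
    return flagged


# Usage: VFA places a boundary at 0.21 s that GD does not confirm.
vfa_bounds = [0.00, 0.21, 0.40, 0.62]
gd_bounds = [0.01, 0.39, 0.63]
print(unmatched_boundaries(vfa_bounds, gd_bounds))  # [0.21]
```

Using a sorted list with `bisect` keeps each lookup logarithmic in the number of GD boundaries, which matters little for a single utterance but keeps the comparison cheap across a whole corpus.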

Notes

Raising the energy to the power 0.01 primarily reduces its dynamic range, thus ensuring that weak syllables are also detected.
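
For a sense of scale, an energy ratio of 10^4 between a strong and a weak syllable shrinks to 10^0.04, about 1.10, after raising both to the power 0.01. The sketch below is a hedged illustration of minimum-phase group delay processing of a short-term energy contour, in the spirit of published root-cepstrum-based group delay segmentation: the contour is raised to `gamma`, mirrored so it resembles the magnitude spectrum of a real signal, converted to a root cepstrum, and the causal part is used to compute a group delay function. The lifter length `n_cep` and both function names are assumptions, and some published variants invert the energy before this step, so the paper's exact procedure may differ.

```python
import math
from typing import List, Tuple


def min_phase_group_delay(energy: List[float], gamma: float = 0.01,
                          n_cep: int = 0) -> List[float]:
    """Group delay of a minimum-phase signal whose 'magnitude spectrum' is energy**gamma.

    Sketch only: the default lifter length n_cep is a hypothetical choice.
    """
    m = len(energy)
    if m < 3:
        raise ValueError("need at least 3 frames")
    # Compress the dynamic range so weak syllables still contribute.
    mag = [e ** gamma for e in energy]
    # Mirror the contour so that s[k] == s[n - k]; frames 0..m-1 map to 0..pi.
    s = mag + mag[-2:0:-1]
    n = len(s)  # n == 2 * (m - 1)
    if n_cep <= 0:
        n_cep = max(3, n // 10)
    n_cep = min(n_cep, n // 2)  # keep only the causal half of the cepstrum
    # Root cepstrum: the inverse DFT of a real, even sequence is a cosine sum.
    c = [sum(s[k] * math.cos(2 * math.pi * q * k / n) for k in range(n)) / n
         for q in range(n_cep)]
    # Minimum-phase group delay tau(w) = 2 * sum_{q>=1} q * c[q] * cos(q * w).
    # Exact for a log cepstrum; for small gamma, x**gamma ~ 1 + gamma*ln(x),
    # so the root cepstrum behaves like a scaled log cepstrum.
    tau = []
    for k in range(m):
        w = 2 * math.pi * k / n
        tau.append(2 * sum(q * c[q] * math.cos(q * w) for q in range(1, n_cep)))
    return tau


def local_extrema(x: List[float]) -> Tuple[List[int], List[int]]:
    """Interior local maxima (candidate vowel nuclei) and minima (candidate boundaries)."""
    peaks = [i for i in range(1, len(x) - 1) if x[i - 1] < x[i] >= x[i + 1]]
    valleys = [i for i in range(1, len(x) - 1) if x[i - 1] > x[i] <= x[i + 1]]
    return peaks, valleys


# Usage: a synthetic contour whose log energy has one Gaussian bump at frame 50.
energy = [math.exp(5 * math.exp(-((k - 50) ** 2) / 50)) for k in range(101)]
tau = min_phase_group_delay(energy)
peaks, valleys = local_extrema(tau)
print(max(range(len(tau)), key=tau.__getitem__))  # 50
```

In this sketch, group delay peaks follow energy peaks (syllable nuclei, where vowels lie) and valleys fall between them, which is where syllable boundaries are taken to be. The O(n·n_cep) cosine sums stand in for an FFT to keep the example dependency-free.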

Cite this article

Golda Brunet, R., Hema Murthy, A. Transcription Correction Using Group Delay Processing for Continuous Speech Recognition. Circuits Syst Signal Process 37, 1177–1202 (2018). https://doi.org/10.1007/s00034-017-0598-2

DOI: https://doi.org/10.1007/s00034-017-0598-2