Abstract

We consider the Cauchy problem for a third-order evolution operator P with coefficients depending on (t, x) and complex-valued lower-order terms. We assume the initial data to be Gevrey regular with an exponential decay at infinity, that is, the data belong to some Gelfand–Shilov space of type \({\mathscr {S}}\). Under suitable assumptions on the decay at infinity of the imaginary parts of the coefficients of P, we prove the existence of a solution with the same Gevrey regularity as the data and we describe its behavior for \(|x| \rightarrow \infty \).


1 Introduction and main result

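Let us consider for \((t,x) \in [0,T] \times {\mathbb {R}}\) the Cauchy problem in the unknown \(u=u(t,x)\):

$$\begin{aligned} {\left\{ \begin{array}{ll} P(t,x,D_t,D_x)u(t,x)=f(t,x), &{} (t,x)\in [0,T]\times {\mathbb {R}}, \\ u(0,x)=g(x), &{} x\in {\mathbb {R}}, \end{array}\right. } \end{aligned} \tag{1.1}$$

where

$$\begin{aligned} P(t,x,D_t,D_x)=D_t+a_p(t)D_x^p+\sum _{j=0}^{p-1}a_j(t,x)D_x^j, \end{aligned} \tag{1.2}$$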

with \(D=\frac{1}{i}\partial ,\) \(p\ge 2\), \(a_p\in C([0,T],{\mathbb {R}}), a_p(t) \ne 0\) for \(t \in [0,T]\), and \(a_j \in C([0,T],C^\infty ({\mathbb {R}};{\mathbb {C}}))\), \(j = 0, \ldots , p-1\). The operator P is known in the literature as a \(p\)-evolution operator, cf. [27], and p is the evolution degree. The well posedness of (1.1), (1.2) has been investigated in various functional settings for arbitrary p, cf. [3, 4, 5, 9]. Further results concern special values of p which correspond to classes of operators of particular interest in Mathematical Physics, cf. [6, 7, 8, 10, 20, 22] for the case \(p=2\) and [1] for the case \(p=3\). The condition that \(a_p\) is real-valued means that the principal symbol of P (in the sense of Petrowski) has the real characteristic \(\tau =-a_p(t)\xi ^p\); this guarantees that operator (1.2) satisfies the assumptions of the Lax–Mizohata theorem. The presence of complex-valued coefficients in the lower-order terms of (1.2) plays a crucial role in the analysis of problem (1.1) in all the above-mentioned papers. In fact, when the coefficients \(a_j(t,x)\), \(j = 0, \ldots , p-1,\) are real valued and of class \({\mathcal {B}}^\infty \) with respect to x (that is, uniformly bounded together with all their x-derivatives), it is well known that problem (1.1) is well-posed in \(L^2({\mathbb {R}})\) (and in \(L^2\)-based Sobolev spaces \(H^{m}\), \(m\in {\mathbb {R}}\)). On the contrary, if any of the coefficients \(a_j(t,x)\) are complex valued, then in order to obtain well-posedness either in \(L^2({\mathbb {R}})\), or in \(H^{\infty }({\mathbb {R}})=\cap _{m\in {\mathbb {R}}}H^m({\mathbb {R}})\), some decay conditions at infinity on the imaginary part of the coefficients \(a_j\) are needed (see [4, 20]).
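To see why the reality of \(a_p\) matters, it may help to look at a model case. The following sketch assumes the simplified operator \(P=D_t+a_p(t)D_x^p\), with no lower-order terms and \(f=0\), and temporarily allows \(a_p\) to be complex valued; these simplifications are ours and serve only as an illustration. Taking the Fourier transform in x turns the equation into an ordinary differential equation in t:

```latex
% Model case (an assumption made for illustration): P = D_t + a_p(t) D_x^p, f = 0.
% Under the Fourier transform in x, D_x becomes \xi; recall D_t = -i \partial_t.
\begin{aligned}
 -i\,\partial_t \hat{u}(t,\xi) + a_p(t)\,\xi^p\,\hat{u}(t,\xi) &= 0,
   \qquad \hat{u}(0,\xi)=\hat{g}(\xi),\\
 \hat{u}(t,\xi) &= \exp\Big(-i\,\xi^p\int_0^t a_p(\tau)\,d\tau\Big)\,\hat{g}(\xi),\\
 |\hat{u}(t,\xi)| &= \exp\Big(\xi^p\int_0^t \mathrm{Im}\,a_p(\tau)\,d\tau\Big)\,|\hat{g}(\xi)|.
\end{aligned}
```

For real-valued \(a_p\) the exponential factor has modulus one, so in this model case the \(L^2\) norm of the solution is conserved by Plancherel's theorem. A nonzero imaginary part would instead produce a factor that can grow exponentially in \(|\xi |^p\).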

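Sufficient conditions for well-posedness in \(L^2\) and \(H^\infty \) have been given in [8] and [22] for the case \(p=2\), and in [3] for larger p. Considering the Cauchy problem (1.1) in the framework of weighted Sobolev–Kato spaces \(H^m= H^{(m_1,m_2)}\), with \(m=(m_1,m_2) \in {\mathbb {R}}^2\), defined as

$$\begin{aligned} H^{(m_1,m_2)}({\mathbb {R}})=\{u\in {\mathscr {S}}'({\mathbb {R}}):\ \langle x\rangle ^{m_{2}}\langle D_{x}\rangle ^{m_{1}}u\in L^2({\mathbb {R}})\}, \end{aligned} \tag{1.3}$$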

where \(\langle x\rangle ^{m_{2}}\langle D_{x}\rangle ^{m_{1}}\) denotes the operator with symbol \(\langle x\rangle ^{m_2}\langle \xi \rangle ^{m_{1}}\), and assuming the coefficients \(a_j\) to be polynomially bounded, the second and the third author obtained in [5] well-posedness also in the Schwartz space \({\mathscr {S}}({\mathbb {R}})\) of smooth and rapidly decreasing functions and in the dual space \({\mathscr {S}}'({\mathbb {R}})\). We recall that \({\mathscr {S}}({\mathbb {R}}) = \cap _{m \in {\mathbb {R}}^2} H^{m}({\mathbb {R}})\) and \({\mathscr {S}}'({\mathbb {R}}) = \cup _{m \in {\mathbb {R}}^2} H^{m}({\mathbb {R}})\). In short, the above-mentioned results can be summarized as follows: if

problem (1.1) is well-posed in:

- \(L^{2}({\mathbb {R}})\), \(H^{m}({\mathbb {R}})\) for every \(m \in {\mathbb {R}}^2\) when \(\sigma > 1\);

- \(H^{\infty }({\mathbb {R}})\), \({\mathscr {S}}({\mathbb {R}})\) when \(\sigma = 1\). In general, a finite loss of regularity of the solution with respect to the initial data is observed in the case \(\sigma =1\).

Now we want to consider the case when an estimate of form (1.4) for \(j=p-1\) holds for some \(\sigma \in (0,1).\) In this situation, there are no results in the literature for p-evolution operators of arbitrary order. In [10, 22] the case \(p=2\), which corresponds to Schrödinger-type equations, is considered assuming \(0<\sigma <1\) and \(C_\beta = C^{|\beta |+1} \beta !^{s_0}\) for some \(s_0 \in (1, 1/(1-\sigma ))\) in (1.4). The authors find well-posedness results in certain Gevrey spaces of order \(\theta \) with \(s_0 \le \theta <1/(1-\sigma )\), namely in the class

In both papers, starting from data f, g in \(H^m_{\rho ;\theta }\) for some \(\rho >0\) the authors obtain a solution in \(H^m_{\rho -\delta ;\theta }\) for some \(\delta >0\) such that \(\rho -\delta >0.\) This means a sort of loss of regularity in the constant \(\rho \) which rules the Gevrey behavior. We also notice that the condition \(s_0 \le \theta <1/(1-\sigma )\) means that the rate of decay of the coefficients of P imposes a restriction on the spaces \({\mathcal {H}}^\infty _\theta \) in which problem (1.1) is well posed. Finally, the case \(\theta >s_0=1/(1-\sigma )\) is investigated in [7] where the authors prove that a decay condition as \(|x|\rightarrow \infty \) on a datum in \(H^m\), \(m\ge 0\), produces a solution with (at least locally) the same regularity as the data, but with a different behavior at infinity. In the recent paper [6] the role of data with exponential decay on the regularity of the solution has been also analyzed for 2-evolution equations in arbitrary space dimension, in the frame of Gelfand–Shilov-type spaces which can be seen as the global counterpart of classical Gevrey spaces, cf. Sect. 2.1. In particular, it is proved that starting from data with an exponential decay at infinity, we can find a solution with the same Gevrey regularity of the data but with a possible exponential growth at infinity in x. Moreover, this holds for every \(\theta >s_0\). Finally, the result in [6] is proved under the more general assumption with respect to (1.4) that the coefficients \(a_1,\ a_0\) may admit an algebraic growth at infinity, namely

Recently, we started to consider the case \(p=3\) in a Gevrey setting in one space dimension under assumption (1.4) with \(j=p-1\) taking \(\sigma \in (0,1)\). This case is of particular interest because linear 3-evolution equations can be regarded as linearizations of relevant physical semilinear models like the KdV and KdV–Burgers equations and their generalizations, see for instance [21, 24, 25, 26, 30]. There are some results concerning KdV-type equations with coefficients not depending on (t, x) in the Gevrey setting, see [16, 17, 18]. Our aim is to treat the more general case of variable coefficients. The present paper and [1] are devoted to establishing the linear theory, which is a preliminary step toward treating the semilinear case. In a future paper we shall consider the case when the coefficients \(a_j\) may also depend on u, following the approach developed in [2] in the \(H^\infty \) setting.

Also for the case \(p=3\), assuming a condition of form (1.4) with \(j=2\) on the term \(a_2\) for some \(\sigma \in (0,1)\), namely

is enough to lose in general well posedness both in \(H^\infty \) and in \({\mathscr {S}}\), since the necessary condition for \(H^\infty \) well-posedness

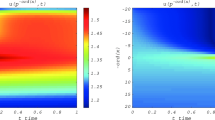

proved in [4] is no longer satisfied. Namely, well posedness in \(H^\infty \) or in \({\mathscr {S}}\) may fail due to an infinite loss of regularity or of decay. To give an idea of the latter phenomenon, consider the following initial value problem

where

Notice that the coefficients \(a_j\) are analytic and satisfy conditions (1.4) for \(j=2\) and (1.5), (1.6) for \(j=0,1\). Moreover the initial datum belongs to \({\mathscr {S}}({\mathbb {R}})\) since \(\sigma \in (0,1)\). It is easy to verify that problem (1.9) admits the solution

if \(T \ge 1\). Analogously, \(u \notin C([0,T], H^\infty ({\mathbb {R}}))\). More precisely, we notice that the solution has the same regularity as the initial data, but it grows exponentially for \(|x| \rightarrow \infty \) when \(t \ge 1.\) This motivates us to study the effect of an exponential decay of the data on the solution of (1.1).

In the recent paper [1] we proved a result of well posedness in the space \({\mathcal {H}}^\infty _{\theta }\) for problem (1.1) which extends to the case \(p=3\) the results obtained in [10, 22] for the case \(p=2\) (at least in one space dimension). As in the latter case, also in [1] a loss of regularity in the index \(\rho \) appears. However, the previous example suggests that this loss can be avoided assuming the initial data to admit a suitable exponential decay. The price to pay is a considerable loss of decay which may produce solutions admitting an exponential growth. In view of the considerations above, it is quite natural to analyze problem (1.1) when the initial data belong to Gelfand–Shilov spaces, cf. Sect. 2.1 for the definition.

In order to state our main result we need to recall the definition of Gevrey-type \({\textbf {{SG}}}\)-symbol classes and of Gelfand–Shilov Sobolev spaces.

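Given \(\mu ,\, \nu \, \ge 1\), \(m = (m_1, m_2) \in {\mathbb {R}}^{2}\), we denote by \({\textbf {{SG}}}^{m_1,m_2}_{\mu , \nu }({\mathbb {R}}^{2})\) (or by \({\textbf {{SG}}}^{m}_{\mu , \nu }({\mathbb {R}}^{2})\)) the space of all functions \(p \in C^\infty ({\mathbb {R}}^{2})\) for which there exist \(C,C_1 > 0\) such that

$$\begin{aligned} |\partial ^{\alpha }_{\xi }\partial ^{\beta }_{x}p(x,\xi )| \le C_1 C^{|\alpha +\beta |}\alpha !^{\mu }\beta !^{\nu }\langle \xi \rangle ^{m_1-|\alpha |}\langle x\rangle ^{m_2-|\beta |}, \qquad \alpha ,\beta \in {\mathbb {N}}_0,\ (x,\xi )\in {\mathbb {R}}^{2}, \end{aligned}$$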

see also Definition 2. In the case \(\mu =\nu \) we write \({\textbf {{SG}}}^{m_1,m_2}_{\mu }({\mathbb {R}}^{2})\) instead of \({\textbf {{SG}}}^{m_1,m_2}_{\mu , \mu }({\mathbb {R}}^{2})\). In the following we shall obtain our results via energy estimates; hence, we need to introduce the Gelfand–Shilov–Sobolev spaces \(H^{m}_{\rho ;s,\theta }({\mathbb {R}})\) defined, for \(m = (m_1, m_2), \rho = (\rho _1, \rho _2)\) in \({\mathbb {R}}^{2}\) and \(\theta , s > 1\), by

where \(e^{\rho _1 \langle D \rangle ^{\frac{1}{\theta }}}\) is the Fourier multiplier with symbol \(e^{\rho _1 \langle \xi \rangle ^{\frac{1}{\theta }}}\). When \(\rho = (0, 0)\) we recover the usual notion of weighted Sobolev spaces (1.3).

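Our pseudodifferential approach allows us to consider more general 3-evolution operators of the form

$$\begin{aligned} P(t,x,D_t,D_x)=D_t+a_3(t,D_x)+a_2(t,x,D_x)+a_1(t,x,D_x)+a_0(t,x,D_x), \end{aligned} \tag{1.10}$$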

\(t \in [0, T],\ x \in {\mathbb {R}},\) where \(a_3(t,D_x)\) is a pseudodifferential operator with symbol \(a_3(t,\xi )\in {\mathbb {R}}\), while, for \(j=0,1,2\), \(a_j(t,x,D_x)\) are pseudodifferential operators with symbols \(a_j(t,x,\xi )\in {\mathbb {C}}\). Notice that (1.2) in the case \(p=3\) is a particular case of (1.10). Our main result reads as follows.

Theorem 1

Let \(P(t, x, D_t, D_x)\) be an operator as in (1.10) and assume that there exist \(C_{a_3}, R_{a_3} > 0\) and \(\sigma \in (0, 1)\) such that the following conditions hold:

(i) \(a_3 \in C([0, T], {\textbf {{SG}}}^{3, 0}_{1}({\mathbb {R}}^2))\), \(a_3\) is real valued and

$$\begin{aligned} |\partial _{\xi } a_3(t, \xi )| \ge C_{a_3}|\xi |^{2}, \qquad \forall |\xi | \ge R_{a_3}, \,\, \forall t \in [0, T]; \end{aligned}$$

(ii) ;

(iii) ;

(iv) .

Let \(s, \theta > 1\) be such that \(s_0 \le s < \frac{1}{1-\sigma }\) and \(\theta > s_0\). Let \(f\in C([0,T]; H^{m}_{\rho ; s, \theta }({\mathbb {R}}))\) and \(g\in H^{m}_{\rho ; s, \theta }({\mathbb {R}})\), where \(m=(m_1,m_2),\rho =(\rho _1,\rho _2) \in {\mathbb {R}}^2\) and \(\rho _2 > 0\). Then the Cauchy problem (1.1) admits a solution \(u\in C([0,T]; H^{m}_{(\rho _1,-{\tilde{\delta }}); s,\theta }({\mathbb {R}}))\) for every \({\tilde{\delta }}>0\), which satisfies the following energy estimate

for all \(t\in [0,T]\) and for some \(C>0\).

Remark 1

We notice that the solution obtained in Theorem 1 has the same Gevrey regularity as the initial data, but it may lose the decay exhibited at \(t=0\) and admit an exponential growth for \(|x| \rightarrow \infty \) when \(t>0\). Moreover, the loss \(\rho _2+{{\tilde{\delta }}}\) for an arbitrary \({{\tilde{\delta }}}>0\) in the behavior at infinity is independent of \(\theta \), s and \(\rho _1.\) Both these phenomena had been already observed in the case \(p=2\), see [6].

Remark 2

Let us compare Theorem 1 with the recent result obtained in [1]. In the latter paper, taking \(a_0\) uniformly bounded, \(a_1 \sim \langle x\rangle ^{-\sigma /2} \) and \( a_2 \sim \langle x\rangle ^{-\sigma }\) for some \(\sigma \in (1/2,1)\), and the Cauchy data \(f(t),g\in H^{(m_1,0)}_{(\rho _1,0);s,\theta }\) with \( s_0<\theta <1/(2(1-\sigma ))\) we prove the existence of a unique solution \(u \in C([0,T], H^{(m_1,0)}_{(\rho _1',0);s,\theta }({\mathbb {R}}))\), for some \(\rho _1' \in (0,\rho _1)\), i.e., a solution less regular than the data. Theorem 1 in the present paper shows that if the data \(f(t),g\in H^{(m_1,m_2)}_{(\rho _1,\rho _2);s,\theta }\) with \(\rho _2>0, \theta >s_0\) and \(s_0 \le s< 1/(1-\sigma )\), then there exists a solution \(u \in C([0,T], H^{(m,0)}_{(\rho _1,-{\tilde{\delta }});s,\theta })\), \(\forall {\tilde{\delta }}>0\), i.e., a solution with the same index \(\rho _1\) as the data, but with a possible worse behavior at infinity: in particular, this solution may grow exponentially for \(|x|\rightarrow \infty \). Concerning the assumptions, with respect to the existing literature, in particular [1, 22], in our result we allow:

- A polynomial growth of exponent \(1-\sigma \in (0,1)\) for the coefficients and \(a_0\);

- An arbitrary Gevrey regularity index \(\theta >s_0\) both for the data and for the solution, without any upper bound: namely there is no relation between the rate of decay of the data and the Gevrey regularity of the solution.

Remark 3

Part of the recent literature on p-evolution equations focuses on the search for necessary conditions for the well posedness of problem (1.1) in various functional settings, see [4, 13, 20]. Necessary conditions are usually expressed in an integral form as in (1.8) rather than via pointwise decay estimates as in (1.7). As far as we know, the only result of this type in the Gevrey setting concerns the case \(p=2\), see [13]. We plan to investigate this problem for generic p in the near future.

In order to help the reading of the next sections we briefly outline the strategy of the proof of Theorem 1. Let

Noticing that \(a_3(t,\xi )\) is real valued, we have

Since \((A+A^{*})(t) \in {\textbf {{SG}}}^{2, 1-\sigma }({\mathbb {R}}^{2})\) we cannot derive an energy inequality in \(L^2\) from the estimate above. The idea is then to conjugate the operator \({ iP}\) by a suitable pseudodifferential operator \(e^{\Lambda }(t,x,D)\) in order to obtain

where \(A_{\Lambda }\) still has symbol \(A_\Lambda (t,x,\xi ) \in {\textbf {{SG}}}^{2, 1-\sigma }({\mathbb {R}}^2)\) but with \(\mathrm{Re}\, A_{\Lambda } \ge 0\). In this way, with the aid of Fefferman–Phong (see [14]) and sharp Gårding (see Theorem 1.7.15 of [28]) inequalities, we obtain the estimate from below

and therefore for the solution v of the Cauchy problem associated with the operator \(P_\Lambda \) we get

Gronwall's inequality then gives the desired energy estimate for the conjugated operator \((iP)_{\Lambda }\). By standard arguments in the energy method we then obtain that the Cauchy problem associated with \(P_{\Lambda }\)

is well-posed in the weighted Sobolev spaces \(H^{m}({\mathbb {R}})\) in (1.3). Finally, we derive the existence of a solution of (1.1) from the well posedness of (1.12). In fact, if u solves (1.1) then \(v=e^{\Lambda }u\) solves (1.12), and if v solves (1.12) then \(u=\{e^{\Lambda }\}^{-1}v\) solves (1.1). In this step the continuous mapping properties of \(e^{\Lambda }\) and \(\{e^{\Lambda }\}^{-1}\) will play an important role.
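The energy argument outlined above can be sketched as follows. The display is only an illustration under assumptions of ours: we write \(iP=\partial _t+ia_3(t,D)+A(t,x,D)\) with \(A=\sum _{j=0}^{2}ia_j(t,x,D)\), we assume that the conjugated operator has the analogous form \((iP)_\Lambda =\partial _t+ia_3(t,D)+A_\Lambda (t,x,D)\), we denote by \((\cdot ,\cdot )\) and \(\Vert \cdot \Vert \) the inner product and the norm of \(L^2({\mathbb {R}})\), and we assume a lower bound \(\mathrm{Re}\,(A_\Lambda v,v)\ge -c\Vert v\Vert ^2\) with a hypothetical constant \(c>0\).

```latex
% Step 1: a_3(t,\xi) is real, so i a_3(t,D) is skew-adjoint and Re(i a_3 u, u) = 0.
\begin{aligned}
\frac{d}{dt}\Vert u(t)\Vert^2
 &= 2\,\mathrm{Re}\,(\partial_t u,u)
  = 2\,\mathrm{Re}\,(iPu,u) - 2\,\mathrm{Re}\,(ia_3(t,D)u,u) - 2\,\mathrm{Re}\,(Au,u)\\
 &\le \Vert Pu\Vert^2 + \Vert u\Vert^2 - ((A+A^{*})u,u).
\end{aligned}

% Step 2: same computation for v and (iP)_\Lambda, using the assumed bound
% Re(A_\Lambda v, v) \ge -c \|v\|^2.
\begin{aligned}
\frac{d}{dt}\Vert v(t)\Vert^2
 \le \Vert (iP)_\Lambda v\Vert^2 + (1+2c)\,\Vert v\Vert^2 .
\end{aligned}

% Step 3: Gronwall's inequality with C = 1 + 2c.
\begin{aligned}
\Vert v(t)\Vert^2
 \le e^{Ct}\Big(\Vert v(0)\Vert^2 + \int_0^t \Vert (iP)_\Lambda v(\tau)\Vert^2\,d\tau\Big),
 \qquad t\in[0,T].
\end{aligned}
```

Step 1 shows where the argument breaks down without conjugation: the term \(((A+A^{*})u,u)\) is not controlled by \(\Vert u\Vert ^2\) when \(A+A^{*}\) has positive order. Steps 2 and 3 are the standard route from a lower bound on \(A_\Lambda \) to an a priori estimate.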

The construction of the operator \(e^\Lambda \) will be the core of the proof. The function \(\Lambda (t,x,\xi )\) will be of the form

where \(\lambda _1, \lambda _2 \in {\textbf {{SG}}}^{0, 1-\sigma }_{\mu } ({\mathbb {R}}^2)\), \(k \in C^1([0,T]; {\mathbb {R}})\) is a non-increasing function to be chosen later on and \(\langle x \rangle _{h}=\sqrt{h^2+x^2}\) for some \(h \ge 1\) to be chosen later on. The role of the terms \(\lambda _1, \lambda _2, k\) will be the following:

- The transformation with \(\lambda _2\) will change the terms of order 2 into the sum of a positive operator of the same order plus a remainder of order 1;

- The transformation with \(\lambda _1\) will not change the terms of order 2, but it will turn the terms of order 1 into the sum of a positive operator of order 1 plus a remainder of order 0, with some growth with respect to x;

- The transformation with \(k(t)\langle x \rangle _{h}^{1-\sigma }\) will correct this remainder term, making it positive.

The precise definitions of \(\lambda _2\) and \(\lambda _1\) will be given in Sect. 4. Since \(\Lambda \) admits algebraic growth in the x variable, \(e^{\Lambda }\) exhibits exponential growth; this is the reason why we need to work with pseudodifferential operators of infinite order.
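To illustrate why exponentially decaying data are a natural choice here, one can compare the growth of \(e^{\Lambda }\) with the weight \(e^{\rho _2\langle x\rangle ^{1/s}}\) carried by the data. The sketch below is heuristic and works at the level of pointwise bounds only. It assumes \(|\Lambda (t,x,\xi )|\le C_\Lambda \langle x\rangle ^{1-\sigma }\) for a hypothetical constant \(C_\Lambda >0\), together with \(\rho _2>0\) and \(s<1/(1-\sigma )\) as in Theorem 1.

```latex
% Assumed pointwise bound: |\Lambda(t,x,\xi)| \le C_\Lambda <x>^{1-\sigma}.
\begin{aligned}
|e^{\Lambda(t,x,\xi)}| &\le e^{C_\Lambda \langle x\rangle^{1-\sigma}},\\
s<\frac{1}{1-\sigma} &\iff 1-\sigma<\frac{1}{s},\\
\sup_{x\in\mathbb{R}}
 \exp\big(C_\Lambda\langle x\rangle^{1-\sigma}-\rho_2\langle x\rangle^{1/s}\big) &<\infty .
\end{aligned}
```

The last line holds because the exponent tends to \(-\infty \) as \(|x|\rightarrow \infty \). At this heuristic level, the exponential growth produced by \(e^{\Lambda }\) is absorbed by any positive amount of exponential decay of order 1/s in the data.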

The paper is organized as follows. In Sect. 2 we recall some basic definitions and properties of Gelfand–Shilov spaces and the calculus for pseudodifferential operators of infinite order that we will use in the next sections. Section 3 is devoted to proving a spectral invariance result for pseudodifferential operators with Gevrey regular symbols, which is new in the literature and of independent interest. In this paper the spectral invariance will be used to prove the continuity properties of the inverse \(\{e^{\Lambda }(t,x,D)\}^{-1}\). In Sect. 4 we introduce the functions \(\lambda _1,\lambda _2\) mentioned above and prove the invertibility of the operator \(e^{{\tilde{\Lambda }}}(x,D), {\tilde{\Lambda }}=\lambda _1+\lambda _2\). In Sect. 5 we perform the change of variable and the conjugation of the operator \(\textit{iP}\). Section 6 concerns the choice of the parameters appearing in the definition of \(\Lambda \) in order to obtain a positive operator on \(L^2({\mathbb {R}})\). Finally, in Sect. 7, we give the proof of Theorem 1.

2 Gelfand–Shilov spaces and pseudodifferential operators of infinite order on \({\mathbb {R}}^n\)

2.1 Gelfand–Shilov spaces

Given \(s, \theta \ge 1\) and \(A, B > 0\) we say that a smooth function f belongs to \({\mathcal {S}}^{\theta , A}_{s, B} ({\mathbb {R}}^{n})\) if there is a constant \(C > 0\) such that

for every \(\alpha , \beta \in {\mathbb {N}}^{n}_{0}\) and \(x \in {\mathbb {R}}^{n}\). The norm

turns \({\mathcal {S}}^{\theta , A}_{s, B}({\mathbb {R}}^{n})\) into a Banach space. We define

and we can equip it with the inductive limit topology of the Banach spaces \({\mathcal {S}}^{\theta , A}_{s, B}({\mathbb {R}}^{n})\). The spaces \({\mathcal {S}}^{\theta }_{s}({\mathbb {R}}^{n})\) were originally introduced in the book [15]; see also [29]. We also consider the projective version, that is

equipped with the projective limit topology. When \(\theta = s\) we simply write \({\mathcal {S}}_{\theta }\), \(\Sigma _{\theta }\) instead of \({\mathcal {S}}^{\theta }_{\theta }, \Sigma ^{\theta }_{\theta }\). We can also define, for \(C, \varepsilon > 0\), the normed space \({\mathcal {S}}^{\theta , \varepsilon }_{s, C}({\mathbb {R}}^{n})\) given by the functions \(f \in C^\infty ({\mathbb {R}}^n)\) such that there is \(C > 0\) satisfying

and we have (with equivalent topologies)

The following inclusions are continuous (for every \(\varepsilon > 0\))

All the previous spaces can be written in terms of the Gelfand–Shilov Sobolev spaces \(H^m_{\rho ;s,\theta },\) with \(\rho , m \in {\mathbb {R}}^2\), defined in the Introduction. Namely, we have

From now on we shall denote by \(({\mathcal {S}}^{\theta }_{s})' ({\mathbb {R}}^{n})\), \((\Sigma ^{\theta }_{s})' ({\mathbb {R}}^{n})\) the respective dual spaces.

Concerning the action of the Fourier transform \(\mathcal {F}\) we have the following isomorphisms

2.2 Pseudodifferential operators of infinite order

We start by defining the symbol classes of infinite order.

Definition 1

Let \(\tau \in {\mathbb {R}}\), \(\kappa , \theta , \mu , \nu > 1\) and \(C ,c> 0\).

(i) We denote by \({\textbf {{SG}}}^{\tau , \infty }_{\mu ,\nu ;\kappa }({\mathbb {R}}^{2n}; C, c)\) the Banach space of all functions \(p\in C^\infty ({\mathbb {R}}^{2n})\) satisfying the following condition:

$$\begin{aligned} \Vert p\Vert _{C,c}:=\sup _{\alpha , \beta \in {\mathbb {N}}^n_0} C^{-|\alpha + \beta |} \alpha !^{-\mu } \beta !^{-\nu }\sup _{x,\xi \in {\mathbb {R}}^n}\langle \xi \rangle ^{-\tau +|\alpha |}\langle x \rangle ^{|\beta |}e^{-c|x|^{\frac{1}{\kappa }}}|\partial ^{\alpha }_{\xi }\partial ^{\beta }_{x}p(x,\xi )| <\infty . \end{aligned}$$

We set \({\textbf {{SG}}}^{\tau , \infty }_{\mu ,\nu ;\kappa }({\mathbb {R}}^{2n}):=\bigcup _{C,c>0}{\textbf {{SG}}}^{\tau , \infty }_{\mu ,\nu ;\kappa }({\mathbb {R}}^{2n}; C, c)\) with the topology of inductive limit of the Banach spaces \({\textbf {{SG}}}^{\tau , \infty }_{\mu ,\nu ;\kappa }({\mathbb {R}}^{2n}; C,c)\).

(ii) We denote by \({\textbf {{SG}}}^{\infty , \tau }_{\mu ,\nu ;\theta }({\mathbb {R}}^{2n}; C,c)\) the Banach space of all functions \(p\in C^\infty ({\mathbb {R}}^{2n})\) satisfying the following condition:

$$\begin{aligned} \Vert p\Vert ^{C,c}:=\sup _{\alpha , \beta \in {\mathbb {N}}^{n}_{0}}C^{-|\alpha + \beta |} \alpha !^{-\mu } \beta !^{-\nu }\sup _{x, \xi \in {\mathbb {R}}^{n}} \langle \xi \rangle ^{|\alpha |} \langle x \rangle ^{-\tau + |\beta |}e^{-c|\xi |^{\frac{1}{\theta }}} |\partial ^{\alpha }_{\xi }\partial ^{\beta }_{x}p(x,\xi )| <\infty . \end{aligned}$$

We set \({\textbf {{SG}}}^{\infty , \tau }_{\mu ,\nu ; \theta }({\mathbb {R}}^{2n}) :=\bigcup _{C,c>0} {\textbf {{SG}}}^{\infty , \tau }_{\mu ,\nu ;\theta }({\mathbb {R}}^{2n}; C,c)\) with the topology of inductive limit of the spaces \({\textbf {{SG}}}^{\infty , \tau }_{\mu ,\nu ; \theta }({\mathbb {R}}^{2n}; C,c)\).

We also need the following symbol classes of finite order.

Definition 2

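Let \(\mu , \nu \ge 1\), \(m = (m_1, m_2) \in {\mathbb {R}}^{2}\) and \(C > 0\). We denote by \({\textbf {{SG}}}^{m}_{\mu ,\nu }({\mathbb {R}}^{2n}; C)\) the Banach space of all functions \(p\in C^\infty ({\mathbb {R}}^{2n})\) satisfying the following condition:

$$\begin{aligned} \Vert p\Vert _{C}:=\sup _{\alpha , \beta \in {\mathbb {N}}^n_0} C^{-|\alpha + \beta |} \alpha !^{-\mu } \beta !^{-\nu }\sup _{x,\xi \in {\mathbb {R}}^n}\langle \xi \rangle ^{-m_1+|\alpha |}\langle x \rangle ^{-m_2+|\beta |}|\partial ^{\alpha }_{\xi }\partial ^{\beta }_{x}p(x,\xi )| <\infty . \end{aligned}$$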

We set \({\textbf {{SG}}}^{m}_{\mu , \nu }({\mathbb {R}}^{2n}):=\bigcup _{C>0}{\textbf {{SG}}}^{m}_{\mu ,\nu }({\mathbb {R}}^{2n}; C)\).

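Finally we say that \(p \in {\textbf {{SG}}}^{m}({\mathbb {R}}^{2n})\) if for any \(\alpha , \beta \in {\mathbb {N}}^{n}_{0}\) there is \(C_{\alpha , \beta } > 0\) satisfying

$$\begin{aligned} |\partial ^{\alpha }_{\xi }\partial ^{\beta }_{x}p(x,\xi )| \le C_{\alpha ,\beta }\langle \xi \rangle ^{m_1-|\alpha |}\langle x \rangle ^{m_2-|\beta |}, \qquad x,\xi \in {\mathbb {R}}^{n}. \end{aligned}$$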

When \(\mu = \nu \) we write \({\textbf {{SG}}}^{m}_{\mu }({\mathbb {R}}^{2n})\), \({\textbf {{SG}}}^{\tau ,\infty }_{\mu ;\kappa }({\mathbb {R}}^{2n})\), \({\textbf {{SG}}}^{\infty ,\tau }_{\mu ;\theta }({\mathbb {R}}^{2n})\) instead of \({\textbf {{SG}}}^{m}_{\mu , \mu }({\mathbb {R}}^{2n})\), \({\textbf {{SG}}}^{\tau , \infty }_{\mu ,\mu ; \kappa }({\mathbb {R}}^{2n})\), \({\textbf {{SG}}}^{\infty , \tau }_{\mu , \mu ; \theta }({\mathbb {R}}^{2n})\).

As usual, given a symbol \(p(x,\xi )\) we shall denote by p(x, D) or by \(\text {op} (p)\) the pseudodifferential operator defined in the standard way by

$$\begin{aligned} p(x,D)u(x)=\int _{{\mathbb {R}}^{n}}e^{i\xi x}p(x,\xi ){\widehat{u}}(\xi )\,đ\xi , \end{aligned}$$

where u belongs to some suitable function space depending on the assumptions on p, and \(đ\xi \) stands for \((2\pi )^{-n}d\xi \). We have the following continuity results.

Proposition 1

Let \(\tau \in {\mathbb {R}}\), \(s > \mu \ge 1\), \(\nu \ge 1\) and \(p \in {\textbf {{SG}}}^{\tau , \infty }_{\mu , \nu ; s}({\mathbb {R}}^{2n})\). Then for every \(\theta >\nu \) the pseudodifferential operator p(x, D) with symbol \(p(x,\xi )\) is continuous on \(\Sigma ^{\theta }_{s}({\mathbb {R}}^{n})\) and it extends to a continuous map on \((\Sigma ^{\theta }_{s})'({\mathbb {R}}^{n})\).

Proposition 2

Let \(\tau \in {\mathbb {R}}\), \(\theta > \nu \ge 1\), \(\mu \ge 1\) and \(p \in {\textbf {{SG}}}^{\infty , \tau }_{\mu , \nu ; \theta }({\mathbb {R}}^{2n})\). Then for every \(s >\mu \) the operator p(x, D) is continuous on \(\Sigma ^{\theta }_{s}({\mathbb {R}}^{n})\) and it extends to a continuous map on \((\Sigma ^{\theta }_{s})'({\mathbb {R}}^{n})\).

The proofs of Propositions 1 and 2 can be derived following the argument in the proof of [6, Proposition 2.3] and [1, Proposition 1]. We leave the details to the reader.

Now we define the notion of asymptotic expansion and recall some fundamental results, which can be found in Appendix A of [6]. For \(t_1, t_2 \ge 0\) set

and \(Q^{e}_{t_1, t_2} = {\mathbb {R}}^{2n} {\setminus } Q_{t_1, t_2}\). When \(t_1 = t_2 = t\) we simply write \(Q_t\) and \(Q^{e}_{t}\).

Definition 3

We say that:

(i) \(\sum \nolimits _{j \ge 0} a_j \in \mathrm{FSG}^{\tau , \infty }_{\mu , \nu ; \kappa }\) if \(a_j(x, \xi ) \in C^{\infty }({\mathbb {R}}^{2n})\) and there are \(C, c, B > 0\) satisfying

$$\begin{aligned} |\partial ^{\alpha }_{\xi }\partial ^{\beta }_{x} a_j(x, \xi )| \le C^{|\alpha | + |\beta | + 2j + 1} \alpha !^{\mu } \beta !^{\nu } j!^{\mu + \nu -1} \langle \xi \rangle ^{\tau - |\alpha | - j} \langle x \rangle ^{-|\beta | - j} e^{c|x|^{\frac{1}{\kappa }}}, \end{aligned}$$

for every \(\alpha , \beta \in {\mathbb {N}}^{n}_{0}\), \(j \ge 0\) and \((x, \xi ) \in Q^{e}_{B(j)}\), where \(B(j) := Bj^{\mu + \nu - 1}\);

(ii) \(\sum \nolimits _{j \ge 0} a_j \in \mathrm{FSG}^{\infty , \tau }_{\mu , \nu ; \theta }\) if \(a_j(x, \xi ) \in C^{\infty }({\mathbb {R}}^{2n})\) and there are \(C, c, B > 0\) satisfying

$$\begin{aligned} |\partial ^{\alpha }_{\xi }\partial ^{\beta }_{x} a_j(x, \xi )| \le C^{|\alpha | + |\beta | + 2j + 1} \alpha !^{\mu } \beta !^{\nu } j!^{\mu + \nu -1} \langle \xi \rangle ^{- |\alpha | - j} e^{c|\xi |^{\frac{1}{\theta }}} \langle x \rangle ^{ \tau -|\beta | - j}, \end{aligned}$$

for every \(\alpha , \beta \in {\mathbb {N}}^{n}_{0}\), \(j \ge 0\) and \((x, \xi ) \in Q^{e}_{B(j)}\);

(iii) \(\sum \nolimits _{j \ge 0} a_j \in \mathrm{FSG}^{m}_{\mu , \nu }\) if \(a_j(x, \xi ) \in C^{\infty }({\mathbb {R}}^{2n})\) and there are \(C, B > 0\) satisfying

$$\begin{aligned} |\partial ^{\alpha }_{\xi }\partial ^{\beta }_{x} a_j(x, \xi )| \le C^{|\alpha | + |\beta | + 2j + 1} \alpha !^{\mu } \beta !^{\nu } j!^{\mu + \nu -1} \langle \xi \rangle ^{m_1 - |\alpha | - j} \langle x \rangle ^{m_2 -|\beta | - j}, \end{aligned}$$

for every \(\alpha , \beta \in {\mathbb {N}}^{n}_{0}\), \(j \ge 0\) and \((x, \xi ) \in Q^{e}_{B(j)}\).

Definition 4

Let \(\sum \nolimits _{j \ge 0} a_j\), \(\sum \nolimits _{j \ge 0} b_j\) in \(\mathrm{FSG}^{\tau , \infty }_{\mu , \nu ; \kappa }\). We say that \(\sum \nolimits _{j \ge 0} a_j \sim \sum \nolimits _{j \ge 0} b_j\) in \(\mathrm{FSG}^{\tau , \infty }_{\mu , \nu ; \kappa }\) if there are \(C, c, B > 0\) satisfying

for every \(\alpha , \beta \in {\mathbb {N}}^{n}_{0}\), \(N \ge 1\) and \((x, \xi ) \in Q^{e}_{B(N)}\). Analogous definitions hold for the classes \(\mathrm{FSG}^{\infty , \tau }_{\mu , \nu ; \theta }\), \(\mathrm{FSG}^{m}_{\mu , \nu }\).

Remark 4

If \(\sum \nolimits _{j \ge 0} a_j \in \mathrm{FSG}^{\tau , \infty }_{\mu , \nu ; \kappa }\), then \(a_0 \in {\textbf {{SG}}}^{\tau , \infty }_{\mu , \nu ; \kappa }\). Given \(a \in {\textbf {{SG}}}^{\tau , \infty }_{\mu , \nu ; \kappa }\) and setting \(b_0 = a\), \(b_j = 0\), \(j \ge 1\), we have \(a = \sum \nolimits _{j \ge 0}b_j\). Hence we can consider \({\textbf {{SG}}}^{\tau , \infty }_{\mu , \nu ; \kappa }\) as a subset of \(\mathrm{FSG}^{\tau , \infty }_{\mu , \nu ; \kappa }\).

Proposition 3

Given \(\sum \nolimits _{j \ge 0} a_j \in \mathrm{FSG}^{\tau , \infty }_{\mu , \nu ; \kappa },\) there exists \(a \in {\textbf {{SG}}}^{\tau , \infty }_{\mu , \nu ; \kappa }\) such that \(a \sim \sum \nolimits _{j \ge 0} a_j\) in \(\mathrm{FSG}^{\tau , \infty }_{\mu , \nu ; \kappa }\). Analogous results hold for the classes \(\mathrm{FSG}^{\infty , \tau }_{\mu , \nu ; \theta }\) and \(\mathrm{FSG}^{m}_{\mu , \nu }\).

Proposition 4

Let \(a \in {\textbf {{SG}}}^{0, \infty }_{\mu , \nu ; \kappa }\) be such that \(a \sim 0\) in \(\mathrm{FSG}^{0, \infty }_{\mu , \nu ; \kappa }\). If \(\kappa > \mu + \nu - 1\), then \(a \in {\mathcal {S}}_\delta ({\mathbb {R}}^{2n})\) for every \(\delta \ge \mu + \nu - 1\). Analogous results hold for the classes \(\mathrm{FSG}^{\infty , \tau }_{\mu , \nu ; \theta }\) and \(\mathrm{FSG}^{m}_{\mu , \nu }\).

Concerning the symbolic calculus and the continuous mapping properties on the Gelfand–Shilov Sobolev spaces we have the following results, cf. [6, Propositions A.12 and A.13].

Theorem 2

Let \(p \in {\textbf {{SG}}}^{\tau , \infty }_{\mu , \nu ; \kappa }({\mathbb {R}}^{2n})\), \(q \in {\textbf {{SG}}}^{\tau ', \infty }_{\mu , \nu ; \kappa }({\mathbb {R}}^{2n})\) with \(\kappa > \mu + \nu - 1\). Then the \(L^{2}\) adjoint \(p^{*}\) and the composition \(p\circ q\) have the following structure:

\(p^{*}(x,D) = a(x, D) + r(x,D)\) where \(r\in {\mathcal {S}}_{\mu +\nu -1}({\mathbb {R}}^{2n})\), \(a \in {\textbf {{SG}}}^{\tau , \infty }_{\mu , \nu ; \kappa }({\mathbb {R}}^{2n})\), and

\(p(x,D)\circ q(x, D) = b(x,D) + s(x,D)\), where \(s \in {\mathcal {S}}_{\mu +\nu -1}({\mathbb {R}}^{2n})\), \(b \in {\textbf {{SG}}}^{\tau +\tau ', \infty }_{\mu , \nu ; \kappa }({\mathbb {R}}^{2n})\) and

Analogous results hold for the classes \({\textbf {{SG}}}^{\infty , \tau }_{\mu , \nu ; \theta }({\mathbb {R}}^{2n})\) and \({\textbf {{SG}}}^{m}_{\mu ,\nu }({\mathbb {R}}^{2n})\).

Theorem 3

Let \(p \in {\textbf {{SG}}}^{m'}_{\mu , \nu }({\mathbb {R}}^{2n})\) for some \(m' \in {\mathbb {R}}^{2}\). Then for every \(m, \rho \in {\mathbb {R}}^{2}\) and \(s,\theta \) such that \(\min \{s, \theta \} > \mu + \nu - 1\) the operator p(x, D) maps \(H^{m}_{\rho ; s, \theta }({\mathbb {R}}^{n})\) into \(H^{m-m'}_{\rho ; s, \theta }({\mathbb {R}}^{n})\) continuously.

A simple application of the Faà di Bruno formula gives us the following result.

Proposition 5

If \(\lambda \in {\textbf {{SG}}}^{0,\frac{1}{\kappa }}_{\mu }({\mathbb {R}}^{2n})\), then \(e^{\lambda } \in {\textbf {{SG}}}^{0, \infty }_{\mu ; \kappa }({\mathbb {R}}^{2n})\). If \(\lambda \in {\textbf {{SG}}}^{\frac{1}{\theta }, 0}_{\mu }({\mathbb {R}}^{2n})\), then \(e^{\lambda } \in {\textbf {{SG}}}^{\infty , 0}_{\mu ; \theta }({\mathbb {R}}^{2n})\).
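For instance, in dimension \(n=1\) the first x-derivative of \(e^{\lambda }\) in the first statement can be estimated directly. The sketch below assumes \(|\lambda (x,\xi )|\le C\langle x\rangle ^{1/\kappa }\) and \(|\partial _x\lambda (x,\xi )|\le C\langle x\rangle ^{1/\kappa -1}\) with a hypothetical constant \(C>0\) (the bounds given by \(\lambda \in {\textbf {{SG}}}^{0,\frac{1}{\kappa }}_{\mu }\) for \(\beta =0,1\)), \(\kappa \ge 1\), and an arbitrary \(\varepsilon >0\).

```latex
\begin{aligned}
|\partial_x e^{\lambda(x,\xi)}|
 &= |\partial_x\lambda(x,\xi)|\,|e^{\lambda(x,\xi)}|
  \le C\,\langle x\rangle^{-1}\,\langle x\rangle^{1/\kappa}\,e^{C\langle x\rangle^{1/\kappa}}\\
 % elementary inequality: t \le \varepsilon^{-1} e^{\varepsilon t} for t \ge 0
 &\le \frac{C}{\varepsilon}\,\langle x\rangle^{-1}\,e^{(C+\varepsilon)\langle x\rangle^{1/\kappa}}\\
 % <x>^{1/\kappa} \le 1 + |x|^{1/\kappa} for \kappa \ge 1
 &\le \frac{C\,e^{C+\varepsilon}}{\varepsilon}\,\langle x\rangle^{-1}\,e^{(C+\varepsilon)|x|^{1/\kappa}} .
\end{aligned}
```

This is the estimate required in Definition 1 (i) for \(\tau =0\), \(\alpha =0\), \(|\beta |=1\), with \(c=C+\varepsilon \). Higher-order derivatives need the Faà di Bruno formula in order to keep track of the factorial growth of the constants.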

We conclude this section proving the following theorem.

Theorem 4

Let \(\rho , m \in {\mathbb {R}}^{2}\) and \(s, \theta , \mu > 1\) with \(\min \{s,\theta \} > 2\mu - 1\). Let \(\lambda \in {\textbf {{SG}}}^{0, \frac{1}{\kappa }}_{\mu }({\mathbb {R}}^{2n})\). Then:

(i) If \(\kappa >s\), the operator \(e^\lambda (x,D):H^{m}_{\rho ; s, \theta } ({\mathbb {R}}^{n})\longrightarrow H^{m}_{\rho -\delta e_2; s, \theta }({\mathbb {R}}^{n})\) is continuous for every \(\delta >0\), where \(e_2 = (0, 1)\);

(ii) If \(\kappa =s \), the operator \(e^{\lambda }(x,D):H^{m}_{\rho ; s, \theta } ({\mathbb {R}}^{n})\longrightarrow H^{m}_{\rho -\delta e_2; s, \theta }({\mathbb {R}}^{n})\) is continuous for every

$$\begin{aligned} \delta >C(\lambda ):= \sup _{(x,\xi ) \in {\mathbb {R}}^{2n}} \displaystyle \frac{\lambda (x,\xi )}{\langle x \rangle ^{1/s}}. \end{aligned}$$

Proof

(i) Let \(\phi \) be a Gevrey function of index \(\mu \) such that, for a positive constant K, \(\phi (x)=1\) for \(|x|<K/2\), \(\phi (x)=0\) for \(|x|>K\) and \(0\le \phi (x)\le 1\) for every \(x\in {\mathbb {R}}^n\). We split the symbol \(e^{\lambda (x,\xi )}\) as

$$\begin{aligned} e^{\lambda (x,\xi )} = \phi (x)e^{\lambda (x,\xi )} +(1-\phi (x))e^{\lambda (x,\xi )} = a_1(x,\xi )+ a_2(x,\xi ). \end{aligned}$$(2.1)

Since \(\phi \) has compact support and \(\lambda \) has order zero with respect to \(\xi \), we have \(a_1 \in {\textbf {{SG}}}_\mu ^{0,0}\). On the other hand, given any \(\delta >0\) and choosing K large enough, since \(\kappa >s\) we may write \(|\lambda (x,\xi )|\langle x\rangle ^{-1/s}<\delta \) on the support of \(a_2(x,\xi )\). Hence we obtain

$$\begin{aligned} a_2(x,\xi )=e^{\delta \langle x\rangle ^{1/s}}(1-\phi (x))e^{\lambda (x,\xi )-\delta \langle x\rangle ^{1/s}}, \end{aligned}$$

with \((1-\phi (x))e^{\lambda (x,\xi )-\delta \langle x\rangle ^{1/s}}\) of order (0, 0) because \(\lambda (x,\xi )-\delta \langle x\rangle ^{1/s}<0\) on the support of \((1-\phi (x))\). Thus, (2.1) becomes

$$\begin{aligned} e^{\lambda (x,\xi )}= a_1(x,\xi )+e^{\delta \langle x\rangle ^{1/s}}{{\tilde{a}}}_2(x,\xi ), \end{aligned}$$

with \(a_1\) and \({{\tilde{a}}}_2\) of order (0, 0). Since, by Theorem 3, the operators \(a_1(x,D)\) and \({{\tilde{a}}}_2(x,D)\) map \(H^{m}_{\rho ; s,\theta }\) continuously into itself, we obtain (i). The proof of (ii) follows a similar argument and can be found in [6, Theorem 2.4].\(\square \)
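The choice of K in the proof of (i) can be made explicit. The following is a sketch under the assumption that the zero-order symbol estimate for \(\lambda \in {\textbf {{SG}}}^{0, \frac{1}{\kappa }}_{\mu }\) reads \(|\lambda (x,\xi )| \le C_\lambda \langle x\rangle ^{1/\kappa }\), where the name \(C_\lambda \) is introduced here only for illustration. On the support of \(a_2\) we have \(|x| \ge K/2\), hence, since \(\frac{1}{\kappa } - \frac{1}{s} < 0\),

$$\begin{aligned} |\lambda (x,\xi )|\langle x\rangle ^{-1/s} \le C_\lambda \langle x\rangle ^{\frac{1}{\kappa } - \frac{1}{s}} \le C_\lambda \left( 1 + \frac{K^2}{4}\right) ^{-\frac{1}{2}\left( \frac{1}{s} - \frac{1}{\kappa }\right) } < \delta \quad \text {as soon as} \quad \left( 1 + \frac{K^2}{4}\right) ^{\frac{1}{2}\left( \frac{1}{s} - \frac{1}{\kappa }\right) } > \frac{C_\lambda }{\delta }. \end{aligned}$$

When \(\kappa = s\) the exponent \(\frac{1}{\kappa } - \frac{1}{s}\) vanishes and no smallness is gained by enlarging K, which is consistent with the threshold \(\delta > C(\lambda )\) appearing in (ii).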

3 Spectral invariance for SG-\(\Psi \)DO with Gevrey estimates

Let \(p \in {\textbf {{SG}}}^{0,0}({\mathbb {R}}^{2n})\), then p(x, D) extends to a continuous operator on \(L^{2}({\mathbb {R}}^{n})\). Suppose that \(p(x,D): L^{2}({\mathbb {R}}^{n}) \rightarrow L^{2}({\mathbb {R}}^{n})\) is bijective. The question is to determine whether or not the inverse \(p^{-1}\) is also a \({\textbf {{SG}}}\) operator of order (0, 0). This is known as the spectral invariance problem and it has an affirmative answer, see [11].

Following the ideas presented in [11, pp. 51–57], we will prove that the symbol of \(p^{-1}\) satisfies Gevrey estimates, whenever the symbol \(p \in {\textbf {{SG}}}^{0,0}_{\mu , \nu }({\mathbb {R}}^{2n})\). This is an important step in the study of the continuous mapping properties of \(\{e^{\Lambda }(x,D)\}^{-1}\) on Gelfand–Shilov Sobolev spaces \(H^m_{\rho ;s,\theta }\).

Theorems 5, 6 and 7 below can be found in [31, Chapters 20, 21].

Theorem 5

Let X, Y be separable Hilbert spaces. Then a bounded operator \(A: X \rightarrow Y\) is Fredholm if and only if there are a bounded operator \(B: Y \rightarrow X\) and compact operators \(K_1: X \rightarrow X\) and \(K_2: Y \rightarrow Y\) such that

$$\begin{aligned} B A = I_X + K_1, \qquad A B = I_Y + K_2. \end{aligned}$$

Theorem 6

Let X, Y, Z be separable Hilbert spaces, and let \(A: X \rightarrow Y\), \(B: Y \rightarrow Z\) be Fredholm operators. Then:

- \(B \circ A : X \rightarrow Z\) is Fredholm and \(i(BA) = i(B) + i(A)\), where \(i(\cdot )\) stands for the index of a Fredholm operator;

- \(Y = N(A^{t}) \oplus R(A)\).

Remark 5

Let X be a Hilbert space and \(K: X \rightarrow X\) be a compact operator. Then \(I-K\) is Fredholm and \(i(I-K) = 0\).

Theorem 7

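Let \(p \in {\textbf {{SG}}}^{m_1, m_2}({\mathbb {R}}^{2n})\) be such that \(p(x,D): H^{s_1+m_1,s_2+m_2}({\mathbb {R}}^{n}) \rightarrow H^{s_1,s_2}({\mathbb {R}}^{n})\) is Fredholm for some \(s_1, s_2 \in {\mathbb {R}}\). Then p is \({\textbf {{SG}}}\)-elliptic, that is, there exist \(C,R>0\) such that

$$\begin{aligned} |p(x,\xi )| \ge C \langle \xi \rangle ^{m_1} \langle x \rangle ^{m_2} \quad \text {for} \quad |x| + |\xi | \ge R. \end{aligned}$$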

Theorem 8

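Let \(p \in {\textbf {{SG}}}^{m_1, m_2}_{\mu ,\nu }({\mathbb {R}}^{2n})\) be \({\textbf {{SG}}}\)-elliptic. Then there is \(q \in {\textbf {{SG}}}^{-m_1, -m_2}_{\mu ,\nu }({\mathbb {R}}^{2n})\) such that

$$\begin{aligned} p(x,D) \circ q(x,D) = I + r_1(x,D), \qquad q(x,D) \circ p(x,D) = I + r_2(x,D), \end{aligned}$$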

where \(r_1, r_2 \in S_{\mu +\nu -1}({\mathbb {R}}^{2n})\).

Proof

See [28, Theorem 6.3.16]. \(\square \)

In order to prove the main result of this section, we need the following technical lemma.

Lemma 1

Let \(A : L^{2}({\mathbb {R}}^n) \rightarrow L^{2}({\mathbb {R}}^n)\) be a bounded operator such that A and \(A^{*}\) map \(L^2({\mathbb {R}}^n)\) into \(\Sigma _{r}({\mathbb {R}}^n)\) continuously. Then the Schwartz kernel of A belongs to \(\Sigma _{r}({\mathbb {R}}^{2n})\).

Proof

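Since \(\Sigma _{r}({\mathbb {R}}^{2n}) \subset L^{2}({\mathbb {R}}^{2n})\) is a nuclear Fréchet space (cf. [12]), by [19, Propositions 2.1.7 and 2.1.8] the operator A is defined by a kernel H(x, y) and we can write

$$\begin{aligned} H(x,y) = \sum _{j} a_{j} f_{j}(x) g_{j}(y) = \sum _{j} {\tilde{a}}_{j} {\tilde{f}}_{j}(x) {\tilde{g}}_{j}(y), \end{aligned}$$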

where \(a_{j}, {\tilde{a}}_{j} \in {\mathbb {C}}\), \({\tilde{f}}_{j}(x), g_{j}(y) \in \Sigma _{r}({\mathbb {R}}^{n})\), \(f_{j}(x), {\tilde{g}}_{j}(y) \in L^{2}({\mathbb {R}}^{n})\), \(\sum \nolimits _{j}|a_j| < \infty \), \(\sum \nolimits _{j}|{\tilde{a}}_{j}| < \infty \), \({\tilde{f}}_{j}(x), g_{j}(y)\) converge to zero in \(\Sigma _{r}({\mathbb {R}}^{n})\) and \(f_{j}(x), {\tilde{g}}_{j}(y)\) converge to zero in \(L^{2}({\mathbb {R}}^{n})\).

We now use the following characterization: \(H \in \Sigma _{r}({\mathbb {R}}^{2n})\) if and only if

for every \(C>0\), and prove that both the latter conditions hold. Note that

Since \(g_j\) converges to zero in \(\Sigma _r({\mathbb {R}}^{n})\), we have

for every \(C>0\), and therefore

Hence

for every \(C >0\). Using the representation \(\sum \nolimits _{j} {\tilde{a}}_{j} {\tilde{f}}_{j}(x) {\tilde{g}}_{j}(y)\), we obtain analogously

for every \(C>0\).

Now note that, for every \(N \in {\mathbb {N}}_0\), \(x, y \in {\mathbb {R}}^{n}\),

Therefore, for every \(C > 0\),

where \(C_1 = (2^{-1}C)^{N}\). Hence, for every \(C > 0\),

Since the Fourier transformation is an isomorphism on \(L^{2}\) and on \(\Sigma _{r}\), we have

where \(a_{j}, {\tilde{a}}_{j} \in {\mathbb {C}}\), \(\widehat{{\tilde{f}}}_{j}(\xi ), {\widehat{g}}_j(\eta ) \in \Sigma _{r}({\mathbb {R}}^{n})\), \({\widehat{f}}_j(\xi ), \widehat{{\tilde{g}}}_{j}(\eta ) \in L^{2}({\mathbb {R}}^{n})\), \(\sum \nolimits _{j}|a_j| < \infty \), \(\sum \nolimits _{j}|{\tilde{a}}_{j}| < \infty \), \(\widehat{{\tilde{f}}}_{j}(\xi ), {\widehat{g}}_j(\eta )\) converge to zero in \(\Sigma _{r}({\mathbb {R}}^{n})\) and \({\widehat{f}}_j(\xi ), \widehat{{\tilde{g}}}_{j}(\eta )\) converge to zero in \(L^{2}({\mathbb {R}}^{n})\). In an analogous way as before we get, for every \(C > 0\),

Hence \(H \in \Sigma _{r}({\mathbb {R}}^{2n})\). \(\square \)

Theorem 9

Let \(p \in {\textbf {{SG}}}^{0,0}_{\mu ,\nu }({\mathbb {R}}^{2n})\) be such that \(p(x,D): L^2({\mathbb {R}}^n) \rightarrow L^2({\mathbb {R}}^n)\) is bijective. Then \(\{p(x,D)\}^{-1}: L^2({\mathbb {R}}^n) \rightarrow L^2({\mathbb {R}}^n)\) is a pseudodifferential operator given by a symbol \({\tilde{p}}= q + {\tilde{k}}\), where \(q \in {\textbf {{SG}}}^{0,0}_{\mu ,\nu }({\mathbb {R}}^{2n})\) and \({\tilde{k}} \in \Sigma _{r}({\mathbb {R}}^{2n})\) for every \(r > \mu + \nu - 1\).

Proof

Since \(p(x,D): L^2({\mathbb {R}}^n) \rightarrow L^2({\mathbb {R}}^n)\) is bijective, p(x, D) is Fredholm and

where N denotes the kernel of the operators.

Therefore, by Theorem 7, p is \({\textbf {{SG}}}\)-elliptic and, by Theorem 8, there is \(q \in {\textbf {{SG}}}^{0,0}_{\mu , \nu }({\mathbb {R}}^{2n})\) such that

for some \(r, s \in {\mathcal {S}}_{\mu +\nu -1}({\mathbb {R}}^{2n})\). In particular r(x, D), s(x, D) are compact operators on \( L^2({\mathbb {R}}^n)\). By Theorem 5, q(x, D) is a Fredholm operator and we have

Note that N(q(x, D)) and \(N(q^{t}(x,D))\) are subspaces of \({\mathcal {S}}_{\mu +\nu -1}({\mathbb {R}}^{n})\). Indeed, let \(f \in N(q)\) and \(g \in N(q^t)\), then

Since \(L^2({\mathbb {R}}^n)\) is a separable Hilbert space and N(q(x, D)) is closed, we have the following decompositions

where \(R_{L^2}(q)\) denotes the range of q(x, D) as an operator on \(L^2({\mathbb {R}}^{n})\).

Let \(\pi : L^2 \rightarrow N(q)\) be the projection of \(L^2\) onto N(q) with null space \(N(q)^{\bot }\), let \(F: N(q) \rightarrow N(q^t)\) be an isomorphism and let \(i: N(q^t) \rightarrow L^2\) be the inclusion. Set \(Q = i\circ F \circ \pi \). Then \(Q: L^2 \rightarrow L^2\) is bounded and its image is contained in \(N(q^t) \subset {\mathcal {S}}_{\mu +\nu -1}\). It is not difficult to see that \(Q^{*} = {\tilde{i}} \circ F^{*} \circ \pi _{N(q^{t})}\), where \({\tilde{i}}\) is the inclusion of N(q) into \(L^2\) and \(\pi _{N(q^{t})}\) is the orthogonal projection of \(L^2\) onto \(N(q^t)\). Since \({\mathcal {S}}_{\mu +\nu -1} \subset \Sigma _{r}\), by Lemma 1 the operator Q is given by a kernel in \(\Sigma _{r}\).

We will now show that \(q + Q\) is a bijective parametrix of p. Indeed, let \(u = u_1 + u_2 \in N(q)\oplus N(q)^{\bot }\) be such that \((q + Q) u = 0\). Then \(0 = q u_2 + (i\circ F)u_1 \in R_{L^2}(q) \oplus N(q^t)\). Hence \(q u_2 = 0\) and \((i\circ F) u_1 = 0\); since \(u_2 \in N(q)^{\bot }\) and F is an isomorphism, this implies that \(u = 0\). In order to prove that \(q + Q\) is surjective, consider \(f = f_1 + f_2 \in R_{L^2}(q) \oplus N(q^t)\). There exist \(u_1 \in L^2\) and \(u_2 \in N(q)\) such that \(q u_1 = f_1\) and \(F u_2 = f_2\). Now write \(u_1 = v_1 + v_2 \in N(q) \oplus N(q)^{\bot }\). Then \(q(u_1) = q(v_2)\) and therefore \((q+Q)(v_2+u_2) = f_1 + f_2 = f\). Finally notice that

where \(r', s' \in \Sigma _{r}({\mathbb {R}}^{2n})\).

Now set \({\tilde{q}} = q(x,D) + Q\). Therefore \({\tilde{q}} \circ p(x,D) = I + r'(x,D): L^2 \rightarrow L^2\) is bijective. Set \(k = -(I+r')^{-1} \circ r'\). Then \((I+r')(I+k) = I\) and \(k= - r' - r'k\). Observe that

Hence \(k, k^t\) map \(L^2\) into \(\Sigma _{r}\) and by Lemma 1 we have that k is given by a kernel in \(\Sigma _{r}({\mathbb {R}}^{2n})\).

To finish the proof, it is enough to notice that

\(\square \)
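Two algebraic steps of the proof above can be spelled out. The sketch below assumes that the parametrix identity from Theorem 8 is used in the form \(q(x,D)\circ p(x,D) = I + r(x,D)\) with r(x, D) compact, and that the index is \(i(A) = \dim N(A) - \dim N(A^{t})\); both are our reading of the displays, not a quotation. By Theorem 6 and Remark 5,

$$\begin{aligned} i(q) + i(p) = i(q \circ p) = i(I + r) = 0, \qquad i(p) = 0 \quad \Longrightarrow \quad \dim N(q) = \dim N(q^{t}), \end{aligned}$$

which is what allows one to choose an isomorphism \(F: N(q) \rightarrow N(q^t)\) between finite-dimensional spaces. Moreover, for \(k = -(I+r')^{-1} \circ r'\) we have \((I+r')k = -r'\), so that

$$\begin{aligned} (I+r')(I+k) = I + r' + (I+r')k = I + r' - r' = I, \qquad k + r'k = -r' \quad \Longrightarrow \quad k = -r' - r'k. \end{aligned}$$

The last identity shows that k inherits the mapping properties of \(r'\), since every term on its right-hand side has \(r'\) as the leftmost factor.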

4 Change of variables

4.1 Definition and properties of \(\lambda _2\) and \(\lambda _1\)

Let \(M_2, M_1>0\) and \(h \ge 1\) be constants to be chosen later on. We define

where

\(|\partial ^{\alpha } w(\xi )| \le C_{w}^{\alpha + 1} \alpha !^{\mu }\), \(|\partial ^{\beta } \psi (y)| \le C_{\psi }^{\beta + 1}\beta !^{\mu }\) for some \(\mu > 1\) which will be chosen later. Notice that by the assumption (i) of Theorem 1 the function \(w(\xi )\) is constant for \(\xi \ge R_{a_3}\) and for \(\xi \le -R_{a_3}.\)

Lemma 2

Let \(\lambda _2(x, \xi )\) be as in (4.1). Then there exists \(C>0\) such that for all \(\alpha , \beta \in {\mathbb {N}}\) and \((x,\xi ) \in {\mathbb {R}}^2\):

(i) \(|\lambda _2(x, \xi )| \le \frac{M_2}{1-\sigma } \langle x \rangle ^{1-\sigma };\)

(ii) \(|\partial ^{\beta }_{x}\lambda _2(x, \xi )| \le M_2 C^{\beta } \beta ! \langle x \rangle ^{1-\sigma -\beta }\), for \(\beta \ge 1\);

(iii) \(| \partial ^{\alpha }_{\xi } \partial ^{\beta }_{x}\lambda _2(x, \xi )| \le M_2 C^{\alpha +\beta +1} \alpha !^{\mu } \beta ! \chi _{E_{h, R_{a_3}}} (\xi ) \langle \xi \rangle ^{-\alpha }_{h} \langle x \rangle ^{1-\sigma - \beta }\), for \(\alpha \ge 1, \beta \ge 0\),

where \(E_{h, R_{a_3}} = \{ \xi \in {\mathbb {R}}:h \le |\xi | \le R_{a_3}h\}\). In particular \(\lambda _2 \in {\textbf {{SG}}}^{0, 1-\sigma }_{\mu }({\mathbb {R}}^2)\).

Proof

First note that

For \(\beta \ge 1\)

For \(\alpha \ge 1\)

Finally, for \(\alpha , \beta \ge 1\)

\(\square \)

For the function \(\lambda _1\) we can prove the following alternative estimates.

Lemma 3

Let \(\lambda _1(x,\xi )\) be as in (4.2). Then there exists \(C>0\) such that for all \(\alpha , \beta \ge 0\) and \((x,\xi ) \in {\mathbb {R}}^2\):

(i) \(|\partial ^{\alpha }_{\xi } \partial ^{\beta }_{x} \lambda _1(x, \xi )| \le M_1 C^{\alpha + \beta + 1} (\alpha ! \beta !)^{\mu } \langle \xi \rangle ^{-1 - \alpha }_{h} \langle x \rangle ^{1-\frac{\sigma }{2} - \beta };\)

(ii) \(|\partial ^{\alpha }_{\xi } \partial ^{\beta }_{x} \lambda _1(x, \xi )| \le M_1 C^{\alpha + \beta + 1} (\alpha ! \beta !)^{\mu } \langle \xi \rangle ^{- \alpha }_{h} \langle x \rangle ^{1-\sigma - \beta }.\)

In particular \(\lambda _1 \in {\textbf {{SG}}}^{0, 1-\sigma }_{\mu }({\mathbb {R}}^2)\).

Proof

Denote by \(\chi _{\xi }(x)\) the characteristic function of the set \(\{x \in {\mathbb {R}}:\langle x \rangle ^{\sigma } \le \langle \xi \rangle ^{2}_{h}\}\). For \(\alpha = \beta = 0\) we have

and

For \(\alpha \ge 1\), with the aid of the Faà di Bruno formula, we have

and

For \(\beta \ge 1\) we have

Finally, for \(\alpha , \beta \ge 1\) we have

\(\square \)

4.2 Invertibility of \(e^{{\tilde{\Lambda }}}\), \({\tilde{\Lambda }} = \lambda _2 + \lambda _1\)

In this section we construct an inverse for the operator \(e^{{{\tilde{\Lambda }}}}(x,D)\) with \({\tilde{\Lambda }}(x, \xi ) = \lambda _2(x,\xi ) + \lambda _1(x, \xi )\) and we prove that the inverse acts continuously on Gelfand-Shilov-Sobolev spaces. By Lemmas 2 and 3 we have \({\tilde{\Lambda }} \in {\textbf {{SG}}}^{0, 1-\sigma }_{\mu }({\mathbb {R}}^{2})\). Therefore, by Proposition 5, \(e^{{\tilde{\Lambda }}} \in {\textbf {{SG}}}^{0, \infty }_{\mu ; 1/(1-\sigma )}({\mathbb {R}}^{2})\). To construct the inverse of \(e^{{\tilde{\Lambda }}}(x,D)\) we need to use the \(L^2\) adjoint of \(e^{-{\tilde{\Lambda }}}(x,D)\), defined as an oscillatory integral by

Assuming \(\mu > 1\) such that \(1/(1-\sigma ) > 2\mu -1\), by results from the calculus, we may write

where \(a_1 \sim \sum _{\alpha } \frac{1}{\alpha !} \partial ^{\alpha }_{\xi }D^{\alpha }_{x}e^{-{\tilde{\Lambda }}}\) in \(\mathrm{FSG}^{0, \infty }_{\mu ; 1/(1-\sigma )}({\mathbb {R}}^{2})\), \(r_1 \in {\mathcal {S}}_{2\mu - 1}({\mathbb {R}}^{2})\), and

where

and \(r_2 \in {\mathcal {S}}_{2\mu -1}({\mathbb {R}}^{2})\). Therefore

where \(a \sim \sum _{\gamma } \frac{1}{\gamma !} \partial ^{\gamma }_{\xi }(e^{{\tilde{\Lambda }}}D^{\gamma }_{x}e^{-{\tilde{\Lambda }}})\) in \(\mathrm{FSG}^{0, \infty }_{\mu ; 1/(1-\sigma )}({\mathbb {R}}^{2})\) and \(r \in {\mathcal {S}}_{2\mu - 1}({\mathbb {R}}^{2})\).

Now let us study more carefully the asymptotic expansion

Note that

hence, for \(\alpha , \beta \ge 0\),

In the following we shall consider the sets

and \(Q_{t_1, t_2; h}^{e} = {\mathbb {R}}^{2} {\setminus } Q_{t_1, t_2; h}\). When \(t_1 = t_2 = t\) we simply write \(Q_{t; h}\) and \(Q^{e}_{t; h}\).

Let \(\psi (x, \xi ) \in C^{\infty }({\mathbb {R}}^{2})\) such that \(\psi \equiv 0\) on \(Q_{2; h}\), \(\psi \equiv 1\) on \(Q^{e}_{3; h}\), \(0 \le \psi \le 1\) and

for every \(x, \xi \in {\mathbb {R}}\) and \(\alpha , \beta \in {\mathbb {N}}_0\). Now set \(\psi _0 \equiv 1\) and, for \(j \ge 1\),

where \(R(j) := R j^{2\mu -1}\) and \(R > 0\) is a large constant. Let us recall that

-

\((x, \xi ) \in Q^{e}_{3R(j)} \implies \left( \dfrac{x}{R(j)}, \dfrac{\xi }{R(j)} \right) \in Q^{e}_{3} \implies \psi _i(x, \xi ) = 1\), for \(i \le j\);

-

\((x, \xi ) \in Q_{R(j)} \implies \left( \dfrac{x}{R(j)}, \dfrac{\xi }{R(j)} \right) \in Q_{2} \implies \psi _i(x, \xi ) = 0\), for \(i \ge j\).

Defining \(b(x,\xi ) = \sum _{j \ge 0} \psi _{j}(x,\xi ) r_{1, j}(x,\xi )\) we have that \(b \in {\textbf {{SG}}}^{0,\infty }_{\mu ;\frac{1}{1-\sigma }}({\mathbb {R}}^{2})\) and

We will show that \(b \in {\textbf {{SG}}}^{0,0}_{\mu }({\mathbb {R}}^{2})\). Indeed, first we write

On the support of \(\partial ^{\alpha _1}_{\xi }\partial ^{\beta _1}_{x} \psi _{j+1}\) we have

whenever \(\alpha _1 + \beta _1 \ge 1\). Hence

We also have that

holds true on the support of \(\partial ^{\alpha _1}_{\xi }\partial ^{\beta _1}_{x} \psi _{j+1}\). If \(\langle \xi \rangle _{h} \ge R(j+1)\), then

On the other hand, since we are assuming \(\mu > 1\) such that \(2\mu -1 < \frac{1}{1-\sigma }\), if \(\langle x \rangle \le R(j+1)\) we obtain

Enlarging \(R >0\) if necessary, we can infer that \(\sum _{j \ge 1} r_{1, j} \in {\textbf {{SG}}}^{-1, -\sigma }_{\mu }({\mathbb {R}}^{2})\).

In an analogous way it is possible to prove that \(\sum _{j \ge k} r_{1, j} \in {\textbf {{SG}}}^{-k, -\sigma k}_{\mu }({\mathbb {R}}^{2})\). Hence, we may conclude

that is, \(b \sim \sum _{j} r_{1,j}\) in \({\textbf {{SG}}}^{0,0}_{\mu }({\mathbb {R}}^{2})\).

Since \(a \sim \sum r_{1,j}\) in \(\mathrm{FSG}^{0, \infty }_{\mu ;1/(1-\sigma )}({\mathbb {R}}^{2})\), \(b \sim \sum r_{1,j}\) in \(\mathrm{FSG}^{0,\infty }_{\mu ;1/(1-\sigma )}({\mathbb {R}}^{2})\) we have \(a - b \in {\mathcal {S}}_{2\mu -1}({\mathbb {R}}^{2})\). Thus we may write

where \({\tilde{r}} \in {\textbf {{SG}}}^{-1, -\sigma }_{\mu }({\mathbb {R}}^{2})\), \({\tilde{r}} \sim \sum _{\gamma \ge 1} r_{1, \gamma }\) in \({\textbf {{SG}}}^{-1, -\sigma }_{\mu }({\mathbb {R}}^{2})\) and \({\bar{r}} \in {\mathcal {S}}_{2\mu -1}({\mathbb {R}}^{2})\). In particular \(r \in {\textbf {{SG}}}^{-1, -\sigma }_{2\mu -1}({\mathbb {R}}^2)\), therefore we obtain

This implies that the \((0,0)-\)seminorms of r are bounded by \(h^{-1}\). Choosing h large enough, we obtain that \(I + r(x,D)\) is invertible on \(L^{2}({\mathbb {R}})\) and its inverse \((I+r(x,D))^{-1}\) is given by the Neumann series \(\sum _{j \ge 0}(-r(x,D))^{j}\).
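The Neumann series argument can be made quantitative. As a sketch, assume that the seminorm bound translates into an operator-norm bound \(\Vert r(x,D)\Vert _{{\mathcal {L}}(L^2)} \le C_0 h^{-1}\), where the constant \(C_0\) is a name introduced here for illustration and is independent of h. Then, for \(h > C_0\) and every \(N \in {\mathbb {N}}\),

$$\begin{aligned} (I + r(x,D)) \sum _{j=0}^{N} (-r(x,D))^{j} = I - (-r(x,D))^{N+1}, \qquad \Vert (-r(x,D))^{N+1}\Vert _{{\mathcal {L}}(L^2)} \le \left( \frac{C_0}{h}\right) ^{N+1} \longrightarrow 0, \end{aligned}$$

so the partial sums converge in \({\mathcal {L}}(L^2)\) to a right inverse (and, by the same computation with the factors exchanged, to a left inverse), with

$$\begin{aligned} \Vert (I+r(x,D))^{-1}\Vert _{{\mathcal {L}}(L^2)} \le \sum _{j \ge 0} \left( \frac{C_0}{h}\right) ^{j} = \frac{h}{h - C_0}. \end{aligned}$$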

By Theorem 9 we have

where \(q \in {\textbf {{SG}}}^{0,0}_{2\mu -1}({\mathbb {R}}^{2})\), \(k \in \Sigma _{\delta }({\mathbb {R}}^{2})\) for every \(\delta > 2(2\mu -1) - 1 = 4\mu - 3 \). Choosing \(\mu >1\) close enough to 1, we have that \(\delta \) can be chosen arbitrarily close to 1. Hence, by Theorem 3, for every fixed \(s>1, \theta >1\), we can find \(\mu >1\) such that

is continuous for every \(m', \rho ' \in {\mathbb {R}}^2\). Analogously one can show the existence of a left inverse of \(e^{{\tilde{\Lambda }}}(x,D)\) with the same properties. Summing up, we obtain the following result.

Lemma 4

Let \(s, \theta > 1\) and take \(\mu > 1\) such that \(\min \{s, \theta \} > 4\mu -3\). For \(h > 0\) large enough, the operator \(e^{{\tilde{\Lambda }}}(x,D)\) is invertible on \(L^2({\mathbb {R}})\) and on \(\Sigma _{\min \{s,\theta \}}({\mathbb {R}})\) and its inverse is given by

where \(r \in {\textbf {{SG}}}^{-1, -\sigma }_{2\mu - 1}({\mathbb {R}}^{2})\) and \(r \sim \sum _{\gamma \ge 1} \frac{1}{\gamma !} \partial ^{\gamma }_{\xi }(e^{{\tilde{\Lambda }}} D^{\gamma }_{x} e^{-{\tilde{\Lambda }}})\) in \({\textbf {{SG}}}^{-1, -\sigma }_{2\mu -1}({\mathbb {R}}^{2})\). Moreover, the symbol of \((I+r(x,D))^{-1}\) belongs to \({\textbf {{SG}}}^{0,0}_{\delta }({\mathbb {R}}^2)\) for every \(\delta >4\mu -3\) and it maps continuously \(H^{m'}_{\rho ';s,\theta }({\mathbb {R}})\) into itself for any \(\rho ', m' \in {\mathbb {R}}^2\).
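To see how restrictive the conditions on \(\mu \) are, it may help to solve them for \(\mu \). The following uses only the two inequalities stated in this section, namely \(\min \{s,\theta \} > 4\mu - 3\) and \(1/(1-\sigma ) > 2\mu - 1\); the numerical values of \(s, \theta , \sigma \) are purely illustrative and assume \(\sigma \in (0,1)\):

$$\begin{aligned} 1< \mu< \min \left\{ \frac{\min \{s,\theta \} + 3}{4},\ \frac{2-\sigma }{2(1-\sigma )} \right\} , \qquad \text {e.g.} \quad s = \theta = 2,\ \sigma = \tfrac{2}{3} \quad \Longrightarrow \quad 1< \mu < \min \left\{ \tfrac{5}{4}, 2 \right\} = \tfrac{5}{4}. \end{aligned}$$

Both upper bounds are strictly larger than 1 whenever \(s, \theta > 1\) and \(\sigma \in (0,1)\), so an admissible \(\mu \) always exists.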

We conclude this section writing \(\{e^{{\tilde{\Lambda }}}(x,D)\}^{-1}\) in a more precise way. From the asymptotic expansion of the symbol \(r(x,\xi )\) we may write

where \(q_{-3}\) denotes an operator with symbol in \({\textbf {{SG}}}^{-3, -3\sigma }_{\delta }({\mathbb {R}}^{2})\) for every \(\delta >4\mu -3\). Now note that

Hence

for a new element \(q_{-3}\) in the same space. We finally obtain:

where \(q_{-3} \in {\textbf {{SG}}}^{-3, -3\sigma }_{\delta }({\mathbb {R}}^{2})\). Since we deal with operators whose order does not exceed 3, in the next sections we are going to use frequently formula (4.3) for the inverse of \(e^{{\tilde{\Lambda }}}(x,D)\).

5 Conjugation of iP

In this section we will perform the conjugation of iP by the operator \(e^{ \rho _1 \langle D \rangle ^{\frac{1}{\theta }} } \circ e^{\Lambda }(t, x, D)\) and its inverse, where \(\Lambda (t,x,\xi )=k(t)\langle x\rangle ^{1-\sigma }_h+{{\tilde{\Lambda }}}(x,\xi )\) and \(k\in C^1([0,T];{\mathbb {R}})\) is a non-increasing function such that \(k(T)\ge 0\). Since the arguments below also involve derivatives with respect to t, these derivatives will be denoted by \(D_t\), whereas the symbol D in the notation for pseudodifferential operators will always correspond to derivatives with respect to x.

More precisely, we will compute

where \(\rho _1 \in {\mathbb {R}}\) and \(P(t, x, D_t, D_x)\) is given by (1.10). As we discussed before, the role of this conjugation is to make positive the lower-order terms of the conjugated operator.

Since the operator \(^{R}e^{-{\tilde{\Lambda }}}\) appears in the inverse \(\{e^{{\tilde{\Lambda }}}(x,D)\}^{-1}\), we need the following technical lemma.

Lemma 5

Let \({\tilde{\Lambda }} \in {\textbf {{SG}}}^{0, 1-\sigma }_{\mu }({\mathbb {R}}^2)\) and \(a \in {\textbf {{SG}}}^{m_1, m_2}_{1, s_0}({\mathbb {R}}^2)\), with \(\mu > 1\) such that \(1/(1-\sigma ) > \mu + s_0 - 1\) and \(s_0 > \mu \). Then, for \(M \in {\mathbb {N}}\),

where \(q_M \in {\textbf {{SG}}}^{m_1 - M, m_2 - M\sigma }_{\mu , s_0}({\mathbb {R}}^2)\) and \(r \in {\mathcal {S}}_{\mu +s_0 -1}({\mathbb {R}}^{2})\).

Proof

Since \(e^{\pm {\tilde{\Lambda }}} \in {\textbf {{SG}}}^{0, \infty }_{\mu ; \frac{1}{1-\sigma }} ({\mathbb {R}}^{2})\) and \(a \in {\textbf {{SG}}}^{m_1, m_2}_{1, s_0} ({\mathbb {R}}^{2})\), by results from the calculus, we obtain

where \(a_1 \in {\textbf {{SG}}}^{0, \infty }_{\mu , s_0; \frac{1}{1-\sigma }}({\mathbb {R}}^{2})\), \(a_2 \in {\textbf {{SG}}}^{m_1, \infty }_{\mu , s_0; \frac{1}{1-\sigma }}({\mathbb {R}}^{2}),\) \(r_1, r_2 \in {\mathcal {S}}_{\mu +s_0-1}({\mathbb {R}}^{2})\) and

Hence

with \(a_2(x,D) \circ a_1(x,D) = a_3(x,D) + r_3(x,D)\), where \(a_3 \in {\textbf {{SG}}}^{m_1, \infty }_{\mu , s_0; \frac{1}{1-\sigma }}({\mathbb {R}}^{2})\), \(r_3 \in {\mathcal {S}}_{\mu +s_0-1}({\mathbb {R}}^{2})\) and

Thus

for some \(r \in {\mathcal {S}}_{\mu +s_0-1}({\mathbb {R}}^{2})\).

Now let us study the asymptotic expansion of \(a_3\). For \(\alpha , \beta \in {\mathbb {N}}_0\) we have (omitting the dependence \((x,\xi )\)):

Therefore, by the Faà di Bruno formula, for \(\gamma , \delta \in {\mathbb {N}}_0\), we have

hence

The above estimate implies

for every \(j \ge 0\), \(\alpha , \beta \in {\mathbb {N}}_0\) and \(x,\xi \in {\mathbb {R}}\).

Let \(\psi (x, \xi ) \in C^{\infty }({\mathbb {R}}^{2})\) such that \(\psi \equiv 0\) on \(Q_{2}\), \(\psi \equiv 1\) on \(Q^{e}_{3}\), \(0 \le \psi \le 1\) and

for every \(x, \xi \in {\mathbb {R}}\) and \(\alpha , \beta \in {\mathbb {N}}_0\). Now set \(\psi _0 \equiv 1\) and, for \(j \ge 1\),

where \(R(j) = R j^{s_0 + \mu - 1}\), for a large constant \(R > 0\).

Setting \(b(x,\xi ) = \sum _{j \ge 0} \psi _{j}(x,\xi )c_{j}(x,\xi )\) we have \(b \in {\textbf {{SG}}}^{m_1, \infty }_{\mu , s_0}({\mathbb {R}}^{2})\) and

By similar arguments as the ones used in Sect. 4.2 we can prove that

Hence

Since \(1/(1-\sigma ) > \mu + s_0 -1\) we can conclude that \(b-a_3 \in {\mathcal {S}}_{\mu +s_0-1}({\mathbb {R}}^{2})\) and we obtain

where \({\tilde{r}} \in {\mathcal {S}}_{\mu +s_0-1}({\mathbb {R}}^{2})\). This concludes the proof. \(\square \)

5.1 Conjugation by \(e^{{\tilde{\Lambda }}}\)

We start by noting that \(e^{{\tilde{\Lambda }}} \partial _t \{e^{{\tilde{\Lambda }}}\}^{-1} = \partial _t\) since \({\tilde{\Lambda }}\) does not depend on t.

-

Conjugation of \(ia_3(t,D)\).

Since \(a_3\) does not depend on x, applying Lemma 5, we have

$$\begin{aligned} e^{{\tilde{\Lambda }}}(x,D) (ia_3)(t,D) ^{R}(e^{-{\tilde{\Lambda }}})= ia_3(t,D) + s(t,x,D) + r_3(t,x,D), \end{aligned}$$

with

$$\begin{aligned} s \sim \sum _{j \ge 1} \frac{1}{j!} \partial ^{j}_{\xi } \{e^{{\tilde{\Lambda }}} ia_3 D^{j}_{x}e^{-{\tilde{\Lambda }}}\} \in \mathrm{FSG}^{2, -\sigma }_{\mu , s_0}({\mathbb {R}}^{2}),\quad r_3 \in C([0,T],{\mathcal {S}}_{\mu +s_0-1}({\mathbb {R}}^{2})). \end{aligned}$$

Hence, using (4.3) we can write more explicitly s(t, x, D) and we obtain

$$\begin{aligned}&e^{{\tilde{\Lambda }}} (ia_3)\{e^{{\tilde{\Lambda }}}\}^{-1}\\&\quad = \text {op}\left( ia_3 - \partial _{\xi }(a_3 \partial _{x}{\tilde{\Lambda }}) + \frac{i}{2}\partial ^{2}_{\xi }[a_3(\partial ^{2}_{x}{\tilde{\Lambda }} - (\partial _{x}{\tilde{\Lambda }})^2)] + a^{(0)}_{3} + r_3\right) \\&\quad \circ \left[ I +\text {op}\left( - i\partial _{\xi } \partial _{x} {\tilde{\Lambda }} - \frac{1}{2}\partial ^{2}_{\xi }(\partial ^{2}_{x}{\tilde{\Lambda }} - [\partial _x {\tilde{\Lambda }}]^{2}) - [\partial _{\xi } \partial _{x} {\tilde{\Lambda }} ]^{2} + q_{-3} \right) \right] \\&\quad = ia_3 - \text {op}\left( \partial _{\xi }(a_3 \partial _{x}{\tilde{\Lambda }}) + \frac{i}{2}\partial ^{2}_{\xi }\{a_3(\partial ^{2}_{x}{\tilde{\Lambda }} - \{\partial _{x}{\tilde{\Lambda }}\}^2)\} - a_3\partial _{\xi }\partial _{x}{\tilde{\Lambda }} + i\partial _{\xi }a_3\partial _{\xi }\partial ^{2}_{x}{\tilde{\Lambda }} \right) \\&\qquad + \text {op}\left( i\partial _{\xi }(a_3 \partial _{x}{\tilde{\Lambda }})\partial _{\xi }\partial _{x}{\tilde{\Lambda }} - \frac{i}{2}a_3\{\partial ^{2}_{\xi }(\partial ^{2}_{x}{\tilde{\Lambda }} + [\partial _x {\tilde{\Lambda }}]^{2}) + 2[\partial _{\xi } \partial _{x} {\tilde{\Lambda }} ]^{2}\}+ r_0 + {\bar{r}}\right) \\&\quad = ia_3 - \text {op}\left( \partial _{\xi }a_3 \partial _{x}{\tilde{\Lambda }} + \frac{i}{2}\partial ^{2}_{\xi }\{a_3[\partial ^{2}_{x}{\tilde{\Lambda }} - (\partial _{x}{\tilde{\Lambda }})^2]\} + i\partial _{\xi }a_3\partial _{\xi }\partial ^{2}_{x}{\tilde{\Lambda }}\right) \\&\qquad +\text {op}\left( i\partial _{\xi }(a_3 \partial _{x}{\tilde{\Lambda }})\partial _{\xi }\partial _{x}{\tilde{\Lambda }} - \frac{i}{2}a_3\{\partial ^{2}_{\xi }(\partial ^{2}_{x}{\tilde{\Lambda }} + [\partial _x {\tilde{\Lambda }}]^{2}) + 2(\partial _{\xi } \partial _{x} {\tilde{\Lambda }} )^{2}\}+ r_0+ {\bar{r}}\right) \\&\quad = ia_3 - \text {op}\left( \partial _{\xi }a_3 \partial _{x}\lambda _2 + \partial _{\xi }a_3\partial _{x}\lambda _1 + \frac{i}{2}\partial ^{2}_{\xi }\{a_3(\partial ^{2}_{x}\lambda _2 - \{\partial _{x}\lambda _2\}^2)\} + i\partial _{\xi }a_3\partial _{\xi }\partial ^{2}_{x}\lambda _2 \right) \\&\qquad +\text {op}\left( i\partial _{\xi }(a_3 \partial _{x}\lambda _2)\partial _{\xi }\partial _{x}\lambda _2 - \frac{i}{2}a_3\{\partial ^{2}_{\xi }(\partial ^{2}_{x}\lambda _2 + [\partial _x \lambda _2]^{2}) + 2[\partial _{\xi } \partial _{x} \lambda _2 ]^{2}\} + r_0+ {\bar{r}}\right) , \end{aligned}$$

for some symbols \(a^{(0)}_{3} \in C([0,T]; {\textbf {{SG}}}^{0,0}_{\mu , s_0}({\mathbb {R}}^2))\), \(r_0 \in C([0,T]; {\textbf {{SG}}}^{0,0}_{\delta }({\mathbb {R}}^{2}))\) and, since we may assume \(\delta < \mu +s_0-1\), \({\bar{r}} \in C([0,T];{\mathcal {S}}_{\mu +s_0-1}({\mathbb {R}}^2))\).

-

Conjugation of \(ia_2(t,x,D)\).

By Lemma 5 with \(M=2\) and using (4.3) we get

$$\begin{aligned}&e^{{\tilde{\Lambda }}}(x,D) (ia_2)(t,x,D)\{e^{{\tilde{\Lambda }}}(x,D)\}^{-1}\\&\quad = \text {op}\left( ia_2 - \partial _{\xi }\{a_2 \partial _{x}{\tilde{\Lambda }}\} + \partial _{\xi }{\tilde{\Lambda }}\partial _{x}a_2 + a^{(0)}_{2} + r_2 \right) \\&\quad \circ \left[ I -\text {op}\left( i\partial _{\xi } \partial _{x} {\tilde{\Lambda }} + \frac{1}{2}\partial ^{2}_{\xi }(\partial ^{2}_{x}{\tilde{\Lambda }} - [\partial _x {\tilde{\Lambda }}]^{2}) + [\partial _{\xi } \partial _{x} {\tilde{\Lambda }} ]^{2} + q_{-3}\right) \right] \\&\quad = ia_2(t,x,D) + \text {op}( - \partial _{\xi }\{a_2 \partial _{x}{\tilde{\Lambda }}\} + \partial _{\xi }{\tilde{\Lambda }}\partial _{x}a_2 + a_2\partial _{\xi }\partial _{x} {\tilde{\Lambda }} + r_0+ {\bar{r}})\\&\quad = ia_2(t,x,D) + \text {op}(- \partial _{\xi }a_2 \partial _{x}{\tilde{\Lambda }} + \partial _{\xi }{\tilde{\Lambda }}\partial _{x}a_2 + r_0 + {\bar{r}} ) \\&\quad = ia_2(t,x,D) + \text {op}\left( - \partial _{\xi }a_2 \partial _{x}\lambda _2 + \partial _{\xi }\lambda _2\partial _{x}a_2 + r_0 +{\bar{r}} \right) , \end{aligned}$$

for some symbols \(a^{(0)}_{2} \in C([0,T]; {\textbf {{SG}}}^{0,0}_{\mu , s_0})\), \(r_0 \in C([0,T]; {\textbf {{SG}}}^{0,0}_{\delta }({\mathbb {R}}^{2}))\) and \({\bar{r}} \in C([0,T];\Sigma _{\mu +s_0-1} ({\mathbb {R}}^{2}))\).

-

Conjugation of \(ia_1(t,x,D)\):

$$\begin{aligned} e^{{\tilde{\Lambda }}}(x,D) ia_1(t,x,D) \{e^{{\tilde{\Lambda }}}(x,D)\}^{-1}&= \text {op}(ia_1 + a^{(0)}_{1} + r_1)\circ \sum _{j\ge 0}(-r(t, x,D))^{j} \\&=\text {op}( ia_1+ {\tilde{r}}_{0} + {\tilde{r}}), \end{aligned}$$

where \(a^{(0)}_{1} \in C([0,T]; {\textbf {{SG}}}^{0,1-2\sigma }_{\mu , s_0}({\mathbb {R}}^2))\), \({\tilde{r}}_{0} \in C([0,T]; {\textbf {{SG}}}^{0,1-2\sigma }_{\delta }({\mathbb {R}}^{2}))\), \({\tilde{r}} \in C([0,T];\Sigma _{\mu +s_0-1} ({\mathbb {R}}^{2}))\).

-

Conjugation of \(ia_0(t,x,D)\):

$$\begin{aligned} e^{{\tilde{\Lambda }}}(x,D) ia_0(t,x,D)\{e^{{\tilde{\Lambda }}}(x,D)\}^{-1}&= \text {op}(ia_0 + a^{(0)}_{0} + r_0) \sum _{j\ge 0}(-r(t,x,D))^{j} \\&= \text {op}( ia_0+ \tilde{{\tilde{r}}}_{0} + {\tilde{r}}_1), \end{aligned}$$

where \(a^{(0)}_{0} \in C([0,T]; {\textbf {{SG}}}^{0,1-2\sigma }_{\mu , s_0})\), \(\tilde{{\tilde{r}}}_0 \in C([0,T]; {\textbf {{SG}}}^{-1,1-2\sigma }_{\delta }({\mathbb {R}}^{2}))\) and \({\tilde{r}}_1 \in C([0,T];\Sigma _{\mu +s_0-1} ({\mathbb {R}}^{2}))\).

Summing up we obtain

where

and

5.2 Conjugation by \(e^{k(t)\langle x \rangle ^{1-\sigma }_{h}}\)

Let us recall that we are assuming that \(k \in C^{1}([0,T]; {\mathbb {R}})\), \(k'(t)\le 0\) and \(k(t) \ge 0\) for every \(t\in [0,T]\). Following the same ideas of the proof of Lemma 5 one can prove the following result.

Lemma 6

Let \(a \in C([0,T],{\textbf {{SG}}}^{m_1, m_2}_{\mu , s_0}({\mathbb {R}}^2))\), where \(1< \mu < s_0\) and \(\mu + s_0 - 1 < \frac{1}{1-\sigma }\). Then

for some symbols \(b \sim \sum _{j \ge 1} \frac{1}{j!} e^{k(t)\langle x \rangle ^{1-\sigma }_{h}}\, \partial ^{j}_{\xi } a\, D^{j}_{x} e^{-k(t)\langle x \rangle ^{1-\sigma }_{h}}\) in \({\textbf {{SG}}}^{m_1 - 1, m_2 -\sigma }_{\mu , s_0}({\mathbb {R}}^{2})\) and \({\bar{r}} \in C([0,T],{\mathcal {S}}_{\mu +s_0-1}({\mathbb {R}}^{2}))\).

Let us perform the conjugation by \(e^{k(t)\langle x \rangle ^{1-\sigma }_{h}}\) of the operator \(e^{{\tilde{\Lambda }}} (iP) \{e^{{\tilde{\Lambda }}}\}^{-1}\) in (5.1) with the aid of Lemma 6.

-

Conjugation of \( \partial _t\): \(e^{k(t)\langle x \rangle ^{1-\sigma }_{h} } \, \partial _{t} \, e^{-k(t)\langle x \rangle ^{1-\sigma }_{h}} = \partial _{t} - k'(t)\langle x \rangle ^{1-\sigma }_{h}\).

-

Conjugation of \(ia_3(t,D)\):

$$\begin{aligned}&e^{k(t)\langle x \rangle ^{1-\sigma }_{h}} \, ia_3(t,D) e^{-k(t)\langle x \rangle ^{1-\sigma }_{h}}\\&\quad = ia_3 +\text {op}(- k(t)\partial _{\xi }a_3 \partial _{x} \langle x \rangle ^{1-\sigma }_{h}) \\&\qquad + \text {op}\left( \frac{i}{2}\partial ^{2}_{\xi }a_3 \{k(t)\partial ^2_{x}\langle x \rangle ^{1-\sigma }_{h} - k^{2}(t) [\partial _{x}\langle x \rangle ^{1-\sigma }_{h}]^2 \}\right) + a^{(0)}_{3} + r_{3}, \end{aligned}$$

where \(a^{(0)}_{3} \in C([0,T]; {\textbf {{SG}}}^{0, -3\sigma }_{\mu , s_0}({\mathbb {R}}^{2}))\) and \(r_{3} \in C([0,T]; \Sigma _{\mu +s_0-1}({\mathbb {R}}^{2}))\).

-

Conjugation of \(\text {op}(ia_2 -\partial _{\xi }a_3 \partial _{x}\lambda _2)\):

$$\begin{aligned}&e^{k(t)\langle x \rangle ^{1-\sigma }_{h}} \text {op}(ia_2 -\partial _{\xi }a_3\partial _{x}\lambda _2) \,e^{-k(t)\langle x \rangle ^{1-\sigma }_{h}}\\&\quad = ia_2 +\text {op}(-\partial _{\xi }a_3\partial _{x}\lambda _2 ) +\text {op}(- k(t)\partial _{\xi }a_2 \partial _{x} \langle x \rangle ^{1-\sigma }_{h} \\&\qquad - ik(t)\partial _{\xi }\{\partial _{\xi }a_3\partial _{x}\lambda _2\} \partial _{x} \langle x \rangle ^{1-\sigma }_{h} + a^{(0)}_{2}+ r_2), \end{aligned}$$

where \(a^{(0)}_{2} \in C([0,T]; {\textbf {{SG}}}^{0, -2\sigma }_{\mu , s_0}({\mathbb {R}}^{2}))\) and \(r_2 \in C([0,T]; \Sigma _{\mu +s_0-1}({\mathbb {R}}^{2}))\).

-

Conjugation of \(i(a_1+a_0)(t,x,D)\):

$$\begin{aligned} e^{k(t)\langle x \rangle ^{1-\sigma }_{h}} i(a_1+a_0)(t,x,D)e^{-k(t)\langle x \rangle ^{1-\sigma }_{h}} = \text {op}(ia_1 + ia_0 + a_{1,0}+ r_1), \end{aligned}$$

where \(r_1 \in C([0,T]; \Sigma _{\mu +s_0-1}({\mathbb {R}}^{2}))\) and

$$\begin{aligned} a_{1, 0} \sim \sum _{j \ge 1} e^{k(t)\langle x \rangle ^{1-\sigma }_{h}} \frac{1}{j!} \partial ^{j}_{\xi }i(a_1+a_0) D^{j}_{x} e^{-k(t)\langle x \rangle ^{1-\sigma }_{h}} \,\, \text {in} \,\, {\textbf {{SG}}}^{0, 1-2\sigma }_{\mu , s_0}({\mathbb {R}}^{2}). \end{aligned}$$

It is not difficult to verify that the following estimate holds:

$$\begin{aligned} | a_{1,0} (t,x,\xi )| \le \max \{1, k(t)\} C_{T} \langle x \rangle ^{1 - 2\sigma }_{h}, \end{aligned}$$(5.3)

where \(C_{T}\) depends on \(a_1\) and does not depend on k(t).

-

Conjugation of \(\text {op}(- \partial _{\xi }a_3\partial _{x}\lambda _1 - \partial _{\xi }a_2\partial _{x}\lambda _2 + \partial _{\xi }\lambda _2\partial _{x}a_2 + ib_1)\): taking into account (i) of Lemma 3, we have

$$\begin{aligned} e^{k(t)\langle x \rangle ^{1-\sigma }_{h}}&\text {op}(- \partial _{\xi }a_3\partial _{x}\lambda _1 - \partial _{\xi }a_2\partial _{x}\lambda _2 + \partial _{\xi }\lambda _2\partial _{x}a_2 + ib_1) e^{-k(t)\langle x \rangle ^{1-\sigma }_{h}} \\&= \text {op}( -\partial _{\xi }a_3\partial _{x}\lambda _1 - \partial _{\xi }a_2\partial _{x}\lambda _2 + \partial _{\xi }\lambda _2\partial _{x}a_2 + ib_1 + r_0 + {\bar{r}}), \end{aligned}$$

where \(r_0 \in C([0,T]; {\textbf {{SG}}}^{0, 0}_{\mu , s_0}({\mathbb {R}}^{2}))\) and \({\bar{r}} \in C([0,T]; \Sigma _{\mu +s_0-1}({\mathbb {R}}^{2}))\).

-

Conjugation of \(r_{\sigma }(t,x,D)\):

$$\begin{aligned} e^{k(t)\langle x \rangle ^{1-\sigma }_{h}} \,r_{\sigma }(t,x,D) \, e^{-k(t)\langle x \rangle ^{1-\sigma }_{h}} = r_{\sigma ,1}(t,x,D) + {\bar{r}}(t,x,D), \end{aligned}$$

where \({\bar{r}} \in C([0,T]; \Sigma _{\mu +s_0-1}({\mathbb {R}}^{2}))\), \(r_{\sigma ,1}\in C([0,T]; {\textbf {{SG}}}^{0, 1-2\sigma }_{\delta }({\mathbb {R}}^{2}))\) and the estimate

$$\begin{aligned} |r_{\sigma ,1}(t,x,\xi )| \le C_{T,{\tilde{\Lambda }}} \langle x \rangle ^{1-2\sigma }_{h} \end{aligned}$$(5.4)

holds with \(C_{T,{\tilde{\Lambda }}}\) independent of k(t).

Gathering all the previous computations we may write

where \(b_1\) satisfies (5.2),

(but \(c_1\) depends on \(\lambda _2, k(t)\)), \(a_{1,0}\) as in (5.3), \(r_{\sigma ,1}\) as in (5.4), and for some new operators

5.3 Conjugation by \(e^{\rho _1 \langle D \rangle ^{\frac{1}{\theta }}}\)

Since we are considering \(\theta > s_0\) and \(\mu > 1\) arbitrarily close to 1, we may assume that all the previous smoothing remainder terms have symbols in \(\Sigma _{\theta }({\mathbb {R}}^{2})\).

Lemma 7

Let \(a \in {\textbf {{SG}}}^{m_1, m_2}_{\mu , s_0}\), where \(1< \mu < s_0\) and \(\mu + s_0 - 1 < \theta \). Then

for some symbols \(b \sim \sum _{j \ge 1} \frac{1}{j!} \partial ^{j}_{\xi }e^{\rho _1 \langle \xi \rangle ^{\frac{1}{\theta }}}\, D^{j}_{x}a \, e^{-\rho _1 \langle \xi \rangle ^{\frac{1}{\theta }}}\) in \({\textbf {{SG}}}^{m_1 - (1-\frac{1}{\theta }), m_2 -1}_{\mu , s_0}({\mathbb {R}}^{2})\) and \(r \in {\mathcal {S}}_{\mu +s_0 -1}({\mathbb {R}}^{2})\).
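To illustrate the orders appearing in Lemma 7, the first term (\(j=1\)) of the expansion of \(b\) can be written out explicitly. The computation below is only a sketch: it assumes the standard bracket \(\langle \xi \rangle = (1+\xi ^2)^{1/2}\) and the usual SG-type bound \(|D_x a| \le C \langle \xi \rangle ^{m_1} \langle x \rangle ^{m_2-1}\), neither of which is restated in this section, and the constant \(C\) is introduced here for illustration only.

$$\begin{aligned} \partial _{\xi }e^{\rho _1 \langle \xi \rangle ^{\frac{1}{\theta }}}\, D_{x}a \, e^{-\rho _1 \langle \xi \rangle ^{\frac{1}{\theta }}}&= \rho _1\, \partial _{\xi }\langle \xi \rangle ^{\frac{1}{\theta }}\, D_{x}a = \frac{\rho _1}{\theta }\, \xi \, \langle \xi \rangle ^{\frac{1}{\theta }-2}\, D_{x}a, \\ \left| \frac{\rho _1}{\theta }\, \xi \, \langle \xi \rangle ^{\frac{1}{\theta }-2}\, D_{x}a \right|&\le \frac{|\rho _1|}{\theta }\, C\, \langle \xi \rangle ^{m_1 - (1-\frac{1}{\theta })} \langle x \rangle ^{m_2-1}. \end{aligned}$$

Under these assumptions the term loses \(1-\frac{1}{\theta }\) orders in \(\xi \) and one order in \(x\), and it is linear in \(\rho _1\), which is consistent with the factor \(|\rho _1|\) in estimates (5.7) and (5.8).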

Let us now conjugate by \(e^{\rho _1 \langle D \rangle ^{\frac{1}{\theta }}}\) the operator \(e^{k(t)\langle x \rangle ^{1-\sigma }_{h}} e^{\tilde{\Lambda }} (iP) \{e^{\tilde{\Lambda }}\}^{-1}e^{-k(t)\langle x \rangle ^{1-\sigma }_{h}}\) in (5.5). First of all we observe that since \(a_3\) does not depend on \(x\), we simply have

- Conjugation of \(\text {op}\left( -\partial _{\xi }a_3\partial _{x}\lambda _2 + ia_2 - k(t)\partial _{\xi }a_3\partial _x \langle x \rangle ^{1-\sigma }_{h}\right) \):

$$\begin{aligned} e^{\rho _1 \langle D \rangle ^{\frac{1}{\theta }}} \,\text {op}&( -\partial _{\xi }a_3\partial _{x}\lambda _2 + ia_2 - k(t)\partial _{\xi }a_3\partial _x \langle x \rangle ^{1-\sigma }_{h} )\, e^{-\rho _1 \langle D \rangle ^{\frac{1}{\theta }}} \\&= \text {op}(-\partial _{\xi }a_3\partial _{x}\lambda _2 + ia_2 - k(t)\partial _{\xi }a_3\partial _x \langle x \rangle ^{1-\sigma }_{h}) + (a_{2,\rho _1} + {\bar{r}})(t,x,D), \end{aligned}$$
where \(a_{2,\rho _1} \in C([0,T], {\textbf {{SG}}}^{1+\frac{1}{\theta }, -1}_{\mu , s_0}({\mathbb {R}}^{2}))\), \({\bar{r}} \in C([0,T],\Sigma _{\theta }({\mathbb {R}}^{2}))\) and the following estimate holds:

$$\begin{aligned} |\partial ^{\alpha }_{\xi } \partial ^{\beta }_{x}a_{2,\rho _1}(t,x,\xi )| \le \max \{1,k(t)\} |\rho _1| C_{\lambda _2, T}^{\alpha +\beta +1}\alpha !^{\mu }\beta !^{s_0} \langle \xi \rangle ^{1+\frac{1}{\theta } -\alpha } \langle x \rangle ^{-\sigma - \beta }. \end{aligned} \tag{5.7}$$
In particular

$$\begin{aligned} |a_{2,\rho _1}(t,x,\xi )| \le \max \{1,k(t)\} |\rho _1| C_{\lambda _2, T} \langle \xi \rangle ^{1+\frac{1}{\theta }}_{h} \langle x \rangle ^{-\sigma }. \end{aligned}$$

- Conjugation of \( \text {op}(- \partial _{\xi }a_3\partial _{x}\lambda _1 + ia_1 - \partial _{\xi }a_2\partial _{x}\lambda _2 + \partial _{\xi }\lambda _2\partial _{x}a_2 - k(t)\partial _{\xi }a_2 \partial _{x} \langle x \rangle ^{1-\sigma }_{h} + ib_1 + ic_1)\): the conjugation of this term is given by

$$\begin{aligned} \text {op}(- \partial _{\xi }a_3\partial _{x}\lambda _1 +&ia_1 - \partial _{\xi }a_2\partial _{x}\lambda _2 + \partial _{\xi }\lambda _2\partial _{x}a_2 - k(t)\partial _{\xi }a_2 \partial _{x} \langle x \rangle ^{1-\sigma }_{h} + ib_1 + ic_1) \\&+ a_{1, \rho _1}(t,x,D) + {\bar{r}}(t,x,D), \end{aligned}$$
where \({\bar{r}} \in C([0,T],\Sigma _{\theta } ({\mathbb {R}}^{2}))\) and \(a_{1,\rho _1}\) satisfies the following estimate:

$$\begin{aligned} |\partial ^{\alpha }_{\xi } \partial ^{\beta }_{x}a_{1,\rho _1}(t,x,\xi )| \le \max \{1,k(t)\} |\rho _1| C_{{\tilde{\Lambda }}, T}^{\alpha +\beta +1}\alpha !^{\mu }\beta !^{s_0} \langle \xi \rangle ^{\frac{1}{\theta } -\alpha } \langle x \rangle ^{-\sigma - \beta }. \end{aligned} \tag{5.8}$$
In particular

$$\begin{aligned} |a_{1,\rho _1}(t,x,\xi )| \le \max \{1,k(t)\} |\rho _1| C_{{\tilde{\Lambda }}, T} \langle \xi \rangle ^{\frac{1}{\theta }}_{h} \langle x \rangle ^{-\sigma }. \end{aligned}$$

- Conjugation of \(\text {op}(ia_0 - k'(t)\langle x \rangle ^{1-\sigma }_{h} + a_{1,0} + r_{\sigma ,1})\):

$$\begin{aligned} e^{\rho _1 \langle D \rangle ^{\frac{1}{\theta }}} \text {op}(ia_0 -&k'(t)\langle x \rangle ^{1-\sigma }_{h} + a_{1,0} + r_{\sigma ,1}) e^{-\rho _1 \langle D \rangle ^{\frac{1}{\theta }}} \\&= \text {op}( ia_0 - k'(t)\langle x \rangle ^{1-\sigma }_{h} + a_{1,0} + r_{\sigma ,1}) + r_0(t,x,D) + {\bar{r}}(t,x,D), \end{aligned}$$
where \(r_0 \in C([0,T]; {\textbf {{SG}}}^{(0,0)}_{\delta }({\mathbb {R}}^{2}))\) and \({\bar{r}} \in C([0,T]; \Sigma _{\theta }({\mathbb {R}}^{2}))\).

Summing up, from the previous computations we obtain

with \(a_{2,\rho _1}\) as in (5.7), \(b_1\) as in (5.2), \(c_1\) as in (5.6), \(a_{1,\rho _1}\) as in (5.8), \(a_{1,0}\) as in (5.3), \(r_{\sigma ,1}\) as in (5.4), and for some operators

6 Estimates from below

In this section we will choose \(M_2, M_1\) and k(t) in order to apply Fefferman–Phong and sharp Gårding inequalities to get the desired energy estimate for the conjugated problem. By the computations of the previous section we have

where \({\tilde{a}}_2, {\tilde{a}}_1\) represent the parts with \(\xi \)-order 2, 1, respectively, and \({\tilde{a}}_0\) represents the part with \(\xi \)-order 0, but with a positive order (less than or equal to \(1-\sigma \)) with respect to \(x\). The real parts are given by

Since the Fefferman–Phong inequality holds true only for scalar symbols, we need to decompose \(\mathrm{Im}\, {\tilde{a}}_2\) into its Hermitian and anti-Hermitian parts:

where \(2\mathrm{Re}\langle t_2(t,x,D)u,u\rangle =0\) and \(t_1(t,x,\xi )=-\displaystyle \sum \nolimits _{\alpha \ge 1}\frac{i}{2\alpha !}\partial _\xi ^\alpha D_x^\alpha \mathrm{Im} {\tilde{a}}_2(t,x,\xi )\). Observe that, using (5.7),

In this way we may write

where \({\tilde{r}}_0\) has symbol of order (0, 0).

Now we are ready to choose \(M_2, M_1\) and k(t). The function k(t) will be of the form \(k(t)=K(T-t)\), \(t \in [0,T]\), for a positive constant K to be chosen. In the following computations we shall consider \(|\xi | > hR_{a_3}\). Observe that \(2|\xi |^{2} \ge \langle \xi \rangle ^{2}_{h}\) whenever \(|\xi | \ge h > 1\). For \(\mathrm{Re}\, {\tilde{a}}_2\) we have:

For \(\mathrm{Re}\,{\tilde{a}}_1\), we have:

Since \(\langle \xi \rangle _{h} \langle x \rangle ^{-\frac{\sigma }{2}} \le \sqrt{2}\) on the support of \(1 - \psi \left( \frac{\langle x \rangle ^{\sigma }}{\langle \xi \rangle ^{2}_{h}} \right) \), we may conclude

Taking (6.1) into account we obtain

For \(\mathrm{Re}\, {\tilde{a}}_0\), we have:

Finally, let us proceed with the choices of \(M_1, M_2\) and K. First we choose K larger than \(\max \{C_{a_0}, 1/T\}\), then we set \(M_2\) such that \(M_2 \frac{C_{a_3}}{2} - C_{a_2} - {\tilde{C}}_{a_3}KT(1-\sigma ) > 0\) and after that we take \(M_1\) such that \(M_1 \frac{C_{a_3}}{2} - C_{a_2} - C_{a_1} - {\tilde{C}}_{a_2, \lambda _2} > 0\) (choosing \(M_2\), \(M_1\) we determine \({\tilde{\Lambda }}\)). Enlarging the parameter h we may assume

With these choices we obtain that \(\mathrm{Re}\, {\tilde{a}}_2 \ge 0\), \(\mathrm{Re}\, ({\tilde{a}}_1 + t_1) + M_1 \frac{C_{a_3}}{2} \sqrt{2} \ge 0\) and \(\mathrm{Re}\, {\tilde{a}}_0 \ge 0\). Let us also remark that the choices of \(M_2, M_1\) and \(k(t)\) do not depend on \(\rho _1\) and \(\theta \).
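The order in which \(K\), \(M_2\) and \(M_1\) are fixed matters, since each inequality involves the constant chosen before it. The following Python sketch only illustrates that order. The function name `choose_parameters`, the argument names and the `margin` parameter are hypothetical. Passing \({\tilde{C}}_{a_2, \lambda _2}\) as a function of \(M_2\) is also an assumption of the sketch (the subscript suggests a dependence on \(\lambda _2\), hence possibly on \(M_2\)). The numerical values in the usage note are made up.

```python
def choose_parameters(C_a0, C_a1, C_a2, C_a3, Ct_a3, Ct_a2_lam2, T, sigma, margin=1.0):
    """Pick K, then M2, then M1, in the order described in the text.

    All constants are assumed positive; Ct_a2_lam2 is a callable M2 -> constant
    (hypothetical modelling choice of this sketch). margin > 0 makes every
    inequality strict.
    """
    # Step 1: K larger than max{C_a0, 1/T}.
    K = max(C_a0, 1.0 / T) + margin
    # Step 2: M2 with  M2*C_a3/2 - C_a2 - Ct_a3*K*T*(1 - sigma) > 0.
    M2 = 2.0 * (C_a2 + Ct_a3 * K * T * (1.0 - sigma)) / C_a3 + margin
    # Step 3: M1 with  M1*C_a3/2 - C_a2 - C_a1 - Ct_a2_lam2 > 0,
    # evaluated only after M2 is known.
    Ct = Ct_a2_lam2(M2)
    M1 = 2.0 * (C_a2 + C_a1 + Ct) / C_a3 + margin
    return K, M2, M1


if __name__ == "__main__":
    # Made-up constants, for illustration only.
    K, M2, M1 = choose_parameters(1.0, 2.0, 3.0, 0.5, 1.5, lambda m2: 0.1 * m2,
                                  T=2.0, sigma=0.6)
    print(K, M2, M1)
```

Choosing \(M_1\) last keeps the sketch valid even if the constant in its inequality grows with \(M_2\); none of the three steps involves \(\rho _1\) or \(\theta \), in line with the remark above.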

7 Proof of Theorem 1

Let us denote

By (6.2), with the choices of \(M_2,M_1,k(t)\) in the previous section, we get

with

The Fefferman–Phong inequality applied to \(\mathrm{Re}\, {\tilde{a}}_2\) and the sharp Gårding inequality applied to \({\tilde{a}}_1 + t_1+M_1 \frac{C_{a_3}}{2} \sqrt{2}\) and \({\tilde{a}}_0\) give

for a positive constant \(c\). Applying now the Gronwall inequality, we arrive at the following energy estimate:

for every \(v(t,x) \in C^{1}([0,T]; {\mathscr {S}}({\mathbb {R}}))\). By usual computations, this estimate provides well-posedness of the Cauchy problem associated with \({{\tilde{P}}}_{\Lambda }\) in \(H^m({\mathbb {R}})\) for every \(m=(m_1,m_2)\in {\mathbb {R}}^2\): namely for every \({{\tilde{f}}}\in C([0,T]; H^m({\mathbb {R}}))\) and \({{\tilde{g}}}\in H^m({\mathbb {R}})\), there exists a unique \(v \in C([0,T]; H^m({\mathbb {R}}))\) such that \({{\tilde{P}}}_\Lambda v = {{\tilde{f}}}\), \(v(0) = \tilde{g}\) and

Let us now turn back to our original Cauchy problem (1.10), (1.1). Fixing initial data \(f \in C([0,T], H^m_{\rho ;s,\theta }({\mathbb {R}}))\) and \(g \in H^m_{\rho ;s,\theta }({\mathbb {R}})\) for some \(m, \rho \in {\mathbb {R}}^2\) with \(\rho _2 >0\) and positive \(s,\theta \) such that \(\theta > s_0\), we can define \(\Lambda \) as at the beginning of Sect. 6 with \(\mu >1\) such that \(s_0 > 2\mu -1\) and \(M_1,M_2, k(0)\) such that (7.1) holds. Then by Theorem 4 we get

and

for every \({{\bar{\delta }}} >0\), because \(1/(1-\sigma )>s\). Since \(\bar{\delta }\) can be taken arbitrarily small, we have that \(f_{\rho _1,\Lambda } \in C([0,T], H^m)\) and \(g_{\rho _1,\Lambda } \in H^m\). Hence the Cauchy problem

admits a unique solution \(v \in C([0,T], H^m)\cap C^{1}([0,T], H^{(m_1-3, m_2-1+1/\sigma )})\) satisfying the energy estimate (7.2). Taking now \(u= (e^{\Lambda (t,x,D)})^{-1} e^{-\rho _1\langle D \rangle ^{1/\theta }}v\), we easily see that \(u\) solves the Cauchy problem (1.1), it satisfies

and it is the unique solution with this property. Namely, \( u \in C([0,T], H^m_{(\rho _1,-{\tilde{\delta }}); s, \theta }({\mathbb {R}})) \cap C^{1}([0,T], H^{(m_1-3,m_2)}_{(\rho _1 ,-{\tilde{\delta }}); s, \theta }({\mathbb {R}})) \) for every \({\tilde{\delta }}>0\). Moreover, from (7.2) we get

which gives (1.11). This concludes the proof. \(\square \)

Remark 6

Notice that the argument of the proof of Theorem 1, and in particular the energy estimate (1.11), implies that the solution of problem (1.1) is unique in the space of all functions \(u\) such that

In general we cannot conclude that it is unique in \(C([0,T], H^m_{(\rho _1, -{\tilde{\delta }}); s, \theta }({\mathbb {R}}))\).

Remark 7

In our main result we assume that the symbol of the leading term \(a_3(t,D)\) is independent of \(x\). This assumption is crucial in the argument of the proof. As a matter of fact, we observe that if \(a_3\) depends on \(x\), even allowing it to decay like \(\langle x \rangle ^{-m}\) for \(m \gg 0\), the conjugation of this term with the operator \(e^{\rho _1 \langle D \rangle ^{1/\theta }}\) would give

We observe that

Hence since \(2+\frac{1}{\theta } >2\), this remainder term could not be controlled by other lower-order terms whose order in \(\xi \) does not exceed 2. Notice that this represents a difference in comparison with the \(H^\infty \) frame where a dependence on x in the leading term can be allowed by assuming a suitable decay assumption with respect to x, cf. [2, Sect. 4].
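The order \(2+\frac{1}{\theta }\) in this remark can be traced back to Lemma 7. As a sketch, suppose that an \(x\)-dependent leading symbol satisfies \(a_3 \in {\textbf {{SG}}}^{3, -m}_{\mu , s_0}({\mathbb {R}}^{2})\); this membership is a hypothetical assumption used only for illustration. Lemma 7 with \(m_1 = 3\), \(m_2 = -m\) then places the correction term in the class of order

$$\begin{aligned} \left( 3 - \left( 1-\tfrac{1}{\theta }\right) ,\, -m-1\right) = \left( 2+\tfrac{1}{\theta },\, -m-1\right) . \end{aligned}$$

Increasing \(m\) improves only the second index: the \(\xi \)-order of this class remains \(2+\frac{1}{\theta } > 2\) however fast \(a_3\) decays in \(x\).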

Remark 8

With a major technical effort one can consider 3-evolution equations in higher space dimension, that is for \(x \in {\mathbb {R}}^n, n >1.\) At this moment, results of this type exist only for the case \(p=2\), cf. [6, 10, 22]. When passing to higher dimension, the main difficulty is the choice of the functions \(\lambda _1, \lambda _2\) defining the change of variable, which must be chosen in order to satisfy certain partial differential inequalities, see also [2, Sect. 4]. These may be non-trivial for \(p >2\). In this paper we prefer to restrict to the one space dimensional case both since the content is already quite technical and since the main physical models to which our results could be applied are included in this case.

Change history

09 October 2022

Missing Open Access funding information has been added in the Funding Note.

References

A. Arias Junior, A. Ascanelli, M. Cappiello, Gevrey well posedness for \(3\)-evolution equations with variable coefficients. Preprint (2021). https://arxiv.org/abs/2106.09511

A. Ascanelli, C. Boiti, Semilinear p-evolution equations in Sobolev spaces. J. Differential Equations 260 (2016), 7563–7605.

A. Ascanelli, C. Boiti, L. Zanghirati, Well-posedness of the Cauchy problem for p-evolution equations. J. Differential Equations 253 (10) (2012), 2765–2795.

A. Ascanelli, C. Boiti, L. Zanghirati, A necessary condition for \(H^{\infty }\) well-posedness of \(p\)-evolution equations. Adv. Differential Equations 21 (2016), 1165–1196.

A. Ascanelli, M. Cappiello, Weighted energy estimates for p-evolution equations in SG classes. J. Evol. Equ. 15 (3) (2015), 583–607.

A. Ascanelli, M. Cappiello, Schrödinger-type equations in Gelfand-Shilov spaces. J. Math. Pures Appl. 132 (2019), 207–250.

A. Ascanelli, M. Cicognani, M. Reissig, The interplay between decay of the data and regularity of the solution in Schrödinger equations. Ann. Mat. Pura Appl. (1923-) 199 (4) (2020), 1649–1671.

A. Baba, The \(H_{\infty }\)-wellposed Cauchy problem for Schrödinger type equations. Tsukuba J. Math. 18 (1) (1994), 101–117.

M. Cicognani, F. Colombini, The Cauchy problem for p-evolution equations. Trans. Amer. Math. Soc. 362 (9) (2010), 4853–4869.

M. Cicognani, M. Reissig, Well-posedness for degenerate Schrödinger equations. Evol. Equ. Control Theory 3 (1) (2014), 15–33.