Abstract

We study the inverse scattering problem of determining a magnetic field and electric potential from scattering measurements corresponding to finitely many plane waves. The main result shows that the coefficients are uniquely determined by 2n measurements up to a natural gauge. We also show that one can recover the full first-order term for a related equation having no gauge invariance, and that it is possible to reduce the number of measurements if the coefficients have certain symmetries. This work extends the fixed angle scattering results of Rakesh and Salo (SIAM J Math Anal 52(6):5467–5499, 2020) and (Inverse Probl 36(3):035005, 2020) to Hamiltonians with first-order perturbations, and it is based on wave equation methods and Carleman estimates.

1 Introduction and Main Theorems

In this work, we study the inverse scattering problem of recovering a first-order perturbation from fixed angle scattering measurements. Let \(\lambda > 0\), \(n\ge 2\), and let \(\omega \in S^{n-1}\) be a fixed unit vector. Suppose that for \(m\in \mathbb {N}\), \(\mathcal V(x,D)\) is a first-order differential operator with \(C^m(\mathbb {R}^n)\) coefficients having compact support in \(B = \{x\in \mathbb {R}^n:|x|<1\}\), the open ball of radius 1. We consider a Hamiltonian \(H_{\mathcal V} = -\Delta + {\mathcal V}(x,D)\) in \(\mathbb {R}^n\) and the problem

where \(\psi ^s_{\mathcal V}(x,\lambda ,\omega )\) is known as the scattering solution. It is well known that in order to have uniqueness for this problem, one needs to put further restrictions on the function \(\psi _{\mathcal V}^s\). See, e.g., [27] for the following facts. The outgoing Sommerfeld radiation condition (SRC for short)

where \(r = |x|\), selects the solutions that heuristically behave as Fourier transforms in time of spherical waves that propagate toward infinity. A function \(\psi ^s_{\mathcal V}\) satisfying (1.1) and the SRC is called an outgoing scattering solution. This solution is given by the so-called outgoing resolvent operator

so that, formally,

Notice that, assuming that such a solution \(\psi ^s_{\mathcal V}\) exists—which would happen if the resolvent is well defined and bounded in appropriate spaces—it must satisfy the Helmholtz equation

since the coefficients of \({\mathcal V}(x,D)\) are compactly supported in B. It is well known that a solution of the Helmholtz equation satisfying the SRC always has the asymptotic expansion

where \(\theta = \frac{x}{|x|}\) and \( a_{\mathcal V}(\lambda ,\theta ,\omega )\) is called the scattering amplitude or far field pattern.
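For orientation, the three conditions just described are usually written as follows. These are the standard textbook forms, stated here as an assumption: the spectral parameter is taken to enter as \(\lambda ^2\), and the normalization of the amplitude varies between references, so constants may differ from those used in [27].

\[
\lim _{r\rightarrow \infty } r^{\frac{n-1}{2}}\left( \partial _r - i\lambda \right) \psi ^s_{\mathcal V}(x,\lambda ,\omega ) = 0 \quad \text {uniformly in } \theta = x/|x|,
\]

\[
(-\Delta - \lambda ^2)\, \psi ^s_{\mathcal V} = 0 \quad \text {in } \mathbb {R}^n \setminus \overline{B},
\]

\[
\psi ^s_{\mathcal V}(x,\lambda ,\omega ) = e^{i\lambda r}\, r^{-\frac{n-1}{2}}\, a_{\mathcal V}(\lambda ,\theta ,\omega ) + o\left( r^{-\frac{n-1}{2}}\right) \quad \text {as } r\rightarrow \infty .
\]

In this form, the leading term is an outgoing spherical wave whose angular profile is the scattering amplitude. Replacing \(-i\lambda \) by \(+i\lambda \) in the first line would select incoming waves instead.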

In this setting, the main objective of an inverse scattering problem consists in reconstructing the coefficients of \(\mathcal V(x,D)\) from partial or full knowledge of \(a_{\mathcal V}(\lambda ,\theta ,\omega )\). Depending on the data that is assumed to be known, we can distinguish several types of inverse scattering problems:

1. Full data. Recover the coefficients of \(\mathcal V(x,D)\) from the knowledge of \(a_{\mathcal V}(\lambda ,\theta ,\omega )\) for all \((\lambda , \theta , \omega )\in (0,\infty ) \times S^{n-1}\times S^{n-1}\).

2. Fixed frequency (or fixed energy). Recover the coefficients of \(\mathcal V(x,D)\) from the knowledge of \(a_{\mathcal V}(\lambda _0,\theta ,\omega )\) for a fixed \(\lambda _0>0\) and all \((\theta , \omega )\in S^{n-1}\times S^{n-1}\).

3. Backscattering. Recover the coefficients of \(\mathcal V(x,D)\) from the knowledge of \(a_{\mathcal V}(\lambda ,\omega ,-\omega )\) for all \((\lambda , \omega )\in (0,\infty ) \times S^{n-1}\).

4. Fixed angle (single measurement). Recover the coefficients of \(\mathcal V(x,D)\) from the knowledge of \(a_{\mathcal V}(\lambda ,\theta ,\omega _0)\) for a fixed \(\omega _0\in S^{n-1}\) and all \((\lambda , \theta )\in (0,\infty ) \times S^{n-1}\).

In the case of fixed angle scattering, it is also interesting to consider analogous inverse problems in which \(a_{\mathcal V}(\, \cdot \,, \, \cdot \,, \omega )\) is assumed to be known for each \(\omega \) in a fixed subset (usually finite) of \(S^{n-1}\).

Let \(D = -i\nabla \). We consider the Hamiltonian

where both the magnetic potential \(\mathbf {A}\) and the electrostatic potential q are real. Then, \(H_{\mathcal V}\) is self-adjoint, and if \(\mathbf {A}\) and q are compactly supported, the resolvent (1.2) is bounded in appropriate spaces under very general assumptions on the regularity of \(\mathbf {A}\) and q. This implies that the problem (1.1) has a unique solution \(\psi ^s_{\mathcal V}\) and hence that the scattering amplitude \(a_{\mathcal V} =a_{\mathbf {A},q}\) is well defined, so that the fixed angle scattering problem can be appropriately stated.

In [19, 21], it has been proved that for \(H_\mathcal V = -\Delta +q\), knowledge of the fixed angle scattering data \(a_{\mathcal V}(\, \cdot \, , \, \cdot \, , \omega )\) in two opposite directions \(\omega = \pm \omega _0\) for a fixed \( \omega _0 \in S^{n-1}\) determines uniquely the potential q. The present article extends the results of [19, 21] to the case of non-vanishing first-order coefficients and proves that from 2n measurements, or just \(n+1\) measurements under symmetry conditions, one can determine both the first- and zeroth-order coefficients up to natural gauges. To prove these results, we follow the approach used in [19]; that is, we show the equivalence of the fixed angle scattering problem with an appropriate inverse problem for the wave equation. This inverse scattering problem in time domain consists in recovering information on \(\mathbf {A}\) and q from boundary measurements of the solution \(U_{\mathbf {A},q}\) of the initial value problem

If the support of \(\mathbf {A}\) and q is contained in B, the boundary measurements of \(U_{\mathbf {A},q}\) are made in the set \( \partial B \times (-T,T) \cap \{(x,t) : t \ge x\cdot \omega \}\), where \(\partial B\) denotes the boundary of the ball.

We now describe some previous results on the inverse scattering problem of recovering a potential q(x) from fixed angle measurements. As discussed above, this problem can be considered in the frequency domain, as the problem of determining q from the scattering amplitude \(a_q(\, \cdot \,, \, \cdot \,, \omega )\) for the Schrödinger operator \(-\Delta + q\) with a fixed direction \(\omega \in S^{n-1}\), or alternatively in the time domain as the problem of recovering q from boundary or scattering measurements of the solution \(U_q\) of the wave equation. The equivalence of these problems is discussed in [19] (see [16, 17, 26] for the odd-dimensional case).

The one-dimensional case is quite classical, see [6, 15]. In dimensions \(n \ge 2\), uniqueness has been proved for small or generic potentials [1, 23], recovery of singularities results are given in [18, 20], and uniqueness of the zero potential is considered in [2]. Recently, in [19, 21] it was proved that measurements for two opposite fixed angles uniquely determine a potential \(q \in C^{\infty }_c(\mathbb {R}^n)\). The problem with one measurement remains open, but [19, 21] prove uniqueness for symmetric or horizontally controlled potentials (similar to angularly controlled potentials in backscattering [25]), and Lipschitz stability estimates are given for the wave equation version of the problem. We also mention the recent work [14] which studies the fixed angle problem when the Euclidean metric is replaced by a Riemannian metric, or sound speed, satisfying certain conditions, and the upcoming work [13] which studies fixed angle scattering for time-dependent coefficients also in the case of first-order perturbations.

We now introduce the main results in this work. Since the metric is Euclidean, the vector potential \(\mathbf {A}\) can equivalently be seen as a 1-form \(\mathbf {A}= A^j dx_j\). We denote by \(d\mathbf {A}\) the exterior derivative of \(\mathbf {A}\). Let \(\{e_1,\dots ,e_n\}\) be any orthonormal basis in \(\mathbb {R}^n\). Our first result shows that the magnetic field \(d\mathbf {A}\) and the electrostatic potential q are uniquely determined by the knowledge of the fixed angle scattering amplitude \(a_{\mathbf {A},q}(\, \cdot \,, \, \cdot \,, \omega )\) for the n orthogonal directions \(\omega = e_j\), \(1\le j \le n\) and the n opposite ones, \(\omega = -e_j\). From now on, in this paper we will fix \(m\) to be the integer

In general, we consider \(\mathbf {A}\in C^{m+2}(\mathbb {R}^n; \mathbb {R}^n)\) and \(q \in C^m(\mathbb {R}^n; \mathbb {R})\). This is required in order to guarantee that the solutions of (1.4) satisfy certain regularity properties.

Theorem 1.1

Let \(n\ge 2\) and \(\lambda _0>0\), and let \(\{e_1,\dots ,e_n\}\) be any orthonormal basis in \(\mathbb {R}^n\). Assume that the pairs of potentials \(\mathbf {A}_1,\mathbf {A}_2 \in C^{m+2}_c(\mathbb {R}^n; \mathbb {R}^n)\) and \(q_1,q_2 \in C^{m}_c(\mathbb {R}^n; \mathbb {R})\) are compactly supported in B. Assume also that the following condition holds:

If for all \(\theta \in S^{n-1}\) and \(\lambda \ge \lambda _0\) we have

then \(d \mathbf {A}_1 = d \mathbf {A}_2\) and \(q_1 = q_2\).

The condition (1.6) is a technical restriction necessary to decouple the information on q from the information on \(\mathbf {A}\) at some point in the proof of this uniqueness result.

In Theorem 1.1, one cannot recover completely the magnetic potential \(\mathbf {A}\) due to the phenomenon of gauge invariance. This consists simply in the observation that if \(H_{\mathbf {A},q} u = v\) for some functions u and v, then \(H_{\mathbf {A}+ \nabla f,q} \, (e^{ -if} u) = e^{-if}v\), for any \(f\in C^2(\mathbb {R}^n)\). Therefore, if f is compactly supported, the scattering amplitude is not going to be affected by f, so that \(a_{\mathbf {A},q} = a_{\mathbf {A}+ \nabla f,q}\). On the other hand, if we consider Hamiltonians

where V is a fixed function, then the gauge invariance is broken and knowledge of the associated scattering amplitude \( \tilde{a}_{\mathbf {A},V}(\, \cdot \, , \,\cdot \,,\omega )\) for the 2n directions \(\omega = \pm e_j\) determines completely \(\mathbf {A}\).
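The gauge identity stated above can be checked in one line. The sketch below assumes that the magnetic Hamiltonian has the form \(H_{\mathbf {A},q} = (D+\mathbf {A})^2 + q\) with \(D=-i\nabla \); this normalization is an assumption made here for illustration. For a scalar function u,

\[
(D + \mathbf {A}+ \nabla f)\left( e^{-if}u\right) = -e^{-if}(\nabla f)\,u + e^{-if}Du + e^{-if}(\mathbf {A}+\nabla f)\,u = e^{-if}(D+\mathbf {A})\,u .
\]

The same computation applies to the divergence-type action on the vector field \((D+\mathbf {A})u\), so that

\[
H_{\mathbf {A}+\nabla f,q}\left( e^{-if}u\right) = e^{-if}\left[ (D+\mathbf {A})^2 u + q\,u\right] = e^{-if}H_{\mathbf {A},q}\,u .
\]

Since \(d(\mathbf {A}+\nabla f) = d\mathbf {A}\), the magnetic field is unchanged by this transformation, which is why only \(d\mathbf {A}\) can be expected to be recoverable in Theorem 1.1.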

Theorem 1.2

Let \(n\ge 2\) and \(\lambda _0>0\), and let \(\{e_1,\dots ,e_n\}\) be any orthonormal basis in \(\mathbb {R}^n\). Assume that \(\mathbf {A}_1,\mathbf {A}_2 \in C^{m+2}_c(\mathbb {R}^n; \mathbb {R}^n)\) and \(V \in C^{m}_c(\mathbb {R}^n; \mathbb {C})\) have compact support in B, and that the Hamiltonians \(\tilde{H}_{\mathbf {A}_1,V} \) and \(\tilde{H}_{\mathbf {A}_2,V} \) are both self-adjoint operators.

If for all \(\theta \in S^{n-1}\) and \(\lambda \ge \lambda _0\) we have

then \(\mathbf {A}_1 = \mathbf {A}_2\).

Notice that in this statement (1.6) is not assumed. This is related to the fact that V is fixed, so it is not necessary to decouple V from \(\mathbf {A}\) in the proof. Similarly, if \(\mathbf {A}\) is a fixed vector potential, it would be possible to determine q from the knowledge of \(a_{\mathbf {A},q}(\,\cdot \,,\,\cdot \,,\pm \omega )\) for a fixed \(\omega \in S^{n-1}\).

In both of the previous theorems, we have considered an orthonormal basis \(\{e_1,\dots ,e_n\}\) of \(\mathbb {R}^n\) in order to simplify the notation and computations in some parts of the arguments, but we remark that our proofs can be easily adapted to allow non-orthonormal directions of measurements. Also, in both theorems the measured quantities are the scattering amplitudes generated by 2n incoming waves, one from each of the 2n directions \(\pm e_j\). Given \(e_j\), one can avoid sending a wave from the opposite direction \(-e_j\) as well, provided the potentials satisfy certain symmetry properties. As an example of this phenomenon, we state the following result.

Theorem 1.3

Let \(n\ge 2\) and \(\lambda _0>0\), and let \(\{e_1,\dots ,e_n\}\) be any orthonormal basis in \(\mathbb {R}^n\). Assume that the pairs of potentials \(\mathbf {A}_1,\mathbf {A}_2 \in C^{m+2}_c(\mathbb {R}^n; \mathbb {R}^n)\) and \(q_1,q_2 \in C^{m}_c(\mathbb {R}^n; \mathbb {R})\) are compactly supported in B and satisfy (1.6). Assume also that

If for all \(\theta \in S^{n-1}\) and \(\lambda \ge \lambda _0\) we have

then \(d \mathbf {A}_1 = d \mathbf {A}_2\) and \(q_1 = q_2\).

We assume that \(a_{ \mathbf {A}_1, q_1} (\lambda ,\theta , \omega ) = a_{ \mathbf {A}_2,q_2}(\lambda ,\theta ,\omega )\) for \(\omega = \pm e_n\) instead of just \(\omega = e_n \) since we have not considered any symmetry on the potential q. (A result assuming symmetries on q to reduce further the data could also be proved modifying slightly the arguments used to prove this theorem.) We also prove in time domain a more technical result analogous to Theorem 1.2 that requires just n measurements instead of 2n, and that is compatible with less restrictive symmetry conditions than (1.7) (see Theorem 5.1).

As already mentioned, the previous theorems follow from corresponding results for the time domain inverse problem (Theorems 2.1, 2.2, and 5.2, respectively). We now state the precise result that establishes the equivalence between the inverse scattering problem in frequency domain and the inverse scattering problem in time domain, extending the results in [19] to first-order perturbations.

Theorem 1.4

Let \(n\ge 2\), \(\omega \in S^{n-1}\), and \(\lambda _0>0\). Assume that \(\mathbf {A}_1,\mathbf {A}_2 \in C^{m+2}_c(\mathbb {R}^n; \mathbb {R}^n)\) and \(q_1,q_2 \in C^{m}_c(\mathbb {R}^n; \mathbb {R})\) are supported in B. For \(k=1,2\), let \(U_{\mathbf {A}_k, q_k}(x,t;\omega )\) be the unique distributional solution of the initial value problem

Then, one has that

if and only if

We remark that the restriction of the distribution \(U_{\mathbf {A}_k,q_k}\) to the surface \(\partial B \times \mathbb {R}\) is always well defined and vanishes in the open set \((\partial B \times \mathbb {R}) \cap \left\{ t <x\cdot \omega \right\} \). This can be seen from the explicit formula for \(U_{\mathbf {A}_k,q_k}\) that we will compute in Sect. 2.

The proof of the main results in time domain is based on a Carleman estimate method introduced in [19, 21], which in turn adapts the method introduced in [4]. See [3, 11, 12] for more information and references on the Bukhgeim–Klibanov method, and [5, 10] for its use in inverse boundary problems for the magnetic Schrödinger operator.

Essentially, the Carleman estimate is applied to the difference of two solutions of (1.4). The general idea is to choose an appropriate Carleman weight function for the wave operator that is large on the surface \(\{ t = x \cdot \omega \}\) and allows one to control a source term on the right-hand side of the equation. Then, one needs an additional energy estimate to absorb the error coming from the source term. This will allow one to control the difference of the potentials \(\mathbf {A}_1-\mathbf {A}_2\) or \(q_1-q_2\). This step is the key to get the uniqueness result. Unfortunately, after doing all this, there is a remaining boundary term in the Carleman estimate that cannot be appropriately controlled. However, this term can be canceled using an equivalent Carleman estimate for solutions of the wave equation coming from the opposite direction. This is why we require 2n measurements to recover n independent functions instead of just n measurements. Assuming symmetry properties on the coefficients, as in Theorem 1.3, is essentially an alternative way to get around this difficulty.

An interesting point in the proof of the time domain results is how one decouples the information concerning \(\mathbf {A}\) from the information on q. The method used here consists in considering the solutions of the initial value problem

where H stands for the Heaviside function. Since (1.4) is essentially the time derivative of the previous IVP, it turns out that it is equivalent to formulate the inverse scattering problem in time domain using any of these initial value problems. The advantage is that the solutions of (1.8) contain information only about \(\mathbf {A}\) at the surface \(\{t=x \cdot \omega \}\). By using these ideas, we are able to estimate both \(\mathbf {A}_1-\mathbf {A}_2\) in terms of \(q_1-q_2\) and \(q_1-q_2\) in terms of \(\mathbf {A}_1-\mathbf {A}_2\). Using these two estimates in tandem allows us to recover both the magnetic field and electric potential under the assumption (1.6).

This paper is structured as follows. In Sect. 2, we state the time domain results, Theorems 2.1 and 2.2, from which Theorems 1.1 and 1.2 follow by Theorem 1.4. We also analyze the structure of the solutions of the initial value problems (1.4) and (1.8), and we state several of their properties that will play an essential role later on. In Sect. 3, we introduce the Carleman estimate, and in Sect. 4, we combine the results of the previous two sections to prove Theorems 2.1 and 2.2. In the last section of the paper, we state and prove Theorems 5.1 and 5.2 in order to illustrate how the number of measurements can be reduced in time domain by imposing symmetry assumptions on the potentials. (Theorem 1.3 follows from the second result.) The proof of Theorem 1.4 and the adaptation of several known results for the wave operator to our purposes are given in Appendices A and B.

2 The Inverse Problem in Time Domain

2.1 Main Results in Time Domain

Let \(\omega \in S^{n-1}\) be fixed. In the time domain setting, we consider the initial value problem

where \(\delta \) represents the 1-dimensional delta distribution and \(H_{\mathcal V} = -\Delta + {\mathcal V}(x,D)\). Formally, the problem (1.1) is the Fourier transform in the time variable of (2.1). As we will show later in this section, there is a unique distributional solution \(U_{\mathcal V}\) of (2.1) if the first-order coefficients of \(\mathcal V\) are in \(C^{m+2}_{c}(\mathbb {R}^n)\) and the zero-order coefficient is in \(C^{m}_{c}(\mathbb {R}^n)\), for \(m\) as in (1.5).

The inverse problem in the time domain consists in determining the coefficients of \(\mathcal {V}\) from certain measurements of \(U_{\mathcal V}\) at the boundary \(\partial B \times (-T, T)\subset \mathbb {R}^{n+1}\) for some fixed \(T>0\). To simplify the notation, we define

From now on, depending on the context, it will be useful to write the Hamiltonian \(H_\mathcal V\) in both of the forms

and

where \(\mathbf {A},\mathbf {W}\in C^{m+2}_c(\mathbb {R}^n; \mathbb {C}^n)\) and \(q,V\in C^{m}_c(\mathbb {R}^n ;\mathbb {C})\). Since the coefficients have high regularity and are complex valued, both forms are completely equivalent, but the first notation is especially convenient in the cases where there is gauge invariance. In fact, this inverse problem has an invariance equivalent to the gauge invariance present in the frequency domain problem. A straightforward computation shows that if U is a solution of

then \(\widetilde{U}= e^{-f} U\) is a solution of

where f is any \(C^2(\mathbb {R}^n)\) function with compact support in B. The initial condition satisfied by \(\widetilde{U}\) is not affected by the exponential factor \(e^{-f}\) since for \(t<-1\) the distribution \(\delta (t-x\cdot \omega )\) is supported in \(\{ x \cdot \omega < -1\}\), a region where f vanishes. On the other hand, we also have that \(\widetilde{U}|_{\Sigma }= U|_{\Sigma }\) since the support of f is contained in B. Hence, at best one can recover the magnetic field \(d\mathbf {A}\) from the boundary data \(U|_{\Sigma }\). We now state two uniqueness results for the inverse problem that we have just introduced.

Theorem 2.1

Let \(\mathbf {A}_1,\mathbf {A}_2 \in C^{m+2}_c(\mathbb {R}^n; \mathbb {C}^n)\) and \(q_1,q_2 \in C^{m}_c(\mathbb {R}^n; \mathbb {C})\) with compact support in B and such that

Also, let \(1 \le j \le n\) and consider the 2n solutions \(U_{k, \pm j}(x,t)\) of

If for each \(1 \le j \le n\) one has \(U_{1,\pm j} = U_{2, \pm j}\) on the surface \(\Sigma \cap \{ t \ge \pm x_j \}\), then \(d \mathbf {A}_1 = d \mathbf {A}_2\) and \(q_1 = q_2\).

As in the introduction, we highlight that the restriction of the distribution \(U_{1,\pm j}\) to the surface \(\Sigma \) is well defined and vanishes in the open set \(\Sigma \cap \{ t < \pm x_j \}\), see the comments after Proposition 2.4 for more details. Theorem 1.1 follows directly from this result and Theorem 1.4. On the other hand, if we fix the zero-order term to be always the same, then the gauge invariance disappears and one can recover completely the first-order term of the perturbation. To state this result, we use the Hamiltonian in the form (2.3).

Theorem 2.2

Let \(\mathbf {W}_1,\mathbf {W}_2 \in C^{m+2}_c(\mathbb {R}^n; \mathbb {C}^n)\) and \(V \in C^{m}_c(\mathbb {R}^n; \mathbb {C})\) with compact support in B. Let \(1 \le j \le n\) and \(k=1,2\), and consider the corresponding 2n solutions \(U_{k, \pm j}\) satisfying

If for each \(1 \le j \le n\) one has \(U_{1,\pm j} = U_{2, \pm j}\) on the surface \( \Sigma \cap \{ t \ge \pm x_j \}\), then \(\mathbf {W}_1 = \mathbf {W}_2\).

As in the previous case, Theorem 1.2 follows from Theorem 2.2 with \(\mathbf {W}_k = -i\mathbf {A}_k\) and Theorem 1.4. To see this, notice that for \(k=1,2\) the Hamiltonians \(\tilde{H}_{\mathbf {A}_k,V}\) in Theorem 1.2 satisfy \(\tilde{H}_{\mathbf {A}_k,V} = (D +\mathbf {A}_k )^2 + q_k \) for \(q_k := V-\mathbf {A}_k^2 -D \cdot \mathbf {A}_k\). And since by assumption \(\mathbf {A}_k\) is real and \(\tilde{H}_{\mathbf {A}_k,V} \) is self-adjoint, \(q_k\) must be a real function. This means that the conditions required to apply Theorem 1.4 are satisfied.
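The formula for \(q_k\) can be verified by expanding the square. The sketch below assumes that \(\tilde{H}_{\mathbf {A},V} = -\Delta + 2\mathbf {A}\cdot D + V\) and \(L_{\mathbf {W},V} = -\Delta + 2\mathbf {W}\cdot \nabla + V\); these normalizations are assumptions chosen to be consistent with the stated expression for \(q_k\) and with the substitution \(\mathbf {W}_k = -i\mathbf {A}_k\). Using \(D\cdot (\mathbf {A}u) = (D\cdot \mathbf {A})u + \mathbf {A}\cdot Du\) and \(D\cdot D = -\Delta \),

\[
(D+\mathbf {A})^2 u = -\Delta u + 2\mathbf {A}\cdot Du + (D\cdot \mathbf {A})\,u + \mathbf {A}^2 u .
\]

Hence

\[
(D+\mathbf {A})^2 + q = -\Delta + 2\mathbf {A}\cdot D + V \quad \Longleftrightarrow \quad q = V - \mathbf {A}^2 - D\cdot \mathbf {A}.
\]

Moreover, \(2\mathbf {A}\cdot D = 2(-i\mathbf {A})\cdot \nabla = 2\mathbf {W}\cdot \nabla \) for \(\mathbf {W} = -i\mathbf {A}\), which under the same assumptions is the identification used to pass from Theorem 2.2 to Theorem 1.2.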

In Theorem 2.2, one needs 2n measurements to obtain the unique determination of the first-order coefficient \(\mathbf {W}\). The number of measurements in Theorem 2.1 stays the same even if one now also proves the unique determination of q. This is due to the gauge invariance phenomenon, since now one uniquely determines just the magnetic field \(d\mathbf {A}\) and not the first-order term \(\mathbf {A}\). In fact, in the uniqueness proof, gauge invariance reduces one degree of freedom in the first-order term by making it possible to choose a gauge in which the nth component of \(\mathbf {A}_1 -\mathbf {A}_2\) vanishes. (As we shall see later on, the fact that the solutions \( U_{k, \pm n}\) coincide at \( \Sigma \cap \{ t = \pm x_n \}\) guarantees that there are no obstructions for this gauge transformation.)

2.2 The Direct Problem

In order to prove the previous theorems, we need to study the direct problem (2.1) in more detail. Let \(\omega \in S^{n-1}\). Assume \(\mathbf {W}\in C^{m+2}_c(\mathbb {R}^n; \mathbb {C}^n)\) and \(V\in C^{m}_c(\mathbb {R}^n;\mathbb {C})\), and consider the initial value problems for the wave operator

and

where \(L_{\mathbf {W},V}\) was defined in (2.3). As mentioned in the introduction, the reason we also consider the second equation is that the \(\delta \)-wave \(U_\delta \) and H-wave \(U_H\) contain equivalent information about \(\mathbf {W}\) and V (see Proposition 2.5), but H-waves decouple the information on \(\mathbf {W}\) from the information on V.

To study (2.6) and (2.7), it is convenient to use certain coordinates in \(\mathbb {R}^n\) associated with the fixed vector \(\omega \). Specifically, we take any orthonormal coordinate system such that, for \(x\in \mathbb {R}^n\), we have \(x = (y,z)\) where \(y \in \{ \omega \}^{\perp }\) (identified with \(\mathbb {R}^{n-1}\)) and \(z = x \cdot \omega \).

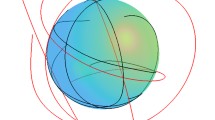

For a fixed \(T> 7\), it will be helpful to introduce the following subsets of \(\mathbb {R}^{n+1}\) (see Fig. 1):

We now give a heuristic motivation of the existence of solutions \(U_\delta \) and \(U_H\) of (2.6) and (2.7). For the interested reader, we give a proof in Sect. B.1 of the properties that we now state, by means of the progressing wave expansion method. We start by making the ansatz of looking for possible solutions of (2.6) and (2.7) in the family of functions satisfying

where f(y, z, t) and g(y, z, t) are \(C^2\) functions in \(\{t \ge z\}\). A straightforward computation shows that

In the case of equation (2.7), to satisfy the initial condition we need to have \(f = u \), where u is a function satisfying \(u(x,t) = 1\) for all \(t<-1\), and \(g(x,t)=0\) for all \((x,t) \in \mathbb {R}^{n+1}\). Then, (2.9) implies that u must satisfy

The unique solution of the last ODE is

since it has to satisfy the initial condition \(u(y,z,z)=1\) for \(z<-1\). We now state this and further results about the solution of (2.7).
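The computation behind this transport equation can be sketched as follows, assuming that \(L_{\mathbf {W},V} = -\Delta + 2\mathbf {W}\cdot \nabla + V\) (a normalization adopted here as an assumption) and writing \(H = H(t-z)\), \(\delta = \delta (t-z)\). Using \(\partial _t H = \delta \) and \(\nabla _x H = -\omega \,\delta \), the terms containing \(\partial _t\delta \) cancel in the first identity and one finds

\[
(\partial _t^2 + L_{\mathbf {W},V})(fH) = \left[ (\partial _t^2 + L_{\mathbf {W},V})f\right] H + 2\left[ (\partial _t+\partial _z-\omega \cdot \mathbf {W})f\right] \delta ,
\]

\[
(\partial _t^2 + L_{\mathbf {W},V})(g\,\delta ) = \left[ (\partial _t^2 + L_{\mathbf {W},V})g\right] \delta + 2\left[ (\partial _t+\partial _z-\omega \cdot \mathbf {W})g\right] \partial _t\delta .
\]

With \(f=u\) and \(g=0\), the coefficient of \(\delta \) only matters on \(\{t=z\}\), where it becomes an ODE in z along each line \(y = \text {const}\):

\[
\frac{d}{dz}\left[ u(y,z,z)\right] = \left( \partial _t u + \partial _z u\right) (y,z,z) = \omega \cdot \mathbf {W}(y,z)\, u(y,z,z), \qquad u(y,z,z) = 1 \ \text {for } z<-1 .
\]

Its solution is \(u(y,z,z) = \exp \left( \int _{-\infty }^{z} \omega \cdot \mathbf {W}(y,s)\, ds\right) \), which depends on \(\mathbf {W}\) but not on V. Under the stated assumption, this exponent is what plays the role of \(\psi \) in the propositions that follow.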

Proposition 2.3

Let \(\omega \in S^{n-1}\) be fixed. Let \(\mathbf {W}\in C^{m+2}_c(\mathbb {R}^n; \mathbb {C}^n)\) and \(V \in C^{m}_c(\mathbb {R}^n; \mathbb {C})\). Define

There is a unique distributional solution \(U_H(x,t;\omega )\) of (2.7), and it is supported in the region \(\left\{ t\ge x \cdot \omega \right\} \). In particular,

where u is a \({C^2}\) function in \(\left\{ t\ge x \cdot \omega \right\} \) satisfying the IVP

Notice that the boundary value of u at \(\{t=x\cdot \omega \}\) depends only on \(\mathbf {W}\) and not on the zero-order term V.

We now study the solutions of (2.6). In this case, we have to consider (2.9) with \(g =1\) for \(t<-1\), and \(f= v\) where v is a function satisfying \(v(x,t) = 0\) for \(t< -1\). Then, the following conditions must be satisfied:

The last two conditions are required so that \( \left[ (\partial _t+\partial _z- \omega \cdot \mathbf {W})g\right] \partial _t \delta (t-z) \) vanishes completely in all \(\mathbb {R}^{n+1}\) as a distribution. As in the case of (2.10), the third ODE and the initial condition imply that

and in fact we are going to choose \(g(y,z,t) = e^{\psi (y,z)}\) for all \((y,z,t) \in \mathbb {R}^{n+1}\). We can freely do this: \(g_1(y,z,t) \delta (t-z) = g_2(y,z,t) \delta (t-z) \) iff \(g_1(y,z,z) = g_2(y,z,z)\). Also, the previous choice implies that \(\partial _t g = 0\), so that the last condition is satisfied too. Computing explicitly \(L_{\mathbf {W},V}(g)\), the second equation in (2.13) becomes the ODE

with initial condition \(v(y,z,z) = 0\) if \(z<-1\). The unique solution is then

We state this rigorously in the following proposition.

Proposition 2.4

Let \(\omega \in S^{n-1}\) be fixed. Let \(\mathbf {W}\in C^{m+2}_c(\mathbb {R}^n; \mathbb {C}^n)\) and \(V \in C^{m}_c(\mathbb {R}^n; \mathbb {C})\). Consider \(\psi \) as in (2.11). There is a unique distributional solution \(U_\delta (x,t;\omega )\) of (2.6), and it is supported in the region \(\left\{ t\ge x \cdot \omega \right\} \). Moreover,

where v is a \(C^2\) function in \(\left\{ t\ge x \cdot \omega \right\} \) satisfying

with

One of the consequences of the formula (2.14) is that the restriction of \(U_\delta (x,t;\omega )\) to the surface \(\Sigma \) is well defined. This essentially follows from the fact that the wave front set of the distribution \(\delta (t-x\cdot \omega )\) is disjoint from the normal bundle of \(\Sigma \). We emphasize that \(U_\delta \) satisfies the initial condition \( U_\delta |_{\left\{ t<-1\right\} }= \delta (t-x\cdot \omega )\), even if it does not look that way at first glance. This is due to the fact that when \(t<-1\) the distribution \(\delta (t-x\cdot \omega )\) is supported in \(\{ x \cdot \omega < -1\}\), a region in which \(\psi \) vanishes. Therefore,

As mentioned previously, for more details about the proofs of Propositions 2.3 and 2.4 see Sect. B.1. We remark that the condition (1.5) on the regularity of the coefficients appears in the proofs of these propositions in order to have \(C^2\) solutions u and v of (2.12) and (2.15).

An important fact later on is that the solutions of (2.6) and (2.7) satisfy \(\partial _t U_H= U_\delta \). This is a consequence of the independence of V and \(\mathbf {W}\) from t together with the uniqueness of solutions for both equations. Of particular relevance for the scattering problem will be that this equivalence also holds for the boundary data: knowledge of \(U_H|_{\Sigma _+}\) gives \(U_\delta |_{\Sigma _+}\) and vice versa.

Proposition 2.5

Let \(\mathbf {W}\in C^{m+2}_c(\mathbb {R}^n; \mathbb {C}^n)\) and \(V \in C^{m}_c(\mathbb {R}^n; \mathbb {C})\), and let \(\omega \in S^{n-1}\) be fixed. If \(U_\delta \) and \(U_H\) are, respectively, the unique distributional solutions of (2.6) and (2.7), then one has that \(\partial _t U_H = U_\delta \) in the sense of distributions. In fact, it also holds that \(v(x,t) = \partial _t u(x,t)\), so that (2.14) can be written as

Specifically, if \(\psi \) is given by (2.11) we have that

Notice that the previous identity holds in particular for every \(x \in \partial B\), so we can write that

Proof of Proposition 2.5

Since \(\mathbf {W}\) and V are independent of t, we can take a time derivative of both sides of (2.7). This implies that \(\partial _t U_H\) satisfies

Computing explicitly \(\partial _t U_H\), we get

Proposition 2.4 implies there is a unique distributional solution of (2.6), and hence, \(\partial _t U_H = U_\delta \). Then, \(\partial _t u = v\). We also get that \(u(x,x\cdot \omega ) = e^{\psi }\), but we already knew this from Proposition 2.3. Therefore, (2.16) holds true. Identity (2.17) follows directly by the fundamental theorem of calculus. \(\square \)
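The explicit computation in this proof can be sketched as follows, assuming the representations \(U_H = u\,H(t-x\cdot \omega )\) and \(U_\delta = v\,H(t-x\cdot \omega ) + e^{\psi }\delta (t-x\cdot \omega )\) suggested by Propositions 2.3 and 2.4 (the precise displays are taken as assumptions here). Differentiating in t and using \(h\,\delta (t-x\cdot \omega ) = h|_{t=x\cdot \omega }\,\delta (t-x\cdot \omega )\),

\[
\partial _t U_H = (\partial _t u)\,H(t-x\cdot \omega ) + u(x,x\cdot \omega )\,\delta (t-x\cdot \omega ).
\]

Comparing with the representation of \(U_\delta \) gives \(v = \partial _t u\) in \(\{t> x\cdot \omega \}\) and \(u(x,x\cdot \omega ) = e^{\psi (x)}\). Integrating in time from the characteristic surface then yields

\[
u(x,t) = e^{\psi (x)} + \int _{x\cdot \omega }^{t} v(x,s)\, ds, \qquad t\ge x\cdot \omega ,
\]

so the values of u at a boundary point are obtained from those of v and \(e^{\psi }\) at the same point, and conversely v is recovered from u by differentiation in t.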

As an immediate consequence of identity (2.18), we get the following lemma.

Lemma 2.6

Let \(\mathbf {W}_1,\mathbf {W}_2 \in C^{m+2}_c(\mathbb {R}^n; \mathbb {C}^n)\) and \(V_1,V_2\in C^{m}_c(\mathbb {R}^n; \mathbb {C})\). For \(k=1,2\) and \(\omega \in S^{n-1}\), consider the solutions \(U_{H,k}\) and \(U_{\delta ,k}\) of

and

Then, \(U_{H,1} = U_{H,2}\) in \(\Sigma \cap \{t \ge x\cdot \omega \}\) if and only if \(U_{\delta ,1} = U_{\delta ,2}\) in \(\Sigma \cap \{ t \ge x\cdot \omega \}\).

2.3 Energy Estimates

To finish this section, we state three different estimates related to the wave operator that will be useful later on. They are analogues of the estimates given in [21, Lemmas 3.3-3.5], modified in order to account for the presence of a first-order perturbation not considered in the mentioned paper. For completeness, we have included the proofs in Sect. B.2. The first two lemmas will be used to control certain boundary terms appearing in the Carleman estimate. We denote by \(\nabla _\Gamma \alpha \) the component of \(\nabla \alpha \) tangential to \(\Gamma \).

Lemma 2.7

Let \(T>1\). Let \(\mathbf {W}\in L^\infty (B,\mathbb {C}^n) \) and \(V\in L^\infty (B,\mathbb {C})\). Then, the estimate

holds true for every \(\alpha \in C^{\infty }(\overline{Q}_+)\). The implicit constant depends on \(\Vert \mathbf {W} \Vert _{L^\infty (B)}\), \(\Vert V \Vert _{L^\infty (B)}\) and T.

Lemma 2.8

Let \(T>1\). Let \(\mathbf {W}\in L^\infty (B,\mathbb {C}^n) \), \(V\in L^\infty (B,\mathbb {C})\) and \(\phi \in C^2(\overline{Q}_+)\). Then, there is a constant \(\sigma _0>0\) such that the following estimate

holds for every \(\alpha \in C^\infty (\overline{Q}_+)\) and for every \(\sigma \ge \sigma _0\). The implicit constant depends on \(\left\| \phi \right\| _{C^2(\overline{Q}_+)}\), \(\Vert \mathbf {W} \Vert _{L^\infty (B)}\), \(\Vert V \Vert _{L^\infty (B)}\) and T.

The following lemma will be used to show that the normal derivative at \(\Sigma _{+}\) of a solution of the free wave equation outside the unit ball vanishes at \(\Sigma _{+}\) provided that certain extra conditions are met. Here, \(\nu \) denotes the unit vector field normal to \(\Sigma _+\).

Lemma 2.9

Let \(T>1\). Let \(\alpha (y,z,t)\) be a \(C^2\) function on \(\left\{ t\ge z\right\} \) satisfying

Assume that on the region \(|(y,z) |\ge 1\), we have

for some \(\beta \in C^2_c(\mathbb {R}^{n-1})\) compactly supported on \(|y |\le 1-\varepsilon \), where \(\varepsilon \in (0,1)\). Then,

for any function \(\chi \in C^1(\overline{Q}_+)\). The implicit constant depends on T.

3 The Carleman Estimate and Its Consequences

In this section, we are going to apply a suitable Carleman estimate in order to be able to control the difference of the potentials with the boundary data. For this purpose, we adapt a Carleman estimate for general second-order operators stated in [19, Theorem A.7]. The trick is to choose an appropriate weight function to obtain a meaningful estimate for the wave operator.

First, take any \(\vartheta \in \mathbb {R}^n\) such that \(|\vartheta |=2\) and consider the following smooth function,

The weight in the Carleman estimate is going to be the function \(\phi = e^{\lambda \eta }\) for some \(\lambda >0\) large enough. This choice is made in order to have several properties. On the one hand, we want \(\phi \) to be sufficiently pseudoconvex so that the Carleman estimate holds for the wave operator. On the other hand, we want \(\phi \) to decay very fast when \(t >z\) in order to deal with certain terms appearing in the Carleman estimate (see Lemma 3.2 and its application in the proof of Lemma 3.3). And finally, since the z coordinate is going to be determined by the direction \(\omega \) of the traveling wave, we require the restriction of \(\phi \) to the surface \(\{t=z\}\) to be independent of \(\omega \) (or in other words, dependent on x but independent of the choice of coordinates \(x=(y,z)\)). This is of great help since we recover \(\mathbf {A}\) combining measurements made from waves traveling in different directions.

In order to state the Carleman estimate, we fix an open set \(D \subset \{ (x,t) \in \mathbb {R}^{n+1} : |x|<3/2\}\) such that \(Q \subset D\). (Recall the notation introduced in (2.8).)

Proposition 3.1

Let \(\phi = e^{\lambda \eta }\) for \(\lambda >0\) large enough. Let \(\Omega \subset D\) be any open set with Lipschitz boundary. Then, there exists some \(\sigma _0>0\) such that

holds for all \(u\in C^{2}(\overline{\Omega })\) and all \(\sigma \ge \sigma _0\). The implicit constant depends on n, \(\lambda \), and \(\Omega \). Here, \(\nu \) is the outward pointing unit vector normal to \(\partial \Omega \), and for real v and \(0\le j \le n\), \(E^j\) is given by

where g is some real-valued and bounded function independent of \(\sigma \) and v. (Here, the index 0 corresponds to t, so that \(\partial _0 \phi = \partial _t \phi \).)

Proof

Since \(\square h \) is real if h is a real function, the statement follows from applying Theorem A.7 of [19] to the real and imaginary parts of u, and then adding the resulting estimates. However, to apply the mentioned result, one needs to verify that \(\phi \) is strongly pseudoconvex in the domain D with respect to the wave operator \(\square \). The reader can find the precise definition of this condition in [19, Appendix], though it is not necessary for the discussion that follows.

Denote by \((\xi ,\tau ) \in \mathbb {R}^{n+1}\) the Fourier variables corresponding to (x, t), where \(\xi \in \mathbb {R}^n\) and \(\tau \in \mathbb {R}\).

It can be proved that a function \(\phi = e^{\lambda \eta }\) will be strongly pseudoconvex for \(\lambda >0\) large enough provided \(\eta \) satisfies certain technical conditions. By Propositions A.3 and A.5 in [19], these conditions are the following: One needs to verify that the level surfaces of \(\eta \) are pseudoconvex with respect to the wave operator \(\square \), and that \(|(\nabla _{x,t} \eta )(x,t) |>0\) for all \((x,t)\in \overline{D}\).

In our case, the second property is immediate since \(|\vartheta |=2\) implies that \(|x-\vartheta |^2\) has non-vanishing gradient in \(\overline{D}\). The reader can find in [19, Definition A.1] a precise definition of the first property. For the purpose of this work, it is enough to use that the level surfaces of a function f are pseudoconvex w.r.t. \(\square \) in a domain D if for all \((x,t) \in \overline{D}\) and \((\xi ,\tau ) \in \mathbb {R}^{n+1}\)

. (Notice that \(\tau ^2 - |\xi |^2\) is the symbol of the wave operator.)

We now consider f given by

where \(|\vartheta |=2\) and \(b>0\). Here, we are using the orthonormal coordinates \((x,t) = (y,z,t) \), \( y\in \mathbb {R}^{n-1}\) introduced previously. Let \((\zeta , \rho ,\tau ) \in \mathbb {R}^{n-1} \times \mathbb {R}\times \mathbb {R}\) be the Fourier variables counterpart to (y, z, t). A straightforward computation with \(j, k =1, \ldots , n-1\) and \(\partial _j = \partial _{y_j}\), shows that the only non-vanishing second-order derivatives of f are

Then, in particular, (3.3) is verified if we show that

Note that both conditions are homogeneous in the variables \((\zeta ,\rho ,\tau )\), so it is enough to study the case \(\tau =1\). Thus, (3.4) becomes

It is not difficult to verify that for \(b>3\), this inequality always holds. This proves that for \(b=4\), the level surfaces of f are strongly pseudoconvex with respect to \(\square \), and therefore, the same holds for \(\eta = \frac{1}{2}f\).

To finish, we mention that the precise formula (3.2) for the quadratic forms \(E^j\) is computed in detail in [19, Section A.2]. \(\square \)

In the following lemma, we prove a couple of properties of the weight \(\phi \) that will be important later in order to show that some terms appearing in the Carleman estimate are suitably small in the parameter \(\sigma \).

Lemma 3.2

Let \(\vartheta \in \mathbb {R}^n\) with \(|\vartheta |=2\), and \(\eta \) as in (3.1). Then, the following properties are satisfied for any \(T>7\):

(i) The smallest value of \(\phi \) on \(\Gamma \) is strictly larger than the largest value of \(\phi \) on \(\Gamma _{-T} \cup \Gamma _{T}\).

(ii) The function

$$\begin{aligned} \kappa (\sigma ) = \sup _{(y,z) \in \overline{B}} \int _{-T}^T e^{2\sigma (\phi (y,z,t)-\phi (y,z,z))} \, dt \end{aligned}$$

satisfies \(\underset{\sigma \rightarrow \infty }{\lim } \kappa (\sigma ) =0\).

Proof

Let \(\eta _0(y, z):= \vert x-\vartheta \vert ^2\). For the first assertion, it is enough to prove that

for T large enough, since \(\eta (y,z,z) = \eta _0(y, z)\). Observing that \(|(y,z)| \le 1\) in \(\Gamma \) and \(\Gamma _{\pm T}\), the previous inequality will hold if

and since \(\max _B \eta _0- \min _B \eta _0 = 8\), this is true for any \(T > 7\). This yields the first assertion. To prove the second assertion, we are going to use the following inequality

Since \(e^{\lambda \eta _0}>1\) always, we have

and hence, since \(|z| \le 1\),

By the dominated convergence theorem, the last integral goes to zero when \(\sigma \rightarrow \infty \), and therefore, \(\displaystyle {\lim _{\sigma \rightarrow \infty } \kappa (\sigma ) =0}\). This completes the proof. \(\square \)
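The value 8 used in the proof of the first assertion can be checked directly from the triangle inequality. The following short computation is only a sanity check; it uses nothing beyond \(|\vartheta |=2\) and \(|x|\le 1\).

$$\begin{aligned} 1 = |\vartheta | - 1 \le |\vartheta | - |x| \le |x-\vartheta | \le |x| + |\vartheta | \le 3 \quad \Longrightarrow \quad 1 \le \eta _0(x) \le 9 \quad \text {for } |x| \le 1. \end{aligned}$$

Both bounds are attained on \(\overline{B}\), at \(x=\vartheta /2\) and \(x=-\vartheta /2\), respectively, so the oscillation of \(\eta _0\) over the closed unit ball is \(9-1=8\). The lower bound \(\eta _0\ge 1\) also shows why \(e^{\lambda \eta _0}>1\) for every \(\lambda >0\).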

We now adapt the Carleman estimate of Proposition 3.1 to our purposes. First, define

for \(x\in \mathbb {R}^n\) and \(|\vartheta | =2\), so that \(\phi (y,z,z) = \phi _0(y,z)\). From now on, it is convenient to use the notation

We are interested in applying Proposition 3.1 for \(\Omega = Q_\pm \). The boundary of \(Q_\pm \) is composed of the following regions: \(\partial Q_\pm = \Gamma \cup \Sigma _\pm \cup \Gamma _{\pm T}\). We are going to use the precise formula (3.2) only in \(\Gamma \), where in fact an explicit computation yields that

for any real function \(v \in C^2(\overline{Q}_\pm )\) (for a detailed derivation of this formula, see [19, Section A.2]). The important thing about this identity is that it does not depend on \(\nabla v\) but just on Zv and \(\nabla _y v\), which are derivatives along directions tangent to \(\Gamma \).

The following lemma is the consequence of the Carleman estimate in Proposition 3.1 that is relevant for our fixed angle scattering problem. It is an analogue of [19, Proposition 3.2] adapted to the case of magnetic potentials.

Lemma 3.3

Let \(T>7\) and \(\omega \in S^{n-1}\), and let \(\phi _0(x,\vartheta )\) be as above. Assume that

and that

hold for some vector fields \(\mathbf {E}_\pm (x), \mathbf {A}_\pm (x) \) in \(C(\mathbb {R}^n;\mathbb {C}^n)\), some functions \(f_\pm (x) , h_\pm (x), q_\pm (x)\) in \(C(\mathbb {R}^n;\mathbb {C})\), and \(w_\pm (x,t)\) in \(C^2(\overline{Q}_\pm )\). Then, there is a constant \(c>0\) such that, for \(\sigma >0\) large enough,

where \(\gamma \) is a positive function satisfying \(\gamma (\sigma ) \rightarrow 0\) as \(\sigma \rightarrow \infty \). The implicit constant is independent of \(\sigma \), \(\vartheta \) and \(w_\pm \).

We remark that since the functions \(\phi _0(x,\vartheta )\) and \(h_\pm (x)\) are independent of t, the norms \( \Vert e^{\sigma \phi _0} h_\pm \Vert _{L^2(B)}\) and \( \Vert e^{\sigma \phi _0} h_\pm \Vert _{L^2(\Gamma )}\) are equivalent. The same holds for \( \mathbf {A}_\pm (x) \) and \( q_\pm (x) \).
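To make the equivalence of norms concrete, here is a sketch under the assumption that \(\Gamma \) is the graph \(\{(y,z,t) : (y,z)\in B,\ t=z\}\) and that \(L^2(\Gamma )\) is taken with respect to the surface measure (the precise definition of \(\Gamma \) is the one in (2.8)). The surface element of the graph of \(t=z\) over B is \(\sqrt{2}\, dy\, dz\), so for any function \(h=h(x)\) independent of t,

$$\begin{aligned} \Vert e^{\sigma \phi _0} h \Vert _{L^2(\Gamma )}^2 = \int _B e^{2\sigma \phi _0(y,z)} |h(y,z)|^2 \sqrt{2}\, dy\, dz = \sqrt{2}\, \Vert e^{\sigma \phi _0} h \Vert _{L^2(B)}^2 . \end{aligned}$$

If one instead parametrizes \(\Gamma \) by \((y,z)\) and integrates against \(dy\,dz\), the two norms coincide. In either case, the constant relating them is independent of \(\sigma \).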

In the proof of the lemma, it will be useful to introduce the notation

We remark that \(F^j\) depends on the function g in Proposition 3.1 which could in principle be different in the domains \(Q_+\) and \(Q_-\), but as discussed in [19, footnote 1 in the proof of Proposition 3.2] we can choose g to be the same both in \(Q_+\) and \(Q_-\).

Proof

We first apply Proposition 3.1 with \(\Omega = Q_+ \subset D\). To simplify notation, we use w, \(\mathbf {A}\), \(\mathbf {E}\), q, and h instead of \(w_+\), \(\mathbf {A}_+\), \(\mathbf {E}_+\), \(q_+\), and \(h_+\). For \(\sigma >0\) large enough, Proposition 3.1 and (3.9) yield the estimate

where to shorten notation, we are temporarily adopting the convention that \(F^j\nu _j\) stands for \(\sum _{j=0}^n F^j\nu _j\). Since \(\mathbf {E}\) and f are bounded, a direct perturbation argument (one can absorb the extra terms in the left-hand side for \(\sigma \) large enough) allows one to obtain from the previous estimate that

for \(\sigma \) large enough. Then, since \(\partial Q_+ = \Gamma \cup \Sigma _+ \cup \Gamma _T\), we have

The energy estimate in Lemma 2.8 yields

Combining this estimate with (3.10) gives

For the terms over \(\Gamma _T\), using the energy estimate in Lemma 2.7 one has

where in the last line we have used (3.6), which implies that

since \(\mathbf {A}\) and q do not depend on t. We now multiply (3.12) by \(e^{\sigma \sup _{\Gamma _T} \phi }\). Then, by Lemma 3.2 one can use in the right-hand side that \(\sup _{\Gamma _T} \phi \le \inf _\Gamma \phi -\delta \) for some \(\delta >0\). This gives

Inserting this estimate in (3.11), and taking \(\sigma \) large enough to absorb the term \(\sigma ^3 e^{-2\delta \sigma } \Vert e^{\sigma \phi }w \Vert _{H^1(\Gamma )}^2 \) on the left, yields

By (3.7), we can relate the left-hand side of the previous inequality with h using that

and that for \(\sigma \) large enough

Inserting these two estimates in (3.13) gives

By (3.6) and Lemma 3.2, we have

where \(\kappa (\sigma ) \rightarrow 0 \) as \(\sigma \rightarrow \infty \). Using this in (3.14) yields

where \(\gamma (\sigma ) := \kappa (\sigma ) + \sigma ^3 e^{-2\delta \sigma } \) also satisfies \(\gamma (\sigma ) \rightarrow 0 \) as \(\sigma \rightarrow \infty \). We now use that \(\phi (y,z,z) = e^{\lambda |x-\vartheta |^2} = \phi _0(x)\), which means that we can write the previous estimate changing the \(L^2(\Gamma )\) norms to \(L^2(B)\) norms. (This is possible since the integrands do not depend on t anymore.) Also, (3.5) and (3.9) imply that

that is, \(F^j\nu _j\) on \(\Gamma \) only depends on the part of the gradient of w tangential to \(\Gamma \). Applying these observations and rewriting the previous estimate with \(w = w_+\) and \(\mathbf {A}= \mathbf {A}_+\) yield

Fix \(\nu \) to be the downward pointing unit normal to \(\Gamma \), so \(\nu \) is an exterior normal for \(Q_+\). An analogous argument in \(Q_-\) yields the estimate

where the minus sign in the boundary term comes from the fact that the outward pointing normal at \(\Gamma \) seen as part of the boundary of \(Q_-\) is the opposite to that of the case of \(Q_+\). Now, since \(F^j(x,\sigma w, \nabla _\Gamma w) \nu _j\) is quadratic in w and \(\nabla _\Gamma w\) (and the coefficients are bounded functions), we have that

Therefore, adding (3.15) and (3.16) and applying the previous estimate give the desired result. \(\square \)

Lemma 3.3 is going to be used for two different purposes, and as a consequence, it will be convenient to restate the estimate in a more specific way. We do this in the following couple of lemmas.

Lemma 3.4

Let \(T>7\). Let \(\mathbf {E}_\pm \), \(\mathbf {A}_\pm \), \(f_\pm \), \(q_\pm \) and \(\gamma (\sigma )\) be as in Lemma 3.3, and suppose that \(h_\pm = q_\pm \). Assume that for a fixed \(\omega \in S^{n-1}\), there exist \(w_\pm \in H^2(Q_\pm )\) such that (3.6) and (3.7) hold with \(w_+ = w_-\) on \(\Gamma \). Assume also that \(w_\pm |_{\Sigma _\pm } = 0\) and \(\partial _\nu w_\pm |_{\Sigma _\pm } = 0 \). Then, one has that

for \(\sigma >0\) large enough.

The proof is immediate from Lemma 3.3. This lemma will be applied in the following section to appropriate \(w_\pm \) functions that we will construct using the \(\delta \)-wave solutions given by Proposition 2.4. The following lemma will be used in a similar setting, in this case for \(w_\pm \) functions generated by H-wave solutions like the ones given by Proposition 2.3.

Lemma 3.5

Let \(T>7\). Also, let \(\mathbf {A}_\pm \), \(q_\pm \) and \(\gamma (\sigma )\) be as in Lemma 3.3.

Suppose that for a fixed \(\omega \in S^{n-1}\) there exist \(w_\pm \), \(\mathbf {E}_\pm \) and \(f_\pm \) satisfying the assumptions of Lemma 3.3, and such that (3.6) and (3.7) hold with \(w_+ = w_-\) on \(\Gamma \) and \(h_\pm = \omega \cdot \mathbf {A}_\pm \). Assume also that \(w_\pm |_{\Sigma _\pm } = 0\) and \(\partial _\nu w_\pm |_{\Sigma _\pm } = 0 \). Then, one has that

Moreover, let \(\{e_1 , \dots , e_n\}\) be an orthonormal basis of \(\mathbb {R}^n\), and let \(J \subseteq \{1,\dots ,n\}\) be the set of indices j for which at least one of the components \(e_j \cdot \mathbf {A}_+ \) and \(e_j \cdot \mathbf {A}_- \) does not vanish identically in \(\mathbb {R}^n\). Suppose that for each \(j\in J\), estimate (3.18) holds with \(\omega = e_j\). Then, one has that

for \(\sigma >0\) large enough.

Proof

Estimate (3.18) follows immediately from Lemma 3.3 under the assumptions in the statement. Let us prove (3.19). By assumption for each \(j \in J\), we have

But notice that, by definition, \(e_j \cdot \mathbf {A}_\pm = 0\) in \(\mathbb {R}^n\) if \(j \notin J\), so that \(\mathbf {A}_\pm = \sum _{j\in J} (e_j \cdot \mathbf {A}_\pm )e_j \). This means that adding (3.20) for all \(j\in J\) gives

Then, using that \(\gamma (\sigma ) \rightarrow 0\) as \(\sigma \rightarrow \infty \), to finish the proof it is enough to take \(\sigma \) large enough in order to absorb in the left-hand side the first term on the right. \(\square \)
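The step in which the estimates (3.20) are added over \(j\in J\) uses only the orthonormality of the basis. As a sketch, write \(a_j := e_j \cdot \mathbf {A}_\pm \) (a notation introduced here only for illustration), and recall that \(a_j = 0\) for \(j\notin J\). Then, pointwise and after integrating against the weight,

$$\begin{aligned} |\mathbf {A}_\pm (x)|^2 = \sum _{j=1}^n |a_j(x)|^2 = \sum _{j\in J} |a_j(x)|^2, \qquad \Vert e^{\sigma \phi _0} \mathbf {A}_\pm \Vert _{L^2(B)}^2 = \sum _{j\in J} \Vert e^{\sigma \phi _0}\, e_j \cdot \mathbf {A}_\pm \Vert _{L^2(B)}^2 . \end{aligned}$$

Hence, summing the left-hand sides of (3.20) over \(j \in J\) reproduces the full weighted norm of \(\mathbf {A}_\pm \), while the right-hand sides add up to at most \(|J| \le n\) copies of the same quantities.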

We have already seen in Sect. 2 that the restriction to \(\Gamma \) of the solutions of (2.7) and (2.6) is in a certain sense related to the coefficients of the perturbation. In the next section, we use this fact to construct appropriate functions \(w_+\) and \(w_-\) so that estimates (3.17) and (3.19) hold simultaneously with \(\mathbf {A}_\pm \) and \(q_\pm \) being quantities related to the differences of potentials \(\mathbf {A}_1 - \mathbf {A}_2 \) and \(q_1 - q_2\). This will yield the proof of Theorem 2.1 (and similarly of Theorem 2.2), since the function \(\gamma (\sigma )\) in the previous lemmas goes to zero when \(\sigma \rightarrow \infty \).

4 Proof of the Uniqueness Theorems with 2n Measurements

With Lemmas 3.4 and 3.5, we can finally prove the uniqueness results, Theorems 2.1 and 2.2. Both theorems are stated in terms of pairs of measurements, one for each direction \(\pm e_j\). Due to this fact, it is convenient to give an explicit expression in the same coordinate system for solutions of (2.7) and (2.6) that correspond to the opposite directions \( \omega = \pm \omega _0\), where \(\omega _0 \in S^{n-1}\) is fixed.

Let \(x \in \mathbb {R}^n\), and choose any orthonormal coordinate system in \(\mathbb {R}^n\) such that \(x = (y,z)\), where \(y \in \{ \omega _0 \}^{\perp }\) (identified with \(\mathbb {R}^{n-1}\)) and \(z= x\cdot \omega _0\). Consider the solutions \(U_{H,\pm }(y,z,t) := U_{H}(y,z,t; \pm \omega _0)\) given by Proposition 2.3 when \(\omega = \omega _0\) and when \(\omega = -\omega _0\). By Proposition 2.3, in this coordinate system it holds that \(U_{H,\pm }(y,z,t) = u_\pm (y,z,t)H(t- (\pm z))\) with

where

In the case of Proposition 2.4, it is convenient to state the results for a Hamiltonian written in the form (2.2). This is easily obtained making the change \(\mathbf {W}= -i\mathbf {A}\) and \(V= \mathbf {A}^2 + D\cdot \mathbf {A}+ q\) in the previous results. Therefore, using the same coordinates as before, the solutions \(U_{\delta ,\pm }(y,z,t):= U_{\delta }(y,z,t;\pm \omega _0)\) of (2.6) given by Proposition 2.4 satisfy

where \( \psi _\pm (y,z) := \pm (-i) \int _{-\infty }^{\pm z} \omega _0 \cdot \mathbf {A}(y, \pm s) \,ds\), and \(v_\pm \) satisfies

Having these explicit coordinate expressions, we now prove Theorems 2.1 and 2.2. We start with the second one which is the simplest, since the zeroth-order term V is fixed. The proof consists in the construction of two appropriate functions \(w_+\) and \(w_-\) using the solutions \(U_{k, \pm j}\) of (2.7) in order to apply the results introduced in the previous section. For a fixed direction \(\omega = e_j\) and \(k=1,2\), we use \(U_{k, + j}\) to construct \(w_+\) and, with a certain gauge change, we use \(U_{k, - j}\) to construct \(w_-\). The gauge change is a technical requirement necessary to have \(w_+ = w_-\) in \(\Gamma \), as assumed in Lemmas 3.4 and 3.5. Notice that the reason for this assumption comes from the fact that one wants to get rid of the first term on the right-hand side of the estimate (3.8) which is large when the parameter \(\sigma \) is large.

Proof of Theorem 2.2

By Lemma 2.6, we know that it is completely equivalent to consider that the \(U_{k, \pm j}\) are solutions of the initial value problem

instead of the IVP (2.5). Here, it is convenient to work with H-waves instead of \(\delta \)-waves since, as mentioned previously, the former have boundary values on \(\Gamma \) that are independent of V (see Proposition 2.3).

Fix \(1 \le j \le n\), and take any orthonormal coordinate system in \(\mathbb {R}^n\) such that \(x= (y,z)\) with \(z= x_j\) and \(y \in \mathbb {R}^{n-1}\). Let \(k=1,2\). By the previous discussion, we know that the \(U_{k,\pm j}\) functions satisfy that

where \(u_{k,\pm j}\) is given by (4.1) with \(\mathbf {W}=\mathbf {W}_k\), \(\omega = \pm e_j\), and \(\omega _0 = e_j\). Writing this in detail, we obtain that

By assumption, we have that \(U_{1,\pm j} = U_{2,\pm j}\) on \(\Sigma _\pm = \Sigma \cap \{ t \ge \pm z\}\), so that

and hence, in particular \(u_{1,j} = u_{2, j}\) in \( \Sigma _+ \cap \Gamma = \Sigma \cap \{ t = z\}\). From this and (4.4), we get that there is a function \(\mu _j : \mathbb {R}^{n-1} \rightarrow \mathbb {C}\) such that

We now define \(w_+ := u_{1,j} - u_{2,j}\) in \(\overline{Q}_+\). With this choice, Proposition 2.3 yields that \(w_+ \in C^2(\overline{Q}_+)\), and (4.4) that

To apply Lemma 3.3 and Lemma 3.5, we need also to define an appropriate function \(w_-\) in \(\overline{Q}_-\). To obtain a useful choice, we now consider the solutions \(u_{k,-j}\) of (4.4) and we take

Then, \(w_- \in C^2(\overline{Q}_-)\) as desired. Also, if \(t=z\)

Therefore, \(w_+\) satisfies

and \(w_- \) satisfies

where \(f_- = V-|\nabla \mu _{j}|^2 + \Delta \mu _{j} -2\mathbf {W}_1 \cdot \nabla \mu _{j}\).

Observe that \(e^{\mu _{j}(y)}\), \(u_{2,\pm j}(x,\pm t)\) and \(|\nabla u_{2,\pm j}(x,\pm t) |\) are bounded functions in \(\overline{Q}_\pm \). Also, we have that \(|u_{2,\pm j}(x, \pm t)| \gtrsim 1\) in \(\Gamma \) by (4.4). Therefore, it holds that

with \(f_+ = V\), \(\mathbf {E}_+ = \mathbf {W}_1\), \(\mathbf {E}_- = \mathbf {W}_1 +\nabla \mu _{j}\), and \(f_-\) as before (notice that \(e_j \cdot \mathbf {E}_\pm = e_j \cdot \mathbf {W}_1\) since \(e_j \cdot \nabla \mu _j =0\)). Hence, (3.6) and (3.7) are satisfied with \(\mathbf {A}_\pm = \mathbf {W}_1-\mathbf {W}_2\), \(q_\pm = 0\), and \(h_\pm = e_j \cdot (\mathbf {W}_1-\mathbf {W}_2)\).

On the other hand, (4.5) implies that \(w_+|_{\Sigma _+} = w_-|_{\Sigma _-} = 0\). Then, applying Lemma 2.9, respectively, with \(\alpha = w_+\) and \(\chi =1\), or \(\alpha = e^{-\mu _j}w_-\) and \(\chi = e^{\mu _j}\) yields that \(\partial _\nu w_\pm |_{\Sigma _\pm } = 0\).

Since \(w_+ = w_-\) in \(\Gamma \) by (4.7), the previous observations imply that all the conditions to apply Lemma 3.5 are satisfied, so that (3.18) holds for each \(j\in \{1,\dots ,n\}\) with \(\mathbf {A}_\pm = \mathbf {W}_1-\mathbf {W}_2\) and \(q_\pm = 0\). Therefore, the same lemma implies that (3.19) must also hold, and this gives

Hence, \(\mathbf {W}_1 =\mathbf {W}_2\), since \(e^{\sigma \phi _0(x)}>0\) always. This finishes the proof. \(\square \)
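The expressions for \(\mathbf {E}_-\) and \(f_-\) used in this proof are the ones produced by conjugating the equation with \(e^{\mu _j}\). The following computation is a sketch under the assumption that the perturbed wave operator is written as \(\square + 2\mathbf {W}\cdot \nabla + V\) with \(\square = \partial _t^2 - \Delta \); here \(\mu \) is a generic \(C^2\) function independent of t and w is a generic \(C^2\) function. For \(u = e^{-\mu } w\), one has

$$\begin{aligned} \nabla u = e^{-\mu }(\nabla w - w \nabla \mu ), \qquad \Delta u = e^{-\mu }\big (\Delta w - 2 \nabla \mu \cdot \nabla w - w \Delta \mu + (\nabla \mu \cdot \nabla \mu )\, w\big ), \end{aligned}$$

and therefore

$$\begin{aligned} e^{\mu }\big (\square + 2\mathbf {W}\cdot \nabla + V\big )(e^{-\mu } w) = \square w + 2(\mathbf {W} + \nabla \mu )\cdot \nabla w + \big (V - \nabla \mu \cdot \nabla \mu + \Delta \mu - 2\mathbf {W}\cdot \nabla \mu \big ) w . \end{aligned}$$

With \(\mathbf {W} = \mathbf {W}_1\) and \(\mu = \mu _j\), the first-order coefficient is \(\mathbf {W}_1 + \nabla \mu _j\) and the zeroth-order coefficient has the same form as \(f_-\), where \(\nabla \mu \cdot \nabla \mu \) appears to correspond to the term written as \(|\nabla \mu _j|^2\).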

We now go to the remaining case, Theorem 2.1. In this result, the zero-order term is not fixed so that there is gauge invariance. (In fact, to simplify the proof it will be convenient to fix a specific gauge.) This is a harder proof than the previous one since we need to decouple information about q from the information about \(\mathbf {A}\).

Proof of Theorem 2.1

Let \(k=1,2\). Since making a change of gauge \(\mathbf {A}_k -\nabla f_k\) with \(f_k\) compactly supported leaves invariant the measured values \(U_{k,\pm j}|_{\Sigma _{+ ,\pm j}}\), we can freely choose a suitable \(f_k\) in order to simplify the problem. In fact, to show that \(d\mathbf {A}_1= d\mathbf {A}_2\) it is enough to prove that \(\mathbf {A}_1=\mathbf {A}_2\) in a specific fixed gauge. Under the assumptions in the statement, one can always take

since (1.6) implies that \(f_k\) must be compactly supported in B. With this choice, one has that

in \(\mathbb {R}^n\). Therefore, the previous arguments show that from now on, we can assume without loss of generality that we have fixed a gauge such that \(e_n \cdot \mathbf {A}_1 = e_n \cdot \mathbf {A}_2 = 0\) in \(\mathbb {R}^n\).

Fix \(1 \le j \le n-1\). We again take any orthonormal coordinate system in \(\mathbb {R}^n\) such that \(x= (y,z)\), where \(y\in \mathbb {R}^{n-1}\) and \(z= x_j\). Let \(k=1,2\). As in the proof of Theorem 2.2, by Lemma 2.6 we can assume that \(U_{k,\pm j}\) satisfies the IVP

instead of (2.4). By Proposition 2.3, we know that the \(U_{k,\pm j}\) have the structure described in (4.3) for \(1\le j \le n-1 \) where \(u_{k,\pm j}\) satisfies (4.1) with \(\mathbf {W}= -i\mathbf {A}_k\), \(\omega = \pm e_j\), and \(\omega _0 = e_j\). Writing this in detail, we obtain that

By the assumption that \(U_{1,\pm j} = U_{2,\pm j}\) on \(\Sigma _\pm \) and (4.3), we have that (4.5) holds analogously in this case. Specifically, in \( \Sigma _+ \cap \Gamma \) this implies that there is a function \(\mu _j\) such that

We now define \(w_+ := u_{1,j} - u_{2,j}\), so that \(w_+ \in C^2(\overline{Q}_+)\) by Proposition 2.3, and

To define \(w_-\), we consider the solutions \(u_{k,-j}\) of (4.10) and we take

Then, \(w_- \in C^2(\overline{Q}_-)\) as desired. Also, for \(t=z\)

Hence, if we define

\(w_+\) satisfies

and \(w_- \) satisfies

where \(\widetilde{V}_k = V_k + |\nabla \mu _{j}|^2 + \Delta \mu _{j} + 2i \mathbf {A}_k \cdot \nabla \mu _{j}\). Since \(|\nabla \mu _j|\) is bounded, we have that

On the other hand, \(u_{2,\pm j}(x, \pm t)\) and \(|\nabla u_{2,\pm j}(x, \pm t) |\) are also bounded in \(\overline{Q}_\pm \), and on \(\Gamma \) we have that \(|u_{2,\pm j} | \gtrsim 1 \) by (4.10). Therefore, if \(f_+ = V_1\), \(f_- = \widetilde{V}_1\), \(\mathbf {E}_+ = -i\mathbf {A}_1\), and \(\mathbf {E}_- = -i\mathbf {A}_1 + \nabla \mu _j\) (notice that \(e_j \cdot \nabla \mu _j = 0\)), one gets

so that (3.6) and (3.7) are satisfied with \(h_\pm = e_j \cdot (\mathbf {A}_1-\mathbf {A}_2)\),

As mentioned previously, (4.5) holds analogously in this case, so that \(w_+|_{\Sigma _+} = w_-|_{\Sigma _-} = 0\). Again, applying Lemma 2.9, respectively, with \(\alpha = w_+\) and \(\chi =1\), or \(\alpha = e^{-\mu _j}w_-\) and \(\chi = e^{\mu _j}\) yields that \(\partial _\nu w_\pm |_{\Sigma _\pm } = 0\).

Also, (4.11) shows that \(w_+ = w_- \) in \(\Gamma \). These assertions hold for each \(j =1, \dots ,n-1\), and the n-th component \(e_n \cdot (\mathbf {A}_1-\mathbf {A}_2)\) vanishes in \(\mathbb {R}^n\). This means that the assumptions of Lemma 3.5 are satisfied with \(J= \{ 1, \dots ,n-1 \}\).

As a consequence, (3.18) holds for each \(j\in J\) and hence (3.19) also holds with the choices established in (4.13). This gives

where \(\gamma (\sigma ) \rightarrow 0\) as \(\sigma \rightarrow \infty \).

We now use the information provided by \(U_{k,\pm n}\). We use the same coordinates as before, in this case with \(z= x_n\). Since \(e_n \cdot \mathbf {A}_1= e_n \cdot \mathbf {A}_2 =0\) in all \(\mathbb {R}^n\), we have that

Then, Proposition 2.4 and (4.2) yield

where using the notation introduced in (4.12) we have

The assumption \(U_{1,\pm n}|_{\Sigma _\pm } = U_{2,\pm n}|_{\Sigma _\pm }\) in the statement implies that

and since \(\mathbf {A}_k\) and \(q_k\) are compactly supported, this means that

We define

so that \(w_\pm \in C^2(\overline{Q}_\pm )\) by Proposition 2.4. The combination of the identities (4.16) and (4.18) and a change of variables shows that

Also, by direct computation (4.16) yields

Hence, (3.6) and (3.7) are satisfied with \(h_\pm = V_1-V_2\), \(\mathbf {E}_\pm =-i\mathbf {A}_1\), \(f_\pm =V_1\), and \(\mathbf {A}_\pm \) and \(q_\pm \) as in (4.13). Also, (4.17) implies that \(w_+|_{\Sigma _+} = w_-|_{\Sigma _-} = 0\), and (4.19) that \(w_+|_\Gamma = w_-|_\Gamma \). The condition \(\partial _\nu w_\pm |_{{\Sigma _\pm }} = 0\) follows again from Lemma 2.9.

Therefore, all the assumptions to apply Lemma 3.4 hold with the previous choices for \(\mathbf {A}_\pm \) and \(q_\pm \), and this yields

Since \(\gamma (\sigma ) \rightarrow 0\) as \(\sigma \rightarrow \infty \), combining the previous estimate with (4.14) immediately implies that \(\mathbf {A}_1-\mathbf {A}_2 =0\) and \(V_1 -V_2 = 0\) in this gauge. In a general gauge, then \(d(\mathbf {A}_1-\mathbf {A}_2) =0\). And since \(V_k = \mathbf {A}_k^2 + D \cdot \mathbf {A}_k + q_k\), one obtains that \(q_1 = q_2\). This finishes the proof. \(\square \)
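The last two steps of the proof can be spelled out as follows. This is only a sketch; \(\widetilde{\mathbf {A}}_k := \mathbf {A}_k - \nabla f_k\) is a notation introduced here for the potentials in the fixed gauge. Since second derivatives commute, the gauge terms do not contribute to the exterior derivative, and the difference of the zeroth-order terms reduces to the difference of the electric potentials once the magnetic potentials coincide:

$$\begin{aligned} \partial _j (\partial _k f) - \partial _k (\partial _j f) = 0 \quad \Longrightarrow \quad d\mathbf {A}_1 - d\mathbf {A}_2 = d(\widetilde{\mathbf {A}}_1 - \widetilde{\mathbf {A}}_2) = 0, \end{aligned}$$

$$\begin{aligned} V_1 - V_2 = (\widetilde{\mathbf {A}}_1^2 - \widetilde{\mathbf {A}}_2^2) + D\cdot (\widetilde{\mathbf {A}}_1 - \widetilde{\mathbf {A}}_2) + (q_1 - q_2) = q_1 - q_2 \quad \text {when } \widetilde{\mathbf {A}}_1 = \widetilde{\mathbf {A}}_2 . \end{aligned}$$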

That the \(w_\pm \) functions satisfy condition (4.19) is one of the key properties needed in order to prove Theorem 2.1, since otherwise Lemma 3.4 cannot be applied with this choice of \(w_\pm \). In order to prove (4.19), we have used indirectly that (1.6) holds. (Notice that this last condition means that we can move to a gauge in which (4.15) is true.) In fact, (4.19) is no longer true in general if one removes (1.6), since there appear non-vanishing terms in the right-hand side of (4.19) related to \(\psi _+\) and \(\psi _-\).

5 Reducing the Number of Measurements

In this section, we prove an analogous result to Theorem 2.2, in which the number of measurements is reduced to n. To compensate for this, one needs to assume that the potentials have certain symmetries. (In fact, each component of \(\mathbf {W}\) must satisfy some kind of antisymmetry property.) The main change in the proof is in the definition of \(w_-\) in \(Q_-\), which is now constructed by symmetry from \(w_+\), instead of using new information coming from the solution associated with the opposite direction. For each \(1\le j \le n\), the symmetry of \(e_j \cdot \mathbf {W}\) plays an essential role since it is necessary to show that \(w_+ = w_-\) in \(\Gamma \), in order to extract meaningful results from the Carleman estimate.

Theorem 5.1

Let \(\mathbf {W}_1,\mathbf {W}_2 \in C^{m+2}_c(\mathbb {R}^n; \mathbb {C}^n)\), and \(V \in C^{m}_c(\mathbb {R}^n; \mathbb {C})\) with compact support in B. Also, let \(1 \le j \le n\) and \(k=1,2\), and consider the n solutions \(U_{k, j}\) of

Assume also that for each \(1 \le j \le n\), there exists an orthogonal transformation \(\mathcal O_j\) satisfying that \(\mathcal O_j(e_j) = -e_j\) and such that

Then, if for all \(1 \le j \le n\) one has \(U_{1,j} = U_{2,j}\) on the surface \( \Sigma \cap \{ t \ge x_j \}\), it holds that \(\mathbf {W}_1 = \mathbf {W}_2\).

The simplest example of a vector field satisfying the previous conditions is the case of an antisymmetric vector field \(\mathbf {W}_k\), that is, such that \(\mathbf {W}_k (-x) = -\mathbf {W}_k(x)\) (for example, the gradient of a radial function).
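To illustrate the example in parentheses, take a radial function of the form \(x \mapsto G(|x|^2)\), where G is a hypothetical smooth function of one real variable chosen so that \(G(|x|^2)\) is sufficiently regular and compactly supported in B. A direct computation gives

$$\begin{aligned} \mathbf {W}(x) := \nabla \big (G(|x|^2)\big ) = 2\, G'(|x|^2)\, x, \qquad \mathbf {W}(-x) = 2\, G'(|x|^2)\, (-x) = -\mathbf {W}(x), \end{aligned}$$

so \(\mathbf {W}\) is antisymmetric in the sense above. Writing the radial function in terms of \(|x|^2\) rather than \(|x|\) avoids any loss of regularity at the origin.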

We remark that it is possible to show that the previous theorem also holds if one substitutes condition (5.2) by

that is, a symmetry condition instead of an antisymmetry condition in the imaginary part of \(e_j \cdot \mathbf {W}_k\).

Proof of Theorem 5.1

By Lemma 2.6, we know that it is completely equivalent to assume that the \(U_{k, j}\) solutions solve

instead of (5.1). Take \(1 \le j \le n\). In this proof, we fix \(x= (y,z)\) in \(\mathbb {R}^n\), where \(z= x_j\) and \(y \in \mathbb {R}^{n-1}\). Let \(k=1,2\). By Proposition 2.3 and (4.1), we know that \(U_{k,j}(y,z,t) = u_{k, j}(y,z,t)H(t- z)\) where \(u_{k, j}\) is the same function as \(u_{k, +j}\) in (4.4)

Under the assumption in the statement, we have that \(u_{1,j} = u_{2, j}\) on the surface \( \Sigma _+ \) and hence also in \( \Sigma _+ \cap \Gamma = \Sigma \cap \{ t = z\}\), which implies that there is a function \(\mu _j : \mathbb {R}^{n-1} \rightarrow \mathbb {C}\) such that (4.6) holds.

We now define \(w_+ := u_{1,j} - u_{2,j}\) in \(\overline{Q}_+\). Then,

In these coordinates, for each matrix \(\mathcal O_j\) in the statement there is by definition an \((n-1) \times (n-1)\) orthogonal matrix \(\mathcal T_j\) such that

To apply Lemma 3.5, we need also to define an appropriate function \(w_-\) in \(\overline{Q}_-\). We take

If \(t=z\), using (5.3) and the symmetry condition (5.2), we get that

Therefore, \(w_+\) satisfies (4.8), and \(w_- \) satisfies

where

Also, since \(e_j \cdot \mathbf {W}_1( {\mathcal T}_j(y),-z) = - e_j \cdot \mathbf {W}_1( y,z) \), we have that

Since \( |u_2(y,z,z)| \gtrsim 1\) always, and \(|\nabla u_2|\) is bounded above in \(\overline{Q}_+\), the previous identities show that

where \(\mathbf {E}_-\) and \(f_-\) were defined in (5.5), \(\mathbf {E}_+ = \mathbf {W}_1\), \(f_+ = V\), and

From (5.4), we get that \(w_+ = w_-\) on \(\Gamma \). Also, we have that \(w_+|_{\Sigma _+} = w_-|_{\Sigma _-} = 0\), and \(\partial _\nu w_\pm |_{{\Sigma _\pm }} = 0\) by Lemma 2.9. Hence, we can use (3.18) of Lemma 3.5 with \(q_\pm = 0\) and \(\omega = e_j\), which yields

Now, this holds for \(\phi _0(x) = \phi _0(x,\vartheta ) = e^{\lambda \vert x-\vartheta \vert ^2}\), where \(\vartheta \) is an arbitrary vector such that \(|\vartheta | = 2\). Also, the implicit constant in the estimate is independent of \(\vartheta \). Therefore, writing \(\vartheta = 2\theta \) for \(\theta \in S^{n-1}\), we can integrate both sides of the previous estimate in \(S^{n-1}\) to get

where \(d S(\theta )\) denotes integration against the surface measure of the unit sphere. Changing the order of integration with the \(L^2(B)\) integrals gives

where it can be verified that \(r(x,\sigma ) : = \left( \int _{S^{n-1}} e^{2\sigma \phi _0(x,2\theta )} \, \mathrm{d} S(\theta ) \right) ^{1/2}\) is a radial function.
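The radiality of \(r(\cdot ,\sigma )\) can be verified as follows. Let R be any orthogonal transformation of \(\mathbb {R}^n\) (a symbol introduced here for this check). Since \(|Rx - 2\theta | = |x - 2R^{T}\theta |\), we have

$$\begin{aligned} \phi _0(Rx,2\theta ) = e^{\lambda |Rx-2\theta |^2} = e^{\lambda |x-2R^{T}\theta |^2} = \phi _0(x,2R^{T}\theta ), \end{aligned}$$

and the invariance of the surface measure of \(S^{n-1}\) under the change of variables \(\theta ' = R^{T}\theta \) gives

$$\begin{aligned} r(Rx,\sigma )^2 = \int _{S^{n-1}} e^{2\sigma \phi _0(x,2R^{T}\theta )} \, \mathrm{d} S(\theta ) = \int _{S^{n-1}} e^{2\sigma \phi _0(x,2\theta ')} \, \mathrm{d} S(\theta ') = r(x,\sigma )^2 . \end{aligned}$$

Thus \(r(x,\sigma )\) depends on x only through \(|x|\).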

Since \(r(x,\sigma )\) is radial, and \(\mathbf {A}_+\) and \(\mathbf {A}_-\) differ by an orthogonal transformation by (5.6), a direct change of variables shows that

and therefore, taking into account that \(\mathbf {A}_+ = \mathbf {W}_1-\mathbf {W}_2\) we get from (5.7) that

This estimate can be proved for any \(1 \le j \le n\). Adding over all directions, and using that \(\gamma (\sigma ) \rightarrow 0\) as \(\sigma \rightarrow \infty \) to absorb the resulting term on the right-hand side in the left, yields

for \(\sigma >0\) large enough. Since \(r(x,\sigma )>0\) for all \(x\in \mathbb {R}^n\), and \(\sigma >0\), the previous estimate implies that \(\mathbf {W}_1 =\mathbf {W}_2\). This finishes the proof. \(\square \)

Combining the techniques used in this proof with the techniques used in the proof of Theorem 2.1, the reader can obtain results similar to the previous one, always interchanging some measurements for symmetry assumptions on \(\mathbf {A}\) and q. A particularly simple case is the following one.

Theorem 5.2

Let \(\mathbf {A}_1, \mathbf {A}_2 \in C^{m+2}_c(\mathbb {R}^n; \mathbb {C}^n)\) and \(q_1,q_2 \in C^{m}_c(\mathbb {R}^n; \mathbb {C})\) with compact support in B, satisfying (1.6), and such that

Let \(1 \le j \le n-1\) and consider the \(n-1\) solutions \(U_{k, j}(x,t)\) of

and the 2 solutions \(U_{k, \pm n}(x,t)\) of

If for each \(1 \le j \le n\) one has \(U_{1, j} = U_{2, j}\) on the surface \(\Sigma \cap \{ t \ge x_j \}\), then \(d \mathbf {A}_1 = d \mathbf {A}_2\) and \(q_1 = q_2\).

Proof

We use the notation introduced in (4.12). Notice that \(U_{k,j}\) is the same as \(U_{k,+j}\) in the proof of Theorem 2.1. We only give a sketch of the main ideas in the proof. One can start as in the proof of Theorem 2.1 by making a change of gauge such that \(e_n \cdot \mathbf {A}_1 = e_n \cdot \mathbf {A}_2 =0 \). One can verify that this choice of gauge does not alter the antisymmetry property (5.8).

Let \(1\le j \le n-1\). By Lemma 2.6, we can assume that the \(U_{k,j} =U_{k,+j}\) satisfy (4.9) and (4.10) with \(\mathbf {W}_k = -i\mathbf {A}_k\) and \(V= V_k\). We define \(w_+ = u_{1,j}-u_{2,j}\) and

It follows that \(w_+ = w_-\) on \(\Gamma \), and this can be verified using (5.8), in complete analogy with the computations in (5.4). The remaining conditions necessary to apply Lemma 3.5 with \(\mathbf {A}_+ = \mathbf {A}_1 -\mathbf {A}_2\), \(\mathbf {A}_-(x) = \mathbf {A}_+(-x)\), \(q_+ = V_1-V_2\) and \(q_-(x) =q_+(-x)\) can be verified as in the proof of Theorem 2.1. Then, Lemma 3.5 and a change of variables to transform \(q_-\) into \(q_+\), and \(\mathbf {A}_-\) into \(\mathbf {A}_+\), yield the estimate

We can now repeat exactly the same arguments used in the proof of Theorem 2.1 to prove (4.20). In fact, we have that (4.20) holds independently for both the weight functions \(\phi _0(\cdot , \vartheta )\) and \(\phi _0(\cdot ,- \vartheta )\). Adding these two possible estimates yields

The previous inequality and (5.9) imply that \(q_1 = q_2\) and \(\mathbf {A}_1 = \mathbf {A}_2\) in the gauge fixed at the beginning of the proof. \(\square \)

Notes

Throughout the paper, we write \(a \lesssim b\) or equivalently \(b \gtrsim a\), when a and b are positive constants and there exists \(C > 0\) so that \(a \le C b\). We refer to C as the implicit constant in the estimate.

References

Barceló, J.A., Castro, C., Luque, T., Meroño, C.J., Ruiz, A., Vilela, M.C.: Uniqueness for the inverse fixed angle scattering problem. J. Inverse Ill-Posed Probl. 28(4), 465–470 (2020)

Bayliss, A., Li, Y., Morawetz, C.: Scattering by a potential using hyperbolic methods. Math. Comput. 52(186), 321–338 (1989)

Bellassoued, M., Yamamoto, M.: Carleman Estimates and Applications to Inverse Problems for Hyperbolic Systems. Springer Monographs in Mathematics. Springer (2017)

Bukhgeim, A., Klibanov, M.: Global uniqueness of class of multidimensional inverse problems. Soviet Math. Dokl. 24, 244–247 (1981)

Cristofol, M., Soccorsi, E.: Stability estimate in an inverse problem for non-autonomous Schrödinger equations. Appl. Anal. 90(10), 1499–1520 (2011)

Deift, P., Trubowitz, E.: Inverse scattering on the line. Commun. Pure Appl. Math 32(2), 121–151 (1979)

Evans, L.: Partial Differential Equations. Graduate Studies in Mathematics, vol. 19, American Mathematical Society (1997)

Hörmander, L.: Linear Partial Differential Operators, 4th Printing. Springer-Verlag, Berlin (1976)

Hörmander, L.: The Analysis of Linear Partial Differential Operators, vol. I–IV. Springer-Verlag, Berlin (1983–1985)

Huang, X., Kian, Y., Soccorsi, E., Yamamoto, M.: Carleman estimate for the Schrödinger equation and application to magnetic inverse problems. J. Math. Anal. Appl. 474, 116–142 (2019)

Imanuvilov, O., Yamamoto, M.: Global uniqueness and stability in determining coefficients of wave equations. Commun. PDE 26, 1409–1425 (2001)

Klibanov, M.: Carleman estimates for global uniqueness, stability and numerical methods for coefficient inverse problems. J. Inverse Ill-Posed Probl. 21(4), 477–560 (2013)

Krishnan, V., Rakesh, Senapati, S.: Stability for a formally determined inverse problem for a hyperbolic PDE with space and time dependent coefficients. Preprint (2020), arXiv:2010.11726

Ma, S., Salo, M.: Fixed angle inverse scattering in the presence of a Riemannian metric. Preprint (2020), arXiv:2008.07329

Marchenko, V.: Sturm–Liouville Operators and Applications. Operator Theory: Advances and Applications. Birkhäuser, Basel (2011)

Melrose, R.: Geometric Scattering Theory. Lectures at Stanford. Cambridge University Press (1995)

Melrose, R., Uhlmann, G.: An Introduction to Microlocal Analysis. Book in preparation, http://www-math.mit.edu/rbm/books/imaast.pdf

Meroño, C.J.: Fixed angle scattering: recovery of singularities and its limitations. SIAM J. Math. Anal. 50(5), 5616–5636 (2018)

Rakesh, Salo, M.: Fixed angle inverse scattering for almost symmetric or controlled perturbations. SIAM J. Math. Anal. 52(6), 5467–5499 (2020)

Ruiz, A.: Recovery of the singularities of a potential from fixed angle scattering data. Commun. Partial Differ. Equ. 26(9–10), 1721–1738 (2001)

Rakesh, Salo, M.: The fixed angle scattering problem and wave equation inverse problems with two measurements. Inverse Probl. 36(3), 035005 (2020)

Shiota, T.: An inverse problem for the wave equation with first order perturbation. Am. J. Math. 107(1), 241–251 (1985)

Stefanov, P.: Generic uniqueness for two inverse problems in potential scattering. Commun. Partial Differ. Equ. 17(1–2), 55–68 (1992)

Teschl, G.: Mathematical Methods in Quantum Mechanics. Graduate studies in Mathematics, vol. 99, AMS (2009)

Uhlmann, G.: Uniqueness for the inverse backscattering problem for angularly controlled potentials. Inverse Probl. 30, 065004 (2014)

Uhlmann, G.: A time-dependent approach to the inverse backscattering problem. Inverse Probl. 17(4), 703–716 (2001)

Yafaev, D.R.: Mathematical Scattering Theory: Analytic Theory. Mathematical Surveys and Monographs, vol. 99, AMS (2010)

Acknowledgements

C.M. was supported by project MTM2017-85934-C3-3-P. L.P. and M.S. were supported by the Academy of Finland (Finnish Centre of Excellence in Inverse Modelling and Imaging, grant numbers 312121 and 309963), and M.S. was also supported by the European Research Council under Horizon 2020 (ERC CoG 770924). The authors would like to thank the anonymous referees for their comments and suggestions that have helped to improve this paper.

Funding

Open access funding provided by University of Jyväskylä (JYU).

Additional information

Communicated by Jan Derezinski.

Appendices

Appendix A. Stationary Scattering

In this section, we prove Theorem 1.4. We have adapted the proof of [19, Theorem 5.1] in order to allow for the presence of a first-order perturbation, but the main ideas and the exposition are similar to the work in that paper.

We define \(\mathbb {C}^+: = \{ \lambda \in \mathbb {C}: \, {{\,\mathrm{\mathrm {Im}}\,}}(\lambda )>0 \}\), and we write \(R_{\mathcal V}(\lambda ) \) for the resolvent operator \(R_{\mathcal V}(\lambda ) =(H_{\mathcal V} -\lambda ^2)^{-1}\), whenever it is well defined. We also use the following non-standard convention for the Fourier transform and its inverse for Schwartz functions on the real line:

(and equally for the extension of the Fourier transform to tempered distributions).
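For orientation, the following is a sketch of a convention that is consistent with the formulas used in the rest of this appendix, in particular with the identity \(\widetilde{U}_{\mathcal V}(x,\lambda ) = e^{i\lambda x\cdot \omega } + \widetilde{u}_{\mathcal V}(x,\lambda )\) appearing in the heuristic argument. It is inferred from those formulas and should be read as an assumption, with \(s\in \mathbb {R}\) an auxiliary parameter introduced here:

\[ \widetilde{f}(\lambda ) = \int _{\mathbb {R}} e^{i\lambda t} f(t) \, \mathrm{d}t, \qquad f(t) = \frac{1}{2\pi } \int _{\mathbb {R}} e^{-i\lambda t} \widetilde{f}(\lambda ) \, \mathrm{d}\lambda . \]

Under this convention, one has

\[ \big (\delta (\cdot - s)\big )^{\sim }(\lambda ) = e^{i\lambda s}, \qquad (\partial _t f)^{\sim }(\lambda ) = -i\lambda \widetilde{f}(\lambda ), \qquad (\partial _t^2 f)^{\sim }(\lambda ) = -\lambda ^2 \widetilde{f}(\lambda ), \]

so that, formally, the wave operator \(\partial _t^2 + H_{\mathcal V}\) becomes \(H_{\mathcal V} - \lambda ^2\) after taking the Fourier transform in time. Moreover, if \(f\) is supported in \([-1,\infty )\), the factor \(e^{i\lambda t}\) decays as \(t\rightarrow \infty \) when \({{\,\mathrm{\mathrm {Im}}\,}}(\lambda )>0\), which is the mechanism behind holomorphic extensions to the upper half-plane.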

In order to illustrate why it is reasonable to expect an equivalence between the stationary scattering data and the time domain data as stated in Theorem 1.4, we reproduce here the following heuristic argument given in [19]. Let \(U_{\mathcal V}(x,t;\omega )\) be the solution of

Suppose for the moment that the Fourier transform of \(U_{\mathcal V}\) in the time variable is well defined. Then, for each \(\lambda \in \mathbb {R}\) the function \(\widetilde{U}_{\mathcal V}(x,\lambda ;\omega ) \) should solve the equation

If we define the time domain scattering solution to be \(u_{\mathcal V} = U_{\mathcal V } - \delta (t-x\cdot \omega )\), one has that \(\widetilde{U}_{\mathcal V}(x,\lambda ) = e^{i\lambda x\cdot \omega } + \widetilde{u}_{\mathcal V}(x,\lambda )\), where \(\widetilde{u}_{\mathcal V}(x,\lambda )\) extends holomorphically to \(\{ {{\,\mathrm{\mathrm {Im}}\,}}(\lambda )>0 \}\) since \(u_{\mathcal V}\) vanishes for \(t<-1\). Since these are the properties that characterize the outgoing eigenfunctions of (A.1), one might expect that

where \(\psi _{\mathcal V}\) is the solution of (1.1). Now, the condition \(a_{\mathcal V_1}(\lambda ,\cdot ,\omega ) = a_{\mathcal V_2}(\lambda ,\cdot ,\omega )\) implies by the Rellich uniqueness theorem that the outgoing eigenfunctions for \(H_{\mathcal V_1}\) and \(H_{\mathcal V_2}\) agree outside the support of the potentials:

If the map \(\lambda \rightarrow \psi _{\mathcal V}(\lambda ,x,\omega )\) were smooth near \(\lambda =0\), then one would have (A.2) for all \(\lambda \in \mathbb {R}\). Taking the inverse Fourier transform would imply that

The argument above is only formal, since it requires the regularity of the map \(\lambda \rightarrow \psi _{\mathcal V}(\lambda ,x,\omega )\) on the real line. The regularity of this map is related to the poles of the meromorphic continuation of the resolvent \(R_{\mathcal {V}}(\lambda )\), initially defined in the resolvent set of \(H_{\mathcal {V}}\). Indeed, in some cases there is a pole located at \(\lambda =0\), and thus the argument above does not work in general. To get around these difficulties, we start by recalling the following property of the Fourier transform.

Lemma A.1

Suppose F(z) is analytic on \(\{{{\,\mathrm{\mathrm {Im}}\,}}(z) >r\}\) for some \(r\in \mathbb {R}\) and

for some positive R, C, N independent of z. Then there exists an \(f \in \mathcal D'(\mathbb {R})\) with \({{\,\mathrm{\mathrm {supp}}\,}}(f)\subset [-R,\infty ) \) and \(e^{-(\mu -r)t} f \in \mathcal S'(\mathbb {R})\) that also satisfies \((e^{-(\mu -r)t} f)^{\sim }(\cdot ) =F(\cdot + i\mu )\) for every \(\mu >r\).

This is essentially a Paley–Wiener theorem that we have stated in the form given in [19, Lemma 5.3]. In the following proposition, we give the precise relation between the time domain and frequency measurements.
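As a simple illustration of Lemma A.1, consider the following sketch. It assumes the convention \(\widetilde{f}(\lambda ) = \int _{\mathbb {R}} e^{i\lambda t} f(t)\, \mathrm{d}t\), takes \(r = 0\), and assumes that the growth condition in the lemma has the usual Paley–Wiener form \(|F(z)| \le C(1+|z|)^N e^{R \, {{\,\mathrm{\mathrm {Im}}\,}}(z)}\). For \(R>0\), let

\[ F(z) = e^{-iRz}, \qquad |F(z)| = e^{R \, {{\,\mathrm{\mathrm {Im}}\,}}(z)}, \]

so the bound holds with \(C = 1\) and any \(N > 0\). The corresponding distribution is \(f(t) = \delta (t+R)\), which is supported in \(\{-R\} \subset [-R,\infty )\), and for every \(\mu > 0\) one computes

\[ (e^{-\mu t} f)^{\sim }(\lambda ) = \int _{\mathbb {R}} e^{i\lambda t} e^{-\mu t} \delta (t+R) \, \mathrm{d}t = e^{\mu R} e^{-i\lambda R} = F(\lambda + i\mu ). \]

The case \(R = 1\) matches the support condition \(t \ge -1\) that is relevant for the time domain scattering solution.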

Proposition A.2

Let \(\omega \in S^{n-1}\), and let \({\mathcal V}(x,D) = \mathbf {W}\cdot \nabla + V\) with \(\mathbf {W}\in C^{m+2}_c(\mathbb {R}^n; \mathbb {C}^n)\), and \(V \in C^{m}_c(\mathbb {R}^n; \mathbb {C})\) compactly supported in B. Let \(U_{\mathcal V}\) be the solution of

given by Proposition 2.4, and let \(u_{\mathcal V}(x,t;\omega ) = U_{\mathcal V} (x,t;\omega ) -\delta (t-x \cdot \omega )\). Assume also that there exists some \(r \ge 0\) such that for \({{\,\mathrm{\mathrm {Im}}\,}}(\lambda ) \ge r\)

where \(C_r>0\) is independent of \(\lambda \). Then, if we define

the following identity holds

for all \(\mu > r\), and all \(\varphi \in C^\infty _c(\mathbb {R}^n)\) and \(\chi \in C^\infty _c(\mathbb {R})\).

Proof

If (A.4) holds for some \(\lambda \), then \(z= \lambda ^2\) is, by definition, in the resolvent set \(\rho (H_{\mathcal V})\subset \mathbb {C}\) of the operator \(H_{\mathcal V}\). It is well known that the resolvent map \(z \rightarrow (H_{\mathcal V} -z)^{-1}\) forms a holomorphic family of bounded \(L^2\) operators in the open set \(\rho (H_{\mathcal V})\) (see, for example, [24, Theorem 2.15]). Since the map \(\lambda \rightarrow \lambda ^2\) is also holomorphic and (A.4) holds for \({{\,\mathrm{\mathrm {Im}}\,}}(\lambda ) \ge r \), \(\lambda \rightarrow R_{\mathcal V}(\lambda ) = (H_{\mathcal V} -\lambda ^2)^{-1}\) is also a holomorphic map for \({{\,\mathrm{\mathrm {Im}}\,}}(\lambda ) \ge r \).

On the other hand, using (A.4) we have

For any fixed \(\varphi \in C^\infty _c(\mathbb {R}^n)\), define

From the previous observations, it follows that \(F_\varphi (\lambda )\) must be a holomorphic function in the set \({{\,\mathrm{\mathrm {Im}}\,}}(\lambda )\ge r \). By estimate (A.5), we get

Then, Lemma A.1 implies that there is a distribution \(f_\varphi \in \mathcal {D}^\prime (\mathbb {R})\) supported on \([-1, \infty )\) such that for all \(\mu >r\):

Now, given \(\mu >r\), define the linear map \(\mathcal K: C^\infty _c(\mathbb {R}^n) \rightarrow \mathcal D'(\mathbb {R})\) given by

The map \(\mathcal {K}\) is continuous. To see this, take a sequence \(\varphi _j \rightarrow 0\) in \(C^\infty _c(\mathbb {R}^n)\), then (A.5) implies that \(F_{\varphi _j} \rightarrow 0\) when \({{\,\mathrm{\mathrm {Im}}\,}}(\lambda ) \ge r\), and hence

Since \(\mathcal {K}\) is continuous, the Schwartz kernel theorem ensures that there is a unique \(K \in \mathcal {D}'(\mathbb {R}^n\times \mathbb {R})\) such that

Since \(f_\varphi \) is supported in \([-1,\infty )\), it follows that K is supported in \(\{t\ge -1\}\). We now define the distribution

We claim that v is a solution in \(\mathbb {R}^{n+1}\) of

Since, by (A.3), this is also the equation satisfied by \(u_{\mathcal V}\), the uniqueness of distributional solutions of the wave equation supported in \(\{t\ge -1\}\) (see [8, Theorem 9.3.2]) implies that \(u_{\mathcal V} = v\), so

which finishes the proof of the Proposition.

To prove the previous claim, we use (A.6) in the following computations. First,

Also, if we denote by \(\mathcal V^*\) the formal adjoint of \( \mathcal V\) (with respect to the distribution pairing \(\langle \cdot ,\cdot \rangle \)), we have

and similarly, one gets