Abstract

This paper revisits the proof of Anderson localization for multi-particle systems. We introduce a multi-particle version of the eigensystem multi-scale analysis by Elgart and Klein, which had previously been used for single-particle systems.


1 Introduction

Anderson localization [4] is the absence of diffusion or transport in certain random media. Both experimental and theoretical aspects of Anderson localization depend not only on the nature of the randomness, but also on other properties of the physical system, such as the number of particles and the strength of their interactions. In the following, the number of particles is denoted by N, and we distinguish three categories of physical systems:

(i) single-particle systems (\(N=1\)),

(ii) multi-particle systems (\(1<N<\infty \)),

(iii) infinite-particle systems (\(N\rightarrow \infty \)).

1.1 Single-Particle Systems

The standard Anderson model for a single-particle system is given by

Here, \(d\geqslant 1\) is the spatial dimension, \(\Delta \) is the centered, discrete Laplacian, \(\lambda >0\) is a (large) disorder parameter, and \(V= \{ V(x) \}_{x \in {\mathbb {Z}}^d}\) is a random potential. The assumptions on the random potential \(V= \{ V(x) \}_{x \in {\mathbb {Z}}^d}\) commonly include the probabilistic independence of the potential at different sites and the absolute continuity of the single-site distribution with a bounded density, but may be less or more restrictive in individual works. In the standard model (1.1), Anderson localization may refer to either spectral or dynamical properties of H. The Hamiltonian H is called spectrally localized if it has a pure-point spectrum and the eigenfunctions are exponentially localized. The Hamiltonian H is called dynamically localized if, for any \(x\in {\mathbb {Z}}^d\), the evolution \(e^{-itH} \delta _x\) decays exponentially in space uniformly in time.

The first proofs of Anderson localization [25, 38, 44] were restricted to one-dimensional models. Later proofs of Anderson localization, which also treated higher-dimensional cases, are mostly based on Aizenman and Molchanov’s fractional moment method [2, 3] or Fröhlich and Spencer’s multi-scale analysis [32, 33, 36, 51]. Both methods are based on estimates of the Green’s function G corresponding to the Hamiltonian H. The Green’s function is defined by
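$$\begin{aligned} G(x,y;z) := \langle \delta _x, (H-z)^{-1} \delta _y \rangle , \end{aligned}\tag{1.2}$$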
where \(x,y\in {\mathbb {Z}}^d\) and \(z\in {\mathbb {C}}\backslash \sigma (H) \supseteq {\mathbb {C}}\backslash {\mathbb {R}}\) lies outside the spectrum of H. The Green’s function G is used because of its desirable algebraic properties, such as the resolvent identity. After the exponential decay of the Green’s function has been proven, the spectral and dynamical localization of H follows from the relationship between the Green’s function and the eigenfunction correlator (cf. [8, Chapter 7]). The fractional moment method proceeds through direct estimates of \({\mathbb {E}}[ |G(x,y;z)|^s]\), where \(0<s<1\). A detailed introduction can be found in the textbook [8] or in the survey article [49]. The multi-scale analysis (MSA) is more involved but often works under less restrictive conditions on the random potential. We note in particular the work of Bourgain and Kenig [9] on localization near the edge of the spectrum for the Anderson–Bernoulli model on \({\mathbb {R}}^d\) for \(d \geqslant 2\), the work of Ding and Smart [26] which establishes the analogous result on \({\mathbb {Z}}^2\), and [45] for the extension of the previous result to \({\mathbb {Z}}^3\). Instead of estimating the Green’s function directly, the (MSA) proceeds through an induction on scales. To outline the induction procedure, we let \(\Lambda _L := [-L,L]^d \cap {\mathbb {Z}}^d\) be the discrete cube of width 2L. The corresponding (finite-dimensional) Hamiltonian \(H_{\Lambda _L}\) is defined by \(H_{\Lambda _L}:= 1_{\Lambda _L} H 1_{\Lambda _L}\), and the corresponding Green’s function \(G_{\Lambda _L}\) is defined as in (1.2) with H replaced by \(H_{\Lambda _L}\). By using the second resolvent identity, estimates of the Green’s function \(G_{\Lambda _L}\) at scale L can be reduced to estimates of the Green’s function \(G_{\Lambda _\ell }\) at a smaller scale \(\ell \), where \(L=\ell ^\gamma \) and \(\gamma >1\) is fixed. 
Together with an initial scale estimate, which relies on Wegner estimates, this ultimately yields uniform estimates of \(G_{\Lambda _L}\) in the length scale L. For an excellent introduction to the (MSA), we refer the reader to the lecture notes [39].
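The reduction from scale L to scale \(\ell \) rests on the second resolvent identity. As a minimal numerical sketch (not part of the original argument), the following Python snippet checks this identity for a one-dimensional box split into two halves. The sign convention \(H=-\Delta +\lambda V\), the uniform potential, \(\lambda =5\), the spectral parameter z, the splitting point, and the helper name `anderson_1d` are all illustrative assumptions.

```python
import numpy as np

def anderson_1d(potential, lam):
    """Finite-volume operator -Delta + lam * V on a 1D box with free boundary.

    Delta is the nearest-neighbour sum (centered Laplacian); the minus sign in
    front of it is an illustrative convention, not taken from the text.
    """
    n = len(potential)
    hopping = np.diag(np.ones(n - 1), 1) + np.diag(np.ones(n - 1), -1)
    return -hopping + lam * np.diag(potential)

rng = np.random.default_rng(0)
L = 10
potential = rng.uniform(0.0, 1.0, size=2 * L + 1)  # sites -L, ..., L stored as 0, ..., 2L
lam, z = 5.0, 0.3 + 0.1j                            # disorder and a non-real spectral parameter

H = anderson_1d(potential, lam)
n = H.shape[0]
cut = n // 2  # Phi = first `cut` sites, the rest is the complement

# Decoupled operator H_Phi (+) H_complement: remove the single bond across the cut.
H_dec = H.copy()
H_dec[cut - 1, cut] = 0.0
H_dec[cut, cut - 1] = 0.0
Gamma = H - H_dec  # boundary term supported on that bond

identity = np.eye(n)
G = np.linalg.inv(H - z * identity)          # Green's function of the full box
G_dec = np.linalg.inv(H_dec - z * identity)  # Green's function of the decoupled pieces

# Second resolvent identity: G = G_dec - G_dec Gamma G
residual = np.max(np.abs(G - (G_dec - G_dec @ Gamma @ G)))
print(f"max residual of the resolvent identity: {residual:.1e}")
```

Here `Gamma` plays the role of the boundary term coupling the two halves; iterating identities of this type is, roughly, how the (MSA) transfers bounds from one scale to the next.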

More recently, Elgart and Klein [27,28,29] developed an eigensystem multi-scale analysis (EMSA), which provides an alternative proof of Anderson localization. Similar to the classical (MSA), the (EMSA) uses an induction on scales. Instead of working with the Green’s function, however, the (EMSA) directly analyzes the eigensystem of the Hamiltonian on the discrete cube \(\Lambda _L\). We anticipate that these methods may be more adaptable to the many-body setting, discussed further in Sect. 1.3, since many statements for such systems are posed in terms of the eigenfunctions themselves. It is perhaps of interest to note that certain aspects of the (EMSA) seem to have some similarities to techniques in nonlinear PDEs: For example, the mapping of (localized) eigenfunctions on smaller scales to (localized) eigenfunctions on larger scales (cf. [27, Lemma 3.6]) is similar to the stability theory for partial differential equations (see, e.g., [50, Section 3.7]). In contrast, the resolvent identity for the Green’s function, which plays a similar role in the (MSA), seems to have no such simple analogue.

1.2 Multi-particle Systems

We define the N-particle Anderson model by

Here, \(\lambda >0\) is the disorder parameter. We define the N-particle Laplacian \(\Delta ^{(N)}\), the random potential \(V:{\mathbb {Z}}^{Nd} \rightarrow {\mathbb {R}}\), and the interaction potential \(U :{\mathbb {Z}}^{Nd} \rightarrow {\mathbb {R}}\) as follows:

-

The N-particle Laplacian \(\Delta ^{(N)}\) is given by

$$\begin{aligned} \Delta ^{(N)} \varphi ( {\mathbf {x}}) = \sum _{\begin{array}{c} {\mathbf {y}}\in {\mathbb {Z}}^{Nd} :\Vert {\mathbf {y}}- {\mathbf {x}}\Vert _{1}=1 \end{array}} \varphi ({\mathbf {y}}), \end{aligned}$$where \(\varphi :{\mathbb {Z}}^{Nd} \rightarrow {\mathbb {C}}\) and \({\mathbf {x}}\in {\mathbb {Z}}^{Nd}\).

-

The (multi-particle) random potential \(V :{\mathbb {Z}}^{Nd} \rightarrow {\mathbb {R}}\) is given by

$$\begin{aligned} V({\mathbf {x}}) = \sum _{j=1}^{N} {\mathcal {V}}(x_j), \end{aligned}$$where \({\mathcal {V}}:{\mathbb {Z}}^{d} \rightarrow {\mathbb {R}}\) and \({\mathbf {x}}=(x_1,\ldots ,x_N) \in {\mathbb {Z}}^{Nd}\). The conditions on the (single-particle) random potential \({\mathcal {V}}= \{ {\mathcal {V}}(u) \}_{u \in {\mathbb {Z}}^d}\) will be detailed in Assumption 1.1.

-

Finally, the interaction \(U:{\mathbb {Z}}^{Nd} \rightarrow {\mathbb {R}}\) is given by

$$\begin{aligned} U({\mathbf {x}}) = \sum _{1\leqslant i < j \leqslant N} {\mathcal {U}}(x_i - x_j), \end{aligned}$$where \({\mathcal {U}}:{\mathbb {Z}}^{d} \rightarrow {\mathbb {R}}\) has finite support and \({\mathbf {x}}=(x_1,\ldots ,x_N) \in {\mathbb {Z}}^{Nd}\).
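To make the three ingredients concrete, here is a small sketch in \(d=1\) that evaluates \(V({\mathbf {x}})\), \(U({\mathbf {x}})\), and the neighbours summed in \(\Delta ^{(N)}\). The uniform single-site values, the window of sites, and the nearest-neighbour interaction `pair_interaction` are hypothetical choices. In particular, we take \({\mathcal {U}}\) to be even, which the sketch needs for U to be permutation invariant.

```python
import itertools
import math
import random

# Illustrative choices (not from the text): d = 1, uniform single-site potential
# on a finite window of sites, and an even nearest-neighbour interaction.
rnd = random.Random(0)
single_site = {u: rnd.uniform(0.0, 1.0) for u in range(-5, 6)}  # hypothetical calV

def pair_interaction(r):
    """Hypothetical finite-range interaction calU(r); it is even in r."""
    return 1.0 if abs(r) <= 1 else 0.0

def potential(x):
    # V(x) = sum_j calV(x_j)
    return sum(single_site[xj] for xj in x)

def interaction(x):
    # U(x) = sum_{i<j} calU(x_i - x_j)
    return sum(pair_interaction(x[i] - x[j])
               for i, j in itertools.combinations(range(len(x)), 2))

def laplacian_neighbours(x):
    # all y in Z^N with ||y - x||_1 = 1, i.e. the terms summed in Delta^(N) (d = 1)
    neighbours = []
    for j in range(len(x)):
        for step in (-1, 1):
            y = list(x)
            y[j] += step
            neighbours.append(tuple(y))
    return neighbours

x = (0, 3, 1)
print(potential(x), interaction(x), len(laplacian_neighbours(x)))
```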

The most essential difference between the single- and multi-particle Anderson models (1.1) and (1.3) lies in the probabilistic dependencies of the random potential. In the single-particle setting, V(x) and V(y) are probabilistically independent for any \(x,y \in {\mathbb {Z}}^d\) satisfying \(x \ne y\). In the multi-particle setting, however, \(V({\mathbf {x}})\) and \(V({\mathbf {y}})\) can be probabilistically dependent as soon as \(\{ x_i \}_{i=1}^N\) and \(\{ y_j \}_{j=1}^{N}\) are not disjoint; even for a fixed \({\mathbf {x}}\in {\mathbb {Z}}^{Nd}\), this is the case for infinitely many \({\mathbf {y}}\in {\mathbb {Z}}^{Nd}\). In fact, \(V({\mathbf {x}})\) and \(V({\mathbf {y}})\) are not only probabilistically dependent but identical if \({\mathbf {y}}\) is a permutation of \({\mathbf {x}}\). To be precise, we let \(S_N\) be the symmetric group on \(\{1,\ldots ,N\}\). For any \(\pi \in S_N\) and \({\mathbf {x}}\in {\mathbb {Z}}^{Nd}\), we define
$$\begin{aligned} \pi {\mathbf {x}}:= (x_{\pi (1)},\ldots ,x_{\pi (N)}). \end{aligned}$$
Then, it is clear from the definition of the random potential that \(V({\mathbf {x}})= V(\pi {\mathbf {x}})\) for all \({\mathbf {x}}\in {\mathbb {Z}}^{Nd}\) and \(\pi \in S_N\). The permutation invariance is exhibited not only by the random potential V, but also by the N-particle Laplacian \(\Delta ^{(N)}\) and the interaction potential U. As a consequence of the permutation invariance, it is natural to measure decay not in the standard \(\ell ^\infty \)-norm, but instead in the symmetrized distance
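$$\begin{aligned} d_S({\mathbf {x}},{\mathbf {y}}) := \min _{\pi \in S_N} \Vert \pi {\mathbf {x}}- {\mathbf {y}}\Vert _{\ell ^\infty }. \end{aligned}$$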
The symmetrized distance \(d_S\) can be viewed as a semi-metric on \({\mathbb {Z}}^{Nd}\) or as a metric on the quotient space \({\mathbb {Z}}^{Nd}/ S_N\). In some previous works, the symmetrized distance was replaced by the weaker Hausdorff distance (cf. [7]). Except for this change in the metric, the spectral and dynamical localization of the multi-particle Hamiltonian (1.3) can be defined exactly as for single-particle systems.
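A brute-force sketch of the symmetrized distance in \(d=1\), assuming \(d_S({\mathbf {x}},{\mathbf {y}}) = \min _{\pi \in S_N} \Vert \pi {\mathbf {x}}- {\mathbf {y}}\Vert _{\ell ^\infty }\); the function names are hypothetical. It shows why \(d_S\) is only a semi-metric on \({\mathbb {Z}}^{Nd}\): distinct configurations that differ by a permutation are at distance zero.

```python
import itertools

def sup_norm_dist(x, y):
    # ||x - y||_inf for N particles in Z (d = 1)
    return max(abs(a - b) for a, b in zip(x, y))

def permute(pi, x):
    # (pi x)_j = x_{pi(j)}
    return tuple(x[p] for p in pi)

def d_sym(x, y):
    """Symmetrized distance, taken here as min over pi in S_N of ||pi x - y||_inf."""
    return min(sup_norm_dist(permute(pi, x), y)
               for pi in itertools.permutations(range(len(x))))

print(sup_norm_dist((4, 1), (1, 4)), d_sym((4, 1), (1, 4)))  # 3 0
```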

Anderson localization for the multi-particle Hamiltonian (1.3) has been proven using multi-particle versions of both the fractional moment method [7, 35] and multi-scale analysis [12, 13, 15,16,17,18,19,20,21,22, 41,42,43]. The main idea, which was first used in [7, 20], is to prove Anderson localization for the multi-particle Hamiltonian (1.3) through an induction on the number of particles. Informally speaking, the bounds on the Green’s function or eigenfunction correlator in [7, 20] distinguish the following two scenarios: If the particle configuration can be separated into two sub-configurations with distant particles, the desired conclusion follows from the induction hypothesis. If the particle configuration cannot be further decomposed, then all particles need to be localized near a single site and form a “quasi-particle” (cf. [7, Section 1]). This case is then treated using single-particle methods. Of course, this informal sketch is overly simplistic and the probabilistic dependencies in the random potential turn out to be a challenge.

In this work, we present an alternative proof of Anderson localization for the N-particle Hamiltonian (1.3), which is based on the eigensystem multi-scale analysis of Elgart and Klein. Instead of striving for optimal or the most general results, we focus on accessible and simple arguments. We first describe the assumptions on the random potential which will be used in this paper.

Assumption 1.1

Let \({\mathcal {V}}= \{ {\mathcal {V}}(u;\omega ) \}_{u\in {\mathbb {Z}}^d}\) be a sequence of independent, identically distributed random variables. We assume that the single-site distribution is given by \(\rho (v) \mathrm {d}v\), where the density \(\rho :{\mathbb {R}}\rightarrow {\mathbb {R}}_{\geqslant 0}\) satisfies the following conditions:

(i) (Compact support) There exists a \(v_{\max } \in {\mathbb {R}}_{>0}\) such that \({\text {supp}}(\rho ) \subseteq [0,v_{\max }]\).

(ii) (Upper/lower bound) There exist \(\rho _{\min },\rho _{\max } \in {\mathbb {R}}_{>0}\) such that

$$\begin{aligned} \rho _{\min }\leqslant \rho (v) \leqslant \rho _{\max } \qquad \text {for all } v \in [0,v_{\max }]. \end{aligned}$$In particular, the density \(\rho \) is strictly positive on its support.

(iii) (Smoothness) The density \(\rho \) is continuously differentiable on \((0,v_{\max })\) and

$$\begin{aligned} \Vert \rho ^\prime (v) 1_{(0,v_{\max })}(v)\Vert _{L_v^\infty ({\mathbb {R}})} < \infty . \end{aligned}$$

The assumptions on the random potential are physically reasonable. In particular, they include the uniform distribution on the compact interval \([0,v_{\max }]\). We note that Assumption 1.1 excludes the case of Bernoulli random variables, which remains open. The full strength of Assumption 1.1 is used only to obtain a regular conditional mean (see Definition 2.3); directly assuming a regular conditional mean could therefore replace the lower bound in (ii) and the smoothness condition (iii). However, we found the current set of assumptions more accessible. In comparison with other works on multi-particle localization, our assumptions are essentially identical to [13] but more restrictive than [7, 19, 20].

In order to state our main result, we also need to introduce some additional notation. For any \({\mathbf {a}}\in {\mathbb {Z}}^{Nd}\) and \(L>0\), we define the symmetrized N-particle cube by

We emphasize that the side length \(L>0\) is not required to be an integer. Although the cube \(\Lambda _L({\mathbf {a}})\) depends only on the integer part of L, allowing non-integer side lengths has notational advantages once we take fractional powers of L. In many statements below, where the center \({\mathbf {a}}\in {\mathbb {Z}}^{Nd}\) is not essential, we simply write \(\Lambda _L\). As for single-particle systems, we define the restriction of the Hamiltonian H to the cube \(\Lambda _L\) by \(H_{\Lambda _L} = 1_{\Lambda _L} H 1_{\Lambda _L}\). In order to state the next definition, we let \(\tau \in (0,1)\) be a parameter as in (1.9). We also denote by \({\widetilde{\sigma }}(H_{\Lambda _L})\) the multi-set containing the eigenvalues of \(H_{\Lambda _L}\) repeated according to their multiplicities.

Definition 1.2

(m-localizing). Let \(N\geqslant 1\), and let \(\Lambda _L = \Lambda _L^{(N)}({\mathbf {a}})\), where \({\mathbf {a}}\in {\mathbb {Z}}^{Nd}\), \({\mathbf {x}}\in \Lambda _L\), and \(m>0\). Then, \(\varphi \in \ell ^2(\Lambda _L)\) is called \(({\mathbf {x}},m)\)-localizing if \(\Vert \varphi \Vert _{\ell ^2}=1\) and

The function \(\varphi \) is called m-localizing if there exists an \({\mathbf {x}}\in \Lambda _L\) such that \(\varphi \) is \(({\mathbf {x}},m)\)-localizing.

Furthermore, the cube \(\Lambda _L\) is called m-localizing for H if there exists an orthonormal eigenbasis

such that \(\varphi _\theta \) is m-localizing for all \(\theta \in {\widetilde{\sigma }}(H_{\Lambda _L})\). Instead of writing that \(\Lambda _L\) is m-localizing for H, we sometimes simply write that \(H_{\Lambda _L}\) is m-localizing, which places more emphasis on the Hamiltonian.

Definition 1.2 is the multi-particle version of [27, Definition 1.3 and Definition 1.5]. Equipped with this definition, we can now state our main result.

Theorem 1.3

(Anderson localization). Let \(d=1\), let \(N\geqslant 1\), and let \(H^{(N)}\) be the N-particle Anderson Hamiltonian as in (1.3). Assume that the localization length \(m>0\), the decay parameter \(p>0\), the initial length scale \(L_0 \geqslant 1\), and the disorder parameter \(\lambda >0\) satisfy

for some explicit constants. Then, we have for all \(L\geqslant L_0\) that

Remark 1.4

Using the parameters \(\tau , \gamma , \beta \), which are fixed in Sect. 1.4 and used throughout the proof, one may take the constants to be

where

Remark 1.5

The main limitation of Theorem 1.3 is the restriction to dimension \(d=1\). This restriction is used only in the proof of the covering properties (Lemma 3.3) and does not enter other parts of the argument. The geometric difficulties occurring in dimension \(d\geqslant 2\), which result from the symmetrization of the cubes, are further discussed in Sect. 3.1 and the appendix.

While Theorem 1.3 does not explicitly contain the spectral or dynamical localization of the N-particle Hamiltonian, both properties can be obtained as consequences of this result, as in [27, Corollary 1.8]. In Theorem 1.3, we obtain polynomial tails in our probabilistic estimate. By working with polynomial instead of exponential tails, we simplify the probabilistic aspects of the inductive step in the length scale (Theorem 5.4).

The proof of Theorem 1.3 combines the eigensystem multi-scale analysis of [27,28,29] with the induction on the number of particles from [7, 20]. The main difficulties are tied to probabilistic dependencies in the random potential \(V:{\mathbb {Z}}^{Nd} \rightarrow {\mathbb {R}}\), which affect the Wegner estimate (Proposition 2.2) and the induction step (Theorem 5.4).

1.3 Infinite-Particle Systems

In recent years, there has been much activity and interest in Anderson localization for many-body systems (\(N\rightarrow \infty \)). Since a survey of this activity is well beyond the scope of this introduction, we refer the reader to the review articles [1, 5] in the physical literature and [6, 47] in the mathematical literature. We instead focus on the nonlinear Schrödinger equation with a random potential given by

where \(\epsilon ,\delta >0\). The nonlinear Schrödinger equation describes the one-particle density of the N-particle Schrödinger equation in the many-body limit (cf. [46] and the references therein). The random nonlinear Schrödinger equation (1.7) was first studied by Bourgain and Wang [10, 11], Fishman et al. [30, 31], Fröhlich et al. [34], and Wang and Zhang [53]. The results of [53] imply that, with high probability, the evolution of any localized initial data remains localized up to the time \(C_k (\epsilon +\delta )^{-k}\), where \(k \geqslant 1\) is arbitrary. However, they do not provide detailed information on the asymptotic behavior as \(t\rightarrow \infty \) for general localized initial data. During the preparation of this work, this problem was revisited by Cong, Shi, and Zhang [24] and Cong and Shi [23]. In [23], the authors show for any localized initial data that the solutions to (1.7) satisfy

for all \(t\in {\mathbb {R}}\), as long as \(\delta \) and \(\epsilon \) are sufficiently small depending on \(\kappa \). The proofs rely on certain normal form (or symplectic) transformations, which successively remove non-resonant terms inside the Hamiltonian. In particular, the methods are more closely related to the original form of the multi-scale analysis [33] than its later implementations (see, e.g., [39, 51]).

While we were initially motivated by the asymptotic behavior of (1.7), we view the eigensystem multi-scale analysis as a bridge between the dynamical system methods, as in [10, 11, 23, 24, 30, 31, 34, 53], and more classical approaches to Anderson localization, as in [2, 3, 32, 33, 36, 51]. We conclude this introduction with an interesting observation, which illustrates the differences between the two perspectives: In the multi-particle setting [7, 20], the worst dependence on the particle number N in proofs of localization is a result of summing over all possible particle configurations inside the cube. In contrast, the worst case in proofs of localization for (1.7) is a “quasi-soliton,” which only has a few degrees of freedom (cf. [30, p. 845]).

1.4 Notation

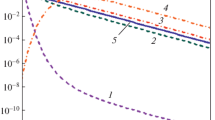

We first choose the parameters that will be needed throughout this paper. We fix \(\beta ,\tau \in (0,1)\) and \(\gamma >1\) such that

Turning toward the geometric objects in this paper, we let \(\Phi \subset \Theta \subset {\mathbb {Z}}^{Nd}\) be symmetric sets and define the boundary

the exterior boundary

and the interior boundary

For any \(r \geqslant 1\), we also define

Thus, \(\Phi ^{\Theta ,r}\) contains all particle configurations in \(\Phi \) which have a symmetrized distance of at least r to all particle configurations in \(\Theta \backslash \Phi \). The sets \(\partial _{ex}^{\Theta } \Phi \), \(\partial _{\text {in}}^{\Theta } \Phi \), and \(\Phi ^{\Theta ,r}\) are illustrated in Fig. 1.
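As a sketch of this definition, the snippet below computes \(\Phi ^{\Theta ,r}\) by brute force for \(N=2\) and \(d=1\). To keep it short, \(\Theta \) and \(\Phi \) are hypothetical product boxes (which are symmetric sets) rather than the symmetrized cubes of Fig. 1, and \(d_S\) is assumed to be the minimum over permutations of the \(\ell ^\infty \)-distance.

```python
import itertools

def d_sym(x, y):
    # symmetrized distance: min over permutations of the sup-norm distance (d = 1)
    return min(max(abs(a - b) for a, b in zip(p, y)) for p in itertools.permutations(x))

def inner_part(phi, theta, r):
    """Phi^{Theta, r}: configurations in phi at symmetrized distance >= r from theta minus phi."""
    outside = theta - phi
    return {x for x in phi if all(d_sym(x, y) >= r for y in outside)}

# Hypothetical symmetric example with N = 2, d = 1: product boxes are symmetric sets.
theta = set(itertools.product(range(8), repeat=2))
phi = set(itertools.product(range(4), repeat=2))
print(sorted(inner_part(phi, theta, 3)))  # [(0, 0), (0, 1), (1, 0), (1, 1)]
```

For these boxes, \(d_S({\mathbf {x}},\Theta \backslash \Phi ) = 4-\max (x_1,x_2)\), so \(\Phi ^{\Theta ,3}\) consists of the four configurations with both particles in \(\{0,1\}\).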

Furthermore, let \({\mathbf {x}}\in {\mathbb {Z}}^{Nd}\) and let \(1\leqslant n \leqslant N\). We define the projection onto the n-th particle by
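
$$\begin{aligned} \Pi _n {\mathbf {x}}:= x_n \in {\mathbb {Z}}^d. \end{aligned}$$

We also denote the sites corresponding to the particle configurations in \(\Theta \subset {\mathbb {Z}}^{Nd}\) by

$$\begin{aligned} \Pi \Theta := \{ \Pi _n {\mathbf {x}}:{\mathbf {x}}\in \Theta ,\ 1\leqslant n \leqslant N \} \subseteq {\mathbb {Z}}^d. \end{aligned}\tag{1.11}$$

For any site \(u \in {\mathbb {Z}}^{d}\), we define the number operator \(N_u :{\mathbb {Z}}^{Nd} \rightarrow {\mathbb {N}}\) by

$$\begin{aligned} N_u({\mathbf {x}}) := \# \{ 1\leqslant j \leqslant N :x_j = u \}. \end{aligned}$$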
Finally, we recall the geometric decomposition of the Hamiltonian given by

Here, \(\oplus \) denotes the direct sum and the boundary term \(\Gamma _{\partial ^\Theta \Phi }\) is defined by

Fig. 1: We choose \(d=1\), \(N=2\), \(\Theta =\Lambda _L^{(2)}({\mathbf {a}})\), and \(\Phi =\Lambda _{L/2}^{(2)}({\mathbf {b}})\), where \(L>0\) is unspecified. The centers \({\mathbf {a}},{\mathbf {b}}\in {\mathbb {Z}}^2\) are chosen so that the lower-left corners of \(\Theta \) and \(\Phi \) coincide. The interior and exterior boundaries are illustrated in the left diagram. The set \(\Phi ^{\Theta ,r}\) with \(r=L/4\) is illustrated in the right diagram. The boundary \(\partial ^{\Theta } \Phi \) is not illustrated here, since it is a subset of \({\mathbb {Z}}^2 \times {\mathbb {Z}}^2\) rather than \({\mathbb {Z}}^2\).

2 Multi-particle Wegner Estimates

In this section, we prove a minor variant of the multi-particle Wegner estimate from [15, Theorem 3]. In order to state the result, we first recall the notion of spectral separation for a family of sets in \({\mathbb {Z}}^{Nd}\). The proof of the multi-particle Wegner estimate, Proposition 2.2, requires additional notation and is therefore placed in a separate subsection.

Definition 2.1

(Spectral separation). Let \(L \geqslant 1\). We call two symmetric, finite sets \( \Theta _1, \Theta _2 \subseteq {\mathbb {Z}}^{Nd} \) spectrally \( L\)-separated for \(H \) if
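$$\begin{aligned} {{\,\mathrm{dist}\,}}\big ( \sigma (H_{\Theta _1}), \sigma (H_{\Theta _2}) \big ) \geqslant \tfrac{1}{2} \exp (-L^\beta ). \end{aligned}$$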
A family \( \{ \Theta _j \}_{j\in J} \) of finite, symmetric sets is called spectrally \( L\)-separated for \( H \) if \( \Theta _j \) and \( \Theta _{j^\prime } \) are spectrally \( L \)-separated for all \(j,j^\prime \in J \) such that

We note that the spectral separation \({{\,\mathrm{dist}\,}}( \sigma (H_{\Theta _j}), \sigma (H_{\Theta _{j^\prime }})) \geqslant 1/2\, \cdot \exp (-L^\beta )\) clearly fails if the two sets \(\Theta _j\) and \(\Theta _{j^\prime }\) coincide, which makes a condition on the distance between \(\Theta _j\) and \(\Theta _{j^\prime }\) natural. We will eventually consider families of cubes with side lengths comparable to \(\ell \), see, e.g., the proof of Theorem 5.4.
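The separation criterion quoted above can be checked directly once the two spectra are known. In this minimal sketch the eigenvalues are passed in as plain lists, the function names are hypothetical, and the values of L and \(\beta \) are illustrative (the paper fixes \(\beta \) in Sect. 1.4).

```python
import math

def spectral_distance(spec1, spec2):
    # dist(sigma_1, sigma_2) = min over pairs of eigenvalues of |theta_1 - theta_2|
    return min(abs(a - b) for a in spec1 for b in spec2)

def spectrally_separated(spec1, spec2, L, beta):
    """Criterion dist(sigma(H_1), sigma(H_2)) >= exp(-L**beta) / 2."""
    return spectral_distance(spec1, spec2) >= 0.5 * math.exp(-L ** beta)

spec = [0.1, 0.7, 1.3]
print(spectrally_separated(spec, spec, L=100, beta=0.5))        # False
print(spectrally_separated([0.0, 1.0], [0.5], L=10, beta=0.5))  # True
```

Passing the same spectrum twice gives distance zero, which reflects the remark that the separation fails when the two sets coincide.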

Proposition 2.2

(Multi-particle Wegner estimate). Let \(L,\ell \geqslant 1\) satisfy \(L=\ell ^\gamma \), and let the random potential satisfy Assumption 1.1. Let \({\mathcal {J}}\) be a finite index set, and let \(\{ \Theta _j \}_{j \in {\mathcal {J}}}\) be a family of finite, symmetric sets satisfying \({{\,\mathrm{diam}\,}}\Theta _j \leqslant 20 N \ell \) for all \(j\in {\mathcal {J}}\). Then

Before we start with the proof of Proposition 2.2, we include a short discussion of (2.1). If the family \(\{ \Theta _j \}_{j \in {\mathcal {J}}}\) consists only of two symmetric sets \(\Theta _1\) and \(\Theta _2\) satisfying \(d_S(\Theta _1,\Theta _2) \geqslant 80 N^2 \ell \), then (2.1) estimates the probability

However, the standard Wegner estimate (as in [39, 40, 48, 52]) only controls

In the single-particle case, estimates of (2.3) directly lead to estimates of (2.2): the condition \(d(\Theta _1,\Theta _2)\geqslant 80\ell \) implies the probabilistic independence of \(H_{\Theta _1}\) and \(H_{\Theta _2}\), so one may, for instance, condition on \(H_{\Theta _2}\) and apply (2.3) at each of its eigenvalues. In the multi-particle case, however, the Hamiltonians \(H_{\Theta _1}\) and \(H_{\Theta _2}\) can be probabilistically dependent even if the symmetrized distance between \(\Theta _1\) and \(\Theta _2\) is arbitrarily large. As a result, estimates of (2.2) are more difficult than estimates of (2.3).
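A small \(d=1\), \(N=2\) example of this dependence, written as a sketch under the assumption that the symmetrized cube is \(\Lambda _L({\mathbf {a}}) = \bigcup _{\pi \in S_N} \{ {\mathbf {x}}:\Vert {\mathbf {x}}- \pi {\mathbf {a}}\Vert _{\ell ^\infty } \leqslant L \}\); the helper names are hypothetical. The cubes around \((0,10)\) and \((0,20)\) have symmetrized distance 8, yet both contain configurations with a particle at the sites \(-1,0,1\), so both restricted Hamiltonians involve \({\mathcal {V}}(-1),{\mathcal {V}}(0),{\mathcal {V}}(1)\).

```python
import itertools

def sym_cube(a, L):
    """Symmetrized cube in d = 1, assumed to be the union over permutations pi
    of the sup-norm balls of radius floor(L) around pi a."""
    r = int(L)
    cube = set()
    for pa in set(itertools.permutations(a)):
        for shift in itertools.product(range(-r, r + 1), repeat=len(a)):
            cube.add(tuple(p + s for p, s in zip(pa, shift)))
    return cube

def d_sym(x, y):
    # min over permutations of the sup-norm distance
    return min(max(abs(a - b) for a, b in zip(p, y)) for p in itertools.permutations(x))

def d_sym_sets(theta1, theta2):
    return min(d_sym(x, y) for x in theta1 for y in theta2)

def projection(theta):
    # Pi Theta: every single-particle site occupied by some configuration in Theta
    return {xj for x in theta for xj in x}

theta1, theta2 = sym_cube((0, 10), 1), sym_cube((0, 20), 1)
print(d_sym_sets(theta1, theta2), sorted(projection(theta1) & projection(theta2)))  # 8 [-1, 0, 1]
```

Moving the second cube further away, e.g. to \((0,100)\), increases \(d_S\) but leaves the shared sites \(\{-1,0,1\}\) unchanged.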

2.1 Regular Conditional Means, Weak Separability, and Applications

In this subsection, we prove Proposition 2.2. Since the methods here will not be used in the rest of the paper, we encourage the reader to skip this subsection during the first reading. Given a finite set \({\mathcal {S}}\subseteq {\mathbb {Z}}^d\), we denote the sample mean of the random potential on \({\mathcal {S}}\) by
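$$\begin{aligned} \langle {\mathcal {V}}\rangle _{\mathcal {S}}:= \frac{1}{\# {\mathcal {S}}} \sum _{u\in {\mathcal {S}}} {\mathcal {V}}(u). \end{aligned}$$

For any site \( u \in {\mathcal {S}}\), we further denote the fluctuations of \( {\mathcal {V}}\) relative to the sample mean by

$$\begin{aligned} \eta _{u,{\mathcal {S}}} := {\mathcal {V}}(u) - \langle {\mathcal {V}}\rangle _{\mathcal {S}}. \end{aligned}$$

We denote the \( \sigma \)-algebra generated by the fluctuations \( \{ \eta _{u,{\mathcal {S}}} \}_{u\in {\mathcal {S}}} \) by \( {\mathscr {F}}_{\mathcal {S}}\). Finally, we denote by \( F_{\mathcal {S}}( \cdot | {\mathscr {F}}_{\mathcal {S}}) \) the conditional distribution function of \( \langle {\mathcal {V}}\rangle _{\mathcal {S}}\) given \( {\mathscr {F}}_{\mathcal {S}}\), i.e.,

$$\begin{aligned} F_{\mathcal {S}}(s | {\mathscr {F}}_{\mathcal {S}}) := {\mathbb {P}}\big ( \langle {\mathcal {V}}\rangle _{\mathcal {S}}\leqslant s \,\big |\, {\mathscr {F}}_{\mathcal {S}}\big ). \end{aligned}$$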
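The following sketch illustrates the decomposition \({\mathcal {V}}(u) = \langle {\mathcal {V}}\rangle _{\mathcal {S}}+ \eta _{u,{\mathcal {S}}}\) on a hypothetical uniform sample (the site set and function names are illustrative). Adding a common constant to all values on \({\mathcal {S}}\) changes the sample mean but leaves every fluctuation, and hence everything measurable with respect to \({\mathscr {F}}_{\mathcal {S}}\), unchanged; conditioning on \({\mathscr {F}}_{\mathcal {S}}\) therefore leaves the sample mean as the remaining randomness.

```python
import math
import random

def sample_mean(pot, sites):
    # <calV>_S = (#S)^{-1} sum_{u in S} calV(u)
    return sum(pot[u] for u in sites) / len(sites)

def fluctuations(pot, sites):
    # eta_{u,S} = calV(u) - <calV>_S
    m = sample_mean(pot, sites)
    return {u: pot[u] - m for u in sites}

rnd = random.Random(1)
sites = [0, 1, 2, 3, 4]
pot = {u: rnd.uniform(0.0, 1.0) for u in sites}  # hypothetical sample of calV on S
shifted = {u: v + 0.25 for u, v in pot.items()}   # same fluctuations, larger mean

eta, eta_shifted = fluctuations(pot, sites), fluctuations(shifted, sites)
print(sample_mean(shifted, sites) - sample_mean(pot, sites))  # 0.25 up to rounding
```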
Definition 2.3

(RCM). We say that the random potential \( {\mathcal {V}}\) has a regular conditional mean if there exist constants \(A_1,A_2 \in [0,\infty )\) and \( b_1,b_2,C_1,C_2\in (0,\infty ) \) such that for any finite subset \( {\mathcal {S}}\subseteq {\mathbb {Z}}^d \), the conditional distribution function \( F_{\mathcal {S}}(\cdot |{\mathscr {F}}_{\mathcal {S}}) \) satisfies

for all \(s>0\).

The simplest example of a random potential \({\mathcal {V}}\) with a regular conditional mean is a standard Gaussian potential. In that case, the sample mean \(\langle {\mathcal {V}}\rangle _{{\mathcal {S}}}\) is a Gaussian random variable with variance \((\# {\mathcal {S}})^{-1}\) which is independent of \({\mathscr {F}}_{\mathcal {S}}\). Thus,

In particular, (2.6) holds with \(C_1= \sqrt{2/\pi }, A_1 = 1/2, b_1=1\), and any choice of \(C_2,A_2,\) and \(b_2\). In the next lemma, we show that Assumption 1.1 also leads to a regular conditional mean.
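The value \(C_1=\sqrt{2/\pi }\) matches an elementary bound: the sample mean of \(n=\# {\mathcal {S}}\) standard Gaussians has density at most \(\sqrt{n/(2\pi )}\), so it lies in any window \([t-s,t+s]\) with probability at most \(2s\sqrt{n/(2\pi )} = \sqrt{2/\pi }\, n^{1/2} s\). Reading (2.6) as a bound on such windows is our assumption. The sketch below checks the bound with `math.erf`; the function names are hypothetical.

```python
import math

def mean_window_probability(n, t, s):
    """P(|<V>_S - t| <= s) when <V>_S is the mean of n i.i.d. standard Gaussians,
    i.e. a centred Gaussian with variance 1/n."""
    scale = math.sqrt(2.0 / n)  # sqrt(2) times the standard deviation

    def cdf(v):
        return 0.5 * (1.0 + math.erf(v / scale))

    return cdf(t + s) - cdf(t - s)

def rcm_bound(n, s):
    # C_1 (#S)^{A_1} s^{b_1} with C_1 = sqrt(2/pi), A_1 = 1/2, b_1 = 1
    return math.sqrt(2.0 / math.pi) * math.sqrt(n) * s

print(mean_window_probability(16, 0.0, 0.01), rcm_bound(16, 0.01))
```

The bound is nearly attained for windows centred at 0 with small s, which is why the constant cannot be improved in this example.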

Lemma 2.4

Assume that the random potential satisfies Assumption 1.1. Then, there exists a constant \(C=C(\rho )>0\), depending only on the single-site density \(\rho \), such that

for all finite \({\mathcal {S}}\subseteq {\mathbb {Z}}^d\) and \(s>0\). In particular, (2.6) is satisfied with

Proof

This is a consequence of [14, Theorem 4 with \(\alpha =1/2\)]. The restriction on s in [14, Theorem 4] is circumvented by increasing the power of N in [14, (6.5)], which makes the bound trivial in the excluded range. \(\square \)

This finishes our discussion of Definition 2.3 and its relation to Assumption 1.1. We now turn to the more geometric aspects of the Wegner estimates.

Definition 2.5

We call two symmetric sets \( \Theta _1,\Theta _2 \subseteq {\mathbb {Z}}^{Nd} \) weakly separable if there exists a set of sites \( {\mathcal {S}}\subseteq {\mathbb {Z}}^d \) and \( 0 \leqslant N_1 \ne N_2 \leqslant N \) such that
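$$\begin{aligned} \# \{ 1\leqslant j \leqslant N :x_j \in {\mathcal {S}}\} = N_1 \quad \text {for all } {\mathbf {x}}\in \Theta _1 \qquad \text {and} \qquad \# \{ 1\leqslant j \leqslant N :y_j \in {\mathcal {S}}\} = N_2 \quad \text {for all } {\mathbf {y}}\in \Theta _2. \end{aligned}\tag{2.7}$$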
In other words, \(\Theta _1\) and \(\Theta _2\) are called weakly separable if we can find a region \({\mathcal {S}}\subseteq {\mathbb {Z}}^d\) in which the number of particles is constant for each set \(\Theta _1\) and \(\Theta _2\) and differs between the two sets. An example of two weakly separable sets is given by the two cubes \(\Lambda _L({\mathbf {x}})\) and \(\Lambda _L({\mathbf {y}})\) in Fig. 2. In that example, (2.7) holds with \({\mathcal {S}}=\{3,4,5\}\), \(N_1=1\), and \(N_2=2\).

Fig. 2: We display two-particle cubes in one spatial dimension. We let \( {\mathbf {x}}= (4,1) \), \( {\mathbf {y}}= (4,4) \), and \( L=1 \). If \( \pi \in S_2 \) is the permutation given by \( \pi (1)=2 \) and \( \pi (2)=1 \), we have \( \pi {\mathbf {x}}= (1,4) \). The two-particle cube \( \Lambda _L^{(2)}({\mathbf {x}}) \) corresponds to the orange area, and the two-particle cube \( \Lambda _L^{(2)}({\mathbf {y}}) \) corresponds to the blue area.
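The example of Fig. 2 can be verified mechanically. This sketch assumes that the symmetrized cube is the union over permutations \(\pi \) of the \(\ell ^\infty \)-balls of radius \(\lfloor L \rfloor \) around \(\pi {\mathbf {a}}\), and reads the separability condition as: the number of particles in \({\mathcal {S}}\) is constant on each set and differs between the two sets. The helper names are hypothetical.

```python
import itertools

def sym_cube(a, L):
    """Symmetrized cube in d = 1, assumed to be the union over permutations pi
    of the sup-norm balls of radius floor(L) around pi a."""
    r = int(L)
    cube = set()
    for pa in set(itertools.permutations(a)):
        for shift in itertools.product(range(-r, r + 1), repeat=len(a)):
            cube.add(tuple(p + s for p, s in zip(pa, shift)))
    return cube

def particle_counts(theta, region):
    # the set of values #{j : x_j in region} over all configurations x in theta
    return {sum(1 for xj in x if xj in region) for x in theta}

def weakly_separated_by(theta1, theta2, region):
    """Check one candidate region S: the particle count in S is constant on each
    set and differs between the two sets."""
    c1, c2 = particle_counts(theta1, region), particle_counts(theta2, region)
    return len(c1) == 1 and len(c2) == 1 and c1 != c2

cube_x = sym_cube((4, 1), 1)  # x = (4, 1), L = 1 as in Fig. 2
cube_y = sym_cube((4, 4), 1)  # y = (4, 4)
S = {3, 4, 5}
print(particle_counts(cube_x, S), particle_counts(cube_y, S))  # {1} {2}
```

A smaller region such as \(\{4\}\) fails, since some configurations in \(\Lambda _1({\mathbf {x}})\) place no particle at site 4.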

In order to use Definition 2.5 in our proof of multi-particle localization, we clarify in the next lemma the relation between weak separability and the symmetrized distance of sets.

Lemma 2.6

If \( \Theta _1,\Theta _2\subseteq {\mathbb {Z}}^{Nd} \) are symmetric and satisfy

then \( \Theta _1\) and \( \Theta _2 \) are weakly separable.

This is a slight generalization of [15, Lemma 2.1].

Proof

The main idea behind the proof is that \( {\mathbf {x}},{\mathbf {y}}\in {\mathbb {Z}}^{Nd} \) satisfy \( d_S({\mathbf {x}},{\mathbf {y}})=0 \) if and only if \( N_u({\mathbf {x}})=N_u({\mathbf {y}}) \) for all \( u \in {\mathbb {Z}}^d \). We now present a more stable version of this elementary fact.

To simplify the notation, we set \( L:= \max ( {{\,\mathrm{diam}\,}}_S(\Theta _1),{{\,\mathrm{diam}\,}}_S(\Theta _2)) \). We also fix two particle configurations \( {\mathbf {a}}\in \Theta _1 \) and \( {\mathbf {b}}\in \Theta _2\). We denote by \( \Gamma _1,\ldots ,\Gamma _M \), where \( 1 \leqslant M \leqslant N \), the connected components of

For each \( 1 \leqslant m\leqslant M \), we let \( {\mathcal {J}}_m({\mathbf {a}}) \) and \( {\mathcal {J}}_m({\mathbf {b}}) \) be the indices corresponding to the connected component \( \Gamma _m \). Since \(\Gamma _m\) is connected, we obtain that

We now claim that there exists an index \( 1\leqslant m\leqslant M \) such that

If not, then \( {\mathbf {a}}\) and \( {\mathbf {b}}\) are close in the symmetrized distance. More precisely, it holds that

which is a contradiction. For any \( m \) as in (2.10), we define \( {\mathcal {S}}:= \Gamma _m \), \( N_1 := \# {\mathcal {J}}_m({\mathbf {a}}) \), and \( N_2 := \# {\mathcal {J}}_m({\mathbf {b}}) \). It remains to verify the conditions in Definition 2.5. Since the argument for \( \Theta _2 \) is similar, it suffices to check for all \( {\mathbf {x}}\in \Theta _1 \) that

Due to the permutation invariance of (2.11), we may assume that \( \Vert {\mathbf {x}}- {\mathbf {a}}\Vert _{\ell ^\infty } \leqslant {{\,\mathrm{diam}\,}}_S(\Theta _1) \leqslant L \). It then follows for all \( 1 \leqslant j \leqslant N \) that \( x_j \in \Lambda _L(a_j) \). Due to the equivalence

it follows that \( x_j \in \Gamma _m \) if and only if \( j \in {\mathcal {J}}_m({\mathbf {a}}) \). This yields

which implies (2.11) and completes the proof. \(\square \)
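The elementary fact at the start of this proof, \(d_S({\mathbf {x}},{\mathbf {y}})=0\) if and only if \(N_u({\mathbf {x}})=N_u({\mathbf {y}})\) for all u, can be confirmed exhaustively on a small example. The sketch assumes \(d=1\), \(N=3\), particles in \(\{0,1,2\}\), and \(d_S\) defined as the minimum over permutations of the \(\ell ^\infty \)-distance; `collections.Counter` stores the occupation numbers \(N_u\).

```python
import itertools
from collections import Counter

def d_sym(x, y):
    # symmetrized distance in d = 1: min over permutations of the sup-norm distance
    return min(max(abs(a - b) for a, b in zip(p, y)) for p in itertools.permutations(x))

def occupation_numbers(x):
    # u -> N_u(x) = #{j : x_j = u}; sites with N_u(x) = 0 are simply absent
    return Counter(x)

configs = list(itertools.product(range(3), repeat=3))  # N = 3 particles on {0, 1, 2}
agree = all((d_sym(x, y) == 0) == (occupation_numbers(x) == occupation_numbers(y))
            for x in configs for y in configs)
print(agree)  # True
```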

The following proposition is a minor extension of [15, Theorem 3].

Proposition 2.7

(Basic multi-particle Wegner estimate). Let \( V \) be a random potential with a regular conditional mean as in Definition 2.3 with constants \(A_i, b_i, C_i\) for \(i=1,2\). Let \( \Theta _1, \Theta _2 \subseteq {\mathbb {Z}}^{Nd} \) be two finite and symmetric sets with

For any \( s >0 \), it then holds that

Proof

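By replacing [15, Lemma 8] with Lemma 2.6, the argument extends essentially verbatim to our situation. The role of L in [15, Theorem 3] is taken by \(\# {\mathcal {S}}\) in Definition 2.5. Since \({\mathcal {S}}\) can always be taken as a subset of the projections \(\Pi \Theta _1 \cup \Pi \Theta _2\), we have \(\# {\mathcal {S}}\leqslant \# (\Theta _1 \cup \Theta _2)\); indeed, since \(\Theta _1 \cup \Theta _2\) is symmetric, every site in \(\Pi \Theta _1 \cup \Pi \Theta _2\) is the position of the first particle in some configuration of \(\Theta _1 \cup \Theta _2\). \(\square \)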
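The bound \(\# {\mathcal {S}}\leqslant \# (\Theta _1 \cup \Theta _2)\) rests on an elementary counting fact: for a symmetric set \(\Theta \), every site in \(\Pi \Theta \) is occupied by the first particle of some configuration, so \(\Pi \Theta = \Pi _1 \Theta \) and \(\# \Pi \Theta \leqslant \# \Theta \). A sketch in \(d=1\) with a hypothetical three-particle set (function names are illustrative):

```python
import itertools

def symmetrize(configs):
    # smallest symmetric set containing the given configurations
    return {p for x in configs for p in itertools.permutations(x)}

def projection(theta):
    # Pi Theta: all single-particle sites occupied by some configuration
    return {xj for x in theta for xj in x}

def first_particle_sites(theta):
    # Pi_1 Theta: sites occupied by the first particle
    return {x[0] for x in theta}

theta = symmetrize({(0, 5, 7), (1, 1, 2)})
print(len(theta), sorted(projection(theta)))     # 9 [0, 1, 2, 5, 7]
print(len(projection({(0, 5)})), len({(0, 5)}))  # 2 1
```

The second line shows the role of symmetry: for the non-symmetric set \(\{(0,5)\}\), the projection contains two sites while the set has only one element.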

We are now ready to prove the main result of this section.

Proof of Proposition 2.2

Using that \({{\,\mathrm{diam}\,}}\Theta _j \leqslant 20 N \ell \) for all \(j \in {\mathcal {J}}\), we obtain for an absolute constant \(C\geqslant 1\) that

Now, let \(j_1,j_2 \in {\mathcal {J}}\) be such that

By combining Lemma and Proposition 2.7, we obtain that

The desired estimate now follows from a union bound. \(\square \)

3 Preparations

In this section, we provide the tools needed for the eigensystem multi-scale analysis in Sect. 5.

3.1 Covers

As is evident from its name, the eigensystem multi-scale analysis connects multiple scales. The notion of a cover allows us to decompose boxes at a large scale L into several boxes at a smaller scale \(\ell \).

Definition 3.1

Let \( \Lambda _L^{(N)}({\mathbf {b}}) \), \( {\mathbf {b}}\in {\mathbb {Z}}^{Nd} \), be an \( N \)-particle box. We define the cover \( C_{L,\ell } = C_{L,\ell }^{(N)}({\mathbf {b}}) \) by

We further denote the centers of the cubes in \( C_{L,\ell } \) by \( \Xi _{L,\ell } \).

In the one-particle version of the eigensystem multi-scale analysis [29], the authors rely on suitable covers (cf. [29, 37]), which contain fewer boxes but still satisfy similar covering properties. Since we are not optimizing the different parameters in our argument, the simpler notion of a cover as in Definition 3.1 is sufficient for our purpose. For more elementary arguments based on covers, we refer to the excellent lecture notes by Kirsch [39, Section 9].

Remark 3.2

Unfortunately, the properties of covers in the (symmetrized) multi-particle setting are much more complicated than in the one-particle setting. While we obtained all the necessary properties for the multi-particle multi-scale analysis in one spatial dimension, i.e., \( d=1 \), we were unable to resolve the geometric difficulties in dimension \( d \geqslant 2 \). Indeed, our argument uses the non-decreasing rearrangement of particles in \({\mathbb {Z}}\), which has no direct analogue in higher spatial dimensions.
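One elementary way in which the ordering of \({\mathbb {Z}}\) helps: in \(d=1\), the non-decreasing rearrangement turns the minimum over all \(N!\) permutations in \(d_S\) into a single comparison, since a standard exchange argument shows that the monotone matching minimizes the largest distance on a line. The sketch compares both computations exhaustively on a small range, assuming \(d_S\) is the minimum over permutations of the \(\ell ^\infty \)-distance. It illustrates the idea only and is not meant to reproduce the argument in the appendix; in \({\mathbb {Z}}^d\) with \(d\geqslant 2\), there is no comparable canonical ordering.

```python
import itertools

def d_sym_brute(x, y):
    # min over all N! permutations of the sup-norm distance
    return min(max(abs(a - b) for a, b in zip(p, y)) for p in itertools.permutations(x))

def d_sym_sorted(x, y):
    # d = 1: pair the particles after non-decreasing rearrangement
    return max(abs(a - b) for a, b in zip(sorted(x), sorted(y)))

configs = list(itertools.product(range(-2, 3), repeat=3))
print(all(d_sym_brute(x, y) == d_sym_sorted(x, y) for x in configs for y in configs))  # True
```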

We record the properties of covers used in the rest of the paper in the next lemma. To avoid interrupting the flow of the main argument, we defer its proof to the appendix.

Lemma 3.3

(Properties of covers). Let \( d=1\) and let \( C_{L,\ell } \) and \( \Xi _{L,\ell } \) be as in Definition 3.1. Then the following properties hold:

-

(i):

The cardinality of the cover is bounded by \( \# C_{L,\ell } \leqslant (2L+1)^{Nd} \).

-

(ii):

We have that

$$\begin{aligned} \Lambda _L^{(N)}({\mathbf {b}}) = \bigcup _{{\mathbf {a}}\in \Xi _{L,\ell }} \Lambda _\ell ^{\Lambda _L({\mathbf {b}}),\ell }({\mathbf {a}}). \end{aligned}$$(3.2)

3.2 Partially and Fully Interactive Cubes

As explained in the introduction, one of the main differences between the one-particle and multi-particle settings lies in the probabilistic independence of certain Hamiltonians. If \(N=1\) and \(\Theta _1,\Theta _2 \subseteq {\mathbb {Z}}^d\) satisfy \(d(\Theta _1,\Theta _2)>0\), which is equivalent to \(\Theta _1 \cap \Theta _2 = \emptyset \), then the restricted Hamiltonians \(H_{\Theta _1}\) and \(H_{\Theta _2}\) are probabilistically independent. If \(N\geqslant 2\) and \(\Theta _1,\Theta _2 \subseteq {\mathbb {Z}}^{Nd}\), however, the symmetrized distance \(d_S(\Theta _1,\Theta _2)\) can be arbitrarily large while the sets of one-particle sites \(\Pi \Theta _1\) and \(\Pi \Theta _2\), as defined in (1.11), overlap. As a result, the restricted Hamiltonians \(H_{\Theta _1}\) and \(H_{\Theta _2}\) need not be probabilistically independent. In this subsection, we introduce partially and fully interactive cubes, which allow us to isolate the related difficulties. This notion appeared in earlier work of Chulaevsky and Suhov [20].
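The following sketch illustrates the last point in the simplest case \(N=2\), \(d=1\). Since (1.11) and the definition of \(d_S\) are not reproduced in this section, it assumes that \(d_S({\mathbf {x}},{\mathbf {y}}) = \min _{\pi } \max _j |x_j - y_{\pi (j)}|\), that \(d_S\) between sets is the minimum over pairs of points, and that a cube is the product of the intervals \([x_j-L,x_j+L]\); the helper names are hypothetical. Two cubes at symmetrized distance \(R-2L\) still share the sites near the origin.

```python
from itertools import permutations, product

def d_sym(x, y):
    """Assumed symmetrized distance in d = 1: min over permutations of the sup-distance."""
    return min(max(abs(a - y[p]) for a, p in zip(x, perm))
               for perm in permutations(range(len(y))))

def cube(x, L):
    """Product cube of side L around the configuration x (d = 1)."""
    return list(product(*[range(xj - L, xj + L + 1) for xj in x]))

def d_sym_sets(A, B):
    """Symmetrized distance between two finite sets of configurations."""
    return min(d_sym(a, b) for a in A for b in B)

def projection(A):
    """Pi A: all one-particle sites occupied in some configuration of A."""
    return {u for a in A for u in a}

R = 100
A, B = cube((0, 0), 1), cube((0, R), 1)
print(d_sym_sets(A, B))                       # 98, i.e. R - 2L
print(sorted(projection(A) & projection(B)))  # [-1, 0, 1]
```

Increasing \(R\) increases the symmetrized distance without bound, while the overlap of the projections persists.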

Definition 3.4

(Partially and fully interactive cubes). We call a cube \( \Lambda ^{(N)}_L({\mathbf {x}}) \) partially interactive if there exist \( 1 \leqslant N_1,N_2 < N \), where \( N_1 + N_2 = N \), and disjoint sets \( {\mathcal {S}}_1, {\mathcal {S}}_2 \subseteq {\mathbb {Z}}^{d} \) satisfying the following conditions:

-

\( {{\,\mathrm{dist}\,}}\,( {\mathcal {S}}_1,{\mathcal {S}}_2) \geqslant C_{{\mathcal {U}}} \), where \(C_{{\mathcal {U}}} = \max \limits _{u \in {{\,\mathrm{supp}\,}}{\mathcal {U}}} \Vert u \Vert +1 \),

-

for all \( {\mathbf {y}}\in \Lambda _L^{(N)}({\mathbf {x}}) \), it holds that

$$\begin{aligned} \# \{ 1 \leqslant j \leqslant N:y_j \in {\mathcal {S}}_1\} = N_1 \qquad \text {and} \qquad \# \{ 1 \leqslant j \leqslant N :y_j \in {\mathcal {S}}_2 \} = N_2. \end{aligned}$$

Conversely, we call a cube \( \Lambda ^{(N)}_L({\mathbf {x}}) \) fully interactive if it is not partially interactive.

An example of a partially interactive and a fully interactive cube is displayed in Fig. 3.

We display a partially interactive and a fully interactive two-particle cube in orange and blue, respectively. We let \(d=1\), \(N=2\), \( {\mathbf {x}}= (5,1) \), and \( L=1 \). We also assume nearest neighbor interactions, that is, \({\text {supp}}({\mathcal {U}})\subseteq \{-1,0,1\}\), so that \(C_{{\mathcal {U}}}=2\). Then, the cube \(\Lambda _L({\mathbf {x}})\) is partially interactive. For instance, one can take \({\mathcal {S}}_1=\{0,1,2\}\) and \({\mathcal {S}}_2=\{4,5,6\}\), which satisfy \({{\,\mathrm{dist}\,}}({\mathcal {S}}_1,{\mathcal {S}}_2)= 2\). It is clear from the picture that each particle lies in exactly one of the sets \({\mathcal {S}}_1\) and \({\mathcal {S}}_2\). Conversely, if \({\mathbf {y}}=(5,5)\), then the cube \(\Lambda _L({\mathbf {y}})\) is fully interactive, since both particles separately range over the set \(\{ 4,5,6\}\).
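For \(d=1\), Definition 3.4 can be checked mechanically. Changing a single coordinate \(y_j\) within the cube must not change the counts, so the whole range of each particle lies in \({\mathcal {S}}_1\) or in \({\mathcal {S}}_2\); one may therefore take \({\mathcal {S}}_1,{\mathcal {S}}_2\) to be the unions of the ranges of a nontrivial split of the particles. The sketch below assumes that the cube is the product of the intervals \([x_j-L,x_j+L]\) (symmetrization only permutes coordinates and leaves the counts unchanged); the function names are hypothetical. It reproduces the example of Fig. 3.

```python
from itertools import combinations

def gap(I, J):
    """Lattice distance between the integer intervals I = (a, b) and J = (c, d)."""
    return max(0, J[0] - I[1], I[0] - J[1])

def is_partially_interactive(x, L, c_u):
    """Definition 3.4 for d = 1: some split of the particles has ranges at distance >= C_U."""
    n = len(x)
    ranges = [(xj - L, xj + L) for xj in x]
    for k in range(1, n):
        for group in combinations(range(n), k):
            rest = [j for j in range(n) if j not in group]
            # dist(S_1, S_2) is the minimum over pairs of intervals from the two groups
            if min(gap(ranges[i], ranges[j]) for i in group for j in rest) >= c_u:
                return True
    return False

# Fig. 3: nearest-neighbour interaction, so C_U = 1 + 1 = 2
print(is_partially_interactive((5, 1), 1, 2))  # True
print(is_partially_interactive((5, 5), 1, 2))  # False
```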

Lemma 3.5

Let \( L > C_{{\mathcal {U}}} \) and let \( \Lambda _L^{(N)}({\mathbf {x}}) \), \( \Lambda _L^{(N)}({\mathbf {y}}) \) be two fully interactive \( N\)-particle cubes. If \( d_S({\mathbf {x}},{\mathbf {y}}) \geqslant 8NL \), then \( \Pi \Lambda _L^{(N)}({\mathbf {x}}) \) and \( \Pi \Lambda _L^{(N)}({\mathbf {y}}) \) are disjoint subsets of \( {\mathbb {Z}}^d \).

Proof

We first obtain a bound for the diameters of \( \Pi \Lambda _L^{(N)}({\mathbf {x}}) \) and \( \Pi \Lambda _L^{(N)}({\mathbf {y}}) \). Since \( \Lambda _L^{(N)}({\mathbf {x}}) \) is fully interactive, the union

is connected. Otherwise, we could choose \( {\mathcal {S}}_1 \) as one of the connected components and \( {\mathcal {S}}_2 \) as the union over the cubes \( \{ u \in {\mathbb {Z}}^d :\Vert u- x_j \Vert _\infty \leqslant L \} \) which are not contained in \( {\mathcal {S}}_1 \). Since the union in (3.3) is connected, we obtain that

by our assumption on L. Since \( \Lambda _L({\mathbf {y}}) \) is also fully interactive, the diameter of \( \Pi \Lambda _L({\mathbf {y}}) \) obeys the same upper bound. Since \( d_S({\mathbf {x}},{\mathbf {y}}) \geqslant 8NL \), there exist \( 1 \leqslant i , j \leqslant N \) such that \( \Vert x_i - y_j \Vert _\infty \geqslant 8NL \). For any \( u \in \Pi \Lambda _L({\mathbf {x}}) \) and \( v \in \Pi \Lambda _L({\mathbf {y}}) \), it follows that

Thus, \( \Pi \Lambda _L({\mathbf {x}}) \) and \( \Pi \Lambda _L({\mathbf {y}}) \) are disjoint. \(\square \)
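Lemma 3.5 can be sanity-checked by brute force for \(N=2\), \(d=1\), \(L=3\), and \(C_{{\mathcal {U}}}=2\), so that \(L>C_{{\mathcal {U}}}\) and \(8NL=48\). The sketch assumes \(d_S({\mathbf {x}},{\mathbf {y}}) = \min _\pi \max _j |x_j-y_{\pi (j)}|\) and product cubes, and decides full interactivity by testing all splits of the particles into two groups whose ranges are at distance at least \(C_{{\mathcal {U}}}\). It searches a finite window of centers for a counterexample; all names and parameter values are illustrative choices.

```python
from itertools import combinations, permutations

def gap(I, J):
    """Lattice distance between the integer intervals I = (a, b) and J = (c, d)."""
    return max(0, J[0] - I[1], I[0] - J[1])

def fully_interactive(x, L, c_u):
    """True if no split of the particles has ranges at distance >= C_U (d = 1)."""
    ranges = [(xj - L, xj + L) for xj in x]
    n = len(x)
    for k in range(1, n):
        for group in combinations(range(n), k):
            rest = [j for j in range(n) if j not in group]
            if min(gap(ranges[i], ranges[j]) for i in group for j in rest) >= c_u:
                return False
    return True

def d_sym(x, y):
    """Assumed symmetrized distance: min over permutations of the sup-distance."""
    return min(max(abs(a - y[p]) for a, p in zip(x, perm))
               for perm in permutations(range(len(y))))

def projection(x, L):
    """One-particle sites covered by the cube of side L around x (d = 1)."""
    return {u for xj in x for u in range(xj - L, xj + L + 1)}

def check_lemma_3_5(L=3, c_u=2, size=60):
    """Return True if no pair of fully interactive cubes with centres in [0, size)
    violates Lemma 3.5. Centres are sorted (a <= b), which loses nothing because
    d_sym and projection are permutation invariant."""
    N = 2
    configs = [(a, b) for a in range(size) for b in range(a, size)
               if fully_interactive((a, b), L, c_u)]
    for x in configs:
        for y in configs:
            if d_sym(x, y) >= 8 * N * L and projection(x, L) & projection(y, L):
                return False  # counterexample found
    return True

print(check_lemma_3_5())  # True
```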

3.3 Decay Estimates on Localizing Cubes

In this subsection, we prove that an eigenfunction \(\psi \) on \(\Lambda _L\) with eigenvalue \(\mu \) decays over a smaller cube \(\Lambda _\ell \) as long as \(\mu \) is not too close to the spectrum of the restricted Hamiltonian \(H_{\Lambda _\ell }\). This forms the basis of an iteration scheme over the cubes in the cover \(C_{L,\ell }\).

In the statement of the next lemma, we take \({\widetilde{\tau }}=(1+\tau )/2\).

Lemma 3.6

Let \( \Theta \subseteq {\mathbb {Z}}^{Nd} \) be symmetric, and let \( \Lambda _\ell = \Lambda _\ell ^{(N)} \subseteq \Theta \) be \( m \)-localizing. Let \( \psi :\Theta \rightarrow {\mathbb {R}} \) be a generalized eigenfunction for \( H_\Theta \) with generalized eigenvalue \( \mu \). We assume that

Then, we have for \( m^\prime := m \cdot (1- 3 \ell ^{- \frac{1-\tau }{2}}) \) and all \( {\mathbf {y}}\in \Lambda _\ell ^{\Theta ,\ell ^{{\widetilde{\tau }}}} \) that

Remark 3.7

This is the \( N \)-particle analog of [29, Lemma 2.2] on the spectral interval \( I = {\mathbb {R}}\). Without the spectral projection, however, the proof simplifies significantly.

For future use, we remark that the constant \(C(m,\tau ,\gamma ,\beta ,d)\) can be chosen as decreasing in m.

Proof

We denote by \( \{ (\varphi _\nu ,{\mathbf {x}}_\nu ) \}_{\nu \in {\widetilde{\sigma }}(H_{\Lambda _\ell })} \) the eigenfunctions and localization centers of \( H_{\Lambda _\ell } \). Recall that \( {\widetilde{\sigma }}(H_{\Lambda _\ell }) \) denotes the spectrum of \( H_{\Lambda _\ell } \) with the eigenvalues (possibly) repeated according to their multiplicities. For any \( {\mathbf {y}}\in \Lambda _\ell ^{\Theta ,\ell ^{{\widetilde{\tau }}}} \), we have that

We can estimate the inner product by

After inserting this into (3.7), we obtain that

In the last line, we used the condition \({\mathbf {y}}\in \Lambda _\ell ^{\Theta ,\ell ^{{\widetilde{\tau }}}}\). In order to complete the argument, it remains to prove that

Using our choice of \( m^\prime \), we have that

Thus, (3.8) reduces to

This follows from the condition \(\tau >\gamma \beta \) on the parameters and the lower bound on \(\ell \). \(\square \)
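The mechanism behind the proof of Lemma 3.6 is, as far as we can tell, the identity \((H_{\Lambda _\ell }-\mu )\psi |_{\Lambda _\ell } = -B\psi |_{\Theta \setminus \Lambda _\ell }\), where \(B\) collects the hopping terms leaving \(\Lambda _\ell \). Expanding in the eigenpairs of \(H_{\Lambda _\ell }\) then expresses each coefficient \(\langle \varphi _\nu ,\psi \rangle \) through a boundary term divided by \(\nu -\mu \), which is why \(\mu \) must stay away from \(\sigma (H_{\Lambda _\ell })\). The sketch below checks this identity numerically for a toy single-particle Hamiltonian (random diagonal minus nearest-neighbour hopping); the matrix, parameters, and function name are our assumptions, not the paper's setup.

```python
import numpy as np

def expansion_error(n=40, lam=3.0, lo=10, hi=25, seed=0):
    """Compare psi on Lambda = {lo, ..., hi-1} with its expansion in eigenpairs of H_Lambda."""
    rng = np.random.default_rng(seed)
    # toy Hamiltonian on Theta = {0, ..., n-1}: lam * V minus nearest-neighbour hopping
    H = lam * np.diag(rng.uniform(0.0, 1.0, n)) - np.eye(n, k=1) - np.eye(n, k=-1)
    mus, psis = np.linalg.eigh(H)
    mu, psi = mus[n // 2], psis[:, n // 2]        # an eigenpair (psi, mu) of H_Theta

    inside = np.arange(lo, hi)
    outside = np.setdiff1d(np.arange(n), inside)
    H_in = H[np.ix_(inside, inside)]              # restricted Hamiltonian H_Lambda
    boundary = H[np.ix_(inside, outside)]         # hopping terms that leave Lambda
    nus, phis = np.linalg.eigh(H_in)

    # (H_Lambda - mu) psi|_Lambda = -boundary @ psi|_outside, hence
    # <phi_nu, psi> = -<phi_nu, boundary @ psi_out> / (nu - mu)
    coeffs = -(phis.T @ (boundary @ psi[outside])) / (nus - mu)
    reconstruction = phis @ coeffs
    return np.max(np.abs(reconstruction - psi[inside])), np.min(np.abs(nus - mu))

err, min_dist = expansion_error()
print(err < 1e-8, min_dist > 0)  # True True
```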

3.4 Buffered Cubes

When proving that a large cube \(\Lambda _L\) is m-localizing (or \(m^\prime \)-localizing with \(m^\prime \) close to m), we would ideally like all smaller cubes \(\Lambda _\ell ({\mathbf {a}})\) in the cover \(C_{L,\ell }\) to be m-localizing. Unfortunately, this cannot be guaranteed: the probability that a single cube is m-localizing increases with its side length, but it is not large enough to ensure that all of the many cubes in the cover are simultaneously m-localizing. This problem already occurs in the one-particle setting, and we refer to the lecture notes of Kirsch [39, Section 9] for a more detailed explanation. In this subsection, which is entirely analytic, we control the influence of small “bad” regions on larger scales. The main idea is that each bad region should be surrounded by a buffer of good cubes.

Definition 3.8

(Buffered cubes). We call a symmetric set \(\Upsilon \subseteq \Lambda _L \subseteq {\mathbb {Z}}^{Nd}\) a buffered cube in \(\Lambda _L\) if the following holds:

-

(i)

\( \Upsilon \) is of the form

$$\begin{aligned} \Upsilon = \Lambda _{R}({\mathbf {b}}) \cap \Lambda _L, \end{aligned}$$
with \({\mathbf {b}}\in \Lambda _L\) and \(\ell \leqslant R \leqslant L\).

-

(ii)

There exists a set of good centers \( {\mathcal {G}}_\Upsilon \subseteq \Xi _{L,\ell }\), where \(\Xi _{L,\ell }\) is as in Definition 3.1, such that the cubes \(\{ \Lambda _\ell ({\mathbf {a}}) \}_{{\mathbf {a}}\in {\mathcal {G}}_\Upsilon } \) are m-localizing and

$$\begin{aligned} \partial ^{\Lambda _L}_{\text {in}} \Upsilon \subseteq \bigcup _{{\mathbf {a}}\in {\mathcal {G}}_\Upsilon } \Lambda _\ell ^{\Lambda _L,\ell }({\mathbf {a}}). \end{aligned}$$

We display a buffered cube \( \Upsilon \subseteq \Lambda _L\). The large black box corresponds to \( \Lambda _L \), and the red region corresponds to \( \Upsilon \). The green boxes illustrate a subset of the localizing boxes \( \Lambda _\ell ({\mathbf {a}})\), \( {\mathbf {a}}\in {\mathcal {G}}_\Upsilon \). The shaded green area displays a single symmetric localizing box. For illustrative purposes, the figure deviates from our argument in two respects. By definition, the full family \(\{ \Lambda _\ell ({\mathbf {a}})\}_{{\mathbf {a}}\in {\mathcal {G}}_\Upsilon } \) has to contain overlapping cubes, but the figure only shows disjoint cubes. While \( \Upsilon \) accounts for a large portion of \( \Lambda _L \) in the figure, our estimates show that the buffered cubes are small compared to \( \Lambda _L \).

While we do not strictly require that \(\Lambda _\ell ({\mathbf {b}})\) in Definition 3.8 is bad, i.e., not m-localizing, this will be the case for most of our buffered cubes. We illustrate this definition in Fig. 4.

We now present a short auxiliary lemma that will be used in the proof of Lemma 3.10. The statement and proof are a minor modification of [29, Lemma 2.1].

Lemma 3.9

Let \( \Theta \subseteq {\mathbb {Z}}^{Nd} \) be symmetric, and let \( \psi \) be a generalized eigenfunction of \( H_\Theta \) with generalized eigenvalue \( \mu \). Let \( \Phi \subseteq \Theta \) be finite and symmetric, \( \eta > 0 \), and suppose

Then, we have the estimate

Except for the weak (and necessary) non-resonance condition (3.9), Lemma 3.9 requires no information on \(\Theta ,\psi \), and \(\mu \). The lemma never yields any decay by itself, but provides a cheap way to leave a bad region.

Proof

Since \( (H_\Theta - \mu ) \psi = 0 \), it follows from the geometric decomposition (1.13) that

Using this, we obtain

\(\square \)

Lemma 3.10

Let \( \Lambda _L = \Lambda _L({\mathbf {x}}_0) \), where \( {\mathbf {x}}_0 \in {\mathbb {Z}}^{Nd} \), and let \( (\psi ,\mu ) \) be an eigenpair for \( H_{\Lambda _L} \). Let \( \Upsilon \subsetneq \Lambda _L \) be an m-buffered cube and suppose that

Further assume that

Then, we have for \( m^\prime = m( 1- 3\ell ^{- \frac{1-\tau }{2}}) \) that

This is the \( N\)-particle analog of [29, Lemma 2.4]. The precise form of the decay in (3.11) is inessential. It is only important that the pre-factor is bounded by 1/2, say, which prevents repeated (or infinitely many) visits to the buffered cubes.

Proof

From Lemma 3.9, we obtain that

From the definition of a buffered cube, we have that \( {\mathbf {u}}\in \Lambda _\ell ^{\Lambda _L,\ell }({\mathbf {a}}_{\mathbf {u}}) \) for some \( {\mathbf {a}}_{\mathbf {u}}\in {\mathcal {G}}_\Upsilon \). From the lower bound on \(\ell \) and Lemma 3.6, it follows that

It therefore remains to prove that

Using the definition of \(m^\prime \) and that \(\ell \geqslant 6^{\frac{2}{1-\tau }}\), we obtain \(m^\prime \geqslant m/2\). Thus, it suffices to show that

Since \(\gamma \beta <1\), this follows from the lower bound on \(\ell \). \(\square \)
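The elementary bound \(m^\prime \geqslant m/2\) used in the proof above, with \(m^\prime = m(1-3\ell ^{-\frac{1-\tau }{2}})\) as in Lemma 3.6, follows in one line from the lower bound on \(\ell \):

```latex
\ell \geqslant 6^{\frac{2}{1-\tau}}
\;\Longrightarrow\;
\ell^{-\frac{1-\tau}{2}} \leqslant \bigl(6^{\frac{2}{1-\tau}}\bigr)^{-\frac{1-\tau}{2}} = \frac{1}{6}
\;\Longrightarrow\;
m' = m\bigl(1 - 3\,\ell^{-\frac{1-\tau}{2}}\bigr) \geqslant m\Bigl(1 - \frac{3}{6}\Bigr) = \frac{m}{2}.
```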

4 Initial Step

In this section, we carry out the initial step of the eigensystem multi-scale analysis; in particular, we establish a localization estimate at an initial scale \(\ell \). We record the main result of this section in the following proposition.

Proposition 4.1

Let \( \ell \geqslant 1 \), let \( m > 0\), let \(\delta >0\), and assume that the disorder parameter, \(\lambda \), satisfies

Then, we have

Remark 4.2

In our application of this proposition, we will in fact take \(\delta \) to be \(\ell ^{-c}\), for some exponent \(c \equiv c(N) >0\), and hence, we see from the statement that by taking \(\lambda \) sufficiently large, we can ensure \(c \gg 1\).

The previous proposition should be compared to [27, Proposition 4.2]. In order to establish Proposition 4.1, we will prove Lemma 4.4, which should be compared with [27, Lemma 4.4]. Lemma 4.4 states that under a certain separation condition on the potential, recorded in (4.2), we have the required eigenfunction decay. The argument for this lemma is purely analytic and does not rely on any probabilistic estimates. Proposition 4.1 then follows from a lower bound on the probability of the event that the separation condition holds. The main difference between the single- and multi-particle settings lies in the permutation invariance of the Hamiltonian, which leads to a degenerate spectrum.

We first present the analytic portion of the initial scale estimate. This part of the argument is based on Gershgorin’s disk theorem, which we now recall.

Lemma 4.3

(Gershgorin’s disk theorem). Let \( A = (a_{jk})_{j,k=1}^n \in {\mathbb {C}}^{n\times n} \) be a complex matrix. For all \( 1 \leqslant j \leqslant n \), we define \( R_j := \sum _{1\leqslant k \leqslant n :k\ne j} |a_{jk}| \). Then, the eigenvalues of \( A \) are contained in

$$\begin{aligned} \bigcup _{j=1}^{n} \big \{ z \in {\mathbb {C}} :|z - a_{jj}| \leqslant R_j \big \}. \end{aligned}$$

We now establish the analytic lemma. To simplify the notation, we write \({\mathbf {x}}\not \sim _\pi {\mathbf {y}}\) for any \({\mathbf {x}},{\mathbf {y}}\in {\mathbb {Z}}^{Nd}\) satisfying \({\mathbf {x}}\ne \pi {\mathbf {y}}\) for all \(\pi \in S_N\).

Lemma 4.4

Let \( \Theta \subseteq {\mathbb {Z}}^{Nd} \) be finite and symmetric, let \( \eta > 4Nd \), and assume for any \( {\mathbf {x}}, {\mathbf {y}}\in \Theta \) satisfying \( {\mathbf {x}}\not \sim _\pi {\mathbf {y}}\) that

Then, for all normalized eigenfunctions \( \varphi \in \ell ^2(\Theta ) \) there exists an \( {\mathbf {x}}\in \Theta \) such that

Proof

For any \( {\mathbf {x}}\in \Theta \), we define the Gershgorin disk \( {\mathbb {D}}_{\mathbf {x}}\) by

From Gershgorin’s disk theorem, we obtain that

Let \( \varphi \in \ell ^2(\Theta ) \) be a normalized eigenfunction of \( H_\Theta \) with eigenvalue \( \mu \). From (4.5), we see that \( \mu \in {{\mathbb {D}}_{{\mathbf {x}}}}\) for some \( {\mathbf {x}}\in \Theta \). From the assumption (4.2), it follows for all \( {\mathbf {y}}\in \Theta \) satisfying \( {\mathbf {y}}\not \sim _\pi {\mathbf {x}}\) that

We obtain for all \( {\mathbf {y}}\in \Theta \) satisfying \( {\mathbf {y}}\not \sim _\pi {\mathbf {x}}\) that

We obtain (4.3) by iterating (4.6) until we reach the orbit \( S_N {\mathbf {x}}= \{ \pi {\mathbf {x}}:\pi \in S_N \}\). \(\square \)
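The proof above relies on Gershgorin’s disk theorem (Lemma 4.3). As a quick numerical sanity check of that statement, the sketch below computes the disks from the row sums \(R_j\) and confirms that every eigenvalue lies in at least one of them. The matrix has well-separated diagonal entries and small off-diagonal entries, loosely mimicking the large-disorder regime; it is an arbitrary illustration, and the function name is hypothetical.

```python
import numpy as np

def in_gershgorin_disks(A, tol=1e-12):
    """Return True if every eigenvalue of A lies in at least one Gershgorin disk."""
    centers = np.diag(A)
    radii = np.sum(np.abs(A), axis=1) - np.abs(centers)   # R_j = sum_{k != j} |a_jk|
    eigenvalues = np.linalg.eigvals(A)
    return all(np.any(np.abs(z - centers) <= radii + tol) for z in eigenvalues)

rng = np.random.default_rng(1)
n = 6
# well-separated diagonal entries and small off-diagonal entries
A = np.diag(10.0 * np.arange(n)) + rng.uniform(-1.0, 1.0, (n, n))
print(in_gershgorin_disks(A))  # True
```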

Having established Lemma 4.4, it only remains to prove the aforementioned probabilistic bounds, to which we now turn.

Proof of Proposition 4.1

Let \( \eta := (1+e^m) 2Nd> 4Nd \). Using Lemma 4.4, it remains to prove that the separation condition (4.2) is satisfied with probability at least \( 1- \delta \). We recall from (1.12) that the number operator \( N_u :{\mathbb {Z}}^{Nd}\rightarrow {\mathbb {N}} \), where \(u \in {\mathbb {Z}}^d\), is given by

Using this notation, we can rewrite the random potential \( V({\mathbf {x}}) \) as

For any pair of particle configurations \( {\mathbf {x}},{\mathbf {y}}\in {\mathbb {Z}}^{Nd} \) satisfying \( {\mathbf {x}}\not \sim _\pi {\mathbf {y}}\), it holds that \( N_w({\mathbf {x}}) \ne N_w({\mathbf {y}}) \) for some \( w \in {\mathbb {Z}}^d \). After splitting

this yields

By using a union bound, we obtain that

Due to the assumption on \( \lambda \), this yields the desired estimate. \(\square \)
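Two facts drive this proof: the potential depends on \({\mathbf {x}}\) only through the occupation numbers \(N_u({\mathbf {x}})\), and \({\mathbf {x}}\not \sim _\pi {\mathbf {y}}\) holds exactly when some \(N_w\) differs. The sketch below checks both in \(d=1\) by brute force. It assumes that (1.12) reads \(N_u({\mathbf {x}}) = \#\{j :x_j = u\}\) and that \(V({\mathbf {x}}) = \sum _j V(x_j)\); the potential values and function names are hypothetical.

```python
from collections import Counter
from itertools import permutations, product
import math

def number_profile(x):
    """Occupation numbers N_u(x) = #{j : x_j = u} (assumed form of (1.12), d = 1)."""
    return Counter(x)

def potential(x, V):
    """Random potential written through occupation numbers: sum_u N_u(x) V(u)."""
    return sum(n * V[u] for u, n in number_profile(x).items())

def same_orbit(x, y):
    """True if x = pi y for some permutation pi (the negation of x !~_pi y)."""
    return any(tuple(y[p] for p in perm) == tuple(x)
               for perm in permutations(range(len(y))))

def check_equivalence(sites=range(3), n=3):
    """x !~_pi y holds exactly when some occupation number N_w differs."""
    configs = list(product(sites, repeat=n))
    return all(same_orbit(x, y) == (number_profile(x) == number_profile(y))
               for x in configs for y in configs)

V = {0: 0.3, 1: 1.7, 2: -0.4}           # hypothetical potential values
x = (0, 1, 1)
print(math.isclose(potential(x, V), sum(V[xj] for xj in x)))  # True
print(check_equivalence())                                     # True
```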

5 Inductive Step

A complication of the current work compared to the single-particle setting of [27] is that, in addition to inducting on the scales, we need to induct on the particle number. We first address the latter problem, with an approach which should be compared to [20]. The following proposition treats partially interactive boxes, which form one of the cases that arise in the proof of Theorem 5.4.

Proposition 5.1

(Induction step in the particle number \( n \)). Let \( N \geqslant 2 \), let \( (p(n))_{n=1,\ldots ,N-1} \subseteq [1,\infty ) \), let \( L \geqslant 2 \), and assume for all \( 1 \leqslant n \leqslant N-1 \) that

If \( \Lambda _L^{(N)}({\mathbf {x}}) \) is partially interactive, we have that

Remark 5.2

In applications of this proposition, we will choose the disorder parameter \(\lambda \) in Proposition 4.1 large enough to ensure that p(n), and hence \({\tilde{p}}(N)\), is sufficiently large.

Remark 5.3

The idea behind the proof is that a partially interactive \( N \)-particle cube can be split into non-interacting cubes with fewer particles. The \( N \)-particle eigensystem can then be obtained as a tensor product of the eigensystems with fewer particles. Unfortunately, the symmetrization of the cube makes the implementation of this idea slightly cumbersome.

Proof

Let \( N_1, N_2, {\mathcal {S}}_1 \), and \( {\mathcal {S}}_2 \) be as in Definition 3.4. Using the permutation invariance of the Hamiltonian \( H^{(N)} \) and the cube \( \Lambda _L^{(N)}({\mathbf {x}}) \), we may assume that \( x_1,\ldots ,x_{N_1} \in {\mathcal {S}}_1 \) and \( x_{N_1+1},\ldots ,x_{N} \in {\mathcal {S}}_2 \). For any \( {\mathcal {J}}\subseteq \{ 1, \ldots , N\} \) with \( \# {\mathcal {J}}= N_1 \), we define

We note that different sets \( {\mathcal {J}}\) lead to disjoint supports in (5.2). Since \(\Lambda _L^{(N)}({\mathbf {x}})\) is partially interactive, it follows from Definition 3.4 that

This leads to the orthogonal decomposition

The subspace \( \ell _{\mathcal {J}}^2(\Lambda _L({\mathbf {x}})) \) contains wavefunctions \( \varphi \) supported on particle configurations \( {\mathbf {y}}\in \Lambda _L({\mathbf {x}})\) with \( y_j \in {\mathcal {S}}_1 \) for all \( j \in {\mathcal {J}}\) and \( y_j \in {\mathcal {S}}_2 \) for all \( j \in {\mathcal {J}}^c \). The operator \( H^{(N)}_{\Lambda _L({\mathbf {x}})} \) leaves each subspace \( \ell ^2_{\mathcal {J}}(\Lambda _L({\mathbf {x}})) \) invariant, and we can decompose

The eigensystems of \( H^{(N)}_{\Lambda _L({\mathbf {x}})} \) can then be obtained as a union of the eigensystems of \( H^{({\mathcal {J}})}_{\Lambda _L({\mathbf {x}})} \).

Let now \( {\mathcal {J}}_0:= \{ 1, \ldots , N_1\} \), and for each \( {\mathcal {J}}\), we fix a permutation \( \pi _{{\mathcal {J}}} \in S_N \) satisfying \( \pi _{\mathcal {J}}({\mathcal {J}}) = {\mathcal {J}}_0 \). With this notation, we have that

Thus,

Together with the decomposition (5.4), it follows that \( H^{(N)}_{\Lambda _L({\mathbf {x}})} \) is \(m\)-localizing if and only if \( H^{({\mathcal {J}}_0)}_{\Lambda _L({\mathbf {x}})} \) is \(m\)-localizing. Consequently, recalling the definition of \( m\)-localizing for operators and boxes, see Definition 1.2, it follows that

We will now establish bounds on the latter probability. Since \( {\mathcal {J}}_0 = \{ 1,\ldots ,N_1\} \), we have from Definition 3.4 that

and

Since the eigensystem for \( H^{({\mathcal {J}}_0)}_{\Lambda _L^{(N)}({\mathbf {x}})} \) can be written as a tensor product of the eigensystems of the two operators on the right, we can estimate

Thus, by combining (5.5), (5.6), and the induction hypothesis, we obtain that

which yields the desired estimate. \(\square \)
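The tensor-product step in the proof rests on a standard linear-algebra fact: for a non-interacting sum \(H_1\otimes I + I \otimes H_2\), the eigenvalues are the sums of eigenvalues of \(H_1\) and \(H_2\), and the eigenvectors are the corresponding tensor products. The sketch below checks this with small random symmetric matrices standing in for the \(N_1\)- and \(N_2\)-particle Hamiltonians; the matrices and sizes are arbitrary assumptions, not the operators of the paper.

```python
import numpy as np

rng = np.random.default_rng(2)

def random_symmetric(n):
    """A random real symmetric matrix (stand-in for a restricted Hamiltonian)."""
    M = rng.normal(size=(n, n))
    return (M + M.T) / 2

H1, H2 = random_symmetric(3), random_symmetric(4)
# non-interacting sum on the product space: H1 (x) I + I (x) H2
H = np.kron(H1, np.eye(4)) + np.kron(np.eye(3), H2)

e1, v1 = np.linalg.eigh(H1)
e2, v2 = np.linalg.eigh(H2)
sums = np.sort(np.add.outer(e1, e2).ravel())
print(np.allclose(sums, np.linalg.eigvalsh(H)))  # True

# each eigenvalue e1[i] + e2[j] has the product eigenvector v1[:, i] (x) v2[:, j]
i, j = 1, 2
w = np.kron(v1[:, i], v2[:, j])
print(np.allclose(H @ w, (e1[i] + e2[j]) * w))  # True
```

If \(v_1\) is localized near one configuration and \(v_2\) near another, the product vector is localized near the concatenated configuration, which is why localization of the factors transfers to \(H^{({\mathcal {J}}_0)}_{\Lambda _L({\mathbf {x}})}\).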

The following is the main inductive step and constitutes the bulk of the work of this section.

Theorem 5.4

(Induction step in the scale \( \ell \)). Let \(d=1\), and let \( (p(n))_{n=1,\ldots ,N}\subseteq [1,\infty ) \) be a decreasing sequence satisfying

Let

and suppose that, for some scale \(\ell \geqslant \ell _0\) and all \( 1 \leqslant n \leqslant N \),

Then, we have for \(L = \ell ^\gamma \) and all \(1\leqslant n \leqslant N\) that

where \(M := m ( 1- 3\ell ^{- \frac{1-\tau }{2}}) (1-250 N^2 \ell ^{1-\tau \gamma })\).

Remark 5.5

We emphasize that in (5.8), n is allowed to be N because we are currently inducting on the length scale and not the particle number. We also mention that the constant \(C=C(\rho ,m,\tau ,\gamma ,\beta ,d)\) can be chosen as decreasing in m.

Proof

We only prove (5.9) for \(n=N\). After minor notational changes, the same argument also applies for \(1\leqslant n < N\).

Suppose (5.8) holds for some scale \(\ell \). Fix \({\mathbf {x}}_0 \in {\mathbb {Z}}^{Nd}\) and consider \(\Lambda _L({\mathbf {x}}_0)\) for L as in the statement of the theorem. We will prove that (5.9) holds in five steps. Throughout, we let \( C_{L,\ell } \) be an \(\ell \)-cover of \( \Lambda _L({\mathbf {x}}_0) \) as in Definition 3.1 and let \(\Xi _{L,\ell }\) be the corresponding centers.

5.1 Step 1: Definition of the Good Event \(\mathcal {E}\)

The good event \( {\mathcal {E}}\) will lead to localization on \( \Lambda _L \) and will satisfy the probabilistic bound \( {\mathbb {P}}({\mathcal {E}})\geqslant 1 - L^{-p(N)} \) for some p(N). We define \( {\mathcal {E}}:= {\mathcal {E}}_{\text {PI}} \cap {\mathcal {E}}_{\text {FI}} \cap {\mathcal {E}}_{\text {NR}} \), where the three events contain conditions on partially interactive cubes, conditions on fully interactive cubes, and a non-resonance condition, respectively.

We first define the good event for partially interactive cubes by

Second, we define the good event for fully interactive cubes by

Finally, we define the good event regarding non-resonances by

5.2 Step 2: Estimate of the Probability of the Bad Set

In this step, we separately estimate the probabilities of \( {\mathcal {E}}_{\text {PI}},{\mathcal {E}}_{\text {FI}}, \) and \( {\mathcal {E}}_{\text {NR}} \).

We first estimate the probability of \( {\mathcal {E}}_{\text {PI}} \). We mention that in the single-particle setting, i.e., \(N=1\), there are no partially interactive cubes, and hence, \({\mathbb {P}}({\mathcal {E}}_{\text {PI}}^c) =0\) holds trivially. If \(N\geqslant 2\), it follows from Lemma 3.3 and Proposition 5.1 that

provided that

We now estimate the probability of \( {\mathcal {E}}_{\text {FI}} \). Let \( {\mathbf {a}}_1,{\mathbf {a}}_2 \in \Xi _{L,\ell } \) be as in the definition of \( {\mathcal {E}}_{\text {FI}} \), that is, such that \( d_S({\mathbf {a}}_1,{\mathbf {a}}_2) \geqslant 8N\ell \) and such that \( \Lambda _\ell ({\mathbf {a}}_1) \) and \( \Lambda _\ell ({\mathbf {a}}_2) \) are fully interactive. By Lemma 3.5, it follows that \( \Pi \Lambda _\ell ({\mathbf {a}}_1) \) and \( \Pi \Lambda _\ell ({\mathbf {a}}_2) \) are disjoint. As a result, the random operators \( H_{\Lambda _\ell ({\mathbf {a}}_1)} \) and \( H_{\Lambda _\ell ({\mathbf {a}}_2)} \) are probabilistically independent. From a union bound and the induction hypothesis (5.8), we obtain that

provided that

As mentioned in Remark 5.2, the disorder parameter \(\lambda \) can be chosen so as to ensure that we can make \({\tilde{p}}(N)\) arbitrarily large, and hence, p(N) can be guaranteed to satisfy the two conditions (5.13) and (5.14) simultaneously.
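For orientation, here is the shape of the union bound for \({\mathcal {E}}_{\text {FI}}\), under two assumptions since the displays are not reproduced here: that \({\mathcal {E}}_{\text {FI}}^c\) is the event that two fully interactive cubes with \(d_S({\mathbf {a}}_1,{\mathbf {a}}_2)\geqslant 8N\ell \) both fail to be \(m\)-localizing, and that (5.8) bounds the probability that a single cube fails by \(\ell ^{-p(N)}\). Independence (Lemma 3.5), Lemma 3.3 (i), and \(\ell = L^{1/\gamma }\) then give

```latex
\mathbb{P}\bigl(\mathcal{E}_{\text{FI}}^c\bigr)
\leqslant \sum_{\substack{\mathbf{a}_1,\mathbf{a}_2\in\Xi_{L,\ell}\\ d_S(\mathbf{a}_1,\mathbf{a}_2)\geqslant 8N\ell}}
\mathbb{P}\bigl(\Lambda_\ell(\mathbf{a}_1)\text{ is not } m\text{-localizing}\bigr)\,
\mathbb{P}\bigl(\Lambda_\ell(\mathbf{a}_2)\text{ is not } m\text{-localizing}\bigr)
\leqslant \bigl(\#C_{L,\ell}\bigr)^2\,\ell^{-2p(N)}
\leqslant (2L+1)^{2Nd}\,L^{-\frac{2p(N)}{\gamma}} .
```

Since \((2L+1)^{2Nd}\leqslant 3^{2Nd}L^{2Nd}\) for \(L\geqslant 1\), the right-hand side is at most \(3^{2Nd}L^{2Nd-2p(N)/\gamma }\), so a large \(p(N)\) makes this term small compared to \(L^{-p(N)}\).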

It remains to estimate the probability of \( {\mathcal {E}}_{\text {NR}} \). Using the multi-particle Wegner estimate, i.e., Proposition 2.2, we have that

provided that

The last condition (5.15) follows from our lower bound on \(\ell \).

5.3 Step 3: Buffered Cubes, Good Cubes, and Spectral Separation

For the rest of this proof, we only work on the good event \( {\mathcal {E}}\). By the definitions of \( {\mathcal {E}}_{\text {PI}} \) and \( {\mathcal {E}}_{\text {FI}} \), there exists a \( {\mathbf {b}}\in \Xi _{L,\ell } \) such that for all \( {\mathbf {a}}\in \Xi _{L,\ell } \) satisfying \( d_S({\mathbf {a}},{\mathbf {b}}) \geqslant 8N\ell \), the cube \( \Lambda _\ell ({\mathbf {a}}) \) is \( m\)-localizing. We define the set of centers for good cubes by

We also define the buffered subset \( \Upsilon \) and the associated set of good cubes \( {\mathcal {G}}_\Upsilon \) in the buffer by

We now verify that \( \Upsilon \) and \( {\mathcal {G}}_{\Upsilon } \) satisfy the conditions in Definition 3.8. The property (i) is immediate. To prove (ii), let \( {\mathbf {y}}\in \partial ^{\Lambda _L}_{\text {in}} \Upsilon \). By the covering Lemma 3.3, there exists an \( {\mathbf {a}}\in \Xi _{L,\ell } \) such that

Since \( d_S({\mathbf {y}},{\mathbf {b}}) = 10N\ell \), we obtain that \( 9N\ell \leqslant d_S({\mathbf {a}},{\mathbf {b}}) \leqslant 11 N\ell \), which implies \( {\mathbf {a}}\in {\mathcal {G}}_\Upsilon \).

From the covering Lemma 3.3, we also obtain that

We then define

We view \( {\mathfrak {C}}_{L,\ell }\) as a modification of the cover \( C_{L,\ell } \), which retains most (but not necessarily all) of the cubes and adds the buffered cube \( \Upsilon \). We will refer to elements of \( {\mathfrak {C}}_{L,\ell }\) by \( \Theta \), which allows us to uniformly treat \( \Lambda _\ell ({\mathbf {a}}) \) and \( \Upsilon \) in some arguments below. From the definition of \( {\mathcal {E}}_{\text {NR}} \supseteq {\mathcal {E}}\), it follows that \( {\mathfrak {C}}_{L,\ell }\) is spectrally \( L \)-separated, see Definition 2.1. In contrast to [29], we only require a single buffered cube \( \Upsilon \). This is an advantage of working with polynomial instead of exponential tails in the probabilistic estimates.

5.4 Step 4: Proximity of the Eigenvalues at the Scales L and \(\ell \)

We let \( \mu \) be an eigenvalue of \( H_{\Lambda _L} \). In this step, we show that there exists a \( \Theta _{\mu } \in {\mathfrak {C}}_{L,\ell }\) such that

Arguing by contradiction, we assume that \( {{\,\mathrm{dist}\,}}( \mu ,\sigma (H_\Theta )) \geqslant 1/2 \cdot e^{-L^\beta } \) for all \( \Theta \in {\mathfrak {C}}_{L,\ell }\). We let \( \psi \) be a normalized eigenfunction of \( H_{\Lambda _L} \) with the eigenvalue \(\mu \). Due to the normalization, we have that \( \Vert \psi \Vert _{\ell ^\infty } \leqslant \Vert \psi \Vert _{\ell ^2} = 1\). We claim that this can be upgraded to

Once the claim (5.21) has been established, the lower bound on \(\ell \) leads to

which is a contradiction.

To see the claim (5.21), we let \( {\mathbf {y}}\in \Lambda _L \) be arbitrary. Using the covering property (5.18), it follows that either \( {\mathbf {y}}\in \Lambda _\ell ^{\Lambda _L,\ell }({\mathbf {a}}) \) for some \( {\mathbf {a}}\in {\mathcal {G}}\) or that \( {\mathbf {y}}\in \Upsilon \).

If \( {\mathbf {y}}\in \Lambda _\ell ^{\Lambda _L,\ell }({\mathbf {a}}) \), it follows from the lower bound on \(\ell \), \(\tau >\gamma \beta \), Lemma 3.6, and the spectral separation \( d(\mu ,\sigma (H_{\Lambda _\ell ({\mathbf {a}})}))\geqslant 1/2 \cdot e^{-L^\beta } \) that

If \( {\mathbf {y}}\in \Upsilon \), we similarly obtain from \( {{\,\mathrm{dist}\,}}( \mu ,\sigma (H_\Upsilon )) \geqslant 1/2\cdot e^{-L^\beta } \), \( {{\,\mathrm{dist}\,}}(\mu ,\sigma (H_{\Lambda _\ell ({\mathbf {a}})})) \geqslant 1/2 \cdot e^{-L^\beta } \) for all \( {\mathbf {a}}\in {\mathcal {G}}_{\Upsilon }\subseteq {\mathcal {G}}\), and Lemma 3.10 that

This completes the proof of the claim (5.21) and hence this step.

5.5 Step 5: M-Localization

As in Step 4, we let \( (\psi _\mu ,\mu ) \) be a normalized eigenpair of \( H_{\Lambda _L} \). It remains to show that there exists an \( {\mathbf {x}}_\mu \in \Lambda _L \) such that \( \psi _\mu \) is \( ({\mathbf {x}}_\mu ,M)\)-localized, i.e.,

for all \( {\mathbf {y}}\in \Lambda _L \) satisfying \( d_S({\mathbf {y}},{\mathbf {x}}_\mu ) \geqslant L^\tau \). By Step 4, there exists a region \( \Theta _\mu \in {\mathfrak {C}}_{L,\ell }\) such that \( {{\,\mathrm{dist}\,}}( \mu ,\sigma (H_{\Theta _\mu })) \leqslant 1/2 \cdot e^{-L^\beta } \). Since \( {\mathfrak {C}}_{L,\ell }\) is spectrally \( L \)-separated and each set in \({\mathfrak {C}}_{L,\ell }\) has a diameter of at most \(20N\ell \), it follows that

We choose any particle configuration \( {\mathbf {x}}_\mu \in \Theta _\mu \) as our localization center. Now, let \( {\mathbf {y}}_0 = {\mathbf {y}}\in \Lambda _L \) satisfy \( d_S({\mathbf {y}}_0,{\mathbf {x}}_\mu ) \geqslant 200 N^2 \ell \). Due to the lower bound on \(\ell \), this assumption is (much) weaker than the assumption \( d_S({\mathbf {y}}_0,{\mathbf {x}}_\mu ) \geqslant L^\tau \) for (5.22). By the covering property (5.18), we have \( {\mathbf {y}}_0 \in \Lambda _\ell ^{\Lambda _L,\ell }({\mathbf {a}}) \) for some \( {\mathbf {a}}\in {\mathcal {G}}\) or \( {\mathbf {y}}_0 \in \Upsilon \). We then set \( \Theta =\Lambda _\ell ({\mathbf {a}}) \) or \( \Theta = \Upsilon \), respectively. Since \( {{\,\mathrm{diam}\,}}_S(\Theta ) \leqslant 20 N \ell \), it follows that

Thus, it follows from the spectral separation of \( {\mathfrak {C}}_{L,\ell }\) that \( {{\,\mathrm{dist}\,}}(\mu ,\sigma (H_\Theta )) \geqslant 1/2 \cdot e^{-L^\beta } \). Next, we apply the decay estimates for good and buffered cubes.

If \( \Theta = \Lambda _\ell ({\mathbf {a}}) \), and hence, \( {\mathbf {y}}_0 \in \Lambda _\ell ^{\Lambda _L,\ell }({\mathbf {a}}) \), it follows from Lemma 3.6 that

for some \( {\mathbf {y}}_1 \in \partial _\text {ex}^{\Lambda _L} \Lambda _\ell ({\mathbf {a}}) \). We call this scenario a good step. In particular, since \( {\mathbf {y}}_0 \in \Lambda _\ell ^{\Lambda _L,\ell }({\mathbf {a}}) \), it follows that \( d_S( {\mathbf {y}}_0,{\mathbf {y}}_1) \geqslant \ell \) and hence \( |\psi ({\mathbf {y}}_0)|\leqslant 1/2\cdot |\psi ({\mathbf {y}}_1)| \).

If \( \Theta = \Upsilon \), and hence, \( {\mathbf {y}}_0 \in \Upsilon \), it follows from Lemma 3.10 that

where \( {\mathbf {y}}_1 \in {\widetilde{\Upsilon }} := \{ {\mathbf {v}}\in \Lambda _L :d_S({\mathbf {v}},\Upsilon ) \leqslant 2N\ell \}\). We call this scenario a bad step.

We illustrate the iteration scheme which generates the sequence \(({\mathbf {y}}_k)_{k=0}^K\). The bad cube \(\Lambda _\ell ({\mathbf {b}})\), which is inside the buffered cube \(\Upsilon \), is displayed in red. The cube \(\Lambda _\ell ({\mathbf {x}}_\mu )\) is displayed in blue. The good and bad steps in the iteration are displayed in green and orange, respectively. Certain pre-factors, such as \(200N^2\) in (ii) below, are ignored in this figure for illustrative purposes.

By iterating this procedure, see Fig. 5, we generate a final iteration index \( K \) and a sequence of particle configurations \( ({\mathbf {y}}_k)_{k=0}^K \) satisfying the following properties:

-

(i)

\( {\mathbf {y}}_0 = {\mathbf {y}}\),

-

(ii)

\( d_S({\mathbf {y}}_k, {\mathbf {x}}_\mu ) \geqslant 200 N^2 \ell \) for all \( 0 \leqslant k \leqslant K-1 \) and \( d_S({\mathbf {y}}_K,{\mathbf {x}}_\mu )< 200 N^2 \ell \).

-

(iii)

If \( {\mathbf {y}}_k \not \in \Upsilon \) and \( 0 \leqslant k \leqslant K-1 \), then \( d_S({\mathbf {y}}_k,{\mathbf {y}}_{k+1})\geqslant \ell \) and

$$\begin{aligned} |\psi ({\mathbf {y}}_k)|\leqslant e^{-m^\prime d_S({\mathbf {y}}_k,{\mathbf {y}}_{k+1})} |\psi ({\mathbf {y}}_{k+1})|. \end{aligned}$$(5.26)
We then call the \( k \)-th iteration step good.

-

(iv)

If \( {\mathbf {y}}_k \in \Upsilon \) and \( 0 \leqslant k \leqslant K-1 \), then \( {\mathbf {y}}_{k+1} \in {\widetilde{\Upsilon }} \) and

$$\begin{aligned} |\psi ({\mathbf {y}}_k)|\leqslant \frac{1}{2} |\psi ({\mathbf {y}}_{k+1})|. \end{aligned}$$(5.27)

We remark that the final index \( K \) is well defined, i.e., the iteration stops after finitely many steps, since both (5.26) and (5.27) gain at least a factor of \( 1/2 \).
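Chaining (5.26) and (5.27) along the sequence makes the bookkeeping explicit: only good steps contribute exponential decay, while each bad step still gains the factor \(1/2\), which we drop below. Moreover, since the symmetrized distance satisfies the triangle inequality and \(d_S({\mathbf {y}}_K,{\mathbf {x}}_\mu )<200N^2\ell \) by (ii), the steps together cover a distance of at least \(d_S({\mathbf {y}},{\mathbf {x}}_\mu ) - 200N^2\ell \):

```latex
|\psi(\mathbf{y}_0)|
\leqslant \Bigl(\prod_{\substack{0\leqslant k\leqslant K-1\\ \mathbf{y}_k\notin\Upsilon}}
   e^{-m' d_S(\mathbf{y}_k,\mathbf{y}_{k+1})}\Bigr)
 \Bigl(\prod_{\substack{0\leqslant k\leqslant K-1\\ \mathbf{y}_k\in\Upsilon}} \tfrac{1}{2}\Bigr)
 |\psi(\mathbf{y}_K)|
\leqslant \exp\Bigl(-m' \sum_{\substack{0\leqslant k\leqslant K-1\\ \mathbf{y}_k\notin\Upsilon}}
   d_S(\mathbf{y}_k,\mathbf{y}_{k+1})\Bigr)\,|\psi(\mathbf{y}_K)|,
\qquad
\sum_{k=0}^{K-1} d_S(\mathbf{y}_k,\mathbf{y}_{k+1})
\geqslant d_S(\mathbf{y}_0,\mathbf{y}_K)
> d_S(\mathbf{y},\mathbf{x}_\mu) - 200N^2\ell .
```

It therefore only remains to control the distance covered by bad steps, all of which take place in \({\widetilde{\Upsilon }}\).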

Since all bad steps occur in \( {\widetilde{\Upsilon }} \), we obtain that

Using the a priori estimate \( |\psi ({\mathbf {y}}_K)|\leqslant 1\), we obtain that

If \( d_S({\mathbf {y}},{\mathbf {x}}_\mu ) \geqslant L^\tau \), then (5.29) and our definition of \( M \) imply that

This completes the proof of (5.22) and hence the proof of the theorem. \(\square \)

6 Proof of Main Theorem

We now prove our main result, Theorem 1.3.

Proof

The proof proceeds by induction on the length scales. We do not induct on the number of particles here, since this was already done in Proposition 5.1 and in the proof of Theorem 5.4. Before we start the induction, however, we need to choose a sequence of decay parameters \((p(n))_{n=1}^{N}\), length scales \((L_k)_{k=0}^\infty \), and (inverse) localization lengths \((m_k)_{k=0}^\infty \). Our choices are motivated by the conditions in Theorem 5.4.

The decay parameters \((p(n))_{n=1}^N\) are defined through a backward recursion: We set

and for all \(2\leqslant n\leqslant N\), we define

By iterating the definition, we obtain the upper bound

Turning to the length scales \((L_k)_{k=0}^{\infty }\), we recall that the initial length scale \(L_0\) is part of the statement of Theorem 5.4. Due to our assumption, the initial length scale satisfies

where the constant C is as in Theorem 5.4. The remaining length scales \(L_k\), where \(k\geqslant 1\), are then defined through the (forward) recursion \(L_{k}= L_{k-1}^\gamma \).
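Since \(L_k = L_{k-1}^\gamma \), induction gives the closed form \(L_k = L_0^{\gamma ^k}\), so the length scales grow super-exponentially in \(k\). The Python sketch below computes \(\log L_k = \gamma ^k \log L_0\), which avoids overflow. The function name and the numerical values are hypothetical, and we assume \(L_0>1\) and \(\gamma >1\) so that the scales increase.

```python
import math

def log_length_scales(L0, gamma, K):
    """Return [log L_0, ..., log L_K] for the recursion L_k = L_{k-1}**gamma.

    Induction gives L_k = L0**(gamma**k), hence log L_k = gamma**k * log L0.
    """
    # Assumption of this sketch: L0 > 1 and gamma > 1, so that L_k increases.
    if L0 <= 1 or gamma <= 1:
        raise ValueError("this sketch assumes L0 > 1 and gamma > 1")
    return [gamma**k * math.log(L0) for k in range(K + 1)]

# Cross-check the closed form against the recursion for small exact values.
L = 10
for log_L in log_length_scales(10, 2, 3)[1:]:
    L = L**2  # L_k = L_{k-1}**gamma with gamma = 2
    assert math.isclose(math.log(L), log_L)
```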

We now turn to the localization lengths \((m_k)_{k=0}^\infty \). With \(m>0\) as in the statement of the theorem, we define

Due to our choice of \(m_0\), the length scales \((L_k)_{k=0}^\infty \), and the (large) constant \(C=C(\rho ,m,\tau ,\gamma ,\beta ,d)\), all three factors in the recursion formula are positive and \(m_k \geqslant m\) for all \(k\geqslant 0\).

We now prove by induction on \(k\geqslant 0\) that

Base case (in the length scale): \(k=0\). Due to our assumption on the disorder parameter \(\lambda \), we have for all \(1\leqslant n \leqslant N\) that

Thus, (6.2) with \(k=0\) follows from Proposition 4.1.

Induction step (in the length scale): \(k-1\rightarrow k\). Due to our choice of \((p(n))_{n=1,\ldots ,N}\), the condition (5.7) in Theorem 5.4 is satisfied. The induction step then follows from the lower bound (6.1) and Theorem 5.4 with \((\ell ,L,m,M)\) replaced by \((L_{k-1},L_k,m_{k-1},m_k)\).

This completes the proof of our claim (6.2). The claim (6.2) almost yields (1.6) in Theorem 1.3, except that the length scale L is currently restricted to the discrete sequence \((L_k)_{k=0}^\infty \). This restriction can essentially be removed as in [27, Section 4.3]. Since [27, Section 4.3] uses exponential instead of polynomial tails, we only mention that the parameter p in the derivation of (6.2) has to be replaced by \(p+4Nd\), and we omit all other details. \(\square \)

References

Agarwal, K., Altman, E., Demler, E., Gopalakrishnan, S., Huse, D.A., Knap, M.: Rare-region effects and dynamics near the many-body localization transition. Ann. Phys. 529(7), 1600326 (2017)

Aizenman, M., Elgart, A., Naboko, S., Schenker, J.H., Stolz, G.: Moment analysis for localization in random Schrödinger operators. Invent. Math. 163(2), 343–413 (2006)

Aizenman, M., Molchanov, S.: Localization at large disorder and at extreme energies: an elementary derivation. Commun. Math. Phys. 157(2), 245–278 (1993)

Anderson, P.W.: Absence of diffusion in certain random lattices. Phys. Rev. 109, 1492–1505 (1958)

Abanin, D.A., Papić, Z.: Recent progress in many-body localization. Ann. Phys. 529(7), 1700169 (2017)

Abdul-Rahman, H., Nachtergaele, B., Sims, R., Stolz, G.: Localization properties of the disordered XY spin chain: a review of mathematical results with an eye toward many-body localization. Ann. Phys. 529(7), 201600280 (2017)

Aizenman, M., Warzel, S.: Localization bounds for multiparticle systems. Commun. Math. Phys. 290(3), 903–934 (2009)

Aizenman, M., Warzel, S.: Random Operators: Disorder Effects on Quantum Spectra and Dynamics, volume 168 of Graduate Studies in Mathematics. American Mathematical Society, Providence, RI (2015)

Bourgain, J., Kenig, C.E.: On localization in the continuous Anderson–Bernoulli model in higher dimension. Invent. Math. 161(2), 389–426 (2005)

Bourgain, J., Wang, W.-M.: Diffusion bound for a nonlinear Schrödinger equation. In: Mathematical Aspects of Nonlinear Dispersive Equations, volume 163 of Annals of Mathematics Studies, pp. 21–42. Princeton University Press, Princeton (2007)

Bourgain, J., Wang, W.-M.: Quasi-periodic solutions of nonlinear random Schrödinger equations. J. Eur. Math. Soc. (JEMS) 10(1), 1–45 (2008)

Chulaevsky, V., de Monvel, A.B., Suhov, Y.: Dynamical localization for a multi-particle model with an alloy-type external random potential. Nonlinearity 24(5), 1451–1472 (2011)

Chulaevsky, V.: On resonances in disordered multi-particle systems. C. R. Math. Acad. Sci. Paris 350(1–2), 81–85 (2012)

Chulaevsky, V.: On the regularity of the conditional distribution of the sample mean. arXiv:1304.6913 (2013)

Chulaevsky, V.: On partial charge transfer processes in multiparticle systems on graphs. J. Oper., Art. ID 373754, 9 pp. (2014)

Chulaevsky, V.: On the regularity of the conditional distribution of the sample mean. Markov Process. Relat. Fields 21(3 part 1), 415–431 (2015)

Chulaevsky, V.: Efficient localization bounds in a continuous \(N\)-particle Anderson model with long-range interaction. Lett. Math. Phys. 106(4), 509–533 (2016)

Chulaevsky, V.: Towards localization in long-range continuous interactive Anderson models. Oper. Matrices 13(1), 121–153 (2019)

Chulaevsky, V., Suhov, Y.: Eigenfunctions in a two-particle Anderson tight binding model. Commun. Math. Phys. 289(2), 701–723 (2009)

Chulaevsky, V., Suhov, Y.: Multi-particle Anderson localisation: induction on the number of particles. Math. Phys. Anal. Geom. 12(2), 117–139 (2009)

Chulaevsky, V., Suhov, Y.: Multi-scale analysis for random quantum systems with interaction. Progress in Mathematical Physics, vol. 65. Springer, New York (2014)

Chulaevsky, V., Suhov, Y.: Efficient Anderson localization bounds for large multi-particle systems. J. Spectr. Theory 7(1), 269–320 (2017)

Cong, H., Shi, Y.: Diffusion bound for the nonlinear Anderson model (2020). arXiv:2008.10171

Cong, H., Shi, Y., Zhang, Z.: Long-time Anderson Localization for the Nonlinear Schrödinger Equation Revisited (2020). arXiv:2006.04332

Delyon, F., Kunz, H., Souillard, B.: One-dimensional wave equations in disordered media. J. Phys. A 16(1), 25–42 (1983)

Ding, J., Smart, C.K.: Localization near the edge for the Anderson Bernoulli model on the two dimensional lattice. Invent. Math. 219(2), 467–506 (2020)

Elgart, A., Klein, A.: An eigensystem approach to Anderson localization. J. Funct. Anal. 271(12), 3465–3512 (2016)

Elgart, A., Klein, A.: Eigensystem multiscale analysis for Anderson localization in energy intervals. J. Spectr. Theory 9(2), 711–765 (2019)

Elgart, A., Klein, A.: Eigensystem multiscale analysis for the Anderson model via the Wegner estimate. Ann. Henri Poincaré 21(7), 2301–2326 (2020)

Fishman, S., Krivolapov, Y., Soffer, A.: On the problem of dynamical localization in the nonlinear Schrödinger equation with a random potential. J. Stat. Phys. 131(5), 843–865 (2008)

Fishman, S., Krivolapov, Y., Soffer, A.: Perturbation theory for the nonlinear Schrödinger equation with a random potential. Nonlinearity 22(12), 2861–2887 (2009)

Fröhlich, J., Martinelli, F., Scoppola, E., Spencer, T.: Constructive proof of localization in the Anderson tight binding model. Commun. Math. Phys. 101(1), 21–46 (1985)

Fröhlich, J., Spencer, T.: Absence of diffusion in the Anderson tight binding model for large disorder or low energy. Commun. Math. Phys. 88(2), 151–184 (1983)

Fröhlich, J., Spencer, T., Wayne, C.E.: Localization in disordered, nonlinear dynamical systems. J. Stat. Phys. 42(3–4), 247–274 (1986)

Fauser, M., Warzel, S.: Multiparticle localization for disordered systems on continuous space via the fractional moment method. Rev. Math. Phys. 27(4), 1550010 (2015)

Germinet, F., Klein, A.: Bootstrap multiscale analysis and localization in random media. Commun. Math. Phys. 222(2), 415–448 (2001)

Germinet, F., Klein, A.: New characterizations of the region of complete localization for random Schrödinger operators. J. Stat. Phys. 122(1), 73–94 (2006)

Gol’dšeĭd, I.J., Molčanov, S.A., Pastur, L.A.: A random homogeneous Schrödinger operator has a pure point spectrum. Funkcional. Anal. i Priložen. 11(1), 1–10, 96 (1977)

Kirsch, W.: An Invitation to Random Schroedinger Operators (2007). arXiv:0709.3707

Kirsch, W.: A Wegner estimate for multi-particle random Hamiltonians. Zh. Mat. Fiz. Anal. Geom. 4(1), 121–127, 203 (2008)

Klein, A., Nguyen, S.T.: The bootstrap multiscale analysis of the multi-particle Anderson model. J. Stat. Phys. 151(5), 938–973 (2013)

Klein, A., Nguyen, S.T.: Bootstrap multiscale analysis and localization for multi-particle continuous Anderson Hamiltonians. J. Spectr. Theory 5(2), 399–444 (2015)

Klein, A., Nguyen, S.T., Rojas-Molina, C.: Characterization of the metal-insulator transport transition for the two-particle Anderson model. Ann. Henri Poincaré 18(7), 2327–2365 (2017)

Kunz, H., Souillard, B.: Sur le spectre des opérateurs aux différences finies aléatoires. Commun. Math. Phys. 78(2), 201–246 (1980/1981)

Li, L., Zhang, L.: Anderson–Bernoulli Localization on the 3D lattice and discrete unique continuation principle (2019). arXiv:1906.04350

Schlein, B.: Derivation of effective evolution equations from microscopic quantum dynamics. In: Evolution Equations, volume 17 of Clay Mathematics Proceedings, pp. 511–572. American Mathematical Society, Providence (2013)

Sims, R., Stolz, G.: Many-body localization: concepts and simple models. Markov Process. Relat. Fields 21(3 part 2), 791–822 (2015)

Stollmann, P.: Wegner estimates and localization for continuum Anderson models with some singular distributions. Arch. Math. (Basel) 75(4), 307–311 (2000)

Stolz, G.: An introduction to the mathematics of Anderson localization. In: Entropy and the Quantum II, volume 552 of Contemporary Mathematics, pp. 71–108. American Mathematical Society, Providence (2011)

Tao, T.: Nonlinear Dispersive Equations: Local and Global Analysis, volume 106 of CBMS Regional Conference Series in Mathematics. Published for the Conference Board of the Mathematical Sciences, Washington, DC; by the American Mathematical Society, Providence, RI (2006)

von Dreifus, H., Klein, A.: A new proof of localization in the Anderson tight binding model. Commun. Math. Phys. 124(2), 285–299 (1989)

Wegner, F.: Bounds on the density of states in disordered systems. Z. Phys. B 44(1–2), 9–15 (1981)

Wang, W.-M., Zhang, Z.: Long time Anderson localization for the nonlinear random Schrödinger equation. J. Stat. Phys. 134(5–6), 953–968 (2009)

Acknowledgements

The authors thank Alexander Elgart, Fei Feng, Rupert Frank, Abel Klein, and Terence Tao for interesting and helpful discussions. D. M. also thanks Carlos Kenig, Charles Smart, and Wilhelm Schlag for prior conversations about Anderson localization.

Additional information

Communicated by Anton Bovier.

DM was partially supported by NSF DMS-180069.

Appendix A. Non-decreasing Rearrangement and Properties of Covers

Before we prove the covering properties, we provide a more convenient representation of the symmetrized distance.

Definition A.1

(Non-decreasing rearrangement). Let \( {\mathbf {x}}\in {\mathbb {Z}}^N \). We define \( {\widehat{{\mathbf {x}}}} \in {\mathbb {Z}}^N \) as the unique vector satisfying \( {\widehat{{\mathbf {x}}}}_j \leqslant {\widehat{{\mathbf {x}}}}_{j+1} \) for all \( j=1,\ldots ,N-1 \) and \( {\widehat{{\mathbf {x}}}} = \pi {\mathbf {x}}\) for some \( \pi \in S_N \). We call \( {\widehat{{\mathbf {x}}}} \) the non-decreasing rearrangement of \( {\mathbf {x}}\). Furthermore, we call \( {\mathbf {x}}\in {\mathbb {Z}}^N \) non-decreasing if \( {\mathbf {x}}= {\widehat{{\mathbf {x}}}} \).
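Computationally, the non-decreasing rearrangement is just a sort. The Python sketch below returns \({\widehat{{\mathbf {x}}}}\) together with one permutation realizing it. The 0-based convention `x_hat[j] == x[pi[j]]` is our assumption about how \(\pi {\mathbf {x}}\) acts, and the function names are hypothetical. Note that \({\widehat{{\mathbf {x}}}}\) is unique, whereas \(\pi \) is not unique when \({\mathbf {x}}\) has repeated entries.

```python
def nondecreasing_rearrangement(x):
    """Return (x_hat, pi) with x_hat non-decreasing and x_hat[j] == x[pi[j]].

    Indices are 0-based. Python's sort is stable, so equal entries keep their
    original order in pi; any other tie-breaking yields the same x_hat.
    """
    pi = sorted(range(len(x)), key=lambda j: x[j])
    x_hat = [x[j] for j in pi]
    return x_hat, pi

def is_nondecreasing(x):
    """Check x_j <= x_{j+1} for all j, i.e. whether x equals its rearrangement."""
    return all(x[j] <= x[j + 1] for j in range(len(x) - 1))

x_hat, pi = nondecreasing_rearrangement([3, -1, 3, 0])
# x_hat == [-1, 0, 3, 3] and pi == [1, 3, 0, 2]
```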

Definition A.1 heavily depends on the natural order on \( {\mathbb {Z}}\) and has no (exact) analogue in \( {\mathbb {Z}}^d \) for \( d \geqslant 2 \). The next lemma characterizes the symmetrized distance of \( {\mathbf {x}}\) and \( {\mathbf {y}}\) in terms of their non-decreasing rearrangements.
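The characterization in Lemma A.2 can be sanity-checked numerically on small boxes. The sketch below is a hypothetical illustration, not part of the proof. We assume here that the symmetrized distance is \(d_S({\mathbf {x}},{\mathbf {y}}) = \min _{\pi \in S_N} \Vert {\mathbf {x}}- \pi {\mathbf {y}}\Vert _\infty \) and read (A.1) as \(d_S({\mathbf {x}},{\mathbf {y}}) = \Vert {\widehat{{\mathbf {x}}}} - {\widehat{{\mathbf {y}}}}\Vert _\infty \); both are assumptions of this sketch. The code compares a brute-force minimum over all permutations with the distance between the sorted vectors.

```python
from itertools import permutations, product

def d_sym(x, y):
    """Assumed symmetrized distance: min over permutations of ||x - pi y||_inf."""
    return min(max(abs(a - b) for a, b in zip(x, p)) for p in permutations(y))

def d_sorted(x, y):
    """Max-norm distance between the non-decreasing rearrangements."""
    return max(abs(a - b) for a, b in zip(sorted(x), sorted(y)))

def check_lemma(N, R):
    """Compare d_sym and d_sorted for all x, y in {-R, ..., R}^N."""
    box = range(-R, R + 1)
    return all(
        d_sym(x, y) == d_sorted(x, y)
        for x in product(box, repeat=N)
        for y in product(box, repeat=N)
    )

print(check_lemma(2, 3), check_lemma(3, 2))  # both checks return True
```

The brute force costs \(N!\) per pair, which is exactly what the rearrangement avoids: sorting reduces the minimization over \(S_N\) to a single comparison.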

Lemma A.2

Let \( N \geqslant 2 \), and let \( {\mathbf {x}},{\mathbf {y}}\in {\mathbb {Z}}^N \). Then, it holds that

Proof

Due to the permutation invariance of the identity (A.1), we may assume that \( {\mathbf {x}}\) and \( {\mathbf {y}}\) are non-decreasing. Then, the identity (A.1) is equivalent to

We proceed by induction on the particle number \( N \geqslant 2 \).

Base case: \( N= 2 \). Since (A.2) clearly holds if \( \pi \) equals the identity, the base case reduces to

Using the symmetry in \( {\mathbf {x}}\) and \( {\mathbf {y}}\), we can further reduce (A.2) to

Using that \( {\mathbf {x}}\) and \( {\mathbf {y}}\) are non-decreasing, (A.3) follows directly from

Induction step: \( N-1\rightarrow N \). We further split the induction step into two cases.

Case 1: \( \pi (N) = N \). This case easily follows from the induction hypothesis. Indeed, let \( {\widetilde{{\mathbf {x}}}} = (x_1,\ldots ,x_{N-1}) \), \( {\widetilde{{\mathbf {y}}}} = ( y_1,\ldots ,y_{N-1} ) \), and let \( {\widetilde{\pi }}\in S_{N-1} \) be the restriction of \( \pi \) to \( \{1,\ldots ,N-1\} \). Using the induction hypothesis, we obtain that