Abstract

Since their first applications, Convolutional Neural Networks (CNNs) have solved problems that have advanced the state-of-the-art in several domains. CNNs represent information using real numbers. Despite encouraging results, theoretical analysis shows that representations such as hyper-complex numbers can achieve richer representational capacities than real numbers, and that Hamilton products can capture intrinsic interchannel relationships. Moreover, in the last few years, experimental research has shown that Quaternion-valued CNNs (QCNNs) can achieve similar performance with fewer parameters than their real-valued counterparts. This paper condenses research in the development of QCNNs from its very beginnings. We propose a conceptual organization of current trends and analyze the main building blocks used in the design of QCNN models. Based on this conceptual organization, we propose future directions of research.

1 Introduction

In the last decade, the use of deep learning models has become ubiquitous for solving difficult and open problems in science and engineering. Convolutional Neural Networks (CNNs) were among the first deep learning models [56, 88, 99], and their success in tackling the large-scale object recognition and classification problem (ImageNet challenge) [92] led to their application in other domains.

The core components of a CNN architecture are the convolution and pooling layers. A convolution layer is a variation of a fully connected layer (Perceptron), as shown in Fig. 1. In the former case, a weight-sharing mechanism over locally connected inputs is applied [51]. This technique is inspired by the local receptive fields discovered by Hubel and Wiesel in their experiments with macaques [79].

Fig. 1 Fully connected layer (a) vs. convolutional layer (b). For an input array of \(2\times 3\) elements, the Perceptron uses 12 weights and 24 connections (not locally connected), while the convolution layer uses 4 weights and 8 connections. In the convolutional layer, we have a reduction in the number of parameters and connections, because a single weight is connected to several inputs, and the weights are applied over locally connected inputs

Formally speaking, the convolution layer applies the mathematical definition of convolution between discrete signals; thus, for a bi-dimensional input:

the convolution with a kernel:

is defined as follows:

thus, \(\textbf{F}\in \mathbb {R}^{(N_1-M_1+1) \times (N_2-M_2+1)}\) is called the feature map.
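As a minimal sketch of how the feature-map size \((N_1-M_1+1) \times (N_2-M_2+1)\) arises, the following pure-Python function computes a discrete 2D convolution without padding. The function name `conv2d_valid` and the choice of flipping the kernel (as in the mathematical definition of convolution, rather than the cross-correlation used by many deep learning libraries) are assumptions made for illustration.

```python
def conv2d_valid(x, w):
    """Discrete 2D convolution of x (N1 x N2) with kernel w (M1 x M2), no padding."""
    n1, n2 = len(x), len(x[0])
    m1, m2 = len(w), len(w[0])
    # The output has (N1 - M1 + 1) rows and (N2 - M2 + 1) columns.
    out = [[0.0] * (n2 - m2 + 1) for _ in range(n1 - m1 + 1)]
    for i in range(n1 - m1 + 1):
        for j in range(n2 - m2 + 1):
            acc = 0.0
            for r in range(m1):
                for s in range(m2):
                    # The kernel is flipped in both directions (true convolution).
                    acc += x[i + r][j + s] * w[m1 - 1 - r][m2 - 1 - s]
            out[i][j] = acc
    return out


x = [[1, 2, 3],
     [4, 5, 6]]          # 2 x 3 input
w = [[1, 0],
     [0, -1]]            # 2 x 2 kernel
f = conv2d_valid(x, w)   # 1 x 2 feature map
```

The same four kernel weights are reused at every output position, which is the weight-sharing mechanism described above.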

The convolution layer is typically followed by a pooling layer; this provides a sort of local invariance to small rotations and translations of the input features [95, 96]. Moreover, T. Poggio proved that the combination of convolution and pooling layers produces a signature that is invariant to a group of geometric transformations [5, 135, 136]. However, in the design of very deep architectures, researchers have encountered some difficulties, e.g. reducing the number of parameters without losing generalization, and finding fast optimization methods for adjusting millions of parameters while avoiding the vanishing and exploding gradient problems [76, 131].

Theoretical as well as experimental analyses have shown that some algebraic systems, different from the real numbers, have the potential to solve these problems. For example, using a complex number representation avoids local minima caused by the hierarchical structure [124], exhibits better generalization [73], and allows faster learning [12]. Because of the Cayley–Dickson construction [13], it could be inferred that these properties would hold for quaternion-valued neural networks. Recent experimental work has favored this conjecture: quaternion-valued neural networks show a reduction in the number of parameters and improved classification accuracies compared to their real-valued counterparts [8, 54, 77, 125, 130, 151, 181]. In addition, a quaternion representation can deal with four-dimensional signals as a single entity [21, 45, 75, 114, 142], efficiently models 3D transformations [66, 93], and captures internal latent relationships via the Hamilton product [127], among other properties.

Because of the diversity of deep learning models, this paper focuses on those using quaternion convolution as the main component. Consequently, we have identified three dominant conceptual trends: the classic, the geometric, and the equivariant approaches. These differ in the definition and interpretation of the quaternion convolution layer. The main contributions of this paper can be summarized as follows:

1. This paper presents a classification of QCNN models based on the definition of quaternion convolution.

2. This paper provides a description of all atomic components needed for implementing QCNN models, the motivation behind each component, the challenges when they are applied in the quaternion domain, and future directions of research for each component.

3. This paper presents an organized overview of the models that have been found in the literature. They are organized by application domain, classified as following the classic, geometric, or equivariant approach, and presented by the type of model: recurrent, residual, convNet, generative or CAE.

The organization of the paper is as follows: In Sect. 2, we introduce fundamental concepts of quaternion algebra, then in Sect. 3 we explain the three conceptual trends (classic, geometric, and equivariant), followed by a presentation of the key atomic components to construct QCNN architectures. Thereafter, in Sect. 4, we show a classification of current works by application, and present the diverse types of architectures. Finally, Sect. 5 presents open issues and guidelines for future work, followed by the conclusions in Sect. 6. Table 12 presents a list of acronyms used in the paper.

2 Quaternion Algebra

This mathematical system was developed by W.R. Hamilton (1805–1865) in the middle of the 19th century [63,64,65]. His work on this subject started by exploring ratios between geometric elements; consequently, he gave the name quaternion to the quotient of two vectors, which is expressed in quadrinomial form as follows [64]:

$$\begin{aligned} {\textbf {q}} = q_R + q_I\hat{i} + q_J\hat{j} + q_K\hat{k}, \end{aligned}$$

where \(q_R, q_I, q_J, q_K\) are scalars, and \(\hat{i}, \hat{j}, \hat{k}\) are three right versors, i.e. the quotient of two perpendicular equally long vectors, and also the square roots of negative unity.

In terms of modern mathematics, the quaternion algebra, \(\mathbb {H}\), is the 4-dimensional vector space over the field of the real numbers, generated by the basis \(\{1,\hat{i},\hat{j},\hat{k}\}\), and endowed with the following multiplication rules (Hamilton product):

$$\begin{aligned} \hat{i}^2=\hat{j}^2=\hat{k}^2=\hat{i}\hat{j}\hat{k}=-1. \end{aligned}$$

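Thus, for two arbitrary quaternions: \({\textbf {p}} = p_R + p_I\hat{i} + p_J\hat{j} + p_K\hat{k}\) and \({\textbf {q}} = q_R + q_I\hat{i} + q_J\hat{j} + q_K\hat{k}\), their multiplication is calculated as follows:

$$\begin{aligned} {\textbf {p}}{\textbf {q}} = {}&(p_Rq_R - p_Iq_I - p_Jq_J - p_Kq_K) \\ &+ (p_Rq_I + p_Iq_R + p_Jq_K - p_Kq_J)\hat{i} \\ &+ (p_Rq_J - p_Iq_K + p_Jq_R + p_Kq_I)\hat{j} \\ &+ (p_Rq_K + p_Iq_J - p_Jq_I + p_Kq_R)\hat{k}. \end{aligned}$$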

Notice that each coefficient of the resulting quaternion is composed of real and imaginary parts of the factors \({\textbf {p}}\) and \({\textbf {q}}\). In this way, the Hamilton product captures interchannel relationships between both factors.
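The following minimal sketch illustrates the Hamilton product on quaternions stored as 4-tuples \((q_R, q_I, q_J, q_K)\). The tuple representation and the function name `hamilton` are assumptions made for illustration; the checks on the basis elements reproduce the multiplication rules and show the non-commutativity of the product.

```python
def hamilton(p, q):
    """Hamilton product of quaternions given as (real, i, j, k) tuples."""
    pr, pi, pj, pk = p
    qr, qi, qj, qk = q
    return (
        pr * qr - pi * qi - pj * qj - pk * qk,  # real part
        pr * qi + pi * qr + pj * qk - pk * qj,  # i component
        pr * qj - pi * qk + pj * qr + pk * qi,  # j component
        pr * qk + pi * qj - pj * qi + pk * qr,  # k component
    )


i = (0, 1, 0, 0)
j = (0, 0, 1, 0)
k = (0, 0, 0, 1)

ij = hamilton(i, j)   # equals k
ji = hamilton(j, i)   # equals -k, so the product is not commutative
ii = hamilton(i, i)   # equals -1
```

Every output component mixes all four components of both factors, which is the interchannel coupling referred to above.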

Moreover, quaternion algebra is associative and non-commutative, and in the sense of W. K. Clifford (1845–1879), it is the even geometric algebra of the 3D Euclidean space, \(G(\mathbb {R}^3)\) [107, 152].

Next, we introduce some useful operations on quaternions.

Let \({\textbf {q}} = q_R+ q_I\hat{i}+ q_J\hat{j} + q_K\hat{k}\) be a quaternion; its conjugate is defined as:

$$\begin{aligned} \bar{{\textbf {q}}} = q_R - q_I\hat{i} - q_J\hat{j} - q_K\hat{k}. \end{aligned}$$

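And its magnitude is computed as follows:

$$\begin{aligned} \Vert {\textbf {q}}\Vert = \sqrt{{\textbf {q}}\bar{{\textbf {q}}}} = \sqrt{q_R^2+q_I^2+q_J^2+q_K^2}. \end{aligned}$$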

Like complex numbers, quaternions can be represented in polar form [85, 164]:

where \(\hat{v}\) is a unit vector, and:

Note that for a pure quaternion \({\textbf {q}}=0\) its angle \(\theta =0\); otherwise, the unit vector of the polar representation can be computed as follows:

Even though other polar parametrizations have been proposed, see for example [4, 20, 21, 143], current QCNNs apply Eq. (2.7).

As was mentioned before, a quaternion can represent a geometric transformation. Let \({\textbf {w}}_\theta \) be a unitary versor expressed in polar form:

and let \(\textbf{q}\) be any quaternion; then, their multiplication:

applies a rotation, with angle \(\theta \), along an axis \({\textbf {w}}=w_I\hat{i}+w_J\hat{j}+w_K \hat{k}\).

Since we have not made any particular assumption on \({\textbf {q}}\), we are applying a rotation in the four-dimensional space of quaternions. Denoting by \(\Pi _1\) the orthogonal projection to the plane defined by the scalar axis and the vector \(w_I\hat{i} + w_J\hat{j} + w_K\hat{k}\), and by \(\Pi _2\) the orthogonal projection to the plane perpendicular to \(w_I\hat{i} + w_J\hat{j} + w_K\hat{k}\), it can be proved that this 4-dimensional rotation is composed of a simultaneous rotation of the elements on plane \(\Pi _1\), and of the elements on plane \(\Pi _2\) [164].

Alternatively, we can split the transformation as a sandwiching product:

in this case, the angle of each versor, \({\textbf {w}}_{\theta /2}\), is halved.
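A minimal sketch of the sandwiching product follows. It assumes the usual form \({\textbf {w}}_{\theta /2}\,{\textbf {q}}\,\bar{{\textbf {w}}}_{\theta /2}\), with quaternions stored as 4-tuples; the helper names `versor` and `rotate` are hypothetical. Rotating \(\hat{i}\) by \(\pi /2\) about the \(\hat{k}\) axis should give \(\hat{j}\).

```python
import math


def hamilton(p, q):
    """Hamilton product of quaternions given as (real, i, j, k) tuples."""
    pr, pi, pj, pk = p
    qr, qi, qj, qk = q
    return (
        pr * qr - pi * qi - pj * qj - pk * qk,
        pr * qi + pi * qr + pj * qk - pk * qj,
        pr * qj - pi * qk + pj * qr + pk * qi,
        pr * qk + pi * qj - pj * qi + pk * qr,
    )


def conjugate(q):
    return (q[0], -q[1], -q[2], -q[3])


def versor(axis, angle):
    """Unit quaternion cos(angle) + sin(angle) * axis / |axis|."""
    n = math.sqrt(sum(a * a for a in axis))
    s = math.sin(angle) / n
    return (math.cos(angle), axis[0] * s, axis[1] * s, axis[2] * s)


def rotate(q, axis, theta):
    """Sandwiching product: each versor carries half of the angle theta."""
    w = versor(axis, theta / 2.0)
    return hamilton(hamilton(w, q), conjugate(w))


# Rotate the pure quaternion i by pi/2 about the k axis.
r = rotate((0.0, 1.0, 0.0, 0.0), (0.0, 0.0, 1.0), math.pi / 2.0)
```

Up to floating-point error, `r` is the pure quaternion \(\hat{j}\), and the real part stays at zero, so pure quaternions are mapped to pure quaternions.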

From the group theory perspective, the set of unitary versors lies on a 3-Sphere, \(\mathbb {S}^3\), embedded in a 4D Euclidean space [66]; and together with the Hamilton product it forms a group, which is isomorphic to the 4D rotation group SO(4) [164], and to the special unitary group SU(2) [43, 66]. Moreover, there exists a two-to-one homomorphism onto the rotation group SO(3) [43, 66].

Another operation of interest is quaternion convolution; because of the non-commutativity of quaternion multiplication, there are three different definitions of discrete quaternion convolution. First, left-side quaternion convolution, defined as follows [44, 46, 133]:

Second, right-side quaternion convolution, defined as follows [46]:

Third, we have two-sided quaternion convolution [46, 133, 141]:

where \({\textbf {q}}, {\textbf {w}}, {\textbf {w}}_{\text {left}}, {\textbf {w}}_{\text {right}} \in \mathbb {H}\), \(*\) represents the convolution operator, and the Hamilton product is applied between \(\textbf{q}\)’s and \(\textbf{w}\)’s.

Finally, Table 1 summarizes the notation that will be used in the rest of this paper.

3 QCNN Components

In this section, we present the main building blocks for implementing quaternion-valued convolutional deep learning architectures. Future directions for each component are specified at the end of each subsection.

3.1 Quaternion Convolution Layers

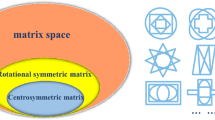

In Sect. 2, the different definitions of quaternion convolution were presented. From these definitions, different conceptual approaches can be obtained by setting some restrictions on the quaternions, e.g. using unitary quaternions, or using just the real part of one of the quaternions. In the following paragraphs, we describe the three main conceptual trends found in most of the current works on QCNNs.

Let us assume a dataset where each sample has dimension \(N\times M\times 4\), i.e. an input can be represented as a 4-channel matrix of real numbers. Then each sample, \(\textbf{Q}\), is represented as a \(N\times M\) matrix where each element is a quaternion:

Then \(\textbf{Q}\) can be decomposed in its real and imaginary components:

where \({Q_R}, {Q_I}, {Q_J}, {Q_K} \in \mathbb {R}^{N\times M}\).

In the same way, a convolution kernel of size \(L\times L\) is represented by a quaternion matrix, as follows:

which can be decomposed as:

where \({W_R},{W_I}, {W_J}, {W_K} \in \mathbb {R}^{L\times L}\).

In the classic approach, the definition of quaternion convolution is a natural extension of the real and complex convolution; its role is to compute the correlation between input data and kernel patterns, in the quaternion domain. Then, Altamirano [3], Gaudet and Maida [54], and Parcollet et al. [130] define the convolution layer using left-sided convolution:

Thus, \(\textbf{F}\in \mathbb {H}^{(N-L+1)\times (M-L+1)}\) represents the output of the layer, i.e. a quaternion feature map, and each element of the tensor is computed as follows:

This approach does not make any particular assumption about quaternions \(\textbf{w}\) and \(\textbf{q}\). Thus, the convolution represents the integral transformation of a quaternion function on a quaternion input signal.
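The classic left-sided quaternion convolution can be sketched as follows. This is an illustration under stated assumptions, not the implementation of any of the cited works: quaternion matrices are nested lists of 4-tuples, no padding is used, the kernel weight multiplies the input from the left, and the index convention (correlation-style, \(\textbf{w}(r,s)\,\textbf{q}(x+r,y+s)\)) is assumed. The names `qconv_left` and `qadd` are hypothetical.

```python
def hamilton(p, q):
    """Hamilton product of quaternions given as (real, i, j, k) tuples."""
    pr, pi, pj, pk = p
    qr, qi, qj, qk = q
    return (
        pr * qr - pi * qi - pj * qj - pk * qk,
        pr * qi + pi * qr + pj * qk - pk * qj,
        pr * qj - pi * qk + pj * qr + pk * qi,
        pr * qk + pi * qj - pj * qi + pk * qr,
    )


def qadd(p, q):
    return tuple(a + b for a, b in zip(p, q))


def qconv_left(W, Q):
    """Left-sided quaternion convolution of Q (N x M) with kernel W (L x L)."""
    L, N, M = len(W), len(Q), len(Q[0])
    F = []
    for x in range(N - L + 1):
        row = []
        for y in range(M - L + 1):
            acc = (0.0, 0.0, 0.0, 0.0)
            for r in range(L):
                for s in range(L):
                    # Weight on the left, input on the right (order matters).
                    acc = qadd(acc, hamilton(W[r][s], Q[x + r][y + s]))
            row.append(acc)
        F.append(row)
    return F


one, i, j, k = (1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0), (0, 0, 0, 1)
Q = [[one, i], [j, k]]   # 2 x 2 quaternion input
W = [[one, i], [j, k]]   # 2 x 2 quaternion kernel
F = qconv_left(W, Q)     # 1 x 1 quaternion feature map
```

Here the single output is \(1\cdot 1+\hat{i}\hat{i}+\hat{j}\hat{j}+\hat{k}\hat{k}=-2\). A right-sided variant would swap the arguments of `hamilton`.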

It should be noted that the definition of Altamirano [3] is based on the work on Quaternion-valued Multilayer Perceptrons (QMLPs) by Arena et al. [6,7,8], while the definition of Gaudet and Maida [54] and Parcollet et al. [130] is derived from Trabelsi's research on Complex-valued CNNs [157]. However, all the authors arrived at the same definition of the convolution layer.

Alternatively, we have the geometric approach which was constructed based on the previous work of Matsui et al. [112] and Isokawa et al. [81]. In this case, the quaternion product applies affine transformations over the input features, consequently the quaternion convolution inherits this property. Thus, Zhu et al. [181] apply the two-sided convolution definition:

where \(\textbf{F}\in \mathbb {H}^{(N-L+1)\times (M-L+1)}\), and each element of the output is computed as follows:

In addition, each quaternion \(\textbf{w}\) is represented in its polar form:

where \(\theta (r,s)\in [-\pi ,\pi ]\), and \(\textbf{u}\) represents the unitary rotation axis. Thus, quaternion convolution applies fixed-axis rotation and scaling transformation (2DoF) on the quaternion \(\textbf{q}\). Note that the role of the magnitude of the quaternion weight at the denominator of Eq. (3.8) is to compensate the double effect of multiplication of the magnitude of \(\textbf{w}(r,s)\) by the left and by the right. Thus, for \(\Vert \textbf{w}(r,s)\Vert =0\) we have directly \(\textbf{f}(x,y)=0\), avoiding division by zero.

Similarly, Hongo et al. [77] use the two-sided quaternion convolution definition, but add a threshold value for each component of the quaternion:

where \(\textbf{B} \in \mathbb {H}^{(N-L+1)\times (M-L+1)}\).

In both cases, quaternion convolution applies geometric transformations over the input data. In a recent work, Matsumoto et al. [113] note the limited expressive ability of working with fixed axes, and propose a model that learns general rotation axes (4DoF); interested readers can find full details of this model in [113].

Finally, concerning the equivariant approach, Shen et al. [151] propose a simplified version; they convolve the quaternion input, \(\textbf{Q} \in \mathbb {H}^{N\times M}\), with a kernel of real numbers, \(\textbf{W} \in \mathbb {R}^{L\times L}\):

This version allows extracting equivariant features, i.e. if an input sample, \(\textbf{Q}\), produces a feature map, \(g(\textbf{Q})\), then the rotated input sample, \(R(\textbf{Q})\), will produce a rotated feature map, \(R(g(\textbf{Q}))\), thus:

$$\begin{aligned} g(R(\textbf{Q})) = R(g(\textbf{Q})). \end{aligned}$$

This type of convolution is especially useful when working with 3D point clouds, since the networks are robust to rotated input features, and the feature maps produced by inner layers are invariant to permutations of the input data points.
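The equivariance property can be checked numerically with a small sketch. It assumes that the rotation \(R\) acts element-wise through the sandwiching product \({\textbf {w}}\,{\textbf {q}}\,\bar{{\textbf {w}}}\) with a unit quaternion \({\textbf {w}}\), that quaternions are stored as 4-tuples, and that the convolution uses no padding; the function names are hypothetical. Because real weights commute with quaternions and the rotation is linear, convolving the rotated input and rotating the convolved input should agree.

```python
import math


def hamilton(p, q):
    """Hamilton product of quaternions given as (real, i, j, k) tuples."""
    pr, pi, pj, pk = p
    qr, qi, qj, qk = q
    return (
        pr * qr - pi * qi - pj * qj - pk * qk,
        pr * qi + pi * qr + pj * qk - pk * qj,
        pr * qj - pi * qk + pj * qr + pk * qi,
        pr * qk + pi * qj - pj * qi + pk * qr,
    )


def rotate(q, w):
    """R(q) = w q conj(w) for a unit quaternion w."""
    w_conj = (w[0], -w[1], -w[2], -w[3])
    return hamilton(hamilton(w, q), w_conj)


def rotate_map(Q, w):
    return [[rotate(q, w) for q in row] for row in Q]


def real_kernel_conv(W, Q):
    """Convolve a quaternion map Q (N x M) with a real kernel W (L x L)."""
    L, N, M = len(W), len(Q), len(Q[0])
    F = []
    for x in range(N - L + 1):
        row = []
        for y in range(M - L + 1):
            acc = [0.0, 0.0, 0.0, 0.0]
            for r in range(L):
                for s in range(L):
                    for c in range(4):
                        acc[c] += W[r][s] * Q[x + r][y + s][c]
            row.append(tuple(acc))
        F.append(row)
    return F


half = math.pi / 6.0
w = (math.cos(half), 0.0, math.sin(half), 0.0)   # rotation of pi/3 about j
Q = [[(0.0, 1.0, 2.0, 3.0), (0.0, -1.0, 0.5, 2.0)],
     [(0.0, 0.0, 1.0, -2.0), (0.0, 4.0, -3.0, 1.0)]]
W = [[0.5, -1.0], [2.0, 0.25]]

lhs = real_kernel_conv(W, rotate_map(Q, w))   # g(R(Q))
rhs = rotate_map(real_kernel_conv(W, Q), w)   # R(g(Q))
max_diff = max(abs(a - b)
               for row_l, row_r in zip(lhs, rhs)
               for q_l, q_r in zip(row_l, row_r)
               for a, b in zip(q_l, q_r))
```

With a quaternion-valued kernel applied from one side only, the same check would generally fail, which is why the real-valued kernel is the simplifying choice here.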

Most work on quaternion-valued CNNs falls into one of the preceding categories or builds on them. However, independently of the approach followed, a related problem when implementing QCNNs is how to deal with multidimensional inputs. In real-valued CNNs, the common way of dealing with them is to define 2D convolution kernels with the same number of channels as the input data and apply 2D convolution separately for each channel; then, the resulting 2D outputs are summed over all channels to produce a single-channel feature map. A different approach is to apply N-dimensional convolution; in this case, the multidimensional kernel is convolved over all the channels of the input data.

For quaternion-valued CNNs, current implementations use variations of the first approach; i.e. the input data is divided into 4-channel sub-inputs, thereafter quaternion convolution is computed for each sub-input. Before explaining the details, some notation is introduced.

Let \(\textbf{X} \in \mathbb {R}^{N\times M \times C}\) be an input, where N is the number of rows, M the number of columns, and C the number of channels, with C a multiple of 4; then \(\textbf{X}\) is partitioned as follows:

where each \(\textbf{Q}_s \in \mathbb {H}^{N\times M}\), \(0\le s\le (C/4)-1\), is a quaternion channel.

Let \(\textbf{V} \in \mathbb {R}^{L\times L \times K}\), with K a multiple of 4, be the convolution kernel, then:

where each \(\textbf{W}_s \in \mathbb {H}^{L\times L}\), \(0\le s\le (K/4)-1\), is a quaternion channel.

Thus, there are three different ways of dealing with multidimensional quaternion inputs, see Fig. 2:

1. Encoding set-up: Kernel and input have the same number of channels (\(K=C\)). In this case, each quaternion input channel is assigned to one quaternion kernel channel, and the convolution between them is computed using Eqs. (3.5), (3.7), (3.10) or (3.11). Thus, if we use left-sided convolution, each individual output, \(\textbf{F}_s \in \mathbb {H}^{(N-L+1)\times (M-L+1)}\), is computed as follows:

$$\begin{aligned} \textbf{F}_s= \textbf{W}_s * \textbf{Q}_s, \end{aligned}$$(3.15)

where \(0\le s\le (C/4)-1\), and the final quaternion feature map is obtained by concatenating all outputs:

$$\begin{aligned} \textbf{F}= [\textbf{F}_0, \textbf{F}_1, \dots , \textbf{F}_{(C/4)-1} ] \end{aligned}$$(3.16)

This method produces an output with the same number of channels as the input data, and could be used in Convolutional Auto-Encoders (CAE).

2. Pyramidal set-up: Kernel and input have a different number of channels (\(K\ne C\)), but both are multiples of 4. In [3], the computation of feature maps using a pyramidal approach is proposed: each kernel \(\textbf{W}_t\), where \(0\le t\le (K/4)-1\), is convolved with each sub-input \(\textbf{Q}_s\), where \(0\le s\le (C/4)-1\); hence, each kernel channel produces C/4 quaternion outputs. Since each quaternion input channel is convolved with each quaternion kernel channel, see Fig. 2, a convolution kernel \(\textbf{V} \in \mathbb {R}^{L\times L \times K}\) will produce \(C*K/16\) quaternion outputs:

$$\begin{aligned} \textbf{F}= [\textbf{F}_0, \textbf{F}_1, \dots , \textbf{F}_{(C*K/16)-1}]. \end{aligned}$$(3.17)

Thus, if left-sided convolution is applied, each quaternion output channel, \(\textbf{F}_k\) with \(k=(t*C/4)+s\), is computed as follows:

$$\begin{aligned} \textbf{F}_{(t*C/4)+s}= \textbf{W}_t * \textbf{Q}_s. \end{aligned}$$(3.18)

Even though [3] used left-sided convolution, this approach is valid with any of the other convolution definitions. The intuition behind this approach is to detect a quaternionic pattern in any sub-input; however, its application is impractical because of the exponential growth of the number of channels in deep architectures. Calculation of summed outputs can alleviate the computational cost.

3. Summed set-up: Similar to the former method, but in this approach each quaternion input channel is convolved with a different quaternion kernel channel:

$$\begin{aligned} \textbf{F}_s= \textbf{W}_s * \textbf{Q}_s, \end{aligned}$$(3.19)

where \(0\le s\le (C/4)-1\). Then, the final quaternion feature map, \(\textbf{F}\in \mathbb {H}^{(N-L+1)\times (M-L+1)}\), is obtained by summing all outputs:

$$\begin{aligned} \textbf{F} = \sum _{s=0}^{(C/4)-1} \textbf{F}_s. \end{aligned}$$(3.20)
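The channel bookkeeping of the three set-ups can be sketched as follows. To keep the example short, each quaternion channel is reduced to a single quaternion (a \(1\times 1\) map with a \(1\times 1\) kernel), so that the convolution collapses to one Hamilton product; this reduction, the pluggable `conv` argument, and all function names are assumptions made for illustration. Any of the convolution definitions could be passed as `conv` instead.

```python
def hamilton(p, q):
    """Hamilton product of quaternions given as (real, i, j, k) tuples."""
    pr, pi, pj, pk = p
    qr, qi, qj, qk = q
    return (
        pr * qr - pi * qi - pj * qj - pk * qk,
        pr * qi + pi * qr + pj * qk - pk * qj,
        pr * qj - pi * qk + pj * qr + pk * qi,
        pr * qk + pi * qj - pj * qi + pk * qr,
    )


def qadd(p, q):
    return tuple(a + b for a, b in zip(p, q))


def encoding_setup(Ws, Qs, conv):
    """One kernel channel per input channel; outputs are concatenated."""
    assert len(Ws) == len(Qs)
    return [conv(w, q) for w, q in zip(Ws, Qs)]


def pyramidal_setup(Ws, Qs, conv):
    """Every kernel channel meets every input channel: F[t*C/4 + s] = W_t * Q_s."""
    n_in = len(Qs)                      # C/4 quaternion input channels
    out = [None] * (len(Ws) * n_in)     # (K/4) * (C/4) quaternion outputs
    for t, w in enumerate(Ws):
        for s, q in enumerate(Qs):
            out[t * n_in + s] = conv(w, q)
    return out


def summed_setup(Ws, Qs, conv, add):
    """Per-channel outputs are summed into a single quaternion channel."""
    outs = encoding_setup(Ws, Qs, conv)
    total = outs[0]
    for o in outs[1:]:
        total = add(total, o)
    return total


i, j, k = (0, 1, 0, 0), (0, 0, 1, 0), (0, 0, 0, 1)
Qs = [i, j]   # two quaternion input channels (C = 8)
Ws = [j, k]   # two quaternion kernel channels (K = 8)

enc = encoding_setup(Ws, Qs, hamilton)        # 2 output channels
pyr = pyramidal_setup(Ws, Qs, hamilton)       # 4 output channels
summed = summed_setup(Ws, Qs, hamilton, qadd)  # 1 output channel
```

The output counts (2, 4, and 1 quaternion channels for a two-channel input) make the growth of the pyramidal set-up visible: stacking such layers multiplies the channel count at every level.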

Fig. 2 Visualization of different approaches for computing quaternion convolution on a multichannel input. This example shows a 2D input with 2 quaternion channels; the output of an encoding set-up is shaded in pink, the output of the pyramidal set-up layer is framed in cyan, and the summed set-up is shaded in orange

3.1.1 Future Directions

As was mentioned before, N-dimensional convolution has been applied in real-valued CNNs for processing multichannel inputs. In a similar way, the equations presented can be extended to 8-channel inputs using an octonion or hypercomplex algebra, see for example [61, 147, 159, 169, 178]. For a larger number of inputs, a geometric algebra [71] representation can be applied. Some approaches in this direction can be found in [61, 178], which learn the algebra rules directly from the data. A different approach consists in adapting Clifford Neurons [16, 18, 19, 132] to the deep learning domain. Even though some work has been proposed in this line of thought [15, 177], the Clifford Deep Learning area is still in its infancy and further work is required in the study of properties, development of components, models and applications.

Finally, in these types of deep learning architectures (quaternion, hyper-complex, or geometric), a major concern is the selection of the signature of the algebra, which will embed data into different geometric spaces, and the processing will take distinct meanings accordingly. Thus, a sensible selection of the dimension and signature should be made according to the nature of the problem and the meaning of input data as well as intermediate layers.

3.2 Quaternion Fully Connected Layers

Let \(\textbf{Q}\) be a \(N_1\times N_2 \times N_3\) tensor, representing the input to a fully connected layer; then each element of \(\textbf{Q}\) is a quaternion:

where \(N_1, N_2, N_3\) are the height, width, and number of channels of the input.

Now, for the fully connected layer, a quaternion kernel, \(\textbf{W}\), of size \(N_1\times N_2 \times N_3\) is defined, where each element is a quaternion:

where \(N_1, N_2, N_3\) are the height, width, and number of channels of the input.

Note that elements of the input and weight tensors are denoted as q(x, y, z), and w(x, y, z), respectively; and the output of the layer will be a quaternion, \(\textbf{f} \in \mathbb {H}\).

Thus, for classic fully connected layers, the output, \(\textbf{f}\), is computed as follows [3]:

Similarly, for geometric fully connected layers, the output is computed as follows [181]:

The difference between quaternion-valued and real-valued fully connected layers lies in the application of the Hamilton product, which captures interchannel relationships; in the former case, the output is a quaternion. Moreover, it should be noted that for real-valued networks, fully connected layers are equivalent to inner product layers, but for quaternion-valued networks, the outputs of quaternion fully connected layers and quaternion inner product layers are different.

3.2.1 Future Directions

Current implementations of quaternion fully connected layers follow a classic or geometric approach; the equivariant approach should be implemented and tested.

3.3 Quaternion Pooling

A pooling layer introduces a sort of invariance to geometric transformations, such as small translations and rotations. Most of the current methods for applying quaternion pooling rely on channel-wise pooling, in a similar way as is applied in Real-valued CNNs. For example, [54, 113] use channel-wise global average pooling layers, and [100, 101, 181] apply channel-wise average as well as channel-wise max pooling layers, while [77, 119, 130] apply just channel-wise max pooling layers, defined as follows:

where \({\textbf {Q}}\) is a quaternion submatrix.

In contrast, [3, 151, 176] use a max-pooling approach, but instead of using the channel-wise maximum value, they select the quaternion with maximum amplitude within a region:

In addition, since this method can obtain multiple maximum amplitude quaternions, [176] applies the angle cosine theorem to discriminate between them. In this way, fully-quaternion pooling takes advantage of the information contained in different channels.
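The difference between channel-wise max pooling and maximum-amplitude pooling can be illustrated with a short sketch over one pooling region. Quaternions are stored as 4-tuples and the function names are hypothetical. Ties between quaternions of equal amplitude are resolved here by taking the first one found, which is a simplification and not the angle-based criterion of [176].

```python
import math


def channelwise_max(region):
    """Split pooling: take the maximum of each component independently."""
    return tuple(max(q[c] for q in region) for c in range(4))


def amplitude(q):
    return math.sqrt(sum(c * c for c in q))


def max_amplitude(region):
    """Fully quaternion pooling: keep the whole quaternion of largest amplitude."""
    return max(region, key=amplitude)


# One flattened 2 x 2 pooling region of quaternions (real, i, j, k).
region = [(1, 0, 0, 0), (0, 3, 0, 0), (2, 2, 2, 2), (0, 0, -5, 0)]

split_result = channelwise_max(region)
full_result = max_amplitude(region)
```

In this example the channel-wise result, (2, 3, 2, 2), is a quaternion that does not occur anywhere in the region, whereas the maximum-amplitude result, (0, 0, -5, 0), is one of the original inputs with its component relationships intact.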

3.3.1 Future Directions

Future works should introduce novel fully quaternion pooling methods, emphasizing the use of interchannel relationships, and their advantages over split methods. For example: using polar representations, introducing quaternion measures [108], or taking inspiration from information theory.

3.4 Quaternion Batch Normalization

Internal covariate shift [80] is a statistical phenomenon that occurs during training: the change of the network parameters causes changes in the statistical distribution of the inputs in hidden layers. Whitening procedures [97, 165], i.e. applying linear transformations to obtain uncorrelated inputs with zero means and unit variances, alleviate this phenomenon. Since whitening the input layers is computationally expensive, because it requires computing covariance matrices, their inverse square roots, and the derivatives of these transformations, Ioffe and Szegedy [80] introduced the batch normalization algorithm, which normalizes each dimension independently. It uses mini-batches to estimate the mean and variance of each channel, and transforms the channel to have zero mean and unit variance using the following equation:

$$\begin{aligned} \hat{x} = \frac{x - E(x)}{\sqrt{\text {Var}(x)+\epsilon }}, \end{aligned}$$

where \(\epsilon \) is a constant added for numerical stability. In order to maintain the representational ability of the network, an affine transformation with two learnable parameters, \(\gamma \) and \(\beta \), is applied to the normalized value \(\hat{x}\):

$$\begin{aligned} y = \gamma \hat{x} + \beta . \end{aligned}$$

Even though this method does not produce uncorrelated inputs, it improves convergence time by enabling higher learning rates, and has allowed the training of deeper neural network models. On the other hand, uncorrelated inputs reduce overfitting and improve generalization [33].
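A minimal sketch of real-valued batch normalization for a single channel is given below, assuming the mini-batch is a plain list of floats and using the biased variance estimate; the function name `batch_norm` and the default values are illustrative choices.

```python
import math


def batch_norm(xs, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one channel over a mini-batch, then apply the affine map."""
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)   # biased estimate
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in xs]


batch = [1.0, 2.0, 3.0, 4.0]
ys = batch_norm(batch)

out_mean = sum(ys) / len(ys)
out_var = sum((y - out_mean) ** 2 for y in ys) / len(ys)
```

Applying this function separately to the four components of a quaternion channel is the channel-wise normalization discussed next; it leaves any correlation between components untouched.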

Since channel-wise normalization does not assure equal variance in the real and imaginary components, Gaudet and Maida [54] proposed a quaternion batch-normalization algorithm using the whitening approach [87], and treat each component of the quaternion as an element of a four dimensional vector. We call this approach Whitening Quaternion Batch-Normalization (WQBN). Let \(\textbf{x}\) be a quaternion input variable, \(\textbf{x}=[x_R, x_I, x_J, x_K]^T\), \(E(\textbf{x})\) its expected value, and \(V(\textbf{x})\) its quaternion covariance matrix, both computed over a mini-batch. Then:

where subscripts represent the covariance between real or imaginary components of \(\textbf{x}\), e.g. \(v_{ij}=\text {cov}(x_I,x_J)\). Then, the Cholesky decomposition of \(V^{-1}\) is computed, and one of the resulting matrices, W, is selected. Thereafter, the whitened quaternion variable, \(\tilde{\textbf{x}}\), is calculated with the following matrix multiplication [54]:

and finally:

where \(\beta \in \mathbb {H}\) is a trainable parameter, and:

is a symmetric matrix with trainable parameters.

In contrast, Yin et al. [176] apply the quaternion variance definition proposed in [162]:

where \(\textbf{v}=\textbf{x}-E(\textbf{x})\). Note that in this case, the variance is a single real value. We call this approach the Variance Quaternion Batch Normalization (VQBN). Thus, the batch normalization is computed as follows:

where \(\gamma \in \mathbb {R}\) and \(\beta \in \mathbb {H}\) are trainable parameters, and \(\epsilon \in \mathbb {R}\) is a constant.

Recently, Grassucci et al. [58] noted that for proper quaternion random variables [29, 161], the covariance matrix in Eq. (3.29) becomes the diagonal matrix:

and the batch-normalization procedure is simplified to applying Eqs. (3.33) and (3.34) with \(V(\textbf{x})=2\sigma \).

A third approach is the one proposed in [151], where the authors define a rotation-equivariance operation as follows:

Generative Adversarial Networks apply another type of normalization, called Spectral Normalization [115]. In these networks, having a Lipschitz-bounded discriminative function is crucial to mitigate the gradient explosion problem [11, 57, 180]; thus, based on their real-valued counterpart, Grassucci et al. [58] proposed a Quaternion Spectral Normalization algorithm that constrains the spectral norm of each layer. To explain this procedure, we introduce some definitions.

Let f be a generic function; it is said to be K-Lipschitz continuous if, for any two points, \(x_1,x_2\), it satisfies:

$$\begin{aligned} \Vert f(x_1)-f(x_2)\Vert \le K \Vert x_1-x_2\Vert . \end{aligned}$$

Let \(\sigma (\cdot )\) be the spectral norm of a matrix, i.e. the largest singular value of a matrix. The Lipschitz norm of a function f, denoted by \(\Vert f\Vert _{\text {Lip}}\), is defined as follows:

Thus, for a generic linear layer, \(f(x)=Wx+b\), whose gradient is W, its Lipschitz norm is:

Now, let \(\textbf{W}\) be a quaternion matrix; its Lipschitz norm, \(\sigma (\textbf{W})\), is computed by estimating the largest singular value of \(\textbf{W}\) via the power iteration method [58]. Then, Quaternion Spectral Normalization is applied, in a split way, using the following equations:

For applying the Lipschitz bound to the whole network, the following relationship is used:

provided the Lipschitz norm of each layer is constrained to 1 [58]. For details about the incorporation of the method in the training stage, consult [58, 115].
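The power iteration step can be sketched for a real-valued matrix as follows. This is only an illustration of the estimation of the largest singular value and of the subsequent division by it; how the components of a quaternion weight matrix are arranged before this step follows [58] and is not reproduced here. The function names, the fixed number of iterations, and the all-ones starting vector are assumptions.

```python
import math


def matvec(A, v):
    return [sum(a * b for a, b in zip(row, v)) for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def norm(v):
    return math.sqrt(sum(x * x for x in v))


def spectral_norm(A, iters=100):
    """Estimate the largest singular value of A by power iteration."""
    At = transpose(A)
    v = [1.0] * len(A[0])
    for _ in range(iters):
        u = matvec(A, v)
        nu = norm(u)
        u = [x / nu for x in u]
        v = matvec(At, u)
        nv = norm(v)
        v = [x / nv for x in v]
    return norm(matvec(A, v))


A = [[3.0, 0.0],
     [0.0, 1.0]]
sigma = spectral_norm(A)                        # close to 3
A_sn = [[a / sigma for a in row] for row in A]  # normalized weights
sigma_sn = spectral_norm(A_sn)                  # close to 1
```

After the division, the linear map defined by `A_sn` has a Lipschitz norm of approximately 1, which is the per-layer constraint mentioned above.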

3.4.1 Future Directions

The WQBN algorithm produces uncorrelated, zero mean, and unit variance inputs, but is computationally expensive. In addition, Kessy et al. [87] state that there are infinitely many possible matrices satisfying \(V^{-1}=\bar{W}W\). In contrast, the VQBN algorithm produces zero mean and unit variance inputs (according to the definition of Wang et al. [162], which averages the variance of the four channels), but since a single variance value is used, scaling is isotropic, and the input channels remain correlated. However, this approach greatly reduces the computational time, by avoiding the decomposition of the covariance matrix. Further theoretical and experimental analysis is required to grasp its advantages versus independent channel batch-normalization.

3.5 Activation Functions

Biological neurons produce an output signal if a set of input stimuli surpasses a threshold value within a lapse of time; for artificial neurons, the role of the activation function is to simulate this triggering action. Mathematically, this behavior is modeled by a mapping: in the case of real-valued neurons, the domain and image are the field of real numbers, while complex and quaternion neurons map complex or quaternion inputs to complex or quaternion outputs, respectively. In addition, non-linear activation functions are required so that artificial neural networks work as universal interpolators of continuous quaternion-valued functions; related density theorems for quaternion-valued MLPs can be consulted in [8].

Since back-propagation has become the standard method for training artificial neural networks, the activation function is required to be analytic [72], i.e. the derivative exists at any point. In the complex domain, by the Liouville theorem, it is known that a bounded function, which is analytical at any point, is a constant function; reciprocally, complex non-constant functions have non-bounded images [153]. Accordingly, some common real-valued functions, like the hyperbolic tangent and sigmoid, will diverge to infinity when they are extended to the complex domain [72]; this makes them unsuitable for representing the behavior of a biological neuron. A similar problem arises in the quaternion domain: “the only quaternion function regular with bounded norm in \(E^4\) is a constant” [40], where \(E^4\) stands for a 4-dimensional Euclidean space.

The relationship between regular functions and the existence of their quaternionic derivatives is stated by the Cauchy–Riemann–Fueter equation [155]; leading to the result that the only globally analytic quaternion functions are some linear and constant functions. Moreover, non-linear activation functions are required for constructing a neural network architecture that works as a universal interpolator of a continuous quaternion valued function [8].

In this manner, a typical approach to circumvent this problem has been to relax the constraints, and use non-linear non-analytic quaternion functions satisfying input and output properties, where the learning dynamics is built using partial derivatives on the quaternion domain. An example of this approach is the family of split quaternion functions, defined as follows:

$$\begin{aligned} f(\textbf{q})= f_R(q_R)+ f_I(q_I) \hat{i} + f_J(q_J) \hat{j} + f_K(q_K) \hat{k}, \end{aligned}$$

where \(\textbf{q}\in \mathbb {H}\), \(f_R\), \(f_I\), \(f_J\), and \(f_K\) are mappings over the real numbers: \(f_{*}:\mathbb {R}\rightarrow \mathbb {R}\).
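A split quaternion activation can be sketched in a few lines. The sketch assumes the same real function is used for all four components (the general definition allows four different mappings), stores quaternions as 4-tuples, and uses hypothetical function names.

```python
import math


def split_activation(q, f):
    """Apply the real function f to each quaternion component independently."""
    return tuple(f(c) for c in q)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def hard_tanh(x):
    return max(-1.0, min(1.0, x))


def relu(x):
    return max(0.0, x)


q = (-1.0, 0.5, 2.0, -3.0)

q_relu = split_activation(q, relu)
q_hard = split_activation(q, hard_tanh)
q_sig = split_activation((0.0, 0.0, 0.0, 0.0), sigmoid)
```

Since each component is processed on its own, a split activation ignores the relationships between components that the Hamilton product captures in the preceding layer.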

Nowadays, split quaternion functions remain the only type of activation function that has been implemented on QCNNs. Even though any type of quaternion split activation function used in QMLP can be applied, split quaternion ReLU is currently the most common activation function applied on QCNNs. For the sake of completeness, we present the activation functions found in current works.

Let \(\textbf{q}=q_R+q_I\hat{i}+q_J\hat{j}+q_K\hat{k} \in \mathbb {H}\), we have the following activation functions:

1. Split Quaternion Sigmoid. It was introduced in [3, 10], and is defined as follows:

$$\begin{aligned} {Q}S(\textbf{q})= S(q_R)+ S(q_I) \hat{i} + S(q_J) \hat{j} + S(q_K) \hat{k}, \end{aligned}$$ (3.43)

where \(S: \mathbb {R}\rightarrow \mathbb {R}\) is the real-valued sigmoid function:

$$\begin{aligned} S(x)= \frac{1}{1+e^{-x}}. \end{aligned}$$ (3.44)

2. Split Quaternion Hyperbolic Tangent. It was introduced in [129, 181], and is defined as follows:

$$\begin{aligned} {Q}tanh(\textbf{q})= \tanh (q_R)+ \tanh (q_I)\hat{i} + \tanh (q_J) \hat{j} + \tanh (q_K) \hat{k}, \end{aligned}$$ (3.45)

where \(\tanh : \mathbb {R}\rightarrow \mathbb {R}\) is the real-valued hyperbolic tangent function:

$$\begin{aligned} \tanh (x)&= \frac{\sinh (x)}{\cosh (x)} \\ &= \frac{\exp (2x)-1}{\exp (2x)+1}. \end{aligned}$$ (3.46)

3. Split Quaternion Hard Hyperbolic Tangent. It was introduced in [127], and is defined as follows:

$$\begin{aligned} {Q}H^2T(\textbf{q})= H^2T(q_R)+ H^2T(q_I) \hat{i} + H^2T(q_J) \hat{j} + H^2T(q_K) \hat{k}, \end{aligned}$$ (3.47)

where \(H^2T: \mathbb {R}\rightarrow \mathbb {R}\) [34]:

$$\begin{aligned} H^2T(x)= {\left\{ \begin{array}{ll} -1 & \text {if } x<-1 \\ x & \text {if } -1\le x\le 1 \\ 1 & \text {if } x>1. \end{array}\right. } \end{aligned}$$ (3.48)

For this type of activation function, Collobert [34] proved that the hidden layers of an MLP work as local SVMs when the real-valued hard hyperbolic tangent is used.

4. Split Quaternion ReLU. It was introduced in [54, 77, 176], and is defined as follows:

$$\begin{aligned} {Q}ReLU(\textbf{q}) = ReLU(q_R) + ReLU(q_I)\hat{i} + ReLU(q_J)\hat{j} + ReLU(q_K)\hat{k}, \end{aligned}$$ (3.49)

where \(ReLU: \mathbb {R}\rightarrow \mathbb {R}\) is the real-valued ReLU function [50, 56]:

$$\begin{aligned} ReLU(x)= \max (0, x). \end{aligned}$$ (3.50)

5. Split Quaternion Parametric ReLU. It was introduced in [130], and is defined as follows:

$$\begin{aligned} {Q}PReLU(\textbf{q}) = PReLU(q_R)+ PReLU(q_I)\hat{i} + PReLU(q_J)\hat{j} + PReLU(q_K)\hat{k}, \end{aligned}$$ (3.51)

where \(PReLU: \mathbb {R}\rightarrow \mathbb {R}\) [67]:

$$\begin{aligned} PReLU(x)= {\left\{ \begin{array}{ll} x & \text {if } x>0 \\ \alpha x & \text {if } x\le 0 \end{array}\right. } \end{aligned}$$ (3.52)

and \(\alpha \) is a parameter learned during the training stage, which controls the slope of the negative side of the ReLU function. Equivalently, we have:

$$\begin{aligned} PReLU(x)= \max (0, x)+\alpha \min (0,x). \end{aligned}$$ (3.53)

6. Split Quaternion Leaky ReLU. It was introduced in [59] as a particular case of the \({Q}PReLU\) function; in this case, \(\alpha \) is a small constant value, e.g. 0.01 [109].

An advantage of using split activation functions is that by processing each channel separately, we can adopt existing frameworks without additional modifications of the source code; however, this separate processing does not adequately capture the cross-dynamics of the data channels.
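The channel-wise nature of split activations can be illustrated with a short NumPy sketch. It assumes, as a storage convention not prescribed by the reviewed works, that a quaternion is held as a real array whose last axis has the four components \((q_R, q_I, q_J, q_K)\); the function names are hypothetical. Under that convention each split activation is simply the real-valued function applied element-wise.

```python
import numpy as np

# Assumed layout: the last axis of `q` holds (q_R, q_I, q_J, q_K).


def q_sigmoid(q):
    """Split quaternion sigmoid: real sigmoid on every component."""
    return 1.0 / (1.0 + np.exp(-np.asarray(q, dtype=float)))


def q_tanh(q):
    """Split quaternion hyperbolic tangent."""
    return np.tanh(np.asarray(q, dtype=float))


def q_hard_tanh(q):
    """Split quaternion hard tanh: clip every component to [-1, 1]."""
    return np.clip(np.asarray(q, dtype=float), -1.0, 1.0)


def q_relu(q):
    """Split quaternion ReLU."""
    return np.maximum(0.0, np.asarray(q, dtype=float))


def q_prelu(q, alpha):
    """Split quaternion PReLU, written as max(0, x) + alpha * min(0, x)."""
    q = np.asarray(q, dtype=float)
    return np.maximum(0.0, q) + alpha * np.minimum(0.0, q)


def q_leaky_relu(q, alpha=0.01):
    """Split quaternion Leaky ReLU: PReLU with a fixed small slope."""
    return q_prelu(q, alpha)


q = np.array([-2.0, 0.5, 3.0, -0.25])
print(q_relu(q), q_hard_tanh(q), q_prelu(q, 0.1))
```

Because no component ever interacts with another, these functions cannot model cross-channel dynamics, which is the limitation noted above.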

A different approach in the design of quaternion activation functions comes from quaternion analysis [155]. So far, it is clear that the key problem is designing suitable activation functions and computing their quaternion derivatives; thus, some mathematicians have been working on redefining quaternion calculus.

For example, De Leo and Rotelli [38, 39], as well as Schwartz [144], have introduced the concept of local quaternionic derivative. The trick was to extend “the concept of a derivative operator from those with constant (even quaternionic) coefficients, to one with variable coefficients depending upon the point of application... [thus] the derivative operator passes from a global form to local form” [39]. Using this novel approach, they define local analyticity, which, in contrast to global analyticity, does not reduce functions to a trivial class (constant or linear functions). Following this idea, Ujang et al. [158] define the concept of fully quaternion functions, and the properties they should fulfill to be locally analytic, non-linear, and suitable for gradient-based learning. These functions displayed better performance than split-quaternion functions when they were applied to the design of adaptive filters. In contrast to split functions, fully quaternion functions capture interchannel relationships, making them suitable for quaternion-based learning; however, experimental comparison on a standard benchmark remains an open issue.

To the best of our knowledge, currently, the only fully quaternion activation function that has been applied in QCNNs is the Rotation-Equivariant ReLU function. It was proposed by Shen et al. [151] as part of a model that extracts rotation equivariant features. Let \(\{\textbf{q}_1, \textbf{q}_2, \dots , \textbf{q}_N\}\) be a set of quaternions; then, for a quaternion \(\textbf{q}_s\), with \(1\le s\le N\), the activation function is defined as follows:

where c is a positive constant, computed as follows: \(c=\frac{1}{N}\sum _{t=1}^N \Vert \textbf{q}_t\Vert \). Since c is the average of the magnitudes of all quaternion outputs in a neighborhood, if the magnitude of a specific quaternion output is above the average, the output remains the same (active); otherwise, the magnitude of the quaternion output is mitigated by a 1/c factor.
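As a hedged illustration of this behavior, the sketch below assumes the activation has the form \(f(\textbf{q}_s)=\frac{\Vert \textbf{q}_s\Vert }{\max (\Vert \textbf{q}_s\Vert , c)}\,\textbf{q}_s\), which is consistent with the description above but is an assumption of this example rather than a transcription of the original definition. The function name and the array layout (one quaternion per row, four real components) are likewise hypothetical.

```python
import numpy as np


def rotation_equivariant_relu(q):
    """Norm-based activation over a set of N quaternions, shape (N, 4).

    Assumed form: f(q_s) = ||q_s|| / max(||q_s||, c) * q_s,
    with c the mean norm over the set.
    """
    q = np.asarray(q, dtype=float)
    norms = np.linalg.norm(q, axis=-1, keepdims=True)  # ||q_s|| for every s
    c = norms.mean()                                   # average magnitude
    # Small floor avoids 0/0 when every quaternion in the set is zero
    scale = norms / np.maximum(np.maximum(norms, c), 1e-12)
    return scale * q


q = np.array([[3.0, 0.0, 0.0, 0.0],
              [0.0, 1.0, 0.0, 0.0]])
print(rotation_equivariant_relu(q))
```

Only the norm of each quaternion is rescaled, so its direction in the 4D space is left untouched; this is what allows such an activation to commute with rotations, which preserve norms.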

3.5.1 Future Directions

So far, we have identified the fundamental trends of thought for activation functions: split-quaternion functions, whose derivatives for training are computed in a channel-wise manner, and fully quaternion functions, whose derivatives are computed locally, or using partial derivatives. Theoretical analysis, as well as some preliminary experimental results, has indicated a better performance of fully quaternion activation functions over others. However, these ideas have not yet been put into practice on QCNNs. Future works should focus on introducing novel fully quaternion activation functions that exploit specific properties of the quaternion representation, and on establishing proper benchmarks for comparison of existing functions.

3.6 Training

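From the point of view of supervised learning, the problem of training a QCNN can be stated as an optimization problem:

$$\begin{aligned} \min _{\textbf{w}_{1},\dots ,\textbf{w}_{n}} L(\textbf{w}_{1},\dots ,\textbf{w}_{n}), \end{aligned}$$ (3.55)

where L is the loss function, and \(\textbf{w}_{1},\dots ,\textbf{w}_{n}\) are all the quaternion weights of the network.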

For solving the problem, current works extend the Gradient Descent Algorithm to the quaternion domain, and computation of the gradient is achieved by means of the backpropagation technique. However, due to the non-analytic condition of non-linear quaternionic functions, there are three approaches to computing derivatives during the training stage: use partial derivatives and the generalized quaternion chain rule [54], use local quaternionic derivatives [38, 39, 144] or use the Generalized Hamiltonian-Real calculus (GHR) [172].

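Currently, all of the methods for training that have been tested on QCNNs rely on the first approach, i.e. the use of partial derivatives. These methods are adaptations of the QMLP backpropagation algorithm [7], or an extension of the generalized complex chain rule for real-valued loss functions [157]. Both approaches are equivalent and relax the analyticity condition by using partial derivatives with respect to the real and imaginary parts, as follows: Let L be a real-valued loss function and \(\textbf{q}=q_R+q_I\hat{i}+ q_J\hat{j} + q_K\hat{k}\), where \(q_R, q_I, q_J, q_K \in \mathbb {R}\), then [54, 155]:

$$\begin{aligned} \frac{\partial L}{\partial \textbf{q}}= \frac{\partial L}{\partial q_R}+ \frac{\partial L}{\partial q_I}\hat{i} + \frac{\partial L}{\partial q_J}\hat{j} + \frac{\partial L}{\partial q_K}\hat{k}. \end{aligned}$$ (3.56)

Now, assume that \(\textbf{q}\) can be expressed in terms of a second quaternion variable, \(\textbf{w}=w_R+w_I \hat{i}+ w_J \hat{j} + w_K \hat{k}\), where \(w_R, w_I, w_J, w_K \in \mathbb {R}\). Then, the generalized quaternion chain rule is calculated as follows [54]:

$$\begin{aligned} \frac{\partial L}{\partial \textbf{w}}&= \frac{\partial L}{\partial w_R}+ \frac{\partial L}{\partial w_I}\hat{i} + \frac{\partial L}{\partial w_J}\hat{j} + \frac{\partial L}{\partial w_K}\hat{k} \\ &= \sum _{X\in \{R,I,J,K\}} \frac{\partial L}{\partial q_X}\left( \frac{\partial q_X}{\partial w_R}+ \frac{\partial q_X}{\partial w_I}\hat{i} + \frac{\partial q_X}{\partial w_J}\hat{j} + \frac{\partial q_X}{\partial w_K}\hat{k}\right) . \end{aligned}$$ (3.57)

From these equations, the gradient descent algorithm using backpropagation was developed; see [3, 9, 10, 113] for a full derivation of the algorithm. The algorithmic form is summarized as follows: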

Let \(\textbf{Q} = [\textbf{q}(x,y)] \in \mathbb {H}^{N\times M}\) be the input to a convolution layer, \(\textbf{W} = [\textbf{w}(x,y)] \in \mathbb {H}^{L\times L}\) be the weights of the convolution kernel, and \(\textbf{F} = [\textbf{f}(x,y)]\in \mathbb {H}^{(N-L+1)\times (M-L+1)}\) be the output of the layer; then, a quaternion weight, \(\textbf{w}(s,t)\), where \(1\le s,t\le L\), represents the weight connecting input \(\textbf{q}(a+s,b+t)\) with output \(\textbf{f}(u,v)\), where \(1\le u\le N-L+1\) and \(1\le v\le M-L+1\). Thus, the gradient of the loss function with respect to \(\textbf{w}(s,t)\) is computed using Eq. 3.57. Hence, given a positive constant learning rate, \(\epsilon \in \mathbb {R}^+\), the quaternion weight must be changed by a value proportional to the negative gradient:

$$\begin{aligned} \Delta \textbf{w}(s,t)= -\epsilon \frac{\partial L}{\partial \textbf{w}(s,t)}. \end{aligned}$$ (3.58)

Let \(\textbf{d}(u,v)^{top}, \textbf{d}(s,t)^{bottom} \in \mathbb {H}\) be the errors propagated from the top layer and to the bottom layer, respectively; the weights are updated as follows:

The bias term is updated using the following equation:

and the error is propagated to the bottom layer according to the following equation:

Note that Eqs. (3.59) and (3.61) use quaternion products. Finally, for an activation layer, the error is propagated to the bottom layer according to the following equation:

where \(\odot \) is the Hadamard or Schur product (component wise) [36].
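The partial-derivative approach can be checked numerically on a toy problem. The sketch below is not the layer-wise algorithm summarized above: it assumes a single quaternion weight \(\textbf{w}\), a single input \(\textbf{x}\), the output \(\textbf{q}=\textbf{w}\otimes \textbf{x}\), and a squared-error loss \(L=\Vert \textbf{q}-\textbf{t}\Vert ^2\), all chosen for illustration. For this toy case, collecting the four real partial derivatives into a quaternion gives \(2(\textbf{q}-\textbf{t})\otimes \bar{\textbf{x}}\), which is compared against central finite differences before one gradient descent step is applied.

```python
import numpy as np


def hamilton(p, q):
    """Hamilton product of two quaternions stored as (R, I, J, K)."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return np.array([
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    ])


def conj(q):
    """Quaternion conjugate."""
    return q * np.array([1.0, -1.0, -1.0, -1.0])


def loss(w, x, t):
    """Real-valued squared error of the toy model q = w (x) x."""
    e = hamilton(w, x) - t
    return float(e @ e)


def analytic_grad(w, x, t):
    """Four real partial derivatives of the loss, packed as a quaternion."""
    e = hamilton(w, x) - t
    return 2.0 * hamilton(e, conj(x))


def numeric_grad(w, x, t, h=1e-6):
    """Central finite differences with respect to each real component."""
    g = np.zeros(4)
    for k in range(4):
        d = np.zeros(4)
        d[k] = h
        g[k] = (loss(w + d, x, t) - loss(w - d, x, t)) / (2.0 * h)
    return g


w = np.array([0.5, -1.0, 0.3, 2.0])   # illustrative weight
x = np.array([1.0, 2.0, -1.0, 0.5])   # illustrative input
t = np.array([0.0, 1.0, 0.0, -1.0])   # illustrative target

grad = analytic_grad(w, x, t)
lr = 0.01                              # learning rate (epsilon)
w_new = w - lr * grad                  # step along the negative gradient
print(np.allclose(grad, numeric_grad(w, x, t), atol=1e-4),
      loss(w_new, x, t) < loss(w, x, t))
```

The appearance of the conjugated input in the toy gradient mirrors the observation above that the weight update and the backward error involve quaternion products.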

Adopting the same definition of the chain rule, but a different definition of convolution, we have the work of Zhu et al. [181], who apply the two-sided convolution and a polar representation of the quaternion weights. Since their model applies rotation and scaling on the input features, the quaternion gradient for their model is simplified to a rotation transformation over the same axis, but with a reversed angle. A general model of this approach, with learnable arbitrary axes, is presented in [113]. Therefore, the training algorithm is similar to the one presented before, but Eq. (3.59) changes accordingly [81, 112, 113]:

and Eq. (3.61) changes to [81, 112, 113]:

So far, we have reviewed the first approach for training; the second approach relies on developing novel quaternionic calculus tools. Thus, using the concept of local quaternionic derivative [38, 39, 144], T. Isokawa proposed a QMLP and a backpropagation algorithm [82, 120].

The third approach follows the same trend of developing novel quaternion calculus tools, but from a different perspective. Mandic et al. [110] start from the observation that for gradient-based optimization, a common objective is to minimize a positive real function of quaternion variables, e.g. \(J(\textbf{e},\mathbf {\bar{e}})=\textbf{e}\mathbf {\bar{e}}\), and for that purpose the pseudo-gradient is used, i.e. the sum of component-wise gradients. Formalization of these ideas is achieved by “establishing the duality between derivatives of quaternion valued functions in \(\mathbb {H}\) and the corresponding quadrivariate real functions in \(\mathbb {R}^4\)” [110], leading to what is called Hamiltonian-Real Calculus (HR). In addition, Mandic et al. [110] proved that for a real function of a quaternion vector variable, the maximum change is in the direction of the conjugate gradient, establishing a general framework for quaternion gradient-based optimization. Going further, Xu and Mandic [172] proposed the product and chain rules for computing derivatives, as well as the quaternion counterparts of the mean value and Taylor’s theorems; this establishes an alternative framework called Generalized HR calculus (GHR). Thereafter, novel quaternion gradient algorithms using GHR calculus were proposed [173, 174]. Although it is shown in [175] that a QMLP trained with a GHR-based algorithm obtains better prediction gains, on the 4D Saito’s chaotic signal task, than other quaternion-based learning algorithms [8, 17, 112], further experimental analysis on a standard benchmark and proper comparison with real and complex counterparts are required. To date, there is no published work on the use of local quaternionic derivatives or GHR calculus for training QCNNs.

Besides the problem of computing derivatives of non-analytic quaternion activation functions, another problem is that algorithms such as gradient descent and backpropagation could be trapped in local minima. Therefore, QCNNs could be trained with alternative methods that do not rely on the computation of quaternion derivatives, such as evolutionary algorithms, ant colony optimization, particle swarm optimization, etc. [31]. In this case, general-purpose optimization algorithms will have the same performance on average [166, 167].

3.6.1 Future Directions

Novel methods for training should be applied, for example: modern quaternion calculus techniques, such as local or GHR calculus, and meta-heuristic algorithms working in the quaternion domain. In addition, fully quaternion loss functions should be introduced.

3.7 Quaternion Weight Initialization

At the beginning of this century, real-valued neural networks were showing the superiority of deep architectures; however, the standard gradient descent algorithm from random weight initialization performed poorly when used for training these models. To understand the reason of this behavior, Glorot and Bengio [55] established an experimental setup and observed that some activation functions can cause saturation in top hidden layers. In addition, by theoretically analyzing the forward and backward propagation variances (expressed with respect to the input, output and weight initialization randomness), they realized that, because of the multiplicative effect through layers, the variance of the back-propagated gradient might vanish or explode in very deep networks. Consequently, they proposed, and validated experimentally, a weight initialization procedure, which makes the variance dependent on each layer, and maintains activation and back-propagated gradient variances as we move up or down the network. Their method, called normalized initialization, uses a scaled uniform distribution for initialization. However, one of its assumptions is that the network is in a linear regime at the initialization step; thus, this method works better for softsign units than for sigmoid or hyperbolic tangent ones [55]. Since the linearity assumption is invalid for activation functions such as ReLU, He et al. [67] developed an initialization method for non-linear ReLU and Parametric ReLU activation functions. Their initialization method uses a zero-mean Gaussian distribution with a specific standard deviation value, and surpasses the performance of the previous method for training extremely deep models, e.g. 30 convolution layers.

In the case of classic QCNNs, Trabelsi et al. [157] extended these results to deep complex networks. Similar results are presented by Gaudet and Maida [54] for deep quaternion networks, and by Parcollet et al. [129] for quaternion recurrent networks. These works treat quaternion weights as 4-dimensional vectors, whose components are normally distributed, centered at zero, and independent. Hence, it can be proved that the magnitude of the weights follows a 4DOF Rayleigh distribution [54, 129], reducing weight initialization to the selection of a single parameter, \(\sigma \), which indicates the mode of the distribution. Let \(n_\text {in}\) and \(n_\text {out}\) be the number of neurons of the input and output layers, respectively; if we select \(\sigma =\frac{1}{\sqrt{2(n_{\text {in}}+n_{\text {out}})}}\), then we have a quaternion normalized initialization which ensures that the variances of the quaternion input, output and their gradients are the same. Alternatively, \(\sigma =\frac{1}{\sqrt{2n_{\text {in}}}}\) is used for the quaternion ReLU initialization. The algorithm is presented in Fig. 3.
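A possible realization of this initialization is sketched below. It is an illustration under stated assumptions, not a transcription of Fig. 3: the magnitude is drawn as the norm of a 4D zero-mean Gaussian vector with standard deviation \(\sigma \), the imaginary axis is a uniformly random unit vector, and the phase is uniform in \([-\pi , \pi ]\). The function name, the `criterion` labels, and the array layout are hypothetical.

```python
import numpy as np


def quaternion_init(n_in, n_out, shape, criterion="glorot", rng=None):
    """Sample quaternion weights of the given shape; returns shape + (4,)."""
    rng = np.random.default_rng() if rng is None else rng
    if criterion == "glorot":
        sigma = 1.0 / np.sqrt(2.0 * (n_in + n_out))   # normalized initialization
    elif criterion == "he":
        sigma = 1.0 / np.sqrt(2.0 * n_in)             # ReLU initialization
    else:
        raise ValueError("criterion must be 'glorot' or 'he'")

    # Magnitude: norm of a 4D Gaussian vector, i.e. sigma * sqrt(chi-square, 4 DOF)
    phi = sigma * np.sqrt(rng.chisquare(df=4, size=shape))
    # Random unit pure-imaginary axis (normalized 3D Gaussian sample)
    u = rng.normal(size=tuple(shape) + (3,))
    u /= np.linalg.norm(u, axis=-1, keepdims=True)
    # Random phase
    theta = rng.uniform(-np.pi, np.pi, size=shape)

    # Polar form: w = phi * (cos(theta) + u * sin(theta))
    w = np.empty(tuple(shape) + (4,))
    w[..., 0] = phi * np.cos(theta)
    w[..., 1:] = (phi * np.sin(theta))[..., None] * u
    return w


weights = quaternion_init(64, 32, (64, 32), rng=np.random.default_rng(0))
print(weights.shape)
```

With this construction the squared magnitude of each weight has expected value \(4\sigma ^2\), so a single parameter controls the scale of all four components.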

On the other hand, for geometric QCNNs, the quaternion weights represent an affine transformation composed of a scale factor, s, and a rotation angle \(\theta \). Thus, to keep the same variance of the gradients during training, Zhu et al. [181] proposed a simple initialization procedure using the uniform distribution, \(U[\cdot ]\):

3.7.1 Future Directions

In the case of geometric QCNNs, further theoretical analysis of weight initialization techniques is required, as well as the extension for ReLU units. For equivariant QCNNs, weight initialization procedures have not been introduced or are not described in the literature. In addition, current works only consider the case of split quaternion activation functions. The propagation of variance using fully quaternion activation functions should be investigated.

4 Architectural Design for Applications

The previous section deals with the individual building blocks of QCNNs; the possible ways to interconnect them give rise to numerous models. Accordingly, there are three leading factors to consider when implementing applications:

1. Domain of application. Current works are primarily focused on 3 areas: vision, language, and forecasting.

2. Mapping the input data from real numbers to the quaternion domain.

3. Topology. Based on current works, we have the following types:

   - ConvNets. They use convolution layers without additional tricks.

   - Residual. They use a shortcut connection from input to forward blocks. This connection creates a pathway with an identity mapping from input to output of a residual block. In this way, it is easier for the solver to learn the desired function with reference to an identity mapping than to learn it as a new one.

   - Convolution Auto-Encoders (CAE). Their aim is to reconstruct the input feature at the output.

   - Point-based. They focus on unordered sets of vectors such as point-cloud input data.

   - Recurrent. They exploit connections that can create cycles, allowing the network to learn long-term temporal information.

   - Generative. They use an adversarial learning paradigm, where one player generates samples resembling the real data, and the other discriminates between real and fake data; the solution of the game is the model achieving Nash equilibrium.

Residual QNN models. Some notation details: For convolution, parameters are presented in the format (number of kernels, size of kernel, stride); if the size of kernel or stride is the same for each dimension, a single number is shown, e.g. 3 is shown instead of \(3\times 3\). Symbol \(+\) inside a circle means summing. Dropout and flatten procedures are omitted. All blocks represent operations in the quaternion domain

Quaternion ConvNet models. Some notation details: For convolution, parameters are presented in the format (number of kernels, size of kernel, stride); if the size of kernel or stride is the same for each dimension, a single number is shown, e.g. 3 is shown instead of \(3\times 3\). LRN stands for Local Response Normalization. Dropout and flatten procedures are omitted. All blocks represent operations in the quaternion domain, except those preceded by the word “real”

Quaternion CAE and generative models. Some notation details: For convolution, parameters are presented in the format (number of kernels, size of kernel, stride); if the size of kernel or stride is the same for each dimension, a single number is shown, e.g. 3 is shown instead of \(3\times 3\). Symbol \(+\) inside a circle means summing while \(\cup \) inside a circle means concatenation. TConv stands for Decoding Transposed Convolution. Dropout and flatten procedures are omitted. All blocks represent operations in the quaternion domain, except those preceded by the word “real”

This section is devoted to showing how the blocks presented in Sect. 3 can be used to construct different architectures, and we focus our analysis on the three points previously introduced.

The information is organized in three subsections corresponding to the domains of applications: vision, language, and forecasting. Within each subsection, the models are ordered according to the type of convolution involved: classic, geometric, or equivariant. In addition, different networks are presented according to their topology: ConvNet, Residual, CAE, Point-based, Recurrent, or Generative. Methods for mapping input data to the quaternion domain are presented in each subsection.

A graphic depiction of several models is shown in Figs. 4, 5, and 6. Some architectures available in the literature are presented from a high-level abstraction point of view, showing their topologies and the blocks they use. Information about the number of quaternion kernels, size, and stride is included when available. Further implementation details, such as weight initialization, optimization methods, etc., are omitted to avoid a cumbersome presentation. Readers interested in specific models are encouraged to consult the original sources.

In addition, Tables 2, 3, 4, 5, 6, 7, 8, 9 and 10 provide a comparison between different QCNNs. They provide extra information, such as datasets on which the models were tested, performance metrics, comparison to real-valued models, etc. For comparison between quaternion and real-valued models, we only report real-valued architectures that have similar topology. The difference in the number of parameters between real-valued and quaternion-valued networks is due to the following reasons: Some authors prefer to compare networks with the same number of real-valued units and quaternion-valued units, where the quaternion-valued units have 4 times more parameters. Others compare networks with the same number of parameters: they reduce the number of quaternion units to \(25\%\) of the real-valued networks, or quadruple the number of real-valued units. In addition, some quaternion-valued networks use real-valued components in some parts of the network.

To conclude, Sect. 4.4 summarizes some insights obtained from these works (Table 10).

4.1 Vision

For mapping data to the quaternion domain, several methods are available in the literature; in the case of color images, the most common approach is to encode the red, green, and blue channels, denoted here by R, G, and B, into the imaginary parts of the quaternion:

$$\begin{aligned} \textbf{q}= 0 + R\,\hat{i} + G\,\hat{j} + B\,\hat{k}, \end{aligned}$$

see for example [26, 27, 58, 59, 60, 77, 83, 119, 127, 146, 176, 181].

Another method, proposed by Gaudet and Maida [54], is to use a residual block:

\(BN\rightarrow ReLU\rightarrow Conv\rightarrow BN\rightarrow ReLU\rightarrow Conv\),

where a shortcut connection sums the input to the output of the block.

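In contrast, for grayscale images, Chen et al. [27] propose to map the grayscale values to the real part of the quaternion as follows:

$$\begin{aligned} \textbf{q}= g + 0\,\hat{i} + 0\,\hat{j} + 0\,\hat{k}, \end{aligned}$$

where g denotes the grayscale value of the pixel.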

In the case of Polarimetric Synthetic Aperture Radar (PolSAR) images [106] containing scattering matrices [14, 90]:

Matsumoto et al. [113] propose to compute a Pauli decomposition [32] from the complex scattering matrix, as follows:

and assign the square magnitude of the components b, c, and a to the red, green, and blue channels, respectively, of an RGB image. Thereafter, the image is mapped to the quaternion domain as usual. Alternatively, Matsumoto et al. [113] propose to transform the scattering matrix into 3D Stokes vectors [98] normalized by their total power. Thereafter, each component is mapped to the imaginary parts of the quaternion.

Finally, for point clouds, Shen et al. [151] propose to map the (x, y, z) coordinates of each 3D point to a quaternion as follows:

$$\begin{aligned} \textbf{q}= 0 + x\,\hat{i} + y\,\hat{j} + z\,\hat{k}. \end{aligned}$$
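The simplest of the mappings described in this subsection (RGB channels and 3D coordinates to the imaginary parts, grayscale values to the real part) can be sketched in NumPy. The function names and the convention of storing the four components along the last axis are assumptions made for this example.

```python
import numpy as np


def rgb_to_quaternion(img):
    """(H, W, 3) RGB image -> (H, W, 4) with zero real part."""
    img = np.asarray(img, dtype=float)
    real = np.zeros(img.shape[:-1] + (1,))
    return np.concatenate([real, img], axis=-1)


def gray_to_quaternion(img):
    """(H, W) grayscale image -> (H, W, 4) with zero imaginary parts."""
    img = np.asarray(img, dtype=float)
    imag = np.zeros(img.shape + (3,))
    return np.concatenate([img[..., None], imag], axis=-1)


def points_to_quaternion(points):
    """(N, 3) point cloud -> (N, 4) pure quaternions 0 + x i + y j + z k."""
    points = np.asarray(points, dtype=float)
    real = np.zeros(points.shape[:-1] + (1,))
    return np.concatenate([real, points], axis=-1)


rgb = np.random.default_rng(0).uniform(size=(2, 2, 3))
print(rgb_to_quaternion(rgb).shape,
      gray_to_quaternion(rgb.mean(axis=-1)).shape,
      points_to_quaternion([[1.0, 2.0, 3.0]]))
```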

4.1.1 Vision Under the Classic Convolution Paradigm

Residual Networks were among the first models proposed in the QCNN literature; Gaudet and Maida [54] tested deep and shallow quaternion-valued residual nets. In both cases, they use three stages; the shallow network contains 2, 1 and 1 residual blocks in each stage, while the deep network uses 10, 9 and 9 residual blocks in each stage, see Fig. 4a. These models were applied to image classification tasks using the CIFAR-10 and CIFAR-100 [91] datasets, and the KITTI Road Estimation benchmark [49]. Recently, Sfikas et al. [148] proposed a standard residual model for keyword spotting in handwritten manuscripts, see Fig. 4c. Their model is applied to the PIOP-DAS [149] and the GRPOLY-DB [53] datasets, containing digitized documents written in modern Greek.

A different type of model is the ConvNet. The first proposal came from Yin et al. [176], who implemented a basic QCNN model, see Fig. 5f. Since quaternion models extract 4 times more features, they propose to use an attention module, whose purpose is to filter out redundant features. These models were applied to the image classification problem on the CIFAR-10 dataset [91]. In addition, they propose a similar model for Double JPEG compression detection on the UCID dataset [78]. Thereafter, Jin et al. [83] propose to include a deformable layer, leading to a Deformable Quaternion Gabor CNN (DQG-CNN), see Fig. 5h. They apply it to a facial recognition task using the Oulu-CASIA [156], MMI [126], and SFEW [41] datasets. Chen et al. [26] propose an architecture based on quaternion versions of Fully Convolutional Neural Networks (FCN) [150]. Their model is applied to the color image splicing localization problem, and tested on the CASIA v1.0, CASIA v2.0 [42], and Columbia color DVMM [122] datasets. The basis of their architecture is the Quaternion-valued Fully Convolutional Network (QFCN), see Fig. 5d. The final model is composed of three QFCNs working in parallel, each with different up-sampling layers: the first network does not have extra connections, the second network has a shortcut connection fusing the results of the fifth pooling layer with the output of the last layer, while the third network combines the results of the third and fourth pooling layers with the output of the last layer. In addition, each network uses a Super-pixel-enhanced pairwise Conditional Random Field module to improve the results from the QCNN. This fusion of the outputs makes it possible to work with different scales of image contents [103]. Thereafter, Chen et al. [27] propose the quaternion-valued two-stream Region-CNN. They extend the real-valued RGB-N [179] to the quaternion domain, improve it for pixel-level processing, and implement two extra modules: an Attention Region Proposal Network, based on CBAM [168], for enhancing the features of important regions; and a Feature Pyramid Network, based on Quaternion-valued ResNet, to extract multi-scale features. Their results improved on the ones previously published in [26]. Because of the complexity of these models, readers are referred to [26, 27] for the details.

In the case of CAE’s, whose aim is to reconstruct the input feature at the output, Parcollet et al. [127] propose a quaternion convolutional encoder-decoder (QCAE), see Fig. 6a, and tested it on the KODAK PhotoCD dataset [48].

Another type of CAE is the Variational Autoencoder, which estimates the probabilistic relationship between input and latent spaces. The Quaternion-valued Variational Autoencoder model (QVAE) was proposed by Grassucci et al. [59], see Fig. 6b, who applied it to reconstruct and generate faces of the CelebFaces Attributes Dataset (CelebA) [104].

A more sophisticated type of architecture is the generative model. Sfikas et al. [146] propose a Quaternion Generative Adversarial Network for text detection of inscriptions found on Byzantine monuments [89, 138]. The generator is a U-Net-like model [139], and the discriminator is a cascade of convolution layers, see Fig. 6e, f, where the activation function of the last layer sums the output of the real and imaginary parts of the quaternion to produce a real-valued output.

Grassucci et al. [58] adapted a Spectral Normalized GAN (SNGAN) [28, 116] to the quaternion domain, and applied it to an image-to-image translation task using the CelebA-HQ [86] and 102 Oxford Flowers [123] datasets. The model is presented in Fig. 6c, d. Thereafter, Grassucci et al. [60] proposed the quaternion-valued version of the StarGANv2 model [30]. It is composed of the generator, mapping, encoding, and discriminator networks; this model was evaluated on an image-to-image translation task using the CelebA-HQ dataset [86]. Because of the complexity of the model, readers are referred to [60] for the details.

4.1.2 Vision Under the Geometric Paradigm

The only residual model lying in this paradigm is the one of Hongo et al. [77], who proposed a residual model, based on ResNet34 [68], see Fig. 4a. This is applied to the image classification task using the CIFAR-10 dataset [91].

On ConvNet models, we have the work of Zhu et al. [181], who proposed shallow QCNN and quaternion VGG-S [145] models for image classification problems. These models are shown in Fig. 5b, c; the former was tested on the CIFAR-10 dataset [91], and the latter on the 102 Oxford flower dataset [123]. Hongo et al. [77] proposed a different QCNN model, see Fig. 5g, and tested it on the image classification task using the CIFAR-10 dataset [91]. Moreover, Matsumoto et al. [113] propose QCNN models for classifying pixels of PolSAR images. This type of image contains additional experimental features given in complex scattering matrices. For their experiments, they labeled each pixel of two images as one of 4 classes: water, grass, forest, or town. Then, they converted the complex scattering matrices into PolSAR pseudocolor features, or into normalized Stokes vectors. By testing similar models under these two different representations, they found that the classification results largely depend on the input features. The proposed model is shown in Fig. 5j.

In the context of CAE’s, Zhu et al. [181] propose a U-Net-like encoder-decoder network [139] for the color image denoising problem. The model was tested for a denoising task on images of the 102 Oxford flower dataset [123], and on a subset of the COCO dataset [102], see details of the model in [111, 139, 160, 181].

4.1.3 Vision Under the Equivariant Paradigm

For tasks that use point clouds as input data, Shen et al. [151] modified the real-valued PointNet model [25] by exchanging all the layers for Rotation Equivariant Quaternion Modules, and removing the Spatial Transformer module, since it discards rotation information. The rotation equivariant properties of the modules were evaluated on the ShapeNet dataset [24]. They showed experimentally that point clouds reconstructed using the synthesized quaternion features had the same orientations as point clouds generated by directly rotating the original point cloud. In addition, they modified other models, i.e. PointNet++ [137], DGCNN [163], and PointConv [170], by replacing their components with their equivariant counterparts, and tested them on a 3D shape classification task on the ModelNet40 [171] and 3D MNIST [37] datasets. Because of the variety of components and interconnections, the reader is directed to [25, 137, 151, 163, 170] for the details of these models.

4.2 Language

For language applications, all works that have been proposed are ConvNets.

Under the classic paradigm, Parcollet et al. [130] propose a Connectionist Temporal Classification CTC-QCNN model, see Fig. 5a, and tested it on a phoneme recognition task, with TIMIT dataset [52]. The mapping of input signals to the quaternion domain is achieved by transforming the raw audio into 40-dimensional log mel-filter-bank coefficients with deltas, delta-deltas, and energy terms, and arranging the resulting vector into the components of an acoustic quaternion signal:

Another problem related to language is joint 3D Sound Event Localization and Detection (SELD); solving this task can be helpful for activity recognition and for assisting hearing-impaired people, among other applications. The SELD problem consists of simultaneously solving two subproblems: Sound Event Detection (SED), i.e., detecting the temporal activity of each sound event in a set of overlapping events and assigning it a label, and sound localization, i.e., estimating the spatial trajectory of each sound. The latter task can be simplified to determining the orientation of a sound source with respect to the microphone, which is called Direction-of-Arrival (DOA) estimation. For this problem, also following a classic approach, Comminiello et al. [35] proposed a quaternion-valued recurrent network (QSELD-net), based on the real-valued model of Adavanne et al. [1]. The model has a first processing stage whose output is processed in parallel by two branches: the first one performs a multi-label classification task (SED), while the second one performs a multi-output regression task (DOA estimation). The model is shown in Fig. 5e, where N is the number of sound event classes to be detected; for DOA estimation, there are three times as many outputs, since they represent the (x, y, z) coordinates of each sound event class. This model was tested on the Ambisonic, Anechoic and Synthetic Impulse Response (ANSYN) and the Ambisonic, Reverberant and Synthetic Impulse Response (RESYN) datasets [2], which consist of spatially located sound events in an anechoic or reverberant environment, respectively, synthesized using artificial impulse responses. Each dataset comprises three subsets: no temporally overlapping sources (O1), at most two temporally overlapping sources (O2), and at most three temporally overlapping sources (O3).
In this application, the input data is a multichannel audio signal; the real-valued SELD-net [1] and its quaternion-valued counterpart [35] apply the same feature extraction method: the spectrogram is computed using the M-point discrete Fourier transform to obtain a feature sequence of T frames containing the magnitude and phase components of each channel.
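
The feature extraction described above can be sketched as follows. This is a minimal illustration and not the code of [1] or [35]: the function name `spectrogram_features`, the Hann window, the hop size, and the choice of keeping the first M/2 frequency bins are all assumptions made for the example.

```python
import numpy as np

def spectrogram_features(audio, n_fft=512, hop=256):
    """Map audio of shape (channels, samples) to features of shape
    (T, n_fft // 2, 2 * channels): magnitudes first, then phases.
    Window type and hop size are illustrative assumptions."""
    n_channels, n_samples = audio.shape
    window = np.hanning(n_fft)
    n_frames = 1 + (n_samples - n_fft) // hop
    feats = np.empty((n_frames, n_fft // 2, 2 * n_channels))
    for c in range(n_channels):
        for t in range(n_frames):
            frame = audio[c, t * hop : t * hop + n_fft] * window
            # M-point DFT of a real frame; keep the first M/2 bins.
            spectrum = np.fft.rfft(frame)[: n_fft // 2]
            feats[t, :, c] = np.abs(spectrum)                 # magnitude
            feats[t, :, n_channels + c] = np.angle(spectrum)  # phase
    return feats

rng = np.random.default_rng(0)
audio = rng.standard_normal((4, 4096))  # hypothetical 4-channel recording
features = spectrogram_features(audio)
print(features.shape)  # (15, 256, 8)
```

With four input channels, the four magnitude maps and the four phase maps could each fill the components of one quaternion; this grouping is one possible choice and is stated here only as an assumption.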

Under the geometric paradigm, Muppidi and Radfar [119] proposed a QCNN for emotion classification from speech and evaluated it on the RAVDESS [105], IEMOCAP [23], and EMO-DB [22] datasets. They convert the speech waveform from the time domain to the frequency domain using the Fourier transform, compute its Mel-spectrogram, and convert it to an RGB image. Finally, the RGB images are processed with the model shown in Fig. 5i, where the Reset-ReLU activation function resets invalid values to the nearest point in color space.

4.3 Forecasting

Neshat et al. [121] proposed a hybrid forecasting model, composed of QCNNs and bi-directional LSTM recurrent networks, for the prediction of short-term and long-term wind speeds. Historical meteorological wind data were collected from the Greek islands of Lesvos and Samothraki, located in the North Aegean Sea, and obtained from the Institute of Environmental Research and Sustainable Development (IERSD) and the National Observatory of Athens (NOA). This work integrates classic QCNNs within a complete system, and shows that QCNNs yield better results than other AI models or handcrafted techniques. Because of the complexity of the model, we refer the reader to [121] for the details.

4.4 Insights

A fair comparison between the performance of the models and their individual blocks is difficult because most of them are applied to different problems or use different datasets; moreover, most of the quaternion-valued models are based on their real-valued counterparts, and very few works build incrementally on previous quaternion models. However, from the works reviewed, the following insights can be established:

1. Quaternion-valued models achieve results at least comparable to those of their real-valued counterparts, with a lower number of parameters. As stated by [148]: “\(\dots \)an operation that is written as \(y=Wx\), where \(y\in \mathbb {R}^{4K}\), \(x \in \mathbb {R}^{4L}\), and \(W \in \mathbb {R}^{4K\times 4L}\) is thus mapped to a quaternionic operation \(\textbf{y} = \textbf{W} \textbf{x}\), where \(\textbf{y} \in \mathbb {H}^K\), \(\textbf{x} \in \mathbb {H}^L\) and \(\textbf{W}\in \mathbb {H}^{K\times L}\). Parameter vector \(\textbf{W}\) only contains \(4 \times K \times L\) parameters compared to \(4 \times 4 \times K \times L\) of W, hence we have a 4x saving.” In addition, some works report faster convergence in the training stage.

2. For the image classification task, the CIFAR-10 dataset has become the standard benchmark. Although the classification errors reported in different works are not standardized (for example, some authors use data-augmentation techniques), the best reported result is from Gaudet and Maida [54], who use a classic approach and a deep residual model. In addition, residual models present the best results for image classification tasks.

3. Attention modules filter out redundant features, and their use can improve the performance of the network [176].

4. Variance Quaternion Batch Normalization (VQBN) experimentally outperforms Split Batch Normalization [176].

5. The integration of quaternion Gabor filters and convolution layers enhances the ability of the model to capture features in terms of spatial localization, orientation selectivity, and spatial frequency selectivity [83, 117, 118].

6. Quaternion generative models demonstrate a better ability to learn the properties of the color space, and generate RGB images with more defined objects than real-valued models [58, 127].
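
The 4x parameter saving quoted in the first insight can be checked numerically. The sketch below is only an illustration under stated assumptions: `hamilton_block_matrix` is a hypothetical helper, and the values of K and L are arbitrary. It expands a quaternion weight matrix, stored as four real \(K\times L\) components, into the equivalent real \(4K\times 4L\) matrix and compares the number of free parameters with the number of entries of an unconstrained real matrix of the same size.

```python
import numpy as np

def hamilton_block_matrix(w_r, w_i, w_j, w_k):
    """Real (4K x 4L) matrix equivalent to multiplying by a quaternion
    (K x L) weight matrix with components w_r, w_i, w_j, w_k."""
    return np.block([
        [w_r, -w_i, -w_j, -w_k],
        [w_i,  w_r, -w_k,  w_j],
        [w_j,  w_k,  w_r, -w_i],
        [w_k, -w_j,  w_i,  w_r],
    ])

K, L = 3, 5  # arbitrary sizes chosen for the example
rng = np.random.default_rng(0)
components = [rng.standard_normal((K, L)) for _ in range(4)]
W_real = hamilton_block_matrix(*components)

quaternion_params = sum(c.size for c in components)  # 4 * K * L
real_params = W_real.size                            # 4K * 4L = 16 * K * L
print(quaternion_params, real_params, real_params // quaternion_params)
# 60 240 4
```

The block matrix has 16KL entries, but only 4KL of them are free; the rest are tied by the sign pattern of the Hamilton product, which is the parameter sharing referred to in the quotation.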

Finally, Table 11 presents information about the available source code. It only includes source code published by the authors of the papers; other implementations can be found on the popular website paperswithcode [154].

5 Further Discussion

Section 3 presented each individual block, together with a discussion of current issues, knowledge gaps, and future improvements. Here, we discuss future research directions and open questions that apply to any QCNN model, independently of its components. Moreover, some topics concern any type of quaternion-valued deep learning model.

5.1 On Proper Comparisons

When looking at the different models that have been proposed, one of the questions that arises is: Is it fair to compare a real-valued architecture with a quaternion-valued one using the same topology and number of parameters? Note that in the first case, the optimization of the parameters occurs in a Euclidean 4-dimensional space, while in the second case, the optimization occurs in the quaternion space, which can be connected to geometric spaces other than the Euclidean one. From this observation, we could argue that a more reasonable comparison is between an optimal real-valued network and an optimal quaternion-valued network, even though they have different topologies, connections, and numbers of weights. Now, it is clear that a single real-valued convolution layer cannot capture interchannel relationships, but could a real-valued multilayer or recurrent architecture capture interchannel relationships without the use of Hamilton products? When are Hamilton products really needed?

Something that could shed light on these questions is the reflection of Sfikas et al. [148], who believe that the effectiveness of quaternion networks is due to “navigation on a much compact parameter space during learning”, as well as to an extra parameter-sharing trait.

5.2 Mapping from Data to Quaternions

Another question that arises is: how can we find an optimal mapping from the input data to the quaternion domain? Common sense suggests that finding a suitable mapping and connecting it with the right geometry could seriously improve the performance of quaternion networks; however, theoretical and experimental work is required in this direction. For example, for images, we can adopt different color models with a direct geometric relation to quaternion space, and point clouds as well as deformable models can avoid algebraic singularities when the quaternion representation is used. Moreover, models for language tasks are in their infancy, and novel methods that take advantage of the quaternion space should be proposed for signal processing, speech recognition, and other tasks.

5.3 Extension to Hypercomplex and Geometric Algebras

Current QCNN models rely on processing 4-dimensional input data, which is mapped to the quaternion domain. If the input data have fewer than 4 channels, a common solution is to apply zero-padding on some channels, or to define a mapping to a 4-dimensional space.

In contrast, for more than 4 channels, there are several alternatives. The first one is to map the input data to an n-dimensional space, where n is a multiple of four. Then, we can process the input data by defining a quaternion kernel for each 4-channel input, see Fig. 2. Alternatively, an extension of the quaternion convolution to the octonions, sedenions, or generalized hypercomplex numbers can be applied [159]. Moreover, geometric algebras [71, 84] can be used to generalize hypercomplex convolution to general n-dimensional spaces, not restricted to multiples of 4, and to connect with different geometries.
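
As a minimal sketch of the zero-padding and channel-grouping strategies mentioned above, the hypothetical helper `to_quaternion_channels` pads the channel axis to the next multiple of four and groups every four channels into one quaternion-valued channel. Placing the zeros in the trailing components is an assumption made for brevity; which component receives the zeros is a design choice of each model.

```python
import numpy as np

def to_quaternion_channels(x):
    """Group a (C, H, W) array into (ceil(C / 4), 4, H, W), where axis 1
    holds the four quaternion components. Missing channels are zero-padded
    at the end (an illustrative choice, not a prescribed convention)."""
    c, h, w = x.shape
    pad = (-c) % 4  # number of zero channels needed to reach a multiple of 4
    padded = np.concatenate([x, np.zeros((pad, h, w), dtype=x.dtype)], axis=0)
    return padded.reshape(-1, 4, h, w)

rgb = np.ones((3, 8, 8))             # fewer than 4 channels
multispectral = np.ones((10, 8, 8))  # more than 4 channels
print(to_quaternion_channels(rgb).shape)            # (1, 4, 8, 8)
print(to_quaternion_channels(multispectral).shape)  # (3, 4, 8, 8)
```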

An approach in this direction is to parametrize the hypercomplex convolution so that the convolution kernel is separated into a set of matrices containing the algebra multiplication rules and a set of matrices containing the filtering weights [61, 178]. Let S and D be the input and output channel dimensions of the data, respectively, and let \(\textbf{W}\in \mathbb {R}^{S\times D}\) be an element of the convolution kernel; then, it is decomposed into a sum of Kronecker products [70] as follows:

\[\textbf{W} = \sum_{n=1}^{N} \textbf{A}_n \otimes \textbf{V}_n,\]

where \(\textbf{A}_n\in \mathbb {R}^{N\times N}\), with \(n=1,\dots ,N\), are learnable matrices containing the algebra multiplication rules, each \(\textbf{V}_n\in \mathbb {R}^{\frac{S}{N}\times \frac{D}{N}\times K\times K}\) is a learnable array of filtering weights, and together they compose an element \(\textbf{W}\) of the convolution kernel.

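The parameter N defines the dimension of the algebra: for \(N=2\) we work in the complex domain, while \(N=4\) leads to the quaternion space. For example, writing \(\textbf{q}=q_R+q_I i+q_J j+q_K k\) and \(\textbf{p}=p_R+p_I i+p_J j+p_K k\), the product between quaternions \({\textbf {q}}\) and \({\textbf {p}}\) is:

\[
\begin{aligned}
\textbf{q}\textbf{p} ={}& (q_R p_R - q_I p_I - q_J p_J - q_K p_K) \\
&+ (q_R p_I + q_I p_R + q_J p_K - q_K p_J)\, i \\
&+ (q_R p_J - q_I p_K + q_J p_R + q_K p_I)\, j \\
&+ (q_R p_K + q_I p_J - q_J p_I + q_K p_R)\, k,
\end{aligned}
\]

which can be rewritten as:

\[
\textbf{q}\textbf{p} =
\begin{bmatrix}
q_R & -q_I & -q_J & -q_K \\
q_I & q_R & -q_K & q_J \\
q_J & q_K & q_R & -q_I \\
q_K & -q_J & q_I & q_R
\end{bmatrix}
\begin{bmatrix} p_R \\ p_I \\ p_J \\ p_K \end{bmatrix},
\]

and the decomposition of this matrix into the sum of Kronecker products is:

\[
\underbrace{\begin{bmatrix} 1&0&0&0 \\ 0&1&0&0 \\ 0&0&1&0 \\ 0&0&0&1 \end{bmatrix}}_{\textbf{A}_1} \otimes \underbrace{[q_R]}_{\textbf{V}_1}
+ \underbrace{\begin{bmatrix} 0&-1&0&0 \\ 1&0&0&0 \\ 0&0&0&-1 \\ 0&0&1&0 \end{bmatrix}}_{\textbf{A}_2} \otimes \underbrace{[q_I]}_{\textbf{V}_2}
+ \underbrace{\begin{bmatrix} 0&0&-1&0 \\ 0&0&0&1 \\ 1&0&0&0 \\ 0&-1&0&0 \end{bmatrix}}_{\textbf{A}_3} \otimes \underbrace{[q_J]}_{\textbf{V}_3}
+ \underbrace{\begin{bmatrix} 0&0&0&-1 \\ 0&0&-1&0 \\ 0&1&0&0 \\ 1&0&0&0 \end{bmatrix}}_{\textbf{A}_4} \otimes \underbrace{[q_K]}_{\textbf{V}_4}.
\]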

Since the matrices \(\textbf{A}_n\) and \(\textbf{V}_n\) are obtained during training, this method does not require the multiplication rules to be defined beforehand; it only requires setting a single parameter N, which represents the dimension of the algebra. Thus, the works of Grassucci et al. [61] and Zhang et al. [178] are an approach to learning latent mathematical structures directly from data. Other ideas on this topic will be discussed in the next section.
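
The Kronecker-product parametrization can be illustrated numerically. The sketch below is not the implementation of [61] or [178]: in those works the matrices \(\textbf{A}_n\) are learned, whereas here they are fixed by hand to the quaternion sign patterns (\(N=4\)) so that the result can be checked against the Hamilton product. The helper names `kronecker_sum` and `hamilton_product`, and the sizes S and D, are assumptions made for the example.

```python
import numpy as np

# Hand-written A_n for N = 4: sign patterns of the quaternion algebra.
A = np.array([
    [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]],
    [[0, -1, 0, 0], [1, 0, 0, 0], [0, 0, 0, -1], [0, 0, 1, 0]],
    [[0, 0, -1, 0], [0, 0, 0, 1], [1, 0, 0, 0], [0, -1, 0, 0]],
    [[0, 0, 0, -1], [0, 0, -1, 0], [0, 1, 0, 0], [1, 0, 0, 0]],
], dtype=float)

def kronecker_sum(A, V):
    """W = sum_n kron(A_n, V_n)."""
    return sum(np.kron(a, v) for a, v in zip(A, V))

def hamilton_product(q, p):
    """Reference Hamilton product of quaternions given as 4-vectors."""
    qr, qi, qj, qk = q
    pr, pi, pj, pk = p
    return np.array([
        qr * pr - qi * pi - qj * pj - qk * pk,
        qr * pi + qi * pr + qj * pk - qk * pj,
        qr * pj - qi * pk + qj * pr + qk * pi,
        qr * pk + qi * pj - qj * pi + qk * pr,
    ])

q = np.array([1.0, 2.0, 3.0, 4.0])
p = np.array([0.5, -1.0, 2.0, 0.0])
W = kronecker_sum(A, q.reshape(4, 1, 1))  # each V_n is the 1x1 block [q_n]
print(np.allclose(W @ p, hamilton_product(q, p)))

# With randomly initialised A_n and V_n (stand-ins for trained values),
# the same sum yields a general (S x D) weight matrix.
S, D, N = 8, 12, 4
rng = np.random.default_rng(0)
A_general = rng.standard_normal((N, N, N))
V_general = rng.standard_normal((N, S // N, D // N))
W_general = kronecker_sum(A_general, V_general)
print(W_general.shape)
```

The first check confirms that, for this particular choice of \(\textbf{A}_n\), the sum of Kronecker products reproduces quaternion multiplication; the second part only shows how the shapes combine when both factors are free parameters.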

5.4 Machine-Learning Mathematical Structures

The use of quaternion-valued algorithms might lead to a faster search for solutions in the quaternion space, or to better solutions, since the machinery of the algorithm is built on quaternion properties and solutions are computed directly in the quaternion domain.

For example, QCNNs could be applied to synthesizing quaternion functions from data, in a similar way to the work of Lample and Charton [94] for real-valued functions. Another interesting question is which of the mathematical problems collected in [69] can be posed in the quaternion domain, and how to solve them with QCNN models.

5.5 Quaternions on the Frequency Domain

A natural implementation of QCNNs in the frequency domain would be to compute the Quaternion Fourier Transform (QFT) [21, 74, 134] of the input and kernels, and multiply them in the frequency domain. Moreover, the computation of the convolution could be accelerated using fast QFT algorithms [140]. To date, no works following this approach have been published. However, using a bio-inspired approach, Moya-Sánchez et al. [118] proposed a monogenic convolution layer. A monogenic signal [47], \(I_M\), is a mapping of the input signal that simultaneously encodes local space and frequency characteristics:

\[I_M = I' + I_1 i + I_2 j,\]

where \(I_1\) and \(I_2\) are the Riesz transforms of the input in the x and y directions. In [118], the monogenic signal is computed in the Fourier domain, \(I'\) is computed as a convolution of the input with quaternion log-Gabor filters with learnable parameters, and local phase and orientation are computed to achieve contrast invariance. In addition, their model provides crucial sensitivity to orientations, resembling properties of the V1 cortex layer.
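
A minimal sketch of how \(I'\), \(I_1\), and \(I_2\) can be obtained in the Fourier domain is given below. It is not the layer of [118]: it uses a single scalar radial log-Gabor filter with fixed parameters instead of learnable quaternion log-Gabor filters, and the function names, the parameters `wavelength` and `sigma_ratio`, and the sign convention of the Riesz transform are assumptions made for the example.

```python
import numpy as np

def monogenic_signal(image, wavelength=8.0, sigma_ratio=0.55):
    """Return (I', I_1, I_2): band-passed image and its Riesz transforms."""
    rows, cols = image.shape
    u = np.fft.fftfreq(cols)[None, :]  # frequencies along x
    v = np.fft.fftfreq(rows)[:, None]  # frequencies along y
    radius = np.sqrt(u ** 2 + v ** 2)
    radius[0, 0] = 1.0                 # avoid division by zero at DC

    # Radial log-Gabor band-pass filter centred at 1 / wavelength.
    log_gabor = np.exp(-np.log(radius * wavelength) ** 2
                       / (2.0 * np.log(sigma_ratio) ** 2))
    log_gabor[0, 0] = 0.0              # remove the DC component

    spectrum = np.fft.fft2(image) * log_gabor
    band = np.real(np.fft.ifft2(spectrum))
    riesz_x = np.real(np.fft.ifft2(spectrum * (-1j * u / radius)))
    riesz_y = np.real(np.fft.ifft2(spectrum * (-1j * v / radius)))
    return band, riesz_x, riesz_y

def local_features(band, riesz_x, riesz_y):
    """Local phase, orientation, and amplitude of the monogenic signal."""
    odd = np.hypot(riesz_x, riesz_y)
    phase = np.arctan2(odd, band)
    orientation = np.arctan2(riesz_y, riesz_x)
    amplitude = np.sqrt(band ** 2 + odd ** 2)
    return phase, orientation, amplitude

rng = np.random.default_rng(0)
image = rng.random((32, 32))
phase, orientation, amplitude = local_features(*monogenic_signal(image))
phase_hi, _, amplitude_hi = local_features(*monogenic_signal(3.0 * image))
# Scaling the contrast rescales the amplitude but leaves the phase unchanged.
print(np.allclose(phase, phase_hi), np.allclose(amplitude_hi, 3.0 * amplitude))
```

Because the three components scale together when the image contrast changes, the local phase and orientation, which depend only on their ratios, are unaffected; this is the contrast invariance mentioned above.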

5.6 Might the Classic, Geometric, and Equivariant Models be Special Cases of a Unified General Model?

To answer this question, let us recall that a quaternion is an element of a 4-dimensional space, and that left and right Hamilton products with unit quaternions (versors) generate the rotation group SO(4). Thus, our point of view is that the classic model, which uses the four components, is the most general one. By splitting the product into a sandwiching product and using an equivalent polar representation, a generalized version of the geometric model could be obtained (not a Euclidean or affine model, but a 3D projective one); in addition, for the equivariant model, the kernel is reduced merely to its real components. However, the connection to invariant theory should be investigated for each of these models, so that a stratified organization in the light of geometry might be achieved. For example, if we link a unified general model to a particular geometry and its invariant properties, we could go down the hierarchy of geometries by setting restrictions on the general model, until we obtain the rotation-equivariant model or the others.

6 Conclusions