Abstract

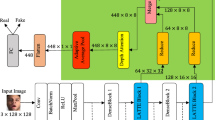

Facial processing technology can generate realistic human faces, which poses significant societal risks when it is exploited maliciously. Deepfake detection uses deep learning to examine the sequence of manipulations applied to a fake face and to uncover traces of tampering. This study focuses on Detecting Sequential DeepFake Operations (Seq-DeepFake), which recasts fake face detection as an image-to-sequence task. To enhance detection accuracy, this paper introduces an improved Seq-DeepFake detection method. The activation function of the Seq-DeepFake Transformer model is replaced with the Randomized Leaky Rectified Linear Unit (RReLU) to address the learning problems that arise for negative input values. Furthermore, several attention mechanism modules are integrated into the backbone network, forming the CLSP-Resnet-50 model. Experiments on a deepfake dataset, assessed with two evaluation metrics, show that the enhanced Seq-DeepFake model improves accuracy. A comparison with other real and fake face detection methods further supports the effectiveness of the Seq-DeepFake model.
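To make the activation change concrete, the following is a minimal sketch of the standard RReLU definition, not the authors' implementation. The function name `rrelu`, the scalar interface, and the slope bounds `lower=1/8` and `upper=1/3` (the defaults used by common deep learning libraries) are assumptions for illustration; the abstract does not state which bounds the paper uses.

```python
import random


def rrelu(x, lower=1 / 8, upper=1 / 3, training=False, rng=None):
    """Randomized leaky ReLU applied to a single float.

    Non-negative inputs pass through unchanged. Negative inputs are
    scaled by a slope: sampled uniformly from [lower, upper] during
    training, fixed at the midpoint of that range during evaluation.
    The bounds are assumed defaults, not values taken from the paper.
    """
    if x >= 0:
        return x
    if training:
        # Fall back to the module-level generator if none is supplied.
        source = rng if rng is not None else random
        slope = source.uniform(lower, upper)
    else:
        slope = (lower + upper) / 2
    return slope * x


if __name__ == "__main__":
    # Evaluation mode is deterministic; training mode is random.
    print(rrelu(2.0))
    print(rrelu(-1.0))
    print(rrelu(-1.0, training=True, rng=random.Random(0)))
```

Because the slope for negative inputs is never zero, a negative input still produces a non-zero gradient, which is the property that distinguishes this family of activations from the plain ReLU.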

Acknowledgments

This work was partially supported by the National Natural Science Foundation of China (No. 6202780103), the Guangxi Science and Technology Project (No. AB22035052), the Guangxi Key Laboratory of Image and Graphic Intelligent Processing Project (Nos. GIIP2211, GIIP2308), and the Innovation Project of GUET Graduate Education (No. 2023YCXB09).

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Deng, Z., You, K., Yang, R., Hu, X., Chen, Y. (2024). An Improved Seq-Deepfake Detection Method. In: Huang, DS., Premaratne, P., Yuan, C. (eds) Applied Intelligence. ICAI 2023. Communications in Computer and Information Science, vol 2014. Springer, Singapore. https://doi.org/10.1007/978-981-97-0903-8_21

DOI: https://doi.org/10.1007/978-981-97-0903-8_21

Publisher Name: Springer, Singapore

Print ISBN: 978-981-97-0902-1

Online ISBN: 978-981-97-0903-8

eBook Packages: Computer Science, Computer Science (R0)