Abstract

Concerning the role of ocean dynamics in climate change, retrieving information about the ocean interior is the basis of scientific studies. Because remote sensing is limited to the surface and in-situ observations are expensive, the sparse information on the ocean’s three-dimensional structure has hindered studies of the global ocean. In this chapter, we describe a neural network (NN) that learns the relationship between gridded Argo Ocean Heat Content (OHC) and remote sensing data and estimates near-global OHC for four depth ranges down to 2000 m. The approach extends OHC to any period covered by the remote sensing products, beyond the 2005–2018 training period. Furthermore, an ensemble method was applied to estimate the hindcasting uncertainty by training with six 108-month (9-year) training periods. The ensemble mean and standard deviation of the six members were then calculated, yielding a new OHC product from 1993 to the present with uncertainty estimates and high accuracy compared with other established OHC products. This reconstructed OHC product is named the Ocean Projection and Extension neural Network (OPEN) product. We evaluated its accuracy with the normalized root-mean-square error (NRMSE) and the determination coefficient, and conducted a series of experiments to optimize the network architecture and feature combinations. Compared with several widely applied global OHC products, OPEN OHC is highly accurate, with a determination coefficient larger than 0.95 and an NRMSE smaller than 20%. The OPEN product reflects the decadal trends and interannual variability of OHC well and can therefore serve as a reliable additional data source for climate change studies.
As a case study, the application of OPEN highlights the broad applicability of NN approaches, while further exploration is urgently needed to facilitate artificial intelligence applications in the climatic and marine sciences.

1 Introduction

In recent decades, the imbalance in the top-of-atmosphere radiation, termed the Earth’s energy imbalance (EEI) [49], has continuously driven changes in the global climate system, leading to continued global warming. The EEI is defined as the net heat gain of the Earth’s climate system, i.e., the difference between the energy entering and leaving the Earth [50], and it must be accurately quantified in order to investigate and comprehend the past, present, and future state of climate change [38]. Because its magnitude is small compared with solar radiation, the EEI is difficult to quantify accurately [38]. Yet more than 93% of the EEI is sequestered in the ocean as ocean heat content (OHC) changes [5, 7]. This is due to the large heat capacity and gigantic volume of seawater: the ocean covers ~71% of the Earth’s surface and holds ~97% of its water. OHC therefore varies more slowly and better captures low-frequency climate variability. These properties make OHC a more suitable variable than sea surface temperature (SST) for detecting and tracking EEI changes [26, 50].

OHC is driven by both human activity and natural variability. Anthropogenic forcing is reflected in the OHC as an accelerating warming rate [35], so OHC serves as an essential indicator of ocean variability. In turn, OHC also feeds back on climate change [10, 25]. On multidecadal timescales of natural variability, global OHC is highly relevant to the Earth’s heat balance [3]. OHC is also closely associated with the El Niño Southern Oscillation (ENSO), which dominates its interannual variability. In recent years, a hemispheric asymmetry in OHC changes has emerged, which can likely be explained by internal dynamics rather than by different surface forcing [39]. In 2021, the world ocean was the hottest ever recorded, despite La Niña conditions [17]. In summary, accurate quantification of OHC is crucial to understanding the EEI [10, 50].

Remote sensing provides wide, near-real-time coverage and a vast collection of spatial and temporal information. However, in most circumstances remote sensing observes only the sea surface and cannot penetrate into the ocean interior. Recently, a series of subsurface and deeper ocean remote sensing (DORS) methods were developed to unlock the enormous potential of remote sensing data for sensing the ocean interior [31]. In particular, Artificial Intelligence (AI) methods have provided cutting-edge tools and infrastructures [41].

Different remote sensing data have been applied to derive subsurface thermal information via various methods [18, 31, 32, 52]. These early initiatives demonstrated the concept of DORS for tackling data sparsity. Recent research has shown that surface remote sensing data can be effectively used to retrieve subsurface temperature anomalies (STA) via machine-learning or AI approaches. For instance, [27] and [23] showed that subsurface structures are dominated by the first baroclinic mode and thus can be estimated from sea surface height (SSH). By combining remote sensing data with a Self-Organizing Map (SOM) approach, [51] further confirmed the credibility of this theory in the North Atlantic Ocean. Reference [36] used a clustered shallow neural network (NN) to obtain subsurface temperature, demonstrating the promise of NNs as a category of generic techniques with powerful regression capabilities. Other relevant contributions include [2, 8, 20, 21, 23], to name a few.

Among the several methodologies, neural networks (NNs), the foundation of contemporary deep learning breakthroughs, have demonstrated their capability in regression problems of ocean subsurface estimation [2, 27,28,29, 48]. Yet the application of NN models to temporally extrapolate remote sensing data has been limited. This is partly because time series estimation is difficult, and partly because STA in the deep ocean is only indirectly influenced by surface signals, through physical controls that are fundamentally nonlinear. In this regard, OHC, a depth-integrated quantity, is more tightly coupled with the surface forcing [40], which may lead to a more physically consistent DORS application. So far, only a few studies have used surface data to retrieve OHC [27, 54]. In the ground-breaking work of [27], an NN was trained to derive OHC at discrete sites in the Indian Ocean. Given that different ocean basins have different OHC dynamics and thus different linkages to the surface, the first goal of this study is to determine whether this approach can be extended to the entire global ocean. We answer this question by developing a big-data-driven NN model that estimates OHC accurately over the global ocean and extends OHC data back to the pre-Argo era (1993–2004).

We also use this NN approach to generate an OHC product that hindcasts OHC before the Argo era. Since the early 2000s, when Argo floats began to be continuously deployed, the ability to accurately quantify OHC has increased to an unprecedented degree [44]. At present, a network of over 4000 Argo floats detects robust climate signals in the large-scale dynamic features of the global ocean. Prior to the Argo era, however, there were no reliable full-coverage ocean interior data. As a result, various climate issues remain under discussion and debate. Consider the trend of heat redistribution during the “hiatus” period between 1998 and the late 2010s, when global warming appeared to slow down [53]. Different climate signals have been detected, each supported by different data products [45], which places high demands on the quality of the ocean interior data needed to support climate research for this period [53]. For example, there are two broad views on the driving processes: one is the Atlantic meridional overturning circulation-controlled mechanism [11], and the other is Indo-Pacific-originated mechanisms [33].

In this chapter, we describe the NN model that yields an NN-based global OHC product named Ocean Projection and Extension neural Network (OPEN) [47], with a focus on the technical details of the NN approach. The chapter is structured as follows. After presenting the data in Sect. 2, we detail the NN method in Sect. 3, together with the design of experiments used to optimize the network. In Sect. 4, we first test the sensitivity to the network parameters and structure. The OHC is then reconstructed for 1993–2020, extending it to the pre-Argo era. In addition, OPEN and other widely used near-global OHC products are evaluated in terms of linear trends and variability modes. Finally, in Sect. 5, we summarize the results and provide prospects for future studies.

2 Data

A summary of all data sets utilized in this chapter is shown in Table 1, including an Argo-based three-dimensional temperature product to derive OHC, multi-source satellite remote sensing data, and OHC products from different sources.

The sea surface height (SSH) is from the Absolute Dynamic Topography products of Archiving, Validation, and Interpretation of Satellite Oceanographic data (AVISO). The SST is from the Optimum Interpolation Sea Surface Temperature (OISST). The sea surface wind (SSW) is from the Cross-Calibrated Multi-Platform (CCMP) product. These three products share a common spatial resolution of 0.25 degrees. The sea surface salinity (SSS) is adopted from the Soil Moisture Ocean Salinity (SMOS) product, which has a spatial resolution of one degree. We linearly interpolated all products except SSS to a one-degree grid.
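The linear interpolation to a one-degree grid can be sketched in NumPy as follows. This is a minimal sketch, assuming the quarter-degree inputs and the one-degree target are both given on increasing cell-centre coordinates; the function name `regrid_linear` is hypothetical, and since the chapter does not say how land points (NaNs) or the longitude seam were handled, neither is treated here.

```python
import numpy as np

def regrid_linear(field, src_lat, src_lon, dst_lat, dst_lon):
    """Bilinear interpolation of a (lat, lon) field onto a new regular grid.

    Linear interpolation on a rectilinear grid is separable, so we interpolate
    along longitude first and then along latitude. Coordinates must increase.
    Assumption: no land mask or longitude wrap-around handling.
    """
    # Step 1: interpolate each source row (fixed latitude) to the target longitudes.
    tmp = np.array([np.interp(dst_lon, src_lon, row) for row in field])
    # Step 2: interpolate each resulting column (fixed longitude) to the target latitudes.
    return np.array([np.interp(dst_lat, src_lat, col) for col in tmp.T]).T

# Hypothetical example: a synthetic quarter-degree field varying linearly in lat/lon.
src_lat = np.arange(-89.875, 90.0, 0.25)   # 720 quarter-degree cell centres
src_lon = np.arange(0.125, 360.0, 0.25)    # 1440 cell centres
dst_lat = np.arange(-89.5, 90.0, 1.0)      # 180 one-degree cell centres
dst_lon = np.arange(0.5, 360.0, 1.0)       # 360 cell centres
LAT, LON = np.meshgrid(src_lat, src_lon, indexing="ij")
field_1deg = regrid_linear(2.0 * LAT + 0.5 * LON, src_lat, src_lon, dst_lat, dst_lon)
```

Because bilinear interpolation reproduces any field that is linear in latitude and longitude exactly, the synthetic example doubles as a quick sanity check.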

The OHC ‘ground truth’ was derived from the Roemmich and Gilson [44] gridded Argo product, which consists of 27 standard levels spanning 0–2000 m. The variables include pressure, temperature, and salinity, and dynamic heights are also provided from the T/S profiles. The product has a monthly time interval from 2005 to the present and a spatial resolution of \(1^{\circ } \times 1^{\circ }\). By definition, the OHC is calculated as the depth integral of temperature T from the surface to a particular level z (depth measured positive downward):

\[ \mathrm{OHC}(z) = \int _{0}^{z} \rho \, C_p \, T(z') \, \mathrm{d}z' \]

In the integration, \(\rho \) is the seawater density and Cp is the heat capacity; constant values of 1025 \(kg\cdot m^{-3}\) and 3850 \(J\cdot kg^{-1}\cdot K^{-1}\) were applied. OHC300, OHC700, OHC1500, and OHC2000 refer to the OHC integrated over the top 300, 700, 1500, and 2000 m, respectively. The 2005–2015 climatological mean was then computed and removed to obtain OHC anomalies. Hereafter, we report OHC anomalies unless otherwise indicated.
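The depth integral and the anomaly step can be illustrated with a short NumPy sketch for a single water column. It uses the constants stated above and the trapezoidal rule; the function names are hypothetical, and holding the shallowest measured temperature constant up to 0 m, as well as removing a single time mean over 2005–2015, are assumptions of this sketch rather than documented details of the chapter.

```python
import numpy as np

RHO = 1025.0  # seawater density (kg m^-3), constant used in the text
CP = 3850.0   # heat capacity (J kg^-1 K^-1), constant used in the text

def ohc_column(temp, depth, z_max):
    """Heat content (J m^-2) of one water column from 0 m down to z_max.

    temp  : temperature (deg C) at the standard levels
    depth : level depths in metres, positive downward and increasing
    Assumption: np.interp holds the shallowest value constant up to the surface.
    """
    temp = np.asarray(temp, dtype=float)
    depth = np.asarray(depth, dtype=float)
    # Integration nodes: the surface, interior levels above z_max, and z_max itself.
    inner = depth[(depth > 0.0) & (depth < z_max)]
    nodes = np.concatenate(([0.0], inner, [z_max]))
    t_nodes = np.interp(nodes, depth, temp)
    # Trapezoidal rule for the integral of T over depth.
    integral = np.sum(0.5 * (t_nodes[1:] + t_nodes[:-1]) * np.diff(nodes))
    return RHO * CP * integral

def ohc_anomaly(ohc_series, years, ref_start=2005, ref_end=2015):
    """Subtract the mean over the reference years (inclusive) from a time series."""
    ohc_series = np.asarray(ohc_series, dtype=float)
    years = np.asarray(years)
    in_ref = (years >= ref_start) & (years <= ref_end)
    return ohc_series - ohc_series[in_ref].mean(axis=0)
```

Because T is taken in degrees Celsius, the absolute OHC depends on the 0 °C reference, but that offset cancels once the climatological mean is removed.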

Other near-global OHC products are compared with the OPEN product: National Centers for Environmental Information (NCEI) data by [35], Institute of Atmospheric Physics (IAP) data by [13], EN4 from the UK Met Office by [22], empirical DORS-based ARMOR3D data by [23], and the numerical reanalysis GLORYS2V4. Among these products, NCEI, IAP, and EN4 are all one-degree products mapped by optimal interpolation from a common collection of discrete station and profiling data, including Argo profilers, conductivity-temperature-depth (CTD) casts, and expendable bathythermographs (XBT). ARMOR3D and GLORYS2V4 both have a 0.25-degree resolution. Because we use only basin-integrated OHC, the difference in resolution is not an issue.
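Basin-integrated OHC is an area-weighted sum of the column values (J m^-2) over the grid cells of a basin. The sketch below assumes a regular latitude-longitude grid, exact spherical cell areas, and an Earth radius of 6.371e6 m; the function names and the boolean basin mask are hypothetical, and the result is reported in zettajoules (10^21 J).

```python
import numpy as np

EARTH_RADIUS = 6.371e6  # mean Earth radius in metres (assumed value)

def cell_areas(lat_centres, dlat=1.0, dlon=1.0):
    """Areas (m^2) of regular lat-lon cells, returned as an (n_lat, 1) column."""
    lat = np.deg2rad(np.asarray(lat_centres, dtype=float))
    half = np.deg2rad(dlat) / 2.0
    # Exact area of a spherical band segment: R^2 * dlon * (sin(lat_n) - sin(lat_s)).
    band = np.sin(lat + half) - np.sin(lat - half)
    return (EARTH_RADIUS ** 2 * np.deg2rad(dlon) * band)[:, None]

def basin_total_zj(ohc, lat_centres, mask, dlat=1.0, dlon=1.0):
    """Sum OHC (J m^-2, shape (n_lat, n_lon)) over cells where mask is True, in ZJ."""
    area = cell_areas(lat_centres, dlat, dlon)
    weighted = np.where(mask, ohc * area, 0.0)
    return np.nansum(weighted) / 1e21
```

Summing per-cell totals in this way is what makes the comparison insensitive to the native resolution of each product, provided each product is weighted by its own cell areas.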

3 Method

3.1 Neural Network

The NN applied in this chapter, with a total of o layers (of which h are hidden layers), can be generally formulated as:

...

For a regression problem, an NN can be expressed mathematically as an approximation function \(\hat{y}=f ({\textbf {x}};{\theta })\) mapping the inputs x to the OHC \(\hat{y}\), with parameters \(\theta \). Here \(\theta \) comprises the weights w and biases b of the neurons, and \(\sigma \) denotes the activation function of each hidden-layer neuron.

Generally, a network comprises one input layer, one or more hidden layers, and one output layer, stacked so that each layer feeds the next. The input layer collects the input features and hence has as many neurons as there are features. Each neuron in a subsequent layer computes a weighted sum of the previous layer’s outputs plus a bias, and then applies its activation function to produce a nonlinear output, which is passed to the next layer. The number of neurons in a layer is often described as the width, and the number of hidden layers as the depth; i.e., a deep NN has more hidden layers.
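The layer-by-layer computation described above can be sketched as a NumPy forward pass. This is an illustrative sketch, not the chapter's implementation: the parameters are random and untrained, the ordering of tansig and ReLU across the hidden layers is a hypothetical choice, and the output neuron is assumed to be linear, as is usual for regression.

```python
import numpy as np

def tansig(x):
    """Tangent sigmoid, 2 / (1 + exp(-2x)) - 1, which equals tanh(x)."""
    return np.tanh(x)

def relu(x):
    """Rectified linear unit."""
    return np.maximum(x, 0.0)

def mlp_forward(x, weights, biases, activations):
    """Forward pass of a fully connected regression NN.

    x           : (n_samples, n_features) input features
    weights     : list of (n_in, n_out) arrays, hidden layers then the output layer
    biases      : list of (n_out,) arrays, matching `weights`
    activations : one callable per hidden layer; the output layer is linear
    """
    h = np.asarray(x, dtype=float)
    for w, b, act in zip(weights[:-1], biases[:-1], activations):
        h = act(h @ w + b)                # weighted sum plus bias, then activation
    return h @ weights[-1] + biases[-1]   # single linear output neuron (OHC)

# Hypothetical example: 6 input features, three hidden layers of 3 neurons each.
rng = np.random.default_rng(0)
sizes = [6, 3, 3, 3, 1]
weights = [rng.normal(size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n) for n in sizes[1:]]
y_hat = mlp_forward(rng.normal(size=(5, 6)), weights, biases, [tansig, relu, tansig])
```

Width corresponds to the entries of `sizes` between input and output, and depth to how many hidden entries there are.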

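Training such an NN is essentially an optimization problem: find the parameters \(\theta \) that minimize the cost function J, here the mean squared error. This can be formulated as:

\[ \theta ^{*} = \mathop {\arg \min }_{\theta } J(\theta ), \qquad J(\theta ) = \frac{1}{N}\sum _{i \in Tr} \left( y_i - f({\textbf {x}}_i;\theta ) \right) ^2 \]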

In the equation, Tr refers to the training set with N samples. We applied Bayesian regularization to the NN, following our previous study [36]. Our experience suggests that Bayesian regularization, which smooths the network response by penalizing large weights, can efficiently avoid overfitting [19]. This trait is advantageous for temporal projection, because a smooth mapping is more likely to remain valid when new data are provided. An ensemble technique was also applied. Six training periods were defined, starting in 2005, 2006, 2007, 2008, 2009, and 2010 and ending in 2013, 2014, 2015, 2016, 2017, and 2018, respectively. For each member, all data outside its training period were used as the testing set. The uncertainty is evaluated as three times the standard deviation across the six ensemble members. A distinct NN was trained for each depth. Once the remote sensing data are provided, the OHC field can be derived. The ensemble average is reported as our OHC hindcast in the following sections.
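The training design in this paragraph (a Bayesian-regularized objective, six sliding 108-month windows with out-of-window testing, and an ensemble mean with a three-standard-deviation envelope) can be sketched as below. The objective is written in the standard form beta * E_D + alpha * E_W used in the Bayesian-regularization literature; in real training alpha and beta are re-estimated iteratively, whereas here they are fixed inputs. All function names are hypothetical, and the use of the sample standard deviation (ddof=1) is an assumption because the chapter does not specify it.

```python
import numpy as np

def training_windows(first_start=2005, n_members=6, n_years=9):
    """Inclusive (start, end) years of the sliding 9-year (108-month) training periods."""
    return [(s, s + n_years - 1) for s in range(first_start, first_start + n_members)]

def split_by_window(years, window):
    """Boolean train/test masks: samples inside the window train, all others test."""
    years = np.asarray(years)
    train = (years >= window[0]) & (years <= window[1])
    return train, ~train

def regularized_objective(y, y_hat, weights, alpha, beta):
    """beta * E_D + alpha * E_W with E_D = sum of squared errors, E_W = sum of squared weights.

    Assumption: alpha and beta are fixed here; Bayesian regularization re-estimates them.
    """
    e_d = np.sum((np.asarray(y) - np.asarray(y_hat)) ** 2)
    e_w = sum(np.sum(np.asarray(w) ** 2) for w in weights)
    return beta * e_d + alpha * e_w

def ensemble_summary(member_predictions, k=3.0):
    """Ensemble mean and k-standard-deviation spread across members (axis 0)."""
    preds = np.asarray(member_predictions, dtype=float)
    return preds.mean(axis=0), k * preds.std(axis=0, ddof=1)
```

With monthly data from 2005 to 2018, each window keeps 108 months for training and leaves the remaining 60 months for testing.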

3.2 Design of Experiments

The NN relies heavily on a proper combination of sea surface variables, which are referred to in the AI field as input features. One can certainly train an NN on unrelated input-output data, yet this often results in an overfitted NN. An NN model is expected to extrapolate successfully to unseen data only if there is a clear input-output relationship for it to learn. Furthermore, in practice, choosing the best feature combination can at times be counterintuitive [46]. As a result, features are frequently chosen empirically in a process known as feature engineering. The availability of historical data also influences feature selection. In the current study, to find the best feature combination, we designed 16 experiments, as shown in Table 2. For these experiments, we chose OHC300 in January 2011 as the target to be hindcast by the tuned NN, and data from the 12 months of 2010 as the training data. Note that the conclusions here are insensitive to this data subsetting. Each case has two variants, A and R. Case R uses remotely sensed SSH, SST, and SSS, while Case A uses the corresponding variables from the uppermost level of the Argo data. Both variants share the same SSW data set. This tests whether Argo ‘surface’ data can be transferred to remote-sensing-based OHC estimation. In addition, we designed several experiments to evaluate the role of temporal and spatial information, involving day of year (DOY), longitude (LON), and latitude (LAT). Finally, the normalized root-mean-square error (NRMSE) and the determination coefficient (R2) were used to measure network performance, where NRMSE is the ratio of the root-mean-square error to the standard deviation of the reference values.
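The two skill scores can be written in a few lines of NumPy. This sketch assumes that R2 is the coefficient of determination, 1 - SS_res / SS_tot, and that NRMSE uses the population standard deviation of the reference; the chapter does not state whether its R2 is computed this way or as a squared correlation, so the identity R2 = 1 - NRMSE^2 below holds only under these assumed definitions.

```python
import numpy as np

def nrmse(y_true, y_pred):
    """Root-mean-square error divided by the standard deviation of the reference."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    rmse = np.sqrt(np.mean((y_pred - y_true) ** 2))
    return rmse / np.std(y_true)   # population std (ddof=0), an assumption

def r2_score(y_true, y_pred):
    """Coefficient of determination, 1 - SS_res / SS_tot (assumed definition)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot
```

Normalizing by the standard deviation makes NRMSE comparable across depth integrals whose absolute OHC magnitudes differ greatly.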

4 Results and Analysis

4.1 Optimization of Feature Combinations

Table 2 shows the R2 and NRMSE values. Overall, the SSH anomaly is the leading factor affecting OHC, followed by the SST anomaly. This can be seen from Case 1, which already achieves a high retrieval accuracy with these two features alone (Table 2): Cases 1A and 1R show fairly good accuracy, with the retrieved OHC explaining 70% of the variance. The retrieval accuracy with satellite data is slightly higher. This could be because Argo’s SSH is actually the dynamic height integrated from temperature and salinity [44], which misses the contribution from volume changes. Consistent with our previous work [36], including spatiotemporal information, i.e., LON, LAT, and DOY, substantially enhances the training for both data sets, as the comparison of Case 1 and Case 2 shows. We suspect that including DOY improves the NN because it allows the network to learn the seasonal cycle, which is the most dominant signal in OHC. Comparing Cases 2 and 4, or Cases 1 and 3, shows that SSW improves the accuracy by only ~1%. Comparing Cases 1 and 5 shows that SSS improves the retrieval in Case R but reduces the accuracy by 6% in Case A. This is because Argo SSS differs substantially from the remotely sensed SSS: training with the measured SSS and predicting with the remotely sensed SSS resulted in considerably lower accuracy. Overall, Case R generally outperforms Case A. This is expected, given that SSH primarily represents the interior dynamics of the first baroclinic mode [9, 40] and that dynamic height and satellite-based absolute dynamic topography are mismatched; directly using remote sensing data for training is therefore the better choice. Case 8R had the highest accuracy, capturing 80% of the variance; nevertheless, because SSS is only available for recent years, the feature combination of Case 4R was chosen for the optimized network.
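Assembling the inputs for one network can be sketched as stacking per-grid-cell predictors into a sample-by-feature matrix. Judging from the case comparisons above, Case 4R appears to combine SSH, SST, SSW, LON, LAT, and DOY, but the exact column order, the use of the 15th of each month as the DOY of a monthly field, and z-score standardization with training-set statistics are all assumptions of this sketch; the function names are hypothetical.

```python
import datetime
import numpy as np

def mid_month_doy(year, month):
    """Day of year of the 15th of a month, used here as the DOY of a monthly field."""
    return datetime.date(year, month, 15).timetuple().tm_yday

def build_features(ssh, sst, ssw, lon, lat, doy):
    """Stack per-sample inputs into an (n_samples, 6) matrix (hypothetical order)."""
    return np.column_stack([ssh, sst, ssw, lon, lat, doy]).astype(float)

def standardize(train_x, other_x):
    """Z-score both matrices with statistics from the training matrix only."""
    mu = train_x.mean(axis=0)
    sd = train_x.std(axis=0)
    sd[sd == 0.0] = 1.0           # guard against constant columns
    return (train_x - mu) / sd, (other_x - mu) / sd
```

Using only training-period statistics for standardization keeps information from the hindcast period out of the training step.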

4.2 Deep, or Shallow—That Is the Question

A common perspective is that a deep NN has a stronger capability to regress the complex hidden relationship between input and output features. Theoretically, however, the universal approximation theorem states that a one-hidden-layer NN with sufficient neurons can approximate any continuous function [24]. To test this for the OHC retrieval, we searched for the optimal hyperparameters with a grid-search method, designing several experiments in which the NN was deepened from two hidden layers to six. The performance of networks with different hyperparameters was examined using a subset of data (Fig. 1).
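A grid search simply evaluates every combination of the candidate hyperparameters and keeps the best-scoring one. The sketch below is a generic harness in which the caller supplies the evaluation (for example, training a network and returning its test R2); `toy_score` is a hypothetical placeholder used only to make the example runnable and does not reproduce the chapter's results.

```python
import itertools

def grid_search(evaluate, n_layers, n_neurons, activations):
    """Score every (layers, neurons, activation) combination and return the best.

    evaluate : callable(layers, neurons, activation) -> validation score,
               higher is better (e.g. R^2 on held-out months)
    """
    scores = {cfg: evaluate(*cfg)
              for cfg in itertools.product(n_layers, n_neurons, activations)}
    best = max(scores, key=scores.get)
    return best, scores

def toy_score(layers, neurons, activation):
    """Placeholder score for demonstration only; not the chapter's results."""
    return -abs(neurons - 4) - 0.1 * layers + (0.05 if activation == "sig" else 0.0)

best, scores = grid_search(toy_score, [2, 3], range(1, 9), ["sig", "relu+sig"])
```

Keeping all scores, not just the best one, is what allows curves like those in Fig. 1 (accuracy versus number of neurons) to be drawn.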

Fig. 1. Determination coefficients as a function of the number of neurons for different NN structures. Different line colors represent different activation function combinations and numbers of hidden layers. The training data are OHC300 of 2005–2013, and the testing data are those of 2017. The configuration of Case 4 was adopted. “Sig” denotes the tangent sigmoid activation function.

As Fig. 1 demonstrates, as the number of neurons increases, the retrieval accuracy of two- and three-layer networks first improves and then declines. Three-layer networks generally show steeper declines, i.e., they are more prone to overfitting. This means that a basic shallow network structure is better for the current problem. We also observe that increasing NN complexity reduces the reproduced linear trends, which matters for the global and basin-wide OHC warming trends examined below. These results agree with our previous application of NNs to subsurface temperature estimation [36]. In summary, adding hidden layers can improve a network’s capacity to fit a complicated input-to-output mapping function, but it may also raise the probability of overfitting and make training more difficult.

The choice of activation functions also matters (Fig. 1). For two-layer networks, the combination of ReLU and sigmoid functions performs worse than the sigmoid function alone. For three-layer networks, on the other hand, the configurations with ReLU outperform the others. Furthermore, the ReLU activation function is expected to be computationally more efficient than the sigmoid function, but this advantage is negligible for shallow networks. This result shows that for a shallow NN the nonlinear sigmoid function is a better choice, consistent with the universal approximation theorem mentioned above [24]. Based on these experiments, the optimal NN design was determined to be a three-layer NN with three neurons per layer and combined sigmoid and ReLU activations. This architecture is used by default in the following text.

4.3 Data Reconstruction

We used the ensemble approach to train the model with data from 2005 to 2018 and then hindcast the OHC from 1993, the earliest year with global altimetry coverage, to 2020. OPEN OHC data are compared with the NCEI, EN4, IAP, ARMOR3D, and GLORYS2V4 datasets, with an emphasis on interannual variability and decadal trends. These datasets are summarized in Table 1.

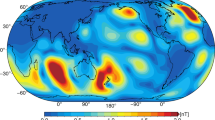

Figure 2 presents OHC300, OHC700, and OHC2000 from OPEN and Argo for January 2011 (as in Table 2). The NRMSE values were 0.36, 0.34, and 0.37 for the three depth integrals, and the retrieval R2 values were 0.80, 0.82, and 0.80, respectively. The accuracy changes little across depths, suggesting that the NN is robust. The spatial distribution hindcast by OPEN closely agrees with the Argo OHC and is dominated by the ENSO fluctuation. In the tropical Indo-Pacific, the OHC has high values for all depths, whereas in the eastern tropical Pacific it is lower. This pattern suggests a La Niña state, consistent with a Multivariate ENSO Index of -1.83. In the southern hemisphere, the meandering of the Agulhas retroflection is discernible, showing alternating warming and cooling patterns [6]. The OHC patterns are consistent across depths, but their magnitude gradually increases with depth. The largest difference is between OHC300 and OHC2000: significant OHC changes are found in the Pacific and Indian Oceans for OHC300, but in all basins for OHC2000.

For the 1993–2004 hindcast, using IAP data as the reference, Fig. 3a shows the pattern correlation of the hindcast OPEN OHC, while the temporal correlation and errors are displayed in Fig. 3b–d. The total R2 was higher than 0.98, with an NRMSE of 12%. The interannual fluctuations of R2 and NRMSE are small, indicating consistent performance, which is preferable for long-term temporal extrapolation. The error is exceptionally high in 1997, most likely due to the strong ENSO signature of that year: ENSO may disturb the ocean surface, causing the network to deviate from its learned association.
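Pattern correlation (one value per month, computed across grid cells) and temporal correlation (one value per grid cell, computed across months) differ only in the axis over which the Pearson correlation is taken. The sketch below assumes (time, lat, lon) arrays with no missing values; land masking and any area weighting, which the chapter does not describe, are left out, and the function names are hypothetical.

```python
import numpy as np

def _corr(a, b, axis):
    """Pearson correlation of a and b along the given axis."""
    a = a - a.mean(axis=axis, keepdims=True)
    b = b - b.mean(axis=axis, keepdims=True)
    return (a * b).sum(axis=axis) / np.sqrt((a * a).sum(axis=axis) * (b * b).sum(axis=axis))

def pattern_correlation(pred, ref):
    """Spatial correlation for each time step; inputs are (time, lat, lon)."""
    nt = pred.shape[0]
    return _corr(pred.reshape(nt, -1), ref.reshape(nt, -1), axis=1)

def temporal_correlation(pred, ref):
    """Correlation of the time series at each grid cell; returns (lat, lon)."""
    return _corr(pred, ref, axis=0)
```

A month with an anomalous surface state, such as 1997 in the hindcast, would show up as a dip in the first series, while regions with complex dynamics would show up as low values in the second map.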

OPEN’s error is relatively high in the extensions of the western boundary current systems, in two zonal bands across the subtropical Pacific Ocean at ~\(25^{\circ }\) in the northern and southern hemispheres, and in the Agulhas retroflection region. All these systems have nonlinear circulation and complex dynamics. In other regions, the site-wise OPEN OHC time series have high correlations and low RMSE, although the error pattern remains spatially heterogeneous. Overall, the hindcast of OHC300 in the global ocean has an error of ~10% relative to the spatiotemporal standard deviation of OHC300.

Table 3 summarizes the statistics between OPEN OHC and the other products. Over the training period (2005–2018), the R2 values against the Argo OHC are all at least 0.988 (OHC300: 0.993; OHC700: 0.988; OHC1500: 0.988; OHC2000: 0.989), and the NRMSE values are all about 11% or less (OHC300: 0.09; OHC700: 0.109; OHC1500: 0.111; OHC2000: 0.106). OPEN agrees best with EN4 and differs more from IAP (Table 3). In summary, given its high overall accuracy, OPEN OHC can be reliably reconstructed for the pre-Argo period.

We further compare the global OHC300 from all products (Fig. 4) and the corresponding linear trends for two periods, 1993–2010 and 1998–2015; the surface warming hiatus occurred in the second period. The trends reflect the ongoing warming of the global ocean; for instance, OHC reached record highs in 2018 and 2019 [15, 16]. Interannual variability such as ENSO leaves a fingerprint on the OHC, showing an abrupt high in 1997 and 1998. This signal is less visible for deeper OHC (0–700, 0–1500, and 0–2000 m) and more visible for OHC300, because the upper layer is more sensitive to surface thermal forcing. Table 4 shows that OPEN has a higher OHC warming trend than IAP, whereas the trend of EN4 is very close to that of IAP. Since these two datasets were both produced by mapping techniques from a similar database of in-situ observations, this similarity is not surprising, especially for OHC300 and less so for the deeper OHC. At these depths, the two other products, the reanalysis GLORYS2V4 and the statistics-based ARMOR3D, present even larger inconsistencies and stronger trends. Across all products in Fig. 4, our NN-based OPEN product agrees well with the others, falling within the range of all datasets. Both OPEN and ARMOR3D show a high bias in OHC300 after 2015, likely because they share the same remote sensing data as their major inputs. Further improvements may be achieved with more sophisticated AI approaches; ongoing efforts use time-sequence learning and spatial autoencoder-decoder structures to predict OHC. Given the large uncertainties among the estimates and the core role of OHC in understanding ocean warming and heat transfer in the Earth system, accurately quantifying OHC is all the more important.
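The period trends can be computed as ordinary least-squares slopes over the selected years. This is a minimal sketch, assuming mid-month decimal-year time stamps and a series in ZJ, so the slope is in ZJ per year; the chapter does not state its exact fitting procedure, and the function name is hypothetical.

```python
import numpy as np

def linear_trend(t, y, start_year, end_year):
    """Least-squares slope of y per year over the inclusive years [start_year, end_year].

    t : decimal-year time stamps (e.g. mid-month values)
    y : series such as global OHC300 in ZJ, giving a trend in ZJ per year
    """
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    sel = (t >= start_year) & (t < end_year + 1)
    slope, _intercept = np.polyfit(t[sel], y[sel], 1)
    return slope

# Mid-month time stamps for 1993-2020 (28 years of monthly data).
t = 1993.0 + (np.arange(28 * 12) + 0.5) / 12.0
```

Applying the same function to each ensemble member would give a spread of trends for the 1993–2010 and 1998–2015 periods.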

The OHC variability and trends for the different ocean basins are shown in Fig. 5 and Table 5, and representative patterns of the linear trends of IAP and OPEN are shown in Fig. 6. All major ocean basins show consistent warming trends, which reflect the overall ocean warming due to anthropogenic forcing. Consistent with the findings of [33], the highest OHC increases are consistently found in the Indo-Pacific basins and the warm pool area during both periods. This highlights the role of the Indo-Pacific in driving the recent global warming hiatus. In contrast, the Southern Ocean shows the lowest warming rates, although this estimate carries large uncertainties that can be attributed to the low Argo coverage there. From 1993 to 2010, both IAP and OPEN present a basin-wide dipole pattern in the Pacific Ocean, with positive trends in the west and negative trends in the east (Fig. 6). This structure resembles the negative phase of the Pacific Decadal Oscillation, consistent with the transition from its positive to its negative phase reported in the literature [37]. For the later period, both IAP and OPEN show bulk warming of the Indian Ocean but less homogeneous patterns in the other basins. These consistencies demonstrate the capability of OPEN to reflect OHC trends in both the Argo and pre-Argo eras.

Fig. 5. 12-month moving averaged OHC300 (unit: ZJ, i.e., \(\times 10^{21}\) J) for four major ocean basins. Because Argo data have a shorter temporal coverage, the reference period here is 2005–2014. The thick black line with gray envelope shows the ensemble average and three standard deviations of the six OPEN ensemble members.

We now focus on the inconsistencies. Compared with most datasets, OPEN differs most in the Pacific, because the ENSO signature is amplified in the Pacific and weaker in the other oceans. For OPEN, a significant jump after 2015 is unexpectedly seen in the Pacific OHC, which is not found in the global OHC (Fig. 4). Further examination shows that the different references account for approximately half of this jump, i.e., OPEN has a lower reference than ARMOR3D and GLORYS2V4. On the other hand, OPEN OHC300 has a minimal uncertainty envelope in the Pacific basin (Fig. 5), which implies that the jump stems mostly from systematic biases rather than random errors. To reduce this error, one option is to use a more sophisticated deeper NN, such as the deep convolutional NN discussed in the following chapters of this book, to extract the complicated link between surface variables and OHC. Alternatively, one can use a clustering technique to divide the global ocean into distinct thermal provinces, each of which can be represented by a simple but different surface-subsurface relationship and thus better estimated by an NN. This strategy has been shown to be viable in our previous effort [36]. These options will be tested in future research.
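Because part of the Pacific discrepancy comes from differing references, comparing products is easier once each series is smoothed and re-expressed relative to a common reference period, as in the basin curves (12-month moving average, 2005–2014 reference). The sketch below is an assumption-laden illustration: it uses a simple boxcar average in NumPy's "valid" mode, which drops 11 months and is offset by half a month for an even window, and the function names are hypothetical.

```python
import numpy as np

def running_mean(x, window=12):
    """Boxcar moving average; 'valid' mode returns len(x) - window + 1 values."""
    return np.convolve(np.asarray(x, dtype=float), np.ones(window) / window, mode="valid")

def rebaseline(series, t, ref_start=2005, ref_end=2014):
    """Express a series as an anomaly relative to its own mean over the reference years."""
    series = np.asarray(series, dtype=float)
    t = np.asarray(t, dtype=float)
    ref = (t >= ref_start) & (t < ref_end + 1)
    return series - series[ref].mean()
```

Two products that differ only by a constant offset become identical after re-baselining, so any remaining difference reflects variability or trend rather than the choice of reference.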

We notice that the IAP reference is about 10% higher than those of Argo and OPEN. To further examine this mismatch, we show the non-anomaly OHC300 from these three products for one snapshot (Fig. 7). Although the three products show relatively similar patterns, IAP is significantly larger, i.e., has a warm bias, relative to OPEN and Argo, which is especially noticeable in the subtropical gyres of the Pacific Ocean. This is very likely due to errors in the XBT correction scheme of IAP. Compared with the more accurate CTD measurements, XBT measurements have a well-documented warm bias of ~0.5 \(^\circ \)C, and residual errors may remain despite the advanced time-varying corrections developed recently [12, 34]. Because this error is systematic, it leaves limited signatures in the decadal trends, but it highlights the value of having additional independent OHC datasets.

Fig. 8. The PCs (left), EOFs of OPEN (middle), and EOFs of IAP (right) from EOF analysis of OHC300; the percentage of variance explained by each mode is listed above the corresponding EOF. Before EOF analysis, linear trends and high-frequency signals (periods shorter than 12 months) were removed.

To further demonstrate the spatiotemporal variability of OHC300, we apply empirical orthogonal function (EOF) analysis to OPEN and IAP (Fig. 8), after removing linear trends and seasonal variations (with a 12-month lowpass filter). The spatial EOFs and temporal PCs show high agreement between the OPEN and IAP datasets (Fig. 8). For instance, the first mode accounts for 41.2% of the variability in IAP and 40.7% in OPEN, which are very close, and only small differences can be found in the corresponding PC1 (Fig. 8a). Both EOF1 patterns have a tropical Pacific dipole, higher in the warm pool and lower in the eastern part. In the extratropics, the differences are also small. Other modes present visibly larger differences, but given the smaller percentage of variance they explain (<12%), their contribution to the OHC difference is small. The same conclusions are drawn when analyzing OPEN against the other products, further demonstrating the validity of OPEN.
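EOF analysis of a (time, space) anomaly matrix can be carried out with a singular value decomposition: the right singular vectors are the spatial EOFs, the scaled left singular vectors are the PCs, and the squared singular values give the explained variance fractions. This is a minimal sketch, assuming the field has already been detrended, filtered, and flattened with land points removed; area weighting (e.g. by the square root of the cosine of latitude), which the chapter does not describe, is not applied, and the function name is hypothetical.

```python
import numpy as np

def eof_analysis(data, n_modes=3):
    """EOFs of a (time, space) anomaly matrix via SVD.

    Returns spatial patterns (n_modes, space), PCs (time, n_modes), and the
    fraction of variance explained by each mode. Assumption: detrending,
    filtering, land masking, and any area weighting were done beforehand.
    """
    x = np.asarray(data, dtype=float)
    x = x - x.mean(axis=0)                  # remove the time mean at each point
    u, s, vt = np.linalg.svd(x, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    pcs = u[:, :n_modes] * s[:n_modes]      # PC time series, scaled by singular values
    return vt[:n_modes], pcs, explained[:n_modes]
```

EOF signs are arbitrary, so comparing OPEN and IAP modes requires aligning signs (for example, by the sign of the pattern correlation) before interpreting differences.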

5 Summary and Conclusions

In this chapter, we describe an AI technique for DORS and its application to climate change studies. An NN approach was developed to estimate OHC from remote sensing data sets, yielding a new OHC estimate termed the Ocean Projection and Extension neural Network (OPEN) product [47]. Using the 1\(^\circ \times 1^\circ \) gridded Argo OHC data as the true values, and taking advantage of remote sensing products of SSH, SST, and SSW with near-global coverage and higher spatiotemporal resolution, we trained four NNs, each estimating the OHC from the surface to 300, 700, 1500, or 2000 m depth. The NNs were trained with the 2005–2018 data in a way that enables the temporal extrapolation of OHC. The NN was optimized by testing a variety of architectures and feature combinations. Generally, a simple shallow NN was favorable for temporal extrapolation. The final NN architecture had three hidden layers, each with three neurons. In this way, the four-depth OPEN OHC product was extended back to 1993, covering the pre-Argo era, with a very high accuracy of R2 > 0.95 and NRMSE < 20%. We also estimated the OHC uncertainty with an ensemble technique, which showed that the uncertainty of OPEN arising from the NN technique is low. Comparisons of OPEN against other widely applied OHC data sets showed its good performance in terms of trends and variability.

Various studies have emphasized the need for more trustworthy OHC products to understand the Earth’s climate, e.g., [42, 54]. As mentioned before, all estimates are subject to different sources of uncertainty. In-situ mapping-based products (IAP, EN4, and NCEI) suffer from inconsistent observation records and uncertainties in their mapping schemes. Numerical model-based products (GLORYS2V4) may incorporate imperfect representations of physics. Despite its favorable performance, the NN-based OPEN product also has limitations. It was trained on gridded Argo data, which, although often treated as observations, are subject to their own mapping and instrumental errors. For instance, [30] found larger errors in such products in western boundary current systems, where nonlinear dynamics prevail. The uneven distribution of Argo profiles also contributes to spatial errors.

In the oceanography community, a persistent skepticism toward AI techniques is: what can these techniques do to solve real-world oceanographic problems? To date, many AI oceanography applications are still very preliminary, ‘in their infancy’ [55], and far from product-level outcomes. In addition to the currently available DORS studies, this chapter shows a promising application of AI techniques in the climatic and ocean sciences, and OPEN may also serve as a basis for future AI studies. Several future directions for AI applications concerning OHC and its climatic effects are: (1) extending the global OHC product to a longer time span, ideally covering several rapid-warming periods and, in particular, the surface-warming ‘hiatus’ periods, to understand the underlying phenomenology [53]; (2) generating a downscaled OHC product with higher spatial/temporal resolution; (3) developing AI methods that mine multiple data sets and incorporate physical laws; and (4) projecting future OHC. These are all areas where the AI oceanography approach can unleash its potential.

References

Akbari E, Alavipanah S, Jeihouni M, Hajeb M, Haase D, Alavipanah S (2017) A review of ocean/sea subsurface water temperature studies from remote sensing and non-remote sensing methods. Water 9(12):936. https://doi.org/10.3390/w9120936

Ali MM, Swain D, Weller RA (2004) Estimation of ocean subsurface thermal structure from surface parameters: A neural network approach. Geophys Res Lett 31(20). https://doi.org/10.1029/2004gl021192

Antonov JI (2005) Thermosteric sea level rise, 1955-2003. Geophys Res Lett 32(12). https://doi.org/10.1029/2005gl023112

Atlas R, Hoffman RN, Ardizzone J, Leidner SM, Jusem JC, Smith DK, Gombos D (2011) A cross-calibrated, multiplatform ocean surface wind velocity product for meteorological and oceanographic applications. Bull Am Meteor Soc 92(2):157–174. https://doi.org/10.1175/2010BAMS2946.1

Balmaseda MA, Trenberth KE, Källén E (2013) Distinctive climate signals in reanalysis of global ocean heat content. Geophys Res Lett 40(9):1754–1759. https://doi.org/10.1002/grl.50382

Beal LM, De Ruijter WP, Biastoch A, Zahn R, Group SWIW (2011) On the role of the Agulhas system in ocean circulation and climate. Nature 472(7344):429–436. https://doi.org/10.1038/nature09983

Bindoff NL, Willebrand J, Artale V, Cazenave A, Gregory JM, Gulev S, Hanawa K, Le Quere C, Levitus S, Nojiri Y, et al. (2007) Observations: oceanic climate change and sea level

Charantonis AA, Badran F, Thiria S (2015) Retrieving the evolution of vertical profiles of Chlorophyll-a from satellite observations using Hidden Markov Models and Self-Organizing Topological Maps. Remote Sens Environ 163:229–239. https://doi.org/10.1016/j.rse.2015.03.019

Chelton DB, deSzoeke RA, Schlax MG, El Naggar K, Siwertz N (1998) Geographical variability of the first baroclinic Rossby radius of deformation. J Phys Oceanogr 28(3):433–460. https://doi.org/10.1175/1520-0485(1998)028<0433:GVOTFB>2.0.CO;2

Chen J, Shum C, Wilson C, Chambers D, Tapley B (2000) Seasonal sea level change from TOPEX/Poseidon observation and thermal contribution. J Geodesy 73(12):638–647

Chen XY, Tung KK (2014) Varying planetary heat sink led to global-warming slowdown and acceleration. Science 345(6199):897–903. https://doi.org/10.1126/science.1254937

Cheng L, Abraham J, Goni G, Boyer T, Wijffels S, Cowley R, Gouretski V, Reseghetti F, Kizu S, Dong S, Bringas F, Goes M, Houpert L, Sprintall J, Zhu J (2016) XBT science: Assessment of instrumental biases and errors. Bull Am Meteor Soc 97(6):924–933. https://doi.org/10.1175/bams-d-15-00031.1

Cheng L, Trenberth KE, Fasullo J, Boyer T, Abraham J, Zhu J (2017) Improved estimates of ocean heat content from 1960 to 2015. Sci Adv 3

Cheng L, Abraham J, Zhu J, Trenberth KE, Fasullo J, Boyer T, Locarnini R, Zhang B, Yu F, Wan L (2020a) Record-setting ocean warmth continued in 2019. Adv Atmos Sci 37:137–142

Cheng LJ, Abraham J, Hausfather Z, Trenberth KE (2019) How fast are the oceans warming? Science 363(6423):128–129. https://doi.org/10.1126/science.aav7619

Cheng LJ, Abraham J, Zhu J, Trenberth KE, Fasullo J, Boyer T, Locarnini R, Zhang B, Yu FJ, Wan LY, Chen XR, Song XZ, Liu YL, Mann ME (2020) Record-setting ocean warmth continued in 2019. Adv Atmos Sci 37(2):137–142. https://doi.org/10.1007/s00376-020-9283-7

Cheng L, Abraham J, Trenberth KE, Fasullo J, Boyer T, Mann ME, Zhu J, Wang F, Locarnini R, Li Y, Zhang B, Tan Z, Yu F, Wan L, Chen X, Song X, Liu Y, Reseghetti F, Simoncelli S, Gouretski V, Chen G, Mishonov A, Reagan J (2022) Another record: ocean warming continues through 2021 despite La Niña conditions. Adv Atmos Sci. https://doi.org/10.1007/s00376-022-1461-3

Chu PC, Fan C, Liu WT (2000) Determination of vertical thermal structure from sea surface temperature. J Atmos Oceanic Tech 17(7):971–979

Foresee FD, Hagan MT (1997) Gauss-Newton approximation to Bayesian learning. In: Proceedings of international conference on neural networks (ICNN’97), IEEE, vol 3, pp 1930–1935

Friedrich T, Oschlies A (2009) Neural network-based estimates of North Atlantic surface pCO2 from satellite data: A methodological study. J Geophys Res: Oceans 114(C3). https://doi.org/10.1029/2007jc004646

Garcia-Gorriz E, Garcia-Sanchez J (2007) Prediction of sea surface temperatures in the western Mediterranean Sea by neural networks using satellite observations. Geophys Res Lett 34(11). https://doi.org/10.1029/2007gl029888

Good SA, Martin MJ, Rayner NA (2013) EN4: Quality controlled ocean temperature and salinity profiles and monthly objective analyses with uncertainty estimates. J Geophys Res: Oceans 118(12):6704–6716. https://doi.org/10.1002/2013JC009067

Guinehut S, Dhomps AL, Larnicol G, Le Traon PY (2012) High resolution 3-D temperature and salinity fields derived from in situ and satellite observations. Ocean Sci 8(5):845–857. https://doi.org/10.5194/os-8-845-2012

Hornik K, Stinchcombe M, White H (1989) Multilayer feedforward networks are universal approximators. Neural Netw 2(5):359–366

Hoskins BJ, McIntyre M, Robertson AW (1985) On the use and significance of isentropic potential vorticity maps. Q J R Meteorol Soc 111(470):877–946

IPCC (2014) Climate Change 2014: Impacts, Adaptation, and Vulnerability. Part A: Global and Sectoral Aspects. Contribution of Working Group II to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, United Kingdom and New York, NY, USA

Jagadeesh PSV, Kumar MS, Ali MM (2015) Estimation of heat content and mean temperature of different ocean layers. IEEE J Sel Topics Appl Earth Obs Remote Sensing 8(3):1251–1255. https://doi.org/10.1109/JSTARS.2015.2403877

Jain S, Ali MM (2006) Estimation of sound speed profiles using artificial neural networks. IEEE Geosci Remote Sens Lett 3(4):467–470. https://doi.org/10.1109/LGRS.2006.876221

Jain S, Ali MM, Sen PN (2007) Estimation of sonic layer depth from surface parameters. Geophys Res Lett 34(17). https://doi.org/10.1029/2007gl030577

Jeong Y, Hwang J, Park J, Jang CJ, Jo YH (2019) Reconstructed 3-D ocean temperature derived from remotely sensed sea surface measurements for mixed layer depth analysis. Remote Sensing 11(24):3018

Klemas V, Yan XH (2014) Subsurface and deeper ocean remote sensing from satellites: an overview and new results. Prog Oceanogr 122:1–9. https://doi.org/10.1016/j.pocean.2013.11.010

Klemas V, Yan XH (2014) Subsurface and deeper ocean remote sensing from satellites: An overview and new results. Prog Oceanogr 122:1–9. https://doi.org/10.1016/j.pocean.2013.11.010

Lee SK, Park W, Baringer MO, Gordon AL, Huber B, Liu Y (2015) Pacific origin of the abrupt increase in Indian Ocean heat content during the warming hiatus. Nature Geosci 8(6):445–449. https://doi.org/10.1038/ngeo2438

Levitus S, Antonov JI, Boyer TP, Locarnini RA, Garcia HE, Mishonov AV (2009) Global ocean heat content 1955-2008 in light of recently revealed instrumentation problems. Geophys Res Lett 36(7). https://doi.org/10.1029/2008gl037155

Levitus S, Antonov JI, Boyer TP, Baranova OK, Garcia HE, Locarnini RA, Mishonov AV, Reagan JR, Seidov D, Yarosh ES, Zweng MM (2012) World ocean heat content and thermosteric sea level change (0-2000 m), 1955-2010. Geophys Res Lett 39(10). https://doi.org/10.1029/2012gl051106

Lu W, Su H, Yang X, Yan XH (2019) Subsurface temperature estimation from remote sensing data using a clustering-neural network method. Remote Sens Environ 229:213–222. https://doi.org/10.1016/j.rse.2019.04.009

Mantua NJ, Hare SR, Zhang Y, Wallace JM, Francis RC (1997) A Pacific interdecadal climate oscillation with impacts on salmon production. Bull Am Meteor Soc 78(6):1069–1080

Meyssignac B, Boyer T, Zhao Z, Hakuba MZ, Landerer FW, Stammer D, Köhl A, Kato S, L’Ecuyer T, Ablain M, Abraham JP, Blazquez A, Cazenave A, Church JA, Cowley R, Cheng L, Domingues CM, Giglio D, Gouretski V, Ishii M, Johnson GC, Killick RE, Legler D, Llovel W, Lyman J, Palmer MD, Piotrowicz S, Purkey SG, Roemmich D, Roca R, Savita A, von Schuckmann K, Speich S, Stephens G, Wang G, Wijffels SE, Zilberman N (2019) Measuring global ocean heat content to estimate the Earth Energy Imbalance. Front Marine Sci 6. https://doi.org/10.3389/fmars.2019.00432

Rathore S, Bindoff NL, Phillips HE, Feng M (2020) Recent hemispheric asymmetry in global ocean warming induced by climate change and internal variability. Nat Commun 11(1):2008. https://doi.org/10.1038/s41467-020-15754-3

Rebert JP, Donguy JR, Eldin G, Wyrtki K (1985) Relations between sea level, thermocline depth, heat content, and dynamic height in the tropical Pacific Ocean. J Geophys Res 90(C6). https://doi.org/10.1029/JC090iC06p11719

Reichstein M, Camps-Valls G, Stevens B, Jung M, Denzler J, Carvalhais N, Prabhat (2019) Deep learning and process understanding for data-driven earth system science. Nature 566(7743):195–204. https://doi.org/10.1038/s41586-019-0912-1

Resplandy L, Keeling RF, Eddebbar Y, Brooks M, Wang R, Bopp L, Long MC, Dunne JP, Koeve W, Oschlies A (2019) Quantification of ocean heat uptake from changes in atmospheric O2 and CO2 composition. Sci Rep 9(1):20244. https://doi.org/10.1038/s41598-019-56490-z

Reynolds RW, Smith TM, Liu C, Chelton DB, Casey KS, Schlax MG (2007) Daily high-resolution-blended analyses for sea surface temperature. J Clim 20(22):5473–5496. https://doi.org/10.1175/2007jcli1824.1

Roemmich D, Gilson J (2009) The 2004–2008 mean and annual cycle of temperature, salinity, and steric height in the global ocean from the Argo program. Prog Oceanogr 82(2):81–100. https://doi.org/10.1016/j.pocean.2009.03.004

Su H, Wu XB, Lu WF, Zhang WW, Yan XH (2017) Inconsistent subsurface and deeper ocean warming signals during recent global warming and hiatus. J Geophys Res-Oceans 122(10):8182–8195. https://doi.org/10.1002/2016jc012481

Su H, Yang X, Lu W, Yan XH (2019) Estimating subsurface thermohaline structure of the global ocean using surface remote sensing observations. Remote Sensing 11(13). https://doi.org/10.3390/rs11131598

Su H, Zhang H, Geng X, Qin T, Lu W, Yan XH (2020) OPEN: A new estimation of global ocean heat content for upper 2000 meters from remote sensing data. Remote Sensing 12(14):2294. https://www.mdpi.com/2072-4292/12/14/2294

Swain D, Ali MM, Weller RA (2006) Estimation of mixed-layer depth from surface parameters. J Mar Res 64(5):745–758. https://doi.org/10.1357/002224006779367285

Trenberth KE, Fasullo JT, Balmaseda MA (2014) Earth’s energy imbalance. J Clim 27(9):3129–3144

von Schuckmann K, Palmer MD, Trenberth KE, Cazenave A, Chambers D, Champollion N, Hansen J, Josey SA, Loeb N, Mathieu PP, Meyssignac B, Wild M (2016) An imperative to monitor Earth’s Energy Imbalance. Nat Clim Chang 6(2):138–144. https://doi.org/10.1038/nclimate2876

Wu X, Yan XH, Jo YH, Liu WT (2012) Estimation of subsurface temperature anomaly in the North Atlantic using a Self-Organizing Map neural network. J Atmos Oceanic Tech 29(11):1675–1688. https://doi.org/10.1175/jtech-d-12-00013.1

Yan XH, Schubel JR, Pritchard DW (1990) Ocean upper mixed layer depth determination by the use of satellite data. Remote Sens Environ 32(1):55–74. https://doi.org/10.1016/0034-4257(90)90098-7

Yan XH, Boyer T, Trenberth K, Karl TR, Xie SP, Nieves V, Tung KK, Roemmich D (2016) The global warming hiatus: slowdown or redistribution? Earth’s Future 4:472–482. https://doi.org/10.1002/2016ef000417

Zanna L, Khatiwala S, Gregory JM, Ison J, Heimbach P (2019) Global reconstruction of historical ocean heat storage and transport. Proc Natl Acad Sci U S A 116(4):1126–1131. https://doi.org/10.1073/pnas.1808838115

Zheng G, Li X, Zhang RH, Liu B (2020) Purely satellite data-driven deep learning forecast of complicated tropical instability waves. Sci Adv 6

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License (http://creativecommons.org/licenses/by-nc-nd/4.0/), which permits any noncommercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if you modified the licensed material. You do not have permission under this license to share adapted material derived from this chapter or parts of it.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Lu, W., Su, H. (2023). Ocean Heat Content Retrieval from Remote Sensing Data Based on Machine Learning. In: Li, X., Wang, F. (eds) Artificial Intelligence Oceanography. Springer, Singapore. https://doi.org/10.1007/978-981-19-6375-9_6

DOI: https://doi.org/10.1007/978-981-19-6375-9_6

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-6374-2

Online ISBN: 978-981-19-6375-9

eBook Packages: Earth and Environmental Science (R0)