Abstract

This chapter proposes DAU-Net, a pixel-level model for classifying sea ice and open water in Synthetic Aperture Radar (SAR) images. DAU-Net is built on U-Net with a ResNet-34 encoder, and it integrates position and channel attention modules to improve performance. Sentinel-1A SAR images are used as experimental data. The model input contains three channels: VV polarization, VH polarization, and the incident angle. Experiments show that the dual-attention mechanism improves classification accuracy and helps classify fine-grained targets. Incorporating the SAR incident angle is also essential for high-accuracy classification. We apply the trained model to a series of SAR images of the Bering Strait covering the period from freezing to melting, and compare the results with the 1 km products of the National Snow and Ice Data Center. The results show that DAU-Net can handle complex sea conditions, demonstrating good robustness and applicability.



1 Introduction

Changes in global sea ice volume, distribution, and movement reflect the interactions among the atmosphere, cryosphere, and hydrosphere, as well as global climate change [30]. Sea ice is also important because it poses risks to the safety of marine navigation and transportation. Since the classification of sea ice and open water provides valuable information for safe navigation, sea ice classification and monitoring have drawn extensive attention [8, 37, 39]. Satellite remote sensing, using optical cameras, microwave radiometers, and synthetic aperture radar (SAR), has been the most effective way to monitor sea ice in the polar regions [21, 40]. SAR images have become the primary data source for sea ice classification and monitoring owing to their high spatial resolution, wide coverage, and ability to penetrate clouds [7].

A series of studies have been devoted to classifying sea ice and open water in SAR images, including threshold-based methods, expert systems, and machine learning methods. The Multi-Year Ice (MYI) Mapping System (MIMS) is a typical threshold-based model that can quickly map MYI in uncalibrated SAR images [13]. A representative expert system is the Advanced Reasoning using Knowledge for Typing Of Sea ice (ARKTOS) [38], which performs a fully automated analysis of SAR sea ice images by mimicking the reasoning process of sea ice experts. Among machine learning methods, regression models were an early exploration. Lundhaug and Maria [29] proposed a multivariate regression method relating sea ice types and water to the mean and standard deviation of the backscattering coefficients and to air temperature; their experiments showed that the correlation coefficients between predicted and actual values were higher than 0.90. Karvonen [20] developed a modified pulse-coupled neural network (PCNN) to classify sea ice in the Baltic Sea. Zhang et al. [47] proposed a k-means-based model that combines microwave scatterometer and radiometer data to classify sea ice types. Zakhvatkina [45] extracted textural features from the gray-level co-occurrence matrix (GLCM) and fed them into an artificial neural network (ANN)-based model to classify sea ice and open water. Similarly, researchers have combined the GLCM with other machine learning algorithms, such as Markov random fields (MRF) [6] and support vector machines (SVM) [25], to classify sea ice in SAR images.

Overall, the main drawback of these traditional methods is that they require prior expert knowledge and sophisticated manual feature engineering to discriminate between sea ice and open water. This is a common challenge faced by Earth system science in the era of big data [33].

Deep learning (DL) technology addresses this challenge [19]. A typical DL model is a deep neural network (DNN) that accepts raw input data and automatically discovers the required features [24]. In recent years, DL has been successfully applied in oceanography, geography, and remote sensing, helping researchers gain a deeper process understanding of Earth system science problems [27, 32, 33, 34, 43]. A deep convolutional neural network (CNN) is a particular type of DNN composed of convolutional (CNN) layers. A CNN layer connects to local patches of the previous layer through convolution kernels to extract local spatial features [22]. Since CNN-based methods have achieved great success in image classification, researchers have employed CNNs to extract features automatically and improve the accuracy and efficiency of sea ice classification. Yan and Scott [44] used an early CNN model, AlexNet [2], together with transfer learning to classify sea ice and open water. Li et al. [26] proposed a CNN-based model to classify sea ice and open water in Chinese Gaofen-3 SAR images. Wang et al. [42] constructed a CNN model consisting of three CNN layers and two fully connected layers to classify sea ice near the Bering Strait. The authors of [16] integrated transfer learning and dense CNN blocks to form a transferred multilevel fusion network (MLFN), which outperformed PCAKM [5], NBRELM [15], and GaborPCANet [12] in classifying sea ice and open water.

More and more researchers are constructing DL-based models to achieve end-to-end classification of sea ice and open water. Although these DL-based models deliver excellent performance, several issues remain. First, classification accuracy needs further improvement. Especially in medium-to-high-resolution SAR images, fine-grained objects such as small floes, sinuous ice-water boundaries, and ice channels need to be classified well. Second, the information in SAR images, such as dual-polarization data and the incident angle (IA), is not fully utilized by most DL-based models. The benefit of fusing dual-polarized information has been demonstrated with a conventional method [25], and the IA affects the radar backscattering intensity. All of this information should be considered to improve classification accuracy. Third, most existing models are validated on individual images, and their applicability to more challenging tasks, such as classifying a series of images from freezing to melting, remains to be verified.

To address these issues, we propose a dual-attention U-Net model, DAU-Net, to classify sea ice and open water in SAR images. U-Net was originally developed for the semantic segmentation of biomedical images [35]; it is designed to work with few training samples while still yielding precise segmentations. The effectiveness of U-Net for classification and segmentation problems in geoscience has been demonstrated [11, 28, 46], so we use U-Net as the backbone of the classification model. The dual-polarized information and the IA of SAR images are used as model inputs. To extract more characteristic features from these multiple inputs, we integrate the dual-attention mechanism [14] into the original U-Net. Finally, we use SAR images of the Bering Sea region to train and evaluate the model. We validate the applicability of DAU-Net on a series of SAR images of the Bering Strait and compare the classification results with the sea ice products of the National Snow and Ice Data Center (NSIDC).

2 Data

2.1 Study Area

The study areas are the Bering Sea and the Bering Strait, located near the outer edge of the sea ice on the Pacific side of the Arctic (Fig. 1). The Bering Strait is the only channel for water exchange between the Pacific Ocean and the Arctic Ocean; it exhibits strong atmosphere-sea-ice interactions and supports one of the world’s most productive and valuable fisheries, with ever-increasing commercial vessel activity [9]. Therefore, sea ice detection and monitoring in this region are of great interest to the scientific research community and to the commercial fishing and transportation industries.

2.2 SAR Images

The SAR images were acquired by Sentinel-1A in interferometric wide-swath (IW) mode with a swath width of 250 km. The images are ground range detected (GRD) products with VV + VH polarizations (vertical transmit with vertical and horizontal receive, respectively). The IA ranges from 30.00° to 46.00°. The range and azimuth resolutions are 5 m and 20 m, respectively, with a pixel spacing of 10 m.

The data set consists of 34 SAR images, listed in Table 1, and is divided into three subsets: 1) the training set, 2) the testing set, and 3) the applicability validation set (Fig. 1). The training set includes 15 images (No. 1–No. 15 in Table 1). The testing set is image No. 16 in Table 1, which we use to evaluate model performance with quantitative metrics. The applicability validation set is a series of images covering the Bering Strait. The series contains six images, each mosaicked from three single Sentinel-1A images, for a total of 18 Sentinel-1A images (No. 17–No. 34 in Table 1). Because the series covers the whole process from freezing to melting in the Bering Strait, it allows us to validate the applicability of the trained model by monitoring the entire sea ice cycle there.

2.3 NSIDC Sea Ice Products

The NSIDC sea ice product [41], named Multisensor Analyzed Sea Ice Extent - Northern Hemisphere (MASIE-NH), is employed as a reference for the applicability discussion. The product is based on the Interactive Multisensor Snow and Ice Mapping System (IMS) results produced by the National Ice Center (NIC). NIC uses visible imagery, passive microwave data, and NIC weekly analysis products to create its data product. MASIE-NH provides daily sea ice extent and sea ice edge boundaries for the Northern Hemisphere and 16 Arctic regions in a polar stereographic projection at both 1 km and 4 km grid cell sizes [41]. We use the 1 km MASIE-NH products as the reference.

2.4 Data Preprocessing

We use SNAP 3.0 [10] to perform radiometric calibration and boxcar filtering on all SAR images. Because the source SAR images are very large, we downscale each image to one third of its original size in each dimension, about 8,000\(\times \)5,000 pixels. Although the spatial resolution is thereby reduced from 10 m to 30 m, it is still much higher than that of the MASIE-NH products (1 km), and far more detailed than could be expected from existing manual or operational automatic classifiers [25]. All pixel values are scaled to 0-1, and all IA values are scaled to 0-1 by mapping 0\(^{\circ }\)-90\(^{\circ }\) onto that range.
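The scaling step can be sketched in NumPy as follows. This is a minimal sketch under our own assumptions: the chapter does not state how backscatter values are mapped to 0-1, so per-image min-max scaling is assumed, while the IA is divided by 90° as described above. The helper names `min_max_scale` and `normalize_scene` are hypothetical.

```python
import numpy as np


def min_max_scale(band):
    """Scale a 2-D array linearly to [0, 1] (assumed per-image min-max scaling)."""
    band = band.astype(np.float64)
    lo, hi = np.nanmin(band), np.nanmax(band)
    if hi == lo:                      # constant band: avoid division by zero
        return np.zeros_like(band)
    return (band - lo) / (hi - lo)


def normalize_scene(vv, vh, ia_deg):
    """Return an (H, W, 3) array with channels VV, VH, IA, all in [0, 1]."""
    vv01 = min_max_scale(vv)
    vh01 = min_max_scale(vh)
    ia01 = np.asarray(ia_deg, dtype=np.float64) / 90.0   # 0-90 degrees -> 0-1
    return np.stack([vv01, vh01, ia01], axis=-1)


rng = np.random.default_rng(0)
vv = rng.normal(-15.0, 3.0, size=(4, 6))   # synthetic calibrated backscatter
vh = rng.normal(-22.0, 3.0, size=(4, 6))
ia = np.linspace(30.0, 46.0, 6)[None, :].repeat(4, axis=0)  # IA varies across range
x = normalize_scene(vv, vh, ia)
print(x.shape)  # (4, 6, 3)
```

Keeping the IA as a full image-sized channel (rather than a scalar) lets the network see how the incident angle varies across the swath.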

The SAR images are labeled into two classes, 1 for sea ice and 0 for open water, with the annotation tool LabelMe [36] to obtain ground truth labels. Because the resolutions of existing sea ice products are much lower than that of the Sentinel-1A images [25], labeling is based on visual interpretation. For regions that are difficult to distinguish, we refer to the 1 km MASIE-NH products. In this way, most pixels in the SAR images can be labeled correctly. Owing to SAR image noise and the limitations of manual labeling, a few pixels are inevitably mislabeled, and some small sea ice objects cannot be labeled accurately. This is a common problem in supervised learning. For most classification tasks, such mislabeled pixels account for a small proportion of all pixels and do not affect model convergence [17].

We divide all images (VV, VH, and IA) into 256\(\times \)256-pixel chips as model inputs. Fig. 2 shows, using the VV channel as an example, SAR image chips and the corresponding ground truth labels.
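The chip extraction can be sketched as below, assuming a scene already stacked as an (H, W, 3) array of VV, VH, and IA with a matching (H, W) label mask. The helper `to_chips` is hypothetical, and discarding edge strips narrower than 256 pixels is our assumption; the chapter does not say how image borders are handled.

```python
import numpy as np


def to_chips(scene, size=256):
    """Split an (H, W) or (H, W, C) array into non-overlapping size x size chips.

    Assumption: edge strips that do not fill a whole chip are discarded.
    Chips are returned in row-major order (left to right, top to bottom).
    """
    h, w = scene.shape[:2]
    rows, cols = h // size, w // size
    trimmed = scene[: rows * size, : cols * size]
    chips = trimmed.reshape(rows, size, cols, size, *scene.shape[2:])
    chips = chips.swapaxes(1, 2)               # (rows, cols, size, size[, C])
    return chips.reshape(rows * cols, size, size, *scene.shape[2:])


scene = np.zeros((600, 800, 3), dtype=np.float32)  # synthetic VV/VH/IA stack
label = np.zeros((600, 800), dtype=np.uint8)       # 1 = sea ice, 0 = open water
x_chips, y_chips = to_chips(scene), to_chips(label)
print(x_chips.shape, y_chips.shape)  # (6, 256, 256, 3) (6, 256, 256)
```

Applying the same function to the image stack and the label mask keeps each input chip aligned with its ground truth chip.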

3 Method

3.1 Overall Structure of DAU-Net

The backbone of the proposed DAU-Net is a U-Net model. U-Net is named after its nearly symmetric, U-shaped encoder-decoder architecture, and it is designed to work with few training samples while still yielding precise segmentations. The encoder extracts abstracted, downscaled high-level feature maps, and the decoder restores their resolution. Intermediate feature maps from the encoder and decoder at the same level are connected to form multi-scale feature maps for pixel-level classification. The encoder can be a mature DNN model, such as VGG16, ResNet-18, or ResNet-34 [4, 23].

Discriminative feature representations are essential for improving classification accuracy. To achieve high-accuracy classification of sea ice and open water in medium-to-high-resolution SAR images, we need more characteristic features to discriminate fine-grained objects such as small floes, sinuous ice-water boundaries, and ice channels. Therefore, we integrate a dual-attention mechanism into the original U-Net, forming the DAU-Net model, to improve the feature representations of sea ice and open water. The dual self-attention mechanism consists of a position attention module (PAM) and a channel attention module (CAM), which capture long-range dependencies in the spatial and channel dimensions, respectively. It has been shown to be effective in general image segmentation [14].

The PAM captures long-range dependencies in the spatial dimension through a self-attention mechanism. For a feature map, the feature value at a specific position is updated by aggregating the feature values at all positions with a weighted summation, where the weights are determined by the feature similarity between the two corresponding positions. Thus, any two positions with similar features can reinforce each other regardless of their distance in the spatial dimension. Similarly, the CAM employs the self-attention mechanism to capture the dependencies between any two channel maps, updating each channel map with a weighted sum of all channel maps. Finally, the outputs of the two attention modules are fused to further enhance the feature representations.

Overall, as shown in Fig. 3, DAU-Net consists of five parts: inputs, encoder, attention, decoder, and output. Each input unit consists of three channels of a 256\(\times \)256-pixel SAR image chip: VV, VH, and IA. The encoder is ResNet-34, a mature image recognition model, which extracts abstracted, downscaled feature maps for accurate classification. The attention part applies position attention and channel attention to the extracted feature maps to capture long-range dependencies in the spatial and channel dimensions, and the outputs of the two attention modules are fused into more characteristic features that are passed to the decoder. The decoder rescales the downscaled feature maps to the original size, and skip connections link encoder and decoder features at the same level. The encoder, attention, decoder, and output modules are detailed below.

3.2 Encoder

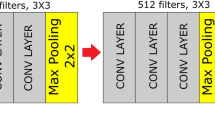

He et al. [18] proposed the residual network (ResNet), which makes it possible to train networks with more than one hundred CNN layers. The ResNet family includes ResNet-18, ResNet-34, ResNet-50, and ResNet-101, where the number indicates the number of weighted layers (convolutional layers plus the final fully connected layer). Larger numbers mean more layers, more parameters, and greater training complexity. The ResNet family has been widely used in semantic segmentation and object detection. Considering the depth of the model, the number of trainable parameters, and the complexity of sea ice texture, we choose ResNet-34 as the encoder of DAU-Net. ResNet-34 is compared with other ResNet-based encoders in Sect. 4.6.

The encoder consists of the 33 CNN layers of ResNet-34, organized into five stages. The first stage is one CNN layer with a 7\(\times \)7 kernel and a stride of 2, which downscales the 256\(\times \)256 input to 128\(\times \)128. The remaining four stages are composed of 3, 4, 6, and 3 ResNet blocks, respectively, 16 ResNet blocks in total (Fig. 3). Each ResNet block contains two stacked CNN layers with a shortcut connection linking the input of the block to the output of its second CNN layer [18]. The numbers of convolutional kernels in the five stages are 64, 64, 128, 256, and 512. The original ResNet-34 model uses four 2\(\times \)2 max-pooling layers stacked on the four ResNet stages to downscale the feature maps. Here, we discard the last max-pooling layer and retain the first three. The activation function of each CNN layer is ReLU [1]. After encoding, each input is transformed into 512 feature maps of 16\(\times \)16 pixels, which are then passed to the attention part.
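The layer count and feature-map sizes stated above can be checked with a short bookkeeping sketch. It only tracks sizes (no weights), and placing each retained max-pooling after ResNet stages 2-4 is our assumption about where the downsampling happens; the function name `trace_encoder` is hypothetical.

```python
# Bookkeeping sketch of the encoder: conv-layer count and feature-map sizes.
# Assumption (ours): each retained 2x2 max-pooling halves the map after stages 2-4.

STAGE1_CHANNELS = 64
STAGE_BLOCKS = [3, 4, 6, 3]            # ResNet blocks in stages 2-5
STAGE_CHANNELS = [64, 128, 256, 512]   # convolutional kernels in stages 2-5
CONVS_PER_BLOCK = 2                    # each block: two stacked CNN layers + shortcut


def trace_encoder(input_size=256, retained_pools=3):
    """Return the conv-layer count and (stage, size, channels) for each stage."""
    size = input_size // 2             # stage 1: 7x7 conv with stride 2
    n_conv = 1
    trace = [(1, size, STAGE1_CHANNELS)]
    for k, (blocks, channels) in enumerate(zip(STAGE_BLOCKS, STAGE_CHANNELS)):
        n_conv += blocks * CONVS_PER_BLOCK
        trace.append((k + 2, size, channels))
        if k < retained_pools:         # the fourth max-pooling is discarded
            size //= 2
    return n_conv, trace


n_conv, trace = trace_encoder()
print(n_conv)      # 33
print(trace[-1])   # (5, 16, 512)
```

The count 1 + 2 × (3 + 4 + 6 + 3) = 33 matches the 33 CNN layers in the text, and 128 / 2³ = 16 matches the 16×16 maps passed to the attention part.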

3.3 Attention

The 512 16\(\times \)16 feature maps extracted by the encoder are fed into the PAM and CAM to capture spatial and channel dependencies. The outputs of the two attention modules are fused and passed to the decoder.

3.3.1 PAM

Because CNN layers use local connections, the features they capture are local. For semantic segmentation, local features generated by fully convolutional networks (FCNs) are not representative enough, which can lead to misclassification [31]. The PAM addresses this issue by updating the feature value at each position with a weighted sum of the feature values at all positions. Thus, the global spatial dependencies between any two positions can be captured, and these global features are fused with local features to form more characteristic features. The computation of the PAM is detailed below.

As shown in Fig. 4a, let H, W, and C denote the height, width, and number of channels, and let \(A \in \mathbb{R}^{H \times W \times C}\) be a local feature map extracted from the model inputs. The white/dark regions represent sea ice/water features. Some features in A are inaccurate, especially in the regions marked by the red rectangle. A is then fed into three separate CNN layers to generate three feature maps \(B \in \mathbb{R}^{H \times W \times C}\), \(C \in \mathbb{R}^{H \times W \times C}\), and \(D \in \mathbb{R}^{H \times W \times C}\), as shown in Fig. 4b. B is reshaped to \(B^{1} \in \mathbb{R}^{N \times C}\), where \(N = H \times W\) is the number of pixels, and C is reshaped and transposed to \(C^{1} \in \mathbb{R}^{C \times N}\). A matrix multiplication is then performed between \(B^{1}\) and \(C^{1}\), and the result is activated by a softmax layer to calculate the spatial attention map \(S = \operatorname{softmax}(B^{1} C^{1}) \in \mathbb{R}^{N \times N}\). The softmax activation [3] normalizes S row-wise so that each row sums to 1. The more similar the feature representations of two positions are, the higher their correlation and the larger the corresponding value in S.

The global dependencies between any two positions in the feature map are modeled by S. D is reshaped to \(D^{1} \in \mathbb{R}^{N \times C}\), and S is multiplied by \(D^{1}\) to generate \(A^{s} \in \mathbb{R}^{N \times C}\):

\[ a_{ij}^{s} = S_{i} D_{j}^{1} = \sum_{k=1}^{N} s_{ik} d_{kj}^{1}, \]

where \(a_{ij}^{s}\) is an element of \(A^{s}\), \(S_{i}\) is the \(i\)-th row of S, \(D_{j}^{1}\) is the \(j\)-th column of \(D^{1}\), and \(s_{ik}\) and \(d_{kj}^{1}\) are elements of S and \(D^{1}\). \(A^{s}\) is reshaped to \(A^{1} \in \mathbb{R}^{H \times W \times C}\). For each channel of \(A^{1}\), the element at a position is the weighted sum of the elements at all positions in the corresponding channel of D, with the weights given by S. Therefore, \(A^{1}\) has a global contextual view and selectively aggregates contexts according to the spatial attention map. \(A^{1}\) is multiplied by a scale parameter \(\alpha\) and added element-wise to the input feature map A to obtain the output \(E \in \mathbb{R}^{H \times W \times C}\):

\[ E = \alpha A^{1} + A, \]

where \(\alpha\) is initialized to 0 and gradually learns to assign more weight.

Each value of the output feature map E is a weighted sum of the features at all pixels plus the original feature. E thus integrates local features with long-range global features. Similar semantic features reinforce each other, improving intra-class compactness and semantic consistency. Intuitively, as shown in Fig. 4c, the inaccurate features in A are corrected by the PAM, which benefits the final output.
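The PAM computation described above can be illustrated with a NumPy sketch on a single feature map. It is a minimal sketch, not the authors' implementation: the three CNN layers producing B, C, and D are modeled as bias-free 1×1 convolutions (plain C×C matrices), which is our assumption, and \(\alpha\) is passed in as a fixed number rather than learned.

```python
import numpy as np


def softmax_rows(m):
    """Row-wise softmax: every row of the result sums to 1."""
    m = m - m.max(axis=1, keepdims=True)     # subtract row max for stability
    e = np.exp(m)
    return e / e.sum(axis=1, keepdims=True)


def position_attention(a, w_b, w_c, w_d, alpha):
    """PAM forward pass on one feature map a of shape (H, W, C).

    w_b, w_c, w_d are (C, C) matrices standing in for the three CNN layers,
    modeled here as bias-free 1x1 convolutions (our assumption).
    """
    h, w, ch = a.shape
    n = h * w
    flat = a.reshape(n, ch)
    b1 = flat @ w_b                  # B^1, N x C
    c1 = (flat @ w_c).T              # C^1, C x N
    d1 = flat @ w_d                  # D^1, N x C
    s = softmax_rows(b1 @ c1)        # spatial attention map S, N x N
    a_s = s @ d1                     # A^s = S D^1, N x C
    e = alpha * a_s.reshape(h, w, ch) + a   # E = alpha * A^1 + A
    return e, s


rng = np.random.default_rng(0)
a = rng.normal(size=(4, 4, 8))
w_b, w_c, w_d = (rng.normal(scale=0.1, size=(8, 8)) for _ in range(3))
e, s = position_attention(a, w_b, w_c, w_d, alpha=0.5)
print(e.shape, s.shape)  # (4, 4, 8) (16, 16)
```

Setting `alpha=0.0` returns A unchanged, which mirrors the zero initialization of \(\alpha\): at the start of training the PAM passes the encoder features through untouched. Note that S has N × N entries, so its memory cost grows quadratically with the number of pixels, one reason to apply attention on the small 16×16 maps.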

3.3.2 CAM

Each channel map of high-level features can be regarded as a class-specific response, and different semantic responses are associated with each other. The CAM updates the feature value at a position by aggregating feature values of all channels in the same position with a weighted sum. The interdependencies between channels of feature maps are captured, which improves the feature representation of specific semantics.

Fig. 5 The detailed calculation process of CAM in the DAU-Net. (a) Feature maps without CAM; some water pixels are inaccurately encoded as sea ice pixels (red rectangle). (b) The calculation process of CAM. (c) Feature maps after CAM; some inaccurate sea ice pixels are corrected to water pixels, improving the accuracy of the outputs.

The structure of the CAM is illustrated in Fig. 5. Let H, W, and C denote the height, width, and number of channels, and let \(A \in \mathbb{R}^{H \times W \times C}\) be a local feature map extracted from the model inputs (Fig. 5a). The channel attention map \(X \in \mathbb{R}^{C \times C}\) is calculated directly from the original features A (Fig. 5b). A is reshaped to \(A^{1} \in \mathbb{R}^{N \times C}\), and reshaped and transposed to \(A^{2} \in \mathbb{R}^{C \times N}\). A matrix multiplication between \(A^{2}\) and \(A^{1}\) is then performed, and a softmax layer is applied to obtain the channel attention map \(X = \operatorname{softmax}(A^{2} A^{1})\), so that each row of X sums to 1. The more similar the feature representations of two channels are, the higher their correlation and the larger the corresponding value in X. \(A^{1}\) is multiplied by the transpose of X to generate \(A^{x} \in \mathbb{R}^{N \times C}\):

\[ a_{ij}^{x} = A_{i}^{1} X_{j}^{\top} = \sum_{k=1}^{C} a_{ik}^{1} x_{jk}, \]

where \(a_{ij}^{x}\) is an element of \(A^{x}\), \(A_{i}^{1}\) is the \(i\)-th row of \(A^{1}\), and \(X_{j}\) is the \(j\)-th row of X (so \(X_{j}^{\top}\) is the \(j\)-th column of \(X^{\top}\)). \(A^{x}\) is reshaped to \(A^{3} \in \mathbb{R}^{H \times W \times C}\). For each position of \(A^{3}\), the element of a channel is the weighted sum of the elements of all channels at the corresponding position of A, with the weights given by X. Therefore, \(A^{3}\) captures long-range dependencies in the channel dimension. \(A^{3}\) is multiplied by a scale parameter \(\beta\) and added element-wise to the input feature map A to obtain the output \(F \in \mathbb{R}^{H \times W \times C}\):

\[ F = \beta A^{3} + A, \]

where \(\beta\) is initialized to 0 and gradually learns a weight.

The final feature of each channel is a weighted sum of the features of all channels plus the original feature. The long-range semantic dependencies between different channels of the feature maps are thereby modeled, which boosts feature discriminability. As shown in Fig. 5a, many open water regions are inaccurately represented as sea ice features in feature map A. After the channel attention procedure, most of these inaccurate regions are corrected (Fig. 5c). The output feature map F is more discriminative than A and helps achieve a good classification result.
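A matching NumPy sketch of the CAM follows the same hedges: it processes a single (H, W, C) feature map, uses no learned layers (consistent with the description above, where X is calculated from A itself), and takes \(\beta\) as a fixed input instead of a learned parameter. Because the output uses \(A^{1}\) multiplied by the transpose of X, channel j of the output is weighted by row j of X.

```python
import numpy as np


def softmax_rows(m):
    """Row-wise softmax: every row of the result sums to 1."""
    m = m - m.max(axis=1, keepdims=True)
    e = np.exp(m)
    return e / e.sum(axis=1, keepdims=True)


def channel_attention(a, beta):
    """CAM forward pass on one feature map a of shape (H, W, C)."""
    h, w, ch = a.shape
    a1 = a.reshape(h * w, ch)        # A^1, N x C
    a2 = a1.T                        # A^2, C x N
    x = softmax_rows(a2 @ a1)        # channel attention map X, C x C
    a_x = a1 @ x.T                   # A^x = A^1 X^T, N x C
    f = beta * a_x.reshape(h, w, ch) + a   # F = beta * A^3 + A
    return f, x


rng = np.random.default_rng(1)
a = rng.normal(scale=0.2, size=(4, 4, 8))
f, x = channel_attention(a, beta=0.5)
print(f.shape, x.shape)  # (4, 4, 8) (8, 8)
```

Unlike the PAM, the attention map here is only C × C, so its size does not depend on the image resolution.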

3.3.3 Fusion

The PAM output and CAM output are separately transformed by a CNN layer. An element-wise summation is performed on the two transformed results. A CNN layer executes convolutions on the summation to generate fusion features. Finally, the fusion features are transmitted to the decoding part.
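The fusion step can be sketched as follows. The chapter does not state the kernel sizes, normalization, or activations of these three CNN layers, nor their number of output channels, so they are modeled here as plain 1×1 convolutions (per-pixel linear maps) that keep 512 channels, purely to show the data flow; `conv1x1` and `fuse` are hypothetical helpers.

```python
import numpy as np


def conv1x1(feat, weight, bias):
    """Assumed 1x1 convolution: the same linear map (C_in -> C_out) at every pixel."""
    return feat @ weight + bias


def fuse(pam_out, cam_out, params):
    """Transform each attention output, sum element-wise, then convolve the sum."""
    p = conv1x1(pam_out, *params["pam"])      # CNN layer on the PAM output
    c = conv1x1(cam_out, *params["cam"])      # CNN layer on the CAM output
    return conv1x1(p + c, *params["fusion"])  # CNN layer producing fused features


rng = np.random.default_rng(2)
ch = 512                                      # 512 maps of 16x16 from the encoder
params = {name: (rng.normal(scale=0.01, size=(ch, ch)), np.zeros(ch))
          for name in ("pam", "cam", "fusion")}
pam_out = rng.normal(size=(16, 16, ch))
cam_out = rng.normal(size=(16, 16, ch))
fused = fuse(pam_out, cam_out, params)
print(fused.shape)  # (16, 16, 512)
```

Summing (rather than concatenating) the two branches keeps the channel count unchanged, so the decoder input has the same shape whether one or both attention modules are used.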

3.4 Decoder

Five decoder modules are stacked upon the features output by the attention modules; each decoder module is composed of one up-sampling layer and two stacked CNN layers. Each CNN layer is followed by a batch normalization layer and a ReLU activation layer. The numbers of convolutional kernels in the five decoder modules are 256, 128, 64, 32, and 16, respectively. Three concatenations fuse the features generated by the encoder and decoder at the same level. The kernel size of all CNN layers in the decoder modules is 3\(\times \)3. After decoding, the 16\(\times \)16 feature maps are rescaled to the size of the input image, 256\(\times \)256.

3.5 Output

The feature maps output by the decoder are fed into the output module, which consists of one CNN layer with a single 1\(\times \)1 convolutional kernel. A sigmoid layer applies a non-linear activation to the convolutional output to predict the value of each pixel. The activation value lies in [0, 1]; if it is larger than 0.5, the pixel is classified as sea ice, otherwise as open water. The loss function is binary cross-entropy.
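The output module and loss can be sketched in NumPy as below. The 1×1 convolution with a single kernel is written as a per-pixel dot product over the 16 channels of the last decoder module; the random weights and the clipping constant `eps` in the hypothetical `binary_cross_entropy` helper are our assumptions, not values from the chapter.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def output_head(decoder_feat, kernel, bias):
    """1x1 conv with one kernel, then sigmoid -> per-pixel sea ice probability."""
    logits = decoder_feat @ kernel + bias     # (H, W, C) @ (C,) -> (H, W)
    return sigmoid(logits)


def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    p = np.clip(y_prob, eps, 1.0 - eps)
    return float(-np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p)))


rng = np.random.default_rng(3)
feat = rng.normal(size=(256, 256, 16))        # 16 maps from the last decoder module
prob = output_head(feat, rng.normal(size=16), 0.0)
pred = (prob > 0.5).astype(np.uint8)          # 1 = sea ice, 0 = open water
```

A probability of exactly 0.5 is assigned to open water here, following the "larger than 0.5" rule in the text.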

4 Experiments

4.1 Experiments Setting

There are 4,684 SAR chips in the training set, of which 30% are held out as the validation set. We choose a typical image with a rough sea surface and various sea ice textures as the testing image and divide it into 672 256\(\times \)256 chips. The model runs on a GPU workstation with one NVIDIA Tesla V100 32 GB GPU. The batch size is 16, and the initial learning rate is 0.0001. We use Keras as the DL package, and the Keras ReduceLROnPlateau and early stopping strategies are employed to accelerate convergence and avoid overfitting.

4.2 Evaluation Metrics

Accuracy, precision, recall, and mean intersection over union (IoU) are employed to evaluate the performance of the classification methods. Their definitions are shown in Fig. 6. Accuracy refers to the proportion of correctly predicted pixels, both sea ice and water, among all predicted pixels. Precision refers to the proportion of pixels that are true sea ice and predicted as sea ice among all predicted sea ice pixels; a higher precision means the model produces fewer false alarms. Recall refers to the proportion of pixels that are true sea ice and predicted as sea ice among all true sea ice pixels; a higher recall means the model misses fewer sea ice pixels. IoU is the ratio of the pixels that are true sea ice and predicted as sea ice to the union of true sea ice and predicted sea ice pixels. When the predicted sea ice pixels coincide completely with the true sea ice pixels, the IoU reaches its maximum value of 1.
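The four metrics can be computed from pixel counts as sketched below, with sea ice (label 1) as the positive class and IoU defined for the sea ice class as in the text. The function name `sea_ice_metrics` is hypothetical, and the toy masks are made up for illustration.

```python
import numpy as np


def sea_ice_metrics(y_true, y_pred):
    """Accuracy, precision, recall and IoU with sea ice (1) as the positive class."""
    y_true = np.asarray(y_true).astype(bool).ravel()
    y_pred = np.asarray(y_pred).astype(bool).ravel()
    tp = np.sum(y_true & y_pred)      # sea ice predicted as sea ice
    fp = np.sum(~y_true & y_pred)     # open water predicted as sea ice (false alarm)
    fn = np.sum(y_true & ~y_pred)     # sea ice missed
    tn = np.sum(~y_true & ~y_pred)    # open water predicted as open water
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "iou": tp / (tp + fp + fn),
    }


truth = np.array([[1, 1, 0, 0],
                  [1, 1, 0, 0]])
pred = np.array([[1, 0, 1, 0],
                 [1, 1, 0, 0]])
print(sea_ice_metrics(truth, pred))
```

For the toy masks, TP = 3, FP = 1, FN = 1, and TN = 3, giving an accuracy, precision, and recall of 0.75 and an IoU of 0.6. IoU is the strictest of the four because both false alarms and misses enlarge its denominator.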

4.3 Comparison Experiments Against Other Models Performances

To validate the performance of the proposed DAU-Net, two recently proposed DL-based sea ice classification models are selected for comparison: 1) CNNwang, the CNN-based detection model proposed by Wang et al. [42] in 2018, which consists of five CNN layers and three max-pooling layers; 2) DenseNetFCN, which has a structure similar to the MLFN model proposed in 2019 [16]. To enable pixel-level segmentation and a fair comparison, DenseNetFCN replaces the fully connected layers of the original MLFN with fully convolutional layers and adds upsampling blocks, forming a “U”-shaped segmentation model.

We also compare our model against the classic U-Net model, which has the same structure as DAU-Net except that the CAM and PAM are removed. Note that the CNN layers after the two attention modules and the CNN layer of the fusion part are retained to ensure a fair comparison. U-NetCAM denotes the U-Net model with CAM but without PAM, and U-NetPAM denotes the U-Net model with PAM but without CAM; the same CNN layers are retained in these two models. We tune the hyperparameters of all compared models and record the results with the best accuracy.

The evaluation metrics of all models are shown in Table 2, and the corresponding classification results are shown in Fig. 7. The accuracy, IoU, and precision of CNNwang are lower than those of the other five models, whereas its recall is the highest. This strong imbalance between precision and recall means that CNNwang misses few sea ice pixels but misclassifies many open water pixels as sea ice (high false alarms). As shown in Fig. 7d, the classification results of CNNwang, such as sea ice edges and ice blocks, are coarse-grained. Limited by its model complexity, CNNwang has difficulty extracting enough representative features for fine-grained classification and thus generates many false alarms. Compared with CNNwang, DenseNetFCN clearly improves accuracy, IoU, and precision, while its recall is lower, narrowing the gap between precision and recall. Fig. 7e shows that its classification results are much more refined than those of CNNwang. However, some false alarms remain in the region marked by the red rectangle. Although DenseNetFCN is more complex than CNNwang, it still cannot extract sufficiently characteristic features to accurately distinguish sea ice from water, especially in areas where sea ice and water are mixed under complex sea conditions.

The U-Net model outperforms CNNwang and DenseNetFCN in both accuracy and IoU, and its recall and precision are more balanced. Fig. 7f shows that U-Net clearly reduces the false alarms generated by DenseNetFCN (marked by the red rectangle). By introducing attention modules, U-NetCAM and U-NetPAM improve accuracy and IoU, although their precision and recall do not improve significantly. However, as shown in Fig. 7g-h, the classification results of U-NetCAM and U-NetPAM are more refined, and the boundary between sea ice and open water is smoother. Finally, DAU-Net, which integrates both CAM and PAM, obtains the highest accuracy, IoU, and precision (Table 2). Compared with the original U-Net model, the accuracy, IoU, and precision of DAU-Net increase by 0.50%, 1.00%, and 1.14%, respectively, and its precision and recall are balanced. Comparing Fig. 7i and f shows that the false alarms generated by U-Net are significantly reduced and that the classification results of DAU-Net are more refined. Fine-grained objects such as small floes, sinuous ice-water boundaries, and ice channels are classified more smoothly by DAU-Net. Therefore, the CAM and PAM improve the representational ability of the extracted features and thereby the classification of sea ice and open water.

4.4 Effectiveness of IA

Because the IA is ignored in existing DL-based models [16, 42], we design an experiment to evaluate the effectiveness of using the IA of SAR images as an input. Table 3 shows the results. DAU-Net is the model with IA, and DAU-NetNIA is the model without IA; all other experimental settings are unchanged. The accuracy and IoU of DAU-NetNIA are lower than those of DAU-Net, and its precision is much higher than its recall, which means that DAU-NetNIA misses many sea ice pixels. As shown in Fig. 8c, some sea ice pixels in the upper left part of the image are misclassified as open water. Thus, the IA is essential for obtaining better classification results.

4.5 Effectiveness of Dual-Polarization Information

We design an experiment to evaluate the effectiveness of dual-polarization inputs. DAU-Net uses the VV channel, VH channel, and IA as inputs; DAU-NetVV uses the VV channel and IA, and DAU-NetVH uses the VH channel and IA. All other experimental settings are unchanged, and the results are shown in Table 4. All four metrics of DAU-NetVH are lower than those of the other two models. As shown in Fig. 8e, DAU-NetVH misclassifies many sea ice pixels as open water, mainly in the upper left part of the image. DAU-NetVV performs better than DAU-NetVH, but it still misses some sea ice pixels in the middle part of the image (Fig. 8d). Finally, by combining VV and VH as inputs, DAU-Net achieves the best performance. Thus, the dual-polarization information of SAR images helps obtain better classification results.

4.6 Performances of Different ResNet-Based Encoders

The encoder in DAU-Net is ResNet-34. We design an experiment to evaluate the performance of two other ResNet-based encoders: DAU-Net18 uses ResNet-18 as the encoder, and DAU-Net50 uses ResNet-50; all other parts of these two models are the same as in DAU-Net. As shown in Table 5, the performances of the three models differ little, with ResNet-34 slightly outperforming the other two encoders. For our classification task, ResNet-34 is therefore a more suitable encoder than the other two ResNet models.

5 Discussions

To validate the robustness of the proposed model, we employ DAU-Net to classify sea ice and open water in a series of SAR images of the Bering Strait and compare the classification results with the sea ice products provided by NSIDC. As DenseNetFCN is representative of existing DL-based sea ice classification models, we also compare against its results. The image series consists of six images, each mosaicked from three Sentinel-1A images, for a total of 18 Sentinel-1A images; their details are listed in Table 1. The series covers the Bering Strait from freezing to melting and includes a variety of sea ice textures and sea surface conditions. As shown in Fig. 9a-f, sea ice partially covered the Bering Strait on Dec 13, 2018, and had covered the entire region by Mar 19, 2019. Then, on Mar 31, 2019, the sea ice started to melt, and by May 6, 2019, most of it had receded. The most recent data of the 1 km MASIE-NH products (generally from the previous day) appear in the archive at approximately 10:00 p.m. Greenwich Mean Time (GMT), whereas the 18 Sentinel-1A images of the Bering Strait were acquired around 06:00 p.m. GMT. Because of this time difference, the MASIE-NH products we use are dated one day later than the corresponding Sentinel-1A images. The cell size of the DAU-Net results is 30 m. Because the spatial resolutions of the two datasets differ so greatly (30 m vs. 1 km), a quantitative comparison of their evaluation metrics would be unreasonable. Instead, we assess the performance of DAU-Net by visually comparing the classification results.
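The one-day offset between each Sentinel-1A image and its matched MASIE-NH product can be expressed with calendar arithmetic, which handles month boundaries such as Mar 31 to Apr 1 automatically. This is a minimal sketch: `masie_date_for` is a hypothetical helper that only encodes the offset rule stated above, and only the four acquisition dates named in the text are used (the full list is in Table 1).

```python
from datetime import date, timedelta

def masie_date_for(sar_date):
    """Date of the MASIE-NH product matched to a Sentinel-1A acquisition date.

    Encodes the rule stated in the text: the product used is dated one day after the SAR image.
    """
    return sar_date + timedelta(days=1)

# Acquisition dates named in the text.
for d in (date(2018, 12, 13), date(2019, 3, 19), date(2019, 3, 31), date(2019, 5, 6)):
    print(d.isoformat(), "->", masie_date_for(d).isoformat())
# 2018-12-13 -> 2018-12-14
# 2019-03-19 -> 2019-03-20
# 2019-03-31 -> 2019-04-01
# 2019-05-06 -> 2019-05-07
```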

Figure 9g-l show the classification results of DAU-Net, and Fig. 9m-r show the corresponding MASIE-NH products. Overall, the DAU-Net results are consistent with the MASIE-NH products. The sea surface in Fig. 9a, d, and f is very rough and bright and is mixed with sea ice, especially in the regions marked by red rectangles. As shown in Fig. 9g, j, and l, DAU-Net classifies sea ice and open water well in these images, which demonstrates that the proposed model can handle complex sea surfaces. Fig. 9c and f contain many water gaps, small sea ice floes, and sinuous ice-water boundaries, which DAU-Net classifies finely, as shown in Fig. 9i and l. The separate water channels in Fig. 9e are also successfully classified by DAU-Net, as shown in Fig. 9k. Because the spatial resolution of the MASIE-NH products is 33.3 times lower than that of the DAU-Net results, many fine-grained objects cannot be resolved in the MASIE-NH products. As shown in Fig. 9i, k, and l, the DAU-Net classification results are more consistent with the SAR images than the MASIE-NH products are, especially in the regions marked by yellow rectangles in Fig. 9c, e, and f. Taking the region marked by the yellow rectangle in Fig. 9f as an example, Fig. 10 shows a detailed comparison between the DAU-Net classification results and the 1 km MASIE-NH products. Our classification results show clear advantages over the MASIE-NH products in spatial resolution (Fig. 10b-d).
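The factor of 33.3 follows directly from the two cell sizes, and squaring it shows how many DAU-Net pixels fall into a single MASIE-NH cell. This is why floes, leads, and water channels much narrower than 1 km cannot be resolved in the 1 km product. A minimal arithmetic sketch, assuming nominal square cells of 30 m and 1 km:

```python
DAU_NET_CELL_M = 30.0   # cell size of the DAU-Net result, as stated in the text
MASIE_CELL_M = 1000.0   # nominal cell size of the 1 km MASIE-NH product

linear_ratio = MASIE_CELL_M / DAU_NET_CELL_M   # about 33.3, the factor quoted in the text
pixels_per_cell = linear_ratio ** 2            # about 1111 DAU-Net pixels per MASIE-NH cell
print(f"{linear_ratio:.1f}x coarser; {pixels_per_cell:.0f} DAU-Net pixels per 1 km cell")
# 33.3x coarser; 1111 DAU-Net pixels per 1 km cell
```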

Fig. 10 A detailed comparison between the results of DAU-Net and the MASIE-NH products in a representative region marked in Fig. 9f. a The SAR image on May 6, 2019. b–d The detailed SAR image, the DAU-Net classification results, and the 1 km MASIE-NH products for the marked region

However, DAU-Net does not perform well in some regions. As marked by the green rectangles in Fig. 9a, some sea ice pixels with dark textures are misclassified as open water, and some open water pixels with extremely rough surfaces are misclassified as sea ice. These misclassifications may be due to the scarcity of these two types of samples in the training set. They occur mainly in the SAR image of Dec 13, 2018, the early stage of sea ice formation in the Bering Strait, which contains some very dark sea ice textures. Such textures are otherwise rare during the freezing and melting stages. In addition, extremely rough sea surfaces are also rare in the training set, resulting in misclassifications. As shown in Fig. 9s-x, the results of DenseNetFCN are generally consistent with the MASIE-NH products. However, DenseNetFCN performs worse than DAU-Net, especially in the regions marked by red circles, where some rough sea surface pixels are misclassified as sea ice.

In summary, by applying DAU-Net to a series of SAR images of the Bering Strait, we demonstrated that DAU-Net performs well in most sea conditions and can handle various sea ice textures. Owing to the high resolution of the SAR images and the performance of the model, the DAU-Net results are more detailed than the MASIE-NH products. DAU-Net also outperforms DenseNetFCN, an existing DL-based sea ice classification model. However, DAU-Net does not perform well on some unusual textures. To further improve the model's applicability, we will collect more training samples to supplement these rare texture types.

6 Conclusions

This study proposes the DAU-Net model to classify sea ice and open water from SAR images. We combine ResNet-34 with U-Net to form the model backbone. The SAR images are obtained from Sentinel-1A, and both the dual-polarized information and the IA of the SAR images are used as model inputs. We integrate a dual-attention mechanism, consisting of the PAM and CAM, into the original U-Net model to extract more representative features, which helps to achieve more accurate classifications. We use 15 Sentinel-1A SAR images acquired near the Bering Sea to train the model, evaluate its performance on one SAR image, and compare DAU-Net with typical DL-based ice classification models. Further, we use the trained model to classify a series of SAR images of the Bering Strait covering the process from freezing to melting, and we compare the DAU-Net classification results with the 1 km MASIE-NH products of NSIDC. Experiments show that: 1) the dual-attention mechanism enhances the representational ability of features and helps DAU-Net outperform the original U-Net and typical existing DL-based ice classification models, especially in classifying fine-grained targets; 2) the three-channel input, consisting of dual-polarized information (VV and VH) and the IA, contributes to high-accuracy classification; and 3) DAU-Net can handle complex sea conditions from freezing to melting, showing good robustness and applicability.
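For readers unfamiliar with the dual-attention design, the channel attention module (CAM) can be sketched in NumPy following the formulation of Fu et al. [14]: channel-to-channel similarities are computed from the flattened feature map, converted into attention weights with a softmax, used to re-weight the channels, and added back through a residual connection scaled by a learnable factor gamma (initialized to 0 in that work). This is an illustrative single-image forward pass under those assumptions, not the DAU-Net implementation, which may differ in detail; the position attention module (PAM) is not shown.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def channel_attention(a, gamma=0.0):
    """Channel attention in the style of DANet (Fu et al.).

    a: feature map of shape (C, H, W). In DANet, gamma is a learnable scalar
    initialized to 0; here it is a plain argument.
    """
    c, h, w = a.shape
    flat = a.reshape(c, h * w)                 # (C, N) with N = H * W
    energy = flat @ flat.T                     # (C, C) channel similarity
    # As in the reference DANet code, energy is subtracted from its row-wise max.
    energy = energy.max(axis=-1, keepdims=True) - energy
    attn = softmax(energy, axis=-1)            # each row sums to 1
    out = (attn @ flat).reshape(c, h, w)       # channels re-weighted by attention
    return gamma * out + a                     # residual connection

feats = np.random.default_rng(0).standard_normal((8, 16, 16))
print(channel_attention(feats, gamma=0.5).shape)  # (8, 16, 16)
```

With gamma = 0 the module returns its input unchanged, so at initialization the network starts from plain U-Net features and learns how much channel attention to mix in.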

In the future, to address the misclassifications of unusual sea ice textures, we will collect more training samples over a wider range of space and time. We will also explore integrating few-shot learning to address this problem. In addition, multi-category classification models that discriminate MYI, sea ice, and open water will be the subject of follow-up work.

References

Agarap AF (2018) Deep learning using rectified linear units (ReLU). arXiv preprint arXiv:1803.08375

Alom MZ, Taha TM, Yakopcic C, Westberg S, Sidike P, Nasrin MS, Van Esesn BC, Awwal AAS, Asari VK (2018) The history began from AlexNet: A comprehensive survey on deep learning approaches. arXiv preprint arXiv:1803.01164

Bahdanau D, Cho K, Bengio Y (2014) Neural machine translation by jointly learning to align and translate. Comput Sci

Balakrishna C, Dadashzadeh S, Soltaninejad S (2018) Automatic detection of lumen and media in the IVUS images using U-Net with VGG16 encoder. arXiv preprint arXiv:1806.07554

Celik T (2009) Unsupervised change detection in satellite images using principal component analysis and k-Means clustering. IEEE Geosci & Remote Sens Lett 6(4):772–776

Clausi D, Deng H (2003) Operational segmentation and classification of SAR sea ice imagery. In: Advances in Techniques for Analysis of Remotely Sensed Data, 2003 IEEE Workshop on

Clausi DA, Deng H (2005) Operational map-guided classification of SAR sea ice imagery. IEEE Trans Geosci & Remote Sens 43:2940–2951

Dabboor M, Geldsetzer T (2014) Towards sea ice classification using simulated RADARSAT constellation mission compact polarimetric SAR imagery. Remote Sens Environ 140:189–195

Danielson S, Curchitser E, Hedstrom K, Weingartner T, Stabeno P (2011) On ocean and sea ice modes of variability in the Bering Sea. J Geophys Res: Ocean 116(C12)

ESA (2021) STEP: Science Toolbox Exploitation Platform. http://step.esa.int/main/toolboxes/snap/

Falk T, Mai D, Bensch R, Çiçek Ö, Abdulkadir A, Marrakchi Y, Böhm A, Deubner J, Jäckel Z, Seiwald K et al (2019) U-Net: deep learning for cell counting, detection, and morphometry. Nat Methods 16(1):67–70

Feng G, Dong J, Bo L, Xu Q (2017) Automatic change detection in synthetic aperture radar images based on PCANet. IEEE Geosci Remote Sens Lett 13(12):1792–1796

Fetterer F, Bertoia C, Jing PY (2002) Multi-year ice concentration from RADARSAT. In: Geoscience & Remote Sensing, IGARSS 97 Remote Sensing-a Scientific Vision for Sustainable Development, IEEE International

Fu J, Liu J, Tian H, Li Y, Bao Y, Fang Z, Lu H (2020) Dual attention network for scene segmentation. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

Gao F, Dong J, Li B, Xu Q, Xie C (2016) Change detection from synthetic aperture radar images based on neighborhood-based ratio and extreme learning machine. J Appl Remote Sens 10(4):046019

Gao Y, Gao F, Dong J, Wang S (2019) Transferred deep learning for sea ice change detection from synthetic aperture radar images. IEEE Geosci Remote Sens Lett 16(10):1655–1659

Garcia LP, de Carvalho AC, Lorena AC (2015) Effect of label noise in the complexity of classification problems. Neurocomputing 160:108–119

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp 770–778. https://doi.org/10.1109/CVPR.2016.90

Hinton GE, Salakhutdinov RR (2006) Reducing the dimensionality of data with neural networks. Science 313(5786):504–507

Karvonen JA (2004) Baltic sea ice SAR segmentation and classification using modified pulse coupled neural networks. IEEE Trans Geosci & Remote Sens

Komarov AS, Buehner M (2017) Automated detection of ice and open water from dual-polarization RADARSAT-2 images for data assimilation. IEEE Trans Geosci & Remote Sens 55(10):1–15

Krizhevsky A, Sutskever I, Hinton G (2012) Imagenet classification with deep convolutional neural networks. Advances in neural information processing systems 25(2)

Lau S, Wang X, Xu Y, Chong E (2020) Automated pavement crack segmentation using fully convolutional U-Net with a pretrained ResNet-34 encoder. arXiv preprint arXiv:2001.01912

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444. https://doi.org/10.1038/nature14539

Leigh S, Wang Z, Clausi DA (2014) Automated ice-water classification using dual polarization SAR satellite imagery. IEEE Trans Geosci & Remote Sens

Li J, Wang C, Wang S, Zhang H, Wang Y (2017) Gaofen-3 sea ice detection based on deep learning. In: 2017 Progress in Electromagnetics Research Symposium - Fall (PIERS - FALL)

Li X, Liu B, Zheng G, Ren Y, Zhang S, Liu Y, Gao L, Liu Y, Zhang B, Wang F (2020) Deep learning-based information mining from ocean remote sensing imagery. Natl Sci Rev

Liu B, Li X, Zheng G (2019) Coastal inundation mapping from bitemporal and dual-polarization SAR imagery based on deep convolutional neural networks. J Geophys Res: Ocean 124(12)

Lundhaug M (2002) ERS SAR studies of sea ice signatures in the Pechora Sea and Kara Sea region. Can J Remote Sens 28(2):114–127

Olonscheck D, Mauritsen T, Notz D (2019) Arctic sea-ice variability is primarily driven by atmospheric temperature fluctuations. Nat Geosci 12(6):430–434

Peng C, Zhang X, Yu G, Luo G, Sun J (2017) Large kernel matters–improve semantic segmentation by global convolutional network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 4353–4361

Petrou ZI, Tian Y (2019) Prediction of sea ice motion with convolutional long short-term memory networks. IEEE Trans Geosci Remote Sens 57(99):1–12

Reichstein M, Camps-Valls G, Stevens B, Jung M, Denzler J, Carvalhais N, Prabhat (2019) Deep learning and process understanding for data-driven earth system science. Nature 566(7743):195

Ren Y, Chen H, Han Y, Cheng T, Chen G (2019) A hybrid integrated deep learning model for the prediction of citywide spatio-temporal flow volumes. Int J Geogr Inf Sci. 1–22

Ronneberger O, Fischer P, Brox T (2015) U-Net: Convolutional networks for biomedical image segmentation. Springer International Publishing

Russell BC, Torralba A, Murphy KP, Freeman WT (2008) LabelMe: A database and web-based tool for image annotation. Int J Comput Vis 77(1-3)

Shokr ME (1991) Evaluation of second-order texture parameters for sea ice classification from radar images. J Geophys Res: Ocean 96

Soh LK (2002) ARKTOS: An intelligent system for satellite sea ice image analysis. CSE Technical Reports

Soh LK, Tsatsoulis C (1999) Texture analysis of SAR sea ice imagery using gray level co-occurrence matrices. IEEE Trans Geosci & Remote Sens 37(2):780–795

Su H, Wang Y, Xiao J, Yan XH (2015) Classification of MODIS images combining surface temperature and texture features using the support vector machine method for estimation of the extent of sea ice in the frozen Bohai Bay, China. Int J Remote Sens 36(9–10):2734–2750

US National Ice Center (2008) IMS Daily Northern Hemisphere Snow and Ice Analysis at 1 km, 4 km, and 24 km Resolutions, Version 1e. Tech. rep., NSIDC: National Snow and Ice Data Center, Boulder, Colorado USA., https://doi.org/10.7265/N52R3PMC, https://nsidc.org/data/g02156

Wang C, Zhang H, Wang Y, Zhang B (2018) Sea ice classification with convolutional neural networks using Sentinel-1 ScanSAR images. In: IGARSS 2018 - 2018 IEEE International Geoscience and Remote Sensing Symposium

Wang L, Scott KA, Xu L, Clausi DA (2016) Sea ice concentration estimation during melt from dual-pol SAR scenes using deep convolutional neural networks: A case study. IEEE Trans Geosci Remote Sens 54(8):4524–4533. https://doi.org/10.1109/TGRS.2016.2543660

Yan X, Scott KA (2017) Sea ice and open water classification of SAR imagery using CNN-based transfer learning. In: IGARSS 2017 - 2017 IEEE International Geoscience and Remote Sensing Symposium

Zakhvatkina NY, Alexandrov VY, Johannessen OM, Sandven S, Frolov IY (2013) Classification of sea ice types in ENVISAT synthetic aperture radar images. IEEE Trans Geosci & Remote Sens 51(5):2587–2600

Zhang Z, Liu Q, Wang Y (2017) Road extraction by deep residual U-Net. IEEE Geosci Remote Sens Lett 15(99):1–5

Zhang Z, Yu Y, Li X, Hui F, Cheng X, Chen Z (2019) Arctic sea ice classification using microwave scatterometer and radiometer data during 2002-2017. IEEE Trans Geosci & Remote Sens. 1–10

Acknowledgements

The authors would like to thank the European Space Agency for providing the Sentinel-1 data and the Sentinel Application Platform (SNAP) software. Ground truth labels are annotated with LabelMe (http://labelme.csail.mit.edu).

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License (http://creativecommons.org/licenses/by-nc-nd/4.0/), which permits any noncommercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if you modified the licensed material. You do not have permission under this license to share adapted material derived from this chapter or parts of it.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Ren, Y., Li, X., Yang, X., Xu, H. (2023). Sea Ice Detection from SAR Images Based on Deep Fully Convolutional Networks. In: Li, X., Wang, F. (eds) Artificial Intelligence Oceanography. Springer, Singapore. https://doi.org/10.1007/978-981-19-6375-9_12

DOI: https://doi.org/10.1007/978-981-19-6375-9_12

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-6374-2

Online ISBN: 978-981-19-6375-9

eBook Packages: Earth and Environmental Science (R0)