Abstract

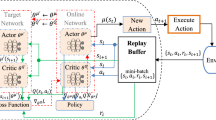

For motion control of a seven-degree-of-freedom (7-DOF) manipulator, the traditional inverse kinematics approach has several drawbacks: it demands considerable modeling expertise, the equation matrices are difficult to solve, and the computational cost is high. In this paper, reinforcement learning (RL) is applied to a 7-DOF manipulator. To cope with the large state space and continuous action space in RL, neural networks are used to map the state space to the action space. An action selection network (actor) and an action evaluation network (critic) are constructed within the Actor-Critic framework, and the action selection policy is learned through RL training based on the deep deterministic policy gradient (DDPG) algorithm. Finally, the effectiveness of the method is tested on a Baxter robot in the Gazebo simulator.
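The following is a minimal sketch, assuming Python with NumPy, of the DDPG actor-critic update described above. It is not the authors' implementation. Linear function approximators stand in for the actor and critic neural networks so that the gradients can be written by hand, Gaussian noise is used for exploration, and the reaching task in `train_toy` (state = seven joint-angle errors, action = bounded joint increments, reward = negative squared error) is a hypothetical stand-in for the Baxter/Gazebo setup. All names (`ReplayBuffer`, `critic_features`, `LinearDDPG`, `train_toy`) and all hyperparameters are illustrative assumptions.

```python
import random
from collections import deque

import numpy as np


class ReplayBuffer:
    """Fixed-size FIFO store of (s, a, r, s2, done) transitions."""

    def __init__(self, capacity, seed=0):
        self.buf = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, s, a, r, s2, done):
        self.buf.append((s, a, r, s2, done))

    def sample(self, n):
        batch = self.rng.sample(list(self.buf), n)
        s, a, r, s2, d = (np.array(x, dtype=float) for x in zip(*batch))
        return s, a, r, s2, d

    def __len__(self):
        return len(self.buf)


def critic_features(s, a):
    """phi(s, a) = [s, a, a^2, a (x) s, 1]: quadratic in a, with state-action cross terms."""
    cross = (a[:, :, None] * s[:, None, :]).reshape(len(s), -1)
    return np.concatenate([s, a, a ** 2, cross, np.ones((len(s), 1))], axis=1)


class LinearDDPG:
    """DDPG update rules with linear stand-ins for the actor and critic networks."""

    def __init__(self, obs_dim, act_dim, gamma=0.99, tau=0.005,
                 lr_actor=1e-3, lr_critic=3e-3, seed=0):
        rng = np.random.default_rng(seed)
        self.obs_dim, self.act_dim = obs_dim, act_dim
        self.gamma, self.tau = gamma, tau
        self.lr_a, self.lr_c = lr_actor, lr_critic
        n_feat = obs_dim + 2 * act_dim + act_dim * obs_dim + 1
        self.W = rng.normal(0.0, 0.1, (act_dim, obs_dim))  # actor: mu(s) = tanh(W s)
        self.w = rng.normal(0.0, 0.1, n_feat)               # critic: Q(s, a) = phi(s, a) . w
        self.W_targ, self.w_targ = self.W.copy(), self.w.copy()

    def act(self, s, W=None):
        """Deterministic policy; tanh keeps every joint command in [-1, 1]."""
        return np.tanh(s @ (self.W if W is None else W).T)

    def q(self, s, a, w=None):
        return critic_features(s, a) @ (self.w if w is None else w)

    def update(self, s, a, r, s2, d):
        # Critic: one SGD step on the mean squared TD error; the TD target is
        # computed with the target actor and the target critic.
        y = r + self.gamma * (1.0 - d) * self.q(s2, self.act(s2, self.W_targ), self.w_targ)
        phi = critic_features(s, a)
        td = phi @ self.w - y
        self.w -= self.lr_c * phi.T @ td / len(s)

        # Actor: deterministic policy gradient. dQ/da is evaluated at a = mu(s)
        # and chained through tanh to the actor weights (gradient ascent on Q).
        o, k = self.obs_dim, self.act_dim
        mu = np.tanh(s @ self.W.T)
        w_a, w_aa = self.w[o:o + k], self.w[o + k:o + 2 * k]
        M = self.w[o + 2 * k:o + 2 * k + k * o].reshape(k, o)
        dq_da = w_a + 2.0 * w_aa * mu + s @ M.T
        self.W += self.lr_a * ((dq_da * (1.0 - mu ** 2)).T @ s) / len(s)

        # Soft (Polyak) update of the target parameters.
        self.W_targ = self.tau * self.W + (1.0 - self.tau) * self.W_targ
        self.w_targ = self.tau * self.w + (1.0 - self.tau) * self.w_targ
        return float(np.mean(td ** 2))


def train_toy(steps=300, batch=32, seed=0):
    """Hypothetical 7-joint reaching task: state = joint-angle error, reward = -|error|^2."""
    rng = np.random.default_rng(seed)
    agent, buf, losses = LinearDDPG(7, 7, seed=seed), ReplayBuffer(10_000, seed), []
    e = rng.uniform(-1.0, 1.0, 7)
    for t in range(steps):
        noise = rng.normal(0.0, 0.1, 7)  # Gaussian exploration noise
        a = np.clip(agent.act(e[None])[0] + noise, -1.0, 1.0)
        e2 = np.clip(e - 0.1 * a, -2.0, 2.0)
        done = (t + 1) % 50 == 0  # episodes are cut every 50 steps
        buf.push(e, a, -float(e2 @ e2), e2, float(done))
        e = rng.uniform(-1.0, 1.0, 7) if done else e2
        if len(buf) >= batch:
            losses.append(agent.update(*buf.sample(batch)))
    return agent, losses
```

For example, `agent, losses = train_toy(steps=300)` runs the loop and returns the critic's TD loss after each update. The critic is made quadratic in the action, with state-action cross terms, so that dQ/da, the quantity the deterministic policy gradient needs, varies with both the state and the action. With real neural networks, the hand-written gradients would be replaced by automatic differentiation.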

Acknowledgements

The authors would like to express their appreciation to their mentors at Shanghai University for their valuable comments and other help. This work was supported by the Shanghai Municipal Commission of Economy and Informatization of China (program No. 2017-GYHLW-01037) and by the Shanghai Science and Technology Committee of China (program No. 17511109300).

Fund Program:

Financed by the Program of the Shanghai Municipal Commission of Economy and Informatization, No. 2017-GYHLW-01037.

Financed by the Program of the Shanghai Science and Technology Committee, No. 17511109300.

Copyright information

© 2019 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Liu, Ll., Chen, El., Gao, Zg., Wang, Y. (2019). Research on Motion Planning of Seven Degree of Freedom Manipulator Based on DDPG. In: Wang, K., Wang, Y., Strandhagen, J., Yu, T. (eds) Advanced Manufacturing and Automation VIII. IWAMA 2018. Lecture Notes in Electrical Engineering, vol 484. Springer, Singapore. https://doi.org/10.1007/978-981-13-2375-1_44

DOI: https://doi.org/10.1007/978-981-13-2375-1_44

Publisher Name: Springer, Singapore

Print ISBN: 978-981-13-2374-4

Online ISBN: 978-981-13-2375-1

eBook Packages: Intelligent Technologies and Robotics, Intelligent Technologies and Robotics (R0)