Abstract

Atom probe tomography (APT) represents a revolutionary characterization tool for materials that combine atomic imaging with a time-of-flight (TOF) mass spectrometer to provide direct space three-dimensional, atomic scale resolution images of materials with the chemical identities of hundreds of millions of atoms. It involves the controlled removal of atoms from a specimen’s surface by field evaporation and then sequentially analyzing them with a position sensitive detector and TOF mass spectrometer. A paradox in APT is that while on the one hand, it provides an unprecedented level of imaging resolution in three dimensions, it is very difficult to obtain an accurate perspective of morphology or shape outlined by atoms of similar chemistry and microstructure. The origins of this problem are numerous, including incomplete detection of atoms and the complexity of the evaporation fields of atoms at or near interfaces. Hence, unlike scattering techniques such as electron microscopy, interfaces appear diffused, not sharp. This, in turn, makes it challenging to visualize and quantitatively interpret the microstructure at the “meso” scale, where one is interested in the shape and form of the interfaces and their associated chemical gradients. It is here that the application of informatics at the nanoscale and statistical learning methods plays a critical role in both defining the level of uncertainty and helping to make quantitative, statistically objective interpretations where heuristics often dominate. In this chapter, we show how the tools of Topological Data Analysis provide a new and powerful tool in the field of nanoinformatics for materials characterization.

You have full access to this open access chapter, Download chapter PDF

Similar content being viewed by others

Keywords

1 Introduction

The modern development of Atom Probe Tomography (APT) has opened new exciting opportunities for material design due to its ability to experimentally map atoms with chemistry in a 3D space [1,2,3,4,5,6,7]. However, the challenges exist to accurately reconstruct the 3D atomic structure and to more precisely identify features (for example, precipitates and interfaces) from the 3D data. Because data is in the format of discrete points in some metric space, i.e., a point cloud, many data mining algorithms which have been developed are applicable to extract the geometric information embedded in the data. Nevertheless, those geometric-based methods have certain limitations when being applied to solve the problems in atom probe data. We summarize below the limitations of geometric-based methods and present a data-driven approach to address significant challenges associated with massive point cloud data and data uncertainty at sub-nanoscales which can be generalized to many other applications.

1.1 Atom Probe Tomography Data and Analysis

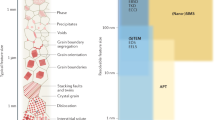

In APT, atoms are removed from a region on a specimen’s surface (the area may be as large as 200 nm × 200 nm) and are then spatially mapped (see Fig. 7.1). When combined with depth resolution of one inter-planar atomic layer for depth profiling, APT provides the highest spatial resolution of any microanalysis technique. This capability provides a unique opportunity to study experimentally with atomic resolution, chemical clustering, and 3D distributions of atoms, and to directly test and refine atomic and molecular-based modeling studies. While APT has its origins in FIM, originally developed by Erwin W. Müller in 1955, and the atom probe microscope dates back to ca. 1968, it is only fairly recently that highly sophisticated and reliable instruments have become commercially available.

Reproduced from Ref. [8] with permission

In APT, the specimen is inserted into a cryogenically cooled, UHV analysis chamber. The analysis chamber is cryogenically cooled to freeze out atomic motion. It is at ultrahigh vacuum (UHV) to allow individual atoms to be identified without interference from the environment. A positive voltage is applied to the specimen via a voltage/laser pulse. The positive voltage attracts electrons and results in the creation of positive ions. These ions are repelled from the specimen and pulled toward a position sensitive detector. The location of the atom in the specimen is determined from the ion’s hit position on the detector. This configuration magnifies the specimen by a million times and in due course, atoms from the surface ionize, exposing another layer of atoms under them. This process of field ionization continues until the specimen has been fully analyzed, and provides a 3D image of the entire specimen. The difference in APT with other characterization techniques is that the image is mathematically a point cloud, as opposed to a traditional gray scale voxelized image.

Improvements in data collection rates, field-of-view, detection sensitivity (at least one atomic part per million), and specimen preparation have advanced the atom probe from a scientific curiosity to a state-of-the-art research instrument [9,10,11,12,13,14,15,16,17,18]. While APT is a powerful technique with the capacity to gather information containing hundreds of millions of atoms from a single specimen, the ability to effectively use this information has significant challenges. The main technological bottleneck lies in handling the extraordinarily large amounts of data in short periods of time (e.g., giga- and terabytes of data). The key to successful scientific applications of this technology in the future will require that handling, processing, and interpreting such data via informatics techniques be an integral part of the equipment and sample preparation aspects of APT.

As applies to APT, two main phases are involved in the data processing and analysis. The first one is the reconstruction of the 3D image, which identifies the 3D coordinate and chemistry for each collected atom. The second phase is to extract useful information from the reconstructed image; for example, to identify crystalline structures, clusters, and precipitates. There are two parameters of interest here which need to be determined during the 3D image reconstruction: the voxel size [19,20,21] and the elemental concentration threshold for the voxels. Normally these two parameters are determined empirically by trial and error—i.e., a value is set for the parameter and if the expected features are visible then the image is considered to be correct. Once the parameters are set, they are treated as fixed values and all the subsequent analyses are done based on these set values. There are two issues with this approach (1) the determination of the values for the parameters is largely subjective, and (2) once the values are chosen, the results of the subsequent analyses are biased toward those particular values.

In the following, we use a practical case to elaborate the issues. Because the number of atoms being imaged is very large, using visual inspection to detect the existence of crystalline structure is very difficult. This is a particular problem which we address later on in this chapter for defining interfaces and precipitates. That is, by identifying where there is a change in crystal structure, we can identify phase transitions. A popular way to detect the crystalline structure in a set of atoms is to find repetitive patterns formed by local subsets of atoms [21]. The local subset of an atom is defined as the set of neighboring atoms within the nth coordinate shell together with the atom itself. The nth coordinate shell of an atom is defined as the distance from the atom to the nth peak of the radial distribution function. Figure 7.2 shows the 1st and 2nd coordinate shells of a point/atom. As a result, every neighboring point is either in or not in the local subset. Although this is an effective method, the results of the 1st and 2nd coordinate shells are relatively independent, and there is no collective way of summarizing the results for all the coordinate shells. On the other hand, there have been many algorithms developed for the detection of precipitates and atomic clustering. Almost all of these algorithms require some parameter inputs, including bin size, chemical threshold, and number of neighbors. As discussed in terms of defining reconstruction parameters, the end result is largely user biased. Therefore, in both aspects (reconstruction and data analysis), a protocol which removes this bias is necessary if we are to trust the results from an APT experiment. Such a protocol is described in the following section, with application of the approach demonstrated in the results section.

Reproduced from Ref. [22] with permission from The Royal Society of Chemistry

Example of how the definition of a cluster or atomic scale feature is largely dependent on user selection of parameters. In this case, based on a difference in nearest neighbor distances, two very different clusters are defined. This is a significant problem in APT, where this same issue can result in two totally different microstructural characterizations. For example, multiple boundaries of a precipitate can be reasonably defined. Through the use of topological methods, we propose to address this issue and define a bias-free approach to reconstruction and data analysis in APT and, therefore, provide believable and sufficiently robust results not provided with geometry-based approaches.

1.2 Characteristics of Geometric-Based Data Analysis Methods

The modern development of (APT) has opened new exciting opportunities for material design due to its ability to experimentally map atoms with chemistry in a 3D space. However, the challenges exist to accurately reconstruct the 3D atomic structure and to more precisely identify features (for example, precipitates and interfaces) from the 3D data [23,24,25,26,27,28,29,30]. Because data is in the format of discrete points in some metric space, i.e., a point cloud, many data mining algorithms, which have been developed, are applicable to extract the geometric information embedded in the data [31,32,33,34]. Nevertheless, those geometric-based methods have certain limitations when being applied to solve the problems in atom probe data. We summarize below the limitations of geometric-based methods.

In the category of supervised learning [35], many methods require prior knowledge about the data. In the case when the prior knowledge is not available, assumptions need to be made and a bias could be introduced. For example, regression usually assumes a mathematical function between the variables, which means the conclusion we draw from the regression would bias the function that is chosen. On the other hand, for unsupervised learning methods [34], there is usually some parameter(s) that needs to be determined for the algorithm. For example, clustering methods usually require the number of clusters (or some equivalent parameter) to be manually determined; in the case of dimensionality reduction, a common assumption is that the data resides on a lower dimensional manifold, which will sufficiently represent the data, although the dimension of the manifold may not be something that can be determined by the algorithm.

Due to the wide range of applications, there is hardly a universal rule to determine the values of the parameters required by the geometric-based methods. For a particular task, the parameters can be determined either empirically based on the constraints of the situation at hand, or by some algorithm [36]. In these cases, the hidden assumption is that the number of the parameter is fixed once chosen. In some scenario, it would be worthwhile to make those fixed parameters variables. This is not equivalent to giving a set of values to the parameters and collecting all of the results, since the results are independent from each other. What is needed is a scheme that can summarize the results as the parameter changes value. The lack of variability also exists on another level, that is, geometric-based approaches have the property of being exact, i.e., two points in a space are geometrically distinguishable as long as they do not share the same coordinates. As a result of this, for example, classification algorithms determine the classes by using a set of hyper boundaries which are fixed once obtained by training the algorithm.

Topological-based methods have certain properties that are not available for the geometrical-based methods [37]:

-

(i)

topology focuses on the qualitative geometric features of the object, which themselves are not sensitive to coordinates, which means that the data can be studied without having to use some algebraic function, and thus no prior assumption or parameter needs to be dealt with;

-

(ii)

instead of using a metric for distance, topology uses a less clear metric, i.e., “proximity” or “neighborhood”, since “proximity” is less absolute than the actual metric, topology is capable of dealing with the scenarios where information is less exact;

-

(iii)

the qualitative geometric features can be associated to some algebraic structure through homology, so changes in the topology can be tracked by these algebraic structures, which can be useful when assessing the impact of a parameter on the result of a given analysis. All these properties make the topological-based methods good candidates for dealing with APT data. Table 7.1 summarizes the main differences between the geometric- and topological-based methods.

Table 7.1 Comparison of geometric-based and topological-based methods

2 Persistent Homology

Persistent Homology [38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55] is a means of topological data analysis. Now let us use an example to show how the topological data analysis methods can overcome the limitations of geometrical methods. Given, as shown in Fig. 7.3, is a set of points (a point cloud) obtained by randomly sampling a circle with some added noise. Let us assume that we are assigned with the task to infer what kind of object the set of points is sampled from. There are several ways we can approach this, with the most straightforward one being to make a judgment based on how the points visually appear. Because the points appear to be located close to the rim of a disk, one can intuitively connect those points along the outskirt of the set, which would give us a zig-zag version of a circle, and thus one may conclude that the points are sampled from a circle. However, not only is this visual observation-based intuition very subjective, but also it is not feasible when the dimension of the data space is higher than three. Alternatively, because we know the points are sampled from some unknown object, we can assume that two or more points are from the same portion of the object if they are close. With this assumption, we can connect two points with a line if they are close. However, this means we need to choose a distance threshold, and it is obvious that choosing different thresholds will result in different conclusions.

Persistent Homology provides a concise way to deal with the above question. First, homology is, generally speaking, a link between topology and algebra, which associates the qualitative geometric features like connected components and n-dimensional holes with algebraic objects (homology groups). Table 7.2 shows the summary of the homology class with the corresponding qualitative geometric features for the first few dimensions. The homology classes of the same dimension in a topological space form a group and the rank of the group (the Betti number) equals the number of distinct qualitative geometric features of that dimension. Thus by using homology, different topological spaces can be distinguished by comparing the number of distinct homology classes. In the case of a circle versus an annulus, homology cannot distinguish them because both the circle and the annulus have one connected component and one hole, i.e., the number of distinct homology classes for both 0th and 1st dimensions are the same. Thus, in the above case, the ambiguity between the circle and the annulus does not affect the result. Next, as the points are sampled from some object, adding continuum to the subsets of discrete points is necessary to recover the shape of the object. Persistent Homology accomplishes this by associating every point with a disk (or hyper-disk in higher dimensional cases) and increasing the diameter of the disks from 0 (growing the size of the disks). During the process, the topology of the space defined by the union of all of the disks will change. The changes will include two or more isolated disks merging together and/or hole(s) being formed or covered by a set of disks. Through homology, these changes will be seen as the change in the number of distinct homology classes and all this will be recorded by a so-called “barcode” representation, which serves as the result of the persistent homology. Because all the disks have the same size at any given time, and the homology classes are recorded for a series of continuous disk sizes, the conclusion can be made without biasing toward a particular value of the disk size.

Figure 7.4 demonstrates the process with the barcode. The left column shows the points and their associated disks at different sizes, and the right column is the barcode which is a summary of the qualitative geometric features (different homology classes) of the space defined by the intersection of the disks. The horizontal axis of the barcode is the diameter of those disks, and the vertical axis is the ordering of the bars. At the beginning when the diameter of all of the 30 disks is 0, no disk overlaps with others, so there are 30 connected components in the space. These 30 connected components are represented by 30 horizontal bars (in orange color) of length 0, while the left end of the bars line up to the horizontal value 0 since they begin to exist when the diameter is zero. As the disk sizes increase, the bars also extend horizontally toward the right, as can be seen in the first row of Fig. 7.4. During this process, whenever there are two disks whose union changes from an empty set to a non-empty set with the two disks originally belonging to two different connect components, then there are two originally connected components becoming one connected component. At this point, one of the two bars representing the two isolated connected components will stop extending, while the other bar will continue extending and represent the union of both connected components. This dynamic is shown in the 1st row through the 3rd row of Fig. 7.4 (notice that the color is lighter for the bars that are still extending). At some large diameter value all the disks merge together and become one single connected component, so there remains one bar representing the union of all the disks. Also at a certain diameter value, an enclosed hole will be formed by the union of the disks, this enclosed hole represents a 1st homology class, and a new bar (green color) is added to the barcode as shown in the fourth row of the Fig. 7.4. Notice this bar did not start from the horizontal value of 0, but it starts from when the diameter value corresponds to when the enclosed hole is just formed. As the disks size increases, this bar also extends horizontally to the right, and at the same time the area of the enclosed hole decreases. When this enclosed hole is totally covered by the disks, it is considered to be no longer “live” and the corresponding bar stops extending at the value of the disk diameter such that the hole is just covered by the disks. We call the diameter value when the enclosed hole is formed the “birth time” of that hole/homology class and the diameter value when the region is just covered by the disks the “death time” of the hole/homology class. Correspondingly, all of the 0th homology classes have a birth time of zero and a death time equal to the diameter when their corresponding bar is no longer extending. By examining the barcode, we can read off the birth and death time of every homology class. This provides an idea of all the qualitative geometric features with their relative birth and death time, and thus how persistent they are within the range of the diameter values being considered. In general, the long-lived or more persistent features are the ones of greater significance than the short-lived ones. Here, except for one orange bar which keeps extending forever, all the orange bars have shorter length than the green bar, so our conclusion is that the 2D hole is the main topological feature of the space. It is worth pointing out that the above example demonstrated the idea of persistent homology in 2D space, but in practice, the data and the geometric features are not restricted to 2D (see Table 7.2).

Demonstration of Persistent Homology. Each row of subfigures corresponds to one value of disk diameter. The left column of subfigures shows the points (blue point) with their associated disks in purple color; the right column is the corresponding barcode. The 0th homology classes are represented by the orange bars and the 1st homology class is represented by the green bar. All the bars for the 0th homology class are sorted in the order of their death time. The lighter shaded bars indicate that the bar is not terminated at that point

In fact, the above process can be thought of as viewing the points through a telescope with the focus of the telescope changing continuously, and thus we name the Persistent Homology on a set of points as a “Data Telescope”. When the image in the telescope is clear and all the points are sharp, it corresponds to the case which the diameter of the disks is 0. When the focus is detuned and the points in the image are vague, it indicates that the diameter of the disks is no longer zero. This tuning ability can be very useful when processing APT data. One point to clarify: in atom probe data the raw output is a point cloud with information associated with each individual atom. We then define the voxels in order to visualize the data and to make the analyses manageable through data reduction. In APT data analyses, the counterpoint of the disk diameter in the above example can be the voxel size and/or the chemical concentration threshold of the voxel. By tuning these parameters, we can track the topological changes within the raw data space, and based on these changes we select the critical transition point(s) in reconstruction parameters to capture the useful information which is otherwise hidden. The exact procedure developed and applied in this chapter is described schematically in Fig. 7.5, encompassing the entire “Data Telescope” for APT data by focusing the voxel size selection and chemical threshold for each voxel (the adjustable reconstruction parameters) in a bias-free manner, and for which we apply for identifying microstructural features.

Process followed in this chapter for defining optimal reconstruction parameters in APT, and identifying microstructural features free of bias. In Sect. 7.3, we introduce an approach for defining optimal voxel size, where the optimal size is defined as that which is least sensitive to atomic positioning with the respective voxels. This makes the data most applicable to topological analyses. In Sect. 7.4, we then apply a topological analysis to the data after voxelized to identify the optimal chemical thresholds which reflect microstructural phase transitions. In Sect. 7.5, we then introduce application of uncertainty into the analysis, reflecting the experimental conditions

3 Voxel Size Determination: Identification of Interfaces

In visualizing APT data, the large number of data points can obscure the underlying structures. Therefore, the reconstructed APT data is first sectioned into voxels (i.e., 3D boxes), which encompass a collection of atoms (Fig. 7.6) and a local density value is assigned to each voxel based on the chemical composition of the voxel. By tracking the variation in the local density associated within each voxel across the sample, one can detect underlying features such as grain boundaries or precipitates. The following section describes the process we outlined in a previous report [56]. Figure 7.6 graphically denotes the process of voxelization for random points scattered in 3D, which represent the atoms in a material. The volume encompassing the data is initially sectioned into voxels of edge length of 0.2 nm (chosen arbitrarily for illustration). The data was then binned into voxels. The voxels were classified by the number of data points they contained and a density value was assigned. The density value of voxels is useful for pinpointing regions of high chemical density, potentially indicating the presence of precipitates, or capturing regions of different densities delineating different phases.

Reproduced from Ref. [56] with permission

Voxelization of random data points scattered in 3D, representative of atoms in a material. a Original data set within a 1 nm3 box. b The atoms are grouped into uniform voxels of edge length 0.2 nm. The numbers of atoms contained within the different voxels represent the local density of that voxel volume.

The procedure which we have developed and which is described below roughly follows these steps:

-

(i)

for a given voxel size, apply a Gaussian kernel to each atom at its exact position;

-

(ii)

sum all of the Gaussian kernels to define a estimated density across the voxel;

-

(iii)

define another Gaussian assuming every atom in the voxel is located within the center of the voxel;

-

(iv)

calculate the difference between the estimated density and the central Gaussian which approximates the true density; and

-

(v)

define the optimal voxel size as that which has the minimum difference.

The specifics of each step are expanded below.

Kernel density estimation (KDE) methods can capture the contribution of each atom toward the voxel and to obtain a smooth overall density function representing the voxel. Each atom is represented by a kernel, which is a symmetric function which integrates to one and contributes to a value at the center of the voxel. The center is considered representative of the region encompassed by the voxel. The contribution of the atoms within the voxel to the voxel center is a function of the atom’s location and is determined by a sampling formula [57]. In the simplest case, the contribution of each atom, located at position “x”, toward a voxel of unit length centered around the origin can be represented by a Parzen window [58] given by:

which indicates that all atoms within the voxel contribute equally, independent of their location within the voxel. There are several other sampling functions and merits of each are discussed elsewhere [59, 60]. In this work, we make use of Gaussian kernels as shown in Fig. 7.7.

Reproduced from Ref. [56] with permission

KDE using Gaussian kernels. h is the edge length of the voxel. xi denotes the location of atom “i”. X denotes the center of the voxel at which the density is calculated.

If “x” is the atom position and X is the center of the voxel of edge length “h” where the individual kernel contribution is measured, the contribution of the Gaussian kernel is defined by the weighting function

where the value hd is known as the bandwidth of the kernel and in the case of the Gaussian kernel: hd = σ where σ is the standard deviation. The standard Gaussian kernel (with zero mean and unit variance) is given by \( K\left( t \right) = \frac{1}{{\sqrt {2\pi } }}e^{{ - \frac{1}{2}t^{2} }} \).

The estimated density function at any point x within the voxel (Fig. 7.8) is defined by the average of the different kernel contributions (Eq. 9.2) as

Reproduced from Ref. [56] with permission

Illustration of density estimation through kernel density function (red lines) representing atom positions (yellow circles) in different voxels. The blue line is the estimated density of atoms in each voxel obtained by summing up the contributions of the various kernels within the voxel.

where hd > 0 is the window width, smoothing parameter or bandwidth.

To automate the voxel size, an error function is defined to compute the difference between the kernel estimated density of the data \( \hat{f}\left( x \right) \) and its true density f(x). A typical measure of the accuracy over the entire voxel is obtained by integrating the square of the error computed given by:

where MISE is the mean integrated square error. Since the distribution of atoms does not follow any known pattern, especially at the region of interest such as the interface, the true density \( f\left( x \right) \) is not known. The approach followed here to approximate the true density as closely as possible to the estimated density consists of the following sequence: \( f\left( x \right) \) is first assumed to be a Gaussian distribution, assumed to represent the actual distribution of atoms within the voxel, although the atoms may very well be non-normally distributed. The mean and variance of this assumed Gaussian spread is calculated for the atoms within and on the boundary of the voxel of interest. Next, depending on the real distribution of the atoms, the Gaussian function may peak either at or off center in the voxel and in the latter case it is translated to the center of the voxel. The difference of this Gaussian distribution with \( \hat{f}\left( x \right) \) is used for computation of MISE. For the cases where the initial assumption of f(x) is a poor one, it will results in a high MISE. By gradually varying the voxel size the validity of this assumption reaches a most probable value corresponding to minimized MISE. The total squared error (E tot ) is then computed for the entire dataset given by the following equation

where V is the total number of voxels. E tot is then minimized with respect to varying voxel size. The kernel density estimation was carried out on the Ni–Al–Cr dataset comprising ~8.72 million atoms. For each atom in a voxel, a Gaussian kernel was fit at the atom location and its amplitude was set at 1 with full width at half maximum set to the voxel edge length. The kernel contributions of atoms to the voxel were calculated at the voxel center for all atoms within and on the boundary of a particular voxel. These values were then added giving the amplitude of density at the voxel center. The error was then calculated between the actual density and estimated density using the procedure explained in the previous section. This procedure is repeated for the voxel size varied from 0.5 to 2.5 nm in steps of 0.1 nm. A minimum error was obtained for 1.6 nm voxel size (Fig. 7.9) providing a tradeoff between the noise and data averaging. This voxel size of 1.6 nm reduces the atomic data set into a representation of 83,754 voxels at 1.6 nm3 each.

Reproduced from Ref. [56] with permission

Dependence of the normalized mean integrated square error (MISE) on the voxel size. The error is minimum for a voxel edge length of 1.6 nm.

In the case of 1 nm, the voxel size is too small to accurately estimate the density. Due to statistical fluctuation in the distribution of atoms, there are many pockets of 1 nm3 throughout the sample where the concentration of Al and Cr are almost equal. As the voxel size is increased, the increase in volume averages out the noise and a clear interface starts emerging. At 1.6 nm most of the statistical noise vanishes and a very sharp interface is obtained, with nanometer scale fluctuations visible on the isosurface representing the interface (Fig. 7.10). As the voxel size is increased beyond this value, over smoothing of data starts occurring. The interface starts becoming diffuse and the graininess in the image disappears. At this stage there is ideally no statistical noise and the residual clusters scattered throughout the volume could potentially be capturing the presence of nanoclusters.

Adapted from Ref. [56] with permission

Feature topology for voxel size of 1.6 nm. Only those voxels where the Al = Cr concentrations are imaged, reflecting the γ/γ’ interface. This elucidates the effect of voxel edge length on capturing the precise interface between the γ/γ’ region of the Ni–Al–Cr sample, with the approach applicable to all APT data for defining voxel size and for any metallic samples for defining microstructural features.

4 Topological Analysis for Defining Morphology of Precipitates

Interfaces and precipitate regions are typically identified from APT data by representing them as isoconcentration surfaces at a particular concentration threshold, thereby making the choice of concentration threshold critical. The popular approach to selecting the appropriate concentration threshold is to draw a proximity histogram [61], which captures the average concentration gradient across the interface and visually identifies a concentration value that is the best representative of an interface or phase change occurrence. This makes the choice of concentration gradient user dependent and subjective. In this section, we will showcase how persistent homology can be applied to better recover the morphology of the precipitates.

As we have mentioned in Sect. 7.2, metric properties such as the position of a point, the distance between points, or the curvature of a surface are irrelevant to topology. Thus, a circle and a square have the same topology although they are geometrically different. Such qualitative geometric features can be represented by simplicial complexes, which are combinatorial objects that can represent spaces and separate the topology of a space from its geometry [62]. Simplicial homology is a process that provides information about the simplicial complex by the number of cycles (a type of hole) it contains. One of its informational outcomes are Betti numbers which record the number of qualitative geometric features such as connected components, holes, tunnels, or cavities. A microstructural features such as a nanocluster can have only limited topological features depending on its dimension. For example, in 3D, a structure can be simply connected, or it can be connected such that a tunnel passes through it, or it can be connected to itself such that it encloses a cavity, or it can remain unconnected. Thus, we can characterize the topology of a structure by counting the number of simply connected components, number of tunnels and number of cavities denoted by Betti numbers β 0 , β 1, and β 2 . The relationship between the Betti numbers, the data topology, and the concept of barcodes as described in the introduction is summarized in Fig. 7.11.

Reproduced from Ref. [62] with permission

Persistent homology and barcodes as described in the introduction, and their relationship with Betti numbers, as applied in this section. a the original point cloud data consisting of 2000 points. b Extracting 1% of the original data points as landmark points. c The barcode using the witness complex [21] achieves the identification of (β0, β1, β2) = (1, 0, 1), representing 1 connected component and 1 cavity.

As discussed earlier and expanded upon in our prior work [62], the persistence of different topological features can be recorded as barcodes, which we now group according to each Betti number. The horizontal axis represents the parameter ɛ or the range of connectivity among points in the point cloud while the vertical axis captures the number of topological components present in the point cloud at each interval of ɛ. There has to be some knowledge of the appropriate range for ɛ, such as the interatomic distance when dealing with raw atom probe data or voxel length if the data has been voxelized. The persistence of features is a measure of whether these features are actually present in the data or if they are artifacts appearing at certain intervals.

Having voxelized the APT data following the approach discussed in Sect. 7.3, each voxel represents a certain value of local concentration. By varying the concentration threshold as our filtration parameter, our underlying dataset provides a different set of voxels corresponding to each concentration threshold. We vary the concentration threshold of each element independently in Fig. 7.12 to show how the process evolves.

Adapted from Ref. [62] with permission

Filtration of an AlMgSc structure with respect to concentration threshold. The three panels show the evolution of Betti numbers with changes in the concentration threshold for Sc, Mg, and Al respectively. The set of (β0, β1, and β2) captures the number of precipitates and the persistence of β0 denotes appropriate concentration thresholds for the different elements Isoconcentration Surfaces obtained at different concentration threshold are shown corresponding to each figure to denote regions of interest.

The top panel shows the evolution of Betti numbers for varying Sc concentration. At each value of Sc concentration threshold “δ”, those voxels having a concentration of δ ± 0.02 were chosen. Consider β0: at a high concentration threshold, beyond 0.5, a very small number of simply connected components are observed. This is because very few voxels have concentration value equal or more than this threshold. As concentration threshold is decreased, more voxels qualify to be included in the group leading to an increase in β0. The value of β0 remains constant for a certain range indicating that these are real features. A plot of the voxels at δ = 0.3 shows that it indeed captures real clusters of Sc. With further decrease of concentration threshold, a decrease in β0 is observed. This is because every voxel outside the Sc clusters has some minimal content of Sc and the inclusion of all exterior voxels results in one single connected component. We also observe a peak in the value of β1 at a low concentration of δ = 0.03. When we plot the isoconcentration surface for those voxels we find that these represent cavities. These voxels with very low Sc concentration sit on the edge of the Sc clusters, and thereby, enclose Sc clusters within themselves. A similar trend is observed with Mg where for low concentration we see Mg Isosurface containing cavities that enclose Sc clusters, whereas for high concentration there are few voxels.

5 Spatial Uncertainty in Isosurfaces

APT data is a point cloud data and in order to study hidden features like precipitates or grain boundaries, isosurfaces are often used. These isosurfaces are drawn at a particular concentration threshold. We calculate the uncertainty in spatial location of isosurfaces here and use visualization techniques that lead to the incorporation of uncertainty information in the final image (Fig. 7.13). Isosurfaces were drawn by joining voxels which have the same value of density or concentration, as defined in Sect. 7.4. For uncertainty calculations in the APT data, we followed the approach described in Sect. 7.3 for calculating the error and difference from ideal density. Consider xi as the atom in a voxel with coordinates (x xi , x yi , and x zi ), the mean µx and standard deviation σx along the x-axis will be given as follows:

The atoms are distributed throughout a voxel, as discussed in Sect. 7.3. When isosurfaces are drawn, only the net value is included in the representation (a). We have added another quantity to include spatially scattered data. We model the uncertainty as Gaussian noise (b). Parameters of Gaussians such as FWHM or variance are calculated based on spatial distribution in the region. We have net value, as before, but also have another parameter which includes information about neighborhood. However, this also adds another dimension in visualization. We represent the uncertainty through selective blurring of the region where blur intensity is mapped to values at the Gaussian distribution

With the above information, a full definition of Gaussian distribution at the voxel center was obtained, following the logic described in Fig. 7.7. We have added the concept of uncertainty in isosurfaces. As we have seen, the calculation for voxels and chemical thresholds involves averaging of data points, while averaging involves some variability in the final result. We may not be able to remove the variability but we can attempt to quantify it. At present, density values are calculated at the centers and we get one net value at each point. Further interpolation across voxels provides uncertain isosurfaces. The above equations were used to study the APT data described in Sect. 7.4. Voxel data was used to convert the data into a structured grid format. The data was then visualized. As a first step, crisp isosurfaces were drawn. In a second step, uncertainty information was added by assigning a shaded region around it. The intensity of the shade was dependent on the uncertainty value. In the present study, an uncertainty of ±1% was used. Figure 7.14 shows an isosurface drawn at a concentration threshold of 12%. Here, each voxel was assigned a value which was assumed to be constant throughout the voxel. Further, all of the voxels with values equal to the threshold were joined to give crisp isosurfaces. Figure 7.14b shows the same isosurface with the inclusion of uncertainty. Uncertainty is a function of spatial distribution of atoms. Distribution of atoms is less at distances away from the isosurface and thus no effect was observed. Near the surface, the uncertainty of the isosurface decreases. From the image, it is observed that there is an increased level of intensity as the surface is approached.

a Output from APT experiment. b Isoconcentration surface obtained at 12% concentration threshold, following the approaches described in Sects. 3 and 4 for defining voxel size and chemical threshold, respectively. Crisp and bias free definition of precipitate boundaries is provided. c Isoconcentration surface shown with inclusion of uncertainty

6 Summary

Atom probe tomography is a chemical imaging tool that produces data in the form of mathematical point clouds. Unlike most images which have a continuous gray scale of voxels, atom probe imaging has voxels associated with discrete points that are associated with individual atoms. The informatics challenge is to assess nano and sub-nanoscale variations in morphology associated with isosurfaces when clear physical models for image formation do not exist given the uncertainty and sparseness in noisy data. In this chapter, we have provided an overview of the application of topological data analysis and computational homology as powerful new informatics tools that address such data challenges in exploring atom probe images.

References

M.K. Miller, R.G. Forbes, Atom-Probe Tomography (Springer, New York, 2014)

D.J. Larson, T.J.T.J. Prosa, R.M. Ulfig, B.P. Geiser, T.F. Kelly, Local Electrode Atom Probe Tomography: A User’s Guide (Springer, New York, 2013)

B. Gault, M.P. Moody, J.M. Cairney, S.P. Ringer, Atom Probe Microscopy (Springer, New York, 2012)

S.K. Suram, K. Rajan, Microsc. Microanal. 18, 941 (2012)

B. Gault, S.T. Loi, V.J. Araullo-Peters, L.T. Stephenson, M.P. Moody, S.L. Srestha, R.K.W. Marceau, L. Yao, J.M. Cairney, S.P. Ringer, Ultramicroscopy 111, 1619 (2011)

E.R. McMullen, J.P. Perdew, Solid State Commun. 44, 945 (1982)

E.R. McMullen, J.P. Perdew, Phys. Rev. B 36, 2598 (1987)

M.K. Miller, T.F. Kelly, K. Rajan, S.P. Ringer, Mat. Today 15, 158 (2012)

M.K. Miller, E.A. Kenik, Microsc. Microanal. 10, 336 (2004)

K. Hono, Acta Mat. 47, 3127 (1999)

T.F. Kelly, D.J. Larson, K. Thompson, J.D. Olson, R.L. Alvis, J.H. Bunton, B.P. Gorman, Ann. Rev. Mat. Res. 37, 681 (2007)

T.F. Kelly, M.K. Miller, Rev. Sci. Instr. 78, 1101 (2007)

M.K. Miller, Atom-probe tomography (Kluwer Academic/Plenum Publishers, New York, 2000)

M.K. Miller, A. Cerezo, M.G. Heatherington, G.D.W. Smith, Atom-probe field-ion microscopy (Clarendon Press, Oxford, 1996)

J. Rsing, J.T. Sebastian, O.C. Hellman, D.N. Seidman, Microsc. Microanal. 6, 445 (2000)

D.N. Seidman, R. Herschitz, Acta Metall. 32, 1141 (1985)

D.N. Seidman, R. Herschitz, Acta Metall. 32, 1155 (1985)

D.N. Seidman, B.W. Krakauer, D. Udler, J. Phys. Chem. Sol. 55, 1035 (1994)

M. Hetherington, M. Miller, J. Phys. Colloq. 50, C8 (1989)

M. Hetherington, M. Miller, J. Phys. Colloq. 48, C6–559 (1987)

K. Torres, M. Daniil, M.A. Willard, G.B. Thompson, Ultramicroscopy 111, 464 (2011)

C. Phillips, G. Voth, Soft Matter 9, 8552 (2013)

M.P. Moody, L.T. Stephenson, P.V. Liddicoat, S.P. Ringer, Contingency table techniques for three dimensional atom probe tomography. Micros. Res. Tech. 70, 258 (2007)

F. Vurpillot, F. De Geuser, G. Da Costa, D. Blavette, J. Microscopy 216, 234 (2004)

J.M. Hyde, A. Cerezo, T.J. Williams, Ultramicroscopy 109, 502 (2009)

M.P. Moody, B. Gault, L.T. Stephenson, D. Haley, S.P. Ringer, Ultramicroscopy 109, 815 (2009)

B. Gault, X.Y. Cui, M.P. Moody, A.V. Ceguerra, A.J. Breen, R.K.W. Marceau, S.P. Ringer, Scripta Mat. 131, 93 (2017)

K. Hono, D. Raabe, S.P. Ringer, D.N. Seidman, MRS Bull. 41, 23 (2016)

F. Vurpillot, W. Lefebvre, J.M. Cairney, C. Obderdorfer, B.P. Geiser, K. Rajan, MRS Bull. 41, 1 (2016)

J.M. Cairney, K. Rajan, D. Haley, B. Gault, P.A.J. Bagot, P.-P. Choi, P.J. Felfer, S.P. Ringer, R.K.W. Marceau, M.P. Moody, Ultramicroscopy 159, 324 (2015)

O. Hellman, J. Vandenbroucke, J. Blatz du Rivage, D.N. Seidman, Mater. Sci. Eng. A 327, 29 (2002)

L.T. Stephenson, M.P. Moody, P.V. Liddicoat, S.P. Ringer, Microsc. Microanal. 13, 448 (2007)

A. Shariq, T. Al-Kassab, R. Kirchheim, R.B. Schwarz, Ultramicroscopy 107, 773 (2007)

F.D. Geuser, W. Lefebvre, Microsc. Res. Tech. 74, 257 (2011)

L. Ericksson, T. Byrne, E. Johansson, J. Trygg, C. Vikstrom, Multi- and Megavariate Data Analysis: Principles, Applications (Umetrics Ab, Umea, 2001)

H.-P. Kriegel, P. Krger, J. Sander, A. Zimek, Wiley Interdisciplinary Reviews. Data Min. Knowl. Disc. 1, 231 (2011)

G. Carlsson, Bull. Am. Math. Soc. 46, 255 (2009)

H. Edelsbrunner, D. Letscher, A. Zomorodian, Discret. Comput. Geom. 28, 511 (2002)

S. Bhattacharya, R. Ghrist, V. Kumar, IEEE Trans. Rob. 31, 578 (2015)

G. Carlsson, T. Ishkhanov, V.d. Silva, A. Zomorodian. Int. J. Comput. Vision 76, 1 (2008)

P.G. Cmara, Current Opinion in Systems Biology Future of Systems Biology Genomics and Epigenomics, vol. 1, p. 95 (2017)

I. Donato, M. Gori, M. Pettini, G. Petri, S. De Nigris, R. Franzosi, F. Vaccarino, Phys. Rev. E 93, 052138 (2016)

H. Edelsbrunner, D. Letscher, A. Zomorodian, Discr. Comput. Geom. 28, 511 (2002)

Y. Hiraoka, T. Nakamura, A. Hirata, E.G. Escolar, K. Matsue, Y. Nishiura, Proc. Natl. Acad. Sci. 113, 7035 (2016)

D. Horak, S. Maletic, M. Rajkovic, J. Stat. Mech Theor. Exp. 2009, P03034 (2009)

H. Liang, H. Wang, PLoS Comput. Biol. 13, e1005325 (2017)

N. Otter, M.A. Porter, U. Tillmann, P. Grindrod, H.A. Harrington, arXiv:1506.08903 (2015)

B. Rieck, H. Leitte, Comput. Graph. Forum 34, 431 (2015)

B. Rieck, H. Leitte, Comput. Graph. Forum 35, 81 (2016)

B. Rieck, H. Mara, H. Leitte, IEEE Trans. Visual Comput. Graph. 18, 2382 (2012)

C.M. Topaz, L. Ziegelmeier, T. Halverson, PLoS One 10, e0126383 (2015)

B. Wang, G.-W. Wei, J. Comput. Phys. 305, 276 (2016)

K. Xia, G.-W. Wei, International journal for numerical methods. Biomed. Eng. 30, 814 (2014)

K. Xia, G.-W. Wei, J. Comput. Chem. 36, 1502 (2015)

A. Zomorodian, G. Carlsson, Discr. Comput. Geom. 33, 249 (2005)

S. Srinivasan, K. Kaluskar, S. Dumpala, S. Broderick, K. Rajan, Ultramicroscopy 159, 381 (2015)

V. Epanechnikov, Theor. Probab. Appl. 14, 153 (1969)

E. Parzen, Ann. Math. Stat. 33, 1065 (1962)

M. Rosenblatt, Ann. Math. Stati. 27, 832 (1956)

D.W. Scott, Biometrika 66, 605 (1979)

O.C. Hellman, J.A. Vandenbroucke, J. Rusing, D. Isheim, D.N. Seidman, Microsc. Microanal. 6, 437 (2000)

S. Srinivasan, K. Kaluskar, S. Brodeick, K. Rajan, Ultramicroscopy 159, 374 (2015)

Acknowledgements

We gratefully acknowledge support from NSF DIBBs Project OAC-1640867 and NSF Project DMR-1623838. KR acknowledges support from the Erich Bloch Endowed Chair at the University at Buffalo-State University of New York.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made. The images or other third party material in this chapter are included in the chapter’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2018 The Author(s)

About this chapter

Cite this chapter

Zhang, T., Broderick, S.R., Rajan, K. (2018). Topological Data Analysis for the Characterization of Atomic Scale Morphology from Atom Probe Tomography Images. In: Tanaka, I. (eds) Nanoinformatics. Springer, Singapore. https://doi.org/10.1007/978-981-10-7617-6_7

Download citation

DOI: https://doi.org/10.1007/978-981-10-7617-6_7

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-7616-9

Online ISBN: 978-981-10-7617-6

eBook Packages: Chemistry and Materials ScienceChemistry and Material Science (R0)