Abstract

Deep learning-based defect detection is rapidly gaining importance for automating visual quality control tasks in industrial applications. However, due to the usually low rejection rates in manufacturing processes, industrial defect detection datasets are subject to three severe data challenges: data sparsity, data imbalance, and data shift. Because the acquisition of defect data is highly cost-intensive and Deep Learning (DL) algorithms require a sufficiently large amount of data, we investigate how to address these challenges using data oversampling and data augmentation (DA) techniques. Given the problem of binary defect detection, we present a novel experimental procedure for analyzing the impact of different DA-techniques. Accordingly, pre-selected DA-techniques are used to generate experiments across multiple datasets and DL models. For each defect detection use-case, we configure a set of random DA-pipelines to generate datasets of different characteristics. To investigate the impact of DA-techniques on defect detection performance, we then train convolutional neural networks with two different but fixed architectures and hyperparameter sets. To quantify and evaluate the generalizability, we compute the distances between dataset derivatives to determine the degree of domain shift. The results show that we can precisely analyze the influences of individual DA-methods, thus laying the foundation for establishing a mapping between dataset properties and DA-induced performance enhancement, with the aim of improving DL development. We show that there is no one-fits-all solution, but that within the categories of geometrical and color augmentations, certain DA-methods outperform others.

1 Introduction

Manufacturing processes have been optimized in recent decades to achieve minimum reject rates and high product quality. However, as product and process complexities increase, the importance of reliable quality assurance continues to grow. Defects such as internal holes, pits, abrasions, and scratches on workpieces or knots, broken picks, and broken yarn in fabrics [1] negatively impact both visual and functional product properties [2]. Defects also contribute to additional waste of resources, pose safety hazards, and can have severe economic consequences for a company. Therefore, reliably assuring the quality of manufactured products is of paramount importance in manufacturing. One prominent contemporary approach towards a fully automated quality control system is deep learning (DL)-based computer vision. DL algorithms improve over existing rule-based systems in terms of generalization and performance, while requiring less domain expertise [3, 4, 5]. However, a major disadvantage of data-driven approaches compared to rule-based techniques lies in the strong dependency of model precision on data quantity, data quality, and the evolution of the data over time (data drift) [6]. While the focus in recent years has been on the development of advanced network architectures (e.g., ResNet-50 [7] or Inception-v3 [8]), the progress being made in model-space is increasingly diminishing. As a result, development is shifting towards data-centric approaches, especially in real-world domains such as manufacturing or medical diagnostics. Table 6.1 provides an overview of the main data challenges that are characteristic of image data acquired from production processes.
These properties stand in strong contrast to those of (research) datasets (e.g., ImageNet [9], COCO [10], MNIST [11]) used for developing and benchmarking deep neural network architectures and DL-algorithms, which is why approaches from research are difficult to transfer one-to-one to such complex defect detection use-cases.

Data augmentation (DA) represents a data-space solution addressing the above-mentioned data quality challenges. There are various DA techniques that aim to change both the geometrical and visual appearance of images in order to improve both the performance and the robustness of deep neural networks. The most common DA techniques are geometric transformations, color augmentations, kernel filters, mixing images, and random erasing [12]. Even though DA is already an integral part of DL pipelines, different DA-methods are often applied blindly based on empirical knowledge and require elaborate tuning for specific datasets. To analyze the impact of different DA-methods on both precision and generalization for the task of visual defect detection, this paper introduces our experimental procedure in Sect. 6.3.3, presents the results in Sect. 6.4.2, and finally derives insights about the studied DA-methods in Sect. 6.5. Sect. 6.3.2 introduces the three real-world datasets with which we work. Our DA-methods are chosen according to a preliminary study of related papers that is summarized in Sects. 6.2 and 6.3.3.

2 Related Work

This section provides a brief overview of work that addresses the generalization problem, DA approaches, and their impact on real-world DL tasks. One central drawback of real-world datasets is that models trained on them do not generalize well, as these datasets are prone to domain shift [13]. In recent years, model-centric techniques such as dropout [14], transfer learning [15], and pretraining [16] have tried to address the issue of generalization, particularly in deep neural networks. DA tries to avoid poor generalization by addressing the root problem, the training data [17], rather than changing the model or training process. Applications of DA can be found in various works across multiple domains such as natural language processing [18], computer vision [17], and time series classification [19]. Particularly in computer vision tasks, DA has been applied to address the domain generalization problem [20, 21, 22]. Many papers exist that apply and analyze basic DA-techniques (e.g., oversampling and data warping on histopathological images [23]) and advanced methods (e.g., stacked DA on medical images [24], style-transfer augmentations [25], cGAN, and geometric transformations [26]) for specific use cases and datasets.

Fewer papers exist that provide an overview of DA-methods and try to examine their influence on model accuracy. The survey of Shorten et al. [17] presents a comprehensive overview of DA and examines the impact of individual methods on well-known datasets (e.g., CIFAR-10, MNIST, Caltech101) in an isolated manner using pairwise comparisons. Shijie et al. [27] explore the impact of various DA-methods on image classification tasks with CNNs. On subsets of CIFAR10 and ImageNet, they conduct pair and triple comparisons to identify the best-performing DA-techniques and to draw general conclusions. Yang et al. [28] systematically review different DA-methods and propose a taxonomy of the reviewed methods. For semantic segmentation, image classification, and object detection, they compare the performance of different model architectures on datasets (e.g., CIFAR-100, SVHN) with and without a pre-defined set of DA-techniques. The survey paper of Khosla et al. [29] presents an overview of selected DA-methods without conducting further effect analyses. In addition to generic studies on scientific datasets, a few domain-specific approaches exist. The only related work on DA in defect detection is provided by Jain et al. [30]. They propose a DA-framework utilizing GANs, which they use to investigate data synthesis for the classification of manufacturing datasets.

2 Scientific Impact

Existing studies are almost exclusively conducted on scientific datasets, and no reference is made to specific application domains (with the exception of [30]). To the best of our knowledge, there is currently no preliminary work that examines the impact of DA-methods specific to DL-based visual quality control in manufacturing datasets in an unconstrained setting (i.e., one not limited to pairwise evaluations).

3 Approach

In this section, we present our approaches and procedures. Sect. 6.3.1 defines the mathematical problem of binary defect detection. Sect. 6.3.2 introduces the datasets considered in this study and their properties. The experimental procedure, the domain shift measure, and the evaluation metrics are presented in Sect. 6.3.3.

3.1 Binary Defect Detection Problem Definition

For binary visual defect detection, the input feature space is denoted by \(\mathcal{X}\) and \(\mathcal{Y}\) denotes the target space. We define the domain as a joint distribution \(P_{XY}\) on \(\mathcal{X}\times\mathcal{Y}\) and the dataset as \(\mathcal{D}=\{(\mathcal{X}_i,\mathcal{Y}_i)\}_{i=1}^{N}\), where \(N\) is the number of training examples. In this work, \(\mathcal{X}_1,\mathcal{X}_2,\mathcal{X}_3\) comprise images from three datasets, namely: (1) AITEX fabric defects [1], (2) Magnetic tile defects [31], and (3) TIG Aluminium 5083 welding defects [32]. We define the binary classification problem where \(\mathcal{Y}\in\{\text{Defected},\text{Non-defected}\}\). Furthermore, the DL model is defined as \(f\colon\mathcal{X}\rightarrow\mathcal{Y}\), where the primary objective is to learn a mapping from the input space \(\mathcal{X}\) to the target space \(\mathcal{Y}\). In this work, \(f\in\{\text{ResNet-50 [7]},\text{Inception-V3 [8]}\}\). The predictions generated using model \(f\) are denoted as \(\hat{\mathcal{Y}}\). The categorical cross entropy loss function is defined as \(\ell\colon\mathcal{Y}\times\hat{\mathcal{Y}}\rightarrow[0,\infty)\). Each dataset \(\mathcal{D}=\{(\mathcal{X}_i,\mathcal{Y}_i)\}_{i=1}^{N}\) is augmented using various DAs, where \(\theta\) denotes the list of all DAs, and a new augmented dataset is generated as \(\mathcal{D}_1=\theta(\mathcal{D})\). For each dataset, ten DA-pipelines with varying DAs are constructed to create ten different datasets \(\mathcal{D}_1,\dots,\mathcal{D}_{10}\).

3.2 Presentation of the Datasets

Three real-world industrial-grade datasets are used in this work. An overview of exemplary images is provided in Fig. 6.1. The Magnetic tile defects dataset (MagTile) contains a total of 1,344 images of magnetic tiles in six categories: the five defect types blowhole, crack, fray, break, and uneven (grinding uneven), plus free (no defects). AITEX is a fabric production dataset containing 246 images of 4,096 × 256 pixels that capture seven different fabric structures. In total, there are 140 defect-free images, 20 for each type of fabric, and 105 images with defects. The TIG Aluminium 5083 welding seam dataset (TIG5083) contains 33,254 images of aluminium weld seams and the area surrounding the weld seam, with six classes: good weld, burn through, contamination, lack of fusion, misalignment, and lack of penetration. We convert the multi-class classification task of all datasets into a binary classification problem by merging all individual defect types into a single defect class.
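The merging of defect types into a single defect class can be illustrated with a minimal sketch. The function name `to_binary`, the `DEFECT_FREE` mapping, and the toy label list are assumptions made for illustration; only the class names are taken from the dataset descriptions above.

```python
from collections import Counter

# Name of the defect-free class per dataset, as listed in the text.
DEFECT_FREE = {"MagTile": "free", "TIG5083": "good weld"}


def to_binary(labels, defect_free_label):
    """Map multi-class labels to 0 (non-defective) or 1 (defective)."""
    return [0 if label == defect_free_label else 1 for label in labels]


# Hypothetical toy labels, not taken from the real dataset.
magtile_labels = ["free", "crack", "blowhole", "free", "fray", "uneven"]
binary = to_binary(magtile_labels, DEFECT_FREE["MagTile"])
print(binary)           # [0, 1, 1, 0, 1, 1]
print(Counter(binary))  # Counter({1: 4, 0: 2})
```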

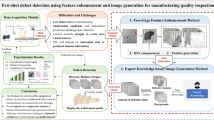

3.3 Experiment Procedure

To evaluate the impact of DA-techniques, we propose a three-stage process: First, for each dataset, apply a DA-pipeline and evaluate model performance on different test sets. Second, measure the domain shift between the train set and the test sets. Third, correlate the achieved performance with the domain shift. This framework provides insight into the effects of different DAs on model performance, domain shift, and, through the correlation of both, the generalization capabilities of the trained model. An overview of our algorithm can be found in Fig. 6.2. We assume a standard train-test split of 80/20 and further divide the 80% train portion into training and validation data, so that 60% of the data is used for training and 20% for validation. Additionally, we create a hold-out test set by splitting off one of the defect classes per dataset before the classes are merged (see Sect. 6.3.2). This hold-out set serves as an additional out-of-distribution test set to measure the generalization capabilities of the model. We apply DA in two different settings. For AITEX and MagTile, augmented datapoints are added as new instances while the original ones are retained. This increases the overall number of instances in the dataset and stabilizes training. For TIG5083, augmented datapoints replace the originals, since the dataset already contains enough images for training. The hold-out class for the AITEX dataset is 'Broken end', the hold-out class for Magnetic tile defects is 'Crack', and the hold-out class for TIG Aluminium 5083 is 'contamination'.
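The splitting scheme described above can be sketched as follows. This is a minimal illustration under stated assumptions: the function `split_dataset`, the fixed seed, and the plain (non-stratified) random shuffle are hypothetical choices, since the text does not specify how samples are drawn.

```python
import random


def split_dataset(samples, holdout_class, seed=0):
    """Split (item, label) pairs into train/val/test plus a hold-out set.

    Samples of `holdout_class` are removed first and form the
    out-of-distribution hold-out set. The remainder is split 60/20/20.
    """
    holdout = [s for s in samples if s[1] == holdout_class]
    rest = [s for s in samples if s[1] != holdout_class]

    rng = random.Random(seed)  # assumption: fixed seed for reproducibility
    rng.shuffle(rest)

    n_test = round(0.2 * len(rest))
    n_val = round(0.2 * len(rest))
    test = rest[:n_test]
    val = rest[n_test:n_test + n_val]
    train = rest[n_test + n_val:]
    return train, val, test, holdout


# Hypothetical toy data: 100 in-distribution samples and 10 'crack' samples.
toy = [(i, "free" if i % 2 else "blowhole") for i in range(100)]
toy += [(100 + i, "crack") for i in range(10)]
train, val, test, holdout = split_dataset(toy, holdout_class="crack")
print(len(train), len(val), len(test), len(holdout))
```

The hold-out class is separated before any label merging, so the model never sees this defect type during training.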

3.3 Data Augmentation Pipelines

In order to pre-select the DA-steps for this paper, a survey was conducted across 24 papers dealing with 6 major industrial image data sets. Table 6.2 describes all available augmentations for each dataset. From these augmentation pools, different pipelines for each dataset were constructed. For each pipeline, two of the augmentations are reserved for the test set and are later referred to as test augmentations. The remaining DAs have a 0.5 chance of being applied to the training set. This process is repeated ten times (see Table 6.3). App. 6.6.1 provides an overview of selected unaugmented and augmented images for all three datasets.
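The pipeline construction can be sketched roughly as below. The function `build_pipelines` and the example pool are illustrative assumptions: the pool only lists augmentation names mentioned elsewhere in this paper and does not reproduce Table 6.2. The sketch also reads the 0.5 chance as a per-pipeline inclusion probability, which is one possible interpretation of the description.

```python
import random


def build_pipelines(augmentation_pool, n_pipelines=10, n_test_augs=2,
                    p=0.5, seed=0):
    """Draw random DA-pipelines from a pool of augmentation names."""
    rng = random.Random(seed)
    pipelines = []
    for _ in range(n_pipelines):
        # Reserve two augmentations for the augmented test set.
        test_augs = rng.sample(augmentation_pool, n_test_augs)
        candidates = [a for a in augmentation_pool if a not in test_augs]
        # Each remaining augmentation enters the train pipeline with chance p.
        train_augs = [a for a in candidates if rng.random() < p]
        pipelines.append({"train": train_augs, "test": test_augs})
    return pipelines


# Illustrative pool only; the actual pools are given in Table 6.2.
pool = ["MLS", "Gaussian noise", "random rotation", "affine transformation",
        "perspective transformation", "image transpose",
        "salt & pepper noise"]
pipelines = build_pipelines(pool)
for idx, pipeline in enumerate(pipelines, start=1):
    print(idx, pipeline["test"], pipeline["train"])
```

Keeping the test augmentations disjoint from the train augmentations ensures that the augmented test set contains transformations the model has not been trained on.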

3.3 Domain Shift Measures

We use an algorithm proposed by [36] for measuring the domain shift between datasets. In computer vision tasks, calculating domain shift can be seen as calculating the difference in representation by a model given the source and target domain. Given that a source domain is distant from the target domain, the representation of the domains in the learned space for a specific model tends to diverge. The authors used the activation values from the model’s last layers to quantify the domain shift. Specifically, by creating a statistical distribution using each kernel’s activation value in those layers, we can measure the distance between the datasets using the Wasserstein distance.
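To make the distance computation concrete, the following sketch computes the one-dimensional Wasserstein distance between two empirical activation distributions and averages it over kernels. It is not the implementation from [36]: the function names, the input layout (one list of activation values per kernel), and the averaging over kernels are assumptions made for illustration.

```python
def wasserstein_1d(u, v):
    """Wasserstein-1 distance between two 1-D empirical distributions.

    Computed as the area between the two empirical CDFs.
    """
    u_sorted, v_sorted = sorted(u), sorted(v)
    all_values = sorted(u_sorted + v_sorted)
    distance = 0.0
    i = j = 0
    for left, right in zip(all_values[:-1], all_values[1:]):
        # Count samples <= left to evaluate both CDFs on [left, right).
        while i < len(u_sorted) and u_sorted[i] <= left:
            i += 1
        while j < len(v_sorted) and v_sorted[j] <= left:
            j += 1
        cdf_u = i / len(u_sorted)
        cdf_v = j / len(v_sorted)
        distance += abs(cdf_u - cdf_v) * (right - left)
    return distance


def representation_shift(source_acts, target_acts):
    """Average per-kernel Wasserstein distance for one layer.

    `source_acts[k]` and `target_acts[k]` hold the activation values of
    kernel k collected over all images of the respective dataset.
    """
    per_kernel = [wasserstein_1d(s, t)
                  for s, t in zip(source_acts, target_acts)]
    return sum(per_kernel) / len(per_kernel)


# Hypothetical activation values for a layer with two kernels.
source = [[0.0, 0.0], [0.0, 1.0, 3.0]]
target = [[1.0, 1.0], [5.0, 6.0, 8.0]]
print(representation_shift(source, target))
```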

3.3 Evaluation Metrics

To evaluate the results of the binary classification problem, various metrics such as F1-Score, precision, recall, Jaccard similarity [37], Cohen's kappa score [38], and Matthews correlation coefficient (MCC) [39] are used. Since the datasets are imbalanced even after applying DA, the per-class metrics (Jaccard, precision, recall, and F1-Score) are weighted by the class distribution. We use multiple evaluation metrics, as they all deviate slightly from each other. In this way, we circumvent the difficulties caused by the sensitivity of individual metrics and obtain a more conclusive evaluation. Since all these scores are bounded between [0,1], we average all of them when reporting final performance values.
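The metrics can be written out from the binary confusion matrix, as in the sketch below. The function names and the toy predictions are hypothetical, and the support-weighted averaging follows the usual definition of "weighted" scores, which is an assumption about how the weighting was done here.

```python
import math


def confusion(y_true, y_pred):
    """Return (tp, tn, fp, fn) with label 1 as the defect class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def _safe_div(a, b):
    return a / b if b else 0.0


def all_scores(y_true, y_pred):
    tp, tn, fp, fn = confusion(y_true, y_pred)
    n = tp + tn + fp + fn
    # (tp, fp, fn) when each class in turn is treated as the positive one.
    per_class = {1: (tp, fp, fn), 0: (tn, fn, fp)}
    scores = {"precision": 0.0, "recall": 0.0, "f1": 0.0, "jaccard": 0.0}
    for tp_c, fp_c, fn_c in per_class.values():
        weight = (tp_c + fn_c) / n  # class support / total
        prec = _safe_div(tp_c, tp_c + fp_c)
        rec = _safe_div(tp_c, tp_c + fn_c)
        scores["precision"] += weight * prec
        scores["recall"] += weight * rec
        scores["f1"] += weight * _safe_div(2 * prec * rec, prec + rec)
        scores["jaccard"] += weight * _safe_div(tp_c, tp_c + fp_c + fn_c)
    # Cohen's kappa: observed vs. chance agreement.
    p_obs = (tp + tn) / n
    p_exp = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / n ** 2
    scores["kappa"] = _safe_div(p_obs - p_exp, 1 - p_exp)
    # Matthews correlation coefficient.
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    scores["mcc"] = _safe_div(tp * tn - fp * fn, denom)
    scores["mean"] = sum(scores.values()) / len(scores)
    return scores


# Hypothetical predictions for ten images (three defective).
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 0, 0, 1]
scores = all_scores(y_true, y_pred)
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```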

4 Results

In this section, we present the results. Sect. 6.4.1 defines the training and implementation procedure. Sect. 6.4.2 provides an overview of the protocol followed to evaluate the results at the example of the AITEX dataset. Sect. 6.4.3 presents the results of our ablation study.

4.1 Training and Implementation

To control the model training, a validation set is split off from the augmented training set. The model is evaluated on the original test set, the augmented test sets (using the two reserved test augmentations), and the hold-out set as described in Sect. 6.3.3. As models for our experiments, ResNet-50 and Inception-v3 were chosen, as both are widely used in the literature on industrial applications. The learning rate for both models is set to \(10^{-3}\), the Adam optimizer [40] is used, and the first-layer input shape of the networks is set to 224 and 299, respectively. We initialize the networks using pre-trained weights (ImageNet) for both architectures. Training uses transfer learning with 50 epochs of frozen weights in the encoder (shallow training) and an additional 30 epochs of fine-tuning the entire model (deep training). As with the evaluation metrics, a class-balanced version of the loss function was employed to stabilize the learning process. The data for each experiment was normalized according to the statistics of the train set after applying DA.
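One common way to class-balance a cross-entropy loss is to weight each sample inversely to its class frequency. The sketch below illustrates that idea; the weighting formula \(w_c = N / (K \cdot n_c)\), the function names, and the toy values are assumptions, since the exact balancing scheme is not specified in the text.

```python
import math
from collections import Counter


def class_weights(labels):
    """Inverse-frequency weights: w_c = N / (K * n_c)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * count) for c, count in counts.items()}


def weighted_cross_entropy(probs, labels, weights):
    """Class-weighted binary cross entropy.

    `probs[i]` is the predicted probability of the defect class (label 1).
    """
    eps = 1e-12  # guards against log(0)
    total = 0.0
    weight_sum = 0.0
    for p, y in zip(probs, labels):
        p_true = p if y == 1 else 1.0 - p
        total += -weights[y] * math.log(max(p_true, eps))
        weight_sum += weights[y]
    return total / weight_sum


# Hypothetical imbalanced batch: eight defect-free images, two defective.
labels = [0] * 8 + [1] * 2
weights = class_weights(labels)
probs = [0.1] * 8 + [0.6, 0.4]
print(weights)
print(weighted_cross_entropy(probs, labels, weights))
```

With these weights, both classes contribute equally to the loss in total, so the minority defect class is not drowned out by the defect-free majority.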

4.2 Results for the AITEX Dataset

Fig. 6.3 depicts the average F1-Score across both models and across the DA steps for each test set. The values are obtained by averaging the performance of each pipeline that contains the respective augmentation. We observe that the performance on the original test set and, to a lesser extent, the augmented test set remains stable, whereas on the hold-out set (highest amount of domain shift) model performance improved significantly. The top three DA-steps for the AITEX dataset are MLS, Gaussian noise, and random rotating. As stated in Sect. 6.3.3, we also averaged the performance across multiple other metrics, since they all differ slightly from each other. Similar trends can be observed in Fig. 6.4.
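The per-augmentation averaging described above can be sketched as follows. The function name and all numbers are hypothetical and serve only to show the aggregation; they are not results from this study.

```python
from collections import defaultdict


def mean_score_per_augmentation(pipelines, scores):
    """Average the score of every pipeline that contains an augmentation."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for augmentations, score in zip(pipelines, scores):
        for aug in augmentations:
            sums[aug] += score
            counts[aug] += 1
    return {aug: sums[aug] / counts[aug] for aug in sums}


# Hypothetical pipelines and F1-Scores, for illustration only.
example_pipelines = [
    ["MLS", "Gaussian noise"],
    ["MLS"],
    ["random rotation"],
]
example_f1 = [0.8, 0.6, 0.5]
per_aug = mean_score_per_augmentation(example_pipelines, example_f1)
for aug, value in sorted(per_aug.items(), key=lambda kv: -kv[1]):
    print(f"{aug}: {value:.2f}")
```

Because each pipeline combines several augmentations, a score attributed to one augmentation also reflects the others it was paired with.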

Next, the distance between the train set (source domain) and the test sets (target domain) was calculated for all the models and datasets. Table 6.4 contains the mean and standard deviation across all the pipelines for the AITEX dataset and ResNet-50 model. The domain shift increases from the original test set to the augmented test set to the hold-out set. Finally, the domain shift is correlated to the respective F1-Scores, as Wasserstein distance alone lacks interpretability.

A negative correlation means that the performance of the model on the test data decreases with increasing domain shift. Therefore, a greater (i.e., less negative) correlation is desirable, as it indicates that performance degrades less as the domain shift grows. Each cell in Table 6.5 contains the Pearson correlation between the distance measure and the F1-Scores across all the test sets. Since the domain shift is measured based on a single layer of the model, we evaluated the last three layers of each model and report the values separately in the columns. The correlation values do not change depending on the layer used, but we observe two outliers among the pipelines that display a weaker correlation between domain shift and model performance. Further information can be found in App. 6.6.2. The same evaluation protocol was followed for the other two datasets as well, and similar trends were observed. The results for TIG5083 and MagTile can be found in App. 6.6.3.
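For a single pipeline, the correlation step can be sketched as below. The `pearson` helper and the example values are hypothetical and chosen only to show the expected sign of the correlation; they are not entries of Table 6.5 or Table 6.6.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


# Hypothetical values for one pipeline, ordered as:
# original test set, augmented test set, hold-out set.
distances = [1.0, 2.0, 3.0]
f1_scores = [0.9, 0.8, 0.5]
r = pearson(distances, f1_scores)
print(f"Pearson r = {r:.3f}")
```

With only three test sets per pipeline, each coefficient is based on three points, so it is best read as a coarse indicator of the trend.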

4.3 Results of the Ablation Study

In addition to the average score presented in Sect. 6.4.2, we draw additional insights from comparing model performance across all available models and datasets. Fig. 6.5 depicts the stacked bar plot of weighted F1-Scores averaged across all datasets and models for each augmentation that was available for the dataset. Across all the experiments, affine transformations, moving least squares (MLS), and random rotation performed best. Similarly, Fig. 6.6 depicts the average of the scores across all other evaluation metrics. We observe similar trends: on average across experiments, affine transformations, perspective transformation, and MLS perform best.

5 Conclusion

DL offers enormous potential to automate complex visual quality control tasks that cannot be solved using rule-based methods. However, manufacturing applications entail three severe data challenges: data sparsity, data imbalance, and data shift. DA-methods have become an integral part of DL-pipelines to improve both performance and generalization. To provide precise assistance in selecting DA-methods for the future development of DL-based quality control, we present an experiment protocol in this paper. With it, we aim to evaluate the impact of individual DA-methods on defect detection performance depending on dataset characteristics. We apply this protocol to three defect detection use-cases and present and interpret the results.

Using our approach, we can evaluate the influence of each DA method on the model metrics in detail. We show how to determine the domain shift between genuine and augmented dataset derivatives, thereby providing a measure and interpretability for choosing the degree of DA. By correlating this domain shift with F1-Scores, the strength of the positive influence of a DA-pipeline on bridging the domain shift can be determined. Applying our protocol to the datasets, we obtain the following three best DA-methods per dataset: MLS, Gaussian noise, and random rotating (AITEX); image transpose, random perspective, and salt & pepper noise (MagTile); and affine transformation, perspective transformation, and image transpose (TIG5083). We thereby confirm that the performance improvement of DA-methods depends on dataset characteristics, the DL-task to be solved, and the degree of DA. This shows that there is no one-fits-all solution, but at the same time makes it all the more clear that establishing a mapping between dataset properties (e.g., degree of imbalance, defect sizes, positional variance of defects) and DA-induced performance enhancement will enable tailor-made and precise DL-pipeline development, especially in real-world applications.

Correlating the observed performances with the respective domain shift revealed additional insights. The two pipelines for the AITEX dataset that induced the weakest negative correlation between domain shift and performance were mainly composed of our three best-performing augmentations for that dataset (see Table 6.5, pipelines 4 and 9). Additionally, we found that the worst-performing pipelines either had very few augmentations or contained badly performing augmentations (mainly "random rotate" for AITEX), further highlighting the need for tailor-made DA-pipelines for each dataset. In contrast, our ablation study showed that, when averaging the results over all datasets and models, at least some augmentations do perform better than others on average. The better-performing augmentations are the more complex ones, showcasing their versatility and robustness, while simple off-the-shelf augmentations display the least amount of lift in model performance. Fig. 6.6 can serve as a benchmark of augmentation techniques for new industrial-grade datasets, or those with unknown properties.

With the proposed protocol, we lay the foundation for determining the appropriateness of DA-methods for specific data properties in an analytical approach. We will also include more advanced DA-methods and extend the study to additional domain-specific datasets to provide more validity to the results. By establishing a catalog of dataset properties to which we can map the results of the study, we aim to develop a domain-specific decision support system for choosing optimal DA-pipelines for DL-applications.

6 Appendix

6.1 Dataset Illustrations

Selection of AITEX [1] images: train set (a), test set (b), hold-out set (c), and augmented test set (d)

Selection of MagTile [31] images: train set (a), test set (b), hold-out set (c), and augmented test set (d)

Selection of TIG5083 [32] images: train set (a), test set (b), hold-out set (c), and augmented test set (d)

6.2 Domain Shift Calculations

The distance measure alone is difficult to interpret. Hence, we correlate the distance measure with the F1-Scores. A negative correlation is expected between them: the smaller the distance, the higher the F1-Score should be. Table 6.6 provides the distance measures for the average-pooling layer of the ResNet-50 model across train and test sets, where the first three columns represent the distance and the following three columns represent the F1-Score for the same pipelines. We compute the Pearson correlation for each pipeline, correlating the distance measure with the corresponding performance metric. Repeating this process for the last layers of both models gives us Table 6.5. The same procedure was followed to construct similar tables for the MagTile and TIG5083 datasets. Furthermore, we take the mean across the last layers of the models.

6.3 Results

6.3.1 MagTile Dataset

6.3.2 TIG5083 Dataset

References

Silvestre-Blanes J, Albero-Albero T, Miralles I, Pérez-Llorens R, Moreno J (2019) A public fabric database for defect detection methods and results. Autex Res J 19(4):363–374. https://doi.org/10.2478/aut-2019-0035

Yang J, Li S, Wang Z, Dong H, Wang J, Tang S (2020) Using deep learning to detect defects in manufacturing: A comprehensive survey and current challenges. Materials 13(24):5755. https://doi.org/10.3390/ma13245755

Minhas MS, Zelek JS (2020) Defect detection using deep learning from minimal annotations. In: Farinella GM, Radeva P, Braz J (eds) Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, VISIGRAPP 2020, Volume 4: VISAPP, Valletta, Malta. SCITEPRESS, Setúbal, pp 506–513. https://doi.org/10.5220/0009168005060513

Hssayeni M, Saxena S, Ptucha R, Savakis A (2017) Distracted driver detection: Deep learning vs handcrafted features. Electron Imaging 2017:20–26. https://doi.org/10.2352/ISSN.2470-1173.2017.10.IMAWM-162

Marnissi MA, Fradi H, Dugelay JL (2019) On the discriminative power of learned vs. hand-crafted features for crowd density analysis. In: 2019 International Joint Conference on Neural Networks (IJCNN), pp 1–8. https://doi.org/10.1109/IJCNN.2019.8851764

Sun C, Shrivastava A, Singh S, Gupta A (2017) Revisiting unreasonable effectiveness of data in deep learning era. http://arxiv.org/pdf/1707.02968v2

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp 770–778. https://doi.org/10.1109/CVPR.2016.90

Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z (2016) Rethinking the inception architecture for computer vision. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp 2818–2826. https://doi.org/10.1109/CVPR.2016.308

Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L (2009) Imagenet: A large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, pp 248–255. https://doi.org/10.1109/CVPR.2009.5206848

Lin T, Maire M, Belongie SJ, Bourdev LD, Girshick RB, Hays J, Perona P, Ramanan D, Dollár P, Zitnick CL (2014) Microsoft COCO: common objects in context. CoRR. http://arxiv.org/abs/1405.0312

LeCun Y, Cortes C (2010) MNIST handwritten digit database. http://yann.lecun.com/exdb/mnist/

Zhong Z, Zheng L, Kang G, Li S, Yang Y (2020) Random erasing data augmentation. AAAI 34(07):13001–13008

Zhou K, Liu Z, Qiao Y, Xiang T, Loy CC (2021) Domain generalization in vision: a survey (arXiv e-prints arXiv:2103.02503)

Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R (2014) Dropout: A simple way to prevent neural networks from overfitting. J Mach Learn Res 15(1):1929–1958

Weiss K, Khoshgoftaar T, Wang D (2016) A survey of transfer learning. J Big Data. https://doi.org/10.1186/s40537-016-0043-6

Erhan D, Bengio Y, Courville A, Manzagol PA, Vincent P, Bengio S (2010) Why does unsupervised pre-training help deep learning? J Mach Learn Res 11(19):625–660

Shorten C, Khoshgoftaar TM (2019) A survey on image data augmentation for deep learning. J Big Data 6:60. https://doi.org/10.1186/s40537-019-0197-0

Feng SY, Gangal V, Wei J, Chandar S, Vosoughi S, Mitamura T, Hovy E (2021) A survey of data augmentation approaches for NLP. In: Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021. pp. 968–988. Association for Computational Linguistics. https://aclanthology.org/2021.findings-acl.84

Iwana BK, Uchida S (2021) An empirical survey of data augmentation for time series classification with neural networks. PLoS ONE 16(7):e254841

Wan C, Shen X, Zhang Y, Yin Z, Tian X, Gao F, Huang J, Hua XS (2022) Meta convolutional neural networks for single domain generalization. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp 4682–4691

Qiao F, Zhao L, Peng X (2020) Learning to learn single domain generalization. CoRR. https://arxiv.org/abs/2003.13216

Xu Z, Liu D, Yang J, Niethammer M (2020) Robust and generalizable visual representation learning via random convolutions. CoRR. https://arxiv.org/abs/2007.13003

Faryna K, van der Laak J, Litjens G (2021) Tailoring automated data augmentation to h&e-stained histopathology. In: Medical imaging with deep learning. https://openreview.net/forum?id=JrBfXaoxbA2

Zhang L, Wang X, Yang D, Sanford T, Harmon SA, Turkbey B, Roth H, Myronenko A, Xu D, Xu Z (2019) When unseen domain generalization is unnecessary? rethinking data augmentation. CoRR. http://arxiv.org/abs/1906.03347

Jackson PTG, Abarghouei AA, Bonner S, Breckon TP, Obara B (2018) Style augmentation: Data augmentation via style randomization. CoRR. http://arxiv.org/abs/1809.05375

Meister S, Wermes MAM, Stüve J, Groves RM (2021) Review of image segmentation techniques for layup defect detection in the automated fiber placement process. J Intell Manuf 32(8):2099–2119

Shijie J, Ping W, Peiyi J, Siping H (2017) Research on data augmentation for image classification based on convolution neural networks. In: 2017 Chinese Automation Congress (CAC), pp 4165–4170. https://doi.org/10.1109/CAC.2017.8243510

Yang S, Xiao W, Zhang M, Guo S, Zhao J, Shen F (2022) Image data augmentation for deep learning: A survey. http://arxiv.org/pdf/2204.08610v1

Khosla C, Saini BS (2020) Enhancing performance of deep learning models with different data augmentation techniques: A survey. In: 2020 International Conference on Intelligent Engineering and Management (ICIEM). IEEE, New York, pp 79–85. https://doi.org/10.1109/ICIEM48762.2020.9160048

Jain S, Seth G, Paruthi A, Soni U, Kumar G (2022) Synthetic data augmentation for surface defect detection and classification using deep learning. J Intell Manuf 33(4):1007–1020. https://doi.org/10.1007/s10845-020-01710-x

Huang Y, Qiu C, Guo Y, Wang X, Yuan K (2018) Surface defect saliency of magnetic tile. In: 2018 IEEE 14th International Conference on Automation Science and Engineering (CASE), pp 612–617. https://doi.org/10.1109/COASE.2018.8560423

Bacioiu D, Melton G, Papaelias M, Shaw R (2019) Automated defect classification of aluminium 5083 tig welding using hdr camera and neural networks. J Manuf Process 45:603–613

Schaefer S, McPhail T, Warren J (2006) Image deformation using moving least squares. ACM Trans Graph 25(3):533–540. https://doi.org/10.1145/1141911.1141920

Petro AB, Sbert C, Morel JM (2014) Multiscale retinex. Image Process Line 4:71–88. https://doi.org/10.5201/ipol.2014.107

Jobson D, Rahman Z, Woodell G (1997) A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans Image Process 6(7):965–976. https://doi.org/10.1109/83.597272

Stacke K, Eilertsen G, Unger J, Lundström C (2021) Measuring domain shift for deep learning in histopathology. IEEE J Biomed Health Inform 25(2):325–336. https://doi.org/10.1109/JBHI.2020.3032060

Hancock J (2004) Jaccard distance (Jaccard index, Jaccard similarity coefficient) https://doi.org/10.1002/9780471650126.dob0956

McHugh M (2012) Interrater reliability: The kappa statistic. Biochem Med 22:276–282. https://doi.org/10.11613/BM.2012.031

Chicco D, Jurman G (2020) The advantages of the matthews correlation coefficient (mcc) over f1 score and accuracy in binary classification evaluation. BMC Genomics. https://doi.org/10.1186/s12864-019-6413-7

Loshchilov I, Hutter F (2019) Decoupled weight decay regularization. In: ICLR

Rights and permissions

Open Access This chapter is published under the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/deed.de), which permits use, reproduction, adaptation, distribution, and communication in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

The images and other third-party material contained in this chapter are also subject to the stated Creative Commons license, unless otherwise indicated in the figure caption. If the material in question is not covered by the stated Creative Commons license and the intended action is not permitted by statutory regulation, the consent of the respective rights holder must be obtained for the uses of the material listed above.

Copyright information

© 2023 The Author(s)

About this paper

Cite this paper

Leyendecker, L., Agarwal, S., Werner, T., Motz, M., Schmitt, R.H. (2023). A Study on Data Augmentation Techniques for Visual Defect Detection in Manufacturing. In: Lohweg, V. (eds) Bildverarbeitung in der Automation. Technologien für die intelligente Automation, vol 17. Springer Vieweg, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-66769-9_6

DOI: https://doi.org/10.1007/978-3-662-66769-9_6

Publisher Name: Springer Vieweg, Berlin, Heidelberg

Print ISBN: 978-3-662-66768-2

Online ISBN: 978-3-662-66769-9

eBook Packages: Computer Science and Engineering (German Language)