Abstract

Anomaly detection (AD) methods that are based on deep learning (DL) have considerably improved the state of the art in AD performance on natural images recently. Combined with the public release of large-scale datasets that target AD for automated visual inspection (AVI), this has triggered the development of numerous, novel AD methods specific to AVI. However, with the rapid emergence of novel methods, the need to systematically categorize them arises. In this review, we perform such a categorization, and identify the underlying assumptions as well as working principles of DL-based AD methods that are geared towards AVI. We perform this for 2D AVI setups, and find that the majority of successful AD methods currently combines features generated by pre-training DL models on large-scale, natural image datasets with classical AD methods in hybrid AD schemes. Moreover, we give the main advantages and drawbacks of the two identified model categories in the context of AVI’s inherent requirements. Last, we outline open research questions, such as the need for an improved detection performance of semantic anomalies, and propose potential ways to address them.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

1 Introduction

Anomaly detection (AD) tries to identify instances in data that deviate from a previously defined (or learned) concept of “normality” [1, 2]. In this context, identified deviations are referred to as “anomalies”, and labeled as “anomalous”, whereas data points that conform to the concept of normality are considered “normal”. In the field of computer vision, AD tries to identify anomalous images, and one of its most promising application domains is the automated visual inspection (AVI) of manufactured goods [3, 4, 5, 6]. The reason for this is the close match between the properties inherent to the AD problem and the constraints imposed by the manufacturing industry on any AVI system (AVIS):

-

1.

Anomalies are rare events [1, 2]. As a consequence, AD algorithms generally focus on finding a description of the normal state, and require few to no anomalies during training. Viewing defective goods as anomalies and the expected product as the normal state, this matches the limited availability of defective goods when setting up AVISs. Manually collecting and labeling defective goods for training supervised deep learning (DL) methods has furthermore been identified as one of the main cost factors for DL-based AVISs [7], and has to be minimized to achieve economic feasibility.

-

2.

The anomaly distribution is ill-defined [1, 2]. This matches the constraint that all possible defect types an AVIS may encounter during deployment are often unknown during training [4]. Still, AVISs are expected to detect also such unknown defect types reliably.

These two constraints imposed by AD problems in general, and the manufacturing industry in particular, already severely limit the feasibility of supervised, DL-based AVISs. Additionally, two further requirements are imposed by the manufacturing industry on AVISs:

-

1.

AVI methods should not be compute″=intensive during training to minimize lead times of product changes. Said product changes are furthermore expected to become more frequent due to a general decrease in lot sizes inherent to industry 4.0 [8].

-

2.

AVI methods need to run in real-time on limited hardware [9].

While the recent success of DL-based AD algorithms has renewed the general interest in AD [2], these two additional constraints have, combined with the release of public datasets [10, 11, 4, 5], led to the development of AD algorithms that are geared specifically towards AVI [12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 5]. The emergence of such algorithms, however, calls for their systematic review in order to consolidate findings and to thereby facilitate additional research. To the best of our knowledge, none of the recent reviews focus on AD for AVI, and they often discuss the broader AD field with a focus on natural images instead [2, 23, 24]. In our work, we fill this gap, and review recent advances in AD for AVI. To this end, we first provide brief formal definitions of both AD and anomaly segmentation (AS), and afterwards summarize public datasets. Next, we systematically categorize algorithms developed for 2D AVI setups, and give their main advantages as well as disadvantages. Last, we outline open research questions, and propose potential ways of addressing them.

2 A Brief Overview of AD/AS

2.1 Formal Definitions

As outlined above, AD is tasked with deciding whether a given test datum is normal or anomalous. More formally speaking, AD is tasked with finding a function Φ : 𝒳 → y that maps from the input space 𝒳 to the anomaly score y. It follows that y should be much lower for a normal test datum than for an anomalous test datum. In context of AVI, 𝒳 typically consists of 2D images \(\vec{x}\in\mathbb{R}^{C\times H\times W}\). Here, H and W specify the height and width of the image \(\vec{x}\), and C corresponds to the number of color channels present in the image. For RGB images, C = 3, whereas C = 1 for grayscale images and C > 3 for multi/hyperspectral images. For a more comprehensive definition of AD, see [2].

In addition to AD, AVI is also concerned with AS, i.e. with localizing the anomaly inside \(\vec{x}\). AS is thus tasked with finding a function \(\Phi\colon\mathcal{X}\to\vec{y}\) that produces an anomaly map \(\vec{y}\in\mathbb{R}^{H\times W}\) instead of a scalar anomaly score y. By aggregating \(\vec{y}\) appropriately, an image-level anomaly score y can be subsequently derived for AS algorithms.

2.2 Types of Algorithms

There exist 3 types of AD/AS algorithms, which differ in their requirements w.r.t. the training data (see Fig. 1.1, [1]):

-

1.

Supervised algorithms. Supervised algorithms treat the AD/AS problems as imbalanced, binary classification/segmentation problems. As such, they require a fully labeled dataset that contains both normal and anomalous images for training. Sampling the anomaly distribution furthermore induces a significant bias [25], also for AVI [12].

-

2.

Semi″=supervised algorithms. As opposed to supervised algorithms, semi″=supervised algorithms require only a dataset of labeled normal images for training. Semi″=supervised approaches commonly make use of the concentration assumption [2], i.e. the assumption that the normal data distribution can be bounded inside a given feature space. Examples here would be neighborhood/prototype [15, 26] or density-based approaches [12, 14, 16]. Other approaches such as autoencoders (AEs) [27] use the concentration assumption in a more indirect manner: They try to train models that are well-behaved only on the manifold of the normal data distribution constructed in the input domain, i.e. the raw images. Thereby, they exploit the observation that DL models fail at generalizing to samples that are different from the training dataset [28]. The majority of proposed AD/AS approaches are semi″=supervised.

-

3.

Unsupervised algorithms. As opposed to supervised and semi″=supervised approaches, unsupervised approaches can work with unlabeled data [29, 30]. To do so, they combine the concentration assumption with the two core assumptions made for anomalies: (I) that anomalies are rare events, and (II) that their distribution is ill-defined (still, a uniform distribution is often assumed).

We note that the terms semi″=supervised and unsupervised, as defined in this review based on [1], are not used consistently throughout literature. For example, unsupervised is often misused to refer to the semi″=supervised setting in AVI [4, 5, 6]. Moreover, the term semi″=supervised has also been used to refer to a partially labeled dataset, where labeled anomalies may also be present and used for training [31]. We believe that a consistent use of terminology which conforms with its historical definition [1] would facilitate a more intuitive understanding of research in AD for AVI.

2.3 Anomaly Types

In previous literature, three anomaly types are distinguished [2]:

-

1.

Point anomalies, which are instances that are anomalous on their own, e.g. a scratch.

-

2.

Contextual anomalies, which are instances that are anomalous only in a specific context. A scratch, for example, might only be considered an anomaly if it lies on a cosmetic surface or otherwise impairs the product’s function.

-

3.

Group anomalies, which are several data points that, as a whole, form an anomaly. Group anomalies may also be contextual, and are rare in AVI.

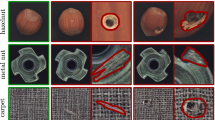

Complementary to these categories, anomalies have recently been partitioned based on the degree of semantic understanding required to detect them [2]. In particular, anomalies are divided into low-level, textural anomalies, and high-level, semantic anomalies (see Fig. 1.2 for an example). Detecting semantic anomalies is generally more difficult than detecting textural anomalies [2, 5], as learning semantically meaningful feature representation is inherently more difficult. Convolutional neural networks (CNNs), for example, exhibit a significant texture bias [32, 33]. Furthermore, we note that synonymous nomenclature was introduced in AD for AVI recently [5], where textural anomalies correspond to structural anomalies, and semantic anomalies correspond to logical anomalies. We again stress the importance of using terminology consistently, and stick to the terms textural/semantic anomalies due to their more intuitive understanding.

Textural vs. semantic anomalies. (a) shows a textural anomaly, whereas (b) shows a semantic anomaly. Images are taken from MVTec AD [4].

2.4 Evaluating AD/AS Performance

Since AD and AS can be viewed as binary problems, corresponding evaluation measures are commonly used to evaluate their performance. Specifically, the area under the receiver operating characteristic (ROC) curve (AUROC) and the area under the precision″=recall (PR) curve (AUPR) are employed. It should be noted that AUPR is better″=suited for evaluating imbalanced problems such as AS [34]. Recently, the per-region″=overlap (PRO) curve was proposed specifically for AS in AVI [13]. As opposed to the pixel-wise AUROC/AUPR, the area under the PRO curve is used for measuring an algorithm’s ability to detect all individual anomalies present in an image. Since the PRO curve does not take false positive (FP) predictions into account, it is constructed up to a preset false positive rate (FPR), and the most commonly used cut-off value is 30% FPR.

We note that all the above evaluation measures focus on an algorithm’s general capability of solving the AD/AS problem. Thereby, the difficulty of selecting the optimal threshold t for y/\(\vec{y}\) is sidestepped by iterating over all possible thresholds t. Finding the optimal value for t, and finding it ideally with normal images only, is a promising avenue for future research [3].

3 AD/AS for 2D AVI

3.1 Datasets

Recent advances in AD/AS for AVI were spurred by the public release of suitable datasets that depict manufactured goods such as screws (objects) or fabrics (textures). We give an overview of them in Table 1.1, and observe the following:

-

1.

In none of the datasets, anomalies are rare events. In fact, global prevalence ranges from 10%–30%, and prevalences commonly reach >50% in the pre-defined test sets. Furthermore, total dataset sizes are relatively small compared to the throughput of a deployed AVIS, and datasets are thus at risk of not fully capturing the normal data distribution. Fully recapitulating the normal data distribution, however, is crucial to achieve high true positive rates (TPRs) at low FPRs, a requirement imposed by the rarity of anomalies/defects. Thus, developed AD/AS methods might not transfer as well to industry. To mitigate this, dataset sizes should be further increased, and an effort should be made to sample the normal data distribution as representatively as possible.

-

2.

Almost no dataset specifies the anomaly type. In fact, only MVTec LOCO AD distinguishes between textural and semantic anomalies, and even MVTec LOCO AD does not differentiate between point, contextual, and group anomalies. Furthermore, MVTec AD, BTAD and MTD contain mostly textural anomalies. Together, this limits research aimed at detecting semantic anomalies in AVI, and additional datasets are required.

-

3.

All datasets contain images that can be cast to the RGB format. Moreover, most of the goods contained in MVTec AD and MVTec LOCO AD are relatively similar in appearance to natural images.

3.2 Methods

In general, developed AD/AS methods can be categorized into those that train complex models and their feature representations in an end-to-end manner, and hybrid approaches that leverage feature representations of DL models pre-trained on large-scale, natural image datasets, but leave them unchanged.

3.2.1 Training Complex Models in an End-to-End Manner.

Methods that train complex models in an end-to-end manner tend to pursue either AEs [27] or knowledge distillation (KD) [13]. Both approaches are based on the assumption that the trained DL model is well-behaved only on images that originate from the normal data distribution. For the AE, this means that the image reconstruction fails for anomalies, whereas for KD, this means that the regression of the teacher’s features by the student network fails. While the AE can be easily applied to multi/hyperspectral images [35], KD-based approaches are limited by their need for a suitable teacher model. Since CNNs pre-trained on ILSVRC2012 (a subset of ImageNet [36]) are commonly used as teacher models, this limits KD approaches to images that are castable to the RGB image format used in ImageNet, i.e. RGB or grayscale images. While a randomly initialized CNN might potentially be used as the teacher to circumvent this problem (similar approaches have been pursued successfully in reinforcement learning [37]), its efficacy has not yet been demonstrated for AVI.

As an alternative to AE and KD, the concentration assumption can be used to formulate learning objectives such as the patch support vector data description [38], which directly learn feature representations where the normal data is concentrated/clustered around a fixed point. Anomalies are then expected to have a larger distance to the cluster center than normal data.

The main advantage of methods that train complex models in an end-to-end manner is their applicability to any data type, including multi/hyperspectral images. For their main drawbacks, it needs to be stated that training these methods is compute″=intensive, and they thus do not conform with the requirement of low/limited training effort imposed by the manufacturing industry. Furthermore, these methods tend to produce worse results than hybrid approaches on RGB-castable images. As a potential explanation, it has been hypothesized that discriminative features are inherently difficult to learn from scratch using normal data only [21]. Moreover, it was shown that AEs tend to generalize to anomalies in AVI [27]. To improve results, two approaches are currently pursued in literature: (I) Initializing the method with a model that was pre-trained on a large-scale natural image dataset [39]. However, this restricts approaches to grayscale/RGB images due to a lack of large-scale multi/hyperspectral image datasets. Furthermore, its effectiveness is limited by catastrophic forgetting [22, 40], which, in AD, refers to a loss of initially present, discriminative features. Therefore, this technique is often combined with the second approach, where (II) surrogates for the anomaly distribution are provided via anomaly synthesis [31, 41]. This requires either access to representative anomalies as a basis for synthesis, or an exhaustive understanding of the underlying manufacturing process and the visual appearance of occurring defects. Thus, anomaly synthesis violates the assumption of an ill-defined anomaly distribution in the same manner as supervised approaches, and is expected to incur a similar bias.

3.2.2 Hybrid Approaches.

At their core, hybrid approaches assume that feature representations generated by training DL models on large-scale, natural image datasets can be transferred to achieve AD in AVI. Specifically, they assume that discriminative features have already been generated, and restrict themselves to finding a description of normality in said features. To this end, they employ three different techniques, all of which are classical AD approaches that are based on the concentration assumption: (I) Generative approaches explicitly model the probability density function (PDF) of the normal data distribution inside the pre-trained feature representations. Both unconstrained PDFs (i.e. via normalizing flows) [16, 42] and constrained PDFs (e.g. by assuming a Gaussian distribution) [12, 14] have been used. (II) Classification″=based approaches fit binary classification models such as the one-class support vector machine (SVM) to the pre-trained feature representations [43]. (III) Neighborhood/Prototype-based approaches employ k-NN algorithms or variations thereof to implicitly approximate the PDF of the normal data distribution [15, 26].

A main advantage of hybrid approaches lies in their outstanding performance: All approaches that achieved state-of-the-art AD performance on MVTec AD so far are hybrid approaches (see Fig. 1.3). Furthermore, hybrid approaches are in general not compute″=intensive during training, as they do not train complex DL models. For example, “training” a Gaussian AD model consists of extracting the pre-trained features for the training dataset, followed by the numeric computation of \(\vec{\mu}\) and \(\vec{\Sigma}\) [12]. Moreover, hybrid approaches are fast, as state-of-the-art, lightweight classification CNNs can be used as feature extractors. Thereby, they fit the requirements of the manufacturing industry extremely well.

The main disadvantage of hybrid approaches lies in their core assumption: If the feature representations of the underlying, pre-trained feature extractor/model are simply not discriminative to the specific AVI problem at hand, hybrid approaches will automatically fail. However, hybrid approaches have been successfully applied even to AVI setups which produce images that differ from the natural image distribution [15, 18, 42]. To nonetheless mitigate this disadvantage, the diversity of available, pre-trained feature extractors should be increased. There are two straightforward ways to do this: (I) Using datasets other than ILSVRC2012 for pre-training, and (II) Using different model architectures, and even computer vision tasks, for pre-training. Both influence general transfer learning performance [44], and initial work indicates they might be beneficial also for AD/AS in AVI [20]. The second disadvantage of hybrid approaches is their limitation to images that can be cast to the RGB format, which is directly due to the underlying feature extractors that are trained on natural image datasets.

4 Open Research Questions

First, the detection performance of semantic anomalies needs to be improved further. This would facilitate the application of developed algorithms to even more sophisticated AVI tasks. Here, hybrid approaches can directly benefit from advances in DL which yield more semantically meaningful feature representations. For example, vision transformers were recently shown to possess as smaller texture bias than CNNs [45], and could thus potentially be used as feature extractors. Second, the bias incurred by sampling the anomaly distribution needs to be decreased. A potential way of achieving this would be to explicitly incorporate the assumptions made for the anomaly distribution into the corresponding learning objectives. Third, methods that facilitate setting the working point of AD/AS methods in an automated manner are needed. As these would ideally rely on normal data only, they could aim at achieving specific target FPRs, e.g. via bootstrapping and model ensembling. Fourth, AD/AS methods that are less compute″=intensive during training are required for multi/hyperspectral images to meet the requirements imposed by the manufacturing industry. Here, public datasets are expected to facilitate progress, similar as was observed for RGB images. Fifth, AD/AS methods are required for 3D AVI tasks. A first dataset was published recently [6], and we expect for hybrid approaches that rely on models pre-trained on large-scale datasets to also achieve strong performance here [17].

While not an open research questions specific to AVI, anomalies have recently been clustered successfully based on their visual appearance [46]. Together with the empirical success of anomaly synthesis/supervised AD [31, 41, 47], this indicates that the commonly made assumption that anomalies follow a uniform distribution might not be true. This aspect thus warrants additional research.

5 Conclusion

In our work, we have reviewed recent advances in AD/AS for AVI. We have provided a brief definition of AD/AS, and gave an overview of public datasets and their limitations. Moreover, we identified two general categories of AD/AS approaches for AVI, and gave their main advantages as well as disadvantages when considering the constraints and requirements imposed by the manufacturing industry. Last, we identified open research questions, and outlined potential ways of addressing them. We expect our review to facilitate additional research in AD/AS for AVI.

References

Chandola V, Banerjee A, Kumar V (2009) Anomaly detection: A survey. Acm Comput Surv (CSUR) 41(3):1–58

Ruff L, Kauffmann JR, Vandermeulen RA, Montavon G, Samek W, Kloft M, Dietterich TG, Müller KR (2021) A unifying review of deep and shallow anomaly detection. Proc IEEE 109(5):756–795

Bergmann P, Fauser M, Sattlegger D, Steger C (2019) MVTec AD – a comprehensive real-world dataset for unsupervised anomaly detection. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

Bergmann P, Batzner K, Fauser M, Sattlegger D, Steger C (2021) The mvtec anomaly detection dataset: A comprehensive real-world dataset for unsupervised anomaly detection. Int J Comput Vis. https://doi.org/10.1007/s11263-020-01400-4

Bergmann P, Batzner K, Fauser M, Sattlegger D, Steger C (2022) Beyond dents and scratches: Logical constraints in unsupervised anomaly detection and localization. Int J Comput Vis. https://doi.org/10.1007/s11263-022-01578-9

Bergmann P, Jin X, Sattlegger D, Steger C (2022) The mvtec 3d-ad dataset for unsupervised 3d anomaly detection and localization. In: Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications – Volume 5: VISAPP. SciTePress, Setúbal, S 202–213

Dai W, Mujeeb A, Erdt M, Sourin A (2018) Towards automatic optical inspection of soldering defects. In: 2018 International Conference on Cyberworlds (CW), S 375–382

Brettel M, Friederichsen N, Keller M, Rosenberg M (2014) How virtualization, decentralization and network building change the manufacturing landscape: An industry 4.0 perspective. Int J Inf Commun Eng 8(1):37–44

Li L, Ota K, Dong M (2018) Deep learning for smart industry: Efficient manufacture inspection system with fog computing. IEEE Trans Ind Informatics 14(10):4665–4673

Mishra P, Verk R, Fornasier D, Piciarelli C, Foresti GL (2021) VT-ADL: A vision transformer network for image anomaly detection and localization. In: 30th IEEE/IES International Symposium on Industrial Electronics (ISIE). Kyoto, Japan

Huang Y, Qiu C, Yuan K (2020) Surface defect saliency of magnetic tile. Vis Comput 36(1):85–96

Rippel O, Mertens P, König E, Merhof D (2021) Gaussian anomaly detection by modeling the distribution of normal data in pretrained deep features. IEEE Trans Instrum Meas 70:1–13

Bergmann P, Fauser M, Sattlegger D, Steger C (2020) Uninformed students: Student-teacher anomaly detection with discriminative latent embeddings. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, S 4183–4192

Defard T, Setkov A, Loesch A, Audigier R (2021) Padim: A patch distribution modeling framework for anomaly detection and localization. In: Del Bimbo A, Cucchiara R, Sclaroff S, Farinella GM, Mei T, Bertini M, Escalante HJ, Vezzani R (Hrsg) Pattern Recognition. ICPR International Workshops and Challenges. Springer, Cham, S 475–489

Roth K, Pemula L, Zepeda J, Schölkopf B, Brox T, Gehler P (2022) Towards total recall in industrial anomaly detection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), S 14318–14328

Gudovskiy D, Ishizaka S, Kozuka K (2022) Cflow-ad: Real-time unsupervised anomaly detection with localization via conditional normalizing flows. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), S 98–107

Bergmann P, Sattlegger D (2022) Anomaly detection in 3d point clouds using deep geometric descriptors (arXiv preprint arXiv:2202.11660)

Rippel O, Haumering P, Brauers J, Merhof D (2021) Anomaly detection for the automated visual inspection of pet preform closures. In: 2021 26th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), S 1–7

Rippel O, Müller M, Merhof D (2020) GAN-based defect synthesis for anomaly detection in fabrics. In: 2020 25th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Bd. 1, S 534–540

Rippel O, Merhof D (2021) Leveraging pre-trained segmentation networks for anomaly segmentation. In: 2021 26th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), S 1–4

Rippel O, Mertens P, Merhof D (2021) Modeling the distribution of normal data in pre-trained deep features for anomaly detection. In: 2020 25th International Conference on Pattern Recognition (ICPR), S 6726–6733

Rippel O, Chavan A, Lei C, Merhof D (2022) Transfer learning gaussian anomaly detection by fine-tuning representations. In: Proceedings of the 2nd International Conference on Image Processing and Vision Engineering – IMPROVE. INSTICC, SciTePress, Setúbal, S 45–56

Chalapathy R, Chawla S (2019) Deep learning for anomaly detection: A survey (arXiv preprint arXiv:1901.03407)

Pang G, Shen C, Cao L, Hengel AVD (2021) Deep learning for anomaly detection: A review. ACM Comput Surv 54(2):1–38

Ye Z, Chen Y, Zheng H (2021) Understanding the effect of bias in deep anomaly detection. In: Zhou ZH (Hrsg) Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, IJCAI 2021, Virtual Event / Montreal, Canada, 19–27 August 2021, S 3314–3320

Cohen N, Hoshen Y (2020) Sub-image anomaly detection with deep pyramid correspondences (arXiv preprint arXiv:2005.02357)

Zavrtanik V, Kristan M, Skocaj D (2021) Draem – a discriminatively trained reconstruction embedding for surface anomaly detection. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), S 8330–8339

Hendrycks D, Basart S, Mu N, Kadavath S, Wang F, Dorundo E, Desai R, Zhu T, Parajuli S, Guo M, Song D, Steinhardt J, Gilmer J (2021) The many faces of robustness: A critical analysis of out-of-distribution generalization. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), S 8340–8349

Cordier A, Missaoui B, Gutierrez P (2022) Data refinement for fully unsupervised visual inspection using pre-trained networks (arXiv preprint arXiv:2202.12759)

Yoon J, Sohn K, Li CL, Arik SO, Lee CY, Pfister T (2022) Self-supervise, refine, repeat: Improving unsupervised anomaly detection. Transactions on machine learning research

Liznerski P, Ruff L, Vandermeulen RA, Franks BJ, Kloft M, Müller KR (2021) Explainable deep one-class classification. In: International Conference on Learning Representations

Geirhos R, Rubisch P, Michaelis C, Bethge M, Wichmann FA, Brendel W (2019) Imagenet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness. In: International Conference on Learning Representations

Hermann KL, Chen T, Kornblith S (2020) The origins and prevalence of texture bias in convolutional neural networks. In: Larochelle H, Ranzato M, Hadsell R, Balcan M, Lin H (Hrsg) Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6–12, 2020, virtual

Davis J, Goadrich M (2006) The relationship between precision-recall and roc curves. In: Proceedings of the 23rd International Conference on Machine learning, S 233–240

Ma N, Peng Y, Wang S, Liu D (2018) Hyperspectral image anomaly targets detection with online deep learning. In: 2018 IEEE International Instrumentation and Measurement Technology Conference (I2MTC). IEEE, New York, S 1–6

Deng J, Dong W, Socher R, Li L, Li K, Fei-Fei L (2009) ImageNet: A large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, S 248–255

Burda Y, Edwards H, Storkey A, Klimov O (2019) Exploration by random network distillation. In: International Conference on Learning Representations

Yi J, Yoon S (2020) Patch svdd: Patch-level svdd for anomaly detection and segmentation. In: Proceedings of the Asian Conference on Computer Vision (ACCV)

Venkataramanan S, Peng KC, Singh RV, Mahalanobis A (2020) Attention guided anomaly localization in images. In: Vedaldi A, Bischof H, Brox T, Frahm JM (Hrsg) Computer Vision – ECCV 2020. Springer, Cham, S 485–503

Deecke L, Ruff L, Vandermeulen RA, Bilen H (2020) Deep anomaly detection by residual adaptation (arXiv preprint arXiv:2010.02310)

Li CL, Sohn K, Yoon J, Pfister T (2021) Cutpaste: Self-supervised learning for anomaly detection and localization. In: 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), S 9659–9669

Rudolph M, Wandt B, Rosenhahn B (2021) Same same but differnet: Semi-supervised defect detection with normalizing flows. In: Winter Conference on Applications of Computer Vision (WACV)

Andrews J, Tanay T, Morton EJ, Griffin LD (2016) Transfer representation-learning for anomaly detection. JMLR, New York

Mensink T, Uijlings J, Kuznetsova A, Gygli M, Ferrari V (2021) Factors of influence for transfer learning across diverse appearance domains and task types. In: IEEE Transactions on Pattern Analysis and Machine Intelligence, S 1–1

Naseer MM, Ranasinghe K, Khan SH, Hayat M, Shahbaz Khan F, Yang MH (2021) Intriguing properties of vision transformers. In: Advances in Neural Information Processing Systems 34

Sohn K, Yoon J, Li CL, Lee CY, Pfister T (2021) Anomaly clustering: Grouping images into coherent clusters of anomaly types (arXiv preprint arXiv:2112.11573)

Ruff L, Vandermeulen RA, Franks BJ, Müller KR, Kloft M (2020) Rethinking assumptions in deep anomaly detection (arXiv preprint arXiv:2006.00339)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Open Access Dieses Kapitel wird unter der Creative Commons Namensnennung 4.0 International Lizenz (http://creativecommons.org/licenses/by/4.0/deed.de) veröffentlicht, welche die Nutzung, Vervielfältigung, Bearbeitung, Verbreitung und Wiedergabe in jeglichem Medium und Format erlaubt, sofern Sie den/die ursprünglichen Autor(en) und die Quelle ordnungsgemäß nennen, einen Link zur Creative Commons Lizenz beifügen und angeben, ob Änderungen vorgenommen wurden.

Die in diesem Kapitel enthaltenen Bilder und sonstiges Drittmaterial unterliegen ebenfalls der genannten Creative Commons Lizenz, sofern sich aus der Abbildungslegende nichts anderes ergibt. Sofern das betreffende Material nicht unter der genannten Creative Commons Lizenz steht und die betreffende Handlung nicht nach gesetzlichen Vorschriften erlaubt ist, ist für die oben aufgeführten Weiterverwendungen des Materials die Einwilligung des jeweiligen Rechteinhabers einzuholen.

Copyright information

© 2023 Der/die Autor(en)

About this paper

Cite this paper

Rippel, O., Merhof, D. (2023). Anomaly Detection for Automated Visual Inspection: A Review. In: Lohweg, V. (eds) Bildverarbeitung in der Automation. Technologien für die intelligente Automation, vol 17. Springer Vieweg, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-66769-9_1

Download citation

DOI: https://doi.org/10.1007/978-3-662-66769-9_1

Published:

Publisher Name: Springer Vieweg, Berlin, Heidelberg

Print ISBN: 978-3-662-66768-2

Online ISBN: 978-3-662-66769-9

eBook Packages: Computer Science and Engineering (German Language)