Abstract

Good quality research depends on good quality data. In multidisciplinary projects with quantitative and qualitative data, it can be difficult to collect data and share it between partners with diverse backgrounds in a timely and useful way, which limits the ability of different disciplines to collaborate. This chapter explores the impact of data collection and sharing on analysis in a recent Horizon 2020 project, RealValue. The main insight is that not only projects but also the processes within them, such as data collection, sharing and analysis, are socio-technical. We use two examples from the project, validating the models and triangulating the qualitative data, to examine data synergy across four dimensions: time (synchronising activities), people (managing and coordinating actors), technology (in this case focusing mainly on connectivity) and quality. Recommendations include developing a data protocol for the energy demand community built on these four dimensions.


Keywords

- Data collection and sharing methods

- Socio-technical

- Multidisciplinary

- Energy demand

- Demand response

- Smart grid

1 Introduction

A large number of field trials have attempted to understand energy use in buildings (e.g. Economidou et al. 2011; Jones et al. 2013; TSB 2014; Guerra-Santin et al. 2013; Gupta and Kapsali 2015). Nevertheless, few studies combine complete monitoring of building data, technologies and people, a gap recognised by the Buildings Performance Institute Europe (BPIE) as limiting the impact of this research on European policy (Economidou et al. 2011). Whatever their size, samples and research scope, many studies encounter similar pitfalls in their data collection processes. Despite recognition of the need to combine multiple methods to understand multidimensional socio-technical issues (Topouzi et al. 2016), and ongoing recognition of the ontological and language challenges of multidisciplinary work (Mallaband et al. 2017; Robison and Foulds 2017; Sovacool et al. 2015), less attention has been paid to the challenge of data collection and the practical implementation of these methodologies.

This chapter reflects on the socio-technical nature of data collection and sharing in multi-partner multidisciplinary¹ projects: not just the fact that different types of data need to be collected and analysed, but also the expectations different disciplines have of data² and the different skills they bring to the analysis. Recognising this and planning accordingly increases the chances that high-quality, useful data will be used collaboratively in complex consortia. We suggest four dimensions for achieving data synergy³ in such contexts: synchronising data processes in time; coordinating the people involved, both logistically and in terms of their skills and expectations; recognising the multiplicity of issues affecting both social and technical data collection; and paying attention to data quality.

Although the chapter will use examples from RealValue⁴ (see Fig. 5.1), the issues discussed are common to most multidisciplinary projects with multiple actors. The chapter will use two illustrative examples. The first examines attempts to validate bottom-up models of energy demand using trial data collected during the project. The second explores efforts to triangulate the qualitative data collected on customers, using monitoring data from the heating and hot water appliances fitted in their homes.

The chapter will start by introducing the background context of the project, move on to discussing the four dimensions of data synergy and finish with some recommendations for achieving data synergy.

2 Background Context

To appreciate the data requirements of each project strand later, it is necessary first to describe the strands briefly.

2.1 Modelling

The plan for the modelling work was to integrate a building energy model (BEM) into power system models in order to assess the potential system value of deploying smart electric thermal storage (SETS) and then to validate them using trial data.

A BEM is a physics-based simulation of building energy use. Inputs into the model include physical characteristics such as building geometry, construction materials, lighting, HVAC⁵ and so on (Negendahl 2015; Clarke and Hensen 2015). The model also needs information about building use, occupancy and indoor temperature. A BEM program combines these inputs with information about local weather to calculate thermal loads, the HVAC system's response to those loads and the resulting energy use. Such models are used by building professionals and researchers to evaluate the energy performance of buildings for applications such as building design, retrofit decision-making, LEED certification⁶ and urban planning. Bottom-up models of demand are based on uncertain assumptions (McKenna et al. 2017). To help deal with some of these, and to allow time to run the simulations required by the project, the models were initially calibrated using 'archetypal' data from national databases (Andrade-Cabrera et al. 2016). The original plan was to use trial data at a later stage to validate the models and recalibrate them if necessary.
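To make the idea of a physics-based model and its validation more concrete, the Python sketch below uses a deliberately simple single-zone lumped parameter (1R1C) heat balance, the general kind of reduced model whose calibration is discussed by Andrade-Cabrera et al. (2016). It is an illustration under stated assumptions, not the RealValue model: the thermal resistance `r_k_per_kw`, capacitance `c_kwh_per_k`, heater rating `p_max_kw` and the on/off thermostat logic are hypothetical choices made for readability. The `rmse` helper shows one common way of comparing simulated with measured indoor temperatures when validating a model against trial data.

```python
import math
from typing import List, Optional, Sequence, Tuple


def simulate_1r1c(t_out: Sequence[float],
                  setpoint: Sequence[Optional[float]],
                  r_k_per_kw: float = 5.0,   # hypothetical envelope resistance (K/kW)
                  c_kwh_per_k: float = 3.0,  # hypothetical thermal capacitance (kWh/K)
                  p_max_kw: float = 3.0,     # hypothetical heater rating (kW)
                  t_init: float = 18.0,      # initial indoor temperature (degC)
                  dt_h: float = 1.0) -> Tuple[List[float], List[float]]:
    """Explicit-Euler single-zone 1R1C model with an on/off heater.

    setpoint[k] is None when heating is off (e.g. nobody at home); otherwise the
    heater runs at full power whenever the zone is below the setpoint.
    Returns the indoor temperature after each step and heater energy per step (kWh).
    """
    t_in = t_init
    temps: List[float] = []
    energy: List[float] = []
    for t_o, t_set in zip(t_out, setpoint):
        p_kw = p_max_kw if (t_set is not None and t_in < t_set) else 0.0
        loss_kw = (t_in - t_o) / r_k_per_kw            # heat lost through the envelope
        t_in += dt_h * (p_kw - loss_kw) / c_kwh_per_k  # heat balance of the zone
        temps.append(t_in)
        energy.append(p_kw * dt_h)
    return temps, energy


def rmse(simulated: Sequence[float], measured: Sequence[Optional[float]]) -> float:
    """Root-mean-square error, skipping steps where the measurement is missing."""
    pairs = [(s, m) for s, m in zip(simulated, measured) if m is not None]
    if not pairs:
        raise ValueError("no overlapping measurements to validate against")
    return math.sqrt(sum((s - m) ** 2 for s, m in pairs) / len(pairs))
```

Passing hourly outdoor temperatures and a heating schedule to `simulate_1r1c`, and then the simulated and measured room temperatures to `rmse`, gives a single figure for how well the model fits. Missing readings (`None`) are skipped, which matters when, as in Box 5.1, the measured temperature data is patchy.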

2.2 Customer Impact Assessment

In parallel with the modelling work, customers were recruited for the live trial and had a combination of technologies installed in their homes, an experience captured in the Customer Impact Assessment (Darby et al. 2018). The technologies installed included heaters and/or hot water cylinders, an internet connection if not already present, a gateway to link the appliances to the cloud (where demand response (DR⁷) would be facilitated), interval meters and, in a sample of homes, additional sensors (occupancy and temperature) and smart plugs⁸. Each home was therefore a source of multiple data points, for assessing the potential for DR and other research purposes.

The social scientists also collected data, including surveys before and after the installation of the technology and at the end of the project, in-home interviews, observations and photographs in a subset of properties and interviews with other project actors (installers, project delivery coordinators, manufacturers, etc.) on their interactions with customers. The objectives were to understand the impact of the installed technologies and, eventually, DR, on customers, and to assess necessary conditions for a good customer experience and DR participation. Five conditions emerged: comfort, control, cost, care and connectivity.

Both the technical and the social data were meant to facilitate multidisciplinary collaboration. Data of interest from the implementation phase included indoor and outdoor temperature, occupancy, building fabric, energy consumption (ideally with heating consumption disaggregated) and customer data held by other partners, such as billing, call centre and DR performance data. The quantitative data from the technologies installed in homes was to be used to triangulate the qualitative data.

3 The Processes of Collecting, Sharing and Analysing Data Are Socio-technical

Based on the social and technical contexts just described, researchers took the view that this was a socio-technical project (Foulds and Robison 2017). Following Powells et al. (2014), who argue that electricity 'load' is not an isolated physical phenomenon but also represents activities and social practices, we recognised that the technology and its users were inextricably interlinked and that, therefore, multiple disciplinary methods were necessary. Table 5.1 summarises the data collected.

It also became clear that the processes of collecting, sharing and analysing data were socio-technical, no matter whether the data being collected was qualitative or quantitative and irrespective of the use to which it was finally put.¹¹ For example, the social scientists collected and shared customer satisfaction data with industry partners, building and occupancy data with the modelling team and the interview, observation and photographic data with several partners who were interested in a more in-depth insight into their customers, often to improve the technology on offer. In return, they hoped to receive more quantitative data such as heating periods and temperature settings from the SETS, call centre complaints/inquiries, cost data from the energy providers and consumption data from interval meters.

Having discussed the use of the data, we now turn our attention to the data itself. There is no space to deal with every data source in turn but, in the discussion that follows, we explore more fully the idea that dealing with data is socio-technical by focusing on four aspects of the data collection and sharing process necessary to achieve data synergy.

4 Data Synergy

We contend that good data depends on four interlinking dimensions:

- Time (synchronising the collection and sharing of data between different parts of the project)
- People (coordinating the different actors involved in the collection and sharing of data)
- Technology (establishing the connectivity between the different technologies so that data could be transmitted)
- Quality (ensuring data is good enough for the research purpose)

The discussion will examine challenges in relation to these dimensions in order to make recommendations for the development of a data protocol for appropriate data synergy for use in other multidisciplinary energy demand projects.

4.1 Time: Synchronisation

Figure 5.2 shows the timing of data collection in the project,¹² including the winter periods (critical data collection opportunities in a heating project), the two strands of the project and the variety of data collection methods. It is noteworthy that most data was collected towards the end of the project, with a gap in the middle caused by recruitment difficulties.

Several issues emerged:

1. Multiple data collection methods required complex coordination with the main implementation phases of the project, such as recruitment, installation and the three heating seasons, as well as a coherent approach across the three countries.
2. As different stages started and finished, facilitating communication among actors across the stages of the process became more complicated. Without a single data person to oversee this process, partners inevitably focused more on managing their own data and results than on collaboration.
3. Collecting the same data at different points in the project required changes to the data collection tools to reflect partners' changing priorities. In some cases this changed the metrics, which compromised the quality of the data and made comparisons across countries difficult.
4. Timing data collection to coincide with the winter season was critical, and the ambitious timeframe meant there were only three heating seasons in which to test the technology and monitor behaviour. The first phase of installations was complete by the first heating season, but the connectivity problems discussed below meant data was absent or of poor quality. Recruitment was then delayed until just before the final heating season, so close to the end of the project that it was difficult to process the data collected when the technologies were at their most reliable.

4.2 People: Coordination

People are a crucial part of collecting data, even when the methods are apparently technical. It is worth noting the different people involved in the project, each of whom affected the data: customers, data collection agents, installers, industrial project partners and researchers. The nature of this project meant direct access to customers was restricted, so data was generally not collected by researchers. This was problematic because those collecting it had other priorities and lacked the skills, training or appreciation of the data's final use needed to collect it correctly.

Previous research (Janda and Parag 2013; Wade et al. 2016) has highlighted the influence of different actors in socio-technical processes, and this project was a case in point. The otherwise excellent project management team had an industry background, and their priority was implementation rather than research. Thus, ensuring timely deliverables sometimes hampered the collection and sharing of research data. Table 5.2 serves to highlight the number of different actors involved in the project and consequent complexity of sharing different types of data.

Apart from the logistical challenge of coordinating the data across actors, working with multiple partners had other challenges, more widely discussed in the literature, such as a lack of shared ontology, vocabulary and culture (Hargreaves and Burgess 2009; Longhurst and Chilvers 2012; Robison and Foulds 2017; Sovacool et al. 2015). Data sets also had a different meaning for different partners, who brought different skills to the analysis and interpreted, and then used, the data differently. This had implications for the quality of data they needed and the way in which the data was interpreted, both of which are discussed later under data quality.

4.3 Technology: Connectivity

Given IOT [Internet of Things] is in the news… clean technology, all these buzzwords are always being used. But yet, when it comes to the practicalities of doing a project with [hundreds of] houses, it was incredibly difficult.

Project delivery coordinator, RealValue project

Good connectivity between the different technologies was essential, both for successful DR and to access most of the quantitative data. It is not necessary to dwell on the details of these connections (Fig. 5.3), but, in essence, it was necessary for the connected appliances to communicate through a gateway to a cloud-based aggregation platform that optimised the charging of those appliances according to the customer’s comfort settings, cost algorithms and grid constraints. This was unexpectedly demanding. Unanticipated complications included the need to install internet connections, customers turning off one or other technology, power failures causing the appliances to revert to ‘stand-alone’ mode (i.e. not connected and so no longer transmitting data or available for DR), the need to develop interfaces for different technologies to communicate, organisational firewalls preventing communication, changing communication protocols necessitating ongoing modifications and a software update that disrupted the appliances.

Fig. 5.3 Diagram of the subsystem integration and data flows behind the RealValue user interface application. WAN = Wide Area Network, IoT = Internet of Things, SETS = Smart Electric Thermal Storage. Source: RealValue project partners, cited in Darby et al. (2018)

The variety of factors that can influence technical data is noteworthy. Whereas Spataru and Gauthier (2014) focused explicitly on the performance of various indoor environmental sensors for monitoring people and indoor temperatures, we are more interested in the impact of these technical problems on the researchers and their work, which was significant (for a specific example, see Box 5.1).

Box 5.1 Attempts to collect temperature and occupancy data using technical and social methods

Temperature and occupancy data were important both to validate the models and to triangulate the qualitative customer data, and there were multiple possible data sources (Table 5.1). The heaters had temperature sensors and timing settings, which offered proxies for temperature and occupancy, respectively. However, the temperature sensors were on the heaters themselves and so could not measure the actual temperature of the room, and heating was often set to come on when people were not at home, making both proxies unreliable. Moreover, as described above, data from most heaters was unavailable until much later in the project. The additional temperature and occupancy sensors installed in a subset of homes were therefore important, both to help calibrate the models alongside this appliance data and to triangulate the customer impact assessment data, but there were two significant problems. First, most did not transmit data. Second, those who installed the sensors did not accurately record their location, making interpretation of the data impossible.

Although the social scientists included occupancy and temperature questions in the surveys, these were filled in by agents with different objectives, and the data was incomplete and ultimately unusable. Follow-up home visits were carried out and did include questions and observations on temperature and occupancy that were shared with modellers, but it was not possible to visit the homes with additional sensors, again because of the need to coordinate with other project partners, and so remedying the connectivity issues or observing the location of the sensors was impossible. Despite multiple possible sources, therefore, the final data on temperature and occupancy was patchy. This prevented researchers collaborating as fully as they might have done otherwise.

4.4 Data Quality: Granularity, Reliability and Project Design

During the final heating season, recruitment was completed and attention turned to fixing the connectivity issues, with some success: data did become available. As partners started to work with it, however, the next major issue arose: the quality of the data, itself a product of the issues discussed in the previous three sections (Stevenson and Leaman 2010). Many factors had affected the data, but there are three main points to discuss here.

First, expectations of the granularity (or resolution¹³), and the duration of the data, varied depending on the partner and their purpose. So, whilst industrial partners needed single 24-hour periods of uninterrupted data to run equipment diagnostics, social scientists wanted data for participants for whom they had other data (such as surveys or interviews), and modellers needed several days of data to help them see patterns but did not mind some gaps, as long as they had an idea of occupancy (Fig. 5.4).

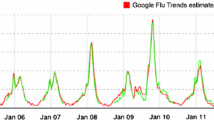
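The differing expectations above can be expressed as 'fit for purpose' checks that each partner runs on the same household series. The Python sketch below is illustrative only: the 15-minute logging interval, the reading of 'several days' as three days, the 80 % completeness threshold and the participant identifiers are all hypothetical assumptions, not values used in RealValue, and the modellers' need for occupancy information is not represented. Missing readings are marked as `None`.

```python
from typing import Dict, Optional, Sequence, Set

# Hypothetical settings for illustration only; not values used in RealValue.
INTERVAL_MINUTES = 15              # assumed logging interval
DIAGNOSTIC_WINDOW_HOURS = 24       # one uninterrupted day for equipment diagnostics
MODELLING_MIN_DAYS = 3             # "several days" read as three days
MODELLING_MIN_COMPLETENESS = 0.8   # modellers tolerate up to 20 % missing readings


def longest_complete_run_hours(values: Sequence[Optional[float]],
                               interval_minutes: int = INTERVAL_MINUTES) -> float:
    """Length, in hours, of the longest run of consecutive non-missing readings."""
    longest = current = 0
    for v in values:
        current = current + 1 if v is not None else 0
        longest = max(longest, current)
    return longest * interval_minutes / 60


def completeness(values: Sequence[Optional[float]]) -> float:
    """Share of expected readings that are actually present."""
    if not values:
        return 0.0
    return sum(v is not None for v in values) / len(values)


def fit_for_purpose(values: Sequence[Optional[float]],
                    participant_id: str,
                    surveyed_ids: Set[str],
                    interval_minutes: int = INTERVAL_MINUTES) -> Dict[str, bool]:
    """Check one household's series against each partner's (assumed) needs."""
    span_days = len(values) * interval_minutes / (60 * 24)
    return {
        # industrial partners: at least one uninterrupted 24-hour window
        "industrial_diagnostics":
            longest_complete_run_hours(values, interval_minutes) >= DIAGNOSTIC_WINDOW_HOURS,
        # modellers: several days of data, with some gaps tolerated
        "modelling":
            span_days >= MODELLING_MIN_DAYS
            and completeness(values) >= MODELLING_MIN_COMPLETENESS,
        # social scientists: the household must also have survey or interview data
        "social_science": participant_id in surveyed_ids,
    }
```

Because all three checks read the same series, a summary of this kind could show at a glance why one partner regards a data set as usable while another does not: four days of readings with a short gap every 20 hours would pass the modelling check but fail the diagnostics check.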

Second is the reliability, or consistency, of the data. As noted in Fig. 5.4, different methods of collecting apparently the same data yielded different results, making methodological transparency and accuracy vital for replicable research. Figure 5.5 demonstrates this from the viewpoint of the data. It shows two sets of temperature data: one from a SETS temperature sensor, the other from an additional temperature sensor (whose location was unknown).

Based on the midday temperature spikes on the solid line, we could speculate that the additional sensor was warmed by the sun. Interestingly, the interpretation of what happened on the days without spikes differed between modellers and social scientists: the former assuming cloudy weather and the latter closed curtains, possibly indicating illness or shift work, for example. Without additional data on weather, the aspect of the room and occupancy, it is not possible to tell which of these is correct, but the different analyses indicate each discipline’s bias.

Still on temperature, the 2–4 °C difference between the two sensors is striking.¹⁴ As the SETS sensor is on the metallic SETS surface near the warm air vent, it might well be warmer than the room. This might help explain the high temperature settings seen during the home visits: 24 °C at the appliance might translate to 20–22 °C in the room.

Both graphs also show gaps in the data, indicated by straight horizontal lines. Strangely, these do not always coincide, suggesting either that they were caused by different factors or that there were various combinations of factors affecting data quality. Again, without a home visit to verify, the cause cannot be known.
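The two observations above, the offset between the SETS sensor and the additional sensor and whether their gaps coincide, could be screened for automatically before a home visit is arranged. The Python sketch below assumes, hypothetically, that both series are already aligned on the same timestamps and that a gap appears as the last reading held constant (a straight horizontal line on the plot); any run of at least `min_len` identical readings is flagged. A genuinely steady temperature would also be flagged, so the output marks candidates for verification rather than confirmed faults. The function names are illustrative.

```python
from typing import Dict, Sequence, Set


def flat_runs(values: Sequence[float], min_len: int = 4) -> Set[int]:
    """Indices in runs of >= min_len identical consecutive readings.

    Assumes (hypothetically) that a logging gap appears as the last value held
    constant, i.e. a straight horizontal line on the plot.
    """
    flagged: Set[int] = set()
    start = 0
    for i in range(1, len(values) + 1):
        # a run ends at the end of the series or when the value changes
        if i == len(values) or values[i] != values[start]:
            if i - start >= min_len:
                flagged.update(range(start, i))
            start = i
    return flagged


def mean_offset(a: Sequence[float], b: Sequence[float], exclude: Set[int]) -> float:
    """Mean of (a - b) over aligned readings whose index is not in `exclude`."""
    diffs = [x - y for i, (x, y) in enumerate(zip(a, b)) if i not in exclude]
    return sum(diffs) / len(diffs) if diffs else float("nan")


def compare_sensors(sets_sensor: Sequence[float], room_sensor: Sequence[float],
                    min_len: int = 4) -> Dict[str, float]:
    gaps_sets = flat_runs(sets_sensor, min_len)
    gaps_room = flat_runs(room_sensor, min_len)
    return {
        "suspect_sets": len(gaps_sets),
        "suspect_room": len(gaps_room),
        # suspect readings shared by both sensors; a low share hints at different causes
        "shared": len(gaps_sets & gaps_room),
        # offset computed only where neither sensor looks suspect
        "mean_offset_c": mean_offset(sets_sensor, room_sensor, gaps_sets | gaps_room),
    }
```

Excluding suspect readings before computing the offset keeps held values from distorting the comparison; the `shared` count gives a rough indication of whether the two sensors' gaps have a common cause, which, as the text notes, still needs verifying in the home.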

Third is the socio-technical project design. Examination of the data has made clear that many of the problems originated in the design phase of the project. Rather than having a socio-technical design, this was in fact an industry-led technical demonstration project with some social inputs, which partly explains the incommensurability of the data discussed above. A socio-technical project design should encompass three phases: model, design and methods, and analysis, all of which should be socio-technical. This should start with a conceptual, theoretical phase that considers how the actions and states of people interact with the technical and physical properties of their environments. It might end with an analysis of socio-technical constructs such as a 'person-space-time mean internal temperature', a measure meant to get closer to the user experience of temperature in the home (Love and Cooper 2015). The methods linking these have yet to be developed, but mobile phones and in-home temperature apps might offer some traction (Grunewald 2015).

5 Achieving Data Synergy

Epistemological debates run as an undercurrent through all of these issues. Fundamentally, the more positivist-grounded technical/monitoring sciences would define quality in very different ways from most critical social scientists, who would instead embrace subjectivity, implying that issues of 'validation' and 'calibration', in the traditional sense, are backgrounded or at least mean something different. Nevertheless, in the context of a replicability crisis in various disciplines, this chapter suggests that data processes in the energy demand research community could be improved.

We have contributed to the conversation about ways in which this might happen and will finish with recommendations in each of the four dimensions discussed:

- Time: Synchronising research rests on critical dependencies that differ from those of project management, and it requires backup plans to ensure quality data when the project plan constrains data collection. The duration of heating projects also needs to be better aligned with their objectives.¹⁵
- People: The impact of different actors should not be underestimated. Planning and responsive management are essential parts of real-world project delivery, and we recommend four coordination roles: a project manager, a project delivery coordinator (for practical project implementation), a data analyst (from the start of the project, to organise, hold and facilitate access to a shared set of data) and a research coordinator (with a socio-technical background, to synchronise the research).
- Technology: Demonstration projects inevitably use novel technologies, and the difficulty of managing the interfaces between them should be taken into account.
- Quality: The use of consistent metrics would allow better comparisons across different countries with different languages, contexts, technologies and participant groups. Data protocols need to be developed to establish conventions for collecting and sharing data, both quantitative (e.g. what to capture, how often and where) and qualitative (e.g. what scales to use for age, income and cost).

This is not trivial and requires work from researchers and funders. However, the reward would be more robust, reliable data; better, more policy-relevant outcomes; and more replicable research.
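As a starting point for the quantitative and qualitative conventions recommended above, a protocol entry could record, for each variable, what is captured, how often, where and by whom, and could flag sensors without a recorded location or survey items without an agreed scale (problems of the kind described in Box 5.1). The Python sketch below is a hypothetical structure: the `ProtocolEntry` fields, the method names and the age bands are illustrative assumptions, not an existing standard or the RealValue protocol.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ProtocolEntry:
    """One variable in a hypothetical shared data protocol (illustrative fields)."""
    variable: str                    # what is captured, e.g. "room_temperature"
    unit: str                        # e.g. "degC"; empty for categorical items
    interval_minutes: Optional[int]  # how often; None for one-off survey items
    location: Optional[str]          # where a sensor sits; needed for sensors
    method: str                      # "sensor", "appliance" or "survey" in this sketch
    collected_by: str                # role responsible, e.g. "installer"
    scale: List[str] = field(default_factory=list)  # agreed categories for survey items


def check_entry(entry: ProtocolEntry) -> List[str]:
    """Return a list of protocol problems for one entry (empty if none found)."""
    problems: List[str] = []
    if entry.method in ("sensor", "appliance"):
        if entry.interval_minutes is None or entry.interval_minutes <= 0:
            problems.append(f"{entry.variable}: sampling interval missing")
        if not entry.location:
            problems.append(f"{entry.variable}: location not recorded")
    if entry.method == "survey" and not entry.scale:
        problems.append(f"{entry.variable}: no agreed response scale")
    return problems
```

For example, an entry for a room temperature sensor logged every 15 minutes but created with `location=None` would be reported as `room_temperature: location not recorded`, prompting the installer to note the position before the data is shared.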

Notes

1. The Oxford Dictionary defines multidisciplinary as 'Combining or involving several academic disciplines or professional specializations in an approach to a topic or problem'. This fits our purposes in this chapter.
2. For example, the need for larger, representative or standardised samples versus the need for depth and for bringing out individual differences in the data.
3. Data synergy is a term coined for this chapter. It describes data from multiple sources or disciplines that, when combined, is more valuable than any of the sources on their own.
4.
5. HVAC (heating, ventilation and air conditioning).
6. LEED (Leadership in Energy and Environmental Design) is the most widely used green building rating system in the world.
7. Demand response seeks to adjust the demand for power instead of adjusting the supply, for the benefit of the grid.
8. A plug that provides control of any device plugged into it.
9. Though this may not be true where indoor temperatures are kept constant using thermostats and where, in fact, people warming the environment through their bodies and activities may lessen the need for heating.
10. In many countries the weather, and thus the heat demand, varies from one year to another. Information on heating costs at stable tariffs in a normal year is therefore required (the effects of price increases and weather variations should be discounted).
11. Love and Cooper (2015) discuss the need for socio-technical data rather than separate streams of social and technical data.
12. This is just the timeline for Ireland. Data was also collected in Latvia and Germany.
13. The number of data points within a particular period for a particular data set.
14. Higher variations than this have been recorded. A firm judgement would require data from many homes and sensors, but only 5 of the 50 homes fitted with additional room temperature sensors provided usable data, and even this was not of good quality.
15. This is out of the control of the project itself but something that should be considered by funders.

References

Andrade-Cabrera, C., Turner, W. J. N., Burke, D., Neu, O., & Finn, D. P. (2016). Lumped Parameter Building Model Calibration using Particle Swarm Optimization. Proceedings of ASIM 2016: 3rd Asia Conference of International Building Performance Simulation Association, Jeju, South Korea.

Clarke, J. A., & Hensen, J. L. M. (2015). Integrated Building Performance Simulation: Progress, Prospects and Requirements. Building and Environment, 91, 294–306.

Darby, S., Higginson, S., Topouzi, M., Goodhew, J., & Reiss, S. (2018). Getting the Balance Right. Can Smart Electric Thermal Storage Work for Both Customers and Grids? Report for the RealValue Project (Deliverable 5.3). Oxford: Environmental Change Institute.

Economidou, M., Atanasiu, B., Despret, C., Maio, J., Nolte, I., & Rapf, O. (2011). Europe’s Buildings Under the Microscope: A Country-by-country Review of the Energy Performance of Buildings. Brussels: Buildings Performance Institute Europe (BPIE).

Foulds, C., & Robison, R. (2017). The SHAPE ENERGY Lexicon—Interpreting Energy-related Social Sciences and Humanities Terminology. Cambridge: SHAPE ENERGY.

Gram-Hanssen, K. (2013). Efficient Technologies or User Behaviour, Which Is the More Important When Reducing Households’ Energy Consumption? Energy Efficiency, 6, 447–457.

Grunewald, P. (2015). Measuring and Evaluating Time-Use and Electricity-Use Relationships (Meter). Early Career Fellowship Ref. EP/M024652/1. Engineering and Physical Sciences Research Council (EPSRC).

Guerra-Santin, O., & Silvester, S. (2017). Development of Dutch Occupancy and Heating Profiles for Building Simulation. Building Research and Information, 45(4), 396–413.

Guerra-Santin, O., Tweed, C., Jenkins, H., & Jiang, S. (2013). Monitoring the Performance of Low Energy Dwellings: Two UK Case Studies. Energy and Buildings, 64, 32–40.

Gupta, R., & Kapsali, M. (2015). Empirical Assessment of Indoor Air Quality and Overheating in Low-carbon Social Housing Dwellings in England, UK. Advances in Building Energy Research, pp. 1–23.

Hargreaves, T., & Burgess, J. (2009). Pathways to Interdisciplinarity: A Technical Report Exploring Collaborative Interdisciplinary Working in the Transition Pathways Consortium. Norwich: School of Environmental Sciences, University of East Anglia.

Janda, K. B., & Parag, Y. (2013). A Middle-out Approach for Improving Energy Performance in Buildings. Building Research and Information, 41, 39–50.

Jones, P., Lannon, S., & Patterson, J. (2013). Retrofitting Existing Housing: How Far, How Much? Building Research and Information, 41, 532–550.

Longhurst, N., & Chilvers, J. (2012). Interdisciplinarity in Transition? A Technical Report on the Interdisciplinarity of the Transition Pathways to a Low Carbon Economy Consortium. Norwich: Science, Society and Sustainability, University of East Anglia.

Love, J., & Cooper, A. (2015). From Social and Technical to Socio-technical: Designing Integrated Research on Domestic Energy Use. Indoor and Built Environment, 24(7), 986–998.

Mallaband, B., Wood, G., Buchanan, K., Staddon, S., Mogles, N. M., & Gabe-Thomas, E. (2017). The Reality of Cross-disciplinary Energy Research in the United Kingdom: A Social Science Perspective. Energy Research & Social Science, 25, 9–18.

McKenna, E., Higginson, S., Grünewald, P., & Darby, S. J. (2017). Simulating Residential Demand Response: Improving Socio-technical Assumptions in Activity-based Models of Energy Demand. Energy Efficiency, pp. 1–15. https://doi.org/10.1007/s12053-017-9525-4.

Negendahl, K. (2015). Building Performance Simulation in the Early Design Stage: An Introduction to Integrated Dynamic Models. Automation in Construction, 54, 39–53.

Peeters, L., de Dear, R., Hensen, J., & d’Haeseleer, W. (2009). Thermal Comfort in Residential Buildings: Comfort Values and Scales for Building Energy Simulation. Applied Energy, 86(5), 772–780.

Powells, G., Bulkeley, H., Bell, S., & Judson, E. (2014). Peak Electricity Demand and the Flexibility of Everyday Life. Geoforum, 55, 43–52.

Richardson, I. (2008). A High-resolution Domestic Building Occupancy Model for Energy Demand Simulations. Energy and Buildings, 40(8), 1560–1566.

Robison, R., & Foulds, C. (2017). Creating an Interdisciplinary Energy Lexicon: Working with Terminology Differences in Support of Better Energy Policy, Proceedings of the eceee 2017 Summer Study on Consumption, Efficiency & Limits, paper 1-267-17. 29 May–3 June 2017, Presqu’ile de Giens, France. pp. 121–130.

Sovacool, B. K., Ryan, S. E., Stern, P. C., Janda, K., Rochlin, G., Spreng, D., Pasqualetti, M. J., Wilhite, H., & Lutzenhiser, L. (2015). Integrating Social Science in Energy Research. Energy Research & Social Science, 6, 95–99.

Spataru, C., & Gauthier, S. (2014). How to Monitor People ‘Smartly’ to Help Reducing Energy Consumption in Buildings? Architectural Engineering and Design Management, 10, 60–78.

Stevenson, F., & Leaman, A. (2010). Evaluating Housing Performance in Relation to Human Behaviour: New Challenges. Building Research & Information, 38, 437–441.

Topouzi, M., Grunewald, P., Gershuny, J., & Harms, T. (2016). Everyday Household Practices and Electricity Use: Early Findings from a Mixed-method Approach to Assessing Demand Flexibility. Proceedings of the BEHAVE 2016 4th European Conference on Behaviour and Energy Efficiency. 8–9 September 2016, Coimbra, Portugal.

TSB. (2014). Retrofit for the Future: Reducing Energy Use in Existing Homes. A Guide to Making Retrofit Work. Swindon: Technology Strategy Board.

Wade, F., Hitchings, R., & Shipworth, M. (2016). Understanding the Missing Middlemen of Domestic Heating: Installers as a Community of Professional Practice in the United Kingdom. Energy Research & Social Science, 19, 39–47.

Wilson, C., & Dowlatabadi, H. (2007). Models of Decision Making and Residential Energy Use. Annual Review of Environment and Resources, 32, 169–203.

Acknowledgements

The RealValue project was funded by the European Union’s Horizon 2020 research and innovation programme under grant agreement no. 646116. We would like to thank our participants, without whom the research would not be possible, and the reviewers, whose insights vastly improved our chapter.


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made. The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2018 The Author(s)

About this chapter

Cite this chapter

Higginson, S., Topouzi, M., Andrade-Cabrera, C., O’Dwyer, C., Darby, S., Finn, D. (2018). Achieving Data Synergy: The Socio-Technical Process of Handling Data. In: Foulds, C., Robison, R. (eds) Advancing Energy Policy. Palgrave Pivot, Cham. https://doi.org/10.1007/978-3-319-99097-2_5


DOI: https://doi.org/10.1007/978-3-319-99097-2_5


Publisher Name: Palgrave Pivot, Cham

Print ISBN: 978-3-319-99096-5

Online ISBN: 978-3-319-99097-2

eBook Packages: Energy, Energy (R0)