Abstract

Synchronous Programming (SP) is a universal computational principle that provides deterministic concurrency. The same input sequence with the same timing always results in the same externally observable output sequence, even if the internal behaviour generates uncertainty in the scheduling of concurrent memory accesses. Consequently, SP languages have always been strongly founded on mathematical semantics that support formal program analysis. So far, however, communication has been constrained to a set of primitive clock-synchronised shared memory (csm) data types, such as data-flow registers, streams and signals with restricted read and write accesses that limit modularity and behavioural abstractions.

This paper proposes an extension to the SP theory which retains the advantages of deterministic concurrency, but allows communication to occur at higher levels of abstraction than currently supported by SP data types. Our approach is as follows. To avoid data races, each csm type publishes a policy interface for specifying the admissibility and precedence of its access methods. Each instance of the csm type has to be policy-coherent, meaning it must behave deterministically under its own policy—a natural requirement if the goal is to build deterministic systems that use these types. In a policy-constructive system, all access methods can be scheduled in a policy-conformant way for all the types without deadlocking. In this paper, we show that a policy-constructive program exhibits deterministic concurrency in the sense that all policy-conformant interleavings produce the same input-output behaviour. Policies are conservative and support the csm types existing in current SP languages. Technically, we introduce a kernel SP language that uses arbitrary policy-driven csm types. A big-step fixed-point semantics for this language is developed for which we prove determinism and termination of constructive programs.

Keywords

- Synchronous programming

- Data abstraction

- Clock-synchronised shared memory

- Determinacy

- Concurrency

- Constructive semantics

1 Introduction

Concurrent programming is challenging. Arbitrary interleavings of concurrent threads lead to non-determinism, and data races raise significant integrity and consistency issues [1]. Moreover, in many application domains such as safety-critical systems, determinism is indeed a matter of life and death. In medical-device software, for instance, the same input sequence from the sensors (with the same timing) must always result in the same output sequence for the actuators, even if the run-time software architecture regime is unpredictable.

Synchronous programming (SP) delivers deterministic concurrency out of the box (Footnote 1), which explains its success in the design, implementation and validation of embedded, reactive and safety-critical systems for the avionics, automotive, energy and nuclear industries. SP-generated code currently flies on the Airbus A380 in systems such as flight control, cockpit display, flight warning and anti-icing, to mention just a few. The SP mathematical theory has been fundamental for implementing correct-by-construction program-derivation algorithms and for establishing formal analysis, verification and testing techniques [2]. For SCADE (Footnote 2), the industrial SP modelling language and software development toolkit, the formal SP background has been a key aspect of its certification at the highest level, A, of the aerospace standard DO-178B/C. This SP rigour has also been important for obtaining certifications in railway and transportation (EN 50128), industry and energy (IEC 61508) and automotive (TÜV and ISO 26262), as well as for ensuring full compliance with the safety standards of nuclear instrumentation and control (IEC 60880) and medical systems (IEC 62304) [3].

Synchronous Programming in a Nutshell. At the top level, we can imagine an SP system as a black box with inputs and outputs for interacting with its environment. A special input, called the clock, determines when communication between system and environment can occur. The clock gets an input stimulus from the environment at discrete times. At those moments we say that the clock ticks. When there is no tick, no communication is possible, as if system and environment were disconnected. At every tick, the system reacts by reading the current inputs and executing a step function that delivers outputs and changes the internal memory. For its part, the environment must synchronise with this reaction and must not issue further ticks until the reaction has completed. Thus, in SP, we assume (Synchrony Hypothesis) that the time interval of a system reaction, also called macro-step or (synchronous) instant, appears instantaneous (has zero delay) to the environment. Since each system reaction takes exactly one clock tick, we describe the evolution of the system-environment interaction as a synchronous (lock-step) sequence of macro-steps. The SP theory guarantees that all externally observable interaction sequences derived from the macro-step reactions define a functional input-output relation.
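
The Python fragment below is a minimal sketch of this black-box view. It is an illustration under our own assumptions and is not part of any SP toolchain. The system is reduced to a step function that is called exactly once per tick, and inputs, outputs and memory are plain integers. The hypothetical `running_sum` step function stands in for an arbitrary reaction, and all internal (micro-step) concurrency is hidden inside it. Because each reaction depends only on the current inputs and the memory, replaying the same input sequence reproduces the same output sequence.

```python
from typing import Callable, List, Tuple

# A step function maps (inputs, memory) to (outputs, new memory).
StepFn = Callable[[int, int], Tuple[int, int]]


class SyncSystem:
    """Black-box view of a synchronous system: one reaction per clock tick."""

    def __init__(self, step: StepFn, init_memory: int) -> None:
        self._step = step
        self._memory = init_memory

    def tick(self, inputs: int) -> int:
        # One macro-step: read the inputs, compute the outputs and the new
        # memory, and commit the memory before the next tick is accepted.
        outputs, self._memory = self._step(inputs, self._memory)
        return outputs


def run(step: StepFn, init_memory: int, input_seq: List[int]) -> List[int]:
    """Feed one input per tick and collect the output sequence."""
    system = SyncSystem(step, init_memory)
    return [system.tick(i) for i in input_seq]


def running_sum(inputs: int, memory: int) -> Tuple[int, int]:
    """Hypothetical reaction: output the sum of all inputs seen so far."""
    total = memory + inputs
    return total, total


print(run(running_sum, 0, [1, 2, 3]))  # [1, 3, 6]
```

The environment loop in `run` is strictly sequential, so the next tick is never issued before the current reaction returns. This is the lock-step discipline that the Synchrony Hypothesis assumes.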

The fact that the sequences of macro-steps take place in time and space (memory) has motivated two orthogonal developments of SP. The data-flow view regards input-output sequences as synchronous streams of data changing over time and studies the functional relationships between streams. Dually, the control-flow approach projects the information of the input-output sequences at each point in time and studies the changes of this global state as time progresses, i.e., from one tick to the next. The SP paradigm includes languages such as Esterel [4], Quartz [5] and SC [6] in the imperative control-flow style, and languages like Signal [7], Lustre [8] and Lucid Synchrone [9] that support the declarative data-flow view. There are even mixed control-data flow languages such as Esterel V7 [10] or SCADE [3]. Independently of the execution model, the common strength of all these SP languages is a precise formal semantics, an indispensable feature when dealing with the complexities of concurrency.

At a more concrete level, we can visualise an SP system as a white-box where inside we find (graphical or textual) code. In the SP domain, the program must be divided into fragments corresponding to the macro-step reactions that will be executed instantaneously at each tick. Declarative languages usually organise these macro-steps by means of (internally generated) activation clocks that prescribe the blocks (nodes) that are performed at each tick. Instead, imperative textual languages provide a \(\mathop {\mathtt {pause}}\) statement for explicitly delimiting code execution within a synchronous instant. In either case, the Synchrony Hypothesis conveniently abstracts away all the, typically concurrent, low-level micro-steps needed to produce a system reaction. The SP theory explains how the micro-step accesses to shared memory must be controlled so as to ensure that all internal (white-box) behaviour eventually stabilises, completing a deterministic macro-step (black-box) response. For more details on SP, the reader is referred to [2].

State of the Art. Traditional imperative SP languages provide constructs to model control-dominated systems. Typically, these include a concurrent composition of threads (sequential processes) that guarantees determinism and offers signals as the main means for data communication between threads. Signals behave like shared variables whose concurrent accesses within a macro-step are scheduled according to the following principles. A pure signal has a status that can be present (1) or absent (0). At the beginning of each macro-step, pure signals have status 0 by default. In any instant, a signal \({\texttt {s}} \) can be explicitly emitted with the statement \({\texttt {s}}.\mathop {\mathtt {emit}} ()\), which atomically sets its status to 1. We can read the status of \({\texttt {s}} \) with the statement \({\texttt {s}}.\mathop {\mathtt {pres}} ()\), so the control flow can branch depending on run-time signal statuses. Specifically, inside programs, if-then-else constructions wait for the appropriate combination of present and absent signal statuses to emit (or not) more signals. The main issue is to avoid inconsistencies due to circular causality resulting from decisions based on absent statuses. Thus, the order in which the access methods \(\mathop {\mathtt {emit}}\) and \(\mathop {\mathtt {pres}}\) are scheduled matters for the final result. The usual SP rule for ensuring determinism is that the \(\mathop {\mathtt {pres}} \) test must wait until the final signal status is decided. If all signal accesses can be scheduled in this decide-then-read way, then the program is constructive. All schedules that keep the decide-then-read order produce the same input-output result. This is how SP reconciles concurrency and observable determinism, and it accounts for much of its algebraic appeal. Constructiveness of programs is what static techniques like the must-can analysis [4, 11,12,13] verify, although in a more abstract manner.
Pure signals are a simple form of clock-synchronised shared memory (csm) data type, with access methods (operations) specific to this csm type. Existing SP control-flow languages also support other restricted csm types, such as valued signals and arrays [10] or sequentially constructive variables [6].
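
The decide-then-read discipline described above can be illustrated with a small schedule explorer. This is a hypothetical Python model and not the must-can analysis itself. Threads are tuples of `emit` operations and `if`-on-presence operations for a single instant. A presence test on a signal that is still absent is postponed while some concurrent thread may still emit that signal. The helper `can_emit` over-approximates this condition by looking into both branches. The function collects the final signal sets of all admissible schedules: a single result indicates a deterministic outcome, and an empty result means that every schedule deadlocks.

```python
from typing import FrozenSet, Set

# Hypothetical one-instant mini-language over pure signals:
#   ("emit", s)                    sets the status of s to present (1)
#   ("if", s, then_ops, else_ops)  branches on s.pres()
# A thread is a tuple of such operations; branches are tuples as well.


def can_emit(ops: tuple) -> Set[str]:
    """Signals a thread may still emit (looks into both branches)."""
    result: Set[str] = set()
    for op in ops:
        if op[0] == "emit":
            result.add(op[1])
        else:
            result |= can_emit(op[2]) | can_emit(op[3])
    return result


def outcomes(threads: tuple, present: FrozenSet[str] = frozenset()) -> Set[FrozenSet[str]]:
    """Final sets of present signals over all decide-then-read schedules.

    An empty result means that every schedule deadlocks (not constructive).
    """
    threads = tuple(t for t in threads if t)  # drop terminated threads
    if not threads:
        return {present}
    results: Set[FrozenSet[str]] = set()
    for i, thread in enumerate(threads):
        op, rest = thread[0], thread[1:]
        others = threads[:i] + threads[i + 1:]
        if op[0] == "emit":
            results |= outcomes(others + (rest,), present | {op[1]})
            continue
        s = op[1]
        pending = set().union(*(can_emit(t) for t in others))
        if s not in present and s in pending:
            continue  # status 0 is not decided yet: a concurrent emit may follow
        branch = op[2] if s in present else op[3]
        results |= outcomes(others + (branch + rest,), present)
    return results


emitter = (("emit", "s"),)
tester = (("if", "s", (("emit", "t"),), ()),)
print(outcomes((emitter, tester)) == {frozenset({"s", "t"})})  # True
```

In this run, the presence test can only be scheduled after the emission, so every admissible schedule ends with both `s` and `t` present. Consider instead two threads that each emit the other's signal only if their own signal is absent. They admit no schedule at all, and `outcomes` returns an empty set. This is the classic causality cycle that constructiveness rules out.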

Contribution. This paper proposes an extension to the SP model which retains the advantages of deterministic concurrency while widening the notion of constructiveness to cover more general csm types. This allows shared-memory communication to occur at higher levels of abstraction than currently supported. In particular, our approach subsumes both the notions of Berry-constructiveness [4] for Esterel and sequential constructiveness for SCL [14]. This is the first time that these SP communication principles are combined side-by-side in a single language. Moreover, our theory permits other predefined communication structures to coexist safely under the same uniform framework, such as data-flow variables [8], registers [15], Kahn channels [16], priority queues, arrays as well as other csm types currently unsupported in SP.

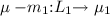

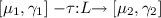

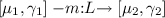

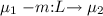

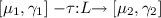

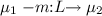

Synopsis and Overview. The core of our approach is presented in Sect. 2 where policies are introduced as a (constructive) synchronisation mechanism for arbitrary abstract data types (ADT). For instance, the policy of a pure signal is depicted in Fig. 1. It has two control states 0 and 1 corresponding to the two possible signal statuses. Transitions are decorated with method names \(\mathop {\mathtt {pres}}\), \(\mathop {\mathtt {emit}}\) or with \(\sigma \) to indicate a clock tick.

The policy tells us whether a given method or tick is admissible, i.e., whether it can be scheduled from a particular state (Footnote 3). In addition, transitions include a blocking set of method names as part of their action labels. This set determines a precedence between methods from a given state. A label m : L specifies that all methods in L take precedence over m. An empty blocking set \(\emptyset \) indicates no precedences. To improve visualisation, we highlight precedences by dotted (red) arrows tagged \(\mathop {\mathtt {prec}}\) (Footnote 4). The policy interface in Fig. 1 specifies the decide-then-read protocol of pure signals as follows. At any instant, if the signal status is 0, then the \(\mathop {\mathtt {pres}}\) test can only be scheduled if there are no more potential \(\mathop {\mathtt {emit}}\) statements that can still update the status to 1. This explains the precedence of the \(\mathop {\mathtt {emit}}\) transition over the self loop with action label \(\mathop {\mathtt {pres}} \mathrel {:}\{\mathop {\mathtt {emit}} \}\) from state 0. Conversely, transitions \(\mathop {\mathtt {pres}}\) and \(\mathop {\mathtt {emit}}\) from state 1 have no precedences, meaning that the \(\mathop {\mathtt {pres}}\) and \(\mathop {\mathtt {emit}}\) methods are confluent and can be freely scheduled (interleaved). The reason is that a signal status 1 is already decided and can no longer be changed by either method in the same instant. In general, any two admissible methods that do not block each other must be confluent, in the sense that the same policy state is reached independently of their order of execution. Note that all the \(\sigma \) transitions go to the initial state 0, since at each tick the SP system enters a new macro-step in which all pure signals are initialised to the 0 status.
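
The pure-signal policy of Fig. 1 can be written down as a finite table. The Python sketch below uses our own encoding, with the string `"sigma"` standing for the clock tick. It maps each state and action to its blocking set and successor state. It then checks the confluence property just described: whenever two methods are admissible in the same state and neither blocks the other, executing them in either order must reach the same state.

```python
from itertools import product

# Policy of a pure signal (Fig. 1): (state, action) -> (blocking set, successor).
# "sigma" stands for the clock tick; the methods are "pres" and "emit".
PURE_SIGNAL = {
    (0, "pres"): ({"emit"}, 0),  # absent status: wait for pending emissions
    (0, "emit"): (set(), 1),
    (1, "pres"): (set(), 1),     # status 1 is decided: no precedences
    (1, "emit"): (set(), 1),
    (0, "sigma"): (set(), 0),    # each tick starts a new macro-step in state 0
    (1, "sigma"): (set(), 0),
}
METHODS = ("pres", "emit")
STATES = (0, 1)


def step(policy, state, action):
    """Successor state, or None if the action is not admissible in state."""
    entry = policy.get((state, action))
    return None if entry is None else entry[1]


def is_confluent(policy, states, methods):
    """Admissible methods that do not block each other must commute."""
    for mu, m1, m2 in product(states, methods, methods):
        e1, e2 = policy.get((mu, m1)), policy.get((mu, m2))
        if e1 is None or e2 is None:
            continue  # one of the methods is not admissible in mu
        (l1, mu1), (l2, mu2) = e1, e2
        if m1 in l2 or m2 in l1:
            continue  # precedence fixes the order, no confluence required
        a, b = step(policy, mu1, m2), step(policy, mu2, m1)
        if a is None or b is None or a != b:
            return False
    return True


print(is_confluent(PURE_SIGNAL, STATES, METHODS))  # True
```

For the pure signal, the only pair exempt from the check is \(\mathop {\mathtt {pres}}\) and \(\mathop {\mathtt {emit}}\) in state 0, because \(\mathop {\mathtt {emit}}\) blocks \(\mathop {\mathtt {pres}}\) there. All remaining pairs trivially commute.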

Section 2 describes in detail the idea of a scheduling policy on general csm types. This leads to a type-level coherence property, which is a local form of determinism. Specifically, a csm type is policy-coherent if it satisfies the (policy) specification of admissibility and precedence of its access methods. The point is that a policy-coherent csm type per se behaves deterministically under its own policy—a very natural requirement if the goal is to build deterministic systems that use this type. For instance, the fact that Esterel signals are deterministic (policy-coherent) in the first place permits techniques such as the must-can analysis to get constructive information about deterministic programs. We show how policy-coherence implies a global determinacy property called commutation. Now, in a policy-constructive program all access methods can be scheduled in a policy-conforming way for all the csm types without deadlocking. We also show that, for policy-coherent types, a policy-constructive program exhibits deterministic concurrency in the sense that all policy-conforming interleavings produce the same input-output behaviour.

To implement a constructive scheduling mechanism parameterised by arbitrary csm type policies, we present the synchronous kernel language called Deterministic Concurrent Language (DCoL) in Sect. 2.1. DCoL is a minimal language for studying the new mathematical concepts, and it can also act as an intermediate language for compiling existing SP languages. Section 3 presents its policy-driven operational semantics, for which determinacy and termination are proven. Section 3 also explains how this model generalises existing notions of constructiveness. We discuss related work in Sect. 4 and present our conclusions in Sect. 5.

A companion to this paper is the research report (https://www.uni-bamberg.de/fileadmin/uni/fakultaeten/wiai_professuren/grundlagen_informatik/papersMM/report-WIAI-102-Feb-2018.pdf) [17], which contains detailed proofs and additional examples of csm types.

2 Synchronous Policies

This section introduces a kernel synchronous Deterministic Concurrent Language (DCoL) for policy-conformant constructive scheduling which integrates policy-controlled csm types within a simple syntax. DCoL is used to discuss the behavioural (clock) abstraction limitations of current SP. Then policies are introduced as a mechanism for specifying the scheduling discipline for csm types which, in this form, can encapsulate arbitrary ADTs.

2.1 Syntax

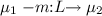

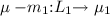

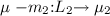

The syntax of DCoL is given by the following operators:

The first two statements correspond to the two forms of immediate completion: \(\mathop {\mathtt {skip}}\) terminates instantaneously and \(\mathop {\mathtt {pause}}\) waits for the logical clock to terminate. The operators \(P \mathop {{\texttt {|\!\!|}}} Q\) and \(P \mathop {{\texttt {;}}} Q\) are parallel interleaving and imperative sequential composition of threads, with the standard operational interpretation. Reading and destructive updating are performed through the execution of method calls \({\texttt {c}}.m(e)\) on a csm variable \({\texttt {c}} \in \textsf {O}\) with a method \(m \in \textsf {M}_{\texttt {c}} \). The sets \(\textsf {O}\) and \(\textsf {M}_{\texttt {c}} \) define the granularity of the available memory accesses. The construct \(\mathop {{\texttt {let}}} x {{\texttt {\,=\,}}} {\texttt {c}}.m(e) \mathop {{\texttt {in}}} P\) calls m on \({\texttt {c}} \) with an input parameter determined by the value expression e. It binds the return value to variable x and then executes program P, which may depend on x, sequentially afterwards. The execution of \({\texttt {c}}.m(e)\) in general has the side effect of changing the internal memory of \({\texttt {c}} \). In contrast, the evaluation of expression e is side-effect free. For convenience we write \(x {{\texttt {\,=\,}}} {\texttt {c}}.m(e) {\mathop {{\texttt {;}}}} P\) for \(\mathop {{\texttt {let}}} x {{\texttt {\,=\,}}} {\texttt {c}}.m(e) \mathop {{\texttt {in}}} P\). When P does not depend on x, we write \({\texttt {c}}.m(e){\mathop {{\texttt {;}}}} P\), and \({\texttt {c}}.m(e){\mathop {{\texttt {;}}}}\) for \({\texttt {c}}.m(e){\mathop {{\texttt {;}}}}\mathop {\mathtt {skip}} \). The exact syntax of value expressions e is irrelevant for this work and left open. It could be as simple as permitting only constant value literals, or it could be a full-fledged functional language. The conditional \(\mathop {\mathtt {{if}}}\, e\, \mathop {\mathtt {then}}\, P\, \mathop {\mathtt {else}}\, Q \) has the usual interpretation.
For simplicity, we may write \(\mathop {\mathtt {{if}}}\, {\texttt {c}}.m(e)\, \mathop {\mathtt {then}}\, P\, \mathop {\mathtt {else}}\, Q \) to mean \(x {{\texttt {\,=\,}}} {\texttt {c}}.m(e) {\mathop {{\texttt {;}}}} \mathop {\mathtt {{if}}}\, x\, \mathop {\mathtt {then}}\, P\, \mathop {\mathtt {else}}\, Q \). The recursive closure \(\mathop {\textsf {rec}} p.\, P\) binds the behaviour P to the program label p so it can be called from within P. Using this construct we can build iterative behaviours. For instance, \({{\texttt {loop}}} \, P\, {{\texttt {end}}} =_{ \scriptstyle df }\mathop {\textsf {rec}} p.\, P {\mathop {{\texttt {;}}}} \mathop {\mathtt {pause}} {\mathop {{\texttt {;}}}} p\) indefinitely repeats P in each tick. We assume that in a closure \(\mathop {\textsf {rec}} p.\, P\) the label p is (i) clock guarded, i.e., it occurs in the scope of at least one \(\mathop {\mathtt {pause}}\) (meaning no instantaneous loops) and (ii) all occurrences of p are in the same thread. Thus, \(\mathop {\textsf {rec}} p.\, p\) is illegal because of (i) and \(\mathop {\textsf {rec}} p.\, (\mathop {\mathtt {pause}} \mathop {{\texttt {;}}} p \mathop {{\texttt {|\!\!|}}}\mathop {\mathtt {pause}} \mathop {{\texttt {;}}} p)\) is not permitted because of (ii).
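
For reference, the constructs described above can be collected into a single grammar. This summary is assembled from the prose of this subsection, so its notation may differ from the original syntax figure. Derived forms such as \(x {{\texttt {\,=\,}}} {\texttt {c}}.m(e) \mathop {{\texttt {;}}} P\) and \({{\texttt {loop}}} \, P\, {{\texttt {end}}}\) are omitted.

```latex
\begin{array}{rcl}
P, Q & ::= & \mathtt{skip} \mid \mathtt{pause} \mid P \mathbin{\|} Q \mid P \mathbin{;} Q \\
     & \mid & \mathtt{let}\; x = \mathtt{c}.m(e)\; \mathtt{in}\; P
        \mid \mathtt{if}\; e\; \mathtt{then}\; P\; \mathtt{else}\; Q \\
     & \mid & \mathsf{rec}\; p.\, P \mid p
\end{array}
```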

This syntax seems minimalistic compared to existing SP languages. For instance, it does not provide primitives for pre-emption, suspension or traps as in Quartz or Esterel. Recent work [18] has shown how these control primitives can be translated into the constructs of the SCL language, exploiting destructive update of sequentially constructive (SC) variables. Since SC variables are a special case of policy-controlled csm variables, DCoL is at least as expressive as SCL.

2.2 Limited Abstraction in SP

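The pertinent feature of standard SP languages is that they do not permit the programmer to express sequential execution order inside a tick for destructive updates of signals. All such updates are considered concurrent and thus must either be combined or concern distinct signals. For instance, in languages such as Esterel V7 or Quartz, a parallel composition

\(({\texttt {xs}}.\mathop {\mathtt {emit}} (1) \mathop {{\texttt {;}}} {\texttt {xs}}.\mathop {\mathtt {emit}} (5)) \mathop {{\texttt {|\!\!|}}} (v {{\texttt {\,=\,}}} {\texttt {xs}}.\textsf {read}() \mathop {{\texttt {;}}} {\texttt {ys}}.\mathop {\mathtt {emit}} (v + 1)) \qquad (1)\)

of signal emissions is only constructive if a commutative and associative function is defined on the shared signal \({\texttt {xs}} \) to combine the values assigned to it. But then, by the properties of this combination function, we get the same behaviour if we swap the assignments of values 1 and 5, or if we execute all of them in parallel as in

\({\texttt {xs}}.\mathop {\mathtt {emit}} (1) \mathop {{\texttt {|\!\!|}}} {\texttt {xs}}.\mathop {\mathtt {emit}} (5) \mathop {{\texttt {|\!\!|}}} (v {{\texttt {\,=\,}}} {\texttt {xs}}.\textsf {read}() \mathop {{\texttt {;}}} {\texttt {ys}}.\mathop {\mathtt {emit}} (v + 1)).\)

Suppose instead that the second emission \({\texttt {xs}}.\mathop {\mathtt {emit}} (5)\) in (1) is meant to override the first \({\texttt {xs}}.\mathop {\mathtt {emit}} (1)\), as in ordinary imperative programming, so that the concurrent thread \(v {{\texttt {\,=\,}}} {\texttt {xs}}.\textsf {read}() \mathop {{\texttt {;}}} {\texttt {ys}}.\mathop {\mathtt {emit}} (v + 1)\) reads the updated value \(v = 5\). Then we must introduce a \(\mathop {\mathtt {pause}} \) statement to separate the emissions by a clock tick and to delay the assignment to \({\texttt {ys}} \), as in

\(({\texttt {xs}}.\mathop {\mathtt {emit}} (1) \mathop {{\texttt {;}}} \mathop {\mathtt {pause}} \mathop {{\texttt {;}}} {\texttt {xs}}.\mathop {\mathtt {emit}} (5)) \mathop {{\texttt {|\!\!|}}} (\mathop {\mathtt {pause}} \mathop {{\texttt {;}}} v {{\texttt {\,=\,}}} {\texttt {xs}}.\textsf {read}() \mathop {{\texttt {;}}} {\texttt {ys}}.\mathop {\mathtt {emit}} (v + 1)).\)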

This makes behavioural abstraction difficult. For instance, suppose nats is a synchronous reaction module, possibly composite and with its own internal clocking, which returns the stream of natural numbers. Every time its step function \({{\texttt {nats}}}.{{\texttt {step}}} ()\) is called, it returns the next number and increments its internal state. If we want to pair up two successive numbers within one tick of an outer clock and emit them in a single signal \({\texttt {y}} \), we would write something like \(x_1 = {{\texttt {nats}}}.{{\texttt {step}}} () \mathop {{\texttt {;}}} x_2 = {{\texttt {nats}}}.{{\texttt {step}}} () \mathop {{\texttt {;}}} {\texttt {y}}.\mathop {\mathtt {emit}} (x_1, x_2)\), where \(x_1\), \(x_2\) are thread-local value variables. This over-clocking is impossible in traditional SP because there is no imperative sequential composition that would let us call the step function of the same module instance twice within a tick. Instead, the two calls \({{\texttt {nats}}}.{{\texttt {step}}} ()\) are considered concurrent and thus create non-determinacy in the value of \({\texttt {y}} \) (Footnote 5). To avoid a compiler error we must separate the calls by a clock tick, as in \(x_1 = {{\texttt {nats}}}.{{\texttt {step}}} () \mathop {{\texttt {;}}} \mathop {\mathtt {pause}} \mathop {{\texttt {;}}} x_2 = {{\texttt {nats}}}.{{\texttt {step}}} () \mathop {{\texttt {;}}} {\texttt {y}}.\mathop {\mathtt {emit}} (x_1, x_2)\), which breaks the intended clock abstraction.
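
The non-determinacy mentioned above can be reproduced in a few lines of Python. The `Nats` class is a hypothetical stand-in for the module nats. `concurrent_pairs` runs the two calls of \(x_1 = {{\texttt {nats}}}.{{\texttt {step}}} () \mathop {{\texttt {|\!\!|}}} x_2 = {{\texttt {nats}}}.{{\texttt {step}}} ()\) in every possible interleaving without any scheduling policy. `sequential_pair` executes the two calls in program order within one tick.

```python
from itertools import permutations
from typing import Dict, Set, Tuple


class Nats:
    """Hypothetical module producing 0, 1, 2, ... one value per step()."""

    def __init__(self) -> None:
        self._next = 0

    def step(self) -> int:
        value = self._next
        self._next += 1
        return value


def concurrent_pairs() -> Set[Tuple[int, int]]:
    """(x1, x2) for x1 = nats.step() || x2 = nats.step(), over all interleavings."""
    results = set()
    for order in permutations(("x1", "x2")):
        nats = Nats()
        env: Dict[str, int] = {}
        for var in order:  # execute the two calls in this interleaving
            env[var] = nats.step()
        results.add((env["x1"], env["x2"]))
    return results


def sequential_pair() -> Tuple[int, int]:
    """(x1, x2) for x1 = nats.step() ; x2 = nats.step() within one tick."""
    nats = Nats()
    x1 = nats.step()
    x2 = nats.step()
    return (x1, x2)


print(sorted(concurrent_pairs()))  # [(0, 1), (1, 0)]
print(sequential_pair())           # (0, 1)
```

The unconstrained concurrent version yields two different pairs depending on the interleaving, which is why traditional SP rejects it. The sequential version always yields successive stream values.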

The data abstraction limitation of traditional SP is that it is not directly possible to encapsulate a composite behaviour on synchronised signals as a shared synchronised object. For this, the simple decide-then-read signal protocol must be generalised; in particular, it must distinguish between concurrent and sequential accesses to the shared data structure. A concurrent access \(x_1 {{\texttt {\,=\,}}} {{\texttt {nats}}}.{{\texttt {step}}} () \mathop {{\texttt {|\!\!|}}} x_2 {{\texttt {\,=\,}}} {{\texttt {nats}}}.{{\texttt {step}}} ()\) must give the same value for \(x_1\) and \(x_2\), while a sequential access \(x_1 {{\texttt {\,=\,}}} {{\texttt {nats}}}.{{\texttt {step}}} () \mathop {{\texttt {;}}} x_2 {{\texttt {\,=\,}}} {{\texttt {nats}}}.{{\texttt {step}}} ()\) must yield successive values of the stream. In a sequence \(x {{\texttt {\,=\,}}} {\texttt {xs}}.{{\texttt {read}}} () \mathop {{\texttt {;}}} {\texttt {xs}}.\mathop {\mathtt {emit}} (v)\), x does not see the value v, but in a parallel composition \(x {{\texttt {\,=\,}}} {\texttt {xs}}.{{\texttt {read}}} () \mathop {{\texttt {|\!\!|}}} {\texttt {xs}}.\mathop {\mathtt {emit}} (v)\) we may want the read to wait for the emission. The rest of this section covers our theory of policies, in which this is possible. The modularity issue is reconsidered in Sect. 2.6.
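
The distinction between sequential and concurrent access to a shared variable xs can also be made concrete. The sketch below is a hypothetical model and not the paper's semantics. It uses a single integer register assumed to start at 0, where `emit` overwrites the value and `read` returns it. With `policy=True`, it applies an emit-before-read precedence, but only against concurrent threads.

```python
from typing import Any, Dict, List, Set, Tuple

Op = Tuple[str, Any]  # ("emit", value) or ("read", name of a local variable)
Reads = Tuple[Tuple[str, int], ...]


def outcomes(threads: Dict[str, List[Op]], policy: bool) -> Set[Reads]:
    """Values observed by reads on one shared register xs, over all interleavings.

    With policy=True, a read is admissible only if no *concurrent* thread still
    has a pending emit; emits that follow the read in its own thread are
    sequentially later and are not waited for.
    """
    results: Set[Reads] = set()

    def explore(pcs: Dict[str, int], value: int, reads: Reads) -> None:
        finished = True
        for t, ops in threads.items():
            if pcs[t] == len(ops):
                continue
            finished = False
            kind, arg = ops[pcs[t]]
            if kind == "read" and policy and any(
                o[0] == "emit"
                for u, us in threads.items() if u != t
                for o in us[pcs[u]:]
            ):
                continue  # blocked: a concurrent emit takes precedence
            nxt = dict(pcs)
            nxt[t] += 1
            if kind == "emit":
                explore(nxt, arg, reads)
            else:
                explore(nxt, value, reads + ((arg, value),))
        if finished:  # all threads terminated (deadlocked paths are dropped)
            results.add(tuple(sorted(reads)))

    explore({t: 0 for t in threads}, 0, ())  # register assumed to start at 0
    return results


parallel = {"A": [("emit", 5)], "B": [("read", "x")]}
sequential = {"A": [("read", "x"), ("emit", 5)]}
print(sorted(outcomes(parallel, policy=False)))  # [(('x', 0),), (('x', 5),)]
print(outcomes(parallel, policy=True))           # {(('x', 5),)}
print(outcomes(sequential, policy=True))         # {(('x', 0),)}
```

Without the precedence, the parallel composition admits reads of both 0 and 5. With the precedence, only 5 remains. The sequence read-then-emit still reads the old value 0, because the emission comes sequentially later in the same thread.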

2.3 Concurrent Access Policies

In the white-box view of SP, an imperative program consists of a set of threads (sequential processes) and some csm variables for communication. Due to concurrency, a given thread under control (tuc) has the chance to access the shared variables only from time to time. For a given csm variable, a concurrent access policy (cap) is the locking mechanism used to control the accesses of the current tuc and its environment. The locking ensures that the determinacy of the csm type is not broken by concurrent accesses. A cap is like a policy with extra transitions that model potential environment accesses outside the tuc. Concretely, a cap is given by a state machine where each transition label \(a \mathrel {:}L\) codifies an action a taking place on the shared variable with blocking set L, where L is a set of methods that take precedence over a. The action is either a method call, a silent action or a clock tick, giving labels \(m \mathrel {:}L\), \(\tau \mathrel {:}L\) and \(\sigma \mathrel {:}L\), respectively. A transition \(m \mathrel {:}L\) expresses that, in the current cap control state, the method m can be called by the tuc, provided that no method in L is called concurrently. A Determinacy Requirement guarantees that each method call by the tuc has a unique blocking set and a unique successor state. Additionally, the execution of methods by the cap must be confluent, in the sense that if two methods are admissible and do not block each other, then the cap reaches the same policy state no matter the order in which they are executed. This preserves determinism for concurrent variable accesses. A transition \(\tau \mathrel {:}L\) internalises method calls by the tuc’s concurrent environment, which are uncontrollable for the tuc. In the sequel, the actions in \(\textsf {M}_{\texttt {c}} \cup \{\sigma \}\) will be called observable. A transition \(\sigma \mathrel {:}L\) models a clock synchronisation step of the tuc.
Like method calls, such clock ticks must be determinate as stated by the Determinacy Requirement. Additionally, the clock must always wait for any predicted concurrent \(\tau \)-activity to complete. This is the Maximal Progress Requirement. Note that we do not need confluence for clock transitions since they are not concurrent.

Definition 1

A concurrent access policy (cap) \(\Vdash _{\texttt {c}} \) of a csm variable \({\texttt {c}} \) with (access) methods \(\textsf {M}_{\texttt {c}} \) is a state machine consisting of a set of control states \(\mathbb {P} _{\texttt {c}} \), an initial state \(\varepsilon \in \mathbb {P} _{\texttt {c}} \) and a labelled transition relation \(\rightarrow \; \subseteq \mathbb {P} _{\texttt {c}} \times \textsf {A}_{\texttt {c}} \times \mathbb {P} _{\texttt {c}} \) with action labels \(\textsf {A}_{\texttt {c}} = (\textsf {M}_{\texttt {c}} \cup \{ \tau , \sigma \}) \times 2^{\textsf {M}_{\texttt {c}}}\). Instead of \((\mu _1, (a, L), \mu _2) \mathop {\in } \rightarrow \) we write \(\mu _1 \mathrel {{\mathop {\longrightarrow }\limits ^{a \mathrel {:}L}}} \mu _2\). We then say action a is admissible in state \(\mu _1\) and blocked by all methods \(m \in L \subseteq \textsf {M}_{\texttt {c}} \). When the blocking set L is irrelevant we drop it and write \(\mu _1 \mathrel {{\mathop {\longrightarrow }\limits ^{a}}} \mu _2\). A cap must satisfy the following conditions:

- Determinacy. If \(\mu \mathrel {{\mathop {\longrightarrow }\limits ^{a \mathrel {:}L_1}}} \mu _1\) and \(\mu \mathrel {{\mathop {\longrightarrow }\limits ^{a \mathrel {:}L_2}}} \mu _2\), then \(L_1 = L_2\) and \(\mu _1 = \mu _2\), provided a is observable, i.e., \(a \ne \tau \).

- Confluence. If \(\mu \mathrel {{\mathop {\longrightarrow }\limits ^{m_1 \mathrel {:}L_1}}} \mu _1\) and \(\mu \mathrel {{\mathop {\longrightarrow }\limits ^{m_2 \mathrel {:}L_2}}} \mu _2\) do not block each other, i.e., \(m_1 \in \textsf {M}_{\texttt {c}} \setminus L_2\) and \(m_2 \in \textsf {M}_{\texttt {c}} \setminus L_1\), then for some \(\mu '\) both \(\mu _1 \mathrel {{\mathop {\longrightarrow }\limits ^{m_2}}} \mu '\) and \(\mu _2 \mathrel {{\mathop {\longrightarrow }\limits ^{m_1}}} \mu '\).

- Maximal Progress. \(\mu \mathrel {{\mathop {\longrightarrow }\limits ^{\sigma }}} \mu _1\) and \(\mu \mathrel {{\mathop {\longrightarrow }\limits ^{a}}} \mu _2\) imply that a is observable.

A policy is a cap without any (concurrent) \(\tau \) activity, i.e., every transition \(\mu _1 \mathrel {{\mathop {\longrightarrow }\limits ^{a}}} \mu _2\) implies that a is observable. \(\square \)
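
For a finite cap, the three conditions of Definition 1 can be checked mechanically. The following Python sketch uses an encoding of our own: transitions are tuples \((\mu _1, a, L, \mu _2)\), and the strings `"tau"` and `"sigma"` stand for \(\tau \) and \(\sigma \). The function returns one Boolean per condition. It is an illustration only and not a tool from the paper.

```python
from itertools import product
from typing import FrozenSet, Hashable, Set, Tuple

TAU, SIGMA = "tau", "sigma"
Transition = Tuple[Hashable, str, FrozenSet[str], Hashable]  # (mu1, a, L, mu2)


def check_cap(trans: Set[Transition], methods: Set[str]) -> Tuple[bool, bool, bool]:
    """Return (Determinacy, Confluence, Maximal Progress) for a finite cap."""

    def out(mu, a):
        # All (L, mu2) such that mu --a:L--> mu2.
        return {(l, nu) for (src, b, l, nu) in trans if src == mu and b == a}

    states = {t[0] for t in trans} | {t[3] for t in trans}

    # Determinacy: at most one (L, successor) per observable action.
    determinacy = all(
        len(out(mu, a)) <= 1 for mu, a in product(states, methods | {SIGMA})
    )

    # Confluence: mutually non-blocking methods reach a common state mu'.
    confluence = True
    for mu, m1, m2 in product(states, methods, methods):
        for (l1, mu1), (l2, mu2) in product(out(mu, m1), out(mu, m2)):
            if m1 in l2 or m2 in l1:
                continue  # one method blocks the other
            targets1 = {nu for _, nu in out(mu1, m2)}
            targets2 = {nu for _, nu in out(mu2, m1)}
            if not targets1 & targets2:
                confluence = False

    # Maximal Progress: wherever the clock is admissible, no tau step is.
    maximal_progress = all(
        a != TAU
        for (mu, b, _, _) in trans if b == SIGMA
        for (src, a, _, _) in trans if src == mu
    )
    return determinacy, confluence, maximal_progress


PURE_SIGNAL = {
    (0, "pres", frozenset({"emit"}), 0), (0, "emit", frozenset(), 1),
    (1, "pres", frozenset(), 1), (1, "emit", frozenset(), 1),
    (0, SIGMA, frozenset(), 0), (1, SIGMA, frozenset(), 0),
}
print(check_cap(PURE_SIGNAL, {"pres", "emit"}))  # (True, True, True)
```

The pure-signal policy of Fig. 1 passes all three checks. Adding a silent step from state 0 violates Maximal Progress, because the clock would then be admissible while \(\tau \)-activity is still predicted.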

The use of a cap as a concurrent policy arises from the notion of enabling. Informally, an observable action \(a \in \textsf {M}_{\texttt {c}} \cup \{ \sigma \}\) is enabled in a state \(\mu \) of a cap if it is admissible in \(\mu \) and in all subsequent states reachable under arbitrary silent steps. To formalise this we define weak transitions \(\mu _1 \Rightarrow \mu _2\) inductively to express that either \(\mu _1 = \mu _2\) or \(\mu _1 \Rightarrow \mu '\) and \(\mu ' \mathrel {{\mathop {\longrightarrow }\limits ^{\tau }}} \mu _2\). We exploit the determinacy for observable actions \(a \in \textsf {M}_{\texttt {c}} \cup \{ \sigma \}\) and write \(\mu \odot a\) for the unique \(\mu '\) such that \(\mu \mathrel {{\mathop {\longrightarrow }\limits ^{a}}} \mu '\), if it exists.

Definition 2

Given a cap \(\Vdash _{\texttt {c}} = (\mathbb {P} _{\texttt {c}}, \varepsilon , \mathrel {{\mathop {\longrightarrow }\limits ^{}}})\), an observable action \(a \in \textsf {M}_{\texttt {c}} \cup \{ \sigma \}\) is enabled in state \(\mu \in \mathbb {P} _{\texttt {c}} \), written \(\mu \Vdash _{\texttt {c}} \mathop {\downarrow }a\), if \(\mu ' \odot a\) exists for all \(\mu '\) such that \(\mu \Rightarrow \mu '\). A sequence \({{\varvec{a}}} \in (\textsf {M}_{\texttt {c}} \cup \{ \sigma \})^*\) of observable actions is enabled in \(\mu \in \mathbb {P} _{\texttt {c}} \), written \(\mu \Vdash _{\texttt {c}} \mathop {\downarrow }{{\varvec{a}}}\), if (i) \({{\varvec{a}}} = \varepsilon \) or (ii) \({{\varvec{a}}} = a\, {{\varvec{b}}}\), \(\mu \Vdash _{\texttt {c}} \mathop {\downarrow }a\) and \(\mu \odot a \Vdash _{\texttt {c}} \mathop {\downarrow }{{\varvec{b}}}\). \(\square \)
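
Definition 2 translates directly into a reachability check. The sketch below uses a hypothetical encoding: a cap is a map from states to (action, successor) pairs, with blocking sets omitted because enabling does not depend on them. `weak_closure` computes the states reachable by silent steps, `odot` implements \(\mu \odot a\) under the Determinacy assumption, and `enabled` and `enabled_seq` follow the clauses of the definition. The small cap `CAP`, with abstract methods `m` and `n`, is made up for illustration. In state 0 a concurrent environment step is still predicted, so only actions admissible both before and after that step are enabled there.

```python
from typing import Dict, Hashable, List, Optional, Sequence, Set, Tuple

TAU, SIGMA = "tau", "sigma"
# A finite cap without blocking sets: state -> list of (action, successor).
Cap = Dict[Hashable, List[Tuple[str, Hashable]]]


def weak_closure(cap: Cap, mu: Hashable) -> Set[Hashable]:
    """All mu2 with mu => mu2, i.e. reachable by zero or more tau steps."""
    seen, stack = {mu}, [mu]
    while stack:
        cur = stack.pop()
        for a, nxt in cap.get(cur, []):
            if a == TAU and nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


def odot(cap: Cap, mu: Hashable, a: str) -> Optional[Hashable]:
    """mu (.) a: the a-successor of mu (unique by Determinacy), else None."""
    succ = [nxt for b, nxt in cap.get(mu, []) if b == a]
    return succ[0] if succ else None


def enabled(cap: Cap, mu: Hashable, a: str) -> bool:
    """a is admissible in every state weakly reachable from mu."""
    return all(odot(cap, nu, a) is not None for nu in weak_closure(cap, mu))


def enabled_seq(cap: Cap, mu: Hashable, actions: Sequence[str]) -> bool:
    """Clauses (i) and (ii): each action is enabled where the previous one led."""
    for a in actions:
        if not enabled(cap, mu, a):
            return False
        mu = odot(cap, mu, a)
    return True


# Hypothetical cap: in state 0 a concurrent environment step (tau) is still
# predicted; state 1 is reached once it has happened.
CAP: Cap = {
    0: [("m", 0), (TAU, 1)],
    1: [("m", 1), ("n", 1), (SIGMA, 0)],
}
print(enabled(CAP, 0, "m"), enabled(CAP, 0, "n"), enabled(CAP, 0, SIGMA))
# prints: True False False
```

In state 0, the clock is not enabled even though it becomes admissible after the silent step. This mirrors the Maximal Progress requirement that a tick must wait for predicted concurrent activity.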

Example 1

Consider the policy \(\Vdash _{\texttt {s}} \) in Fig. 1 of an Esterel pure signal \({\texttt {s}} \). An edge labelled a : L from state \(\mu _1\) to \(\mu _2\) corresponds to a transition \(\mu _1 \xrightarrow {a : L} \mu _2\) in \(\Vdash _{\texttt {s}} \). The start state is \(\varepsilon = 0\) and the methods \(\textsf {M}_{\texttt {s}} = \{ \mathop {\mathtt {pres}}, \mathop {\mathtt {emit}} \}\) are always admissible, i.e., \(\mu \odot m\) is defined in each state \(\mu \) for all methods m. The presence test does not change the state and any emission sets it to 1, i.e., \(\mu \odot \mathop {\mathtt {pres}} = \mu \) and \(\mu \odot \mathop {\mathtt {emit}} = 1\) for all \(\mu \in \mathbb {P} _{\texttt {s}} \). Each signal status is reset to 0 with the clock tick, i.e., \(\mu \odot \sigma = 0\). Clearly, \(\Vdash _{\texttt {s}} \) satisfies Determinacy. A presence test on a signal that has not yet been emitted has to wait for all pending concurrent emissions, that is, \(\mathop {\mathtt {emit}}\) blocks \(\mathop {\mathtt {pres}}\) in state 0, i.e., \(0 \xrightarrow {\mathop {\mathtt {pres}} : \{ \mathop {\mathtt {emit}} \}} 0\). Otherwise, no transition is blocked. Also, all competing transitions \(\mu \xrightarrow {m_1 : L_1} \mu _1\) and \(\mu \xrightarrow {m_2 : L_2} \mu _2\) that do not block each other satisfy \(\mu _1 = \mu _2\), from which Confluence follows. Note that since there are no silent transitions, Maximal Progress is always fulfilled too. Moreover, an action sequence is enabled in a state \(\mu \) (Definition 2) iff it corresponds to a path in the automaton starting from \(\mu \). Hence, for \({{\varvec{m}}} \in \textsf {M}_{\texttt {s}} ^*\) we have \(0 \Vdash _{\texttt {s}} \mathop {\downarrow }{{\varvec{m}}}\) iff \({{\varvec{m}}}\) is in the regular language (Footnote 6) \(\mathop {\mathtt {pres}} ^{*} + \mathop {\mathtt {pres}} ^{*} \mathop {\mathtt {emit}} (\mathop {\mathtt {pres}} + \mathop {\mathtt {emit}})^{*}\), and \(1 \Vdash _{\texttt {s}} \mathop {\downarrow }{{\varvec{m}}}\) for all \({{\varvec{m}}} \in \textsf {M}_{\texttt {s}} ^{*}\).

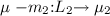

Contrast \(\Vdash _{\texttt {s}} \) with the policy \(\Vdash _{\texttt {c}} \) of a synchronous immutable variable (IVar) \({\texttt {c}}\) shown in Fig. 2 with methods \(\textsf {M}_{\texttt {c}} = \{ {{\texttt {get}}}, {{\texttt {put}}} \}\). During each instant an IVar can be written (put) at most once and cannot be read (get) until it has been written. No value is stored between ticks, which means the memory is only temporary and can be reused, e.g., IVars can be implemented by wires. Formally, \(\mu \Vdash _{\texttt {c}} \mathop {\downarrow }{{\texttt {put}}} \) iff \(\mu = 0\), where 0 is the initial empty state, and \(\mu \Vdash _{\texttt {c}} \mathop {\downarrow }{{\texttt {get}}} \) iff \(\mu = 1\), where 1 is the filled state. The transition \(0 \xrightarrow {{{\texttt {put}}} : \{ {{\texttt {put}}} \}} 1\) switches to the filled state, where get is admissible but put no longer is. The blocking set \(\{ {{\texttt {put}}} \}\) means there cannot be other concurrent threads writing \({\texttt {c}}\) at the same time. \(\square \)

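The two policies of Example 1 are small enough to check mechanically. The sketch below encodes them as transition tables (a hypothetical format; clock transitions are omitted) and tests Confluence for competing transitions that do not block each other, comparing successor states only and ignoring the blocking sets on the closing edges. It records the fact that emit blocks pres in state 0 by listing emit in the blocking set of the pres transition, and it assumes that the get transition of the IVar has an empty blocking set.

```python
from typing import Dict, FrozenSet, List, Optional, Tuple

# Hypothetical table encoding of a policy (no silent steps, clock omitted):
# policy[state][method] = (successor state, blocking set L of that transition).
Policy = Dict[int, Dict[str, Tuple[int, FrozenSet[str]]]]

# Pure signal (Fig. 1): in state 0, pres must wait for emit.
SIGNAL: Policy = {
    0: {"pres": (0, frozenset({"emit"})), "emit": (1, frozenset())},
    1: {"pres": (1, frozenset()), "emit": (1, frozenset())},
}
# IVar (Fig. 2): put once, get only when filled (blocking set of get assumed empty).
IVAR: Policy = {
    0: {"put": (1, frozenset({"put"}))},
    1: {"get": (1, frozenset())},
}
# The same IVar without the blocking set {put}, for comparison.
IVAR_NO_BLOCK: Policy = {
    0: {"put": (1, frozenset())},
    1: {"get": (1, frozenset())},
}


def odot(policy: Policy, mu: int, m: str) -> Optional[int]:
    """mu (.) m, or None if m is not admissible in mu."""
    entry = policy[mu].get(m)
    return None if entry is None else entry[0]


def confluent(policy: Policy) -> bool:
    """Non-blocking competing transitions must close into a common state."""
    for moves in policy.values():
        for m1, (mu1, l1) in moves.items():
            for m2, (mu2, l2) in moves.items():
                if m1 in l2 or m2 in l1:
                    continue  # the two transitions block each other: no requirement
                after1, after2 = odot(policy, mu1, m2), odot(policy, mu2, m1)
                if after1 is None or after1 != after2:
                    return False
    return True


def is_path(policy: Policy, mu: int, seq: List[str]) -> bool:
    """Without silent steps, a sequence is enabled iff it is a path from mu."""
    for m in seq:
        next_mu = odot(policy, mu, m)
        if next_mu is None:
            return False
        mu = next_mu
    return True
```

Dropping the blocking set \(\{ {{\texttt {put}}} \}\) breaks Confluence in this encoding: two concurrent puts in state 0 would both lead to state 1, where no further put is admissible.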
2.4 Enabling and Policy Conformance

A policy describes what a single thread can do to a csm variable \({\texttt {c}}\) when it operates alone. In a cap, all potential activities of the environment are added as \(\tau \)-transitions that may block the tuc's accesses. To implement this \(\tau \)-locking, we define an operation that generates a cap with states \([\mu , \gamma ]\) out of a policy. In this construction, \(\mu \in \mathbb {P} _{\texttt {c}} \) is a policy state recording the history of methods that have been performed on \({\texttt {c}}\) so far (\( must \) information). The second component \(\gamma \subseteq \textsf {M}_{\texttt {c}} ^*\) is a prediction for the sequences of methods that can still potentially be executed by the concurrent environment (\( can \) information).

Definition 3

Let \((\mathbb {P} _{\texttt {c}}, \varepsilon , \rightarrow )\) be a policy. We define a cap \(\Vdash _{\texttt {c}} \) where states are pairs \([\mu , \gamma ]\) such that \(\mu \in \mathbb {P} _{\texttt {c}} \) is a policy state and \(\gamma \subseteq \textsf {M}_{\texttt {c}} ^*\) is a prediction. The initial state is \([\varepsilon , \textsf {M}_{\texttt {c}} ^*]\) and the transitions are as follows:

1. The observable transitions \([\mu _1, \gamma _1] \xrightarrow {a : L} [\mu _2, \gamma _2]\) are such that \(\gamma _1 = \gamma _2\) and \(\mu _1 \xrightarrow {a : L} \mu _2\), provided that for all sequences \(n\,{{\varvec{n}}} \in \gamma _1\) with  we have \(n \not \in L\).

2. The silent transitions are \([\mu _1, \gamma _1] \xrightarrow {\tau : L} [\mu _2, \gamma _2]\) such that \(\emptyset \ne m\, \gamma _2 \subseteq \gamma _1\) and \(\mu _1 \xrightarrow {m : L} \mu _2\).

3. The clock transitions are \([\mu _1, \gamma _1] \xrightarrow {\sigma : L} [\mu _2, \gamma _2]\) such that \(\gamma _1 = \emptyset \) and \(\mu _1 \xrightarrow {\sigma : L} \mu _2\). \(\square \)

Silent steps arise from the concurrent environment: a step \([\mu _1, \gamma _1] \xrightarrow {\tau : L} [\mu _2, \gamma _2]\) removes some prefix method m from the environment prediction \(\gamma _1\), which contracts to an updated suffix prediction \(\gamma _2\) with \(m\, \gamma _2 \subseteq \gamma _1\). This method m is executed on the csm variable, changing the policy state to \(\mu _2 = \mu _1 \odot m\). A method m is enabled, \([\mu , \gamma ] \Vdash _{\texttt {c}} \mathop {\downarrow }m\), if for all \([\mu _1, \gamma _1]\) which are \(\tau \)-reachable from \([\mu , \gamma ]\), method m is admissible, i.e., \([\mu _1, \gamma _1] \xrightarrow {m : L} [\mu _2, \gamma _1]\) for some \(\mu _2\) and L.

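The cap construction of Definition 3 and the enabling test just described can be sketched for finite predictions, using a hypothetical table encoding of policies. Two simplifications are made and should be read as assumptions: the side condition of Definition 3(1) is approximated by checking every predicted head method, and silent steps follow only the largest residual prediction, since smaller residuals can only relax that check. The function names are illustrative.

```python
from typing import Dict, FrozenSet, Iterator, Tuple

# Hypothetical encodings: policy[state][method] = (successor, blocking set L);
# a prediction gamma is a finite set of method sequences (tuples).
Policy = Dict[int, Dict[str, Tuple[int, FrozenSet[str]]]]
Prediction = FrozenSet[Tuple[str, ...]]

IVAR: Policy = {0: {"put": (1, frozenset({"put"}))}, 1: {"get": (1, frozenset())}}
SIGNAL: Policy = {
    0: {"pres": (0, frozenset({"emit"})), "emit": (1, frozenset())},
    1: {"pres": (1, frozenset()), "emit": (1, frozenset())},
}


def observable_ok(policy: Policy, mu: int, gamma: Prediction, m: str) -> bool:
    """Does the cap transition [mu, gamma] --m:L--> [mu (.) m, gamma] exist?"""
    entry = policy[mu].get(m)
    if entry is None:
        return False
    _succ, blocking = entry
    # Simplifying assumption: every predicted head method n must satisfy n not in L.
    return all(seq[0] not in blocking for seq in gamma if seq)


def silent_steps(policy: Policy, mu: int, gamma: Prediction) -> Iterator[Tuple[int, Prediction]]:
    """Silent steps: the environment performs an admissible predicted head m."""
    for m in {seq[0] for seq in gamma if seq}:
        if m in policy[mu]:
            # Largest residual gamma2 with m gamma2 contained in gamma (never empty).
            rest = frozenset(seq[1:] for seq in gamma if seq and seq[0] == m)
            yield policy[mu][m][0], rest


def enabled(policy: Policy, mu: int, gamma: Prediction, m: str) -> bool:
    """[mu, gamma] ||- down m: m admissible in every tau-reachable cap state."""
    seen = {(mu, gamma)}
    todo = [(mu, gamma)]
    while todo:
        cur_mu, cur_gamma = todo.pop()
        if not observable_ok(policy, cur_mu, cur_gamma, m):
            return False
        for state in silent_steps(policy, cur_mu, cur_gamma):
            if state not in seen:
                seen.add(state)
                todo.append(state)
    return True
```

For instance, `enabled(IVAR, 0, frozenset({("put",)}), "get")` is false, matching the blocked `zs.get` of \(P_1\) in Example 2, while a pending `("pres", "emit")` in the environment blocks a pres in state 0 only after looking ahead through the silent pres step.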
Example 2

Consider the concurrent threads \(P_1\) and \(P_2\) running in parallel, where \(P_2 = {\texttt {zs}}.{{{\texttt {put}}} (5)} \mathop {{\texttt {;}}} u {{\texttt {\,=\,}}} {\texttt {ys}}.{{{\texttt {get}}}}()\) and \(P_1 = v {{\texttt {\,=\,}}} {\texttt {zs}}.{{{\texttt {get}}}}() \mathop {{\texttt {;}}} {\texttt {ys}}.{{\texttt {put}}} (v+1)\) with IVars \({\texttt {zs}} \), \({\texttt {ys}} \) according to Example 1. Under the IVar policy the execution is deterministic: first \(P_2\) writes to \({\texttt {zs}}\), then \(P_1\) reads from \({\texttt {zs}}\) and writes to \({\texttt {ys}}\), whereupon finally \(P_2\) reads \({\texttt {ys}}\). Suppose the variables have reached policy states \(\mu _{\texttt {zs}} \) and \(\mu _{\texttt {ys}} \) and the threads are ready to execute the residual programs \(P_i'\), each waiting at some method call \({\texttt {c}} _i.m_i(v_i)\). Since thread \(P_i'\) is concurrent with the other thread \(P_{3-i}'\), it can only proceed if \(m_i\) is not blocked by \(P_{3-i}'\), i.e., if \([\mu _{{\texttt {c}} _i}, can _{{\texttt {c}} _i}(P_{3-i}') ] \Vdash _{{\texttt {c}} _i} \mathop {\downarrow }m_i\), where \( can _{{\texttt {c}}}(P) \subseteq \textsf {M}_{\texttt {c}} ^*\) is the set of method sequences predicted for P on \({\texttt {c}} \).

Initially we have \(\mu _{\texttt {zs}} = 0 = \mu _{\texttt {ys}} \). Since method \({{\texttt {get}}} \) is not admissible in state 0, we get \([0, can _{{\texttt {zs}}}(P_{2}) ] \nVdash _{{\texttt {zs}}} \mathop {\downarrow }{{\texttt {get}}} \) by Definitions 3 and 2. So, \(P_1\) is blocked. The \({\texttt {zs}}.{{\texttt {put}}} \) of \(P_2\), however, can proceed. First, since no method sequence in the prediction \( can _{{\texttt {zs}}}(P_{1}) = \{ {{\texttt {get}}} \}\) of \(P_1\) starts with \({{\texttt {put}}} \), the transition \(0 \xrightarrow {{{\texttt {put}}} : \{ {{\texttt {put}}} \}} 1\) implies that \([0, can _{{\texttt {zs}}}(P_{1}) ] \xrightarrow {{{\texttt {put}}} : \{ {{\texttt {put}}} \}} [1, can _{{\texttt {zs}}}(P_{1}) ]\) by Definition 3(1). Moreover, since get of \(P_1\) is not admissible in 0, there are no silent transitions out of \([0, can _{{\texttt {zs}}}(P_{1}) ]\) according to Definition 3(2). Thus, \([0, can _{{\texttt {zs}}}(P_{1}) ] \Vdash _{\texttt {zs}} \mathop {\downarrow }{{\texttt {put}}} \), as claimed.

When the \({\texttt {zs}}.{{\texttt {put}}} \) is executed by \(P_2\), it turns into \(P_2' = u {{\texttt {\,=\,}}} {\texttt {ys}}.{{\texttt {get}}} ()\) and the policy state for \({\texttt {zs}} \) advances to \(\mu _{\texttt {zs}} ' = 1\), while \({\texttt {ys}} \) is still at \(\mu _{\texttt {ys}} = 0\). Now \({\texttt {ys}}.{{\texttt {get}}} \) of \(P_2'\) blocks for the same reason as \({\texttt {zs}}.{{\texttt {get}}} \) of \(P_1\) was blocked before. But since \(P_2\) has advanced, its prediction on \({\texttt {zs}} \) reduces to \( can _{{\texttt {zs}}}(P_2') = \emptyset \). Therefore, the transition \(1 \xrightarrow {{{\texttt {get}}} : L} 1\) of the IVar policy, whatever its blocking set L, implies \([1, can _{{\texttt {zs}}}(P_2') ] \xrightarrow {{{\texttt {get}}} : L} [1, can _{{\texttt {zs}}}(P_2') ]\) by Definition 3(1), whose condition on predicted sequences holds vacuously. Also, there are no silent transitions out of \([1, can _{{\texttt {zs}}}(P_2') ]\) by Definition 3(2) and so \([\mu _{\texttt {zs}} ', can _{{\texttt {zs}}}(P_2') ] \Vdash _{\texttt {zs}} \mathop {\downarrow }{{\texttt {get}}} \) by Definition 2. This permits \(P_1\) to execute \({\texttt {zs}}.{{\texttt {get}}} ()\) and proceed to \(P_1' = {\texttt {ys}}.{{\texttt {put}}} (5+1)\). The policy state of \({\texttt {zs}} \) is not changed by this, and neither is the state of \({\texttt {ys}}\), whence \(P_2'\) is still blocked. Yet, we have \([\mu _{\texttt {ys}}, can _{{\texttt {ys}}}(P_2') ] \Vdash _{\texttt {ys}} \mathop {\downarrow }{{\texttt {put}}} \), which lets \(P_1'\) complete \({\texttt {ys}}.{{\texttt {put}}} \). It reaches \(P_1''\) with \( can _{{\texttt {ys}}}(P_1'') = \emptyset \) and changes the policy state of \({\texttt {ys}}\) to \(\mu _{\texttt {ys}} ' = 1\). At this point, \([\mu _{\texttt {ys}} ', can _{{\texttt {ys}}}(P_1'') ] \Vdash _{\texttt {ys}} \mathop {\downarrow }{{\texttt {get}}} \), which means \(P_2'\) unblocks to execute \({\texttt {ys}}.{{\texttt {get}}} \). \(\square \)

Definition 4

Let \(\Vdash _{\texttt {c}} \) be a policy for \({\texttt {c}}\). A method sequence \({{\varvec{m}}}_{1}\) blocks another \({{\varvec{m}}}_{2}\) in state \(\mu \), written \(\mu \Vdash _{\texttt {c}} {{\varvec{m}}}_{1} \rightarrow {{\varvec{m}}}_{2}\), if \(\mu \Vdash _{\texttt {c}} \mathop {\downarrow }{{\varvec{m}}}_{2}\) but \([\mu , \{ {{\varvec{m}}}_{1} \}] \nVdash _{\texttt {c}} \mathop {\downarrow }{{\varvec{m}}}_{2}\). Two method sequences \({{\varvec{m}}}_{1}\) and \({{\varvec{m}}}_{2}\) are concurrently enabled, denoted \(\mu \Vdash _{\texttt {c}} {{\varvec{m}}}_{1} \mathrel {\diamond _{}} {{\varvec{m}}}_{2}\) if \(\mu \Vdash _{\texttt {c}} \mathop {\downarrow }{{\varvec{m}}}_{1}\), \(\mu \Vdash _{\texttt {c}} \mathop {\downarrow }{{\varvec{m}}}_{2}\) and both \(\mu \nVdash _{\texttt {c}} {{\varvec{m}}}_{1} \rightarrow {{\varvec{m}}}_{2}\) and \(\mu \nVdash _{\texttt {c}} {{\varvec{m}}}_{2} \rightarrow {{\varvec{m}}}_{1}\). \(\square \)

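For single methods, Definition 4 can be evaluated directly: in the cap \([\mu , \{ m_1 \}]\) the only \(\tau \)-reachable states are the initial one and, if \(m_1\) is admissible in \(\mu \), the state \([\mu \odot m_1, \{ \varepsilon \}]\). The sketch below uses this observation together with a simplified reading of Definition 3(1) (the pending method \(m_1\) must not lie in the blocking set of \(m_2\)) as an assumption, and a hypothetical table encoding of the pure-signal and IVar policies.

```python
from typing import Dict, FrozenSet, Tuple

# Hypothetical encoding: policy[state][method] = (successor, blocking set L).
Policy = Dict[int, Dict[str, Tuple[int, FrozenSet[str]]]]

SIGNAL: Policy = {
    0: {"pres": (0, frozenset({"emit"})), "emit": (1, frozenset())},
    1: {"pres": (1, frozenset()), "emit": (1, frozenset())},
}
IVAR: Policy = {0: {"put": (1, frozenset({"put"}))}, 1: {"get": (1, frozenset())}}


def enabled_against(policy: Policy, mu: int, m1: str, m2: str) -> bool:
    """[mu, {m1}] ||- down m2 for single methods m1, m2."""
    if m2 not in policy[mu] or m1 in policy[mu][m2][1]:
        return False  # m2 not admissible now, or it must wait for the pending m1
    if m1 in policy[mu]:
        # The environment may perform m1 silently first; m2 must stay admissible.
        after = policy[mu][m1][0]
        return m2 in policy[after]
    return True


def blocks(policy: Policy, mu: int, m1: str, m2: str) -> bool:
    """mu ||- m1 -> m2: m2 is enabled alone but not against a pending m1."""
    return m2 in policy[mu] and not enabled_against(policy, mu, m1, m2)


def concurrent(policy: Policy, mu: int, m1: str, m2: str) -> bool:
    """mu ||- m1 <> m2: both enabled and neither blocks the other."""
    return (m1 in policy[mu] and m2 in policy[mu]
            and not blocks(policy, mu, m1, m2)
            and not blocks(policy, mu, m2, m1))


# All concurrently enabled method pairs of the pure signal, per policy state.
pairs = {(mu, a, b) for mu in (0, 1) for a in ("pres", "emit") for b in ("pres", "emit")
         if concurrent(SIGNAL, mu, a, b)}
```

Under these assumptions the set `pairs` coincides with the concurrent enablings listed for the pure signal in Example 3: identical methods in either state, and emit together with pres only in state 1.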
Our operational semantics will only let a tuc execute a sequence \({{\varvec{m}}}\) provided \([\mu , \gamma ] \Vdash _{\texttt {c}} \mathop {\downarrow }{{\varvec{m}}}\), where \(\mu \) is the current policy state of \({\texttt {c}} \) and \(\gamma \) the predicted activity in the tuc's concurrent environment. Symmetrically, the environment will execute any \({{\varvec{n}}} \in \gamma \) only if it is enabled with respect to \({{\varvec{m}}}\), i.e., if \([\mu , \{{{\varvec{m}}}\}] \Vdash _{\texttt {c}} \mathop {\downarrow }{{\varvec{n}}}\). Together, this means \(\mu \Vdash _{\texttt {c}} {{\varvec{m}}} \mathrel {\diamond _{}} {{\varvec{n}}}\). Policy coherence (Definition 5 below) then implies that every interleaving of the sequences \({{\varvec{m}}}\) and any \({{\varvec{n}}} \in \gamma \) leads to the same return values and final variable state (Proposition 1).

2.5 Coherence and Determinacy

A method call m(v) combines a method \(m \in \textsf {M}_{\texttt {c}} \) with a method parameter (Footnote 7) \(v \in \mathbb {D} \), where \(\mathbb {D} \) is a universal domain for method arguments and return values, including the special don't-care value \( {\_} \in \mathbb {D} \). We denote by \(\textsf {A}_{\texttt {c}} = \{ m(v) \mid m \in \textsf {M}_{\texttt {c}}, v \in \mathbb {D} \}\) the set of all method calls on object \({\texttt {c}} \). Sequences of method calls \(\alpha \in \textsf {A}_{\texttt {c}} ^*\) can be abstracted back into sequences of methods \(\alpha ^\# \in \textsf {M}_{\texttt {c}} ^*\) by dropping the method parameters: \(\varepsilon ^\# = \varepsilon \) and \((m(v)\, \alpha )^\# = m\, \alpha ^\#\).

Coherence concerns the semantics of method calls as state transformations. Let \(\mathbb {S} _{\texttt {c}} \) be the domain of memory states of the object \({\texttt {c}} \) with initial state \( init _{\texttt {c}} \in \mathbb {S} _{\texttt {c}} \). Each method call \(m(v) \in \textsf {A}_{\texttt {c}} \) corresponds to a semantic action \([\![m(v)]\!]_{\texttt {c}} \in \mathbb {S} _{\texttt {c}} \rightarrow (\mathbb {D} \times \mathbb {S} _{\texttt {c}})\). If \(s \in \mathbb {S} _{\texttt {c}} \) is the current state of the object, then executing a call m(v) on \({\texttt {c}} \) returns a pair \((u, s') = [\![m(v)]\!]_{\texttt {c}} (s)\), where the first projection \(u \in \mathbb {D} \) is the return value of the call and the second projection \(s' \in \mathbb {S} _{\texttt {c}} \) is the updated state of the variable. For convenience, we will denote \(u = \pi _1 [\![m(v)]\!]_{\texttt {c}} (s)\) by \(u = s.m(v)\) and \(s' = \pi _2[\![m(v)]\!]_{\texttt {c}} (s)\) by \(s' = s \odot m(v)\). The action notation is extended to sequences of calls \(\alpha \in \textsf {A}_{\texttt {c}} ^*\) in the natural way: \(s \odot \varepsilon = s\) and \(s \odot (m(v)\,\alpha ) = (s \odot m(v)) \odot \alpha \).

For policy-based scheduling we assume an abstraction function mapping a memory state \(s \in \mathbb {S} _{\texttt {c}} \) into a policy state \(s^\# \in \mathbb {P} _{\texttt {c}} \). In particular, \( init _{\texttt {c}} ^\# = \varepsilon \). Further, we assume the abstraction commutes with method execution: executing a sequence of calls and then abstracting the final state yields the same policy state as running the policy automaton on the abstracted method sequence from the abstracted initial state. Formally, \((s \odot \alpha )^\# = s^\# \odot \alpha ^\#\) for all \(s \in \mathbb {S} _{\texttt {c}} \) and \(\alpha \in \textsf {A}_{\texttt {c}} ^*\).

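As an illustration of semantic actions and of the abstraction \(s \mapsto s^\#\), here is a hypothetical IVar memory model: the memory is either empty (`None`) or holds the written value, and the abstraction maps it to policy state 0 or 1. The code checks the commutation \((s \odot \alpha )^\# = s^\# \odot \alpha ^\#\) on one admissible sequence only, so it is a sanity check under these assumptions, not a proof.

```python
from typing import Any, List, Optional, Tuple

DONT_CARE = "_"   # stands in for the don't-care value in D
EMPTY = None      # hypothetical memory state of an IVar not yet written this tick

# Policy part of the IVar: successor policy state for each admissible method.
IVAR_POLICY = {0: {"put": 1}, 1: {"get": 1}}


def call(s: Optional[Any], m: str, v: Any) -> Tuple[Any, Optional[Any]]:
    """[[m(v)]](s) = (return value s.m(v), new state s (.) m(v))."""
    if m == "put" and s is EMPTY:
        return DONT_CARE, v
    if m == "get" and s is not EMPTY:
        return s, s
    raise ValueError(f"{m} is not admissible in memory state {s!r}")


def act(s: Optional[Any], calls: List[Tuple[str, Any]]) -> Optional[Any]:
    """s (.) alpha, applying the calls from left to right."""
    for m, v in calls:
        s = call(s, m, v)[1]
    return s


def abstract(s: Optional[Any]) -> int:
    """s#: the empty memory maps to policy state 0, a filled one to 1."""
    return 0 if s is EMPTY else 1


def policy_act(mu: int, methods: List[str]) -> int:
    """mu (.) alpha# in the policy automaton."""
    for m in methods:
        mu = IVAR_POLICY[mu][m]
    return mu


alpha = [("put", 5), ("get", DONT_CARE), ("get", DONT_CARE)]
# Commutation (s (.) alpha)# = s# (.) alpha#, checked for s = EMPTY.
assert abstract(act(EMPTY, alpha)) == policy_act(abstract(EMPTY), [m for m, _ in alpha])
```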
Definition 5

(Coherence). A csm variable \({\texttt {c}} \) is policy-coherent if for all method calls \(a, b \in \textsf {A}_{\texttt {c}} \) whenever \(s^\# \Vdash _{\texttt {c}} a^\# \mathrel {\diamond _{}} b^\#\) for a state \(s \in \mathbb {S} _{\texttt {c}} \), then a and b are confluent in the sense that \(s.a = (s \odot b).a\), \(s.b = (s \odot a).b\) and \(s \odot a \odot b = s \odot b \odot a\). \(\square \)

Example 3

Esterel pure signals do not carry any data value, so their memory state coincides with the policy state, \(\mathbb {S} _{\texttt {s}} = \mathbb {P} _{\texttt {s}} = \{ 0, 1 \}\) and \(s^\# = s\). An emission \(\mathop {\mathtt {emit}} \) does not return any value but sets the state of \({\texttt {s}} \) to 1, i.e., \(s.\mathop {\mathtt {emit}} ( {\_} ) = {\_} \in \mathbb {D} \) and \({s}{\odot }{\mathop {\mathtt {emit}} ( {\_} ) = 1 \in \mathbb {S} _{\texttt {s}}}\). A presence test returns the state, \(s.\mathop {\mathtt {pres}} ( {\_} ) = s\), but does not modify it, \({s}{\odot }{\mathop {\mathtt {pres}} ( {\_} ) = s}\). From the policy in Fig. 1 we find that the concurrent enablings \(s^\# \Vdash _{\texttt {s}} a^\# \mathrel {\diamond _{}} b^\#\) according to Definition 4 are (i) \(a = b \in \{ \mathop {\mathtt {pres}} ( {\_} ), \mathop {\mathtt {emit}} ( {\_} ) \}\) for arbitrary s, or (ii) \(s = 1\), \(a = \mathop {\mathtt {emit}} ( {\_} )\) and \(b = \mathop {\mathtt {pres}} ( {\_} )\), or vice versa. In each of these cases we verify \(s.a = (s \odot b).a\), \(s.b = (s \odot a).b\) and \(s \odot a \odot b = s \odot b \odot a\) without difficulty. Note that \(1 \Vdash _{\texttt {s}} \mathop {\mathtt {emit}} \mathrel {\diamond _{}} \mathop {\mathtt {pres}} \) since the order of execution is irrelevant if \(s = 1\). On the other hand, \(0 \nVdash _{\texttt {s}} \mathop {\mathtt {emit}} \mathrel {\diamond _{}} \mathop {\mathtt {pres}} \) because in state 0 the two methods are not confluent. Specifically, \(0.\mathop {\mathtt {pres}} ( {\_} ) = 0 \ne 1 = (0 \odot \mathop {\mathtt {emit}} ( {\_} )).\mathop {\mathtt {pres}} ( {\_} )\). \(\square \)

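The case analysis of Example 3 can be replayed in a few lines. The sketch below (helper names are hypothetical) implements the return values and state updates of pure-signal calls, checks the three equations of Definition 5 for the concurrent enablings listed in the example, and confirms that emit and pres fail them in state 0.

```python
from itertools import product

DONT_CARE = "_"  # the don't-care value returned by emit


def ret(s: int, m: str):
    """s.m(_): emit returns don't-care, pres returns the signal status."""
    return DONT_CARE if m == "emit" else s


def upd(s: int, m: str) -> int:
    """s (.) m(_): emit sets the status to 1, pres leaves it unchanged."""
    return 1 if m == "emit" else s


def confluent_calls(s: int, a: str, b: str) -> bool:
    """The three equations of Definition 5 for calls a and b in state s."""
    return (ret(s, a) == ret(upd(s, b), a)
            and ret(s, b) == ret(upd(s, a), b)
            and upd(upd(s, a), b) == upd(upd(s, b), a))


# Concurrent enablings from Example 3: (i) a = b in any state, (ii) emit/pres in state 1.
CONCURRENT = [(s, m, m) for s, m in product((0, 1), ("pres", "emit"))]
CONCURRENT += [(1, "emit", "pres"), (1, "pres", "emit")]
```

All six listed pairs satisfy the equations, whereas `confluent_calls(0, "emit", "pres")` is false because the presence test returns 0 before the emission and 1 after it.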
A special case is that of linear precedence policies, where \(\mu \Vdash _{\texttt {c}} \mathop {\downarrow }m\) for all \(m \in \textsf {M}_{\texttt {c}} \) and \(\mu \Vdash _{\texttt {c}} m \rightarrow n\) is a linear ordering on \(\textsf {M}_{\texttt {c}} \), for all policy states \(\mu \). Then there is no state in which \(\mu \Vdash _{\texttt {c}} m_{1} \mathrel {\diamond _{}} m_2\), so there is no concurrency and thus no confluence requirement to satisfy at all. Coherence of \({\texttt {c}} \) is trivially satisfied, whatever the semantics of method calls. For any two admissible methods one takes precedence over the other, and thus the enabling relation becomes deterministic. There is, however, a risk of deadlock, which can be excluded if we assume that threads always call methods in order of decreasing precedence.

The other extreme case is where the policy makes all methods concurrently enabled, i.e., \(\mu \Vdash _{\texttt {c}} m_1 \mathrel {\diamond _{}} m_2\) for all policy states \(\mu \) and methods \(m_1\), \(m_2\). This avoids deadlock completely and gives maximal concurrency but imposes the strongest confluence condition, viz. independently of the scheduling order of any two methods, the resulting variable state must be the same. This requires complete isolation of the effects of any two methods. Such an extreme is used, e.g., in the CR library [19]. The typical csm variable, however, will strike a trade-off between these two extremes. It will impose a sensible set of precedences that are strong enough to ensure coherent implementations, and thus determinacy for policy-conformant scheduling, while being sufficiently relaxed to permit concurrent implementations and to avoid unnecessary deadlocks that would cause programs to be rejected by the compiler as unschedulable.

Whatever the policies, if the variables are coherent, then all policy-conformant interleavings are indistinguishable for each csm variable. To state schedule invariance in its general form, we lift method actions and independence to multi-variable sequences over the set of method calls \(\textsf {A} = \{ {\texttt {c}}.m(v) \mid {\texttt {c}} \in \textsf {O}, m(v) \in \textsf {A}_{\texttt {c}} \}\). For a given sequence \(\alpha \in \textsf {A}^*\) let \(\pi _{\texttt {c}} (\alpha ) \in \textsf {A}_{\texttt {c}} ^*\) be the projection of \(\alpha \) on \({\texttt {c}} \), formally \(\pi _{\texttt {c}} (\varepsilon ) = \varepsilon \), \(\pi _{\texttt {c}} ({\texttt {c}}.m(v)\, \alpha ) = m(v)\, \pi _{\texttt {c}} (\alpha )\) and \(\pi _{\texttt {c}} ({\texttt {c}} '.m(v)\, \alpha ) = \pi _{\texttt {c}} (\alpha )\) for \({\texttt {c}} ' \ne {\texttt {c}} \). A global memory \({\varSigma } \in \mathbb {S} = \prod _{{\texttt {c}} \in \textsf {O}} \mathbb {S} _{\texttt {c}} \) assigns a local memory \({\varSigma }.{\texttt {c}} \in \mathbb {S} _{\texttt {c}} \) to each variable \({\texttt {c}} \). We write \( init \) for the initial memory that has \( init .{\texttt {c}} = init _{\texttt {c}} \) and \(( init .{\texttt {c}})^\# = \varepsilon \in \mathbb {P} _{\texttt {c}} \).

Given a global memory \({\varSigma } \in \mathbb {S} \) and sequences \(\alpha , \beta \in \textsf {A}^*\) of method calls, we extend the independence relation of Definition 4 variable-wise, defining \({\varSigma } \Vdash \alpha \mathrel {\diamond _{}} \beta \) iff \(({\varSigma }.{\texttt {c}})^\# \Vdash _{\texttt {c}} (\pi _{\texttt {c}} (\alpha ))^\# \mathrel {\diamond _{}} (\pi _{\texttt {c}} (\beta ))^\#\) for all \({\texttt {c}} \in \textsf {O}\). The return value of a method call \(a \in \textsf {A}\) in a memory \({\varSigma } \in \mathbb {S} \) is written \({\varSigma }.a \in \mathbb {D} \) and defined by \({\varSigma }.({\texttt {c}}.m(v)) = ({\varSigma }.{\texttt {c}}).m(v)\). Analogously, method actions are lifted to global memories, i.e., \(({\varSigma } \odot {\texttt {c}}.m(v)).{\texttt {c}} ' = {\varSigma }.{\texttt {c}} '\) if \({\texttt {c}} ' \ne {\texttt {c}} \) and \(({\varSigma } \odot {\texttt {c}}.m(v)).{\texttt {c}} = {\varSigma }.{\texttt {c}} \odot m(v)\), and extended to sequences \(\alpha \in \textsf {A}^*\) as before.

Proposition 1

(Commutation). Let all csm variables be policy-coherent and \({\varSigma } \Vdash a\mathrel {\diamond _{}} \alpha \) for a memory \({\varSigma } \in \mathbb {S} \), a method call \(a \in \textsf {A}\) and a sequence of method calls \(\alpha \in \textsf {A}^*\). Then, \({\varSigma } \odot a \odot \alpha = {\varSigma } \odot \alpha \odot a\) and \({\varSigma }.a = ({\varSigma } \odot \alpha ).a\).

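Proposition 1 can be illustrated (not proved) on two pure signals `s` and `t`, assuming that a global memory is a dictionary from variable names to signal states. In `sigma`, the projections on `s` are pres against emit in state 1, which are concurrently enabled according to Example 3, while on `t` only \(\alpha \) performs a method; the proposition therefore predicts that the relative order of `a` and `alpha` does not matter. All names are illustrative.

```python
from typing import Dict, List, Tuple

Call = Tuple[str, str]   # (variable c, method m); arguments are don't-care here
DONT_CARE = "_"


def ret(s: int, m: str):
    """Pure-signal return value s.m(_)."""
    return DONT_CARE if m == "emit" else s


def upd(s: int, m: str) -> int:
    """Pure-signal action s (.) m(_)."""
    return 1 if m == "emit" else s


def g_ret(sigma: Dict[str, int], call: Call):
    """Sigma.(c.m) = (Sigma.c).m: only the addressed variable is consulted."""
    c, m = call
    return ret(sigma[c], m)


def g_act(sigma: Dict[str, int], calls: List[Call]) -> Dict[str, int]:
    """Sigma (.) alpha: each call updates only its own variable."""
    result = dict(sigma)  # copy, so memories behave as values
    for c, m in calls:
        result[c] = upd(result[c], m)
    return result


def project(calls: List[Call], c: str) -> List[str]:
    """pi_c(alpha) with the (don't-care) arguments dropped."""
    return [m for d, m in calls if d == c]


sigma = {"s": 1, "t": 0}
sigma_bad = {"s": 0, "t": 0}  # pres and emit are not concurrently enabled in state 0
a: Call = ("s", "pres")
alpha: List[Call] = [("t", "emit"), ("s", "emit")]
```

In `sigma_bad`, where the premise of the proposition fails, the return value of `a` does depend on whether it runs before or after `alpha`.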
2.6 Policies and Modularity

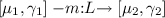

Consider the synchronous data-flow network cnt in Fig. 3b with three process nodes, a multiplexer mux, a register reg and an incrementor inc. Their DCoL code is given in Fig. 3a. The network implements a settable counter, which produces at its output \({\texttt {ys}}\) a stream of consecutive integers, incremented with each clock tick. The wires \({\texttt {ys}}\), \({\texttt {zs}}\) and \({\texttt {ws}}\) are IVars (see Example 2) carrying a single integer value per tick. The input \({\texttt {xs}}\) is a pure Esterel signal (see Example 1). The counter state is stored by reg in a local variable xv with \({{\texttt {read}}} \) and \({{\texttt {write}}} \) methods that can be called by a single thread only. The register is initialised to value 0 and in each subsequent tick the value at \({\texttt {ys}}\) is stored. The inc takes the value at \({\texttt {zs}}\) and increments it. When the signal \({\texttt {xs}}\) is absent, mux passes the incremented value on \({\texttt {ws}}\) to \({\texttt {ys}}\) for the next tick. Otherwise, if \({\texttt {xs}}\) is present then mux resets \({\texttt {ys}}\).

The evaluation order is implemented by the policies of the IVars \({\texttt {ys}}\), \({\texttt {zs}}\) and \({\texttt {ws}}\). In each case the put method takes precedence over get, which ensures that the latter is blocked until the former has been executed. The causality cycle of the feedback loop is broken by the fact that the reg node first sends the current counter value to \({\texttt {zs}}\) before it waits for the new value at \({\texttt {ys}}\). The other nodes, mux and inc, in contrast, first read their inputs and then write to their outputs.

Now suppose, for modularity, the reg node is pre-compiled into a synchronous IO automaton to be used by mux and inc as a black-box component. Then, \({{\texttt {reg}}} \) must be split into three modes [20] \({{\texttt {reg}}}.{{\texttt {init}}} \), \({{\texttt {reg}}}.{{\texttt {get}}} \) and \({{\texttt {reg}}}.{{\texttt {set}}} \) that can be called independently in each instant. The init mode initialises the register memory with 0. The \({{\texttt {get}}} \) mode extracts the buffered value and \({{\texttt {set}}} \) stores a new value into the register. Since there may be data races if get and set are called concurrently on reg, a policy must be imposed. In the scheduling of Fig. 3b, first \({{\texttt {reg}}}.{{\texttt {get}}} \) is executed to place the output on \({\texttt {zs}}\). Then, \({{\texttt {reg}}} \) waits for \({{\texttt {mux}}} \) to produce the next value of \({\texttt {ys}}\) from \({\texttt {xs}}\) or \({\texttt {ws}}\). Finally, \({{\texttt {reg}}}.{{\texttt {set}}} \) is executed to store the current value of \({\texttt {ys}}\) for the next tick. Thus, the natural policy for the register is to require that in each tick set is called by at most one thread and, if it is, no other thread calls get afterwards. In addition, the policy requires init to take place at least once before any set or get. Hence, the policy has two states \(\mathbb {P} _{{\texttt {reg}}} = \{ 0, 1 \}\) with initial \(\varepsilon = 0\) and admissibility such that \(0 \Vdash _{{\texttt {reg}}} \mathop {\downarrow }m\) iff \(m = {{\texttt {init}}} \) and \(1 \Vdash _{{\texttt {reg}}} \mathop {\downarrow }m\) for all m. The transitions are \(0 \odot {{\texttt {init}}} = 1\) and \(1 \odot m = 1\) for all \(m \in \textsf {M}_{{\texttt {reg}}} \). Further, for coherence, in state 1 no set may be concurrent and every get must take place before any concurrent set. This means that we have \(1 \Vdash _{{{\texttt {reg}}}} m \rightarrow {{\texttt {set}}} \) for all \(m \in \{ {{\texttt {get}}}, {{\texttt {set}}} \}\). Figure 3c shows the partially compiled code in which reg is treated as a compiled object. The policy on reg makes sure the accesses by mux and inc are scheduled in the right way (see Example 4). Note that reg is not an IVar because it has memory.

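The register policy described above can be turned into a small conformance checker for the calls observed in one tick. This is a hypothetical sketch, not the compiled code of Fig. 3c: a trace is a list of (thread, mode) pairs, and the checker enforces admissibility (init before anything else in state 0) and the precedences \(1 \Vdash _{{\texttt {reg}}} m \rightarrow {{\texttt {set}}} \) for \(m \in \{ {{\texttt {get}}}, {{\texttt {set}}} \}\), read as: once one thread has called set, no other thread may call get or set in that tick.

```python
from typing import List, Optional, Tuple

# Register policy as described in the text: in state 0 only init is
# admissible; init leads to state 1, where every mode is admissible.
REG_NEXT = {0: {"init": 1}, 1: {"init": 1, "get": 1, "set": 1}}


def conforms(mu: int, trace: List[Tuple[str, str]]) -> bool:
    """Check one tick's interleaving of (thread, mode) calls against the policy."""
    setter: Optional[str] = None  # thread that has called set in this tick, if any
    for thread, mode in trace:
        if mode not in REG_NEXT[mu]:
            return False  # not admissible, e.g. get or set before init
        if mode in ("get", "set") and setter not in (None, thread):
            return False  # another thread's set already happened: m -> set violated
        if mode == "set":
            setter = thread
        mu = REG_NEXT[mu][mode]
    return True
```

For example, `conforms(1, [("A", "set"), ("B", "get")])` is false, since the get of thread B would have to precede the concurrent set of thread A.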
The cnt example exhibits a general pattern found in the modular compilation of SP: modules (here reg) may be exercised several times in a synchronous tick through modes which are executed in a specific prescribed order. Mode calls (here \({{\texttt {reg}}}.{{\texttt {set}}} \), \({{\texttt {reg}}}.{{\texttt {get}}} \)) in the same module are coupled via common shared memory (here the local variable xv), while mode calls in distinct modules are isolated from each other [15, 20].

3 Constructive Semantics of DCoL

To formalise our semantics it is technically expedient to keep track of the completion status of each active thread inside the program expression. This results in a syntax for processes, which are distinguished from programs in that each parallel composition is labelled by completion codes \(k_i \in \{ \bot , 0, 1 \}\) indicating whether thread i is waiting (\(k_i = \bot \)), terminated (\(k_i = 0\)) or pausing (\(k_i = 1\)). Since we remove a thread from the parallel composition as soon as it terminates, the code \(k_i = 0\) cannot occur. An unlabelled parallel composition of programs is considered a special case of a process with \(k_i = \bot \). The formal semantics is given by a reduction relation (2) on processes, specified by the inductive rules in Figs. 4 and 5.

The relation (2) determines an instantaneous sequential reduction step of process P, called an sstep, that follows a sequence of enabled method calls \( {{\varvec{m}}} \in \textsf {M}^*\) in sequential program order in P. This does not include any context switches between concurrent threads inside P. For thread communication, several ssteps must be chained, as described later. The sstep (2) results in an updated memory \({\varSigma } '\) and residual process \(P'\). The subscript \(k'\) is a completion code, described below. The reduction (2) is performed in a context consisting of a global memory \({\varSigma } \in \mathbb {S} \) (\( must \) context) containing the current state of all csm variables and an environment prediction \(\varPi \subseteq \textsf {M}^*\) (\( can \) context). The prediction records all potentially outstanding method sequences from threads running concurrently with P.

We write \(\pi _{\texttt {c}} ({{\varvec{m}}}) \in \textsf {M}_{\texttt {c}} ^*\) for the projection of a method sequence \({{\varvec{m}}} \in \textsf {M}^*\) to variable \({\texttt {c}} \) and write \(\pi _{\texttt {c}} (\varPi )\) for its lifting to sets of sequences. Prefixing is lifted, too, i.e., \({\texttt {c}}.m \odot \varPi = \{ {\texttt {c}}.m\, {{\varvec{m}}} \mid {\varvec{m}} \in \varPi \}\) for any \({\texttt {c}}.m \in \textsf {M}\).

Performing a method call \({\texttt {c}}.m(v)\) in \({\varSigma }; \varPi \) advances the \( must \) context to \({\varSigma } \odot {\texttt {c}}.m(v)\) but leaves \(\varPi \) unchanged. The sequence of methods \({{\varvec{m}}} \in \textsf {M}^*\) in (2) is enabled in \({\varSigma }; \varPi \), written \([{\varSigma }, \varPi ] \Vdash \mathop {\downarrow }{{\varvec{m}}}\), if \([({\varSigma }.{\texttt {c}})^\#, \pi _{\texttt {c}} (\varPi )] \Vdash _{\texttt {c}} \mathop {\downarrow }\pi _{\texttt {c}} ({{\varvec{m}}})\) for all \({\texttt {c}} \in \textsf {O}\). In this way, the context \([{\varSigma }, \varPi ]\) forms a joint policy state for all variables for the tuc P, in the sense of Sect. 2 (Definition 3).
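To make the projection-based definition of enabledness concrete, here is a minimal Python sketch. It is an illustration under simplifying assumptions, not the paper's formalisation: a method call is modelled as a pair (variable, method name), arguments and return values are ignored, and each per-variable relation \(\Vdash _{\texttt {c}}\) is supplied as a hypothetical function of the policy state, the projected prediction and the projected sequence. The `signal_check` function is a deliberately simplified, hypothetical stand-in for a pure-signal policy, modelled on the condition for \(\mathop {\mathtt {pres}}\) used in Example 4.

```python
from typing import Callable, Dict, FrozenSet, Tuple

Method = Tuple[str, str]          # (variable, method name), e.g. ("xs", "pres")
Seq = Tuple[Method, ...]          # a method sequence m in M*
Local = Tuple[str, ...]           # a projected sequence pi_c(m) in M_c*


def project(c: str, ms: Seq) -> Local:
    """pi_c(m): keep the methods of variable c, in program order."""
    return tuple(name for (var, name) in ms if var == c)


def project_set(c: str, pi: FrozenSet[Seq]) -> FrozenSet[Local]:
    """Lifting of pi_c to sets of sequences."""
    return frozenset(project(c, ms) for ms in pi)


def prefix(cm: Method, pi: FrozenSet[Seq]) -> FrozenSet[Seq]:
    """c.m (.) Pi = { c.m n | n in Pi }."""
    return frozenset((cm,) + ms for ms in pi)


# Hypothetical shape of a per-variable check [state, pi_c(Pi)] ||-_c down pi_c(m).
LocalCheck = Callable[[int, FrozenSet[Local], Local], bool]


def enabled(states: Dict[str, int], checks: Dict[str, LocalCheck],
            pi: FrozenSet[Seq], ms: Seq) -> bool:
    """[Sigma, Pi] ||- down m iff every variable c accepts its projections."""
    return all(checks[c](states[c], project_set(c, pi), project(c, ms))
               for c in states)


# Simplified, hypothetical signal policy: pres is enabled if the signal is
# already emitted (state 1) or no emit is predicted by concurrent threads.
def signal_check(state: int, pred: FrozenSet[Local], seq: Local) -> bool:
    if "pres" not in seq:
        return True
    return state == 1 or all("emit" not in p for p in pred)


def always(state: int, pred: FrozenSet[Local], seq: Local) -> bool:
    """A variable that never constrains its methods."""
    return True


pi_I = frozenset({(("reg", "get"), ("ws", "put"))})
print(enabled({"xs": 0, "reg": 1}, {"xs": signal_check, "reg": always},
              pi_I, (("xs", "pres"),)))   # True
```

The design choice mirrors the definition: enabledness of a whole sequence is checked variable by variable on projections, so each csm type only ever sees its own methods.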

Most of the rules in Figs. 4 and 5 should be straightforward for a reader familiar with structural operational semantics. \(\textsf {Seq} _1\) is the case of a sequential composition P; Q where P pauses or waits (\(k' \ne 0\)), and \(\textsf {Seq} _2\) is the case where P terminates and control passes to Q. The statements \(\mathop {\mathtt {skip}} \) and \(\mathop {\mathtt {pause}} \) are handled by rules \(\textsf {Cmp} _1\) and \(\textsf {Cmp} _2\). The rule \(\textsf {Rec} \) explains recursion \(\mathop {\textsf {rec}} p. P\) by syntactic unfolding of the recursion body P. All interaction with the memory takes place in the method calls \(\mathop {{\texttt {let}}} x = {\texttt {c}}.m(e)\; \mathop {{\texttt {in}}} P\). Rule \(\textsf {Let} _1\) is applicable when the method call is enabled, i.e., \([{\varSigma }, \varPi ] \Vdash \mathop {\downarrow }{\texttt {c}}.m\). Since processes are closed, the argument expression e evaluates to a value, \( eval (e) = v\), and we obtain the new memory \({\varSigma } \odot {\texttt {c}}.m(v)\) and return value \({\varSigma }.{\texttt {c}}.m(v)\). The return value is substituted for the local (stack-allocated) identifier x, giving the continuation process \(P\{{\varSigma }.{\texttt {c}}.m(v)/x\}\), which is run in the updated context \({\varSigma } \odot {\texttt {c}}.m(v); \varPi \). The prediction \(\varPi \) remains the same. The second rule, \(\textsf {Let} _2\), is used when the method call is blocked or the thread wants to wait and yield to the scheduler. The rules for conditionals \(\textsf {Cnd} _1\), \(\textsf {Cnd} _2\) are canonical. More interesting are the rules \(\textsf {Par} _1\)–\(\textsf {Par} _4\) for parallel composition, which implement non-deterministic thread switching. It is here that we need to generate predictions and pass them between the threads to exercise policy control.

The key operation is the computation of the \( can \)-prediction of a process P to obtain an over-approximation of the set of possible method sequences potentially executed by P. For compositionality we work with sequences \( can ^s(P) \subseteq \textsf {M}^*\times \{ 0 , 1 \}\) stoppered with a completion code 0 if the sequence ends in termination or 1 if it ends in pausing. The symbols \(\bot _0\), \(\bot _1\) and \(\top \) are the terminated, paused and fully unconstrained \( can \) contexts, respectively, with \(\bot _0 = \{ (\varepsilon , 0) \}\), \(\bot _1 = \{ (\varepsilon , 1) \}\) and \(\top = \textsf {M}^*\times \{ 0 , 1 \}\). The set \( can ^s(P) \), defined in Fig. 6, is extracted from the structure of P using prefixing \({\texttt {c}}.m \odot \varPi '\), choice \(\varPi _1' \oplus \varPi _2' = \varPi _1' \cup \varPi _2'\), parallel \(\varPi _1' \otimes \varPi _2'\) and sequential composition \(\varPi _1' \cdot \varPi _2'\). Sequential composition is obtained pairwise on stoppered sequences such that \(({{\varvec{m}}}, 0) \cdot ({{\varvec{n}}}, c) = ({{\varvec{m}}}\, {\varvec{n}}, c)\) and \(({{\varvec{m}}}, 1) \cdot ({{\varvec{n}}}, c) = ({{\varvec{m}}}, 1)\). As a consequence, \(\bot _0 \cdot \varPi ' = \varPi '\) and \(\bot _1 \cdot \varPi ' = \bot _1\). Parallel composition is pairwise free interleaving with synchronisation on completion codes. Specifically, a product \(({{\varvec{m}}}, c) \otimes ({{\varvec{n}}}, d)\) generates all interleavings of \({{\varvec{m}}}\) and \({{\varvec{n}}}\) with a completion that models a parallel composition that terminates iff both threads terminate and pauses if one pauses. Formally, \(({{\varvec{m}}}, c) \otimes ({{\varvec{n}}}, d) = \{ ({{\varvec{c}}}, \textit{max} (c, d)) \mid {{\varvec{c}}} \in {\varvec{m}} \otimes {{\varvec{n}}} \}\). 
Thus, \(\varPi _P' \otimes \varPi _Q' = \bot _0\) iff \(\varPi _P' = \bot _0 = \varPi _Q'\) and \(\varPi _P' \otimes \varPi _Q' = \bot _1\) if \(\varPi _P' = \bot _1 = \varPi _Q'\), or \(\varPi _P' = \bot _0\) and \(\varPi _Q' = \bot _1\), or \(\varPi _P' = \bot _1\) and \(\varPi _Q' = \bot _0\). From \( can ^s(P) \) we obtain \( can (P) \subseteq \textsf {M}^*\) by dropping all stopper codes, i.e., \( can (P) = \{ {{\varvec{m}}} \mid \exists d.\, ({{\varvec{m}}}, d) \in can ^s(P) \}\).
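The operators on stoppered sequences can be checked mechanically. The following Python sketch is an illustration under simplifying assumptions (methods are plain strings, completion codes are the integers 0 and 1, and prediction sets are finite); the function names are hypothetical. It implements sequential composition, the interleaving product with the \(\textit{max}\) rule on codes, and the dropping of stoppers that yields \( can (P)\) from \( can ^s(P)\).

```python
from itertools import combinations
from typing import FrozenSet, Set, Tuple

Stoppered = Tuple[Tuple[str, ...], int]  # (method sequence, completion code 0 or 1)
Pred = FrozenSet[Stoppered]

BOT0: Pred = frozenset({((), 0)})  # terminated
BOT1: Pred = frozenset({((), 1)})  # paused


def seq_pair(a: Stoppered, b: Stoppered) -> Stoppered:
    """(m,0).(n,d) = (m n, d) and (m,1).(n,d) = (m,1)."""
    (m, c), (n, d) = a, b
    return (m + n, d) if c == 0 else (m, 1)


def seq(p: Pred, q: Pred) -> Pred:
    """Sequential composition, lifted pairwise to sets."""
    return frozenset(seq_pair(a, b) for a in p for b in q)


def interleavings(m: Tuple[str, ...], n: Tuple[str, ...]) -> Set[Tuple[str, ...]]:
    """All free interleavings (shuffles) of m and n."""
    k = len(m) + len(n)
    result = set()
    for positions in combinations(range(k), len(m)):
        chosen = set(positions)          # slots occupied by elements of m
        it_m, it_n = iter(m), iter(n)
        result.add(tuple(next(it_m) if i in chosen else next(it_n)
                         for i in range(k)))
    return result


def par(p: Pred, q: Pred) -> Pred:
    """Interleave pairwise; the completion code is max(c, d)."""
    return frozenset((s, max(c, d)) for (m, c) in p for (n, d) in q
                     for s in interleavings(m, n))


def drop_stoppers(p: Pred) -> FrozenSet[Tuple[str, ...]]:
    """can(P) from can^s(P): forget the completion codes."""
    return frozenset(m for (m, _) in p)


# A thread that calls "a" and terminates, in parallel with one that calls "b" and pauses.
left = seq(frozenset({(("a",), 0)}), BOT0)
right = seq(frozenset({(("b",), 0)}), BOT1)
print(sorted(par(left, right)))  # both interleavings, each stoppered with 1
```

Taking the maximum of the two codes is what makes the parallel terminate only when both threads terminate, and pause as soon as one of them pauses.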

The rule \(\textsf {Par} _1\) exercises a parallel \(P \mathrel {_{k_P \;}\!\!{\mathop {{\texttt {|\!\!|}}}}{\!}_{\;k_Q }} Q\) by performing an sstep in P. This sstep is taken in the extended context \({\varSigma }; \varPi \otimes can (Q)\), in which the prediction of the sibling Q is added to the method prediction \(\varPi \) for the outer environment in which the parent \(P \mathrel {_{k_P \;}\!\!{\mathop {{\texttt {|\!\!|}}}}{\!}_{\;k_Q }} Q\) is running. In this way, Q can block method calls of P. When P finally yields as \(P'\) with a non-terminating completion code, \(0 \ne k' \in \{ \bot , 1 \}\), the parallel completes as \(P' \mathrel {_{k' \;}\!\!{\mathop {{\texttt {|\!\!|}}}}{\!}_{\;k_Q }} Q\) with code \(k' \sqcap k_Q\). This operation is defined by \(k_1 \sqcap k_2 = 1\) if \(k_1 = 1 = k_2\), and \(k_1 \sqcap k_2 = \bot \) otherwise. When P terminates its sstep with code \(k' = 0\), rule \(\textsf {Par} _2\) applies instead and removes the child \(P'\) from the parallel composition. The rules \(\textsf {Par} _3, \textsf {Par} _4\) are symmetrical to \(\textsf {Par} _1, \textsf {Par} _2\): they run the right child Q of a parallel \(P \mathrel {_{k_P \;}\!\!{\mathop {{\texttt {|\!\!|}}}}{\!}_{\;k_Q }} Q\).
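The completion bookkeeping of \(\textsf {Par} _1\) amounts to the meet \(\sqcap \) on completion codes. A minimal Python sketch; encoding \(\bot \) as `None` is a choice of this sketch, and the function names are hypothetical.

```python
from typing import Optional

Code = Optional[int]  # None encodes the waiting code ⊥; 0 = terminated, 1 = pausing


def meet(k1: Code, k2: Code) -> Code:
    """k1 ⊓ k2 = 1 if both children pause, and ⊥ otherwise."""
    return 1 if k1 == 1 and k2 == 1 else None


def par1_completion(k_new: Code, k_q: Code) -> Code:
    """Code of the parallel after the left child yields with k_new (rule Par_1)."""
    if k_new == 0:
        # A terminating left child is handled by Par_2, which removes it.
        raise ValueError("k' = 0 is covered by Par_2, not Par_1")
    return meet(k_new, k_q)


print(par1_completion(1, 1))     # 1: both children pause, so the parallel pauses
print(par1_completion(None, 1))  # None: the left child still waits
```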

Completion and Stability. A process \(P'\) is 0-stable if \(P' = \mathop {\mathtt {skip}} \), and 1-stable if \(P' = \mathop {\mathtt {pause}} \), or \(P' = P_1' \mathop {{\texttt {;}}} P_2'\) and \(P_1'\) is 1-stable, or \(P' = P_1' \mathrel {_{1 \;}\!\!{\mathop {{\texttt {|\!\!|}}}}{\!}_{\;1 }} P_2'\) and both \(P_i'\) are 1-stable. A process is stable if it is 0-stable or 1-stable. A process expression P is well-formed if in each sub-expression \(P_1 \mathrel {_{k_1 \;}\!\!{\mathop {{\texttt {|\!\!|}}}}{\!}_{\;k_2 }} P_2\) of P the completion annotations match the processes, i.e., if \(k_i \ne \bot \) then \(P_i\) is \(k_i\)-stable. Stable processes are well-formed by definition. For stable processes we define a (syntactic) tick function \(\mathop {\sigma }\) which steps a stable process to the next tick. It is defined such that \(\mathop {\sigma }(\mathop {\mathtt {skip}}) = \mathop {\mathtt {skip}} \), \(\mathop {\sigma }(\mathop {\mathtt {pause}}) = \mathop {\mathtt {skip}} \), \(\mathop {\sigma }(P_1' \mathop {{\texttt {;}}} P_2') = \mathop {\sigma }(P_1') \mathop {{\texttt {;}}} P_2'\) and \(\mathop {\sigma }(P_1' \mathrel {_{1 \;}\!\!{\mathop {{\texttt {|\!\!|}}}}{\!}_{\;1 }} P_2') = \mathop {\sigma }(P_1') \mathrel {_{\bot \;}\!\!{\mathop {{\texttt {|\!\!|}}}}{\!}_{\;\bot }} \mathop {\sigma }(P_2')\).
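The stability predicates and the tick function \(\mathop {\sigma }\) are simple structural recursions. The sketch below covers only the four stable forms listed above; the class names are hypothetical, \(\bot \) is encoded as `None`, and resetting both annotations to \(\bot \) in the parallel case is an assumption of this sketch, reflecting that completion codes describe the status of threads within the current instant.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class Skip:
    pass


@dataclass(frozen=True)
class Pause:
    pass


@dataclass(frozen=True)
class Seq:
    first: "Proc"
    second: "Proc"


@dataclass(frozen=True)
class Par:
    left: "Proc"
    k_left: Optional[int]   # completion annotation; None encodes ⊥
    right: "Proc"
    k_right: Optional[int]


Proc = Union[Skip, Pause, Seq, Par]


def stable1(p: Proc) -> bool:
    """1-stable: pause, P1';P2' with P1' 1-stable, or P1' 1||1 P2' with both 1-stable."""
    if isinstance(p, Pause):
        return True
    if isinstance(p, Seq):
        return stable1(p.first)
    if isinstance(p, Par):
        return (p.k_left == 1 and p.k_right == 1
                and stable1(p.left) and stable1(p.right))
    return False


def stable(p: Proc) -> bool:
    """Stable means 0-stable (skip) or 1-stable."""
    return isinstance(p, Skip) or stable1(p)


def tick(p: Proc) -> Proc:
    """sigma: step a stable process to the next tick."""
    if not stable(p):
        raise ValueError("tick is only defined on stable processes")
    if isinstance(p, (Skip, Pause)):
        return Skip()
    if isinstance(p, Seq):
        return Seq(tick(p.first), p.second)
    return Par(tick(p.left), None, tick(p.right), None)  # p is a Par here


q = Par(Pause(), 1, Seq(Pause(), Pause()), 1)
print(stable1(q), tick(q))
```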

Example 4

The data-flow cnt-cmp from Fig. 3c can be represented as a DCoL process in the form  with


Let us evaluate process C from an initialised memory \({\varSigma } _0\) such that \({\varSigma } _0.{\texttt {xs}} = 0 = {\varSigma } _0.{\texttt {ws}} \), and empty environment prediction \(\{\epsilon \}\).

The first sstep is executed from the context \({\varSigma } _0; \{\epsilon \}\) with empty \( can \) prediction. Note that C abbreviates \(\mathop {{\texttt {let}}}\ \_ = {{\texttt {reg}}}.{{\texttt {init}}} (0)\ \mathop {{\texttt {in}}} \) \((M \mathrel {_{\bot \;}\!\!{\mathop {{\texttt {|\!\!|}}}}{\!}_{\;\bot }} I)\). In context \({\varSigma } _0; \{\epsilon \}\) the method call \({{\texttt {reg}}}.{{\texttt {init}}} (0)\) is enabled, i.e., \([{\varSigma } _0, \{\epsilon \}] \Vdash \mathop {\downarrow }{{\texttt {reg}}}.{{\texttt {init}}} \). Since \( eval (0) = 0\), we can execute the first method call of C using rule \(\textsf {Let} _1\). This advances the memory to \({\varSigma } _1 = {\varSigma } _0 \odot {{\texttt {reg}}}.{{\texttt {init}}} (0)\). The continuation process \(M \mathrel {_{\bot \;}\!\!{\mathop {{\texttt {|\!\!|}}}}{\!}_{\;\bot }} I\) is now executed in context \({\varSigma } _1; \{\epsilon \}\), since \(\textsf {Let} _1\) leaves the prediction unchanged. The left child M starts with method call \({\texttt {xs}}.\mathop {\mathtt {pres}} ()\) and the right child I with \({{\texttt {reg}}}.{{\texttt {get}}} ()\). The latter is admissible, since \(({\varSigma } _1.{{\texttt {reg}}})^\# = 1\). Moreover, get does not need to honour any precedences, whence it is enabled, \([{\varSigma } _1, \varPi ] \Vdash \mathop {\downarrow }{{\texttt {reg}}}.{{\texttt {get}}} \) for any \(\varPi \). On the other hand, \({\texttt {xs}}.\mathop {\mathtt {pres}} \) in M is enabled only if \(({\varSigma } _1.{\texttt {xs}})^\# = 1\) or if there is no concurrent \(\mathop {\mathtt {emit}} \) predicted for \({\texttt {xs}} \). Indeed, no emit is predicted: the concurrent context of M is \(\varPi _{I} = \{\epsilon \} \otimes can (I) = can (I) = \{ {{\texttt {reg}}}.{{\texttt {get}}} \cdot {\texttt {ws}}.{{\texttt {put}}} \}\). We project \(\pi _{\texttt {xs}} (\varPi _{I}) = \{ \epsilon \}\) and find \([{\varSigma } _1, \varPi _I] \Vdash \mathop {\downarrow }{\texttt {xs}}.\mathop {\mathtt {pres}} \). Hence, we have a non-deterministic choice to take an sstep in M or in I. Let us use rule \(\textsf {Par} _1/\textsf {Par} _2\) to run M in context \({\varSigma } _1; \varPi _{I}\). 
By loop unfolding (rule \(\textsf {Rec} \)) and rule \(\textsf {Let} _1\) we execute the present test of M, which returns the value \({\varSigma } _1.{\texttt {xs}}.\mathop {\mathtt {pres}} () = {\texttt {false}}\). This leads to an updated memory \({\varSigma } _2 = {\varSigma } _1 \odot {\texttt {xs}}.\mathop {\mathtt {pres}} () = {\varSigma } _1\) and continuation process \(P({\texttt {false}}); \mathop {\mathtt {pause}}; M\). In this (right-associated) sequential composition we first execute \(P({\texttt {false}})\), where the conditional rule \(\textsf {Cnd} _2\) switches to the \({{\texttt {else}}} \) branch Q, i.e., \(u = {\texttt {ws}}.{{\texttt {get}}} (); {{\texttt {reg}}}.{{\texttt {set}}} (u)\), still in the context \({\varSigma } _2; \varPi _I\). The read of the data-flow variable \({\texttt {ws}} \), however, is not enabled, \([{\varSigma } _2, \varPi _I] \nVdash \mathop {\downarrow }{\texttt {ws}}.{{\texttt {get}}} \), because \(({\varSigma } _2.{\texttt {ws}})^\# = 0\) and thus \({{\texttt {get}}} \) is not admissible. The sstep blocks with rule \(\textsf {Let} _2\):