Abstract

Given a simplicial complex and a vector-valued function on its vertices, we present an algorithmic construction of an acyclic partial matching on the cells of the complex. This construction is used to build a reduced filtered complex with the same multidimensional persistent homology as the original complex filtered by the sublevel sets of the function. Numerical experiments show that the algorithm achieves a substantial reduction in the number of cells.

T. Kaczynski—This work was partially supported by NSERC Canada Discovery Grant and IMA Minnesota.

C. Landi—Work performed under the auspices of INdAM-GNSAGA.


Keywords

- Multidimensional persistent homology

- Discrete Morse theory

- Acyclic partial matchings

- Matching algorithm

- Reduced complex

1 Introduction

Persistent homology has been established as an important tool for the topological analysis of discrete data. However, its effective computation remains a challenge due to the huge size of complexes built from data. Some recent works have focused on algorithms that reduce the original complexes generated from data to much smaller cellular complexes, homotopically equivalent to the initial ones, by means of acyclic partial matchings of discrete Morse theory.

Although algorithms computing acyclic partial matchings have primarily been used for persistence of one-dimensional filtrations, see e.g. [11, 13, 16], there is currently a strong interest in combining persistence information coming from multiple functions in multiscale problems, e.g. in biological applications [20], which motivates extensions to generalized types of persistence. The extension of persistent homology to multifiltrations is studied in [5]. Other related directions are explored, e.g., by the authors of [19], who do statistics on a set of one-dimensional persistence diagrams that vary as the coordinate system rotates, and in [8], where persistence modules on quiver complexes are studied.

Our own attempt in this direction, parallel to [8], is [2], where an algorithm given by King et al. in [11] is extended to multifiltrations. The algorithm produces a partition of the initial complex into three sets \((\texttt {A},\texttt {B},\texttt {C})\) and an acyclic partial matching \(\texttt {m}: \texttt {A}\rightarrow \texttt {B}\). Any simplex which is not matched is added to \(\texttt {C}\) and defined as critical. The matching algorithm of [2] is used for reducing a simplicial complex to a smaller one by elimination of matched simplices. First experiments with filtrations of triangular meshes show that a considerable number of cells are identified by the algorithm as critical but seem to be spurious, in the sense that they appear in clusters of adjacent critical faces which do not seem to carry significant topological information.

The aim of this paper is to improve our previous matching method for optimality, in the sense of reducing the number of spurious critical cells. Our new matching algorithm extends the one given in [16] for cubical complexes, which processes lower stars rather than lower links, and improves the result of [2] for optimality. It emerges from the observation that, in the multidimensional setting, it is not enough to look at lower stars of vertices: one should take into consideration the lower stars of simplices of all dimensions, as there may be vertices of a simplex which are not comparable in the partial order of the multifiltration. The vector-valued function initially given on vertices of a complex is first extended to simplices of all dimensions. Then the algorithm processes the lower stars of all simplices, not only the vertices. The resulting acyclic partial matching is used as in [2] to construct a reduced filtered Lefschetz complex with the same multidimensional persistent homology as the original simplicial complex filtered by the sublevel sets of the function. Our reduction is derived from the works of [10, 13, 14]. Recent related work on reduction techniques can be found, among others, in [6].

The paper is organized as follows. In Sect. 2, the preliminaries are introduced. In Sect. 3, the main Algorithm 2 is presented and its correctness is discussed. At the end of that section, the complementing reduction method is recalled from [2]. In Sect. 4, experiments on synthetic and real 3D data are presented. In Sect. 5, we comment on open questions and prospects for future work.

2 Preliminaries

Let \(\mathcal{K}\) be a finite geometric simplicial complex, that is a finite set composed of vertices, edges, triangles, and their q-dimensional counterparts, called simplices. A q-dimensional simplex is the convex hull of affinely independent vertices \(v_0, \ldots v_q \in {\mathbb R}^n\) and is denoted by \(\sigma =[v_0, \ldots v_q]\). The set of q-simplices of \(\mathcal{K}\) is denoted by \(\mathcal{K}_q\). A face of a q-simplex \(\sigma \in \mathcal{K}\) is a simplex \(\tau \) whose vertices constitute a subset of \(\{v_0,v_1,\ldots ,v_q\}\). If \(\dim \tau = q-1\), it is called a facet of \(\sigma \). In this case, \(\sigma \) is called a cofacet of \(\tau \), and we write \(\tau < \sigma \).

A partial matching \((\texttt {A},\texttt {B},\texttt {C},\texttt {m})\) on \(\mathcal{K}\) is a partition of \(\mathcal{K}\) into three sets \(\texttt {A},\texttt {B},\texttt {C}\) together with a bijective map \(\texttt {m}: \texttt {A}\rightarrow \texttt {B}\), also called a discrete vector field, such that, for each \(\tau \in \texttt {A}\), \(\texttt {m}(\tau )\) is a cofacet of \(\tau \). The intuition is that the projection from \(\tau \) to the complementing part of the boundary of \(\texttt {m}(\tau )\) induces a homotopy equivalence between \(\mathcal{K}\) and a smaller complex. An \(\texttt {m}\)-path is a sequence \(\sigma _0,\tau _0,\sigma _1,\tau _1,\ldots ,\sigma _p,\tau _p,\sigma _{p+1}\) such that, for each \(i=0,\ldots ,p\), \(\sigma _{i+1}\ne \sigma _i\), \(\tau _i=\texttt {m}(\sigma _i)\), and \(\tau _i\) is a cofacet of \(\sigma _{i+1}\).

A partial matching \((\texttt {A},\texttt {B},\texttt {C},\texttt {m})\) on \(\mathcal{K}\) is called acyclic if there does not exist a closed \(\texttt {m}\)-path, that is, an \(\texttt {m}\)-path such that \(\sigma _{p+1}=\sigma _0\).
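The acyclicity condition can be checked mechanically. The following is a minimal Python sketch, assuming simplices are stored as frozensets of vertex labels and the matching is given as a dictionary from each cell of \(\texttt {A}\) to its cofacet in \(\texttt {B}\); the names `is_acyclic` and `fs` are hypothetical. It looks for a directed cycle in the graph whose arrows are single steps of an \(\texttt {m}\)-path.

```python
def is_acyclic(m):
    """Return True if the matching m (dict: cell of A -> its cofacet in B)
    admits no closed m-path. Simplices are frozensets of vertex labels."""
    # sigma -> sigma' is one step of an m-path when sigma' != sigma is a facet
    # of m(sigma) and sigma' is itself in A (so that the path can continue).
    succ = {
        s: [t for t in (m[s] - {v} for v in m[s]) if t != s and t in m]
        for s in m
    }
    ON_STACK, DONE = 1, 2
    state = {}

    def visit(s):
        # Depth-first search; meeting a cell that is still on the stack
        # means that a closed m-path exists.
        state[s] = ON_STACK
        for t in succ[s]:
            if state.get(t) == ON_STACK:
                return False
            if t not in state and not visit(t):
                return False
        state[s] = DONE
        return True

    return all(s in state or visit(s) for s in m)


def fs(*vertices):
    return frozenset(vertices)


# Two vertices matched with edges pointing towards vertex 0: no closed path.
tree_like = {fs(1): fs(0, 1), fs(2): fs(0, 2)}
# Every vertex of a triangle boundary matched "around the circle": closed path.
cyclic = {fs(0): fs(0, 1), fs(1): fs(1, 2), fs(2): fs(0, 2)}
print(is_acyclic(tree_like), is_acyclic(cyclic))
```

The recursive search is adequate for small examples; for large complexes an iterative traversal would avoid the recursion limit.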

The main goal of this paper is to produce an acyclic partial matching which preserves the filtration of a simplicial complex \(\mathcal{K}\) by sublevel sets of a vector-valued function \(f: \mathcal{K}_0 \rightarrow {\mathbb R}^k\) given on the set of vertices of \(\mathcal{K}\). We assume that \(f: \mathcal{K}_0 \rightarrow \mathbb {R}^k\) is a function which is component-wise injective, that is, whose components \(f_i\) are injective. This assumption is used in [3] for proving correctness of the algorithm. Given any function \(\tilde{f}: \mathcal{K}_0 \rightarrow \mathbb {R}^k\), we can obtain a component-wise injective function f which is arbitrarily close to \(\tilde{f}\) via the following procedure. Let n denote the cardinality of \(\mathcal{K}_0\). For \(i=1,\ldots ,k\), let us set \(\eta _i = \min \{ |\tilde{f}_i(v) - \tilde{f}_i(w)| : v, w \in \mathcal{K}_0,\ \tilde{f}_i(v) \ne \tilde{f}_i(w) \}\). For each i with \(1\le i\le k\), we can assume that the n vertices in \(\mathcal{K}_0\) are indexed by an integer index j, with \(1\le j\le n\), increasing with \(\tilde{f}_i\). Thus, the function \(f_i: \mathcal{K}_0 \rightarrow \mathbb {R}\) can be defined by setting \(f_i(v_j) = \tilde{f}_i(v_j) + j\eta _i/(ns)\), with \(s\ge 1\) (the larger s, the closer f to \(\tilde{f}\)). Finally, it is sufficient to set \(f=(f_1,f_2,\ldots , f_k)\). We extend f to a function \(f: \mathcal{K}\rightarrow {\mathbb R}^k\) as follows:

\[ f(\sigma ) = (f_1(\sigma ), \ldots , f_k(\sigma )) \quad \text {with} \quad f_i(\sigma ) = \max _{v \in \mathcal{K}_0(\sigma )} f_i(v), \qquad (1) \]

where \(\mathcal{K}_0(\sigma )\) denotes the set of vertices of \(\sigma \).
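The perturbation step can be sketched in Python as follows. This is a minimal illustration, assuming an offset of the form \(j\eta _i/(ns)\), where \(\eta _i\) is the smallest nonzero gap between values of \(\tilde{f}_i\) and j is the rank of the vertex when sorted by \(\tilde{f}_i\). The function name `perturb`, the list-of-tuples input layout, and the fallback value used when all values of a component coincide are assumptions made for the example.

```python
def perturb(values, s=1.0):
    """values: list of k-tuples, one per vertex.
    Returns a list of k-tuples whose i-th components are pairwise distinct
    and differ from the input by at most eta_i / s."""
    n, k = len(values), len(values[0])
    out = [list(v) for v in values]
    for i in range(k):
        comp = [v[i] for v in values]
        distinct = sorted(set(comp))
        # eta_i: smallest nonzero gap between values of the i-th component
        # (assumed fallback 1.0 when all values coincide).
        eta = min((b - a for a, b in zip(distinct, distinct[1:])), default=1.0)
        # Stable sort: vertices get ranks 1..n increasing with the component,
        # ties being broken by their position in the input list.
        order = sorted(range(n), key=comp.__getitem__)
        for j, idx in enumerate(order, start=1):
            out[idx][i] = comp[idx] + j * eta / (n * s)
    return [tuple(v) for v in out]


# Four vertices on a grid: neither component is injective before perturbation.
grid = [(0, 0), (0, 1), (1, 0), (1, 1)]
print(perturb(grid, s=2))
```

Because the offset increases strictly with the rank and never exceeds \(\eta _i\), strict inequalities between values of \(\tilde{f}_i\) are preserved, and equal values are separated.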

Any function \(f : {\mathcal{K}} \rightarrow {\mathbb R}^k\) that is an extension of a component-wise injective function \(f : {\mathcal{K}}_0 \rightarrow {\mathbb R}^k\) defined on the vertices of the complex \(\mathcal{K}\) in such a way that f satisfies Eq. (1) will be called admissible. In \({\mathbb R}^k\) we consider the following partial order. Given two values \(a=(a_i), b=(b_i)\in {\mathbb R}^k\) we set \(a\preceq b\) if and only if \(a_i\le b_i\) for every i with \(1\le i\le k\). Moreover we write \(a\precneqq b\) whenever \(a\preceq b\) and \(a\ne b\). The sublevel set filtration of \(\mathcal{K}\) induced by an admissible function f is the family \(\{\mathcal{K}^a\}_{a\in {\mathbb R}^k}\) of subsets of \(\mathcal{K}\) defined as follows:

\[ \mathcal{K}^a = \{ \sigma \in \mathcal{K} \mid f(\sigma ) \preceq a \}. \]

It is clear that, for any parameter value \(a\in {\mathbb R}^k\) and any simplex \(\sigma \in {\mathcal{K}}^a\), all faces of \(\sigma \) are also in \({\mathcal{K}}^a\). Thus \({\mathcal{K}}^a\) is a simplicial subcomplex of \(\mathcal{K}\) for each a. The changes of topology of \({\mathcal{K}}^a\) as we change the multiparameter a permit recognizing some features of the shape of \(|{\mathcal{K}}|\) if f is appropriately chosen. For this reason, the function f is called in the literature a measuring function or, more specifically, a multidimensional measuring function [4]. The lower star of a simplex \(\sigma \) is the set

\[ L(\sigma ) = \{ \alpha \in \mathcal{K} \mid \sigma \subseteq \alpha \ \text {and}\ f(\alpha ) \preceq f(\sigma ) \}, \]

and the reduced lower star is the set \(L_*(\sigma )=L(\sigma )\setminus \{\sigma \}\).
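The notions of this section can be illustrated by a short Python sketch. It assumes that simplices are frozensets of vertex labels, that f is extended from vertices to simplices by the component-wise maximum, and that the lower star of \(\sigma \) consists of the simplices \(\alpha \supseteq \sigma \) with \(f(\alpha ) \preceq f(\sigma )\). All function names are hypothetical.

```python
from itertools import combinations


def leq(a, b):
    """Partial order on R^k: a precedes b if a_i <= b_i in every component."""
    return all(x <= y for x, y in zip(a, b))


def closure(top_simplices):
    """All nonempty faces of the given simplices, as frozensets of vertices."""
    return {frozenset(c) for s in top_simplices
            for r in range(1, len(s) + 1) for c in combinations(s, r)}


def extend(K, f_vertices):
    """Assumed extension: component-wise maximum over the vertices of a simplex."""
    k = len(next(iter(f_vertices.values())))
    return {s: tuple(max(f_vertices[v][i] for v in s) for i in range(k))
            for s in K}


def sublevel_set(K, f, a):
    return {s for s in K if leq(f[s], a)}


def lower_star(K, f, sigma):
    return {t for t in K if sigma <= t and leq(f[t], f[sigma])}


def reduced_lower_star(K, f, sigma):
    return lower_star(K, f, sigma) - {sigma}


# One triangle whose vertices 1 and 2 are not comparable in the partial order.
K = closure([(0, 1, 2)])
f = extend(K, {0: (0, 0), 1: (1, 2), 2: (2, 1)})
print(sorted(map(sorted, sublevel_set(K, f, (2, 1)))))
print(sorted(map(sorted, lower_star(K, f, frozenset({1, 2})))))
```

In this example the triangle belongs to no lower star of a vertex, only to the lower star of the edge joining the two incomparable vertices, which is the situation that motivates processing lower stars of simplices of all dimensions.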

2.1 Indexing Map

An indexing map on the simplices of the complex \(\mathcal{K}\) of cardinality N, compatible with an admissible function f, is a bijective map \(I:{\mathcal{K}} \rightarrow \{1,2,\ldots , N\}\) such that, for each \(\sigma , \tau \in {\mathcal{K}}\) with \(\sigma \ne \tau \), if \(\sigma \subseteq \tau \) or \(f(\sigma )\precneqq f(\tau )\) then \(I(\sigma )<I(\tau )\).

To build an indexing map I on the simplices of the complex \(\mathcal{K}\), we will revisit the algorithm introduced in [2] that uses the topological sorting of a Directed Acyclic Graph (DAG) to build an indexing for vertices of a complex that is compatible with the ordering of values of a given function defined on the vertices. We will extend the algorithm to build an indexing for all cells of a complex that is compatible with both the ordering of values of a given admissible function defined on the cells and the ordering of the dimensions of the cells. We recall that a topological sorting of a directed graph is a linear ordering of its nodes such that for every directed edge (u, v) from node u to node v, u precedes v in the ordering. This ordering is possible if and only if the graph has no directed cycles, that is, if it is a DAG. A simple well known algorithm (see [17]) for this task consists of successively finding nodes of the DAG that have no incoming edges and placing them in a list for the final sorting. Note that at least one such node must exist in a DAG, otherwise the graph must have at least one directed cycle.

When the graph is a DAG, there exists at least one solution for the sorting problem, which is not necessarily unique. Each node and each edge of the DAG is visited once by the algorithm, so its running time is linear in the number of nodes plus the number of edges in the DAG. The following lemma, whose proof is based on Algorithm 1, is proved in [3].

Lemma 1

Let \(f:\mathcal{K}\rightarrow {\mathbb R}^k\) be an admissible function. There exists an injective function \(I:{\mathcal{K}} \rightarrow {\mathbb N}\) such that, for each \(\sigma , \tau \in {\mathcal{K}}\) with \(\sigma \ne \tau \), if \(\sigma \subseteq \tau \) or \(f(\sigma )\precneqq f(\tau )\) then \(I(\sigma )<I(\tau )\).
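An indexing map with the property of Lemma 1 can be produced by the topological sorting described above. The sketch below is a minimal illustration, not a transcription of Algorithm 1: it assumes simplices stored as frozensets and the component-wise maximum extension of f, and it builds the constraint graph by comparing all pairs of cells, which is quadratic in N and only meant for small examples. The names `indexing_map`, `closure` and `extend` are hypothetical.

```python
from collections import deque
from itertools import combinations


def leq(a, b):
    return all(x <= y for x, y in zip(a, b))


def closure(top_simplices):
    return {frozenset(c) for s in top_simplices
            for r in range(1, len(s) + 1) for c in combinations(s, r)}


def extend(K, f_vertices):
    k = len(next(iter(f_vertices.values())))
    return {s: tuple(max(f_vertices[v][i] for v in s) for i in range(k))
            for s in K}


def indexing_map(K, f):
    """Number the cells 1..N so that I(s) < I(t) whenever s is a proper face
    of t or f(s) is strictly below f(t) in the partial order."""
    cells = sorted(K, key=lambda s: (len(s), sorted(s)))  # fixed scan order
    succ = {s: [] for s in cells}
    indegree = dict.fromkeys(cells, 0)
    for s in cells:
        for t in cells:
            if s < t or (f[s] != f[t] and leq(f[s], f[t])):
                succ[s].append(t)
                indegree[t] += 1
    # Repeatedly remove a node without incoming edges and number it.
    ready = deque(s for s in cells if indegree[s] == 0)
    I = {}
    while ready:
        s = ready.popleft()
        I[s] = len(I) + 1
        for t in succ[s]:
            indegree[t] -= 1
            if indegree[t] == 0:
                ready.append(t)
    if len(I) != len(cells):
        raise ValueError("constraint graph has a cycle; f is not admissible")
    return I


K = closure([(0, 1, 2)])
f = extend(K, {0: (0, 0), 1: (1, 2), 2: (2, 1)})
I = indexing_map(K, f)
for cell in sorted(K, key=I.get):
    print(I[cell], sorted(cell), f[cell])
```

Different scan orders give different, equally valid indexing maps, which reflects the non-uniqueness of the topological sorting.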

3 Matching Algorithm

The main contribution of this paper is the Matching Algorithm 2. It takes as input a finite simplicial complex \(\mathcal{K}\) of cardinality N, an admissible function \(f:\mathcal{K}\rightarrow {\mathbb R}^k\) built from a component-wise injective function \(f : {\mathcal{K}}_0 \rightarrow {\mathbb R}^k\) using the extension formula given in Eq. (1), and an indexing map I compatible with f. The indexing map can be precomputed using the topological sorting Algorithm 1. Given a simplex \(\sigma \), we use unclass_facets\(_{\sigma }(\alpha )\) to denote the set of facets of a simplex \(\alpha \) that are in \(L(\sigma )\) and have not been classified yet, that is, not inserted in any of \(\texttt {A}\), \(\texttt {B}\), or \(\texttt {C}\), and num_unclass_facets\(_{\sigma }(\alpha )\) to denote the cardinality of unclass_facets\(_{\sigma }(\alpha )\). We initialize classified(\(\sigma \)) = false for every \(\sigma \in \mathcal{K}\). We use priority queues PQzero and PQone which store candidates for pairings with zero and one unclassified facets respectively in the order given by I. We initialize both as empty sets. The algorithm processes cells in the increasing order of their indices. The flag classified(\(\sigma \)) records whether a cell has already been handled, so that a cell processed as part of the lower star of another cell is not processed again by the algorithm. The algorithm makes use of extra routines to calculate the cells in the lower star \(L(\sigma )\) and the set of unclassified facets unclass_facets\(_{\sigma }(\alpha )\) of \(\alpha \) in \(L_*(\sigma )\) for each cell \(\sigma \in \mathcal{K}\) and each cell \(\alpha \in L_*(\sigma )\).

The goal of the process is to build a partition of \(\mathcal{K}\) into three lists \(\texttt {A}\), \(\texttt {B}\), and \(\texttt {C}\), where \(\texttt {C}\) is the list of critical cells and each cell in \(\texttt {A}\) is paired in a one-to-one manner with a cell in \(\texttt {B}\), which defines a bijective map \(\texttt {m}:\texttt {A}\rightarrow \texttt {B}\). When a cell \(\sigma \) is considered, each cell in its lower star \(L(\sigma )\) is processed exactly once, as shown in [3]. The cell \(\sigma \) is inserted into the list of critical cells \(\texttt {C}\) if \(L_* (\sigma ) = \emptyset \). Otherwise, \(\sigma \) is paired with the cofacet \(\delta \in L_* (\sigma )\) that has minimal index value \(I(\delta )\). The algorithm makes additional pairings, which can be interpreted topologically as the process of constructing \(L_*(\sigma )\) with simple homotopy expansions or the process of reducing \(L_*(\sigma )\) with simple homotopy contractions. When no pairing is possible, a cell is classified as critical and the process is continued from that cell. A cell \(\alpha \) is a candidate for a pairing when unclass_facets\(_{\sigma }(\alpha )\) contains exactly one element \(\lambda \) that belongs to PQzero. For this purpose, the priority queues PQzero and PQone, which store cells with zero and one available unclassified faces respectively, are created. As long as PQone is not empty, its front is popped and either inserted into PQzero or paired with its single available unclassified face. When PQone becomes empty, the front cell of PQzero is declared critical and inserted in \(\texttt {C}\).

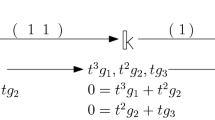
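The following Python sketch is one possible reading of the lower-star processing described above, not a transcription of Algorithm 2. It assumes simplices stored as frozensets, the component-wise maximum extension of f, and, in place of Algorithm 1, an index obtained by sorting on the pair (sum of the components of f, dimension), which is compatible with f in exact arithmetic. The names `build`, `match`, `pair`, `free_facets` and `push_ones` are hypothetical, and removal from PQzero is done lazily when a cell is popped.

```python
import heapq
from itertools import combinations


def leq(a, b):
    return all(x <= y for x, y in zip(a, b))


def build(top_simplices, f_vertices):
    """Closure of the top simplices, max-extension of f, compatible index."""
    K = {frozenset(c) for s in top_simplices
         for r in range(1, len(s) + 1) for c in combinations(s, r)}
    k = len(next(iter(f_vertices.values())))
    f = {s: tuple(max(f_vertices[v][i] for v in s) for i in range(k)) for s in K}
    # Shortcut replacing the topological sort: (sum of f, dimension) increases
    # strictly along proper inclusion and along the strict partial order.
    order = sorted(K, key=lambda s: (sum(f[s]), len(s), sorted(s)))
    return K, f, {s: i for i, s in enumerate(order, start=1)}


def match(K, f, I):
    A, B, C, m, done = [], [], [], {}, set()

    def pair(lo, hi):
        A.append(lo); B.append(hi); m[lo] = hi
        done.update((lo, hi))

    for sigma in sorted(K, key=I.get):
        if sigma in done:
            continue
        star = [a for a in K if sigma < a and leq(f[a], f[sigma])]  # L_*(sigma)
        if not star:
            C.append(sigma); done.add(sigma)      # empty reduced lower star
            continue
        in_L = set(star) | {sigma}

        def free_facets(a):
            """Facets of a lying in L(sigma) that are not classified yet."""
            return [t for t in (a - {v} for v in a)
                    if t in in_L and t not in done]

        pq_zero, pq_one, queued = [], [], set()

        def push_ones():
            for a in star:
                if a not in done and a not in queued and len(free_facets(a)) == 1:
                    queued.add(a)
                    heapq.heappush(pq_one, (I[a], a))

        # Pair sigma with its cofacet of minimal index in the lower star.
        delta = min((a for a in star if len(a) == len(sigma) + 1), key=I.get)
        pair(sigma, delta)
        for a in star:
            if a not in done and not free_facets(a):
                heapq.heappush(pq_zero, (I[a], a))
        push_ones()
        while pq_one or pq_zero:
            while pq_one:
                _, a = heapq.heappop(pq_one)
                if a in done:
                    continue
                free = free_facets(a)
                if free:
                    pair(free[0], a)              # a with its single free facet
                    push_ones()
                else:
                    heapq.heappush(pq_zero, (I[a], a))
            while pq_zero and pq_zero[0][1] in done:
                heapq.heappop(pq_zero)            # drop cells paired meanwhile
            if pq_zero:
                _, g = heapq.heappop(pq_zero)
                C.append(g); done.add(g)          # no pairing possible: critical
                push_ones()
    return A, B, C, m


K, f, I = build([(0, 1, 2)], {0: (0, 0), 1: (1, 2), 2: (2, 1)})
A, B, C, m = match(K, f, I)
print("critical:", [sorted(c) for c in C])
print("pairs:", [(sorted(a), sorted(m[a])) for a in A])
```

On this single triangle, whose vertices 1 and 2 are not comparable, the sketch leaves only vertex 0 critical; the edge joining vertices 1 and 2 is matched with the triangle when its own lower star is processed.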

We illustrate the algorithm by a simple example. We use the simplicial complex \(\texttt {S}\) from our first paper [2, Figure 2] to compare the outputs of the previous matching algorithm and the new one. Figure 1(a) displays \(\texttt {S}\) and the output of [2, Algorithm 6]. The coordinates of vertices are the values of the function considered in [2]. Since that function is not component-wise injective, we denote it by \(\tilde{f}\) and we start from constructing a component-wise injective approximation f discussed at the beginning of Sect. 2. If we interpret the passage from \(\tilde{f}\) to f as a displacement of the coordinates of vertices, the new complex \(\mathcal{K}\) is illustrated by Fig. 1(b). The partial order relation is preserved when passing from \(\tilde{f}\) to f, and the indexing of vertices in [2, Figure 2] may be kept for f. Hence, it is easy to see that [2, Algorithm 6] applied to \(\mathcal{K}\) gives the same result as that displayed in Fig. 1(a). In order to apply our new Algorithm 2, we need to index all 14 simplices of \(\mathcal{K}\). For convenience of presentation, we label the vertices \(w_i\), edges \(e_i\), and triangles \(t_i\) by the index values \(i=1,2,...,14\). The result is displayed in Fig. 1(b). The sequence of vertices \((v_0,v_1,v_2,v_3,v_4)\) is replaced by \((w_1,w_2,w_4,w_8,w_{12})\). Here are the main steps of the algorithm:

The output is displayed in Fig. 1(b).

Fig. 1. In (a), the complex and the output of Algorithm 6 of [2] are displayed. Gray-shaded triangles are those which are present in the simplicial complex. Critical simplices are marked by red circles and the matched simplices are marked by arrows. In (b), the complex is modified so as to satisfy the component-wise injectivity assumption. The labeling of all simplices by the indexing function and the output of Algorithm 2 are displayed. (Color figure online)

3.1 Correctness

The full and detailed proof of correctness of Algorithm 2 is given in [3]. We present here the main steps of the proof. Recall that \(f=(f_1,\ldots , f_k): \mathcal{K}\rightarrow \mathbb {R}^k\) is an admissible function. We start with some preparatory results that allow a proof by induction that every cell of the complex is classified exactly once by the algorithm and that the algorithm indeed produces an acyclic partial matching of the complex.

- First, we note that lower stars of distinct simplices are not necessarily disjoint. However, when two lower stars meet, they get automatically classified at the same time by the algorithm (see [3, Lemma 3.1]).

- If a cell \(\sigma \) is unclassified when Algorithm 2 reaches line 5, then either \(L_*(\sigma )\) is empty, in which case \(\sigma \) is classified as critical, or \(L_*(\sigma )\) contains at least one cofacet of \(\sigma \) that has \(\sigma \) as a unique facet in \(L(\sigma )\). In the latter case, \(\sigma \) is paired with the cofacet with minimal index, and the remaining cofacets of \(\sigma \) in \(L_*(\sigma )\) have no unclassified faces in \(L_*(\sigma )\) and hence must enter PQzero at line 11 of Algorithm 2. Moreover, if for every cell \(\alpha \) with \(I(\alpha ) < I(\sigma )\), \(L(\alpha )\) consists only of classified cells, then all cells in \(L(\sigma )\) are also unclassified (see [3, Lemmas 3.2, 3.4]).

- Finally, we prove by induction on the index of the cells that every cell is classified in a unique fashion by the algorithm. The proof is simple when the index takes values 1 and 2 since the cells can be only vertices or edges (see [3, Lemma 3.3]). For the general index, we first prove that for any cell popped from PQone, its unique unclassified facet belongs to PQzero and the two cells get paired at line 19 of the algorithm (see [3, Lemma 3.5]). Afterwards, we show that each cell that is still unclassified after line 10 of the algorithm ultimately enters PQone or PQzero and gets classified. This requires an argument based on the dimension of the cell and the number of its unclassified faces in the considered lower star. Moreover, we prove that if a cell is already classified, it cannot be considered again for classification or enter the structures PQone or PQzero (see [3, Lemma 3.6]).

- The correctness proof is concluded by proving that the algorithm produces an acyclic partial matching of the complex (see [3, Proposition 3.7 and Theorem 3.8]).

3.2 Filtration Preserving Reductions

Lefschetz complexes, introduced by Lefschetz in [12], are developed further in [14] under the name \(\texttt {S}\)-complex. In our context, these complexes are produced by applying the reduction method [10, 13, 14] to an initial simplicial complex \(\mathcal{K}\), with the use of the matchings produced by our main Algorithm 2. Both concepts of partial matchings and sublevel set filtration of \(\mathcal{K}\) induced by \(f: \mathcal{K}\rightarrow {\mathbb R}^k\) introduced in Sect. 2 naturally extend to Lefschetz complexes, as proved in [2]. Persistence is based on analyzing the homological changes occurring along the filtration as the multiparameter \(a\in {\mathbb R}^k\) varies. This analysis is carried out by considering, for \(a \preceq b\), the homomorphism \(H_*(j^{(a,b)}): H_*(\texttt {S}^{a}) \rightarrow H_*(\texttt {S}^{b})\) induced by the inclusion map \(j^{(a,b)}:\texttt {S}^{a}\hookrightarrow \texttt {S}^{b}\). The image of the map \(H_q(j^{(a,b)})\) is known as the q-th multidimensional persistent homology group of the filtration at (a, b) and we denote it by \(H_q^{a,b}(\texttt {S})\). It contains the homology classes of order q born not later than a and still alive at b.

If we assume that \((\texttt {A},\texttt {B},\texttt {C},\texttt {m})\) is an acyclic matching on a filtered Lefschetz complex \(\texttt {S}\) obtained from the original simplicial complex \(\mathcal{K}\) by reduction, the following result holds, which asserts that the multidimensional persistent homology of the reduced complex is the same as that of the initial complex (see [2]).

Corollary 2

For every \(a\preceq b\in {\mathbb R}^k\), \(H_*^{a,b}(\texttt {C}) \cong H_*^{a,b}(\mathcal{K})\).

3.3 Complexity Analysis

Given a simplex \(\sigma \in \mathcal{K}\), the coboundary cells of \(\sigma \) are given by \({\mathbf {cb\,}}(\sigma ) := \{ \tau \in \mathcal{K}\, |\, \sigma \, \text {is a face of } \, \tau \}\). It is immediate from the definitions that \(L_* (\sigma ) \subset {\mathbf {cb\,}}(\sigma )\). We define the coboundary mass \(\gamma \) of \(\mathcal{K}\) as \(\gamma = \max _{\sigma \in \mathcal{K}} \mathrm{card\,}{\mathbf {cb\,}}(\sigma )\), where \(\mathrm{card\,}\) denotes cardinality. While \(\gamma \) is trivially bounded by N, the number of cells in \(\mathcal{K}\), this upper bound is a very crude estimate of \(\gamma \) for many complexes, such as triangulations of manifolds and complexes approximating surface boundaries of objects. For the simplicial complex \(\mathcal{K}\), we assume that the boundary and coboundary cells of each simplex are computed offline and stored in such a way that access to every cell is done in constant time. Given an admissible function \(f : {\mathcal{K}} \rightarrow {\mathbb R}^k\), the values of f on the simplices \(\sigma \in \mathcal{K}\) are stored in the structure that stores the complex \(\mathcal{K}\) in such a way that they are accessed in constant time. We assume that adding cells to the lists \(\texttt {A}\), \(\texttt {B}\), and \(\texttt {C}\) is done in constant time. Algorithm 2 processes every cell \(\sigma \) of the simplicial complex \(\mathcal{K}\) and checks whether it is classified or not. If it is not, the algorithm requires a function that returns the cells in the reduced lower star \(L_* (\sigma )\), which is read directly from the structure storing the complex. In the best case, \(L_* (\sigma )\) is empty and the cell is declared critical. Since \(L_* (\sigma ) \subset {\mathbf {cb\,}}(\sigma )\), it follows that \(\mathrm{card\,}L_* (\sigma ) \le \gamma \). From Algorithm 2, we can see that every cell in \(L_* (\sigma )\) enters each of PQzero and PQone at most once. It follows that the while loops in the algorithm are executed all together in at most \(2 \gamma \) steps. We may consider operations such as finding the number of unclassified faces of a cell to take constant time, except for the priority queue operations, which are logarithmic in the size of the priority queue when implemented using heaps. Since the sizes of PQzero and PQone are clearly bounded by \(\gamma \), it follows that \(L_* (\sigma )\) is processed in at most \(O(\gamma \log \gamma )\) steps. Therefore processing the whole complex incurs a worst-case cost of \(O(N \cdot \gamma \log \gamma )\).
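To get a feeling for how far \(\gamma \) can be from N, the coboundary mass can be computed directly. The sketch below is a small illustration under the assumptions that simplices are frozensets, that the complex is closed under taking faces, and that a simplex counts as a face of itself, as in the definition of face given in Sect. 2. The names `closure` and `coboundary_mass` are hypothetical.

```python
import math
from itertools import combinations


def closure(top_simplices):
    return {frozenset(c) for s in top_simplices
            for r in range(1, len(s) + 1) for c in combinations(s, r)}


def coboundary_mass(K):
    """gamma = max over sigma of card cb(sigma), where cb(sigma) is the set of
    simplices of K having sigma as a face (sigma itself included)."""
    count = dict.fromkeys(K, 0)
    for tau in K:
        # Every nonempty face of tau gains tau as a coboundary cell.
        for r in range(1, len(tau) + 1):
            for face in combinations(sorted(tau), r):
                count[frozenset(face)] += 1
    return max(count.values())


# Two triangles glued along the edge {1, 2}.
K = closure([(0, 1, 2), (1, 2, 3)])
N, gamma = len(K), coboundary_mass(K)
print(N, gamma, N * gamma * math.log2(gamma))
```

For a triangulated surface, \(\gamma \) is governed by the largest vertex degree and stays small when the mesh is refined, whereas N grows, so the bound \(O(N \cdot \gamma \log \gamma )\) is close to linear in N in that setting.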

4 Experimental Results

We have successfully applied the algorithms from Sect. 3 to different sets of triangle meshes. In each case the input data is a 2-dimensional simplicial complex \(\mathcal{K}\) and a function f defined on the vertices of \(\mathcal{K}\) with values in \({\mathbb R}^2\). The first step is to slightly perturb f in order to achieve injectivity on each component as described in Sect. 2. The second step is to construct an index function defined on all the simplices of the complex and satisfying the properties of Lemma 1. Then we build the acyclic matching \(\texttt {m}\) and the partition \((\texttt {A},\texttt {B},\texttt {C})\) of the simplices of the complex using Algorithm 2. In particular, the number of simplices in \(\texttt {C}\) out of the total number of simplices of \(\mathcal{K}\) is relevant, because it determines the amount of reduction obtained by our algorithm to speed up the computation of multidimensional persistent homology.

4.1 Examples on Synthetic Data

We consider two well-known 2-dimensional manifolds: the sphere and the torus. For the sphere, we consider triangulations of five different sizes and we take \(f(x,y,z)=(x,y)\). The triangulated sphere with the maximum number of simplices is shown in Fig. 2(left), where cells found critical by the algorithm are colored. The comparison with other triangulations of the sphere is shown in Table 1: the first row shows the number of simplices in each considered mesh \(\mathcal{K}\); the middle row shows the number of critical cells obtained by using our matching algorithm to reduce \(\mathcal{K}\); the bottom row shows the ratio of the second row to the first, expressed as a percentage. In the case of the torus, we again consider triangulations of different sizes and we take \(f(x,y,z)=(x,y)\). The numerical results are shown in the same table and also displayed in Fig. 2(right).

Fig. 2. A triangulation of the sphere with 2882 simplices and one of the torus with 7200 simplices. Critical cells are computed with respect to a component-wise injective perturbation of the function defined on the vertices by \(f(x,y,z)=(x,y)\). Critical vertices are in yellow, critical edges in blue, and critical triangles in red. (Color figure online)

In conclusion, our experiments on synthetic data confirm that the current simplex-based matching algorithm scales well with the size of the complex.

4.2 Examples on Real Data

We consider four triangle meshes (available at [1]). For each mesh the input 2-dimensional measuring function f takes each vertex v of coordinates (x, y, z) to the pair \(f(v) = (|x|, |y|)\). In Table 2, the first row shows, on the top line, the number of vertices in each considered mesh; on the middle line, the reduction ratio achieved by our algorithm on those cells, expressed as a percentage; and on the bottom line, the analogous ratio achieved by our previous algorithm [2]. The second and the third rows show similar information for the edges and the faces. Finally, the fourth row shows the same information for the total number of cells of each considered mesh \(\mathcal{K}\).

Our experiments confirm that the current simplex-based matching algorithm produces a fair rate of reduction for simplices of any dimension on real data as well. In particular, they show a clear improvement over the analogous results presented in [2], which were obtained using a vertex-based and recursive matching algorithm.

4.3 Discussion

The experiments on synthetic data confirmed two aspects: (1) The discrete case seems to behave much as the differentiable case for two functions [18] because critical cells are still localized along curves; (2) The number of critical cells scales well with the total number of cells, indicating that we are not detecting too many spurious critical cells.

We should point out here a fundamental difference between Morse theory for one function, whose critical points are isolated, and extensions of Morse theory to vector-valued functions where, even in the generic case, critical points form stratified submanifolds. For example, for two functions on a surface, they form curves. Hence the topological complexity depends not on the number of critical points but on the number of such curves. As a consequence, the finer the triangulation, the finer the discretization of such curves and the larger the number of critical cells we get.

On the other hand, experiments on real data show the improvement with respect to our previous algorithm [2] already observed in the toy example of Fig. 1. We think that the new algorithm performs better because it is simplex-based rather than vertex-based. So, the presence of many non-comparable vertices has a limited impact on it.

5 Conclusion

The point of this paper is the presentation of Algorithm 2 to construct an acyclic partial matching from which a gradient compatible with multiple functions can be obtained. As such, it can be useful for specific purposes such as multidimensional persistence computation, whereas it is not meant to be a competitive algorithm to construct an acyclic partial matching for general purposes.

Some questions remain open. First, since the indexing map is not unique, one may ask what effect its choice has on the output. We believe that the size of the resulting complex should be independent of this choice. This is a subject for future work.

A deeper open problem arises from the fact that the optimality of reductions is not yet well defined in the multidimensional setting, although the improvement is observed in practice. As commented earlier, even in the classical smooth case, the singularities of vector-valued functions on manifolds are not isolated. An appropriate application-driven extension of Morse theory to multidimensional functions has not yet been much investigated. Some related work is that of [7] on Jacobi sets and of [15] on preimages of maps between manifolds. However, there are essential differences between those concepts and our sublevel sets with respect to the partial order relation.

The experiments presented in this paper show an improvement with respect to the algorithm in [2] that, to the best of our knowledge, is still the only other algorithm available for this task. Such experiments were obtained with a non-optimized implementation. For an optimized implementation, we refer the reader to [9], where experiments on larger data sets can be found.

References

[1] The GTS Library. http://gts.sourceforge.net/samples.html

[2] Allili, M., Kaczynski, T., Landi, C.: Reducing complexes in multidimensional persistent homology theory. J. Symbolic Comput. 78, 61–75 (2017)

[3] Allili, M., Kaczynski, T., Landi, C., Masoni, F.: A new matching algorithm for multidimensional persistence. arXiv:1511.05427v3

[4] Biasotti, S., Cerri, A., Frosini, P., Giorgi, D., Landi, C.: Multidimensional size functions for shape comparison. J. Math. Imaging Vis. 32(2), 161–179 (2008)

[5] Carlsson, G., Zomorodian, A.: The theory of multidimensional persistence. In: Proceedings of the 23rd Annual Symposium on Computational Geometry (SCG 2007), pp. 184–193, New York, NY, USA. ACM (2007)

[6] Dłotko, P., Wagner, H.: Simplification of complexes for persistent homology computations. Homology Homotopy Appl. 16(1), 49–63 (2014)

[7] Edelsbrunner, H., Harer, J.: Jacobi sets of multiple Morse functions. In: Foundations of Computational Mathematics (FoCM 2002), Minneapolis, pp. 37–57. Cambridge Univ. Press, Cambridge (2002)

[8] Escolar, E.G., Hiraoka, Y.: Computing persistence modules on commutative ladders of finite type. In: Hong, H., Yap, C. (eds.) ICMS 2014. LNCS, vol. 8592, pp. 144–151. Springer, Heidelberg (2014). doi:10.1007/978-3-662-44199-2_25

[9] Iuricich, F., Scaramuccia, S., Landi, C., De Floriani, L.: A discrete Morse-based approach to multivariate data analysis. In: SIGGRAPH ASIA 2016 Symposium on Visualization (SA 2016), New York, NY, USA, pp. 5:1–5:8. ACM (2016)

[10] Kaczynski, T., Mrozek, M., Slusarek, M.: Homology computation by reduction of chain complexes. Comput. Math. Appl. 35(4), 59–70 (1998)

[11] King, H., Knudson, K., Mramor, N.: Generating discrete Morse functions from point data. Exp. Math. 14(4), 435–444 (2005)

[12] Lefschetz, S.: Algebraic Topology, vol. 27. American Mathematical Society, Providence (1942). Colloquium Publications

[13] Mischaikow, K., Nanda, V.: Morse theory for filtrations and efficient computation of persistent homology. Discrete Comput. Geometry 50(2), 330–353 (2013)

[14] Mrozek, M., Batko, B.: Coreduction homology algorithm. Discrete Comput. Geometry 41, 96–118 (2009)

[15] Patel, A.: Reeb spaces and the robustness of preimages. Ph.D. thesis, Duke University (2010)

[16] Robins, V., Wood, P.J., Sheppard, A.P.: Theory and algorithms for constructing discrete Morse complexes from grayscale digital images. IEEE Trans. Pattern Anal. Mach. Intell. 33(8), 1646–1658 (2011)

[17] Sedgewick, R., Wayne, K.: Algorithms. Addison-Wesley, Boston (2011)

[18] Smale, S.: Global analysis and economics. I. Pareto optimum and a generalization of Morse theory. In: Dynamical systems (Proceedings of Symposium, Univ. Bahia, Salvador, 1971), pp. 531–544. Academic Press, New York (1973)

[19] Turner, K., Mukherjee, S., Boyer, D.M.: Persistent homology transform for modeling shapes and surfaces. Inf. Inference 3(4), 310–344 (2014)

[20] Xia, K., Wei, G.-W.: Multidimensional persistence in biomolecular data. J. Comput. Chem. 36(20), 1502–1520 (2015)


© 2017 Springer International Publishing AG


Allili, M., Kaczynski, T., Landi, C., Masoni, F. (2017). Algorithmic Construction of Acyclic Partial Matchings for Multidimensional Persistence. In: Kropatsch, W., Artner, N., Janusch, I. (eds) Discrete Geometry for Computer Imagery. DGCI 2017. Lecture Notes in Computer Science(), vol 10502. Springer, Cham. https://doi.org/10.1007/978-3-319-66272-5_30


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-66271-8

Online ISBN: 978-3-319-66272-5
