Abstract

We propose a method to visualize information flow in the visual pathway following a visual stimulus. Our method estimates structural connections using diffusion magnetic resonance imaging and functional connections using electroencephalography. First, a Bayesian network which represents the cortical regions of the brain and their connections is built from the structural connections. Next, the functional information is added as evidence into the network and the posterior probability of activation is inferred using a maximum entropy on the mean approach. Finally, projecting these posterior probabilities back onto streamlines generates a visual depiction of pathways used in the network. We first show the effect of noise in a simulated phantom dataset. We then present the results obtained from left and right visual stimuli which show expected information flow traveling from eyes to the lateral geniculate nucleus and to the visual cortex. Information flow visualization along white matter pathways has potential to explore the brain dynamics in novel ways.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

- Diffusion MRI

- Visual Information Flow

- Lateral Geniculate Nucleus

- Dipole Intensity

- Fiber Orientation Distribution Function

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

The brain can be described as a network of interconnected information processing nodes. Each node, corresponding to a brain region, is specialized for a specific type of information. It is the interaction of the different nodes via the connections of the network that allows the brain to process and appropriately respond to external stimuli. Consider a subject that is asked to reach for and grasp an object when a visual cue is presented. First, visual information is transferred from the retina to the primary visual cortex through the optic nerve, optic tract and optic radiation. It is then expected that the reach and grasp component of the task will be handled separately by subregions of the parietal and premotor cortex [1]. Finally, the primary motor cortex will be involved to initiate the reaching movement. Individually, the visual, parietal, premotor, and motor cortex cannot produce the desired task. It is their interaction, or more specifically their exchange of information, that allows the subject to reach and grasp the object. The ability to image and observe the interaction of the different regions of the brain would therefore be invaluable to our understanding of the brain.

In this work, we present a new model of information flow in the brain built on the previous work of [2]. First, we build a Bayesian network which captures the state of the cortical surface of the brain and their connections. The network is subject specific as it is built from the structural connectivity via diffusion magnetic resonance imaging (MRI). Next, we introduce the electroencephalography (EEG) measurements as evidence into this network. Finally, using the principle of maximum entropy on the mean, we infer the posterior probability that cortical regions and connections are active. By projecting activation probabilities of connections back onto the streamlines obtained from diffusion MRI, we generate an animated representation of information flow. Thus, by combining functional information obtained from EEG with anatomical information obtained in diffusion MRI, we infer the information flow in the white matter of the brain.

2 Theory

We model the cortical activity using a distributed dipole model. The activation of a small area of the cortical surface is modeled by a dipole n that is perpendicular to the surface and whose intensity at a time t is given by \(x_{n,t} \in {\text {I}\!\text {R}}\). We distribute thousands of these dipoles on the cortical surface and combine their intensities in a vector \(\varvec{x} \in {\text {I}\!\text {R}}^{N_dN_t}\) where \(N_d\) and \(N_t\) are the number of dipoles and samples, respectively. The forward problem can then be modeled as \(\varvec{m} = \varvec{G}\varvec{x} + \varvec{\epsilon }\) where \(\varvec{m} \in {\text {I}\!\text {R}}^{N_sN_t}\) is the vector of EEG measurements with \(N_s\) the number of sensors. The matrix \(\varvec{G}\) is the lead field that projects the dipole intensities onto the sensors. It can be computed using the anatomical MR images of the subject [5] and is therefore a known quantity. The observation are corrupted by the additive noise term \(\varvec{\epsilon }\). Note that we consider all time samples in a single very large problem which makes the forward operator \(\varvec{G}\) block diagonal.

2.1 Connectivity Informed Maximum Entropy on the Mean

The vector of dipole intensities \(\varvec{x}\) represents the cortical activity for a given time window. Estimating these intensities from the measurements \(\varvec{m}\) is an ill-posed problem. We have many more intensities to estimate than available measurements. In [2], Amblard et al. propose to group the dipoles into \(N_S\) cortical regions, which we refer to as clusters. Each cluster is assigned a state variable which dictates the possible intensities of dipoles within the cluster. Let \(S_k\) represent the state of the \(k^{th}\) cluster, that is whether it is active or not. Let \(\varvec{S} = [S_1, S_2, ..., S_{N_S}]\) be the cluster state vector. Note that like \(\varvec{m}\) and \(\varvec{x}\), the vector \(\varvec{S}\) incorporates a time component. That is, if the cortical surface is segmented into 60 regions and the data window contains 10 time points, \(\varvec{S}\) contains 600 state variables. Let \(d\mu (\varvec{x}, \varvec{S}) = \mu (\varvec{x}, \varvec{S})d\varvec{x}\) be a reference law that represents the a priori information of the dipole intensities and cluster states. We assume the source amplitudes \(\varvec{x}_k\) of the \(k^\text {th}\) cluster depends only on the state of the cluster \(S_k\) to get

where \(\pi (\varvec{S})\) is the joint probability law of the cluster states. This distribution, along with the assumption of independent cluster states, was used by [2] to constrain the inverse problem and estimate the dipole intensities.

The output of a tractography pipeline is a set of streamlines which represent the white matter fiber bundles. When streamlines begin in a cluster and end in another, we say that those two clusters are connected. A connection between two clusters is characterized by its length, which is the average length of the streamlines that reach those clusters. We treat these connections as wires or pipes which allow communication between cortical regions. The state of a cluster at a time \(t_0\) can influence the state of a different cluster at a time \(t_\varDelta \) if they are connected. The delay \(\varDelta \) is proportional to the length of the connection and the speed at which information flows in the connection. For example, if a cluster is known to be active at a time \(t_0\), it increases the likelihood that its connected counterpart is active at a time \(t_\varDelta \). This spatio-temporal regularization constitutes an additional prior and is therefore included in the model by modifying the distribution (1). The connections are added as a new set of variable which represent connection states. Much like the state of the clusters affect the dipoles, the state of the connections affect clusters. That is, the state of a connection \(C_i\) gives us information about the state of the clusters it connects. Like clusters, we assume connections are either active or inactive. Let \(\varvec{C}\) be the connection state vector, the updated prior information on sources, clusters, and connections is then given by

where \(\varphi (\varvec{C})\) is the joint probability law of the connection states. The distribution (2) is too general. It allows the state of a cluster to be affected by a connection that does not reach it. We therefore assume that the state of a cluster depends only on the connections that reach it. If we let \(\varvec{C}_{\gamma (k)}\) be the connections that reach the \(k^{th}\) cluster, the joint distribution of the cluster states given the connection states is

Finally, we assume that the state of a connection is independent of other connection which, along with (3) yields

The distribution (4) contains all of the available prior information. It describes our prior knowledge of the source intensities, cluster states, and connection states given no EEG measurements. The next step is to insert the observed EEG measurements as evidence and thus be able to infer the posterior probability of any given source, cluster, and connection configuration.

Let the dipole intensities \(\varvec{x}\) have a probability law \(dp(\varvec{x})\), distinct from the reference law \(d\mu (\varvec{x})\) described previously. The objective of the MEM algorithm is to find the probability law \(dp^*(\varvec{x}) = p^*(\varvec{x})d\varvec{x}\) that satisfies

while minimizing the distance to the reference law measured using the Kullback-Leibler divergence \(D_\text {KL}(p(\varvec{x})|d\mu (\varvec{x}))\). In other words, the solution is the probability law \(dp^*(\varvec{x})\) closest to the reference law \(d\mu (\varvec{x})\) that explains the observations on average. By introducing Lagrange multipliers, minimizing \(D_\text {KL}(p(\varvec{x})|d\mu (\varvec{x}))\) subject to (5) can be written as

The first term is the entropy of the model, the second is the data fit, and the third ensures \(p(\varvec{x})\) is a probability distribution. Eq. (6) can be thought of as finding the optimal compromise between the uncertainty (i.e. entropy) of the model and the observations. If we assume the noise is Gaussian with zero mean, the unique optimal Lagrange multipliers \(\varvec{\lambda }^*\) can be determined by solving [2]

where \({\varSigma }_\epsilon ^2\) is the variance of the additive noise and where \(Z(\varvec{\lambda })\) is the partition function given by

Note that (7) does not depend on \(p(\varvec{x})\) or \(\lambda _0\), which can be determined from the unique minimizer \(\varvec{\lambda }^*\). Finding the optimal Lagrange multipliers from (7) requires evaluating \(Z(\varvec{\lambda })\) which in turn requires the reference law \(d\mu (\varvec{x})\). We propose to obtain this reference law by marginalizing \(\varvec{S}\) and \(\varvec{C}\) out of (4), that is

where the sum over \(\left\{ \varvec{C}\right\} \) indicates a sum over all possible realizations of \(\varvec{C}\). The optimal Lagrange multipliers \(\varvec{\lambda }^*\) are identified by solving (7) which requires evaluating \(Z(\varvec{\lambda })\) repeatedly. The sum over all possible states of \(\varvec{C}\) which appears in (9) is prohibitive because it generates \(2^{N_c}\) terms which quickly becomes intractable. This problem can be mitigated by noting that \(Z(\varvec{\lambda })\) is the unnormalized sum of the marginal probability of any variable in \(\varvec{C}\) or \(\varvec{S}\). All terms in the sum need not be evaluated because the state of a cluster depends only on a few connections. Using a suitable variable elimination order, which depends on the specific connections between clusters, the sum of products can be rearranged to compute \(Z(\varvec{\lambda })\) efficiently.

3 Methods

3.1 Synthetic Data

To validate our algorithm, synthetic EEG data was generated using a simplified model of the visual system. The model consists of two clusters of 10 sources representing the left and right primary visual cortices. In addition, the model contains four clusters representing the lateral geniculate nuclei (LGN) and the eyes. These four clusters each have a single invisible source, i.e. their corresponding column of \(\varvec{G}\) contains only zeros. Bidirectional connections are present between the LGN and between the visual cortices. Unidirectional connections link the eyes to the LGN and the LGN to the visual cortices. To simulate signals, information is propagated from the eyes to the LGN and to the visual cortex by selecting random connections. When a cluster is activated by one of its connections, the correspond sources are activated. The synthetic EEG signals are then recorded by two sensors located near the visual cortices. Gaussian noise is added to the synthetic EEG measurements to obtain the desired signal to noise ratio (SNR) defined as the variance of the signal over the variance of the noise.

To quantify how the SNR affects our algorithm’s ability to recover information flow patterns, synthetic signals were generated with an SNR varying between 1 and 20. These signals were then used to recover the posterior probability that each connection was active. A recovery was deemed successful if all connections used to simulate the data obtained a posterior probability above 0.5 while all other posterior probabilities were below 0.5.

3.2 Experimental Data Acquisition and Preprocessing

Magnetic resonance images and EEG signals were acquired on a healthy volunteer. The MR images included diffusion weighted images acquired using a SPLICE sequence (b-value 1000 s/mm\(^2\), 64 directions) and a T1 weighted image. The SPLICE sequence was selected over a more common EPI sequence because it does not generate distortions near the eyes and allows us to track the optic nerve and optic tract. Fiber orientation distribution functions were computed using constrained spherical deconvolution [6] implemented in dipy [4]. Fiber tracking was performed using anatomically constrained particle filter tractography [7] also implemented in dipy. The surface of the cortex was extracted and segmented from the T1 weighted image using FreeSurfer and then downsampled to 8000 vertices. The forward operator \(\varvec{G}\) was computed using OpenMEEG [5, 8].

After the MR acquisition, the subjects were fitted with a 64 channel actiCAP (Brain Products GmbH) EEG cap. The subjects were seated in front of a computer screen in a dark quiet room and were asked to fixate on a red circle appearing at the center of the screen. The visual stimuli consisted of a circle appearing on the left or right side of the screen. One run consisted of 50 left and 50 right visual cues randomly interleaved. A total of 10 runs were performed, 5 with each stimuli. The EEG signals were separated into 150 ms epochs starting at the stimulus onset. Epochs with blink artifacts were excluded from further analysis. All remaining epochs for each stimuli were averaged and downsampled to 100 Hz.

3.3 Model Parameters

The model presented above relies on several parameters. First, the information velocity must be specified to compute the delay associated with each connection. Here, we assume a constant velocity of 6 m/s [9] for all connections of the brain. Next, the parameters of the prior distribution \(d\mu (\varvec{x}, \varvec{S}, \varvec{C})\) must be given. Connections and clusters are initialized with a 0.9 probability of being inactive. However, when a connection is active, the clusters it connects must also be active. Finally, the intensity of sources follows a Gaussian distribution with zero mean. If the cluster is active or inactive, the variance of the distribution is 1e−4 or 1e−7, respectively.

4 Results

Figure 1 illustrates the rate of successful information flow recovery averaged over 100 realizations for each SNR. The rate of recovery sharply rises between a SNR of 5 and 10. An example of a successful recovery is illustrated in Fig. 2. The left part of the figure presents the posterior probabilities projected back onto the model and animated. Information can be seen flowing through the different clusters. The right part of the figure presents and overview of the same results in the form of a flow diagram. Each row of the graph represents a cortical region and the circles correspond to individual time points. Information flow in connections is illustrated by lines connecting cortical regions at different time points. In both cases, green indicates a high probability that a region or connection is active whereas white indicates a low probability.

Reconstruction of the information flow from the left eye, to the right LGN, to right visual, to the left visual (\(\text{ SNR }=5\)). The left of the figure is a video of the information flow projected back onto the model. The right of the figure presents the same information in the form of an information flow diagram.

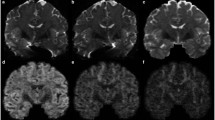

Reconstruction of the information flow following a left and right visual stimulus is illustrated in Fig. 3. For the left visual stimulus, information flow through the optic nerve and optic chiasm to reach the right LGN. The information then goes through the optic radiation to reach the occipital lobe. Notably, the right LGN and right optic radiation have a higher posterior probability than their left counterpart, consistent with the expected visual activation. Similar results are obtained for the right visual stimuli, however the right and left visual pathways are activated with similar posterior probabilities.

5 Conclusion

By combining structural connectivity information from diffusion MRI and functional information from EEG, our algorithm is able to infer information in the white matter of the brain. We evaluated the rate of successful recovery using synthetic data and presented preliminary in vivo results of information flow in the visual pathway. In this study, a constant information velocity of 6 m/s was assumed for all connections of the brain. However, it should be noted that our model is not specific to this assumption and can accommodate different information velocities for different connections. In the future, it may be possible to tune information speed using additional micro-structure information such as axon diameter or myelination. Our method represents a promising new approach to visualizing brain dynamics and connectomics across white matter pathways.

References

Gallivan, J.P., Culham, J.C.: Neural coding within humain brain areas involved in actions. Current Opin. Neurobiol. 33, 141–149 (2015)

Amblard, C., Lapalme, E., Lina, J.M.: Biomagnetic source detection by maximum entropy and graphical models. IEEE Trans. Bio-Med. Eng. 3, 427–442 (2004)

Kwon, D., Shinohara, R.T., Akbari, H., Davatzikos, C.: Combining generative models for multifocal glioma segmentation and registration. In: Golland, P., Hata, N., Barillot, C., Hornegger, J., Howe, R. (eds.) MICCAI 2014. LNCS, vol. 8673, pp. 763–770. Springer, Cham (2014). doi:10.1007/978-3-319-10404-1_95

Garyfallidis, E., Brett, M., Amirbekian, B., Rokem, A., Van Der Walt, S., Descoteaux, M., Nimmo-Smith, I.: Dipy, a library for the analysis of diffusion MRI data. Front. Neuroinf. 8 (2014)

Gramfort, A., Papadopoulo, T., Olivi, E., Clerc, M.: OpenMEEG: opensource software for quasistatic bioelectromagnetics. Biomed. Eng. Online 9, 45 (2010)

Tournier, J.D., Calamate, F., Connelly, A.: Robust determination of the fibre orientation distribution in diffusion MRI: non-negativity constrained super-resolved spherical deconvolution. NeuroImage 35, 1459–1472 (2007)

Girard, G., Whittingstall, K., Deriche, R., Descoteaux, M.: Towards quantitative connectivity analysis: reducing tractography biases. NeuroImage 98, 266–278 (2014)

Kybic, J., Clerc, M., Abboud, T., Faugeras, O., Keriven, R., Papadopoulo, T.: A common formalism for the integral formulations of the forward EEG problem. IEEE Trans. Med. Imaging 24, 12–28 (2005)

Tomasi, S., Caminiti, R., Innocenti, G.: Areal differences in diameter and length of corticofugal projections. Cereb. Cortex 22, 1463–1472 (2012)

Acknowledgement

This work has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (ERC Advanced Grant agreement No 694665 : CoBCoM - Computational Brain Connectivity Mapping).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

1 Electronic supplementary material

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Deslauriers-Gauthier, S. et al. (2017). Inference and Visualization of Information Flow in the Visual Pathway Using dMRI and EEG. In: Descoteaux, M., Maier-Hein, L., Franz, A., Jannin, P., Collins, D., Duchesne, S. (eds) Medical Image Computing and Computer Assisted Intervention − MICCAI 2017. MICCAI 2017. Lecture Notes in Computer Science(), vol 10433. Springer, Cham. https://doi.org/10.1007/978-3-319-66182-7_58

Download citation

DOI: https://doi.org/10.1007/978-3-319-66182-7_58

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-66181-0

Online ISBN: 978-3-319-66182-7

eBook Packages: Computer ScienceComputer Science (R0)